mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-01 21:50:13 +08:00

commit

867e236ef4

@ -0,0 +1,287 @@

|

||||

服务端 I/O 性能:Node、PHP、Java、Go 的对比

|

||||

============

|

||||

|

||||

了解应用程序的输入/输出(I/O)模型意味着理解应用程序处理其数据的载入差异,并揭示其在真实环境中表现。或许你的应用程序很小,在不承受很大的负载时,这并不是个严重的问题;但随着应用程序的流量负载增加,可能因为使用了低效的 I/O 模型导致承受不了而崩溃。

|

||||

|

||||

和大多数情况一样,处理这种问题的方法有多种方式,这不仅仅是一个择优的问题,而是对权衡的理解问题。 接下来我们来看看 I/O 到底是什么。

|

||||

|

||||

|

||||

|

||||

在本文中,我们将对 Node、Java、Go 和 PHP + Apache 进行对比,讨论不同语言如何构造其 I/O ,每个模型的优缺点,并总结一些基本的规律。如果你担心你的下一个 Web 应用程序的 I/O 性能,本文将给你最优的解答。

|

||||

|

||||

### I/O 基础知识: 快速复习

|

||||

|

||||

要了解 I/O 所涉及的因素,我们首先深入到操作系统层面复习这些概念。虽然看起来并不与这些概念直接打交道,但你会一直通过应用程序的运行时环境与它们间接接触。了解细节很重要。

|

||||

|

||||

#### 系统调用

|

||||

|

||||

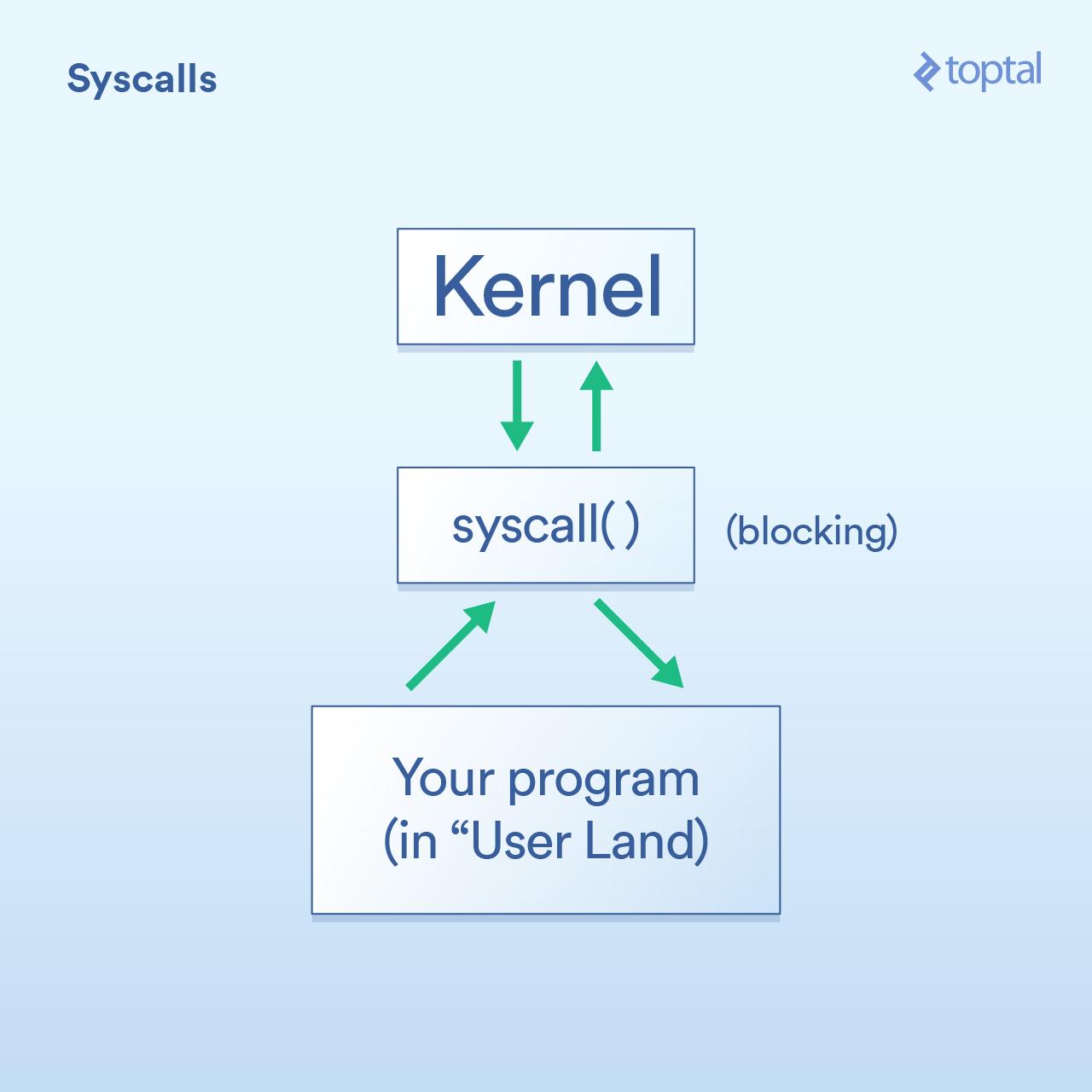

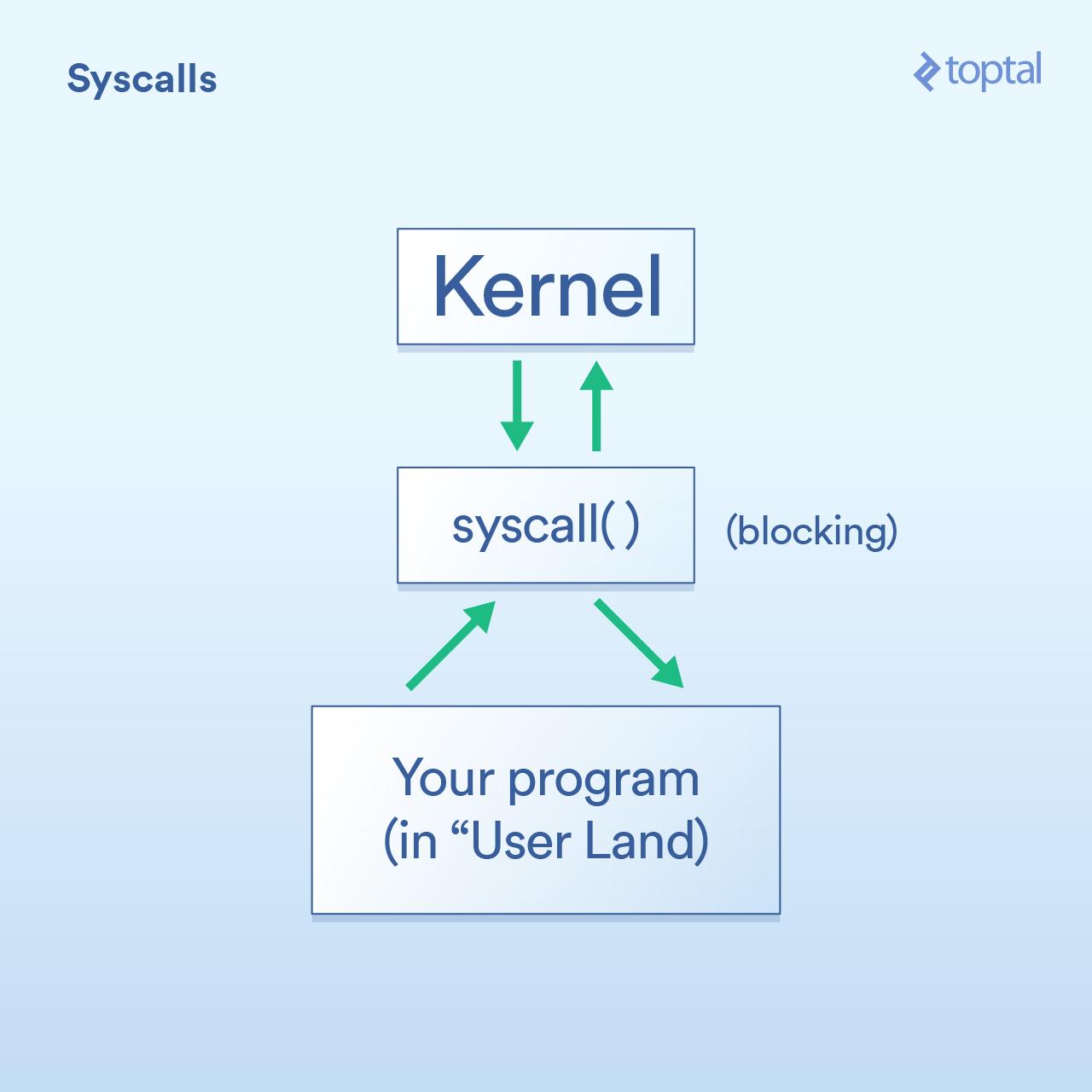

首先是系统调用,其被描述如下:

|

||||

|

||||

* 程序(所谓“<ruby>用户端<rt>user land</rt></ruby>”)必须请求操作系统内核代表它执行 I/O 操作。

|

||||

* “<ruby>系统调用<rt>syscall</rt></ruby>”是你的程序要求内核执行某些操作的方法。这些实现的细节在操作系统之间有所不同,但基本概念是相同的。有一些具体的指令会将控制权从你的程序转移到内核(类似函数调用,但是使用专门用于处理这种情况的专用方式)。一般来说,系统调用会被阻塞,这意味着你的程序会等待内核返回(控制权到)你的代码。

|

||||

* 内核在所需的物理设备( 磁盘、网卡等 )上执行底层 I/O 操作,并回应系统调用。在实际情况中,内核可能需要做许多事情来满足你的要求,包括等待设备准备就绪、更新其内部状态等,但作为应用程序开发人员,你不需要关心这些。这是内核的工作。

|

||||

|

||||

|

||||

|

||||

#### 阻塞与非阻塞

|

||||

|

||||

上面我们提到过,系统调用是阻塞的,一般来说是这样的。然而,一些调用被归类为“非阻塞”,这意味着内核会接收你的请求,将其放在队列或缓冲区之类的地方,然后立即返回而不等待实际的 I/O 发生。所以它只是在很短的时间内“阻塞”,只需要排队你的请求即可。

|

||||

|

||||

举一些 Linux 系统调用的例子可能有助于理解:

|

||||

|

||||

- `read()` 是一个阻塞调用 - 你传递一个句柄,指出哪个文件和缓冲区在哪里传送它所读取的数据,当数据就绪时,该调用返回。这种方式的优点是简单友好。

|

||||

- 分别调用 `epoll_create()`、`epoll_ctl()` 和 `epoll_wait()` ,你可以创建一组句柄来侦听、添加/删除该组中的处理程序、然后阻塞直到有任何事件发生。这允许你通过单个线程有效地控制大量的 I/O 操作,但是现在谈这个还太早。如果你需要这个功能当然好,但须知道它使用起来是比较复杂的。

|

||||

|

||||

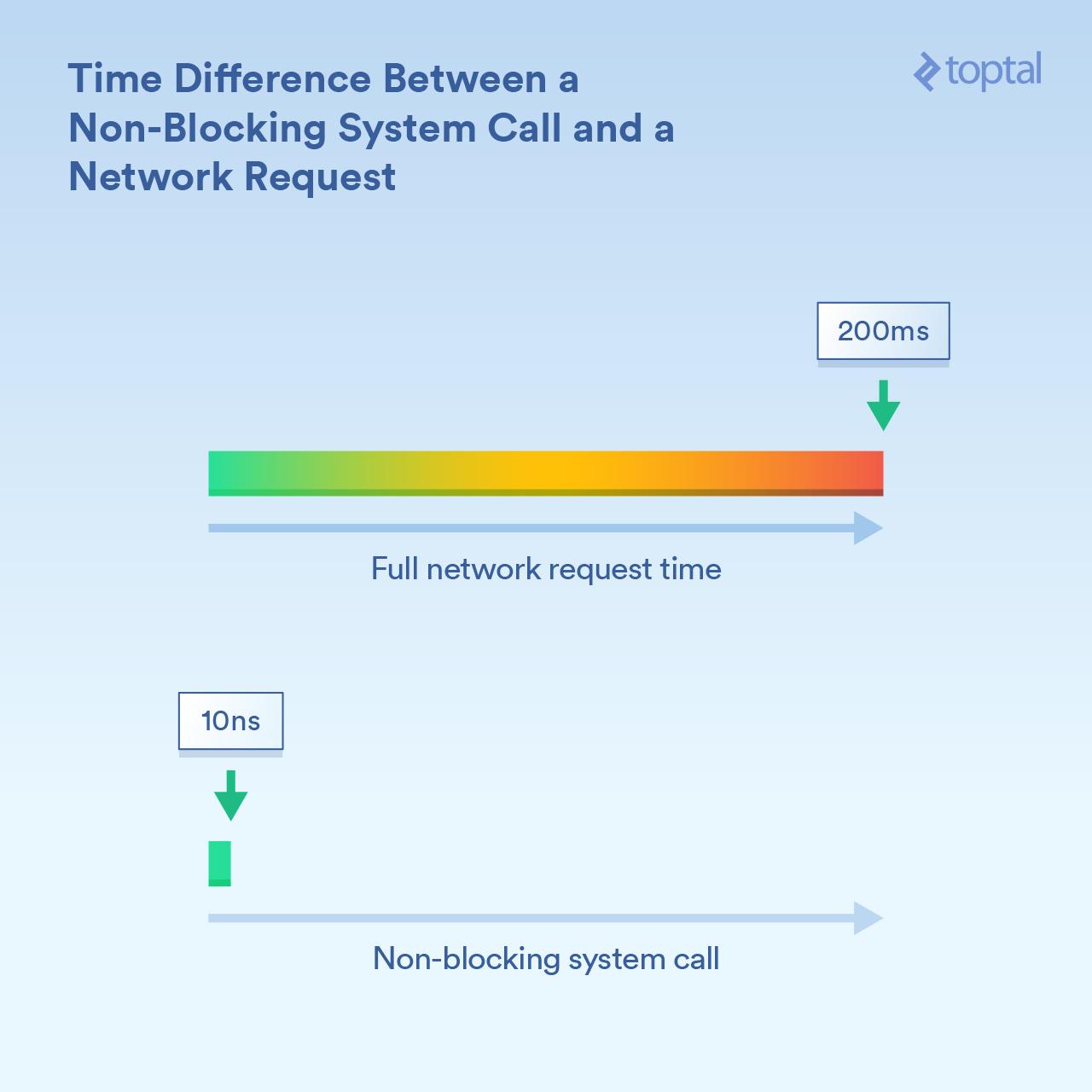

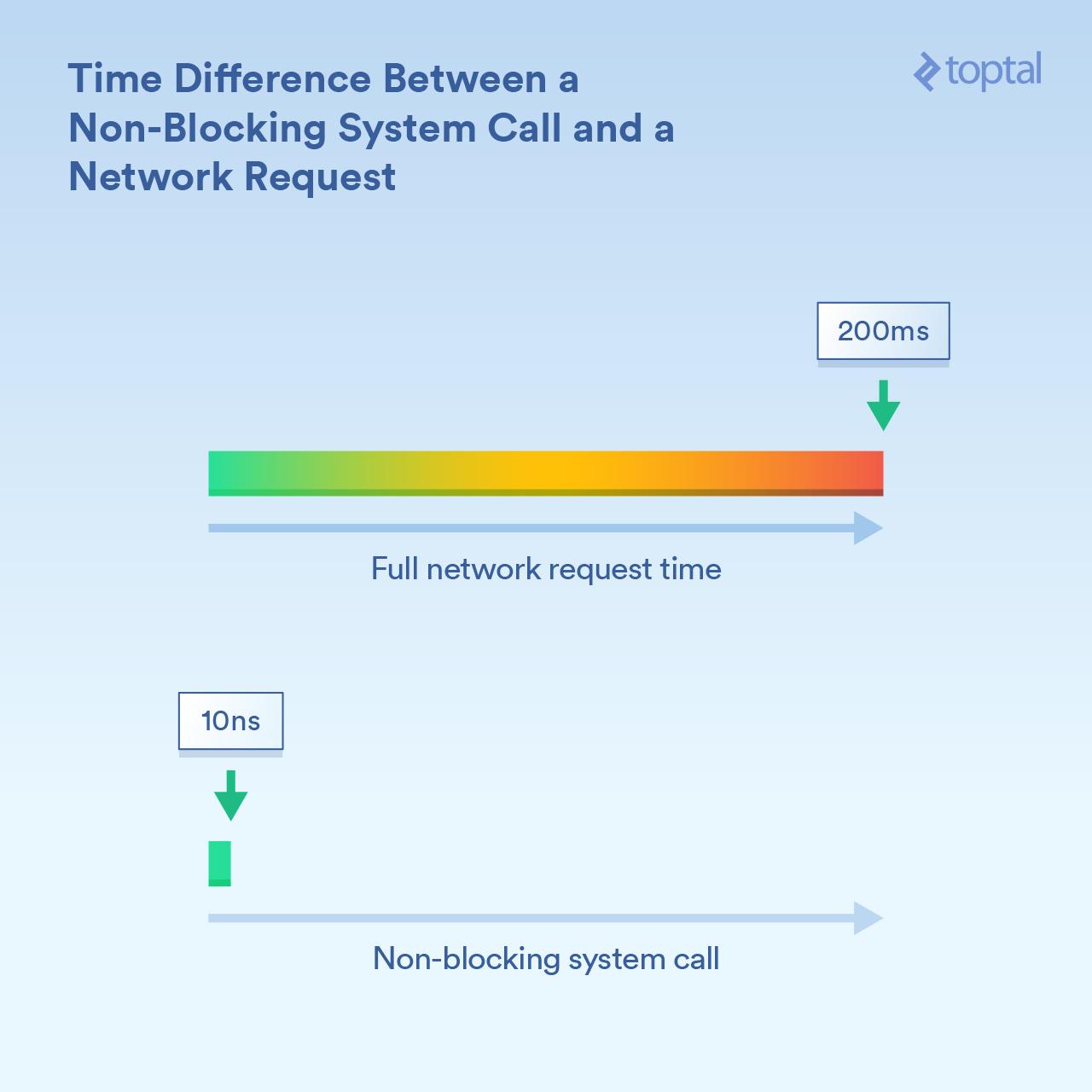

了解这里的时间差异的数量级是很重要的。假设 CPU 内核运行在 3GHz,在没有进行 CPU 优化的情况下,那么它每秒执行 30 亿次<ruby>周期<rt>cycle</rt></ruby>(即每纳秒 3 个周期)。非阻塞系统调用可能需要几十个周期来完成,或者说 “相对少的纳秒” 时间完成。而一个被跨网络接收信息所阻塞的系统调用可能需要更长的时间 - 例如 200 毫秒(1/5 秒)。这就是说,如果非阻塞调用需要 20 纳秒,阻塞调用需要 2 亿纳秒。你的进程因阻塞调用而等待了 1000 万倍的时长!

|

||||

|

||||

|

||||

|

||||

内核既提供了阻塞 I/O (“从网络连接读取并给出数据”),也提供了非阻塞 I/O (“告知我何时这些网络连接具有新数据”)的方法。使用的是哪种机制对调用进程的阻塞时长有截然不同的影响。

|

||||

|

||||

#### 调度

|

||||

|

||||

关键的第三件事是当你有很多线程或进程开始阻塞时会发生什么。

|

||||

|

||||

根据我们的理解,线程和进程之间没有很大的区别。在现实生活中,最显著的性能相关的差异在于,由于线程共享相同的内存,而进程每个都有自己的内存空间,使得单独的进程往往占用更多的内存。但是当我们谈论<ruby>调度<rt>Scheduling</rt></ruby>时,它真正归结为一类事情(线程和进程类同),每个都需要在可用的 CPU 内核上获得一段执行时间。如果你有 300 个线程运行在 8 个内核上,则必须将时间分成几份,以便每个线程和进程都能分享它,每个运行一段时间,然后交给下一个。这是通过 “<ruby>上下文切换<rt>context switch</rt></ruby>” 完成的,可以使 CPU 从运行到一个线程/进程到切换下一个。

|

||||

|

||||

这些上下文切换也有相关的成本 - 它们需要一些时间。在某些快速的情况下,它可能小于 100 纳秒,但根据实际情况、处理器速度/体系结构、CPU 缓存等,偶见花费 1000 纳秒或更长时间。

|

||||

|

||||

而线程(或进程)越多,上下文切换就越多。当我们涉及数以千计的线程时,每个线程花费数百纳秒,就会变得很慢。

|

||||

|

||||

然而,非阻塞调用实质上是告诉内核“仅在这些连接之一有新的数据或事件时再叫我”。这些非阻塞调用旨在有效地处理大量 I/O 负载并减少上下文交换。

|

||||

|

||||

这些你明白了么?现在来到了真正有趣的部分:我们来看看一些流行的语言对那些工具的使用,并得出关于易用性和性能之间权衡的结论,以及一些其他有趣小东西。

|

||||

|

||||

声明,本文中显示的示例是零碎的(片面的,只能体现相关的信息); 数据库访问、外部缓存系统( memcache 等等)以及任何需要 I/O 的东西都将执行某种类型的 I/O 调用,其实质与上面所示的简单示例效果相同。此外,对于将 I/O 描述为“阻塞”( PHP、Java )的情况,HTTP 请求和响应读取和写入本身就是阻塞调用:系统中隐藏着更多 I/O 及其伴生的性能问题需要考虑。

|

||||

|

||||

为一个项目选择编程语言要考虑很多因素。甚至当你只考虑效率时,也有很多因素。但是,如果你担心你的程序将主要受到 I/O 的限制,如果 I/O 性能影响到项目的成败,那么这些是你需要了解的。

|

||||

|

||||

### “保持简单”方法:PHP

|

||||

|

||||

早在 90 年代,很多人都穿着 [Converse][1] 鞋,用 Perl 写着 CGI 脚本。然后 PHP 来了,就像一些人喜欢咒骂的一样,它使得动态网页更容易。

|

||||

|

||||

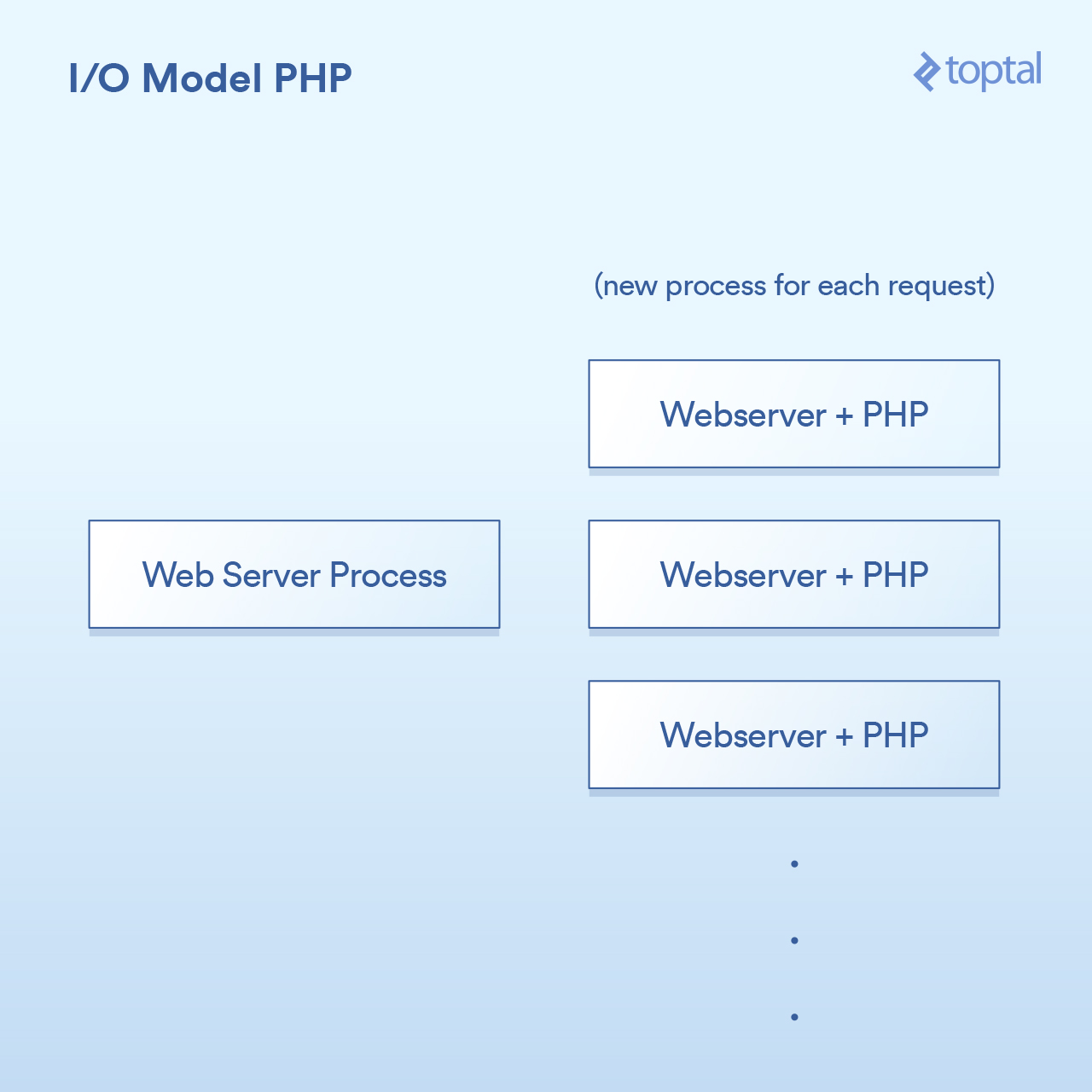

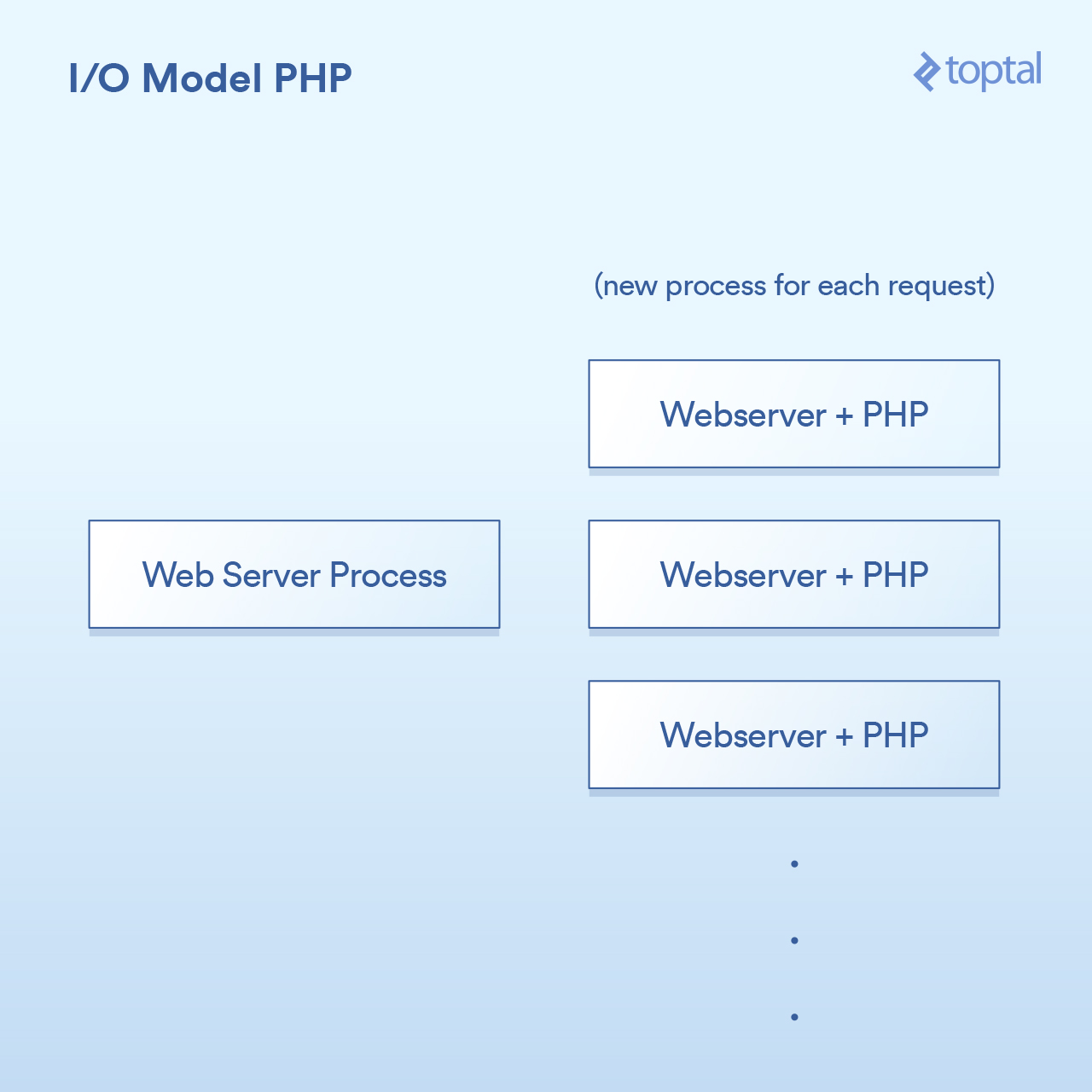

PHP 使用的模型相当简单。虽有一些出入,但你的 PHP 服务器基本上是这样:

|

||||

|

||||

HTTP 请求来自用户的浏览器,并访问你的 Apache Web 服务器。Apache 为每个请求创建一个单独的进程,有一些优化方式可以重新使用它们,以最大限度地减少创建次数( 相对而言,创建进程较慢 )。Apache 调用 PHP 并告诉它运行磁盘上合适的 `.php` 文件。PHP 代码执行并阻塞 I/O 调用。你在 PHP 中调用 `file_get_contents()` ,其底层会调用 `read()` 系统调用并等待结果。

|

||||

|

||||

当然,实际的代码是直接嵌入到你的页面,并且该操作被阻塞:

|

||||

|

||||

```

|

||||

<?php

|

||||

|

||||

// blocking file I/O

|

||||

$file_data = file_get_contents(‘/path/to/file.dat’);

|

||||

|

||||

// blocking network I/O

|

||||

$curl = curl_init('http://example.com/example-microservice');

|

||||

$result = curl_exec($curl);

|

||||

|

||||

// some more blocking network I/O

|

||||

$result = $db->query('SELECT id, data FROM examples ORDER BY id DESC limit 100');

|

||||

|

||||

?>

|

||||

|

||||

```

|

||||

|

||||

关于如何与系统集成,就像这样:

|

||||

|

||||

|

||||

|

||||

很简单:每个请求一个进程。 I/O 调用就阻塞。优点是简单可工作,缺点是,同时与 20,000 个客户端连接,你的服务器将会崩溃。这种方法不能很好地扩展,因为内核提供的用于处理大容量 I/O (epoll 等) 的工具没有被使用。 雪上加霜的是,为每个请求运行一个单独的进程往往会使用大量的系统资源,特别是内存,这通常是你在这样的场景中遇到的第一个问题。

|

||||

|

||||

_注意:Ruby 使用的方法与 PHP 非常相似,在大致的方面上,它们可以被认为是相同的。_

|

||||

|

||||

### 多线程方法: Java

|

||||

|

||||

就在你购买你的第一个域名,在某个句子后很酷地随机说出 “dot com” 的那个时候,Java 来了。而 Java 具有内置于该语言中的多线程功能,它非常棒(特别是在创建时)。

|

||||

|

||||

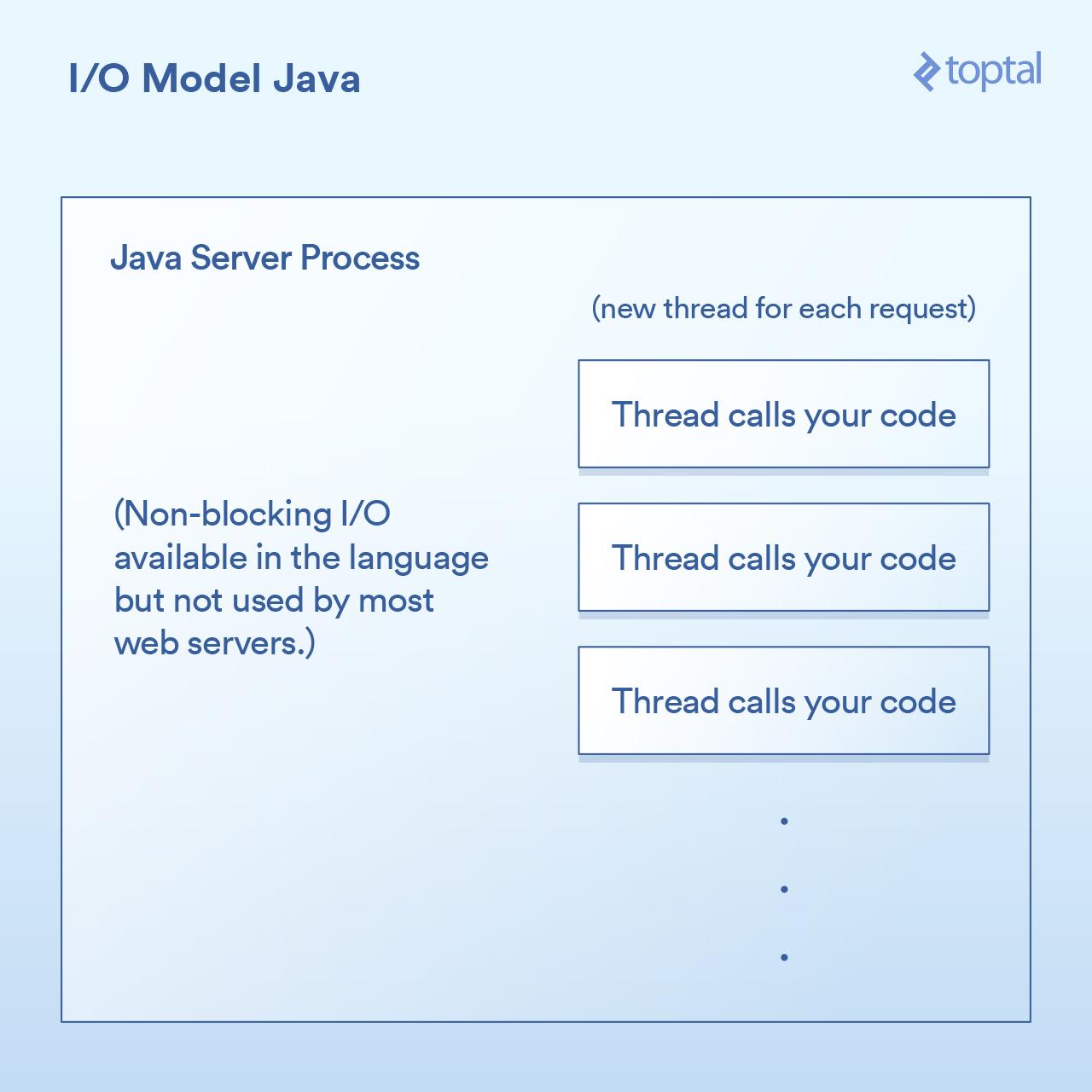

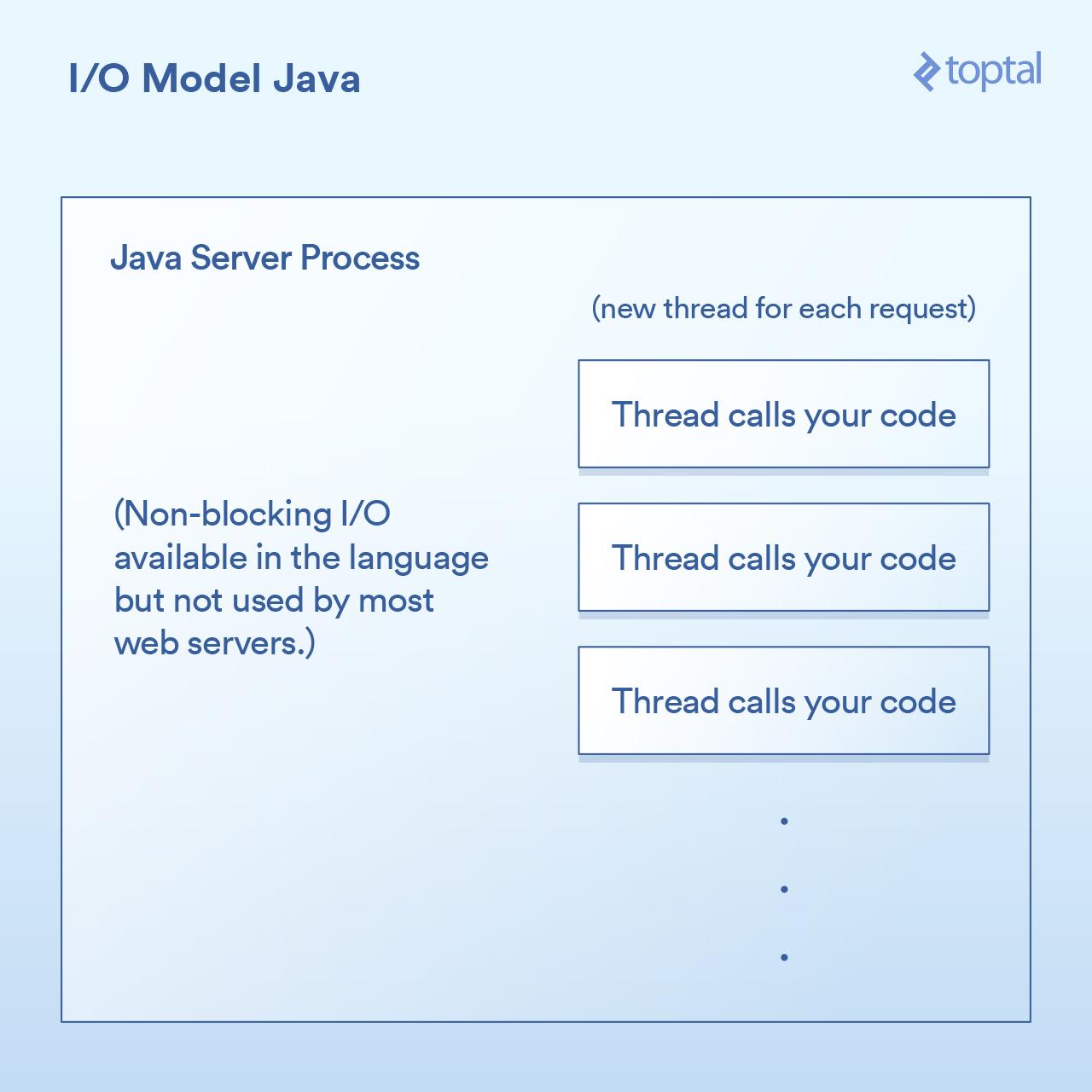

大多数 Java Web 服务器通过为每个请求启动一个新的执行线程,然后在该线程中最终调用你(作为应用程序开发人员)编写的函数。

|

||||

|

||||

在 Java Servlet 中执行 I/O 往往看起来像:

|

||||

|

||||

```

|

||||

public void doGet(HttpServletRequest request,

|

||||

HttpServletResponse response) throws ServletException, IOException

|

||||

{

|

||||

|

||||

// blocking file I/O

|

||||

InputStream fileIs = new FileInputStream("/path/to/file");

|

||||

|

||||

// blocking network I/O

|

||||

URLConnection urlConnection = (new URL("http://example.com/example-microservice")).openConnection();

|

||||

InputStream netIs = urlConnection.getInputStream();

|

||||

|

||||

// some more blocking network I/O

|

||||

out.println("...");

|

||||

}

|

||||

```

|

||||

|

||||

由于我们上面的 `doGet` 方法对应于一个请求,并且在其自己的线程中运行,而不是每个请求一个单独的进程,申请自己的内存。这样有一些好处,比如在线程之间共享状态、缓存数据等,因为它们可以访问彼此的内存,但是它与调度的交互影响与之前的 PHP 的例子几乎相同。每个请求获得一个新线程,该线程内的各种 I/O 操作阻塞在线程内,直到请求被完全处理为止。线程被池化以最小化创建和销毁它们的成本,但是数千个连接仍然意味着数千个线程,这对调度程序是不利的。

|

||||

|

||||

重要的里程碑出现在 Java 1.4 版本(以及 1.7 的重要升级)中,它获得了执行非阻塞 I/O 调用的能力。大多数应用程序、web 应用和其它用途不会使用它,但至少它是可用的。一些 Java Web 服务器尝试以各种方式利用这一点;然而,绝大多数部署的 Java 应用程序仍然如上所述工作。

|

||||

|

||||

|

||||

|

||||

肯定有一些很好的开箱即用的 I/O 功能,Java 让我们更接近,但它仍然没有真正解决当你有一个大量的 I/O 绑定的应用程序被数千个阻塞线程所压垮的问题。

|

||||

|

||||

### 无阻塞 I/O 作为一等公民: Node

|

||||

|

||||

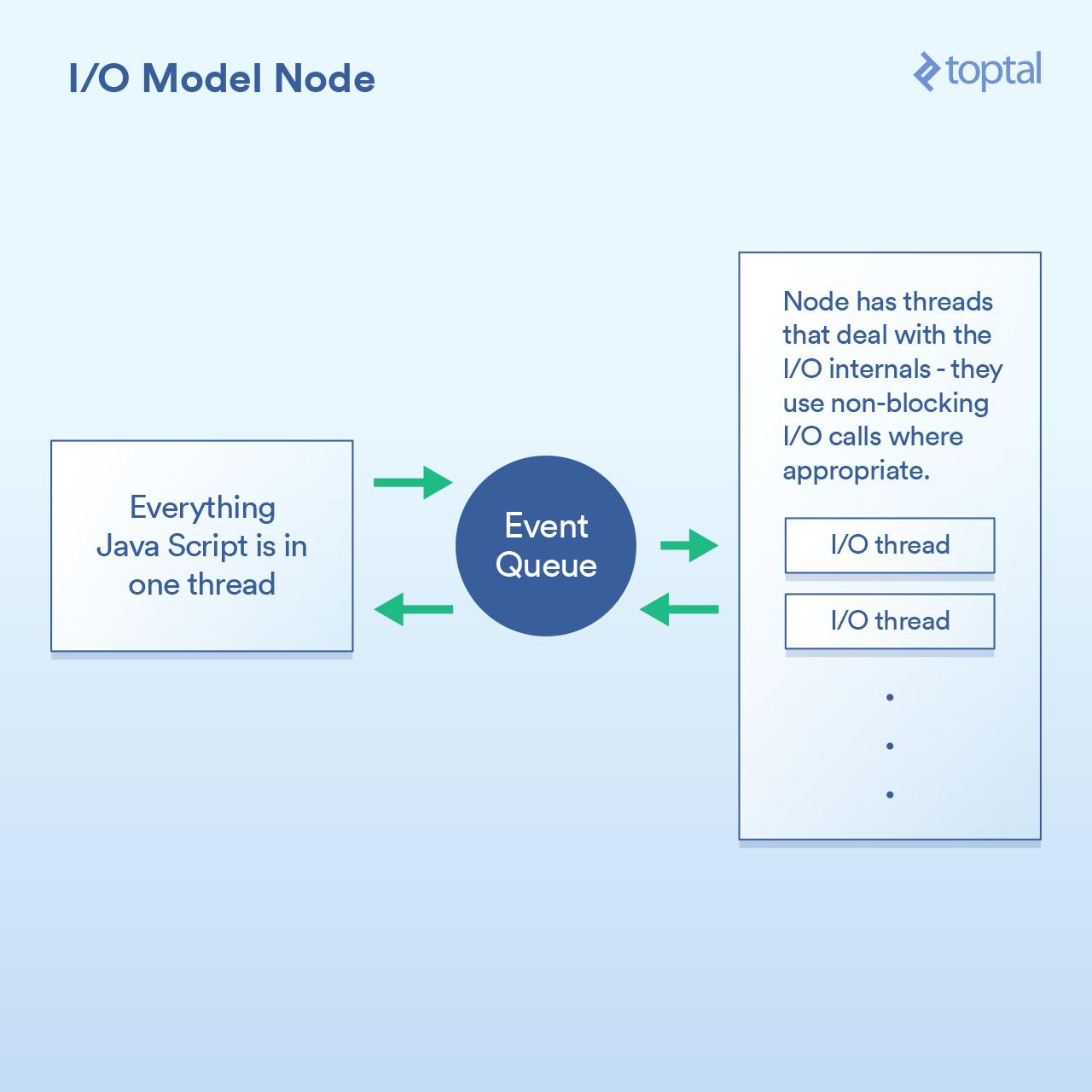

当更好的 I/O 模式来到 Node.js,阻塞才真正被解决。任何一个曾听过 Node 简单介绍的人都被告知这是“非阻塞”,可以有效地处理 I/O。这在一般意义上是正确的。但在细节中则不尽然,而且当在进行性能工程时,这种巫术遇到了问题。

|

||||

|

||||

Node 实现的范例基本上不是说 “在这里写代码来处理请求”,而是说 “在这里写代码来**开始**处理请求”。每次你需要做一些涉及到 I/O 的操作,你会创建一个请求并给出一个回调函数,Node 将在完成之后调用该函数。

|

||||

|

||||

在请求中执行 I/O 操作的典型 Node 代码如下所示:

|

||||

|

||||

```

|

||||

http.createServer(function(request, response) {

|

||||

fs.readFile('/path/to/file', 'utf8', function(err, data) {

|

||||

response.end(data);

|

||||

});

|

||||

});

|

||||

|

||||

```

|

||||

|

||||

你可以看到,这里有两个回调函数。当请求开始时,第一个被调用,当文件数据可用时,第二个被调用。

|

||||

|

||||

这样做的基本原理是让 Node 有机会有效地处理这些回调之间的 I/O 。一个更加密切相关的场景是在 Node 中进行数据库调用,但是我不会在这个例子中啰嗦,因为它遵循完全相同的原则:启动数据库调用,并给 Node 一个回调函数,它使用非阻塞调用单独执行 I/O 操作,然后在你要求的数据可用时调用回调函数。排队 I/O 调用和让 Node 处理它然后获取回调的机制称为“事件循环”。它工作的很好。

|

||||

|

||||

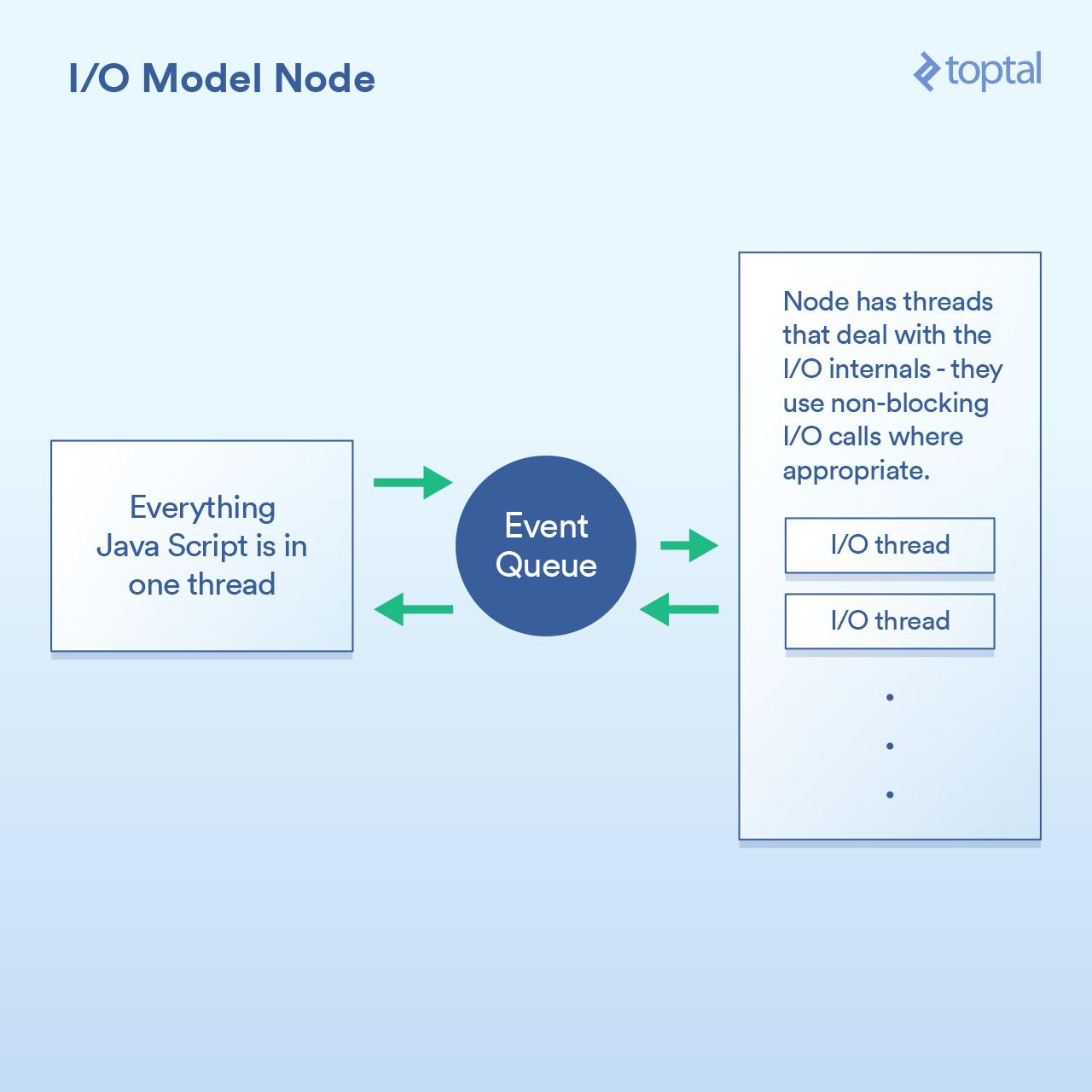

|

||||

|

||||

然而,这个模型有一个陷阱,究其原因,很多是与 V8 JavaScript 引擎(Node 用的是 Chrome 浏览器的 JS 引擎)如何实现的有关^注1 。你编写的所有 JS 代码都运行在单个线程中。你可以想想,这意味着当使用高效的非阻塞技术执行 I/O 时,你的 JS 可以在单个线程中运行计算密集型的操作,每个代码块都会阻塞下一个。可能出现这种情况的一个常见例子是以某种方式遍历数据库记录,然后再将其输出到客户端。这是一个示例,展示了其是如何工作:

|

||||

|

||||

```

|

||||

var handler = function(request, response) {

|

||||

|

||||

connection.query('SELECT ...', function (err, rows) {

|

||||

|

||||

if (err) { throw err };

|

||||

|

||||

for (var i = 0; i < rows.length; i++) {

|

||||

// do processing on each row

|

||||

}

|

||||

|

||||

response.end(...); // write out the results

|

||||

|

||||

})

|

||||

|

||||

};

|

||||

```

|

||||

|

||||

虽然 Node 确实有效地处理了 I/O ,但是上面的例子中 `for` 循环是在你的唯一的一个主线程中占用 CPU 周期。这意味着如果你有 10,000 个连接,则该循环可能会使你的整个应用程序像爬行般缓慢,具体取决于其会持续多久。每个请求必须在主线程中分享一段时间,一次一段。

|

||||

|

||||

这整个概念的前提是 I/O 操作是最慢的部分,因此最重要的是要有效地处理这些操作,即使这意味着要连续进行其他处理。这在某些情况下是正确的,但不是全部。

|

||||

|

||||

另一点是,虽然这只是一个观点,但是写一堆嵌套的回调可能是相当令人讨厌的,有些则认为它使代码更难以追踪。在 Node 代码中看到回调嵌套 4 层、5 层甚至更多层并不罕见。

|

||||

|

||||

我们再次来权衡一下。如果你的主要性能问题是 I/O,则 Node 模型工作正常。然而,它的关键是,你可以在一个处理 HTTP 请求的函数里面放置 CPU 密集型的代码,而且不小心的话会导致每个连接都很慢。

|

||||

|

||||

### 最自然的非阻塞:Go

|

||||

|

||||

在我进入 Go 部分之前,我应该披露我是一个 Go 的粉丝。我已经在许多项目中使用过它,我是一个其生产力优势的公开支持者,我在我的工作中使用它。

|

||||

|

||||

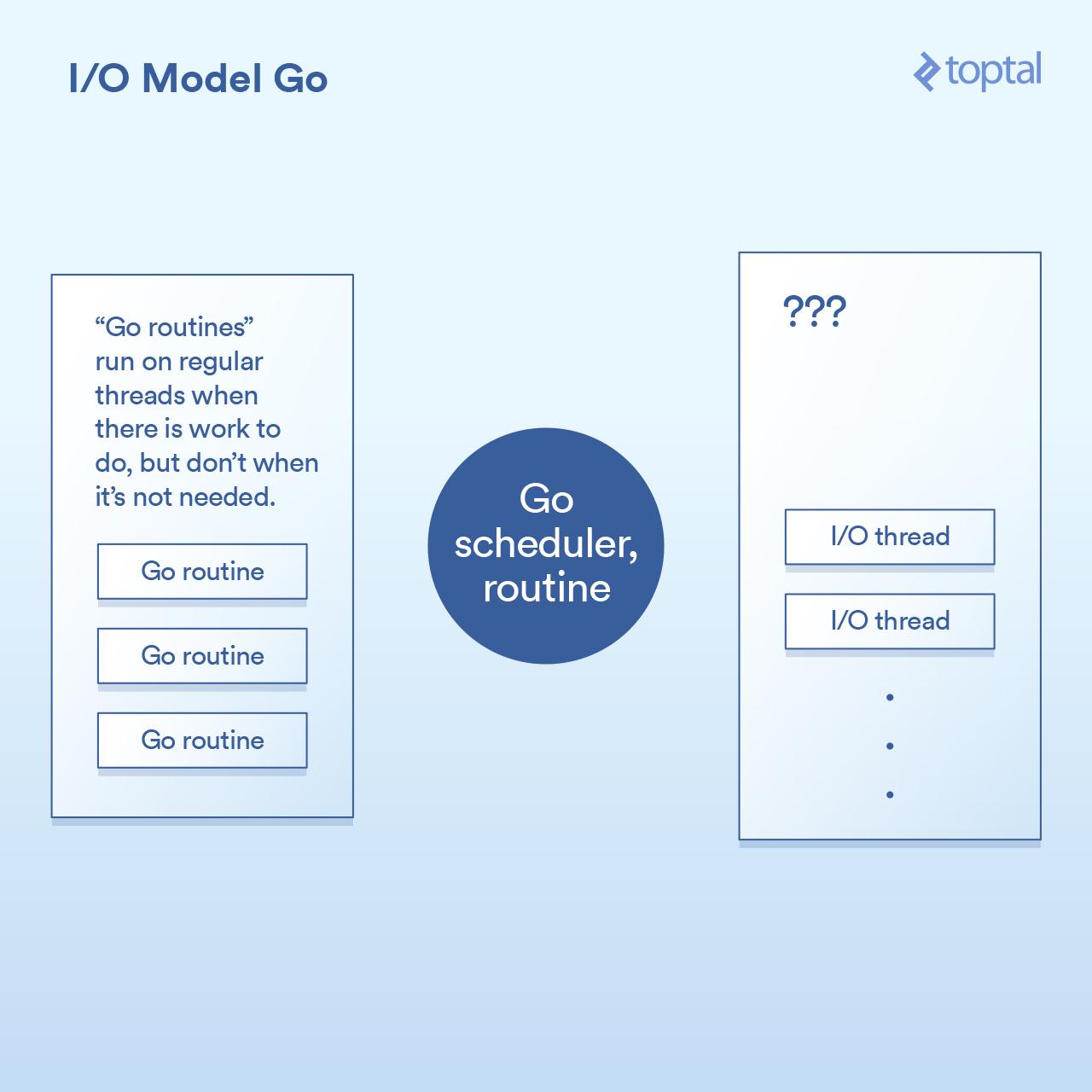

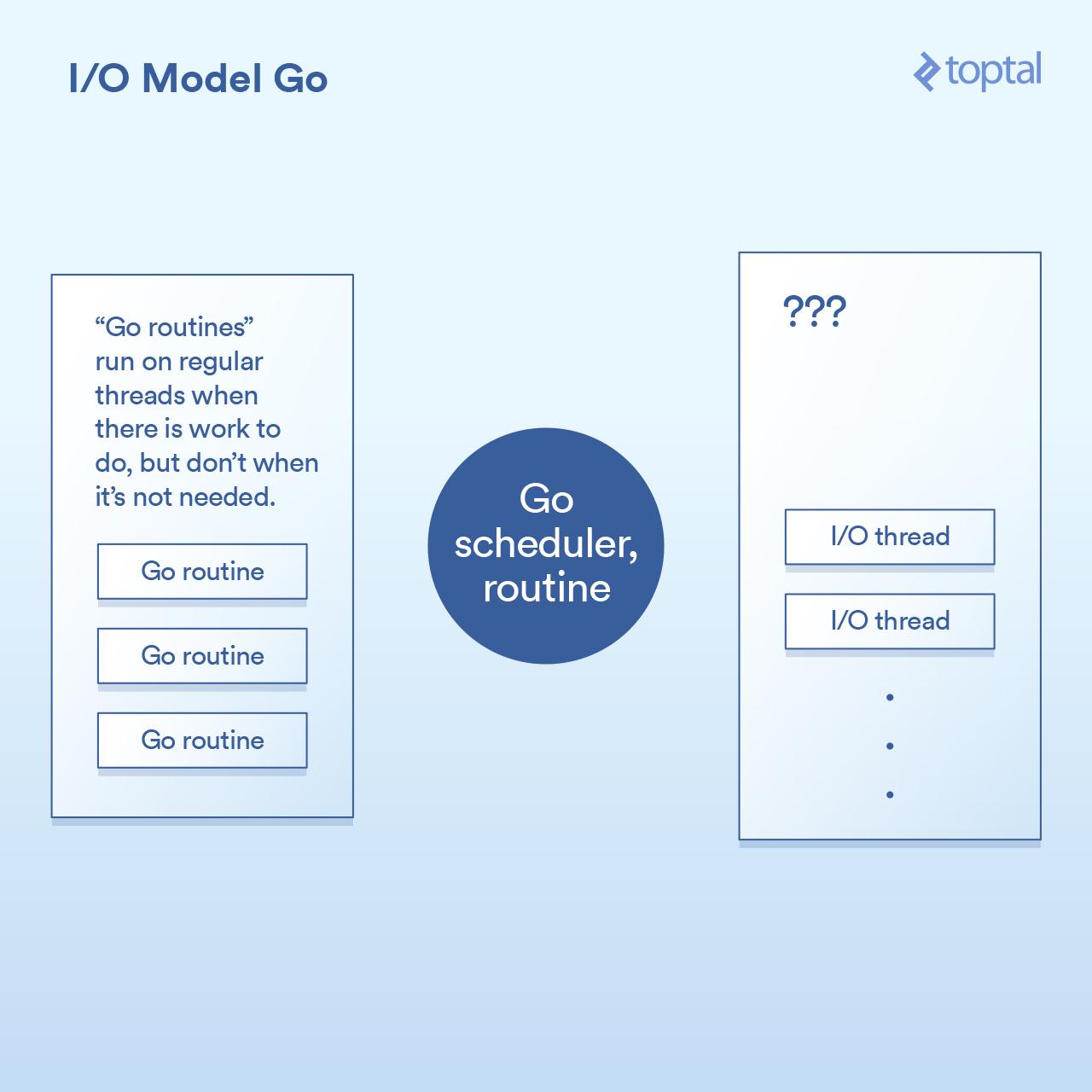

那么,让我们来看看它是如何处理 I/O 的。Go 语言的一个关键特征是它包含自己的调度程序。在 Go 中,不是每个执行线程对应于一个单一的 OS 线程,其通过一种叫做 “<ruby>协程<rt>goroutine</rt></ruby>” 的概念来工作。而 Go 的运行时可以将一个协程分配给一个 OS 线程,使其执行或暂停它,并且它不与一个 OS 线程相关联——这要基于那个协程正在做什么。来自 Go 的 HTTP 服务器的每个请求都在单独的协程中处理。

|

||||

|

||||

调度程序的工作原理如图所示:

|

||||

|

||||

|

||||

|

||||

在底层,这是通过 Go 运行时中的各个部分实现的,它通过对请求的写入/读取/连接等操作来实现 I/O 调用,将当前协程休眠,并当采取进一步动作时唤醒该协程。

|

||||

|

||||

从效果上看,Go 运行时做的一些事情与 Node 做的没有太大不同,除了回调机制是内置到 I/O 调用的实现中,并自动与调度程序交互。它也不会受到必须让所有处理程序代码在同一个线程中运行的限制,Go 将根据其调度程序中的逻辑自动将协程映射到其认为适当的 OS 线程。结果是这样的代码:

|

||||

|

||||

```

|

||||

func ServeHTTP(w http.ResponseWriter, r *http.Request) {

|

||||

|

||||

// the underlying network call here is non-blocking

|

||||

rows, err := db.Query("SELECT ...")

|

||||

|

||||

for _, row := range rows {

|

||||

// do something with the rows,

|

||||

// each request in its own goroutine

|

||||

}

|

||||

|

||||

w.Write(...) // write the response, also non-blocking

|

||||

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

如上所述,我们重构基本的代码结构为更简化的方式,并在底层仍然实现了非阻塞 I/O。

|

||||

|

||||

在大多数情况下,最终是“两全其美”的。非阻塞 I/O 用于所有重要的事情,但是你的代码看起来像是阻塞,因此更容易理解和维护。Go 调度程序和 OS 调度程序之间的交互处理其余部分。这不是完整的魔法,如果你建立一个大型系统,那么值得我们来看看有关它的工作原理的更多细节;但与此同时,你获得的“开箱即用”的环境可以很好地工作和扩展。

|

||||

|

||||

Go 可能有其缺点,但一般来说,它处理 I/O 的方式不在其中。

|

||||

|

||||

### 谎言,可恶的谎言和基准

|

||||

|

||||

对这些各种模式的上下文切换进行准确的定时是很困难的。我也可以认为这对你来说不太有用。相反,我会给出一些比较这些服务器环境的整个 HTTP 服务器性能的基本基准。请记住,影响整个端到端 HTTP 请求/响应路径的性能有很多因素,这里提供的数字只是我将一些样本放在一起进行基本比较的结果。

|

||||

|

||||

对于这些环境中的每一个,我写了适当的代码在一个 64k 文件中读取随机字节,在其上运行了一个 SHA-256 哈希 N 次( N 在 URL 的查询字符串中指定,例如 .../test.php?n=100),并打印出结果十六进制散列。我选择这样做,是因为使用一些一致的 I/O 和受控的方式来运行相同的基准测试是一个增加 CPU 使用率的非常简单的方法。

|

||||

|

||||

有关使用的环境的更多细节,请参阅 [基准说明][3] 。

|

||||

|

||||

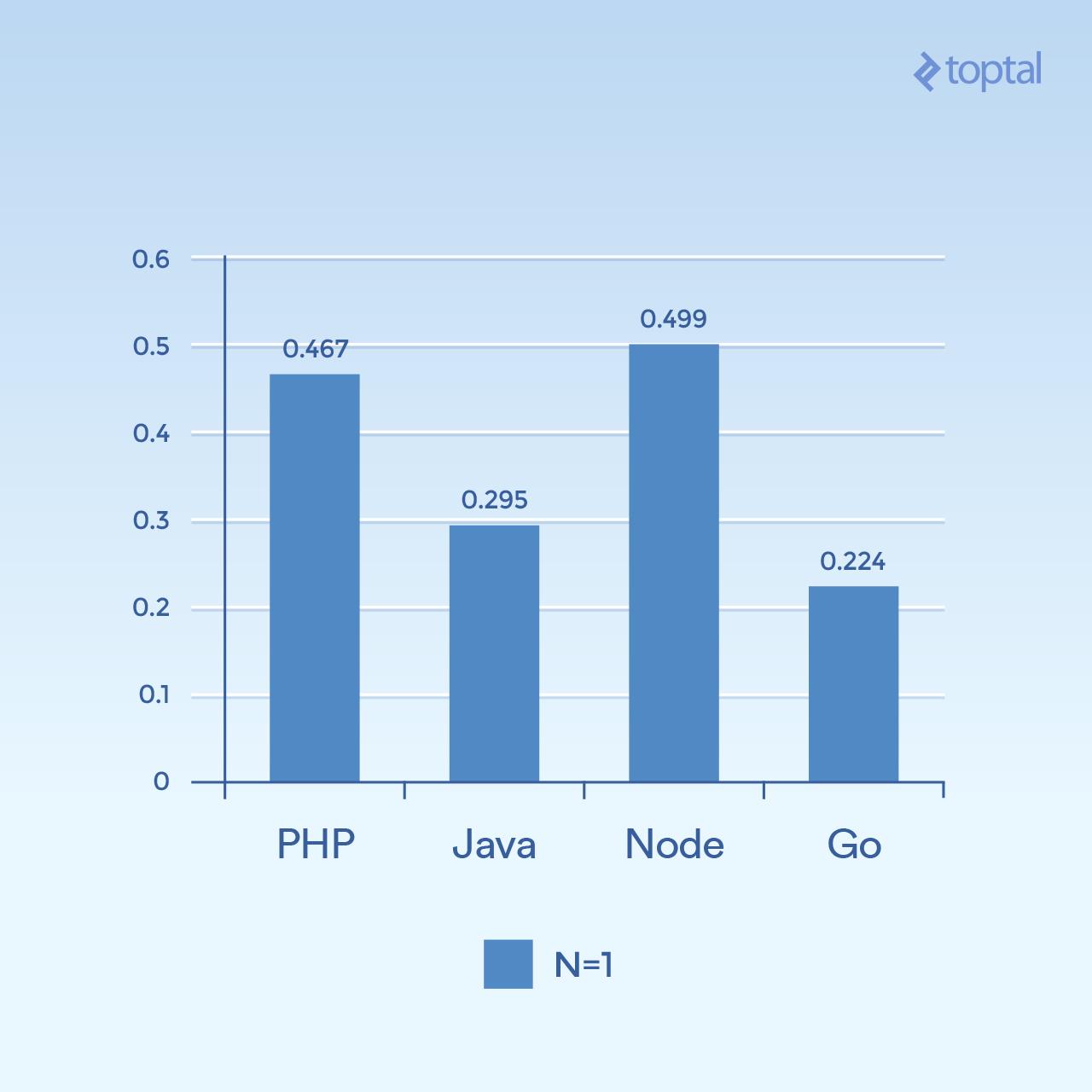

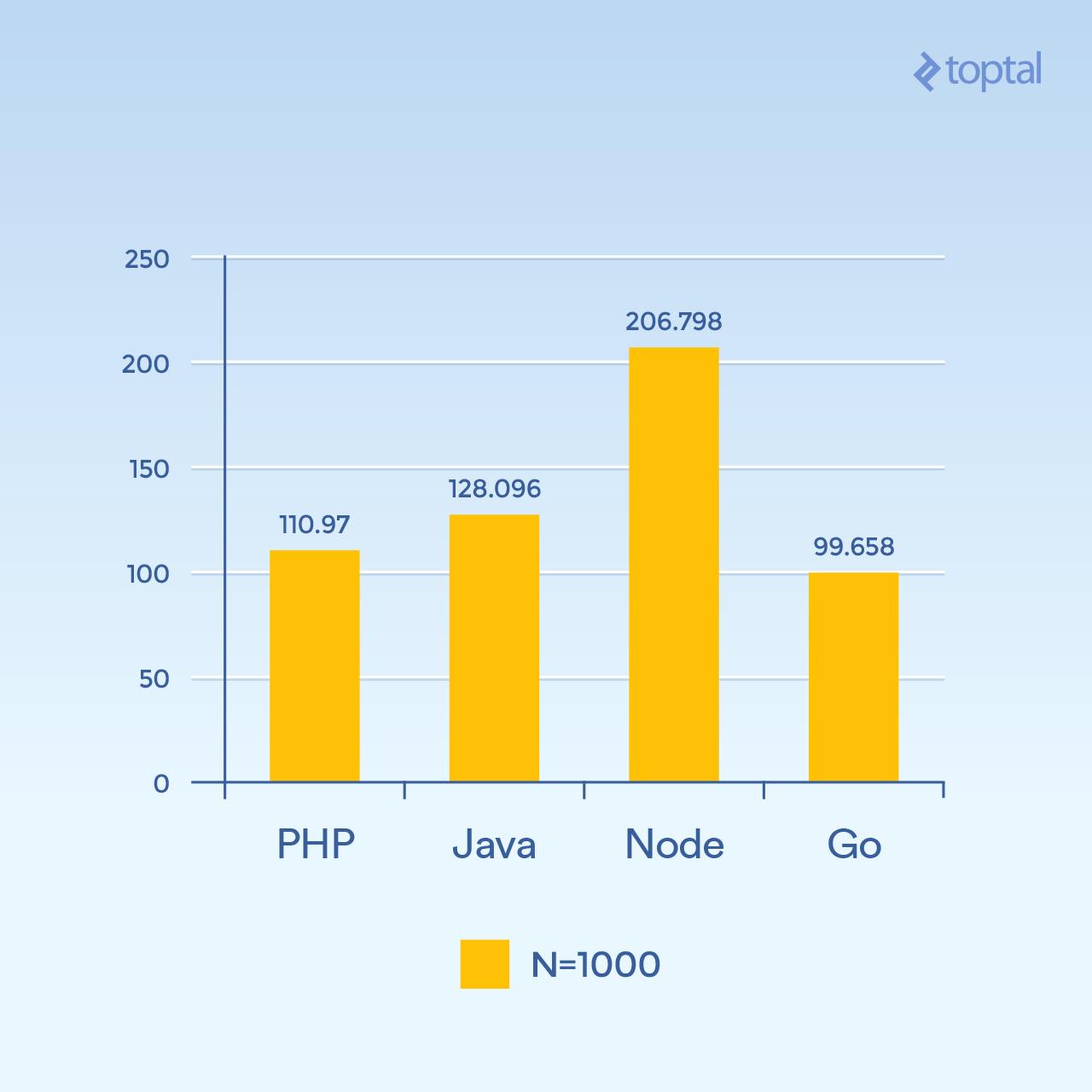

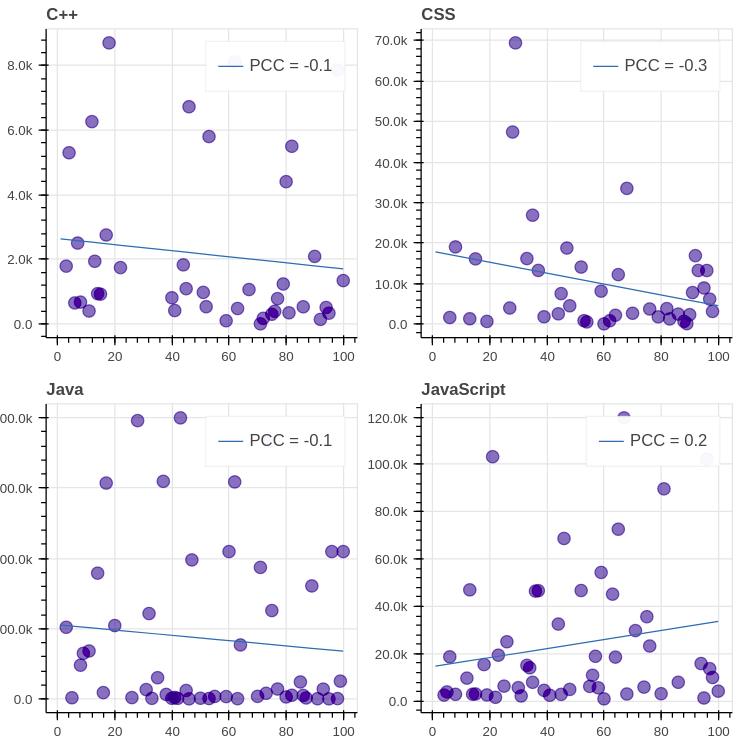

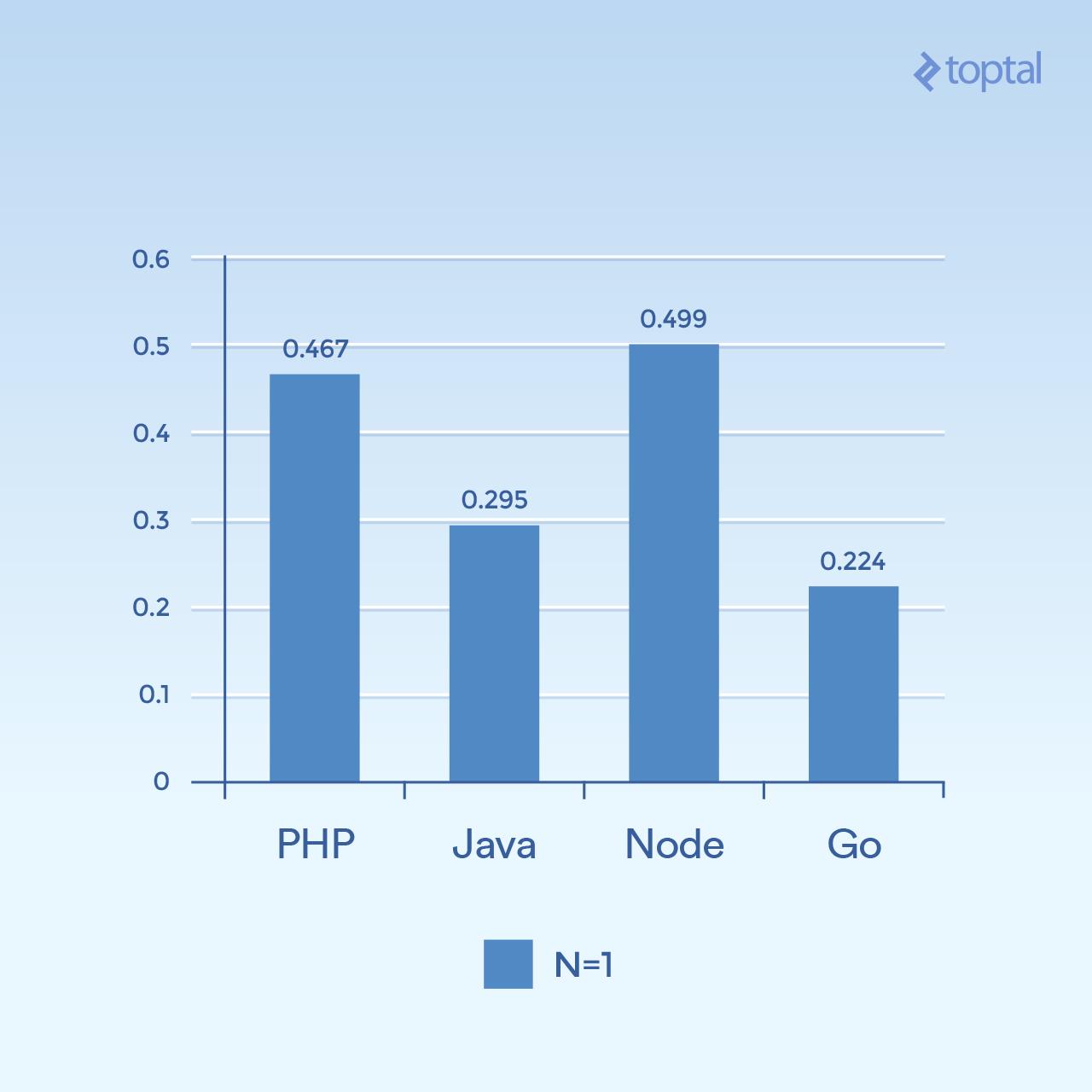

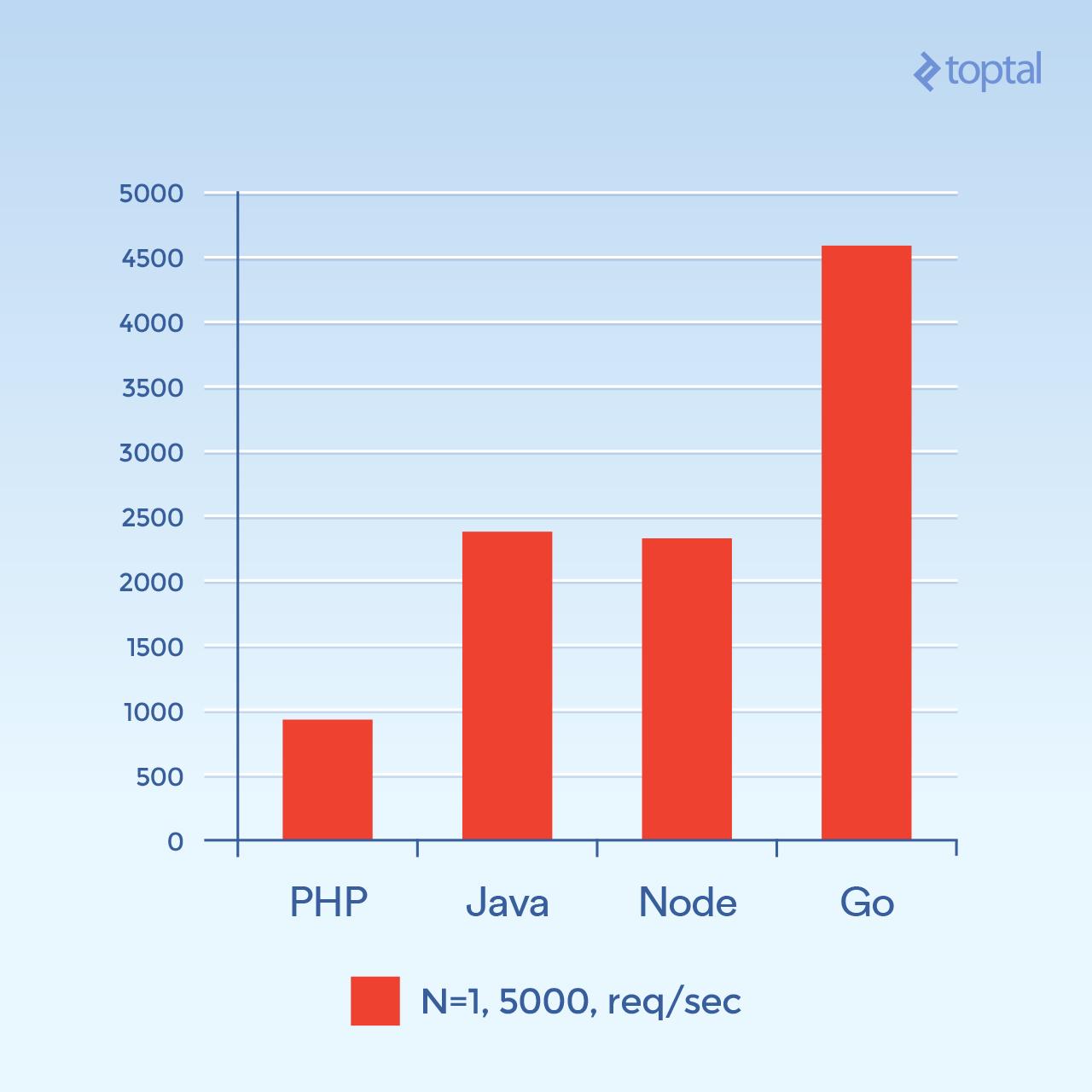

首先,我们来看一些低并发的例子。运行 2000 次迭代,300 个并发请求,每个请求只有一个散列(N = 1),结果如下:

|

||||

|

||||

|

||||

|

||||

*时间是在所有并发请求中完成请求的平均毫秒数。越低越好。*

|

||||

|

||||

仅从一张图很难得出结论,但是对我来说,似乎在大量的连接和计算量上,我们看到时间更多地与语言本身的一般执行有关,对于 I/O 更是如此。请注意,那些被视为“脚本语言”的语言(松散类型,动态解释)执行速度最慢。

|

||||

|

||||

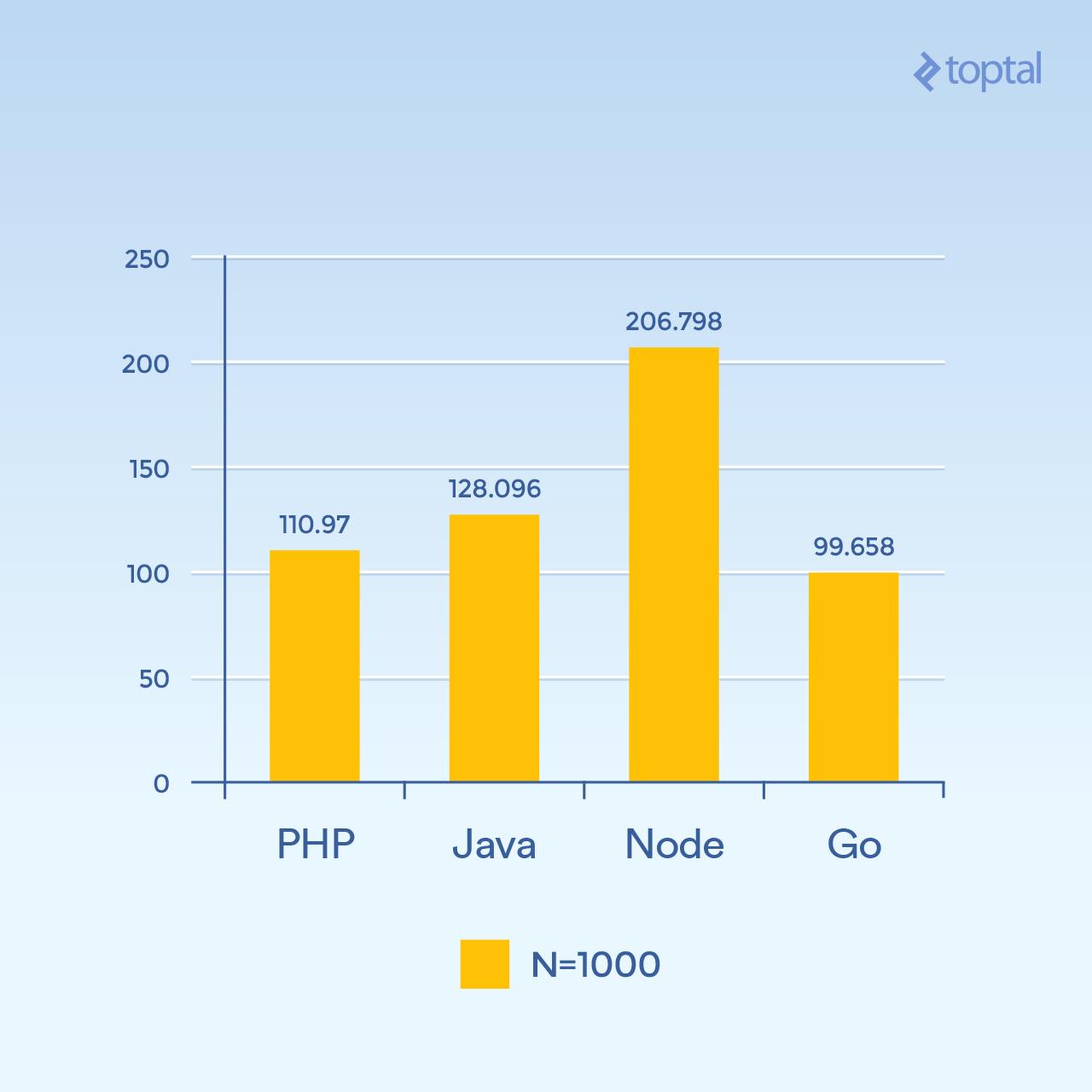

但是,如果我们将 N 增加到 1000,仍然有 300 个并发请求,相同的任务,但是哈希迭代是 1000 倍(显着增加了 CPU 负载):

|

||||

|

||||

|

||||

|

||||

*时间是在所有并发请求中完成请求的平均毫秒数。越低越好。*

|

||||

|

||||

突然间, Node 性能显著下降,因为每个请求中的 CPU 密集型操作都相互阻塞。有趣的是,在这个测试中,PHP 的性能要好得多(相对于其他的),并且打败了 Java。(值得注意的是,在 PHP 中,SHA-256 实现是用 C 编写的,在那个循环中执行路径花费了更多的时间,因为现在我们正在进行 1000 个哈希迭代)。

|

||||

|

||||

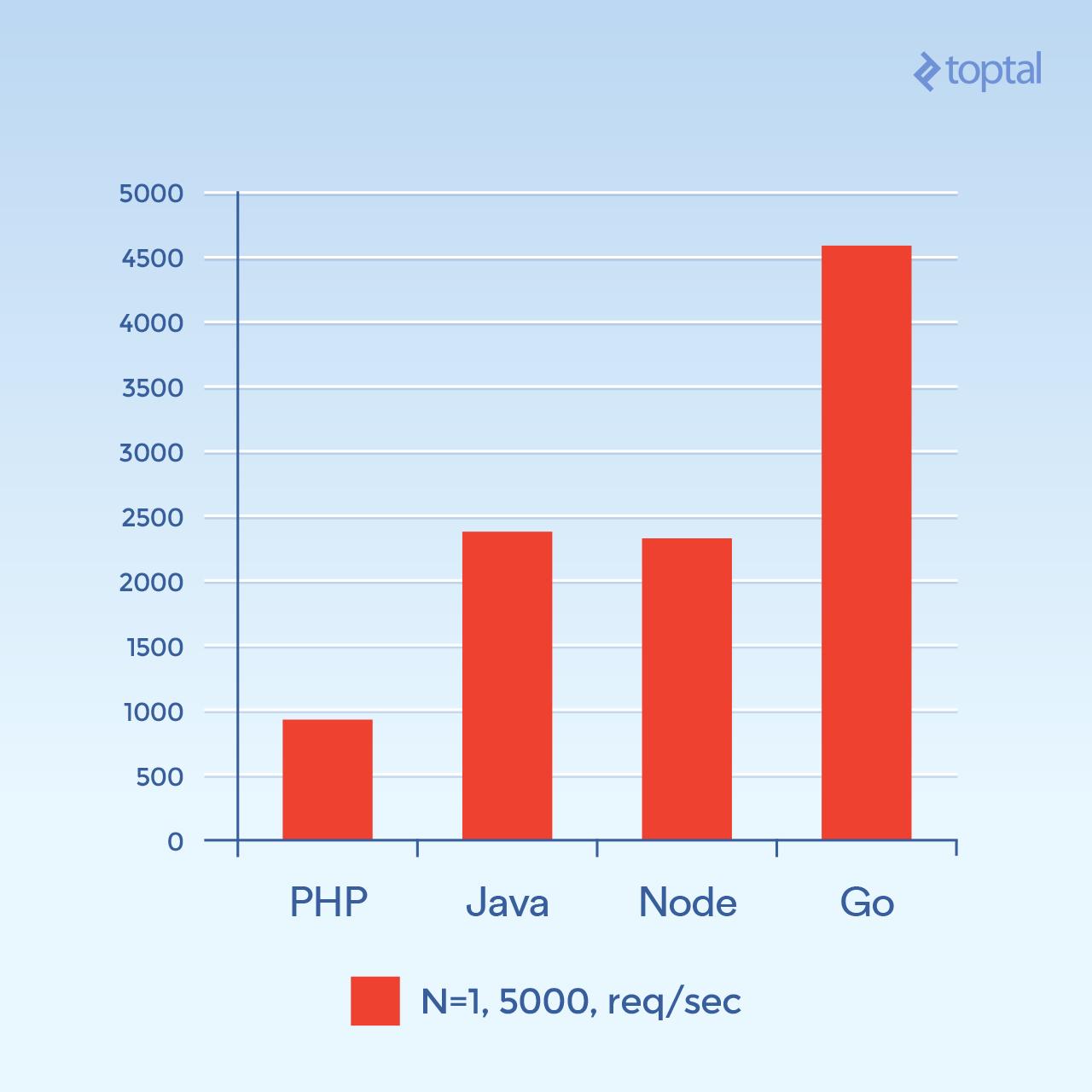

现在让我们尝试 5000 个并发连接(N = 1) - 或者是我可以发起的最大连接。不幸的是,对于大多数这些环境,故障率并不显着。对于这个图表,我们来看每秒的请求总数。 _越高越好_ :

|

||||

|

||||

|

||||

|

||||

*每秒请求数。越高越好。*

|

||||

|

||||

这个图看起来有很大的不同。我猜测,但是看起来像在高连接量时,产生新进程所涉及的每连接开销以及与 PHP + Apache 相关联的附加内存似乎成为主要因素,并阻止了 PHP 的性能。显然,Go 是这里的赢家,其次是 Java,Node,最后是 PHP。

|

||||

|

||||

虽然与你的整体吞吐量相关的因素很多,并且在应用程序之间也有很大的差异,但是你对底层发生什么的事情以及所涉及的权衡了解更多,你将会得到更好的结果。

|

||||

|

||||

### 总结

|

||||

|

||||

以上所有这一切,很显然,随着语言的发展,处理大量 I/O 的大型应用程序的解决方案也随之发展。

|

||||

|

||||

为了公平起见,PHP 和 Java,尽管这篇文章中的描述,确实 [实现了][4] 在 [ web 应用程序][7] 中 [可使用的][6] [ 非阻塞 I/O][5] 。但是这些方法并不像上述方法那么常见,并且需要考虑使用这种方法来维护服务器的随之而来的操作开销。更不用说你的代码必须以与这些环境相适应的方式进行结构化;你的 “正常” PHP 或 Java Web 应用程序通常不会在这样的环境中进行重大修改。

|

||||

|

||||

作为比较,如果我们考虑影响性能和易用性的几个重要因素,我们得出以下结论:

|

||||

|

||||

| 语言 | 线程与进程 | 非阻塞 I/O | 使用便捷性 |

|

||||

| --- | --- | --- | --- |

|

||||

| PHP | 进程 | 否 | |

|

||||

| Java | 线程 | 可用 | 需要回调 |

|

||||

| Node.js | 线程 | 是 | 需要回调 |

|

||||

| Go | 线程 (协程) | 是 | 不需要回调 |

|

||||

|

||||

线程通常要比进程有更高的内存效率,因为它们共享相同的内存空间,而进程则没有。结合与非阻塞 I/O 相关的因素,我们可以看到,至少考虑到上述因素,当我们从列表往下看时,与 I/O 相关的一般设置得到改善。所以如果我不得不在上面的比赛中选择一个赢家,那肯定会是 Go。

|

||||

|

||||

即使如此,在实践中,选择构建应用程序的环境与你的团队对所述环境的熟悉程度以及你可以实现的总体生产力密切相关。因此,每个团队都深入并开始在 Node 或 Go 中开发 Web 应用程序和服务可能就没有意义。事实上,寻找开发人员或你内部团队的熟悉度通常被认为是不使用不同语言和/或环境的主要原因。也就是说,过去十五年来,时代已经发生了变化。

|

||||

|

||||

希望以上内容可以帮助你更清楚地了解底层发生的情况,并为你提供如何处理应用程序的现实可扩展性的一些想法。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.toptal.com/back-end/server-side-io-performance-node-php-java-go

|

||||

|

||||

作者:[BRAD PEABODY][a]

|

||||

译者:[MonkeyDEcho](https://github.com/MonkeyDEcho)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.toptal.com/resume/brad-peabody

|

||||

[1]:https://www.pinterest.com/pin/414401603185852181/

|

||||

[2]:http://www.journaldev.com/7462/node-js-architecture-single-threaded-event-loop

|

||||

[3]:https://peabody.io/post/server-env-benchmarks/

|

||||

[4]:http://reactphp.org/

|

||||

[5]:http://amphp.org/

|

||||

[6]:http://undertow.io/

|

||||

[7]:https://netty.io/

|

||||

@ -1,88 +1,82 @@

|

||||

使用 Docker 构建你的 Serverless 树莓派集群

|

||||

============================================================

|

||||

|

||||

这篇博文将向你展示如何使用 Docker 和 [OpenFaaS][33] 框架构建你自己的 Serverless 树莓派集群。大家常常问我能用他们的集群来做些什么?而这个应用完美匹配卡片尺寸的设备——只需添加更多的树莓派就能获取更强的计算能力。

|

||||

|

||||

这篇博文将向你展示如何使用 Docker 和 [OpenFaaS][33] 框架构建你自己的 Serverless 树莓派集群。大家常常问我他们能用他们的集群来做些什么?这个应用完美匹配卡片尺寸的设备——只需添加更多的树莓派就能获取更强的计算能力。

|

||||

> “Serverless” (无服务器)是事件驱动架构的一种设计模式,与“桥接模式”、“外观模式”、“工厂模式”和“云”这些名词一样,都是一种抽象概念。

|

||||

|

||||

> “Serverless” 是事件驱动架构的一种设计模式,与“桥接模式”、“外观模式”、“工厂模式”和“云”这些名词一样,都是一种抽象概念。

|

||||

|

||||

|

||||

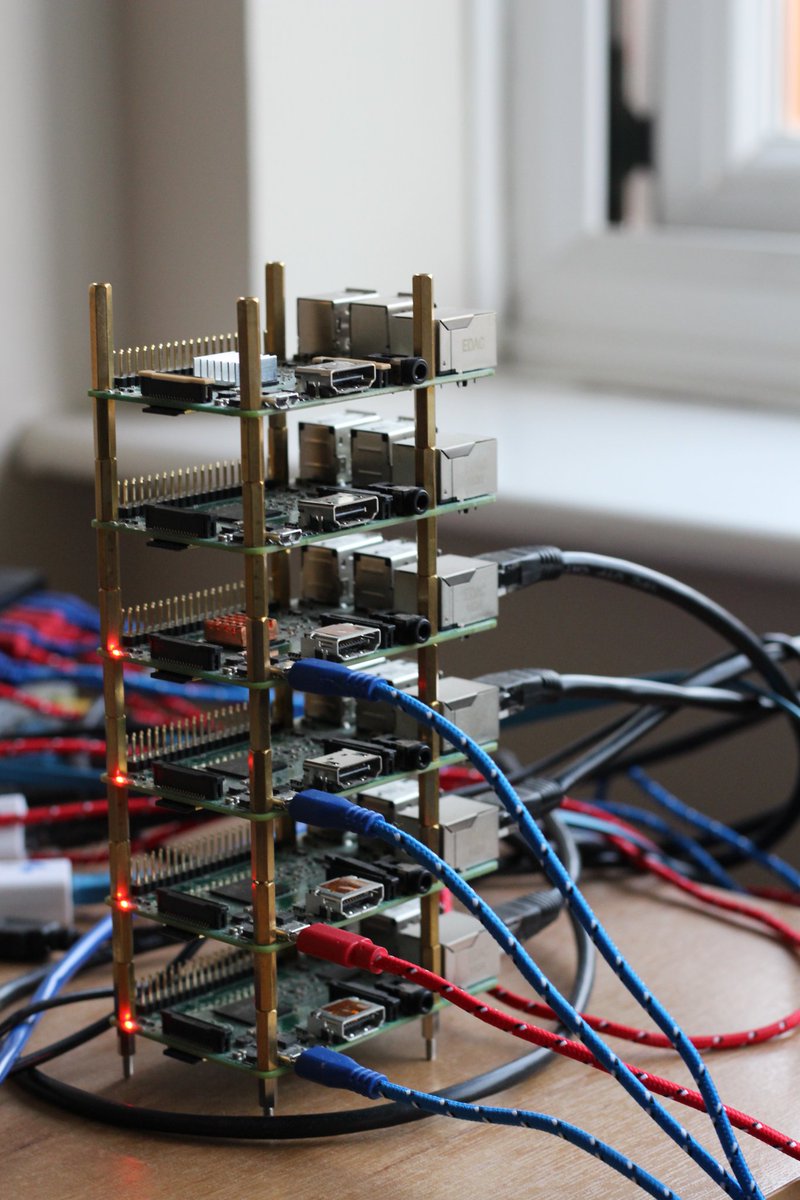

_图片:3 个 Raspberry Pi Zero_

|

||||

|

||||

这是我在本文中描述的集群,用黄铜支架分隔每个设备。

|

||||

|

||||

|

||||

|

||||

### Serverless 是什么?它为何重要?

|

||||

|

||||

行业对于“serverless”这个术语的含义有几种解释。在这篇博文中,我们就把它理解为一种事件驱动的架构模式,它能让你用自己喜欢的任何语言编写轻量可复用的功能。[更多关于 Serverless 的资料][22]。

|

||||

行业对于 “serverless” 这个术语的含义有几种解释。在这篇博文中,我们就把它理解为一种事件驱动的架构模式,它能让你用自己喜欢的任何语言编写轻量可复用的功能。[更多关于 Serverless 的资料][22]。

|

||||

|

||||

|

||||

_Serverless 架构也引出了“功能即服务服务”模式,简称 FaaS_

|

||||

|

||||

_Serverless 架构也引出了“功能即服务服务”模式,简称 FaaS_

|

||||

|

||||

Serverless 的“功能”可以做任何事,但通常用于处理给定的输入——例如来自 GitHub、Twitter、PayPal、Slack、Jenkins CI pipeline 的事件;或者以树莓派为例,处理像红外运动传感器、激光绊网、温度计等真实世界的传感器的输入。

|

||||

|

||||

|

||||

|

||||

|

||||

Serverless 功能能够更好地结合第三方的后端服务,使系统整体的能力大于各部分之和。

|

||||

|

||||

|

||||

了解更多背景信息,可以阅读我最近一偏博文:[Introducing Functions as a Service (FaaS)][34]。

|

||||

了解更多背景信息,可以阅读我最近一偏博文:[功能即服务(FaaS)简介][34]。

|

||||

|

||||

### 概述

|

||||

|

||||

我们将使用 [OpenFaaS][35], 它能够让主机或者集群作为支撑 Serverless 功能运行的后端。任何能够使用 Docker 部署的可执行二进制文件、脚本或者编程语言都能在 [OpenFaaS][36] 上运作,你可以根据速度和伸缩性选择部署的规模。另一个优点是,它还内建了用户界面和监控系统。

|

||||

我们将使用 [OpenFaaS][35],它能够让主机或者集群作为支撑 Serverless 功能运行的后端。任何能够使用 Docker 部署的可执行二进制文件、脚本或者编程语言都能在 [OpenFaaS][36] 上运作,你可以根据速度和伸缩性选择部署的规模。另一个优点是,它还内建了用户界面和监控系统。

|

||||

|

||||

这是我们要执行的步骤:

|

||||

|

||||

* 在一个或多个主机上配置 Docker (树莓派 2 或者 3);

|

||||

|

||||

* 利用 Docker Swarm 将它们连接;

|

||||

|

||||

* 部署 [OpenFaaS][23];

|

||||

|

||||

* 使用 Python 编写我们的第一个功能。

|

||||

|

||||

### Docker Swarm

|

||||

|

||||

Docker 是一项打包和部署应用的技术,支持集群上运行,有着安全的默认设置,而且在搭建集群时只需要一条命令。OpenFaaS 使用 Docker 和 Swarm 在你的可用树莓派上传递你的 Serverless 功能。

|

||||

|

||||

|

||||

_图片:3 个 Raspberry Pi Zero_

|

||||

|

||||

我推荐你在这个项目中使用带树莓派 2 或者 3,以太网交换机和[强大的 USB 多端口电源适配器][37]。

|

||||

|

||||

### 准备 Raspbian

|

||||

|

||||

把 [Raspbian Jessie Lite][38] 写入 SD 卡(8GB 容量就正常工作了,但还是推荐使用 16GB 的 SD 卡)。

|

||||

|

||||

_注意:不要下载成 Raspbian Stretch 了_

|

||||

_注意:不要下载成 Raspbian Stretch 了_

|

||||

|

||||

> 社区在努力让 Docker 支持 Raspbian Stretch,但是还未能做到完美运行。请从[树莓派基金会网站][24]下载 Jessie Lite 镜像。

|

||||

|

||||

我推荐使用 [Etcher.io][39] 烧写镜像。

|

||||

|

||||

> 在引导树莓派之前,你需要在引导分区创建名为“ssh”的空白文件。这样才能允许远程登录。

|

||||

> 在引导树莓派之前,你需要在引导分区创建名为 `ssh` 的空白文件。这样才能允许远程登录。

|

||||

|

||||

* 接通电源,然后修改主机名

|

||||

#### 接通电源,然后修改主机名

|

||||

|

||||

现在启动树莓派的电源并且使用`ssh`连接:

|

||||

现在启动树莓派的电源并且使用 `ssh` 连接:

|

||||

|

||||

```

|

||||

$ ssh pi@raspberrypi.local

|

||||

|

||||

```

|

||||

|

||||

> 默认密码是`raspberry`

|

||||

> 默认密码是 `raspberry`

|

||||

|

||||

使用 `raspi-config` 工具把主机名改为 `swarm-1` 或者类似的名字,然后重启。

|

||||

|

||||

当你到了这一步,你还可以把划分给 GPU (显卡)的内存设置为 16MB。

|

||||

|

||||

* 现在安装 Docker

|

||||

#### 现在安装 Docker

|

||||

|

||||

我们可以使用通用脚本来安装:

|

||||

|

||||

```

|

||||

$ curl -sSL https://get.docker.com | sh

|

||||

|

||||

```

|

||||

|

||||

> 这个安装方式在将来可能会发生变化。如上文所说,你的系统需要是 Jessie,这样才能得到一个确定的配置。

|

||||

@ -92,23 +86,21 @@ $ curl -sSL https://get.docker.com | sh

|

||||

```

|

||||

WARNING: raspbian is no longer updated @ https://get.docker.com/

|

||||

Installing the legacy docker-engine package...

|

||||

|

||||

```

|

||||

|

||||

之后,用下面这个命令确保你的用户帐号可以访问 Docker 客户端:

|

||||

|

||||

```

|

||||

$ usermod pi -aG docker

|

||||

|

||||

```

|

||||

|

||||

> 如果你的用户名不是 `pi`,那就把它替换成你的用户名。

|

||||

|

||||

* 修改默认密码

|

||||

#### 修改默认密码

|

||||

|

||||

输入 `$sudo passwd pi`,然后设置一个新密码,请不要跳过这一步!

|

||||

|

||||

* 重复以上步骤

|

||||

#### 重复以上步骤

|

||||

|

||||

现在为其它的树莓派重复上述步骤。

|

||||

|

||||

@ -128,9 +120,9 @@ To add a worker to this swarm, run the following command:

|

||||

|

||||

```

|

||||

|

||||

你会看到它显示了一个口令,以及其它结点加入集群的命令。接下来使用 `ssh` 登录每个树莓派,运行这个加入集群的命令。

|

||||

你会看到它显示了一个口令,以及其它节点加入集群的命令。接下来使用 `ssh` 登录每个树莓派,运行这个加入集群的命令。

|

||||

|

||||

等待连接完成后,在第一个树莓派上查看集群的结点:

|

||||

等待连接完成后,在第一个树莓派上查看集群的节点:

|

||||

|

||||

```

|

||||

$ docker node ls

|

||||

@ -143,15 +135,15 @@ y2p089bs174vmrlx30gc77h4o swarm4 Ready Active

|

||||

|

||||

恭喜你!你现在拥有一个树莓派集群了!

|

||||

|

||||

* _更多关于集群的内容_

|

||||

#### 更多关于集群的内容

|

||||

|

||||

你可以看到三个结点启动运行。这时只有一个结点是集群管理者。如果我们的管理结点_死机_了,集群就进入了不可修复的状态。我们可以通过添加冗余的管理结点解决这个问题。而且它们依然会运行工作负载,除非你明确设置了让你的服务只运作在工作结点上。

|

||||

你可以看到三个节点启动运行。这时只有一个节点是集群管理者。如果我们的管理节点_死机_了,集群就进入了不可修复的状态。我们可以通过添加冗余的管理节点解决这个问题。而且它们依然会运行工作负载,除非你明确设置了让你的服务只运作在工作节点上。

|

||||

|

||||

要把一个工作结点升级为管理结点,只需要在其中一个管理结点上运行 `docker node promote <node_name>` 命令。

|

||||

要把一个工作节点升级为管理节点,只需要在其中一个管理节点上运行 `docker node promote <node_name>` 命令。

|

||||

|

||||

> 注意: Swarm 命令,例如 `docker service ls` 或者 `docker node ls` 只能在管理结点上运行。

|

||||

> 注意: Swarm 命令,例如 `docker service ls` 或者 `docker node ls` 只能在管理节点上运行。

|

||||

|

||||

想深入了解管理结点与工作结点如何保持一致性,可以查阅 [Docker Swarm 管理指南][40]。

|

||||

想深入了解管理节点与工作节点如何保持一致性,可以查阅 [Docker Swarm 管理指南][40]。

|

||||

|

||||

### OpenFaaS

|

||||

|

||||

@ -159,9 +151,9 @@ y2p089bs174vmrlx30gc77h4o swarm4 Ready Active

|

||||

|

||||

|

||||

|

||||

> 如果你支持 [OpenFaaS][41],希望你能 **start** [OpenFaaS][25] 的 GitHub 仓库。

|

||||

> 如果你支持 [OpenFaaS][41],希望你能 **星标** [OpenFaaS][25] 的 GitHub 仓库。

|

||||

|

||||

登录你的第一个树莓派(你运行 `docker swarm init` 的结点),然后部署这个项目:

|

||||

登录你的第一个树莓派(你运行 `docker swarm init` 的节点),然后部署这个项目:

|

||||

|

||||

```

|

||||

$ git clone https://github.com/alexellis/faas/

|

||||

@ -178,7 +170,7 @@ Creating service func_echoit

|

||||

|

||||

```

|

||||

|

||||

你的其它树莓派会收到 Docer Swarm 的指令,开始从网上拉取这个 Docker 镜像,并且解压到 SD 卡上。这些工作会分布到各个结点上,所以没有哪个结点产生过高的负载。

|

||||

你的其它树莓派会收到 Docer Swarm 的指令,开始从网上拉取这个 Docker 镜像,并且解压到 SD 卡上。这些工作会分布到各个节点上,所以没有哪个节点产生过高的负载。

|

||||

|

||||

这个过程会持续几分钟,你可以用下面指令查看它的完成状况:

|

||||

|

||||

@ -195,7 +187,7 @@ v9vsvx73pszz func_nodeinfo replicated 1/1

|

||||

|

||||

```

|

||||

|

||||

我们希望看到每个服务都显示“1/1”。

|

||||

我们希望看到每个服务都显示 “1/1”。

|

||||

|

||||

你可以根据服务名查看该服务被调度到哪个树莓派上:

|

||||

|

||||

@ -203,7 +195,6 @@ v9vsvx73pszz func_nodeinfo replicated 1/1

|

||||

$ docker service ps func_markdown

|

||||

ID IMAGE NODE STATE

|

||||

func_markdown.1 functions/markdownrender:latest-armhf swarm4 Running

|

||||

|

||||

```

|

||||

|

||||

状态一项应该显示 `Running`,如果它是 `Pending`,那么镜像可能还在下载中。

|

||||

@ -212,16 +203,15 @@ func_markdown.1 functions/markdownrender:latest-armhf swarm4 Running

|

||||

|

||||

```

|

||||

$ ifconfig

|

||||

|

||||

```

|

||||

|

||||

例如,如果你的 IP 地址是 192.168.0.100,那就访问 [http://192.168.0.100:8080][42]。

|

||||

例如,如果你的 IP 地址是 192.168.0.100,那就访问 http://192.168.0.100:8080 。

|

||||

|

||||

这是你会看到 FaaS UI(也叫 API 网关)。这是你定义、测试、调用功能的地方。

|

||||

|

||||

点击名称为 func_markdown 的 Markdown 转换功能,输入一些 Markdown(这是 Wikipedia 用来组织内容的语言)文本。

|

||||

点击名称为 “func_markdown” 的 Markdown 转换功能,输入一些 Markdown(这是 Wikipedia 用来组织内容的语言)文本。

|

||||

|

||||

然后点击 `invoke`。你会看到调用计数增加,屏幕下方显示功能调用的结果。

|

||||

然后点击 “invoke”。你会看到调用计数增加,屏幕下方显示功能调用的结果。

|

||||

|

||||

|

||||

|

||||

@ -229,53 +219,48 @@ $ ifconfig

|

||||

|

||||

这一节的内容已经有相关的教程,但是我们需要几个步骤来配置树莓派。

|

||||

|

||||

* 获取 FaaS-CLI:

|

||||

#### 获取 FaaS-CLI

|

||||

|

||||

```

|

||||

$ curl -sSL cli.openfaas.com | sudo sh

|

||||

armv7l

|

||||

Getting package https://github.com/alexellis/faas-cli/releases/download/0.4.5-b/faas-cli-armhf

|

||||

|

||||

```

|

||||

|

||||

* 下载样例:

|

||||

#### 下载样例

|

||||

|

||||

```

|

||||

$ git clone https://github.com/alexellis/faas-cli

|

||||

$ cd faas-cli

|

||||

|

||||

```

|

||||

|

||||

* 为树莓派修补样例:

|

||||

#### 为树莓派修补样例模版

|

||||

|

||||

我们临时修改我们的模版,让它们能在树莓派上工作:

|

||||

|

||||

```

|

||||

$ cp template/node-armhf/Dockerfile template/node/

|

||||

$ cp template/python-armhf/Dockerfile template/python/

|

||||

|

||||

```

|

||||

|

||||

这么做是因为树莓派和我们平时关注的大多数计算机使用不一样的处理器架构。

|

||||

|

||||

> 了解 Docker 在树莓派上的最新状况,请查阅: [5 Things you need to know][26]

|

||||

> 了解 Docker 在树莓派上的最新状况,请查阅: [你需要了解的五件事][26]。

|

||||

|

||||

现在你可以跟着下面为 PC,笔记本和云端所写的教程操作,但我们在树莓派上要先运行一些命令。

|

||||

现在你可以跟着下面为 PC、笔记本和云端所写的教程操作,但我们在树莓派上要先运行一些命令。

|

||||

|

||||

* [使用 OpenFaaS 运行你的第一个 Serverless Python 功能][27]

|

||||

|

||||

注意第 3 步:

|

||||

|

||||

* 把你的功能放到先前从 GitHub 下载的 `faas-cli` 文件夹中,而不是 `~/functinos/hello-python` 里。

|

||||

|

||||

* 同时,在 `stack.yml` 文件中把 `localhost` 替换成第一个树莓派的 IP 地址。

|

||||

|

||||

集群可能会花费几分钟把 Serverless 功能下载到相关的树莓派上。你可以用下面的命令查看你的服务,确保副本一项显示“1/1”:

|

||||

集群可能会花费几分钟把 Serverless 功能下载到相关的树莓派上。你可以用下面的命令查看你的服务,确保副本一项显示 “1/1”:

|

||||

|

||||

```

|

||||

$ watch 'docker service ls'

|

||||

pv27thj5lftz hello-python replicated 1/1 alexellis2/faas-hello-python-armhf:latest

|

||||

|

||||

```

|

||||

|

||||

**继续阅读教程:** [使用 OpenFaaS 运行你的第一个 Serverless Python 功能][43]

|

||||

@ -286,7 +271,7 @@ pv27thj5lftz hello-python replicated 1/1

|

||||

|

||||

既然使用 Serverless,你也不想花时间监控你的功能。幸运的是,OpenFaaS 内建了 [Prometheus][45] 指标检测,这意味着你可以追踪每个功能的运行时长和调用频率。

|

||||

|

||||

_指标驱动自动伸缩_

|

||||

#### 指标驱动自动伸缩

|

||||

|

||||

如果你给一个功能生成足够的负载,OpenFaaS 将自动扩展你的功能;当需求消失时,你又会回到单一副本的状态。

|

||||

|

||||

@ -298,31 +283,27 @@ pv27thj5lftz hello-python replicated 1/1

|

||||

|

||||

```

|

||||

http://192.168.0.25:9090/graph?g0.range_input=15m&g0.stacked=1&g0.expr=rate(gateway_function_invocation_total%5B20s%5D)&g0.tab=0&g1.range_input=1h&g1.expr=gateway_service_count&g1.tab=0

|

||||

|

||||

```

|

||||

|

||||

这些请求使用 PromQL(Prometheus 请求语言)编写。第一个请求返回功能调用的频率:

|

||||

|

||||

```

|

||||

rate(gateway_function_invocation_total[20s])

|

||||

|

||||

```

|

||||

|

||||

第二个请求显示每个功能的副本数量,最开始应该是每个功能只有一个副本。

|

||||

|

||||

```

|

||||

gateway_service_count

|

||||

|

||||

```

|

||||

|

||||

如果你想触发自动扩展,你可以在树莓派上尝试下面指令:

|

||||

|

||||

```

|

||||

$ while [ true ]; do curl -4 localhost:8080/function/func_echoit --data "hello world" ; done

|

||||

|

||||

```

|

||||

|

||||

查看 Prometheus 的“alerts”页面,可以知道你是否产生足够的负载来触发自动扩展。如果没有,你可以尝试在多个终端同时运行上面的指令。

|

||||

查看 Prometheus 的 “alerts” 页面,可以知道你是否产生足够的负载来触发自动扩展。如果没有,你可以尝试在多个终端同时运行上面的指令。

|

||||

|

||||

|

||||

|

||||

@ -332,41 +313,33 @@ $ while [ true ]; do curl -4 localhost:8080/function/func_echoit --data "hello w

|

||||

|

||||

我们现在配置好了 Docker、Swarm, 并且让 OpenFaaS 运行代码,把树莓派像大型计算机一样使用。

|

||||

|

||||

> 希望大家支持这个项目,**Star** [FaaS 的 GitHub 仓库][28]。

|

||||

> 希望大家支持这个项目,**星标** [FaaS 的 GitHub 仓库][28]。

|

||||

|

||||

你是如何搭建好了自己的 Docker Swarm 集群并且运行 OpenFaaS 的呢?在 Twitter [@alexellisuk][46] 上分享你的照片或推文吧。

|

||||

|

||||

**观看我在 Dockercon 上关于 OpenFaaS 的视频**

|

||||

|

||||

我在 [Austin 的 Dockercon][47] 上展示了 OpenFaaS。——观看介绍和互动例子的视频:

|

||||

|

||||

** 此处有iframe,请手动处理 **

|

||||

我在 [Austin 的 Dockercon][47] 上展示了 OpenFaaS。——观看介绍和互动例子的视频: https://www.youtube.com/embed/-h2VTE9WnZs

|

||||

|

||||

有问题?在下面的评论中提出,或者给我发邮件,邀请我进入你和志同道合者讨论树莓派、Docker、Serverless 的 Slack channel。

|

||||

|

||||

**想要学习更多关于树莓派上运行 Docker 的内容?**

|

||||

|

||||

我建议从 [5 Things you need to know][48] 开始,它包含了安全性、树莓派和普通 PC 间微妙差别等话题。

|

||||

我建议从 [你需要了解的五件事][48] 开始,它包含了安全性、树莓派和普通 PC 间微妙差别等话题。

|

||||

|

||||

* [Dockercon tips: Docker & Raspberry Pi][18]

|

||||

|

||||

* [Control GPIO with Docker Swarm][19]

|

||||

|

||||

* [Is that a Docker Engine in your pocket??][20]

|

||||

|

||||

_在 Twitter 上分享_

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://blog.alexellis.io/your-serverless-raspberry-pi-cluster/

|

||||

|

||||

作者:[Alex Ellis ][a]

|

||||

作者:[Alex Ellis][a]

|

||||

译者:[haoqixu](https://github.com/haoqixu)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,204 @@

|

||||

每个安卓开发初学者应该了解的 12 个技巧

|

||||

====

|

||||

|

||||

> 一次掌握一个技巧,更好地学习安卓

|

||||

|

||||

|

||||

|

||||

距离安迪·鲁宾和他的团队着手开发一个希望颠覆传统手机操作模式的操作系统已经过去 12 年了,这套系统有可能让手机或者智能机给消费者以及软件开发人员带来全新的体验。之前的智能机仅限于收发短信和查看电子邮件(当然还可以打电话),给用户和开发者带来很大的限制。

|

||||

|

||||

安卓,作为打破这个枷锁的系统,拥有非常优秀的框架设计,给大家提供的不仅仅是一组有限的功能,更多的是自由的探索。有人会说 iPhone 才是手机产业的颠覆产品,不过我们说的不是 iPhone 有多么酷(或者多么贵,是吧?),它还是有限制的,而这是我们从来都不希望有的。

|

||||

|

||||

不过,就像本大叔说的,能力越大责任越大,我们也需要更加认真对待安卓应用的设计方式。我看到很多教程都忽略了向初学者传递这个理念,在动手之前请先充分理解系统架构。他们只是把一堆的概念和代码丢给读者,却没有解释清楚相关的优缺点,它们对系统的影响,以及该用什么不该用什么等等。

|

||||

|

||||

在这篇文章里,我们将介绍一些初学者以及中级开发人员都应该掌握的技巧,以帮助更好地理解安卓框架。后续我们还会在这个系列里写更多这样的关于实用技巧的文章。我们开始吧。

|

||||

|

||||

### 1、 `@+id` 和 `@id` 的区别

|

||||

|

||||

要在 Java 代码里访问一个图形控件(或组件),或者是要让它成为其他控件的依赖,我们需要一个唯一的值来引用它。这个唯一值用 `android:id` 属性来定义,本质上就是把用户提供的 id 附加到 `@+id/` 后面,写入到 _id 资源文件_,供其他控件使用。一个 Toolbar 的 id 可以这样定义,

|

||||

|

||||

```

|

||||

android:id="@+id/toolbar"

|

||||

```

|

||||

|

||||

然后这个 id 值就能被 `findViewById(…)` 识别,这个函数会在资源文件里查找 id,或者直接从 R.id 路径引用,然后返回所查找的 View 的类型。

|

||||

|

||||

而另一种,`@id`,和 `findViewById(…)` 行为一样 - 也会根据提供的 id 查找组件,不过仅限于布局时使用。一般用来布置相关控件。

|

||||

|

||||

```

|

||||

android:layout_below="@id/toolbar"

|

||||

```

|

||||

|

||||

### 2、 使用 `@string` 资源为 XML 提供字符串

|

||||

|

||||

简单来说,就是不要在 XML 里直接用字符串。原因很简单。当我们在 XML 里直接使用了字符串,我们一般会在其它地方再次用到同样的字符串。想像一下当我们需要在不同的地方调整同一个字符串的噩梦,而如果使用字符串资源就只改一个地方就够了。另一个好处是,使用资源文件可以提供多国语言支持,因为可以为不同的语言创建相应的字符串资源文件。

|

||||

|

||||

```

|

||||

android:text="My Awesome Application"

|

||||

```

|

||||

|

||||

当你直接使用字符串时,你会在 Android Studio 里收到警告,提示说应该把写死的字符串改成字符串资源。可以点击这个提示,然后按下 `ALT + ENTER` 打开字符串编辑。你也可以直接打开 `res` 目录下的 `values` 目录里的 `strings.xml` 文件,然后像下面这样声明一个字符串资源。

|

||||

|

||||

```

|

||||

<string name="app_name">My Awesome Application</string>

|

||||

```

|

||||

|

||||

然后用它来替换写死的字符串,

|

||||

|

||||

```

|

||||

android:text="@string/app_name"

|

||||

```

|

||||

|

||||

### 3、 使用 `@android` 和 `?attr` 常量

|

||||

|

||||

尽量使用系统预先定义的常量而不是重新声明。举个例子,在布局中有几个地方要用白色或者 #ffffff 颜色值。不要每次都直接用 #ffffff 数值,也不要自己为白色重新声明资源,我们可以直接用这个,

|

||||

|

||||

```

|

||||

@android:color/white

|

||||

```

|

||||

|

||||

安卓预先定义了很多常用的颜色常量,比如白色,黑色或粉色。最经典的应用场景是透明色:

|

||||

|

||||

```

|

||||

@android:color/transparent

|

||||

```

|

||||

|

||||

另一个引用常量的方式是 `?attr`,用来将预先定义的属性值赋值给不同的属性。举个自定义 Toolbar 的例子。这个 Toolbar 需要定义宽度和高度。宽度通常可以设置为 `MATCH_PARENT`,但高度呢?我们大多数人都没有注意设计指导,只是简单地随便设置一个看上去差不多的值。这样做不对。不应该随便自定义高度,而应该这样做,

|

||||

|

||||

```

|

||||

android:layout_height="?attr/actionBarSize"

|

||||

```

|

||||

|

||||

`?attr` 的另一个应用是点击视图时画水波纹效果。`SelectableItemBackground` 是一个预定义的 drawable,任何视图需要增加波纹效果时可以将它设为背景:

|

||||

|

||||

```

|

||||

android:background="?attr/selectableItemBackground"

|

||||

```

|

||||

|

||||

也可以用这个:

|

||||

|

||||

```

|

||||

android:background="?attr/selectableItemBackgroundBorderless"

|

||||

```

|

||||

|

||||

来显示无边框波纹。

|

||||

|

||||

### 4、 SP 和 DP 的区别

|

||||

|

||||

虽然这两个没有本质上的区别,但知道它们是什么以及在什么地方适合用哪个很重要。

|

||||

|

||||

SP 的意思是缩放无关像素,一般建议用于 TextView,首先文字不会因为显示密度不同而显示效果不一样,另外 TextView 的内容还需要根据用户设定做拉伸,或者只调整字体大小。

|

||||

|

||||

其他需要定义尺寸和位置的地方,可以使用 DP,也就是密度无关像素。之前说过,DP 和 SP 的性质是一样的,只是 DP 会根据显示密度自动拉伸,因为安卓系统会动态计算实际显示的像素,这样就可以让使用 DP 的组件在不同显示密度的设备上都可以拥有相同的显示效果。

|

||||

|

||||

### 5、 Drawable 和 Mipmap 的应用

|

||||

|

||||

这两个最让人困惑的是 - drawable 和 mipmap 有多少差异?

|

||||

|

||||

虽然这两个好像有同样的用途,但它们设计目的不一样。mipmap 是用来储存图标的,而 drawable 用于任何其他格式。我们可以看一下系统内部是如何使用它们的,就知道为什么不能混用了。

|

||||

|

||||

你可以看到你的应用里有几个 mipmap 和 drawable 目录,每一个分别代表不同的显示分辨率。当系统从 drawable 目录读取资源时,只会根据当前设备的显示密度选择确定的目录。然而,在读取 mipmap 时,系统会根据需要选择合适的目录,而不仅限于当前显示密度,主要是因为有些启动器会故意显示较大的图标,所以系统会使用较大分辨率的资源。

|

||||

|

||||

总之,用 mipmap 来存放图标或标记图片,可以在不同显示密度的设备上看到分辨率变化,而其它根据需要显示的图片资源都用 drawable。

|

||||

|

||||

比如说,Nexus 5 的显示分辨率是 xxhdpi。当我们把图标放到 `mipmap` 目录里时,所有 `mipmap` 目录都将读入内存。而如果放到 drawable 里,只有 `drawable-xxhdpi` 目录会被读取,其他目录都会被忽略。

|

||||

|

||||

### 6、 使用矢量图形

|

||||

|

||||

为了支持不同显示密度的屏幕,将同一个资源的多个版本(大小)添加到项目里是一个很常见的技巧。这种方式确实有用,不过它也会带来一定的性能开支,比如更大的 apk 文件以及额外的开发工作。为了消除这种影响,谷歌的安卓团队发布了新增的矢量图形。

|

||||

|

||||

矢量图形是用 XML 描述的 SVG(可拉伸矢量图形),是用点、直线和曲线组合以及填充颜色绘制出的图形。正因为矢量图形是由点和线动态画出来的,在不同显示密度下拉伸也不会损失分辨率。而矢量图形带来的另一个好处是更容易做动画。往一个 AnimatedVectorDrawable 文件里添加多个矢量图形就可以做出动画,而不用添加多张图片然后再分别处理。

|

||||

|

||||

```

|

||||

<vector xmlns:android="http://schemas.android.com/apk/res/android"

|

||||

android:width="24dp"

|

||||

android:height="24dp"

|

||||

android:viewportWidth="24.0"

|

||||

android:viewportHeight="24.0">

|

||||

|

||||

<path android:fillColor="#69cdff" android:pathData="M3,18h18v-2L3,16v2zM3,13h18v-2L3,11v2zM3,6v2h18L21,6L3,6z"/>

|

||||

|

||||

</vector>

|

||||

```

|

||||

|

||||

上面的向量定义可以画出下面的图形,

|

||||

|

||||

|

||||

|

||||

要在你的安卓项目里添加矢量图形,可以右键点击你项目里的应用模块,然后选择 New >> Vector Assets。然后会打开 Assets Studio,你可以有两种方式添加矢量图形。第一种是从 Material 图标里选择,另一种是选择本地的 SVG 或 PSD 文件。

|

||||

|

||||

谷歌建议与应用相关都使用 Material 图标,来保持安卓的连贯性和统一体验。[这里][1]有全部图标,记得看一下。

|

||||

|

||||

### 7、 设定边界的开始和结束

|

||||

|

||||

这是人们最容易忽略的地方之一。边界!增加边界当然很简单,但是如果要考虑支持很旧的平台呢?

|

||||

|

||||

边界的“开始”和“结束”分别是“左”和“右”的超集,所以如果应用的 `minSdkVersion` 是 17 或更低,边界和填充的“开始”和“结束”定义是旧的“左”/“右”所需要的。在那些没有定义“开始”和“结束”的系统上,这两个定义可以被安全地忽略。可以像下面这样声明:

|

||||

|

||||

```

|

||||

android:layout_marginEnd="20dp"

|

||||

android:paddingStart="20dp"

|

||||

```

|

||||

|

||||

### 8、 使用 Getter/Setter 生成工具

|

||||

|

||||

在创建一个容器类(只是用来简单的存放一些变量数据)时很烦的一件事情是写多个 getter 和 setter,复制/粘贴该方法的主体再为每个变量重命名。

|

||||

|

||||

幸运的是,Android Studio 有一个解决方法。可以这样做,在类里声明你需要的所有变量,然后打开 Toolbar >> Code。快捷方式是 `ALT + Insert`。点击 Code 会显示 Generate,点击它会出来很多选项,里面有 Getter 和 Setter 选项。在保持焦点在你的类页面然后点击,就会为当前类添加所有的 getter 和 setter(有需要的话可以再去之前的窗口操作)。很爽吧。

|

||||

|

||||

### 9、 使用 Override/Implement 生成工具

|

||||

|

||||

这是另一个很好用的生成工具。自定义一个类然后再扩展很容易,但是如果要扩展你不熟悉的类呢。比如说 PagerAdapter,你希望用 ViewPager 来展示一些页面,那就需要定制一个 PagerAdapter 并实现它的重载方法。但是具体有哪些方法呢?Android Studio 非常贴心地为自定义类强行添加了一个构造函数,或者可以用快捷键(`ALT + Enter`),但是父类 PagerAdapter 里的其他(虚拟)方法需要自己手动添加,我估计大多数人都觉得烦。

|

||||

|

||||

要列出所有可以重载的方法,可以点击 Code >> Generate and Override methods 或者 Implement methods,根据你的需要。你还可以为你的类选择多个方法,只要按住 Ctrl 再选择方法,然后点击 OK。

|

||||

|

||||

### 10、 正确理解 Context

|

||||

|

||||

Context 有点恐怖,我估计许多初学者从没有认真理解过 Context 类的结构 - 它是什么,为什么到处都要用到它。

|

||||

|

||||

简单地说,它将你能从屏幕上看到的所有内容都整合在一起。所有的视图(或者它们的扩展)都通过 Context 绑定到当前的环境。Context 用来管理应用层次的资源,比如说显示密度,或者当前的关联活动。活动、服务和应用都实现了 Context 类的接口来为其他关联组件提供内部资源。举个添加到 MainActivity 的 TextView 的例子。你应该注意到了,在创建一个对象的时候,TextView 的构造函数需要 Context 参数。这是为了获取 TextView 里定义到的资源。比如说,TextView 需要在内部用到 Roboto 字体。这样的话,TextView 需要 Context。而且在我们将 Context(或者 `this`)传递给 TextView 的时候,也就是告诉它绑定当前活动的生命周期。

|

||||

|

||||

另一个 Context 的关键应用是初始化应用层次的操作,比如初始化一个库。库的生命周期和应用是不相关的,所以它需要用 `getApplicationContext()` 来初始化,而不是用 `getContext` 或 `this` 或 `getActivity()`。掌握正确使用不同 Context 类型非常重要,可以避免内存泄漏。另外,要用到 Context 来启动一个活动或服务。还记得 `startActivity(…)` 吗?当你需要在一个非活动类里切换活动时,你需要一个 Context 对象来调用 `startActivity` 方法,因为它是 Context 类的方法,而不是 Activity 类。

|

||||

|

||||

```

|

||||

getContext().startActivity(getContext(), SecondActivity.class);

|

||||

```

|

||||

|

||||

如果你想了解更多 Context 的行为,可以看看[这里][2]或[这里][3]。第一个是一篇关于 Context 的很好的文章,介绍了在哪些地方要用到它。而另一个是安卓关于 Context 的文档,全面介绍了所有的功能 - 方法,静态标识以及更多。

|

||||

|

||||

### 奖励 #1: 格式化代码

|

||||

|

||||

有人会不喜欢整齐,统一格式的代码吗?好吧,几乎我们每一个人,在写一个超过 1000 行的类的时候,都希望我们的代码能有合适的结构。而且,并不仅仅大的类才需要格式化,每一个小模块类也需要让代码保持可读性。

|

||||

|

||||

使用 Android Studio,或者任何 JetBrains IDE,你都不需要自己手动整理你的代码,像增加缩进或者 = 之前的空格。就按自己希望的方式写代码,在想要格式化的时候,如果是 Windows 系统可以按下 `ALT + CTRL + L`,Linux 系统按下 `ALT + CTRL + SHIFT + L`。*代码就自动格式化好了*

|

||||

|

||||

### 奖励 #2: 使用库

|

||||

|

||||

面向对象编程的一个重要原则是增加代码的可重用性,或者说减少重新发明轮子的习惯。很多初学者错误地遵循了这个原则。这条路有两个方向,

|

||||

|

||||

- 不用任何库,自己写所有的代码。

|

||||

- 用库来处理所有事情。

|

||||

|

||||

不管哪个方向走到底都是不对的。如果你彻底选择第一个方向,你将消耗大量的资源,仅仅是为了满足自己拥有一切的骄傲。很可能你的代码没有做过替代库那么多的测试,从而增加模块出问题的可能。如果资源有限,不要重复发明轮子。直接用经过测试的库,在有了明确目标以及充分的资源后,可以用自己的可靠代码来替换这个库。

|

||||

|

||||

而彻底走向另一个方向,问题更严重 - 别人代码的可靠性。不要习惯于所有事情都依赖于别人的代码。在不用太多资源或者自己能掌控的情况下尽量自己写代码。你不需要用库来自定义一个 TypeFaces(字体),你可以自己写一个。

|

||||

|

||||

所以要记住,在这两个极端中间平衡一下 - 不要重新创造所有事情,也不要过分依赖外部代码。保持中立,根据自己的能力写代码。

|

||||

|

||||

这篇文章最早发布在 [What’s That Lambda][4] 上。请访问网站阅读更多关于 Android、Node.js、Angular.js 等等类似文章。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://android.jlelse.eu/12-practices-every-android-beginner-should-know-cd43c3710027

|

||||

|

||||

作者:[Nilesh Singh][a]

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://android.jlelse.eu/@nileshsingh?source=post_header_lockup

|

||||

[1]:https://material.io/icons/

|

||||

[2]:https://blog.mindorks.com/understanding-context-in-android-application-330913e32514

|

||||

[3]:https://developer.android.com/reference/android/content/Context.html

|

||||

[4]:https://www.whatsthatlambda.com/android/android-dev-101-things-every-beginner-must-know

|

||||

@ -1,26 +1,27 @@

|

||||

# IoT 网络安全:后备计划是什么?

|

||||

IoT 网络安全:后备计划是什么?

|

||||

=======

|

||||

|

||||

八月份,四名美国参议员提出了一项旨在改善物联网 (IoT) 安全性的法案。2017 年的“ 物联网网络安全改进法” 是一项小幅的立法。它没有规范物联网市场。它没有任何特别关注的行业,或强制任何公司做任何事情。甚至不修改嵌入式软件的法律责任。无论安全多么糟糕,公司可以继续销售物联网设备。

|

||||

八月份,四名美国参议员提出了一项旨在改善物联网(IoT)安全性的法案。2017 年的 “物联网网络安全改进法” 是一项小幅的立法。它没有规范物联网市场。它没有任何特别关注的行业,或强制任何公司做任何事情。甚至没有修改嵌入式软件的法律责任。无论安全多么糟糕,公司可以继续销售物联网设备。

|

||||

|

||||

法案的做法是利用政府的购买力推动市场:政府购买的任何物联网产品都必须符合最低安全标准。它要求供应商确保设备不仅可以打补丁,而且可以通过认证和及时的方式进行修补,没有不可更改的默认密码,并且没有已知的漏洞。这是一个你可以设置的低安全值,并且将大大提高安全性可以说明关于物联网安全性的当前状态。(全面披露:我帮助起草了一些法案的安全性要求。)

|

||||

法案的做法是利用政府的购买力推动市场:政府购买的任何物联网产品都必须符合最低安全标准。它要求供应商确保设备不仅可以打补丁,而且是以认证和及时的方式进行修补,没有不可更改的默认密码,并且没有已知的漏洞。这是一个你可以达到的低安全值,并且将大大提高安全性,可以说明关于物联网安全性的当前状态。(全面披露:我帮助起草了一些法案的安全性要求。)

|

||||

|

||||

该法案还将修改“计算机欺诈和滥用”和“数字千年版权”法案,以便安全研究人员研究政府购买的物联网设备的安全性。这比我们的行业需求要窄得多。但这是一个很好的第一步,这可能是对这个立法最好的事。

|

||||

|

||||

不过,这一步甚至不可能采取。我在八月份写这个专栏,毫无疑问,这个法案你在十月份或以后读的时候会没有了。如果听证会举行,它们无关紧要。该法案不会被任何委员会投票,不会在任何立法日程上。这个法案成为法律的可能性是零。这不仅仅是因为目前的政治 - 我在奥巴马政府下同样悲观。

|

||||

不过,这一步甚至不可能施行。我在八月份写这个专栏,毫无疑问,这个法案你在十月份或以后读的时候会没有了。如果听证会举行,它们无关紧要。该法案不会被任何委员会投票,不会在任何立法日程上。这个法案成为法律的可能性是零。这不仅仅是因为目前的政治 - 我在奥巴马政府下同样悲观。

|

||||

|

||||

但情况很严重。互联网是危险的 - 物联网不仅给了眼睛和耳朵,而且还给手脚。一旦有影响到位和字节的安全漏洞、利用和攻击现在会影响血肉和血肉。

|

||||

但情况很严重。互联网是危险的 - 物联网不仅给了眼睛和耳朵,而且还给手脚。一旦有影响到位和字节的安全漏洞、利用和攻击现在将会影响到其血肉。

|

||||

|

||||

正如我们在过去一个世纪一再学到的那样,市场是改善产品和服务安全的可怕机制。汽车、食品、餐厅、飞机、火灾和金融仪器安全都是如此。原因很复杂,但基本上卖家不会在安全方面进行竞争,因为买方无法根据安全考虑有效区分产品。市场使用的竞相降低门槛的机制价格降到最低的同时也将质量降至最低。没有政府干预,物联网仍然会很不安全。

|

||||

|

||||

美国政府对干预没有兴趣,所以我们不会看到严肃的安全和保障法规、新的联邦机构或更好的责任法。我们可能在欧盟有更好的机会。根据“通用数据保护条例”在数据隐私的规定,欧盟可能会在 5 年内通过类似的安全法。没有其他国家有足够的市场份额来做改变。

|

||||

|

||||

有时我们可以选择不使用物联网,但是这个选择变得越来越少见了。去年,我试着不连接网络购买新车但是失败了。再过几年, 就几乎不可能不连接到物联网。我们最大的安全风险将不会来自我们与之有市场关系的设备,而是来自其他人的汽车、照相机、路由器、无人机等等。

|

||||

有时我们可以选择不使用物联网,但是这个选择变得越来越少见了。去年,我试着不连接网络来购买新车但是失败了。再过几年, 就几乎不可能不连接到物联网。我们最大的安全风险将不会来自我们与之有市场关系的设备,而是来自其他人的汽车、照相机、路由器、无人机等等。

|

||||

|

||||

我们可以尝试为理想买单,并要求更多的安全性,但企业不会在物联网安全方面进行竞争 - 而且我们的安全专家不是一个足够大的市场力量来产生影响。

|

||||

我们可以尝试为理想买单,并要求更多的安全性,但企业不会在物联网安全方面进行竞争 - 而且我们的安全专家不是一个可以产生影响的足够大的市场力量。

|

||||

|

||||

我们需要一个后备计划,虽然我不知道是什么。如果你有任何想法请评论。

|

||||

|

||||

这篇文章以前出现在_ 9/10 月的 IEEE安全与隐私_上。

|

||||

这篇文章以前出现在 9/10 月的 《IEEE 安全与隐私》上。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -35,7 +36,7 @@ via: https://www.schneier.com/blog/archives/2017/10/iot_cybersecuri.html

|

||||

|

||||

作者:[Bruce Schneier][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

157

published/201710/20171019 How to run DOS programs in Linux.md

Normal file

157

published/201710/20171019 How to run DOS programs in Linux.md

Normal file

@ -0,0 +1,157 @@

|

||||

怎么在 Linux 中运行 DOS 程序

|

||||

============================================================

|

||||

|

||||

> QEMU 和 FreeDOS 使得很容易在 Linux 中运行老的 DOS 程序

|

||||

|

||||

|

||||

|

||||

Image by : opensource.com

|

||||

|

||||

传统的 DOS 操作系统支持的许多非常优秀的应用程序: 文字处理,电子表格,游戏和其它的程序。但是一个应用程序太老了,并不意味着它没用了。

|

||||

|

||||

如今有很多理由去运行一个旧的 DOS 应用程序。或许是从一个遗留的业务应用程序中提取一个报告,或者是想玩一个经典的 DOS 游戏,或者只是因为你对“传统计算机”很好奇。你不需要去双引导你的系统去运行 DOS 程序。取而代之的是,你可以在 Linux 中在一个 PC 仿真程序和 [FreeDOS][18] 的帮助下去正确地运行它们。

|

||||

|

||||

FreeDOS 是一个完整的、免费的、DOS 兼容的操作系统,你可以用它来玩经典的游戏、运行旧式业务软件,或者开发嵌入式系统。任何工作在 MS-DOS 中的程序也可以运行在 FreeDOS 中。

|

||||

|

||||

在那些“过去的时光”里,你安装的 DOS 是作为一台计算机上的独占操作系统。 而现今,它可以很容易地安装到 Linux 上运行的一台虚拟机中。 [QEMU][19] (<ruby>快速仿真程序<rt>Quick EMUlator</rt></ruby>的缩写) 是一个开源的虚拟机软件,它可以在 Linux 中以一个“<ruby>访客<rt>guest</rt></ruby>”操作系统来运行 DOS。许多流行的 Linux 系统都默认包含了 QEMU 。

|

||||

|

||||

通过以下四步,很容易地在 Linux 下通过使用 QEMU 和 FreeDOS 去运行一个老的 DOS 程序。

|

||||

|

||||

### 第 1 步:设置一个虚拟磁盘

|

||||

|

||||

你需要一个地方来在 QEMU 中安装 FreeDOS,为此你需要一个虚拟的 C: 驱动器。在 DOS 中,字母`A:` 和 `B:` 是分配给第一和第二个软盘驱动器的,而 `C:` 是第一个硬盘驱动器。其它介质,包括其它硬盘驱动器和 CD-ROM 驱动器,依次分配 `D:`、`E:` 等等。

|

||||

|

||||

在 QEMU 中,虚拟磁盘是一个镜像文件。要初始化一个用做虚拟 `C: ` 驱动器的文件,使用 `qemu-img` 命令。要创建一个大约 200 MB 的镜像文件,可以这样输入:

|

||||

|

||||

```

|

||||

qemu-img create dos.img 200M

|

||||

```

|

||||

|

||||

与现代计算机相比, 200MB 看起来非常小,但是早在 1990 年代, 200MB 是非常大的。它足够安装和运行 DOS。

|

||||

|

||||

### 第 2 步: QEMU 选项

|

||||

|

||||

与 PC 仿真系统 VMware 或 VirtualBox 不同,你需要通过 QEMU 命令去增加每个虚拟机的组件来 “构建” 你的虚拟系统 。虽然,这可能看起来很费力,但它实际并不困难。这些是我们在 QEMU 中用于去引导 FreeDOS 的参数:

|

||||

|

||||

| | |

|

||||

|:-- |:--|

|

||||

| `qemu-system-i386` | QEMU 可以仿真几种不同的系统,但是要引导到 DOS,我们需要有一个 Intel 兼容的 CPU。 为此,使用 i386 命令启动 QEMU。 |

|

||||

| `-m 16` | 我喜欢定义一个使用 16MB 内存的虚拟机。它看起来很小,但是 DOS 工作不需要很多的内存。在 DOS 时代,计算机使用 16MB 或者 8MB 内存是非常普遍的。 |

|

||||

| `-k en-us` | 从技术上说,这个 `-k` 选项是不需要的,因为 QEMU 会设置虚拟键盘去匹配你的真实键盘(在我的例子中, 它是标准的 US 布局的英语键盘)。但是我还是喜欢去指定它。 |

|

||||

| `-rtc base=localtime` | 每个传统的 PC 设备有一个实时时钟 (RTC) 以便于系统可以保持跟踪时间。我发现它是设置虚拟 RTC 匹配你的本地时间的最简单的方法。 |

|

||||

| `-soundhw sb16,adlib,pcspk` | 如果你需要声音,尤其是为了玩游戏时,我更喜欢定义 QEMU 支持 SoundBlaster 16 声音硬件和 AdLib 音乐。SoundBlaster 16 和 AdLib 是在 DOS 时代非常常见的声音硬件。一些老的程序也许使用 PC 喇叭发声; QEMU 也可以仿真这个。 |

|

||||

| `-device cirrus-vga` | 要使用图像,我喜欢去仿真一个简单的 VGA 视频卡。Cirrus VGA 卡是那时比较常见的图形卡, QEMU 可以仿真它。 |

|

||||

| `-display gtk` | 对于虚拟显示,我设置 QEMU 去使用 GTK toolkit,它可以将虚拟系统放到它自己的窗口内,并且提供一个简单的菜单去控制虚拟机。 |

|

||||

| `-boot order=` | 你可以告诉 QEMU 从多个引导源来引导虚拟机。从软盘驱动器引导(在 DOS 机器中一般情况下是 `A:` )指定 `order=a`。 从第一个硬盘驱动器引导(一般称为 `C:`) 使用 `order=c`。 或者去从一个 CD-ROM 驱动器(在 DOS 中经常分配为 `D:` ) 使用 `order=d`。 你可以使用组合字母去指定一个特定的引导顺序, 比如 `order=dc` 去第一个使用 CD-ROM 驱动器,如果 CD-ROM 驱动器中没有引导介质,然后使用硬盘驱动器。 |

|

||||

|

||||

### 第 3 步: 引导和安装 FreeDOS

|

||||

|

||||

现在 QEMU 已经设置好运行虚拟机,我们需要一个 DOS 系统来在那台虚拟机中安装和引导。 FreeDOS 做这个很容易。它的最新版本是 FreeDOS 1.2, 发行于 2016 年 12 月。

|

||||

|

||||

从 [FreeDOS 网站][20]上下载 FreeDOS 1.2 的发行版。 FreeDOS 1.2 CD-ROM “standard” 安装器 (`FD12CD.iso`) 可以很好地在 QEMU 上运行,因此,我推荐使用这个版本。

|

||||

|

||||

安装 FreeDOS 很简单。首先,告诉 QEMU 使用 CD-ROM 镜像并从其引导。 记住,第一个硬盘驱动器是 `C:` 驱动器,因此, CD-ROM 将以 `D:` 驱动器出现。

|

||||

|

||||

```

|

||||

qemu-system-i386 -m 16 -k en-us -rtc base=localtime -soundhw sb16,adlib -device cirrus-vga -display gtk -hda dos.img -cdrom FD12CD.iso -boot order=d

|

||||

```

|

||||

|

||||

正如下面的提示,你将在几分钟内安装完成 FreeDOS 。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

在你安装完成之后,关闭窗口退出 QEMU。

|

||||

|

||||

### 第 4 步:安装并运行你的 DOS 应用程序

|

||||

|

||||

一旦安装完 FreeDOS,你可以在 QEMU 中运行各种 DOS 应用程序。你可以在线上通过各种档案文件或其它[网站][21]找到老的 DOS 程序。

|

||||

|

||||

QEMU 提供了一个在 Linux 上访问本地文件的简单方法。比如说,想去用 QEMU 共享 `dosfiles/` 文件夹。 通过使用 `-drive` 选项,简单地告诉 QEMU 去使用这个文件夹作为虚拟的 FAT 驱动器。 QEMU 将像一个硬盘驱动器一样访问这个文件夹。

|

||||

|

||||

```

|

||||

-drive file=fat:rw:dosfiles/

|

||||

```

|

||||

|

||||

现在,你可以使用合适的选项去启动 QEMU,加上一个外部的虚拟 FAT 驱动器:

|

||||

|

||||

```

|

||||

qemu-system-i386 -m 16 -k en-us -rtc base=localtime -soundhw sb16,adlib -device cirrus-vga -display gtk -hda dos.img -drive file=fat:rw:dosfiles/ -boot order=c

|

||||

```

|

||||

|

||||

一旦你引导进入 FreeDOS,你保存在 `D:` 驱动器中的任何文件将被保存到 Linux 上的 `dosfiles/` 文件夹中。可以从 Linux 上很容易地直接去读取该文件;然而,必须注意的是,启动 QEMU 后,不能从 Linux 中去改变 `dosfiles/` 这个文件夹。 当你启动 QEMU 时,QEMU 一次性构建一个虚拟的 FAT 表,如果你在启动 QEMU 之后,在 `dosfiles/` 文件夹中增加或删除文件,仿真程序可能会很困惑。

|

||||

|

||||

我使用 QEMU 像这样运行一些我收藏的 DOS 程序, 比如 As-Easy-As 电子表格程序。这是一个在上世纪八九十年代非常流行的电子表格程序,它和现在的 Microsoft Excel 和 LibreOffice Calc 或和以前更昂贵的 Lotus 1-2-3 电子表格程序完成的工作是一样的。 As-Easy-As 和 Lotus 1-2-3 都保存数据为 WKS 文件,最新版本的 Microsoft Excel 不能读取它,但是,根据兼容性, LibreOffice Calc 可以支持它。

|

||||

|

||||

|

||||

|

||||

*As-Easy-As 电子表格程序*

|

||||

|

||||

我也喜欢在 QEMU中引导 FreeDOS 去玩一些收藏的 DOS 游戏,比如原版的 Doom。这些老的 DOS 游戏玩起来仍然非常有趣, 并且它们现在在 QEMU 上运行的非常好。

|

||||

|

||||

|

||||

|

||||

*Doom*

|

||||

|

||||

|

||||

|

||||

*Heretic*

|

||||

|

||||

|

||||

|

||||

*Jill of the Jungle*

|

||||

|

||||

|

||||

|

||||

*Commander Keen*

|

||||

|

||||

QEMU 和 FreeDOS 使得在 Linux 上运行老的 DOS 程序变得很容易。你一旦设置好了 QEMU 作为虚拟机仿真程序并安装了 FreeDOS,你将可以在 Linux 上运行你收藏的经典的 DOS 程序。

|

||||

|

||||

_所有图片要致谢 [FreeDOS.org][16]。_

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Jim Hall 是一位开源软件的开发者和支持者,可能最广为人知的是他是 FreeDOS 的创始人和项目协调者。 Jim 也非常活跃于开源软件适用性领域,作为 GNOME Outreachy 适用性测试的导师,同时也作为一名兼职教授,教授一些开源软件适用性的课程,从 2016 到 2017, Jim 在 GNOME 基金会的董事会担任董事,在工作中, Jim 是本地政府部门的 CIO。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/10/run-dos-applications-linux

|

||||

|

||||

作者:[Jim Hall][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/jim-hall

|

||||

[1]:https://opensource.com/resources/what-is-linux?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[2]:https://opensource.com/resources/what-are-linux-containers?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[3]:https://developers.redhat.com/promotions/linux-cheatsheet/?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[4]:https://developers.redhat.com/cheat-sheet/advanced-linux-commands-cheatsheet?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[5]:https://opensource.com/tags/linux?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[6]:https://opensource.com/file/374821

|

||||

[7]:https://opensource.com/file/374771

|

||||

[8]:https://opensource.com/file/374776

|

||||

[9]:https://opensource.com/file/374781

|

||||

[10]:https://opensource.com/file/374761

|

||||

[11]:https://opensource.com/file/374786

|

||||

[12]:https://opensource.com/file/374791

|

||||

[13]:https://opensource.com/file/374796

|

||||

[14]:https://opensource.com/file/374801

|

||||

[15]:https://opensource.com/article/17/10/run-dos-applications-linux?rate=STdDX4LLLyyllTxAOD-CdfSwrZQ9D3FNqJTpMGE7v_8

|

||||

[16]:http://www.freedos.org/

|

||||

[17]:https://opensource.com/user/126046/feed

|

||||

[18]:http://www.freedos.org/

|

||||

[19]:https://www.qemu.org/

|

||||

[20]:http://www.freedos.org/

|

||||

[21]:http://www.freedos.org/links/

|

||||

[22]:https://opensource.com/users/jim-hall

|

||||

[23]:https://opensource.com/users/jim-hall

|

||||

[24]:https://opensource.com/article/17/10/run-dos-applications-linux#comments

|

||||

83

published/20171013 Best of PostgreSQL 10 for the DBA.md

Normal file

83

published/20171013 Best of PostgreSQL 10 for the DBA.md

Normal file

@ -0,0 +1,83 @@

|

||||

对 DBA 最重要的 PostgreSQL 10 新亮点

|

||||

============================================================

|

||||

|

||||

前段时间新的重大版本的 PostgreSQL 10 发布了! 强烈建议阅读[公告][3]、[发布说明][4]和“[新功能][5]”概述可以在[这里][3]、[这里][4]和[这里][5]。像往常一样,已经有相当多的博客覆盖了所有新的东西,但我猜每个人都有自己认为重要的角度,所以与 9.6 版一样我再次在这里列出我印象中最有趣/相关的功能。

|

||||

|

||||

与往常一样,升级或初始化一个新集群的用户将获得更好的性能(例如,更好的并行索引扫描、合并 join 和不相关的子查询,更快的聚合、远程服务器上更加智能的 join 和聚合),这些都开箱即用,但本文中我想讲一些不能开箱即用,实际上你需要采取一些步骤才能从中获益的内容。下面重点展示的功能是从 DBA 的角度来汇编的,很快也有一篇文章从开发者的角度讲述更改。

|

||||

|

||||

### 升级注意事项

|

||||

|

||||

首先有些从现有设置升级的提示 - 有一些小的事情会导致从 9.6 或更旧的版本迁移时引起问题,所以在真正的升级之前,一定要在单独的副本上测试升级,并遍历发行说明中所有可能的问题。最值得注意的缺陷是:

|

||||

|

||||

* 所有包含 “xlog” 的函数都被重命名为使用 “wal” 而不是 “xlog”。

|

||||

|

||||

后一个命名可能与正常的服务器日志混淆,因此这是一个“以防万一”的更改。如果使用任何第三方备份/复制/HA 工具,请检查它们是否为最新版本。

|

||||

* 存放服务器日志(错误消息/警告等)的 pg_log 文件夹已重命名为 “log”。

|

||||

|

||||

确保验证你的日志解析或 grep 脚本(如果有)可以工作。

|

||||

* 默认情况下,查询将最多使用 2 个后台进程。

|

||||

|

||||

如果在 CPU 数量较少的机器上在 `postgresql.conf` 设置中使用默认值 `10`,则可能会看到资源使用率峰值,因为默认情况下并行处理已启用 - 这是一件好事,因为它应该意味着更快的查询。如果需要旧的行为,请将 `max_parallel_workers_per_gather` 设置为 `0`。

|

||||

* 默认情况下,本地主机的复制连接已启用。

|

||||

|

||||

为了简化测试等工作,本地主机和本地 Unix 套接字复制连接现在在 `pg_hba.conf` 中以“<ruby>信任<rt>trust</rt></ruby>”模式启用(无密码)!因此,如果其他非 DBA 用户也可以访问真实的生产计算机,请确保更改配置。

|

||||

|

||||

### 从 DBA 的角度来看我的最爱

|

||||

|

||||

* 逻辑复制

|

||||

|

||||

这个期待已久的功能在你只想要复制一张单独的表、部分表或者所有表时只需要简单的设置而性能损失最小,这也意味着之后主要版本可以零停机升级!历史上(需要 Postgres 9.4+),这可以通过使用第三方扩展或缓慢的基于触发器的解决方案来实现。对我而言这是 10 最好的功能。

|

||||

* 声明分区

|

||||

|

||||

以前管理分区的方法通过继承并创建触发器来把插入操作重新路由到正确的表中,这一点很烦人,更不用说性能的影响了。目前支持的是 “range” 和 “list” 分区方案。如果有人在某些数据库引擎中缺少 “哈希” 分区,则可以使用带表达式的 “list” 分区来实现相同的功能。

|

||||

* 可用的哈希索引

|

||||

|

||||

哈希索引现在是 WAL 记录的,因此是崩溃安全的,并获得了一些性能改进,对于简单的搜索,它们比在更大的数据上的标准 B 树索引快。也支持更大的索引大小。

|

||||

|

||||

* 跨列优化器统计

|

||||

|

||||

这样的统计数据需要在一组表的列上手动创建,以指出这些值实际上是以某种方式相互依赖的。这将能够应对计划器认为返回的数据很少(概率的乘积通常会产生非常小的数字)从而导致在大量数据下性能不好的的慢查询问题(例如选择“嵌套循环” join)。

|

||||

* 副本上的并行快照

|

||||

|

||||

现在可以在 pg_dump 中使用多个进程(`-jobs` 标志)来极大地加快备用服务器上的备份。

|

||||

* 更好地调整并行处理 worker 的行为

|

||||

|

||||

参考 `max_parallel_workers` 和 `min_parallel_table_scan_size`/`min_parallel_index_scan_size` 参数。我建议增加一点后两者的默认值(8MB、512KB)。

|

||||

* 新的内置监控角色,便于工具使用

|

||||

|

||||

新的角色 `pg_monitor`、`pg_read_all_settings`、`pg_read_all_stats` 和 `pg_stat_scan_tables` 能更容易进行各种监控任务 - 以前必须使用超级用户帐户或一些 SECURITY DEFINER 包装函数。

|

||||

* 用于更安全的副本生成的临时 (每个会话) 复制槽

|

||||

* 用于检查 B 树索引的有效性的一个新的 Contrib 扩展

|

||||

|

||||

这两个智能检查发现结构不一致和页面级校验未覆盖的内容。希望不久的将来能更加深入。

|

||||

* Psql 查询工具现在支持基本分支(`if`/`elif`/`else`)

|

||||

|

||||

例如下面的将启用具有特定版本分支(对 pg_stat* 视图等有不同列名)的单个维护/监视脚本,而不是许多版本特定的脚本。

|

||||

|

||||

```

|

||||

SELECT :VERSION_NAME = '10.0' AS is_v10 \gset

|

||||

\if :is_v10

|

||||

SELECT 'yippee' AS msg;

|

||||

\else

|

||||

SELECT 'time to upgrade!' AS msg;

|

||||

\endif

|

||||

```

|

||||

|

||||

这次就这样了!当然有很多其他的东西没有列出,所以对于专职 DBA,我一定会建议你更全面地看发布记录。非常感谢那 300 多为这个版本做出贡献的人!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.cybertec.at/best-of-postgresql-10-for-the-dba/

|

||||

|

||||

作者:[Kaarel Moppel][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.cybertec.at/author/kaarel-moppel/

|

||||

[1]:http://www.cybertec.at/author/kaarel-moppel/

|

||||

[2]:http://www.cybertec.at/best-of-postgresql-10-for-the-dba/

|

||||

[3]:https://www.postgresql.org/about/news/1786/

|

||||

[4]:https://www.postgresql.org/docs/current/static/release-10.html

|

||||

[5]:https://wiki.postgresql.org/wiki/New_in_postgres_10

|

||||

242

published/20171015 Monitoring Slow SQL Queries via Slack.md

Normal file

242

published/20171015 Monitoring Slow SQL Queries via Slack.md

Normal file

@ -0,0 +1,242 @@

|

||||

通过 Slack 监视慢 SQL 查询

|

||||

==============

|

||||

|

||||

> 一个获得关于慢查询、意外错误和其它重要日志通知的简单 Go 秘诀。

|

||||

|

||||

|

||||

|

||||

我的 Slack bot 提示我一个运行了很长时间 SQL 查询。我应该尽快解决它。

|

||||

|

||||

**我们不能管理我们无法去测量的东西。**每个后台应用程序都需要我们去监视它在数据库上的性能。如果一个特定的查询随着数据量增长变慢,你必须在它变得太慢之前去优化它。

|

||||

|

||||

由于 Slack 已经成为我们工作的中心,它也在改变我们监视系统的方式。 虽然我们已经有非常不错的监视工具,如果在系统中任何东西有正在恶化的趋势,让 Slack 机器人告诉我们,也是非常棒的主意。比如,一个太长时间才完成的 SQL 查询,或者,在一个特定的 Go 包中发生一个致命的错误。

|

||||

|

||||

在这篇博客文章中,我们将告诉你,通过使用已经支持这些特性的[一个简单的日志系统][8] 和 [一个已存在的数据库库(database library)][9] 怎么去设置来达到这个目的。

|

||||

|

||||

### 使用记录器

|

||||

|

||||

[logger][10] 是一个为 Go 库和应用程序使用设计的小型库。在这个例子中我们使用了它的三个重要的特性:

|

||||

|

||||

* 它为测量性能提供了一个简单的定时器。

|

||||

* 支持复杂的输出过滤器,因此,你可以从指定的包中选择日志。例如,你可以告诉记录器仅从数据库包中输出,并且仅输出超过 500 ms 的定时器日志。

|

||||

* 它有一个 Slack 钩子,因此,你可以过滤并将日志输入到 Slack。

|

||||

|

||||

让我们看一下在这个例子中,怎么去使用定时器,稍后我们也将去使用过滤器:

|

||||

|

||||

```

|

||||

package main

|

||||

|

||||

import (

|

||||

"github.com/azer/logger"

|

||||

"time"

|

||||

)

|

||||

|

||||

var (

|

||||

users = logger.New("users")

|

||||

database = logger.New("database")

|

||||

)

|

||||

|

||||

func main () {

|

||||

users.Info("Hi!")

|

||||

|

||||

timer := database.Timer()

|

||||

time.Sleep(time.Millisecond * 250) // sleep 250ms

|

||||

timer.End("Connected to database")

|

||||

|

||||

users.Error("Failed to create a new user.", logger.Attrs{

|

||||

"e-mail": "foo@bar.com",

|

||||

})

|

||||

|

||||

database.Info("Just a random log.")

|

||||

|

||||

fmt.Println("Bye.")

|

||||

}

|

||||

```

|

||||

|

||||

运行这个程序没有输出:

|

||||

|

||||

```

|

||||

$ go run example-01.go

|

||||

Bye

|

||||

```

|

||||

|

||||

记录器是[缺省静默的][11],因此,它可以在库的内部使用。我们简单地通过一个环境变量去查看日志:

|

||||

|

||||

例如:

|

||||

|

||||

```

|

||||

$ LOG=database@timer go run example-01.go

|

||||

01:08:54.997 database(250.095587ms): Connected to database.

|

||||

Bye

|

||||

```

|

||||

|

||||

上面的示例我们使用了 `database@timer` 过滤器去查看 `database` 包中输出的定时器日志。你也可以试一下其它的过滤器,比如:

|

||||

|

||||

* `LOG=*`: 所有日志

|

||||

* `LOG=users@error,database`: 所有来自 `users` 的错误日志,所有来自 `database` 的所有日志

|

||||

* `LOG=*@timer,database@info`: 来自所有包的定时器日志和错误日志,以及来自 `database` 的所有日志

|

||||

* `LOG=*,users@mute`: 除了 `users` 之外的所有日志

|

||||

|

||||

### 发送日志到 Slack

|

||||

|

||||

控制台日志是用于开发环境的,但是我们需要产品提供一个友好的界面。感谢 [slack-hook][12], 我们可以很容易地在上面的示例中,使用 Slack 去整合它:

|

||||

|

||||

```

|

||||

import (

|

||||

"github.com/azer/logger"

|

||||

"github.com/azer/logger-slack-hook"

|

||||

)

|

||||

|

||||

func init () {

|

||||

logger.Hook(&slackhook.Writer{

|

||||

WebHookURL: "https://hooks.slack.com/services/...",

|

||||

Channel: "slow-queries",

|

||||

Username: "Query Person",

|

||||

Filter: func (log *logger.Log) bool {

|

||||

return log.Package == "database" && log.Level == "TIMER" && log.Elapsed >= 200

|

||||

}

|

||||

})

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

我们来解释一下,在上面的示例中我们做了什么:

|

||||

|

||||

* 行 #5: 设置入站 webhook url。这个 URL [链接在这里][1]。

|

||||

* 行 #6: 选择流日志的入口通道。

|

||||

* 行 #7: 显示的发送者的用户名。

|

||||

* 行 #11: 使用流过滤器,仅输出时间超过 200 ms 的定时器日志。

|

||||

|

||||

希望这个示例能给你提供一个大概的思路。如果你有更多的问题,去看这个 [记录器][13]的文档。

|

||||

|

||||

### 一个真实的示例: CRUD

|

||||

|

||||

[crud][14] 是一个用于 Go 的数据库的 ORM 式的类库,它有一个隐藏特性是内部日志系统使用 [logger][15] 。这可以让我们很容易地去监视正在运行的 SQL 查询。

|

||||

|

||||

#### 查询

|

||||

|

||||

这有一个通过给定的 e-mail 去返回用户名的简单查询:

|

||||

|

||||

```

|

||||

func GetUserNameByEmail (email string) (string, error) {

|

||||

var name string

|

||||

if err := DB.Read(&name, "SELECT name FROM user WHERE email=?", email); err != nil {

|

||||

return "", err

|

||||

}

|

||||

|

||||

return name, nil

|

||||

}

|

||||

```

|

||||

|

||||

好吧,这个太短了, 感觉好像缺少了什么,让我们增加全部的上下文:

|

||||

|

||||

```

|

||||

import (

|

||||

"github.com/azer/crud"

|

||||

_ "github.com/go-sql-driver/mysql"

|

||||

"os"

|

||||

)

|

||||

|

||||

var db *crud.DB

|

||||

|

||||

func main () {

|

||||

var err error

|

||||

|

||||

DB, err = crud.Connect("mysql", os.Getenv("DATABASE_URL"))

|

||||

if err != nil {

|

||||

panic(err)

|

||||

}

|

||||

|

||||

username, err := GetUserNameByEmail("foo@bar.com")

|

||||

if err != nil {

|

||||

panic(err)

|

||||

}

|

||||

|

||||

fmt.Println("Your username is: ", username)

|

||||

}

|

||||

```

|

||||

|

||||

因此,我们有一个通过环境变量 `DATABASE_URL` 连接到 MySQL 数据库的 [crud][16] 实例。如果我们运行这个程序,将看到有一行输出:

|

||||

|

||||

```

|

||||

$ DATABASE_URL=root:123456@/testdb go run example.go

|

||||

Your username is: azer

|

||||

```

|

||||

|

||||

正如我前面提到的,日志是 [缺省静默的][17]。让我们看一下 crud 的内部日志:

|

||||

|

||||

```

|

||||

$ LOG=crud go run example.go

|

||||

22:56:29.691 crud(0): SQL Query Executed: SELECT username FROM user WHERE email='foo@bar.com'

|

||||

Your username is: azer

|

||||

```

|

||||

|

||||

这很简单,并且足够我们去查看在我们的开发环境中查询是怎么执行的。

|

||||

|

||||

#### CRUD 和 Slack 整合

|

||||

|

||||

记录器是为配置管理应用程序级的“内部日志系统”而设计的。这意味着,你可以通过在你的应用程序级配置记录器,让 crud 的日志流入 Slack :

|

||||

|

||||

```

|

||||

import (

|

||||

"github.com/azer/logger"

|

||||

"github.com/azer/logger-slack-hook"

|

||||

)

|

||||

|

||||

func init () {

|

||||

logger.Hook(&slackhook.Writer{

|

||||

WebHookURL: "https://hooks.slack.com/services/...",

|

||||

Channel: "slow-queries",

|

||||

Username: "Query Person",

|

||||

Filter: func (log *logger.Log) bool {

|

||||

return log.Package == "mysql" && log.Level == "TIMER" && log.Elapsed >= 250

|

||||

}

|

||||

})

|

||||

}

|

||||

```

|

||||

|

||||

在上面的代码中:

|

||||

|

||||

* 我们导入了 [logger][2] 和 [logger-slack-hook][3] 库。

|

||||

* 我们配置记录器日志流入 Slack。这个配置覆盖了代码库中 [记录器][4] 所有的用法, 包括第三方依赖。

|

||||

* 我们使用了流过滤器,仅输出 MySQL 包中超过 250 ms 的定时器日志。

|

||||

|

||||

这种使用方法可以被扩展,而不仅是慢查询报告。我个人使用它去跟踪指定包中的重要错误, 也用于统计一些类似新用户登入或生成支付的日志。

|

||||

|

||||

### 在这篇文章中提到的包

|

||||

|

||||

* [crud][5]

|

||||

* [logger][6]

|

||||

* [logger-slack-hook][7]

|

||||

|

||||

[告诉我们][18] 如果你有任何的问题或建议。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://azer.bike/journal/monitoring-slow-sql-queries-via-slack/

|

||||

|

||||

作者:[Azer Koçulu][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://azer.bike/

|

||||

[1]:https://my.slack.com/services/new/incoming-webhook/

|

||||

[2]:https://github.com/azer/logger

|

||||

[3]:https://github.com/azer/logger-slack-hook

|

||||

[4]:https://github.com/azer/logger

|

||||

[5]:https://github.com/azer/crud

|

||||

[6]:https://github.com/azer/logger

|

||||

[7]:https://github.com/azer/logger

|

||||

[8]:http://azer.bike/journal/monitoring-slow-sql-queries-via-slack/?utm_source=dbweekly&utm_medium=email#logger

|

||||

[9]:http://azer.bike/journal/monitoring-slow-sql-queries-via-slack/?utm_source=dbweekly&utm_medium=email#crud

|

||||

[10]:https://github.com/azer/logger

|

||||

[11]:http://www.linfo.org/rule_of_silence.html

|

||||

[12]:https://github.com/azer/logger-slack-hook

|

||||

[13]:https://github.com/azer/logger

|

||||

[14]:https://github.com/azer/crud

|

||||

[15]:https://github.com/azer/logger

|

||||

[16]:https://github.com/azer/crud

|

||||

[17]:http://www.linfo.org/rule_of_silence.html

|

||||

[18]:https://twitter.com/afrikaradyo

|

||||

@ -1,26 +1,27 @@

|

||||

为什么要在 Docker 中使用 R? 一位 DevOps 的视角

|

||||

为什么要在 Docker 中使用 R? 一位 DevOps 的看法

|

||||

============================================================

|

||||

|

||||

[][11]

|

||||

|

||||

有几篇关于为什么要在 Docker 中使用 R 的文章。在这篇文章中,我将尝试加入一个 DevOps 的观点,并解释在 OpenCPU 系统的上下文中如何使用容器化 R 来构建和部署 R 服务器。

|

||||

> R 语言,一种自由软件编程语言与操作环境,主要用于统计分析、绘图、数据挖掘。R 内置多种统计学及数字分析功能。R 的另一强项是绘图功能,制图具有印刷的素质,也可加入数学符号。——引自维基百科。

|

||||

|

||||

> 有在 [#rstats][2] 世界的人真正地写*为什么*他们使用 Docker,而不是*如何*么?

|

||||

已经有几篇关于为什么要在 Docker 中使用 R 的文章。在这篇文章中,我将尝试加入一个 DevOps 的观点,并解释在 OpenCPU 系统的环境中如何使用容器化 R 来构建和部署 R 服务器。

|

||||

|

||||

> 有在 [#rstats][2] 世界的人真正地写过*为什么*他们使用 Docker,而不是*如何*么?

|

||||

>

|

||||

> — Jenny Bryan (@JennyBryan) [September 29, 2017][3]

|

||||

|

||||

### 1:轻松开发

|

||||

|

||||

OpenCPU 系统的旗舰是[ OpenCPU 服务器][12]:它是一个成熟且强大的 Linux 栈,用于在系统和应用程序中嵌入 R。因为 OpenCPU 是完全开源的,我们可以在 DockerHub 上构建和发布。可以使用以下命令启动(使用端口8004或80)一个可以立即使用的 OpenCPU 和 RStudio 的 Linux 服务器:

|

||||

OpenCPU 系统的旗舰是 [OpenCPU 服务器][12]:它是一个成熟且强大的 Linux 栈,用于在系统和应用程序中嵌入 R。因为 OpenCPU 是完全开源的,我们可以在 DockerHub 上构建和发布。可以使用以下命令启动一个可以立即使用的 OpenCPU 和 RStudio 的 Linux 服务器(使用端口 8004 或 80):

|

||||

|

||||

```

|

||||

docker run -t -p 8004:8004 opencpu/rstudio

|

||||

|

||||

```

|

||||

|

||||

现在只需在你的浏览器打开 [http://localhost:8004/ocpu/][13] 和 [http://localhost:8004/rstudio/][14]!在 rstudio 中用用户 `opencpu`(密码:`opencpu`)登录来构建或安装应用程序。有关详细信息,请参阅[自述文件][15]。

|

||||