mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-28 01:01:09 +08:00

commit

855b8caa23

125

published/20180107 7 leadership rules for the DevOps age.md

Normal file

125

published/20180107 7 leadership rules for the DevOps age.md

Normal file

@ -0,0 +1,125 @@

|

||||

DevOps 时代的 7 个领导力准则

|

||||

======

|

||||

|

||||

> DevOps 是一种持续性的改变和提高:那么也准备改变你所珍视的领导力准则吧。

|

||||

|

||||

|

||||

|

||||

如果 [DevOps] 最终更多的是一种文化而非某种技术或者平台,那么请记住:它没有终点线。而是一种持续性的改变和提高——而且最高管理层并不及格。

|

||||

|

||||

然而,如果期望 DevOps 能够帮助获得更多的成果,领导者需要[修订他们的一些传统的方法][2]。让我们考虑 7 个在 DevOps 时代更有效的 IT 领导的想法。

|

||||

|

||||

### 1、 向失败说“是的”

|

||||

|

||||

“失败”这个词在 IT 领域中一直包含着非常具体的意义,而且通常是糟糕的意思:服务器失败、备份失败、硬盘驱动器失败——你的印象就是如此。

|

||||

|

||||

然而一个健康的 DevOps 文化取决于如何重新定义失败——IT 领导者应该在他们的字典里重新定义这个单词,使这个词的含义和“机会”对等起来。

|

||||

|

||||

“在 DevOps 之前,我们曾有一种惩罚失败者的文化,”[Datical][3] 的首席技术官兼联合创始人罗伯特·里夫斯说,“我们学到的仅仅是去避免错误。在 IT 领域避免错误的首要措施就是不要去改变任何东西:不要加速版本迭代的日程,不要迁移到云中,不要去做任何不同的事”

|

||||

|

||||

那是一个旧时代的剧本,里夫斯坦诚的说,它已经不起作用了,事实上,那种停滞实际上是失败。

|

||||

|

||||

“那些缓慢的发布周期并逃避云的公司被恐惧所麻痹——他们将会走向失败,”里夫斯说道。“IT 领导者必须拥抱失败,并把它当做成一个机遇。人们不仅仅从他们的过错中学习,也会从别人的错误中学习。开放和[安全心理][4]的文化促进学习和提高。”

|

||||

|

||||

**[相关文章:[为什么敏捷领导者谈论“失败”必须超越它本义][5]]**

|

||||

|

||||

### 2、 在管理层渗透开发运营的理念

|

||||

|

||||

尽管 DevOps 文化可以在各个方向有机的发展,那些正在从单体、孤立的 IT 实践中转变出来的公司,以及可能遭遇逆风的公司——需要高管层的全面支持。如果缺少了它,你就会传达模糊的信息,而且可能会鼓励那些宁愿被推着走的人,但这是我们一贯的做事方式。[改变文化是困难的][6];人们需要看到高管层完全投入进去并且知道改变已经实际发生了。

|

||||

|

||||

“高层管理必须全力支持 DevOps,才能成功的实现收益”,来自 [Rainforest QA][7] 的首席信息官德里克·蔡说道。

|

||||

|

||||

成为一个 DevOps 商店。德里克指出,涉及到公司的一切,从技术团队到工具到进程到规则和责任。

|

||||

|

||||

“没有高层管理的统一赞助支持,DevOps 的实施将很难成功,”德里克说道,“因此,在转变到 DevOps 之前在高层中保持一致是很重要的。”

|

||||

|

||||

### 3、 不要只是声明 “DevOps”——要明确它

|

||||

|

||||

即使 IT 公司也已经开始张开双臂拥抱 DevOps,也可能不是每个人都在同一个步调上。

|

||||

|

||||

**[参考我们的相关文章,[3 阐明了DevOps和首席技术官们必须在同一进程上][8]]**

|

||||

|

||||

造成这种脱节的一个根本原因是:人们对这个术语的有着不同的定义理解。

|

||||

|

||||

“DevOps 对不同的人可能意味着不同的含义,”德里克解释道,“对高管层和副总裁层来说,要执行明确的 DevOps 的目标,清楚地声明期望的成果,充分理解带来的成果将如何使公司的商业受益,并且能够衡量和报告成功的过程。”

|

||||

|

||||

事实上,在基线定义和远景之外,DevOps 要求正在进行频繁的交流,不是仅仅在小团队里,而是要贯穿到整个组织。IT 领导者必须将它设置为优先。

|

||||

|

||||

“不可避免的,将会有些阻碍,在商业中将会存在失败和破坏,”德里克说道,“领导者们需要清楚的将这个过程向公司的其他人阐述清楚,告诉他们他们作为这个过程的一份子能够期待的结果。”

|

||||

|

||||

### 4、 DevOps 对于商业和技术同样重要

|

||||

|

||||

IT 领导者们成功的将 DevOps 商店的这种文化和实践当做一项商业策略,以及构建和运营软件的方法。DevOps 是将 IT 从支持部门转向战略部门的推动力。

|

||||

|

||||

IT 领导者们必须转变他们的思想和方法,从成本和服务中心转变到驱动商业成果,而且 DevOps 的文化能够通过自动化和强大的协作加速这些成果,来自 [CYBRIC][9] 的首席技术官和联合创始人迈克·凯尔说道。

|

||||

|

||||

事实上,这是一个强烈的趋势,贯穿这些新“规则”,在 DevOps 时代走在前沿。

|

||||

|

||||

“促进创新并且鼓励团队成员去聪明的冒险是 DevOps 文化的一个关键部分,IT 领导者们需要在一个持续的基础上清楚的和他们交流”,凯尔说道。

|

||||

|

||||

“一个高效的 IT 领导者需要比以往任何时候都要积极的参与到业务中去,”来自 [West Monroe Partners][10] 的性能服务部门的主任埃文说道,“每年或季度回顾的日子一去不复返了——[你需要欢迎每两周一次的挤压整理][11],你需要有在年度水平上的思考战略能力,在冲刺阶段的互动能力,在商业期望满足时将会被给予一定的奖励。”

|

||||

|

||||

### 5、 改变妨碍 DevOps 目标的任何事情

|

||||

|

||||

虽然 DevOps 的老兵们普遍认为 DevOps 更多的是一种文化而不是技术,成功取决于通过正确的过程和工具激活文化。当你声称自己的部门是一个 DevOps 商店却拒绝对进程或技术做必要的改变,这就是你买了辆法拉利却使用了用了 20 年的引擎,每次转动钥匙都会冒烟。

|

||||

|

||||

展览 A: [自动化][12]。这是 DevOps 成功的重要并行策略。

|

||||

|

||||

“IT 领导者需要重点强调自动化,”卡伦德说,“这将是 DevOps 的前期投资,但是如果没有它,DevOps 将会很容易被低效吞噬,而且将会无法完整交付。”

|

||||

|

||||

自动化是基石,但改变不止于此。

|

||||

|

||||

“领导者们需要推动自动化、监控和持续交付过程。这意着对现有的实践、过程、团队架构以及规则的很多改变,” 德里克说。“领导者们需要改变一切会阻碍团队去实现完全自动化的因素。”

|

||||

|

||||

### 6、 重新思考团队架构和能力指标

|

||||

|

||||

当你想改变时……如果你桌面上的组织结构图和你过去大部分时候嵌入的名字都是一样的,那么你是时候该考虑改革了。

|

||||

|

||||

“在这个 DevOps 的新时代文化中,IT 执行者需要采取一个全新的方法来组织架构。”凯尔说,“消除组织的边界限制,它会阻碍团队间的合作,允许团队自我组织、敏捷管理。”

|

||||

|

||||

凯尔告诉我们在 DevOps 时代,这种反思也应该拓展应用到其他领域,包括你怎样衡量个人或者团队的成功,甚至是你和人们的互动。

|

||||

|

||||

“根据业务成果和总体的积极影响来衡量主动性,”凯尔建议。“最后,我认为管理中最重要的一个方面是:有同理心。”

|

||||

|

||||

注意很容易收集的到测量值不是 DevOps 真正的指标,[Red Hat] 的技术专家戈登·哈夫写到,“DevOps 应该把指标以某种形式和商业成果绑定在一起”,他指出,“你可能并不真正在乎开发者些了多少代码,是否有一台服务器在深夜硬件损坏,或者是你的测试是多么的全面。你甚至都不直接关注你的网站的响应情况或者是你更新的速度。但是你要注意的是这些指标可能和顾客放弃购物车去竞争对手那里有关,”参考他的文章,[DevOps 指标:你在测量什么?]

|

||||

|

||||

### 7、 丢弃传统的智慧

|

||||

|

||||

如果 DevOps 时代要求关于 IT 领导能力的新的思考方式,那么也就意味着一些旧的方法要被淘汰。但是是哪些呢?

|

||||

|

||||

“说实话,是全部”,凯尔说道,“要摆脱‘因为我们一直都是以这种方法做事的’的心态。过渡到 DevOps 文化是一种彻底的思维模式的转变,不是对瀑布式的过去和变革委员会的一些细微改变。”

|

||||

|

||||

事实上,IT 领导者们认识到真正的变革要求的不只是对旧方法的小小接触。它更多的是要求对之前的进程或者策略的一个重新启动。

|

||||

|

||||

West Monroe Partners 的卡伦德分享了一个阻碍 DevOps 的领导力的例子:未能拥抱 IT 混合模型和现代的基础架构比如说容器和微服务。

|

||||

|

||||

“我所看到的一个大的规则就是架构整合,或者认为在一个同质的环境下长期的维护会更便宜,”卡伦德说。

|

||||

|

||||

**领导者们,想要更多像这样的智慧吗?[注册我们的每周邮件新闻报道][15]。**

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://enterprisersproject.com/article/2018/1/7-leadership-rules-devops-age

|

||||

|

||||

作者:[Kevin Casey][a]

|

||||

译者:[FelixYFZ](https://github.com/FelixYFZ)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://enterprisersproject.com/user/kevin-casey

|

||||

[1]:https://enterprisersproject.com/tags/devops

|

||||

[2]:https://enterprisersproject.com/article/2017/7/devops-requires-dumping-old-it-leadership-ideas

|

||||

[3]:https://www.datical.com/

|

||||

[4]:https://rework.withgoogle.com/guides/understanding-team-effectiveness/steps/foster-psychological-safety/

|

||||

[5]:https://enterprisersproject.com/article/2017/10/why-agile-leaders-must-move-beyond-talking-about-failure?sc_cid=70160000000h0aXAAQ

|

||||

[6]:https://enterprisersproject.com/article/2017/10/how-beat-fear-and-loathing-it-change

|

||||

[7]:https://www.rainforestqa.com/

|

||||

[8]:https://enterprisersproject.com/article/2018/1/3-areas-where-devops-and-cios-must-get-same-page

|

||||

[9]:https://www.cybric.io/

|

||||

[10]:http://www.westmonroepartners.com/

|

||||

[11]:https://www.scrumalliance.org/community/articles/2017/february/product-backlog-grooming

|

||||

[12]:https://www.redhat.com/en/topics/automation?intcmp=701f2000000tjyaAAA

|

||||

[13]:https://www.redhat.com/en?intcmp=701f2000000tjyaAAA

|

||||

[14]:https://enterprisersproject.com/article/2017/7/devops-metrics-are-you-measuring-what-matters

|

||||

[15]:https://enterprisersproject.com/email-newsletter?intcmp=701f2000000tsjPAAQ

|

||||

@ -0,0 +1,146 @@

|

||||

如何使用命令行检查 Linux 上的磁盘空间

|

||||

========

|

||||

|

||||

> Linux 提供了所有必要的工具来帮助你确切地发现你的驱动器上剩余多少空间。Jack 在这里展示了如何做。

|

||||

|

||||

|

||||

|

||||

快速提问:你的驱动器剩余多少剩余空间?一点点还是很多?接下来的提问是:你知道如何找出这些剩余空间吗?如果你碰巧使用的是 GUI 桌面( 例如 GNOME、KDE、Mate、Pantheon 等 ),则任务可能非常简单。但是,当你要在一个没有 GUI 桌面的服务器上查询剩余空间,你该如何去做呢?你是否要为这个任务安装相应的软件工具?答案是绝对不是。在 Linux 中,具备查找驱动器上的剩余磁盘空间的所有工具。事实上,有两个非常容易使用的工具。

|

||||

|

||||

在本文中,我将演示这些工具。我将使用 [Elementary OS][1](LCTT译注:Elementary OS 是基于 Ubuntu 精心打磨美化的桌面 Linux 发行版 ),它还包括一个 GUI 方式,但我们将限制自己仅使用命令行。好消息是这些命令行工具随时可用于每个 Linux 发行版。在我的测试系统中,连接了许多的驱动器(内部的和外部的)。使用的命令与连接驱动器的位置无关,仅仅与驱动器是否已经挂载好并且对操作系统可见有关。

|

||||

|

||||

言归正传,让我们来试试这些工具。

|

||||

|

||||

### df

|

||||

|

||||

`df` 命令是我第一个用于在 Linux 上查询驱动器空间的工具,时间可以追溯到 20 世纪 90 年代。它的使用和报告结果非常简单。直到今天,`df` 还是我执行此任务的首选命令。此命令有几个选项开关,对于基本的报告,你实际上只需要一个选项。该命令是 `df -H` 。`-H` 选项开关用于将 `df` 的报告结果以人类可读的格式进行显示。`df -H` 的输出包括:已经使用了的空间量、可用空间、空间使用的百分比,以及每个磁盘连接到系统的挂载点(图 1)。

|

||||

|

||||

![df output][3]

|

||||

|

||||

*图 1:Elementary OS 系统上 `df -H` 命令的输出结果*

|

||||

|

||||

如果你的驱动器列表非常长并且你只想查看单个驱动器上使用的空间,该怎么办?对于 `df` 这没问题。我们来看一下位于 `/dev/sda1` 的主驱动器已经使用了多少空间。为此,执行如下命令:

|

||||

|

||||

```

|

||||

df -H /dev/sda1

|

||||

```

|

||||

输出将限于该驱动器(图 2)。

|

||||

|

||||

![disk usage][6]

|

||||

|

||||

*图 2:一个单独驱动器空间情况*

|

||||

|

||||

你还可以限制 `df` 命令结果报告中显示指定的字段。可用的字段包括:

|

||||

|

||||

- `source` — 文件系统的来源(LCTT译注:通常为一个设备,如 `/dev/sda1` )

|

||||

- `size` — 块总数

|

||||

- `used` — 驱动器已使用的空间

|

||||

- `avail` — 可以使用的剩余空间

|

||||

- `pcent` — 驱动器已经使用的空间占驱动器总空间的百分比

|

||||

- `target` —驱动器的挂载点

|

||||

|

||||

让我们显示所有驱动器的输出,仅显示 `size` ,`used` ,`avail` 字段。对此的命令是:

|

||||

|

||||

```

|

||||

df -H --output=size,used,avail

|

||||

```

|

||||

|

||||

该命令的输出非常简单( 图 3 )。

|

||||

|

||||

![output][8]

|

||||

|

||||

*图 3:显示我们驱动器的指定输出*

|

||||

|

||||

这里唯一需要注意的是我们不知道该输出的来源,因此,我们要把 `source` 加入命令中:

|

||||

|

||||

```

|

||||

df -H --output=source,size,used,avail

|

||||

```

|

||||

|

||||

现在输出的信息更加全面有意义(图 4)。

|

||||

|

||||

![source][10]

|

||||

|

||||

*图 4:我们现在知道了磁盘使用情况的来源*

|

||||

|

||||

### du

|

||||

|

||||

我们的下一个命令是 `du` 。 正如您所料,这代表<ruby>磁盘使用情况<rt>disk usage</rt></ruby>。 `du` 命令与 `df` 命令完全不同,因为它报告目录而不是驱动器的空间使用情况。 因此,您需要知道要检查的目录的名称。 假设我的计算机上有一个包含虚拟机文件的目录。 那个目录是 `/media/jack/HALEY/VIRTUALBOX` 。 如果我想知道该特定目录使用了多少空间,我将运行如下命令:

|

||||

|

||||

```

|

||||

du -h /media/jack/HALEY/VIRTUALBOX

|

||||

```

|

||||

|

||||

上面命令的输出将显示目录中每个文件占用的空间(图 5)。

|

||||

|

||||

![du command][12]

|

||||

|

||||

*图 5 在特定目录上运行 `du` 命令的输出*

|

||||

|

||||

到目前为止,这个命令并没有那么有用。如果我们想知道特定目录的总使用量怎么办?幸运的是,`du` 可以处理这项任务。对于同一目录,命令将是:

|

||||

|

||||

```

|

||||

du -sh /media/jack/HALEY/VIRTUALBOX/

|

||||

```

|

||||

|

||||

现在我们知道了上述目录使用存储空间的总和(图 6)。

|

||||

|

||||

![space used][14]

|

||||

|

||||

*图 6:我的虚拟机文件使用存储空间的总和是 559GB*

|

||||

|

||||

您还可以使用此命令查看父项的所有子目录使用了多少空间,如下所示:

|

||||

|

||||

```

|

||||

du -h /media/jack/HALEY

|

||||

```

|

||||

|

||||

此命令的输出见(图 7),是一个用于查看各子目录占用的驱动器空间的好方法。

|

||||

|

||||

![directories][16]

|

||||

|

||||

*图 7:子目录的存储空间使用情况*

|

||||

|

||||

`du` 命令也是一个很好的工具,用于查看使用系统磁盘空间最多的目录列表。执行此任务的方法是将 `du` 命令的输出通过管道传递给另外两个命令:`sort` 和 `head` 。下面的命令用于找出驱动器上占用存储空间最大的前 10 个目录:

|

||||

|

||||

```

|

||||

du -a /media/jack | sort -n -r |head -n 10

|

||||

```

|

||||

|

||||

输出将以从大到小的顺序列出这些目录(图 8)。

|

||||

|

||||

![top users][18]

|

||||

|

||||

*图 8:使用驱动器空间最多的 10 个目录*

|

||||

|

||||

### 没有你想像的那么难

|

||||

|

||||

查看 Linux 系统上挂载的驱动器的空间使用情况非常简单。只要你将你的驱动器挂载在 Linux 系统上,使用 `df` 命令或 `du` 命令在报告必要信息方面都会非常出色。使用 `df` 命令,您可以快速查看磁盘上总的空间使用量,使用 `du` 命令,可以查看特定目录的空间使用情况。对于每一个 Linux 系统的管理员来说,这两个命令的结合使用是必须掌握的。

|

||||

|

||||

而且,如果你没有注意到,我最近介绍了[查看 Linux 上内存使用情况的方法][19]。总之,这些技巧将大力帮助你成功管理 Linux 服务器。

|

||||

|

||||

通过 Linux Foundation 和 edX 免费提供的 “Linux 简介” 课程,了解更多有关 Linux 的信息。

|

||||

|

||||

--------

|

||||

|

||||

via: https://www.linux.com/learn/intro-to-linux/2018/6how-check-disk-space-linux-command-line

|

||||

|

||||

作者:[Jack Wallen][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[SunWave](https://github.com/SunWave)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/jlwallen

|

||||

[1]:https://elementary.io/

|

||||

[3]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/diskspace_1.jpg?itok=aJa8AZAM

|

||||

[6]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/diskspace_2.jpg?itok=_PAq3kxC

|

||||

[8]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/diskspace_3.jpg?itok=51m8I-Vu

|

||||

[10]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/diskspace_4.jpg?itok=SuwgueN3

|

||||

[12]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/diskspace_5.jpg?itok=XfS4s7Zq

|

||||

[14]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/diskspace_6.jpg?itok=r71qICyG

|

||||

[16]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/diskspace_7.jpg?itok=PtDe4q5y

|

||||

[18]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/diskspace_8.jpg?itok=v9E1SFcC

|

||||

[19]:https://www.linux.com/learn/5-commands-checking-memory-usage-linux

|

||||

[20]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

55

published/20180627 CIP- Keeping the Lights On with Linux.md

Normal file

55

published/20180627 CIP- Keeping the Lights On with Linux.md

Normal file

@ -0,0 +1,55 @@

|

||||

CIP:延续 Linux 之光

|

||||

======

|

||||

|

||||

> CIP 的目标是创建一个基本的系统,使用开源软件来为我们现代社会的基础设施提供动力。

|

||||

|

||||

|

||||

|

||||

|

||||

现如今,现代民用基础设施遍及各处 —— 发电厂、雷达系统、交通信号灯、水坝和天气系统等。这些基础设施项目已然存在数十年,这些设施还将继续提供更长时间的服务,所以安全性和使用寿命是至关重要的。

|

||||

|

||||

并且,其中许多系统都是由 Linux 提供支持,它为技术提供商提供了对这些问题的更多控制。然而,如果每个提供商都在构建自己的解决方案,这可能会导致分散和重复工作。因此,<ruby>[民用基础设施平台][1]<rt>Civil Infrastructure Platform</rt></ruby>(CIP)最首要的目标是创造一个开源基础层,提供给工业设施,例如嵌入式控制器或是网关设备。

|

||||

|

||||

担任 CIP 的技术指导委员会主席的 Yoshitake Kobayashi 说过,“我们在这个领域有一种非常保守的文化,因为一旦我们建立了一个系统,它必须得到长达十多年的支持,在某些情况下超过 60 年。这就是为什么这个项目被创建的原因,因为这个行业的每个使用者都面临同样的问题,即能够长时间地使用 Linux。”

|

||||

|

||||

CIP 的架构是创建一个非常基础的系统,以在控制器上使用开源软件。其中,该基础层包括 Linux 内核和一系列常见的开源软件如 libc、busybox 等。由于软件的使用寿命是一个最主要的问题,CIP 选择使用 Linux 4.4 版本的内核,这是一个由 Greg Kroah-Hartman 维护的长期支持版本。

|

||||

|

||||

### 合作

|

||||

|

||||

由于 CIP 有上游优先政策,因此他们在项目中需要的代码必须位于上游内核中。为了与内核社区建立积极的反馈循环,CIP 聘请 Ben Hutchings 作为 CIP 的官方维护者。Hutchings 以他在 Debian LTS 版本上所做的工作而闻名,这也促成了 CIP 与 Debian 项目之间的官方合作。

|

||||

|

||||

在新的合作下,CIP 将使用 Debian LTS 版本作为构建平台。 CIP 还将支持 Debian 长期支持版本(LTS),延长所有 Debian 稳定版的生命周期。CIP 还将与 Freexian 进行密切合作,后者是一家围绕 Debian LTS 版本提供商业服务的公司。这两个组织将专注于嵌入式系统的开源软件的互操作性、安全性和维护。CIP 还会为一些 Debian LTS 版本提供资金支持。

|

||||

|

||||

Debian 项目负责人 Chris Lamb 表示,“我们对此次合作以及 CIP 对 Debian LTS 项目的支持感到非常兴奋,这样将使支持周期延长至五年以上。我们将一起致力于为用户提供长期支持,并为未来的城市奠定基础。”

|

||||

|

||||

### 安全性

|

||||

|

||||

Kobayashi 说过,其中最需要担心的是安全性。虽然出于明显的安全原因,大部分民用基础设施没有接入互联网(你肯定不想让一座核电站连接到互联网),但也存在其他风险。

|

||||

|

||||

仅仅是系统本身没有连接到互联网,这并不意味着能避开所有危险。其他系统,比如个人移动电脑也能够通过接入互联网而间接入侵到本地系统中。如若有人收到一封带有恶意文件作为电子邮件的附件,这将会“污染”系统内部的基础设备。

|

||||

|

||||

因此,至关重要的是保持运行在这些控制器上的所有软件是最新的并且完全修补的。为了确保安全性,CIP 还向后移植了<ruby>内核自我保护<rt>Kernel Self Protection</rt></ruby>(KSP)项目的许多组件。CIP 还遵循最严格的网络安全标准之一 —— IEC 62443,该标准定义了软件的流程和相应的测试,以确保系统更安全。

|

||||

|

||||

### 展望未来

|

||||

|

||||

随着 CIP 日趋成熟,官方正在加大与各个 Linux 提供商的合作力度。除了与 Debian 和 freexian 的合作外,CIP 最近还邀请了企业 Linux 操作系统供应商 Cybertrust Japan Co., Ltd. 作为新的银牌成员。

|

||||

|

||||

Cybertrust 与其他行业领军者合作,如西门子、东芝、Codethink、日立、Moxa、Plat'Home 和瑞萨,致力于为未来数十年打造一个可靠、安全的基于 Linux 的嵌入式软件平台。

|

||||

|

||||

这些公司在 CIP 的保护下所进行的工作,将确保管理我们现代社会中的民用基础设施的完整性。

|

||||

|

||||

想要了解更多信息,请访问 [民用基础设施官网][1]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/2018/6/cip-keeping-lights-linux

|

||||

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[wyxplus](https://github.com/wyxplus)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/arnieswap

|

||||

[1]:https://www.cip-project.org/

|

||||

@ -0,0 +1,130 @@

|

||||

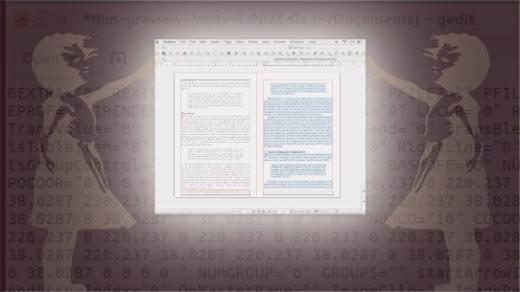

如何用 Scribus 和 Gedit 编辑 Adobe InDesign 文件

|

||||

======

|

||||

|

||||

> 学习一下这些用开源工具编辑 InDesign 文件的方案。

|

||||

|

||||

|

||||

|

||||

要想成为一名优秀的平面设计师,您必须善于使用各种各样专业的工具。现在,对大多数设计师来说,最常用的工具是 <ruby>Adobe 全家桶<rt>Adobe Creative Suite</rt></ruby>。

|

||||

|

||||

但是,有时候使用开源工具能够帮您摆脱困境。比如,您正在使用一台公共打印机打印一份用 Adobe InDesign 创建的文件。这时,您需要对文件做一些简单的改动(比如,改正一个错别字),但您无法立刻使用 Adobe 套件。虽然这种情况很少见,但电子杂志制作软件 [Scribus][1] 和文本编辑器 [Gedit][2] 等开源工具可以节约您的时间。

|

||||

|

||||

在本文中,我将向您展示如何使用 Scribus 和 Gedit 编辑 Adobe InDesign 文件。请注意,还有许多其他开源平面设计软件可以用来代替 Adobe InDesign 或者结合使用。详情请查看我的文章:[昂贵的工具(从来!)不是平面设计的唯一选择][3] 以及 [两个开源 Adobe InDesign 脚本][4].

|

||||

|

||||

在编写本文的时候,我阅读了一些关于如何使用开源软件编辑 InDesign 文件的博客,但没有找到有用的文章。我尝试了两个解决方案。一个是:在 InDesign 创建一个 EPS 并在文本编辑器 Scribus 中将其以可编辑文件打开,但这不起作用。另一个是:从 InDesign 中创建一个 IDML(一种旧的 InDesign 文件格式)文件,并在 Scribus 中打开它。第二种方法效果更好,也是我在下文中使用的解决方法。

|

||||

|

||||

### 编辑名片

|

||||

|

||||

我尝试在 Scribus 中打开和编辑 InDesign 名片文件的效果很好。唯一的问题是字母间的间距有些偏移,以及我用倒过来的 ‘J’ 来创建 “Jeff” 中的 ‘f’ 被翻转。其他部分,像样式和颜色等都完好无损。

|

||||

|

||||

![Business card in Adobe InDesign][6]

|

||||

|

||||

*图:在 Adobe InDesign 中编辑名片。*

|

||||

|

||||

![InDesign IDML file opened in Scribus][8]

|

||||

|

||||

*图:在 Scribus 中打开 InDesign IDML 文件。*

|

||||

|

||||

### 删除带页码的书籍中的副本

|

||||

|

||||

书籍的转换并不顺利。书籍的正文还 OK,但当我用 Scribus 打开 InDesign 文件,目录、页脚和一些首字下沉的段落都出现问题。不过至少,它是一个可编辑的文档。其中一个问题是一些块引用中的文字变成了默认的 Arial 字体,这是因为字体样式(似乎来自其原始的 Word 文档)的优先级比段落样式高。这个问题容易解决。

|

||||

|

||||

![Book layout in InDesign][10]

|

||||

|

||||

*图:InDesign 中的书籍布局。*

|

||||

|

||||

![InDesign IDML file of book layout opened in Scribus][12]

|

||||

|

||||

*图:用 Scribus 打开 InDesign IDML 文件的书籍布局。*

|

||||

|

||||

当我试图选择并删除一页文本的时候,发生了奇异事件。我把光标放在文本中,按下 `Command + A`(“全选”的快捷键)。表面看起来高亮显示了一页文本,但事实并非如此!

|

||||

|

||||

![Selecting text in Scribus][14]

|

||||

|

||||

*图:Scribus 中被选中的文本。*

|

||||

|

||||

当我按下“删除”键,整个文本(不只是高亮的部分)都消失了。

|

||||

|

||||

![Both pages of text deleted in Scribus][16]

|

||||

|

||||

*图:两页文本都被删除了。*

|

||||

|

||||

然后,更奇异的事情发生了……我按下 `Command + Z` 键来撤回删除操作,文本恢复,但文本格式全乱套了。

|

||||

|

||||

![Undo delete restored the text, but with bad formatting.][18]

|

||||

|

||||

*图:Command+Z (撤回删除操作) 恢复了文本,但格式乱套了。*

|

||||

|

||||

### 用文本编辑器打开 InDesign 文件

|

||||

|

||||

当您用普通的记事本(比如,Mac 中的 TextEdit)分别打开 Scribus 文件和 InDesign 文件,会发现 Scribus 文件是可读的,而 InDesign 文件全是乱码。

|

||||

|

||||

您可以用 TextEdit 对两者进行更改并成功保存,但得到的文件是损坏的。下图是当我用 InDesign 打开编辑后的文件时的报错。

|

||||

|

||||

![InDesign error message][20]

|

||||

|

||||

*图:InDesign 的报错。*

|

||||

|

||||

我在 Ubuntu 系统上用文本编辑器 Gedit 编辑 Scribus 时得到了更好的结果。我从命令行启动了 Gedit,然后打开并编辑 Scribus 文件,保存后,再次使用 Scribus 打开文件时,我在 Gedit 中所做的更改都成功显示在 Scribus 中。

|

||||

|

||||

![Editing Scribus file in Gedit][22]

|

||||

|

||||

*图:用 Gedit 编辑 Scribus 文件。*

|

||||

|

||||

![Result of the Gedit edit in Scribus][24]

|

||||

|

||||

*图:用 Scribus 打开 Gedit 编辑过的文件。*

|

||||

|

||||

当您正准备打印的时候,客户打来电话说有一个错别字需要更改,此时您不需要苦等客户爸爸发来新的文件,只需要用 Gedit 打开 Scribus 文件,改正错别字,继续打印。

|

||||

|

||||

### 把图像拖拽到 ID 文件中

|

||||

|

||||

我将 InDesign 文档另存为 IDML 文件,这样我就可以用 Scribus 往其中拖进一些 PDF 文档。似乎 Scribus 并不能像 InDesign 一样把 PDF 文档拖拽进去。于是,我把 PDF 文档转换成 JPG 格式的图片然后导入到 Scribus 中,成功了。但这么做的结果是,将 IDML 文档转换成 PDF 格式后,文件大小非常大。

|

||||

|

||||

![Huge PDF file][26]

|

||||

|

||||

*图:把 Scribus 转换成 PDF 时得到一个非常大的文件*。

|

||||

|

||||

我不确定为什么会这样——这个坑留着以后再填吧。

|

||||

|

||||

您是否有使用开源软件编辑平面图形文件的技巧?如果有,请在评论中分享哦。

|

||||

|

||||

------

|

||||

|

||||

via: https://opensource.com/article/18/7/adobe-indesign-open-source-tools

|

||||

|

||||

作者:[Jeff Macharyas][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[XiatianSummer](https://github.com/XiatianSummer)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/rikki-endsley

|

||||

[1]: https://www.scribus.net/

|

||||

[2]: https://wiki.gnome.org/Apps/Gedit

|

||||

[3]: https://opensource.com/life/16/8/open-source-alternatives-graphic-design

|

||||

[4]: https://opensource.com/article/17/3/scripts-adobe-indesign

|

||||

[5]: /file/402516

|

||||

[6]: https://opensource.com/sites/default/files/uploads/1-business_card_designed_in_adobe_indesign_cc.png "Business card in Adobe InDesign"

|

||||

[7]: /file/402521

|

||||

[8]: https://opensource.com/sites/default/files/uploads/2-indesign_.idml_file_opened_in_scribus.png "InDesign IDML file opened in Scribus"

|

||||

[9]: /file/402531

|

||||

[10]: https://opensource.com/sites/default/files/uploads/3-book_layout_in_indesign.png "Book layout in InDesign"

|

||||

[11]: /file/402536

|

||||

[12]: https://opensource.com/sites/default/files/uploads/4-indesign_.idml_file_of_book_opened_in_scribus.png "InDesign IDML file of book layout opened in Scribus"

|

||||

[13]: /file/402541

|

||||

[14]: https://opensource.com/sites/default/files/uploads/5-command-a_in_the_scribus_file.png "Selecting text in Scribus"

|

||||

[15]: /file/402546

|

||||

[16]: https://opensource.com/sites/default/files/uploads/6-deleted_text_in_scribus.png "Both pages of text deleted in Scribus"

|

||||

[17]: /file/402551

|

||||

[18]: https://opensource.com/sites/default/files/uploads/7-command-z_in_scribus.png "Undo delete restored the text, but with bad formatting."

|

||||

[19]: /file/402556

|

||||

[20]: https://opensource.com/sites/default/files/uploads/8-indesign_error_message.png "InDesign error message"

|

||||

[21]: /file/402561

|

||||

[22]: https://opensource.com/sites/default/files/uploads/9-scribus_edited_in_gedit_on_linux.png "Editing Scribus file in Gedit"

|

||||

[23]: /file/402566

|

||||

[24]: https://opensource.com/sites/default/files/uploads/10-scribus_opens_after_gedit_changes.png "Result of the Gedit edit in Scribus"

|

||||

[25]: /file/402571

|

||||

[26]: https://opensource.com/sites/default/files/uploads/11-large_pdf_size.png "Huge PDF file"

|

||||

|

||||

@ -0,0 +1,74 @@

|

||||

为什么 Arch Linux 如此“难弄”又有何优劣?

|

||||

======

|

||||

|

||||

|

||||

|

||||

[Arch Linux][1] 于 **2002** 年发布,由 **Aaron Grifin** 领头,是当下最热门的 Linux 发行版之一。从设计上说,Arch Linux 试图给用户提供简单、最小化且优雅的体验,但它的目标用户群可不是怕事儿多的用户。Arch 鼓励参与社区建设,并且从设计上期待用户自己有学习操作系统的能力。

|

||||

|

||||

很多 Linux 老鸟对于 **Arch Linux** 会更了解,但电脑前的你可能只是刚开始打算把 Arch 当作日常操作系统来使用。虽然我也不是权威人士,但下面几点优劣是我认为你总会在使用中慢慢发现的。

|

||||

|

||||

### 1、优点: 定制属于你自己的 Linux 操作系统

|

||||

|

||||

大多数热门的 Linux 发行版(比如 **Ubuntu** 和 **Fedora**)很像一般我们会看到的预装系统,和 **Windows** 或者 **MacOS** 一样。但 Arch 则会更鼓励你去把操作系统配置的符合你的品味。如果你能顺利做到这点的话,你会得到一个每一个细节都如你所想的操作系统。

|

||||

|

||||

#### 缺点: 安装过程让人头疼

|

||||

|

||||

[Arch Linux 的安装 ][2] 别辟蹊径——因为你要花些时间来微调你的操作系统。你会在过程中了解到不少终端命令和组成你系统的各种软件模块——毕竟你要自己挑选安装什么。当然,你也知道这个过程少不了阅读一些文档/教程。

|

||||

|

||||

### 2、优点: 没有预装垃圾

|

||||

|

||||

鉴于 **Arch** 允许你在安装时选择你想要的系统部件,你再也不用烦恼怎么处理你不想要的一堆预装软件。作为对比,**Ubuntu** 会预装大量的软件和桌面应用——很多你不需要、甚至卸载之前都不知道它们存在的东西。

|

||||

|

||||

总而言之,**Arch Linux* 能省去大量的系统安装后时间。**Pacman**,是 Arch Linux 默认使用的优秀包管理组件。或者你也可以选择 [Pamac][3] 作为替代。

|

||||

|

||||

### 3、优点: 无需繁琐系统升级

|

||||

|

||||

**Arch Linux** 采用滚动升级模型,简直妙极了。这意味着你不需要操心升级了。一旦你用上了 Arch,持续的更新体验会让你和一会儿一个版本的升级说再见。只要你记得‘滚’更新(Arch 用语),你就一直会使用最新的软件包们。

|

||||

|

||||

#### 缺点: 一些升级可能会滚坏你的系统

|

||||

|

||||

虽然升级过程是完全连续的,你有时得留意一下你在更新什么。没人能知道所有软件的细节配置,也没人能替你来测试你的情况。所以如果你盲目更新,有时候你会滚坏你的系统。(LCTT 译注:别担心,你可以‘滚’回来 ;D )

|

||||

|

||||

### 4、优点: Arch 有一个社区基因

|

||||

|

||||

所有 Linux 用户通常有一个共同点:对独立自由的追求。虽然大多数 Linux 发行版和公司企业等挂钩极少,但也并非没有。比如 基于 **Ubuntu** 的衍生版本们不得不受到 Canonical 公司决策的影响。

|

||||

|

||||

如果你想让你的电脑更独立,那么 Arch Linux 是你的伙伴。不像大多数操作系统,Arch 完全没有商业集团的影响,完全由社区驱动。

|

||||

|

||||

### 5、优点: Arch Wiki 无敌

|

||||

|

||||

[Arch Wiki][4] 是一个无敌文档库,几乎涵盖了所有关于安装和维护 Arch 以及关于操作系统本身的知识。Arch Wiki 最厉害的一点可能是,不管你在用什么发行版,你多多少少可能都在 Arch Wiki 的页面里找到有用信息。这是因为 Arch 用户也会用别的发行版用户会用的东西,所以一些技巧和知识得以泛化。

|

||||

|

||||

### 6、优点: 别忘了 Arch 用户软件库 (AUR)

|

||||

|

||||

<ruby>[Arch 用户软件库][5]<rt>Arch User Repository</rt></ruby> (AUR)是一个来自社区的超大软件仓库。如果你找了一个还没有 Arch 的官方仓库里出现的软件,那你肯定能在 AUR 里找到社区为你准备好的包。

|

||||

|

||||

AUR 是由用户自发编译和维护的。Arch 用户也可以给每个包投票,这样后来者就能找到最有用的那些软件包了。

|

||||

|

||||

#### 最后: Arch Linux 适合你吗?

|

||||

|

||||

**Arch Linux** 优点多于缺点,也有很多优缺点我无法在此一一叙述。安装过程很长,对非 Linux 用户来说也可能偏有些技术,但只要你投入一些时间和善用 Wiki,你肯定能迈过这道坎。

|

||||

|

||||

**Arch Linux** 是一个非常优秀的发行版——尽管它有一些复杂性。同时它也很受那些知道自己想要什么的用户的欢迎——只要你肯做点功课,有些耐心。

|

||||

|

||||

当你从零开始搭建完 Arch 的时候,你会掌握很多 GNU/Linux 的内部细节,也再也不会对你的电脑内部运作方式一无所知了。

|

||||

|

||||

欢迎读者们在评论区讨论你使用 Arch Linux 的优缺点?以及你曾经遇到过的一些挑战。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.fossmint.com/why-is-arch-linux-so-challenging-what-are-pros-cons/

|

||||

|

||||

作者:[Martins D. Okoi][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[Moelf](https://github.com/Moelf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.fossmint.com/author/dillivine/

|

||||

[1]:https://www.archlinux.org/

|

||||

[2]:https://www.tecmint.com/arch-linux-installation-and-configuration-guide/

|

||||

[3]:https://www.fossmint.com/pamac-arch-linux-gui-package-manager/

|

||||

[4]:https://wiki.archlinux.org/

|

||||

[5]:https://wiki.archlinux.org/index.php/Arch_User_Repository

|

||||

322

published/20180705 Testing Node.js in 2018.md

Normal file

322

published/20180705 Testing Node.js in 2018.md

Normal file

@ -0,0 +1,322 @@

|

||||

测试 Node.js,2018

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

超过 3 亿用户正在使用 [Stream][4]。这些用户全都依赖我们的框架,而我们十分擅长测试要放到生产环境中的任何东西。我们大部分的代码库是用 Go 语言编写的,剩下的部分则是用 Python 编写。

|

||||

|

||||

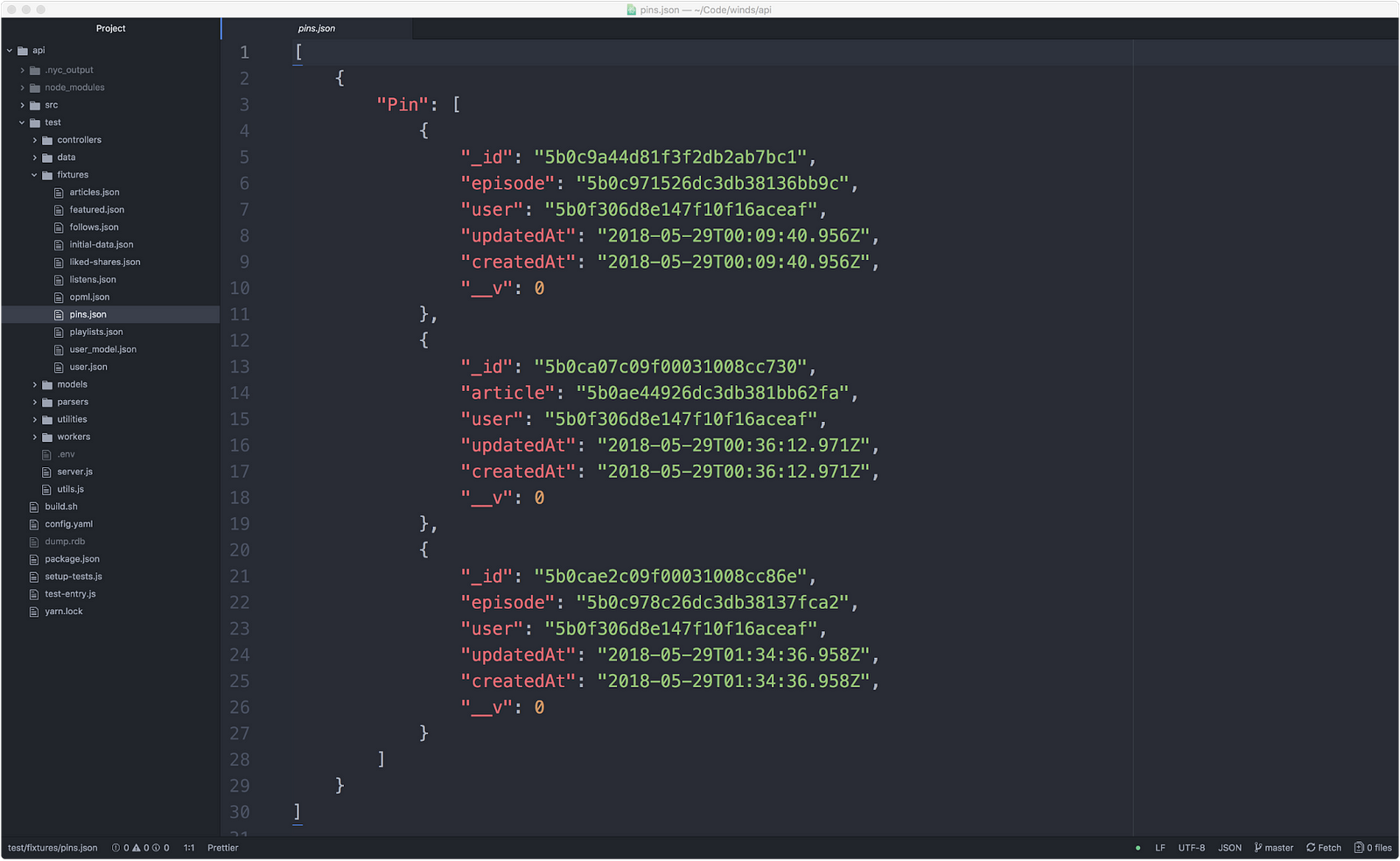

我们最新的展示应用,[Winds 2.0][5],是用 Node.js 构建的,很快我们就了解到测试 Go 和 Python 的常规方法并不适合它。而且,创造一个好的测试套件需要用 Node.js 做很多额外的工作,因为我们正在使用的框架没有提供任何内建的测试功能。

|

||||

|

||||

不论你用什么语言,要构建完好的测试框架可能都非常复杂。本文我们会展示 Node.js 测试过程中的困难部分,以及我们在 Winds 2.0 中用到的各种工具,并且在你要编写下一个测试集合时为你指明正确的方向。

|

||||

|

||||

### 为什么测试如此重要

|

||||

|

||||

我们都向生产环境中推送过糟糕的提交,并且遭受了其后果。碰到这样的情况不是好事。编写一个稳固的测试套件不仅仅是一个明智的检测,而且它还让你能够完全地重构代码,并自信重构之后的代码仍然可以正常运行。这在你刚刚开始编写代码的时候尤为重要。

|

||||

|

||||

如果你是与团队共事,达到测试覆盖率极其重要。没有它,团队中的其他开发者几乎不可能知道他们所做的工作是否导致重大变动(或破坏)。

|

||||

|

||||

编写测试同时会促进你和你的队友把代码分割成更小的片段。这让别人去理解你的代码和修改 bug 变得容易多了。产品收益变得更大,因为你能更早的发现 bug。

|

||||

|

||||

最后,没有测试,你的基本代码还不如一堆纸片。基本不能保证你的代码是稳定的。

|

||||

|

||||

### 困难的部分

|

||||

|

||||

在我看来,我们在 Winds 中遇到的大多数测试问题是 Node.js 中特有的。它的生态系统一直在变大。例如,如果你用的是 macOS,运行 `brew upgrade`(安装了 homebrew),你看到你一个新版本的 Node.js 的概率非常高。由于 Node.js 迭代频繁,相应的库也紧随其后,想要与最新的库保持同步非常困难。

|

||||

|

||||

以下是一些马上映入脑海的痛点:

|

||||

|

||||

1. 在 Node.js 中进行测试是非常主观而又不主观的。人们对于如何构建一个测试架构以及如何检验成功有不同的看法。沮丧的是还没有一个黄金准则规定你应该如何进行测试。

|

||||

2. 有一堆框架能够用在你的应用里。但是它们一般都很精简,没有完好的配置或者启动过程。这会导致非常常见的副作用,而且还很难检测到;所以你最终会想要从零开始编写自己的<ruby>测试执行平台<rt>test runner</rt></ruby>测试执行平台。

|

||||

3. 几乎可以保证你 _需要_ 编写自己的测试执行平台(马上就会讲到这一节)。

|

||||

|

||||

以上列出的情况不是理想的,而且这是 Node.js 社区应该尽管处理的事情。如果其他语言解决了这些问题,我认为也是作为广泛使用的语言, Node.js 解决这些问题的时候。

|

||||

|

||||

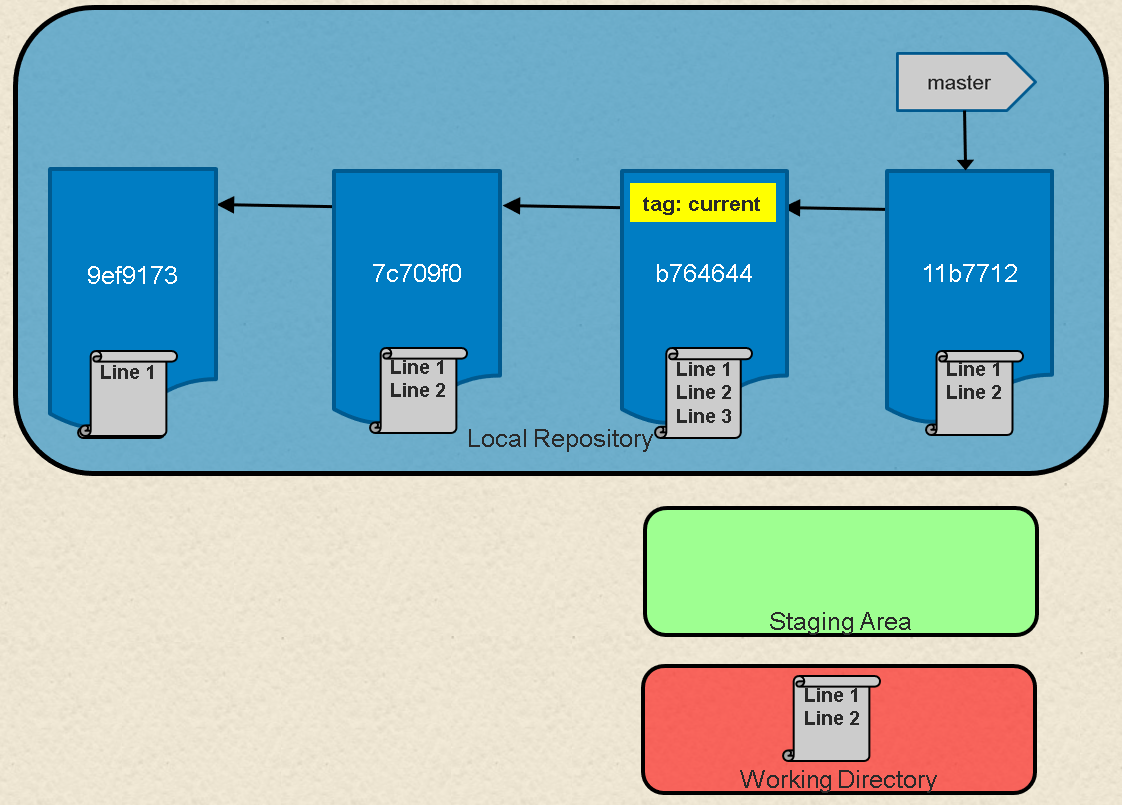

### 编写你自己的测试执行平台

|

||||

|

||||

所以……你可能会好奇<rt>test runner</rt></ruby>测试执行平台 _是_ 什么,说实话,它并不复杂。测试执行平台是测试套件中最高层的容器。它允许你指定全局配置和环境,还可以导入配置。可能有人觉得做这个很简单,对吧?别那么快下结论。

|

||||

|

||||

我们所了解到的是,尽管现在就有足够多的测试框架了,但没有一个测试框架为 Node.js 提供了构建你的测试执行平台的标准方式。不幸的是,这需要开发者来完成。这里有个关于测试执行平台的需求的简单总结:

|

||||

|

||||

* 能够加载不同的配置(比如,本地的、测试的、开发的),并确保你 _永远不会_ 加载一个生产环境的配置 —— 你能想象出那样会出什么问题。

|

||||

* 播种数据库——产生用于测试的数据。必须要支持多种数据库,不论是 MySQL、PostgreSQL、MongoDB 或者其它任何一个数据库。

|

||||

* 能够加载配置(带有用于开发环境测试的播种数据的文件)。

|

||||

|

||||

开发 Winds 的时候,我们选择 Mocha 作为测试执行平台。Mocha 提供了简单并且可编程的方式,通过命令行工具(整合了 Babel)来运行 ES6 代码的测试。

|

||||

|

||||

为了进行测试,我们注册了自己的 Babel 模块引导器。这为我们提供了更细的粒度,更强大的控制,在 Babel 覆盖掉 Node.js 模块加载过程前,对导入的模块进行控制,让我们有机会在所有测试运行前对模块进行模拟。

|

||||

|

||||

此外,我们还使用了 Mocha 的测试执行平台特性,预先把特定的请求赋给 HTTP 管理器。我们这么做是因为常规的初始化代码在测试中不会运行(服务器交互是用 Chai HTTP 插件模拟的),还要做一些安全性检查来确保我们不会连接到生产环境数据库。

|

||||

|

||||

尽管这不是测试执行平台的一部分,有一个<ruby>配置<rt>fixture</rt></ruby>加载器也是我们测试套件中的重要的一部分。我们试验过已有的解决方案;然而,我们最终决定编写自己的助手程序,这样它就能贴合我们的需求。根据我们的解决方案,在生成或手动编写配置时,通过遵循简单专有的协议,我们就能加载数据依赖很复杂的配置。

|

||||

|

||||

### Winds 中用到的工具

|

||||

|

||||

尽管过程很冗长,我们还是能够合理使用框架和工具,使得针对后台 API 进行的适当测试变成现实。这里是我们选择使用的工具:

|

||||

|

||||

#### Mocha

|

||||

|

||||

[Mocha][6],被称为 “运行在 Node.js 上的特性丰富的测试框架”,是我们用于该任务的首选工具。拥有超过 15K 的星标,很多支持者和贡献者,我们知道对于这种任务,这是正确的框架。

|

||||

|

||||

#### Chai

|

||||

|

||||

然后是我们的断言库。我们选择使用传统方法,也就是最适合配合 Mocha 使用的 —— [Chai][7]。Chai 是一个用于 Node.js,适合 BDD 和 TDD 模式的断言库。拥有简单的 API,Chai 很容易整合进我们的应用,让我们能够轻松地断言出我们 _期望_ 从 Winds API 中返回的应该是什么。最棒的地方在于,用 Chai 编写测试让人觉得很自然。这是一个简短的例子:

|

||||

|

||||

```

|

||||

describe('retrieve user', () => {

|

||||

let user;

|

||||

|

||||

before(async () => {

|

||||

await loadFixture('user');

|

||||

user = await User.findOne({email: authUser.email});

|

||||

expect(user).to.not.be.null;

|

||||

});

|

||||

|

||||

after(async () => {

|

||||

await User.remove().exec();

|

||||

});

|

||||

|

||||

describe('valid request', () => {

|

||||

it('should return 200 and the user resource, including the email field, when retrieving the authenticated user', async () => {

|

||||

const response = await withLogin(request(api).get(`/users/${user._id}`), authUser);

|

||||

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body._id).to.equal(user._id.toString());

|

||||

});

|

||||

|

||||

it('should return 200 and the user resource, excluding the email field, when retrieving another user', async () => {

|

||||

const anotherUser = await User.findOne({email: 'another_user@email.com'});

|

||||

|

||||

const response = await withLogin(request(api).get(`/users/${anotherUser.id}`), authUser);

|

||||

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body._id).to.equal(anotherUser._id.toString());

|

||||

expect(response.body).to.not.have.an('email');

|

||||

});

|

||||

|

||||

});

|

||||

|

||||

describe('invalid requests', () => {

|

||||

|

||||

it('should return 404 if requested user does not exist', async () => {

|

||||

const nonExistingId = '5b10e1c601e9b8702ccfb974';

|

||||

expect(await User.findOne({_id: nonExistingId})).to.be.null;

|

||||

|

||||

const response = await withLogin(request(api).get(`/users/${nonExistingId}`), authUser);

|

||||

expect(response).to.have.status(404);

|

||||

});

|

||||

});

|

||||

|

||||

});

|

||||

```

|

||||

|

||||

#### Sinon

|

||||

|

||||

拥有与任何单元测试框架相适应的能力,[Sinon][8] 是模拟库的首选。而且,精简安装带来的超级整洁的整合,让 Sinon 把模拟请求变成了简单而轻松的过程。它的网站有极其良好的用户体验,并且提供简单的步骤,供你将 Sinon 整合进自己的测试框架中。

|

||||

|

||||

#### Nock

|

||||

|

||||

对于所有外部的 HTTP 请求,我们使用健壮的 HTTP 模拟库 [nock][9],在你要和第三方 API 交互时非常易用(比如说 [Stream 的 REST API][10])。它做的事情非常酷炫,这就是我们喜欢它的原因,除此之外关于这个精妙的库没有什么要多说的了。这是我们的速成示例,调用我们在 Stream 引擎中提供的 [personalization][11]:

|

||||

|

||||

```

|

||||

nock(config.stream.baseUrl)

|

||||

.get(/winds_article_recommendations/)

|

||||

.reply(200, { results: [{foreign_id:`article:${article.id}`}] });

|

||||

```

|

||||

|

||||

#### Mock-require

|

||||

|

||||

[mock-require][12] 库允许依赖外部代码。用一行代码,你就可以替换一个模块,并且当代码尝试导入这个库时,将会产生模拟请求。这是一个小巧但稳定的库,我们是它的超级粉丝。

|

||||

|

||||

#### Istanbul

|

||||

|

||||

[Istanbul][13] 是 JavaScript 代码覆盖工具,在运行测试的时候,通过模块钩子自动添加覆盖率,可以计算语句,行数,函数和分支覆盖率。尽管我们有相似功能的 CodeCov(见下一节),进行本地测试时,这仍然是一个很棒的工具。

|

||||

|

||||

### 最终结果 — 运行测试

|

||||

|

||||

_有了这些库,还有之前提过的测试执行平台,现在让我们看看什么是完整的测试(你可以在 [_这里_][14] 看看我们完整的测试套件):_

|

||||

|

||||

```

|

||||

import nock from 'nock';

|

||||

import { expect, request } from 'chai';

|

||||

|

||||

import api from '../../src/server';

|

||||

import Article from '../../src/models/article';

|

||||

import config from '../../src/config';

|

||||

import { dropDBs, loadFixture, withLogin } from '../utils.js';

|

||||

|

||||

describe('Article controller', () => {

|

||||

let article;

|

||||

|

||||

before(async () => {

|

||||

await dropDBs();

|

||||

await loadFixture('initial-data', 'articles');

|

||||

article = await Article.findOne({});

|

||||

expect(article).to.not.be.null;

|

||||

expect(article.rss).to.not.be.null;

|

||||

});

|

||||

|

||||

describe('get', () => {

|

||||

it('should return the right article via /articles/:articleId', async () => {

|

||||

let response = await withLogin(request(api).get(`/articles/${article.id}`));

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('get parsed article', () => {

|

||||

it('should return the parsed version of the article', async () => {

|

||||

const response = await withLogin(

|

||||

request(api).get(`/articles/${article.id}`).query({ type: 'parsed' })

|

||||

);

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('list', () => {

|

||||

it('should return the list of articles', async () => {

|

||||

let response = await withLogin(request(api).get('/articles'));

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('list from personalization', () => {

|

||||

after(function () {

|

||||

nock.cleanAll();

|

||||

});

|

||||

|

||||

it('should return the list of articles', async () => {

|

||||

nock(config.stream.baseUrl)

|

||||

.get(/winds_article_recommendations/)

|

||||

.reply(200, { results: [{foreign_id:`article:${article.id}`}] });

|

||||

|

||||

const response = await withLogin(

|

||||

request(api).get('/articles').query({

|

||||

type: 'recommended',

|

||||

})

|

||||

);

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body.length).to.be.at.least(1);

|

||||

expect(response.body[0].url).to.eq(article.url);

|

||||

});

|

||||

});

|

||||

});

|

||||

```

|

||||

|

||||

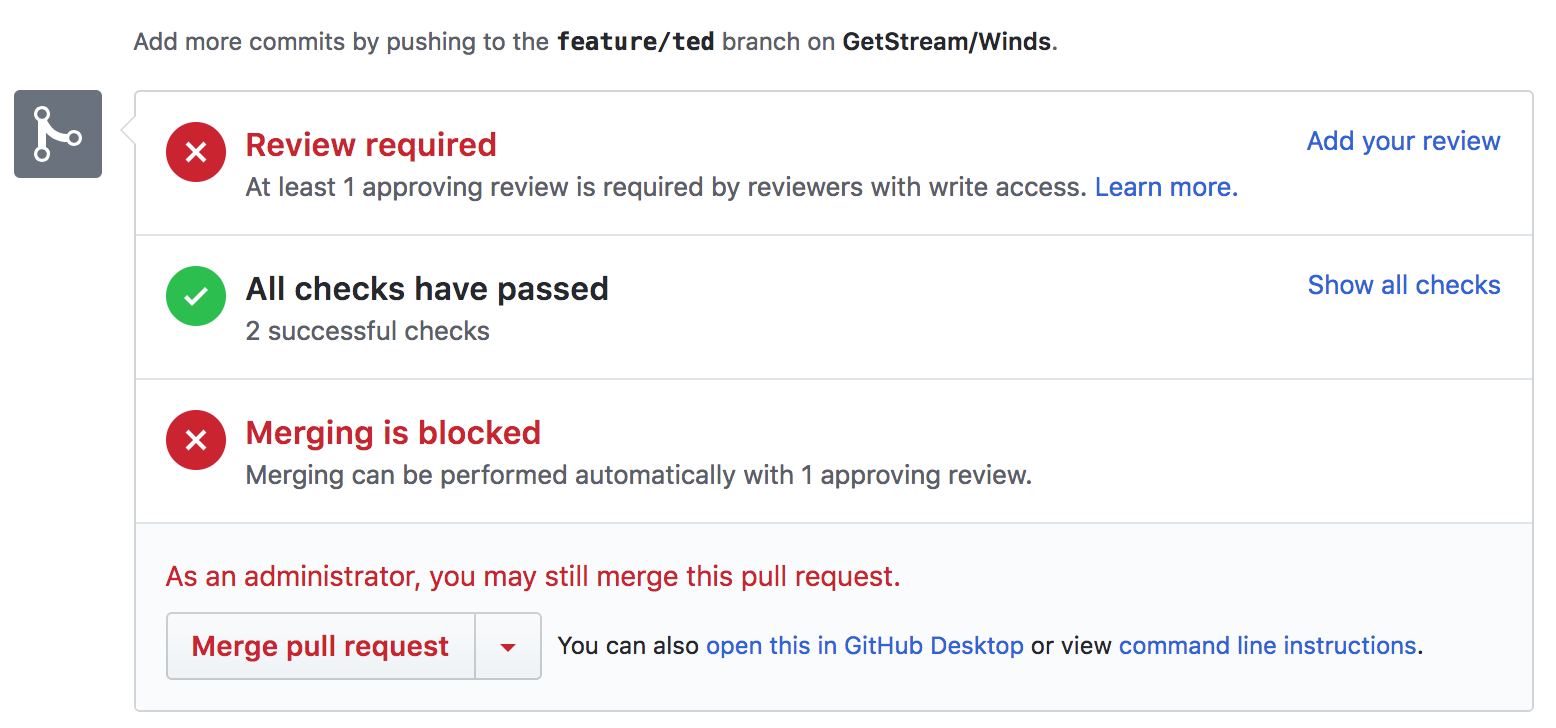

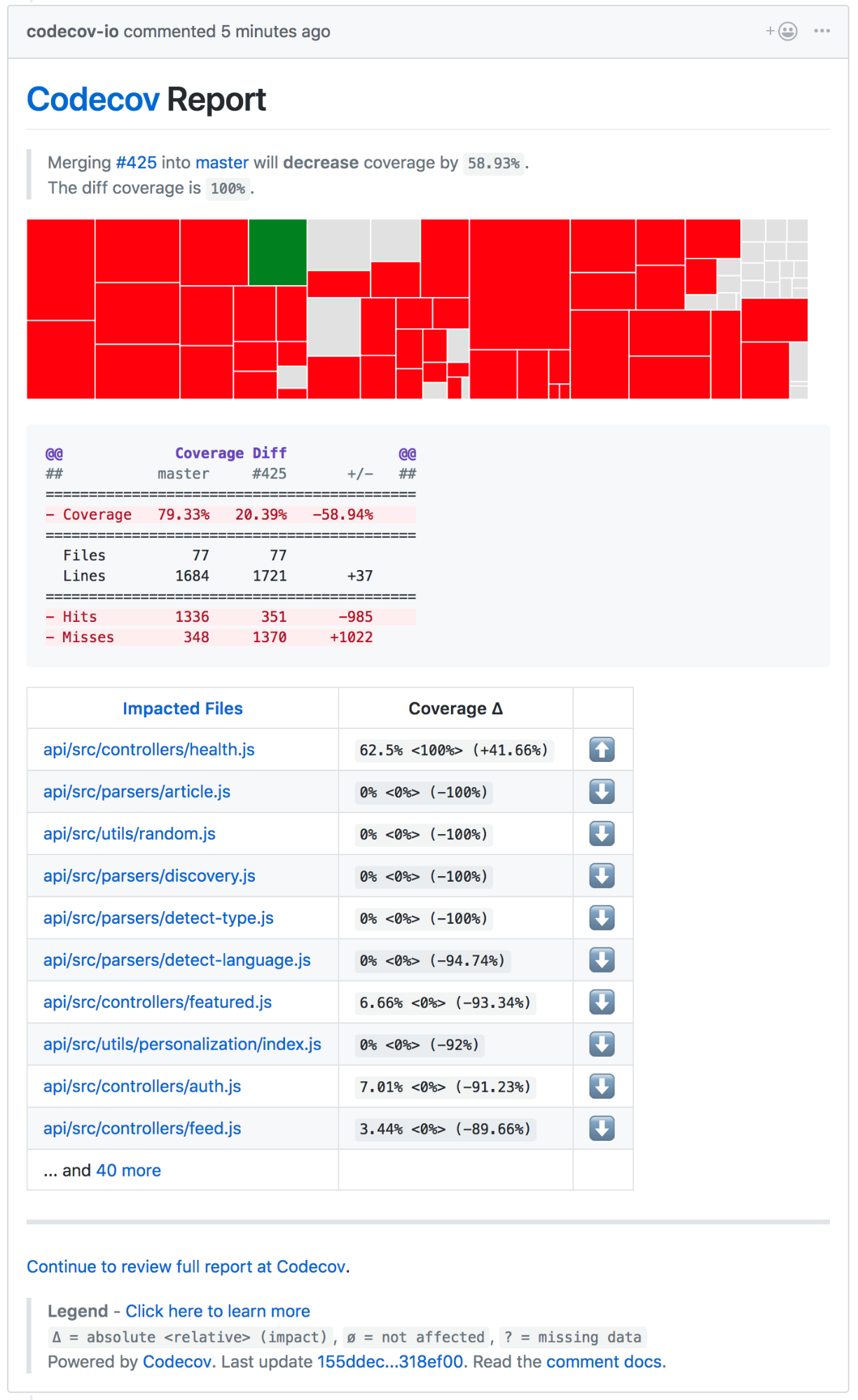

### 持续集成

|

||||

|

||||

有很多可用的持续集成服务,但我们钟爱 [Travis CI][15],因为他们和我们一样喜爱开源环境。考虑到 Winds 是开源的,它再合适不过了。

|

||||

|

||||

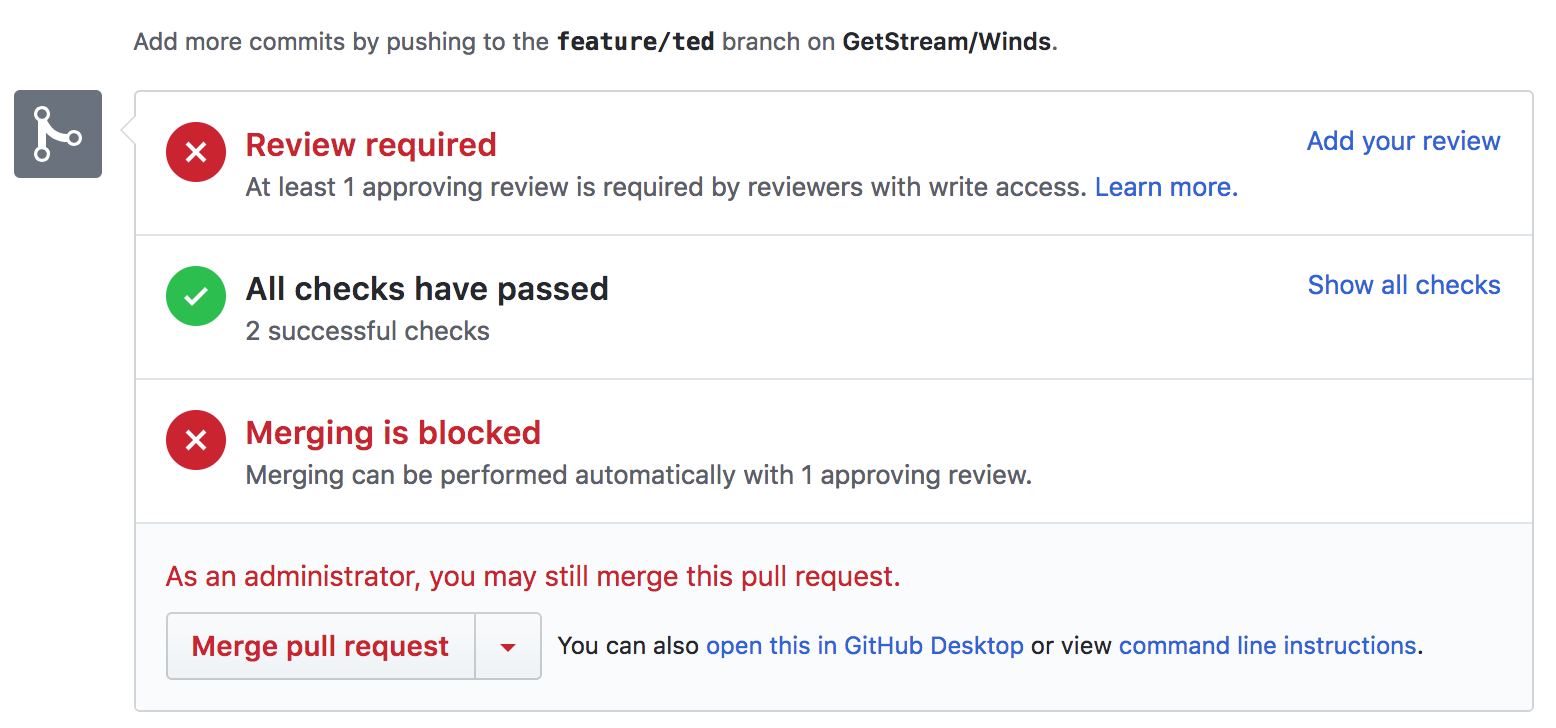

我们的集成非常简单 —— 我们用 [.travis.yml] 文件设置环境,通过简单的 [npm][17] 命令进行测试。测试覆盖率反馈给 GitHub,在 GitHub 上我们将清楚地看出我们最新的代码或者 PR 是不是通过了测试。GitHub 集成很棒,因为它可以自动查询 Travis CI 获取结果。以下是一个在 GitHub 上看到 (经过了测试的) PR 的简单截图:

|

||||

|

||||

|

||||

|

||||

除了 Travis CI,我们还用到了叫做 [CodeCov][18] 的工具。CodeCov 和 [Istanbul] 很像,但它是个可视化的工具,方便我们查看代码覆盖率、文件变动、行数变化,还有其他各种小玩意儿。尽管不用 CodeCov 也可以可视化数据,但把所有东西囊括在一个地方也很不错。

|

||||

|

||||

|

||||

|

||||

### 我们学到了什么

|

||||

|

||||

在开发我们的测试套件的整个过程中,我们学到了很多东西。开发时没有所谓“正确”的方法,我们决定开始创造自己的测试流程,通过理清楚可用的库,找到那些足够有用的东西添加到我们的工具箱中。

|

||||

|

||||

最终我们学到的是,在 Node.js 中进行测试不是听上去那么简单。还好,随着 Node.js 持续完善,社区将会聚集力量,构建一个坚固稳健的库,可以用“正确”的方式处理所有和测试相关的东西。

|

||||

|

||||

但在那时到来之前,我们还会接着用自己的测试套件,它开源在 [Winds 的 GitHub 仓库][20]。

|

||||

|

||||

### 局限

|

||||

|

||||

#### 创建配置没有简单的方法

|

||||

|

||||

有的框架和语言,就如 Python 中的 Django,有简单的方式来创建配置。比如,你可以使用下面这些 Django 命令,把数据导出到文件中来自动化配置的创建过程:

|

||||

|

||||

以下命令会把整个数据库导出到 `db.json` 文件中:

|

||||

|

||||

```

|

||||

./manage.py dumpdata > db.json

|

||||

```

|

||||

|

||||

以下命令仅导出 django 中 `admin.logentry` 表里的内容:

|

||||

|

||||

```

|

||||

./manage.py dumpdata admin.logentry > logentry.json

|

||||

```

|

||||

|

||||

以下命令会导出 `auth.user` 表中的内容:

|

||||

|

||||

```

|

||||

./manage.py dumpdata auth.user > user.json

|

||||

```

|

||||

|

||||

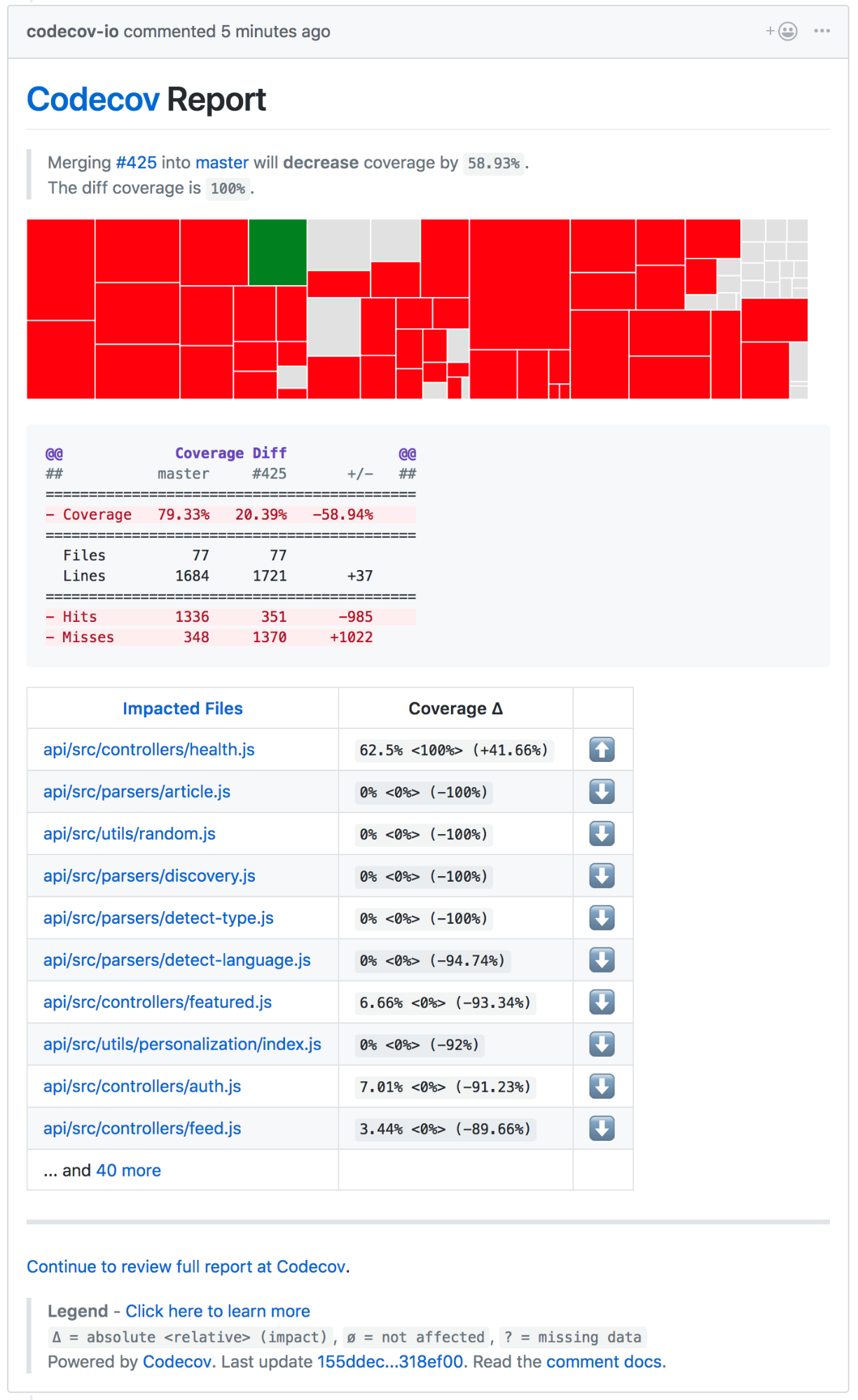

Node.js 里面没有创建配置的简单方式。我们最后做的事情是用 MongoDB Compass 工具导出数据到 JSON 中。这生成了不错的配置,如下图(但是,这是个乏味的过程,肯定会出错):

|

||||

|

||||

|

||||

|

||||

#### 使用 Babel,模拟模块和 Mocha 测试执行平台时,模块加载不直观

|

||||

|

||||

为了支持多种 node 版本,和获取 JavaScript 标准的最新附件,我们使用 Babel 把 ES6 代码转换成 ES5。Node.js 模块系统基于 CommonJS 标准,而 ES6 模块系统中有不同的语义。

|

||||

|

||||

Babel 在 Node.js 模块系统的顶层模拟 ES6 模块语义,但由于我们要使用 mock-require 来介入模块的加载,所以我们经历了罕见的怪异的模块加载过程,这看上去很不直观,而且能导致在整个代码中,导入的、初始化的和使用的模块有不同的版本。这使测试时的模拟过程和全局状态管理复杂化了。

|

||||

|

||||

#### 在使用 ES6 模块时声明的函数,模块内部的函数,都无法模拟

|

||||

|

||||

当一个模块导出多个函数,其中一个函数调用了其他的函数,就不可能模拟使用在模块内部的函数。原因在于当你引用一个 ES6 模块时,你得到的引用集合和模块内部的是不同的。任何重新绑定引用,将其指向新值的尝试都无法真正影响模块内部的函数,内部函数仍然使用的是原始的函数。

|

||||

|

||||

### 最后的思考

|

||||

|

||||

测试 Node.js 应用是复杂的过程,因为它的生态系统总在发展。掌握最新和最好的工具很重要,这样你就不会掉队了。

|

||||

|

||||

如今有很多方式获取 JavaScript 相关的新闻,导致与时俱进很难。关注邮件新闻刊物如 [JavaScript Weekly][21] 和 [Node Weekly][22] 是良好的开始。还有,关注一些 reddit 子模块如 [/r/node][23] 也不错。如果你喜欢了解最新的趋势,[State of JS][24] 在测试领域帮助开发者可视化趋势方面就做的很好。

|

||||

|

||||

最后,这里是一些我喜欢的博客,我经常在这上面发文章:

|

||||

|

||||

* [Hacker Noon][1]

|

||||

* [Free Code Camp][2]

|

||||

* [Bits and Pieces][3]

|

||||

|

||||

觉得我遗漏了某些重要的东西?在评论区或者 Twitter [@NickParsons][25] 让我知道。

|

||||

|

||||

还有,如果你想要了解 Stream,我们的网站上有很棒的 5 分钟教程。点 [这里][26] 进行查看。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Nick Parsons

|

||||

|

||||

Dreamer. Doer. Engineer. Developer Evangelist https://getstream.io.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://hackernoon.com/testing-node-js-in-2018-10a04dd77391

|

||||

|

||||

作者:[Nick Parsons][a]

|

||||

译者:[BriFuture](https://github.com/BriFuture)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://hackernoon.com/@nparsons08?source=post_header_lockup

|

||||

[1]:https://hackernoon.com/

|

||||

[2]:https://medium.freecodecamp.org/

|

||||

[3]:https://blog.bitsrc.io/

|

||||

[4]:https://getstream.io/

|

||||

[5]:https://getstream.io/winds

|

||||

[6]:https://github.com/mochajs/mocha

|

||||

[7]:http://www.chaijs.com/

|

||||

[8]:http://sinonjs.org/

|

||||

[9]:https://github.com/node-nock/nock

|

||||

[10]:https://getstream.io/docs_rest/

|

||||

[11]:https://getstream.io/personalization

|

||||

[12]:https://github.com/boblauer/mock-require

|

||||

[13]:https://github.com/gotwarlost/istanbul

|

||||

[14]:https://github.com/GetStream/Winds/tree/master/api/test

|

||||

[15]:https://travis-ci.org/

|

||||

[16]:https://github.com/GetStream/Winds/blob/master/.travis.yml

|

||||

[17]:https://www.npmjs.com/

|

||||

[18]:https://codecov.io/#features

|

||||

[19]:https://github.com/gotwarlost/istanbul

|

||||

[20]:https://github.com/GetStream/Winds/tree/master/api/test

|

||||

[21]:https://javascriptweekly.com/

|

||||

[22]:https://nodeweekly.com/

|

||||

[23]:https://www.reddit.com/r/node/

|

||||

[24]:https://stateofjs.com/2017/testing/results/

|

||||

[25]:https://twitter.com/@nickparsons

|

||||

[26]:https://getstream.io/try-the-api

|

||||

@ -1,6 +1,8 @@

|

||||

系统管理员的一个网络管理指南

|

||||

面向系统管理员的网络管理指南

|

||||

======

|

||||

|

||||

> 一个使管理服务器和网络更轻松的 Linux 工具和命令的参考列表。

|

||||

|

||||

|

||||

|

||||

如果你是一位系统管理员,那么你的日常工作应该包括管理服务器和数据中心的网络。以下的 Linux 实用工具和命令 —— 从基础的到高级的 —— 将帮你更轻松地管理你的网络。

|

||||

@ -16,8 +18,6 @@

|

||||

* IPv4: `ping <ip address>/<fqdn>`

|

||||

* IPv6: `ping6 <ip address>/<fqdn>`

|

||||

|

||||

|

||||

|

||||

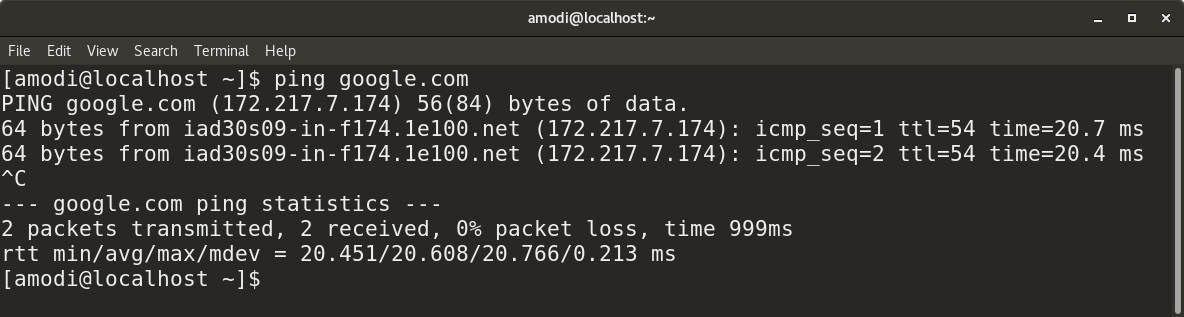

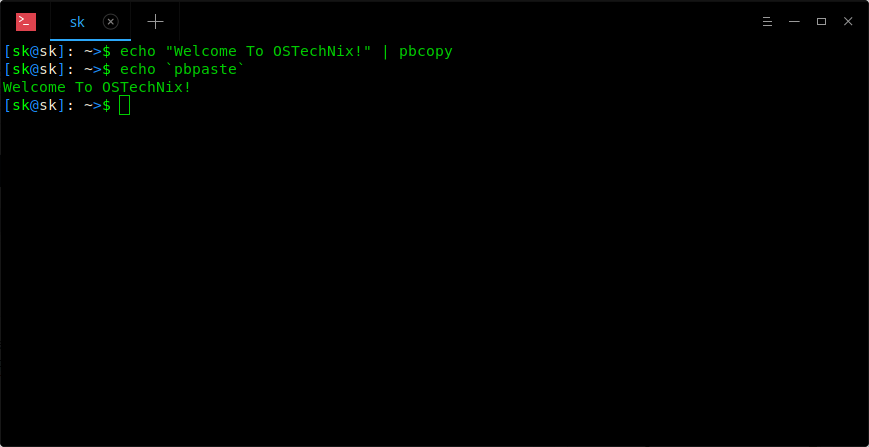

你也可以使用 `ping` 去解析出网站所对应的 IP 地址,如下图所示:

|

||||

|

||||

|

||||

@ -32,16 +32,12 @@

|

||||

|

||||

* `traceroute <ip address>/<fqdn>`

|

||||

|

||||

|

||||

|

||||

### Telnet

|

||||

|

||||

**语法:**

|

||||

|

||||

* `telnet <ip address>/<fqdn>` 是用于 [telnet][3] 进入任何支持该协议的服务器。

|

||||

|

||||

|

||||

|

||||

### Netstat

|

||||

|

||||

这个网络统计(`netstat`)实用工具是用于去分析解决网络连接问题和检查接口/端口统计数据、路由表、协议状态等等的。它是任何管理员都应该必须掌握的工具。

|

||||

@ -69,20 +65,14 @@

|

||||

**语法:**

|

||||

|

||||

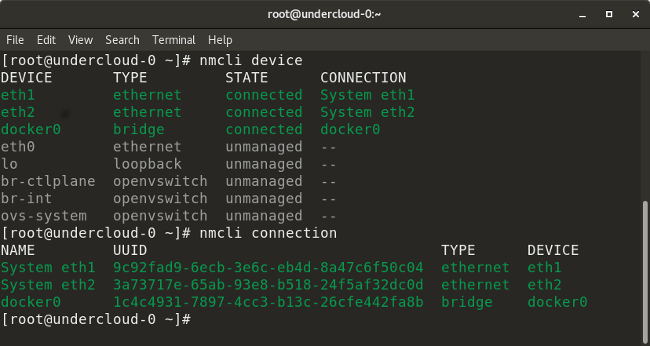

* `nmcli device` 列出网络上的所有设备。

|

||||

|

||||

* `nmcli device show <interface>` 显示指定接口的网络相关的详细情况。

|

||||

|

||||

* `nmcli connection` 检查设备的连接情况。

|

||||

|

||||

* `nmcli connection down <interface>` 关闭指定接口。

|

||||

|

||||

* `nmcli connection up <interface>` 打开指定接口。

|

||||

|

||||

* `nmcli con add type vlan con-name <connection-name> dev <interface> id <vlan-number> ipv4 <ip/cidr> gw4 <gateway-ip>` 在特定的接口上使用指定的 VLAN 号添加一个虚拟局域网(VLAN)接口、IP 地址、和网关。

|

||||

|

||||

|

||||

|

||||

|

||||

### 路由

|

||||

|

||||

检查和配置路由的命令很多。下面是其中一些比较有用的:

|

||||

@ -101,13 +91,13 @@

|

||||

|

||||

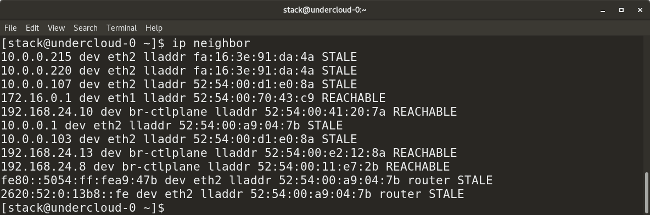

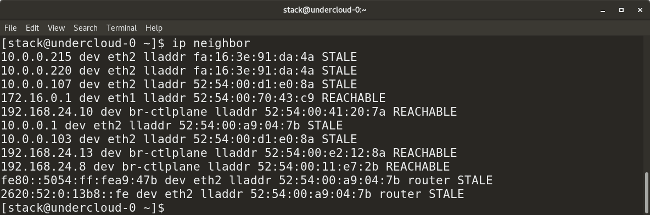

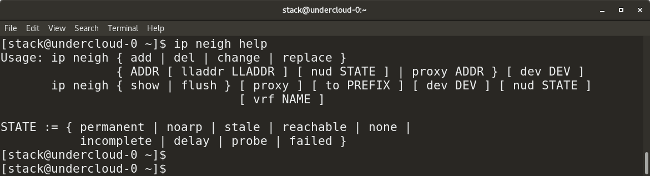

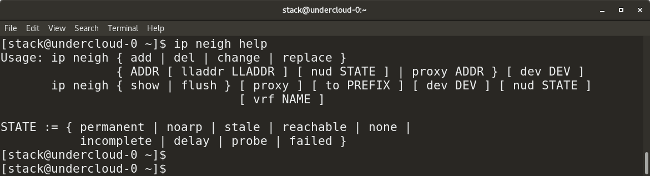

* `ip neighbor` 显示当前的邻接表和用于去添加、改变、或删除新的邻居。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

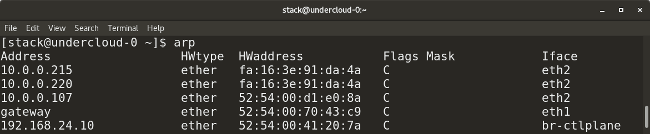

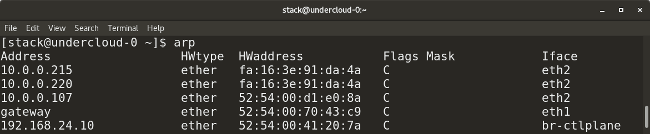

* `arp` (它的全称是 “地址解析协议”)类似于 `ip neighbor`。`arp` 映射一个系统的 IP 地址到它相应的 MAC(介质访问控制)地址。

|

||||

|

||||

|

||||

|

||||

|

||||

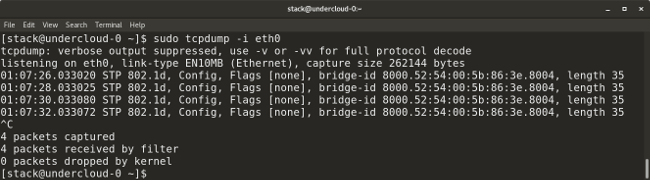

### Tcpdump 和 Wireshark

|

||||

|

||||

@ -117,7 +107,7 @@ Linux 提供了许多包捕获工具,比如 `tcpdump`、`wireshark`、`tshark`

|

||||

|

||||

* `tcpdump -i <interface-name>` 显示指定接口上实时通过的数据包。通过在命令中添加一个 `-w` 标志和输出文件的名字,可以将数据包保存到一个文件中。例如:`tcpdump -w <output-file.> -i <interface-name>`。

|

||||

|

||||

|

||||

|

||||

|

||||

* `tcpdump -i <interface> src <source-ip>` 从指定的源 IP 地址上捕获数据包。

|

||||

* `tcpdump -i <interface> dst <destination-ip>` 从指定的目标 IP 地址上捕获数据包。

|

||||

@ -135,22 +125,16 @@ Linux 提供了许多包捕获工具,比如 `tcpdump`、`wireshark`、`tshark`

|

||||

* `iptables -L` 列出所有已存在的 `iptables` 规则。

|

||||

* `iptables -F` 删除所有已存在的规则。

|

||||

|

||||

|

||||

|

||||

下列命令允许流量从指定端口到指定接口:

|

||||

|

||||

* `iptables -A INPUT -i <interface> -p tcp –dport <port-number> -m state –state NEW,ESTABLISHED -j ACCEPT`

|

||||

* `iptables -A OUTPUT -o <interface> -p tcp -sport <port-number> -m state – state ESTABLISHED -j ACCEPT`

|

||||

|

||||

|

||||

|

||||

下列命令允许<ruby>环回<rt>loopback</rt></ruby>接口访问系统:

|

||||

|

||||

* `iptables -A INPUT -i lo -j ACCEPT`

|

||||

* `iptables -A OUTPUT -o lo -j ACCEPT`

|

||||

|

||||

|

||||

|

||||

### Nslookup

|

||||

|

||||

`nslookup` 工具是用于去获得一个网站或域名所映射的 IP 地址。它也能用于去获得你的 DNS 服务器的信息,比如,一个网站的所有 DNS 记录(具体看下面的示例)。与 `nslookup` 类似的一个工具是 `dig`(Domain Information Groper)实用工具。

|

||||

@ -161,7 +145,6 @@ Linux 提供了许多包捕获工具,比如 `tcpdump`、`wireshark`、`tshark`

|

||||

* `nslookup -type=any <website-name.com>` 显示指定网站/域中所有可用记录。

|

||||

|

||||

|

||||

|

||||

### 网络/接口调试

|

||||

|

||||

下面是用于接口连通性或相关网络问题调试所需的命令和文件的汇总。

|

||||

@ -182,7 +165,6 @@ Linux 提供了许多包捕获工具,比如 `tcpdump`、`wireshark`、`tshark`

|

||||

* `/etc/ntp.conf` 指定 NTP 服务器域名。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/sysadmin-guide-networking-commands

|

||||

@ -190,7 +172,7 @@ via: https://opensource.com/article/18/7/sysadmin-guide-networking-commands

|

||||

作者:[Archit Modi][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

Linux 下 cut 命令的 4 个本质且实用的示例

|

||||

Linux 下 cut 命令的 4 个基础实用的示例

|

||||

============================================================

|

||||

|

||||

`cut` 命令是用来从文本文件中移除“某些列”的经典工具。在本文中的“一列”可以被定义为按照一行中位置区分的一系列字符串或者字节, 或者是以某个分隔符为间隔的某些域。

|

||||

`cut` 命令是用来从文本文件中移除“某些列”的经典工具。在本文中的“一列”可以被定义为按照一行中位置区分的一系列字符串或者字节,或者是以某个分隔符为间隔的某些域。

|

||||

|

||||

先前我已经介绍了[如何使用 AWK 命令][13]。在本文中,我将解释 linux 下 `cut` 命令的 4 个本质且实用的例子,有时这些例子将帮你节省很多时间。

|

||||

|

||||

@ -11,26 +11,13 @@ Linux 下 cut 命令的 4 个本质且实用的示例

|

||||

|

||||

假如你想,你可以观看下面的视频,视频中解释了本文中我列举的 cut 命令的使用例子。

|

||||

|

||||

目录:

|

||||

- https://www.youtube.com/PhE_cFLzVFw

|

||||

|

||||

* [作用在一系列字符上][8]

|

||||

* [范围如何定义?][1]

|

||||

### 1、 作用在一系列字符上

|

||||

|

||||

* [作用在一系列字节上][9]

|

||||

* [作用在多字节编码的字符上][2]

|

||||

当启用 `-c` 命令行选项时,`cut` 命令将移除一系列字符。

|

||||

|

||||

* [作用在域上][10]

|

||||

* [处理不包含分隔符的行][3]

|

||||

|

||||

* [改变输出的分隔符][4]

|

||||

|

||||

* [非 POSIX GNU 扩展][11]

|

||||

|

||||

### 1\. 作用在一系列字符上

|

||||

|

||||

当启用 `-c` 命令行选项时,cut 命令将移除一系列字符。

|

||||

|

||||

和其他的过滤器类似, cut 命令不会就地改变输入的文件,它将复制已修改的数据到它的标准输出里去。你可以通过重定向命令的结果到一个文件中来保存修改后的结果,或者使用管道将结果送到另一个命令的输入中,这些都由你来负责。

|

||||

和其他的过滤器类似, `cut` 命令不会直接改变输入的文件,它将复制已修改的数据到它的标准输出里去。你可以通过重定向命令的结果到一个文件中来保存修改后的结果,或者使用管道将结果送到另一个命令的输入中,这些都由你来负责。

|

||||

|

||||

假如你已经下载了上面视频中的[示例测试文件][26],你将看到一个名为 `BALANCE.txt` 的数据文件,这些数据是直接从我妻子在她工作中使用的某款会计软件中导出的:

|

||||

|

||||

@ -50,7 +37,7 @@ ACCDOC ACCDOCDATE ACCOUNTNUM ACCOUNTLIB ACCDOCLIB

|

||||

|

||||

上述文件是一个固定宽度的文本文件,因为对于每一项数据,都使用了不定长的空格做填充,使得它看起来是一个对齐的列表。

|

||||

|

||||

这样一来,每一列数据开始和结束的位置都是一致的。从 cut 命令的字面意思去理解会给我们带来一个小陷阱:`cut` 命令实际上需要你指出你想_保留_的数据范围,而不是你想_移除_的范围。所以,假如我_只_需要上面文件中的 `ACCOUNTNUM` 和 `ACCOUNTLIB` 列,我需要这么做:

|

||||

这样一来,每一列数据开始和结束的位置都是一致的。从 `cut` 命令的字面意思去理解会给我们带来一个小陷阱:`cut` 命令实际上需要你指出你想_保留_的数据范围,而不是你想_移除_的范围。所以,假如我_只_需要上面文件中的 `ACCOUNTNUM` 和 `ACCOUNTLIB` 列,我需要这么做:

|

||||

|

||||

```

|

||||

sh$ cut -c 25-59 BALANCE.txt | head

|

||||

@ -68,17 +55,17 @@ ACCOUNTNUM ACCOUNTLIB

|

||||

|

||||

#### 范围如何定义?

|

||||

|

||||

正如我们上面看到的那样, cut 命令需要我们特别指定需要保留的数据的_范围_。所以,下面我将更正式地介绍如何定义范围:对于 `cut` 命令来说,范围是由连字符(`-`)分隔的起始和结束位置组成,范围是基于 1 计数的,即每行的第一项是从 1 开始计数的,而不是从 0 开始。范围是一个闭区间,开始和结束位置都将包含在结果之中,正如它们之间的所有字符那样。如果范围中的结束位置比起始位置小,则这种表达式是错误的。作为快捷方式,你可以省略起始_或_结束值,正如下面的表格所示:

|

||||

正如我们上面看到的那样, `cut` 命令需要我们特别指定需要保留的数据的_范围_。所以,下面我将更正式地介绍如何定义范围:对于 `cut` 命令来说,范围是由连字符(`-`)分隔的起始和结束位置组成,范围是基于 1 计数的,即每行的第一项是从 1 开始计数的,而不是从 0 开始。范围是一个闭区间,开始和结束位置都将包含在结果之中,正如它们之间的所有字符那样。如果范围中的结束位置比起始位置小,则这种表达式是错误的。作为快捷方式,你可以省略起始_或_结束值,正如下面的表格所示:

|

||||

|

||||

|

||||

|||

|

||||

|--|--|

|

||||

| 范围 | 含义 |

|

||||

|---|---|

|

||||

| `a-b` | a 和 b 之间的范围(闭区间) |

|

||||

|`a` | 与范围 `a-a` 等价 |

|

||||

| `-b` | 与范围 `1-a` 等价 |

|

||||

| `b-` | 与范围 `b-∞` 等价 |

|

||||

|

||||

cut 命令允许你通过逗号分隔多个范围,下面是一些示例:

|

||||

`cut` 命令允许你通过逗号分隔多个范围,下面是一些示例:

|

||||

|

||||

```

|

||||

# 保留 1 到 24 之间(闭区间)的字符

|

||||

@ -108,8 +95,7 @@ Files /dev/fd/63 and /dev/fd/62 are identical

|

||||

类似的,`cut` 命令 _不会重复数据_:

|

||||

|

||||

```

|

||||

# One might expect that could be a way to repeat

|

||||

# the first column three times, but no...

|

||||

# 某人或许期待这可以第一列三次,但并不会……

|

||||

cut -c -10,-10,-10 BALANCE.txt | head -5

|

||||

ACCDOC

|

||||

4

|

||||

@ -118,13 +104,13 @@ ACCDOC

|

||||

5

|

||||

```

|

||||

|

||||

值得提及的是,曾经有一个提议,建议使用 `-o` 选项来实现上面提到的两个限制,使得 `cut` 工具可以重排或者重复数据。但这个提议被 [POSIX 委员会拒绝了][14],_“因为这类增强不属于 IEEE P1003.2b 草案标准的范围”_。

|

||||

值得提及的是,曾经有一个提议,建议使用 `-o` 选项来去除上面提到的两个限制,使得 `cut` 工具可以重排或者重复数据。但这个提议被 [POSIX 委员会拒绝了][14],_“因为这类增强不属于 IEEE P1003.2b 草案标准的范围”_。

|

||||

|

||||

据我所知,我还没有见过哪个版本的 cut 程序实现了上面的提议,以此来作为扩展,假如你知道某些例外,请使用下面的评论框分享给大家!

|

||||

据我所知,我还没有见过哪个版本的 `cut` 程序实现了上面的提议,以此来作为扩展,假如你知道某些例外,请使用下面的评论框分享给大家!

|

||||

|

||||

### 2\. 作用在一系列字节上

|

||||

### 2、 作用在一系列字节上

|

||||

|

||||

当使用 `-b` 命令行选项时,cut 命令将移除字节范围。

|

||||

当使用 `-b` 命令行选项时,`cut` 命令将移除字节范围。

|

||||

|

||||

咋一看,使用_字符_范围和使用_字节_没有什么明显的不同:

|

||||

|

||||

@ -197,11 +183,11 @@ ACCDOC ACCDOCDATE ACCOUNTLIB DEBIT CREDIT

|

||||

36 1012017 VAT BS/ENC 00000000013,83

|

||||

```

|

||||

|

||||

我已经_毫无删减地_复制了上面命令的输出。所以可以很明显地看出列对齐那里有些问题。

|

||||

我_毫无删减地_复制了上面命令的输出。所以可以很明显地看出列对齐那里有些问题。

|

||||

|

||||

对此我的解释是原来的数据文件只包含 US-ASCII 编码的字符(符号、标点符号、数字和没有发音符号的拉丁字母)。

|

||||

|

||||

但假如你仔细地查看经软件升级后产生的文件,你可以看到新导出的数据文件保留了带发音符号的字母。例如名为“ALNÉENRE”的公司现在被合理地记录了,而不是先前的 “ALNEENRE”(没有发音符号)。

|

||||

但假如你仔细地查看经软件升级后产生的文件,你可以看到新导出的数据文件保留了带发音符号的字母。例如现在合理地记录了名为 “ALNÉENRE” 的公司,而不是先前的 “ALNEENRE”(没有发音符号)。

|

||||

|

||||

`file -i` 正确地识别出了改变,因为它报告道现在这个文件是 [UTF-8 编码][15] 的。

|

||||

|

||||

@ -231,28 +217,26 @@ sh$ sed '2!d' BALANCE-V2.txt | hexdump -C

|

||||

在 `hexdump` 输出的 00000030 那行,在一系列的空格(字节 `20`)之后,你可以看到:

|

||||

|

||||

* 字母 `A` 被编码为 `41`,

|

||||

|

||||

* 字母 `L` 被编码为 `4c`,

|

||||

|

||||

* 字母 `N` 被编码为 `4e`。

|

||||

|

||||

但对于大写的[带有注音的拉丁大写字母 E][16] (这是它在 Unicode 标准中字母 _É_ 的官方名称),则是使用 _2_ 个字节 `c3 89` 来编码的。

|

||||

|

||||

这样便出现问题了:对于使用固定宽度编码的文件, 使用字节位置来表示范围的 `cut` 命令工作良好,但这并不适用于使用变长编码的 UTF-8 或者 [Shift JIS][17] 编码。这种情况在下面的 [POSIX标准的非规范性摘录][18] 中被明确地解释过:

|

||||

这样便出现问题了:对于使用固定宽度编码的文件, 使用字节位置来表示范围的 `cut` 命令工作良好,但这并不适用于使用变长编码的 UTF-8 或者 [Shift JIS][17] 编码。这种情况在下面的 [POSIX 标准的非规范性摘录][18] 中被明确地解释过:

|

||||

|

||||

> 先前版本的 cut 程序将字节和字符视作等同的环境下运作(正如在某些实现下对 退格键<backspace> 和制表键<tab> 的处理)。在针对多字节字符的情况下,特别增加了 `-b` 选项。

|

||||

> 先前版本的 `cut` 程序将字节和字符视作等同的环境下运作(正如在某些实现下对退格键 `<backspace>` 和制表键 `<tab>` 的处理)。在针对多字节字符的情况下,特别增加了 `-b` 选项。

|

||||

|

||||

嘿,等一下!我并没有在上面“有错误”的例子中使用 '-b' 选项,而是 `-c` 选项呀!所以,难道_不应该_能够成功处理了吗!?

|

||||

|

||||

是的,确实_应该_:但是很不幸,即便我们现在已身处 2018 年,GNU Coreutils 的版本为 8.30 了,cut 程序的 GNU 版本实现仍然不能很好地处理多字节字符。引用 [GNU 文档][19] 的话说,_`-c` 选项“现在和 `-b` 选项是相同的,但对于国际化的情形将有所不同[...]”_。需要提及的是,这个问题距今已有 10 年之久了!

|

||||

是的,确实_应该_:但是很不幸,即便我们现在已身处 2018 年,GNU Coreutils 的版本为 8.30 了,`cut` 程序的 GNU 版本实现仍然不能很好地处理多字节字符。引用 [GNU 文档][19] 的话说,_`-c` 选项“现在和 `-b` 选项是相同的,但对于国际化的情形将有所不同[...]”_。需要提及的是,这个问题距今已有 10 年之久了!

|

||||

|

||||

另一方面,[OpenBSD][20] 的实现版本和 POSIX 相吻合,这将归功于当前的本地化(locale) 设定来合理地处理多字节字符:

|

||||

另一方面,[OpenBSD][20] 的实现版本和 POSIX 相吻合,这将归功于当前的本地化(`locale`)设定来合理地处理多字节字符:

|

||||

|

||||

```

|

||||

# 确保随后的命令知晓我们现在处理的是 UTF-8 编码的文本文件

|

||||

openbsd-6.3$ export LC_CTYPE=en_US.UTF-8

|

||||

|

||||

# 使用 `-c` 选项, cut 能够合理地处理多字节字符

|

||||

# 使用 `-c` 选项, `cut` 能够合理地处理多字节字符

|

||||

openbsd-6.3$ cut -c -24,36-59,93- BALANCE-V2.txt

|

||||

ACCDOC ACCDOCDATE ACCOUNTLIB DEBIT CREDIT

|

||||

4 1012017 TIDE SCHEDULE 00000001615,00

|

||||

@ -286,7 +270,7 @@ ACCDOC ACCDOCDATE ACCOUNTLIB DEBIT CREDIT

|

||||

36 1012017 VAT BS/ENC 00000000013,83

|

||||

```

|

||||

|

||||

正如期望的那样,当使用 `-b` 选项而不是 `-c` 选项后, OpenBSD 版本的 cut 实现和传统的 `cut` 表现是类似的:

|

||||

正如期望的那样,当使用 `-b` 选项而不是 `-c` 选项后, OpenBSD 版本的 `cut` 实现和传统的 `cut` 表现是类似的:

|

||||

|

||||

```

|

||||

openbsd-6.3$ cut -b -24,36-59,93- BALANCE-V2.txt

|

||||

@ -322,7 +306,7 @@ ACCDOC ACCDOCDATE ACCOUNTLIB DEBIT CREDIT

|

||||

36 1012017 VAT BS/ENC 00000000013,83

|

||||

```

|

||||

|

||||

### 3\. 作用在域上

|

||||

### 3、 作用在域上

|

||||

|

||||

从某种意义上说,使用 `cut` 来处理用特定分隔符隔开的文本文件要更加容易一些,因为只需要确定好每行中域之间的分隔符,然后复制域的内容到输出就可以了,而不需要烦恼任何与编码相关的问题。

|

||||

|

||||

@ -342,9 +326,9 @@ ACCDOC;ACCDOCDATE;ACCOUNTNUM;ACCOUNTLIB;ACCDOCLIB;DEBIT;CREDIT

|

||||

6;1012017;623795;TOURIST GUIDE BOOK;FACT FA00006253 - BIT QUIROBEN;00000001531,00;

|

||||

```

|

||||

|

||||

你可能知道上面文件是一个 [CSV][29] 格式的文件(它以逗号来分隔),即便有时候域分隔符不是逗号。例如分号(`;`)也常被用来作为分隔符,并且对于那些总使用逗号作为 [十进制分隔符][30]的国家(例如法国,所以上面我的示例文件中选用了他们国家的字符),当导出数据为 "CSV" 格式时,默认将使用分号来分隔数据。另一种常见的情况是使用 [tab 键][32] 来作为分隔符,从而生成叫做 [tab 分隔数值][32] 的文件。最后,在 Unix 和 Linux 领域,冒号 (`:`) 是另一种你能找到的常见分隔符号,例如在标准的 `/etc/passwd` 和 `/etc/group` 这两个文件里。

|

||||

你可能知道上面文件是一个 [CSV][29] 格式的文件(它以逗号来分隔),即便有时候域分隔符不是逗号。例如分号(`;`)也常被用来作为分隔符,并且对于那些总使用逗号作为 [十进制分隔符][30]的国家(例如法国,所以上面我的示例文件中选用了他们国家的字符),当导出数据为 “CSV” 格式时,默认将使用分号来分隔数据。另一种常见的情况是使用 [tab 键][32] 来作为分隔符,从而生成叫做 [tab 分隔的值][32] 的文件。最后,在 Unix 和 Linux 领域,冒号 (`:`) 是另一种你能找到的常见分隔符号,例如在标准的 `/etc/passwd` 和 `/etc/group` 这两个文件里。

|

||||

|

||||

当处理使用分隔符隔开的文本文件格式时,你可以向带有 `-f` 选项的 cut 命令提供需要保留的域的范围,并且你也可以使用 `-d` 选项来制定分隔符(当没有使用 `-d` 选项时,默认以 tab 字符来作为分隔符):

|

||||

当处理使用分隔符隔开的文本文件格式时,你可以向带有 `-f` 选项的 `cut` 命令提供需要保留的域的范围,并且你也可以使用 `-d` 选项来指定分隔符(当没有使用 `-d` 选项时,默认以 tab 字符来作为分隔符):

|

||||

|

||||

```

|

||||

sh$ cut -f 5- -d';' BALANCE.csv | head

|

||||

@ -362,9 +346,9 @@ FACT FA00006253 - BIT QUIROBEN;00000001531,00;

|

||||

|

||||

#### 处理不包含分隔符的行

|

||||

|

||||

但要是输入文件中的某些行没有分隔符又该怎么办呢?很容易地认为可以将这样的行视为只包含第一个域。但 cut 程序并 _不是_ 这样做的。

|

||||

但要是输入文件中的某些行没有分隔符又该怎么办呢?很容易地认为可以将这样的行视为只包含第一个域。但 `cut` 程序并 _不是_ 这样做的。

|

||||

|

||||

默认情况下,当使用 `-f` 选项时, cut 将总是原样输出不包含分隔符的那一行(可能假设它是非数据行,就像表头或注释等):

|

||||

默认情况下,当使用 `-f` 选项时,`cut` 将总是原样输出不包含分隔符的那一行(可能假设它是非数据行,就像表头或注释等):

|

||||

|

||||

```

|

||||

sh$ (echo "# 2018-03 BALANCE"; cat BALANCE.csv) > BALANCE-WITH-HEADER.csv

|

||||

@ -388,8 +372,7 @@ DEBIT;CREDIT

|

||||

00000001333,00;

|

||||

```

|

||||

|

||||

假如你好奇心强,你还可以探索这种特性,来作为一种相对

|

||||

隐晦的方式去保留那些只包含给定字符的行:

|

||||

假如你好奇心强,你还可以探索这种特性,来作为一种相对隐晦的方式去保留那些只包含给定字符的行:

|

||||

|

||||

```

|

||||

# 保留含有一个 `e` 的行

|

||||

@ -398,7 +381,7 @@ sh$ printf "%s\n" {mighty,bold,great}-{condor,monkey,bear} | cut -s -f 1- -d'e'

|

||||

|

||||

#### 改变输出的分隔符

|

||||

|

||||

作为一种扩展, GNU 版本实现的 cut 允许通过使用 `--output-delimiter` 选项来为结果指定一个不同的域分隔符:

|

||||

作为一种扩展, GNU 版本实现的 `cut` 允许通过使用 `--output-delimiter` 选项来为结果指定一个不同的域分隔符:

|

||||

|

||||

```

|

||||

sh$ cut -f 5,6- -d';' --output-delimiter="*" BALANCE.csv | head

|

||||

@ -416,10 +399,12 @@ FACT FA00006253 - BIT QUIROBEN*00000001531,00*

|

||||

|

||||

需要注意的是,在上面这个例子中,所有出现域分隔符的地方都被替换掉了,而不仅仅是那些在命令行中指定的作为域范围边界的分隔符。

|

||||

|

||||

### 4\. 非 POSIX GNU 扩展

|

||||

### 4、 非 POSIX GNU 扩展

|

||||

|

||||

说到非 POSIX GNU 扩展,它们中的某些特别有用。特别需要提及的是下面的扩展也同样对字节、字符或者域范围工作良好(相对于当前的 GNU 实现来说)。

|

||||

|

||||

`--complement`:

|

||||

|

||||

想想在 sed 地址中的感叹符号(`!`),使用它,`cut` 将只保存**没有**被匹配到的范围:

|

||||

|

||||

```

|

||||

@ -436,7 +421,9 @@ ACCDOC;ACCDOCDATE;ACCOUNTNUM;ACCOUNTLIB;DEBIT;CREDIT

|

||||

4;1012017;445452;VAT BS/ENC;00000000323,00;

|

||||

```

|

||||

|

||||

使用 [NUL 字符][6] 来作为行终止符,而不是 [新行(newline)字符][7]。当你的数据包含 新行 字符时, `-z` 选项就特别有用了,例如当处理文件名的时候(因为在文件名中 新行 字符是可以使用的,而 NUL 则不可以)。

|

||||

`--zero-terminated (-z)`:

|

||||

|

||||

使用 [NUL 字符][6] 来作为行终止符,而不是 [<ruby>新行<rt>newline</rt></ruby>字符][7]。当你的数据包含 新行字符时, `-z` 选项就特别有用了,例如当处理文件名的时候(因为在文件名中新行字符是可以使用的,而 NUL 则不可以)。

|

||||

|

||||

为了展示 `-z` 选项,让我们先做一点实验。首先,我们将创建一个文件名中包含换行符的文件:

|

||||

|

||||

@ -448,7 +435,7 @@ BALANCE-V2.txt

|

||||

EMPTY?FILE?WITH FUNKY?NAME.txt

|

||||

```

|

||||

|

||||

现在假设我想展示每个 `*.txt` 文件的前 5 个字符。一个想当然的解法将会失败:

|

||||

现在假设我想展示每个 `*.txt` 文件的前 5 个字符。一个想当然的解决方法将会失败:

|

||||

|

||||

```

|

||||

sh$ ls -1 *.txt | cut -c 1-5

|

||||

@ -460,7 +447,7 @@ WITH

|

||||

NAME.

|

||||

```

|

||||

|

||||

你可以已经知道 `[ls][21]` 是为了[方便人类使用][33]而特别设计的,并且在一个命令管道中使用它是一个反模式(确实是这样的)。所以让我们用 `[find][22]` 来替换它:

|

||||

你可以已经知道 [ls][21] 是为了[方便人类使用][33]而特别设计的,并且在一个命令管道中使用它是一个反模式(确实是这样的)。所以让我们用 [find][22] 来替换它:

|

||||

|

||||

```

|

||||

sh$ find . -name '*.txt' -printf "%f\n" | cut -c 1-5

|

||||

@ -484,11 +471,11 @@ EMPTY

|

||||

BALAN

|

||||

```

|

||||

|

||||

通过上面最后的例子,我们就达到了本文的最后部分了,所以我将让你自己试试 `-printf` 后面那个有趣的 `"%f\0"` 参数或者理解为什么我在管道的最后使用了 `[tr][23]` 命令。

|

||||

通过上面最后的例子,我们就达到了本文的最后部分了,所以我将让你自己试试 `-printf` 后面那个有趣的 `"%f\0"` 参数或者理解为什么我在管道的最后使用了 [tr][23] 命令。

|

||||

|

||||

### 使用 cut 命令可以实现更多功能

|

||||

|

||||

我只是列举了 cut 命令的最常见且在我眼中最实质的使用方式。你甚至可以将它以更加实用的方式加以运用,这取决于你的逻辑和想象。

|

||||

我只是列举了 `cut` 命令的最常见且在我眼中最基础的使用方式。你甚至可以将它以更加实用的方式加以运用,这取决于你的逻辑和想象。

|

||||

|

||||

不要再犹豫了,请使用下面的评论框贴出你的发现。最后一如既往的,假如你喜欢这篇文章,请不要忘记将它分享到你最喜爱网站和社交媒体中!

|

||||

|

||||

@ -496,9 +483,9 @@ BALAN

|

||||

|

||||

via: https://linuxhandbook.com/cut-command/

|

||||

|

||||

作者:[Sylvain Leroux ][a]

|

||||

作者:[Sylvain Leroux][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,23 +1,21 @@

|

||||

针对 Bash 的不完整路径展开(补全)

|

||||

针对 Bash 的不完整路径展开(补全)功能

|

||||

======

|

||||

|

||||

|

||||

|

||||

|

||||

[bash-complete-partial-path][1] 通过添加不完整的路径展开(类似于 Zsh)来增强 Bash(它在 Linux 上,macOS 使用 gnu-sed,Windows 使用 MSYS)中的路径补全。如果你想在 Bash 中使用这个省时特性,而不必切换到 Zsh,它将非常有用。

|

||||

|

||||

这是它如何工作的。当按下 `Tab` 键时,bash-complete-partial-path 假定每个部分都不完整并尝试展开它。假设你要进入 `/usr/share/applications` 。你可以输入 `cd /u/s/app`,按下 `Tab`,bash-complete-partial-path 应该把它展开成 `cd /usr/share/applications` 。如果存在冲突,那么按 `Tab` 仅补全没有冲突的路径。例如,Ubuntu 用户在 `/usr/share` 中应该有很多以 “app” 开头的文件夹,在这种情况下,输入 `cd /u/s/app` 只会展开 `/usr/share/` 部分。

|

||||

|

||||

这是更深层不完整文件路径展开的另一个例子。在Ubuntu系统上输入 `cd /u/s/f/t/u`,按下 `Tab`,它应该自动展开为 `cd /usr/share/fonts/truetype/ubuntu`。

|

||||

另一个更深层不完整文件路径展开的例子。在Ubuntu系统上输入 `cd /u/s/f/t/u`,按下 `Tab`,它应该自动展开为 `cd /usr/share/fonts/truetype/ubuntu`。

|

||||

|

||||

功能包括:

|

||||

|

||||

* 转义特殊字符

|

||||

|

||||

* 如果用户路径开头使用引号,则不转义字符转义,而是在展开路径后使用匹配字符结束引号

|

||||

|

||||

* 正确展开 ~ 表达式

|

||||

|

||||

* 如果 bash-completion 包正在使用,则此代码将安全地覆盖其 _filedir 函数。无需额外配置,只需确保在主 bash-completion 后 source 此项目。

|

||||

* 正确展开 `~` 表达式

|

||||

* 如果正在使用 bash-completion 包,则此代码将安全地覆盖其 `_filedir` 函数。无需额外配置,只需确保在主 bash-completion 后引入此项目。

|

||||

|

||||

查看[项目页面][2]以获取更多信息和演示截图。

|

||||

|

||||

@ -25,7 +23,7 @@

|

||||

|

||||

bash-complete-partial-path 安装说明指定直接下载 bash_completion 脚本。我更喜欢从 Git 仓库获取,这样我可以用一个简单的 `git pull` 来更新它,因此下面的说明将使用这种安装 bash-complete-partial-path。如果你喜欢,可以使用[官方][3]说明。

|

||||

|

||||

1. 安装 Git(需要克隆 bash-complete-partial-path 的 Git 仓库)。

|

||||

1、 安装 Git(需要克隆 bash-complete-partial-path 的 Git 仓库)。

|

||||

|

||||

在 Debian、Ubuntu、Linux Mint 等中,使用此命令安装 Git:

|

||||

|

||||

@ -33,13 +31,13 @@ bash-complete-partial-path 安装说明指定直接下载 bash_completion 脚本

|

||||

sudo apt install git

|

||||

```

|

||||

|

||||

2. 在 `~/.config/` 中克隆 bash-complete-partial-path 的 Git 仓库:

|

||||

2、 在 `~/.config/` 中克隆 bash-complete-partial-path 的 Git 仓库:

|

||||

|

||||

```

|

||||

cd ~/.config && git clone https://github.com/sio/bash-complete-partial-path

|

||||

```

|

||||

|

||||

3. 在 `~/.bashrc` 文件中 source `~/.config/bash-complete-partial-path/bash_completion`,

|

||||

3、 在 `~/.bashrc` 文件中 source `~/.config/bash-complete-partial-path/bash_completion`,

|

||||

|

||||

用文本编辑器打开 ~/.bashrc。例如你可以使用 Gedit:

|

||||

|

||||

@ -55,7 +53,7 @@ gedit ~/.bashrc

|

||||

|

||||

我提到在文件的末尾添加它,因为这需要包含在你的 `~/.bashrc` 文件的主 bash-completion 下面(之后)。因此,请确保不要将其添加到原始 bash-completion 之上,因为它会导致问题。

|

||||

|

||||

4\. Source `~/.bashrc`:

|

||||

4、 引入 `~/.bashrc`:

|

||||

|

||||

```

|

||||

source ~/.bashrc

|

||||

@ -63,8 +61,6 @@ source ~/.bashrc

|

||||

|

||||

这样就好了,现在应该安装完 bash-complete-partial-path 并可以使用了。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxuprising.com/2018/07/incomplete-path-expansion-completion.html

|

||||

@ -72,7 +68,7 @@ via: https://www.linuxuprising.com/2018/07/incomplete-path-expansion-completion.

|

||||

作者:[Logix][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,93 @@

|

||||

2018 年 7 月 COPR 中 4 个值得尝试很酷的新项目

|

||||

======

|

||||

|

||||

|

||||

|

||||

COPR 是个人软件仓库[集合][1],它不在 Fedora 中。这是因为某些软件不符合轻松打包的标准。或者它可能不符合其他 Fedora 标准,尽管它是自由而开源的。COPR 可以在 Fedora 套件之外提供这些项目。COPR 中的软件不被 Fedora 基础设施不支持或没有被该项目所签名。但是,这是一种尝试新的或实验性的软件的一种巧妙的方式。

|

||||

|

||||

这是 COPR 中一组新的有趣项目。

|

||||

|

||||

### Hledger

|

||||

|

||||

[Hledger][2] 是用于跟踪货币或其他商品的命令行程序。它使用简单的纯文本格式日志来存储数据和复式记帐。除了命令行界面,hledger 还提供终端界面和 Web 客户端,可以显示帐户余额图。

|

||||

|

||||

![][3]

|

||||

|

||||

#### 安装说明

|

||||

|

||||

该仓库目前为 Fedora 27、28 和 Rawhide 提供了 hledger。要安装 hledger,请使用以下命令:

|

||||

|

||||

```

|

||||

sudo dnf copr enable kefah/HLedger

|

||||

sudo dnf install hledger

|

||||

```

|

||||

|

||||

### Neofetch

|

||||

|

||||

[Neofetch][4] 是一个命令行工具,可显示有关操作系统、软件和硬件的信息。其主要目的是以紧凑的方式显示数据来截图。你可以使用命令行标志和配置文件将 Neofetch 配置为完全按照你希望的方式显示。

|

||||

|

||||

![][5]

|

||||

|

||||

#### 安装说明

|

||||

|

||||

仓库目前为 Fedora 28 提供 Neofetch。要安装 Neofetch,请使用以下命令:

|

||||

|

||||

```

|

||||

sudo dnf copr enable sysek/neofetch

|

||||

sudo dnf install neofetch

|

||||

|

||||

```

|

||||

|

||||

### Remarkable

|

||||

|

||||