mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

8527596cc2

published

20171101 -dev-urandom- entropy explained.md

20171121 How To Kill The Largest Process In An Unresponsive Linux System.md

20180507 4 Firefox extensions to install now.md

sources

talk

20180418 Is DevOps compatible with part-time community teams.md

20180425 How will the GDPR impact open source communities

20180528 What is a Linux server and why does your business need one.md

tech

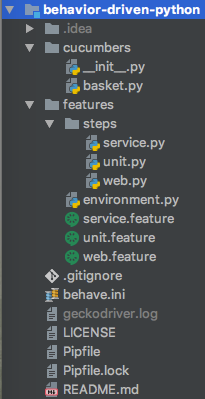

20141217 The Best Linux Tools for Teachers and Students.md

20180305 Getting started with Python for data science.md

20180404 Bring some JavaScript to your Java enterprise with Vert.x.md

20180525 A Bittorrent Filesystem Based On FUSE.md

20180525 How to Set Different Wallpaper for Each Monitor in Linux.md

20180525 How to clean up your data in the command line.md

20180528 What is behavior-driven Python.md

20180529 5 trending open source machine learning JavaScript frameworks.md

20180529 Copying and renaming files on Linux.md

20180529 Manage your workstation with Ansible- Configure desktop settings.md

20180529 The Source Code Line Counter And Analyzer.md

20180530 3 Python command-line tools.md

20180530 Introduction to the Pony programming language.md

translated

104

published/20171101 -dev-urandom- entropy explained.md

Normal file


@ -0,0 +1,104 @@

/dev/[u]random: entropy explained
======

### Entropy

Whenever `/dev/random` and `/dev/urandom` come up, one word always follows: "entropy". Everyone seems to have their own analogy for it. Mine? I like to think of entropy as "random juice": the juice that random numbers need in order to be more random.

If you have ever generated an SSL certificate or a GPG key, you may have seen something like this:


```
We need to generate a lot of random bytes. It is a good idea to perform
some other action (type on the keyboard, move the mouse, utilize the
disks) during the prime generation; this gives the random number
generator a better chance to gain enough entropy.
++++++++++..+++++.+++++++++++++++.++++++++++...+++++++++++++++...++++++
+++++++++++++++++++++++++++++.+++++..+++++.+++++.+++++++++++++++++++++++++>.
++++++++++>+++++...........................................................+++++
Not enough random bytes available. Please do some other work to give
the OS a chance to collect more entropy! (Need 290 more bytes)
```

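
By typing on the keyboard and moving the mouse, you help generate entropy, or random juice.

You might ask yourself: why do I need entropy, and why does it matter so much for random numbers to be truly random? Well, suppose our only sources of entropy are keyboard, mouse, and disk I/O data. But our system is a server, so there is no mouse or keyboard input. That leaves disk I/O as the only source. If it is a single, rarely used disk, you will have low entropy, which means the system's ability to be random is weak. In other words, I could play the odds and drastically reduce the time it takes to crack your SSH keys or decrypt a session you believed was encrypted.

Fine, but that is hard to pull off, right? Actually, no. Look at this [Debian OpenSSH vulnerability][1]. That particular problem was caused by someone removing some of the code responsible for adding entropy. Rumor has it they removed it because it made valgrind emit warnings. In doing so, however, the random numbers became far less random. In fact, there was so little entropy that brute forcing became a viable attack vector.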
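
To put rough numbers on why low entropy makes brute forcing feasible, here is a small Python sketch. It assumes, as a simplification, that a secret derived from n bits of entropy can take at most 2^n distinct values and that an attacker simply tries every candidate. The function names and the guessing rate of 1,000,000 per second are hypothetical; the 15-bit case stands in for a seed that can only vary across roughly 32,768 values, such as a process ID.

```python
def keyspace_size(entropy_bits: int) -> int:
    """Number of distinct values a secret with this much entropy can take."""
    return 2 ** entropy_bits


def worst_case_seconds(entropy_bits: int, guesses_per_second: float) -> float:
    """Time to try every candidate at a given (hypothetical) guessing rate."""
    return keyspace_size(entropy_bits) / guesses_per_second


if __name__ == "__main__":
    rate = 1_000_000  # hypothetical attacker speed, guesses per second
    for bits in (15, 64, 128):
        seconds = worst_case_seconds(bits, rate)
        print(f"{bits:>3} bits: {keyspace_size(bits):.3e} candidates, "
              f"{seconds:.3e} s to exhaust")
```

The point of the comparison is the gap between the rows: every bit of entropy that is lost halves the work an attacker has to do.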


Hopefully by now we understand how important entropy is to security, whether or not you realize you are using it.

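
### /dev/random and /dev/urandom

`/dev/urandom` is a pseudo-random number generator, and it does **not** block when entropy runs low.

`/dev/random` is a true random number generator, and it blocks when entropy runs low.

For the most part, if we are dealing with practical matters that do not involve your most critical secrets, `/dev/urandom` is the right choice. If you go with `/dev/random` instead, your program will behave oddly once the system runs out of entropy. Whether it fails outright or simply hangs until enough entropy is available depends on how you wrote the program.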
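
As a minimal sketch of what choosing the non-blocking source looks like from inside a program, the Python snippet below asks the operating system for random bytes through the standard library. `os.urandom` and `secrets.token_hex` are real standard-library calls; the helper name `make_session_token` and the 16-byte length are illustrative choices that do not come from the article. On Linux, Python may obtain these bytes through a system call rather than by literally opening the device file, so treat this as the urandom-style interface rather than a direct read of `/dev/urandom`.

```python
import os
import secrets


def make_session_token(num_bytes: int = 16) -> str:
    """Return a hex string built from OS-provided random bytes."""
    return secrets.token_hex(num_bytes)


if __name__ == "__main__":
    raw = os.urandom(16)         # 16 bytes from the operating system's generator
    print(raw.hex())
    print(make_session_token())  # two hex characters per random byte
```

Using the library call instead of reading a device path keeps the program portable and avoids handling short reads by hand.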


### Checking entropy

So, how much entropy do you have?


```
[root@testbox test]# cat /proc/sys/kernel/random/poolsize
4096
[root@testbox test]# cat /proc/sys/kernel/random/entropy_avail
2975
```

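
`/proc/sys/kernel/random/poolsize` gives the size of the entropy pool in bits, i.e. how much random juice we should store before we stop pumping. `/proc/sys/kernel/random/entropy_avail` is the amount of random juice currently in the pool, also in bits.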
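
The two files can also be read from a script. The sketch below is one possible way to do it in Python, assuming the procfs paths shown above exist on your system; the helper names `read_int` and `entropy_report` are made up for this example. Newer kernels reworked the random subsystem, so the numbers you see may differ considerably from the ones in this article.

```python
from pathlib import Path
from typing import Optional

RANDOM_DIR = Path("/proc/sys/kernel/random")


def read_int(path: Path) -> Optional[int]:
    """Read a single integer from a procfs-style file, or None if unavailable."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


def entropy_report(base: Path = RANDOM_DIR) -> str:
    """Summarise entropy_avail against poolsize (both in bits)."""
    avail = read_int(base / "entropy_avail")
    size = read_int(base / "poolsize")
    if avail is None or size is None or size <= 0:
        return "entropy information not available on this system"
    return f"{avail} of {size} bits available ({100 * avail / size:.0f}%)"


if __name__ == "__main__":
    print(entropy_report())
```

Passing the directory as a parameter keeps the function testable on machines without `/proc`, and the fallback message avoids a crash when the files are missing.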


### How can we influence this number?

The number drains as we use it. The simplest example I can come up with is redirecting `/dev/random` into `/dev/null`:


```
[root@testbox test]# cat /dev/random > /dev/null &
[1] 19058
[root@testbox test]# cat /proc/sys/kernel/random/entropy_avail
0
[root@testbox test]# cat /proc/sys/kernel/random/entropy_avail
1
```


The easiest way to influence this is to run [Haveged][2]. Haveged is a daemon that uses processor "jitter" to add entropy to the system entropy pool. Installation and basic setup are straightforward.


```
[root@b08s02ur ~]# systemctl enable haveged
Created symlink from /etc/systemd/system/multi-user.target.wants/haveged.service to /usr/lib/systemd/system/haveged.service.
[root@b08s02ur ~]# systemctl start haveged
```


On a machine with relatively moderate traffic:


```
[root@testbox ~]# pv /dev/random > /dev/null
 40 B 0:00:15 [ 0 B/s] [ <=> ]
 52 B 0:00:23 [ 0 B/s] [ <=> ]
 58 B 0:00:25 [5.92 B/s] [ <=> ]
 64 B 0:00:30 [6.03 B/s] [ <=> ]
^C
[root@testbox ~]# systemctl start haveged
[root@testbox ~]# pv /dev/random > /dev/null
7.12MiB 0:00:05 [1.43MiB/s] [ <=> ]
15.7MiB 0:00:11 [1.44MiB/s] [ <=> ]
27.2MiB 0:00:19 [1.46MiB/s] [ <=> ]
 43MiB 0:00:30 [1.47MiB/s] [ <=> ]
^C
```

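
Using `pv`, we can see how much data is passing through the pipe. As you can see, before running `haveged` we averaged about 2.1 bytes per second (B/s). After starting `haveged`, which feeds processor jitter into the entropy pool, we get roughly 1.5 MiB/s.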
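
The averages can be checked from the last `pv` line of each run. The short Python calculation below is only a worked example of that arithmetic; the helper name `average_rate` is made up, and the inputs (64 B and 43 MiB, each after 30 seconds) are taken from the output above.

```python
def average_rate(total_bytes: float, seconds: float) -> float:
    """Average throughput in bytes per second."""
    return total_bytes / seconds


MIB = 1024 * 1024

if __name__ == "__main__":
    before = average_rate(64, 30)        # 64 B after 30 s without haveged
    after = average_rate(43 * MIB, 30)   # 43 MiB after 30 s with haveged
    print(f"before: {before:.1f} B/s")
    print(f"after:  {after / MIB:.2f} MiB/s")
    print(f"speed-up: about {after / before:,.0f}x")
```

Note that `pv` reports bytes (B), not bits, so both figures are byte rates.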


--------------------------------------------------------------------------------

via: http://jhurani.com/linux/2017/11/01/entropy-explained.html

Author: [James J][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://jblevins.org/log/ssh-vulnkey
[1]:https://jblevins.org/log/ssh-vulnkey
[2]:http://www.issihosts.com/haveged/


@ -1,51 +1,56 @@

How to kill the largest process in an unresponsive Linux system
How to kill the process consuming the most memory in an unresponsive Linux system
======

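
As a blogger, I have bookmarked many blogs, websites, and forums to mark Linux- and Unix-related content. Sometimes I have so many tabs open in my browser that the operating system becomes unresponsive for several minutes. I cannot move my mouse to kill a process or close any open tabs. In that situation I have no choice but to force a reboot. Of course, I also use browser extensions such as **OneTab** (Translator's note: OneTab is a Chrome extension that converts tabs into a saved list.) and **Great Suspender** (Translator's note: Great Suspender is a Chrome extension that automatically suspends tabs), but they do not help much here either. I often run out of memory. This is where **Early OOM** comes in. When things get critical, it kills the largest process in an unresponsive system. Early OOM checks available memory and free swap 10 times per second, and once both drop below 10%, it kills the largest process.
As a blogger, I have bookmarked many blogs, websites, and forums to look up Linux- and Unix-related content. Sometimes I have so many tabs open in my browser that the operating system becomes unresponsive for several minutes. I cannot move my mouse, nor can I kill a process or close any open tabs. In that situation I have no choice but to force a reboot. Of course, I also use browser extensions such as **OneTab** (LCTT translator's note: OneTab is a Chrome extension that converts tabs into a saved list.) and **Great Suspender** (LCTT translator's note: Great Suspender is a Chrome extension that automatically suspends tabs), but they do not help much here either. I often run out of memory. This is where **Early OOM** comes in. When things get critical, it kills the process consuming the most memory in an unresponsive system. Early OOM checks available memory and free swap 10 times per second, and once both drop below 10%, it kills the largest process.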

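
### Why Early OOM? Why not the built-in OOM killer?

Before going any further, let me briefly introduce the OOM killer, that is, the **O** ut **O** f **M** emory killer. The OOM killer is a mechanism the kernel uses when available memory is critically low. Its main job is to keep killing processes until enough memory has been freed for the rest of the processes the kernel is running to work smoothly. The OOM killer picks the processes that are least important to the system and would free the most memory, and kills them. In the **pid** directory under **/proc**, we can see each process's oom_score.
Before going any further, let me briefly introduce the OOM killer, that is, the **O**ut **O**f **M**emory killer. The OOM killer is a mechanism the kernel uses when available memory is critically low. Its main job is to keep killing processes until enough memory has been freed for the other processes the kernel is running to work smoothly. The OOM killer picks the processes that are least important to the system and would free the most memory, and kills them. In the `pid` directory under `/proc`, we can see each process's `oom_score`.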


Example:


```
$ cat /proc/10299/oom_score
1
```

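
The higher a process's oom_score, the more likely it is to be killed by the OOM killer when the system runs out of memory.
The higher a process's `oom_score`, the more likely it is to be killed by the OOM killer when the system runs out of memory.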
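
To see which processes the kernel currently considers the most likely candidates, the scores can be collected for every PID. The Python sketch below is one way to do that, assuming a Linux-style `/proc` layout; the function name `oom_scores` and the choice to show the top five are illustrative and not part of Early OOM or the kernel.

```python
from pathlib import Path
from typing import List, Tuple


def oom_scores(proc_root: Path = Path("/proc")) -> List[Tuple[int, int]]:
    """Return (oom_score, pid) pairs, highest score first."""
    results = []
    for entry in proc_root.iterdir():
        if not entry.name.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        try:
            score = int((entry / "oom_score").read_text().strip())
        except (OSError, ValueError):
            continue  # process exited meanwhile, or the file is not readable
        results.append((score, int(entry.name)))
    return sorted(results, reverse=True)


if __name__ == "__main__":
    if Path("/proc").is_dir():
        for score, pid in oom_scores()[:5]:
            print(f"pid {pid}: oom_score {score}")
```

Processes can disappear while the directory is being scanned, which is why read errors are skipped rather than treated as failures.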

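
Early OOM's developers say it has one big advantage over the built-in OOM killer. As I said earlier, the OOM killer kills the process with the highest oom_score, and as a result Chrome is always the first process to be killed. To avoid this, Early OOM uses **/proc/*/status** instead of **echo f > /proc/sysrq-trigger** (Translator's note: this command invokes the OOM killer to kill a process). The developers also say that manually triggering the OOM killer may not work at all in recent Linux kernel versions.
Early OOM's developers say it has one big advantage over the built-in OOM killer. As I said earlier, the OOM killer kills the process with the highest `oom_score`, and as a result Chrome is always the first process to be killed. To avoid this, Early OOM uses `/proc/*/status` instead of `echo f > /proc/sysrq-trigger` (LCTT translator's note: this command invokes the OOM killer to kill a process). The developers also say that manually triggering the OOM killer may not work at all in recent Linux kernel versions.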


### Installing Early OOM

Early OOM is available in the AUR (Arch User Repository), so you can install it on Arch and its derivatives with any AUR helper.


Using [Pacaur][1]:

Using [**Pacaur**][1]:

```
pacaur -S earlyoom
```


Using [**Packer**][2]:
Using [Packer][2]:


```
packer -S earlyoom
```


Using [**Yaourt**][3]:
Using [Yaourt][3]:


```
yaourt -S earlyoom
```


Enable and start the Early OOM daemon:


```
sudo systemctl enable earlyoom
```
```
sudo systemctl start earlyoom
```


On other Linux distributions, compile and install it from source as follows:


```
git clone https://github.com/rfjakob/earlyoom.git
cd earlyoom
@ -53,19 +58,22 @@ make
sudo make install
```


### Early OOM - Kill the largest process in an unresponsive Linux system

Run the following command to start Early OOM:


```
earlyoom
```


If you installed it by compiling from source, run the following command to start Early OOM:


```
./earlyoom
```


Sample output:


```
earlyoom 0.12
mem total: 3863 MiB, min: 386 MiB (10 %)
@ -82,21 +90,24 @@ mem avail: 1784 MiB (46 %), swap free: 2047 MiB (99 %)
[...]
```

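
As you can see in the output above, Early OOM displays how much memory and swap you have in total, and how much of each is available. Remember that it keeps running until you press CTRL+C.
As you can see in the output above, Early OOM displays how much memory and swap you have in total, and how much of each is available. Remember that it keeps running until you press `CTRL+C`.

If both available memory and free swap drop below 10%, Early OOM automatically kills the largest process, and keeps doing so until the system has enough memory to run smoothly. You can also configure the minimum percentages to suit your needs.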
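
The rule described above, kill only when both percentages fall below their minimums, can be illustrated in a few lines of Python. This is a sketch of the decision logic as the article describes it, not Early OOM's actual code. The sample text imitates the `/proc/meminfo` format, with values chosen to match the 46% and 99% in the sample output; the `SwapTotal` figure, the function names, and the treatment of a system without swap are assumptions made for the example.

```python
from typing import Dict

# Hypothetical sample in /proc/meminfo format (values in kB).
SAMPLE_MEMINFO = """\
MemTotal:        3955712 kB
MemAvailable:    1826816 kB
SwapTotal:       2097148 kB
SwapFree:        2096128 kB
"""


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    values = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def percent(part: int, total: int) -> int:
    """Integer percentage; a zero total (e.g. no swap) counts as 0% (assumption)."""
    return 100 * part // total if total else 0


def should_kill(info: Dict[str, int], mem_min: int = 10, swap_min: int = 10) -> bool:
    """True only when BOTH available memory and free swap are below their minimums."""
    mem_pct = percent(info["MemAvailable"], info["MemTotal"])
    swap_pct = percent(info["SwapFree"], info["SwapTotal"])
    return mem_pct < mem_min and swap_pct < swap_min


if __name__ == "__main__":
    info = parse_meminfo(SAMPLE_MEMINFO)
    print("kill largest process:", should_kill(info))
```

The `mem_min` and `swap_min` parameters play the role of the configurable minimum percentages mentioned above.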


To set the minimum available memory percentage, run:


```
earlyoom -m <PERCENT_HERE>
```


To set the minimum free swap percentage, run:


```
earlyoom -s <PERCENT_HERE>
```


See the help section for more details:


```

|

||||

$ earlyoom -h

|

||||

earlyoom 0.12

|

||||

@ -120,15 +131,13 @@ Usage: earlyoom [OPTION]...


Thanks!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/kill-largest-process-unresponsive-linux-system/

Author: [Aditya Goturu][a]
Translator: [cizezsy](https://github.com/cizezsy)
Proofreader: [proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


81

published/20180507 4 Firefox extensions to install now.md

Normal file


@ -0,0 +1,81 @@

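4 Firefox extensions to install now
=====

> The right extensions can greatly enhance your browser's capabilities, but it is important to choose carefully.

As I mentioned in my [original article][1] on Firefox extensions, the web browser has become a critical component of the computing experience for many users. Modern browsers have evolved into powerful and extensible platforms, and extensions can add or modify their functionality. Extensions for Firefox are built using the WebExtensions API, a cross-browser development system.

In the first article, I asked readers: "Which extensions should you install?" To reiterate, that decision largely comes down to how you use your browser, your views on privacy, how much you trust extension developers, and other personal preferences. Since that article was published, one extension I recommended (Xmarks) has been discontinued. In addition, the article received a great deal of feedback, which has been taken into account in this update.

Once again, I'd like to point out that browser extensions often require the ability to read and/or change everything on the web pages you visit. You should consider this very carefully. If an extension has permission to modify every web page you visit, it could act as a keylogger, intercept credit card information, track you online, insert advertisements, and perform a variety of other malicious activities. That doesn't mean every extension will surreptitiously do these things, but before installing any extension you should carefully consider the installation source, the permissions involved, your risk profile, and other factors. Keep in mind that you can use profiles to manage how an extension affects your attack surface: for example, using a dedicated profile with no extensions for tasks such as online banking.

With that in mind, here are four open source Firefox extensions you may want to consider.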

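
### uBlock Origin

![ublock origin ad blocker screenshot][2]

My first recommendation remains unchanged. [uBlock Origin][3] is a fast, low-memory, wide-spectrum blocker that not only blocks ads but also lets you enforce your own content filtering. By default, uBlock Origin blocks ads, trackers, and malware sites using several predefined filter lists. It lets you add arbitrary lists and rules, and can even be locked down to a default-deny mode. Despite being powerful, it has proven to be efficient and performant. It continues to be updated regularly and is one of the best options available for this functionality.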

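
### Privacy Badger

![privacy badger ad blocker][4]

My second recommendation also remains unchanged. If anything, privacy has come even more to the forefront since my previous article, which makes this extension an easy recommendation. As the name suggests, [Privacy Badger][5] is a privacy-focused extension that blocks ads and other third-party trackers. It is a project of the Electronic Frontier Foundation (EFF), which says:

> "Privacy Badger was born out of our desire to be able to recommend a single extension that would automatically analyze and block any tracker or ad that violated the principle of user consent; which could function well without any settings, knowledge, or configuration by the user; which is produced by an organization that is unambiguously working for its users rather than for advertisers; and which uses algorithmic methods to decide what is and isn't tracking."

Why is Privacy Badger on this list when its functionality looks so similar to the previous extension? A couple of reasons. First, it fundamentally works differently from uBlock Origin. Second, the practice of defense in depth is a sound policy to follow. Speaking of defense in depth, the EFF also maintains the [HTTPS Everywhere][6] extension, which automatically ensures HTTPS is used on many major websites. When you install Privacy Badger, you may want to consider HTTPS Everywhere as well.

In case you were starting to think this article was just a rehash of the last one, here is where my recommendations diverge.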

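
### Bitwarden

![Bitwarden][7]

When I recommended LastPass in the previous article, I mentioned that it was likely to be a controversial choice. That certainly proved true. Whether you should use a password manager at all, and if you do, whether you should choose one with a browser plugin, is a hotly debated topic, and the answer depends heavily on your personal risk profile. I maintain that most casual computer users should use one, because it is much better than the most common alternative: using the same weak password everywhere! I still believe that.

[Bitwarden][8] has really matured since I last looked at it. Like LastPass, it is user-friendly, supports two-factor authentication, and is reasonably secure. Unlike LastPass, it is [open source][9]. It can be used with or without the browser plugin and supports importing from other solutions, including LastPass. The core functionality is completely free, and there is a premium version for $10 a year.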

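
### Vimium-FF

![Vimium][10]

[Vimium][11] is another open source extension; it provides Vim-like keyboard shortcuts for navigating and controlling Firefox, and its developers call it "The Hacker's Browser." Modifier keys are written as `<c-x>`, `<m-x>`, and `<a-x>` for `Ctrl+x`, `Meta+x`, and `Alt+x` respectively, and the default bindings are easy to customize. Once Vimium is installed, you can press `?` at any time to see the list of key bindings. Note that if you prefer Emacs, there are extensions for those key bindings as well. Either way, I think keyboard shortcuts are an underutilized productivity booster.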

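
### Bonus: Grammarly

Not everyone is lucky enough to write a column on Opensource.com, although you should seriously consider writing for the site; if you have questions, are interested, or would like a mentor, reach out and let's chat. But even without a column to write, proper grammar is beneficial in a wide variety of situations. Give [Grammarly][12] a try. Unfortunately, this extension is not open source, but it does help make sure everything you type is clear, effective, and mistake-free. It does so by scanning your text for common and complex grammatical errors, covering everything from subject-verb agreement to article use to modifier placement. Its basic functionality is free, and there is a premium version available for a monthly fee. I used it for this article, and it caught multiple errors that my proofreading had missed.

Again, Grammarly is the only extension on this list that is not open source, so if you know of a similar high-quality open source alternative, let us know in the comments.

These are the extensions I have found useful and recommend to others. Let me know in the comments what you think of the updated recommendations.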


--------------------------------------------------------------------------------

via: https://opensource.com/article/18/5/firefox-extensions

Author: [Jeremy Garcia][a]
Topic selected by: [lujun9972](https://github.com/lujun9972)
Translator: [MjSeven](https://github.com/MjSeven)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/jeremy-garcia
[1]:https://opensource.com/article/18/1/top-5-firefox-extensions
[2]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/ublock.png?itok=_QFEbDmq (ublock origin ad blocker screenshot)
[3]:https://addons.mozilla.org/en-US/firefox/addon/ublock-origin/
[4]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/images/life-uploads/privacy_badger_1.0.1.png?itok=qZXQeKtc (privacy badger ad blocker screenshot)
[5]:https://www.eff.org/privacybadger
[6]:https://www.eff.org/https-everywhere
[7]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/bitwarden.png?itok=gZPrCYoi (Bitwarden)
[8]:https://bitwarden.com/
[9]:https://github.com/bitwarden
[10]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/vimium.png?itok=QRESXjWG (Vimium)
[11]:https://addons.mozilla.org/en-US/firefox/addon/vimium-ff/
[12]:https://www.grammarly.com/


@ -1,40 +1,22 @@

|

||||

# Is DevOps compatible with part-time community teams?

|

||||

|

||||

### DevOps can greatly benefit projects of all sizes. Here's how to build a DevOps adoption plan for smaller projects.

|

||||

|

||||

|

||||

|

||||

Image by :

|

||||

|

||||

[WOCinTech Chat][1]. Modified by Opensource.com. [CC BY-SA 4.0][2]

|

||||

|

||||

### Get the newsletter

|

||||

|

||||

Join the 85,000 open source advocates who receive our giveaway alerts and article roundups.

|

||||

Is DevOps compatible with part-time community teams?

|

||||

======

|

||||

|

||||

|

||||

DevOps seems to be the talk of the IT world of late—and for good reason. DevOps has streamlined the process and production of IT development and operations. However, there is also an upfront cost to embracing a DevOps ideology, in terms of time, effort, knowledge, and financial investment. Larger companies may have the bandwidth, budget, and time to make the necessary changes, but is it feasible for part-time, resource-strapped communities?

|

||||

|

||||

Part-time communities are teams of like-minded people who take on projects outside of their normal work schedules. The members of these communities are driven by passion and a shared purpose. For instance, one such community is the [ALM | DevOps Rangers][3]. With 100 rangers engaged across the globe, a DevOps solution may seem daunting; nonetheless, they took on the challenge and embraced the ideology. Through their example, we've learned that DevOps is not only feasible but desirable in smaller teams. To read about their transformation, check out [How DevOps eliminates development bottlenecks][4].

|

||||

Part-time communities are teams of like-minded people who take on projects outside of their normal work schedules. The members of these communities are driven by passion and a shared purpose. For instance, one such community is the [ALM | DevOps Rangers][1]. With 100 rangers engaged across the globe, a DevOps solution may seem daunting; nonetheless, they took on the challenge and embraced the ideology. Through their example, we've learned that DevOps is not only feasible but desirable in smaller teams. To read about their transformation, check out [How DevOps eliminates development bottlenecks][2].

|

||||

|

||||

> “DevOps is the union of people, process, and products to enable continuous delivery of value to our end customers.” - Donovan Brown

|

||||

|

||||

### The cost of DevOps

|

||||

|

||||

As stated above, there is an _upfront_ "cost" to DevOps. The cost manifests itself in many forms, such as the time and collaboration between development, operations, and other stakeholders, planning a smooth-flowing process that delivers continuous value, finding the best DevOps products, and training the team in new technologies, to name a few. This aligns directly with Donovan's definition of DevOps, in fact—a **process** for delivering **continuous value** and the **people** who make that happen.

|

||||

|

||||

More DevOps resources

|

||||

|

||||

* [What is DevOps?][5]

|

||||

* [Free eBook: DevOps with OpenShift][6]

|

||||

* [10 bad DevOps habits to break][7]

|

||||

* [10 must-read DevOps resources][8]

|

||||

* [The latest on DevOps][9]

|

||||

As stated above, there is an upfront "cost" to DevOps. The cost manifests itself in many forms, such as the time and collaboration between development, operations, and other stakeholders, planning a smooth-flowing process that delivers continuous value, finding the best DevOps products, and training the team in new technologies, to name a few. This aligns directly with Donovan's definition of DevOps, in fact—a **process** for delivering **continuous value** and the **people** who make that happen.

|

||||

|

||||

Streamlined DevOps takes a lot of planning and training just to create the process, and that doesn't even consider the testing phase. We also can't forget the existing in-flight projects that need to be converted into the new system. While the cost increases the more pervasive the transformation—for instance, if an organization aims to unify its entire development organization under a single process, then that would cost more versus transforming a single pilot or subset of the entire portfolio—these upfront costs must be addressed regardless of their scale. There are a lot of resources and products already out there that can be implemented for a smoother transition—but again, we face the time and effort that will be necessary just to research which ones might work best.

|

||||

|

||||

In the case of the ALM | DevOps Rangers, they had to halt all projects for a couple of sprints to set up the initial process. Many organizations would not be able to do that. Even part-time groups might have very good reasons to keep things moving, which only adds to the complexity. In such scenarios, additional cutover planning (and therefore additional cost) is needed, and the overall state of the community is one of flux and change, which adds risk, which—you guessed it—requires more cost to mitigate.

|

||||

|

||||

There is also an _ongoing_ "cost" that teams will face with a DevOps mindset: Simple maintenance of the system, training and transitioning new team members, and keeping up with new, improved technologies are all a part of the process.

|

||||

There is also an ongoing "cost" that teams will face with a DevOps mindset: Simple maintenance of the system, training and transitioning new team members, and keeping up with new, improved technologies are all a part of the process.

|

||||

|

||||

### DevOps for a part-time community

|

||||

|

||||

@ -46,7 +28,7 @@ The answer to that is dependent on a few variables, such as the ability of the t

|

||||

|

||||

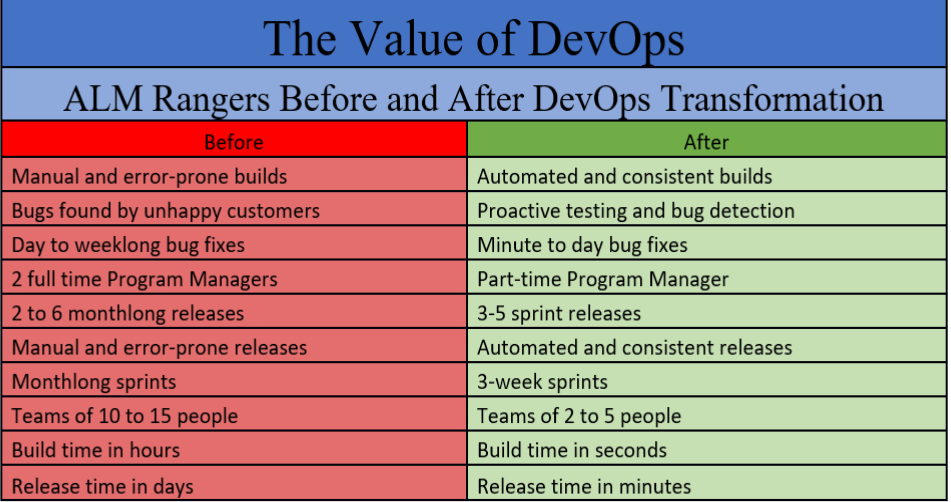

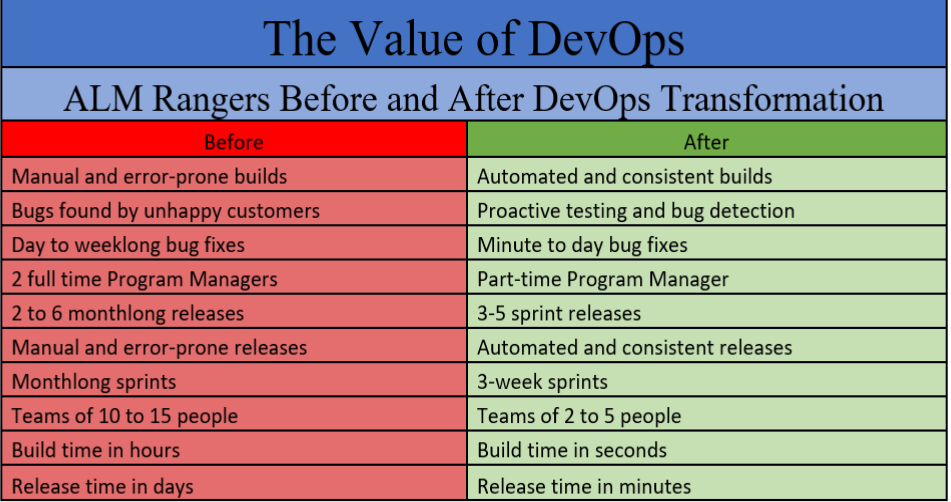

Luckily, we aren't without examples to demonstrate just how DevOps can benefit a smaller group. Let's take a quick look at the ALM Rangers again. The results from their transformation help us understand how DevOps changed their community:

|

||||

|

||||

|

||||

|

||||

|

||||

As illustrated, there are some huge benefits for part-time community teams. Planning goes from long, arduous design sessions to a quick prototyping and storyboarding process. Builds become automated, reliable, and resilient. Testing and bug detection are proactive instead of reactive, which turns into a happier clientele. Multiple full-time program managers are replaced with self-managing teams with a single part-time manager to oversee projects. Teams become smaller and more efficient, which equates to higher production rates and higher-quality project delivery. With results like these, it's hard to argue against DevOps.

|

||||

|

||||

@ -56,62 +38,36 @@ Still, the upfront and ongoing costs aren't right for every community. The numbe

|

||||

|

||||

Another important question to ask: How can a low-bandwidth group make such a massive transition? The good news is that a DevOps transformation doesn’t need to happen all at once. Taken in smaller, more manageable steps, organizations of any size can embrace DevOps.

|

||||

|

||||

1. Determine why DevOps may be the solution you need. Are your projects bottlenecking? Are they running over budget and over time? Of course, these concerns are common for any community, big or small. Answering these questions leads us to step two:

|

||||

2. Develop the right framework to improve the engineering process. DevOps is all about automation, collaboration, and streamlining. Rather than trying to fit everyone into the same process box, the framework should support the work habits, preferences, and delivery needs of the community. Some broad standards should be established (for example, that all teams use a particular version control system). Beyond that, however, let the teams decide their own best process.

|

||||

3. Use the current products that are already available if they meet your needs. Why reinvent the wheel?

|

||||

4. Finally, implement and test the actual DevOps solution. This is, of course, where the actual value of DevOps is realized. There will likely be a few issues and some heartburn, but it will all be worth it in the end because, once established, the products of the community’s work will be nimbler and faster for the users.

|

||||

1. Determine why DevOps may be the solution you need. Are your projects bottlenecking? Are they running over budget and over time? Of course, these concerns are common for any community, big or small. Answering these questions leads us to step two:

|

||||

2. Develop the right framework to improve the engineering process. DevOps is all about automation, collaboration, and streamlining. Rather than trying to fit everyone into the same process box, the framework should support the work habits, preferences, and delivery needs of the community. Some broad standards should be established (for example, that all teams use a particular version control system). Beyond that, however, let the teams decide their own best process.

|

||||

3. Use the current products that are already available if they meet your needs. Why reinvent the wheel?

|

||||

4. Finally, implement and test the actual DevOps solution. This is, of course, where the actual value of DevOps is realized. There will likely be a few issues and some heartburn, but it will all be worth it in the end because, once established, the products of the community’s work will be nimbler and faster for the users.

|

||||

|

||||

|

||||

|

||||

### Reuse DevOps solutions

|

||||

|

||||

One benefit to creating effective CI/CD pipelines is the reusability of those pipelines. Although there is no one-size fits all solution, anyone can adopt a process. There are several pre-made templates available for you to examine, such as build templates on VSTS, ARM templates to deploy Azure resources, and "cookbook"-style textbooks from technical publishers. Once it identifies a process that works well, a community can also create its own template by defining and establishing standards and making that template easily discoverable by the entire community. For more information on DevOps journeys and tools, check out [this site][10].

|

||||

One benefit to creating effective CI/CD pipelines is the reusability of those pipelines. Although there is no one-size fits all solution, anyone can adopt a process. There are several pre-made templates available for you to examine, such as build templates on VSTS, ARM templates to deploy Azure resources, and "cookbook"-style textbooks from technical publishers. Once it identifies a process that works well, a community can also create its own template by defining and establishing standards and making that template easily discoverable by the entire community. For more information on DevOps journeys and tools, check out [this site][3].

|

||||

|

||||

### Summary

|

||||

|

||||

Overall, the success or failure of DevOps relies on the culture of a community. It doesn't matter if the community is a large, resource-rich enterprise or a small, resource-sparse, part-time group. DevOps will still bring solid benefits. The difference is in the approach for adoption and the scale of that adoption. There are both upfront and ongoing costs, but the value greatly outweighs those costs. Communities can use any of the powerful tools available today for their pipelines, and they can also leverage reusability, such as templates, to reduce upfront implementation costs. DevOps is most certainly feasible—and even critical—for the success of part-time community teams.

|

||||

|

||||

---

|

||||

**[See our related story, [How DevOps eliminates development bottlenecks][4].]**

|

||||

|

||||

**\[See our related story, [How DevOps eliminates development bottlenecks][11].\]**

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/4/devops-compatible-part-time-community-teams

|

||||

|

||||

### About the author

|

||||

Author: [Edward Fry][a]
Topic selected by: [lujun9972](https://github.com/lujun9972)
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

[][13]

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

Edward Fry \- Edward is a business technophile who revels in blending people, process, and technology to solve real problems with grace and efficiency. As a member of the ALM Rangers, he loves to share his passion for the process of software development and ways to get computers to do the drudge work so that people can dream the big dreams. He also enjoys the art of great software design and architecture and also just likes being immersed in code. When he isn't helping people with technology, he enjoys... [more about Edward Fry][14]

|

||||

|

||||

[More about me][15]

|

||||

|

||||

* [Learn how you can contribute][16]

|

||||

|

||||

---

|

||||

|

||||

via: [https://opensource.com/article/18/4/devops-compatible-part-time-community-teams][17]

|

||||

|

||||

Author: [Edward Fry][18] Topic selected by: [@lujun9972][19] Translator: [translator ID][20] Proofreader: [proofreader ID][21]

|

||||

|

||||

This article was originally compiled by [LCTT][22] and is proudly presented by [Linux中国][23]

|

||||

|

||||

[1]: https://www.flickr.com/photos/wocintechchat/25392377053/

|

||||

[2]: https://creativecommons.org/licenses/by/4.0/

|

||||

[3]: https://github.com/ALM-Rangers

|

||||

[4]: https://opensource.com/article/17/11/devops-rangers-transformation

|

||||

[5]: https://opensource.com/resources/devops?src=devops_resource_menu1

|

||||

[6]: https://www.openshift.com/promotions/devops-with-openshift.html?intcmp=7016000000127cYAAQ&src=devops_resource_menu2

|

||||

[7]: https://enterprisersproject.com/article/2018/1/10-bad-devops-habits-break?intcmp=7016000000127cYAAQ&src=devops_resource_menu3

|

||||

[8]: https://opensource.com/article/17/12/10-must-read-devops-books?src=devops_resource_menu4

|

||||

[9]: https://opensource.com/tags/devops?src=devops_resource_menu5

|

||||

[10]: https://www.visualstudio.com/devops/

|

||||

[11]: https://opensource.com/article/17/11/devops-rangers-transformation

|

||||

[12]: https://opensource.com/tags/devops

|

||||

[13]: https://opensource.com/users/edwardf

|

||||

[14]: https://opensource.com/users/edwardf

|

||||

[15]: https://opensource.com/users/edwardf

|

||||

[16]: https://opensource.com/participate

|

||||

[17]: https://opensource.com/article/18/4/devops-compatible-part-time-community-teams

|

||||

[18]: https://opensource.com/users/edwardf

|

||||

[19]: https://github.com/lujun9972

|

||||

[20]: https://github.com/译者ID

|

||||

[21]: https://github.com/校对者ID

|

||||

[22]: https://github.com/LCTT/TranslateProject

|

||||

[23]: https://linux.cn/

|

||||

[a]:https://opensource.com/users/edwardf

|

||||

[1]:https://github.com/ALM-Rangers

|

||||

[2]:https://opensource.com/article/17/11/devops-rangers-transformation

|

||||

[3]:https://www.visualstudio.com/devops/

|

||||

[4]:https://opensource.com/article/17/11/devops-rangers-transformation

|

||||

@ -1,111 +0,0 @@

|

||||

# How will the GDPR impact open source communities?

|

||||

|

||||

|

||||

|

||||

Image by :

|

||||

|

||||

opensource.com

|

||||

|

||||

|

||||

|

||||

On May 25, 2018, the [General Data Protection Regulation][1] will go into effect. This new regulation by the European Union will change how organizations around the world need to protect personal data. That could include open source projects and their communities.

|

||||

|

||||

### GDPR details

|

||||

|

||||

The General Data Protection Regulation (GDPR) was approved by the EU Parliament on April 14, 2016, and will be enforced beginning May 25, 2018. The GDPR replaces the Data Protection Directive 95/46/EC and was designed "to harmonize data privacy laws across Europe, to protect and empower all EU citizens data privacy and to reshape the way organizations across the region approach data privacy."

|

||||

|

||||

The aim of the GDPR is to protect the personal data of individuals in the EU in an increasingly data-driven world.

|

||||

|

||||

### To whom does it apply

|

||||

|

||||

One of the biggest changes that comes with the GDPR is an increased territorial scope. The GDPR applies to all organizations processing the personal data of data subjects residing in the European Union, regardless of where the organization is located.

|

||||

|

||||

While most of the online articles covering the GDPR mention companies selling goods or services, we can also look at this territorial scope with open source projects in mind. There are a few variations, such as a for-profit software company running a community, and a non-profit organization, i.e. an open source software project and its community. Once these communities are run on a global scale, it is most likely that EU-based persons are taking part in them.

|

||||

|

||||

When such a global community has an online presence, using platforms such as a website, forum, or issue tracker, it is very likely that it is processing personal data of these EU persons, such as their names, e-mail addresses and possibly even more. These activities will trigger a need to comply with the GDPR.

|

||||

|

||||

### GDPR changes and its impact

|

||||

|

||||

The GDPR brings [many changes][2], strengthening data protection and privacy of EU persons, compared to the previous Directive. Some of these changes have a direct impact on a community as described earlier. Let's look at some of these changes.

|

||||

|

||||

#### Consent

|

||||

|

||||

Let's assume that the community in question uses a forum for its members, and also has one or more forms on its website for registration purposes. With the GDPR you will no longer be able to use one lengthy and illegible privacy policy and terms and conditions. For each of those specific purposes, registering on the forum and on one of those forms, you will need to obtain explicit consent. This consent must be “freely given, specific, informed, and unambiguous.”

|

||||

|

||||

In case of such a form, you could have a checkbox, which should not be pre-checked, with clear text indicating for which purposes the personal data is used, preferably linking to an ‘addendum’ of your existing privacy policy and terms of use.

|

||||

|

||||

#### Right to access

|

||||

|

||||

EU persons get expanded rights by the GDPR. One of them is the right to ask an organization if, where and which personal data is processed. Upon request, they should also be provided with a copy of this data, free of charge, and in an electronic format if this data subject (e.g. EU citizen) asks for it.

|

||||

|

||||

#### Right to be forgotten

|

||||

|

||||

Another right EU citizens get through the GDPR is the "right to be forgotten," also known as data erasure. This means that, subject to certain limitations, the organization will have to erase that person's data, and possibly even stop any further processing, including by the organization’s third parties.

|

||||

|

||||

The above three changes imply that your platform(s) software will need to comply with certain aspects of the GDPR as well. It will need to have specific features such as obtaining and storing consent, extracting data and providing a copy in electronic format to a data subject, and finally the means to erase specific data about a data subject.

|

||||

|

||||

#### Breach notification

|

||||

|

||||

Under the GDPR, a data breach occurs whenever personal data is taken or stolen without the authorization of the data subject. Once you discover a breach, you must notify the supervisory authority within 72 hours, unless the personal data breach is unlikely to result in a risk to the rights and freedoms of natural persons. Affected community members must also be informed without undue delay when the breach is likely to result in a high risk to them. This breach notification is mandatory under the GDPR.

|

||||

|

||||

#### Register

|

||||

|

||||

As an organization, you will become responsible for keeping a register which will include detailed descriptions of all procedures, purposes etc for which you process personal data. This register will act as proof of the organization's compliance with the GDPR’s requirement to maintain a record of personal data processing activities, and will be used for audit purposes.

|

||||

|

||||

#### Fines

|

||||

|

||||

Organizations that do not comply with the GDPR risk fines up to 4% of annual global turnover or €20 million (whichever is greater). According to the GDPR, "this is the maximum fine that can be imposed for the most serious infringements e.g. not having sufficient customer consent to process data or violating the core of Privacy by Design concepts."

|

||||

|

||||

### Final words

|

||||

|

||||

My article should not be used as legal advice or a definitive guide to GDPR compliance. I have covered some of the parts of the regulation that could affect an open source community, to raise awareness about the GDPR and its impact. Obviously, the regulation contains much more that you will need to know about and possibly comply with.

|

||||

|

||||

As you can probably conclude yourself, you will have to take steps when you are running a global community, to comply with the GDPR. If you already apply robust security standards in your community, such as ISO 27001, NIST or PCI DSS, you should have a head start.

|

||||

|

||||

You can find more information about the GDPR at the following sites/resources:

|

||||

|

||||

* [GDPR Portal][3] (by the EU)

|

||||

|

||||

* [Official Regulation (EU) 2016/679][4] (GDPR, including translations)

|

||||

|

||||

* [What is GDPR? 8 things leaders should know][5] (The Enterprisers Project)

|

||||

|

||||

* [How to avoid a GDPR compliance audit: Best practices][6] (The Enterprisers Project)

|

||||

|

||||

|

||||

### About the author

|

||||

|

||||

[][7]

|

||||

|

||||

Robin Muilwijk \- Robin Muilwijk is Advisor Internet and e-Government. He also serves as a community moderator for Opensource.com, an online publication by Red Hat, and as ambassador for The Open Organization. Robin is also Chair of the eZ Community Board, and Community Manager at [eZ Systems][8]. Robin writes and is active on social media to promote and advocate for open source in our businesses and lives. Follow him on Twitter... [more about Robin Muilwijk][9]

|

||||

|

||||

[More about me][10]

|

||||

|

||||

* [Learn how you can contribute][11]

|

||||

|

||||

---

|

||||

|

||||

via: [https://opensource.com/article/18/4/gdpr-impact][12]

|

||||

|

||||

Author: [Robin Muilwijk][13] Topic selection: [@lujun9972][14] Translator: [译者ID][15] Proofreader: [校对者ID][16]

This article was originally compiled and translated by [LCTT][17] and is proudly presented by [Linux中国][18]

|

||||

|

||||

[1]: https://www.eugdpr.org/eugdpr.org.html

|

||||

[2]: https://www.eugdpr.org/key-changes.html

|

||||

[3]: https://www.eugdpr.org/eugdpr.org.html

|

||||

[4]: http://eur-lex.europa.eu/legal-content/EN/TXT/?qid=1520531479111&uri=CELEX:32016R0679

|

||||

[5]: https://enterprisersproject.com/article/2018/4/what-gdpr-8-things-leaders-should-know

|

||||

[6]: https://enterprisersproject.com/article/2017/9/avoiding-gdpr-compliance-audit-best-practices

|

||||

[7]: https://opensource.com/users/robinmuilwijk

|

||||

[8]: http://ez.no

|

||||

[9]: https://opensource.com/users/robinmuilwijk

|

||||

[10]: https://opensource.com/users/robinmuilwijk

|

||||

[11]: https://opensource.com/participate

|

||||

[12]: https://opensource.com/article/18/4/gdpr-impact

|

||||

[13]: https://opensource.com/users/robinmuilwijk

|

||||

[14]: https://github.com/lujun9972

|

||||

[15]: https://github.com/译者ID

|

||||

[16]: https://github.com/校对者ID

|

||||

[17]: https://github.com/LCTT/TranslateProject

|

||||

[18]: https://linux.cn/

|

||||

@ -0,0 +1,63 @@

|

||||

What is a Linux server and why does your business need one?

|

||||

======

|

||||

|

||||

|

||||

IT organizations strive to deliver business value by increasing productivity and delivering services faster while remaining flexible enough to incorporate innovations like cloud, containers, and configuration automation. Modern workloads, whether they run on bare metal, virtual machines, containers, or private or public clouds, are expected to be portable and scalable. Supporting all this requires a modern, secure platform.

|

||||

|

||||

The most direct route to innovation is not always a straight line. With the growing adoption of private and public clouds, multiple architectures, and virtualization, today’s data center is like a globe, with varying infrastructure choices bringing it dimension and depth. And just as a pilot depends on air traffic controllers to provide continuous updates, your digital transformation journey should be guided by a trusted operating system like Linux to provide continuously updated technology and the most efficient and secure access to innovations like cloud, containers, and configuration automation.

|

||||

|

||||

Linux is a family of free, open source software operating systems built around the Linux kernel. Originally developed for personal computers based on the Intel x86 architecture, Linux has since been ported to more platforms than any other operating system. Thanks to the dominance of the Linux kernel-based Android OS on smartphones, Linux has the largest installed base of all general-purpose operating systems. Linux is also the leading operating system on servers and "big iron" systems such as mainframe computers, and it is the only OS used on [TOP500][1] supercomputers.

|

||||

|

||||

|

||||

To tap this functionality, many enterprise companies have adopted servers with a high-powered variant of the Linux open source operating system. These are designed to handle the most demanding business application requirements, such as network and system administration, database management, and web services. Linux servers are often chosen over other server operating systems for their stability, security, and flexibility. Leading Linux server operating systems include [Debian][2], [Ubuntu Server][3], [CentOS][4], [Slackware][5], and [Gentoo][6].

|

||||

|

||||

|

||||

What features and benefits of an enterprise-grade Linux server should you consider for an enterprise workload? First, built-in security controls and scale-out manageability through interfaces that are familiar to both Linux and Windows administrators will enable you to focus on business growth instead of reacting to security vulnerabilities and costly management configuration mistakes. The Linux server you choose, whether it comes from an open source project or a specific OS vendor, should provide security technologies and certifications and maintain enhancements to combat intrusions, protect your data, and meet regulatory compliance. It should:

|

||||

|

||||

* **Deliver resources with security** using integrated control features such as centralized identity management and [Security-Enhanced Linux][7] (SELinux), mandatory access controls (MAC) on a foundation that is [Common Criteria-][8] and [FIPS 140-2-certified][9], as well as the first Linux container framework support to be Common Criteria-certified.

|

||||

* **Automate regulatory compliance and security configuration remediation** across your system and within containers with image scanning like [OpenSCAP][10] that checks for and remediates vulnerabilities and deviations from configuration security baselines, including against [National Checklist Program][11] content for [PCI-DSS][12], [DISA STIG][13], and more. Additionally, it should centralize and scale out configuration remediation across your entire hybrid environment.

|

||||

* **Receive continuous vulnerability security updates** from the upstream community itself or a specific OS vendor, which fixes all critical issues and delivers the fixes by the next business day, if possible, to minimize business impact.

|

||||

|

||||

|

||||

|

||||

As the foundation of your hybrid data center, the Linux server should provide platform manageability and flexible integration with legacy management and automation infrastructure. This will save IT staff time and reduce unplanned downtime compared to a non-paid Linux infrastructure. It should:

|

||||

|

||||

* **Speed image building, deployment, and patch management** across the data center with built-in capabilities, and enrich system life-cycle management, provisioning, enhanced patching, and more.

|

||||

* **Manage individual systems from an easy-to-use web interface** that includes storage, networking, containers, services, and more.

|

||||

* **Automate consistency and compliance** across multiple heterogeneous environments and reduce scripting rework with system roles using native configuration management tools like [Ansible][14], [Chef][15], [Salt][16], [Puppet][17], and more.

|

||||

* **Simplify platform updates** with in-place upgrades that eliminate the hassle of machine migrations and application rebuilds.

|

||||

* **Resolve technical issues** before they impact business operations by using predictive analytics tools to automate identification and remediation of anomalies and their root causes.

|

||||

|

||||

|

||||

|

||||

Linux servers are powering innovation around the globe. As the platform for enterprise workloads, a Linux server should provide a stable, secure, and performance-driven foundation for the applications that run the business of today and tomorrow.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/5/what-linux-server

|

||||

|

||||

Author: [Daniel Oh][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/daniel-oh

|

||||

[1]:https://en.wikipedia.org/wiki/TOP500

|

||||

[2]:https://www.debian.org/

|

||||

[3]:https://www.ubuntu.com/download/server

|

||||

[4]:https://www.centos.org/

|

||||

[5]:http://www.slackware.com/

|

||||

[6]:https://www.gentoo.org/

|

||||

[7]:https://en.wikipedia.org/wiki/Security-Enhanced_Linux

|

||||

[8]:https://en.wikipedia.org/wiki/Common_Criteria

|

||||

[9]:https://en.wikipedia.org/wiki/FIPS_140-2

|

||||

[10]:https://www.open-scap.org/

|

||||

[11]:https://www.nist.gov/programs-projects/national-checklist-program

|

||||

[12]:https://www.pcisecuritystandards.org/pci_security/

|

||||

[13]:https://iase.disa.mil/stigs/Pages/index.aspx

|

||||

[14]:https://www.ansible.com/

|

||||

[15]:https://www.chef.io/chef/

|

||||

[16]:https://saltstack.com/salt-open-source/

|

||||

[17]:https://puppet.com/

|

||||

@ -1,3 +1,5 @@

|

||||

Translating by MjSeven

|

||||

|

||||

The Best Linux Tools for Teachers and Students

|

||||

======

|

||||

Linux is a platform ready for everyone. If you have a niche, Linux is ready to meet or exceed the needs of said niche. One such niche is education. If you are a teacher or a student, Linux is ready to help you navigate the waters of nearly any level of the educational system. From study aids, to writing papers, to managing classes, to running an entire institution, Linux has you covered.

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by zhouzhuowei

|

||||

Getting started with Python for data science

|

||||

======

|

||||

|

||||

|

||||

102

sources/tech/20180525 A Bittorrent Filesystem Based On FUSE.md

Normal file

@ -0,0 +1,102 @@

|

||||

A Bittorrent Filesystem Based On FUSE

|

||||

======

|

||||

|

||||

|

||||

Torrents have long been used to share and download data over the Internet, and there is a plethora of GUI and CLI torrent clients available. Sometimes, though, you cannot sit and wait for a download to complete; you might want to watch the content immediately. This is where **BTFS**, the BitTorrent filesystem, comes in handy. Using BTFS, you can mount a torrent file or magnet link as a directory and then use it like any read-only directory in your file tree. The contents of the files are downloaded on demand as applications read them. Since BTFS runs on top of FUSE, it requires no changes to the Linux kernel.

|

||||

|

||||

## BTFS – A Bittorrent Filesystem Based On FUSE

|

||||

|

||||

### Installing BTFS

|

||||

|

||||

BTFS is available in the default repositories of most Linux distributions.

|

||||

|

||||

On Arch Linux and its variants, run the following command to install BTFS.

|

||||

```

$ sudo pacman -S btfs

```

|

||||

|

||||

On Debian, Ubuntu, Linux Mint:

|

||||

```

$ sudo apt-get install btfs

```

|

||||

|

||||

On Gentoo:

|

||||

```

# emerge -av btfs

```

|

||||

|

||||

BTFS can also be installed using [**Linuxbrew**][1] package manager.

|

||||

```

$ brew install btfs

```

|

||||

|

||||

### Usage

|

||||

|

||||

BTFS usage is fairly simple. All you have to do is find the .torrent file or magnet link and mount it in a directory. The contents of the torrent file or magnet link will be mounted inside the directory of your choice. When a program tries to access a file for reading, the actual data will be downloaded on demand. Furthermore, tools like **ls**, **cat** and **cp** work as expected for manipulating the torrents. Applications like **vlc** and **mplayer** can also work without changes. The players don’t even know that the content is not physically present on the local disk and is being collected in parts from peers on demand.

|

||||

|

||||

Create a directory to mount the torrent/magnet link:

|

||||

```

$ mkdir mnt

```

|

||||

|

||||

Mount the torrent/magnet link:

|

||||

```

$ btfs video.torrent mnt

```

|

||||

|

||||

[![][2]][3]

|

||||

|

||||

Change to the directory:

|

||||

```

$ cd mnt

```

|

||||

|

||||

And, start watching!

|

||||

```

$ vlc <path-to-video.mp4>

```

|

||||

|

||||

Give BTFS a few moments to find and contact the tracker. Once the real data is loaded, BTFS won’t require the tracker any more.

|

||||

|

||||

![][4]

|

||||

|

||||

To unmount the BTFS filesystem, simply run the following command:

|

||||

```

$ fusermount -u mnt

```

|

||||

|

||||

Now, the contents in the mounted directory will be gone. To access the contents again, you need to mount the torrent as described above.

|

||||

|

||||

The BTFS application will turn your VLC or Mplayer into Popcorn Time. Mount your favorite TV show or movie torrent file or magnet link and start watching without having to download the entire contents of the torrent or wait for your download to complete. The contents of the torrent or magnet link will be downloaded on demand when accessed by the applications.

|

||||

|

||||

And that’s all for now. Hope this was useful. More good stuff to come. Stay tuned!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/btfs-a-bittorrent-filesystem-based-on-fuse/

|

||||

|

||||

Author: [SK][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://www.ostechnix.com/linuxbrew-common-package-manager-linux-mac-os-x/

|

||||

[2]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2018/05/btfs.png

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2018/05/btfs-1.png

|

||||

@ -0,0 +1,89 @@

|

||||

How to Set Different Wallpaper for Each Monitor in Linux

|

||||

======

|

||||

**Brief: If you want to display different wallpapers on multiple monitors on Ubuntu 18.04 or any other Linux distribution with GNOME, MATE or Budgie desktop environment, this nifty tool will help you achieve this.**

|

||||

|

||||

A multi-monitor setup often leads to multiple issues on Linux, but I am not going to discuss those issues in this article. Instead, this is a positive article about multiple-monitor support on Linux.

|

||||

|

||||

If you are using multiple monitors, perhaps you would like to set up a different wallpaper for each monitor. I am not sure about other Linux distributions and desktop environments, but Ubuntu with [GNOME desktop][1] doesn’t provide this functionality on its own.

|

||||

|

||||

Fret not! In this quick tutorial, I’ll show you how to set a different wallpaper for each monitor on Linux distributions with GNOME desktop environment.

|

||||

|

||||

### Setting up different wallpaper for each monitor on Ubuntu 18.04 and other Linux distributions

|

||||

|

||||

![Different wallpaper on each monitor in Ubuntu][2]

|

||||

|

||||

I am going to use a nifty tool called [HydraPaper][3] for setting different backgrounds on different monitors. HydraPaper is a [GTK][4] based application to set different backgrounds for each monitor in [GNOME desktop environment][5].

|

||||

|

||||

It also supports the [MATE][6] and [Budgie][7] desktop environments, which means Ubuntu MATE and [Ubuntu Budgie][8] users can also benefit from this application.

|

||||

|

||||

#### Install HydraPaper on Linux using FlatPak

|

||||

|

||||

HydraPaper can be installed easily using [FlatPak][9]. Ubuntu 18.04 already provides support for FlatPaks so all you need to do is to download the application file and double click on it to open it with the GNOME Software Center.

|

||||

|

||||

You can refer to this article to learn [how to enable FlatPak support][10] on your distribution. Once you have the FlatPak support enabled, just download it from [FlatHub][11] and install it.

|

||||

|

||||

[Download HydraPaper][12]

|

||||

|

||||

#### Using HydraPaper for setting different background on different monitors

|

||||

|

||||

Once installed, just look for HydraPaper in application menu and start the application. You’ll see images from your Pictures folder here because by default the application takes images from the Pictures folder of the user.

|

||||

|

||||

You can add your own folder(s) where you keep your wallpapers. Do note that it doesn’t find images recursively. If you have nested folders, it will only show images from the top folder.

|

||||

|

||||

![Setting up different wallpaper for each monitor on Linux][13]

|

||||

|

||||

Using HydraPaper is absolutely simple. Just select the wallpapers for each monitor and click on the apply button at the top. You can easily identify external monitor(s), which are labeled HDMI.

|

||||

|

||||

![Setting up different wallpaper for each monitor on Linux][14]

|

||||

|

||||

You can also add selected wallpapers to ‘Favorites’ for quick access. Doing this will move the ‘favorite wallpapers’ from Wallpapers tab to Favorites tab.

|

||||

|

||||

![Setting up different wallpaper for each monitor on Linux][15]

|

||||

|

||||

You don’t need to start HydraPaper at each boot. Once you set different wallpaper for different monitor, the settings are saved and you’ll see your chosen wallpapers even after restart. This would be expected behavior of course but I thought I would mention the obvious.

|

||||

|

||||

One big downside of HydraPaper is in the way it is designed to work. You see, HydraPaper combines your selected wallpapers into one single image and stretches it across the screens giving an impression of having different background on each display. And this becomes an issue when you remove the external display.

|

||||

|

||||

For example, when I tried using my laptop without the external display, it showed me a background image like this.

|

||||

|

||||

![Dual Monitor wallpaper HydraPaper][16]

|

||||

|

||||

Quite obviously, this is not what I would expect.

|

||||

|

||||

#### Did you like it?

|

||||

|

||||

HydraPaper makes setting up different backgrounds on different monitors a painless task. It supports more than two monitors and monitors with different orientation. Simple interface with only the required features makes it an ideal application for those who always use dual monitors.

|

||||

|

||||

How do you set different wallpaper for different monitor on Linux? Do you think HydraPaper is an application worth installing?

|

||||

|

||||

Do share your views, and if you find this article useful, please share it on various social media channels such as Twitter and [Reddit][17].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/wallpaper-multi-monitor/

|

||||

|

||||

Author: [Abhishek Prakash][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]:https://www.gnome.org/

|

||||

[2]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/multi-monitor-wallpaper-setup-800x450.jpeg

|

||||

[3]:https://github.com/GabMus/HydraPaper

|

||||

[4]:https://www.gtk.org/

|

||||

[5]:https://itsfoss.com/gnome-tricks-ubuntu/

|

||||

[6]:https://mate-desktop.org/

|

||||

[7]:https://budgie-desktop.org/home/

|

||||

[8]:https://itsfoss.com/ubuntu-budgie-18-review/

|

||||

[9]:https://flatpak.org

|

||||

[10]:https://flatpak.org/setup/

|

||||

[11]:https://flathub.org

|

||||

[12]:https://flathub.org/apps/details/org.gabmus.hydrapaper

|

||||

[13]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-2-800x631.jpeg

|

||||

[14]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-1.jpeg

|

||||

[15]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-3.jpeg

|

||||

[16]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/hydra-paper-dual-monitor-800x450.jpeg

|

||||

[17]:https://www.reddit.com/r/LinuxUsersGroup/

|

||||

@ -0,0 +1,114 @@

|

||||

How to clean up your data in the command line

|

||||

======

|

||||

|

||||

|

||||

I work part-time as a data auditor. Think of me as a proofreader who works with tables of data rather than pages of prose. The tables are exported from relational databases and are usually fairly modest in size: 100,000 to 1,000,000 records and 50 to 200 fields.

|

||||

|

||||

I haven't seen an error-free data table, ever. The messiness isn't limited, as you might think, to duplicate records, spelling and formatting errors, and data items placed in the wrong field. I also find:

|

||||

|

||||

* broken records spread over several lines because data items had embedded line breaks

|

||||

* data items in one field disagreeing with data items in another field, in the same record

|

||||

* records with truncated data items, often because very long strings were shoehorned into fields with 50- or 100-character limits

|

||||

* character encoding failures producing the gibberish known as [mojibake][1]

|

||||

* invisible [control characters][2], some of which can cause data processing errors

|

||||

* [replacement characters][3] and mysterious question marks inserted by the last program that failed to understand the data's character encoding
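Some of the problems in this list can be spotted with standard tools alone. As a minimal sketch (not taken from the article), the function below lists the lines of a tab-separated file that contain control characters. The function name `find_control_chars` and the file name in the usage note are hypothetical, and the tab is removed first on the assumption that it is the field separator and should not be reported.

```shell
# Hypothetical helper: list lines of tab-separated file $1 that contain
# control characters other than the tab field separator.
find_control_chars() {
    # Delete tabs first, because a tab is itself a control character.
    # LC_ALL=C makes grep treat the input as plain bytes, -a forces text
    # mode, and -n prefixes each matching line with its line number.
    tr -d '\t' < "$1" | LC_ALL=C grep -a -n '[[:cntrl:]]'
}
```

Running `find_control_chars table.tsv` prints each offending line prefixed with its line number; it prints nothing if the file is clean.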

|

||||

|

||||

|

||||

|

||||

Cleaning up these problems isn't hard, but there are non-technical obstacles to finding them. The first is everyone's natural reluctance to deal with data errors. Before I see a table, the data owners or managers may well have gone through all five stages of Data Grief:

|

||||

|

||||

1. There are no errors in our data.

|

||||

2. Well, maybe there are a few errors, but they're not that important.

|

||||

3. OK, there are a lot of errors; we'll get our in-house people to deal with them.

|

||||

4. We've started fixing a few of the errors, but it's time-consuming; we'll do it when we migrate to the new database software.

|

||||

5. We didn't have time to clean the data when moving to the new database; we could use some help.

|

||||

|

||||

|

||||

|

||||

The second progress-blocking attitude is the belief that data cleaning requires dedicated applications—either expensive proprietary programs or the excellent open source program [OpenRefine][4]. To deal with problems that dedicated applications can't solve, data managers might ask a programmer for help—someone good with [Python][5] or [R][6].

|

||||

|

||||

But data auditing and cleaning generally don't require dedicated applications. Plain-text data tables have been around for many decades, and so have text-processing tools. Open up a Bash shell and you have a toolbox loaded with powerful text processors like `grep`, `cut`, `paste`, `sort`, `uniq`, `tr`, and `awk`. They're fast, reliable, and easy to use.
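To give a flavor of how these tools combine, here is a small sketch (an illustration, not one of the author's own functions) that tallies how many fields each record has. In a well-formed tab-separated table every record has the same number of fields, so more than one line of output suggests broken or malformed records. The name `field_counts` is hypothetical.

```shell
# Hypothetical helper: tally how many records in tab-separated file $1
# have each number of fields.
field_counts() {
    # awk prints the field count (NF) of every line; sort groups equal
    # counts together; uniq -c reports how often each count occurs.
    awk -F'\t' '{print NF}' "$1" | sort -n | uniq -c
}
```

Each output line shows a number of records followed by the field count those records share.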

|

||||

|

||||

I do all my data auditing on the command line, and I've put many of my data-auditing tricks on a ["cookbook" website][7]. Operations I do regularly get stored as functions and shell scripts (see the example below).

|

||||

|

||||

Yes, a command-line approach requires that the data to be audited have been exported from the database. And yes, the audit results need to be edited later within the database, or (database permitting) the cleaned data items need to be imported as replacements for the messy ones.

|

||||

|

||||

But the advantages are remarkable. `awk` will process a few million records in seconds on a consumer-grade desktop or laptop. Uncomplicated regular expressions will find all the data errors you can imagine. And all of this will happen safely outside the database structure: Command-line auditing cannot affect the database, because it works with data liberated from its database prison.

|

||||

|

||||

Readers who trained on Unix will be smiling smugly at this point. They remember manipulating data on the command line many years ago in just these ways. What's happened since then is that processing power and RAM have increased spectacularly, and the standard command-line tools have been made substantially more efficient. Data auditing has never been faster or easier. And now that Microsoft Windows 10 can run Bash and GNU/Linux programs, Windows users can appreciate the Unix and Linux motto for dealing with messy data: Keep calm and open a terminal.

|

||||

|

||||

|

||||

![Tshirt, Keep Calm and Open A Terminal][9]

|

||||

|

||||

Photo by Robert Mesibov, CC BY

|

||||

|

||||

### An example

|

||||

|

||||

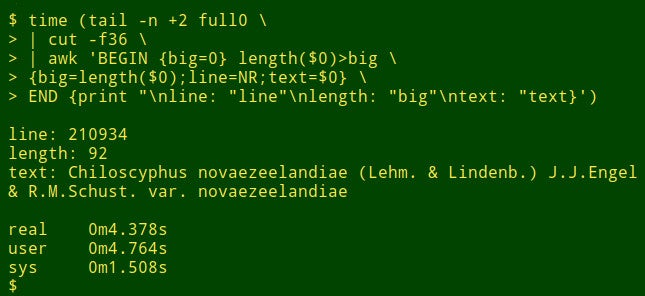

Suppose I want to find the longest data item in a particular field of a big table. That's not really a data auditing task, but it will show how shell tools work. For demonstration purposes, I'll use the tab-separated table `full0`, which has 1,122,023 records (plus a header line) and 49 fields, and I'll look in field number 36. (I get field numbers with a function explained [on my cookbook site][10].)
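
The cookbook function itself is not reproduced here. As a rough stand-in, one way to list field numbers is to print each header name next to its position. The name `fields` and this implementation are assumptions for illustration, not the cookbook's code.

```shell
# fields FILE: print "number<TAB>name" for each field in the header line
# of a tab-separated FILE. (Hypothetical helper, not the cookbook function.)
fields() {
    awk -F'\t' 'NR == 1 { for (i = 1; i <= NF; i++) print i "\t" $i; exit }' "$1"
}
```

Piping the result through `grep` is then enough to look up the number of a field whose name is known.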

The command begins by using `tail` to remove the header line from `full0`. The result is piped to `cut`, which extracts the decapitated field 36. Next in the pipeline is `awk`. Here the variable `big` is initialized to a value of 0; then `awk` tests the length of the data item in the first record. If the length is bigger than 0, `awk` resets `big` to the new length and stores the line number (`NR`, counted after the header line has been removed) in the variable `line` and the whole data item in the variable `text`. `awk` then processes each of the remaining 1,122,022 records in turn, resetting the three variables whenever it finds a longer data item. Finally, it prints out a neatly separated list of the line number, the length of the data item, and the full text of the longest data item. (In the following code, the commands have been broken up for clarity onto several lines.)

```
tail -n +2 full0 \
| cut -f36 \
| awk 'BEGIN {big=0} length($0)>big \
{big=length($0);line=NR;text=$0} \
END {print "\nline: "line"\nlength: "big"\ntext: "text}'
```

How long does this take? About 4 seconds on my desktop (Core i5, 8 GB RAM):

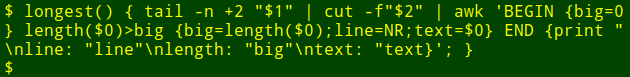

Now for the neat part: I can pop that long command into a shell function, `longest`, which takes as its arguments the filename `($1)` and the field number `($2)`:

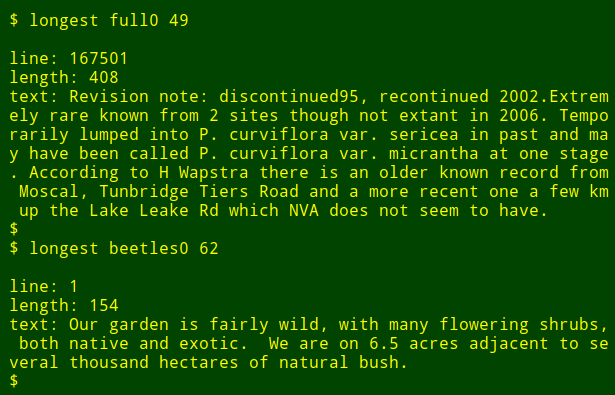

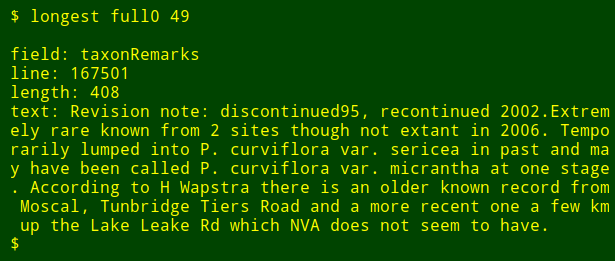

I can then re-run the command as a function, finding the longest data items in other fields and in other files without needing to remember how the command is written:
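
For readers who cannot see the screenshots, here is a minimal sketch of what such a function could look like, assuming it simply wraps the pipeline shown earlier and substitutes `$1` for the filename and `$2` for the field number. The exact definition in the original may differ.

```shell
# longest FILE FIELD: report the longest data item in FIELD of a
# tab-separated FILE that has a header line.
# (A sketch that wraps the pipeline from the text; details are assumed.)
longest() {
    tail -n +2 "$1" \
    | cut -f"$2" \
    | awk 'BEGIN { big = 0 }
           length($0) > big { big = length($0); line = NR; text = $0 }
           END { print "\nline: " line "\nlength: " big "\ntext: " text }'
}
```

With this definition loaded in the shell, `longest full0 36` repeats the search described above, and the same call with a different filename or field number searches elsewhere. As in the original pipeline, the reported line number counts records after the header line has been removed.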
