mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit

8496e0a104

@ -0,0 +1,136 @@

|

||||

又一波你可能不知道的 Linux 命令行网络监控工具

|

||||

===============================================================================

|

||||

|

||||

对任何规模的业务来说,网络监控工具都是一个重要的功能。网络监控的目标可能千差万别。比如,监控活动的目标可以是保证长期的网络服务、安全保护、对性能进行排查、网络使用统计等。由于它的目标不同,网络监控器使用很多不同的方式来完成任务。比如对包层面的嗅探,对数据流层面的统计数据,向网络中注入探测的流量,分析服务器日志等。

|

||||

|

||||

尽管有许多专用的网络监控系统可以365天24小时监控,但您依旧可以在特定的情况下使用命令行式的网络监控器,某些命令行式的网络监控器在某方面很有用。如果您是系统管理员,那您就应该有亲身使用一些知名的命令行式网络监控器的经历。这里有一份**Linux上流行且实用的网络监控器**列表。

|

||||

|

||||

### 包层面的嗅探器 ###

|

||||

|

||||

在这个类别下,监控工具在链路上捕捉独立的包,分析它们的内容,展示解码后的内容或者包层面的统计数据。这些工具在最底层对网络进行监控、管理,同样的也能进行最细粒度的监控,其代价是影响网络I/O和分析的过程。

|

||||

|

||||

1. **dhcpdump**:一个命令行式的DHCP流量嗅探工具,捕捉DHCP的请求/回复流量,并以用户友好的方式显示解码的DHCP协议消息。这是一款排查DHCP相关故障的实用工具。

|

||||

|

||||

2. **[dsniff][1]**:一个基于命令行的嗅探、伪造和劫持的工具合集,被设计用于网络审查和渗透测试。它可以嗅探多种信息,比如密码、NSF流量(LCTT 译注:此处疑为 NFS 流量)、email消息、网络地址等。

|

||||

|

||||

3. **[httpry][2]**:一个HTTP报文嗅探器,用于捕获、解码HTTP请求和回复报文,并以用户友好的方式显示这些信息。(LCTT 译注:[延伸阅读](https://linux.cn/article-4148-1.html)。 )

|

||||

|

||||

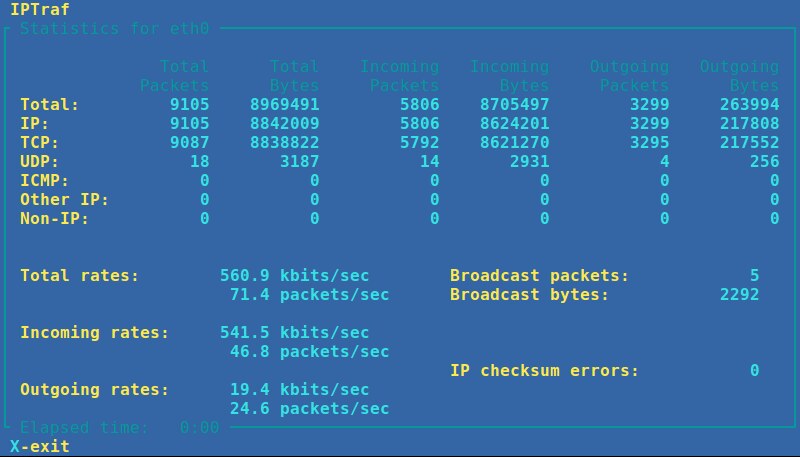

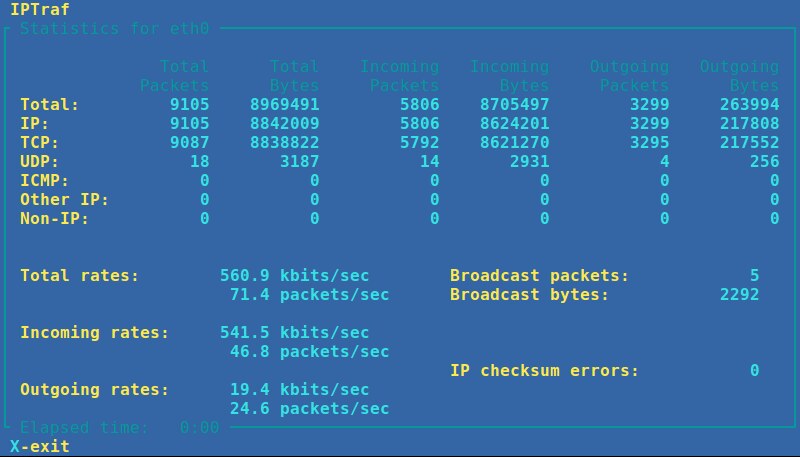

4. **IPTraf**:基于命令行的网络统计数据查看器。它实时显示包层面、连接层面、接口层面、协议层面的报文/字节数。抓包过程由协议过滤器控制,且操作过程全部是菜单驱动的。(LCTT 译注:[延伸阅读](https://linux.cn/article-5430-1.html)。)

|

||||

|

||||

|

||||

|

||||

5. **[mysql-sniffer][3]**:一个用于抓取、解码MySQL请求相关的数据包的工具。它以可读的方式显示最频繁或全部的请求。

|

||||

|

||||

6. **[ngrep][4]**:在网络报文中执行grep。它能实时抓取报文,并用正则表达式或十六进制表达式的方式匹配(过滤)报文。它是一个可以对异常流量进行检测、存储或者对实时流中特定模式报文进行抓取的实用工具。

|

||||

|

||||

7. **[p0f][5]**:一个被动的基于包嗅探的指纹采集工具,可以可靠地识别操作系统、NAT或者代理设置、网络链路类型以及许多其它与活动的TCP连接相关的属性。

|

||||

|

||||

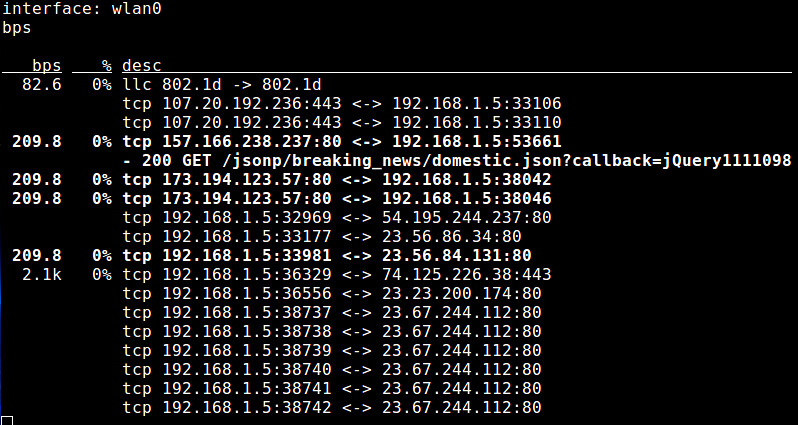

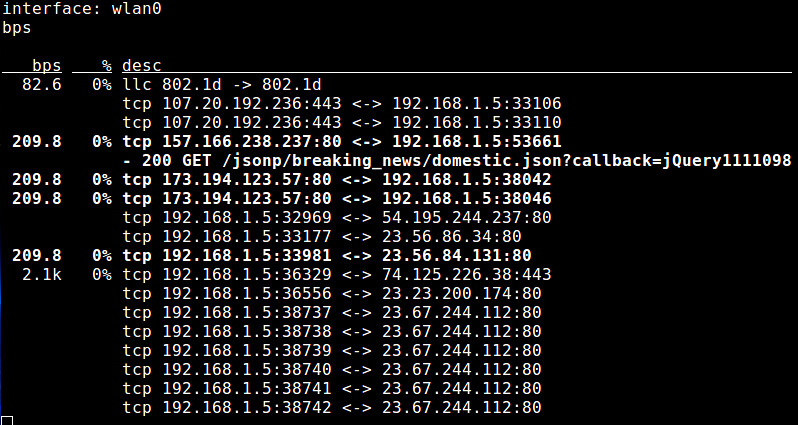

8. **pktstat**:一个命令行式的工具,通过实时分析报文,显示连接带宽使用情况以及相关的协议(例如,HTTP GET/POST、FTP、X11)等描述信息。

|

||||

|

||||

|

||||

|

||||

9. **Snort**:一个入侵检测和预防工具,通过规则驱动的协议分析和内容匹配,来检测/预防活跃流量中各种各样的后门、僵尸网络、网络钓鱼、间谍软件攻击。

|

||||

|

||||

10. **tcpdump**:一个命令行的嗅探工具,可以基于过滤表达式抓取网络中的报文,分析报文,并且在包层面输出报文内容以便于包层面的分析。他在许多网络相关的错误排查、网络程序debug、或[安全][6]监测方面应用广泛。

|

||||

|

||||

11. **tshark**:一个与Wireshark窗口程序一起使用的命令行式的嗅探工具。它能捕捉、解码网络上的实时报文,并能以用户友好的方式显示其内容。

|

||||

|

||||

### 流/进程/接口层面的监控 ###

|

||||

|

||||

在这个分类中,网络监控器通过把流量按照流、相关进程或接口分类,收集每个流、每个进程、每个接口的统计数据。其信息的来源可以是libpcap抓包库或者sysfs内核虚拟文件系统。这些工具的监控成本很低,但是缺乏包层面的检视能力。

|

||||

|

||||

12. **bmon**:一个基于命令行的带宽监测工具,可以显示各种接口相关的信息,不但包括接收/发送的总量/平均值统计数据,而且拥有历史带宽使用视图。

|

||||

|

||||

|

||||

|

||||

13. **[iftop][7]**:一个带宽使用监测工具,可以实时显示某个网络连接的带宽使用情况。它对所有带宽使用情况排序并通过ncurses的接口来进行可视化。他可以方便的监控哪个连接消耗了最多的带宽。(LCTT 译注:[延伸阅读](https://linux.cn/article-1843-1.html)。)

|

||||

|

||||

14. **nethogs**:一个基于ncurses显示的进程监控工具,提供进程相关的实时的上行/下行带宽使用信息。它对检测占用大量带宽的进程很有用。(LCTT 译注:[延伸阅读](https://linux.cn/article-2808-1.html)。)

|

||||

|

||||

15. **netstat**:一个显示许多TCP/UDP的网络堆栈的统计信息的工具。诸如打开的TCP/UDP连接书、网络接口发送/接收、路由表、协议/套接字的统计信息和属性。当您诊断与网络堆栈相关的性能、资源使用时它很有用。

|

||||

|

||||

16. **[speedometer][8]**:一个可视化某个接口发送/接收的带宽使用的历史趋势,并且基于ncurses的条状图进行显示的终端工具。

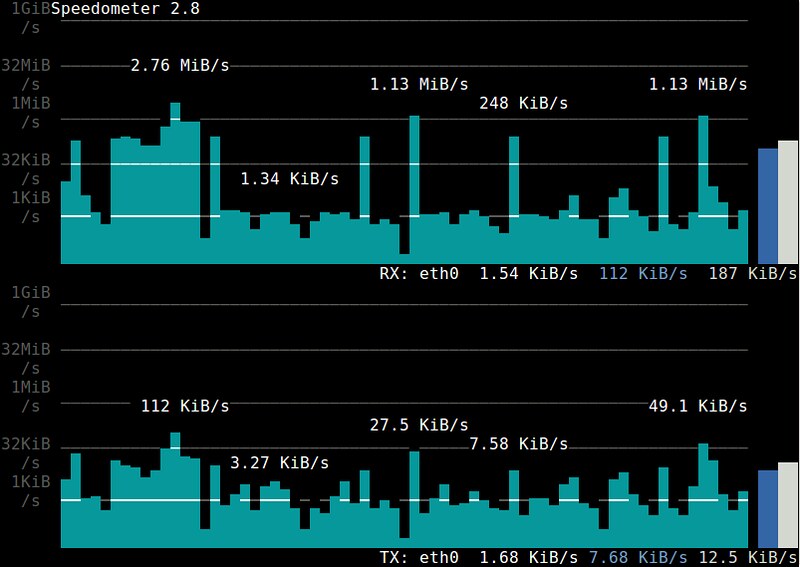

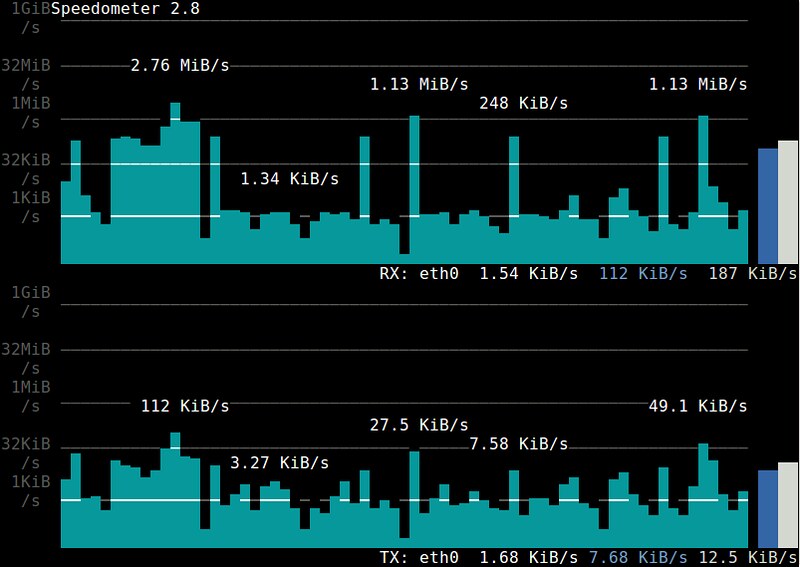

|

||||

|

||||

|

||||

|

||||

17. **[sysdig][9]**:一个可以通过统一的界面对各个Linux子系统进行系统级综合性调试的工具。它的网络监控模块可以监控在线或离线、许多进程/主机相关的网络统计数据,例如带宽、连接/请求数等。(LCTT 译注:[延伸阅读](https://linux.cn/article-4341-1.html)。)

|

||||

|

||||

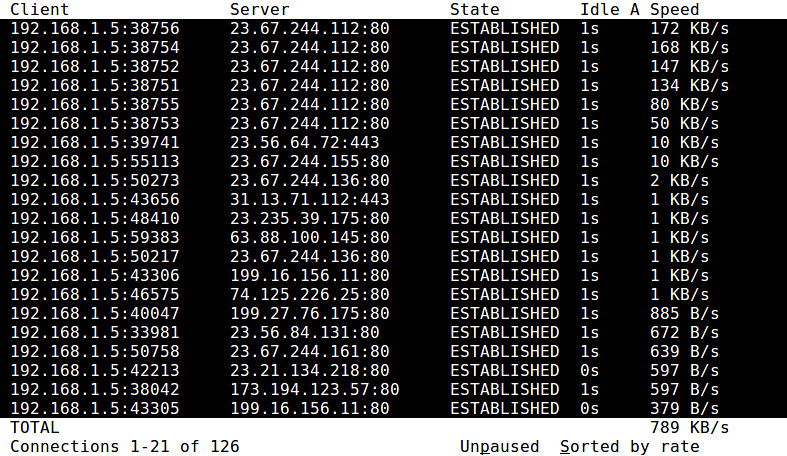

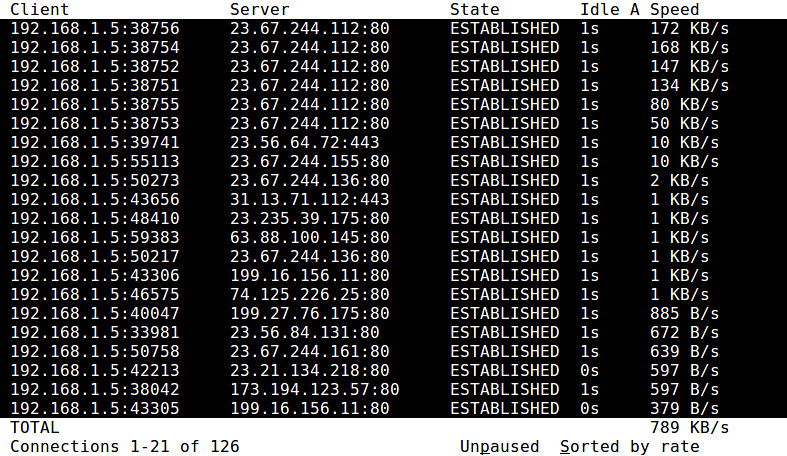

18. **tcptrack**:一个TCP连接监控工具,可以显示活动的TCP连接,包括源/目的IP地址/端口、TCP状态、带宽使用等。

|

||||

|

||||

|

||||

|

||||

19. **vnStat**:一个存储并显示每个接口的历史接收/发送带宽视图(例如,当前、每日、每月)的流量监控器。作为一个后台守护进程,它收集并存储统计数据,包括接口带宽使用率和传输字节总数。(LCTT 译注:[延伸阅读](https://linux.cn/article-5256-1.html)。)

|

||||

|

||||

### 主动网络监控器 ###

|

||||

|

||||

不同于前面提到的被动的监听工具,这个类别的工具们在监听时会主动的“注入”探测内容到网络中,并且会收集相应的反应。监听目标包括路由路径、可供使用的带宽、丢包率、延时、抖动(jitter)、系统设置或者缺陷等。

|

||||

|

||||

20. **[dnsyo][10]**:一个DNS检测工具,能够管理跨越多达1500个不同网络的开放解析器的DNS查询。它在您检查DNS传播或排查DNS设置的时候很有用。

|

||||

|

||||

21. **[iperf][11]**:一个TCP/UDP带宽测量工具,能够测量两个端点间最大可用带宽。它通过在两个主机间单向或双向的输出TCP/UDP探测流量来测量可用的带宽。它在监测网络容量、调谐网络协议栈参数时很有用。一个叫做[netperf][12]的变种拥有更多的功能及更好的统计数据。

|

||||

|

||||

22. **[netcat][13]/socat**:通用的网络调试工具,可以对TCP/UDP套接字进行读、写或监听。它通常和其他的程序或脚本结合起来在后端对网络传输或端口进行监听。(LCTT 译注:[延伸阅读](https://linux.cn/article-1171-1.html)。)

|

||||

|

||||

23. **nmap**:一个命令行的端口扫描和网络发现工具。它依赖于若干基于TCP/UDP的扫描技术来查找开放的端口、活动的主机或者在本地网络存在的操作系统。它在你审查本地主机漏洞或者建立维护所用的主机映射时很有用。[zmap][14]是一个类似的替代品,是一个用于互联网范围的扫描工具。(LCTT 译注:[延伸阅读](https://linux.cn/article-2561-1.html)。)

|

||||

|

||||

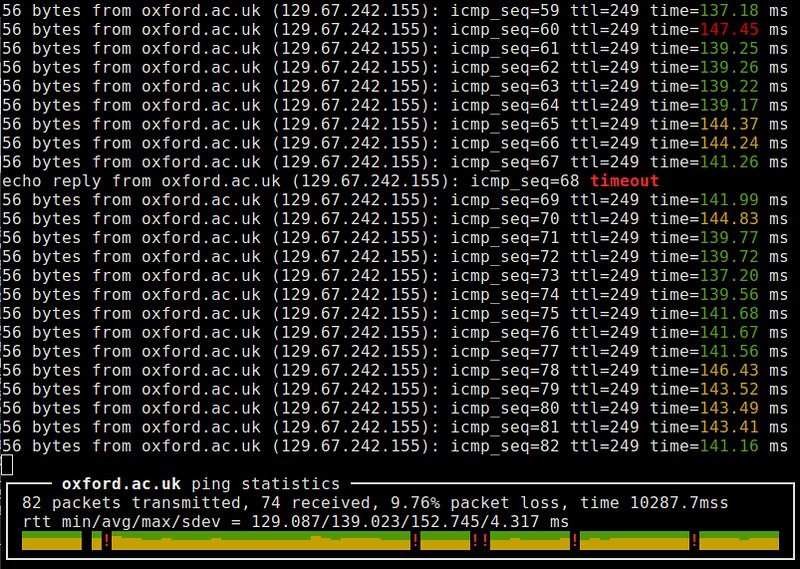

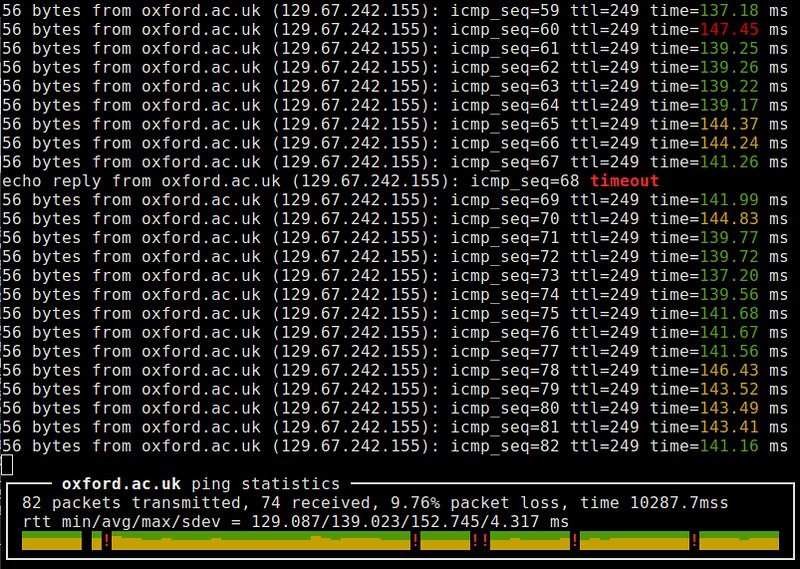

24. ping:一个常用的网络测试工具。通过交换ICMP的echo和reply报文来实现其功能。它在测量路由的RTT、丢包率以及检测远端系统防火墙规则时很有用。ping的变种有更漂亮的界面(例如,[noping][15])、多协议支持(例如,[hping][16])或者并行探测能力(例如,[fping][17])。(LCTT 译注:[延伸阅读](https://linux.cn/article-2303-1.html)。)

|

||||

|

||||

|

||||

|

||||

25. **[sprobe][18]**:一个启发式推断本地主机和任意远端IP地址之间的网络带宽瓶颈的命令行工具。它使用TCP三次握手机制来评估带宽的瓶颈。它在检测大范围网络性能和路由相关的问题时很有用。

|

||||

|

||||

26. **traceroute**:一个能发现从本地到远端主机的第三层路由/转发路径的网络发现工具。它发送限制了TTL的探测报文,收集中间路由的ICMP反馈信息。它在排查低速网络连接或者路由相关的问题时很有用。traceroute的变种有更好的RTT统计功能(例如,[mtr][19])。

|

||||

|

||||

### 应用日志解析器 ###

|

||||

|

||||

在这个类别下的网络监测器把特定的服务器应用程序作为目标(例如,web服务器或者数据库服务器)。由服务器程序产生或消耗的网络流量通过它的日志被分析和监测。不像前面提到的网络层的监控器,这个类别的工具能够在应用层面分析和监控网络流量。

|

||||

|

||||

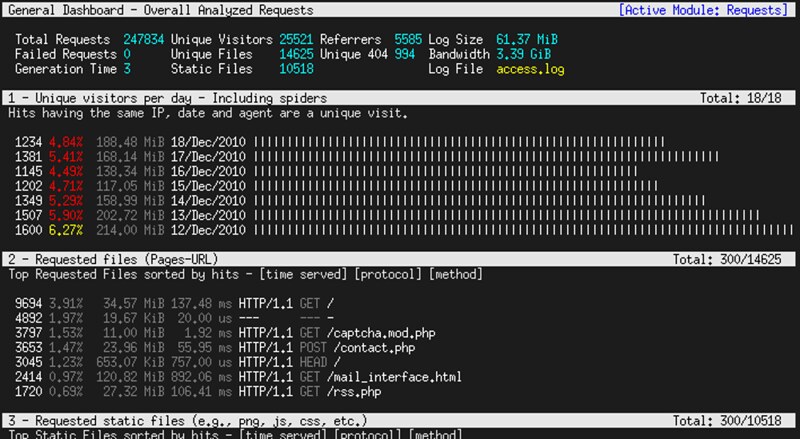

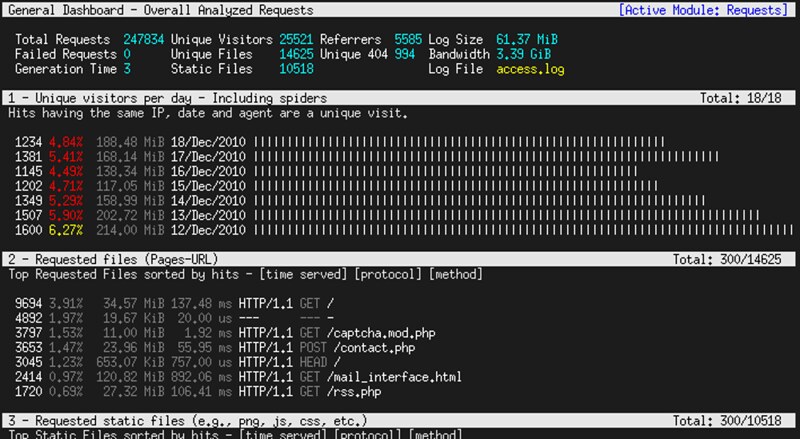

27. **[GoAccess][20]**:一个针对Apache和Nginx服务器流量的交互式查看器。基于对获取到的日志的分析,它能展示包括日访问量、最多请求、客户端操作系统、客户端位置、客户端浏览器等在内的多个实时的统计信息,并以滚动方式显示。

|

||||

|

||||

|

||||

|

||||

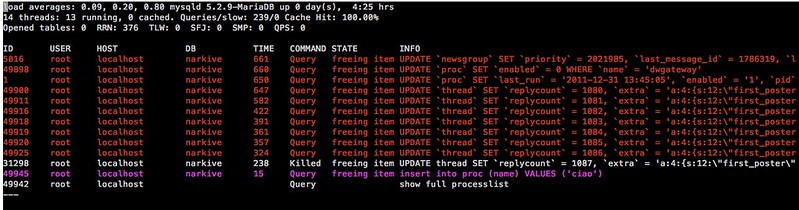

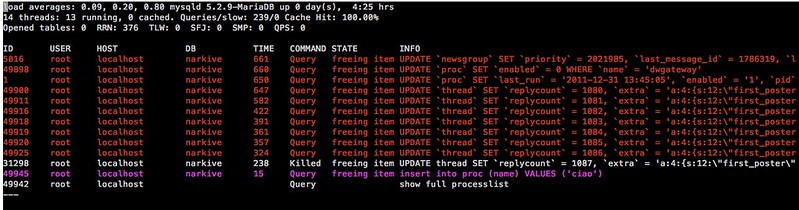

28. **[mtop][21]**:一个面向MySQL/MariaDB服务器的命令行监控器,它可以将成本最大的查询和当前数据库服务器负载以可视化的方式显示出来。它在您优化MySQL服务器性能、调谐服务器参数时很有用。

|

||||

|

||||

|

||||

|

||||

29. **[ngxtop][22]**:一个面向Nginx和Apache服务器的流量监测工具,能够以类似top指令的方式可视化的显示Web服务器的流量。它解析web服务器的查询日志文件并收集某个目的地或请求的流量统计信息。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

在这篇文章中,我展示了许多命令行式监测工具,从最底层的包层面的监控器到最高层应用程序层面的网络监控器。了解那个工具的作用是一回事,选择哪个工具使用又是另外一回事。单一的一个工具不能作为您每天使用的通用的解决方案。一个好的系统管理员应该能决定哪个工具更适合当前的环境。希望这个列表对此有所帮助。

|

||||

|

||||

欢迎您通过回复来改进这个列表的内容!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/useful-command-line-network-monitors-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[wwy-hust](https://github.com/wwy-hust)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:http://www.monkey.org/~dugsong/dsniff/

|

||||

[2]:http://xmodulo.com/monitor-http-traffic-command-line-linux.html

|

||||

[3]:https://github.com/zorkian/mysql-sniffer

|

||||

[4]:http://ngrep.sourceforge.net/

|

||||

[5]:http://lcamtuf.coredump.cx/p0f3/

|

||||

[6]:http://xmodulo.com/recommend/firewallbook

|

||||

[7]:http://xmodulo.com/how-to-install-iftop-on-linux.html

|

||||

[8]:https://excess.org/speedometer/

|

||||

[9]:http://xmodulo.com/monitor-troubleshoot-linux-server-sysdig.html

|

||||

[10]:http://xmodulo.com/check-dns-propagation-linux.html

|

||||

[11]:https://iperf.fr/

|

||||

[12]:http://www.netperf.org/netperf/

|

||||

[13]:http://xmodulo.com/useful-netcat-examples-linux.html

|

||||

[14]:https://zmap.io/

|

||||

[15]:http://noping.cc/

|

||||

[16]:http://www.hping.org/

|

||||

[17]:http://fping.org/

|

||||

[18]:http://sprobe.cs.washington.edu/

|

||||

[19]:http://xmodulo.com/better-alternatives-basic-command-line-utilities.html#mtr_link

|

||||

[20]:http://goaccess.io/

|

||||

[21]:http://mtop.sourceforge.net/

|

||||

[22]:http://xmodulo.com/monitor-nginx-web-server-command-line-real-time.html

|

||||

@ -1,7 +1,7 @@

|

||||

既然float不能表示所有的int,那为什么在类型转换时C++将int转换成float?

|

||||

---------

|

||||

=============

|

||||

|

||||

#问题:

|

||||

###问题:

|

||||

|

||||

代码如下:

|

||||

|

||||

@ -13,7 +13,7 @@ if (i == f) // 执行某段代码

|

||||

|

||||

编译器会将i转换成float类型,然后比较这两个float的大小,但是float能够表示所有的int吗?为什么没有将int和float转换成double类型进行比较呢?

|

||||

|

||||

#回答:

|

||||

###回答:

|

||||

|

||||

在整型数的演变中,当`int`变成`unsigned`时,会丢掉负数部分(有趣的是,这样的话,`0u < -1`就是对的了)。

|

||||

|

||||

@ -32,11 +32,11 @@ if((double) i < (double) f)

|

||||

顺便提一下,在这个问题中有趣的是,`unsigned`的优先级高于`int`,所以把`int`和`unsigned`进行比较时,最终进行的是unsigned类型的比较(开头提到的`0u < -1`就是这个道理)。我猜测这可能是在早些时候(计算机发展初期),当时的人们认为`unsigned`比`int`在所表示的数值范围上受到的限制更小:现在还不需要符号位,所以可以使用额外的位来表示更大的数值范围。如果你觉得`int`可能会溢出,那么就使用unsigned好了——在使用16位表示的ints时这个担心会更明显。

|

||||

|

||||

----

|

||||

via:[stackoverflow](http://stackoverflow.com/questions/28010565/why-does-c-promote-an-int-to-a-float-when-a-float-cannot-represent-all-int-val/28011249#28011249)

|

||||

via: [stackoverflow](http://stackoverflow.com/questions/28010565/why-does-c-promote-an-int-to-a-float-when-a-float-cannot-represent-all-int-val/28011249#28011249)

|

||||

|

||||

作者:[wintermute][a]

|

||||

译者:[KayGuoWhu](https://github.com/KayGuoWhu)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,65 @@

|

||||

iptraf:一个实用的TCP/UDP网络监控工具

|

||||

================================================================================

|

||||

|

||||

[iptraf][1]是一个基于ncurses的IP局域网监控器,用来生成包括TCP信息、UDP计数、ICMP和OSPF信息、以太网负载信息、节点状态信息、IP校验和错误等等统计数据。

|

||||

|

||||

它基于ncurses的用户界面可以使用户免于记忆繁琐的命令行开关。

|

||||

|

||||

### 特征 ###

|

||||

|

||||

- IP流量监控器,用来显示你的网络中的IP流量变化信息。包括TCP标识信息、包以及字节计数,ICMP细节,OSPF包类型。

|

||||

- 简单的和详细的接口统计数据,包括IP、TCP、UDP、ICMP、非IP以及其他的IP包计数、IP校验和错误,接口活动、包大小计数。

|

||||

- TCP和UDP服务监控器,能够显示常见的TCP和UDP应用端口上发送的和接收的包的数量。

|

||||

- 局域网数据统计模块,能够发现在线的主机,并显示其上的数据活动统计信息。

|

||||

- TCP、UDP、及其他协议的显示过滤器,允许你只查看感兴趣的流量。

|

||||

- 日志功能。

|

||||

- 支持以太网、FDDI、ISDN、SLIP、PPP以及本地回环接口类型。

|

||||

- 利用Linux内核内置的原始套接字接口,允许它(指iptraf)能够用于各种支持的网卡上

|

||||

- 全屏,菜单式驱动的操作。

|

||||

|

||||

###安装方法###

|

||||

|

||||

**Ubuntu以及其衍生版本**

|

||||

|

||||

sudo apt-get install iptraf

|

||||

|

||||

**Arch Linux以及其衍生版本**

|

||||

|

||||

sudo pacman -S iptra

|

||||

|

||||

**Fedora以及其衍生版本**

|

||||

|

||||

sudo yum install iptraf

|

||||

|

||||

### 用法 ###

|

||||

|

||||

如果不加任何命令行选项地运行**iptraf**命令,程序将进入一种交互模式,通过主菜单可以访问多种功能。

|

||||

|

||||

|

||||

|

||||

简易的上手导航菜单。

|

||||

|

||||

|

||||

|

||||

选择要监控的接口。

|

||||

|

||||

|

||||

|

||||

接口**ppp0**处的流量。

|

||||

|

||||

|

||||

|

||||

试试吧!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.unixmen.com/iptraf-tcpudp-network-monitoring-utility/

|

||||

|

||||

作者:[Enock Seth Nyamador][a]

|

||||

译者:[DongShuaike](https://github.com/DongShuaike)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.unixmen.com/author/seth/

|

||||

[1]:http://iptraf.seul.org/about.html

|

||||

@ -1,12 +1,14 @@

|

||||

|

||||

自动化部署基于Docker的Rails应用

|

||||

================================================================================

|

||||

|

||||

|

||||

[TL;DR] 这是系列文章的第三篇,讲述了我的公司是如何将基础设施从PaaS移植到Docker上的。

|

||||

|

||||

- [第一部分][1]:谈论了我接触Docker之前的经历;

|

||||

- [第二部分][2]:一步步搭建一个安全而又私有的registry。

|

||||

|

||||

----------

|

||||

|

||||

在系列文章的最后一篇里,我们将用一个实例来学习如何自动化整个部署过程。

|

||||

|

||||

### 基本的Rails应用程序###

|

||||

@ -18,99 +20,97 @@

|

||||

$ rvm use 2.2.0

|

||||

$ rails new && cd docker-test

|

||||

|

||||

创建一个基础控制器:

|

||||

创建一个基本的控制器:

|

||||

|

||||

$ rails g controller welcome index

|

||||

|

||||

……然后编辑 `routes.rb` ,以便让工程的根指向我们新创建的welcome#index方法:(这句话理解不太理解)

|

||||

……,然后编辑 `routes.rb` ,以便让该项目的根指向我们新创建的welcome#index方法:

|

||||

|

||||

root 'welcome#index'

|

||||

|

||||

在终端运行 `rails s` ,然后打开浏览器,登录[http://localhost:3000][3],你会进入到索引界面当中。我们不准备给应用加上多么神奇的东西,这只是一个基础实例,用来验证当我们将要创建并部署容器的时候,一切运行正常。

|

||||

在终端运行 `rails s` ,然后打开浏览器,登录[http://localhost:3000][3],你会进入到索引界面当中。我们不准备给应用加上多么神奇的东西,这只是一个基础的实例,当我们将要创建并部署容器的时候,用它来验证一切是否运行正常。

|

||||

|

||||

### 安装webserver ###

|

||||

|

||||

我们打算使用Unicorn当做我们的webserver。在Gemfile中添加 `gem 'unicorn'`和 `gem 'foreman'`然后将它bundle起来(运行 `bundle install`命令)。

|

||||

|

||||

在Rails应用启动的伺候,需要配置Unicorn,所以我们将一个**unicorn.rb**文件放在**config**目录下。[这里有一个Unicorn配置文件的例子][4]你可以直接复制粘贴Gist的内容。

|

||||

启动Rails应用时,需要先配置好Unicorn,所以我们将一个**unicorn.rb**文件放在**config**目录下。[这里有一个Unicorn配置文件的例子][4],你可以直接复制粘贴Gist的内容。

|

||||

|

||||

Let's also add a Procfile with the following content inside the root of the project so that we will be able to start the app with foreman:

|

||||

接下来,在工程的根目录下添加一个Procfile,以便可以使用foreman启动应用,内容为下:

|

||||

接下来,在项目的根目录下添加一个Procfile,以便可以使用foreman启动应用,内容为下:

|

||||

|

||||

web: bundle exec unicorn -p $PORT -c ./config/unicorn.rb

|

||||

|

||||

现在运行**foreman start**命令启动应用,一切都将正常运行,并且你将能够在[http://localhost:5000][5]上看到一个正在运行的应用。

|

||||

|

||||

### 创建一个Docker映像 ###

|

||||

### 构建一个Docker镜像 ###

|

||||

|

||||

现在我们创建一个映像来运行我们的应用。在Rails工程的跟目录下,创建一个名为**Dockerfile**的文件,然后粘贴进以下内容:

|

||||

现在我们构建一个镜像来运行我们的应用。在这个Rails项目的根目录下,创建一个名为**Dockerfile**的文件,然后粘贴进以下内容:

|

||||

|

||||

# Base image with ruby 2.2.0

|

||||

# 基于镜像 ruby 2.2.0

|

||||

FROM ruby:2.2.0

|

||||

|

||||

# Install required libraries and dependencies

|

||||

# 安装所需的库和依赖

|

||||

RUN apt-get update && apt-get install -qy nodejs postgresql-client sqlite3 --no-install-recommends && rm -rf /var/lib/apt/lists/*

|

||||

|

||||

# Set Rails version

|

||||

# 设置 Rails 版本

|

||||

ENV RAILS_VERSION 4.1.1

|

||||

|

||||

# Install Rails

|

||||

# 安装 Rails

|

||||

RUN gem install rails --version "$RAILS_VERSION"

|

||||

|

||||

# Create directory from where the code will run

|

||||

# 创建代码所运行的目录

|

||||

RUN mkdir -p /usr/src/app

|

||||

WORKDIR /usr/src/app

|

||||

|

||||

# Make webserver reachable to the outside world

|

||||

# 使 webserver 可以在容器外面访问

|

||||

EXPOSE 3000

|

||||

|

||||

# Set ENV variables

|

||||

# 设置环境变量

|

||||

ENV PORT=3000

|

||||

|

||||

# Start the web app

|

||||

# 启动 web 应用

|

||||

CMD ["foreman","start"]

|

||||

|

||||

# Install the necessary gems

|

||||

# 安装所需的 gems

|

||||

ADD Gemfile /usr/src/app/Gemfile

|

||||

ADD Gemfile.lock /usr/src/app/Gemfile.lock

|

||||

RUN bundle install --without development test

|

||||

|

||||

# Add rails project (from same dir as Dockerfile) to project directory

|

||||

# 将 rails 项目(和 Dockerfile 同一个目录)添加到项目目录

|

||||

ADD ./ /usr/src/app

|

||||

|

||||

# Run rake tasks

|

||||

# 运行 rake 任务

|

||||

RUN RAILS_ENV=production rake db:create db:migrate

|

||||

|

||||

使用提供的Dockerfile,执行下列命令创建一个映像[1][7]:

|

||||

使用上述Dockerfile,执行下列命令创建一个镜像(确保**boot2docker**已经启动并在运行当中):

|

||||

|

||||

$ docker build -t localhost:5000/your_username/docker-test .

|

||||

|

||||

然后,如果一切正常,长日志输出的最后一行应该类似于:

|

||||

然后,如果一切正常,长长的日志输出的最后一行应该类似于:

|

||||

|

||||

Successfully built 82e48769506c

|

||||

$ docker images

|

||||

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

|

||||

localhost:5000/your_username/docker-test latest 82e48769506c About a minute ago 884.2 MB

|

||||

|

||||

来运行容器吧!

|

||||

让我们运行一下容器试试!

|

||||

|

||||

$ docker run -d -p 3000:3000 --name docker-test localhost:5000/your_username/docker-test

|

||||

|

||||

You should be able to reach your Rails app running inside the Docker container at port 3000 of your boot2docker VM[2][8] (in my case [http://192.168.59.103:3000][6]).

|

||||

通过你的boot2docker虚拟机[2][8]的3000号端口(我的是[http://192.168.59.103:3000][6]),你可以观察你的Rails应用。

|

||||

通过你的boot2docker虚拟机的3000号端口(我的是[http://192.168.59.103:3000][6]),你可以观察你的Rails应用。(如果不清楚你的boot2docker虚拟地址,输入` $ boot2docker ip`命令查看。)

|

||||

|

||||

### 使用shell脚本进行自动化部署 ###

|

||||

|

||||

前面的文章(指文章1和文章2)已经告诉了你如何将新创建的映像推送到私有registry中,并将其部署在服务器上,所以我们跳过这一部分直接开始自动化进程。

|

||||

前面的文章(指文章1和文章2)已经告诉了你如何将新创建的镜像推送到私有registry中,并将其部署在服务器上,所以我们跳过这一部分直接开始自动化进程。

|

||||

|

||||

我们将要定义3个shell脚本,然后最后使用rake将它们捆绑在一起。

|

||||

|

||||

### 清除 ###

|

||||

|

||||

每当我们创建映像的时候,

|

||||

每当我们创建镜像的时候,

|

||||

|

||||

- 停止并重启boot2docker;

|

||||

- 去除Docker孤儿映像(那些没有标签,并且不再被容器所使用的映像们)。

|

||||

- 去除Docker孤儿镜像(那些没有标签,并且不再被容器所使用的镜像们)。

|

||||

|

||||

在你的工程根目录下的**clean.sh**文件中输入下列命令。

|

||||

|

||||

@ -132,22 +132,22 @@ You should be able to reach your Rails app running inside the Docker container a

|

||||

|

||||

$ chmod +x clean.sh

|

||||

|

||||

### 创建 ###

|

||||

### 构建 ###

|

||||

|

||||

创建的过程基本上和之前我们所做的(docker build)内容相似。在工程的根目录下创建一个**build.sh**脚本,填写如下内容:

|

||||

构建的过程基本上和之前我们所做的(docker build)内容相似。在工程的根目录下创建一个**build.sh**脚本,填写如下内容:

|

||||

|

||||

docker build -t localhost:5000/your_username/docker-test .

|

||||

|

||||

给脚本执行权限。

|

||||

记得给脚本执行权限。

|

||||

|

||||

### 部署 ###

|

||||

|

||||

最后,创建一个**deploy.sh**脚本,在里面填进如下内容:

|

||||

|

||||

# Open SSH connection from boot2docker to private registry

|

||||

# 打开 boot2docker 到私有注册库的 SSH 连接

|

||||

boot2docker ssh "ssh -o 'StrictHostKeyChecking no' -i /Users/username/.ssh/id_boot2docker -N -L 5000:localhost:5000 root@your-registry.com &" &

|

||||

|

||||

# Wait to make sure the SSH tunnel is open before pushing...

|

||||

# 在推送前先确认该 SSH 通道是开放的。

|

||||

echo Waiting 5 seconds before pushing image.

|

||||

|

||||

echo 5...

|

||||

@ -165,7 +165,7 @@ You should be able to reach your Rails app running inside the Docker container a

|

||||

echo Starting push!

|

||||

docker push localhost:5000/username/docker-test

|

||||

|

||||

如果你不理解这其中的含义,请先仔细阅读这部分[part 2][9]。

|

||||

如果你不理解这其中的含义,请先仔细阅读这部分[第二部分][2]。

|

||||

|

||||

给脚本加上执行权限。

|

||||

|

||||

@ -179,10 +179,9 @@ You should be able to reach your Rails app running inside the Docker container a

|

||||

|

||||

这一点都不费工夫,可是事实上开发者比你想象的要懒得多!那么咱们就索性再懒一点!

|

||||

|

||||

我们最后再把工作好好整理一番,我们现在要将三个脚本捆绑在一起,通过rake。

|

||||

|

||||

为了更简单一点,你可以在工程根目录下已经存在的Rakefile中添加几行代码,打开Rakefile文件——pun intended——把下列内容粘贴进去。

|

||||

我们最后再把工作好好整理一番,我们现在要将三个脚本通过rake捆绑在一起。

|

||||

|

||||

为了更简单一点,你可以在工程根目录下已经存在的Rakefile中添加几行代码,打开Rakefile文件,把下列内容粘贴进去。

|

||||

|

||||

namespace :docker do

|

||||

desc "Remove docker container"

|

||||

@ -221,34 +220,27 @@ Deploy独立于build,build独立于clean。所以每次我们输入命令运

|

||||

|

||||

$ rake docker:deploy

|

||||

|

||||

接下来就是见证奇迹的时刻了。一旦映像文件被上传(第一次可能花费较长的时间),你就可以ssh登录产品服务器,并且(通过SSH管道)把docker映像拉取到服务器并运行了。多么简单!

|

||||

接下来就是见证奇迹的时刻了。一旦镜像文件被上传(第一次可能花费较长的时间),你就可以ssh登录产品服务器,并且(通过SSH管道)把docker镜像拉取到服务器并运行了。多么简单!

|

||||

|

||||

也许你需要一段时间来习惯,但是一旦成功,它几乎与用Heroku部署一样简单。

|

||||

|

||||

备注:像往常一样,请让我了解到你的意见。我不敢保证这种方法是最好,最快,或者最安全的Docker开发的方法,但是这东西对我们确实奏效。

|

||||

|

||||

- 确保**boot2docker**已经启动并在运行当中。

|

||||

- 如果你不了解你的boot2docker虚拟地址,输入` $ boot2docker ip`命令查看。

|

||||

- 点击[here][10],教你怎样搭建私有的registry。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://cocoahunter.com/2015/01/23/docker-3/

|

||||

|

||||

作者:[Michelangelo Chasseur][a]

|

||||

译者:[DongShuaike](https://github.com/DongShuaike)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://cocoahunter.com/author/michelangelo/

|

||||

[1]:http://cocoahunter.com/docker-1

|

||||

[2]:http://cocoahunter.com/2015/01/23/docker-2/

|

||||

[1]:https://linux.cn/article-5339-1.html

|

||||

[2]:https://linux.cn/article-5379-1.html

|

||||

[3]:http://localhost:3000/

|

||||

[4]:https://gist.github.com/chasseurmic/0dad4d692ff499761b20

|

||||

[5]:http://localhost:5000/

|

||||

[6]:http://192.168.59.103:3000/

|

||||

[7]:http://cocoahunter.com/2015/01/23/docker-3/#fn:1

|

||||

[8]:http://cocoahunter.com/2015/01/23/docker-3/#fn:2

|

||||

[9]:http://cocoahunter.com/2015/01/23/docker-2/

|

||||

[10]:http://cocoahunter.com/2015/01/23/docker-2/

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

25个给git熟手的技巧

|

||||

25个 Git 进阶技巧

|

||||

================================================================================

|

||||

我已经使用git差不多18个月了,觉得自己对它应该已经非常了解。然后来自GitHub的[Scott Chacon][1]过来给LVS做培训,[LVS是一个赌博软件供应商和开发商][2](从2013年开始的合同),而我在第一天里就学到了很多。

|

||||

我已经使用git差不多18个月了,觉得自己对它应该已经非常了解。然后来自GitHub的[Scott Chacon][1]过来给LVS做培训([LVS是一个赌博软件供应商和开发商][2],从2013年开始的合同),而我在第一天里就学到了很多。

|

||||

|

||||

作为一个对git感觉良好的人,我觉得分享从社区里掌握的一些有价值的信息,也许能帮某人解决问题而不用做太深入研究。

|

||||

|

||||

@ -15,21 +15,21 @@

|

||||

|

||||

#### 2. Git是基于指针的 ####

|

||||

|

||||

保存在git里的一切都是文件。当你创建一个提交的时候,会建立一个包含你的提交信息和相关数据(名字,邮件地址,日期/时间,前一个提交,等等)的文件,并把它链接到一个文件树中。文件树中包含了对象或其他树的列表。对象或容器是和本次提交相关的实际内容(也是一个文件,你想了解的话,尽管文件名并没有包含在对象里,而是在树中)。所有这些文件都使用对象的SHA-1哈希值作为文件名。

|

||||

保存在git里的一切都是文件。当你创建一个提交的时候,会建立一个包含你的提交信息和相关数据(名字,邮件地址,日期/时间,前一个提交,等等)的文件,并把它链接到一个树文件中。这个树文件中包含了对象或其他树的列表。这里的提到的对象(或二进制大对象)是和本次提交相关的实际内容(它也是一个文件,另外,尽管文件名并没有包含在对象里,但是存储在树中)。所有这些文件都使用对象的SHA-1哈希值作为文件名。

|

||||

|

||||

用这种方式,分支和标签就是简单的文件(基本上是这样),包含指向实际提交的SHA-1哈希值。使用这些索引会带来优秀的灵活性和速度,比如创建一个新分支就只要简单地创建一个包含分支名字和所分出的那个提交的SHA-1索引的文件。当然,你不需要自己做这些,而只要使用Git命令行工具(或者GUI),但是实际上就是这么简单。

|

||||

用这种方式,分支和标签就是简单的文件(基本上是这样),包含指向该提交的SHA-1哈希值。使用这些索引会带来优秀的灵活性和速度,比如创建一个新分支就是简单地用分支名字和所分出的那个提交的SHA-1索引来创建一个文件。当然,你不需要自己做这些,而只要使用Git命令行工具(或者GUI),但是实际上就是这么简单。

|

||||

|

||||

你也许听说过叫HEAD的索引。这只是简单的一个文件,包含了你当前指向的那个提交的SHA-1索引值。如果你正在解决一次合并冲突然后看到了HEAD,这并不是一个特别的分支或分值上一个必须的特殊点,只是标明你当前所在位置。

|

||||

你也许听说过叫HEAD的索引。这只是简单的一个文件,包含了你当前指向的那个提交的SHA-1索引值。如果你正在解决一次合并冲突然后看到了HEAD,这并不是一个特别的分支或分支上的一个必需的特殊位置,只是标明你当前所在位置。

|

||||

|

||||

所有的分支指针都保存在.git/refs/heads里,HEAD在.git/HEAD里,而标签保存在.git/refs/tags里 - 自己可以放心地进去看看。

|

||||

所有的分支指针都保存在.git/refs/heads里,HEAD在.git/HEAD里,而标签保存在.git/refs/tags里 - 自己可以随便进去看看。

|

||||

|

||||

#### 3. 两个父节点 - 当然! ####

|

||||

#### 3. 两个爸爸(父节点) - 你没看错! ####

|

||||

|

||||

在历史中查看一个合并提交的信息时,你将看到有两个父节点(相对于一般工作上的常规提交的情况)。第一个父节点是你所在的分支,第二个是你合并过来的分支。

|

||||

在历史中查看一个合并提交的信息时,你将看到有两个父节点(不同于工作副本上的常规提交的情况)。第一个父节点是你所在的分支,第二个是你合并过来的分支。

|

||||

|

||||

#### 4. 合并冲突 ####

|

||||

|

||||

目前我相信你碰到过合并冲突并且解决过。通常是编辑一下文件,去掉<<<<,====,>>>>标志,保留需要留下的代码。有时能够看到这两个修改之前的代码会很不错,比如,在这两个分支上有冲突的改动之前。下面是一种方式:

|

||||

目前我相信你碰到过合并冲突并且解决过。通常是编辑一下文件,去掉<<<<,====,>>>>标志,保留需要留下的代码。有时能够看到这两个修改之前的代码会很不错,比如,在这两个现在冲突的分支之前的改动。下面是一种方式:

|

||||

|

||||

$ git diff --merge

|

||||

diff --cc dummy.rb

|

||||

@ -45,14 +45,14 @@

|

||||

end

|

||||

end

|

||||

|

||||

如果是二进制文件,比较差异就没那么简单了...通常你要做的就是测试这个二进制文件的两个版本来决定保留哪个(或者在二进制文件编辑器里手工复制冲突部分)。从一个特定分支获取文件拷贝(比如说你在合并master和feature123):

|

||||

如果是二进制文件,比较差异就没那么简单了...通常你要做的就是测试这个二进制文件的两个版本来决定保留哪个(或者在二进制文件编辑器里手工复制冲突部分)。从一个特定分支获取文件拷贝(比如说你在合并master和feature123两个分支):

|

||||

|

||||

$ git checkout master flash/foo.fla # 或者...

|

||||

$ git checkout feature132 flash/foo.fla

|

||||

$ # 然后...

|

||||

$ git add flash/foo.fla

|

||||

|

||||

另一种方式是通过git输出文件 - 你可以输出到另外的文件名,然后再重命名正确的文件(当你决定了要用哪个)为正常的文件名:

|

||||

另一种方式是通过git输出文件 - 你可以输出到另外的文件名,然后当你决定了要用哪个后,再将选定的正确文件复制为正常的文件名:

|

||||

|

||||

$ git show master:flash/foo.fla > master-foo.fla

|

||||

$ git show feature132:flash/foo.fla > feature132-foo.fla

|

||||

@ -71,7 +71,7 @@

|

||||

|

||||

#### 5. 远端服务器 ####

|

||||

|

||||

git的一个超强大的功能就是可以有不止一个远端服务器(实际上你一直都在一个本地仓库上工作)。你并不是一定都要有写权限,你可以有多个可以读取的服务器(用来合并他们的工作)然后写入其他仓库。添加一个新的远端服务器很简单:

|

||||

git的一个超强大的功能就是可以有不止一个远端服务器(实际上你一直都在一个本地仓库上工作)。你并不是一定都要有这些服务器的写权限,你可以有多个可以读取的服务器(用来合并他们的工作)然后写入到另外一个仓库。添加一个新的远端服务器很简单:

|

||||

|

||||

$ git remote add john git@github.com:johnsomeone/someproject.git

|

||||

|

||||

@ -87,10 +87,10 @@ git的一个超强大的功能就是可以有不止一个远端服务器(实

|

||||

|

||||

$ git diff master..john/master

|

||||

|

||||

你也可以查看不在远端分支的HEAD的改动:

|

||||

你也可以查看没有在远端分支上的HEAD的改动:

|

||||

|

||||

$ git log remote/branch..

|

||||

# 注意:..后面没有结束的refspec

|

||||

# 注意:..后面没有结束的特定引用

|

||||

|

||||

#### 6. 标签 ####

|

||||

|

||||

@ -99,7 +99,7 @@ git的一个超强大的功能就是可以有不止一个远端服务器(实

|

||||

建立这两种类型的标签都很简单(只有一个命令行开关的差异)

|

||||

|

||||

$ git tag to-be-tested

|

||||

$ git tag -a v1.1.0 # 会提示输入标签信息

|

||||

$ git tag -a v1.1.0 # 会提示输入标签的信息

|

||||

|

||||

#### 7. 建立分支 ####

|

||||

|

||||

@ -108,7 +108,7 @@ git的一个超强大的功能就是可以有不止一个远端服务器(实

|

||||

$ git branch feature132

|

||||

$ git checkout feature132

|

||||

|

||||

当然,如果你确定自己要新建分支并直接切换过去,可以用一个命令实现:

|

||||

当然,如果你确定自己直接切换到新建的分支,可以用一个命令实现:

|

||||

|

||||

$ git checkout -b feature132

|

||||

|

||||

@ -117,20 +117,20 @@ git的一个超强大的功能就是可以有不止一个远端服务器(实

|

||||

$ git checkout -b twitter-experiment feature132

|

||||

$ git branch -d feature132

|

||||

|

||||

更新:你也可以(像Brian Palmer在原博客文章的评论里提出的)只用“git branch”的-m开关在一个命令里实现(像Mike提出的,如果你只有一个分支参数,就会重命名当前分支):

|

||||

更新:你也可以(像Brian Palmer在原博客文章的评论里提出的)只用“git branch”的-m开关在一个命令里实现(像Mike提出的,如果你只指定了一个分支参数,就会重命名当前分支):

|

||||

|

||||

$ git branch -m twitter-experiment

|

||||

$ git branch -m feature132 twitter-experiment

|

||||

|

||||

#### 8. 合并分支 ####

|

||||

|

||||

在将来什么时候,你希望合并改动。有两种方式:

|

||||

也许在将来的某个时候,你希望将改动合并。有两种方式:

|

||||

|

||||

$ git checkout master

|

||||

$ git merge feature83 # 或者...

|

||||

$ git rebase feature83

|

||||

|

||||

merge和rebase之间的差别是merge会尝试处理改动并建立一个新的混合了两者的提交。rebase会尝试把你从一个分支最后一次分离后的所有改动,一个个加到该分支的HEAD上。不过,在已经将分支推到远端服务器后不要再rebase了 - 这回引起冲突/问题。

|

||||

merge和rebase之间的差别是merge会尝试处理改动并建立一个新的混合了两者的提交。rebase会尝试把你从一个分支最后一次分离后的所有改动,一个个加到该分支的HEAD上。不过,在已经将分支推到远端服务器后不要再rebase了 - 这会引起冲突/问题。

|

||||

|

||||

如果你不确定在哪些分支上还有独有的工作 - 所以你也不知道哪些分支需要合并而哪些可以删除,git branch有两个开关可以帮你:

|

||||

|

||||

@ -147,7 +147,7 @@ merge和rebase之间的差别是merge会尝试处理改动并建立一个新的

|

||||

$ git push origin twitter-experiment:refs/heads/twitter-experiment

|

||||

# origin是我们服务器的名字,而twitter-experiment是分支名字

|

||||

|

||||

更新:感谢Erlend在原博客文章上的评论 - 这个实际上和`git push origin twitter-experiment`效果一样,不过使用完整的语法,你可以在两者之间使用不同的分知名(这样本地分支可以是`add-ssl-support`而远端是`issue-1723`)。

|

||||

更新:感谢Erlend在原博客文章上的评论 - 这个实际上和`git push origin twitter-experiment`效果一样,不过使用完整的语法,你可以在两者之间使用不同的分支名(这样本地分支可以是`add-ssl-support`而远端是`issue-1723`)。

|

||||

|

||||

如果你想在远端服务器上删除一个分支(注意分支名前面的冒号):

|

||||

|

||||

@ -210,7 +210,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

这会让你进入一个基于菜单的交互式提示。你可以使用命令中的数字或高亮的字母(如果你在终端里打开了高亮的话)来进入相应的模式。然后就只是输入你希望操作的文件的数字了(你可以使用这样的格式,1或者1-4或2,4,7)。

|

||||

|

||||

如果你想进入补丁模式(交互式模式下的‘p’或‘5’),你也可以直接进入:

|

||||

如果你想进入补丁模式(交互式模式下按‘p’或‘5’),你也可以直接进入:

|

||||

|

||||

$ git add -p

|

||||

diff --git a/dummy.rb b/dummy.rb

|

||||

@ -226,11 +226,11 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

end

|

||||

Stage this hunk [y,n,q,a,d,/,e,?]?

|

||||

|

||||

你可以看到下方会有一些选项供选择用来添加该文件的这个改动,该文件的所有改动,等等。使用‘?’命令可以详细解释这些选项。

|

||||

你可以看到下方会有一些选项供选择用来添加该文件的这个改动、该文件的所有改动,等等。使用‘?’命令可以详细解释这些选项。

|

||||

|

||||

#### 12. 从文件系统里保存/取回改动 ####

|

||||

|

||||

有些项目(比如git项目本身)在git文件系统中直接保存额外文件而并没有将它们加入到版本控制中。

|

||||

有些项目(比如Git项目本身)在git文件系统中直接保存额外文件而并没有将它们加入到版本控制中。

|

||||

|

||||

让我们从在git中存储一个随机文件开始:

|

||||

|

||||

@ -251,7 +251,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

#### 13. 查看日志 ####

|

||||

|

||||

如果不用‘git log’来查看最近的提交你git用不了多久。不过,有一些技巧来更好地应用。比如,你可以使用下面的命令来查看每次提交的具体改动:

|

||||

长时间使用 Git 的话,不会没用过‘git log’来查看最近的提交。不过,有一些技巧来更好地应用。比如,你可以使用下面的命令来查看每次提交的具体改动:

|

||||

|

||||

$ git log -p

|

||||

|

||||

@ -268,7 +268,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

#### 14. 搜索日志 ####

|

||||

|

||||

如果你想找特定作者可以这样做:

|

||||

如果你想找特定提交者可以这样做:

|

||||

|

||||

$ git log --author=Andy

|

||||

|

||||

@ -278,7 +278,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

$ git log --grep="Something in the message"

|

||||

|

||||

也有一个更强大的叫做pickaxe的命令用来查找删除或添加某个特定内容的提交(比如,该文件第一次出现或被删除)。这可以告诉你什么时候增加了一行(但这一行里的某个字符后面被改动过就不行了):

|

||||

也有一个更强大的叫做pickaxe的命令用来查找包含了删除或添加的某个特定内容的提交(比如,该内容第一次出现或被删除)。这可以告诉你什么时候增加了一行(但这一行里的某个字符后面被改动过就不行了):

|

||||

|

||||

$ git log -S "TODO: Check for admin status"

|

||||

|

||||

@ -294,7 +294,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

$ git log --since=2.months.ago --until=1.day.ago

|

||||

|

||||

默认情况下会用OR来组合查询,但你可以轻易地改为AND(如果你有超过一条的标准)

|

||||

默认情况下会用OR来组合查询,但你可以轻易地改为AND(如果你有超过一条的查询标准)

|

||||

|

||||

$ git log --since=2.months.ago --until=1.day.ago --author=andy -S "something" --all-match

|

||||

|

||||

@ -310,7 +310,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

$ git show feature132@{yesterday} # 时间相关

|

||||

$ git show feature132@{2.hours.ago} # 时间相关

|

||||

|

||||

注意和之前部分有些不同,末尾的插入符号意思是该提交的父节点 - 开始位置的插入符号意思是不在这个分支。

|

||||

注意和之前部分有些不同,末尾的^的意思是该提交的父节点 - 开始位置的^的意思是不在这个分支。

|

||||

|

||||

#### 16. 选择范围 ####

|

||||

|

||||

@ -321,7 +321,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

你也可以省略[new],将使用当前的HEAD。

|

||||

|

||||

### Rewinding Time & Fixing Mistakes ###

|

||||

### 时光回溯和后悔药 ###

|

||||

|

||||

#### 17. 重置改动 ####

|

||||

|

||||

@ -329,7 +329,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

$ git reset HEAD lib/foo.rb

|

||||

|

||||

通常会使用‘unstage’的别名,因为看上去有些不直观。

|

||||

通常会使用‘unstage’的别名,因为上面的看上去有些不直观。

|

||||

|

||||

$ git config --global alias.unstage "reset HEAD"

|

||||

$ git unstage lib/foo.rb

|

||||

@ -369,11 +369,11 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

#### 19. 交互式切换基础 ####

|

||||

|

||||

这是一个我之前看过展示却没真正理解过的很赞的功能,现在很简单。假如说你提交了3次但是你希望更改顺序或编辑(或者合并):

|

||||

这是一个我之前看过展示却没真正理解过的很赞的功能,现在觉得它就很简单了。假如说你提交了3次但是你希望更改顺序或编辑(或者合并):

|

||||

|

||||

$ git rebase -i master~3

|

||||

|

||||

然后会启动你的编辑器并带有一些指令。你所要做的就是修改这些指令来选择/插入/编辑(或者删除)提交和保存/退出。然后在编辑完后你可以用`git rebase --continue`命令来让每一条指令生效。

|

||||

然后这会启动你的编辑器并带有一些指令。你所要做的就是修改这些指令来选择/插入/编辑(或者删除)提交和保存/退出。然后在编辑完后你可以用`git rebase --continue`命令来让每一条指令生效。

|

||||

|

||||

如果你有修改,将会切换到你提交时所处的状态,之后你需要使用命令git commit --amend来编辑。

|

||||

|

||||

@ -446,7 +446,7 @@ git会基于当前的提交信息自动创建评论。如果你更希望有自

|

||||

|

||||

$ git branch experimental SHA1_OF_HASH

|

||||

|

||||

如果你访问过的话,你通常可以用git reflog来找到SHA1哈希值。

|

||||

如果你最近访问过的话,你通常可以用git reflog来找到SHA1哈希值。

|

||||

|

||||

另一种方式是使用`git fsck —lost-found`。其中一个dangling的提交就是丢失的HEAD(它只是已删除分支的HEAD,而HEAD^被引用为当前的HEAD所以它并不处于dangling状态)

|

||||

|

||||

@ -460,7 +460,7 @@ via: https://www.andyjeffries.co.uk/25-tips-for-intermediate-git-users/

|

||||

|

||||

作者:[Andy Jeffries][a]

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,67 @@

|

||||

在Ubuntu 14.10上安装基于Web的监控工具:Linux-Dash

|

||||

================================================================================

|

||||

|

||||

Linux-Dash是一个用于GNU/Linux机器的,低开销的监控仪表盘。您可以安装试试!Linux Dash的界面提供了您的服务器的所有关键信息的详细视图,可监测的信息包括RAM、磁盘使用率、网络、安装的软件、用户、运行的进程等。所有的信息都被分成几类,您可以通过主页工具栏中的按钮跳到任何一类中。Linux Dash并不是最先进的监测工具,但它十分适合寻找灵活、轻量级、容易部署的应用的用户。

|

||||

|

||||

### Linux-Dash的功能 ###

|

||||

|

||||

- 使用一个基于Web的漂亮的仪表盘界面来监控服务器信息

|

||||

- 实时的按照你的要求监控RAM、负载、运行时间、磁盘配置、用户和许多其他系统状态

|

||||

- 支持基于Apache2/niginx + PHP的服务器

|

||||

- 通过点击和拖动来重排列控件

|

||||

- 支持多种类型的linux服务器

|

||||

|

||||

### 当前控件列表 ###

|

||||

|

||||

- 通用信息

|

||||

- 平均负载

|

||||

- RAM

|

||||

- 磁盘使用量

|

||||

- 用户

|

||||

- 软件

|

||||

- IP

|

||||

- 网络速率

|

||||

- 在线状态

|

||||

- 处理器

|

||||

- 日志

|

||||

|

||||

### 在Ubuntu server 14.10上安装Linux-Dash ###

|

||||

|

||||

首先您需要确认您安装了[Ubuntu LAMP server 14.10][1],接下来您需要安装下面的包:

|

||||

|

||||

sudo apt-get install php5-json unzip

|

||||

|

||||

安装这个模块后,需要在apache2中启用该模块,所以您需要使用下面的命令重启apache2服务器:

|

||||

|

||||

sudo service apache2 restart

|

||||

|

||||

现在您需要下载linux-dash的安装包并安装它:

|

||||

|

||||

wget https://github.com/afaqurk/linux-dash/archive/master.zip

|

||||

|

||||

unzip master.zip

|

||||

|

||||

sudo mv linux-dash-master/ /var/www/html/linux-dash-master/

|

||||

|

||||

接下来您需要使用下面的命令来改变权限:

|

||||

|

||||

sudo chmod 755 /var/www/html/linux-dash-master/

|

||||

|

||||

现在您便可以访问http://serverip/linux-dash-master/了。您应该会看到类似下面的输出:

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.ubuntugeek.com/install-linux-dash-web-based-monitoring-tool-on-ubntu-14-10.html

|

||||

|

||||

作者:[ruchi][a]

|

||||

译者:[wwy-hust](https://github.com/wwy-hust)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.ubuntugeek.com/author/ubuntufix

|

||||

[1]:http://www.ubuntugeek.com/step-by-step-ubuntu-14-10-utopic-unicorn-lamp-server-setup.html

|

||||

@ -1,14 +1,14 @@

|

||||

Linux有问必答时间--如何在Linux下禁用IPv6

|

||||

Linux有问必答:如何在Linux下禁用IPv6

|

||||

================================================================================

|

||||

> **问题**:我发现我的一个应用程序在尝试通过IPv6建立连接,但是由于我们本地网络不允许分配IPv6的流量,IPv6连接会超时,应用程序的连接会退回到IPv4,这样就会造成不必要的延迟。由于我目前对IPv6没有任何需求,所以我想在我的Linux主机上禁用IPv6。有什么比较合适的方法呢?

|

||||

> **问题**:我发现我的一个应用程序在尝试通过IPv6建立连接,但是由于我们本地网络不允许分配IPv6的流量,IPv6连接会超时,应用程序的连接会回退到IPv4,这样就会造成不必要的延迟。由于我目前对IPv6没有任何需求,所以我想在我的Linux主机上禁用IPv6。有什么比较合适的方法呢?

|

||||

|

||||

IPv6被认为是IPv4——互联网上的传统32位地址空间的替代产品,它为了解决现有IPv4地址空间即将耗尽的问题。然而,由于IPv4已经被每台主机或设备连接到了互联网上,所以想在一夜之间将它们全部切换到IPv6几乎是不可能的。许多IPv4到IPv6的转换机制(例如:双协议栈、网络隧道、代理) 已经被提出来用来促进IPv6能被采用,并且很多应用也正在进行重写,就像我们所说的,来增加对IPv6的支持。有一件事情能确定,就是在可预见的未来里IPv4和IPv6势必将共存。

|

||||

IPv6被认为是IPv4——互联网上的传统32位地址空间——的替代产品,它用来解决现有IPv4地址空间即将耗尽的问题。然而,由于已经有大量主机、设备用IPv4连接到了互联网上,所以想在一夜之间将它们全部切换到IPv6几乎是不可能的。许多IPv4到IPv6的转换机制(例如:双协议栈、网络隧道、代理) 已经被提出来用来促进IPv6能被采用,并且很多应用也正在进行重写,如我们所提倡的,来增加对IPv6的支持。有一件事情可以确定,就是在可预见的未来里IPv4和IPv6势必将共存。

|

||||

|

||||

理想情况下,[向IPv6过渡的进程][1]不应该被最终的用户所看见,但是IPv4/IPv6混合环境有时会让你碰到各种源于IPv4和IPv6之间不经意间的相互作用的问题。举个例子,你会碰到应用程序超时的问题比如apt-get或ssh尝试通过IPv6连接失败、DNS服务器意外清空了IPv6的AAAA记录、或者你支持IPv6的设备不兼容你的互联网服务提供商遗留下的IPv4网络等等等等。

|

||||

理想情况下,[向IPv6过渡的进程][1]不应该被最终的用户所看见,但是IPv4/IPv6混合环境有时会让你碰到各种源于IPv4和IPv6之间不经意间的相互碰撞的问题。举个例子,你会碰到应用程序超时的问题,比如apt-get或ssh尝试通过IPv6连接失败、DNS服务器意外清空了IPv6的AAAA记录、或者你支持IPv6的设备不兼容你的互联网服务提供商遗留下的IPv4网络,等等等等。

|

||||

|

||||

当然这不意味着你应该盲目地在你的Linux机器上禁用IPv6。鉴于IPv6许诺的种种好处,作为社会的一份子我们最终还是要充分拥抱它的,但是作为给最终用户进行故障排除过程的一部分,如果IPv6确实是罪魁祸首那你可以尝试去关闭它。

|

||||

当然这不意味着你应该盲目地在你的Linux机器上禁用IPv6。鉴于IPv6许诺的种种好处,作为社会的一份子我们最终还是要充分拥抱它的,但是作为给最终用户进行故障排除过程的一部分,如果IPv6确实是罪魁祸首,那你可以尝试去关闭它。

|

||||

|

||||

这里有一些让你在Linux中部分或全部禁用IPv6的小技巧(例如:为一个已经确定的网络接口)。这些小贴士应该适用于所有主流的Linux发行版包括Ubuntu、Debian、Linux Mint、CentOS、Fedora、RHEL以及Arch Linux。

|

||||

这里有一些让你在Linux中部分(例如:对于某个特定的网络接口)或全部禁用IPv6的小技巧。这些小贴士应该适用于所有主流的Linux发行版包括Ubuntu、Debian、Linux Mint、CentOS、Fedora、RHEL以及Arch Linux。

|

||||

|

||||

### 查看IPv6在Linux中是否被启用 ###

|

||||

|

||||

@ -24,7 +24,7 @@ IPv6被认为是IPv4——互联网上的传统32位地址空间的替代产品

|

||||

|

||||

### 临时禁用IPv6 ###

|

||||

|

||||

如果你想要在你的Linux系统上临时关闭IPv6,你可以用 /proc 文件系统。"临时",意思是我们所做的禁用IPv6的更改在系统重启后将不被保存。IPv6会在你的Linux机器重启后再次被启用。

|

||||

如果你想要在你的Linux系统上临时关闭IPv6,你可以用 /proc 文件系统。"临时"的意思是我们所做的禁用IPv6的更改在系统重启后将不被保存。IPv6会在你的Linux机器重启后再次被启用。

|

||||

|

||||

要将一个特定的网络接口禁用IPv6,使用以下命令:

|

||||

|

||||

@ -50,7 +50,7 @@ IPv6被认为是IPv4——互联网上的传统32位地址空间的替代产品

|

||||

|

||||

#### 方法一 ####

|

||||

|

||||

第一种方法是请求以上提到的 /proc 对 /etc/sysctl.conf 文件进行修改。

|

||||

第一种方法是通过 /etc/sysctl.conf 文件对 /proc 进行永久修改。

|

||||

|

||||

换句话说,就是用文本编辑器打开 /etc/sysctl.conf 然后添加以下内容:

|

||||

|

||||

@ -69,7 +69,7 @@ IPv6被认为是IPv4——互联网上的传统32位地址空间的替代产品

|

||||

|

||||

#### 方法二 ####

|

||||

|

||||

另一个永久禁用IPv6的方法是在开机的时候执行一个必要的内核参数。

|

||||

另一个永久禁用IPv6的方法是在开机的时候传递一个必要的内核参数。

|

||||

|

||||

用文本编辑器打开 /etc/default/grub 并给GRUB_CMDLINE_LINUX变量添加"ipv6.disable=1"。

|

||||

|

||||

@ -79,7 +79,7 @@ IPv6被认为是IPv4——互联网上的传统32位地址空间的替代产品

|

||||

|

||||

GRUB_CMDLINE_LINUX="xxxxx ipv6.disable=1"

|

||||

|

||||

上面的"xxxxx"代表任意存在着的内核参数,在它后面添加"ipv6.disable=1"。

|

||||

上面的"xxxxx"代表任何已有的内核参数,在它后面添加"ipv6.disable=1"。

|

||||

|

||||

|

||||

|

||||

@ -97,7 +97,7 @@ Fedora、CentOS/RHEL系统:

|

||||

|

||||

### 禁用IPv6之后的其它可选步骤 ###

|

||||

|

||||

这里有一些可选步骤在你禁用IPv6后需要考虑,这是因为当你在内核里禁用IPv6后,其它程序仍然会尝试使用IPv6。在大多数情况下,例如应用程序的运转状态不太会遭到破坏,但是出于效率或安全方面的原因,你要为他们禁用IPv6。

|

||||

这里有一些在你禁用IPv6后需要考虑的可选步骤,这是因为当你在内核里禁用IPv6后,其它程序也许仍然会尝试使用IPv6。在大多数情况下,应用程序的这种行为不太会影响到什么,但是出于效率或安全方面的原因,你可以为他们禁用IPv6。

|

||||

|

||||

#### /etc/hosts ####

|

||||

|

||||

@ -124,7 +124,7 @@ Fedora、CentOS/RHEL系统:

|

||||

|

||||

默认情况下,OpenSSH服务(sshd)会去尝试捆绑IPv4和IPv6的地址。

|

||||

|

||||

要强制sshd只捆绑IPv4地址,用文本编辑器打开 /etc/ssh/sshd_config 并添加以下脚本行。inet只适用于IPv4,而inet6是适用于IPv6的。

|

||||

要强制sshd只捆绑IPv4地址,用文本编辑器打开 /etc/ssh/sshd_config 并添加以下行。inet只适用于IPv4,而inet6是适用于IPv6的。

|

||||

|

||||

$ sudo vi /etc/ssh/sshd_config

|

||||

|

||||

@ -140,7 +140,7 @@ via: http://ask.xmodulo.com/disable-ipv6-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,18 +1,18 @@

|

||||

领略一些最著名的 Linux 网络工具

|

||||

一大波你可能不知道的 Linux 网络工具

|

||||

================================================================================

|

||||

在你的系统上使用命令行工具来监控你的网络是非常实用的,并且对于 Linux 用户来说,有着许许多多现成的工具可以使用,如 nethogs, ntopng, nload, iftop, iptraf, bmon, slurm, tcptrack, cbm, netwatch, collectl, trafshow, cacti, etherape, ipband, jnettop, netspeed 以及 speedometer。

|

||||

如果要在你的系统上监控网络,那么使用命令行工具是非常实用的,并且对于 Linux 用户来说,有着许许多多现成的工具可以使用,如: nethogs, ntopng, nload, iftop, iptraf, bmon, slurm, tcptrack, cbm, netwatch, collectl, trafshow, cacti, etherape, ipband, jnettop, netspeed 以及 speedometer。

|

||||

|

||||

鉴于世上有着许多的 Linux 专家和开发者,显然还存在其他的网络监控工具,但在这篇教程中,我不打算将它们所有包括在内。

|

||||

|

||||

上面列出的工具都有着自己的独特之处,但归根结底,它们都做着监控网络流量的工作,且并不是只有一种方法来完成这件事。例如 nethogs 可以被用来展示每个进程的带宽使用情况,以防你想知道究竟是哪个应用在消耗了你的整个网络资源; iftop 可以被用来展示每个套接字连接的带宽使用情况,而 像 nload 这类的工具可以帮助你得到有关整个带宽的信息。

|

||||

上面列出的工具都有着自己的独特之处,但归根结底,它们都做着监控网络流量的工作,只是通过各种不同的方法。例如 nethogs 可以被用来展示每个进程的带宽使用情况,以防你想知道究竟是哪个应用在消耗了你的整个网络资源; iftop 可以被用来展示每个套接字连接的带宽使用情况,而像 nload 这类的工具可以帮助你得到有关整个带宽的信息。

|

||||

|

||||

### 1) nethogs ###

|

||||

|

||||

nethogs 是一个免费的工具,当要查找哪个 PID (注:即 process identifier,进程 ID) 给你的网络流量带来了麻烦时,它是非常方便的。它按每个进程来组织带宽,而不是像大多数的工具那样按照每个协议或每个子网来划分流量。它功能丰富,同时支持 IPv4 和 IPv6,并且我认为,若你想在你的 Linux 主机上确定哪个程序正消耗着你的全部带宽,它是来做这件事的最佳的程序。

|

||||

nethogs 是一个免费的工具,当要查找哪个 PID (注:即 process identifier,进程 ID) 给你的网络流量带来了麻烦时,它是非常方便的。它按每个进程来分组带宽,而不是像大多数的工具那样按照每个协议或每个子网来划分流量。它功能丰富,同时支持 IPv4 和 IPv6,并且我认为,若你想在你的 Linux 主机上确定哪个程序正消耗着你的全部带宽,它是来做这件事的最佳的程序。

|

||||

|

||||

一个 Linux 用户可以使用 **nethogs** 来显示每个进程的 TCP 下载和上传速率,使用命令 **nethogs eth0** 来监控一个特定的设备,上面的 eth0 是那个你想获取信息的设备的名称,你还可以得到有关正在被传输的数据的传输速率的信息。

|

||||

一个 Linux 用户可以使用 **nethogs** 来显示每个进程的 TCP 下载和上传速率,可以使用命令 **nethogs eth0** 来监控一个指定的设备,上面的 eth0 是那个你想获取信息的设备的名称,你还可以得到有关正在传输的数据的传输速率信息。

|

||||

|

||||

对我而言, nethogs 是非常容易使用的,或许是因为我非常喜欢它以至于我总是在我的 Ubuntu 12.04 LTS 机器中使用它来监控我的网络带宽。

|

||||

对我而言, nethogs 是非常容易使用的,或许是因为我非常喜欢它,以至于我总是在我的 Ubuntu 12.04 LTS 机器中使用它来监控我的网络带宽。

|

||||

|

||||

例如要想使用混杂模式来嗅探,可以像下面展示的命令那样使用选项 -p:

|

||||

|

||||

@ -20,6 +20,8 @@ nethogs 是一个免费的工具,当要查找哪个 PID (注:即 process ide

|

||||

|

||||

假如你想更多地了解 nethogs 并深入探索它,那么请毫不犹豫地阅读我们做的关于这个网络带宽监控工具的整个教程。

|

||||

|

||||

(LCTT 译注:关于 nethogs 的更多信息可以参考:https://linux.cn/article-2808-1.html )

|

||||

|

||||

### 2) nload ###

|

||||

|

||||

nload 是一个控制台应用,可以被用来实时地监控网络流量和带宽使用情况,它还通过提供两个简单易懂的图表来对流量进行可视化。这个绝妙的网络监控工具还可以在监控过程中切换被监控的设备,而这可以通过按左右箭头来完成。

|

||||

@ -28,19 +30,21 @@ nload 是一个控制台应用,可以被用来实时地监控网络流量和

|

||||

|

||||

正如你在上面的截图中所看到的那样,由 nload 提供的图表是非常容易理解的。nload 提供了有用的信息,也展示了诸如被传输数据的总量和最小/最大网络速率等信息。

|

||||

|

||||

而更酷的是你可以在下面的命令的帮助下运行 nload 这个工具,这个命令是非常的短小且易记的:

|

||||

而更酷的是你只需要直接运行 nload 这个工具就行,这个命令是非常的短小且易记的:

|

||||

|

||||

nload

|

||||

|

||||

我很确信的是:我们关于如何使用 nload 的详细教程将帮助到新的 Linux 用户,甚至可以帮助那些正寻找关于 nload 信息的老手。

|

||||

|

||||

(LCTT 译注:关于 nload 的更新信息可以参考:https://linux.cn/article-5114-1.html )

|

||||

|

||||

### 3) slurm ###

|

||||

|

||||

slurm 是另一个 Linux 网络负载监控工具,它以一个不错的 ASCII 图来显示结果,它还支持许多键值用以交互,例如 **c** 用来切换到经典模式, **s** 切换到分图模式, **r** 用来重绘屏幕, **L** 用来启用 TX/RX(注:TX,发送流量;RX,接收流量) LED,**m** 用来在经典分图模式和大图模式之间进行切换, **q** 退出 slurm。

|

||||

slurm 是另一个 Linux 网络负载监控工具,它以一个不错的 ASCII 图来显示结果,它还支持许多按键用以交互,例如 **c** 用来切换到经典模式, **s** 切换到分图模式, **r** 用来重绘屏幕, **L** 用来启用 TX/RX 灯(注:TX,发送流量;RX,接收流量) ,**m** 用来在经典分图模式和大图模式之间进行切换, **q** 退出 slurm。

|

||||

|

||||

|

||||

|

||||

在网络负载监控工具 slurm 中,还有许多其它的键值可用,你可以很容易地使用下面的命令在 man 手册中学习它们。

|

||||

在网络负载监控工具 slurm 中,还有许多其它的按键可用,你可以很容易地使用下面的命令在 man 手册中学习它们。

|

||||

|

||||

man slurm

|

||||

|

||||

@ -48,11 +52,11 @@ slurm 在 Ubuntu 和 Debian 的官方软件仓库中可以找到,所以使用

|

||||

|

||||

sudo apt-get install slurm

|

||||

|

||||

我们已经在一个教程中对 slurm 的使用做了介绍,所以请访问相关网页( 注:应该指的是[这篇文章](http://linoxide.com/ubuntu-how-to/monitor-network-load-slurm-tool/) ),并不要忘记和其它使用 Linux 的朋友分享这些知识。

|

||||

我们已经在一个[教程](http://linoxide.com/ubuntu-how-to/monitor-network-load-slurm-tool/)中对 slurm 的使用做了介绍,不要忘记和其它使用 Linux 的朋友分享这些知识。

|

||||

|

||||

### 4) iftop ###

|

||||

|

||||

当你想在一个接口上按照主机来展示带宽使用情况时,iftop 是一个非常有用的工具。根据 man 手册,**iftop** 在一个已命名的接口或在它可以找到的第一个接口(假如没有任何特殊情况,它就像一个外部的接口)上监听网络流量,并且展示出一个表格来显示当前一对主机间的带宽使用情况。

|

||||

当你想显示连接到网卡上的各个主机的带宽使用情况时,iftop 是一个非常有用的工具。根据 man 手册,**iftop** 在一个指定的接口或在它可以找到的第一个接口(假如没有任何特殊情况,它应该是一个对外的接口)上监听网络流量,并且展示出一个表格来显示当前的一对主机间的带宽使用情况。

|

||||

|

||||

通过在虚拟终端中使用下面的命令,Ubuntu 和 Debian 用户可以在他们的机器中轻易地安装 iftop:

|

||||

|

||||

@ -61,6 +65,8 @@ slurm 在 Ubuntu 和 Debian 的官方软件仓库中可以找到,所以使用

|

||||

在你的机器上,可以使用下面的命令通过 yum 来安装 iftop:

|

||||

|

||||

yum -y install iftop

|

||||

|

||||

(LCTT 译注:关于 nload 的更多信息请参考:https://linux.cn/article-1843-1.html )

|

||||

|

||||

### 5) collectl ###

|

||||

|

||||

@ -69,7 +75,7 @@ collectl 可以被用来收集描述当前系统状态的数据,并且它支

|

||||

- 记录模式

|

||||

- 回放模式

|

||||

|

||||

**记录模式** 允许从一个正在运行的系统中读取数据,然后将这些数据要么显示在终端中,要么写入一个或多个文件或套接字中。

|

||||

**记录模式** 允许从一个正在运行的系统中读取数据,然后将这些数据要么显示在终端中,要么写入一个或多个文件或一个套接字中。

|

||||

|

||||

**回放模式**

|

||||

|

||||

@ -79,13 +85,15 @@ Ubuntu 和 Debian 用户可以在他们的机器上使用他们默认的包管

|

||||

|

||||

sudo apt-get install collectl

|

||||

|

||||

还可以使用下面的命令来安装 collectl, 因为对于这些发行版本(注:这里指的是用 yum 作为包管理器的发行版本),在它们官方的软件仓库中也含有 collectl:

|

||||

还可以使用下面的命令来安装 collectl, 因为对于这些发行版本(注:这里指的是用 yum 作为包管理器的发行版本),在它们官方的软件仓库中也含有 collectl:

|

||||

|

||||

yum install collectl

|

||||

|

||||

(LCTT 译注:关于 collectl 的更多信息请参考: https://linux.cn/article-3154-1.html )

|

||||

|

||||

### 6) Netstat ###

|

||||

|

||||

Netstat 是一个用来监控**传入和传出的网络数据包统计数据**和接口统计数据的命令行工具。它为传输控制协议 TCP (包括上传和下行),路由表,及一系列的网络接口(网络接口控制器或者软件定义的网络接口) 和网络协议统计数据展示网络连接情况。

|

||||

Netstat 是一个用来监控**传入和传出的网络数据包统计数据**的接口统计数据命令行工具。它会显示 TCP 连接 (包括上传和下行),路由表,及一系列的网络接口(网卡或者SDN接口)和网络协议统计数据。

|

||||

|

||||

Ubuntu 和 Debian 用户可以在他们的机器上使用默认的包管理器来安装 netstat。Netsta 软件被包括在 net-tools 软件包中,并可以在 shell 或虚拟终端中运行下面的命令来安装它:

|

||||

|

||||

@ -107,6 +115,8 @@ CentOS, Fedora, RHEL 用户可以在他们的机器上使用默认的包管理

|

||||

|

||||

|

||||

|

||||

(LCTT 译注:关于 netstat 的更多信息请参考:https://linux.cn/article-2434-1.html )

|

||||

|

||||

### 7) Netload ###

|

||||

|

||||

netload 命令只展示一个关于当前网络荷载和自从程序运行之后传输数据总的字节数目的简要报告,它没有更多的功能。它是 netdiag 软件的一部分。

|

||||

@ -115,9 +125,9 @@ netload 命令只展示一个关于当前网络荷载和自从程序运行之后

|

||||

|

||||

# yum install netdiag

|

||||

|

||||

Netload 在默认仓库中作为 netdiag 的一部分可以被找到,我们可以轻易地使用下面的命令来利用 **apt** 包管理器安装 **netdiag**:

|

||||

Netload 是默认仓库中 netdiag 的一部分,我们可以轻易地使用下面的命令来利用 **apt** 包管理器安装 **netdiag**:

|

||||

|

||||

$ sudo apt-get install netdiag (注:这里原文为 sudo install netdiag,应该加上 apt-get)

|

||||

$ sudo apt-get install netdiag

|

||||

|

||||

为了运行 netload,我们需要确保选择了一个正在工作的网络接口的名称,如 eth0, eh1, wlan0, mon0等,然后在 shell 或虚拟终端中运行下面的命令:

|

||||

|

||||

@ -127,21 +137,23 @@ Netload 在默认仓库中作为 netdiag 的一部分可以被找到,我们可

|

||||

|

||||

### 8) Nagios ###

|

||||

|

||||

Nagios 是一个领先且功能强大的开源监控系统,它使得网络或系统管理员在服务器相关的问题影响到服务器的主要事务之前,鉴定并解决这些问题。 有了 Nagios 系统,管理员便可以在一个单一的窗口中监控远程的 Linux 、Windows 系统、交换机、路由器和打印机等。它显示出重要的警告并指示出在你的网络或服务器中是否出现某些故障,这间接地帮助你在问题发生之前,着手执行补救行动。

|

||||

Nagios 是一个领先且功能强大的开源监控系统,它使得网络或系统管理员可以在服务器的各种问题影响到服务器的主要事务之前,发现并解决这些问题。 有了 Nagios 系统,管理员便可以在一个单一的窗口中监控远程的 Linux 、Windows 系统、交换机、路由器和打印机等。它会显示出重要的警告并指出在你的网络或服务器中是否出现某些故障,这可以间接地帮助你在问题发生前就着手执行补救行动。

|

||||

|

||||

Nagios 有一个 web 界面,其中有一个图形化的活动监视器。通过浏览网页 http://localhost/nagios/ 或 http://localhost/nagios3/ 便可以登录到这个 web 界面。假如你在远程的机器上进行操作,请使用你的 IP 地址来替换 localhost,然后键入用户名和密码,我们便会看到如下图所展示的信息:

|

||||

|

||||

|

||||

|

||||

(LCTT 译注:关于 Nagios 的更多信息请参考:https://linux.cn/article-2436-1.html )

|

||||

|

||||

### 9) EtherApe ###

|

||||

|

||||

EtherApe 是一个针对 Unix 的图形化网络监控工具,它仿照了 etherman 软件。它具有链路层,IP 和 TCP 模式并支持 Ethernet, FDDI, Token Ring, ISDN, PPP, SLIP 及 WLAN 设备等接口,再加上支持一些封装的格式。主机和链接随着流量大小和被着色的协议名称展示而变化。它可以过滤要展示的流量,并可从一个文件或运行的网络中读取数据报。

|

||||

EtherApe 是一个针对 Unix 的图形化网络监控工具,它仿照了 etherman 软件。它支持链路层、IP 和 TCP 等模式,并支持以太网, FDDI, 令牌环, ISDN, PPP, SLIP 及 WLAN 设备等接口,以及一些封装格式。主机和连接随着流量和协议而改变其尺寸和颜色。它可以过滤要展示的流量,并可从一个文件或运行的网络中读取数据包。

|

||||

|

||||

在 CentOS、Fedora、RHEL 等 Linux 发行版本中安装 etherape 是一件容易的事,因为在它们的官方软件仓库中就可以找到 etherape。我们可以像下面展示的命令那样使用 yum 包管理器来安装它:

|

||||

|

||||

yum install etherape

|

||||

|

||||

我们可以使用下面的命令在 Ubuntu、Debian 及它们的衍生发行版本中使用 **apt** 包管理器来安装 EtherApe :

|

||||

我们也可以使用下面的命令在 Ubuntu、Debian 及它们的衍生发行版本中使用 **apt** 包管理器来安装 EtherApe :

|

||||

|

||||

sudo apt-get install etherape

|

||||

|

||||

@ -149,13 +161,13 @@ EtherApe 是一个针对 Unix 的图形化网络监控工具,它仿照了 ethe

|

||||

|

||||

sudo etherape

|

||||

|

||||

然后, **etherape** 的 **图形用户界面** 便会被执行。接着,在菜单上面的 **捕捉** 选项下,我们可以选择 **模式**(IP,链路层,TCP) 和 **接口**。一切设定完毕后,我们需要点击 **开始** 按钮。接着我们便会看到类似下面截图的东西:

|

||||

然后, **etherape** 的 **图形用户界面** 便会被执行。接着,在菜单上面的 **捕捉** 选项下,我们可以选择 **模式**(IP,链路层,TCP) 和 **接口**。一切设定完毕后,我们需要点击 **开始** 按钮。接着我们便会看到类似下面截图的东西:

|

||||

|

||||

|

||||

|

||||

### 10) tcpflow ###

|

||||

|

||||

tcpflow 是一个命令行工具,它可以捕捉作为 TCP 连接(流)的一部分的传输数据,并以一种方便协议分析或除错的方式来存储数据。它重建了实际的数据流并将每个流存储在不同的文件中,以备日后的分析。它理解 TCP 序列号并可以正确地重建数据流,不管是在重发或乱序发送状态下。

|

||||

tcpflow 是一个命令行工具,它可以捕捉 TCP 连接(流)的部分传输数据,并以一种方便协议分析或除错的方式来存储数据。它重构了实际的数据流并将每个流存储在不同的文件中,以备日后的分析。它能识别 TCP 序列号并可以正确地重构数据流,不管是在重发还是乱序发送状态下。

|

||||

|

||||

通过 **apt** 包管理器在 Ubuntu 、Debian 系统中安装 tcpflow 是很容易的,因为默认情况下在官方软件仓库中可以找到它。

|

||||

|

||||

@ -175,7 +187,7 @@ tcpflow 是一个命令行工具,它可以捕捉作为 TCP 连接(流)的一

|

||||

|

||||

# yum install --nogpgcheck http://pkgs.repoforge.org/tcpflow/tcpflow-0.21-1.2.el6.rf.i686.rpm

|

||||

|

||||

我们可以使用 tcpflow 来捕捉全部或部分 tcp 流量并以一种简单的方式把它们写到一个可读文件中。下面的命令执行着我们想要做的事情,但我们需要在一个空目录中运行下面的命令,因为它将创建诸如 x.x.x.x.y-a.a.a.a.z 格式的文件,做完这些之后,只需按 Ctrl-C 便可停止这个命令。

|

||||

我们可以使用 tcpflow 来捕捉全部或部分 tcp 流量,并以一种简单的方式把它们写到一个可读的文件中。下面的命令就可以完成这个事情,但我们需要在一个空目录中运行下面的命令,因为它将创建诸如 x.x.x.x.y-a.a.a.a.z 格式的文件,运行之后,只需按 Ctrl-C 便可停止这个命令。

|

||||

|

||||

$ sudo tcpflow -i eth0 port 8000

|

||||

|

||||

@ -183,49 +195,51 @@ tcpflow 是一个命令行工具,它可以捕捉作为 TCP 连接(流)的一

|

||||

|

||||

### 11) IPTraf ###

|

||||

|

||||

[IPTraf][2] 是一个针对 Linux 平台的基于控制台的网络统计应用。它生成一系列的图形,如 TCP 连接包和字节的数目、接口信息和活动指示器、 TCP/UDP 流量故障以及 LAN 状态包和字节的数目。

|

||||

[IPTraf][2] 是一个针对 Linux 平台的基于控制台的网络统计应用。它生成一系列的图形,如 TCP 连接的包/字节计数、接口信息和活动指示器、 TCP/UDP 流量故障以及局域网内设备的包/字节计数。

|

||||

|

||||

在默认的软件仓库中可以找到 IPTraf,所以我们可以使用下面的命令通过 **apt** 包管理器轻松地安装 IPTraf:

|

||||

|

||||

$ sudo apt-get install iptraf

|

||||

|

||||

在默认的软件仓库中可以找到 IPTraf,所以我们可以使用下面的命令通过 **yum** 包管理器轻松地安装 IPTraf:

|

||||

我们可以使用下面的命令通过 **yum** 包管理器轻松地安装 IPTraf:

|

||||

|

||||

# yum install iptraf

|

||||

|

||||

我们需要以管理员权限来运行 IPTraf(注:这里原文写错为 TPTraf),并带有一个可用的网络接口名。这里,我们的网络接口名为 wlan2,所以我们使用 wlan2 来作为接口的名称:

|

||||

我们需要以管理员权限来运行 IPTraf,并带有一个有效的网络接口名。这里,我们的网络接口名为 wlan2,所以我们使用 wlan2 来作为参数:

|

||||

|

||||

$ sudo iptraf wlan2 (注:这里原文为 sudo iptraf,应该加上 wlan2)

|

||||

$ sudo iptraf wlan2

|

||||

|

||||

|

||||

|

||||

开始一般的网络接口统计,键入:

|

||||

开始通常的网络接口统计,键入:

|

||||

|

||||

# iptraf -g

|

||||

|

||||

为了在一个名为 eth0 的接口设备上看详细的统计信息,使用:

|

||||

查看接口 eth0 的详细统计信息,使用:

|

||||

|

||||

# iptraf -d wlan2 (注:这里的 wlan2 和 上面的 eth0 不一致,下面的几句也是这种情况,请相应地改正)

|

||||

# iptraf -d eth0

|

||||

|

||||

为了看一个名为 eth0 的接口的 TCP 和 UDP 监控,使用:

|

||||

查看接口 eth0 的 TCP 和 UDP 监控信息,使用:

|

||||

|

||||

# iptraf -z wlan2

|

||||

# iptraf -z eth0

|

||||

|

||||

为了展示在一个名为 eth0 的接口上的包的大小和数目,使用:

|

||||

查看接口 eth0 的包的大小和数目,使用:

|

||||

|

||||

# iptraf -z wlan2

|

||||

# iptraf -z eth0

|

||||

|

||||

注意:请将上面的 wlan2 替换为你的接口名称。你可以通过运行`ip link show`命令来检查你的接口。

|

||||

注意:请将上面的 eth0 替换为你的接口名称。你可以通过运行`ip link show`命令来检查你的接口。

|

||||

|

||||

(LCTT 译注:关于 iptraf 的更多详细信息请参考:https://linux.cn/article-5430-1.html )

|

||||

|

||||

### 12) Speedometer ###

|

||||

|

||||

Speedometer 是一个小巧且简单的工具,它只绘出一幅包含有通过某个给定端口的上行、下行流量的好看的图。

|

||||

Speedometer 是一个小巧且简单的工具,它只用来绘出一幅包含有通过某个给定端口的上行、下行流量的好看的图。

|

||||

|

||||

在默认的软件仓库中可以找到 Speedometer ,所以我们可以使用下面的命令通过 **yum** 包管理器轻松地安装 Speedometer:

|

||||

|

||||

# yum install speedometer

|

||||

|

||||

在默认的软件仓库中可以找到 Speedometer ,所以我们可以使用下面的命令通过 **apt** 包管理器轻松地安装 Speedometer:

|

||||

我们可以使用下面的命令通过 **apt** 包管理器轻松地安装 Speedometer:

|

||||

|

||||

$ sudo apt-get install speedometer

|

||||

|

||||

@ -239,15 +253,15 @@ Speedometer 可以简单地通过在 shell 或虚拟终端中执行下面的命

|

||||

|

||||

### 13) Netwatch ###

|

||||

|

||||

Netwatch 是 netdiag 工具集里的一部分,并且它也显示出当前主机和其他远程主机的连接情况,以及在每个连接中数据传输的速率。

|

||||

Netwatch 是 netdiag 工具集里的一部分,它也显示当前主机和其他远程主机的连接情况,以及在每个连接中数据传输的速率。

|

||||

|

||||

我们可以使用 yum 在 fedora 中安装 Netwatch,因为它在 fedora 的默认软件仓库中。但若你运行着 CentOS 或 RHEL , 我们需要安装 [rpmforge 软件仓库][3]。

|

||||

|

||||

# yum install netwatch

|

||||

|

||||

Netwatch 作为 netdiag 的一部分可以在默认的软件仓库中找到,所以我们可以轻松地使用下面的命令来利用 **apt** 包管理器安装 **netdiag**:

|

||||

Netwatch 是 netdiag 的一部分,可以在默认的软件仓库中找到,所以我们可以轻松地使用下面的命令来利用 **apt** 包管理器安装 **netdiag**:

|

||||

|

||||

$ sudo apt-get install netdiag(注:这里应该加上 apt-get

|

||||

$ sudo apt-get install netdiag

|

||||

|

||||

为了运行 netwatch, 我们需要在虚拟终端或 shell 中执行下面的命令:

|

||||

|

||||

@ -259,15 +273,15 @@ Netwatch 作为 netdiag 的一部分可以在默认的软件仓库中找到,

|

||||

|

||||

### 14) Trafshow ###

|

||||

|

||||

Trafshow 同 netwatch 和 pktstat(注:这里原文中多了一个 trafshow)一样,可以报告当前激活的连接里使用的协议和每个连接中数据传输的速率。它可以使用 pcap 类型的滤波器来筛选出特定的连接。

|

||||

Trafshow 同 netwatch 和 pktstat 一样,可以报告当前活动的连接里使用的协议和每个连接中数据传输的速率。它可以使用 pcap 类型的过滤器来筛选出特定的连接。

|

||||

|

||||

我们可以使用 yum 在 fedora 中安装 trafshow(注:这里原文为 Netwatch,应该为 trafshow),因为它在 fedora 的默认软件仓库中。但若你正运行着 CentOS 或 RHEL , 我们需要安装 [rpmforge 软件仓库][4]。

|

||||

我们可以使用 yum 在 fedora 中安装 trafshow ,因为它在 fedora 的默认软件仓库中。但若你正运行着 CentOS 或 RHEL , 我们需要安装 [rpmforge 软件仓库][4]。

|

||||

|

||||

# yum install trafshow

|

||||

|

||||

Trafshow 在默认仓库中可以找到,所以我们可以轻松地使用下面的命令来利用 **apt** 包管理器安装它:

|

||||

|

||||

$ sudo apt-get install trafshow(注:原文少了 apt-get)

|

||||

$ sudo apt-get install trafshow

|

||||

|

||||

为了使用 trafshow 来执行监控任务,我们需要在虚拟终端或 shell 中执行下面的命令:

|

||||

|

||||

@ -275,7 +289,7 @@ Trafshow 在默认仓库中可以找到,所以我们可以轻松地使用下

|

||||

|

||||

|

||||

|

||||

为了特别地监控 tcp 连接,如下面一样添加上 tcp 参数:

|

||||

为了专门监控 tcp 连接,如下面一样添加上 tcp 参数:

|

||||

|

||||

$ sudo trafshow -i wlan2 tcp

|

||||

|

||||

@ -285,7 +299,7 @@ Trafshow 在默认仓库中可以找到,所以我们可以轻松地使用下

|

||||

|

||||

### 15) Vnstat ###

|

||||

|

||||

与大多数的其他工具相比,Vnstat 有一点不同。实际上它运行一个后台服务或守护进程,并时刻记录着传输数据的大小。另外,它可以被用来生成一个带有网络使用历史记录的报告。

|

||||

与大多数的其他工具相比,Vnstat 有一点不同。实际上它运行着一个后台服务或守护进程,并时刻记录着传输数据的大小。另外,它可以被用来生成一个网络使用历史记录的报告。

|

||||

|

||||

我们需要开启 EPEL 软件仓库,然后运行 **yum** 包管理器来安装 vnstat。

|

||||

|

||||

@ -301,7 +315,7 @@ Vnstat 在默认软件仓库中可以找到,所以我们可以使用下面的

|

||||

|

||||

|

||||

|

||||

为了实时地监控带宽使用情况,使用 ‘-l’ 选项(实时模式)。然后它将以一种非常精确的方式来展示被上行和下行数据所使用的带宽总量,但不会显示任何有关主机连接或进程的内部细节。

|

||||

为了实时地监控带宽使用情况,使用 ‘-l’ 选项(live 模式)。然后它将以一种非常精确的方式来展示上行和下行数据所使用的带宽总量,但不会显示任何有关主机连接或进程的内部细节。

|

||||

|

||||

$ vnstat -l

|

||||

|

||||

@ -313,7 +327,7 @@ Vnstat 在默认软件仓库中可以找到,所以我们可以使用下面的

|

||||

|

||||

### 16) tcptrack ###

|

||||

|

||||

[tcptrack][5] 可以展示 TCP 连接的状态,它在一个给定的网络端口上进行监听。tcptrack 监控它们的状态并展示出一个经过排列且不断更新的有关来源/目标地址、带宽使用情况等信息的列表,这与 **top** 命令的输出非常类似 。

|

||||

[tcptrack][5] 可以展示 TCP 连接的状态,它在一个给定的网络端口上进行监听。tcptrack 监控它们的状态并展示出排序且不断更新的列表,包括来源/目标地址、带宽使用情况等信息,这与 **top** 命令的输出非常类似 。

|

||||

|

||||

鉴于 tcptrack 在软件仓库中,我们可以轻松地在 Debian、Ubuntu 系统中从软件仓库使用 **apt** 包管理器来安装 tcptrack。为此,我们需要在 shell 或虚拟终端中执行下面的命令:

|

||||

|

||||

@ -329,7 +343,7 @@ Vnstat 在默认软件仓库中可以找到,所以我们可以使用下面的

|

||||

|

||||

注:这里我们下载了 rpmforge-release 的当前最新版本,即 0.5.3-1,你总是可以从 rpmforge 软件仓库中下载其最新版本,并请在上面的命令中替换为你下载的版本。

|

||||

|

||||

**tcptrack** 需要以 root 权限或超级用户身份来运行。执行 tcptrack 时,我们需要带上那个我们想监视的网络接口 TCP 连接状况的接口名称。这里我们的接口名称为 wlan2,所以如下面这样使用:

|

||||

**tcptrack** 需要以 root 权限或超级用户身份来运行。执行 tcptrack 时,我们需要带上要监视的网络接口 TCP 连接状况的接口名称。这里我们的接口名称为 wlan2,所以如下面这样使用:

|

||||

|

||||

sudo tcptrack -i wlan2

|

||||

|

||||

@ -345,7 +359,7 @@ Vnstat 在默认软件仓库中可以找到,所以我们可以使用下面的

|

||||

|

||||

### 17) CBM ###

|

||||

|

||||

CBM 或 Color Bandwidth Meter 可以展示出当前所有网络设备的流量使用情况。这个程序是如此的简单,以至于应该可以从它的名称中看出其功能。CBM 的源代码和新版本可以在 [http://www.isotton.com/utils/cbm/][7] 上找到。

|

||||

CBM ( Color Bandwidth Meter) 可以展示出当前所有网络设备的流量使用情况。这个程序是如此的简单,以至于都可以从它的名称中看出其功能。CBM 的源代码和新版本可以在 [http://www.isotton.com/utils/cbm/][7] 上找到。

|

||||

|

||||

鉴于 CBM 已经包含在软件仓库中,我们可以简单地使用 **apt** 包管理器从 Debian、Ubuntu 的软件仓库中安装 CBM。为此,我们需要在一个 shell 窗口或虚拟终端中运行下面的命令:

|

||||

|

||||

@ -359,7 +373,7 @@ CBM 或 Color Bandwidth Meter 可以展示出当前所有网络设备的流量

|

||||

|

||||

### 18) bmon ###

|

||||

|

||||

[Bmon][8] 或 Bandwidth Monitoring ,是一个用于调试和实时监控带宽的工具。这个工具能够检索各种输入模块的统计数据。它提供了多种输出方式,包括一个基于 curses 库的界面,轻量级的HTML输出,以及 ASCII 输出格式。

|

||||

[Bmon][8] ( Bandwidth Monitoring) ,是一个用于调试和实时监控带宽的工具。这个工具能够检索各种输入模块的统计数据。它提供了多种输出方式,包括一个基于 curses 库的界面,轻量级的HTML输出,以及 ASCII 输出格式。

|

||||

|

||||

bmon 可以在软件仓库中找到,所以我们可以通过使用 apt 包管理器来在 Debian、Ubuntu 中安装它。为此,我们需要在一个 shell 窗口或虚拟终端中运行下面的命令:

|

||||

|

||||

@ -373,7 +387,7 @@ bmon 可以在软件仓库中找到,所以我们可以通过使用 apt 包管

|

||||

|

||||

### 19) tcpdump ###

|

||||

|

||||

[TCPDump][9] 是一个用于网络监控和数据获取的工具。它可以为我们节省很多的时间,并可用来调试网络或服务器 的相关问题。它打印出在某个网络接口上与布尔表达式匹配的数据包所包含的内容的一个描述。

|

||||

[TCPDump][9] 是一个用于网络监控和数据获取的工具。它可以为我们节省很多的时间,并可用来调试网络或服务器的相关问题。它可以打印出在某个网络接口上与布尔表达式相匹配的数据包所包含的内容的一个描述。

|

||||

|

||||

tcpdump 可以在 Debian、Ubuntu 的默认软件仓库中找到,我们可以简单地以 sudo 权限使用 apt 包管理器来安装它。为此,我们需要在一个 shell 窗口或虚拟终端中运行下面的命令:

|

||||

|

||||

@ -389,7 +403,6 @@ tcpdump 需要以 root 权限或超级用户来运行,我们需要带上我们

|

||||

|

||||

|

||||

|

||||

|

||||

假如你只想监视一个特定的端口,则可以运行下面的命令。下面是一个针对 80 端口(网络服务器)的例子:

|

||||

|

||||

$ sudo tcpdump -i wlan2 'port 80'

|

||||

@ -419,14 +432,15 @@ tcpdump 需要以 root 权限或超级用户来运行,我们需要带上我们

|

||||

|

||||

### 结论 ###

|

||||

|

||||

在第一部分中(注:我认为原文多了 first 这个单词,总之是前后文的内容有些不连贯),我们介绍了一些在 Linux 下的网络负载监控工具,这对于系统管理员甚至是新手来说,都是很有帮助的。在这篇文章中介绍的每一个工具都有其特点,不同的选项等,但最终它们都可以帮助你来监控你的网络流量。

|

||||

在这篇文章中,我们介绍了一些在 Linux 下的网络负载监控工具,这对于系统管理员甚至是新手来说,都是很有帮助的。在这篇文章中介绍的每一个工具都具有其特点,不同的选项等,但最终它们都可以帮助你来监控你的网络流量。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/monitoring-2/network-monitoring-tools-linux/

|

||||

|

||||

作者:[Bobbin Zachariah][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

如何修复:apt-get update无法添加新的CD-ROM

|

||||

如何修复 apt-get update 无法添加新的 CD-ROM 的错误

|

||||

================================================================================

|

||||

|

||||

|

||||

@ -63,8 +63,8 @@

|

||||

via: http://itsfoss.com/fix-failed-fetch-cdrom-aptget-update-add-cdroms/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -4,7 +4,7 @@

|

||||

|

||||

|

||||

|

||||

Linux系统的KVM管理

|

||||

*Linux系统的KVM管理*

|

||||

|

||||

在这篇文章里没有什么新的概念,我们只是用命令行工具重复之前所做过的事情,也没有什么前提条件,都是相同的过程,之前的文章我们都讨论过。

|

||||

|

||||

@ -31,35 +31,40 @@ Virsh命令行工具是一款管理virsh客户域的用户界面。virsh程序

|

||||

# virsh pool-define-as Spool1 dir - - - - "/mnt/personal-data/SPool1/"

|

||||

|

||||

|

||||

创建新存储池

|

||||

|

||||

*创建新存储池*

|

||||

|

||||

**2. 查看环境中我们所有的存储池,用以下命令。**

|

||||

|

||||

# virsh pool-list --all

|

||||

|

||||

|

||||

列出所有存储池

|

||||

|

||||

*列出所有存储池*

|

||||

|

||||

**3. 现在我们来构造存储池了,用以下命令来构造我们刚才定义的存储池。**

|

||||

|

||||

# virsh pool-build Spool1

|

||||

|

||||

|

||||

构造存储池

|

||||

|

||||

**4. 用virsh带pool-start的命令来激活并启动我们刚才创建并构造完成的存储池。**

|

||||

*构造存储池*

|

||||

|

||||

**4. 用带pool-start参数的virsh命令来激活并启动我们刚才创建并构造完成的存储池。**

|

||||

|

||||

# virsh pool-start Spool1

|

||||

|

||||

|

||||

激活存储池

|

||||

|

||||

*激活存储池*

|

||||

|

||||

**5. 查看环境中存储池的状态,用以下命令。**

|

||||

|

||||

# virsh pool-list --all

|

||||

|

||||

|

||||

查看存储池状态

|

||||

|

||||

*查看存储池状态*

|

||||

|

||||

你会发现Spool1的状态变成了已激活。

|

||||

|

||||

@ -68,14 +73,16 @@ Virsh命令行工具是一款管理virsh客户域的用户界面。virsh程序

|

||||

# virsh pool-autostart Spool1

|

||||

|

||||

|

||||

配置KVM存储池

|

||||

|

||||

*配置KVM存储池*

|

||||

|

||||

**7. 最后来看看我们新的存储池的信息吧。**

|

||||

|

||||

# virsh pool-info Spool1

|

||||

|

||||

|

||||

查看KVM存储池信息

|

||||

|

||||

*查看KVM存储池信息*

|

||||

|

||||

恭喜你,Spool1已经准备好待命,接下来我们试着创建存储卷来使用它。

|

||||

|

||||

@ -90,12 +97,14 @@ Virsh命令行工具是一款管理virsh客户域的用户界面。virsh程序

|

||||

# qemu-img create -f raw /mnt/personal-data/SPool1/SVol1.img 10G

|

||||

|

||||

|

||||

创建存储卷

|

||||

|

||||

*创建存储卷*

|

||||

|

||||

**9. 通过使用带info的qemu-img命令,你可以获取到你的新磁盘映像的一些信息。**

|

||||

|

||||

|

||||

查看存储卷信息

|

||||

|

||||

*查看存储卷信息*

|

||||

|

||||

**警告**: 不要用qemu-img命令来修改被运行中的虚拟机或任何其它进程所正在使用的映像,那样映像会被破坏。

|

||||

|

||||

@ -120,15 +129,18 @@ Virsh命令行工具是一款管理virsh客户域的用户界面。virsh程序

|

||||

# virt-install --name=rhel7 --disk path=/mnt/personal-data/SPool1/SVol1.img --graphics spice --vcpu=1 --ram=1024 --location=/run/media/dos/9e6f605a-f502-4e98-826e-e6376caea288/rhel-server-7.0-x86_64-dvd.iso --network bridge=virbr0

|

||||

|

||||

|

||||

创建新的虚拟机

|

||||

|

||||

*创建新的虚拟机*

|

||||

|

||||

**11. 你会看到弹出一个virt-vierwer窗口,像是在通过它在与虚拟机通信。**

|

||||

|

||||

|

||||

虚拟机启动程式

|

||||

|

||||

*虚拟机启动程式*

|

||||

|

||||

|

||||

虚拟机安装过程

|

||||

|

||||

*虚拟机安装过程*

|

||||

|

||||

### 结论 ###

|

||||

|

||||

@ -143,7 +155,7 @@ via: http://www.tecmint.com/kvm-management-tools-to-manage-virtual-machines/

|

||||

|

||||

作者:[Mohammad Dosoukey][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Linux 基础:如何修复Ubuntu上“E: /var/cache/apt/archives/ subprocess new pre-removal script returned error exit status 1 ”的错误

|

||||