diff --git a/sources/tech/20180125 BUILDING A FULL-TEXT SEARCH APP USING DOCKER AND ELASTICSEARCH.md b/sources/tech/20180125 BUILDING A FULL-TEXT SEARCH APP USING DOCKER AND ELASTICSEARCH.md

deleted file mode 100644

index f69feb8c2a..0000000000

--- a/sources/tech/20180125 BUILDING A FULL-TEXT SEARCH APP USING DOCKER AND ELASTICSEARCH.md

+++ /dev/null

@@ -1,1383 +0,0 @@

-Translating by qhwdw

-BUILDING A FULL-TEXT SEARCH APP USING DOCKER AND ELASTICSEARCH

-============================================================

-

- _How does Wikipedia sort through 5+ million articles to find the most relevant one for your research?_

-

- _How does Facebook find the friend who you're looking for (and whose name you've misspelled), across a userbase of 2+ billion people?_

-

- _How does Google search the entire internet for webpages relevant to your vague, typo-filled search query?_

-

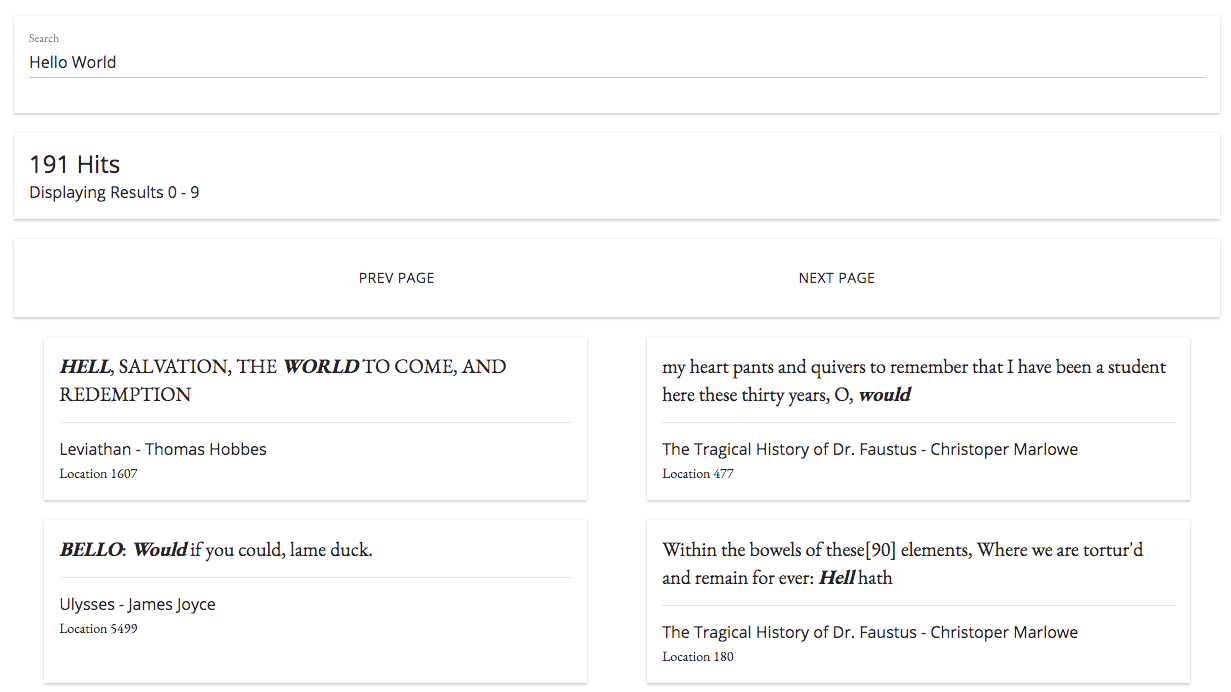

-In this tutorial, we'll walk through setting up our own full-text search application (of an admittedly lesser complexity than the systems in the questions above). Our example app will provide a UI and API to search the complete texts of 100 literary classics such as _Peter Pan_, _Frankenstein_, and _Treasure Island_.

-

-You can preview a completed version of the tutorial app here - [https://search.patricktriest.com][6]

-

-

-

-The source code for the application is 100% open-source and can be found at the GitHub repository here - [https://github.com/triestpa/guttenberg-search][7]

-

-Adding fast, flexible full-text search to apps can be a challenge. Most mainstream databases, such as [PostgreSQL][8] and [MongoDB][9], offer very basic text searching capabilities due to limitations on their existing query and index structures. In order to implement high quality full-text search, a separate datastore is often the best option. [Elasticsearch][10] is a leading open-source datastore that is optimized to perform incredibly flexible and fast full-text search.

-

-We'll be using [Docker][11] to set up our project environment and dependencies. Docker is a containerization engine used by the likes of [Uber][12], [Spotify][13], [ADP][14], and [PayPal][15]. A major advantage of building a containerized app is that the project setup is virtually the same on Windows, macOS, and Linux, which makes writing this tutorial quite a bit simpler for me. Don't worry if you've never used Docker; we'll go through the full project configuration further down.

-

-We'll also be using [Node.js][16] (with the [Koa][17] framework), and [Vue.js][18] to build our search API and frontend web app respectively.

-

-### 1 - WHAT IS ELASTICSEARCH?

-

-Full-text search is a heavily requested feature in modern applications. Search can also be one of the most difficult features to implement competently - many popular websites have subpar search functionality that returns results slowly and has trouble finding non-exact matches. Often, this is due to limitations in the underlying database: most standard relational databases are limited to basic `CONTAINS` or `LIKE` SQL queries, which provide only the most basic string matching functionality.

-

-We'd like our search app to be:

-

-1. **Fast** - Search results should be returned almost instantly, in order to provide a responsive user experience.

-

-2. **Flexible** - We'll want to be able to modify how the search is performed, in order to optimize for different datasets and use cases.

-

-3. **Forgiving** - If a search contains a typo, we'd still like to return relevant results for what the user might have been trying to search for.

-

-4. **Full-Text** - We don't want to limit our search to specific matching keywords or tags - we want to search _everything_ in our datastore (including large text fields) for a match.

-

-

-

-In order to build a super-powered search feature, it's often best to use a datastore that is optimized for the task of full-text search. This is where [Elasticsearch][19] comes into play; Elasticsearch is an open-source search engine and datastore written in Java and built on top of the [Apache Lucene][20] library.

-

-Here are some examples of real-world Elasticsearch use cases from the official [Elastic website][21].

-

-* Wikipedia uses Elasticsearch to provide full-text search with highlighted search snippets, and search-as-you-type and did-you-mean suggestions.

-

-* The Guardian uses Elasticsearch to combine visitor logs with social-network data to provide real-time feedback to its editors about the public’s response to new articles.

-

-* Stack Overflow combines full-text search with geolocation queries and uses more-like-this to find related questions and answers.

-

-* GitHub uses Elasticsearch to query 130 billion lines of code.

-

-### What makes Elasticsearch different from a "normal" database?

-

-At its core, Elasticsearch is able to provide fast and flexible full-text search through the use of _inverted indices_.

-

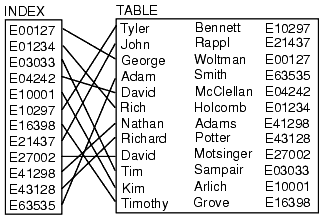

-An "index" is a data structure to allow for ultra-fast data query and retrieval operations in databases. Databases generally index entries by storing an association of fields with the matching table rows. By storing the index in a searchable data structure (often a [B-Tree][22]), databases can achieve sub-linear time on optimized queries (such as “Find the row with ID = 5”).

-

-

-

-We can think of a database index like an old-school library card catalog - it tells you precisely where the entry that you're searching for is located, as long as you already know the title and author of the book. Database tables generally have multiple indices in order to speed up queries on specific fields (e.g. an index on the `name` column would greatly speed up queries for rows with a specific name).
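To make the card-catalog analogy concrete, here is a small sketch of a lookup index on a `name` column. It is a simplification: a sorted array searched with binary search stands in for the B-Tree a real database would use. Both need only O(log n) comparisons per lookup. The `rows` data and the `buildNameIndex` and `lookup` helpers are hypothetical.

```javascript
// Hypothetical index on a `name` column: (key, rowId) pairs kept sorted by key
function buildNameIndex (rows) {
  return rows
    .map(row => ({ key: row.name, rowId: row.id }))
    .sort((a, b) => (a.key < b.key ? -1 : a.key > b.key ? 1 : 0))
}

// Binary search over the sorted entries: O(log n) comparisons, like a B-Tree
// descent, instead of checking every row
function lookup (index, name) {
  let lo = 0
  let hi = index.length - 1
  while (lo <= hi) {
    const mid = (lo + hi) >> 1
    const key = index[mid].key
    if (key === name) return index[mid].rowId
    if (key < name) lo = mid + 1
    else hi = mid - 1
  }
  return null // No row with that name
}

const rows = [
  { id: 1, name: 'Moby Dick' },
  { id: 2, name: 'Dracula' },
  { id: 3, name: 'Emma' },
  { id: 4, name: 'Beowulf' }
]
const nameIndex = buildNameIndex(rows)
console.log(lookup(nameIndex, 'Emma')) // 3
console.log(lookup(nameIndex, 'Ulysses')) // null
```

The catalog has the same limitation as the index. It only helps when you already know the exact key, which is the problem inverted indexes address.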

-

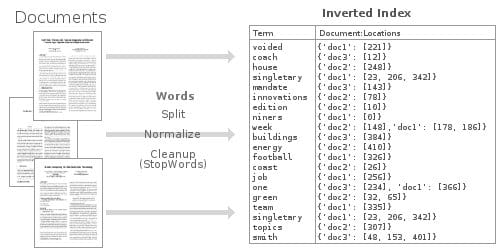

-Inverted indexes work in a substantially different manner. The content of each row (or document) is split up, and each individual entry (in this case each word) points back to any documents that it was found within.

-

-

-

-This inverted-index data structure allows us to very quickly find, say, all of the documents where “football” was mentioned. Through the use of a heavily optimized inverted index, Elasticsearch enables us to perform some very powerful and customizable full-text searches on our stored data.
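The inverted-index idea can be sketched in a few lines of JavaScript. This is a toy model and not how Lucene stores its index, which relies on term dictionaries, compressed postings lists, and relevance scoring. The `tokenize`, `buildInvertedIndex`, and `search` helpers and the sample documents are hypothetical. The tokenizer only lowercases ASCII text and splits on non-alphanumeric characters.

```javascript
// Simplified tokenizer: lowercase, then split on anything that is not an
// ASCII letter or digit
function tokenize (text) {
  return text.toLowerCase().split(/[^a-z0-9]+/).filter(Boolean)
}

// Map each word to the set of document ids that contain it
function buildInvertedIndex (docs) {
  const index = new Map()
  for (const [id, text] of Object.entries(docs)) {
    for (const word of tokenize(text)) {
      if (!index.has(word)) index.set(word, new Set())
      index.get(word).add(id)
    }
  }
  return index
}

// Ids of the documents that contain every word in the query
function search (index, query) {
  const postings = tokenize(query).map(word => index.get(word) || new Set())
  if (postings.length === 0) return []
  return [...postings[0]].filter(id => postings.every(set => set.has(id)))
}

const docs = {
  doc1: 'Football is popular in Europe',
  doc2: 'American football and baseball',
  doc3: 'Baseball season starts in spring'
}
const invertedIndex = buildInvertedIndex(docs)
console.log(search(invertedIndex, 'football')) // [ 'doc1', 'doc2' ]
console.log(search(invertedIndex, 'football baseball')) // [ 'doc2' ]
```

Here, answering "which documents mention football?" takes a single map lookup. Without the inverted index, we would have to scan the text of every document.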

-

-### 2 - PROJECT SETUP

-

-### 2.0 - Docker

-

-We'll be using [Docker][23] to manage the environments and dependencies for this project. Docker is a containerization engine that allows applications to be run in isolated environments, unaffected by the host operating system and local development environment. Many web-scale companies run a majority of their server infrastructure in containers now, due to the increased flexibility and composability of containerized application components.

-

-

-

-The advantage of using Docker for me, as the friendly author of this tutorial, is that the local environment setup is minimal and consistent across Windows, macOS, and Linux systems. Instead of going through divergent installation instructions for Node.js, Elasticsearch, and Nginx, we can just define these dependencies in Docker configuration files, and then run our app anywhere using this configuration. Furthermore, since each application component will run in its own isolated container, there is much less potential for existing junk on our local machines to interfere, so "But it works on my machine!" types of scenarios will be much rarer when debugging issues.

-

-### 2.1 - Install Docker & Docker-Compose

-

-The only dependencies for this project are [Docker][24] and [docker-compose][25], the latter of which is an officially supported tool for defining multiple container configurations to _compose_ into a single application stack.

-

-Install Docker - [https://docs.docker.com/engine/installation/][26]

-Install Docker Compose - [https://docs.docker.com/compose/install/][27]

-

-### 2.2 - Setup Project Directories

-

-Create a base directory (say `guttenberg_search`) for the project. To organize our project we'll work within two main subdirectories.

-

-* `/public` - Store files for the frontend Vue.js webapp.

-

-* `/server` - Server-side Node.js source code

-

-### 2.3 - Add Docker-Compose Config

-

-Next, we'll create a `docker-compose.yml` file to define each container in our application stack.

-

-1. `gs-api` - The Node.js container for the backend application logic.

-

-2. `gs-frontend` - An Nginx container for serving the frontend webapp files.

-

-3. `gs-search` - An Elasticsearch container for storing and searching data.

-

-```

-version: '3'

-

-services:

-  api: # Node.js App

-    container_name: gs-api

-    build: .

-    ports:

-      - "3000:3000" # Expose API port

-      - "9229:9229" # Expose Node process debug port (disable in production)

-    environment: # Set ENV vars

-      - NODE_ENV=local

-      - ES_HOST=elasticsearch

-      - PORT=3000

-    volumes: # Attach local book data directory

-      - ./books:/usr/src/app/books

-

-  frontend: # Nginx Server For Frontend App

-    container_name: gs-frontend

-    image: nginx

-    volumes: # Serve local "public" dir

-      - ./public:/usr/share/nginx/html

-    ports:

-      - "8080:80" # Forward site to localhost:8080

-

-  elasticsearch: # Elasticsearch Instance

-    container_name: gs-search

-    image: docker.elastic.co/elasticsearch/elasticsearch:6.1.1

-    volumes: # Persist ES data in separate "esdata" volume

-      - esdata:/usr/share/elasticsearch/data

-    environment:

-      - bootstrap.memory_lock=true

-      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"

-      - discovery.type=single-node

-    ports: # Expose Elasticsearch ports

-      - "9300:9300"

-      - "9200:9200"

-

-volumes: # Define separate volume for Elasticsearch data

-  esdata:

-

-```

-

-This file defines our entire application stack - no need to install Elasticsearch, Node, or Nginx on your local system. Each container is forwarding ports to the host system (`localhost`), in order for us to access and debug the Node API, Elasticsearch instance, and frontend web app from our host machine.

-

-### 2.4 - Add Dockerfile

-

-We are using official prebuilt images for Nginx and Elasticsearch, but we'll need to build our own image for the Node.js app.

-

-Define a simple `Dockerfile` configuration in the application root directory.

-

-```

-# Use Node v8.9.0 LTS

-FROM node:carbon

-

-# Setup app working directory

-WORKDIR /usr/src/app

-

-# Copy package.json and package-lock.json

-COPY package*.json ./

-

-# Install app dependencies

-RUN npm install

-

-# Copy sourcecode

-COPY . .

-

-# Start app

-CMD [ "npm", "start" ]

-

-```

-

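-This Docker configuration extends the official Node.js image, copies in our `package.json` files, installs the NPM dependencies within the container, and then copies in our application source code. Copying the package files first lets Docker cache the `npm install` layer until the dependencies change.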

-

-We'll also add a `.dockerignore` file to avoid copying unneeded files into the container.

-

-```

-node_modules/

-npm-debug.log

-books/

-public/

-

-```

-

-> Note that we're not copying the `node_modules` directory into our container - this is because we'll be running `npm install` from within the container build process. Attempting to copy the `node_modules` from the host system into a container can cause errors since some packages need to be specifically built for certain operating systems. For instance, installing the `bcrypt` package on macOS and attempting to copy that module directly to an Ubuntu container will not work because `bcrypt` relies on a binary that needs to be built specifically for each operating system.

-

-### 2.5 - Add Base Files

-

-In order to test out the configuration, we'll need to add some placeholder files to the app directories.

-

-Add this base HTML file at `public/index.html`

-

-```

-Hello World From The Frontend Container

-

-```

-

-Next, add the placeholder Node.js app file at `server/app.js`.

-

-```

-const Koa = require('koa')

-const app = new Koa()

-

-app.use(async (ctx, next) => {

-  ctx.body = 'Hello World From the Backend Container'

-})

-

-const port = process.env.PORT || 3000

-

-// The listen callback receives no error argument; startup errors are emitted as 'error' events

-app.listen(port, () => {

-  console.log(`App Listening on Port ${port}`)

-})

-

-```

-

-Finally, add our `package.json` Node app configuration.

-

-```

-{

-  "name": "guttenberg-search",

-  "version": "0.0.1",

-  "description": "Source code for Elasticsearch tutorial using 100 classic open source books.",

-  "scripts": {

-    "start": "node --inspect=0.0.0.0:9229 server/app.js"

-  },

-  "repository": {

-    "type": "git",

-    "url": "git+https://github.com/triestpa/guttenberg-search.git"

-  },

-  "author": "patrick.triest@gmail.com",

-  "license": "MIT",

-  "bugs": {

-    "url": "https://github.com/triestpa/guttenberg-search/issues"

-  },

-  "homepage": "https://github.com/triestpa/guttenberg-search#readme",

-  "dependencies": {

-    "elasticsearch": "13.3.1",

-    "joi": "13.0.1",

-    "koa": "2.4.1",

-    "koa-joi-validate": "0.5.1",

-    "koa-router": "7.2.1"

-  }

-}

-

-```

-

-This file defines the application start command and the Node.js package dependencies.

-

-> Note - You don't have to run `npm install` - the dependencies will be installed inside the container when it is built.

-

-### 2.6 - Try it Out

-

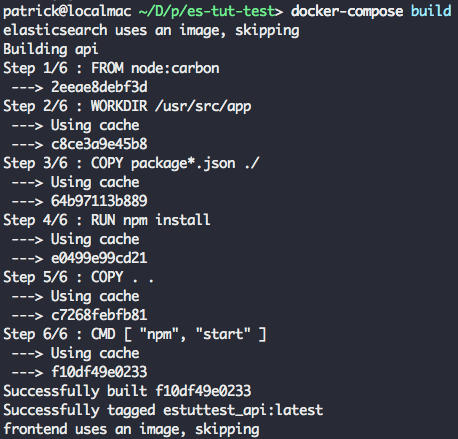

-Everything is in place now to test out each component of the app. From the base directory, run `docker-compose build`, which will build our Node.js application container.

-

-

-

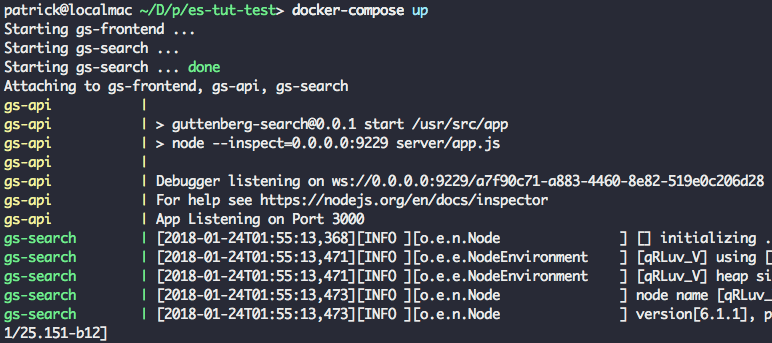

-Next, run `docker-compose up` to launch our entire application stack.

-

-

-

-> This step might take a few minutes since Docker has to download the base images for each container. In subsequent runs, starting the app should be nearly instantaneous, since the required images will have already been downloaded.

-

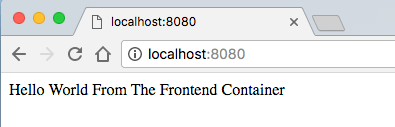

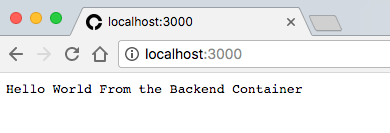

-Try visiting `localhost:8080` in your browser - you should see a simple "Hello World" webpage.

-

-

-

-Visit `localhost:3000` to verify that our Node server returns its own "Hello World" message.

-

-

-

-Finally, visit `localhost:9200` to check that Elasticsearch is running. It should return information similar to this.

-

-```

-{

- "name" : "SLTcfpI",

- "cluster_name" : "docker-cluster",

- "cluster_uuid" : "iId8e0ZeS_mgh9ALlWQ7-w",

- "version" : {

- "number" : "6.1.1",

- "build_hash" : "bd92e7f",

- "build_date" : "2017-12-17T20:23:25.338Z",

- "build_snapshot" : false,

- "lucene_version" : "7.1.0",

- "minimum_wire_compatibility_version" : "5.6.0",

- "minimum_index_compatibility_version" : "5.0.0"

- },

- "tagline" : "You Know, for Search"

-}

-

-```

-

-If all three URLs display data successfully, congrats! The entire containerized stack is running, so now we can move on to the fun part.

-

-### 3 - CONNECT TO ELASTICSEARCH

-

-The first thing that we'll need to do in our app is connect to our local Elasticsearch instance.

-

-### 3.0 - Add ES Connection Module

-

-Add the following Elasticsearch initialization code to a new file `server/connection.js`.

-

-```

-const elasticsearch = require('elasticsearch')

-

-// Core ES variables for this project

-const index = 'library'

-const type = 'novel'

-const port = 9200

-const host = process.env.ES_HOST || 'localhost'

-const client = new elasticsearch.Client({ host: { host, port } })

-

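-/** Check the ES connection status, retrying until the cluster responds */

-async function checkConnection () {

-  let isConnected = false

-  while (!isConnected) {

-    console.log('Connecting to ES')

-    try {

-      const health = await client.cluster.health({})

-      console.log(health)

-      isConnected = true

-    } catch (err) {

-      console.log('Connection Failed, Retrying...', err)

-      // Pause for a second so we don't hammer ES while it is still starting up

-      await new Promise(resolve => setTimeout(resolve, 1000))

-    }

-  }

-}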

-

-checkConnection()

-

-```

-

-Let's rebuild our Node app now that we've made changes, using `docker-compose build`. Next, run `docker-compose up -d` to start the application stack as a background daemon process.

-

-With the app started, run `docker exec gs-api "node" "server/connection.js"` on the command line in order to run our script within the container. You should see some system output similar to the following.

-

-```

-{ cluster_name: 'docker-cluster',

- status: 'yellow',

- timed_out: false,

- number_of_nodes: 1,

- number_of_data_nodes: 1,

- active_primary_shards: 1,

- active_shards: 1,

- relocating_shards: 0,

- initializing_shards: 0,

- unassigned_shards: 1,

- delayed_unassigned_shards: 0,

- number_of_pending_tasks: 0,

- number_of_in_flight_fetch: 0,

- task_max_waiting_in_queue_millis: 0,

- active_shards_percent_as_number: 50 }

-

-```

-

-Go ahead and remove the `checkConnection()` call at the bottom before moving on, since in our final app we'll be making that call from outside the connection module.
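The loop in `checkConnection` keeps retrying until Elasticsearch answers and has no upper bound on the number of attempts. If you would rather give up after a while and pause between attempts, a generic helper could look like the sketch below. The `retry` and `sleep` names and the default values are assumptions for illustration. The demo uses a fake health check in place of `client.cluster.health({})`, so it runs without a cluster.

```javascript
// Hypothetical helper: call `fn` until it resolves, waiting `delayMs` between
// attempts and rethrowing the last error after `maxAttempts` failures
const sleep = ms => new Promise(resolve => setTimeout(resolve, ms))

async function retry (fn, { maxAttempts = 10, delayMs = 1000 } = {}) {
  let lastError
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await fn()
    } catch (err) {
      lastError = err
      console.log(`Attempt ${attempt} of ${maxAttempts} failed: ${err.message}`)
      if (attempt < maxAttempts) await sleep(delayMs)
    }
  }
  throw lastError
}

// Demo: a fake health check that fails twice before succeeding
let calls = 0
async function fakeHealthCheck () {
  calls++
  if (calls < 3) throw new Error('connect ECONNREFUSED')
  return { status: 'yellow' }
}

retry(fakeHealthCheck, { maxAttempts: 5, delayMs: 100 })
  .then(health => console.log(`Connected, cluster status: ${health.status}`))
```

In `server/connection.js`, this could be called as something like `await retry(() => client.cluster.health({}), { maxAttempts: 30, delayMs: 1000 })`.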

-

-### 3.1 - Add Helper Function To Reset Index

-

-In `server/connection.js` add the following function below `checkConnection`, in order to provide an easy way to reset our Elasticsearch index.

-

-```

-/** Clear the index, recreate it, and add mappings */

-async function resetIndex () {

-  // Uses the module-level `index` ('library') defined at the top of this file

-  if (await client.indices.exists({ index })) {

-    await client.indices.delete({ index })

-  }

-

-  await client.indices.create({ index })

-  await putBookMapping()

-}

-

-```

-

-### 3.2 - Add Book Schema

-

-Next, we'll want to add a "mapping" for the book data schema. Add the following function below `resetIndex` in `server/connection.js`.

-

-```

-/** Add book section schema mapping to ES */

-async function putBookMapping () {

-  const schema = {

-    title: { type: 'keyword' },

-    author: { type: 'keyword' },

-    location: { type: 'integer' },

-    text: { type: 'text' }

-  }

-

-  return client.indices.putMapping({ index, type, body: { properties: schema } })

-}

-

-```

-

-Here we are defining a mapping for the `novel` type in the `library` index. An Elasticsearch `index` is roughly analogous to a SQL `table` or a MongoDB `collection`. Adding a mapping allows us to specify each field and datatype for the stored documents. Elasticsearch can infer a mapping dynamically from the documents we insert, so we don't technically need to add one, but doing so gives us more control over how the data is handled.

-

-For instance, we're assigning the `keyword` type to the "title" and "author" fields, and the `text` type to the "text" field. Doing so will cause the search engine to treat these string fields differently: during a search, the engine will search _within_ the `text` field for potential matches, whereas `keyword` fields will be matched based on their full content. This might seem like a minor distinction, but it can have a huge impact on the behavior and speed of different searches.
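The difference between the two field types can be illustrated with a deliberately simplified model. The sketch below is not Elasticsearch code. By default, a `text` field is processed by the standard analyzer, which tokenizes on Unicode word boundaries and lowercases. The hypothetical `analyze` helper here only lowercases ASCII and splits on non-alphanumeric characters.

```javascript
// Simplified stand-in for an analyzer: lowercase, then split on anything
// that is not an ASCII letter or digit
function analyze (value) {
  return value.toLowerCase().split(/[^a-z0-9]+/).filter(Boolean)
}

// keyword: the whole stored value is one term, so only an exact
// (case-sensitive) match of the full value succeeds
function matchesKeyword (storedValue, query) {
  return storedValue === query
}

// text: the stored value is split into terms, and the query matches if any
// of its terms appear among them
function matchesText (storedValue, query) {
  const terms = new Set(analyze(storedValue))
  return analyze(query).some(term => terms.has(term))
}

const title = 'Treasure Island'
console.log(matchesKeyword(title, 'Treasure Island')) // true
console.log(matchesKeyword(title, 'island')) // false
console.log(matchesText(title, 'island')) // true
```

In this model, a search for "island" finds the `text` field but misses the `keyword` field. That is why `keyword` suits exact filters (such as a specific title) and `text` suits free-form searching.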

-

-Export the exposed properties and functions at the bottom of the file, so that they can be accessed by other modules in our app.

-

-```

-module.exports = {

-  client, index, type, checkConnection, resetIndex

-}

-

-```

-

-### 4 - LOAD THE RAW DATA

-

-We'll be using data from [Project Gutenberg][28] - an online effort dedicated to providing free, digital copies of books within the public domain. For this project, we'll be populating our library with 100 classic books, including texts such as _The Adventures of Sherlock Holmes_, _Treasure Island_, _The Count of Monte Cristo_, _Around the World in 80 Days_, _Romeo and Juliet_, and _The Odyssey_.

-

-

-

-### 4.1 - Download Book Files

-

-I've zipped the 100 books into a file that you can download here -

-[https://cdn.patricktriest.com/data/books.zip][29]

-

-Extract this file into a `books/` directory in your project.

-

-If you want, you can do this by using the following commands (requires [wget][30] and ["The Unarchiver" CLI][31]).

-

-```

-wget https://cdn.patricktriest.com/data/books.zip

-unar books.zip

-

-```

-

-### 4.2 - Preview A Book

-

-Try opening one of the book files, say `219-0.txt`. You'll notice that it starts with an open access license, followed by some lines identifying the book title, author, release dates, language and character encoding.

-

-```

-Title: Heart of Darkness

-

-Author: Joseph Conrad

-

-Release Date: February 1995 [EBook #219]

-Last Updated: September 7, 2016

-

-Language: English

-

-Character set encoding: UTF-8

-

-```

-

-After these lines comes `*** START OF THIS PROJECT GUTENBERG EBOOK HEART OF DARKNESS ***`, after which the book content actually starts.

-

-If you scroll to the end of the book you'll see the matching message `*** END OF THIS PROJECT GUTENBERG EBOOK HEART OF DARKNESS ***`, which is followed by a much more detailed version of the book's license.

-

-In the next steps, we'll programmatically parse the book metadata from this header and extract the book content from between the `*** START OF` and `*** END OF` place markers.

-

-### 4.3 - Read Data Dir

-

-Let's write a script to read the content of each book and to add that data to Elasticsearch. We'll define a new JavaScript file `server/load_data.js` in order to perform these operations.

-

-First, we'll obtain a list of every file within the `books/` data directory.

-

-Add the following content to `server/load_data.js`.

-

-```

-const fs = require('fs')

-const path = require('path')

-const esConnection = require('./connection')

-

-/** Clear ES index, parse and index all files from the books directory */

-async function readAndInsertBooks () {

-  try {

-    // Clear previous ES index

-    await esConnection.resetIndex()

-

-    // Read books directory

-    let files = fs.readdirSync('./books').filter(file => file.slice(-4) === '.txt')

-    console.log(`Found ${files.length} Files`)

-

-    // Read each book file, and index each paragraph in elasticsearch

-    for (let file of files) {

-      console.log(`Reading File - ${file}`)

-      const filePath = path.join('./books', file)

-      const { title, author, paragraphs } = parseBookFile(filePath)

-      await insertBookData(title, author, paragraphs)

-    }

-  } catch (err) {

-    console.error(err)

-  }

-}

-

-readAndInsertBooks()

-

-```

-

-We'll use a shortcut command to rebuild our Node.js app and update the running container.

-

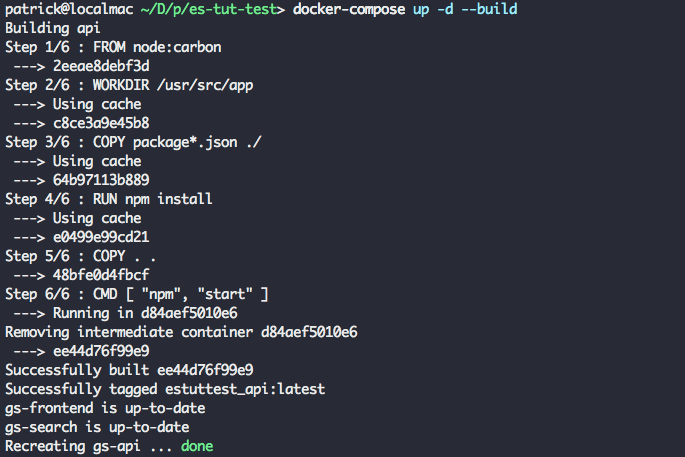

-Run `docker-compose up -d --build` to update the application. This is a shortcut for running `docker-compose build` and `docker-compose up -d`.

-

-

-

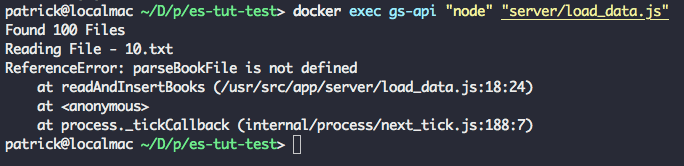

-Run `docker exec gs-api "node" "server/load_data.js"` in order to run our `load_data` script within the container. You should see `Found 100 Files` in the output.

-

-After this, the script will exit due to an error because we're calling a helper function (`parseBookFile`) that we have not yet defined.

-

-

-

-### 4.4 - Read Data File

-

-Next, we'll read the metadata and content for each book.

-

-Define a new function in `server/load_data.js`.

-

-```

-/** Read an individual book text file, and extract the title, author, and paragraphs */

-function parseBookFile (filePath) {

-  // Read text file

-  const book = fs.readFileSync(filePath, 'utf8')

-

-  // Find book title and author

-  const title = book.match(/^Title:\s(.+)$/m)[1]

-  const authorMatch = book.match(/^Author:\s(.+)$/m)

-  const author = (!authorMatch || authorMatch[1].trim() === '') ? 'Unknown Author' : authorMatch[1]

-

-  console.log(`Reading Book - ${title} By ${author}`)

-

-  // Find Gutenberg metadata header and footer

-  const startOfBookMatch = book.match(/^\*{3}\s*START OF (THIS|THE) PROJECT GUTENBERG EBOOK.+\*{3}$/m)

-  const startOfBookIndex = startOfBookMatch.index + startOfBookMatch[0].length

-  const endOfBookIndex = book.match(/^\*{3}\s*END OF (THIS|THE) PROJECT GUTENBERG EBOOK.+\*{3}$/m).index

-

-  // Clean book text and split into array of paragraphs

-  const paragraphs = book

-    .slice(startOfBookIndex, endOfBookIndex) // Remove Gutenberg header and footer

-    .split(/\n\s*\n/g) // Split on blank lines (CRLF or LF), giving each paragraph its own array entry

-    .map(line => line.replace(/\r?\n/g, ' ').trim()) // Remove paragraph line breaks and whitespace

-    .map(line => line.replace(/_/g, '')) // Gutenberg uses "_" to signify italics. We'll remove it, since it makes the raw text look messy.

-    .filter(line => line.length > 0) // Remove empty lines

-

-  console.log(`Parsed ${paragraphs.length} Paragraphs\n`)

-  return { title, author, paragraphs }

-}

-

-```

-

-This function performs a few important tasks.

-

-1. Read book text from the file system.

-

-2. Use regular expressions (check out [this post][1] for a primer on using regex) to parse the book title and author.

-

-3. Identify the start and end of the book content, by matching on the all-caps "Project Gutenberg" header and footer.

-

-4. Extract the book text content.

-

-5. Split the text into an array of paragraphs.

-

-6. Clean up the text and remove blank lines.

-

-As a return value, we'll form an object containing the book's title, author, and an array of paragraphs within the book.
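Because `parseBookFile` reads straight from disk, it can be handy to try the same parsing steps on a short in-memory string first. The hypothetical `parseBookText` below mirrors those steps: the title and author regexes, the START/END markers, the paragraph split, and the cleanup. It adds two assumptions of our own. It throws a descriptive error if the markers are missing instead of failing on a `null` match, and it accepts both `\r\n` and `\n` line endings.

```javascript
// Hypothetical in-memory variant of parseBookFile: same parsing steps, but it
// takes the book text as a string instead of a file path
function parseBookText (book) {
  // Title and author lines from the Gutenberg header
  const titleMatch = book.match(/^Title:\s(.+)$/m)
  const authorMatch = book.match(/^Author:\s(.+)$/m)
  const title = titleMatch ? titleMatch[1].trim() : 'Unknown Title'
  const author = (!authorMatch || authorMatch[1].trim() === '') ? 'Unknown Author' : authorMatch[1].trim()

  // Locate the START and END markers that wrap the actual book content
  const startMatch = book.match(/^\*{3}\s*START OF (THIS|THE) PROJECT GUTENBERG EBOOK.+\*{3}$/m)
  const endMatch = book.match(/^\*{3}\s*END OF (THIS|THE) PROJECT GUTENBERG EBOOK.+\*{3}$/m)
  if (!startMatch || !endMatch) throw new Error('Project Gutenberg START/END markers not found')

  const paragraphs = book
    .slice(startMatch.index + startMatch[0].length, endMatch.index)
    .split(/\n\s*\n/) // One or more blank lines separate paragraphs
    .map(p => p.replace(/\r?\n/g, ' ').replace(/_/g, '').trim()) // Join lines, drop "_" italics markers
    .filter(p => p.length > 0) // Discard empty fragments

  return { title, author, paragraphs }
}

// A tiny sample in the same layout as a Gutenberg file
const sample = [
  'Title: Heart of Darkness',
  '',
  'Author: Joseph Conrad',
  '',
  '*** START OF THIS PROJECT GUTENBERG EBOOK HEART OF DARKNESS ***',
  '',
  'The Nellie, a cruising yawl,',
  'swung to her anchor.',
  '',
  'The _Director of Companies_ was our captain.',
  '',
  '*** END OF THIS PROJECT GUTENBERG EBOOK HEART OF DARKNESS ***'
].join('\n')

console.log(parseBookText(sample))
```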

-

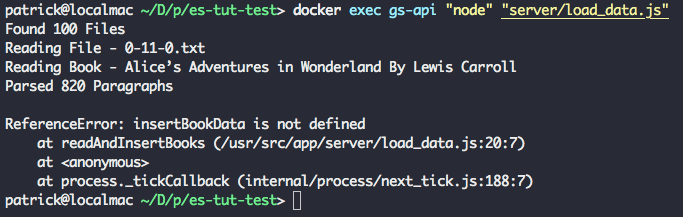

-Run `docker-compose up -d --build` and `docker exec gs-api "node" "server/load_data.js"` again, and you should see the same output as before, this time with three extra lines at the end of the output.

-

-

-

-Success! Our script successfully parsed the title and author from the text file. The script will again end with an error since we still have to define one more helper function.

-

-### 4.5 - Index Datafile in ES

-

-As a final step, we'll bulk-upload each array of paragraphs into the Elasticsearch index.

-

-Add a new `insertBookData` function to `load_data.js`.

-

-```

-/** Bulk index the book data in Elasticsearch */

-async function insertBookData (title, author, paragraphs) {

-  let bulkOps = [] // Array to store bulk operations

-

-  // Add an index operation for each section in the book

-  for (let i = 0; i < paragraphs.length; i++) {

-    // Describe action

-    bulkOps.push({ index: { _index: esConnection.index, _type: esConnection.type } })

-

-    // Add document

-    bulkOps.push({

-      author,

-      title,

-      location: i,

-      text: paragraphs[i]

-    })

-

-    if ((i + 1) % 500 === 0) { // Do bulk insert in 500 paragraph batches

-      await esConnection.client.bulk({ body: bulkOps })

-      bulkOps = []

-      console.log(`Indexed Paragraphs ${i - 498} - ${i + 1}`)

-    }

-  }

-

-  // Insert remainder of bulk ops array (skipped if the last batch was already sent)

-  if (bulkOps.length > 0) {

-    await esConnection.client.bulk({ body: bulkOps })

-    console.log(`Indexed Paragraphs ${paragraphs.length - (bulkOps.length / 2) + 1} - ${paragraphs.length}\n\n\n`)

-  }

-}

-

-```

-

-This function will index each paragraph of the book, with author, title, and paragraph location metadata attached. We are inserting the paragraphs using a bulk operation, which is much faster than indexing each paragraph individually.

-

-> We're bulk indexing the paragraphs in batches, instead of inserting all of them at once. This was a last minute optimization which I added in order for the app to run on the low-ish memory (1.7 GB) host machine that serves `search.patricktriest.com`. If you have a reasonable amount of RAM (4+ GB), you probably don't need to worry about batching each bulk upload.
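The batching logic can also be written as a pure function that only builds the request bodies, so it can be checked without a running cluster. In this sketch, `buildBulkBatches` is a hypothetical helper. Each document contributes an action object followed by the document source. That is the pairing the bulk API expects, and the same shape `insertBookData` pushes onto `bulkOps`.

```javascript
// Hypothetical helper: turn a book's paragraphs into bulk request bodies of at
// most `batchSize` documents each. Every document adds two entries to a body:
// an action object, then the document source itself.
function buildBulkBatches (paragraphs, { title, author, index, type, batchSize = 500 }) {
  const batches = []
  for (let start = 0; start < paragraphs.length; start += batchSize) {
    const body = []
    paragraphs.slice(start, start + batchSize).forEach((text, offset) => {
      body.push({ index: { _index: index, _type: type } })
      body.push({ author, title, location: start + offset, text })
    })
    batches.push(body)
  }
  return batches
}

// Five paragraphs with a batch size of 2 produce bodies for 2 + 2 + 1 documents
const batches = buildBulkBatches(['p0', 'p1', 'p2', 'p3', 'p4'], {
  title: 'Heart of Darkness',
  author: 'Joseph Conrad',
  index: 'library',
  type: 'novel',
  batchSize: 2
})
console.log(batches.length) // 3
console.log(batches[2][1].location) // 4
```

Each element of the returned array could then be sent with `await esConnection.client.bulk({ body })` inside a `for...of` loop.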

-

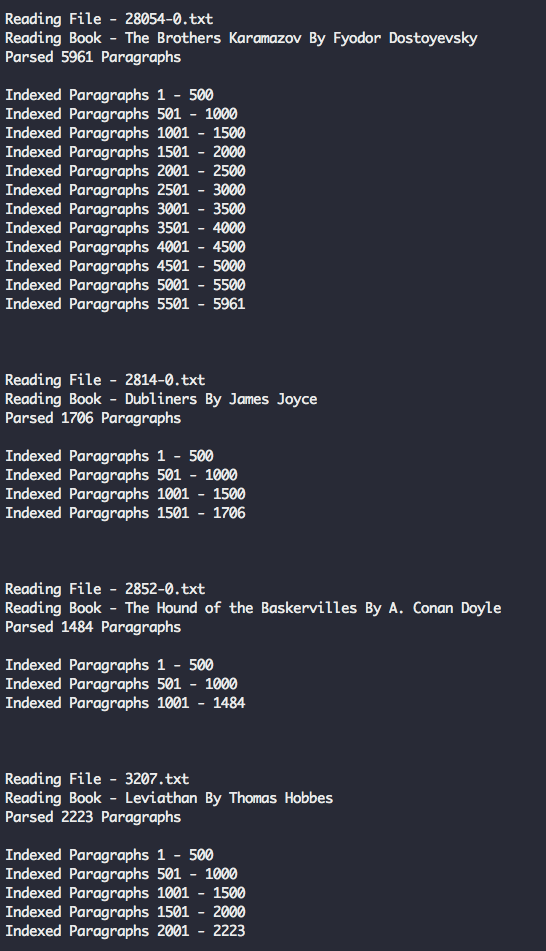

-Run `docker-compose up -d --build` and `docker exec gs-api "node" "server/load_data.js"` one more time - you should now see a full output of 100 books being parsed and inserted in Elasticsearch. This might take a minute or so.

-

-

-

-### 5 - SEARCH

-

-Now that Elasticsearch has been populated with one hundred books (amounting to roughly 230,000 paragraphs), let's try out some search queries.

-

-### 5.0 - Simple HTTP Query

-

-First, let's just query Elasticsearch directly using its HTTP API.

-

-Visit this URL in your browser - `http://localhost:9200/library/_search?q=text:Java&pretty`

-

-Here, we are performing a bare-bones full-text search to find the word "Java" within our library of books.

-

-You should see a JSON response similar to the following.

-

-```

-{

- "took" : 11,

- "timed_out" : false,

- "_shards" : {

- "total" : 5,

- "successful" : 5,

- "skipped" : 0,

- "failed" : 0

- },

- "hits" : {

- "total" : 13,

- "max_score" : 14.259304,

- "hits" : [

- {

- "_index" : "library",

- "_type" : "novel",

- "_id" : "p_GwFWEBaZvLlaAUdQgV",

- "_score" : 14.259304,

- "_source" : {

- "author" : "Charles Darwin",

- "title" : "On the Origin of Species",

- "location" : 1080,

- "text" : "Java, plants of, 375."

- }

- },

- {

- "_index" : "library",

- "_type" : "novel",

- "_id" : "wfKwFWEBaZvLlaAUkjfk",

- "_score" : 10.186235,

- "_source" : {

- "author" : "Edgar Allan Poe",

- "title" : "The Works of Edgar Allan Poe",

- "location" : 827,

- "text" : "After many years spent in foreign travel, I sailed in the year 18-- , from the port of Batavia, in the rich and populous island of Java, on a voyage to the Archipelago of the Sunda islands. I went as passenger--having no other inducement than a kind of nervous restlessness which haunted me as a fiend."

- }

- },

- ...

- ]

- }

-}

-

-```
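When consuming this response in code, we usually only need a handful of fields from each hit. The hypothetical `summarizeHits` helper below flattens the response shown above. It assumes the Elasticsearch 6.x shape, where `hits.total` is a plain number. In 7.x and later, `hits.total` became an object with `value` and `relation`. The helper prefers a `highlight` snippet when one is present.

```javascript
// Hypothetical helper: keep only the fields a UI would typically display.
// Assumes the Elasticsearch 6.x response shape shown above.
function summarizeHits (response) {
  return {
    total: response.hits.total, // A plain number in 6.x
    results: response.hits.hits.map(hit => ({
      score: hit._score,
      title: hit._source.title,
      author: hit._source.author,
      location: hit._source.location,
      // Use the highlighted snippet if the query asked for one
      text: (hit.highlight && hit.highlight.text) ? hit.highlight.text[0] : hit._source.text
    }))
  }
}

// Trimmed copy of the first hit from the response above
const sample = {
  hits: {
    total: 13,
    max_score: 14.259304,
    hits: [{
      _index: 'library',
      _type: 'novel',
      _score: 14.259304,
      _source: {
        author: 'Charles Darwin',
        title: 'On the Origin of Species',
        location: 1080,
        text: 'Java, plants of, 375.'
      }
    }]
  }
}

console.log(summarizeHits(sample).results[0].title) // On the Origin of Species
```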

-

-The Elasticsearch HTTP interface is useful for testing that our data is inserted successfully, but exposing this API directly to the web app would be a huge security risk. The API exposes administrative functionality (such as directly adding and deleting documents), and should never be exposed publicly. Instead, we'll write a simple Node.js API to receive requests from the client, and make the appropriate query (within our private local network) to Elasticsearch.

-

-### 5.1 - Query Script

-

-Let's now try querying Elasticsearch from our Node.js application.

-

-Create a new file, `server/search.js`.

-

-```

-const { client, index, type } = require('./connection')

-

-module.exports = {

-  /** Query ES index for the provided term */

-  queryTerm (term, offset = 0) {

-    const body = {

-      from: offset,

-      query: {

-        match: {

-          text: {

-            query: term,

-            operator: 'and',

-            fuzziness: 'auto'

-          }

-        }

-      },

-      highlight: { fields: { text: {} } }

-    }

-

-    return client.search({ index, type, body })

-  }

-}

-

-```

-

-Our search module defines a simple `queryTerm` function, which will perform a `match` query using the input term.

-

-Here are the query fields broken down:

-

-* `from` - Allows us to paginate the results. Each query returns 10 results by default, so specifying `from: 10` would allow us to retrieve results 11-20.

-

-* `query` - Where we specify the actual term that we are searching for.

-

-* `operator` - We can modify the search behavior; in this case, we're using the "and" operator so that only results containing all of the tokens (words) in the query, or fuzzy variants of them, are returned.

-

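-* `fuzziness` - Adjusts tolerance for spelling mistakes. `auto` picks the allowed edit distance from the length of each term: 0 edits for terms of 1-2 characters, 1 edit for 3-5 characters, and 2 edits for longer terms. A higher fuzziness will allow for more corrections in result hits. For instance, `fuzziness: 1` would allow `Patricc` to return `Patrick` as a match.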

-

-* `highlight` - Returns an extra field with each result, containing snippets of the matched text with the matching terms wrapped in HTML tags (`<em>` by default).
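To get a feel for what `fuzziness` allows, here is a sketch of the edit-distance check it is based on. `editDistance` is a standard Levenshtein implementation and `autoFuzziness` encodes the default `AUTO` thresholds. Both names are hypothetical. One simplification to keep in mind: Elasticsearch counts a transposition of two adjacent characters as a single edit by default, while plain Levenshtein counts it as two.

```javascript
// Levenshtein distance: the minimum number of single-character insertions,
// deletions, or substitutions needed to turn `a` into `b`
function editDistance (a, b) {
  // prev[j] holds the distance between the first i characters of `a`
  // and the first j characters of `b`, one row at a time
  const prev = Array.from({ length: b.length + 1 }, (_, j) => j)
  for (let i = 1; i <= a.length; i++) {
    let diag = prev[0] // Distance for (i - 1, j - 1)
    prev[0] = i
    for (let j = 1; j <= b.length; j++) {
      const above = prev[j] // Distance for (i - 1, j)
      prev[j] = Math.min(
        above + 1, // Delete a[i - 1]
        prev[j - 1] + 1, // Insert b[j - 1]
        diag + (a[i - 1] === b[j - 1] ? 0 : 1) // Substitute (or keep a match)
      )
      diag = above
    }
  }
  return prev[b.length]
}

// Default AUTO thresholds: 0 edits for 1-2 characters, 1 edit for 3-5,
// 2 edits for anything longer
function autoFuzziness (term) {
  if (term.length <= 2) return 0
  if (term.length <= 5) return 1
  return 2
}

function fuzzyMatches (queryTerm, indexedTerm) {
  return editDistance(queryTerm, indexedTerm) <= autoFuzziness(queryTerm)
}

console.log(editDistance('patricc', 'patrick')) // 1
console.log(fuzzyMatches('patricc', 'patrick')) // true
console.log(fuzzyMatches('jav', 'java')) // true (3 characters allow 1 edit)
console.log(fuzzyMatches('ja', 'java')) // false (2 characters allow 0 edits)
```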

-

-Feel free to play around with these parameters, and to customize the search query further by exploring the [Elastic Full-Text Query DSL][32].
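As one example of customizing the query, the sketch below builds the same `match` body as `queryTerm`, but takes a 1-based page number. It also adds the `size` parameter, which sets the number of hits to return (10 by default). `buildSearchBody` and its defaults are hypothetical.

```javascript
// Hypothetical helper: the same match query as queryTerm, paginated by a
// 1-based page number instead of a raw offset
function buildSearchBody (term, page = 1, pageSize = 10) {
  return {
    from: (page - 1) * pageSize, // 0-based index of the first hit to return
    size: pageSize, // Number of hits to return
    query: {
      match: {
        text: {
          query: term,
          operator: 'and',
          fuzziness: 'auto'
        }
      }
    },
    highlight: { fields: { text: {} } }
  }
}

console.log(buildSearchBody('java', 2).from) // 10, i.e. hits 11-20
```

The body could be passed as `client.search({ index, type, body: buildSearchBody(term, page) })`. Elasticsearch rejects requests where `from + size` exceeds the `index.max_result_window` setting (10,000 by default), so very deep pagination needs a different approach.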

-

-### 6 - API

-

-Let's write a quick HTTP API in order to access our search functionality from a frontend app.

-

-### 6.0 - API Server

-

-Replace our existing `server/app.js` file with the following contents.

-

-```

-const Koa = require('koa')

-const Router = require('koa-router')

-const joi = require('joi')

-const validate = require('koa-joi-validate')

-const search = require('./search')

-

-const app = new Koa()

-const router = new Router()

-

-// Log each request to the console

-app.use(async (ctx, next) => {

- const start = Date.now()

- await next()

- const ms = Date.now() - start

- console.log(`${ctx.method} ${ctx.url} - ${ms}`)

-})

-

-// Log percolated errors to the console

-app.on('error', err => {

- console.error('Server Error', err)

-})

-

-// Set permissive CORS header

-app.use(async (ctx, next) => {

- ctx.set('Access-Control-Allow-Origin', '*')

- return next()

-})

-

-// ADD ENDPOINTS HERE

-

-const port = process.env.PORT || 3000

-

-app

- .use(router.routes())

- .use(router.allowedMethods())

- .listen(port, () => {

- // Node's listen callback takes no error argument; startup failures are emitted as 'error' events

- console.log(`App Listening on Port ${port}`)

- })

-

-```

-

-This code will import our server dependencies and set up simple request logging, error handling, and a permissive CORS header for a [Koa.js][33] Node API server.
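The request logger can time the whole request because of how Koa chains middleware: each middleware receives a `next` function, and `await next()` pauses it until everything downstream (including the route handler) has finished. The sketch below is a simplified, dependency-free stand-in for that chaining; it is not Koa's actual implementation (Koa uses `koa-compose`, which also guards against calling `next()` more than once), and `compose`/`handle` are illustrative names.

```javascript
// Simplified stand-in for Koa's middleware chaining (not Koa's real code).
// Each middleware gets (ctx, next); calling next() runs the rest of the chain.
function compose (middleware) {
  return function run (ctx) {
    function dispatch (i) {
      const fn = middleware[i]
      if (!fn) return Promise.resolve() // end of the chain
      return Promise.resolve(fn(ctx, () => dispatch(i + 1)))
    }
    return dispatch(0)
  }
}

const handle = compose([
  // Like the request logger: work before and after everything downstream
  async (ctx, next) => {
    ctx.events.push('logger: start')
    await next()
    ctx.events.push('logger: end')
  },
  // Like the CORS middleware: set a header, then continue
  async (ctx, next) => {
    ctx.headers['Access-Control-Allow-Origin'] = '*'
    return next()
  },
  // Like a route handler: produce the response body
  async (ctx) => {
    ctx.events.push('route')
    ctx.body = { ok: true }
  }
])

const ctx = { events: [], headers: {}, body: null }
handle(ctx).then(() => console.log(ctx.events))
// logs [ 'logger: start', 'route', 'logger: end' ]
```

Because the logger's code after `await next()` only runs once the route handler has finished, the measured time covers the full request.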

-

-### 6.1 - Link endpoint with queries

-

-Next, we'll add an endpoint to our server in order to expose our Elasticsearch query function.

-

-Insert the following code below the `// ADD ENDPOINTS HERE` comment in `server/app.js`.

-

-```

-/**

- * GET /search

- * Search for a term in the library

- */

-router.get('/search', async (ctx, next) => {

- const { term, offset } = ctx.request.query

- ctx.body = await search.queryTerm(term, offset)

- }

-)

-

-```

-

-Restart the app using `docker-compose up -d --build`. In your browser, try calling the search endpoint. For example, this request would search the entire library for passages mentioning "Java" - `http://localhost:3000/search?term=java`
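To request a later page or a multi-word term, both query parameters need to be URL-encoded. Here is a small sketch using the standard `URL` API (available globally in Node.js and browsers); `searchUrl` is a hypothetical helper. Note that Koa parses every query-string value as a string, so `offset` reaches the handler as `"10"` rather than `10`.

```javascript
// Hypothetical helper: builds a request URL for the /search endpoint.
// URLSearchParams handles the encoding (spaces become "+").
function searchUrl (baseUrl, term, offset = 0) {
  const url = new URL('/search', baseUrl)
  url.searchParams.set('term', term)
  url.searchParams.set('offset', String(offset))
  return url.toString()
}

console.log(searchUrl('http://localhost:3000', 'java'))
// http://localhost:3000/search?term=java&offset=0
console.log(searchUrl('http://localhost:3000', 'Hello World', 10))
// http://localhost:3000/search?term=Hello+World&offset=10
```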

-

-The result will look quite similar to the response from earlier when we called the Elasticsearch HTTP interface directly.

-

-```

-{

- "took": 242,

- "timed_out": false,

- "_shards": {

- "total": 5,

- "successful": 5,

- "skipped": 0,

- "failed": 0

- },

- "hits": {

- "total": 93,

- "max_score": 13.356944,

- "hits": [{

- "_index": "library",

- "_type": "novel",

- "_id": "eHYHJmEBpQg9B4622421",

- "_score": 13.356944,

- "_source": {

- "author": "Charles Darwin",

- "title": "On the Origin of Species",

- "location": 1080,

- "text": "Java, plants of, 375."

- },

- "highlight": {

- "text": ["<em>Java</em>, plants of, 375."]

- }

- }, {

- "_index": "library",

- "_type": "novel",

- "_id": "2HUHJmEBpQg9B462xdNg",

- "_score": 9.030668,

- "_source": {

- "author": "Unknown Author",

- "title": "The King James Bible",

- "location": 186,

- "text": "10:4 And the sons of Javan; Elishah, and Tarshish, Kittim, and Dodanim."

- },

- "highlight": {

- "text": ["10:4 And the sons of <em>Javan</em>; Elishah, and Tarshish, Kittim, and Dodanim."]

- }

- }

- ...

- ]

- }

-}

-

-```
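A frontend only needs a few of these fields. The sketch below reduces a raw response to what a result list would display. It assumes the Elasticsearch 6.x response shape used in this tutorial, where `hits.total` is a plain number (Elasticsearch 7 and later return an object there); `summarizeHits` is a hypothetical helper.

```javascript
// Sketch: reduce a raw search response to the fields a UI needs.
// Assumes the Elasticsearch 6.x shape, where `hits.total` is a number.
function summarizeHits (response) {
  return {
    total: response.hits.total,
    results: response.hits.hits.map(hit => ({
      title: hit._source.title,
      author: hit._source.author,
      location: hit._source.location,
      // Prefer the highlighted fragment; fall back to the raw paragraph text
      snippet: hit.highlight && hit.highlight.text
        ? hit.highlight.text[0]
        : hit._source.text
    }))
  }
}

// A trimmed response in the same shape as the one above
const sample = {
  hits: {
    total: 93,
    hits: [{
      _score: 13.356944,
      _source: { author: 'Charles Darwin', title: 'On the Origin of Species', location: 1080, text: 'Java, plants of, 375.' },
      highlight: { text: ['<em>Java</em>, plants of, 375.'] }
    }]
  }
}

console.log(summarizeHits(sample).results[0].title) // On the Origin of Species
```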

-

-### 6.2 - Input validation

-

-This endpoint is still brittle - we are not doing any checks on the request parameters, so invalid or missing values would result in a server error.

-

-We'll add some middleware to the endpoint in order to validate input parameters using [Joi][34] and the [Koa-Joi-Validate][35] library.

-

-```

-/**

- * GET /search

- * Search for a term in the library

- * Query Params -

- * term: string of at most 60 characters

- * offset: non-negative integer

- */

-router.get('/search',

- validate({

- query: {

- term: joi.string().max(60).required(),

- offset: joi.number().integer().min(0).default(0)

- }

- }),

- async (ctx, next) => {

- const { term, offset } = ctx.request.query

- ctx.body = await search.queryTerm(term, offset)

- }

-)

-

-```

-

-Now, if you restart the server and make a request with a missing term (`http://localhost:3000/search`), you will get back an HTTP 400 error with a relevant message, such as `Invalid URL Query - child "term" fails because ["term" is required]`.
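Joi expresses these rules declaratively. To make them concrete, here is a rough, dependency-free sketch of equivalent checks. `validateSearchQuery` is a hypothetical name, and this is not how Joi or Koa-Joi-Validate work internally. Because query-string values arrive as strings, the sketch converts `offset` to a number before checking it.

```javascript
// Hypothetical, hand-rolled equivalent of the Joi schema above:
//   term:   required string, at most 60 characters
//   offset: non-negative integer, defaulting to 0
function validateSearchQuery (query) {
  const { term, offset } = query
  if (typeof term !== 'string' || term.length === 0) {
    return { error: '"term" is required' }
  }
  if (term.length > 60) {
    return { error: '"term" must be at most 60 characters' }
  }
  // Query-string values are strings, so coerce offset before checking it
  const parsedOffset = offset === undefined ? 0 : Number(offset)
  if (!Number.isInteger(parsedOffset) || parsedOffset < 0) {
    return { error: '"offset" must be a non-negative integer' }
  }
  return { value: { term, offset: parsedOffset } }
}

console.log(validateSearchQuery({ offset: '10' }))
// { error: '"term" is required' }
console.log(validateSearchQuery({ term: 'java', offset: '10' }))
// { value: { term: 'java', offset: 10 } }
```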

-

-To view live logs from the Node app, you can run `docker-compose logs -f api`.

-

-### 7 - FRONT-END APPLICATION

-

-Now that our `/search` endpoint is in place, let's wire up a simple web app to test out the API.

-

-### 7.0 - Vue.js App

-

-We'll be using Vue.js to coordinate our frontend.

-

-Add a new file, `/public/app.js`, to hold our Vue.js application code.

-

-```

-const vm = new Vue ({

- el: '#vue-instance',

- data () {

- return {

- baseUrl: 'http://localhost:3000', // API url

- searchTerm: 'Hello World', // Default search term

- searchDebounce: null, // Timeout for search bar debounce

- searchResults: [], // Displayed search results

- numHits: null, // Total search results found

- searchOffset: 0, // Search result pagination offset

-

- selectedParagraph: null, // Selected paragraph object

- bookOffset: 0, // Offset for book paragraphs being displayed

- paragraphs: [] // Paragraphs being displayed in book preview window

- }

- },

- async created () {

- this.searchResults = await this.search() // Search for default term

- },

- methods: {

- /** Debounce search input by 100 ms */

- onSearchInput () {

- clearTimeout(this.searchDebounce)

- this.searchDebounce = setTimeout(async () => {

- this.searchOffset = 0

- this.searchResults = await this.search()

- }, 100)

- },

- /** Call API to search for inputted term */

- async search () {

- const response = await axios.get(`${this.baseUrl}/search`, { params: { term: this.searchTerm, offset: this.searchOffset } })

- this.numHits = response.data.hits.total

- return response.data.hits.hits

- },

- /** Get next page of search results */

- async nextResultsPage () {

- if (this.numHits > 10) {

- this.searchOffset += 10

- if (this.searchOffset + 10 > this.numHits) { this.searchOffset = this.numHits - 10}

- this.searchResults = await this.search()

- document.documentElement.scrollTop = 0

- }

- },

- /** Get previous page of search results */

- async prevResultsPage () {

- this.searchOffset -= 10

- if (this.searchOffset < 0) { this.searchOffset = 0 }

- this.searchResults = await this.search()

- document.documentElement.scrollTop = 0

- }

- }

-})

-

-```

-

-The app is pretty simple - we're just defining some shared data properties, and adding methods to retrieve and paginate through search results. The search input is debounced by 100ms, to prevent the API from being called with every keystroke.
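The paging and debouncing logic can be pulled out into plain functions, which makes the edge cases easier to see. The sketch below mirrors `nextResultsPage`, `prevResultsPage`, and the `onSearchInput` debounce; the function names are hypothetical, and the 10-hit page size assumes Elasticsearch's default result size.

```javascript
const PAGE_SIZE = 10 // Elasticsearch returns 10 hits per query by default

// Mirrors nextResultsPage: advance one page, but clamp so the last
// page still shows a full PAGE_SIZE hits
function nextOffset (offset, numHits) {
  if (numHits <= PAGE_SIZE) return offset // a single page; nothing to advance to
  const next = offset + PAGE_SIZE
  return next + PAGE_SIZE > numHits ? numHits - PAGE_SIZE : next
}

// Mirrors prevResultsPage: step back one page, never below zero
function prevOffset (offset) {
  return Math.max(offset - PAGE_SIZE, 0)
}

// Mirrors onSearchInput: restart the timer on every call so `fn` only
// runs once the input has been quiet for `waitMs` milliseconds
function debounce (fn, waitMs) {
  let timer = null
  return (...args) => {
    clearTimeout(timer)
    timer = setTimeout(() => fn(...args), waitMs)
  }
}

console.log(nextOffset(80, 93)) // 83 (the last page shows hits 84-93)
console.log(prevOffset(5))      // 0
```

Clamping the final offset to `numHits - 10` means the last page overlaps the previous one slightly instead of showing only a few results.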

-

-Explaining how Vue.js works is outside the scope of this tutorial, but this probably won't look too crazy if you've used Angular or React. If you're completely unfamiliar with Vue and want something quick to get started with, I would recommend the official quick-start guide - [https://vuejs.org/v2/guide/][36]

-

-### 7.1 - HTML

-

-Replace our placeholder `/public/index.html` file with the following contents, in order to load our Vue.js app and to lay out a basic search interface.

-

-```

-

-

-

-

- Elastic Library

-

-

-

-

-

-

-

-

-