Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-01-28 23:20:10 +08:00)

Merge pull request #15 from LCTT/master

Merge pull request #13 from LCTT/master

Commit 83176973d3
@@ -2,13 +2,13 @@ An Easy Way to Track Multiple Time Zones in Ubuntu

================================================================================

**Whether I'm tweeting first thing in the morning that a Chromebook sale in Australia has sold out, or remembering a Skype call with Sam Chen, one half of Ohso's development team, my brain has to work across several time zones at once.**

Therein lies the problem. If you know me, you'll know my brain has roughly the capacity of a goldfish's, yet it's stuffed with something as bloated as Windows Vista (that is, not very good). I can barely remember anything before yesterday, let alone the time difference between my front door and the foot of the Golden Gate Bridge!

To help, I rely on a few widgets and menu items to keep me in sync. Over a normal working day I hop between several operating systems, both mobile and desktop, but only one of them lets me set up a "world clock" quickly and easily.

**Its name is the one in the title of this article.**

@ -16,10 +16,10 @@ Ubuntu中跟踪多个时区的简捷方法

|

||||

|

||||

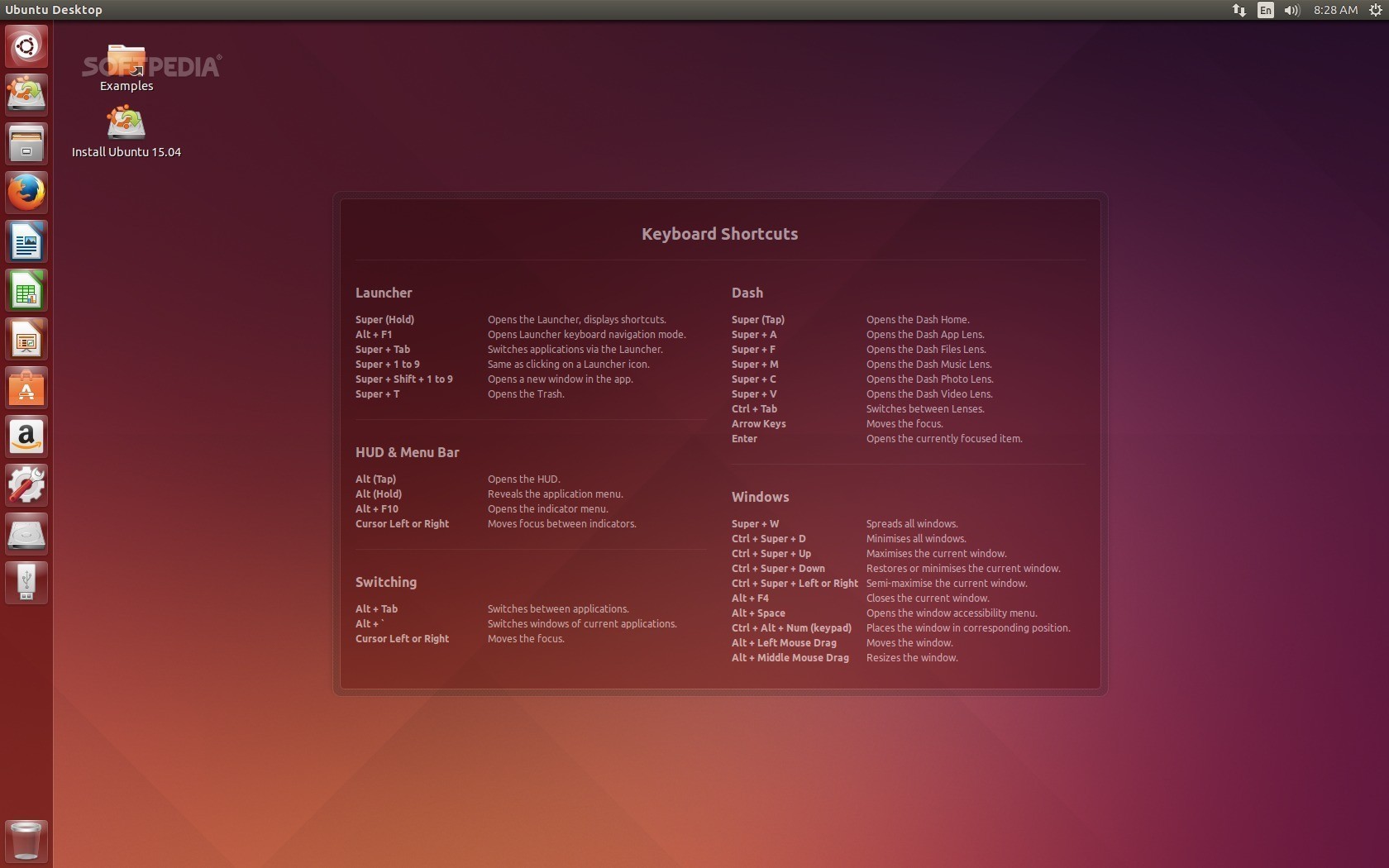

Unity中默认的日期-时间指示器提供了添加并查看多个时区的支持,不需要附加组件,不需要额外的包。

|

||||

|

||||

1. 点击时钟小应用,然后uxuanze‘**时间和日期设置**’条目

|

||||

1. 点击时钟小应用,然后选择‘**时间和日期设置**’条目

|

||||

1. 在‘**时钟**’标签中,选中‘**其它位置的时间**’选框

|

||||

1. 点击‘**选择位置**’按钮

|

||||

1. 点击‘**+**’,然后输入位置名称那个

|

||||

1. 点击‘**+**’,然后输入位置名称

|

||||

|

||||

#### 其它桌面环境 ####

|

||||

|

||||

@@ -34,13 +34,13 @@ The default date-time indicator in Unity supports adding and viewing multiple time zones

*World clock calendar in Cinnamon 2.4*

**XFCE** and **LXDE** are less generous. Apart from the workaround of adding **multiple clocks** to a panel, each of which has to be configured by hand for a specific location, they offer nothing built in. Both support 'indicator applets', so if you're not using Unity you can install and add the standalone date/time indicator.

**Budgie** is still too young to cater for niche needs like this one. I haven't tried Pantheon yet, so please tell me more in the comments.

#### Desktop Apps, Widgets & Conky Themes ####

Of course, panel applets are only one way to keep track of time zones in other countries. If accessing them from a panel doesn't suit you, there is a wide range of **desktop apps** available. Many of them work across versions, and some even across platforms.
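If you live in a terminal, the same "world clock" idea can be sketched with the standard TZ environment variable. This is a minimal sketch, not one of the apps the article refers to. It assumes GNU date (for -d) and installed tzdata. The function name world_clock and the WORLD_CLOCK_AT override are hypothetical.

```shell
#!/usr/bin/env bash
# world_clock (hypothetical helper): print the time in each zone passed as an argument.
# Assumes GNU date (-d) and zone names known to the system's tzdata
# (e.g. Europe/London); POSIX strings such as "AEST-10" also work without tzdata.
# WORLD_CLOCK_AT (hypothetical) pins the instant, e.g. "@0"; it defaults to "now".
world_clock() {
  local zone
  for zone in "$@"; do
    # TZ is set only for this single date invocation
    printf '%-22s %s\n' "$zone" "$(TZ="$zone" date -d "${WORLD_CLOCK_AT:-now}" '+%a %H:%M %Z')"
  done
}
```

For example, world_clock Europe/London America/Los_Angeles Australia/Sydney prints one line per city. Setting TZ per call leaves the system clock and your session's own time zone untouched.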

@@ -54,7 +54,7 @@ via: http://www.omgubuntu.co.uk/2014/12/add-time-zones-world-clock-ubuntu

Author: [Joey-Elijah Sneddon][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

@@ -0,0 +1,143 @@

20 Linux Command Interview Questions & Answers
================================================================================

**Q:1 How do you check the current run level of a Linux server?**

Ans: The ‘who -r’ and ‘runlevel’ commands can be used to check the current run level of a Linux server.

**Q:2 How do you check the default gateway in Linux?**

Ans: The “route -n” and “netstat -nr” commands show the default gateway. Besides the default gateway, both commands also display the current routing table.
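Both commands read the kernel's routing table, which Linux also exposes as text in /proc/net/route. The sketch below pulls the default gateway straight from that file. The default route is the entry whose destination is 00000000. Addresses are stored as hex in host byte order, so this version assumes a little-endian machine such as x86 and reverses the bytes. The function name default_gw is hypothetical.

```shell
#!/usr/bin/env bash
# default_gw (hypothetical helper): print the IPv4 default gateway from a
# /proc/net/route-style table. Assumes a little-endian host (e.g. x86), where
# each hex address field holds the bytes in reverse order.
# An optional argument names a saved copy of the table to read instead.
default_gw() {
  local table=${1:-/proc/net/route} iface dest gw rest
  while read -r iface dest gw rest; do
    # The default route has destination 0.0.0.0, written as 00000000
    if [ "$dest" = "00000000" ]; then
      # Reverse the four bytes and convert each from hex to decimal
      printf '%d.%d.%d.%d\n' "0x${gw:6:2}" "0x${gw:4:2}" "0x${gw:2:2}" "0x${gw:0:2}"
      return 0
    fi
  done < "$table"
  return 1   # no default route found
}
```

Run default_gw with no arguments on a Linux host to inspect the live table. It returns a non-zero status when no default route exists.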

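**Q:3 How do you rebuild the initial ramdisk image file on Linux?**

Ans: On CentOS 5.X / RHEL 5.X, the mkinitrd command creates the initial ramdisk file, for example:

    # mkinitrd -f -v /boot/initrd-$(uname -r).img $(uname -r)

To create the initial ramdisk for a specific kernel version, replace ‘uname -r’ with the desired kernel version.

On CentOS 6.X / RHEL 6.X, the dracut command creates the initial ramdisk file, for example:

    # dracut -f

The command above creates the initial ramdisk for the currently running kernel. To rebuild the initial ramdisk file for a specific kernel version, use:

    # dracut -f initramfs-2.x.xx-xx.el6.x86_64.img 2.x.xx-xx.el6.x86_64

**Q:4 What is the cpio command?**

Ans: cpio stands for "copy in, copy out". It copies files and file lists into an archive (or a single file), and it can also extract files from one.

**Q:5 What is the patch command and how is it used?**

Ans: As the name suggests, patch applies changes (patches) to text files. It usually takes the output of diff and converts the old version of a file into the new one. For example, the Linux kernel source consists of millions of lines of code. A contributor therefore sends only the changed parts rather than the whole source tree, and the recipient uses patch to apply those changes to the original source.

Create a diff file for patch to use:

    # diff -Naur old_file new_file > diff_file

old_file and new_file can both be single files, or both be directories of files. The -r option recurses through directory trees.

Once the diff file has been created, we can apply it to the old file to turn it into the new one:

    # patch < diff_file

For a diff of two directory trees, run patch from inside the old directory with -p1 (patch -p1 < diff_file). This strips the leading directory name from each path.

**Q:6 What is aspell used for?**

Ans: As the name suggests, aspell is an interactive spell checker for Linux. It succeeds an earlier program named ispell and can, for the most part, be used as a drop-in replacement for it. Other programs that need spell checking are its main users, but it is also very effective as a standalone command-line tool.

**Q:7 How do you check a domain's SPF record from the command line?**

Ans: Use the dig command to check a domain's SPF record, for example:

    linuxtechi@localhost:~$ dig -t TXT google.com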
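The dig command above prints every TXT record for the domain. The SPF policy is the one that starts with v=spf1. This minimal sketch filters TXT answers in the one-record-per-line form printed by dig +short. Long records can be split into several quoted chunks, so the sketch joins them first. The helper name spf_only is hypothetical.

```shell
#!/usr/bin/env bash
# spf_only (hypothetical helper): read TXT answers on stdin, as printed by
# `dig +short -t TXT <domain>`, and print only SPF policies.
# A TXT record may arrive as several quoted chunks ("..." "..."), so the
# chunks on each line are joined and the outer quotes removed first.
spf_only() {
  sed -e 's/" "//g' -e 's/^"//' -e 's/"$//' | grep '^v=spf1'
}
```

Typical use: dig +short -t TXT google.com | spf_only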

|

||||

|

||||

**问:8 如何识别Linux系统中指定文件(/etc/fstab)的关联包?**

|

||||

|

||||

答: # rpm -qf /etc/fstab

|

||||

|

||||

以上命令能列出提供“/etc/fstab”这个文件的包。

|

||||

|

||||

**问:9 哪条命令用来查看bond0的状态?**

|

||||

|

||||

答: cat /proc/net/bonding/bond0

|

||||

|

||||

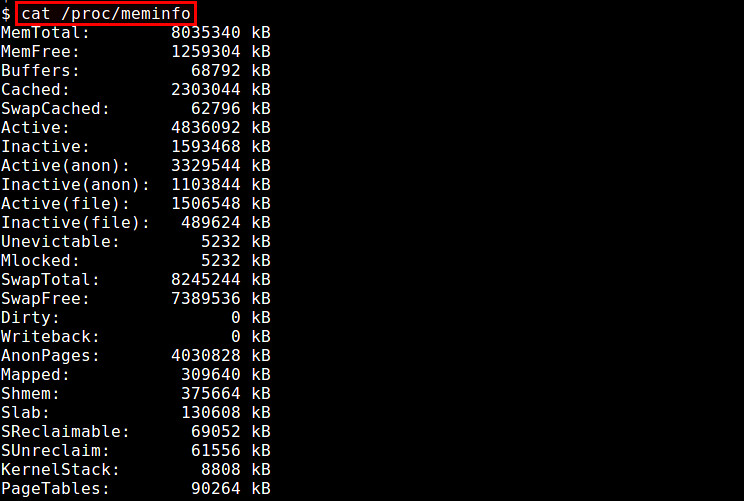
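The bonding status file is fairly long. This small sketch condenses it to the bonding mode plus the link state of each slave. It assumes the usual "Key: value" layout of /proc/net/bonding files, with "Slave Interface" and "MII Status" lines. The function name bond_summary is hypothetical.

```shell
#!/usr/bin/env bash
# bond_summary (hypothetical helper): condense a /proc/net/bonding/<bond> file
# into the bonding mode plus one "<slave> <MII status>" line per slave.
# The bond-level "MII Status" line (before any slave) is skipped.
bond_summary() {
  awk -F': ' '
    /^Bonding Mode/                 { print "mode:", $2 }
    /^Slave Interface/              { slave = $2 }
    /^MII Status/ && slave != ""    { print slave, $2; slave = "" }
  ' "${1:-/proc/net/bonding/bond0}"
}
```

A slave reported as down shows up immediately, for example "eth1 down".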

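**Q:10 What is the /proc file system in Linux used for?**

Ans: /proc is a memory-based file system that holds information about the state of the running kernel. This includes the CPU, memory, partitioning, I/O addresses, DMA channels and running processes. Its files do not represent data stored on disk; they point to information held in memory. The system maintains the /proc file system automatically.

**Q:11 How do you find files larger than 10MB in the /usr directory?**

Ans: # find /usr -size +10M

**Q:12 How do you find files in /home that were last modified more than 120 days ago?**

Ans: # find /home -mtime +120

**Q:13 How do you find files in /var that have not been accessed in the last 90 days?**

Ans: # find /var \\! -atime -90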
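To try the three find queries above safely, wrap them with the directory as a parameter and run them in a scratch directory. This sketch adds -type f so that only regular files are reported (the answers above also match directories). The function names are hypothetical. find counts age in whole 24-hour periods. -atime is only as reliable as the filesystem's access-time updates: relatime or noatime mounts update it rarely or never.

```shell
#!/usr/bin/env bash
# Hypothetical wrappers around the three queries; $1 is the directory to search.
big_files()    { find "$1" -type f -size +10M; }    # larger than 10 MiB
stale_files()  { find "$1" -type f -mtime +120; }   # modified more than 120 days ago
unread_files() { find "$1" -type f ! -atime -90; }  # not accessed in the last 90 days
```

For example, big_files /usr reproduces the answer to Q:11, restricted to regular files.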

**Q:14 Search the entire directory tree for files named “core” and delete them without prompting.**

Ans: # find / -name core -exec rm {} \;
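Run against /, the one-liner above also matches directories named core, which many source trees contain, and rm fails on those. It also starts one rm process per file. This more cautious sketch restricts the match to regular files and prints what it removes. It assumes a find that supports -delete (GNU and BSD find do). The function name remove_core_files is hypothetical.

```shell
#!/usr/bin/env bash
# remove_core_files (hypothetical helper): delete regular files named exactly
# "core" below the given directory, printing each path as it is removed.
# -type f leaves directories called "core" alone; -delete avoids one rm per file.
remove_core_files() {
  find "${1:?directory required}" -type f -name core -print -delete
}
```

Running find without -delete first shows what would be removed.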

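**Q:15 What does the strings command do?**

Ans: The strings command extracts and displays the text strings embedded in non-text files. (LCTT note: when analysing a mysterious binary that has turned up on your system, it can reveal suspicious file accesses, which helps when tracking down an intrusion.)

**Q:16 What does the tee filter do?**

Ans: The tee filter sends output to more than one destination. In a pipeline, it writes one copy of the output to a file and another copy to the screen (or to another program).

    linuxtechi@localhost:~$ ll /etc | nl | tee /tmp/ll.out

In the example above, the numbered output of ll is captured in the file /tmp/ll.out and is also displayed on the screen.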
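ll is normally a shell alias, commonly for ls -l or ls -alF. It may not exist in scripts or on every system. This sketch builds the same pipeline with ls -l directly. The function name numbered_listing is hypothetical.

```shell
#!/usr/bin/env bash
# numbered_listing (hypothetical helper): number the lines of `ls -l DIR` and,
# through tee, send the same text both to stdout and to the file in $2.
numbered_listing() {
  ls -l "$1" | nl | tee "$2"
}
```

numbered_listing /etc /tmp/ll.out matches the example above without relying on the alias.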

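**Q:17 What does the command `export PS1="$LOGNAME@$(hostname):\$PWD: "` do?**

Ans: This export command changes the login prompt to show the user name, the host name and the current working directory. In bash, $LOGNAME and the hostname are expanded once, when the command runs. $PWD, whose dollar sign is escaped with a backslash, is expanded each time the prompt is displayed, so the directory shown stays current.

**Q:18 What does the command `ll | awk '{print $3, "owns", $9}'` do?**

Ans: For each entry in the long listing, it prints the owner (field 3), the word "owns" and the file name (field 9). In other words, it shows the files together with their owners.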
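Because ll is an alias, the same idea can be sketched with plain ls -l. This version also skips the leading "total N" line that ls -l prints, which the original one-liner turns into a stray " owns " line. The function name owners is hypothetical.

```shell
#!/usr/bin/env bash
# owners (hypothetical helper): read an `ls -l` listing on stdin and print
# "<owner> owns <name>". NF >= 9 skips the "total N" header line; names that
# contain spaces are cut at the first space, since awk splits on whitespace.
owners() {
  awk 'NF >= 9 { print $3, "owns", $9 }'
}
```

Typical use: ls -l /etc | owners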

**Q:19 What is the at command used for in Linux?**

Ans: The at command schedules a program to run once at a future time. All submitted jobs are placed in the /var/spool/at directory, and the atd daemon runs them when their scheduled time arrives.

**Q:20 What does the lspci command do in Linux?**

Ans: The lspci command displays information about the PCI buses in your system and the devices attached to them. Specify -v, -vv or -vvv for increasingly detailed output. With the -r option, the output becomes more readable.

--------------------------------------------------------------------------------

via: http://www.linuxtechi.com/20-linux-commands-interview-questions-answers/

Author: [Pradeep Kumar][a]

Translator: [ZTinoZ](https://github.com/ZTinoZ)

Proofreader: [wxy](https://github.com/wxy)

[a]:http://www.linuxtechi.com/author/pradeep/

@@ -1,13 +1,11 @@

Linux FAQs with Answers: How to Install WPS on Linux
================================================================================

> **Question**: I've heard good things about Kingsoft Office (translator's note: this is WPS), so I'd like to try it on my Linux system. How can I install Kingsoft Office?

Kingsoft Office is an office suite available on multiple platforms, including Windows, Linux, iOS and Android. It has three components: Writer (WPS Writer) for word processing, Presentation (WPS Presentation) for slides and Spreadsheets (WPS Spreadsheets) for spreadsheets. It follows a freemium model in which the basic version is free to use. Compared with other Linux office suites such as LibreOffice and OpenOffice, its biggest advantage is the best compatibility with Microsoft Office (translator's note: a copyright question? The history between WPS and Office suggests some conclusions). If you need to exchange documents between Windows and Linux, Kingsoft Office is a good choice.

### Installing Kingsoft Office on CentOS, Fedora or RHEL ###

Download the RPM file from the [official page][1]. The official RPM package only supports 32-bit Linux, but you can install it on 64-bit systems as well.

Use the yum command with the "localinstall" option to install the RPM package locally.

@@ -39,7 +37,7 @@ The DEB package likewise runs into a pile of dependencies, so use the [gdebi][3] command instead of dpkg to automatically

### Launching Kingsoft Office ###

Once installed, you can easily launch Writer (WPS Writer), Presentation (WPS Presentation) and Spreadsheets (WPS Spreadsheets) from your desktop manager, as shown below.

In Ubuntu Unity:

@@ -49,7 +47,7 @@ In the GNOME desktop:

You can also launch Kingsoft Office from the command line.

To launch Writer (WPS Writer), use this command:

@@ -74,7 +72,7 @@ In the GNOME desktop:

via: http://ask.xmodulo.com/install-kingsoft-office-linux.html

Translator: [Vic020/VicYu](http://www.vicyu.net)

Proofreader: [wxy](https://github.com/wxy)

@@ -2,9 +2,9 @@

================================================================================

When you reinstall Ubuntu, or just install a newer release, it helps to have a convenient way to reinstall your previous applications and restore their settings. This is where *Aptik* comes in: it makes the job easy.

Aptik (Automated Package Backup and Restore) is an application for Ubuntu, Linux Mint and other Debian- and Ubuntu-based Linux distributions. It backs up PPAs (Personal Package Archives), downloaded packages, installed applications and themes, and user settings. The backup can go to an external USB drive, network storage or a cloud service such as Dropbox.

Note: When we tell you to type something in this article and the text is enclosed in quotes, do NOT type the quotes unless we specify otherwise.

@@ -16,7 +16,7 @@ Aptik (Automated Package Backup and Restore) is an application for Ubuntu, Linux Mint, and

Type the following command at the command prompt to make sure the repositories are up to date.

    sudo apt-get update

@@ -86,11 +86,11 @@ The main Aptik window displays. From the “Backup Directory” drop-down list, select

Next, the “Downloaded Packages (APT Cache)” item is only useful if you are reinstalling the same release of Ubuntu. It backs up the packages in your system cache (/var/cache/apt/archives). If you are upgrading, you can skip this item: the new release's packages will be newer than those in the current cache.

Backing up and restoring downloaded packages saves time and network bandwidth when you reinstall Ubuntu and its packages. Once the packages are restored to the system cache they can be reused. Nothing needs to be downloaded again, so they install faster.

If you are reinstalling the same release of Ubuntu, click the “Backup” button to the right of “Downloaded Packages (APT Cache)” to back up the packages in the system cache.

Note: No secondary dialog box appears when you back up downloaded packages. The packages in your system cache (/var/cache/apt/archives) are copied to a folder named “archives” in the backup directory. A dialog box tells you when the backup is complete.
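This rough shell equivalent shows what this step amounts to, or lets you do it without the GUI. It sketches the idea only and is not Aptik's own code. It copies the .deb files from the APT cache into an "archives" folder under a backup directory. The function name and argument order are hypothetical. Restoring is the reverse copy into /var/cache/apt/archives, done as root.

```shell
#!/usr/bin/env bash
# backup_apt_cache (hypothetical helper): copy downloaded .deb files from an APT
# cache into DEST/archives, mirroring the layout described above.
# Usage: backup_apt_cache DEST [CACHE_DIR]   (CACHE_DIR defaults to the system cache)
backup_apt_cache() {
  local dest=${1:?backup directory required} cache=${2:-/var/cache/apt/archives}
  mkdir -p "$dest/archives" || return
  (
    shopt -s nullglob            # an empty cache yields an empty list, not "*.deb"
    debs=("$cache"/*.deb)
    # -p keeps file timestamps; nothing is copied when the cache is empty
    [ "${#debs[@]}" -eq 0 ] || cp -p -- "${debs[@]}" "$dest/archives/"
  )
}
```

Only *.deb files are copied. The cache's lock file and partial/ directory are left behind.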

@@ -104,7 +104,7 @@ The main Aptik window displays. From the “Backup Directory” drop-down list, select

Two files named “packages.list” and “packages-installed.list” appear in the backup directory, and a dialog box tells you the backup is complete. Click “OK” to close it.

Note: The “packages-installed.list” file lists all the packages. “packages.list” also lists all the packages, and additionally indicates which ones were selected.

@@ -120,27 +120,27 @@ The main Aptik window displays. From the “Backup Directory” drop-down list, select

When archiving is complete, the archive files are copied to the backup directory and another dialog box confirms the backup was successful. Click “OK” to close it.

Themes from the “/usr/share/themes” directory and icons from the “/usr/share/icons” directory can also be backed up. To do this, click “Backup” to the right of “Themes and Icons”. The “Backup Themes” dialog box selects all themes and icons by default. Keep the ones you need, deselect the ones you don't, and then click “Backup”.

The themes are archived and copied to a folder named “themes” in the backup directory, and the icons to a folder named “icons”. A success dialog box then appears; click “OK” to close it.

Once you have made the backups you need, click the “X” in the upper-left corner of the main window to close Aptik.

The backed-up files are now in the backup directory you chose, ready whenever you need them.

After you reinstall Ubuntu or install a new release, install Aptik on the new system and make the backup files available to it. Run Aptik and use the “Restore” button for each item to restore your software sources, applications, packages, settings, themes and icons.

--------------------------------------------------------------------------------

@@ -148,7 +148,7 @@ via: http://www.howtogeek.com/206454/how-to-backup-and-restore-your-apps-and-ppa

Author: Lori Kaufman

Translator: [Ping](https://github.com/mr-ping)

Proofreader: [wxy](https://github.com/wxy)

@@ -1,18 +1,17 @@

Configuring an HTTP Load Balancer with HAProxy
================================================================================

As web-based applications and services multiply, IT system administrators shoulder ever more responsibility. Your web application must stay available no matter what happens. The threat may be an unexpected event, such as a traffic spike or steady traffic growth. It may also be an internal challenge, such as a hardware failure or emergency maintenance. Even today's popular devops and continuous delivery (CD) practices can threaten the reliability and consistent performance of your web service.

Unpredictable, inconsistent performance is not acceptable. But how do we eliminate these weaknesses? In most cases, a proper load-balancing solution solves the problem. Today I will show you how to set up an HTTP load balancer using [HAProxy][1].

### What is HTTP load balancing? ###

HTTP load balancing is a networking solution that distributes incoming HTTP or HTTPS requests across a group of servers that all serve the same web application content. By spreading requests over several servers, a load balancer prevents any one server from being a single point of failure. This improves overall availability and responsiveness. It also lets you scale out or in simply by adding or removing servers to match the workload.

### When, and in what situations, do you need load balancing? ###

Load balancing improves server utilization and maximizes availability, so you can introduce it once your servers start running under high load. When designing the architecture of a large project, it is also good practice to put a load balancer in front. It pays off whenever your environment needs to scale.

### What is HAProxy? ###

HAProxy is a popular open-source TCP/HTTP load balancer and proxy for the GNU/Linux platform. Its single-threaded, event-driven architecture can easily handle [10 Gbps][2] of traffic, and it is widely used in production. Its features include automatic health checks, customizable load-balancing algorithms, HTTPS/SSL support, session rate limiting and more.

@@ -24,13 +23,13 @@ HAProxy is a popular open-source TCP/HTTP load balancer and proxy for the GNU/Linux platform

### Prerequisites ###

You will need at least one, or preferably two, web servers to verify that your load balancer works. We assume the backend HTTP web servers are already [up and running][3].

## Installing HAProxy on Linux ##

On most distributions, HAProxy can be installed with the distribution's package manager.

### Installing HAProxy on Debian ###

On Debian Wheezy we need to add a backports repository. Create a file named "backports.list" under /etc/apt/sources.list.d with the following content:

@@ -41,25 +40,25 @@ You will need at least one, or preferably two web servers to verify functionalit

    # apt-get update
    # apt-get install haproxy

### Installing HAProxy on Ubuntu ###

    # apt-get install haproxy

### Installing HAProxy on CentOS and RHEL ###

    # yum install haproxy

## Configuring HAProxy ##

This tutorial assumes two running HTTP web servers with the IP addresses 192.168.100.2 and 192.168.100.3. We will set up the load balancer on the server at 192.168.100.4.

For HAProxy to work properly, you need to change a few options in /etc/haproxy/haproxy.cfg. This section explains those changes, and notes any setting that differs between GNU/Linux distributions.

### 1. Configure logging ###

The first thing to do is set up logging for HAProxy, which will be useful when troubleshooting. The logging settings live in the global section of /etc/haproxy/haproxy.cfg. Below are the HAProxy logging settings for the different Linux distributions.

#### CentOS or RHEL ####

To enable logging on CentOS/RHEL, replace the following:

@@ -82,7 +81,7 @@ You will need at least one, or preferably two web servers to verify functionalit

    # service rsyslog restart

#### Debian or Ubuntu ####

To enable logging on Debian or Ubuntu, replace the following:

@@ -106,7 +105,7 @@ You will need at least one, or preferably two web servers to verify functionalit

    # service rsyslog restart

### 2. Set default options ###

The next step is to set HAProxy's default options. In the defaults section of /etc/haproxy/haproxy.cfg, replace the existing settings with the following:

@@ -124,7 +123,7 @@ You will need at least one, or preferably two web servers to verify functionalit

The configuration above is the recommended starting point for HTTP load balancing with HAProxy, but it is not necessarily optimal for your environment. Study the HAProxy manual and tune it yourself.

### 3. Web cluster configuration ###

The web cluster configuration defines the pool of available HTTP servers, and it holds most of our load balancer's settings. We will now create a basic configuration that defines our nodes. Replace everything in the configuration file from the frontend section onward with the following:

@@ -141,14 +140,14 @@ The web cluster configuration defines the pool of available HTTP servers. Our load balanc

    server web01 192.168.100.2:80 cookie node1 check
    server web02 192.168.100.3:80 cookie node2 check

"listen webfarm \*:80" defines the address and port the load balancer listens on. For this tutorial I set the address to "*", meaning all interfaces. In a real-world deployment that may not be appropriate; use the interface that is reachable from the internet instead.

    stats enable
    stats uri /haproxy?stats
    stats realm Haproxy\ Statistics
    stats auth haproxy:stats

These settings make the load balancer's statistics available at http://\<load-balancer-IP>/haproxy?stats. Access requires simple HTTP authentication with the user name "haproxy" and the password "stats". Replace these with your own credentials. If you don't need the statistics page, you can disable it entirely.
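The statistics can also be read from scripts. HAProxy can export the same data as CSV when ";csv" is appended to the stats URI; check this against the documentation for your HAProxy version. The CSV header line starts with "# pxname,svname,..." and includes a status column. This minimal sketch lists the state of each backend server and locates columns by name rather than position. The function name server_states is hypothetical.

```shell
#!/usr/bin/env bash
# server_states (hypothetical helper): read HAProxy CSV statistics on stdin and
# print "<proxy>/<server> <status>" for every server row. The FRONTEND and
# BACKEND summary rows are skipped. Column positions come from the header line.
server_states() {
  awk -F, '
    NR == 1 {
      sub(/^# /, "")                       # header starts with "# pxname,..."
      for (i = 1; i <= NF; i++) col[$i] = i
      next
    }
    NF > 1 && $(col["svname"]) != "FRONTEND" && $(col["svname"]) != "BACKEND" {
      print $(col["pxname"]) "/" $(col["svname"]), $(col["status"])
    }
  '
}
```

With the settings above, typical use would be: curl -s -u haproxy:stats 'http://192.168.100.4/haproxy?stats;csv' | server_states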

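Below is an example of the HAProxy statistics page.

@@ -160,7 +159,7 @@ The web cluster configuration defines the pool of available HTTP servers. Our load balanc

- **source**: hashes the client's IP address and, based on the hash and the server weights, dispatches the request to a backend server
- **uri**: hashes the left part of the URI (the part before the question mark) and dispatches the request based on the hash and the server weights
- **url_param**: dispatches based on a URL query parameter of each HTTP GET request, so requests carrying the same parameter value go to the same server
- **hdr(name)**: dispatches based on the \<name> field in the HTTP request headers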
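The hash-based methods above share one property: the same input always maps to the same server, as long as the server list does not change. This sketch illustrates that idea for source. It is not HAProxy's algorithm: it uses the CRC from cksum in place of HAProxy's own hash and ignores server weights. The function name pick_server is hypothetical.

```shell
#!/usr/bin/env bash
# pick_server (hypothetical helper): illustrate "balance source" by hashing a
# client IP and mapping it onto the list of servers given as arguments.
# cksum's CRC stands in for HAProxy's real hash; weights are ignored.
pick_server() {
  local ip=$1 h
  shift
  [ "$#" -gt 0 ] || return 1           # need at least one server
  local servers=("$@")
  # cksum prints "<CRC> <byte count>"; keep only the CRC
  h=$(printf '%s' "$ip" | cksum | cut -d' ' -f1)
  printf '%s\n' "${servers[h % ${#servers[@]}]}"
}
```

pick_server 203.0.113.7 web01 web02 always returns the same node for that address. Adding or removing a server reshuffles most clients, which is one reason HAProxy also offers consistent hashing.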

"cookie LBN insert indirect nocache" tells the load balancer to store cookie information that binds a particular session to a node in the backend server pool. The node's cookie is stored under a custom name. Here we use "LBN", but you can choose another. The backend node then keeps the session associated with this cookie.

@@ -169,25 +168,25 @@ The web cluster configuration defines the pool of available HTTP servers. Our load balanc

The lines above define our web server nodes. Each server is identified by an internal name (e.g. web01, web02), an IP address and a unique cookie string. The cookie string can be anything; I use simple values: node1, node2 ... node(n).

## Starting HAProxy ##

Once you have finished the configuration, start HAProxy and verify that it is running properly.

### Starting HAProxy on CentOS/RHEL ###

Enable HAProxy at boot and start it now with the following commands:

    # chkconfig haproxy on
    # service haproxy start

Of course, the firewall must allow port 80, as follows:

#### Firewall on CentOS/RHEL 7 ####

    # firewall-cmd --permanent --zone=public --add-port=80/tcp
    # firewall-cmd --reload

#### Firewall on CentOS/RHEL 6 ####

Add the following to the ":OUTPUT ACCEPT" section of /etc/sysconfig/iptables:

@@ -197,9 +196,9 @@ The web cluster configuration defines the pool of available HTTP servers. Our load balanc

    # service iptables restart

### Starting HAProxy on Debian ###

Start HAProxy:

    # service haproxy start

@@ -207,7 +206,7 @@ The web cluster configuration defines the pool of available HTTP servers. Our load balanc

    -A INPUT -p tcp --dport 80 -j ACCEPT

### Starting HAProxy on Ubuntu ###

To make HAProxy start automatically at boot, configure it in /etc/default/haproxy:

@@ -221,7 +220,7 @@ The web cluster configuration defines the pool of available HTTP servers. Our load balanc

    # ufw allow 80

## Testing HAProxy ##

To check whether HAProxy is working properly, we can do the following:

@@ -239,7 +238,7 @@ The web cluster configuration defines the pool of available HTTP servers. Our load balanc

    $ curl http://192.168.100.4/test.php

If we run this command several times, the output alternates between the backend servers (because we are using the round-robin algorithm) and looks like this:

    Server IP: 192.168.100.2
    X-Forwarded-for: 192.168.100.4

@@ -251,13 +250,13 @@ The web cluster configuration defines the pool of available HTTP servers. Our load balanc

If we stop one of the backend web services, the curl command keeps working, because requests are dispatched to the other available web server.
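Rather than comparing repeated curl runs by eye, you can tally the responses per backend. This minimal sketch assumes that test.php prints a "Server IP:" line as shown above and that curl is installed. The function names tally_backends and probe_lb are hypothetical.

```shell
#!/usr/bin/env bash
# tally_backends (hypothetical helper): count how many responses each backend
# served, given the concatenated test.php output on stdin.
tally_backends() {
  grep '^Server IP:' | sort | uniq -c
}

# probe_lb (hypothetical helper): request URL N times (default 10) and tally.
probe_lb() {
  local url=$1 n=${2:-10} i
  for ((i = 0; i < n; i++)); do
    curl -s "$url"
    echo    # keep responses on separate lines even if one lacks a final newline
  done | tally_backends
}
```

With round-robin, probe_lb http://192.168.100.4/test.php 10 should show roughly equal counts for 192.168.100.2 and 192.168.100.3. With one backend stopped, all requests should land on the other.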

## Summary ##

You now have a fully working load balancer that distributes requests across your web nodes in round-robin fashion. You can experiment with other configuration options to suit your environment. I hope this tutorial helps make your web projects more highly available.

As you may have noticed, this tutorial covers only a single load balancer, so we still have a single point of failure. In a real deployment you should run at least two or three load balancers to guard against failures, but that is beyond the scope of this tutorial.

If you have any questions or suggestions, please leave them in the comments and I will do my best to answer.

--------------------------------------------------------------------------------

@@ -265,11 +264,11 @@ via: http://xmodulo.com/haproxy-http-load-balancer-linux.html

Author: [Jaroslav Štěpánek][a]

Translator: [Liao](https://github.com/liaoishere)

Proofreader: [wxy](https://github.com/wxy)

[a]:http://xmodulo.com/author/jaroslav
[1]:http://www.haproxy.org/
[2]:http://www.haproxy.org/10g.html
[3]:http://linux.cn/article-1567-1.html

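published/201501/20141203 Docker--Present and Future.md (Normal file, 158 lines)

@@ -0,0 +1,158 @@

Docker: Present and Future
================================================================================

### Docker - The Story So Far ###

Docker is a toolset for Linux containers designed to 'build, ship and run' distributed applications. DotCloud first released it as an open-source project in March 2013. The project became so popular that DotCloud renamed itself Docker Inc. (and eventually [sold its original PaaS business][1]). [Docker 1.0][2] was released in June 2014, continuing the tradition of a new release every month.

The 1.0 release marked the point at which Docker Inc. considered the platform mature enough for production use, with paid support available from the company and its partners. The monthly releases show that the project is evolving rapidly, adding new features and fixing issues as they are found. The project has successfully separated 'run' from 'ship', so Docker images from any version can be used with other versions (forward and backward compatible). This gives Docker a stable foundation while it changes quickly.

Some may see Docker's rise to become one of the most popular open-source projects as hype, but it rests on solid foundations. Docker's impact has won the support of many big names across the industry, including Amazon, Canonical, CenturyLink, Google, IBM, Microsoft, New Relic, Pivotal, Red Hat and VMware. This means that wherever there is Linux, Docker can be there too. Beyond these well-known companies, many startups are growing up around Docker or changing direction to align better with it. These partners, large and small, will help drive the rapid development of the core Docker project and its surrounding ecosystem.

### A Brief Technical Overview of Docker ###

Docker uses Linux kernel mechanisms such as [cGroups][3], namespaces and [SELinux][4] to isolate containers from each other. Docker started out as a front end to the [LXC][5] container management subsystem. Release 0.9 introduced [libcontainer][6], a native Go library that provides the interface between user space and the kernel.

Containers are built on union file systems such as [AUFS][7], which allow components such as operating system images and installed libraries to be shared across multiple containers. [Dockerfile][8]-based DevOps tooling also exploits this layered approach, caching operations that complete successfully. This saves the time spent installing the operating system and application dependencies, greatly speeding up test cycles. Libraries shared between containers can also reduce memory footprint.

A container is started from an image, which can be created locally, cached locally or downloaded from a registry. Docker Inc. operates the [Docker Hub public registry][9], which hosts official repositories for a variety of operating systems, middleware and databases. Organizations and individuals can publish public repositories of images on Docker Hub, and can also sign up for private repositories. Since an uploaded image could contain almost anything, Docker provides an automated build facility (formerly called "trusted builds"). It builds images from a manifest of the image's contents called a Dockerfile.

### Containers vs. Virtual Machines ###

Containers can be more efficient than virtual machines because they share a single kernel and can share application libraries. This gives Docker a smaller memory footprint than VM-based systems, even when the VMs use memory overcommit. Sharing the underlying image layers when deploying containers also reduces storage footprint. IBM's Boden Russell has done some [benchmarking][10] that illustrates the differences.

Containers also have lower system overhead than VMs, so an application in a container will generally perform as well as, or better than, the same application in a VM. A team of IBM researchers has published a paper titled [An Updated Performance Comparison of Virtual Machines and Linux Containers][11].

Isolation is the one area where containers fall short of virtual machines. VMs can take advantage of ring -1 [hardware isolation][12] such as that provided by Intel's VT-d and VT-x technologies. This isolation prevents VMs from breaking out and interfering with each other. Containers don't yet have any form of hardware isolation, which makes them susceptible to exploits. A proof-of-concept attack named [Shocker][13] showed that Docker versions before 1.0 were vulnerable in this way. Docker 1.0 fixed many of the more serious issues that Shocker exposed. Even so, Docker CTO Solomon Hykes [said][14], "When we feel comfortable saying that Docker out-of-the-box can safely contain untrusted uid0 programs (programs with root privileges), we will say so clearly." Hykes's statement acknowledges that exploits and the associated risks remain, and that more work is needed before containers can be trusted.

For many use cases, choosing between containers and VMs is a false dichotomy. Docker works well inside VMs, so it can be used on existing virtual infrastructure, private clouds and public clouds. It is also possible to run VMs inside containers, which resembles how Google uses containers in its cloud platform. IaaS services that provision VMs on demand are widely available, so containers and VMs are likely to be used together for years to come. Container management and virtualization technologies may also be combined to give the best of both worlds. For example, libcontainer containers could be backed by hardware-trust-anchored micro-virtualization. They would integrate with the Docker tool chain and ecosystem at the front end, while a different back end provides better isolation. Micro-virtualization (such as Bromium's [vSentry][15] and VMware's [Project Fargo][16]) is already used on desktops to provide hardware-based isolation between applications. Similar approaches could be used with libcontainer as an alternative to the container mechanisms in the Linux kernel.

### 'Containerizing' Applications ###

Almost any Linux application can run inside a Docker container, with no restrictions on choice of language or framework. The only real limitation is what the operating system allows the container to do. Even so, containers can be run in privileged mode, which removes most of those restrictions. The trade-off is a greater risk that the containerized application damages the host operating system.

Containers are started from images, and images can be created from running containers. There are essentially two ways to get an application into a container: building it manually, or using a Dockerfile.

#### Manual builds ####

A manual build starts from a base operating system image. You then use the package manager of your chosen Linux distribution, in an interactive terminal, to install the application and its dependencies. Zef Hemel describes the process in his article '[Using Linux Containers to Support Portable Application Deployment][17]'. Once the application is installed, the container can be pushed to a registry (such as Docker Hub) or exported as a tar file.

#### Dockerfile ####

A Dockerfile is a scripted system for building Docker containers. Each Dockerfile specifies the base image to start from, followed by a series of commands to run in the container and files to add to it. A Dockerfile can also specify the ports to expose, the working directory and the default command to run when the container starts. Containers built from a Dockerfile can be pushed or exported just like manually built images. Dockerfiles also work with Docker Hub's automated build system. There, images are built from scratch under Docker Inc.'s control, and the image's source is visible to anyone who wants to use it.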
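To make the description concrete, here is a small shell helper that writes a minimal Dockerfile. It covers each element mentioned above: base image, build-time commands, added files, working directory, exposed port and default command. The base image, package, port and app.sh are placeholders chosen for illustration, not taken from the article. The helper name write_dockerfile is hypothetical.

```shell
#!/usr/bin/env bash
# write_dockerfile (hypothetical helper): generate a minimal, illustrative
# Dockerfile in the given directory. Image, package, port and app.sh are placeholders.
write_dockerfile() {
  local dir=${1:?target directory required}
  cat > "$dir/Dockerfile" <<'EOF'
# Base image the build starts from
FROM debian:stable-slim
# Commands run inside the image at build time
RUN apt-get update && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*
# Files added to the image
COPY app.sh /opt/app/app.sh
# Working directory and the port the service listens on
WORKDIR /opt/app
EXPOSE 8080
# Default command when a container starts from this image
CMD ["/opt/app/app.sh"]
EOF
}
```

Assuming the directory also contains an executable app.sh, docker build -t myapp DIR followed by docker run -p 8080:8080 myapp would build and start it. Each instruction becomes a cached image layer, as described earlier.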

|

||||

|

||||

#### 单进程? ####

|

||||

|

||||

无论镜像是手动构建还是通过 Dockerfile 构建,有一个要考虑的关键因素是当容器启动时仅启动一个进程。对于一个单一用途的容器,例如运行一个应用服务器,运行一个单一的进程不是一个问题(有些关于容器应该只有一个单独的进程的争议)。对于一些容器需要启动多个进程的情况,必须先启动 [supervisor][18] 进程,才能生成其它内部所需的进程。由于容器内没有初始化系统,所以任何依赖于 systemd、upstart 或类似初始化系统的东西不修改是无法工作的。

|

||||

|

||||

### 容器和微服务 ###

|

||||

|

||||

全面介绍使用微服务结构体系的原理和好处已经超出了这篇文章的范畴(在 [InfoQ eMag: Microservices][19] 有全面阐述)。然而容器是绑定和部署微服务实例的捷径。

|

||||

|

||||

大规模微服务部署的多数案例都是部署在虚拟机上,容器只是用于较小规模的部署上。容器具有共享操作系统和公用库的的内存和硬盘存储的能力,这也意味着它可以非常有效的并行部署多个版本的服务。

|

||||

|

||||

### 连接容器 ###

|

||||

|

||||

一些小的应用程序适合放在单独的容器中,但在许多案例中应用程序需要分布在多个容器中。Docker 的成功包括催生了一连串新的应用程序组合工具、编制工具及平台作为服务(PaaS)的实现。在这些努力的背后,是希望简化从一组相互连接的容器来创建应用的过程。很多工具也在扩展、容错、性能管理以及对已部署资产进行版本控制方面提供了帮助。

|

||||

|

||||

#### 连通性 ####

|

||||

|

||||

Docker 的网络功能是相当原始的。在同一主机,容器内的服务可以互相访问,而且 Docker 也可以通过端口映射到主机操作系统,使服务可以通过网络访问。官方支持的提供连接能力的库叫做 [libchan][20],这是一个提供给 Go 语言的网络服务库,类似于[channels][21]。在 libchan 找到进入应用的方法之前,第三方应用仍然有很大空间可提供配套的网络服务。例如,[Flocker][22] 已经采取了基于代理的方法使服务实现跨主机(以及底层存储)的移植。

|

||||

|

||||

#### 合成 ####

|

||||

|

||||

Docker 本身拥有把容器连接在一起的机制,与元数据相关的依赖项可以被传递到相依赖的容器中,并用于环境变量和主机入口。如 [Fig][23] 和 [geard][24] 这样的应用合成工具可以在单一文件中展示出这种依赖关系图,这样多个容器就可以汇聚成一个连贯的系统。CenturyLink 公司的 [Panamax][25] 合成工具类似 Fig 和 geard 的底层实现方法,但新增了一些基于 web 的用户接口,并直接与 GitHub 相结合,以便于应用程序分享。

|

||||

|

||||

#### 编制 ####

|

||||

|

||||

像 [Decking][26]、New Relic 公司的 [Centurion][27] 和谷歌公司的 [Kubernetes][28] 这样的编制系统都是旨在协助容器的部署和管理其生命周期系统。也有许多 [Apache Mesos][30] (特别是 [Marathon(马拉松式)持续运行很久的框架])的案例(例如[Mesosphere][29])已经被用于配合 Docker 一起使用。通过为应用程序与底层基础架构之间(例如传递 CPU 核数和内存的需求)提供一个抽象的模型,编制工具提供了两者的解耦,简化了应用程序开发和数据中心操作。有很多各种各样的编制系统,因为许多来自内部系统的以前开发的用于大规模容器部署的工具浮现出来了;如 Kubernetes 是基于谷歌的 [Omega][32] 系统的,[Omega][32] 是用于管理遍布谷歌云环境中容器的系统。

|

||||

|

||||

虽然从某种程度上来说合成工具和编制工具的功能存在重叠,但这也是它们之间互补的一种方式。例如 Fig 可以被用于描述容器间如何实现功能交互,而 Kubernetes pods(容器组)可用于提供监控和扩展。

|

||||

|

||||

#### 平台(即服务)####

|

||||

|

||||

有一些 Docker 原生的 PaaS 服务实现,例如 [Deis][33] 和 [Flynn][34] 已经显现出 Linux 容器在开发上的的灵活性(而不是那些“自以为是”的给出一套语言和框架)。其它平台,例如 CloudFoundry、OpenShift 和 Apcera Continuum 都已经采取将 Docker 基础功能融入其现有的系统的技术路线,这样基于 Docker 镜像(或者基于 Dockerfile)的应用程序也可以与之前用支持的语言和框架的开发的应用一同部署和管理。

|

||||

|

||||

### 所有的云 ###

|

||||

|

||||

由于 Docker 能够运行在任何正常更新内核的 Linux 虚拟机中,它几乎可以用在所有提供 IaaS 服务的云上。大多数的主流云厂商已经宣布提供对 Docker 及其生态系统的支持。

|

||||

|

||||

亚马逊已经把 Docker 引入它们的 Elastic Beanstalk 系统(这是在底层 IaaS 上的一个编制系统)。谷歌使 Docker 成为了“可管理的 VM”,它提供了GAE PaaS 和GCE IaaS 之间的中转站。微软和 IBM 也都已经宣布了基于 Kubernetes 的服务,这样可以在它们的云上部署和管理多容器应用程序。

|

||||

|

||||

为了给现有种类繁多的后端提供可用的一致接口,Docker 团队已经引进 [libswarm][35], 它可以集成于众多的云和资源管理系统。Libswarm 所阐明的目标之一是“通过切换服务来源避免被特定供应商套牢”。这是通过呈现一组一致的服务(与API相关联的)来完成的,该服务会通过特定的后端服务所实现。例如 Docker 服务器将支持本地 Docker 命令行工具的 Docker 远程 API 调用,这样就可以管理一组服务供应商的容器了。

|

||||

|

||||

基于 Docker 的新服务类型仍在起步阶段。总部位于伦敦的 Orchard 实验室提供了 Docker 的托管服务,但是 Docker 公司表示,收购 Orchard 后,其相关服务不会置于优先位置。Docker 公司也出售了之前 DotCloud 的PaaS 业务给 cloudControl。基于更早的容器管理系统的服务例如 [OpenVZ][36] 已经司空见惯了,所以在一定程度上 Docker 需要向主机托管商们证明其价值。

|

||||

|

||||

### Docker 及其发行版 ###

|

||||

|

||||

Docker 已经成为大多数 Linux 发行版例如 Ubuntu、Red Hat 企业版(RHEL)和 CentOS 的一个标准功能。遗憾的是这些发行版的步调和 Docker 项目并不一致,所以在发布版中找到的版本总是远远落后于最新版本。例如 Ubuntu 14.04 版本中的版本是 Docker 0.9.1,而当 Ubuntu 升级至 14.04.1 时 Docker 版本并没有随之升级(此时 Docker 已经升至 1.1.2 版本)。在发行版的软件仓库中还有一个名字空间的冲突,因为 “Docker” 也是 KDE 系统托盘的名字;所以在 Ubuntu 14.04 版本中相关安装包的名字和命令行工具都是使用“Docker.io”的名字。

|

||||

|

||||

在企业级 Linux 的世界中,情况也并没有因此而不同。CentOS 7 中的 Docker 版本是 0.11.1,这是 Docker 公司宣布准备发行 Docker 1.0 产品版本之前的开发版。Linux 发行版用户如果希望使用最新版本以保障其稳定、性能和安全,那么最好地按照 Docker 的[安装说明][37]进行,使用 Docker 公司的所提供的软件库而不是采用发行版的。

|

||||

|

||||

Docker 的到来也催生了新的 Linux 发行版,如 [CoreOS][38] 和红帽的 [Project Atomic][39],它们被设计为能运行容器的最小环境。这些发布版相比传统的发行版,带着更新的内核及 Docker 版本,对内存的使用和硬盘占用率也更低。新发行版也配备了用于大型部署的新工具,例如 [fleet][40](一个分布式初始化系统)和[etcd][41](用于元数据管理)。这些发行版也有新的自我更新机制,以便可以使用最新的内核和 Docker。这也意味着使用 Docker 的影响之一是它抛开了对发行版和相关的包管理解决方案的关注,而对 Linux 内核(及使用它的 Docker 子系统)更加关注。

|

||||

|

||||

这些新发行版也许是运行 Docker 的最好方式,但是传统的发行版和它们的包管理器对容器来说仍然是非常重要的。Docker Hub 托管的官方镜像有 Debian、Ubuntu 和 CentOS,以及一个‘半官方’的 Fedora 镜像库。RHEL 镜像在Docker Hub 中不可用,因为它是 Red Hat 直接发布的。这意味着在 Docker Hub 的自动构建机制仅仅用于那些纯开源发行版下(并愿意信任那些源于 Docker 公司团队提供的基础镜像)。

|

||||

|

||||

Docker Hub 集成了如 Git Hub 和 Bitbucket 这样源代码控制系统来自动构建包管理器,用于管理构建过程中创建的构建规范(在Dockerfile中)和生成的镜像之间的复杂关系。构建过程的不确定结果并非是 Docker 的特定问题——而与软件包管理器如何工作有关。今天构建完成的是一个版本,明天构建的可能就是更新的版本,这就是为什么软件包管理器需要升级的原因。容器抽象(较少关注容器中的内容)以及容器扩展(因为轻量级资源利用率)有可能让这种不确定性成为 Docker 的痛点。

|

||||

|

||||

### Docker 的未来 ###

|

||||

|

||||

Docker 公司对核心功能(libcontainer),跨服务管理(libswarm) 和容器间的信息传递(libchan)的发展上提出了明确的路线。与此同时,该公司已经表明愿意收购 Orchard 实验室,将其纳入自身生态系统。然而 Docker 不仅仅是 Docker 公司的,这个项目的贡献者也来自许多大牌贡献者,其中不乏像谷歌、IBM 和 Red Hat 这样的大公司。在仁慈独裁者、CTO Solomon Hykes 掌舵的形势下,为公司和项目明确了技术领导关系。在前18个月的项目中通过成果输出展现了其快速行动的能力,而且这种趋势并没有减弱的迹象。

|

||||

|

||||

许多投资者正在寻找10年前 VMware 公司的 ESX/vSphere 平台的特征矩阵,并试图找出虚拟机的普及而带动的企业预期和当前 Docker 生态系统两者的距离(和机会)。目前 Docker 生态系统正缺乏类似网络、存储和(对于容器的内容的)细粒度版本管理,这些都为初创企业和创业者提供了机会。

|

||||

|

||||

随着时间的推移,在虚拟机和容器(Docker 的“运行”部分)之间的区别将变得没那么重要了,而关注点将会转移到“构建”和“交付”方面。这些变化将会使“Docker发生什么?”变得不如“Docker将会给IT产业带来什么?”那么重要了。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

via: http://www.infoq.com/articles/docker-future

Author: [Chris Swan][a]

Translator: [disylee](https://github.com/disylee)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://www.infoq.com/author/Chris-Swan
[1]:http://blog.dotcloud.com/dotcloud-paas-joins-cloudcontrol
[2]:http://www.infoq.com/news/2014/06/docker_1.0
[3]:https://www.kernel.org/doc/Documentation/cgroups/cgroups.txt
[4]:http://selinuxproject.org/page/Main_Page
[5]:https://linuxcontainers.org/
[6]:http://blog.docker.com/2014/03/docker-0-9-introducing-execution-drivers-and-libcontainer/
[7]:http://aufs.sourceforge.net/aufs.html
[8]:https://docs.docker.com/reference/builder/
[9]:https://registry.hub.docker.com/
[10]:http://bodenr.blogspot.co.uk/2014/05/kvm-and-docker-lxc-benchmarking-with.html?m=1
[11]:http://domino.research.ibm.com/library/cyberdig.nsf/papers/0929052195DD819C85257D2300681E7B/$File/rc25482.pdf
[12]:https://en.wikipedia.org/wiki/X86_virtualization#Hardware-assisted_virtualization
[13]:http://stealth.openwall.net/xSports/shocker.c
[14]:https://news.ycombinator.com/item?id=7910117
[15]:http://www.bromium.com/products/vsentry.html
[16]:http://cto.vmware.com/vmware-docker-better-together/
[17]:http://www.infoq.com/articles/docker-containers
[18]:http://docs.docker.com/articles/using_supervisord/
[19]:http://www.infoq.com/minibooks/emag-microservices
[20]:https://github.com/docker/libchan
[21]:https://gobyexample.com/channels
[22]:http://www.infoq.com/news/2014/08/clusterhq-launch-flocker
[23]:http://www.fig.sh/
[24]:http://openshift.github.io/geard/
[25]:http://panamax.io/
[26]:http://decking.io/
[27]:https://github.com/newrelic/centurion
[28]:https://github.com/GoogleCloudPlatform/kubernetes
[29]:https://mesosphere.io/2013/09/26/docker-on-mesos/
[30]:http://mesos.apache.org/
[31]:https://github.com/mesosphere/marathon
[32]:http://static.googleusercontent.com/media/research.google.com/en/us/pubs/archive/41684.pdf
[33]:http://deis.io/
[34]:https://flynn.io/
[35]:https://github.com/docker/libswarm
[36]:http://openvz.org/Main_Page
[37]:https://docs.docker.com/installation/#installation
[38]:https://coreos.com/
[39]:http://www.projectatomic.io/
[40]:https://github.com/coreos/fleet
[41]:https://github.com/coreos/etcd

@@ -1,6 +1,6 @@

Properly Synchronizing Time with NTP Servers in CentOS 7.x
================================================================================

**Chrony** is a free, open-source application that helps you keep your system clock synchronized with time servers (NTP), so that your time stays accurate. It consists of two programs, chronyd and chronyc. chronyd is a daemon running in the background that adjusts the system clock running in the kernel to keep it in sync with the time servers. It determines the rate at which the computer gains or loses time and compensates for it. chronyc provides a user interface for monitoring performance and changing various settings. It can do this on the computer controlled by the chronyd instance, or on a different, remote computer.

On RHEL-based operating systems such as CentOS 7, Chrony is installed by default.

@@ -10,19 +10,17 @@ Properly Synchronizing Time with NTP Servers in CentOS 7.x

**server** - This directive can be used multiple times to add time servers; each server goes on its own "server" line followed by the server's address. In general, you can add as many servers as you like.

Example:

server 0.centos.pool.ntp.org
server 3.europe.pool.ntp.org

**stratumweight** - The stratumweight directive sets how much distance is added per stratum to the synchronization distance when chronyd selects a synchronization source from the available sources. CentOS sets it to 0 by default, which makes chronyd ignore the stratum of sources when choosing one.

**driftfile** - One of the main activities of chronyd is working out the rate at which the computer gains or loses time relative to real time. It is most sensible to record this rate in a file, so that it can be used to compensate the system clock after a restart, even before chronyd has had a chance to obtain a good new estimate from the time servers.

**rtcsync** - The rtcsync directive enables a kernel mode in which the system time is copied to the real-time clock (RTC) every 11 minutes.

**allow / deny** - Here you can specify a host, subnet or network that is allowed or denied NTP access to the machine acting as a time server.

Examples:

allow 192.168.4.5
deny 192.168/16

@@ -30,11 +28,10 @@ Properly Synchronizing Time with NTP Servers in CentOS 7.x

**bindcmdaddress** - This directive lets you restrict the network interface on which chronyd listens for command packets (sent by chronyc). It provides an additional level of access control on top of what is available through the cmddeny mechanism.

Example:

bindcmdaddress 127.0.0.1
bindcmdaddress ::1

**makestep** - Normally chronyd corrects any time offset gradually, by slowing down or speeding up the clock as required. In certain situations, the system clock may have drifted so far that this slewing process would take a very long time to correct it. This directive forces chronyd to step the system clock if the adjustment is larger than a threshold value, but only if there have been no more clock updates since chronyd was started than a specified limit (a negative limit disables this check).

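Taken together, the directives described above might look like the following in a configuration file. This is a minimal sketch that writes an illustrative chrony.conf to a scratch file (not /etc/chrony.conf) and counts the directives it contains. The server, allow/deny and bindcmdaddress lines are the examples from this article; the driftfile path and the `makestep 10 3` values are assumed example values, so compare against your distribution's own /etc/chrony.conf before reusing any of it.

```shell
#!/bin/sh
# Write an illustrative chrony.conf to a scratch file (not /etc/chrony.conf).
# ASSUMPTION: the driftfile path and makestep values are example values only.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
# One "server" line per time server
server 0.centos.pool.ntp.org
server 3.europe.pool.ntp.org

# Ignore the stratum of sources when choosing one
stratumweight 0

# File in which chronyd records the measured gain/loss rate
driftfile /var/lib/chrony/drift

# Kernel copies system time to the RTC every 11 minutes
rtcsync

# Step the clock if the offset exceeds 10 s, during the first 3 updates only
makestep 10 3

# NTP client access rules (examples from the text)
allow 192.168.4.5
deny 192.168/16

# Accept chronyc command packets only on the loopback addresses
bindcmdaddress 127.0.0.1
bindcmdaddress ::1
EOF

# Count how often each directive appears, ignoring comments and blank lines
grep -v -e '^#' -e '^$' "$conf" | awk '{ print $1 }' | sort | uniq -c
```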
### Using chronyc ###

@@ -66,7 +63,7 @@ via: http://linoxide.com/linux-command/chrony-time-sync/

Author: [Adrian Dinu][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

@@ -1,30 +1,30 @@

Linux FAQs with Answers: How to Boot Straight into the Command Line on Ubuntu or Debian
================================================================================

> **Question**: I am running the Ubuntu desktop, but I want to temporarily boot into the command line after startup. Is there an easy way to boot straight into a terminal?

A Linux desktop comes with a display manager (e.g., GDM, KDM, LightDM) that makes the computer boot automatically into a GUI-based login environment. But what if you want to boot straight into a terminal? For example, you may be troubleshooting a desktop-related problem, or you may want to run an application that does not need a GUI.

Note that you can temporarily switch from the desktop GUI to a virtual terminal by pressing Ctrl+Alt+F1 through F6. In that case, however, your desktop GUI is still running in the background, which is different from booting in pure text mode.

On an Ubuntu or Debian desktop, you can boot into text mode by passing the appropriate kernel parameters at boot time.

### Boot into the Command Line Temporarily ###

If you want to disable the desktop GUI and boot into text mode just once, you can use the GRUB menu.

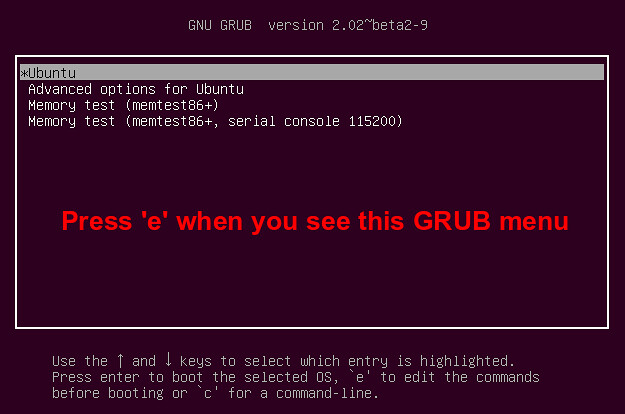

First, power on your computer. When you see the initial GRUB menu, press ‘e’.

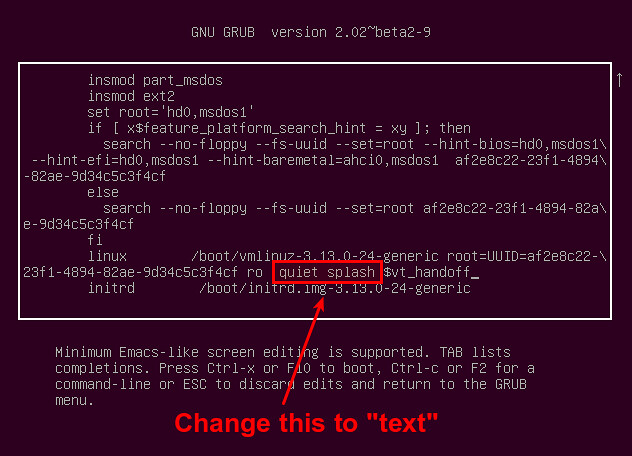

The next screen lets you modify the kernel boot options. Scroll down to the line that starts with "linux"; this is the list of kernel parameters. Remove "quiet" and "splash" from the parameter list, and add "text" to it.

The modified kernel parameter list looks like this. Press Ctrl+x to continue booting. This boots into the console in verbose mode once (LCTT note: since the change is not saved, the next reboot will go back to the GUI).

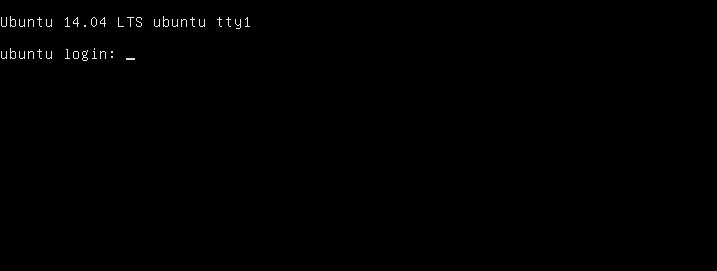

### Boot into the Command Line Permanently ###

If you want to boot into the command line permanently, you need to [update the GRUB settings that define the kernel boot parameters][1].

@@ -32,7 +32,7 @@ A Linux desktop comes with a display manager (e.g., GDM, KDM, LightDM),

$ sudo vi /etc/default/grub

Look for the line that starts with GRUB\_CMDLINE\_LINUX\_DEFAULT and comment it out with "#". This disables the splash screen and enables verbose mode (that is, the detailed boot process is shown).

Change GRUB_CMDLINE_LINUX="" to:

@@ -48,7 +48,7 @@ A Linux desktop comes with a display manager (e.g., GDM, KDM, LightDM),

$ sudo update-grub

At this point, your desktop should switch from booting into the GUI to booting into the console. You can verify this by rebooting.

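The permanent change can be rehearsed on a scratch copy before touching the real file. The sketch below starts from a hypothetical sample of /etc/default/grub, comments out GRUB_CMDLINE_LINUX_DEFAULT and sets GRUB_CMDLINE_LINUX to "text". The "text" value is an assumption carried over from the one-time GRUB menu method above, since the exact replacement line is not shown here.

```shell
#!/bin/sh
# Rehearse the permanent change on a hypothetical sample of /etc/default/grub.
# ASSUMPTION: GRUB_CMDLINE_LINUX gets "text", as in the one-time method above.
grub="$(mktemp)"
cat > "$grub" <<'EOF'
GRUB_DEFAULT=0
GRUB_CMDLINE_LINUX_DEFAULT="quiet splash"
GRUB_CMDLINE_LINUX=""
EOF

tmp="$(mktemp)"
# 1) comment out GRUB_CMDLINE_LINUX_DEFAULT (drops "quiet splash")
# 2) pass "text" to the kernel through GRUB_CMDLINE_LINUX
sed -e 's/^GRUB_CMDLINE_LINUX_DEFAULT=/#&/' \
    -e 's/^GRUB_CMDLINE_LINUX=""$/GRUB_CMDLINE_LINUX="text"/' \
    "$grub" > "$tmp" && mv "$tmp" "$grub"

cat "$grub"
# On a real system: edit /etc/default/grub as root, then run "sudo update-grub".
```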
@@ -57,7 +57,7 @@ A Linux desktop comes with a display manager (e.g., GDM, KDM, LightDM),

via: http://ask.xmodulo.com/boot-into-command-line-ubuntu-debian.html

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

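@@ -0,0 +1,50 @@

Data of 20 Million Users Stolen from Dating Website

----------

*Info includes Gmail, Hotmail and Yahoo emails*

#A hacker has illegally obtained a database containing the details of 20 million users of the online dating website Topface.

It is unclear at the moment whether the information has been made public, but according to a post on an undisclosed paste site, someone using the online alias "Mastermind" claims to have it.

#List contains hundreds of domains from all over the world

The individual claims that the details are 100% valid, and Daniel Ingevaldson, Chief Technology Officer at Easy Solutions, said in a blog post on Sunday that the list includes email addresses from Hotmail, Yahoo and Gmail.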

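Easy Solutions is a US-based company that provides cyber fraud detection and security products across multiple platforms.

According to Ingevaldson, the list contains over 7 million credentials from Hotmail, 2.5 million from Yahoo and 2.2 million from Gmail.com.

It is unclear whether these credentials are usernames and passwords that can be used to log in to the email accounts or to the dating website accounts. It is also unknown whether the database stored the passwords securely or in plain text.

Email addresses are often used as usernames on online sites, with users logging in with a password of their own. However, many users reuse the same password, so one password can unlock many online accounts.

[Ingevaldson also said][1]: "It appears the list actually includes hundreds of domains from all over the world. Beyond the original site that was compromised, hackers and criminals will likely use the stolen credentials in brute-force and automated scanning attacks against many other sites, including banking, travel and email providers."

#More details expected

According to several of our sources, the leak originated from Topface, an online dating website with 90 million users. The company is headquartered in St. Petersburg, Russia, and more than 50% of its users are from outside Russia.

We contacted Topface to ask whether it had recently suffered a cyberattack that could have caused such a large data leak, but we have not yet heard back from the company.

The attackers may have obtained the data without breaking into the site itself: Easy Solutions speculates that they most likely collected the user data directly through phishing emails aimed at the site's users.

We were unable to reach Easy Solutions through its website, but we have tried other channels and are waiting for more information.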

--------------------------------------------------------------------------------

via: http://news.softpedia.com/news/Data-of-20-Million-Users-Stolen-from-Dating-Website-471179.shtml

Published: 26 Jan 2015, 10:20 GMT

Author: [Ionut Ilascu][a]

Translator: [Mr小眼儿](https://github.com/tinyeyeser)

Proofreader: [Caroline](https://github.com/carolinewuyan)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://news.softpedia.com/editors/browse/ionut-ilascu
[1]:http://newblog.easysol.net/dating-site-breached/

@ -1,7 +1,7 @@

|

||||

Linux下如何过滤、分割以及合并 pcap 文件

|

||||

=============

|

||||

|

||||

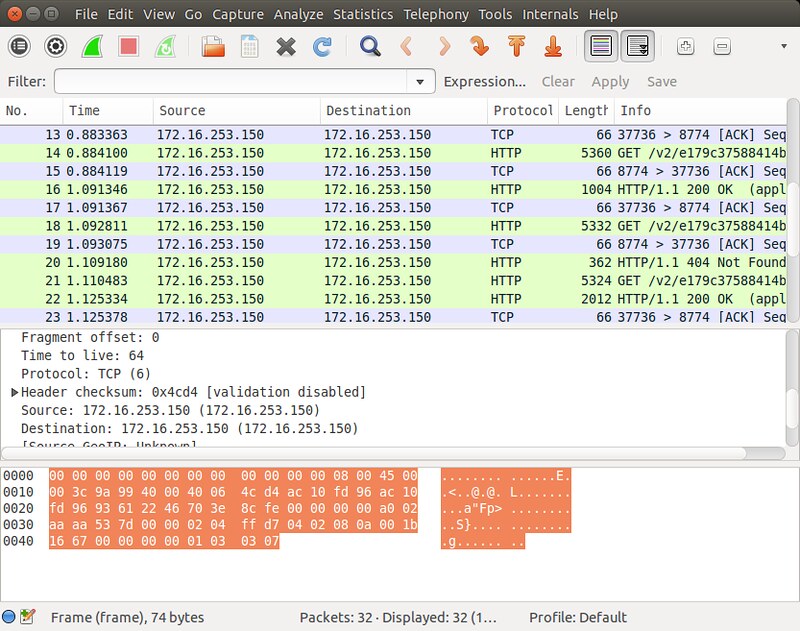

如果你是个网络管理员,并且你的工作包括测试一个[入侵侦测系统][1]或一些网络访问控制策略,那么你通常需要抓取数据包并且在离线状态下分析这些文件。当需要保存捕获的数据包时,我们会想到 libpcap 的数据包格式被广泛使用于许多开源的嗅探工具以及捕包程序。如果 pcap 文件被用于入侵测试或离线分析的话,那么在将他们[注入][2]网络之前通常要先对 pcap 文件进行一些操作。

|

||||

如果你是一个测试[入侵侦测系统][1]或一些网络访问控制策略的网络管理员,那么你经常需要抓取数据包并在离线状态下分析这些文件。当需要保存捕获的数据包时,我们一般会存储为 libpcap 的数据包格式 pcap,这是一种被许多开源的嗅探工具以及捕包程序广泛使用的格式。如果 pcap 文件被用于入侵测试或离线分析的话,那么在将他们[注入][2]网络之前通常要先对 pcap 文件进行一些操作。

|

||||

|

||||

|

||||

|

||||

@@ -9,9 +9,9 @@ How to Filter, Split and Merge pcap Files on Linux

### Editcap and Mergecap ###

Wireshark, the most popular GUI sniffer, actually comes with a very useful set of command-line tools, including editcap and mergecap. editcap is a versatile pcap editor that can filter pcap files and split them in various ways. mergecap merges multiple pcap files into one. This article is based on these Wireshark command-line tools.

If you have already installed Wireshark, these tools are already on your system. If not, let's install the Wireshark command-line tools next. Note that on Debian-based distributions you can install just the command-line tools without the Wireshark GUI, whereas on Red Hat and its derivatives you need to install the whole Wireshark package.

**Debian, Ubuntu or Linux Mint**

@@ -27,15 +27,15 @@ Wireshark, the most popular GUI sniffer, actually comes from a very

With editcap, we can filter the contents of a pcap file by many different criteria and save the result to a new file.

First, let's filter a pcap file by start and end time. The `-A <start-time>` and `-B <end-time>` options keep only the packets that arrived within that time range (e.g., from 2:30 to 2:35). The time format is "YYYY-MM-DD HH:MM:SS".

$ editcap -A '2014-12-10 10:11:01' -B '2014-12-10 10:21:01' input.pcap output.pcap

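Since editcap expects the timestamps in the format given above, a small wrapper can catch typos before editcap runs. The sketch below uses hypothetical helpers (the names `is_editcap_time` and `pcap_time_window` are made up for illustration): it checks both timestamps against the YYYY-MM-DD HH:MM:SS pattern and only then calls `editcap -A ... -B ...`. Newer Wireshark releases may accept additional time formats, so treat this check as stricter than editcap itself.

```shell
#!/bin/sh
# Hypothetical helpers around editcap's -A/-B time filter.

# Succeeds only for timestamps shaped like "2014-12-10 10:11:01"
is_editcap_time() {
    printf '%s\n' "$1" | grep -Eq '^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2}$'
}

# Usage: pcap_time_window START END INPUT OUTPUT
pcap_time_window() {
    is_editcap_time "$1" || { echo "bad start time: $1" >&2; return 2; }
    is_editcap_time "$2" || { echo "bad end time: $2" >&2; return 2; }
    command -v editcap >/dev/null 2>&1 || { echo "editcap not found" >&2; return 127; }
    # Keep packets between START and END (see editcap(1) for how the bounds are treated)
    editcap -A "$1" -B "$2" "$3" "$4"
}

# Example: the same 10-minute window as the command above
# pcap_time_window '2014-12-10 10:11:01' '2014-12-10 10:21:01' input.pcap output.pcap
```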
You can also extract a specific range of packets from a file. The following command extracts 100 packets (numbers 401 to 500) from input.pcap and saves them to output.pcap; the -r option makes editcap keep the listed packets instead of dropping them:

$ editcap -r input.pcap output.pcap 401-500

The `-D <dup-window>` option removes duplicate packets (dup-window is the size of the comparison window: each packet is only compared with packets inside it). Each packet's length and MD5 hash are compared with those of the previous `<dup-window> - 1` packets, and if a match is found, the packet is discarded.

$ editcap -D 10 input.pcap output.pcap

@@ -71,13 +71,13 @@ Wireshark, the most popular GUI sniffer, actually comes from a very

If you want to ignore timestamps and simply merge the files in the order given on the command line, use the -a option.

For example, the following command writes the contents of input.pcap to output.pcap and then appends the contents of input2.pcap.

$ mergecap -a -w output.pcap input.pcap input2.pcap

### Summary ###

In this tutorial, I demonstrated several examples of manipulating pcap files with editcap and mergecap. There are other related tools as well, such as [reordercap][3] for reordering packets, [text2pcap][4] for converting a text hex dump into a pcap file, and [pcap-diff][5] for comparing pcap files. Together with [packet injection tools][6], these tools are very handy for network intrusion testing and troubleshooting network problems, so it is worth getting to know them.

Have you used any pcap tools? If so, what have you used them for?

@@ -86,8 +86,8 @@ Wireshark, the most popular GUI sniffer, actually comes from a very

via: http://xmodulo.com/filter-split-merge-pcap-linux.html

Author: [Dan Nanni][a]

Translator: [SPccman](https://github.com/SPccman)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

@@ -1,8 +1,9 @@

How to Extract a tar File to a Different Directory
================================================================================

I want to extract a tar file to a specific directory called /tmp/data. How can I use the tar command on Linux or a Unix-like system to extract a tar file to a different directory?

You do not need to use the cd command to change to another directory before extracting. You can extract a file using the following syntax:

### Syntax ###

@@ -16,9 +17,9 @@ GNU/tar syntax:

tar xf file.tar --directory /path/to/directory

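The syntax above can be tried end to end without touching real data. This sketch builds a throwaway archive in a temporary directory (standing in for $HOME/etc.backup.tar and /tmp/data from the example below), creates the target directory first, and extracts into it with --directory; it also shows -C, the short form of the same option, and extracting a single named member.

```shell
#!/bin/sh
# Throwaway paths stand in for $HOME/etc.backup.tar and /tmp/data.
work="$(mktemp -d)"
mkdir -p "$work/src/etc"
echo "example" > "$work/src/etc/hosts.sample"

# Create a small archive containing etc/hosts.sample
tar cf "$work/etc.backup.tar" -C "$work/src" etc

# The target directory has to exist before extracting into it
mkdir -p "$work/data"

# Extract into another directory without cd: --directory DIR (short form: -C DIR)
tar xf "$work/etc.backup.tar" --directory "$work/data"

# Extract only one member by naming it after the archive
mkdir -p "$work/one"
tar xf "$work/etc.backup.tar" -C "$work/one" etc/hosts.sample

ls -R "$work/data"
```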

### Example: Extract Files to Another Directory ###

In this example, I extract $HOME/etc.backup.tar into the /tmp/data directory. First, create the directory manually by typing:

mkdir /tmp/data

@@ -34,7 +35,7 @@ GNU/tar syntax:

*Gif 01: Extracting files to a different directory with the tar command*

You can also specify which files to extract:

@@ -56,8 +57,8 @@ via: http://www.cyberciti.biz/faq/howto-extract-tar-file-to-specific-directory-o

Author: [nixCraft][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [Caroline](https://github.com/carolinewuyan)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://www.cyberciti.biz/tips/about-us

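@@ -0,0 +1,58 @@

Problems Migrating Apache from 2.2 to 2.4 in Ubuntu 14.04
================================================================================

If you upgraded **Ubuntu** from 12.04 straight to 14.04, that included a major upgrade: **Apache** went from version 2.2 to 2.4. This **Apache** upgrade brings many performance improvements, **but continuing to use 2.2 configuration files will cause a lot of errors**.

### Changes to Access Control ###

Starting with **Apache 2.4**, the authorization mechanism is more flexible than in 2.2, where it was a single check against a single data store. In the past it was hard to work out in which order authorization was applied and how, but the introduction of authorization container directives solves this: the configuration can now control when authorization methods are invoked and which conditions determine when access is granted.

This is why most failed upgrades are caused by configuration errors. In 2.2, access control based on IP address, hostname and other characteristics of the client was done with the Order, Allow, Deny and Satisfy directives; in 2.4, all of this is checked through the new authorization mechanism.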

To make this clear, let's look at some virtual host examples, which can be found in /etc/apache2/sites-enabled/default or /etc/apache2/sites-enabled/*your site name*:

Old 2.2 virtual host configuration:

Order allow,deny
Allow from all

New 2.4 virtual host configuration:

Require all granted

(LCTT note: Order, Allow and Deny are deprecated and may be removed in a later version, so avoid them where possible; the Require directive already provides more powerful and flexible functionality.)

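When a site breaks after the upgrade, a quick way to find leftovers like the 2.2 example above is to search the site configuration for the old directives. The sketch below is a hypothetical helper, not part of Apache: it greps a directory (a temporary one holding a sample vhost unless SITES_DIR is set, for example to /etc/apache2/sites-enabled) for lines that start with Order, Allow, Deny or Satisfy, while leaving AllowOverride and Require lines alone.

```shell
#!/bin/sh
# Hypothetical helper: list Apache 2.2-style access directives that need
# rewriting (e.g. "Order allow,deny" + "Allow from all" -> "Require all granted").

# Without SITES_DIR, build a scratch directory holding one sample vhost
if [ -z "${SITES_DIR:-}" ]; then
    SITES_DIR="$(mktemp -d)"
    cat > "$SITES_DIR/example.conf" <<'EOF'
<VirtualHost *:80>
    DocumentRoot /var/www/example
    <Directory /var/www/example>
        AllowOverride All
        Order allow,deny
        Allow from all
    </Directory>
    <Directory /var/www/example/public>
        Require all granted
    </Directory>
</VirtualHost>
EOF
fi

# Print file:line:text for Order/Allow/Deny/Satisfy lines (case-insensitive,
# like Apache directive names). Requiring whitespace after the name keeps
# "AllowOverride" from matching.
find_legacy_access() {
    grep -HniE '^[[:space:]]*(Order|Allow|Deny|Satisfy)[[:space:]]' "$1"/*
}

find_legacy_access "$SITES_DIR"
```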
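### .htaccess Problems ###

If some settings stop working after the upgrade, or you get redirect errors, check whether those settings live in a .htaccess file. If Apache 2.4 is not applying the settings in your .htaccess file, that is because the default value of the AllowOverride directive is None in 2.4, so .htaccess files are ignored. All you need to do is change or add an AllowOverride All directive in your site configuration file.

In the screenshot above, you can see the AllowOverride All directive.

### Missing Configuration Files or Modules ###

In my experience, another problem this upgrade causes is that some old modules and configuration files are no longer needed or supported in 2.4. You will get a clear warning that Apache cannot include the corresponding file; what you need to do is remove the offending lines from your configuration. After that, you can search for and install similar modules as replacements.

### Other Minor Changes to Know About ###

There are a few other changes to consider, although they usually produce only warnings rather than errors.

- MaxClients has been renamed to MaxRequestWorkers, which describes more accurately what it does. With asynchronous MPMs such as event, the maximum number of client connections is not the same as the number of worker threads. The old name is still supported.
- The DefaultType directive no longer has any effect; using it with any value other than none produces a warning. Other configuration settings must be used in its place.
- EnableSendfile is now off by default.
- FileETag now defaults to "MTime Size" (without INode).
- KeepAlive only accepts the values "On" or "Off". Previously, any value other than "Off" or "0" was treated as "On".
- The AcceptMutex, LockFile, RewriteLock, SSLMutex, SSLStaplingMutex and WatchdogMutexPath directives have been replaced by a single Mutex directive. You need to review how these replaced directives were used in your 2.2 configuration to decide whether they can simply be deleted or should be replaced with Mutex.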

--------------------------------------------------------------------------------

via: http://linoxide.com/linux-how-to/apache-migration-2-2-to-2-4-ubuntu-14-04/

Author: [Adrian Dinu][a]

Translator: [Vic020/VicYu](http://vicyu.net)

Proofreader: [Caroline](https://github.com/carolinewuyan)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://linoxide.com/author/adriand/
[1]:http://httpd.apache.org/docs/2.4/

@@ -1,17 +1,16 @@

What You May Not Know About the df Command on Linux
================================================================================

> **Question**: I know I can use the df command to check a file system's disk space usage on Linux. Can you show me practical examples of the df command so that I can make the most out of it?

As far as disk storage is concerned, there are many command-line or GUI-based tools that can tell you about current disk space usage. These tools report on detailed disk utilization in various human-readable formats, such as easy-to-understand summary, detailed statistics, or [intuitive visualization][1]. If you simply want to know how much free disk space is available for different file systems, then df command is probably all you need.

The df command can report on disk utilization of any "mounted" file system. There are different ways this command can be invoked. Here are some **useful** df **command examples**.

### Display in Human-Readable Format ###

By default, the df command reports disk space in 1K blocks, which is not easily interpretable. The "-h" parameter will make df print disk space in a more human-readable format (e.g., 100K, 200M, 3G).

$ df -h

@@ -27,9 +26,9 @@ By default, the df command reports disk space in 1K blocks, which is not easily

none 100M 48K 100M 1% /run/user
/dev/sda1 228M 98M 118M 46% /boot

### Display Inode Usage ###

When you monitor disk usage, you must watch out not only for disk space, but also for "inode" usage. In Linux, an inode is a data structure used to store the metadata of a particular file, and when a file system is created, a pre-defined number of inodes is allocated. This means that **a file system can run out of space not only because big files use up all available space, but also because many small files use up all available inodes**. To display inode usage, use the "-i" option.

$ df -i

@@ -45,9 +44,9 @@ When you monitor disk usage, you must watch out for not only disk space, but als

none 1004417 28 1004389 1% /run/user
/dev/sda1 124496 346 124150 1% /boot

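Because a file system can fill up either way, a monitoring check should look at both numbers. Here is a minimal sketch, assuming GNU coreutils df (for `-P` combined with `-i`), that reads the Use% and IUse% columns for the file system holding a given path and warns when either reaches a limit; the function names `usage_pct` and `check_fs` and the 90% default are made up for illustration.

```shell
#!/bin/sh
# Sketch: warn when space OR inode usage of a file system passes a limit.
# Assumes GNU coreutils df; -P keeps each file system on a single line.

# usage_pct PATH [-i] -> prints the Use% (or, with -i, IUse%) value without "%"
usage_pct() {
    df -P ${2:+"$2"} "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# check_fs PATH [LIMIT] -> returns 1 if either percentage is >= LIMIT (default 90)
check_fs() {
    path="$1"
    limit="${2:-90}"
    space="$(usage_pct "$path")"
    inodes="$(usage_pct "$path" -i)"
    echo "$path: space ${space}% used, inodes ${inodes}% used"
    for pct in "$space" "$inodes"; do
        # Some file systems report "-" for inode usage; skip non-numbers
        case "$pct" in
            ''|*[!0-9]*) continue ;;
        esac
        if [ "$pct" -ge "$limit" ]; then
            echo "WARNING: $path has reached ${pct}% (limit ${limit}%)" >&2
            return 1
        fi
    done
    return 0
}

check_fs / || echo "consider freeing space or inodes on /"
```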
### Display Disk Usage Grand Total ###

By default, the df command shows disk utilization of individual file systems. If you want to know the total disk usage over all existing file systems, add the "--total" option (see the "total" summary line at the bottom of the output).

$ df -h --total

@@ -64,9 +63,9 @@ By default, the df command shows disk utilization of individual file systems. If

/dev/sda1 228M 98M 118M 46% /boot
total 918G 565G 307G 65% -

### Display File System Types ###

By default, the df command does not show file system type information. Use the "-T" option to add file system types to the output.

$ df -T

@@ -82,9 +81,9 @@ By default, the df command does not show file system type information. Use "-T"

none tmpfs 102400 48 102352 1% /run/user
/dev/sda1 ext2 233191 100025 120725 46% /boot

### Include or Exclude a Specific File System Type ###

If you want to know the free space of a specific file system type, use the `-t <type>` option. You can use this option multiple times to include more than one file system type.

$ df -t ext2 -t ext4

@@ -94,13 +93,13 @@ If you want to know free space of a specific file system type, use "-t <type>" o

/dev/mapper/ubuntu-root 952893348 591583380 312882756 66% /
/dev/sda1 233191 100025 120725 46% /boot

To exclude a specific file system type, use the `-x <type>` option. You can use this option multiple times as well.

$ df -x tmpfs

### Display Disk Usage of a Specific Mount Point ###

If you specify a mount point with df, it will report disk usage of the file system mounted at that location. If you specify a regular file (or a directory) instead of a mount point, df will display disk utilization of the file system which contains the file (or the directory).

$ df /

@@ -118,9 +117,9 @@ If you specify a mount point with df, it will report disk usage of the file syst

Filesystem 1K-blocks Used Available Use% Mounted on
/dev/mapper/ubuntu-root 952893348 591583528 312882608 66% /

### Display Information about Dummy File Systems ###

If you want to display disk space information for all existing file systems, including dummy file systems, use the "-a" option. Here, dummy file systems refer to pseudo file systems which do not have corresponding physical devices, e.g., proc, cgroup virtual file systems or some FUSE file systems. These dummy file systems have a size of 0, and are not reported by df without the "-a" option.

$ df -a

@@ -150,8 +149,8 @@ If you want to display disk space information for all existing file systems incl

via: http://ask.xmodulo.com/check-disk-space-linux-df-command.html

Translator: [mtunique](https://github.com/mtunique)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

published/20150127 Install Jetty Web Server On CentOS 7.md

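@@ -0,0 +1,78 @@

Installing the Jetty Web Server on CentOS 7
================================================================================

[Jetty][1] is a pure Java HTTP **(Web) server** and Java Servlet container. Jetty is often used for machine-to-machine communication, usually within larger software frameworks, whereas other web servers typically serve documents to "humans" :D. Jetty is a free and open-source project of the Eclipse Foundation. The web server is used in products such as Apache ActiveMQ, Alfresco, Apache Geronimo, Apache Maven, Apache Spark, Google App Engine, Eclipse, FUSE, Twitter's Streaming API and Zimbra.

This article explains how to install Jetty on a CentOS server.

**First, install the JDK (and wget, used in the next step) with the following command:**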

yum -y install java-1.7.0-openjdk wget

**Once the JDK is installed, download the latest version of Jetty:**

wget http://download.eclipse.org/jetty/stable-9/dist/jetty-distribution-9.2.5.v20141112.tar.gz

**Extract the downloaded package into /opt:**

tar zxvf jetty-distribution-9.2.5.v20141112.tar.gz -C /opt/

**Rename the directory to jetty:**

mv /opt/jetty-distribution-9.2.5.v20141112/ /opt/jetty

**Create a jetty user:**

useradd -m jetty

**Change the owner of the jetty directory:**

chown -R jetty:jetty /opt/jetty/

**Create a startup script by symlinking jetty.sh into the /etc/init.d directory:**

ln -s /opt/jetty/bin/jetty.sh /etc/init.d/jetty

**Add the script as a service:**

chkconfig --add jetty

**Make Jetty start at system boot:**

chkconfig --level 345 jetty on

**Open /etc/default/jetty in your favorite text editor and change the port and listen address:**

vi /etc/default/jetty

----------

JETTY_HOME=/opt/jetty
JETTY_USER=jetty
JETTY_PORT=8080
JETTY_HOST=50.116.24.78
JETTY_LOGS=/opt/jetty/logs/

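If you set up several servers, the same settings can be generated rather than typed. This is a minimal sketch with a hypothetical `write_jetty_defaults` function that writes the five variables shown above to any file you name. The host is a parameter, because the address above is the author's own server; 192.0.2.10 in the example is a documentation address, and the other values are copied from this article.

```shell
#!/bin/sh
# Hypothetical helper: write the /etc/default/jetty settings from this article.
# usage: write_jetty_defaults OUTFILE HOST [PORT]
write_jetty_defaults() {
    out="$1"
    host="$2"
    port="${3:-8080}"
    cat > "$out" <<EOF
JETTY_HOME=/opt/jetty
JETTY_USER=jetty
JETTY_PORT=$port
JETTY_HOST=$host
JETTY_LOGS=/opt/jetty/logs/
EOF
}

# Try it on a scratch file first; as root you would target /etc/default/jetty
demo="$(mktemp)"
write_jetty_defaults "$demo" 192.0.2.10
cat "$demo"
```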
**The installation is complete; now we can start the jetty service:**

service jetty start

Done!

You can now access it at **http://\<your IP address>:8080**

That's it.

Cheers!!

--------------------------------------------------------------------------------

via: http://www.unixmen.com/install-jetty-web-server-centos-7/

Author: [Jijo][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://www.unixmen.com/author/jijo/
[1]:http://eclipse.org/jetty/

@@ -1,50 +0,0 @@

Data of 20 Million Users Stolen from Dating Website

----------

*Info includes Gmail, Hotmail and Yahoo emails*

#A database containing details of more than 20 million users of an online dating website has been allegedly stolen by a hacker.

It is unclear at the moment if the information has been dumped into the public domain, but someone using the online alias “Mastermind” claims to have it, according to a post on an undisclosed paste site.

#List contains hundreds of domains from all over the world

The individual claims that the details are 100% valid and Daniel Ingevaldson, Chief Technology Officer at Easy Solutions, said in a blog post on Sunday that the list included email addresses from Hotmail, Yahoo and Gmail.

Easy Solutions is a US-based company that provides security products for detecting and preventing cyber fraud across different computer platforms.

According to Ingevaldson, the list contains over 7 million credentials from Hotmail, 2.5 million from Yahoo, and 2.2 million from Gmail.com.

It is unclear if “credentials” refers to usernames and passwords that can be used to access the email accounts or the account of the dating website. Also, it is unknown whether the database stored the passwords in a secure manner or if they were available in plain text.