mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

commit

827ad24a78

@ -1,142 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

Running MongoDB as a Microservice with Docker and Kubernetes

|

||||

===================

|

||||

|

||||

### Introduction

|

||||

|

||||

Want to try out MongoDB on your laptop? Execute a single command and you have a lightweight, self-contained sandbox; another command removes all traces when you're done.

|

||||

|

||||

Need an identical copy of your application stack in multiple environments? Build your own container image and let your development, test, operations, and support teams launch an identical clone of your environment.

|

||||

|

||||

Containers are revolutionizing the entire software lifecycle: from the earliest technical experiments and proofs of concept through development, test, deployment, and support.

|

||||

|

||||

#### [Read the Enabling Microservices: Containers & Orchestration Explained white paper][6].

|

||||

|

||||

Orchestration tools manage how multiple containers are created, upgraded and made highly available. Orchestration also controls how containers are connected to build sophisticated applications from multiple, microservice containers.

|

||||

|

||||

The rich functionality, simple tools, and powerful APIs make container and orchestration functionality a favorite for DevOps teams who integrate them into Continuous Integration (CI) and Continuous Delivery (CD) workflows.

|

||||

|

||||

This post delves into the extra challenges you face when attempting to run and orchestrate MongoDB in containers and illustrates how these challenges can be overcome.

|

||||

|

||||

### Considerations for MongoDB

|

||||

|

||||

Running MongoDB with containers and orchestration introduces some additional considerations:

|

||||

|

||||

* MongoDB database nodes are stateful. In the event that a container fails, and is rescheduled, it's undesirable for the data to be lost (it could be recovered from other nodes in the replica set, but that takes time). To solve this, features such as the _Volume_ abstraction in Kubernetes can be used to map what would otherwise be an ephemeral MongoDB data directory in the container to a persistent location where the data survives container failure and rescheduling.

|

||||

|

||||

* MongoDB database nodes within a replica set must communicate with each other – including after rescheduling. All of the nodes within a replica set must know the addresses of all of their peers, but when a container is rescheduled, it is likely to be restarted with a different IP address. For example, all containers within a Kubernetes Pod share a single IP address, which changes when the pod is rescheduled. With Kubernetes, this can be handled by associating a Kubernetes Service with each MongoDB node, which uses the Kubernetes DNS service to provide a `hostname` for the service that remains constant through rescheduling.

|

||||

|

||||

* Once each of the individual MongoDB nodes is running (each within its own container), the replica set must be initialized and each node added. This is likely to require some additional logic beyond that offered by off the shelf orchestration tools. Specifically, one MongoDB node within the intended replica set must be used to execute the `rs.initiate` and `rs.add` commands.

|

||||

|

||||

* If the orchestration framework provides automated rescheduling of containers (as Kubernetes does) then this can increase MongoDB's resiliency since a failed replica set member can be automatically recreated, thus restoring full redundancy levels without human intervention.

|

||||

|

||||

* It should be noted that while the orchestration framework might monitor the state of the containers, it is unlikely to monitor the applications running within the containers, or backup their data. That means it's important to use a strong monitoring and backup solution such as [MongoDB Cloud Manager][1], included with [MongoDB Enterprise Advanced][2] and [MongoDB Professional][3]. Consider creating your own image that contains both your preferred version of MongoDB and the [MongoDB Automation Agent][4].

|

||||

|

||||

### Implementing a MongoDB Replica Set using Docker and Kubernetes

|

||||

|

||||

As described in the previous section, distributed databases such as MongoDB require a little extra attention when being deployed with orchestration frameworks such as Kubernetes. This section goes to the next level of detail, showing how this can actually be implemented.

|

||||

|

||||

We start by creating the entire MongoDB replica set in a single Kubernetes cluster (which would normally be within a single data center – that clearly doesn't provide geographic redundancy). In reality, little has to be changed to run across multiple clusters and those steps are described later.

|

||||

|

||||

Each member of the replica set will be run as its own pod with a service exposing an external IP address and port. This 'fixed' IP address is important as both external applications and other replica set members can rely on it remaining constant in the event that a pod is rescheduled.

|

||||

|

||||

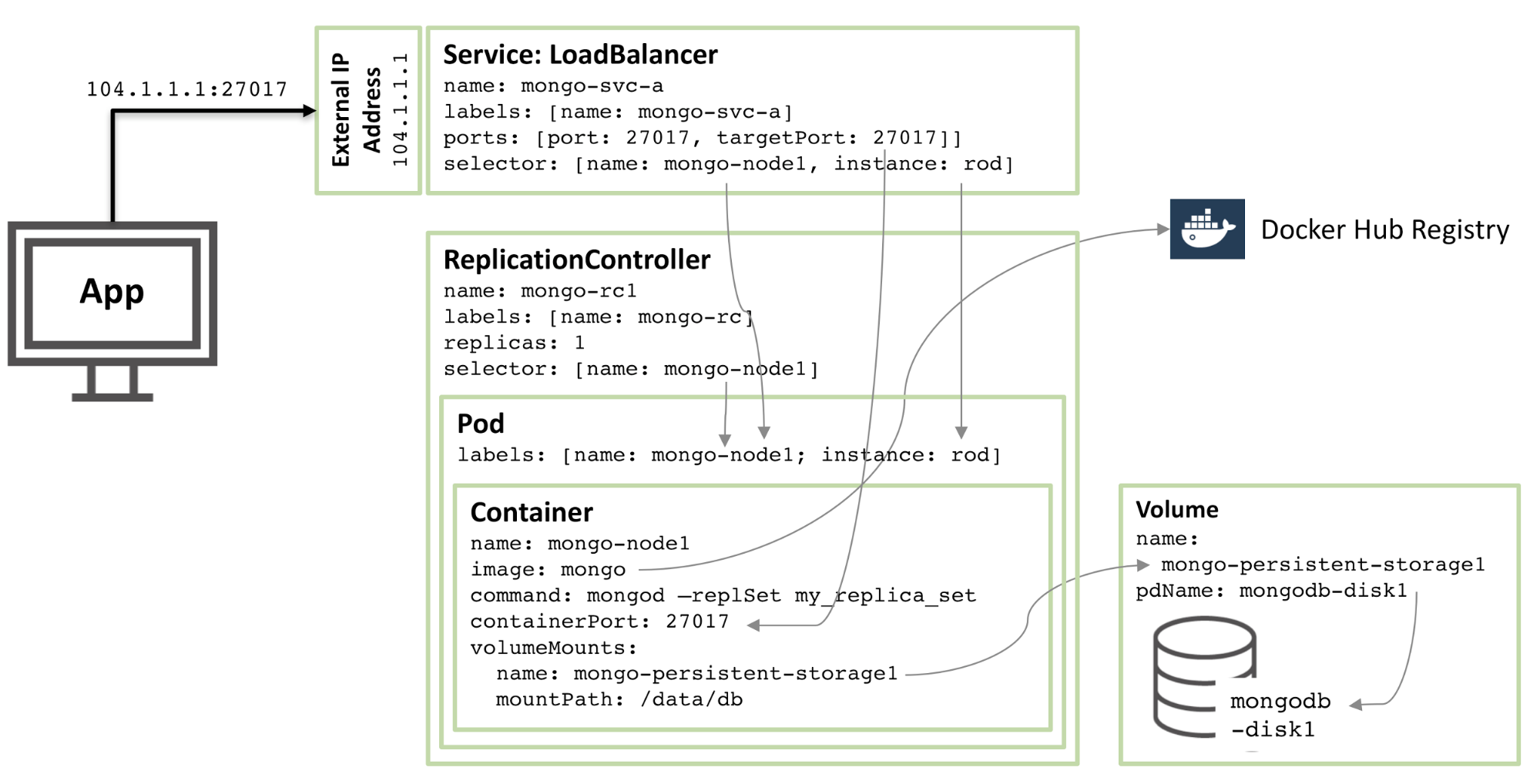

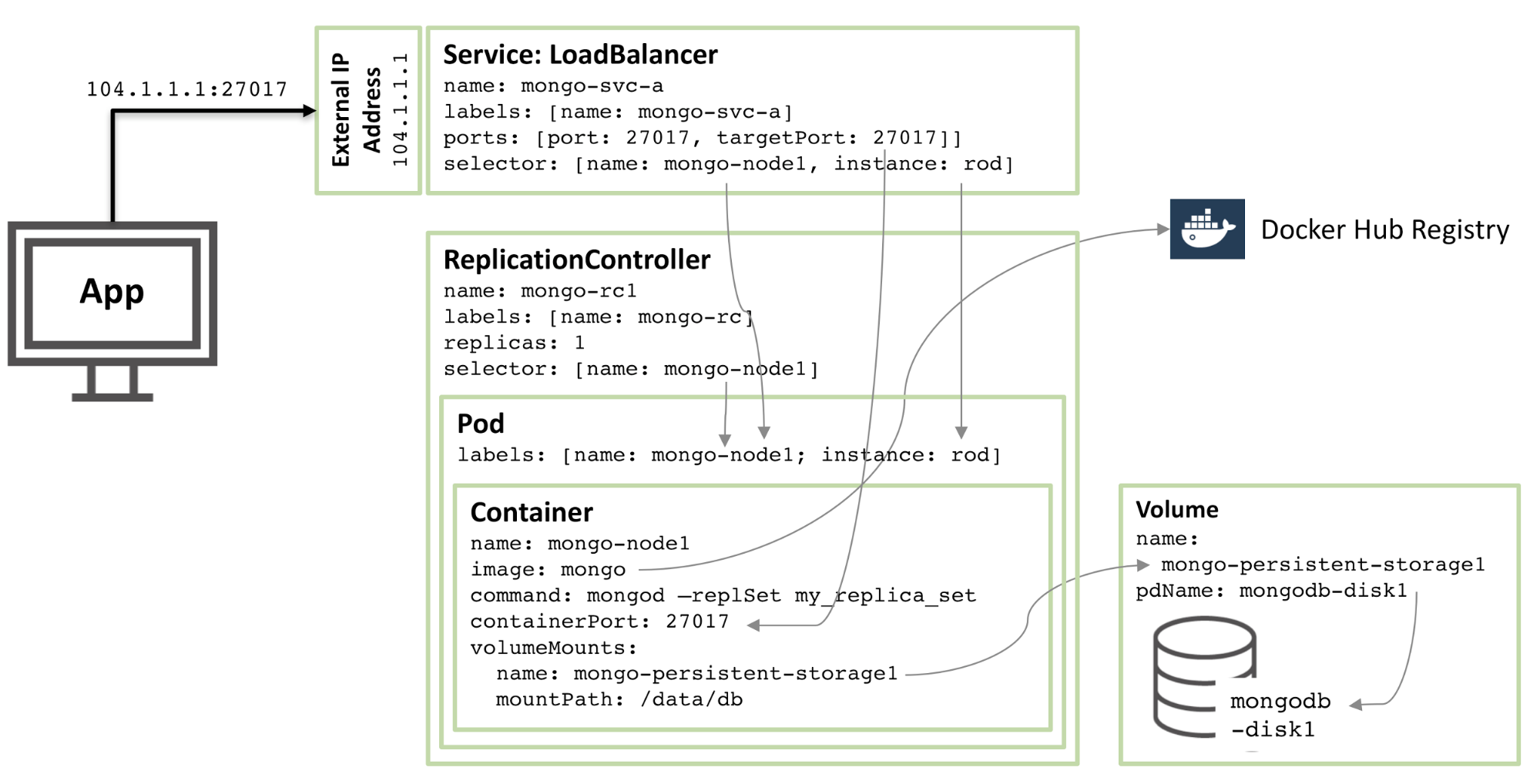

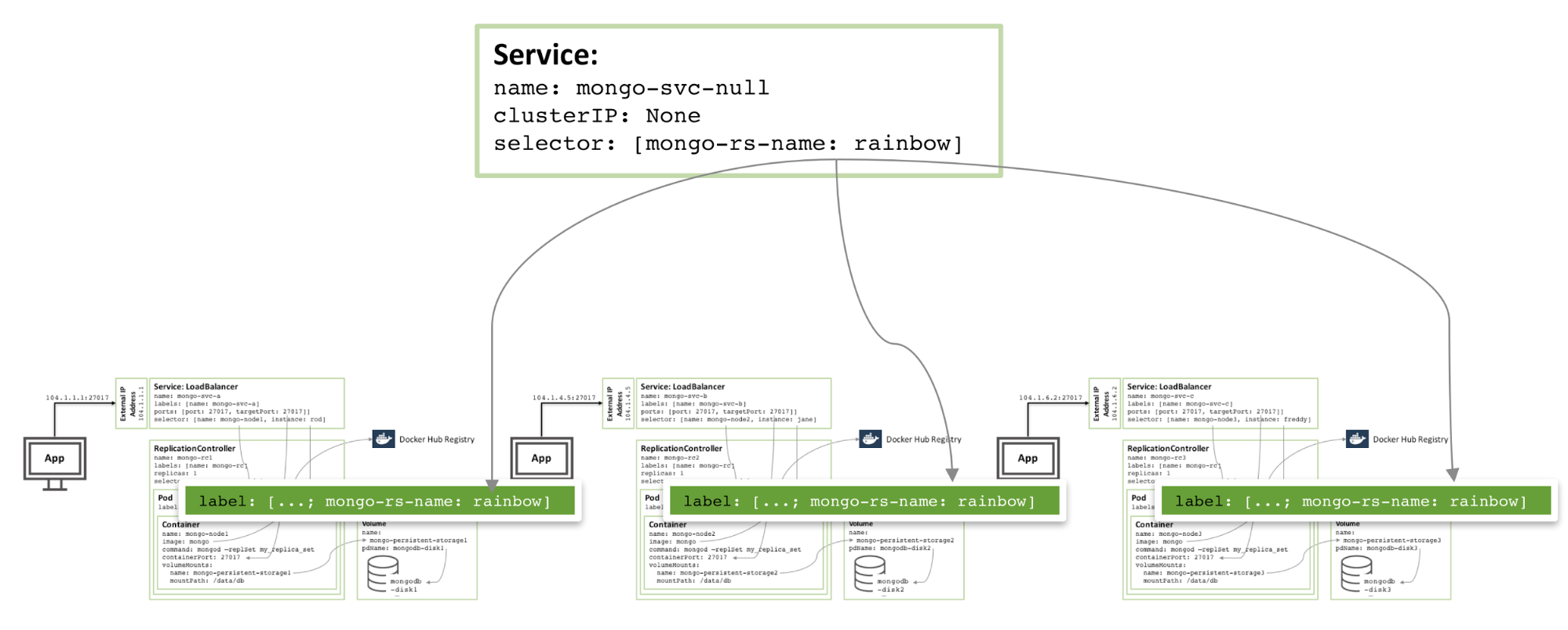

The following diagram illustrates one of these pods and the associated Replication Controller and service.

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

Figure 1: MongoDB Replica Set member configured as a Kubernetes Pod and exposed as a service

|

||||

|

||||

Stepping through the resources described in that configuration we have:

|

||||

|

||||

* Starting at the core there is a single container named `mongo-node1`. `mongo-node1`includes an image called `mongo` which is a publicly available MongoDB container image hosted on [Docker Hub][5]. The container exposes port `27107` within the cluster.

|

||||

|

||||

* The Kubernetes _volumes_ feature is used to map the `/data/db` directory within the connector to the persistent storage element named `mongo-persistent-storage1`; which in turn is mapped to a disk named `mongodb-disk1` created in the Google Cloud. This is where MongoDB would store its data so that it is persisted over container rescheduling.

|

||||

|

||||

* The container is held within a pod which has the labels to name the pod `mongo-node`and provide an (arbitrary) instance name of `rod`.

|

||||

|

||||

* A Replication Controller named `mongo-rc1` is configured to ensure that a single instance of the `mongo-node1` pod is always running.

|

||||

|

||||

* The `LoadBalancer` service named `mongo-svc-a` exposes an IP address to the outside world together with the port of `27017` which is mapped to the same port number in the container. The service identifies the correct pod using a selector that matches the pod's labels. That external IP address and port will be used by both an application and for communication between the replica set members. There are also local IP addresses for each container, but those change when containers are moved or restarted, and so aren't of use for the replica set.

|

||||

|

||||

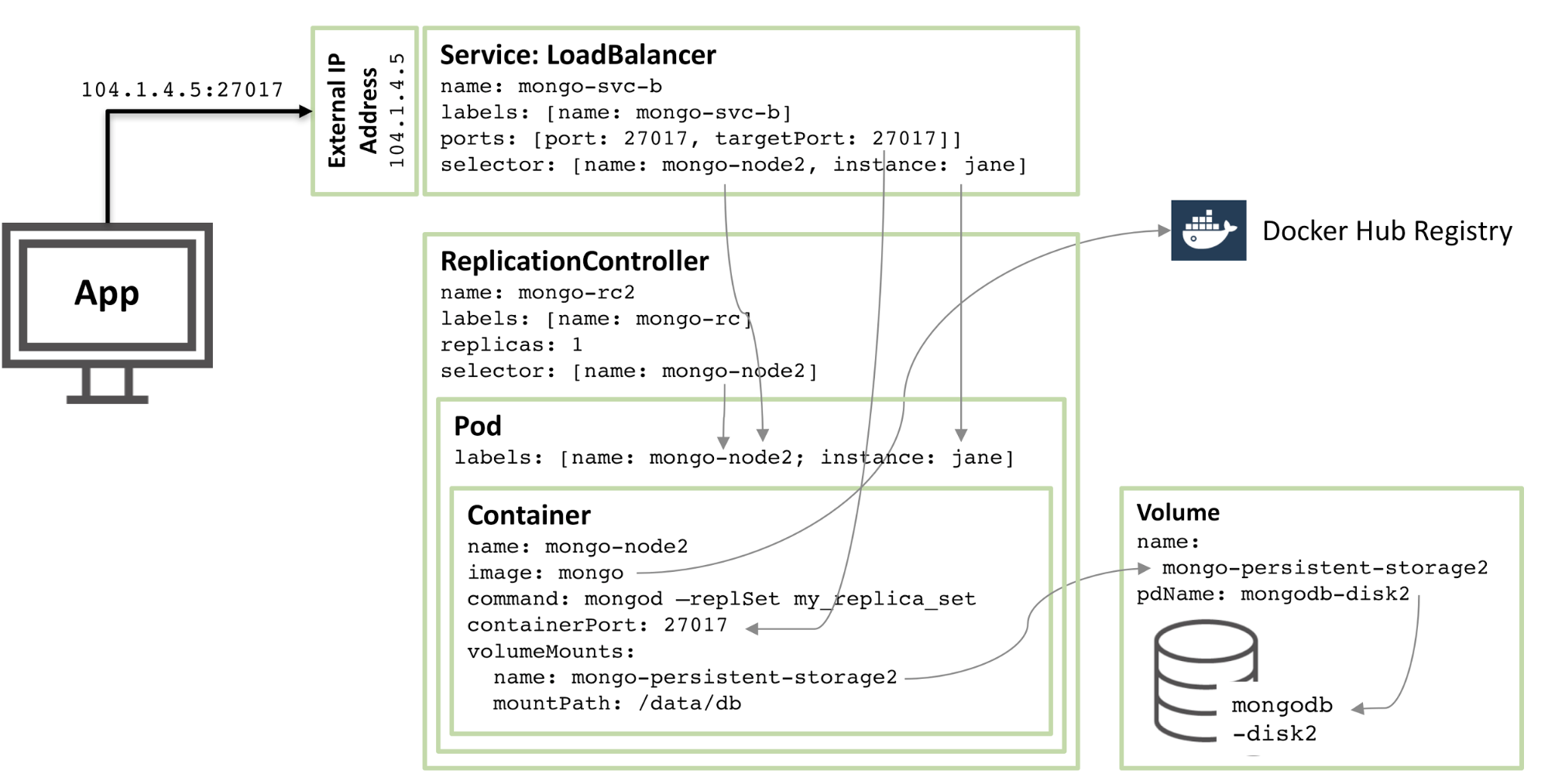

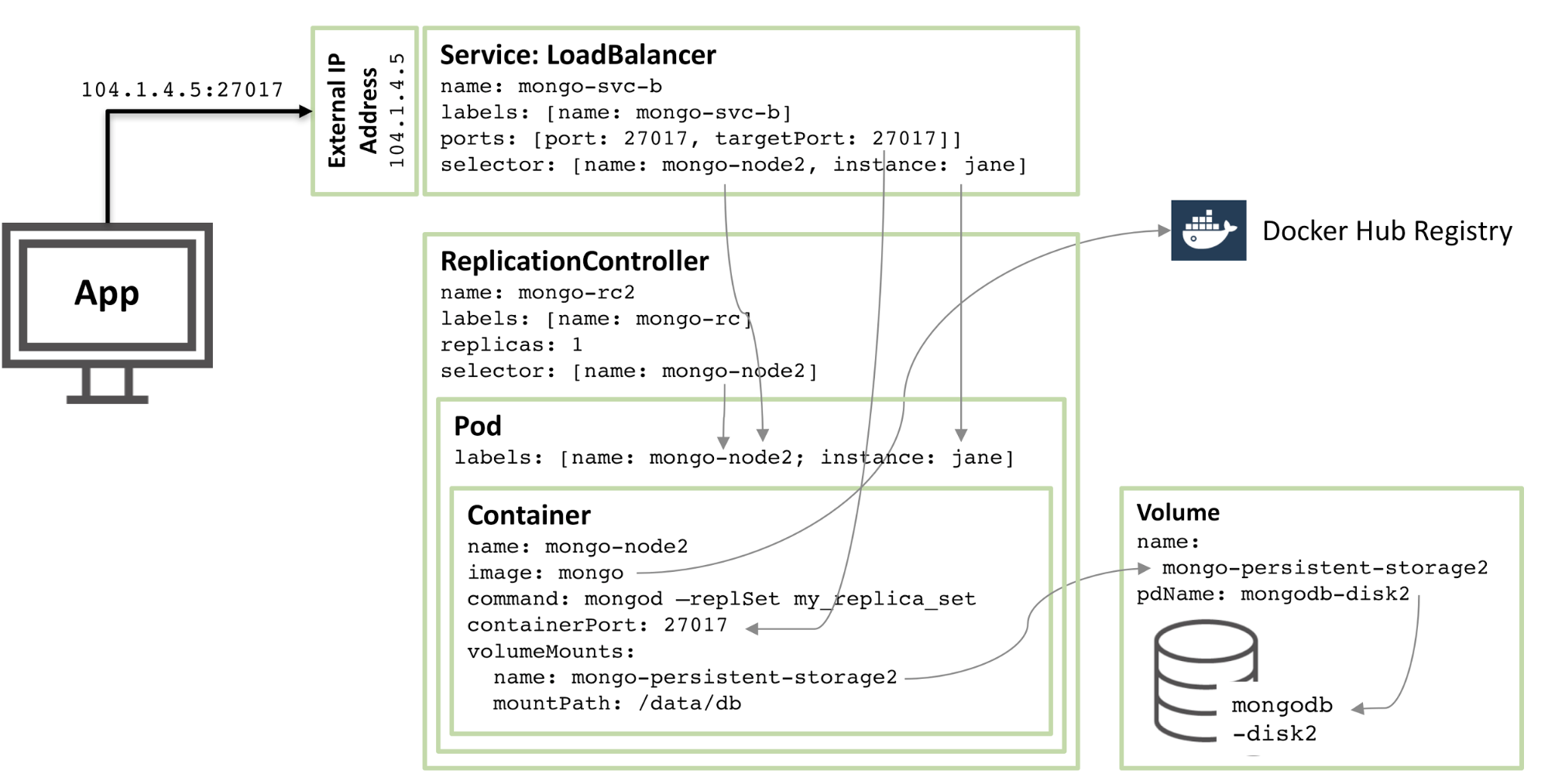

The next diagram shows the configuration for a second member of the replica set.

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

Figure 2: Second MongoDB Replica Set member configured as a Kubernetes Pod

|

||||

|

||||

90% of the configuration is the same, with just these changes:

|

||||

|

||||

* The disk and volume names must be unique and so `mongodb-disk2` and `mongo-persistent-storage2` are used

|

||||

|

||||

* The Pod is assigned a label of `instance: jane` and `name: mongo-node2` so that the new service can distinguish it (using a selector) from the `rod` Pod used in Figure 1.

|

||||

|

||||

* The Replication Controller is named `mongo-rc2`

|

||||

|

||||

* The Service is named `mongo-svc-b` and gets a unique, external IP address (in this instance, Kubernetes has assigned `104.1.4.5`)

|

||||

|

||||

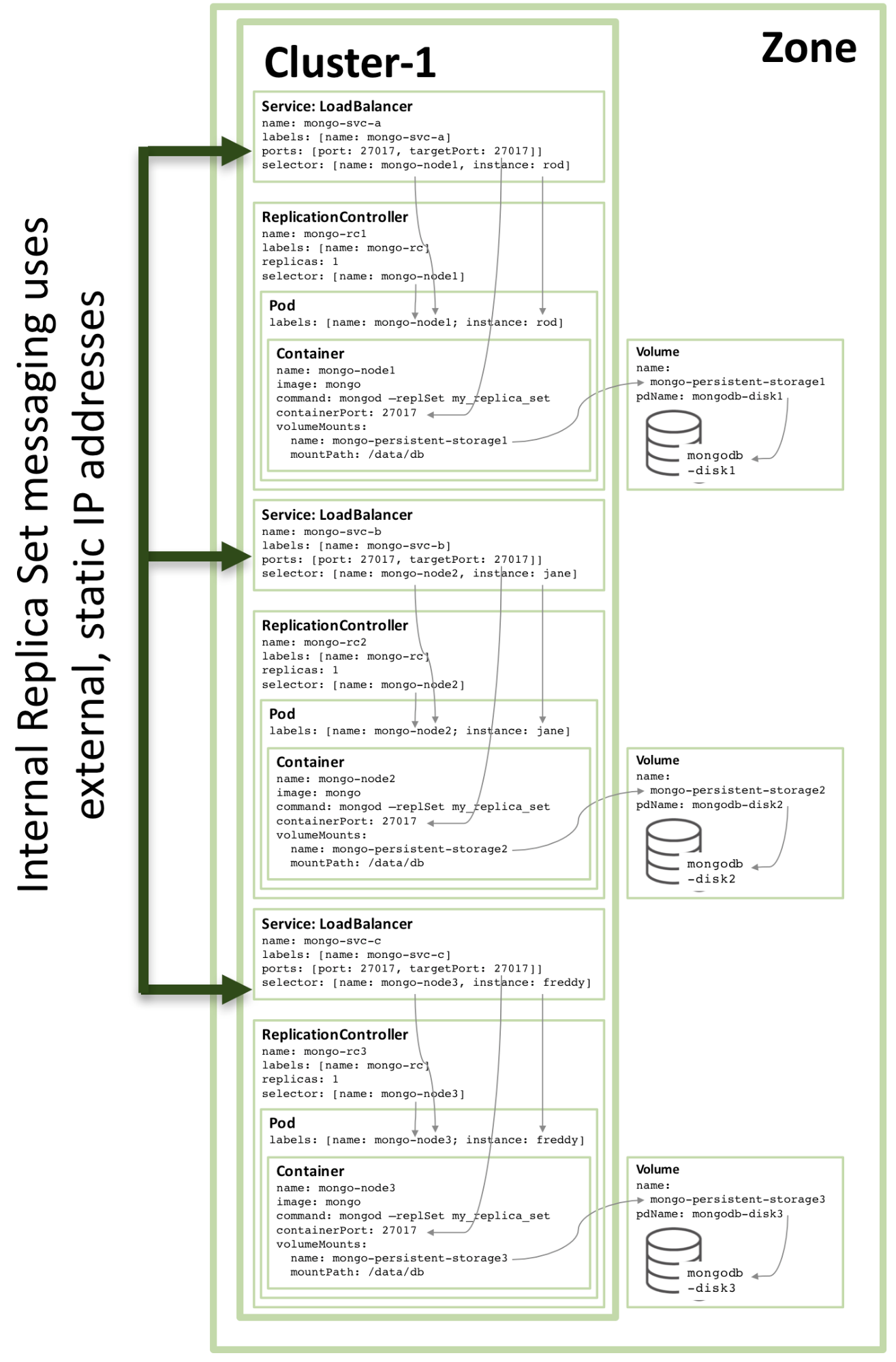

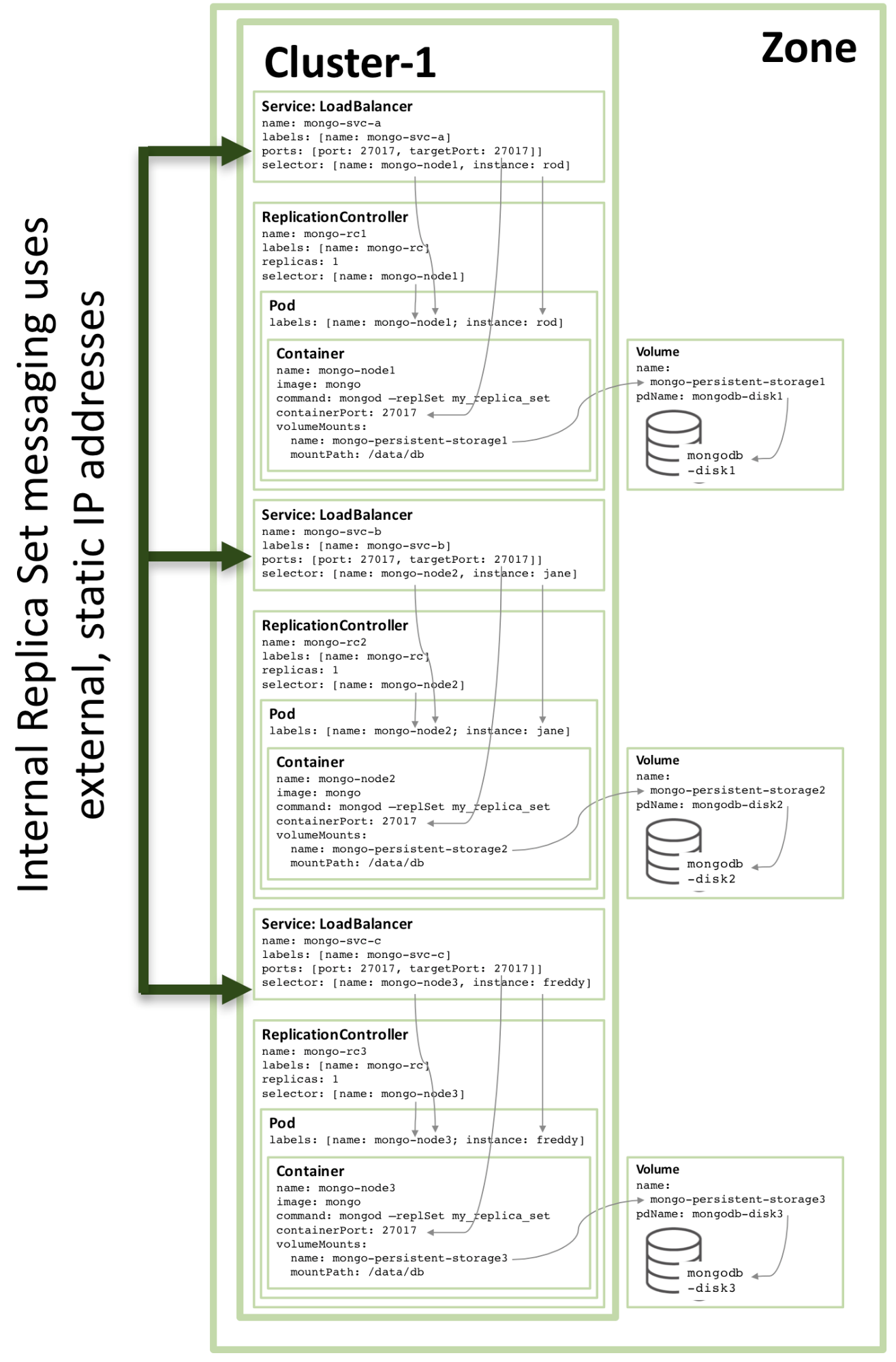

The configuration of the third replica set member follows the same pattern and the following figure shows the complete replica set:

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

Figure 3: Full Replica Set member configured as a Kubernetes Service

|

||||

|

||||

Note that even if running the configuration shown in Figure 3 on a Kubernetes cluster of three or more nodes, Kubernetes may (and often will) schedule two or more MongoDB replica set members on the same host. This is because Kubernetes views the three pods as belonging to three independent services.

|

||||

|

||||

To increase redundancy (within the zone), an additional _headless_ service can be created. The new service provides no capabilities to the outside world (and will not even have an IP address) but it serves to inform Kubernetes that the three MongoDB pods form a service and so Kubernetes will attempt to schedule them on different nodes.

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

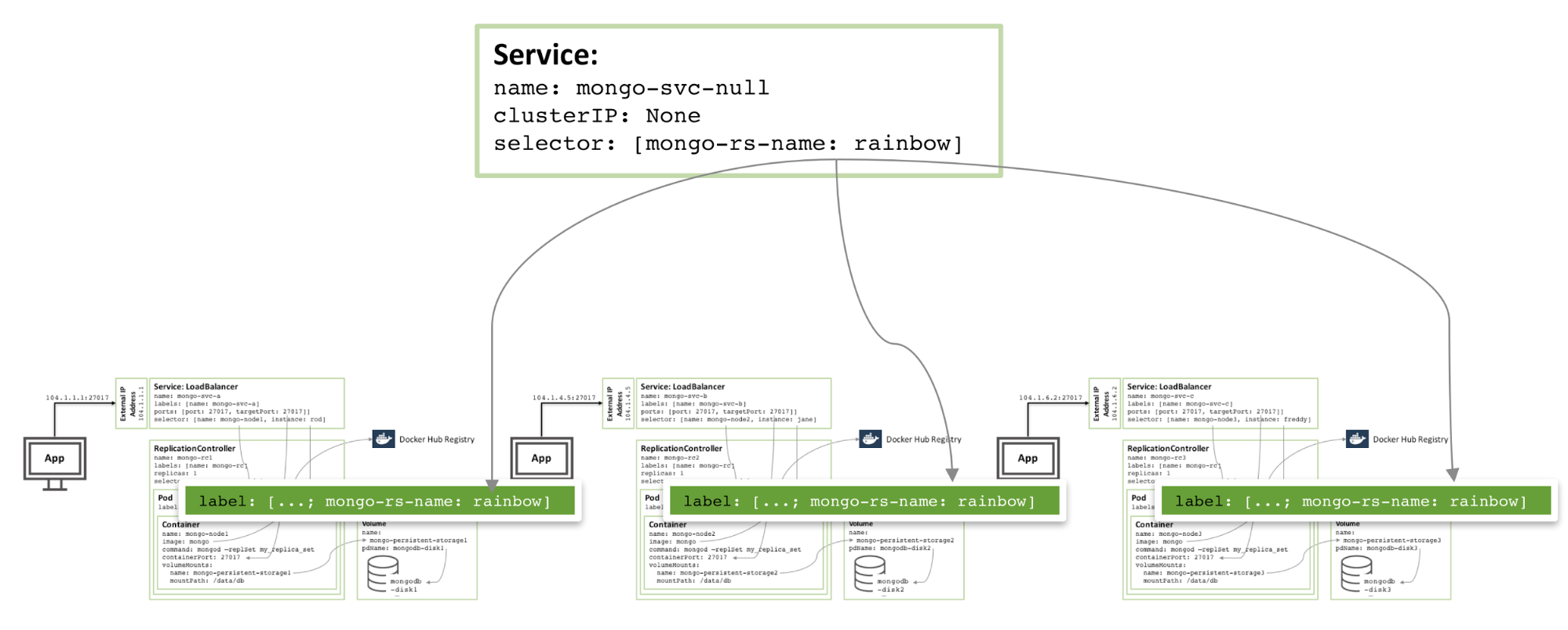

Figure 4: Headless service to avoid co-locating of MongoDB replica set members

|

||||

|

||||

The actual configuration files and the commands needed to orchestrate and start the MongoDB replica set can be found in the [Enabling Microservices: Containers & Orchestration Explained white paper][7]. In particular, there are some special steps required to combine the three MongoDB instances into a functioning, robust replica set which are described in the paper.

|

||||

|

||||

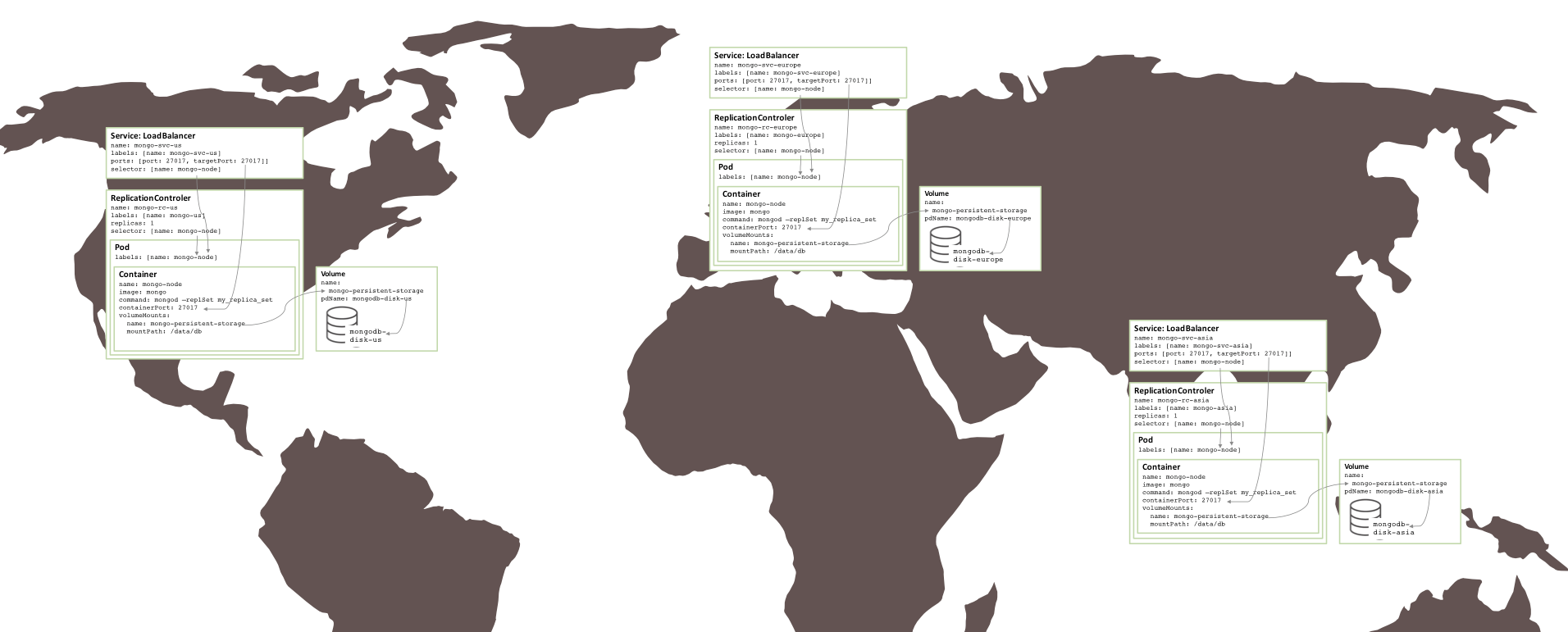

#### Multiple Availability Zone MongoDB Replica Set

|

||||

|

||||

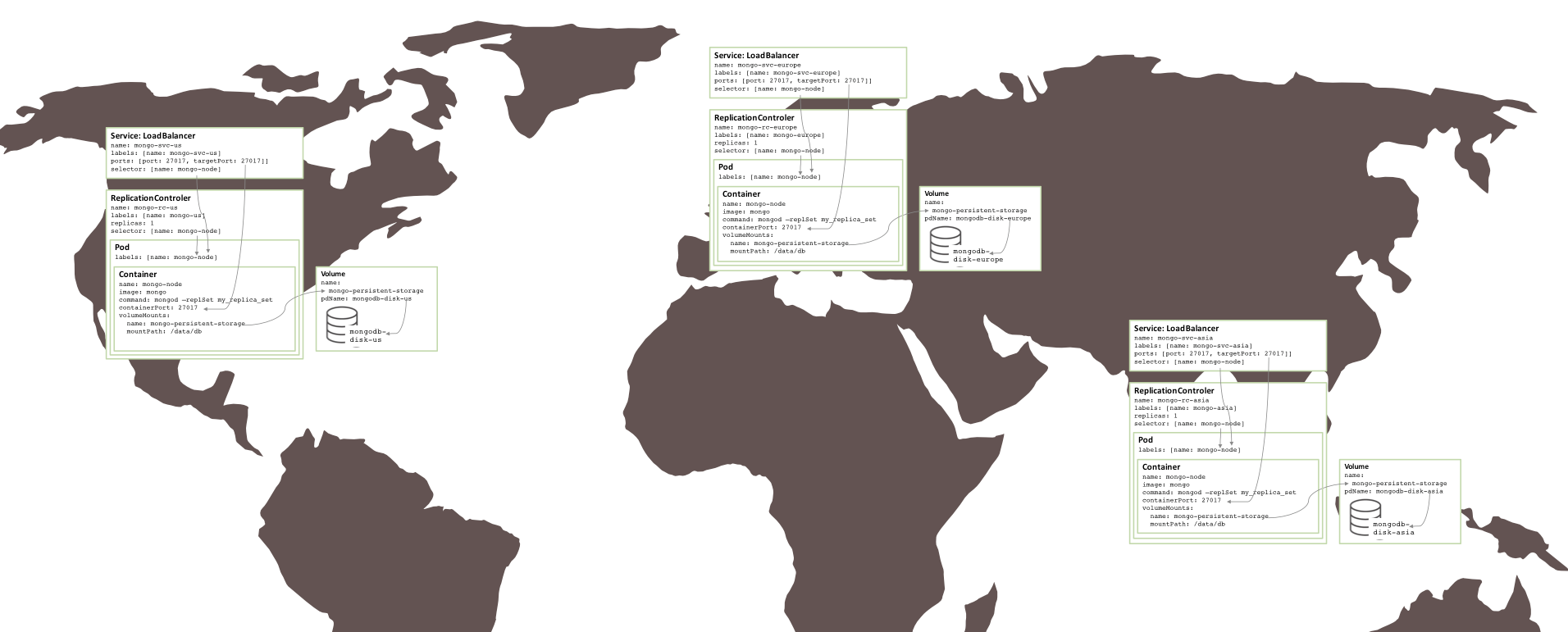

There is risk associated with the replica set created above in that everything is running in the same GCE cluster, and hence in the same availability zone. If there were a major incident that took the availability zone offline, then the MongoDB replica set would be unavailable. If geographic redundancy is required, then the three pods should be run in three different availability zones or regions.

|

||||

|

||||

Surprisingly little needs to change in order to create a similar replica set that is split between three zones – which requires three clusters. Each cluster requires its own Kubernetes YAML file that defines just the pod, Replication Controller and service for one member of the replica set. It is then a simple matter to create a cluster, persistent storage, and MongoDB node for each zone.

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

Figure 5: Replica set running over multiple availability zones

|

||||

|

||||

### Next Steps

|

||||

|

||||

To learn more about containers and orchestration – both the technologies involved and the business benefits they deliver – read the [Enabling Microservices: Containers & Orchestration Explained white paper][8]. The same paper provides the complete instructions to get the replica set described in this post up and running on Docker and Kubernetes in the Google Container Engine.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Andrew is a Principal Product Marketing Manager working for MongoDB. He joined at the start last summer from Oracle where he spent 6+ years in product management, focused on High Availability. He can be contacted @andrewmorgan or through comments on his blog (clusterdb.com).

|

||||

|

||||

-------

|

||||

|

||||

via: https://www.mongodb.com/blog/post/running-mongodb-as-a-microservice-with-docker-and-kubernetes

|

||||

|

||||

作者:[Andrew Morgan ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.clusterdb.com/

|

||||

[1]:https://www.mongodb.com/cloud/

|

||||

[2]:https://www.mongodb.com/products/mongodb-enterprise-advanced

|

||||

[3]:https://www.mongodb.com/products/mongodb-professional

|

||||

[4]:https://docs.cloud.mongodb.com/tutorial/nav/install-automation-agent/

|

||||

[5]:https://hub.docker.com/_/mongo/

|

||||

[6]:https://www.mongodb.com/collateral/microservices-containers-and-orchestration-explained?jmp=inline

|

||||

[7]:https://www.mongodb.com/collateral/microservices-containers-and-orchestration-explained

|

||||

[8]:https://www.mongodb.com/collateral/microservices-containers-and-orchestration-explained

|

||||

@ -0,0 +1,140 @@

|

||||

使用 Docker 和 Kubernetes 将 MongoDB 作为微服务运行

|

||||

===================

|

||||

|

||||

### 介绍

|

||||

|

||||

想在笔记本电脑上尝试 MongoDB?只需执行一个命令,你就会有一个轻量级,独立的沙箱。完成后可以删除你所做的所有痕迹。

|

||||

|

||||

需要在多个环境中有相同的程序栈副本?构建你自己的容器镜像,让你的开发、测试、操作和支持团队有相同的环境克隆。

|

||||

|

||||

容器正在彻底改变整个软件生命周期:从最早的技术实验和概念证明到开发、测试、部署和支持。

|

||||

|

||||

#### [阅读启用微服务:容器和编排解释白皮书][6]。

|

||||

|

||||

编排工具管理如何创建、升级并高可用多个容器。编排还控制容器如何连接,以从多个微服务容器构建复杂的应用程序。

|

||||

|

||||

丰富的功能,简单的工具和强大的 API 使容器和编排功能成为 DevOps 团队的首选,将其集成到连续集成 (CI) 和连续交付 (CD) 的工作流程中。

|

||||

|

||||

这篇文章探讨了在容器中运行和编排 MongoDB 时遇到的额外挑战,并说明了如何克服这些挑战。

|

||||

|

||||

### MongoDB 的注意事项

|

||||

|

||||

使用容器和编排运行 MongoDB 引入了一些额外的注意事项:

|

||||

|

||||

* MongoDB 数据库节点是有状态的。如果容器发生故障并被重新安排,数据则会丢失(可能会从副本集的其他节点恢复,但需要时间),这是不合需要的。为了解决这个问题,可以使用诸如 Kubernetes 中的 _Volume_ 抽象等功能来将容器中临时的 MongoDB 数据目录映射到在容器故障和重新规划过程中存在的持久位置。

|

||||

|

||||

* 一个副本集中的 MongoDB 数据库节点必须相互通信 - 包括重新计划后。副本集中的所有节点必须知道其所有对等体的地址,但是当重新编排容器时,可能会使用不同的 IP 地址重新启动。例如,Kubernetes Pod 中的所有容器共享一个 IP 地址,当重新编排 pod 时,IP 地址会发生变化。使用 Kubernetes,可以通过将 Kubernetes 服务与每个 MongoDB 节点相关联来处理,该节点使用 Kubernetes DNS 服务为通过重新编排保持不变的服务提供“主机名”。

|

||||

|

||||

* 一旦每个单独的 MongoDB 节点正在运行(每个都在自己的容器中),则必须初始化副本集,并添加每个节点。这可能需要一些除了现成的编排工具提供的额外逻辑。具体来说,必须使用目标副本集中的一个 MongoDB 节点来执行 `rs.initiate` 和 `rs.add` 命令。

|

||||

|

||||

* 如果编排框架提供了自动重新编排容器(如 Kubernetes),那么可以增加 MongoDB 的弹性,因为这可以自动重新创建错误的副本组成员,从而在没有人为干预的情况下恢复完全冗余级别。

|

||||

|

||||

* 应该注意的是,虽然编排框架可能监控容器的状态,但是不太可能监视容器内运行的应用程序或备份数据。这意味着使用 [MongoDB Enterprise Advanced][2] 和 [MongoDB Professional][3] 中包含的 [MongoDB Cloud Manager][1] 等强大的监控和备份解决方案非常重要。考虑创建自己的镜像,其中包含你首选的 MongoDB 版本和 [MongoDB Automation Agent][4]。

|

||||

|

||||

### 使用 Docker 和 Kubernetes 实现 MongoDB 副本集

|

||||

|

||||

如上节所述,分布式数据库(如MongoDB)在使用编排框架(如Kubernetes)进行部署时,需要稍加注意。本节将介绍详细介绍如何实现。

|

||||

|

||||

我们首先在单个 Kubernetes 集群中创建整个 MongoDB 副本集(通常在一个数据中心内,这显然不能提供地理冗余)。实际上,很少有必要改变以跨多个集群运行,这些步骤将在后面描述。

|

||||

|

||||

副本集的每个成员将作为自己的 pod 运行,并提供一个公开 IP 地址和端口的服务。这个“固定”的 IP 地址非常重要,因为外部应用程序和其他副本集成员都可以依赖于它在重新编排 pod 的情况下保持不变。

|

||||

|

||||

下图说明了其中一个 pod 以及相关的复制控制器和服务。

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

图 1:MongoDB 副本集成员被配置为 Kubernetes Pod 并作为服务公开

|

||||

|

||||

逐步介绍该配置中描述的资源:

|

||||

|

||||

* 从核心开始,有一个名为 `mongo-node1` 的容器。`mongo-node1` 包含一个名为 `mongo` 的镜像,这是一个在 [Docker Hub][5] 上托管的一个公开可用的 MongoDB 容器镜像。容器在集群中暴露端口 `27107`。

|

||||

|

||||

* Kubernetes _volumes_ 功能用于将连接器中的 `/data/db` 目录映射到名为 `mongo-persistent-storage1` 的永久存储上,这又被映射到到 Google Cloud 中创建的名为 `mongodb-disk1` 的磁盘中。这是 MongoDB 存储其数据的地方,这样它可以在容器重新编排后保留。

|

||||

|

||||

* 容器保存在一个 pod 中,该 pod 中有标签命名为 `mongo-node`,并提供一个(任意)名为 `rod` 的示例名。

|

||||

|

||||

* 名为 `mongo-node1` 的复制控制台被配置为确保 `mongo-node1` pod 的单个实例始终运行。

|

||||

|

||||

* 名为 `mongo-svc-a` 的 `负载均衡` 服务给外部开放了一个 IP 地址还有一个 `27017` 端口,它被映射到容器相同的端口号上。该服务使用选择器来匹配 pod 标签来确定正确的 pod。外部 IP 地址和端口将用于应用程序以及副本集成员之间的通信。每个容器也有本地 IP 地址,但是当容器移动或重新启动时,这些 IP 地址会变化,因此不会用于副本集。

|

||||

|

||||

下一个图显示了副本集的第二个成员的配置。

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

图 2:第二个 MongoDB 副本集成员配置为 Kubernetes Pod

|

||||

|

||||

90% 的配置是一样的,只有这些变化:

|

||||

|

||||

* 磁盘和卷名必须是唯一的,因此使用的是 `mongodb-disk2` 和 `mongo-persistent-storage2`

|

||||

|

||||

* Pod 被分配了一个 `instance: jane` 和 `name: mongo-node2` 的标签,以便新的服务可以使用图 1 所示的 `rod` Pod 来区分它(使用选择器)。

|

||||

|

||||

* 复制控制器命名为 `mongo-rc2`

|

||||

|

||||

* 该服务名为` mongo-svc-b`,并获得了一个唯一的外部 IP 地址(在这种情况下,Kubernetes 分配了 `104.1.4.5`)

|

||||

|

||||

第三个副本成员的配置遵循相同的模式,下图展示了完整的副本集:

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

图 3:配置为 Kubernetes 服务的完整副本集成员

|

||||

|

||||

请注意,即使在三个或更多节点的 Kubernetes 群集上运行图 3 所示的配置,Kubernetes 可能(并且经常会)在同一主机上安排两个或多个 MongoDB 副本集成员。这是因为 Kubernetes 将三个 pod 视为属于三个独立的服务。

|

||||

|

||||

为了增加冗余(在区域内),可以创建一个附加的 _headless_ 服务。新服务不向外界提供任何功能(甚至不会有 IP 地址),但是它可以让 Kubernetes 通知三个 MongoDB pod 形成一个服务,所以 Kubernetes 会尝试在不同的节点上编排它们。

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

图 4:避免同一 MongoDB 副本集成员的 Headless 服务

|

||||

|

||||

配置和启动 MongoDB 副本集所需的实际配置文件和命令可以在[启用微服务:容器和编排解释白皮书][7]中找到。特别的是,需要一些本文中描述的特殊步骤来将三个 MongoDB 实例组合成功能强大的副本集。

|

||||

|

||||

#### 多个可用区域 MongoDB 副本集

|

||||

|

||||

上面创建的副本集有风险,因为所有内容都在相同的 GCE 集群中运行,因此都在相同的可用性区域中。如果有一个重大事件使可用性区域离线,那么 MongoDB 副本集将不可用。如果需要地理冗余,则三个 pod 应该在三个不同的可用区域或地区中运行。

|

||||

|

||||

令人惊奇的是,为了创建在三个区域之间分割的类似的副本集(需要三个集群),几乎不需要改变。每个集群都需要自己的 Kubernetes YAML 文件,该文件仅为该副本集中的一个成员定义了 pod、复制控制器和服务。那么为每个区域创建一个集群,永久存储和 MongoDB 节点是一件很简单的事情。

|

||||

|

||||

<center style="-webkit-font-smoothing: subpixel-antialiased; color: rgb(66, 66, 66); font-family: "Akzidenz Grotesk BQ Light", Helvetica; font-size: 16px; position: relative;">

|

||||

|

||||

</center>

|

||||

|

||||

图 5:在多个可用区域上运行的副本集

|

||||

|

||||

### 下一步

|

||||

|

||||

要了解有关容器和编排的更多信息 - 所涉及的技术和所提供的业务优势 - 请阅读[启用微服务:容器和编排解释白皮书][8]。同样的文件提供了完整的说明,以获取本文中描述的副本集,并在 Google Container Engine 中的 Docker 和 Kubernetes 上运行。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Andrew 是 MongoDB 的产品营销总经理。他在去年夏天离开 Oracle 加入 MongoDB,在 Oracle 他花了 6 年多的时间在产品管理上,专注于高可用性。他可以通过 @andrewmorgan 或者在他的博客(clusterdb.com)评论联系他。

|

||||

|

||||

-------

|

||||

|

||||

via: https://www.mongodb.com/blog/post/running-mongodb-as-a-microservice-with-docker-and-kubernetes

|

||||

|

||||

作者:[Andrew Morgan ][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.clusterdb.com/

|

||||

[1]:https://www.mongodb.com/cloud/

|

||||

[2]:https://www.mongodb.com/products/mongodb-enterprise-advanced

|

||||

[3]:https://www.mongodb.com/products/mongodb-professional

|

||||

[4]:https://docs.cloud.mongodb.com/tutorial/nav/install-automation-agent/

|

||||

[5]:https://hub.docker.com/_/mongo/

|

||||

[6]:https://www.mongodb.com/collateral/microservices-containers-and-orchestration-explained?jmp=inline

|

||||

[7]:https://www.mongodb.com/collateral/microservices-containers-and-orchestration-explained

|

||||

[8]:https://www.mongodb.com/collateral/microservices-containers-and-orchestration-explained

|

||||

Loading…

Reference in New Issue

Block a user