mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

Remove some selected topics

@lujun9972 Some of the selected topics are outdated

parent

c9756c90e4

commit

825f475b5a

@ -1,116 +0,0 @@

18 Cyber-Security Trends Organizations Need to Brace for in 2018
======

### 18 Cyber-Security Trends Organizations Need to Brace for in 2018

Enterprises, end users and governments faced no shortage of security challenges in 2017. Some of those same challenges will continue into 2018, and there will be new problems to solve as well. Ransomware has been a concern for several years and will likely continue to be a big issue in 2018. The new year is also going to bring the formal introduction of the European Union's General Data Protection Regulation (GDPR), which will impact how organizations manage private information. A key trend that emerged in 2017 was an increasing use of artificial intelligence (AI) to help solve cyber-security challenges, and that's a trend that will continue to accelerate in 2018. What else will the new year bring? In this slide show, eWEEK presents 18 security predictions for the year ahead from 18 security experts.

### Africa Emerges as New Area for Threat Actors and Targets

"In 2018, Africa will emerge as a new focus area for cyber-threats--both targeting organizations based there and attacks originating from the continent. With its growth in technology adoption and operations and rising economy, and its increasing number of local resident threat actors, Africa has the largest potential for net-new impactful cyber events." -Steve Stone, IBM X-Force IRIS

### AI vs. AI

"2018 will see a rise in AI-based attacks as cyber-criminals begin using machine learning to spoof human behaviors. The cyber-security industry will need to tune their own AI tools to better combat the new threats. The cat and mouse game of cybercrime and security innovation will rapidly escalate to include AI-enabled tools on both sides." --Caleb Barlow, vice president of Threat Intelligence, IBM Security

### Cyber-Security as a Growth Driver

"CEOs view cyber-security as one of their top risks, but many also see it as an opportunity to innovate and find new ways to generate revenue. In 2018 and beyond, effective cyber-security measures will support companies that are transforming their security, privacy and continuity controls in an effort to grow their businesses." -Greg Bell, KPMG's Global Cyber Security Practice co-leader

### GDPR Means Good Enough Isn't Good Enough

"Too many professionals share a 'good enough' philosophy that they've adopted from their consumer mindset that they can simply upgrade and patch to comply with the latest security and compliance best practices or regulations. In 2018, with the upcoming enforcement of the EU GDPR 'respond fast' rules, organizations will quickly come to terms, and face fines, with why 'good enough' is not 'good' anymore." -Kris Lovejoy, CEO of BluVector

### Consumerization of Cyber-Security

"2018 will mark the debut of the 'consumerization of cyber-security.' This means consumers will be offered a unified, comprehensive suite of security offerings, including, in addition to antivirus and spyware protection, credit and identity abuse monitoring and identity restoration. This is a big step forward compared to what is available in one package today. McAfee Total Protection, which safeguards consumer identities in addition to providing virus and malware protection, is an early, simplified example of this. Consumers want to feel more secure." -Don Dixon, co-founder and managing director, Trident Capital Cybersecurity

### Ransomware Will Continue

"Ransomware will continue to plague organizations with 'old' attacks 'refreshed' and reused. The threat of ransomware will continue into 2018. This year we've seen ransomware wreak havoc across the globe with both WannaCry and NotPetya hitting the headlines. Threats of this type and on this scale will be a common feature of the next 12 months." -Andrew Avanessian, chief operating officer at Avecto

### More Encryption Will Be Needed

"It will become increasingly clear in the industry that HTTPS does not offer the robust security and end-to-end encryption as is commonly believed, and there will be a push to encrypt data before it is sent over HTTPS." -Darren Guccione, CEO and co-founder, Keeper Security

### Denial of Service Will Become Financially Lucrative

"Denial of service will become as financially lucrative as identity theft. Using stolen identities for new account fraud has been the major revenue driver behind breaches. However, in recent years ransomware attacks have caused as much if not more damage, as increased reliance on distributed applications and cloud services results in massive business damage when information, applications or systems are held hostage by attackers." -John Pescatore, SANS' director of emerging security trends

### Goodbye Social Security Number

"2018 is the turning point for the retirement of the Social Security number. At this point, the vast majority of SSNs are compromised, and we can no longer rely on them--nor should we have previously." -Michael Sutton, CISO, Zscaler

### Post-Quantum Cyber-Security Discussion Warms Up the Boardroom

"The uncertainty of cyber-security in a post-quantum world is percolating some circles, but 2018 is the year the discussions gain momentum in the top levels of business. As security experts grapple with preparing for a post-quantum world, top executives will begin to ask what can be done to ensure all of our connected 'things' remain secure." -Malte Pollmann, CEO of Utimaco

### Market Consolidation Is Coming

"There will be accelerated consolidation of cyber niche markets flooded with too many 'me-too' companies offering extremely similar products and services. As an example, authentication, end-point security and threat intelligence now boast a total of more than 25 competitors. Ultimately, only three to six companies in each niche can survive." -Mike Janke, co-founder of DataTribe

### Health Care Will Be a Lucrative Target

"Health records are highly valued on the black market because they are saturated with Personally Identifiable Information (PII). Health care institutions will continue to be a target as they have tighter allocations for security in their IT budgets. Also, medical devices are hard to update and often run on older operating system versions." -Larry Cashdollar, senior engineer, Security Intelligence Response Team, Akamai

### 2018: The Year of Simple Multifactor Authentication for SMBs

"Unfortunately, effective multifactor authentication (MFA) solutions have remained largely out of reach for the average small- and medium-sized business. Though enterprise multifactor technology is quite mature, it often required complex on-premises solutions and expensive hardware tokens that most small businesses couldn't afford or manage. However, the growth of SaaS and smartphones has introduced new multifactor solutions that are inexpensive and easy for small businesses to use. Next year, many SMBs will adopt these new MFA solutions to secure their more privileged accounts and users. 2018 will be the year of MFA for SMBs." -Corey Nachreiner, CTO at WatchGuard Technologies

### Automation Will Improve the IT Skills Gap

"The security skills gap is widening every year, with no signs of slowing down. To combat the skills gap and assist in the growing adoption of advanced analytics, automation will become an even higher priority for CISOs." -Haiyan Song, senior vice president of Security Markets at Splunk

### Industrial Security Gets Overdue Attention

"The high-profile attacks of 2017 acted as a wake-up call, and many plant managers now worry that they could be next. Plant manufacturers themselves will offer enhanced security. Third-party companies going on their own will stay in a niche market. The industrial security manufacturers themselves will drive a cooperation with the security industry to provide security themselves. This is because there is an awareness thing going on and impending government scrutiny. This is different from what happened in the rest of IT/IoT where security vendors just go to market by themselves as a layer on top of IT (i.e.: an antivirus on top of Windows)." -Renaud Deraison, co-founder and CTO, Tenable

### Cryptocurrencies Become the New Playground for Identity Thieves

"The rising value of cryptocurrencies will lead to greater attention from hackers and bad actors. Next year we'll see more fraud, hacks and money laundering take place across the top cryptocurrency marketplaces. This will lead to a greater focus on identity verification and, ultimately, will result in legislation focused on trader identity." -Stephen Maloney, executive vice president of Business Development & Strategy, Acuant

### GDPR Compliance Will Be a Challenge

"In 2018, three quarters of companies or apps will be ruled out of compliance with GDPR and at least one major corporation will be fined to the highest extent in 2018 to set an example for others. Most companies are preparing internally by performing more security assessments and recruiting a mix of security professionals with privacy expertise and lawyers, but with the deadline quickly approaching, it's clear the bulk of businesses are woefully behind and may not be able to avoid these consequences." -Sanjay Beri, founder and CEO, Netskope

### Data Security Solidifies Its Spot in the IT Security Stack

"Many businesses are stuck in the mindset that security of networks, servers and applications is sufficient to protect their data. However, the barrage of breaches in 2017 highlights a clear disconnect between what organizations think is working and what actually works. In 2018, we expect more businesses to implement data security solutions that complement their existing network security deployments." -Jim Varner, CEO of SecurityFirst

### [Eight Cyber-Security Vendors Raise New Funding in November 2017][1]

Though the pace of funding slowed in November, multiple firms raised new venture capital to develop and improve their cyber-security products.

--------------------------------------------------------------------------------

via: http://voip.eweek.com/security/18-cyber-security-trends-organizations-need-to-brace-for-in-2018

Author: [Sean Michael Kerner][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://voip.eweek.com/Authors/sean-michael-kerner

[1]:http://voip.eweek.com/security/eight-cyber-security-vendors-raise-new-funding-in-november-2017

@ -1,255 +0,0 @@

A review of Virtual Labs virtualization solutions for MOOCs – WebLog Pro Olivier Berger
======

### 1 Introduction

This is a memo that tries to capture some of the experience gained in the [FLIRT project][3] on the topic of Virtual Labs for MOOCs (Massive Open Online Courses).

In this memo, we try to draw an overview of some benefits and concerns with existing approaches to using virtualization techniques for running Virtual Labs, as distributions of tools made available for distant learners.

We describe 3 main technical architectures: (1) running Virtual Machine images locally on a virtual machine manager, (2) displaying the remote execution of similar virtual machines on an IaaS cloud, and (3) the potential of connecting to the remote execution of minimized containers on a remote PaaS cloud.

We then elaborate on some perspectives for locally running ports of applications to the WebAssembly virtual machine of the modern Web browsers.

Disclaimer: This memo doesn’t intend to point to extensive literature on the subject, so part of our analysis may be biased by our particular context.

### 2 Context : MOOCs

Many MOOCs (Massive Open Online Courses) include a kind of “virtual laboratory” for learners to experiment with tools, as a way to apply the knowledge, practice, and be more active in the learning process. In quite a few (technical) disciplines, this can consist of using a set of standard applications in a professional domain, which represent typical tools that would be used in real-life scenarii.

Our main perspective will be that of a MOOC editor and of MOOC production teams which want to make “virtual labs” available for MOOC participants.

Such a “virtual lab” would typically contain installations of existing applications, pre-installed and configured, and loaded with scenario data in order to perform a lab.

The main constraint here is that such labs would typically be fabricated with limited software development expertise and funds[1][4]. Thus we consider here only the assembly of existing “normal” applications and discard the option of developing novel “serious games” and simulator applications for such MOOCs.

#### 2.1 The FLIRT project

The [FLIRT project][5] groups a consortium of 19 partners in Industry, SMEs and Academia to work on a collection of MOOCs and SPOCs for professional development in Networks and Telecommunications. Led by Institut Mines Telecom, it benefits from the funding support of the French “Investissements d’avenir” programme.

As part of the FLIRT roadmap, we’re leading an “innovation task” focused on Virtual Labs in the context of the Cloud. This memo was produced as part of this task.

#### 2.2 Some challenges in virtual labs design for distant learning

Virtual Labs used in distance learning contexts require learners to use software applications autonomously, running either on a personal or a professional computer. In general, the technical skills of participants may be diverse. The same goes for the quality (bandwidth, QoS, filtering, limitations such as firewalling) of the hardware and networks they use at home or at work. It’s thus very optimistic to seek a one-size-fits-all strategy.

Most of the time there’s a learning curve in getting familiar with the tools which students will have to use, and each tool is one more challenge for beginners to overcome. These tools may not be suited for beginners, but they will still be selected by the trainers as they’re representative of the professional context being taught.

In theory, this usability challenge should be addressed by devising an adapted pedagogical approach, especially in a context of distance learning, so that learners can practice the labs on their own, without the presence of a tutor or professor. Or some particular prerequisite skills could be required (“please follow System Administration 101 before applying to this course”).

Unfortunately there are many cases where instructors basically just transpose to a distance learning scenario lab resources that had previously been devised for in-person learning. This leaves learners facing many challenges to overcome. The only support resource is often a regular forum on the MOOC’s LMS (Learning Management System).

My intuition[2][6] is that developing ad-hoc simulators for distance education would probably be more efficient and easier to use for learners. But that would require too high an investment from the designers of the courses.

In the context of MOOCs, which are mainly free to participate in, not much investment is possible in devising ad-hoc lab applications, and instructors have to rely on existing applications, tools and scenarii to deliver a cheap enough environment. Furthermore, technical or licensing constraints[3][7] may lead to selecting lab tools which may not be easy to learn, but have the great advantage of being freely redistributable[4][8].

### 3 Virtual Machines for Virtual Labs

The learners who will try unattended learning in such typical virtual labs will face difficulties in making specialized applications run. They must overcome the technical details of downloading, installing and configuring programs, before even trying to perform a particular pedagogical scenario linked to the matter studied.

To diminish these difficulties, one traditional approach for implementing labs in MOOCs has been to assemble a Virtual Machine image in advance. This ready-made image can then be downloaded and run with a virtual machine simulator (like [VirtualBox][9][5][10]).

The pre-loaded VM will already have everything ready for use, so that the learners don’t have to install anything on their machines.

An alternative is to let learners download and install the needed software tools themselves, but this leads to so many compatibility issues or technical skill prerequisites, that this is often not advised, and mentioned only as a fallback option.

#### 3.1 Downloading and installation issues

Experience shows[2][11] that such virtual machines also bring some issues. Even if installation of every piece of software is no longer required, learners still need to be able to run the VM simulator on a wide range of diverse hardware, OSes and configurations. Even once learners manage to download the VMs, many issues remain (lack of admin privileges, image size vs. download speed, memory or CPU load, disk space, screen configurations, firewall filtering, keyboard layout, etc.).

These problems aren’t generally faced by the majority of learners, but the impacted minority is not marginal either, and they generally will produce a lot of support requests for the MOOC team (usually in the forums), which needs to be anticipated by the community managers.

The use of VMs is no show stopper for most, but can be a serious problem for a minority of learners, and is then no silver bullet.

Some general usability issues may also emerge if users aren’t used to the look and feel of the enclosed desktop. For instance, the VM may consist of a GNU/Linux desktop, whereas users would use a Windows or Mac OS system.

#### 3.2 Fabrication issues for the VM images

On the MOOC team’s side, the fabrication of a lightweight, fast, tested, license-free and easy to use VM image isn’t necessarily easy.

Software configurations tend to rot as time passes, and maintenance may not be easy when later editions of the MOOC require the virtual lab scenarii to be maintained years later.

Ideally, this would require adopting an “industrial” process in building (and testing) the lab VMs, but this requires considerable expertise (system administration, packaging, etc.) that may or may not have been anticipated at the time of building the MOOC (unlike video editing competence, for instance).

Our experiment with the [Vagrant][12] technology [[0][13]] and Debian packaging was interesting in this respect, as it allowed us to use a well managed “script” to precisely control the build of a minimal VM image.

### 4 Virtual Labs as a Service

To overcome the difficulties in downloading and running Virtual Machines on one’s local computer, we have started exploring the possibility to run these applications in a kind of Software as a Service (SaaS) context, “on the cloud”.

But not all applications typically used in MOOC labs are already available for remote execution on the cloud (unless the course deals precisely with managing email in GMail).

We have then studied the option to use such an approach not for a single application, but for a whole virtual “desktop” which would be available on the cloud.

#### 4.1 IaaS deployments

One way to achieve this goal is to deploy Virtual Machine images quite similar to the ones described above, on the cloud, in an Infrastructure as a Service (IaaS) context[6][14], to offer access to remote desktops for every learner.

There are different technical options to achieve this goal, but a simplified description of the architecture can be seen as just running Virtual Machines on a single IaaS platform instead of on each learner’s computer. Access to the desktop and application interfaces is made possible with the use of Web pages (or other dedicated lightweight clients) which will display a “full screen” display of the remote desktop running for the user on the cloud VM. Under the hood, the remote display of a Linux desktop session is made with technologies like [VNC][15] and [RDP][16] connecting to a [Guacamole][17] server on the remote VM.

In the context of the FLIRT project, we have made early experiments with such an architecture. We used the CloVER solution by our partner [ProCAN][18] which provides a virtual desktops broker between [OpenEdX][19] and an [OpenStack][20] IaaS public platform.

The expected benefit is that users don’t have to install anything locally, as the only tool needed locally is a Web browser (showing a full-screen [HTML5 canvas][21] that displays the remote desktop run by the Guacamole server on the cloud VM).

But there are still some issues with such an approach. First, the cost of operating such an infrastructure: Virtual Machines need to be hosted on an IaaS platform, and that cost of operation isn’t zero[7][22] for the MOOC editor, compared to the cost of VirtualBox and a VM running on the learner’s side (basically zero for the MOOC editor).

Another issue, which could be more problematic, lies in the need for a reliable connection to the Internet during the whole lab session[8][23]. Even if Guacamole is quite efficient at compressing rendering traffic, some basic connectivity is needed throughout the Lab work sessions, preventing some mobile uses for instance.

One other potential annoyance is the delay in making a VM available to a learner (provisioning a VM), when huge VM images need to be copied inside the IaaS platform the first time a learner connects to the Virtual Lab activity (delays of several minutes). This may be worse if the VM image is too big (hence the need for optimization of the content[9][24]).
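
As a rough illustration of why image size matters here, the copy time can be approximated as the image size divided by the effective throughput inside the IaaS platform. The Python sketch below assumes that simple model; the function name and the figures (a 10 GB image, a trimmed 2 GB image, 50 MB/s) are hypothetical and are not measurements from FLIRT.

```python
def provisioning_delay_seconds(image_size_gb: float, throughput_mb_per_s: float) -> float:
    """Estimate the time needed to copy a VM image before a lab can start.

    Assumes a deliberately simple model: delay = size / throughput,
    with 1 GB counted as 1000 MB.
    """
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    return image_size_gb * 1000 / throughput_mb_per_s


# Hypothetical figures: a 10 GB desktop image vs. a trimmed 2 GB image at 50 MB/s.
full_image = provisioning_delay_seconds(10, 50)
trimmed_image = provisioning_delay_seconds(2, 50)
print(f"full: {full_image / 60:.1f} min, trimmed: {trimmed_image / 60:.1f} min")
```

Under these assumed figures, trimming the image moves the wait from minutes to well under a minute, which is the kind of gain the content optimization is after.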

However, the fact that all VMs are running on a platform under the control of the MOOC editor allows new kinds of features for the MOOC. For instance, learners can submit results of their labs directly to the LMS without the need to upload or copy-paste results manually. This can help monitor progress or perform evaluation or grading.

The fact that their VMs run on the same platform also allows new kinds of pedagogical scenarii, as VMs of multiple learners can be interconnected, allowing cooperative activities between learners. The VM images may then need to be instrumented and deployed in particular configurations, which may require the use of a dedicated broker like CloVER to manage such scenarii.

For the record, we have yet to perform a rigorous benchmarking of such a solution in order to evaluate its benefits or constraints in particular contexts. In FLIRT, our main focus will be on SPOCs for professional training (a somewhat different context from public MOOCs).

Still, this approach doesn’t solve the VM fabrication issues for the MOOC staff. Installing software inside a VM, be it locally inside a VirtualBox simulator or over the cloud through a remote desktop display, makes little difference. This relies mainly on manual operations and may not be well managed in terms of quality of the process (reproducibility, optimization).

#### 4.2 PaaS deployments using containers

Some key issues in the IaaS context described above, are the cost of operation of running full VMs, and long provisioning delays.

We’re experimenting with new options to address these issues, through the use of [Linux containers][25] running on a PaaS (Platform as a Service) platform, instead of full-fledged Virtual Machines[10][26].

The main difference, with containers instead of Virtual Machines, lies in the reduced size of images and much lower CPU load requirements, as containers remove the need for one layer of virtualization. Also, the deduplication techniques at the heart of some virtual file-systems used by container platforms lead to really fast provisioning, avoiding the need to wait for the labs to start.

The traditional making of VMs, done by installing packages and taking a snapshot, was affordable for the regular teacher, but involved manual operations. In this respect, one other major benefit of containers is the potential for better industrialization of the virtual lab fabrication, as they are generally not assembled manually. Instead, one uses a “scripting” approach in describing which applications and their dependencies need to be put inside a container image. But this requires new competence from the Lab creators, like learning the [Docker][27] technology (and the [OpenShift][28] PaaS, for instance), which may be quite specialized. Whereas Docker containers tend to become quite popular in Software Development faculty (through the “[devops][29]” hype), they may be a bit new to other field instructors.
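
To make the “scripting” approach more concrete, here is a minimal Python sketch that renders a Dockerfile from a declarative list of packages. It is only an illustration under stated assumptions: the helper `render_dockerfile`, the `debian:stable-slim` base image and the `sqlite3` package are choices made for this example, not the build process used in FLIRT.

```python
from typing import Sequence


def render_dockerfile(base_image: str, packages: Sequence[str], command: Sequence[str]) -> str:
    """Render a Dockerfile that installs Debian packages on top of a base image."""
    if not packages:
        raise ValueError("at least one package is required")
    # Sorting keeps the generated file stable from one build to the next.
    install = " ".join(sorted(packages))
    cmd = ", ".join(f'"{part}"' for part in command)
    lines = [
        f"FROM {base_image}",
        "RUN apt-get update \\",
        f"    && apt-get install -y --no-install-recommends {install} \\",
        "    && rm -rf /var/lib/apt/lists/*",
        f"CMD [{cmd}]",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical lab: a SQLite command-line environment.
dockerfile = render_dockerfile("debian:stable-slim", ["sqlite3"], ["sqlite3"])
print(dockerfile)
```

Because the image contents are described as data, the same description can be rebuilt and reviewed for each new edition of a course instead of being re-assembled by hand.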

The learning curve to mastering the automation of the whole container-based labs installation needs to be evaluated. There’s a trade-off to consider in adopting technology like Vagrant or Docker: acquiring container/PaaS expertise vs quality of industrialization and optimization. The production of a MOOC should then require careful planning if one has to hire or contract with a PaaS expert for setting up the Virtual Labs.

We may also expect interesting pedagogical benefits. As containers are lightweight, and platforms make it “easy” to deploy multiple interlinked containers (over dedicated virtual networks), this enables the setup of more realistic scenarii, where each learner may be provided with multiple “nodes” over virtual networks (all running their individual containers). This would be particularly interesting for Computer Networks or Security teaching, where each learner may have access to both client and server nodes to study client-server protocols, for instance. This is particularly relevant for us in the context of our FLIRT project, where we produce a collection of Computer Networks courses.
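
A multi-node lab of this kind can be pictured as a small data structure: one container per role, all attached to a network private to the learner. The Python sketch below builds such a description, loosely modeled on the layout of a Compose file; the function `build_lab_topology` and the image names are hypothetical, and a real PaaS would need its own deployment format.

```python
from typing import Dict, List


def build_lab_topology(learner_id: str, node_roles: List[str]) -> Dict[str, dict]:
    """Describe one learner's lab: one container per role on a private network."""
    if len(set(node_roles)) != len(node_roles):
        raise ValueError("node roles must be unique")
    network = f"lab-{learner_id}"
    services = {
        role: {
            "image": f"example/netlab-{role}",  # hypothetical image name
            "hostname": role,
            "networks": [network],
        }
        for role in node_roles
    }
    # "internal" marks the network as isolated from the outside world.
    return {"services": services, "networks": {network: {"internal": True}}}


topology = build_lab_topology("alice", ["client", "server"])
for name, spec in topology["services"].items():
    print(name, "->", spec["networks"])
```

Each learner gets the same roles on a separate network, so a client-server exercise can run without learners interfering with each other.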

Still, this mode of operation relies on a good connectivity of the learners to the Cloud. In contexts of distance learning in poorly connected contexts, the PaaS architecture doesn’t solve that particular issue compared to the previous IaaS architecture.

### 5 Future server-less Virtual Labs with WebAssembly

As we have seen, the IaaS or PaaS based Virtual Labs running on the Cloud offer alternatives to installing local virtual machines on the learner’s computer. But they both require learners to be connected for the whole duration of the Lab, as the applications are executed on remote servers, on the Cloud (either inside VMs or containers).

We have been thinking of another alternative which could allow the deployment of some Virtual Labs on the local computers of the learners without the hassles of downloading and installing a Virtual Machine manager and VM image. We envision the possibility to use the infrastructure provided by modern Web browsers to allow running the lab’s applications.

At the time of writing, this architecture is still highly experimental. The main idea is to rebuild the applications needed for the Lab so that they can be run in the “generic” virtual machine present in the modern browsers, the [WebAssembly][30] and Javascript execution engine.

WebAssembly is a modern language which aims for maximum portability and, as its name hints, is a kind of assembly language for the Web platform. What is of interest to us is that WebAssembly runs on most modern Web browsers, making it a very interesting target platform.

Emerging toolchains allow recompiling applications written in languages like C or C++ so that they can be run on the WebAssembly virtual machine in the browser. This is interesting as it doesn’t require modifying the source code of these programs. Of course, there are limitations, in the kind of underlying APIs and libraries compatible with that platform, and on the sandboxing of the WebAssembly execution engine enforced by the Web browser.

Historically, WebAssembly has been developed so as to allow running games written in C++ for a framework like Unity, in the Web browser.

In some contexts, for instance for tools with an interactive GUI, and processing data retrieved from files, and which don’t need very specific interaction with the underlying operating system, it seems possible to port these programs to WebAssembly for running them inside the Web browser.

We have to experiment further with this technology to validate its potential for running Virtual Labs in the context of a Web browser.

We used a similar approach in the past in porting a Relational Database course lab to the Web browser, for standalone execution. A real database would run in the minimal SQLite RDBMS, recompiled to JavaScript[11][31]. Instead of having to download, install and run a VM with a RDBMS, the students would only connect to a Web page, which would load the DBMS in memory, and allow performing the lab SQL queries locally, disconnected from any third party server.
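
The principle of a database lab that runs entirely on the learner’s side can be illustrated with an in-memory SQLite database. The sketch below uses Python’s standard `sqlite3` module as a stand-in for the JavaScript build of SQLite mentioned above; the tables and rows are made up for the example.

```python
import sqlite3

# An in-memory database: nothing is installed and no server is contacted.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE course (id INTEGER PRIMARY KEY, title TEXT NOT NULL);
    CREATE TABLE enrolment (learner TEXT NOT NULL, course_id INTEGER REFERENCES course(id));
    INSERT INTO course VALUES (1, 'Relational Databases'), (2, 'Computer Networks');
    INSERT INTO enrolment VALUES ('alice', 1), ('bob', 1), ('carol', 2);
    """
)

# A typical lab query: number of learners per course.
rows = conn.execute(
    """
    SELECT c.title, COUNT(e.learner)
    FROM course AS c JOIN enrolment AS e ON e.course_id = c.id
    GROUP BY c.id
    ORDER BY c.id
    """
).fetchall()
print(rows)
conn.close()
```

The whole lab state lives in the memory of the process, just as the browser-based version keeps the database in the memory of the Web page.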

In a similar manner, we can think, for instance, of a Lab scenario where the Internet packet inspector features of the Wireshark tool would run inside the WebAssembly virtual machine, to allow dissecting provided capture files directly in the Web browser, without having to install Wireshark.
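
To give an idea of what dissecting a provided capture file involves at the lowest level, here is a minimal Python sketch that walks the records of a classic pcap capture held in memory. It is not Wireshark code and does not decode any protocol; the function name is hypothetical, and only the classic pcap layout (a 24-byte global header followed by 16-byte record headers) is assumed.

```python
import struct
from typing import List, Tuple

PCAP_MAGIC = 0xA1B2C3D4  # classic pcap with microsecond timestamps


def read_pcap_records(data: bytes) -> List[Tuple[int, bytes]]:
    """Return (timestamp_seconds, raw_packet_bytes) for each record in a classic pcap capture."""
    # The magic number tells us which byte order the writer used.
    if struct.unpack_from("<I", data, 0)[0] == PCAP_MAGIC:
        endian = "<"
    elif struct.unpack_from(">I", data, 0)[0] == PCAP_MAGIC:
        endian = ">"
    else:
        raise ValueError("not a classic pcap file")
    offset = 24  # size of the global header
    records = []
    while offset + 16 <= len(data):
        ts_sec, _ts_usec, incl_len, _orig_len = struct.unpack_from(endian + "IIII", data, offset)
        offset += 16
        records.append((ts_sec, data[offset:offset + incl_len]))
        offset += incl_len
    return records


# Build a tiny one-packet capture in memory instead of reading a real file.
header = struct.pack("<IHHiIII", PCAP_MAGIC, 2, 4, 0, 0, 65535, 1)
packet = b"\x00" * 14  # placeholder bytes standing in for an Ethernet header
record = struct.pack("<IIII", 1500000000, 0, len(packet), len(packet)) + packet
print(read_pcap_records(header + record))
```

Everything here operates on bytes already loaded in memory, which is the kind of file-in, results-out processing that fits the browser sandbox described above.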

We expect to publish a report on that last experiment in the future with more details and results.

### 6 Conclusion

The most promising architecture for Virtual Lab deployments seems to be the use of containers on a PaaS platform for deploying virtual desktops or virtual application GUIs available in the Web browser.

|

||||

|

||||

This would allow the controlled fabrication of Virtual Labs containing the exact bits needed for learners to practice while minimizing the delays.

|

||||

|

||||

Still, the need for always-on connectivity can be a problem.

|

||||

|

||||

Also, inter-networked containers supporting the kind of multi-node and collaborative scenarios we described would require a lot of expertise to develop, as well as management platforms for the MOOC operators, which aren’t yet mature.

|

||||

|

||||

We hope to be able to report on our progress in the coming months and years on those aspects.

|

||||

|

||||

### 7 References

|

||||

|

||||

|

||||

|

||||

[0]

|

||||

Olivier Berger, J Paul Gibson, Claire Lecocq and Christian Bac, “Designing a virtual laboratory for a relational database MOOC”. International Conference on Computer Supported Education, SCITEPRESS, 23-25 May 2015, Lisbon, Portugal, 2015, vol. 7, pp. 260-268, ISBN 978-989-758-107-6 – [DOI: 10.5220/0005439702600268][1] ([preprint (HTML)][2])

|

||||

|

||||

### 8 Copyright

|

||||

|

||||


|

||||

|

||||

This work is licensed under a [Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][46].

|

||||

|

||||

### Footnotes:

|

||||

|

||||

[1][32] – The FLIRT project also works on business model aspects of MOOC or SPOC production in the context of professional development, but the present memo starts from a minimalistic hypothesis where funding for course production is quite limited.

|

||||

|

||||

[2][33] – research-based evidence needed

|

||||

|

||||

[3][34] – In typical MOOCs, which are free to participate in, the VM should include only gratis tools, which typically means a GNU/Linux distribution loaded with applications available under free and open source licenses.

|

||||

|

||||

[4][35] – Typically, Free and Open Source software, aka Libre Software

|

||||

|

||||

[5][36] – VirtualBox is portable on many operating systems, making it a very popular solution for such a need

|

||||

|

||||

[6][37] – the IaaS platform could typically be an open cloud for MOOCs or a private cloud for SPOCs (for closer monitoring of student activity or security control reasons).

|

||||

|

||||

[7][38] – Depending on the expected use of the lab by learners, this cost may vary a lot. The size and configuration required for the included software may have an impact (hence the need to minimize the footprint of the VM images). With diminishing costs in general, this may not be a show stopper. Refer to the published pricing of commercial IaaS offerings for accurate figures. Beware of additional licensing costs if the OS of the VM isn’t free software, or if other licenses must be provided for every learner.

|

||||

|

||||

[8][39] – The need for always-on connectivity may not be a problem for professional development SPOCs where learners connect from enterprise networks, for instance. It may be detrimental when MOOCs are very popular in southern countries where high bandwidth is both unreliable and expensive.

|

||||

|

||||

[9][40] – In this respect, providing a full Linux desktop inside the VM doesn’t necessarily make sense. Instead, running applications full-screen may be better, avoiding installation of whole desktop environments like Gnome or XFCE, though this has usability consequences. Careful tuning and testing is needed in any case.

|

||||

|

||||

[10][41] – Container-based architectures are quite popular in the industry, but to our knowledge, at the time of writing, they have not yet been deployed on a large scale in the context of large public MOOC hosting platforms. There are interesting technical challenges which the FLIRT project tries to tackle together with its partner ProCAN.

|

||||

|

||||

[11][42] – See the corresponding paragraph [http://www-inf.it-sudparis.eu/PROSE/csedu2015/#standalone-sql-env][43] in [0][44]

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/

|

||||

|

||||

Author: [Olivier Berger, Télécom SudParis][a]

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://www-public.tem-tsp.eu

|

||||

[1]:http://dx.doi.org/10.5220/0005439702600268

|

||||

[2]:http://www-inf.it-sudparis.eu/PROSE/csedu2015/

|

||||

[3]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#org50fdc1a

|

||||

[4]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.1

|

||||

[5]:http://flirtmooc.wixsite.com/flirt-mooc-telecom

|

||||

[6]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.2

|

||||

[7]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.3

|

||||

[8]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.4

|

||||

[9]:http://virtualbox.org

|

||||

[10]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.5

|

||||

[11]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.2

|

||||

[12]:https://www.vagrantup.com/

|

||||

[13]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#orgde5af50

|

||||

[14]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.6

|

||||

[15]:https://en.wikipedia.org/wiki/Virtual_Network_Computing

|

||||

[16]:https://en.wikipedia.org/wiki/Remote_Desktop_Protocol

|

||||

[17]:http://guacamole.apache.org/

|

||||

[18]:https://www.procan-group.com/

|

||||

[19]:https://open.edx.org/

|

||||

[20]:http://openstack.org/

|

||||

[21]:https://en.wikipedia.org/wiki/Canvas_element

|

||||

[22]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.7

|

||||

[23]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.8

|

||||

[24]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.9

|

||||

[25]:https://www.redhat.com/en/topics/containers

|

||||

[26]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.10

|

||||

[27]:https://en.wikipedia.org/wiki/Docker_(software)

|

||||

[28]:https://www.openshift.com/

|

||||

[29]:https://en.wikipedia.org/wiki/DevOps

|

||||

[30]:http://webassembly.org/

|

||||

[31]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fn.11

|

||||

[32]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.1

|

||||

[33]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.2

|

||||

[34]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.3

|

||||

[35]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.4

|

||||

[36]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.5

|

||||

[37]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.6

|

||||

[38]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.7

|

||||

[39]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.8

|

||||

[40]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.9

|

||||

[41]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.10

|

||||

[42]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#fnr.11

|

||||

[43]:http://www-inf.it-sudparis.eu/PROSE/csedu2015/#standalone-sql-env

|

||||

[44]:https://www-public.tem-tsp.eu/~berger_o/weblog/a-review-of-virtual-labs-virtualization-solutions-for-moocs/#orgde5af50

|

||||

[45]:http://creativecommons.org/licenses/by-nc-sa/4.0/

|

||||

[46]:http://creativecommons.org/licenses/by-nc-sa/4.0/

|

||||

@ -1,45 +0,0 @@

|

||||

New Training Options Address Demand for Blockchain Skills

|

||||

======

|

||||

|

||||

|

||||

|

||||

Blockchain technology is transforming industries and bringing new levels of trust to contracts, payment processing, asset protection, and supply chain management. Blockchain-related jobs are the second-fastest growing in today’s labor market, [according to TechCrunch][1]. But, as in the rapidly expanding field of artificial intelligence, there is a pronounced blockchain skills gap and a need for expert training resources.

|

||||

|

||||

### Blockchain for Business

|

||||

|

||||

A new training option was recently announced by The Linux Foundation. Enrollment is now open for a free training course called [Blockchain: Understanding Its Uses and Implications][2], as well as a [Blockchain for Business][2] professional certificate program. Delivered through the edX training platform, the new course and program provide a way to learn about the impact of blockchain technologies and a means to demonstrate that knowledge. Certification, in particular, can make a difference for anyone looking to work in the blockchain arena.

|

||||

|

||||

“In the span of only a year or two, blockchain has gone from something seen only as related to cryptocurrencies to a necessity for businesses across a wide variety of industries,” [said][3] Linux Foundation General Manager, Training & Certification Clyde Seepersad. “Providing a free introductory course designed not only for technical staff but business professionals will help improve understanding of this important technology, while offering a certificate program through edX will enable professionals from all over the world to clearly demonstrate their expertise.”

|

||||

|

||||

TechCrunch [also reports][4] that venture capital is rapidly flowing toward blockchain-focused startups. And, this new program is designed for business professionals who need to understand the potential – or threat – of blockchain to their company and industry.

|

||||

|

||||

“Professional Certificate programs on edX deliver career-relevant education in a flexible, affordable way, by focusing on the critical skills industry leaders and successful professionals are seeking today,” said Anant Agarwal, edX CEO and MIT Professor.

|

||||

|

||||

### Hyperledger Fabric

|

||||

|

||||

The Linux Foundation is steward to many valuable blockchain resources and includes some notable community members. In fact, a recent New York Times article — “[The People Leading the Blockchain Revolution][5]” — named Brian Behlendorf, Executive Director of The Linux Foundation’s [Hyperledger Project][6], one of the [top influential voices][7] in the blockchain world.

|

||||

|

||||

Hyperledger offers proven paths for gaining credibility and skills in the blockchain space. For example, the project offers a free course titled Introduction to Hyperledger Fabric for Developers. Fabric has emerged as a key open source toolset in the blockchain world. Through the Hyperledger project, you can also take the B9-lab Certified Hyperledger Fabric Developer course. More information on both courses is available [here][8].

|

||||

|

||||

“As you can imagine, someone needs to do the actual coding when companies move to experiment and replace their legacy systems with blockchain implementations,” states the Hyperledger website. “With training, you could gain serious first-mover advantage.”

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/2018/7/new-training-options-address-demand-blockchain-skills

|

||||

|

||||

Author: [SAM DEAN][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://www.linux.com/users/sam-dean

|

||||

[1]:https://techcrunch.com/2018/02/14/blockchain-engineers-are-in-demand/

|

||||

[2]:https://www.edx.org/course/understanding-blockchain-and-its-implications

|

||||

[3]:https://www.linuxfoundation.org/press-release/as-demand-skyrockets-for-blockchain-expertise-the-linux-foundation-and-edx-offer-new-introductory-blockchain-course-and-blockchain-for-business-professional-certificate-program/

|

||||

[4]:https://techcrunch.com/2018/05/20/with-at-least-1-3-billion-invested-globally-in-2018-vc-funding-for-blockchain-blows-past-2017-totals/

|

||||

[5]:https://www.nytimes.com/2018/06/27/business/dealbook/blockchain-stars.html

|

||||

[6]:https://www.hyperledger.org/

|

||||

[7]:https://www.linuxfoundation.org/blog/hyperledgers-brian-behlendorf-named-as-top-blockchain-influencer-by-new-york-times/

|

||||

[8]:https://www.hyperledger.org/resources/training

|

||||

@ -1,185 +0,0 @@

|

||||

How blockchain will influence open source

|

||||

======

|

||||

|

||||

|

||||

|

||||

What [Satoshi Nakamoto][1] started as Bitcoin a decade ago has found a lot of followers and turned into a movement for decentralization. For some, blockchain technology is a religion that will have the same impact on humanity as the Internet has had. For others, it is hype and technology suitable only for Ponzi schemes. While blockchain is still evolving and trying to find its place, one thing is for sure: It is a disruptive technology that will fundamentally transform certain industries. And I'm betting open source will be one of them.

|

||||

|

||||

### The open source model

|

||||

|

||||

Open source is a collaborative software development and distribution model that allows people with common interests to gather and produce something that no individual can create on their own. It allows the creation of value that is bigger than the sum of its parts. Open source is enabled by distributed collaboration tools (IRC, email, git, wiki, issue trackers, etc.), distributed and protected by an open source licensing model and often governed by software foundations such as the [Apache Software Foundation][2] (ASF), [Cloud Native Computing Foundation][3] (CNCF), etc.

|

||||

|

||||

One interesting aspect of the open source model is the lack of financial incentives at its core. Some people believe open source work should remain detached from money and remain a free and voluntary activity driven only by intrinsic motivators (such as "common purpose" and "for the greater good"). Others believe open source work should be rewarded directly or indirectly through extrinsic motivators (such as financial incentive). While the idea of open source projects prospering only through voluntary contributions is romantic, in reality, the majority of open source contributions are done through paid development. Yes, we have a lot of voluntary contributions, but these are on a temporary basis from contributors who come and go, or for exceptionally popular projects while they are at their peak. Creating and sustaining open source projects that are useful for enterprises requires developing, documenting, testing, and bug-fixing for prolonged periods, even when the software is no longer shiny and exciting. It is a boring activity that is best motivated through financial incentives.

|

||||

|

||||

### Commercial open source

|

||||

|

||||

Software foundations such as ASF survive on donations and other income streams such as sponsorships, conference fees, etc. But those funds are primarily used to run the foundations, to provide legal protection for the projects, and to ensure there are enough servers to run builds, issue trackers, mailing lists, etc.

|

||||

|

||||

Similarly, CNCF has member fees and other income streams, which are used to run the foundation and provide resources for the projects. These days, most software is not built on laptops; it is run and tested on hundreds of machines on the cloud, and that requires money. Creating marketing campaigns, brand designs, distributing stickers, etc. takes money, and some foundations can assist with that as well. At its core, foundations implement the right processes to interact with users, developers, and control mechanisms and ensure distribution of available financial resources to open source projects for the common good.

|

||||

|

||||

If users of open source projects can donate money and the foundations can distribute it in a fair way, what is missing?

|

||||

|

||||

What is missing is a direct, transparent, trusted, decentralized, automated bidirectional link for transfer of value between the open source producers and the open source consumer. Currently, the link is either unidirectional or indirect:

|

||||

|

||||

* **Unidirectional** : A developer (think of a "developer" as any role that is involved in the production, maintenance, and distribution of software) can use their brain juice and devote time to do a contribution and share that value with all open source users. But there is no reverse link.

|

||||

|

||||

* **Indirect** : If there is a bug that affects a specific user/company, the options are:

|

||||

|

||||

* To have in-house developers fix the bug and do a pull request. That is ideal, but it is not always possible to hire in-house developers who are knowledgeable about hundreds of open source projects used daily.

|

||||

|

||||

* To hire a freelancer specializing in that specific open source project and pay for the services. Ideally, the freelancer is also a committer for the open source project and can directly change the project code quickly. Otherwise, the fix might not ever make it to the project.

|

||||

|

||||

* To approach a company providing services around the open source project. Such companies typically employ open source committers to influence and gain credibility in the community and offer products, expertise, and professional services.

|

||||

|

||||

|

||||

|

||||

|

||||

The third option has been a successful [model][4] for sustaining many open source projects. Whether they provide services (training, consulting, workshops), support, packaging, open core, or SaaS, there are companies that employ hundreds of staff members who work on open source full time. There is a long [list of companies][5] that have managed to build a successful open source business model over the years, and that list is growing steadily.

|

||||

|

||||

The companies that back open source projects play an important role in the ecosystem: They are the catalyst between the open source projects and the users. The ones that add real value do more than just package software nicely; they can identify user needs and technology trends, and they create a full stack and even an ecosystem of open source projects to address these needs. They can take a boring project and support it for years. If there is a missing piece in the stack, they can start an open source project from scratch and build a community around it. They can acquire a closed source software company and open source the projects (here I got a little carried away, but yes, I'm talking about my employer, [Red Hat][6]).

|

||||

|

||||

To summarize, with the commercial open source model, projects are officially or unofficially managed and controlled by a very few individuals or companies that monetize them and give back to the ecosystem by ensuring the project is successful. It is a win-win-win for open source developers, managing companies, and end users. The alternative is inactive projects and expensive closed source software.

|

||||

|

||||

### Self-sustaining, decentralized open source

|

||||

|

||||

For a project to become part of a reputable foundation, it must conform to certain criteria. For example, ASF and CNCF require incubation and graduation processes, respectively, where apart from all the technical and formal requirements, a project must have a healthy number of active committers and users. And that is the essence of forming a sustainable open source project. Having source code on GitHub is not the same thing as having an active open source project. The latter requires committers who write the code and users who use the code, with both groups reinforcing each other continuously by exchanging value and forming an ecosystem where everybody benefits. Some project ecosystems might be tiny and short-lived, and some may consist of multiple projects and competing service providers, with very complex interactions lasting for many years. But as long as there is an exchange of value and everybody benefits from it, the project is developed, maintained, and sustained.

|

||||

|

||||

If you look at ASF [Attic][7], you will find projects that have reached their end of life. When a project is no longer technologically fit for its purpose, it is usually its natural end. Similarly, in the ASF [Incubator][8], you will find tons of projects that never graduated but were instead retired. Typically, these projects were not able to build a large enough community because they are too specialized or there are better alternatives available.

|

||||

|

||||

But there are also cases where projects with high potential and superior technology cannot sustain themselves because they cannot form or maintain a functioning ecosystem for the exchange of value. The open source model and the foundations do not provide a framework and mechanisms for developers to get paid for their work or for users to get their requests heard. There isn’t a common value commitment framework for either party. As a result, some projects can sustain themselves only in the context of commercial open source, where a company acts as an intermediary and value adder between developers and users. That adds another constraint and requires a service provider company to sustain some open source projects. Ideally, users should be able to express their interest in a project and developers should be able to show their commitment to the project in a transparent and measurable way, which forms a community with common interest and intent for the exchange of value.

|

||||

|

||||

Imagine there is a model with mechanisms and tools that enable direct interaction between open source users and developers. This includes not only code contributions through pull requests, questions over the mailing lists, GitHub stars, and stickers on laptops, but also other ways that allow users to influence projects' destinies in a richer, more self-controlled and transparent manner.

|

||||

|

||||

This model could include incentives for actions such as:

|

||||

|

||||

* Funding open source projects directly rather than through software foundations

|

||||

|

||||

* Influencing the direction of projects through voting (by token holders)

|

||||

|

||||

* Feature requests driven by user needs

|

||||

|

||||

* On-time pull request merges

|

||||

|

||||

* Bounties for bug hunts

|

||||

|

||||

* Better test coverage incentives

|

||||

|

||||

* Up-to-date documentation rewards

|

||||

|

||||

* Long-term support guarantees

|

||||

|

||||

* Timely security fixes

|

||||

|

||||

* Expert assistance, support, and services

|

||||

|

||||

* Budget for evangelism and promotion of the projects

|

||||

|

||||

* Budget for regular boring activities

|

||||

|

||||

* Fast email and chat assistance

|

||||

|

||||

* Full visibility of the overall project findings, etc.

|

||||

|

||||

|

||||

|

||||

|

||||

If you haven't guessed, I'm talking about using blockchain and [smart contracts][9] to allow such interactions between users and developers: smart contracts that will put power in the hands of token holders to influence projects.

|

||||

|

||||

![blockchain_in_open_source_ecosystem.png][11]

|

||||

|

||||

The usage of blockchain in the open source ecosystem

|

||||

|

||||

Existing channels in the open source ecosystem provide ways for users to influence projects through financial commitments to service providers or other limited means through the foundations. But the addition of blockchain-based technology to the open source ecosystem could open new channels for interaction between users and developers. I'm not saying this will replace the commercial open source model; most companies working with open source do many things that cannot be replaced by smart contracts. But smart contracts can spark a new way of bootstrapping new open source projects, giving a second life to commodity projects that are a burden to maintain. They can motivate developers to apply boring pull requests, write documentation, get tests to pass, etc., providing a direct value exchange channel between users and open source developers. Blockchain can add new channels to help open source projects grow and become self-sustaining in the long term, even when company backing is not feasible. It can create a new complementary model for self-sustaining open source projects—a win-win.
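The "direct value exchange channel" can be made a little more concrete with a toy model of a bug bounty held in escrow. This is only a sketch under heavy assumptions: it is plain Python running in memory, not a smart contract on any blockchain, and it does not reflect how any of the platforms mentioned in this article work. The `BountyLedger` class, the account names and the issue identifier are all hypothetical.

```python
from collections import defaultdict


class BountyLedger:
    """Toy in-memory stand-in for a bounty escrow (no blockchain involved)."""

    def __init__(self):
        self.balances = defaultdict(int)  # account -> spendable tokens
        self.escrow = {}                  # issue id -> {backer: pledged tokens}

    def deposit(self, account, amount):
        """Credit tokens to an account."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balances[account] += amount

    def pledge(self, backer, issue, amount):
        """Move tokens from a backer's balance into escrow for an issue."""
        if amount <= 0 or self.balances[backer] < amount:
            raise ValueError("invalid amount or insufficient balance")
        self.balances[backer] -= amount
        pledges = self.escrow.setdefault(issue, {})
        pledges[backer] = pledges.get(backer, 0) + amount

    def bounty(self, issue):
        """Total tokens currently escrowed for an issue."""
        return sum(self.escrow.get(issue, {}).values())

    def resolve(self, issue, developer):
        """Pay the whole escrowed bounty to the developer whose fix was merged."""
        payout = sum(self.escrow.pop(issue, {}).values())
        self.balances[developer] += payout
        return payout

    def refund(self, issue):
        """Return every pledge to its backer, e.g. if the issue is abandoned."""
        for backer, amount in self.escrow.pop(issue, {}).items():
            self.balances[backer] += amount


if __name__ == "__main__":
    ledger = BountyLedger()
    ledger.deposit("acme", 100)
    ledger.deposit("bob", 30)
    ledger.pledge("acme", "issue-42", 60)
    ledger.pledge("bob", "issue-42", 30)
    print(ledger.bounty("issue-42"))
    print(ledger.resolve("issue-42", "carol"))
```

The point of the escrow step is that pledged tokens leave the backer's balance immediately, so a developer can see a committed reward before starting work. A real smart contract would also need a trusted way to decide that an issue has actually been resolved, which this sketch leaves out entirely.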

|

||||

|

||||

### Tokenizing open source

|

||||

|

||||

There are already a number of initiatives aiming to tokenize open source. Some focus only on an open source model, and some are more generic but apply to open source development as well:

|

||||

|

||||

* [Gitcoin][12] \- grow open source, one of the most promising ones in this area.

|

||||

|

||||

* [Oscoin][13] \- cryptocurrency for open source

|

||||

|

||||

* [Open collective][14] \- a platform for supporting open source projects.

|

||||

|

||||

* [FundYourselfNow][15] \- Kickstarter and ICOs for projects.

|

||||

|

||||

* [Kauri][16] \- support for open source project documentation.

|

||||

|

||||

* [Liberapay][17] \- a recurrent donations platform.

|

||||

|

||||

* [FundRequest][18] \- a decentralized marketplace for open source collaboration.

|

||||

|

||||

* [CanYa][19] \- recently acquired [Bountysource][20], now the world’s largest open source P2P bounty platform.

|

||||

|

||||

* [OpenGift][21] \- a new model for open source monetization.

|

||||

|

||||

* [Hacken][22] \- a white hat token for hackers.

|

||||

|

||||

* [Coinlancer][23] \- a decentralized job market.

|

||||

|

||||

* [CodeFund][24] \- an open source ad platform.

|

||||

|

||||

* [IssueHunt][25] \- a funding platform for open source maintainers and contributors.

|

||||

|

||||

* [District0x 1Hive][26] \- a crowdfunding and curation platform.

|

||||

|

||||

* [District0x Fixit][27] \- github bug bounties.

|

||||

|

||||

|

||||

|

||||

|

||||

This list is varied and growing rapidly. Some of these projects will disappear, others will pivot, but a few will emerge as the [SourceForge][28], the ASF, the GitHub of the future. That doesn't necessarily mean they'll replace these platforms, but they'll complement them with token models and create a richer open source ecosystem. Every project can pick its distribution model (license), governing model (foundation), and incentive model (token). In all cases, this will pump fresh blood to the open source world.

|

||||

|

||||

### The future is open and decentralized

|

||||

|

||||

* Software is eating the world.

|

||||

|

||||

* Every company is a software company.

|

||||

|

||||

* Open source is where innovation happens.

|

||||

|

||||

|

||||

|

||||

|

||||

Given that, it is clear that open source is too big to fail and too important to be controlled by a few or left to its own destiny. Open source is a shared-resource system that has value to all, and more importantly, it must be managed as such. It is only a matter of time until every company on earth will want to have a stake and a say in the open source world. Unfortunately, we don't have the tools and the habits to do it yet. Such tools would allow anybody to show their appreciation or ignorance of software projects. It would create a direct and faster feedback loop between producers and consumers, between developers and users. It will foster innovation—innovation driven by user needs and expressed through token metrics.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/8/open-source-tokenomics

|

||||

|

||||

Author: [Bilgin Ibryam][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/bibryam

|

||||

[1]:https://en.wikipedia.org/wiki/Satoshi_Nakamoto

|

||||

[2]:https://www.apache.org/

|

||||

[3]:https://www.cncf.io/

|

||||

[4]:https://medium.com/open-consensus/3-oss-business-model-progressions-dafd5837f2d

|

||||

[5]:https://docs.google.com/spreadsheets/d/17nKMpi_Dh5slCqzLSFBoWMxNvWiwt2R-t4e_l7LPLhU/edit#gid=0

|

||||

[6]:http://jobs.redhat.com/

|

||||

[7]:https://attic.apache.org/

|

||||

[8]:http://incubator.apache.org/

|

||||

[9]:https://en.wikipedia.org/wiki/Smart_contract

|

||||

[10]:/file/404421

|

||||

[11]:https://opensource.com/sites/default/files/uploads/blockchain_in_open_source_ecosystem.png (blockchain_in_open_source_ecosystem.png)

|

||||

[12]:https://gitcoin.co/

|

||||

[13]:http://oscoin.io/

|

||||

[14]:https://opencollective.com/opensource

|

||||

[15]:https://www.fundyourselfnow.com/page/about

|

||||

[16]:https://kauri.io/

|

||||

[17]:https://liberapay.com/

|

||||

[18]:https://fundrequest.io/

|

||||

[19]:https://canya.io/

|

||||

[20]:https://www.bountysource.com/

|

||||

[21]:https://opengift.io/pub/

|

||||

[22]:https://hacken.io/

|

||||

[23]:https://www.coinlancer.com/home

|

||||

[24]:https://codefund.io/

|

||||

[25]:https://issuehunt.io/

|

||||

[26]:https://blog.district0x.io/district-proposal-spotlight-1hive-283957f57967

|

||||

[27]:https://github.com/district0x/district-proposals/issues/177

|

||||

[28]:https://sourceforge.net/

|

||||

@ -1,116 +0,0 @@

|

||||

Debian Turns 25! Here are Some Interesting Facts About Debian Linux

|

||||

======

|

||||

One of the oldest Linux distributions still in development, Debian has just turned 25. Let’s have a look at some interesting facts about this awesome FOSS project.

|

||||

|

||||

### 10 Interesting facts about Debian Linux

|

||||

|

||||

![Interesting facts about Debian Linux][1]

|

||||

|

||||

The facts presented here have been collected from various sources available on the internet. They are true to the best of my knowledge, but in case of any error, please remind me to update the article.

|

||||

|

||||

#### 1\. One of the oldest Linux distributions still under active development

|

||||

|

||||

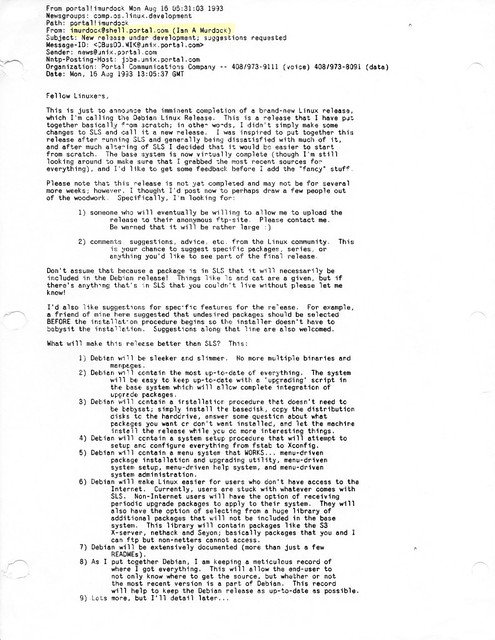

The [Debian project][2] was announced on 16th August 1993 by Ian Murdock, the Debian founder. Like Linux creator [Linus Torvalds][3], Ian was a college student when he announced the Debian project.

|

||||

|

||||

|

||||

|

||||

#### 2\. Some people get a tattoo, while others name their project after their girlfriend

|

||||

|

||||

The project name combines the names of Ian and his then-girlfriend, Debra Lynn. Ian and Debra later married and had three children. They divorced in 2008.

|

||||

|

||||

#### 3\. Ian Murdock: The Maverick behind the creation of the Debian project

|

||||

|

||||

![Debian Founder Ian Murdock][4]

|

||||

Ian Murdock

|

||||

|

||||

[Ian Murdock][5] led the Debian project from August 1993 until March 1996. He shaped Debian into a community project based on the principles of Free Software. The [Debian Manifesto][6] and the [Debian Social Contract][7] still govern the project.

|

||||

|

||||

He founded a commercial Linux company called [Progeny Linux Systems][8] and worked for a number of Linux-related organizations, including Sun Microsystems, the Linux Foundation and Docker.

|

||||

|

||||

Sadly, [Ian committed suicide in December 2015][9]. His contribution to Debian is certainly invaluable.

|

||||

|

||||

#### 4\. Debian is a community project in the true sense

|

||||

|

||||

Debian is a community-based project in the true sense. No one ‘owns’ Debian. It is developed by volunteers from all over the world. Unlike many other Linux distributions, it is not a commercial project backed by a corporation.

|

||||

|

||||

The Debian Linux distribution is composed of Free Software only. It’s one of the few Linux distributions that is true to the spirit of [Free Software][10] and takes pride in being called a GNU/Linux distribution.

|

||||

|

||||

Debian is supported by a non-profit organization called [Software in the Public Interest][11] (SPI). Along with Debian, SPI financially supports many other open source projects.

|

||||

|

||||

#### 5\. Debian and its 3 branches

|

||||

|

||||

Debian has three branches or versions: Debian Stable, Debian Unstable (Sid) and Debian Testing.

|

||||

|

||||

Debian Stable, as the name suggests, is the stable branch, in which all the software and packages are well tested to give you a rock-solid system. Since it takes time for well-tested software to land in the stable branch, Debian Stable often contains older versions of programs, hence the joke that Debian Stable means stale.

|

||||

|

||||

[Debian Unstable][12], codenamed Sid, is the version where all Debian development takes place. This is where new packages first land or are developed. After that, the changes are propagated to the testing version.

|

||||

|

||||

[Debian Testing][13] is the next release after the current stable release. If the current stable release is N, Debian Testing is the N+1 release. Packages from Debian Unstable are tested in this version. Once all the new changes are well tested, Debian Testing is ‘promoted’ to become the new Stable version.
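
The flow between the three branches can be pictured with a small toy model. This is only an illustrative sketch, not how the real Debian archive software works: the `Archive` class, its method names and the `hello` package versions are all made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Archive:
    """Toy model of the three Debian branches (hypothetical, for illustration only)."""

    unstable: dict = field(default_factory=dict)  # package name -> version
    testing: dict = field(default_factory=dict)
    stable: dict = field(default_factory=dict)

    def upload(self, package: str, version: str) -> None:
        """New package versions land in Unstable (Sid) first."""
        self.unstable[package] = version

    def migrate(self, package: str) -> None:
        """Propagate the version currently in Unstable to Testing."""
        self.testing[package] = self.unstable[package]

    def release(self) -> None:
        """Promote a snapshot of Testing to become the new Stable."""
        self.stable = dict(self.testing)


archive = Archive()
archive.upload("hello", "2.10")  # hypothetical package and version
archive.migrate("hello")
archive.release()
archive.upload("hello", "2.11")  # a newer upload reaches Unstable only

print("stable:", archive.stable)
print("testing:", archive.testing)
print("unstable:", archive.unstable)
```

In this model, a newer upload does not reach Stable until it has been migrated and a new release is made, which mirrors why Debian Stable tends to carry older versions.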

|

||||

|

||||

There is no strict release schedule for Debian.

|

||||

|

||||

#### 7\. There was no Debian 1.0 release

|

||||

|

||||

Debian 1.0 was never released. The CD vendor InfoMagic accidentally shipped a development release of Debian in late 1995 and labeled it 1.0. To prevent confusion between the CD version and the actual Debian release, the Debian Project renamed its next release to “Debian 1.1”.

|

||||

|

||||

#### 8\. Debian releases are codenamed after Toy Story characters

|

||||

|

||||

![Toy Story Characters][14]

|

||||

|

||||

Debian releases are codenamed after characters from Pixar’s hit animated film series [Toy Story][15].

|

||||

|

||||

Debian 1.1 was the first release with a codename. It was named Buzz after the Toy Story character Buzz Lightyear.

|

||||

|

||||

This was in 1996, when [Bruce Perens][16] had taken over leadership of the project from Ian Murdock. Bruce was working at Pixar at the time.

|

||||

|

||||

The trend continued, and all subsequent releases have been codenamed after Toy Story characters. For example, the current stable release is Stretch, while the upcoming release has been codenamed Buster.

|

||||

|

||||

The unstable Debian version is codenamed Sid. In Toy Story, Sid is a kid with emotional problems who enjoys breaking toys. The name is symbolic: Debian Unstable might break your system with untested packages.

|

||||

|

||||

#### 9\. Debian also has a BSD distribution

|

||||

|

||||

Debian is not limited to Linux. Debian also has a distribution based on the FreeBSD kernel. It is called [Debian GNU/kFreeBSD][17].

|

||||

|

||||

#### 10\. Google uses Debian

|

||||

|

||||

[Google uses Debian][18] as its in-house development platform. Earlier, Google used a customized version of Ubuntu for this purpose. Recently it opted for the Debian-based gLinux.

|

||||

|

||||

#### Happy 25th birthday Debian

|

||||

|

||||

![Happy 25th birthday Debian][19]

|

||||

|

||||

I hope you liked these little facts about Debian. Details like these are among the reasons why people love Debian.

|

||||

|

||||

I wish a very happy 25th birthday to Debian. Please continue to be awesome. Cheers :)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/debian-facts/

|

||||

|

||||

Author: [Abhishek Prakash][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/Interesting-facts-about-debian.jpeg

|

||||

[2]:https://www.debian.org

|

||||

[3]:https://itsfoss.com/linus-torvalds-facts/

|

||||

[4]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/ian-murdock.jpg

|

||||