mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-28 01:01:09 +08:00

Merge remote-tracking branch 'LCTT/master'


commit

817719d77e

@ -1,9 +1,9 @@

Cloud Commander: A Web File Manager with a Console and Editor
======

**Cloud Commander** is a web-based file manager that lets you view, access, and manage the files and folders on your system from the browser of any computer, mobile phone, or tablet. It has two simple, classic panels that automatically resize to fit your device's display. It also comes with two built-in text editors, **Dword** and **Edward**, which support syntax highlighting, plus a console that gives you access to your system's command line. So you can edit files anytime, anywhere. The Cloud Commander server is a cross-platform application that runs on Linux, Windows, and Mac OS X, and its client runs in any browser. It is written in **JavaScript/Node.js** and released under the **MIT** license.

In this brief tutorial, let's see how to install Cloud Commander on an Ubuntu 18.04 LTS server.

@ -11,53 +11,52 @@ Cloud Commander – a file steward on the Web with a console and editor

As I mentioned earlier, it is written in Node.js. So to install Cloud Commander, we first need to install Node.js. To do so, refer to the following guide.

- [How to install Node.js on Linux](https://www.ostechnix.com/install-node-js-linux/)

### Install Cloud Commander

After installing Node.js, run the following command to install Cloud Commander:

```
$ npm i cloudcmd -g
```

Congratulations! Cloud Commander is now installed. Let's move on and look at its basic usage.

### Getting started with Cloud Commander

Run the following command to start Cloud Commander:

```
$ cloudcmd
```

**Sample output:**

```
url: http://localhost:8000
```

Now, open your browser and go to `http://localhost:8000` or `http://IP-address:8000`.

From now on, you can create, delete, view, and manage files or folders directly in the web browser of your local system, a remote system, or a mobile device, tablet, and so on.

![][2]

As you can see in the screenshot above, Cloud Commander has two panels, ten hotkeys (`F1` to `F10`), and a console.

Each hotkey performs one task:

* `F1` – Help
* `F2` – Rename file/folder
* `F3` – View files/folders
* `F4` – Edit file
* `F5` – Copy files/folders
* `F6` – Move files/folders
* `F7` – Create a new directory
* `F8` – Delete files/folders
* `F9` – Open menu
* `F10` – Open settings

#### Cloud Commander console

@ -65,11 +64,11 @@ url: http://localhost:8000

![][3]

From this console, you can perform various administrative tasks such as installing packages, removing packages, updating the system, and so on. You can even shut down or reboot the system. So Cloud Commander is not just a file manager; it also works as a remote administration tool.

#### Create files/folders

To create a new file or folder, right-click on any empty space and go to “New -> File or Directory”.

![][4]

@ -83,39 +82,39 @@ url: http://localhost:8000

Another cool feature is that we can easily upload a file to the Cloud Commander system from any system or device.

To upload a file, right-click on any empty space in the Cloud Commander panel and click the “Upload” option.

![][6]

Select the files you want to upload.

Also, you can upload files from cloud services such as Google Drive, Dropbox, Amazon Cloud Drive, Facebook, Twitter, Gmail, GitHub, Picasa, Instagram, and many more.

To upload files from the cloud, right-click on any empty space in the panel and select “Upload from Cloud”.

![][7]

Choose any web service you like, for example Google Drive, and click the “Connect to Google drive” button.

![][8]

Next, authenticate your Google Drive with Cloud Commander, then select files from Google Drive and click “Upload”.

![][9]

#### Update Cloud Commander

To update to the latest available version, run the following command:

```
$ npm update cloudcmd -g
```

#### Conclusion

As far as I tested, it works well. I didn't run into any problems while testing it on my Ubuntu server. Also, Cloud Commander is not only a web-based file manager; it can also act as a remote administration tool for most Linux administration tasks. You can create files/folders, and rename, delete, edit, and view them. You can also install, update, upgrade, and remove any package, just as you would in a terminal on the local system. And of course, you can even shut down or restart the system from the Cloud Commander console itself. What more do you need? Give it a try, and you will find it useful.

That's all for now. I'll be back soon with another interesting article. Until then, stay tuned.

Cheers!

@ -128,7 +127,7 @@ via: https://www.ostechnix.com/cloud-commander-a-web-file-manager-with-console-a

Author: [SK][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [fuzheng1998](https://github.com/fuzheng1998)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,4 +1,4 @@

Trash-Cli: A Command-Line Trashcan Tool for Linux
======

Everyone is probably familiar with the trashcan, since it is common to Linux, Windows, and Mac users alike. When you delete a file or directory, it is moved to the trashcan.

@ -33,31 +33,27 @@ $ sudo apt install trash-cli

```
$ sudo yum install trash-cli
```

For Fedora users, use the [dnf][6] command to install Trash-Cli:

```
$ sudo dnf install trash-cli
```

For Arch Linux users, use the [pacman][7] command to install Trash-Cli:

```
$ sudo pacman -S trash-cli
```

For openSUSE users, use the [zypper][8] command to install Trash-Cli:

```
$ sudo zypper in trash-cli
```

If your distribution doesn't provide a Trash-Cli package, you can also install it with `pip`. To install Python packages, your system needs to have the `pip` package manager.

```
$ sudo pip install trash-cli

@ -66,7 +62,6 @@ Collecting trash-cli

Installing collected packages: trash-cli
Running setup.py bdist_wheel for trash-cli ... done
Successfully installed trash-cli-0.17.1.14
```

### How to use Trash-Cli

@ -81,7 +76,7 @@ Trash-Cli is not hard to use, because it provides a very simple syntax. Trash-C

Now, let's try it out with some examples.

1) Delete files and directories: in this example, we move the file `2g.txt` and the folder `magi` to the trashcan by running the following command.

```
$ trash-put 2g.txt magi

@ -89,7 +84,7 @@ $ trash-put 2g.txt magi

It's the same as what you would see in a file manager.

2) List deleted files and directories: to view the deleted files and directories, run the following command. The output shows details of each deleted file and directory, such as its name, the date and time it was deleted, and its file path.

```
$ trash-list

@ -97,7 +92,7 @@ $ trash-list

2017-10-01 01:40:50 /home/magi/magi/magi
```

3) Restore files or directories from the trashcan: you can restore files and directories at any time by running the following command. It will ask you to choose the file or directory you want to restore. In this example, I want to restore the `2g.txt` file, so my choice is `0`.

```
$ trash-restore

@ -106,7 +101,7 @@ $ trash-restore

What file to restore [0..1]: 0
```

4) Remove files from the trashcan: if you want to delete a specific file from the trashcan, run the following command. In this example, I will remove the `magi` directory.

```
$ trash-rm magi

@ -118,11 +113,10 @@ $ trash-rm magi

$ trash-empty
```

6) Remove trashed items older than X days: alternatively, you can remove the files that have been in the trashcan for more than X days by running the following command. In this example, I will remove the items that have been in the trashcan for more than `10` days.

```
$ trash-empty 10
```
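trash-cli works with the FreeDesktop.org trash layout, where every trashed item has a matching `.trashinfo` file that records its original path and deletion time. The bash sketch below shows roughly how an age-based cleanup like `trash-empty 10` can decide which items qualify. It assumes the default per-user trash directory `~/.local/share/Trash` and GNU `date`, and it only lists names and never deletes anything. The function names are hypothetical helpers, not part of trash-cli.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of trash-cli) for reading .trashinfo files, e.g.
# ~/.local/share/Trash/info/2g.txt.trashinfo containing:
#   [Trash Info]
#   Path=/home/magi/2g.txt
#   DeletionDate=2017-10-01T01:40:50
# Requires GNU date for `date -d`.

# Print the raw DeletionDate value stored in a .trashinfo file.
trashinfo_deletion_date() {
  sed -n 's/^DeletionDate=//p' "$1"
}

# Print how many whole days ago the item was trashed.
# An optional second argument fixes "now" as a Unix timestamp (useful for testing).
trashinfo_age_days() {
  local file=$1 now=${2:-$(date +%s)} raw deleted
  raw=$(trashinfo_deletion_date "$file")
  # DeletionDate is local time as YYYY-MM-DDThh:mm:ss; swap the T for a space
  deleted=$(date -d "${raw/T/ }" +%s) || return 1
  echo $(( (now - deleted) / 86400 ))
}

# List the names of trashed items deleted more than DAYS days ago.
list_trash_older_than() {
  local days=$1 dir=${2:-$HOME/.local/share/Trash} info age
  for info in "$dir"/info/*.trashinfo; do
    [ -e "$info" ] || continue              # the glob matched nothing
    age=$(trashinfo_age_days "$info") || continue
    if [ "$age" -gt "$days" ]; then
      basename "$info" .trashinfo
    fi
  done
}
```

For example, `list_trash_older_than 10` prints the items that an age threshold of 10 days would target, so you can review them before running `trash-empty 10`.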

Trash-Cli works well, but if you want to try some alternatives, you can also give [gvfs-trash][9] and [autotrash][10] a try.

@ -133,7 +127,7 @@ via: https://www.2daygeek.com/trash-cli-command-line-trashcan-linux-system/

Author: [2daygeek][a]
Translator: [ucasFL](https://github.com/ucasFL)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -5,13 +5,13 @@

Nowadays, whether you are on the couch at home or in a coffee shop, you can open your laptop, connect to Wi-Fi, and stay in touch with the world. But most Wi-Fi hotspots today can track your device by [the unique MAC address of each network card][1]. Let's look at how to avoid being tracked.

Many people have started to care about privacy. Privacy is not only about keeping others from accessing the private content on your computer (that is a separate problem); it is more about *legibility*, that is, how easily you can be counted and tracked. Everyone should [pay more attention to this][2]. At the same time, the bottom line here is that a service provider should track users only with their consent; for example, timed Wi-Fi at an airport can be used only after the user agrees.

Because a fixed MAC address is easy to track, it should be changed regularly, and a random MAC address is a good choice. Since MAC addresses are normally used only within a local network, random MAC addresses are also unlikely to cause [collisions][3].

### Configure NetworkManager

To use random MAC addresses for all Wi-Fi connections by default, create the file `/etc/NetworkManager/conf.d/00-macrandomize.conf`:

```
[device]

@ -21,51 +21,47 @@ wifi.scan-rand-mac-address=yes

wifi.cloned-mac-address=stable
ethernet.cloned-mac-address=stable
connection.stable-id=${CONNECTION}/${BOOT}
```

Then restart NetworkManager:

```
systemctl restart NetworkManager
```

In the configuration file above, setting `cloned-mac-address` to `stable` makes NetworkManager generate the same MAC address every time it activates a given connection (within one boot, since the `stable-id` above includes `${BOOT}`), but a different MAC address for each connection. If you also want a new random MAC address every time a connection is activated, set `cloned-mac-address` to `random`.

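Setting it to `stable` lets you get the same IP address from DHCP, and lets a Wi-Fi [captive portal](https://en.wikipedia.org/wiki/Captive_portal) remember your login status based on your MAC address. With `random`, you have to re-authenticate (or click “I agree”) every time you connect; you might want `random` mode on airport Wi-Fi, for instance. See this NetworkManager [blog post][4] for detailed instructions on using `nmcli` to configure specific connections from the terminal.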
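To make the difference between `stable` and `random` more concrete, here is a toy bash sketch. It is **not** NetworkManager's actual algorithm, which mixes a per-host secret key with the stable ID and other inputs. It only illustrates the idea: hashing a stable ID always gives the same address for that ID, a new ID gives a new address, and the result is marked as a locally administered unicast address. The `fake_stable_mac` name, the plain SHA-256 over the ID, and the example IDs are all assumptions for illustration.

```shell
#!/usr/bin/env bash
# Toy illustration only, NOT NetworkManager's real algorithm: derive a MAC
# address deterministically from a stable ID string using sha256sum (coreutils).
fake_stable_mac() {
  local id=$1 h first
  h=$(printf '%s' "$id" | sha256sum)   # 64 hex digits followed by "  -"
  h=${h:0:12}                          # keep 6 bytes = 12 hex digits
  # Set the "locally administered" bit (0x02), clear the multicast bit (0x01)
  first=$(( (16#${h:0:2} | 0x02) & 0xFE ))
  printf '%02x:%s:%s:%s:%s:%s\n' "$first" \
    "${h:2:2}" "${h:4:2}" "${h:6:2}" "${h:8:2}" "${h:10:2}"
}

# Same ID -> same address (the `stable` idea); different ID -> different address.
fake_stable_mac "MyHome/boot-1"
fake_stable_mac "MyHome/boot-1"
fake_stable_mac "Amtrak_WiFi/boot-1"
```

A `random`-style address would simply skip the hash and draw fresh bytes on every activation, which is why captive portals cannot recognize you across reconnects.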

Use the `ip link` command to see the current MAC address; it appears after the word `ether`.

```
$ ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: enp2s0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc fq_codel state DOWN mode DEFAULT group default qlen 1000
    link/ether 52:54:00:5f:d5:4e brd ff:ff:ff:ff:ff:ff
3: wlp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DORMANT group default qlen 1000
    link/ether 52:54:00:03:23:59 brd ff:ff:ff:ff:ff:ff
```
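If you want a script to check whether an interface is using a software-assigned address, one heuristic is the "locally administered" bit (0x02 in the first octet). Addresses burned in by the manufacturer normally have it cleared, while generated addresses normally have it set. The bash sketch below parses `ip link` output and prints each `link/ether` interface with its MAC, its first three octets (the OUI that identifies the manufacturer of a permanent address), and whether the bit is set. The function names are hypothetical. Treat the result as a hint, not proof: virtual NICs also use locally administered addresses, and the `52:54:00` prefix in the sample above is the one QEMU/KVM commonly assigns.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for inspecting MAC addresses reported by `ip link`.

# Exit 0 if the MAC's first octet has the locally administered bit (0x02) set.
mac_is_locally_administered() {
  local first=${1%%:*}
  (( (16#$first & 0x02) != 0 ))
}

# Read `ip link` output on stdin and print "IFACE MAC OUI local|universal"
# for every link/ether entry.
ip_link_macs() {
  local line iface mac kind
  local re_iface='^[0-9]+: ([^:@]+)' re_ether='^link/ether ([0-9a-fA-F:]{17})'
  # `read` strips the leading spaces before "link/ether"
  while read -r line || [ -n "$line" ]; do
    if [[ $line =~ $re_iface ]]; then
      iface=${BASH_REMATCH[1]}
    elif [[ $line =~ $re_ether ]]; then
      mac=${BASH_REMATCH[1]}
      if mac_is_locally_administered "$mac"; then kind=local; else kind=universal; fi
      echo "$iface $mac ${mac:0:8} $kind"
    fi
  done
}

# Example: ip link | ip_link_macs
```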

### When not to randomize the MAC address

Naturally, there are times when you do need to be identifiable. For example, on your home network you might want the router to assign your computer a consistent IP address for port forwarding, or your employer might provide Wi-Fi access based on MAC addresses, which requires tracking. To change a specific Wi-Fi connection, use `nmcli` to list your NetworkManager connections and show the current settings:

```
$ nmcli c | grep wifi
Amtrak_WiFi 5f4b9f75-9e41-47f8-8bac-25dae779cd87 wifi --
StaplesHotspot de57940c-32c2-468b-8f96-0a3b9a9b0a5e wifi --
MyHome e8c79829-1848-4563-8e44-466e14a3223d wifi wlp1s0
...
$ nmcli c show 5f4b9f75-9e41-47f8-8bac-25dae779cd87 | grep cloned
802-11-wireless.cloned-mac-address: --
$ nmcli c show e8c79829-1848-4563-8e44-466e14a3223d | grep cloned
802-11-wireless.cloned-mac-address: stable
```

This example uses a fully random MAC address for Amtrak (using the default configuration) and the permanent MAC address for MyHome (using the `stable` configuration). The permanent MAC address is assigned to the network interface when the hardware is manufactured, and network administrators can use it to look up [the device's manufacturer ID][5].

Change the configuration and reconnect the active interface:

@ -76,7 +72,6 @@ $ nmcli c down e8c79829-1848-4563-8e44-466e14a3223d

$ nmcli c up e8c79829-1848-4563-8e44-466e14a3223d
$ ip link
...
```

You can also install NetworkManager-tui to edit connections through a menu-based interface.

@ -92,7 +87,7 @@ via: https://fedoramagazine.org/randomize-mac-address-nm/

Author: [sheogorath][a], [Stuart D Gathman][b]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [HankChow](https://github.com/HankChow)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,3 +1,6 @@

heguangzhi translating

Linus, His Apology, And Why We Should Support Him
======

@ -1,3 +1,4 @@

### fuzheng1998 translating

10 Games You Can Play on Linux with Wine
======

@ -1,143 +0,0 @@

Translating by DavidChen

How do groups work on Linux?
============================================================

Hello! Last week, I thought I knew how users and groups worked on Linux. Here is what I thought:

1. Every process belongs to a user (like `julia`)
2. When a process tries to read a file owned by a group, Linux a) checks if the user `julia` can access the file, and b) checks which groups `julia` belongs to, and whether any of those groups owns & can access that file
3. If either of those is true (or if the ‘any’ bits are set right) then the process can access the file

So, for example, if a process is owned by the `julia` user and `julia` is in the `awesome` group, then the process would be allowed to read this file.

```
-r--r--r-- 1 root awesome 6872 Sep 24 11:09 file.txt
```

I had not thought carefully about this, but if pressed I would have said that it probably checks the `/etc/group` file at runtime to see what groups you’re in.

### that is not how groups work

I found out at work last week that, no, what I describe above is not how groups work. In particular Linux does **not** check which groups a process’s user belongs to every time that process tries to access a file.

Here is how groups actually work! I learned this by reading Chapter 9 (“Process Credentials”) of [The Linux Programming Interface][1], which is an incredible book. As soon as I realized that I did not understand how users and groups worked, I opened up the table of contents with absolute confidence that it would tell me what’s up, and I was right.

### how users and groups checks are done

The key new insight for me was pretty simple! The chapter starts out by saying that user and group IDs are **attributes of the process**:

* real user ID and group ID;
* effective user ID and group ID;
* saved set-user-ID and saved set-group-ID;
* file-system user ID and group ID (Linux-specific); and
* supplementary group IDs.

This means that the way Linux **actually** does group checks to see whether a process can read a file is:

* look at the process’s group IDs & supplementary group IDs (from the attributes on the process, **not** by looking them up in `/etc/group`)
* look at the group on the file
* see if they match

Generally when doing access control checks it uses the **effective** user/group ID, not the real user/group ID. Technically when accessing a file it actually uses the **file-system** ids but those are usually the same as the effective uid/gid.
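Here is a rough bash model of that check. It assumes a plain three-digit `rwx` mode with no ACLs, and it ignores every capability except root's ability to read anything. One detail worth making explicit: the kernel picks exactly one permission class. If the process's file-system UID owns the file, only the owner bits count; otherwise, if the file's group is among the process's group IDs, only the group bits count; otherwise the "other" bits apply. The function name and argument order are made up for this sketch, and the GIDs in the usage example are hypothetical.

```shell
#!/usr/bin/env bash
# Simplified model of the read-permission check for one file.
# Ignores ACLs; treats UID 0 as always allowed to read (CAP_DAC_OVERRIDE).
#
# usage: can_read MODE FILE_UID FILE_GID PROC_UID "PROC_GIDS"
#   MODE       three octal digits, e.g. 640
#   PROC_UID   the process's file-system (normally effective) UID
#   PROC_GIDS  space-separated effective + supplementary GIDs of the process
can_read() {
  local mode=$1 file_uid=$2 file_gid=$3 uid=$4 gids=$5 digit g
  (( uid == 0 )) && return 0
  if (( uid == file_uid )); then
    digit=${mode:0:1}                 # owner bits, and only the owner bits
  else
    digit=${mode:2:1}                 # "other" bits unless a group matches
    for g in $gids; do                # unquoted on purpose: split the list
      if (( g == file_gid )); then
        digit=${mode:1:1}             # group bits
        break
      fi
    done
  fi
  (( digit & 4 ))                     # 4 = read permission
}
```

For example, `can_read 660 0 1001 "$(id -u)" "$(id -G)"` asks whether the current shell could read a `root`-owned, mode 660 file whose group has GID 1001. Note that `id -G` with no user argument reports the groups attached to the running process, which is exactly the set this check uses.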

### Adding a user to a group doesn’t put existing processes in that group

Here’s another fun example that follows from this: if I create a new `panda` group and add myself (bork) to it, then run `groups` to check my group memberships – I’m not in the panda group!

```
bork@kiwi~> sudo addgroup panda
Adding group `panda' (GID 1001) ...
Done.
bork@kiwi~> sudo adduser bork panda
Adding user `bork' to group `panda' ...
Adding user bork to group panda
Done.
bork@kiwi~> groups
bork adm cdrom sudo dip plugdev lpadmin sambashare docker lxd
```

No `panda` in that list! To double check, let’s try making a file owned by the `panda` group and see if I can access it:

```
$ touch panda-file.txt
$ sudo chown root:panda panda-file.txt
$ sudo chmod 660 panda-file.txt
$ cat panda-file.txt
cat: panda-file.txt: Permission denied
```

Sure enough, I can’t access `panda-file.txt`. No big surprise there. My shell didn’t have the `panda` group as a supplementary GID before, and running `adduser bork panda` didn’t do anything to change that.
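Because `groups` and `id` without a user argument report the groups of the *current process*, while `id -Gn USER` looks the user up in the group database (for example `/etc/group`), comparing the two shows which memberships a shell has not picked up yet. A small bash sketch; the `pending_groups` name is made up:

```shell
#!/usr/bin/env bash
# Print groups that the group database lists for a user but that the current
# process does not carry yet, one per line. Both arguments are space-separated.
pending_groups() {
  local process_groups=$1 db_groups=$2
  # comm -13 keeps only lines unique to the second (database) list
  comm -13 <(tr ' ' '\n' <<<"$process_groups" | sort -u) \
           <(tr ' ' '\n' <<<"$db_groups" | sort -u)
}
```

To compare your current shell with the database, run `pending_groups "$(id -Gn)" "$(id -Gn "$(id -un)")"`. Right after `adduser bork panda`, this would be expected to print `panda` until you start a new login shell.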

### how do you get your groups in the first place?

So this raises kind of a confusing question, right – if processes have groups baked into them, how do you get assigned your groups in the first place? Obviously you can’t assign yourself more groups (that would defeat the purpose of access control).

It’s relatively clear how processes I **execute** from my shell (bash/fish) get their groups – my shell runs as me, and it has a bunch of group IDs on it. Processes I execute from my shell are forked from the shell so they get the same groups as the shell had.

So there needs to be some “first” process that has your groups set on it, and all the other processes you start inherit their groups from that. That process is called your **login shell** and it’s run by the `login` program (`/bin/login`) on my laptop. `login` runs as root and calls a C function called `initgroups` to set up your groups (by reading `/etc/group`). It’s allowed to set up your groups because it runs as root.

### let’s try logging in again!

So! Let’s say I am running in a shell, and I want to refresh my groups! From what we’ve learned about how groups are initialized, I should be able to run `login` to refresh my groups and start a new login shell!

Let’s try it:

```
$ sudo login bork
$ groups
bork adm cdrom sudo dip plugdev lpadmin sambashare docker lxd panda
$ cat panda-file.txt # it works! I can access the file owned by `panda` now!
```

Sure enough, it works! Now the new shell that `login` spawned is part of the `panda` group! Awesome! This won’t affect any other shells I already have running. If I really want the new `panda` group everywhere, I need to restart my login session completely, which means quitting my window manager and logging in again.

### newgrp

Somebody on Twitter told me that if you want to start a new shell with a new group that you’ve been added to, you can use `newgrp`. Like this:

```
sudo addgroup panda
sudo adduser bork panda
newgrp panda # starts a new shell, and you don't have to be root to run it!
```

You can accomplish the same(ish) thing with `sg panda bash`, which will start a `bash` shell that runs with the `panda` group.

### setuid sets the effective user ID

I’ve also always been a little vague about what it means for a process to run as “setuid root”. It turns out that setuid sets the effective user ID! So if I (`julia`) run a setuid root process (like `passwd`), then the **real** user ID will be set to `julia`, and the **effective** user ID will be set to `root`.

`passwd` needs to run as root, but it can look at its real user ID to see that `julia` started the process, and prevent `julia` from editing any passwords except for `julia`’s password.
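To see which programs on a system are setuid, you can look for the set-user-ID permission bit (octal `4000`), which `ls -l` shows as an `s` in the owner's execute position (for example `-rwsr-xr-x` for `passwd` on many distributions). A minimal bash sketch using `find`'s `-perm -4000` test; the function name is hypothetical, and it only lists files without changing anything.

```shell
#!/usr/bin/env bash
# List regular files directly inside DIR (default /usr/bin) that have the
# setuid bit set, sorted by path.
list_setuid() {
  local dir=${1:-/usr/bin}
  find "$dir" -maxdepth 1 -type f -perm -4000 -print | sort
}

# Example: list_setuid    # on many systems the output includes /usr/bin/passwd
```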

### that’s all!

There are a bunch more details about all the edge cases and exactly how everything works in The Linux Programming Interface so I will not get into all the details here. That book is amazing. Everything I talked about in this post is from Chapter 9, which is a 17-page chapter inside a 1300-page book.

The thing I love most about that book is that reading 17 pages about how users and groups work is really approachable, self-contained, super useful, and I don’t have to tackle all 1300 pages of it at once to learn helpful things :)

--------------------------------------------------------------------------------

via: https://jvns.ca/blog/2017/11/20/groups/

Author: [Julia Evans][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://jvns.ca/
[1]:http://man7.org/tlpi/

@ -1,3 +1,5 @@

translating---geekpi

How to Play Windows-only Games on Linux with Steam Play
======
The new experimental feature of Steam allows you to play Windows-only games on Linux. Here’s how to use this feature in Steam right now.

@ -1,112 +0,0 @@

heguangzhi Translating

3 open source log aggregation tools
======
Log aggregation systems can help with troubleshooting and other tasks. Here are three top options.

How is metrics aggregation different from log aggregation? Can’t logs include metrics? Can’t log aggregation systems do the same things as metrics aggregation systems?

These are questions I hear often. I’ve also seen vendors pitching their log aggregation system as the solution to all observability problems. Log aggregation is a valuable tool, but it isn’t normally a good tool for time-series data.

A couple of valuable features in a time-series metrics aggregation system are the regular interval and the storage system customized specifically for time-series data. The regular interval allows a user to derive real mathematical results consistently. If a log aggregation system is collecting metrics in a regular interval, it can potentially work the same way. However, the storage system isn’t optimized for the types of queries that are typical in a metrics aggregation system. These queries will take more resources and time to process using storage systems found in log aggregation tools.

So, we know a log aggregation system is likely not suitable for time-series data, but what is it good for? A log aggregation system is a great place for collecting event data. These are irregular activities that are significant. An example might be access logs for a web service. These are significant because we want to know what is accessing our systems and when. Another example would be an application error condition—because it is not a normal operating condition, it might be valuable during troubleshooting.

A handful of rules for logging:

* DO include a timestamp
* DO format in JSON
* DON’T log insignificant events
* DO log all application errors
* MAYBE log warnings
* DO turn on logging
* DO write messages in a human-readable form
* DON’T log informational data in production
* DON’T log anything a human can’t read or react to
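As a small illustration of the first two rules (a timestamp on every entry, and JSON formatting with one object per line), here is a bash sketch. The field names `timestamp`, `level`, and `message` are arbitrary choices, not a format that any of the tools below require. The escaping covers backslashes, double quotes, newlines, and tabs only; other control characters would need a real JSON encoder such as `jq`.

```shell
#!/usr/bin/env bash
# Escape a string for use inside a JSON string literal
# (handles backslash, double quote, newline, and tab only).
json_escape() {
  local s=$1 bs='\' q='"'
  s=${s//"$bs"/"$bs$bs"}        # \  -> \\   (must run first)
  s=${s//"$q"/"$bs$q"}          # "  -> \"
  s=${s//$'\n'/"${bs}n"}        # newline -> \n
  s=${s//$'\t'/"${bs}t"}        # tab -> \t
  printf '%s' "$s"
}

# Emit one JSON log line with a UTC ISO 8601 timestamp.
log_json() {
  local level=$1 message=$2 ts
  ts=$(date -u +%Y-%m-%dT%H:%M:%SZ)
  printf '{"timestamp":"%s","level":"%s","message":"%s"}\n' \
    "$ts" "$(json_escape "$level")" "$(json_escape "$message")"
}

# Example: log_json error 'payment service returned HTTP 503'
```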

### Cloud costs

When investigating log aggregation tools, the cloud might seem like an attractive option. However, it can come with significant costs. Logs represent a lot of data when aggregated across hundreds or thousands of hosts and applications. The ingestion, storage, and retrieval of that data are expensive in cloud-based systems.

As a point of reference from a real system, a collection of around 500 nodes with a few hundred apps results in 200GB of log data per day. There’s probably room for improvement in that system, but even reducing it by half will cost nearly $10,000 per month in many SaaS offerings. This often includes retention of only 30 days, which isn’t very long if you want to look at trending data year-over-year.
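A quick back-of-the-envelope check of what these numbers mean for storage, using only the figures above: the log volume halved from 200 GB/day, held for the 30-day retention window and, for comparison, for a full year of year-over-year trending. This ignores compression, indexing overhead, and replication, which vary by provider.

```latex
\[
  \frac{200\ \text{GB/day}}{2} \times 30\ \text{days} = 3000\ \text{GB} \approx 3\ \text{TB},
  \qquad
  100\ \text{GB/day} \times 365\ \text{days} = 36\,500\ \text{GB} \approx 36.5\ \text{TB}
\]
```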

This isn’t to discourage the use of these systems, as they can be very valuable—especially for smaller organizations. The purpose is to point out that there could be significant costs, and it can be discouraging when they are realized. The rest of this article will focus on open source and commercial solutions that are self-hosted.

### Tool options

#### ELK

[ELK][1], short for Elasticsearch, Logstash, and Kibana, is the most popular open source log aggregation tool on the market. It’s used by Netflix, Facebook, Microsoft, LinkedIn, and Cisco. The three components are all developed and maintained by [Elastic][2]. [Elasticsearch][3] is essentially a NoSQL, Lucene search engine implementation. [Logstash][4] is a log pipeline system that can ingest data, transform it, and load it into a store like Elasticsearch. [Kibana][5] is a visualization layer on top of Elasticsearch.

A few years ago, Beats were introduced. Beats are data collectors. They simplify the process of shipping data to Logstash. Instead of needing to understand the proper syntax of each type of log, a user can install a Beat that will export NGINX logs or Envoy proxy logs properly so they can be used effectively within Elasticsearch.

When installing a production-level ELK stack, a few other pieces might be included, like [Kafka][6], [Redis][7], and [NGINX][8]. Also, it is common to replace Logstash with Fluentd, which we’ll discuss later. This system can be complex to operate, which in its early days led to a lot of problems and complaints. These have largely been fixed, but it’s still a complex system, so you might not want to try it if you’re a smaller operation.

That said, there are services available so you don’t have to worry about that. [Logz.io][9] will run it for you, but its list pricing is a little steep if you have a lot of data. Of course, you’re probably smaller and may not have a lot of data. If you can’t afford Logz.io, you could look at something like [AWS Elasticsearch Service][10] (ES). ES is a service Amazon Web Services (AWS) offers that makes it very easy to get Elasticsearch working quickly. It also has tooling to get all AWS logs into ES using Lambda and S3. This is a much cheaper option, but there is some management required and there are a few limitations.

Elastic, the parent company of the stack, [offers][11] a more robust product that uses the open core model, which provides additional options around analytics tools and reporting. It can also be hosted on Google Cloud Platform or AWS. This might be the best option, as this combination of tools and hosting platforms offers a cheaper solution than most SaaS options and still provides a lot of value. This system could effectively replace or give you the capability of a [security information and event management][12] (SIEM) system.

|

||||

|

||||

The ELK stack also offers great visualization tools through Kibana, but it lacks an alerting function. Elastic provides alerting functionality within the paid X-Pack add-on, but there is nothing built in for the open source system. Yelp has created a solution to this problem, called [ElastAlert][13], and there are probably others. This additional piece of software is fairly robust, but it increases the complexity of an already complex system.

|

||||

|

||||

#### Graylog

|

||||

|

||||

[Graylog][14] has recently risen in popularity, but it got its start when Lennart Koopmann created it back in 2010. A company was born with the same name two years later. Despite its increasing use, it still lags far behind the ELK stack. This also means it has fewer community-developed features, but it can use the same Beats that the ELK stack uses. Graylog has gained praise in the Go community with the introduction of the Graylog Collector Sidecar written in [Go][15].

|

||||

|

||||

Graylog uses Elasticsearch, [MongoDB][16], and the Graylog Server under the hood. This makes it as complex to run as the ELK stack and maybe a little more. However, Graylog comes with alerting built into the open source version, as well as several other notable features like streaming, message rewriting, and geolocation.

The streaming feature routes data into specific Streams in real time while it is being processed. With this feature, a user can see all database errors in a single Stream and web server errors in a different Stream. Alerts can even be based on these Streams, triggered as new items are added or when a threshold is exceeded. Latency is probably one of the biggest issues with log aggregation systems, and Streams eliminate that issue in Graylog: as soon as a log message comes in, it can be routed to other systems through a Stream without being fully processed.

The message rewriting feature uses the open source rules engine [Drools][17]. All incoming messages are evaluated against a user-defined rules file, which can drop a message (called Blacklisting), add or remove a field, or modify the message.

The coolest feature might be Graylog’s geolocation capability, which supports plotting IP addresses on a map. This is a fairly common feature and is available in Kibana as well, but it adds a lot of value, especially if you want to use Graylog as your SIEM system. The geolocation functionality is provided in the open source version of the system.

Graylog, the company, charges for support on the open source version if you want it. It also offers an open core model for its Enterprise version, which adds archiving, audit logging, and additional support. There aren’t many other options for support or hosting, so you’ll likely be on your own if you don’t use Graylog (the company).

#### Fluentd

[Fluentd][18] was developed at [Treasure Data][19], and the [CNCF][20] has adopted it as an Incubating project. It was written in C and Ruby and is recommended by [AWS][21] and [Google Cloud][22]. Fluentd has become a common replacement for Logstash in many installations. It acts as a local aggregator that collects all node logs and sends them off to central storage systems; it is not itself a log aggregation system.

It uses a robust plugin system to provide quick and easy integrations with different data sources and data outputs. Since there are over 500 plugins available, most of your use cases should be covered. If they aren’t, this sounds like an opportunity to contribute back to the open source community.

Fluentd is a common choice in Kubernetes environments due to its low memory requirements (just tens of megabytes) and its high throughput. In an environment like [Kubernetes][23], where each pod has a Fluentd sidecar, memory consumption will increase linearly with each new pod created, so a collector with a footprint as small as Fluentd's drastically reduces your overall system utilization. This is becoming a common problem with tools developed in Java, which were intended to run one instance per node, where the memory overhead hasn't been a major issue.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/9/open-source-log-aggregation-tools

Author: [Dan Barker][a]
Selected by: [lujun9972](https://github.com/lujun9972)
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/barkerd427
[1]: https://www.elastic.co/webinars/introduction-elk-stack
[2]: https://www.elastic.co/
[3]: https://www.elastic.co/products/elasticsearch
[4]: https://www.elastic.co/products/logstash
[5]: https://www.elastic.co/products/kibana
[6]: http://kafka.apache.org/
[7]: https://redis.io/
[8]: https://www.nginx.com/
[9]: https://logz.io/
[10]: https://aws.amazon.com/elasticsearch-service/
[11]: https://www.elastic.co/cloud
[12]: https://en.wikipedia.org/wiki/Security_information_and_event_management
[13]: https://github.com/Yelp/elastalert
[14]: https://www.graylog.org/
[15]: https://opensource.com/tags/go
[16]: https://www.mongodb.com/
[17]: https://www.drools.org/
[18]: https://www.fluentd.org/
[19]: https://www.treasuredata.com/
[20]: https://www.cncf.io/
[21]: https://aws.amazon.com/blogs/aws/all-your-data-fluentd/
[22]: https://cloud.google.com/logging/docs/agent/
[23]: https://opensource.com/resources/what-is-kubernetes

@ -1,126 +0,0 @@
translating---geekpi

How To Configure Mouse Support For Linux Virtual Consoles
======

I use Oracle VirtualBox to test various Unix-like operating systems. Most of my VMs are headless servers that do not have a graphical desktop environment. For a long time, I have been wondering how we can use the mouse in the text-based terminals of headless Linux servers. Thanks to **GPM**, today I learned that we can use the mouse in virtual consoles for copy and paste operations. **GPM**, an acronym for **G**eneral **P**urpose **M**ouse, is a daemon that provides mouse support for Linux virtual consoles. Please do not confuse GPM with **GDM** (GNOME Display Manager); the two serve entirely different purposes.

GPM is especially useful in the following scenarios:

* New Linux server installations, or systems that cannot or do not use the X Window System by default, like Arch Linux and Gentoo.
* Copying and pasting text in virtual terminals/consoles.
* Copying and pasting in text-based editors and browsers (e.g. Emacs, Lynx).
* Copying and pasting in text-based file managers (e.g. Ranger, Midnight Commander).

In this brief tutorial, we are going to see how to use the mouse in text-based terminals on Unix-like operating systems.

### Installing GPM

To enable mouse support on text-only Linux systems, install the GPM package. It is available in the default repositories of most Linux distributions.

On Arch Linux and its variants like Antergos and Manjaro Linux, run the following command to install GPM:

```
$ sudo pacman -S gpm
```

On Debian, Ubuntu, Linux Mint:

```
$ sudo apt install gpm
```

On Fedora:

```
$ sudo dnf install gpm
```

On openSUSE:

```
$ sudo zypper install gpm
```

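The four per-distribution commands above can be folded into one small Bash helper. This is only a sketch, not something shipped with GPM or any distribution: the function name `gpm_install_cmd` is hypothetical, and it assumes the package is called `gpm` everywhere, as in the commands above. It prints the matching command rather than running it, so you can check it before using sudo. With no argument, it picks the first of pacman, apt, dnf, or zypper that it finds in `$PATH`; run `gpm_install_cmd` and then execute the command it prints.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of GPM): print the GPM install command for a
# package manager. Pass pacman, apt, dnf or zypper explicitly, or pass nothing
# to use the first of those found in $PATH.
gpm_install_cmd() {
  local pm=${1:-} candidate
  if [ -z "$pm" ]; then
    for candidate in pacman apt dnf zypper; do
      if command -v "$candidate" >/dev/null 2>&1; then
        pm=$candidate
        break
      fi
    done
  fi
  case $pm in
    pacman) echo "sudo pacman -S gpm" ;;
    apt)    echo "sudo apt install gpm" ;;
    dnf)    echo "sudo dnf install gpm" ;;
    zypper) echo "sudo zypper install gpm" ;;
    *)      echo "gpm_install_cmd: no supported package manager found" >&2
            return 1 ;;
  esac
}
```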
Once installed, enable and start the GPM service using the following commands:

```
$ sudo systemctl enable gpm
$ sudo systemctl start gpm
```

On Debian-based systems, the gpm service starts automatically after installation, so you don't need to start it manually as shown above.

### Configure Mouse Support For Linux Virtual Consoles

No special configuration is required. GPM starts working as soon as you install it and start the gpm service.

Have a look at the following screenshot of my Ubuntu 18.04 LTS server before installing GPM:

As you can see in the above screenshot, there is no visible mouse pointer on my Ubuntu 18.04 LTS headless server, only a blinking cursor, and it won’t let me select, copy, or paste text with the mouse. Without GPM, the mouse is of no use at all on CLI-only Linux servers.

Now check the following screenshot of the Ubuntu 18.04 LTS server after installing GPM:

See? I can now select text.

To select, copy and paste text, do the following:

* To select text, press the left mouse button and drag the mouse.
* Once you have selected the text, release the left mouse button and paste the text in the same or another console by pressing the middle mouse button.
* The right button is used to extend the selection, like in `xterm`.
* If you’re using a two-button mouse, use the right button to paste text.

It’s that simple!

As I already said, GPM works just fine and no extra configuration is needed. Here are sample contents of the GPM configuration file **/etc/gpm.conf** (or `/etc/conf.d/gpm` in some distributions):

```
# protected from evaluation (i.e. by quoting them).
#
# This file is used by /etc/init.d/gpm and can be modified by
# "dpkg-reconfigure gpm" or by hand at your option.
#
device=/dev/input/mice
responsiveness=
repeat_type=none
type=exps2
append=''
sample_rate=
```

In my example, I use a USB mouse. If you’re using a different mouse, you might have to change the values of the **device=/dev/input/mice** and **type=exps2** parameters.

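Because the Debian-style /etc/gpm.conf shown above is written as plain shell variable assignments (its header says it is read by /etc/init.d/gpm), a short Bash function can read it and print the equivalent gpm command line, which is handy when experimenting with other `device` and `type` values. This is a hypothetical helper (`gpm_cmdline` is a made-up name) that assumes the `device` and `type` variable names from the sample file; files such as /etc/conf.d/gpm on other distributions may use different names. It relies only on gpm's `-m` (mouse device) and `-t` (mouse type) options, and `gpm -t help` lists the mouse types your gpm build accepts. For the sample file above, it prints `gpm -m /dev/input/mice -t exps2`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the gpm command line equivalent to the
# device= and type= settings in a Debian-style gpm.conf.
gpm_cmdline() {
  local conf=${1:-/etc/gpm.conf}
  # "." searches $PATH for names without a slash, so anchor relative names
  case $conf in */*) ;; *) conf=./$conf ;; esac
  if [ ! -r "$conf" ]; then
    echo "gpm_cmdline: cannot read $conf" >&2
    return 1
  fi
  # Source the file in a subshell so its variables (device, type, ...)
  # do not leak into the calling shell.
  (
    . "$conf"
    printf 'gpm -m %s -t %s\n' "$device" "$type"
  )
}
```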
For more details, refer to the man page:

```
$ man gpm
```

And that’s all for now. I hope this was useful. More good stuff to come. Stay tuned!

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/how-to-configure-mouse-support-for-linux-virtual-consoles/

Author: [SK][a]
Selected by: [lujun9972](https://github.com/lujun9972)
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.ostechnix.com/author/sk/

@ -0,0 +1,72 @@
4 scanning tools for the Linux desktop
======
Go paperless by driving your scanner with one of these open source applications.

While the paperless world isn't here quite yet, more and more people are getting rid of paper by scanning documents and photos. Having a scanner isn't enough to do the deed, though. You need software to drive that scanner.

The catch is that many scanner makers don't have Linux versions of the software they bundle with their devices. For the most part, that doesn't matter, because there are good scanning applications available for the Linux desktop. They work with a variety of scanners and do a good job.

Let's take a look at four simple but flexible open source Linux scanning tools. I've used each of these tools (and even wrote about three of them [back in 2014][1]) and found them very useful. You might, too.

### Simple Scan

One of my longtime favorites, [Simple Scan][2] is small, quick, efficient, and easy to use. If you've seen it before, that's because Simple Scan is the default scanner application on the GNOME desktop, as well as for a number of Linux distributions.

Scanning a document or photo takes one click. After scanning something, you can rotate or crop it and save it as an image (JPEG or PNG only) or as a PDF. That said, Simple Scan can be slow, even if you scan documents at lower resolutions. On top of that, Simple Scan uses a set of global defaults for scanning, like 150dpi for text and 300dpi for photos. You need to go into Simple Scan's preferences to change those settings.

If you've scanned something with more than a couple of pages, you can reorder the pages before you save. And if necessary (say, you're submitting a signed form), you can email from within Simple Scan.

### Skanlite

In many ways, [Skanlite][3] is Simple Scan's cousin in the KDE world. Skanlite has few features, but it gets the job done nicely.

The software has options that you can configure, including automatically saving scanned files, setting the quality of the scan, and identifying where to save your scans. Skanlite can save to these image formats: JPEG, PNG, BMP, PPM, XBM, and XPM.

One nifty feature is the software's ability to save portions of what you've scanned to separate files. That comes in handy when, say, you want to excise someone or something from a photo.

### Gscan2pdf

Another old favorite, [gscan2pdf][4] might be showing its age, but it still packs a few more features than some of the other applications mentioned here. Even so, gscan2pdf is still comparatively light.

In addition to saving scans in various image formats (JPEG, PNG, and TIFF), gscan2pdf also saves them as PDF or [DjVu][5] files. You can set the scan's resolution, whether it's black and white or color, and paper size before you click the Scan button. That beats going into gscan2pdf's preferences every time you want to change any of those settings. You can also rotate, crop, and delete pages.

None of those features is a killer feature on its own, but together they give you a bit more flexibility.

### GIMP

You probably know [GIMP][6] as an image-editing tool. But did you know you can use it to drive your scanner?

You'll need to install the [XSane][7] scanner software and the GIMP XSane plugin. Both of those should be available from your Linux distro's package manager. Then, in GIMP, select File > Create > Scanner/Camera, click on your scanner, and then click the Scan button.

If that's not your cup of tea, or if it doesn't work, you can combine GIMP with a plugin called [QuiteInsane][8]. With either plugin, GIMP becomes a powerful scanning application that lets you set a number of options like whether to scan in color or black and white, the resolution of the scan, and whether or not to compress results. You can also use GIMP's tools to touch up or apply effects to your scans. This makes it great for scanning photos and art.

### Do they really just work?

All of this software works well for the most part and with a variety of hardware. I've used them with several multifunction printers that I've owned over the years, whether connected with a USB cable or over wireless.

You might have noticed that I wrote "works well for the most part" in the previous paragraph. I did run into one exception: an inexpensive Canon multifunction printer. None of the software I used could detect it. I had to download and install Canon's Linux scanner software, which did work.

What's your favorite open source scanning tool for Linux? Share your pick by leaving a comment.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/9/linux-scanner-tools

Author: [Scott Nesbitt][a]
Selected by: [lujun9972](https://github.com/lujun9972)
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/scottnesbitt
[1]: https://opensource.com/life/14/8/3-tools-scanners-linux-desktop
[2]: https://gitlab.gnome.org/GNOME/simple-scan
[3]: https://www.kde.org/applications/graphics/skanlite/
[4]: http://gscan2pdf.sourceforge.net/
[5]: http://en.wikipedia.org/wiki/DjVu
[6]: http://www.gimp.org/
[7]: https://en.wikipedia.org/wiki/Scanner_Access_Now_Easy#XSane
[8]: http://sourceforge.net/projects/quiteinsane/

@ -0,0 +1,169 @@
Linux tricks that can save you time and trouble
======
Some command line tricks can make you even more productive on the Linux command line.

Good Linux command line tricks don’t only save you time and trouble. They also help you remember and reuse complex commands, making it easier for you to focus on what you need to do, not how you should go about doing it. In this post, we’ll look at some handy command line tricks that you might come to appreciate.

### Editing your commands

When making changes to a command that you're about to run on the command line, you can move your cursor to the beginning or the end of the command line to facilitate your changes using the ^a (control key plus “a”) and ^e (control key plus “e”) sequences.

You can also fix and rerun the previous command with an easy text substitution by putting your before and after strings between **^** characters, as in ^before^after^. Only the first occurrence of the before string is replaced.

```
$ eho hello world <== oops!

Command 'eho' not found, did you mean:

command 'echo' from deb coreutils
command 'who' from deb coreutils

Try: sudo apt install <deb name>

$ ^e^ec^ <== replace text
echo hello world
hello world
```

### Logging into a remote system with just its name

If you log into other systems from the command line (I do this all the time), you might consider adding some aliases to your system to supply the details. Your alias can provide the username you want to use (which may or may not be the same as your username on your local system) and the identity of the remote server. Use a command of the form alias server_name='ssh -v -l username IP-address', like this:

```
$ alias butterfly="ssh -v -l jdoe 192.168.0.11"
```

You can use the system name in place of the IP address if it’s listed in your /etc/hosts file or available through your DNS server.

And remember you can list your aliases with the **alias** command.

```
$ alias
alias butterfly='ssh -v -l jdoe 192.168.0.11'
alias c='clear'
alias egrep='egrep --color=auto'
alias fgrep='fgrep --color=auto'
alias grep='grep --color=auto'
alias l='ls -CF'
alias la='ls -A'
alias list_repos='grep ^[^#] /etc/apt/sources.list /etc/apt/sources.list.d/*'
alias ll='ls -alF'
alias ls='ls --color=auto'
alias show_dimensions='xdpyinfo | grep '\''dimensions:'\'''
```

It's good practice to test new aliases and then add them to your ~/.bashrc or similar file to be sure they will be available any time you log in.

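Adding an alias to ~/.bashrc by hand is easy, but repeating the step can leave duplicate definitions behind. The Bash function below is a hypothetical helper (the name `persist_alias` is made up for this sketch) that appends an alias line only if the file doesn't already define that name, for example `persist_alias butterfly 'ssh -v -l jdoe 192.168.0.11'`. It assumes a plain alias name and a value without single quotes. Afterwards, run `source ~/.bashrc` (or open a new shell) to load the alias.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "alias NAME='VALUE'" to a startup file
# (default ~/.bashrc) unless an alias with that NAME is already defined there.
# Assumes NAME is a plain word and VALUE contains no single quotes.
persist_alias() {
  local name=$1 value=$2 rcfile=${3:-$HOME/.bashrc}
  if grep -q "^alias ${name}=" "$rcfile" 2>/dev/null; then
    echo "persist_alias: $name is already defined in $rcfile" >&2
    return 0
  fi
  # Same single-quoted form that the alias command itself prints
  printf "alias %s='%s'\n" "$name" "$value" >> "$rcfile"
}
```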
### Freezing and thawing out your terminal window

The ^s (control key plus “s”) sequence stops a terminal from providing output by sending an XOFF (transmit off) flow control character. This affects PuTTY sessions, as well as terminal windows on your desktop. If you type it by mistake, enter ^q (control key plus “q”) to make the terminal window responsive again. The only real trick here is remembering ^q, since you aren't very likely to run into this situation very often.

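If you would rather not have ^s freeze the terminal at all, XON/XOFF flow control can be switched off with `stty -ixon`. The snippet below is a sketch meant for ~/.bashrc (putting it there is an assumption about your setup). It is guarded so it runs only in interactive shells whose input is a terminal, because stty fails when there is no terminal. A side benefit in Bash: with flow control off, ^s reaches readline, which binds it to forward incremental history search, the counterpart of the ^r reverse search.

```bash
# ~/.bashrc snippet: disable XON/XOFF flow control so ^s no longer
# freezes output (and ^q is no longer needed to thaw it).
# $- contains "i" only in interactive shells; -t 0 checks that stdin is a terminal.
if [[ $- == *i* ]] && [ -t 0 ]; then
  stty -ixon
fi
```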
### Repeating commands

Linux provides many ways to reuse commands. The key to command reuse is your history buffer and the commands it collects for you. The easiest way to repeat a command is to type an ! followed by the beginning letters of a recently used command. Another is to press the up-arrow on your keyboard until you see the command you want to reuse and then press Enter. You can also display previously entered commands with the history command and then type ! followed by the number shown next to the command you want to reuse.

```
!! <== repeat previous command
!ec <== repeat last command that started with "ec"
!76 <== repeat command #76 from command history
```

### Watching a log file for updates

Commands such as tail -f /var/log/syslog will show you lines as they are being added to the specified log file, which is very useful if you are waiting for some particular activity or want to track what’s happening right now. The command will show the end of the file and then additional lines as they are added.

```
$ tail -f /var/log/auth.log
Sep 17 09:41:01 fly CRON[8071]: pam_unix(cron:session): session closed for user smmsp
Sep 17 09:45:01 fly CRON[8115]: pam_unix(cron:session): session opened for user root
Sep 17 09:45:01 fly CRON[8115]: pam_unix(cron:session): session closed for user root
Sep 17 09:47:00 fly sshd[8124]: Accepted password for shs from 192.168.0.22 port 47792
Sep 17 09:47:00 fly sshd[8124]: pam_unix(sshd:session): session opened for user shs by
Sep 17 09:47:00 fly systemd-logind[776]: New session 215 of user shs.
Sep 17 09:55:01 fly CRON[8208]: pam_unix(cron:session): session opened for user root
Sep 17 09:55:01 fly CRON[8208]: pam_unix(cron:session): session closed for user root
<== waits for additional lines to be added
```

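Two refinements of tail -f are often handy when watching logs, combined in the sketch below. The function name `follow_matching` is hypothetical. First, tail -F follows the file by name and retries, so it keeps working after log rotation replaces the file, whereas plain -f keeps following the original, renamed file. Second, grep --line-buffered writes each matching line immediately instead of holding it in grep's buffer when its output goes to a pipe or file. For example, `follow_matching /var/log/auth.log sshd` watches for SSH activity until you press Ctrl-C. On Ubuntu, /var/log/auth.log is typically readable only by root and the adm group, so you may need membership in that group.

```bash
#!/usr/bin/env bash
# Hypothetical helper: follow FILE and print only the lines matching PATTERN.
#   tail -F          follow by name and retry, so log rotation doesn't break it
#   --line-buffered  flush each matching line right away, even into a pipe
follow_matching() {
  local file=$1 pattern=$2
  tail -F -- "$file" | grep --line-buffered -- "$pattern"
}
```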
### Asking for help

For most Linux commands, you can enter the name of the command followed by the option **--help** to get some fairly succinct information on what the command does and how to use it. Less extensive than the man page, the --help output often tells you just what you need to know without expanding on all of the options available.

```
$ mkdir --help
Usage: mkdir [OPTION]... DIRECTORY...
Create the DIRECTORY(ies), if they do not already exist.

Mandatory arguments to long options are mandatory for short options too.
  -m, --mode=MODE   set file mode (as in chmod), not a=rwx - umask
  -p, --parents     no error if existing, make parent directories as needed
  -v, --verbose     print a message for each created directory
  -Z                   set SELinux security context of each created directory
                         to the default type
      --context[=CTX]  like -Z, or if CTX is specified then set the SELinux
                         or SMACK security context to CTX
      --help     display this help and exit
      --version  output version information and exit

GNU coreutils online help: <http://www.gnu.org/software/coreutils/>
Full documentation at: <http://www.gnu.org/software/coreutils/mkdir>
or available locally via: info '(coreutils) mkdir invocation'
```

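Two related habits are sketched in Bash below. When you only care about one option, pipe the --help output through grep. For commands such as cd, which are built into the shell and documented by Bash itself, use Bash's `help` builtin. The `type` builtin tells you which kind of command you are dealing with. The commands assume GNU coreutils and Bash, as in the output above.

```bash
#!/usr/bin/env bash
# Show only the help lines that mention mkdir's --parents option
mkdir --help | grep -- '--parents'

# cd is part of Bash itself: type confirms that, and help documents it
type cd            # prints: cd is a shell builtin
help cd | head -n 3
```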
### Removing files with care

To add a little caution to your use of the rm command, you can set it up with an alias that asks you to confirm your request to delete files before it goes ahead and deletes them. Some sysadmins make this the default. In that case, you might like the next option even more.

```
$ alias rm='rm -i' <== prompt for confirmation
```

### Turning off aliases

You can always disable an alias interactively by using the unalias command. It doesn’t change the configuration of the alias in question; it just disables it until the next time you log in or source the file in which the alias is set up.

```
$ unalias rm
```

If the **rm -i** alias is set up as the default and you prefer to never have to provide confirmation before deleting files, you can put your **unalias** command in one of your startup files (e.g., ~/.bashrc).

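When you want to skip an alias for just one command instead of unaliasing it, Bash offers two standard escapes, shown in the sketch below. You can quote any part of the command name (a leading backslash, as in \rm, is the usual form), or you can run the command through the command builtin, since alias expansion applies only to the first word of a command. Aliases are expanded only in interactive shells by default, so scripts that call rm are not affected by an rm -i alias in the first place. The scratch file is just a temporary file created for the demonstration.

```bash
#!/usr/bin/env bash
# Two one-off ways to bypass an alias such as rm='rm -i' without removing it.
scratch=$(mktemp)              # throwaway file for the demonstration

\rm -- "$scratch"              # quoted command name: alias expansion is skipped

touch "$scratch"
command rm -- "$scratch"       # rm is not the first word, so it is not alias-expanded
```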
### Remembering to use sudo

If you often forget to precede commands that only root can run with “sudo”, there are two things you can do. You can take advantage of your command history by using “sudo !!” (which reruns your most recent command with sudo prepended to it), or you can turn some of these commands into aliases with the required "sudo" attached.

```
$ alias update='sudo apt update'
```

### More complex tricks

Some useful command line tricks require a little more than a clever alias. An alias, after all, replaces a command, often inserting options so you don't have to enter them and allowing you to tack on additional information. If you want something more complex than an alias can manage, you can write a simple script or add a function to your .bashrc or other start-up file. The function below, for example, creates a directory and moves you into it. Once it's been set up, source your .bashrc or other file and you can use commands such as "md temp" to set up a directory and cd into it.

```
# Create the directory (plus any missing parents), then cd into the first one
md () { mkdir -p "$@" && cd "$1"; }
```

### Wrap-up

Working on the Linux command line remains one of the most productive and enjoyable ways to get work done on my Linux systems, but a group of command line tricks and clever aliases can make that experience even better.

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3305811/linux/linux-tricks-that-even-you-can-love.html

Author: [Sandra Henry-Stocker][a]
Selected by: [lujun9972](https://github.com/lujun9972)
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/

@ -0,0 +1,72 @@
Cozy Is A Nice Linux Audiobook Player For DRM-Free Audio Files
======
[Cozy][1] **is a free and open source audiobook player for the Linux desktop. The application lets you listen to DRM-free audiobooks (mp3, m4a, flac, ogg and wav) using a modern Gtk3 interface.**

You could use any audio player to listen to audiobooks, but a specialized audiobook player like Cozy makes everything easier, by **remembering your playback position and continuing from where you left off for each audiobook**, or by letting you **set the playback speed of each book individually**, among other things.

|

||||

|

||||

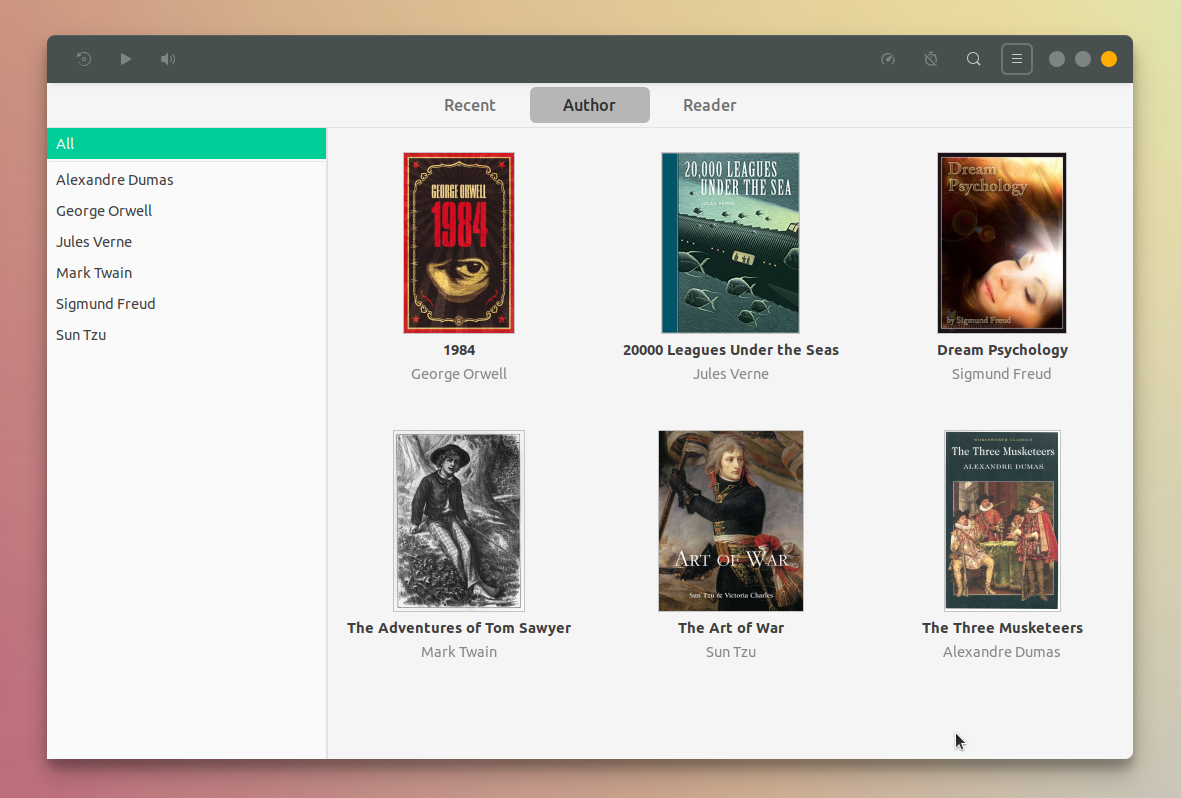

The Cozy interface lets you browse books by author, reader or recency, while also providing search functionality. **Books front covers are supported by Cozy** \- either by using embedded images, or by adding a cover.jpg or cover.png image in the book folder, which is automatically picked up and displayed by Cozy.

|

||||

|

||||

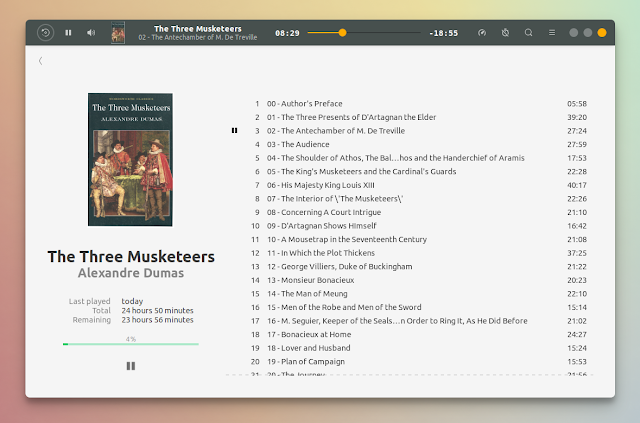

When you click on an audiobook, Cozy lists the book chapters on the right, while displaying the book cover (if available) on the left, along with the book name, author and the last played time, along with total and remaining time:

|

||||

|

||||

|

||||

|

||||

From the application toolbar you can easily **go back 30 seconds** by clicking the rewind icon from its top left-hand side corner. Besides regular controls, cover and title, you'll also find a playback speed button on the toolbar, which lets you increase the playback speed up to 2X.

|

||||

|

||||

**A sleep timer is also available**. It can be set to stop after the current chapter or after a given number of minutes.

Other Cozy features worth mentioning:

* **Mpris integration** (Media keys & playback info)
* **Supports multiple storage locations**
* **Drag'n'drop support for importing new audiobooks**
* **Offline Mode**. If your audiobooks are on an external or network drive, you can toggle the download switch to keep a locally cached copy of the book to listen to on the go. To enable this feature, you have to set your storage location to external in the settings
* **Prevents your system from suspending during playback**
* **Dark mode**

What I'd like to see in Cozy is a way to get audiobook metadata, including the book cover, automatically. A feature to retrieve metadata from Audible.com was proposed on the Cozy GitHub project page and the developer seems interested in this, but it's not clear when or if this will be implemented.

As I mentioned at the beginning of the article, Cozy only supports DRM-free audio files. Currently it supports mp3, m4a, flac, ogg and wav. Support for more formats will probably come in the future, with m4b being listed on the Cozy 0.7.0 todo list.

Cozy cannot play Audible audiobooks due to DRM. But you'll find some solutions out there for converting Audible (.aa/.aax) audiobooks to mp3, like [audiblefreedom][3].

### Install Cozy

**Any Linux distribution / Flatpak**: Cozy is available as a Flatpak on Flathub. To install it, follow the quick Flatpak [setup][4], then go to the Cozy Flathub [page][5] and click the install button, or use the install command at the bottom of its page.

**elementary OS**: Cozy is available in the [AppCenter][6].

**Ubuntu 18.04 / Linux Mint 19**: you can install Cozy from its repository:

```
wget -nv https://download.opensuse.org/repositories/home:geigi/Ubuntu_18.04/Release.key -O Release.key
sudo apt-key add - < Release.key
sudo sh -c "echo 'deb http://download.opensuse.org/repositories/home:/geigi/Ubuntu_18.04/ /' > /etc/apt/sources.list.d/home:geigi.list"
sudo apt update
sudo apt install com.github.geigi.cozy
```

**For other ways of installing Cozy, check out its [website][2].**

--------------------------------------------------------------------------------

via: https://www.linuxuprising.com/2018/09/cozy-is-nice-linux-audiobook-player-for.html

Author: [Logix][a]
Selected by: [lujun9972](https://github.com/lujun9972)
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://plus.google.com/118280394805678839070
[1]: https://cozy.geigi.de/
[2]: https://cozy.geigi.de/#how-can-i-get-it
[3]: https://gitlab.com/ReverendJ1/audiblefreedom/blob/master/audiblefreedom
[4]: https://flatpak.org/setup/
[5]: https://flathub.org/apps/details/com.github.geigi.cozy
[6]: https://appcenter.elementary.io/com.github.geigi.cozy/

@ -0,0 +1,47 @@
How To Force APT Package Manager To Use IPv4 In Ubuntu 16.04
======