mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

Merge branch 'master' into 20200427

This commit is contained in:

commit

814f08265a

@ -0,0 +1,127 @@

An Introduction to the GNU Core Utilities
======

> Most of what Linux sysadmins need to do can be found in GNU coreutils or util-linux.

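Many of the most basic and frequently used tools for Linux system administrators are included in two sets of utilities: the [GNU Core Utilities (coreutils)][3] and util-linux. Their basic functions let sysadmins perform many of the tasks of administering a Linux system, including managing and manipulating text files, directories, data streams, storage media, process controls, filesystems, and much more.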

These tools are indispensable: without them, it is not possible to accomplish any useful work on a Unix or Linux computer. Given their importance, let's examine them.

### GNU coreutils

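To understand the origins of the GNU Core Utilities, we need to take a short trip in a time machine to the early days of Unix at Bell Labs. [Unix was written][8] so that Ken Thompson, Dennis Ritchie, Doug McIlroy, and Joe Ossanna could continue something they had started while working on a large multitasking, multiuser computer project called [Multics][9]: a little thing that was a game called Space Travel. As remains true today, it always seems to be the gamers who push computing technology forward. The new operating system was much more limited than Multics (LCTT translator's note: the prefix multi- means "many"), as only two users could log in at a time, so it was called Unics (LCTT translator's note: the prefix uni- means "single"). The name was later changed to Unix.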

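Over time, Unix became such a success that Bell Labs began essentially giving it away to universities, and later to companies, for only the cost of the media and shipping. Back in those days, system-level software was shared between organizations and programmers, because at the level of system administration they were working toward common goals.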

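Eventually, the [PHBs][10] at AT&T decided they should make money on Unix and began using more restrictive, and more expensive, licensing. This happened at a time when software in general was becoming more proprietary, restricted, and closed, and from then on sharing software with other users and organizations became impossible.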

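Some people did not like this and fought it with free software. Richard M. Stallman (RMS) led a group of "rebels" who set out to write an open, freely available operating system that they called the GNU operating system. This group created the GNU utilities but did not produce a viable kernel.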

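When Linus Torvalds first wrote and compiled the Linux kernel, he needed a set of very basic system utilities to begin doing even marginally useful work. The kernel provides neither commands nor any type of command shell such as Bash; by itself it is useless. So Linus used the freely available GNU Core Utilities and recompiled them for Linux. This gave him a complete, if quite basic, operating system.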

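You can learn about everything the GNU Core Utilities contain by entering the command `info coreutils` at a terminal. The following list of the core utilities is part of that info page. The utilities are grouped by function to make specific ones easier to find; in the terminal, select the group you want more information on and press Enter.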

```
* Output of entire files:: cat tac nl od base32 base64
* Formatting file contents:: fmt pr fold
* Output of parts of files:: head tail split csplit
* Summarizing files:: wc sum cksum b2sum md5sum sha1sum sha2
* Operating on sorted files:: sort shuf uniq comm ptx tsort
* Operating on fields:: cut paste join
* Operating on characters:: tr expand unexpand
* Directory listing:: ls dir vdir dircolors
* Basic operations:: cp dd install mv rm shred
* Special file types:: mkdir rmdir unlink mkfifo mknod ln link readlink
* Changing file attributes:: chgrp chmod chown touch
* Disk usage:: df du stat sync truncate
* Printing text:: echo printf yes
* Conditions:: false true test expr
* Redirection:: tee
* File name manipulation:: dirname basename pathchk mktemp realpath
* Working context:: pwd stty printenv tty
* User information:: id logname whoami groups users who
* System context:: date arch nproc uname hostname hostid uptime
* SELinux context:: chcon runcon
* Modified command invocation:: chroot env nice nohup stdbuf timeout
* Process control:: kill
* Delaying:: sleep
* Numeric operations:: factor numfmt seq
```

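There are 102 utilities in this list. It covers many of the functions needed to perform basic tasks on a Unix or Linux host. However, many basic utilities are missing; for example, the `mount` and `umount` commands are not in this list. Those and many other commands that are not in the GNU coreutils can be found in the util-linux collection.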
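
As a small illustration of the core utilities working together, the sketch below counts the utility names in menu lines of the kind shown above, using only `cut`, `wc`, and `tr`. The function name `count_utils` and the two-line sample are assumptions made for this example; they are not part of the info page.

```shell
# count_utils: read "* Group:: name name ..." menu lines on stdin and
# print how many utility names they contain in total.
# (Hypothetical helper, written for illustration only.)
count_utils() {
    # Splitting on ":" puts everything after the "::" separator in field 3.
    n=$(cut -d: -f3- | wc -w | tr -d ' ')
    echo "$n"
}

# A two-line sample taken from the menu above.
menu='* Output of entire files:: cat tac nl od base32 base64
* Formatting file contents:: fmt pr fold'

printf '%s\n' "$menu" | count_utils    # prints: 9
```

Feeding the complete menu to the same function is one way to check the total quoted in the text.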

### util-linux

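The util-linux package contains many of the other common commands that sysadmins use. Its 107 utilities are distributed by the Linux Kernel Organization. (The GNU coreutils described above, by contrast, were originally three separate collections, fileutils, shellutils, and textutils, which were [combined into a single package][11] in 2003.)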

```
agetty          fsck.minix      mkfs.bfs        setpriv
blkdiscard      fsfreeze        mkfs.cramfs     setsid
blkid           fstab           mkfs.minix      setterm
blockdev        fstrim          mkswap          sfdisk
cal             getopt          more            su
cfdisk          hexdump         mount           sulogin
chcpu           hwclock         mountpoint      swaplabel
chfn            ionice          namei           swapoff
chrt            ipcmk           newgrp          swapon
chsh            ipcrm           nologin         switch_root
colcrt          ipcs            nsenter         tailf
col             isosize         partx           taskset
colrm           kill            pg              tunelp
column          last            pivot_root      ul
ctrlaltdel      ldattach        prlimit         umount
addpart         line            raw             unshare
delpart         logger          readprofile     utmpdump
dmesg           login           rename          uuidd
eject           look            renice          uuidgen
fallocate       losetup         reset           vipw
fdformat        lsblk           resizepart      wall
fdisk           lscpu           rev             wdctl
findfs          lslocks         RTC Alarm       whereis
findmnt         lslogins        runuser         wipefs
flock           mcookie         script          write
fsck            mesg            scriptreplay    zramctl
fsck.cramfs     mkfs            setarch
```

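Some of these utilities have been deprecated and will likely be dropped from the collection at some point in the future. Check [Wikipedia's util-linux page][12] for information on many of the utilities; the man pages also provide details on the commands.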

### Summary

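These two collections of Linux utilities, the GNU Core Utilities and util-linux, together provide the basic utilities required to administer a Linux system. While researching this article, I found several interesting utilities I never knew about. Many of these commands are seldom needed, but when you need them, they are indispensable.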

Between these two collections, there are over 200 Linux utilities. While Linux has many more commands, these are the ones needed to manage the basic functions of a typical Linux host.

---

via: [https://opensource.com/article/18/4/gnu-core-utilities][17]

Author: [David Both][18] Topic selection: [lujun9972][19] Translator: [wxy][20] Proofreader: [wxy][21]

This article was originally compiled by [LCTT][22] and is proudly presented by [Linux中国][23]

[1]: https://pixabay.com/en/tiny-people-core-apple-apple-half-700921/
[2]: https://creativecommons.org/publicdomain/zero/1.0/
[3]: https://www.gnu.org/software/coreutils/coreutils.html
[4]: https://opensource.com/life/17/10/top-terminal-emulators?intcmp=7016000000127cYAAQ
[5]: https://opensource.com/article/17/2/command-line-tools-data-analysis-linux?intcmp=7016000000127cYAAQ
[6]: https://opensource.com/downloads/advanced-ssh-cheat-sheet?intcmp=7016000000127cYAAQ
[7]: https://developers.redhat.com/cheat-sheet/advanced-linux-commands-cheatsheet?intcmp=7016000000127cYAAQ
[8]: https://en.wikipedia.org/wiki/History_of_Unix
[9]: https://en.wikipedia.org/wiki/Multics
[10]: https://en.wikipedia.org/wiki/Pointy-haired_Boss
[11]: https://en.wikipedia.org/wiki/GNU_Core_Utilities
[12]: https://en.wikipedia.org/wiki/Util-linux
[13]: https://opensource.com/users/dboth
[14]: https://opensource.com/users/dboth
[15]: https://opensource.com/users/dboth
[16]: https://opensource.com/participate
[17]: https://opensource.com/article/18/4/gnu-core-utilities
[18]: https://opensource.com/users/dboth
[19]: https://github.com/lujun9972
[20]: https://github.com/译者ID
[21]: https://github.com/校对者ID
[22]: https://github.com/LCTT/TranslateProject
[23]: https://linux.cn/

266

published/20180612 Systemd Services- Reacting to Change.md

Normal file

@ -0,0 +1,266 @@

Systemd Services: Reacting to Change
======

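[I have one of these Compute Sticks][1] (Figure 1) and use it as an all-purpose server. It is small and silent and, as it is built around the x86 architecture, I had no problems installing drivers for my printer. That is what it does most of the time: it talks to the shared printer and scanner in my living room.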

![ComputeStick][3]

*An Intel Compute Stick, with a euro coin for scale.*

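Most of the time it is idle, especially when we are out, so I thought it would be a good idea to use it as a surveillance system. The device doesn't come with its own camera, and it wouldn't need to be watching all the time. I also didn't want to start the image capturing by hand, because that would mean logging in over SSH and typing commands in a shell to fire up the process before leaving the house.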

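So I thought the thing to do would be to grab a USB webcam and have the surveillance system start automatically just by plugging it in. Bonus points if the surveillance system also started after the Stick rebooted and found the camera connected.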

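In earlier installments, we saw that systemd services can be [started or stopped by hand][5] or [when certain conditions are met][6]. Those conditions are not limited to the OS reaching a certain state during boot-up or shutdown; they can also be triggered when you plug in new hardware or when something changes in the filesystem. You do that by combining a Udev rule with a systemd service.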

### Hotplugging with Udev

Udev rules live in the `/etc/udev/rules.d` directory and are usually written as a single line containing *conditions* and *assignments* that lead to an *action*.

That was a bit cryptic. Let's try again:

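Typically, in a Udev rule, you tell systemd what to look for when a device is connected. For example, you may want to check that the make and model of the device you just plugged in match the make and model of the device you told Udev to wait for. Those are the *conditions* mentioned earlier.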

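Then you may want to change a few things so the device is easy to use later. One example is changing the read and write permissions of a device: if you plug in a USB printer, you want users to be able to read information from the printer (the user's printing application needs to know the model, the manufacturer, and whether the printer is ready to accept print jobs) and to write to it, that is, send it data to print. Changing the read and write permissions of a device is done with one of the *assignments* you read about earlier.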

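Finally, you will probably want the system to do something when the conditions above are met, such as starting a backup program to copy important files when a certain external hard disk is plugged in. That is an example of an *action* mentioned above.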

With that in mind, consider this rule:

```
ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="03f0", ATTRS{idProduct}=="e207", \
  SYMLINK+="mywebcam", TAG+="systemd", MODE="0666", ENV{SYSTEMD_WANTS}="webcam.service"
```

The first part of the rule,

```
ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="03f0",
ATTRS{idProduct}=="e207" [etc... ]
```

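establishes the conditions the device has to meet before the system does any of the other things you want it to do. The device has to be added (`ACTION=="add"`) to the machine, and it has to be added to the `video4linux` subsystem. To make sure the rule is applied only when the correct device is plugged in, you have to make sure Udev correctly identifies the manufacturer (`ATTRS{idVendor}=="03f0"`) and the model (`ATTRS{idProduct}=="e207"`) of the device.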

In this case, we are talking about this device (Figure 2):

![webcam][8]

*The HP webcam used in this experiment.*

Notice how `==` is used to indicate that these are logical comparisons. You would read the condition part of the rule like this:

> If a device is added, and that device is controlled by the video4linux subsystem, and the manufacturer code of the device is 03f0 and the model is e207, then...

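But where do you get all this information? Where do you find the action that triggers the event, the manufacturer, the model, and so on? You will need to use several sources. You can get the `idVendor` and `idProduct` by plugging the webcam into your machine and running `lsusb`: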

```
lsusb
Bus 002 Device 002: ID 8087:0024 Intel Corp. Integrated Rate Matching Hub
Bus 002 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
Bus 003 Device 003: ID 03f0:e207 Hewlett-Packard
Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 001 Device 003: ID 04f2:b1bb Chicony Electronics Co., Ltd
Bus 001 Device 002: ID 8087:0024 Intel Corp. Integrated Rate Matching Hub
Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
```

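The webcam I am using is made by HP, and there is only one HP device in the list above. The `ID` gives you the manufacturer and the model, separated by a colon (`:`). If you have more than one device from the same manufacturer and cannot tell which is which, unplug the webcam, run `lsusb` again, and check what is missing.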
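
Picking the two IDs out of `lsusb` output can also be scripted. The following is a minimal sketch, assuming the line layout shown above (the `vendor:product` pair in the sixth field); the helper name `usb_ids` is hypothetical, and the sample lines are copied from the listing so that the snippet runs without a webcam attached.

```shell
# usb_ids PATTERN: read lsusb-style lines on stdin and print
# "vendor product" for every line that matches PATTERN.
# (Hypothetical helper, written for illustration only.)
usb_ids() {
    # Field 6 of an lsusb line is the "vendor:product" pair.
    awk -v pat="$1" '$0 ~ pat { split($6, id, ":"); print id[1], id[2] }'
}

# Two lines copied from the lsusb output above.
sample='Bus 003 Device 003: ID 03f0:e207 Hewlett-Packard
Bus 001 Device 003: ID 04f2:b1bb Chicony Electronics Co., Ltd'

printf '%s\n' "$sample" | usb_ids 'Hewlett-Packard'    # prints: 03f0 e207
```

On a real machine, `lsusb | usb_ids 'Hewlett-Packard'` would apply the same filter to the live device list.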

Or...

Unplug the webcam, wait a few seconds, run the command `udevadm monitor --environment`, and then plug the webcam back in. With the HP webcam, you will see:

```
udevadm monitor --environment
UDEV [35776.495221] add /devices/pci0000:00/0000:00:1c.3/0000:04:00.0/usb3/3-1/3-1:1.0/input/input21/event11 (input)
.MM_USBIFNUM=00
ACTION=add
BACKSPACE=guess
DEVLINKS=/dev/input/by-path/pci-0000:04:00.0-usb-0:1:1.0-event /dev/input/by-id/usb-Hewlett_Packard_HP_Webcam_HD_2300-event-if00
DEVNAME=/dev/input/event11
DEVPATH=/devices/pci0000:00/0000:00:1c.3/0000:04:00.0/usb3/3-1/3-1:1.0/input/input21/event11
ID_BUS=usb
ID_INPUT=1
ID_INPUT_KEY=1
ID_MODEL=HP_Webcam_HD_2300
ID_MODEL_ENC=HP\x20Webcam\x20HD\x202300
ID_MODEL_ID=e207
ID_PATH=pci-0000:04:00.0-usb-0:1:1.0
ID_PATH_TAG=pci-0000_04_00_0-usb-0_1_1_0
ID_REVISION=1020
ID_SERIAL=Hewlett_Packard_HP_Webcam_HD_2300
ID_TYPE=video
ID_USB_DRIVER=uvcvideo
ID_USB_INTERFACES=:0e0100:0e0200:010100:010200:030000:
ID_USB_INTERFACE_NUM=00
ID_VENDOR=Hewlett_Packard
ID_VENDOR_ENC=Hewlett\x20Packard
ID_VENDOR_ID=03f0
LIBINPUT_DEVICE_GROUP=3/3f0/e207:usb-0000:04:00.0-1/button
MAJOR=13
MINOR=75
SEQNUM=3162
SUBSYSTEM=input
USEC_INITIALIZED=35776495065
XKBLAYOUT=es
XKBMODEL=pc105
XKBOPTIONS=
XKBVARIANT=
```

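That may look like a lot of information to process, but look at the `ACTION` field near the top of the list: it tells you what event just happened, namely that a device was added to the system. You can also see the name of the device spelled out on several of the lines, so you can be pretty sure it is the device you are looking for. The output also shows the manufacturer's ID (`ID_VENDOR_ID=03f0`) and the model ID (`ID_MODEL_ID=e207`).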
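
If you save that output to a file, individual values can be pulled out of the `KEY=VALUE` lines with a few lines of shell. This is only a sketch: the helper name `env_value` is hypothetical, and the sample below is a short excerpt of the dump above rather than live `udevadm` output.

```shell
# env_value KEY: read KEY=VALUE lines on stdin and print the value of KEY.
# (Hypothetical helper, written for illustration only.)
env_value() {
    # Compare the text before the first "=" with KEY, then print the rest.
    awk -F= -v k="$1" '$1 == k { print substr($0, length(k) + 2) }'
}

# A few lines copied from the monitor output above.
dump='ACTION=add
ID_MODEL_ID=e207
ID_VENDOR_ID=03f0
SUBSYSTEM=input'

printf '%s\n' "$dump" | env_value ID_VENDOR_ID    # prints: 03f0
printf '%s\n' "$dump" | env_value ID_MODEL_ID     # prints: e207
```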

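This gives you three of the four values the condition part of the rule needs. You may be tempted to think it gives you the fourth, too, because there is also a line that says: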

```
SUBSYSTEM=input
```

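Careful! Although a USB webcam is indeed a device that provides input (as do a keyboard and a mouse), it also belongs to the usb subsystem and several others. This means your webcam gets added to several subsystems and looks like several devices. If you pick the wrong subsystem, your rule may not work the way you expect, or may not work at all.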

So, the third thing to check is every subsystem the webcam gets added to, so that you can pick the correct one. To do that, unplug your webcam again and run:

```
ls /dev/video*
```

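This shows you all the video devices connected to the machine. If you are using a laptop, it most likely has a built-in webcam, which will probably show up as `/dev/video0`. Plug your webcam back in and run `ls /dev/video*` again.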

Now you should see one more video device (probably `/dev/video1`).

Now you can find out all the subsystems it belongs to by running `udevadm info -a /dev/video1`:

```
udevadm info -a /dev/video1

Udevadm info starts with the device specified by the devpath and then
walks up the chain of parent devices. It prints for every device
found, all possible attributes in the udev rules key format.
A rule to match, can be composed by the attributes of the device
and the attributes from one single parent device.

 looking at device '/devices/pci0000:00/0000:00:1c.3/0000:04:00.0/usb3/3-1/3-1:1.0/video4linux/video1':
 KERNEL=="video1"
 SUBSYSTEM=="video4linux"
 DRIVER==""
 ATTR{dev_debug}=="0"
 ATTR{index}=="0"
 ATTR{name}=="HP Webcam HD 2300: HP Webcam HD"

[etc...]
```

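The output goes on for quite a while, but what you are interested in is right at the beginning: `SUBSYSTEM=="video4linux"`. You can copy and paste this line straight into your rule. The rest of the output (not shown for brevity) gives you more information, such as the manufacturer and model IDs, again in a format you can copy and paste into your rule.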

Now that you have a way of identifying the device and know which event should trigger the action, it is time to make changes to the device.

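The next section of the rule, `SYMLINK+="mywebcam", TAG+="systemd", MODE="0666"`, tells Udev to do three things. First, you create a symbolic link named `/dev/mywebcam` that points to the device (for example, `/dev/video1`). This is because you cannot predict what the system will call the device by default. When you have a built-in webcam and hotplug a new one, the built-in webcam will usually be `/dev/video0` and the external one `/dev/video1`. However, if you reboot the computer with the external USB webcam plugged in, that may be reversed: the internal webcam may become `/dev/video1` and the external one `/dev/video0`. In other words, although your image-capturing script (which you will see later) always needs to point at the external webcam, you cannot rely on it being `/dev/video0` or `/dev/video1`. To solve this problem, you tell Udev to create a symbolic link that stays the same from the moment the device is added to the `video4linux` subsystem, and you make your script point to that link.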
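
The effect of the stable link can be tried out safely in a scratch directory. In the sketch below, a temporary directory stands in for `/dev`, plain files stand in for the device nodes, and the helper `point_webcam` is a hypothetical stand-in for what Udev does with `SYMLINK+=`; none of this touches real devices.

```shell
# Illustration only: a temporary directory stands in for /dev.
devdir=$(mktemp -d)
touch "$devdir/video0" "$devdir/video1"

# point_webcam NODE: make "mywebcam" resolve to the given node name.
# (Hypothetical helper that mimics the link Udev would maintain.)
point_webcam() {
    ln -sfn "$1" "$devdir/mywebcam"
}

point_webcam video1            # hotplug case: the external camera is video1
readlink "$devdir/mywebcam"    # prints: video1

point_webcam video0            # after a reboot the names may be swapped
readlink "$devdir/mywebcam"    # prints: video0
```

A script that always opens `mywebcam` keeps working in both cases, which is the point of the link.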

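The second thing is to add `systemd` to the list of Udev tags associated with this rule. This tells Udev that the action the rule triggers will be managed by systemd, that is, it will be some sort of systemd service.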

Notice how the `+=` operator is used in both cases. It adds the value to a list, which means you can add more than one value to `SYMLINK` and `TAG`.

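`MODE`, on the other hand, can hold only one value (hence the simple `=` assignment operator). What `MODE` does is tell Udev who can read from or write to the device. If you are familiar with `chmod` (and if you are reading this, you probably are), you will also be familiar with [how permissions are expressed as numbers][9]. That is what this is: `0666` means "give read and write permission on the device to everybody".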
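
To see what mode `0666` looks like in practice, you can apply it to a scratch file and read it back. This sketch assumes GNU `stat` (for the `-c` option); the helper name `mode_of` is hypothetical, and the temporary file merely stands in for the device node.

```shell
# mode_of PATH: print the octal mode and the symbolic permission string.
# (Hypothetical helper; relies on GNU stat's -c option.)
mode_of() {
    stat -c '%a %A' "$1"
}

# Illustration only: a scratch file stands in for the device node.
f=$(mktemp)
chmod 0666 "$f"
mode_of "$f"    # prints: 666 -rw-rw-rw-
```

Each `6` is read (4) plus write (2), given in turn to the owner, the group, and everyone else.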

Finally, `ENV{SYSTEMD_WANTS}="webcam.service"` tells Udev which systemd service to run.

Save this rule to a file called `90-webcam.rules` (or something similar) in the `/etc/udev/rules.d` directory. You can then load it either by rebooting your machine or by running:

```
sudo udevadm control --reload-rules && sudo udevadm trigger
```

### Service at Last

The service that the Udev rule triggers is very simple:

```
# webcam.service

[Service]
Type=simple
ExecStart=/home/[user name]/bin/checkimage.sh
```

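Basically, it just runs the `checkimage.sh` script stored in your personal `bin/` directory and pushes it to the background. [This is something you saw in an earlier installment][5]. It may look like a small thing, but because it is started through a Udev rule tagged for systemd, it is pulled in by a special kind of systemd unit called a `device` unit, which systemd creates for the tagged device. Congratulations.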

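As for the `checkimage.sh` script that `webcam.service` calls, there are several ways of grabbing an image from a webcam and comparing it with the previous one to check for changes (which is what `checkimage.sh` does), but this is how I did it: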

```
#!/bin/bash
# This is the checkimage.sh script

# Grab a single reference frame from the webcam (saved as 00000001.png).
mplayer -vo png -frames 1 tv:// -tv driver=v4l2:width=640:height=480:device=/dev/mywebcam &>/dev/null
mv 00000001.png /home/[user name]/monitor/monitor.png

while true
do
    # Grab a new frame to compare against the reference image.
    mplayer -vo png -frames 1 tv:// -tv driver=v4l2:width=640:height=480:device=/dev/mywebcam &>/dev/null
    mv 00000001.png /home/[user name]/monitor/temp.png

    # compare prints the mean absolute error on stderr; keep its first field.
    imagediff=`compare -metric mae /home/[user name]/monitor/monitor.png /home/[user name]/monitor/temp.png /home/[user name]/monitor/diff.png 2>&1 > /dev/null | cut -f 1 -d " "`
    # If the difference exceeds the threshold, the new frame becomes the reference.
    if [ `echo "$imagediff > 700.0" | bc` -eq 1 ]
    then
        mv /home/[user name]/monitor/temp.png /home/[user name]/monitor/monitor.png
    fi

    sleep 0.5
done
```

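First, it uses [MPlayer][10] to grab a frame (`00000001.png`) from the webcam. Notice how `mplayer` is pointed at the `mywebcam` symbolic link created by the Udev rule instead of at `video0` or `video1`. Then it moves the image to the `monitor/` directory in your home directory. After that, it runs an infinite loop that does the same thing again and again, but also uses [ImageMagick's compare tool][11] to see whether there are any differences between the last image captured and the one already in the `monitor/` directory.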

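If the images differ, something has moved within the webcam's field of view. The script overwrites the original image with the new one and continues comparing, waiting for more movement.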
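
The threshold test at the heart of the loop can be tried on its own, without a camera. The sketch below is not the author's code: it swaps `bc` for `awk` to do the floating-point comparison, and the function name `exceeds_threshold` and the sample numbers are assumptions made for illustration.

```shell
# exceeds_threshold VALUE LIMIT: succeed when VALUE is greater than LIMIT.
# awk performs the floating-point comparison, so bc is not required.
# (Hypothetical helper, written for illustration only.)
exceeds_threshold() {
    awk -v v="$1" -v t="$2" 'BEGIN { exit !(v + 0 > t + 0) }'
}

# Made-up difference values, compared against the script's 700.0 threshold.
if exceeds_threshold 812.4 700.0; then
    echo "movement detected"
fi
if ! exceeds_threshold 12.3 700.0; then
    echo "no significant change"
fi
```

Because the function reports its result through the exit status, it can be used directly in an `if`, in place of the `[ ... -eq 1 ]` test around `bc`.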

### Plugging It In

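With everything in place, plugging in the webcam triggers your Udev rule, which starts `webcam.service`. `webcam.service` runs `checkimage.sh` in the background, and `checkimage.sh` starts taking a picture every half second. You will know it is working because the webcam's LED flashes each time it takes a picture.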

As always, if something goes wrong, run:

```
systemctl status webcam.service
```

to check what your service and script are doing.

### Coming Up

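You may be wondering: why overwrite the original image? Surely you would want to see what happened whenever the system detects movement, right? You would be right, but as you will see in the next installment, leaving things as they are and processing the images with yet another type of systemd unit makes things nicer, cleaner, and simpler.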

Stay tuned for the next installment.

--------------------------------------------------------------------------------

via: https://www.linux.com/blog/intro-to-linux/2018/6/systemd-services-reacting-change

Author: [Paul Brown][a]
Topic selection: [lujun9972][b]
Translator: [messon007](https://github.com/messon007)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.linux.com/users/bro66
[b]: https://github.com/lujun9972
[1]: https://www.intel.com/content/www/us/en/products/boards-kits/compute-stick/stk1a32sc.html
[2]: https://www.linux.com/files/images/fig01png
[3]: https://lcom.static.linuxfound.org/sites/lcom/files/fig01.png
[4]: https://www.linux.com/licenses/category/used-permission
[5]: https://linux.cn/article-9700-1.html
[6]: https://linux.cn/article-9703-1.html
[7]: https://www.linux.com/files/images/fig02png
[8]: https://www.linux.com/sites/lcom/files/styles/floated_images/public/fig02.png?itok=esFv4BdM (webcam)
[9]: https://chmod-calculator.com/
[10]: https://mplayerhq.hu/design7/news.html
[11]: https://www.imagemagick.org/script/compare.php
[12]: https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

@ -0,0 +1,199 @@

[#]: collector: (lujun9972)
[#]: translator: (robsean)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-12186-1.html)
[#]: subject: (How to Configure SFTP Server with Chroot in Debian 10)
[#]: via: (https://www.linuxtechi.com/configure-sftp-chroot-debian10/)
[#]: author: (Pradeep Kumar https://www.linuxtechi.com/author/pradeep/)

How to Configure an SFTP Server with Chroot in Debian 10
======

SFTP stands for Secure File Transfer Protocol or SSH File Transfer Protocol. It is one of the most common methods for securely transferring files over `ssh` from a local system to a remote server and vice versa. The main advantage of `sftp` is that we don't need to install any additional package besides `openssh-server`; in most Linux distributions, the `openssh-server` package is part of the default installation. Another benefit of `sftp` is that we can allow a user to use `sftp` only, without `ssh` shell access.

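The current Debian release, Debian 10, is code-named "Buster". In this article, we demonstrate how to configure `sftp` in a chroot "jail" environment on a Debian 10 system. Here, a chroot jail environment means that users cannot go beyond their respective home directories; that is, they cannot change to any directory outside their own home directory. The lab details are: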

* OS = Debian 10
* IP address = 192.168.56.151

Let's jump into the SFTP configuration steps.

### Step 1: Create a group for sftp using the groupadd command

Open a terminal and create a group named `sftp_users` with the `groupadd` command below:

```
root@linuxtechi:~# groupadd sftp_users
```

### Step 2: Add users to the sftp_users group and set permissions

If you want to create a new user and add that user to the `sftp_users` group, run the following command.

**Syntax:**

```
# useradd -m -G sftp_users <username>
```

Let's suppose the user name is `jonathan`:

```
root@linuxtechi:~# useradd -m -G sftp_users jonathan
```

Set the password using the following `chpasswd` command:

```
root@linuxtechi:~# echo "jonathan:<password>" | chpasswd
```

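If you want to add an existing user to the `sftp_users` group, run the `usermod` command below. Let's suppose the existing user name is `chris`. Note that `usermod -G` on its own replaces the user's existing supplementary groups; adding `-a` appends the new group instead.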

```
# -a appends sftp_users to chris's existing supplementary groups instead of replacing them
root@linuxtechi:~# usermod -aG sftp_users chris
```

Now set the required ownership on the users' home directories:

```
root@linuxtechi:~# chown root /home/jonathan /home/chris/
```
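
The ownership change matters because `sshd` only accepts a chroot directory that is owned by root and not writable by group or others. The following is a minimal sketch of how those two properties could be checked; the function names are hypothetical, GNU `stat` is assumed, and a scratch directory stands in for a real home directory.

```shell
# writable_by_others DIR: succeed if DIR is writable by group or others.
# (Hypothetical helper; relies on GNU stat's -c option.)
writable_by_others() {
    mode=$(stat -c '%a' "$1")          # octal mode, for example 755
    # Octal bits 020 (group write) and 002 (other write).
    [ $(( 0$mode & 022 )) -ne 0 ]
}

# owned_by_root DIR: succeed if DIR belongs to uid 0.
owned_by_root() {
    [ "$(stat -c '%u' "$1")" -eq 0 ]
}

# Illustration only: a scratch directory stands in for /home/jonathan.
d=$(mktemp -d)
chmod 755 "$d"
writable_by_others "$d" || echo "755: not writable by group or others"
chmod 775 "$d"
writable_by_others "$d" && echo "775: too permissive for a chroot"
```

On the server itself, `owned_by_root /home/jonathan` would be expected to succeed after the `chown` above.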

Create an upload folder in each user's home directory and set the correct ownership:

```
root@linuxtechi:~# mkdir /home/jonathan/upload
root@linuxtechi:~# mkdir /home/chris/upload
root@linuxtechi:~# chown jonathan /home/jonathan/upload
root@linuxtechi:~# chown chris /home/chris/upload
```

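**Note:** Users such as jonathan and chris can upload files and directories from their local systems, but only into their `upload` folder, because the home directory itself now belongs to root.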

### Step 3: Edit the sftp configuration file /etc/ssh/sshd_config

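As already stated, `sftp` operations are carried out over `ssh`, so its configuration file is `/etc/ssh/sshd_config`. Before making any changes, I suggest backing up the file first; then edit it and add the following content: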

```
root@linuxtechi:~# cp /etc/ssh/sshd_config /etc/ssh/sshd_config-org
root@linuxtechi:~# vim /etc/ssh/sshd_config
......
#Subsystem sftp /usr/lib/openssh/sftp-server
Subsystem sftp internal-sftp

Match Group sftp_users
  X11Forwarding no
  AllowTcpForwarding no
  ChrootDirectory %h
  ForceCommand internal-sftp
......
```

Save and exit the file.

To apply the changes above, restart the `ssh` service using the following `systemctl` command:

```
root@linuxtechi:~# systemctl restart sshd
```

In the `sshd_config` file above, we commented out the existing line that starts with `Subsystem` and added the new entry `Subsystem sftp internal-sftp` along with several new lines, where:

`Match Group sftp_users` -> If a user is a member of the `sftp_users` group, the rules listed below this entry are applied to that user.

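`ChrootDirectory %h` -> Users can change directories only within their own home directories and cannot go beyond them. In other words, the home directory becomes the root of a jail-like environment, and users cannot access other users' directories or system directories.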

`ForceCommand internal-sftp` -> Users are restricted to the `sftp` command only.
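
Before restarting the service, it can be worth confirming that the three directives really ended up in the file. The sketch below is one possible check, not part of the original procedure: the function name `has_sftp_chroot` is hypothetical, and a scratch file stands in for `/etc/ssh/sshd_config` so the snippet runs without root.

```shell
# has_sftp_chroot FILE: succeed if FILE contains the three settings used above.
# (Hypothetical helper, written for illustration only.)
has_sftp_chroot() {
    grep -Eq '^[[:space:]]*Subsystem[[:space:]]+sftp[[:space:]]+internal-sftp' "$1" &&
    grep -Eq '^[[:space:]]*ChrootDirectory[[:space:]]+%h' "$1" &&
    grep -Eq '^[[:space:]]*ForceCommand[[:space:]]+internal-sftp' "$1"
}

# Illustration only: a scratch file stands in for /etc/ssh/sshd_config.
conf=$(mktemp)
cat > "$conf" <<'EOF'
#Subsystem sftp /usr/lib/openssh/sftp-server
Subsystem sftp internal-sftp

Match Group sftp_users
  X11Forwarding no
  AllowTcpForwarding no
  ChrootDirectory %h
  ForceCommand internal-sftp
EOF

has_sftp_chroot "$conf" && echo "sftp chroot settings present"
```

On a real server, running `sshd -t` as root additionally checks the whole configuration for syntax errors before you restart the service.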

### Step 4: Test and verify sftp

Log in to any other Linux system on the same network as your `sftp` server, and then try ssh and sftp to the server as one of the users we placed in the `sftp_users` group.

```
[root@linuxtechi ~]# ssh root@linuxtechi
root@linuxtechi's password:
Write failed: Broken pipe
[root@linuxtechi ~]# ssh root@linuxtechi
root@linuxtechi's password:
Write failed: Broken pipe
[root@linuxtechi ~]#
```

The above confirms that the user is not allowed to `ssh`. Now try `sftp` using the following command:

```
[root@linuxtechi ~]# sftp root@linuxtechi
root@linuxtechi's password:
Connected to 192.168.56.151.
sftp> ls -l
drwxr-xr-x 2 root 1001 4096 Sep 14 07:52 debian10-pkgs
-rw-r--r-- 1 root 1001 155 Sep 14 07:52 devops-actions.txt
drwxr-xr-x 2 1001 1002 4096 Sep 14 08:29 upload
```

Let's try to download a file using the sftp `get` command:

```
sftp> get devops-actions.txt
Fetching /devops-actions.txt to devops-actions.txt
/devops-actions.txt 100% 155 0.2KB/s 00:00
sftp>
sftp> cd /etc
Couldn't stat remote file: No such file or directory
sftp> cd /root
Couldn't stat remote file: No such file or directory
sftp>
```

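The output above confirms that we can download files from our sftp server to the local machine. It also shows that the user cannot change to directories outside the chroot, such as `/etc` or `/root`.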

Let's try to upload a file into the `upload` folder:

```
sftp> cd upload/
sftp> put metricbeat-7.3.1-amd64.deb
Uploading metricbeat-7.3.1-amd64.deb to /upload/metricbeat-7.3.1-amd64.deb
metricbeat-7.3.1-amd64.deb 100% 38MB 38.4MB/s 00:01
sftp> ls -l
-rw-r--r-- 1 1001 1002 40275654 Sep 14 09:18 metricbeat-7.3.1-amd64.deb
sftp>
```

This confirms that we have successfully uploaded a file from our local system to the sftp server.

Now test the sftp server with the WinSCP tool. Enter the sftp server's IP address along with the user's credentials:

![][3]

Click "Login", and then try to download and upload files:

![][4]

Now try to upload files into the `upload` folder:

![][5]

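The window above confirms that uploading also works fine. That's all for this article. If these steps helped you configure an SFTP server with a chroot environment on Debian 10, please share your feedback and comments.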

--------------------------------------------------------------------------------

via: https://www.linuxtechi.com/configure-sftp-chroot-debian10/

Author: [Pradeep Kumar][a]
Topic selection: [lujun9972][b]
Translator: [robsean](https://github.com/robsean)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.linuxtechi.com/author/pradeep/
[b]: https://github.com/lujun9972
[1]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7
[2]: https://www.linuxtechi.com/wp-content/uploads/2019/09/Configure-sftp-debian10.jpg
[3]: https://www.linuxtechi.com/wp-content/uploads/2019/09/Winscp-sftp-debian10.jpg
[4]: https://www.linuxtechi.com/wp-content/uploads/2019/09/Download-file-winscp-debian10-sftp.jpg
[5]: https://www.linuxtechi.com/wp-content/uploads/2019/09/Upload-File-using-winscp-Debian10-sftp.jpg

@ -0,0 +1,96 @@

[#]: collector: (lujun9972)
[#]: translator: (messon007)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-12202-1.html)
[#]: subject: (The ins and outs of high-performance computing as a service)
[#]: via: (https://www.networkworld.com/article/3534725/the-ins-and-outs-of-high-performance-computing-as-a-service.html)
[#]: author: (Josh Fruhlinger https://www.networkworld.com/author/Josh-Fruhlinger/)

The ins and outs of high-performance computing as a service
======

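> High-performance computing (HPC) services can be a way to meet the growing demand for supercomputing, but depending on the use case, they are not necessarily better than on-premises supercomputers.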

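Electronics on missiles and military helicopters need to work under extreme conditions. Before any physical device is deployed, US defense contractor McCormick Stevenson Corp. simulates the real-world conditions it will have to endure. The simulations rely on finite element analysis software such as Ansys, which requires significant computing power.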

Then one day a few years ago, the company unexpectedly ran up against its computing limits.

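"We had some jobs that would have overwhelmed the computers we had in the office," says Mike Krawczyk, principal engineer at McCormick Stevenson. "It did not make economic or schedule sense to buy a machine and install software." Instead, the company contracted with Rescale, which sells processing capacity on its supercomputer-class systems, for a fraction of what new hardware would have cost.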

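McCormick Stevenson had become an early adopter in a market known as supercomputing as a service or high-performance computing (HPC) as a service, two closely related terms. According to the National Institute for Computational Sciences, HPC is the application of supercomputers to computationally complex problems, while supercomputers are the computers at the leading edge of processing capacity.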

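Whatever it is called, these services are upending the traditional supercomputing market and bringing HPC power to customers who could not afford it before. But it is no panacea, and it is definitely not plug-and-play, at least not yet.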

### HPC services in practice

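From the end user's perspective, HPC as a service resembles the batch-processing model of the early mainframe era. "We create an Ansys batch file and send it up, and after it runs, we pull down the result files and import them locally," Krawczyk says.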

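Behind the scenes, cloud providers run the supercomputing infrastructure in their own data centers, though that does not necessarily mean the kind of cutting-edge hardware you might picture when you hear "supercomputer." As Dave Turek, vice president of technical computing at IBM OpenPOWER, explains, HPC services at their core are "a collection of servers that are strongly interconnected. You have the ability to invoke this virtual computing infrastructure, which allows many different servers to work together in parallel to solve the problem when you present it."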

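It sounds simple in theory. But making it viable in practice required solving some technical problems, says Theo Lynn, professor of digital business at Dublin City University. What differentiates ordinary computing from HPC is those interconnects, which are high-speed, low-latency, and expensive, so they had to be brought into the world of cloud infrastructure. Storage performance and data transport also had to be brought up to at least the same level as on-premises HPC before HPC services could be viable.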

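But Lynn says some institutional innovations have helped HPC services take off even more than the technical ones. In particular, "we are now seeing more and more traditional HPC applications adopting cloud-friendly licensing models, which was a barrier to adoption in the past."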

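The economics have also shifted the potential customer base, he says. "Cloud service providers have opened up the market further by targeting low-end HPC buyers who could not afford the capital expenditure associated with traditional HPC. As the market opens up, the hyperscale economic model becomes more and more feasible, and costs start to fall."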

### Avoiding on-premises capex

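HPC services are attractive to private-sector customers in the fields where traditional supercomputing has long held sway. These include industries that rely heavily on complex mathematical modeling, including defense contractors like McCormick Stevenson, along with oil and gas companies, financial services firms, and biotech companies. Dublin City University's Lynn adds that loosely coupled workloads are a particularly good use case, which meant that many early adopters used it for 3D image rendering and related applications.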

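But when does it make sense to consider HPC services over on-premises HPC? For hhpberlin, a German company that simulates the spread of smoke in buildings and the damage fire does to a building's structural components, the answer came when it outgrew its existing resources.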

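"For several years, we had run our own small cluster with up to 80 processor cores," says Susanne Kilian, hhpberlin's scientific head of numerical simulation. "With the rise in application complexity, however, this setup has increasingly proven to be inadequate; the available capacity was not always sufficient to handle projects promptly."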

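But just spending money on a new cluster was not an ideal solution, she says: "In view of the size and administrative environment of our company, the constant maintenance of such a cluster (regular software and hardware upgrades) turned out to be impractical. Plus, the number of projects requiring simulation fluctuates significantly, so utilization of the cluster was not really predictable. Typically, phases of intensive use alternate with phases of little to no use." By moving to an HPC service model, hhpberlin shed that excess capacity and no longer has to pay for upgrades.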

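IBM's Turek explains the calculation that different companies go through when assessing their needs. For a biosciences startup with 30 people, "you need computing, but you really can't afford to have 15% of your staff dedicated to it. It's just like you might also say you don't want to have on-staff legal representation, so you'll get that as a service as well." For a bigger company, though, it comes down to weighing the operational expense of an HPC service against the capital expense of buying an in-house supercomputer or HPC cluster.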

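So far, these are the same kinds of arguments you would have over adopting any cloud service. But some specifics of the HPC market can tip the opex-versus-capex decision toward opex. Supercomputers are not commodity hardware like storage or x86 servers; they are very expensive, and technological advances can quickly render them obsolete. As McCormick Stevenson's Krawczyk puts it, "It's like buying a car: as soon as you drive off the lot, it starts to depreciate." And for many companies, especially larger and less nimble ones, the process of buying a supercomputer can get hopelessly bogged down. "You're caught up in planning issues, building issues, construction issues, training issues, and then you have to execute an RFP," says IBM's Turek. "You have to get the CIO's support. You have to work with your internal customers to make sure there's continuity of service. It's a very, very complex process, and not something that a lot of institutions are really excellent at executing."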

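Once you choose to go down the HPC services route, you will find you get many of the advantages you expect from cloud services, particularly the ability to pay for HPC power only when you need it, which results in efficient use of resources. Chirag Dekate, senior director and analyst at Gartner, says bursty workloads, where you have short-term needs for high-performance computing, are a key use case driving adoption of HPC services.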

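"In the manufacturing industry, you tend to have a high peak of HPC activity around the product design stage," he says. "But once the product is designed, HPC resources are less utilized during the rest of the product-development cycle." In contrast, he says, "when you have large, long-running jobs, the economics of the cloud wear down."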

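With clever system design, you can integrate those bursts of HPC-services activity with your own in-house conventional computing. Teresa Tung, managing director at Accenture Labs, gives an example: "Accessing HPC via APIs makes it seamless to mix with traditional computing. A traditional AI pipeline might have its training done on a high-end supercomputer at the stage when the model is being developed, but then the resulting trained model that runs predictions over and over would be deployed on other services in the cloud or even on devices at the edge."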

### It's not for all use cases

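HPC services lend themselves to batch-processing and loosely coupled use cases. That ties into a common HPC downside: data transfer. High-performance computing by its very nature often involves huge data sets, and sending all that information over the internet to a cloud service provider is no simple thing. "We have clients I talk to in the biotech industry who spend $10 million a month on just the data charges," says IBM's Turek.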

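And money is not the only potential problem. Building a workflow that makes use of your data can challenge you to work around the long times that data transfer requires. "When we had our own HPC cluster, local access to the simulation results already produced, and thus an interactive interim evaluation, was of course possible at any time," says hhpberlin's Kilian. "We are currently working on being able to access and evaluate the data produced in the cloud more efficiently and interactively at any point in the simulation, without needing to download large amounts of simulation data."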

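Mike Krawczyk cites another stumbling block: compliance. Any service a defense contractor uses needs to comply with the International Traffic in Arms Regulations (ITAR), and McCormick Stevenson went with Rescale in part because it was the only compliant vendor the company found. While more companies use cloud services today, any company looking to use them should be aware of the legal and data-protection issues involved in working on someone else's infrastructure, and the sensitive nature of many HPC use cases makes that issue all the more salient for HPC as a service.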

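In addition, the IT governance that HPC services require goes beyond regulatory needs. For instance, you need to keep track of whether your software licenses permit cloud use, especially for specialized packages written to run on an on-premises HPC cluster. In general, you need to keep track of how HPC services are used; they can be a tempting resource, especially if you have moved from in-house systems where staff were used to having idle HPC capacity available. For instance, Ron Gilpin, senior director and Azure Platform Services global lead at Avanade, suggests dialing back the number of processing cores you use for tasks that are not time sensitive. "If a job only needs to be completed in an hour instead of ten minutes," he says, "it might use 165 processors instead of 1,000, a savings of thousands of dollars."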

### A premium on HPC skills

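One of the biggest barriers to HPC adoption has always been the unique in-house skills it requires, and HPC services do not magically make that barrier vanish. "Many CIOs have migrated a lot of their workloads to the cloud, and they have seen cost savings and increased agility and efficiency, and believe they can achieve similar results in HPC ecosystems," says Gartner's Dekate. "A common misperception is that they can somehow optimize human resource cost by essentially moving away from system admins and hiring new cloud experts who can solve their HPC workloads." That holds all the more for HPC as a service.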

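"But HPC is not one of the mainstream enterprise environments," he says. "You're dealing with high-end compute nodes interconnected with high-bandwidth, low-latency networks, along with quite complicated application and middleware stacks. Even the filesystem layers in many cases are unique to HPC environments. Not having the right skills can be destabilizing."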

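But supercomputing skills are in shrinking supply, something Dekate calls the "greying" of the workforce, as a generation of developers has set its sights on splashy startups rather than academia or the more staid firms where HPC is in use. As a result, vendors of HPC services are doing what they can to bridge the gap. IBM's Turek says that many HPC veterans will always want to run their own carefully tuned code and will need specialized debuggers and other tools to help them do that in the cloud. But even HPC newcomers can call code libraries built by vendors to exploit supercomputing's parallel processing. And third-party software providers sell turnkey software packages that hide much of HPC's complexity.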

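Accenture's Tung says the sector needs to invest further in this in order to truly prosper. "HPCaaS has created dramatically impactful new capability, but what needs to happen is making it easy to apply for the data scientist, the enterprise architect, or the software developer," she says. "This includes easy-to-use APIs, documentation, and sample code. It includes user support to answer questions. It's not enough to provide an API; that API needs to be fit for purpose. For a data scientist, this should likely be in Python and easily swap in for the frameworks she is already using. The value comes from enabling these users, who ultimately will have their jobs improved through new efficiencies and performance, if only they can access the new capabilities." If vendors can pull that off, HPC services might truly bring supercomputing to the masses.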

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3534725/the-ins-and-outs-of-high-performance-computing-as-a-service.html

Author: [Josh Fruhlinger][a]
Topic selection: [lujun9972][b]
Translator: [messon007](https://github.com/messon007)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.networkworld.com/author/Josh-Fruhlinger/
[b]: https://github.com/lujun9972
[1]: https://www.networkworld.com/article/3236875/embargo-10-of-the-worlds-fastest-supercomputers.html
[2]: https://www.networkworld.com/blog/itaas-and-the-corporate-storage-technology/?utm_source=IDG&utm_medium=promotions&utm_campaign=HPE22140&utm_content=sidebar (ITAAS and Corporate Storage Strategy)
[3]: https://www.facebook.com/NetworkWorld/
[4]: https://www.linkedin.com/company/network-world

@ -0,0 +1,80 @@

[#]: collector: "lujun9972"
[#]: translator: "tinyeyeser"
[#]: reviewer: "wxy"
[#]: publisher: "wxy"
[#]: url: "https://linux.cn/article-12191-1.html"
[#]: subject: "How to avoid man-in-the-middle cyber attacks"
[#]: via: "https://opensource.com/article/20/4/mitm-attacks"
[#]: author: "Jackie Lam https://opensource.com/users/beenverified"

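How to avoid man-in-the-middle (MITM) attacks
======

> Understand what a man-in-the-middle (MITM) attack really is, and you can avoid becoming a victim of this kind of high-tech eavesdropping.

When you use a computer to send data or talk to someone online, you assume some level of security and privacy.

But what if a third party is eavesdropping without your knowledge, or even impersonating a business partner you trust in order to obtain damaging information? Your private data would then be in the hands of dangerous actors.

This is the notorious man-in-the-middle (MITM) attack.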

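### What exactly is a man-in-the-middle attack?

A man-in-the-middle attack happens when an attacker inserts themselves into the communication between you, the victim, and a device, in order to steal sensitive information (usually identity data) and use it for all kinds of illegal activity. Steve J. J. Weisman, founder of Scamicide, explains:

> "A man-in-the-middle attack can also occur between the victim and a legitimate app or website. The victim thinks they are dealing with the normal app or website, but they are actually interacting with a fake one and handing their sensitive information to criminals."

MITM attacks date back to the 1980s, which makes them one of the oldest forms of cyberattack, yet they remain very common. Weisman says there are many ways an MITM attack can happen:

* **Compromising a Wi-Fi router that is not properly secured**: This usually happens when people use public Wi-Fi. "While home routers might be vulnerable, it's more common for hackers to attack public Wi-Fi networks," says Weisman. "The goal is to steal sensitive information, such as online banking credentials, from unsuspecting people."
* **Compromising the email accounts of banks, financial advisers, and similar institutions**: "Once the hackers have compromised these email systems, they send emails to victims that appear to come from the bank or company," says Weisman. "Citing some emergency, they ask for personal information such as usernames and passwords. Victims are easily tricked into handing it over."
* **Sending phishing emails**: Thieves may also pose as companies the victim does business with and ask for personal information. "In many cases, the phishing email leads the victim to a counterfeit website that looks just like the legitimate company's site the victim visits regularly," says Weisman.
* **Embedding malicious code in a legitimate website**: Attackers may also plant malicious code, usually JavaScript, in a legitimate website. "When the victim loads the legitimate page, the malicious code sits quietly in the background until the user enters account login or credit card details, which it then copies and sends to the attacker's server," says cybersecurity expert Nicholas McBride.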

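### What are some well-known examples of MITM attacks?

Lenovo, a mainstream computer manufacturer, preinstalled software called VisualDiscovery on consumer laptops it sold in 2014 and 2015. The software intercepted users' web browsing, and when a user hovered the mouse over a product page, it popped up an ad for a similar product from a partner.

What made this a man-in-the-middle attack is that VisualDiscovery had access to all of a user's private data, including Social Security numbers, financial transaction details, medical information, and logins and passwords, all without the user's knowledge or permission. The Federal Trade Commission (FTC) deemed this a deceptive and unfair practice. In 2019, Lenovo agreed to pay $8.3 million in a class-action settlement.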

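### How can I protect myself from a man-in-the-middle attack?

* **Avoid public Wi-Fi**: Weisman advises never using public Wi-Fi for financial transactions unless you have installed a reliable VPN client and are connected to a VPN server you trust. Over a VPN connection your traffic is encrypted, so your information cannot be stolen in transit.
* **Stay alert**: Be wary of emails or text messages that ask you to update your password or provide a username or other personal information. These tactics may be used to steal your identity.

If you are not sure who actually sent an email, tools such as reverse phone lookup or reverse email lookup can help. With a reverse phone lookup you may be able to learn more about an unknown sender, and with a reverse email lookup you can try to determine who sent you the message.

Generally, if something really does seem wrong, get advice from someone at the company whom you know or trust. Or go to your bank, school, or other organization and ask for help in person. Above all, never give important account information to a "technician" you don't know.

* **Don't click links in emails**: If someone sends you an email saying you need to log in to an account, don't click the link in the email. Instead, go to the site yourself the way you normally would and check whether there are any alerts. If you don't see an alert in your account settings and decide to call customer service, *don't* use the phone number given in the email; use the contact information on the site itself.
* **Install reliable security software**: If you use Windows, install open source antivirus software such as [ClamAV][2]. On any other platform, keep your software up to date with the latest security patches.
* **Take warnings seriously**: If the page you are visiting starts with HTTPS, your browser may show you a warning, for example when the domain name on the site's certificate does not match the site you are trying to reach. Never ignore such a warning. Heed it and close the page right away. If you have confirmed that you did not mistype the domain name and the warning persists, contact the site owner immediately.
* **Use an ad blocker**: Pop-up ads (also known as adware attacks) can be used to steal personal information, so consider using an ad blocker. For individual users, MITM attacks are hard to guard against because they are designed to keep the victim in the dark, unaware that anything is wrong. A good open source ad blocker is [uBlock Origin][4]. It works in Firefox and Chromium (and all Chromium-based browsers, such as Chrome, Brave, Vivaldi, and Edge), and even in Safari.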
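
To make the certificate warning mentioned in the list above a little more concrete, here is a minimal sketch of the kind of comparison a browser performs between the host name you typed and the name on a certificate. It is a simplification for illustration only: the helper `name_matches` and the sample host names are invented here, and real browsers apply more rules than this.

```shell
# Simplified sketch of certificate name matching; real browsers apply more rules.
name_matches() (
    host=$1
    pattern=$2
    case $pattern in
        '*.'*)
            suffix=${pattern#'*'}        # "*.example.com" -> ".example.com"
            label=${host%"$suffix"}      # "www.example.com" -> "www"
            # The wildcard stands for exactly one label: non-empty and without dots.
            [ "$label" != "$host" ] && [ -n "$label" ] && [ "${label#*.}" = "$label" ]
            ;;
        *)
            [ "$host" = "$pattern" ]
            ;;
    esac
)

for host in www.example.com www.examp1e.com; do
    if name_matches "$host" '*.example.com'; then
        echo "$host: matches the certificate"
    else
        echo "$host: does NOT match, expect a browser warning"
    fi
done
```

The second host differs from the first by a single character (`1` instead of `l`), which is exactly the kind of look-alike name a warning is meant to catch.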

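### Stay vigilant

Always remember that you don't have to click a link right away, and you don't have to follow a stranger's advice, no matter how urgent the message seems. The internet will still be there. You can step away from the computer, verify who these people really are, and find out whether that "incredibly urgent" page is real or fake.

Although anyone can be hit by an MITM attack, understanding what it is, knowing how it happens, and taking effective precautions can keep you from becoming a victim.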

--------------------------------------------------------------------------------

via: https://opensource.com/article/20/4/mitm-attacks

Author: [Jackie Lam][a]
Topic selection: [lujun9972][b]
Translator: [tinyeyeser](https://github.com/tinyeyeser)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/beenverified
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/security_password_chaos_engineer_monster.png?itok=J31aRccu "Security monster"
[2]: https://www.clamav.net
[3]: https://opensource.com/article/20/1/stop-typosquatting-attacks
[4]: https://github.com/gorhill/uBlock
[5]: https://www.beenverified.com/crime/what-is-a-man-in-the-middle-attack/
[6]: https://creativecommons.org/licenses/by-sa/2.0/

185

published/20200417 Create a SDN on Linux with open source.md

Normal file


@ -0,0 +1,185 @@

[#]: collector: (lujun9972)
[#]: translator: (messon007)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-12199-1.html)
[#]: subject: (Create a SDN on Linux with open source)
[#]: via: (https://opensource.com/article/20/4/quagga-linux)
[#]: author: (M Umer https://opensource.com/users/noisybotnet)

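Create a SDN on Linux with open source
======

> Use the open source routing protocol stack Quagga to turn your Linux system into a router.

Network routing protocols fall into two main categories: interior gateway protocols and exterior gateway protocols. Routers use interior gateway protocols to share information within a single autonomous system. If you run Linux, you can make your system behave like a router with the open source (GPLv2) routing stack [Quagga][2].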

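### What is Quagga?

Quagga is a [routing software suite][3] and a fork of [GNU Zebra][4]. It provides implementations of all the major routing protocols for Unix-like platforms, such as Open Shortest Path First (OSPF), Routing Information Protocol (RIP), Border Gateway Protocol (BGP), and Intermediate System to Intermediate System (IS-IS).

Although Quagga implements routing protocols for both IPv4 and IPv6, it is not a complete router. A true router not only implements the routing protocols but can also forward network traffic. Quagga implements only the routing stack; forwarding network traffic is handled by the Linux kernel.

### Architecture

Quagga implements the different routing protocols through protocol-specific daemons. Each daemon is named after its routing protocol with the letter "d" appended. Zebra is the core, protocol-independent daemon: it provides an [abstraction layer][5] to the kernel and presents the Zserv API to Quagga clients over TCP sockets. Each protocol-specific daemon runs its protocol and builds the routing table based on the information exchanged.

![Quagga architecture][6]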

|

||||

|

||||

### 环境

|

||||

|

||||

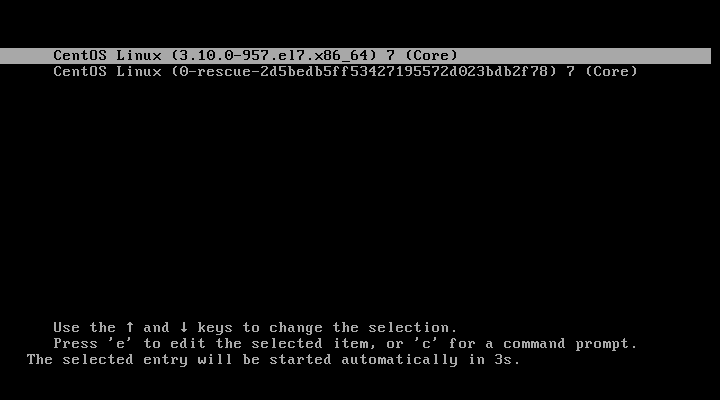

本教程通过 Quagga 实现的 OSPF 协议来配置动态路由。该环境包括两个名为 Alpha 和 Beta 的 CentOS 7.7 主机。两台主机共享访问 **192.168.122.0/24** 网络。

|

||||

|

||||

**主机 Alpha:**

|

||||

|

||||

IP:192.168.122.100/24

|

||||

网关:192.168.122.1

|

||||

|

||||

**主机 Beta:**

|

||||

|

||||

IP:192.168.122.50/24

|

||||

网关:192.168.122.1

### Install the package

First, install the Quagga package on both hosts. It is available in the CentOS base repository:

```
yum install quagga -y
```

### Enable IP forwarding

Next, enable IP forwarding on both hosts, since forwarding is performed by the Linux kernel:

```
sysctl -w net.ipv4.ip_forward=1
sysctl -p
```
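
A quick way to confirm that the setting took effect is to read it back from the kernel. The sketch below assumes a Linux host that exposes `/proc/sys/net/ipv4/ip_forward`; the helper name `forwarding_enabled` is made up for illustration and takes the path as an argument so that it can be pointed at any file.

```shell
# Hypothetical helper: succeeds if the given file contains exactly "1".
forwarding_enabled() {
    [ "$(cat "$1" 2>/dev/null)" = "1" ]
}

if forwarding_enabled /proc/sys/net/ipv4/ip_forward; then
    echo "IPv4 forwarding is on"
else
    echo "IPv4 forwarding is off"
fi
```

Note that `sysctl -w` changes only the running kernel. To keep the setting across reboots, the usual approach is to put `net.ipv4.ip_forward = 1` in `/etc/sysctl.conf` or in a file under `/etc/sysctl.d/`.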

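### Configuration

Now go to the `/etc/quagga` directory and create the configuration files for your setup. You need three files:

* `zebra.conf`: the Quagga daemon's configuration file, where you define the interfaces, their IP addresses, and IP forwarding
* `ospfd.conf`: the protocol configuration file, where you define the networks to be offered through OSPF
* `daemons`: where you specify which protocol daemons need to run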

On host Alpha:

```
[root@alpha]# cat /etc/quagga/zebra.conf
interface eth0
 ip address 192.168.122.100/24
 ipv6 nd suppress-ra
interface eth1
 ip address 10.12.13.1/24
 ipv6 nd suppress-ra
interface lo
ip forwarding
line vty

[root@alpha]# cat /etc/quagga/ospfd.conf
interface eth0
interface eth1
interface lo
router ospf
 network 192.168.122.0/24 area 0.0.0.0
 network 10.12.13.0/24 area 0.0.0.0
line vty

[root@alpha ~]# cat /etc/quagga/daemons
zebra=yes
ospfd=yes
```

On host Beta:

```
[root@beta quagga]# cat zebra.conf
interface eth0
 ip address 192.168.122.50/24
 ipv6 nd suppress-ra
interface eth1
 ip address 10.10.10.1/24
 ipv6 nd suppress-ra
interface lo
ip forwarding
line vty

[root@beta quagga]# cat ospfd.conf
interface eth0
interface eth1
interface lo
router ospf
 network 192.168.122.0/24 area 0.0.0.0
 network 10.10.10.0/24 area 0.0.0.0
line vty

[root@beta ~]# cat /etc/quagga/daemons
zebra=yes
ospfd=yes
```

### Configure the firewall

To use OSPF, you must allow it through the firewall:

```
firewall-cmd --add-protocol=ospf --permanent

firewall-cmd --reload
```

Now start the `zebra` and `ospfd` daemons.

```
# systemctl start zebra
# systemctl start ospfd
```

Check the routing table on both hosts with the following command:

```
[root@alpha ~]# ip route show
default via 192.168.122.1 dev eth0 proto static metric 100
10.10.10.0/24 via 192.168.122.50 dev eth0 proto zebra metric 20
10.12.13.0/24 dev eth1 proto kernel scope link src 10.12.13.1
192.168.122.0/24 dev eth0 proto kernel scope link src 192.168.122.100 metric 100
```

You can see that the routing table on Alpha contains an entry for **10.10.10.0/24** via **192.168.122.50**, learned through protocol zebra. Likewise, on host Beta the table contains an entry for network **10.12.13.0/24** via **192.168.122.100**.

```
[root@beta ~]# ip route show
default via 192.168.122.1 dev eth0 proto static metric 100
10.10.10.0/24 dev eth1 proto kernel scope link src 10.10.10.1
10.12.13.0/24 via 192.168.122.100 dev eth0 proto zebra metric 20
192.168.122.0/24 dev eth0 proto kernel scope link src 192.168.122.50 metric 100
```
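
On a host with many routes it can help to pick out only the entries that Quagga installed. The following is a small sketch, not part of Quagga itself: the function name `zebra_routes` is invented here, and it simply filters `ip route show` style text for lines marked `proto zebra`. The sample input reuses the Beta routing table shown above.

```shell
# Print "<network> <next hop>" for every route marked "proto zebra".
# Reads `ip route show` style text on standard input.
zebra_routes() {
    awk '/ proto zebra / { print $1, $3 }'
}

# On a live host you would run: ip route show | zebra_routes
printf '%s\n' \
    'default via 192.168.122.1 dev eth0 proto static metric 100' \
    '10.10.10.0/24 dev eth1 proto kernel scope link src 10.10.10.1' \
    '10.12.13.0/24 via 192.168.122.100 dev eth0 proto zebra metric 20' |
    zebra_routes
```

With the sample input this prints `10.12.13.0/24 192.168.122.100`, the one route Beta learned from Alpha.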

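### Conclusion

As you can see, the setup and configuration are relatively simple. To add complexity, you can add more network interfaces to the router to provide routing for more networks. You can also implement the BGP and RIP protocols using the same approach.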

--------------------------------------------------------------------------------

via: https://opensource.com/article/20/4/quagga-linux

Author: [M Umer][a]
Topic selection: [lujun9972][b]
Translator: [messon007](https://github.com/messon007)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/noisybotnet
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/code_computer_laptop_hack_work.png?itok=aSpcWkcl (Coding on a computer)
[2]: https://www.quagga.net/
[3]: https://en.wikipedia.org/wiki/Quagga_(software)
[4]: https://www.gnu.org/software/zebra/
[5]: https://en.wikipedia.org/wiki/Abstraction_layer
[6]: https://opensource.com/sites/default/files/uploads/quagga_arch.png (Quagga architecture)

203

published/20200417 How to compress files on Linux 5 ways.md

Normal file


@ -0,0 +1,203 @@

[#]: collector: (lujun9972)
[#]: translator: (robsean)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-12190-1.html)
[#]: subject: (How to compress files on Linux 5 ways)
[#]: via: (https://www.networkworld.com/article/3538471/how-to-compress-files-on-linux-5-ways.html)
[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

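How to compress files on Linux 5 ways
======

> There are plenty of tools for compressing files on Linux systems, but they don't all behave the same way or yield the same level of compression. In this post, we compare five of them.

There are quite a few commands on Linux for compressing files. One of the newest and most effective is `xz`, but they all have advantages for both saving disk space and preserving files for later use. In this post, we compare the compression commands and point out the significant differences.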

### tar

The `tar` command is not specifically a compression command. It is generally used to pull a number of files into a single file, either for easy transport to another system or to back the files up as a related group. It can also compress: adding the `z` option compresses the resulting archive.

When compression is added to a `tar` command with the `z` option, `tar` uses `gzip` to do the compressing.

You can use `tar` to compress a single file as easily as a group, though this offers no particular advantage over using `gzip` directly. To do so, identify the file just as you would a group of files, with a `tar cfz newtarfile filename` command like this:

```
$ tar cfz bigfile.tgz bigfile
          ^           ^
          |           |
          +- new file +- file to be compressed

$ ls -l bigfile*
-rw-rw-r-- 1 shs shs 103270400 Apr 16 16:09 bigfile
-rw-rw-r-- 1 shs shs  21608325 Apr 16 16:08 bigfile.tgz
```

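Note that the file size is significantly reduced.

If you prefer, you can use the `tar.gz` extension, which might make the nature of the file a bit more obvious, but most Linux users will probably recognize `tgz` as meaning the same thing: the combination of `tar` and `gz` indicates that the file is a compressed tar file. Once the compression is complete, you are left with both the original file and the compressed file.

To collect a number of files together and compress the resulting "tar ball" in one command, use the same syntax, but specify the files to be included as a group in place of the single file. Here is an example:

```
$ tar cfz bin.tgz bin/*
          ^       ^
          |       +-- files to include
          + new file
```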
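
Before unpacking an archive it is often useful to see what is inside it. The sketch below builds a throwaway archive in a temporary directory and lists its contents with `tar -t`; the directory layout and file names are made up for the example.

```shell
# Build a small sample directory, archive it, then list the archive without extracting.
workdir=$(mktemp -d)
mkdir "$workdir/bin"
echo 'echo hello' > "$workdir/bin/hello.sh"
echo 'echo bye' > "$workdir/bin/bye.sh"

# -C switches to the working directory so the archive stores relative paths.
tar -czf "$workdir/bin.tgz" -C "$workdir" bin

# t = list contents, z = gzip, f = archive file name
tar -tzf "$workdir/bin.tgz"
```

The listing shows the `bin/` directory and the two scripts inside it. Remove the temporary directory with `rm -r "$workdir"` when you are done.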

### zip

The `zip` command creates a compressed file while leaving the original file intact. The syntax is as straightforward as using `tar`, except that you have to remember that the original file should be the last argument on the command line.

```
$ zip ./bigfile.zip bigfile
updating: bigfile (deflated 79%)
$ ls -l bigfile bigfile.zip
-rw-rw-r-- 1 shs shs 103270400 Apr 16 11:18 bigfile
-rw-rw-r-- 1 shs shs  21606889 Apr 16 11:19 bigfile.zip
```

### gzip

The `gzip` command is very simple to use. You just type `gzip` followed by the name of the file you want to compress. Unlike the commands described above, `gzip` compresses the file "in place". In other words, the original file is replaced by the compressed file.

```
$ gzip bigfile
$ ls -l bigfile*
-rw-rw-r-- 1 shs shs  21606751 Apr 15 17:57 bigfile.gz
```
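
If you would rather keep the original file, one option is to have `gzip` write to standard output and redirect that into a new file. This is a small sketch using a generated sample file; the file contents are arbitrary.

```shell
sample=$(mktemp)
# Repetitive text compresses well, which makes the size difference easy to see.
yes 'the quick brown fox jumps over the lazy dog' | head -n 5000 > "$sample"

# -c writes the compressed data to standard output, leaving the input file in place.
gzip -c "$sample" > "$sample.gz"

ls -l "$sample" "$sample.gz"
```

Both files are listed afterward, and the `.gz` file is much smaller than the original.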

### bzip2

As with the `gzip` command, `bzip2` compresses the file you select "in place", leaving no original file behind.

```
$ bzip2 bigfile
$ ls -l bigfile*
-rw-rw-r-- 1 shs shs  18115234 Apr 15 17:57 bigfile.bz2
```

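### xz

A relative newcomer to the compression command team, `xz` is a front-runner in terms of how well it compresses files. As with the two previous commands, you only need to supply the file name to the command. Again, the original file is compressed in place.

```
$ xz bigfile
$ ls -l bigfile*
-rw-rw-r-- 1 shs shs 13427236 Apr 15 17:30 bigfile.xz
```

For large files, you are likely to notice that `xz` takes longer to run than the other compression commands, but the compression results are very impressive.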

### Comparisons

Most people have heard it said that "size isn't everything". So, let's compare file size as well as some other issues to consider when you plan how to compress your files.

The stats shown below all relate to compressing the single file, `bigfile`, used in the example commands shown above. This file is a large and fairly random text file. Compression rates depend to some extent on the content of the files.

#### Size reduction

When compared, the various compression commands shown above yield the following results. The percentages show the size of each compressed file relative to the original file.

```
-rw-rw-r-- 1 shs shs 103270400 Apr 16 14:01 bigfile
------------------------------------------------------
-rw-rw-r-- 1 shs shs  18115234 Apr 16 13:59 bigfile.bz2    ~17%
-rw-rw-r-- 1 shs shs  21606751 Apr 16 14:00 bigfile.gz     ~21%
-rw-rw-r-- 1 shs shs  21608322 Apr 16 13:59 bigfile.tgz    ~21%
-rw-rw-r-- 1 shs shs  13427236 Apr 16 14:00 bigfile.xz     ~13%
-rw-rw-r-- 1 shs shs  21606889 Apr 16 13:59 bigfile.zip    ~21%
```

The `xz` command wins, ending up at only 13% of the original file's size, but all of these compression commands reduce the original file's size quite significantly.
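
The percentages can be reproduced from the byte counts in the listing. Here is a minimal sketch; the helper name `percent_of_original` is invented for the example, and it relies on `awk` for the floating-point division.

```shell
# Print a compressed size as a percentage of the original size, to one decimal place.
percent_of_original() {
    awk -v c="$1" -v o="$2" 'BEGIN { printf "%.1f\n", c * 100 / o }'
}

percent_of_original 13427236 103270400   # bigfile.xz
percent_of_original 18115234 103270400   # bigfile.bz2
percent_of_original 21606751 103270400   # bigfile.gz
```

This prints 13.0, 17.5, and 20.9, in line with the rounded figures shown next to each file.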

#### Whether the original files are replaced

The `bzip2`, `gzip`, and `xz` commands all replace the original file with the compressed version. The `tar` and `zip` commands do not.

#### Run time

The `xz` command seems to take more time than the other commands to compress the file. For `bigfile`, the approximate times were:

```
command   run time
tar       4.9 seconds
zip       5.2 seconds
bzip2     22.8 seconds
gzip      4.8 seconds
xz        50.4 seconds
```
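
To collect timings like these on your own system, the shell's `time` keyword is the usual tool. As an alternative that is easy to use inside scripts, the sketch below measures whole seconds with `date`; the function name `elapsed_seconds` is made up for the example, and `sleep 1` stands in for a real compression command.

```shell
# Run a command and print how many whole seconds it took.
elapsed_seconds() (
    start=$(date +%s)
    "$@" > /dev/null 2>&1
    end=$(date +%s)
    echo $((end - start))
)

# Replace "sleep 1" with, for example: xz -k bigfile
elapsed_seconds sleep 1
```

Because it counts whole seconds only, this is best suited to large files where the runs take several seconds each.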

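Decompressing files is likely to take considerably less time than compressing them.

#### File permissions

Regardless of the permissions set on the original file, the permissions of the compressed file will be based on your `umask` setting, except for `bzip2`, which retains the original file's permissions.

#### Compatibility with Windows

Files created with the `zip` command can be used (that is, decompressed) on Windows systems as well as on Linux and other Unix systems, without having to install other tools that may or may not be available.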

### Decompressing files

The commands for decompressing files are similar to those used to compress them. After running the compression commands above, these commands decompress `bigfile`:

* tar: `tar xf bigfile.tgz`
* zip: `unzip bigfile.zip`
* gzip: `gunzip bigfile.gz`
* bzip2: `bunzip2 bigfile.bz2`
* xz: `xz -d bigfile.xz` or `unxz bigfile.xz`
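
If you want reassurance that a compressed file is intact before deleting anything, you can check it without unpacking it to disk. The following sketch uses `gzip` on a small generated file; the same idea applies to the other tools, which have their own test options.

```shell
original=$(mktemp)
printf 'line one\nline two\n' > "$original"

gzip -c "$original" > "$original.gz"

# -t checks the integrity of the compressed file without writing any output.
gzip -t "$original.gz" && echo "archive is intact"

# -d decompresses and -c sends the result to standard output;
# cmp exits with status 0 when both inputs are identical.
if gzip -dc "$original.gz" | cmp -s - "$original"; then
    echo "round trip OK"
fi
```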

### Running your own compression comparisons

If you would like to run some tests of your own, grab a large but replaceable file and compress it using each of the commands shown above, preferably in a new subdirectory. You might have to install `xz` first if you want to include it in the tests. This script can make the comparison easier, but it will likely take a few minutes to complete.

```
#!/bin/bash

# ask the user for the file name
echo -n "filename> "
read -r filename

# you need this because some commands will replace the original file
cp "$filename" "$filename-2"

# clean up first (in case previous results are still available)
rm -f "$filename".*

tar cvfz "./$filename.tgz" "$filename" > /dev/null
zip "$filename.zip" "$filename" > /dev/null
bzip2 "$filename"
# recover the original file
cp "$filename-2" "$filename"
gzip "$filename"
# recover the original file
cp "$filename-2" "$filename"
xz "$filename"

# show the results
ls -l "$filename".*

# put the original file back
mv "$filename-2" "$filename"
```

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3538471/how-to-compress-files-on-linux-5-ways.html

Author: [Sandra Henry-Stocker][a]
Topic selection: [lujun9972][b]
Translator: [robsean](https://github.com/robsean)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/
[b]: https://github.com/lujun9972
[1]: https://www.networkworld.com/blog/itaas-and-the-corporate-storage-technology/?utm_source=IDG&utm_medium=promotions&utm_campaign=HPE22140&utm_content=sidebar (ITAAS and Corporate Storage Strategy)
[2]: https://www.facebook.com/NetworkWorld/
[3]: https://www.linkedin.com/company/network-world

@ -1,8 +1,8 @@

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-12180-1.html)
[#]: subject: (4 Git scripts I can't live without)
[#]: via: (https://opensource.com/article/20/4/git-extras)
[#]: author: (Vince Power https://opensource.com/users/vincepower)

@ -12,11 +12,11 @@

> The Git Extras repository hosts more than 60 scripts that complement Git's basic functionality. Here is how to install, use, and contribute to it.

![Person using a laptop][1]

In 2005, [Linus Torvalds][2] created [Git][3] to replace the proprietary distributed source control management solution he had previously used to maintain the Linux kernel. Since then, Git has become a dominant version-control solution for open source and cloud-native development teams.

But even feature-rich software like Git does not have every feature that people want or need, so someone ends up making the effort to create the missing ones. In the case of Git, that person is [TJ Holowaychuk][4]. His [Git Extras][5] project hosts more than 60 "extras" that extend Git's basic functionality.

### Using Git Extras

@ -24,9 +24,9 @@

#### git-ignore

`git ignore` is a handy extra that lets you manually add file types and comments to the `.gitignore` file without having to open a text editor. It works with both the global ignore file for your individual user account and the ignore file of the repository you are working in.

Running `git ignore` without arguments lists the global ignore file first, then the local ignore files.

```
$ git ignore

@ -105,7 +105,7 @@ branch.master.merge=refs/heads/master

* `git mr` checks out a merge request from GitLab.
* `git pr` checks out a pull request from GitHub.

Either way, you just need the merge request or pull request number, or the full URL, and it will fetch the remote reference, check out the branch, and adjust the configuration so Git knows which branch it is going to replace.

```
$ git mr 51

@ -142,7 +142,7 @@ $ git extras --help

$ brew install git-extras
```

On Linux, Git Extras is available in each platform's native package manager. Sometimes you need to enable an extra repository, such as [EPEL][10] on CentOS, and then run one command.

```
$ sudo yum install git-extras

@ -152,9 +152,9 @@ $ sudo yum install git-extras

### Contributing

Do you think there is a missing feature in Git, and have you built a script to handle it? Why not share it with the world by making it part of the Git Extras distribution?

To do so, contribute the functionality to the Git Extras repository. There are more specific details in the [CONTRIBUTING.md][12] file in the repository, but the basics are easy:

1. Create a Bash script that handles the functionality.
2. Create a basic man file so people will know how to use it.

@ -171,7 +171,7 @@ via: https://opensource.com/article/20/4/git-extras

Author: [Vince Power][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

@ -0,0 +1,283 @@

[#]: collector: (lujun9972)
[#]: translator: (qfzy1233)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-12183-1.html)
[#]: subject: (16 Things to do After Installing Ubuntu 20.04)
[#]: via: (https://itsfoss.com/things-to-do-after-installing-ubuntu-20-04/)
[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

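16 Things to do After Installing Ubuntu 20.04
======

> Here is a list of tweaks and things to do after installing Ubuntu 20.04 to get a smoother and better desktop Linux experience.

[Ubuntu 20.04 LTS (long-term support) brings plenty of new features][1] and visual changes. If you are installing Ubuntu 20.04, let me show you a few recommended steps to get started with it.

### 16 things to do after installing Ubuntu 20.04 LTS "Focal Fossa"

![][2]

The steps mentioned here are only my recommendations. You may ignore any customization or tweak that does not suit your needs and interests.

Similarly, some steps may seem too simple, but they are essential for someone who is new to Ubuntu.

A number of the suggestions here apply to the default Ubuntu 20.04 with the GNOME desktop, so please check [which Ubuntu version][3] and [which desktop environment][4] you have.

The following list covers what to do after installing Ubuntu 20.04 LTS, codenamed Focal Fossa.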

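#### 1. Get your system ready by updating and enabling additional repos

The first thing you should do after installing Ubuntu or any other Linux distribution is to update it. Linux works from a local database of available packages, and this cache needs to be synced before you can install software.

Updating Ubuntu is very easy. You can run the Software Updater from the menu (press the `Super` key and search for "software updater"):

![Software Updater in Ubuntu 20.04][5]

You can also update your system from the terminal with the following command:

```
sudo apt update && sudo apt upgrade
```

Next, you should make sure that the [universe and multiverse repositories][6] are enabled. These repositories give you access to a lot more software. I also recommend reading about the [Ubuntu repositories][6] to learn the basic concept behind them.

Search for "Software & Updates" in the menu:

![Software & Updates settings][7]

Make sure to check the boxes in front of the repositories:

![Enable additional repositories][8]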
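
If you prefer to check from the terminal, the sketch below looks for the two components in the APT sources file. It assumes the classic one-line format in `/etc/apt/sources.list`, which Ubuntu 20.04 uses by default; the helper name `component_enabled` is invented for the example, and entries kept in other files under `/etc/apt/sources.list.d/` are not inspected.

```shell
# Hypothetical helper: succeeds if an active "deb" line in the given file lists the component.
component_enabled() {
    grep -Eq "^deb[[:space:]].*[[:space:]]$2([[:space:]]|\$)" "$1" 2>/dev/null
}

for component in universe multiverse; do
    if component_enabled /etc/apt/sources.list "$component"; then
        echo "$component: enabled"
    else
        echo "$component: not found in /etc/apt/sources.list"
    fi
done
```

A missing component can be enabled from the command line with `sudo add-apt-repository universe` (or `multiverse`), followed by `sudo apt update`.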

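#### 2. Install media codecs to play MP3, MPEG4 and other media files

If you want to play media files such as MP3, MPEG4, and AVI, you need to install media codecs. Ubuntu does not install them by default because of copyright issues in various countries.

As an individual, you can install these media codecs easily [using the Ubuntu Restricted Extras package][9]. This will [install][10] media codecs, Adobe Flash player, and Microsoft TrueType fonts on your Ubuntu system.

You can install it by [clicking this link][11] (it will ask to open the Software Center) or by using this command:

```
sudo apt install ubuntu-restricted-extras
```

If you encounter the EULA or license screen, remember to use the `tab` key to move between the options and then press Enter to confirm your choice.

![Press tab to select OK and press Enter][12]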

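#### 3. Install software from the Software Center or the web

Now that you have set up the repositories and updated the package cache, you can start installing the software you need.

There are several ways to install applications in Ubuntu. The easiest and the official way is to use the Software Center.

![Ubuntu Software Center][14]

If you want some recommendations about software, please refer to this [extensive list of Ubuntu applications for different purposes][15].

Some software vendors provide .deb files to make their applications easy to install. You can get the .deb files from their websites. For example, to [install Google Chrome on Ubuntu][16], you can get the .deb file from its website and double-click it to start the installation.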

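#### 4. Enjoy gaming with Steam Proton and GameMode

[Gaming on Linux][17] has come a long way. You are no longer limited to the handful of games included by default. You can [install Steam on Ubuntu][18] and enjoy a good number of games.

[Steam's new Proton project][19] lets you play a number of Windows-only games on Linux. In addition, Ubuntu 20.04 comes with [Feral Interactive's GameMode][20] installed by default.

GameMode automatically adjusts Linux system performance to give games higher priority than other background processes.

This means that some games that support GameMode (such as [Rise of the Tomb Raider][21]) should see improved performance on Ubuntu.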

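#### 5. Manage auto updates (for intermediate users and experts)

Recently, Ubuntu has started to automatically download and install security updates that are essential to your system. This is a security feature, and as a regular user you should leave it enabled.

But if you like to manage updates yourself and this auto update frequently leads you to the ["Unable to lock the administration directory" error][22], you may want to change the auto update behavior.

You can choose "Display immediately" so that you are notified of security updates as soon as they are available instead of having them installed automatically.

![Manage auto update settings][23]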

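#### 6. Control automatic suspend and screen lock

If you are using Ubuntu 20.04 on a laptop, you may want to pay attention to a few power and screen lock settings.

If your laptop is on battery, Ubuntu will suspend the system after 20 minutes of inactivity. This is done to save battery power. Personally, I don't like it, so I disable it.

Similarly, if you leave your system for a few minutes, it automatically locks the screen. I don't like this behavior either, so I prefer to disable it.

![Power settings in Ubuntu 20.04][24]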

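#### 7. Enjoy dark mode

One of the [most talked-about features of Ubuntu 20.04][25] is dark mode. You can enable it by going to Settings and selecting it in the Appearance section.

![Enable the dark theme in Ubuntu][26]

You may have to do some [additional tweaking to get full dark mode in Ubuntu 20.04][27].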

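#### 8. Control desktop icons and the launcher

If you want a minimal-looking desktop, you can disable the icons on the desktop. You can also disable the launcher on the left side and the app indicators in the top panel.

All of this can be controlled through the new GNOME Extensions app, which is available by default.

![Disable the Dock in Ubuntu 20.04][28]

By the way, you can also move the launcher to the bottom or to the right by going to Settings -> Appearance.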

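#### 9. Use emojis and special characters, or disable them from search

Ubuntu provides an easy way to use emojis. There is a dedicated application called Characters installed by default. It basically gives you the [Unicode][29] of the emojis.

Not just emojis: you can also use it to get the Unicode for French, German, Russian, and Latin characters. Clicking on a symbol lets you copy the Unicode, and when you paste this code, your chosen symbol is inserted.

![Emoji in Ubuntu][30]

You will find these special characters and emojis in the desktop search as well. You can copy them from the search results too.

![Emojis appear in desktop search][31]

If you don't want to see them in search results, you should disable the search feature's access to them. The next section discusses how to do that.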