mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-15 01:50:08 +08:00

commit

80687b3576

@ -5,7 +5,7 @@ LCTT 是“Linux中国”([https://linux.cn/](https://linux.cn/))的翻译

|

||||

|

||||

LCTT 已经拥有几百名活跃成员,并欢迎更多的Linux志愿者加入我们的团队。

|

||||

|

||||

|

||||

|

||||

|

||||

LCTT 的组成

|

||||

-------------------------------

|

||||

|

||||

@ -0,0 +1,66 @@

|

||||

一个老奶奶的唠叨:当年我玩 Linux 时……

|

||||

=====================

|

||||

|

||||

|

||||

|

||||

在很久以前,那时还没有 Linux 系统。真的没有!之前也从未存在过。不像现在,Linux 系统随处可见。那时有各种流派的 Unix 系统、有苹果的操作系统、有微软的 Windows 操作系统。

|

||||

|

||||

比如说 Windows,它的很多东西都改变了,但是依然保持不变的东西的更多。尽管它已经增加了 20GB 以上的鬼知道是什么的东西,但是 Windows 还是大体保持不变(除了不能在 DOS 的提示下实际做些什么了)。嘿,谁还记得 Gorilla.bas 那个出现在 DOS 系统里的炸香蕉的游戏吗?多么美好的时光啊!不过互联网却不会忘记,你可以在 Kongregate.com 这个网站玩这个游戏的 Flash版本。

|

||||

|

||||

苹果系统也改变了,从一个鼓励你 hack 的友善系统变成了一个漂亮而密实的、根本不让你打开的小盒子,而且还限制了你使用的硬件接口。1998 年:软盘没了。2012 年:光驱没了。而 12 英寸的 MacBook 只有一个单一的 USB Type-C 接口,提供了电源、蓝牙、无线网卡、外部存储、视频输出和其它的一些配件的接口。而你要是想一次连接多个外设就不得不背着一堆转接器,这真是太令人抓狂了!然后就轮到耳机插孔了,没错,这唯一一个苹果世界里留着的非专有的标准硬件接口了,也注定要消失了。(LCTT 译注:还好,虽然最新的 IPhone 7 上没有耳机插孔了,但是新发布的 Macbook 上还有)

|

||||

|

||||

还有一大堆其它的操作系统,比如:Amiga、BeOS、OS/2 ,如果你愿意的话,你能找到几十个操作系统。我建议你去找找看,太容易找到了。Amiga、 BeOS 和 OS/2 这些系统都值得关注,因为它们有强大的功能,比如 32 位的多任务和高级图形处理能力。但是市场的影响击败了强大的系统性能,因此技术上并不出众的 Apple 和 Windows 操作系统统治了市场的主导地位,而那些曾经的系统也逐渐销声匿迹了。

|

||||

|

||||

然后 Linux 系统出现了,世界也因此改变了。

|

||||

|

||||

### 第一款电脑

|

||||

|

||||

我曾经使用过的第一款电脑是 Apple IIc ,大概在 1994年左右,而那个时候 Linux 系统刚出来 3 年。这是我从一个朋友那里借来的,用起来各方面都还不错,但是很不方便。所以我自己花了将近 500 美元买了一台二手的 Tandy 牌的电脑。这对于卖电脑的人来说是一件很伤心的事,因为新电脑要花费双倍的价钱。那个时候,电脑贬值的速度非常快。这个电脑当时看起来强劲得像是个怪物:一个英特尔 386SX 的 CPU,4MB的内存,一个 107MB 的硬盘,14 英寸的彩色 CRT 显示器,运行 MS-DOS 5 和 Windows 3.1 系统。

|

||||

|

||||

我曾无数次的拆开那个可怜的怪物,并且多次重新安装 Windows 和 DOS 系统。因为 Windows 桌面用的比较少,所以我的大部分工作都是在 DOS 下完成的。我喜欢玩血腥暴力的视频游戏,包括 Doom、Duke Nukem、Quake 和 Heretic。啊!那些美好的,让人心动的 8 位图像!

|

||||

|

||||

那个时候硬件的发展一直落后于软件,因此我经常升级硬件。现在我们能买到满足我们需求的任何配置的电脑。我已经好多年都没有再更新我的任何电脑硬件了。

|

||||

|

||||

### 《比特杂志(Computer bits)》

|

||||

|

||||

回到那些曾经辉煌的年代,电脑商店布满大街小巷,找到本地的一家网络服务提供商(ISP)也不用走遍整个街区。ISP 那个时候真的非比寻常,它们不是那种冷冰冰的令人讨论的超级大公司,而是像美国电信运营商和有线电视公司这样的好朋友。他们都非常友好,并且提供各种各样的像 BBS、文件下载、MUD (多玩家在线游戏)等的额外服务。

|

||||

|

||||

我花了很多的时间在电脑商店购买配件,但是很多时候我一个女人家去那里会让店里的员工感到吃惊,我真的很无语了,这怎么就会让一些人看不惯了。我现成已经是一位 58 岁的老家伙了,但是他们还是一样的看不惯我。我希望我这个女电脑迷在我死之前能被他们所接受。

|

||||

|

||||

那些商店的书架上摆满了《比特杂志(Computer bits)》。有关《比特杂志》的[历史刊物](https://web.archive.org/web/20020122193349/http://computerbits.com/)可以在互联网档案库(Internet Archive)中查到。《比特杂志》是当地一家免费报纸,有很多关于计算机方面的优秀的文章和大量的广告。可惜当时的广告没有网络版,因此大家不能再看到那些很有价值的关于计算机方面的详细信息了。你知道现在的广告商们有多么的抓狂吗?他们埋怨那些安装广告过滤器的人,致使科技新闻变成了伪装的广告。他们应该学习一下过去的广告模式,那时候的广告里有很多有价值的信息,大家都喜欢阅读。我从《比特杂志》和其它的电脑杂志的广告中学到了所有关于计算机硬件的知识。《电脑购买者杂志(Computer Shopper)》更是非常好的学习资料,其中有上百页的广告和很多高质量的文章。

|

||||

|

||||

|

||||

|

||||

《比特杂志》的出版人 Paul Harwood 开启了我的写作生涯。我的第一篇计算机专业性质的文章就是在《比特杂志》发表的。 Paul,仿佛你一直都在我的身旁,谢谢你。

|

||||

|

||||

在互联网档案库中,关于《比特杂志》的分类广告信息已经几乎查询不到了。分类广告模式曾经给出版商带来巨大的收入。免费的分类广告网站 Craigslist 在这个领域独占鳌头,同时也扼杀了像《比特杂志》这种以传统的报纸和出版为主的杂志行业。

|

||||

|

||||

其中有一些让我最难以忘怀的记忆就是一个 12 岁左右的吊儿郎当的小屁孩,他脸上挂满了对我这种光鲜亮丽的女人所做工作的不屑和不理解的表情,他在我钟爱的电脑店里走动,递给我一本《比特杂志》,当成给初学者的一本好书。我翻开杂志,指着其中一篇我写的关于 Linux 系统的文章给他看,他说“哦,我明白了”。他尴尬的脸变成了那种正常生理上都不可能呈现的颜色,然后很仓促地夹着尾巴溜走了。(不是的,我亲爱的、诚实的读者们,他不是真的只有 12 岁,而应该是 20 来岁。他现在应该比以前更成熟懂事一些了吧!)

|

||||

|

||||

### 发现 Linux

|

||||

|

||||

我第一次了解到 Linux 系统是在《比特杂志》上,大概在 1997 年左右。我一开始用的一些 Linux操作系统版本是 Red Hat 5 和 Mandrake Linux(曼德拉草)。 Mandrake 真是太棒了,它是第一款易安装型的 Linux 系统,并且还附带图形界面和声卡驱动,因此我马上就可以玩 Tux Racer 游戏了。不像那时候大多数的 Linux 迷们,因为我之前没接触过 Unix系统,所以我学习起来比较难。但是一切都还顺利吧,因为我学到的东西都很有用。相对于我在 Windows 中的体验,我在 Windows 中学习的大部分东西都是徒劳,最终只能放弃返回到 DOS 下。

|

||||

|

||||

玩转电脑真是充满了太多的乐趣,后来我转行成为计算机自由顾问,去帮助一些小型公司的 IT 部门把数据迁移到 Linux 服务器上,这让 Windows 系统或多或少的失去一些用武之地。通常情况下我们都是在背地里偷偷地做这些工作的,因为那段时期微软把 Linux 称为毒瘤,诬蔑 Linux 系统是一种共产主义,用来削弱和吞噬我们身体里的珍贵血液的阴谋。

|

||||

|

||||

### Linux 赢了

|

||||

|

||||

我持续做了很多年的顾问工作,也做其它一些相关的工作,比如:电脑硬件修理和升级、布线、系统和网络管理,还有运行包括 Apple、Windows、Linux 系统在内的混合网络。Apple 和 Windows 系统故意不兼容对方,因此这两个系统真的是最头疼,也最难整合到同一网络中。但是在 Linux 系统和其它开源软件中最有趣的一件事是总有一些人能够处理这些厂商之间的兼容性问题。

|

||||

|

||||

现在已经大不同了。在系统间的互操作方面一直存在一些兼容性问题,而且也没有桌面 Linux 系统的 1 级 OEM 厂商。我觉得这是因为微软和苹果公司在零售行业在大力垄断造成的。也许他们这样做反而帮了我们帮大忙,因为我们可以从 ZaReason 和 System76 这样独立的 Linux 系统开发商得到更好的服务和更高性能的系统。他们对 Linux 系统都很专业,也特别欢迎我们这样的用户。

|

||||

|

||||

Linux 除了在零售行业的桌面版系统占有一席之地以外,从嵌入式系统到超级计算机以及分布式计算系统等各个方面都占据着主要地位。开源技术掌握着软件行业的发展方向,所有的软件行业的前沿技术,比如容器、集群以及人工智能的发展都离不开开源技术的支持。从我的第一台老式的 386SX 电脑到现在,Linux 和开源技术都取得了巨大的进步。

|

||||

|

||||

如果没有 Linux 系统和开源,这一切都不可能发生。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/7/my-linux-story-carla-schroder

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[rusking](https://github.com/rusking)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/carlaschroder

|

||||

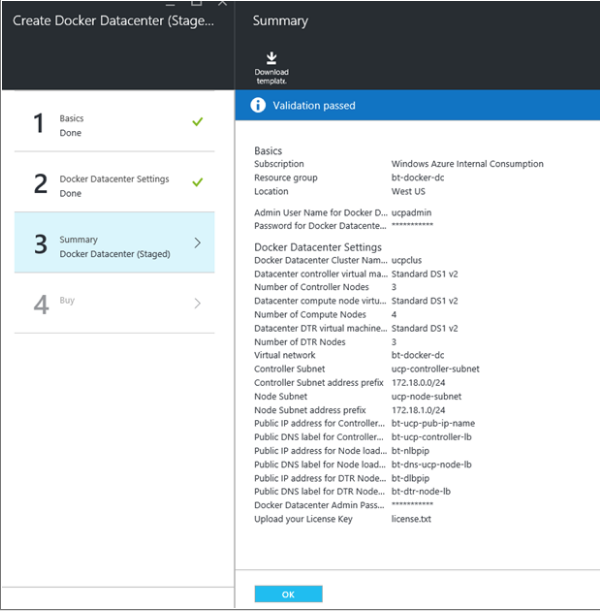

@ -0,0 +1,217 @@

|

||||

如何在 Ubuntu 上使用 Grafana 监控 Docker

|

||||

=========

|

||||

|

||||

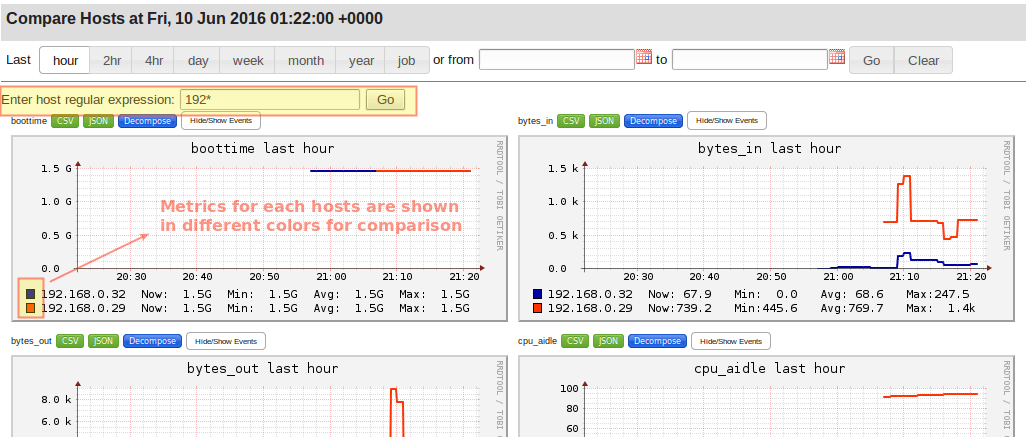

Grafana 是一个有着丰富指标的开源控制面板。在可视化大规模测量数据的时候是非常有用的。根据不同的指标数据,它提供了一个强大、优雅的来创建、分享和浏览数据的方式。

|

||||

|

||||

它提供了丰富多样、灵活的图形选项。此外,针对数据源(Data Source),它支持许多不同的存储后端。每个数据源都有针对特定数据源的特性和功能所定制的查询编辑器。Grafana 提供了对下述数据源的正式支持:Graphite、InfluxDB、OpenTSDB、 Prometheus、Elasticsearch 和 Cloudwatch。

|

||||

|

||||

每个数据源的查询语言和能力显然是不同的,你可以将来自多个数据源的数据混合到一个单一的仪表盘上,但每个面板(Panel)被绑定到属于一个特定组织(Organization)的特定数据源上。它支持验证登录和基于角色的访问控制方案。它是作为一个独立软件部署,使用 Go 和 JavaScript 编写的。

|

||||

|

||||

在这篇文章,我将讲解如何在 Ubuntu 16.04 上安装 Grafana 并使用这个软件配置 Docker 监控。

|

||||

|

||||

### 先决条件

|

||||

|

||||

- 安装好 Docker 的服务器

|

||||

|

||||

### 安装 Grafana

|

||||

|

||||

我们可以在 Docker 中构建我们的 Grafana。 有一个官方提供的 Grafana Docker 镜像。请运行下述命令来构建Grafana 容器。

|

||||

|

||||

```

|

||||

root@ubuntu:~# docker run -i -p 3000:3000 grafana/grafana

|

||||

|

||||

Unable to find image 'grafana/grafana:latest' locally

|

||||

latest: Pulling from grafana/grafana

|

||||

5c90d4a2d1a8: Pull complete

|

||||

b1a9a0b6158e: Pull complete

|

||||

acb23b0d58de: Pull complete

|

||||

Digest: sha256:34ca2f9c7986cb2d115eea373083f7150a2b9b753210546d14477e2276074ae1

|

||||

Status: Downloaded newer image for grafana/grafana:latest

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Starting Grafana" logger=main version=3.1.0 commit=v3.1.0 compiled=2016-07-12T06:42:28+0000

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Config loaded from" logger=settings file=/usr/share/grafana/conf/defaults.ini

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Config loaded from" logger=settings file=/etc/grafana/grafana.ini

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Config overriden from command line" logger=settings arg="default.paths.data=/var/lib/grafana"

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Config overriden from command line" logger=settings arg="default.paths.logs=/var/log/grafana"

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Config overriden from command line" logger=settings arg="default.paths.plugins=/var/lib/grafana/plugins"

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Path Home" logger=settings path=/usr/share/grafana

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Path Data" logger=settings path=/var/lib/grafana

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Path Logs" logger=settings path=/var/log/grafana

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Path Plugins" logger=settings path=/var/lib/grafana/plugins

|

||||

t=2016-07-27T15:20:19+0000 lvl=info msg="Initializing DB" logger=sqlstore dbtype=sqlite3

|

||||

|

||||

t=2016-07-27T15:20:20+0000 lvl=info msg="Executing migration" logger=migrator id="create playlist table v2"

|

||||

t=2016-07-27T15:20:20+0000 lvl=info msg="Executing migration" logger=migrator id="create playlist item table v2"

|

||||

t=2016-07-27T15:20:20+0000 lvl=info msg="Executing migration" logger=migrator id="drop preferences table v2"

|

||||

t=2016-07-27T15:20:20+0000 lvl=info msg="Executing migration" logger=migrator id="drop preferences table v3"

|

||||

t=2016-07-27T15:20:20+0000 lvl=info msg="Executing migration" logger=migrator id="create preferences table v3"

|

||||

t=2016-07-27T15:20:20+0000 lvl=info msg="Created default admin user: [admin]"

|

||||

t=2016-07-27T15:20:20+0000 lvl=info msg="Starting plugin search" logger=plugins

|

||||

t=2016-07-27T15:20:20+0000 lvl=info msg="Server Listening" logger=server address=0.0.0.0:3000 protocol=http subUrl=

|

||||

```

|

||||

|

||||

我们可以通过运行此命令确认 Grafana 容器的工作状态 `docker ps -a` 或通过这个URL访问 `http://Docker IP:3000`。

|

||||

|

||||

所有的 Grafana 配置设置都使用环境变量定义,在使用容器技术时这个是非常有用的。Grafana 配置文件路径为 `/etc/grafana/grafana.ini`。

|

||||

|

||||

### 理解配置项

|

||||

|

||||

Grafana 可以在它的 ini 配置文件中指定几个配置选项,或可以使用前面提到的环境变量来指定。

|

||||

|

||||

#### 配置文件位置

|

||||

|

||||

通常配置文件路径:

|

||||

|

||||

- 默认配置文件路径 : `$WORKING_DIR/conf/defaults.ini`

|

||||

- 自定义配置文件路径 : `$WORKING_DIR/conf/custom.ini`

|

||||

|

||||

PS:当你使用 deb、rpm 或 docker 镜像安装 Grafana 时,你的配置文件在 `/etc/grafana/grafana.ini`。

|

||||

|

||||

#### 理解配置变量

|

||||

|

||||

现在我们看一些配置文件中的变量:

|

||||

|

||||

- `instance_name`:这是 Grafana 服务器实例的名字。默认值从 `${HOSTNAME}` 获取,其值是环境变量` HOSTNAME`,如果该变量为空或不存在,Grafana 将会尝试使用系统调用来获取机器名。

|

||||

- `[paths]`:这些路径通常都是在 init.d 脚本或 systemd service 文件中通过命令行指定。

|

||||

- `data`:这个是 Grafana 存储 sqlite3 数据库(如果使用)、基于文件的会话(如果使用),和其他数据的路径。

|

||||

- `logs`:这个是 Grafana 存储日志的路径。

|

||||

- `[server]`

|

||||

- `http_addr`:应用监听的 IP 地址,如果为空,则监听所有的接口。

|

||||

- `http_port`:应用监听的端口,默认是 3000,你可以使用下面的命令将你的 80 端口重定向到 3000 端口:`$iptables -t nat -A PREROUTING -p tcp --dport 80 -j REDIRECT --to-port 3000`

|

||||

- `root_url` : 这个 URL 用于从浏览器访问 Grafana 。

|

||||

- `cert_file` : 证书文件的路径(如果协议是 HTTPS)。

|

||||

- `cert_key` : 证书密钥文件的路径(如果协议是 HTTPS)。

|

||||

- `[database]`:Grafana 使用数据库来存储用户和仪表盘以及其他信息,默认配置为使用内嵌在 Grafana 主二进制文件中的 SQLite3。

|

||||

- `type`:你可以根据你的需求选择 MySQL、Postgres、SQLite3。

|

||||

- `path`:仅用于选择 SQLite3 数据库时,这个是数据库所存储的路径。

|

||||

- `host`:仅适用 MySQL 或者 Postgres。它包括 IP 地址或主机名以及端口。例如,Grafana 和 MySQL 运行在同一台主机上设置如: `host = 127.0.0.1:3306`。

|

||||

- `name`:Grafana 数据库的名称,把它设置为 Grafana 或其它名称。

|

||||

- `user`:数据库用户(不适用于 SQLite3)。

|

||||

- `password`:数据库用户密码(不适用于 SQLite3)。

|

||||

- `ssl_mode`:对于 Postgres,使用 `disable`,`require`,或 `verify-full` 等值。对于 MySQL,使用 `true`,`false`,或 `skip-verify`。

|

||||

- `ca_cert_path`:(只适用于 MySQL)CA 证书文件路径,在多数 Linux 系统中,证书可以在 `/etc/ssl/certs` 找到。

|

||||

- `client_key_path`:(只适用于 MySQL)客户端密钥的路径,只在服务端需要用户端验证时使用。

|

||||

- `client_cert_path`:(只适用于 MySQL)客户端证书的路径,只在服务端需要用户端验证时使用。

|

||||

- `server_cert_name`:(只适用于 MySQL)MySQL 服务端使用的证书的通用名称字段。如果 `ssl_mode` 设置为 `skip-verify` 时可以不设置。

|

||||

- `[security]`

|

||||

- `admin_user`:这个是 Grafana 默认的管理员用户的用户名,默认设置为 admin。

|

||||

- `admin_password`:这个是 Grafana 默认的管理员用户的密码,在第一次运行时设置,默认为 admin。

|

||||

- `login_remember_days`:保持登录/记住我的持续天数。

|

||||

- `secret_key`:用于保持登录/记住我的 cookies 的签名。

|

||||

|

||||

### 设置监控的重要组件

|

||||

|

||||

我们可以使用下面的组件来创建我们的 Docker 监控系统。

|

||||

|

||||

- `cAdvisor`:它被称为 Container Advisor。它给用户提供了一个资源利用和性能特征的解读。它会收集、聚合、处理、导出运行中的容器的信息。你可以通过[这个文档](https://github.com/google/cadvisor)了解更多。

|

||||

- `InfluxDB`:这是一个包含了时间序列、度量和分析数据库。我们使用这个数据源来设置我们的监控。cAdvisor 只展示实时信息,并不保存这些度量信息。Influx Db 帮助保存 cAdvisor 提供的监控数据,以展示非某一时段的数据。

|

||||

- `Grafana Dashboard`:它可以帮助我们在视觉上整合所有的信息。这个强大的仪表盘使我们能够针对 InfluxDB 数据存储进行查询并将他们放在一个布局合理好看的图表中。

|

||||

|

||||

### Docker 监控的安装

|

||||

|

||||

我们需要一步一步的在我们的 Docker 系统中安装以下每一个组件:

|

||||

|

||||

#### 安装 InfluxDB

|

||||

|

||||

我们可以使用这个命令来拉取 InfluxDB 镜像,并部署了 influxDB 容器。

|

||||

|

||||

```

|

||||

root@ubuntu:~# docker run -d -p 8083:8083 -p 8086:8086 --expose 8090 --expose 8099 -e PRE_CREATE_DB=cadvisor --name influxsrv tutum/influxdb:0.8.8

|

||||

Unable to find image 'tutum/influxdb:0.8.8' locally

|

||||

0.8.8: Pulling from tutum/influxdb

|

||||

a3ed95caeb02: Already exists

|

||||

23efb549476f: Already exists

|

||||

aa2f8df21433: Already exists

|

||||

ef072d3c9b41: Already exists

|

||||

c9f371853f28: Already exists

|

||||

a248b0871c3c: Already exists

|

||||

749db6d368d0: Already exists

|

||||

7d7c7d923e63: Pull complete

|

||||

e47cc7808961: Pull complete

|

||||

1743b6eeb23f: Pull complete

|

||||

Digest: sha256:8494b31289b4dbc1d5b444e344ab1dda3e18b07f80517c3f9aae7d18133c0c42

|

||||

Status: Downloaded newer image for tutum/influxdb:0.8.8

|

||||

d3b6f7789e0d1d01fa4e0aacdb636c221421107d1df96808ecbe8e241ceb1823

|

||||

|

||||

-p 8083:8083 : user interface, log in with username-admin, pass-admin

|

||||

-p 8086:8086 : interaction with other application

|

||||

--name influxsrv : container have name influxsrv, use to cAdvisor link it.

|

||||

```

|

||||

|

||||

你可以测试 InfluxDB 是否安装好,通过访问这个 URL `http://你的 IP 地址:8083`,用户名和密码都是 ”root“。

|

||||

|

||||

|

||||

|

||||

我们可以在这个界面上创建我们所需的数据库。

|

||||

|

||||

|

||||

|

||||

#### 安装 cAdvisor

|

||||

|

||||

我们的下一个步骤是安装 cAdvisor 容器,并将其链接到 InfluxDB 容器。你可以使用此命令来创建它。

|

||||

|

||||

```

|

||||

root@ubuntu:~# docker run --volume=/:/rootfs:ro --volume=/var/run:/var/run:rw --volume=/sys:/sys:ro --volume=/var/lib/docker/:/var/lib/docker:ro --publish=8080:8080 --detach=true --link influxsrv:influxsrv --name=cadvisor google/cadvisor:latest -storage_driver_db=cadvisor -storage_driver_host=influxsrv:8086

|

||||

Unable to find image 'google/cadvisor:latest' locally

|

||||

latest: Pulling from google/cadvisor

|

||||

09d0220f4043: Pull complete

|

||||

151807d34af9: Pull complete

|

||||

14cd28dce332: Pull complete

|

||||

Digest: sha256:8364c7ab7f56a087b757a304f9376c3527c8c60c848f82b66dd728980222bd2f

|

||||

Status: Downloaded newer image for google/cadvisor:latest

|

||||

3bfdf7fdc83872485acb06666a686719983a1172ac49895cd2a260deb1cdde29

|

||||

root@ubuntu:~#

|

||||

|

||||

--publish=8080:8080 : user interface

|

||||

--link=influxsrv:influxsrv: link to container influxsrv

|

||||

-storage_driver=influxdb: set the storage driver as InfluxDB

|

||||

Specify what InfluxDB instance to push data to:

|

||||

-storage_driver_host=influxsrv:8086: The ip:port of the database. Default is ‘localhost:8086’

|

||||

-storage_driver_db=cadvisor: database name. Uses db ‘cadvisor’ by default

|

||||

```

|

||||

|

||||

你可以通过访问这个地址来测试安装 cAdvisor 是否正常 `http://你的 IP 地址:8080`。 这将为你的 Docker 主机和容器提供统计信息。

|

||||

|

||||

|

||||

|

||||

#### 安装 Grafana 控制面板

|

||||

|

||||

最后,我们需要安装 Grafana 仪表板并连接到 InfluxDB,你可以执行下面的命令来设置它。

|

||||

|

||||

```

|

||||

root@ubuntu:~# docker run -d -p 3000:3000 -e INFLUXDB_HOST=localhost -e INFLUXDB_PORT=8086 -e INFLUXDB_NAME=cadvisor -e INFLUXDB_USER=root -e INFLUXDB_PASS=root --link influxsrv:influxsrv --name grafana grafana/grafana

|

||||

f3b7598529202b110e4e6b998dca6b6e60e8608d75dcfe0d2b09ae408f43684a

|

||||

```

|

||||

|

||||

现在我们可以登录 Grafana 来配置数据源. 访问 `http://你的 IP 地址:3000` 或 `http://你的 IP 地址`(如果你在前面做了端口映射的话):

|

||||

|

||||

- 用户名 - admin

|

||||

- 密码 - admin

|

||||

|

||||

一旦我们安装好了 Grafana,我们可以连接 InfluxDB。登录到仪表盘并且点击面板左上方角落的 Grafana 图标(那个火球)。点击数据源(Data Sources)来配置。

|

||||

|

||||

|

||||

|

||||

现在你可以添加新的图形(Graph)到我们默认的数据源 InfluxDB。

|

||||

|

||||

|

||||

|

||||

我们可以通过在测量(Metric)页面编辑和调整我们的查询以调整我们的图形。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

关于 Docker 监控,你可用[从此了解][1]更多信息。 感谢你的阅读。我希望你可以留下有价值的建议和评论。希望你有个美好的一天。

|

||||

|

||||

------

|

||||

|

||||

via: http://linoxide.com/linux-how-to/monitor-docker-containers-grafana-ubuntu/

|

||||

|

||||

作者:[Saheetha Shameer][a]

|

||||

译者:[Bestony](https://github.com/bestony)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://linoxide.com/author/saheethas/

|

||||

[1]: https://github.com/vegasbrianc/docker-monitoring

|

||||

410

published/20160820 Protocol Buffer Basics C++.md

Normal file

410

published/20160820 Protocol Buffer Basics C++.md

Normal file

@ -0,0 +1,410 @@

|

||||

C++ 程序员 Protocol Buffers 基础指南

|

||||

============================

|

||||

|

||||

这篇教程提供了一个面向 C++ 程序员关于 protocol buffers 的基础介绍。通过创建一个简单的示例应用程序,它将向我们展示:

|

||||

|

||||

* 在 `.proto` 文件中定义消息格式

|

||||

* 使用 protocol buffer 编译器

|

||||

* 使用 C++ protocol buffer API 读写消息

|

||||

|

||||

这不是一个关于在 C++ 中使用 protocol buffers 的全面指南。要获取更详细的信息,请参考 [Protocol Buffer Language Guide][1] 和 [Encoding Reference][2]。

|

||||

|

||||

### 为什么使用 Protocol Buffers

|

||||

|

||||

我们接下来要使用的例子是一个非常简单的"地址簿"应用程序,它能从文件中读取联系人详细信息。地址簿中的每一个人都有一个名字、ID、邮件地址和联系电话。

|

||||

|

||||

如何序列化和获取结构化的数据?这里有几种解决方案:

|

||||

|

||||

* 以二进制形式发送/接收原生的内存数据结构。通常,这是一种脆弱的方法,因为接收/读取代码必须基于完全相同的内存布局、大小端等环境进行编译。同时,当文件增加时,原始格式数据会随着与该格式相关的软件而迅速扩散,这将导致很难扩展文件格式。

|

||||

* 你可以创造一种 ad-hoc 方法,将数据项编码为一个字符串——比如将 4 个整数编码为 `12:3:-23:67`。虽然它需要编写一次性的编码和解码代码且解码需要耗费一点运行时成本,但这是一种简单灵活的方法。这最适合编码非常简单的数据。

|

||||

* 序列化数据为 XML。这种方法是非常吸引人的,因为 XML 是一种适合人阅读的格式,并且有为许多语言开发的库。如果你想与其他程序和项目共享数据,这可能是一种不错的选择。然而,众所周知,XML 是空间密集型的,且在编码和解码时,它对程序会造成巨大的性能损失。同时,使用 XML DOM 树被认为比操作一个类的简单字段更加复杂。

|

||||

|

||||

Protocol buffers 是针对这个问题的一种灵活、高效、自动化的解决方案。使用 Protocol buffers,你需要写一个 `.proto` 说明,用于描述你所希望存储的数据结构。利用 `.proto` 文件,protocol buffer 编译器可以创建一个类,用于实现对高效的二进制格式的 protocol buffer 数据的自动化编码和解码。产生的类提供了构造 protocol buffer 的字段的 getters 和 setters,并且作为一个单元来处理读写 protocol buffer 的细节。重要的是,protocol buffer 格式支持格式的扩展,代码仍然可以读取以旧格式编码的数据。

|

||||

|

||||

### 在哪可以找到示例代码

|

||||

|

||||

示例代码被包含于源代码包,位于“examples”文件夹。可在[这里][4]下载代码。

|

||||

|

||||

### 定义你的协议格式

|

||||

|

||||

为了创建自己的地址簿应用程序,你需要从 `.proto` 开始。`.proto` 文件中的定义很简单:为你所需要序列化的每个数据结构添加一个消息(message),然后为消息中的每一个字段指定一个名字和类型。这里是定义你消息的 `.proto` 文件 `addressbook.proto`。

|

||||

|

||||

```

|

||||

package tutorial;

|

||||

|

||||

message Person {

|

||||

required string name = 1;

|

||||

required int32 id = 2;

|

||||

optional string email = 3;

|

||||

|

||||

enum PhoneType {

|

||||

MOBILE = 0;

|

||||

HOME = 1;

|

||||

WORK = 2;

|

||||

}

|

||||

|

||||

message PhoneNumber {

|

||||

required string number = 1;

|

||||

optional PhoneType type = 2 [default = HOME];

|

||||

}

|

||||

|

||||

repeated PhoneNumber phone = 4;

|

||||

}

|

||||

|

||||

message AddressBook {

|

||||

repeated Person person = 1;

|

||||

}

|

||||

```

|

||||

|

||||

如你所见,其语法类似于 C++ 或 Java。我们开始看看文件的每一部分内容做了什么。

|

||||

|

||||

`.proto` 文件以一个 package 声明开始,这可以避免不同项目的命名冲突。在 C++,你生成的类会被置于与 package 名字一样的命名空间。

|

||||

|

||||

下一步,你需要定义消息(message)。消息只是一个包含一系列类型字段的集合。大多标准的简单数据类型是可以作为字段类型的,包括 `bool`、`int32`、`float`、`double` 和 `string`。你也可以通过使用其他消息类型作为字段类型,将更多的数据结构添加到你的消息中——在以上的示例,`Person` 消息包含了 `PhoneNumber` 消息,同时 `AddressBook` 消息包含 `Person` 消息。你甚至可以定义嵌套在其他消息内的消息类型——如你所见,`PhoneNumber` 类型定义于 `Person` 内部。如果你想要其中某一个字段的值是预定义值列表中的某个值,你也可以定义 `enum` 类型——这儿你可以指定一个电话号码是 `MOBILE`、`HOME` 或 `WORK` 中的某一个。

|

||||

|

||||

每一个元素上的 `= 1`、`= 2` 标记确定了用于二进制编码的唯一“标签”(tag)。标签数字 1-15 的编码比更大的数字少需要一个字节,因此作为一种优化,你可以将这些标签用于经常使用的元素或 repeated 元素,剩下 16 以及更高的标签用于非经常使用的元素或 `optional` 元素。每一个 `repeated` 字段的元素需要重新编码标签数字,因此 `repeated` 字段适合于使用这种优化手段。

|

||||

|

||||

每一个字段必须使用下面的修饰符加以标注:

|

||||

|

||||

* `required`:必须提供该字段的值,否则消息会被认为是 “未初始化的”(uninitialized)。如果 `libprotobuf` 以调试模式编译,序列化未初始化的消息将引起一个断言失败。以优化形式构建,将会跳过检查,并且无论如何都会写入该消息。然而,解析未初始化的消息总是会失败(通过 parse 方法返回 `false`)。除此之外,一个 `required` 字段的表现与 `optional` 字段完全一样。

|

||||

* `optional`:字段可能会被设置,也可能不会。如果一个 `optional` 字段没被设置,它将使用默认值。对于简单类型,你可以指定你自己的默认值,正如例子中我们对电话号码的 `type` 一样,否则使用系统默认值:数字类型为 0、字符串为空字符串、布尔值为 false。对于嵌套消息,默认值总为消息的“默认实例”或“原型”,它的所有字段都没被设置。调用 accessor 来获取一个没有显式设置的 `optional`(或 `required`) 字段的值总是返回字段的默认值。

|

||||

* `repeated`:字段可以重复任意次数(包括 0 次)。`repeated` 值的顺序会被保存于 protocol buffer。可以将 repeated 字段想象为动态大小的数组。

|

||||

|

||||

你可以查找关于编写 `.proto` 文件的完整指导——包括所有可能的字段类型——在 [Protocol Buffer Language Guide][6] 里面。不要在这里面查找与类继承相似的特性,因为 protocol buffers 不会做这些。

|

||||

|

||||

> **`required` 是永久性的**

|

||||

|

||||

>在把一个字段标识为 `required` 的时候,你应该特别小心。如果在某些情况下你不想写入或者发送一个 `required` 的字段,那么将该字段更改为 `optional` 可能会遇到问题——旧版本的读者(LCTT 译注:即读取、解析旧版本 Protocol Buffer 消息的一方)会认为不含该字段的消息是不完整的,从而有可能会拒绝解析。在这种情况下,你应该考虑编写特别针对于应用程序的、自定义的消息校验函数。Google 的一些工程师得出了一个结论:使用 `required` 弊多于利;他们更愿意使用 `optional` 和 `repeated` 而不是 `required`。当然,这个观点并不具有普遍性。

|

||||

|

||||

### 编译你的 Protocol Buffers

|

||||

|

||||

既然你有了一个 `.proto`,那你需要做的下一件事就是生成一个将用于读写 `AddressBook` 消息的类(从而包括 `Person` 和 `PhoneNumber`)。为了做到这样,你需要在你的 `.proto` 上运行 protocol buffer 编译器 `protoc`:

|

||||

|

||||

1. 如果你没有安装编译器,请[下载这个包][4],并按照 README 中的指令进行安装。

|

||||

2. 现在运行编译器,指定源目录(你的应用程序源代码位于哪里——如果你没有提供任何值,将使用当前目录)、目标目录(你想要生成的代码放在哪里;常与 `$SRC_DIR` 相同),以及你的 `.proto` 路径。在此示例中:

|

||||

|

||||

```

|

||||

protoc -I=$SRC_DIR --cpp_out=$DST_DIR $SRC_DIR/addressbook.proto

|

||||

```

|

||||

|

||||

因为你想要 C++ 的类,所以你使用了 `--cpp_out` 选项——也为其他支持的语言提供了类似选项。

|

||||

|

||||

在你指定的目标文件夹,将生成以下的文件:

|

||||

|

||||

* `addressbook.pb.h`,声明你生成类的头文件。

|

||||

* `addressbook.pb.cc`,包含你的类的实现。

|

||||

|

||||

### Protocol Buffer API

|

||||

|

||||

让我们看看生成的一些代码,了解一下编译器为你创建了什么类和函数。如果你查看 `addressbook.pb.h`,你可以看到有一个在 `addressbook.proto` 中指定所有消息的类。关注 `Person` 类,可以看到编译器为每个字段生成了读写函数(accessors)。例如,对于 `name`、`id`、`email` 和 `phone` 字段,有下面这些方法:(LCTT 译注:此处原文所指文件名有误,径该之。)

|

||||

|

||||

```c++

|

||||

// name

|

||||

inline bool has_name() const;

|

||||

inline void clear_name();

|

||||

inline const ::std::string& name() const;

|

||||

inline void set_name(const ::std::string& value);

|

||||

inline void set_name(const char* value);

|

||||

inline ::std::string* mutable_name();

|

||||

|

||||

// id

|

||||

inline bool has_id() const;

|

||||

inline void clear_id();

|

||||

inline int32_t id() const;

|

||||

inline void set_id(int32_t value);

|

||||

|

||||

// email

|

||||

inline bool has_email() const;

|

||||

inline void clear_email();

|

||||

inline const ::std::string& email() const;

|

||||

inline void set_email(const ::std::string& value);

|

||||

inline void set_email(const char* value);

|

||||

inline ::std::string* mutable_email();

|

||||

|

||||

// phone

|

||||

inline int phone_size() const;

|

||||

inline void clear_phone();

|

||||

inline const ::google::protobuf::RepeatedPtrField< ::tutorial::Person_PhoneNumber >& phone() const;

|

||||

inline ::google::protobuf::RepeatedPtrField< ::tutorial::Person_PhoneNumber >* mutable_phone();

|

||||

inline const ::tutorial::Person_PhoneNumber& phone(int index) const;

|

||||

inline ::tutorial::Person_PhoneNumber* mutable_phone(int index);

|

||||

inline ::tutorial::Person_PhoneNumber* add_phone();

|

||||

```

|

||||

|

||||

正如你所见到,getters 的名字与字段的小写名字完全一样,并且 setter 方法以 set_ 开头。同时每个单一(singular)(`required` 或 `optional`)字段都有 `has_` 方法,该方法在字段被设置了值的情况下返回 true。最后,所有字段都有一个 `clear_` 方法,用以清除字段到空(empty)状态。

|

||||

|

||||

数字型的 `id` 字段仅有上述的基本读写函数集合(accessors),而 `name` 和 `email` 字段有两个额外的方法,因为它们是字符串——一个是可以获得字符串直接指针的`mutable_` 的 getter ,另一个为额外的 setter。注意,尽管 `email` 还没被设置(set),你也可以调用 `mutable_email`;因为 `email` 会被自动地初始化为空字符串。在本例中,如果你有一个单一的(`required` 或 `optional`)消息字段,它会有一个 `mutable_` 方法,而没有 `set_` 方法。

|

||||

|

||||

`repeated` 字段也有一些特殊的方法——如果你看看 `repeated` 的 `phone` 字段的方法,你可以看到:

|

||||

|

||||

* 检查 `repeated` 字段的 `_size`(也就是说,与 `Person` 相关的电话号码的个数)

|

||||

* 使用下标取得特定的电话号码

|

||||

* 更新特定下标的电话号码

|

||||

* 添加新的电话号码到消息中,之后你便可以编辑。(`repeated` 标量类型有一个 `add_` 方法,用于传入新的值)

|

||||

|

||||

为了获取 protocol 编译器为所有字段定义生成的方法的信息,可以查看 [C++ generated code reference][5]。

|

||||

|

||||

#### 枚举和嵌套类

|

||||

|

||||

与 `.proto` 的枚举相对应,生成的代码包含了一个 `PhoneType` 枚举。你可以通过 `Person::PhoneType` 引用这个类型,通过 `Person::MOBILE`、`Person::HOME` 和 `Person::WORK` 引用它的值。(实现细节有点复杂,但是你无须了解它们而可以直接使用)

|

||||

|

||||

编译器也生成了一个 `Person::PhoneNumber` 的嵌套类。如果你查看代码,你可以发现真正的类型为 `Person_PhoneNumber`,但它通过在 `Person` 内部使用 `typedef` 定义,使你可以把 `Person_PhoneNumber` 当成嵌套类。唯一产生影响的一个例子是,如果你想要在其他文件前置声明该类——在 C++ 中你不能前置声明嵌套类,但是你可以前置声明 `Person_PhoneNumber`。

|

||||

|

||||

#### 标准的消息方法

|

||||

|

||||

所有的消息方法都包含了许多别的方法,用于检查和操作整个消息,包括:

|

||||

|

||||

* `bool IsInitialized() const;` :检查是否所有 `required` 字段已经被设置。

|

||||

* `string DebugString() const;` :返回人类可读的消息表示,对调试特别有用。

|

||||

* `void CopyFrom(const Person& from);`:使用给定的值重写消息。

|

||||

* `void Clear();`:清除所有元素为空(empty)的状态。

|

||||

|

||||

上面这些方法以及下一节要讲的 I/O 方法实现了被所有 C++ protocol buffer 类共享的消息(Message)接口。为了获取更多信息,请查看 [complete API documentation for Message][7]。

|

||||

|

||||

#### 解析和序列化

|

||||

|

||||

最后,所有 protocol buffer 类都有读写你选定类型消息的方法,这些方法使用了特定的 protocol buffer [二进制格式][8]。这些方法包括:

|

||||

|

||||

* `bool SerializeToString(string* output) const;`:序列化消息并将消息字节数据存储在给定的字符串中。注意,字节数据是二进制格式的,而不是文本格式;我们只使用 `string` 类作为合适的容器。

|

||||

* `bool ParseFromString(const string& data);`:从给定的字符创解析消息。

|

||||

* `bool SerializeToOstream(ostream* output) const;`:将消息写到给定的 C++ `ostream`。

|

||||

* `bool ParseFromIstream(istream* input);`:从给定的 C++ `istream` 解析消息。

|

||||

|

||||

这些只是两个用于解析和序列化的选择。再次说明,可以查看 `Message API reference` 完整的列表。

|

||||

|

||||

> **Protocol Buffers 和面向对象设计**

|

||||

|

||||

> Protocol buffer 类通常只是纯粹的数据存储器(像 C++ 中的结构体);它们在对象模型中并不是一等公民。如果你想向生成的 protocol buffer 类中添加更丰富的行为,最好的方法就是在应用程序中对它进行封装。如果你无权控制 `.proto` 文件的设计的话,封装 protocol buffers 也是一个好主意(例如,你从另一个项目中重用一个 `.proto` 文件)。在那种情况下,你可以用封装类来设计接口,以更好地适应你的应用程序的特定环境:隐藏一些数据和方法,暴露一些便于使用的函数,等等。**但是你绝对不要通过继承生成的类来添加行为。**这样做的话,会破坏其内部机制,并且不是一个好的面向对象的实践。

|

||||

|

||||

### 写消息

|

||||

|

||||

现在我们尝试使用 protocol buffer 类。你的地址簿程序想要做的第一件事是将个人详细信息写入到地址簿文件。为了做到这一点,你需要创建、填充 protocol buffer 类实例,并且将它们写入到一个输出流(output stream)。

|

||||

|

||||

这里的程序可以从文件读取 `AddressBook`,根据用户输入,将新 `Person` 添加到 `AddressBook`,并且再次将新的 `AddressBook` 写回文件。这部分直接调用或引用 protocol buffer 类的代码会以“// pb”标出。

|

||||

|

||||

```c++

|

||||

#include <iostream>

|

||||

#include <fstream>

|

||||

#include <string>

|

||||

#include "addressbook.pb.h" // pb

|

||||

using namespace std;

|

||||

|

||||

// This function fills in a Person message based on user input.

|

||||

void PromptForAddress(tutorial::Person* person) {

|

||||

cout << "Enter person ID number: ";

|

||||

int id;

|

||||

cin >> id;

|

||||

person->set_id(id); // pb

|

||||

cin.ignore(256, '\n');

|

||||

|

||||

cout << "Enter name: ";

|

||||

getline(cin, *person->mutable_name()); // pb

|

||||

|

||||

cout << "Enter email address (blank for none): ";

|

||||

string email;

|

||||

getline(cin, email);

|

||||

if (!email.empty()) { // pb

|

||||

person->set_email(email); // pb

|

||||

}

|

||||

|

||||

while (true) {

|

||||

cout << "Enter a phone number (or leave blank to finish): ";

|

||||

string number;

|

||||

getline(cin, number);

|

||||

if (number.empty()) {

|

||||

break;

|

||||

}

|

||||

|

||||

tutorial::Person::PhoneNumber* phone_number = person->add_phone(); //pb

|

||||

phone_number->set_number(number); // pb

|

||||

|

||||

cout << "Is this a mobile, home, or work phone? ";

|

||||

string type;

|

||||

getline(cin, type);

|

||||

if (type == "mobile") {

|

||||

phone_number->set_type(tutorial::Person::MOBILE); // pb

|

||||

} else if (type == "home") {

|

||||

phone_number->set_type(tutorial::Person::HOME); // pb

|

||||

} else if (type == "work") {

|

||||

phone_number->set_type(tutorial::Person::WORK); // pb

|

||||

} else {

|

||||

cout << "Unknown phone type. Using default." << endl;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

// Main function: Reads the entire address book from a file,

|

||||

// adds one person based on user input, then writes it back out to the same

|

||||

// file.

|

||||

int main(int argc, char* argv[]) {

|

||||

// Verify that the version of the library that we linked against is

|

||||

// compatible with the version of the headers we compiled against.

|

||||

GOOGLE_PROTOBUF_VERIFY_VERSION; // pb

|

||||

|

||||

if (argc != 2) {

|

||||

cerr << "Usage: " << argv[0] << " ADDRESS_BOOK_FILE" << endl;

|

||||

return -1;

|

||||

}

|

||||

|

||||

tutorial::AddressBook address_book; // pb

|

||||

|

||||

{

|

||||

// Read the existing address book.

|

||||

fstream input(argv[1], ios::in | ios::binary);

|

||||

if (!input) {

|

||||

cout << argv[1] << ": File not found. Creating a new file." << endl;

|

||||

} else if (!address_book.ParseFromIstream(&input)) { // pb

|

||||

cerr << "Failed to parse address book." << endl;

|

||||

return -1;

|

||||

}

|

||||

}

|

||||

|

||||

// Add an address.

|

||||

PromptForAddress(address_book.add_person()); // pb

|

||||

|

||||

{

|

||||

// Write the new address book back to disk.

|

||||

fstream output(argv[1], ios::out | ios::trunc | ios::binary);

|

||||

if (!address_book.SerializeToOstream(&output)) { // pb

|

||||

cerr << "Failed to write address book." << endl;

|

||||

return -1;

|

||||

}

|

||||

}

|

||||

|

||||

// Optional: Delete all global objects allocated by libprotobuf.

|

||||

google::protobuf::ShutdownProtobufLibrary(); // pb

|

||||

|

||||

return 0;

|

||||

}

|

||||

```

|

||||

|

||||

注意 `GOOGLE_PROTOBUF_VERIFY_VERSION` 宏。它是一种好的实践——虽然不是严格必须的——在使用 C++ Protocol Buffer 库之前执行该宏。它可以保证避免不小心链接到一个与编译的头文件版本不兼容的库版本。如果被检查出来版本不匹配,程序将会终止。注意,每个 `.pb.cc` 文件在初始化时会自动调用这个宏。

|

||||

|

||||

同时注意在程序最后调用 `ShutdownProtobufLibrary()`。它用于释放 Protocol Buffer 库申请的所有全局对象。对大部分程序,这不是必须的,因为虽然程序只是简单退出,但是 OS 会处理释放程序的所有内存。然而,如果你使用了内存泄漏检测工具,工具要求全部对象都要释放,或者你正在写一个 Protocol Buffer 库,该库可能会被一个进程多次加载和卸载,那么你可能需要强制 Protocol Buffer 清除所有东西。

|

||||

|

||||

### 读取消息

|

||||

|

||||

当然,如果你无法从它获取任何信息,那么这个地址簿没多大用处!这个示例读取上面例子创建的文件,并打印文件里的所有内容。

|

||||

|

||||

```c++

|

||||

#include <iostream>

|

||||

#include <fstream>

|

||||

#include <string>

|

||||

#include "addressbook.pb.h" // pb

|

||||

using namespace std;

|

||||

|

||||

// Iterates though all people in the AddressBook and prints info about them.

|

||||

void ListPeople(const tutorial::AddressBook& address_book) { // pb

|

||||

for (int i = 0; i < address_book.person_size(); i++) { // pb

|

||||

const tutorial::Person& person = address_book.person(i); // pb

|

||||

|

||||

cout << "Person ID: " << person.id() << endl; // pb

|

||||

cout << " Name: " << person.name() << endl; // pb

|

||||

if (person.has_email()) { // pb

|

||||

cout << " E-mail address: " << person.email() << endl; // pb

|

||||

}

|

||||

|

||||

for (int j = 0; j < person.phone_size(); j++) { // pb

|

||||

const tutorial::Person::PhoneNumber& phone_number = person.phone(j); // pb

|

||||

|

||||

switch (phone_number.type()) { // pb

|

||||

case tutorial::Person::MOBILE: // pb

|

||||

cout << " Mobile phone #: ";

|

||||

break;

|

||||

case tutorial::Person::HOME: // pb

|

||||

cout << " Home phone #: ";

|

||||

break;

|

||||

case tutorial::Person::WORK: // pb

|

||||

cout << " Work phone #: ";

|

||||

break;

|

||||

}

|

||||

cout << phone_number.number() << endl; // ob

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

// Main function: Reads the entire address book from a file and prints all

|

||||

// the information inside.

|

||||

int main(int argc, char* argv[]) {

|

||||

// Verify that the version of the library that we linked against is

|

||||

// compatible with the version of the headers we compiled against.

|

||||

GOOGLE_PROTOBUF_VERIFY_VERSION; // pb

|

||||

|

||||

if (argc != 2) {

|

||||

cerr << "Usage: " << argv[0] << " ADDRESS_BOOK_FILE" << endl;

|

||||

return -1;

|

||||

}

|

||||

|

||||

tutorial::AddressBook address_book; // pb

|

||||

|

||||

{

|

||||

// Read the existing address book.

|

||||

fstream input(argv[1], ios::in | ios::binary);

|

||||

if (!address_book.ParseFromIstream(&input)) { // pb

|

||||

cerr << "Failed to parse address book." << endl;

|

||||

return -1;

|

||||

}

|

||||

}

|

||||

|

||||

ListPeople(address_book);

|

||||

|

||||

// Optional: Delete all global objects allocated by libprotobuf.

|

||||

google::protobuf::ShutdownProtobufLibrary(); // pb

|

||||

|

||||

return 0;

|

||||

}

|

||||

```

|

||||

|

||||

### 扩展 Protocol Buffer

|

||||

|

||||

或早或晚在你发布了使用 protocol buffer 的代码之后,毫无疑问,你会想要 "改善"

|

||||

protocol buffer 的定义。如果你想要新的 buffers 向后兼容,并且老的 buffers 向前兼容——几乎可以肯定你很渴望这个——这里有一些规则,你需要遵守。在新的 protocol buffer 版本:

|

||||

|

||||

* 你绝不可以修改任何已存在字段的标签数字

|

||||

* 你绝不可以添加或删除任何 `required` 字段

|

||||

* 你可以删除 `optional` 或 `repeated` 字段

|

||||

* 你可以添加新的 `optional` 或 `repeated` 字段,但是你必须使用新的标签数字(也就是说,标签数字在 protocol buffer 中从未使用过,甚至不能是已删除字段的标签数字)。

|

||||

|

||||

(对于上面规则有一些[例外情况][9],但它们很少用到。)

|

||||

|

||||

如果你能遵守这些规则,旧代码则可以欢快地读取新的消息,并且简单地忽略所有新的字段。对于旧代码来说,被删除的 `optional` 字段将会简单地赋予默认值,被删除的 `repeated` 字段会为空。新代码显然可以读取旧消息。然而,请记住新的 `optional` 字段不会呈现在旧消息中,因此你需要显式地使用 `has_` 检查它们是否被设置或者在 `.proto` 文件在标签数字后使用 `[default = value]` 提供一个合理的默认值。如果一个 `optional` 元素没有指定默认值,它将会使用类型特定的默认值:对于字符串,默认值为空字符串;对于布尔值,默认值为 false;对于数字类型,默认类型为 0。注意,如果你添加一个新的 `repeated` 字段,新代码将无法辨别它被留空(left empty)(被新代码)或者从没被设置(被旧代码),因为 `repeated` 字段没有 `has_` 标志。

|

||||

|

||||

### 优化技巧

|

||||

|

||||

C++ Protocol Buffer 库已极度优化过了。但是,恰当的用法能够更多地提高性能。这里是一些技巧,可以帮你从库中挤压出最后一点速度:

|

||||

|

||||

* 尽可能复用消息对象。即使它们被清除掉,消息也会尽量保存所有被分配来重用的内存。因此,如果我们正在处理许多相同类型或一系列相似结构的消息,一个好的办法是重用相同的消息对象,从而减少内存分配的负担。但是,随着时间的流逝,对象可能会膨胀变大,尤其是当你的消息尺寸(LCTT 译注:各消息内容不同,有些消息内容多一些,有些消息内容少一些)不同的时候,或者你偶尔创建了一个比平常大很多的消息的时候。你应该自己通过调用 [SpaceUsed][10] 方法监测消息对象的大小,并在它太大的时候删除它。

|

||||

* 对于在多线程中分配大量小对象的情况,你的操作系统内存分配器可能优化得不够好。你可以尝试使用 google 的 [tcmalloc][11]。

|

||||

|

||||

### 高级用法

|

||||

|

||||

Protocol Buffers 绝不仅用于简单的数据存取以及序列化。请阅读 [C++ API reference][12] 来看看你还能用它来做什么。

|

||||

|

||||

protocol 消息类所提供的一个关键特性就是反射(reflection)。你不需要针对一个特殊的消息类型编写代码,就可以遍历一个消息的字段并操作它们的值。一个使用反射的有用方法是 protocol 消息与其他编码互相转换,比如 XML 或 JSON。反射的一个更高级的用法可能就是可以找出两个相同类型的消息之间的区别,或者开发某种“协议消息的正则表达式”,利用正则表达式,你可以对某种消息内容进行匹配。只要你发挥你的想像力,就有可能将 Protocol Buffers 应用到一个更广泛的、你可能一开始就期望解决的问题范围上。

|

||||

|

||||

反射是由 [Message::Reflection interface][13] 提供的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://developers.google.com/protocol-buffers/docs/cpptutorial

|

||||

|

||||

作者:[Google][a]

|

||||

译者:[cposture](https://github.com/cposture)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://developers.google.com/protocol-buffers/docs/cpptutorial

|

||||

[1]: https://developers.google.com/protocol-buffers/docs/proto

|

||||

[2]: https://developers.google.com/protocol-buffers/docs/encoding

|

||||

[3]: https://developers.google.com/protocol-buffers/docs/downloads

|

||||

[4]: https://developers.google.com/protocol-buffers/docs/downloads.html

|

||||

[5]: https://developers.google.com/protocol-buffers/docs/reference/cpp-generated

|

||||

[6]: https://developers.google.com/protocol-buffers/docs/proto

|

||||

[7]: https://developers.google.com/protocol-buffers/docs/reference/cpp/google.protobuf.message.html#Message

|

||||

[8]: https://developers.google.com/protocol-buffers/docs/encoding

|

||||

[9]: https://developers.google.com/protocol-buffers/docs/proto#updating

|

||||

[10]: https://developers.google.com/protocol-buffers/docs/reference/cpp/google.protobuf.message.html#Message.SpaceUsed.details

|

||||

[11]: http://code.google.com/p/google-perftools/

|

||||

[12]: https://developers.google.com/protocol-buffers/docs/reference/cpp/index.html

|

||||

[13]: https://developers.google.com/protocol-buffers/docs/reference/cpp/google.protobuf.message.html#Message.Reflection

|

||||

@ -0,0 +1,447 @@

|

||||

新手指南 - 通过 Docker 在 Linux 上托管 .NET Core

|

||||

=====

|

||||

|

||||

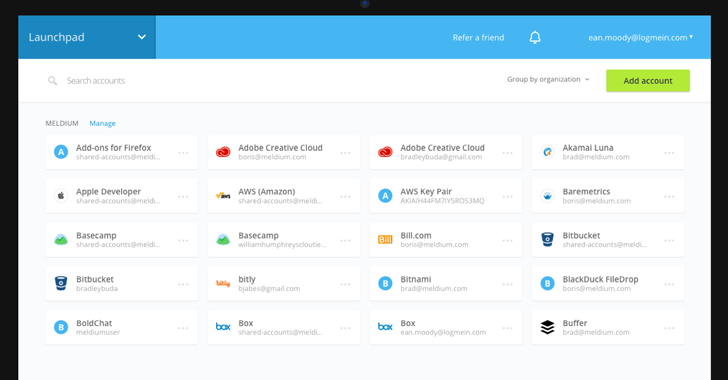

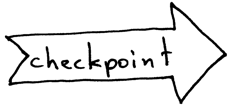

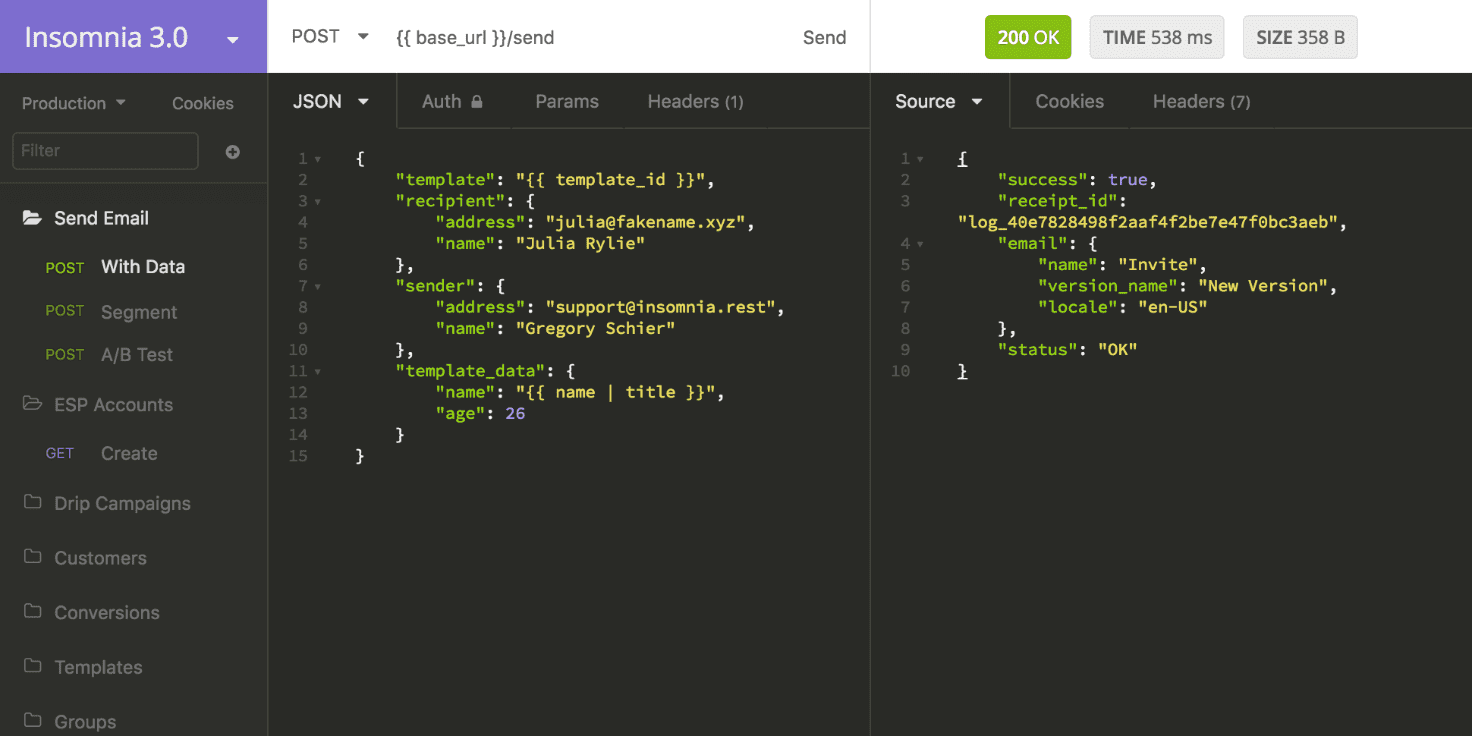

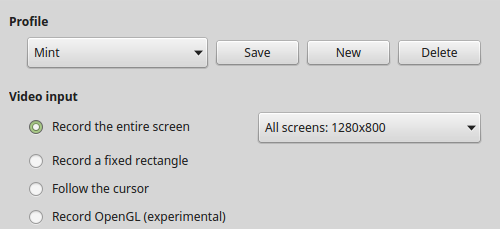

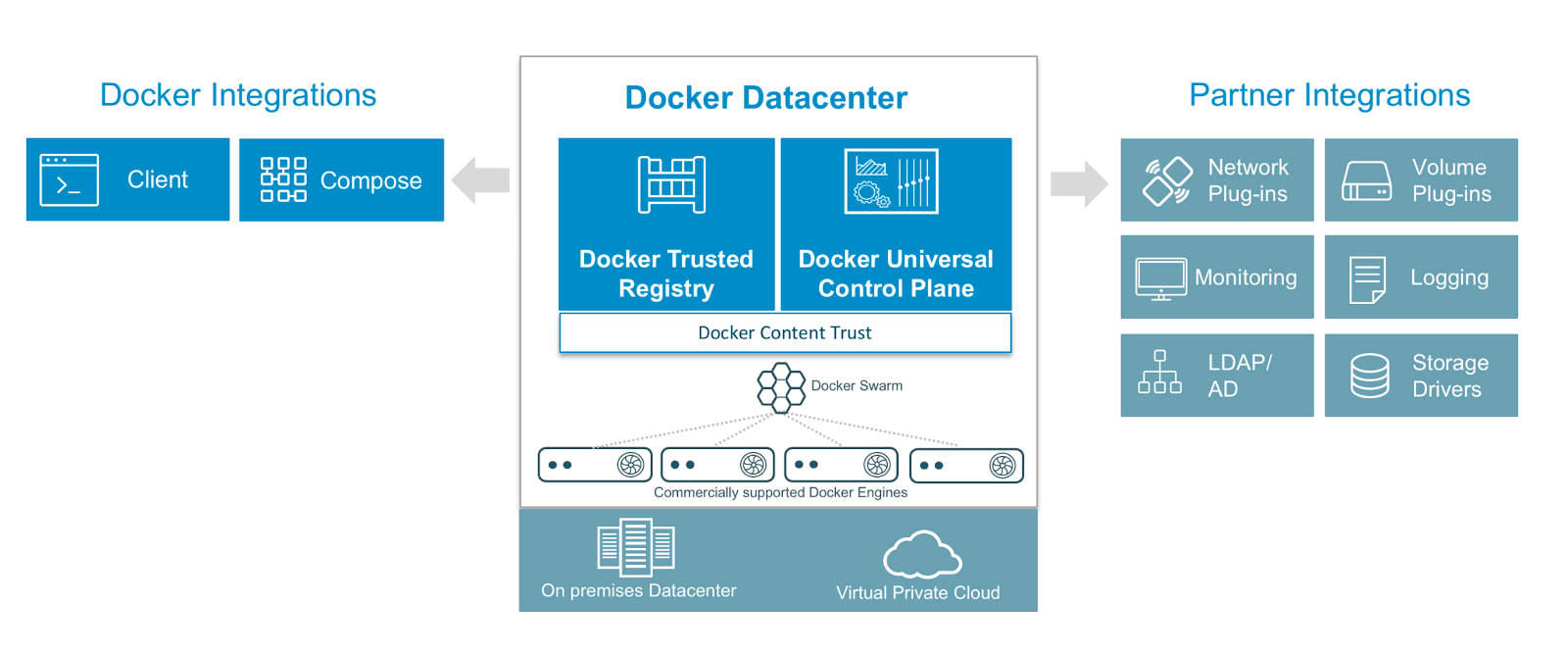

这篇文章基于我之前的文章 [.NET Core 入门][1]。首先,我把 RESTful API 从 .NET Core RC1 升级到了 .NET Core 1.0,然后,我增加了对 Docker 的支持并描述了如何在 Linux 生产环境里托管它。

|

||||

|

||||

|

||||

|

||||

我是首次接触 Docker 并且距离成为一名 Linux 高手还有很远的一段路程。因此,这里的很多想法是来自一个新手。

|

||||

|

||||

### 安装

|

||||

|

||||

按照 https://www.microsoft.com/net/core 上的介绍在你的电脑上安装 .NET Core 。这将会同时在 Windows 上安装 dotnet 命令行工具以及最新的 Visual Studio 工具。

|

||||

|

||||

### 源代码

|

||||

|

||||

你可以直接到 [GitHub](https://github.com/niksoper/aspnet5-books/tree/blog-docker) 上找最到最新完整的源代码。

|

||||

|

||||

### 转换到 .NET CORE 1.0

|

||||

|

||||

自然地,当我考虑如何把 API 从 .NET Core RC1 升级到 .NET Core 1.0 时想到的第一个求助的地方就是谷歌搜索。我是按照下面这两条非常全面的指导来进行升级的:

|

||||

|

||||

- [从 DNX 迁移到 .NET Core CLI][2]

|

||||

- [从 ASP.NET 5 RC1 迁移到 ASP.NET Core 1.0][3]

|

||||

|

||||

当你迁移代码的时候,我建议仔细阅读这两篇指导,因为我在没有阅读第一篇指导的情况下又尝试浏览第二篇,结果感到非常迷惑和沮丧。

|

||||

|

||||

我不想描述细节上的改变因为你可以看 GitHub 上的[提交](https://github.com/niksoper/aspnet5-books/commit/b41ad38794c69a70a572be3ffad051fd2d7c53c0)。这儿是我所作改变的总结:

|

||||

|

||||

- 更新 `global.json` 和 `project.json` 上的版本号

|

||||

- 删除 `project.json` 上的废弃章节

|

||||

- 使用轻型 `ControllerBase` 而不是 `Controller`, 因为我不需要与 MVC 视图相关的方法(这是一个可选的改变)。

|

||||

- 从辅助方法中去掉 `Http` 前缀,比如:`HttpNotFound` -> `NotFound`

|

||||

- `LogVerbose` -> `LogTrace`

|

||||

- 名字空间改变: `Microsoft.AspNetCore.*`

|

||||

- 在 `Startup` 中使用 `SetBasePath`(没有它 `appsettings.json` 将不会被发现)

|

||||

- 通过 `WebHostBuilder` 来运行而不是通过 `WebApplication.Run` 来运行

|

||||

- 删除 Serilog(在写文章的时候,它不支持 .NET Core 1.0)

|

||||

|

||||

唯一令我真正头疼的事是需要移动 Serilog。我本可以实现自己的文件记录器,但是我删除了文件记录功能,因为我不想为了这次操作在这件事情上花费精力。

|

||||

|

||||

不幸的是,将有大量的第三方开发者扮演追赶 .NET Core 1.0 的角色,我非常同情他们,因为他们通常在休息时间还坚持工作但却依旧根本无法接近靠拢微软的可用资源。我建议阅读 Travis Illig 的文章 [.NET Core 1.0 发布了,但 Autofac 在哪儿][4]?这是一篇关于第三方开发者观点的文章。

|

||||

|

||||

做了这些改变以后,我可以从 `project.json` 目录恢复、构建并运行 dotnet,可以看到 API 又像以前一样工作了。

|

||||

|

||||

### 通过 Docker 运行

|

||||

|

||||

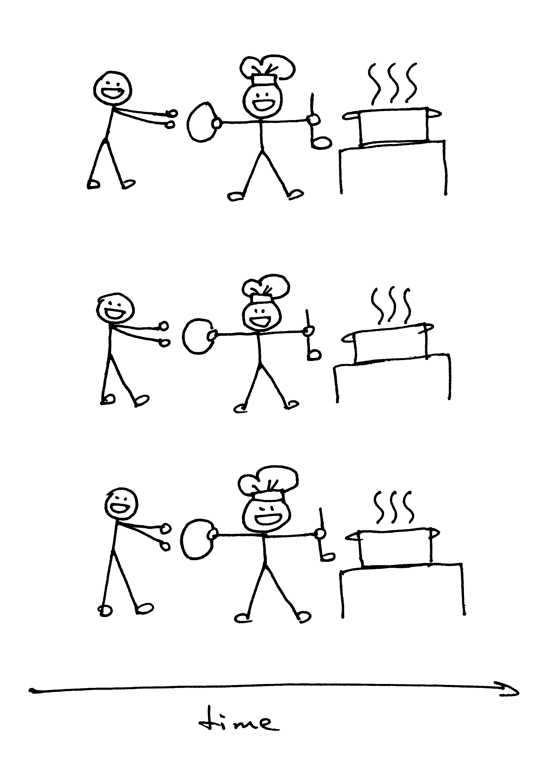

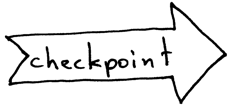

在我写这篇文章的时候, Docker 只能够在 Linux 系统上工作。在 [Windows](https://docs.docker.com/engine/installation/windows/#/docker-for-windows) 系统和 [OS X](https://docs.docker.com/engine/installation/mac/) 上有 beta 支持 Docker,但是它们都必须依赖于虚拟化技术,因此,我选择把 Ubuntu 14.04 当作虚拟机来运行。如果你还没有安装过 Docker,请按照[指导](https://docs.docker.com/engine/installation/linux/ubuntulinux/)来安装。

|

||||

|

||||

我最近阅读了一些关于 Docker 的东西,但我直到现在还没有真正用它来干任何事。我假设读者还没有关于 Docker 的知识,因此我会解释我所使用的所有命令。

|

||||

|

||||

#### HELLO DOCKER

|

||||

|

||||

在 Ubuntu 上安装好 Docker 之后,我所进行的下一步就是按照 https://www.microsoft.com/net/core#docker 上的介绍来开始运行 .NET Core 和 Docker。

|

||||

|

||||

首先启动一个已安装有 .NET Core 的容器。

|

||||

|

||||

```

|

||||

docker run -it microsoft/dotnet:latest

|

||||

```

|

||||

|

||||

`-it` 选项表示交互,所以你执行这条命令之后,你就处于容器之内了,可以如你所希望的那样执行任何 bash 命令。

|

||||

|

||||

然后我们可以执行下面这五条命令来在 Docker 内部运行起来微软 .NET Core 控制台应用程序示例。

|

||||

|

||||

```

|

||||

mkdir hwapp

|

||||

cd hwapp

|

||||

dotnet new

|

||||

dotnet restore

|

||||

dotnet run

|

||||

```

|

||||

|

||||

你可以通过运行 `exit` 来离开容器,然后运行 `Docker ps -a` 命令,这会显示你创建的那个已经退出的容器。你可以通过上运行命令 `Docker rm <container_name>` 来清除容器。

|

||||

|

||||

#### 挂载源代码

|

||||

|

||||

我的下一步骤是使用和上面相同的 microsoft/dotnet 镜像,但是将为我们的应用程序以[数据卷](https://docs.docker.com/engine/tutorials/dockervolumes/1)的方式挂载上源代码。

|

||||

|

||||

首先签出有相关提交的仓库:

|

||||

|

||||

```

|

||||

git clone https://github.com/niksoper/aspnet5-books.git

|

||||

cd aspnet5-books/src/MvcLibrary

|

||||

git checkout dotnet-core-1.0

|

||||

```

|

||||

|

||||

现在启动一个容器来运行 .NET Core 1.0,并将源代码放在 `/book` 下。注意更改 `/path/to/repo` 这部分文件来匹配你的电脑:

|

||||

|

||||

```

|

||||

docker run -it \

|

||||

-v /path/to/repo/aspnet5-books/src/MvcLibrary:/books \

|

||||

microsoft/dotnet:latest

|

||||

```

|

||||

|

||||

现在你可以在容器中运行应用程序了!

|

||||

|

||||

```

|

||||

cd /books

|

||||

dotnet restore

|

||||

dotnet run

|

||||

```

|

||||

|

||||

作为一个概念性展示这的确很棒,但是我们可不想每次运行一个程序都要考虑如何把源代码安装到容器里。

|

||||

|

||||

#### 增加一个 DOCKERFILE

|

||||

|

||||

我的下一步骤是引入一个 Dockerfile,这可以让应用程序很容易在自己的容器内启动。

|

||||

|

||||

我的 Dockerfile 和 `project.json` 一样位于 `src/MvcLibrary` 目录下,看起来像下面这样:

|

||||

|

||||

|

||||

```

|

||||

FROM microsoft/dotnet:latest

|

||||

|

||||

# 为应用程序源代码创建目录

|

||||

RUN mkdir -p /usr/src/books

|

||||

WORKDIR /usr/src/books

|

||||

|

||||

# 复制源代码并恢复依赖关系

|

||||

|

||||

COPY . /usr/src/books

|

||||

RUN dotnet restore

|

||||

|

||||

# 暴露端口并运行应用程序

|

||||

EXPOSE 5000

|

||||

CMD [ "dotnet", "run" ]

|

||||

```

|

||||

|

||||

严格来说,`RUN mkdir -p /usr/src/books` 命令是不需要的,因为 `COPY` 会自动创建丢失的目录。

|

||||

|

||||

Docker 镜像是按层建立的,我们从包含 .NET Core 的镜像开始,添加另一个从源代码生成应用程序,然后运行这个应用程序的层。

|

||||

|

||||

添加了 Dockerfile 以后,我通过运行下面的命令来生成一个镜像,并使用生成的镜像启动一个容器(确保在和 Dockerfile 相同的目录下进行操作,并且你应该使用自己的用户名)。

|

||||

|

||||

```

|

||||

docker build -t niksoper/netcore-books .

|

||||

docker run -it niksoper/netcore-books

|

||||

```

|

||||

|

||||

你应该看到程序能够和之前一样的运行,不过这一次我们不需要像之前那样安装源代码,因为源代码已经包含在 docker 镜像里面了。

|

||||

|

||||

#### 暴露并发布端口

|

||||

|

||||

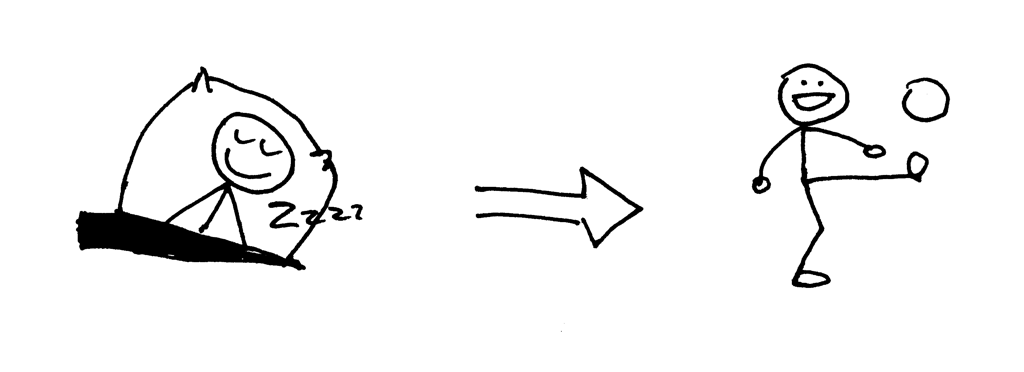

这个 API 并不是特别有用,除非我们需要从容器外面和它进行通信。 Docker 已经有了暴露和发布端口的概念,但这是两件完全不同的事。

|

||||

|

||||

据 Docker [官方文档](https://docs.docker.com/engine/reference/builder/#/expose):

|

||||

|

||||

> `EXPOSE` 指令通知 Docker 容器在运行时监听特定的网络端口。`EXPOSE` 指令不能够让容器的端口可被主机访问。要使可被访问,你必须通过 `-p` 标志来发布一个端口范围或者使用 `-P` 标志来发布所有暴露的端口

|

||||

|

||||

`EXPOSE` 指令只是将元数据添加到镜像上,所以你可以如文档中说的认为它是镜像消费者。从技术上讲,我本应该忽略 `EXPOSE 5000` 这行指令,因为我知道 API 正在监听的端口,但把它们留下很有用的,并且值得推荐。

|

||||

|

||||

在这个阶段,我想直接从主机访问这个 API ,因此我需要通过 `-p` 指令来发布这个端口,这将允许请求从主机上的端口 5000 转发到容器上的端口 5000,无论这个端口是不是之前通过 Dockerfile 暴露的。

|

||||

|

||||

```

|

||||

docker run -d -p 5000:5000 niksoper/netcore-books

|

||||

```

|

||||

|

||||

通过 `-d` 指令告诉 docker 在分离模式下运行容器,因此我们不能看到它的输出,但是它依旧会运行并监听端口 5000。你可以通过 `docker ps` 来证实这件事。

|

||||

|

||||

因此,接下来我准备从主机向容器发起一个请求来庆祝一下:

|

||||

|

||||

```

|

||||

curl http://localhost:5000/api/books

|

||||

```

|

||||

|

||||

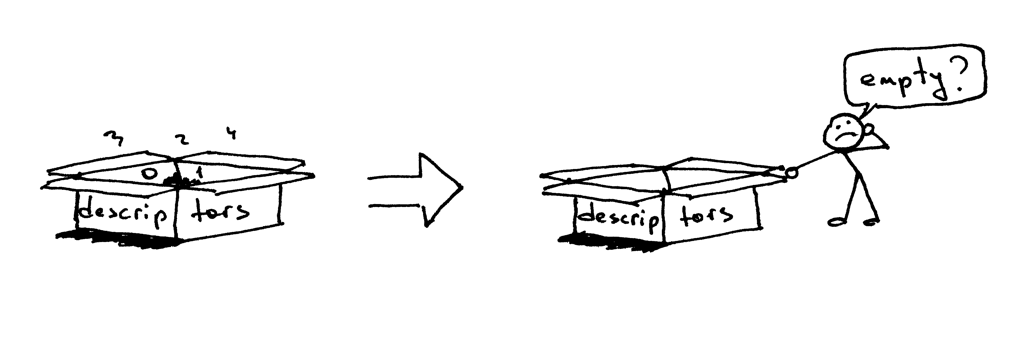

它不工作。

|

||||

|

||||

重复进行相同 `curl` 请求,我看到了两个错误:要么是 `curl: (56) Recv failure: Connection reset by peer`,要么是 `curl: (52) Empty reply from server`。

|

||||

|

||||

我返回去看 docker run 的[文档](https://docs.docker.com/engine/reference/run/#/expose-incoming-ports),然后再次检查我所使用的 `-p` 选项以及 Dockerfile 中的 `EXPOSE` 指令是否正确。我没有发现任何问题,这让我开始有些沮丧。

|

||||

|

||||

重新振作起来以后,我决定去咨询当地的一个 Scott Logic DevOps 大师 - Dave Wybourn(也在[这篇 Docker Swarm 的文章](http://blog.scottlogic.com/2016/08/30/docker-1-12-swarm-mode-round-robin.html)里提到过),他的团队也曾遇到这个实际问题。这个问题是我没有配置过 [Kestral](https://docs.asp.net/en/latest/fundamentals/servers.html#kestrel),这是一个全新的轻量级、跨平台 web 服务器,用于 .NET Core 。

|

||||

|

||||

默认情况下, Kestrel 会监听 http://localhost:5000。但问题是,这儿的 `localhost` 是一个回路接口。

|

||||

|

||||

据[维基百科](https://en.wikipedia.org/wiki/Localhost):

|

||||

|

||||

> 在计算机网络中,localhost 是一个代表本机的主机名。本地主机可以通过网络回路接口访问在主机上运行的网络服务。通过使用回路接口可以绕过任何硬件网络接口。

|

||||

|

||||

当运行在容器内时这是一个问题,因为 `localhost` 只能够在容器内访问。解决方法是更新 `Startup.cs` 里的 `Main` 方法来配置 Kestral 监听的 URL:

|

||||

|

||||

```

|

||||

public static void Main(string[] args)

|

||||

{

|

||||

var host = new WebHostBuilder()

|

||||

.UseKestrel()

|

||||

.UseContentRoot(Directory.GetCurrentDirectory())

|

||||

.UseUrls("http://*:5000") // 在所有网络接口上监听端口 5000

|

||||

.UseIISIntegration()

|

||||

.UseStartup<Startup>()

|

||||

.Build();

|

||||

|

||||

host.Run();

|

||||

}

|

||||

```

|

||||

|

||||

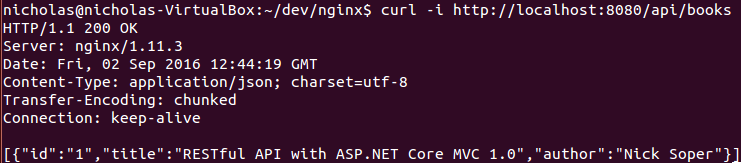

通过这些额外的配置,我可以重建镜像,并在容器中运行应用程序,它将能够接收来自主机的请求:

|

||||

|

||||

```

|

||||

docker build -t niksoper/netcore-books .

|

||||

docker run -d -p 5000:5000 niksoper/netcore-books

|

||||

curl -i http://localhost:5000/api/books

|

||||

```

|

||||

|

||||

我现在得到下面这些相应:

|

||||

|

||||

```

|

||||

HTTP/1.1 200 OK

|

||||

Date: Tue, 30 Aug 2016 15:25:43 GMT

|

||||

Transfer-Encoding: chunked

|

||||

Content-Type: application/json; charset=utf-8

|

||||

Server: Kestrel

|

||||

|

||||

[{"id":"1","title":"RESTful API with ASP.NET Core MVC 1.0","author":"Nick Soper"}]

|

||||

```

|

||||

|

||||

### 在产品环境中运行 KESTREL

|

||||

|

||||

[微软的介绍](https://docs.asp.net/en/latest/publishing/linuxproduction.html#why-use-a-reverse-proxy-server):

|

||||

|

||||

> Kestrel 可以很好的处理来自 ASP.NET 的动态内容,然而,网络服务部分的特性没有如 IIS,Apache 或者 Nginx 那样的全特性服务器那么好。反向代理服务器可以让你不用去做像处理静态内容、缓存请求、压缩请求、SSL 端点这样的来自 HTTP 服务器的工作。

|

||||

|

||||

因此我需要在我的 Linux 机器上把 Nginx 设置成一个反向代理服务器。微软介绍了如何[发布到 Linux 生产环境下](https://docs.asp.net/en/latest/publishing/linuxproduction.html)的指导教程。我把说明总结在这儿:

|

||||

|

||||

1. 通过 `dotnet publish` 来给应用程序产生一个自包含包。

|

||||

2. 把已发布的应用程序复制到服务器上

|

||||

3. 安装并配置 Nginx(作为反向代理服务器)

|

||||

4. 安装并配置 [supervisor](http://supervisord.org/)(用于确保 Nginx 服务器处于运行状态中)

|

||||

5. 安装并配置 [AppArmor](https://wiki.ubuntu.com/AppArmor)(用于限制应用的资源使用)

|

||||

6. 配置服务器防火墙

|

||||

7. 安全加固 Nginx(从源代码构建和配置 SSL)

|

||||

|

||||

这些内容已经超出了本文的范围,因此我将侧重于如何把 Nginx 配置成一个反向代理服务器。自然地,我通过 Docker 来完成这件事。

|

||||

|

||||

### 在另一个容器中运行 NGINX

|

||||

|

||||

我的目标是在第二个 Docker 容器中运行 Nginx 并把它配置成我们的应用程序容器的反向代理服务器。

|

||||

|

||||

我使用的是[来自 Docker Hub 的官方 Nginx 镜像](https://hub.docker.com/_/nginx/)。首先我尝试这样做:

|

||||

|

||||

```

|

||||

docker run -d -p 8080:80 --name web nginx

|

||||

```

|

||||

|

||||

这启动了一个运行 Nginx 的容器并把主机上的 8080 端口映射到了容器的 80 端口上。现在在浏览器中打开网址 `http://localhost:8080` 会显示出 Nginx 的默认登录页面。

|

||||

|

||||

现在我们证实了运行 Nginx 是多么的简单,我们可以关闭这个容器。

|

||||

|

||||

```

|

||||

docker rm -f web

|

||||

```

|

||||

|

||||

### 把 NGINX 配置成一个反向代理服务器

|

||||

|

||||

可以通过像下面这样编辑位于 `/etc/nginx/conf.d/default.conf` 的配置文件,把 Nginx 配置成一个反向代理服务器:

|

||||

|

||||

```

|

||||

server {

|

||||

listen 80;

|

||||

|

||||

location / {

|

||||

proxy_pass http://localhost:6666;

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

通过上面的配置可以让 Nginx 将所有对根目录的访问请求代理到 `http://localhost:6666`。记住这里的 `localhost` 指的是运行 Nginx 的容器。我们可以在 Nginx容器内部利用卷来使用我们自己的配置文件:

|

||||

|

||||

```

|

||||

docker run -d -p 8080:80 \

|

||||

-v /path/to/my.conf:/etc/nginx/conf.d/default.conf \

|

||||

nginx

|

||||

```

|

||||

|

||||

注意:这把一个单一文件从主机映射到容器中,而不是一个完整目录。

|

||||

|

||||

### 在容器间进行通信

|

||||

|

||||

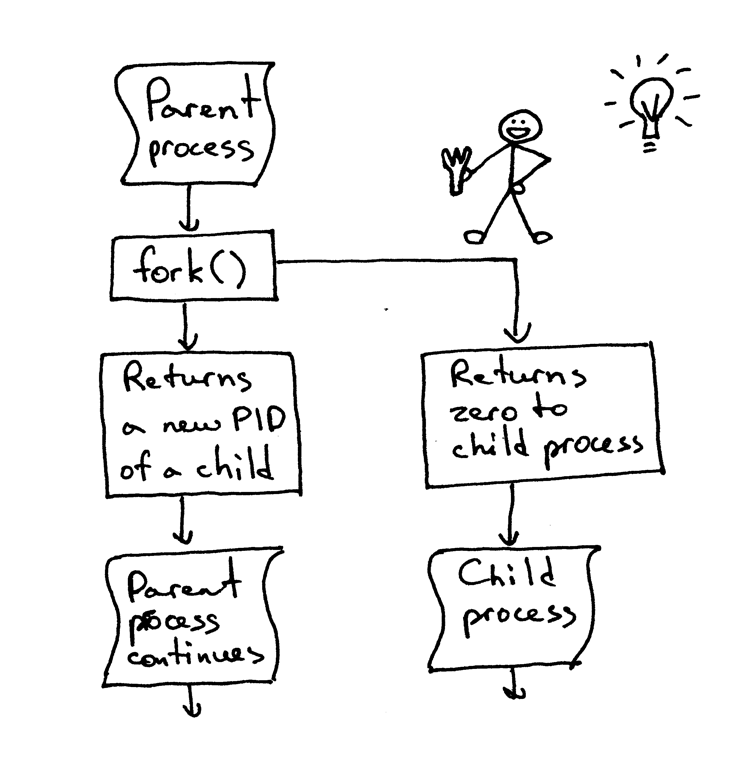

Docker 允许内部容器通过共享虚拟网络进行通信。默认情况下,所有通过 Docker 守护进程启动的容器都可以访问一种叫做“桥”的虚拟网络。这使得一个容器可以被另一个容器在相同的网络上通过 IP 地址和端口来引用。

|

||||

|

||||

你可以通过监测(inspect)容器来找到它的 IP 地址。我将从之前创建的 `niksoper/netcore-books` 镜像中启动一个容器并监测(inspect)它:

|

||||

|

||||

```

|

||||

docker run -d -p 5000:5000 --name books niksoper/netcore-books

|

||||

docker inspect books

|

||||

```

|

||||

|

||||

|

||||

|

||||

我们可以看到这个容器的 IP 地址是 `"IPAddress": "172.17.0.3"`。

|

||||

|

||||

所以现在如果我创建下面的 Nginx 配置文件,并使用这个文件启动一个 Nginx容器, 它将代理请求到我的 API :

|

||||

|

||||

```

|

||||

server {

|

||||

listen 80;

|

||||

|

||||

location / {

|

||||

proxy_pass http://172.17.0.3:5000;

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

现在我可以使用这个配置文件启动一个 Nginx 容器(注意我把主机上的 8080 端口映射到了 Nginx 容器上的 80 端口):

|

||||

|

||||

```

|

||||

docker run -d -p 8080:80 \

|

||||

-v ~/dev/nginx/my.nginx.conf:/etc/nginx/conf.d/default.conf \

|

||||

nginx

|

||||

```

|

||||

|

||||

一个到 `http://localhost:8080` 的请求将被代理到应用上。注意下面 `curl` 响应的 `Server` 响应头:

|

||||

|

||||

|

||||

|

||||

### DOCKER COMPOSE

|

||||

|

||||

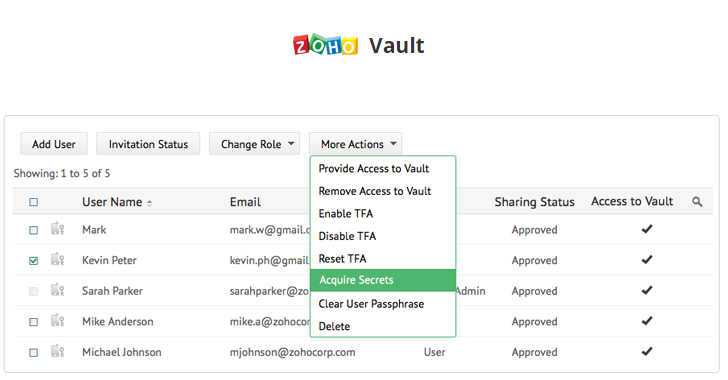

在这个地方,我为自己的进步而感到高兴,但我认为一定还有更好的方法来配置 Nginx,可以不需要知道应用程序容器的确切 IP 地址。另一个当地的 Scott Logic DevOps 大师 Jason Ebbin 在这个地方进行了改进,并建议使用 [Docker Compose](https://docs.docker.com/compose/)。

|

||||

|

||||

概况描述一下,Docker Compose 使得一组通过声明式语法互相连接的容器很容易启动。我不想再细说 Docker Compose 是如何工作的,因为你可以在[之前的文章](http://blog.scottlogic.com/2016/01/25/playing-with-docker-compose-and-erlang.html)中找到。

|

||||

|

||||

我将通过一个我所使用的 `docker-compose.yml` 文件来启动:

|

||||

|

||||

```

|

||||

version: '2'

|

||||

services:

|

||||

books-service:

|

||||

container_name: books-api

|

||||

build: .

|

||||

|

||||

reverse-proxy:

|

||||

container_name: reverse-proxy

|

||||

image: nginx

|

||||

ports:

|

||||

- "9090:8080"

|

||||

volumes:

|

||||

- ./proxy.conf:/etc/nginx/conf.d/default.conf

|

||||

```

|

||||

|

||||

*这是版本 2 语法,所以为了能够正常工作,你至少需要 1.6 版本的 Docker Compose。*

|

||||

|

||||

这个文件告诉 Docker 创建两个服务:一个是给应用的,另一个是给 Nginx 反向代理服务器的。

|

||||

|

||||

### BOOKS-SERVICE

|

||||

|

||||

这个与 docker-compose.yml 相同目录下的 Dockerfile 构建的容器叫做 `books-api`。注意这个容器不需要发布任何端口,因为只要能够从反向代理服务器访问它就可以,而不需要从主机操作系统访问它。

|

||||

|

||||

### REVERSE-PROXY

|

||||

|

||||

这将基于 nginx 镜像启动一个叫做 `reverse-proxy` 的容器,并将位于当前目录下的 `proxy.conf` 文件挂载为配置。它把主机上的 9090 端口映射到容器中的 8080 端口,这将允许我们在 `http://localhost:9090` 上通过主机访问容器。

|

||||

|

||||

`proxy.conf` 文件看起来像下面这样:

|

||||

|

||||

```

|

||||

server {

|

||||

listen 8080;

|

||||

|

||||

location / {

|

||||

proxy_pass http://books-service:5000;

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

这儿的关键点是我们现在可以通过名字引用 `books-service`,因此我们不需要知道 `books-api` 这个容器的 IP 地址!

|

||||

|

||||

现在我们可以通过一个运行着的反向代理启动两个容器(`-d` 意味着这是独立的,因此我们不能看到来自容器的输出):

|

||||

|

||||

```

|

||||

docker compose up -d

|

||||

```

|

||||

|

||||

验证我们所创建的容器:

|

||||

|

||||

```

|

||||

docker ps

|

||||

```

|

||||

|

||||

最后来验证我们可以通过反向代理来控制该 API :

|

||||

|

||||

```

|

||||

curl -i http://localhost:9090/api/books

|

||||

```

|

||||

|

||||

### 怎么做到的?

|

||||

|

||||

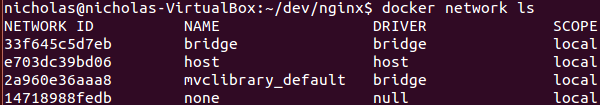

Docker Compose 通过创建一个新的叫做 `mvclibrary_default` 的虚拟网络来实现这件事,这个虚拟网络同时用于 `books-api` 和 `reverse-proxy` 容器(名字是基于 `docker-compose.yml` 文件的父目录)。

|

||||

|

||||

通过 `docker network ls` 来验证网络已经存在:

|

||||

|

||||

|

||||

|

||||

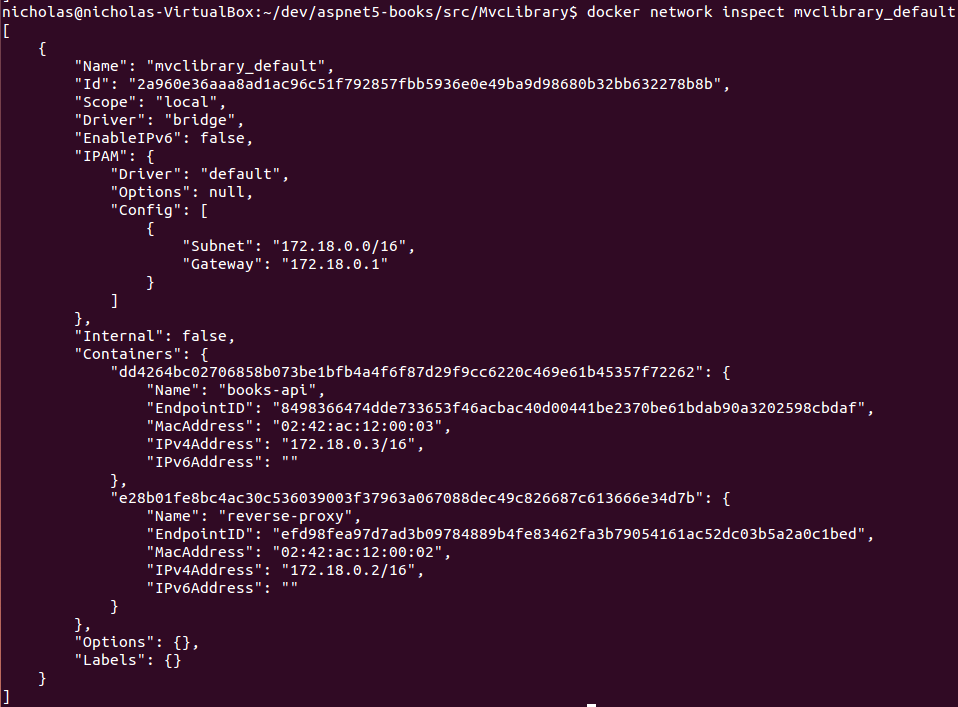

你可以使用 `docker network inspect mvclibrary_default` 来看到新的网络的细节:

|

||||

|

||||

|

||||

|

||||

注意 Docker 已经给网络分配了子网:`"Subnet": "172.18.0.0/16"`。/16 部分是无类域内路由选择(CIDR),完整的解释已经超出了本文的范围,但 CIDR 只是表示 IP 地址范围。运行 `docker network inspect bridge` 显示子网:`"Subnet": "172.17.0.0/16"`,因此这两个网络是不重叠的。

|

||||

|

||||

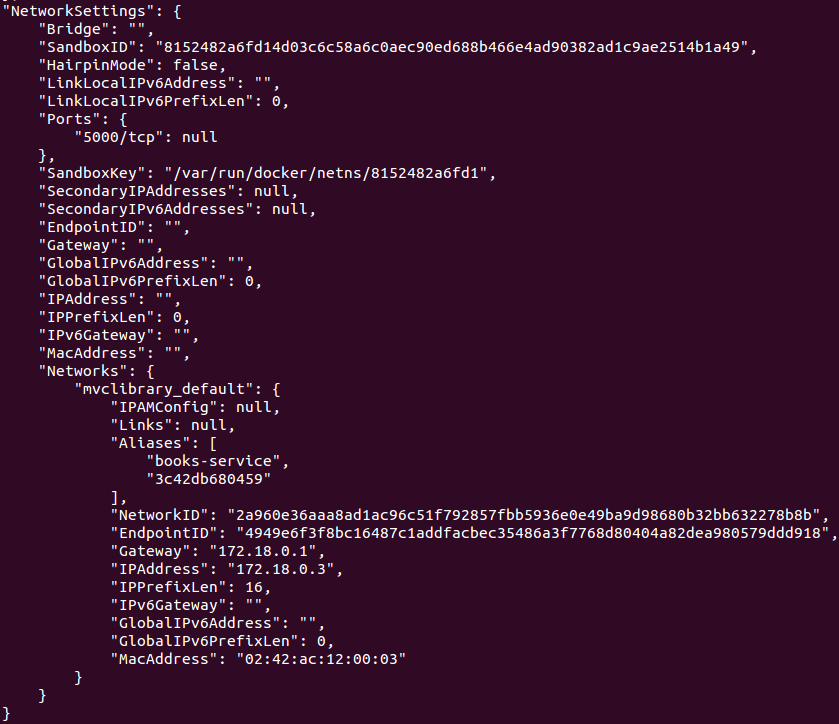

现在用 `docker inspect books-api` 来确认应用程序的容器正在使用该网络:

|

||||

|

||||

|

||||

|

||||

注意容器的两个别名(`"Aliases"`)是容器标识符(`3c42db680459`)和由 `docker-compose.yml` 给出的服务名(`books-service`)。我们通过 `books-service` 别名在自定义 Nginx 配置文件中来引用应用程序的容器。这本可以通过 `docker network create` 手动创建,但是我喜欢用 Docker Compose,因为它可以干净简洁地将容器创建和依存捆绑在一起。

|

||||

|

||||

### 结论

|

||||

|

||||

所以现在我可以通过几个简单的步骤在 Linux 系统上用 Nginx 运行应用程序,不需要对主机操作系统做任何长期的改变:

|

||||

|

||||

```

|

||||

git clone https://github.com/niksoper/aspnet5-books.git

|

||||

cd aspnet5-books/src/MvcLibrary

|

||||

git checkout blog-docker

|

||||

docker-compose up -d

|

||||

curl -i http://localhost:9090/api/books

|

||||

```

|

||||

|

||||

我知道我在这篇文章中所写的内容不是一个真正的生产环境就绪的设备,因为我没有写任何有关下面这些的内容,绝大多数下面的这些主题都需要用单独一篇完整的文章来叙述。

|

||||

|

||||

- 安全考虑比如防火墙和 SSL 配置

|

||||

- 如何确保应用程序保持运行状态

|

||||

- 如何选择需要包含的 Docker 镜像(我把所有的都放入了 Dockerfile 中)

|

||||

- 数据库 - 如何在容器中管理它们

|

||||

|

||||

对我来说这是一个非常有趣的学习经历,因为有一段时间我对探索 ASP.NET Core 的跨平台支持非常好奇,使用 “Configuratin as Code” 的 Docker Compose 方法来探索一下 DevOps 的世界也是非常愉快并且很有教育意义的。

|

||||

|

||||

如果你对 Docker 很好奇,那么我鼓励你来尝试学习它 或许这会让你离开舒适区,不过,有可能你会喜欢它?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://blog.scottlogic.com/2016/09/05/hosting-netcore-on-linux-with-docker.html

|

||||

|

||||

作者:[Nick Soper][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://blog.scottlogic.com/nsoper

|

||||

[1]: http://blog.scottlogic.com/2016/01/20/restful-api-with-aspnet50.html

|

||||

[2]: https://docs.microsoft.com/en-us/dotnet/articles/core/migrating-from-dnx

|

||||

[3]: https://docs.asp.net/en/latest/migration/rc1-to-rtm.html

|

||||

[4]: http://www.paraesthesia.com/archive/2016/06/29/netcore-rtm-where-is-autofac/

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

92

published/20160914 Down and dirty with Windows Nano Server 2016.md

Executable file

92

published/20160914 Down and dirty with Windows Nano Server 2016.md

Executable file

@ -0,0 +1,92 @@

|

||||

一起来看看 Windows Nano Server 2016

|

||||

====

|

||||

|

||||

|

||||

|

||||

> 对于远程 Windows 服务器管理,Nano Server 是快速的功能强大的工具,但是你需要知道你在做的是什么。

|

||||

|

||||

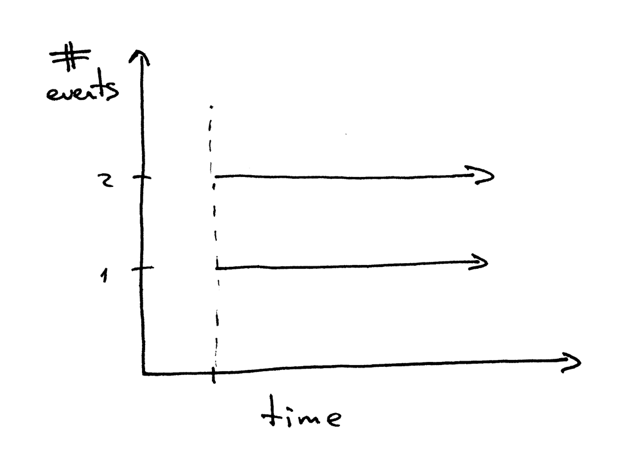

下面谈论[即将到来的 Windows Server 2016 的 Nano 版本][1],带远程管理和命令行设计,考虑到了私有云和数据中心。但是,谈论它和动手用它区别还是很大的,让我们深入看下去。

|

||||

|

||||

没有本地登录,且所有的程序、工具和代理都是 64 位,安装升级快捷,只需要非常少的时间就能重启。它非常适合做计算节点(无论在不在集群)、存储主机、DNS 服务器、IIS web 服务器,以及在容器中提供主机服务或者虚拟化的客户操作系统。

|

||||

|

||||

Nano 服务器也并不是太好玩的,你必须知道你要完成什么。你会看着远程的 PowerShell 的连接而不知所措,但如果你知道你要做什么的话,那它就非常方便和强大。

|

||||

|

||||

微软为设置 Nano 服务器提供了一个[快速入门手册][2] ,在这我给大家具体展示一下。

|

||||

|

||||

首先,你必须创建一个 .vhd 格式的虚拟磁盘文件。在步骤一中,我有几个文件不在正确的位置的小问题,Powershell 总是提示不匹配,所以我不得不反复查找文件的位置方便我可以用 ISO 信息(需要把它拷贝粘贴到你要创建 .vhd 文件的服务器上)。当你所有的东西都位置正确了,你可以开始创建 .vhd 文件的步骤了。

|

||||

|

||||

|

||||

|

||||

*步骤一:尝试运行 New-NanoServerImage 脚本时,很多文件路径错误,我把文件位置的问题搞定后,就能进行下去创建 .vhd 文件了(如图所示)*

|

||||

|

||||

接下来,你可以用创建 VM 向导在 Hyper-V 里面创建 VM 虚拟机,你需要指定一个存在的虚拟磁盘同时指向你新创建的 .vhd 文件。(步骤二)

|

||||

|

||||

|

||||

|

||||

*步骤二:连接虚拟磁盘(一开始创建的)*

|

||||

|

||||

当你启动 Nano 服务器的时候,你或许会发现有内存错误,提示你已经分配了多少内存,如果你还有其他 VM 虚拟机的话, Hyper-V 服务器剩余了多少内存。我已经关闭了一些虚机以增加可用内存,因为微软说 Nano 服务器最少需要 512M 内存,但是它又推荐你至少 800M,最后我分配了 8G 内存因为我给它 1G 的时候根本不能用,为了方便,我也没有尝试其他的内存配置。

|

||||

|

||||

最后我终于到达登录界面,到达 Nano Server Recovery Console 界面(步骤三),Nano 服务器的基本命令界面。

|

||||

|

||||

|

||||

|

||||

*步骤三:Nano 服务器的恢复窗口*

|

||||

|

||||

本来我以为进到这里会很美好,但是当我弄明白几个细节(如何加入域,弹出个磁盘,添加用户),我明白一些配置的细节,用几个参数运行 New-NanoServerImage cmdlet 会变得很简单。

|

||||

|

||||

然而,当你的服务器运行时,也有办法确认它的状态,就像步骤四所示,这一切都始于一个远程 PowerShell 连接。

|

||||

|

||||

|

||||

|

||||

*步骤四:从 Nano 服务器恢复窗口得到的信息,你可以从远程运行一个 Powershell 连接。*

|

||||

|

||||

微软展示了如何创建连接,但是尝试了四个不同的方法,我发现 [MSDN][4] 提供的是最好的方式,步骤五展示。

|

||||

|

||||

|

||||

|

||||

*步骤五:创建到 Nano 服务器的 PowerShell 远程连接*

|

||||

|

||||

提示:创建远程连接,你可以用下面这条命令:

|

||||

|

||||

```

|

||||

Enter-PSSession –ComputerName "192.168.0.100"-Credential ~\Administrator.

|

||||

```

|

||||

|

||||

如果你提前知道这台服务器将要做 DNS 服务器或者集群中的一个计算节点,可以事先在 .vhd 文件中加入一些角色和特定的软件包。如果不太清楚,你可以先建立 PowerShell 连接,然后安装 NanoServerPackage 并导入它,你就可以用 Find-NanoServerPackage 命令来查找你要部署的软件包了(步骤六)。

|

||||

|

||||

|

||||

|

||||

*步骤六:你安装完并导入 NanoServerPackage 后,你可以找到你需要启动服务器的工具以及设置的用户和你需要的一些其他功能包。*

|

||||

|

||||

我测试了安装 DNS 安装包,用 `Install-NanoServerPackage –Name Microsoft-NanoServer-DNS-Package` 这个命令。安装好后,我用 `Enable-WindowsOptionalFeature –Online –FeatureName DNS-Server-Full-Role` 命令启用它。

|

||||

|

||||

之前我并不知道这些命令,之前也从来没运行过,也没有弄过 DNS,但是现在稍微研究一下我就能用 Nano 服务器建立一个 DNS 服务并且运行它。

|

||||

|

||||

接下来是用 PowerShell 来配置 DNS 服务器。这个复杂一些,需要网上研究一下。但是它也不是那么复杂,当你学习了使用 cmdlet ,就可以用 `Add-DNSServerPrimaryZone` 添加 zone,用 `Add-DNSServerResourceRecordA` 命令在 zone 中添加记录。

|

||||

|

||||

做完这些命令行的工作,你可能需要验证是否起效。你可以快速浏览一下 PowerShell 命令,没有太多的 DNS 命令(使用 `Get-Command` 命令)。

|

||||

|

||||

如果你需要一个图形化的配置,你可以从一个图形化的主机上用图形管理器打开 Nano 服务器的 IP 地址。右击需要管理的服务器,提供你的验证信息,你连接好后,在图形管理器中右击然后选择 Add Roles and Features,它会显示你安装好的 DNS 服务,如步骤七所示。

|

||||

|

||||

|

||||

|

||||

*步骤七:通过图形化界面验证 DNS 已经安装*

|

||||

|

||||

不用麻烦登录服务器的远程桌面,你可以用服务器管理工具来进行操作。当然你能验证 DNS 角色不代表你能够通过 GUI 添加新的角色和特性,它有命令行就足够了。你现在可以用 Nano 服务器做一些需要的调整了。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.infoworld.com/article/3119770/windows-server/down-and-dirty-with-windows-nano-server-2016.html

|

||||

|

||||

作者:[J. Peter Bruzzese][a]

|

||||

译者:[jiajia9linuxer](https://github.com/jiajia9linuxer)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://www.infoworld.com/author/J.-Peter-Bruzzese/

|

||||

[1]: http://www.infoworld.com/article/3049191/windows-server/nano-server-a-slimmer-slicker-windows-server-core.html

|

||||

[2]: https://technet.microsoft.com/en-us/windows-server-docs/compute/nano-server/getting-started-with-nano-server

|

||||

[3]: https://technet.microsoft.com/en-us/windows-server-docs/get-started/system-requirements--and-installation

|

||||

[4]: https://msdn.microsoft.com/en-us/library/mt708805(v=vs.85).aspx

|

||||

@ -3,31 +3,30 @@

|

||||

|

||||

|

||||

|

||||

|

||||

我自认为自己是个奥迪亚的维基人。我通过写文章和纠正错误的文章贡献[奥迪亚][1]知识(在印度的[奥里萨邦][2]的主要的语言 )给很多维基项目,像维基百科和维基文库,我也为用印地语和英语写的维基文章做贡献。

|

||||

我自认为自己是个奥迪亚的维基人。我通过写文章和纠正文章错误给很多维基项目贡献了[奥迪亚(Odia)][1]知识(这是在印度的[奥里萨邦][2]的主要语言),比如维基百科和维基文库,我也为用印地语和英语写的维基文章做贡献。

|

||||

|

||||

|

||||

|

||||

我对维基的爱从我第 10 次考试(像在美国的 10 年级学生的年级考试)之后看到的英文维基文章[孟加拉解放战争][3]开始。一不小心我打开了印度维基文章的链接,,并且开始阅读它. 在文章左边有用奥迪亚语写的东西, 所以我点击了一下, 打开了一篇在奥迪亚维基上的 [????/Bhārat][4] 文章. 发现了用母语写的维基让我很激动!

|

||||

我对维基的爱从我第 10 次考试(像在美国的 10 年级学生的年级考试)之后看到的英文维基文章[孟加拉解放战争][3]开始。一不小心我打开了印度维基文章的链接,并且开始阅读它。在文章左边有用奥迪亚语写的东西,所以我点击了一下, 打开了一篇在奥迪亚维基上的 [ଭାରତ/Bhārat][4] 文章。发现了用母语写的维基让我很激动!

|

||||

|

||||

|

||||

|

||||

一个邀请读者参加 2014 年 4 月 1 日第二次布巴内斯瓦尔的研讨会的标语引起了我的好奇。我过去从来没有为维基做过贡献, 只用它搜索过, 我并不熟悉开源和社区贡献流程。加上,我只有 15 岁。我注册了。在研讨会上有很多语言爱好者,我是中间最年轻的一个。尽管我害怕我父亲还是鼓励我去参与。他起了非常重要的作用—他不是一个维基媒体人,和我不一样,但是他的鼓励给了我改变奥迪亚维基的动力和参加社区活动的勇气。

|

||||

一个邀请读者参加 2014 年 4 月 1 日召开的第二届布巴内斯瓦尔研讨会的旗帜广告引起了我的好奇。我过去从来没有为维基做过贡献,只用它做过研究,我并不熟悉开源和社区贡献流程。再加上,当时我只有 15 岁。我注册了,在研讨会上有很多语言爱好者,他们全比我大。尽管我害怕,我父亲还是鼓励我去参与。他起了非常重要的作用—他不是一个维基媒体人,和我不一样,但是他的鼓励给了我改变奥迪亚维基的动力和参加社区活动的勇气。

|

||||

|

||||

我相信奥迪亚语言和文学需要改进很多错误的想法和知识缺口所以,我帮助组织关于奥迪亚维基的活动和和研讨会,我完成了如下列表:

|

||||

我觉得关于奥迪亚语言和文学的知识很多需要改进,有很多错误的观念和知识缺口,所以,我帮助组织关于奥迪亚维基的活动和和研讨会,我完成了如下列表:

|

||||

|

||||

* 发起3次主要的 edit-a-thons 在奥迪亚维基:2015 年妇女节,2016年妇女节, abd [Nabakalebara edit-a-thon 2015][5]

|

||||

* 在奥迪亚维基发起 3 次主要的 edit-a-thons :2015 年妇女节、2016年妇女节、abd [Nabakalebara edit-a-thon 2015][5]

|

||||

* 在全印度发起了征集[檀车节][6]图片的比赛

|

||||

* 在谷歌的两大事件([谷歌I/O大会扩展][7]和谷歌开发节)中代表奥迪亚维基

|

||||

* 在2015[Perception][8]和第一次[Open Access India][9]会议

|

||||

* 在2015 [Perception][8] 和第一次 [Open Access India][9] 会议

|

||||

|

||||

|

||||

|

||||

我只编辑维基项目到了去年,在 2015 年一月,当我出席[孟加拉语维基百科的十周年会议][10]和[毗瑟挐][11]活动时,[互联网和社会中心][12]主任,邀请我参加[培训培训师][13] 计划。我的灵感始于扩展奥迪亚维基,为[华丽][14]的活动举办的聚会和培训新的维基人。这些经验告诉我作为一个贡献者该如何为社区工作。

|

||||

我在维基项目当编辑直到去年( 2015 年 1 月)为止,当我出席[孟加拉语维基百科的十周年会议][10]和[毗瑟挐][11]活动时,[互联网和社会中心][12]主任,邀请我参加[培训师培训计划][13]。我的开始超越奥迪亚维基,为[GLAM][14]的活动举办聚会和培训新的维基人。这些经验告诉我作为一个贡献者该如何为社区工作。

|

||||

|

||||

[Ravi][15],在当时维基的主任,在我的旅程也发挥了重要作用。他非常相信我让我参与到了[Wiki Loves Food][16],维基共享中的公共摄影比赛,组织方是[2016 印度维基会议][17]。在2015的 Loves Food 活动期间,我的团队在维基共享中加入了 10,000+ 有 CC BY-SA 协议的图片。Ravi 进一步巩固了我的承诺,和我分享很多关于维基媒体运动的信息,和他自己在 [奥迪亚维基百科13周年][18]的经历。

|

||||

[Ravi][15],在当时印度维基的总监,在我的旅程也发挥了重要作用。他非常相信我,让我参与到了 [Wiki Loves Food][16],维基共享中的公共摄影比赛,组织方是 [2016 印度维基会议][17]。在 2015 的 Loves Food 活动期间,我的团队在维基共享中加入了 10000+ 采用 CC BY-SA 协议的图片。Ravi 进一步坚定了我的信心,和我分享了很多关于维基媒体运动的信息,以及他自己在 [奥迪亚维基百科 13 周年][18]的经历。

|

||||

|

||||

不到一年后,在 2015 年十二月,我成为了网络与社会中心的[获取知识的程序][19]的项目助理( CIS-A2K 运动)。我自豪的时刻之一是在普里的研讨会,我们从印度带了 20 个新的维基人来编辑奥迪亚维基媒体社区。现在,我的指导者在一个普里非正式聚会被叫作[WikiTungi][20]。我和这个小组一起工作,把 wikiquotes 变成一个真实的计划项目。在奥迪亚维基我也致力于缩小性别差距。[八个女编辑][21]也正帮助组织聚会和研讨会,参加 [Women's History month edit-a-thon][22]。

|

||||

不到一年后,在 2015 年十二月,我成为了网络与社会中心的[获取知识计划][19]的项目助理( CIS-A2K 运动)。我自豪的时刻之一是在印度普里的研讨会,我们给奥迪亚维基媒体社区带来了 20 个新的维基人。现在,我在一个名为 [WikiTungi][20] 普里的非正式聚会上指导着维基人。我和这个小组一起工作,把奥迪亚 Wikiquotes 变成一个真实的计划项目。在奥迪亚维基我也致力于缩小性别差距。[八个女编辑][21]也正帮助组织聚会和研讨会,参加 [Women's History month edit-a-thon][22]。

|

||||

|

||||

在我四年短暂而令人激动的旅行之中,我也参与到 [维基百科的教育项目][23],[通讯团队][24],两个全球的 edit-a-thons: [Art and Feminsim][25] 和 [Menu Challenge][26]。我期待着更多的到来!

|

||||

|

||||

@ -38,8 +37,8 @@

|

||||

via: https://opensource.com/life/16/4/my-open-source-story-sailesh-patnaik

|

||||

|

||||

作者:[Sailesh Patnaik][a]

|

||||

译者:[译者ID](https://github.com/hkurj)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[hkurj](https://github.com/hkurj)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,38 @@

|