mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

7fec10650c

@ -1,5 +1,6 @@

|

||||

如何设置在Quagga BGP路由器中设置IPv6的BGP对等体和过滤

|

||||

如何设置在 Quagga BGP 路由器中设置 IPv6 的 BGP 对等体和过滤

|

||||

================================================================================

|

||||

|

||||

在之前的教程中,我们演示了如何使用Quagga建立一个[完备的BGP路由器][1]和配置[前缀过滤][2]。在本教程中,我们会向你演示如何创建IPv6 BGP对等体并通过BGP通告IPv6前缀。同时我们也将演示如何使用前缀列表和路由映射特性来过滤通告的或者获取到的IPv6前缀。

|

||||

|

||||

### 拓扑 ###

|

||||

@ -47,7 +48,7 @@ Quagga内部提供一个叫作vtysh的shell,其界面与那些主流路由厂

|

||||

|

||||

# vtysh

|

||||

|

||||

提示将改为:

|

||||

提示符将改为:

|

||||

|

||||

router-a#

|

||||

|

||||

@ -65,7 +66,7 @@ Quagga内部提供一个叫作vtysh的shell,其界面与那些主流路由厂

|

||||

|

||||

router-a# configure terminal

|

||||

|

||||

提示将变更成:

|

||||

提示符将变更成:

|

||||

|

||||

router-a(config)#

|

||||

|

||||

@ -246,13 +247,13 @@ Quagga内部提供一个叫作vtysh的shell,其界面与那些主流路由厂

|

||||

via: http://xmodulo.com/ipv6-bgp-peering-filtering-quagga-bgp-router.html

|

||||

|

||||

作者:[Sarmed Rahman][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[martin2011qi](https://github.com/martin2011qi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/sarmed

|

||||

[1]:http://xmodulo.com/centos-bgp-router-quagga.html

|

||||

[1]:https://linux.cn/article-4232-1.html

|

||||

[2]:http://xmodulo.com/filter-bgp-routes-quagga-bgp-router.html

|

||||

[3]:http://ask.xmodulo.com/open-port-firewall-centos-rhel.html

|

||||

[4]:http://xmodulo.com/filter-bgp-routes-quagga-bgp-router.html

|

||||

@ -1,8 +1,9 @@

|

||||

如何在 Docker 中通过 Kitematic 交互式执行任务

|

||||

如何在 Windows 上通过 Kitematic 使用 Docker

|

||||

================================================================================

|

||||

在本篇文章中,我们会学习如何在 Windows 操作系统上安装 Kitematic 以及部署一个 Hello World Nginx Web 服务器。Kitematic 是一个自由开源软件,它有现代化的界面设计使得允许我们在 Docker 中交互式执行任务。Kitematic 设计非常漂亮、界面也很不错。我们可以简单快速地开箱搭建我们的容器而不需要输入命令,我们可以在图形用户界面中通过简单的点击从而在容器上部署我们的应用。Kitematic 集成了 Docker Hub,允许我们搜索、拉取任何需要的镜像,并在上面部署应用。它同时也能很好地切换到命令行用户接口模式。目前,它包括了自动映射端口、可视化更改环境变量、配置卷、精简日志以及其它功能。

|

||||

|

||||

下面是在 Windows 上安装 Kitematic 并部署 Hello World Nginx Web 服务器的 3 个简单步骤。

|

||||

在本篇文章中,我们会学习如何在 Windows 操作系统上安装 Kitematic 以及部署一个测试性的 Nginx Web 服务器。Kitematic 是一个具有现代化的界面设计的自由开源软件,它可以让我们在 Docker 中交互式执行任务。Kitematic 设计的非常漂亮、界面美观。使用它,我们可以简单快速地开箱搭建我们的容器而不需要输入命令,可以在图形用户界面中通过简单的点击从而在容器上部署我们的应用。Kitematic 集成了 Docker Hub,允许我们搜索、拉取任何需要的镜像,并在上面部署应用。它同时也能很好地切换到命令行用户接口模式。目前,它包括了自动映射端口、可视化更改环境变量、配置卷、流式日志以及其它功能。

|

||||

|

||||

下面是在 Windows 上安装 Kitematic 并部署测试性 Nginx Web 服务器的 3 个简单步骤。

|

||||

|

||||

### 1. 下载 Kitematic ###

|

||||

|

||||

@ -16,15 +17,15 @@

|

||||

|

||||

### 2. 安装 Kitematic ###

|

||||

|

||||

下载好可执行安装程序之后,我们现在打算在我们的 Windows 操作系统上安装 Kitematic。安装程序现在会开始下载并安装运行 Kitematic 需要的依赖,包括 Virtual Box 和 Docker。如果已经在系统上安装了 Virtual Box,它会把它升级到最新版本。安装程序会在几分钟内完成,但取决于你网络和系统的速度。如果你还没有安装 Virtual Box,它会问你是否安装 Virtual Box 网络驱动。建议安装它,因为它有助于 Virtual Box 的网络。

|

||||

下载好可执行安装程序之后,我们现在就可以在我们的 Windows 操作系统上安装 Kitematic了。安装程序现在会开始下载并安装运行 Kitematic 需要的依赖软件,包括 Virtual Box 和 Docker。如果已经在系统上安装了 Virtual Box,它会把它升级到最新版本。安装程序会在几分钟内完成,但取决于你网络和系统的速度。如果你还没有安装 Virtual Box,它会问你是否安装 Virtual Box 网络驱动。建议安装它,因为它用于 Virtual Box 的网络功能。

|

||||

|

||||

|

||||

|

||||

需要的依赖 Docker 和 Virtual Box 安装完成并运行后,会让我们登录到 Docker Hub。如果我们还没有账户或者还不想登录,可以点击 **SKIP FOR NOW** 继续后面的步骤。

|

||||

所需的依赖 Docker 和 Virtual Box 安装完成并运行后,会让我们登录到 Docker Hub。如果我们还没有账户或者还不想登录,可以点击 **SKIP FOR NOW** 继续后面的步骤。

|

||||

|

||||

|

||||

|

||||

如果你还没有账户,你可以在应用程序上点击注册链接并在 Docker Hub 上创建账户。

|

||||

如果你还没有账户,你可以在应用程序上点击注册(Sign Up)链接并在 Docker Hub 上创建账户。

|

||||

|

||||

完成之后,就会出现 Kitematic 应用程序的第一个界面。正如下面看到的这样。我们可以搜索可用的 docker 镜像。

|

||||

|

||||

@ -50,7 +51,11 @@

|

||||

|

||||

### 总结 ###

|

||||

|

||||

我们终于成功在 Windows 操作系统上安装了 Kitematic 并部署了一个 Hello World Ngnix 服务器。总是推荐下载安装 Kitematic 最新的发行版,因为会增加很多新的高级功能。由于 Docker 运行在 64 位平台,当前 Kitematic 也是为 64 位操作系统构建。它只能在 Windows 7 以及更高版本上运行。在这篇教程中,我们部署了一个 Nginx Web 服务器,类似地我们可以在 Kitematic 中简单的点击就能通过镜像部署任何 docker 容器。Kitematic 已经有可用的 Mac OS X 和 Windows 版本,Linux 版本也在开发中很快就会发布。如果你有任何疑问、建议或者反馈,请在下面的评论框中写下来以便我们更改地改进或更新我们的内容。非常感谢!Enjoy :-)

|

||||

我们终于成功在 Windows 操作系统上安装了 Kitematic 并部署了一个 Hello World Ngnix 服务器。推荐下载安装 Kitematic 最新的发行版,因为会增加很多新的高级功能。由于 Docker 运行在 64 位平台,当前 Kitematic 也是为 64 位操作系统构建。它只能在 Windows 7 以及更高版本上运行。

|

||||

|

||||

在这篇教程中,我们部署了一个 Nginx Web 服务器,类似地我们可以在 Kitematic 中简单的点击就能通过镜像部署任何 docker 容器。Kitematic 已经有可用的 Mac OS X 和 Windows 版本,Linux 版本也在开发中很快就会发布。

|

||||

|

||||

如果你有任何疑问、建议或者反馈,请在下面的评论框中写下来以便我们更改地改进或更新我们的内容。非常感谢!Enjoy :-)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -58,7 +63,7 @@ via: http://linoxide.com/linux-how-to/interactively-docker-kitematic/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,129 @@

|

||||

如何使用 Weave 以及 Docker 搭建 Nginx 反向代理/负载均衡服务器

|

||||

================================================================================

|

||||

|

||||

Hi, 今天我们将会学习如何使用 Weave 和 Docker 搭建 Nginx 的反向代理/负载均衡服务器。Weave 可以创建一个虚拟网络将 Docker 容器彼此连接在一起,支持跨主机部署及自动发现。它可以让我们更加专注于应用的开发,而不是基础架构。Weave 提供了一个如此棒的环境,仿佛它的所有容器都属于同个网络,不需要端口/映射/连接等的配置。容器中的应用提供的服务在 weave 网络中可以轻易地被外部世界访问,不论你的容器运行在哪里。在这个教程里我们将会使用 weave 快速并且简单地将 nginx web 服务器部署为一个负载均衡器,反向代理一个运行在 Amazon Web Services 里面多个节点上的 docker 容器中的简单 php 应用。这里我们将会介绍 WeaveDNS,它提供一个不需要改变代码就可以让容器利用主机名找到的简单方式,并且能够让其他容器通过主机名连接彼此。

|

||||

|

||||

在这篇教程里,我们将使用 nginx 来将负载均衡分配到一个运行 Apache 的容器集合。最简单轻松的方法就是使用 Weave 来把运行在 ubuntu 上的 docker 容器中的 nginx 配置成负载均衡服务器。

|

||||

|

||||

### 1. 搭建 AWS 实例 ###

|

||||

|

||||

首先,我们需要搭建 Amzaon Web Service 实例,这样才能在 ubuntu 下用 weave 跑 docker 容器。我们将会使用[AWS 命令行][1] 来搭建和配置两个 AWS EC2 实例。在这里,我们使用最小的可用实例,t1.micro。我们需要一个有效的**Amazon Web Services 账户**使用 AWS 命令行界面来搭建和配置。我们先在 AWS 命令行界面下使用下面的命令将 github 上的 weave 仓库克隆下来。

|

||||

|

||||

$ git clone http://github.com/fintanr/weave-gs

|

||||

$ cd weave-gs/aws-nginx-ubuntu-simple

|

||||

|

||||

在克隆完仓库之后,我们执行下面的脚本,这个脚本将会部署两个 t1.micro 实例,每个实例中都是 ubuntu 作为操作系统并用 weave 跑着 docker 容器。

|

||||

|

||||

$ sudo ./demo-aws-setup.sh

|

||||

|

||||

在这里,我们将会在以后用到这些实例的 IP 地址。这些地址储存在一个 weavedemo.env 文件中,这个文件创建于执行 demo-aws-setup.sh 脚本期间。为了获取这些 IP 地址,我们需要执行下面的命令,命令输出类似下面的信息。

|

||||

|

||||

$ cat weavedemo.env

|

||||

|

||||

export WEAVE_AWS_DEMO_HOST1=52.26.175.175

|

||||

export WEAVE_AWS_DEMO_HOST2=52.26.83.141

|

||||

export WEAVE_AWS_DEMO_HOSTCOUNT=2

|

||||

export WEAVE_AWS_DEMO_HOSTS=(52.26.175.175 52.26.83.141)

|

||||

|

||||

请注意这些不是固定的 IP 地址,AWS 会为我们的实例动态地分配 IP 地址。

|

||||

|

||||

我们在 bash 下执行下面的命令使环境变量生效。

|

||||

|

||||

. ./weavedemo.env

|

||||

|

||||

### 2. 启动 Weave 和 WeaveDNS ###

|

||||

|

||||

在安装完实例之后,我们将会在每台主机上启动 weave 以及 weavedns。Weave 以及 weavedns 使得我们能够轻易地将容器部署到一个全新的基础架构以及配置中, 不需要改变代码,也不需要去理解像 Ambassador 容器以及 Link 机制之类的概念。下面是在第一台主机上启动 weave 以及 weavedns 的命令。

|

||||

|

||||

ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST1

|

||||

$ sudo weave launch

|

||||

$ sudo weave launch-dns 10.2.1.1/24

|

||||

|

||||

下一步,我也准备在第二台主机上启动 weave 以及 weavedns。

|

||||

|

||||

ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST2

|

||||

$ sudo weave launch $WEAVE_AWS_DEMO_HOST1

|

||||

$ sudo weave launch-dns 10.2.1.2/24

|

||||

|

||||

### 3. 启动应用容器 ###

|

||||

|

||||

现在,我们准备跨两台主机启动六个容器,这两台主机都用 Apache2 Web 服务实例跑着简单的 php 网站。为了在第一个 Apache2 Web 服务器实例跑三个容器, 我们将会使用下面的命令。

|

||||

|

||||

ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST1

|

||||

$ sudo weave run --with-dns 10.3.1.1/24 -h ws1.weave.local fintanr/weave-gs-nginx-apache

|

||||

$ sudo weave run --with-dns 10.3.1.2/24 -h ws2.weave.local fintanr/weave-gs-nginx-apache

|

||||

$ sudo weave run --with-dns 10.3.1.3/24 -h ws3.weave.local fintanr/weave-gs-nginx-apache

|

||||

|

||||

在那之后,我们将会在第二个实例上启动另外三个容器,请使用下面的命令。

|

||||

|

||||

ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST2

|

||||

$ sudo weave run --with-dns 10.3.1.4/24 -h ws4.weave.local fintanr/weave-gs-nginx-apache

|

||||

$ sudo weave run --with-dns 10.3.1.5/24 -h ws5.weave.local fintanr/weave-gs-nginx-apache

|

||||

$ sudo weave run --with-dns 10.3.1.6/24 -h ws6.weave.local fintanr/weave-gs-nginx-apache

|

||||

|

||||

注意: 在这里,--with-dns 选项告诉容器使用 weavedns 来解析主机名,-h x.weave.local 则使得 weavedns 能够解析该主机。

|

||||

|

||||

### 4. 启动 Nginx 容器 ###

|

||||

|

||||

在应用容器如预期的运行后,我们将会启动 nginx 容器,它将会在六个应用容器服务之间轮询并提供反向代理或者负载均衡。 为了启动 nginx 容器,请使用下面的命令。

|

||||

|

||||

ssh -i weavedemo-key.pem ubuntu@$WEAVE_AWS_DEMO_HOST1

|

||||

$ sudo weave run --with-dns 10.3.1.7/24 -ti -h nginx.weave.local -d -p 80:80 fintanr/weave-gs-nginx-simple

|

||||

|

||||

因此,我们的 nginx 容器在 $WEAVE_AWS_DEMO_HOST1 上公开地暴露成为一个 http 服务器。

|

||||

|

||||

### 5. 测试负载均衡服务器 ###

|

||||

|

||||

为了测试我们的负载均衡服务器是否可以工作,我们执行一段可以发送 http 请求给 nginx 容器的脚本。我们将会发送6个请求,这样我们就能看到 nginx 在一次的轮询中服务于每台 web 服务器之间。

|

||||

|

||||

$ ./access-aws-hosts.sh

|

||||

|

||||

{

|

||||

"message" : "Hello Weave - nginx example",

|

||||

"hostname" : "ws1.weave.local",

|

||||

"date" : "2015-06-26 12:24:23"

|

||||

}

|

||||

{

|

||||

"message" : "Hello Weave - nginx example",

|

||||

"hostname" : "ws2.weave.local",

|

||||

"date" : "2015-06-26 12:24:23"

|

||||

}

|

||||

{

|

||||

"message" : "Hello Weave - nginx example",

|

||||

"hostname" : "ws3.weave.local",

|

||||

"date" : "2015-06-26 12:24:23"

|

||||

}

|

||||

{

|

||||

"message" : "Hello Weave - nginx example",

|

||||

"hostname" : "ws4.weave.local",

|

||||

"date" : "2015-06-26 12:24:23"

|

||||

}

|

||||

{

|

||||

"message" : "Hello Weave - nginx example",

|

||||

"hostname" : "ws5.weave.local",

|

||||

"date" : "2015-06-26 12:24:23"

|

||||

}

|

||||

{

|

||||

"message" : "Hello Weave - nginx example",

|

||||

"hostname" : "ws6.weave.local",

|

||||

"date" : "2015-06-26 12:24:23"

|

||||

}

|

||||

|

||||

### 结束语 ###

|

||||

|

||||

我们最终成功地将 nginx 配置成一个反向代理/负载均衡服务器,通过使用 weave 以及运行在 AWS(Amazon Web Service)EC2 里面的 ubuntu 服务器中的 docker。从上面的步骤输出可以清楚的看到我们已经成功地配置了 nginx。我们可以看到请求在一次轮询中被发送到6个应用容器,这些容器在 Apache2 Web 服务器中跑着 PHP 应用。在这里,我们部署了一个容器化的 PHP 应用,使用 nginx 横跨多台在 AWS EC2 上的主机而不需要改变代码,利用 weavedns 使得每个容器连接在一起,只需要主机名就够了,眼前的这些便捷, 都要归功于 weave 以及 weavedns。

|

||||

|

||||

如果你有任何的问题、建议、反馈,请在评论中注明,这样我们才能够做得更好,谢谢:-)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/linux-how-to/nginx-load-balancer-weave-docker/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[dingdongnigetou](https://github.com/dingdongnigetou)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linoxide.com/author/arunp/

|

||||

[1]:http://console.aws.amazon.com/

|

||||

418

published/20150803 Managing Linux Logs.md

Normal file

418

published/20150803 Managing Linux Logs.md

Normal file

@ -0,0 +1,418 @@

|

||||

Linux 日志管理指南

|

||||

================================================================================

|

||||

|

||||

管理日志的一个最好做法是将你的日志集中或整合到一个地方,特别是在你有许多服务器或多层级架构时。我们将告诉你为什么这是一个好主意,然后给出如何更容易的做这件事的一些小技巧。

|

||||

|

||||

### 集中管理日志的好处 ###

|

||||

|

||||

如果你有很多服务器,查看某个日志文件可能会很麻烦。现代的网站和服务经常包括许多服务器层级、分布式的负载均衡器,等等。找到正确的日志将花费很长时间,甚至要花更长时间在登录服务器的相关问题上。没什么比发现你找的信息没有被保存下来更沮丧的了,或者本该保留的日志文件正好在重启后丢失了。

|

||||

|

||||

集中你的日志使它们查找更快速,可以帮助你更快速的解决产品问题。你不用猜测那个服务器存在问题,因为所有的日志在同一个地方。此外,你可以使用更强大的工具去分析它们,包括日志管理解决方案。一些解决方案能[转换纯文本日志][1]为一些字段,更容易查找和分析。

|

||||

|

||||

集中你的日志也可以使它们更易于管理:

|

||||

|

||||

- 它们更安全,当它们备份归档到一个单独区域时会有意无意地丢失。如果你的服务器宕机或者无响应,你可以使用集中的日志去调试问题。

|

||||

- 你不用担心ssh或者低效的grep命令在陷入困境的系统上需要更多的资源。

|

||||

- 你不用担心磁盘占满,这个能让你的服务器死机。

|

||||

- 你能保持你的产品服务器的安全性,只是为了查看日志无需给你所有团队登录权限。给你的团队从日志集中区域访问日志权限更安全。

|

||||

|

||||

随着集中日志管理,你仍需处理由于网络联通性不好或者耗尽大量网络带宽从而导致不能传输日志到中心区域的风险。在下面的章节我们将要讨论如何聪明的解决这些问题。

|

||||

|

||||

### 流行的日志归集工具 ###

|

||||

|

||||

在 Linux 上最常见的日志归集是通过使用 syslog 守护进程或者日志代理。syslog 守护进程支持本地日志的采集,然后通过syslog 协议传输日志到中心服务器。你可以使用很多流行的守护进程来归集你的日志文件:

|

||||

|

||||

- [rsyslog][2] 是一个轻量后台程序,在大多数 Linux 分支上已经安装。

|

||||

- [syslog-ng][3] 是第二流行的 Linux 系统日志后台程序。

|

||||

- [logstash][4] 是一个重量级的代理,它可以做更多高级加工和分析。

|

||||

- [fluentd][5] 是另一个具有高级处理能力的代理。

|

||||

|

||||

Rsyslog 是集中日志数据最流行的后台程序,因为它在大多数 Linux 分支上是被默认安装的。你不用下载或安装它,并且它是轻量的,所以不需要占用你太多的系统资源。

|

||||

|

||||

如果你需要更多先进的过滤或者自定义分析功能,如果你不在乎额外的系统负载,Logstash 是另一个最流行的选择。

|

||||

|

||||

### 配置 rsyslog.conf ###

|

||||

|

||||

既然 rsyslog 是最广泛使用的系统日志程序,我们将展示如何配置它为日志中心。它的全局配置文件位于 /etc/rsyslog.conf。它加载模块,设置全局指令,和包含位于目录 /etc/rsyslog.d 中的应用的特有的配置。目录中包含的 /etc/rsyslog.d/50-default.conf 指示 rsyslog 将系统日志写到文件。在 [rsyslog 文档][6]中你可以阅读更多相关配置。

|

||||

|

||||

rsyslog 配置语言是是[RainerScript][7]。你可以给日志指定输入,就像将它们输出到另外一个位置一样。rsyslog 已经配置标准输入默认是 syslog ,所以你通常只需增加一个输出到你的日志服务器。这里有一个 rsyslog 输出到一个外部服务器的配置例子。在本例中,**BEBOP** 是一个服务器的主机名,所以你应该替换为你的自己的服务器名。

|

||||

|

||||

action(type="omfwd" protocol="tcp" target="BEBOP" port="514")

|

||||

|

||||

你可以发送你的日志到一个有足够的存储容量的日志服务器来存储,提供查询,备份和分析。如果你存储日志到文件系统,那么你应该建立[日志轮转][8]来防止你的磁盘爆满。

|

||||

|

||||

作为一种选择,你可以发送这些日志到一个日志管理方案。如果你的解决方案是安装在本地你可以发送到系统文档中指定的本地主机和端口。如果你使用基于云提供商,你将发送它们到你的提供商特定的主机名和端口。

|

||||

|

||||

### 日志目录 ###

|

||||

|

||||

你可以归集一个目录或者匹配一个通配符模式的所有文件。nxlog 和 syslog-ng 程序支持目录和通配符(*)。

|

||||

|

||||

常见的 rsyslog 不能直接监控目录。作为一种解决办法,你可以设置一个定时任务去监控这个目录的新文件,然后配置 rsyslog 来发送这些文件到目的地,比如你的日志管理系统。举个例子,日志管理提供商 Loggly 有一个开源版本的[目录监控脚本][9]。

|

||||

|

||||

### 哪个协议: UDP、TCP 或 RELP? ###

|

||||

|

||||

当你使用网络传输数据时,有三个主流协议可以选择。UDP 在你自己的局域网是最常用的,TCP 用在互联网。如果你不能失去(任何)日志,就要使用更高级的 RELP 协议。

|

||||

|

||||

[UDP][10] 发送一个数据包,那只是一个单一的信息包。它是一个只外传的协议,所以它不会发送给你回执(ACK)。它只尝试发送包。当网络拥堵时,UDP 通常会巧妙的降级或者丢弃日志。它通常使用在类似局域网的可靠网络。

|

||||

|

||||

[TCP][11] 通过多个包和返回确认发送流式信息。TCP 会多次尝试发送数据包,但是受限于 [TCP 缓存][12]的大小。这是在互联网上发送送日志最常用的协议。

|

||||

|

||||

[RELP][13] 是这三个协议中最可靠的,但是它是为 rsyslog 创建的,而且很少有行业采用。它在应用层接收数据,如果有错误就会重发。请确认你的日志接受位置也支持这个协议。

|

||||

|

||||

### 用磁盘辅助队列可靠的传送 ###

|

||||

|

||||

如果 rsyslog 在存储日志时遭遇错误,例如一个不可用网络连接,它能将日志排队直到连接还原。队列日志默认被存储在内存里。无论如何,内存是有限的并且如果问题仍然存在,日志会超出内存容量。

|

||||

|

||||

**警告:如果你只存储日志到内存,你可能会失去数据。**

|

||||

|

||||

rsyslog 能在内存被占满时将日志队列放到磁盘。[磁盘辅助队列][14]使日志的传输更可靠。这里有一个例子如何配置rsyslog 的磁盘辅助队列:

|

||||

|

||||

$WorkDirectory /var/spool/rsyslog # 暂存文件(spool)放置位置

|

||||

$ActionQueueFileName fwdRule1 # 暂存文件的唯一名字前缀

|

||||

$ActionQueueMaxDiskSpace 1g # 1gb 空间限制(尽可能大)

|

||||

$ActionQueueSaveOnShutdown on # 关机时保存日志到磁盘

|

||||

$ActionQueueType LinkedList # 异步运行

|

||||

$ActionResumeRetryCount -1 # 如果主机宕机,不断重试

|

||||

|

||||

### 使用 TLS 加密日志 ###

|

||||

|

||||

如果你担心你的数据的安全性和隐私性,你应该考虑加密你的日志。如果你使用纯文本在互联网传输日志,嗅探器和中间人可以读到你的日志。如果日志包含私人信息、敏感的身份数据或者政府管制数据,你应该加密你的日志。rsyslog 程序能使用 TLS 协议加密你的日志保证你的数据更安全。

|

||||

|

||||

建立 TLS 加密,你应该做如下任务:

|

||||

|

||||

1. 生成一个[证书授权(CA)][15]。在 /contrib/gnutls 有一些证书例子,可以用来测试,但是你需要为产品环境创建自己的证书。如果你正在使用一个日志管理服务,它会给你一个证书。

|

||||

1. 为你的服务器生成一个[数字证书][16]使它能启用 SSL 操作,或者使用你自己的日志管理服务提供商的一个数字证书。

|

||||

1. 配置你的 rsyslog 程序来发送 TLS 加密数据到你的日志管理系统。

|

||||

|

||||

这有一个 rsyslog 配置 TLS 加密的例子。替换 CERT 和 DOMAIN_NAME 为你自己的服务器配置。

|

||||

|

||||

$DefaultNetstreamDriverCAFile /etc/rsyslog.d/keys/ca.d/CERT.crt

|

||||

$ActionSendStreamDriver gtls

|

||||

$ActionSendStreamDriverMode 1

|

||||

$ActionSendStreamDriverAuthMode x509/name

|

||||

$ActionSendStreamDriverPermittedPeer *.DOMAIN_NAME.com

|

||||

|

||||

### 应用日志的最佳管理方法 ###

|

||||

|

||||

除 Linux 默认创建的日志之外,归集重要的应用日志也是一个好主意。几乎所有基于 Linux 的服务器应用都把它们的状态信息写入到独立、专门的日志文件中。这包括数据库产品,像 PostgreSQL 或者 MySQL,网站服务器,像 Nginx 或者 Apache,防火墙,打印和文件共享服务,目录和 DNS 服务等等。

|

||||

|

||||

管理员安装一个应用后要做的第一件事是配置它。Linux 应用程序典型的有一个放在 /etc 目录里 .conf 文件。它也可能在其它地方,但是那是大家找配置文件首先会看的地方。

|

||||

|

||||

根据应用程序有多复杂多庞大,可配置参数的数量可能会很少或者上百行。如前所述,大多数应用程序可能会在某种日志文件写它们的状态:配置文件是定义日志设置和其它东西的地方。

|

||||

|

||||

如果你不确定它在哪,你可以使用locate命令去找到它:

|

||||

|

||||

[root@localhost ~]# locate postgresql.conf

|

||||

/usr/pgsql-9.4/share/postgresql.conf.sample

|

||||

/var/lib/pgsql/9.4/data/postgresql.conf

|

||||

|

||||

#### 设置一个日志文件的标准位置 ####

|

||||

|

||||

Linux 系统一般保存它们的日志文件在 /var/log 目录下。一般是这样,但是需要检查一下应用是否保存它们在 /var/log 下的特定目录。如果是,很好,如果不是,你也许想在 /var/log 下创建一个专用目录?为什么?因为其它程序也在 /var/log 下保存它们的日志文件,如果你的应用保存超过一个日志文件 - 也许每天一个或者每次重启一个 - 在这么大的目录也许有点难于搜索找到你想要的文件。

|

||||

|

||||

如果在你网络里你有运行多于一个的应用实例,这个方法依然便利。想想这样的情景,你也许有一打 web 服务器在你的网络运行。当排查任何一个机器的问题时,你就很容易知道确切的位置。

|

||||

|

||||

#### 使用一个标准的文件名 ####

|

||||

|

||||

给你的应用最新的日志使用一个标准的文件名。这使一些事变得容易,因为你可以监控和追踪一个单独的文件。很多应用程序在它们的日志文件上追加一种时间戳。它让 rsyslog 更难于找到最新的文件和设置文件监控。一个更好的方法是使用日志轮转给老的日志文件增加时间。这样更易去归档和历史查询。

|

||||

|

||||

#### 追加日志文件 ####

|

||||

|

||||

日志文件会在每个应用程序重启后被覆盖吗?如果这样,我们建议关掉它。每次重启 app 后应该去追加日志文件。这样,你就可以追溯重启前最后的日志。

|

||||

|

||||

#### 日志文件追加 vs. 轮转 ####

|

||||

|

||||

要是应用程序每次重启后写一个新日志文件,如何保存当前日志?追加到一个单独的、巨大的文件?Linux 系统并不以频繁重启或者崩溃而出名:应用程序可以运行很长时间甚至不间歇,但是也会使日志文件非常大。如果你查询分析上周发生连接错误的原因,你可能无疑的要在成千上万行里搜索。

|

||||

|

||||

我们建议你配置应用每天半晚轮转(rotate)它的日志文件。

|

||||

|

||||

为什么?首先它将变得可管理。找一个带有特定日期的文件名比遍历一个文件中指定日期的条目更容易。文件也小的多:你不用考虑当你打开一个日志文件时 vi 僵住。第二,如果你正发送日志到另一个位置 - 也许每晚备份任务拷贝到归集日志服务器 - 这样不会消耗你的网络带宽。最后第三点,这样帮助你做日志保留。如果你想剔除旧的日志记录,这样删除超过指定日期的文件比用一个应用解析一个大文件更容易。

|

||||

|

||||

#### 日志文件的保留 ####

|

||||

|

||||

你保留你的日志文件多长时间?这绝对可以归结为业务需求。你可能被要求保持一个星期的日志信息,或者管理要求保持一年的数据。无论如何,日志需要在一个时刻或其它情况下从服务器删除。

|

||||

|

||||

在我们看来,除非必要,只在线保持最近一个月的日志文件,并拷贝它们到第二个地方如日志服务器。任何比这更旧的日志可以被转到一个单独的介质上。例如,如果你在 AWS 上,你的旧日志可以被拷贝到 Glacier。

|

||||

|

||||

#### 给日志单独的磁盘分区 ####

|

||||

|

||||

更好的,Linux 通常建议挂载到 /var 目录到一个单独的文件系统。这是因为这个目录的高 I/O。我们推荐挂载 /var/log 目录到一个单独的磁盘系统下。这样可以节省与主要的应用数据的 I/O 竞争。另外,如果一些日志文件变的太多,或者一个文件变的太大,不会占满整个磁盘。

|

||||

|

||||

#### 日志条目 ####

|

||||

|

||||

每个日志条目中应该捕获什么信息?

|

||||

|

||||

这依赖于你想用日志来做什么。你只想用它来排除故障,或者你想捕获所有发生的事?这是一个捕获每个用户在运行什么或查看什么的规则条件吗?

|

||||

|

||||

如果你正用日志做错误排查的目的,那么只保存错误,报警或者致命信息。没有理由去捕获调试信息,例如,应用也许默认记录了调试信息或者另一个管理员也许为了故障排查而打开了调试信息,但是你应该关闭它,因为它肯定会很快的填满空间。在最低限度上,捕获日期、时间、客户端应用名、来源 ip 或者客户端主机名、执行的动作和信息本身。

|

||||

|

||||

#### 一个 PostgreSQL 的实例 ####

|

||||

|

||||

作为一个例子,让我们看看 vanilla PostgreSQL 9.4 安装的主配置文件。它叫做 postgresql.conf,与其它Linux 系统中的配置文件不同,它不保存在 /etc 目录下。下列的代码段,我们可以在我们的 Centos 7 服务器的 /var/lib/pgsql 目录下找到它:

|

||||

|

||||

root@localhost ~]# vi /var/lib/pgsql/9.4/data/postgresql.conf

|

||||

...

|

||||

#------------------------------------------------------------------------------

|

||||

# ERROR REPORTING AND LOGGING

|

||||

#------------------------------------------------------------------------------

|

||||

# - Where to Log -

|

||||

log_destination = 'stderr'

|

||||

# Valid values are combinations of

|

||||

# stderr, csvlog, syslog, and eventlog,

|

||||

# depending on platform. csvlog

|

||||

# requires logging_collector to be on.

|

||||

# This is used when logging to stderr:

|

||||

logging_collector = on

|

||||

# Enable capturing of stderr and csvlog

|

||||

# into log files. Required to be on for

|

||||

# csvlogs.

|

||||

# (change requires restart)

|

||||

# These are only used if logging_collector is on:

|

||||

log_directory = 'pg_log'

|

||||

# directory where log files are written,

|

||||

# can be absolute or relative to PGDATA

|

||||

log_filename = 'postgresql-%a.log' # log file name pattern,

|

||||

# can include strftime() escapes

|

||||

# log_file_mode = 0600 .

|

||||

# creation mode for log files,

|

||||

# begin with 0 to use octal notation

|

||||

log_truncate_on_rotation = on # If on, an existing log file with the

|

||||

# same name as the new log file will be

|

||||

# truncated rather than appended to.

|

||||

# But such truncation only occurs on

|

||||

# time-driven rotation, not on restarts

|

||||

# or size-driven rotation. Default is

|

||||

# off, meaning append to existing files

|

||||

# in all cases.

|

||||

log_rotation_age = 1d

|

||||

# Automatic rotation of logfiles will happen after that time. 0 disables.

|

||||

log_rotation_size = 0 # Automatic rotation of logfiles will happen after that much log output. 0 disables.

|

||||

# These are relevant when logging to syslog:

|

||||

#syslog_facility = 'LOCAL0'

|

||||

#syslog_ident = 'postgres'

|

||||

# This is only relevant when logging to eventlog (win32):

|

||||

#event_source = 'PostgreSQL'

|

||||

# - When to Log -

|

||||

#client_min_messages = notice # values in order of decreasing detail:

|

||||

# debug5

|

||||

# debug4

|

||||

# debug3

|

||||

# debug2

|

||||

# debug1

|

||||

# log

|

||||

# notice

|

||||

# warning

|

||||

# error

|

||||

#log_min_messages = warning # values in order of decreasing detail:

|

||||

# debug5

|

||||

# debug4

|

||||

# debug3

|

||||

# debug2

|

||||

# debug1

|

||||

# info

|

||||

# notice

|

||||

# warning

|

||||

# error

|

||||

# log

|

||||

# fatal

|

||||

# panic

|

||||

#log_min_error_statement = error # values in order of decreasing detail:

|

||||

# debug5

|

||||

# debug4

|

||||

# debug3

|

||||

# debug2

|

||||

# debug1

|

||||

# info

|

||||

# notice

|

||||

# warning

|

||||

# error

|

||||

# log

|

||||

# fatal

|

||||

# panic (effectively off)

|

||||

#log_min_duration_statement = -1 # -1 is disabled, 0 logs all statements

|

||||

# and their durations, > 0 logs only

|

||||

# statements running at least this number

|

||||

# of milliseconds

|

||||

# - What to Log

|

||||

#debug_print_parse = off

|

||||

#debug_print_rewritten = off

|

||||

#debug_print_plan = off

|

||||

#debug_pretty_print = on

|

||||

#log_checkpoints = off

|

||||

#log_connections = off

|

||||

#log_disconnections = off

|

||||

#log_duration = off

|

||||

#log_error_verbosity = default

|

||||

# terse, default, or verbose messages

|

||||

#log_hostname = off

|

||||

log_line_prefix = '< %m >' # special values:

|

||||

# %a = application name

|

||||

# %u = user name

|

||||

# %d = database name

|

||||

# %r = remote host and port

|

||||

# %h = remote host

|

||||

# %p = process ID

|

||||

# %t = timestamp without milliseconds

|

||||

# %m = timestamp with milliseconds

|

||||

# %i = command tag

|

||||

# %e = SQL state

|

||||

# %c = session ID

|

||||

# %l = session line number

|

||||

# %s = session start timestamp

|

||||

# %v = virtual transaction ID

|

||||

# %x = transaction ID (0 if none)

|

||||

# %q = stop here in non-session

|

||||

# processes

|

||||

# %% = '%'

|

||||

# e.g. '<%u%%%d> '

|

||||

#log_lock_waits = off # log lock waits >= deadlock_timeout

|

||||

#log_statement = 'none' # none, ddl, mod, all

|

||||

#log_temp_files = -1 # log temporary files equal or larger

|

||||

# than the specified size in kilobytes;5# -1 disables, 0 logs all temp files5

|

||||

log_timezone = 'Australia/ACT'

|

||||

|

||||

虽然大多数参数被加上了注释,它们使用了默认值。我们可以看见日志文件目录是 pg_log(log_directory 参数,在 /var/lib/pgsql/9.4/data/ 下的子目录),文件名应该以 postgresql 开头(log_filename参数),文件每天轮转一次(log_rotation_age 参数)然后每行日志记录以时间戳开头(log_line_prefix参数)。特别值得说明的是 log_line_prefix 参数:全部的信息你都可以包含在这。

|

||||

|

||||

看 /var/lib/pgsql/9.4/data/pg_log 目录下展现给我们这些文件:

|

||||

|

||||

[root@localhost ~]# ls -l /var/lib/pgsql/9.4/data/pg_log

|

||||

total 20

|

||||

-rw-------. 1 postgres postgres 1212 May 1 20:11 postgresql-Fri.log

|

||||

-rw-------. 1 postgres postgres 243 Feb 9 21:49 postgresql-Mon.log

|

||||

-rw-------. 1 postgres postgres 1138 Feb 7 11:08 postgresql-Sat.log

|

||||

-rw-------. 1 postgres postgres 1203 Feb 26 21:32 postgresql-Thu.log

|

||||

-rw-------. 1 postgres postgres 326 Feb 10 01:20 postgresql-Tue.log

|

||||

|

||||

所以日志文件名只有星期命名的标签。我们可以改变它。如何做?在 postgresql.conf 配置 log_filename 参数。

|

||||

|

||||

查看一个日志内容,它的条目仅以日期时间开头:

|

||||

|

||||

[root@localhost ~]# cat /var/lib/pgsql/9.4/data/pg_log/postgresql-Fri.log

|

||||

...

|

||||

< 2015-02-27 01:21:27.020 EST >LOG: received fast shutdown request

|

||||

< 2015-02-27 01:21:27.025 EST >LOG: aborting any active transactions

|

||||

< 2015-02-27 01:21:27.026 EST >LOG: autovacuum launcher shutting down

|

||||

< 2015-02-27 01:21:27.036 EST >LOG: shutting down

|

||||

< 2015-02-27 01:21:27.211 EST >LOG: database system is shut down

|

||||

|

||||

### 归集应用的日志 ###

|

||||

|

||||

#### 使用 imfile 监控日志 ####

|

||||

|

||||

习惯上,应用通常记录它们数据在文件里。文件容易在一个机器上寻找,但是多台服务器上就不是很恰当了。你可以设置日志文件监控,然后当新的日志被添加到文件尾部后就发送事件到一个集中服务器。在 /etc/rsyslog.d/ 里创建一个新的配置文件然后增加一个配置文件,然后输入如下:

|

||||

|

||||

$ModLoad imfile

|

||||

$InputFilePollInterval 10

|

||||

$PrivDropToGroup adm

|

||||

|

||||

-----

|

||||

# Input for FILE1

|

||||

$InputFileName /FILE1

|

||||

$InputFileTag APPNAME1

|

||||

$InputFileStateFile stat-APPNAME1 #this must be unique for each file being polled

|

||||

$InputFileSeverity info

|

||||

$InputFilePersistStateInterval 20000

|

||||

$InputRunFileMonitor

|

||||

|

||||

替换 FILE1 和 APPNAME1 为你自己的文件名和应用名称。rsyslog 将发送它到你配置的输出目标中。

|

||||

|

||||

#### 本地套接字日志与 imuxsock ####

|

||||

|

||||

套接字类似 UNIX 文件句柄,所不同的是套接字内容是由 syslog 守护进程读取到内存中,然后发送到目的地。不需要写入文件。作为一个例子,logger 命令发送它的日志到这个 UNIX 套接字。

|

||||

|

||||

如果你的服务器 I/O 有限或者你不需要本地文件日志,这个方法可以使系统资源有效利用。这个方法缺点是套接字有队列大小的限制。如果你的 syslog 守护进程宕掉或者不能保持运行,然后你可能会丢失日志数据。

|

||||

|

||||

rsyslog 程序将默认从 /dev/log 套接字中读取,但是你需要使用如下命令来让 [imuxsock 输入模块][17] 启用它:

|

||||

|

||||

$ModLoad imuxsock

|

||||

|

||||

#### UDP 日志与 imupd ####

|

||||

|

||||

一些应用程序使用 UDP 格式输出日志数据,这是在网络上或者本地传输日志文件的标准 syslog 协议。你的 syslog 守护进程接受这些日志,然后处理它们或者用不同的格式传输它们。备选的,你可以发送日志到你的日志服务器或者到一个日志管理方案中。

|

||||

|

||||

使用如下命令配置 rsyslog 通过 UDP 来接收标准端口 514 的 syslog 数据:

|

||||

|

||||

$ModLoad imudp

|

||||

|

||||

----------

|

||||

|

||||

$UDPServerRun 514

|

||||

|

||||

### 用 logrotate 管理日志 ###

|

||||

|

||||

日志轮转是当日志到达指定的时期时自动归档日志文件的方法。如果不介入,日志文件一直增长,会用尽磁盘空间。最后它们将破坏你的机器。

|

||||

|

||||

logrotate 工具能随着日志的日期截取你的日志,腾出空间。你的新日志文件保持该文件名。你的旧日志文件被重命名加上后缀数字。每次 logrotate 工具运行,就会创建一个新文件,然后现存的文件被逐一重命名。你来决定何时旧文件被删除或归档的阈值。

|

||||

|

||||

当 logrotate 拷贝一个文件,新的文件会有一个新的 inode,这会妨碍 rsyslog 监控新文件。你可以通过增加copytruncate 参数到你的 logrotate 定时任务来缓解这个问题。这个参数会拷贝现有的日志文件内容到新文件然后从现有文件截短这些内容。因为日志文件还是同一个,所以 inode 不会改变;但它的内容是一个新文件。

|

||||

|

||||

logrotate 工具使用的主配置文件是 /etc/logrotate.conf,应用特有设置在 /etc/logrotate.d/ 目录下。DigitalOcean 有一个详细的 [logrotate 教程][18]

|

||||

|

||||

### 管理很多服务器的配置 ###

|

||||

|

||||

当你只有很少的服务器,你可以登录上去手动配置。一旦你有几打或者更多服务器,你可以利用工具的优势使这变得更容易和更可扩展。基本上,所有的事情就是拷贝你的 rsyslog 配置到每个服务器,然后重启 rsyslog 使更改生效。

|

||||

|

||||

#### pssh ####

|

||||

|

||||

这个工具可以让你在很多服务器上并行的运行一个 ssh 命令。使用 pssh 部署仅用于少量服务器。如果你其中一个服务器失败,然后你必须 ssh 到失败的服务器,然后手动部署。如果你有很多服务器失败,那么手动部署它们会话费很长时间。

|

||||

|

||||

#### Puppet/Chef ####

|

||||

|

||||

Puppet 和 Chef 是两个不同的工具,它们能在你的网络按你规定的标准自动的配置所有服务器。它们的报表工具可以使你了解错误情况,然后定期重新同步。Puppet 和 Chef 都有一些狂热的支持者。如果你不确定那个更适合你的部署配置管理,你可以拜读一下 [InfoWorld 上这两个工具的对比][19]

|

||||

|

||||

一些厂商也提供一些配置 rsyslog 的模块或者方法。这有一个 Loggly 上 Puppet 模块的例子。它提供给 rsyslog 一个类,你可以添加一个标识令牌:

|

||||

|

||||

node 'my_server_node.example.net' {

|

||||

# Send syslog events to Loggly

|

||||

class { 'loggly::rsyslog':

|

||||

customer_token => 'de7b5ccd-04de-4dc4-fbc9-501393600000',

|

||||

}

|

||||

}

|

||||

|

||||

#### Docker ####

|

||||

|

||||

Docker 使用容器去运行应用,不依赖于底层服务。所有东西都运行在内部的容器,你可以把它想象为一个功能单元。ZDNet 有一篇关于在你的数据中心[使用 Docker][20] 的深入文章。

|

||||

|

||||

这里有很多方式从 Docker 容器记录日志,包括链接到一个日志容器,记录到一个共享卷,或者直接在容器里添加一个 sysllog 代理。其中最流行的日志容器叫做 [logspout][21]。

|

||||

|

||||

#### 供应商的脚本或代理 ####

|

||||

|

||||

大多数日志管理方案提供一些脚本或者代理,可以从一个或更多服务器相对容易地发送数据。重量级代理会耗尽额外的系统资源。一些供应商像 Loggly 提供配置脚本,来使用现存的 syslog 守护进程更轻松。这有一个 Loggly 上的例子[脚本][22],它能运行在任意数量的服务器上。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.loggly.com/ultimate-guide/logging/managing-linux-logs/

|

||||

|

||||

作者:[Jason Skowronski][a1]

|

||||

作者:[Amy Echeverri][a2]

|

||||

作者:[Sadequl Hussain][a3]

|

||||

译者:[wyangsun](https://github.com/wyangsun)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a1]:https://www.linkedin.com/in/jasonskowronski

|

||||

[a2]:https://www.linkedin.com/in/amyecheverri

|

||||

[a3]:https://www.linkedin.com/pub/sadequl-hussain/14/711/1a7

|

||||

[1]:https://docs.google.com/document/d/11LXZxWlkNSHkcrCWTUdnLRf_CiZz9kK0cr3yGM_BU_0/edit#heading=h.esrreycnpnbl

|

||||

[2]:http://www.rsyslog.com/

|

||||

[3]:http://www.balabit.com/network-security/syslog-ng/opensource-logging-system

|

||||

[4]:http://logstash.net/

|

||||

[5]:http://www.fluentd.org/

|

||||

[6]:http://www.rsyslog.com/doc/rsyslog_conf.html

|

||||

[7]:http://www.rsyslog.com/doc/master/rainerscript/index.html

|

||||

[8]:https://docs.google.com/document/d/11LXZxWlkNSHkcrCWTUdnLRf_CiZz9kK0cr3yGM_BU_0/edit#heading=h.eck7acdxin87

|

||||

[9]:https://www.loggly.com/docs/file-monitoring/

|

||||

[10]:http://www.networksorcery.com/enp/protocol/udp.htm

|

||||

[11]:http://www.networksorcery.com/enp/protocol/tcp.htm

|

||||

[12]:http://blog.gerhards.net/2008/04/on-unreliability-of-plain-tcp-syslog.html

|

||||

[13]:http://www.rsyslog.com/doc/relp.html

|

||||

[14]:http://www.rsyslog.com/doc/queues.html

|

||||

[15]:http://www.rsyslog.com/doc/tls_cert_ca.html

|

||||

[16]:http://www.rsyslog.com/doc/tls_cert_machine.html

|

||||

[17]:http://www.rsyslog.com/doc/v8-stable/configuration/modules/imuxsock.html

|

||||

[18]:https://www.digitalocean.com/community/tutorials/how-to-manage-log-files-with-logrotate-on-ubuntu-12-10

|

||||

[19]:http://www.infoworld.com/article/2614204/data-center/puppet-or-chef--the-configuration-management-dilemma.html

|

||||

[20]:http://www.zdnet.com/article/what-is-docker-and-why-is-it-so-darn-popular/

|

||||

[21]:https://github.com/progrium/logspout

|

||||

[22]:https://www.loggly.com/docs/sending-logs-unixlinux-system-setup/

|

||||

@ -1,10 +1,10 @@

|

||||

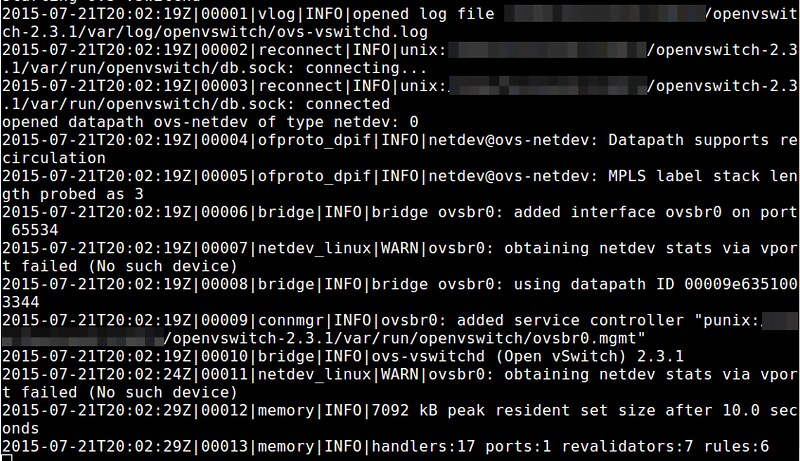

Linux有问必答——如何启用Open vSwitch的日志功能以便调试和排障

|

||||

Linux有问必答:如何启用Open vSwitch的日志功能以便调试和排障

|

||||

================================================================================

|

||||

> **问题** 我试着为我的Open vSwitch部署排障,鉴于此,我想要检查它的由内建日志机制生成的调试信息。我怎样才能启用Open vSwitch的日志功能,并且修改它的日志等级(如,修改成INFO/DEBUG级别)以便于检查更多详细的调试信息呢?

|

||||

|

||||

Open vSwitch(OVS)是Linux平台上用于虚拟切换的最流行的开源部署。由于当今的数据中心日益依赖于软件定义的网络(SDN)架构,OVS被作为数据中心的SDN部署中实际上的标准网络元素而快速采用。

|

||||

Open vSwitch(OVS)是Linux平台上最流行的开源的虚拟交换机。由于当今的数据中心日益依赖于软件定义网络(SDN)架构,OVS被作为数据中心的SDN部署中的事实标准上的网络元素而得到飞速应用。

|

||||

|

||||

Open vSwitch具有一个内建的日志机制,它称之为VLOG。VLOG工具允许你在各种切换组件中启用并自定义日志,由VLOG生成的日志信息可以被发送到一个控制台,syslog以及一个独立日志文件组合,以供检查。你可以通过一个名为`ovs-appctl`的命令行工具在运行时动态配置OVS日志。

|

||||

Open vSwitch具有一个内建的日志机制,它称之为VLOG。VLOG工具允许你在各种网络交换组件中启用并自定义日志,由VLOG生成的日志信息可以被发送到一个控制台、syslog以及一个便于查看的单独日志文件。你可以通过一个名为`ovs-appctl`的命令行工具在运行时动态配置OVS日志。

|

||||

|

||||

|

||||

|

||||

@ -14,7 +14,7 @@ Open vSwitch具有一个内建的日志机制,它称之为VLOG。VLOG工具允

|

||||

|

||||

$ sudo ovs-appctl vlog/set module[:facility[:level]]

|

||||

|

||||

- **Module**:OVS中的任何合法组件的名称(如netdev,ofproto,dpif,vswitchd,以及其它大量组件)

|

||||

- **Module**:OVS中的任何合法组件的名称(如netdev,ofproto,dpif,vswitchd等等)

|

||||

- **Facility**:日志信息的目的地(必须是:console,syslog,或者file)

|

||||

- **Level**:日志的详细程度(必须是:emer,err,warn,info,或者dbg)

|

||||

|

||||

@ -36,13 +36,13 @@ Open vSwitch具有一个内建的日志机制,它称之为VLOG。VLOG工具允

|

||||

|

||||

|

||||

|

||||

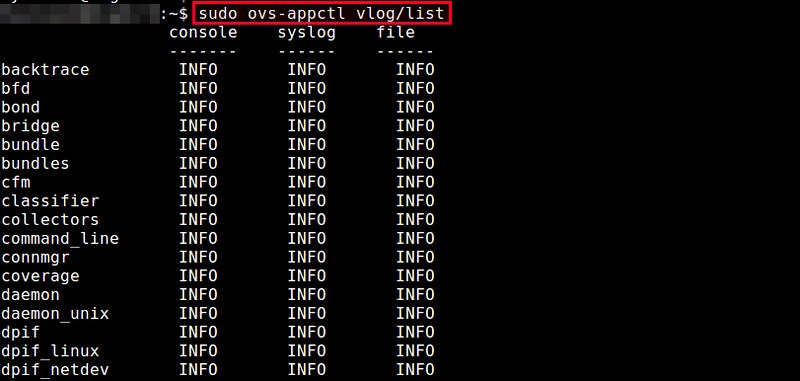

输出结果显示了用于三个工具(console,syslog,file)的各个模块的调试级别。默认情况下,所有模块的日志等级都被设置为INFO。

|

||||

输出结果显示了用于三个场合(facility:console,syslog,file)的各个模块的调试级别。默认情况下,所有模块的日志等级都被设置为INFO。

|

||||

|

||||

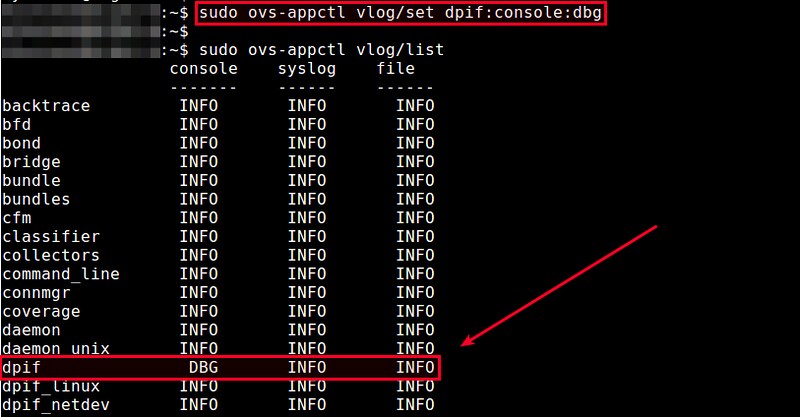

指定任何一个OVS模块,你可以选择性地修改任何特定工具的调试级别。例如,如果你想要在控制台屏幕中查看dpif更为详细的调试信息,可以运行以下命令。

|

||||

指定任何一个OVS模块,你可以选择性地修改任何特定场合的调试级别。例如,如果你想要在控制台屏幕中查看dpif更为详细的调试信息,可以运行以下命令。

|

||||

|

||||

$ sudo ovs-appctl vlog/set dpif:console:dbg

|

||||

|

||||

你将看到dpif模块的console工具已经将其日志等级修改为DBG,而其它两个工具syslog和file的日志级别仍然没有改变。

|

||||

你将看到dpif模块的console工具已经将其日志等级修改为DBG,而其它两个场合syslog和file的日志级别仍然没有改变。

|

||||

|

||||

|

||||

|

||||

@ -52,7 +52,7 @@ Open vSwitch具有一个内建的日志机制,它称之为VLOG。VLOG工具允

|

||||

|

||||

|

||||

|

||||

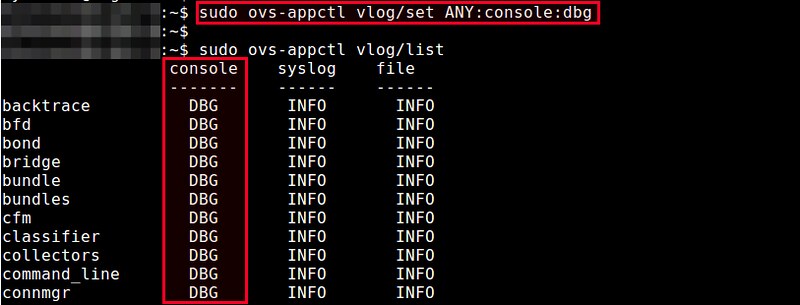

同时,如果你想要一次性修改所有三个工具的日志级别,你可以指定“ANY”作为工具名。例如,下面的命令将修改每个模块的所有工具的日志级别为DBG。

|

||||

同时,如果你想要一次性修改所有三个场合的日志级别,你可以指定“ANY”作为场合名。例如,下面的命令将修改每个模块的所有场合的日志级别为DBG。

|

||||

|

||||

$ sudo ovs-appctl vlog/set ANY:ANY:dbg

|

||||

|

||||

@ -62,7 +62,7 @@ via: http://ask.xmodulo.com/enable-logging-open-vswitch.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,13 @@

|

||||

在Ubuntu 15.04中如何安装和使用Snort

|

||||

在 Ubuntu 15.04 中如何安装和使用 Snort

|

||||

================================================================================

|

||||

对于IT安全而言入侵检测是一件非常重要的事。入侵检测系统用于检测网络中非法与恶意的请求。Snort是一款知名的开源入侵检测系统。Web界面(Snorby)可以用于更好地分析警告。Snort使用iptables/pf防火墙来作为入侵检测系统。本篇中,我们会安装并配置一个开源的IDS系统snort。

|

||||

|

||||

对于网络安全而言入侵检测是一件非常重要的事。入侵检测系统(IDS)用于检测网络中非法与恶意的请求。Snort是一款知名的开源的入侵检测系统。其 Web界面(Snorby)可以用于更好地分析警告。Snort使用iptables/pf防火墙来作为入侵检测系统。本篇中,我们会安装并配置一个开源的入侵检测系统snort。

|

||||

|

||||

### Snort 安装 ###

|

||||

|

||||

#### 要求 ####

|

||||

|

||||

snort所使用的数据采集库(DAQ)用于抽象地调用采集库。这个在snort上就有。下载过程如下截图所示。

|

||||

snort所使用的数据采集库(DAQ)用于一个调用包捕获库的抽象层。这个在snort上就有。下载过程如下截图所示。

|

||||

|

||||

|

||||

|

||||

@ -48,7 +49,7 @@ make和make install 命令的结果如下所示。

|

||||

|

||||

|

||||

|

||||

创建安装目录并在脚本中设置prefix参数。同样也建议启用包性能监控(PPM)标志。

|

||||

创建安装目录并在脚本中设置prefix参数。同样也建议启用包性能监控(PPM)的sourcefire标志。

|

||||

|

||||

#mkdir /usr/local/snort

|

||||

|

||||

@ -56,7 +57,7 @@ make和make install 命令的结果如下所示。

|

||||

|

||||

|

||||

|

||||

配置脚本由于缺少libpcre-dev、libdumbnet-dev 和zlib开发库而报错。

|

||||

配置脚本会由于缺少libpcre-dev、libdumbnet-dev 和zlib开发库而报错。

|

||||

|

||||

配置脚本由于缺少libpcre库报错。

|

||||

|

||||

@ -96,7 +97,7 @@ make和make install 命令的结果如下所示。

|

||||

|

||||

|

||||

|

||||

最终snort在/usr/local/snort/bin中运行。现在它对eth0的所有流量都处在promisc模式(包转储模式)。

|

||||

最后,从/usr/local/snort/bin中运行snort。现在它对eth0的所有流量都处在promisc模式(包转储模式)。

|

||||

|

||||

|

||||

|

||||

@ -106,14 +107,17 @@ make和make install 命令的结果如下所示。

|

||||

|

||||

#### Snort的规则和配置 ####

|

||||

|

||||

从源码安装的snort需要规则和安装配置,因此我们会从/etc/snort下面复制规则和配置。我们已经创建了单独的bash脚本来用于规则和配置。它会设置下面这些snort设置。

|

||||

从源码安装的snort还需要设置规则和配置,因此我们需要复制规则和配置到/etc/snort下面。我们已经创建了单独的bash脚本来用于设置规则和配置。它会设置下面这些snort设置。

|

||||

|

||||

- 在linux中创建snort用户用于snort IDS服务。

|

||||

- 在linux中创建用于snort IDS服务的snort用户。

|

||||

- 在/etc下面创建snort的配置文件和文件夹。

|

||||

- 权限设置并从etc中复制snortsnort源代码

|

||||

- 权限设置并从源代码的etc目录中复制数据。

|

||||

- 从snort文件中移除规则中的#(注释符号)。

|

||||

|

||||

#!/bin/bash##PATH of source code of snort

|

||||

-

|

||||

|

||||

#!/bin/bash#

|

||||

# snort源代码的路径

|

||||

snort_src="/home/test/Downloads/snort-2.9.7.3"

|

||||

echo "adding group and user for snort..."

|

||||

groupadd snort &> /dev/null

|

||||

@ -141,15 +145,15 @@ make和make install 命令的结果如下所示。

|

||||

sed -i 's/include \$RULE\_PATH/#include \$RULE\_PATH/' /etc/snort/snort.conf

|

||||

echo "---DONE---"

|

||||

|

||||

改变脚本中的snort源目录并运行。下面是成功的输出。

|

||||

改变脚本中的snort源目录路径并运行。下面是成功的输出。

|

||||

|

||||

|

||||

|

||||

上面的脚本从snort源中复制下面的文件/文件夹到/etc/snort配置文件中

|

||||

上面的脚本从snort源中复制下面的文件和文件夹到/etc/snort配置文件中

|

||||

|

||||

|

||||

|

||||

、snort的配置非常复杂,然而为了IDS能正常工作需要进行下面必要的修改。

|

||||

snort的配置非常复杂,要让IDS能正常工作需要进行下面必要的修改。

|

||||

|

||||

ipvar HOME_NET 192.168.1.0/24 # LAN side

|

||||

|

||||

@ -173,7 +177,7 @@ make和make install 命令的结果如下所示。

|

||||

|

||||

|

||||

|

||||

下载[下载社区][1]规则并解压到/etc/snort/rules。启用snort.conf中的社区及紧急威胁规则。

|

||||

现在[下载社区规则][1]并解压到/etc/snort/rules。启用snort.conf中的社区及紧急威胁规则。

|

||||

|

||||

|

||||

|

||||

@ -187,7 +191,7 @@ make和make install 命令的结果如下所示。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

本篇中,我们致力于开源IDPS系统snort在Ubuntu上的安装和配置。默认它用于监控时间,然而它可以被配置成用于网络保护的内联模式。snort规则可以在离线模式中可以使用pcap文件测试和分析

|

||||

本篇中,我们关注了开源IDPS系统snort在Ubuntu上的安装和配置。通常它用于监控事件,然而它可以被配置成用于网络保护的在线模式。snort规则可以在离线模式中可以使用pcap捕获文件进行测试和分析

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -195,7 +199,7 @@ via: http://linoxide.com/security/install-snort-usage-ubuntu-15-04/

|

||||

|

||||

作者:[nido][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,11 +1,11 @@

|

||||

Linux小技巧:Chrome小游戏,文字说话,计划作业,重复执行命令

|

||||

Linux 小技巧:Chrome 小游戏,让文字说话,计划作业,重复执行命令

|

||||

================================================================================

|

||||

|

||||

重要的事情说两遍,我完成了一个[Linux提示与彩蛋][1]系列,让你的Linux获得更多创造和娱乐。

|

||||

|

||||

|

||||

|

||||

Linux提示与彩蛋系列

|

||||

*Linux提示与彩蛋系列*

|

||||

|

||||

本文,我将会讲解Google-chrome内建小游戏,在终端中如何让文字说话,使用‘at’命令设置作业和使用watch命令重复执行命令。

|

||||

|

||||

@ -17,7 +17,7 @@ Linux提示与彩蛋系列

|

||||

|

||||

|

||||

|

||||

不能连接到互联网

|

||||

*不能连接到互联网*

|

||||

|

||||

按下空格键来激活Google-chrome彩蛋游戏。游戏没有时间限制。并且还不需要浪费时间安装使用。

|

||||

|

||||

@ -27,27 +27,25 @@ Linux提示与彩蛋系列

|

||||

|

||||

|

||||

|

||||

Google Chrome中玩游戏

|

||||

*Google Chrome中玩游戏*

|

||||

|

||||

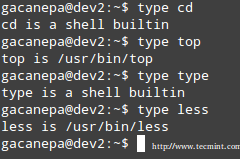

### 2. Linux 终端中朗读文字 ###

|

||||

|

||||

对于那些不能文字朗读的设备,有个小工具可以实现文字说话的转换器。

|

||||

espeak支持多种语言,可以及时朗读输入文字。

|

||||

对于那些不能文字朗读的设备,有个小工具可以实现文字说话的转换器。用各种语言写一些东西,espeak就可以朗读给你。

|

||||

|

||||

系统应该默认安装了Espeak,如果你的系统没有安装,你可以使用下列命令来安装:

|

||||

|

||||

# apt-get install espeak (Debian)

|

||||

# yum install espeak (CentOS)

|

||||

# dnf install espeak (Fedora 22 onwards)

|

||||

# dnf install espeak (Fedora 22 及其以后)

|

||||

|

||||

You may ask espeak to accept Input Interactively from standard Input device and convert it to speech for you. You may do:

|

||||

你可以设置接受从标准输入的交互地输入并及时转换成语音朗读出来。这样设置:

|

||||

你可以让espeak接受标准输入的交互输入并及时转换成语音朗读出来。如下:

|

||||

|

||||

$ espeak [按回车键]

|

||||

|

||||

更详细的输出你可以这样做:

|

||||

|

||||

$ espeak --stdout | aplay [按回车键][这里需要双击]

|

||||

$ espeak --stdout | aplay [按回车键][再次回车]

|

||||

|

||||

espeak设置灵活,也可以朗读文本文件。你可以这样设置:

|

||||

|

||||

@ -55,29 +53,29 @@ espeak设置灵活,也可以朗读文本文件。你可以这样设置:

|

||||

|

||||

espeak可以设置朗读速度。默认速度是160词每分钟。使用-s参数来设置。

|

||||

|

||||

设置30词每分钟:

|

||||

设置每分钟30词的语速:

|

||||

|

||||

$ espeak -s 30 -f /path/to/text/file/file_name.txt | aplay

|

||||

|

||||

设置200词每分钟:

|

||||

设置每分钟200词的语速:

|

||||

|

||||

$ espeak -s 200 -f /path/to/text/file/file_name.txt | aplay

|

||||

|

||||

让其他语言说北印度语(作者母语),这样设置:

|

||||

说其他语言,比如北印度语(作者母语),这样设置:

|

||||

|

||||

$ espeak -v hindi --stdout 'टेकमिंट विश्व की एक बेहतरीन लाइंक्स आधारित वेबसाइट है|' | aplay

|

||||

|

||||

espeak支持多种语言,支持自定义设置。使用下列命令来获得语言表:

|

||||

你可以使用各种语言,让espeak如上面说的以你选择的语言朗读。使用下列命令来获得语言列表:

|

||||

|

||||

$ espeak --voices

|

||||

|

||||

### 3. 快速计划作业 ###

|

||||

### 3. 快速调度任务 ###

|

||||

|

||||

我们已经非常熟悉使用[cron][2]后台执行一个计划命令。

|

||||

我们已经非常熟悉使用[cron][2]守护进程执行一个计划命令。

|

||||

|

||||

Cron是一个Linux系统管理的高级命令,用于计划定时任务如备份或者指定时间或间隔的任何事情。

|

||||

|

||||

但是,你是否知道at命令可以让你计划一个作业或者命令在指定时间?at命令可以指定时间和指定内容执行作业。

|

||||

但是,你是否知道at命令可以让你在指定时间调度一个任务或者命令?at命令可以指定时间执行指定内容。

|

||||

|

||||

例如,你打算在早上11点2分执行uptime命令,你只需要这样做:

|

||||

|

||||

@ -85,17 +83,17 @@ Cron是一个Linux系统管理的高级命令,用于计划定时任务如备

|

||||

uptime >> /home/$USER/uptime.txt

|

||||

Ctrl+D

|

||||

|

||||

|

||||

|

||||

|

||||

Linux中计划作业

|

||||

*Linux中计划任务*

|

||||

|

||||

检查at命令是否成功设置,使用:

|

||||

|

||||

$ at -l

|

||||

|

||||

|

||||

|

||||

|

||||

浏览计划作业

|

||||

*浏览计划任务*

|

||||

|

||||

at支持计划多个命令,例如:

|

||||

|

||||

@ -117,17 +115,17 @@ at支持计划多个命令,例如:

|

||||

|

||||

|

||||

|

||||

Linux中查看日期和时间

|

||||

*Linux中查看日期和时间*

|

||||

|

||||

为了查看这个命令每三秒的输出,我需要运行下列命令:

|

||||

为了每三秒查看一下这个命令的输出,我需要运行下列命令:

|

||||

|

||||

$ watch -n 3 'date +"%H:%M:%S"'

|

||||

|

||||

|

||||

|

||||

Linux中watch命令

|

||||

*Linux中watch命令*

|

||||

|

||||

watch命令的‘-n’开关设定时间间隔。在上诉命令中,我们定义了时间间隔为3秒。你可以按你的需求定义。同样watch

|

||||

watch命令的‘-n’开关设定时间间隔。在上述命令中,我们定义了时间间隔为3秒。你可以按你的需求定义。同样watch

|

||||

也支持其他命令或者脚本。

|

||||

|

||||

至此。希望你喜欢这个系列的文章,让你的linux更有创造性,获得更多快乐。所有的建议欢迎评论。欢迎你也看看其他文章,谢谢。

|

||||

@ -138,7 +136,7 @@ via: http://www.tecmint.com/text-to-speech-in-terminal-schedule-a-job-and-watch-

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[VicYu/Vic020](http://vicyu.net)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

440

published/20150813 Linux file system hierarchy v2.0.md

Normal file

440

published/20150813 Linux file system hierarchy v2.0.md

Normal file

@ -0,0 +1,440 @@

|

||||

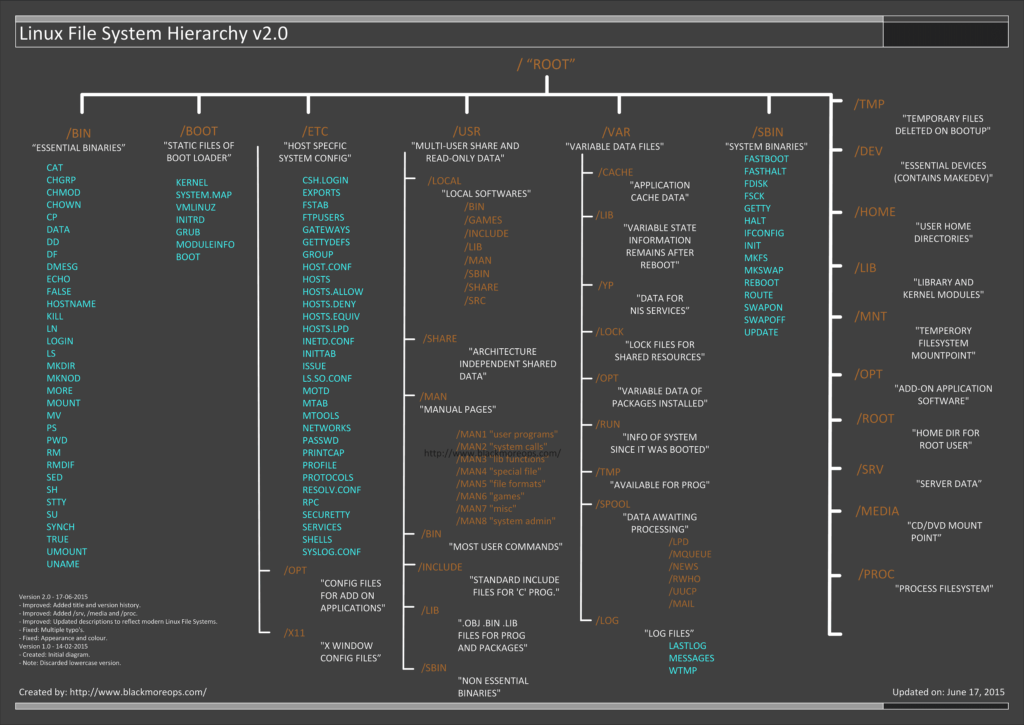

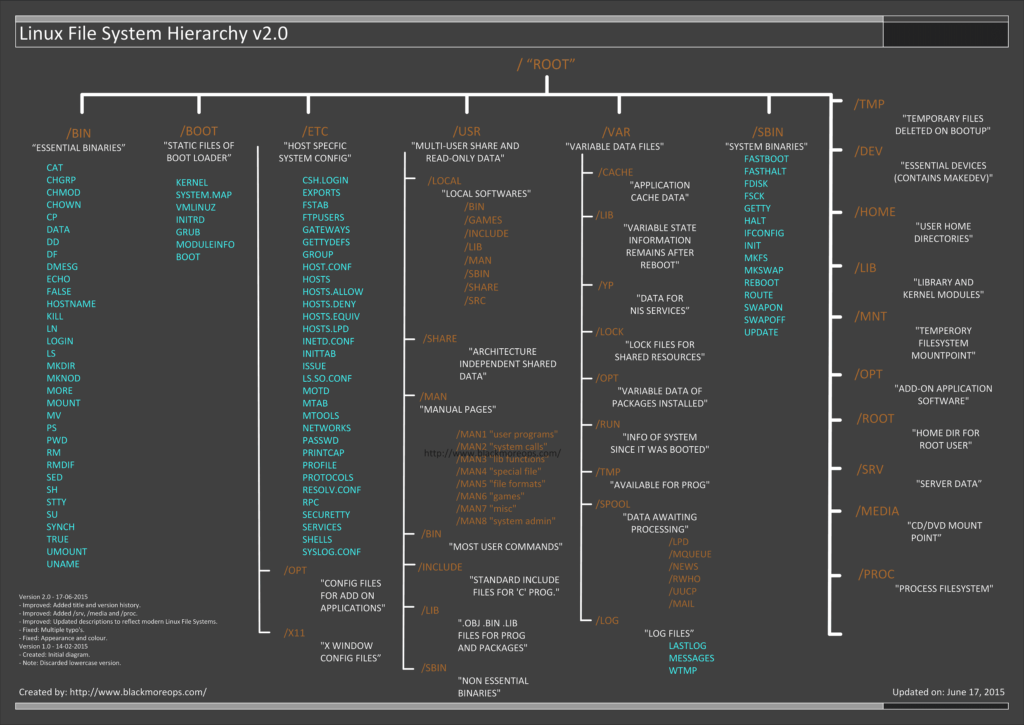

Linux 文件系统结构介绍

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

Linux中的文件是什么?它的文件系统又是什么?那些配置文件又在哪里?我下载好的程序保存在哪里了?在 Linux 中文件系统是标准结构的吗?好了,上图简明地阐释了Linux的文件系统的层次关系。当你苦于寻找配置文件或者二进制文件的时候,这便显得十分有用了。我在下方添加了一些解释以及例子,不过“篇幅较长,可以有空再看”。

|

||||

|

||||

另外一种情况便是当你在系统中获取配置以及二进制文件时,出现了不一致性问题,如果你是在一个大型组织中,或者只是一个终端用户,这也有可能会破坏你的系统(比如,二进制文件运行在旧的库文件上了)。若然你在[你的Linux系统上做安全审计][1]的话,你将会发现它很容易遭到各种攻击。所以,保持一个清洁的操作系统(无论是Windows还是Linux)都显得十分重要。

|

||||

|

||||

### Linux的文件是什么? ###

|

||||

|

||||

对于UNIX系统来说(同样适用于Linux),以下便是对文件简单的描述:

|

||||

|

||||

> 在UNIX系统中,一切皆为文件;若非文件,则为进程

|

||||

|

||||

这种定义是比较正确的,因为有些特殊的文件不仅仅是普通文件(比如命名管道和套接字),不过为了让事情变的简单,“一切皆为文件”也是一个可以让人接受的说法。Linux系统也像UNIX系统一样,将文件和目录视如同物,因为目录只是一个包含了其他文件名的文件而已。程序、服务、文本、图片等等,都是文件。对于系统来说,输入和输出设备,基本上所有的设备,都被当做是文件。

|

||||

|

||||

题图版本历史:

|

||||

|

||||

- Version 2.0 – 17-06-2015

|

||||

- – Improved: 添加标题以及版本历史

|

||||

- – Improved: 添加/srv,/meida和/proc

|

||||

- – Improved: 更新了反映当前的Linux文件系统的描述

|

||||

- – Fixed: 多处的打印错误

|

||||

- – Fixed: 外观和颜色

|

||||

- Version 1.0 – 14-02-2015

|

||||

- – Created: 基本的图表

|

||||

- – Note: 摒弃更低的版本

|

||||

|

||||

### 下载链接 ###

|

||||

|

||||

以下是大图的下载地址。如果你需要其他格式,请跟原作者联系,他会尝试制作并且上传到某个地方以供下载

|

||||

|

||||

- [大图 (PNG 格式) – 2480×1755 px – 184KB][2]

|

||||

- [最大图 (PDF 格式) – 9919x7019 px – 1686KB][3]

|

||||

|

||||

**注意**: PDF格式文件是打印的最好选择,因为它画质很高。

|

||||

|

||||

### Linux 文件系统描述 ###

|

||||

|

||||

为了有序地管理那些文件,人们习惯把这些文件当做是硬盘上的有序的树状结构,正如我们熟悉的'MS-DOS'(磁盘操作系统)就是一个例子。大的分枝包括更多的分枝,分枝的末梢是树的叶子或者普通的文件。现在我们将会以这树形图为例,但晚点我们会发现为什么这不是一个完全准确的一幅图。

|

||||

|

||||

<table cellspacing="2" border="4" style="border-collapse: collapse; width: 731px; height: 2617px;">

|

||||

<thead>

|

||||

<tr>

|

||||

<th scope="col">目录</th>

|

||||

<th scope="col">描述</th>

|

||||

</tr>

|

||||

</thead>

|

||||

<tbody>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/</code>

|

||||

</td>

|

||||

<td><i>主层次</i> 的根,也是整个文件系统层次结构的根目录</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/bin</code>

|

||||

</td>

|

||||

<td>存放在单用户模式可用的必要命令二进制文件,所有用户都可用,如 cat、ls、cp等等</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/boot</code>

|

||||

</td>

|

||||

<td>存放引导加载程序文件,例如kernels、initrd等</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/dev</code>

|

||||

</td>

|

||||

<td>存放必要的设备文件,例如<code>/dev/null</code> </td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/etc</code>

|

||||

</td>

|

||||

<td>存放主机特定的系统级配置文件。其实这里有个关于它名字本身意义上的的争议。在贝尔实验室的UNIX实施文档的早期版本中,/etc表示是“其他(etcetera)目录”,因为从历史上看,这个目录是存放各种不属于其他目录的文件(然而,文件系统目录标准 FSH 限定 /etc 用于存放静态配置文件,这里不该存有二进制文件)。早期文档出版后,这个目录名又重新定义成不同的形式。近期的解释中包含着诸如“可编辑文本配置”或者“额外的工具箱”这样的重定义</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/etc/opt</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>存储着新增包的配置文件 <code>/opt/</code>.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/etc/sgml</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>存放配置文件,比如 catalogs,用于那些处理SGML(译者注:标准通用标记语言)的软件的配置文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/etc/X11</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>X Window 系统11版本的的配置文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/etc/xml</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>配置文件,比如catalogs,用于那些处理XML(译者注:可扩展标记语言)的软件的配置文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/home</code>

|

||||

</td>

|

||||

<td>用户的主目录,包括保存的文件,个人配置,等等</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/lib</code>

|

||||

</td>

|

||||

<td><code>/bin/</code> 和 <code>/sbin/</code>中的二进制文件的必需的库文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/lib<架构位数></code>

|

||||

</td>

|

||||

<td>备用格式的必要的库文件。 这样的目录是可选的,但如果他们存在的话肯定是有需要用到它们的程序</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/media</code>

|

||||

</td>

|

||||

<td>可移动的多媒体(如CD-ROMs)的挂载点。(出现于 FHS-2.3)</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/mnt</code>

|

||||

</td>

|

||||

<td>临时挂载的文件系统</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/opt</code>

|

||||

</td>

|

||||

<td>可选的应用程序软件包</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/proc</code>

|

||||

</td>

|

||||

<td>以文件形式提供进程以及内核信息的虚拟文件系统,在Linux中,对应进程文件系统(procfs )的挂载点</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/root</code>

|

||||

</td>

|

||||

<td>根用户的主目录</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/sbin</code>

|

||||

</td>

|

||||

<td>必要的系统级二进制文件,比如, init, ip, mount</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/srv</code>

|

||||

</td>

|

||||

<td>系统提供的站点特定数据</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/tmp</code>

|

||||

</td>

|

||||

<td>临时文件 (另见 <code>/var/tmp</code>). 通常在系统重启后删除</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/usr</code>

|

||||

</td>

|

||||

<td><i>二级层级</i>存储用户的只读数据; 包含(多)用户主要的公共文件以及应用程序</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/bin</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>非必要的命令二进制文件 (在单用户模式中不需要用到的);用于所有用户</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/include</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>标准的包含文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/lib</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>库文件,用于<code>/usr/bin/</code> 和 <code>/usr/sbin/</code>中的二进制文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/lib<架构位数></code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>备用格式库(可选的)</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/local</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td><i>三级层次</i> 用于本地数据,具体到该主机上的。通常会有下一个子目录, <i>比如</i>, <code>bin/</code>, <code>lib/</code>, <code>share/</code>.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/local/sbin</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>非必要系统的二进制文件,比如用于不同网络服务的守护进程</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/share</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>架构无关的 (共享) 数据.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/src</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>源代码,比如内核源文件以及与它相关的头文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/usr/X11R6</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>X Window系统,版本号:11,发行版本:6</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

<code>/var</code>

|

||||

</td>

|

||||

<td>各式各样的(Variable)文件,一些随着系统常规操作而持续改变的文件就放在这里,比如日志文件,脱机文件,还有临时的电子邮件文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/cache</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>应用程序缓存数据. 这些数据是由耗时的I/O(输入/输出)的或者是运算本地生成的结果。这些应用程序是可以重新生成或者恢复数据的。当没有数据丢失的时候,可以删除缓存文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/lib</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>状态信息。这些信息随着程序的运行而不停地改变,比如,数据库,软件包系统的元数据等等</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/lock</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>锁文件。这些文件用于跟踪正在使用的资源</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/log</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>日志文件。包含各种日志。</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/mail</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>内含用户邮箱的相关文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/opt</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>来自附加包的各种数据都会存储在 <code>/var/opt/</code>.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/run</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>存放当前系统上次启动以来的相关信息,例如当前登入的用户以及当前运行的<a href="http://en.wikipedia.org/wiki/Daemon_%28computing%29">daemons(守护进程)</a>.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/spool</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>该spool主要用于存放将要被处理的任务,比如打印队列以及邮件外发队列</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

|

||||

|

||||

<code>/var/mail</code>

|

||||

|

||||

|

||||

|

||||

|

||||

</td>

|

||||

<td>过时的位置,用于放置用户邮箱文件</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>

|

||||

|

||||

|

||||

<code>/var/tmp</code>

|

||||

|

||||

|

||||

</td>

|

||||

<td>存放重启后保留的临时文件</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

|

||||

### Linux的文件类型 ###

|

||||

|

||||

大多数文件仅仅是普通文件,他们被称为`regular`文件;他们包含普通数据,比如,文本、可执行文件、或者程序、程序的输入或输出等等

|

||||

|

||||

虽然你可以认为“在Linux中,一切你看到的皆为文件”这个观点相当保险,但这里仍有着一些例外。

|

||||

|

||||

- `目录`:由其他文件组成的文件

|

||||

- `特殊文件`:用于输入和输出的途径。大多数特殊文件都储存在`/dev`中,我们将会在后面讨论这个问题。

|

||||

- `链接文件`:让文件或者目录出现在系统文件树结构上多个地方的机制。我们将详细地讨论这个链接文件。

|

||||

- `(域)套接字`:特殊的文件类型,和TCP/IP协议中的套接字有点像,提供进程间网络通讯,并受文件系统的访问控制机制保护。

|

||||

- `命名管道` : 或多或少有点像sockets(套接字),提供一个进程间的通信机制,而不用网络套接字协议。

|

||||

|

||||

### 现实中的文件系统 ###

|

||||

|

||||

对于大多数用户和常规系统管理任务而言,“文件和目录是一个有序的类树结构”是可以接受的。然而,对于电脑而言,它是不会理解什么是树,或者什么是树结构。

|

||||

|

||||

每个分区都有它自己的文件系统。想象一下,如果把那些文件系统想成一个整体,我们可以构思一个关于整个系统的树结构,不过这并没有这么简单。在文件系统中,一个文件代表着一个`inode`(索引节点),这是一种包含着构建文件的实际数据信息的序列号:这些数据表示文件是属于谁的,还有它在硬盘中的位置。

|

||||

|

||||

每个分区都有一套属于他们自己的inode,在一个系统的不同分区中,可以存在有相同inode的文件。

|

||||

|

||||

每个inode都表示着一种在硬盘上的数据结构,保存着文件的属性,包括文件数据的物理地址。当硬盘被格式化并用来存储数据时(通常发生在初始系统安装过程,或者是在一个已经存在的系统中添加额外的硬盘),每个分区都会创建固定数量的inode。这个值表示这个分区能够同时存储各类文件的最大数量。我们通常用一个inode去映射2-8k的数据块。当一个新的文件生成后,它就会获得一个空闲的inode。在这个inode里面存储着以下信息:

|

||||

|

||||

- 文件属主和组属主

|

||||

- 文件类型(常规文件,目录文件......)

|

||||

- 文件权限

|

||||

- 创建、最近一次读文件和修改文件的时间

|

||||

- inode里该信息被修改的时间

|

||||

- 文件的链接数(详见下一章)

|

||||

- 文件大小

|

||||

- 文件数据的实际地址

|

||||

|

||||

唯一不在inode的信息是文件名和目录。它们存储在特殊的目录文件。通过比较文件名和inode的数目,系统能够构造出一个便于用户理解的树结构。用户可以通过ls -i查看inode的数目。在硬盘上,inodes有他们独立的空间。

|

||||

|

||||

------------------------

|

||||

|

||||

via: http://www.blackmoreops.com/2015/06/18/linux-file-system-hierarchy-v2-0/

|

||||

|

||||

译者:[tnuoccalanosrep](https://github.com/tnuoccalanosrep)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.blackmoreops.com/2015/02/15/in-light-of-recent-linux-exploits-linux-security-audit-is-a-must/

|

||||

[2]:http://www.blackmoreops.com/wp-content/uploads/2015/06/Linux-file-system-hierarchy-v2.0-2480px-blackMORE-Ops.png

|

||||

[3]:http://www.blackmoreops.com/wp-content/uploads/2015/06/Linux-File-System-Hierarchy-blackMORE-Ops.pdf

|

||||

@ -1,13 +1,10 @@

|

||||

看这些孩子在Ubuntu的Linux终端下玩耍

|

||||

看这些孩子在 Ubuntu 的 Linux 终端下玩耍

|

||||

================================================================================

|

||||

我发现了一个孩子们在他们的计算机教室里玩得很开心的视频。我不知道他们在哪里,但我猜测是在印度尼西亚或者马来西亚。

|

||||

|

||||

注:youtube 视频

|

||||

<iframe width="640" height="390" frameborder="0" allowfullscreen="true" src="http://www.youtube.com/embed/z8taQPomp0Y?version=3&rel=1&fs=1&showsearch=0&showinfo=1&iv_load_policy=1&wmode=transparent" type="text/html" class="youtube-player"></iframe>

|

||||

我发现了一个孩子们在他们的计算机教室里玩得很开心的视频。我不知道他们在哪里,但我猜测是在印度尼西亚或者马来西亚。视频请自行搭梯子: http://www.youtube.com/z8taQPomp0Y

|

||||

|

||||

### 在Linux终端下面跑火车 ###

|

||||

|

||||

这里没有魔术。只是一个叫做“sl”的命令行工具。我假定它是在把ls打错的情况下为了好玩而开发的。如果你曾经在Linux的命令行下工作,你会知道ls是一个最常使用的一个命令,也许也是一个最经常打错的命令。

|

||||

这里没有魔术。只是一个叫做“sl”的命令行工具。我想它是在把ls打错的情况下为了好玩而开发的。如果你曾经在Linux的命令行下工作,你会知道ls是一个最常使用的一个命令,也许也是一个最经常打错的命令。

|

||||

|

||||

如果你想从这个终端下的火车获得一些乐趣,你可以使用下面的命令安装它。

|

||||

|

||||

@ -30,7 +27,7 @@ via: http://itsfoss.com/ubuntu-terminal-train/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,295 @@

|

||||

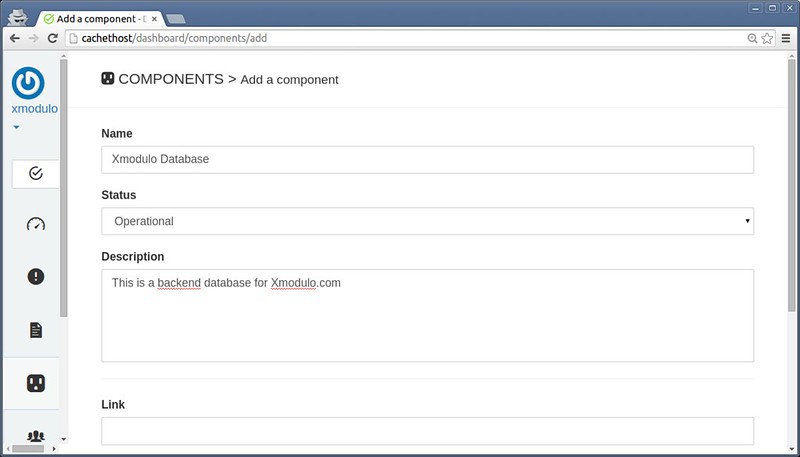

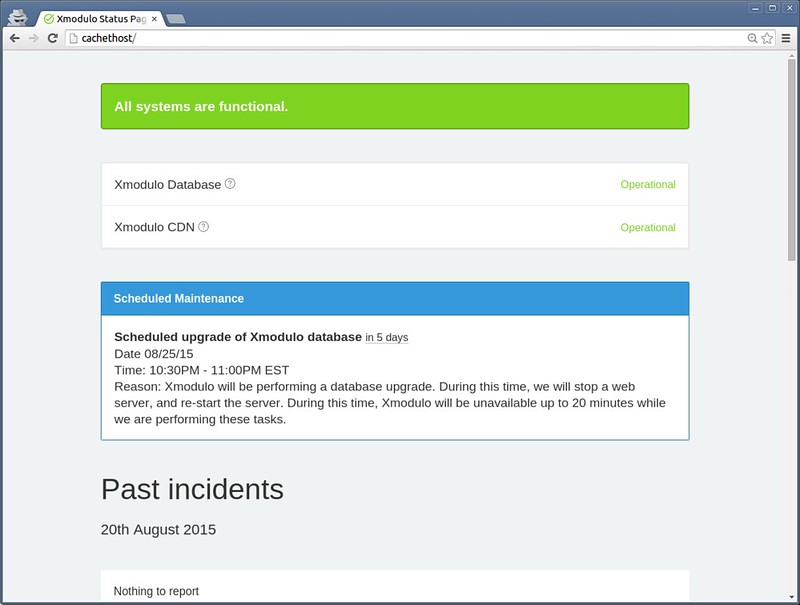

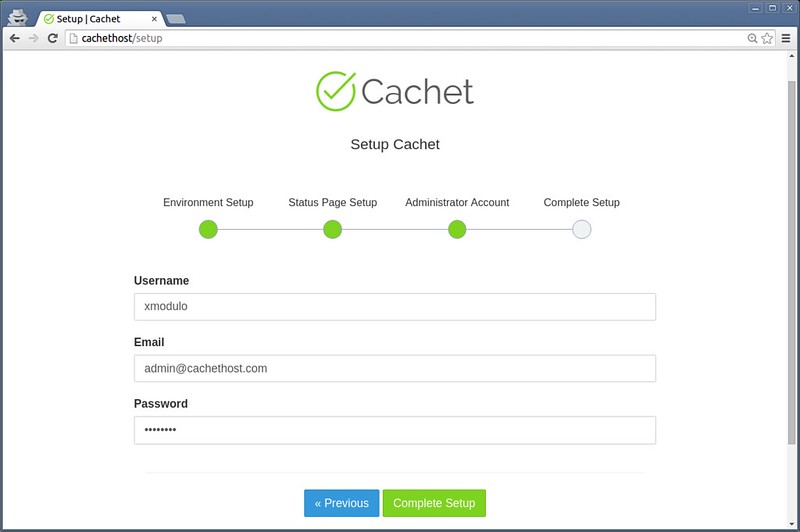

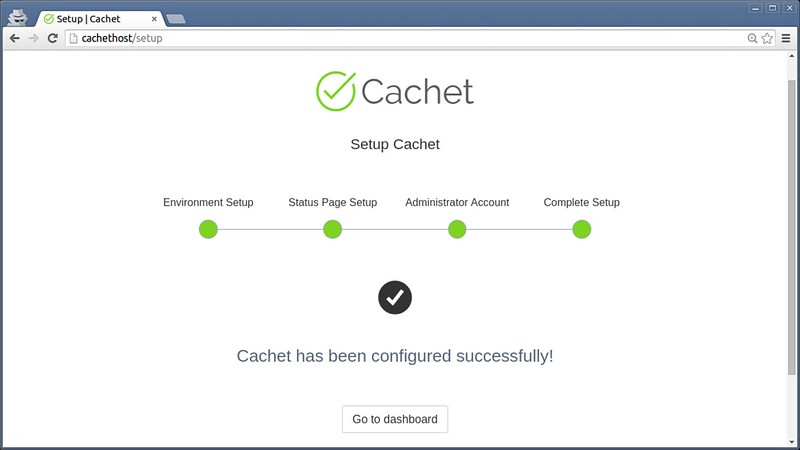

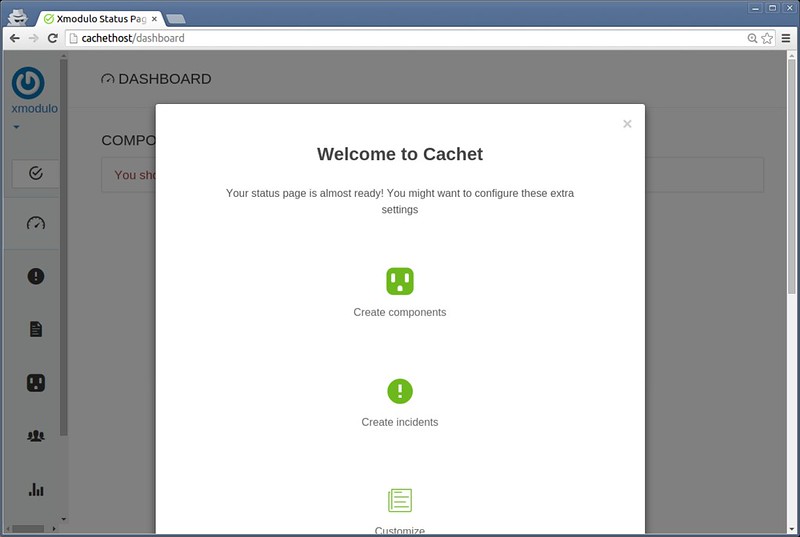

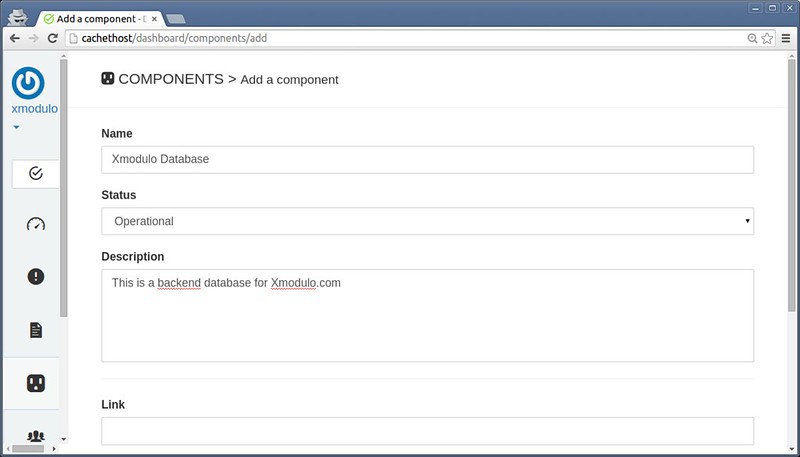

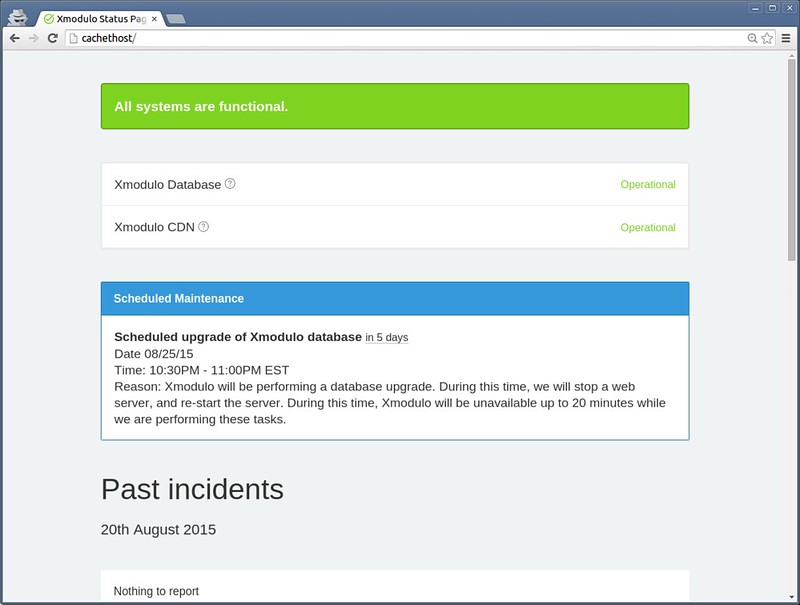

如何为你的平台部署一个公开的系统状态页

|

||||

================================================================================

|

||||

|

||||

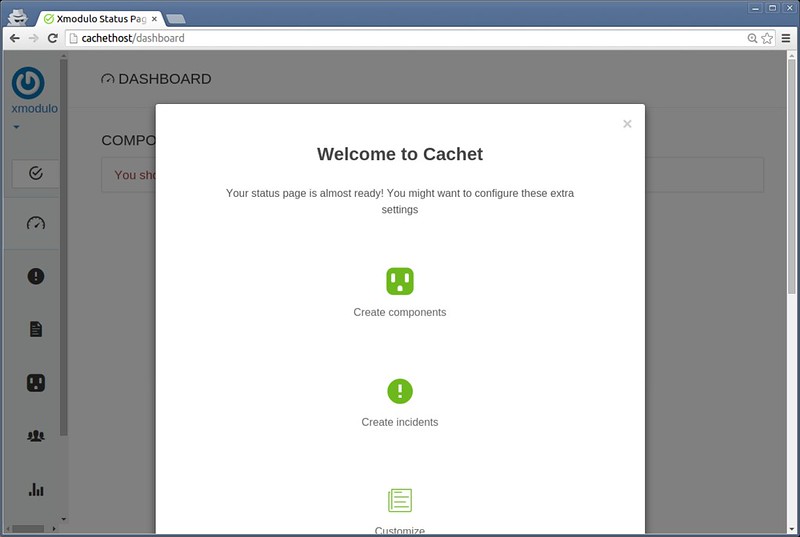

如果你是一个系统管理员,负责关键的 IT 基础设置或公司的服务,你将明白有效的沟通在日常任务中的重要性。假设你的线上存储服务器故障了。你希望团队所有人达成共识你好尽快的解决问题。当你忙来忙去时,你不会想一半的人问你为什么他们不能访问他们的文档。当一个维护计划快到时间了你想在计划前提醒相关人员,这样避免了不必要的开销。

|

||||

|

||||

这一切的要求或多或少改进了你、你的团队、和你服务的用户之间沟通渠道。一个实现它的方法是维护一个集中的系统状态页面,报告和记录故障停机详情、进度更新和维护计划等。这样,在故障期间你避免了不必要的打扰,也可以提醒一些相关方,以及加入一些可选的状态更新。

|

||||

|

||||

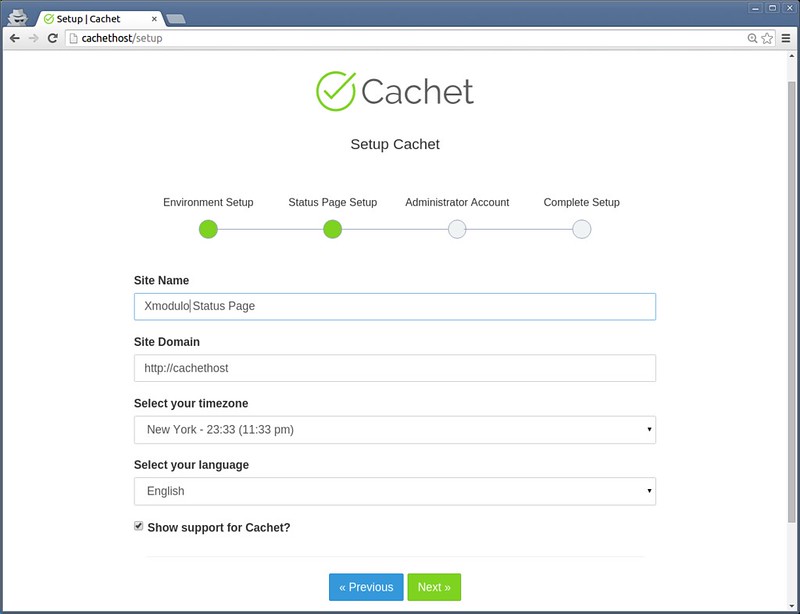

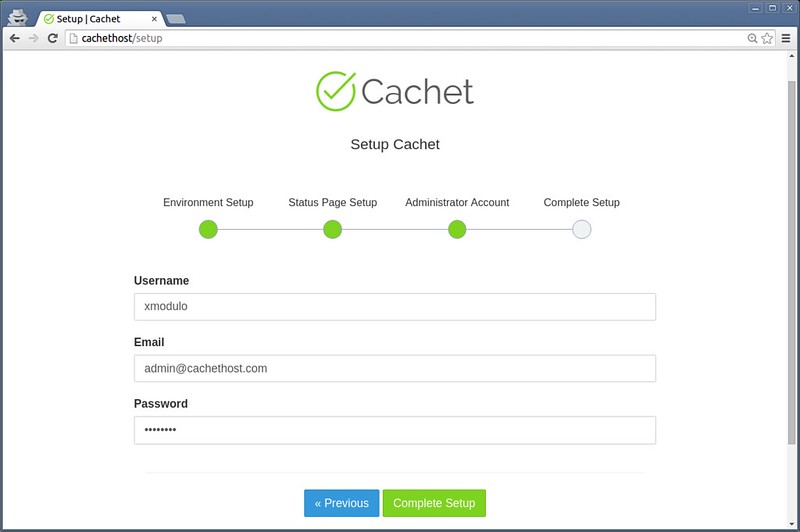

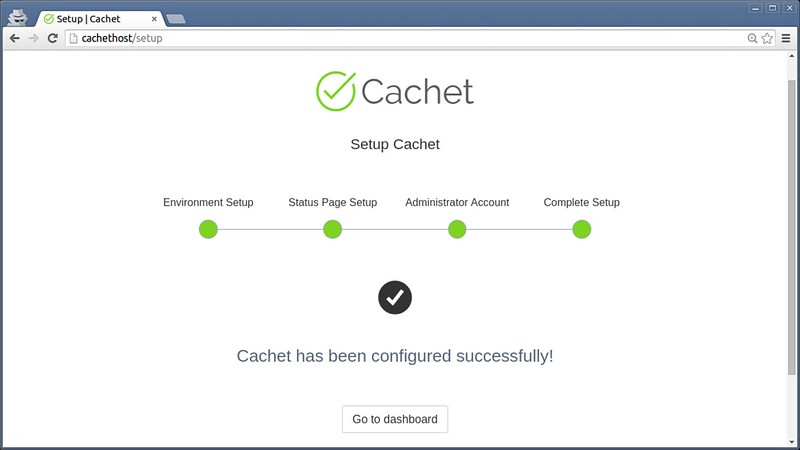

有一个不错的**开源, 自承载系统状态页解决方案**叫做 [Cachet][1]。在这个教程,我将要描述如何用 Cachet 部署一个自承载系统状态页面。

|

||||

|

||||

### Cachet 特性 ###

|

||||

|

||||

在详细的配置 Cachet 之前,让我简单的介绍一下它的主要特性。

|

||||

|

||||

- **全 JSON API**:Cachet API 可以让你使用任意的外部程序或脚本(例如,uptime 脚本)连接到 Cachet 来自动报告突发事件或更新状态。

|

||||

- **认证**:Cachet 支持基础认证和 JSON API 的 API 令牌,所以只有认证用户可以更新状态页面。

|

||||

- **衡量系统**:这通常用来展现随着时间推移的自定义数据(例如,服务器负载或者响应时间)。

|

||||

- **通知**:可选地,你可以给任一注册了状态页面的人发送突发事件的提示邮件。

|

||||

- **多语言**:状态页被翻译为11种不同的语言。

|

||||

- **双因子认证**:这允许你使用 Google 的双因子认证来提升 Cachet 管理账户的安全性。

|

||||

- **跨数据库支持**:你可以选择 MySQL,SQLite,Redis,APC 和 PostgreSQL 作为后端存储。

|

||||

|

||||

剩下的教程,我会说明如何在 Linux 上安装配置 Cachet。

|

||||

|

||||

### 第一步:下载和安装 Cachet ###

|

||||

|

||||

Cachet 需要一个 web 服务器和一个后端数据库来运转。在这个教程中,我将使用 LAMP 架构。以下是一些特定发行版上安装 Cachet 和 LAMP 架构的指令。

|

||||

|

||||

#### Debian,Ubuntu 或者 Linux Mint ####

|

||||

|

||||

$ sudo apt-get install curl git apache2 mysql-server mysql-client php5 php5-mysql

|

||||

$ sudo git clone https://github.com/cachethq/Cachet.git /var/www/cachet

|

||||

$ cd /var/www/cachet

|

||||

$ sudo git checkout v1.1.1

|

||||

$ sudo chown -R www-data:www-data .

|

||||

|

||||

在基于 Debian 的系统上设置 LAMP 架构的更多细节,参考这个[教程][2]。

|

||||

|

||||

#### Fedora, CentOS 或 RHEL ####

|

||||

|

||||

在基于 Red Hat 系统上,你首先需要[设置 REMI 软件库][3](以满足 PHP 的版本需求)。然后执行下面命令。

|

||||

|

||||

$ sudo yum install curl git httpd mariadb-server

|

||||

$ sudo yum --enablerepo=remi-php56 install php php-mysql php-mbstring

|

||||

$ sudo git clone https://github.com/cachethq/Cachet.git /var/www/cachet

|

||||

$ cd /var/www/cachet

|

||||

$ sudo git checkout v1.1.1

|

||||

$ sudo chown -R apache:apache .

|

||||

$ sudo firewall-cmd --permanent --zone=public --add-service=http

|

||||

$ sudo firewall-cmd --reload

|

||||

$ sudo systemctl enable httpd.service; sudo systemctl start httpd.service

|

||||

$ sudo systemctl enable mariadb.service; sudo systemctl start mariadb.service

|

||||

|

||||

在基于 Red Hat 系统上设置 LAMP 的更多细节,参考这个[教程][4]。

|

||||

|

||||

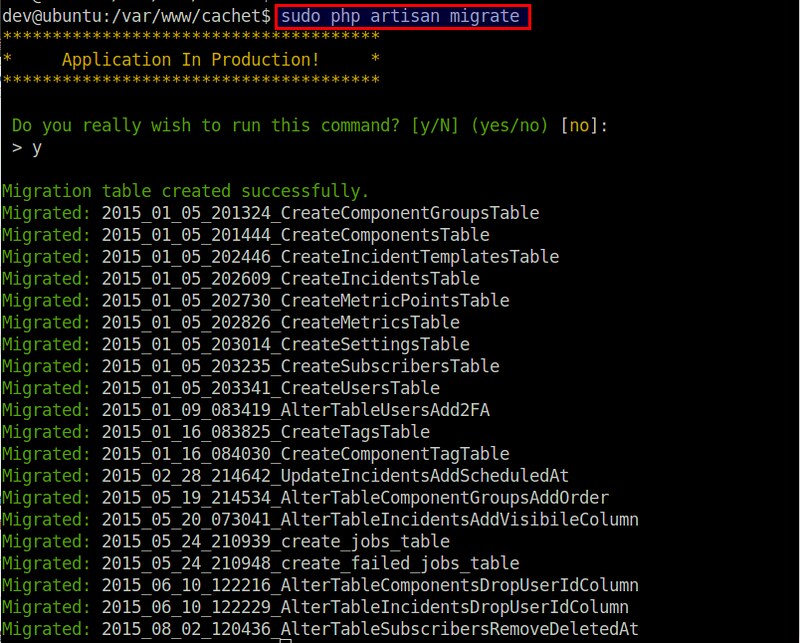

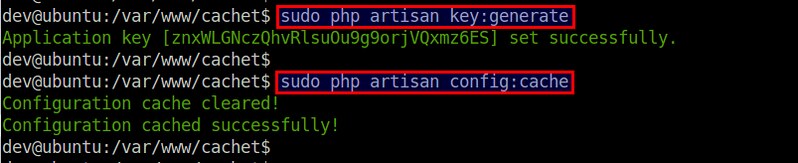

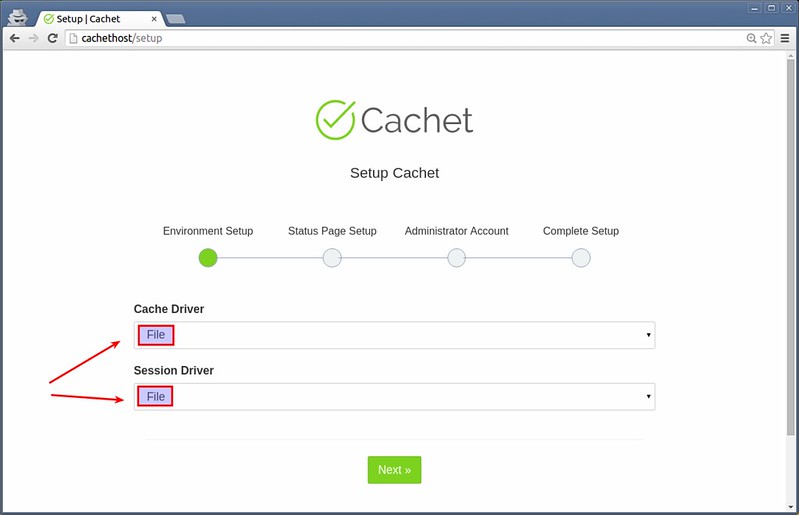

### 配置 Cachet 的后端数据库###

|

||||

|

||||

下一步是配置后端数据库。

|

||||

|