mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-07 22:11:09 +08:00

commit

7f8d210395

@ -0,0 +1,53 @@

|

||||

如果总统候选人们要使用 Linux 发行版,他们会选择哪个?

|

||||

================================================================================

|

||||

|

||||

|

||||

*共和党总统候选人 Donald Trump【译者注:唐纳德·特朗普,美国地产大亨、作家、主持人】*

|

||||

|

||||

如果要竞选总统的人们使用 Linux 或其他的开源操作系统,那么会使用哪个发行版呢?问题的关键是存在许多其它的因素,比如,一些“政治立场”问题,或者是给一个发行版的名字添加上感叹号是否合适——而这问题一直被忽视。先不管这些忽视:接下来是时事新闻工作者关于总统大选和 Linux 发行版的报道。

|

||||

|

||||

对于那些已经看了很多年我的文字的人来说(除了我亲爱的的编辑之外,他们一直听我的瞎扯是不是倒霉到家了?),这篇文章听起来很熟悉,这是因为我在去年的总统选举期间写了一篇[类似的文章][1]。一些读者把这篇文章的内容看的比我想象的还要严肃,所以我会花点时间阐述我的观点:事实上,我不认为开源软件和政治运动彼此之间有多大的关系。我写那样的文章仅仅是新的一周的自我消遣罢了。

|

||||

|

||||

当然,你也可以认为它们彼此相关,毕竟你才是读者。

|

||||

|

||||

### Linux 发行版之选:共和党人们 ###

|

||||

|

||||

今天,我只是谈及一些有关共和党人们的话题,我甚至只会谈论他们的其中一部分。因为共和党的提名人太多了,以至于我写满了整篇文章。由此开始:

|

||||

|

||||

如果 **Jeb (Jeb!?) Bush** 使用 Linux,它一定是 [Debian][2]。Debian 属于一个相当无趣的分支,它是为真正意义上的、成熟的黑客设计的,这些人将清理那些由经验不甚丰富的开源爱好者所造成的混乱视为一大使命。当然,这也使得 Debian 显得很枯燥,所以它已有的用户基数一直在缩减。

|

||||

|

||||

**Scott Walker** ,对于他来说,应该是一个 [Damn Small Linux][3] (DSL) 用户。这个系统仅仅需要 50MB 的硬盘空间和 16MB 的 RAM 便可运行。DSL 可以使一台 20 年前的 486 计算机焕发新春,而这恰好符合了 **Scott Walker** 所主张的消减成本计划。当然,你在 DSL 上的用户体验也十分原始,这个系统平台只能够运行一个浏览器。但是至少你你不用浪费钱财购买新的电脑硬件,你那台 1993 年购买的机器仍然可以为你好好的工作。

|

||||

|

||||

**Chris Christie** 会使用哪种系统呢?他肯定会使用 [Relax-and-Recover Linux][4],它号称“一次搞定(Setup-and-forget)的裸机 Linux 灾难恢复方案” 。从那次不幸的华盛顿大桥事故后,“一次搞定(Setup-and-forget)”基本上便成了 Christie 的政治主张。不管灾难恢复是否能够让 Christie 最终挽回一切,但是当他的电脑死机的时候,至少可以找到一两封意外丢失的机密邮件。

|

||||

|

||||

至于 **Carly Fiorina**,她无疑将要使用 [惠普][6] (HPQ)为“[The Machine][5]”开发的操作系统,她在 1999 年到 2005 年这 6 年期间管理的这个公司。事实上,The Machine 可以运行几种不同的操作系统,也许是基于 Linux 的,也许不是,我们并不太清楚,它的开发始于 **Carly Fiorina** 在惠普公司的任期结束后。不管怎么说,作为 IT 圈里一个成功的管理者,这是她履历里面重要的组成部分,同时这也意味着她很难与惠普彻底断绝关系。

|

||||

|

||||

最后,但并不是不重要,你也猜到了——**Donald Trump**。他显然会动用数百万美元去雇佣一个精英黑客团队去定制属于自己的操作系统——尽管他原本是想要免费获得一个完美的、现成的操作系统——然后还能向别人炫耀自己的财力。他可能会吹嘘自己的操作系统是目前最好的系统,虽然它可能没有兼容 POSIX 或者一些其它的标准,因为那样的话就需要花掉更多的钱。同时这个系统也将根本不会提供任何文档,因为如果 **Donald Trump** 向人们解释他的系统的实际运行方式,他会冒着所有机密被泄露至伊斯兰国家的风险,绝对是这样的。

|

||||

|

||||

另外,如果 **Donald Trump** 非要选择一种已有的 Linux 平台的话, [Ubuntu][7] 应该是明智的选择。就像 **Donald Trump** 一样, Ubuntu 的开发者秉承“我们做自己想要做的”原则,通过他们自己的实现来构建开源软件。自由软件纯化论者却很反感 Ubuntu 这一点,但是很多普通用户却更喜欢一些。当然,无论你是不是一个纯粹论者,无论是在软件领域还是政治领域,还需要时间才能知道分晓。

|

||||

|

||||

### 敬请期待 ###

|

||||

|

||||

如果你想知道为什么我还没有提到民主党候选人,别想多了。我没有在这篇文章中提及他们,是因为我对民主党并不比共和党喜欢更多或更少一点(我个人认为,这种只有两个政党的美国特色是不荒谬的,根本不能体现民主,我也不相信这些党派候选人)。

|

||||

|

||||

另一方面,也可能会有很多人关心民主党候选人使用的 Linux 发行版。后续的帖子中我会提及的,请拭目以待。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://thevarguy.com/open-source-application-software-companies/081715/which-open-source-linux-distributions-would-presidential-

|

||||

|

||||

作者:[Christopher Tozzi][a]

|

||||

译者:[vim-kakali](https://github.com/vim-kakali)

|

||||

校对:[PurlingNayuki](https://github.com/PurlingNayuki), [wxy](https://github.com/wxy/)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://thevarguy.com/author/christopher-tozzi

|

||||

[1]:http://thevarguy.com/open-source-application-software-companies/aligning-linux-distributions-presidential-hopefuls

|

||||

[2]:http://debian.org/

|

||||

[3]:http://www.damnsmalllinux.org/

|

||||

[4]:http://relax-and-recover.org/

|

||||

[5]:http://thevarguy.com/open-source-application-software-companies/061614/hps-machine-open-source-os-truly-revolutionary

|

||||

[6]:http://hp.com/

|

||||

[7]:http://ubuntu.com/

|

||||

@ -4,10 +4,9 @@ Linux 内核里的数据结构——双向链表

|

||||

双向链表

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

Linux 内核中自己实现了双向链表,可以在 [include/linux/list.h](https://github.com/torvalds/linux/blob/master/include/linux/list.h) 找到定义。我们将会首先从双向链表数据结构开始介绍**内核里的数据结构**。为什么?因为它在内核里使用的很广泛,你只需要在 [free-electrons.com](http://lxr.free-electrons.com/ident?i=list_head) 检索一下就知道了。

|

||||

|

||||

Linux 内核自己实现了双向链表,可以在[include/linux/list.h](https://github.com/torvalds/linux/blob/master/include/linux/list.h)找到定义。我们将会从双向链表数据结构开始介绍`内核里的数据结构`。为什么?因为它在内核里使用的很广泛,你只需要在[free-electrons.com](http://lxr.free-electrons.com/ident?i=list_head) 检索一下就知道了。

|

||||

|

||||

首先让我们看一下在[include/linux/types.h](https://github.com/torvalds/linux/blob/master/include/linux/types.h) 里的主结构体:

|

||||

首先让我们看一下在 [include/linux/types.h](https://github.com/torvalds/linux/blob/master/include/linux/types.h) 里的主结构体:

|

||||

|

||||

```C

|

||||

struct list_head {

|

||||

@ -15,7 +14,7 @@ struct list_head {

|

||||

};

|

||||

```

|

||||

|

||||

你可能注意到这和你以前见过的双向链表的实现方法是不同的。举个例子来说,在[glib](http://www.gnu.org/software/libc/) 库里是这样实现的:

|

||||

你可能注意到这和你以前见过的双向链表的实现方法是不同的。举个例子来说,在 [glib](http://www.gnu.org/software/libc/) 库里是这样实现的:

|

||||

|

||||

```C

|

||||

struct GList {

|

||||

@ -25,7 +24,7 @@ struct GList {

|

||||

};

|

||||

```

|

||||

|

||||

通常来说一个链表结构会包含一个指向某个项目的指针。但是Linux内核中的链表实现并没有这样做。所以问题来了:`链表在哪里保存数据呢?`。实际上,内核里实现的链表是`侵入式链表`。侵入式链表并不在节点内保存数据-它的节点仅仅包含指向前后节点的指针,以及指向链表节点数据部分的指针——数据就是这样附加在链表上的。这就使得这个数据结构是通用的,使用起来就不需要考虑节点数据的类型了。

|

||||

通常来说一个链表结构会包含一个指向某个项目的指针。但是 Linux 内核中的链表实现并没有这样做。所以问题来了:**链表在哪里保存数据呢?**。实际上,内核里实现的链表是**侵入式链表(Intrusive list)**。侵入式链表并不在节点内保存数据-它的节点仅仅包含指向前后节点的指针,以及指向链表节点数据部分的指针——数据就是这样附加在链表上的。这就使得这个数据结构是通用的,使用起来就不需要考虑节点数据的类型了。

|

||||

|

||||

比如:

|

||||

|

||||

@ -36,7 +35,7 @@ struct nmi_desc {

|

||||

};

|

||||

```

|

||||

|

||||

让我们看几个例子来理解一下在内核里是如何使用`list_head` 的。如上所述,在内核里有很多很多不同的地方都用到了链表。我们来看一个在杂项字符驱动里面的使用的例子。在 [drivers/char/misc.c](https://github.com/torvalds/linux/blob/master/drivers/char/misc.c) 的杂项字符驱动API 被用来编写处理小型硬件或虚拟设备的小驱动。这些驱动共享相同的主设备号:

|

||||

让我们看几个例子来理解一下在内核里是如何使用 `list_head` 的。如上所述,在内核里有很多很多不同的地方都用到了链表。我们来看一个在杂项字符驱动里面的使用的例子。在 [drivers/char/misc.c](https://github.com/torvalds/linux/blob/master/drivers/char/misc.c) 的杂项字符驱动 API 被用来编写处理小型硬件或虚拟设备的小驱动。这些驱动共享相同的主设备号:

|

||||

|

||||

```C

|

||||

#define MISC_MAJOR 10

|

||||

@ -68,7 +67,7 @@ crw------- 1 root root 10, 63 Mar 21 12:01 vga_arbiter

|

||||

crw------- 1 root root 10, 137 Mar 21 12:01 vhci

|

||||

```

|

||||

|

||||

现在让我们看看它是如何使用链表的。首先看一下结构体`miscdevice`:

|

||||

现在让我们看看它是如何使用链表的。首先看一下结构体 `miscdevice`:

|

||||

|

||||

```C

|

||||

struct miscdevice

|

||||

@ -97,13 +96,13 @@ static LIST_HEAD(misc_list);

|

||||

struct list_head name = LIST_HEAD_INIT(name)

|

||||

```

|

||||

|

||||

然后使用宏`LIST_HEAD_INIT` 进行初始化,这会使用变量`name` 的地址来填充`prev`和`next` 结构体的两个变量。

|

||||

然后使用宏 `LIST_HEAD_INIT` 进行初始化,这会使用变量`name` 的地址来填充`prev`和`next` 结构体的两个变量。

|

||||

|

||||

```C

|

||||

#define LIST_HEAD_INIT(name) { &(name), &(name) }

|

||||

```

|

||||

|

||||

现在来看看注册杂项设备的函数`misc_register`。它在开始就用函数 `INIT_LIST_HEAD` 初始化了`miscdevice->list`。

|

||||

现在来看看注册杂项设备的函数`misc_register`。它在一开始就用函数 `INIT_LIST_HEAD` 初始化了`miscdevice->list`。

|

||||

|

||||

```C

|

||||

INIT_LIST_HEAD(&misc->list);

|

||||

@ -125,7 +124,7 @@ static inline void INIT_LIST_HEAD(struct list_head *list)

|

||||

list_add(&misc->list, &misc_list);

|

||||

```

|

||||

|

||||

内核文件`list.h` 提供了向链表添加新项的API 接口。我们来看看它的实现:

|

||||

内核文件`list.h` 提供了向链表添加新项的 API 接口。我们来看看它的实现:

|

||||

|

||||

|

||||

```C

|

||||

@ -205,9 +204,9 @@ int main() {

|

||||

}

|

||||

```

|

||||

|

||||

最终会打印`2`

|

||||

最终会打印出`2`

|

||||

|

||||

下一点就是`typeof`,它也很简单。就如你从名字所理解的,它仅仅返回了给定变量的类型。当我第一次看到宏`container_of`的实现时,让我觉得最奇怪的就是表达式`((type *)0)`中的0.实际上这个指针巧妙的计算了从结构体特定变量的偏移,这里的`0`刚好就是位宽里的零偏移。让我们看一个简单的例子:

|

||||

下一点就是`typeof`,它也很简单。就如你从名字所理解的,它仅仅返回了给定变量的类型。当我第一次看到宏`container_of`的实现时,让我觉得最奇怪的就是表达式`((type *)0)`中的0。实际上这个指针巧妙的计算了从结构体特定变量的偏移,这里的`0`刚好就是位宽里的零偏移。让我们看一个简单的例子:

|

||||

|

||||

```C

|

||||

#include <stdio.h>

|

||||

@ -236,21 +235,23 @@ int main() {

|

||||

|

||||

当然了`list_add` 和 `list_entry`不是`<linux/list.h>`提供的唯一功能。双向链表的实现还提供了如下API:

|

||||

|

||||

* list_add

|

||||

* list_add_tail

|

||||

* list_del

|

||||

* list_replace

|

||||

* list_move

|

||||

* list_is_last

|

||||

* list_empty

|

||||

* list_cut_position

|

||||

* list_splice

|

||||

* list_for_each

|

||||

* list_for_each_entry

|

||||

* list\_add

|

||||

* list\_add\_tail

|

||||

* list\_del

|

||||

* list\_replace

|

||||

* list\_move

|

||||

* list\_is\_last

|

||||

* list\_empty

|

||||

* list\_cut\_position

|

||||

* list\_splice

|

||||

* list\_for\_each

|

||||

* list\_for\_each\_entry

|

||||

|

||||

等等很多其它API。

|

||||

|

||||

via: https://github.com/0xAX/linux-insides/edit/master/DataStructures/dlist.md

|

||||

----

|

||||

|

||||

via: https://github.com/0xAX/linux-insides/blob/master/DataStructures/dlist.md

|

||||

|

||||

译者:[Ezio](https://github.com/oska874)

|

||||

校对:[Mr小眼儿](https://github.com/tinyeyeser)

|

||||

@ -13,7 +13,7 @@

|

||||

- 意外的文件删除

|

||||

- 文件或文件系统损坏

|

||||

- 服务器完全毁坏,包括由于火灾或其他问题导致的同盘备份毁坏

|

||||

- 硬盘或SSD崩溃

|

||||

- 硬盘或 SSD 崩溃

|

||||

- 病毒或勒索软件破坏或删除文件

|

||||

|

||||

你可以使用磁带归档备份整个服务器并将其离线存储。

|

||||

@ -22,7 +22,7 @@

|

||||

|

||||

|

||||

|

||||

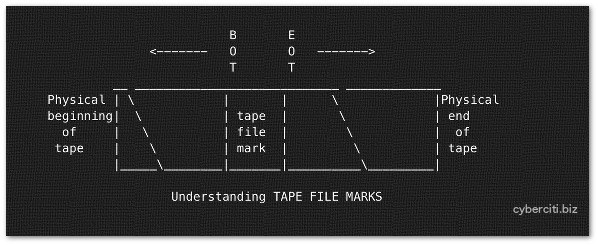

图01:磁带文件标记

|

||||

*图01:磁带文件标记*

|

||||

|

||||

每个磁带设备能存储多个备份文件。磁带备份文件通过 cpio,tar,dd 等命令创建。同时,磁带设备可以由多种程序打开、写入数据、及关闭。你可以存储若干备份(磁带文件)到一个物理磁带上。在每个磁带文件之间有个“磁带文件标记”。这用来指示一个物理磁带上磁带文件的结尾以及另一个文件的开始。你需要使用 mt 命令来定位磁带(快进,倒带和标记)。

|

||||

|

||||

@ -30,7 +30,7 @@

|

||||

|

||||

|

||||

|

||||

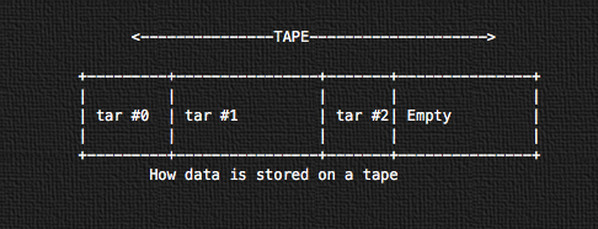

图02:磁带上的数据是如何存储的

|

||||

*图02:磁带上的数据是如何存储的*

|

||||

|

||||

所有的数据使用 tar 以连续磁带存储格式连续地存储。第一个磁带归档会从磁带的物理开始端开始存储(tar #0)。接下来的就是 tar #1,以此类推。

|

||||

|

||||

@ -60,22 +60,22 @@

|

||||

|

||||

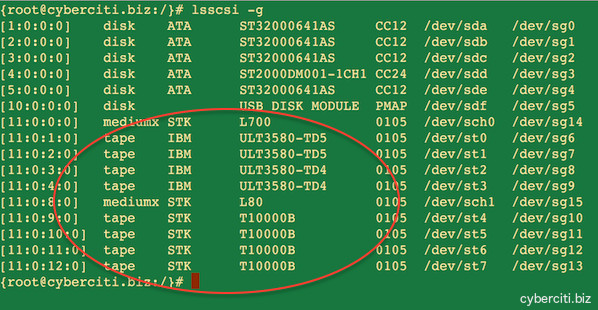

输入下列命令:

|

||||

|

||||

## Linux(更多信息参阅 man) ##

|

||||

### Linux(更多信息参阅 man) ###

|

||||

lsscsi

|

||||

lsscsi -g

|

||||

|

||||

## IBM AIX ##

|

||||

### IBM AIX ###

|

||||

lsdev -Cc tape

|

||||

lsdev -Cc adsm

|

||||

lscfg -vl rmt*

|

||||

|

||||

## Solaris Unix ##

|

||||

### Solaris Unix ###

|

||||

cfgadm –a

|

||||

cfgadm -al

|

||||

luxadm probe

|

||||

iostat -En

|

||||

|

||||

## HP-UX Unix ##

|

||||

### HP-UX Unix ###

|

||||

ioscan Cf

|

||||

ioscan -funC tape

|

||||

ioscan -fnC tape

|

||||

@ -86,11 +86,11 @@

|

||||

|

||||

|

||||

|

||||

图03:Linux 服务器上已安装的磁带设备

|

||||

*图03:Linux 服务器上已安装的磁带设备*

|

||||

|

||||

### mt 命令示例 ###

|

||||

|

||||

在 Linux 和类Unix系统上,mt 命令用来控制磁带驱动器的操作,比如查看状态或查找磁带上的文件或写入磁带控制标记。下列大多数命令需要作为 root 用户执行。语法如下:

|

||||

在 Linux 和类 Unix 系统上,mt 命令用来控制磁带驱动器的操作,比如查看状态或查找磁带上的文件或写入磁带控制标记。下列大多数命令需要作为 root 用户执行。语法如下:

|

||||

|

||||

mt -f /tape/device/name operation

|

||||

|

||||

@ -98,7 +98,7 @@

|

||||

|

||||

你可以设置 TAPE shell 变量。这是磁带驱动器的路径名。在 FreeBSD 上默认的(如果变量没有设置,而不是 null)是 /dev/nsa0。可以通过 mt 命令的 -f 参数传递变量覆盖它,就像下面解释的那样。

|

||||

|

||||

## 添加到你的 shell 配置文件 ##

|

||||

### 添加到你的 shell 配置文件 ###

|

||||

TAPE=/dev/st1 #Linux

|

||||

TAPE=/dev/rmt/2 #Unix

|

||||

TAPE=/dev/nsa3 #FreeBSD

|

||||

@ -106,11 +106,11 @@

|

||||

|

||||

### 1:显示磁带/驱动器状态 ###

|

||||

|

||||

mt status #Use default

|

||||

mt -f /dev/rmt/0 status #Unix

|

||||

mt -f /dev/st0 status #Linux

|

||||

mt -f /dev/nsa0 status #FreeBSD

|

||||

mt -f /dev/rmt/1 status #Unix unity 1 也就是 tape device no. 1

|

||||

mt status ### Use default

|

||||

mt -f /dev/rmt/0 status ### Unix

|

||||

mt -f /dev/st0 status ### Linux

|

||||

mt -f /dev/nsa0 status ### FreeBSD

|

||||

mt -f /dev/rmt/1 status ### Unix unity 1 也就是 tape device no. 1

|

||||

|

||||

你可以像下面一样使用 shell 循环语句遍历一个系统并定位其所有的磁带驱动器:

|

||||

|

||||

@ -208,7 +208,7 @@

|

||||

|

||||

mt -f /dev/st0 rewind; dd if=/dev/st0 of=-

|

||||

|

||||

## tar 格式 ##

|

||||

### tar 格式 ###

|

||||

tar tvf {DEVICE} {Directory-FileName}

|

||||

tar tvf /dev/st0

|

||||

tar tvf /dev/st0 desktop

|

||||

@ -216,40 +216,40 @@

|

||||

|

||||

### 12:使用 dump 或 ufsdump 备份分区 ###

|

||||

|

||||

## Unix 备份 c0t0d0s2 分区 ##

|

||||

### Unix 备份 c0t0d0s2 分区 ###

|

||||

ufsdump 0uf /dev/rmt/0 /dev/rdsk/c0t0d0s2

|

||||

|

||||

## Linux 备份 /home 分区 ##

|

||||

### Linux 备份 /home 分区 ###

|

||||

dump 0uf /dev/nst0 /dev/sda5

|

||||

dump 0uf /dev/nst0 /home

|

||||

|

||||

## FreeBSD 备份 /usr 分区 ##

|

||||

### FreeBSD 备份 /usr 分区 ###

|

||||

dump -0aL -b64 -f /dev/nsa0 /usr

|

||||

|

||||

### 12:使用 ufsrestore 或 restore 恢复分区 ###

|

||||

|

||||

## Unix ##

|

||||

### Unix ###

|

||||

ufsrestore xf /dev/rmt/0

|

||||

## Unix 交互式恢复 ##

|

||||

### Unix 交互式恢复 ###

|

||||

ufsrestore if /dev/rmt/0

|

||||

|

||||

## Linux ##

|

||||

### Linux ###

|

||||

restore rf /dev/nst0

|

||||

## 从磁带媒介上的第6个备份交互式恢复 ##

|

||||

### 从磁带媒介上的第6个备份交互式恢复 ###

|

||||

restore isf 6 /dev/nst0

|

||||

|

||||

## FreeBSD 恢复 ufsdump 格式 ##

|

||||

### FreeBSD 恢复 ufsdump 格式 ###

|

||||

restore -i -f /dev/nsa0

|

||||

|

||||

### 13:从磁带开头开始写入(见图02) ###

|

||||

|

||||

## 这会覆盖磁带上的所有数据 ##

|

||||

### 这会覆盖磁带上的所有数据 ###

|

||||

mt -f /dev/st1 rewind

|

||||

|

||||

### 备份 home ##

|

||||

### 备份 home ###

|

||||

tar cvf /dev/st1 /home

|

||||

|

||||

## 离线并卸载磁带 ##

|

||||

### 离线并卸载磁带 ###

|

||||

mt -f /dev/st0 offline

|

||||

|

||||

从磁带开头开始恢复:

|

||||

@ -260,22 +260,22 @@

|

||||

|

||||

### 14:从最后一个 tar 后开始写入(见图02) ###

|

||||

|

||||

## 这会保留之前写入的数据 ##

|

||||

### 这会保留之前写入的数据 ###

|

||||

mt -f /dev/st1 eom

|

||||

|

||||

### 备份 home ##

|

||||

### 备份 home ###

|

||||

tar cvf /dev/st1 /home

|

||||

|

||||

## 卸载 ##

|

||||

### 卸载 ###

|

||||

mt -f /dev/st0 offline

|

||||

|

||||

### 15:从 tar number 2 后开始写入(见图02) ###

|

||||

|

||||

## 在 tar number 2 之后写入(应该是 2+1)

|

||||

### 在 tar number 2 之后写入(应该是 2+1)###

|

||||

mt -f /dev/st0 asf 3

|

||||

tar cvf /dev/st0 /usr

|

||||

|

||||

## asf 等效于 fsf ##

|

||||

### asf 等效于 fsf ###

|

||||

mt -f /dev/sf0 rewind

|

||||

mt -f /dev/st0 fsf 2

|

||||

|

||||

@ -1,20 +1,22 @@

|

||||

安装 openSUSE Leap 42.1 之后要做的 8 件事

|

||||

================================================================================

|

||||

|

||||

致谢:[Metropolitan Transportation/Flicrk][1]

|

||||

|

||||

> 你已经在你的电脑上安装了 openSUSE,这是你接下来要做的。

|

||||

*致谢:[Metropolitan Transportation/Flicrk][1]*

|

||||

|

||||

[openSUSE Leap 确实是个巨大的飞跃][2],它允许用户运行一个和 SUSE Linux 企业版拥有一样基因的发行版。和其它系统一样,为了实现最佳的使用效果,在使用它之前需要做些优化设置。

|

||||

> 如果你已经在你的电脑上安装了 openSUSE,这就是你接下来要做的。

|

||||

|

||||

下面是一些我在我的电脑上安装 openSUSE Leap 之后做的一些事情(不适用于服务器)。这里面没有强制性要求的设置,基本安装对你来说也可能足够了。但如果你想获得更好的 openSUSE Leap 体验,那就跟着我往下看吧。

|

||||

[openSUSE Leap 确实是个巨大的飞跃][2],它允许用户运行一个和 SUSE Linux 企业版拥有同样基因的发行版。和其它系统一样,为了实现最佳的使用效果,在使用它之前需要做些优化设置。

|

||||

|

||||

下面是一些我在我的电脑上安装 openSUSE Leap 之后做的一些事情(不适用于服务器)。这里面没有强制性的设置,基本安装对你来说也可能足够了。但如果你想获得更好的 openSUSE Leap 体验,那就跟着我往下看吧。

|

||||

|

||||

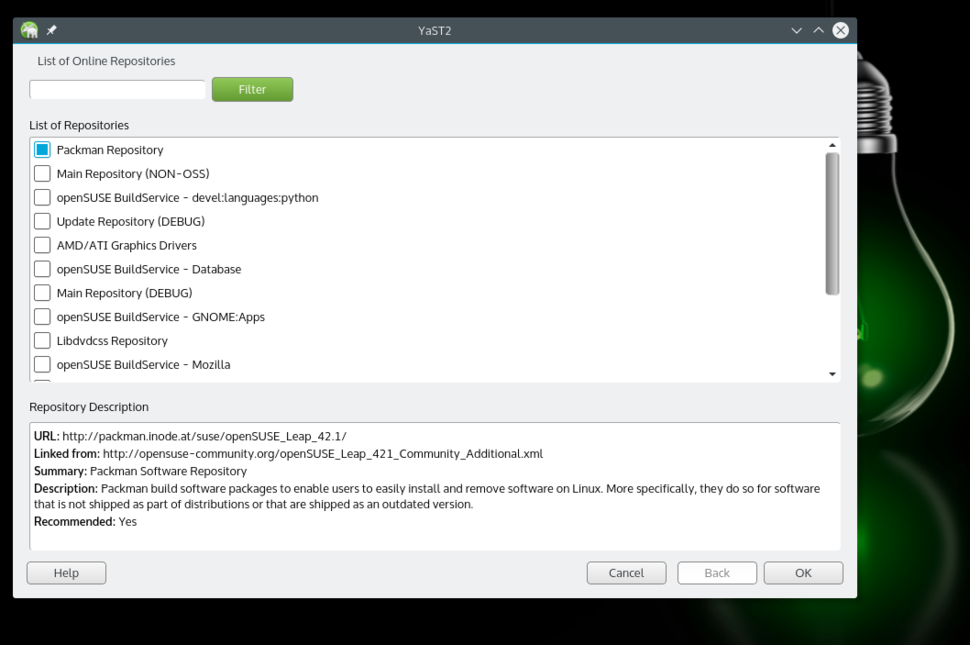

### 1. 添加 Packman 仓库 ###

|

||||

|

||||

由于专利和授权等原因,openSUSE 和许多 Linux 发行版一样,不通过官方仓库(repos)提供一些软件、解码器,以及驱动等。取而代之的是通过第三方或社区仓库来提供。第一个也是最重要的仓库是“Packman”。因为这些仓库不是默认启用的,我们需要添加它们。你可以通过 YaST(openSUSE 的特色之一)或者命令行完成(如下方介绍)。

|

||||

|

||||

|

||||

添加 Packman 仓库。

|

||||

|

||||

*添加 Packman 仓库。*

|

||||

|

||||

使用 YaST,打开软件源部分。点击“添加”按钮并选择“社区仓库(Community Repositories)”。点击“下一步”。一旦仓库列表加载出来了,选择 Packman 仓库。点击“确认”,然后点击“信任”导入信任的 GnuPG 密钥。

|

||||

|

||||

@ -58,7 +60,7 @@ openSUSE 的默认浏览器是 Firefox。但是因为 Firefox 不能够播放专

|

||||

|

||||

### 5. 安装 Nvidia 驱动 ###

|

||||

|

||||

即便你有 Nvidia 或 ATI 显卡,openSUSE Leap 也能够开箱即用。但是,如果你需要专有驱动来游戏或其它目的,你可以安装这些驱动,但需要一点额外的工作。

|

||||

即便你使用 Nvidia 或 ATI 显卡,openSUSE Leap 也能够开箱即用。但是,如果你需要专有驱动来游戏或其它目的,你可以安装这些驱动,但需要一点额外的工作。

|

||||

|

||||

首先你需要添加 Nvidia 源;它的步骤和使用 YaST 添加 Packman 仓库是一样的。唯一的不同是你需要在社区仓库部分选择 Nvidia。添加好了之后,到 **软件管理 > 附加** 去并选择“附加/安装所有匹配的推荐包”。

|

||||

|

||||

@ -76,14 +78,15 @@ openSUSE 的默认浏览器是 Firefox。但是因为 Firefox 不能够播放专

|

||||

|

||||

### 7. 安装你喜欢的电子邮件客户端 ###

|

||||

|

||||

openSUSE 自带 Kmail 或 Evolution,这取决于你安装的桌面环境。我用的是 Plasma,自带 Kmail,这个邮件客户端还有许多地方有待改进。我建议可以试试 Thunderbird 或 Evolution。所有主要的邮件客户端都能在官方仓库找到。你还可以看看我[精心挑选的 Linux 最佳邮件客户端][7]。

|

||||

openSUSE 自带 Kmail 或 Evolution,这取决于你安装的桌面环境。我用的是 KDE Plasma 自带的 Kmail,这个邮件客户端还有许多地方有待改进。我建议可以试试 Thunderbird 或 Evolution。所有主要的邮件客户端都能在官方仓库找到。你还可以看看我[精心挑选的 Linux 最佳邮件客户端][7]。

|

||||

|

||||

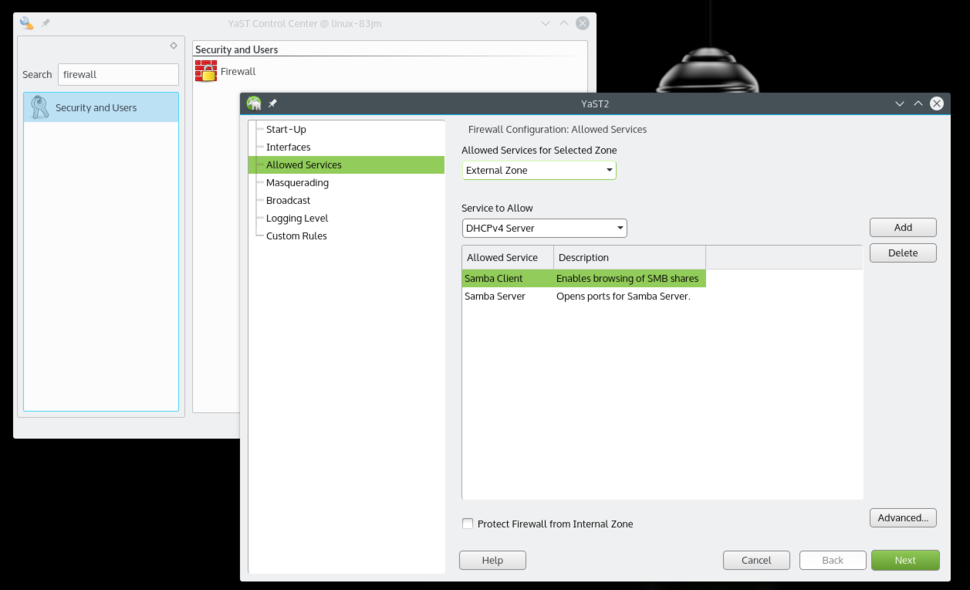

### 8. 在防火墙允许 Samba 服务 ###

|

||||

|

||||

相比于其它发行版,openSUSE 默认提供了更加安全的系统。但对新用户来说它也需要一点设置。如果你正在使用 Samba 协议分享文件到本地网络的话,你需要在防火墙允许该服务。

|

||||

|

||||

|

||||

在防火墙设置里允许 Samba 客户端和服务端

|

||||

|

||||

*在防火墙设置里允许 Samba 客户端和服务端*

|

||||

|

||||

打开 YaST 并搜索 Firewall。在防火墙设置里,进入到“允许的服务(Allowed Services)”,你会在“要允许的服务(Service to allow)”下面看到一个下拉列表。选择“Samba 客户端”,然后点击“添加”。同样方法添加“Samba 服务器”。都添加了之后,点击“下一步”,然后点击“完成”,现在你就可以通过本地网络从你的 openSUSE 分享文件以及访问其它机器了。

|

||||

|

||||

@ -1,55 +0,0 @@

|

||||

vim-kakali translating

|

||||

|

||||

Which Open Source Linux Distributions Would Presidential Hopefuls Run?

|

||||

================================================================================

|

||||

|

||||

|

||||

Republican presidential candidate Donald Trump

|

||||

|

||||

If people running for president used Linux or another open source operating system, which distribution would it be? That's a key question that the rest of the press—distracted by issues of questionable relevance such as "policy platforms" and whether it's appropriate to add an exclamation point to one's Christian name—has been ignoring. But the ignorance ends here: Read on for this sometime-journalist's take on presidential elections and Linux distributions.

|

||||

|

||||

If this sounds like a familiar topic to those of you who have been reading my drivel for years (is anyone, other than my dear editor, unfortunate enough to have actually done that?), it's because I wrote a [similar post][1] during the last presidential election cycle. Some kind readers took that article more seriously than I intended, so I'll take a moment to point out that I don't actually believe that open source software and political campaigns have anything meaningful to do with one another. I am just trying to amuse myself at the start of a new week.

|

||||

|

||||

But you can make of this what you will. You're the reader, after all.

|

||||

|

||||

### Linux Distributions of Choice: Republicans ###

|

||||

|

||||

Today, I'll cover just the Republicans. And I won't even discuss all of them, since the candidates hoping for the Republican party's nomination are too numerous to cover fully here in one post. But for starters:

|

||||

|

||||

If **Jeb (Jeb!?) Bush** ran Linux, it would be [Debian][2]. It's a relatively boring distribution designed for serious, grown-up hackers—the kind who see it as their mission to be the adults in the pack and clean up the messes that less-experienced open source fans create. Of course, this also makes Debian relatively unexciting, and its user base remains perennially small as a result.

|

||||

|

||||

**Scott Walker**, for his part, would be a [Damn Small Linux][3] (DSL) user. Requiring merely 50MB of disk space and 16MB of RAM to run, DSL can breathe new life into 20-year-old 486 computers—which is exactly what a cost-cutting guru like Walker would want. Of course, the user experience you get from DSL is damn primitive; the platform barely runs a browser. But at least you won't be wasting money on new computer hardware when the stuff you bought in 1993 can still serve you perfectly well.

|

||||

|

||||

How about **Chris Christie**? He'd obviously be clinging to [Relax-and-Recover Linux][4], which bills itself as a "setup-and-forget Linux bare metal disaster recovery solution." "Setup-and-forget" has basically been Christie's political strategy ever since that unfortunate incident on the George Washington Bridge stymied his political momentum. Disaster recovery may or may not bring back everything for Christie in the end, but at least he might succeed in recovering a confidential email or two that accidentally disappeared when his computer crashed.

|

||||

|

||||

As for **Carly Fiorina**, she'd no doubt be using software developed for "[The Machine][5]" operating system from [Hewlett-Packard][6] (HPQ), the company she led from 1999 to 2005. The Machine actually may run several different operating systems, which may or may not be based on Linux—details remain unclear—and its development began well after Fiorina's tenure at HP came to a conclusion. Still, her roots as a successful executive in the IT world form an important part of her profile today, meaning that her ties to HP have hardly been severed fully.

|

||||

|

||||

Last but not least—and you knew this was coming—there's **Donald Trump**. He'd most likely pay a team of elite hackers millions of dollars to custom-build an operating system just for him—even though he could obtain a perfectly good, ready-made operating system for free—to show off how much money he has to waste. He'd then brag about it being the best operating system ever made, though it would of course not be compliant with POSIX or anything else, because that would mean catering to the establishment. The platform would also be totally undocumented, since, if Trump explained how his operating system actually worked, he'd risk giving away all his secrets to the Islamic State—obviously.

|

||||

|

||||

Alternatively, if Trump had to go with a Linux platform already out there, [Ubuntu][7] seems like the most obvious choice. Like Trump, the Ubuntu developers have taken a we-do-what-we-want approach to building open source software by implementing their own, sometimes proprietary applications and interfaces. Free-software purists hate Ubuntu for that, but plenty of ordinary people like it a lot. Of course, whether playing purely by your own rules—in the realms of either software or politics—is sustainable in the long run remains to be seen.

|

||||

|

||||

### Stay Tuned ###

|

||||

|

||||

If you're wondering why I haven't yet mentioned the Democratic candidates, worry not. I am not leaving them out of today's writing because I like them any more or less than the Republicans. (Personally, I think the peculiar American practice of having only two viable political parties—which virtually no other functioning democracy does—is ridiculous, and I am suspicious of all of these candidates as a result.)

|

||||

|

||||

On the contrary, there's plenty to say about the Linux distributions the Democrats might use, too. And I will, in a future post. Stay tuned.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://thevarguy.com/open-source-application-software-companies/081715/which-open-source-linux-distributions-would-presidential-

|

||||

|

||||

作者:[Christopher Tozzi][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://thevarguy.com/author/christopher-tozzi

|

||||

[1]:http://thevarguy.com/open-source-application-software-companies/aligning-linux-distributions-presidential-hopefuls

|

||||

[2]:http://debian.org/

|

||||

[3]:http://www.damnsmalllinux.org/

|

||||

[4]:http://relax-and-recover.org/

|

||||

[5]:http://thevarguy.com/open-source-application-software-companies/061614/hps-machine-open-source-os-truly-revolutionary

|

||||

[6]:http://hp.com/

|

||||

[7]:http://ubuntu.com/

|

||||

@ -1,3 +1,7 @@

|

||||

vim-kakali translating

|

||||

|

||||

|

||||

|

||||

Confessions of a cross-platform developer

|

||||

=============================================

|

||||

|

||||

|

||||

@ -0,0 +1,46 @@

|

||||

Linus Torvalds Talks IoT, Smart Devices, Security Concerns, and More[video]

|

||||

===========================================================================

|

||||

|

||||

|

||||

>Dirk Hohndel interviews Linus Torvalds at ELC.

|

||||

|

||||

For the first time in the 11-year history of the [Embedded Linux Conference (ELC)][0], held in San Diego, April 4-6, the keynotes included a discussion with Linus Torvalds. The creator and lead overseer of the Linux kernel, and “the reason we are all here,” in the words of his interviewer, Intel Chief Linux and Open Source Technologist Dirk Hohndel, seemed upbeat about the state of Linux in embedded and Internet of Things applications. Torvalds very presence signaled that embedded Linux, which has often been overshadowed by Linux desktop, server, and cloud technologies, had come of age.

|

||||

|

||||

|

||||

>Linus Torvalds speaking at Embedded Linux Conference.

|

||||

|

||||

IoT was the main topic at ELC, which included an OpenIoT Summit track, and the chief topic in the Torvalds interview.

|

||||

|

||||

“Maybe you won’t see Linux at the IoT leaf nodes, but anytime you have a hub, you will need it,” Torvalds told Hohndel. “You need smart devices especially if you have 23 [IoT standards]. If you have all these stupid devices that don’t necessarily run Linux, and they all talk with slightly different standards, you will need a lot of smart devices. We will never have one completely open standard, one ring to rule them all, but you will have three of four major protocols, and then all these smart hubs that translate.”

|

||||

|

||||

Torvalds remained customarily philosophical when Hohndel asked about the gaping security holes in IoT. “I don’t worry about security because there’s not a lot we can do,” he said. “IoT is unpatchable -- it’s a fact of life.”

|

||||

|

||||

The Linux creator seemed more concerned about the lack of timely upstream contributions from one-off embedded projects, although he noted there have been significant improvements in recent years, partially due to consolidation on hardware.

|

||||

|

||||

“The embedded world has traditionally been hard to interact with as an open source developer, but I think that’s improving,” Torvalds said. “The ARM community has become so much better. Kernel people can now actually keep up with some of the hardware improvements. It’s improving, but we’re not nearly there yet.”

|

||||

|

||||

Torvalds admitted to being more at home on the desktop than in embedded and to having “two left hands” when it comes to hardware.

|

||||

|

||||

“I’ve destroyed things with a soldering iron many times,” he said. “I’m not really set up to do hardware.” On the other hand, Torvalds guessed that if he were a teenager today, he would be fiddling around with a Raspberry Pi or BeagleBone. “The great part is if you’re not great at soldering, you can just buy a new one.”

|

||||

|

||||

Meanwhile, Torvalds vowed to continue fighting for desktop Linux for another 25 years. “I’ll wear them down,” he said with a smile.

|

||||

|

||||

Watch the full video, below.

|

||||

|

||||

Get the Latest on Embedded Linux and IoT. Access 150+ recorded sessions from Embedded Linux Conference 2016. [Watch Now][1].

|

||||

|

||||

[video](https://youtu.be/tQKUWkR-wtM)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/news/linus-torvalds-talks-iot-smart-devices-security-concerns-and-more-video

|

||||

|

||||

作者:[ERIC BROWN][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linux.com/users/ericstephenbrown

|

||||

[0]: http://events.linuxfoundation.org/events/embedded-linux-conference

|

||||

[1]: http://go.linuxfoundation.org/elc-openiot-summit-2016-videos?utm_source=lf&utm_medium=blog&utm_campaign=linuxcom

|

||||

@ -0,0 +1,63 @@

|

||||

65% of companies are contributing to open source projects

|

||||

==========================================================

|

||||

|

||||

|

||||

|

||||

This year marks the 10th annual Future of Open Source Survey to examine trends in open source, hosted by Black Duck and North Bridge. The big takeaway from the survey this year centers around the mainstream acceptance of open source today and how much has changed over the last decade.

|

||||

|

||||

The [2016 Future of Open Source Survey][1] analyzed responses from nearly 3,400 professionals. Developers made their voices heard in the survey this year, comprising roughly 70% of the participants. The group that showed exponential growth were security professionals, whose participation increased by over 450%. Their participation shows the increasing interest in ensuring that the open source community pays attention to security issues in open source software and securing new technologies as they emerge.

|

||||

|

||||

Black Duck's [Open Source Rookies][2] of the Year awards identify some of these emerging technologies, like Docker and Kontena in containers. Containers themselves have seen huge growth this year–76% of respondents say their company has some plans to use containers. And an amazing 59% of respondents are already using containers in a variety of deployments, from development and testing to internal and external production environment. The developer community has embraced containers as a way to get their code out quickly and easily.

|

||||

|

||||

It's not surprising that the survey shows a miniscule number of organizations having no developers contributing to open source software. When large corporations like Microsoft and Apple open source some of their solutions, developers gain new opportunities to participate in open source. I certainly hope this trend will continue, with more software developers contributing to open source projects at work and outside of work.

|

||||

|

||||

### Highlights from the 2016 survey

|

||||

|

||||

#### Business value

|

||||

|

||||

* Open source is an essential element in development strategy with more than 65% of respondents relying on open source to speed development.

|

||||

* More than 55% leverage open source within their production environments.

|

||||

|

||||

#### Engine for innovation

|

||||

|

||||

* Respondents reported use of open source to drive innovation through faster, more agile development; accelerated time to market and vastly superior interoperability.

|

||||

* Additional innovation is afforded by open source's quality of solutions; competitive features and technical capabilities; and ability to customize.

|

||||

|

||||

#### Proliferation of open source business models and investment

|

||||

|

||||

* More diverse business models are emerging that promise to deliver more value to open source companies than ever before. They are not as dependent on SaaS and services/support.

|

||||

* Open source private financing has increased almost 4x in five years.

|

||||

|

||||

#### Security and management

|

||||

|

||||

The development of best-in-class open source security and management practices has not kept pace with growth in adoption. Despite a proliferation of expensive, high-profile open source breaches in recent years, the survey revealed that:

|

||||

|

||||

* 50% of companies have no formal policy for selecting and approving open source code.

|

||||

* 47% of companies don’t have formal processes in place to track open source code, limiting their visibility into their open source and therefore their ability to control it.

|

||||

* More than one-third of companies have no process for identifying, tracking or remediating known open source vulnerabilities.

|

||||

|

||||

#### Open source participation on the rise

|

||||

|

||||

The survey revealed an active corporate open source community that spurs innovation, delivers exponential value and shares camaraderie:

|

||||

|

||||

* 67% of respondents report actively encouraging developers to engage in and contribute to open source projects.

|

||||

* 65% of companies are contributing to open source projects.

|

||||

* One in three companies have a fulltime resource dedicated to open source projects.

|

||||

* 59% of respondents participate in open source projects to gain competitive edge.

|

||||

|

||||

Black Duck and North Bridge learned a great deal this year about security, policy, business models and more from the survey, and we’re excited to share these findings. Thank you to our many collaborators and all the respondents for taking the time to take the survey. It’s been a great ten years, and I am happy that we can safely say that the future of open source is full of possibilities.

|

||||

|

||||

Learn more, see the [full results][3].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/business/16/5/2016-future-open-source-survey

|

||||

|

||||

作者:[Haidee LeClair][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

[a]: https://opensource.com/users/blackduck2016

|

||||

[1]: http://www.slideshare.net/blackducksoftware/2016-future-of-open-source-survey-results

|

||||

[2]: https://info.blackducksoftware.com/OpenSourceRookies2015.html

|

||||

[3]: http://www.slideshare.net/blackducksoftware/2016-future-of-open-source-survey-results%C2%A0

|

||||

@ -0,0 +1,56 @@

|

||||

Linux gives me all the tools I need

|

||||

==========================================

|

||||

|

||||

|

||||

|

||||

[Linux][0] is all around us. It's [on our phones][1] in the form of Android. It's [used on the International Space Station][2]. It [provides much of the backbone of the Internet][3]. And yet many people never notice it. Discovering Linux is a rewarding endeavor. Lots of other people have [shared their Linux stories][4] on Opensource.com, and now it's my turn.

|

||||

|

||||

I still remember when I first discovered Linux in 2008. The person who helped me discover Linux was my father, Socrates Ballais. He was an economics professor here in Tacloban City, Philippines. He was also a technology enthusiast. He taught me a lot about computers and technology, but only advocated using Linux as a fallback operating system in case Windows fails.

|

||||

|

||||

### My earliest days

|

||||

|

||||

Before we had a computer in the home, I was a Windows user. I played games, created documents, and did all the other things kids do with computers. I didn't know what Linux was or what it was used for. The Windows logo was my symbol for a computer.

|

||||

|

||||

When we got our first computer, my father installed Linux ([Ubuntu][5] 8.04) on it. Being the curious kid I was, I booted into the operating system. I was astonished with the interface. It was beautiful. I found it to be very user friendly. For some time, all I did in Ubuntu was play the bundled games. I would do my school work in Windows.

|

||||

|

||||

### The first install

|

||||

|

||||

Four years later, I decided that I would reinstall Windows on our family computer. Without hesitation, I also decided to install Ubuntu. With that, I had fallen in love with Linux (again). Over time, I became more adept with Ubuntu and would casually advocate its use to my friends. When I got my first laptop, I installed it right away.

|

||||

|

||||

### Today

|

||||

|

||||

Today, Linux is my go-to operating system. When I need to do something on a computer, I do it in Linux. For documents and presentations, I use Microsoft Office via [Wine][6]. For my web needs, there's [Chrome and Firefox][7]. For email, there's [Geary][8]. You can do pretty much everything with Linux.

|

||||

|

||||

Most, if not all, of my programming work is done in Linux. The lack of a standard Integrated Development Environment (IDE) like [Visual Studio][9] or [XCode][10] taught me to be flexible and learn more things as a programmer. Now a text editor and a compiler/interpreter are all I need to start coding. I only use an IDE in cases when it's the best tool for accomplishing a task at hand. I find Linux to be more developer-friendly than Windows. To generalize, Linux gives me all the tools I need to develop software.

|

||||

|

||||

Today, I am the co-founder and CTO of a startup called [Creatomiv Studios][11]. I use Linux to develop code for the backend server of our latest project, Basyang. I'm also an amateur photographer, and use [GIMP][12] and [Darktable][13] to edit and manage photos. For communication with my team, I use [Telegram][14].

|

||||

|

||||

### The beauty of Linux

|

||||

|

||||

Many may see Linux as an operating system only for those who love solving complicated problems and working on the command line. Others may see it as a rubbish operating system lacking the support of many companies. However, I see Linux as a thing of beauty and a tool for creation. I love Linux the way it is and hope to see it continue to grow.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/3/my-linux-story-sean-ballais

|

||||

|

||||

作者:[Sean Francis N. Ballais][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

[a]: https://opensource.com/users/seanballais

|

||||

[0]: https://opensource.com/resources/what-is-linux

|

||||

[1]: http://www.howtogeek.com/189036/android-is-based-on-linux-but-what-does-that-mean/

|

||||

[2]: http://www.extremetech.com/extreme/155392-international-space-station-switches-from-windows-to-linux-for-improved-reliability

|

||||

[3]: https://www.youtube.com/watch?v=JzsLkbwi1LA

|

||||

[4]: https://opensource.com/tags/my-linux-story

|

||||

[5]: http://ubuntu.com/

|

||||

[6]: https://www.winehq.org/

|

||||

[7]: https://www.google.com/chrome/browser/desktop/index.html

|

||||

[8]: https://wiki.gnome.org/Apps/Geary

|

||||

[9]: https://www.visualstudio.com/en-us/visual-studio-homepage-vs.aspx

|

||||

[10]: https://developer.apple.com/xcode/

|

||||

[11]: https://www.facebook.com/CreatomivStudios/

|

||||

[12]: https://www.gimp.org/

|

||||

[13]: http://www.darktable.org/

|

||||

[14]: https://telegram.org/

|

||||

@ -0,0 +1,49 @@

|

||||

Growing a career alongside Linux

|

||||

==================================

|

||||

|

||||

|

||||

|

||||

My Linux story started in 1998 and continues today. Back then, I worked for The Gap managing thousands of desktops running [OS/2][1] (and a few years later, [Warp 3.0][2]). As an OS/2 guy, I was really happy then. The desktops hummed along and it was quite easy to support thousands of users with the tools the GAP had built. Changes were coming, though.

|

||||

|

||||

In November of 1998, I received an invitation to join a brand new startup which would focus on Linux in the enterprise. This startup became quite famous as [Linuxcare][2].

|

||||

|

||||

### My time at Linuxcare

|

||||

|

||||

I had played with Linux a bit, but had never considered delivering it to enterprise customers. Mere months later (which is a turn of the corner in startup time and space), I was managing a line of business that let enterprises get their hardware, software, and even books certified on a few flavors of Linux that were popular back then.

|

||||

|

||||

I supported customers like IBM, Dell, and HP in ensuring their hardware ran Linux successfully. You hear a lot now about preloading Linux on hardware today, but way back then I was invited to Dell to discuss getting a laptop certified to run Linux for an upcoming trade show. Very exciting times! We also supported IBM and HP on a number of certification efforts that spanned a few years.

|

||||

|

||||

Linux was changing fast, much like it always has. It gained hardware support for more key devices like sound, network, graphics. At around that time, I shifted from RPM-based systems to [Debian][3] for my personal use.

|

||||

|

||||

### Using Linux through the years

|

||||

|

||||

Fast forward some years and I worked at a number of companies that did Linux as hardened appliances, Linux as custom software, and Linux in the data center. By the mid 2000s, I was busy doing consulting for that rather large software company in Redmond around some analysis and verification of Linux compared to their own solutions. My personal use had not changed though—I would still run Debian testing systems on anything I could.

|

||||

|

||||

I really appreciated the flexibility of a distribution that floated and was forever updated. Debian is one of the most fun and well supported distributions and has the best community I've ever been a part of.

|

||||

|

||||

When I look back at my own adoption of Linux, I remember with fondness the numerous Linux Expo trade shows in San Jose, San Francisco, Boston, and New York in the early and mid 2000's. At Linuxcare we always did fun and funky booths, and walking the show floor always resulted in getting re-acquainted with old friends. Rumors of work were always traded, and the entire thing underscored the fun of using Linux in real endeavors.

|

||||

|

||||

The rise of virtualization and cloud has really made the use of Linux even more interesting. When I was with Linuxcare, we partnered with a small 30-person company in Palo Alto. We would drive to their offices and get things ready for a trade show that they would attend with us. Who would have ever known that little startup would become VMware?

|

||||

|

||||

I have so many stories, and there were so many people I was so fortunate to meet and work with. Linux has evolved in so many ways and has become so important. And even with its increasing importance, Linux is still fun to use. I think its openness and the ability to modify it has contributed to a legion of new users, which always astounds me.

|

||||

|

||||

### Today

|

||||

|

||||

I've moved away from doing mainstream Linux things over the past five years. I manage large scale infrastructure projects that include a variety of OSs (both proprietary and open), but my heart has always been with Linux.

|

||||

|

||||

The constant evolution and fun of using Linux has been a driving force for me for over the past 18 years. I started with the 2.0 Linux kernel and have watched it become what it is now. It's a remarkable thing. An organic thing. A cool thing.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/3/my-linux-story-michael-perry

|

||||

|

||||

作者:[Michael Perry][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

[a]: https://opensource.com/users/mpmilestogo

|

||||

[1]: https://en.wikipedia.org/wiki/OS/2

|

||||

[2]: https://archive.org/details/IBMOS2Warp3Collection

|

||||

[3]: https://en.wikipedia.org/wiki/Linuxcare

|

||||

[4]: https://www.debian.org/

|

||||

[5]:

|

||||

@ -0,0 +1,54 @@

|

||||

After a nasty computer virus, sys admin looks to Linux

|

||||

=======================================================

|

||||

|

||||

|

||||

|

||||

My first brush with open source came while I was working for my university as a part-time system administrator in 2001. I was part of a small group that created business case studies for teaching not just in the university, but elsewhere in academia.

|

||||

|

||||

As the team grew, the need for a robust LAN setup with file serving, intranet applications, domain logons, etc. emerged. Our IT infrastructure consisted mostly of bootstrapped Windows 98 computers that had become too old for the university's IT labs and were reassigned to our department.

|

||||

|

||||

### Discovering Linux

|

||||

|

||||

One day, as part of the university's IT procurement plan, our department received an IBM server. We planned to use it as an Internet gateway, domain controller, file and backup server, and intranet application host.

|

||||

|

||||

Upon unboxing, we noticed that it came with Red Hat Linux CDs. No one on our 22-person team (including me) knew anything about Linux. After a few days of research, I met a friend of a friend who did Linux RTOS programming for a living. I asked him for some help installing it.

|

||||

|

||||

It was heady stuff as I watched the friend load up the CD drive with the first of the installation CDs and boot into the Anaconda install system. In about an hour we had completed the basic installation, but still had no working internet connection.

|

||||

|

||||

Another hour of tinkering got us connected to the Internet, but we still weren't anywhere near domain logons or Internet gateway functionality. After another weekend of tinkering, we were able to instruct our Windows 98 terminals to accept the IP of the the Linux PC as the proxy so that we had a working shared Internet connection. But domain logons were still some time away.

|

||||

|

||||

We downloaded [Samba][1] over our awfully slow phone modem connection and hand configured it to serve as the domain controller. File services were also enabled via NFS Kernel Server and creating user directories and making the necessary adjustments and configurations on Windows 98 in Network Neighborhood.

|

||||

|

||||

This setup ran flawlessly for quite some time, and we eventually decided to get started with Intranet applications for timesheet management and some other things. By this time, I was leaving the organization and had handed over most of the sys admin stuff to someone who replaced me.

|

||||

|

||||

### A second Linux experience

|

||||

|

||||

In 2004, I got into Linux once again. My wife ran an independent staff placement business that used data from services like Monster.com to connect clients with job seekers.

|

||||

|

||||

Being the more computer literate of the two of us, it was my job to set things right with the computer or Internet when things went wrong. We also needed to experiment with a lot of tools for sifting through the mountains of resumes and CVs she had to go through on a daily basis.

|

||||

|

||||

Windows [BSoDs][2] were a routine affair, but that was tolerable as long as the data we paid for was safe. I had to spend a few hours each week creating backups.

|

||||

|

||||

One day, we had a virus that simply would not go away. Little did we know what was happening to the data on the slave disk. When it finally failed, we plugged in the week-old slave backup and it failed a week later. Our second backup simply refused to boot up. It was time for professional help, so we took our PC to a reputable repair shop. After two days, we learned that some malware or virus had wiped certain file types, including our paid data, clean.

|

||||

|

||||

This was a body blow to my wife's business plans and meant lost contracts and delayed invoice payments. I had in the interim travelled abroad on business and purchased my first laptop computer from [Computex 2004][3] in Taiwan. It had Windows XP pre-installed, but I wanted to replace it with Linux. I had read that Linux was ready for the desktop and that [Mandrake Linux][4] was a good choice. My first attempt at installation went without a glitch. Everything worked beautifully. I used [OpenOffice][5] for my writing, presentation, and spreadsheet needs.

|

||||

|

||||

We got new hard drives for our computer and installed Mandrake Linux on them. OpenOffice replaced Microsoft Office. We relied on webmail for mailing needs, and [Mozilla Firefox][6] was a welcome change in November 2004. My wife saw the benefits immediately, as there were no crashes or virus/malware infections. More importantly, we bade goodbye to the frequent crashes that plagued Windows 98 and XP. She continued to use the same distribution.

|

||||

|

||||

I, on the other hand, started playing around with other distributions. I love distro-hopping and trying out new ones every once in a while. I also regularly try and test out web applications like Drupal, Joomla, and WordPress on Apache and NGINX stacks. And now our son, who was born in 2006, grew up on Linux. He's very happy with Tux Paint, Gcompris, and SMPlayer.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/3/my-linux-story-soumya-sarkar

|

||||

|

||||

作者:[Soumya Sarkar][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

[a]: https://opensource.com/users/ssarkarhyd

|

||||

[1]: https://www.samba.org/

|

||||

[2]: https://en.wikipedia.org/wiki/Blue_Screen_of_Death

|

||||

[3]: https://en.wikipedia.org/wiki/Computex_Taipei

|

||||

[4]: https://en.wikipedia.org/wiki/Mandriva_Linux

|

||||

[5]: http://www.openoffice.org/

|

||||

[6]: https://www.mozilla.org/en-US/firefox/new/

|

||||

@ -1,3 +1,4 @@

|

||||

hkurj translating

|

||||

A four year, action-packed experience with Wikipedia

|

||||

=======================================================

|

||||

|

||||

|

||||

@ -0,0 +1,38 @@

|

||||

Aspiring sys admin works his way up in Linux

|

||||

===============================================

|

||||

|

||||

|

||||

|

||||

I first saw Linux in action around 2001 at my first job. I was as an account manager for an Austrian automotive industry supplier and shared an office with our IT guy. He was creating a CD burning station (one of those huge things that can burn and print several CDs simultaneously) so that we could create and send CDs of our car parts catalogue to customers. While the burning station was originally designed for Windows, he just could not get it to work. He eventually gave up on Windows and turned to Linux, and it worked flawlessly.

|

||||

|

||||

For me, it was all kind of arcane. Most of the work was done on the command line, which looked like DOS but was much more powerful (even back then, I recognized this). I had been a Mac user since 1993, and a CLI (command line interface) seemed a bit old fashioned to me at the time.

|

||||

|

||||

It was not until years later—I believe around 2009—that I really discovered Linux for myself. By then, I had moved to the Netherlands and found a job working for a retail supplier. It was a small company (about 20 people) where, aside from my normal job as a key account manager, I had involuntarily become the first line of IT support. Whenever something didn't work, people first came to me before calling the expensive external IT consultant.

|

||||

|

||||

One of my colleagues had fallen for a phishing attack and clicked on an .exe file in an email that appeared to be from DHL. (Yes, it does happen.) His computer got completely taken over and he could not do anything. Even a complete reformat wouldn't help, as the virus kept rearing it's ugly head. I only later learned that it probably had written itself to the MBR (Master Boot Record). By this time, the contract with the external IT consultant had been terminated due to cost savings.

|

||||

|

||||

I turned to Ubuntu to get my colleague to work again. And work it did—like a charm. The computer was humming along again, and I got all the important applications to work like they should. In some ways it wasn't the most elegant solution, I'll admit, yet he (and I) liked the speed and stability of the system.

|

||||

|

||||

However, my colleague was so entrenched in the Windows world that he just couldn't get used to the fact that some things were done differently. He just kept complaining. (Sound familiar?)

|

||||

|

||||

While my colleague couldn't bear that things were done differently, I noticed that this was much less of an issue for me as a Mac user. There were more similarities. I was intrigued. So, I installed a dual boot with Ubuntu on my work laptop and found that I got much more work done in less time and it was much easier to get the machine to do what I wanted. Ever since then I've been regularly using several Linux distros, with Ubuntu and Elementary being my personal favorites.

|

||||

|

||||

At the moment, I am unemployed and hence have a lot of time to educate myself. Because I've always had an interest in IT, I am working to get into Linux systems administration. But is awfully hard to get a chance to show your knowledge nowadays because 95% of what I have learned over the years can't be shown on a paper with a stamp on it. Interviews are the place for me to have a conversation about what I know. So, I signed up for Linux certifications that I hope give me the boost I need.

|

||||

|

||||

I have also been contributing to open source for some time. I started by doing translations (English to German) for the xTuple ERP and have since moved on to doing Mozilla "customer service" on Twitter, filing bug reports, etc. I evangelize for free and open software (with varying degrees of success) and financially support several FOSS advocate organizations (DuckDuckGo, bof.nl, EFF, GIMP, LibreCAD, Wikipedia, and many others) whenever I can. I am also currently working to set up a regional privacy cafe.

|

||||

|

||||

Aside from that, I have started working on my first book. It's supposed to be a lighthearted field manual for normal people about computer privacy and security, which I hope to self-publish by the end of the year. (The book will be licensed under Creative Commons.) As for content, you can expect that I will explain in detail why privacy is important and what is wrong with the whole "I have nothing to hide" mentality. But the biggest part will be instructions how to get rid of pesky ad-trackers, encrypting your hard disk and mail, chat OTR, how to use TOR, etc. While it's a manual first, I aim for a tone that is casual and easy to understand spiced up with stories of personal experiences.

|

||||

|

||||

I still love my Macs and will use them whenever I can afford it (mainly because of the great construction), but Linux is always there in a VM and is used for most of my daily work. Nothing fancy here, though: word processing (LibreOffice and Scribus), working on my website and blog (Wordpress and Jekyll), editing some pictures (Shotwell and Gimp), listening to music (Rhythmbox), and pretty much every other task that comes along.

|

||||

|

||||

Whichever way my job hunt turns out, I know that Linux will always be my go-to system.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/5/my-linux-story-rene-raggl

|

||||

|

||||

作者:[Rene Raggl][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

[a]: https://opensource.com/users/rraggl

|

||||

@ -1,179 +0,0 @@

|

||||

How to Install OsTicket Ticketing System in Fedora 22 / Centos 7

|

||||

================================================================================

|

||||

In this article, we'll learn how to setup help desk ticketing system with osTicket in our machine or server running Fedora 22 or CentOS 7 as operating system. osTicket is a free and open source popular customer support ticketing system developed and maintained by [Enhancesoft][1] and its contributors. osTicket is the best solution for help and support ticketing system and management for better communication and support assistance with clients and customers. It has the ability to easily integrate with inquiries created via email, phone and web based forms into a beautiful multi-user web interface. osTicket makes us easy to manage, organize and log all our support requests and responses in one single place. It is a simple, lightweight, reliable, open source, web-based and easy to setup and use help desk ticketing system.

|

||||

|

||||

Here are some easy steps on how we can setup Help Desk ticketing system with osTicket in Fedora 22 or CentOS 7 operating system.

|

||||

|

||||

### 1. Installing LAMP stack ###

|

||||

|

||||

First of all, we'll need to install LAMP Stack to make osTicket working. LAMP stack is the combination of Apache web server, MySQL or MariaDB database system and PHP. To install a complete suit of LAMP stack that we need for the installation of osTicket, we'll need to run the following commands in a shell or a terminal.

|

||||

|

||||

**On Fedora 22**

|

||||

|

||||

LAMP stack is available on the official repository of Fedora 22. As the default package manager of Fedora 22 is the latest DNF package manager, we'll need to run the following command.

|

||||

|

||||

$ sudo dnf install httpd mariadb mariadb-server php php-mysql php-fpm php-cli php-xml php-common php-gd php-imap php-mbstring wget

|

||||

|

||||

**On CentOS 7**

|

||||

|

||||

As there is LAMP stack available on the official repository of CentOS 7, we'll gonna install it using yum package manager.

|

||||

|

||||

$ sudo yum install httpd mariadb mariadb-server php php-mysql php-fpm php-cli php-xml php-common php-gd php-imap php-mbstring wget

|

||||

|

||||

### 2. Starting Apache Web Server and MariaDB ###

|

||||

|

||||

Next, we'll gonna start MariaDB server and Apache Web Server to get started.

|

||||

|

||||

$ sudo systemctl start mariadb httpd

|

||||

|

||||

Then, we'll gonna enable them to start on every boot of the system.

|

||||

|

||||

$ sudo systemctl enable mariadb httpd

|

||||

|

||||

Created symlink from /etc/systemd/system/multi-user.target.wants/mariadb.service to /usr/lib/systemd/system/mariadb.service.

|

||||

Created symlink from /etc/systemd/system/multi-user.target.wants/httpd.service to /usr/lib/systemd/system/httpd.service.

|

||||

|

||||

### 3. Downloading osTicket package ###

|

||||

|

||||

Next, we'll gonna download the latest release of osTicket ie version 1.9.9 . We can download it from the official download page [http://osticket.com/download][2] or from the official github repository. [https://github.com/osTicket/osTicket-1.8/releases][3] . Here, in this tutorial we'll download the tarball of the latest release of osTicket from the github release page using wget command.

|

||||

|

||||

$ cd /tmp/

|

||||

$ wget https://github.com/osTicket/osTicket-1.8/releases/download/v1.9.9/osTicket-v1.9.9-1-gbe2f138.zip

|

||||

|

||||

--2015-07-16 09:14:23-- https://github.com/osTicket/osTicket-1.8/releases/download/v1.9.9/osTicket-v1.9.9-1-gbe2f138.zip

|

||||

Resolving github.com (github.com)... 192.30.252.131

|

||||

...

|

||||

Connecting to s3.amazonaws.com (s3.amazonaws.com)|54.231.244.4|:443... connected.

|

||||

HTTP request sent, awaiting response... 200 OK

|

||||

Length: 7150871 (6.8M) [application/octet-stream]

|

||||

Saving to: ‘osTicket-v1.9.9-1-gbe2f138.zip’

|

||||

osTicket-v1.9.9-1-gb 100%[========================>] 6.82M 1.25MB/s in 12s

|

||||

2015-07-16 09:14:37 (604 KB/s) - ‘osTicket-v1.9.9-1-gbe2f138.zip’ saved [7150871/7150871]

|

||||

|

||||

### 4. Extracting the osTicket ###

|

||||

|

||||

After we have successfully downloaded the osTicket zipped package, we'll now gonna extract the zip. As the default root directory of Apache web server is /var/www/html/ , we'll gonna create a directory called "**support**" where we'll extract the whole directory and files of the compressed zip file. To do so, we'll need to run the following commands in a terminal or a shell.

|

||||

|

||||

$ unzip osTicket-v1.9.9-1-gbe2f138.zip

|

||||

|

||||

Then, we'll move the whole extracted files to it.

|

||||

|

||||

$ sudo mv /tmp/upload /var/www/html/support

|

||||

|

||||

### 5. Fixing Ownership and Permission ###

|

||||

|

||||

Now, we'll gonna assign the ownership of the directories and files under /var/ww/html/support to apache to enable writable access to the apache process owner. To do so, we'll need to run the following command.

|

||||

|

||||

$ sudo chown apache: -R /var/www/html/support

|

||||

|

||||

Then, we'll also need to copy a sample configuration file to its default configuration file. To do so, we'll need to run the below command.

|

||||

|

||||

$ cd /var/www/html/support/

|

||||

$ sudo cp include/ost-sampleconfig.php include/ost-config.php

|

||||

$ sudo chmod 0666 include/ost-config.php

|

||||

|

||||

If you have SELinux enabled on the system, run the following command.

|

||||

|

||||

$ sudo chcon -R -t httpd_sys_content_t /var/www/html/vtigercrm

|

||||

$ sudo chcon -R -t httpd_sys_rw_content_t /var/www/html/vtigercrm

|

||||

|

||||

### 6. Configuring MariaDB ###

|

||||

|

||||

As this is the first time we're going to configure MariaDB, we'll need to create a password for the root user of mariadb so that we can use it to login and create the database for our osTicket installation. To do so, we'll need to run the following command in a terminal or a shell.

|

||||

|

||||

$ sudo mysql_secure_installation

|

||||

|

||||

...

|

||||

Enter current password for root (enter for none):

|

||||

OK, successfully used password, moving on...

|

||||

|

||||

Setting the root password ensures that nobody can log into the MariaDB

|

||||

root user without the proper authorisation.

|

||||

|

||||

Set root password? [Y/n] y

|

||||

New password:

|

||||

Re-enter new password:

|

||||

Password updated successfully!

|

||||

Reloading privilege tables..

|

||||

Success!

|

||||

...

|

||||

All done! If you've completed all of the above steps, your MariaDB

|

||||

installation should now be secure.

|

||||

|

||||

Thanks for using MariaDB!

|

||||

|

||||

Note: Above, we are asked to enter the root password of the mariadb server but as we are setting for the first time and no password has been set yet, we'll simply hit enter while asking the current mariadb root password. Then, we'll need to enter twice the new password we wanna set. Then, we can simply hit enter in every argument in order to set default configurations.

|

||||

|

||||

### 7. Creating osTicket Database ###

|

||||

|

||||

As osTicket needs a database system to store its data and information, we'll be configuring MariaDB for osTicket. So, we'll need to first login into the mariadb command environment. To do so, we'll need to run the following command.

|

||||

|

||||

$ sudo mysql -u root -p

|

||||

|

||||

Now, we'll gonna create a new database "**osticket_db**" with user "**osticket_user**" and password "osticket_password" which will be granted access to the database. To do so, we'll need to run the following commands inside the MariaDB command environment.

|

||||

|

||||

> CREATE DATABASE osticket_db;

|

||||

> CREATE USER 'osticket_user'@'localhost' IDENTIFIED BY 'osticket_password';

|

||||

> GRANT ALL PRIVILEGES on osticket_db.* TO 'osticket_user'@'localhost' ;

|

||||

> FLUSH PRIVILEGES;

|

||||

> EXIT;

|

||||

|

||||

**Note**: It is strictly recommended to replace the database name, user and password as your desire for security issue.

|

||||

|

||||

### 8. Allowing Firewall ###

|

||||

|

||||

If we are running a firewall program, we'll need to configure our firewall to allow port 80 so that the Apache web server's default port will be accessible externally. This will allow us to navigate our web browser to osTicket's web interface with the default http port 80. To do so, we'll need to run the following command.

|

||||

|

||||

$ sudo firewall-cmd --zone=public --add-port=80/tcp --permanent

|

||||

|

||||

After done, we'll need to reload our firewall service.

|

||||

|

||||

$ sudo firewall-cmd --reload

|

||||

|

||||

### 9. Web based Installation ###

|

||||

|

||||

Finally, is everything is done as described above, we'll now should be able to navigate osTicket's Installer by pointing our web browser to http://domain.com/support or http://ip-address/support . Now, we'll be shown if the dependencies required by osTicket are installed or not. As we've already installed all the necessary packages, we'll be welcomed with **green colored tick** to proceed forward.

|

||||

|

||||

|

||||

|

||||

After that, we'll be required to enter the details for our osTicket instance as shown below. We'll need to enter the database name, username, password and hostname and other important account information that we'll require while logging into the admin panel.

|

||||

|

||||

|

||||

|

||||

After the installation has been completed successfully, we'll be welcomed by a Congratulations screen. There we can see two links, one for our Admin Panel and the other for the support center as the homepage of the osTicket Support Help Desk.

|

||||

|

||||

|

||||

|

||||

If we click on http://ip-address/support or http://domain.com/support, we'll be redirected to the osTicket support page which is as shown below.

|

||||

|

||||

|

||||

|

||||

Next, to login into the admin panel, we'll need to navigate our web browser to http://ip-address/support/scp or http://domain.com/support/scp . Then, we'll need to enter the login details we had just created above while configuring the database and other information in the web installer. After successful login, we'll be able to access our dashboard and other admin sections.

|

||||

|

||||

|

||||

|

||||

### 10. Post Installation ###

|

||||

|

||||

After we have finished the web installation of osTicket, we'll now need to secure some of our configuration files. To do so, we'll need to run the following command.

|

||||

|

||||

$ sudo rm -rf /var/www/html/support/setup/

|

||||