mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-25 00:50:15 +08:00

Merge pull request #1 from LCTT/master

This commit is contained in:

commit

7ec3675ddf

@ -3,29 +3,29 @@

|

||||

|

||||

|

||||

|

||||

在 2014 年,为了对网上一些关于在科技行业女性稀缺的评论作出回应,我的同事 [Crystal Beasley][1] 倡议在科技/信息安全方面工作的女性在网络上分享自己的“成才之路”。这篇文章就是我的故事。我把我的故事与你们分享是因为我相信榜样的力量,也相信一个人有多种途径,选择一个让自己满意的有挑战性的工作,以及实现目标的人生。

|

||||

在 2014 年,为了对网上一些关于在科技行业女性稀缺的评论作出回应,我的同事 [Crystal Beasley][1] 倡议在科技/信息安全方面工作的女性在网络上分享自己的“成才之路”。这篇文章就是我的故事。我把我的故事与你们分享是因为我相信榜样的力量,也相信一个人有多种途径,选择一个让自己满意的有挑战性的工作以及可以实现目标的人生。

|

||||

|

||||

### 和电脑相伴的童年

|

||||

|

||||

我可以说是硅谷的女儿。我的故事不是一个从科技业余爱好转向专业的故事,也不是从小就专注于这份事业的故事。这个故事更多的是关于环境如何塑造你 — 通过它的那种已然存在的文化来改变你,如果你想要被改变的话。这不是从小就开始努力并为一个明确的目标而奋斗的故事,我意识到,这其实是享受了一些特权的成长故事。

|

||||

|

||||

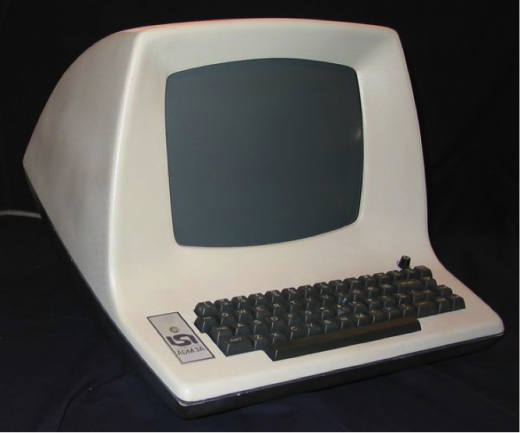

我出生在曼哈顿,但是我在新泽西州长大,因为我的爸爸退伍后,在那里的罗格斯大学攻读计算机科学的博士学位。当我四岁时,学校里有人问我爸爸干什么谋生时,我说,“他就是看电视和捕捉小虫子,但是我从没有见过那些小虫子”(译者注:小虫子,bug)。他在家里有一台哑终端,这大概与他在 Bolt Beranek Newman 公司的工作有关,做关于早期互联网人工智能方面的工作。我就在旁边看着。

|

||||

我出生在曼哈顿,但是我在新泽西州长大,因为我的爸爸退伍后,在那里的罗格斯大学攻读计算机科学的博士学位。当我四岁时,学校里有人问我爸爸干什么谋生时,我说,“他就是看电视和捕捉小虫子,但是我从没有见过那些小虫子”(LCTT 译注:小虫子,bug)。他在家里有一台哑终端(LCTT 译注:就是那台“电视”),这大概与他在 Bolt Beranek Newman 公司的工作有关,做关于早期互联网人工智能方面的工作。我就在旁边看着。

|

||||

|

||||

我没能玩上父亲的会抓小虫子的电视,但是我很早就接触到了技术领域,我很珍惜这个礼物。提早的熏陶对于一个未来的高手是十分必要的 — 所以,请花时间和你的小孩谈谈你所知道的你做的事情!

|

||||

我没能玩上父亲的会抓小虫子的电视,但是我很早就接触到了技术领域,我很珍惜这个礼物。提早的熏陶对于一个未来的高手是十分必要的 — 所以,请花时间和你的小孩谈谈你在做的事情!

|

||||

|

||||

|

||||

|

||||

*我父亲的终端和这个很类似 —— 如果不是这个的话 CC BY-SA 4.0*

|

||||

|

||||

当我六岁时,我们搬到了加州。父亲在施乐的研究中心找到了一个工作。我记得那时我认为这个城市一定有很多熊,因为在它的旗帜上有一个熊。在1979年,Palo Alto 还是一个大学城,还有果园和开阔地带。

|

||||

当我六岁时,我们搬到了加州。父亲在施乐的帕克研究中心(Xerox PARC)找到了一个工作。我记得那时我认为这个城市一定有很多熊,因为在它的旗帜上有一个熊。在1979年,帕洛阿图市还是一个大学城,还有果园和开阔地带。

|

||||

|

||||

在 Palo Alto 的公立学校待了一年之后,我的姐姐和我被送到了“半岛学校”,这个“民主模范”学校对我造成了深刻的影响。在那里,好奇心和创新意识是被高度推崇的,教育也是由学生自己分组讨论决定的。在学校,我们很少能看到叫做电脑的东西,但是在家就不同了。

|

||||

在 Palo Alto 的公立学校待了一年之后,我的姐姐和我被送到了“半岛学校”,这个“民主典范”学校对我造成了深刻的影响。在那里,好奇心和创新意识是被高度推崇的,教育也是由学生自己分组讨论决定的。在学校,我们很少能看到叫做电脑的东西,但是在家就不同了。

|

||||

|

||||

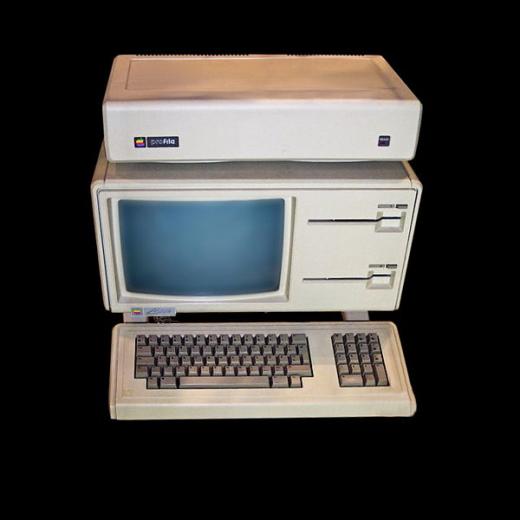

在父亲从施乐辞职之后,他就去了 Apple 公司,在那里他工作使用并带回家让我玩的第一批电脑就是:Apple II 和 LISA。我的父亲在最初的 LISA 的研发团队。我直到现在还深刻的记得他让我们一次又一次的“玩鼠标”的场景,因为他想让我的 3 岁大的妹妹对这个东西感到舒服 —— 她也确实那样。

|

||||

在父亲从施乐辞职之后,他就去了苹果公司,在那里他工作使用并带回家让我玩的第一批电脑就是:Apple II 和 LISA。我的父亲在最初的 LISA 的研发团队。我直到现在还深刻的记得他让我们一次又一次的“玩”鼠标训练的场景,因为他想让我的 3 岁大的妹妹也能对这个东西觉得好用 —— 她也确实那样。

|

||||

|

||||

|

||||

|

||||

*我们的 LISA 看起来就像这样,看到鼠标了吗?CC BY-SA 4.0*

|

||||

*我们的 LISA 看起来就像这样。谁看到鼠标哪儿去了?CC BY-SA 4.0*

|

||||

|

||||

在学校,我的数学的概念学得不错,但是基本计算却惨不忍睹。我的第一个学校的老师告诉我的家长和我,说我的数学很差,还说我很“笨”。虽然我在“常规的”数学项目中表现出色,能理解一个超出 7 岁孩子理解能力的逻辑谜题,但是我不能完成我们每天早上都要做的“练习”。她说我傻,这事我不会忘记。在那之后的十年我都没能相信自己的逻辑能力和算法的水平。**不要低估你对孩子说的话的影响**。

|

||||

|

||||

@ -33,7 +33,7 @@

|

||||

|

||||

### 本科时光

|

||||

|

||||

我想我要成为一个小学教师,我就读米尔斯学院就是想要做这个。但是后来我开始研究女性,后来又研究神学,我这样做仅仅是由于我自己的一个渴求:我希望能理解人类的意志以及为更好的世界而努力。

|

||||

我想我要成为一个小学教师,我就读米尔斯学院就是想要做这个。但是后来我开始研究女性学,后来又研究神学,我这样做仅仅是由于我自己的一个渴求:我希望能理解人类的意志以及为更好的世界而努力。

|

||||

|

||||

同时,我也感受到了互联网的巨大力量。在 1991 年,拥有你自己的 UNIX 的账户,能够和全世界的人谈话,是很令人兴奋的事。我仅仅从在互联网中“玩”就学到了不少,从那些愿意回答我提出的问题的人那里学到的就更多了。这些学习对我的职业生涯的影响不亚于我在正规学校教育之中学到的知识。所有的信息都是有用的。我在一个女子学院度过了学习的关键时期,那时是一个杰出的女性在掌管计算机院。在那个宽松氛围的学院,我们不仅被允许,还被鼓励去尝试很多的道路(我们能接触到很多很多的科技,还有聪明人愿意帮助我们),我也确实那样做了。我十分感激当年的教育。在那个学院,我也了解了什么是极客文化。

|

||||

|

||||

@ -41,31 +41,31 @@

|

||||

|

||||

### 新的开端

|

||||

|

||||

在 1995 年,我被万维网连接人们以及分享想法和信息的能力所震惊(直到现在仍是如此)。我想要进入这个行业。看起来我好像要“女承父业”,但是我不知道如何开始。我开始在硅谷做临时工,从 Sun Microsystems 公司得到我的第一个“真正”技术职位前尝试做了一些事情(为半导体数据写最基础的数据库,技术手册印发前的事务,备份工资单的存跟)。这些事很让人激动。(毕竟,我们是“.com”中的那个”点“)。

|

||||

在 1995 年,我被互联网连接人们以及分享想法和信息的能力所震惊(直到现在仍是如此)。我想要进入这个行业。看起来我好像要“女承父业”,但是我不知道如何开始。我开始在硅谷做临时工,从 Sun 微系统公司得到我的第一个“真正”技术职位前尝试做了一些事情(为半导体数据公司写最基础的数据库,技术手册印发前的事务,备份工资单的存跟)。这些事很让人激动。(毕竟,我们是“.com”中的那个”点“)。

|

||||

|

||||

在 Sun ,我努力学习,尽可能多的尝试新事物。我的第一个工作是<ruby>网页化<rt> HTMLing</rt></ruby>(啥?这是一个词!)白皮书,以及为 Beta 程序修改一些基础的服务工具(大多数是Perl写的)。后来我成为 Solaris beta 项目组中的项目经理,并在 Open Solaris 的 Beta 版运行中感受到了开源的力量。

|

||||

在 Sun 公司,我努力学习,尽可能多的尝试新事物。我的第一个工作是<ruby>网页化<rt> HTMLing</rt></ruby>(啥?这居然是一个词!)白皮书,以及为 Beta 程序修改一些基础的服务工具(大多数是 Perl 写的)。后来我成为 Solaris beta 项目组中的项目经理,并在 Open Solaris 的 Beta 版运行中感受到了开源的力量。

|

||||

|

||||

在那里我做的最重要的事情就是学校。我发现在同样重视工程和教育的地方有一种气氛,在那里我的问题不再显得“傻”。我很庆幸我选对了导师和朋友。在决定休第二个孩子的产假之前,我上每一堂我能上的课程,读每一本我能读的书,尝试自学我在学校没有学习过的技术,商业以及项目管理方面的技能。

|

||||

在那里我做的最重要的事情就是学习。我发现在同样重视工程和教育的地方有一种气氛,在那里我的问题不再显得“傻”。我很庆幸我选对了导师和朋友。在决定休第二个孩子的产假之前,我上每一堂我能上的课程,读每一本我能读的书,尝试自学我在学校没有学习过的技术,商业以及项目管理方面的技能。

|

||||

|

||||

### 重回工作

|

||||

|

||||

当我准备重新工作时,Sun 已经不是可行的地方。所以,我收集了很多人的信息(网络是你的朋友),利用我的沟通技能,最终获得了一个管理互联网门户的长期合同(2005 年时,一切皆门户),并且开始了解 CRM,发布产品的方式,本地化,网络等知识。我讲这么多背景,主要是我尝试以及失败的经历,和我成功的经历同等重要,从中学到很多。我也认为我们需要这个方面的榜样。

|

||||

当我准备重新工作时,Sun 公司已经不再是合适的地方了。所以,我整理了我的联系信息(网络帮到了我),利用我的沟通技能,最终获得了一个管理互联网门户的长期合同(2005 年时,一切皆门户),并且开始了解 CRM、发布产品的方式、本地化、网络等知识。我讲这么多背景,主要是我的尝试以及失败的经历,和我成功的经历同等重要,从中学到很多。我也认为我们需要这个方面的榜样。

|

||||

|

||||

从很多方面来看,我的职业生涯的第一部分是我的技术教育。这事发生的时间和地点都和现在不一样了 —— 我在帮助组织中的女性和其他弱势群体,但是我之后成为一个技术行业的女性。当时无疑我没有看到这个行业的缺陷,但是现在这个行业更加的厌恶女性,一点没有减少。

|

||||

从很多方面来看,我的职业生涯的第一部分是我的技术教育。时变势移 —— 我在帮助组织中的女性和其他弱势群体,但是并没有看出为一个技术行业的女性有多难。当时无疑我没有看到这个行业的缺陷,但是现在这个行业更加的厌恶女性,一点没有减少。

|

||||

|

||||

在这些事情之后,我还没有把自己当作一个标杆,或者一个高级技术人员。当我在父母圈子里认识的一位极客朋友鼓励我申请一个看起来定位十分模糊且技术性很强的开源的非盈利基础设施商店(互联网系统协会,BIND --一个广泛部署的开源 DNS 名称服务器--的缔造者,13 台根域名服务器之一的运营商)的产品经理时,我很震惊。有很长一段时间,我都不知道他们为什么要雇佣我!我对 DNS ,基础设备,以及协议的开发知之甚少,但是我再次遇到了老师,并再度开始飞速发展。我花时间旅行,在关键流程攻关,搞清楚如何与高度国际化的团队合作,解决麻烦的问题,最重要的是,拥抱支持我们的开源和充满活力的社区。我几乎重新学了一切,通过试错的方式。我学习如何构思一个产品。如何通过建设开源社区,领导那些有这特定才能,技能和耐心的人,是他们给了产品价值。

|

||||

在这些事情之后,我还没有把自己当作一个标杆,或者一个高级技术人员。当我在父母圈子里认识的一位极客朋友鼓励我申请一个看起来定位十分模糊且技术性很强的开源的非盈利基础设施机构(互联网系统协会 ISC,它是广泛部署的开源 DNS 名称服务器 BIND 的缔造者,也是 13 台根域名服务器之一的运营商)的产品经理时,我很震惊。有很长一段时间,我都不知道他们为什么要雇佣我!我对 DNS、基础设备,以及协议的开发知之甚少,但是我再次遇到了老师,并再度开始飞速发展。我花时间出差,在关键流程攻关,搞清楚如何与高度国际化的团队合作,解决麻烦的问题,最重要的是,拥抱支持我们的开源和充满活力的社区。我几乎重新学了一切,通过试错的方式。我学习如何构思一个产品。如何通过建设开源社区,领导那些有这特定才能,技能和耐心的人,是他们给了产品价值。

|

||||

|

||||

### 成为别人的导师

|

||||

|

||||

当我在 ISC 工作时,我通过 [TechWomen 项目][2] (一个让来自中东和北非的技术行业的女性到硅谷来接受教育的计划),我开始喜欢教学生以及支持那些技术女性,特别是在开源行业中奋斗的。也正是从这时起我开始相信自己的能力。我还需要学很多。

|

||||

|

||||

当我第一次读 TechWomen 关于导师的广告时,我认为那些导师甚至都不会想要和我说话!我有冒名顶替综合征。当他们邀请我成为第一批导师(以及以后 6 年的导师)时,我很震惊,但是现在我学会了相信这些都是我努力得到的待遇。冒名顶替综合征是真实的,但是它能被时间冲淡。

|

||||

当我第一次读 TechWomen 关于导师的广告时,我根本不认为他们会约我面试!我有冒名顶替综合症。当他们邀请我成为第一批导师(以及以后六年每年的导师)时,我很震惊,但是现在我学会了相信这些都是我努力得到的待遇。冒名顶替综合症是真实的,但是随着时间过去我就慢慢名副其实了。

|

||||

|

||||

### 现在

|

||||

|

||||

最后,我不得不离开我在 ISC 的工作。幸运的是,我的工作以及我的价值让我进入了 Mozilla ,在这里我的努力和我的幸运让我在这里承担着重要的角色。现在,我是一名支持多样性的高级项目经理。我致力于构建一个更多样化,更有包容性的 Mozilla ,站在之前的做同样事情的巨人的肩膀上,与最聪明友善的人们一起工作。我用我的激情来让人们找到贡献一个世界需要的互联网的有意义的方式:这让我兴奋了很久。我能看见,我做到了!

|

||||

最后,我不得不离开我在 ISC 的工作。幸运的是,我的工作以及我的价值让我进入了 Mozilla ,在这里我的努力和我的幸运让我在这里承担着重要的角色。现在,我是一名支持多样性与包容的高级项目经理。我致力于构建一个更多样化,更有包容性的 Mozilla ,站在之前的做同样事情的巨人的肩膀上,与最聪明友善的人们一起工作。我用我的激情来让人们找到贡献一个世界需要的互联网的有意义的方式:这让我兴奋了很久。当我爬上山峰,我能极目四望!

|

||||

|

||||

通过对组织和个人行为的干预来获取一种新的方式,以改变文化,这和我的人生轨迹有着不可思议的联系 —— 从我的早期的学术生涯,到职业生涯再到现在。每天都是一个新的挑战,我想这是我喜欢在科技行业工作,尤其是在开放互联网工作的地方。互联网天然的多元性是它最开始吸引我的原因,也是我还在寻求的 —— 所有人都有机会和获取资源的可能性,无论背景如何。榜样,导师,资源,以及最重要的,尊重,是不断发展技术和开源文化的必要组成部分,实现我相信它能实现的所有事 —— 包括给所有人平等的接触机会。

|

||||

通过对组织和个人行为的干预来获取一种改变文化的新方式,这和我的人生轨迹有着不可思议的联系 —— 从我的早期的学术生涯,到职业生涯再到现在。每天都是一个新的挑战,我想这是我喜欢在科技行业工作,尤其是在开放互联网工作的理由。互联网天然的多元性是它最开始吸引我的原因,也是我还在寻求的 —— 所有人都有机会和获取资源的可能性,无论背景如何。榜样、导师、资源,以及最重要的,尊重,是不断发展技术和开源文化的必要组成部分,实现我相信它能实现的所有事 —— 包括给所有人平等的接触机会。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -0,0 +1,391 @@

|

||||

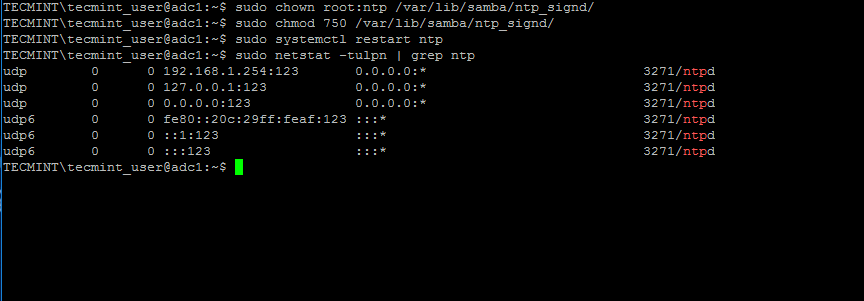

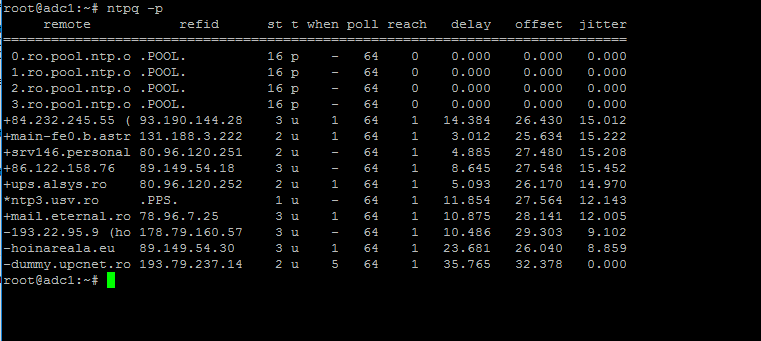

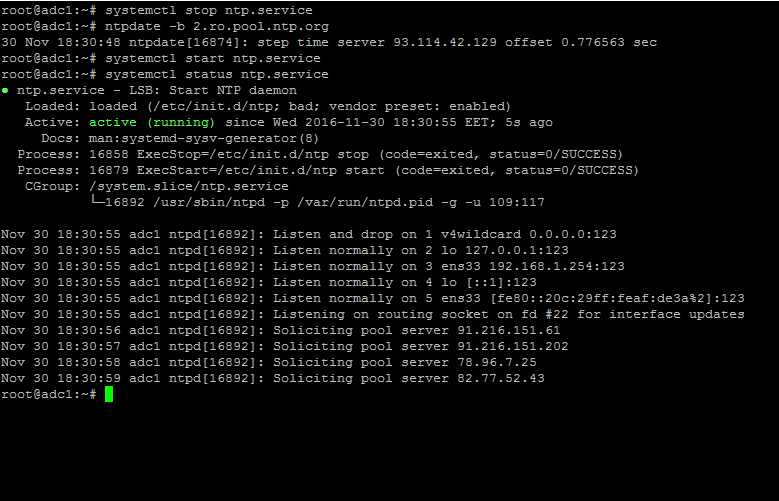

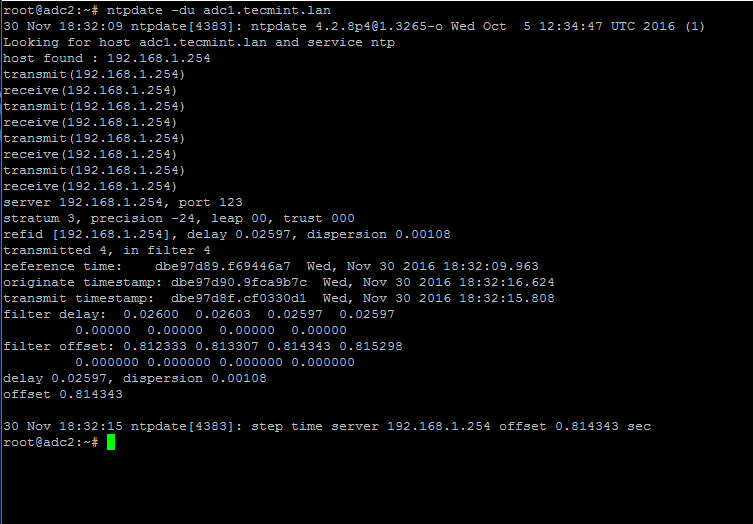

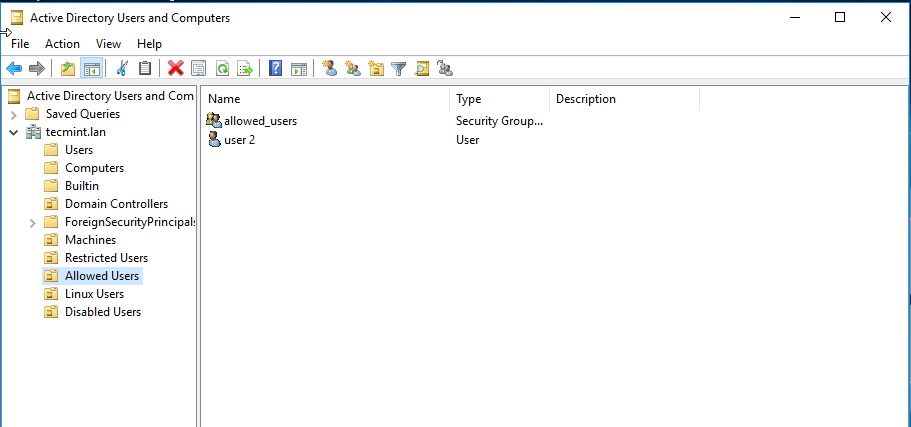

在 Linux 命令行下管理 Samba4 AD 架构(二)

|

||||

============================================================

|

||||

|

||||

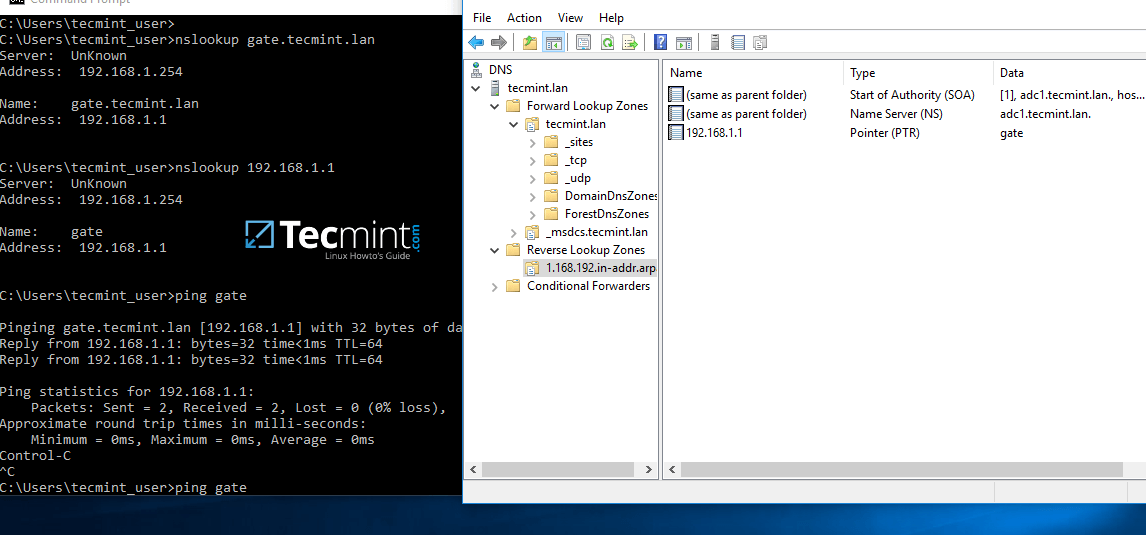

这篇文章包括了管理 Samba4 域控制器架构过程中的一些常用命令,比如添加、移除、禁用或者列出用户及用户组等。

|

||||

|

||||

我们也会关注一下如何配置域安全策略以及如何把 AD 用户绑定到本地的 PAM 认证中,以实现 AD 用户能够在 Linux 域控制器上进行本地登录。

|

||||

|

||||

#### 要求

|

||||

|

||||

- [在 Ubuntu 系统上使用 Samba4 来创建活动目录架构][1]

|

||||

|

||||

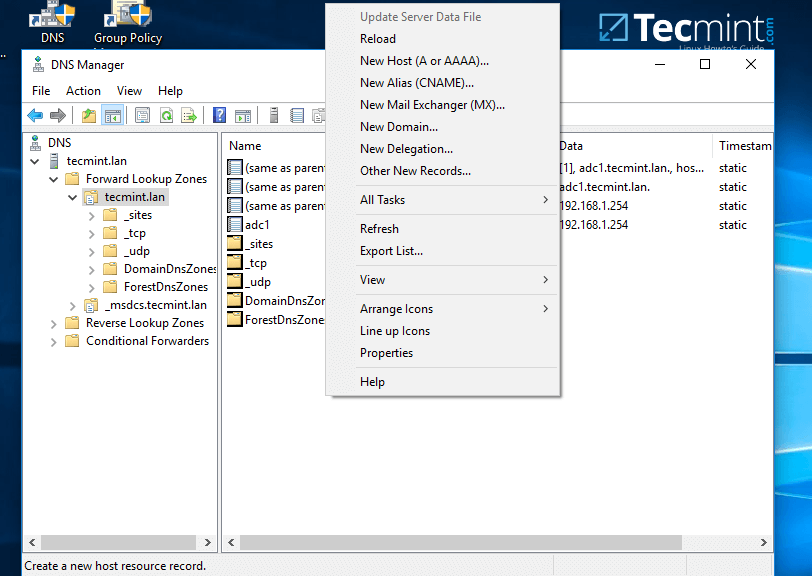

### 第一步:在命令行下管理

|

||||

|

||||

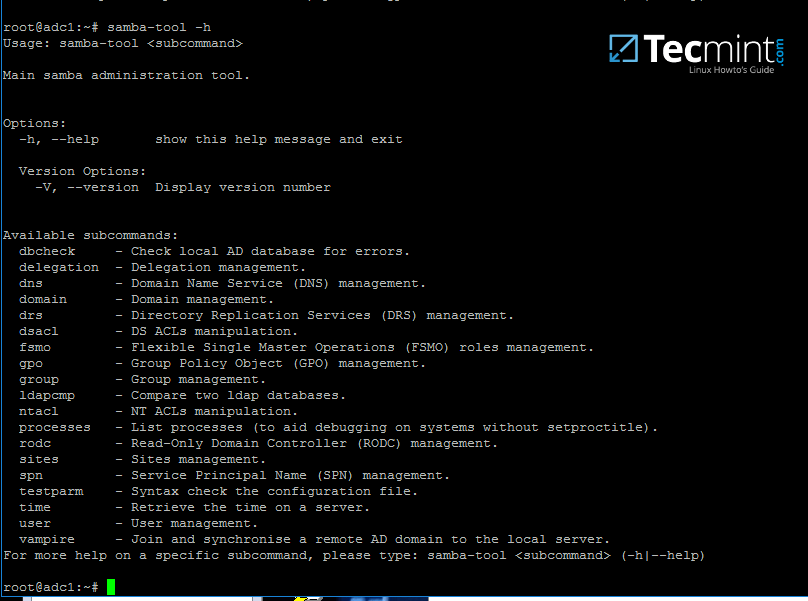

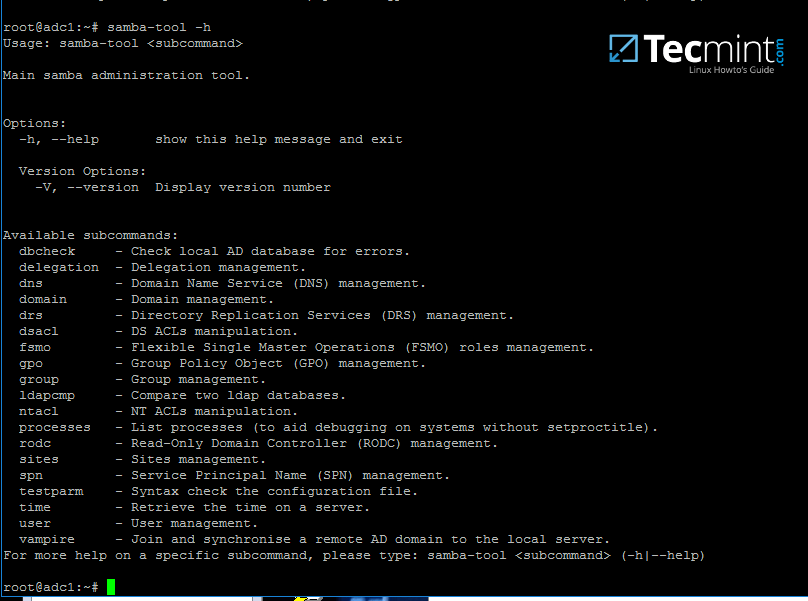

1、 可以通过 `samba-tool` 命令行工具来进行管理,这个工具为域管理工作提供了一个功能强大的管理接口。

|

||||

|

||||

通过 `samba-tool` 命令行接口,你可以直接管理域用户及用户组、域组策略、域站点,DNS 服务、域复制关系和其它重要的域功能。

|

||||

|

||||

Using an account with root privileges, run `samba-tool` with no arguments, or with the `-h` option as shown here, to see everything the tool can do.

|

||||

|

||||

```

|

||||

# samba-tool -h

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][3]

|

||||

|

||||

*samba-tool —— Samba 管理工具*

|

||||

|

||||

2、 现在,让我们开始使用 `samba-tool` 工具来管理 Samba4 活动目录中的用户。

|

||||

|

||||

使用如下命令来创建 AD 用户:

|

||||

|

||||

```

|

||||

# samba-tool user add your_domain_user

|

||||

```

|

||||

|

||||

添加一个用户,包括 AD 可选的一些重要属性,如下所示:

|

||||

|

||||

```
--------- review all options ---------
# samba-tool user add -h
# samba-tool user add your_domain_user --given-name=your_name --surname=your_username --mail-address=your_domain_user@tecmint.lan --login-shell=/bin/bash
```

|

||||

|

||||

[

|

||||

|

||||

][4]

|

||||

|

||||

*在 Samba AD 上创建用户*
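The options above can be combined in a small script when several accounts have to be created. The following is only a sketch: the helper name `build_user_add`, the sample logins, and the mail domain are hypothetical, and the script prints the commands instead of running them so they can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) a samba-tool command that creates one AD user.
# Arguments: login, given name, surname, mail domain. All values are placeholders.
build_user_add() {
    login=$1; given=$2; surname=$3; maildomain=$4
    printf 'samba-tool user add %s --given-name=%s --surname=%s --mail-address=%s@%s --login-shell=/bin/bash\n' \
        "$login" "$given" "$surname" "$login" "$maildomain"
}

# Dry run over a small inline list in the form login:given:surname.
while IFS=: read -r login given surname; do
    build_user_add "$login" "$given" "$surname" tecmint.lan
done <<'EOF'
jdoe:John:Doe
asmith:Anna:Smith
EOF
```

Once the printed commands look right, they can be run as root one at a time; `samba-tool user add` then asks for each user's password interactively.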

|

||||

|

||||

3、 可以通过下面的命令来列出所有 Samba AD 域用户:

|

||||

|

||||

```

|

||||

# samba-tool user list

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][5]

|

||||

|

||||

*列出 Samba AD 用户信息*

|

||||

|

||||

4、 使用下面的命令来删除 Samba AD 域用户:

|

||||

|

||||

```

|

||||

# samba-tool user delete your_domain_user

|

||||

```

|

||||

|

||||

5、 重置 Samba 域用户的密码:

|

||||

|

||||

```

|

||||

# samba-tool user setpassword your_domain_user

|

||||

```

|

||||

|

||||

6、 启用或禁用 Samba 域用户账号:

|

||||

|

||||

```
# samba-tool user disable your_domain_user
# samba-tool user enable your_domain_user
```

|

||||

|

||||

7、 同样地,可以使用下面的方法来管理 Samba 用户组:

|

||||

|

||||

```
--------- review all options ---------
# samba-tool group add -h
# samba-tool group add your_domain_group
```

|

||||

|

||||

8、 删除 samba 域用户组:

|

||||

|

||||

```

|

||||

# samba-tool group delete your_domain_group

|

||||

```

|

||||

|

||||

9、 显示所有的 Samba 域用户组信息:

|

||||

|

||||

```

|

||||

# samba-tool group list

|

||||

```

|

||||

|

||||

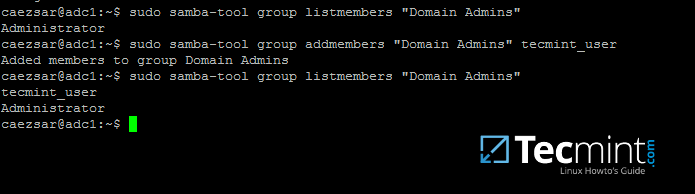

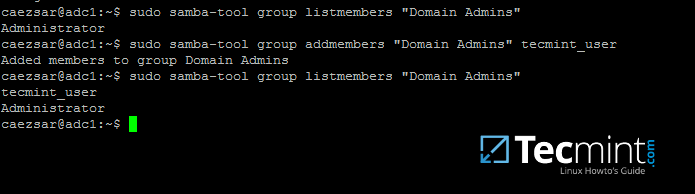

10、 列出指定组下的 Samba 域用户:

|

||||

|

||||

```

|

||||

# samba-tool group listmembers "your_domain group"

|

||||

```

|

||||

[

|

||||

|

||||

][6]

|

||||

|

||||

*列出 Samba 域用户组*

|

||||

|

||||

11、 从 Samba 域组中添加或删除某一用户:

|

||||

|

||||

```
# samba-tool group addmembers your_domain_group your_domain_user
# samba-tool group removemembers your_domain_group your_domain_user
```
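Because the two subcommands are easy to mistype, a thin wrapper can map a plain `add` or `remove` action to the matching `samba-tool group` subcommand. This is a sketch only: `group_member_cmd` is a hypothetical name, and it prints the command rather than executing it.

```shell
#!/bin/sh
# Hypothetical wrapper: translate "add"/"remove" into the samba-tool subcommand
# and print the resulting command line (dry run).
group_member_cmd() {
    action=$1; group=$2; user=$3
    case $action in
        add)    sub=addmembers ;;
        remove) sub=removemembers ;;
        *)      echo "usage: group_member_cmd add|remove GROUP USER" >&2; return 1 ;;
    esac
    # The group name is quoted because AD group names may contain spaces.
    printf 'samba-tool group %s "%s" %s\n' "$sub" "$group" "$user"
}

group_member_cmd add "your_domain_group" your_domain_user
group_member_cmd remove "your_domain_group" your_domain_user
```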

|

||||

|

||||

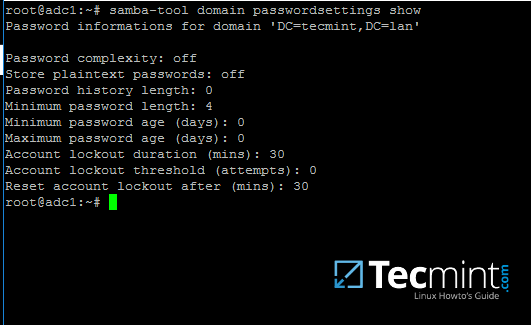

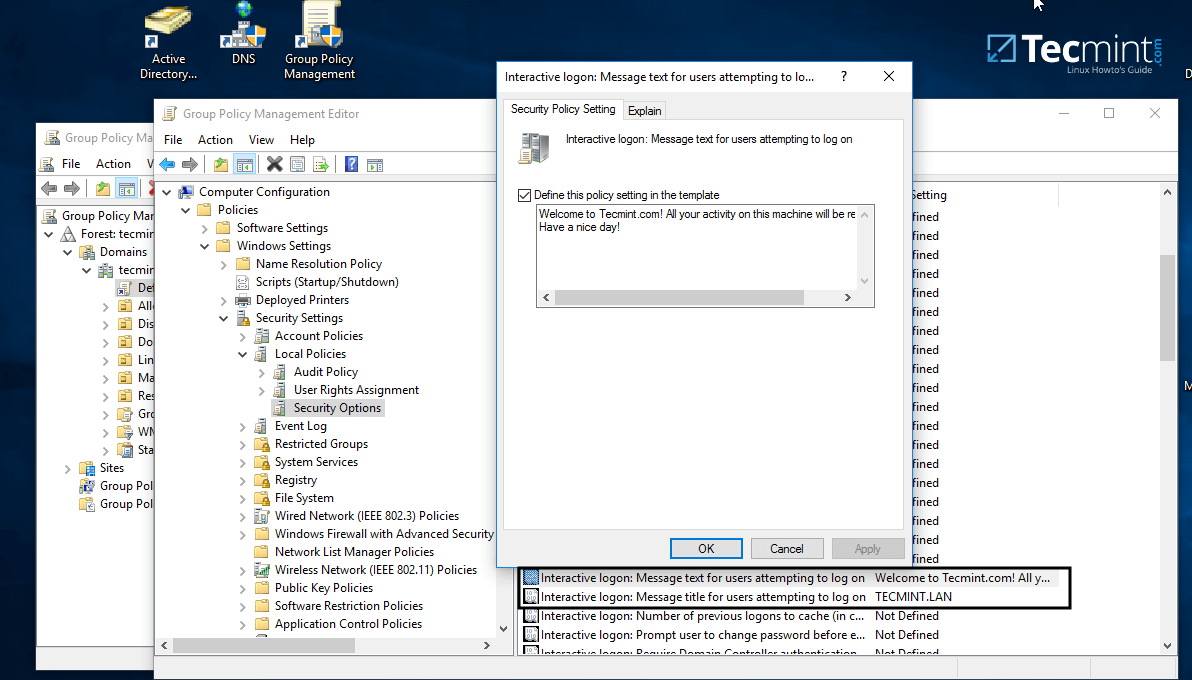

12、 如上面所提到的, `samba-tool` 命令行工具也可以用于管理 Samba 域策略及安全。

|

||||

|

||||

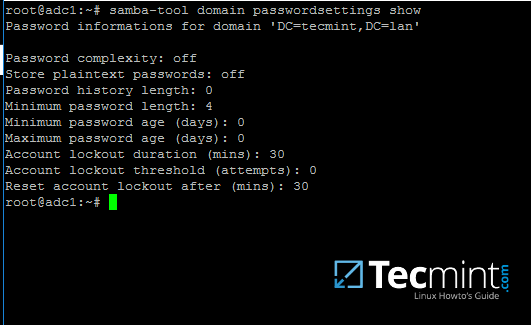

查看 samba 域密码设置:

|

||||

|

||||

```

|

||||

# samba-tool domain passwordsettings show

|

||||

```

|

||||

[

|

||||

|

||||

][7]

|

||||

|

||||

*检查 Samba 域密码*

|

||||

|

||||

13、 为了修改 samba 域密码策略,比如密码复杂度,密码失效时长,密码长度,密码重复次数以及其它域控制器要求的安全策略等,可参照如下命令来完成:

|

||||

|

||||

```
---------- List all command options ----------
# samba-tool domain passwordsettings -h
```

|

||||

|

||||

[

|

||||

|

||||

][8]

|

||||

|

||||

*管理 Samba 域密码策略*

|

||||

|

||||

不要把上图中的密码策略规则用于生产环境中。上面的策略仅仅是用于演示目的。
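For reference, a policy change is made with the `set` subcommand of `samba-tool domain passwordsettings`. The sketch below only prints such a command; the helper name `build_password_policy` is hypothetical and the numbers are illustrative assumptions, not recommended values.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) a password-policy command.
# Arguments: minimum length, history length, maximum password age in days.
build_password_policy() {
    min_len=$1; history=$2; max_age_days=$3
    printf 'samba-tool domain passwordsettings set --complexity=on --min-pwd-length=%s --history-length=%s --max-pwd-age=%s\n' \
        "$min_len" "$history" "$max_age_days"
}

# Illustrative values only.
build_password_policy 12 24 90
```

After applying a real policy as root, `samba-tool domain passwordsettings show` confirms the values now in effect.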

|

||||

|

||||

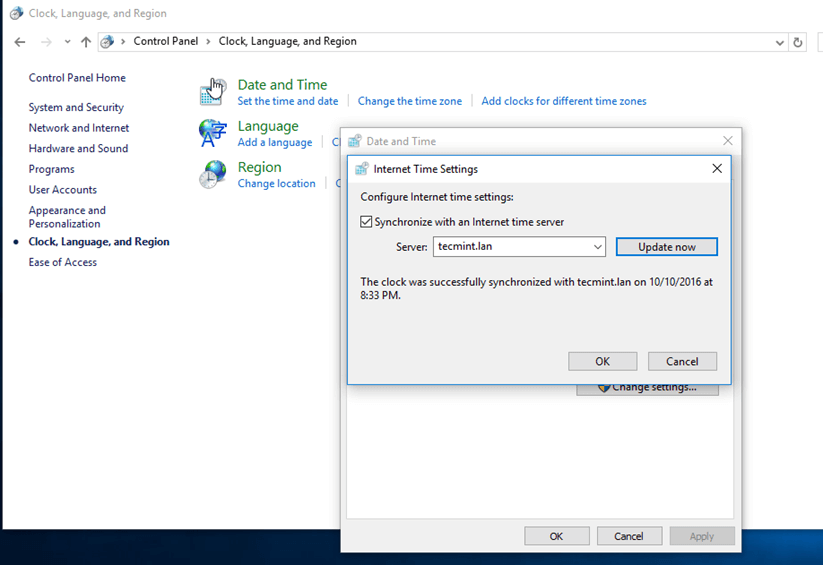

### 第二步:使用活动目录账号来完成 Samba 本地认证

|

||||

|

||||

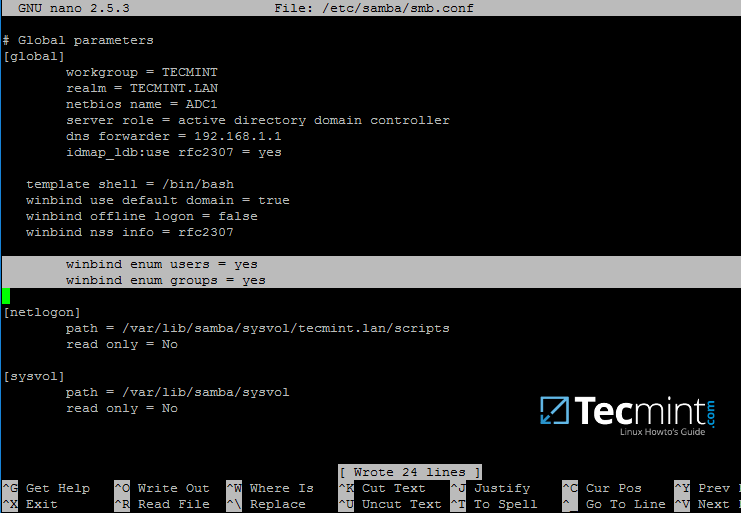

14、 默认情况下,离开 Samba AD DC 环境,AD 用户不能从本地登录到 Linux 系统。

|

||||

|

||||

为了让活动目录账号也能登录到系统,你必须在 Linux 系统环境中做如下设置,并且要修改 Samba4 AD DC 配置。

|

||||

|

||||

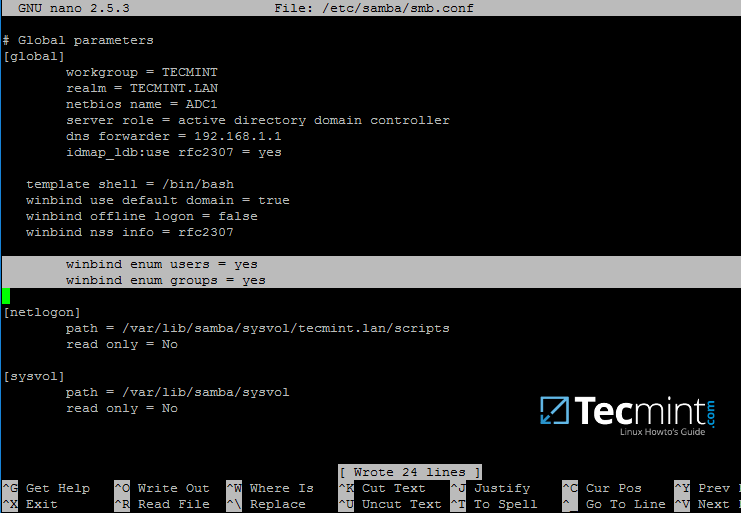

首先,打开 Samba 主配置文件,如果以下内容不存在,则添加:

|

||||

|

||||

```

|

||||

$ sudo nano /etc/samba/smb.conf

|

||||

```

|

||||

|

||||

确保以下参数出现在配置文件中:

|

||||

|

||||

```
winbind enum users = yes
winbind enum groups = yes
```

|

||||

|

||||

[

|

||||

|

||||

][9]

|

||||

|

||||

*Samba 通过 AD 用户账号来进行认证*
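A quick way to confirm that both parameters are present is to grep for them. The function below is a minimal sketch; `check_winbind_enum` is a hypothetical name, and the demonstration runs against a temporary file rather than the real `/etc/samba/smb.conf`.

```shell
#!/bin/sh
# Return 0 only if both winbind enumeration settings are present and set to "yes".
# Argument: path to the configuration file (defaults to /etc/samba/smb.conf).
check_winbind_enum() {
    conf=${1:-/etc/samba/smb.conf}
    for key in 'winbind enum users' 'winbind enum groups'; do
        grep -Eiq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*yes[[:space:]]*$" "$conf" || {
            echo "missing or disabled: $key" >&2
            return 1
        }
    done
}

# Demonstration on a throwaway file.
tmp=$(mktemp)
printf '[global]\n   winbind enum users = yes\n   winbind enum groups = yes\n' > "$tmp"
check_winbind_enum "$tmp" && echo "winbind enumeration enabled"
rm -f "$tmp"
```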

|

||||

|

||||

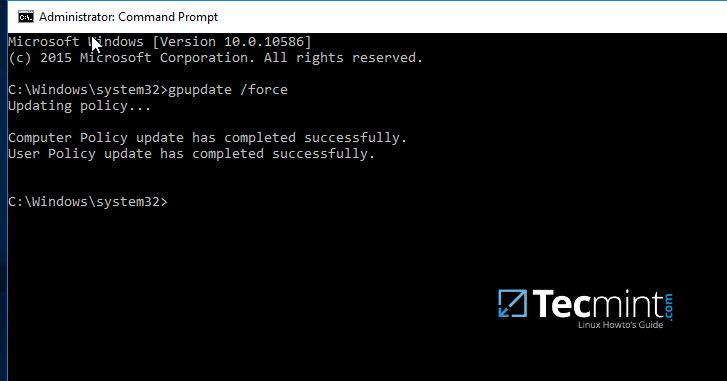

15、 修改之后,使用 `testparm` 工具来验证配置文件没有错误,然后通过如下命令来重启 Samba 服务:

|

||||

|

||||

```
$ testparm
$ sudo systemctl restart samba-ad-dc.service
```

|

||||

|

||||

[

|

||||

|

||||

][10]

|

||||

|

||||

*检查 Samba 配置文件是否报错*

|

||||

|

||||

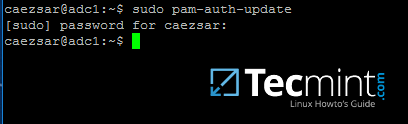

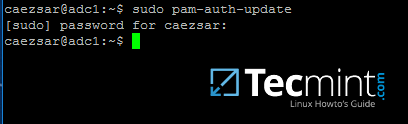

16、 下一步,我们需要修改本地 PAM 配置文件,以让 Samba4 活动目录账号能够完成本地认证、开启会话,并且在第一次登录系统时创建一个用户目录。

|

||||

|

||||

使用 `pam-auth-update` 命令来打开 PAM 配置提示界面,确保所有的 PAM 选项都已经使用 `[空格]` 键来启用,如下图所示:

|

||||

|

||||

完成之后,按 `[Tab]` 键跳转到 OK ,以启用修改。

|

||||

|

||||

```

|

||||

$ sudo pam-auth-update

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][11]

|

||||

|

||||

*为 Samba4 AD 配置 PAM 认证*

|

||||

|

||||

[

|

||||

|

||||

][12]

|

||||

|

||||

为 Samba4 AD 用户启用 PAM认证模块

|

||||

|

||||

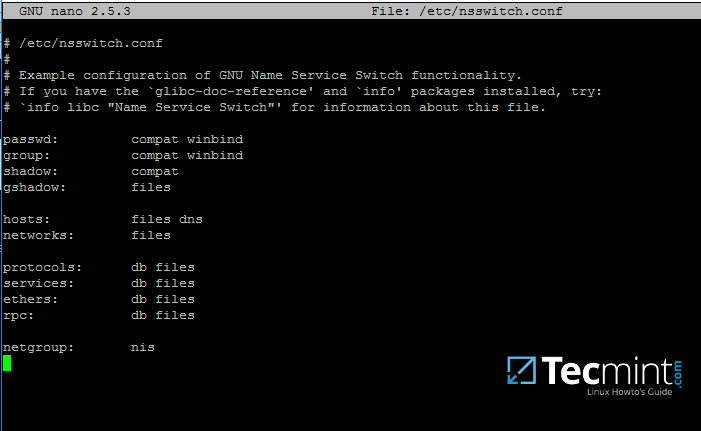

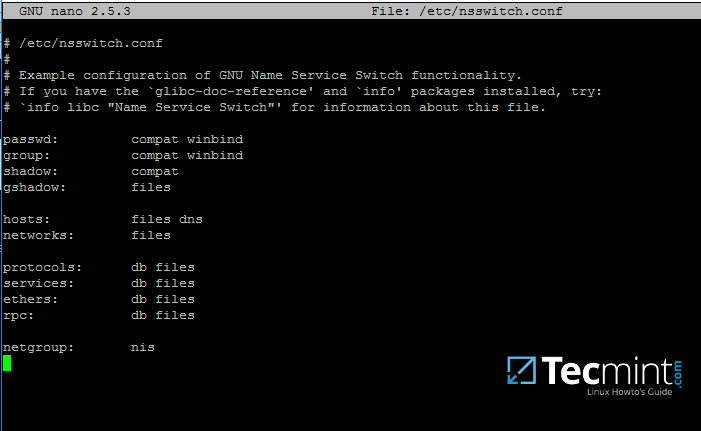

17、 现在,使用文本编辑器打开 `/etc/nsswitch.conf` 配置文件,在 `passwd` 和 `group` 参数的最后面添加 `winbind` 参数,如下图所示:

|

||||

|

||||

```

|

||||

$ sudo vi /etc/nsswitch.conf

|

||||

```

|

||||

[

|

||||

|

||||

][13]

|

||||

|

||||

*为 Samba 服务添加 Winbind Service Switch 设置*
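The same edit can be previewed with `sed` before touching the real file. This is a sketch under assumptions: `add_winbind_to_nsswitch` is a hypothetical helper, the sample `compat` entries stand in for whatever your file contains, and the result is written to standard output, not back to the file.

```shell
#!/bin/sh
# Append "winbind" to the passwd and group lines unless it is already listed.
# Argument: path to an nsswitch.conf-style file. The file itself is not modified.
add_winbind_to_nsswitch() {
    sed -E '/^(passwd|group):/{/winbind/!s/[[:space:]]*$/ winbind/;}' "$1"
}

# Demonstration on a throwaway copy.
demo=$(mktemp)
printf 'passwd:         compat\ngroup:          compat\nshadow:         compat\n' > "$demo"
add_winbind_to_nsswitch "$demo"
rm -f "$demo"
```

Only the `passwd` and `group` lines gain the extra `winbind` source; every other line passes through unchanged.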

|

||||

|

||||

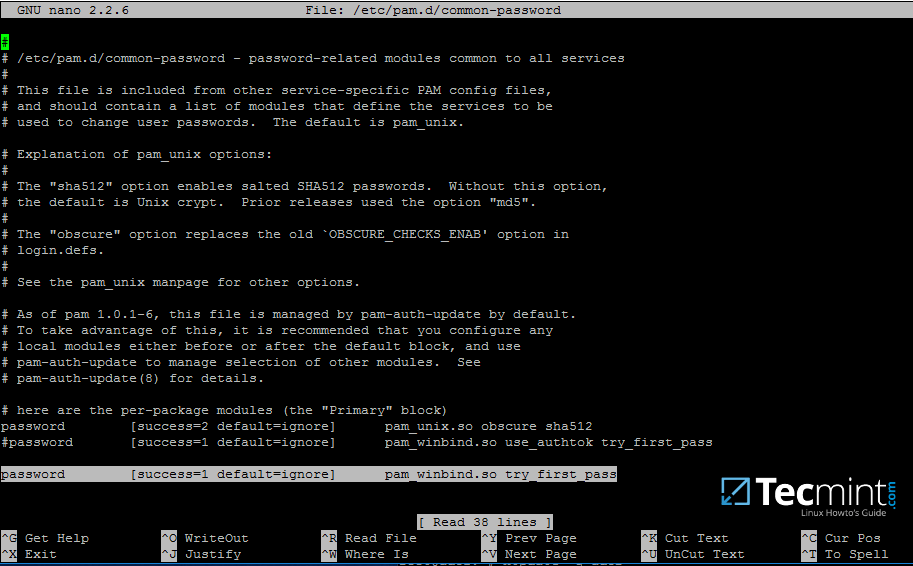

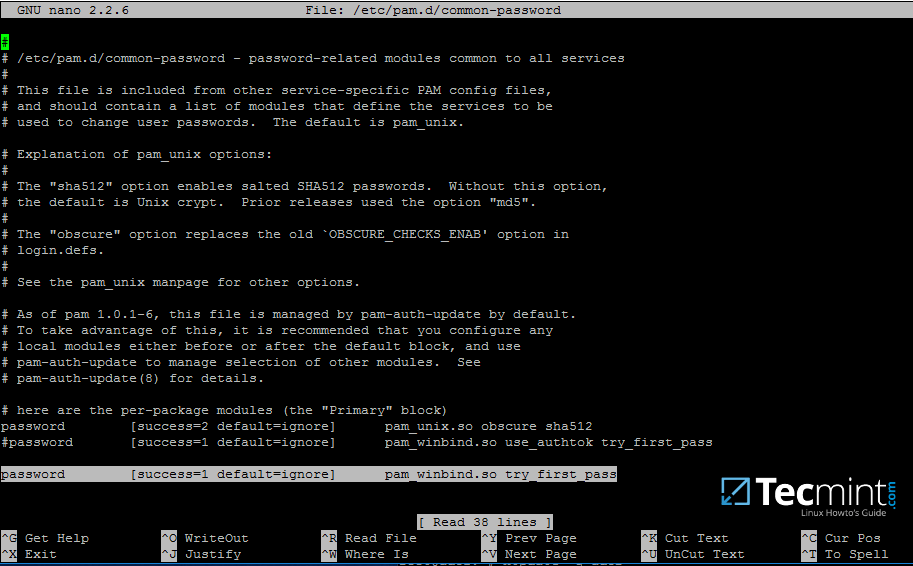

18、 Finally, edit the `/etc/pam.d/common-password` file, find the line shown in the screenshot below, and remove the `use_authtok` parameter from it.

|

||||

|

||||

该设置确保 AD 用户在通过 Linux 系统本地认证后,可以在命令行下修改他们的密码。有这个参数时,本地认证的 AD 用户不能在控制台下修改他们的密码。

|

||||

|

||||

```

|

||||

password [success=1 default=ignore] pam_winbind.so try_first_pass

|

||||

```

|

||||

[

|

||||

|

||||

][14]

|

||||

|

||||

*允许 Samba AD 用户修改密码*

|

||||

|

||||

在每次 PAM 更新安装完成并应用到 PAM 模块,或者你每次执行 `pam-auth-update` 命令后,你都需要删除 `use_authtok` 参数。
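Since this removal has to be repeated, it may help to script it. The following sketch previews the change with `sed`; `strip_use_authtok` is a hypothetical helper, the sample line is an assumption about what `pam-auth-update` generates, and nothing is written back to the file.

```shell
#!/bin/sh
# Print the file with use_authtok removed from pam_winbind.so lines only.
# Argument: path to a common-password style file. The file itself is not modified.
strip_use_authtok() {
    sed -E '/pam_winbind\.so/s/[[:space:]]+use_authtok//' "$1"
}

# Demonstration on a throwaway file.
demo=$(mktemp)
printf 'password [success=1 default=ignore] pam_winbind.so use_authtok try_first_pass\n' > "$demo"
strip_use_authtok "$demo"
rm -f "$demo"
```

Review the printed result before applying the same expression to `/etc/pam.d/common-password`, and keep a backup copy of the original file.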

|

||||

|

||||

19、 The Samba4 binaries ship with a built-in `winbindd` daemon, which is enabled by default.

|

||||

|

||||

因此,你没必要再次去启用并运行 Ubuntu 系统官方自带的 winbind 服务。

|

||||

|

||||

为了防止系统里原来已废弃的 winbind 服务被启动,确保执行以下命令来禁用并停止原来的 winbind 服务。

|

||||

|

||||

```
$ sudo systemctl disable winbind.service
$ sudo systemctl stop winbind.service
```

|

||||

|

||||

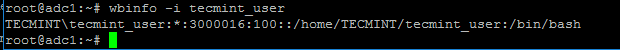

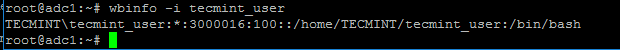

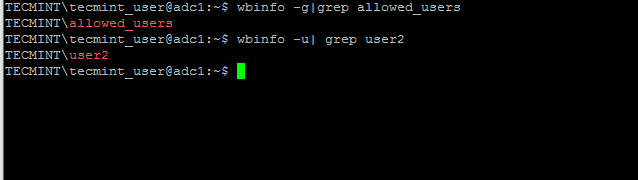

虽然我们不再需要运行原有的 winbind 进程,但是为了安装并使用 wbinfo 工具,我们还得从系统软件库中安装 Winbind 包。

|

||||

|

||||

wbinfo 工具可以用来从 winbindd 进程侧来查询活动目录用户和组。

|

||||

|

||||

以下命令显示了使用 `wbinfo` 命令如何查询 AD 用户及组信息。

|

||||

|

||||

```
$ wbinfo -g
$ wbinfo -u
$ wbinfo -i your_domain_user
```

|

||||

|

||||

[

|

||||

|

||||

][15]

|

||||

|

||||

*检查 Samba4 AD 信息*

|

||||

|

||||

[

|

||||

|

||||

][16]

|

||||

|

||||

*检查 Samba4 AD 用户信息*

|

||||

|

||||

20、 除了 `wbinfo` 工具外,你也可以使用 `getent` 命令行工具从 Name Service Switch 库中查询活动目录信息库,在 `/etc/nsswitch.conf` 配置文件中有相关描述内容。

|

||||

|

||||

通过 grep 命令用管道符从 `getent` 命令过滤结果集,以获取信息库中 AD 域用户及组信息。

|

||||

|

||||

```
# getent passwd | grep TECMINT
# getent group | grep TECMINT
```

|

||||

[

|

||||

|

||||

][17]

|

||||

|

||||

*查看 Samba4 AD 详细信息*

|

||||

|

||||

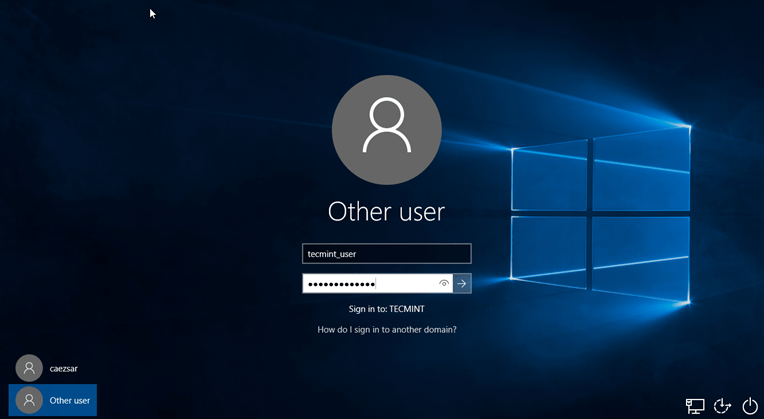

### 第三步:使用活动目录账号登录 Linux 系统

|

||||

|

||||

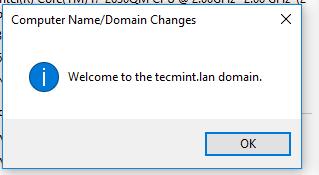

21、 为了使用 Samba4 AD 用户登录系统,使用 `su -` 命令切换到 AD 用户账号即可。

|

||||

|

||||

第一次登录系统后,控制台会有信息提示用户的 home 目录已创建完成,系统路径为 `/home/$DOMAIN/` 之下,名字为用户的 AD 账号名。

|

||||

|

||||

Use the `id` command to display additional information about the authenticated user.

|

||||

|

||||

```
# su - your_ad_user
$ id
$ exit
```

|

||||

|

||||

[

|

||||

|

||||

][18]

|

||||

|

||||

*检查 Linux 下 Samba4 AD 用户认证结果*

|

||||

|

||||

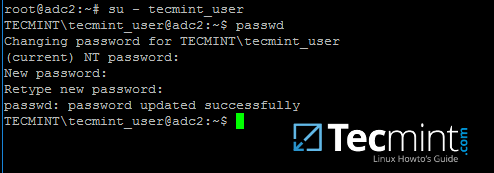

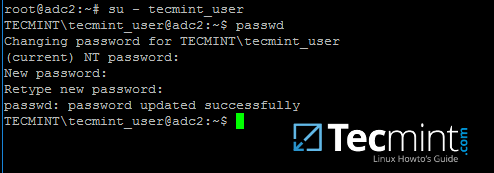

22、 当你成功登入系统后,在控制台下输入 `passwd` 命令来修改已登录的 AD 用户密码。

|

||||

|

||||

```
$ su - your_ad_user
$ passwd
```

|

||||

|

||||

[

|

||||

|

||||

][19]

|

||||

|

||||

*修改 Samba4 AD 用户密码*

|

||||

|

||||

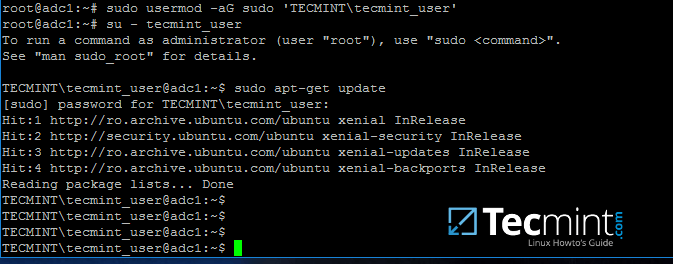

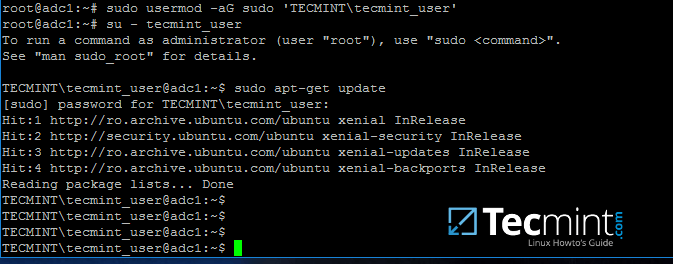

23、 默认情况下,活动目录用户没有可以完成系统管理工作的 root 权限。

|

||||

|

||||

要授予 AD 用户 root 权限,你必须把用户名添加到本地 sudo 组中,可使用如下命令完成。

|

||||

|

||||

Make sure you type the domain, a backslash, and the AD username, all enclosed in ASCII single quotes, as shown below:

|

||||

|

||||

```

|

||||

# usermod -aG sudo 'DOMAIN\your_domain_user'

|

||||

```

|

||||

|

||||

要检查 AD 用户在本地系统上是否有 root 权限,登录后执行一个命令,比如,使用 sudo 权限执行 `apt-get update` 命令。

|

||||

|

||||

```
# su - tecmint_user
$ sudo apt-get update
```

|

||||

|

||||

[

|

||||

|

||||

][20]

|

||||

|

||||

*授予 Samba4 AD 用户 sudo 权限*

|

||||

|

||||

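24、 To grant root privileges to every account in an Active Directory group, edit the `/etc/sudoers` file with the `visudo` command and add the following line after the root privileges line: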

|

||||

|

||||

```

|

||||

%DOMAIN\\your_domain\ group ALL=(ALL:ALL) ALL

|

||||

```

|

||||

|

||||

注意 `/etc/sudoers` 的格式,不要弄乱。

|

||||

|

||||

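The `/etc/sudoers` file does not handle ASCII quote characters well, so use `%` to mark the entry as a group, escape the backslash after the domain name with another backslash, and, if the group name contains spaces (most built-in AD groups do), escape each space with a backslash. Write the domain name in uppercase.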

|

||||

|

||||

[

|

||||

|

||||

][21]

|

||||

|

||||

*授予所有 Samba4 用户 sudo 权限*
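The escaping rules described above are mechanical, so they can be generated. The function below is a sketch: `sudoers_group_line` is a hypothetical name, and the domain and group in the example are placeholders.

```shell
#!/bin/sh
# Build a sudoers entry for an AD group:
#   - the domain is upper-cased,
#   - the backslash after the domain is doubled,
#   - every space in the group name is escaped with a backslash.
sudoers_group_line() {
    domain=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')
    group=$(printf '%s' "$2" | sed 's/ /\\ /g')
    printf '%%%s\\\\%s ALL=(ALL:ALL) ALL\n' "$domain" "$group"
}

sudoers_group_line tecmint 'domain admins'
```

Paste the generated line through `visudo` rather than editing the file directly, so that a syntax error cannot lock you out of sudo.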

|

||||

|

||||

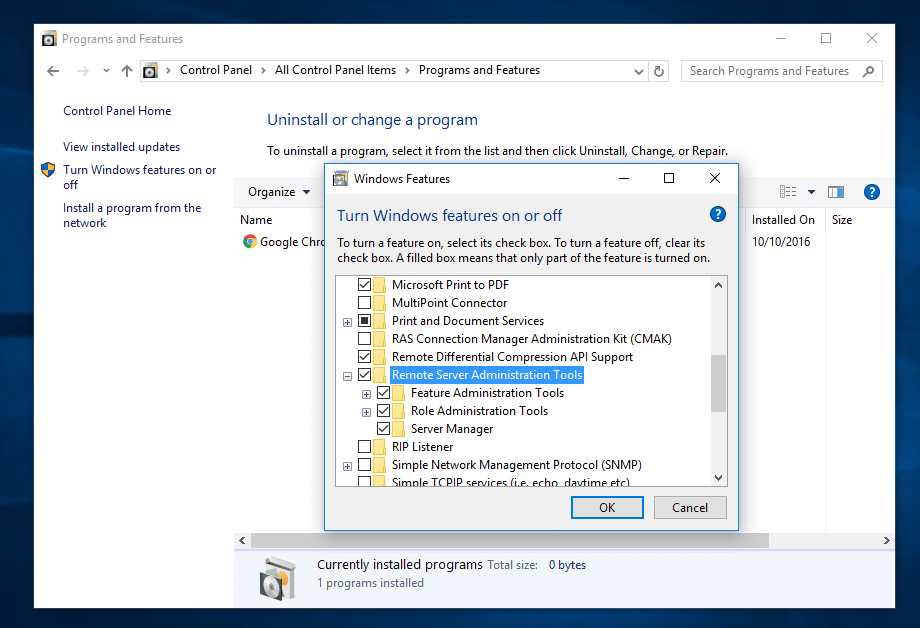

好了,差不多就这些了!管理 Samba4 AD 架构也可以使用 Windows 环境中的其它几个工具,比如 ADUC、DNS 管理器、 GPM 等等,这些工具可以通过安装从 Microsoft 官网下载的 RSAT 软件包来获得。

|

||||

|

||||

要通过 RSAT 工具来管理 Samba4 AD DC ,你必须要把 Windows 系统加入到 Samba4 活动目录。这将是我们下一篇文章的重点,在这之前,请继续关注。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/manage-samba4-active-directory-linux-command-line

|

||||

|

||||

作者:[Matei Cezar][a]

|

||||

译者:[rusking](https://github.com/rusking)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/cezarmatei/

|

||||

[1]:https://linux.cn/article-8065-1.html

|

||||

[2]:http://www.tecmint.com/60-commands-of-linux-a-guide-from-newbies-to-system-administrator/

|

||||

[3]:http://www.tecmint.com/wp-content/uploads/2016/11/Samba-Administration-Tool.png

|

||||

[4]:http://www.tecmint.com/wp-content/uploads/2016/11/Create-User-on-Samba-AD.png

|

||||

[5]:http://www.tecmint.com/wp-content/uploads/2016/11/List-Samba-AD-Users.png

|

||||

[6]:http://www.tecmint.com/wp-content/uploads/2016/11/List-Samba-Domain-Members-of-Group.png

|

||||

[7]:http://www.tecmint.com/wp-content/uploads/2016/11/Check-Samba-Domain-Password.png

|

||||

[8]:http://www.tecmint.com/wp-content/uploads/2016/11/Manage-Samba-Domain-Password-Settings.png

|

||||

[9]:http://www.tecmint.com/wp-content/uploads/2016/11/Samba-Authentication-Using-Active-Directory-Accounts.png

|

||||

[10]:http://www.tecmint.com/wp-content/uploads/2016/11/Check-Samba-Configuration-for-Errors.png

|

||||

[11]:http://www.tecmint.com/wp-content/uploads/2016/11/PAM-Configuration-for-Samba4-AD.png

|

||||

[12]:http://www.tecmint.com/wp-content/uploads/2016/11/Enable-PAM-Authentication-Module-for-Samba4-AD.png

|

||||

[13]:http://www.tecmint.com/wp-content/uploads/2016/11/Add-Windbind-Service-Switch-for-Samba.png

|

||||

[14]:http://www.tecmint.com/wp-content/uploads/2016/11/Allow-Samba-AD-Users-to-Change-Password.png

|

||||

[15]:http://www.tecmint.com/wp-content/uploads/2016/11/Check-Information-of-Samba4-AD.png

|

||||

[16]:http://www.tecmint.com/wp-content/uploads/2016/11/Check-Samba4-AD-User-Info.png

|

||||

[17]:http://www.tecmint.com/wp-content/uploads/2016/11/Get-Samba4-AD-Details.png

|

||||

[18]:http://www.tecmint.com/wp-content/uploads/2016/11/Check-Samba4-AD-User-Authentication-on-Linux.png

|

||||

[19]:http://www.tecmint.com/wp-content/uploads/2016/11/Change-Samba4-AD-User-Password.png

|

||||

[20]:http://www.tecmint.com/wp-content/uploads/2016/11/Grant-sudo-Permission-to-Samba4-AD-User.png

|

||||

[21]:http://www.tecmint.com/wp-content/uploads/2016/11/Give-Sudo-Access-to-All-Samba4-AD-Users.png

|

||||

@ -0,0 +1,65 @@

|

||||

把 SQL Server 迁移到 Linux?不如换成 MySQL

|

||||

============================================================

|

||||

|

||||

最近几年,数量庞大的个人和组织放弃 Windows 平台选择 Linux 平台,而且随着人们体验到更多 Linux 的发展,这个数字将会继续增长。在很长的一段时间内, Linux 是网络服务器的领导者,因为大部分的网络服务器都运行在 Linux 之上,这或许是为什么那么多的个人和组织选择迁移的一个原因。

|

||||

|

||||

迁移的原因有很多,更强的平台稳定性、可靠性、成本、所有权和安全性等等。随着更多的个人和组织迁移到 Linux 平台,MS SQL 服务器数据库管理系统的迁移也有着同样的趋势,首选的是 MySQL ,这是因为 MySQL 的互用性、平台无关性和购置成本低。

|

||||

|

||||

有如此多的个人和组织完成了迁移,这是应业务需求而产生的迁移,而不是为了迁移的乐趣。因此,有必要做一个综合可行性和成本效益分析,以了解迁移对于你的业务上的正面和负面影响。

|

||||

|

||||

迁移需要基于以下重要因素:

|

||||

|

||||

### 对平台的掌控

|

||||

|

||||

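Unlike Windows, where you have no real control over releases and fixes, Linux gives you the flexibility to obtain a fix whenever you need one. Developers and security staff value this because they can patch a security threat themselves as soon as it is identified, rather than waiting for the vendor to release a patch as they must on Windows.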

|

||||

|

||||

### 跟随大众

|

||||

|

||||

目前, 运行在 Linux 平台上的服务器在数量上远超 Windows,几乎是全世界服务器数量的四分之三,而且这种趋势在最近一段时间内不会改变。因此,许多组织正在将他们的服务完全迁移到 Linux 上,而不是同时使用两种平台,同时使用将会增加他们的运营成本。

|

||||

|

||||

### 微软没有开放 SQL Server 的源代码

|

||||

|

||||

微软宣称他们下一个名为 Denali 的新版 MS SQL Server 将会是一个 Linux 版本,并且不会开放其源代码,这意味着他们仍然使用的是软件授权模式,只是新版本将能在 Linux 上运行而已。这一点将许多乐于接受开源新版本的人拒之门外。

|

||||

|

||||

这也没有给那些使用闭源的 Oracle 用户另一个选择,对于使用完全开源的 [MySQL 用户][7]也是如此。

|

||||

|

||||

### 节约授权许可证的花费

|

||||

|

||||

授权许可证的潜在成本让许多用户很失望。在 Windows 平台上运行 MS SQL 服务器有太多的授权许可证牵涉其中。你需要这些授权许可证:

|

||||

|

||||

* Windows 操作系统

|

||||

* MS SQL 服务器

|

||||

* 特定的数据库工具,例如 SQL 分析工具等

|

||||

|

||||

不像 Windows 平台,Linux 完全没有高昂的授权花费,因此更能吸引用户。 MySQL 数据库也能免费获取,甚而它提供了像 MS SQL 服务器一样的灵活性,那就更值得选择了。不像那些给 MS SQL 设计的收费工具,大部分的 MySQL 数据库实用程序是免费的。

|

||||

|

||||

### 有时候用的是特殊的硬件

|

||||

|

||||

因为 Linux 是不同的开发者所开发,并在不断改进中,所以它独立于所运行的硬件之上,并能被广泛使用在不同的硬件平台。然而尽管微软正在努力让 Windows 和 MSSQL 服务器做到硬件无关,但在平台无关上依旧有些限制。

|

||||

|

||||

### 支持

|

||||

|

||||

有了 Linux、MySQL 和其它的开源软件,获取满足自己特定需求的帮助变得更加简单,因为有不同开发者参与到这些软件的开发过程中。这些开发者或许就在你附近,这样更容易获取帮助。在线论坛也能帮上不少,你能发帖并讨论你所面对的问题。

|

||||

|

||||

至于那些商业软件,你只能根据他们的软件协议和时间来获得帮助,有时候他们不能在你的时间范围内给出一个解决方案。

|

||||

|

||||

在不同的情况中,迁移到 Linux 都是你最好的选择,加入一个彻底的、稳定可靠的平台来获取优异表现,众所周知,它比 Windows 更健壮。这值得一试。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/tutorial/moving-with-sql-server-to-linux-move-from-sql-server-to-mysql-as-well/

|

||||

|

||||

作者:[Tony Branson][a]

|

||||

译者:[ypingcn](https://github.com/ypingcn)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://twitter.com/howtoforgecom

|

||||

[1]:https://www.howtoforge.com/tutorial/moving-with-sql-server-to-linux-move-from-sql-server-to-mysql-as-well/#to-have-control-over-the-platform

|

||||

[2]:https://www.howtoforge.com/tutorial/moving-with-sql-server-to-linux-move-from-sql-server-to-mysql-as-well/#joining-the-crowd

|

||||

[3]:https://www.howtoforge.com/tutorial/moving-with-sql-server-to-linux-move-from-sql-server-to-mysql-as-well/#microsoft-isnrsquot-open-sourcing-sql-serverrsquos-code

|

||||

[4]:https://www.howtoforge.com/tutorial/moving-with-sql-server-to-linux-move-from-sql-server-to-mysql-as-well/#saving-on-license-costs

|

||||

[5]:https://www.howtoforge.com/tutorial/moving-with-sql-server-to-linux-move-from-sql-server-to-mysql-as-well/#sometimes-the-specific-hardware-being-used

|

||||

[6]:https://www.howtoforge.com/tutorial/moving-with-sql-server-to-linux-move-from-sql-server-to-mysql-as-well/#support

|

||||

[7]:http://www.scalearc.com/how-it-works/products/scalearc-for-mysql

|

||||

@ -0,0 +1,124 @@

|

||||

如何在 Ubuntu 环境下搭建邮件服务器(一)

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

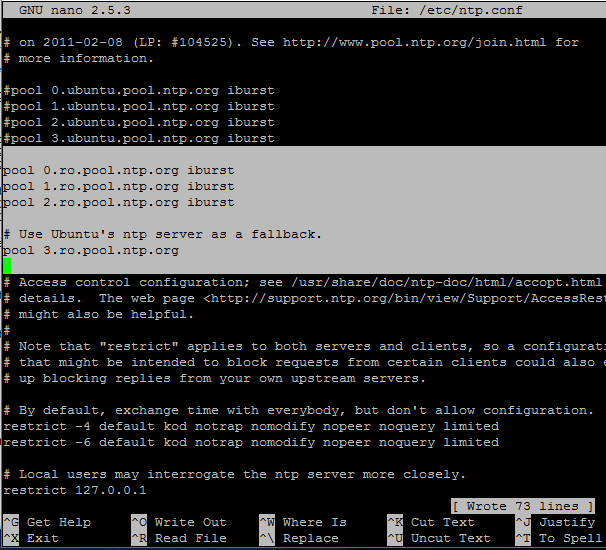

在这个系列的文章中,我们将通过使用 Postfix、Dovecot 和 openssl 这三款工具来为你展示如何在 ubuntu 系统上搭建一个既可靠又易于配置的邮件服务器。

|

||||

|

||||

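In this fast-changing era of containers and microservices, it is comforting that some things stay the same, such as setting up a Linux mail server. It still takes many steps to fit the various servers together, but once everything is configured it is stable and reliable, without the here-one-moment-gone-the-next churn of microservices. In this series we will use Postfix, Dovecot, and OpenSSL to build a reliable, easy-to-configure mail server on Ubuntu.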

|

||||

|

||||

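Postfix is a venerable and reliable program that is easier to configure and use than Sendmail, the original Unix MTA (is anyone still using Sendmail?). Exim is the default MTA on Debian; it is lighter than Postfix and very easy to configure, so we will cover Exim in a future tutorial.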

|

||||

|

||||

Dovecot (LCTT translator's note: see [Wikipedia](https://en.wikipedia.org/wiki/Dovecot_(software)) for details) and Courier are two popular, excellent IMAP/POP3 servers. Dovecot is the lighter of the two and easier to configure.

|

||||

|

||||

你必须要保证你的邮件通讯是安全的,因此我们就需要使用到 OpenSSL 这个软件,OpenSSL 也提供了一些很好用的工具来测试你的邮件服务器。

|

||||

|

||||

为了简单起见,在这一系列的教程中,我们将指导大家安装一个在局域网上的邮件服务器,你应该拥有一个局域网内的域名服务,并确保它是启用且正常工作的,查看这篇“[使用 dnsmasq 为局域网轻松提供 DNS 服务][5]”会有些帮助,然后,你就可以通过注册域名并相应地配置防火墙,来将这台局域网服务器变成互联网可访问邮件服务器。这个过程网上已经有很多很详细的教程了,这里不再赘述,请大家继续跟着教程进行即可。

|

||||

|

||||

### 一些术语

|

||||

|

||||

让我们先来快速了解一些术语,因为当我们了解了这些术语的时候就能知道这些见鬼的东西到底是什么。 :D

|

||||

|

||||

* **MTA**:邮件传输代理(Mail Transfer Agent),基于 SMTP 协议(简单邮件传输协议)的服务端,比如 Postfix、Exim、Sendmail 等。SMTP 服务端彼此之间进行相互通信(LCTT 译注 : 详情请阅读[维基百科](https://en.wikipedia.org/wiki/Message_transfer_agent))。

|

||||

* **MUA**: 邮件用户代理(Mail User Agent),你本地的邮件客户端,例如 : Evolution、KMail、Claws Mail 或者 Thunderbird(LCTT 译注 : 例如国内的 Foxmail)。

|

||||

* **POP3**:邮局协议(Post-Office Protocol)版本 3,将邮件从 SMTP 服务器传输到你的邮件客户端的的最简单的协议。POP 服务端是非常简单小巧的,单一的一台机器可以为数以千计的用户提供服务。

|

||||

* **IMAP**: Internet Message Access Protocol. Many businesses use it because mail can be kept on the server, so users do not have to worry about losing messages. IMAP servers need a lot of memory and storage.

|

||||

* **TLS**: Transport Layer Security, the successor to SSL (Secure Sockets Layer). It provides the encrypted transport layer for SASL authentication.

|

||||

* **SASL**:简单身份认证与安全层(Simple Authentication and Security Layer),用于认证用户。SASL进行身份认证,而上面说的 TLS 提供认证数据的加密传输。

|

||||

* **StartTLS**: 也被称为伺机 TLS 。如果服务器双方都支持 SSL/TLS,StartTLS 就会将纯文本连接升级为加密连接(TLS 或 SSL)。如果有一方不支持加密,则使用明文传输。StartTLS 会使用标准的未加密端口 25 (SMTP)、 110(POP3)和 143 (IMAP)而不是对应的加密端口 465(SMTP)、995(POP3) 和 993 (IMAP)。
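The port pairs named in the StartTLS entry can be kept in a small lookup function, which is handy when scripting connection tests. This is only a sketch; `mail_ports` is a hypothetical helper, not part of any mail package.

```shell
#!/bin/sh
# Print "<plaintext/StartTLS port> <implicit TLS port>" for a mail protocol.
mail_ports() {
    case $1 in
        smtp) echo "25 465" ;;
        pop3) echo "110 995" ;;
        imap) echo "143 993" ;;
        *)    echo "unknown protocol: $1" >&2; return 1 ;;
    esac
}

for proto in smtp pop3 imap; do
    # Split the two port numbers into $1 and $2.
    set -- $(mail_ports "$proto")
    echo "$proto: StartTLS on port $1, implicit TLS on port $2"
done
```

To test a StartTLS upgrade by hand, OpenSSL's client can be pointed at the plaintext port, for example `openssl s_client -starttls smtp -connect myserver:25`, where `myserver` is a placeholder host name.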

|

||||

|

||||

### 啊,我们仍然有 sendmail

|

||||

|

||||

绝大多数的 Linux 版本仍然还保留着 `/usr/sbin/sendmail` 。 这是在那个 MTA 只有一个 sendmail 的古代遗留下来的痕迹。在大多数 Linux 发行版中,`/usr/sbin/sendmail` 会符号链接到你安装的 MTA 软件上。如果你的 Linux 中有它,不用管它,你的发行版会自己处理好的。

|

||||

|

||||

### 安装 Postfix

|

||||

|

||||

使用 `apt-get install postfix` 来做基本安装时要注意(图 1),安装程序会打开一个向导,询问你想要搭建的服务器类型,你要选择“Internet Server”,虽然这里是局域网服务器。它会让你输入完全限定的服务器域名(例如: myserver.mydomain.net)。对于局域网服务器,假设你的域名服务已经正确配置,(我多次提到这个是因为经常有人在这里出现错误),你也可以只使用主机名。

|

||||

|

||||

|

||||

|

||||

*图 1:Postfix 的配置。*

|

||||

|

||||

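Ubuntu creates a configuration file for Postfix and starts three daemons: `master`, `qmgr`, and `pickup`. There is no Postfix command or daemon. (LCTT translator's note: there is a `postfix` command, which is used for administration.)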

|

||||

|

||||

```
$ ps ax
6494 ? Ss 0:00 /usr/lib/postfix/master
6497 ? S 0:00 pickup -l -t unix -u -c
6498 ? S 0:00 qmgr -l -t unix -u
```

|

||||

|

||||

You can test your configuration files with Postfix's built-in syntax checker; if it finds no syntax errors, it prints nothing.

|

||||

|

||||

```
$ sudo postfix check
[sudo] password for carla:
```

|

||||

|

||||

使用 `netstat` 来验证 `postfix` 是否正在监听 25 端口。

|

||||

|

||||

```
$ netstat -ant
tcp 0 0 0.0.0.0:25 0.0.0.0:* LISTEN
tcp6 0 0 :::25 :::* LISTEN
```
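On a busy host the `netstat` output is long, so it can help to filter it down to listeners on one port. The filter below is a sketch; `listening_on_port` is a hypothetical helper, and the sample input is the output shown above plus one unrelated line added for contrast.

```shell
#!/bin/sh
# Read netstat-style lines on stdin and print only sockets in LISTEN state
# whose local address ends with ":<port>".
listening_on_port() {
    port=$1
    awk -v p=":$port" '$6 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p'
}

# Demonstration with canned input; in practice use: netstat -ant | listening_on_port 25
listening_on_port 25 <<'EOF'
tcp 0 0 0.0.0.0:25 0.0.0.0:* LISTEN
tcp 0 0 0.0.0.0:2525 0.0.0.0:* LISTEN
tcp6 0 0 :::25 :::* LISTEN
EOF
```

Matching on the end of the local address keeps a port such as 2525 from being mistaken for port 25.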

|

||||

|

||||

现在让我们再操起古老的 `telnet` 来进行测试 :

|

||||

|

||||

```
$ telnet myserver 25
Trying 127.0.1.1...
Connected to myserver.
Escape character is '^]'.
220 myserver ESMTP Postfix (Ubuntu)
EHLO myserver
250-myserver
250-PIPELINING
250-SIZE 10240000
250-VRFY
250-ETRN
250-STARTTLS
250-ENHANCEDSTATUSCODES
250-8BITMIME
250 DSN
^]
telnet>
```

|

||||

|

||||

嘿,我们已经验证了我们的服务器名,而且 Postfix 正在监听 SMTP 的 25 端口而且响应了我们键入的命令。

|

||||

|

||||

按下 `^]` 终止连接,返回 telnet。输入 `quit` 来退出 telnet。输出的 ESMTP(扩展的 SMTP ) 250 状态码如下。

|

||||

(LCTT 译注: ESMTP (Extended SMTP),即扩展 SMTP,就是对标准 SMTP 协议进行的扩展。详情请阅读[维基百科](https://en.wikipedia.org/wiki/Extended_SMTP))

|

||||

|

||||

* **PIPELINING** 允许多个命令流式发出,而不必对每个命令作出响应。

|

||||

* **SIZE** 表示服务器可接收的最大消息大小。

|

||||

* **VRFY** 可以告诉客户端某一个特定的邮箱地址是否存在,这通常应该被取消,因为这是一个安全漏洞。

|

||||

* **ETRN** 适用于非持久互联网连接的服务器。这样的站点可以使用 ETRN 从上游服务器请求邮件投递,Postfix 可以配置成延迟投递邮件到 ETRN 客户端。

|

||||

* **STARTTLS** (详情见上述说明)。

|

||||

* **ENHANCEDSTATUSCODES**: the server supports enhanced status and error codes.

|

||||

* **8BITMIME**,支持 8 位 MIME,这意味着完整的 ASCII 字符集。最初,原始的 ASCII 是 7 位。

|

||||

* **DSN**,投递状态通知,用于通知你投递时的错误。
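The extension keywords can also be pulled out of a saved EHLO transcript automatically. This sketch assumes the transcript follows the usual layout, in which the first `250` line is the server greeting and each later `250` line names one extension; `ehlo_extensions` is a hypothetical helper.

```shell
#!/bin/sh
# Read an SMTP session transcript on stdin and print one ESMTP extension
# keyword per line. The first 250 line (the greeting) is skipped.
ehlo_extensions() {
    awk '/^250[- ]/ { n++; if (n > 1) { sub(/^250[- ]/, ""); print $1 } }'
}

# Demonstration with the session shown earlier.
ehlo_extensions <<'EOF'
220 myserver ESMTP Postfix (Ubuntu)
EHLO myserver
250-myserver
250-PIPELINING
250-SIZE 10240000
250-VRFY
250-ETRN
250-STARTTLS
250-ENHANCEDSTATUSCODES
250-8BITMIME
250 DSN
EOF
```

Piping the result through `grep -x STARTTLS` is a simple way to check whether a server advertises encryption.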

|

||||

|

||||

Postfix 的主配置文件是: `/etc/postfix/main.cf`,这个文件是安装程序创建的,可以参考[这个资料][6]来查看完整的 `main.cf` 参数列表, `/etc/postfix/postfix-files` 这个文件描述了 Postfix 完整的安装文件。

|

||||

|

||||

下一篇教程我们会讲解 Dovecot 的安装和测试,然后会给我们自己发送一些邮件。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/learn/how-build-email-server-ubuntu-linux

|

||||

|

||||

作者:[CARLA SCHRODER][a]

|

||||

译者:[WangYihang](https://github.com/WangYihang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/cschroder

|

||||

[1]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[2]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[3]:https://www.linux.com/files/images/postfix-1png

|

||||

[4]:https://www.linux.com/files/images/mail-stackjpg

|

||||

[5]:https://www.linux.com/learn/dnsmasq-easy-lan-name-services

|

||||

[6]:http://www.postfix.org/postconf.5.html

|

||||

|

||||

@ -0,0 +1,215 @@

|

||||

RHEL (Red Hat Enterprise Linux,红帽企业级 Linux) 7.3 安装指南

|

||||

=====

|

||||

|

||||

RHEL 是由红帽公司开发维护的开源 Linux 发行版,可以运行在所有的主流 CPU 架构中。一般来说,多数的 Linux 发行版都可以免费下载、安装和使用,但对于 RHEL,只有在购买了订阅之后,你才能下载和使用,否则只能获取到试用期为 30 天的评估版。

|

||||

|

||||

本文会告诉你如何在你的机器上安装最新的 RHEL 7.3,当然了,使用的是期限 30 天的评估版 ISO 镜像,请自行到 [https://access.redhat.com/downloads][1] 下载。

|

||||

|

||||

如果你更喜欢使用 CentOS,请移步 [CentOS 7.3 安装指南][2]。

|

||||

|

||||

欲了解 RHEL 7.3 的新特性,请参考 [版本更新日志][3]。

|

||||

|

||||

#### 先决条件

|

||||

|

||||

本次安装是在支持 UEFI 的虚拟机固件上进行的。为了完成安装,你首先需要进入主板的 EFI 固件更改启动顺序为已刻录好 ISO 镜像的对应设备(DVD 或者 U 盘)。

|

||||

|

||||

如果是通过 USB 介质来安装,你需要确保这个可以启动的 USB 设备是用支持 UEFI 兼容的工具来创建的,比如 [Rufus][4],它能将你的 USB 设备设置为 UEFI 固件所需要的 GPT 分区方案。

|

||||

|

||||

为了进入主板的 UEFI 固件设置面板,你需要在电脑初始化 POST (Power on Self Test,通电自检) 的时候按下一个特殊键。

|

||||

|

||||

关于该设置需要用到特殊键,你可以向主板厂商进行咨询获取。通常来说,在笔记本上,可能是这些键:F2、F9、F10、F11 或者 F12,也可能是 Fn 与这些键的组合。

|

||||

|

||||

此外,更改 UEFI 启动顺序前,你要确保快速启动选项 (QuickBoot/FastBoot) 和 安全启动选项 (Secure Boot) 处于关闭状态,这样才能在 EFI 固件中运行 RHEL。

|

||||

|

||||

有一些 UEFI 固件主板模型有这样一个选项,它让你能够以传统的 BIOS 或者 EFI CSM (Compatibility Support Module,兼容支持模块) 两种模式来安装操作系统,其中 CSM 是主板固件中一个用来模拟 BIOS 环境的模块。这种类型的安装需要 U 盘以 MBR 而非 GPT 来进行分区。

|

||||

|

||||

此外,一旦在你的 UEFI 机器中以这两种模式之一成功安装好 RHEL 或者类似的 OS,那么安装好的系统就必须以你安装时使用的模式来运行。而且,你也不能够从 UEFI 模式变更到传统的 BIOS 模式,反之亦然。强行变更这两种模式会让你的系统变得不稳定、无法启动,同时还需要重新安装系统。
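On an already installed Linux system, the mode it was booted in can be checked by looking for the `/sys/firmware/efi` directory, which the kernel exposes only after a UEFI boot. The function below is a small sketch; `boot_mode` and its optional root-prefix argument are hypothetical and exist only to make the check easy to try out.

```shell
#!/bin/sh
# Print "UEFI" if the EFI firmware directory exists, otherwise "BIOS".
# Optional argument: an alternative root prefix (useful for testing).
boot_mode() {
    root=${1:-}
    if [ -d "$root/sys/firmware/efi" ]; then
        echo UEFI
    else
        echo BIOS
    fi
}

echo "This system booted in $(boot_mode) mode"
```

Running this from a live or rescue environment before reinstalling shows which mode the installation media itself was started in.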

|

||||

|

||||

### RHEL 7.3 安装指南

|

||||

|

||||

1、 首先,下载并使用合适的工具刻录 RHEL 7.3 ISO 镜像到 DVD 或者创建一个可启动的 U 盘。

|

||||

|

||||

给机器加电启动,把 DVD/U 盘放入合适驱动器中,并根据你的 UEFI/BIOS 类型,按下特定的启动键变更启动顺序来启动安装介质。

|

||||

|

||||

Once the installation media is detected, it boots into the RHEL GRUB menu. Select “Install Red Hat Enterprise Linux 7.3” and press Enter to continue.

|

||||

|

||||

[][5]

|

||||

|

||||

*RHEL 7.3 启动菜单*

|

||||

|

||||

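2、 The RHEL 7.3 welcome screen appears next. Choose the language to use during installation (LCTT translator's note: this is only the language for the installation process; the language of the installed system is set later in the installation), then press Enter to move to the next screen.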

|

||||

|

||||

[][6]

|

||||

|

||||

*选择 RHEL 7.3 安装过程使用的语言*

|

||||

|

||||

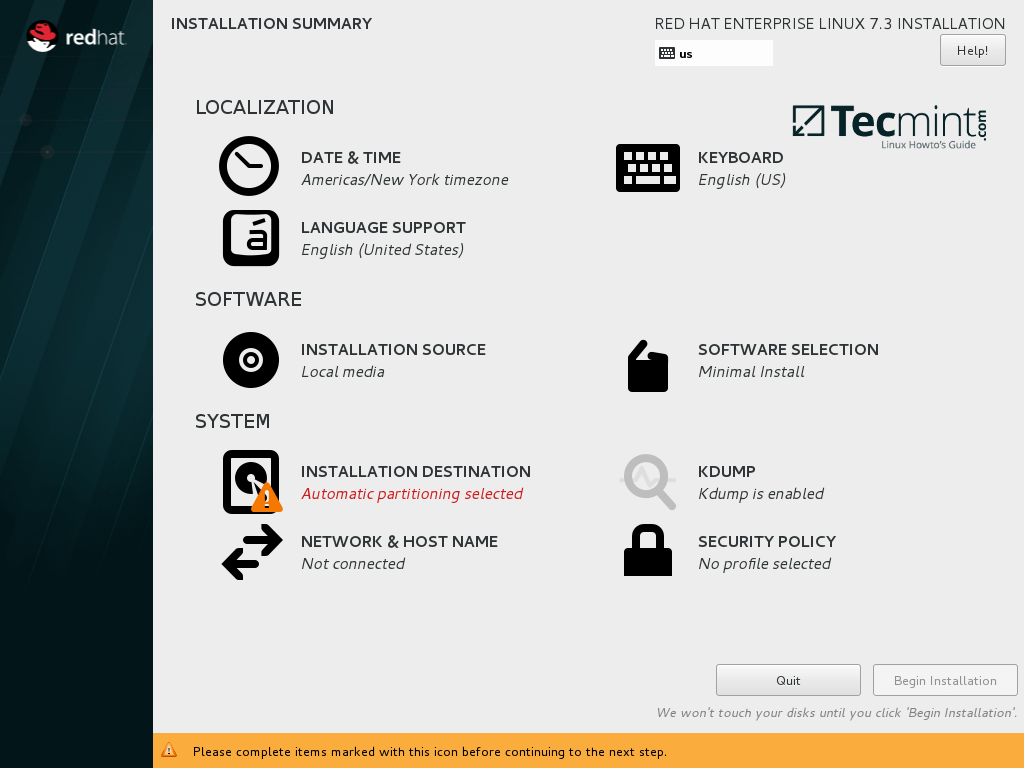

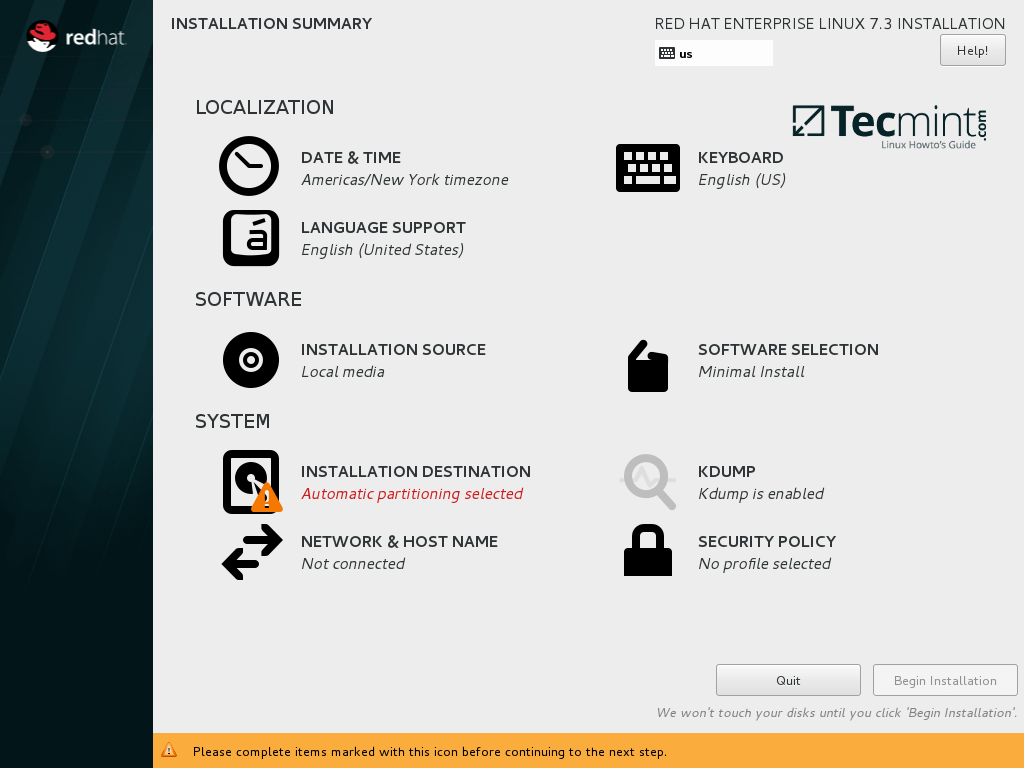

3、 下一界面中显示的是安装 RHEL 时你需要设置的所有事项的总体概览。首先点击日期和时间 (DATE & TIME) 并在地图中选择你的设备所在地区。

|

||||

|

||||

Click the Done button at the top to save your settings, then move on to the next system setting.

|

||||

|

||||

[][7]

|

||||

|

||||

*RHEL 7.3 安装概览*

|

||||

|

||||

[][8]

|

||||

|

||||

*选择 RHEL 7.3 日期和时间*

|

||||

|

||||

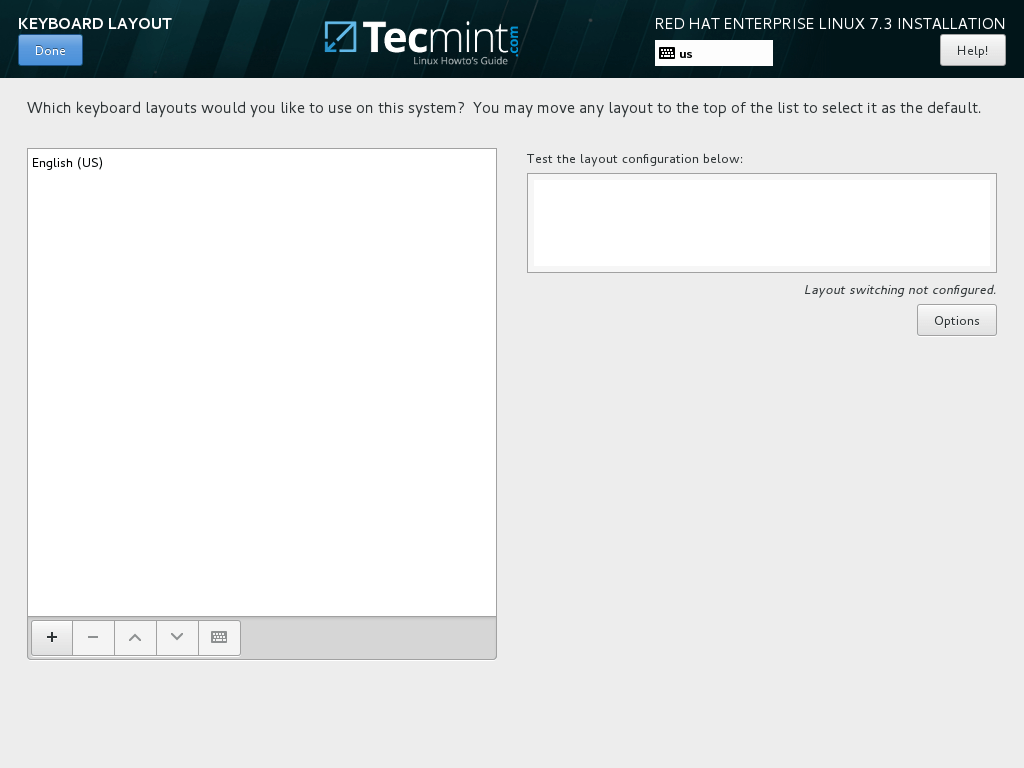

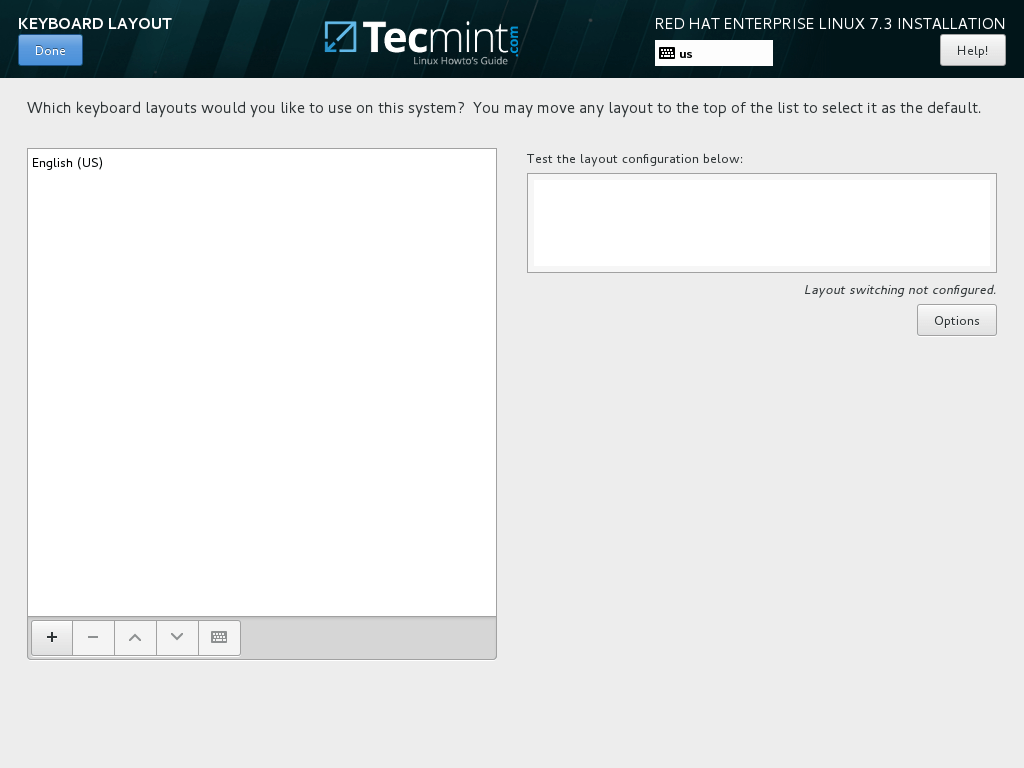

4. Next, configure your keyboard layout and click Done again to return to the main installer menu.

[][9]

*Configure the keyboard layout*

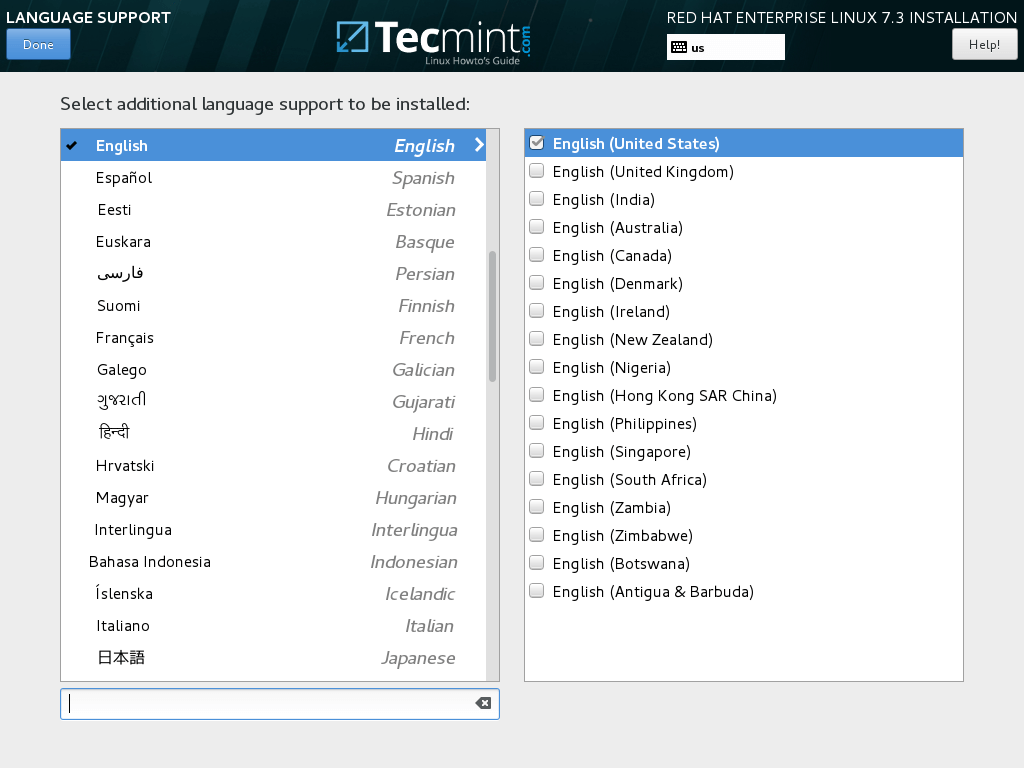

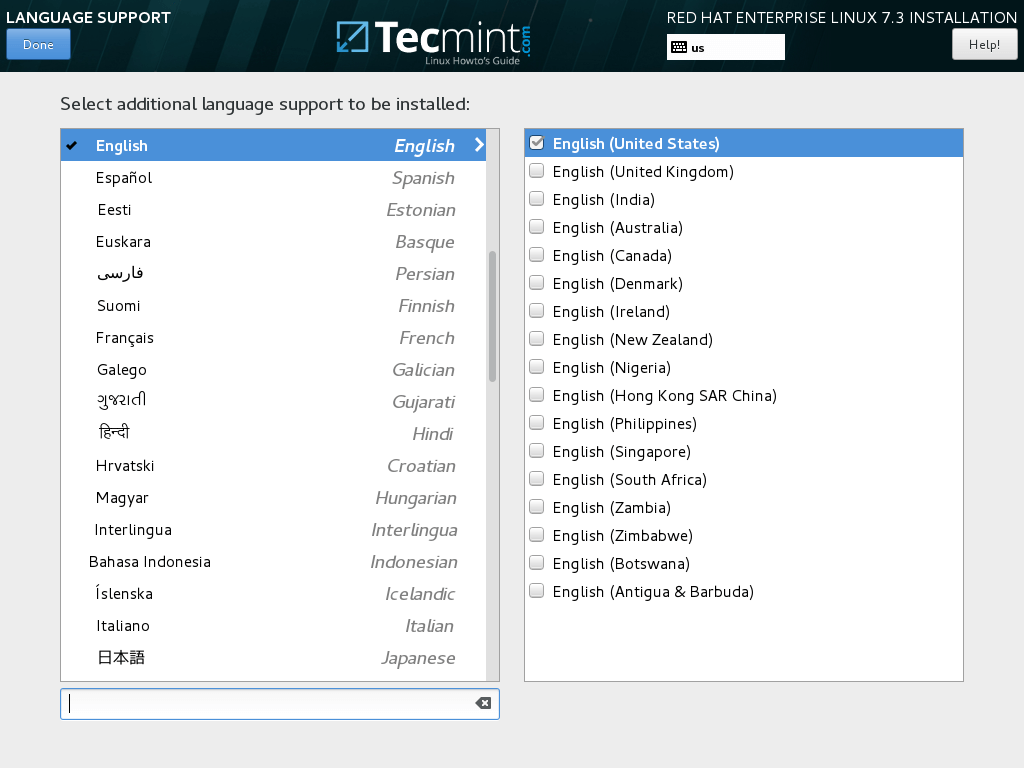

5. Then select the language support you need and click Done to move on.

[][10]

*Choose language support*

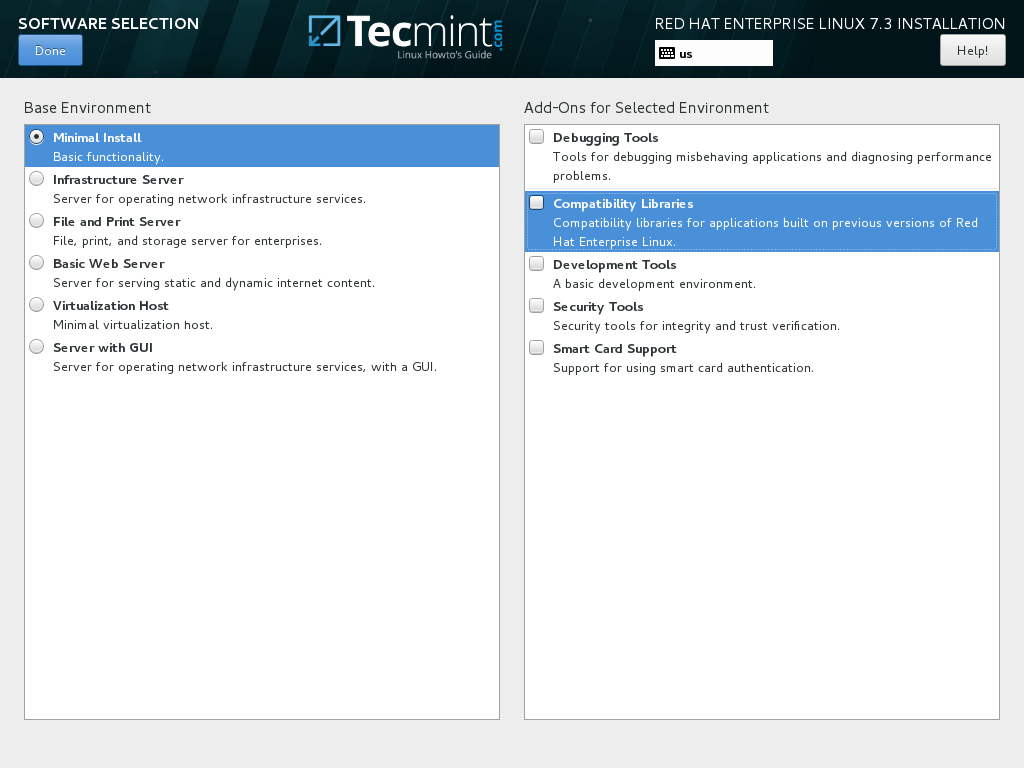

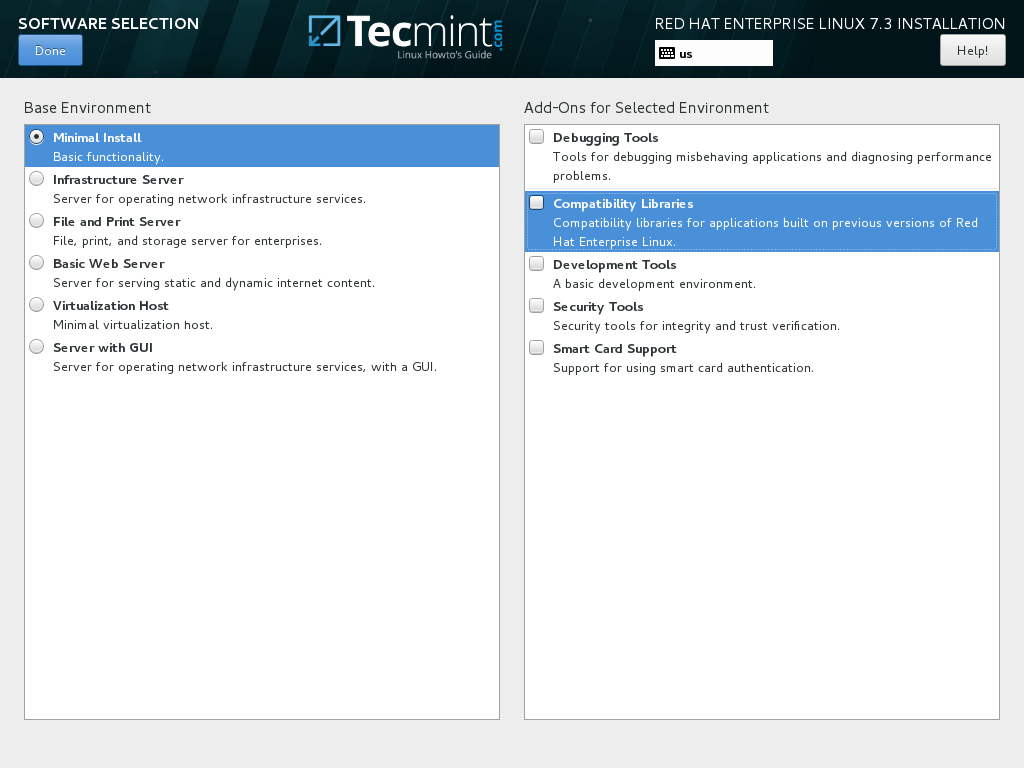

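6. Leave Installation Source at its default, since this example installs from local media (DVD/USB image), then open Software Selection.

Here you can pick a base environment and add-ons. Because RHEL is mostly used as a Linux server, Minimal Install is the best choice for a system administrator.

It is also the installation type strongly recommended for production, because only a minimal set of software is installed on the OS.

That means better security and scalability and a very small disk footprint. All the other environments and add-ons listed here can easily be installed later from the command line, either with a purchased subscription or by using the DVD image as a source.

[][11]

*RHEL 7.3 software selection*

7. If you want to install one of the predefined base environments, such as Web Server, File and Print Server, Infrastructure Server, Virtualization Host or Server with GUI, select it, choose the add-ons in the right-hand box, and click Done to finish this step.

[][12]

*Select Server with GUI*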

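8. Next, click Installation Destination. This step asks you to partition the disk for the new system, format the file systems and set the mount points.

The safest approach is to let the installer partition the disk automatically. This creates all the basic partitions a Linux system needs (`/boot` and `/boot/efi` as standard partitions, plus `/` (root) and `swap` inside LVM) and formats them with XFS, the default file system in RHEL 7.3.

Keep in mind: if the installer was booted from UEFI firmware, the disk's partition table uses the GPT scheme. If you booted through CSM or legacy BIOS instead, the partition table uses the older MBR scheme.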
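
As a rough sketch of how to confirm which mode an installed Linux system actually booted in: the kernel exposes the `/sys/firmware/efi` directory only when it was started through UEFI. The function name `boot_mode` and its optional path argument are illustrative choices made here, not something provided by RHEL.

```shell
# Report whether the running system was booted through UEFI or legacy BIOS.
# Assumption for illustration: the optional first argument overrides the
# directory to check; on a real system the default is the one that matters.
boot_mode() {
    efi_dir=${1:-/sys/firmware/efi}
    if [ -d "$efi_dir" ]; then
        echo "UEFI"
    else
        echo "BIOS"
    fi
}

# Print the mode of the current machine.
boot_mode
```

Running this after the first reboot is a quick way to check that the system came up in the same mode it was installed in.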

If you do not like automatic partitioning, you can configure the partition table yourself and create the partitions you need by hand.

Either way, this article recommends automatic partitioning. Click Done to continue.

[][13]

*Select the RHEL 7.3 installation disk*

9. Next, disable the Kdump service, then move on to the network configuration.

[][14]

*Disable the Kdump feature*

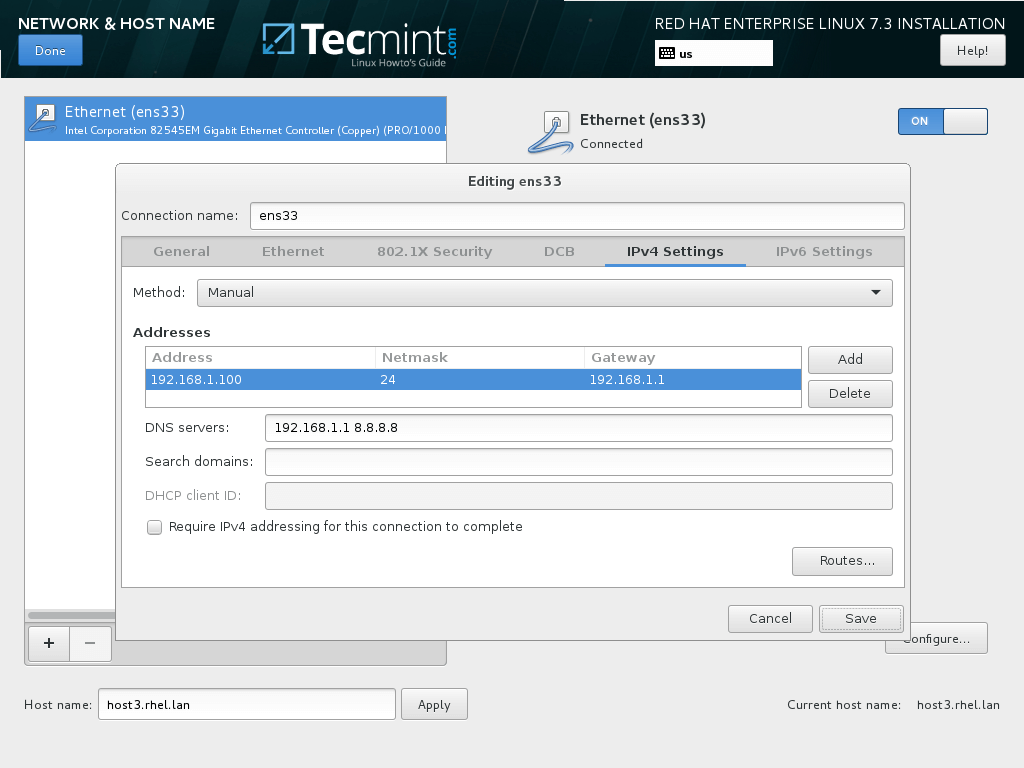

10. In Network and Hostname, set a descriptive hostname for your machine and drag the Ethernet switch to `ON` to enable networking.

If there is a DHCP server on your network, the IP settings are obtained and applied automatically.

[][15]

*Configure the network hostname*

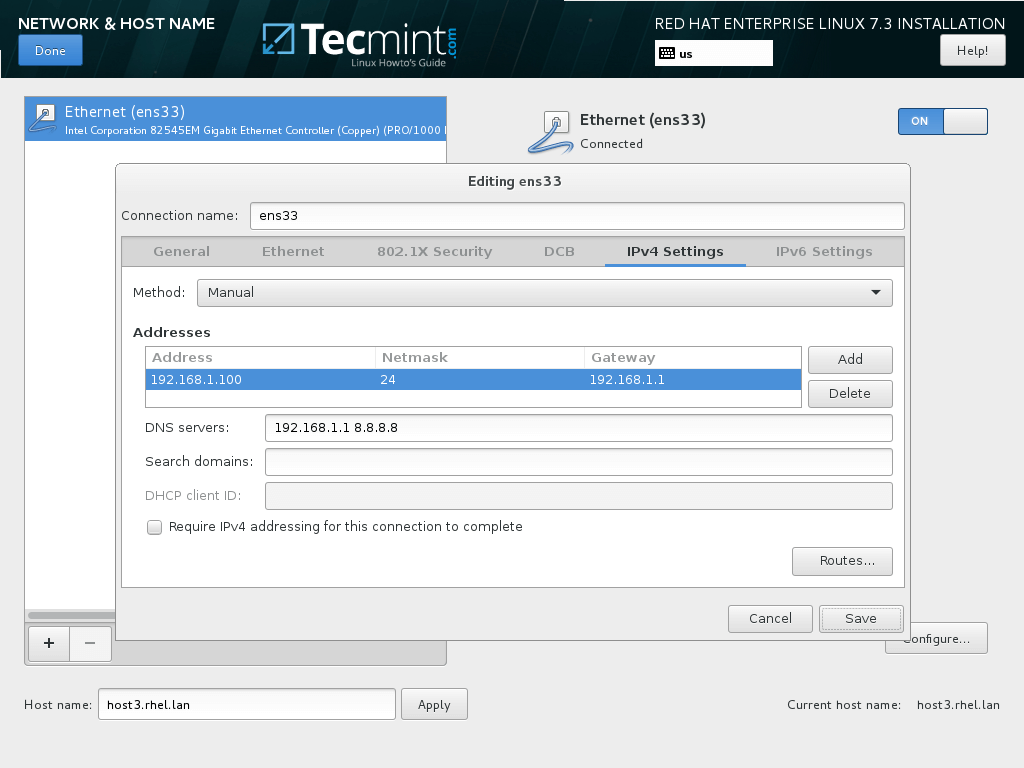

11. To give the network interface a static IP, click the Configure button and enter the IP settings manually, as shown in the screenshot below.

After setting the interface's IP address, click Save, then toggle the interface `OFF` and `ON` to apply the static IP you just configured.

Finally, click Done to return to the main installation screen.

[][16]

*Configure the network IP address*

12. The last item to configure on the main installation screen is the Security Policy profile. Select and apply the Default security policy, then click Done to return to the main screen.

Review all your installation settings and click Begin Installation to start the process. Once it has started, you can no longer stop it.

[][17]

*Apply the security policy for RHEL 7.3*

[][18]

*Begin the RHEL 7.3 installation*

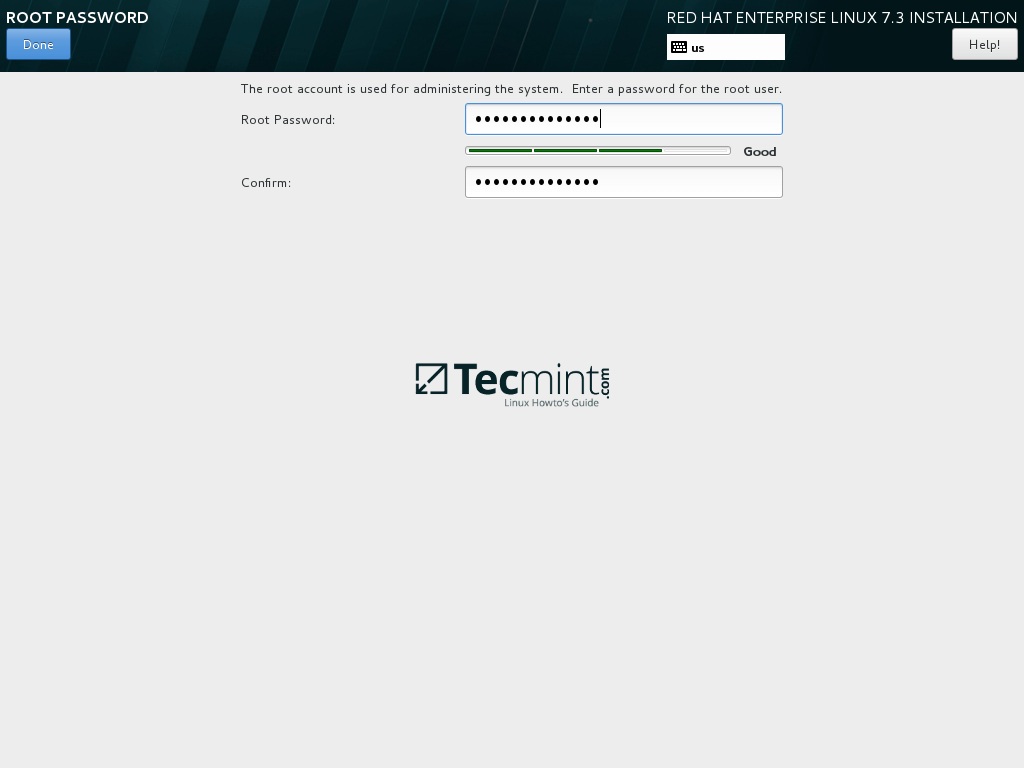

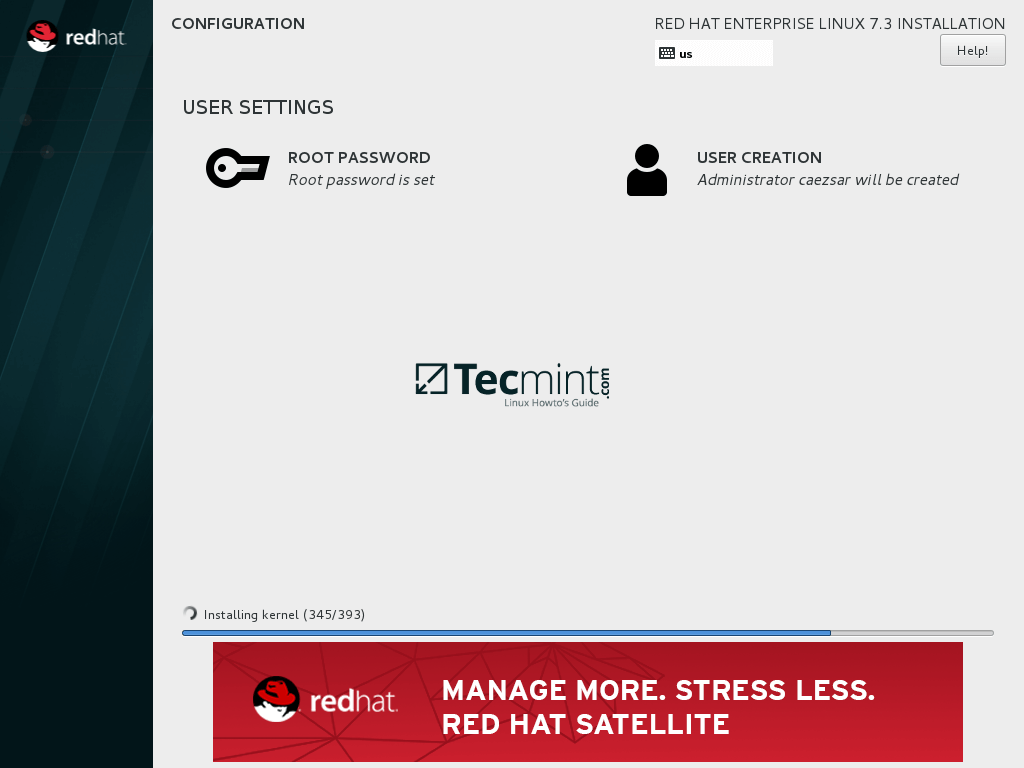

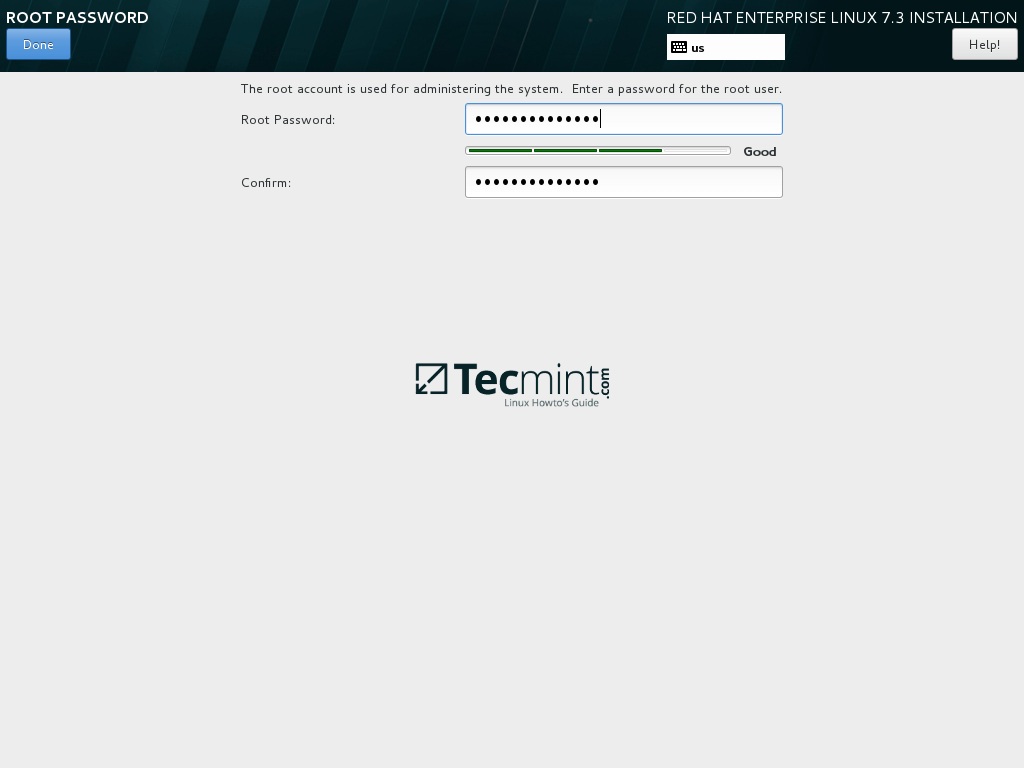

13. While the installation runs, the User Settings screen appears. First click Root Password and set a strong password for the root account.

[][19]

*Configure user settings*

[][20]

*Set the root account password*

14. Finally, create a new user and grant it root privileges by ticking "Make this user administrator". Set a strong password for this account as well, click Done to return to the User Settings menu, and wait for the installation to finish.

[][21]

*Create a new user account*

[][22]

*RHEL 7.3 installation progress*

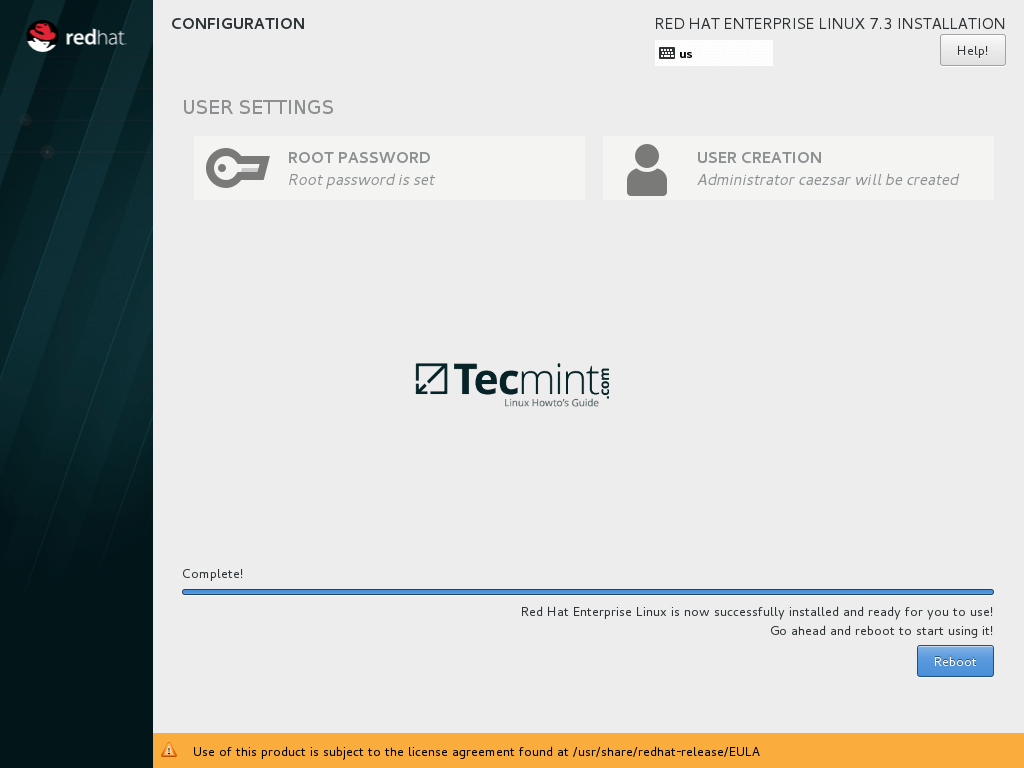

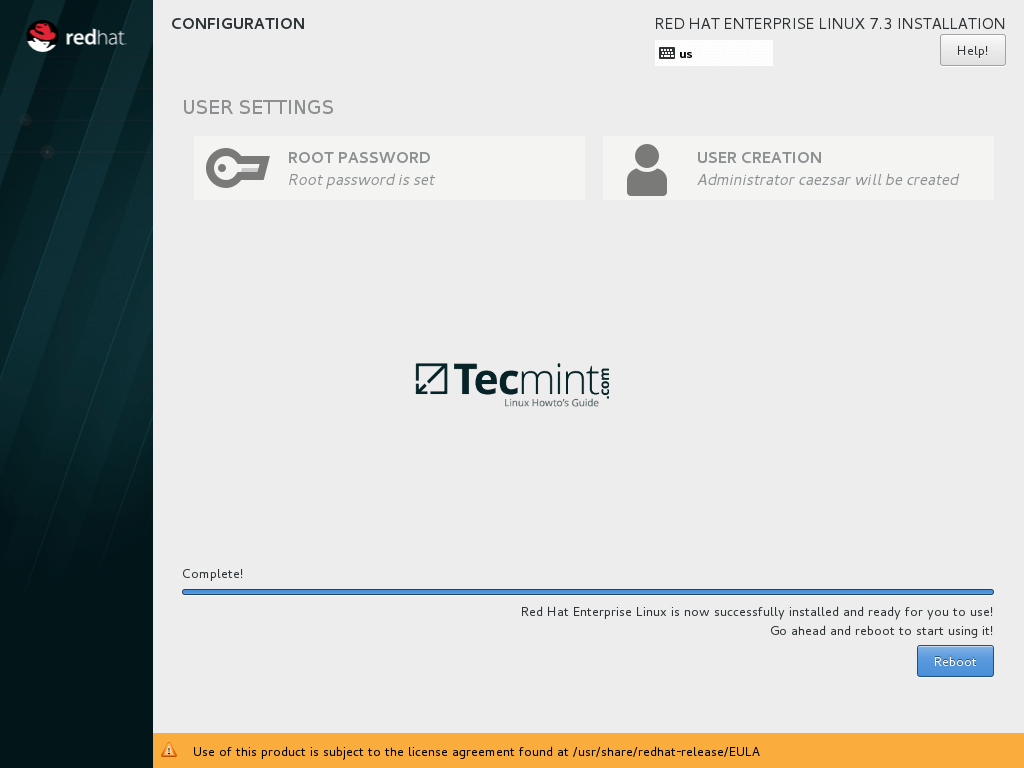

15. When the installation has finished successfully, eject the DVD or unplug the USB device and reboot the machine.

[][23]

*RHEL 7.3 installation complete*

[][24]

*Booting RHEL 7.3*

That's it. To keep using RHEL in the long run, buy a subscription from the Red Hat Customer Portal and then [register your RHEL system with Subscription Manager][25] from the command line.

------------------

About the author:

Matei Cezar

I am a computer-addicted guy, a fan of open source and Linux-based software, with about 4 years of experience with Linux desktop distributions, servers and bash scripting.

---------------------------------------------------------------------

via: http://www.tecmint.com/red-hat-enterprise-linux-7-3-installation-guide/

Author: [Matei Cezar][a]

Translator: [GHLandy](https://github.com/GHLandy)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:http://www.tecmint.com/author/cezarmatei/

[1]:https://access.redhat.com/downloads
[2]:https://linux.cn/article-8048-1.html
[3]:https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7-Beta/html/7.3_Release_Notes/chap-Red_Hat_Enterprise_Linux-7.3_Release_Notes-Overview.html
[4]:https://rufus.akeo.ie/
[5]:http://www.tecmint.com/wp-content/uploads/2016/12/RHEL-7.3-Boot-Menu.jpg
[6]:http://www.tecmint.com/wp-content/uploads/2016/12/Select-RHEL-7.3-Language.png
[7]:http://www.tecmint.com/wp-content/uploads/2016/12/RHEL-7.3-Installation-Summary.png
[8]:http://www.tecmint.com/wp-content/uploads/2016/12/Select-RHEL-7.3-Date-and-Time.png
[9]:http://www.tecmint.com/wp-content/uploads/2016/12/Configure-Keyboard-Layout.png
[10]:http://www.tecmint.com/wp-content/uploads/2016/12/Choose-Language-Support.png
[11]:http://www.tecmint.com/wp-content/uploads/2016/12/RHEL-7.3-Software-Selection.png
[12]:http://www.tecmint.com/wp-content/uploads/2016/12/Select-Server-with-GUI-on-RHEL-7.3.png
[13]:http://www.tecmint.com/wp-content/uploads/2016/12/Choose-RHEL-7.3-Installation-Drive.png
[14]:http://www.tecmint.com/wp-content/uploads/2016/12/Disable-Kdump-Feature.png
[15]:http://www.tecmint.com/wp-content/uploads/2016/12/Configure-Network-Hostname.png
[16]:http://www.tecmint.com/wp-content/uploads/2016/12/Configure-Network-IP-Address.png
[17]:http://www.tecmint.com/wp-content/uploads/2016/12/Apply-Security-Policy-on-RHEL-7.3.png
[18]:http://www.tecmint.com/wp-content/uploads/2016/12/Begin-RHEL-7.3-Installation.png
[19]:http://www.tecmint.com/wp-content/uploads/2016/12/Configure-User-Settings.png
[20]:http://www.tecmint.com/wp-content/uploads/2016/12/Set-Root-Account-Password.png
[21]:http://www.tecmint.com/wp-content/uploads/2016/12/Create-New-User-Account.png
[22]:http://www.tecmint.com/wp-content/uploads/2016/12/RHEL-7.3-Installation-Process.png
[23]:http://www.tecmint.com/wp-content/uploads/2016/12/RHEL-7.3-Installation-Complete.png
[24]:http://www.tecmint.com/wp-content/uploads/2016/12/RHEL-7.3-Booting.png
[25]:http://www.tecmint.com/enable-redhat-subscription-reposiories-and-updates-for-rhel-7/

412

published/LXD/Part 4 - LXD 2.0--Resource control.md

Normal file

@ -0,0 +1,412 @@

|

||||

LXD 2.0 Series (Part 4): Resource Control
======================================

This is the fourth post in [this series about LXD 2.0][0].

Because LXD container management involves many commands, this post is fairly long. If you would rather get a quick look at the same commands, you can [try our online demo][3]!

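### Available resource limits

LXD offers a variety of resource limits. Some are tied to the container itself, such as memory quotas, CPU limits and I/O priorities. Others are tied to a particular device, such as I/O bandwidth or disk usage limits.

As with all LXD configuration, resource limits can be changed dynamically while the container is running. Some changes may fail to apply, for example setting a memory limit below the container's current memory usage, but LXD will try anyway and report the failure.

All limits can also be inherited through profiles, in which case every affected container is constrained by that limit. That is, if you set `limits.memory=256MB` in the default profile, every container using the default profile (typically all of them) gets a memory limit of 256MB.

We do not support resource limit pooling, where a limit would be shared by a group of containers, because there is no good way to implement that with the existing kernel APIs.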

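#### Disk

This is perhaps the most requested and most obvious one: simply set a size limit on the container's file system and have it enforced against the container.

And that is exactly what LXD lets you do!

Unfortunately, it is far more complicated than it sounds. Linux does not have path-based quotas; most file systems only have user and group quotas, which are of little use to containers.

This means that, right now, LXD only supports disk limits if you are using the ZFS or btrfs storage backend. It may be possible to implement this for LVM too, but that depends on the file system being used with it, and it gets tricky when combined with live updates, as not all file systems allow online growth and pretty much none of them allow online shrinking.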

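#### CPU

When it comes to CPU limits, we support 4 different things:

* Just give me X CPUs

  In this mode, you let LXD pick a set of cores for you and then load-balance things as more containers are started and CPUs go online or offline.

  The container only sees that number of CPUs.

* Give me a specific set of CPUs (say, cores 1, 3 and 5)

  Similar to the first mode, except that no load-balancing happens: you are stuck with those cores no matter how busy they are.

* Give me 20% of whatever you have

  In this mode, you get to see all the CPUs, but the scheduler restricts you to 20% of the CPU time, and only when the system is under load! So if the system is not busy, your container can have as much fun as it wants. When other containers start using the CPU too, it gets capped.

* Out of every measured 200ms, give me 50ms (and no more than that)

  This mode is similar to the previous one in that you get to see all the CPUs, but this time you can only use as much CPU time as the limit you set, no matter how idle the system may be. On a system without over-commit, this lets you slice your CPU very neatly and guarantees constant performance to those containers.

It is also possible to combine one of the first two with one of the last two, that is, request a set of CPUs and then further restrict how much CPU time you get on those.

On top of that, we also have a generic priority knob which tells the scheduler who wins when you are under load and two containers are fighting for the same resource.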

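#### Memory

Memory sounds pretty simple: just give me X MB of RAM!

And it absolutely can be that simple. We support that kind of limit as well as percentage-based requests, such as: give me 10% of whatever the host has!

We also support some extra things on top. For example, you can choose to turn swap on or off on a per-container basis, and if it is on, set a priority so you can choose which containers have their memory swapped out to disk first!

Memory limits are "hard" by default. That is, when you run out of memory, the kernel starts killing your processes.

Alternatively, you can set the enforcement policy to "soft", in which case you are allowed to use as much memory as you want as long as nothing else needs it. As soon as something else wants that memory, you cannot allocate anything until you are back under your limit or until the host has memory to spare again.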
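
To make the percentage-based request concrete, here is a minimal sketch of the arithmetic behind "give me 10% of the host's memory". It only illustrates the calculation; how LXD computes the value internally is not described in this article, and the function name `mem_share_kb` and its optional second argument are made up for this example.

```shell
# Print the given percentage of the host's total memory, in kB.
# Assumption for illustration: the optional second argument supplies the
# total in kB; without it, MemTotal is read from /proc/meminfo.
mem_share_kb() {
    percent=$1
    total_kb=${2:-$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)}
    echo $(( total_kb * percent / 100 ))
}

# 10% of a host with 16000000 kB of RAM.
mem_share_kb 10 16000000   # prints 1600000
```

Integer shell arithmetic rounds down, which is good enough for a back-of-the-envelope check of what a percentage limit amounts to on a given host.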

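#### Network I/O

Network I/O is probably the simplest-looking limit we have, but trust me, the implementation really is not simple!

We support two things. The first is a basic rate limit on network interfaces. You can set a limit for ingress and egress, or just set the "max" limit, which then applies to both. This is only supported for "bridged" and "p2p" type interfaces.

The second is a global network I/O priority, which only applies when the network interface you are trying to talk through is saturated.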

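#### Block I/O

I kept the weirdest for last. It may look straightforward to the user, but there are a number of cases where it will not give the result you expect.

What we support here is basically identical to what I described for network I/O.

You can set IOps or byte/s read and write limits directly on a disk device, and there is a global block I/O priority which tells the I/O scheduler who to prefer.

The weirdness comes from how and where those limits are applied. Unfortunately, the underlying feature we use to implement them works on full block devices. That means we cannot set per-partition I/O limits, let alone per-path ones.

It also means that when using ZFS or btrfs, which can map multiple block devices to a given path (with or without RAID), we effectively do not know which block device is providing a given path.

It also means that it is entirely possible, and in fact likely, that a container has multiple disk entries (bind-mounts or straight mounts) coming from the same underlying disk.

And that is where things get weird. To make the limits work, LXD has logic to guess which block devices back a given path. This includes interrogating the ZFS and btrfs tools and even figuring them out recursively when it finds a loop-mounted file backing a file system.

That logic, while not perfect, usually yields a set of block devices that should have a limit applied. LXD then records that and moves on to the next path. When it is done looking at all the paths, the very weird part kicks in: it averages the limits you have set for every affected block device and then applies those.

That means that "on average" you get the right speed in the container, but it also means that you cannot have a "/fast" and a "/slow" directory, both coming from the same physical disk, with differing speed limits. LXD will let you set it up, but in the end it will give you the average of the two values.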
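
The averaging described above can be sketched in a few lines of shell. This is a deliberate simplification that assumes a plain arithmetic mean of whole-number limits; it is not LXD's actual code, and the name `average_limit` is hypothetical.

```shell
# Average the limits (in MB/s) set on several paths that share one block
# device. Assumption for illustration: a plain integer mean of the values.
average_limit() {
    sum=0
    count=0
    for value in "$@"; do
        sum=$((sum + value))
        count=$((count + 1))
    done
    if [ "$count" -eq 0 ]; then
        echo 0
        return
    fi
    echo $((sum / count))
}

# A "/fast" path limited to 30MB/s and a "/slow" path limited to 10MB/s,
# both on the same physical disk:
average_limit 30 10   # prints 20
```

Under that assumption both paths would end up throttled to 20MB/s, which is why separate limits only make sense for paths that live on different block devices.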

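### How does it all work?

Most of the limits described above are applied through the Linux kernel cgroup API. The exception is the network limits, which are applied through good old "tc".

LXD detects at startup which cgroups are enabled in your kernel and only applies the limits your kernel supports. Should you be missing some cgroups, the daemon also prints a warning, which then gets logged by your init system.

On Ubuntu 16.04, everything is enabled by default with the exception of swap memory accounting, which requires you to pass the `swapaccount=1` kernel boot parameter.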
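
As a small sketch of how to check whether that boot parameter is active, the kernel command line of the running system can be read from `/proc/cmdline`. The helper name `has_swapaccount` and its optional argument are assumptions made for this example, not part of LXD.

```shell
# Succeed if the kernel command line contains swapaccount=1.
# Assumption for illustration: the optional first argument supplies a
# command line to inspect; without it, /proc/cmdline is read.
has_swapaccount() {
    cmdline=${1:-$(cat /proc/cmdline 2>/dev/null)}
    case " $cmdline " in
        *" swapaccount=1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

if has_swapaccount; then
    echo "swap accounting is enabled"
else
    echo "swap accounting is not enabled"
fi
```

If the parameter is missing, the swap-related memory limits described in this post will most likely not be enforced, even though the rest of the limits still work.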

### Applying some limits

All the limits described above are applied either directly to the container or to one of the profiles it uses. Container-wide limits are applied with:

```
lxc config set CONTAINER KEY VALUE
```

or, for a profile:

```
lxc profile set PROFILE KEY VALUE
```

while device-specific ones are applied with:

```
lxc config device set CONTAINER DEVICE KEY VALUE
```

or, for a profile:

```
lxc profile device set PROFILE DEVICE KEY VALUE
```

The complete list of valid configuration keys, device types and device keys can be [found here][1].

#### CPU

To limit a container to any 2 CPUs, do:

```
lxc config set my-container limits.cpu 2
```

To pin it to specific CPU cores, say the second and fourth:

```
lxc config set my-container limits.cpu 1,3
```

More complex pinning ranges also work:

```
lxc config set my-container limits.cpu 0-3,7-11
```
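
To see how many cores a given `limits.cpu` value stands for, the three forms shown above can be counted with a short helper. This is only a sketch of the syntax as it is used in this post (a bare number means "any N cores", while lists and ranges name specific cores); `count_cpus` is a hypothetical name, not an LXD command.

```shell
# Count how many CPU cores a limits.cpu value grants.
# Assumption for illustration: a bare number is a core count, anything
# containing "," or "-" is a list of core IDs and inclusive ranges.
count_cpus() {
    spec=$1
    case $spec in
        *[,-]*) ;;                  # list or range: count it below
        *) echo "$spec"; return ;;  # bare number: already a count
    esac
    total=0
    old_ifs=$IFS
    IFS=,
    for part in $spec; do
        case $part in
            *-*) total=$((total + ${part#*-} - ${part%-*} + 1)) ;;
            *)   total=$((total + 1)) ;;
        esac
    done
    IFS=$old_ifs
    echo "$total"
}

count_cpus 2          # prints 2
count_cpus 1,3        # prints 2
count_cpus 0-3,7-11   # prints 9
```

Note that `2` and `1,3` both yield two cores, but only the second form pins the container to particular cores.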

The limits are applied live, as you can see in this example:

```
stgraber@dakara:~$ lxc exec zerotier -- cat /proc/cpuinfo | grep ^proces
processor : 0
processor : 1
processor : 2
processor : 3
stgraber@dakara:~$ lxc config set zerotier limits.cpu 2
stgraber@dakara:~$ lxc exec zerotier -- cat /proc/cpuinfo | grep ^proces
processor : 0
processor : 1
```

Note that, to avoid utterly confusing userspace, lxcfs rearranges the `/proc/cpuinfo` entries so that there are no gaps.

As with just about everything in LXD, those settings can also be applied in profiles:

```
stgraber@dakara:~$ lxc exec snappy -- cat /proc/cpuinfo | grep ^proces
processor : 0
processor : 1
processor : 2
processor : 3
stgraber@dakara:~$ lxc profile set default limits.cpu 3
stgraber@dakara:~$ lxc exec snappy -- cat /proc/cpuinfo | grep ^proces
processor : 0
processor : 1
processor : 2
```

To limit the CPU time of a container to 10% of the total, set the CPU allowance:

```
lxc config set my-container limits.cpu.allowance 10%
```

Or to give it a fixed slice of CPU time:

```
lxc config set my-container limits.cpu.allowance 25ms/200ms
```
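
A fixed slice is written as "run time per period", so it can be converted into a percentage by dividing the two numbers. The helper below is a sketch of that arithmetic only; the name `allowance_percent` is made up here, and it assumes both values carry the `ms` suffix used in this post.

```shell
# Convert a "QUOTAms/PERIODms" CPU allowance into a percentage of the period.
# Assumption for illustration: both values are given in milliseconds.
allowance_percent() {
    quota=${1%%/*}      # text before the slash, e.g. "25ms"
    period=${1##*/}     # text after the slash, e.g. "200ms"
    quota=${quota%ms}
    period=${period%ms}
    awk -v q="$quota" -v p="$period" 'BEGIN { printf "%.1f\n", q * 100 / p }'
}

allowance_percent 25ms/200ms   # prints 12.5
allowance_percent 50ms/200ms   # prints 25.0
```

Unlike the percentage form, which per the description earlier in this post only takes effect under load, the time-slice form is a cap that applies even when the host is idle.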

And lastly, to reduce the priority of a container to the minimum:

```
lxc config set my-container limits.cpu.priority 0
```

#### Memory

To apply a straightforward memory limit, run:

```
lxc config set my-container limits.memory 256MB
```

(The supported suffixes are KB, MB, GB, TB, PB and EB.)

To turn swap off for the container (it is on by default):

```
lxc config set my-container limits.memory.swap false
```

To tell the kernel to swap this container's memory first:

```
lxc config set my-container limits.memory.swap.priority 0
```

And if you do not want hard memory limit enforcement:

```
lxc config set my-container limits.memory.enforce soft
```

#### Disk and block I/O

Unlike CPU and memory, disk and I/O limits are applied to the actual device entry, so you either need to edit the original device or mask it with a more specific one.

To set a disk limit (requires btrfs or ZFS):

```
lxc config device set my-container root size 20GB
```

For example:

```
stgraber@dakara:~$ lxc exec zerotier -- df -h /
Filesystem                        Size Used Avail Use% Mounted on
encrypted/lxd/containers/zerotier 179G 542M  178G   1% /
stgraber@dakara:~$ lxc config device set zerotier root size 20GB
stgraber@dakara:~$ lxc exec zerotier -- df -h /
Filesystem                        Size Used Avail Use% Mounted on
encrypted/lxd/containers/zerotier  20G 542M   20G   3% /
```

To restrict speed, you can do the following:

```
lxc config device set my-container root limits.read 30MB
lxc config device set my-container root limits.write 10MB
```

Or to restrict IOps instead:

```
lxc config device set my-container root limits.read 20Iops
lxc config device set my-container root limits.write 10Iops
```

And lastly, if you are on a busy system with over-commit, you may want to also run:

```
lxc config set my-container limits.disk.priority 10
```

to raise the I/O priority of that container to the maximum.

#### Network I/O

Network I/O is basically identical to block I/O as far as the available knobs go.

For example:

```
stgraber@dakara:~$ lxc exec zerotier -- wget http://speedtest.newark.linode.com/100MB-newark.bin -O /dev/null
--2016-03-26 22:17:34-- http://speedtest.newark.linode.com/100MB-newark.bin
Resolving speedtest.newark.linode.com (speedtest.newark.linode.com)... 50.116.57.237, 2600:3c03::4b
Connecting to speedtest.newark.linode.com (speedtest.newark.linode.com)|50.116.57.237|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 104857600 (100M) [application/octet-stream]
Saving to: '/dev/null'

/dev/null 100%[===================>] 100.00M 58.7MB/s in 1.7s

2016-03-26 22:17:36 (58.7 MB/s) - '/dev/null' saved [104857600/104857600]

stgraber@dakara:~$ lxc profile device set default eth0 limits.ingress 100Mbit
stgraber@dakara:~$ lxc profile device set default eth0 limits.egress 100Mbit
stgraber@dakara:~$ lxc exec zerotier -- wget http://speedtest.newark.linode.com/100MB-newark.bin -O /dev/null
--2016-03-26 22:17:47-- http://speedtest.newark.linode.com/100MB-newark.bin
Resolving speedtest.newark.linode.com (speedtest.newark.linode.com)... 50.116.57.237, 2600:3c03::4b
Connecting to speedtest.newark.linode.com (speedtest.newark.linode.com)|50.116.57.237|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 104857600 (100M) [application/octet-stream]
Saving to: '/dev/null'

/dev/null 100%[===================>] 100.00M 11.4MB/s in 8.8s

2016-03-26 22:17:56 (11.4 MB/s) - '/dev/null' saved [104857600/104857600]
```

And that is how you throttle a gigabit connection down to a mere 100Mbit/s one!
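
The download speed reported after the limit was applied can be sanity-checked with a one-line conversion: network limits are expressed in bits per second, while wget reports bytes per second, so the ceiling is the limit divided by 8. The function name `mbit_to_mbyte_per_s` is an illustrative choice, and the sketch ignores protocol overhead.

```shell
# Convert a link speed in Mbit/s into the matching ceiling in MB/s.
# Assumption for illustration: 8 bits per byte, no protocol overhead.
mbit_to_mbyte_per_s() {
    awk -v mbit="$1" 'BEGIN { printf "%.1f\n", mbit / 8 }'
}

mbit_to_mbyte_per_s 100    # prints 12.5
mbit_to_mbyte_per_s 1000   # prints 125.0
```

The measured 11.4MB/s sits a little below the 12.5MB/s ceiling of a 100Mbit/s limit, which is roughly what one would expect once overhead is taken into account.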

As with block I/O, you can set an overall network priority with:

```
lxc config set my-container limits.network.priority 5
```

### Getting the current resource usage

The [LXD API][2] exports a fair amount of information on current container resource usage. You can get:

* Memory: current, peak, current swap and peak swap
* Disk: current disk usage
* Network: bytes and packets received and sent for every interface

And if you are running a very recent LXD (only in git at the time of this writing), you can also get all of that from `lxc info`:

```
stgraber@dakara:~$ lxc info zerotier
Name: zerotier
Architecture: x86_64
Created: 2016/02/20 20:01 UTC
Status: Running
Type: persistent
Profiles: default
Pid: 29258
Ips:
  eth0: inet 172.17.0.101
  eth0: inet6 2607:f2c0:f00f:2700:216:3eff:feec:65a8
  eth0: inet6 fe80::216:3eff:feec:65a8
  lo: inet 127.0.0.1
  lo: inet6 ::1
  lxcbr0: inet 10.0.3.1
  lxcbr0: inet6 fe80::f0bd:55ff:feee:97a2
  zt0: inet 29.17.181.59
  zt0: inet6 fd80:56c2:e21c:0:199:9379:e711:b3e1
  zt0: inet6 fe80::79:e7ff:fe0d:5123
Resources:
  Processes: 33
  Disk usage:
    root: 808.07MB
  Memory usage:
    Memory (current): 106.79MB
    Memory (peak): 195.51MB
    Swap (current): 124.00kB
    Swap (peak): 124.00kB
  Network usage:
    lxcbr0:
      Bytes received: 0 bytes
      Bytes sent: 570 bytes
      Packets received: 0
      Packets sent: 0
    zt0:
      Bytes received: 1.10MB
      Bytes sent: 806 bytes
      Packets received: 10957
      Packets sent: 10957
    eth0:
      Bytes received: 99.35MB
      Bytes sent: 5.88MB
      Packets received: 64481
      Packets sent: 64481
    lo:
      Bytes received: 9.57kB
      Bytes sent: 9.57kB
      Packets received: 81
      Packets sent: 81
Snapshots:
  zerotier/blah (taken at 2016/03/08 23:55 UTC) (stateless)
```

### Conclusion

The LXD team spent quite a few months iterating over the language we use for these limits. It is meant to be as simple as it can get while remaining very powerful and specific when you want it to be.

Being able to apply these limits live and to inherit them through profiles makes this a very powerful tool for managing the load on your servers live, without impacting the running services.

### Extra information

The main LXD website: <https://linuxcontainers.org/lxd>

The LXD GitHub repository: <https://github.com/lxc/lxd>

The LXD mailing list: <https://lists.linuxcontainers.org>

The LXD IRC channel: #lxcontainers on irc.freenode.net

And if you do not want to install LXD on your own machine, you can always [try it online][3].

--------------------------------------------------------------------------------

via: https://www.stgraber.org/2016/03/26/lxd-2-0-resource-control-412/

Author: [Stéphane Graber][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.stgraber.org/author/stgraber/
[0]: https://www.stgraber.org/2016/03/11/lxd-2-0-blog-post-series-012/
[1]: https://github.com/lxc/lxd/blob/master/doc/configuration.md
[2]: https://github.com/lxc/lxd/blob/master/doc/rest-api.md
[3]: https://linuxcontainers.org/lxd/try-it

@ -1,6 +1,3 @@

|

||||

|

||||

@poodarchu translating

|

||||

|

||||

Building a data science portfolio: Storytelling with data

|

||||

========

|

||||

|

||||

|

||||

@ -1,149 +0,0 @@

|

||||

ucasFL translating

|

||||

PyCharm - The Best Linux Python IDE

|

||||

=========

|

||||

|

||||

|

||||

### Introduction

|

||||

|

||||

In this guide I will introduce you to the PyCharm integrated development environment which can be used to develop professional applications using the Python programming language.

|

||||

|

||||

Python is a great programming language because it is truly cross platform and can be used to develop a single application which will run on Windows, Linux and Mac computers without having to recompile any code.

|

||||

|

||||

PyCharm is an editor and debugger developed by [Jetbrains][1], the same people who developed Resharper, a great tool used by Windows developers for refactoring code and for making their lives easier when writing .NET code. Many of the principles of [Resharper][2] have been added to the professional version of [PyCharm][3].

|

||||

|

||||

### How To Install PyCharm

|

||||

|

||||

I have written a guide showing how to get PyCharm, download it, extract the files and run it.

|

||||

|

||||

[Simply click this link][4].

|

||||

|

||||

### The Welcome Screen

|

||||

|

||||

When you first run PyCharm or when you close a project you will be presented with a screen showing a list of recent projects.

|

||||

|

||||

You will also see the following menu options:

|

||||

|

||||

* Create New Project

|

||||

* Open A Project

|

||||

* Checkout From Version Control

|

||||

|

||||

There is also a configure settings option which lets you set up the default Python version and other such settings.

|

||||

|

||||

### Creating A New Project

|

||||

|

||||

When you choose to create a new project you are provided with a list of possible project types as follows:

|

||||

|

||||

* Pure Python
* Django
* Flask
* Google App Engine
* Pyramid
* Web2Py
* Angular CLI
* AngularJS
* Foundation
* HTML5 Boilerplate
* React Starter Kit
* Twitter Bootstrap
* Web Starter Kit

|

||||

|

||||

This isn't a programming tutorial so I won't be listing what all of those project types are. If you want to create a basic desktop application which will run on Windows, Linux and Mac then you can choose a Pure Python project and use the Qt libraries to develop graphical applications which look native to the operating system they are running on, regardless of where they were developed.

|

||||

|

||||

As well as choosing the project type you can also enter the name for your project and also choose the version of Python to develop against.

|

||||

|

||||

### Open A Project

|

||||

|

||||

You can open a project by clicking on the name within the recently opened projects list or you can click the open button and navigate to the folder where the project you wish to open is located.

|

||||

|

||||

### Checking Out From Source Control

|

||||

|

||||

PyCharm provides the option to check out project code from various online resources including [GitHub][5], [CVS][6], Git, [Mercurial][7] and [Subversion][8].

|

||||

|

||||

### The PyCharm IDE

|

||||

|

||||

The PyCharm IDE starts with a menu at the top and underneath this you have tabs for each open project.

|

||||

|

||||

On the right side of the screen are debugging options for stepping through code.

|

||||

|

||||

The left pane has a list of project files and external libraries.

|

||||

|

||||

To add a file you right-click on the project name and choose "new". You then get the option to add one of the following file types:

|

||||

|

||||

* File
* Directory
* Python Package
* Python File
* Jupyter Notebook
* HTML File
* Stylesheet
* JavaScript
* TypeScript
* CoffeeScript
* Gherkin
* Data Source

|

||||

|

||||

When you add a file, such as a python file you can start typing into the editor in the right panel.

|

||||

|

||||

The text is all colour coded, with some of it shown in bold. A vertical line shows the indentation so you can be sure that you are tabbing correctly.

|

||||

|

||||

The editor also includes full intellisense which means as you start typing the names of libraries or recognised commands you can complete the commands by pressing tab.

|

||||

|

||||

### Debugging The Application

|

||||

|

||||

You can debug your application at any point by using the debugging options in the top right corner.

|

||||

|

||||

If you are developing a graphical application then you can simply press the green button to run the application. You can also press shift and F10.

|

||||

|

||||

To debug the application you can either click the button next to the green arrow or press shift and F9. You can place breakpoints in the code so that the program stops on a given line by clicking in the grey margin on the line you wish to break at.

To make a single step forward you can press F8 which steps over the code. This means it will run the code but it won't step into a function. To step into the function you would press F7. If you are in a function and want to step out to the calling function press shift and F8.

|

||||

|

||||

At the bottom of the screen whilst you are debugging you will see various windows such as a list of processes and threads, and variables that you are watching the values for.

|

||||

|

||||

As you are stepping through code you can add a watch on a variable so that you can see when the value changes.

|

||||

|

||||

Another great option is to run the code with the coverage checker. The programming world has changed a lot over the years and it is now common for developers to perform test-driven development, so that after every change they can check that they haven't broken another part of the system.

|

||||

|

||||

The coverage checker actually helps you to run the program, perform some tests and then when you have finished it will tell you how much of the code was covered as a percentage during your test run.

|

||||

|

||||

There is also a tool for showing the name of a method or class, how many times the items were called, and how long was spent in that particular piece of code.

|

||||

|

||||

### Code Refactoring

|

||||

|

||||

A really powerful feature of PyCharm is the code refactoring option.

|

||||

|

||||

When you start to develop code little marks will appear in the right margin. If you type something which is likely to cause an error or just isn't written well then PyCharm will place a coloured marker.

|

||||

|

||||

Clicking on the coloured marker will tell you the issue and will offer a solution.

|

||||

|

||||

For example, if you have an import statement which imports a library and then don't use anything from that library, not only will the code turn grey but the marker will also state that the library is unused.

Other warnings relate to coding style, such as having only one blank line between an import statement and the start of a function. You will also be told when you have created a function whose name isn't in lowercase.

You don't have to abide by all of the PyCharm rules. Many of them are just good coding guidelines and have nothing to do with whether the code will run or not.

|

||||

|

||||

The code menu has other refactoring options. For example, you can perform code cleanup and you can inspect a file or project for issues.