mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-15 01:50:08 +08:00

Merge branch 'master' of https://github.com/ZTinoZ/TranslateProject.git

This commit is contained in:

commit

7dc7384542

@ -0,0 +1,86 @@

|

||||

7 个驱动开源发展的社区

|

||||

================================================================================

|

||||

不久前,开源模式还被成熟的工业级厂商以怀疑的态度认作是叛逆小孩的玩物。如今,开源的促进会和基金会在一长列的供应商提供者的支持下正蓬勃发展,而他们将开源模式视作创新的关键。

|

||||

|

||||

|

||||

|

||||

### 技术的开放发展驱动着创新 ###

|

||||

|

||||

在过去的 20 几年间,技术的开源推进已被视作驱动创新的关键因素。即使那些以前将开源视作威胁的公司也开始接受这个观点 — 例如微软,如今它在一系列的开源的促进会中表现活跃。到目前为止,大多数的开源推进都集中在软件方面,但甚至这个也正在改变,因为社区已经开始向开源硬件倡议方面聚拢。这里介绍 7 个成功地在硬件和软件方面同时促进和发展开源技术的组织。

|

||||

|

||||

### OpenPOWER 基金会 ###

|

||||

|

||||

|

||||

|

||||

[OpenPOWER 基金会][2] 由 IBM, Google, Mellanox, Tyan 和 NVIDIA 于 2013 年共同创建,在与开源软件发展相同的精神下,旨在驱动开放协作硬件的发展,在过去的 20 几年间,开源软件发展已经找到了肥沃的土壤。

|

||||

|

||||

IBM 通过开放其基于 Power 架构的硬件和软件技术,向使用 Power IP 的独立硬件产品提供许可证等方式为基金会的建立播下种子。如今超过 70 个成员共同协作来为基于 Linux 的数据中心提供自定义的开放服务器,组件和硬件。

|

||||

|

||||

去年四月,在比最新基于 x86 系统快 50 倍的数据分析能力的新的 POWER8 处理器的服务器的基础上, OpenPOWER 推出了一个技术路线图。七月, IBM 和 Google 发布了一个固件堆栈。去年十月见证了 NVIDIA GPU 带来加速 POWER8 系统的能力和来自 Tyan 的第一个 OpenPOWER 参考服务器。

|

||||

|

||||

### Linux 基金会 ###

|

||||

|

||||

|

||||

|

||||

于 2000 年建立的 [Linux 基金会][2] 如今成为掌控着历史上最大的开源协同开发成果,它有着超过 180 个合作成员和许多独立成员及学生成员。它赞助 Linux 核心开发者的工作并促进、保护和推进 Linux 操作系统,并协调软件的协作开发。

|

||||

|

||||

它最为成功的协作项目包括 Code Aurora Forum (一个拥有为移动无线产业服务的企业财团),MeeGo (一个为移动设备和 IVI [注:指的是车载消息娱乐设备,为 In-Vehicle Infotainment 的简称] 构建一个基于 Linux 内核的操作系统的项目) 和 Open Virtualization Alliance (开放虚拟化联盟,它促进自由和开源软件虚拟化解决方案的采用)。

|

||||

|

||||

### 开放虚拟化联盟 ###

|

||||

|

||||

|

||||

|

||||

[开放虚拟化联盟(OVA)][3] 的存在目的为:通过提供使用案例和对具有互操作性的通用接口和 API 的发展提供支持,来促进自由、开源软件的虚拟化解决方案,例如 KVM 的采用。KVM 将 Linux 内核转变为一个虚拟机管理程序。

|

||||

|

||||

如今, KVM 已成为和 OpenStack 共同使用的最为常见的虚拟机管理程序。

|

||||

|

||||

### OpenStack 基金会 ###

|

||||

|

||||

|

||||

|

||||

原本作为一个 IaaS(基础设施即服务) 产品由 NASA 和 Rackspace 于 2010 年启动,[OpenStack 基金会][4] 已成为最大的开源项目聚居地之一。它拥有超过 200 家公司成员,其中包括 AT&T, AMD, Avaya, Canonical, Cisco, Dell 和 HP。

|

||||

|

||||

大约以 6 个月为一个发行周期,基金会的 OpenStack 项目开发用于通过一个基于 Web 的仪表盘,命令行工具或一个 RESTful 风格的 API 来控制或调配流经一个数据中心的处理存储池和网络资源。至今为止,基金会支持的协同开发已经孕育出了一系列 OpenStack 组件,其中包括 OpenStack Compute(一个云计算网络控制器,它是一个 IaaS 系统的主要部分),OpenStack Networking(一个用以管理网络和 IP 地址的系统) 和 OpenStack Object Storage(一个可扩展的冗余存储系统)。

|

||||

|

||||

### OpenDaylight ###

|

||||

|

||||

|

||||

|

||||

作为来自 Linux 基金会的另一个协作项目, [OpenDaylight][5] 是一个由诸如 Dell, HP, Oracle 和 Avaya 等行业厂商于 2013 年 4 月建立的联合倡议。它的任务是建立一个由社区主导、开源、有工业支持的针对软件定义网络( SDN: Software-Defined Networking)的包含代码和蓝图的框架。其思路是提供一个可直接部署的全功能 SDN 平台,而不需要其他组件,供应商可提供附件组件和增强组件。

|

||||

|

||||

### Apache 软件基金会 ###

|

||||

|

||||

|

||||

|

||||

[Apache 软件基金会 (ASF)][7] 是将近 150 个顶级项目的聚居地,这些项目涵盖从开源的企业级自动化软件到与 Apache Hadoop 相关的分布式计算的整个生态系统。这些项目分发企业级、可免费获取的软件产品,而 Apache 协议则是为了让无论是商业用户还是个人用户更方便地部署 Apache 的产品。

|

||||

|

||||

ASF 是 1999 年成立的一个会员制,非盈利公司,以精英为其核心 — 要成为它的成员,你必须首先在基金会的一个或多个协作项目中做出积极贡献。

|

||||

|

||||

### 开放计算项目 ###

|

||||

|

||||

|

||||

|

||||

作为 Facebook 重新设计其 Oregon 数据中心的副产物, [开放计算项目][7] 旨在发展针对数据中心的开源硬件解决方案。 OCP 是一个由廉价无浪费的服务器、针对 Open Rack(为数据中心设计的机架标准,来让机架集成到数据中心的基础设施中) 的模块化 I/O 存储和一个相对 "绿色" 的数据中心设计方案等构成。

|

||||

|

||||

OCP 董事会成员包括来自 Facebook,Intel,Goldman Sachs,Rackspace 和 Microsoft 的代表。

|

||||

|

||||

OCP 最近宣布了有两种可选的许可证: 一个类似 Apache 2.0 的允许衍生工作的许可证,和一个更规范的鼓励将更改回馈到原有软件的许可证。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.networkworld.com/article/2866074/opensource-subnet/7-communities-driving-open-source-development.html

|

||||

|

||||

作者:[Thor Olavsrud][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.networkworld.com/author/Thor-Olavsrud/

|

||||

[1]:http://openpowerfoundation.org/

|

||||

[2]:http://www.linuxfoundation.org/

|

||||

[3]:https://openvirtualizationalliance.org/

|

||||

[4]:http://www.openstack.org/foundation/

|

||||

[5]:http://www.opendaylight.org/

|

||||

[6]:http://www.apache.org/

|

||||

[7]:http://www.opencompute.org/

|

||||

@ -1,20 +1,20 @@

|

||||

如何在 Ubuntu 中管理和使用 LVM(Logical Volume Management,逻辑卷管理)

|

||||

如何在 Ubuntu 中管理和使用 逻辑卷管理 LVM

|

||||

================================================================================

|

||||

|

||||

|

||||

在我们之前的文章中,我们介绍了[什么是 LVM 以及能用 LVM 做什么][1],今天我们会给你介绍一些 LVM 的主要管理工具,使得你在设置和扩展安装时更游刃有余。

|

||||

|

||||

正如之前所述,LVM 是介于你的操作系统和物理硬盘驱动器之间的抽象层。这意味着你的物理硬盘驱动器和分区不再依赖于他们所在的硬盘驱动和分区。而是,你的操作系统所见的硬盘驱动和分区可以是由任意数目的独立硬盘驱动汇集而成或是一个软件磁盘阵列。

|

||||

正如之前所述,LVM 是介于你的操作系统和物理硬盘驱动器之间的抽象层。这意味着你的物理硬盘驱动器和分区不再依赖于他们所在的硬盘驱动和分区。而是你的操作系统所见的硬盘驱动和分区可以是由任意数目的独立硬盘汇集而成的或是一个软件磁盘阵列。

|

||||

|

||||

要管理 LVM,这里有很多可用的 GUI 工具,但要真正理解 LVM 配置发生的事情,最好要知道一些命令行工具。这当你在一个服务器或不提供 GUI 工具的发行版上管理 LVM 时尤为有用。

|

||||

|

||||

LVM 的大部分命令和彼此都非常相似。每个可用的命令都由以下其中之一开头:

|

||||

|

||||

- Physical Volume = pv

|

||||

- Volume Group = vg

|

||||

- Logical Volume = lv

|

||||

- Physical Volume (物理卷) = pv

|

||||

- Volume Group (卷组)= vg

|

||||

- Logical Volume (逻辑卷)= lv

|

||||

|

||||

物理卷命令用于在卷组中添加或删除硬盘驱动。卷组命令用于为你的逻辑卷操作更改显示的物理分区抽象集。逻辑卷命令会以分区形式显示卷组使得你的操作系统能使用指定的空间。

|

||||

物理卷命令用于在卷组中添加或删除硬盘驱动。卷组命令用于为你的逻辑卷操作更改显示的物理分区抽象集。逻辑卷命令会以分区形式显示卷组,使得你的操作系统能使用指定的空间。

|

||||

|

||||

### 可下载的 LVM 备忘单 ###

|

||||

|

||||

@ -26,7 +26,7 @@ LVM 的大部分命令和彼此都非常相似。每个可用的命令都由以

|

||||

|

||||

### 如何查看当前 LVM 信息 ###

|

||||

|

||||

你首先需要做的事情是检查你的 LVM 设置。s 和 display 命令和物理卷(pv)、卷组(vg)以及逻辑卷(lv)一起使用,是一个找出当前设置好的开始点。

|

||||

你首先需要做的事情是检查你的 LVM 设置。s 和 display 命令可以和物理卷(pv)、卷组(vg)以及逻辑卷(lv)一起使用,是一个找出当前设置的好起点。

|

||||

|

||||

display 命令会格式化输出信息,因此比 s 命令更易于理解。对每个命令你会看到名称和 pv/vg 的路径,它还会给出空闲和已使用空间的信息。

|

||||

|

||||

@ -40,17 +40,17 @@ display 命令会格式化输出信息,因此比 s 命令更易于理解。对

|

||||

|

||||

#### 创建物理卷 ####

|

||||

|

||||

我们会从一个完全新的没有任何分区和信息的硬盘驱动开始。首先找出你将要使用的磁盘。(/dev/sda, sdb, 等)

|

||||

我们会从一个全新的没有任何分区和信息的硬盘开始。首先找出你将要使用的磁盘。(/dev/sda, sdb, 等)

|

||||

|

||||

> 注意:记住所有的命令都要以 root 身份运行或者在命令前面添加 'sudo' 。

|

||||

|

||||

fdisk -l

|

||||

|

||||

如果之前你的硬盘驱动从没有格式化或分区,在 fdisk 的输出中你很可能看到类似下面的信息。这完全正常,因为我们会在下面的步骤中创建需要的分区。

|

||||

如果之前你的硬盘从未格式化或分区过,在 fdisk 的输出中你很可能看到类似下面的信息。这完全正常,因为我们会在下面的步骤中创建需要的分区。

|

||||

|

||||

|

||||

|

||||

我们的新磁盘位置是 /dev/sdb,让我们用 fdisk 命令在驱动上创建一个新的分区。

|

||||

我们的新磁盘位置是 /dev/sdb,让我们用 fdisk 命令在磁盘上创建一个新的分区。

|

||||

|

||||

这里有大量能创建新分区的 GUI 工具,包括 [Gparted][2],但由于我们已经打开了终端,我们将使用 fdisk 命令创建需要的分区。

|

||||

|

||||

@ -62,9 +62,9 @@ display 命令会格式化输出信息,因此比 s 命令更易于理解。对

|

||||

|

||||

|

||||

|

||||

以指定的顺序输入命令创建一个使用新硬盘驱动 100% 空间的主分区并为 LVM 做好了准备。如果你需要更改分区的大小或相应多个分区,我建议使用 GParted 或自己了解关于 fdisk 命令的使用。

|

||||

以指定的顺序输入命令创建一个使用新硬盘 100% 空间的主分区并为 LVM 做好了准备。如果你需要更改分区的大小或想要多个分区,我建议使用 GParted 或自己了解一下关于 fdisk 命令的使用。

|

||||

|

||||

**警告:下面的步骤会格式化你的硬盘驱动。确保在进行下面步骤之前你的硬盘驱动中没有任何信息。**

|

||||

**警告:下面的步骤会格式化你的硬盘驱动。确保在进行下面步骤之前你的硬盘驱动中没有任何有用的信息。**

|

||||

|

||||

- n = 创建新分区

|

||||

- p = 创建主分区

|

||||

@ -79,9 +79,9 @@ display 命令会格式化输出信息,因此比 s 命令更易于理解。对

|

||||

- t = 更改分区类型

|

||||

- 8e = 更改为 LVM 分区类型

|

||||

|

||||

核实并将信息写入硬盘驱动器。

|

||||

核实并将信息写入硬盘。

|

||||

|

||||

- p = 查看分区设置使得写入更改到磁盘之前可以回看

|

||||

- p = 查看分区设置使得在写入更改到磁盘之前可以回看

|

||||

- w = 写入更改到磁盘

|

||||

|

||||

|

||||

@ -102,7 +102,7 @@ display 命令会格式化输出信息,因此比 s 命令更易于理解。对

|

||||

|

||||

|

||||

|

||||

Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称,但建议标签以 vg 开头,以便后面你使用它时能意识到这是一个卷组。

|

||||

vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称,但建议标签以 vg 开头,以便后面你使用它时能意识到这是一个卷组。

|

||||

|

||||

#### 创建逻辑卷 ####

|

||||

|

||||

@ -112,7 +112,7 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

|

||||

|

||||

-L 命令指定逻辑卷的大小,在该情况中是 3 GB,-n 命令指定卷的名称。 指定 vgpool 所以 lvcreate 命令知道从什么卷获取空间。

|

||||

-L 命令指定逻辑卷的大小,在该情况中是 3 GB,-n 命令指定卷的名称。 指定 vgpool 以便 lvcreate 命令知道从什么卷获取空间。

|

||||

|

||||

#### 格式化并挂载逻辑卷 ####

|

||||

|

||||

@ -131,7 +131,7 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

#### 重新设置逻辑卷大小 ####

|

||||

|

||||

逻辑卷的一个好处是你能使你的共享物理变大或变小而不需要移动所有东西到一个更大的硬盘驱动。另外,你可以添加新的硬盘驱动并同时扩展你的卷组。或者如果你有一个不使用的硬盘驱动,你可以从卷组中移除它使得逻辑卷变小。

|

||||

逻辑卷的一个好处是你能使你的存储物理地变大或变小,而不需要移动所有东西到一个更大的硬盘。另外,你可以添加新的硬盘并同时扩展你的卷组。或者如果你有一个不使用的硬盘,你可以从卷组中移除它使得逻辑卷变小。

|

||||

|

||||

这里有三个用于使物理卷、卷组和逻辑卷变大或变小的基础工具。

|

||||

|

||||

@ -147,9 +147,9 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

按照上面创建新分区并更改分区类型为 LVM(8e) 的步骤安装一个新硬盘驱动。然后用 pvcreate 命令创建一个 LVM 能识别的物理卷。

|

||||

|

||||

#### 添加新硬盘驱动到卷组 ####

|

||||

#### 添加新硬盘到卷组 ####

|

||||

|

||||

要添加新的硬盘驱动到一个卷组,你只需要知道你的新分区,在我们的例子中是 /dev/sdc1,以及想要添加到的卷组的名称。

|

||||

要添加新的硬盘到一个卷组,你只需要知道你的新分区,在我们的例子中是 /dev/sdc1,以及想要添加到的卷组的名称。

|

||||

|

||||

这会添加新物理卷到已存在的卷组中。

|

||||

|

||||

@ -189,7 +189,7 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

1. 调整文件系统大小 (调整之前确保已经移动文件到硬盘驱动安全的地方)

|

||||

1. 减小逻辑卷 (除了 + 可以扩展大小,你也可以用 - 压缩大小)

|

||||

1. 用 vgreduce 从卷组中移除硬盘驱动

|

||||

1. 用 vgreduce 从卷组中移除硬盘

|

||||

|

||||

#### 备份逻辑卷 ####

|

||||

|

||||

@ -197,7 +197,7 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

|

||||

|

||||

LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该照片可以用于在不同的硬盘驱动上进行备份。生成一个备份的时候,任何需要添加到逻辑卷的新信息会如往常一样写入磁盘,但会跟踪更改使得原始快照永远不会损毁。

|

||||

LVM 获取快照的时候,会有一张和逻辑卷完全相同的“照片”,该“照片”可以用于在不同的硬盘上进行备份。生成一个备份的时候,任何需要添加到逻辑卷的新信息会如往常一样写入磁盘,但会跟踪更改使得原始快照永远不会损毁。

|

||||

|

||||

要创建一个快照,我们需要创建拥有足够空闲空间的逻辑卷,用于保存我们备份的时候会写入该逻辑卷的任何新信息。如果驱动并不是经常写入,你可以使用很小的一个存储空间。备份完成的时候我们只需要移除临时逻辑卷,原始逻辑卷会和往常一样。

|

||||

|

||||

@ -209,7 +209,7 @@ LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该

|

||||

|

||||

|

||||

|

||||

这里我们创建了一个只有 512MB 的逻辑卷,因为驱动实际上并不会使用。512MB 的空间会保存备份时产生的任何新数据。

|

||||

这里我们创建了一个只有 512MB 的逻辑卷,因为该硬盘实际上并不会使用。512MB 的空间会保存备份时产生的任何新数据。

|

||||

|

||||

#### 挂载新快照 ####

|

||||

|

||||

@ -222,7 +222,7 @@ LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该

|

||||

|

||||

#### 复制快照和删除逻辑卷 ####

|

||||

|

||||

你剩下需要做的是从 /mnt/lvstuffbackup/ 中复制所有文件到一个外部的硬盘驱动或者打包所有文件到一个文件。

|

||||

你剩下需要做的是从 /mnt/lvstuffbackup/ 中复制所有文件到一个外部的硬盘或者打包所有文件到一个文件。

|

||||

|

||||

**注意:tar -c 会创建一个归档文件,-f 要指出归档文件的名称和路径。要获取 tar 命令的帮助信息,可以在终端中输入 man tar。**

|

||||

|

||||

@ -230,7 +230,7 @@ LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该

|

||||

|

||||

|

||||

|

||||

记住备份发生的时候写到 lvstuff 的所有文件都会在我们之前创建的临时逻辑卷中被跟踪。确保备份的时候你有足够的空闲空间。

|

||||

记住备份时候写到 lvstuff 的所有文件都会在我们之前创建的临时逻辑卷中被跟踪。确保备份的时候你有足够的空闲空间。

|

||||

|

||||

备份完成后,卸载卷并移除临时快照。

|

||||

|

||||

@ -259,10 +259,10 @@ LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该

|

||||

via: http://www.howtogeek.com/howto/40702/how-to-manage-and-use-lvm-logical-volume-management-in-ubuntu/

|

||||

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.howtogeek.com/howto/36568/what-is-logical-volume-management-and-how-do-you-enable-it-in-ubuntu/

|

||||

[1]:https://linux.cn/article-5953-1.html

|

||||

[2]:http://www.howtogeek.com/howto/17001/how-to-format-a-usb-drive-in-ubuntu-using-gparted/

|

||||

[3]:http://www.howtogeek.com/howto/33552/htg-explains-which-linux-file-system-should-you-choose/

|

||||

183

published/20150522 Analyzing Linux Logs.md

Normal file

183

published/20150522 Analyzing Linux Logs.md

Normal file

@ -0,0 +1,183 @@

|

||||

如何分析 Linux 日志

|

||||

==============================================================================

|

||||

|

||||

|

||||

日志中有大量的信息需要你处理,尽管有时候想要提取并非想象中的容易。在这篇文章中我们会介绍一些你现在就能做的基本日志分析例子(只需要搜索即可)。我们还将涉及一些更高级的分析,但这些需要你前期努力做出适当的设置,后期就能节省很多时间。对数据进行高级分析的例子包括生成汇总计数、对有效值进行过滤,等等。

|

||||

|

||||

我们首先会向你展示如何在命令行中使用多个不同的工具,然后展示了一个日志管理工具如何能自动完成大部分繁重工作从而使得日志分析变得简单。

|

||||

|

||||

### 用 Grep 搜索 ###

|

||||

|

||||

搜索文本是查找信息最基本的方式。搜索文本最常用的工具是 [grep][1]。这个命令行工具在大部分 Linux 发行版中都有,它允许你用正则表达式搜索日志。正则表达式是一种用特殊的语言写的、能识别匹配文本的模式。最简单的模式就是用引号把你想要查找的字符串括起来。

|

||||

|

||||

#### 正则表达式 ####

|

||||

|

||||

这是一个在 Ubuntu 系统的认证日志中查找 “user hoover” 的例子:

|

||||

|

||||

$ grep "user hoover" /var/log/auth.log

|

||||

Accepted password for hoover from 10.0.2.2 port 4792 ssh2

|

||||

pam_unix(sshd:session): session opened for user hoover by (uid=0)

|

||||

pam_unix(sshd:session): session closed for user hoover

|

||||

|

||||

构建精确的正则表达式可能很难。例如,如果我们想要搜索一个类似端口 “4792” 的数字,它可能也会匹配时间戳、URL 以及其它不需要的数据。Ubuntu 中下面的例子,它匹配了一个我们不想要的 Apache 日志。

|

||||

|

||||

$ grep "4792" /var/log/auth.log

|

||||

Accepted password for hoover from 10.0.2.2 port 4792 ssh2

|

||||

74.91.21.46 - - [31/Mar/2015:19:44:32 +0000] "GET /scripts/samples/search?q=4972 HTTP/1.0" 404 545 "-" "-”

|

||||

|

||||

#### 环绕搜索 ####

|

||||

|

||||

另一个有用的小技巧是你可以用 grep 做环绕搜索。这会向你展示一个匹配前面或后面几行是什么。它能帮助你调试导致错误或问题的东西。`B` 选项展示前面几行,`A` 选项展示后面几行。举个例子,我们知道当一个人以管理员员身份登录失败时,同时他们的 IP 也没有反向解析,也就意味着他们可能没有有效的域名。这非常可疑!

|

||||

|

||||

$ grep -B 3 -A 2 'Invalid user' /var/log/auth.log

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12545]: reverse mapping checking getaddrinfo for 216-19-2-8.commspeed.net [216.19.2.8] failed - POSSIBLE BREAK-IN ATTEMPT!

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12545]: Received disconnect from 216.19.2.8: 11: Bye Bye [preauth]

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: Invalid user admin from 216.19.2.8

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: input_userauth_request: invalid user admin [preauth]

|

||||

Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: Received disconnect from 216.19.2.8: 11: Bye Bye [preauth]

|

||||

|

||||

#### Tail ####

|

||||

|

||||

你也可以把 grep 和 [tail][2] 结合使用来获取一个文件的最后几行,或者跟踪日志并实时打印。这在你做交互式更改的时候非常有用,例如启动服务器或者测试代码更改。

|

||||

|

||||

$ tail -f /var/log/auth.log | grep 'Invalid user'

|

||||

Apr 30 19:49:48 ip-172-31-11-241 sshd[6512]: Invalid user ubnt from 219.140.64.136

|

||||

Apr 30 19:49:49 ip-172-31-11-241 sshd[6514]: Invalid user admin from 219.140.64.136

|

||||

|

||||

关于 grep 和正则表达式的详细介绍并不在本指南的范围,但 [Ryan’s Tutorials][3] 有更深入的介绍。

|

||||

|

||||

日志管理系统有更高的性能和更强大的搜索能力。它们通常会索引数据并进行并行查询,因此你可以很快的在几秒内就能搜索 GB 或 TB 的日志。相比之下,grep 就需要几分钟,在极端情况下可能甚至几小时。日志管理系统也使用类似 [Lucene][4] 的查询语言,它提供更简单的语法来检索数字、域以及其它。

|

||||

|

||||

### 用 Cut、 AWK、 和 Grok 解析 ###

|

||||

|

||||

#### 命令行工具 ####

|

||||

|

||||

Linux 提供了多个命令行工具用于文本解析和分析。当你想要快速解析少量数据时非常有用,但处理大量数据时可能需要很长时间。

|

||||

|

||||

#### Cut ####

|

||||

|

||||

[cut][5] 命令允许你从有分隔符的日志解析字段。分隔符是指能分开字段或键值对的等号或逗号等。

|

||||

|

||||

假设我们想从下面的日志中解析出用户:

|

||||

|

||||

pam_unix(su:auth): authentication failure; logname=hoover uid=1000 euid=0 tty=/dev/pts/0 ruser=hoover rhost= user=root

|

||||

|

||||

我们可以像下面这样用 cut 命令获取用等号分割后的第八个字段的文本。这是一个 Ubuntu 系统上的例子:

|

||||

|

||||

$ grep "authentication failure" /var/log/auth.log | cut -d '=' -f 8

|

||||

root

|

||||

hoover

|

||||

root

|

||||

nagios

|

||||

nagios

|

||||

|

||||

#### AWK ####

|

||||

|

||||

另外,你也可以使用 [awk][6],它能提供更强大的解析字段功能。它提供了一个脚本语言,你可以过滤出几乎任何不相干的东西。

|

||||

|

||||

例如,假设在 Ubuntu 系统中我们有下面的一行日志,我们想要提取登录失败的用户名称:

|

||||

|

||||

Mar 24 08:28:18 ip-172-31-11-241 sshd[32701]: input_userauth_request: invalid user guest [preauth]

|

||||

|

||||

你可以像下面这样使用 awk 命令。首先,用一个正则表达式 /sshd.*invalid user/ 来匹配 sshd invalid user 行。然后用 { print $9 } 根据默认的分隔符空格打印第九个字段。这样就输出了用户名。

|

||||

|

||||

$ awk '/sshd.*invalid user/ { print $9 }' /var/log/auth.log

|

||||

guest

|

||||

admin

|

||||

info

|

||||

test

|

||||

ubnt

|

||||

|

||||

你可以在 [Awk 用户指南][7] 中阅读更多关于如何使用正则表达式和输出字段的信息。

|

||||

|

||||

#### 日志管理系统 ####

|

||||

|

||||

日志管理系统使得解析变得更加简单,使用户能快速的分析很多的日志文件。他们能自动解析标准的日志格式,比如常见的 Linux 日志和 Web 服务器日志。这能节省很多时间,因为当处理系统问题的时候你不需要考虑自己写解析逻辑。

|

||||

|

||||

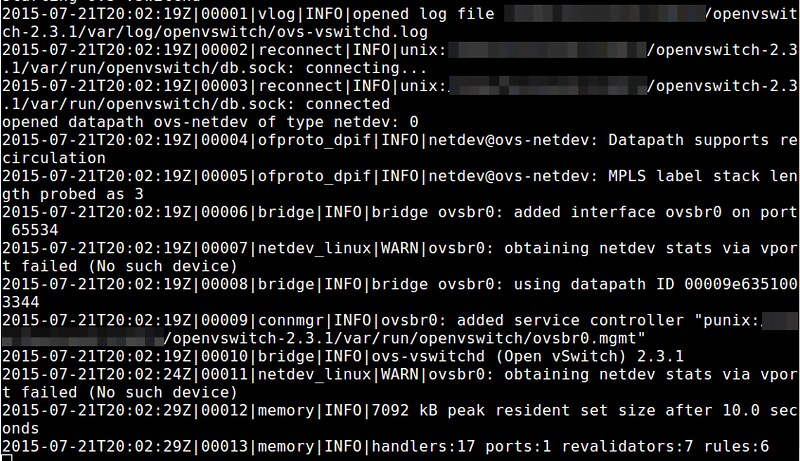

下面是一个 sshd 日志消息的例子,解析出了每个 remoteHost 和 user。这是 Loggly 中的一张截图,它是一个基于云的日志管理服务。

|

||||

|

||||

|

||||

|

||||

你也可以对非标准格式自定义解析。一个常用的工具是 [Grok][8],它用一个常见正则表达式库,可以解析原始文本为结构化 JSON。下面是一个 Grok 在 Logstash 中解析内核日志文件的事例配置:

|

||||

|

||||

filter{

|

||||

grok {

|

||||

match => {"message" => "%{CISCOTIMESTAMP:timestamp} %{HOST:host} %{WORD:program}%{NOTSPACE} %{NOTSPACE}%{NUMBER:duration}%{NOTSPACE} %{GREEDYDATA:kernel_logs}"

|

||||

}

|

||||

}

|

||||

|

||||

下图是 Grok 解析后输出的结果:

|

||||

|

||||

|

||||

|

||||

### 用 Rsyslog 和 AWK 过滤 ###

|

||||

|

||||

过滤使得你能检索一个特定的字段值而不是进行全文检索。这使你的日志分析更加准确,因为它会忽略来自其它部分日志信息不需要的匹配。为了对一个字段值进行搜索,你首先需要解析日志或者至少有对事件结构进行检索的方式。

|

||||

|

||||

#### 如何对应用进行过滤 ####

|

||||

|

||||

通常,你可能只想看一个应用的日志。如果你的应用把记录都保存到一个文件中就会很容易。如果你需要在一个聚集或集中式日志中过滤一个应用就会比较复杂。下面有几种方法来实现:

|

||||

|

||||

1. 用 rsyslog 守护进程解析和过滤日志。下面的例子将 sshd 应用的日志写入一个名为 sshd-message 的文件,然后丢弃事件以便它不会在其它地方重复出现。你可以将它添加到你的 rsyslog.conf 文件中测试这个例子。

|

||||

|

||||

:programname, isequal, “sshd” /var/log/sshd-messages

|

||||

&~

|

||||

|

||||

2. 用类似 awk 的命令行工具提取特定字段的值,例如 sshd 用户名。下面是 Ubuntu 系统中的一个例子。

|

||||

|

||||

$ awk '/sshd.*invalid user/ { print $9 }' /var/log/auth.log

|

||||

guest

|

||||

admin

|

||||

info

|

||||

test

|

||||

ubnt

|

||||

|

||||

3. 用日志管理系统自动解析日志,然后在需要的应用名称上点击过滤。下面是在 Loggly 日志管理服务中提取 syslog 域的截图。我们对应用名称 “sshd” 进行过滤,如维恩图图标所示。

|

||||

|

||||

|

||||

|

||||

#### 如何过滤错误 ####

|

||||

|

||||

一个人最希望看到日志中的错误。不幸的是,默认的 syslog 配置不直接输出错误的严重性,也就使得难以过滤它们。

|

||||

|

||||

这里有两个解决该问题的方法。首先,你可以修改你的 rsyslog 配置,在日志文件中输出错误的严重性,使得便于查看和检索。在你的 rsyslog 配置中你可以用 pri-text 添加一个 [模板][9],像下面这样:

|

||||

|

||||

"<%pri-text%> : %timegenerated%,%HOSTNAME%,%syslogtag%,%msg%n"

|

||||

|

||||

这个例子会按照下面的格式输出。你可以看到该信息中指示错误的 err。

|

||||

|

||||

<authpriv.err> : Mar 11 18:18:00,hoover-VirtualBox,su[5026]:, pam_authenticate: Authentication failure

|

||||

|

||||

你可以用 awk 或者 grep 检索错误信息。在 Ubuntu 中,对这个例子,我们可以用一些语法特征,例如 . 和 >,它们只会匹配这个域。

|

||||

|

||||

$ grep '.err>' /var/log/auth.log

|

||||

<authpriv.err> : Mar 11 18:18:00,hoover-VirtualBox,su[5026]:, pam_authenticate: Authentication failure

|

||||

|

||||

你的第二个选择是使用日志管理系统。好的日志管理系统能自动解析 syslog 消息并抽取错误域。它们也允许你用简单的点击过滤日志消息中的特定错误。

|

||||

|

||||

下面是 Loggly 中一个截图,显示了高亮错误严重性的 syslog 域,表示我们正在过滤错误:

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.loggly.com/ultimate-guide/logging/analyzing-linux-logs/

|

||||

|

||||

作者:[Jason Skowronski][a],[Amy Echeverri][b],[ Sadequl Hussain][c]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linkedin.com/in/jasonskowronski

|

||||

[b]:https://www.linkedin.com/in/amyecheverri

|

||||

[c]:https://www.linkedin.com/pub/sadequl-hussain/14/711/1a7

|

||||

[1]:http://linux.die.net/man/1/grep

|

||||

[2]:http://linux.die.net/man/1/tail

|

||||

[3]:http://ryanstutorials.net/linuxtutorial/grep.php

|

||||

[4]:https://lucene.apache.org/core/2_9_4/queryparsersyntax.html

|

||||

[5]:http://linux.die.net/man/1/cut

|

||||

[6]:http://linux.die.net/man/1/awk

|

||||

[7]:http://www.delorie.com/gnu/docs/gawk/gawk_26.html#IDX155

|

||||

[8]:http://logstash.net/docs/1.4.2/filters/grok

|

||||

[9]:http://www.rsyslog.com/doc/v8-stable/configuration/templates.html

|

||||

@ -1,18 +1,18 @@

|

||||

Ubuntu 15.04上配置OpenVPN服务器-客户端

|

||||

在 Ubuntu 15.04 上配置 OpenVPN 服务器和客户端

|

||||

================================================================================

|

||||

虚拟专用网(VPN)是几种用于建立与其它网络连接的网络技术中常见的一个名称。它被称为虚拟网,因为各个节点的连接不是通过物理线路实现的。而由于没有网络所有者的正确授权是不能通过公共线路访问到网络,所以它是专用的。

|

||||

虚拟专用网(VPN)常指几种通过其它网络建立连接技术。它之所以被称为“虚拟”,是因为各个节点间的连接不是通过物理线路实现的,而“专用”是指如果没有网络所有者的正确授权是不能被公开访问到。

|

||||

|

||||

|

||||

|

||||

[OpenVPN][1]软件通过TUN/TAP驱动的帮助,使用TCP和UDP协议来传输数据。UDP协议和TUN驱动允许NAT后的用户建立到OpenVPN服务器的连接。此外,OpenVPN允许指定自定义端口。它提额外提供了灵活的配置,可以帮助你避免防火墙限制。

|

||||

[OpenVPN][1]软件借助TUN/TAP驱动使用TCP和UDP协议来传输数据。UDP协议和TUN驱动允许NAT后的用户建立到OpenVPN服务器的连接。此外,OpenVPN允许指定自定义端口。它提供了更多的灵活配置,可以帮助你避免防火墙限制。

|

||||

|

||||

OpenVPN中,由OpenSSL库和传输层安全协议(TLS)提供了安全和加密。TLS是SSL协议的一个改进版本。

|

||||

|

||||

OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示了如何配置OpenVPN的服务器端,以及如何预备使用带有公共密钥非对称加密和TLS协议基础结构(PKI)。

|

||||

OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示了如何配置OpenVPN的服务器端,以及如何配置使用带有公共密钥基础结构(PKI)的非对称加密和TLS协议。

|

||||

|

||||

### 服务器端配置 ###

|

||||

|

||||

首先,我们必须安装OpenVPN。在Ubuntu 15.04和其它带有‘apt’报管理器的Unix系统中,可以通过如下命令安装:

|

||||

首先,我们必须安装OpenVPN软件。在Ubuntu 15.04和其它带有‘apt’包管理器的Unix系统中,可以通过如下命令安装:

|

||||

|

||||

sudo apt-get install openvpn

|

||||

|

||||

@ -20,7 +20,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

sudo apt-get unstall easy-rsa

|

||||

|

||||

**注意**: 所有接下来的命令要以超级用户权限执行,如在“sudo -i”命令后;此外,你可以使用“sudo -E”作为接下来所有命令的前缀。

|

||||

**注意**: 所有接下来的命令要以超级用户权限执行,如在使用`sudo -i`命令后执行,或者你可以使用`sudo -E`作为接下来所有命令的前缀。

|

||||

|

||||

开始之前,我们需要拷贝“easy-rsa”到openvpn文件夹。

|

||||

|

||||

@ -32,15 +32,15 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

cd /etc/openvpn/easy-rsa/2.0

|

||||

|

||||

这里,我们开启了一个密钥生成进程。

|

||||

这里,我们开始密钥生成进程。

|

||||

|

||||

首先,我们编辑一个“var”文件。为了简化生成过程,我们需要在里面指定数据。这里是“var”文件的一个样例:

|

||||

首先,我们编辑一个“vars”文件。为了简化生成过程,我们需要在里面指定数据。这里是“vars”文件的一个样例:

|

||||

|

||||

export KEY_COUNTRY="US"

|

||||

export KEY_PROVINCE="CA"

|

||||

export KEY_CITY="SanFrancisco"

|

||||

export KEY_ORG="Fort-Funston"

|

||||

export KEY_EMAIL="my@myhost.mydomain"

|

||||

export KEY_COUNTRY="CN"

|

||||

export KEY_PROVINCE="BJ"

|

||||

export KEY_CITY="Beijing"

|

||||

export KEY_ORG="Linux.CN"

|

||||

export KEY_EMAIL="open@vpn.linux.cn"

|

||||

export KEY_OU=server

|

||||

|

||||

希望这些字段名称对你而言已经很清楚,不需要进一步说明了。

|

||||

@ -61,7 +61,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

./build-ca

|

||||

|

||||

在对话中,我们可以看到默认的变量,这些变量是我们先前在“vars”中指定的。我们可以检查以下,如有必要进行编辑,然后按回车几次。对话如下

|

||||

在对话中,我们可以看到默认的变量,这些变量是我们先前在“vars”中指定的。我们可以检查一下,如有必要进行编辑,然后按回车几次。对话如下

|

||||

|

||||

Generating a 2048 bit RSA private key

|

||||

.............................................+++

|

||||

@ -75,14 +75,14 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

For some fields there will be a default value,

|

||||

If you enter '.', the field will be left blank.

|

||||

-----

|

||||

Country Name (2 letter code) [US]:

|

||||

State or Province Name (full name) [CA]:

|

||||

Locality Name (eg, city) [SanFrancisco]:

|

||||

Organization Name (eg, company) [Fort-Funston]:

|

||||

Organizational Unit Name (eg, section) [MyOrganizationalUnit]:

|

||||

Common Name (eg, your name or your server's hostname) [Fort-Funston CA]:

|

||||

Country Name (2 letter code) [CN]:

|

||||

State or Province Name (full name) [BJ]:

|

||||

Locality Name (eg, city) [Beijing]:

|

||||

Organization Name (eg, company) [Linux.CN]:

|

||||

Organizational Unit Name (eg, section) [Tech]:

|

||||

Common Name (eg, your name or your server's hostname) [Linux.CN CA]:

|

||||

Name [EasyRSA]:

|

||||

Email Address [me@myhost.mydomain]:

|

||||

Email Address [open@vpn.linux.cn]:

|

||||

|

||||

接下来,我们需要生成一个服务器密钥

|

||||

|

||||

@ -102,14 +102,14 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

For some fields there will be a default value,

|

||||

If you enter '.', the field will be left blank.

|

||||

-----

|

||||

Country Name (2 letter code) [US]:

|

||||

State or Province Name (full name) [CA]:

|

||||

Locality Name (eg, city) [SanFrancisco]:

|

||||

Organization Name (eg, company) [Fort-Funston]:

|

||||

Organizational Unit Name (eg, section) [MyOrganizationalUnit]:

|

||||

Common Name (eg, your name or your server's hostname) [server]:

|

||||

Country Name (2 letter code) [CN]:

|

||||

State or Province Name (full name) [BJ]:

|

||||

Locality Name (eg, city) [Beijing]:

|

||||

Organization Name (eg, company) [Linux.CN]:

|

||||

Organizational Unit Name (eg, section) [Tech]:

|

||||

Common Name (eg, your name or your server's hostname) [Linux.CN server]:

|

||||

Name [EasyRSA]:

|

||||

Email Address [me@myhost.mydomain]:

|

||||

Email Address [open@vpn.linux.cn]:

|

||||

|

||||

Please enter the following 'extra' attributes

|

||||

to be sent with your certificate request

|

||||

@ -119,14 +119,14 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

Check that the request matches the signature

|

||||

Signature ok

|

||||

The Subject's Distinguished Name is as follows

|

||||

countryName :PRINTABLE:'US'

|

||||

stateOrProvinceName :PRINTABLE:'CA'

|

||||

localityName :PRINTABLE:'SanFrancisco'

|

||||

organizationName :PRINTABLE:'Fort-Funston'

|

||||

organizationalUnitName:PRINTABLE:'MyOrganizationalUnit'

|

||||

commonName :PRINTABLE:'server'

|

||||

countryName :PRINTABLE:'CN'

|

||||

stateOrProvinceName :PRINTABLE:'BJ'

|

||||

localityName :PRINTABLE:'Beijing'

|

||||

organizationName :PRINTABLE:'Linux.CN'

|

||||

organizationalUnitName:PRINTABLE:'Tech'

|

||||

commonName :PRINTABLE:'Linux.CN server'

|

||||

name :PRINTABLE:'EasyRSA'

|

||||

emailAddress :IA5STRING:'me@myhost.mydomain'

|

||||

emailAddress :IA5STRING:'open@vpn.linux.cn'

|

||||

Certificate is to be certified until May 22 19:00:25 2025 GMT (3650 days)

|

||||

Sign the certificate? [y/n]:y

|

||||

1 out of 1 certificate requests certified, commit? [y/n]y

|

||||

@ -143,7 +143,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

Generating DH parameters, 2048 bit long safe prime, generator 2

|

||||

This is going to take a long time

|

||||

................................+................<and many many dots>

|

||||

................................+................<许多的点>

|

||||

|

||||

在漫长的等待之后,我们可以继续生成最后的密钥了,该密钥用于TLS验证。命令如下:

|

||||

|

||||

@ -176,7 +176,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

### Unix的客户端配置 ###

|

||||

|

||||

假定我们有一台装有类Unix操作系统的设备,比如Ubuntu 15.04,并安装有OpenVPN。我们想要从先前的部分连接到OpenVPN服务器。首先,我们需要为客户端生成密钥。为了生成该密钥,请转到服务器上的目录中:

|

||||

假定我们有一台装有类Unix操作系统的设备,比如Ubuntu 15.04,并安装有OpenVPN。我们想要连接到前面建立的OpenVPN服务器。首先,我们需要为客户端生成密钥。为了生成该密钥,请转到服务器上的对应目录中:

|

||||

|

||||

cd /etc/openvpn/easy-rsa/2.0

|

||||

|

||||

@ -211,7 +211,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

dev tun

|

||||

proto udp

|

||||

|

||||

# IP and Port of remote host with OpenVPN server

|

||||

# 远程 OpenVPN 服务器的 IP 和 端口号

|

||||

remote 111.222.333.444 1194

|

||||

|

||||

resolv-retry infinite

|

||||

@ -243,7 +243,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

安卓设备上的OpenVPN配置和Unix系统上的十分类似,我们需要一个含有配置文件、密钥和证书的包。文件列表如下:

|

||||

|

||||

- configuration file (.ovpn),

|

||||

- 配置文件 (扩展名 .ovpn),

|

||||

- ca.crt,

|

||||

- dh2048.pem,

|

||||

- client.crt,

|

||||

@ -257,7 +257,7 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

dev tun

|

||||

proto udp

|

||||

|

||||

# IP and Port of remote host with OpenVPN server

|

||||

# 远程 OpenVPN 服务器的 IP 和 端口号

|

||||

remote 111.222.333.444 1194

|

||||

|

||||

resolv-retry infinite

|

||||

@ -274,21 +274,21 @@ OpenSSL提供了两种加密方法:对称和非对称。下面,我们展示

|

||||

|

||||

所有这些文件我们必须移动我们设备的SD卡上。

|

||||

|

||||

然后,我们需要安装[OpenVPN连接][2]。

|

||||

然后,我们需要安装一个[OpenVPN Connect][2] 应用。

|

||||

|

||||

接下来,配置过程很是简单:

|

||||

|

||||

open setting of OpenVPN and select Import options

|

||||

select Import Profile from SD card option

|

||||

in opened window go to folder with prepared files and select .ovpn file

|

||||

application offered us to create a new profile

|

||||

tap on the Connect button and wait a second

|

||||

- 打开 OpenVPN 并选择“Import”选项

|

||||

- 选择“Import Profile from SD card”

|

||||

- 在打开的窗口中导航到我们放置好文件的目录,并选择那个 .ovpn 文件

|

||||

- 应用会要求我们创建一个新的配置文件

|

||||

- 点击“Connect”按钮并稍等一下

|

||||

|

||||

搞定。现在,我们的安卓设备已经通过安全的VPN连接连接到我们的专用网。

|

||||

|

||||

### 尾声 ###

|

||||

|

||||

虽然OpenVPN初始配置花费不少时间,但是简易客户端配置为我们弥补了时间上的损失,也提供了从任何设备连接的能力。此外,OpenVPN提供了一个很高的安全等级,以及从不同地方连接的能力,包括位于NAT后面的客户端。因此,OpenVPN可以同时在家和在企业中使用。

|

||||

虽然OpenVPN初始配置花费不少时间,但是简易的客户端配置为我们弥补了时间上的损失,也提供了从任何设备连接的能力。此外,OpenVPN提供了一个很高的安全等级,以及从不同地方连接的能力,包括位于NAT后面的客户端。因此,OpenVPN可以同时在家和企业中使用。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -296,7 +296,7 @@ via: http://linoxide.com/ubuntu-how-to/configure-openvpn-server-client-ubuntu-15

|

||||

|

||||

作者:[Ivan Zabrovskiy][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,205 @@

|

||||

关于Linux防火墙'iptables'的面试问答

|

||||

================================================================================

|

||||

Nishita Agarwal是Tecmint的用户,她将分享关于她刚刚经历的一家公司(印度的一家私人公司Pune)的面试经验。在面试中她被问及许多不同的问题,但她是iptables方面的专家,因此她想分享这些关于iptables的问题和相应的答案给那些以后可能会进行相关面试的人。

|

||||

|

||||

|

||||

|

||||

所有的问题和相应的答案都基于Nishita Agarwal的记忆并经过了重写。

|

||||

|

||||

> “嗨,朋友!我叫**Nishita Agarwal**。我已经取得了理学学士学位,我的专业集中在UNIX和它的变种(BSD,Linux)。它们一直深深的吸引着我。我在存储方面有1年多的经验。我正在寻求职业上的变化,并将供职于印度的Pune公司。”

|

||||

|

||||

下面是我在面试中被问到的问题的集合。我已经把我记忆中有关iptables的问题和它们的答案记录了下来。希望这会对您未来的面试有所帮助。

|

||||

|

||||

### 1. 你听说过Linux下面的iptables和Firewalld么?知不知道它们是什么,是用来干什么的? ###

|

||||

|

||||

**答案** : iptables和Firewalld我都知道,并且我已经使用iptables好一段时间了。iptables主要由C语言写成,并且以GNU GPL许可证发布。它是从系统管理员的角度写的,最新的稳定版是iptables 1.4.21。iptables通常被用作类UNIX系统中的防火墙,更准确的说,可以称为iptables/netfilter。管理员通过终端/GUI工具与iptables打交道,来添加和定义防火墙规则到预定义的表中。Netfilter是内核中的一个模块,它执行包过滤的任务。

|

||||

|

||||

Firewalld是RHEL/CentOS 7(也许还有其他发行版,但我不太清楚)中最新的过滤规则的实现。它已经取代了iptables接口,并与netfilter相连接。

|

||||

|

||||

### 2. 你用过一些iptables的GUI或命令行工具么? ###

|

||||

|

||||

**答案** : 虽然我既用过GUI工具,比如与[Webmin][1]结合的Shorewall;以及直接通过终端访问iptables,但我必须承认通过Linux终端直接访问iptables能给予用户更高级的灵活性、以及对其背后工作更好的理解的能力。GUI适合初级管理员,而终端适合有经验的管理员。

|

||||

|

||||

### 3. 那么iptables和firewalld的基本区别是什么呢? ###

|

||||

|

||||

**答案** : iptables和firewalld都有着同样的目的(包过滤),但它们使用不同的方式。iptables与firewalld不同,在每次发生更改时都刷新整个规则集。通常iptables配置文件位于‘/etc/sysconfig/iptables‘,而firewalld的配置文件位于‘/etc/firewalld/‘。firewalld的配置文件是一组XML文件。以XML为基础进行配置的firewalld比iptables的配置更加容易,但是两者都可以完成同样的任务。例如,firewalld可以在自己的命令行界面以及基于XML的配置文件下使用iptables。

|

||||

|

||||

### 4. 如果有机会的话,你会在你所有的服务器上用firewalld替换iptables么? ###

|

||||

|

||||

**答案** : 我对iptables很熟悉,它也工作的很好。如果没有任何需求需要firewalld的动态特性,那么没有理由把所有的配置都从iptables移动到firewalld。通常情况下,目前为止,我还没有看到iptables造成什么麻烦。IT技术的通用准则也说道“为什么要修一件没有坏的东西呢?”。上面是我自己的想法,但如果组织愿意用firewalld替换iptables的话,我不介意。

|

||||

|

||||

### 5. 你看上去对iptables很有信心,巧的是,我们的服务器也在使用iptables。 ###

|

||||

|

||||

iptables使用的表有哪些?请简要的描述iptables使用的表以及它们所支持的链。

|

||||

|

||||

**答案** : 谢谢您的赞赏。至于您问的问题,iptables使用的表有四个,它们是:

|

||||

|

||||

- Nat 表

|

||||

- Mangle 表

|

||||

- Filter 表

|

||||

- Raw 表

|

||||

|

||||

Nat表 : Nat表主要用于网络地址转换。根据表中的每一条规则修改网络包的IP地址。流中的包仅遍历一遍Nat表。例如,如果一个通过某个接口的包被修饰(修改了IP地址),该流中其余的包将不再遍历这个表。通常不建议在这个表中进行过滤,由NAT表支持的链称为PREROUTING 链,POSTROUTING 链和OUTPUT 链。

|

||||

|

||||

Mangle表 : 正如它的名字一样,这个表用于校正网络包。它用来对特殊的包进行修改。它能够修改不同包的头部和内容。Mangle表不能用于地址伪装。支持的链包括PREROUTING 链,OUTPUT 链,Forward 链,Input 链和POSTROUTING 链。

|

||||

|

||||

Filter表 : Filter表是iptables中使用的默认表,它用来过滤网络包。如果没有定义任何规则,Filter表则被当作默认的表,并且基于它来过滤。支持的链有INPUT 链,OUTPUT 链,FORWARD 链。

|

||||

|

||||

Raw表 : Raw表在我们想要配置之前被豁免的包时被使用。它支持PREROUTING 链和OUTPUT 链。

|

||||

|

||||

### 6. 简要谈谈什么是iptables中的目标值(能被指定为目标),他们有什么用 ###

|

||||

|

||||

**答案** : 下面是在iptables中可以指定为目标的值:

|

||||

|

||||

- ACCEPT : 接受包

|

||||

- QUEUE : 将包传递到用户空间 (应用程序和驱动所在的地方)

|

||||

- DROP : 丢弃包

|

||||

- RETURN : 将控制权交回调用的链并且为当前链中的包停止执行下一调用规则

|

||||

|

||||

### 7. 让我们来谈谈iptables技术方面的东西,我的意思是说实际使用方面 ###

|

||||

|

||||

你怎么检测在CentOS中安装iptables时需要的iptables的rpm?

|

||||

|

||||

**答案** : iptables已经被默认安装在CentOS中,我们不需要单独安装它。但可以这样检测rpm:

|

||||

|

||||

# rpm -qa iptables

|

||||

|

||||

iptables-1.4.21-13.el7.x86_64

|

||||

|

||||

如果您需要安装它,您可以用yum来安装。

|

||||

|

||||

# yum install iptables-services

|

||||

|

||||

### 8. 怎样检测并且确保iptables服务正在运行? ###

|

||||

|

||||

**答案** : 您可以在终端中运行下面的命令来检测iptables的状态。

|

||||

|

||||

# service status iptables [On CentOS 6/5]

|

||||

# systemctl status iptables [On CentOS 7]

|

||||

|

||||

如果iptables没有在运行,可以使用下面的语句

|

||||

|

||||

---------------- 在CentOS 6/5下 ----------------

|

||||

# chkconfig --level 35 iptables on

|

||||

# service iptables start

|

||||

|

||||

---------------- 在CentOS 7下 ----------------

|

||||

# systemctl enable iptables

|

||||

# systemctl start iptables

|

||||

|

||||

我们还可以检测iptables的模块是否被加载:

|

||||

|

||||

# lsmod | grep ip_tables

|

||||

|

||||

### 9. 你怎么检查iptables中当前定义的规则呢? ###

|

||||

|

||||

**答案** : 当前的规则可以简单的用下面的命令查看:

|

||||

|

||||

# iptables -L

|

||||

|

||||

示例输出

|

||||

|

||||

Chain INPUT (policy ACCEPT)

|

||||

target prot opt source destination

|

||||

ACCEPT all -- anywhere anywhere state RELATED,ESTABLISHED

|

||||

ACCEPT icmp -- anywhere anywhere

|

||||

ACCEPT all -- anywhere anywhere

|

||||

ACCEPT tcp -- anywhere anywhere state NEW tcp dpt:ssh

|

||||

REJECT all -- anywhere anywhere reject-with icmp-host-prohibited

|

||||

|

||||

Chain FORWARD (policy ACCEPT)

|

||||

target prot opt source destination

|

||||

REJECT all -- anywhere anywhere reject-with icmp-host-prohibited

|

||||

|

||||

Chain OUTPUT (policy ACCEPT)

|

||||

target prot opt source destination

|

||||

|

||||

### 10. 你怎样刷新所有的iptables规则或者特定的链呢? ###

|

||||

|

||||

**答案** : 您可以使用下面的命令来刷新一个特定的链。

|

||||

|

||||

# iptables --flush OUTPUT

|

||||

|

||||

要刷新所有的规则,可以用:

|

||||

|

||||

# iptables --flush

|

||||

|

||||

### 11. 请在iptables中添加一条规则,接受所有从一个信任的IP地址(例如,192.168.0.7)过来的包。 ###

|

||||

|

||||

**答案** : 上面的场景可以通过运行下面的命令来完成。

|

||||

|

||||

# iptables -A INPUT -s 192.168.0.7 -j ACCEPT

|

||||

|

||||

我们还可以在源IP中使用标准的斜线和子网掩码:

|

||||

|

||||

# iptables -A INPUT -s 192.168.0.7/24 -j ACCEPT

|

||||

# iptables -A INPUT -s 192.168.0.7/255.255.255.0 -j ACCEPT

|

||||

|

||||

### 12. 怎样在iptables中添加规则以ACCEPT,REJECT,DENY和DROP ssh的服务? ###

|

||||

|

||||

**答案** : 但愿ssh运行在22端口,那也是ssh的默认端口,我们可以在iptables中添加规则来ACCEPT ssh的tcp包(在22号端口上)。

|

||||

|

||||

# iptables -A INPUT -s -p tcp --dport 22 -j ACCEPT

|

||||

|

||||

REJECT ssh服务(22号端口)的tcp包。

|

||||

|

||||

# iptables -A INPUT -s -p tcp --dport 22 -j REJECT

|

||||

|

||||

DENY ssh服务(22号端口)的tcp包。

|

||||

|

||||

|

||||

# iptables -A INPUT -s -p tcp --dport 22 -j DENY

|

||||

|

||||

DROP ssh服务(22号端口)的tcp包。

|

||||

|

||||

|

||||

# iptables -A INPUT -s -p tcp --dport 22 -j DROP

|

||||

|

||||

### 13. 让我给你另一个场景,假如有一台电脑的本地IP地址是192.168.0.6。你需要封锁在21、22、23和80号端口上的连接,你会怎么做? ###

|

||||

|

||||

**答案** : 这时,我所需要的就是在iptables中使用‘multiport‘选项,并将要封锁的端口号跟在它后面。上面的场景可以用下面的一条语句搞定:

|

||||

|

||||

# iptables -A INPUT -s 192.168.0.6 -p tcp -m multiport --dport 22,23,80,8080 -j DROP

|

||||

|

||||

可以用下面的语句查看写入的规则。

|

||||

|

||||

# iptables -L

|

||||

|

||||

Chain INPUT (policy ACCEPT)

|

||||

target prot opt source destination

|

||||

ACCEPT all -- anywhere anywhere state RELATED,ESTABLISHED

|

||||

ACCEPT icmp -- anywhere anywhere

|

||||

ACCEPT all -- anywhere anywhere

|

||||

ACCEPT tcp -- anywhere anywhere state NEW tcp dpt:ssh

|

||||

REJECT all -- anywhere anywhere reject-with icmp-host-prohibited

|

||||

DROP tcp -- 192.168.0.6 anywhere multiport dports ssh,telnet,http,webcache

|

||||

|

||||

Chain FORWARD (policy ACCEPT)

|

||||

target prot opt source destination

|

||||

REJECT all -- anywhere anywhere reject-with icmp-host-prohibited

|

||||

|

||||

Chain OUTPUT (policy ACCEPT)

|

||||

target prot opt source destination

|

||||

|

||||

**面试官** : 好了,我问的就是这些。你是一个很有价值的雇员,我们不会错过你的。我将会向HR推荐你的名字。如果你有什么问题,请问我。

|

||||

|

||||

作为一个候选人我不愿不断的问将来要做的项目的事以及公司里其他的事,这样会打断愉快的对话。更不用说HR轮会不会比较难,总之,我获得了机会。

|

||||

|

||||

同时我要感谢Avishek和Ravi(我的朋友)花时间帮我整理我的面试。

|

||||

|

||||

朋友!如果您有过类似的面试,并且愿意与数百万Tecmint读者一起分享您的面试经历,请将您的问题和答案发送到admin@tecmint.com。

|

||||

|

||||

谢谢!保持联系。如果我能更好的回答我上面的问题的话,请记得告诉我。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/linux-firewall-iptables-interview-questions-and-answers/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[wwy-hust](https://github.com/wwy-hust)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/avishek/

|

||||

[1]:http://www.tecmint.com/install-webmin-web-based-system-administration-tool-for-rhel-centos-fedora/

|

||||

@ -1,11 +1,14 @@

|

||||

如何使用Docker Machine部署Swarm集群

|

||||

================================================================================

|

||||

大家好,今天我们来研究一下如何使用Docker Machine部署Swarm集群。Docker Machine提供了独立的Docker API,所以任何与Docker守护进程进行交互的工具都可以使用Swarm来(透明地)扩增到多台主机上。Docker Machine可以用来在个人电脑、云端以及的数据中心里创建Docker主机。它为创建服务器,安装Docker以及根据用户设定配置Docker客户端提供了便捷化的解决方案。我们可以使用任何驱动来部署swarm集群,并且swarm集群将由于使用了TLS加密具有极好的安全性。

|

||||

|

||||

大家好,今天我们来研究一下如何使用Docker Machine部署Swarm集群。Docker Machine提供了标准的Docker API 支持,所以任何可以与Docker守护进程进行交互的工具都可以使用Swarm来(透明地)扩增到多台主机上。Docker Machine可以用来在个人电脑、云端以及的数据中心里创建Docker主机。它为创建服务器,安装Docker以及根据用户设定来配置Docker客户端提供了便捷化的解决方案。我们可以使用任何驱动来部署swarm集群,并且swarm集群将由于使用了TLS加密具有极好的安全性。

|

||||

|

||||

下面是我提供的简便方法。

|

||||

|

||||

### 1. 安装Docker Machine ###

|

||||

|

||||

Docker Machine 在任何Linux系统上都被支持。首先,我们需要从Github上下载最新版本的Docker Machine。我们使用curl命令来下载最先版本Docker Machine ie 0.2.0。

|

||||

Docker Machine 在各种Linux系统上都支持的很好。首先,我们需要从Github上下载最新版本的Docker Machine。我们使用curl命令来下载最先版本Docker Machine ie 0.2.0。

|

||||

|

||||

64位操作系统:

|

||||

|

||||

# curl -L https://github.com/docker/machine/releases/download/v0.2.0/docker-machine_linux-amd64 > /usr/local/bin/docker-machine

|

||||

@ -18,7 +21,7 @@ Docker Machine 在任何Linux系统上都被支持。首先,我们需要从Git

|

||||

|

||||

# chmod +x /usr/local/bin/docker-machine

|

||||

|

||||

在做完上面的事情以后,我们必须确保docker-machine已经安装好。怎么检查呢?运行docker-machine -v指令,指令将会给出我们系统上所安装的docker-machine版本。

|

||||

在做完上面的事情以后,我们要确保docker-machine已经安装正确。怎么检查呢?运行`docker-machine -v`指令,该指令将会给出我们系统上所安装的docker-machine版本。

|

||||

|

||||

# docker-machine -v

|

||||

|

||||

@ -31,14 +34,15 @@ Docker Machine 在任何Linux系统上都被支持。首先,我们需要从Git

|

||||

|

||||

### 2. 创建Machine ###

|

||||

|

||||

在将Docker Machine安装到我们的设备上之后,我们需要使用Docker Machine创建一个machine。在这片文章中,我们会将其部署在Digital Ocean Platform上。所以我们将使用“digitalocean”作为它的Driver API,然后将docker swarm运行在其中。这个Droplet会被设置为Swarm主节点,我们还要创建另外一个Droplet,并将其设定为Swarm节点代理。

|

||||

在将Docker Machine安装到我们的设备上之后,我们需要使用Docker Machine创建一个machine。在这篇文章中,我们会将其部署在Digital Ocean Platform上。所以我们将使用“digitalocean”作为它的Driver API,然后将docker swarm运行在其中。这个Droplet会被设置为Swarm主控节点,我们还要创建另外一个Droplet,并将其设定为Swarm节点代理。

|

||||

|

||||

创建machine的命令如下:

|

||||

|

||||

# docker-machine create --driver digitalocean --digitalocean-access-token <API-Token> linux-dev

|

||||

|

||||

**Note**: 假设我们要创建一个名为“linux-dev”的machine。<API-Token>是用户在Digital Ocean Cloud Platform的Digital Ocean控制面板中生成的密钥。为了获取这个密钥,我们需要登录我们的Digital Ocean控制面板,然后点击API选项,之后点击Generate New Token,起个名字,然后在Read和Write两个选项上打钩。之后我们将得到一个很长的十六进制密钥,这个就是<API-Token>了。用其替换上面那条命令中的API-Token字段。

|

||||

**备注**: 假设我们要创建一个名为“linux-dev”的machine。<API-Token>是用户在Digital Ocean Cloud Platform的Digital Ocean控制面板中生成的密钥。为了获取这个密钥,我们需要登录我们的Digital Ocean控制面板,然后点击API选项,之后点击Generate New Token,起个名字,然后在Read和Write两个选项上打钩。之后我们将得到一个很长的十六进制密钥,这个就是<API-Token>了。用其替换上面那条命令中的API-Token字段。

|

||||

|

||||

现在,运行下面的指令,将Machine configuration装载进shell。

|

||||

现在,运行下面的指令,将Machine 的配置变量加载进shell里。

|

||||

|

||||

# eval "$(docker-machine env linux-dev)"

|

||||

|

||||

@ -48,7 +52,7 @@ Docker Machine 在任何Linux系统上都被支持。首先,我们需要从Git

|

||||

|

||||

# docker-machine active linux-dev

|

||||

|

||||

现在,我们检查是否它(指machine)被标记为了 ACTIVE "*"。

|

||||

现在,我们检查它(指machine)是否被标记为了 ACTIVE "*"。

|

||||

|

||||

# docker-machine ls

|

||||

|

||||

@ -56,22 +60,21 @@ Docker Machine 在任何Linux系统上都被支持。首先,我们需要从Git

|

||||

|

||||

### 3. 运行Swarm Docker镜像 ###

|

||||

|

||||

现在,在我们创建完成了machine之后。我们需要将swarm docker镜像部署上去。这个machine将会运行这个docker镜像并且控制Swarm主节点和从节点。使用下面的指令运行镜像:

|

||||

现在,在我们创建完成了machine之后。我们需要将swarm docker镜像部署上去。这个machine将会运行这个docker镜像,并且控制Swarm主控节点和从节点。使用下面的指令运行镜像:

|

||||

|

||||

# docker run swarm create

|

||||

|

||||

|

||||

|

||||

If you are trying to run swarm docker image using **32 bit Operating System** in the computer where Docker Machine is running, we'll need to SSH into the Droplet.

|

||||

如果你想要在**32位操作系统**上运行swarm docker镜像。你需要SSH登录到Droplet当中。

|

||||

|

||||

# docker-machine ssh

|

||||

#docker run swarm create

|

||||

#exit

|

||||

|

||||

### 4. 创建Swarm主节点 ###

|

||||

### 4. 创建Swarm主控节点 ###

|

||||

|

||||

在我们的swarm image已经运行在machine当中之后,我们将要创建一个Swarm主节点。使用下面的语句,添加一个主节点。(这里的感觉怪怪的,好像少翻译了很多东西,是我把Master翻译为主节点的原因吗?)

|

||||

在我们的swarm image已经运行在machine当中之后,我们将要创建一个Swarm主控节点。使用下面的语句,添加一个主控节点。

|

||||

|

||||

# docker-machine create \

|

||||

-d digitalocean \

|

||||

@ -83,9 +86,9 @@ If you are trying to run swarm docker image using **32 bit Operating System** in

|

||||

|

||||

|

||||

|

||||

### 5. 创建Swarm结点群 ###

|

||||

### 5. 创建Swarm从节点 ###

|

||||

|

||||

现在,我们将要创建一个swarm结点,此结点将与Swarm主节点相连接。下面的指令将创建一个新的名为swarm-node的droplet,其与Swarm主节点相连。到此,我们就拥有了一个两节点的swarm集群了。

|

||||

现在,我们将要创建一个swarm从节点,此节点将与Swarm主控节点相连接。下面的指令将创建一个新的名为swarm-node的droplet,其与Swarm主控节点相连。到此,我们就拥有了一个两节点的swarm集群了。

|

||||

|

||||

# docker-machine create \

|

||||

-d digitalocean \

|

||||

@ -96,21 +99,19 @@ If you are trying to run swarm docker image using **32 bit Operating System** in

|

||||

|

||||

|

||||

|

||||

### 6. Connecting to the Swarm Master ###

|

||||

### 6. 与Swarm主节点连接 ###

|

||||

### 6. 与Swarm主控节点连接 ###

|

||||

|

||||

现在,我们连接Swarm主节点以便我们可以依照需求和配置文件在节点间部署Docker容器。运行下列命令将Swarm主节点的Machine配置文件加载到环境当中。

|

||||

现在,我们连接Swarm主控节点以便我们可以依照需求和配置文件在节点间部署Docker容器。运行下列命令将Swarm主控节点的Machine配置文件加载到环境当中。

|

||||

|

||||

# eval "$(docker-machine env --swarm swarm-master)"

|

||||

|

||||

然后,我们就可以跨结点地运行我们所需的容器了。在这里,我们还要检查一下是否一切正常。所以,运行**docker info**命令来检查Swarm集群的信息。

|

||||

然后,我们就可以跨节点地运行我们所需的容器了。在这里,我们还要检查一下是否一切正常。所以,运行**docker info**命令来检查Swarm集群的信息。

|

||||

|

||||

# docker info

|

||||

|

||||

### Conclusion ###

|

||||

### 总结 ###

|

||||

|

||||

我们可以用Docker Machine轻而易举地创建Swarm集群。这种方法有非常高的效率,因为它极大地减少了系统管理员和用户的时间消耗。在这篇文章中,我们以Digital Ocean作为驱动,通过创建一个主节点和一个从节点成功地部署了集群。其他类似的应用还有VirtualBox,Google Cloud Computing,Amazon Web Service,Microsoft Azure等等。这些连接都是通过TLS进行加密的,具有很高的安全性。如果你有任何的疑问,建议,反馈,欢迎在下面的评论框中注明以便我们可以更好地提高文章的质量!

|

||||

我们可以用Docker Machine轻而易举地创建Swarm集群。这种方法有非常高的效率,因为它极大地减少了系统管理员和用户的时间消耗。在这篇文章中,我们以Digital Ocean作为驱动,通过创建一个主控节点和一个从节点成功地部署了集群。其他类似的驱动还有VirtualBox,Google Cloud Computing,Amazon Web Service,Microsoft Azure等等。这些连接都是通过TLS进行加密的,具有很高的安全性。如果你有任何的疑问,建议,反馈,欢迎在下面的评论框中注明以便我们可以更好地提高文章的质量!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -118,7 +119,7 @@ via: http://linoxide.com/linux-how-to/provision-swarm-clusters-using-docker-mach

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[DongShuaike](https://github.com/DongShuaike)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,101 @@

|

||||

FreeBSD 和 Linux 有什么不同?

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

### 简介 ###

|

||||

|

||||

BSD最初从UNIX继承而来,目前,有许多的类Unix操作系统是基于BSD的。FreeBSD是使用最广泛的开源的伯克利软件发行版(即 BSD 发行版)。就像它隐含的意思一样,它是一个自由开源的类Unix操作系统,并且是公共服务器平台。FreeBSD源代码通常以宽松的BSD许可证发布。它与Linux有很多相似的地方,但我们得承认它们在很多方面仍有不同。

|

||||

|

||||

本文的其余部分组织如下:FreeBSD的描述在第一部分,FreeBSD和Linux的相似点在第二部分,它们的区别将在第三部分讨论,对他们功能的讨论和总结在最后一节。

|

||||

|

||||

### FreeBSD描述 ###

|

||||

|

||||

#### 历史 ####

|

||||

|

||||

- FreeBSD的第一个版本发布于1993年,它的第一张CD-ROM是FreeBSD1.0,发行于1993年12月。接下来,FreeBSD 2.1.0在1995年发布,并且获得了所有用户的青睐。实际上许多IT公司都使用FreeBSD并且很满意,我们可以列出其中的一些:IBM、Nokia、NetApp和Juniper Network。

|

||||

|

||||

#### 许可证 ####

|

||||

|

||||

- 关于它的许可证,FreeBSD以多种开源许可证进行发布,它的名为Kernel的最新代码以两句版BSD许可证进行了发布,给予使用和重新发布FreeBSD的绝对自由。其它的代码则以三句版或四句版BSD许可证进行发布,有些是以GPL和CDDL的许可证发布的。

|

||||

|

||||

(LCTT 译注:BSD 许可证与 GPL 许可证相比,相当简短,最初只有四句规则;1999年应 RMS 请求,删除了第三句,新的许可证称作“新 BSD”或三句版BSD;原来的 BSD 许可证称作“旧 BSD”、“修订的 BSD”或四句版BSD;也有一种删除了第三、第四两句的版本,称之为两句版 BSD,等价于 MIT 许可证。)

|

||||

|

||||

#### 用户 ####

|

||||

|

||||

- FreeBSD的重要特点之一就是它的用户多样性。实际上,FreeBSD可以作为邮件服务器、Web 服务器、FTP 服务器以及路由器等,您只需要在它上运行服务相关的软件即可。而且FreeBSD还支持ARM、PowerPC、MIPS、x86、x86-64架构。

|

||||

|

||||

### FreeBSD和Linux的相似处 ###

|

||||

|

||||

FreeBSD和Linux是两个自由开源的软件。实际上,它们的用户可以很容易的检查并修改源代码,用户拥有绝对的自由。而且,FreeBSD和Linux都是类Unix系统,它们的内核、内部组件、库程序都使用从历史上的AT&T Unix继承来的算法。FreeBSD从根基上更像Unix系统,而Linux是作为自由的类Unix系统发布的。许多工具应用都可以在FreeBSD和Linux中找到,实际上,他们几乎有同样的功能。

|

||||

|

||||

此外,FreeBSD能够运行大量的Linux应用。它可以安装一个Linux的兼容层,这个兼容层可以在编译FreeBSD时加入AAC Compact Linux得到,或通过下载已编译了Linux兼容层的FreeBSD系统,其中会包括兼容程序:aac_linux.ko。不同于FreeBSD的是,Linux无法运行FreeBSD的软件。

|

||||

|

||||

最后,我们注意到虽然二者有同样的目标,但二者还是有一些不同之处,我们在下一节中列出。

|

||||

|

||||

### FreeBSD和Linux的区别 ###

|

||||

|

||||

目前对于大多数用户来说并没有一个选择FreeBSD还是Linux的明确的准则。因为他们有着很多同样的应用程序,因为他们都被称作类Unix系统。

|

||||

|

||||

在这一章,我们将列出这两种系统的一些重要的不同之处。

|

||||

|

||||

#### 许可证 ####

|

||||

|

||||

- 两个系统的区别首先在于它们的许可证。Linux以GPL许可证发行,它为用户提供阅读、发行和修改源代码的自由,GPL许可证帮助用户避免仅仅发行二进制。而FreeBSD以BSD许可证发布,BSD许可证比GPL更宽容,因为其衍生著作不需要仍以该许可证发布。这意味着任何用户能够使用、发布、修改代码,并且不需要维持之前的许可证。

|

||||

- 您可以依据您的需求,在两种许可证中选择一种。首先是BSD许可证,由于其特殊的条款,它更受用户青睐。实际上,这个许可证使用户在保证源代码的封闭性的同时,可以售卖以该许可证发布的软件。再说说GPL,它需要每个使用以该许可证发布的软件的用户多加注意。

|

||||

- 如果想在以不同许可证发布的两种软件中做出选择,您需要了解他们各自的许可证,以及他们开发中的方法论,从而能了解他们特性的区别,来选择更适合自己需求的。

|

||||

|

||||

#### 控制 ####

|

||||

|

||||

- 由于FreeBSD和Linux是以不同的许可证发布的,Linus Torvalds控制着Linux的内核,而FreeBSD却与Linux不同,它并未被控制。我个人更倾向于使用FreeBSD而不是Linux,这是因为FreeBSD才是绝对自由的软件,没有任何控制许可证的存在。Linux和FreeBSD还有其他的不同之处,我建议您先不急着做出选择,等读完本文后再做出您的选择。

|

||||

|

||||

#### 操作系统 ####

|

||||

|

||||

- Linux主要指内核系统,这与FreeBSD不同,FreeBSD的整个系统都被维护着。FreeBSD的内核和一组由FreeBSD团队开发的软件被作为一个整体进行维护。实际上,FreeBSD开发人员能够远程且高效的管理核心操作系统。

|

||||

- 而Linux方面,在管理系统方面有一些困难。由于不同的组件由不同的源维护,Linux开发者需要将它们汇集起来,才能达到同样的功能。

|

||||

- FreeBSD和Linux都给了用户大量的可选软件和发行版,但他们管理的方式不同。FreeBSD是统一的管理方式,而Linux需要被分别维护。

|

||||

|

||||

#### 硬件支持 ####

|

||||

|

||||

- 说到硬件支持,Linux比FreeBSD做的更好。但这不意味着FreeBSD没有像Linux那样支持硬件的能力。他们只是在管理的方式不同,这通常还依赖于您的需求。因此,如果您在寻找最新的解决方案,FreeBSD更适应您;但如果您在寻找更多的普适性,那最好使用Linux。

|

||||

|

||||

#### 原生FreeBSD Vs 原生Linux ####

|

||||

|

||||

- 两者的原生系统的区别又有不同。就像我之前说的,Linux是一个Unix的替代系统,由Linux Torvalds编写,并由网络上的许多极客一起协助实现的。Linux有一个现代系统所需要的全部功能,诸如虚拟内存、共享库、动态加载、优秀的内存管理等。它以GPL许可证发布。

|

||||

- FreeBSD也继承了Unix的许多重要的特性。FreeBSD作为在加州大学开发的BSD的一种发行版。开发BSD的最重要的原因是用一个开源的系统来替代AT&T操作系统,从而给用户无需AT&T许可证便可使用的能力。

|

||||

- 许可证的问题是开发者们最关心的问题。他们试图提供一个最大化克隆Unix的开源系统。这影响了用户的选择,由于FreeBSD使用BSD许可证进行发布,因而相比Linux更加自由。

|

||||

|

||||

#### 支持的软件包 ####

|

||||

|

||||

- 从用户的角度来看,另一个二者不同的地方便是软件包以及从源码安装的软件的可用性和支持。Linux只提供了预编译的二进制包,这与FreeBSD不同,它不但提供预编译的包,而且还提供从源码编译和安装的构建系统。使用它的 ports 工具,FreeBSD给了您选择使用预编译的软件包(默认)和在编译时定制您软件的能力。(LCTT 译注:此处说明有误。Linux 也提供了源代码方式的包,并支持自己构建。)

|

||||

- 这些 ports 允许您构建所有支持FreeBSD的软件。而且,它们的管理还是层次化的,您可以在/usr/ports下找到源文件的地址以及一些正确使用FreeBSD的文档。

|

||||

- 这些提到的 ports给予你产生不同软件包版本的可能性。FreeBSD给了您通过源代码构建以及预编译的两种软件,而不是像Linux一样只有预编译的软件包。您可以使用两种安装方式管理您的系统。

|

||||

|

||||

#### FreeBSD 和 Linux 常用工具比较 ####

|

||||

|

||||

- 有大量的常用工具在FreeBSD上可用,并且有趣的是他们由FreeBSD的团队所拥有。相反的,Linux工具来自GNU,这就是为什么在使用中有一些限制。(LCTT 译注:这也是 Linux 正式的名称被称作“GNU/Linux”的原因,因为本质上 Linux 其实只是指内核。)

|

||||

- 实际上FreeBSD采用的BSD许可证非常有益且有用。因此,您有能力维护核心操作系统,控制这些应用程序的开发。有一些工具类似于它们的祖先 - BSD和Unix的工具,但不同于GNU的套件,GNU套件只想做到最小的向后兼容。

|

||||

|

||||

#### 标准 Shell ####

|

||||

|

||||

- FreeBSD默认使用tcsh。它是csh的评估版,由于FreeBSD以BSD许可证发行,因此不建议您在其中使用GNU的组件 bash shell。bash和tcsh的区别仅仅在于tcsh的脚本功能。实际上,我们更推荐在FreeBSD中使用sh shell,因为它更加可靠,可以避免一些使用tcsh和csh时出现的脚本问题。

|

||||

|

||||

#### 一个更加层次化的文件系统 ####

|

||||

|

||||

- 像之前提到的一样,使用FreeBSD时,基础操作系统以及可选组件可以被很容易的区别开来。这导致了一些管理它们的标准。在Linux下,/bin,/sbin,/usr/bin或者/usr/sbin都是存放可执行文件的目录。FreeBSD不同,它有一些附加的对其进行组织的规范。基础操作系统被放在/usr/local/bin或者/usr/local/sbin目录下。这种方法可以帮助管理和区分基础操作系统和可选组件。

|

||||

|

||||

### 结论 ###

|

||||

|

||||

FreeBSD和Linux都是自由且开源的系统,他们有相似点也有不同点。上面列出的内容并不能说哪个系统比另一个更好。实际上,FreeBSD和Linux都有自己的特点和技术规格,这使它们与别的系统区别开来。那么,您有什么看法呢?您已经有在使用它们中的某个系统了么?如果答案为是的话,请给我们您的反馈;如果答案是否的话,在读完我们的描述后,您怎么看?请在留言处发表您的观点。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.unixmen.com/comparative-introduction-freebsd-linux-users/

|

||||

|

||||

作者:[anismaj][a]

|

||||

译者:[wwy-hust](https://github.com/wwy-hust)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.unixmen.com/author/anis/

|

||||

@ -1,13 +1,14 @@

|

||||

在Linux中使用‘Systemctl’管理‘Systemd’服务和单元

|

||||

systemctl 完全指南

|

||||

================================================================================

|

||||

Systemctl是一个systemd工具,主要负责控制systemd系统和服务管理器。

|

||||

|

||||

Systemd是一个系统管理守护进程、工具和库的集合,用于取代System V初始进程。Systemd的功能是用于集中管理和配置类UNIX系统。

|

||||

|

||||

在Linux生态系统中,Systemd被部署到了大多数的标准Linux发行版中,只有位数不多的几个尚未部署。Systemd通常是所有其它守护进程的父进程,但并非总是如此。

|

||||

在Linux生态系统中,Systemd被部署到了大多数的标准Linux发行版中,只有为数不多的几个发行版尚未部署。Systemd通常是所有其它守护进程的父进程,但并非总是如此。

|

||||

|

||||

|

||||

使用Systemctl管理Linux服务

|

||||

|

||||

*使用Systemctl管理Linux服务*

|

||||

|

||||

本文旨在阐明在运行systemd的系统上“如何控制系统和服务”。

|

||||

|

||||

@ -41,11 +42,9 @@ Systemd是一个系统管理守护进程、工具和库的集合,用于取代S

|

||||

root 555 1 0 16:27 ? 00:00:00 /usr/lib/systemd/systemd-logind

|

||||

dbus 556 1 0 16:27 ? 00:00:00 /bin/dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation

|

||||

|

||||

**注意**:systemd是作为父进程(PID=1)运行的。在上面带(-e)参数的ps命令输出中,选择所有进程,(-

|

||||

**注意**:systemd是作为父进程(PID=1)运行的。在上面带(-e)参数的ps命令输出中,选择所有进程,(-a)选择除会话前导外的所有进程,并使用(-f)参数输出完整格式列表(即 -eaf)。

|

||||

|

||||

a)选择除会话前导外的所有进程,并使用(-f)参数输出完整格式列表(如 -eaf)。

|

||||

|

||||

也请注意上例中后随的方括号和样例剩余部分。方括号表达式是grep的字符类表达式的一部分。

|

||||

也请注意上例中后随的方括号和例子中剩余部分。方括号表达式是grep的字符类表达式的一部分。

|

||||

|

||||

#### 4. 分析systemd启动进程 ####

|

||||

|

||||

@ -147,7 +146,7 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

1 loaded units listed. Pass --all to see loaded but inactive units, too.

|

||||

To show all installed unit files use 'systemctl list-unit-files'.

|

||||

|

||||

#### 10. 检查某个单元(cron.service)是否启用 ####

|

||||

#### 10. 检查某个单元(如 cron.service)是否启用 ####

|

||||

|

||||

# systemctl is-enabled crond.service

|

||||

|

||||

@ -187,7 +186,7 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

dbus-org.fedoraproject.FirewallD1.service enabled

|

||||

....

|

||||

|

||||

#### 13. Linux中如何启动、重启、停止、重载服务以及检查服务(httpd.service)状态 ####

|

||||

#### 13. Linux中如何启动、重启、停止、重载服务以及检查服务(如 httpd.service)状态 ####

|

||||

|

||||

# systemctl start httpd.service

|

||||

# systemctl restart httpd.service

|

||||

@ -214,15 +213,15 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

Apr 28 17:21:30 tecmint systemd[1]: Started The Apache HTTP Server.

|

||||

Hint: Some lines were ellipsized, use -l to show in full.

|

||||

|

||||

**注意**:当我们使用systemctl的start,restart,stop和reload命令时,我们不会不会从终端获取到任何输出内容,只有status命令可以打印输出。

|

||||

**注意**:当我们使用systemctl的start,restart,stop和reload命令时,我们不会从终端获取到任何输出内容,只有status命令可以打印输出。

|

||||

|

||||

#### 14. 如何激活服务并在启动时启用或禁用服务(系统启动时自动启动服务) ####

|

||||

#### 14. 如何激活服务并在启动时启用或禁用服务(即系统启动时自动启动服务) ####

|

||||

|

||||

# systemctl is-active httpd.service

|

||||

# systemctl enable httpd.service

|

||||

# systemctl disable httpd.service

|

||||

|

||||

#### 15. 如何屏蔽(让它不能启动)或显示服务(httpd.service) ####

|

||||

#### 15. 如何屏蔽(让它不能启动)或显示服务(如 httpd.service) ####

|

||||

|

||||

# systemctl mask httpd.service

|

||||

ln -s '/dev/null' '/etc/systemd/system/httpd.service'

|

||||

@ -297,7 +296,7 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

# systemctl enable tmp.mount

|

||||

# systemctl disable tmp.mount

|

||||

|

||||

#### 20. 在Linux中屏蔽(让它不能启动)或显示挂载点 ####

|

||||

#### 20. 在Linux中屏蔽(让它不能启用)或可见挂载点 ####

|

||||

|

||||

# systemctl mask tmp.mount

|

||||

|

||||

@ -375,7 +374,7 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

|

||||

CPUShares=2000

|

||||

|

||||

**注意**:当你为某个服务设置CPUShares,会自动创建一个以服务名命名的目录(httpd.service),里面包含了一个名为90-CPUShares.conf的文件,该文件含有CPUShare限制信息,你可以通过以下方式查看该文件:

|

||||

**注意**:当你为某个服务设置CPUShares,会自动创建一个以服务名命名的目录(如 httpd.service),里面包含了一个名为90-CPUShares.conf的文件,该文件含有CPUShare限制信息,你可以通过以下方式查看该文件:

|

||||

|

||||

# vi /etc/systemd/system/httpd.service.d/90-CPUShares.conf

|

||||

|

||||

@ -528,13 +527,13 @@ a)选择除会话前导外的所有进程,并使用(-f)参数输出完

|

||||

#### 35. 启动运行等级5,即图形模式 ####

|

||||

|

||||

# systemctl isolate runlevel5.target

|

||||

OR

|

||||

或

|

||||

# systemctl isolate graphical.target

|

||||

|

||||

#### 36. 启动运行等级3,即多用户模式(命令行) ####

|

||||

|

||||

# systemctl isolate runlevel3.target

|

||||

OR

|

||||

或

|

||||

# systemctl isolate multiuser.target

|

||||

|

||||

#### 36. 设置多用户模式或图形模式为默认运行等级 ####

|

||||

@ -572,7 +571,7 @@ via: http://www.tecmint.com/manage-services-using-systemd-and-systemctl-in-linux

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,20 +1,18 @@

|

||||

|

||||

如何在 Ubuntu/CentOS7.1/Fedora22 上安装 Plex Media Server ?

|

||||

如何在 Ubuntu/CentOS7.1/Fedora22 上安装 Plex Media Server

|

||||

================================================================================

|

||||

在本文中我们将会向你展示如何容易地在主流的最新发布的Linux发行版上安装Plex Home Media Server。在Plex安装成功后你将可以使用你的集中式家庭媒体播放系统,该系统能让多个Plex播放器App共享它的媒体资源,并且该系统允许你设置你的环境,通过增加你的设备以及设置一个可以一起使用Plex的用户组。让我们首先在Ubuntu15.04上开始Plex的安装。

|

||||

在本文中我们将会向你展示如何容易地在主流的最新Linux发行版上安装Plex Media Server。在Plex安装成功后你将可以使用你的中央式家庭媒体播放系统,该系统能让多个Plex播放器App共享它的媒体资源,并且该系统允许你设置你的环境,增加你的设备以及设置一个可以一起使用Plex的用户组。让我们首先在Ubuntu15.04上开始Plex的安装。

|

||||

|

||||

### 基本的系统资源 ###

|

||||

|

||||

系统资源主要取决于你打算用来连接服务的设备类型和数量, 所以根据我们的需求我们将会在一个单独的服务器上使用以下系统资源。

|

||||

|

||||

注:表格

|

||||

<table width="666" style="height: 181px;">

|

||||

<tbody>

|

||||

<tr>

|

||||

<td width="670" colspan="2"><b>Plex Home Media Server</b></td>

|

||||

<td width="670" colspan="2"><b>Plex Media Server</b></td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="236"><b>Base Operating System</b></td>

|

||||

<td width="236"><b>基础操作系统</b></td>

|

||||

<td width="425">Ubuntu 15.04 / CentOS 7.1 / Fedora 22 Work Station</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -22,11 +20,11 @@

|

||||

<td width="425">Version 0.9.12.3.1173-937aac3</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="236"><b>RAM and CPU</b></td>

|

||||

<td width="236"><b>RAM 和 CPU</b></td>

|

||||

<td width="425">1 GB , 2.0 GHZ</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="236"><b>Hard Disk</b></td>

|

||||

<td width="236"><b>硬盘</b></td>

|

||||

<td width="425">30 GB</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

@ -38,13 +36,13 @@

|

||||

|

||||

#### 步骤 1: 系统更新 ####

|

||||

|

||||

用root权限登陆你的服务器。确保你的系统是最新的,如果不是就使用下面的命令。

|

||||

用root权限登录你的服务器。确保你的系统是最新的,如果不是就使用下面的命令。

|

||||

|

||||

root@ubuntu-15:~#apt-get update

|

||||

|

||||

#### 步骤 2: 下载最新的Plex Media Server包 ####

|

||||

|

||||

创建一个新目录,用wget命令从Plex官网下载为Ubuntu提供的.deb包并放入该目录中。

|

||||

创建一个新目录,用wget命令从[Plex官网](https://plex.tv/)下载为Ubuntu提供的.deb包并放入该目录中。

|

||||

|

||||

root@ubuntu-15:~# cd /plex/

|

||||

root@ubuntu-15:/plex#

|

||||

@ -52,7 +50,7 @@

|

||||

|

||||

#### 步骤 3: 安装Plex Media Server的Debian包 ####

|

||||

|

||||

现在在相同的目录下执行下面的命令来开始debian包的安装, 然后检查plexmediaserver(译者注: 原文plekmediaserver, 明显笔误)的状态。

|

||||

现在在相同的目录下执行下面的命令来开始debian包的安装, 然后检查plexmediaserver服务的状态。

|

||||

|

||||

root@ubuntu-15:/plex# dpkg -i plexmediaserver_0.9.12.3.1173-937aac3_amd64.deb

|

||||

|

||||

@ -62,41 +60,41 @@

|

||||

|

||||

|

||||

|

||||

### 在Ubuntu 15.04上设置Plex Home Media Web应用 ###

|

||||

### 在Ubuntu 15.04上设置Plex Media Web应用 ###

|

||||

|

||||

让我们在你的本地网络主机中打开web浏览器, 并用你的本地主机IP以及端口32400来打开Web界面并完成以下步骤来配置Plex。

|

||||

让我们在你的本地网络主机中打开web浏览器, 并用你的本地主机IP以及端口32400来打开Web界面,并完成以下步骤来配置Plex。

|

||||

|

||||

http://172.25.10.179:32400/web

|

||||

http://localhost:32400/web

|

||||

|

||||

#### 步骤 1: 登陆前先注册 ####

|

||||

#### 步骤 1: 登录前先注册 ####

|

||||

|

||||

在你访问到Plex Media Server的Web界面之后(译者注: 原文是Plesk, 应该是笔误), 确保注册并填上你的用户名(译者注: 原文username email ID感觉怪怪:))和密码来登陆。

|

||||

在你访问到Plex Media Server的Web界面之后, 确保注册并填上你的用户名和密码来登录。

|

||||

|

||||

|

||||

|

||||

#### 输入你的PIN码来保护你的Plex Home Media用户(译者注: 原文Plex Media Home, 个人觉得专业称谓应该保持一致) ####

|

||||

#### 输入你的PIN码来保护你的Plex Media用户####

|

||||

|

||||

|

||||

|

||||

现在你已经成功的在Plex Home Media下配置你的用户。

|

||||

现在你已经成功的在Plex Media下配置你的用户。

|

||||

|

||||

|

||||

|

||||

### 在设备上而不是本地服务器上打开Plex Web应用 ###

|

||||

|

||||

正如我们在Plex Media主页看到的表明"你没有权限访问这个服务"。 这是因为我们跟服务器计算机不在同个网络。

|

||||

如我们在Plex Media主页看到的提示“你没有权限访问这个服务”。 这说明我们跟服务器计算机不在同个网络。

|

||||

|

||||

|

||||

|

||||

现在我们需要解决这个权限问题以便我们通过设备访问服务器而不是通过托管服务器(Plex服务器), 通过完成下面的步骤。

|

||||

现在我们需要解决这个权限问题,以便我们通过设备访问服务器而不是只能在服务器上访问。通过完成下面的步骤完成。

|

||||

|

||||

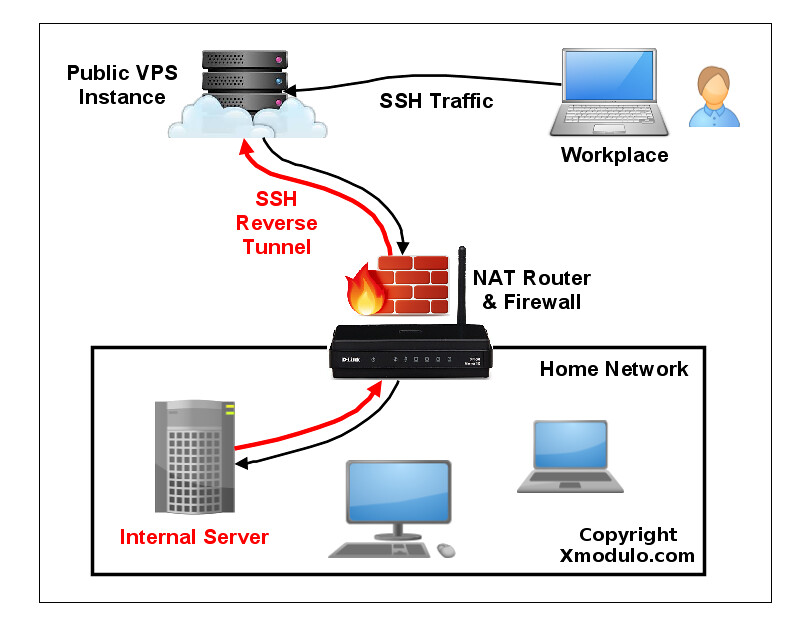

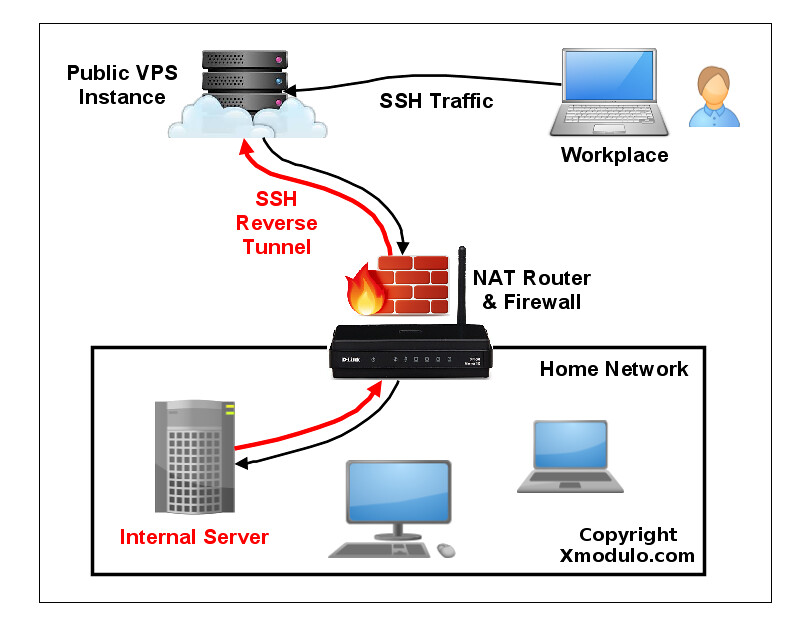

### 设置SSH隧道使Windows系统访问到Linux服务器 ###

|

||||

### 设置SSH隧道使Windows系统可以访问到Linux服务器 ###

|

||||

|

||||

首先我们需要建立一条SSH隧道以便我们访问远程服务器资源,就好像资源在本地一样。 这仅仅是必要的初始设置。

|

||||

|

||||

如果你正在使用Windows作为你的本地系统,Linux作为服务器,那么我们可以参照下图通过Putty来设置SSH隧道。

|

||||

(译者注: 首先要在Putty的Session中用Plex服务器IP配置一个SSH的会话,才能进行下面的隧道转发规则配置。

|

||||

(LCTT译注: 首先要在Putty的Session中用Plex服务器IP配置一个SSH的会话,才能进行下面的隧道转发规则配置。

|

||||

然后点击“Open”,输入远端服务器用户名密码, 来保持SSH会话连接。)

|

||||

|

||||

|

||||

@ -111,13 +109,13 @@

|

||||

|

||||

|

||||

|

||||

现在一个功能齐全的Plex Home Media Server已经准备好添加新的媒体库、频道、播放列表等资源。

|

||||

现在一个功能齐全的Plex Media Server已经准备好添加新的媒体库、频道、播放列表等资源。

|

||||

|

||||

|

||||

|

||||

### 在CentOS 7.1上安装Plex Media Server 0.9.12.3 ###

|

||||

|

||||

我们将会按照上述在Ubuntu15.04上安装Plex Home Media Server的步骤来将Plex安装到CentOS 7.1上。

|

||||

我们将会按照上述在Ubuntu15.04上安装Plex Media Server的步骤来将Plex安装到CentOS 7.1上。

|

||||

|

||||

让我们从安装Plex Media Server开始。

|

||||

|

||||

@ -144,9 +142,9 @@

|

||||

[root@linux-tutorials plex]# systemctl enable plexmediaserver.service

|

||||

[root@linux-tutorials plex]# systemctl status plexmediaserver.service

|

||||

|

||||

### 在CentOS-7.1上设置Plex Home Media Web应用 ###

|

||||

### 在CentOS-7.1上设置Plex Media Web应用 ###

|

||||

|

||||

现在我们只需要重复在Ubuntu上设置Plex Web应用的所有步骤就可以了。 让我们在Web浏览器上打开一个新窗口并用localhost或者Plex服务器的IP(译者注: 原文为or your Plex server, 明显的笔误)来访问Plex Home Media Web应用(译者注:称谓一致)。

|

||||

现在我们只需要重复在Ubuntu上设置Plex Web应用的所有步骤就可以了。 让我们在Web浏览器上打开一个新窗口并用localhost或者Plex服务器的IP来访问Plex Media Web应用。

|

||||

|

||||

http://172.20.3.174:32400/web

|

||||

http://localhost:32400/web

|

||||

@ -157,25 +155,25 @@