mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

Merge branch 'master' of github.com:LCTT/TranslateProject

This commit is contained in:

commit

7ad96ab03c

@ -60,6 +60,7 @@ LCTT 的组成

|

||||

* 2017/03/13 制作了 LCTT 主页、成员列表和成员主页,LCTT 主页将移动至 https://linux.cn/lctt 。

|

||||

* 2017/03/16 提升 GHLandy、bestony、rusking 为新的 Core 成员。创建 Comic 小组。

|

||||

* 2017/04/11 启用头衔制,为各位重要成员颁发头衔。

|

||||

* 2017/11/21 鉴于 qhwdw 快速而上佳的翻译质量,提升 qhwdw 为新的 Core 成员。

|

||||

|

||||

核心成员

|

||||

-------------------------------

|

||||

@ -86,6 +87,7 @@ LCTT 的组成

|

||||

- 核心成员 @Locez,

|

||||

- 核心成员 @ucasFL,

|

||||

- 核心成员 @rusking,

|

||||

- 核心成员 @qhwdw,

|

||||

- 前任选题 @DeadFire,

|

||||

- 前任校对 @reinoir222,

|

||||

- 前任校对 @PurlingNayuki,

|

||||

|

||||

@ -0,0 +1,347 @@

|

||||

理解多区域配置中的 firewalld

|

||||

============================================================

|

||||

|

||||

现在的新闻里充斥着服务器被攻击和数据失窃事件。对于一个阅读过安全公告博客的人来说,通过访问错误配置的服务器,利用最新暴露的安全漏洞或通过窃取的密码来获得系统控制权,并不是件多困难的事情。在一个典型的 Linux 服务器上的任何互联网服务都可能存在漏洞,允许未经授权的系统访问。

|

||||

|

||||

因为在应用程序层面上强化系统以防范任何可能的威胁是不可能做到的事情,而防火墙可以通过限制对系统的访问提供了安全保证。防火墙基于源 IP、目标端口和协议来过滤入站包。因为这种方式中,仅有几个 IP/端口/协议的组合与系统交互,而其它的方式做不到过滤。

|

||||

|

||||

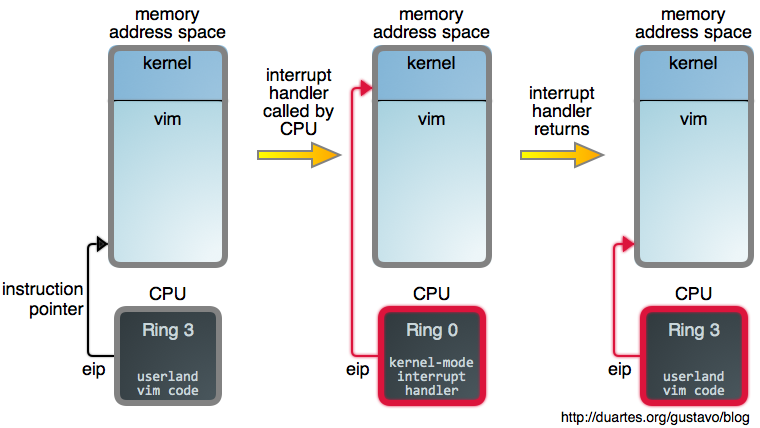

Linux 防火墙是通过 netfilter 来处理的,它是内核级别的框架。这十几年来,iptables 被作为 netfilter 的用户态抽象层(LCTT 译注: userland,一个基本的 UNIX 系统是由 kernel 和 userland 两部分构成,除 kernel 以外的称为 userland)。iptables 将包通过一系列的规则进行检查,如果包与特定的 IP/端口/协议的组合匹配,规则就会被应用到这个包上,以决定包是被通过、拒绝或丢弃。

|

||||

|

||||

Firewalld 是最新的 netfilter 用户态抽象层。遗憾的是,由于缺乏描述多区域配置的文档,它强大而灵活的功能被低估了。这篇文章提供了一个示例去改变这种情况。

|

||||

|

||||

### Firewalld 的设计目标

|

||||

|

||||

firewalld 的设计者认识到大多数的 iptables 使用案例仅涉及到几个单播源 IP,仅让每个符合白名单的服务通过,而其它的会被拒绝。这种模式的好处是,firewalld 可以通过定义的源 IP 和/或网络接口将入站流量分类到不同<ruby>区域<rt>zone</rt></ruby>。每个区域基于指定的准则按自己配置去通过或拒绝包。

|

||||

|

||||

另外的改进是基于 iptables 进行语法简化。firewalld 通过使用服务名而不是它的端口和协议去指定服务,使它更易于使用,例如,是使用 samba 而不是使用 UDP 端口 137 和 138 和 TCP 端口 139 和 445。它进一步简化语法,消除了 iptables 中对语句顺序的依赖。

|

||||

|

||||

最后,firewalld 允许交互式修改 netfilter,允许防火墙独立于存储在 XML 中的永久配置而进行改变。因此,下面的的临时修改将在下次重新加载时被覆盖:

|

||||

|

||||

```

|

||||

# firewall-cmd <some modification>

|

||||

```

|

||||

|

||||

而,以下的改变在重加载后会永久保存:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent <some modification>

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

### 区域

|

||||

|

||||

在 firewalld 中最上层的组织是区域。如果一个包匹配区域相关联的网络接口或源 IP/掩码 ,它就是区域的一部分。可用的几个预定义区域:

|

||||

|

||||

```

|

||||

# firewall-cmd --get-zones

|

||||

block dmz drop external home internal public trusted work

|

||||

```

|

||||

|

||||

任何配置了一个**网络接口**和/或一个**源**的区域就是一个<ruby>活动区域<rt>active zone</rt></ruby>。列出活动的区域:

|

||||

|

||||

```

|

||||

# firewall-cmd --get-active-zones

|

||||

public

|

||||

interfaces: eno1 eno2

|

||||

```

|

||||

|

||||

**Interfaces** (接口)是系统中的硬件和虚拟的网络适配器的名字,正如你在上面的示例中所看到的那样。所有的活动的接口都将被分配到区域,要么是默认的区域,要么是用户指定的一个区域。但是,一个接口不能被分配给多于一个的区域。

|

||||

|

||||

在缺省配置中,firewalld 设置所有接口为 public 区域,并且不对任何区域设置源。其结果是,`public` 区域是唯一的活动区域。

|

||||

|

||||

**Sources** (源)是入站 IP 地址的范围,它也可以被分配到区域。一个源(或重叠的源)不能被分配到多个区域。这样做的结果是产生一个未定义的行为,因为不清楚应该将哪些规则应用于该源。

|

||||

|

||||

因为指定一个源不是必需的,任何包都可以通过接口匹配而归属于一个区域,而不需要通过源匹配来归属一个区域。这表示通过使用优先级方式,优先到达多个指定的源区域,稍后将详细说明这种情况。首先,我们来检查 `public` 区域的配置:

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --list-all

|

||||

public (default, active)

|

||||

interfaces: eno1 eno2

|

||||

sources:

|

||||

services: dhcpv6-client ssh

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=public --get-target

|

||||

default

|

||||

```

|

||||

|

||||

逐行说明如下:

|

||||

|

||||

* `public (default, active)` 表示 `public` 区域是默认区域(当接口启动时会自动默认),并且它是活动的,因为,它至少有一个接口或源分配给它。

|

||||

* `interfaces: eno1 eno2` 列出了这个区域上关联的接口。

|

||||

* `sources:` 列出了这个区域的源。现在这里什么都没有,但是,如果这里有内容,它们应该是这样的格式 xxx.xxx.xxx.xxx/xx。

|

||||

* `services: dhcpv6-client ssh` 列出了允许通过这个防火墙的服务。你可以通过运行 `firewall-cmd --get-services` 得到一个防火墙预定义服务的详细列表。

|

||||

* `ports:` 列出了一个允许通过这个防火墙的目标端口。它是用于你需要去允许一个没有在 firewalld 中定义的服务的情况下。

|

||||

* `masquerade: no` 表示这个区域是否允许 IP 伪装。如果允许,它将允许 IP 转发,它可以让你的计算机作为一个路由器。

|

||||

* `forward-ports:` 列出转发的端口。

|

||||

* `icmp-blocks:` 阻塞的 icmp 流量的黑名单。

|

||||

* `rich rules:` 在一个区域中优先处理的高级配置。

|

||||

* `default` 是目标区域,它决定了与该区域匹配而没有由上面设置中显式处理的包的动作。

|

||||

|

||||

### 一个简单的单区域配置示例

|

||||

|

||||

如果只是简单地锁定你的防火墙。简单地在删除公共区域上当前允许的服务,并重新加载:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=public --remove-service=dhcpv6-client

|

||||

# firewall-cmd --permanent --zone=public --remove-service=ssh

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

在下面的防火墙上这些命令的结果是:

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --list-all

|

||||

public (default, active)

|

||||

interfaces: eno1 eno2

|

||||

sources:

|

||||

services:

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=public --get-target

|

||||

default

|

||||

```

|

||||

|

||||

本着尽可能严格地保证安全的精神,如果发生需要在你的防火墙上临时开放一个服务的情况(假设是 ssh),你可以增加这个服务到当前会话中(省略 `--permanent`),并且指示防火墙在一个指定的时间之后恢复修改:

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --add-service=ssh --timeout=5m

|

||||

```

|

||||

|

||||

这个 `timeout` 选项是一个以秒(`s`)、分(`m`)或小时(`h`)为单位的时间值。

|

||||

|

||||

### 目标

|

||||

|

||||

当一个区域处理它的源或接口上的一个包时,但是,没有处理该包的显式规则时,这时区域的<ruby>目标<rt>target</rt></ruby>决定了该行为:

|

||||

|

||||

* `ACCEPT`:通过这个包。

|

||||

* `%%REJECT%%`:拒绝这个包,并返回一个拒绝的回复。

|

||||

* `DROP`:丢弃这个包,不回复任何信息。

|

||||

* `default`:不做任何事情。该区域不再管它,把它踢到“楼上”。

|

||||

|

||||

在 firewalld 0.3.9 中有一个 bug (已经在 0.3.10 中修复),对于一个目标是除了“default”以外的源区域,不管允许的服务是什么,这的目标都会被应用。例如,一个使用目标 `DROP` 的源区域,将丢弃所有的包,甚至是白名单中的包。遗憾的是,这个版本的 firewalld 被打包到 RHEL7 和它的衍生版中,使它成为一个相当常见的 bug。本文中的示例避免了可能出现这种行为的情况。

|

||||

|

||||

### 优先权

|

||||

|

||||

活动区域中扮演两个不同的角色。关联接口行为的区域作为接口区域,并且,关联源行为的区域作为源区域(一个区域能够扮演两个角色)。firewalld 按下列顺序处理一个包:

|

||||

|

||||

1. 相应的源区域。可以存在零个或一个这样的区域。如果这个包满足一个<ruby>富规则<rt>rich rule</rt></ruby>、服务是白名单中的、或者目标没有定义,那么源区域处理这个包,并且在这里结束。否则,向上传递这个包。

|

||||

2. 相应的接口区域。肯定有一个这样的区域。如果接口处理这个包,那么到这里结束。否则,向上传递这个包。

|

||||

3. firewalld 默认动作。接受 icmp 包并拒绝其它的一切。

|

||||

|

||||

这里的关键信息是,源区域优先于接口区域。因此,对于多区域的 firewalld 配置的一般设计模式是,创建一个优先源区域来允许指定的 IP 对系统服务的提升访问,并在一个限制性接口区域限制其它访问。

|

||||

|

||||

### 一个简单的多区域示例

|

||||

|

||||

为演示优先权,让我们在 `public` 区域中将 `http` 替换成 `ssh`,并且为我们喜欢的 IP 地址,如 1.1.1.1,设置一个默认的 `internal` 区域。以下的命令完成这个任务:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=public --remove-service=ssh

|

||||

# firewall-cmd --permanent --zone=public --add-service=http

|

||||

# firewall-cmd --permanent --zone=internal --add-source=1.1.1.1

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

这些命令的结果是生成如下的配置:

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --list-all

|

||||

public (default, active)

|

||||

interfaces: eno1 eno2

|

||||

sources:

|

||||

services: dhcpv6-client http

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=public --get-target

|

||||

default

|

||||

# firewall-cmd --zone=internal --list-all

|

||||

internal (active)

|

||||

interfaces:

|

||||

sources: 1.1.1.1

|

||||

services: dhcpv6-client mdns samba-client ssh

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=internal --get-target

|

||||

default

|

||||

```

|

||||

|

||||

在上面的配置中,如果有人尝试从 1.1.1.1 去 `ssh`,这个请求将会成功,因为这个源区域(`internal`)被首先应用,并且它允许 `ssh` 访问。

|

||||

|

||||

如果有人尝试从其它的地址,如 2.2.2.2,去访问 `ssh`,它不是这个源区域的,因为和这个源区域不匹配。因此,这个请求被直接转到接口区域(`public`),它没有显式处理 `ssh`,因为,public 的目标是 `default`,这个请求被传递到默认动作,它将被拒绝。

|

||||

|

||||

如果 1.1.1.1 尝试进行 `http` 访问会怎样?源区域(`internal`)不允许它,但是,目标是 `default`,因此,请求将传递到接口区域(`public`),它被允许访问。

|

||||

|

||||

现在,让我们假设有人从 3.3.3.3 拖你的网站。要限制从那个 IP 的访问,简单地增加它到预定义的 `drop` 区域,正如其名,它将丢弃所有的连接:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=drop --add-source=3.3.3.3

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

下一次 3.3.3.3 尝试去访问你的网站,firewalld 将转发请求到源区域(`drop`)。因为目标是 `DROP`,请求将被拒绝,并且它不会被转发到接口区域(`public`)。

|

||||

|

||||

### 一个实用的多区域示例

|

||||

|

||||

假设你为你的组织的一台服务器配置防火墙。你希望允许全世界使用 `http` 和 `https` 的访问,你的组织(1.1.0.0/16)和工作组(1.1.1.0/8)使用 `ssh` 访问,并且你的工作组可以访问 `samba` 服务。使用 firewalld 中的区域,你可以用一个很直观的方式去实现这个配置。

|

||||

|

||||

`public` 这个命名,它的逻辑似乎是把全世界访问指定为公共区域,而 `internal` 区域用于为本地使用。从在 `public` 区域内设置使用 `http` 和 `https` 替换 `dhcpv6-client` 和 `ssh` 服务来开始:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=public --remove-service=dhcpv6-client

|

||||

# firewall-cmd --permanent --zone=public --remove-service=ssh

|

||||

# firewall-cmd --permanent --zone=public --add-service=http

|

||||

# firewall-cmd --permanent --zone=public --add-service=https

|

||||

```

|

||||

|

||||

然后,取消 `internal` 区域的 `mdns`、`samba-client` 和 `dhcpv6-client` 服务(仅保留 `ssh`),并增加你的组织为源:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=internal --remove-service=mdns

|

||||

# firewall-cmd --permanent --zone=internal --remove-service=samba-client

|

||||

# firewall-cmd --permanent --zone=internal --remove-service=dhcpv6-client

|

||||

# firewall-cmd --permanent --zone=internal --add-source=1.1.0.0/16

|

||||

```

|

||||

|

||||

为容纳你提升的 `samba` 的权限,增加一个富规则:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=internal --add-rich-rule='rule family=ipv4 source address="1.1.1.0/8" service name="samba" accept'

|

||||

```

|

||||

|

||||

最后,重新加载,把这些变化拉取到会话中:

|

||||

|

||||

```

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

仅剩下少数的细节了。从一个 `internal` 区域以外的 IP 去尝试通过 `ssh` 到你的服务器,结果是回复一个拒绝的消息。它是 firewalld 默认的。更为安全的作法是去显示不活跃的 IP 行为并丢弃该连接。改变 `public` 区域的目标为 `DROP`,而不是 `default` 来实现它:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=public --set-target=DROP

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

但是,等等,你不再可以 ping 了,甚至是从内部区域!并且 icmp (ping 使用的协议)并不在 firewalld 可以列入白名单的服务列表中。那是因为,icmp 是第 3 层的 IP 协议,它没有端口的概念,不像那些捆绑了端口的服务。在设置公共区域为 `DROP` 之前,ping 能够通过防火墙是因为你的 `default` 目标通过它到达防火墙的默认动作(default),即允许它通过。但现在它已经被删除了。

|

||||

|

||||

为恢复内部网络的 ping,使用一个富规则:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=internal --add-rich-rule='rule protocol value="icmp" accept'

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

结果如下,这里是两个活动区域的配置:

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --list-all

|

||||

public (default, active)

|

||||

interfaces: eno1 eno2

|

||||

sources:

|

||||

services: http https

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=public --get-target

|

||||

DROP

|

||||

# firewall-cmd --zone=internal --list-all

|

||||

internal (active)

|

||||

interfaces:

|

||||

sources: 1.1.0.0/16

|

||||

services: ssh

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

rule family=ipv4 source address="1.1.1.0/8" service name="samba" accept

|

||||

rule protocol value="icmp" accept

|

||||

# firewall-cmd --permanent --zone=internal --get-target

|

||||

default

|

||||

```

|

||||

|

||||

这个设置演示了一个三层嵌套的防火墙。最外层,`public`,是一个接口区域,包含全世界的访问。紧接着的一层,`internal`,是一个源区域,包含你的组织,它是 `public` 的一个子集。最后,一个富规则增加到最内层,包含了你的工作组,它是 `internal` 的一个子集。

|

||||

|

||||

这里的关键信息是,当在一个场景中可以突破到嵌套层,最外层将使用接口区域,接下来的将使用一个源区域,并且在源区域中额外使用富规则。

|

||||

|

||||

### 调试

|

||||

|

||||

firewalld 采用直观范式来设计防火墙,但比它的前任 iptables 更容易产生歧义。如果产生无法预料的行为,或者为了更好地理解 firewalld 是怎么工作的,则可以使用 iptables 描述 netfilter 是如何配置操作的。前一个示例的输出如下,为了简单起见,将输出和日志进行了修剪:

|

||||

|

||||

```

|

||||

# iptables -S

|

||||

-P INPUT ACCEPT

|

||||

... (forward and output lines) ...

|

||||

-N INPUT_ZONES

|

||||

-N INPUT_ZONES_SOURCE

|

||||

-N INPUT_direct

|

||||

-N IN_internal

|

||||

-N IN_internal_allow

|

||||

-N IN_internal_deny

|

||||

-N IN_public

|

||||

-N IN_public_allow

|

||||

-N IN_public_deny

|

||||

-A INPUT -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

|

||||

-A INPUT -i lo -j ACCEPT

|

||||

-A INPUT -j INPUT_ZONES_SOURCE

|

||||

-A INPUT -j INPUT_ZONES

|

||||

-A INPUT -p icmp -j ACCEPT

|

||||

-A INPUT -m conntrack --ctstate INVALID -j DROP

|

||||

-A INPUT -j REJECT --reject-with icmp-host-prohibited

|

||||

... (forward and output lines) ...

|

||||

-A INPUT_ZONES -i eno1 -j IN_public

|

||||

-A INPUT_ZONES -i eno2 -j IN_public

|

||||

-A INPUT_ZONES -j IN_public

|

||||

-A INPUT_ZONES_SOURCE -s 1.1.0.0/16 -g IN_internal

|

||||

-A IN_internal -j IN_internal_deny

|

||||

-A IN_internal -j IN_internal_allow

|

||||

-A IN_internal_allow -p tcp -m tcp --dport 22 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -s 1.1.1.0/8 -p udp -m udp --dport 137 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -s 1.1.1.0/8 -p udp -m udp --dport 138 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -s 1.1.1.0/8 -p tcp -m tcp --dport 139 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -s 1.1.1.0/8 -p tcp -m tcp --dport 445 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -p icmp -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_public -j IN_public_deny

|

||||

-A IN_public -j IN_public_allow

|

||||

-A IN_public -j DROP

|

||||

-A IN_public_allow -p tcp -m tcp --dport 80 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_public_allow -p tcp -m tcp --dport 443 -m conntrack --ctstate NEW -j ACCEPT

|

||||

```

|

||||

|

||||

在上面的 iptables 输出中,新的链(以 `-N` 开始的行)是被首先声明的。剩下的规则是附加到(以 `-A` 开始的行) iptables 中的。已建立的连接和本地流量是允许通过的,并且入站包被转到 `INPUT_ZONES_SOURCE` 链,在那里如果存在相应的区域,IP 将被发送到那个区域。从那之后,流量被转到 `INPUT_ZONES` 链,从那里它被路由到一个接口区域。如果在那里它没有被处理,icmp 是允许通过的,无效的被丢弃,并且其余的都被拒绝。

|

||||

|

||||

### 结论

|

||||

|

||||

firewalld 是一个文档不足的防火墙配置工具,它的功能远比大多数人认识到的更为强大。以创新的区域范式,firewalld 允许系统管理员去分解流量到每个唯一处理它的分类中,简化了配置过程。因为它直观的设计和语法,它在实践中不但被用于简单的单一区域中也被用于复杂的多区域配置中。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxjournal.com/content/understanding-firewalld-multi-zone-configurations?page=0,0

|

||||

|

||||

作者:[Nathan Vance][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linuxjournal.com/users/nathan-vance

|

||||

[1]:https://www.linuxjournal.com/tag/firewalls

|

||||

[2]:https://www.linuxjournal.com/tag/howtos

|

||||

[3]:https://www.linuxjournal.com/tag/networking

|

||||

[4]:https://www.linuxjournal.com/tag/security

|

||||

[5]:https://www.linuxjournal.com/tag/sysadmin

|

||||

[6]:https://www.linuxjournal.com/users/william-f-polik

|

||||

[7]:https://www.linuxjournal.com/users/nathan-vance

|

||||

@ -1,36 +1,28 @@

|

||||

Translating By LHRchina

|

||||

|

||||

|

||||

Ubuntu Core in LXD containers

|

||||

使用 LXD 容器运行 Ubuntu Core

|

||||

============================================================

|

||||

|

||||

|

||||

### Share or save

|

||||

|

||||

|

||||

|

||||

### What’s Ubuntu Core?

|

||||

### Ubuntu Core 是什么?

|

||||

|

||||

Ubuntu Core is a version of Ubuntu that’s fully transactional and entirely based on snap packages.

|

||||

Ubuntu Core 是完全基于 snap 包构建,并且完全事务化的 Ubuntu 版本。

|

||||

|

||||

Most of the system is read-only. All installed applications come from snap packages and all updates are done using transactions. Meaning that should anything go wrong at any point during a package or system update, the system will be able to revert to the previous state and report the failure.

|

||||

该系统大部分是只读的,所有已安装的应用全部来自 snap 包,完全使用事务化更新。这意味着不管在系统更新还是安装软件的时候遇到问题,整个系统都可以回退到之前的状态并且记录这个错误。

|

||||

|

||||

The current release of Ubuntu Core is called series 16 and was released in November 2016.

|

||||

最新版是在 2016 年 11 月发布的 Ubuntu Core 16。

|

||||

|

||||

Note that on Ubuntu Core systems, only snap packages using confinement can be installed (no “classic” snaps) and that a good number of snaps will not fully work in this environment or will require some manual intervention (creating user and groups, …). Ubuntu Core gets improved on a weekly basis as new releases of snapd and the “core” snap are put out.

|

||||

注意,Ubuntu Core 限制只能够安装 snap 包(而非 “传统” 软件包),并且有相当数量的 snap 包在当前环境下不能正常运行,或者需要人工干预(创建用户和用户组等)才能正常运行。随着新版的 snapd 和 “core” snap 包发布,Ubuntu Core 每周都会得到改进。

|

||||

|

||||

### Requirements

|

||||

### 环境需求

|

||||

|

||||

As far as LXD is concerned, Ubuntu Core is just another Linux distribution. That being said, snapd does require unprivileged FUSE mounts and AppArmor namespacing and stacking, so you will need the following:

|

||||

就 LXD 而言,Ubuntu Core 仅仅相当于另一个 Linux 发行版。也就是说,snapd 需要挂载无特权的 FUSE 和 AppArmor 命名空间以及软件栈,像下面这样:

|

||||

|

||||

* An up to date Ubuntu system using the official Ubuntu kernel

|

||||

* 一个新版的使用 Ubuntu 官方内核的系统

|

||||

* 一个新版的 LXD

|

||||

|

||||

* An up to date version of LXD

|

||||

### 创建一个 Ubuntu Core 容器

|

||||

|

||||

### Creating an Ubuntu Core container

|

||||

|

||||

The Ubuntu Core images are currently published on the community image server.

|

||||

You can launch a new container with:

|

||||

当前 Ubuntu Core 镜像发布在社区的镜像服务器。你可以像这样启动一个新的容器:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc launch images:ubuntu-core/16 ubuntu-core

|

||||

@ -38,9 +30,9 @@ Creating ubuntu-core

|

||||

Starting ubuntu-core

|

||||

```

|

||||

|

||||

The container will take a few seconds to start, first executing a first stage loader that determines what read-only image to use and setup the writable layers. You don’t want to interrupt the container in that stage and “lxc exec” will likely just fail as pretty much nothing is available at that point.

|

||||

这个容器启动需要一点点时间,它会先执行第一阶段的加载程序,加载程序会确定使用哪一个镜像(镜像是只读的),并且在系统上设置一个可读层,你不要在这一阶段中断容器执行,这个时候什么都没有,所以执行 `lxc exec` 将会出错。

|

||||

|

||||

Seconds later, “lxc list” will show the container IP address, indicating that it’s booted into Ubuntu Core:

|

||||

几秒钟之后,执行 `lxc list` 将会展示容器的 IP 地址,这表明已经启动了 Ubuntu Core:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc list

|

||||

@ -51,7 +43,7 @@ stgraber@dakara:~$ lxc list

|

||||

+-------------+---------+----------------------+----------------------------------------------+------------+-----------+

|

||||

```

|

||||

|

||||

You can then interact with that container the same way you would any other:

|

||||

之后你就可以像使用其他的交互一样和这个容器进行交互:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core bash

|

||||

@ -63,11 +55,11 @@ pc-kernel 4.4.0-45-4 37 canonical -

|

||||

root@ubuntu-core:~#

|

||||

```

|

||||

|

||||

### Updating the container

|

||||

### 更新容器

|

||||

|

||||

If you’ve been tracking the development of Ubuntu Core, you’ll know that those versions above are pretty old. That’s because the disk images that are used as the source for the Ubuntu Core LXD images are only refreshed every few months. Ubuntu Core systems will automatically update once a day and then automatically reboot to boot onto the new version (and revert if this fails).

|

||||

如果你一直关注着 Ubuntu Core 的开发,你应该知道上面的版本已经很老了。这是因为被用作 Ubuntu LXD 镜像的代码每隔几个月才会更新。Ubuntu Core 系统在重启时会检查更新并进行自动更新(更新失败会回退)。

|

||||

|

||||

If you want to immediately force an update, you can do it with:

|

||||

如果你想现在强制更新,你可以这样做:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core bash

|

||||

@ -81,7 +73,7 @@ series 16

|

||||

root@ubuntu-core:~#

|

||||

```

|

||||

|

||||

And then reboot the system and check the snapd version again:

|

||||

然后重启一下 Ubuntu Core 系统,然后看看 snapd 的版本。

|

||||

|

||||

```

|

||||

root@ubuntu-core:~# reboot

|

||||

@ -95,7 +87,7 @@ series 16

|

||||

root@ubuntu-core:~#

|

||||

```

|

||||

|

||||

You can get an history of all snapd interactions with

|

||||

你也可以像下面这样查看所有 snapd 的历史记录:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core snap changes

|

||||

@ -105,9 +97,9 @@ ID Status Spawn Ready Summary

|

||||

3 Done 2017-01-31T05:21:30Z 2017-01-31T05:22:45Z Refresh all snaps in the system

|

||||

```

|

||||

|

||||

### Installing some snaps

|

||||

### 安装 Snap 软件包

|

||||

|

||||

Let’s start with the simplest snaps of all, the good old Hello World:

|

||||

以一个最简单的例子开始,经典的 Hello World:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core bash

|

||||

@ -117,7 +109,7 @@ root@ubuntu-core:~# hello-world

|

||||

Hello World!

|

||||

```

|

||||

|

||||

And then move on to something a bit more useful:

|

||||

接下来让我们看一些更有用的:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core bash

|

||||

@ -125,9 +117,9 @@ root@ubuntu-core:~# snap install nextcloud

|

||||

nextcloud 11.0.1snap2 from 'nextcloud' installed

|

||||

```

|

||||

|

||||

Then hit your container over HTTP and you’ll get to your newly deployed Nextcloud instance.

|

||||

之后通过 HTTP 访问你的容器就可以看到刚才部署的 Nextcloud 实例。

|

||||

|

||||

If you feel like testing the latest LXD straight from git, you can do so with:

|

||||

如果你想直接通过 git 测试最新版 LXD,你可以这样做:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc config set ubuntu-core security.nesting true

|

||||

@ -156,7 +148,7 @@ What IPv6 address should be used (CIDR subnet notation, “auto” or “none”

|

||||

LXD has been successfully configured.

|

||||

```

|

||||

|

||||

And because container inception never gets old, lets run Ubuntu Core 16 inside Ubuntu Core 16:

|

||||

已经设置过的容器不能回退版本,但是可以在 Ubuntu Core 16 中运行另一个 Ubuntu Core 16 容器:

|

||||

|

||||

```

|

||||

root@ubuntu-core:~# lxc launch images:ubuntu-core/16 nested-core

|

||||

@ -170,28 +162,29 @@ root@ubuntu-core:~# lxc list

|

||||

+-------------+---------+---------------------+-----------------------------------------------+------------+-----------+

|

||||

```

|

||||

|

||||

### Conclusion

|

||||

### 写在最后

|

||||

|

||||

If you ever wanted to try Ubuntu Core, this is a great way to do it. It’s also a great tool for snap authors to make sure their snap is fully self-contained and will work in all environments.

|

||||

如果你只是想试用一下 Ubuntu Core,这是一个不错的方法。对于 snap 包开发者来说,这也是一个不错的工具来测试你的 snap 包能否在不同的环境下正常运行。

|

||||

|

||||

Ubuntu Core is a great fit for environments where you want to ensure that your system is always up to date and is entirely reproducible. This does come with a number of constraints that may or may not work for you.

|

||||

如果你希望你的系统总是最新的,并且整体可复制,Ubuntu Core 是一个很不错的方案,不过这也会带来一些相应的限制,所以可能不太适合你。

|

||||

|

||||

And lastly, a word of warning. Those images are considered as good enough for testing, but aren’t officially supported at this point. We are working towards getting fully supported Ubuntu Core LXD images on the official Ubuntu image server in the near future.

|

||||

最后是一个警告,对于测试来说,这些镜像是足够的,但是当前并没有被正式的支持。在不久的将来,官方的 Ubuntu server 可以完整的支持 Ubuntu Core LXD 镜像。

|

||||

|

||||

### Extra information

|

||||

### 附录

|

||||

|

||||

The main LXD website is at: [https://linuxcontainers.org/lxd][2] Development happens on Github at: [https://github.com/lxc/lxd][3]

|

||||

Mailing-list support happens on: [https://lists.linuxcontainers.org][4]

|

||||

IRC support happens in: #lxcontainers on irc.freenode.net

|

||||

Try LXD online: [https://linuxcontainers.org/lxd/try-it][5]

|

||||

- LXD 主站:[https://linuxcontainers.org/lxd][2]

|

||||

- Github:[https://github.com/lxc/lxd][3]

|

||||

- 邮件列表:[https://lists.linuxcontainers.org][4]

|

||||

- IRC:#lxcontainers on irc.freenode.net

|

||||

- 在线试用:[https://linuxcontainers.org/lxd/try-it][5]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://insights.ubuntu.com/2017/02/27/ubuntu-core-in-lxd-containers/

|

||||

|

||||

作者:[Stéphane Graber ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

作者:[Stéphane Graber][a]

|

||||

译者:[aiwhj](https://github.com/aiwhj)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,67 @@

|

||||

了解用于 Linux 和 Windows 容器的 Docker “容器主机”与“容器操作系统”

|

||||

=================

|

||||

|

||||

让我们来探讨一下“容器主机”和“容器操作系统”之间的关系,以及它们在 Linux 和 Windows 容器之间的区别。

|

||||

|

||||

### 一些定义

|

||||

|

||||

* <ruby>容器主机<rt>Container Host</rt></ruby>:也称为<ruby>主机操作系统<rt>Host OS</rt></ruby>。主机操作系统是 Docker 客户端和 Docker 守护程序在其上运行的操作系统。在 Linux 和非 Hyper-V 容器的情况下,主机操作系统与运行中的 Docker 容器共享内核。对于 Hyper-V,每个容器都有自己的 Hyper-V 内核。

|

||||

* <ruby>容器操作系统<rt>Container OS</rt></ruby>:也被称为<ruby>基础操作系统<rt>Base OS</rt></ruby>。基础操作系统是指包含操作系统如 Ubuntu、CentOS 或 windowsservercore 的镜像。通常情况下,你将在基础操作系统镜像之上构建自己的镜像,以便可以利用该操作系统的部分功能。请注意,Windows 容器需要一个基础操作系统,而 Linux 容器不需要。

|

||||

* <ruby>操作系统内核<rt>Operating System Kernel</rt></ruby>:内核管理诸如内存、文件系统、网络和进程调度等底层功能。

|

||||

|

||||

### 如下的一些图

|

||||

|

||||

|

||||

|

||||

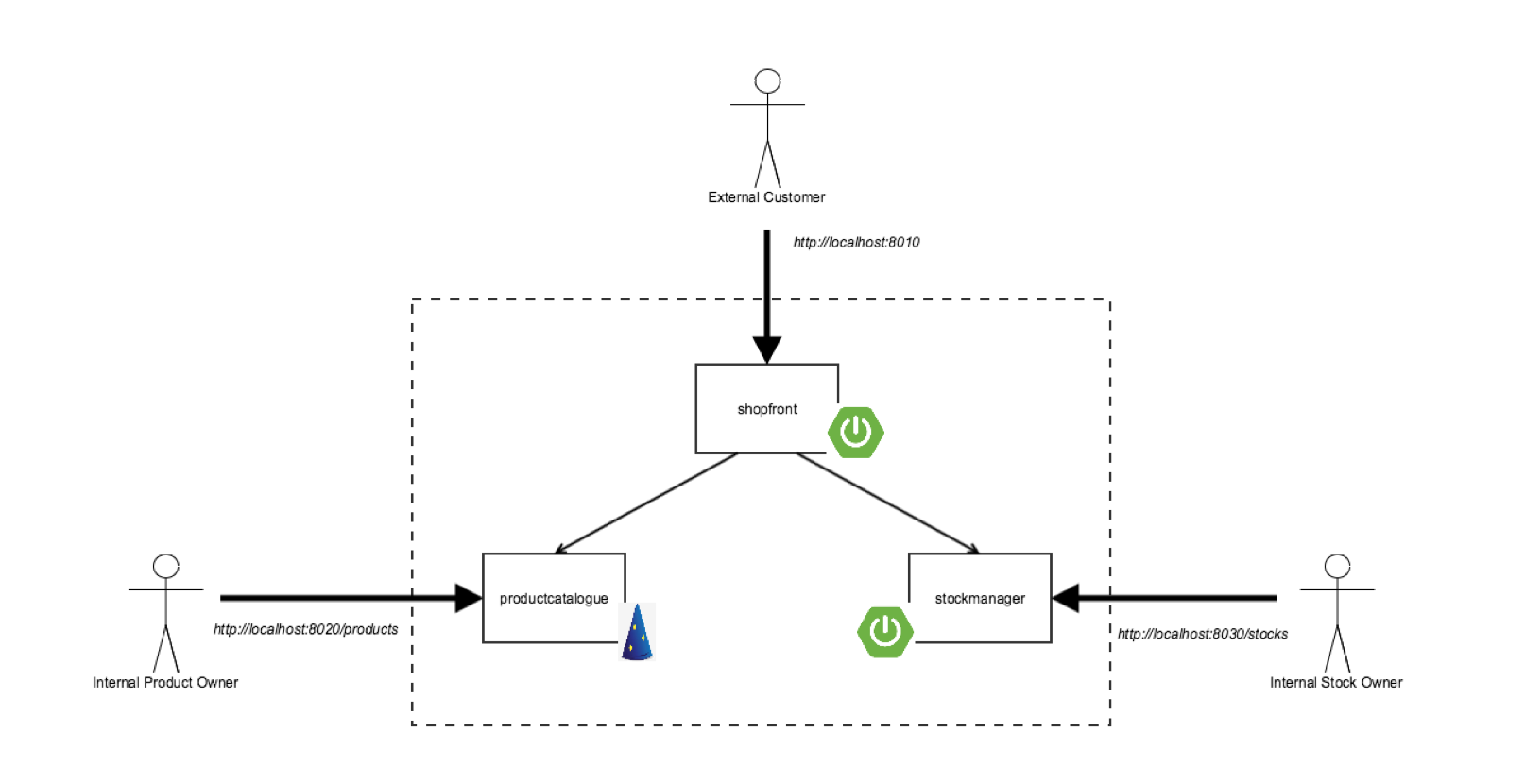

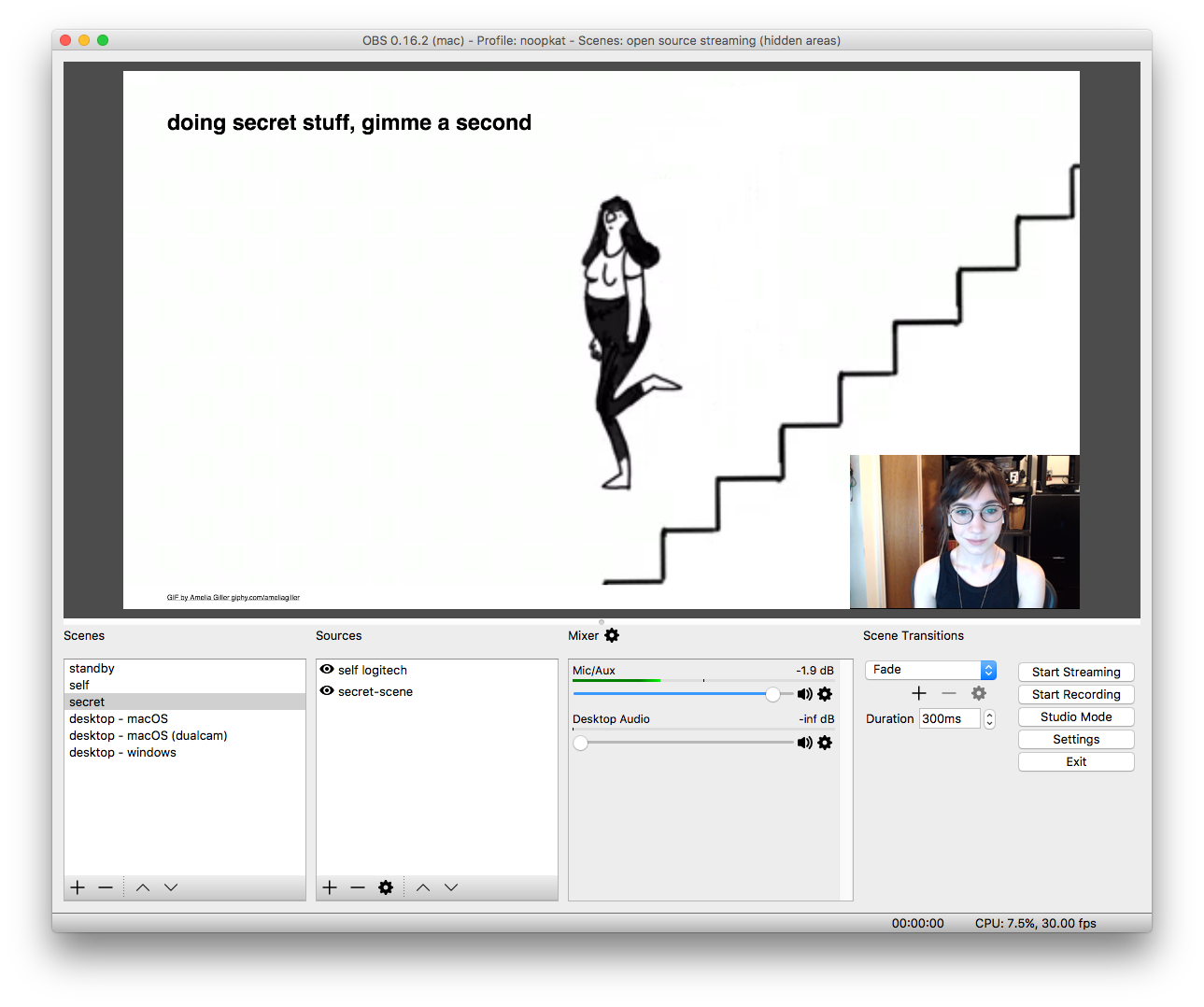

在上面的例子中:

|

||||

|

||||

* 主机操作系统是 Ubuntu。

|

||||

* Docker 客户端和 Docker 守护进程(一起被称为 Docker 引擎)正在主机操作系统上运行。

|

||||

* 每个容器共享主机操作系统内核。

|

||||

* CentOS 和 BusyBox 是 Linux 基础操作系统镜像。

|

||||

* “No OS” 容器表明你不需要基础操作系统以在 Linux 中运行一个容器。你可以创建一个含有 [scratch][1] 基础镜像的 Docker 文件,然后运行直接使用内核的二进制文件。

|

||||

* 查看[这篇][2]文章来比较基础 OS 的大小。

|

||||

|

||||

|

||||

|

||||

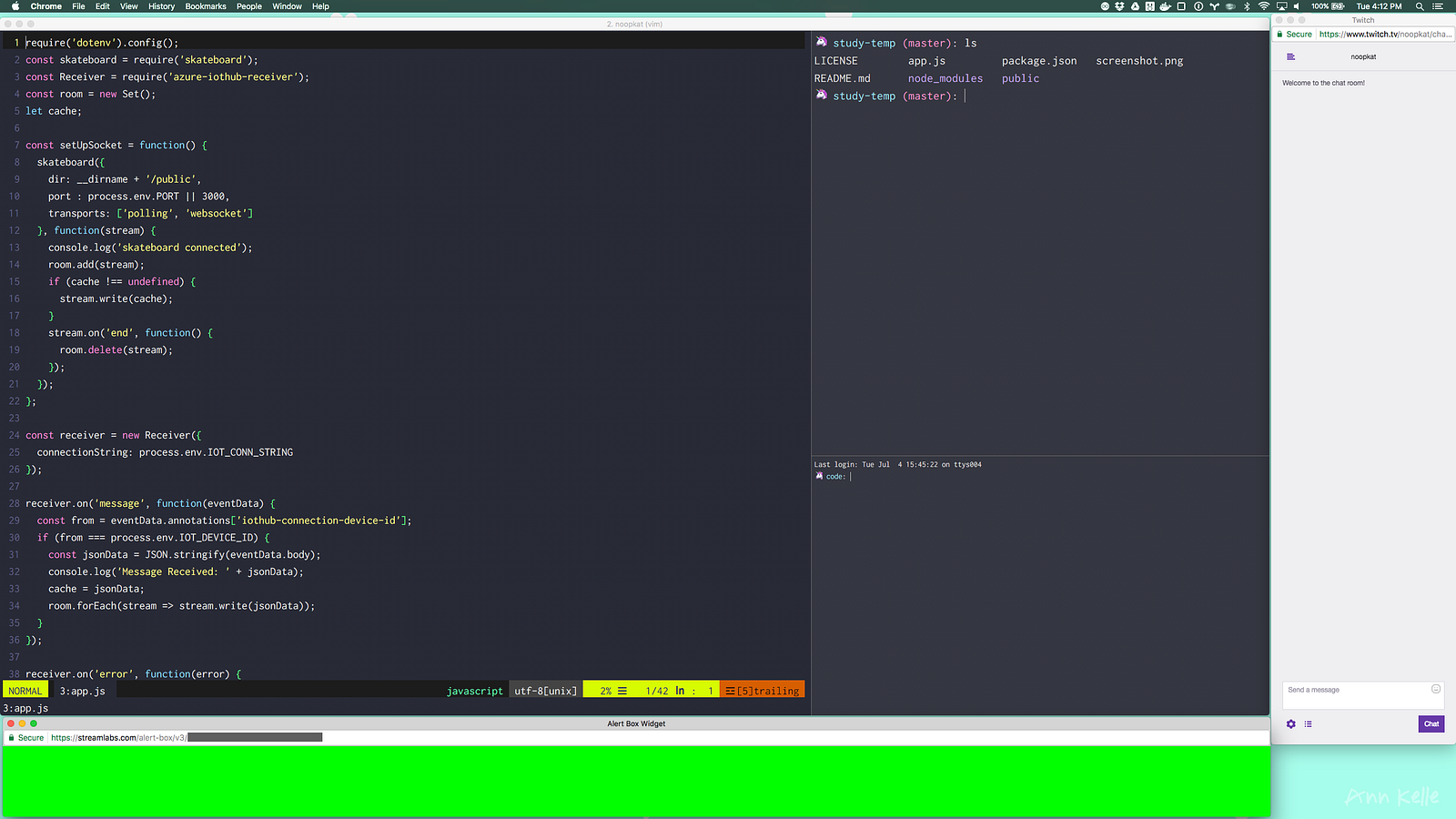

在上面的例子中:

|

||||

|

||||

* 主机操作系统是 Windows 10 或 Windows Server。

|

||||

* 每个容器共享主机操作系统内核。

|

||||

* 所有 Windows 容器都需要 [nanoserver][3] 或 [windowsservercore][4] 的基础操作系统。

|

||||

|

||||

|

||||

|

||||

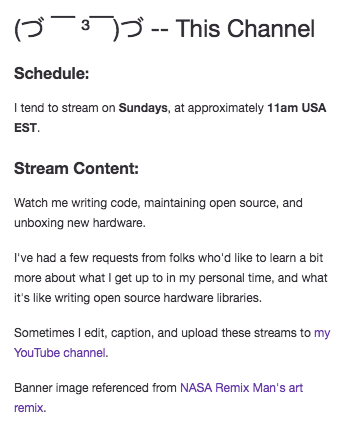

在上面的例子中:

|

||||

|

||||

* 主机操作系统是 Windows 10 或 Windows Server。

|

||||

* 每个容器都托管在自己的轻量级 Hyper-V 虚拟机中。

|

||||

* 每个容器使用 Hyper-V 虚拟机内的内核,它在容器之间提供额外的分离层。

|

||||

* 所有 Windows 容器都需要 [nanoserver][5] 或 [windowsservercore][6] 的基础操作系统。

|

||||

|

||||

### 几个好的链接

|

||||

|

||||

* [关于 Windows 容器][7]

|

||||

* [深入实现 Windows 容器,包括多用户模式和“写时复制”来节省资源][8]

|

||||

* [Linux 容器如何通过使用“写时复制”来节省资源][9]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://floydhilton.com/docker/2017/03/31/Docker-ContainerHost-vs-ContainerOS-Linux-Windows.html

|

||||

|

||||

作者:[Floyd Hilton][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://floydhilton.com/about/

|

||||

[1]:https://hub.docker.com/_/scratch/

|

||||

[2]:https://www.brianchristner.io/docker-image-base-os-size-comparison/

|

||||

[3]:https://hub.docker.com/r/microsoft/nanoserver/

|

||||

[4]:https://hub.docker.com/r/microsoft/windowsservercore/

|

||||

[5]:https://hub.docker.com/r/microsoft/nanoserver/

|

||||

[6]:https://hub.docker.com/r/microsoft/windowsservercore/

|

||||

[7]:https://docs.microsoft.com/en-us/virtualization/windowscontainers/about/

|

||||

[8]:http://blog.xebia.com/deep-dive-into-windows-server-containers-and-docker-part-2-underlying-implementation-of-windows-server-containers/

|

||||

[9]:https://docs.docker.com/engine/userguide/storagedriver/imagesandcontainers/#the-copy-on-write-strategy

|

||||

@ -0,0 +1,48 @@

|

||||

肯特·贝克:改变人生的代码整理魔法

|

||||

==========

|

||||

|

||||

> 本文作者<ruby>肯特·贝克<rt>Kent Beck</rt></ruby>,是最早研究软件开发的模式和重构的人之一,是敏捷开发的开创者之一,更是极限编程和测试驱动开发的创始人,同时还是 Smalltalk 和 JUnit 的作者,对当今世界的软件开发影响深远。现在 Facebook 工作。

|

||||

|

||||

本周我一直在整理 Facebook 代码,而且我喜欢这个工作。我的职业生涯中已经整理了数千小时的代码,我有一套使这种整理更加安全、有趣和高效的规则。

|

||||

|

||||

整理工作是通过一系列短小而安全的步骤进行的。事实上,规则一就是**如果这很难,那就不要去做**。我以前在晚上做填字游戏。如果我卡住那就去睡觉,第二天晚上那些没有发现的线索往往很容易发现。与其想要一心搞个大的,不如在遇到阻力的时候停下来。

|

||||

|

||||

整理会陷入这样一种感觉:你错失的要比你从一个个成功中获得的更多(稍后会细说)。第二条规则是**当你充满活力时开始,当你累了时停下来**。起来走走。如果还没有恢复精神,那这一天的工作就算做完了。

|

||||

|

||||

只有在仔细追踪其它变化的时候(我把它和最新的差异搞混了),整理工作才可以与开发同步进行。第三条规则是**立即完成每个环节的工作**。与功能开发所不同的是,功能开发只有在完成一大块工作时才有意义,而整理是基于时间一点点完成的。

|

||||

|

||||

整理在任何步骤中都只需要付出不多的努力,所以我会在任何步骤遇到麻烦的时候放弃。所以,规则四是**两次失败后恢复**。如果我整理代码,运行测试,并遇到测试失败,那么我会立即修复它。如果我修复失败,我会立即恢复到上次已知最好的状态。

|

||||

|

||||

即便没有闪亮的新设计的愿景,整理也是有用的。不过,有时候我想看看事情会如何发展,所以第五条就是**实践**。执行一系列的整理和还原。第二次将更快,你会更加熟悉避免哪些坑。

|

||||

|

||||

只有在附带损害的风险较低,审查整理变化的成本也较低的时候整理才有用。规则六是**隔离整理**。如果你错过了在编写代码中途整理的机会,那么接下来可能很困难。要么完成并接着整理,要么还原、整理并进行修改。

|

||||

|

||||

试试这些。将临时申明的变量移动到它第一次使用的位置,简化布尔表达式(`return expression == True`?),提取一个 helper,将逻辑或状态的范围缩小到实际使用的位置。

|

||||

|

||||

### 规则

|

||||

|

||||

- 规则一、 如果这很难,那就不要去做

|

||||

- 规则二、 当你充满活力时开始,当你累了时停下来

|

||||

- 规则三、 立即完成每个环节工作

|

||||

- 规则四、 两次失败后恢复

|

||||

- 规则五、 实践

|

||||

- 规则六、 隔离整理

|

||||

|

||||

### 尾声

|

||||

|

||||

我通过严格地整理改变了架构、提取了框架。这种方式可以安全地做出重大改变。我认为这是因为,虽然每次整理的成本是不变的,但回报是指数级的,但我需要数据和模型来解释这个假说。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.facebook.com/notes/kent-beck/the-life-changing-magic-of-tidying-up-code/1544047022294823/

|

||||

|

||||

作者:[KENT BECK][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.facebook.com/kentlbeck

|

||||

[1]:https://www.facebook.com/notes/kent-beck/the-life-changing-magic-of-tidying-up-code/1544047022294823/?utm_source=wanqu.co&utm_campaign=Wanqu+Daily&utm_medium=website#

|

||||

[2]:https://www.facebook.com/kentlbeck

|

||||

[3]:https://www.facebook.com/notes/kent-beck/the-life-changing-magic-of-tidying-up-code/1544047022294823/

|

||||

@ -0,0 +1,33 @@

|

||||

Let’s Encrypt :2018 年 1 月发布通配证书

|

||||

============================================================

|

||||

|

||||

Let’s Encrypt 将于 2018 年 1 月开始发放通配证书。通配证书是一个经常需要的功能,并且我们知道在一些情况下它可以使 HTTPS 部署更简单。我们希望提供通配证书有助于加速网络向 100% HTTPS 进展。

|

||||

|

||||

Let’s Encrypt 目前通过我们的全自动 DV 证书颁发和管理 API 保护了 4700 万个域名。自从 Let's Encrypt 的服务于 2015 年 12 月发布以来,它已经将加密网页的数量从 40% 大大地提高到了 58%。如果你对通配证书的可用性以及我们达成 100% 的加密网页的使命感兴趣,我们请求你为我们的[夏季筹款活动][1](LCTT 译注:之前的夏季活动,原文发布于今年夏季)做出贡献。

|

||||

|

||||

通配符证书可以保护基本域的任何数量的子域名(例如 *.example.com)。这使得管理员可以为一个域及其所有子域使用单个证书和密钥对,这可以使 HTTPS 部署更加容易。

|

||||

|

||||

通配符证书将通过我们[即将发布的 ACME v2 API 终端][2]免费提供。我们最初只支持通过 DNS 进行通配符证书的基础域验证,但是随着时间的推移可能会探索更多的验证方式。我们鼓励人们在我们的[社区论坛][3]上提出任何关于通配证书支持的问题。

|

||||

|

||||

我们决定在夏季筹款活动中宣布这一令人兴奋的进展,因为我们是一个非营利组织,这要感谢使用我们服务的社区的慷慨支持。如果你想支持一个更安全和保密的网络,[现在捐赠吧][4]!

|

||||

|

||||

我们要感谢我们的[社区][5]和我们的[赞助者][6],使我们所做的一切成为可能。如果你的公司或组织能够赞助 Let's Encrypt,请发送电子邮件至 [sponsor@letsencrypt.org][7]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://letsencrypt.org/2017/07/06/wildcard-certificates-coming-jan-2018.html

|

||||

|

||||

作者:[Josh Aas][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://letsencrypt.org/2017/07/06/wildcard-certificates-coming-jan-2018.html

|

||||

[1]:https://letsencrypt.org/donate/

|

||||

[2]:https://letsencrypt.org/2017/06/14/acme-v2-api.html

|

||||

[3]:https://community.letsencrypt.org/

|

||||

[4]:https://letsencrypt.org/donate/

|

||||

[5]:https://letsencrypt.org/getinvolved/

|

||||

[6]:https://letsencrypt.org/sponsors/

|

||||

[7]:mailto:sponsor@letsencrypt.org

|

||||

@ -0,0 +1,149 @@

|

||||

Linux 用户的手边工具:Guide to Linux

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

> “Guide to Linux” 这个应用并不完美,但它是一个非常好的工具,可以帮助你学习 Linux 命令。

|

||||

|

||||

还记得你初次使用 Linux 时的情景吗?对于有些人来说,他的学习曲线可能有些挑战性。比如,在 `/usr/bin` 中能找到许多命令。在我目前使用的 Elementary OS 系统中,命令的数量是 1944 个。当然,这并不全是真实的命令(或者,我会使用到的命令数量),但这个数目是很多的。

|

||||

|

||||

正因为如此(并且不同平台不一样),现在,新用户(和一些已经熟悉的用户)需要一些帮助。

|

||||

|

||||

对于每个管理员来说,这些技能是必须具备的:

|

||||

|

||||

* 熟悉平台

|

||||

* 理解命令

|

||||

* 编写 Shell 脚本

|

||||

|

||||

当你寻求帮助时,有时,你需要去“阅读那些该死的手册”(Read the Fine/Freaking/Funky Manual,LCTT 译注:一个网络用语,简写为 RTFM),但是当你自己都不知道要找什么的时候,它就没办法帮到你了。在那个时候,你就会为你拥有像 [Guide to Linux][15] 这样的手机应用而感到高兴。

|

||||

|

||||

不像你在 Linux.com 上看到的那些大多数的内容,这篇文章只是介绍一个 Android 应用的。为什么呢?因为这个特殊的 应用是用来帮助用户学习 Linux 的。

|

||||

|

||||

而且,它做的很好。

|

||||

|

||||

关于这个应用我清楚地告诉你 —— 它并不完美。Guide to Linux 里面充斥着很烂的英文,糟糕的标点符号,并且(如果你是一个纯粹主义者),它从来没有提到过 GNU。在这之上,它有一个特别的功能(通常它对用户非常有用)功能不是很有用(LCTT 译注:是指终端模拟器,后面会详细解释)。除此之外,我敢说 Guide to Linux 可能是 Linux 平台上最好的一个移动端的 “口袋指南”。

|

||||

|

||||

对于这个应用,你可能会喜欢它的如下特性:

|

||||

|

||||

* 离线使用

|

||||

* Linux 教程

|

||||

* 基础的和高级的 Linux 命令的详细介绍

|

||||

* 包含了命令示例和语法

|

||||

* 专用的 Shell 脚本模块

|

||||

|

||||

除此以外,Guide to Linux 是免费提供的(尽管里面有一些广告)。如果你想去除广告,它有一个应用内的购买,($2.99 USD/年)可以去消除广告。

|

||||

|

||||

让我们来安装这个应用,来看一看它的构成。

|

||||

|

||||

### 安装

|

||||

|

||||

像所有的 Android 应用一样,安装 Guide to Linux 是非常简单的。按照以下简单的几步就可以安装它了:

|

||||

|

||||

1. 打开你的 Android 设备上的 Google Play 商店

|

||||

2. 搜索 Guide to Linux

|

||||

3. 找到 Essence Infotech 的那个,并轻触进入

|

||||

4. 轻触 Install

|

||||

5. 允许安装

|

||||

|

||||

安装完成后,你可以在你的<ruby>应用抽屉<rt>App Drawer</rt></ruby>或主屏幕上(或者两者都有)上找到它去启动 Guide to Linux 。轻触图标去启动这个应用。

|

||||

|

||||

### 使用

|

||||

|

||||

让我们看一下 Guide to Linux 的每个功能。我发现某些功能比其它的更有帮助,或许你的体验会不一样。在我们分别讲解之前,我将重点提到其界面。开发者在为这个应用创建一个易于使用的界面方面做的很好。

|

||||

|

||||

从主窗口中(图 1),你可以获取四个易于访问的功能。

|

||||

|

||||

|

||||

|

||||

*图 1: The Guide to Linux 主窗口。[已获授权][1]*

|

||||

|

||||

轻触四个图标中的任何一个去启动一个功能,然后,准备去学习。

|

||||

|

||||

### 教程

|

||||

|

||||

让我们从这个应用教程的最 “新手友好” 的功能开始。打开“Tutorial”功能,然后,将看到该教程的欢迎部分,“Linux 操作系统介绍”(图 2)。

|

||||

|

||||

|

||||

|

||||

*图 2:教程开始。[已获授权][2]*

|

||||

|

||||

如果你轻触 “汉堡包菜单” (左上角的三个横线),显示了内容列表(图 3),因此,你可以在教程中选择任何一个可用部分。

|

||||

|

||||

|

||||

|

||||

*图 3:教程的内容列表。[已获授权][3]*

|

||||

|

||||

如果你现在还没有注意到,Guide to Linux 教程部分是每个主题的一系列短文的集合。短文包含图片和链接(有时候),链接将带你到指定的 web 网站(根据主题的需要)。这里没有交互,仅仅只能阅读。但是,这是一个很好的起点,由于开发者在描述各个部分方面做的很好(虽然有语法问题)。

|

||||

|

||||

尽管你可以在窗口的顶部看到一个搜索选项,但是,我还是没有发现这一功能的任何效果 —— 但是,你可以试一下。

|

||||

|

||||

对于 Linux 新手来说,如果希望获得 Linux 管理的技能,你需要去阅读整个教程。完成之后,转到下一个主题。

|

||||

|

||||

### 命令

|

||||

|

||||

命令功能类似于手机上的 man 页面一样,是大量的频繁使用的 Linux 命令。当你首次打开它,欢迎页面将详细解释使用命令的益处。

|

||||

|

||||

读完之后,你可以轻触向右的箭头(在屏幕底部)或轻触 “汉堡包菜单” ,然后从侧边栏中选择你想去学习的其它命令。(图 4)

|

||||

|

||||

|

||||

|

||||

*图 4:命令侧边栏允许你去查看列出的命令。[已获授权][4]*

|

||||

|

||||

轻触任意一个命令,你可以阅读这个命令的解释。每个命令解释页面和它的选项都提供了怎么去使用的示例。

|

||||

|

||||

### Shell 脚本

|

||||

|

||||

在这个时候,你开始熟悉 Linux 了,并对命令已经有一定程序的掌握。现在,是时候去熟悉 shell 脚本了。这个部分的设置方式与教程部分和命令部分相同。

|

||||

|

||||

你可以打开内容列表的侧边栏,然后打开包含 shell 脚本教程的任意部分(图 5)。

|

||||

|

||||

|

||||

|

||||

*图 5:Shell 脚本节看上去很熟悉。[已获授权][5]*

|

||||

|

||||

开发者在解释如何最大限度地利用 shell 脚本方面做的很好。对于任何有兴趣学习 shell 脚本细节的人来说,这是个很好的起点。

|

||||

|

||||

### 终端

|

||||

|

||||

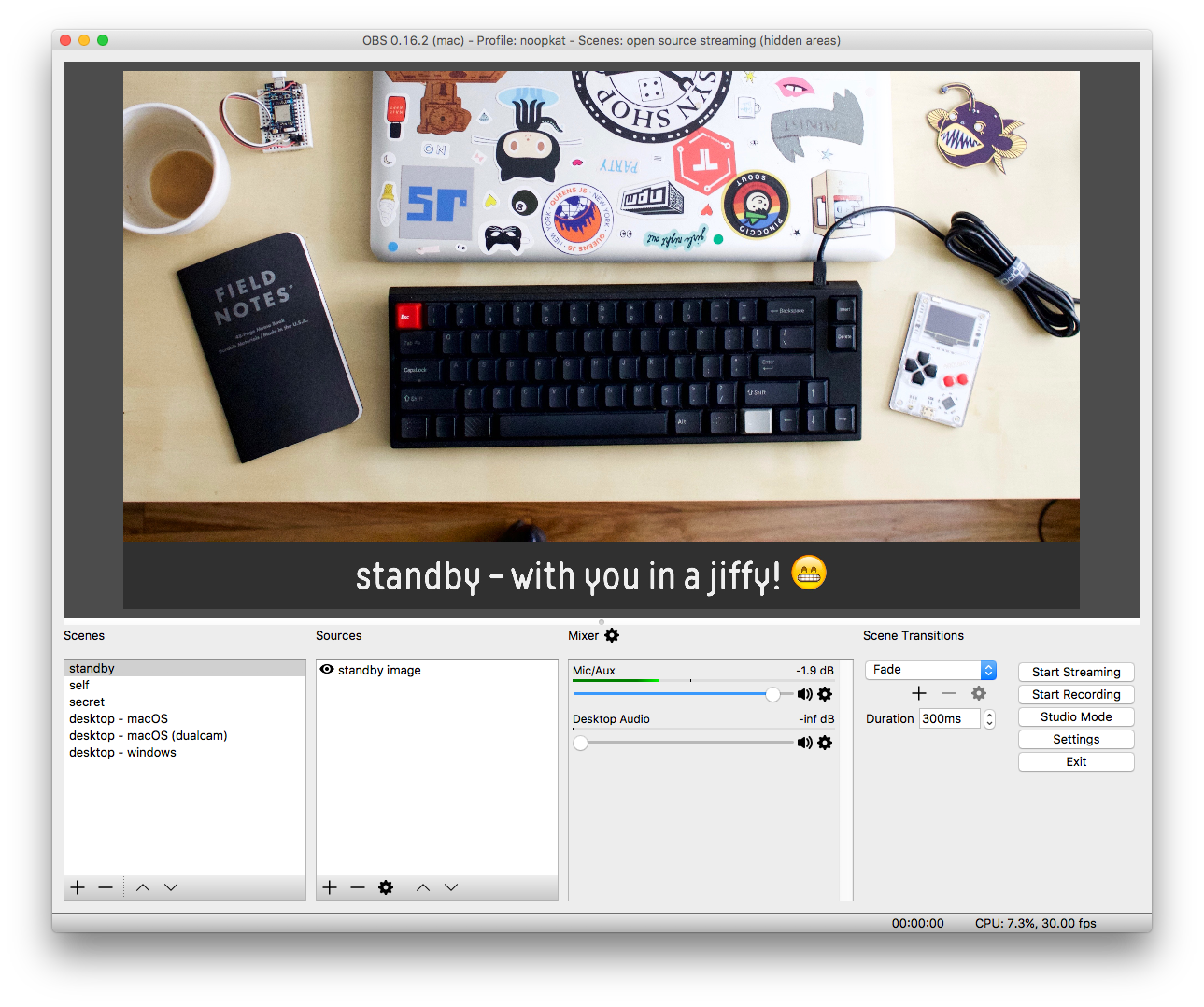

现在我们到了一个新的地方,开发者在这个应用中包含了一个终端模拟器。遗憾的是,当你在一个没有 “root” 权限的 Android 设备上安装这个应用时,你会发现你被限制在一个只读文件系统中,在那里,大部分命令根本无法工作。但是,我在一台 Pixel 2 (通过 Android 应用商店)安装的 Guide to Linux 中,可以使用更多的这个功能(还只是较少的一部分)。在一台 OnePlus 3 (非 root 过的)上,不管我改变到哪个目录,我都是得到相同的错误信息 “permission denied”,甚至是一个简单的命令也如此。

|

||||

|

||||

在 Chromebook 上,不管怎么操作,它都是正常的(图 6)。可以说,它可以一直很好地工作在一个只读操作系统中(因此,你不能用它进行真正的工作或创建新文件)。

|

||||

|

||||

|

||||

|

||||

*图 6: 可以完美地(可以这么说)用一个终端模拟器去工作。[已获授权][6]*

|

||||

|

||||

记住,这并不是真实的成熟终端,但却是一个新用户去熟悉终端是怎么工作的一种方法。遗憾的是,大多数用户只会发现自己对这个工具的终端功能感到沮丧,仅仅是因为,它们不能使用他们在其它部分学到的东西。开发者可能将这个终端功能打造成了一个 Linux 文件系统沙箱,因此,用户可以真实地使用它去学习。每次用户打开那个工具,它将恢复到原始状态。这只是我一个想法。

|

||||

|

||||

### 写在最后…

|

||||

|

||||

尽管终端功能被一个只读文件系统所限制(几乎到了没法使用的程序),Guide to Linux 仍然是一个新手学习 Linux 的好工具。在 guide to Linux 中,你将学习关于 Linux、命令、和 shell 脚本的很多知识,以便在你安装你的第一个发行版之前,让你学习 Linux 有一个好的起点。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/learn/intro-to-linux/2017/8/guide-linux-app-handy-tool-every-level-linux-user

|

||||

|

||||

作者:[JACK WALLEN][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/jlwallen

|

||||

[1]:https://www.linux.com/licenses/category/used-permission

|

||||

[2]:https://www.linux.com/licenses/category/used-permission

|

||||

[3]:https://www.linux.com/licenses/category/used-permission

|

||||

[4]:https://www.linux.com/licenses/category/used-permission

|

||||

[5]:https://www.linux.com/licenses/category/used-permission

|

||||

[6]:https://www.linux.com/licenses/category/used-permission

|

||||

[7]:https://www.linux.com/licenses/category/used-permission

|

||||

[8]:https://www.linux.com/files/images/guidetolinux1jpg

|

||||

[9]:https://www.linux.com/files/images/guidetolinux2jpg

|

||||

[10]:https://www.linux.com/files/images/guidetolinux3jpg-0

|

||||

[11]:https://www.linux.com/files/images/guidetolinux4jpg

|

||||

[12]:https://www.linux.com/files/images/guidetolinux-5-newjpg

|

||||

[13]:https://www.linux.com/files/images/guidetolinux6jpg-0

|

||||

[14]:https://www.linux.com/files/images/guide-linuxpng

|

||||

[15]:https://play.google.com/store/apps/details?id=com.essence.linuxcommands

|

||||

[16]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

[17]:https://www.addtoany.com/share#url=https%3A%2F%2Fwww.linux.com%2Flearn%2Fintro-to-linux%2F2017%2F8%2Fguide-linux-app-handy-tool-every-level-linux-user&title=Guide%20to%20Linux%20App%20Is%20a%20Handy%20Tool%20for%20Every%20Level%20of%20Linux%20User

|

||||

@ -0,0 +1,85 @@

|

||||

放弃你的代码,而不是你的时间

|

||||

============================================================

|

||||

|

||||

作为软件开发人员,我认为我们可以认同开源代码^注1 已经[改变了世界][9]。它的公共性质去除了壁垒,可以让软件可以变的最好。但问题是,太多有价值的项目由于领导者的精力耗尽而停滞不前:

|

||||

|

||||

>“我没有时间和精力去投入开源了。我在开源上没有得到任何收入,所以我在那上面花的时间,我可以用在‘生活上的事’,或者写作上……正因为如此,我决定现在结束我所有的开源工作。”

|

||||

>

|

||||

>—[Ryan Bigg,几个 Ruby 和 Elixir 项目的前任维护者][1]

|

||||

>

|

||||

>“这也是一个巨大的机会成本,由于我无法同时学习或者完成很多事情,FubuMVC 占用了我很多的时间,这是它现在必须停下来的主要原因。”

|

||||

>

|

||||

>—[前 FubuMVC 项目负责人 Jeremy Miller][2]

|

||||

>

|

||||

>“当我们决定要孩子的时候,我可能会放弃开源,我预计最终解决我问题的方案将是:核武器。”

|

||||

>

|

||||

>—[Nolan Lawson,PouchDB 的维护者之一][3]

|

||||

|

||||

我们需要的是一种新的行业规范,即项目领导者将_总是_能获得(其付出的)时间上的补偿。我们还需要抛弃的想法是, 任何提交问题或合并请求的开发人员都自动会得到维护者的注意。

|

||||

|

||||

我们先来回顾一下开源代码在市场上的作用。它是一个积木。它是[实用软件][10],是企业为了在别处获利而必须承担的成本。如果用户能够理解该代码的用途并且发现它比替代方案(闭源专用、定制的内部解决方案等)更有价值,那么围绕该软件的社区就会不断增长。它可以更好,更便宜,或两者兼而有之。

|

||||

|

||||

如果一个组织需要改进该代码,他们可以自由地聘请任何他们想要的开发人员。通常情况下[为了他们的利益][11]会将改进贡献给社区,因为由于代码合并的复杂性,这是他们能够轻松地从其他用户获得未来改进的唯一方式。这种“引力”倾向于把社区聚集在一起。

|

||||

|

||||

但是它也会加重项目维护者的负担,因为他们必须对这些改进做出反应。他们得到了什么回报?最好的情况是,这些社区贡献可能是他们将来可以使用的东西,但现在不是。最坏的情况下,这只不过是一个带着利他主义面具的自私请求罢了。

|

||||

|

||||

有一类开源项目避免了这个陷阱。Linux、MySQL、Android、Chromium 和 .NET Core 除了有名,有什么共同点么?他们都对一个或多个大型企业具有_战略性重要意义_,因为它们满足了这些利益。[聪明的公司商品化他们的商品][12],没有什么比开源软件便宜的商品了。红帽需要那些使用 Linux 的公司来销售企业级 Linux,Oracle 使用 MySQL 作为销售 MySQL Enterprise 的引子,谷歌希望世界上每个人都拥有电话和浏览器,而微软则试图将开发者锁定在平台上然后将它们拉入 Azure 云。这些项目全部由各自公司直接资助。

|

||||

|

||||

但是那些其他的项目呢,那些不是大玩家核心战略的项目呢?

|

||||

|

||||

如果你是其中一个项目的负责人,请向社区成员收取年费。_开放的源码,封闭的社区_。给用户的信息应该是“尽你所愿地使用代码,但如果你想影响项目的未来,请_为我们的时间支付_。”将非付费用户锁定在论坛和问题跟踪之外,并忽略他们的电子邮件。不支付的人应该觉得他们错过了派对。

|

||||

|

||||

还要向贡献者收取合并非普通的合并请求的时间花费。如果一个特定的提交不会立即给你带来好处,请为你的时间收取全价。要有原则并[记住 YAGNI][13]。

|

||||

|

||||

这会导致一个极小的社区和更多的分支么?绝对。但是,如果你坚持不懈地构建自己的愿景,并为其他人创造价值,他们会尽快为要做的贡献而支付。_合并贡献的意愿是[稀缺资源][4]_。如果没有它,用户必须反复地将它们的变化与你发布的每个新版本进行协调。

|

||||

|

||||

如果你想在代码库中保持高水平的[概念完整性][14],那么限制社区是特别重要的。有[自由贡献政策][15]的无领导者项目没有必要收费。

|

||||

|

||||

为了实现更大的愿景,而不是单独为自己的业务支付成本,而是可能使其他人受益,去[众筹][16]吧。有许多成功的故事:

|

||||

|

||||

- [Font Awesome 5][5]

|

||||

- [Ruby enVironment Management (RVM)][6]

|

||||

- [Django REST framework 3][7]

|

||||

|

||||

[众筹有局限性][17]。它[不适合][18][大型项目][19]。但是,开源代码也是实用软件,它不需要雄心勃勃、冒险的破局者。它已经一点点地[渗透到每个行业][20]。

|

||||

|

||||

这些观点代表着一条可持续发展的道路,也可以解决[开源的多样性问题][21],这可能源于其历史上无偿的性质。但最重要的是,我们要记住,[我们一生中只留下那么多的按键次数][22],而且我们总有一天会后悔那些我们浪费的东西。

|

||||

|

||||

- 注 1 :当我说“开源”时,我的意思是代码[许可][8]以某种方式来构建专有的东西。这通常意味着一个宽松许可证(MIT 或 Apache 或 BSD),但并非总是如此。Linux 是当今科技行业的核心,但是是以 GPL 授权的。_

|

||||

|

||||

感谢 Jason Haley、Don McNamara、Bryan Hogan 和 Nadia Eghbal 阅读了这篇文章的草稿。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://wgross.net/essays/give-away-your-code-but-never-your-time

|

||||

|

||||

作者:[William Gross][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://wgross.net/#about-section

|

||||

[1]:http://ryanbigg.com/2015/11/open-source-work

|

||||

[2]:https://jeremydmiller.com/2014/04/03/im-throwing-in-the-towel-in-fubumvc/

|

||||

[3]:https://nolanlawson.com/2017/03/05/what-it-feels-like-to-be-an-open-source-maintainer/

|

||||

[4]:https://hbr.org/2010/11/column-to-win-create-whats-scarce

|

||||

[5]:https://www.kickstarter.com/projects/232193852/font-awesome-5

|

||||

[6]:https://www.bountysource.com/teams/rvm/fundraiser

|

||||

[7]:https://www.kickstarter.com/projects/tomchristie/django-rest-framework-3

|

||||

[8]:https://choosealicense.com/

|

||||

[9]:https://www.wired.com/insights/2013/07/in-a-world-without-open-source/

|

||||

[10]:https://martinfowler.com/bliki/UtilityVsStrategicDichotomy.html

|

||||

[11]:https://tessel.io/blog/67472869771/monetizing-open-source

|

||||

[12]:https://www.joelonsoftware.com/2002/06/12/strategy-letter-v/

|

||||

[13]:https://martinfowler.com/bliki/Yagni.html

|

||||

[14]:http://wiki.c2.com/?ConceptualIntegrity

|

||||

[15]:https://opensource.com/life/16/5/growing-contributor-base-modern-open-source

|

||||

[16]:https://poststatus.com/kickstarter-open-source-project/

|

||||

[17]:http://blog.felixbreuer.net/2013/04/24/crowdfunding-for-open-source.html

|

||||

[18]:https://www.indiegogo.com/projects/geary-a-beautiful-modern-open-source-email-client#/

|

||||

[19]:http://www.itworld.com/article/2708360/open-source-tools/canonical-misses-smartphone-crowdfunding-goal-by--19-million.html

|

||||

[20]:http://www.infoworld.com/article/2914643/open-source-software/rise-and-rise-of-open-source.html

|

||||

[21]:http://readwrite.com/2013/12/11/open-source-diversity/

|

||||

[22]:http://keysleft.com/

|

||||

[23]:http://wgross.net/essays/give-away-your-code-but-never-your-time

|

||||

250

published/20171002 Scaling the GitLab database.md

Normal file

250

published/20171002 Scaling the GitLab database.md

Normal file

@ -0,0 +1,250 @@

|

||||

在 GitLab 我们是如何扩展数据库的

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

> 在扩展 GitLab 数据库和我们应用的解决方案,去帮助解决我们的数据库设置中的问题时,我们深入分析了所面临的挑战。

|

||||

|

||||

很长时间以来 GitLab.com 使用了一个单个的 PostgreSQL 数据库服务器和一个用于灾难恢复的单个复制。在 GitLab.com 最初的几年,它工作的还是很好的,但是,随着时间的推移,我们看到这种设置的很多问题,在这篇文章中,我们将带你了解我们在帮助解决 GitLab.com 和 GitLab 实例所在的主机时都做了些什么。

|

||||

|

||||

例如,数据库长久处于重压之下, CPU 使用率几乎所有时间都处于 70% 左右。并不是因为我们以最好的方式使用了全部的可用资源,而是因为我们使用了太多的(未经优化的)查询去“冲击”服务器。我们意识到需要去优化设置,这样我们就可以平衡负载,使 GitLab.com 能够更灵活地应对可能出现在主数据库服务器上的任何问题。

|

||||

|

||||

在我们使用 PostgreSQL 去跟踪这些问题时,使用了以下的四种技术:

|

||||

|

||||

1. 优化你的应用程序代码,以使查询更加高效(并且理论上使用了很少的资源)。

|

||||

2. 使用一个连接池去减少必需的数据库连接数量(及相关的资源)。

|

||||

3. 跨多个数据库服务器去平衡负载。

|

||||

4. 分片你的数据库

|

||||

|

||||

在过去的两年里,我们一直在积极地优化应用程序代码,但它不是一个完美的解决方案,甚至,如果你改善了性能,当流量也增加时,你还需要去应用其它的几种技术。出于本文的目的,我们将跳过优化应用代码这个特定主题,而专注于其它技术。

|

||||

|

||||

### 连接池

|

||||

|

||||

在 PostgreSQL 中,一个连接是通过启动一个操作系统进程来处理的,这反过来又需要大量的资源,更多的连接(及这些进程)将使用你的数据库上的更多的资源。 PostgreSQL 也在 [max_connections][5] 设置中定义了一个强制的最大连接数量。一旦达到这个限制,PostgreSQL 将拒绝新的连接, 比如,下面的图表示的设置:

|

||||

|

||||

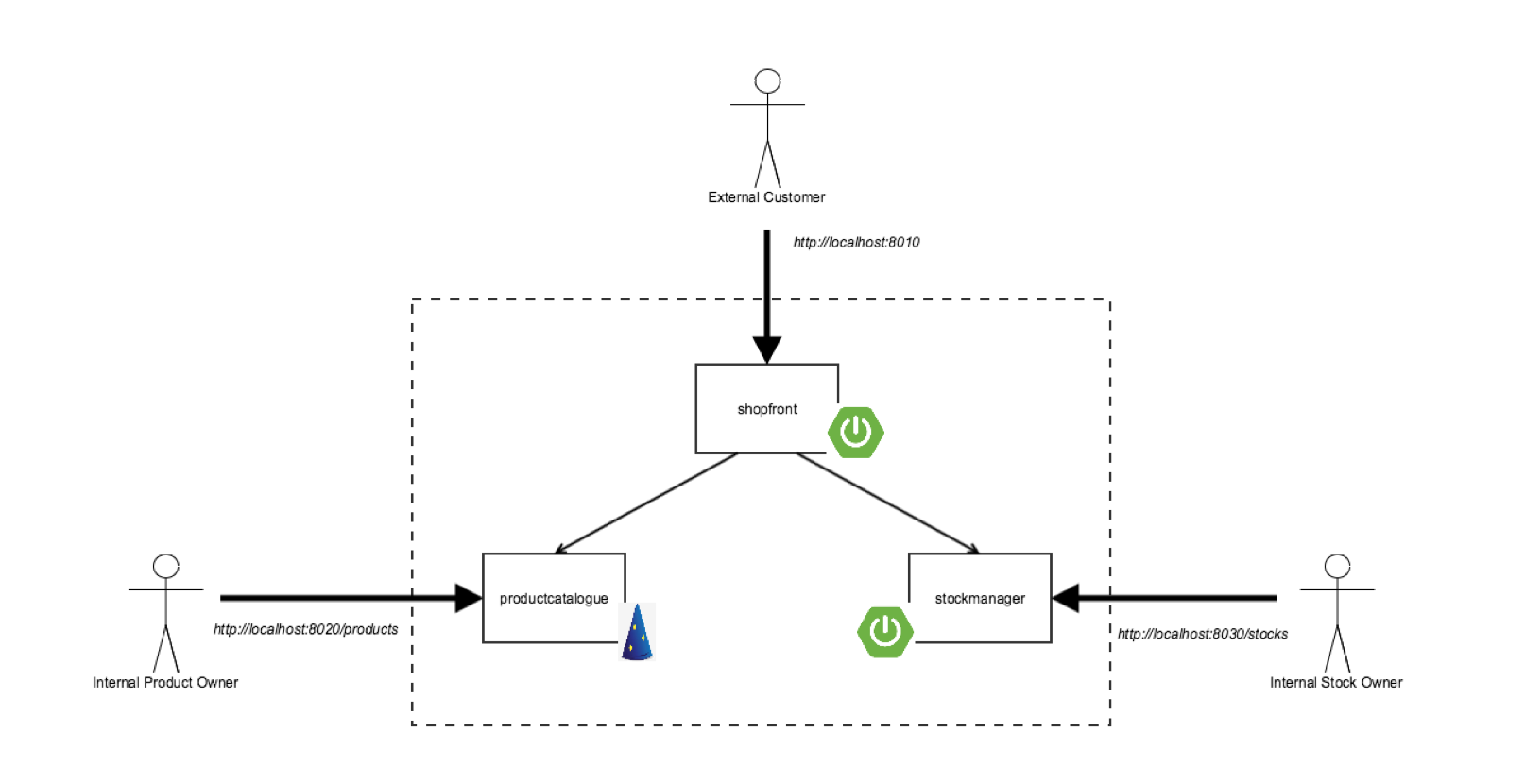

|

||||

|

||||

这里我们的客户端直接连接到 PostgreSQL,这样每个客户端请求一个连接。

|

||||

|

||||

通过连接池,我们可以有多个客户端侧的连接重复使用一个 PostgreSQL 连接。例如,没有连接池时,我们需要 100 个 PostgreSQL 连接去处理 100 个客户端连接;使用连接池后,我们仅需要 10 个,或者依据我们配置的 PostgreSQL 连接。这意味着我们的连接图表将变成下面看到的那样:

|

||||

|

||||

|

||||

|

||||

这里我们展示了一个示例,四个客户端连接到 pgbouncer,但不是使用了四个 PostgreSQL 连接,而是仅需要两个。

|

||||

|

||||

对于 PostgreSQL 有两个最常用的连接池:

|

||||

|

||||

* [pgbouncer][1]

|

||||

* [pgpool-II][2]

|

||||

|

||||

pgpool 有一点特殊,因为它不仅仅是连接池:它有一个内置的查询缓存机制,可以跨多个数据库负载均衡、管理复制等等。

|

||||

|

||||

另一个 pgbouncer 是很简单的:它就是一个连接池。

|

||||

|

||||

### 数据库负载均衡

|

||||

|

||||

数据库级的负载均衡一般是使用 PostgreSQL 的 “<ruby>[热备机][6]<rt>hot-standby</rt></ruby>” 特性来实现的。 热备机是允许你去运行只读 SQL 查询的 PostgreSQL 副本,与不允许运行任何 SQL 查询的普通<ruby>备用机<rt>standby</rt></ruby>相反。要使用负载均衡,你需要设置一个或多个热备服务器,并且以某些方式去平衡这些跨主机的只读查询,同时将其它操作发送到主服务器上。扩展这样的一个设置是很容易的:(如果需要的话)简单地增加多个热备机以增加只读流量。

|

||||

|

||||

这种方法的另一个好处是拥有一个更具弹性的数据库集群。即使主服务器出现问题,仅使用次级服务器也可以继续处理 Web 请求;当然,如果这些请求最终使用主服务器,你可能仍然会遇到错误。

|

||||

|

||||

然而,这种方法很难实现。例如,一旦它们包含写操作,事务显然需要在主服务器上运行。此外,在写操作完成之后,我们希望继续使用主服务器一会儿,因为在使用异步复制的时候,热备机服务器上可能还没有这些更改。

|

||||

|

||||

### 分片

|

||||

|

||||

分片是水平分割你的数据的行为。这意味着数据保存在特定的服务器上并且使用一个分片键检索。例如,你可以按项目分片数据并且使用项目 ID 做为分片键。当你的写负载很高时,分片数据库是很有用的(除了一个多主设置外,均衡写操作没有其它的简单方法),或者当你有_大量_的数据并且你不再使用传统方式保存它也是有用的(比如,你不能把它简单地全部放进一个单个磁盘中)。

|

||||

|

||||

不幸的是,设置分片数据库是一个任务量很大的过程,甚至,在我们使用诸如 [Citus][7] 的软件时也是这样。你不仅需要设置基础设施 (不同的复杂程序取决于是你运行在你自己的数据中心还是托管主机的解决方案),你还得需要调整你的应用程序中很大的一部分去支持分片。

|

||||

|

||||

#### 反对分片的案例

|

||||

|

||||

在 GitLab.com 上一般情况下写负载是非常低的,同时大多数的查询都是只读查询。在极端情况下,尖峰值达到每秒 1500 元组写入,但是,在大多数情况下不超过每秒 200 元组写入。另一方面,我们可以在任何给定的次级服务器上轻松达到每秒 1000 万元组读取。

|

||||

|

||||

存储方面,我们也不使用太多的数据:大约 800 GB。这些数据中的很大一部分是在后台迁移的,这些数据一经迁移后,我们的数据库收缩的相当多。

|

||||

|

||||

接下来的工作量就是调整应用程序,以便于所有查询都可以正确地使用分片键。 我们的一些查询包含了一个项目 ID,它是我们使用的分片键,也有许多查询没有包含这个分片键。分片也会影响提交到 GitLab 的改变内容的过程,每个提交者现在必须确保在他们的查询中包含分片键。

|

||||

|

||||

最后,是完成这些工作所需要的基础设施。服务器已经完成设置,监视也添加了、工程师们必须培训,以便于他们熟悉上面列出的这些新的设置。虽然托管解决方案可能不需要你自己管理服务器,但它不能解决所有问题。工程师们仍然需要去培训(很可能非常昂贵)并需要为此支付账单。在 GitLab 上,我们也非常乐意提供我们用过的这些工具,这样社区就可以使用它们。这意味着如果我们去分片数据库, 我们将在我们的 Omnibus 包中提供它(或至少是其中的一部分)。确保你提供的服务的唯一方法就是你自己去管理它,这意味着我们不能使用主机托管的解决方案。

|

||||

|

||||

最终,我们决定不使用数据库分片,因为它是昂贵的、费时的、复杂的解决方案。

|

||||

|

||||

### GitLab 的连接池

|

||||

|

||||

对于连接池我们有两个主要的诉求:

|

||||

|

||||

1. 它必须工作的很好(很显然这是必需的)。

|

||||

2. 它必须易于在我们的 Omnibus 包中运用,以便于我们的用户也可以从连接池中得到好处。

|

||||

|

||||

用下面两步去评估这两个解决方案(pgpool 和 pgbouncer):

|

||||

|

||||

1. 执行各种技术测试(是否有效,配置是否容易,等等)。

|

||||

2. 找出使用这个解决方案的其它用户的经验,他们遇到了什么问题?怎么去解决的?等等。

|

||||

|

||||

pgpool 是我们考察的第一个解决方案,主要是因为它提供的很多特性看起来很有吸引力。我们其中的一些测试数据可以在 [这里][8] 找到。

|

||||

|

||||

最终,基于多个因素,我们决定不使用 pgpool 。例如, pgpool 不支持<ruby>粘连接<rt>sticky connection</rt></ruby>。 当执行一个写入并(尝试)立即显示结果时,它会出现问题。想像一下,创建一个<ruby>工单<rt>issue</rt></ruby>并立即重定向到这个页面, 没有想到会出现 HTTP 404,这是因为任何用于只读查询的服务器还没有收到数据。针对这种情况的一种解决办法是使用同步复制,但这会给表带来更多的其它问题,而我们希望避免这些问题。

|

||||

|

||||

另一个问题是, pgpool 的负载均衡逻辑与你的应用程序是不相干的,是通过解析 SQL 查询并将它们发送到正确的服务器。因为这发生在你的应用程序之外,你几乎无法控制查询运行在哪里。这实际上对某些人也可能是有好处的, 因为你不需要额外的应用程序逻辑。但是,它也妨碍了你在需要的情况下调整路由逻辑。

|

||||

|

||||

由于配置选项非常多,配置 pgpool 也是很困难的。或许促使我们最终决定不使用它的原因是我们从过去使用过它的那些人中得到的反馈。即使是在大多数的案例都不是很详细的情况下,我们收到的反馈对 pgpool 通常都持有负面的观点。虽然出现的报怨大多数都与早期版本的 pgpool 有关,但仍然让我们怀疑使用它是否是个正确的选择。

|

||||

|

||||

结合上面描述的问题和反馈,最终我们决定不使用 pgpool 而是使用 pgbouncer 。我们用 pgbouncer 执行了一套类似的测试,并且对它的结果是非常满意的。它非常容易配置(而且一开始不需要很多的配置),运用相对容易,仅专注于连接池(而且它真的很好),而且没有明显的负载开销(如果有的话)。也许我唯一的报怨是,pgbouncer 的网站有点难以导航。

|

||||

|

||||

使用 pgbouncer 后,通过使用<ruby>事务池<rt>transaction pooling</rt></ruby>我们可以将活动的 PostgreSQL 连接数从几百个降到仅 10 - 20 个。我们选择事务池是因为 Rails 数据库连接是持久的。这个设置中,使用<ruby>会话池<rt>session pooling</rt></ruby>不能让我们降低 PostgreSQL 连接数,从而受益(如果有的话)。通过使用事务池,我们可以调低 PostgreSQL 的 `max_connections` 的设置值,从 3000 (这个特定值的原因我们也不清楚) 到 300 。这样配置的 pgbouncer ,即使在尖峰时,我们也仅需要 200 个连接,这为我们提供了一些额外连接的空间,如 `psql` 控制台和维护任务。

|

||||

|

||||

对于使用事务池的负面影响方面,你不能使用预处理语句,因为 `PREPARE` 和 `EXECUTE` 命令也许最终在不同的连接中运行,从而产生错误的结果。 幸运的是,当我们禁用了预处理语句时,并没有测量到任何响应时间的增加,但是我们 _确定_ 测量到在我们的数据库服务器上内存使用减少了大约 20 GB。

|

||||

|

||||

为确保我们的 web 请求和后台作业都有可用连接,我们设置了两个独立的池: 一个有 150 个连接的后台进程连接池,和一个有 50 个连接的 web 请求连接池。对于 web 连接需要的请求,我们很少超过 20 个,但是,对于后台进程,由于在 GitLab.com 上后台运行着大量的进程,我们的尖峰值可以很容易达到 100 个连接。

|

||||

|

||||

今天,我们提供 pgbouncer 作为 GitLab EE 高可用包的一部分。对于更多的信息,你可以参考 “[Omnibus GitLab PostgreSQL High Availability][9]”。

|

||||

|

||||

### GitLab 上的数据库负载均衡

|

||||

|

||||

使用 pgpool 和它的负载均衡特性,我们需要一些其它的东西去分发负载到多个热备服务器上。

|

||||

|

||||

对于(但不限于) Rails 应用程序,它有一个叫 [Makara][10] 的库,它实现了负载均衡的逻辑并包含了一个 ActiveRecord 的缺省实现。然而,Makara 也有一些我们认为是有些遗憾的问题。例如,它支持的粘连接是非常有限的:当你使用一个 cookie 和一个固定的 TTL 去执行一个写操作时,连接将粘到主服务器。这意味着,如果复制极大地滞后于 TTL,最终你可能会发现,你的查询运行在一个没有你需要的数据的主机上。

|

||||

|

||||

Makara 也需要你做很多配置,如所有的数据库主机和它们的角色,没有服务发现机制(我们当前的解决方案也不支持它们,即使它是将来计划的)。 Makara 也 [似乎不是线程安全的][11],这是有问题的,因为 Sidekiq (我们使用的后台进程)是多线程的。 最终,我们希望尽可能地去控制负载均衡的逻辑。

|

||||

|

||||

除了 Makara 之外 ,还有一个 [Octopus][12] ,它也是内置的负载均衡机制。但是 Octopus 是面向分片数据库的,而不仅是均衡只读查询的。因此,最终我们不考虑使用 Octopus。

|

||||

|

||||

最终,我们直接在 GitLab EE构建了自己的解决方案。 添加初始实现的<ruby>合并请求<rt>merge request</rt></ruby>可以在 [这里][13]找到,尽管一些更改、提升和修复是以后增加的。

|

||||

|

||||

我们的解决方案本质上是通过用一个处理查询的路由的代理对象替换 `ActiveRecord::Base.connection` 。这可以让我们均衡负载尽可能多的查询,甚至,包括不是直接来自我们的代码中的查询。这个代理对象基于调用方式去决定将查询转发到哪个主机, 消除了解析 SQL 查询的需要。

|

||||

|

||||

#### 粘连接

|

||||

|

||||

粘连接是通过在执行写入时,将当前 PostgreSQL WAL 位置存储到一个指针中实现支持的。在请求即将结束时,指针短期保存在 Redis 中。每个用户提供他自己的 key,因此,一个用户的动作不会导致其他的用户受到影响。下次请求时,我们取得指针,并且与所有的次级服务器进行比较。如果所有的次级服务器都有一个超过我们的指针的 WAL 指针,那么我们知道它们是同步的,我们可以为我们的只读查询安全地使用次级服务器。如果一个或多个次级服务器没有同步,我们将继续使用主服务器直到它们同步。如果 30 秒内没有写入操作,并且所有的次级服务器还没有同步,我们将恢复使用次级服务器,这是为了防止有些人的查询永远运行在主服务器上。

|

||||

|

||||

检查一个次级服务器是否就绪十分简单,它在如下的 `Gitlab::Database::LoadBalancing::Host#caught_up?` 中实现:

|

||||

|

||||

```

|

||||

def caught_up?(location)

|

||||

string = connection.quote(location)

|

||||

|

||||

query = "SELECT NOT pg_is_in_recovery() OR " \

|

||||

"pg_xlog_location_diff(pg_last_xlog_replay_location(), #{string}) >= 0 AS result"

|

||||

|

||||

row = connection.select_all(query).first

|

||||

|

||||

row && row['result'] == 't'

|

||||

ensure

|

||||

release_connection

|

||||

end

|

||||

|

||||

```

|

||||

这里的大部分代码是运行原生查询(raw queries)和获取结果的标准的 Rails 代码,查询的最有趣的部分如下:

|

||||

|

||||

```

|

||||

SELECT NOT pg_is_in_recovery()

|

||||

OR pg_xlog_location_diff(pg_last_xlog_replay_location(), WAL-POINTER) >= 0 AS result"

|

||||

|

||||

```

|

||||

|

||||

这里 `WAL-POINTER` 是 WAL 指针,通过 PostgreSQL 函数 `pg_current_xlog_insert_location()` 返回的,它是在主服务器上执行的。在上面的代码片断中,该指针作为一个参数传递,然后它被引用或转义,并传递给查询。

|

||||

|

||||

使用函数 `pg_last_xlog_replay_location()` 我们可以取得次级服务器的 WAL 指针,然后,我们可以通过函数 `pg_xlog_location_diff()` 与我们的主服务器上的指针进行比较。如果结果大于 0 ,我们就可以知道次级服务器是同步的。

|

||||

|

||||

当一个次级服务器被提升为主服务器,并且我们的 GitLab 进程还不知道这一点的时候,添加检查 `NOT pg_is_in_recovery()` 以确保查询不会失败。在这个案例中,主服务器总是与它自己是同步的,所以它简单返回一个 `true`。

|

||||

|

||||

#### 后台进程

|

||||

|

||||

我们的后台进程代码 _总是_ 使用主服务器,因为在后台执行的大部分工作都是写入。此外,我们不能可靠地使用一个热备机,因为我们无法知道作业是否在主服务器执行,也因为许多作业并没有直接绑定到用户上。

|

||||

|

||||

#### 连接错误

|

||||

|

||||

要处理连接错误,比如负载均衡器不会使用一个视作离线的次级服务器,会增加主机上(包括主服务器)的连接错误,将会导致负载均衡器多次重试。这是确保,在遇到偶发的小问题或数据库失败事件时,不会立即显示一个错误页面。当我们在负载均衡器级别上处理 [热备机冲突][14] 的问题时,我们最终在次级服务器上启用了 `hot_standby_feedback` ,这样就解决了热备机冲突的所有问题,而不会对表膨胀造成任何负面影响。

|

||||

|

||||

我们使用的过程很简单:对于次级服务器,我们在它们之间用无延迟试了几次。对于主服务器,我们通过使用越来越快的回退尝试几次。

|

||||

|

||||

更多信息你可以查看 GitLab EE 上的源代码:

|

||||

|

||||

* [https://gitlab.com/gitlab-org/gitlab-ee/tree/master/lib/gitlab/database/load_balancing.rb][3]

|

||||

* [https://gitlab.com/gitlab-org/gitlab-ee/tree/master/lib/gitlab/database/load_balancing][4]

|

||||

|

||||

数据库负载均衡首次引入是在 GitLab 9.0 中,并且 _仅_ 支持 PostgreSQL。更多信息可以在 [9.0 release post][15] 和 [documentation][16] 中找到。

|

||||

|

||||

### Crunchy Data

|

||||

|

||||

我们与 [Crunchy Data][17] 一起协同工作来部署连接池和负载均衡。不久之前我还是唯一的 [数据库专家][18],它意味着我有很多工作要做。此外,我对 PostgreSQL 的内部细节的和它大量的设置所知有限 (或者至少现在是),这意味着我能做的也有限。因为这些原因,我们雇用了 Crunchy 去帮我们找出问题、研究慢查询、建议模式优化、优化 PostgreSQL 设置等等。

|

||||

|

||||

在合作期间,大部分工作都是在相互信任的基础上完成的,因此,我们共享私人数据,比如日志。在合作结束时,我们从一些资料和公开的内容中删除了敏感数据,主要的资料在 [gitlab-com/infrastructure#1448][19],这又反过来导致产生和解决了许多分立的问题。

|

||||

|

||||

这次合作的好处是巨大的,它帮助我们发现并解决了许多的问题,如果必须我们自己来做的话,我们可能需要花上几个月的时间来识别和解决它。

|

||||

|

||||

幸运的是,最近我们成功地雇佣了我们的 [第二个数据库专家][20] 并且我们希望以后我们的团队能够发展壮大。

|

||||

|

||||

### 整合连接池和数据库负载均衡

|

||||

|

||||

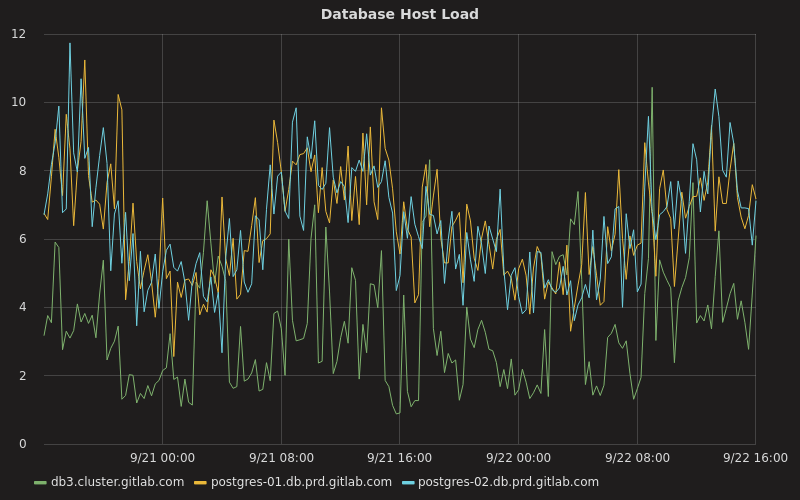

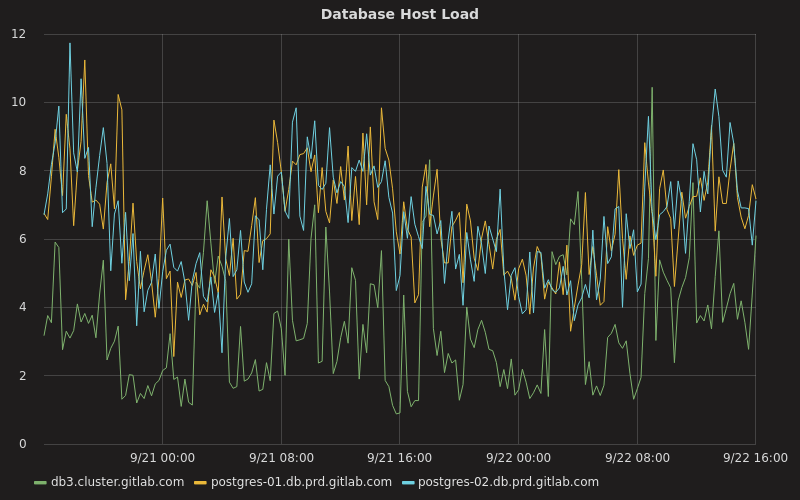

整合连接池和数据库负载均衡可以让我们去大幅减少运行数据库集群所需要的资源和在分发到热备机上的负载。例如,以前我们的主服务器 CPU 使用率一直徘徊在 70%,现在它一般在 10% 到 20% 之间,而我们的两台热备机服务器则大部分时间在 20% 左右:

|

||||

|

||||

|

||||

|

||||

在这里, `db3.cluster.gitlab.com` 是我们的主服务器,而其它的两台是我们的次级服务器。

|

||||

|

||||

其它的负载相关的因素,如平均负载、磁盘使用、内存使用也大为改善。例如,主服务器现在的平均负载几乎不会超过 10,而不像以前它一直徘徊在 20 左右:

|

||||

|

||||

|

||||

|

||||

在业务繁忙期间,我们的次级服务器每秒事务数在 12000 左右(大约为每分钟 740000),而主服务器每秒事务数在 6000 左右(大约每分钟 340000):

|

||||

|

||||

|

||||

|

||||

可惜的是,在部署 pgbouncer 和我们的数据库负载均衡器之前,我们没有关于事务速率的任何数据。

|

||||

|

||||

我们的 PostgreSQL 的最新统计数据的摘要可以在我们的 [public Grafana dashboard][21] 上找到。

|

||||

|

||||

我们的其中一些 pgbouncer 的设置如下:

|

||||

|

||||

| 设置 | 值 |

|

||||

| --- | --- |

|

||||

| `default_pool_size` | 100 |

|

||||

| `reserve_pool_size` | 5 |

|

||||

| `reserve_pool_timeout` | 3 |

|

||||

| `max_client_conn` | 2048 |

|

||||

| `pool_mode` | transaction |

|

||||

| `server_idle_timeout` | 30 |

|

||||

|

||||

除了前面所说的这些外,还有一些工作要作,比如: 部署服务发现([#2042][22]), 持续改善如何检查次级服务器是否可用([#2866][23]),和忽略落后于主服务器太多的次级服务器 ([#2197][24])。

|

||||

|

||||

值得一提的是,到目前为止,我们还没有任何计划将我们的负载均衡解决方案,独立打包成一个你可以在 GitLab 之外使用的库,相反,我们的重点是为 GitLab EE 提供一个可靠的负载均衡解决方案。

|

||||

|

||||

如果你对它感兴趣,并喜欢使用数据库、改善应用程序性能、给 GitLab上增加数据库相关的特性(比如: [服务发现][25]),你一定要去查看一下我们的 [招聘职位][26] 和 [数据库专家手册][27] 去获取更多信息。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://about.gitlab.com/2017/10/02/scaling-the-gitlab-database/

|

||||

|

||||

作者:[Yorick Peterse][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://about.gitlab.com/team/#yorickpeterse

|

||||

[1]:https://pgbouncer.github.io/

|

||||

[2]:http://pgpool.net/mediawiki/index.php/Main_Page

|

||||

[3]:https://gitlab.com/gitlab-org/gitlab-ee/tree/master/lib/gitlab/database/load_balancing.rb

|

||||

[4]:https://gitlab.com/gitlab-org/gitlab-ee/tree/master/lib/gitlab/database/load_balancing

|

||||

[5]:https://www.postgresql.org/docs/9.6/static/runtime-config-connection.html#GUC-MAX-CONNECTIONS

|

||||

[6]:https://www.postgresql.org/docs/9.6/static/hot-standby.html

|

||||

[7]:https://www.citusdata.com/

|

||||

[8]:https://gitlab.com/gitlab-com/infrastructure/issues/259#note_23464570

|

||||

[9]:https://docs.gitlab.com/ee/administration/high_availability/alpha_database.html

|

||||

[10]:https://github.com/taskrabbit/makara

|

||||

[11]:https://github.com/taskrabbit/makara/issues/151

|

||||

[12]:https://github.com/thiagopradi/octopus

|

||||

[13]:https://gitlab.com/gitlab-org/gitlab-ee/merge_requests/1283

|

||||

[14]:https://www.postgresql.org/docs/current/static/hot-standby.html#HOT-STANDBY-CONFLICT

|

||||

[15]:https://about.gitlab.com/2017/03/22/gitlab-9-0-released/

|

||||

[16]:https://docs.gitlab.com/ee/administration/database_load_balancing.html

|

||||

[17]:https://www.crunchydata.com/

|

||||

[18]:https://about.gitlab.com/handbook/infrastructure/database/

|

||||

[19]:https://gitlab.com/gitlab-com/infrastructure/issues/1448

|

||||

[20]:https://gitlab.com/_stark

|

||||

[21]:http://monitor.gitlab.net/dashboard/db/postgres-stats?refresh=5m&orgId=1

|

||||

[22]:https://gitlab.com/gitlab-org/gitlab-ee/issues/2042

|

||||

[23]:https://gitlab.com/gitlab-org/gitlab-ee/issues/2866

|

||||

[24]:https://gitlab.com/gitlab-org/gitlab-ee/issues/2197

|

||||

[25]:https://gitlab.com/gitlab-org/gitlab-ee/issues/2042

|

||||

[26]:https://about.gitlab.com/jobs/specialist/database/

|

||||

[27]:https://about.gitlab.com/handbook/infrastructure/database/

|

||||

@ -1,29 +1,31 @@

|

||||

PostgreSQL 的哈希索引现在很酷

|

||||

======

|

||||

由于我刚刚提交了最后一个改进 PostgreSQL 11 哈希索引的补丁,并且大部分哈希索引的改进都致力于预计下周发布的 PostgreSQL 10,因此现在似乎是对过去 18 个月左右所做的工作进行简要回顾的好时机。在版本 10 之前,哈希索引在并发性能方面表现不佳,缺少预写日志记录,因此在宕机或复制时都是不安全的,并且还有其他二等公民。在 PostgreSQL 10 中,这在很大程度上被修复了。

|

||||

|

||||

虽然我参与了一些设计,但改进哈希索引的首要功劳来自我的同事 Amit Kapila,[他在这个话题下的博客值得一读][1]。哈希索引的问题不仅在于没有人打算写预写日志记录的代码,还在于代码没有以某种方式进行结构化,使其可以添加实际上正常工作的预写日志记录。要拆分一个桶,系统将锁定已有的桶(使用一种十分低效的锁定机制),将半个元组移动到新的桶中,压缩已有的桶,然后松开锁。即使记录了个别更改,在错误的时刻发生崩溃也会使索引处于损坏状态。因此,Aimt 首先做的是重新设计锁定机制。[新的机制][2]在某种程度上允许扫描和拆分并行进行,并且允许稍后完成那些因报错或崩溃而被中断的拆分。一完成一系列漏洞的修复和一些重构工作,Aimt 就打了另一个补丁,[添加了支持哈希索引的预写日志记录][3]。

|

||||

由于我刚刚提交了最后一个改进 PostgreSQL 11 哈希索引的补丁,并且大部分哈希索引的改进都致力于预计下周发布的 PostgreSQL 10(LCTT 译注:已发布),因此现在似乎是对过去 18 个月左右所做的工作进行简要回顾的好时机。在版本 10 之前,哈希索引在并发性能方面表现不佳,缺少预写日志记录,因此在宕机或复制时都是不安全的,并且还有其他二等公民。在 PostgreSQL 10 中,这在很大程度上被修复了。

|

||||

|

||||

与此同时,我们发现哈希索引已经错过了许多已应用于 B 树多年的相当明显的性能改进。因为哈希索引不支持预写日志记录,以及旧的锁定机制十分笨重,所以没有太多的动机去提升其他的性能。而这意味着如果哈希索引会成为一个非常有用的技术,那么需要做的事只是添加预写日志记录而已。PostgreSQL 索引存取方法的抽象层允许索引保留有关其信息的后端专用缓存,避免了重复查询索引本身来获取相关的元数据。B 树和 SQLite 的索引正在使用这种机制,但哈希索引没有,所以我的同事 Mithun Cy 写了一个补丁来[使用此机制缓存哈希索引的元页][4]。同样,B 树索引有一个称为“单页回收”的优化,它巧妙地从索引页移除没用的索引指针,从而防止了大量索引膨胀,否则这将发生。我的同事 Ashutosh Sharma 打了一个补丁将[这个逻辑移植到哈希索引上][5],也大大减少了索引的膨胀。最后,B 树索引[自 2006 年以来][6]就有了一个功能,可以避免重复锁定和解锁同一个索引页——所有元组都在页中一次性删除,然后一次返回一个。Ashutosh Sharma 也[将此逻辑移植到了哈希索引中][7],但是由于缺少时间,这个优化没有在版本 10 中完成。在这个博客提到的所有内容中,这是唯一一个直到版本 11 才会出现的改进。

|

||||

虽然我参与了一些设计,但改进哈希索引的首要功劳来自我的同事 Amit Kapila,[他在这个话题下的博客值得一读][1]。哈希索引的问题不仅在于没有人打算写预写日志记录的代码,还在于代码没有以某种方式进行结构化,使其可以添加实际上正常工作的预写日志记录。要拆分一个桶,系统将锁定已有的桶(使用一种十分低效的锁定机制),将半个元组移动到新的桶中,压缩已有的桶,然后松开锁。即使记录了个别更改,在错误的时刻发生崩溃也会使索引处于损坏状态。因此,Aimt 首先做的是重新设计锁定机制。[新的机制][2]在某种程度上允许扫描和拆分并行进行,并且允许稍后完成那些因报错或崩溃而被中断的拆分。完成了一系列漏洞的修复和一些重构工作,Aimt 就打了另一个补丁,[添加了支持哈希索引的预写日志记录][3]。

|

||||

|

||||

关于哈希索引的工作有一个更有趣的地方是,很难确定行为是否真的正确。锁定行为的更改只可能在繁重的并发状态下失败,而预写日志记录中的错误可能仅在崩溃恢复的情况下显示出来。除此之外,在每种情况下,问题可能是微妙的。没有东西崩溃还不够;它们还必须在所有情况下产生正确的答案,并且这似乎很难去验证。为了协助这项工作,我的同事 Kuntal Ghosh 先后跟进了最初由 Heikki Linnakangas 和 Michael Paquier 开始的工作,并且制作了一个 WAL 一致性检查器,它不仅可以作为开发人员测试的专用补丁,还能真正[致力于 PostgreSQL][8]。在提交之前,我们对哈希索引的预写日志代码使用此工具进行了广泛的测试,并十分成功地查找到了一些漏洞。这个工具并不仅限于哈希索引,相反:它也可用于其他模块的预写日志记录代码,包括堆,当今的 AMS 索引,以及一些以后开发的其他东西。事实上,它已经成功地[在 BRIN 中找到了一个漏洞][9]。

|

||||

与此同时,我们发现哈希索引已经错过了许多已应用于 B 树索引多年的相当明显的性能改进。因为哈希索引不支持预写日志记录,以及旧的锁定机制十分笨重,所以没有太多的动机去提升其他的性能。而这意味着如果哈希索引会成为一个非常有用的技术,那么需要做的事只是添加预写日志记录而已。PostgreSQL 索引存取方法的抽象层允许索引保留有关其信息的后端专用缓存,避免了重复查询索引本身来获取相关的元数据。B 树和 SQLite 的索引正在使用这种机制,但哈希索引没有,所以我的同事 Mithun Cy 写了一个补丁来[使用此机制缓存哈希索引的元页][4]。同样,B 树索引有一个称为“单页回收”的优化,它巧妙地从索引页移除没用的索引指针,从而防止了大量索引膨胀。我的同事 Ashutosh Sharma 打了一个补丁将[这个逻辑移植到哈希索引上][5],也大大减少了索引的膨胀。最后,B 树索引[自 2006 年以来][6]就有了一个功能,可以避免重复锁定和解锁同一个索引页——所有元组都在页中一次性删除,然后一次返回一个。Ashutosh Sharma 也[将此逻辑移植到了哈希索引中][7],但是由于缺少时间,这个优化没有在版本 10 中完成。在这个博客提到的所有内容中,这是唯一一个直到版本 11 才会出现的改进。

|

||||

|

||||

关于哈希索引的工作有一个更有趣的地方是,很难确定行为是否真的正确。锁定行为的更改只可能在繁重的并发状态下失败,而预写日志记录中的错误可能仅在崩溃恢复的情况下显示出来。除此之外,在每种情况下,问题可能是微妙的。没有东西崩溃还不够;它们还必须在所有情况下产生正确的答案,并且这似乎很难去验证。为了协助这项工作,我的同事 Kuntal Ghosh 先后跟进了最初由 Heikki Linnakangas 和 Michael Paquier 开始的工作,并且制作了一个 WAL 一致性检查器,它不仅可以作为开发人员测试的专用补丁,还能真正[提交到 PostgreSQL][8]。在提交之前,我们对哈希索引的预写日志代码使用此工具进行了广泛的测试,并十分成功地查找到了一些漏洞。这个工具并不仅限于哈希索引,相反:它也可用于其他模块的预写日志记录代码,包括堆,当今的所有 AM 索引,以及一些以后开发的其他东西。事实上,它已经成功地[在 BRIN 中找到了一个漏洞][9]。

|

||||

|

||||

虽然 WAL 一致性检查是主要的开发者工具——尽管它也适合用户使用,如果怀疑有错误——也可以升级到专为数据库管理人员提供的几种工具。Jesper Pedersen 写了一个补丁来[升级 pageinspect contrib 模块来支持哈希索引][10],Ashutosh Sharma 做了进一步的工作,Peter Eisentraut 提供了测试用例(这是一个很好的办法,因为这些测试用例迅速失败,引发了几轮漏洞修复)。多亏了 Ashutosh Sharma 的工作,pgstattuple contrib 模块[也支持哈希索引了][11]。

|

||||

|

||||

一路走来,也有一些其他性能的改进。我一开始没有意识到的是,当一个哈希索引开始新一轮的桶拆分时,磁盘上的大小会突然加倍,这对于 1MB 的索引来说并不是一个问题,但是如果你碰巧有一个 64GB 的索引,那就有些不幸了。Mithun Cy 通过编写一个补丁,把加倍[分为四个阶段][12]在某个程度上解决了这一问题,这意味着我们将从 64GB 到 80GB 到 96GB 到 112GB 到 128GB,而不是一次性从 64GB 到 128GB。这个问题可以进一步改进,但需要对磁盘格式进行更深入的重构,并且需要仔细考虑对查找性能的影响。

|

||||

一路走来,也有一些其他性能的改进。我一开始没有意识到的是,当一个哈希索引开始新一轮的桶拆分时,磁盘上的大小会突然加倍,这对于 1MB 的索引来说并不是一个问题,但是如果你碰巧有一个 64GB 的索引,那就有些不幸了。Mithun Cy 通过编写一个补丁,把加倍过程[分为四个阶段][12]在某个程度上解决了这一问题,这意味着我们将从 64GB 到 80GB 到 96GB 到 112GB 到 128GB,而不是一次性从 64GB 到 128GB。这个问题可以进一步改进,但需要对磁盘格式进行更深入的重构,并且需要仔细考虑对查找性能的影响。

|

||||

|

||||

七月时,一份[来自于“AP”测试人员][13]的报告使我们感到需要做进一步的调整。AP 发现,若试图将 20 亿行数据插入到新创建的哈希索引中会导致错误。为了解决这个问题,Amit 修改了拆分桶的代码,[使得在每次拆分之后清理旧的桶][14],大大减少了溢出页的累积。为了得以确保,Aimt 和我也[增加了四倍的位图页的最大数量][15],用于跟踪溢出页分配。

|

||||

|

||||