mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-15 01:50:08 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

merge from lctt.

This commit is contained in:

commit

79b9756115

179

published/19951001 Writing man Pages Using groff.md

Normal file

179

published/19951001 Writing man Pages Using groff.md

Normal file

@ -0,0 +1,179 @@

|

||||

使用 groff 编写 man 手册页

|

||||

===================

|

||||

|

||||

`groff` 是大多数 Unix 系统上所提供的流行的文本格式化工具 nroff/troff 的 GNU 版本。它一般用于编写手册页,即命令、编程接口等的在线文档。在本文中,我们将给你展示如何使用 `groff` 编写你自己的 man 手册页。

|

||||

|

||||

在 Unix 系统上最初有两个文本处理系统:troff 和 nroff,它们是由贝尔实验室为初始的 Unix 所开发的(事实上,开发 Unix 系统的部分原因就是为了支持这样的一个文本处理系统)。这个文本处理器的第一个版本被称作 roff(意为 “runoff”——径流);稍后出现了 troff,在那时用于为特定的<ruby>排字机<rt>Typesetter</rt></ruby>生成输出。nroff 是更晚一些的版本,它成为了各种 Unix 系统的标准文本处理器。groff 是 nroff 和 troff 的 GNU 实现,用在 Linux 系统上。它包括了几个扩展功能和一些打印设备的驱动程序。

|

||||

|

||||

`groff` 能够生成文档、文章和书籍,很多时候它就像是其它的文本格式化系统(如 TeX)的血管一样。然而,`groff`(以及原来的 nroff)有一个固有的功能是 TeX 及其变体所缺乏的:生成普通 ASCII 输出。其它的系统在生成打印的文档方面做得很好,而 `groff` 却能够生成可以在线浏览的普通 ASCII(甚至可以在最简单的打印机上直接以普通文本打印)。如果要生成在线浏览的文档以及打印的表单,`groff` 也许是你所需要的(虽然也有替代品,如 Texinfo、Lametex 等等)。

|

||||

|

||||

`groff` 还有一个好处是它比 TeX 小很多;它所需要的支持文件和可执行程序甚至比最小化的 TeX 版本都少。

|

||||

|

||||

`groff` 一个特定的用途是用于格式化 Unix 的 man 手册页。如果你是一个 Unix 程序员,你肯定需要编写和生成各种 man 手册页。在本文中,我们将通过编写一个简短的 man 手册页来介绍 `groff` 的使用。

|

||||

|

||||

像 TeX 一样,`groff` 使用特定的文本格式化语言来描述如何处理文本。这种语言比 TeX 之类的系统更加神秘一些,但是更加简洁。此外,`groff` 在基本的格式化器之上提供了几个宏软件包;这些宏软件包是为一些特定类型的文档所定制的。举个例子, mgs 宏对于写作文章或论文很适合,而 man 宏可用于 man 手册页。

|

||||

|

||||

### 编写 man 手册页

|

||||

|

||||

用 `groff` 编写 man 手册页十分简单。要让你的 man 手册页看起来和其它的一样,你需要从源头上遵循几个惯例,如下所示。在这个例子中,我们将为一个虚构的命令 `coffee` 编写 man 手册页,它用于以各种方式控制你的联网咖啡机。

|

||||

|

||||

使用任意文本编辑器,输入如下代码,并保存为 `coffee.man`。不要输入每行的行号,它们仅用于本文中的说明。

|

||||

|

||||

```

|

||||

.TH COFFEE 1 "23 March 94"

|

||||

.SH NAME

|

||||

coffee \- Control remote coffee machine

|

||||

.SH SYNOPSIS

|

||||

\fBcoffee\fP [ -h | -b ] [ -t \fItype\fP ]

|

||||

\fIamount\fP

|

||||

.SH DESCRIPTION

|

||||

\fBcoffee\fP queues a request to the remote

|

||||

coffee machine at the device \fB/dev/cf0\fR.

|

||||

The required \fIamount\fP argument specifies

|

||||

the number of cups, generally between 0 and

|

||||

12 on ISO standard coffee machines.

|

||||

.SS Options

|

||||

.TP

|

||||

\fB-h\fP

|

||||

Brew hot coffee. Cold is the default.

|

||||

.TP

|

||||

\fB-b\fP

|

||||

Burn coffee. Especially useful when executing

|

||||

\fBcoffee\fP on behalf of your boss.

|

||||

.TP

|

||||

\fB-t \fItype\fR

|

||||

Specify the type of coffee to brew, where

|

||||

\fItype\fP is one of \fBcolumbian\fP,

|

||||

\fBregular\fP, or \fBdecaf\fP.

|

||||

.SH FILES

|

||||

.TP

|

||||

\fC/dev/cf0\fR

|

||||

The remote coffee machine device

|

||||

.SH "SEE ALSO"

|

||||

milk(5), sugar(5)

|

||||

.SH BUGS

|

||||

May require human intervention if coffee

|

||||

supply is exhausted.

|

||||

```

|

||||

|

||||

*清单 1:示例 man 手册页源文件*

|

||||

|

||||

不要让这些晦涩的代码吓坏了你。字符串序列 `\fB`、`\fI` 和 `\fR` 分别用来改变字体为粗体、斜体和正体(罗马字体)。`\fP` 设置字体为前一个选择的字体。

|

||||

|

||||

其它的 `groff` <ruby>请求<rt>request</rt></ruby>以点(`.`)开头出现在行首。第 1 行中,我们看到的 `.TH` 请求用于设置该 man 手册页的标题为 `COFFEE`、man 的部分为 `1`、以及该 man 手册页的最新版本的日期。(说明,man 手册的第 1 部分用于用户命令、第 2 部分用于系统调用等等。使用 `man man` 命令了解各个部分)。

|

||||

|

||||

在第 2 行,`.SH` 请求用于标记一个<ruby>节<rt>section</rt></ruby>的开始,并给该节名称为 `NAME`。注意,大部分的 Unix man 手册页依次使用 `NAME`、 `SYNOPSIS`、`DESCRIPTION`、`FILES`、`SEE ALSO`、`NOTES`、`AUTHOR` 和 `BUGS` 等节,个别情况下也需要一些额外的可选节。这只是编写 man 手册页的惯例,并不强制所有软件都如此。

|

||||

|

||||

第 3 行给出命令的名称,并在一个横线(`-`)后给出简短描述。在 `NAME` 节使用这个格式以便你的 man 手册页可以加到 whatis 数据库中——它可以用于 `man -k` 或 `apropos` 命令。

|

||||

|

||||

第 4-6 行我们给出了 `coffee` 命令格式的大纲。注意,斜体 `\fI...\fP` 用于表示命令行的参数,可选参数用方括号扩起来。

|

||||

|

||||

第 7-12 行给出了该命令的摘要介绍。粗体通常用于表示程序或文件的名称。

|

||||

|

||||

在 13 行,使用 `.SS` 开始了一个名为 `Options` 的子节。

|

||||

|

||||

接着第 14-25 行是选项列表,会使用参数列表样式表示。参数列表中的每一项以 `.TP` 请求来标记;`.TP` 后的行是参数,再之后是该项的文本。例如,第 14-16 行:

|

||||

|

||||

```

|

||||

.TP

|

||||

\fB-h\P

|

||||

Brew hot coffee. Cold is the default.

|

||||

```

|

||||

|

||||

将会显示如下:

|

||||

|

||||

```

|

||||

-h Brew hot coffee. Cold is the default.

|

||||

```

|

||||

|

||||

第 26-29 行创建该 man 手册页的 `FILES` 节,它用于描述该命令可能使用的文件。可以使用 `.TP` 请求来表示文件列表。

|

||||

|

||||

第 30-31 行,给出了 `SEE ALSO` 节,它提供了其它可以参考的 man 手册页。注意,第 30 行的 `.SH` 请求中 `"SEE ALSO"` 使用括号扩起来,这是因为 `.SH` 使用第一个空格来分隔该节的标题。任何超过一个单词的标题都需要使用引号扩起来成为一个单一参数。

|

||||

|

||||

最后,第 32-34 行,是 `BUGS` 节。

|

||||

|

||||

### 格式化和安装 man 手册页

|

||||

|

||||

为了在你的屏幕上查看这个手册页格式化的样式,你可以使用如下命令:

|

||||

|

||||

|

||||

```

|

||||

$ groff -Tascii -man coffee.man | more

|

||||

```

|

||||

|

||||

`-Tascii` 选项告诉 `groff` 生成普通 ASCII 输出;`-man` 告诉 `groff` 使用 man 手册页宏集合。如果一切正常,这个 man 手册页显示应该如下。

|

||||

|

||||

```

|

||||

COFFEE(1) COFFEE(1)

|

||||

NAME

|

||||

coffee - Control remote coffee machine

|

||||

SYNOPSIS

|

||||

coffee [ -h | -b ] [ -t type ] amount

|

||||

DESCRIPTION

|

||||

coffee queues a request to the remote coffee machine at

|

||||

the device /dev/cf0\. The required amount argument speci-

|

||||

fies the number of cups, generally between 0 and 12 on ISO

|

||||

standard coffee machines.

|

||||

Options

|

||||

-h Brew hot coffee. Cold is the default.

|

||||

-b Burn coffee. Especially useful when executing cof-

|

||||

fee on behalf of your boss.

|

||||

-t type

|

||||

Specify the type of coffee to brew, where type is

|

||||

one of columbian, regular, or decaf.

|

||||

FILES

|

||||

/dev/cf0

|

||||

The remote coffee machine device

|

||||

SEE ALSO

|

||||

milk(5), sugar(5)

|

||||

BUGS

|

||||

May require human intervention if coffee supply is

|

||||

exhausted.

|

||||

```

|

||||

|

||||

*格式化的 man 手册页*

|

||||

|

||||

如之前提到过的,`groff` 能够生成其它类型的输出。使用 `-Tps` 选项替代 `-Tascii` 将会生成 PostScript 输出,你可以将其保存为文件,用 GhostView 查看,或用一个 PostScript 打印机打印出来。`-Tdvi` 会生成设备无关的 .dvi 输出,类似于 TeX 的输出。

|

||||

|

||||

如果你希望让别人在你的系统上也可以查看这个 man 手册页,你需要安装这个 groff 源文件到其它用户的 `%MANPATH` 目录里面。标准的 man 手册页放在 `/usr/man`。第一部分的 man 手册页应该放在 `/usr/man/man1` 下,因此,使用命令:

|

||||

|

||||

```

|

||||

$ cp coffee.man /usr/man/man1/coffee.1

|

||||

```

|

||||

|

||||

这将安装该 man 手册页到 `/usr/man` 中供所有人使用(注意使用 `.1` 扩展名而不是 `.man`)。当接下来执行 `man coffee` 命令时,该 man 手册页会被自动重新格式化,并且可查看的文本会被保存到 `/usr/man/cat1/coffee.1.Z` 中。

|

||||

|

||||

如果你不能直接复制 man 手册页的源文件到 `/usr/man`(比如说你不是系统管理员),你可创建你自己的 man 手册页目录树,并将其加入到你的 `%MANPATH`。`%MANPATH` 环境变量的格式同 `%PATH` 一样,举个例子,要添加目录 `/home/mdw/man` 到 `%MANPATH` ,只需要:

|

||||

|

||||

```

|

||||

$ export MANPATH=/home/mdw/man:$MANPATH

|

||||

```

|

||||

|

||||

`groff` 和 man 手册页宏还有许多其它的选项和格式化命令。找到它们的最好办法是查看 `/usr/lib/groff` 中的文件; `tmac` 目录包含了宏文件,自身通常会包含其所提供的命令的文档。要让 `groff` 使用特定的宏集合,只需要使用 `-m macro` (或 `-macro`) 选项。例如,要使用 mgs 宏,使用命令:

|

||||

|

||||

```

|

||||

groff -Tascii -mgs files...

|

||||

```

|

||||

|

||||

`groff` 的 man 手册页对这个选项描述了更多细节。

|

||||

|

||||

不幸的是,随同 `groff` 提供的宏集合没有完善的文档。第 7 部分的 man 手册页提供了一些,例如,`man 7 groff_mm` 会给你 mm 宏集合的信息。然而,该文档通常只覆盖了在 `groff` 实现中不同和新功能,而假设你已经了解过原来的 nroff/troff 宏集合(称作 DWB:the Documentor's Work Bench)。最佳的信息来源或许是一本覆盖了那些经典宏集合细节的书。要了解更多的编写 man 手册页的信息,你可以看看 man 手册页源文件(`/usr/man` 中),并通过它们来比较源文件的输出。

|

||||

|

||||

这篇文章是《Running Linux》 中的一章,由 Matt Welsh 和 Lar Kaufman 著,奥莱理出版(ISBN 1-56592-100-3)。在本书中,还包括了 Linux 下使用的各种文本格式化系统的教程。这期的《Linux Journal》中的内容及《Running Linux》应该可以给你提供在 Linux 上使用各种文本工具的良好开端。

|

||||

|

||||

### 祝好,撰写快乐!

|

||||

|

||||

Matt Welsh ([mdw@cs.cornell.edu][1])是康奈尔大学的一名学生和系统程序员,在机器人和视觉实验室从事于时时机器视觉研究。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxjournal.com/article/1158

|

||||

|

||||

作者:[Matt Welsh][a]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxjournal.com/user/800006

|

||||

[1]:mailto:mdw@cs.cornell.edu

|

||||

@ -1,41 +1,27 @@

|

||||

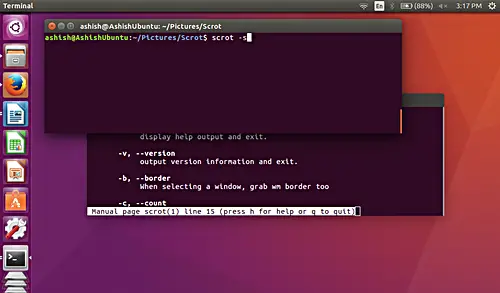

如何在 Linux 系统里用 Scrot 截屏

|

||||

============================================================

|

||||

|

||||

### 文章主要内容

|

||||

|

||||

1. [关于 Scrot][12]

|

||||

2. [安装 Scrot][13]

|

||||

3. [Scrot 的使用和特点][14]

|

||||

1. [获取程序版本][1]

|

||||

2. [抓取当前窗口][2]

|

||||

3. [抓取选定窗口][3]

|

||||

4. [在截屏时包含窗口边框][4]

|

||||

5. [延时截屏][5]

|

||||

6. [截屏前倒数][6]

|

||||

7. [图片质量][7]

|

||||

8. [生成缩略图][8]

|

||||

9. [拼接多显示器截屏][9]

|

||||

10. [在保存截图后执行操作][10]

|

||||

11. [特殊字符串][11]

|

||||

4. [结论][15]

|

||||

|

||||

最近,我们介绍过 [gnome-screenshot][17] 工具,这是一个很优秀的屏幕抓取工具。但如果你想找一个在命令行运行的更好用的截屏工具,你一定要试试 Scrot。这个工具有一些 gnome-screenshot 没有的独特功能。在这片文章里,我们会通过简单易懂的例子来详细介绍 Scrot。

|

||||

最近,我们介绍过 [gnome-screenshot][17] 工具,这是一个很优秀的屏幕抓取工具。但如果你想找一个在命令行运行的更好用的截屏工具,你一定要试试 Scrot。这个工具有一些 gnome-screenshot 没有的独特功能。在这篇文章里,我们会通过简单易懂的例子来详细介绍 Scrot。

|

||||

|

||||

请注意一下,这篇文章里的所有例子都在 Ubuntu 16.04 LTS 上测试过,我们用的 scrot 版本是 0.8。

|

||||

|

||||

### 关于 Scrot

|

||||

|

||||

[Scrot][18] (**SCR**eensh**OT**) 是一个屏幕抓取工具,使用 imlib2 库来获取和保存图片。由 Tom Gilbert 用 C 语言开发完成,通过 BSD 协议授权。

|

||||

[Scrot][18] (**SCR**eensh**OT**) 是一个屏幕抓取工具,使用 imlib2 库来获取和保存图片。由 Tom Gilbert 用 C 语言开发完成,通过 BSD 协议授权。

|

||||

|

||||

### 安装 Scrot

|

||||

|

||||

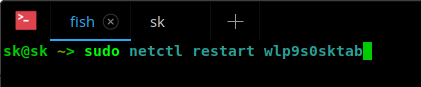

scort 工具可能在你的 Ubuntu 系统里预装了,不过如果没有的话,你可以用下面的命令安装:

|

||||

|

||||

```

|

||||

sudo apt-get install scrot

|

||||

```

|

||||

|

||||

安装完成后,你可以通过下面的命令来使用:

|

||||

|

||||

```

|

||||

scrot [options] [filename]

|

||||

```

|

||||

|

||||

**注意**:方括号里的参数是可选的。

|

||||

|

||||

@ -51,13 +37,17 @@ scrot [options] [filename]

|

||||

|

||||

默认情况下,抓取的截图会用带时间戳的文件名保存到当前目录下,不过你也可以在运行命令时指定截图文件名。比如:

|

||||

|

||||

```

|

||||

scrot [image-name].png

|

||||

```

|

||||

|

||||

### 获取程序版本

|

||||

|

||||

你想的话,可以用 -v 选项来查看 scrot 的版本。

|

||||

你想的话,可以用 `-v` 选项来查看 scrot 的版本。

|

||||

|

||||

```

|

||||

scrot -v

|

||||

```

|

||||

|

||||

这是例子:

|

||||

|

||||

@ -67,10 +57,11 @@ scrot -v

|

||||

|

||||

### 抓取当前窗口

|

||||

|

||||

这个工具可以限制抓取当前的焦点窗口。这个功能可以通过 -u 选项打开。

|

||||

这个工具可以限制抓取当前的焦点窗口。这个功能可以通过 `-u` 选项打开。

|

||||

|

||||

```

|

||||

scrot -u

|

||||

|

||||

```

|

||||

例如,这是我在命令行执行上边命令时的桌面:

|

||||

|

||||

[

|

||||

@ -85,9 +76,11 @@ scrot -u

|

||||

|

||||

### 抓取选定窗口

|

||||

|

||||

这个工具还可以让你抓取任意用鼠标点击的窗口。这个功能可以用 -s 选项打开。

|

||||

这个工具还可以让你抓取任意用鼠标点击的窗口。这个功能可以用 `-s` 选项打开。

|

||||

|

||||

```

|

||||

scrot -s

|

||||

```

|

||||

|

||||

例如,在下面的截图里你可以看到,我有两个互相重叠的终端窗口。我在上层的窗口里执行上面的命令。

|

||||

|

||||

@ -95,7 +88,7 @@ scrot -s

|

||||

|

||||

][23]

|

||||

|

||||

现在假如我想抓取下层的终端窗口。这样我只要在执行命令后点击窗口就可以了 - 在你用鼠标点击之前,命令的执行不会结束。

|

||||

现在假如我想抓取下层的终端窗口。这样我只要在执行命令后点击窗口就可以了 —— 在你用鼠标点击之前,命令的执行不会结束。

|

||||

|

||||

这是我点击了下层终端窗口后的截图:

|

||||

|

||||

@ -107,9 +100,11 @@ scrot -s

|

||||

|

||||

### 在截屏时包含窗口边框

|

||||

|

||||

我们之前介绍的 -u 选项在截屏时不会包含窗口边框。不过,需要的话你也可以在截屏时包含窗口边框。这个功能可以通过 -b 选项打开(当然要和 -u 选项一起)。

|

||||

我们之前介绍的 `-u` 选项在截屏时不会包含窗口边框。不过,需要的话你也可以在截屏时包含窗口边框。这个功能可以通过 `-b` 选项打开(当然要和 `-u` 选项一起)。

|

||||

|

||||

```

|

||||

scrot -ub

|

||||

```

|

||||

|

||||

下面是示例截图:

|

||||

|

||||

@ -121,11 +116,13 @@ scrot -ub

|

||||

|

||||

### 延时截屏

|

||||

|

||||

你可以在开始截屏时增加一点延时。需要在 --delay 或 -d 选项后设定一个时间值参数。

|

||||

你可以在开始截屏时增加一点延时。需要在 `--delay` 或 `-d` 选项后设定一个时间值参数。

|

||||

|

||||

```

|

||||

scrot --delay [NUM]

|

||||

|

||||

scrot --delay 5

|

||||

```

|

||||

|

||||

例如:

|

||||

|

||||

@ -137,11 +134,13 @@ scrot --delay 5

|

||||

|

||||

### 截屏前倒数

|

||||

|

||||

这个工具也可以在你使用延时功能后显示一个倒计时。这个功能可以通过 -c 选项打开。

|

||||

这个工具也可以在你使用延时功能后显示一个倒计时。这个功能可以通过 `-c` 选项打开。

|

||||

|

||||

```

|

||||

scrot –delay [NUM] -c

|

||||

|

||||

scrot -d 5 -c

|

||||

```

|

||||

|

||||

下面是示例截图:

|

||||

|

||||

@ -153,11 +152,13 @@ scrot -d 5 -c

|

||||

|

||||

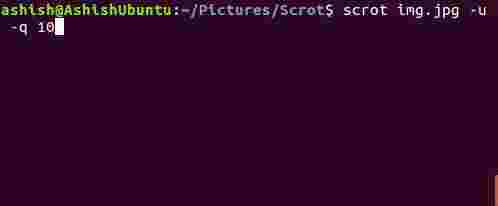

你可以使用这个工具来调整截图的图片质量,范围是 1-100 之间。较大的值意味着更大的文件大小以及更低的压缩率。默认值是 75,不过最终效果根据选择的文件类型也会有一些差异。

|

||||

|

||||

这个功能可以通过 --quality 或 -q 选项打开,但是你必须提供一个 1-100 之间的数值作为参数。

|

||||

这个功能可以通过 `--quality` 或 `-q` 选项打开,但是你必须提供一个 1 - 100 之间的数值作为参数。

|

||||

|

||||

```

|

||||

scrot –quality [NUM]

|

||||

|

||||

scrot –quality 10

|

||||

```

|

||||

|

||||

下面是示例截图:

|

||||

|

||||

@ -165,17 +166,19 @@ scrot –quality 10

|

||||

|

||||

][28]

|

||||

|

||||

你可以看到,-q 选项的参数更靠近 1 让图片质量下降了很多。

|

||||

你可以看到,`-q` 选项的参数更靠近 1 让图片质量下降了很多。

|

||||

|

||||

### 生成缩略图

|

||||

|

||||

scort 工具还可以生成截屏的缩略图。这个功能可以通过 --thumb 选项打开。这个选项也需要一个 NUM 数值作为参数,基本上是指定原图大小的百分比。

|

||||

scort 工具还可以生成截屏的缩略图。这个功能可以通过 `--thumb` 选项打开。这个选项也需要一个 NUM 数值作为参数,基本上是指定原图大小的百分比。

|

||||

|

||||

```

|

||||

scrot --thumb NUM

|

||||

|

||||

scrot --thumb 50

|

||||

```

|

||||

|

||||

**注意**:加上 --thumb 选项也会同时保存原始截图文件。

|

||||

**注意**:加上 `--thumb` 选项也会同时保存原始截图文件。

|

||||

|

||||

例如,下面是我测试的原始截图:

|

||||

|

||||

@ -191,9 +194,11 @@ scrot --thumb 50

|

||||

|

||||

### 拼接多显示器截屏

|

||||

|

||||

如果你的电脑接了多个显示设备,你可以用 scort 抓取并拼接这些显示设备的截图。这个功能可以通过 -m 选项打开。

|

||||

如果你的电脑接了多个显示设备,你可以用 scort 抓取并拼接这些显示设备的截图。这个功能可以通过 `-m` 选项打开。

|

||||

|

||||

```

|

||||

scrot -m

|

||||

```

|

||||

|

||||

下面是示例截图:

|

||||

|

||||

@ -203,9 +208,11 @@ scrot -m

|

||||

|

||||

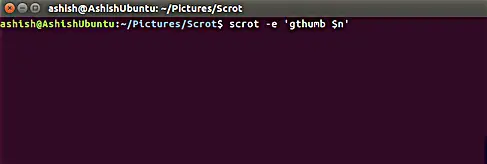

### 在保存截图后执行操作

|

||||

|

||||

使用这个工具,你可以在保存截图后执行各种操作 - 例如,用像 gThumb 这样的图片编辑器打开截图。这个功能可以通过 -e 选项打开。下面是例子:

|

||||

使用这个工具,你可以在保存截图后执行各种操作 —— 例如,用像 gThumb 这样的图片编辑器打开截图。这个功能可以通过 `-e` 选项打开。下面是例子:

|

||||

|

||||

scrot abc.png -e ‘gthumb abc.png’

|

||||

```

|

||||

scrot abc.png -e 'gthumb abc.png'

|

||||

```

|

||||

|

||||

这个命令里的 gthumb 是一个图片编辑器,上面的命令在执行后会自动打开。

|

||||

|

||||

@ -223,29 +230,33 @@ scrot abc.png -e ‘gthumb abc.png’

|

||||

|

||||

你可以看到 scrot 抓取了屏幕截图,然后再启动了 gThumb 图片编辑器打开刚才保存的截图图片。

|

||||

|

||||

如果你截图时没有指定文件名,截图将会用带有时间戳的文件名保存到当前目录 - 这是 scrot 的默认设定,我们前面已经说过。

|

||||

如果你截图时没有指定文件名,截图将会用带有时间戳的文件名保存到当前目录 —— 这是 scrot 的默认设定,我们前面已经说过。

|

||||

|

||||

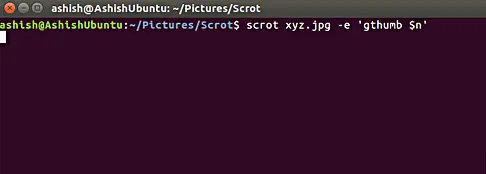

下面是一个使用默认名字并且加上 -e 选项来截图的例子:

|

||||

下面是一个使用默认名字并且加上 `-e` 选项来截图的例子:

|

||||

|

||||

scrot -e ‘gthumb $n’

|

||||

```

|

||||

scrot -e 'gthumb $n'

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][34]

|

||||

|

||||

有个地方要注意的是 $n 是一个特殊字符串,用来获取当前截图的文件名。关于特殊字符串的更多细节,请继续看下个小节。

|

||||

有个地方要注意的是 `$n` 是一个特殊字符串,用来获取当前截图的文件名。关于特殊字符串的更多细节,请继续看下个小节。

|

||||

|

||||

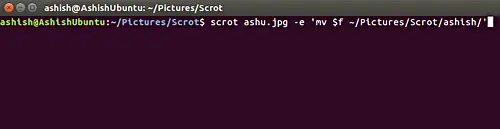

### 特殊字符串

|

||||

|

||||

scrot 的 -e(或 --exec)选项和文件名参数可以使用格式说明符。有两种类型格式。第一种是以 '%' 加字母组成,用来表示日期和时间,第二种以 '$' 开头,scrot 内部使用。

|

||||

scrot 的 `-e`(或 `--exec`)选项和文件名参数可以使用格式说明符。有两种类型格式。第一种是以 `%` 加字母组成,用来表示日期和时间,第二种以 `$` 开头,scrot 内部使用。

|

||||

|

||||

下面介绍几个 --exec 和文件名参数接受的说明符。

|

||||

下面介绍几个 `--exec` 和文件名参数接受的说明符。

|

||||

|

||||

**$f** – 让你可以使用截图的全路径(包括文件名)。

|

||||

`$f` – 让你可以使用截图的全路径(包括文件名)。

|

||||

|

||||

例如

|

||||

例如:

|

||||

|

||||

```

|

||||

scrot ashu.jpg -e ‘mv $f ~/Pictures/Scrot/ashish/’

|

||||

```

|

||||

|

||||

下面是示例截图:

|

||||

|

||||

@ -253,17 +264,19 @@ scrot ashu.jpg -e ‘mv $f ~/Pictures/Scrot/ashish/’

|

||||

|

||||

][35]

|

||||

|

||||

如果你没有指定文件名,scrot 默认会用日期格式的文件名保存截图。这个是 scrot 的默认文件名格式:%yy-%mm-%dd-%hhmmss_$wx$h_scrot.png。

|

||||

如果你没有指定文件名,scrot 默认会用日期格式的文件名保存截图。这个是 scrot 的默认文件名格式:`%yy-%mm-%dd-%hhmmss_$wx$h_scrot.png`。

|

||||

|

||||

**$n** – 提供截图文件名。下面是示例截图:

|

||||

`$n` – 提供截图文件名。下面是示例截图:

|

||||

|

||||

[

|

||||

|

||||

][36]

|

||||

|

||||

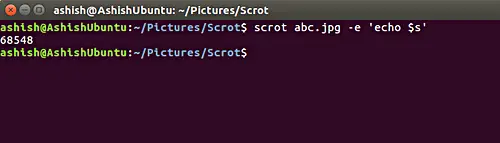

**$s** – 获取截图的文件大小。这个功能可以像下面这样使用。

|

||||

`$s` – 获取截图的文件大小。这个功能可以像下面这样使用。

|

||||

|

||||

```

|

||||

scrot abc.jpg -e ‘echo $s’

|

||||

```

|

||||

|

||||

下面是示例截图:

|

||||

|

||||

@ -271,22 +284,19 @@ scrot abc.jpg -e ‘echo $s’

|

||||

|

||||

][37]

|

||||

|

||||

类似的,你也可以使用其他格式字符串 **$p**, **$w**, **$h**, **$t**, **$$** 以及 **\n** 来分别获取图片像素大小,图像宽度,图像高度,图像格式,输入 $ 字符,以及换行。你可以像上面介绍的 **$s** 格式那样使用这些字符串。

|

||||

类似的,你也可以使用其他格式字符串 `$p`、`$w`、 `$h`、`$t`、`$$` 以及 `\n` 来分别获取图片像素大小、图像宽度、图像高度、图像格式、输入 `$` 字符、以及换行。你可以像上面介绍的 `$s` 格式那样使用这些字符串。

|

||||

|

||||

### 结论

|

||||

|

||||

这个应用能轻松地安装在 Ubuntu 系统上,对初学者比较友好。scrot 也提供了一些高级功能,比如支持格式化字符串,方便专业用户用脚本处理。当然,如果你想用起来的话有一点轻微的学习曲线。

|

||||

|

||||

|

||||

[vie][16]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/tutorial/how-to-take-screenshots-in-linux-with-scrot/

|

||||

|

||||

作者:[Himanshu Arora][a]

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,5 +1,5 @@

|

||||

vim 的酷功能:会话!

|

||||

============================================================•

|

||||

============================================================

|

||||

|

||||

昨天我在编写我的[vimrc][5]的时候了解到一个很酷的 vim 功能!(主要为了添加 fzf 和 ripgrep 插件)。这是一个内置功能,不需要特别的插件。

|

||||

|

||||

@ -17,9 +17,7 @@ vim 的酷功能:会话!

|

||||

一些 vim 插件给 vim 会话添加了额外的功能:

|

||||

|

||||

* [https://github.com/tpope/vim-obsession][1]

|

||||

|

||||

* [https://github.com/mhinz/vim-startify][2]

|

||||

|

||||

* [https://github.com/xolox/vim-session][3]

|

||||

|

||||

这是漫画:

|

||||

@ -30,9 +28,9 @@ vim 的酷功能:会话!

|

||||

|

||||

via: https://jvns.ca/blog/2017/09/10/vim-sessions/

|

||||

|

||||

作者:[Julia Evans ][a]

|

||||

作者:[Julia Evans][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,9 +1,9 @@

|

||||

并发服务器(3) —— 事件驱动

|

||||

并发服务器(三):事件驱动

|

||||

============================================================

|

||||

|

||||

这是《并发服务器》系列的第三节。[第一节][26] 介绍了阻塞式编程,[第二节 —— 线程][27] 探讨了多线程,将其作为一种可行的方法来实现服务器并发编程。

|

||||

这是并发服务器系列的第三节。[第一节][26] 介绍了阻塞式编程,[第二节:线程][27] 探讨了多线程,将其作为一种可行的方法来实现服务器并发编程。

|

||||

|

||||

另一种常见的实现并发的方法叫做 _事件驱动编程_,也可以叫做 _异步_ 编程 [^注1][28]。这种方法变化万千,因此我们会从最基本的开始,使用一些基本的 APIs 而非从封装好的高级方法开始。本系列以后的文章会讲高层次抽象,还有各种混合的方法。

|

||||

另一种常见的实现并发的方法叫做 _事件驱动编程_,也可以叫做 _异步_ 编程 ^注1 。这种方法变化万千,因此我们会从最基本的开始,使用一些基本的 API 而非从封装好的高级方法开始。本系列以后的文章会讲高层次抽象,还有各种混合的方法。

|

||||

|

||||

本系列的所有文章:

|

||||

|

||||

@ -13,13 +13,13 @@

|

||||

|

||||

### 阻塞式 vs. 非阻塞式 I/O

|

||||

|

||||

要介绍这个标题,我们先讲讲阻塞和非阻塞 I/O 的区别。阻塞式 I/O 更好理解,因为这是我们使用 I/O 相关 API 时的“标准”方式。从套接字接收数据的时候,调用 `recv` 函数会发生 _阻塞_,直到它从端口上接收到了来自另一端套接字的数据。这恰恰是第一部分讲到的顺序服务器的问题。

|

||||

作为本篇的介绍,我们先讲讲阻塞和非阻塞 I/O 的区别。阻塞式 I/O 更好理解,因为这是我们使用 I/O 相关 API 时的“标准”方式。从套接字接收数据的时候,调用 `recv` 函数会发生 _阻塞_,直到它从端口上接收到了来自另一端套接字的数据。这恰恰是第一部分讲到的顺序服务器的问题。

|

||||

|

||||

因此阻塞式 I/O 存在着固有的性能问题。第二节里我们讲过一种解决方法,就是用多线程。哪怕一个线程的 I/O 阻塞了,别的线程仍然可以使用 CPU 资源。实际上,阻塞 I/O 通常在利用资源方面非常高效,因为线程就等待着 —— 操作系统将线程变成休眠状态,只有满足了线程需要的条件才会被唤醒。

|

||||

|

||||

_非阻塞式_ I/O 是另一种思路。把套接字设成非阻塞模式时,调用 `recv` 时(还有 `send`,但是我们现在只考虑接收),函数返回地会很快,哪怕没有数据要接收。这时,就会返回一个特殊的错误状态 ^[注2][15] 来通知调用者,此时没有数据传进来。调用者可以去做其他的事情,或者尝试再次调用 `recv` 函数。

|

||||

_非阻塞式_ I/O 是另一种思路。把套接字设成非阻塞模式时,调用 `recv` 时(还有 `send`,但是我们现在只考虑接收),函数返回的会很快,哪怕没有接收到数据。这时,就会返回一个特殊的错误状态 ^注2 来通知调用者,此时没有数据传进来。调用者可以去做其他的事情,或者尝试再次调用 `recv` 函数。

|

||||

|

||||

证明阻塞式和非阻塞式的 `recv` 区别的最好方式就是贴一段示例代码。这里有个监听套接字的小程序,一直在 `recv` 这里阻塞着;当 `recv` 返回了数据,程序就报告接收到了多少个字节 ^[注3][16]:

|

||||

示范阻塞式和非阻塞式的 `recv` 区别的最好方式就是贴一段示例代码。这里有个监听套接字的小程序,一直在 `recv` 这里阻塞着;当 `recv` 返回了数据,程序就报告接收到了多少个字节 ^注3 :

|

||||

|

||||

```

|

||||

int main(int argc, const char** argv) {

|

||||

@ -69,8 +69,7 @@ hello # wait for 2 seconds after typing this

|

||||

socket world

|

||||

^D # to end the connection>

|

||||

```

|

||||

|

||||

The listening program will print the following:

|

||||

|

||||

监听程序会输出以下内容:

|

||||

|

||||

```

|

||||

@ -144,7 +143,6 @@ int main(int argc, const char** argv) {

|

||||

这里与阻塞版本有些差异,值得注意:

|

||||

|

||||

1. `accept` 函数返回的 `newsocktfd` 套接字因调用了 `fcntl`, 被设置成非阻塞的模式。

|

||||

|

||||

2. 检查 `recv` 的返回状态时,我们对 `errno` 进行了检查,判断它是否被设置成表示没有可供接收的数据的状态。这时,我们仅仅是休眠了 200 毫秒然后进入到下一轮循环。

|

||||

|

||||

同样用 `nc` 进行测试,以下是非阻塞监听器的输出:

|

||||

@ -183,19 +181,19 @@ Peer disconnected; I'm done.

|

||||

|

||||

作为练习,给输出添加一个时间戳,确认调用 `recv` 得到结果之间花费的时间是比输入到 `nc` 中所用的多还是少(每一轮是 200 ms)。

|

||||

|

||||

这里就实现了使用非阻塞的 `recv` 让监听者检查套接字变为可能,并且在没有数据的时候重新获得控制权。换句话说,这就是 _polling(轮询)_ —— 主程序周期性的查询套接字以便读取数据。

|

||||

这里就实现了使用非阻塞的 `recv` 让监听者检查套接字变为可能,并且在没有数据的时候重新获得控制权。换句话说,用编程的语言说这就是 <ruby>轮询<rt>polling</rt></ruby> —— 主程序周期性的查询套接字以便读取数据。

|

||||

|

||||

对于顺序响应的问题,这似乎是个可行的方法。非阻塞的 `recv` 让同时与多个套接字通信变成可能,轮询这些套接字,仅当有新数据到来时才处理。就是这样,这种方式 _可以_ 用来写并发服务器;但实际上一般不这么做,因为轮询的方式很难扩展。

|

||||

|

||||

首先,我在代码中引入的 200 ms 延迟对于记录非常好(监听器在我输入 `nc` 之间只打印几行 “Calling recv...”,但实际上应该有上千行)。但它也增加了多达 200 ms 的服务器响应时间,这几乎是意料不到的。实际的程序中,延迟会低得多,休眠时间越短,进程占用的 CPU 资源就越多。有些时钟周期只是浪费在等待,这并不好,尤其是在移动设备上,这些设备的电量往往有限。

|

||||

首先,我在代码中引入的 200ms 延迟对于演示非常好(监听器在我输入 `nc` 之间只打印几行 “Calling recv...”,但实际上应该有上千行)。但它也增加了多达 200ms 的服务器响应时间,这无意是不必要的。实际的程序中,延迟会低得多,休眠时间越短,进程占用的 CPU 资源就越多。有些时钟周期只是浪费在等待,这并不好,尤其是在移动设备上,这些设备的电量往往有限。

|

||||

|

||||

但是当我们实际这样来使用多个套接字的时候,更严重的问题出现了。想像下监听器正在同时处理 1000 个 客户端。这意味着每一个循环迭代里面,它都得为 _这 1000 个套接字中的每一个_ 执行一遍非阻塞的 `recv`,找到其中准备好了数据的那一个。这非常低效,并且极大的限制了服务器能够并发处理的客户端数。这里有个准则:每次轮询之间等待的间隔越久,服务器响应性越差;而等待的时间越少,CPU 在无用的轮询上耗费的资源越多。

|

||||

但是当我们实际这样来使用多个套接字的时候,更严重的问题出现了。想像下监听器正在同时处理 1000 个客户端。这意味着每一个循环迭代里面,它都得为 _这 1000 个套接字中的每一个_ 执行一遍非阻塞的 `recv`,找到其中准备好了数据的那一个。这非常低效,并且极大的限制了服务器能够并发处理的客户端数。这里有个准则:每次轮询之间等待的间隔越久,服务器响应性越差;而等待的时间越少,CPU 在无用的轮询上耗费的资源越多。

|

||||

|

||||

讲真,所有的轮询都像是无用功。当然操作系统应该是知道哪个套接字是准备好了数据的,因此没必要逐个扫描。事实上,就是这样,接下来就会讲一些API,让我们可以更优雅地处理多个客户端。

|

||||

讲真,所有的轮询都像是无用功。当然操作系统应该是知道哪个套接字是准备好了数据的,因此没必要逐个扫描。事实上,就是这样,接下来就会讲一些 API,让我们可以更优雅地处理多个客户端。

|

||||

|

||||

### select

|

||||

|

||||

`select` 的系统调用是轻便的(POSIX),标准 Unix API 中常有的部分。它是为上一节最后一部分描述的问题而设计的 —— 允许一个线程可以监视许多文件描述符 ^[注4][17] 的变化,不用在轮询中执行不必要的代码。我并不打算在这里引入一个关于 `select` 的理解性的教程,有很多网站和书籍讲这个,但是在涉及到问题的相关内容时,我会介绍一下它的 API,然后再展示一个非常复杂的例子。

|

||||

`select` 的系统调用是可移植的(POSIX),是标准 Unix API 中常有的部分。它是为上一节最后一部分描述的问题而设计的 —— 允许一个线程可以监视许多文件描述符 ^注4 的变化,而不用在轮询中执行不必要的代码。我并不打算在这里引入一个关于 `select` 的全面教程,有很多网站和书籍讲这个,但是在涉及到问题的相关内容时,我会介绍一下它的 API,然后再展示一个非常复杂的例子。

|

||||

|

||||

`select` 允许 _多路 I/O_,监视多个文件描述符,查看其中任何一个的 I/O 是否可用。

|

||||

|

||||

@ -209,30 +207,25 @@ int select(int nfds, fd_set *readfds, fd_set *writefds,

|

||||

`select` 的调用过程如下:

|

||||

|

||||

1. 在调用之前,用户先要为所有不同种类的要监视的文件描述符创建 `fd_set` 实例。如果想要同时监视读取和写入事件,`readfds` 和 `writefds` 都要被创建并且引用。

|

||||

|

||||

2. 用户可以使用 `FD_SET` 来设置集合中想要监视的特殊描述符。例如,如果想要监视描述符 2、7 和 10 的读取事件,在 `readfds` 这里调用三次 `FD_SET`,分别设置 2、7 和 10。

|

||||

|

||||

3. `select` 被调用。

|

||||

|

||||

4. 当 `select` 返回时(现在先不管超时),就是说集合中有多少个文件描述符已经就绪了。它也修改 `readfds` 和 `writefds` 集合,来标记这些准备好的描述符。其它所有的描述符都会被清空。

|

||||

|

||||

5. 这时用户需要遍历 `readfds` 和 `writefds`,找到哪个描述符就绪了(使用 `FD_ISSET`)。

|

||||

|

||||

作为完整的例子,我在并发的服务器程序上使用 `select`,重新实现了我们之前的协议。[完整的代码在这里][18];接下来的是代码中的高亮,还有注释。警告:示例代码非常复杂,因此第一次看的时候,如果没有足够的时间,快速浏览也没有关系。

|

||||

作为完整的例子,我在并发的服务器程序上使用 `select`,重新实现了我们之前的协议。[完整的代码在这里][18];接下来的是代码中的重点部分及注释。警告:示例代码非常复杂,因此第一次看的时候,如果没有足够的时间,快速浏览也没有关系。

|

||||

|

||||

### 使用 select 的并发服务器

|

||||

|

||||

使用 I/O 的多发 API 诸如 `select` 会给我们服务器的设计带来一些限制;这不会马上显现出来,但这值得探讨,因为它们是理解事件驱动编程到底是什么的关键。

|

||||

|

||||

最重要的是,要记住这种方法本质上是单线程的 ^[注5][19]。服务器实际上在 _同一时刻只能做一件事_。因为我们想要同时处理多个客户端请求,我们需要换一种方式重构代码。

|

||||

最重要的是,要记住这种方法本质上是单线程的 ^注5 。服务器实际上在 _同一时刻只能做一件事_。因为我们想要同时处理多个客户端请求,我们需要换一种方式重构代码。

|

||||

|

||||

首先,让我们谈谈主循环。它看起来是什么样的呢?先让我们想象一下服务器有一堆任务,它应该监视哪些东西呢?两种类型的套接字活动:

|

||||

|

||||

1. 新客户端尝试连接。这些客户端应该被 `accept`。

|

||||

|

||||

2. 已连接的客户端发送数据。这个数据要用 [第一节][11] 中所讲到的协议进行传输,有可能会有一些数据要被回送给客户端。

|

||||

|

||||

尽管这两种活动在本质上有所区别,我们还是要把他们放在一个循环里,因为只能有一个主循环。循环会包含 `select` 的调用。这个 `select` 的调用会监视上述的两种活动。

|

||||

尽管这两种活动在本质上有所区别,我们还是要把它们放在一个循环里,因为只能有一个主循环。循环会包含 `select` 的调用。这个 `select` 的调用会监视上述的两种活动。

|

||||

|

||||

这里是部分代码,设置了文件描述符集合,并在主循环里转到被调用的 `select` 部分。

|

||||

|

||||

@ -264,9 +257,7 @@ while (1) {

|

||||

这里的一些要点:

|

||||

|

||||

1. 由于每次调用 `select` 都会重写传递给函数的集合,调用器就得维护一个 “master” 集合,在循环迭代中,保持对所监视的所有活跃的套接字的追踪。

|

||||

|

||||

2. 注意我们所关心的,最开始的唯一那个套接字是怎么变成 `listener_sockfd` 的,这就是最开始的套接字,服务器借此来接收新客户端的连接。

|

||||

|

||||

3. `select` 的返回值,是在作为参数传递的集合中,那些已经就绪的描述符的个数。`select` 修改这个集合,用来标记就绪的描述符。下一步是在这些描述符中进行迭代。

|

||||

|

||||

```

|

||||

@ -298,7 +289,7 @@ for (int fd = 0; fd <= fdset_max && nready > 0; fd++) {

|

||||

}

|

||||

```

|

||||

|

||||

这部分循环检查 _可读的_ 描述符。让我们跳过监听器套接字(要浏览所有内容,[看这个代码][20]) 然后看看当其中一个客户端准备好了之后会发生什么。出现了这种情况后,我们调用一个叫做 `on_peer_ready_recv` 的 _回调_ 函数,传入相应的文件描述符。这个调用意味着客户端连接到套接字上,发送某些数据,并且对套接字上 `recv` 的调用不会被阻塞 ^[注6][21]。这个回调函数返回结构体 `fd_status_t`。

|

||||

这部分循环检查 _可读的_ 描述符。让我们跳过监听器套接字(要浏览所有内容,[看这个代码][20]) 然后看看当其中一个客户端准备好了之后会发生什么。出现了这种情况后,我们调用一个叫做 `on_peer_ready_recv` 的 _回调_ 函数,传入相应的文件描述符。这个调用意味着客户端连接到套接字上,发送某些数据,并且对套接字上 `recv` 的调用不会被阻塞 ^注6 。这个回调函数返回结构体 `fd_status_t`。

|

||||

|

||||

```

|

||||

typedef struct {

|

||||

@ -307,7 +298,7 @@ typedef struct {

|

||||

} fd_status_t;

|

||||

```

|

||||

|

||||

这个结构体告诉主循环,是否应该监视套接字的读取事件,写入事件,或者两者都监视。上述代码展示了 `FD_SET` 和 `FD_CLR` 是怎么在合适的描述符集合中被调用的。对于主循环中某个准备好了写入数据的描述符,代码是类似的,除了它所调用的回调函数,这个回调函数叫做 `on_peer_ready_send`。

|

||||

这个结构体告诉主循环,是否应该监视套接字的读取事件、写入事件,或者两者都监视。上述代码展示了 `FD_SET` 和 `FD_CLR` 是怎么在合适的描述符集合中被调用的。对于主循环中某个准备好了写入数据的描述符,代码是类似的,除了它所调用的回调函数,这个回调函数叫做 `on_peer_ready_send`。

|

||||

|

||||

现在来花点时间看看这个回调:

|

||||

|

||||

@ -464,37 +455,36 @@ INFO:2017-09-26 05:29:18,070:conn0 disconnecting

|

||||

INFO:2017-09-26 05:29:18,070:conn2 disconnecting

|

||||

```

|

||||

|

||||

和线程的情况相似,客户端之间没有延迟,他们被同时处理。而且在 `select-server` 也没有用线程!主循环 _多路_ 处理所有的客户端,通过高效使用 `select` 轮询多个套接字。回想下 [第二节中][22] 顺序的 vs 多线程的客户端处理过程的图片。对于我们的 `select-server`,三个客户端的处理流程像这样:

|

||||

和线程的情况相似,客户端之间没有延迟,它们被同时处理。而且在 `select-server` 也没有用线程!主循环 _多路_ 处理所有的客户端,通过高效使用 `select` 轮询多个套接字。回想下 [第二节中][22] 顺序的 vs 多线程的客户端处理过程的图片。对于我们的 `select-server`,三个客户端的处理流程像这样:

|

||||

|

||||

|

||||

|

||||

所有的客户端在同一个线程中同时被处理,通过乘积,做一点这个客户端的任务,然后切换到另一个,再切换到下一个,最后切换回到最开始的那个客户端。注意,这里没有什么循环调度,客户端在它们发送数据的时候被客户端处理,这实际上是受客户端左右的。

|

||||

|

||||

### 同步,异步,事件驱动,回调

|

||||

### 同步、异步、事件驱动、回调

|

||||

|

||||

`select-server` 示例代码为讨论什么是异步编程,它和事件驱动及基于回调的编程有何联系,提供了一个良好的背景。因为这些词汇在并发服务器的(非常矛盾的)讨论中很常见。

|

||||

`select-server` 示例代码为讨论什么是异步编程、它和事件驱动及基于回调的编程有何联系,提供了一个良好的背景。因为这些词汇在并发服务器的(非常矛盾的)讨论中很常见。

|

||||

|

||||

让我们从一段 `select` 的手册页面中引用的一句好开始:

|

||||

让我们从一段 `select` 的手册页面中引用的一句话开始:

|

||||

|

||||

> select,pselect,FD_CLR,FD_ISSET,FD_SET,FD_ZERO - 同步 I/O 处理

|

||||

> select,pselect,FD\_CLR,FD\_ISSET,FD\_SET,FD\_ZERO - 同步 I/O 处理

|

||||

|

||||

因此 `select` 是 _同步_ 处理。但我刚刚演示了大量代码的例子,使用 `select` 作为 _异步_ 处理服务器的例子。有哪些东西?

|

||||

|

||||

答案是:这取决于你的观查角度。同步常用作阻塞处理,并且对 `select` 的调用实际上是阻塞的。和第 1、2 节中讲到的顺序的、多线程的服务器中对 `send` 和 `recv` 是一样的。因此说 `select` 是 _同步的_ API 是有道理的。可是,服务器的设计却可以是 _异步的_,或是 _基于回调的_,或是 _事件驱动的_,尽管其中有对 `select` 的使用。注意这里的 `on_peer_*` 函数是回调函数;它们永远不会阻塞,并且只有网络事件触发的时候才会被调用。它们可以获得部分数据,并能够在调用过程中保持稳定的状态。

|

||||

答案是:这取决于你的观察角度。同步常用作阻塞处理,并且对 `select` 的调用实际上是阻塞的。和第 1、2 节中讲到的顺序的、多线程的服务器中对 `send` 和 `recv` 是一样的。因此说 `select` 是 _同步的_ API 是有道理的。可是,服务器的设计却可以是 _异步的_,或是 _基于回调的_,或是 _事件驱动的_,尽管其中有对 `select` 的使用。注意这里的 `on_peer_*` 函数是回调函数;它们永远不会阻塞,并且只有网络事件触发的时候才会被调用。它们可以获得部分数据,并能够在调用过程中保持稳定的状态。

|

||||

|

||||

如果你曾经做过一些 GUI 编程,这些东西对你来说应该很亲切。有个 “事件循环”,常常完全隐藏在框架里,应用的 “业务逻辑” 建立在回调上,这些回调会在各种事件触发后被调用,用户点击鼠标,选择菜单,定时器到时间,数据到达套接字,等等。曾经最常见的编程模型是客户端的 JavaScript,这里面有一堆回调函数,它们在浏览网页时用户的行为被触发。

|

||||

如果你曾经做过一些 GUI 编程,这些东西对你来说应该很亲切。有个 “事件循环”,常常完全隐藏在框架里,应用的 “业务逻辑” 建立在回调上,这些回调会在各种事件触发后被调用,用户点击鼠标、选择菜单、定时器触发、数据到达套接字等等。曾经最常见的编程模型是客户端的 JavaScript,这里面有一堆回调函数,它们在浏览网页时用户的行为被触发。

|

||||

|

||||

### select 的局限

|

||||

|

||||

使用 `select` 作为第一个异步服务器的例子对于说明这个概念很有用,而且由于 `select` 是很常见,可移植的 API。但是它也有一些严重的缺陷,在监视的文件描述符非常大的时候就会出现。

|

||||

使用 `select` 作为第一个异步服务器的例子对于说明这个概念很有用,而且由于 `select` 是很常见、可移植的 API。但是它也有一些严重的缺陷,在监视的文件描述符非常大的时候就会出现。

|

||||

|

||||

1. 有限的文件描述符的集合大小。

|

||||

|

||||

2. 糟糕的性能。

|

||||

|

||||

从文件描述符的大小开始。`FD_SETSIZE` 是一个编译期常数,在如今的操作系统中,它的值通常是 1024。它被硬编码在 `glibc` 的头文件里,并且不容易修改。它把 `select` 能够监视的文件描述符的数量限制在 1024 以内。曾有些分支想要写出能够处理上万个并发访问的客户端请求的服务器,这个问题很有现实意义。有一些方法,但是不可移植,也很难用。

|

||||

从文件描述符的大小开始。`FD_SETSIZE` 是一个编译期常数,在如今的操作系统中,它的值通常是 1024。它被硬编码在 `glibc` 的头文件里,并且不容易修改。它把 `select` 能够监视的文件描述符的数量限制在 1024 以内。曾有些人想要写出能够处理上万个并发访问的客户端请求的服务器,所以这个问题很有现实意义。有一些方法,但是不可移植,也很难用。

|

||||

|

||||

糟糕的性能问题就好解决的多,但是依然非常严重。注意当 `select` 返回的时候,它向调用者提供的信息是 “就绪的” 描述符的个数,还有被修改过的描述符集合。描述符集映射着描述符 就绪/未就绪”,但是并没有提供什么有效的方法去遍历所有就绪的描述符。如果只有一个描述符是就绪的,最坏的情况是调用者需要遍历 _整个集合_ 来找到那个描述符。这在监视的描述符数量比较少的时候还行,但是如果数量变的很大的时候,这种方法弊端就凸显出了 ^[注7][23]。

|

||||

糟糕的性能问题就好解决的多,但是依然非常严重。注意当 `select` 返回的时候,它向调用者提供的信息是 “就绪的” 描述符的个数,还有被修改过的描述符集合。描述符集映射着描述符“就绪/未就绪”,但是并没有提供什么有效的方法去遍历所有就绪的描述符。如果只有一个描述符是就绪的,最坏的情况是调用者需要遍历 _整个集合_ 来找到那个描述符。这在监视的描述符数量比较少的时候还行,但是如果数量变的很大的时候,这种方法弊端就凸显出了 ^注7 。

|

||||

|

||||

由于这些原因,为了写出高性能的并发服务器, `select` 已经不怎么用了。每一个流行的操作系统有独特的不可移植的 API,允许用户写出非常高效的事件循环;像框架这样的高级结构还有高级语言通常在一个可移植的接口中包含这些 API。

|

||||

|

||||

@ -541,30 +531,23 @@ while (1) {

|

||||

}

|

||||

```

|

||||

|

||||

通过调用 `epoll_ctl` 来配置 `epoll`。这时,配置监听的套接字数量,也就是 `epoll` 监听的描述符的数量。然后分配一个缓冲区,把就绪的事件传给 `epoll` 以供修改。在主循环里对 `epoll_wait` 的调用是魅力所在。它阻塞着,直到某个描述符就绪了(或者超时),返回就绪的描述符数量。但这时,不少盲目地迭代所有监视的集合,我们知道 `epoll_write` 会修改传给它的 `events` 缓冲区,缓冲区中有就绪的事件,从 0 到 `nready-1`,因此我们只需迭代必要的次数。

|

||||

通过调用 `epoll_ctl` 来配置 `epoll`。这时,配置监听的套接字数量,也就是 `epoll` 监听的描述符的数量。然后分配一个缓冲区,把就绪的事件传给 `epoll` 以供修改。在主循环里对 `epoll_wait` 的调用是魅力所在。它阻塞着,直到某个描述符就绪了(或者超时),返回就绪的描述符数量。但这时,不要盲目地迭代所有监视的集合,我们知道 `epoll_write` 会修改传给它的 `events` 缓冲区,缓冲区中有就绪的事件,从 0 到 `nready-1`,因此我们只需迭代必要的次数。

|

||||

|

||||

要在 `select` 里面重新遍历,有明显的差异:如果在监视着 1000 个描述符,只有两个就绪, `epoll_waits` 返回的是 `nready=2`,然后修改 `events` 缓冲区最前面的两个元素,因此我们只需要“遍历”两个描述符。用 `select` 我们就需要遍历 1000 个描述符,找出哪个是就绪的。因此,在繁忙的服务器上,有许多活跃的套接字时 `epoll` 比 `select` 更加容易扩展。

|

||||

|

||||

剩下的代码很直观,因为我们已经很熟悉 `select 服务器` 了。实际上,`epoll 服务器` 中的所有“业务逻辑”和 `select 服务器` 是一样的,回调构成相同的代码。

|

||||

剩下的代码很直观,因为我们已经很熟悉 “select 服务器” 了。实际上,“epoll 服务器” 中的所有“业务逻辑”和 “select 服务器” 是一样的,回调构成相同的代码。

|

||||

|

||||

这种相似是通过将事件循环抽象分离到一个库/框架中。我将会详述这些内容,因为很多优秀的程序员曾经也是这样做的。相反,下一篇文章里我们会了解 `libuv`,一个最近出现的更加受欢迎的时间循环抽象层。像 `libuv` 这样的库让我们能够写出并发的异步服务器,并且不用考虑系统调用下繁琐的细节。

|

||||

这种相似是通过将事件循环抽象分离到一个库/框架中。我将会详述这些内容,因为很多优秀的程序员曾经也是这样做的。相反,下一篇文章里我们会了解 libuv,一个最近出现的更加受欢迎的时间循环抽象层。像 libuv 这样的库让我们能够写出并发的异步服务器,并且不用考虑系统调用下繁琐的细节。

|

||||

|

||||

* * *

|

||||

|

||||

|

||||

[注1][1] 我试着在两件事的实际差别中突显自己,一件是做一些网络浏览和阅读,但经常做得头疼。有很多不同的选项,从“他们是一样的东西”到“一个是另一个的子集”,再到“他们是完全不同的东西”。在面临这样主观的观点时,最好是完全放弃这个问题,专注特殊的例子和用例。

|

||||

|

||||

[注2][2] POSIX 表示这可以是 `EAGAIN`,也可以是 `EWOULDBLOCK`,可移植应用应该对这两个都进行检查。

|

||||

|

||||

[注3][3] 和这个系列所有的 C 示例类似,代码中用到了某些助手工具来设置监听套接字。这些工具的完整代码在这个 [仓库][4] 的 `utils` 模块里。

|

||||

|

||||

[注4][5] `select` 不是网络/套接字专用的函数,它可以监视任意的文件描述符,有可能是硬盘文件,管道,终端,套接字或者 Unix 系统中用到的任何文件描述符。这篇文章里,我们主要关注它在套接字方面的应用。

|

||||

|

||||

[注5][6] 有多种方式用多线程来实现事件驱动,我会把它放在稍后的文章中进行讨论。

|

||||

|

||||

[注6][7] 由于各种非实验因素,它 _仍然_ 可以阻塞,即使是在 `select` 说它就绪了之后。因此服务器上打开的所有套接字都被设置成非阻塞模式,如果对 `recv` 或 `send` 的调用返回了 `EAGAIN` 或者 `EWOULDBLOCK`,回调函数就装作没有事件发生。阅读示例代码的注释可以了解更多细节。

|

||||

|

||||

[注7][8] 注意这比该文章前面所讲的异步 polling 例子要稍好一点。polling 需要 _一直_ 发生,而 `select` 实际上会阻塞到有一个或多个套接字准备好读取/写入;`select` 会比一直询问浪费少得多的 CPU 时间。

|

||||

- 注1:我试着在做网络浏览和阅读这两件事的实际差别中突显自己,但经常做得头疼。有很多不同的选项,从“它们是一样的东西”到“一个是另一个的子集”,再到“它们是完全不同的东西”。在面临这样主观的观点时,最好是完全放弃这个问题,专注特殊的例子和用例。

|

||||

- 注2:POSIX 表示这可以是 `EAGAIN`,也可以是 `EWOULDBLOCK`,可移植应用应该对这两个都进行检查。

|

||||

- 注3:和这个系列所有的 C 示例类似,代码中用到了某些助手工具来设置监听套接字。这些工具的完整代码在这个 [仓库][4] 的 `utils` 模块里。

|

||||

- 注4:`select` 不是网络/套接字专用的函数,它可以监视任意的文件描述符,有可能是硬盘文件、管道、终端、套接字或者 Unix 系统中用到的任何文件描述符。这篇文章里,我们主要关注它在套接字方面的应用。

|

||||

- 注5:有多种方式用多线程来实现事件驱动,我会把它放在稍后的文章中进行讨论。

|

||||

- 注6:由于各种非实验因素,它 _仍然_ 可以阻塞,即使是在 `select` 说它就绪了之后。因此服务器上打开的所有套接字都被设置成非阻塞模式,如果对 `recv` 或 `send` 的调用返回了 `EAGAIN` 或者 `EWOULDBLOCK`,回调函数就装作没有事件发生。阅读示例代码的注释可以了解更多细节。

|

||||

- 注7:注意这比该文章前面所讲的异步轮询的例子要稍好一点。轮询需要 _一直_ 发生,而 `select` 实际上会阻塞到有一个或多个套接字准备好读取/写入;`select` 会比一直询问浪费少得多的 CPU 时间。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -572,7 +555,7 @@ via: https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/

|

||||

|

||||

作者:[Eli Bendersky][a]

|

||||

译者:[GitFuture](https://github.com/GitFuture)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -587,9 +570,9 @@ via: https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/

|

||||

[8]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id9

|

||||

[9]:https://eli.thegreenplace.net/tag/concurrency

|

||||

[10]:https://eli.thegreenplace.net/tag/c-c

|

||||

[11]:http://eli.thegreenplace.net/2017/concurrent-servers-part-1-introduction/

|

||||

[12]:http://eli.thegreenplace.net/2017/concurrent-servers-part-1-introduction/

|

||||

[13]:http://eli.thegreenplace.net/2017/concurrent-servers-part-2-threads/

|

||||

[11]:https://linux.cn/article-8993-1.html

|

||||

[12]:https://linux.cn/article-8993-1.html

|

||||

[13]:https://linux.cn/article-9002-1.html

|

||||

[14]:http://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/

|

||||

[15]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id11

|

||||

[16]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id12

|

||||

@ -598,10 +581,10 @@ via: https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/

|

||||

[19]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id14

|

||||

[20]:https://github.com/eliben/code-for-blog/blob/master/2017/async-socket-server/select-server.c

|

||||

[21]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id15

|

||||

[22]:http://eli.thegreenplace.net/2017/concurrent-servers-part-2-threads/

|

||||

[22]:https://linux.cn/article-9002-1.html

|

||||

[23]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id16

|

||||

[24]:https://github.com/eliben/code-for-blog/blob/master/2017/async-socket-server/epoll-server.c

|

||||

[25]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/

|

||||

[26]:http://eli.thegreenplace.net/2017/concurrent-servers-part-1-introduction/

|

||||

[27]:http://eli.thegreenplace.net/2017/concurrent-servers-part-2-threads/

|

||||

[26]:https://linux.cn/article-8993-1.html

|

||||

[27]:https://linux.cn/article-9002-1.html

|

||||

[28]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id10

|

||||

@ -0,0 +1,80 @@

|

||||

面向初学者的 Linux 网络硬件:软件思维

|

||||

===========================================================

|

||||

|

||||

|

||||

|

||||

> 没有路由和桥接,我们将会成为孤独的小岛,你将会在这个网络教程中学到更多知识。

|

||||

|

||||

[Commons Zero][3]Pixabay

|

||||

|

||||

上周,我们学习了本地网络硬件知识,本周,我们将学习网络互联技术和在移动网络中的一些很酷的黑客技术。

|

||||

|

||||

### 路由器

|

||||

|

||||

网络路由器就是计算机网络中的一切,因为路由器连接着网络,没有路由器,我们就会成为孤岛。图一展示了一个简单的有线本地网络和一个无线接入点,所有设备都接入到互联网上,本地局域网的计算机连接到一个连接着防火墙或者路由器的以太网交换机上,防火墙或者路由器连接到网络服务供应商(ISP)提供的电缆箱、调制调节器、卫星上行系统……好像一切都在计算中,就像是一个带着不停闪烁的的小灯的盒子。当你的网络数据包离开你的局域网,进入广阔的互联网,它们穿过一个又一个路由器直到到达自己的目的地。

|

||||

|

||||

|

||||

|

||||

*图一:一个简单的有线局域网和一个无线接入点。*

|

||||

|

||||

路由器可以是各种样式:一个只专注于路由的小巧特殊的小盒子,一个将会提供路由、防火墙、域名服务,以及 VPN 网关功能的大点的盒子,一台重新设计的台式电脑或者笔记本,一个树莓派计算机或者一个 Arduino,体积臃肿矮小的像 PC Engines 这样的单板计算机,除了苛刻的用途以外,普通的商品硬件都能良好的工作运行。高端的路由器使用特殊设计的硬件每秒能够传输最大量的数据包。它们有多路数据总线,多个中央处理器和极快的存储。(可以通过了解 Juniper 和思科的路由器来感受一下高端路由器书什么样子的,而且能看看里面是什么样的构造。)

|

||||

|

||||

接入你的局域网的无线接入点要么作为一个以太网网桥,要么作为一个路由器。桥接器扩展了这个网络,所以在这个桥接器上的任意一端口上的主机都连接在同一个网络中。一台路由器连接的是两个不同的网络。

|

||||

|

||||

### 网络拓扑

|

||||

|

||||

有多种设置你的局域网的方式,你可以把所有主机接入到一个单独的<ruby>平面网络<rt>flat network</rt></ruby>,也可以把它们划分为不同的子网。如果你的交换机支持 VLAN 的话,你也可以把它们分配到不同的 VLAN 中。

|

||||

|

||||

平面网络是最简单的网络,只需把每一台设备接入到同一个交换机上即可,如果一台交换上的端口不够使用,你可以将更多的交换机连接在一起。有些交换机有特殊的上行端口,有些是没有这种特殊限制的上行端口,你可以连接其中的任意端口,你可能需要使用交叉类型的以太网线,所以你要查阅你的交换机的说明文档来设置。

|

||||

|

||||

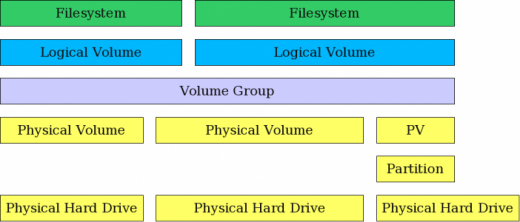

平面网络是最容易管理的,你不需要路由器也不需要计算子网,但它也有一些缺点。它们的伸缩性不好,所以当网络规模变得越来越大的时候就会被广播网络所阻塞。将你的局域网进行分段将会提升安全保障, 把局域网分成可管理的不同网段将有助于管理更大的网络。图二展示了一个分成两个子网的局域网络:内部的有线和无线主机,和一个托管公开服务的主机。包含面向公共的服务器的子网称作非军事区域 DMZ,(你有没有注意到那些都是主要在电脑上打字的男人们的术语?)因为它被阻挡了所有的内部网络的访问。

|

||||

|

||||

|

||||

|

||||

*图二:一个分成两个子网的简单局域网。*

|

||||

|

||||

即使像图二那样的小型网络也可以有不同的配置方法。你可以将防火墙和路由器放置在一台单独的设备上。你可以为你的非军事区域设置一个专用的网络连接,把它完全从你的内部网络隔离,这将引导我们进入下一个主题:一切基于软件。

|

||||

|

||||

### 软件思维

|

||||

|

||||

你可能已经注意到在这个简短的系列中我们所讨论的硬件,只有网络接口、交换机,和线缆是特殊用途的硬件。

|

||||

其它的都是通用的商用硬件,而且都是软件来定义它的用途。Linux 是一个真实的网络操作系统,它支持大量的网络操作:网关、虚拟专用网关、以太网桥、网页、邮箱以及文件等等服务器、负载均衡、代理、服务质量、多种认证、中继、故障转移……你可以在运行着 Linux 系统的标准硬件上运行你的整个网络。你甚至可以使用 Linux 交换应用(LISA)和VDE2 协议来模拟以太网交换机。

|

||||

|

||||

有一些用于小型硬件的特殊发行版,如 DD-WRT、OpenWRT,以及树莓派发行版,也不要忘记 BSD 们和它们的特殊衍生用途如 pfSense 防火墙/路由器,和 FreeNAS 网络存储服务器。

|

||||

|

||||

你知道有些人坚持认为硬件防火墙和软件防火墙有区别?其实是没有区别的,就像说硬件计算机和软件计算机一样。

|

||||

|

||||

### 端口聚合和以太网绑定

|

||||

|

||||

聚合和绑定,也称链路聚合,是把两条以太网通道绑定在一起成为一条通道。一些交换机支持端口聚合,就是把两个交换机端口绑定在一起,成为一个是它们原来带宽之和的一条新的连接。对于一台承载很多业务的服务器来说这是一个增加通道带宽的有效的方式。

|

||||

|

||||

你也可以在以太网口进行同样的配置,而且绑定汇聚的驱动是内置在 Linux 内核中的,所以不需要任何其他的专门的硬件。

|

||||

|

||||

### 随心所欲选择你的移动宽带

|

||||

|

||||

我期望移动宽带能够迅速增长来替代 DSL 和有线网络。我居住在一个有 25 万人口的靠近一个城市的地方,但是在城市以外,要想接入互联网就要靠运气了,即使那里有很大的用户上网需求。我居住的小角落离城镇有 20 分钟的距离,但对于网络服务供应商来说他们几乎不会考虑到为这个地方提供网络。 我唯一的选择就是移动宽带;这里没有拨号网络、卫星网络(即使它很糟糕)或者是 DSL、电缆、光纤,但却没有阻止网络供应商把那些我在这个区域从没看到过的 Xfinity 和其它高速网络服务的传单塞进我的邮箱。

|

||||

|

||||

我试用了 AT&T、Version 和 T-Mobile。Version 的信号覆盖范围最广,但是 Version 和 AT&T 是最昂贵的。

|

||||

我居住的地方在 T-Mobile 信号覆盖的边缘,但迄今为止他们给了最大的优惠,为了能够能够有效的使用,我必须购买一个 WeBoost 信号放大器和一台中兴的移动热点设备。当然你也可以使用一部手机作为热点,但是专用的热点设备有着最强的信号。如果你正在考虑购买一台信号放大器,最好的选择就是 WeBoost,因为他们的服务支持最棒,而且他们会尽最大努力去帮助你。在一个小小的 APP [SignalCheck Pro][8] 的协助下设置将会精准的增强你的网络信号,他们有一个功能较少的免费的版本,但你将一点都不会后悔去花两美元使用专业版。

|

||||

|

||||

那个小巧的中兴热点设备能够支持 15 台主机,而且还有拥有基本的防火墙功能。 但你如果你使用像 Linksys WRT54GL这样的设备,可以使用 Tomato、OpenWRT,或者 DD-WRT 来替代普通的固件,这样你就能完全控制你的防护墙规则、路由配置,以及任何其它你想要设置的服务。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/learn/intro-to-linux/2017/10/linux-networking-hardware-beginners-think-software

|

||||

|

||||

作者:[CARLA SCHRODER][a]

|

||||

译者:[FelixYFZ](https://github.com/FelixYFZ)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/cschroder

|

||||

[1]:https://www.linux.com/licenses/category/used-permission

|

||||

[2]:https://www.linux.com/licenses/category/used-permission

|

||||

[3]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[4]:https://www.linux.com/files/images/fig-1png-7

|

||||

[5]:https://www.linux.com/files/images/fig-2png-4

|

||||

[6]:https://www.linux.com/files/images/soderskar-islandjpg

|

||||

[7]:https://www.linux.com/learn/intro-to-linux/2017/10/linux-networking-hardware-beginners-lan-hardware

|

||||

[8]:http://www.bluelinepc.com/signalcheck/

|

||||

@ -0,0 +1,135 @@

|

||||

如何使用 GPG 加解密文件

|

||||

=================

|

||||

|

||||

目标:使用 GPG 加密文件

|

||||

|

||||

发行版:适用于任何发行版

|

||||

|

||||

要求:安装了 GPG 的 Linux 或者拥有 root 权限来安装它。

|

||||

|

||||

难度:简单

|

||||

|

||||

约定:

|

||||

|

||||

* `#` - 需要使用 root 权限来执行指定命令,可以直接使用 root 用户来执行,也可以使用 `sudo` 命令

|

||||

* `$` - 可以使用普通用户来执行指定命令

|

||||

|

||||

### 介绍

|

||||

|

||||

加密非常重要。它对于保护敏感信息来说是必不可少的。你的私人文件应该要被加密,而 GPG 提供了很好的解决方案。

|

||||

|

||||

### 安装 GPG

|

||||

|

||||

GPG 的使用非常广泛。你在几乎每个发行版的仓库中都能找到它。如果你还没有安装它,那现在就来安装一下吧。

|

||||

|

||||

**Debian/Ubuntu**

|

||||

|

||||

```

|

||||

$ sudo apt install gnupg

|

||||

```

|

||||

|

||||

**Fedora**

|

||||

|

||||

```

|

||||

# dnf install gnupg2

|

||||

```

|

||||

|

||||

**Arch**

|

||||

|

||||

```

|

||||

# pacman -S gnupg

|

||||

```

|

||||

|

||||

**Gentoo**

|

||||

|

||||

```

|

||||

# emerge --ask app-crypt/gnupg

|

||||

```

|

||||

|

||||

### 创建密钥

|

||||

|

||||

你需要一个密钥对来加解密文件。如果你为 SSH 已经生成过了密钥对,那么你可以直接使用它。如果没有,GPG 包含工具来生成密钥对。

|

||||

|

||||

```

|

||||

$ gpg --full-generate-key

|

||||

```

|

||||

|

||||

GPG 有一个命令行程序可以帮你一步一步的生成密钥。它还有一个简单得多的工具,但是这个工具不能让你设置密钥类型,密钥的长度以及过期时间,因此不推荐使用这个工具。

|

||||

|

||||

GPG 首先会询问你密钥的类型。没什么特别的话选择默认值就好。

|

||||

|

||||

下一步需要设置密钥长度。`4096` 是一个不错的选择。

|

||||

|

||||

之后,可以设置过期的日期。 如果希望密钥永不过期则设置为 `0`。

|

||||

|

||||

然后,输入你的名称。

|

||||

|

||||

最后,输入电子邮件地址。

|

||||

|

||||

如果你需要的话,还能添加一个注释。

|

||||

|

||||

所有这些都完成后,GPG 会让你校验一下这些信息。

|

||||

|

||||

GPG 还会问你是否需要为密钥设置密码。这一步是可选的, 但是会增加保护的程度。若需要设置密码,则 GPG 会收集你的操作信息来增加密钥的健壮性。 所有这些都完成后, GPG 会显示密钥相关的信息。

|

||||

|

||||

### 加密的基本方法

|

||||

|

||||

现在你拥有了自己的密钥,加密文件非常简单。 使用下面的命令在 `/tmp` 目录中创建一个空白文本文件。

|

||||

|

||||

```

|

||||

$ touch /tmp/test.txt

|

||||

```

|

||||

|

||||

然后用 GPG 来加密它。这里 `-e` 标志告诉 GPG 你想要加密文件, `-r` 标志指定接收者。

|

||||

|

||||

```

|

||||

$ gpg -e -r "Your Name" /tmp/test.txt

|

||||

```

|

||||

|

||||

GPG 需要知道这个文件的接收者和发送者。由于这个文件给是你的,因此无需指定发送者,而接收者就是你自己。

|

||||

|

||||

### 解密的基本方法

|

||||

|

||||

你收到加密文件后,就需要对它进行解密。 你无需指定解密用的密钥。 这个信息被编码在文件中。 GPG 会尝试用其中的密钥进行解密。

|

||||

|

||||

```

|

||||

$ gpg -d /tmp/test.txt.gpg

|

||||

```

|

||||

|

||||

### 发送文件

|

||||

|

||||

假设你需要发送文件给别人。你需要有接收者的公钥。 具体怎么获得密钥由你自己决定。 你可以让他们直接把公钥发送给你, 也可以通过密钥服务器来获取。

|

||||

|

||||

收到对方公钥后,导入公钥到 GPG 中。

|

||||

|

||||

```

|

||||

$ gpg --import yourfriends.key

|

||||

```

|

||||

|

||||

这些公钥与你自己创建的密钥一样,自带了名称和电子邮件地址的信息。 记住,为了让别人能解密你的文件,别人也需要你的公钥。 因此导出公钥并将之发送出去。

|

||||

|

||||

```

|

||||

gpg --export -a "Your Name" > your.key

|

||||

```

|

||||

|

||||

现在可以开始加密要发送的文件了。它跟之前的步骤差不多, 只是需要指定你自己为发送人。

|

||||

|

||||

```

|

||||

$ gpg -e -u "Your Name" -r "Their Name" /tmp/test.txt

|

||||

```

|

||||

|

||||

### 结语

|

||||

|

||||

就这样了。GPG 还有一些高级选项, 不过你在 99% 的时间内都不会用到这些高级选项。 GPG 就是这么易于使用。你也可以使用创建的密钥对来发送和接受加密邮件,其步骤跟上面演示的差不多, 不过大多数的电子邮件客户端在拥有密钥的情况下会自动帮你做这个动作。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://linuxconfig.org/how-to-encrypt-and-decrypt-individual-files-with-gpg

|

||||

|

||||

作者:[Nick Congleton][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux 中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://linuxconfig.org

|

||||

74

published/20171120 Mark McIntyre How Do You Fedora.md

Normal file

74

published/20171120 Mark McIntyre How Do You Fedora.md

Normal file

@ -0,0 +1,74 @@

|

||||

Mark McIntyre:与 Fedora 的那些事

|

||||

===========================

|

||||

|

||||

|

||||

|

||||

最近我们采访了 Mark McIntyre,谈了他是如何使用 Fedora 系统的。这也是 Fedora 杂志上[系列文章的一部分][2]。该系列简要介绍了 Fedora 用户,以及他们是如何用 Fedora 把事情做好的。如果你想成为采访对象,请通过[反馈表][3]与我们联系。

|

||||

|

||||

### Mark McIntyre 是谁?

|

||||

|

||||

Mark McIntyre 为极客而生,以 Linux 为乐趣。他说:“我在 13 岁开始编程,当时自学 BASIC 语言,我体会到其中的乐趣,并在乐趣的引导下,一步步成为专业的码农。” Mark 和他的侄女都是披萨饼的死忠粉。“去年秋天,我和我的侄女开始了一个任务,去尝试诺克斯维尔的许多披萨饼连锁店。点击[这里][4]可以了解我们的进展情况。”Mark 也是一名业余的摄影爱好者,并且在 Flickr 上 [发布自己的作品][5]。

|

||||

|

||||

|

||||

|

||||

作为一名开发者,Mark 有着丰富的工作背景。他用过 Visual Basic 编写应用程序,用过 LotusScript、 PL/SQL(Oracle)、 Tcl/TK 编写代码,也用过基于 Python 的 Django 框架。他的强项是 Python。这也是目前他作为系统工程师的工作语言。“我经常使用 Python。由于我的工作变得更像是自动化工程师, Python 用得就更频繁了。”

|

||||

|

||||

McIntyre 自称是个书呆子,喜欢科幻电影,但他最喜欢的一部电影却不是科幻片。“尽管我是个书呆子,喜欢看《<ruby>星际迷航<rt>Star Trek</rt></ruby>》、《<ruby>星球大战<rt>Star Wars</rt></ruby>》之类的影片,但《<ruby>光荣战役<rt>Glory</rt></ruby>》或许才是我最喜欢的电影。”他还提到,电影《<ruby>冲出宁静号<rt>Serenity</rt></ruby>》是一个著名电视剧的精彩后续(指《萤火虫》)。

|

||||

|

||||

Mark 比较看重他人的谦逊、知识与和气。他欣赏能够设身处地为他人着想的人。“如果你决定为另一个人服务,那么你会选择自己愿意亲近的人,而不是让自己备受折磨的人。”

|

||||

|

||||

McIntyre 目前在 [Scripps Networks Interactive][6] 工作,这家公司是 HGTV、Food Network、Travel Channel、DIY、GAC 以及其他几个有线电视频道的母公司。“我现在是一名系统工程师,负责非线性视频内容,这是所有媒体要开展线上消费所需要的。”他为一些开发团队提供支持,他们编写应用程序,将线性视频从有线电视发布到线上平台,比如亚马逊、葫芦。这些系统既包含预置系统,也包含云系统。Mark 还开发了一些自动化工具,将这些应用程序主要部署到云基础结构中。

|

||||

|

||||

### Fedora 社区

|

||||

|

||||

Mark 形容 Fedora 社区是一个富有活力的社区,充满着像 Fedora 用户一样热爱生活的人。“从设计师到封包人,这个团体依然非常活跃,生机勃勃。” 他继续说道:“这使我对该操作系统抱有一种信心。”

|

||||

|

||||

2002 年左右,Mark 开始经常使用 IRC 上的 #fedora 频道:“那时候,Wi-Fi 在启用适配器和配置模块功能时,有许多还是靠手工实现的。”为了让他的 Wi-Fi 能够工作,他不得不重新去编译 Fedora 内核。

|

||||

|

||||

McIntyre 鼓励他人参与 Fedora 社区。“这里有许多来自不同领域的机会。前端设计、测试部署、开发、应用程序打包以及新技术实现。”他建议选择一个感兴趣的领域,然后向那个团体提出疑问。“这里有许多机会去奉献自己。”

|

||||

|

||||

对于帮助他起步的社区成员,Mark 赞道:“Ben Williams 非常乐于助人。在我第一次接触 Fedora 时,他帮我搞定了一些 #fedora 支持频道中的安装补丁。” Ben 也鼓励 Mark 去做 Fedora [大使][7]。

|

||||

|

||||

### 什么样的硬件和软件?

|

||||

|

||||

McIntyre 将 Fedora Linux 系统用在他的笔记本和台式机上。在服务器上他选择了 CentOS,因为它有更长的生命周期支持。他现在的台式机是自己组装的,配有 Intel 酷睿 i5 处理器,32GB 的内存和2TB 的硬盘。“我装了个 4K 的显示屏,有足够大的地方来同时查看所有的应用。”他目前工作用的笔记本是戴尔灵越二合一,配备 13 英寸的屏,16 GB 的内存和 525 GB 的 m.2 固态硬盘。

|

||||

|

||||

|

||||

|

||||

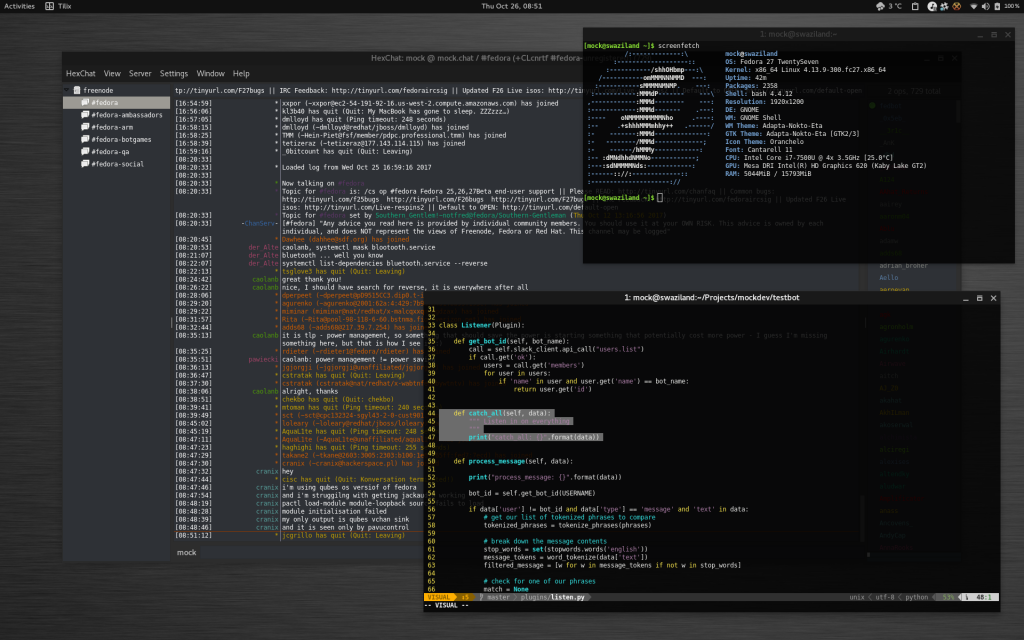

Mark 现在将 Fedora 26 运行在他过去几个月装配的所有机器中。当一个新版本正式发布的时候,他倾向于避开这个高峰期。“除非在它即将发行的时候,我的工作站中有个正在运行下一代测试版本,通常情况下,一旦它发展成熟,我都会试着去获取最新的版本。”他经常采取就地更新:“这种就地更新方法利用 dnf 系统升级插件,目前表现得非常好。”

|

||||

|

||||

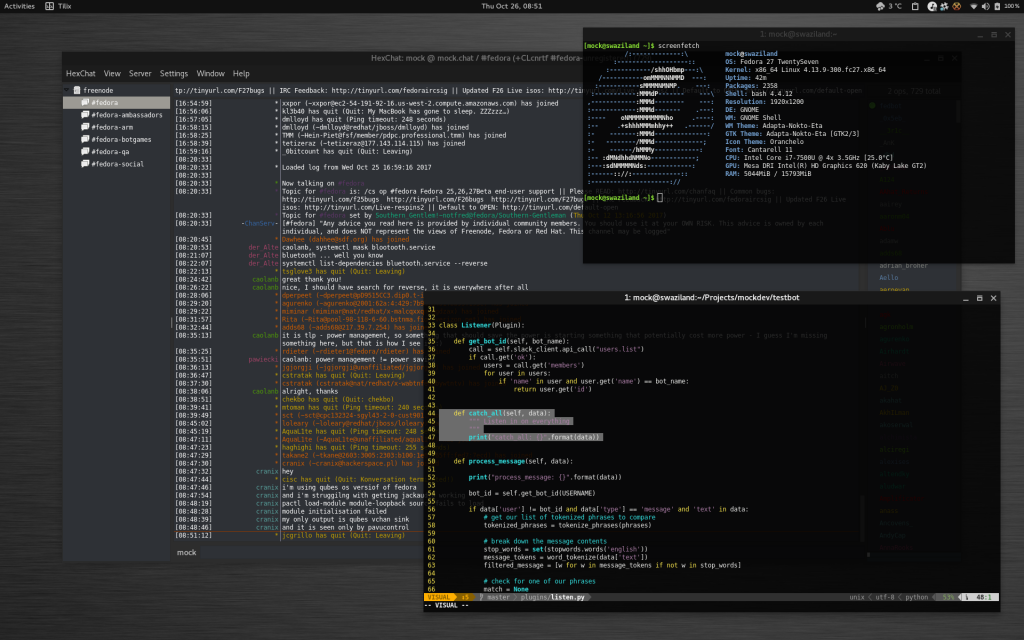

为了搞摄影,McIntyre 用上了 [GIMP][8]、[Darktable][9],以及其他一些照片查看包和快速编辑包。当不用 Web 电子邮件时,Mark 会使用 [Geary][10],还有[GNOME Calendar][11]。Mark 选用 HexChat 作为 IRC 客户端,[HexChat][12] 与在 Fedora 服务器实例上运行的 [ZNC bouncer][13] 联机。他的部门通过 Slave 进行沟通交流。

|

||||

|

||||

“我从来都不是 IDE 粉,所以大多数的编辑任务都是在 [vim][14] 上完成的。”Mark 偶尔也会打开一个简单的文本编辑器,如 [gedit][15],或者 [xed][16]。他用 [GPaste][17] 做复制和粘贴工作。“对于终端的选择,我已经变成 [Tilix][18] 的忠粉。”McIntyre 通过 [Rhythmbox][19] 来管理他喜欢的播客,并用 [Epiphany][20] 实现快速网络查询。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/mark-mcintyre-fedora/

|

||||

|

||||

作者:[Charles Profitt][a]

|

||||

译者:[zrszrszrs](https://github.com/zrszrszrs)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://fedoramagazine.org/author/cprofitt/

|

||||

[1]:https://fedoramagazine.org/mark-mcintyre-fedora/

|

||||

[2]:https://fedoramagazine.org/tag/how-do-you-fedora/

|

||||

[3]:https://fedoramagazine.org/submit-an-idea-or-tip/

|

||||

[4]:https://knox-pizza-quest.blogspot.com/

|

||||

[5]:https://www.flickr.com/photos/mockgeek/

|

||||

[6]:http://www.scrippsnetworksinteractive.com/

|

||||

[7]:https://fedoraproject.org/wiki/Ambassadors

|

||||

[8]:https://www.gimp.org/

|

||||

[9]:http://www.darktable.org/

|

||||

[10]:https://wiki.gnome.org/Apps/Geary

|

||||

[11]:https://wiki.gnome.org/Apps/Calendar

|

||||

[12]:https://hexchat.github.io/

|

||||

[13]:https://wiki.znc.in/ZNC

|

||||

[14]:http://www.vim.org/

|

||||

[15]:https://wiki.gnome.org/Apps/Gedit

|

||||

[16]:https://github.com/linuxmint/xed

|

||||

[17]:https://github.com/Keruspe/GPaste

|

||||

[18]:https://fedoramagazine.org/try-tilix-new-terminal-emulator-fedora/

|

||||

[19]:https://wiki.gnome.org/Apps/Rhythmbox

|

||||

[20]:https://wiki.gnome.org/Apps/Web

|

||||

@ -0,0 +1,149 @@

|

||||

如何判断 Linux 服务器是否被入侵?

|

||||

=========================

|

||||

|

||||

本指南中所谓的服务器被入侵或者说被黑了的意思,是指未经授权的人或程序为了自己的目的登录到服务器上去并使用其计算资源,通常会产生不好的影响。

|

||||

|

||||

免责声明:若你的服务器被类似 NSA 这样的国家机关或者某个犯罪集团入侵,那么你并不会注意到有任何问题,这些技术也无法发觉他们的存在。

|

||||

|

||||

然而,大多数被攻破的服务器都是被类似自动攻击程序这样的程序或者类似“脚本小子”这样的廉价攻击者,以及蠢蛋罪犯所入侵的。

|

||||

|

||||

这类攻击者会在访问服务器的同时滥用服务器资源,并且不怎么会采取措施来隐藏他们正在做的事情。

|

||||

|

||||

### 被入侵服务器的症状

|

||||

|

||||

当服务器被没有经验攻击者或者自动攻击程序入侵了的话,他们往往会消耗 100% 的资源。他们可能消耗 CPU 资源来进行数字货币的采矿或者发送垃圾邮件,也可能消耗带宽来发动 DoS 攻击。

|

||||

|

||||

因此出现问题的第一个表现就是服务器 “变慢了”。这可能表现在网站的页面打开的很慢,或者电子邮件要花很长时间才能发送出去。

|

||||

|

||||

那么你应该查看那些东西呢?

|

||||

|

||||

#### 检查 1 - 当前都有谁在登录?

|

||||

|

||||

你首先要查看当前都有谁登录在服务器上。发现攻击者登录到服务器上进行操作并不复杂。

|

||||

|

||||

其对应的命令是 `w`。运行 `w` 会输出如下结果:

|

||||

|

||||

```

|

||||

08:32:55 up 98 days, 5:43, 2 users, load average: 0.05, 0.03, 0.00

|

||||

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

|

||||

root pts/0 113.174.161.1 08:26 0.00s 0.03s 0.02s ssh root@coopeaa12

|

||||

root pts/1 78.31.109.1 08:26 0.00s 0.01s 0.00s w

|

||||

```

|

||||

|

||||

第一个 IP 是英国 IP,而第二个 IP 是越南 IP。这个不是个好兆头。

|

||||

|

||||

停下来做个深呼吸, 不要恐慌之下只是干掉他们的 SSH 连接。除非你能够防止他们再次进入服务器,否则他们会很快进来并踢掉你,以防你再次回去。

|

||||

|

||||

请参阅本文最后的“被入侵之后怎么办”这一章节来看找到了被入侵的证据后应该怎么办。

|

||||

|

||||

`whois` 命令可以接一个 IP 地址然后告诉你该 IP 所注册的组织的所有信息,当然就包括所在国家的信息。

|

||||

|

||||

#### 检查 2 - 谁曾经登录过?

|

||||

|

||||

Linux 服务器会记录下哪些用户,从哪个 IP,在什么时候登录的以及登录了多长时间这些信息。使用 `last` 命令可以查看这些信息。

|

||||

|

||||

输出类似这样:

|

||||

|

||||

```

|

||||

root pts/1 78.31.109.1 Thu Nov 30 08:26 still logged in

|

||||

root pts/0 113.174.161.1 Thu Nov 30 08:26 still logged in

|

||||

root pts/1 78.31.109.1 Thu Nov 30 08:24 - 08:26 (00:01)

|

||||

root pts/0 113.174.161.1 Wed Nov 29 12:34 - 12:52 (00:18)

|

||||

root pts/0 14.176.196.1 Mon Nov 27 13:32 - 13:53 (00:21)

|

||||

```

|

||||

|

||||

这里可以看到英国 IP 和越南 IP 交替出现,而且最上面两个 IP 现在还处于登录状态。如果你看到任何未经授权的 IP,那么请参阅最后章节。

|

||||

|

||||

登录后的历史记录会记录到二进制的 `/var/log/wtmp` 文件中(LCTT 译注:这里作者应该写错了,根据实际情况修改),因此很容易被删除。通常攻击者会直接把这个文件删掉,以掩盖他们的攻击行为。 因此, 若你运行了 `last` 命令却只看得见你的当前登录,那么这就是个不妙的信号。

|

||||

|

||||

如果没有登录历史的话,请一定小心,继续留意入侵的其他线索。

|

||||

|

||||

#### 检查 3 - 回顾命令历史

|

||||

|

||||

这个层次的攻击者通常不会注意掩盖命令的历史记录,因此运行 `history` 命令会显示出他们曾经做过的所有事情。

|

||||

一定留意有没有用 `wget` 或 `curl` 命令来下载类似垃圾邮件机器人或者挖矿程序之类的非常规软件。

|

||||

|

||||

命令历史存储在 `~/.bash_history` 文件中,因此有些攻击者会删除该文件以掩盖他们的所作所为。跟登录历史一样,若你运行 `history` 命令却没有输出任何东西那就表示历史文件被删掉了。这也是个不妙的信号,你需要很小心地检查一下服务器了。(LCTT 译注,如果没有命令历史,也有可能是你的配置错误。)

|

||||

|

||||

#### 检查 4 - 哪些进程在消耗 CPU?

|

||||

|

||||

你常遇到的这类攻击者通常不怎么会去掩盖他们做的事情。他们会运行一些特别消耗 CPU 的进程。这就很容易发现这些进程了。只需要运行 `top` 然后看最前的那几个进程就行了。

|

||||

|

||||

这也能显示出那些未登录进来的攻击者。比如,可能有人在用未受保护的邮件脚本来发送垃圾邮件。

|

||||

|

||||

如果你最上面的进程对不了解,那么你可以 Google 一下进程名称,或者通过 `losf` 和 `strace` 来看看它做的事情是什么。

|

||||

|

||||

使用这些工具,第一步从 `top` 中拷贝出进程的 PID,然后运行:

|

||||

|

||||

```

|

||||

strace -p PID

|

||||

```

|

||||

|

||||

这会显示出该进程调用的所有系统调用。它产生的内容会很多,但这些信息能告诉你这个进程在做什么。

|

||||

|

||||

```

|

||||

lsof -p PID

|

||||

```

|

||||

|

||||

这个程序会列出该进程打开的文件。通过查看它访问的文件可以很好的理解它在做的事情。

|

||||

|

||||

#### 检查 5 - 检查所有的系统进程

|

||||

|

||||

消耗 CPU 不严重的未授权进程可能不会在 `top` 中显露出来,不过它依然可以通过 `ps` 列出来。命令 `ps auxf` 就能显示足够清晰的信息了。

|

||||

|

||||

你需要检查一下每个不认识的进程。经常运行 `ps` (这是个好习惯)能帮助你发现奇怪的进程。

|

||||

|

||||

#### 检查 6 - 检查进程的网络使用情况

|

||||

|

||||

`iftop` 的功能类似 `top`,它会排列显示收发网络数据的进程以及它们的源地址和目的地址。类似 DoS 攻击或垃圾机器人这样的进程很容易显示在列表的最顶端。

|

||||

|

||||

#### 检查 7 - 哪些进程在监听网络连接?

|

||||

|

||||

通常攻击者会安装一个后门程序专门监听网络端口接受指令。该进程等待期间是不会消耗 CPU 和带宽的,因此也就不容易通过 `top` 之类的命令发现。

|

||||

|

||||

`lsof` 和 `netstat` 命令都会列出所有的联网进程。我通常会让它们带上下面这些参数:

|

||||

|

||||

```

|

||||

lsof -i

|

||||

```

|

||||

|

||||

```

|

||||

netstat -plunt

|

||||

```

|

||||

|

||||

你需要留意那些处于 `LISTEN` 和 `ESTABLISHED` 状态的进程,这些进程要么正在等待连接(LISTEN),要么已经连接(ESTABLISHED)。如果遇到不认识的进程,使用 `strace` 和 `lsof` 来看看它们在做什么东西。

|

||||

|

||||

### 被入侵之后该怎么办呢?

|

||||

|

||||

首先,不要紧张,尤其当攻击者正处于登录状态时更不能紧张。**你需要在攻击者警觉到你已经发现他之前夺回机器的控制权。**如果他发现你已经发觉到他了,那么他可能会锁死你不让你登陆服务器,然后开始毁尸灭迹。

|

||||

|

||||

如果你技术不太好那么就直接关机吧。你可以在服务器上运行 `shutdown -h now` 或者 `systemctl poweroff` 这两条命令之一。也可以登录主机提供商的控制面板中关闭服务器。关机后,你就可以开始配置防火墙或者咨询一下供应商的意见。

|

||||

|

||||

如果你对自己颇有自信,而你的主机提供商也有提供上游防火墙,那么你只需要以此创建并启用下面两条规则就行了:

|

||||

|

||||

1. 只允许从你的 IP 地址登录 SSH。

|

||||

2. 封禁除此之外的任何东西,不仅仅是 SSH,还包括任何端口上的任何协议。

|

||||

|

||||

这样会立即关闭攻击者的 SSH 会话,而只留下你可以访问服务器。

|

||||

|

||||

如果你无法访问上游防火墙,那么你就需要在服务器本身创建并启用这些防火墙策略,然后在防火墙规则起效后使用 `kill` 命令关闭攻击者的 SSH 会话。(LCTT 译注:本地防火墙规则 有可能不会阻止已经建立的 SSH 会话,所以保险起见,你需要手工杀死该会话。)

|

||||

|

||||

最后还有一种方法,如果支持的话,就是通过诸如串行控制台之类的带外连接登录服务器,然后通过 `systemctl stop network.service` 停止网络功能。这会关闭所有服务器上的网络连接,这样你就可以慢慢的配置那些防火墙规则了。

|

||||

|

||||

重夺服务器的控制权后,也不要以为就万事大吉了。

|

||||

|

||||

不要试着修复这台服务器,然后接着用。你永远不知道攻击者做过什么,因此你也永远无法保证这台服务器还是安全的。

|

||||

|

||||

最好的方法就是拷贝出所有的数据,然后重装系统。(LCTT 译注:你的程序这时已经不可信了,但是数据一般来说没问题。)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://bash-prompt.net/guides/server-hacked/

|

||||

|

||||

作者:[Elliot Cooper][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://bash-prompt.net

|

||||

132

published/20171130 Wake up and Shut Down Linux Automatically.md

Normal file

132

published/20171130 Wake up and Shut Down Linux Automatically.md

Normal file

@ -0,0 +1,132 @@

|

||||

如何自动唤醒和关闭 Linux

|

||||

=====================

|

||||

|

||||

|

||||

|

||||

> 了解如何通过配置 Linux 计算机来根据时间自动唤醒和关闭。

|

||||

|

||||

|

||||

不要成为一个电能浪费者。如果你的电脑不需要开机就请把它们关机。出于方便和计算机宅的考虑,你可以通过配置你的 Linux 计算机实现自动唤醒和关闭。

|

||||

|

||||

### 宝贵的系统运行时间

|

||||

|

||||

有时候有些电脑需要一直处在开机状态,在不超过电脑运行时间的限制下这种情况是被允许的。有些人为他们的计算机可以长时间的正常运行而感到自豪,且现在我们有内核热补丁能够实现只有在硬件发生故障时才需要机器关机。我认为比较实际可行的是,像减少移动部件磨损一样节省电能,且在不需要机器运行的情况下将其关机。比如,你可以在规定的时间内唤醒备份服务器,执行备份,然后关闭它直到它要进行下一次备份。或者,你可以设置你的互联网网关只在特定的时间运行。任何不需要一直运行的东西都可以将其配置成在其需要工作的时候打开,待其完成工作后将其关闭。

|

||||

|

||||

### 系统休眠

|

||||

|

||||

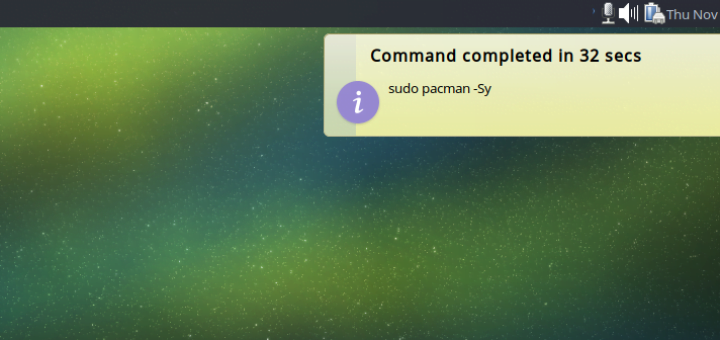

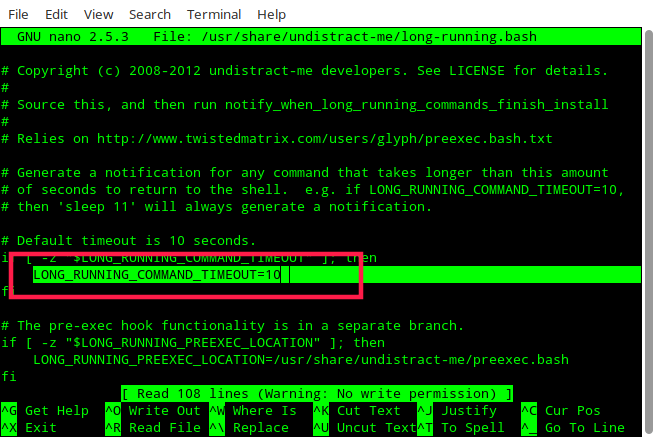

对于不需要一直运行的电脑,使用 root 的 cron 定时任务(即 `/etc/crontab`)可以可靠地关闭电脑。这个例子创建一个 root 定时任务实现每天晚上 11 点 15 分定时关机。

|

||||

|

||||

```

|

||||

# crontab -e -u root

|

||||

# m h dom mon dow command

|

||||

15 23 * * * /sbin/shutdown -h now

|

||||

```

|

||||

|

||||

以下示例仅在周一至周五运行:

|

||||

|

||||

```

|

||||

15 23 * * 1-5 /sbin/shutdown -h now

|

||||

```

|

||||

|

||||

您可以为不同的日期和时间创建多个 cron 作业。 通过命令 `man 5 crontab` 可以了解所有时间和日期的字段。

|

||||

|

||||

一个快速、容易的方式是,使用 `/etc/crontab` 文件。但这样你必须指定用户:

|

||||

|

||||

```

|

||||

15 23 * * 1-5 root shutdown -h now

|

||||

```

|

||||

|

||||

### 自动唤醒

|

||||

|

||||

实现自动唤醒是一件很酷的事情;我大多数 SUSE (SUSE Linux)的同事都在纽伦堡,因此,因此为了跟同事能有几小时一起工作的时间,我不得不需要在凌晨五点起床。我的计算机早上 5 点半自动开始工作,而我只需要将自己和咖啡拖到我的桌子上就可以开始工作了。按下电源按钮看起来好像并不是什么大事,但是在每天的那个时候每件小事都会变得很大。

|

||||

|

||||

唤醒 Linux 计算机可能不如关闭它可靠,因此你可能需要尝试不同的办法。你可以使用远程唤醒(Wake-On-LAN)、RTC 唤醒或者个人电脑的 BIOS 设置预定的唤醒这些方式。这些方式可行的原因是,当你关闭电脑时,这并不是真正关闭了计算机;此时计算机处在极低功耗状态且还可以接受和响应信号。只有在你拔掉电源开关时其才彻底关闭。

|

||||

|

||||

### BIOS 唤醒

|

||||

|

||||

BIOS 唤醒是最可靠的。我的系统主板 BIOS 有一个易于使用的唤醒调度程序 (图 1)。对你来说也是一样的容易。

|

||||

|

||||

|

||||

|

||||

*图 1:我的系统 BIOS 有个易用的唤醒定时器。*

|

||||

|

||||

### 主机远程唤醒(Wake-On-LAN)

|

||||

|

||||

远程唤醒是仅次于 BIOS 唤醒的又一种可靠的唤醒方法。这需要你从第二台计算机发送信号到所要打开的计算机。可以使用 Arduino 或<ruby>树莓派<rt>Raspberry Pi</rt></ruby>发送给基于 Linux 的路由器或者任何 Linux 计算机的唤醒信号。首先,查看系统主板 BIOS 是否支持 Wake-On-LAN ,要是支持的话,必须先启动它,因为它被默认为禁用。

|

||||

|

||||

然后,需要一个支持 Wake-On-LAN 的网卡;无线网卡并不支持。你需要运行 `ethtool` 命令查看网卡是否支持 Wake-On-LAN :

|

||||

|

||||

```

|

||||

# ethtool eth0 | grep -i wake-on

|

||||

Supports Wake-on: pumbg

|

||||

Wake-on: g

|

||||

```

|

||||

|

||||

这条命令输出的 “Supports Wake-on” 字段会告诉你你的网卡现在开启了哪些功能:

|

||||

|

||||

* d -- 禁用

|

||||

* p -- 物理活动唤醒

|

||||

* u -- 单播消息唤醒

|

||||

* m -- 多播(组播)消息唤醒

|

||||

* b -- 广播消息唤醒

|

||||

* a -- ARP 唤醒

|

||||

* g -- <ruby>特定数据包<rt>magic packet</rt></ruby>唤醒

|

||||

* s -- 设有密码的<ruby>特定数据包<rt>magic packet</rt></ruby>唤醒

|

||||

|

||||

`ethtool` 命令的 man 手册并没说清楚 `p` 选项的作用;这表明任何信号都会导致唤醒。然而,在我的测试中它并没有这么做。想要实现远程唤醒主机,必须支持的功能是 `g` —— <ruby>特定数据包<rt>magic packet</rt></ruby>唤醒,而且下面的“Wake-on” 行显示这个功能已经在启用了。如果它没有被启用,你可以通过 `ethtool` 命令来启用它。

|

||||

|

||||

```

|

||||

# ethtool -s eth0 wol g

|

||||

```

|

||||

|

||||

这条命令可能会在重启后失效,所以为了确保万无一失,你可以创建个 root 用户的定时任务(cron)在每次重启的时候来执行这条命令。

|

||||

|

||||

```

|

||||

@reboot /usr/bin/ethtool -s eth0 wol g

|

||||

```

|

||||

|

||||

另一个选择是最近的<ruby>网络管理器<rt>Network Manager</rt></ruby>版本有一个很好的小复选框来启用 Wake-On-LAN(图 2)。

|

||||

|

||||

|

||||

|

||||

*图 2:启用 Wake on LAN*

|

||||

|

||||

这里有一个可以用于设置密码的地方,但是如果你的网络接口不支持<ruby>安全开机<rt>Secure On</rt></ruby>密码,它就不起作用。

|

||||

|

||||

现在你需要配置第二台计算机来发送唤醒信号。你并不需要 root 权限,所以你可以为你的普通用户创建 cron 任务。你需要用到的是想要唤醒的机器的网络接口和MAC地址信息。

|

||||

|

||||

```

|

||||

30 08 * * * /usr/bin/wakeonlan D0:50:99:82:E7:2B

|

||||

```

|

||||

|

||||

### RTC 唤醒

|

||||

|

||||

通过使用实时闹钟来唤醒计算机是最不可靠的方法。对于这个方法,可以参看 [Wake Up Linux With an RTC Alarm Clock][4] ;对于现在的大多数发行版来说这种方法已经有点过时了。

|

||||

|

||||

下周继续了解更多关于使用 RTC 唤醒的方法。

|

||||

|

||||

通过 Linux 基金会和 edX 可以学习更多关于 Linux 的免费 [Linux 入门][5]教程。

|

||||

|

||||

(题图:[The Observatory at Delhi][7])

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via:https://www.linux.com/learn/intro-to-linux/2017/11/wake-and-shut-down-linux-automatically

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[HardworkFish](https://github.com/HardworkFish)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/cschroder

|

||||

[1]:https://www.linux.com/files/images/bannerjpg

|

||||

[2]:https://www.linux.com/files/images/fig-1png-11

|

||||

[3]:https://www.linux.com/files/images/fig-2png-7

|

||||

[4]:https://www.linux.com/learn/wake-linux-rtc-alarm-clock

|

||||

[5]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

[6]:https://www.linux.com/licenses/category/creative-commons-attribution

|

||||

[7]:http://www.columbia.edu/itc/mealac/pritchett/00routesdata/1700_1799/jaipur/delhijantarearly/delhijantarearly.html

|

||||

[8]:https://www.linux.com/licenses/category/used-permission

|

||||

[9]:https://www.linux.com/licenses/category/used-permission

|

||||

|

||||

@ -1,13 +1,9 @@

|

||||

如何在 Linux 系统中用用户组来管理用户

|

||||

如何在 Linux 系统中通过用户组来管理用户

|

||||

============================================================

|

||||

|

||||

### [group-of-people-1645356_1920.jpg][1]

|

||||

|

||||

|

||||

|

||||

本教程可以了解如何通过用户组和访问控制表(ACL)来管理用户。

|

||||

|

||||

[创意共享协议][4]

|

||||

> 本教程可以了解如何通过用户组和访问控制表(ACL)来管理用户。

|

||||

|

||||

当你需要管理一台容纳多个用户的 Linux 机器时,比起一些基本的用户管理工具所提供的方法,有时候你需要对这些用户采取更多的用户权限管理方式。特别是当你要管理某些用户的权限时,这个想法尤为重要。比如说,你有一个目录,某个用户组中的用户可以通过读和写的权限访问这个目录,而其他用户组中的用户对这个目录只有读的权限。在 Linux 中,这是完全可以实现的。但前提是你必须先了解如何通过用户组和访问控制表(ACL)来管理用户。

|

||||

|

||||

@ -18,36 +14,32 @@

|

||||

你需要用下面两个用户名新建两个用户:

|

||||

|

||||

* olivia

|

||||

|

||||

* nathan

|

||||

|

||||

你需要新建以下两个用户组:

|

||||

|