mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

7826602c01

@ -1,21 +1,24 @@

|

||||

如何在 Git 中重置、恢复、和返回到以前的状态

|

||||

如何在 Git 中重置、恢复,返回到以前的状态

|

||||

======

|

||||

|

||||

> 用简洁而优雅的 Git 命令撤销仓库中的改变。

|

||||

|

||||

|

||||

|

||||

使用 Git 工作时其中一个鲜为人知(和没有意识到)的方面就是,如何很容易地返回到你以前的位置 —— 也就是说,在仓库中如何很容易地去撤销那怕是重大的变更。在本文中,我们将带你了解如何去重置、恢复、和完全回到以前的状态,做到这些只需要几个简单而优雅的 Git 命令。

|

||||

使用 Git 工作时其中一个鲜为人知(和没有意识到)的方面就是,如何轻松地返回到你以前的位置 —— 也就是说,在仓库中如何很容易地去撤销那怕是重大的变更。在本文中,我们将带你了解如何去重置、恢复和完全回到以前的状态,做到这些只需要几个简单而优雅的 Git 命令。

|

||||

|

||||

### reset

|

||||

### 重置

|

||||

|

||||

我们从 Git 的 `reset` 命令开始。确实,你应该能够想到它就是一个 "回滚" — 它将你本地环境返回到前面的提交。这里的 "本地环境" 一词,我们指的是你的本地仓库、暂存区、以及工作目录。

|

||||

我们从 Git 的 `reset` 命令开始。确实,你应该能够认为它就是一个 “回滚” —— 它将你本地环境返回到之前的提交。这里的 “本地环境” 一词,我们指的是你的本地仓库、暂存区以及工作目录。

|

||||

|

||||

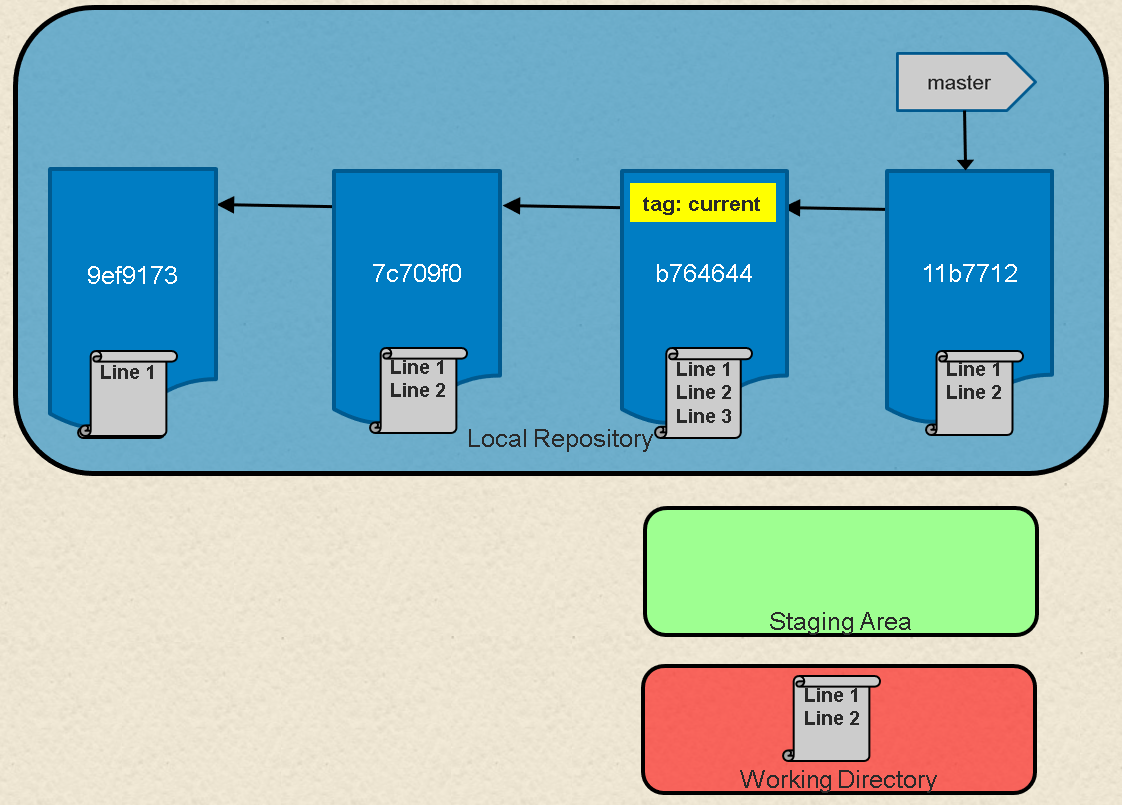

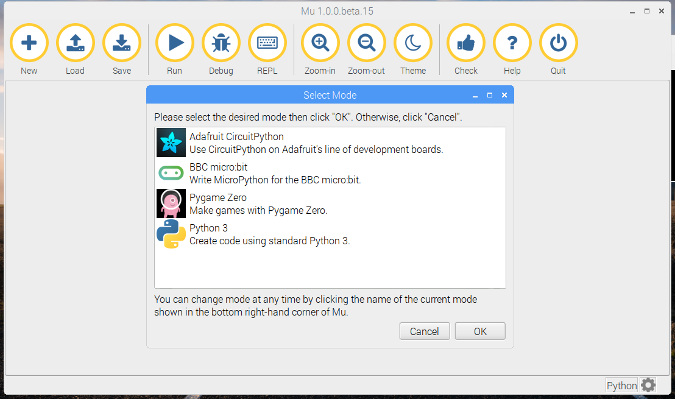

先看一下图 1。在这里我们有一个在 Git 中表示一系列状态的提交。在 Git 中一个分支就是简单的一个命名的、可移动指针到一个特定的提交。在这种情况下,我们的 master 分支是链中指向最新提交的一个指针。

|

||||

先看一下图 1。在这里我们有一个在 Git 中表示一系列提交的示意图。在 Git 中一个分支简单来说就是一个命名的、指向一个特定的提交的可移动指针。在这里,我们的 master 分支是指向链中最新提交的一个指针。

|

||||

|

||||

![Local Git environment with repository, staging area, and working directory][2]

|

||||

|

||||

图 1:有仓库、暂存区、和工作目录的本地环境

|

||||

*图 1:有仓库、暂存区、和工作目录的本地环境*

|

||||

|

||||

如果看一下我们的 master 分支是什么,可以看一下到目前为止我们产生的提交链。

|

||||

|

||||

```

|

||||

$ git log --oneline

|

||||

b764644 File with three lines

|

||||

@ -23,41 +26,49 @@ b764644 File with three lines

|

||||

9ef9173 File with one line

|

||||

```

|

||||

|

||||

如果我们想回滚到前一个提交会发生什么呢?很简单 —— 我们只需要移动分支指针即可。Git 提供了为我们做这个动作的命令。例如,如果我们重置 master 为当前提交回退两个提交的位置,我们可以使用如下之一的方法:

|

||||

如果我们想回滚到前一个提交会发生什么呢?很简单 —— 我们只需要移动分支指针即可。Git 提供了为我们做这个动作的 `reset` 命令。例如,如果我们重置 master 为当前提交回退两个提交的位置,我们可以使用如下之一的方法:

|

||||

|

||||

`$ git reset 9ef9173`(使用一个绝对的提交 SHA1 值 9ef9173)

|

||||

```

|

||||

$ git reset 9ef9173

|

||||

```

|

||||

|

||||

或

|

||||

(使用一个绝对的提交 SHA1 值 `9ef9173`)

|

||||

|

||||

`$ git reset current~2`(在 “current” 标签之前,使用一个相对值 -2)

|

||||

或:

|

||||

|

||||

```

|

||||

$ git reset current~2

|

||||

```

|

||||

(在 “current” 标签之前,使用一个相对值 -2)

|

||||

|

||||

图 2 展示了操作的结果。在这之后,如果我们在当前分支(master)上运行一个 `git log` 命令,我们将看到只有一个提交。

|

||||

|

||||

```

|

||||

$ git log --oneline

|

||||

|

||||

9ef9173 File with one line

|

||||

|

||||

```

|

||||

|

||||

![After reset][4]

|

||||

|

||||

图 2:在 `reset` 之后

|

||||

*图 2:在 `reset` 之后*

|

||||

|

||||

`git reset` 命令也包含使用一个你最终满意的提交内容去更新本地环境的其它部分的选项。这些选项包括:`hard` 在仓库中去重置指向的提交,用提交的内容去填充工作目录,并重置暂存区;`soft` 仅重置仓库中的指针;而 `mixed`(默认值)将重置指针和暂存区。

|

||||

`git reset` 命令也包含使用一些选项,可以让你最终满意的提交内容去更新本地环境的其它部分。这些选项包括:`hard` 在仓库中去重置指向的提交,用提交的内容去填充工作目录,并重置暂存区;`soft` 仅重置仓库中的指针;而 `mixed`(默认值)将重置指针和暂存区。

|

||||

|

||||

这些选项在特定情况下非常有用,比如,`git reset --hard <commit sha1 | reference>` 这个命令将覆盖本地任何未提交的更改。实际上,它重置了(清除掉)暂存区,并用你重置的提交内容去覆盖了工作区中的内容。在你使用 `hard` 选项之前,一定要确保这是你真正地想要做的操作,因为这个命令会覆盖掉任何未提交的更改。

|

||||

|

||||

### revert

|

||||

### 恢复

|

||||

|

||||

`git revert` 命令的实际结果类似于 `reset`,但它的方法不同。`reset` 命令是在(默认)链中向后移动分支的指针去“撤销”更改,`revert` 命令是在链中添加一个新的提交去“取消”更改。再次查看图 1 可以非常轻松地看到这种影响。如果我们在链中的每个提交中向文件添加一行,一种方法是使用 `reset` 使那个提交返回到仅有两行的那个版本,如:`git reset HEAD~1`。

|

||||

`git revert` 命令的实际结果类似于 `reset`,但它的方法不同。`reset` 命令(默认)是在链中向后移动分支的指针去“撤销”更改,`revert` 命令是在链中添加一个新的提交去“取消”更改。再次查看图 1 可以非常轻松地看到这种影响。如果我们在链中的每个提交中向文件添加一行,一种方法是使用 `reset` 使那个提交返回到仅有两行的那个版本,如:`git reset HEAD~1`。

|

||||

|

||||

另一个方法是添加一个新的提交去删除第三行,以使最终结束变成两行的版本 —— 实际效果也是取消了那个更改。使用一个 `git revert` 命令可以实现上述目的,比如:

|

||||

|

||||

另一个方法是添加一个新的提交去删除第三行,以使最终结束变成两行的版本 — 实际效果也是取消了那个更改。使用一个 `git revert` 命令可以实现上述目的,比如:

|

||||

```

|

||||

$ git revert HEAD

|

||||

|

||||

```

|

||||

|

||||

因为它添加了一个新的提交,Git 将提示如下的提交信息:

|

||||

|

||||

```

|

||||

Revert "File with three lines"

|

||||

|

||||

@ -74,6 +85,7 @@ This reverts commit b764644bad524b804577684bf74e7bca3117f554.

|

||||

图 3(在下面)展示了 `revert` 操作完成后的结果。

|

||||

|

||||

如果我们现在运行一个 `git log` 命令,我们将看到前面的提交之前的一个新提交。

|

||||

|

||||

```

|

||||

$ git log --oneline

|

||||

11b7712 Revert "File with three lines"

|

||||

@ -83,6 +95,7 @@ b764644 File with three lines

|

||||

```

|

||||

|

||||

这里是工作目录中这个文件当前的内容:

|

||||

|

||||

```

|

||||

$ cat <filename>

|

||||

Line 1

|

||||

@ -91,31 +104,34 @@ Line 2

|

||||

|

||||

|

||||

|

||||

#### Revert 或 reset 如何选择?

|

||||

*图 3 `revert` 操作之后*

|

||||

|

||||

#### 恢复或重置如何选择?

|

||||

|

||||

为什么要优先选择 `revert` 而不是 `reset` 操作?如果你已经将你的提交链推送到远程仓库(其它人可以已经拉取了你的代码并开始工作),一个 `revert` 操作是让他们去获得更改的非常友好的方式。这是因为 Git 工作流可以非常好地在分支的末端添加提交,但是当有人 `reset` 分支指针之后,一组提交将再也看不见了,这可能会是一个挑战。

|

||||

|

||||

当我们以这种方式使用 Git 工作时,我们的基本规则之一是:在你的本地仓库中使用这种方式去更改还没有推送的代码是可以的。如果提交已经推送到了远程仓库,并且可能其它人已经使用它来工作了,那么应该避免这些重写提交历史的更改。

|

||||

|

||||

总之,如果你想回滚、撤销、或者重写其它人已经在使用的一个提交链的历史,当你的同事试图将他们的更改合并到他们拉取的原始链上时,他们可能需要做更多的工作。如果你必须对已经推送并被其他人正在使用的代码做更改,在你做更改之前必须要与他们沟通,让他们先合并他们的更改。然后在没有需要去合并的侵入操作之后,他们再拉取最新的副本。

|

||||

总之,如果你想回滚、撤销或者重写其它人已经在使用的一个提交链的历史,当你的同事试图将他们的更改合并到他们拉取的原始链上时,他们可能需要做更多的工作。如果你必须对已经推送并被其他人正在使用的代码做更改,在你做更改之前必须要与他们沟通,让他们先合并他们的更改。然后在这个侵入操作没有需要合并的内容之后,他们再拉取最新的副本。

|

||||

|

||||

你可能注意到了,在我们做了 `reset` 操作之后,原始的提交链仍然在那个位置。我们移动了指针,然后 `reset` 代码回到前一个提交,但它并没有删除任何提交。换句话说就是,只要我们知道我们所指向的原始提交,我们能够通过简单的返回到分支的原始链的头部来“恢复”指针到前面的位置:

|

||||

|

||||

你可能注意到了,在我们做了 `reset` 操作之后,原始的链仍然在那个位置。我们移动了指针,然后 `reset` 代码回到前一个提交,但它并没有删除任何提交。换句话说就是,只要我们知道我们所指向的原始提交,我们能够通过简单的返回到分支的原始头部来“恢复”指针到前面的位置:

|

||||

```

|

||||

git reset <sha1 of commit>

|

||||

|

||||

```

|

||||

|

||||

当提交被替换之后,我们在 Git 中做的大量其它操作也会发生类似的事情。新提交被创建,有关的指针被移动到一个新的链,但是老的提交链仍然存在。

|

||||

|

||||

### Rebase

|

||||

### 变基

|

||||

|

||||

现在我们来看一个分支变基。假设我们有两个分支 — master 和 feature — 提交链如下图 4 所示。Master 的提交链是 `C4->C2->C1->C0` 和 feature 的提交链是 `C5->C3->C2->C1->C0`.

|

||||

现在我们来看一个分支变基。假设我们有两个分支:master 和 feature,提交链如下图 4 所示。master 的提交链是 `C4->C2->C1->C0` 和 feature 的提交链是 `C5->C3->C2->C1->C0`。

|

||||

|

||||

![Chain of commits for branches master and feature][6]

|

||||

|

||||

图 4:master 和 feature 分支的提交链

|

||||

*图 4:master 和 feature 分支的提交链*

|

||||

|

||||

如果我们在分支中看它的提交记录,它们看起来应该像下面的这样。(为了易于理解,`C` 表示提交信息)

|

||||

|

||||

```

|

||||

$ git log --oneline master

|

||||

6a92e7a C4

|

||||

@ -131,9 +147,10 @@ f33ae68 C1

|

||||

5043e79 C0

|

||||

```

|

||||

|

||||

我给人讲,在 Git 中,可以将 `rebase` 认为是 “将历史合并”。从本质上来说,Git 将一个分支中的每个不同提交尝试“重放”到另一个分支中。

|

||||

我告诉人们在 Git 中,可以将 `rebase` 认为是 “将历史合并”。从本质上来说,Git 将一个分支中的每个不同提交尝试“重放”到另一个分支中。

|

||||

|

||||

因此,我们使用基本的 Git 命令,可以变基一个 feature 分支进入到 master 中,并将它拼入到 `C4` 中(比如,将它插入到 feature 的链中)。操作命令如下:

|

||||

|

||||

因此,我们使用基本的 Git 命令,可以 rebase 一个 feature 分支进入到 master 中,并将它拼入到 `C4` 中(比如,将它插入到 feature 的链中)。操作命令如下:

|

||||

```

|

||||

$ git checkout feature

|

||||

$ git rebase master

|

||||

@ -147,9 +164,10 @@ Applying: C5

|

||||

|

||||

![Chain of commits after the rebase command][8]

|

||||

|

||||

图 5:`rebase` 命令完成后的提交链

|

||||

*图 5:`rebase` 命令完成后的提交链*

|

||||

|

||||

接着,我们看一下提交历史,它应该变成如下的样子。

|

||||

|

||||

```

|

||||

$ git log --oneline master

|

||||

6a92e7a C4

|

||||

@ -168,25 +186,27 @@ f33ae68 C1

|

||||

|

||||

注意那个 `C3'` 和 `C5'`— 在 master 分支上已处于提交链的“顶部”,由于产生了更改而创建了新提交。但是也要注意的是,rebase 后“原始的” `C3` 和 `C5` 仍然在那里 — 只是再没有一个分支指向它们而已。

|

||||

|

||||

如果我们做了这个 rebase,然后确定这不是我们想要的结果,希望去撤销它,我们可以做下面示例所做的操作:

|

||||

如果我们做了这个变基,然后确定这不是我们想要的结果,希望去撤销它,我们可以做下面示例所做的操作:

|

||||

|

||||

```

|

||||

$ git reset 79768b8

|

||||

|

||||

```

|

||||

|

||||

由于这个简单的变更,现在我们的分支将重新指向到做 `rebase` 操作之前一模一样的位置 —— 完全等效于撤销操作(图 6)。

|

||||

|

||||

![After undoing rebase][10]

|

||||

|

||||

图 6:撤销 `rebase` 操作之后

|

||||

*图 6:撤销 `rebase` 操作之后*

|

||||

|

||||

如果你想不起来之前一个操作指向的一个分支上提交了什么内容怎么办?幸运的是,Git 命令依然可以帮助你。用这种方式可以修改大多数操作的指针,Git 会记住你的原始提交。事实上,它是在 `.git` 仓库目录下,将它保存为一个特定的名为 `ORIG_HEAD ` 的文件中。在它被修改之前,那个路径是一个包含了大多数最新引用的文件。如果我们 `cat` 这个文件,我们可以看到它的内容。

|

||||

|

||||

```

|

||||

$ cat .git/ORIG_HEAD

|

||||

79768b891f47ce06f13456a7e222536ee47ad2fe

|

||||

```

|

||||

|

||||

我们可以使用 `reset` 命令,正如前面所述,它返回指向到原始的链。然后它的历史将是如下的这样:

|

||||

|

||||

```

|

||||

$ git log --oneline feature

|

||||

79768b8 C5

|

||||

@ -196,7 +216,8 @@ f33ae68 C1

|

||||

5043e79 C0

|

||||

```

|

||||

|

||||

在 reflog 中是获取这些信息的另外一个地方。这个 reflog 是你本地仓库中相关切换或更改的详细描述清单。你可以使用 `git reflog` 命令去查看它的内容:

|

||||

在 reflog 中是获取这些信息的另外一个地方。reflog 是你本地仓库中相关切换或更改的详细描述清单。你可以使用 `git reflog` 命令去查看它的内容:

|

||||

|

||||

```

|

||||

$ git reflog

|

||||

79768b8 HEAD@{0}: reset: moving to 79768b

|

||||

@ -216,10 +237,10 @@ f33ae68 HEAD@{13}: commit: C1

|

||||

5043e79 HEAD@{14}: commit (initial): C0

|

||||

```

|

||||

|

||||

你可以使用日志中列出的、你看到的相关命名格式,去 reset 任何一个东西:

|

||||

你可以使用日志中列出的、你看到的相关命名格式,去重置任何一个东西:

|

||||

|

||||

```

|

||||

$ git reset HEAD@{1}

|

||||

|

||||

```

|

||||

|

||||

一旦你理解了当“修改”链的操作发生后,Git 是如何跟踪原始提交链的基本原理,那么在 Git 中做一些更改将不再是那么可怕的事。这就是强大的 Git 的核心能力之一:能够很快速、很容易地尝试任何事情,并且如果不成功就撤销它们。

|

||||

@ -233,7 +254,7 @@ via: https://opensource.com/article/18/6/git-reset-revert-rebase-commands

|

||||

作者:[Brent Laster][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,29 +1,28 @@

|

||||

跨站请求伪造

|

||||

CSRF(跨站请求伪造)简介

|

||||

======

|

||||

|

||||

设计 Web 程序时,安全性是一个主要问题。我不是在谈论 DDOS 保护,使用强密码或两步验证。我在谈论对网络程序的最大威胁。它被称为**CSRF**, 是 **Cross Site Resource Forgery** (跨站请求伪造)的缩写。

|

||||

|

||||

设计 Web 程序时,安全性是一个主要问题。我不是在谈论 DDoS 保护、使用强密码或两步验证。我说的是对网络程序的最大威胁。它被称为**CSRF**, 是 **Cross Site Resource Forgery** (跨站请求伪造)的缩写。

|

||||

|

||||

### 什么是 CSRF?

|

||||

|

||||

[][1]

|

||||

|

||||

首先,**CSRF** 是 Cross Site Resource Forgery 的缩写。它通常发音为 “sea-surf”,也经常被称为XSRF。CSRF 是一种攻击类型,在受害者不知情的情况下,在受害者登录的 Web 程序上执行各种操作。这些行为可以是任何事情,从简单地喜欢或评论社交媒体帖子到向人们发送垃圾消息,甚至从受害者的银行账户转移资金。

|

||||

|

||||

首先,**CSRF** 是 Cross Site Resource Forgery 的缩写。它通常发音为 “sea-surf”,也经常被称为 XSRF。CSRF 是一种攻击类型,在受害者不知情的情况下,在受害者登录的 Web 程序上执行各种操作。这些行为可以是任何事情,从简单地点赞或评论社交媒体帖子到向人们发送垃圾消息,甚至从受害者的银行账户转移资金。

|

||||

|

||||

### CSRF 如何工作?

|

||||

|

||||

**CSRF** 攻击尝试利用在所有浏览器一个简单的常见漏洞。每次我们对网站进行身份验证或登录时,会话 cookie 都会存储在浏览器中。因此,每当我们向网站提出请求时,这些 cookie 就会自动发送到服务器,服务器通过匹配与服务器记录一起发送的 cookie 来识别我们。这样就知道是我们了。

|

||||

**CSRF** 攻击尝试利用所有浏览器上的一个简单的常见漏洞。每次我们对网站进行身份验证或登录时,会话 cookie 都会存储在浏览器中。因此,每当我们向网站提出请求时,这些 cookie 就会自动发送到服务器,服务器通过匹配与服务器记录一起发送的 cookie 来识别我们。这样就知道是我们了。

|

||||

|

||||

[][2]

|

||||

|

||||

这意味着我将在知情或不知情的情况下发出关请求。由于 cookie 被发送并且它们将匹配服务器上的记录,服务器认为我在发出该请求。

|

||||

这意味着我将在知情或不知情的情况下发出请求。由于 cookie 也被发送并且它们将匹配服务器上的记录,服务器认为我在发出该请求。

|

||||

|

||||

|

||||

CSRF 攻击通常以链接的形式出现。我们可以在其他网站上点击它们或通过电子邮件接收它们。单击这些链接时,会向服务器发出不需要的请求。正如我之前所说,服务器认为我们发出了请求并对其进行了身份验证。

|

||||

|

||||

#### 一个真实世界的例子

|

||||

|

||||

为了把事情看得更深入,想象一下你已登录银行的网站。并在 **yourbank.com/transfer** 上填写表格。你将接收者的帐号填写为 1234,填入金额 5,000 并单击提交按钮。现在,我们将有一个 **yourbank.com/transfer/send?to=1234&amount=5000** 的请求。因此服务器将根据请求进行操作并转账。现在想象一下你在另一个网站上,然后点击一个链接,用黑客的帐号打开上面的 URL。这笔钱现在会转账给黑客,服务器认为你做了交易。即使你没有。

|

||||

为了把事情看得更深入,想象一下你已登录银行的网站。并在 **yourbank.com/transfer** 上填写表格。你将接收者的帐号填写为 1234,填入金额 5,000 并单击提交按钮。现在,我们将有一个 **yourbank.com/transfer/send?to=1234&amount=5000** 的请求。因此服务器将根据请求进行操作并转账。现在想象一下你在另一个网站上,然后点击一个链接,用黑客的帐号作为参数打开上面的 URL。这笔钱现在会转账给黑客,服务器认为你做了交易。即使你没有。

|

||||

|

||||

[][3]

|

||||

|

||||

@ -43,7 +42,7 @@ via: http://www.linuxandubuntu.com/home/understanding-csrf-cross-site-request-fo

|

||||

作者:[linuxandubuntu][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,603 +0,0 @@

|

||||

Translating by MjSeven

|

||||

|

||||

A Collection Of Useful BASH Scripts For Heavy Commandline Users

|

||||

======

|

||||

|

||||

|

||||

|

||||

Today, I have stumbled upon a collection of useful BASH scripts for heavy commandline users. These scripts, known as **Bash-Snippets** , might be quite helpful for those who live in Terminal all day. Want to check the weather of a place where you live? This script will do that for you. Wondering what is the Stock price? You can run the script that displays the current details of a stock. Feel bored? You can watch some youtube videos. All from commandline. You don’t need to install any heavy memory consumable GUI applications.

|

||||

|

||||

As of writing this, Bash-Snippets provides the following 19 useful tools:

|

||||

|

||||

1. **Cheat** – Linux Commands cheat sheet.

|

||||

2. **Cloudup** – A tool to backup your GitHub repositories to bitbucket.

|

||||

3. **Crypt** – Encrypt and decrypt files.

|

||||

4. **Cryptocurrency** – Converts Cryptocurrency based on realtime exchange rates of the top 10 cryptos.

|

||||

5. **Currency** – Currency converter.

|

||||

6. **Geo** – Provides the details of wan, lan, router, dns, mac, and ip.

|

||||

7. **Lyrics** – Grab lyrics for a given song quickly from the command line.

|

||||

8. **Meme** – Command line meme creator.

|

||||

9. **Movies** – Search and display a movie details.

|

||||

10. **Newton** – Performs numerical calculations all the way up to symbolic math parsing.

|

||||

11. **Qrify** – Turns the given string into a qr code.

|

||||

12. **Short** – URL Shortner

|

||||

13. **Siteciphers** – Check which ciphers are enabled / disabled for a given https site.

|

||||

14. **Stocks** – Provides certain Stock details.

|

||||

15. **Taste** – Recommendation engine that provides three similar items like the supplied item (The items can be books, music, artists, movies, and games etc).

|

||||

16. **Todo** – Command line todo manager.

|

||||

17. **Transfer** – Quickly transfer files from the command line.

|

||||

18. **Weather** – Displays weather details of your place.

|

||||

19. **Youtube-Viewer** – Watch YouTube from Terminal.

|

||||

|

||||

|

||||

|

||||

The author might add more utilities and/or features in future, so I recommend you to keep an eye on the project’s website or GitHub page for future updates.

|

||||

|

||||

### Bash-Snippets – A Collection Of Useful BASH Scripts For Heavy Commandline Users

|

||||

|

||||

#### Installation

|

||||

|

||||

You can install these scripts on any OS that supports BASH.

|

||||

|

||||

First, clone the GIT repository using command:

|

||||

```

|

||||

$ git clone https://github.com/alexanderepstein/Bash-Snippets

|

||||

|

||||

```

|

||||

|

||||

Go to the cloned directory:

|

||||

```

|

||||

$ cd Bash-Snippets/

|

||||

|

||||

```

|

||||

|

||||

Git checkout to the latest stable release:

|

||||

```

|

||||

$ git checkout v1.22.0

|

||||

|

||||

```

|

||||

|

||||

Finally, install the Bash-Snippets using command:

|

||||

```

|

||||

$ sudo ./install.sh

|

||||

|

||||

```

|

||||

|

||||

This will ask you which scripts to install. Just type **Y** and press ENTER key to install the respective script. If you don’t want to install a particular script, type **N** and hit ENTER.

|

||||

```

|

||||

Do you wish to install currency [Y/n]: y

|

||||

|

||||

```

|

||||

|

||||

To install all scripts, run:

|

||||

```

|

||||

$ sudo ./install.sh all

|

||||

|

||||

```

|

||||

|

||||

To install a specific script, say currency, run:

|

||||

```

|

||||

$ sudo ./install.sh currency

|

||||

|

||||

```

|

||||

|

||||

You can also install it using [**Linuxbrew**][1] package manager.

|

||||

|

||||

To installs all tools, run:

|

||||

```

|

||||

$ brew install bash-snippets

|

||||

|

||||

```

|

||||

|

||||

To install specific tools:

|

||||

```

|

||||

$ brew install bash-snippets --without-all-tools --with-newton --with-weather

|

||||

|

||||

```

|

||||

|

||||

Also, there is a PPA for Debian-based systems such as Ubuntu, Linux Mint.

|

||||

```

|

||||

$ sudo add-apt-repository ppa:navanchauhan/bash-snippets

|

||||

$ sudo apt update

|

||||

$ sudo apt install bash-snippets

|

||||

|

||||

```

|

||||

|

||||

#### Usage

|

||||

|

||||

**An active Internet connection is required** to use these tools. The usage is fairly simple. Let us see how to use some of these scripts. I assume you have installed all scripts.

|

||||

|

||||

**1\. Currency – Currency Converter**

|

||||

|

||||

This script converts the currency based on realtime exchange rates. Enter the base currency code and the currency to exchange to, and the amount being exchanged one by one as shown below.

|

||||

```

|

||||

$ currency

|

||||

What is the base currency: INR

|

||||

What currency to exchange to: USD

|

||||

What is the amount being exchanged: 10

|

||||

|

||||

=========================

|

||||

| INR to USD

|

||||

| Rate: 0.015495

|

||||

| INR: 10

|

||||

| USD: .154950

|

||||

=========================

|

||||

|

||||

```

|

||||

|

||||

You can also pass all arguments in a single command as shown below.

|

||||

```

|

||||

$ currency INR USD 10

|

||||

|

||||

```

|

||||

|

||||

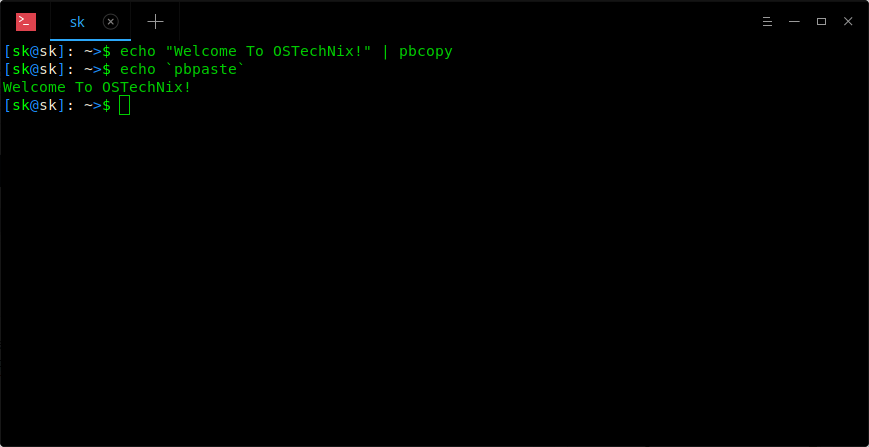

Refer the following screenshot.

|

||||

|

||||

[![Bash-Snippets][2]][3]

|

||||

|

||||

**2\. Stocks – Display stock price details**

|

||||

|

||||

If you want to check a stock price details, mention the stock item as shown below.

|

||||

```

|

||||

$ stocks Intel

|

||||

|

||||

INTC stock info

|

||||

=============================================

|

||||

| Exchange Name: NASDAQ

|

||||

| Latest Price: 34.2500

|

||||

| Close (Previous Trading Day): 34.2500

|

||||

| Price Change: 0.0000

|

||||

| Price Change Percentage: 0.00%

|

||||

| Last Updated: Jul 12, 4:00PM EDT

|

||||

=============================================

|

||||

|

||||

```

|

||||

|

||||

The above output the **Intel stock** details.

|

||||

|

||||

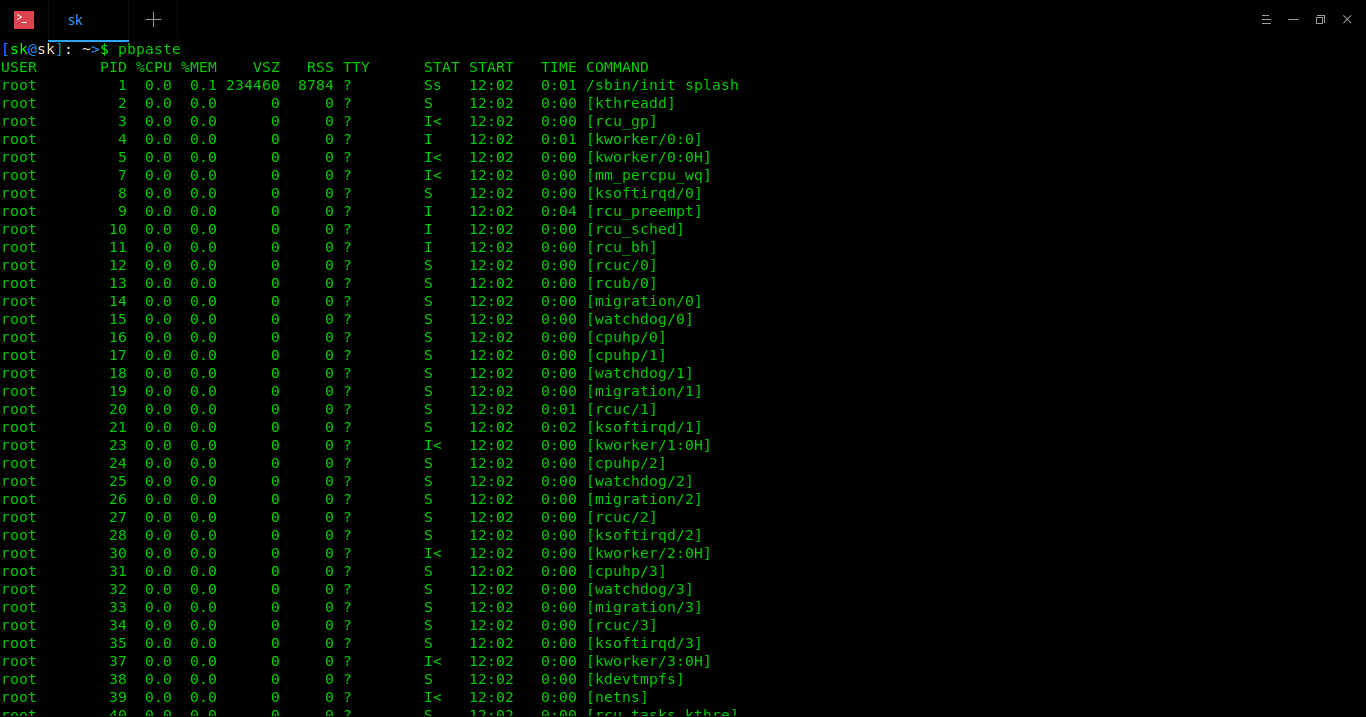

**3\. Weather – Display Weather details**

|

||||

|

||||

Let us check the Weather details by running the following command:

|

||||

```

|

||||

$ weather

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

|

||||

![][4]

|

||||

|

||||

As you see in the above screenshot, it provides the 3 day weather forecast. Without any arguments, it will display the weather details based on your IP address. You can also bring the weather details of a particular city or country like below.

|

||||

```

|

||||

$ weather Chennai

|

||||

|

||||

```

|

||||

|

||||

Also, you can view the moon phase by entering the following command:

|

||||

```

|

||||

$ weather moon

|

||||

|

||||

```

|

||||

|

||||

Sample output would be:

|

||||

|

||||

![][5]

|

||||

|

||||

**4\. Crypt – Encrypt and Decrypt files**

|

||||

|

||||

This script is a wrapper for openssl that allows you to encrypt and decrypt files quickly and easily.

|

||||

|

||||

To encrypt a file, use the following command:

|

||||

```

|

||||

$ crypt -e [original file] [encrypted file]

|

||||

|

||||

```

|

||||

|

||||

For example, the following command will encrypt a file called **ostechnix.txt** , and save it as **encrypt_ostechnix.txt **in the current working directory.

|

||||

```

|

||||

$ crypt -e ostechnix.txt encrypt_ostechnix.txt

|

||||

|

||||

```

|

||||

|

||||

Enter the password for the file twice.

|

||||

```

|

||||

Encrypting ostechnix.txt...

|

||||

enter aes-256-cbc encryption password:

|

||||

Verifying - enter aes-256-cbc encryption password:

|

||||

Successfully encrypted

|

||||

|

||||

```

|

||||

|

||||

The above command will encrypt the given file using **AES 256 level encryption**. The password will not be saved in plain text. You can encrypt .pdf, .txt, .docx, .doc, .png, .jpeg type files.

|

||||

|

||||

To decrypt the file, use the following command:

|

||||

```

|

||||

$ crypt -d [encrypted file] [output file]

|

||||

|

||||

```

|

||||

|

||||

Example:

|

||||

```

|

||||

$ crypt -d encrypt_ostechnix.txt ostechnix.txt

|

||||

|

||||

```

|

||||

|

||||

Enter the password to decrypt.

|

||||

```

|

||||

Decrypting encrypt_ostechnix.txt...

|

||||

enter aes-256-cbc decryption password:

|

||||

Successfully decrypted

|

||||

|

||||

```

|

||||

|

||||

**5\. Movies – Find Movie details**

|

||||

|

||||

Using this script, you can find a movie details.

|

||||

|

||||

The following command displays the details of a movie called “mother”.

|

||||

```

|

||||

$ movies mother

|

||||

|

||||

==================================================

|

||||

| Title: Mother

|

||||

| Year: 2009

|

||||

| Tomato: 95%

|

||||

| Rated: R

|

||||

| Genre: Crime, Drama, Mystery

|

||||

| Director: Bong Joon Ho

|

||||

| Actors: Hye-ja Kim, Bin Won, Goo Jin, Je-mun Yun

|

||||

| Plot: A mother desperately searches for the killer who framed her son for a girl's horrific murder.

|

||||

==================================================

|

||||

|

||||

```

|

||||

|

||||

**6\. Display similar items like the supplied item**

|

||||

|

||||

To use this script, you need to get the API key **[here][6]**. No worries, it is completely FREE! Once the you got the API, add the following line to your **~/.bash_profile** : **export TASTE_API_KEY=”yourAPIKeyGoesHere”**``

|

||||

|

||||

Now, you can view the similar item like the supplied item as shown below:

|

||||

```

|

||||

$ taste -i Red Hot Chilli Peppers

|

||||

|

||||

```

|

||||

|

||||

**7\. Short – Shorten URLs**

|

||||

|

||||

This script shortens the given URL.

|

||||

```

|

||||

$ short <URL>

|

||||

|

||||

```

|

||||

|

||||

**8\. Geo – Display the details of your network**

|

||||

|

||||

This script helps you to find out the details of your network, such as wan, lan, router, dns, mac, and ip geolocation.

|

||||

|

||||

For example, to find out your LAN ip, run:

|

||||

```

|

||||

$ geo -l

|

||||

|

||||

```

|

||||

|

||||

Sample output from my system:

|

||||

```

|

||||

192.168.43.192

|

||||

|

||||

```

|

||||

|

||||

To find your Wan IP:

|

||||

```

|

||||

$ geo -w

|

||||

|

||||

```

|

||||

|

||||

For more details, just type ‘geo’ in the Terminal.

|

||||

```

|

||||

$ geo

|

||||

Geo

|

||||

Description: Provides quick access for wan, lan, router, dns, mac, and ip geolocation data

|

||||

Usage: geo [flag]

|

||||

-w Returns WAN IP

|

||||

-l Returns LAN IP(s)

|

||||

-r Returns Router IP

|

||||

-d Returns DNS Nameserver

|

||||

-m Returns MAC address for interface. Ex. eth0

|

||||

-g Returns Current IP Geodata

|

||||

Examples:

|

||||

geo -g

|

||||

geo -wlrdgm eth0

|

||||

Custom Geo Output =>

|

||||

[all] [query] [city] [region] [country] [zip] [isp]

|

||||

Example: geo -a 8.8.8.8 -o city,zip,isp

|

||||

-o [options] Returns Specific Geodata

|

||||

-a [address] For specific ip in -s

|

||||

-v Returns Version

|

||||

-h Returns Help Screen

|

||||

-u Updates Bash-Snippets

|

||||

|

||||

```

|

||||

|

||||

**9\. Cheat – Display cheatsheets of Linux commands**

|

||||

|

||||

Want to refer the cheatsheet of Linux command? Well, it is also possible. The following command will display the cheatsheet of **curl** command:

|

||||

```

|

||||

$ cheat curl

|

||||

|

||||

```

|

||||

|

||||

Just replace **curl** with the command of your choice to display its cheatsheet. This can be very useful for the quick reference to any command you want to use.

|

||||

|

||||

**10\. Youtube-Viewer – Watch YouTube videos**

|

||||

|

||||

Using this script, you can search or watch youtube videos right from the Terminal.

|

||||

|

||||

Let us watch some **Ed Sheeran** videos.

|

||||

```

|

||||

$ ytview Ed Sheeran

|

||||

|

||||

```

|

||||

|

||||

Choose the video you want to play from the list. The selected will play in your default media player.

|

||||

|

||||

![][7]

|

||||

|

||||

To view recent videos by an artist, you can use:

|

||||

```

|

||||

$ ytview -c [channel name]

|

||||

|

||||

```

|

||||

|

||||

To search for videos, just enter:

|

||||

```

|

||||

$ ytview -s [videoToSearch]

|

||||

|

||||

```

|

||||

|

||||

or just,

|

||||

```

|

||||

$ ytview [videoToSearch]

|

||||

|

||||

```

|

||||

|

||||

**11\. cloudup – Backup GitHub repositories to bitbucket**

|

||||

|

||||

Have you hosted any project on GitHub? Great! You can backup the GitHub repositories to **bitbucket** , a web-based hosting service used for source code and development projects, at any time.

|

||||

|

||||

You can either backup all github repositories of the designated user at once with the **-a** option. Or run it with no flags and backup individual repositories.

|

||||

|

||||

To backup GitHub repository, run:

|

||||

```

|

||||

$ cloudup

|

||||

|

||||

```

|

||||

|

||||

You will be asked to enter your GitHub username, name of the repository to backup, and bitbucket username and password etc.

|

||||

|

||||

**12\. Qrify – Convert Strings into QR code**

|

||||

|

||||

This script converts any given string of text into a QR code. This is useful for sending links or saving a string of commands to your phone

|

||||

```

|

||||

$ qrify convert this text into qr code

|

||||

|

||||

```

|

||||

|

||||

Sample output would be:

|

||||

|

||||

![][8]

|

||||

|

||||

Cool, isn’t it?

|

||||

|

||||

**13\. Cryptocurrency**

|

||||

|

||||

It displays the top ten cryptocurrencies realtime exchange rates.

|

||||

|

||||

Type the following command and hit ENTER to run it:

|

||||

```

|

||||

$ cryptocurrency

|

||||

|

||||

```

|

||||

|

||||

![][9]

|

||||

|

||||

**14\. Lyrics**

|

||||

|

||||

This script grabs the lyrics for a given song quickly from the command line.

|

||||

|

||||

Say for example, I am going to fetch the lyrics of **“who is it”** song, a popular song sung by **Michael Jackson**.

|

||||

```

|

||||

$ lyrics -a michael jackson -s who is it

|

||||

|

||||

```

|

||||

|

||||

![][10]

|

||||

|

||||

**15\. Meme**

|

||||

|

||||

This script allows you to create simple memes from command line. It is quite faster than GUI-based meme generators.

|

||||

|

||||

To create a meme, just type:

|

||||

```

|

||||

$ meme -f mymeme

|

||||

Enter the name for the meme's background (Ex. buzz, doge, blb ): buzz

|

||||

Enter the text for the first line: THIS IS A

|

||||

Enter the text for the second line: MEME

|

||||

|

||||

```

|

||||

|

||||

This will create jpg file in your current working directory.

|

||||

|

||||

**16\. Newton**

|

||||

|

||||

Tired of solving complex Maths problems? Here you go. The Newton script will perform numerical calculations all the way up to symbolic math parsing.

|

||||

|

||||

![][11]

|

||||

|

||||

**17\. Siteciphers**

|

||||

|

||||

This script helps you to check which ciphers are enabled / disabled for a given https site.

|

||||

```

|

||||

$ siteciphers google.com

|

||||

|

||||

```

|

||||

|

||||

![][12]

|

||||

|

||||

**18\. Todo**

|

||||

|

||||

It allows you to create everyday tasks directly from the Terminal.

|

||||

|

||||

Let us create some tasks.

|

||||

```

|

||||

$ todo -a The first task

|

||||

01). The first task Tue Jun 26 14:51:30 IST 2018

|

||||

|

||||

```

|

||||

|

||||

To add another task, simply re-run the above command with the task name.

|

||||

```

|

||||

$ todo -a The second task

|

||||

01). The first task Tue Jun 26 14:51:30 IST 2018

|

||||

02). The second task Tue Jun 26 14:52:29 IST 2018

|

||||

|

||||

```

|

||||

|

||||

To view the list of tasks, run:

|

||||

```

|

||||

$ todo -g

|

||||

01). The first task Tue Jun 26 14:51:30 IST 2018

|

||||

02). A The second task Tue Jun 26 14:51:46 IST 2018

|

||||

|

||||

```

|

||||

|

||||

Once you completed a task, remove it from the list as shown below.

|

||||

```

|

||||

$ todo -r 2

|

||||

Sucessfully removed task number 2

|

||||

01). The first task Tue Jun 26 14:51:30 IST 2018

|

||||

|

||||

```

|

||||

|

||||

To clear all tasks, run:

|

||||

```

|

||||

$ todo -c

|

||||

Tasks cleared.

|

||||

|

||||

```

|

||||

|

||||

**19\. Transfer**

|

||||

|

||||

The transfer script allows you to quickly and easily transfer files and directories over Internet.

|

||||

|

||||

Let us upload a file.

|

||||

```

|

||||

$ transfer test.txt

|

||||

Uploading test.txt

|

||||

################################################################################################################################################ 100.0%

|

||||

Success!

|

||||

Transfer Download Command: transfer -d desiredOutputDirectory ivmfj test.txt

|

||||

Transfer File URL: https://transfer.sh/ivmfj/test.txt

|

||||

|

||||

```

|

||||

|

||||

The file will be uploaded to transfer.sh site. Transfer.sh allows you to upload files up to **10 GB** in one go. All shared files automatically expire after **14 days**. As you can see, anyone can download the file either by visiting the second URL via a web browser or using the transfer command (it is installed in his/her system, of course).

|

||||

|

||||

Now remove the file from your system.

|

||||

```

|

||||

$ rm -fr test.txt

|

||||

|

||||

```

|

||||

|

||||

Now, you can download the file from transfer.sh site at any time (within 14 days) like below.

|

||||

```

|

||||

$ transfer -d Downloads ivmfj test.txt

|

||||

|

||||

```

|

||||

|

||||

For more details about this utility, refer our following guide.

|

||||

|

||||

##### Getting help

|

||||

|

||||

If you don’t know how to use a particular script, just type that script’s name and press ENTER. You will see the usage details. The following example displays the help section of **Qrify** script.

|

||||

```

|

||||

$ qrify

|

||||

Qrify

|

||||

Usage: qrify [stringtoturnintoqrcode]

|

||||

Description: Converts strings or urls into a qr code.

|

||||

-u Update Bash-Snippet Tools

|

||||

-m Enable multiline support (feature not working yet)

|

||||

-h Show the help

|

||||

-v Get the tool version

|

||||

Examples:

|

||||

qrify this is a test string

|

||||

qrify -m two\\nlines

|

||||

qrify github.com # notice no http:// or https:// this will fail

|

||||

|

||||

```

|

||||

|

||||

#### Updating scripts

|

||||

|

||||

You can update the installed tools at any time suing -u option. The following command updates “weather” tool.

|

||||

```

|

||||

$ weather -u

|

||||

|

||||

```

|

||||

|

||||

#### Uninstall

|

||||

|

||||

You can uninstall these tools as shown below.

|

||||

|

||||

Git clone the repository:

|

||||

```

|

||||

$ git clone https://github.com/alexanderepstein/Bash-Snippets

|

||||

|

||||

```

|

||||

|

||||

Go to the Bash-Snippets directory:

|

||||

```

|

||||

$ cd Bash-Snippets

|

||||

|

||||

```

|

||||

|

||||

And uninstall the scripts by running the following command:

|

||||

```

|

||||

$ sudo ./uninstall.sh

|

||||

|

||||

```

|

||||

|

||||

Type **y** and hit ENTER to remove each script.

|

||||

```

|

||||

Do you wish to uninstall currency [Y/n]: y

|

||||

|

||||

```

|

||||

|

||||

**Also read: **

|

||||

|

||||

And, that’s all for now folks. I must admit that I’m very impressed when testing this scripts. I really liked the idea of combing all useful scripts into a single package. Kudos to the developer. Give it a try, you won’t be disappointed.

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/collection-useful-bash-scripts-heavy-commandline-users/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://www.ostechnix.com/linuxbrew-common-package-manager-linux-mac-os-x/

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/07/sk@sk_001.png

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2017/07/sk@sk_002-1.png

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2017/07/sk@sk_003.png

|

||||

[6]:https://tastedive.com/account/api_access

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2017/07/ytview-1.png

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2017/07/sk@sk_005.png

|

||||

[9]:http://www.ostechnix.com/wp-content/uploads/2017/07/cryptocurrency.png

|

||||

[10]:http://www.ostechnix.com/wp-content/uploads/2017/07/lyrics.png

|

||||

[11]:http://www.ostechnix.com/wp-content/uploads/2017/07/newton.png

|

||||

[12]:http://www.ostechnix.com/wp-content/uploads/2017/07/siteciphers.png

|

||||

@ -1,151 +0,0 @@

|

||||

fuowang 翻译中

|

||||

|

||||

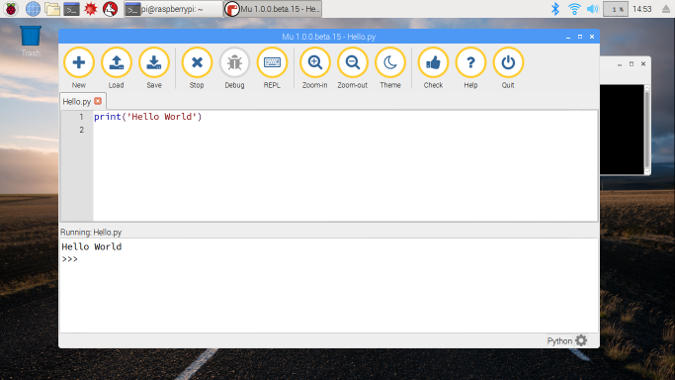

Arch Linux Applications Automatic Installation Script

|

||||

======

|

||||

|

||||

|

||||

|

||||

Howdy Archers! Today, I have stumbled upon an useful utility called **“ArchI0”** , a CLI menu-based Arch Linux applications automatic installation script. This script provides an easiest way to install all essential applications for your Arch-based distribution. Please note that **this script is meant for noobs only**. Intermediate and advanced users can easily figure out [**how to use pacman**][1] to get things done. If you want to learn how Arch Linux works, I suggest you to manually install all software one by one. For those who are still noobs and wanted an easy and quick way to install all essential applications for their Arch-based systems, make use of this script.

|

||||

|

||||

### ArchI0 – Arch Linux Applications Automatic Installation Script

|

||||

|

||||

The developer of this script has created two scripts namely **ArchI0live** and **ArchI0**. You can use ArchI0live script to test without installing it. This might be helpful to know what actually is in this script before installing it on your system.

|

||||

|

||||

### Install ArchI0

|

||||

|

||||

To install this script, Git cone the ArchI0 script repository using command:

|

||||

```

|

||||

$ git clone https://github.com/SifoHamlaoui/ArchI0.git

|

||||

|

||||

```

|

||||

|

||||

The above command will clone the ArchI0 GtiHub repository contents in a folder called ArchI0 in your current directory. Go to the directory using command:

|

||||

```

|

||||

$ cd ArchI0/

|

||||

|

||||

```

|

||||

|

||||

Make the script executable using command:

|

||||

```

|

||||

$ chmod +x ArchI0live.sh

|

||||

|

||||

```

|

||||

|

||||

Run the script with command:

|

||||

```

|

||||

$ sudo ./ArchI0live.sh

|

||||

|

||||

```

|

||||

|

||||

We need to run this script as root or sudo user, because installing applications requires root privileges.

|

||||

|

||||

> **Note:** For those wondering what all are those commands for at the beginning of the script, the first command downloads **figlet** , because the script logo is shown using figlet. The 2nd command install **Leafpad** which is used to open and read the license file. The 3rd command install **wget** to download files from sourceforge. The 4th and 5th commands are to download and open the License File on leafpad. And, the final and 6th command is used to close the license file after reading it.

|

||||

|

||||

Type your Arch Linux system’s architecture and hit ENTER key. When it asks to install the script, type y and hit ENTER.

|

||||

|

||||

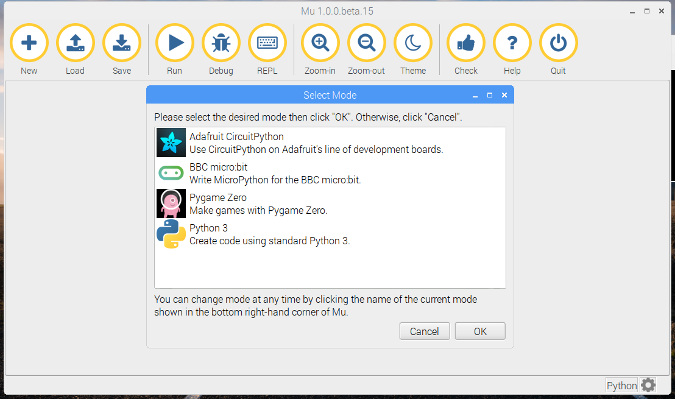

![][3]

|

||||

|

||||

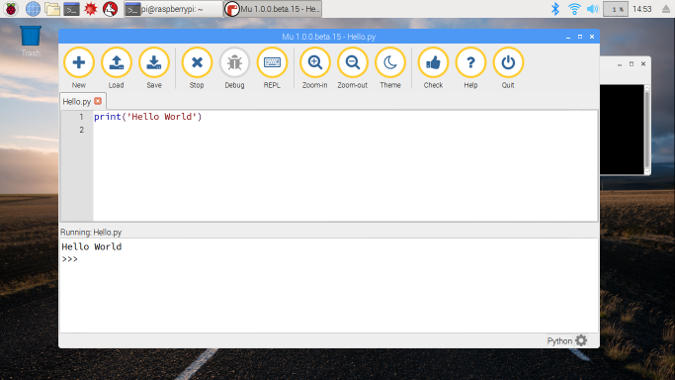

Once it is installed, you will be redirected to the main menu.

|

||||

|

||||

![][4]

|

||||

|

||||

As you see in the above screenshot, ArchI0 has 13 categories and contains 90 easy-to-install programs under those categories. These 90 programs are just enough to setup a full-fledged Arch Linux desktop to perform day-to-day activities. To know about this script, type **a** and to exit this script type **q**.

|

||||

|

||||

After installing it, you don’t need to run the ArchI0live script. You can directly launch it using the following command:

|

||||

```

|

||||

$ sudo ArchI0

|

||||

|

||||

```

|

||||

|

||||

It will ask you each time to choose your Arch Linux distribution architecture.

|

||||

```

|

||||

This script Is under GPLv3 License

|

||||

|

||||

Preparing To Run Script

|

||||

Checking For ROOT: PASSED

|

||||

What Is Your OS Architecture? {32/64} 64

|

||||

|

||||

```

|

||||

|

||||

From now on, you can install the program of your choice from the categories listed in the main menu. To view the list of available programs under a specific category, enter the category number. Say for example, to view the list of available programs under **Text Editors** category, type **1** and hit ENTER.

|

||||

```

|

||||

This script Is under GPLv3 License

|

||||

|

||||

[ R00T MENU ]

|

||||

Make A Choice

|

||||

1) Text Editors

|

||||

2) FTP/Torrent Applications

|

||||

3) Download Managers

|

||||

4) Network managers

|

||||

5) VPN clients

|

||||

6) Chat Applications

|

||||

7) Image Editors

|

||||

8) Video editors/Record

|

||||

9) Archive Handlers

|

||||

10) Audio Applications

|

||||

11) Other Applications

|

||||

12) Development Environments

|

||||

13) Browser/Web Plugins

|

||||

14) Dotfiles

|

||||

15) Usefull Links

|

||||

------------------------

|

||||

a) About ArchI0 Script

|

||||

q) Leave ArchI0 Script

|

||||

|

||||

Choose An Option: 1

|

||||

|

||||

```

|

||||

|

||||

Next, choose the application you want to install. To return to main menu, type **q** and hit ENTER.

|

||||

|

||||

I want to install Emacs, so I type **3**.

|

||||

```

|

||||

This script Is under GPLv3 License

|

||||

|

||||

[ TEXT EDITORS ]

|

||||

[ Option ] [ Description ]

|

||||

1) GEdit

|

||||

2) Geany

|

||||

3) Emacs

|

||||

4) VIM

|

||||

5) Kate

|

||||

---------------------------

|

||||

q) Return To Main Menu

|

||||

|

||||

Choose An Option: 3

|

||||

|

||||

```

|

||||

|

||||

Now, Emacs will be installed on your Arch Linux system.

|

||||

|

||||

![][5]

|

||||

|

||||

Press ENTER key to return to main menu after installing the applications of your choice.

|

||||

|

||||

### Conclusion

|

||||

|

||||

Undoubtedly, this script makes the Arch Linux user’s life easier, particularly the beginner’s. If you are looking for a fast and easy way to install applications without using pacman, then this script might be a good choice. Give it a try and let us know what you think about this script in the comment section below.

|

||||

|

||||

And, that’s all. Hope this tool helps. We will be posting useful guides every day. If you find our guides useful, please share them on your social, professional networks and support OSTechNix.

|

||||

|

||||

Cheers!!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/archi0-arch-linux-applications-automatic-installation-script/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:http://www.ostechnix.com/getting-started-pacman/

|

||||

[2]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/07/sk@sk-ArchI0_003.png

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2017/07/sk@sk-ArchI0_004-1.png

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2017/07/pacman-as-superuser_005.png

|

||||

@ -1,141 +0,0 @@

|

||||

# A gentle introduction to FreeDOS

|

||||

|

||||

|

||||

|

||||

Image credits :

|

||||

|

||||

Jim Hall, CC BY

|

||||

|

||||

## Get the newsletter

|

||||

|

||||

Join the 85,000 open source advocates who receive our giveaway alerts and article roundups.

|

||||

|

||||

FreeDOS is an old operating system, but it is new to many people. In 1994, several developers and I came together to [create FreeDOS][1]—a complete, free, DOS-compatible operating system you can use to play classic DOS games, run legacy business software, or develop embedded systems. Any program that works on MS-DOS should also run on FreeDOS.

|

||||

|

||||

In 1994, FreeDOS was immediately familiar to anyone who had used Microsoft's proprietary MS-DOS. And that was by design; FreeDOS intended to mimic MS-DOS as much as possible. As a result, DOS users in the 1990s were able to jump right into FreeDOS. But times have changed. Today, open source developers are more familiar with the Linux command line or they may prefer a graphical desktop like [GNOME][2], making the FreeDOS command line seem alien at first.

|

||||

|

||||

New users often ask, "I [installed FreeDOS][3], but how do I use it?" If you haven't used DOS before, the blinking C:\> DOS prompt can seem a little unfriendly. And maybe scary. This gentle introduction to FreeDOS should get you started. It offers just the basics: how to get around and how to look at files. If you want to learn more than what's offered here, visit the [FreeDOS wiki][4].

|

||||

|

||||

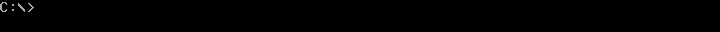

## The DOS prompt

|

||||

|

||||

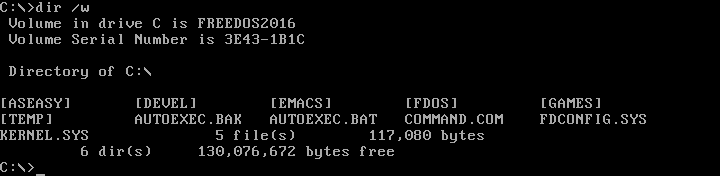

First, let's look at the empty prompt and what it means.

|

||||

|

||||

|

||||

|

||||

DOS is a "disk operating system" created when personal computers ran from floppy disks. Even when computers supported hard drives, it was common in the 1980s and 1990s to switch frequently between the different drives. For example, you might make a backup copy of your most important files to a floppy disk.

|

||||

|

||||

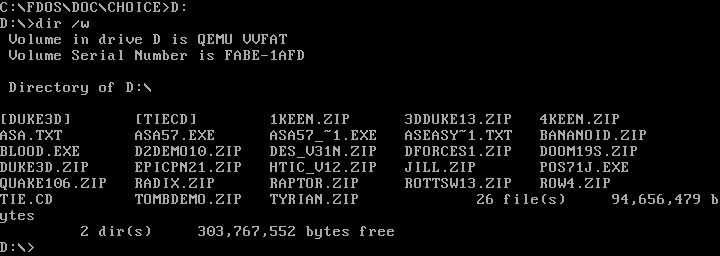

DOS referenced each drive by a letter. Early PCs could have only two floppy drives, which were assigned as the A: and B: drives. The first partition on the first hard drive was the C: drive, and so on for other drives. The C: in the prompt means you are using the first partition on the first hard drive.

|

||||

|

||||

Starting with PC-DOS 2.0 in 1983, DOS also supported directories and subdirectories, much like the directories and subdirectories on Linux filesystems. But unlike Linux, DOS directory names are delimited by \ instead of /. Putting that together with the drive letter, the C:\ in the prompt means you are in the top, or "root," directory of the C: drive.

|

||||

|

||||

The > is the literal prompt where you type your DOS commands, like the $ prompt on many Linux shells. The part before the > tells you the current working directory, and you type commands at the > prompt.

|

||||

|

||||

## Finding your way around in DOS

|

||||

|

||||

The basics of navigating through directories in DOS are very similar to the steps you'd use on the Linux command line. You need to remember only a few commands.

|

||||

|

||||

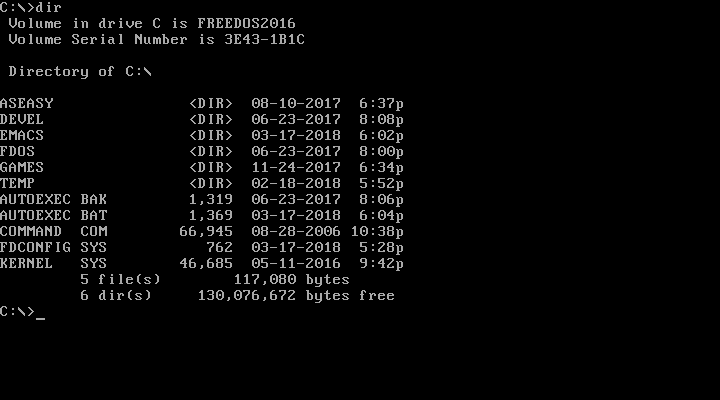

### Displaying a directory

|

||||

|

||||

When you want to see the contents of the current directory, use the DIR command. Since DOS commands are not case-sensitive, you could also type dir. By default, DOS displays the details of every file and subdirectory, including the name, extension, size, and last modified date and time.

|

||||

|

||||

|

||||

|

||||

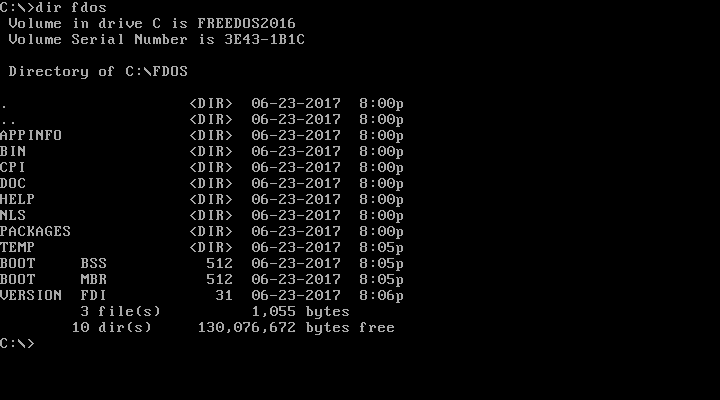

If you don't want the extra details about individual file sizes, you can display a "wide" directory by using the /w option with the DIR command. Note that Linux uses the hyphen (-) or double-hyphen (--) to start command-line options, but DOS uses the slash character (/).

|

||||

|

||||

|

||||

|

||||

You can look inside a specific subdirectory by passing the pathname as a parameter to DIR. Again, another difference from Linux is that Linux files and directories are case-sensitive, but DOS names are case-insensitive. DOS will usually display files and directories in all uppercase, but you can equally reference them in lowercase.

|

||||

|

||||

|

||||

|

||||

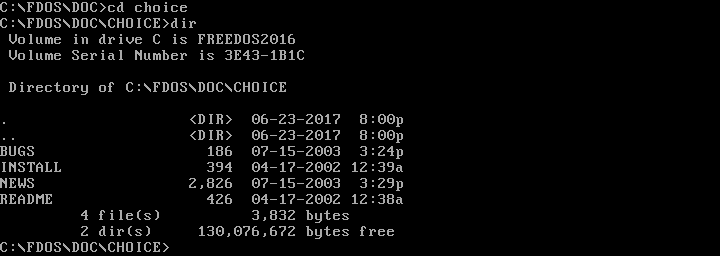

### Changing the working directory

|

||||

|

||||

Once you can see the contents of a directory, you can "move into" any other directory. On DOS, you change your working directory with the CHDIR command, also abbreviated as CD. You can change into a subdirectory with a command like CD CHOICE or into a new path with CD \FDOS\DOC\CHOICE.

|

||||

|

||||

|

||||

|

||||

Just like on the Linux command line, DOS uses . to represent the current directory, and .. for the parent directory (one level "up" from the current directory). You can combine these. For example, CD .. changes to the parent directory, and CD ..\.. moves you two levels "up" from the current directory.

|

||||

|

||||

FreeDOS also borrows a feature from Linux: You can use CD - to jump back to your previous working directory. That is handy after you change into a new path to do one thing and want to go back to your previous work.

|

||||

|

||||

|

||||

|

||||

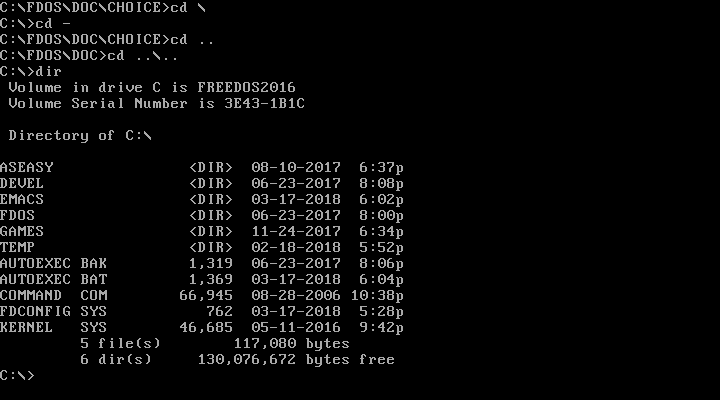

### Changing the working drive

|

||||

|

||||

Under Linux, the concept of a "drive" is hidden. In Linux and other Unix systems, you "mount" a drive to a directory path, such as /backup, or the system does it for you automatically, such as /var/run/media/user/flashdrive. But DOS is a much simpler system. With DOS, you must change the working drive by yourself.

|

||||

|

||||

Remember that DOS assigns the first partition on the first hard drive as the C: drive, and so on for other drive letters. On modern systems, people rarely divide a hard drive with multiple DOS partitions; they simply use the whole disk—or as much of it as they can assign to DOS. Today, C: is usually the first hard drive, and D: is usually another hard drive or the CD-ROM drive. Other network drives can be mapped to other letters, such as E: or Z: or however you want to organize them.

|

||||

|

||||

Changing drives is easy under DOS. Just type the drive letter followed by a colon (:) on the command line, and DOS will change to that working drive. For example, on my [QEMU][5] system, I set my D: drive to a shared directory in my Linux home directory, where I keep installers for various DOS applications and games I want to test.

|

||||

|

||||

|

||||

|

||||

Be careful that you don't try to change to a drive that doesn't exist. DOS may set the working drive, but if you try to do anything there you'll get the somewhat infamous "Abort, Retry, Fail" DOS error message.

|

||||

|

||||

|

||||

|

||||

## Other things to try

|

||||

|

||||

With the CD and DIR commands, you have the basics of DOS navigation. These commands allow you to find your way around DOS directories and see what other subdirectories and files exist. Once you are comfortable with basic navigation, you might also try these other basic DOS commands:

|

||||

|

||||

* MKDIR or MD to create new directories

|

||||

* RMDIR or RD to remove directories

|

||||

* TREE to view a list of directories and subdirectories in a tree-like format

|

||||

* TYPE and MORE to display file contents

|

||||

* RENAME or REN to rename files

|

||||

* DEL or ERASE to delete files

|

||||

* EDIT to edit files

|

||||

* CLS to clear the screen

|

||||

|

||||

If those aren't enough, you can find a list of [all DOS commands][6] on the FreeDOS wiki.

|

||||

|

||||

In FreeDOS, you can use the /? parameter to get brief instructions to use each command. For example, EDIT /? will show you the usage and options for the editor. Or you can type HELP to use an interactive help system.

|

||||

|

||||

Like any DOS, FreeDOS is meant to be a simple operating system. The DOS filesystem is pretty simple to navigate with only a few basic commands. So fire up a QEMU session, install FreeDOS, and experiment with the DOS command line. Maybe now it won't seem so scary.

|

||||

|

||||

## Related stories:

|

||||

|

||||

* [How to install FreeDOS in QEMU][7]

|

||||

* [How to install FreeDOS on Raspberry Pi][8]

|

||||

* [The origin and evolution of FreeDOS][9]

|

||||

* [Four cool facts about FreeDOS][10]

|

||||

|

||||

## About the author

|

||||

|

||||

[][11]

|

||||

|

||||

Jim Hall \- Jim Hall is an open source software developer and advocate, probably best known as the founder and project coordinator for FreeDOS. Jim is also very active in the usability of open source software, as a mentor for usability testing in GNOME Outreachy, and as an occasional adjunct professor teaching a course on the Usability of Open Source Software. From 2016 to 2017, Jim served as a director on the GNOME Foundation Board of Directors. At work, Jim is Chief Information Officer in local... [more about Jim Hall][12]

|

||||

|

||||

[More about me][13]

|

||||

|

||||

* [Learn how you can contribute][14]

|

||||

|

||||

---

|

||||

|

||||

via: [https://opensource.com/article/18/4/gentle-introduction-freedos][15]

|

||||

|

||||

作者: [undefined][16] 选题者: [@lujun9972][17] 译者: [译者ID][18] 校对: [校对者ID][19]

|

||||

|

||||

本文由 [LCTT][20] 原创编译,[Linux中国][21] 荣誉推出

|

||||

|

||||

[1]: https://opensource.com/article/17/10/freedos

|

||||

[2]: https://opensource.com/article/17/8/gnome-20-anniversary

|

||||

[3]: http://www.freedos.org/

|

||||

[4]: http://wiki.freedos.org/

|

||||

[5]: https://www.qemu.org/

|

||||

[6]: http://wiki.freedos.org/wiki/index.php/Dos_commands

|

||||

[7]: https://opensource.com/article/17/10/run-dos-applications-linux

|

||||

[8]: https://opensource.com/article/18/3/can-you-run-dos-raspberry-pi

|

||||

[9]: https://opensource.com/article/17/10/freedos

|

||||

[10]: https://opensource.com/article/17/6/freedos-still-cool-today

|

||||

[11]: https://opensource.com/users/jim-hall

|

||||

[12]: https://opensource.com/users/jim-hall

|

||||

[13]: https://opensource.com/users/jim-hall

|

||||

[14]: https://opensource.com/participate

|

||||

[15]: https://opensource.com/article/18/4/gentle-introduction-freedos

|

||||

[16]: undefined

|

||||

[17]: https://github.com/lujun9972

|

||||

[18]: https://github.com/译者ID

|

||||

[19]: https://github.com/校对者ID

|

||||

[20]: https://github.com/LCTT/TranslateProject

|

||||

[21]: https://linux.cn/

|

||||

@ -1,489 +0,0 @@

|

||||

Translating by qhwdw

|

||||

# Understanding metrics and monitoring with Python

|

||||

|

||||

|

||||

|

||||

Image by :

|

||||

|

||||

opensource.com

|

||||

|

||||

## Get the newsletter

|

||||

|

||||

Join the 85,000 open source advocates who receive our giveaway alerts and article roundups.

|

||||

|

||||

My reaction when I first came across the terms counter and gauge and the graphs with colors and numbers labeled "mean" and "upper 90" was one of avoidance. It's like I saw them, but I didn't care because I didn't understand them or how they might be useful. Since my job didn't require me to pay attention to them, they remained ignored.

|

||||

|

||||

That was about two years ago. As I progressed in my career, I wanted to understand more about our network applications, and that is when I started learning about metrics.

|

||||

|

||||

The three stages of my journey to understanding monitoring (so far) are:

|

||||

|

||||

* Stage 1: What? (Looks elsewhere)

|

||||

* Stage 2: Without metrics, we are really flying blind.

|

||||

* Stage 3: How do we keep from doing metrics wrong?

|

||||

|

||||

I am currently in Stage 2 and will share what I have learned so far. I'm moving gradually toward Stage 3, and I will offer some of my resources on that part of the journey at the end of this article.

|

||||

|

||||

Let's get started!

|

||||

|

||||

## Software prerequisites

|

||||

|

||||

More Python Resources

|

||||

|

||||

* [What is Python?][1]

|

||||

* [Top Python IDEs][2]

|

||||

* [Top Python GUI frameworks][3]

|

||||

* [Latest Python content][4]

|

||||

* [More developer resources][5]

|

||||

|

||||

All the demos discussed in this article are available on [my GitHub repo][6]. You will need to have docker and docker-compose installed to play with them.

|

||||

|

||||

## Why should I monitor?

|

||||

|

||||

The top reasons for monitoring are:

|

||||

|

||||

* Understanding _normal_ and _abnormal_ system and service behavior

|

||||

* Doing capacity planning, scaling up or down

|

||||

* Assisting in performance troubleshooting

|

||||

* Understanding the effect of software/hardware changes

|

||||

* Changing system behavior in response to a measurement

|

||||

* Alerting when a system exhibits unexpected behavior

|

||||

|

||||

## Metrics and metric types

|

||||

|

||||

For our purposes, a **metric** is an _observed_ value of a certain quantity at a given point in _time_. The total of number hits on a blog post, the total number of people attending a talk, the number of times the data was not found in the caching system, the number of logged-in users on your website—all are examples of metrics.

|

||||

|

||||

They broadly fall into three categories:

|

||||

|

||||

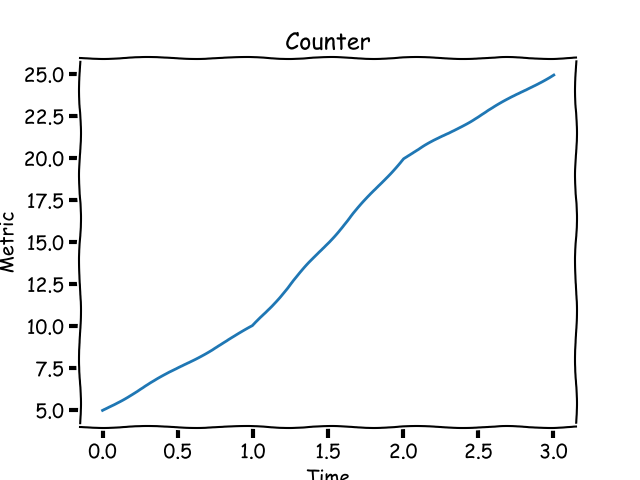

### Counters

|

||||

|

||||

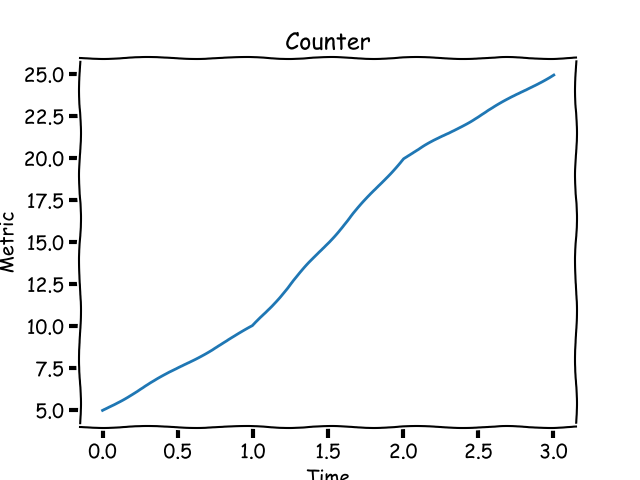

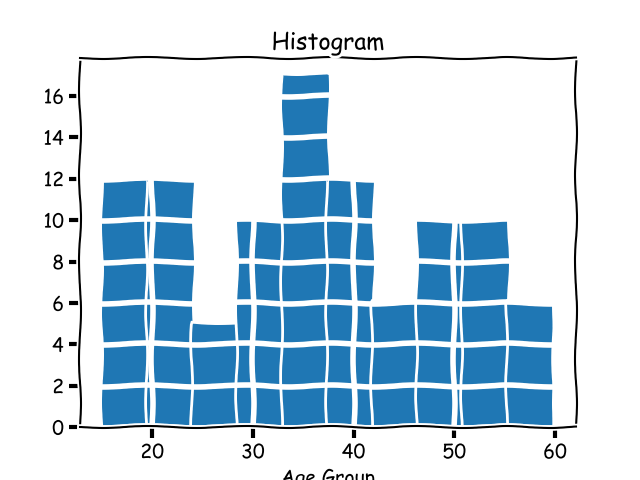

Consider your personal blog. You just published a post and want to keep an eye on how many hits it gets over time, a number that can only increase. This is an example of a **counter** metric. Its value starts at 0 and increases during the lifetime of your blog post. Graphically, a counter looks like this:

|

||||

|

||||

|

||||

|

||||

A counter metric always increases.

|

||||

|

||||

### Gauges

|

||||

|

||||

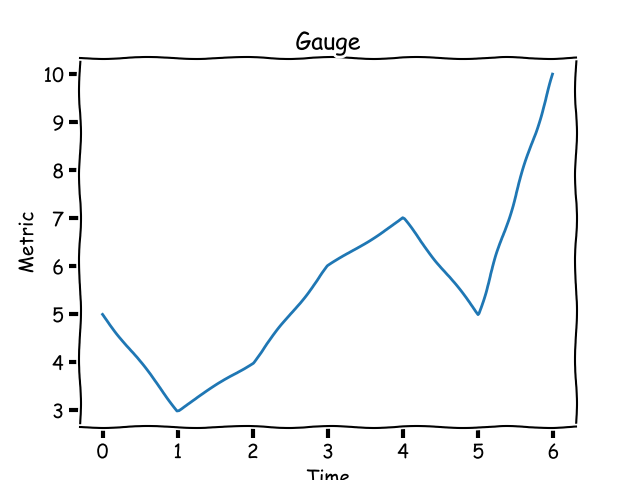

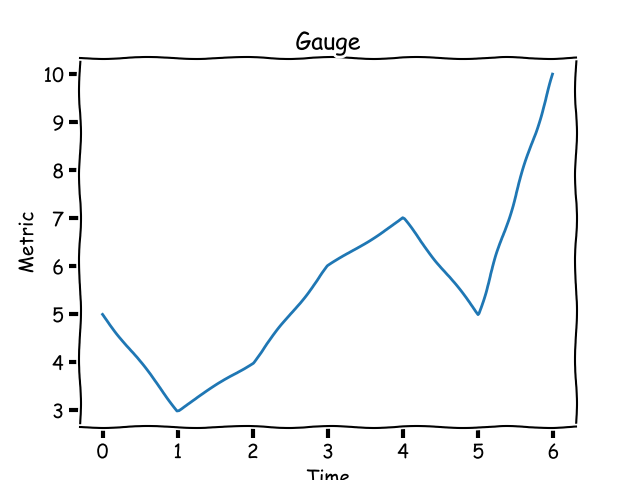

Instead of the total number of hits on your blog post over time, let's say you want to track the number of hits per day or per week. This metric is called a **gauge** and its value can go up or down. Graphically, a gauge looks like this:

|

||||

|

||||

|

||||

|

||||

A gauge metric can increase or decrease.

|

||||

|

||||

A gauge's value usually has a _ceiling_ and a _floor_ in a certain time window.

|

||||

|

||||

### Histograms and timers

|

||||

|

||||

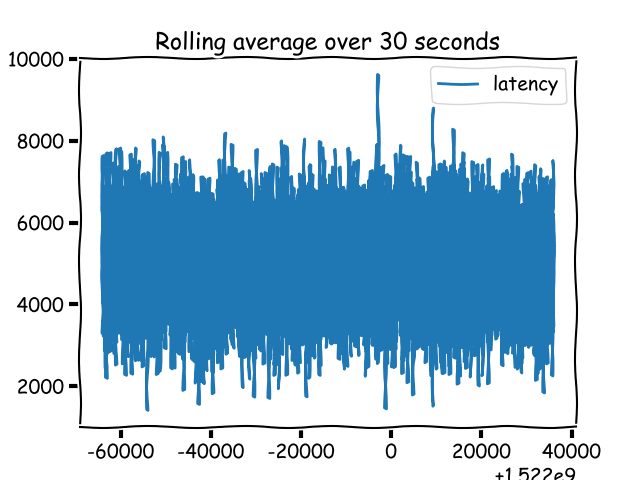

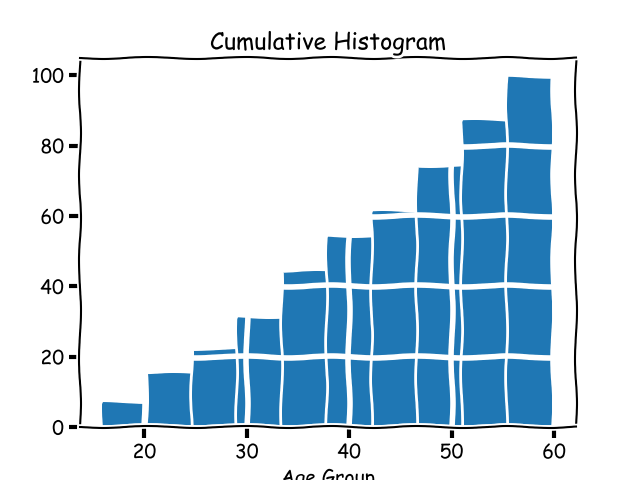

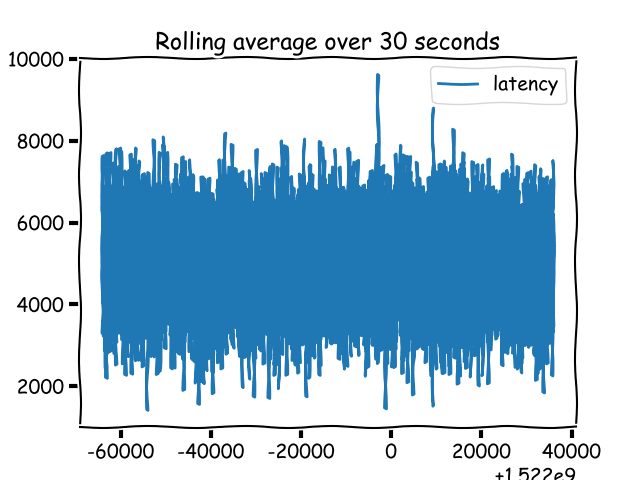

A **histogram** (as Prometheus calls it) or a **timer** (as StatsD calls it) is a metric to track _sampled observations_. Unlike a counter or a gauge, the value of a histogram metric doesn't necessarily show an up or down pattern. I know that doesn't make a lot of sense and may not seem different from a gauge. What's different is what you expect to _do_ with histogram data compared to a gauge. Therefore, the monitoring system needs to know that a metric is a histogram type to allow you to do those things.

|

||||

|

||||

|

||||

|

||||

A histogram metric can increase or decrease.

|

||||

|

||||

## Demo 1: Calculating and reporting metrics

|

||||

|

||||

[Demo 1][7] is a basic web application written using the [Flask][8] framework. It demonstrates how we can _calculate_ and _report_ metrics.

|

||||

|

||||

The src directory has the application in app.py with the src/helpers/middleware.py containing the following:

|

||||

|

||||

```

|

||||

from flask import request

|

||||

import csv

|

||||

import time

|

||||

|

||||

|

||||

def start_timer():

|

||||

request.start_time = time.time()

|

||||

|

||||

|

||||

def stop_timer(response):

|

||||

# convert this into milliseconds for statsd

|

||||

resp_time = (time.time() - request.start_time)*1000

|

||||

with open('metrics.csv', 'a', newline='') as f:

|

||||

csvwriter = csv.writer(f)

|

||||

csvwriter.writerow([str(int(time.time())), str(resp_time)])

|

||||

|

||||

return response

|

||||

|

||||

|

||||

def setup_metrics(app):

|

||||

app.before_request(start_timer)

|

||||

app.after_request(stop_timer)

|

||||

```

|

||||

|

||||

When setup_metrics() is called from the application, it configures the start_timer() function to be called before a request is processed and the stop_timer() function to be called after a request is processed but before the response has been sent. In the above function, we write the timestamp and the time it took (in milliseconds) for the request to be processed.

|

||||

|

||||

When we run docker-compose up in the demo1 directory, it starts the web application, then a client container that makes a number of requests to the web application. You will see a src/metrics.csv file that has been created with two columns: timestamp and request_latency.

|

||||

|

||||

Looking at this file, we can infer two things:

|

||||

|

||||

* There is a lot of data that has been generated

|

||||

* No observation of the metric has any characteristic associated with it

|

||||

|

||||

Without a characteristic associated with a metric observation, we cannot say which HTTP endpoint this metric was associated with or which node of the application this metric was generated from. Hence, we need to qualify each metric observation with the appropriate metadata.

|

||||

|

||||

## Statistics 101

|

||||

|

||||

If we think back to high school mathematics, there are a few statistics terms we should all recall, even if vaguely, including mean, median, percentile, and histogram. Let's briefly recap them without judging their usefulness, just like in high school.

|

||||

|

||||

### Mean

|

||||

|

||||

The **mean**, or the average of a list of numbers, is the sum of the numbers divided by the cardinality of the list. The mean of 3, 2, and 10 is (3+2+10)/3 = 5.

|

||||

|

||||

### Median

|

||||

|

||||

The **median** is another type of average, but it is calculated differently; it is the center numeral in a list of numbers ordered from smallest to largest (or vice versa). In our list above (2, 3, 10), the median is 3. The calculation is not very straightforward; it depends on the number of items in the list.

|

||||

|

||||

### Percentile

|

||||

|

||||

The **percentile** is a measure that gives us a measure below which a certain (k) percentage of the numbers lie. In some sense, it gives us an _idea_ of how this measure is doing relative to the k percentage of our data. For example, the 95th percentile score of the above list is 9.29999. The percentile measure varies from 0 to 100 (non-inclusive). The _zeroth_ percentile is the minimum score in a set of numbers. Some of you may recall that the median is the 50th percentile, which turns out to be 3.

|

||||

|

||||

Some monitoring systems refer to the percentile measure as upper_X where _X_ is the percentile; _upper 90_ refers to the value at the 90th percentile.

|

||||

|

||||

### Quantile

|

||||

|

||||

The **q-Quantile** is a measure that ranks q_N_ in a set of _N_ numbers. The value of **q** ranges between 0 and 1 (both inclusive). When **q** is 0.5, the value is the median. The relationship between the quantile and percentile is that the measure at **q** quantile is equivalent to the measure at **100_q_** percentile.

|

||||

|

||||

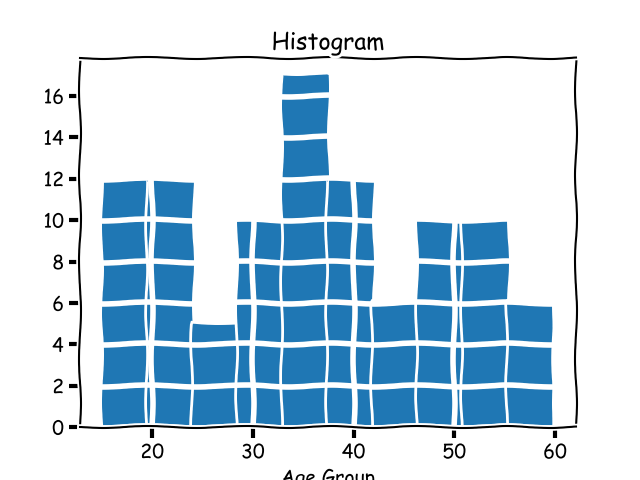

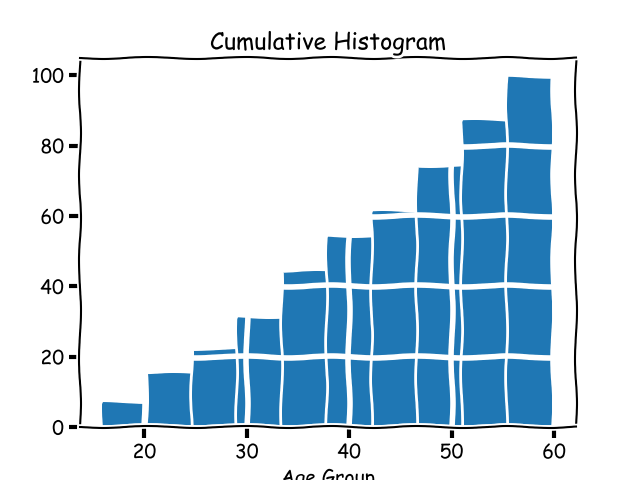

### Histogram

|

||||

|

||||