mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-16 22:42:21 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

781f74b9e1

@ -1,3 +1,4 @@

translated by lixinyuxx

6 common questions about agile development practices for teams
======

@ -1,3 +1,4 @@

translated by lixinyuxx

5 guiding principles you should know before you design a microservice
======

@ -0,0 +1,64 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (5 resolutions for open source project maintainers)
[#]: via: (https://opensource.com/article/18/12/resolutions-open-source-project-maintainers)
[#]: author: (Ben Cotton https://opensource.com/users/bcotton)

5 resolutions for open source project maintainers
======

No matter how you say it, good communication is essential to strong open source communities.

I'm generally not big on New Year's resolutions. I have no problem with self-improvement, of course, but I tend to anchor around other parts of the calendar. Even so, there's something about taking down this year's free calendar and replacing it with next year's that inspires some introspection.

In 2017, I resolved to not share articles on social media until I'd read them. I've kept to that pretty well, and I'd like to think it has made me a better citizen of the internet. For 2019, I'm thinking about resolutions to make me a better open source software maintainer.

Here are some resolutions I'll try to stick to on the projects where I'm a maintainer or co-maintainer.

### 1\. Include a code of conduct

Jono Bacon included "not enforcing the code of conduct" in his article "[7 mistakes you're probably making][1]." Of course, to enforce a code of conduct, you must first have a code of conduct. I plan on defaulting to the [Contributor Covenant][2], but you can use whatever you like. As with licenses, it's probably best to use one that's already written instead of writing your own. But the important thing is to find something that defines how you want your community to behave, whatever that looks like. Once it's written down and enforced, people can decide for themselves if it looks like the kind of community they want to be a part of.

### 2\. Make the license clear and specific

You know what really stinks? Unclear licenses. "This software is licensed under the GPL" with no further text doesn't tell me much. Which version of the [GPL][3]? Do I get to pick? For non-code portions of a project, "licensed under a Creative Commons license" is even worse. I love the [Creative Commons licenses][4], but there are several different licenses with significantly different rights and obligations. So, I will make it very clear which variant and version of a license applies to my projects. I will include the full text of the license in the repo and a concise note in the other files.

Sort of related to this is using an [OSI][5]-approved license. It's tempting to come up with a new license that says exactly what you want it to say, but good luck if you ever need to enforce it. Will it hold up? Will the people using your project understand it?

### 3\. Triage bug reports and questions quickly

Few things in technology scale as poorly as open source maintainers. Even on small projects, it can be hard to find the time to answer every question and fix every bug. But that doesn't mean I can't at least acknowledge the person. It doesn't have to be a multi-paragraph reply. Even just labeling the GitHub issue shows that I saw it. Maybe I'll get to it right away. Maybe I'll get to it a year later. But it's important for the community to see that, yes, there is still someone here.

### 4\. Don't push features or bug fixes without accompanying documentation

For as much as my open source contributions over the years have revolved around documentation, my projects don't reflect the importance I put on it. There aren't many commits I can push that don't require some form of documentation. New features should obviously be documented at (or before!) the time they're committed. But even bug fixes should get an entry in the release notes. If nothing else, a push is a good opportunity to also make a commit to improving the docs.

### 5\. Make it clear when I'm abandoning a project

I'm really bad at saying "no" to things. I told the editors I'd write one or two articles for [Opensource.com][6], and here I am almost 60 articles later. Oops. But at some point, the things that once held my interest no longer do. Maybe the project is unnecessary because its functionality got absorbed into a larger project. Maybe I'm just tired of it. But it's unfair to the community (and potentially dangerous, as the recent [event-stream malware injection][7] showed) to leave a project in limbo. Maintainers have the right to walk away whenever and for whatever reason, but it should be clear that they have.

Whether you're an open source maintainer or contributor, if you know other resolutions project maintainers should make, please share them in the comments.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/12/resolutions-open-source-project-maintainers

Author: [Ben Cotton][a]
Selected by: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://opensource.com/users/bcotton
[b]: https://github.com/lujun9972
[1]: https://opensource.com/article/17/8/mistakes-open-source-avoid
[2]: https://www.contributor-covenant.org/
[3]: https://opensource.org/licenses/gpl-license
[4]: https://creativecommons.org/share-your-work/licensing-types-examples/
[5]: https://opensource.org/
[6]: http://Opensource.com
[7]: https://arstechnica.com/information-technology/2018/11/hacker-backdoors-widely-used-open-source-software-to-steal-bitcoin/

@ -0,0 +1,111 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (8 tips to help non-techies move to Linux)
[#]: via: (https://opensource.com/article/18/12/help-non-techies)
[#]: author: (Scott Nesbitt https://opensource.com/users/scottnesbitt)

8 tips to help non-techies move to Linux
======

Help your friends dump their proprietary operating systems and make the move to open source.

Back in 2016, I took down the shingle for my technology coaching business. Permanently. Or so I thought.

Over the last 10 months, a handful of friends and acquaintances have pulled me back into that realm. How? With their desire to dump That Other Operating System™ and move to Linux.

This has been an interesting experience, in no small part because most of the people aren't at all technical. They know how to use a computer to do what they need to do. Beyond that, they're not interested in delving deeper. That said, they were (and are) attracted to Linux for a number of reasons—probably because I constantly prattle on about it.

While bringing them to the Linux side of the computing world, I learned a few things about helping non-techies move to Linux. If someone asks you to help them make the jump to Linux, these eight tips can help you.

### 1\. Be honest about Linux.

Linux is great. It's not perfect, though. It can be perplexing and sometimes frustrating for new users. It's best to prepare the person you're helping with a short pep talk.

What should you talk about? Briefly explain what Linux is and how it differs from other operating systems. Explain what you can and _can't_ do with it. Let them know some of the pain points they might encounter when using Linux daily.

If you take a bit of time to [ease them into][1] Linux and open source, the switch won't be as jarring.

### 2\. It's not about you.

It's easy to fall into what I call the _power user fallacy_: the idea that everyone uses technology the same way you do. That's rarely, if ever, the case.

This isn't about you. It's not about your needs or how you use a computer. It's about the needs and intentions of the person you're helping. Their needs, especially if they're not particularly technical, will be different from yours.

It doesn't matter if Ubuntu or Elementary or Manjaro aren't your distros of choice. It doesn't matter if you turn your nose up at desktop environments like GNOME, KDE, or Pantheon in favor of window managers like i3 or Ratpoison. The person you're helping might think otherwise.

Put your needs and prejudices aside and help them find the right Linux distribution for them. Find out what they use their computer for and tailor your recommendations for a distribution or three based on that.

### 3\. Not everyone's a techie.

And not everyone wants to be. Everyone I've helped move to Linux in the last 10 months has no interest in compiling kernels or code, or in editing and tweaking configuration files. Most of them will never crack open a terminal window. I don't expect them to be interested in doing any of that in the future, either.

Guess what? There's nothing wrong with that. Maybe they won't _get the most out of_ Linux (whatever that means) by not embracing their inner geeks. Not everyone will want to take on the challenge of, say, installing and configuring Slackware or Arch. They need something that will work out of the box.

### 4\. Take stock of their hardware.

In an ideal world, we'd all have tricked-out, high-powered laptops or desktops with everything maxed out. Sadly, that world doesn't exist.

That probably includes the person you're helping move to Linux. They may have slightly (maybe more than slightly) older hardware that they're comfortable with and that works for them. Hardware that they might not be able to afford to upgrade or replace.

Also, remember that not everyone needs a system for heavy-duty development or gaming or audio and video production. They just need a computer for browsing the web, editing photos, running personal productivity software, and the like.

One person I recently helped adopt Linux had an Acer Aspire 1 laptop with 4GB of RAM and a 64GB SSD. That helped inform my recommendations, which revolved around a few lightweight Linux distributions.

### 5\. Help them test-drive some distros.

The [DistroWatch][2] database contains close to 900 Linux distributions. You should be able to find three to five Linux distributions to recommend. Make a short list of the distributions you think would be a good fit for them. Also, point them to reviews so they can get other perspectives on those distributions.

When it comes time to take those Linux distributions for a spin, don't just hand someone a bunch of flash drives and walk away. You might be surprised to learn that most people have never run a live Linux distribution or installed an operating system. Any operating system. Beyond plugging the flash drives in, they probably won't know what to do.

Instead, show them how to [create bootable flash drives][3] and set up their computer's BIOS to start from those drives. Then, let them spend some time running the distros off the flash drives. That will give them a rudimentary feel for the distros and their window managers' quirks.

### 6\. Walk them through an installation.

Running a live session with a flash drive tells someone only so much. They need to work with a Linux distribution for a couple or three weeks to really form an opinion of it and to understand its quirks and strengths.

There's a myth that Linux is difficult to install. That might have been true back in the mid-1990s, but today most Linux distributions are easy to install. You follow a few graphical prompts and let the software do the rest.

For someone who's never installed any operating system, installing Linux can be a bit daunting. They might not know what to choose when, say, they're asked which filesystem to use or whether or not to encrypt their hard disk.

Guide them through at least one installation. While you should let them do most of the work, be there to answer questions.

### 7\. Be prepared to do a couple of installs.

As I mentioned a paragraph or two ago, using a Linux distribution for two weeks gives someone ample time to regularly interact with it and see if it can be their daily driver. It often works out. Sometimes, though, it doesn't.

Remember the person with the Acer Aspire 1 laptop? She thought Xubuntu was the right distribution for her. After a few weeks of working with it, she found that wasn't the case. There wasn't a technical reason; Xubuntu ran smoothly on her laptop. It was just a matter of feel. Instead, she switched back to the first distro she test-drove: [MX Linux][4]. She's been happily using MX ever since.

### 8\. Teach them to fish.

You can't always be there to be the guiding hand. Or to be the mechanic or plumber who can fix any problems the person encounters. You have a life, too.

Once they've settled on a Linux distribution, explain that you'll offer a helping hand for two or three weeks. After that, they're on their own. Don't completely abandon them. Be around to help with big problems, but let them know they'll have to learn to do things for themselves.

Introduce them to websites that can help them solve their problems. Point them to useful articles and books. Doing that will help make them more confident and competent users of Linux—and of computers and technology in general.

### Final thoughts

Helping someone move to Linux from another, more familiar operating system can be a challenge—a challenge for them and for you. If you take it slowly and follow the advice in this article, you can make the process smoother.

Do you have other tips for helping a non-techie switch to Linux? Feel free to share them by leaving a comment.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/12/help-non-techies

Author: [Scott Nesbitt][a]
Selected by: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://opensource.com/users/scottnesbitt
[b]: https://github.com/lujun9972
[1]: https://opensource.com/business/15/2/ato2014-lightning-talks-scott-nesbitt
[2]: https://distrowatch.com
[3]: https://opensource.com/article/18/7/getting-started-etcherio
[4]: https://opensource.com/article/18/2/mx-linux-17-distro-beginners

146

sources/talk/20181218 The Rise and Demise of RSS.md

Normal file

@ -0,0 +1,146 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (The Rise and Demise of RSS)
[#]: via: (https://twobithistory.org/2018/12/18/rss.html)
[#]: author: (Two-Bit History https://twobithistory.org)

The Rise and Demise of RSS
======

This post was originally published on [September 16th, 2018][1]. What follows is a revision that includes additional information gleaned from interviews with Ramanathan Guha, Ian Davis, Dan Libby, and Kevin Werbach.

About a decade ago, the average internet user might well have heard of RSS. Really Simple Syndication, or Rich Site Summary—what the acronym stands for depends on who you ask—is a standard that websites and podcasts can use to offer a feed of content to their users, one easily understood by lots of different computer programs. Today, though RSS continues to power many applications on the web, it has become, for most people, an obscure technology.

The story of how this happened is really two stories. The first is a story about a broad vision for the web’s future that never quite came to fruition. The second is a story about how a collaborative effort to improve a popular standard devolved into one of the most contentious forks in the history of open-source software development.

In the late 1990s, in the go-go years between Netscape’s IPO and the Dot-com crash, everyone could see that the web was going to be an even bigger deal than it already was, even if they didn’t know exactly how it was going to get there. One theory was that the web was about to be revolutionized by syndication. The web, originally built to enable a simple transaction between two parties—a client fetching a document from a single host server—would be broken open by new standards that could be used to repackage and redistribute entire websites through a variety of channels. Kevin Werbach, writing for Release 1.0, a newsletter influential among investors in the 1990s, predicted that syndication “would evolve into the core model for the Internet economy, allowing businesses and individuals to retain control over their online personae while enjoying the benefits of massive scale and scope.”

He invited his readers to imagine a future in which fencing aficionados, rather than going directly to an “online sporting goods site” or “fencing equipment retailer,” could buy a new épée directly through e-commerce widgets embedded into their favorite website about fencing. Just like in the television world, where big networks syndicate their shows to smaller local stations, syndication on the web would allow businesses and publications to reach consumers through a multitude of intermediary sites. This would mean, as a corollary, that consumers would gain significant control over where and how they interacted with any given business or publication on the web.

RSS was one of the standards that promised to deliver this syndicated future. To Werbach, RSS was “the leading example of a lightweight syndication protocol.” Another contemporaneous article called RSS the first protocol to realize the potential of XML. It was going to be a way for both users and content aggregators to create their own customized channels out of everything the web had to offer. And yet, two decades later, after the rise of social media and Google’s decision to shut down Google Reader, RSS appears to be [a slowly dying technology][2], now used chiefly by podcasters, programmers with tech blogs, and the occasional journalist. Though of course some people really do still rely on RSS readers, stubbornly adding an RSS feed to your blog, even in 2018, is a political statement. That little tangerine bubble has become a wistful symbol of defiance against a centralized web increasingly controlled by a handful of corporations, a web that hardly resembles the syndicated web of Werbach’s imagining.

The future once looked so bright for RSS. What happened? Was its downfall inevitable, or was it precipitated by the bitter infighting that thwarted the development of a single RSS standard?

### Muddied Water

RSS was invented twice. This meant it never had an obvious owner, a state of affairs that spawned endless debate and acrimony. But it also suggests that RSS was an important idea whose time had come.

In 1998, Netscape was struggling to envision a future for itself. Its flagship product, the Netscape Navigator web browser—once preferred by over 80 percent of web users—was quickly losing ground to Microsoft’s Internet Explorer. So Netscape decided to compete in a new arena. In May, a team was brought together to start work on what was known internally as “Project 60.” Two months later, Netscape announced “My Netscape,” a web portal that would fight it out with other portals like Yahoo, MSN, and Excite.

The following year, in March, Netscape announced an addition to the My Netscape portal called the “My Netscape Network.” My Netscape users could now customize their My Netscape page so that it contained “channels” featuring the most recent headlines from sites around the web. As long as your favorite website published a special file in a format dictated by Netscape, you could add that website to your My Netscape page, typically by clicking an “Add Channel” button that participating websites were supposed to add to their interfaces. A little box containing a list of linked headlines would then appear.

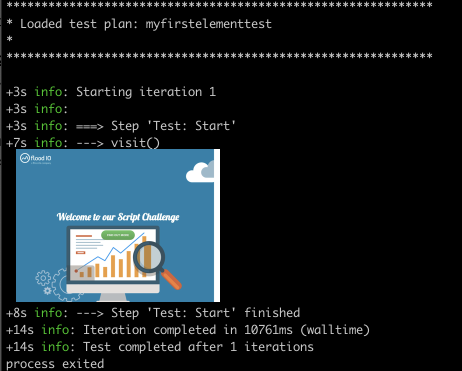

![A My Netscape Network Channel][3] A My Netscape Network channel for Mozilla.org, as it might look to users

|

||||

about to add it to their My Netscape page.

|

||||

|

||||

The special file that participating websites had to publish was an RSS file. In the My Netscape Network announcement, Netscape explained that RSS stood for “RDF Site Summary.” This was somewhat of a misnomer. RDF, or the Resource Description Framework, is basically a grammar for describing certain properties of arbitrary resources. (See [my article about the Semantic Web][4] if that sounds really exciting to you.) In 1999, a draft specification for RDF was being considered by the World Wide Web Consortium (W3C), the web’s main standards body. Though RSS was supposed to be based on RDF, the example RSS document Netscape actually released didn’t use any RDF tags at all. In a document that accompanied the Netscape RSS specification, Dan Libby, one of the specification’s authors, explained that “in this release of MNN, Netscape has intentionally limited the complexity of the RSS format.” The specification was given the 0.90 version number, the idea being that subsequent versions would bring RSS more in line with the W3C’s XML specification and the evolving draft of the RDF specification.

RSS had been created by Libby and two other Netscape employees, Eckart Walther and Ramanathan Guha. According to an email to me from Guha, he and Walther cooked up RSS in the beginning with some input from Libby; after AOL bought Netscape in 1998, he and Walther left and it became Libby’s responsibility. Before Netscape, Guha had worked for Apple, where he came up with something called the Meta Content Framework. MCF was a format for representing metadata about anything from web pages to local files. Guha demonstrated its power by developing an application called [HotSauce][5] that visualized relationships between files as a network of nodes suspended in 3D space. Immediately after leaving Apple for Netscape, Guha worked with a Netscape consultant named Tim Bray, who in a post on his blog said that he and Guha eventually produced an XML-based version of MCF that in turn became the foundation for the W3C’s RDF draft. It’s no surprise, then, that Guha, Walther, and Libby were keen to build on Guha’s prior work and incorporate RDF into RSS. But Libby later wrote that the original vision for an RDF-based RSS was pared back because of time constraints and the perception that RDF was “‘too complex’ for the ‘average user.’”

While Netscape was trying to win eyeballs in what became known as the “portal wars,” elsewhere on the web a new phenomenon known as “weblogging” was being pioneered. One of these pioneers was Dave Winer, CEO of a company called UserLand Software, which developed early content management systems that made blogging accessible to people without deep technical fluency. Winer ran his own blog, [Scripting News][6], which today is one of the oldest blogs on the internet. More than a year before Netscape announced My Netscape Network, on December 15, 1997, Winer published a post announcing that the blog would now be available in XML as well as HTML.

Dave Winer’s XML format became known as the Scripting News format. It was supposedly similar to Microsoft’s Channel Definition Format (a “push technology” standard submitted to the W3C in March, 1997), but I haven’t been able to find a file in the original format to verify that claim. Like Netscape’s RSS, it structured the content of Winer’s blog so that it could be understood by other software applications. When Netscape released RSS 0.90, Winer and UserLand Software began to support both formats. But Winer believed that Netscape’s format was “woefully inadequate” and “missing the key thing web writers and readers need.” It could only represent a list of links, whereas the Scripting News format could represent a series of paragraphs, each containing one or more links.

In June 1999, two months after Netscape’s My Netscape Network announcement, Winer introduced a new version of the Scripting News format, called ScriptingNews 2.0b1. Winer claimed that he decided to move ahead with his own format only after trying but failing to get anyone at Netscape to care about RSS 0.90’s deficiencies. The new version of the Scripting News format added several items to the `<header>` element that brought the Scripting News format to parity with RSS. But the two formats continued to differ in that the Scripting News format, which Winer nicknamed the “fat” syndication format, could include entire paragraphs and not just links.

Netscape got around to releasing RSS 0.91 the very next month. The updated specification was a major about-face. RSS no longer stood for “RDF Site Summary”; it now stood for “Rich Site Summary.” All the RDF—and there was almost none anyway—was stripped out. Many of the Scripting News tags were incorporated. In the text of the new specification, Libby explained:

> RDF references removed. RSS was originally conceived as a metadata format providing a summary of a website. Two things have become clear: the first is that providers want more of a syndication format than a metadata format. The structure of an RDF file is very precise and must conform to the RDF data model in order to be valid. This is not easily human-understandable and can make it difficult to create useful RDF files. The second is that few tools are available for RDF generation, validation and processing. For these reasons, we have decided to go with a standard XML approach.

Winer was enormously pleased with RSS 0.91, calling it “even better than I thought it would be.” UserLand Software adopted it as a replacement for the existing ScriptingNews 2.0b1 format. For a while, it seemed that RSS finally had a single authoritative specification.
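
Below is a minimal Python sketch of what the 0.91 design means for a reader, using only the standard library's `xml.etree.ElementTree`. The feed is a hypothetical, simplified sample modeled on the RSS 0.91 layout: an `<rss>` root that holds one `<channel>` with `<item>` children. It has not been validated against Netscape's or UserLand's published specification. It illustrates Libby's point. With the RDF removed, a consumer needs no namespaces and no RDF tooling, only plain element lookups.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified RSS 0.91-style feed. The element names follow the
# <rss>/<channel>/<item> layout, but this sample is illustrative only and
# has not been checked against either published 0.91 specification.
FEED = """<?xml version="1.0"?>
<rss version="0.91">
  <channel>
    <title>Example Weblog</title>
    <link>http://www.example.com/</link>
    <description>A hypothetical weblog.</description>
    <language>en-us</language>
    <item>
      <title>First post</title>
      <link>http://www.example.com/1</link>
    </item>
    <item>
      <title>Second post</title>
      <link>http://www.example.com/2</link>
    </item>
  </channel>
</rss>"""


def read_items(xml_text):
    """Return (title, link) pairs from a namespace-free, 0.91-style feed."""
    root = ET.fromstring(xml_text)
    channel = root.find("channel")
    # No namespace prefixes are needed: every element name is unqualified.
    return [(item.findtext("title"), item.findtext("link"))
            for item in channel.findall("item")]


for title, link in read_items(FEED):
    print(title, link)
```

The script prints one line per item, with the title first and the link second.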

### The Great Fork

A year later, the RSS 0.91 specification had become woefully inadequate. There were all sorts of things people were trying to do with RSS that the specification did not address. There were other parts of the specification that seemed unnecessarily constraining—each RSS channel could only contain a maximum of 15 items, for example.
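
A limit like 15 items per channel is easy to state as a check. The sketch below is a hypothetical helper. The names `count_items`, `within_091_limit`, and `make_feed` were invented for this illustration and do not come from any RSS tool. It counts the `<item>` elements inside a namespace-free, 0.91-style `<channel>` and compares that count against the ceiling described above.

```python
import xml.etree.ElementTree as ET

# The 15-item ceiling comes from the text above. The helpers below are
# hypothetical illustrations, not part of any real RSS validator.
MAX_ITEMS_RSS_091 = 15


def count_items(xml_text):
    """Count <item> children of the <channel> in a 0.91-style feed."""
    root = ET.fromstring(xml_text)
    return len(root.findall("./channel/item"))


def within_091_limit(xml_text, limit=MAX_ITEMS_RSS_091):
    """True if the channel stays within the per-channel item ceiling."""
    return count_items(xml_text) <= limit


def make_feed(n):
    """Build a tiny synthetic feed with n items, for demonstration only."""
    items = "".join(f"<item><title>Post {i}</title></item>" for i in range(n))
    return f'<rss version="0.91"><channel><title>t</title>{items}</channel></rss>'


print(within_091_limit(make_feed(15)))  # True
print(within_091_limit(make_feed(16)))  # False
```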

By that point, RSS had been adopted by several more organizations. Other than Netscape, which seems to have lost interest after RSS 0.91, the big players were Dave Winer’s UserLand Software; O’Reilly Net, which ran an RSS aggregator called Meerkat; and Moreover.com, which also ran an RSS aggregator focused on news. Via mailing list, representatives from these organizations and others regularly discussed how to improve on RSS 0.91. But there were deep disagreements about what those improvements should look like.

The mailing list in which most of the discussion occurred was called the Syndication mailing list. [An archive of the Syndication mailing list][7] is still available. It is an amazing historical resource. It provides a moment-by-moment account of how those deep disagreements eventually led to a political rupture of the RSS community.

On one side of the coming rupture was Winer. Winer was impatient to evolve RSS, but he wanted to change it only in relatively conservative ways. In June, 2000, he published his own RSS 0.91 specification on the UserLand website, meant to be a starting point for further development of RSS. It made no significant changes to the 0.91 specification published by Netscape. Winer claimed in a blog post that accompanied his specification that it was only a “cleanup” documenting how RSS was actually being used in the wild, which was needed because the Netscape specification was no longer being maintained. In the same post, he argued that RSS had succeeded so far because it was simple, and that by adding namespaces (a way to explicitly distinguish between different RSS vocabularies) or RDF back to the format—some had suggested this be done in the Syndication mailing list—it “would become vastly more complex, and IMHO, at the content provider level, would buy us almost nothing for the added complexity.” In a message to the Syndication mailing list sent around the same time, Winer suggested that these issues were important enough that they might lead him to create a fork:

> I’m still pondering how to move RSS forward. I definitely want ICE-like stuff in RSS2, publish and subscribe is at the top of my list, but I am going to fight tooth and nail for simplicity. I love optional elements. I don’t want to go down the namespaces and schema road, or try to make it a dialect of RDF. I understand other people want to do this, and therefore I guess we’re going to get a fork. I have my own opinion about where the other fork will lead, but I’ll keep those to myself for the moment at least.

Arrayed against Winer were several other people, including Rael Dornfest of O’Reilly, Ian Davis (responsible for a search startup called Calaba), and a precocious, 14-year-old Aaron Swartz. This is the same Aaron Swartz who would later co-found Reddit and become famous for his hacktivism. (In 2000, according to an email to me from Davis, Swartz’s dad often accompanied him to technology meetups.) Dornfest, Davis, and Swartz all thought that RSS needed namespaces in order to accommodate the many different things everyone wanted to do with it. On another mailing list hosted by O’Reilly, Davis proposed a namespace-based module system, writing that such a system would “make RSS as extensible as we like rather than packing in new features that over-complicate the spec.” The “namespace camp” believed that RSS would soon be used for much more than the syndication of blog posts, so namespaces, rather than being a complication, were the only way to keep RSS from becoming unmanageable as it supported more and more use cases.

At the root of this disagreement about namespaces was a deeper disagreement about what RSS was even for. Winer had invented his Scripting News format to syndicate the posts he wrote for his blog. Netscape had released RSS as “RDF Site Summary” because it was a way of recreating a site in miniature within the My Netscape online portal. Some people felt that Netscape’s original vision should be honored. Writing to the Syndication mailing list, Davis explained his view that RSS was “originally conceived as a way of building mini sitemaps,” and that now he and others wanted to expand RSS “to encompass more types of information than simple news headlines and to cater for the new uses of RSS that have emerged over the last 12 months.” This was a sensible point to make because the goal of the Netscape RSS project in the beginning was even loftier than Davis suggests: Guha told me that he wanted to create a technology that could support not just website channels but feeds about arbitrary entities such as, for example, Madonna. Further developing RSS so that it could do this would indeed be in keeping with that original motivation. But Davis’ argument also overstates the degree to which there was a unified vision at Netscape by the time the RSS specification was published. According to Libby, who I talked to via email, there was eventually contention between a “Let’s Build the Semantic Web” group and “Let’s Make This Simple for People to Author” group even within Netscape.

For his part, Winer argued that Netscape’s original goals were irrelevant because his Scripting News format was in fact the first RSS and it had been meant for a very different purpose. Given that the people most involved in the development of RSS disagreed about who had created RSS and why, a fork seems to have been inevitable.

The fork happened after Dornfest announced a proposed RSS 1.0 specification and formed the RSS-DEV Working Group—which would include Davis, Swartz, and several others but not Winer—to get it ready for publication. In the proposed specification, RSS once again stood for “RDF Site Summary,” because RDF had been added back in to represent metadata properties of certain RSS elements. The specification acknowledged Winer by name, giving him credit for popularizing RSS through his “evangelism.” But it also argued that RSS could not be improved in the way that Winer was advocating. Just adding more elements to RSS without providing for extensibility with a module system would “sacrifice scalability.” The specification went on to define a module system for RSS based on XML namespaces.

Winer felt that it was “unfair” that the RSS-DEV Working Group had arrogated the “RSS 1.0” name for themselves. In another mailing list about decentralization, he wrote that he had “recently had a standard stolen by a big name,” presumably meaning O’Reilly, which had convened the RSS-DEV Working Group. Other members of the Syndication mailing list also felt that the RSS-DEV Working Group should not have used the name “RSS” without unanimous agreement from the community on how to move RSS forward. But the Working Group stuck with the name. Dan Brickley, another member of the RSS-DEV Working Group, defended this decision by arguing that “RSS 1.0 as proposed is solidly grounded in the original RSS vision, which itself had a long heritage going back to MCF (an RDF precursor) and related specs (CDF etc).” He essentially felt that the RSS 1.0 effort had a better claim to the RSS name than Winer did, since RDF had originally been a part of RSS. The RSS-DEV Working Group published a final version of their specification in December. That same month, Winer published his own improvement to RSS 0.91, which he called RSS 0.92, on UserLand’s website. RSS 0.92 made several small optional improvements to RSS, among which was the addition of the `<enclosure>` tag soon used by podcasters everywhere. RSS had officially forked.

The fork might have been avoided if a better effort had been made to include Winer in the RSS-DEV Working Group. He obviously belonged there. He was a prominent contributor to the Syndication mailing list and responsible for much of RSS’ popularity, as the members of the Working Group themselves acknowledged. But, as Davis wrote in an email to me, Winer “wanted control and wanted RSS to be his legacy so was reluctant to work with us.” Tim O’Reilly, founder and CEO of O’Reilly, explained in a UserLand discussion group in September, 2000 that Winer basically refused to participate:

> A group of people involved in RSS got together to start thinking about its future evolution. Dave was part of the group. When the consensus of the group turned in a direction he didn’t like, Dave stopped participating, and characterized it as a plot by O’Reilly to take over RSS from him, despite the fact that Rael Dornfest of O’Reilly was only one of about a dozen authors of the proposed RSS 1.0 spec, and that many of those who were part of its development had at least as long a history with RSS as Dave had.

To this, Winer said:

> I met with Dale [Dougherty] two weeks before the announcement, and he didn’t say anything about it being called RSS 1.0. I spoke on the phone with Rael the Friday before it was announced, again he didn’t say that they were calling it RSS 1.0. The first I found out about it was when it was publicly announced.
>
> Let me ask you a straight question. If it turns out that the plan to call the new spec “RSS 1.0” was done in private, without any heads-up or consultation, or for a chance for the Syndication list members to agree or disagree, not just me, what are you going to do?
>
> UserLand did a lot of work to create and popularize and support RSS. We walked away from that, and let your guys have the name. That’s the top level. If I want to do any further work in Web syndication, I have to use a different name. Why and how did that happen Tim?

I have not been able to find a discussion in the Syndication mailing list about using the RSS 1.0 name prior to the announcement of the RSS 1.0 proposal. Winer, in a message to me, said that he was not trying to control RSS and just wanted to use it in his products.

RSS would fork again in 2003, when several developers frustrated with the bickering in the RSS community sought to create an entirely new format. These developers created Atom, a format that did away with RDF but embraced XML namespaces. Atom would eventually be specified by [a proposed IETF standard][8]. After the introduction of Atom, there were three competing versions of RSS: Winer’s RSS 0.92 (updated to RSS 2.0 in 2002 and renamed “Really Simple Syndication”), the RSS-DEV Working Group’s RSS 1.0, and Atom.

### Decline

The proliferation of competing RSS specifications may have hampered RSS in other ways that I’ll discuss shortly. But it did not stop RSS from becoming enormously popular during the 2000s. By 2004, the New York Times had started offering its headlines in RSS and had written an article explaining to the layperson what RSS was and how to use it. Google Reader, the RSS aggregator ultimately used by millions, was launched in 2005. By 2013, RSS seemed popular enough that the New York Times, in its obituary for Aaron Swartz, called the technology “ubiquitous.” For a while, before a third of the planet had signed up for Facebook, RSS was simply how many people stayed abreast of news on the internet.

The New York Times published Swartz’ obituary in January 2013. By that point, though, RSS had actually turned a corner and was well on its way to becoming an obscure technology. Google Reader was shut down in July 2013, ostensibly because user numbers had been falling “over the years.” This prompted several articles from various outlets declaring that RSS was dead. But people had been declaring that RSS was dead for years, even before Google Reader’s shuttering. Steve Gillmor, writing for TechCrunch in May 2009, advised that “it’s time to get completely off RSS and switch to Twitter” because “RSS just doesn’t cut it anymore.” He pointed out that Twitter was basically a better RSS feed, since it could show you what people thought about an article in addition to the article itself. It allowed you to follow people and not just channels. Gillmor told his readers that it was time to let RSS recede into the background. He ended his article with a verse from Bob Dylan’s “Forever Young.”

Today, RSS is not dead. But neither is it anywhere near as popular as it once was. Lots of people have offered explanations for why RSS lost its broad appeal. Perhaps the most persuasive explanation is exactly the one offered by Gillmor in 2009. Social networks, just like RSS, provide a feed featuring all the latest news on the internet. Social networks took over from RSS because they were simply better feeds. They also provide more benefits to the companies that own them. Some people have accused Google, for example, of shutting down Google Reader in order to encourage people to use Google+. Google might have been able to monetize Google+ in a way that it could never have monetized Google Reader. Marco Arment, the creator of Instapaper, wrote on his blog in 2013:

> Google Reader is just the latest casualty of the war that Facebook started, seemingly accidentally: the battle to own everything. While Google did technically “own” Reader and could make some use of the huge amount of news and attention data flowing through it, it conflicted with their far more important Google+ strategy: they need everyone reading and sharing everything through Google+ so they can compete with Facebook for ad-targeting data, ad dollars, growth, and relevance.

So both users and technology companies realized that they got more out of using social networks than they did out of RSS.

Another theory is that RSS was always too geeky for regular people. Even the New York Times, which seems to have been eager to adopt RSS and promote it to its audience, complained in 2006 that RSS is a “not particularly user friendly” acronym coined by “computer geeks.” Before the RSS icon was designed in 2004, websites like the New York Times linked to their RSS feeds using little orange boxes labeled “XML,” which can only have been intimidating. The label was perfectly accurate though, because back then clicking the link would take a hapless user to a page full of XML. [This great tweet][9] captures the essence of this explanation for RSS’ demise. Regular people never felt comfortable using RSS; it hadn’t really been designed as a consumer-facing technology and involved too many hurdles; people jumped ship as soon as something better came along.

RSS might have been able to overcome some of these limitations if it had been further developed. Maybe RSS could have been extended somehow so that friends subscribed to the same channel could syndicate their thoughts about an article to each other. Maybe browser support could have been improved. But whereas a company like Facebook was able to “move fast and break things,” the RSS developer community was stuck trying to achieve consensus. When they failed to agree on a single standard, effort that could have gone into improving RSS was instead squandered on duplicating work that had already been done. Davis told me, for example, that Atom would not have been necessary if the members of the Syndication mailing list had been able to compromise and collaborate, and “all that cleanup work could have been put into RSS to strengthen it.” So if we are asking ourselves why RSS is no longer popular, a good first-order explanation is that social networks supplanted it. If we ask ourselves why social networks were able to supplant it, then the answer may be that the people trying to make RSS succeed faced a problem much harder than, say, building Facebook. As Dornfest wrote to the Syndication mailing list at one point, “currently it’s the politics far more than the serialization that’s far from simple.”

So today we are left with centralized silos of information. Even so, the syndicated web that Werbach foresaw in 1999 has been realized, just not in the way he thought it would be. After all, The Onion is a publication that relies on syndication through Facebook and Twitter the same way that Seinfeld relied on syndication to rake in millions after the end of its original run. I asked Werbach what he thinks about this and he more or less agrees. He told me that RSS, on one level, was clearly a failure, because it isn’t now “a technology that is really the core of the whole blogging world or content world or world of assembling different elements of things into sites.” But, on another level, “the whole social media revolution is partly about the ability to aggregate different content and resources” in a manner reminiscent of RSS and his original vision for a syndicated web. To Werbach, “it’s the legacy of RSS, even if it’s not built on RSS.”

Unfortunately, syndication on the modern web still only happens through one of a very small number of channels, meaning that none of us “retain control over our online personae” the way that Werbach imagined we would. One reason this happened is garden-variety corporate rapaciousness: RSS, an open format, didn’t give technology companies the control over data and eyeballs that they needed to sell ads, so they did not support it. But the more mundane reason is that centralized silos are just easier to design than common standards. Consensus is difficult to achieve and it takes time, but without consensus, spurned developers will go off and create competing standards. The lesson here may be that if we want to see a better, more open web, we have to get better at not screwing each other over.

If you enjoyed this post, more like it come out every four weeks! Follow [@TwoBitHistory][10] on Twitter or subscribe to the [RSS feed][11] to make sure you know when a new post is out.

Previously on TwoBitHistory…

> I've long wondered if the Unix commands on my Macbook are built from the same code that they were built from 20 or 30 years ago. The answer, it turns out, is "kinda"!
>
> My latest post, on how the implementation of cat has changed over the years: <https://t.co/dHizjK50ES>
>
> — TwoBitHistory (@TwoBitHistory) [November 12, 2018][12]

--------------------------------------------------------------------------------

via: https://twobithistory.org/2018/12/18/rss.html

Author: [Two-Bit History][a]

Topic selected by: [lujun9972][b]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/).

[a]: https://twobithistory.org
[b]: https://github.com/lujun9972
[1]: https://twobithistory.org/2018/09/16/the-rise-and-demise-of-rss.html
[2]: https://trends.google.com/trends/explore?date=all&geo=US&q=rss
[3]: https://twobithistory.org/images/mnn-channel.gif
[4]: https://twobithistory.org/2018/05/27/semantic-web.html
[5]: http://web.archive.org/web/19970703020212/http://mcf.research.apple.com:80/hs/screen_shot.html
[6]: http://scripting.com
[7]: https://groups.yahoo.com/neo/groups/syndication/info
[8]: https://tools.ietf.org/html/rfc4287
[9]: https://twitter.com/mgsiegler/status/311992206716203008
[10]: https://twitter.com/TwoBitHistory
[11]: https://twobithistory.org/feed.xml
[12]: https://twitter.com/TwoBitHistory/status/1062114484209311746?ref_src=twsrc%5Etfw

@ -1,3 +1,4 @@

Translating by qhwdw

Protecting Code Integrity with PGP — Part 4: Moving Your Master Key to Offline Storage
======

@ -1,3 +1,4 @@

translated by lixinyuxx

4 Firefox extensions worth checking out
======

@ -1,3 +1,4 @@

translated by lixinyuxx

The life cycle of a software bug
======

@ -1,142 +0,0 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (11 Uses for a Raspberry Pi Around the Office)
[#]: via: (https://blog.dxmtechsupport.com.au/11-uses-for-a-raspberry-pi-around-the-office/)
[#]: author: (James Mawson https://blog.dxmtechsupport.com.au/author/james-mawson/)

11 Uses for a Raspberry Pi Around the Office
======

Look, I know what you’re thinking: a Raspberry Pi is really just for tinkering, prototyping and hobby use. It’s not actually meant for running a business on.

And it’s definitely true that this computer’s relatively low processing power, corruptible SD card, lack of battery backup and the DIY nature of the support means it’s not going to be a viable replacement for a [professionally installed and configured business server][1] for your most mission-critical operations any time soon.

But the board is affordable, incredibly frugal with power, small enough to fit just about anywhere and endlessly flexible – it’s actually a pretty great way to handle some basic tasks around the office.

And, even better, there’s a whole world of people out there who have done these projects before and are happy to share how they did it.

### DNS Server

Every time you type a website address or click a link in your browser, it needs to convert the domain name into a numeric IP address before it can show you anything.

Normally this means a request to a DNS server somewhere on the internet – but you can speed up your browsing by handling this locally.

You can also assign your own subdomains for local access to machines around the office.

[Here’s how to get this working.][2]
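
The linked guide walks through the full setup. As a minimal sketch of the local-subdomain idea, and assuming the Pi runs dnsmasq (whose `address=/name/ip` option answers queries for a name with a fixed IP), the shell function below turns a list of office machines into those lines. The `office.lan` domain, the host names, and the addresses are hypothetical.

```shell
#!/bin/sh
# Hypothetical sketch: turn "hostname ip" pairs into dnsmasq address= lines.
# Assumptions: dnsmasq is the local DNS server, and office.lan is a made-up
# internal domain; pass a different domain as the first argument.
make_dnsmasq_entries() {
  domain="${1:-office.lan}"
  while read -r name ip; do
    case "$name" in
      '' | '#'*) continue ;;  # skip blank lines and comments
    esac
    # address=/<name>/<ip> makes dnsmasq answer <name> with <ip>
    printf 'address=/%s.%s/%s\n' "$name" "$domain" "$ip"
  done
}

# Example (hypothetical hosts):
# printf 'nas 192.168.1.50\nprinter 192.168.1.60\n' \
#   | make_dnsmasq_entries | sudo tee /etc/dnsmasq.d/office.conf
# sudo systemctl restart dnsmasq
```

Keeping the host list as plain `name ip` pairs means it can be edited by hand. On Debian-based systems such as Raspbian, files in `/etc/dnsmasq.d/` are typically read after a restart, but it is worth checking your own dnsmasq configuration.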

### Toilet Occupied Sign

Ever get queues at the loos?

That’s annoying for those left waiting and the time spent dealing with it is a drain on your whole office’s productivity.

I guess you could always hang those signs they have on airplanes all through your office.

[Occu-pi][3] is a much simpler solution, using a magnetic switch and a Raspberry Pi to tell when the bolt is closed and update a Slack channel as to when it’s in use – meaning that the whole office can tell at a glance of their computer or mobile device whether there’s a cubicle free.
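
The linked write-up has its own implementation. As a rough, hypothetical sketch of the idea only, the shell script below polls a magnetic reed switch through the (now deprecated) sysfs GPIO interface and posts to a Slack incoming webhook whenever the bolt state changes. GPIO pin 17, the `SLACK_WEBHOOK_URL` variable, and the assumption that the pin reads `1` when the bolt is closed are all illustrative choices, not details from the project.

```shell
#!/bin/sh
# Hypothetical sketch of an Occu-pi-style sensor (not the project's code).
# Assumptions: a reed switch on a GPIO pin already exported through the
# legacy sysfs interface, reading 1 when the bolt is closed, and a Slack
# incoming-webhook URL in $SLACK_WEBHOOK_URL.

GPIO_VALUE_FILE="${GPIO_VALUE_FILE:-/sys/class/gpio/gpio17/value}"

# Translate the raw pin value into a human-readable status.
door_status() {
  if [ "$(cat "$1")" = "1" ]; then
    echo "occupied"
  else
    echo "free"
  fi
}

# Post a message to Slack; incoming webhooks accept JSON with a "text" field.
notify_slack() {
  curl -s -X POST -H 'Content-Type: application/json' \
    --data "{\"text\": \"Toilet is now $1\"}" "$SLACK_WEBHOOK_URL" >/dev/null
}

# Poll once a second and notify only when the status changes.
watch_door() {
  last=""
  while true; do
    current=$(door_status "$GPIO_VALUE_FILE")
    if [ "$current" != "$last" ]; then
      notify_slack "$current"
      last="$current"
    fi
    sleep 1
  done
}

# Start with (URL is a placeholder): SLACK_WEBHOOK_URL='<your webhook URL>' watch_door
```

Posting only on a change keeps the Slack channel quiet. A real installation would probably also want debouncing of the switch, which this sketch leaves out.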

### Honeypot Trap for Hackers

It should scare most business owners just a little bit that their first clue that a hacker’s breached the network is when something goes badly wrong.

That’s where it can help to have a honeypot: a computer that serves no purpose except to sit on your network with certain ports open to masquerade as a juicy target to hackers.

Security researchers often deploy honeypots on the exterior of a network, to collect data on what attackers are doing.

But for the average small business, these are more usefully deployed in the interior, to serve as a kind of tripwire. Because no ordinary user has any real reason to want to connect to the honeypot, any login attempts that occur are a very good indicator that mischief is afoot.

This can provide early warning of outsider intrusion, and of trusted insiders up to no good.

In larger, client/server networks, it might be more practical to run something like this as a virtual machine. But in small-office/home-office situations with a peer-to-peer network running on a wireless router, something like [HoneyPi][4] is a great little burglar alarm.
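
HoneyPi has its own setup. As a rough, hypothetical sketch of the tripwire idea itself, assume the Pi logs every TCP connection attempt to a decoy port with an iptables `LOG` rule (the port 2222 and the `HONEYPOT: ` prefix are made up). Because no legitimate user should ever connect, the alert logic can be as blunt as "any matching log line at all".

```shell
#!/bin/sh
# Hypothetical tripwire sketch (not HoneyPi itself).
# Assumption: connection attempts to a decoy port are logged with
#   sudo iptables -A INPUT -p tcp --dport 2222 -j LOG --log-prefix "HONEYPOT: "
# and kernel messages land in a file such as /var/log/kern.log.

# Print each distinct source IP that touched the decoy port.
honeypot_sources() {
  grep 'HONEYPOT: ' "$1" \
    | sed -n 's/.* SRC=\([0-9.]*\) .*/\1/p' \
    | sort -u
}

# Alert if anything shows up at all: no ordinary user should connect.
check_tripwire() {
  [ -r "$1" ] || { echo "cannot read $1" >&2; return 2; }
  hits=$(honeypot_sources "$1")
  if [ -n "$hits" ]; then
    echo "ALERT: honeypot contacted by: $hits"
    return 1
  fi
  echo "OK: no honeypot activity"
}
```

You might run `check_tripwire /var/log/kern.log` from cron and mail any output that starts with `ALERT`. The log location is an assumption and varies between systems.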

### Print Server

Network-attached printers are so much more convenient.

But it can be expensive to replace all your printers, especially if you’re otherwise happy with them.

It might make a lot more sense to [set up a Raspberry Pi as a print server][5].

### Network Attached Storage

Turning simple hard drives into network attached storage was one of the earliest practical uses for a Raspberry Pi, and it’s still one of the best.

[Here’s how to create a NAS with your Raspberry Pi.][6]
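
The linked guide uses Samba. As a hypothetical sketch of the core configuration step, the function below prints a share definition in `smb.conf` syntax for a drive mounted on the Pi. The share name, mount point, and user are placeholders, and the tutorial's exact settings may differ.

```shell
#!/bin/sh
# Hypothetical sketch: print a Samba share stanza for a mounted USB drive.
# Arguments: share name, mount point, and the Samba user(s) allowed in.
samba_share() {
  name="$1"; path="$2"; users="$3"
  printf '[%s]\n' "$name"
  printf '   path = %s\n' "$path"
  printf '   browseable = yes\n'
  printf '   read only = no\n'
  printf '   valid users = %s\n' "$users"
}

# Example (placeholders): append the share, set a Samba password, restart.
# samba_share office /mnt/usbdrive pi | sudo tee -a /etc/samba/smb.conf
# sudo smbpasswd -a pi
# sudo systemctl restart smbd
```

Generating the stanza from a function makes it easy to add several shares in the same way.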

### Ticketing Server

Looking for a way to manage the support tickets for your help desk on a shoestring budget?

There’s a totally open source ticketing program called osTicket that you can install on your Pi, and it’s even available as [a ready-to-go SD card image][7].

### Digital Signage

Whether it’s for events, advertising, a menu, or something else entirely, a lot of businesses need a way to display digital signage – and the Pi’s affordability and frugal electricity needs make it a very attractive choice.

[There is a wealth of options to choose from here.][8]

### Directories and Kiosks

[FullPageOS][9] is a Linux distribution based on Raspbian that boots straight into a full-screen version of Chromium, ideal for shopping directories, library catalogues and so on.

### Basic Intranet Web Server

For hosting a public-facing website, you’re really much better off just getting a hosting account. A Raspberry Pi is not really built to serve any real volume of web traffic.

But for small offices, it can host an internal business wiki or basic company intranet. It can also work as a sandbox environment for experimenting with code and server configurations.

[Here’s how to get Apache, MySQL and PHP running on a Pi.][10]

### Penetration Tester

Kali Linux is an operating system built specifically to probe networks for security vulnerabilities. By installing it on a Pi, you’ve got a super portable penetration tester with more than 600 tools included.

[You can find a torrent link for the Raspberry Pi image here.][11]

Be absolutely scrupulous to only use this on your own network or networks you’ve got permission to perform a security audit on – using this to hack other networks is a serious crime.

### VPN Server

When you’re out on the road, relying on public wireless internet, you’ve not really any say in who else might be on the network, snooping on all your traffic. That’s why it can be reassuring to encrypt everything with a VPN connection.

There are any number of commercial VPN services you can subscribe to – and you can install your own in the cloud – but by running one from your office, you can also access the local network from anywhere.

For light use – say, the occasional bit of business travel – a Raspberry Pi is a great, power-efficient way to set up a VPN server. (It’s also worth checking first that your router doesn’t offer this functionality already – very many do.)

[Here’s how to install OpenVPN on a Raspberry Pi.][12]

### Wireless Coffee Machine

Ahh, ambrosia: sweet nectar of the gods and the backbone of all productive enterprise.

So why not [hack the office coffee machine into a smart coffee machine][13] for precision temperature control and wireless network connectivity?

--------------------------------------------------------------------------------

via: https://blog.dxmtechsupport.com.au/11-uses-for-a-raspberry-pi-around-the-office/

Author: [James Mawson][a]

Topic selected by: [lujun9972][b]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/).

[a]: https://blog.dxmtechsupport.com.au/author/james-mawson/
[b]: https://github.com/lujun9972
[1]: https://dxmtechsupport.com.au/server-configuration
[2]: https://www.1and1.com/digitalguide/server/configuration/how-to-make-your-raspberry-pi-into-a-dns-server/
[3]: https://blog.usejournal.com/occu-pi-the-bathroom-of-the-future-ed69b84e21d5
[4]: https://trustfoundry.net/honeypi-easy-honeypot-raspberry-pi/
[5]: https://opensource.com/article/18/3/print-server-raspberry-pi
[6]: https://howtoraspberrypi.com/create-a-nas-with-your-raspberry-pi-and-samba/
[7]: https://everyday-tech.com/a-raspberry-pi-ticketing-system-image-with-osticket/
[8]: https://blog.capterra.com/7-free-and-open-source-digital-signage-software-options-for-your-next-event/
[9]: https://github.com/guysoft/FullPageOS
[10]: https://maker.pro/raspberry-pi/projects/raspberry-pi-web-server
[11]: https://www.offensive-security.com/kali-linux-arm-images/
[12]: https://medium.freecodecamp.org/running-your-own-openvpn-server-on-a-raspberry-pi-8b78043ccdea
[13]: https://www.techradar.com/au/how-to/how-to-build-your-own-smart-coffee-machine

@ -1,5 +1,5 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: translator: ( Auk7F7)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: subject: (Arch-Audit : A Tool To Check Vulnerable Packages In Arch Linux)

@ -7,6 +7,7 @@

[#]: author: (Prakash Subramanian https://www.2daygeek.com/author/prakash/)
[#]: url: ( )

Arch-Audit : A Tool To Check Vulnerable Packages In Arch Linux
======

169

sources/tech/20181204 4 Unique Terminal Emulators for Linux.md

Normal file

@ -0,0 +1,169 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (4 Unique Terminal Emulators for Linux)
[#]: via: (https://www.linux.com/blog/learn/2018/12/4-unique-terminals-linux)
[#]: author: (Jack Wallen https://www.linux.com/users/jlwallen)

4 Unique Terminal Emulators for Linux
======

Let’s face it, if you’re a Linux administrator, you’re going to work with the command line. To do that, you’ll be using a terminal emulator. Most likely, your distribution of choice came pre-installed with a default terminal emulator that gets the job done. But this is Linux, so you have a wealth of choices to pick from, and that ideology holds true for terminal emulators as well. In fact, if you open up your distribution’s GUI package manager (or search from the command line), you’ll find a trove of possible options. Of those, many are pretty straightforward tools; however, some are truly unique.

In this article, I’ll highlight four such terminal emulators that will not only get the job done but do so while making the job a bit more interesting or fun. So, let’s take a look at these terminals.

### Tilda

[Tilda][1] is designed for GTK and is a member of the cool drop-down family of terminals (such as Guake and Yakuake). That means the terminal is always running in the background, ready to drop down from the top of your monitor. What makes Tilda rise above many of the others is the number of configuration options available for the terminal (Figure 1).

Tilda can be installed from the standard repositories. On an Ubuntu- (or Debian-) based distribution, the installation is as simple as:

```
sudo apt-get install tilda -y
```

Once installed, open Tilda from your desktop menu, which will also open the configuration window. Configure the app to suit your taste and then close the configuration window. You can then open and close Tilda by hitting the F1 hotkey. One caveat to using Tilda is that, after the first run, you won’t find any indication of how to reach the configuration wizard. No worries: run the command `tilda -C` and it will open the configuration window, while still retaining the options you’ve previously set.

Available options include:

* Terminal size and location
* Font and color configurations
* Auto Hide
* Title
* Custom commands
* URL Handling
* Transparency
* Animation
* Scrolling
* And more

What I like about these types of terminals is that they easily get out of the way when you don’t need them and are just a button click away when you do. For those that hop in and out of the terminal, a tool like Tilda is ideal.

### Aterm

Aterm holds a special place in my heart, as it was one of the first terminals I used that made me realize how flexible Linux was. This was back when AfterStep was my window manager of choice (which dates me a bit) and I was new to the command line. What Aterm offered was a terminal emulator that was highly customizable, while helping me learn the ins and outs of using the terminal (how to add options and switches to a command). “How?” you ask. Because Aterm never had a GUI for customization. To run Aterm with any special options, it had to run as a command. For example, say you want to open Aterm with transparency enabled, green text, white highlights, and no scroll bar. To do this, issue the command:

```
aterm -tr -fg green -bg white +sb
```

The end result (with the `top` command running for illustration) would look like Figure 2.

![Aterm][3]

Figure 2: Aterm with a few custom options.

[Used with permission][4]

Of course, you must first install Aterm. Fortunately, the application is still found in the standard repositories, so installing on the likes of Ubuntu is as simple as:

```
sudo apt-get install aterm -y
```

If you want to always open Aterm with those options, your best bet is to create an alias in your `~/.bashrc` file like so:

```
alias aterm='aterm -tr -fg green -bg white +sb'
```

Save the file and reload it (run `source ~/.bashrc` or open a new terminal); from then on, the `aterm` command will always open with those options. For more about creating aliases, check out [this tutorial][5].
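
If you script your desktop setup, a hedged sketch like the one below appends the alias line only when it is not already present, so running it twice doesn’t leave duplicate entries. The function name `add_aterm_alias` is hypothetical. It defaults to `~/.bashrc` but accepts another file path, which makes it easy to try out safely.

```shell
#!/bin/sh
# Hypothetical helper: add the Aterm alias to a shell startup file once.
# The file defaults to ~/.bashrc; pass another path to test on a scratch file.
add_aterm_alias() {
  rc="${1:-$HOME/.bashrc}"
  line="alias aterm='aterm -tr -fg green -bg white +sb'"
  # -F: fixed string, -x: whole-line match, -q: quiet (exit status only)
  if ! grep -Fxq "$line" "$rc" 2>/dev/null; then
    printf '%s\n' "$line" >> "$rc"
  fi
}

# Usage: add_aterm_alias, then open a new terminal or run: source ~/.bashrc
```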

### Eterm

Eterm is the second terminal that really showed me how much fun the Linux command line could be. Eterm is the default terminal emulator for the Enlightenment desktop. When I eventually migrated from AfterStep to Enlightenment (back in the early 2000s), I was afraid I’d lose out on all those cool aesthetic options. That turned out not to be the case. In fact, Eterm offered plenty of unique options, while making the task easier with a terminal toolbar. With Eterm, you can easily choose from a large number of background images (should you want one; see Figure 3) via the Background > Pixmap menu entry.

![Eterm][7]

Figure 3: Selecting from one of the many background images for Eterm.

[Used with permission][4]

There are a number of other options to configure (such as font size, map alerts, scrollbar toggling, and the brightness, contrast, and gamma of background images). Once you’ve configured Eterm to suit your tastes, make sure to click Eterm > Save User Settings. Otherwise, all of your settings will be lost when you close the app.

Eterm can be installed from the standard repositories, with a command such as:

```
sudo apt-get install eterm
```

### Extraterm

[Extraterm][8] should probably win a few awards for the coolest feature set of any terminal window project available today. Its most distinctive feature is the ability to wrap commands in color-coded frames (blue for successful commands and red for failed commands; Figure 4).

![Extraterm][10]

Figure 4: Extraterm showing two failed command frames.

[Used with permission][4]

When you run a command, Extraterm will wrap the command in an isolated frame. If the command succeeds, the frame will be outlined in blue. Should the command fail, the frame will be outlined in red.

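The article doesn't describe how Extraterm decides between blue and red. Presumably it follows the shell's exit status convention, where 0 means success and anything else means failure. The sketch below is a hypothetical helper, frame_color, that applies that rule to any command so you can predict which color a frame should get. It only mimics the behavior under that assumption and is not part of Extraterm.

```shell
#!/bin/sh
# Hypothetical helper (not part of Extraterm): run a command and report the
# frame color it would presumably get, based only on its exit status.
frame_color() {
    # Discard the command's output; only its exit status matters here.
    if "$@" >/dev/null 2>&1; then
        echo "blue"   # exit status 0: success
    else
        echo "red"    # non-zero exit status: failure
    fi
}

frame_color ls /                    # ls succeeds on the root directory
frame_color ls /no-such-directory   # ls fails on a missing directory
```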
Extraterm cannot be installed via the standard repositories. In fact, the only way to run Extraterm on Linux (at the moment) is to [download the precompiled binary][11] from the project’s GitHub page, extract the file, change into the newly created directory, and issue the command ./extraterm.

Once the app is running, you must enable Bash integration before you can use frames. To do that, right-click anywhere in the Extraterm window to reveal the pop-up menu, and scroll until you see the Inject Bash shell Integration entry (Figure 5). Select that entry, and you can then begin using the frames option.

![Extraterm][13]

Figure 5: Injecting Bash integration for Extraterm.

[Used with permission][4]

If you run a command and don’t see a frame appear, you probably need to create a new frame rule for that command, because Extraterm ships with only a few default rules. To do that, click the Extraterm menu button (three horizontal lines in the top-right corner of the window), select Settings, and then click the Frames tab. In this window, scroll down and click the New Rule button. You can then add a command you want to use with the frames option (Figure 6).

![frames][15]

Figure 6: Adding a new rule for frames.

[Used with permission][4]

If, after this, you still don’t see frames appearing, download the extraterm-commands file from the [Download page][11], extract the file, change into the newly created directory, and issue the command sh setup_extraterm_bash.sh. That should enable frames for Extraterm.

There are plenty more options available for Extraterm. I’m convinced that, once you start playing around with this new take on the terminal window, you won’t want to go back to the standard terminal. Hopefully the developer will make this app available in the standard repositories soon, as it could easily become one of the most popular terminal windows in use.

### And Many More

As you probably expected, there are quite a lot of terminals available for Linux. These four represent (at least for me) four unique takes on the task, each of which does a great job of helping you run the commands every Linux admin needs to run. If you aren’t satisfied with any of these, give your package manager a look to see what’s available. You are sure to find something that works perfectly for you.

--------------------------------------------------------------------------------

via: https://www.linux.com/blog/learn/2018/12/4-unique-terminals-linux

Author: [Jack Wallen][a]
Selected by: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://www.linux.com/users/jlwallen
[b]: https://github.com/lujun9972
[1]: http://tilda.sourceforge.net/tildadoc.php
[2]: https://www.linux.com/files/images/terminals2jpg
[3]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/terminals_2.jpg?itok=gBkRLwDI (Aterm)
[4]: https://www.linux.com/licenses/category/used-permission
[5]: https://www.linux.com/blog/learn/2018/12/aliases-diy-shell-commands
[6]: https://www.linux.com/files/images/terminals3jpg
[7]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/terminals_3.jpg?itok=RVPTJAtK (Eterm)
[8]: http://extraterm.org
[9]: https://www.linux.com/files/images/terminals4jpg
[10]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/terminals_4.jpg?itok=2n01qdwO (Extraterm)
[11]: https://github.com/sedwards2009/extraterm/releases
[12]: https://www.linux.com/files/images/terminals5jpg
[13]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/terminals_5.jpg?itok=FdaE1Mpf (Extraterm)
[14]: https://www.linux.com/files/images/terminals6jpg
[15]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/terminals_6.jpg?itok=lQ1Zv5wq (frames)