mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-28 23:20:10 +08:00

commit

7819d066d3

@ -1,11 +1,9 @@

|

||||

已校对。

|

||||

|

||||

为满足当今和未来 IT 需求,培训员工还是雇佣新人?

|

||||

================================================================

|

||||

|

||||

|

||||

|

||||

在数字化时代,由于IT工具不断更新,技术公司紧随其后,对 IT 技能的需求也不断变化。对于企业来说,寻找和雇佣那些拥有令人垂涎能力的创新人才,是非常不容易的。同时,培训内部员工来使他们接受新的技能和挑战,需要一定的时间,而时间要求常常是紧迫的。

|

||||

在数字化时代,由于 IT 工具不断更新,技术公司紧随其后,对 IT 技能的需求也不断变化。对于企业来说,寻找和雇佣那些拥有令人垂涎能力的创新人才,是非常不容易的。同时,培训内部员工来使他们接受新的技能和挑战,需要一定的时间,而时间要求常常是紧迫的。

|

||||

|

||||

[Sandy Hill][1] 对 IT 涉及到的多项技术都很熟悉。她作为 [Pegasystems][2] 项目的 IT 总监,负责多个 IT 团队,从应用的部署到数据中心的运营都要涉及。更重要的是,Pegasystems 开发帮助销售、市场、服务以及运营团队流水化操作,以及客户联络的应用。这意味着她需要掌握使用 IT 内部资源的最佳方法,面对公司客户遇到的 IT 挑战。

|

||||

|

||||

@ -19,7 +17,7 @@

|

||||

|

||||

**TEP:说说培训方法吧,怎样帮助你的员工发展他们的技能?**

|

||||

|

||||

**Hill**:我要求每一位员工制定一个技术性的和非技术性的训练目标。这作为他们绩效评估的一部分。他们的技术性目标需要与他们的工作职能相符,非技术行目标则随意,比如着重发展一项软技能,或是学一些专业领域之外的东西。我每年对职员进行一次评估,看看差距和不足之处,以使团队保持全面发展。

|

||||

**Hill**:我要求每一位员工制定一个技术性的和非技术性的训练目标。这作为他们绩效评估的一部分。他们的技术性目标需要与他们的工作职能相符,非技术岗目标则随意,比如着重发展一项软技能,或是学一些专业领域之外的东西。我每年对职员进行一次评估,看看差距和不足之处,以使团队保持全面发展。

|

||||

|

||||

**TEP:你的训练计划能够在多大程度上减轻招聘工作量, 保持职员的稳定性?**

|

||||

|

||||

@ -1,9 +1,11 @@

|

||||

小模块的开销

|

||||

JavaScript 小模块的开销

|

||||

====

|

||||

|

||||

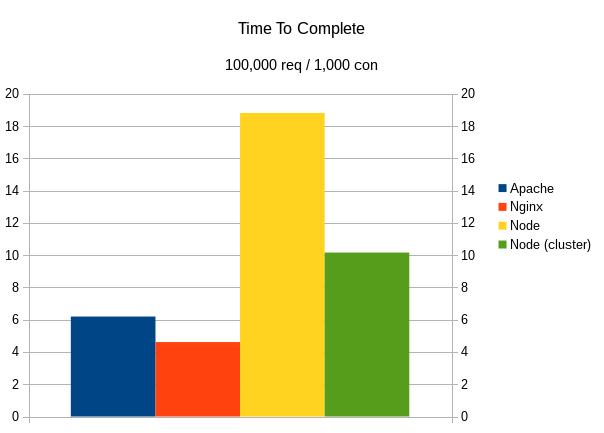

大约一年之前,我在将一个大型 JavaScript 代码库重构为小模块时发现了 Browserify 和 Webpack 中一个令人沮丧的事实:

|

||||

更新(2016/10/30):我写完这篇文章之后,我在[这个基准测试中发了一个错误](https://github.com/nolanlawson/cost-of-small-modules/pull/8),会导致 Rollup 比它预期的看起来要好一些。不过,整体结果并没有明显的不同(Rollup 仍然击败了 Browserify 和 Webpack,虽然它并没有像 Closure 十分好),所以我只是更新了图表。该基准测试包括了 [RequireJS 和 RequireJS Almond 打包器](https://github.com/nolanlawson/cost-of-small-modules/pull/5),所以文章中现在也包括了它们。要看原始帖子,可以查看[历史版本](https://web.archive.org/web/20160822181421/https://nolanlawson.com/2016/08/15/the-cost-of-small-modules/)。

|

||||

|

||||

> “代码越模块化,代码体积就越大。”- Nolan Lawson

|

||||

大约一年之前,我在将一个大型 JavaScript 代码库重构为更小的模块时发现了 Browserify 和 Webpack 中一个令人沮丧的事实:

|

||||

|

||||

> “代码越模块化,代码体积就越大。:< ”- Nolan Lawson

|

||||

|

||||

过了一段时间,Sam Saccone 发布了一些关于 [Tumblr][1] 和 [Imgur][2] 页面加载性能的出色的研究。其中指出:

|

||||

|

||||

@ -15,9 +17,9 @@

|

||||

|

||||

一个页面中包含的 JavaScript 脚本越多,页面加载也将越慢。庞大的 JavaScript 包会导致浏览器花费更多的时间去下载、解析和执行,这些都将加长载入时间。

|

||||

|

||||

即使当你使用如 Webpack [code splitting][3]、Browserify [factor bundles][4] 等工具将代码分解为多个包,时间的花费也仅仅是被延迟到页面生命周期的晚些时候。JavaScript 迟早都将有一笔开销。

|

||||

即使当你使用如 Webpack [code splitting][3]、Browserify [factor bundles][4] 等工具将代码分解为多个包,该开销也仅仅是被延迟到页面生命周期的晚些时候。JavaScript 迟早都将有一笔开销。

|

||||

|

||||

此外,由于 JavaScript 是一门动态语言,同时流行的 [CommonJS][5] 模块也是动态的,所以这就使得在最终分发给用户的代码中剔除无用的代码变得异常困难。譬如你可能只使用到 jQuery 中的 $.ajax,但是通过载入 jQuery 包,你将以整个包为代价。

|

||||

此外,由于 JavaScript 是一门动态语言,同时流行的 [CommonJS][5] 模块也是动态的,所以这就使得在最终分发给用户的代码中剔除无用的代码变得异常困难。譬如你可能只使用到 jQuery 中的 $.ajax,但是通过载入 jQuery 包,你将付出整个包的代价。

|

||||

|

||||

JavaScript 社区对这个问题提出的解决办法是提倡 [小模块][6] 的使用。小模块不仅有许多 [美好且实用的好处][7] 如易于维护,易于理解,易于集成等,而且还可以通过鼓励包含小巧的功能而不是庞大的库来解决之前提到的 jQuery 的问题。

|

||||

|

||||

@ -66,7 +68,7 @@ $ browserify node_modules/qs | browserify-count-modules

|

||||

|

||||

顺带一提,我写过的最大的开源站点 [Pokedex.org][21] 包含了 4 个包,共 311 个模块。

|

||||

|

||||

让我们先暂时忽略这些 JavaScript 包的实际大小,我认为去探索一下一定数量的模块本身开销会事一件有意思的事。虽然 Sam Saccone 的文章 [“2016 年 ES2015 转译的开销”][22] 已经广为流传,但是我认为他的结论还未到达足够深度,所以让我们挖掘的稍微再深一点吧。

|

||||

让我们先暂时忽略这些 JavaScript 包的实际大小,我认为去探索一下一定数量的模块本身开销会是一件有意思的事。虽然 Sam Saccone 的文章 [“2016 年 ES2015 转译的开销”][22] 已经广为流传,但是我认为他的结论还未到达足够深度,所以让我们挖掘的稍微再深一点吧。

|

||||

|

||||

### 测试环节!

|

||||

|

||||

@ -86,13 +88,13 @@ console.log(total)

|

||||

module.exports = 1

|

||||

```

|

||||

|

||||

我测试了五种打包方法:Browserify, 带 [bundle-collapser][24] 插件的 Browserify, Webpack, Rollup 和 Closure Compiler。对于 Rollup 和 Closure Compiler 我使用了 ES6 模块,而对于 Browserify 和 Webpack 则用的 CommonJS,目的是为了不涉及其各自缺点而导致测试的不公平(由于它们可能需要做一些转译工作,如 Babel 一样,而这些工作将会增加其自身的运行时间)。

|

||||

我测试了五种打包方法:Browserify、带 [bundle-collapser][24] 插件的 Browserify、Webpack、Rollup 和 Closure Compiler。对于 Rollup 和 Closure Compiler 我使用了 ES6 模块,而对于 Browserify 和 Webpack 则用的是 CommonJS,目的是为了不涉及其各自缺点而导致测试的不公平(由于它们可能需要做一些转译工作,如 Babel 一样,而这些工作将会增加其自身的运行时间)。

|

||||

|

||||

为了更好地模拟一个生产环境,我将带 -mangle 和 -compress 参数的 Uglify 用于所有的包,并且使用 gzip 压缩后通过 GitHub Pages 用 HTTPS 协议进行传输。对于每个包,我一共下载并执行 15 次,然后取其平均值,并使用 performance.now() 函数来记录载入时间(未使用缓存)与执行时间。

|

||||

为了更好地模拟一个生产环境,我对所有的包采用带 `-mangle` 和 `-compress` 参数的 `Uglify` ,并且使用 gzip 压缩后通过 GitHub Pages 用 HTTPS 协议进行传输。对于每个包,我一共下载并执行 15 次,然后取其平均值,并使用 `performance.now()` 函数来记录载入时间(未使用缓存)与执行时间。

|

||||

|

||||

### 包大小

|

||||

|

||||

在我们查看测试结果之前,我们有必要先来看一眼我们要测试的包文件。一下是每个包最小处理后但并未使用 gzip 压缩时的体积大小(单位:Byte):

|

||||

在我们查看测试结果之前,我们有必要先来看一眼我们要测试的包文件。以下是每个包最小处理后但并未使用 gzip 压缩时的体积大小(单位:Byte):

|

||||

|

||||

| | 100 个模块 | 1000 个模块 | 5000 个模块 |

|

||||

| --- | --- | --- | --- |

|

||||

@ -110,7 +112,7 @@ module.exports = 1

|

||||

| rollup | 300 | 2145 | 11510 |

|

||||

| closure | 302 | 2140 | 11789 |

|

||||

|

||||

Browserify 和 Webpack 的工作方式是隔离各个模块到各自的函数空间,然后声明一个全局载入器,并在每次 require() 函数调用时定位到正确的模块处。下面是我们的 Browserify 包的样子:

|

||||

Browserify 和 Webpack 的工作方式是隔离各个模块到各自的函数空间,然后声明一个全局载入器,并在每次 `require()` 函数调用时定位到正确的模块处。下面是我们的 Browserify 包的样子:

|

||||

|

||||

```

|

||||

(function e(t,n,r){function s(o,u){if(!n[o]){if(!t[o]){var a=typeof require=="function"&&require;if(!u&&a)return a(o,!0);if(i)return i(o,!0);var f=new Error("Cannot find module '"+o+"'");throw f.code="MODULE_NOT_FOUND",f}var l=n[o]={exports:{}};t[o][0].call(l.exports,function(e){var n=t[o][1][e];return s(n?n:e)},l,l.exports,e,t,n,r)}return n[o].exports}var i=typeof require=="function"&&require;for(var o=0;o

|

||||

@ -144,7 +146,7 @@ Browserify 和 Webpack 的工作方式是隔离各个模块到各自的函数空

|

||||

|

||||

在 100 个模块时,各包的差异是微不足道的,但是一旦模块数量达到 1000 个甚至 5000 个时,差异将会变得非常巨大。iPod Touch 在不同包上的差异并不明显,而对于具有一定年代的 Nexus 5 来说,Browserify 和 Webpack 明显耗时更多。

|

||||

|

||||

与此同时,我发现有意思的是 Rollup 和 Closure 的运行开销对于 iPod 而言几乎可以忽略,并且与模块的数量关系也不大。而对于 Nexus 5 来说,运行的开销并非完全可以忽略,但它们仍比 Browserify 或 Webpack 低很多。后者若未在几百毫秒内完成加载则将会占用主线程的好几帧的时间,这就意味着用户界面将冻结并且等待直到模块载入完成。

|

||||

与此同时,我发现有意思的是 Rollup 和 Closure 的运行开销对于 iPod 而言几乎可以忽略,并且与模块的数量关系也不大。而对于 Nexus 5 来说,运行的开销并非完全可以忽略,但 Rollup/Closure 仍比 Browserify/Webpack 低很多。后者若未在几百毫秒内完成加载则将会占用主线程的好几帧的时间,这就意味着用户界面将冻结并且等待直到模块载入完成。

|

||||

|

||||

值得注意的是前面这些测试都是在千兆网速下进行的,所以在网络情况来看,这只是一个最理想的状况。借助 Chrome 开发者工具,我们可以认为地将 Nexus 5 的网速限制到 3G 水平,然后来看一眼这对测试产生的影响([查看表格][30]):

|

||||

|

||||

@ -152,13 +154,13 @@ Browserify 和 Webpack 的工作方式是隔离各个模块到各自的函数空

|

||||

|

||||

一旦我们将网速考虑进来,Browserify/Webpack 和 Rollup/Closure 的差异将变得更为显著。在 1000 个模块规模(接近于 Reddit 1050 个模块的规模)时,Browserify 花费的时间比 Rollup 长大约 400 毫秒。然而 400 毫秒已经不是一个小数目了,正如 Google 和 Bing 指出的,亚秒级的延迟都会 [对用户的参与产生明显的影响][32] 。

|

||||

|

||||

还有一件事需要指出,那就是这个测试并非测量 100 个、1000 个或者 5000 个模块的每个模块的精确运行时间。因为这还与你对 require() 函数的使用有关。在这些包中,我采用的是对每个模块调用一次 require() 函数。但如果你每个模块调用了多次 require() 函数(这在代码库中非常常见)或者你多次动态调用 require() 函数(例如在子函数中调用 require() 函数),那么你将发现明显的性能退化。

|

||||

还有一件事需要指出,那就是这个测试并非测量 100 个、1000 个或者 5000 个模块的每个模块的精确运行时间。因为这还与你对 `require()` 函数的使用有关。在这些包中,我采用的是对每个模块调用一次 `require()` 函数。但如果你每个模块调用了多次 `require()` 函数(这在代码库中非常常见)或者你多次动态调用 `require()` 函数(例如在子函数中调用 `require()` 函数),那么你将发现明显的性能退化。

|

||||

|

||||

Reddit 的移动站点就是一个很好的例子。虽然该站点有 1050 个模块,但是我测量了它们使用 Browserify 的实际执行时间后发现比“1000 个模块”的测试结果差好多。当使用那台运行 Chrome 的 Nexus 5 时,我测出 Reddit 的 Browserify require() 函数耗时 2.14 秒。而那个“1000 个模块”脚本中的等效函数只需要 197 毫秒(在搭载 i7 处理器的 Surface Book 上的桌面版 Chrome,我测出的结果分别为 559 毫秒与 37 毫秒,虽然给出桌面平台的结果有些令人惊讶)。

|

||||

|

||||

这结果提示我们有必要对每个模块使用多个 require() 函数的情况再进行一次测试。不过,我并不认为这对 Browserify 和 Webpack 会是一个公平的测试,因为 Rollup 和 Closure 都会将重复的 ES6 库导入处理为一个的顶级变量声明,同时也阻止了顶层空间以外的其他区域的导入。所以根本上来说,Rollup 和 Closure 中一个导入和多个导入的开销是相同的,而对于 Browserify 和 Webpack,运行开销随 require() 函数的数量线性增长。

|

||||

这结果提示我们有必要对每个模块使用多个 `require()` 函数的情况再进行一次测试。不过,我并不认为这对 Browserify 和 Webpack 会是一个公平的测试,因为 Rollup 和 Closure 都会将重复的 ES6 库导入处理为一个的顶级变量声明,同时也阻止了顶层空间以外的其他区域的导入。所以根本上来说,Rollup 和 Closure 中一个导入和多个导入的开销是相同的,而对于 Browserify 和 Webpack,运行开销随 `require()` 函数的数量线性增长。

|

||||

|

||||

为了我们这个分析的目的,我认为最好假设模块的数量是性能的短板。而事实上,“5000 个模块”也是一个比“5000 个 require() 函数调用”更好的度量标准。

|

||||

为了我们这个分析的目的,我认为最好假设模块的数量是性能的短板。而事实上,“5000 个模块”也是一个比“5000 个 `require()` 函数调用”更好的度量标准。

|

||||

|

||||

### 结论

|

||||

|

||||

@ -168,11 +170,11 @@ Reddit 的移动站点就是一个很好的例子。虽然该站点有 1050 个

|

||||

|

||||

给出这些结果之后,我对 Closure Compiler 和 Rollup 在 JavaScript 社区并没有得到过多关注而感到惊讶。我猜测或许是因为(前者)需要依赖 Java,而(后者)仍然相当不成熟并且未能做到开箱即用(详见 [Calvin’s Metcalf 的评论][37] 中作的不错的总结)。

|

||||

|

||||

即使没有足够数量的 JavaScript 开发者加入到 Rollup 或 Closure 的队伍中,我认为 npm 包作者们也已准备好了去帮助解决这些问题。如果你使用 npm 安装 lodash,你将会发其现主要的导入是一个巨大的 JavaScript 模块,而不是你期望的 Lodash 的超模块(hyper-modular)特性(require('lodash/uniq'),require('lodash.uniq') 等等)。对于 PouchDB,我们做了一个类似的声明以 [使用 Rollup 作为预发布步骤][38],这将产生对于用户而言尽可能小的包。

|

||||

即使没有足够数量的 JavaScript 开发者加入到 Rollup 或 Closure 的队伍中,我认为 npm 包作者们也已准备好了去帮助解决这些问题。如果你使用 npm 安装 lodash,你将会发其现主要的导入是一个巨大的 JavaScript 模块,而不是你期望的 Lodash 的超模块(hyper-modular)特性(`require('lodash/uniq')`,`require('lodash.uniq')` 等等)。对于 PouchDB,我们做了一个类似的声明以 [使用 Rollup 作为预发布步骤][38],这将产生对于用户而言尽可能小的包。

|

||||

|

||||

同时,我创建了 [rollupify][39] 来尝试将这过程变得更为简单一些,只需拖动到已存在的 Browserify 工程中即可。其基本思想是在你自己的项目中使用导入(import)和导出(export)(可以使用 [cjs-to-es6][40] 来帮助迁移),然后使用 require() 函数来载入第三方包。这样一来,你依旧可以在你自己的代码库中享受所有模块化的优点,同时能导出一个适当大小的大模块来发布给你的用户。不幸的是,你依旧得为第三方库付出一些代价,但是我发现这是对于当前 npm 生态系统的一个很好的折中方案。

|

||||

|

||||

所以结论如下:一个大的 JavaScript 包比一百个小 JavaScript 模块要快。尽管这是事实,我依旧希望我们社区能最终发现我们所处的困境————提倡小模块的原则对开发者有利,但是对用户不利。同时希望能优化我们的工具,使得我们可以对两方面都有利。

|

||||

所以结论如下:**一个大的 JavaScript 包比一百个小 JavaScript 模块要快**。尽管这是事实,我依旧希望我们社区能最终发现我们所处的困境————提倡小模块的原则对开发者有利,但是对用户不利。同时希望能优化我们的工具,使得我们可以对两方面都有利。

|

||||

|

||||

### 福利时间!三款桌面浏览器

|

||||

|

||||

@ -205,15 +207,15 @@ Firefox 48 ([查看表格][45])

|

||||

|

||||

[![Nexus 5 (3G) RequireJS 结果][53]](https://nolanwlawson.files.wordpress.com/2016/08/2016-08-20-14_45_29-small_modules3-xlsx-excel.png)

|

||||

|

||||

|

||||

更新 3: 我写了一个 [optimize-js](http://github.com/nolanlawson/optimize-js) ,它会减少一些函数内的函数的解析成本。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://nolanlawson.com/2016/08/15/the-cost-of-small-modules/?utm_source=javascriptweekly&utm_medium=email

|

||||

via: https://nolanlawson.com/2016/08/15/the-cost-of-small-modules/

|

||||

|

||||

作者:[Nolan][a]

|

||||

译者:[Yinr](https://github.com/Yinr)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,57 @@

|

||||

宽松开源许可证的崛起意味着什么

|

||||

====

|

||||

|

||||

为什么像 GNU GPL 这样的限制性许可证越来越不受青睐。

|

||||

|

||||

“如果你用了任何开源软件, 那么你软件的其他部分也必须开源。” 这是微软前 CEO 巴尔默 2001 年说的, 尽管他说的不对, 还是引发了人们对自由软件的 FUD (恐惧, 不确定和怀疑(fear, uncertainty and doubt))。 大概这才是他的意图。

|

||||

|

||||

对开源软件的这些 FUD 主要与开源许可有关。 现在有许多不同的许可证, 当中有些限制比其他的更严格(也有人称“更具保护性”)。 诸如 GNU 通用公共许可证 (GPL) 这样的限制性许可证使用了 copyleft 的概念。 copyleft 赋予人们自由发布软件副本和修改版的权力, 只要衍生工作保留同样的权力。 bash 和 GIMP 等开源项目就是使用了 GPL(v3)。 还有一个 AGPL( Affero GPL) 许可证, 它为网络上的软件(如 web service)提供了 copyleft 许可。

|

||||

|

||||

这意味着, 如果你使用了这种许可的代码, 然后加入了你自己的专有代码, 那么在一些情况下, 整个代码, 包括你的代码也就遵从这种限制性开源许可证。 Ballmer 说的大概就是这类的许可证。

|

||||

|

||||

但宽松许可证不同。 比如, 只要保留版权声明和许可声明且不要求开发者承担责任, MIT 许可证允许任何人任意使用开源代码, 包括修改和出售。 另一个比较流行的宽松开源许可证是 Apache 许可证 2.0,它还包含了贡献者向用户提供专利授权相关的条款。 使用 MIT 许可证的有 JQuery、.NET Core 和 Rails , 使用 Apache 许可证 2.0 的软件包括安卓, Apache 和 Swift。

|

||||

|

||||

两种许可证类型最终都是为了让软件更有用。 限制性许可证促进了参与和分享的开源理念, 使每一个人都能从软件中得到最大化的利益。 而宽松许可证通过允许人们任意使用软件来确保人们能从软件中得到最多的利益, 即使这意味着他们可以使用代码, 修改它, 据为己有,甚至以专有软件出售,而不做任何回报。

|

||||

|

||||

开源许可证管理公司黑鸭子软件的数据显示, 去年使用最多的开源许可证是限制性许可证 GPL 2.0,份额大约 25%。 宽松许可证 MIT 和 Apache 2.0 次之, 份额分别为 18% 和 16%, 再后面是 GPL 3.0, 份额大约 10%。 这样来看, 限制性许可证占 35%, 宽松许可证占 34%, 几乎是平手。

|

||||

|

||||

但这份当下的数据没有揭示发展趋势。黑鸭子软件的数据显示, 从 2009 年到 2015 年的六年间, MIT 许可证的份额上升了 15.7%, Apache 的份额上升了 12.4%。 在这段时期, GPL v2 和 v3 的份额惊人地下降了 21.4%。 换言之, 在这段时期里, 大量软件从限制性许可证转到宽松许可证。

|

||||

|

||||

这个趋势还在继续。 黑鸭子软件的[最新数据][1]显示, MIT 现在的份额为 26%, GPL v2 为 21%, Apache 2 为 16%, GPL v3 为 9%。 即 30% 的限制性许可证和 42% 的宽松许可证--与前一年的 35% 的限制许可证和 34% 的宽松许可证相比, 发生了重大的转变。 对 GitHub 上使用许可证的[调查研究][2]证实了这种转变。 它显示 MIT 以压倒性的 45% 占有率成为最流行的许可证, 与之相比, GPL v2 只有 13%, Apache 11%。

|

||||

|

||||

|

||||

|

||||

### 引领趋势

|

||||

|

||||

从限制性许可证到宽松许可证,这么大的转变背后是什么呢? 是公司害怕如果使用了限制性许可证的软件,他们就会像巴尔默说的那样,失去自己私有软件的控制权了吗? 事实上, 可能就是如此。 比如, Google 就[禁用了 Affero GPL 软件][3]。

|

||||

|

||||

[Instructional Media + Magic][4] 的主席 Jim Farmer, 是一个教育开源技术的开发者。 他认为很多公司为避免法律问题而不使用限制性许可证。 “问题就在于复杂性。 许可证的复杂性越高, 被他人因为某行为而告上法庭的可能性越高。 高复杂性更可能带来诉讼”, 他说。

|

||||

|

||||

他补充说, 这种对限制性许可证的恐惧正被律师们驱动着, 许多律师建议自己的客户使用 MIT 或 Apache 2.0 许可证的软件, 并明确反对使用 Affero 许可证的软件。

|

||||

|

||||

他说, 这会对软件开发者产生影响, 因为如果公司都避开限制性许可证软件的使用,开发者想要自己的软件被使用, 就更会把新的软件使用宽松许可证。

|

||||

|

||||

但 SalesAgility(开源 SuiteCRM 背后的公司)的 CEO Greg Soper 认为这种到宽松许可证的转变也是由一些开发者驱动的。 “看看像 Rocket.Chat 这样的应用。 开发者本可以选择 GPL 2.0 或 Affero 许可证, 但他们选择了宽松许可证,” 他说。 “这样可以给这个应用最大的机会, 因为专有软件厂商可以使用它, 不会伤害到他们的产品, 且不需要把他们的产品也使用开源许可证。 这样如果开发者想要让第三方应用使用他的应用的话, 他有理由选择宽松许可证。”

|

||||

|

||||

Soper 指出, 限制性许可证致力于帮助开源项目获得成功,方式是阻止开发者拿了别人的代码、做了修改,但不把结果回报给社区。 “Affero 许可证对我们的产品健康发展很重要, 因为如果有人利用了我们的代码开发,做得比我们好, 却又不把代码回报回来, 就会扼杀掉我们的产品,” 他说。 “ 对 Rocket.Chat 则不同, 因为如果它使用 Affero, 那么它会污染公司的知识产权, 所以公司不会使用它。 不同的许可证有不同的使用案例。”

|

||||

|

||||

曾在 Gnome、OpenOffice 工作过,现在是 LibreOffice 的开源开发者的 Michael Meeks 同意 Jim Farmer 的观点,认为许多公司确实出于对法律的担心,而选择使用宽松许可证的软件。 “copyleft 许可证有风险, 但同样也有巨大的益处。 遗憾的是人们都听从律师的, 而律师只是讲风险, 却从不告诉你有些事是安全的。”

|

||||

|

||||

巴尔默发表他的错误言论已经过去 15 年了, 但它产生的 FUD 还是有影响--即使从限制性许可证到宽松许可证的转变并不是他的目的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.cio.com/article/3120235/open-source-tools/what-the-rise-of-permissive-open-source-licenses-means.html

|

||||

|

||||

作者:[Paul Rubens][a]

|

||||

译者:[willcoderwang](https://github.com/willcoderwang)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://www.cio.com/author/Paul-Rubens/

|

||||

[1]: https://www.blackducksoftware.com/top-open-source-licenses

|

||||

[2]: https://github.com/blog/1964-open-source-license-usage-on-github-com

|

||||

[3]: http://www.theregister.co.uk/2011/03/31/google_on_open_source_licenses/

|

||||

[4]: http://immagic.com/

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

在 Linux 上检测硬盘坏道和坏块

|

||||

在 Linux 上检测硬盘上的坏道和坏块

|

||||

===

|

||||

|

||||

让我们从定义坏道和坏块开始说起,它们是一块磁盘或闪存上不再能够被读写的部分,一般是由于磁盘表面特定的[物理损坏][7]或闪存晶体管失效导致的。

|

||||

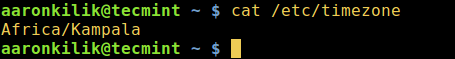

让我们从坏道和坏块的定义开始说起,它们是一块磁盘或闪存上不再能够被读写的部分,一般是由于磁盘表面特定的[物理损坏][7]或闪存晶体管失效导致的。

|

||||

|

||||

随着坏道的继续积累,它们会对你的磁盘或闪存容量产生令人不快或破坏性的影响,甚至可能会导致硬件失效。

|

||||

|

||||

@ -13,7 +13,7 @@

|

||||

|

||||

### 在 Linux 上使用坏块工具检查坏道

|

||||

|

||||

坏块工具可以让用户扫描设备检查坏道或坏块。设备可以是一个磁盘或外置磁盘,由一个如 /dev/sdc 这样的文件代表。

|

||||

坏块工具可以让用户扫描设备检查坏道或坏块。设备可以是一个磁盘或外置磁盘,由一个如 `/dev/sdc` 这样的文件代表。

|

||||

|

||||

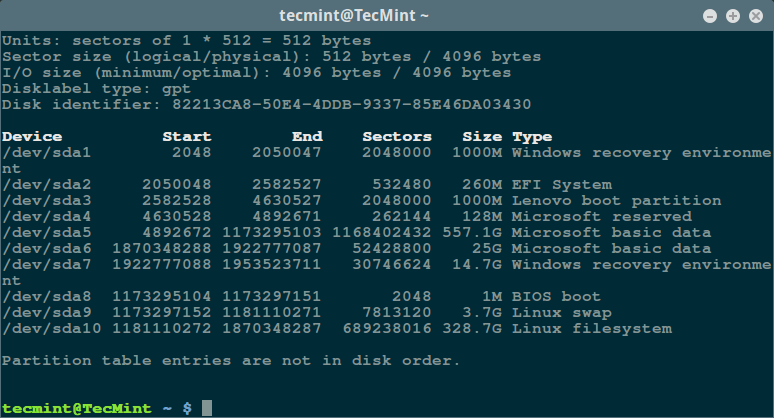

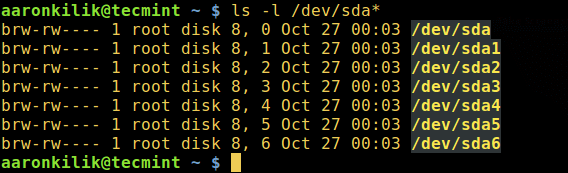

首先,通过超级用户权限执行 [fdisk 命令][5]来显示你的所有磁盘或闪存的信息以及它们的分区信息:

|

||||

|

||||

@ -24,9 +24,9 @@ $ sudo fdisk -l

|

||||

|

||||

[][4]

|

||||

|

||||

列出 Linux 文件系统分区

|

||||

*列出 Linux 文件系统分区*

|

||||

|

||||

然后用这个命令检查你的 Linux 硬盘上的坏道/坏块:

|

||||

然后用如下命令检查你的 Linux 硬盘上的坏道/坏块:

|

||||

|

||||

```

|

||||

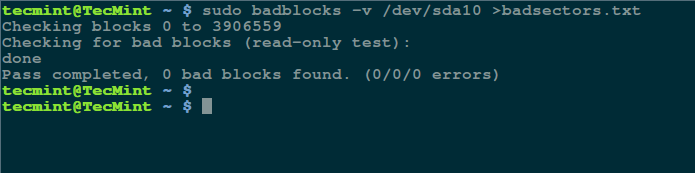

$ sudo badblocks -v /dev/sda10 > badsectors.txt

|

||||

@ -35,15 +35,15 @@ $ sudo badblocks -v /dev/sda10 > badsectors.txt

|

||||

|

||||

[][3]

|

||||

|

||||

在 Linux 上扫描硬盘坏道

|

||||

*在 Linux 上扫描硬盘坏道*

|

||||

|

||||

上面的命令中,badblocks 扫描设备 /dev/sda10(记得指定你的实际设备),-v 选项让它显示操作的详情。另外,这里使用了输出重定向将操作结果重定向到了文件 badsectors.txt。

|

||||

上面的命令中,badblocks 扫描设备 `/dev/sda10`(记得指定你的实际设备),`-v` 选项让它显示操作的详情。另外,这里使用了输出重定向将操作结果重定向到了文件 `badsectors.txt`。

|

||||

|

||||

如果你在你的磁盘上发现任何坏道,卸载磁盘并像下面这样让系统不要将数据写入回报的扇区中。

|

||||

|

||||

你需要执行 e2fsck(针对 ext2/ext3/ext4 文件系统)或 fsck 命令,命令中还需要用到 badsectors.txt 文件和设备文件。

|

||||

你需要执行 `e2fsck`(针对 ext2/ext3/ext4 文件系统)或 `fsck` 命令,命令中还需要用到 `badsectors.txt` 文件和设备文件。

|

||||

|

||||

`-l` 选项告诉命令将指定文件名文件(badsectors.txt)中列出的扇区号码加入坏块列表。

|

||||

`-l` 选项告诉命令将在指定的文件 `badsectors.txt` 中列出的扇区号码加入坏块列表。

|

||||

|

||||

```

|

||||

------------ 针对 for ext2/ext3/ext4 文件系统 ------------

|

||||

@ -60,7 +60,7 @@ $ sudo fsck -l badsectors.txt /dev/sda10

|

||||

|

||||

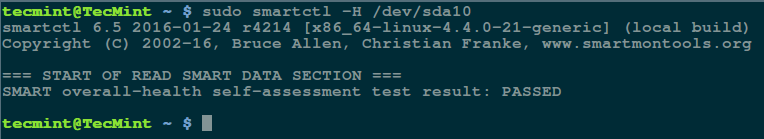

这个方法对带有 S.M.A.R.T(Self-Monitoring, Analysis and Reporting Technology,自我监控分析报告技术)系统的现代磁盘(ATA/SATA 和 SCSI/SAS 硬盘以及固态硬盘)更加的可靠和高效。S.M.A.R.T 系统能够帮助检测,报告,以及可能记录它们的健康状况,这样你就可以找出任何可能出现的硬件失效。

|

||||

|

||||

你可以使用以下命令安装 smartmontools:

|

||||

你可以使用以下命令安装 `smartmontools`:

|

||||

|

||||

```

|

||||

------------ 在基于 Debian/Ubuntu 的系统上 ------------

|

||||

@ -71,7 +71,7 @@ $ sudo yum install smartmontools

|

||||

|

||||

```

|

||||

|

||||

安装完成之后,使用 smartctl 控制磁盘集成的 S.M.A.R.T 系统。你可以这样查看它的手册或帮助:

|

||||

安装完成之后,使用 `smartctl` 控制磁盘集成的 S.M.A.R.T 系统。你可以这样查看它的手册或帮助:

|

||||

|

||||

```

|

||||

$ man smartctl

|

||||

@ -79,7 +79,7 @@ $ smartctl -h

|

||||

|

||||

```

|

||||

|

||||

然后执行 smartctrl 命令并在命令中指定你的设备作为参数,以下命令包含了参数 `-H` 或 `--health` 以显示 SMART 整体健康自我评估测试结果。

|

||||

然后执行 `smartctrl` 命令并在命令中指定你的设备作为参数,以下命令包含了参数 `-H` 或 `--health` 以显示 SMART 整体健康自我评估测试结果。

|

||||

|

||||

```

|

||||

$ sudo smartctl -H /dev/sda10

|

||||

@ -88,7 +88,7 @@ $ sudo smartctl -H /dev/sda10

|

||||

|

||||

[][2]

|

||||

|

||||

检查 Linux 硬盘健康

|

||||

*检查 Linux 硬盘健康*

|

||||

|

||||

上面的结果指出你的硬盘很健康,近期内不大可能发生硬件失效。

|

||||

|

||||

@ -102,10 +102,8 @@ via: http://www.tecmint.com/check-linux-hard-disk-bad-sectors-bad-blocks/

|

||||

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

|

||||

译者:[alim0x](https://github.com/alim0x)

|

||||

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,13 @@

|

||||

# 如何在 Linux 中将文件编码转换为 UTF-8

|

||||

如何在 Linux 中将文件编码转换为 UTF-8

|

||||

===============

|

||||

|

||||

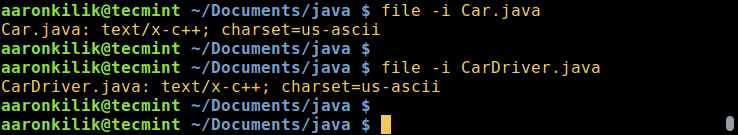

在这篇教程中,我们将解释字符编码的含义,然后给出一些使用命令行工具将使用某种字符编码的文件转化为另一种编码的例子。最后,我们将一起看一看如何在 Linux 下将使用各种字符编码的文件转化为 UTF-8 编码。

|

||||

|

||||

你可能已经知道,计算机是不会理解和存储字符、数字或者任何人类能够理解的东西的,除了二进制数据。一个二进制位只有两种可能的值,也就是 `0` 或 `1`,`真`或`假`,`对`或`错`。其它的任何事物,比如字符、数据和图片,必须要以二进制的形式来表现,以供计算机处理。

|

||||

你可能已经知道,计算机除了二进制数据,是不会理解和存储字符、数字或者任何人类能够理解的东西的。一个二进制位只有两种可能的值,也就是 `0` 或 `1`,`真`或`假`,`是`或`否`。其它的任何事物,比如字符、数据和图片,必须要以二进制的形式来表现,以供计算机处理。

|

||||

|

||||

简单来说,字符编码是一种可以指示电脑来将原始的 0 和 1 解释成实际字符的方式,在这些字符编码中,字符都可以用数字串来表示。

|

||||

简单来说,字符编码是一种可以指示电脑来将原始的 0 和 1 解释成实际字符的方式,在这些字符编码中,字符都以一串数字来表示。

|

||||

|

||||

字符编码方案有很多种,比如 ASCII, ANCI, Unicode 等等。下面是 ASCII 编码的一个例子。

|

||||

字符编码方案有很多种,比如 ASCII、ANCI、Unicode 等等。下面是 ASCII 编码的一个例子。

|

||||

|

||||

```

|

||||

字符 二进制

|

||||

@ -22,11 +23,9 @@ B 01000010

|

||||

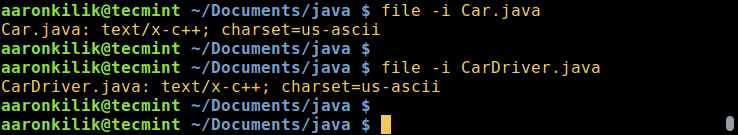

$ file -i Car.java

|

||||

$ file -i CarDriver.java

|

||||

```

|

||||

[

|

||||

|

||||

][3]

|

||||

|

||||

|

||||

在 Linux 中查看文件的编码

|

||||

*在 Linux 中查看文件的编码*

|

||||

|

||||

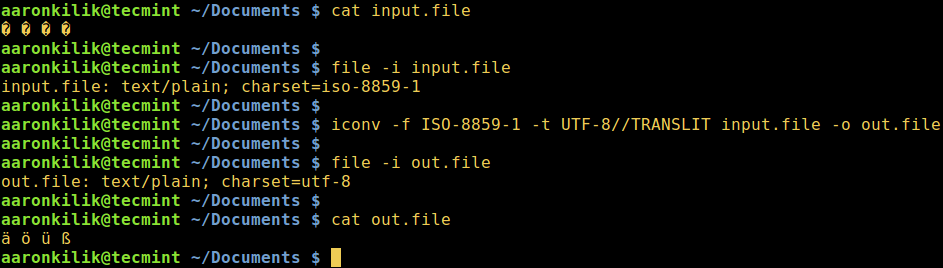

iconv 工具的使用方法如下:

|

||||

|

||||

@ -34,25 +33,21 @@ iconv 工具的使用方法如下:

|

||||

$ iconv option

|

||||

$ iconv options -f from-encoding -t to-encoding inputfile(s) -o outputfile

|

||||

```

|

||||

|

||||

在这里,`-f` 或 `--from-code` 标明了输入编码,而 `-t` 或 `--to-encoding` 指定了输出编码。

|

||||

在这里,`-f` 或 `--from-code` 表明了输入编码,而 `-t` 或 `--to-encoding` 指定了输出编码。

|

||||

|

||||

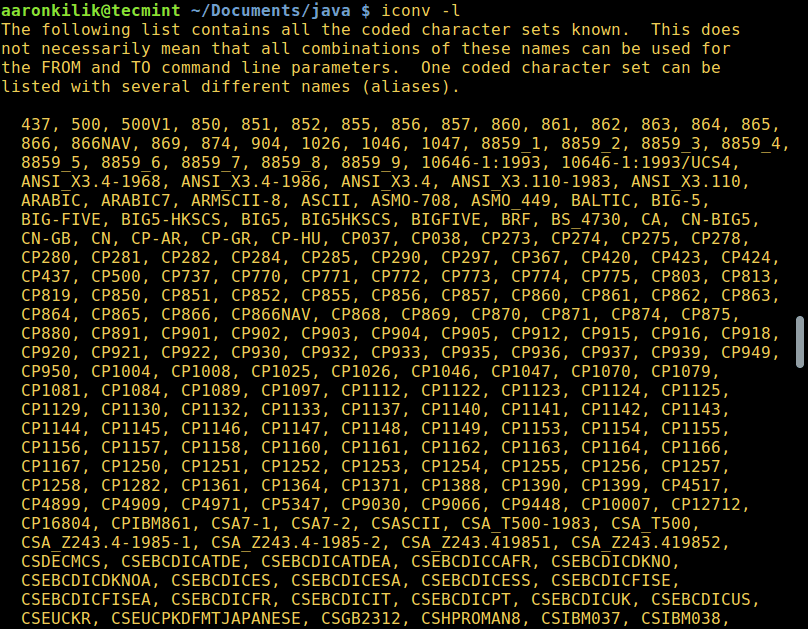

为了列出所有已有编码的字符集,你可以使用以下命令:

|

||||

|

||||

```

|

||||

$ iconv -l

|

||||

```

|

||||

[

|

||||

|

||||

][2]

|

||||

|

||||

|

||||

列出所有已有编码字符集

|

||||

*列出所有已有编码字符集*

|

||||

|

||||

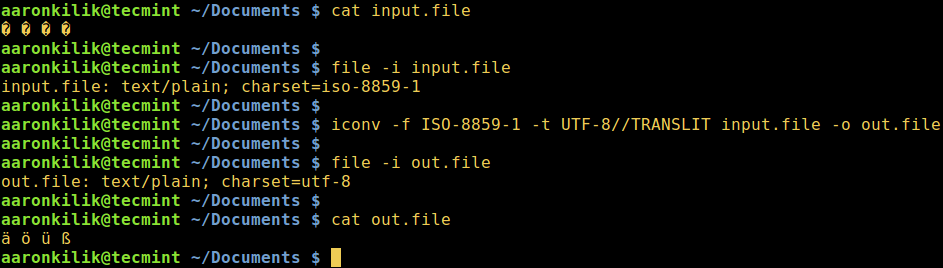

### 将文件从 ISO-8859-1 编码转换为 UTF-8 编码

|

||||

|

||||

下面,我们将学习如何将一种编码方案转换为另一种编码方案。下面的命令将会将 ISO-8859-1 编码转换为 UTF-8 编码。

|

||||

|

||||

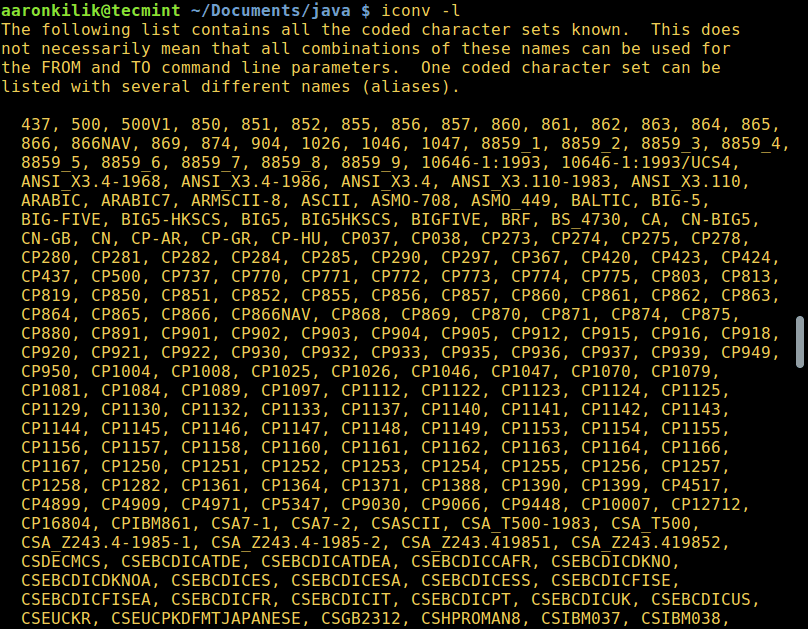

Consider a file named `input.file` which contains the characters:

|

||||

考虑如下文件 `input.file`,其中包含这几个字符:

|

||||

|

||||

```

|

||||

@ -70,17 +65,15 @@ $ iconv -f ISO-8859-1 -t UTF-8//TRANSLIT input.file -o out.file

|

||||

$ cat out.file

|

||||

$ file -i out.file

|

||||

```

|

||||

[

|

||||

|

||||

][1]

|

||||

|

||||

|

||||

在 Linux 中将 ISO-8859-1 转化为 UTF-8

|

||||

*在 Linux 中将 ISO-8859-1 转化为 UTF-8*

|

||||

|

||||

注意:如果输出编码后面添加了 `//IGNORE` 字符串,那些不能被转换的字符将不会被转换,并且在转换后,程序会显示一条错误信息。

|

||||

|

||||

好,如果字符串 `//TRANSLIT` 被添加到了上面例子中的输出编码之后 (UTF-8//TRANSLIT),待转换的字符会尽量采用形译原则。也就是说,如果某个字符在输出编码方案中不能被表示的话,它将会被替换为一个形状比较相似的字符。

|

||||

好,如果字符串 `//TRANSLIT` 被添加到了上面例子中的输出编码之后 (`UTF-8//TRANSLIT`),待转换的字符会尽量采用形译原则。也就是说,如果某个字符在输出编码方案中不能被表示的话,它将会被替换为一个形状比较相似的字符。

|

||||

|

||||

而且,如果一个字符不在输出编码中,而且不能被形译,它将会在输出文件中被一个问号标记 `(?)` 代替。

|

||||

而且,如果一个字符不在输出编码中,而且不能被形译,它将会在输出文件中被一个问号标记 `?` 代替。

|

||||

|

||||

### 将多个文件转换为 UTF-8 编码

|

||||

|

||||

@ -88,13 +81,13 @@ $ file -i out.file

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

# 将 values_here 替换为输入编码

|

||||

### 将 values_here 替换为输入编码

|

||||

FROM_ENCODING="value_here"

|

||||

# 输出编码 (UTF-8)

|

||||

### 输出编码 (UTF-8)

|

||||

TO_ENCODING="UTF-8"

|

||||

# 转换命令

|

||||

### 转换命令

|

||||

CONVERT=" iconv -f $FROM_ENCODING -t $TO_ENCODING"

|

||||

# 使用循环转换多个文件

|

||||

### 使用循环转换多个文件

|

||||

for file in *.txt; do

|

||||

$CONVERT "$file" -o "${file%.txt}.utf8.converted"

|

||||

done

|

||||

@ -122,13 +115,11 @@ $ man iconv

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/convert-files-to-utf-8-encoding-in-linux/#

|

||||

via: http://www.tecmint.com/convert-files-to-utf-8-encoding-in-linux/

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

|

||||

译者:[StdioA](https://github.com/StdioA)

|

||||

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,116 @@

|

||||

如何在 Linux 中压缩及解压缩 .bz2 文件

|

||||

============================================================

|

||||

|

||||

对文件进行压缩,可以通过使用较少的字节对文件中的数据进行编码来显著地减小文件的大小,并且在跨网络的[文件的备份和传送][1]时很有用。 另一方面,解压文件意味着将文件中的数据恢复到初始状态。

|

||||

|

||||

Linux 中有几个[文件压缩和解压缩工具][2],比如gzip、7-zip、Lrzip、[PeaZip][3] 等等。

|

||||

|

||||

本篇教程中,我们将介绍如何在 Linux 中使用 bzip2 工具压缩及解压缩`.bz2`文件。

|

||||

|

||||

bzip2 是一个非常有名的压缩工具,并且在大多数主流 Linux 发行版上都有,你可以在你的发行版上用合适的命令来安装它。

|

||||

|

||||

```

|

||||

$ sudo apt install bzip2 [On Debian/Ubuntu]

|

||||

$ sudo yum install bzip2 [On CentOS/RHEL]

|

||||

$ sudo dnf install bzip2 [On Fedora 22+]

|

||||

```

|

||||

|

||||

使用 bzip2 的常规语法是:

|

||||

|

||||

```

|

||||

$ bzip2 option(s) filenames

|

||||

```

|

||||

|

||||

### 如何在 Linux 中使用“bzip2”压缩文件

|

||||

|

||||

你可以如下压缩一个文件,使用`-z`标志启用压缩:

|

||||

|

||||

```

|

||||

$ bzip2 filename

|

||||

或者

|

||||

$ bzip2 -z filename

|

||||

```

|

||||

|

||||

要压缩一个`.tar`文件,使用的命令为:

|

||||

|

||||

```

|

||||

$ bzip2 -z backup.tar

|

||||

```

|

||||

|

||||

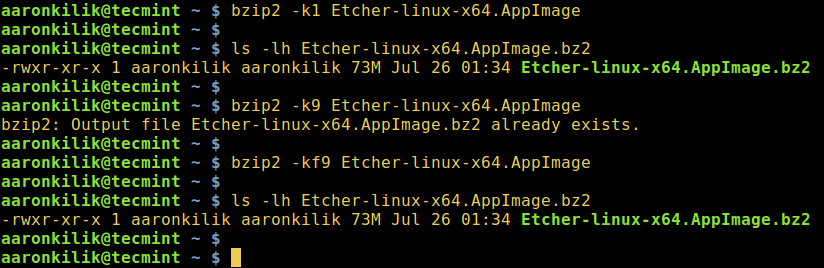

重要:bzip2 默认会在压缩及解压缩文件时删除输入文件(原文件),要保留输入文件,使用`-k`或者`--keep`选项。

|

||||

|

||||

此外,`-f`或者`--force`标志会强制让 bzip2 覆盖已有的输出文件。

|

||||

|

||||

```

|

||||

------ 要保留输入文件 ------

|

||||

$ bzip2 -zk filename

|

||||

$ bzip2 -zk backup.tar

|

||||

```

|

||||

|

||||

你也可以设置块的大小,从 100k 到 900k,分别使用`-1`或者`--fast`到`-9`或者`--best`:

|

||||

|

||||

```

|

||||

$ bzip2 -k1 Etcher-linux-x64.AppImage

|

||||

$ ls -lh Etcher-linux-x64.AppImage.bz2

|

||||

$ bzip2 -k9 Etcher-linux-x64.AppImage

|

||||

$ bzip2 -kf9 Etcher-linux-x64.AppImage

|

||||

$ ls -lh Etcher-linux-x64.AppImage.bz2

|

||||

```

|

||||

|

||||

下面的截屏展示了如何使用选项来保留输入文件,强制 bzip2 覆盖输出文件,并且在压缩中设置块的大小。

|

||||

|

||||

|

||||

|

||||

*在 Linux 中使用 bzip2 压缩文件*

|

||||

|

||||

### 如何在 Linux 中使用“bzip2”解压缩文件

|

||||

|

||||

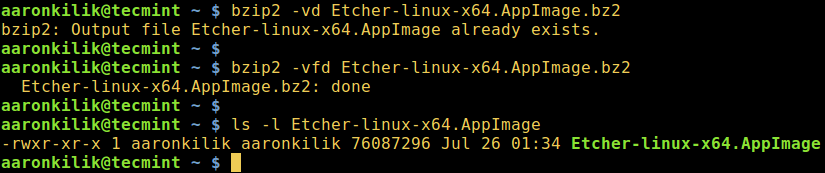

要解压缩`.bz2`文件,确保使用`-d`或者`--decompress`选项:

|

||||

|

||||

```

|

||||

$ bzip2 -d filename.bz2

|

||||

```

|

||||

|

||||

注意:这个文件必须是`.bz2`的扩展名,上面的命令才能使用。

|

||||

|

||||

```

|

||||

$ bzip2 -vd Etcher-linux-x64.AppImage.bz2

|

||||

$ bzip2 -vfd Etcher-linux-x64.AppImage.bz2

|

||||

$ ls -l Etcher-linux-x64.AppImage

|

||||

```

|

||||

|

||||

|

||||

|

||||

*在 Linux 中解压 bzip2 文件*

|

||||

|

||||

要浏览 bzip2 的帮助及 man 页面,输入下面的命令:

|

||||

|

||||

```

|

||||

$ bzip2 -h

|

||||

$ man bzip2

|

||||

```

|

||||

|

||||

最后,通过上面简单的阐述,我相信你现在已经可以在 Linux 中压缩及解压缩`bz2`文件了。然而,有任何的问题和反馈,可以在评论区中留言。

|

||||

|

||||

重要的是,你可能想在 Linux 中查看一些重要的 [tar 命令示例][6],以便学习使用 tar 命令来[创建压缩归档文件][7]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/linux-compress-decompress-bz2-files-using-bzip2

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/aaronkili/

|

||||

[1]:http://www.tecmint.com/rsync-local-remote-file-synchronization-commands/

|

||||

[2]:http://www.tecmint.com/command-line-archive-tools-for-linux/

|

||||

[3]:http://www.tecmint.com/peazip-linux-file-manager-and-file-archive-tool/

|

||||

[4]:http://www.tecmint.com/wp-content/uploads/2016/11/Compress-Files-Using-bzip2-in-Linux.png

|

||||

[5]:http://www.tecmint.com/wp-content/uploads/2016/11/Decompression-bzip2-File-in-Linux.png

|

||||

[6]:http://www.tecmint.com/18-tar-command-examples-in-linux/

|

||||

[7]:http://www.tecmint.com/compress-files-and-finding-files-in-linux/

|

||||

|

||||

|

||||

@ -0,0 +1,241 @@

|

||||

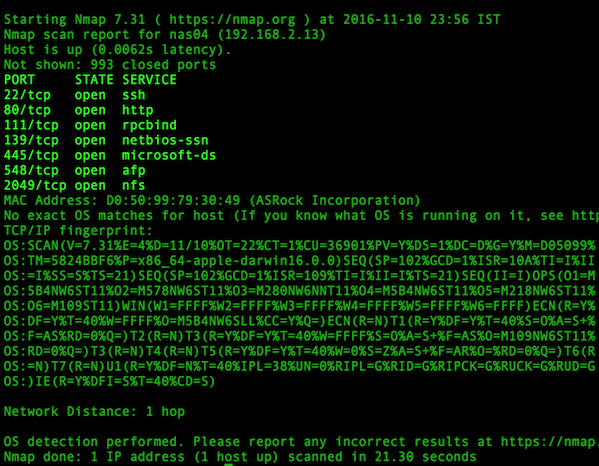

在 Kali Linux 下实战 Nmap(网络安全扫描器)

|

||||

========

|

||||

|

||||

在这第二篇 Kali Linux 文章中, 将讨论称为 ‘[nmap][30]‘ 的网络工具。虽然 nmap 不是 Kali 下唯一的一个工具,但它是最[有用的网络映射工具][29]之一。

|

||||

|

||||

- [第一部分-为初学者准备的Kali Linux安装指南][4]

|

||||

|

||||

Nmap, 是 Network Mapper 的缩写,由 Gordon Lyon 维护(更多关于 Mr. Lyon 的信息在这里: [http://insecure.org/fyodor/][28]) ,并被世界各地许多的安全专业人员使用。

|

||||

|

||||

这个工具在 Linux 和 Windows 下都能使用,并且是用命令行驱动的。相对于那些令人害怕的命令行,对于 nmap,在这里有一个美妙的图形化前端叫做 zenmap。

|

||||

|

||||

强烈建议个人去学习 nmap 的命令行版本,因为与图形化版本 zenmap 相比,它提供了更多的灵活性。

|

||||

|

||||

对服务器进行 nmap 扫描的目的是什么?很好的问题。Nmap 允许管理员快速彻底地了解网络上的系统,因此,它的名字叫 Network MAPper 或者 nmap。

|

||||

|

||||

Nmap 能够快速找到活动的主机和与该主机相关联的服务。Nmap 的功能还可以通过结合 Nmap 脚本引擎(通常缩写为 NSE)进一步被扩展。

|

||||

|

||||

这个脚本引擎允许管理员快速创建可用于确定其网络上是否存在新发现的漏洞的脚本。已经有许多脚本被开发出来并且包含在大多数的 nmap 安装中。

|

||||

|

||||

提醒一句 - 使用 nmap 的人既可能是善意的,也可能是恶意的。应该非常小心,确保你不要使用 nmap 对没有明确得到书面许可的系统进行扫描。请在使用 nmap 工具的时候注意!

|

||||

|

||||

#### 系统要求

|

||||

|

||||

1. [Kali Linux][3] (nmap 可以用于其他操作系统,并且功能也和这个指南里面讲的类似)。

|

||||

2. 另一台计算机,并且装有 nmap 的计算机有权限扫描它 - 这通常很容易通过软件来实现,例如通过 [VirtualBox][2] 创建虚拟机。

|

||||

1. 想要有一个好的机器来练习一下,可以了解一下 Metasploitable 2。

|

||||

2. 下载 MS2 :[Metasploitable2][1]。

|

||||

3. 一个可以工作的网络连接,或者是使用虚拟机就可以为这两台计算机建立有效的内部网络连接。

|

||||

|

||||

### Kali Linux – 使用 Nmap

|

||||

|

||||

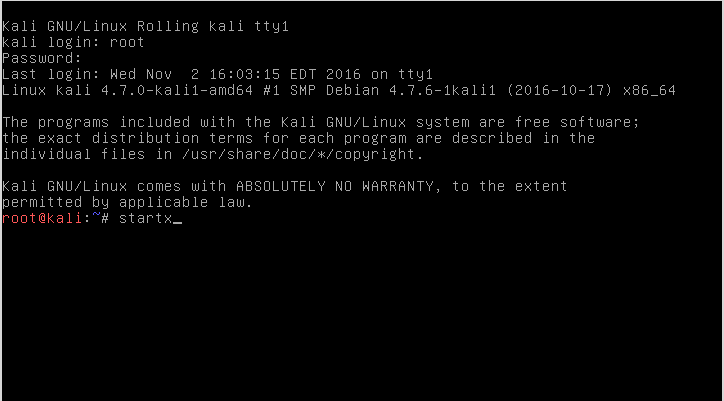

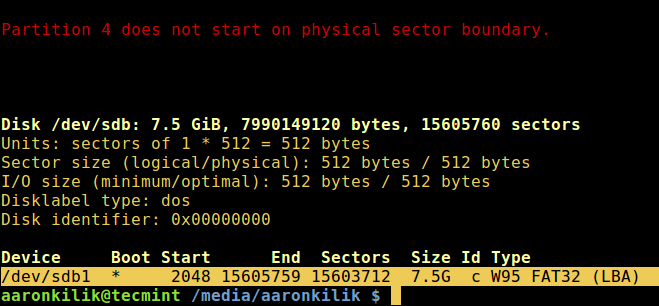

使用 nmap 的第一步是登录 Kali Linux,如果需要,就启动一个图形会话(本系列的第一篇文章安装了 [Kali Linux 的 Enlightenment 桌面环境] [27])。

|

||||

|

||||

在安装过程中,安装程序将提示用户输入用来登录的“root”用户和密码。 一旦登录到 Kali Linux 机器,使用命令`startx`就可以启动 Enlightenment 桌面环境 - 值得注意的是 nmap 不需要运行桌面环境。

|

||||

|

||||

```

|

||||

# startx

|

||||

|

||||

```

|

||||

|

||||

|

||||

*在 Kali Linux 中启动桌面环境*

|

||||

|

||||

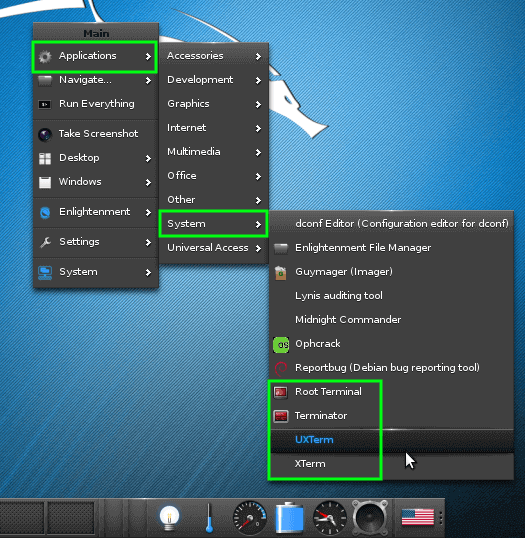

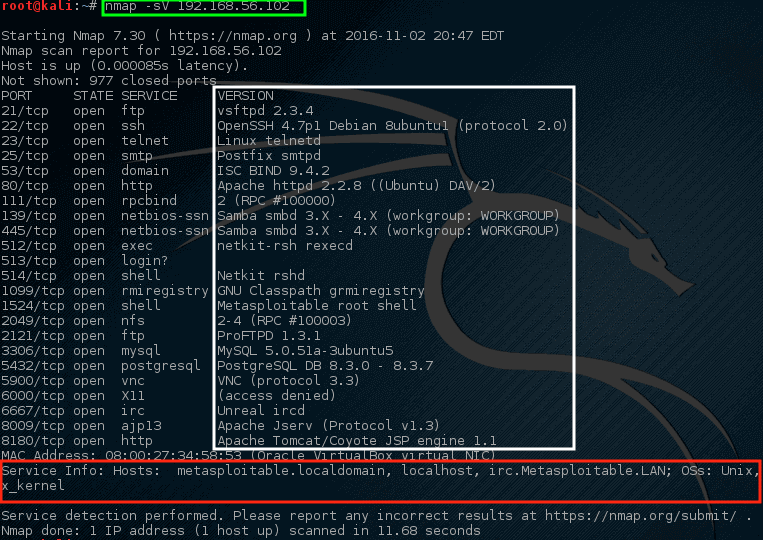

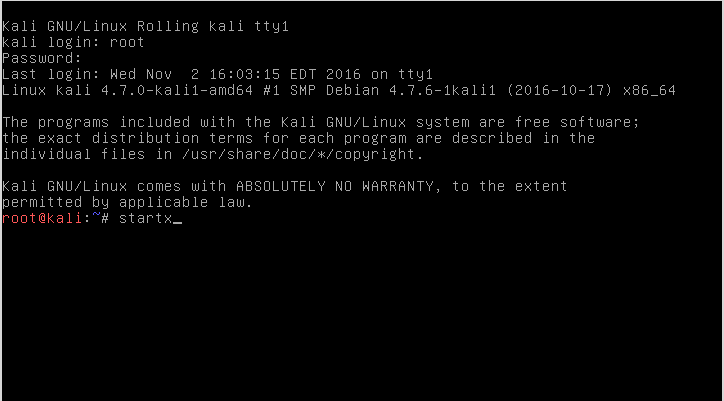

一旦登录到 Enlightenment,将需要打开终端窗口。通过点击桌面背景,将会出现一个菜单。导航到终端可以进行如下操作:应用程序 -> 系统 -> 'Xterm' 或 'UXterm' 或 '根终端'。

|

||||

|

||||

作者是名为 '[Terminator] [25]' 的 shell 程序的粉丝,但是这可能不会显示在 Kali Linux 的默认安装中。这里列出的所有 shell 程序都可用于使用 nmap 。

|

||||

|

||||

|

||||

|

||||

*在 Kali Linux 下启动终端*

|

||||

|

||||

一旦终端启动,nmap 的乐趣就开始了。 对于这个特定的教程,将会创建一个 Kali 机器和 Metasploitable机器之间的私有网络。

|

||||

|

||||

这会使事情变得更容易和更安全,因为私有的网络范围将确保扫描保持在安全的机器上,防止易受攻击的 Metasploitable 机器被其他人攻击。

|

||||

|

||||

### 怎样在我的网络上找到活动主机

|

||||

|

||||

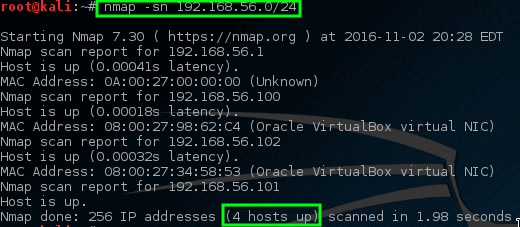

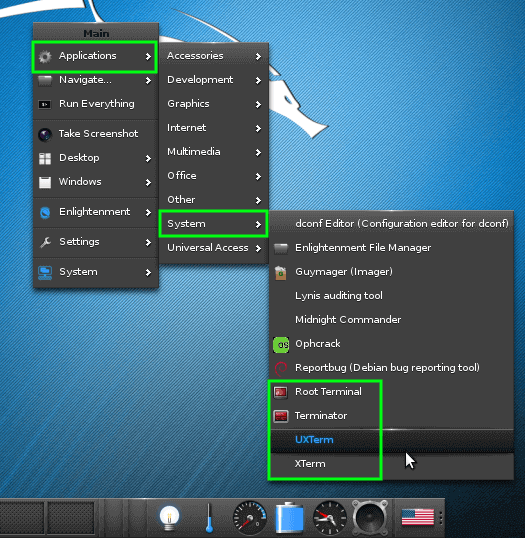

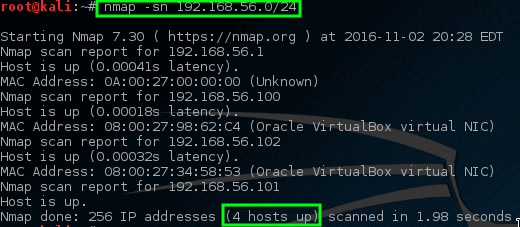

在此示例中,这两台计算机都位于专用的 192.168.56.0/24 网络上。 Kali 机器的 IP 地址为 192.168.56.101,要扫描的 Metasploitable 机器的 IP 地址为 192.168.56.102。

|

||||

|

||||

假如我们不知道 IP 地址信息,但是可以通过快速 nmap 扫描来帮助确定在特定网络上哪些是活动主机。这种扫描称为 “简单列表” 扫描,将 `-sL`参数传递给 nmap 命令。

|

||||

|

||||

```

|

||||

# nmap -sL 192.168.56.0/24

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

*Nmap – 扫描网络上的活动主机*

|

||||

|

||||

悲伤的是,这个初始扫描没有返回任何活动主机。 有时,这是某些操作系统处理[端口扫描网络流量][22]的一个方法。

|

||||

|

||||

###在我的网络中找到并 ping 所有活动主机

|

||||

|

||||

不用担心,在这里有一些技巧可以使 nmap 尝试找到这些机器。 下一个技巧会告诉 nmap 尝试去 ping 192.168.56.0/24 网络中的所有地址。

|

||||

|

||||

```

|

||||

# nmap -sn 192.168.56.0/24

|

||||

|

||||

```

|

||||

|

||||

|

||||

*Nmap – Ping 所有已连接的活动网络主机*

|

||||

|

||||

这次 nmap 会返回一些潜在的主机来进行扫描! 在此命令中,`-sn` 禁用 nmap 的尝试对主机端口扫描的默认行为,只是让 nmap 尝试 ping 主机。

|

||||

|

||||

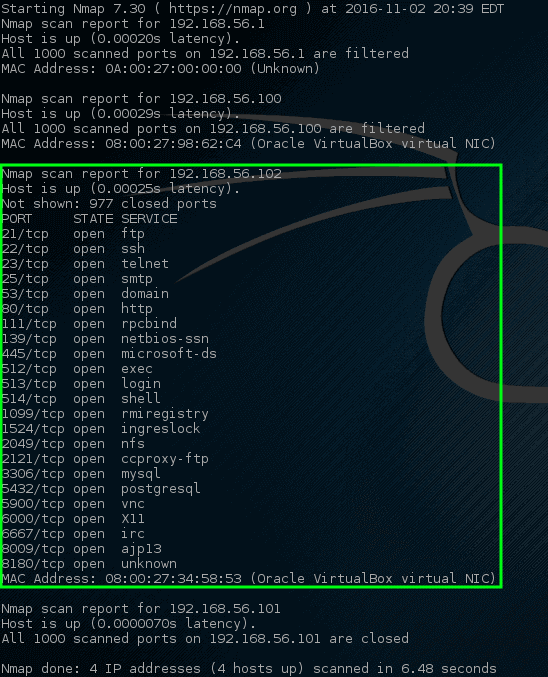

### 找到主机上的开放端口

|

||||

|

||||

让我们尝试让 nmap 端口扫描这些特定的主机,看看会出现什么。

|

||||

|

||||

```

|

||||

# nmap 192.168.56.1,100-102

|

||||

|

||||

```

|

||||

|

||||

|

||||

*Nmap – 在主机上扫描网络端口*

|

||||

|

||||

哇! 这一次 nmap 挖到了一个金矿。 这个特定的主机有相当多的[开放网络端口][19]。

|

||||

|

||||

这些端口全都代表着在此特定机器上的某种监听服务。 我们前面说过,192.168.56.102 的 IP 地址会分配给一台易受攻击的机器,这就是为什么在这个主机上会有这么多[开放端口][18]。

|

||||

|

||||

在大多数机器上打开这么多端口是非常不正常的,所以赶快调查这台机器是个明智的想法。管理员可以检查下网络上的物理机器,并在本地查看这些机器,但这不会很有趣,特别是当 nmap 可以为我们更快地做到时!

|

||||

|

||||

### 找到主机上监听端口的服务

|

||||

|

||||

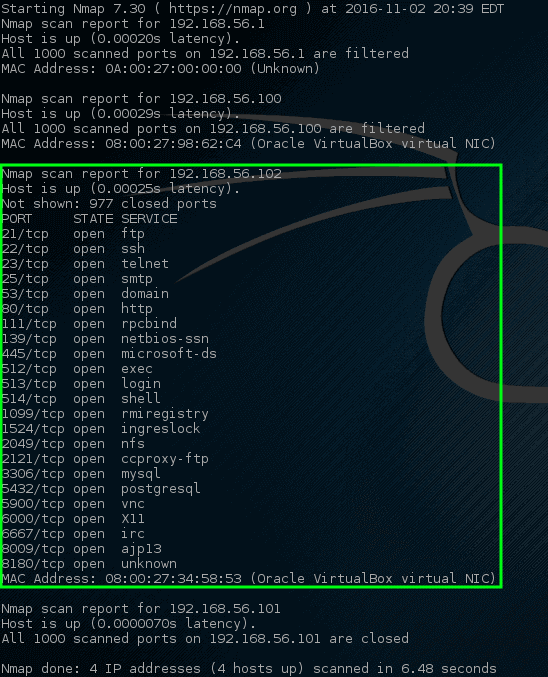

下一个扫描是服务扫描,通常用于尝试确定机器上什么[服务监听在特定的端口][17]。

|

||||

|

||||

Nmap 将探测所有打开的端口,并尝试从每个端口上运行的服务中获取信息。

|

||||

|

||||

```

|

||||

# nmap -sV 192.168.56.102

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

*Nmap – 扫描网络服务监听端口*

|

||||

|

||||

请注意这次 nmap 提供了一些关于 nmap 在特定端口运行的建议(在白框中突出显示),而且 nmap 也试图确认运行在这台机器上的[这个操作系统的信息][15]和它的主机名(也非常成功!)。

|

||||

|

||||

查看这个输出,应该引起网络管理员相当多的关注。 第一行声称 VSftpd 版本 2.3.4 正在这台机器上运行! 这是一个真正的旧版本的 VSftpd。

|

||||

|

||||

通过查找 ExploitDB,对于这个版本早在 2001 年就发现了一个非常严重的漏洞(ExploitDB ID – 17491)。

|

||||

|

||||

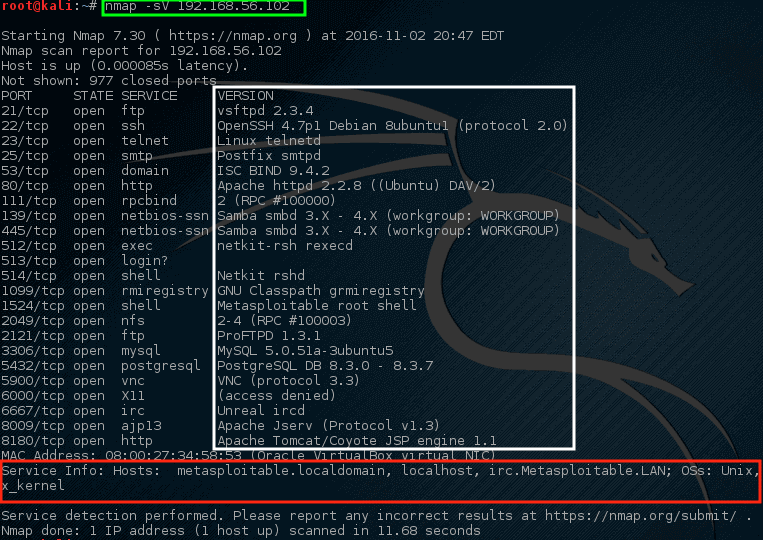

### 发现主机上上匿名 ftp 登录

|

||||

|

||||

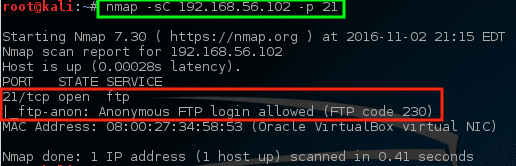

让我们使用 nmap 更加清楚的查看这个端口,并且看看可以确认什么。

|

||||

|

||||

```

|

||||

# nmap -sC 192.168.56.102 -p 21

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

*Nmap – 扫描机器上的特定端口*

|

||||

|

||||

使用此命令,让 nmap 在主机上的 FTP 端口(`-p 21`)上运行其默认脚本(`-sC`)。 虽然它可能是、也可能不是一个问题,但是 nmap 确实发现在这个特定的服务器[是允许匿名 FTP 登录的][13]。

|

||||

|

||||

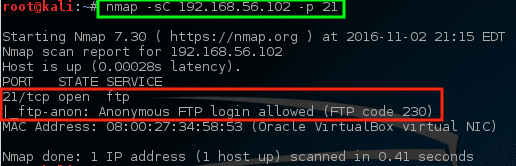

### 检查主机上的漏洞

|

||||

|

||||

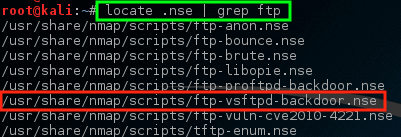

这与我们早先知道 VSftd 有旧漏洞的知识相匹配,应该引起一些关注。 让我们看看 nmap有没有脚本来尝试检查 VSftpd 漏洞。

|

||||

|

||||

```

|

||||

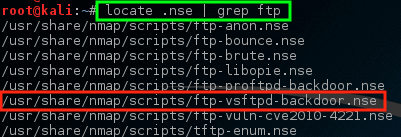

# locate .nse | grep ftp

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

*Nmap – 扫描 VSftpd 漏洞*

|

||||

|

||||

注意 nmap 已有一个 NSE 脚本已经用来处理 VSftpd 后门问题!让我们尝试对这个主机运行这个脚本,看看会发生什么,但首先知道如何使用脚本可能是很重要的。

|

||||

|

||||

```

|

||||

# nmap --script-help=ftp-vsftd-backdoor.nse

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

*了解 Nmap NSE 脚本使用*

|

||||

|

||||

通过这个描述,很明显,这个脚本可以用来试图查看这个特定的机器是否容易受到先前识别的 ExploitDB 问题的影响。

|

||||

|

||||

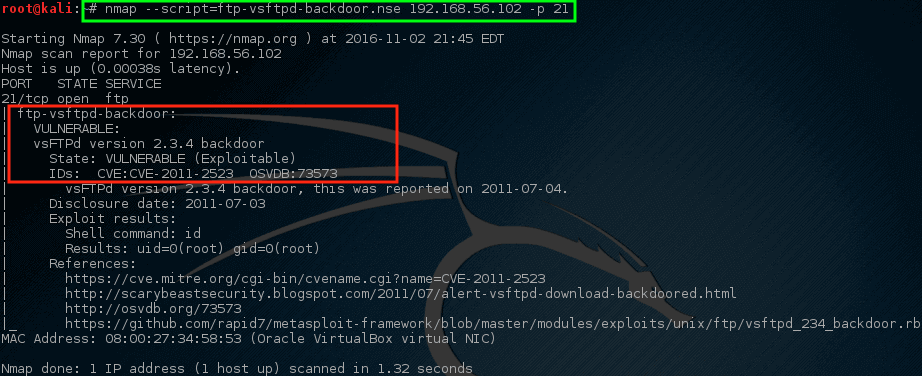

让我们运行这个脚本,看看会发生什么。

|

||||

|

||||

```

|

||||

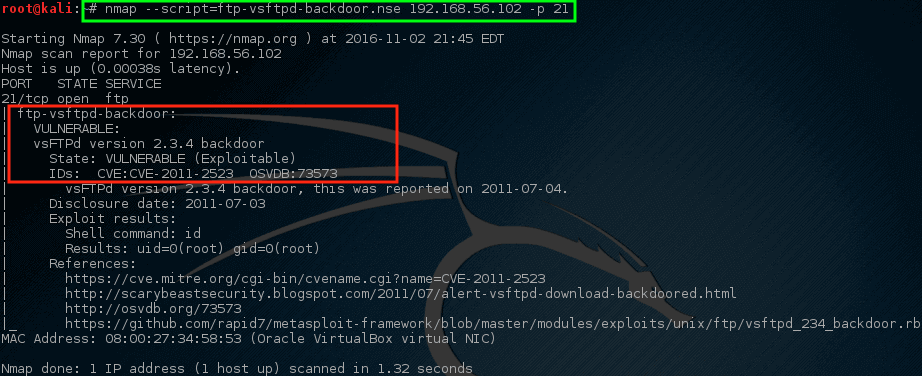

# nmap --script=ftp-vsftpd-backdoor.nse 192.168.56.102 -p 21

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

*Nmap – 扫描易受攻击的主机*

|

||||

|

||||

耶!Nmap 的脚本返回了一些危险的消息。 这台机器可能面临风险,之后可以进行更加详细的调查。虽然这并不意味着机器缺乏对风险的抵抗力和可以被用于做一些可怕/糟糕的事情,但它应该给网络/安全团队带来一些关注。

|

||||

|

||||

Nmap 具有极高的选择性,非常平稳。 到目前为止已经做的大多数扫描, nmap 的网络流量都保持适度平稳,然而以这种方式扫描对个人拥有的网络可能是非常耗时的。

|

||||

|

||||

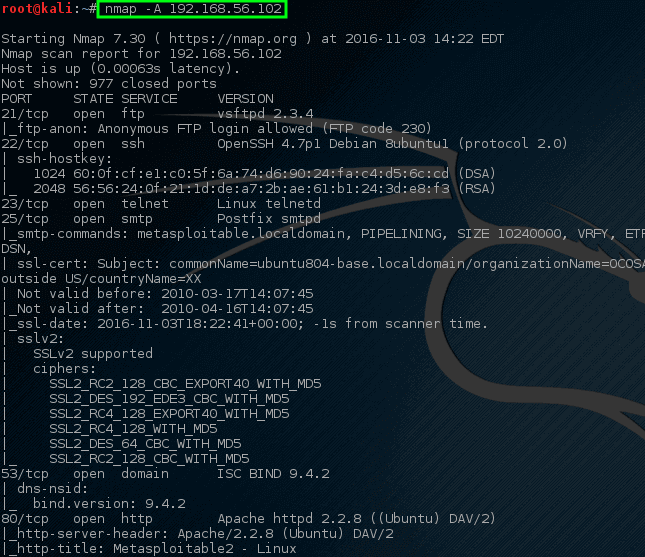

Nmap 有能力做一个更积极的扫描,往往一个命令就会产生之前几个命令一样的信息。 让我们来看看积极的扫描的输出(注意 - 积极的扫描会触发[入侵检测/预防系统][9]!)。

|

||||

|

||||

```

|

||||

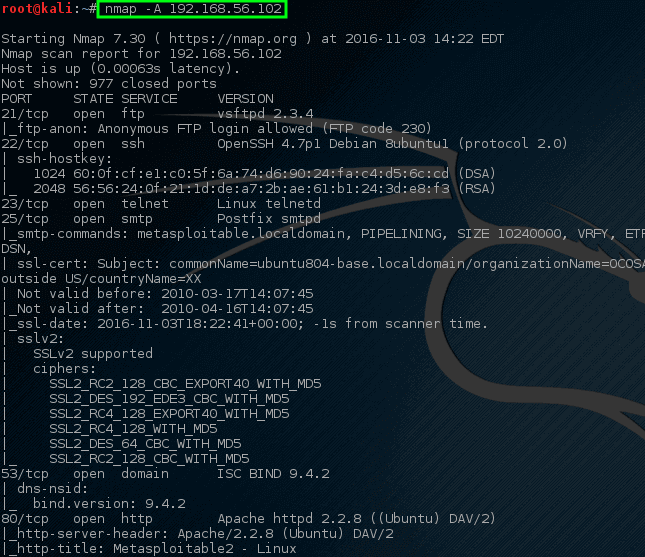

# nmap -A 192.168.56.102

|

||||

|

||||

```

|

||||

|

||||

|

||||

*Nmap – 在主机上完成网络扫描*

|

||||

|

||||

注意这一次,使用一个命令,nmap 返回了很多关于在这台特定机器上运行的开放端口、服务和配置的信息。 这些信息中的大部分可用于帮助确定[如何保护本机][7]以及评估网络上可能运行的软件。

|

||||

|

||||

这只是 nmap 可用于在主机或网段上找到的许多有用信息的很短的一个列表。强烈敦促个人在个人拥有的网络上继续[以nmap][6] 进行实验。(不要通过扫描其他主机来练习!)。

|

||||

|

||||

有一个关于 Nmap 网络扫描的官方指南,作者 Gordon Lyon,可从[亚马逊](http://amzn.to/2eFNYrD)上获得。

|

||||

|

||||

方便的话可以留下你的评论和问题(或者使用 nmap 扫描器的技巧)。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/nmap-network-security-scanner-in-kali-linux/

|

||||

|

||||

作者:[Rob Turner][a]

|

||||

译者:[DockerChen](https://github.com/DockerChen)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/robturner/

|

||||

[1]:https://sourceforge.net/projects/metasploitable/files/Metasploitable2/

|

||||

[2]:http://www.tecmint.com/install-virtualbox-on-redhat-centos-fedora/

|

||||

[3]:http://www.tecmint.com/kali-linux-installation-guide

|

||||

[4]:http://www.tecmint.com/kali-linux-installation-guide

|

||||

[5]:http://amzn.to/2eFNYrD

|

||||

[6]:http://www.tecmint.com/nmap-command-examples/

|

||||

[7]:http://www.tecmint.com/security-and-hardening-centos-7-guide/

|

||||

[8]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Network-Host.png

|

||||

[9]:http://www.tecmint.com/protect-apache-using-mod_security-and-mod_evasive-on-rhel-centos-fedora/

|

||||

[10]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Host-for-Vulnerable.png

|

||||

[11]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Learn-NSE-Script.png

|

||||

[12]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Service-Vulnerability.png

|

||||

[13]:http://www.tecmint.com/setup-ftp-anonymous-logins-in-linux/

|

||||

[14]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Particular-Port-on-Host.png

|

||||

[15]:http://www.tecmint.com/commands-to-collect-system-and-hardware-information-in-linux/

|

||||

[16]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Network-Services-Ports.png

|

||||

[17]:http://www.tecmint.com/find-linux-processes-memory-ram-cpu-usage/

|

||||

[18]:http://www.tecmint.com/find-open-ports-in-linux/

|

||||

[19]:http://www.tecmint.com/find-open-ports-in-linux/

|

||||

[20]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-for-Ports-on-Hosts.png

|

||||

[21]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Ping-All-Network-Live-Hosts.png

|

||||

[22]:http://www.tecmint.com/audit-network-performance-security-and-troubleshooting-in-linux/

|

||||

[23]:http://www.tecmint.com/wp-content/uploads/2016/11/Nmap-Scan-Network.png

|

||||

[24]:http://www.tecmint.com/wp-content/uploads/2016/11/Launch-Terminal-in-Kali-Linux.png

|

||||

[25]:http://www.tecmint.com/terminator-a-linux-terminal-emulator-to-manage-multiple-terminal-windows/

|

||||

[26]:http://www.tecmint.com/wp-content/uploads/2016/11/Start-Desktop-Environment-in-Kali-Linux.png

|

||||

[27]:http://www.tecmint.com/kali-linux-installation-guide

|

||||

[28]:http://insecure.org/fyodor/

|

||||

[29]:http://www.tecmint.com/bcc-best-linux-performance-monitoring-tools/

|

||||

[30]:http://www.tecmint.com/nmap-command-examples/

|

||||

|

||||

@ -0,0 +1,87 @@

|

||||

LINUX NOW RUNS ON 99.6% OF TOP 500 SUPERCOMPUTERS

|

||||

============================================================

|

||||

|

||||

[

|

||||

|

||||

][12]

|

||||

|

||||

_Brief: Linux may have just 2% in the desktop market share, but when it comes to supercomputers, Linux is simply ruling it with over 99% of the share._

|

||||

|

||||

Linux running on more than 99% of the top 500 fastest supercomputers in the world is no surprise. If you followed our previous reports, in the year 2015, [Linux was running on more than 97% of the top 500 supercomputers][13]. This year, it just got better.

|

||||

|

||||

[#Linux now runs on more than 99% of top 500 #supercomputers in the world][4]

|

||||

|

||||

[CLICK TO TWEET][5]

|

||||

|

||||

This information is collected by an independent organization [Top500][14] that publishes the details about the top 500 fastest supercomputers known to them, twice a year. You can [go the website and filter out the list][15] based on country, OS type used, vendors etc. Don’t worry, I’ll do it for you to present some of the most interesting facts from this year’s list.

|

||||

|

||||

### LINUX GOT 498 OUT OF 500

|

||||

|

||||

If I have to break it down in numbers, 498 out of the top 500 supercomputers run Linux. Rest of the two supercomputers run Unix-based OS. Windows, which was running on 1 supercomputer until last year, is nowhere in the list this year. Perhaps, none of the supercomputers can run Windows 10 (pun intended).

|

||||

|

||||

To summarize the list of top 500 supercomputers based on OS this year:

|

||||

|

||||

* Linux: 498

|

||||

* Unix: 2

|

||||

* Windows: 0

|

||||

|

||||

To give you a year wise summary of Linux shares on the top 500 supercomputers:

|

||||

|

||||

* In 2012: 94%

|

||||

* In [2013][6]: 95%

|

||||

* In [2014][7]: 97%

|

||||

* In [2015][8]: 97.2%

|

||||

* In 2016: 99.6%

|

||||

* In 2017: ???

|

||||

|

||||

In addition to that, first 380 fastest supercomputers run Linux, including of course the fastest supercomputer based in China. Unix is used by the 386th and 387th ranked supercomputers also based in China.

|

||||

|

||||

### SOME OTHER INTERESTING STATS ABOUT FASTEST SUPERCOMPUTERS

|

||||

|

||||

[

|

||||

|

||||

][16]

|

||||

|

||||

Moving Linux aside, I was looking at the list and thought of sharing some other interesting stats with you.

|

||||

|

||||

* World’s fastest supercomputer is [Sunway TaihuLight][9]. It based in [National Supercomputing Center in Wuxi][10], China. It has a speed of 93PFLOPS.

|

||||

* World’s second fastest supercomputer is also based in China ([Tianhe-2][11]) while the third spot is taken by US based Titan.

|

||||

* Out of the top 10 fastest supercomputers, USA has 5, Japan and China have 2 each while Switzerland has 1.

|

||||

* US and China both have 171 supercomputers each in the list of the top 500 supercomputers.

|

||||

* Japan has 27, France has 20, while India, Russia and Saudi Arabia has 5 supercomputers in the list.

|

||||

|

||||

[Suggested ReaddigiKam 5.0 Released! Install It In Ubuntu Linux][17]

|

||||

|

||||

Some interesting facts, isn’t it? You can filter out your own list [here][18] to further details. For the moment I am happy to brag about Linux running on 99% of the top 500 supercomputers and look forward to a perfect score of 100% next year.

|

||||

|

||||

While you are reading it, do share this article on social media. It’s an achievement for Linux and we got to show off :P

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/linux-99-percent-top-500-supercomputers

|

||||

|

||||

作者:[Abhishek Prakash ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://itsfoss.com/author/abhishek/

|

||||

[1]:https://twitter.com/share?original_referer=https%3A%2F%2Fitsfoss.com%2F&source=tweetbutton&text=Linux+Now+Runs+On+99.6%25+Of+Top+500+Supercomputers&url=https%3A%2F%2Fitsfoss.com%2Flinux-99-percent-top-500-supercomputers%2F&via=%40itsfoss

|

||||

[2]:https://www.linkedin.com/cws/share?url=https://itsfoss.com/linux-99-percent-top-500-supercomputers/

|

||||

[3]:http://pinterest.com/pin/create/button/?url=https://itsfoss.com/linux-99-percent-top-500-supercomputers/&description=Linux+Now+Runs+On+99.6%25+Of+Top+500+Supercomputers&media=https://itsfoss.com/wp-content/uploads/2016/11/Linux-King-Supercomputer-world-min.jpg

|

||||

[4]:https://twitter.com/share?text=%23Linux+now+runs+on+more+than+99%25+of+top+500+%23supercomputers+in+the+world&via=itsfoss&related=itsfoss&url=https://itsfoss.com/linux-99-percent-top-500-supercomputers/

|

||||

[5]:https://twitter.com/share?text=%23Linux+now+runs+on+more+than+99%25+of+top+500+%23supercomputers+in+the+world&via=itsfoss&related=itsfoss&url=https://itsfoss.com/linux-99-percent-top-500-supercomputers/

|

||||

[6]:https://itsfoss.com/95-percent-worlds-top-500-supercomputers-run-linux/

|

||||

[7]:https://itsfoss.com/97-percent-worlds-top-500-supercomputers-run-linux/

|

||||

[8]:https://itsfoss.com/linux-runs-97-percent-worlds-top-500-supercomputers/

|

||||

[9]:https://en.wikipedia.org/wiki/Sunway_TaihuLight

|

||||

[10]:https://www.top500.org/site/50623

|

||||

[11]:https://en.wikipedia.org/wiki/Tianhe-2

|

||||

[12]:https://itsfoss.com/wp-content/uploads/2016/11/Linux-King-Supercomputer-world-min.jpg

|

||||

[13]:https://itsfoss.com/linux-runs-97-percent-worlds-top-500-supercomputers/

|

||||

[14]:https://www.top500.org/

|

||||

[15]:https://www.top500.org/statistics/sublist/

|

||||

[16]:https://itsfoss.com/wp-content/uploads/2016/11/fastest-supercomputers.png

|

||||

[17]:https://itsfoss.com/digikam-5-0-released-install-it-in-ubuntu-linux/

|

||||

[18]:https://www.top500.org/statistics/sublist/

|

||||

@ -0,0 +1,84 @@

|

||||

How To Manually Backup Your SMS / MMS Messages On Android?

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

If you’re switching a device or upgrading your system, making a backup of your data might be of crucial importance.

|

||||

|

||||

|

||||

One of the places where our important data may lie, is in our SMS / MMS messages, be it of sentimental or utilizable value, backing it up might prove quite useful.

|

||||

|

||||

However, unlike our photos, videos or song files which can be transferred and backed up with relative ease, backing our SMS / MMS usually proves to be a bit more complicated task that commonly require involving a third party-app or service.

|

||||

|

||||

### Why Do It Manually?

|

||||

|

||||

Although there currently exist quite a bit of different apps that might take care of backing SMS and MMS for you, you may want to consider doing it manually for the following reasons:

|

||||

|

||||

1. Apps **may not work** on different devices or different Android versions.

|

||||

2. Apps may backup your data by uploading it to the Internet cloud therefore requiring you to **jeopardize the safety** of your content.

|

||||

3. By backing up manually, you have complete control over where your data goes and what it goes through, thus **limiting the risk of spyware** in the process.

|

||||

4. Doing it manually can be overall **less time consuming, easier and more straightforward**than any other way.

|

||||

|

||||

### How To Backup SMS / MMS Manually?

|

||||

|

||||

To backup your SMS / MMS messages manually you’ll need to have an Android tool called [adb][1]installed on your computer.

|

||||

|

||||

Now, the important thing to know regarding SMS / MMS is that Android stores them in a database commonly named **mmssms.db.**

|

||||

|

||||

Since the location of that database may differ between one device to another and also because other SMS apps can create databases of their own, such as, gommssms.db created by GO SMS app, the first thing you’d want to do is to search for these databases.

|

||||

|

||||

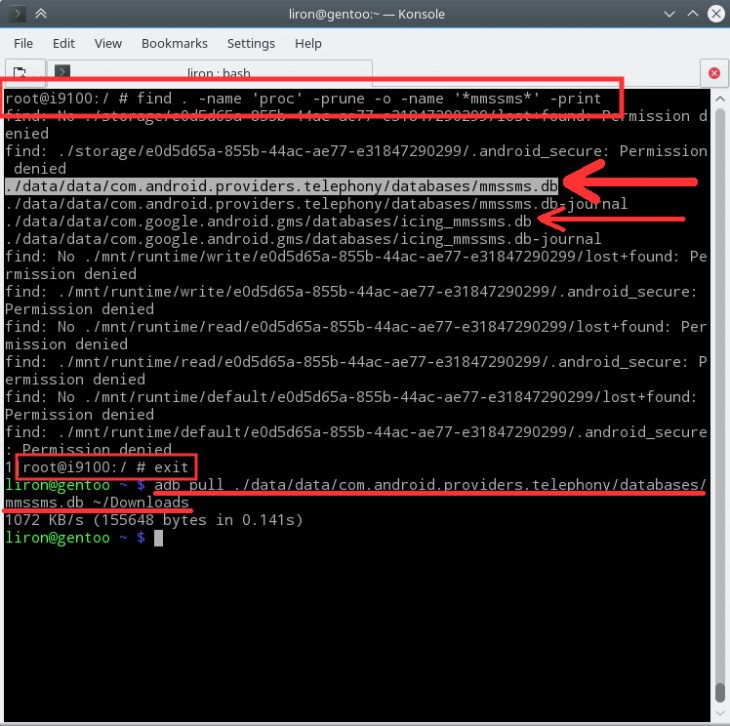

So, open up your CLI tool (I use Linux Terminal, you may use Windows CMD or PowerShell) and issue the following commands:

|

||||

|

||||

Note: below is a series of commands needed for the task and later is the explanation of what each command does.

|

||||

|

||||

`

|

||||

adb root

|

||||

|

||||

adb shell

|

||||

|

||||

find / -name "*mmssms*"

|

||||

|

||||

exit

|

||||

|

||||

adb pull /PATH/TO/mmssms.db /PATH/TO/DESTINATION/FOLDER

|

||||

|

||||

`

|

||||

|

||||

#### Explanation:

|

||||

|

||||

We start with adb root command in order to start adb in root mode – so that we’ll have permissions to reach system protected files as well.

|

||||

|

||||

“adb shell” is used to get inside the device shell.

|

||||

|

||||

Next, the “find” command is used to search for the databases. (in my case it’s found in: /data/data/com.android.providers.telephony/databases/mmssms.db)

|

||||

|

||||

* Tip: if your Terminal prints too many irrelevant results, try refining your “find” parameters (google it).

|

||||

|

||||

[

|

||||

|

||||

][2]

|

||||

|

||||

Android SMS&MMS databases

|

||||

|

||||

Then we use exit command in order to exit back to our local system directory.

|

||||

|

||||

Lastly, adb pull is used to copy the database files into a folder on our computer.

|

||||

|

||||

Now, once you’re ready to restore your SMS / MMS messages, whether it’s on a new device or a new system version, simply search again for the location of mmssms on the new system and replace it with the one you’ve backed.

|

||||

|

||||

Use adb push to replace it, e.g: adb push ~/Downloads/mmssms.db /data/data/com.android.providers.telephony/databases/mmssms.db

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://iwf1.com/how-to-manually-backup-your-sms-mms-messages-on-android/

|

||||

|

||||

作者:[Liron ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://iwf1.com/tag/android

|

||||

[1]:http://developer.android.com/tools/help/adb.html

|

||||

[2]:http://iwf1.com/wordpress/wp-content/uploads/2016/10/Android-SMSMMS-databases.jpg

|

||||

@ -0,0 +1,100 @@

|

||||

Livepatch – Apply Critical Security Patches to Ubuntu Linux Kernel Without Rebooting

|

||||

============================================================

|

||||

|

||||

If you are a system administrator in charge of maintaining critical systems in enterprise environments, we are sure you know two important things:

|

||||

|

||||

1) Finding a downtime window to install security patches in order to handle kernel or operating system vulnerabilities can be difficult. If the company or business you work for does not have security policies in place, operations management may end up favoring uptime over the need to solve vulnerabilities. Additionally, internal bureaucracy can cause delays in granting approvals for a downtime. Been there myself.

|

||||

|

||||

2) Sometimes you can’t really afford a downtime, and should be prepared to mitigate any potential exposures to malicious attacks some other way.

|

||||

|

||||

The good news is that Canonical has recently released (actually, a couple of days ago) its Livepatchservice to apply critical kernel patches to Ubuntu 16.04 (64-bit edition / 4.4.x kernel) without the need for a later reboot. Yes, you read that right: with Livepatch, you don’t need to restart your Ubuntu 16.04 server in order for the security patches to take effect.

|

||||

|

||||

### Signing up for Ubuntu Livepatch

|

||||

|

||||

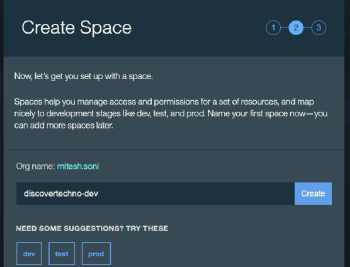

In order to use Canonical Livepatch Service, you need to sign up at [https://auth.livepatch.canonical.com/][1] and indicate if you are a regular Ubuntu user or an Advantage subscriber (paid option). All Ubuntu users can link up to 3 different machines to Livepatch through the use of a token:

|

||||

|

||||

[

|

||||

|

||||

][2]

|

||||

|

||||

Canonical Livepatch Service

|

||||

|

||||

In the next step you will be prompted to enter your Ubuntu One credentials or sign up for a new account. If you choose the latter, you will need to confirm your email address in order to finish your registration:

|

||||

|

||||

[

|

||||

|

||||

][3]

|

||||

|

||||

Ubuntu One Confirmation Mail

|

||||

|

||||

Once you click on the link above to confirm your email address, you’ll be ready to go back to [https://auth.livepatch.canonical.com/][4] and get your Livepatch token.

|

||||

|

||||

### Getting and Using your Livepatch Token

|

||||

|

||||

To begin, copy the unique token assigned to your Ubuntu One account:

|

||||

|

||||

[

|

||||

|

||||

][5]

|

||||

|

||||

Canonical Livepatch Token

|

||||

|

||||

Then go to a terminal and type:

|

||||

|

||||

```

|

||||

$ sudo snap install canonical-livepatch

|

||||

```

|

||||

|

||||

The above command will install the livepatch, whereas

|

||||

|

||||

```

|

||||

$ sudo canonical-livepatch enable [YOUR TOKEN HERE]

|

||||

```

|

||||

|

||||

will enable it for your system. If this last command indicates it can’t find canonical-livepatch, make sure `/snap/bin` has been added to your path. A workaround consists of changing your working directory to `/snap/bin` and do.

|

||||

|

||||

```

|

||||

$ sudo ./canonical-livepatch enable [YOUR TOKEN HERE]

|

||||

```

|

||||

[

|

||||

|

||||

][6]

|

||||

|

||||

Install Livepatch in Ubuntu

|

||||

|

||||

Overtime, you’ll want to check the description and the status of patches applied to your kernel. Fortunately, this is as easy as doing.

|

||||

|

||||

```

|

||||

$ sudo ./canonical-livepatch status --verbose

|

||||

```

|

||||

|

||||

as you can see in the following image:

|

||||

|

||||

[

|

||||

|

||||

][7]

|

||||

|

||||

Check Livepatch Status in Ubuntu

|

||||

|

||||

Having enabled Livepatch on your Ubuntu server, you will be able to reduce planned and unplanned downtimes at a minimum while keeping your system secure. Hopefully Canonical’s initiative will award you a pat on the back by management – or better yet, a raise.

|

||||

|

||||

Feel free to let us know if you have any questions about this article. Just drop us a note using the comment form below and we will get back to you as soon as possible.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/livepatch-install-critical-security-patches-to-ubuntu-kernel

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/gacanepa/

|

||||

[1]:https://auth.livepatch.canonical.com/

|

||||

[2]:http://www.tecmint.com/wp-content/uploads/2016/10/Canonical-Livepatch-Service.png

|

||||

[3]:http://www.tecmint.com/wp-content/uploads/2016/10/Ubuntu-One-Confirmation-Mail.png

|

||||

[4]:https://auth.livepatch.canonical.com/

|

||||

[5]:http://www.tecmint.com/wp-content/uploads/2016/10/Livepatch-Token.png

|

||||

[6]:http://www.tecmint.com/wp-content/uploads/2016/10/Install-Livepatch-in-Ubuntu.png

|

||||

[7]:http://www.tecmint.com/wp-content/uploads/2016/10/Check-Livepatch-Status.png

|

||||

@ -1,3 +1,5 @@

|

||||

GitFuture get translating

|

||||

|

||||

DTrace for Linux 2016

|

||||

===========

|

||||

|

||||

|

||||

@ -0,0 +1,113 @@

|

||||

翻译中 by zky001

|

||||

How to Sort Output of ‘ls’ Command By Last Modified Date and Time

|

||||

============================================================

|

||||

|

||||

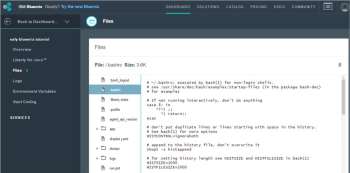

One of the commonest things a Linux user will always do on the command line is [listing the contents of a directory][1]. As we may already know, [ls][2] and [dir][3] are the two commands available on Linux for listing directory content, with the former being more popular and in most cases, preferred by users.

|

||||

|

||||

When listing directory contents, the results can be sorted based on several criteria such as alphabetical order of filenames, modification time, access time, version and file size. Sorting using each of these file properties can be enabled by using a specific flag.

|

||||

|

||||

In this brief [ls command guide][4], we will look at how to [sort the output of ls command][5] by last modification time (date and time).

|

||||

|

||||

Let us start by executing some [basic ls commands][6].

|

||||

|

||||

### Linux Basic ls Commands

|

||||

|

||||

1. Running ls command without appending any argument will list current working directory contents.

|

||||

|

||||

```

|

||||

$ ls

|

||||

```

|

||||

[

|

||||

|

||||

][7]

|

||||

|

||||

List Content of Working Directory

|

||||

|

||||

2. To list contents of any directory, for example /etc directory use:

|

||||

|

||||

```

|

||||

$ ls /etc

|

||||

```

|

||||

[

|

||||

|

||||

][8]

|

||||

|

||||

List Contents of Directory

|

||||

|

||||

3. A directory always contains a few hidden files (at least two), therefore, to show all files in a directory, use the `-a` or `--all` flag:

|

||||

|

||||

```

|

||||

$ ls -a

|

||||

```

|

||||

[

|

||||

|

||||

][9]

|

||||

|

||||

List Hidden Files in Directory

|

||||

|

||||

4. You can as well print detailed information about each file in the ls output, such as the file permissions, number of links, owner’s name and group owner, file size, time of last modification and the file/directory name.

|

||||

|

||||

This is activated by the `-l` option, which means a long listing format as in the next screenshot:

|

||||

|

||||

```

|

||||

$ ls -l

|

||||

```

|

||||

[

|

||||

|

||||

][10]

|

||||

|

||||

Long List Directory Contents

|

||||

|

||||

### Sort Files Based on Time and Date

|

||||

|

||||

5. To list files in a directory and [sort them last modified date and time][11], make use of the `-t` option as in the command below:

|

||||

|

||||

```

|

||||

$ ls -lt

|

||||

```

|

||||

[

|

||||

|

||||

][12]

|

||||

|

||||

Sort ls Output by Date and Time

|

||||

|

||||

6. If you want a reverse sorting files based on date and time, you can use the `-r` option to work like so:

|

||||

|

||||

```

|

||||

$ ls -ltr

|

||||

```

|

||||

[

|

||||

|

||||

][13]

|

||||

|

||||

Sort ls Output Reverse by Date and Time

|

||||

|

||||

We will end here for now, however, there is more usage information and options in the [ls command][14], so make it a point to look through it or any other guides offering [ls command tricks every Linux user should know][15] or [use sort command][16]. Last but not least, you can reach us via the feedback section below.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/sort-ls-output-by-last-modified-date-and-time

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[zky001](https://github.com/zky001)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/aaronkili/

|

||||

[1]:http://www.tecmint.com/file-and-directory-management-in-linux/

|

||||

[2]:http://www.tecmint.com/15-basic-ls-command-examples-in-linux/

|

||||

[3]:http://www.tecmint.com/linux-dir-command-usage-with-examples/

|

||||

[4]:http://www.tecmint.com/tag/linux-ls-command/

|

||||

[5]:http://www.tecmint.com/sort-command-linux/

|

||||

[6]:http://www.tecmint.com/15-basic-ls-command-examples-in-linux/

|

||||

[7]:http://www.tecmint.com/wp-content/uploads/2016/10/List-Content-of-Working-Directory.png

|

||||

[8]:http://www.tecmint.com/wp-content/uploads/2016/10/List-Contents-of-Directory.png

|

||||

[9]:http://www.tecmint.com/wp-content/uploads/2016/10/List-Hidden-Files-in-Directory.png

|

||||

[10]:http://www.tecmint.com/wp-content/uploads/2016/10/ls-Long-List-Format.png

|

||||

[11]:http://www.tecmint.com/find-and-sort-files-modification-date-and-time-in-linux/

|

||||

[12]:http://www.tecmint.com/wp-content/uploads/2016/10/Sort-ls-Output-by-Date-and-Time.png

|

||||

[13]:http://www.tecmint.com/wp-content/uploads/2016/10/Sort-ls-Output-Reverse-by-Date-and-Time.png

|

||||

[14]:http://www.tecmint.com/tag/linux-ls-command/

|

||||

[15]:http://www.tecmint.com/linux-ls-command-tricks/

|

||||

[16]:http://www.tecmint.com/linux-sort-command-examples/

|

||||

@ -0,0 +1,115 @@

|

||||

3 Ways to Extract and Copy Files from ISO Image in Linux

|

||||

============================================================

|

||||

|

||||

Let’s say you have a large ISO file on your Linux server and you wanted to access, extract or copy one single file from it. How do you do it? Well in Linux there are couple ways do it.

|

||||

|

||||

For example, you can use standard mount command to mount an ISO image in read-only mode using the loop device and then copy the files to another directory.

|

||||

|

||||

### Mount or Extract ISO File in Linux

|

||||

|

||||

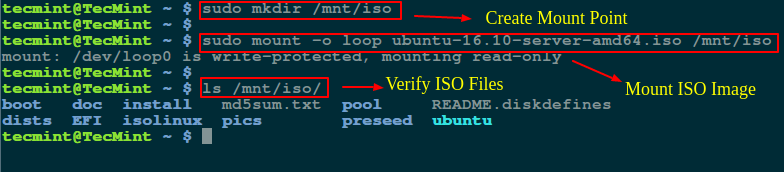

To do so, you must have an ISO file (I used ubuntu-16.10-server-amd64.iso ISO image) and mount point directory to mount or extract ISO files.

|

||||

|

||||

First create an mount point directory, where you will going to mount the image as shown:

|

||||

|

||||

```

|

||||

$ sudo mkdir /mnt/iso

|

||||

```

|

||||

|

||||

Once directory has been created, you can easily mount ubuntu-16.10-server-amd64.iso file and verify its content by running following command.

|

||||

|

||||

```

|

||||

$ sudo mount -o loop ubuntu-16.10-server-amd64.iso /mnt/iso

|

||||

$ ls /mnt/iso/

|

||||

```

|

||||

[

|

||||

|

||||

][1]

|

||||

|

||||

Mount ISO File in Linux

|

||||

|

||||

Now you can go inside the mounted directory (/mnt/iso) and access the files or copy the files to `/tmp`directory using [cp command][2].

|

||||

|

||||

```

|

||||

$ cd /mnt/iso

|

||||

$ sudo cp md5sum.txt /tmp/

|

||||

$ sudo cp -r ubuntu /tmp/

|

||||

```

|

||||

[

|

||||

|

||||

][3]

|

||||

|

||||

Copy Files From ISO File in Linux

|

||||

|

||||

Note: The `-r` option used to copy directories recursively, if you want you can also [monitor progress of copy command][4].

|

||||

|

||||

### Extract ISO Content Using 7zip Command

|

||||

|

||||

If you don’t want to mount ISO file, you can simply install 7zip, is an open source archive program used to pack or unpack different number of formats including TAR, XZ, GZIP, ZIP, BZIP2, etc..

|

||||

|

||||

```

|

||||

$ sudo apt-get install p7zip-full p7zip-rar [On Debian/Ubuntu systems]

|

||||

$ sudo yum install p7zip p7zip-plugins [On CentOS/RHEL systems]

|

||||

```

|

||||

|

||||

Once 7zip program has been installed, you can use 7z command to extract ISO file contents.

|

||||

|

||||

```

|

||||

$ 7z x ubuntu-16.10-server-amd64.iso

|

||||

```

|

||||

[

|

||||

|

||||

][5]

|

||||

|

||||

7zip – Extract ISO File Content in Linux

|

||||

|

||||

Note: As compared to Linux mount command, 7zip seems much faster and smart enough to pack or unpack any archive formats.

|

||||

|

||||

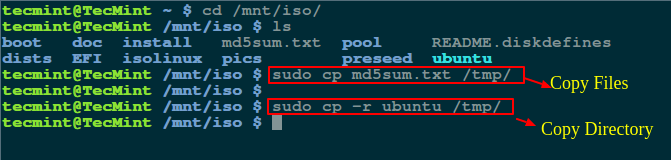

### Extract ISO Content Using isoinfo Command

|

||||

|

||||

The isoinfo command is used for directory listings of iso9660 images, but you can also use this program to extract files.

|

||||

|

||||

As I said isoinfo program perform directory listing, so first list the content of ISO file.

|

||||

|

||||

```

|

||||

$ isoinfo -i ubuntu-16.10-server-amd64.iso -l

|

||||

```

|

||||

[

|

||||

|

||||

][6]

|

||||

|

||||

List ISO Content in Linux

|

||||

|

||||

Now you can extract a single file from an ISO image like so:

|

||||

|

||||

```

|

||||

$ isoinfo -i ubuntu-16.10-server-amd64.iso -x MD5SUM.TXT > MD5SUM.TXT

|

||||

```

|

||||

|

||||

Note: The redirection is needed as `-x` option extracts to stdout.

|

||||

|

||||

[

|

||||

|

||||

][7]

|

||||

|

||||

Extract Single File from ISO in Linux

|

||||

|

||||

Well, there are many ways to do, if you know any useful command or program to extract or copy files from ISO file do share us via comment section.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/extract-files-from-iso-files-linux

|

||||

|

||||

作者:[Ravi Saive][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/admin/

|

||||

[1]:http://www.tecmint.com/wp-content/uploads/2016/10/Mount-ISO-File-in-Linux.png

|

||||

[2]:http://www.tecmint.com/advanced-copy-command-shows-progress-bar-while-copying-files/

|

||||

[3]:http://www.tecmint.com/wp-content/uploads/2016/10/Copy-Files-From-ISO-File-in-Linux.png

|

||||