mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-28 23:20:10 +08:00

Merge branch 'LCTT/master'

This commit is contained in:

commit

77875d24bf

@ -0,0 +1,42 @@

|

||||

Debian 8 "Jessie" 将把GNOME作为默认桌面环境

|

||||

================================================================================

|

||||

> Debian的GNOME团队已经取得了实质进展

|

||||

|

||||

<center></center>

|

||||

|

||||

<center>*GNOME 3.14桌面*</center>

|

||||

|

||||

**Debian项目开发者花了很长一段时间来决定将Xfce,GNOME或一些其他桌面环境中的哪个作为默认环境,不过目前看起来像是GNOME赢了。**

|

||||

|

||||

[我们两天前提到了][1],GNOME 3.14的软件包被上传到 Debian Testing(Debian 8 “Jessie”)的软件仓库中,这是一个令人惊喜的事情。通常情况下,GNOME的维护者对任何类型的软件包都不会这么快地决定添加,更别说桌面环境。

|

||||

|

||||

事实证明,关于即将到来的Debian 8的发行版中所用的默认桌面的争论已经尘埃落定,尽管这个词可能有点过于武断。无论什么情况下,总是有些开发者想要Xfce,另外一些则是喜欢 GNOME,看起来 MATE 也是不少人的备选。

|

||||

|

||||

### 最有可能的是,GNOME将Debian 8“Jessie” 的默认桌面环境###

|

||||

|

||||

我们之所以说“最有可能”是因为协议尚未达成一致,但它看起来GNOME已经遥遥领先了。Debian的维护者和开发者乔伊·赫斯解释了为什么会这样。

|

||||

|

||||

“根据从 https://wiki.debian.org/DebianDesktop/Requalification/Jessie 初步结果看,一些所需数据尚不可用,但在这一点上,我百分之八十地确定GNOME已经领先了。特别是,由于“辅助功能”和某些“systemd”整合的进度。在辅助功能方面:Gnome和Mate都领先了一大截。其他一些桌面的辅助功能改善了在Debian上的支持,部分原因是这一过程推动的,但仍需要上游大力支持。“

|

||||

|

||||

“Systemd /etc 整合方面:Xfce,Mate等尽力追赶在这一领域正在发生的变化,当技术团队停止了修改之后,希望有时间能在冻结期间解决这些问题。所以这并不是完全否决这些桌面,但要从目前的状态看,GNOME是未来的选择,“乔伊·赫斯[补充说][2]。

|

||||

|

||||

开发者在邮件中表示,在Debian的GNOME团队对他们所维护的项目[充满了激情][3],而Debian的Xfce的团队是决定默认桌面的实际阻碍。

|

||||

|

||||

无论如何,Debian 8“Jessie”没有一个具体发布时间,并没有迹象显示何时可能会被发布。在另一方面,GNOME 3.14已经发布了(也许你已经看到新闻了),它将很快应对好进行Debian的测试。

|

||||

|

||||

我们也应该感谢Jordi Mallach,在Debian中的GNOME包的维护者之一,他为我们指引了正确的讯息。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Debian-8-quot-Jessie-quot-to-Have-GNOME-as-the-Default-Desktop-459665.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[fbigun](https://github.com/fbigun)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://news.softpedia.com/news/Debian-8-quot-Jessie-quot-to-Get-GNOME-3-14-459470.shtml

|

||||

[2]:http://anonscm.debian.org/cgit/tasksel/tasksel.git/commit/?id=dce99f5f8d84e4c885e6beb4cc1bb5bb1d9ee6d7

|

||||

[3]:http://news.softpedia.com/news/Debian-Maintainer-Says-that-Xfce-on-Debian-Will-Not-Meet-Quality-Standards-GNOME-Is-Needed-454962.shtml

|

||||

@ -0,0 +1,29 @@

|

||||

Red Hat Enterprise Linux 5产品线终结

|

||||

================================================================================

|

||||

2007年3月,红帽公司首次宣布它的[Red Hat Enterprise Linux 5][1](RHEL)平台。虽然如今看来很普通,RHEL 5特别显著的一点是它是红帽公司第一个强调虚拟化的主要发行版本,而这点是如今现代发行版所广泛接受的特性。

|

||||

|

||||

最初的计划是为RHEL 5提供七年的寿命,但在2012年该计划改变了,红帽为RHEL 5[扩展][2]至10年的标准支持。

|

||||

|

||||

刚刚过去的这个星期,Red Hat发布的RHEL 5.11是RHEL 5.X系列的最后的、次要里程碑版本。红帽现在进入了将持续三年的名为“production 3”的支持周期。在这阶段将没有新的功能被添加到平台中,并且红帽公司将只提供有重大影响的安全修复程序和紧急优先级的bug修复。

|

||||

|

||||

平台事业部副总裁兼总经理Jim Totton在红帽公司在一份声明中说:“红帽公司致力于建立一个长期,稳定的产品生命周期,这将给那些依赖Red Hat Enterprise Linux为他们的关键应用服务的企业客户提供关键的益处。虽然RHEL 5.11是RHEL 5平台的最终次要版本,但它提供了安全性和可靠性方面的增强功能,以保持该平台接下来几年的活力。”

|

||||

|

||||

新的增强功能包括安全性和稳定性更新,包括改进了红帽帮助用户调试系统的方式。

|

||||

|

||||

还有一些新的存储的驱动程序,以支持新的存储适配器和改进在VMware ESXi上运行RHEL的支持。

|

||||

|

||||

在安全方面的巨大改进是OpenSCAP更新到版本1.0.8。红帽在2011年五月的[RHEL5.7的里程碑更新][3]中第一次支持了OpenSCAP。 OpenSCAP是安全内容自动化协议(SCAP)框架的开源实现,用于创建一个标准化方法来维护安全系统。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxplanet.com/news/end-of-the-line-for-red-hat-enterprise-linux-5.html

|

||||

|

||||

作者:Sean Michael Kerner

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.internetnews.com/ent-news/article.php/3665641

|

||||

[2]:http://www.serverwatch.com/server-news/red-hat-extends-linux-support.html

|

||||

[3]:http://www.internetnews.com/skerner/2011/05/red-hat-enterprise-linux-57-ad.html

|

||||

@ -0,0 +1,39 @@

|

||||

KDE Plasma 5的第二个bug修复版本发布,带来了很多的改变

|

||||

================================================================================

|

||||

> 新的Plasma 5发布了,带来了新的外观

|

||||

|

||||

<center></center>

|

||||

|

||||

<center>*KDE Plasma 5*</center>

|

||||

|

||||

### Plasma 5的第二个bug修复版本发布,已可下载###

|

||||

|

||||

KDE Plasma 5的bug修复版本不断来到,它新的桌面体验将会是KDE的生态系统的一个组成部分。

|

||||

|

||||

[公告][1]称:“plasma-5.0.2这个版本,新增了一个月以来来自KDE的贡献者新的翻译和修订。Bug修复通常是很小但是很重要,如修正未翻译的文字,使用正确的图标和修正KDELibs 4软件的文件重复现象。它还增加了一个月以来辛勤的翻译成果,使其支持其他更多的语言”

|

||||

|

||||

这个桌面还没有在任何Linux发行版中默认安装,这将持续一段时间,直到我们测试完成。

|

||||

|

||||

开发者还解释说,更新的软件包可以在Kubuntu Plasma 5的开发版本中进行审查。

|

||||

|

||||

如果你个人需要它们,你也可以下载源码包。

|

||||

|

||||

- [KDE Plasma Packages][2]

|

||||

- [KDE Plasma Sources][3]

|

||||

|

||||

如果你决定去编译它,你必须需要知道 KDE Plasma 5.0.2是一组复杂的软件,可能你需要解决不少问题。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Second-Bugfix-Release-for-KDE-Plasma-5-Arrives-with-Lots-of-Changes-459688.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://kde.org/announcements/plasma-5.0.2.php

|

||||

[2]:https://community.kde.org/Plasma/Packages

|

||||

[3]:http://kde.org/info/plasma-5.0.2.php

|

||||

@ -1,36 +0,0 @@

|

||||

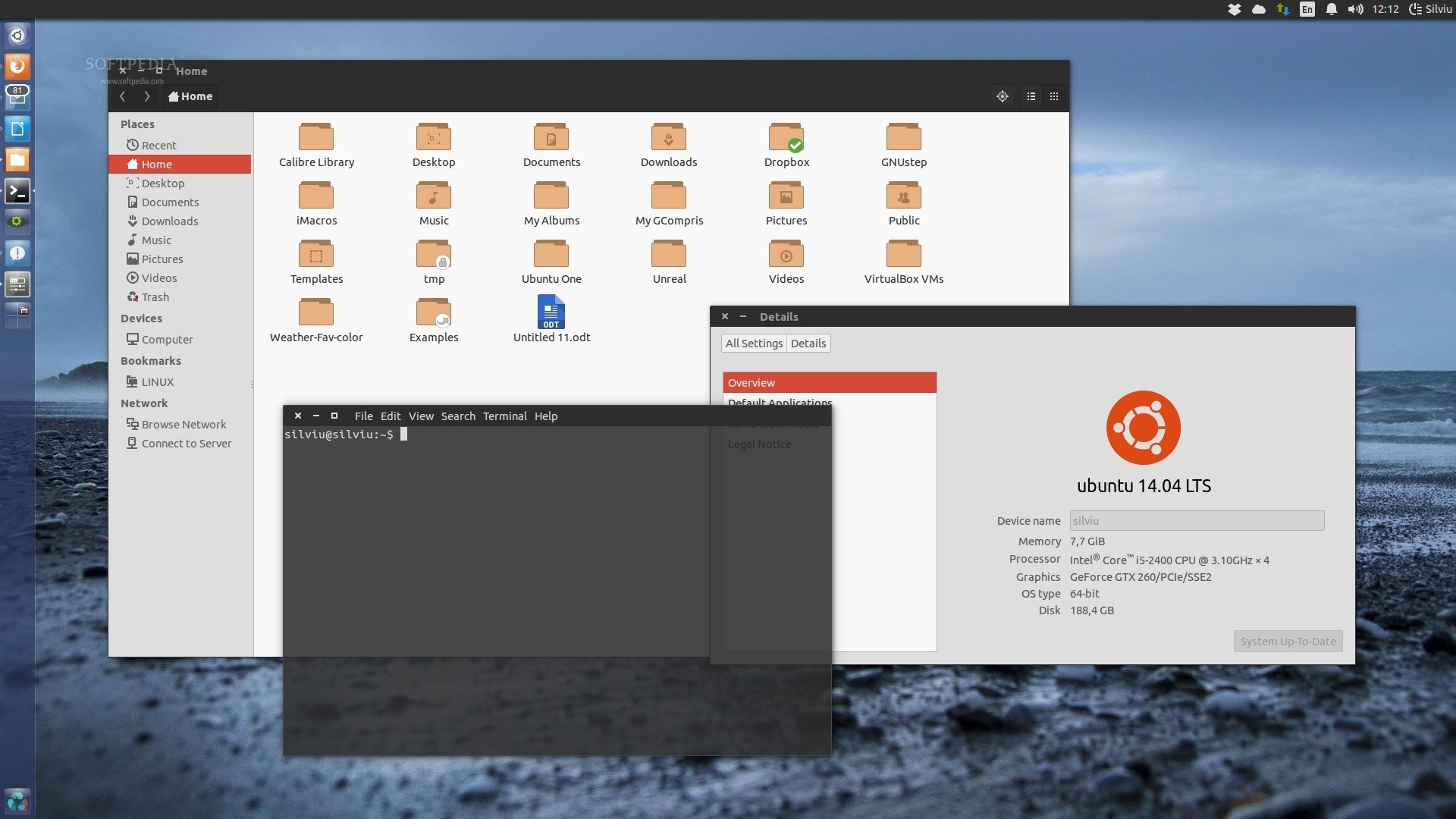

Canonical Closes nginx Exploit in Ubuntu 14.04 LTS

|

||||

================================================================================

|

||||

> Users have to upgrade their systems to fix the issue

|

||||

|

||||

|

||||

|

||||

Ubuntu 14.04 LTS

|

||||

|

||||

**Canonical has published details in a security notice about an nginx vulnerability that affected Ubuntu 14.04 LTS (Trusty Tahr). The problem has been identified and fixed.**

|

||||

|

||||

The Ubuntu developers have fixed a small nginx exploit. They explain that nginx could have been made to expose sensitive information over the network.

|

||||

|

||||

According to the security notice, “Antoine Delignat-Lavaud and Karthikeyan Bhargavan discovered that nginx incorrectly reused cached SSL sessions. An attacker could possibly use this issue in certain configurations to obtain access to information from a different virtual host.”

|

||||

|

||||

For a more detailed description of the problems, you can see Canonical's security [notification][1]. Users should upgrade their Linux distribution in order to correct this issue.

|

||||

|

||||

The problem can be repaired by upgrading the system to the latest nginx package (and dependencies). To apply the patch, you can simply run the Update Manager application.

|

||||

|

||||

If you don't want to use the Software Updater, you can open a terminal and enter the following commands (you will need to be root):

|

||||

|

||||

sudo apt-get update

|

||||

sudo apt-get dist-upgrade

|

||||

|

||||

In general, a standard system update will make all the necessary changes. You don't have to restart the PC in order to implement this fix.

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Canonical-Closes-Nginx-Exploit-in-Ubuntu-14-04-LTS-459677.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://www.ubuntu.com/usn/usn-2351-1/

|

||||

@ -1,29 +0,0 @@

|

||||

End of the Line for Red Hat Enterprise Linux 5

|

||||

================================================================================

|

||||

In March of 2007, Red Hat first announced its [Red Hat Enterprise Linux 5][1]( RHEL) platform. Though it might seem quant today, RHEL 5 was particularly notable in that it was the first major release for Red Hat to emphasize virtualization, which is a feature all modern distros now take for granted.

|

||||

|

||||

Originally the plan was for RHEL 5 to have seven years of life, but that plan changed in 2012 when when Red Hat [extended][2] its standard support for RHEL 5 to 10 years.

|

||||

|

||||

This past week, Red Hat released RHEL 5.11 which is the final minor milestone release for RHEL 5.X. RHEL now enters what Red Hat calls it production 3 support which will last for another three years. During the production three phase no new functionality is added to the platform and Red Hat will only provide critical impact security fixes and urgent priority bug fixes.

|

||||

|

||||

"Red Hat’s commitment to a long, stable product lifecycle is a key benefit for enterprise customers who rely on Red Hat Enterprise Linux for their critical applications," Jim Totton, vice president and general manager, Platform Business Unit, Red Hat said in a statement. " While Red Hat Enterprise Linux 5.11 is the final minor release of the Red Hat Enterprise Linux 5 platform, the enhancements it offers in terms of security and reliability are designed to maintain the platform’s viability for years to come."

|

||||

|

||||

The new enhancements include security and stability updates including improvements to the way that Red Hat can help users to debug a system.

|

||||

|

||||

There are also new storage drivers to support newer storage adapters and improved support for RHEL running on VMware ESXi.

|

||||

|

||||

On the security front the big improvement is an update to OpenSCAP version 1.0.8. Red Hat first provided support for OpenSCAP in May of 2011 with the [RHEL 5.7 milestone update][3]. OpenSCAP is an open source implementation of the Security Content Automation Protocol (SCAP) framework for creating a standardized approach for maintaining secure systems.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxplanet.com/news/end-of-the-line-for-red-hat-enterprise-linux-5.html

|

||||

|

||||

作者:Sean Michael Kerner

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.internetnews.com/ent-news/article.php/3665641

|

||||

[2]:http://www.serverwatch.com/server-news/red-hat-extends-linux-support.html

|

||||

[3]:http://www.internetnews.com/skerner/2011/05/red-hat-enterprise-linux-57-ad.html

|

||||

@ -1,38 +0,0 @@

|

||||

Second Bugfix Release for KDE Plasma 5 Arrives with Lots of Changes

|

||||

================================================================================

|

||||

> The new Plasma 5 desktop is out with a new version

|

||||

|

||||

|

||||

|

||||

KDE Plasma 5

|

||||

|

||||

### The KDE Community has announced that the second bugfix release for Plasma 5 is now out and available for download. ###

|

||||

|

||||

Bugfix releases for the KDE Plasma 5, the new desktop experience that will be an integral part of the KDE ecosystem, have started to arrive very often.

|

||||

|

||||

"This release, versioned plasma-5.0.2, adds a month's worth of new translations and fixes from KDE's contributors. The bugfixes are typically small but important such as fixing text which couldn't be translated, using the correct icons and fixing overlapping files with KDELibs 4 software. It also adds a month's hard work of translations to make support in other languages even more complete," reads the [announcement][1].

|

||||

|

||||

This particular desktop is not yet implemented by default in any Linux distro and it will be a while until we are able to test it properly.

|

||||

|

||||

The developers also explain that the updated packages can be reviewed in the development versions of Kubuntu Plasma 5.

|

||||

|

||||

You can also download the source packages, if you need them individually.

|

||||

|

||||

- [KDE Plasma Packages][2]

|

||||

- [KDE Plasma Sources][3]

|

||||

|

||||

You also have to keep in mind that KDE Plasma 5.0.2 is a sophisticated piece of software and you really need to know what you are doing if you decide to compile it.

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Second-Bugfix-Release-for-KDE-Plasma-5-Arrives-with-Lots-of-Changes-459688.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://kde.org/announcements/plasma-5.0.2.php

|

||||

[2]:https://community.kde.org/Plasma/Packages

|

||||

[3]:http://kde.org/info/plasma-5.0.2.php

|

||||

@ -0,0 +1,36 @@

|

||||

Wal Commander GitHub Edition 0.17 released

|

||||

================================================================================

|

||||

|

||||

|

||||

> ### Description ###

|

||||

>

|

||||

> Wal Commander GitHub Edition is a multi-platform open source file manager for Windows, Linux, FreeBSD and OS X.

|

||||

>

|

||||

> The purpose of this project is to create a portable file manager mimicking the look-n-feel of Far Manager.

|

||||

|

||||

The next stable version of our Wal Commander GitHub Edition 0.17 is out. Major features include command line autocomplete using the commands history; file associations to bind custom commands to different actions on files; and experimental support of OS X using XQuartz. A lot of new hotkeys were added in this release. Precompiled binaries are available for Windows x64. Linux, FreeBSD and OS X versions can be built directly from the [GitHub source code][1].

|

||||

|

||||

### Major features ###

|

||||

|

||||

- command line autocomplete (use Del key to erase a command)

|

||||

- file associations (Main menu -> Commands -> File associations)

|

||||

- experimental OS X support on top of XQuartz ([https://github.com/corporateshark/WalCommander/issues/5][2])

|

||||

|

||||

### [Downloads][3] ###.

|

||||

|

||||

Source code: [https://github.com/corporateshark/WalCommander][4]

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://wcm.linderdaum.com/release-0-17-0/

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://github.com/corporateshark/WalCommander/releases

|

||||

[2]:https://github.com/corporateshark/WalCommander/issues/5

|

||||

[3]:http://wcm.linderdaum.com/downloads/

|

||||

[4]:https://github.com/corporateshark/WalCommander

|

||||

@ -1,92 +0,0 @@

|

||||

Making MySQL Better at GitHub

|

||||

================================================================================

|

||||

> At GitHub we say, "it's not fully shipped until it's fast." We've talked before about some of the ways we keep our [frontend experience speedy][1], but that's only part of the story. Our MySQL database infrastructure dramatically affects the performance of GitHub.com. Here's a look at how our infrastructure team seamlessly conducted a major MySQL improvement last August and made GitHub even faster.

|

||||

|

||||

### The mission ###

|

||||

|

||||

Last year we moved the bulk of GitHub.com's infrastructure into a new datacenter with world-class hardware and networking. Since MySQL forms the foundation of our backend systems, we expected database performance to benefit tremendously from an improved setup. But creating a brand-new cluster with brand-new hardware in a new datacenter is no small task, so we had to plan and test carefully to ensure a smooth transition.

|

||||

|

||||

### Preparation ###

|

||||

|

||||

A major infrastructure change like this requires measurement and metrics gathering every step of the way. After installing base operating systems on our new machines, it was time to test out our new setup with various configurations. To get a realistic test workload, we used tcpdump to extract SELECT queries from the old cluster that was serving production and replayed them onto the new cluster.

|

||||

|

||||

MySQL tuning is very workload specific, and well-known configuration settings like innodb_buffer_pool_size often make the most difference in MySQL's performance. But on a major change like this, we wanted to make sure we covered everything, so we took a look at settings like innodb_thread_concurrency, innodb_io_capacity, and innodb_buffer_pool_instances, among others.

|

||||

|

||||

We were careful to only make one test configuration change at a time, and to run tests for at least 12 hours. We looked for query response time changes, stalls in queries per second, and signs of reduced concurrency. We observed the output of SHOW ENGINE INNODB STATUS, particularly the SEMAPHORES section, which provides information on work load contention.

|

||||

|

||||

Once we were relatively comfortable with configuration settings, we started migrating one of our largest tables onto an isolated cluster. This served as an early test of the process, gave us more space in the buffer pools of our core cluster and provided greater flexibility for failover and storage. This initial migration introduced an interesting application challenge, as we had to make sure we could maintain multiple connections and direct queries to the correct cluster.

|

||||

|

||||

In addition to all our raw hardware improvements, we also made process and topology improvements: we added delayed replicas, faster and more frequent backups, and more read replica capacity. These were all built out and ready for go-live day.

|

||||

|

||||

### Making a list; checking it twice ###

|

||||

|

||||

With millions of people using GitHub.com on a daily basis, we did not want to take any chances with the actual switchover. We came up with a thorough [checklist][2] before the transition:

|

||||

|

||||

|

||||

|

||||

We also planned a maintenance window and [announced it on our blog][3] to give our users plenty of notice.

|

||||

|

||||

### Migration day ###

|

||||

|

||||

At 5am Pacific Time on a Saturday, the migration team assembled online in chat and the process began:

|

||||

|

||||

|

||||

|

||||

We put the site in maintenance mode, made an announcement on Twitter, and set out to work through the list above:

|

||||

|

||||

|

||||

|

||||

**13 minutes** later, we were able to confirm operations of the new cluster:

|

||||

|

||||

|

||||

|

||||

Then we flipped GitHub.com out of maintenance mode, and let the world know that we were in the clear.

|

||||

|

||||

|

||||

|

||||

Lots of up front testing and preparation meant that we kept the work we needed on go-live day to a minimum.

|

||||

|

||||

### Measuring the final results ###

|

||||

|

||||

In the weeks following the migration, we closely monitored performance and response times on GitHub.com. We found that our cluster migration cut the average GitHub.com page load time by half and the 99th percentile by *two-thirds*:

|

||||

|

||||

|

||||

|

||||

### What we learned ###

|

||||

|

||||

#### Functional partitioning ####

|

||||

|

||||

During this process we decided that moving larger tables that mostly store historic data to separate cluster was a good way to free up disk and buffer pool space. This allowed us to leave more resources for our "hot" data, splitting some connection logic to enable the application to query multiple clusters. This proved to be a big win for us and we are working to reuse this pattern.

|

||||

|

||||

#### Always be testing ####

|

||||

|

||||

You can never do too much acceptance and regression testing for your application. Replicating data from the old cluster to the new cluster while running acceptance tests and replaying queries were invaluable for tracing out issues and preventing surprises during the migration.

|

||||

|

||||

#### The power of collaboration ####

|

||||

|

||||

Large changes to infrastructure like this mean a lot of people need to be involved, so pull requests functioned as our primary point of coordination as a team. We had people all over the world jumping in to help.

|

||||

|

||||

Deploy day team map:

|

||||

|

||||

<iframe width="620" height="420" frameborder="0" src="https://render.githubusercontent.com/view/geojson?url=https://gist.githubusercontent.com/anonymous/5fa29a7ccbd0101630da/raw/map.geojson"></iframe>

|

||||

|

||||

This created a workflow where we could open a pull request to try out changes, get real-time feedback, and see commits that fixed regressions or errors -- all without phone calls or face-to-face meetings. When everything has a URL that can provide context, it's easy to involve a diverse range of people and make it simple for them give feedback.

|

||||

|

||||

### One year later.. ###

|

||||

|

||||

A full year later, we are happy to call this migration a success — MySQL performance and reliability continue to meet our expectations. And as an added bonus, the new cluster enabled us to make further improvements towards greater availability and query response times. I'll be writing more about those improvements here soon.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://github.com/blog/1880-making-mysql-better-at-github

|

||||

|

||||

作者:[samlambert][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://github.com/samlambert

|

||||

[1]:https://github.com/blog/1756-optimizing-large-selector-sets

|

||||

[2]:https://help.github.com/articles/writing-on-github#task-lists

|

||||

[3]:https://github.com/blog/1603-site-maintenance-august-31st-2013

|

||||

@ -0,0 +1,82 @@

|

||||

ChromeOS vs Linux: The Good, the Bad and the Ugly

|

||||

================================================================================

|

||||

> In the battle between ChromeOS and Linux, both desktop environments have strengths and weaknesses.

|

||||

|

||||

Anyone who believes Google isn't "making a play" for desktop users isn't paying attention. In recent years, I've seen [ChromeOS][1] making quite a splash on the [Google Chromebook][2]. Exploding with popularity on sites such as Amazon.com, it looks as if ChromeOS could be unstoppable.

|

||||

|

||||

In this article, I'm going to look at ChromeOS as a concept to market, how it's affecting Linux adoption and whether or not it's a good/bad thing for the Linux community as a whole. Plus, I'll talk about the biggest issue of all and how no one is doing anything about it.

|

||||

|

||||

### ChromeOS isn't really Linux ###

|

||||

|

||||

When folks ask me if ChromeOS is a Linux distribution, I usually reply that ChromeOS is to Linux what OS X is to BSD. In other words, I consider ChromeOS to be a forked operating system that uses the Linux kernel under the hood. Much of the operating system is made up of Google's own proprietary blend of code and software.

|

||||

|

||||

So while the ChromeOS is using the Linux kernel under its hood, it's still very different from what we might find with today's modern Linux distributions.

|

||||

|

||||

Where ChromeOS's difference becomes most apparent, however, is in the apps it offers the end user: Web applications. With everything being launched from a browser window, Linux users might find using ChromeOS to be a bit vanilla. But for non-Linux users, the experience is not all that different than what they may have used on their old PCs.

|

||||

|

||||

For example: Anyone who is living a Google-centric lifestyle on Windows will feel right at home on ChromeOS. Odds are this individual is already relying on the Chrome browser, Google Drive and Gmail. By extension, moving over to ChromeOS feels fairly natural for these folks, as they're simply using the browser they're already used to.

|

||||

|

||||

Linux enthusiasts, however, tend to feel constrained almost immediately. Software choices feel limited and boxed in, plus games and VoIP are totally out of the question. Sorry, but [GooglePlus Hangouts][3] isn't a replacement for [VoIP][4] software. Not even by a long shot.

|

||||

|

||||

### ChromeOS or Linux on the desktop ###

|

||||

|

||||

Anyone making the claim that ChromeOS hurts Linux adoption on the desktop needs to come up for air and meet non-technical users sometime.

|

||||

|

||||

Yes, desktop Linux is absolutely fine for most casual computer users. However it helps to have someone to install the OS and offer "maintenance" services like we see in the Windows and OS X camps. Sadly Linux lacks this here in the States, which is where I see ChromeOS coming into play.

|

||||

|

||||

I've found the Linux desktop is best suited for environments where on-site tech support can manage things on the down-low. Examples include: Homes where advanced users can drop by and handle updates, governments and schools with IT departments. These are environments where Linux on the desktop is set up to be used by users of any skill level or background.

|

||||

|

||||

By contrast, ChromeOS is built to be completely maintenance free, thus not requiring any third part assistance short of turning it on and allowing updates to do the magic behind the scenes. This is partly made possible due to the ChromeOS being designed for specific hardware builds, in a similar spirit to how Apple develops their own computers. Because Google has a pulse on the hardware ChromeOS is bundled with, it allows for a generally error free experience. And for some individuals, this is fantastic!

|

||||

|

||||

Comically, the folks who exclaim that there's a problem here are not even remotely the target market for ChromeOS. In short, these are passionate Linux enthusiasts looking for something to gripe about. My advice? Stop inventing problems where none exist.

|

||||

|

||||

The point is: the market share for ChromeOS and Linux on the desktop are not even remotely the same. This could change in the future, but at this time, these two groups are largely separate.

|

||||

|

||||

### ChromeOS use is growing ###

|

||||

|

||||

No matter what your view of ChromeOS happens to be, the fact remains that its adoption is growing. New computers built for ChromeOS are being released all the time. One of the most recent ChromeOS computer releases is from Dell. Appropriately named the [Dell Chromebox][5], this desktop ChromeOS appliance is yet another shot at traditional computing. It has zero software DVDs, no anti-malware software, and offfers completely seamless updates behind the scenes. For casual users, Chromeboxes and Chromebooks are becoming a viable option for those who do most of their work from within a web browser.

|

||||

|

||||

Despite this growth, ChromeOS appliances face one huge downside – storage. Bound by limited hard drive size and a heavy reliance on cloud storage, ChromeOS isn't going to cut it for anyone who uses their computers outside of basic web browser functionality.

|

||||

|

||||

### ChromeOS and Linux crossing streams ###

|

||||

|

||||

Previously, I mentioned that ChromeOS and Linux on the desktop are in two completely separate markets. The reason why this is the case stems from the fact that the Linux community has done a horrid job at promoting Linux on the desktop offline.

|

||||

|

||||

Yes, there are occasional events where casual folks might discover this "Linux thing" for the first time. But there isn't a single entity to then follow up with these folks, making sure they’re getting their questions answered and that they're getting the most out of Linux.

|

||||

|

||||

In reality, the likely offline discovery breakdown goes something like this:

|

||||

|

||||

- Casual user finds out Linux from their local Linux event.

|

||||

- They bring the DVD/USB device home and attempt to install the OS.

|

||||

- While some folks very well may have success with the install process, I've been contacted by a number of folks with the opposite experience.

|

||||

- Frustrated, these folks are then expected to "search" online forums for help. Difficult to do on a primary computer experiencing network or video issues.

|

||||

- Completely fed up, some of the above frustrated bring their computers back into a Windows shop for "repair." In addition to Windows being re-installed, they also receive an earful about how "Linux isn't for them" and should be avoided.

|

||||

|

||||

Some of you might charge that the above example is exaggerated. I would respond with this: It's happened to people I know personally and it happens often. Wake up Linux community, our adoption model is broken and tired.

|

||||

|

||||

### Great platforms, horrible marketing and closing thoughts ###

|

||||

|

||||

If there is one thing that I feel ChromeOS and Linux on the desktop have in common...besides the Linux kernel, it's that they both happen to be great products with rotten marketing. The advantage however, goes to Google with this one, due to their ability to spend big money online and reserve shelf space at big box stores.

|

||||

|

||||

Google believes that because they have the "online advantage" that offline efforts aren't really that important. This is incredibly short-sighted and reflects one of Google's biggest missteps. The belief that if you're not exposed to their online efforts, you're not worth bothering with, is only countered by local shelf-space at select big box stores.

|

||||

|

||||

My suggestion is this – offer Linux on the desktop to the ChromeOS market through offline efforts. This means Linux User Groups need to start raising funds to be present at county fairs, mall kiosks during the holiday season and teaching free classes at community centers. This will immediately put Linux on the desktop in front of the same audience that might otherwise end up with a ChromeOS powered appliance.

|

||||

|

||||

If local offline efforts like this don't happen, not to worry. Linux on the desktop will continue to grow as will the ChromeOS market. Sadly though, it will absolutely keep the two markets separate as they are now.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.datamation.com/open-source/chromeos-vs-linux-the-good-the-bad-and-the-ugly-1.html

|

||||

|

||||

作者:[Matt Hartley][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.datamation.com/author/Matt-Hartley-3080.html

|

||||

[1]:http://en.wikipedia.org/wiki/Chrome_OS

|

||||

[2]:http://www.google.com/chrome/devices/features/

|

||||

[3]:https://plus.google.com/hangouts

|

||||

[4]:http://en.wikipedia.org/wiki/Voice_over_IP

|

||||

[5]:http://www.pcworld.com/article/2602845/dell-brings-googles-chrome-os-to-desktops.html

|

||||

@ -1,74 +0,0 @@

|

||||

Translating by GOLinux!

|

||||

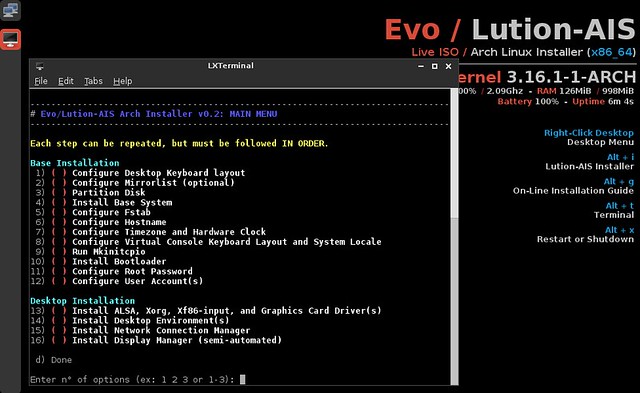

How to install Arch Linux the easy way with Evo/Lution

|

||||

================================================================================

|

||||

The one who ventures into an install of Arch Linux and has only experienced installing Linux with Ubuntu or Mint is in for a steep learning curve. The number of people giving up halfway is probably higher than the ones that pull it through. Arch Linux is somewhat cult in the way that you may call yourself a weathered Linux user if you succeed in setting it up and configuring it in a useful way.

|

||||

|

||||

Even though there is a [helpful wiki][1] to guide newcomers, the requirements are still too high for some who set out to conquer Arch. You need to be at least familiar with commands like fdisk or mkfs in a terminal and have heard of mc, nano or chroot to make it through this endeavour. It reminds me of a Debian install 10 years ago.

|

||||

|

||||

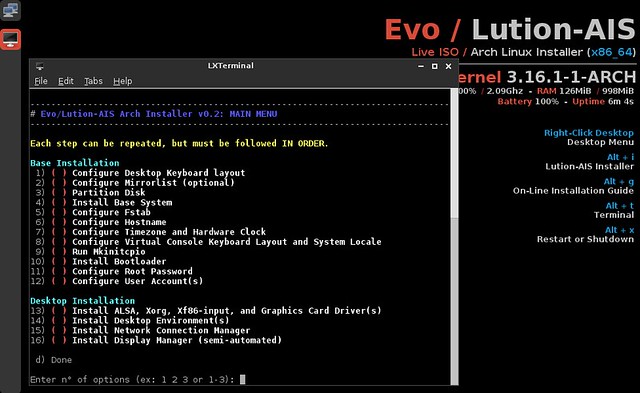

For those ambitious souls that still lack some knowledge, there is an installer in the form of an ISO image called [Evo/Lution Live ISO][2] to the rescue. Even though it is booted like a distribution of its own, it does nothing but assist with installing a barebone Arch Linux. Evo/Lution is a project that aims to diversify the user base of Arch by providing a simple way of installing Arch as well as a community that provides comprehensive help and documentation to that group of users. In this mix, Evo is the (non-installable) live CD and Lution is the installer itself. The project's founders see a widening gap between Arch developers and users of Arch and its derivative distributions, and want to build a community with equal roles between all participants.

|

||||

|

||||

|

||||

|

||||

The software part of the project is the CLI installer Lution-AIS which explains every step of what happens during the installation of a pure vanilla Arch. The resulting installation will have all the latest software that Arch has to offer without adding anything from AUR or any other custom packages.

|

||||

|

||||

After booting up the ISO image, which weighs in at 422 MB, we are presented with a workspace consisting of a Conky display on the right with shortcuts to the options and a LX-Terminal on the left waiting to run the installer.

|

||||

|

||||

|

||||

|

||||

After setting off the actual installer by either right-clicking on the desktop or using ALT-i, you are presented with a list of 16 jobs to be run. It makes sense to run them all unless you know better. You can either run them one by one or make a selection like 1 3 6 or 1-4 or do them all at once by entering 1-16. Most steps need to be confirmed with a 'y' for yes, and the next task waits for you to hit Enter. This will allow time to read the installation guide which is hidden behind ALT-g or even walking away from it.

|

||||

|

||||

|

||||

|

||||

The 16 steps are divided in "Base Install" and "Desktop Install". The first group takes care of localization, partitioning, and installing a bootloader.

|

||||

|

||||

The installer leads you through partitioning with gparted, gdisk, and cfdisk as options.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

After you have created partitions (e.g., /dev/sda1 for root and /dev/sda2 for swap using gparted as shown in the screenshot), you can choose 1 out of 10 file systems. In the next step, you can choose your kernel (latest or LTS) and base system.

|

||||

|

||||

|

||||

|

||||

After installing the bootloader of your choice, the first part of the install is done, which takes approximately 12 minutes. This is the point where in plain Arch Linux you reboot into your system for the first time.

|

||||

|

||||

With Lution you just move on to the second part which installs Xorg, sound and graphics drivers, and then moves on to desktop environments.

|

||||

|

||||

|

||||

|

||||

The installer detects if an install is done in VirtualBox, and will automatically install and load the right generic drivers for the VM and sets up **systemd** accordingly.

|

||||

|

||||

In the next step, you can choose between the desktop environments KDE, Gnome, Cinnamon, LXDE, Enlightenment, Mate or XFCE. Should you not be friends with the big ships, you can also go with a Window manager like Awesome, Fluxbox, i3, IceWM, Openbox or PekWM.

|

||||

|

||||

|

||||

|

||||

Part two of the installer will take under 10 minutes with Cinnamon as the desktop environment; however, KDE will take longer due to a much larger download.

|

||||

|

||||

Lution-AIS worked like a charm on two tries with Cinnamon and Awesome. After the installer was done and prompted me to reboot, it took me to the desired environments.

|

||||

|

||||

|

||||

|

||||

I have only two points to criticize: when the installer offered me to choose a mirror list and when it created the fstab file. In both cases it opened a second terminal, prompting me with an informational text. It took me a while to figure out I had to close the terminals before the installer would move on. When it prompts you after creating fstab, you need to close the terminal, and answer 'yes' when asked if you want to save the file.

|

||||

|

||||

|

||||

|

||||

The second of my issues probably has to do with VirtualBox. When starting up, you may see a message that no network has been detected. Clicking on the top icon on the left will open wicd, the network manager that is used here. Clicking on "Disconnect" and then "Connect" and restarting the installer will get it automatically detected.

|

||||

|

||||

Evo/Lution seems a worthwhile project, where Lution works fine. Not much can be said on the community part yet. They started a brand new website, forum, and wiki that need to be filled with content first. So if you like the idea, join [their forum][3] and let them know. The ISO image can be downloaded from [the website][4].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/2014/09/install-arch-linux-easy-way-evolution.html

|

||||

|

||||

作者:[Ferdinand Thommes][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/ferdinand

|

||||

[1]:https://wiki.archlinux.org/

|

||||

[2]:http://www.evolutionlinux.com/

|

||||

[3]:http://www.evolutionlinux.com/forums/

|

||||

[4]:http://www.evolutionlinux.com/downloads.html

|

||||

@ -1,72 +0,0 @@

|

||||

How to use CloudFlare as a ddclient provider under Ubuntu

|

||||

================================================================================

|

||||

DDclient is a Perl client used to update dynamic DNS entries for accounts on Dynamic DNS Network Service Provider. It was originally written by Paul Burry and is now mostly by wimpunk. It has the capability to update more than just dyndns and it can fetch your WAN-ipaddress in a few different ways.

|

||||

|

||||

CloudFlare, however, has a little known feature that will allow you to update your DNS records via API or a command line script called ddclient. This will give you the same result, and it's also free.

|

||||

|

||||

Unfortunately, ddclient does not work with CloudFlare out of the box. There is a patch available and here is how to hack it up on Debian or Ubuntu, also works in Raspbian with Raspberry Pi.

|

||||

|

||||

### Requirements ###

|

||||

|

||||

Make sure you have a domain name that you own and Sign up to CloudFlare ,add your domain name. Follow the instructions, the default values it gives should be fine.You'll be letting CloudFlare host your domain so you need to adjust the settings at your registrar.If you'd like to use a subdomain, add an ‘A' record for it. Any IP address will do for now.

|

||||

|

||||

### Install ddclient on ubuntu ###

|

||||

|

||||

Open the terminal and run the following command

|

||||

|

||||

sudo apt-get install ddclient

|

||||

|

||||

Now you need to install the patch using the following commands

|

||||

|

||||

sudo apt-get install curl sendmail libjson-any-perl libio-socket-ssl-perl

|

||||

|

||||

curl -O http://blog.peter-r.co.uk/uploads/ddclient-3.8.0-cloudflare-22-6-2014.patch

|

||||

|

||||

sudo patch /usr/sbin/ddclient < ddclient-3.8.0-cloudflare-22-6-2014.patch

|

||||

|

||||

The above commands completes the ddclient and patch

|

||||

|

||||

### Configuring ddclient ###

|

||||

|

||||

You need to edit the ddclient.conf file using the following command

|

||||

|

||||

sudo vi /etc/ddclient.conf

|

||||

|

||||

Add the following information

|

||||

|

||||

##

|

||||

### CloudFlare (cloudflare.com)

|

||||

###

|

||||

ssl=yes

|

||||

use=web, web=dyndns

|

||||

protocol=cloudflare, \

|

||||

server=www.cloudflare.com, \

|

||||

zone=domain.com, \

|

||||

login=you@email.com, \

|

||||

password=api-key \

|

||||

host.domain.com

|

||||

|

||||

Comment out:

|

||||

|

||||

#daemon=300

|

||||

|

||||

Your api-key comes from the cloudflare account page

|

||||

|

||||

ssl=yes might already be in that file

|

||||

|

||||

use=web, web=dyndns will use dyndns to check IP (useful for NAT)

|

||||

|

||||

You're done. Log in to https://www.cloudflare.com and check that the IP listed for your domain matches http://checkip.dyndns.com

|

||||

|

||||

To verify your settings using the following command

|

||||

|

||||

sudo ddclient -daemon=0 -debug -verbose -noquiet

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.ubuntugeek.com/how-to-use-cloudflare-as-a-ddclient-provider-under-ubuntu.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -0,0 +1,84 @@

|

||||

Linux FAQs with Answers--How to build a RPM or DEB package from the source with CheckInstall

|

||||

================================================================================

|

||||

> **Question**: I would like to install a software program by building it from the source. Is there a way to build and install a package from the source, instead of running "make install"? That way, I could uninstall the program easily later if I want to.

|

||||

|

||||

If you have installed a Linux program from its source by running "make install", it becomes really tricky to remove it completely, unless the author of the program provides an uninstall target in the Makefile. You will have to compare the complete list of files in your system before and after installing the program from source, and manually remove all the files that were added during the installation.

|

||||

|

||||

That is when CheckInstall can come in handy. CheckInstall keeps track of all the files created or modified by an install command line (e.g., "make install" "make install_modules", etc.), and builds a standard binary package, giving you the ability to install or uninstall it with your distribution's standard package management system (e.g., yum for Red Hat or apt-get for Debian). It has been also known to work with Slackware, SuSe, Mandrake and Gentoo as well, as per the [official documentation][1].

|

||||

|

||||

In this post, we will only focus on Red Hat and Debian based distributions, and show how to build a RPM or DEB package from the source using CheckInstall.

|

||||

|

||||

### Installing CheckInstall on Linux ###

|

||||

|

||||

To install CheckInstall on Debian derivatives:

|

||||

|

||||

# aptitude install checkinstall

|

||||

|

||||

To install CheckInstall on Red Hat-based distributions, you will need to download a pre-built .rpm of CheckInstall (e.g., searchable from [http://rpm.pbone.net][2]), as it has been removed from the Repoforge repository. The .rpm package for CentOS 6 works in CentOS 7 as well.

|

||||

|

||||

# wget ftp://ftp.pbone.net/mirror/ftp5.gwdg.de/pub/opensuse/repositories/home:/ikoinoba/CentOS_CentOS-6/x86_64/checkinstall-1.6.2-3.el6.1.x86_64.rpm

|

||||

# yum install checkinstall-1.6.2-3.el6.1.x86_64.rpm

|

||||

|

||||

Once checkinstall is installed, you can use the following format to build a package for particular software.

|

||||

|

||||

# checkinstall <install-command>

|

||||

|

||||

Without <install-command> argument, the default install command "make install" will be used.

|

||||

|

||||

### Build a RPM or DEB Pacakge with CheckInstall ###

|

||||

|

||||

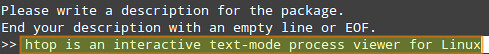

In this example, we will build a package for [htop][3], an interactive text-mode process viewer for Linux (like top on steroids).

|

||||

|

||||

First, let's download the source code from the official website of the project. As a best practice, we will store the tarball in /usr/local/src, and untar it.

|

||||

|

||||

# cd /usr/local/src

|

||||

# wget http://hisham.hm/htop/releases/1.0.3/htop-1.0.3.tar.gz

|

||||

# tar xzf htop-1.0.3.tar.gz

|

||||

# cd htop-1.0.3

|

||||

|

||||

Let's find out the install command for htop, so that we can invoke checkinstall with the command. As shown below, htop is installed with 'make install' command.

|

||||

|

||||

# ./configure

|

||||

# make install

|

||||

|

||||

Therefore, to build a htop package, we can invoke checkinstall without any argument, which will then use 'make install' command to build a package. Along the process, the checkinstall command will ask you a series of questions.

|

||||

|

||||

In short, here are the commands to build a package for **htop**:

|

||||

|

||||

# ./configure

|

||||

# checkinstall

|

||||

|

||||

Answer 'y' to "Should I create a default set of package docs?":

|

||||

|

||||

|

||||

|

||||

You can enter a brief description of the package, then press Enter twice:

|

||||

|

||||

|

||||

|

||||

Enter a number to modify any of the following values or Enter to proceed:

|

||||

|

||||

|

||||

|

||||

Then checkinstall will create a .rpm or a .deb package automatically, depending on what your Linux system is:

|

||||

|

||||

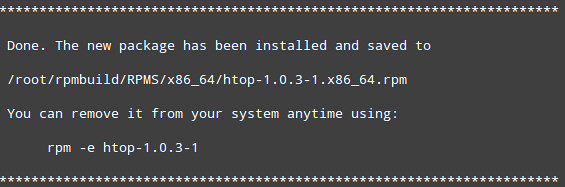

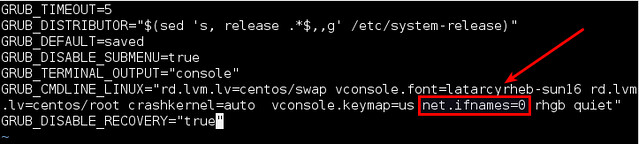

On CentOS 7:

|

||||

|

||||

|

||||

|

||||

On Debian 7:

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/build-rpm-deb-package-source-checkinstall.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://checkinstall.izto.org/docs/README

|

||||

[2]:http://rpm.pbone.net/

|

||||

[3]:http://ask.xmodulo.com/install-htop-centos-rhel.html

|

||||

@ -0,0 +1,30 @@

|

||||

Linux FAQs with Answers--How to catch and handle a signal in Perl

|

||||

================================================================================

|

||||

> **Question**: I need to handle an interrupt signal by using a custom signal handler in Perl. In general, how can I catch and handle various signals (e.g., INT, TERM) in a Perl program?

|

||||

|

||||

As an asynchronous notification mechanism in the POSIX standard, a signal is sent by an operating system to a process to notify it of a certain event. When a signal is generated, the target process's execution is interrupted by an operating system, and the signal is delivered to the process's signal handler routine. One can define and register a custom signal handler or rely on the default signal handler.

|

||||

|

||||

In Perl, signals can be caught and handled by using a global %SIG hash variable. This %SIG hash variable is keyed by signal numbers, and contains references to corresponding signal handlers. Therefore, if you want to define a custom signal handler for a particular signal, you can simply update the hash value of %SIG for the signal.

|

||||

|

||||

Here is a code snippet to handle interrupt (INT) and termination (TERM) signals using a custom signal handler.

|

||||

|

||||

$SIG{INT} = \&signal_handler;

|

||||

$SIG{TERM} = \&signal_handler;

|

||||

|

||||

sub signal_handler {

|

||||

print "This is a custom signal handler\n";

|

||||

die "Caught a signal $!";

|

||||

}

|

||||

|

||||

|

||||

|

||||

Other valid hash values for %SIG are 'IGNORE' and 'DEFAULT'. When an assigned hash value is 'IGNORE' (e.g., $SIG{CHLD}='IGNORE'), the corresponding signal will be ignored. Assigning 'DEFAULT' hash value (e.g., $SIG{HUP}='DEFAULT') means that we will be using a default signal handler.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/catch-handle-interrupt-signal-perl.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -0,0 +1,69 @@

|

||||

Linux FAQs with Answers--How to change a network interface name on CentOS 7

|

||||

================================================================================

|

||||

> **Question**: On CentOS 7, I would like to change the assigned name of a network interface to something else. What is a proper way to rename a network interface on CentOS or RHEL 7?

|

||||

|

||||

Traditionally, network interfaces in Linux are enumerated as eth[0123...], but these names do not necessarily correspond to actual hardware slots, PCI geography, USB port number, etc. This introduces a unpredictable naming problem (e.g., due to undeterministic device probing behavior) which can cause various network misconfigurations (e.g., disabled interface or firewall bypass resulting from unintentional interface renaming). MAC address based udev rules are not so much helpful in a virtualized environment where MAC addresses are as euphemeral as port numbers.

|

||||

|

||||

CentOS/RHEL 6 has introduced a method for [consistent and predictable network device naming][1] for network interfaces. These features uniquely determine the name of network interfaces in order to make locating and differentiating the interfaces easier and in such a way that it is persistent across later boots, time, and hardware changes. However, this naming rule is not turned on by default on CentOS/RHEL 6.

|

||||

|

||||

Starting with CentOS/RHEL 7, the predictable naming rule is adopted by default. Under this rule, interface names are automatically determined based on firmware, topology, and location information. Now interface names stay fixed even if NIC hardware is added or removed without re-enumeration, and broken hardware can be replaced seamlessly.

|

||||

|

||||

* Two character prefixes based on the type of interface:

|

||||

* en -- ethernet

|

||||

* sl -- serial line IP (slip)

|

||||

* wl -- wlan

|

||||

* ww -- wwan

|

||||

*

|

||||

* Type of names:

|

||||

* b<number> -- BCMA bus core number

|

||||

* ccw<name> -- CCW bus group name

|

||||

* o<index> -- on-board device index number

|

||||

* s<slot>[f<function>][d<dev_port>] -- hotplug slot index number

|

||||

* x<MAC> -- MAC address

|

||||

* [P<domain>]p<bus>s<slot>[f<function>][d<dev_port>]

|

||||

* -- PCI geographical location

|

||||

* [P<domain>]p<bus>s<slot>[f<function>][u<port>][..]1[i<interface>]

|

||||

* -- USB port number chain

|

||||

|

||||

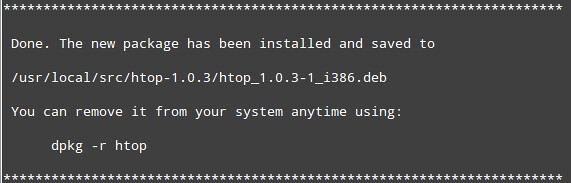

A minor disadvantage of this new naming scheme is that the interface names are somewhat harder to read than the traditional names. For example, you may find names like enp0s3. Besides, you no longer have any control over such interface names.

|

||||

|

||||

|

||||

|

||||

If, for some reason, you prefer the old way, and want to be able to assign any arbitrary name of your choice to an interface on CentOS/RHEL 7, you need to override the default predictable naming rule, and define a MAC address based udev rule.

|

||||

|

||||

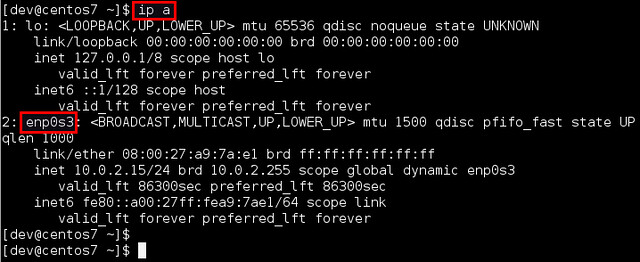

**Here is how to rename a network interface on CentOS or RHEL 7.**

|

||||

|

||||

First, let's disable the predictable naming rule. For that, you can pass "net.ifnames=0" kernel parameter during boot. This is achieved by editing /etc/default/grub and adding "net.ifnames=0" to GRUB_CMDLINE_LINUX variable.

|

||||

|

||||

|

||||

|

||||

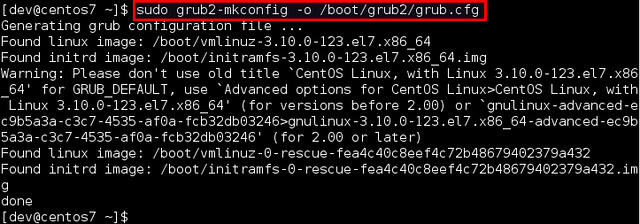

Then run this command to regenerate GRUB configuration with updated kernel parameters.

|

||||

|

||||

$ sudo grub2-mkconfig -o /boot/grub2/grub.cfg

|

||||

|

||||

|

||||

|

||||

Next, edit (or create) a udev network naming rule file (/etc/udev/rules.d/70-persistent-net.rules), and add the following line. Replace MAC address and interface with your own.

|

||||

|

||||

$ sudo vi /etc/udev/rules.d/70-persistent-net.rules

|

||||

|

||||

----------

|

||||

|

||||

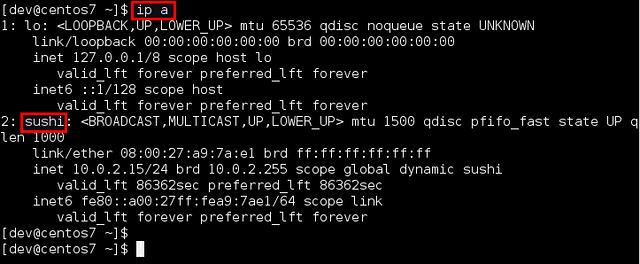

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="08:00:27:a9:7a:e1", ATTR{type}=="1", KERNEL=="eth*", NAME="sushi"

|

||||

|

||||

Finally, reboot the machine, and verify the new interface name.

|

||||

|

||||

|

||||

|

||||

Note that it is still your responsibility to configure the renamed interface. If the network configuration (e.g., IPv4 settings, firewall rules) is based on the old name (before change), you need to update network configuration to reflect the name change.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/change-network-interface-name-centos7.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/6/html/Deployment_Guide/appe-Consistent_Network_Device_Naming.html

|

||||

@ -0,0 +1,78 @@

|

||||

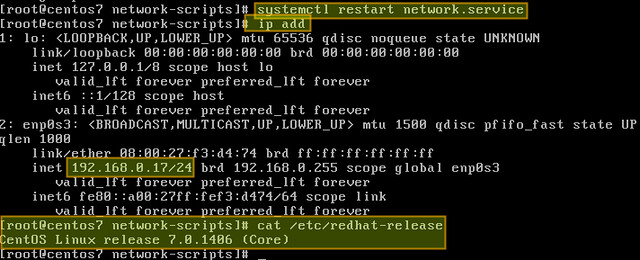

Linux FAQs with Answers--How to configure a static IP address on CentOS 7

|

||||

================================================================================

|

||||

> **Question**: On CentOS 7, I want to switch from DHCP to static IP address configuration with one of my network interfaces. What is a proper way to assign a static IP address to a network interface permanently on CentOS or RHEL 7?

|

||||

|

||||

If you want to set up a static IP address on a network interface in CentOS 7, there are several different ways to do it, varying depending on whether or not you want to use Network Manager for that.

|

||||

|

||||

Network Manager is a dynamic network control and configuration system that attempts to keep network devices and connections up and active when they are available). CentOS/RHEL 7 comes with Network Manager service installed and enabled by default.

|

||||

|

||||

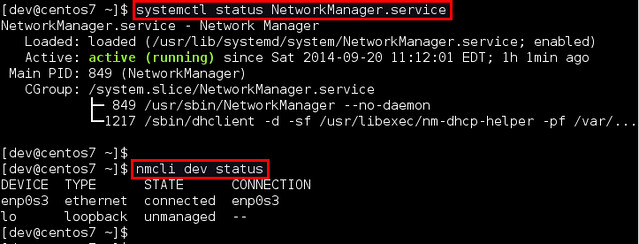

To verify the status of Network Manager service:

|

||||

|

||||

$ systemctl status NetworkManager.service

|

||||

|

||||

To check which network interface is managed by Network Manager, run:

|

||||

|

||||

$ nmcli dev status

|

||||

|

||||

|

||||

|

||||

If the output of nmcli shows "connected" for a particular interface (e.g., enp0s3 in the example), it means that the interface is managed by Network Manager. You can easily disable Network Manager for a particular interface, so that you can configure it on your own for a static IP address.

|

||||

|

||||

Here are **two different ways to assign a static IP address to a network interface on CentOS 7**. We will be configuring a network interface named enp0s3.

|

||||

|

||||

### Configure a Static IP Address without Network Manager ###

|

||||

|

||||

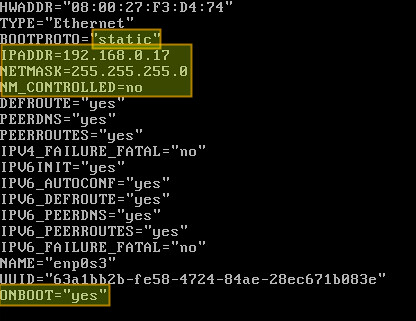

Go to the /etc/sysconfig/network-scripts directory, and locate its configuration file (ifcfg-enp0s3). Create it if not found.

|

||||

|

||||

|

||||

|

||||

Open the configuration file and edit the following variables:

|

||||

|

||||

|

||||

|

||||

In the above, "NM_CONTROLLED=no" indicates that this interface will be set up using this configuration file, instead of being managed by Network Manager service. "ONBOOT=yes" tells the system to bring up the interface during boot.

|

||||

|

||||

Save changes and restart the network service using the following command:

|

||||

|

||||

# systemctl restart network.service

|

||||

|

||||

Now verify that the interface has been properly configured:

|

||||

|

||||

# ip add

|

||||

|

||||

|

||||

|

||||

### Configure a Static IP Address with Network Manager ###

|

||||

|

||||

If you want to use Network Manager to manage the interface, you can use nmtui (Network Manager Text User Interface) which provides a way to configure Network Manager in a terminal environment.

|

||||

|

||||

Before using nmtui, first set "NM_CONTROLLED=yes" in /etc/sysconfig/network-scripts/ifcfg-enp0s3.

|

||||

|

||||

Now let's install nmtui as follows.

|

||||

|

||||

# yum install NetworkManager-tui

|

||||

|

||||

Then go ahead and edit the Network Manager configuration of enp0s3 interface:

|

||||

|

||||

# nmtui edit enp0s3

|

||||

|

||||

The following screen will allow us to manually enter the same information that is contained in /etc/sysconfig/network-scripts/ifcfg-enp0s3.

|

||||

|

||||

Use the arrow keys to navigate this screen, press Enter to select from a list of values (or fill in the desired values), and finally click OK at the bottom right:

|

||||

|

||||

|

||||

|

||||

Finally, restart the network service.

|

||||

|

||||

# systemctl restart network.service

|

||||

|

||||

and you're ready to go.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/configure-static-ip-address-centos7.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -0,0 +1,53 @@

|

||||

Linux FAQs with Answers--How to detect a Linux distribution in Perl

|

||||

================================================================================

|

||||

> **Question**: I need to write a Perl program which contains Linux distro-dependent code. For that, the Perl program needs to be able to automatically detect what Linux distribution (e.g., Ubuntu, CentOS, Debian, Fedora, etc) it is running on, and what version number it is. How can I identify Linux distribution in Perl?

|

||||

|

||||

If you want to detect Linux distribution within a Perl script, you can use a Perl module named [Linux::Distribution][1]. This module guesses the underlying Linux operating system by examining /etc/lsb-release, and other distro-specific files under /etc directory. It supports detecting all major Linux distributions, including Fedora, CentOS, Arch Linux, Debian, Ubuntu, SuSe, Red Hat, Gentoo, Slackware, Knoppix, and Mandrake.

|

||||

|

||||

To use this module in a Perl program, you need to install it first.

|

||||

|

||||

### Install Linux::Distribution on Debian or Ubuntu ###

|

||||

|

||||

Installation on Debian-based system is straightforward with apt-get:

|

||||

|

||||

$ sudo apt-get install liblinux-distribution-packages-perl

|

||||

|

||||

### Install Linux::Distribution on Fedora, CentOS or RHEL ###

|

||||

|

||||

If Linux::Distribution module is not available as a package in your Linux (such as on Red Hat based systems), you can use CPAN to build it.

|

||||

|

||||

First, make sure that you have CPAN installed on your Linux system:

|

||||

|

||||

$ sudo yum -y install perl-CPAN

|

||||

|

||||

Then use this command to build and install the module:

|

||||

|

||||

$ sudo perl -MCPAN -e 'install Linux::Distribution'

|

||||

|

||||

### Identify a Linux Distribution in Perl ###

|

||||

|

||||

Once Linux::Distribution module is installed, you can use the following code snippet to identify on which Linux distribution you are running.

|

||||

|

||||

use Linux::Distribution qw(distribution_name distribution_version);

|

||||

|

||||

my $linux = Linux::Distribution->new;

|

||||

|

||||

if ($linux) {

|

||||

my $distro = $linux->distribution_name();

|

||||

my $version = $linux->distribution_version();

|

||||

print "Distro: $distro $version\n";

|

||||

}

|

||||

else {

|

||||

print "Distro: unknown\n";

|

||||

}

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/detect-linux-distribution-in-perl.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://metacpan.org/pod/Linux::Distribution

|

||||

@ -0,0 +1,39 @@

|

||||

Linux FAQs with Answers--How to embed all fonts in a PDF document generated with LaTex

|

||||

================================================================================

|

||||

> **Question**: I generated a PDF document by compiling LaTex source files. However, I noticed that not all fonts used are embedded in the PDF document. How can I make sure that all fonts are embedded in a PDF document generated from LaTex?

|

||||

|

||||

When you create a PDF file, it is a good idea to embed fonts in the PDF file. If you don't embed fonts, a PDF viewer can replace a font with something else if the font is not available on the computer. This will cause the document to be rendered differently across different PDF viewers or OS platforms. Missing fonts can also be an issue when you print out the document.

|

||||

|

||||

When you generate a PDF document from LaTex (for example with pdflatex or dvipdfm), it's possible that not all fonts are embedded in the PDF document. For example, the following output of [pdffonts][1] says that there are missing fonts (e.g., Helvetica) in a PDF document.

|

||||

|

||||

|

||||

|

||||

To avoid this kind of problems, here is how to embed all fonts at LaTex compile time.

|

||||

|

||||

$ latex document.tex

|

||||

$ dvips -Ppdf -G0 -t letter -o document.ps document.dvi

|

||||

$ ps2pdf -dPDFSETTINGS=/prepress \

|

||||

-dCompatibilityLevel=1.4 \

|

||||

-dAutoFilterColorImages=false \

|

||||

-dAutoFilterGrayImages=false \

|

||||

-dColorImageFilter=/FlateEncode \

|

||||

-dGrayImageFilter=/FlateEncode \

|

||||

-dMonoImageFilter=/FlateEncode \

|

||||

-dDownsampleColorImages=false \

|

||||

-dDownsampleGrayImages=false \

|

||||

document.ps document.pdf

|

||||

|

||||

Now you will see that all fonts are properly embedded in the PDF file.

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/embed-all-fonts-pdf-document-latex.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://ask.xmodulo.com/check-which-fonts-are-used-pdf-document.html

|

||||

@ -0,0 +1,51 @@

|

||||

How To Reset Root Password On CentOS 7

|

||||

================================================================================

|

||||

The way to reset the root password on centos7 is totally different to Centos 6. Let me show you how to reset root password in CentOS 7.

|

||||

|

||||

1 – In the boot grub menu select option to edit.

|

||||

|

||||

|

||||

|

||||

2 – Select Option to edit (e).

|

||||

|

||||

|

||||

|

||||

3 – Go to the line of Linux 16 and change ro with rw init=/sysroot/bin/sh.

|

||||

|

||||

|

||||

|

||||

4 – Now press Control+x to start on single user mode.

|

||||

|

||||

|

||||

|

||||

5 – Now access the system with this command.

|

||||

|

||||

chroot /sysroot

|

||||

|

||||

6 – Reset the password.

|

||||

|

||||

passwd root

|

||||

|

||||

7 – Update selinux information

|

||||

|

||||

touch /.autorelabel

|

||||

|

||||

8 – Exit chroot

|

||||

|

||||

exit

|

||||

|

||||

9 – Reboot your system

|

||||

|

||||

reboot

|

||||

|

||||

That’s it. Enjoy.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.unixmen.com/reset-root-password-centos-7/

|

||||

|

||||

作者:M.el Khamlichi

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -0,0 +1,151 @@

|

||||

How to manage configurations in Linux with Puppet and Augeas

|

||||

================================================================================

|

||||