Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-01-25 23:11:02 +08:00)

Commit 7720875b8d

@ -73,21 +73,21 @@ Nagios安装过程中可以设置邮件服务器,安装后也可以进行自

    root@mrtg:/etc/nagios3/conf.d/# vim linux-server.cfg

    define host{
            name linux-server ; name, change as needed
            notifications_enabled 1
            event_handler_enabled 1
            flap_detection_enabled 1
            failure_prediction_enabled 1
            process_perf_data 1
            retain_status_information 1
            retain_nonstatus_information 1
            check_command example-host-check ; script used for the check, change as needed
            check_interval 3 ; interval between consecutive checks, change as needed
            max_check_attempts 3 ; number of checks before an email alert is sent, change as needed
            notification_interval 0
            notification_period 24x7
            notification_options d,u,r
            contact_groups admins ; group that alert emails are sent to, change as needed
            register 0
    }
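The `check_interval` and `max_check_attempts` settings in this template decide how long a failure takes to become an email. The sketch below estimates that delay under several assumptions:

- Nagios's soft/hard state logic applies. A failing host is re-checked every `retry_interval` until `max_check_attempts` consecutive failures make the state HARD.
- `interval_length` has its default of 60 seconds, so intervals are in minutes.
- `retry_interval` is 1. This template does not set it, but the CentOS template later in this commit uses 1.

Check latency is ignored, so treat the results as rough estimates. Separately, `notification_interval 0` means the problem is notified once, with no repeats.

```python
def minutes_until_hard_state(check_interval, retry_interval, max_check_attempts):
    """Return (best, worst) minutes from a host failing to a HARD state.

    Assumes Nagios's soft/hard state logic with interval_length = 60 s:
    after the first failed check, the host is retried every
    retry_interval minutes until max_check_attempts checks in a row have
    failed. Check latency is ignored.
    """
    if max_check_attempts < 1:
        raise ValueError("max_check_attempts must be at least 1")
    retries = (max_check_attempts - 1) * retry_interval
    # Best case: a scheduled check runs the moment the host fails.
    # Worst case: the host fails just after a check, so the first failing
    # result only arrives one full check_interval later.
    return retries, check_interval + retries


print(minutes_until_hard_state(3, 1, 3))  # (2, 5)
```

With these values, the alert goes out roughly 2 to 5 minutes after the host goes down.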

@ -100,22 +100,22 @@ Nagios安装过程中可以设置邮件服务器,安装后也可以进行自

    root@mrtg:/etc/nagios3/conf.d/# vim cisco-device.cfg

    define host{
            name cisco-device ; name, change as needed
            notifications_enabled 1
            event_handler_enabled 1
            flap_detection_enabled 1
            failure_prediction_enabled 1
            process_perf_data 1
            retain_status_information 1
            retain_nonstatus_information 1
            check_command example-host-check ; script used for the check, change as needed
            check_interval 3 ; interval between consecutive checks, change as needed
            max_check_attempts 3 ; number of checks before an email alert is sent, change as needed
            notification_interval 0
            notification_period 24x7
            notification_options d,u,r
            contact_groups admins ; group that alert emails are sent to, change as needed
            register 0
    }

### Adding Hosts ###

@ -148,13 +148,13 @@ Nagios安装过程中可以设置邮件服务器,安装后也可以进行自

    root@mrtg:/etc/nagios3/conf.d/# vim hostgroups_nagios2.cfg

    define hostgroup {
            hostgroup_name linux-server ; host group name
            alias Linux Servers
            members our-server ; list of members
    }

    define hostgroup {
            hostgroup_name cisco-device ; host group name
            alias Cisco Devices
            members our-server ; comma separated list of members
    }

@ -176,18 +176,18 @@ Nagios安装过程中可以设置邮件服务器,安装后也可以进行自

    define service {
            hostgroup_name linux-server
            service_description Linux Servers
            check_command example-host-check
            use generic-service
            notification_interval 0 ; initially set to 0
    }

    define service {
            hostgroup_name cisco-device
            service_description Cisco Devices
            check_command example-host-check
            use generic-service
            notification_interval 0 ; initially set to 0
    }

### Contact Definition ###

@ -205,12 +205,12 @@ Nagios安装过程中可以设置邮件服务器,安装后也可以进行自

            host_notification_options d,r
            service_notification_commands notify-service-by-email
            host_notification_commands notify-host-by-email
            email root@localhost, sentinel@example.tst
    }

Finally, do a dry run to check the configuration for errors. If none are reported, Nagios can be restarted safely.

    root@mrtg:~# nagios -v /etc/nagios3/nagios.cfg
    root@mrtg:~# service nagios3 restart

## Nagios Configuration on CentOS/RHEL ##

@ -229,33 +229,33 @@ Redhat系统中Nagios的配置文件地址如下所示。

    [root@mrtg objects]# vim templates.cfg

    define host{
            name linux-server
            use generic-host
            check_period 24x7
            check_interval 3
            retry_interval 1
            max_check_attempts 3
            check_command example-host-check
            notification_period 24x7
            notification_interval 0
            notification_options d,u,r
            contact_groups admins
            register 0
    }

    define host{
            name cisco-router
            use generic-host
            check_period 24x7
            check_interval 3
            retry_interval 1
            max_check_attempts 3
            check_command example-host-check
            notification_period 24x7
            notification_interval 0
            notification_options d,u,r
            contact_groups admins
            register 0
    }
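The `use` and `register 0` lines are what make `linux-server` and `cisco-router` templates rather than real hosts. A host that says `use linux-server` inherits every directive it does not set itself.

The sketch below illustrates that inheritance with a deliberately small parser. It assumes:

- one directive per line;
- a single template name per `use`;
- `name`, `register` and `use` are not passed down.

Real Nagios also supports multiple templates, additive `+` values and custom variables, so this is an illustration, not a validator; `nagios -v` remains the real check. The sample objects are hypothetical and trimmed down.

```python
import re

# Sketch only: handles "define <type>{ ... }" blocks with one
# "directive value" pair per line and ";" comments, as in these files.
BLOCK_RE = re.compile(r"define\s+(\w+)\s*\{(.*?)\}", re.S)
# Assumption: these directives describe the template itself and are not
# passed on to objects that "use" it.
NOT_INHERITED = {"name", "register", "use"}


def parse_objects(text):
    """Return a list of (object_type, {directive: value}) tuples."""
    objects = []
    for obj_type, body in BLOCK_RE.findall(text):
        attrs = {}
        for raw in body.splitlines():
            line = raw.split(";", 1)[0].strip()  # drop inline comments
            if not line:
                continue
            parts = line.split(None, 1)
            attrs[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
        objects.append((obj_type, attrs))
    return objects


def resolve(attrs, templates, seen=()):
    """Merge attrs over the template chain named by 'use' (one name only)."""
    merged = {}
    parent = attrs.get("use")
    if parent:
        if parent in seen:
            raise ValueError(f"template loop at {parent!r}")
        inherited = resolve(templates[parent], templates, seen + (parent,))
        merged.update(
            {k: v for k, v in inherited.items() if k not in NOT_INHERITED}
        )
    merged.update(attrs)  # local directives override inherited ones
    return merged


def effective_hosts(text):
    """Resolve every registered host (register != 0) to its full settings."""
    hosts = [a for kind, a in parse_objects(text) if kind == "host"]
    templates = {a["name"]: a for a in hosts if "name" in a}
    return {
        a["host_name"]: resolve(a, templates)
        for a in hosts
        if a.get("register", "1") != "0" and "host_name" in a
    }


sample = """
define host{
        name                    generic-host
        notifications_enabled   1
        register                0
        }
define host{
        name            linux-server
        use             generic-host
        check_interval  3
        contact_groups  admins ; alert group
        register        0
        }
define host{
        use             linux-server
        host_name       our-server
        address         172.17.1.23
        }
"""

host = effective_hosts(sample)["our-server"]
print(host["check_interval"], host["contact_groups"], host["notifications_enabled"])
# 3 admins 1
```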

### Adding Hosts and Host Groups ###

@ -267,7 +267,7 @@ Redhat系统中Nagios的配置文件地址如下所示。

    #Adding Linux server
    define host{
            use linux-server
            host_name our-server
            alias our-server
            address 172.17.1.23

@ -275,7 +275,7 @@ Redhat系统中Nagios的配置文件地址如下所示。

    #Adding Cisco Router
    define host{
            use cisco-router
            host_name our-router
            alias our-router
            address 172.17.1.1

@ -310,10 +310,10 @@ Redhat系统中Nagios的配置文件地址如下所示。

Add the email addresses that alerts should be sent to into Nagios.

    [root@objects objects]# vim contacts.cfg

    define contact{
            contact_name nagiosadmin
            use generic-contact
            alias Nagios Admin
            email nagios@localhost, sentinel@example.tst
    }

@ -326,7 +326,7 @@ Redhat系统中Nagios的配置文件地址如下所示。

### Accessing Nagios After Configuration ###

Everything is now ready, and you can start using Nagios. Ubuntu/Debian users can access Nagios at http://IP-address/nagios3, and CentOS/RHEL users at http://IP-address/nagios (for example, http://172.17.1.23/nagios3). Access to the page requires authenticating as the “nagiosadmin” user.

@ -1,18 +1,21 @@

Why Do Companies Need to Take Part in Writing More Open Source Software?
================================================================================

> Innovation can't be built behind closed doors.

[According to the Wall Street Journal][1], Zulily is reportedly writing more of its own in-house software, but that is hardly new. Years ago [Eric Raymond wrote][2] that 95% of the world's software is written to be used, not sold. There are many reasons for this, but one stands out. As Zulily CIO Luke Friang puts it, “almost no [off-the-shelf] software solution can keep up with our pace.”

That was true 20 years ago, and it is still true today.

But one thing is different, and it is exactly what the Wall Street Journal missed entirely. Historically, in-house software has stayed proprietary because it was a company's core competitive advantage. Today, however, more and more companies are realizing the other side of the coin: open-sourcing in-house software can bring more benefits than keeping it proprietary.

That is why your company needs to contribute more to open source projects. Yes, more.

### The Unusual Years

We have just lived through an unusual 20 years. A lot of software was still developed for internal use, but most people's attention was on the broad enterprise solutions built by vendors such as SAP and Microsoft.

That, at any rate, was the theory.

@ -27,32 +30,37 @@

Along the way, however, some companies also found that vendors couldn't adequately deliver what they wanted, even in well-understood product categories such as content management systems. They needed products that stood out, not ones cast from the same mold.

So the customers left, and some of them turned into vendors themselves.

This is nothing new, as [O'Grady pointed out][4]. Back in 2010, O'Grady noticed an interesting phenomenon: “software vendors are facing a powerful new market competitor: their customers.”

### Do It Yourself

Look at today's high tech. Most of it is open source, and almost all of these projects started as some company's internal project or simply as a developer's hobby: Linux, Git, Hadoop, Cassandra, MongoDB, Android and so on. None of them was created to be sold.

Instead, these projects are usually maintained by companies, mostly web companies, that use open source to build software and [improve it][5]. Unlike the banks, hospitals and other organizations of the past, which developed software for internal use only, these companies open up their source code.

Some companies [avoid custom software][6] because they don't want to maintain it themselves. Open source, however, (somewhat) eases the burden of maintaining a project for these growing companies by spreading its development costs beyond the project's originator. Yahoo built Hadoop, but its biggest contributors today are Cloudera and Hortonworks. Facebook built Cassandra, but today it is maintained mainly by DataStax. And so on.

### Get Out There Now!

Today, real software innovation doesn't happen behind closed doors, and even where it could, it wouldn't stay there. Open source has overturned decades of software development tradition.

And this is about far more than one person's small contribution.

The best open source projects [grow quickly][7], but that doesn't mean anyone will care about your open-sourced code. [Opening your source code has significant pros and cons][8]. One important advantage is that many great developers want to contribute to open source. If you want great developers to work with you, you need to give them open source code to work on. (So says [Netflix][9].)

Still, there's no reason to stand on the sidelines. Now is the time to take part in open source communities instead of demonizing “the community”. Yes, the biggest participants in open source are you and your company. Get started now.

Lead image courtesy of Shutterstock. (Note: Shutterstock is an American stock photography website.)

--------------------------------------------------------------------------------

via: http://readwrite.com/2014/08/16/open-source-software-business-zulily-erp-wall-street-journal

Author: [Matt Asay][a]

Translator: [barney-ro](https://github.com/barney-ro)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

@ -1,16 +1,16 @@

Why Do Some Old Programming Languages Never Die?
================================================================================

> We love what we already know.

Many of today's best-known programming languages are already very old. PHP is 20 years old, Python 23, HTML 21, Ruby and JavaScript 19, and C a full 42.

Nobody could have predicted this, not even computer scientist [Brian Kernighan][1]. He co-wrote the first book about C, which is still in print today. (Kernighan's co-author, [Dennis Ritchie][2], who invented C itself, died in 2011.)

“I dimly remember early conversations with our editors, telling them that we'd sell something like 5,000 copies,” Kernighan told me in a recent interview. “We managed to do better. What I never imagined was that students would still be using the first edition as a textbook in 2014.”

What makes C's longevity especially remarkable is that Google has developed a new language, Go, which solves the same problems more efficiently than C. Even so, I find it hard to imagine Go killing off C completely, no matter how good it is.

“Most languages don't disappear, or at least a large share of their users accept that they won't,” he said. “C still dominates certain domains, so it stays firmly grounded.”

@ -20,13 +20,13 @@

Researchers Ari Rabkin of Princeton University and Leo Meyerovich of the University of California, Berkeley spent two years investigating this question. Their paper, [“Empirical Analysis of Programming Language Adoption”][3], documents their analysis of more than 200,000 Sourceforge projects and a survey of more than 13,000 programmers.

Their main finding? Most of the time, programmers choose the programming languages they already know.

“The languages we use persist because we keep using them,” Rabkin told me. “For example, astronomers often use IDL [Interactive Data Language] to write their programs. That's not because it has any special standout features; it's because using it has become a habit. They've already built excellent programs with it and want to keep things as they are.”

In other words, these languages owe their staying power partly to the name recognition they have already built up. That doesn't mean popular languages never change, of course. Rabkin points out that the C we use today is quite different from the C that Kernighan and Ritchie first described, and the C compilers of that era aren't fully compatible with modern ones.

“There's an old joke about an engineer who is asked which programming language people will be using in 30 years. He says, ‘I don't know, but it'll be called Fortran,’” Rabkin said. “Long-lived languages are not quite the same as when they were first designed in the '70s and '80s. People usually add features rather than remove them, to keep backward compatibility, but some features do get fixed.”

Backward compatibility means that when a language is upgraded, programmers can use the new features without going back to rewrite old blocks of code that already work. The syntax used in old “legacy code” may have fallen out of use, but discarding that code is costly. As long as it exists, there is good reason to believe its language will survive too.

@ -34,17 +34,17 @@

Legacy code means programs, or parts of programs, written in outdated source code. Imagine that the key functions of an enterprise or engineering project were written in a programming language nobody maintains any more. They still do their job, and rewriting them in modern code would be very hard or too expensive, so they have to be kept. Even as the rest of the code changes, programmers must keep wrestling with them to make sure they still work.

Any programming language that has been around for more than a few decades has some form of legacy-code problem, and PHP is no exception. PHP is an interesting example because its legacy code is markedly different from today's code, and supporters and critics alike acknowledge that the change is a huge improvement.

Andi Gutmans is one of the creators of the Zend Engine, which became the standard compiler for PHP 4. Gutmans says he and his partner originally set out to improve PHP 3. Their work was so successful that PHP's original creator, Rasmus Lerdorf, joined their project, and the result became the compiler for PHP 4 and its successor, PHP 5.

As a result, today's PHP is completely different from its ancestor, the original PHP. Yet in Gutmans' view, prejudice against legacy code written in old versions of PHP persists and has been extended to the language as a whole. Examples include the notions that PHP is full of security holes, or that it lacks the “clustering” capabilities needed for large-scale computing tasks.

“People who criticize PHP are usually criticizing PHP as it was in 1998,” he said. “These people haven't kept up with the times. Today's PHP has a very mature ecosystem.”

Today, Gutmans says, the most important part of his leadership role is encouraging people to upgrade to the latest version. “PHP has a community big enough to help you with legacy code problems,” he said. “But overall, most of our community is on PHP 5.3 or later.”

The problem is that no language gets all of its users to upgrade to the latest version. That's why many Python users are still on Python 2, released in 2000, rather than Python 3, released in 2008. Even six years later, most users, Google among them, still haven't upgraded. There are many reasons for this, but it leaves many developers carrying risk.

“Nothing ever dies,” Rabkin said. “Legacy code in any language sticks around. Rewriting is extremely expensive, so if it isn't broken, don't touch it.”

@ -54,15 +54,15 @@ Andi Gutmans 是 已经成为 PHP4 的标准编译器的 Zend Engine 的发明

> One thing really struck us. The most important part was when we grouped people by age and asked how many programming languages they knew. We had assumed people would know more languages as they got older, but that turned out not to be the case: the 25-year-old group and the 45-year-old group knew the same number of languages. This held steady across several repeated questions. The chance that you know a given language isn't tied to your age.

In other words, it isn't only older developers who cling to tradition. Young programmers also embrace old programming languages and adopt them as their first language. That may be because these languages have interesting libraries and features, or because the developers in their community are a group who love that language.

“There's only so much attention that programmers around the world can give to languages,” Rabkin said. “If a language offers enough unique value, people will learn and use it. If the people you exchange code and knowledge with share a programming language, you'll learn it. So, for example, as long as those Python libraries exist and the community has Python expertise, Python will keep thriving.”

The researchers found that community is a huge factor, relative to the features a language implements. High-level languages such as Python and Ruby don't differ much, yet programmers tend to feel that one is superior to the other.

“Rails didn't have to be written in Ruby, but it was, and that's community factors at work,” Rabkin said. “Take the revival of Objective-C: Apple's engineering team said, ‘Let's use it,’ and developers had no choice.”

Taking social influence and legacy code together, we find that both the oldest and the newest programming languages have enormous inertia. So how could Go ever overtake C? It will if the right people or companies say it does.

“It comes down to who spreads the word better,” Rabkin said.

@ -74,7 +74,7 @@ via: http://readwrite.com/2014/09/02/programming-language-coding-lifetime

Author: [Lauren Orsini][a]

Translator: [runningwater](https://github.com/runningwater)

Proofreader: [wxy](https://github.com/wxy)

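@ -1,24 +1,26 @@

Manage Multiple LVM Disks Using Striping I/O (Part 5)
================================================================================

In this article we will see how logical volumes write data to disks using striped I/O. One of the cool features of Logical Volume Management is that it can write data across multiple disks using striped I/O.

*Manage LVM Disks Using Striping I/O*

### What Is LVM Striping? ###

**LVM striping** is an LVM feature that writes data across multiple disks instead of continuously writing to a single physical volume.

#### Features of Striping ####

- It improves disk performance.
- It saves a single disk from repeated hard writes.
- It avoids constant heavy writing to a single disk.
- Striping writes across multiple disks reduces the chance of a disk filling up.

In Logical Volume Management, when we create a logical volume, its extents are fully mapped onto the volume group and the physical volumes. In that situation, if one of the **PV**s (physical volumes) fills up, we need to add more extents from other physical volumes. Instead of adding more extents to the PV, we can point the logical volume at particular physical volumes for writing its I/O.

Suppose we have **four disk** drives, each backing one of four physical volumes. If each physical volume can handle **100 I/O**, our volume group can get **400 I/O** in total.

If we don't use the **striping method**, the file system writes to the underlying physical volumes in order. For example, if we write data amounting to 100 I/O, all of it goes only to the first PV (**sdb1**). If we create the logical volume with the striping option, it splits those 100 I/O across the four drives, so each drive receives 25 I/O.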
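The 100 I/O to 25 I/O per drive arithmetic follows from how striping maps addresses. The logical volume is cut into stripe-sized chunks, and the chunks are handed to the PVs in round-robin order.

The sketch below models that mapping. It assumes:

- the 64 KB default stripe size mentioned later in this article;
- the four PVs used here;
- one "I/O" means one stripe-sized write.

It ignores metadata and extent boundaries. It illustrates the idea, not LVM's exact on-disk layout.

```python
# Sketch: round-robin striping as in RAID 0, assuming LVM's default 64 KB
# stripe size and the four PVs used in this article.
STRIPE_SIZE = 64 * 1024
PVS = ("sdb1", "sdc1", "sdd1", "sde1")


def locate(offset, stripe_size=STRIPE_SIZE, pvs=PVS):
    """Map a byte offset in the LV to (PV, offset within that PV's share)."""
    stripe_no, within = divmod(offset, stripe_size)
    row, pv_index = divmod(stripe_no, len(pvs))
    return pvs[pv_index], row * stripe_size + within


def spread(total_bytes, stripe_size=STRIPE_SIZE, pvs=PVS):
    """Count how many bytes of one sequential write land on each PV."""
    counts = dict.fromkeys(pvs, 0)
    offset = 0
    while offset < total_bytes:
        pv, _ = locate(offset, stripe_size, pvs)
        # Write up to the end of the current stripe chunk.
        chunk = min(stripe_size - offset % stripe_size, total_bytes - offset)
        counts[pv] += chunk
        offset += chunk
    return counts


# 100 stripe-sized writes spread evenly: 25 per drive.
per_pv = spread(100 * STRIPE_SIZE)
print({pv: n // STRIPE_SIZE for pv, n in per_pv.items()})
# {'sdb1': 25, 'sdc1': 25, 'sdd1': 25, 'sde1': 25}
```

Without striping, the same writes would all go to `sdb1` until it filled up.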

|

||||

|

||||

@ -41,27 +43,31 @@

|

||||

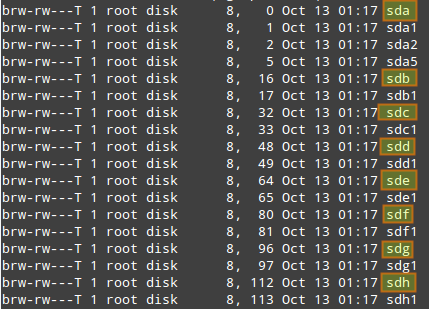

# fdisk -l | grep sd

|

||||

|

||||

|

||||

列出硬盘驱动器

|

||||

|

||||

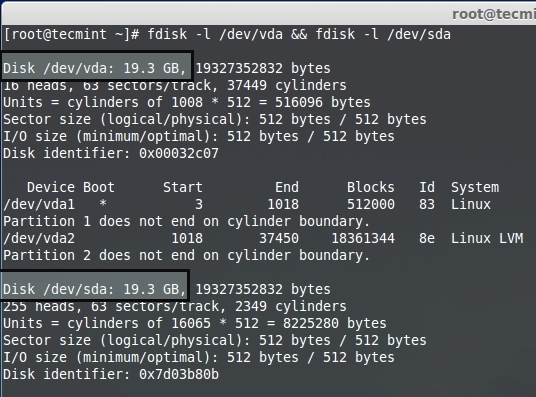

现在,我们必须为这4个硬盘驱动器**sdb**,**sdc**,**sdd**和**sde**创建分区,我们将用‘**fdisk**’命令来完成该工作。要创建分区,请遵从本文**第一部分**中**步骤#4**的说明,并在创建分区时确保你已将类型修改为**LVM(8e)**。

|

||||

*列出硬盘驱动器*

|

||||

|

||||

现在,我们必须为这4个硬盘驱动器**sdb**,**sdc**,**sdd**和**sde**创建分区,我们将用‘**fdisk**’命令来完成该工作。要创建分区,请遵从本文**[第一部分][1]**中**步骤#4**的说明,并在创建分区时确保你已将类型修改为**LVM(8e)**。

|

||||

|

||||

# pvcreate /dev/sd[b-e]1 -v

|

||||

|

||||

|

||||

在LVM中创建物理卷

|

||||

|

||||

*在LVM中创建物理卷*

|

||||

|

||||

PV创建完成后,你可以使用‘**pvs**’命令将它们列出来。

|

||||

|

||||

# pvs

|

||||

|

||||

|

||||

验证物理卷

|

||||

|

||||

*验证物理卷*

|

||||

|

||||

现在,我们需要使用这4个物理卷来定义卷组。这里,我定义了一个物理扩展大小(PE)为**16MB**,名为**vg_strip**的卷组。

|

||||

|

||||

# vgcreate -s 16M vg_strip /dev/sd[b-e]1 -v

|

||||

|

||||

上面命令中选项的说明。

|

||||

上面命令中选项的说明:

|

||||

|

||||

- **[b-e]1** – 定义硬盘驱动器名称,如sdb1,sdc1,sdd1,sde1。

|

||||

- **-s** – 定义物理扩展大小。

|

||||

- **-v** – 详情。

|

||||

@ -71,14 +77,16 @@ PV创建完成后,你可以使用‘**pvs**’命令将它们列出来。

|

||||

# vgs vg_strip

|

||||

|

||||

|

||||

验证卷组

|

||||

|

||||

*验证卷组*

|

||||

|

||||

要获取VG更详细的信息,可以在**vgdisplay**命令中使用‘-v’选项,它将给出**vg_strip**卷组中所使用的全部物理卷的详细情况。

|

||||

|

||||

# vgdisplay vg_strip -v

|

||||

|

||||

|

||||

卷组信息

|

||||

|

||||

*卷组信息*

|

||||

|

||||

回到我们的话题,现在在创建逻辑卷时,我们需要定义条块化值,就是数据需要如何使用条块化方法来写入到我们的逻辑卷中。

|

||||

|

||||

@ -91,46 +99,54 @@ PV创建完成后,你可以使用‘**pvs**’命令将它们列出来。

|

||||

- **-i** –条块化

|

||||

|

||||

|

||||

创建逻辑卷

|

||||

|

||||

*创建逻辑卷*

|

||||

|

||||

在上面的图片中,我们可以看到条块尺寸的默认大小为**64 KB**,如果我们需要自定义条块值,我们可以使用**-I**(大写I)。要确认逻辑卷已经是否已经创建,请使用以下命令。

|

||||

|

||||

# lvdisplay vg_strip/lv_tecmint_strp1

|

||||

|

||||

|

||||

确认逻辑卷

|

||||

|

||||

*确认逻辑卷*

|

||||

|

||||

现在,接下来的问题是,我们怎样才能知道条块被写入到了4个驱动器。这里,我们可以使用‘**lvdisplay**’和**-m**(显示逻辑卷映射)命令来验证。

|

||||

|

||||

# lvdisplay vg_strip/lv_tecmint_strp1 -m

|

||||

|

||||

|

||||

检查逻辑卷

|

||||

|

||||

*检查逻辑卷*

|

||||

|

||||

要创建自定义的条块尺寸,我们需要用我们自定义的条块大小**256KB**来创建一个**1GB**大小的逻辑卷。现在,我打算将条块分布到3个PV上。这里,我们可以定义我们想要哪些pv条块化。

|

||||

|

||||

# lvcreate -L 1G -i3 -I 256 -n lv_tecmint_strp2 vg_strip /dev/sdb1 /dev/sdc1 /dev/sdd1

|

||||

|

||||

|

||||

定义条块大小

|

||||

|

||||

*定义条块大小*
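With the 16MB extents chosen for vg_strip, the 1GB request in this command is 64 extents, which does not divide evenly across 3 stripes. Each striped PV has to hold the same number of extents, so lvcreate rounds the size up to a stripe boundary and typically reports the rounding in its output.

The sketch below reproduces that arithmetic under this assumption. Check the size that `lvdisplay` actually reports, since details can differ between LVM versions.

```python
import math


def stripe_extents(lv_size_mib, pe_size_mib, stripes):
    """Return (requested, allocated) extent counts for a striped LV.

    Assumes the size is first rounded up to whole physical extents and
    then up to a multiple of the stripe count, so each PV gets an equal
    share of extents.
    """
    requested = math.ceil(lv_size_mib / pe_size_mib)
    allocated = math.ceil(requested / stripes) * stripes
    return requested, allocated


requested, allocated = stripe_extents(1024, 16, 3)  # -L 1G, 16M PEs, -i3
print(requested, allocated, allocated // 3, allocated * 16)
# 64 66 22 1056
```

So the 3-stripe volume would end up with 22 extents per PV, about 1056 MiB. The 4-stripe volume needs no rounding, because 64 divides evenly by 4.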

Next, check the stripe size and the striped volume.

    # lvdisplay vg_strip/lv_tecmint_strp2 -m

*Check Stripe Size*

Now it's time to look at the device mapper, using the ‘**dmsetup**’ command. It is a low-level logical volume management tool for managing logical devices that use the device-mapper driver.

    # dmsetup deps /dev/vg_strip/lv_tecmint_strp[1-2]

*Device Mapper*

Here we can see that strp1 depends on 4 drives and strp2 depends on 3 devices.
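To check the dependency counts from a script instead of by eye, you can pull the `(major, minor)` pairs out of the `dmsetup deps` output. The sketch below makes two assumptions:

- the output uses that pair format;
- the PVs are ordinary sd* partitions with major number 8, which covers only the first 16 such disks at 16 minor numbers per disk.

The sample line is illustrative, not output captured from the article's system.

```python
import re

# Dependencies are printed as "(major, minor)" pairs.
PAIR_RE = re.compile(r"\((\d+),\s*(\d+)\)")


def parse_deps(line):
    """Extract (major, minor) pairs from one line of `dmsetup deps` output."""
    return [(int(major), int(minor)) for major, minor in PAIR_RE.findall(line)]


def sd_name(major, minor):
    """Name an sd* partition from its numbers.

    Assumes major 8, which covers only the first 16 SCSI/SATA disks
    (16 minors per disk: 0 = whole disk, 1-15 = partitions).
    """
    if major != 8:
        raise ValueError("this sketch only handles major number 8")
    disk, part = divmod(minor, 16)
    return "sd" + chr(ord("a") + disk) + (str(part) if part else "")


# Illustrative line in the assumed format, not captured from the article.
sample = "vg_strip-lv_tecmint_strp1: 4 dependencies\t: (8, 65) (8, 49) (8, 33) (8, 17)"
deps = parse_deps(sample)
print(len(deps), sorted(sd_name(*d) for d in deps))
# 4 ['sdb1', 'sdc1', 'sdd1', 'sde1']
```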

I hope you now understand how to make logical volumes write data using striping. This setup requires a good grasp of logical volume management basics.

In my next article, I'll show you how to migrate data in Logical Volume Management. Until then, stay tuned, and don't forget to leave your valuable feedback on this article.

--------------------------------------------------------------------------------

@ -138,8 +154,9 @@ via: http://www.tecmint.com/manage-multiple-lvm-disks-using-striping-io/

Author: [Babin Lonston][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

[a]:http://www.tecmint.com/author/babinlonston/
[1]:http://linux.cn/article-3965-1.html

@ -1,10 +1,11 @@

Migrating LVM Partitions to a New Logical Volume/Drive (Part 6)
================================================================================

This is Part 6 of our ongoing LVM series. In this article we'll show you how to migrate an existing logical volume to a new drive online. Before we start, I'd like to introduce LVM migration and its features.

*LVM Storage Migration*

### What Is LVM Migration? ###

@ -17,7 +18,7 @@ LVM存储迁移

- We can use any type of disk: SATA, SSD, SAS, or SAN storage over iSCSI or FC.
- Disks are migrated online, with no data loss.

In LVM migration, we swap every volume, file system and its data out of the existing storage. For example, suppose we have a single logical volume mapped to a physical volume, and that physical volume is a physical hard drive.

Now, if we need to upgrade the server's storage to SSD drives, what do we need to think about first? Reformatting the disks? No! We don't have to reformat the server. LVM gives us the option of migrating the data from the old SATA drives to the new SSD drives. Online migration supports any type of disk, whether local drives, SAN or Fibre Channel.

@ -35,7 +36,8 @@ LVM存储迁移

    # lvs

*Check Logical Volume Disk*

### Step 2: Check the Newly Added Drive ###

@ -44,7 +46,8 @@ LVM存储迁移

    # fdisk -l | grep dev

*Check Newly Added Drive*

**Note**: Do you see the screen above? The new drive has been added successfully, and its name is “**/dev/sda**”.

@ -57,7 +60,8 @@ LVM存储迁移

    # cat tecmint.txt

*Check Logical Volume Data*

**Note**: For demonstration purposes, we have created two files under the **/mnt/lvm** mount point, and we will migrate this data to the new drive online.
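Before migrating, it is worth recording what the data looks like so you can show that nothing changed afterwards; the article does this by eye with `cat`. Below is a minimal sketch of the same check in code: take a SHA-256 snapshot before the migration and compare it with one taken afterwards. It assumes the files live under /mnt/lvm as in this example, and that it runs as root so that directories such as lost+found are readable. `snapshot` is a hypothetical helper, not part of LVM.

```python
import hashlib
import os


def snapshot(root):
    """Return {relative path: SHA-256 hex digest} for every file under root."""
    digests = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            h = hashlib.sha256()
            with open(path, "rb") as f:
                # Hash in 1 MiB blocks so large files don't fill memory.
                for block in iter(lambda: f.read(1 << 20), b""):
                    h.update(block)
            digests[os.path.relpath(path, root)] = h.hexdigest()
    return digests


# Usage (as root, before and after the migration):
#   before = snapshot("/mnt/lvm")
#   ... vgextend / lvconvert / vgreduce steps ...
#   assert snapshot("/mnt/lvm") == before, "data changed during migration"
```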

@ -67,7 +71,8 @@ LVM存储迁移

    # vgs -o+devices | grep tecmint_vg

*Confirm Logical Volume Names*

**Note**: Do you see the screen above? “**vdb**” holds the volume group **tecmint_vg**.

@ -79,7 +84,8 @@ LVM存储迁移

    # pvs

*Create Physical Volume*

**6.** Next, add the newly created physical volume to the existing volume group tecmint_vg using the ‘vgextend’ command.

@ -87,14 +93,16 @@ LVM存储迁移

    # vgs

*Add Physical Volume*

**7.** To get a complete listing of the volume group's information, use the ‘vgdisplay’ command.

    # vgdisplay tecmint_vg -v

*List Volume Group Info*

**Note**: In the screen above, we can see at the end of the output that our PV has been added to the volume group.

@ -108,7 +116,8 @@ LVM存储迁移

    # ls -l /dev | grep vd

*List Device Information*

**Note**: In the output above, we can see that the major number is **252** and the minor number is **17**, which belong to **vdb1**. I hope the output of the command above makes sense.
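For device nodes, `ls -l` prints the major and minor numbers where a regular file's size would appear. The same lookup can be done programmatically. The sketch below scans the top level of /dev and maps each block device's (major, minor) pair to its name; subdirectories such as /dev/mapper are not searched. On the machine in this article, `(252, 17)` would map to `vdb1`; on other machines the numbers will differ.

```python
import os
import stat


def block_devices(dev_dir="/dev"):
    """Map (major, minor) -> device name for block device nodes in dev_dir."""
    found = {}
    for name in sorted(os.listdir(dev_dir)):
        path = os.path.join(dev_dir, name)
        try:
            st = os.lstat(path)  # lstat, so symlinks (e.g. /dev/cdrom) are skipped
        except OSError:
            continue  # node disappeared or is not accessible
        if stat.S_ISBLK(st.st_mode):
            found[(os.major(st.st_rdev), os.minor(st.st_rdev))] = name
    return found


# On the article's machine: block_devices().get((252, 17)) -> "vdb1"
```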

@ -122,7 +131,8 @@ LVM存储迁移

- **1** = add a single mirror

*Mirror Method Migration*

**Note**: Depending on the size of the volume, the migration above may take a while.

@ -131,14 +141,16 @@ LVM存储迁移

    # lvs -o+devices

*Verify Converted Mirror*

**11.** Once you are sure the converted mirror has no problems, you can remove the old virtual disk **vdb1**. Passing **-m 0** removes the mirror; earlier we used **-m 1** to add it.

    # lvconvert -m 0 /dev/tecmint_vg/tecmint_lv /dev/vdb1

*Remove Virtual Disk*

**12.** After the old virtual disk has been removed, you can check the logical volume's devices again with the following command.

@ -147,7 +159,8 @@ LVM存储迁移

    # ls -l /dev | grep sd

*Check New Mirrored Device*

Do you see it in the image above? Our logical volume now depends on **8,1**, whose name is **sda1**. This shows that the migration is complete.

@ -157,7 +170,8 @@ LVM存储迁移

    # cat tecmint.txt

*Check Mirrored Data*

    # vgreduce /dev/tecmint_vg /dev/vdb1

@ -170,7 +184,8 @@ LVM存储迁移

    # lvs

*Delete Virtual Disk*

### Step 6: LVM pvmove Mirroring Method ###

@ -190,7 +205,7 @@ via: http://www.tecmint.com/lvm-storage-migration/#comment-331336

Author: [Babin Lonston][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

@ -1,4 +1,4 @@

Checking Hard Disk Health with smartmontools
================================================================================

If there's one thing Linux users least want to see, it's a hard drive failing without any warning. [Backup][1] and storage technologies such as [RAID][2] can get your data back at any time. Still, guarding against data loss from a sudden hardware failure can be quite costly, especially if you have never planned ahead for how to respond in such situations.

@ -28,7 +28,7 @@

where sdX is the device name assigned to the corresponding hard drive on your machine.

To display information about a specific hard drive, run smartctl with the "--info" option and specify the drive's device name, as shown below. This includes the device model, serial number, firmware version, size, ATA version/revision, and SMART availability and status.

@ -67,8 +67,8 @@

- **THRESH**: the minimum value that WORST may reach before the drive is reported as FAILED.
- **TYPE**: the type of the attribute (Pre-fail or Old_age). A Pre-fail attribute can be regarded as critical: it takes part in the drive's overall SMART health assessment (PASSED/FAILED). If any Pre-fail attribute fails, the drive can be considered about to fail. An Old_age attribute, on the other hand, can be regarded as non-critical (e.g. normal wear) and will not by itself cause the drive to fail.
- **UPDATED**: how often the attribute is updated. Offline means it is updated when offline tests are run on the drive.
- **WHEN\_FAILED**: set to “FAILING\_NOW” if VALUE is less than or equal to THRESH, to “In\_the\_past” if WORST is less than or equal to THRESH, and to “-” if neither applies. In the “FAILING\_NOW” case, back up important files as soon as possible, especially if the attribute is of type Pre-fail. “In\_the\_past” means the attribute failed at some point but was fine when the test was run. “-” means the attribute has never failed.
- **RAW\_VALUE**: a manufacturer-defined raw value from which VALUE is derived.
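The `WHEN_FAILED` rule described here is simple enough to reproduce, which is handy when post-processing smartctl output in a script. The sketch below assumes the usual ten-column layout of the `smartctl -A` attribute table: `ID#`, `ATTRIBUTE_NAME`, `FLAG`, `VALUE`, `WORST`, `THRESH`, `TYPE`, `UPDATED`, `WHEN_FAILED`, `RAW_VALUE`. It applies only the comparison described above. It is an illustration; smartctl's own column is authoritative.

```python
def when_failed(value, worst, thresh):
    """Apply the WHEN_FAILED rule: check the current value, then the worst value."""
    if value <= thresh:
        return "FAILING_NOW"
    if worst <= thresh:
        return "In_the_past"
    return "-"


def parse_attribute(line):
    """Split one row of the `smartctl -A` attribute table into fields.

    RAW_VALUE may contain spaces (e.g. temperatures with min/max), so the
    line is split into at most 10 fields.
    """
    (attr_id, name, flag, value, worst, thresh,
     attr_type, updated, failed_col, raw) = line.split(None, 9)
    return {
        "id": int(attr_id),
        "name": name,
        "flag": flag,
        "value": int(value),
        "worst": int(worst),
        "thresh": int(thresh),
        "type": attr_type,
        "updated": updated,
        "when_failed": failed_col,
        "raw": raw.strip(),
    }


row = parse_attribute(
    "194 Temperature_Celsius     0x0022   035   045   000    Old_age   Always       -       35 (Min/Max 20/45)"
)
print(row["type"], row["raw"], when_failed(row["value"], row["worst"], row["thresh"]))
# Old_age 35 (Min/Max 20/45) -
```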

At this point you may be thinking, “Yes, smartctl looks like a nice tool, but I'd rather avoid the hassle of running it manually. Wouldn't it be better if it could run at specified intervals and notify me of the test results?”

@ -134,7 +134,7 @@ via: http://xmodulo.com/check-hard-disk-health-linux-smartmontools.html

Author: [Gabriel Cánepa][a]

Translator: [KayGuoWhu](https://github.com/KayGuoWhu)

Proofreader: [wxy](https://github.com/wxy)

@ -76,7 +76,7 @@ via: http://www.ubuntugeek.com/configuring-layer-two-peer-to-peer-vpn-using-n2n.

Author: [ruchi][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

@ -1,8 +1,8 @@

Monitoring Log Files on Linux with logwatch
================================================================================

The Linux operating system and many applications create special files to record their runtime events. These files are usually called “logs”. System logs and application-specific log files are essential tools when you need to understand how the operating system or a third-party application behaves, or when you need to troubleshoot. However, log files are not what you would call “clear” or “easy” to read. Analyzing raw log files by hand is time-consuming and tedious. For this reason, system administrators benefit greatly from any tool that can turn raw log files into more human-friendly summaries.

[logwatch][1] is an open-source log parser and analyzer written in Perl. It parses raw log files and converts them into structured documents, and it can tailor its reports to your use cases and requirements. logwatch's main purpose is to produce log summaries that are easier to use; it is not meant for real-time log processing and monitoring. For that reason, logwatch is usually run in one of two ways: by an automated scheduled job at a set time and frequency, or manually from the command line whenever log processing is needed. Once a report has been generated, logwatch can email it to you, or you can save it to a file or display it directly on the screen.

Both the level of detail and the coverage of Logwatch reports are fully customizable. Logwatch's log processing engine is also extensible. To use logwatch with a new application, you only need to write a log processing script (in Perl) for that application's log files and hook it into logwatch.

@ -20,13 +20,13 @@ logwatch 有一点不好的就是,在它生成的报告中没有详细的时

### Configuring Logwatch ###

On installation, the main configuration file (logwatch.conf) is placed in the **/etc/logwatch/conf** directory. The options defined in this file (which is empty by default) override the system-wide settings defined in /usr/share/logwatch/default.conf/logwatch.conf.

When logwatch is launched from the command line without arguments, it uses the options defined in /etc/logwatch/conf/logwatch.conf. As soon as arguments are specified, however, they override any default/custom settings in /etc/logwatch/conf/logwatch.conf.
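The precedence described here is a classic layered lookup. Command-line arguments win over /etc/logwatch/conf/logwatch.conf, which in turn wins over the defaults in /usr/share/logwatch/default.conf/logwatch.conf. The sketch below models this with Python's `collections.ChainMap`.

The parser handles only single-valued `Key = Value` lines with `#` comments, and it lowercases keys for convenience. Repeatable options such as `Service` are not modelled; only the last value would be kept. The sample values are hypothetical.

```python
from collections import ChainMap


def parse_conf(text):
    """Parse 'Key = Value' lines; '#' starts a comment. Keys are lowercased here."""
    settings = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip().lower()] = value.strip()
    return settings


def effective_settings(defaults, local, cli):
    """Lookups try the command line first, then the local file, then defaults."""
    return ChainMap(cli, local, defaults)


# Hypothetical values, for illustration only.
defaults = parse_conf("Detail = Low\nRange = yesterday\n")
local = parse_conf("")      # /etc/logwatch/conf/logwatch.conf starts out empty
cli = {"detail": "High"}    # e.g. logwatch --detail High
settings = effective_settings(defaults, local, cli)
print(settings["detail"], settings["range"])
# High yesterday
```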

In this article, we will edit /etc/logwatch/conf/logwatch.conf to customize a few of the default settings.

    Detail = <Low, Med, High, or a number>

The “Detail” directive controls how detailed logwatch reports are. It can be a positive integer, or one of High, Med and Low, which stand for 10, 5 and 0 respectively.
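Below is a small sketch of how a `Detail` value could be normalised to a number, using the mapping given here (High = 10, Med = 5, Low = 0). The text says a positive integer, but Low maps to 0, so this sketch accepts 0 as well. Any other spellings that logwatch may accept are not handled. This is an illustration, not logwatch's own code.

```python
NAMED_LEVELS = {"low": 0, "med": 5, "high": 10}


def detail_level(setting):
    """Convert a Detail value (Low, Med, High or an integer) to a number."""
    key = setting.strip().lower()
    if key in NAMED_LEVELS:
        return NAMED_LEVELS[key]
    level = int(key)  # raises ValueError for anything that isn't a number
    if level < 0:
        raise ValueError("Detail must not be negative")
    return level


print([detail_level(v) for v in ("Low", "Med", "High", "7")])
# [0, 5, 10, 7]
```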

@ -53,7 +53,7 @@ logwatch 有一点不好的就是,在它生成的报告中没有详细的时

    Service = <service-name-2>
    . . .

The “Service” option specifies one or more services to monitor. Any service listed in the /usr/share/logwatch/scripts/services directory can be monitored. These already cover important system services (e.g. pam, secure, iptables, syslogd) as well as mainstream application services such as sudo, sshd, http, fail2ban and samba. To add a new service to the list, you need to write a corresponding log processing Perl script and place it in this directory.

If you use this option to select specific services, you need to comment out the line "Service = All" in /usr/share/logwatch/default.conf/logwatch.conf.

@ -123,7 +123,7 @@ via: http://xmodulo.com/monitor-log-file-linux-logwatch.html

Author: [Gabriel Cánepa][a]

Translator: [runningwater](https://github.com/runningwater)

Proofreader: [wxy](https://github.com/wxy)

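@ -0,0 +1,295 @@

You Deserve It: 25 Linux Performance Monitoring Tools
================================================================================

For some time now, we have been showing readers on our site how to configure a variety of performance monitoring tools for Linux and Linux-like operating systems. In this article we list the most frequently used performance monitoring tools, each with a link to a short introduction, roughly divided into two categories: command-line tools and tools with a graphical interface.

### Command-line performance monitoring tools ###

#### 1. dstat - Versatile resource statistics tool ####

This command combines **vmstat**, **iostat** and **ifstat**. It adds new features and functionality that let you see the usage of various resources in real time, so you can compare and combine different resource statistics. Colors and a block layout make the information clearer and easier to read. It can also export its data to a **CSV** file, which you can then open in other applications or import into a database. You can use it to [monitor CPU, memory and network usage over time][1].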

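#### 2. atop - A better ASCII experience than top ####

This command-line tool with an **ASCII** display is a performance monitor that shows the activity of all processes. It can keep daily logs of system activity for long-term analysis of process activity, and it highlights overloaded system resources. It covers CPU, memory, swap, disk and network metrics. All of this is available simply by running **atop** in a terminal.

    # atop

You can of course also use its [interactive interface to display][2] and sort the data.

#### 3. Nmon - Performance monitor for Unix-like systems ####

Nmon stands for **Nigel's Monitor** and was originally developed as a system monitoring tool for **AIX**. In **online mode**, the monitoring data is displayed in real time in the terminal, and you can navigate the screen with the cursor keys. In **capture mode**, the data is saved in **CSV** format for further processing and graphing.

For more information, see our [article on nmon performance monitoring][3].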

#### 4. slabtop - Display kernel slab cache information ####

This application shows how the **slab allocator** manages the different types of objects cached in the Linux kernel. It is similar to top, except that it focuses on showing kernel slab cache information in real time. It can sort the cache list by different criteria, and it also displays a header with statistics about the slab layer. For example:

    # slabtop --sort=a
    # slabtop -s b
    # slabtop -s c
    # slabtop -s l
    # slabtop -s v
    # slabtop -s n
    # slabtop -s o

**For more information**, see the [kernel slab cache article][4].
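For reference, the single-letter sort keys used in the slabtop examples above can be summarized in a small bash lookup. The descriptions follow the SORT CRITERIA section of the procps `slabtop` man page as we understand it; check `man slabtop` on your system, since versions may differ. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Describe a slabtop sort key (the argument to -s / --sort).
slabtop_sort_key() {
  case "$1" in
    a) echo "number of active objects" ;;
    b) echo "objects per slab" ;;
    c) echo "cache size" ;;
    l) echo "number of slabs" ;;
    v) echo "number of active slabs" ;;
    n) echo "name" ;;
    o) echo "number of objects" ;;
    p) echo "pages per slab" ;;
    s) echo "object size" ;;
    u) echo "cache utilization" ;;
    *) echo "unknown sort key: $1" >&2; return 1 ;;
  esac
}

# Annotate the keys used in the examples above.
for k in a b c l v n o; do
  printf 'slabtop -s %s  # sort by %s\n' "$k" "$(slabtop_sort_key "$k")"
done
```

To print a single snapshot instead of the interactive display, procps slabtop also accepts `-o` (`--once`), for example `sudo slabtop -o -s c | head -n 15`.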

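#### 5. sar - Performance monitoring and bottleneck checking ####

The **sar** command writes the contents of selected cumulative activity counters in the operating system to standard output. Based on the count and interval parameters, the **accounting system** writes information the specified number of times at the specified interval. If the interval parameter is set to 0, [sar displays the average statistics since the system was started][5]. Useful commands:

    # sar -u 2 3
    # sar -u -f /var/log/sa/sa05
    # sar -P ALL 1 1
    # sar -r 1 3
    # sar -W 1 3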
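In `sar -u 2 3`, 2 is the interval in seconds and 3 the number of reports. The `-f` example reads a daily data file named after the day of the month, and a small bash helper (hypothetical name) can build that path. The /var/log/sa directory comes from the example above; on Debian and Ubuntu the sysstat data files usually live in /var/log/sysstat instead, so the directory is a parameter.

```bash
#!/usr/bin/env bash
# Print the path of the daily sysstat file for DAY (1-31), e.g. .../sa05.
# Optional second argument: the data directory (default /var/log/sa).
sar_daily_file() {
  local day="$1"
  local dir="${2:-/var/log/sa}"
  # 10# forces base 10, so "08" and "09" are not rejected as invalid octal.
  printf '%s/sa%02d\n' "$dir" "$((10#$day))"
}
```

For example, `sar -u -f "$(sar_daily_file "$(date +%d)")"` would report today's CPU usage from the collected data.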

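#### 6. Saidar - Simple statistics monitor ####

Saidar is a **simple** and **lightweight** system information monitor. Although it does not provide many performance reports, it shows the most useful system health data in a simple, clear way. You can easily see [uptime, load average, CPU, memory, process, disk and network interface][6] statistics.

    Usage: saidar [-d delay] [-c] [-v] [-h]

    -d Sets the update time in seconds
    -c Enables colored output
    -v Prints version number
    -h Displays this help information

#### 7. top - The classic Linux task manager ####

As a well-known **Linux** tool, **top** is the task manager found on most Unix-like operating systems. It displays a list of the currently running processes, which users can sort by different criteria. It mainly shows how processes use **CPU** and memory, and lets you quickly find which process or processes are hanging your system. You can see examples of using top [here][7]. Type top in a terminal to run it in interactive mode.

Some shortcuts available in interactive mode:

    Global commands: <Ret/Sp> ?, =, A, B, d, G, h, I, k, q, r, s, W, Z
    Summary area commands: l, m, t, 1
    Task area commands:
        Appearance: b, x, y, z  Content: c, f, H, o, S, u  Size: #, i, n  Sorting: <, >, F, O, R
    Color mapping: <Ret>, a, B, b, H, M, q, S, T, w, z, 0 - 7
    Window commands: -, _, =, +, A, a, G, g, w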

#### 8. Sysdig - Advanced view of system processes ####

**Sysdig** is a monitoring tool that gives system administrators and developers unprecedented visibility into how their systems behave. Its developers set out to improve system-level monitoring and troubleshooting by providing **unified**, **coherent** and **granular** visibility into the **storage, process, network and memory** subsystems. It can also create trace files of system activity that you can easily analyze at any time.

Simple examples:

    # sysdig proc.name=vim
    # sysdig -p"%proc.name %fd.name" "evt.type=accept and proc.name!=httpd"
    # sysdig evt.type=chdir and user.name=root
    # sysdig -l
    # sysdig -L
    # sysdig -c topprocs_net
    # sysdig -c fdcount_by fd.sport "evt.type=accept"
    # sysdig -c topprocs_file
    # sysdig -c fdcount_by proc.name "fd.type=file"
    # sysdig -p "%12user.name %6proc.pid %12proc.name %3fd.num %fd.typechar %fd.name" evt.type=open
    # sysdig -c topprocs_cpu
    # sysdig -c topprocs_cpu evt.cpu=0
    # sysdig -p"%evt.arg.path" "evt.type=chdir and user.name=root"
    # sysdig evt.type=open and fd.name contains /etc

**More information** can be found in [How to use sysdig to improve system-level monitoring and troubleshooting][8].
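The sysdig examples above combine filter fields (`evt.type`, `proc.name`, `fd.name`) with `and` and the `contains` operator. When you script sysdig, it can help to assemble such a filter in one place. The helper below is a hypothetical sketch that only builds the string; it does not validate field names or values.

```bash
#!/usr/bin/env bash
# Build a sysdig filter: events of EVT_TYPE from process PROC, optionally
# restricted to file descriptors whose name contains PATH_PART.
sysdig_filter() {
  local evt_type="$1" proc="$2" path_part="${3:-}"
  local filter="evt.type=${evt_type} and proc.name=${proc}"
  if [[ -n "$path_part" ]]; then
    filter+=" and fd.name contains ${path_part}"
  fi
  printf '%s\n' "$filter"
}
```

For instance, `sudo sysdig "$(sysdig_filter open vim /etc)"` would show files under /etc opened by vim, passing the whole filter as one quoted argument just like the examples above.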

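#### 9. netstat - Display open ports and connections ####

**Linux administrators** use it to display all kinds of network information, such as which ports are open, which network connections are established and which processes are running on those connections. It also shows information about the **Unix sockets** opened between different programs. netstat is part of most Linux distributions, and many of its commands are described in detail in [netstat and its various outputs][9]. The most commonly used ones are:

    $ netstat | head -20
    $ netstat -r
    $ netstat -rC
    $ netstat -i
    $ netstat -ie
    $ netstat -s
    $ netstat -g
    $ netstat -tapn

#### 10. tcpdump - Insight into network packets ####

**tcpdump** can be used to look at the **packets** of **network connections**. It displays various information about the contents of packets in transit. To make its output more useful, it lets you apply different filters to get exactly the information you want. Some examples:

    # tcpdump -i eth0 not port 22
    # tcpdump -c 10 -i eth0
    # tcpdump -ni eth0 -c 10 not port 22
    # tcpdump -w aloft.cap -s 0
    # tcpdump -r aloft.cap
    # tcpdump -i eth0 dst port 80

You can find a detailed description in the article "[Capturing network traffic with tcpdump][10]".

#### 11. vmstat - Virtual memory statistics ####

**vmstat** is short for **virtual memory** statistics. As a **memory monitoring** tool, it collects and displays summary information about **memory**, **processes**, **interrupts**, **paging** and **block I/O**. As an open-source program, it is available in most Linux distributions, as well as on Solaris and FreeBSD. It is used to diagnose most memory performance problems and other related issues.

**More information** in the [vmstat command article][11].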

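#### 12. free - Memory statistics ####

free is another command-line tool that displays memory and swap usage in the terminal. Because it is so simple, it is often used to check memory usage quickly, or inside scripts and applications. Here you can see [many uses of this little program][12]. Almost every system administrator uses it every day. :-)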
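Since free is often used inside scripts, here is a minimal bash sketch of the kind of check such a script might perform. Instead of parsing free's column layout, which has changed between procps versions, it reads the `MemAvailable` field from /proc/meminfo, the kernel file free itself reads; that field exists on kernels 3.14 and later. The function names and the threshold are illustrative assumptions.

```bash
#!/usr/bin/env bash
# Print available memory in MiB from the MemAvailable field of a meminfo
# file (default /proc/meminfo). Returns non-zero if the field is missing.
mem_available_mib() {
  local meminfo="${1:-/proc/meminfo}"
  awk '/^MemAvailable:/ { printf "%d\n", $2 / 1024; found = 1 }
       END { exit !found }' "$meminfo"
}

# Succeed when available memory is below THRESHOLD_MIB; return 2 if the
# value cannot be read.
mem_is_low() {
  local threshold_mib="$1" meminfo="${2:-/proc/meminfo}" avail
  avail=$(mem_available_mib "$meminfo") || return 2
  (( avail < threshold_mib ))
}
```

For example, `mem_is_low 500 && echo "less than 500 MiB available"`.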

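#### 13. Htop - A friendlier top ####

**Htop** is basically an improved version of top. It displays more statistics in a more colorful way, lets you sort them in different ways, and provides a **user-friendly** interface.

You can find **more information** in the article "[Comparison of htop and top][13]".

#### 14. ss - Modern replacement for networking tools ####

**ss** is part of the **iproute2** package. iproute2 is meant to replace a whole suite of standard **Unix networking** tools that were used for [configuring network interfaces, routing tables and managing the ARP table][14]. The ss tool dumps socket statistics: it can display information similar to netstat, and it can also show more TCP and state information. Some examples:

    # ss -tnap
    # ss -tnap6
    # ss -s
    # ss -tn -o state established -p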

#### 15. lsof - List open files ####

The **lsof** command, meaning "**list open files**", is used on many Unix-like systems to display all open files and the processes that opened them. On most Linux distributions and other Linux-like operating systems, system administrators use it to check which files are opened by which processes.

    # lsof -p process_id
    # lsof | less
    # lsof -u username
    # lsof /etc/passwd
    # lsof -i TCP:ftp
    # lsof -i TCP:80

You can find **more examples** in the [lsof article][15].

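#### 16. iftop - Top-like tool for network connections ####

**iftop** is another top-like program, this time for network information. It shows the current **network connections** sorted by **bandwidth usage**, or by the amount of upload or download traffic. It also provides estimated completion times for files being downloaded.

**More information** in the [iftop network traffic article][16].

#### 17. iperf - Network performance tool ####

**iperf** is a **network testing** tool that can create **TCP** and **UDP** data connections and measure their **throughput** across a network. It lets you tune various parameters related to timing, protocols and buffers. For each test, it reports the bandwidth, packet loss and other parameters.

If you want to use this tool, see this article: [How to install and use iperf][17].

#### 18. Smem - Advanced memory reporting tool ####

**Smem** is one of the most advanced command-line tools on **Linux**. It reports how much physical memory is used and shared in the system, and tries to give a more reliable picture of current **memory** usage.

    $ smem -m
    $ smem -m -p | grep firefox
    $ smem -u -p
    $ smem -w -p

See our article: [More examples of smem][18].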

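### Graphical or web-based performance tools ###

#### 19. Icinga - Community fork of Nagios ####

**Icinga** is a **free and open-source** network monitoring program. As a fork of Nagios, it inherits most of that tool's existing features and builds on them with features and patches the user community had long been asking for.

**More information** in the [article on installing and configuring Icinga][19].

#### 20. Nagios - The most popular monitoring tool ####

As the most widely used and most popular **monitoring solution** on Linux, Nagios has a daemon that collects information about various processes and remote hosts, and all of that information is presented through a powerful **web interface**.

You can **find more information** in the article "[How to install Nagios][20]".

#### 21. Linux process explorer - procexp for Linux ####

**Linux process explorer** is a graphical process explorer for Linux. It displays various process information, such as the process tree, TCP/IP connections and performance figures for each process. It is meant as a Linux counterpart to **procexp**, the **Windows** tool developed by **Sysinternals**, and aims to be more user-friendly than **top** and **ps**.

See the [linux process explorer article][21] for more information.

#### 22. Collectl - Performance monitoring tool ####

You can use this **performance monitoring** tool either interactively or to write **reports** to disk and then access them through a web server. It displays statistics on **CPU, disk, memory, network, NFS, processes, slabs** and more, in a format that is **easy to read and manage**.

**More** in the [Collectl article][22].

#### 23. MRTG - Classic network traffic graphing tool ####

This is a traffic monitoring tool that uses **rrdtool** to generate graphs. As one of the **earliest** traffic monitoring tools with a **graphical interface**, it is widely used on Unix-like operating systems. See our article on [how to use MRTG][23] for more information on installation and configuration.

#### 24. Monit - Simple, easy-to-use monitor ####

**Monit** is an open-source Linux tool for monitoring **processes**, **system load**, **filesystems**, **directories and files** and more. You can have it carry out automatic maintenance and repair, and it can run specific actions or send email alerts to the system administrator when errors occur. If you want to use it, see our [article on how to use Monit][24].

#### 25. Munin - Monitoring and alerting services for servers ####

As a network resource monitoring tool, **Munin** helps you analyze **resource trends**, **spot weak points** and find the causes of **performance problems**. Its development team wanted it to be easy to use and user-friendly. It is written in Perl, uses **rrdtool** to draw graphs and presents them through a **web interface**. Its developers advertise that more than 500 monitoring plugins are currently available, ready to "**plug and play**".

**More information** can be found in the [article on Munin][25].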

--------------------------------------------------------------------------------

via: http://linoxide.com/monitoring-2/linux-performance-monitoring-tools/

Author: [Adrian Dinu][a]

Translator: [andyxue](https://github.com/andyxue)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://linoxide.com/author/adriand/
[1]:http://linux.cn/article-3215-1.html
[2]:http://linoxide.com/monitoring-2/guide-using-linux-atop/
[3]:http://linoxide.com/monitoring-2/install-nmon-monitor-linux-performance/
[4]:http://linux.cn/article-3702-1.html
[5]:http://linoxide.com/linux-command/linux-system-performance-monitoring-using-sar-command/
[6]:http://linoxide.com/monitoring-2/monitor-linux-saidar-tool/
[7]:http://linux.cn/article-2352-1.html
[8]:http://linux.cn/article-4341-1.html
[9]:http://linux.cn/article-2434-1.html
[10]:http://linoxide.com/linux-how-to/network-traffic-capture-tcp-dump-command/
[11]:http://linux.cn/article-2472-1.html
[12]:http://linux.cn/article-2443-1.html
[13]:http://linux.cn/article-3141-1.html
[14]:http://linux.cn/article-4372-1.html
[15]:http://linux.cn/article-4099-1.html
[16]:http://linux.cn/article-1843-1.html
[17]:http://linoxide.com/monitoring-2/install-iperf-test-network-speed-bandwidth/
[18]:http://linoxide.com/tools/memory-usage-reporting-smem/
[19]:http://linoxide.com/monitoring-2/install-configure-icinga-linux/
[20]:http://linux.cn/article-2436-1.html
[21]:http://sourceforge.net/projects/procexp/
[22]:http://linux.cn/article-3154-1.html
[23]:http://linoxide.com/tools/multi-router-traffic-grapher/
[24]:http://linoxide.com/monitoring-2/monit-linux/
[25]:http://linoxide.com/ubuntu-how-to/install-munin/

@ -27,7 +27,7 @@

### eCryptFS basics ###

eCryptFS is a stacked cryptographic filesystem that has been part of the Linux kernel since version 2.6.19 (as the ecryptfs module). The encrypted pseudo-filesystem is mounted on top of your current filesystem. It works well on the EXT filesystem family and on other filesystems such as JFS, XFS, ReiserFS and Btrfs, and even on NFS/CIFS shares. Ubuntu uses eCryptFS as its default method for encrypting home directories, and so does ChromeOS. Under the hood, eCryptFS uses the AES algorithm by default, but it also supports other algorithms such as blowfish, des3, cast5 and cast6. If you set up eCryptFS manually, you can choose any one of these algorithms.

As I said, Ubuntu lets us choose whether to encrypt the /home directory during installation. Well, that is the simplest way to use eCryptFS.

@ -63,13 +63,13 @@ Arch Linux:

It will ask for your login passphrase and a mount passphrase. The login passphrase is the same as the password you normally log in with, while the mount passphrase is used to derive the master key for file encryption. Leave it blank to have a (complex) one generated, which is more secure. Then log out and log back in.

You will notice that, by default, eCryptFS creates two directories in your home directory: Private and .Private. The ~/.Private directory holds the encrypted data, while you can access the corresponding decrypted data in ~/Private. When you log in, ~/.Private is automatically decrypted and mapped to ~/Private so you can access it. When you log out, ~/Private is automatically unmounted and its contents are encrypted back into ~/.Private.

How does eCryptFS know that ~/.Private belongs to you, and decrypt it into ~/Private automatically without asking for a password? That is the work of the eCryptFS PAM module, which provides this convenience for us.

If you do not want ~/Private to be mounted automatically at login, just add the "--noautomount" option when running the ecryptfs-setup-private tool. Likewise, if you do not want ~/Private to be unmounted automatically after logout, add the "--noautoumount" option. In that case, however, you will have to mount and unmount ~/Private manually:

    $ ecryptfs-mount-private ~/.Private ~/Private
    $ ecryptfs-umount-private ~/Private

@ -94,7 +94,7 @@ via: http://xmodulo.com/encrypt-files-directories-ecryptfs-linux.html

Author: [Christopher Valerio][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

@ -51,7 +51,7 @@ Shell Scripting - Checking Conditions with if Statements

    echo "Number is smaller"
    fi

### If..elif..else..fi statement (short for else if) ###

In the Bourne Shell if statement syntax, the block of code in the else clause runs when the if condition is false. We can also nest if statements to test multiple conditions, and we can use the elif statement (short for else if) to build multi-condition tests.
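A minimal, self-contained example of the if..elif..else..fi form described above: the first condition that is true wins, and the else block runs only when none matched. The function name `compare_numbers` is only illustrative; note that `-gt` and `-lt` compare integers, not strings.

```bash
#!/usr/bin/env bash
# Compare two integers with if..elif..else..fi.
compare_numbers() {
  local a="$1" b="$2"
  if [ "$a" -gt "$b" ]; then
    echo "$a is greater than $b"
  elif [ "$a" -lt "$b" ]; then
    echo "$a is smaller than $b"
  else
    echo "$a is equal to $b"
  fi
}

compare_numbers 10 20    # prints: 10 is smaller than 20
```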

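@ -94,7 +94,7 @@ In the Bourne Shell if statement syntax, the block of code in the else clause runs when the if condition

If and else statements can be nested inside each other in a bash script. The keyword "fi" marks the end of the inner if statement, and every if statement must be closed with the keyword "fi".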
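A small runnable instance of this nesting, in which the inner if is evaluated only when the outer condition holds and each if is closed by its own fi. `classify_number` is an illustrative name, not part of the original article.

```bash
#!/usr/bin/env bash
# Nested if: classify an integer as negative, non-negative even or odd.
classify_number() {
  local n="$1"
  if [ "$n" -ge 0 ]; then
    if [ $((n % 2)) -eq 0 ]; then
      echo "non-negative even"
    else
      echo "non-negative odd"
    fi                  # closes the inner if
  else
    echo "negative"
  fi                    # closes the outer if
}
```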

**Nested syntax** of the basic if statement:

    if [ condition1 ]
    then

@ -139,7 +139,7 @@ via: http://www.linuxtechi.com/shell-scripting-checking-conditions-with-if/

Author: [Pradeep Kumar][a]

Translator: [ThomazL](https://github.com/ThomazL)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

@ -1,10 +1,10 @@

Using rsync on Linux: Excluding Lists of Files and Directories
================================================================================

**rsync** is a very useful and very popular Linux tool. It is used for backing up and restoring files, as well as for comparing and synchronizing them. In an earlier article we covered [how to use rsync on Linux][1], and today we add some more useful rsync tips.

### Excluding a list of files and directories ###

Sometimes, when doing a large sync, we may want to exclude a list of files and directories from it. Generally, files that are not suitable for syncing, such as device files and certain system files, or files that take up unnecessary disk space, such as temporary or cache files, are the ones we need to exclude.

First, create a file named "excluded" (you can of course name it anything you like), then write the folders or files you want to exclude into it, one per line. In our example, if you want to make a full backup of the root partition, you should exclude the device directories created at boot and the directories that hold temporary files. The list looks like this:

@ -19,7 +19,8 @@ Using rsync on Linux: Excluding Lists of Files and Directories

### Excluding files from the command line ###

You can also exclude files directly from the command line. This is useful when there are only a few files to exclude, and when you want to put the command in a script or crontab without making the script or cron job depend on another file.

For example, if you want to sync /var to a backup directory but do not want to include the cache and tmp folders, which usually hold nothing important between restarts, you can use the following command:

    $ sudo rsync -aAXhv --exclude={"/var/cache","/var/tmp"} /var /home/adrian/var
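The `{"/var/cache","/var/tmp"}` part is bash brace expansion, not an rsync feature: the shell turns it into one `--exclude` option per item before rsync starts. That also means the command must run in bash (or another shell with brace expansion), not plain sh, which matters in a crontab, since cron runs commands with /bin/sh by default. The sketch below shows the expansion and, for longer lists, writes the same patterns to a file for rsync's `--exclude-from` option, which reads one pattern per line as with the "excluded" file described earlier. Function names are hypothetical.

```bash
#!/usr/bin/env bash
# Show what bash passes to rsync: the brace expression becomes two
# separate arguments, one per excluded path.
brace_demo() {
  printf '%s\n' --exclude={"/var/cache","/var/tmp"}
}

# Write the same exclusions to FILE, one pattern per line, in the format
# read by rsync --exclude-from.
write_exclude_file() {
  local file="$1"
  printf '%s\n' /var/cache /var/tmp > "$file"
}

brace_demo
# prints:
# --exclude=/var/cache
# --exclude=/var/tmp
```

With such a file, the command becomes `sudo rsync -aAXhv --exclude-from=excluded /var /home/adrian/var`.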

@ -34,9 +35,9 @@ via: http://linoxide.com/linux-command/exclude-files-rsync-examples/

Author: [Adrian Dinu][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://linoxide.com/author/adriand/
[1]:http://linux.cn/article-4503-1.html

@ -1,25 +1,25 @@

Pitivi 0.94 Switches to GTK HeaderBar, Fixes Countless Bugs
=====================================

**I'm a big fan of the [Pitivi video editor][1]. Pitivi may not be (at least not yet) the flashiest, most feature-complete non-linear video editor available on Linux, but it is definitely the most reliable one.**

Naturally, I have been looking forward to [this][2] new beta release of the open-source video editor.

Pitivi 0.94 is the fourth release based on the new "GStreamer Editing Services" (GES).

Team member Jean-François Fortin Tam ("Nekohayo") describes this upgrade as "**...primarily a maintenance release, but besides bug fixes it also brings several interesting improvements and features.**"

### What's new? ###

Plenty of interesting improvements! The most visible change in Pitivi 0.94 is the addition of a GTK HeaderBar, like the ones in GNOME apps. The HeaderBar merges the desktop window bar, title bar and toolbar, reclaiming a lot of wasted vertical and horizontal space.

"*Once you've used it, you'll never go back,*" says Fortin Tam. Take a look at the screenshot below and you'll surely agree.

*Pitivi now uses a GTK HeaderBar and a menu button. (image: Nekohayo)*

What about the application menu? Don't worry, it follows the GNOME interface guidelines; check the application menu on your own machine to confirm.

@ -49,13 +49,11 @@ Pitivi now uses a GTK HeaderBar and a menu button. (image: Nekohayo)

Sounds good so far? The next release will be even better! This is not just the usual developer hype, as Jean-François explains:

> "The next release (0.95) will run on an incredibly powerful backend. Thanks to the work of Mathieu [Duponchelle] and Thibault [Saunier] on replacing GNonLin with NLE (the new non-linear engine for GES) and fixing issues."

Ubuntu 14.10 ships an older (and more crash-prone) version in the Software Center, so head to the Pitivi website and download the [bundle][5] to try the latest masterpiece.

**The Pitivi Foundation has raised nearly €20,000, which lets us take a big step toward the promised 1.0 release. If you would also like to see 1.0 arrive sooner, skip that Grande vanilla latte at Starbucks and donate!**

--------------------------------------------------------------------------------

@ -64,7 +62,7 @@ via: http://www.omgubuntu.co.uk/2014/11/pitivi-0-94-header-bar-more-features

Author: [Joey-Elijah Sneddon][a]

Translator: [ThomazL](https://github.com/ThomazL)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

@ -1,45 +1,36 @@

|

||||

|

||||

如何从Ubuntu的声音菜单中移除音乐播放器

|

||||

================================================================================

|

||||

|

||||

|

||||

**自从2010年首次出现,Ubuntu 的声音菜单已经被证明是Unity 桌面上的最流行的独有特性之一。**

|

||||

|

||||

**自从2010年的介绍一来,Ubuntu声音菜单已经被证明是最流行和个性的统一桌面之一.**

|

||||

把音乐播放器与音量控制程序集成到一个标准的界面里是一种看起来很聪明的做法,这样就不用到处找声音相关的各种程序。人们不禁要问,为什么其它操作系统没有效仿这种做法!

|

||||

|

||||

随着音乐播放器与音量程序合成小体积的应用程序-即集成,其中一个希望找到与声音相关的蠢事-通过标准接口的灵感。人们不禁要问,为什么其它操作系统没有效仿这种做法!

|

||||

|

||||

#### 冗长的 ####

|

||||

|

||||

|

||||

尽管它看起来很方便,但是这个小应用当前存在一个问题:相当多的东西集在一起看起来想一个MP3,是否真正的把想要的东西都放在里面了。虽然有用,但是一个无所不再的应用程序清单已经安装了,这让一些不经常适用的人看着很累赘和反感。

|

||||

|

||||

|

||||

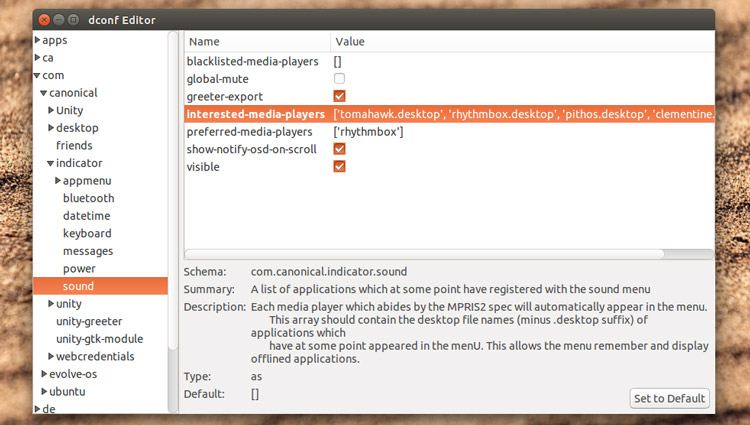

我将要打赌上面的截图看起来一定很熟悉,你们中的很多人一定阅读过吧!不要害怕,**dconf-editor **就在这里。

|

||||

#### 臃肿 ####

|

||||

|

||||

尽管它看起来很方便,但是这个小应用当前存在一个问题:很多播放器都堆在一起,像一个组合音响一样。也许你用得着,但是你安装的所有的媒体播放器都挤在这里,这会让人看着很累赘和反感。

|

||||

|

||||

我将要打赌,当你读到这里时,一定发现上面的截图看起来很熟悉!不要担心,**dconf-editor**可以解决它。

|

||||

|

||||

### 从Ubuntu 声音菜单中移除播放器 ###

|

||||

|

||||

|

||||

#### 第一部分: 基础知识 ####

|

||||

|

||||

最快速和最简单地从声音菜单中移除播放器的方法就是卸载相关的应用程序。但这是极端的方式,我的意思是指你也许想要保留应用程序,但是不需要它集成。

|

||||

最快速和最简单地从声音菜单中移除播放器的方法就是卸载相关的应用程序。但这是极端的方式,我的意思是指你也许想要保留应用程序,但是不需要它集成到菜单里面。

|

||||

|

||||

只删除播放器但是保留我们需要的应用程序,我们用到一个看起来令人惊讶的工具叫“dconf-editor”.

|

||||

只删除播放器但是保留我们需要的应用程序,我们用到一个看起来令人惊讶的工具叫“dconf-editor”。

|

||||

|

||||

你可能已经安装了,如果没有安装的话,那么你从Ubuntu软件中心找出。

|

||||

|

||||

|

||||

- [在Ubuntu中点击安装Dconf-Editor][1]

|

||||

|

||||

一旦安装完毕,找到Unity Dash并打开。打开的时候不要惊慌;你不会再回到2002年了,它确实是这样子的。

|

||||

|

||||

一旦安装完毕,找到Unity Dash并打开。打开的时候不要惊慌;你没有到2002年,它确实是这种古老的样子。

|

||||

|

||||

使用右侧菜单栏,你需要从导航到 com > canonical > indicator > sound.下面的面板将会出现。

|

||||

|

||||

|

||||

|

||||

双击靠近interested-media-players的比括号并删除你希望从声音菜单里移除掉的播放器,但需要保留在方括号中,且不要删除任何你想保留逗号或者撇号。

|

||||

|

||||

双击“interested-media-players”旁的闭括号,并删除你希望从声音菜单里移除掉的播放器,但需要保留方括号中,且不要删除任何需要保留的逗号或者单引号。

|

||||

|

||||

举个例子,我移除掉这些

|

||||

|

||||

@ -55,9 +46,9 @@

#### Part 2: The blacklist ####

Wait! Don't close dconf-editor yet. Although the steps above seem to have tidied things up neatly, some players reload themselves into the sound menu as soon as they are opened. To avoid repeating this process, add them to **blacklisted-media-players**.

Remember that each player goes in single quotes, with multiple entries separated by commas. They must also stay inside the square brackets, so double-check before exiting.
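The value edited here is a GVariant array of strings: single-quoted entries separated by commas inside square brackets. If you would rather script the change, a small helper can build that literal. This is a sketch under assumptions: the helper name is hypothetical, the player IDs in the usage example are guesses that you should replace with the exact entries shown in your own interested-media-players value, and entries must not themselves contain single quotes.

```bash
#!/usr/bin/env bash
# Build a GVariant string-array literal, e.g. ['a.desktop', 'b.desktop'].
# With no arguments it prints an empty array: []
to_gvariant_strv() {
  local out="[" sep="" item
  for item in "$@"; do
    out+="${sep}'${item}'"
    sep=", "
  done
  printf '%s]\n' "$out"
}
```

Assuming the schema id mirrors the dconf path com > canonical > indicator > sound, you could then run `gsettings get com.canonical.indicator.sound interested-media-players` to see the current entries, and `gsettings set com.canonical.indicator.sound blacklisted-media-players "$(to_gvariant_strv rhythmbox.desktop)"` to blacklist a player.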

The end result looks like this:

@ -69,7 +60,7 @@ via: http://www.omgubuntu.co.uk/2014/11/remove-players-ubuntu-sound-menu

Author: [Joey-Elijah Sneddon][a]

Translator: [disylee](https://github.com/译者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

@ -1,4 +1,4 @@

|

||||

运行级别与服务管理命令systemd简介

|

||||

systemd的运行级别与服务管理命令简介

|

||||

================================================================================

|

||||

|

||||

|

||||

@ -6,20 +6,21 @@

|

||||

|

||||

在开始介绍systemd命令前,让我们先简单的回顾一下历史。在Linux世界里,有一个很奇怪的现象,一方面Linux和自由软件(FOSS)在不断的向前推进,另一方面人们对这些变化却不断的抱怨。这就是为什么我要在此稍稍提及那些反对systemd所引起的争论的原因,因为我依然记得历史上有不少类似的争论:

|

||||

|

||||

- 软件包(Pacakge)是邪恶的,因为正真的Linux用户会从源码构建他所想要的的一切,并严格的管理系统中安装的软件。

|

||||

- 解析依赖关系的包管理器是邪恶的,正真的Linux用户会手动解决这些该死的依赖关系。

|

||||

- 软件包(Pacakge)是邪恶的,因为真正的Linux用户会从源码构建他所想要的的一切,并严格的管理系统中安装的软件。

|

||||

- 解析依赖关系的包管理器是邪恶的,真正的Linux用户会手动解决这些该死的依赖关系。

|

||||

- apt-get总能把事情干好,所以只有Yum是邪恶的。

|

||||

- Red Hat简直就是Linux中的微软。

|

||||

- 好样的,Ubuntu!

|

||||

- 滚蛋吧,Ubuntu!

|

||||

|

||||

诸如此类...就像我之前常常说的一样,变化总是让人沮丧。这些该死的变化搅乱了我的工作流程,这可不是一件小事情,任何业务流程的中断,都会直接影响到生产力。但是,我们现在还处于计算机发展的婴儿期,在未来的很长的一段时间内将会持续有快速的变化和发展。想必大家应该都认识一些因循守旧的人,在他们的心里,商品一旦买回家以后就是恒久不变的,就像是买了一把扳手、一套家具或是一个粉红色的火烈鸟草坪装饰品。就是这些人,仍然在坚持使用Windows Vista,甚至还有人在使用运行Windows95的老破烂机器和CRT显示器。他们不能理解为什么要去换一台新机器。老的还能用啊,不是么?

|

||||

诸如此类...就像我之前常常说的一样,变化总是让人沮丧。这些该死的变化搅乱了我的工作流程,这可不是一件小事情,任何业务流程的中断,都会直接影响到生产力。但是,我们现在还处于计算机发展的婴儿期,在未来的很长的一段时间内将会持续有快速的变化和发展。想必大家应该都认识一些因循守旧的人,在他们的心里,商品一旦买回家以后就是恒久不变的,就像是买了一把扳手、一套家具或是一个粉红色的火烈鸟草坪装饰品。就是这些人,仍然在坚持使用Windows Vista,甚至还有人在使用运行Windows 95的老破烂机器和CRT显示器。他们不能理解为什么要去换一台新机器。老的还能用啊,不是么?

|

||||

|

||||

这让我回忆起了我在维护老电脑上的一项伟大的成就,那台破电脑真的早就该淘汰掉。从前我有个朋友有一台286的老机器,安装了一个极其老的MS-DOS版本。她使用这台电脑来处理一些简单的任务,比如说约会、日记、记账等,我还用BASIC给她写了一个简单的记账软件。她不用关注任何安全更新,是这样么?因为它压根都没有联网。所以我会时不时给她维修一下电脑,更换电阻、电容、电源或者是CMOS电池什么的。它竟然还一直能用。它那袖珍的琥珀CRT显示器变得越来越暗,在使用了20多年后,终于退出了历史舞台。现在我的这位朋友,换了一台运行Linux的老Thinkpad,来干同样的活。

|

||||

|

||||

前面的话题有点偏题了,下面抓紧时间开始介绍systemd。

|

||||

|

||||

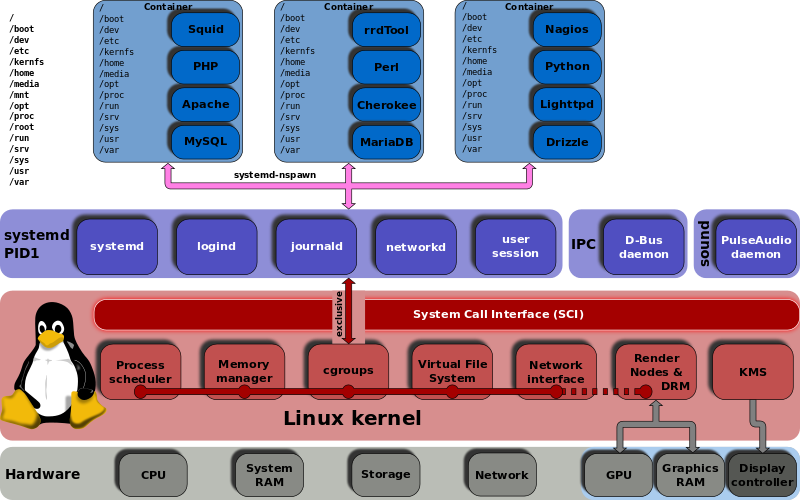

###运行级别 vs. 状态###

|

||||

|

||||

SysVInit使用静态的运行级别来构建不同的启动状态,大部分发布版本中提供了以下5个运行级别:

|

||||

|

||||

- 单用户模式(Single-user mode)

|

||||

@ -28,7 +29,7 @@ SysVInit使用静态的运行级别来构建不同的启动状态,大部分发

|

||||

- 系统关机(System shutdown)

|

||||

- 系统重启(System reboot)

|

||||

|

||||
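To connect the two worlds: systemd ships runlevelN.target compatibility aliases that point to descriptive targets. The bash helper below (a hypothetical name) encodes that mapping for the standard numeric runlevels.

```bash
#!/usr/bin/env bash
# Print the systemd target that corresponds to a SysV runlevel number.
runlevel_to_target() {
  case "$1" in
    0)     echo poweroff.target ;;
    1)     echo rescue.target ;;
    2|3|4) echo multi-user.target ;;
    5)     echo graphical.target ;;
    6)     echo reboot.target ;;
    *)     echo "unknown runlevel: $1" >&2; return 1 ;;
  esac
}

for rl in 0 1 3 5 6; do
  printf 'runlevel %s -> %s\n' "$rl" "$(runlevel_to_target "$rl")"
done
```

On a running system, `systemctl get-default` shows which target it boots into, and `sudo systemctl isolate multi-user.target` switches to the target that corresponds to the old runlevel 3.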

As far as I'm concerned, multiple runlevels have never been much use, yet they have always been there. Instead of runlevels, systemd creates different states, which provide a flexible mechanism for configuring what starts at boot. These states are made up of multiple unit files and are called targets. Targets have clear, descriptive names rather than runlevel numbers. Unit files can control services, devices, sockets and mount points. Take a look at /usr/lib/systemd/system/graphical.target, the default target on CentOS 7:

    [Unit]
    Description=Graphical Interface

@ -71,15 +72,16 @@ SysVInit uses static runlevels to build different boot states; most distributions

    DIR_SUFFIX="${APACHE_CONFDIR##/etc/apache2-}"
    else
    DIR_SUFFIX=

The whole file is 410 lines long.

You can check a unit's dependencies; their complexity often startles me:

    $ systemctl list-dependencies httpd.service

### cgroups ###

cgroups, or control groups, have been in the Linux kernel for several years, but they were not really put to use until systemd came along. [The kernel documentation][1] describes cgroups like this: "Control Groups provide a mechanism for aggregating/partitioning sets of tasks, and all their future children, into hierarchical groups with specialized behaviour." In other words, they provide several effective ways to control, limit and allocate resources. systemd uses cgroups, and you can inspect them easily; the following command displays your system's entire cgroup tree:

    $ systemd-cgls

@ -115,7 +117,7 @@ via: http://www.linux.com/learn/tutorials/794615-systemd-runlevels-and-service-m

Author: [Carla Schroder][a]

Translator: [coloka](https://github.com/coloka)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

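@ -1,10 +1,10 @@

5 Best Open Source Browser Security Apps
================================================================================

The browser is the gateway to today's online services. Computer security is still an unsolved problem, and technological progress keeps giving malware new ways to infect our devices and break into business networks. Smartphones and tablets, for example, open up a whole new playground in which malware and its partner in crime, "[malvertising][1]", can run riot.

Malvertising injects malware into legitimate ads and legitimate networks. Of course, you might argue that there is only a thin line between "legitimate" and illegitimate ads and networks. But let's not get sidetracked. Privacy and security are natural siblings: protecting your privacy means protecting your security.

Firefox, Chrome and Opera are unquestionably the best browsers, with the best performance, the best compatibility and the best security. The following five open-source security apps, once installed in your browser, will help you fend off all kinds of threats.

### Protecting privacy: open source browser security apps ###

@ -12,11 +12,11 @@ Firefox, Chrome, and Opera are unquestionably the best browsers: the best performance,

Ad networks provide fertile ground for malware. A single ad network can reach thousands of sites, so compromising one ad network is like compromising thousands of machines. AdBlock and its derivatives, [AdBlock Plus][2], [AdBlock Pro][3] and [AdBlock Edge][4], are all excellent tools for blocking ads, and they can bring peace and quiet back to sites crammed with annoying ads.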