mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject into new

This commit is contained in:

commit

76fd134c4f

@ -1,43 +1,41 @@

|

||||

关于安全,开发人员需要知道的

|

||||

======

|

||||

(to 校正:有些长句子理解得不好,望见谅)

|

||||

> 开发人员不需要成为安全专家, 但他们确实需要摆脱将安全视为一些不幸障碍的心态。

|

||||

|

||||

|

||||

|

||||

DevOps 并不意味着每个人都需要成为开发和运维方面的专家。尤其在大型组织中,其中角色往往更加专业化。相反,DevOps 思想在某种程度上更多地是关注问题的分离。在某种程度上,运维团队可以为开发人员(无论是在本地云还是在公共云中)部署平台,并且不受影响,这对两个团队来说都是好消息。开发人员可以获得高效的开发环境和自助服务,运维人员可以专注于保持基础管道运行和维护平台。

|

||||

|

||||

这是一种约定。开发者期望从运维人员那里得到一个稳定和实用的平台,运维人员希望开发者能够自己处理与开发应用相关的大部分任务。

|

||||

|

||||

也就是说,DevOps 还涉及更好的沟通、合作和透明度。如果它不仅仅是一种介于开发和运维之间的新型壁垒,它的效果会更好。运维人员需要对开发者想要和需要的工具类型以及他们通过监视和日志记录来编写更好应用程序所需的可见性保持敏感。相反,开发人员需要了解如何才能使底层基础设施更有效地使用,以及什么能够在夜间(字面上)保持操作。(to 校正:这里意思是不是在无人时候操作)

|

||||

也就是说,DevOps 还涉及更好的沟通、合作和透明度。如果它不仅仅是一种介于开发和运维之间的新型壁垒,它的效果会更好。运维人员需要对开发者想要和需要的工具类型以及他们通过监视和日志记录来编写更好应用程序所需的可见性保持敏感。另一方面,开发人员需要了解如何才能更有效地使用底层基础设施,以及什么能够使运维在夜间(字面上)保持运行。

|

||||

|

||||

同样的原则也适用于更广泛的 DevSecOps,这个术语明确地提醒我们,安全需要嵌入到整个 DevOps 管道中,从获取内容到编写应用程序、构建应用程序、测试应用程序以及在生产环境中运行它们。开发人员(和运维人员)不需要突然成为安全专家,除了他们的其它角色。但是,他们通常可以从对安全最佳实践(这可能不同于他们已经习惯的)的更高认识中获益,并从将安全视为一些不幸障碍的心态中转变出来。

|

||||

同样的原则也适用于更广泛的 DevSecOps,这个术语明确地提醒我们,安全需要嵌入到整个 DevOps 管道中,从获取内容到编写应用程序、构建应用程序、测试应用程序以及在生产环境中运行它们。开发人员(和运维人员)除了他们已有的角色不需要突然成为安全专家。但是,他们通常可以从对安全最佳实践(这可能不同于他们已经习惯的)的更高认识中获益,并从将安全视为一些不幸障碍的心态中转变出来。

|

||||

|

||||

以下是一些观察结果。

|

||||

|

||||

开放式 Web 应用程序安全项目(Open Web Application Security Project)([OWASP][1])[Top 10 列表]提供了一个窗口,可以了解 Web 应用程序中的主要漏洞。列表中的许多条目对 Web 程序员来说都很熟悉。跨站脚本(XSS)和注入漏洞是最常见的。但令人震惊的是,2007 年列表中的许多漏洞仍在 2017 年的列表中([PDF][3])。无论是培训还是工具,都有问题,许多相同的编码漏洞在不断出现。(to 校正:这句话不清楚)

|

||||

<ruby>开放式 Web 应用程序安全项目<rt>Open Web Application Security Project</rt></ruby>([OWASP][1])[Top 10 列表]提供了一个窗口,可以了解 Web 应用程序中的主要漏洞。列表中的许多条目对 Web 程序员来说都很熟悉。跨站脚本(XSS)和注入漏洞是最常见的。但令人震惊的是,2007 年列表中的许多漏洞仍在 2017 年的列表中([PDF][3])。无论是培训还是工具,都有问题,许多同样的编码漏洞一再出现。

|

||||

|

||||

新平台技术加剧了这种情况。例如,虽然容器不一定要求应用程序以不同的方式编写,但是它们与新模式(例如[微服务][4])相吻合,并且可以放大某些对于安全实践的影响。例如,我的同事 [Dan Walsh][5]([@rhatdan][6])写道:“计算机领域最大的误解是需要 root 权限来运行应用程序,问题是并不是所有开发者都认为他们需要 root,而是他们将这种假设构建到他们建设的服务中,即服务无法在非 root 情况下运行,而这降低了安全性。”

|

||||

新的平台技术加剧了这种情况。例如,虽然容器不一定要求应用程序以不同的方式编写,但是它们与新模式(例如[微服务][4])相吻合,并且可以放大某些对于安全实践的影响。例如,我的同事 [Dan Walsh][5]([@rhatdan][6])写道:“计算机领域最大的误解是需要 root 权限来运行应用程序,问题是并不是所有开发者都认为他们需要 root,而是他们将这种假设构建到他们建设的服务中,即服务无法在非 root 情况下运行,而这降低了安全性。”

|

||||

|

||||

默认使用 root 访问是一个好的实践吗?并不是。但它可能(也许)是一个可以防御的应用程序和系统,否则就会被其它方法完全隔离。但是,由于所有东西都连接在一起,没有真正的边界,多用户工作负载,拥有许多不同级别访问权限的用户,更不用说更加危险的环境了,那么快捷方式的回旋余地就更小了。

|

||||

|

||||

[自动化][7]应该是 DevOps 不可分割的一部分。自动化需要覆盖整个过程中,包括安全和合规性测试。代码是从哪里来的?是否涉及第三方技术、产品或容器映像?是否有已知的安全勘误表?是否有已知的常见代码缺陷?秘密和个人身份信息是否被隔离?如何进行身份认证?谁被授权部署服务和应用程序?

|

||||

[自动化][7]应该是 DevOps 不可分割的一部分。自动化需要覆盖整个过程中,包括安全和合规性测试。代码是从哪里来的?是否涉及第三方技术、产品或容器镜像?是否有已知的安全勘误表?是否有已知的常见代码缺陷?机密信息和个人身份信息是否被隔离?如何进行身份认证?谁被授权部署服务和应用程序?

|

||||

|

||||

你不是在写你自己的加密代码吧?

|

||||

你不是自己在写你的加密代码吧?

|

||||

|

||||

尽可能地自动化渗透测试。我提到过自动化没?它是使安全性持续的一个重要部分,而不是偶尔做一次的检查清单。

|

||||

|

||||

这听起来很难吗?可能有点。至少它是不同的。但是,作一名 [DevOpsDays OpenSpaces][8] 伦敦论坛的一名参与者对我说:“这只是技术测试。它既不神奇也不神秘。”他接着说,将安全作为一种更广泛地了解整个软件生命周期(这是一种不错的技能)的方法来参与进来并不难。他还建议参加事件响应练习或[捕获国旗练习][9]。你会发现它们很有趣。

|

||||

|

||||

本文基于作者将于 5 月 8 日至 10 日在旧金山举行的 [Red Hat Summit 2018][11] 上发表的演讲。_[5 月 7 日前注册][11]以节省 500 美元的注册。使用折扣代码**OPEN18**在支付页面应用折扣_

|

||||

|

||||

这听起来很难吗?可能有点。至少它是不同的。但是,一名 [DevOpsDays OpenSpaces][8] 伦敦论坛的参与者对我说:“这只是技术测试。它既不神奇也不神秘。”他接着说,将安全作为一种更广泛地了解整个软件生命周期的方法(这是一种不错的技能)来参与进来并不难。他还建议参加事件响应练习或[夺旗练习][9]。你会发现它们很有趣。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/4/what-developers-need-know-about-security

|

||||

|

||||

作者:[Gordon Haff][a]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,67 @@

|

||||

一个用于家庭项目的单用户、轻量级操作系统

|

||||

======

|

||||

> 业余爱好者应该了解一下 RISC OS 的五个原因。

|

||||

|

||||

|

||||

|

||||

究竟什么是 RISC OS?嗯,它不是一种新的 Linux。它也不是有些人认为的 Windows。事实上,它发布于 1987 年,它比它们任何一个都要古老。但你看到它时不一定会意识到这一点。

|

||||

|

||||

其点击式图形用户界面在底部为活动的程序提供一个固定面板和一个图标栏。因此,它看起来像 Windows 95,并且比它早了 8 年。

|

||||

|

||||

这个操作系统最初是为 [Acorn Archimedes][1] 编写的。这台机器中的 Acorn RISC Machines CPU 是全新的硬件,因此需要在其上运行全新的软件。这是最早的 ARM 芯片上的系统,早于任何人想到的 Android 或 [Armbian][2] 之前。

|

||||

|

||||

虽然 Acorn 桌面最终消失了,但 ARM 芯片继续征服世界。在这里,RISC OS 一直有一个优点 —— 通常在嵌入式设备中,你从来没有真正地意识到它。RISC OS 过去长期以来一直是一个完全专有的操作系统。但近年来,该抄系统的所有者已经开始将源代码发布到一个名为 [RISC OS Open][3] 的项目中。

|

||||

|

||||

### 1、你可以将它安装在树莓派上

|

||||

|

||||

树莓派的官方操作系统 [Raspbian][4] 实际上非常棒(如果你对摆弄不同技术上新奇的东西不感兴趣,那么你可能最初也不会选择树莓派)。由于 RISC OS 是专门为 ARM 编写的,因此它可以在各种小型计算机上运行,包括树莓派的各个型号。

|

||||

|

||||

### 2、它超轻量级

|

||||

|

||||

我的树莓派上安装的 RISC 系统占用了几百兆 —— 这是在我加载了数十个程序和游戏之后。它们大多数时候不超过 1 兆。

|

||||

|

||||

如果你真的节俭,RISC OS Pico 可用在 16MB SD 卡上。如果你要在嵌入式系统或物联网项目中鼓捣某些东西,这是很完美的。当然,16MB 实际上比压缩到 512KB 的老 Archimedes 的 ROM 要多得多。但我想 30 年间内存技术的发展,我们可以稍微放宽一下了。

|

||||

|

||||

### 3、它非常适合复古游戏

|

||||

|

||||

当 Archimedes 处于鼎盛时期时,ARM CPU 的速度比 Apple Macintosh 和 Commodore Amiga 中的 Motorola 68000 要快几倍,它也完全吸了新的 386 技术。这使得它成为对游戏开发者有吸引力的一个平台,他们希望用这个星球上最强大的桌面计算机来支撑他们的东西。

|

||||

|

||||

那些游戏的许多拥有者都非常慷慨,允许业余爱好者免费下载他们的老作品。虽然 RISC OS 和硬件已经发展了,但只需要进行少量的调整就可以让它们运行起来。

|

||||

|

||||

如果你有兴趣探索这个,[这里有一个指南][5]让这些游戏在你的树莓派上运行。

|

||||

|

||||

### 4、它有 BBC BASIC

|

||||

|

||||

就像过去一样,按下 `F12` 进入命令行,输入 `*BASIC`,就可以看到一个完整的 BBC BASIC 解释器。

|

||||

|

||||

对于那些在 80 年代没有接触过它的人,请让我解释一下:BBC BASIC 是当时我们很多人的第一个编程语言,因为它专门教孩子如何编码。当时有大量的书籍和杂志文章教我们编写自己的简单但高度可玩的游戏。

|

||||

|

||||

几十年后,对于一个想要在学校假期做点什么的有技术头脑的孩子而言,在 BBC BASIC 上编写自己的游戏仍然是一个很棒的项目。但很少有孩子在家里有 BBC micro。那么他们应该怎么做呢?

|

||||

|

||||

当然,你可以在每台家用电脑上运行解释器,但是当别人需要使用它时就不能用了。那么为什么不使用装有 RISC OS 的树莓派呢?

|

||||

|

||||

### 5、它是一个简单的单用户操作系统

|

||||

|

||||

RISC OS 不像 Linux 一样有自己的用户和超级用户访问权限。它有一个用户并可以完全访问整个机器。因此,它可能不是跨企业部署的最佳日常驱动,甚至不适合给老人家做银行业务。但是,如果你正在寻找可以用来修改和鼓捣的东西,那绝对是太棒了。你和机器之间没有那么多障碍,所以你可以直接闯进去。

|

||||

|

||||

### 扩展阅读

|

||||

|

||||

如果你想了解有关此操作系统的更多信息,请查看 [RISC OS Open][3],或者将镜像烧到闪存到卡上并开始使用它。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/gentle-intro-risc-os

|

||||

|

||||

作者:[James Mawson][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/dxmjames

|

||||

[1]:https://en.wikipedia.org/wiki/Acorn_Archimedes

|

||||

[2]:https://www.armbian.com/

|

||||

[3]:https://www.riscosopen.org/content/

|

||||

[4]:https://www.raspbian.org/

|

||||

[5]:https://www.riscosopen.org/wiki/documentation/show/Introduction%20to%20RISC%20OS

|

||||

@ -1,67 +1,64 @@

|

||||

**全文共三处“译注”,麻烦校对大大**

|

||||

|

||||

2018 年 5 款最好的 Linux 游戏

|

||||

======

|

||||

|

||||

|

||||

|

||||

Linux 可能不会很快成为游戏玩家选择的平台——Valve Steam Machines 的失败似乎是对这一点的深刻提醒——但这并不意味着该平台没有稳定增长,并且拥有相当多的优秀游戏。

|

||||

Linux 可能不会很快成为游戏玩家选择的平台 —— Valve Steam Machines 的失败似乎是对这一点的深刻提醒 —— 但这并不意味着该平台没有稳定增长,并且拥有相当多的优秀游戏。

|

||||

|

||||

从独立打击到辉煌的 RPG(角色扮演),2018 年已经可以称得上是 Linux 游戏的丰收年,在这里,我们将列出迄今为止最喜欢的五款。

|

||||

从独立单机到辉煌的 RPG(角色扮演),2018 年已经可以称得上是 Linux 游戏的丰收年,在这里,我们将列出迄今为止最喜欢的五款。

|

||||

|

||||

你是否在寻找优秀的 Linux 游戏却又不想挥霍金钱?来看看我们的最佳 [免费 Linux 游戏][1] 名单吧!

|

||||

|

||||

### 1. 永恒之柱2:死亡之火(Pillars of Eternity II: Deadfire)

|

||||

### 1、<ruby>永恒之柱 2:死亡之火<rt>Pillars of Eternity II: Deadfire</rt></ruby>

|

||||

|

||||

![best-linux-games-2018-pillars-of-eternity-2-deadfire][2]

|

||||

|

||||

其中一款最能代表近年来 cRPG 的复兴,它让传统的 Bethesda RPG 看起来更像是轻松的动作冒险游戏。在《永恒之柱》系列的最新作品中,当你和船员在充满冒险和危机的岛屿周围航行时,你会发现自己更像是一个海盗。

|

||||

其中一款最能代表近年来 cRPG 的复兴,它让传统的 Bethesda RPG 看起来更像是轻松的动作冒险游戏。在磅礴的《<ruby>永恒之柱<rt>Pillars of Eternity</rt></ruby>》系列的最新作品中,当你和船员在充满冒险和危机的岛屿周围航行时,你会发现自己更像是一个海盗。

|

||||

|

||||

在混合了海战元素的基础上,《死亡之火》延续了前作丰富的游戏剧情和出色的写作,同时在美丽的画面和手绘背景的基础上更进一步。

|

||||

在混合了海战元素的基础上,《死亡之火》延续了前作丰富的游戏剧情和出色的文笔,同时在美丽的画面和手绘背景的基础上更进一步。

|

||||

|

||||

这是一款毫无疑问的深度的硬核 RPG ,可能会让一些人对它产生抵触情绪,不过那些接受它的人会投入几个月的时间沉迷其中。

|

||||

这是一款毫无疑问的令人印象深刻的硬核 RPG ,可能会让一些人对它产生抵触情绪,不过那些接受它的人会投入几个月的时间沉迷其中。

|

||||

|

||||

|

||||

### 2. 杀戮尖塔(Slay the Spire)

|

||||

### 2、<ruby>杀戮尖塔<rt>Slay the Spire</rt></ruby>

|

||||

|

||||

![best-linux-games-2018-slay-the-spire][3]

|

||||

|

||||

《杀戮尖塔》仍处于早期阶段,却已经成为年度最佳游戏之一,它是一款采用 deck-building 玩法的卡牌游戏,由充满活力的视觉风格和流氓般的机制加以点缀,在每次令人愤怒的(但可能是应受的)死亡之后,你还会回来尝试更多次。(译注:翻译出来有点生硬)

|

||||

《杀戮尖塔》仍处于早期阶段,却已经成为年度最佳游戏之一,它是一款采用 deck-building 玩法的卡牌游戏,由充满活力的视觉风格和 rogue-like 机制加以点缀,即便在一次次令人愤怒的(但可能是应受的)死亡之后,你还会再次投入其中。

|

||||

|

||||

每次游戏都有无尽的卡牌组合和不同的布局,《杀戮尖塔》就像是近年来所有震撼独立场景的最佳实现——卡牌游戏和永久死亡冒险合二为一。

|

||||

每次游戏都有无尽的卡牌组合和不同的布局,《杀戮尖塔》就像是近年来所有令人震撼的独立游戏的最佳具现 —— 卡牌游戏和永久死亡冒险模式合二为一。

|

||||

|

||||

再强调一次,它仍处于早期阶段,所以它只会变得越来越好!

|

||||

|

||||

### 3. 战斗机甲(Battletech)

|

||||

### 3、<ruby>战斗机甲<rt>Battletech</rt></ruby>

|

||||

|

||||

![best-linux-games-2018-battletech][4]

|

||||

|

||||

正如我们在这个名单上看到的“重磅”游戏一样(译注:这句翻译出来前后逻辑感觉有问题),《战斗机甲》是一款星际战争游戏(基于桌面游戏),你将装载一个机甲战队并引导它们进行丰富的回合制战斗。

|

||||

这是我们榜单上像“大片”一样的游戏,《战斗机甲》是一款星际战争游戏(基于桌面游戏),你将装载一个机甲战队并引导它们进行丰富的回合制战斗。

|

||||

|

||||

战斗发生在一系列的地形上——从寒冷的荒地到阳光普照的地带——你将用巨大的热武器装备你的四人小队,与对手小队作战。如果你觉得这听起来有点“机械战士”的味道,那么你正是在正确的思考路线上,只不过这次更注重战术安排而不是直接行动。

|

||||

战斗发生在一系列的地形上,从寒冷的荒地到阳光普照的地带,你将用巨大的热武器装备你的四人小队,与对手小队作战。如果你觉得这听起来有点“机械战士”的味道,那么你想的没错,只不过这次更注重战术安排而不是直接行动。

|

||||

|

||||

除了让你在宇宙冲突中指挥的战役外,多人模式也可能会耗费你数不清的时间。

|

||||

|

||||

### 4. 死亡细胞(Dead Cells)

|

||||

### 4、<ruby>死亡细胞<rt>Dead Cells</rt></ruby>

|

||||

|

||||

![best-linux-games-2018-dead-cells][5]

|

||||

|

||||

这款游戏称得上是年度最佳平台动作游戏。"Roguelite" 类游戏《死亡细胞》将你带入一个黑暗(却色彩绚丽)的世界,在那里进行攻击和躲避以通过程序生成的关卡。它有点像 2D 的《黑暗之魂(Dark Souls)》,如果黑暗之魂被五彩缤纷的颜色浸透的话。

|

||||

这款游戏称得上是年度最佳平台动作游戏。Roguelike 游戏《死亡细胞》将你带入一个黑暗(却色彩绚丽)的世界,在那里进行攻击和躲避以通过程序生成的关卡。它有点像 2D 的《<ruby>黑暗之魂<rt>Dark Souls</rt></ruby>》,如果《黑暗之魂》也充满五彩缤纷的颜色的话。

|

||||

|

||||

死亡细胞很残忍,不过精确而灵敏的控制系统一定会让你为死亡付出代价,而在两次运行期间的升级系统又会确保你总是有一些进步的成就感。

|

||||

《死亡细胞》是无情的,只有精确而灵敏的控制才会让你避开死亡,而在两次运行期间的升级系统又会确保你总是有一些进步的成就感。

|

||||

|

||||

《死亡细胞》的像素风、动画效果和游戏机制都达到了巅峰,它及时地提醒我们,在没有 3D 图形的过度使用下游戏可以制作成什么样子。

|

||||

|

||||

|

||||

### 5. 叛逆机械师(Iconoclasts)

|

||||

### 5、<ruby>叛逆机械师<rt>Iconoclasts</rt></ruby>

|

||||

|

||||

![best-linux-games-2018-iconoclasts][6]

|

||||

|

||||

这款游戏不像上面提到的几款那样为人所知,它是一款可爱风格的游戏,可以看作是《死亡细胞》不那么惊悚、更可爱的替代品(译注:形容词生硬)。玩家将扮演成罗宾,发现自己处于政治扭曲的外星世界后开始了逃亡。

|

||||

这款游戏不像上面提到的几款那样为人所知,它是一款可爱风格的游戏,可以看作是《死亡细胞》不那么惊悚、更可爱的替代品。玩家将扮演成罗宾,一个发现自己处于政治扭曲的外星世界后开始了逃亡的女孩。

|

||||

|

||||

尽管你的角色将在非线性的关卡中行动,游戏却有着扣人心弦的游戏剧情,罗宾会获得各种各样充满想象力的提升,其中最重要的是她的扳手,从发射炮弹到解决巧妙的环境问题,你几乎可以用它来做任何事。

|

||||

|

||||

《叛逆机械师》是一个充满快乐与活力的平台游戏,融合了《洛克人(Megaman)》的战斗和《银河战士(Metroid)》的探索。如果你借鉴了那两部伟大的作品,可能不会比它做得更好。

|

||||

《叛逆机械师》是一个充满快乐与活力的平台游戏,融合了《<ruby>洛克人<rt>Megaman</rt></ruby>》的战斗和《<ruby>银河战士<rt>Metroid</rt></ruby>》的探索。如果你借鉴了那两部伟大的作品,可能不会比它做得更好。

|

||||

|

||||

### 总结

|

||||

|

||||

@ -74,7 +71,7 @@ via: https://www.maketecheasier.com/best-linux-games/

|

||||

作者:[Robert Zak][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[seriouszyx](https://github.com/seriouszyx)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,247 @@

|

||||

如何使用 chkconfig 和 systemctl 命令启用或禁用 Linux 服务

|

||||

======

|

||||

|

||||

对于 Linux 管理员来说这是一个重要(美妙)的话题,所以每个人都必须知道,并练习怎样才能更高效的使用它们。

|

||||

|

||||

在 Linux 中,无论何时当你安装任何带有服务和守护进程的包,系统默认会把这些服务的初始化及 systemd 脚本添加进去,不过此时它们并没有被启用。

|

||||

|

||||

我们需要手动的开启或者关闭那些服务。Linux 中有三个著名的且一直在被使用的初始化系统。

|

||||

|

||||

### 什么是初始化系统?

|

||||

|

||||

在以 Linux/Unix 为基础的操作系统上,`init` (初始化的简称) 是内核引导系统启动过程中第一个启动的进程。

|

||||

|

||||

`init` 的进程 id (pid)是 1,除非系统关机否则它将会一直在后台运行。

|

||||

|

||||

`init` 首先根据 `/etc/inittab` 文件决定 Linux 运行的级别,然后根据运行级别在后台启动所有其他进程和应用程序。

|

||||

|

||||

BIOS、MBR、GRUB 和内核程序在启动 `init` 之前就作为 Linux 的引导程序的一部分开始工作了。

|

||||

|

||||

下面是 Linux 中可以使用的运行级别(从 0~6 总共七个运行级别):

|

||||

|

||||

* `0`:关机

|

||||

* `1`:单用户模式

|

||||

* `2`:多用户模式(没有NFS)

|

||||

* `3`:完全的多用户模式

|

||||

* `4`:系统未使用

|

||||

* `5`:图形界面模式

|

||||

* `6`:重启

|

||||

|

||||

下面是 Linux 系统中最常用的三个初始化系统:

|

||||

|

||||

* System V(Sys V)

|

||||

* Upstart

|

||||

* systemd

|

||||

|

||||

### 什么是 System V(Sys V)?

|

||||

|

||||

System V(Sys V)是类 Unix 系统第一个也是传统的初始化系统。`init` 是内核引导系统启动过程中第一支启动的程序,它是所有程序的父进程。

|

||||

|

||||

大部分 Linux 发行版最开始使用的是叫作 System V(Sys V)的传统的初始化系统。在过去的几年中,已经发布了好几个初始化系统以解决标准版本中的设计限制,例如:launchd、Service Management Facility、systemd 和 Upstart。

|

||||

|

||||

但是 systemd 已经被几个主要的 Linux 发行版所采用,以取代传统的 SysV 初始化系统。

|

||||

|

||||

### 什么是 Upstart?

|

||||

|

||||

Upstart 是一个基于事件的 `/sbin/init` 守护进程的替代品,它在系统启动过程中处理任务和服务的启动,在系统运行期间监视它们,在系统关机的时候关闭它们。

|

||||

|

||||

它最初是为 Ubuntu 而设计,但是它也能够完美的部署在其他所有 Linux系统中,用来代替古老的 System-V。

|

||||

|

||||

Upstart 被用于 Ubuntu 从 9.10 到 Ubuntu 14.10 和基于 RHEL 6 的系统,之后它被 systemd 取代。

|

||||

|

||||

### 什么是 systemd?

|

||||

|

||||

systemd 是一个新的初始化系统和系统管理器,它被用于所有主要的 Linux 发行版,以取代传统的 SysV 初始化系统。

|

||||

|

||||

systemd 兼容 SysV 和 LSB 初始化脚本。它可以直接替代 SysV 初始化系统。systemd 是被内核启动的第一个程序,它的 PID 是 1。

|

||||

|

||||

systemd 是所有程序的父进程,Fedora 15 是第一个用 systemd 取代 upstart 的发行版。`systemctl` 用于命令行,它是管理 systemd 的守护进程/服务的主要工具,例如:(开启、重启、关闭、启用、禁用、重载和状态)

|

||||

|

||||

systemd 使用 .service 文件而不是 bash 脚本(SysVinit 使用的)。systemd 将所有守护进程添加到 cgroups 中排序,你可以通过浏览 `/cgroup/systemd` 文件查看系统等级。

|

||||

|

||||

### 如何使用 chkconfig 命令启用或禁用引导服务?

|

||||

|

||||

`chkconfig` 实用程序是一个命令行工具,允许你在指定运行级别下启动所选服务,以及列出所有可用服务及其当前设置。

|

||||

|

||||

此外,它还允许我们从启动中启用或禁用服务。前提是你有超级管理员权限(root 或者 `sudo`)运行这个命令。

|

||||

|

||||

所有的服务脚本位于 `/etc/rd.d/init.d`文件中

|

||||

|

||||

### 如何列出运行级别中所有的服务

|

||||

|

||||

`--list` 参数会展示所有的服务及其当前状态(启用或禁用服务的运行级别):

|

||||

|

||||

```

|

||||

# chkconfig --list

|

||||

NetworkManager 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

abrt-ccpp 0:off 1:off 2:off 3:on 4:off 5:on 6:off

|

||||

abrtd 0:off 1:off 2:off 3:on 4:off 5:on 6:off

|

||||

acpid 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

atd 0:off 1:off 2:off 3:on 4:on 5:on 6:off

|

||||

auditd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

.

|

||||

.

|

||||

```

|

||||

|

||||

### 如何查看指定服务的状态

|

||||

|

||||

如果你想查看运行级别下某个服务的状态,你可以使用下面的格式匹配出需要的服务。

|

||||

|

||||

比如说我想查看运行级别中 `auditd` 服务的状态

|

||||

|

||||

```

|

||||

# chkconfig --list| grep auditd

|

||||

auditd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

|

||||

```

|

||||

|

||||

### 如何在指定运行级别中启用服务

|

||||

|

||||

使用 `--level` 参数启用指定运行级别下的某个服务,下面展示如何在运行级别 3 和运行级别 5 下启用 `httpd` 服务。

|

||||

|

||||

|

||||

```

|

||||

# chkconfig --level 35 httpd on

|

||||

```

|

||||

|

||||

### 如何在指定运行级别下禁用服务

|

||||

|

||||

同样使用 `--level` 参数禁用指定运行级别下的服务,下面展示的是在运行级别 3 和运行级别 5 中禁用 `httpd` 服务。

|

||||

|

||||

```

|

||||

# chkconfig --level 35 httpd off

|

||||

```

|

||||

|

||||

### 如何将一个新服务添加到启动列表中

|

||||

|

||||

`-–add` 参数允许我们添加任何新的服务到启动列表中,默认情况下,新添加的服务会在运行级别 2、3、4、5 下自动开启。

|

||||

|

||||

```

|

||||

# chkconfig --add nagios

|

||||

```

|

||||

|

||||

### 如何从启动列表中删除服务

|

||||

|

||||

可以使用 `--del` 参数从启动列表中删除服务,下面展示的是如何从启动列表中删除 Nagios 服务。

|

||||

|

||||

```

|

||||

# chkconfig --del nagios

|

||||

```

|

||||

|

||||

### 如何使用 systemctl 命令启用或禁用开机自启服务?

|

||||

|

||||

`systemctl` 用于命令行,它是一个用来管理 systemd 的守护进程/服务的基础工具,例如:(开启、重启、关闭、启用、禁用、重载和状态)。

|

||||

|

||||

所有服务创建的 unit 文件位与 `/etc/systemd/system/`。

|

||||

|

||||

### 如何列出全部的服务

|

||||

|

||||

使用下面的命令列出全部的服务(包括启用的和禁用的)。

|

||||

|

||||

```

|

||||

# systemctl list-unit-files --type=service

|

||||

UNIT FILE STATE

|

||||

arp-ethers.service disabled

|

||||

auditd.service enabled

|

||||

autovt@.service enabled

|

||||

blk-availability.service disabled

|

||||

brandbot.service static

|

||||

chrony-dnssrv@.service static

|

||||

chrony-wait.service disabled

|

||||

chronyd.service enabled

|

||||

cloud-config.service enabled

|

||||

cloud-final.service enabled

|

||||

cloud-init-local.service enabled

|

||||

cloud-init.service enabled

|

||||

console-getty.service disabled

|

||||

console-shell.service disabled

|

||||

container-getty@.service static

|

||||

cpupower.service disabled

|

||||

crond.service enabled

|

||||

.

|

||||

.

|

||||

150 unit files listed.

|

||||

```

|

||||

|

||||

使用下面的格式通过正则表达式匹配出你想要查看的服务的当前状态。下面是使用 `systemctl` 命令查看 `httpd` 服务的状态。

|

||||

|

||||

```

|

||||

# systemctl list-unit-files --type=service | grep httpd

|

||||

httpd.service disabled

|

||||

```

|

||||

|

||||

### 如何让指定的服务开机自启

|

||||

|

||||

使用下面格式的 `systemctl` 命令启用一个指定的服务。启用服务将会创建一个符号链接,如下可见:

|

||||

|

||||

```

|

||||

# systemctl enable httpd

|

||||

Created symlink from /etc/systemd/system/multi-user.target.wants/httpd.service to /usr/lib/systemd/system/httpd.service.

|

||||

```

|

||||

|

||||

运行下列命令再次确认服务是否被启用。

|

||||

|

||||

```

|

||||

# systemctl is-enabled httpd

|

||||

enabled

|

||||

```

|

||||

|

||||

### 如何禁用指定的服务

|

||||

|

||||

运行下面的命令禁用服务将会移除你启用服务时所创建的符号链接。

|

||||

|

||||

```

|

||||

# systemctl disable httpd

|

||||

Removed symlink /etc/systemd/system/multi-user.target.wants/httpd.service.

|

||||

```

|

||||

|

||||

运行下面的命令再次确认服务是否被禁用。

|

||||

|

||||

```

|

||||

# systemctl is-enabled httpd

|

||||

disabled

|

||||

```

|

||||

|

||||

### 如何查看系统当前的运行级别

|

||||

|

||||

使用 `systemctl` 命令确认你系统当前的运行级别,`runlevel` 命令仍然可在 systemd 下工作,不过,运行级别对于 systemd 来说是一个历史遗留的概念。所以我建议你全部使用 `systemctl` 命令。

|

||||

|

||||

我们当前处于运行级别 3, 它等同于下面显示的 `multi-user.target`。

|

||||

|

||||

```

|

||||

# systemctl list-units --type=target

|

||||

UNIT LOAD ACTIVE SUB DESCRIPTION

|

||||

basic.target loaded active active Basic System

|

||||

cloud-config.target loaded active active Cloud-config availability

|

||||

cryptsetup.target loaded active active Local Encrypted Volumes

|

||||

getty.target loaded active active Login Prompts

|

||||

local-fs-pre.target loaded active active Local File Systems (Pre)

|

||||

local-fs.target loaded active active Local File Systems

|

||||

multi-user.target loaded active active Multi-User System

|

||||

network-online.target loaded active active Network is Online

|

||||

network-pre.target loaded active active Network (Pre)

|

||||

network.target loaded active active Network

|

||||

paths.target loaded active active Paths

|

||||

remote-fs.target loaded active active Remote File Systems

|

||||

slices.target loaded active active Slices

|

||||

sockets.target loaded active active Sockets

|

||||

swap.target loaded active active Swap

|

||||

sysinit.target loaded active active System Initialization

|

||||

timers.target loaded active active Timers

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

|

||||

via: https://www.2daygeek.com/how-to-enable-or-disable-services-on-boot-in-linux-using-chkconfig-and-systemctl-command/

|

||||

|

||||

|

||||

作者:[Prakash Subramanian][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[way-ww](https://github.com/way-ww)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

|

||||

[a]: https://www.2daygeek.com/author/prakash/

|

||||

[b]: https://github.com/lujun9972

|

||||

@ -1,11 +1,11 @@

|

||||

使用 Calcurse 在 Linux 命令行中组织任务

|

||||

======

|

||||

|

||||

使用 Calcurse 了解你的日历和待办事项列表。

|

||||

> 使用 Calcurse 了解你的日历和待办事项列表。

|

||||

|

||||

|

||||

|

||||

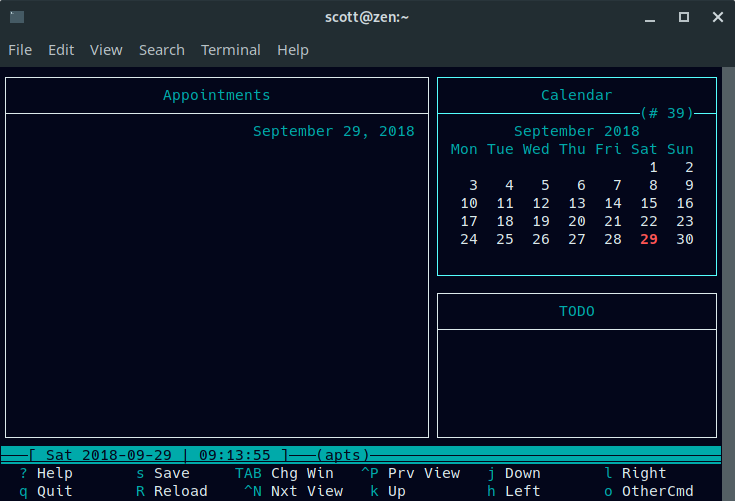

你是否需要复杂,功能丰富的图形或 Web 程序才能保持井井有条?我不这么认为。正确的命令行工具可以完成工作并且做得很好。

|

||||

你是否需要复杂、功能丰富的图形或 Web 程序才能保持井井有条?我不这么认为。合适的命令行工具可以完成工作并且做得很好。

|

||||

|

||||

当然,说出命令行这个词可能会让一些 Linux 用户感到害怕。对他们来说,命令行是未知领域。

|

||||

|

||||

@ -15,54 +15,51 @@

|

||||

|

||||

### 获取软件

|

||||

|

||||

如果你喜欢编译代码(我通常不喜欢),你可以从[Calcurse 网站][1]获取源码。否则,根据你的 Linux 发行版获取[二进制安装程序][2]。你甚至可以从 Linux 发行版的软件包管理器中获取 Calcurse。检查一下不会有错的。

|

||||

如果你喜欢编译代码(我通常不喜欢),你可以从 [Calcurse 网站][1]获取源码。否则,根据你的 Linux 发行版获取[二进制安装程序][2]。你甚至可以从 Linux 发行版的软件包管理器中获取 Calcurse。检查一下不会有错的。

|

||||

|

||||

编译或安装 Calcurse 后(两者都不用太长时间),你就可以开始使用了。

|

||||

|

||||

### 使用 Calcurse

|

||||

|

||||

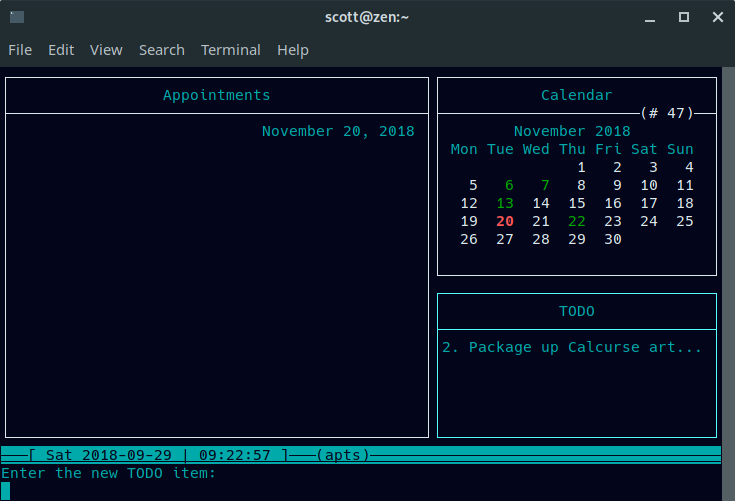

打开终端并输入 **calcurse**。

|

||||

打开终端并输入 `calcurse`。

|

||||

|

||||

|

||||

|

||||

Calcurse 的界面由三个面板组成:

|

||||

|

||||

* 预约(屏幕左侧)

|

||||

* 日历(右上角)

|

||||

* 待办事项清单(右下角)

|

||||

* <ruby>预约<rt>Appointments</rt></ruby>(屏幕左侧)

|

||||

* <ruby>日历<rt>Calendar</rt></ruby>(右上角)

|

||||

* <ruby>待办事项清单<rt>TODO</rt></ruby>(右下角)

|

||||

|

||||

按键盘上的 `Tab` 键在面板之间移动。要在面板添加新项目,请按下 `a`。Calcurse 将指导你完成添加项目所需的操作。

|

||||

|

||||

一个有趣的地方地是预约和日历面板配合工作。你选中日历面板并添加一个预约。在那里,你选择一个预约的日期。完成后,你回到预约面板,你就看到了。

|

||||

|

||||

|

||||

按键盘上的 Tab 键在面板之间移动。要在面板添加新项目,请按下 **a**。Calcurse 将指导你完成添加项目所需的操作。

|

||||

|

||||

一个有趣的地方地预约和日历面板一起生效。你选中日历面板并添加一个预约。在那里,你选择一个预约的日期。完成后,你回到预约面板。我知道。。。

|

||||

|

||||

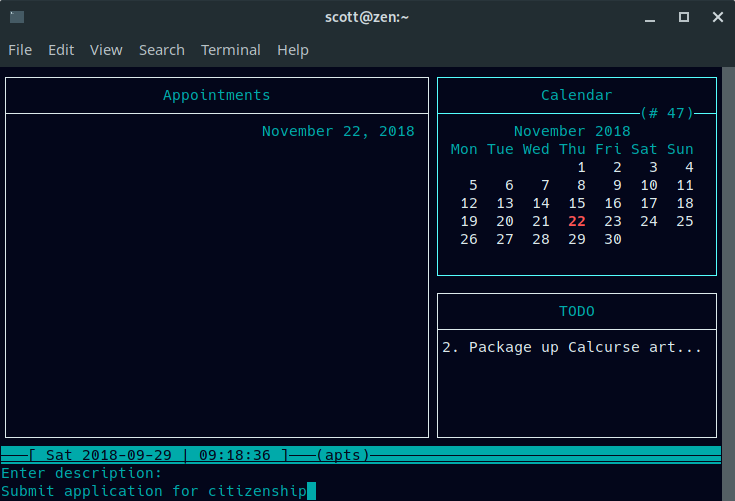

按下 **a** 设置开始时间,持续时间(以分钟为单位)和预约说明。开始时间和持续时间是可选的。Calcurse 在它们到期的那天显示预约。

|

||||

按下 `a` 设置开始时间、持续时间(以分钟为单位)和预约说明。开始时间和持续时间是可选的。Calcurse 在它们到期的那天显示预约。

|

||||

|

||||

|

||||

|

||||

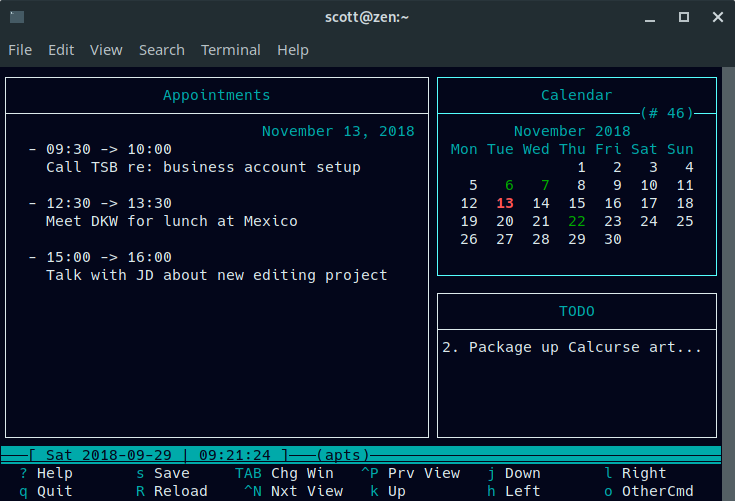

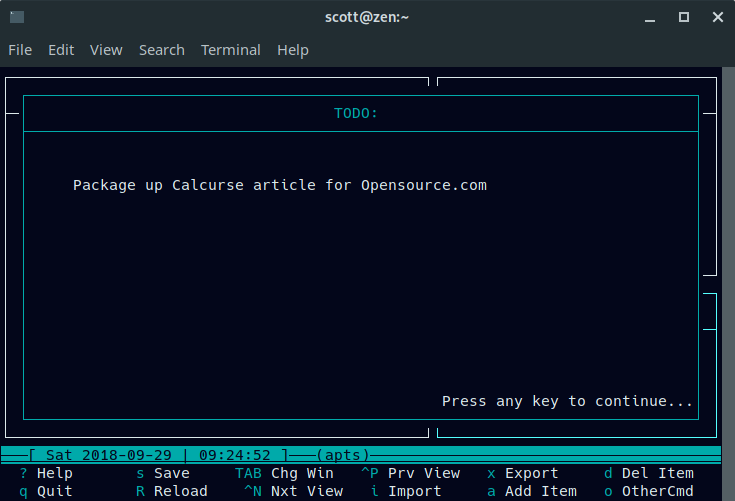

一天的预约看起来像:

|

||||

一天的预约看起来像这样:

|

||||

|

||||

|

||||

|

||||

待办事项列表独立运作。选中待办面板并(再次)按下 **a**。输入任务的描述,然后设置优先级(1 表示最高,9 表示最低)。Calcurse 会在待办事项面板中列出未完成的任务。

|

||||

待办事项列表独立运作。选中待办面板并(再次)按下 `a`。输入任务的描述,然后设置优先级(1 表示最高,9 表示最低)。Calcurse 会在待办事项面板中列出未完成的任务。

|

||||

|

||||

|

||||

|

||||

如果你的任务有很长的描述,那么 Calcurse 会截断它。你可以使用键盘上的向上或向下箭头键浏览任务,然后按下 **v** 查看描述。

|

||||

如果你的任务有很长的描述,那么 Calcurse 会截断它。你可以使用键盘上的向上或向下箭头键浏览任务,然后按下 `v` 查看描述。

|

||||

|

||||

|

||||

|

||||

Calcurse 将其信息以文本形式保存在你的主目录下名为 **.calcurse** 的隐藏文件夹中,例如 **/home/scott/.calcurse**。如果 Calcurse 停止工作,那也很容易找到你的信息。

|

||||

Calcurse 将其信息以文本形式保存在你的主目录下名为 `.calcurse` 的隐藏文件夹中,例如 `/home/scott/.calcurse`。如果 Calcurse 停止工作,那也很容易找到你的信息。

|

||||

|

||||

### 其他有用的功能

|

||||

|

||||

Calcurse 其他的功能包括设置重复预约的功能。要执行此操作,找出要重复的预约,然后在预约面板中按下 **r**。系统会要求你设置频率(例如,每天或每周)以及你希望重复预约的时间。

|

||||

Calcurse 其他的功能包括设置重复预约的功能。要执行此操作,找出要重复的预约,然后在预约面板中按下 `r`。系统会要求你设置频率(例如,每天或每周)以及你希望重复预约的时间。

|

||||

|

||||

你还可以导入 [ICAL][3] 格式的日历或以 ICAL 或 [PCAL][4] 格式导出数据。使用 ICAL,你可以与其他日历程序共享数据。使用 PCAL,你可以生成日历的 Postscript 版本。

|

||||

|

||||

你还可以将许多命令行参数传递给 Calcurse。你可以[在文档中][5]阅读它们。

|

||||

你还可以将许多命令行参数传递给 Calcurse。你可以[在文档中][5]了解它们。

|

||||

|

||||

虽然很简单,但 Calcurse 可以帮助你保持井井有条。你需要更加关注自己的任务和预约,但是你将能够更好地关注你需要做什么以及你需要做的方向。

|

||||

|

||||

@ -73,7 +70,7 @@ via: https://opensource.com/article/18/10/calcurse

|

||||

作者:[Scott Nesbitt][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,6 +1,7 @@

|

||||

pydbgen:一个数据库随机生成器

|

||||

======

|

||||

> 用这个简单的工具生成大型数据库,让你更好地研究数据科学。

|

||||

|

||||

> 用这个简单的工具生成带有多表的大型数据库,让你更好地用 SQL 研究数据科学。

|

||||

|

||||

|

||||

|

||||

@ -38,7 +39,6 @@ from pydbgen import pydbgen

|

||||

myDB=pydbgen.pydb()

|

||||

```

|

||||

|

||||

Then you can access the various internal functions exposed by the **pydb** object. For example, to print random US cities, enter:

|

||||

随后就可以调用 `pydb` 对象公开的各种内部函数了。可以按照下面的例子,输出随机的美国城市和车牌号码:

|

||||

|

||||

```

|

||||

@ -58,7 +58,7 @@ for _ in range(10):

|

||||

SZL-0934

|

||||

```

|

||||

|

||||

另外,如果你输入的是 city 而不是 city_real,返回的将会是虚构的城市名。

|

||||

另外,如果你输入的是 `city()` 而不是 `city_real()`,返回的将会是虚构的城市名。

|

||||

|

||||

```

|

||||

print(myDB.gen_data_series(num=8,data_type='city'))

|

||||

@ -97,11 +97,12 @@ fields=['name','city','street_address','email'])

|

||||

```

|

||||

|

||||

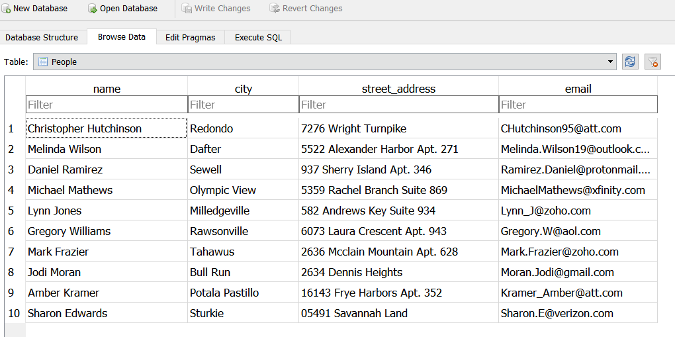

上面的例子种生成了一个能被 MySQL 和 SQLite 支持的 `.db` 文件。下图则显示了这个文件中的数据表在 SQLite 可视化客户端中打开的画面。

|

||||

|

||||

|

||||

|

||||

### 生成 Excel 文件

|

||||

|

||||

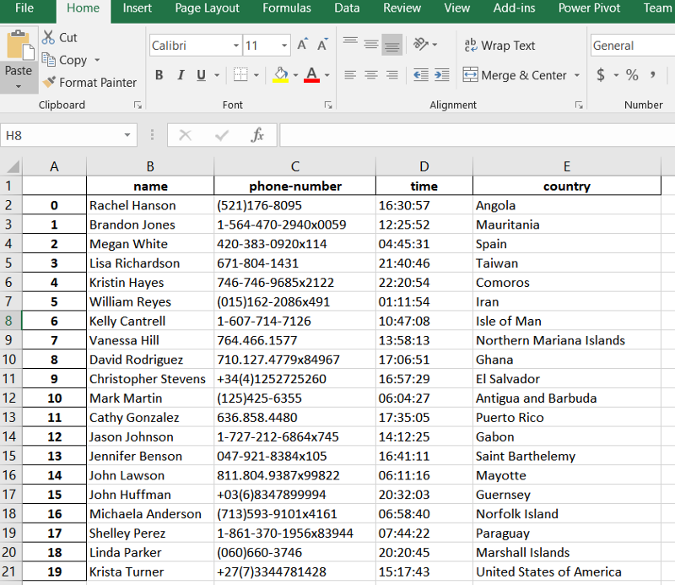

和上面的其它示例类似,下面的代码可以生成一个具有随机数据的 Excel 文件。值得一提的是,通过将`phone_simple` 参数设为 `False` ,可以生成较长较复杂的电话号码。如果你想要提高自己在数据提取方面的能力,不妨尝试一下这个功能。

|

||||

和上面的其它示例类似,下面的代码可以生成一个具有随机数据的 Excel 文件。值得一提的是,通过将 `phone_simple` 参数设为 `False` ,可以生成较长较复杂的电话号码。如果你想要提高自己在数据提取方面的能力,不妨尝试一下这个功能。

|

||||

|

||||

```

|

||||

myDB.gen_excel(num=20,fields=['name','phone','time','country'],

|

||||

@ -109,6 +110,7 @@ phone_simple=False,filename='TestExcel.xlsx')

|

||||

```

|

||||

|

||||

最终的结果类似下图所示:

|

||||

|

||||

|

||||

|

||||

### 生成随机电子邮箱地址

|

||||

@ -133,7 +135,7 @@ Tirtha.S@comcast.net

|

||||

|

||||

### 未来的改进和用户贡献

|

||||

|

||||

目前的版本中并不完美。如果你发现了 pydbgen 的 bug 导致 pydbgen 在运行期间发生崩溃,请向我反馈。如果你打算对这个项目贡献代码,[也随时欢迎你][1]。当然现在也还有很多改进的方向:

|

||||

目前的版本中并不完美。如果你发现了 pydbgen 的 bug 导致它在运行期间发生崩溃,请向我反馈。如果你打算对这个项目贡献代码,[也随时欢迎你][1]。当然现在也还有很多改进的方向:

|

||||

|

||||

* pydbgen 作为随机数据生成器,可以集成一些机器学习或统计建模的功能吗?

|

||||

* pydbgen 是否会添加可视化功能?

|

||||

@ -151,7 +153,7 @@ via: https://opensource.com/article/18/11/pydbgen-random-database-table-generato

|

||||

作者:[Tirthajyoti Sarkar][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -11,9 +11,9 @@

|

||||

|

||||

### tin-summer

|

||||

|

||||

`tin-summer` 是使用 Rust 语言编写的免费开源工具,它可以用于查找占用磁盘空间的文件,它也是 `du` 命令的另一个替代品。由于使用了多线程,因此 `tin-summer` 在计算大目录的大小时会比 `du` 命令快得多。`tin-summer` 与 `du` 命令之间的区别是前者读取文件的大小,而后者则读取磁盘使用情况。

|

||||

tin-summer 是使用 Rust 语言编写的自由开源工具,它可以用于查找占用磁盘空间的文件,它也是 `du` 命令的另一个替代品。由于使用了多线程,因此 tin-summer 在计算大目录的大小时会比 `du` 命令快得多。tin-summer 与 `du` 命令之间的区别是前者读取文件的大小,而后者则读取磁盘使用情况。

|

||||

|

||||

`tin-summer` 的开发者认为它可以替代 `du`,因为它具有以下优势:

|

||||

tin-summer 的开发者认为它可以替代 `du`,因为它具有以下优势:

|

||||

|

||||

* 在大目录的操作速度上比 `du` 更快;

|

||||

* 在显示结果上默认采用易读格式;

|

||||

@ -21,26 +21,26 @@

|

||||

* 可以对输出进行排序和着色处理;

|

||||

* 可扩展,等等。

|

||||

|

||||

|

||||

|

||||

**安装 tin-summer**

|

||||

|

||||

要安装 `tin-summer`,只需要在终端中执行以下命令:

|

||||

要安装 tin-summer,只需要在终端中执行以下命令:

|

||||

|

||||

```

|

||||

$ curl -LSfs https://japaric.github.io/trust/install.sh | sh -s -- --git vmchale/tin-summer

|

||||

```

|

||||

|

||||

你也可以使用 `cargo` 软件包管理器安装 `tin-summer`,但你需要在系统上先安装 Rust。在 Rust 已经安装好的情况下,执行以下命令:

|

||||

你也可以使用 `cargo` 软件包管理器安装 tin-summer,但你需要在系统上先安装 Rust。在 Rust 已经安装好的情况下,执行以下命令:

|

||||

|

||||

```

|

||||

$ cargo install tin-summer

|

||||

```

|

||||

|

||||

如果上面提到的这两种方法都不能成功安装 `tin-summer`,还可以从它的[软件发布页][1]下载最新版本的二进制文件编译,进行手动安装。

|

||||

如果上面提到的这两种方法都不能成功安装 tin-summer,还可以从它的[软件发布页][1]下载最新版本的二进制文件编译,进行手动安装。

|

||||

|

||||

**用法**

|

||||

|

||||

(LCTT 译注:tin-summer 的命令名为 `sn`)

|

||||

|

||||

如果需要查看当前工作目录的文件大小,可以执行以下命令:

|

||||

|

||||

```

|

||||

@ -80,13 +80,13 @@ $ sn sort /home/sk/ -n5

|

||||

$ sn ar

|

||||

```

|

||||

|

||||

`tin-summer` 同样支持查找指定大小的带有构建工程的目录。例如执行以下命令可以查找到大小在 100 MB 以上的带有构建工程的目录:

|

||||

tin-summer 同样支持查找指定大小的带有构建工程的目录。例如执行以下命令可以查找到大小在 100 MB 以上的带有构建工程的目录:

|

||||

|

||||

```

|

||||

$ sn ar -t100M

|

||||

```

|

||||

|

||||

如上文所说,`tin-summer` 在操作大目录的时候速度比较快,因此在操作小目录的时候,速度会相对比较慢一些。不过它的开发者已经表示,将会在以后的版本中优化这个缺陷。

|

||||

如上文所说,tin-summer 在操作大目录的时候速度比较快,因此在操作小目录的时候,速度会相对比较慢一些。不过它的开发者已经表示,将会在以后的版本中优化这个缺陷。

|

||||

|

||||

要获取相关的帮助,可以执行以下命令:

|

||||

|

||||

@ -98,7 +98,7 @@ $ sn --help

|

||||

|

||||

### dust

|

||||

|

||||

`dust` (含义是 `du` + `rust` = `dust`)使用 Rust 编写,是一个免费、开源的更直观的 `du` 工具。它可以在不需要 `head` 或`sort` 命令的情况下即时显示目录占用的磁盘空间。与 `tin-summer` 一样,它会默认情况以易读的格式显示每个目录的大小。

|

||||

`dust` (含义是 `du` + `rust` = `dust`)使用 Rust 编写,是一个免费、开源的更直观的 `du` 工具。它可以在不需要 `head` 或`sort` 命令的情况下即时显示目录占用的磁盘空间。与 tin-summer 一样,它会默认情况以易读的格式显示每个目录的大小。

|

||||

|

||||

**安装 dust**

|

||||

|

||||

@ -114,7 +114,7 @@ $ cargo install du-dust

|

||||

$ wget https://github.com/bootandy/dust/releases/download/v0.3.1/dust-v0.3.1-x86_64-unknown-linux-gnu.tar.gz

|

||||

```

|

||||

|

||||

抽取文件:

|

||||

抽取文件:

|

||||

|

||||

```

|

||||

$ tar -xvf dust-v0.3.1-x86_64-unknown-linux-gnu.tar.gz

|

||||

@ -283,7 +283,7 @@ via: https://www.ostechnix.com/some-good-alternatives-to-du-command/

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,56 +0,0 @@

|

||||

plutoid Translating!

|

||||

|

||||

Write Dumb Code

|

||||

======

|

||||

The best way you can contribute to an open source project is to remove lines of code from it. We should endeavor to write code that a novice programmer can easily understand without explanation or that a maintainer can understand without significant time investment.

|

||||

|

||||

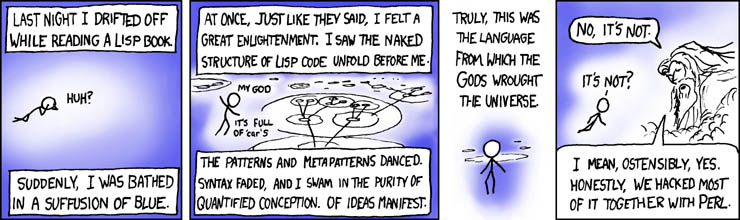

As students we attempt increasingly challenging problems with increasingly sophisticated technologies. We first learn loops, then functions, then classes, etc.. We are praised as we ascend this hierarchy, writing longer programs with more advanced technology. We learn that experienced programmers use monads while new programmers use for loops.

|

||||

|

||||

Then we graduate and find a job or open source project to work on with others. We search for something that we can add, and implement a solution pridefully, using the all the tricks that we learned in school.

|

||||

|

||||

Ah ha! I can extend this project to do X! And I can use inheritance here! Excellent!

|

||||

|

||||

We implement this feature and feel accomplished, and with good reason. Programming in real systems is no small accomplishment. This was certainly my experience. I was excited to write code and proud that I could show off all of the things that I knew how to do to the world. As evidence of my historical love of programming technology, here is a [linear algebra language][1] built with a another meta-programming language. Notice that no one has touched this code in several years.

|

||||

|

||||

However after maintaining code a bit more I now think somewhat differently.

|

||||

|

||||

1. We should not seek to build software. Software is the currency that we pay to solve problems, which is our actual goal. We should endeavor to build as little software as possible to solve our problems.

|

||||

2. We should use technologies that are as simple as possible, so that as many people as possible can use and extend them without needing to understand our advanced techniques. We should use advanced techniques only when we are not smart enough to figure out how to use more common techniques.

|

||||

|

||||

|

||||

|

||||

Neither of these points are novel. Most people I meet agree with them to some extent, but somehow we forget them when we go to contribute to a new project. The instinct to contribute by building and to demonstrate sophistication often take over.

|

||||

|

||||

### Software is a cost

|

||||

|

||||

Every line that you write costs people time. It costs you time to write it of course, but you are willing to make this personal sacrifice. However this code also costs the reviewers their time to understand it. It costs future maintainers and developers their time as they fix and modify your code. They could be spending this time outside in the sunshine or with their family.

|

||||

|

||||

So when you add code to a project you should feel meek. It should feel as though you are eating with your family and there isn't enough food on the table. You should take only what you need and no more. The people with you will respect you for your efforts to restrict yourself. Solving problems with less code is a hard, but it is a burden that you take on yourself to lighten the burdens of others.

|

||||

|

||||

### Complex technologies are harder to maintain

|

||||

|

||||

As students, we demonstrate merit by using increasingly advanced technologies. Our measure of worth depends on our ability to use functions, then classes, then higher order functions, then monads, etc. in public projects. We show off our solutions to our peers and feel pride or shame according to our sophistication.

|

||||

|

||||

However when working with a team to solve problems in the world the situation is reversed. Now we strive to solve problems with code that is as simple as possible. When we solve a problem simply we enable junior programmers to extend our solution to solve other problems. Simple code enables others and boosts our impact. We demonstrate our value by solving hard problems with only basic techniques.

|

||||

|

||||

Look! I replaced this recursive function with a for loop and it still does everything that we need it to. I know it's not as clever, but I noticed that the interns were having trouble with it and I thought that this change might help.

|

||||

|

||||

If you are a good programmer then you don't need to demonstrate that you know cool tricks. Instead, you can demonstrate your value by solving a problem in a simple way that enables everyone on your team to contribute in the future.

|

||||

|

||||

### But moderation, of course

|

||||

|

||||

That being said, over-adherence to the "build things with simple tools" dogma can be counter productive. Often a recursive solution can be much simpler than a for-loop solution and often times using a Class or a Monad is the right approach. But we should be mindful when using these technologies that we are building for ourselves our own system; a system with which others have had no experience.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://matthewrocklin.com/blog/work/2018/01/27/write-dumb-code

|

||||

|

||||

作者:[Matthew Rocklin][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://matthewrocklin.com

|

||||

[1]:https://github.com/mrocklin/matrix-algebra

|

||||

@ -1,3 +1,5 @@

|

||||

HankChow translating

|

||||

|

||||

What you need to know about the GPL Cooperation Commitment

|

||||

======

|

||||

|

||||

|

||||

158

sources/talk/20181114 Analyzing the DNA of DevOps.md

Normal file

158

sources/talk/20181114 Analyzing the DNA of DevOps.md

Normal file

@ -0,0 +1,158 @@

|

||||

Analyzing the DNA of DevOps

|

||||

======

|

||||

How have waterfall, agile, and other development frameworks shaped the evolution of DevOps? Here's what we discovered.

|

||||

|

||||

|

||||

If you were to analyze the DNA of DevOps, what would you find in its ancestry report?

|

||||

|

||||

This article is not a methodology bake-off, so if you are looking for advice or a debate on the best approach to software engineering, you can stop reading here. Rather, we are going to explore the genetic sequences that have brought DevOps to the forefront of today's digital transformations.

|

||||

|

||||

Much of DevOps has evolved through trial and error, as companies have struggled to be responsive to customers’ demands while improving quality and standing out in an increasingly competitive marketplace. Adding to the challenge is the transition from a product-driven to a service-driven global economy that connects people in new ways. The software development lifecycle is becoming an increasingly complex system of services and microservices, both interconnected and instrumented. As DevOps is pushed further and faster than ever, the speed of change is wiping out slower traditional methodologies like waterfall.

|

||||

|

||||

We are not slamming the waterfall approach—many organizations have valid reasons to continue using it. However, mature organizations should aim to move away from wasteful processes, and indeed, many startups have a competitive edge over companies that use more traditional approaches in their day-to-day operations.

|

||||

|

||||

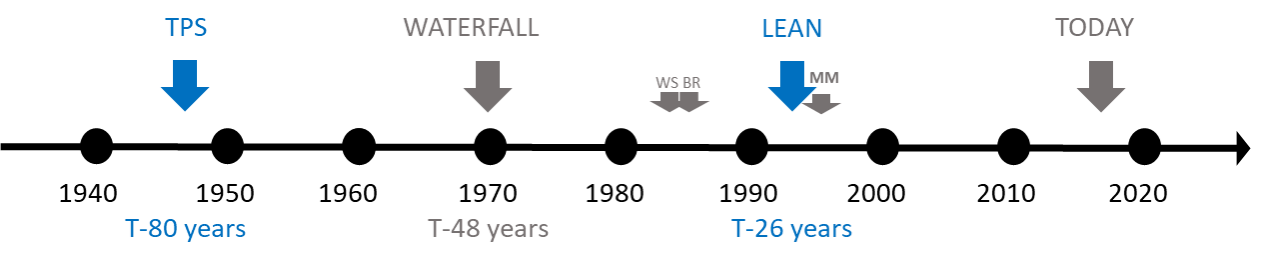

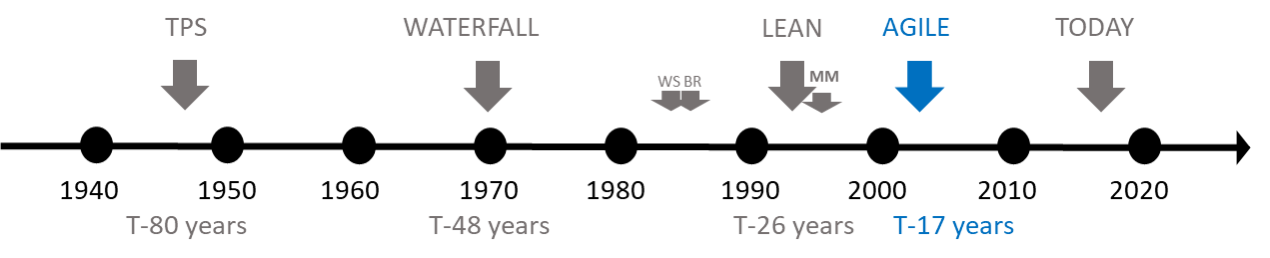

Ironically, lean, [Kanban][1], continuous, and agile principles and processes trace back to the early 1940's, so DevOps cannot claim to be a completely new idea.

|

||||

|

||||

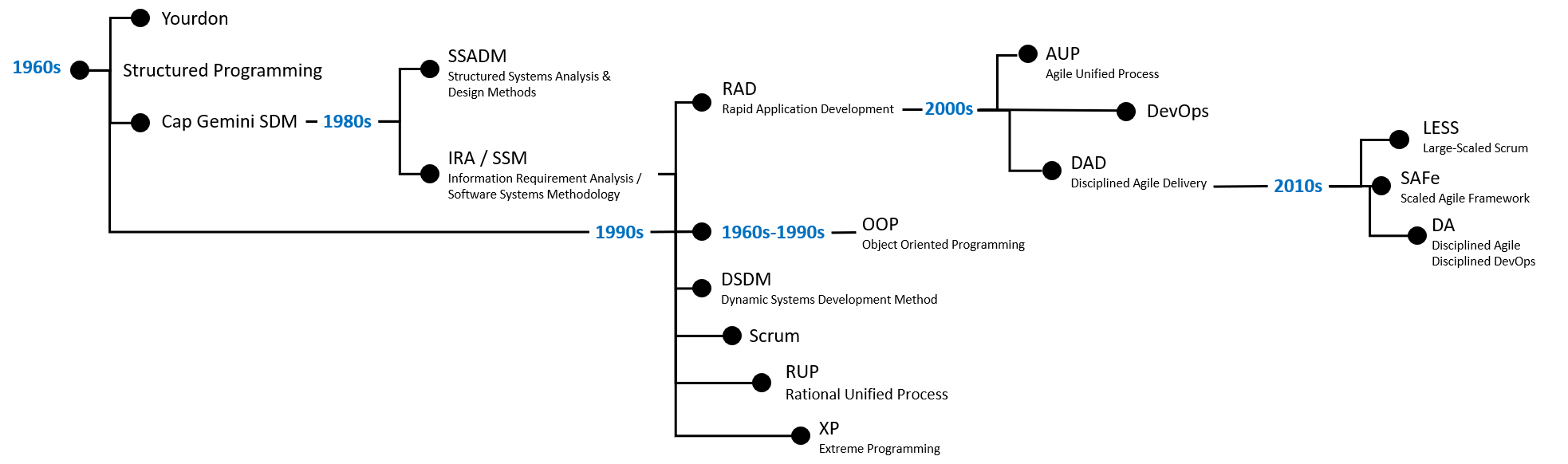

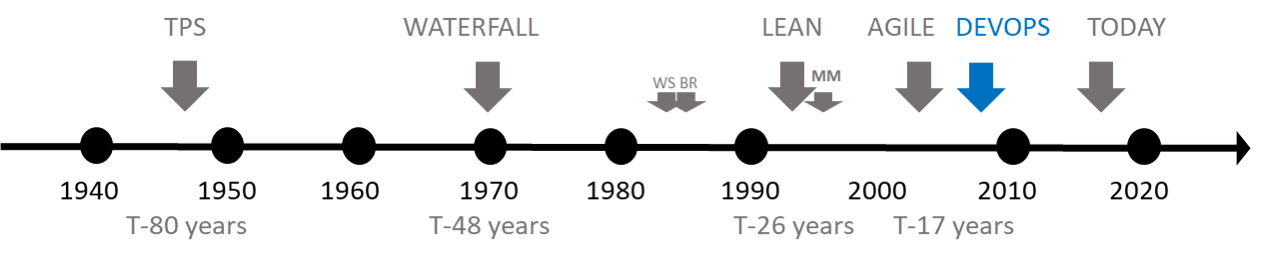

Let's start by stepping back a few years and looking at the waterfall, lean, and agile software development approaches. The figure below shows a “haplogroup” of the software development lifecycle. (Remember, we are not looking for the best approach but trying to understand which approach has positively influenced our combined 67 years of software engineering and the evolution to a DevOps mindset.)

|

||||

|

||||

|

||||

|

||||

> “A fool with a tool is still a fool.” -Mathew Mathai

|

||||

|

||||

### The traditional waterfall method

|

||||

|

||||

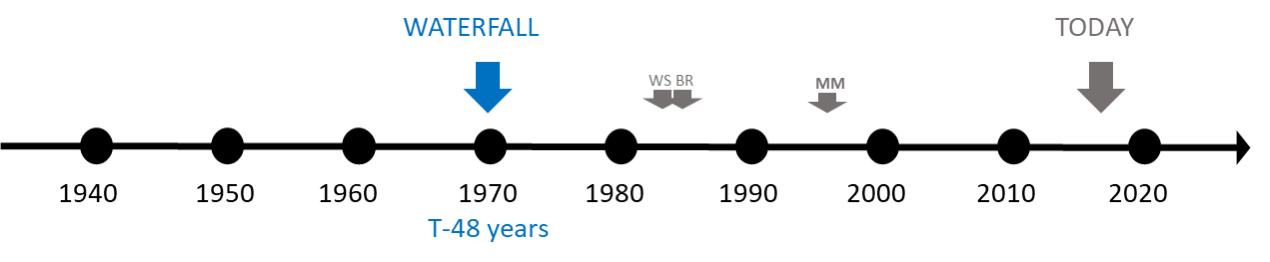

From our perspective, the oldest genetic material comes from the [waterfall][2] model, first introduced by Dr. Winston W. Royce in a paper published in the 1970's.

|

||||

|

||||

|

||||

|

||||

Like a waterfall, this approach emphasizes a logical and sequential progression through requirements, analysis, coding, testing, and operations in a single pass. You must complete each sequence, meet criteria, and obtain a signoff before you can begin the next one. The waterfall approach benefits projects that need stringent sequences and that have a detailed and predictable scope and milestone-based development. Contrary to popular belief, it also allows teams to experiment and make early design changes during the requirements, analysis, and design stages.

|

||||

|

||||

|

||||

|

||||

### Lean thinking

|

||||

|

||||

Although lean thinking dates to the Venetian Arsenal in the 1450s, we start the clock when Toyota created the [Toyota Production System][3], developed by Japanese engineers between 1948 and 1972. Toyota published an official description of the system in 1992.

|

||||

|

||||

|

||||

|

||||

Lean thinking is based on [five principles][4]: value, value stream, flow, pull, and perfection. The core of this approach is to understand and support an effective value stream, eliminate waste, and deliver continuous value to the user. It is about delighting your users without interruption.

|

||||

|

||||

|

||||

|

||||

### Kaizen

|

||||

|

||||

Kaizen is based on incremental improvements; the **Plan- >Do->Check->Act** lifecycle moved companies toward a continuous improvement mindset. Originally developed to improve the flow and processes of the assembly line, the Kaizen concept also adds value across the supply chain. The Toyota Production system was one of the early implementors of Kaizen and continuous improvement. Kaizen and DevOps work well together in environments where workflow goes from design to production. Kaizen focuses on two areas:

|

||||

|

||||

* Flow

|

||||

* Process

|

||||

|

||||

|

||||

|

||||

### Continuous delivery

|

||||

|

||||

Kaizen inspired the development of processes and tools to automate production. Companies were able to speed up production and improve the quality, design, build, test, and delivery phases by removing waste (including culture and mindset) and automating as much as possible using machines, software, and robotics. Much of the Kaizen philosophy also applies to lean business and software practices and continuous delivery deployment for DevOps principles and goals.

|

||||

|

||||

### Agile

|

||||

|

||||

The [Manifesto for Agile Software Development][5] appeared in 2001, authored by Alistair Cockburn, Bob Martin, Jeff Sutherland, Jim Highsmith, Ken Schwaber, Kent Beck, Ward Cunningham, and others.

|

||||

|

||||

|

||||

|

||||

[Agile][6] is not about throwing caution to the wind, ditching design, or building software in the Wild West. It is about being able to create and respond to change. Agile development is [based on twelve principles][7] and a manifesto that values individuals and collaboration, working software, customer collaboration, and responding to change.

|

||||

|

||||

|

||||

|

||||

### Disciplined agile

|

||||

|

||||

Since the Agile Manifesto has remained static for 20 years, many agile practitioners have looked for ways to add choice and subjectivity to the approach. Additionally, the Agile Manifesto focuses heavily on development, so a tweak toward solutions rather than code or software is especially needed in today's fast-paced development environment. Scott Ambler and Mark Lines co-authored [Disciplined Agile Delivery][8] and [The Disciplined Agile Framework][9], based on their experiences at Rational, IBM, and organizations in which teams needed more choice or were not mature enough to implement lean practices, or where context didn't fit the lifecycle.

|

||||

|

||||

The significance of DAD and DA is that it is a [process-decision framework][10] that enables simplified process decisions around incremental and iterative solution delivery. DAD builds on the many practices of agile software development, including scrum, agile modeling, lean software development, and others. The extensive use of agile modeling and refactoring, including encouraging automation through test-driven development (TDD), lean thinking such as Kanban, [XP][11], [scrum][12], and [RUP][13] through a choice of five agile lifecycles, and the introduction of the architect owner, gives agile practitioners added mindsets, processes, and tools to successfully implement DevOps.

|

||||

|

||||

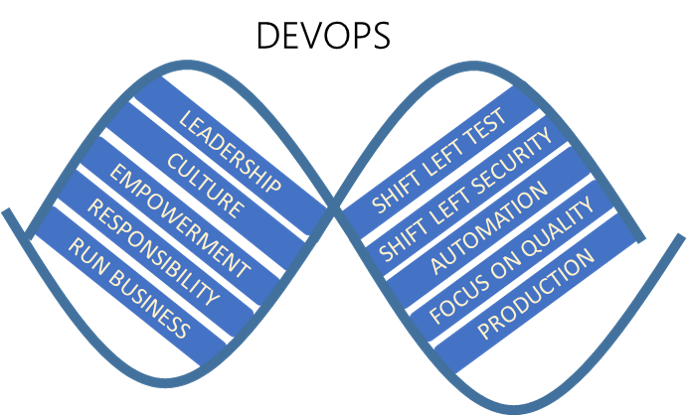

### DevOps

|

||||

|

||||

As far as we can gather, DevOps emerged during a series of DevOpsDays in Belgium in 2009, going on to become the foundation for numerous digital transformations. Microsoft principal DevOps manager [Donovan Brown][14] defines DevOps as “the union of people, process, and products to enable continuous delivery of value to our end users.”

|

||||

|

||||

|

||||

|

||||

Let's go back to our original question: What would you find in the ancestry report of DevOps if you analyzed its DNA?

|

||||

|

||||

|

||||

|

||||

We are looking at history dating back 80, 48, 26, and 17 years—an eternity in today’s fast-paced and often turbulent environment. By nature, we humans continuously experiment, learn, and adapt, inheriting strengths and resolving weaknesses from our genetic strands.

|

||||

|

||||

Under the microscope, we will find traces of waterfall, lean thinking, agile, scrum, Kanban, and other genetic material. For example, there are traces of waterfall for detailed and predictable scope, traces of lean for cutting waste, and traces of agile for promoting increments of shippable code. The genetic strands that define when and how to ship the code are where DevOps lights up in our DNA exploration.

|

||||

|

||||

|

||||

|

||||

You use the telemetry you collect from watching your solution in production to drive experiments, confirm hypotheses, and prioritize your product backlog. In other words, DevOps inherits from a variety of proven and evolving frameworks and enables you to transform your culture, use products as enablers, and most importantly, delight your customers.

|

||||

|

||||

If you are comfortable with lean thinking and agile, you will enjoy the full benefits of DevOps. If you come from a waterfall environment, you will receive help from a DevOps mindset, but your lean and agile counterparts will outperform you.

|

||||

|

||||

### eDevOps

|

||||

|

||||

|

||||

|

||||

In 2016, Brent Reed coined the term eDevOps (no Google or Wikipedia references exist to date), defining it as “a way of working (WoW) that brings continuous improvement across the enterprise seamlessly, through people, processes and tools.”

|

||||

|

||||

Brent found that agile was failing in IT: Businesses that had adopted lean thinking were not achieving the value, focus, and velocity they expected from their trusted IT experts. Frustrated at seeing an "ivory tower" in which siloed IT services were disconnected from architecture, development, operations, and help desk support teams, he applied his practical knowledge of disciplined agile delivery and added some goals and practical applications to the DAD toolset, including:

|

||||

|

||||

* Focus and drive of culture through a continuous improvement (Kaizen) mindset, bringing people together even when they are across the cubicle

|

||||

* Velocity through automation (TDD + refactoring everything possible), removing waste and adopting a [TOGAF][15], JBGE (just barely good enough) approach to documentation

|

||||

* Value through modeling (architecture modeling) and shifting left to enable right through exposing anti-patterns while sharing through collaboration patterns in a more versatile and strategic modern digital repository

|

||||

|

||||

|

||||

|

||||

Using his experience with AI at IBM, Brent designed a maturity model for eDevOps that incrementally automates dashboards for measuring and decision-making purposes so that continuous improvement through a continuous deployment (automating from development to production) is a real possibility for any organization. eDevOps in an effective transformation program based on disciplined DevOps that enables:

|

||||

|

||||

* Business to DevOps (BizDevOps),

|

||||

* Security to DevOps (SecDevOps)

|

||||

* Information to DevOps (DataDevOps)

|

||||

* Loosely coupled technical services while bringing together and delighting all stakeholders

|

||||

* Building potentially consumable solutions every two weeks or faster

|

||||

* Collecting, measuring, analyzing, displaying, and automating actionable insight through the DevOps processes from concept through live production use

|

||||

* Continuous improvement following a Kaizen and disciplined agile approach

|

||||

|

||||

|

||||

|

||||

### The next stage in the development of DevOps

|

||||

|

||||

|

||||

|

||||

Will DevOps ultimately be considered hype—a collection of more tech thrown at corporations and added to the already extensive list of buzzwords? Time, of course, will tell how DevOps will progress. However, DevOps' DNA must continue to mature and be refined, and developers must understand that it is neither a silver bullet nor a remedy to cure all ailments and solve all problems.

|

||||

|

||||

```

|

||||

DevOps != Agile != Lean Thinking != Waterfall

|

||||

|

||||

DevOps != Tools !=Technology

|

||||

|

||||

DevOps Ì Agile Ì Lean Thinking Ì Waterfall

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/11/analyzing-devops

|

||||

|

||||

作者:[Willy-Peter Schaub][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/wpschaub

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://en.wikipedia.org/wiki/Kanban

|

||||

[2]: https://airbrake.io/blog/sdlc/waterfall-model

|

||||

[3]: https://en.wikipedia.org/wiki/Toyota_Production_System

|

||||

[4]: https://www.lean.org/WhatsLean/Principles.cfm

|

||||

[5]: http://agilemanifesto.org/

|

||||

[6]: https://www.agilealliance.org/agile101

|

||||

[7]: http://agilemanifesto.org/principles.html

|

||||

[8]: https://books.google.com/books?id=CwvBEKsCY2gC

|

||||

[9]: http://www.disciplinedagiledelivery.com/books/

|

||||

[10]: https://en.wikipedia.org/wiki/Disciplined_agile_delivery

|

||||

[11]: https://en.wikipedia.org/wiki/Extreme_programming

|

||||

[12]: https://www.scrum.org/resources/what-is-scrum

|

||||

[13]: https://en.wikipedia.org/wiki/Rational_Unified_Process

|

||||

[14]: http://donovanbrown.com/

|

||||

[15]: http://www.opengroup.org/togaf

|

||||

@ -0,0 +1,76 @@

|

||||

Is your startup built on open source? 9 tips for getting started

|

||||

======

|

||||

Are open source businesses all that different from normal businesses?

|

||||

|

||||

|

||||

When I started [Gluu][1] in 2009, I had no idea how difficult it would be to start an open source software company. Using the open source development methodology seemed like a good idea, especially for infrastructure software based on protocols defined by open standards. By nature, entrepreneurs are optimistic—we underestimate the difficulty of starting a business. However, Gluu was my fourth business, so I thought I knew what I was in for. But I was in for a surprise!

|

||||

|

||||

Every business is unique. One of the challenges of serial entrepreneurship is that a truth that was core to the success of a previous business may be incorrect in your next business. Building a business around open source forced me to change my plan. How to find the right team members, how to price our offering, how to market our product—all of these aspects of starting a business (and more) were impacted by the open source mission and required an adjustment from my previous experience.

|

||||

|

||||

A few years ago, we started to question whether Gluu was pursuing the right business model. The business was growing, but not as fast as we would have liked.

|

||||

|

||||

One of the things we did at Gluu was to prepare a "business model canvas," an approach detailed in the book [Business Model Generation: A Handbook for Visionaries, Game Changers, and Challengers][2] by Yves Pigneur and Alexander Osterwalder. This is a thought-provoking exercise for any business at any stage. It helped us consider our business more holistically. A business is more than a stream of revenue. You need to think about how you segment the market, how to interact with customers, what are your sales channels, what are your key activities, what is your value proposition, what are your expenses, partnerships, and key resources. We've done this a few times over the years because a business model naturally evolves over time.

|

||||

|

||||

In 2016, I started to wonder how other open source businesses were structuring their business models. Business Model Generation talks about three types of companies: product innovation, customer relationship, and infrastructure.

|

||||

|

||||

* Product innovation companies are first to market with new products and can get a lot of market share because they are first.

|

||||

* Customer relationship companies have a wider offering and need to get "wallet share" not market share.

|

||||

* Infrastructure companies are very scalable but need established operating procedures and lots of capital.

|

||||

|

||||

|

||||

|

||||

![Open Source Underdogs podcast][4]

|

||||

|

||||

Mike Swartz, CC BY

|

||||

|

||||

It's hard to figure out what models and types of business other open source software companies are pursuing by just looking at their website. And most open source companies are private—so there are no SEC filings to examine.

|

||||

|

||||

To find out more, I went to the web. I found a [great talk][5] from Mike Olson, Founder and Chief Strategy Officer at Cloudera, about open source business models. It was recorded as part of a Stanford business lecture series. I wanted more of these kinds of talks! But I couldn't find any. That's when I got the idea to start a podcast where I interview founders of open source companies and ask them to describe what business model they are pursuing.

|

||||

|

||||

In 2018, this idea became a reality when we started a podcast called [Open Source Underdogs][6]. So far, we have recorded nine episodes. There is a lot of great content in all the episodes, but I thought it would be fun to share one piece of advice from each.

|

||||

|

||||

### Advice from 9 open source businesses

|

||||

|

||||

**Peter Wang, CTO of Anaconda: **"Investors coming in to help put more gas in your gas tank want to understand what road you're on and how far you want to go. If you can't communicate to investors on a basis that they understand about your business model and revenue model, then you have no business asking them for their money. Don't get mad at them!"

|

||||

|

||||

**Jim Thompson, Founder of Netgate: **"Businesses survive at the whim of their customers. Solving customer problems and providing value to the business is literally why you have a business!"

|

||||

|

||||

**Michael Howard, CEO of MariaDB: **"My advice to open source software startups? It depends what part of the stack you're in. If you're infrastructure, you have no choice but to be open source."

|

||||

|

||||

**Ian Tien, CEO of** **Mattermost: ** "You want to build something that people love. So start with roles that open source can play in your vision for the product, the distribution model, the community you want to build, and the business you want to build."

|

||||

|

||||

**Mike Olson, Founder and Chief Strategy Officer at Cloudera: **"A business model is a complex construct. Open source is a really important component of strategic thinking. It's a great distributed development model. It's a genius, low-cost distribution model—and those have a bunch of advantages. But you need to think about how you're going to get paid."

|

||||

|

||||

**Elliot Horowitz, Founder of MongoDB: **"The most important thing, whether it's open source or not open source, is to get incredibly close to your users."

|

||||

|

||||

**Tom Hatch, CEO of SaltStack: **"Being able to build an internal culture and a management mindset that deals with open source, and profits from open source, and functions in a stable and responsible way with regard to open source is one of the big challenges you're going to face. It's one thing to make a piece of open source software and get people to use it. It's another to build a company on top of that open source."

|

||||

|

||||

**Matt Mullenweg, CEO of Automattic: **"Open source businesses aren't that different from normal businesses. A mistake that we made, that others can avoid, is not incorporating the best leaders and team members in functions like marketing and sales."

|

||||

|

||||

**Gabriel Engel, CEO of RocketChat: **"Moving from a five-person company, where you are the center of the company, and it's easy to know what everyone is doing, and everyone relies on you for decisions, to a 40-person company—that transition is harder than expected."

|

||||

|

||||

### What we've learned

|

||||

|

||||

After recording these podcasts, we've tweaked Gluu's business model a little. It's become clearer that we need to embrace open core—we've been over-reliant on support revenue. It's a direction we had been going, but listening to our podcast's guests supported our decision.

|

||||

|

||||

We have many new episodes lined up for 2018 and 2019, including conversations with the founders of Liferay, Couchbase, TimescaleDB, Canonical, Redis, and more, who are sure to offer even more great insights about the open source software business. You can find all the podcast episodes by searching for "Open Source Underdogs" on iTunes and Google podcasts or by visiting our [website][6]. We want to hear your opinions and ideas you have to help us improve the podcast, so after you listen, please leave us a review.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/11/tips-open-source-entrepreneurs

|

||||

|

||||

作者:[Mike Schwartz][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/gluufederation

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.gluu.org/

|

||||

[2]: https://www.wiley.com/en-us/Business+Model+Generation%3A+A+Handbook+for+Visionaries%2C+Game+Changers%2C+and+Challengers-p-9780470876411

|

||||

[3]: /file/414706

|

||||

[4]: https://opensource.com/sites/default/files/uploads/underdogs_logo.jpg (Open Source Underdogs podcast)

|

||||

[5]: https://youtu.be/T_UM5PYk9NA

|

||||

[6]: https://opensourceunderdogs.com/

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

Publishing Markdown to HTML with MDwiki

|

||||

======

|

||||

|

||||

|

||||

@ -1,75 +0,0 @@

|

||||

Translating by qhwdw

|

||||

|

||||

|

||||

Greg Kroah-Hartman Explains How the Kernel Community Is Securing Linux

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

Kernel maintainer Greg Kroah-Hartman talks about how the kernel community is hardening Linux against vulnerabilities.[Creative Commons Zero][2]

|

||||

|

||||

As Linux adoption expands, it’s increasingly important for the kernel community to improve the security of the world’s most widely used technology. Security is vital not only for enterprise customers, it’s also important for consumers, as 80 percent of mobile devices are powered by Linux. In this article, Linux kernel maintainer Greg Kroah-Hartman provides a glimpse into how the kernel community deals with vulnerabilities.

|

||||

|

||||

### There will be bugs

|

||||

|

||||

|

||||

|

||||

|

||||

Greg Kroah-Hartman[The Linux Foundation][1]

|

||||

|

||||

As Linus Torvalds once said, most security holes are bugs, and bugs are part of the software development process. As long as the software is being written, there will be bugs.

|

||||

|

||||

“A bug is a bug. We don’t know if a bug is a security bug or not. There is a famous bug that I fixed and then three years later Red Hat realized it was a security hole,” said Kroah-Hartman.

|

||||

|

||||

There is not much the kernel community can do to eliminate bugs, but it can do more testing to find them. The kernel community now has its own security team that’s made up of kernel developers who know the core of the kernel.

|

||||

|

||||

“When we get a report, we involve the domain owner to fix the issue. In some cases it’s the same people, so we made them part of the security team to speed things up,” Kroah Hartman said. But he also stressed that all parts of the kernel have to be aware of these security issues because kernel is a trusted environment and they have to protect it.

|

||||

|

||||

“Once we fix things, we can put them in our stack analysis rules so that they are never reintroduced,” he said.

|

||||

|

||||

Besides fixing bugs, the community also continues to add hardening to the kernel. “We have realized that we need to have mitigations. We need hardening,” said Kroah-Hartman.

|

||||

|

||||

Huge efforts have been made by Kees Cook and others to take the hardening features that have been traditionally outside of the kernel and merge or adapt them for the kernel. With every kernel released, Cook provides a summary of all the new hardening features. But hardening the kernel is not enough, vendors have to enable the new features and take advantage of them. That’s not happening.

|

||||

|

||||

Kroah-Hartman [releases a stable kernel every week][5], and companies pick one to support for a longer period so that device manufacturers can take advantage of it. However, Kroah-Hartman has observed that, aside from the Google Pixel, most Android phones don’t include the additional hardening features, meaning all those phones are vulnerable. “People need to enable this stuff,” he said.

|

||||

|

||||

“I went out and bought all the top of the line phones based on kernel 4.4 to see which one actually updated. I found only one company that updated their kernel,” he said. “I'm working through the whole supply chain trying to solve that problem because it's a tough problem. There are many different groups involved -- the SoC manufacturers, the carriers, and so on. The point is that they have to push the kernel that we create out to people.”

|

||||

|

||||

The good news is that unlike with consumer electronics, the big vendors like Red Hat and SUSE keep the kernel updated even in the enterprise environment. Modern systems with containers, pods, and virtualization make this even easier. It’s effortless to update and reboot with no downtime. It is, in fact, easier to keep things secure than it used to be.

|

||||

|

||||

### Meltdown and Spectre

|

||||

|

||||

No security discussion is complete without the mention of Meltdown and Spectre. The kernel community is still working on fixes as new flaws are discovered. However, Intel has changed its approach in light of these events.

|

||||

|

||||

“They are reworking on how they approach security bugs and how they work with the community because they know they did it wrong,” Kroah-Hartman said. “The kernel has fixes for almost all of the big Spectre issues, but there is going to be a long tail of minor things.”

|

||||

|

||||

The good news is that these Intel vulnerabilities proved that things are getting better for the kernel community. “We are doing more testing. With the latest round of security patches, we worked on our own for four months before releasing them to the world because we were embargoed. But once they hit the real world, it made us realize how much we rely on the infrastructure we have built over the years to do this kind of testing, which ensures that we don’t have bugs before they hit other people,” he said. “So things are certainly getting better.”

|

||||

|

||||

The increasing focus on security is also creating more job opportunities for talented people. Since security is an area that gets eyeballs, those who want to build a career in kernel space, security is a good place to get started with.

|

||||

|

||||

“If there are people who want a job to do this type of work, we have plenty of companies who would love to hire them. I know some people who have started off fixing bugs and then got hired,” Kroah-Hartman said.

|

||||

|

||||

You can hear more in the video below:

|

||||

|

||||

[视频](https://youtu.be/jkGVabyMh1I)

|

||||

|

||||

_Check out the schedule of talks for Open Source Summit Europe and sign up to receive updates:_

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/2018/10/greg-kroah-hartman-explains-how-kernel-community-securing-linux-0

|

||||

|

||||

作者:[SWAPNIL BHARTIYA][a]

|

||||

选题:[oska874][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/arnieswap

|

||||

[b]:https://github.com/oska874

|

||||

[1]:https://www.linux.com/licenses/category/linux-foundation

|

||||