mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

commit

7680a83b63

@ -1,13 +1,13 @@

|

||||

IT 灾备:系统管理员对抗自然灾害 | HPE

|

||||

IT 灾备:系统管理员对抗自然灾害

|

||||

======

|

||||

|

||||

|

||||

|

||||

面对倾泻的洪水或地震时业务需要继续运转。在飓风卡特里娜、桑迪和其他灾难中幸存下来的系统管理员向在紧急状况下负责 IT 的人们分享真实世界中的建议。

|

||||

> 面对倾泻的洪水或地震时业务需要继续运转。在飓风卡特里娜、桑迪和其他灾难中幸存下来的系统管理员向在紧急状况下负责 IT 的人们分享真实世界中的建议。

|

||||

|

||||

说到自然灾害,2017 年可算是多灾多难。飓风哈维,厄玛和玛莉亚给休斯顿,波多黎各,弗罗里达和加勒比造成了严重破坏。此外,西部的野火将多处住宅和商业建筑付之一炬。

|

||||

说到自然灾害,2017 年可算是多灾多难。(LCTT 译注:本文发表于 2017 年)飓风哈维、厄玛和玛莉亚给休斯顿、波多黎各、弗罗里达和加勒比造成了严重破坏。此外,西部的野火将多处住宅和商业建筑付之一炬。

|

||||

|

||||

再来一篇关于[有备无患][1]的警示文章——当然其中都是好的建议——是很简单的,但这无法帮助网络管理员应对湿漉漉的烂摊子。那些善意的建议中大多数都假定掌权的人乐于投入资金来实施这些建议。

|

||||

再来一篇关于[有备无患][1]的警示文章 —— 当然其中都是好的建议 —— 是很简单的,但这无法帮助网络管理员应对湿漉漉的烂摊子。那些善意的建议中大多数都假定掌权的人乐于投入资金来实施这些建议。

|

||||

|

||||

我们对真实世界更感兴趣。不如让我们来充分利用这些坏消息。

|

||||

|

||||

@ -23,21 +23,21 @@ IT 灾备:系统管理员对抗自然灾害 | HPE

|

||||

|

||||

当灯光忽明忽暗,狂风象火车机车一样怒号时,就该启动你的业务持续计划和灾备计划了。

|

||||

|

||||

有太多的系统管理员报告当暴风雨来临时这两个计划中一个也没有。这并不令人惊讶。2014 年[<ruby>灾备预备状态委员会<rt>Disaster Recovery Preparedness Council</rt></ruby>][6]发现[世界范围内被调查的公司中有 73% 没有足够的灾备计划][7]。

|

||||

有太多的系统管理员报告当暴风雨来临时这两个计划中一个也没有。这并不令人惊讶。2014 年<ruby>[灾备预备状态委员会][6]<rt>Disaster Recovery Preparedness Council</rt></ruby>发现[世界范围内被调查的公司中有 73% 没有足够的灾备计划][7]。

|

||||

|

||||

“足够”是关键词。正如一个系统管理员2016年在 Reddit 上写的那样,“[我们的灾备计划就是一场灾难。][8]我们所有的数据都备份在离这里大约 30 英里的一个<ruby>存储区域网络<rt>SAN</rt></ruby>。我们没有将数据重新上线的硬件,甚至好几天过去了都没能让核心服务器启动运行起来。我们是个年营收 40 亿美元的公司,却不愿为适当的设备投入几十万美元,或是在数据中心添置几台服务器。当添置硬件的提案被提出的时候,我们的管理层说,‘嗐,碰到这种事情的机会能有多大呢’。”

|

||||

“**足够**”是关键词。正如一个系统管理员 2016 年在 Reddit 上写的那样,“[我们的灾备计划就是一场灾难。][8]我们所有的数据都备份在离这里大约 30 英里的一个<ruby>存储区域网络<rt>SAN</rt></ruby>。我们没有将数据重新上线的硬件,甚至好几天过去了都没能让核心服务器启动运行起来。我们是个年营收 40 亿美元的公司,却不愿为适当的设备投入几十万美元,或是在数据中心添置几台服务器。当添置硬件的提案被提出的时候,我们的管理层说,‘嗐,碰到这种事情的机会能有多大呢’。”

|

||||

|

||||

同一个帖子中另一个人说得更简洁:“眼下我的灾备计划只能在黑暗潮湿的角落里哭泣,但愿没人在乎损失的任何东西。”

|

||||

|

||||

如果你在哭泣,但愿你至少不是独自流泪。任何灾备计划,即便是 IT 部门制订的灾备计划,必须确定[你能跟别人通讯][10],如同系统管理员 Jim Thompson 从卡特里娜飓风中得到的教训:“确保你有一个与人们通讯的计划。在一场严重的区域性灾难期间,你将无法给身处灾区的任何人打电话。”

|

||||

|

||||

有一个选择可能会让有技术头脑的人感兴趣:[<ruby>业余电台<rt>ham radio</rt></ruby>][11]。[它在波多黎各发挥了巨大作用][12]。

|

||||

有一个选择可能会让有技术头脑的人感兴趣:<ruby>[业余电台][11]<rt>ham radio</rt></ruby>。[它在波多黎各发挥了巨大作用][12]。

|

||||

|

||||

### 列一个愿望清单

|

||||

|

||||

第一步是承认问题。“许多公司实际上对灾备计划不感兴趣,或是消极对待”,[Micro Focus][14] 的首席架构师 [Joshua Focus][13] 说。“将灾备看作业务持续性的一个方面是种不同的视角。所有公司都要应对业务持续性,所以灾备应被视为业务持续性的一部分。”

|

||||

|

||||

IT 部门需要将其需求书面化以确保适当的灾备和业务持续性计划。即使是你不知道如何着手,或尤其是这种时候,也是如此。正如一个系统管理员所言,“我喜欢有一个‘想法转储’,让所有计划,点子,改进措施毫无保留地提出来。[这][对一类情况尤其有帮助,即当你提议变更][15],并付诸实施,接着 6 个月之后你警告过的状况就要来临。”现在你做好了一切准备并且可以开始讨论:“如同我们之前在 4 月讨论过的那样……”

|

||||

IT 部门需要将其需求书面化以确保适当的灾备和业务持续性计划。即使是你不知道如何着手,或尤其是这种时候,也是如此。正如一个系统管理员所言,“我喜欢有一个‘想法转储’,让所有计划、点子、改进措施毫无保留地提出来。(这)[对一类情况尤其有帮助,即当你提议变更][15],并付诸实施,接着 6 个月之后你警告过的状况就要来临。”现在你做好了一切准备并且可以开始讨论:“如同我们之前在 4 月讨论过的那样……”

|

||||

|

||||

因此,当你的管理层对业务持续性计划回应道“嗐,碰到这种事的机会能有多大呢?”的时候你能做些什么呢?有个系统管理员称这也完全是管理层的正常行为。在这种糟糕的处境下,老练的系统管理员建议用书面形式把这些事情记录下来。记录应清楚表明你告知管理层需要采取的措施,且[他们拒绝采纳建议][16]。“总的来说就是有足够的书面材料能让他们搓成一根绳子上吊,”该系统管理员补充道。

|

||||

|

||||

@ -47,13 +47,13 @@ IT 部门需要将其需求书面化以确保适当的灾备和业务持续性

|

||||

|

||||

“[我们的办公室是幢摇摇欲坠的建筑][18],”飓风哈维重创休斯顿之后有个系统管理员提到。“我们盲目地进入那幢建筑,现场的基础设施糟透了。正是我们给那幢建筑里带去了最不想要的一滴水,现在基础设施整个都沉在水下了。”

|

||||

|

||||

尽管如此,如果你想让数据中心继续运转——或在暴风雨过后恢复运转——你需要确保该场所不仅能经受住你所在地区那些意料中的灾难,而且能经受住那些意料之外的灾难。一个旧金山的系统管理员知道为什么重要的是确保公司的服务器安置在可以承受里氏 7 级地震的建筑内。一家圣路易斯的公司知道如何应对龙卷风。但你应当为所有可能发生的事情做好准备:加州的龙卷风,密苏里州的地震,或[僵尸末日][19](给你在 IT 预算里增加一把链锯提供了充分理由)。

|

||||

尽管如此,如果你想让数据中心继续运转——或在暴风雨过后恢复运转 —— 你需要确保该场所不仅能经受住你所在地区那些意料中的灾难,而且能经受住那些意料之外的灾难。一个旧金山的系统管理员知道为什么重要的是确保公司的服务器安置在可以承受里氏 7 级地震的建筑内。一家圣路易斯的公司知道如何应对龙卷风。但你应当为所有可能发生的事情做好准备:加州的龙卷风、密苏里州的地震,或[僵尸末日][19](给你在 IT 预算里增加一把链锯提供了充分理由)。

|

||||

|

||||

在休斯顿的情况下,[多数数据中心保持运转][20],因为它们是按照抵御暴风雨和洪水的标准建造的。[Data Foundry][21] 的首席技术官 Edward Henigin 说他们公司的数据中心之一,“专门建造的休斯顿 2 号的设计能抵御 5 级飓风的风速。这个场所的公共供电没有中断,我们得以避免切换到后备发电机。”

|

||||

|

||||

那是好消息。坏消息是伴随着超级飓风桑迪于2012年登场,如果[你的数据中心没准备好应对洪水][22],你会陷入一个麻烦不断的世界。一个不能正常运转的数据中心 [Datagram][23] 服务的客户包括 Gawker,Gizmodo 和 Buzzfeed 等知名网站。

|

||||

那是好消息。坏消息是伴随着超级飓风桑迪于 2012 年登场,如果[你的数据中心没准备好应对洪水][22],你会陷入一个麻烦不断的世界。一个不能正常运转的数据中心 [Datagram][23] 服务的客户包括 Gawker、Gizmodo 和 Buzzfeed 等知名网站。

|

||||

|

||||

当然,有时候你什么也做不了。正如某个波多黎各圣胡安的系统管理员在飓风厄玛扫过后悲伤地写到,“发电机没油了。服务器机房靠电池在运转但是没有[空调]。[永别了,服务器][24]。”由于 <ruby>MPLS<rt>Multiprotocol Lable Switching</rt></ruby> 线路亦中断,该系统管理员没法切换到灾备措施:“多么充实的一天。”

|

||||

当然,有时候你什么也做不了。正如某个波多黎各圣胡安的系统管理员在飓风厄玛扫过后悲伤地写到,“发电机没油了。服务器机房靠电池在运转但是没有(空调)。[永别了,服务器][24]。”由于 <ruby>MPLS<rt>Multiprotocol Lable Switching</rt></ruby> 线路亦中断,该系统管理员没法切换到灾备措施:“多么充实的一天。”

|

||||

|

||||

总而言之,IT 专业人士需要了解他们所处的地区,了解他们面临的风险并将他们的服务器安置在能抵御当地自然灾害的数据中心内。

|

||||

|

||||

@ -73,37 +73,37 @@ IT 部门需要将其需求书面化以确保适当的灾备和业务持续性

|

||||

|

||||

某个系统管理员挖苦式的计划是什么呢?“趁 UPS 完蛋之前把你能关的机器关掉,不能关的就让它崩溃咯。然后,[喝个痛快直到供电恢复][28]。”

|

||||

|

||||

在 2016 年德尔塔和西南航空停电事故之后,IT 员工驱动的一个更加严肃的计划是由一个有管理的服务供应商为其客户[部署不间断电源][29]:“对于至关重要的部分,在供电中断时我们结合使用<ruby>简单网络管理协议<rt>SNMP</rt></ruby>信令和 <ruby>PowerChute 网络关机<rt>PowerChute Nrework Shutdown</rt></ruby>客户端来关闭设备。至于重新开机,那取决于客户。有些是自动启动,有些则需要人工干预。”

|

||||

在 2016 年德尔塔和西南航空停电事故之后,IT 员工推动的一个更加严肃的计划是由一个有管理的服务供应商为其客户[部署不间断电源][29]:“对于至关重要的部分,在供电中断时我们结合使用<ruby>简单网络管理协议<rt>SNMP</rt></ruby>信令和 <ruby>PowerChute 网络关机<rt>PowerChute Nrework Shutdown</rt></ruby>客户端来关闭设备。至于重新开机,那取决于客户。有些是自动启动,有些则需要人工干预。”

|

||||

|

||||

另一种做法是用来自两个供电所的供电线路支持数据中心。例如,[西雅图威斯汀大厦数据中心][30]有来自不同供电所的多路 13.4 千伏供电线路,以及多个 480 伏三相变电箱。

|

||||

|

||||

预防严重断电的系统不是“通用的”设备。系统管理员应当[为数据中心请求一台定制的柴油发电机][31]。除了按你特定的需求调整,发电机必须能迅速跳至全速运转并承载全部电力负荷而不致影响系统负载性能。”

|

||||

|

||||

这些发电机也必须加以保护。例如,将你的发电机安置在泛洪区的一楼就不是个聪明的主意。位于纽约<ruby>百老街<rt>Broad street</rt></ruby>的数据中心在超级飓风桑迪期间就是类似情形,备用发电机的燃料油桶在地下室——并且被水淹了。尽管一场[“人力接龙”用容量 5 加仑的水桶将柴油输送到 17 段楼梯之上的发电机][32]使 [Peer 1 Hosting][33] 得以继续运营,这不是一个切实可行的业务持续计划。

|

||||

这些发电机也必须加以保护。例如,将你的发电机安置在泛洪区的一楼就不是个聪明的主意。位于纽约<ruby>百老街<rt>Broad street</rt></ruby>的数据中心在超级飓风桑迪期间就是类似情形,备用发电机的燃料油桶在地下室 —— 并且被水淹了。尽管一场[“人力接龙”用容量 5 加仑的水桶将柴油输送到 17 段楼梯之上的发电机][32]使 [Peer 1 Hosting][33] 得以继续运营,但这不是一个切实可行的业务持续计划。

|

||||

|

||||

正如多数数据中心专家所知那样,如果你有时间——假设一个飓风离你有一天的距离——确保你的发电机正常工作,加满油,准备好当供电线路被刮断时立即开启,不管怎样你之前应当每月测试你的发电机。你之前是那么做的,是吧?是就好!

|

||||

正如多数数据中心专家所知那样,如果你有时间 —— 假设一个飓风离你有一天的距离 —— 确保你的发电机正常工作,加满油,准备好当供电线路被刮断时立即开启,不管怎样你之前应当每月测试你的发电机。你之前是那么做的,是吧?是就好!

|

||||

|

||||

### 测试你对备份的信心

|

||||

|

||||

普通用户几乎从不备份,检查备份是否实际完好的就更少了。系统管理员对此更加了解。

|

||||

|

||||

有些 [IT 部门在寻求将他们的备份迁移到云端][34]。但有些系统管理员仍对此不买账——他们有很好的理由。最近有人报告,“在用了整整 5 天[从亚马逊 Glacier 恢复了 [400 GB] 数据][35]之后,我欠了亚马逊将近 200 美元的传输费,并且[我还是]处于未完全恢复状态,还差大约 100 GB 文件。

|

||||

有些 [IT 部门在寻求将他们的备份迁移到云端][34]。但有些系统管理员仍对此不买账 —— 他们有很好的理由。最近有人报告,“在用了整整 5 天[从亚马逊 Glacier 恢复了(400 GB)数据][35]之后,我欠了亚马逊将近 200 美元的传输费,并且(我还是)处于未完全恢复状态,还差大约 100 GB 文件。”

|

||||

|

||||

结果是有些系统管理员依然喜欢磁带备份。磁带肯定不够时髦,但正如操作系统专家 Andrew S. Tanenbaum 说的那样,“[永远不要低估一辆装满磁带在高速上飞驰的旅行车的带宽][36]。”

|

||||

|

||||

目前每盘磁带可以存储 10 TB 数据;有的进行中的实验可在磁带上存储高达 200 TB 数据。诸如[<ruby>线性磁带文件系统<rt>Linear Tape File System</rt></ruby>][37]之类的技术允许你象访问网络硬盘一样读取磁带数据。

|

||||

目前每盘磁带可以存储 10 TB 数据;有的进行中的实验可在磁带上存储高达 200 TB 数据。诸如<ruby>[线性磁带文件系统][37]<rt>Linear Tape File System</rt></ruby>之类的技术允许你象访问网络硬盘一样读取磁带数据。

|

||||

|

||||

然而对许多人而言,磁带[绝对是最后选择的手段][38]。没关系,因为备份应该有大量的可选方案。在这种情况下,一个系统管理员说到,“故障时我们会用这些方法(恢复备份):[Windows] 服务器层面的 VSS [Volume Shadow Storage] 快照,<ruby>存储区域网络<rt>SAN</rt></ruby>层面的卷快照,以及存储区域网络层面的异地归档快照。但是万一有什么事情发生并摧毁了我们的虚拟机,存储区域网络和备份存储区域网络,我们还是可以取回磁带并恢复数据。”

|

||||

然而对许多人而言,磁带[绝对是最后选择的手段][38]。没关系,因为备份应该有大量的可选方案。在这种情况下,一个系统管理员说到,“故障时我们会用这些方法(恢复备份):(Windows)服务器层面的 VSS (Volume Shadow Storage)快照,<ruby>存储区域网络<rt>SAN</rt></ruby>层面的卷快照,以及存储区域网络层面的异地归档快照。但是万一有什么事情发生并摧毁了我们的虚拟机,存储区域网络和备份存储区域网络,我们还是可以取回磁带并恢复数据。”

|

||||

|

||||

当麻烦即将到来时,可使用副本工具如 [Veeam][39],它会为你的服务器创建一个虚拟机副本。若出现故障,副本会自动启动。没有麻烦,没有手忙脚乱,正如某个系统管理员在这个流行的系统管理员帖子中所说,“[我爱你 Veeam][40]。”

|

||||

|

||||

### 网络?什么网络?

|

||||

|

||||

当然,如果员工们无法触及他们的服务,没有任何云,colo 和远程数据中心能帮到你。你不需要一场自然灾害来证明冗余互联网连接的正确性。只需要一台挖断线路的挖掘机或断掉的光缆就能让你在工作中渡过糟糕的一天。

|

||||

当然,如果员工们无法触及他们的服务,没有任何云、colo 和远程数据中心能帮到你。你不需要一场自然灾害来证明冗余互联网连接的正确性。只需要一台挖断线路的挖掘机或断掉的光缆就能让你在工作中渡过糟糕的一天。

|

||||

|

||||

“理想状态下”,某个系统管理员明智地观察到,“你应该有[两路互联网接入线路连接到有独立基础设施的两个 ISP][41]。例如,你不希望两个 ISP 都依赖于同一根光缆。你也不希望采用两家本地 ISP,并发现他们的上行带宽都依赖于同一家骨干网运营商。”

|

||||

|

||||

聪明的系统管理员知道他们公司的互联网接入线路[必须是商业级别的,带有<ruby>服务等级协议<rt>service-level agreement(SLA)</rt></ruby>][43],其中包含“修复时间”条款。或者更好的是采用<ruby>互联网接入专线<rt></rt>dedicated Internet access</ruby>。技术上这与任何其他互联网接入方式没有区别。区别在于互联网接入专线不是一种“尽力而为”的接入方式,而是你会得到明确规定的专供你使用的带宽并附有服务等级协议。这种专线不便宜,但正如一句格言所说的那样,“速度,可靠性,便宜,只能挑两个。”当你的业务跑在这条线路上并且一场暴风雨即将来袭,“可靠性”必须是你挑的两个之一。

|

||||

聪明的系统管理员知道他们公司的互联网接入线路[必须是商业级别的][43],带有<ruby>服务等级协议<rt>service-level agreement(SLA)</rt></ruby>,其中包含“修复时间”条款。或者更好的是采用<ruby>互联网接入专线<rt></rt>dedicated Internet access</ruby>。技术上这与任何其他互联网接入方式没有区别。区别在于互联网接入专线不是一种“尽力而为”的接入方式,而是你会得到明确规定的专供你使用的带宽并附有服务等级协议。这种专线不便宜,但正如一句格言所说的那样,“速度、可靠性、便宜,只能挑两个。”当你的业务跑在这条线路上并且一场暴风雨即将来袭,“可靠性”必须是你挑的两个之一。

|

||||

|

||||

### 晴空重现之时

|

||||

|

||||

@ -113,7 +113,7 @@ IT 部门需要将其需求书面化以确保适当的灾备和业务持续性

|

||||

|

||||

* 你的 IT 员工得说多少次:不要仅仅备份,还得测试备份?

|

||||

* 没电就没公司。确保你的服务器有足够的应急电源来满足业务需要,并确保它们能正常工作。

|

||||

* 如果你的公司在一场自然灾害中幸存下来——或者避开了灾害——明智的系统管理员知道这就是向管理层申请被他们推迟的灾备预算的时候了。因为下次你就未必有这么幸运了。

|

||||

* 如果你的公司在一场自然灾害中幸存下来,或者避开了灾害,明智的系统管理员知道这就是向管理层申请被他们推迟的灾备预算的时候了。因为下次你就未必有这么幸运了。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -121,8 +121,8 @@ IT 部门需要将其需求书面化以确保适当的灾备和业务持续性

|

||||

via: https://www.hpe.com/us/en/insights/articles/it-disaster-recovery-sysadmins-vs-natural-disasters-1711.html

|

||||

|

||||

作者:[Steven-J-Vaughan-Nichols][a]

|

||||

译者:[译者ID](https://github.com/0x996)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[0x996](https://github.com/0x996)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,137 @@

|

||||

两种 cp 命令的绝佳用法的快捷方式

|

||||

===================

|

||||

|

||||

> 这篇文章是关于如何在使用 cp 命令进行备份以及同步时提高效率。

|

||||

|

||||

|

||||

|

||||

去年七月,我写了一篇[关于 cp 命令的两种绝佳用法][7]的文章:备份一个文件,以及同步一个文件夹的备份。

|

||||

|

||||

虽然这些工具确实很好用,但同时,输入这些命令太过于累赘了。为了解决这个问题,我在我的 Bash 启动文件里创建了一些 Bash 快捷方式。现在,我想把这些捷径分享给你们,以便于你们在需要的时候可以拿来用,或者是给那些还不知道怎么使用 Bash 的别名以及函数的用户提供一些思路。

|

||||

|

||||

### 使用 Bash 别名来更新一个文件夹的副本

|

||||

|

||||

如果要使用 `cp` 来更新一个文件夹的副本,通常会使用到的命令是:

|

||||

|

||||

```

|

||||

cp -r -u -v SOURCE-FOLDER DESTINATION-DIRECTORY

|

||||

```

|

||||

|

||||

其中 `-r` 代表“向下递归访问文件夹中的所有文件”,`-u` 代表“更新目标”,`-v` 代表“详细模式”,`SOURCE-FOLDER` 是包含最新文件的文件夹的名称,`DESTINATION-DIRECTORY` 是包含必须同步的`SOURCE-FOLDER` 副本的目录。

|

||||

|

||||

因为我经常使用 `cp` 命令来复制文件夹,我会很自然地想起使用 `-r` 选项。也许再想地更深入一些,我还可以想起用 `-v` 选项,如果再想得再深一层,我会想起用选项 `-u`(不知道这个选项是代表“更新”还是“同步”还是一些什么其它的)。

|

||||

|

||||

或者,还可以使用[Bash 的别名功能][8]来将 `cp` 命令以及其后的选项转换成一个更容易记忆的单词,就像这样:

|

||||

|

||||

```

|

||||

alias sync='cp -r -u -v'

|

||||

```

|

||||

|

||||

如果我将其保存在我的主目录中的 `.bash_aliases` 文件中,然后启动一个新的终端会话,我可以使用该别名了,例如:

|

||||

|

||||

```

|

||||

sync Pictures /media/me/4388-E5FE

|

||||

```

|

||||

|

||||

可以将我的主目录中的图片文件夹与我的 USB 驱动器中的相同版本同步。

|

||||

|

||||

不清楚 `sync` 是否已经定义了?你可以在终端里输入 `alias` 这个单词来列出所有正在使用的命令别名。

|

||||

|

||||

喜欢吗?想要现在就立即使用吗?那就现在打开终端,输入:

|

||||

|

||||

```

|

||||

echo "alias sync='cp -r -u -v'" >> ~/.bash_aliases

|

||||

```

|

||||

|

||||

然后启动一个新的终端窗口并在命令提示符下键入 `alias`。你应该看到这样的东西:

|

||||

|

||||

```

|

||||

me@mymachine~$ alias

|

||||

|

||||

alias alert='notify-send --urgency=low -i "$([ $? = 0 ] && echo terminal || echo error)" "$(history|tail -n1|sed -e '\''s/^\s*[0-9]\+\s*//;s/[;&|]\s*alert$//'\'')"'

|

||||

alias egrep='egrep --color=auto'

|

||||

alias fgrep='fgrep --color=auto'

|

||||

alias grep='grep --color=auto'

|

||||

alias gvm='sdk'

|

||||

alias l='ls -CF'

|

||||

alias la='ls -A'

|

||||

alias ll='ls -alF'

|

||||

alias ls='ls --color=auto'

|

||||

alias sync='cp -r -u -v'

|

||||

me@mymachine:~$

|

||||

```

|

||||

|

||||

这里你能看到 `sync` 已经定义了。

|

||||

|

||||

### 使用 Bash 函数来为备份编号

|

||||

|

||||

若要使用 `cp` 来备份一个文件,通常使用的命令是:

|

||||

|

||||

```

|

||||

cp --force --backup=numbered WORKING-FILE BACKED-UP-FILE

|

||||

```

|

||||

|

||||

其中 `--force` 代表“强制制作副本”,`--backup= numbered` 代表“使用数字表示备份的生成”,`WORKING-FILE` 是我们希望保留的当前文件,`BACKED-UP-FILE` 与 `WORKING-FILE` 的名称相同,并附加生成信息。

|

||||

|

||||

我们不仅需要记得所有 `cp` 的选项,我们还需要记得去重复输入 `WORKING-FILE` 的名字。但当[Bash 的函数功能][9]已经可以帮我们做这一切,为什么我们还要不断地重复这个过程呢?就像这样:

|

||||

|

||||

再一次提醒,你可将下列内容保存入你在家目录下的 `.bash_aliases` 文件里:

|

||||

|

||||

```

|

||||

function backup {

|

||||

if [ $# -ne 1 ]; then

|

||||

echo "Usage: $0 filename"

|

||||

elif [ -f $1 ] ; then

|

||||

echo "cp --force --backup=numbered $1 $1"

|

||||

cp --force --backup=numbered $1 $1

|

||||

else

|

||||

echo "$0: $1 is not a file"

|

||||

fi

|

||||

}

|

||||

```

|

||||

|

||||

我将此函数称之为 `backup`,因为我的系统上没有任何其他名为 `backup` 的命令,但你可以选择适合的任何名称。

|

||||

|

||||

第一个 `if` 语句是用于检查是否提供有且只有一个参数,否则,它会用 `echo` 命令来打印出正确的用法。

|

||||

|

||||

`elif` 语句是用于检查提供的参数所指向的是一个文件,如果是的话,它会用第二个 `echo` 命令来打印所需的 `cp` 的命令(所有的选项都是用全称来表示)并且执行它。

|

||||

|

||||

如果所提供的参数不是一个文件,文件中的第三个 `echo` 用于打印错误信息。

|

||||

|

||||

在我的家目录下,如果我执行 `backup` 这个命令,我可以发现目录下多了一个文件名为`checkCounts.sql.~1~` 的文件,如果我再执行一次,便又多了另一个名为 `checkCounts.sql.~2~` 的文件。

|

||||

|

||||

成功了!就像所想的一样,我可以继续编辑 `checkCounts.sql`,但如果我可以经常地用这个命令来为文件制作快照的话,我可以在我遇到问题的时候回退到最近的版本。

|

||||

|

||||

也许在未来的某个时间,使用 `git` 作为版本控制系统会是一个好主意。但像上文所介绍的 `backup` 这个简单而又好用的工具,是你在需要使用快照的功能时却还未准备好使用 `git` 的最好工具。

|

||||

|

||||

### 结论

|

||||

|

||||

在我的上一篇文章里,我保证我会通过使用脚本,shell 里的函数以及别名功能来简化一些机械性的动作来提高生产效率。

|

||||

|

||||

在这篇文章里,我已经展示了如何在使用 `cp` 命令同步或者备份文件时运用 shell 函数以及别名功能来简化操作。如果你想要了解更多,可以读一下这两篇文章:[怎样通过使用命令别名功能来减少敲击键盘的次数][10] 以及由我的同事 Greg 和 Seth 写的 [Shell 编程:shift 方法和自定义函数介绍][11]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/1/two-great-uses-cp-command-update

|

||||

|

||||

作者:[Chris Hermansen][a]

|

||||

译者:[zyk2290](https://github.com/zyk2290)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/clhermansen

|

||||

[1]:https://opensource.com/users/clhermansen

|

||||

[2]:https://opensource.com/users/clhermansen

|

||||

[3]:https://opensource.com/user/37806/feed

|

||||

[4]:https://opensource.com/article/18/1/two-great-uses-cp-command-update?rate=J_7R7wSPbukG9y8jrqZt3EqANfYtVAwZzzpopYiH3C8

|

||||

[5]:https://opensource.com/article/18/1/two-great-uses-cp-command-update#comments

|

||||

[6]:https://www.flickr.com/photos/internetarchivebookimages/14803082483/in/photolist-oy6EG4-pZR3NZ-i6r3NW-e1tJSX-boBtf7-oeYc7U-o6jFKK-9jNtc3-idt2G9-i7NG1m-ouKjXe-owqviF-92xFBg-ow9e4s-gVVXJN-i1K8Pw-4jybMo-i1rsBr-ouo58Y-ouPRzz-8cGJHK-85Evdk-cru4Ly-rcDWiP-gnaC5B-pAFsuf-hRFPcZ-odvBMz-hRCE7b-mZN3Kt-odHU5a-73dpPp-hUaaAi-owvUMK-otbp7Q-ouySkB-hYAgmJ-owo4UZ-giHgqu-giHpNc-idd9uQ-osAhcf-7vxk63-7vwN65-fQejmk-pTcLgA-otZcmj-fj1aSX-hRzHQk-oyeZfR

|

||||

[7]:https://opensource.com/article/17/7/two-great-uses-cp-command

|

||||

[8]:https://opensource.com/article/17/5/introduction-alias-command-line-tool

|

||||

[9]:https://opensource.com/article/17/1/shell-scripting-shift-method-custom-functions

|

||||

[10]:https://opensource.com/article/17/5/introduction-alias-command-line-tool

|

||||

[11]:https://opensource.com/article/17/1/shell-scripting-shift-method-custom-functions

|

||||

[12]:https://opensource.com/tags/linux

|

||||

[13]:https://opensource.com/users/clhermansen

|

||||

[14]:https://opensource.com/users/clhermansen

|

||||

@ -0,0 +1,201 @@

|

||||

本地开发如何测试 Webhook

|

||||

===================

|

||||

|

||||

|

||||

|

||||

[Webhook][10] 可用于外部系统通知你的系统发生了某个事件或更新。可能最知名的 [Webhook][10] 类型是支付服务提供商(PSP)通知你的系统支付状态有了更新。

|

||||

|

||||

它们通常以监听的预定义 URL 的形式出现,例如 `http://example.com/webhooks/payment-update`。同时,另一个系统向该 URL 发送具有特定有效载荷的 POST 请求(例如支付 ID)。一旦请求进入,你就会获得支付 ID,可以通过 PSP 的 API 用这个支付 ID 向它们询问最新状态,然后更新你的数据库。

|

||||

|

||||

其他例子可以在这个对 Webhook 的出色的解释中找到:[https://sendgrid.com/blog/whats-webhook/][12]。

|

||||

|

||||

只要系统可通过互联网公开访问(这可能是你的生产环境或可公开访问的临时环境),测试这些 webhook 就相当顺利。而当你在笔记本电脑上或虚拟机内部(例如,Vagrant 虚拟机)进行本地开发时,它就变得困难了。在这些情况下,发送 webhook 的一方无法公开访问你的本地 URL。此外,监视发送的请求也很困难,这可能使开发和调试变得困难。

|

||||

|

||||

因此,这个例子将解决:

|

||||

|

||||

* 测试来自本地开发环境的 webhook,该环境无法通过互联网访问。从服务器向 webhook 发送数据的服务无法访问它。

|

||||

* 监控发送的请求和数据,以及应用程序生成的响应。这样可以更轻松地进行调试,从而缩短开发周期。

|

||||

|

||||

前置需求:

|

||||

|

||||

* *可选*:如果你使用虚拟机(VM)进行开发,请确保它正在运行,并确保在 VM 中完成后续步骤。

|

||||

* 对于本教程,我们假设你定义了一个 vhost:`webhook.example.vagrant`。我在本教程中使用了 Vagrant VM,但你可以自由选择 vhost。

|

||||

* 按照这个[安装说明][3]安装 `ngrok`。在 VM 中,我发现它的 Node 版本也很有用:[https://www.npmjs.com/package/ngrok][4],但你可以随意使用其他方法。

|

||||

|

||||

我假设你没有在你的环境中运行 SSL,但如果你使用了,请将在下面的示例中的端口 80 替换为端口 433,`http://` 替换为 `https://`。

|

||||

|

||||

### 使 webhook 可测试

|

||||

|

||||

我们假设以下示例代码。我将使用 PHP,但请将其视作伪代码,因为我留下了一些关键部分(例如 API 密钥、输入验证等)没有编写。

|

||||

|

||||

第一个文件:`payment.php`。此文件创建一个 `$payment` 对象,将其注册到 PSP。然后它获取客户需要访问的 URL,以便支付并将用户重定向到客户那里。

|

||||

|

||||

请注意,此示例中的 `webhook.example.vagrant` 是我们为开发设置定义的本地虚拟主机。它无法从外部世界进入。

|

||||

|

||||

```

|

||||

<?php

|

||||

/*

|

||||

* This file creates a payment and tells the PSP what webhook URL to use for updates

|

||||

* After creating the payment, we get a URL to send the customer to in order to pay at the PSP

|

||||

*/

|

||||

$payment = [

|

||||

'order_id' => 123,

|

||||

'amount' => 25.00,

|

||||

'description' => 'Test payment',

|

||||

'redirect_url' => 'http://webhook.example.vagrant/redirect.php',

|

||||

'webhook_url' => 'http://webhook.example.vagrant/webhook.php',

|

||||

];

|

||||

|

||||

$payment = $paymentProvider->createPayment($payment);

|

||||

header("Location: " . $payment->getPaymentUrl());

|

||||

```

|

||||

|

||||

第二个文件:`webhook.php`。此文件等待 PSP 调用以获得有关更新的通知。

|

||||

|

||||

```

|

||||

<?php

|

||||

/*

|

||||

* This file gets called by the PSP and in the $_POST they submit an 'id'

|

||||

* We can use this ID to get the latest status from the PSP and update our internal systems afterward

|

||||

*/

|

||||

|

||||

$paymentId = $_POST['id'];

|

||||

$paymentInfo = $paymentProvider->getPayment($paymentId);

|

||||

$status = $paymentInfo->getStatus();

|

||||

|

||||

// Perform actions in here to update your system

|

||||

if ($status === 'paid') {

|

||||

..

|

||||

}

|

||||

elseif ($status === 'cancelled') {

|

||||

..

|

||||

}

|

||||

```

|

||||

|

||||

我们的 webhook URL 无法通过互联网访问(请记住它:`webhook.example.vagrant`)。因此,PSP 永远不可能调用文件 `webhook.php`,你的系统将永远不会知道付款状态,这最终导致订单永远不会被运送给客户。

|

||||

|

||||

幸运的是,`ngrok` 可以解决这个问题。 [ngrok][13] 将自己描述为:

|

||||

|

||||

> ngrok 通过安全隧道将 NAT 和防火墙后面的本地服务器暴露给公共互联网。

|

||||

|

||||

让我们为我们的项目启动一个基本的隧道。在你的环境中(在你的系统上或在 VM 上)运行以下命令:

|

||||

|

||||

```

|

||||

ngrok http -host-header=rewrite webhook.example.vagrant:80

|

||||

```

|

||||

|

||||

> 阅读其文档可以了解更多配置选项:[https://ngrok.com/docs][14]。

|

||||

|

||||

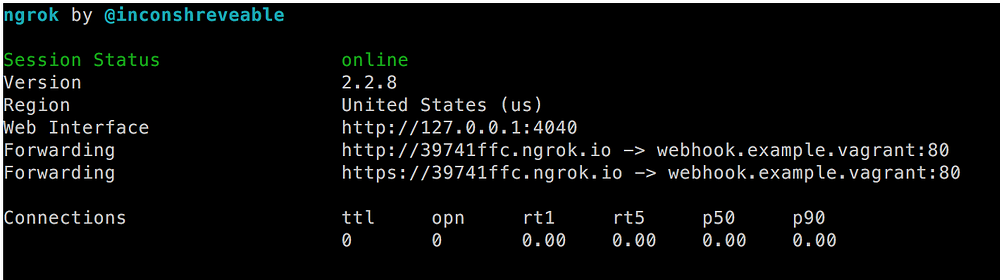

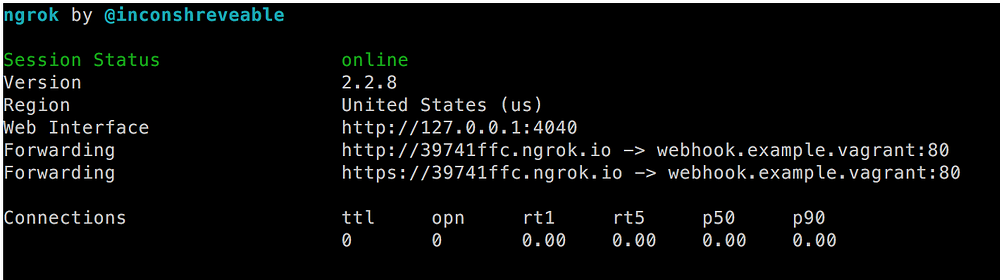

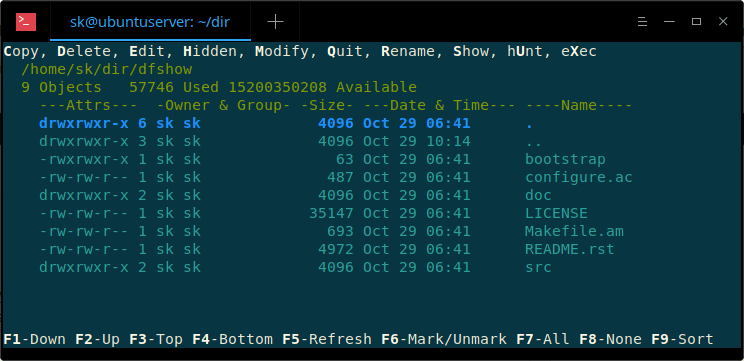

会出现这样的屏幕:

|

||||

|

||||

|

||||

|

||||

*ngrok 输出*

|

||||

|

||||

我们刚刚做了什么?基本上,我们指示 `ngrok` 在端口 80 建立了一个到 `http://webhook.example.vagrant` 的隧道。同一个 URL 也可以通过 `http://39741ffc.ngrok.io` 或 `https://39741ffc.ngrok.io` 访问,它们能被任何知道此 URL 的人通过互联网公开访问。

|

||||

|

||||

请注意,你可以同时获得 HTTP 和 HTTPS 两个服务。这个文档提供了如何将此限制为 HTTPS 的示例:[https://ngrok.com/docs#bind-tls][16]。

|

||||

|

||||

那么,我们如何让我们的 webhook 现在工作起来?将 `payment.php` 更新为以下代码:

|

||||

|

||||

```

|

||||

<?php

|

||||

/*

|

||||

* This file creates a payment and tells the PSP what webhook URL to use for updates

|

||||

* After creating the payment, we get a URL to send the customer to in order to pay at the PSP

|

||||

*/

|

||||

$payment = [

|

||||

'order_id' => 123,

|

||||

'amount' => 25.00,

|

||||

'description' => 'Test payment',

|

||||

'redirect_url' => 'http://webhook.example.vagrant/redirect.php',

|

||||

'webhook_url' => 'https://39741ffc.ngrok.io/webhook.php',

|

||||

];

|

||||

|

||||

$payment = $paymentProvider->createPayment($payment);

|

||||

header("Location: " . $payment->getPaymentUrl());

|

||||

```

|

||||

|

||||

现在,我们告诉 PSP 通过 HTTPS 调用此隧道 URL。只要 PSP 通过隧道调用 webhook,`ngrok` 将确保使用未修改的有效负载调用内部 URL。

|

||||

|

||||

### 如何监控对 webhook 的调用?

|

||||

|

||||

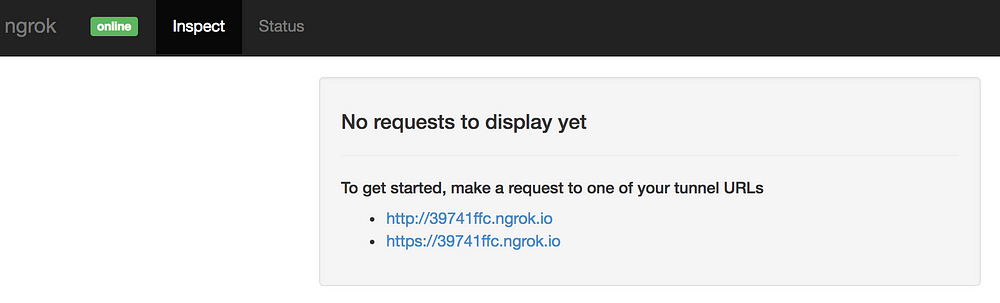

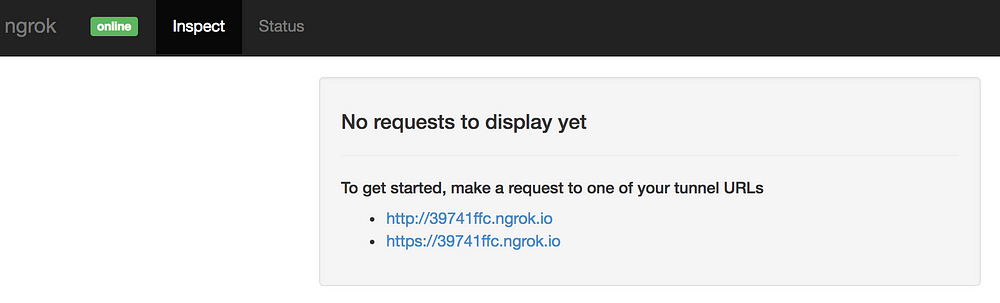

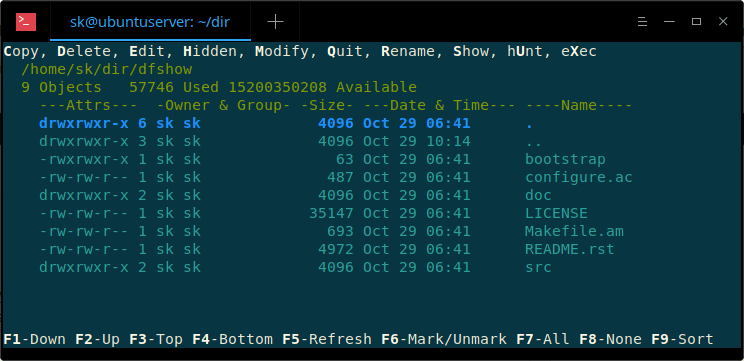

你在上面看到的屏幕截图概述了对隧道主机的调用,这些数据相当有限。幸运的是,`ngrok` 提供了一个非常好的仪表板,允许你检查所有调用:

|

||||

|

||||

|

||||

|

||||

我不会深入研究这个问题,因为它是不言自明的,你只要运行它就行了。因此,我将解释如何在 Vagrant 虚拟机上访问它,因为它不是开箱即用的。

|

||||

|

||||

仪表板将允许你查看所有调用、其状态代码、标头和发送的数据。你将看到应用程序生成的响应。

|

||||

|

||||

仪表板的另一个优点是它允许你重放某个调用。假设你的 webhook 代码遇到了致命的错误,开始新的付款并等待 webhook 被调用将会很繁琐。重放上一个调用可以使你的开发过程更快。

|

||||

|

||||

默认情况下,仪表板可在 `http://localhost:4040` 访问。

|

||||

|

||||

### 虚拟机中的仪表盘

|

||||

|

||||

为了在 VM 中完成此工作,你必须执行一些额外的步骤:

|

||||

|

||||

首先,确保可以在端口 4040 上访问 VM。然后,在 VM 内创建一个文件已存放此配置:

|

||||

|

||||

```

|

||||

web_addr: 0.0.0.0:4040

|

||||

```

|

||||

|

||||

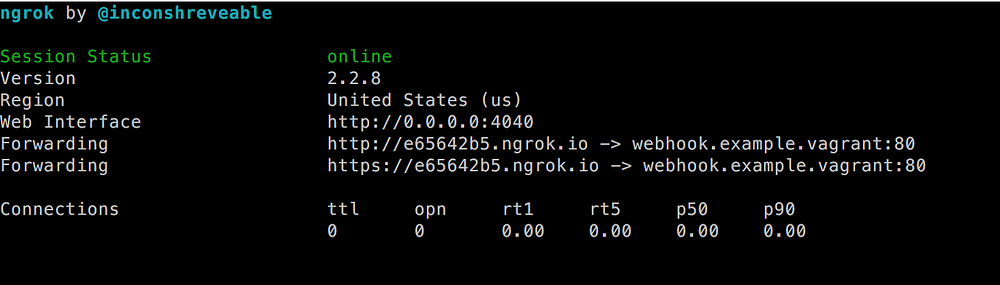

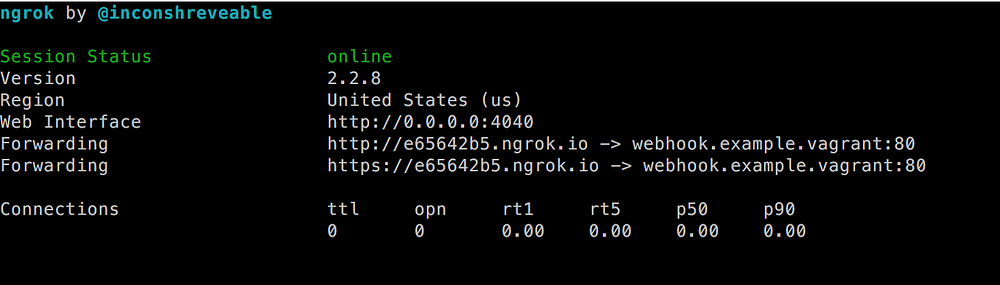

现在,杀死仍在运行的 `ngrok` 进程,并使用稍微调整过的命令启动它:

|

||||

|

||||

```

|

||||

ngrok http -config=/path/to/config/ngrok.conf -host-header=rewrite webhook.example.vagrant:80

|

||||

```

|

||||

|

||||

尽管 ID 已经更改,但你将看到类似于上一屏幕截图的屏幕。之前的网址不再有效,但你有了一个新网址。 此外,`Web Interface` URL 已更改:

|

||||

|

||||

|

||||

|

||||

现在将浏览器指向 `http://webhook.example.vagrant:4040` 以访问仪表板。另外,对 `https://e65642b5.ngrok.io/webhook.php` 做个调用。这可能会导致你的浏览器出错,但仪表板应显示正有一个请求。

|

||||

|

||||

### 最后的备注

|

||||

|

||||

上面的例子是伪代码。原因是每个外部系统都以不同的方式使用 webhook。我试图基于一个虚构的 PSP 实现给出一个例子,因为可能很多开发人员在某个时刻肯定会处理付款。

|

||||

|

||||

请注意,你的 webhook 网址也可能被意图不好的其他人使用。确保验证发送给它的任何输入。

|

||||

|

||||

更好的的,可以向 URL 添加令牌,该令牌对于每个支付是唯一的。只有你的系统和发送 webhook 的系统才能知道此令牌。

|

||||

|

||||

祝你测试和调试你的 webhook 顺利!

|

||||

|

||||

注意:我没有在 Docker 上测试过本教程。但是,这个 Docker 容器看起来是一个很好的起点,并包含了明确的说明:[https://github.com/wernight/docker-ngrok][19] 。

|

||||

|

||||

--------

|

||||

|

||||

via: https://medium.freecodecamp.org/testing-webhooks-while-using-vagrant-for-development-98b5f3bedb1d

|

||||

|

||||

作者:[Stefan Doorn][a]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://medium.freecodecamp.org/@stefandoorn

|

||||

[1]:https://unsplash.com/photos/MYTyXb7fgG0?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText

|

||||

[2]:https://unsplash.com/?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText

|

||||

[3]:https://ngrok.com/download

|

||||

[4]:https://www.npmjs.com/package/ngrok

|

||||

[5]:http://webhook.example.vagrnat/

|

||||

[6]:http://39741ffc.ngrok.io/

|

||||

[7]:http://39741ffc.ngrok.io/

|

||||

[8]:http://webhook.example.vagrant:4040/

|

||||

[9]:https://e65642b5.ngrok.io/webhook.php.

|

||||

[10]:https://sendgrid.com/blog/whats-webhook/

|

||||

[11]:http://example.com/webhooks/payment-update%29

|

||||

[12]:https://sendgrid.com/blog/whats-webhook/

|

||||

[13]:https://ngrok.com/

|

||||

[14]:https://ngrok.com/docs

|

||||

[15]:http://39741ffc.ngrok.io%2C/

|

||||

[16]:https://ngrok.com/docs#bind-tls

|

||||

[17]:http://localhost:4040./

|

||||

[18]:https://e65642b5.ngrok.io/webhook.php.

|

||||

[19]:https://github.com/wernight/docker-ngrok

|

||||

[20]:https://github.com/stefandoorn

|

||||

[21]:https://twitter.com/stefan_doorn

|

||||

[22]:https://www.linkedin.com/in/stefandoorn

|

||||

@ -0,0 +1,130 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11200-1.html)

|

||||

[#]: subject: (How to detect automatically generated emails)

|

||||

[#]: via: (https://arp242.net/weblog/autoreply.html)

|

||||

[#]: author: (Martin Tournoij https://arp242.net/)

|

||||

|

||||

如何检测自动生成的电子邮件

|

||||

======

|

||||

|

||||

|

||||

|

||||

当你用电子邮件系统发送自动回复时,你需要注意不要向自动生成的电子邮件发送回复。最好的情况下,你将获得无用的投递失败消息。更可能的是,你会得到一个无限的电子邮件循环和一个混乱的世界。

|

||||

|

||||

事实证明,可靠地检测自动生成的电子邮件并不总是那么容易。以下是基于为此编写的检测器并使用它扫描大约 100,000 封电子邮件(大量的个人存档和公司存档)的观察结果。

|

||||

|

||||

### Auto-submitted 信头

|

||||

|

||||

由 [RFC 3834][1] 定义。

|

||||

|

||||

这是表示你的邮件是自动回复的“官方”标准。如果存在 `Auto-Submitted` 信头,并且其值不是 `no`,你应该**不**发送回复。

|

||||

|

||||

### X-Auto-Response-Suppress 信头

|

||||

|

||||

[由微软][2]定义。

|

||||

|

||||

此信头由微软 Exchange、Outlook 和其他一些产品使用。许多新闻订阅等都设定了这个。如果 `X-Auto-Response-Suppress` 包含 `DR`(“抑制投递报告”)、`AutoReply`(“禁止 OOF 通知以外的自动回复消息”)或 `All`,你应该**不**发送回复。

|

||||

|

||||

### List-Id 和 List-Unsubscribe 信头

|

||||

|

||||

由 [RFC 2919][3] 定义。

|

||||

|

||||

你通常不希望给邮件列表或新闻订阅发送自动回复。几乎所有的邮件列表和大多数新闻订阅都至少设置了其中一个信头。如果存在这些信头中的任何一个,你应该**不**发送回复。这个信头的值不重要。

|

||||

|

||||

### Feedback-ID 信头

|

||||

|

||||

[由谷歌][4]定义。

|

||||

|

||||

Gmail 使用此信头识别邮件是否是新闻订阅,并使用它为这些新闻订阅的所有者生成统计信息或报告。如果此信头存在,你应该**不**发送回复。这个信头的值不重要。

|

||||

|

||||

### 非标准方式

|

||||

|

||||

上述方法定义明确(即使有些是非标准的)。不幸的是,有些电子邮件系统不使用它们中的任何一个 :-( 这里有一些额外的措施。

|

||||

|

||||

#### Precedence 信头

|

||||

|

||||

在 [RFC 2076][5] 中没有真正定义,不鼓励使用它(但通常会遇到此信头)。

|

||||

|

||||

请注意,不建议检查是否存在此信头,因为某些邮件使用 `normal` 和其他一些(少见的)值(尽管这不常见)。

|

||||

|

||||

我的建议是如果其值不区分大小写地匹配 `bulk`、`auto_reply` 或 `list`,则**不**发送回复。

|

||||

|

||||

#### 其他不常见的信头

|

||||

|

||||

这是我遇到的另外的一些(不常见的)信头。如果设置了其中一个,我建议**不**发送自动回复。大多数邮件也设置了上述信头之一,但有些没有(这并不常见)。

|

||||

|

||||

* `X-MSFBL`:无法真正找到定义(Microsoft 信头?),但我只有自动生成的邮件带有此信头。

|

||||

* `X-Loop`:在任何地方都没有真正定义过,有点罕见,但有时有。它通常设置为不应该收到电子邮件的地址,但也会遇到 `X-Loop: yes`。

|

||||

* `X-Autoreply`:相当罕见,并且似乎总是具有 `yes` 的值。

|

||||

|

||||

#### Email 地址

|

||||

|

||||

检查 `From` 或 `Reply-To` 信头是否包含 `noreply`、`no-reply` 或 `no_reply`(正则表达式:`^no.?reply@`)。

|

||||

|

||||

#### 只有 HTML 部分

|

||||

|

||||

如果电子邮件只有 HTML 部分,而没有文本部分,则表明这是一个自动生成的邮件或新闻订阅。几乎所有邮件客户端都设置了文本部分。

|

||||

|

||||

#### 投递失败消息

|

||||

|

||||

许多传递失败消息并不能真正表明它们是失败的。一些检查方法:

|

||||

|

||||

* `From` 包含 `mailer-daemon` 或 `Mail Delivery Subsystem`

|

||||

|

||||

#### 特定的邮件库特征

|

||||

|

||||

许多邮件类库留下了某种痕迹,大多数常规邮件客户端使用自己的数据覆盖它。检查这个似乎工作得相当可靠。

|

||||

|

||||

* `X-Mailer: Microsoft CDO for Windows 2000`:由某些微软软件设置;我只能在自动生成的邮件中找到它。是的,在 2015 年它仍然在使用。

|

||||

* `Message-ID` 信头包含 `.JavaMail.`:我发现了一些(5 个 50k 大小的)常规消息,但不是很多;绝大多数(数千封)邮件是新闻订阅、订单确认等。

|

||||

* `^X-Mailer` 以 `PHP` 开头。这应该会同时看到 `X-Mailer: PHP/5.5.0` 和 `X-Mailer: PHPmailer XXX XXX`。与 “JavaMail” 相同。

|

||||

* 出现了 `X-Library`;似乎只有 [Indy][6] 设定了这个。

|

||||

* `X-Mailer` 以 `wdcollect` 开头。由一些 Plesk 邮件设置。

|

||||

* `X-Mailer` 以 `MIME-tools` 开头。

|

||||

|

||||

### 最后的预防措施:限制回复的数量

|

||||

|

||||

即使遵循上述所有建议,你仍可能会遇到一个避开所有这些检测的电子邮件程序。这可能非常危险,因为电子邮件系统只是“如果有电子邮件那么发送”,就有可能导致无限的电子邮件循环。

|

||||

|

||||

出于这个原因,我建议你记录你自动发送的电子邮件,并将此速率限制为在几分钟内最多几封电子邮件。这将打破循环链条。

|

||||

|

||||

我们使用每五分钟一封电子邮件的设置,但没这么严格的设置可能也会运作良好。

|

||||

|

||||

### 你需要为自动回复设置什么信头

|

||||

|

||||

具体细节取决于你发送的邮件类型。这是我们用于自动回复邮件的内容:

|

||||

|

||||

```

|

||||

Auto-Submitted: auto-replied

|

||||

X-Auto-Response-Suppress: All

|

||||

Precedence: auto_reply

|

||||

```

|

||||

|

||||

### 反馈

|

||||

|

||||

你可以发送电子邮件至 [martin@arp242.net][7] 或 [创建 GitHub 议题][8]以提交反馈、问题等。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://arp242.net/weblog/autoreply.html

|

||||

|

||||

作者:[Martin Tournoij][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://arp242.net/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: http://tools.ietf.org/html/rfc3834

|

||||

[2]: https://msdn.microsoft.com/en-us/library/ee219609(v=EXCHG.80).aspx

|

||||

[3]: https://tools.ietf.org/html/rfc2919)

|

||||

[4]: https://support.google.com/mail/answer/6254652?hl=en

|

||||

[5]: http://www.faqs.org/rfcs/rfc2076.html

|

||||

[6]: http://www.indyproject.org/index.en.aspx

|

||||

[7]: mailto:martin@arp242.net

|

||||

[8]: https://github.com/Carpetsmoker/arp242.net/issues/new

|

||||

@ -0,0 +1,228 @@

|

||||

[#]: collector: "lujun9972"

|

||||

[#]: translator: "wxy"

|

||||

[#]: reviewer: "wxy"

|

||||

[#]: publisher: "wxy"

|

||||

[#]: url: "https://linux.cn/article-11198-1.html"

|

||||

[#]: subject: "How To Parse And Pretty Print JSON With Linux Commandline Tools"

|

||||

[#]: via: "https://www.ostechnix.com/how-to-parse-and-pretty-print-json-with-linux-commandline-tools/"

|

||||

[#]: author: "EDITOR https://www.ostechnix.com/author/editor/"

|

||||

|

||||

如何用 Linux 命令行工具解析和格式化输出 JSON

|

||||

======

|

||||

|

||||

|

||||

|

||||

JSON 是一种轻量级且与语言无关的数据存储格式,易于与大多数编程语言集成,也易于人类理解 —— 当然,如果格式正确的话。JSON 这个词代表 **J**ava **S**cript **O**bject **N**otation,虽然它以 JavaScript 开头,而且主要用于在服务器和浏览器之间交换数据,但现在正在用于许多领域,包括嵌入式系统。在这里,我们将使用 Linux 上的命令行工具解析并格式化打印 JSON。它对于在 shell 脚本中处理大型 JSON 数据或在 shell 脚本中处理 JSON 数据非常有用。

|

||||

|

||||

### 什么是格式化输出?

|

||||

|

||||

JSON 数据的结构更具人性化。但是在大多数情况下,JSON 数据会存储在一行中,甚至没有行结束字符。

|

||||

|

||||

显然,这对于手动阅读和编辑不太方便。

|

||||

|

||||

这是<ruby>格式化输出<rt>pretty print</rt></ruby>就很有用。这个该名称不言自明:重新格式化 JSON 文本,使人们读起来更清晰。这被称为 **JSON 格式化输出**。

|

||||

|

||||

### 用 Linux 命令行工具解析和格式化输出 JSON

|

||||

|

||||

可以使用命令行文本处理器解析 JSON 数据,例如 `awk`、`sed` 和 `gerp`。实际上 `JSON.awk` 是一个来做这个的 awk 脚本。但是,也有一些专用工具可用于同一目的。

|

||||

|

||||

1. `jq` 或 `jshon`,shell 下的 JSON 解析器,它们都非常有用。

|

||||

2. Shell 脚本,如 `JSON.sh` 或 `jsonv.sh`,用于在 bash、zsh 或 dash shell 中解析JSON。

|

||||

3. `JSON.awk`,JSON 解析器 awk 脚本。

|

||||

4. 像 `json.tool` 这样的 Python 模块。

|

||||

5. `undercore-cli`,基于 Node.js 和 javascript。

|

||||

|

||||

在本教程中,我只关注 `jq`,这是一个 shell 下的非常强大的 JSON 解析器,具有高级过滤和脚本编程功能。

|

||||

|

||||

### JSON 格式化输出

|

||||

|

||||

JSON 数据可能放在一行上使人难以解读,因此为了使其具有一定的可读性,JSON 格式化输出就可用于此目的的。

|

||||

|

||||

**示例:**来自 `jsonip.com` 的数据,使用 `curl` 或 `wget` 工具获得 JSON 格式的外部 IP 地址,如下所示。

|

||||

|

||||

```

|

||||

$ wget -cq http://jsonip.com/ -O -

|

||||

```

|

||||

|

||||

实际数据看起来类似这样:

|

||||

|

||||

```

|

||||

{"ip":"111.222.333.444","about":"/about","Pro!":"http://getjsonip.com"}

|

||||

```

|

||||

|

||||

现在使用 `jq` 格式化输出它:

|

||||

|

||||

```

|

||||

$ wget -cq http://jsonip.com/ -O - | jq '.'

|

||||

```

|

||||

|

||||

通过 `jq` 过滤了该结果之后,它应该看起来类似这样:

|

||||

|

||||

```

|

||||

{

|

||||

"ip": "111.222.333.444",

|

||||

"about": "/about",

|

||||

"Pro!": "http://getjsonip.com"

|

||||

}

|

||||

```

|

||||

|

||||

同样也可以通过 Python `json.tool` 模块做到。示例如下:

|

||||

|

||||

```

|

||||

$ cat anything.json | python -m json.tool

|

||||

```

|

||||

|

||||

这种基于 Python 的解决方案对于大多数用户来说应该没问题,但是如果没有预安装或无法安装 Python 则不行,比如在嵌入式系统上。

|

||||

|

||||

然而,`json.tool` Python 模块具有明显的优势,它是跨平台的。因此,你可以在 Windows、Linux 或 Mac OS 上无缝使用它。

|

||||

|

||||

### 如何用 jq 解析 JSON

|

||||

|

||||

首先,你需要安装 `jq`,它已被大多数 GNU/Linux 发行版选中,并使用各自的软件包安装程序命令进行安装。

|

||||

|

||||

在 Arch Linux 上:

|

||||

|

||||

```

|

||||

$ sudo pacman -S jq

|

||||

```

|

||||

|

||||

在 Debian、Ubuntu、Linux Mint 上:

|

||||

|

||||

```

|

||||

$ sudo apt-get install jq

|

||||

```

|

||||

|

||||

在 Fedora 上:

|

||||

|

||||

```

|

||||

$ sudo dnf install jq

|

||||

```

|

||||

|

||||

在 openSUSE 上:

|

||||

|

||||

```

|

||||

$ sudo zypper install jq

|

||||

```

|

||||

|

||||

对于其它操作系统或平台参见[官方的安装指导][1]。

|

||||

|

||||

#### jq 的基本过滤和标识符功能

|

||||

|

||||

`jq` 可以从 `STDIN` 或文件中读取 JSON 数据。你可以根据情况使用。

|

||||

|

||||

单个符号 `.` 是最基本的过滤器。这些过滤器也称为**对象标识符-索引**。`jq` 使用单个 `.` 过滤器基本上相当将输入的 JSON 文件格式化输出。

|

||||

|

||||

- **单引号**:不必始终使用单引号。但是如果你在一行中组合几个过滤器,那么你必须使用它们。

|

||||

- **双引号**:你必须用两个双引号括起任何特殊字符,如 `@`、`#`、`$`,例如 `jq .foo.”@bar”`。

|

||||

- **原始数据打印**:不管出于任何原因,如果你只需要最终解析的数据(不包含在双引号内),请使用带有 `-r` 标志的 `jq` 命令,如下所示:`jq -r .foo.bar`。

|

||||

|

||||

#### 解析特定数据

|

||||

|

||||

要过滤出 JSON 的特定部分,你需要了解格式化输出的 JSON 文件的数据层次结构。

|

||||

|

||||

来自维基百科的 JSON 数据示例:

|

||||

|

||||

```

|

||||

{

|

||||

"firstName": "John",

|

||||

"lastName": "Smith",

|

||||

"age": 25,

|

||||

"address": {

|

||||

"streetAddress": "21 2nd Street",

|

||||

"city": "New York",

|

||||

"state": "NY",

|

||||

"postalCode": "10021"

|

||||

},

|

||||

"phoneNumber": [

|

||||

{

|

||||

"type": "home",

|

||||

"number": "212 555-1234"

|

||||

},

|

||||

{

|

||||

"type": "fax",

|

||||

"number": "646 555-4567"

|

||||

}

|

||||

],

|

||||

"gender": {

|

||||

"type": "male"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

我将在本教程中将此 JSON 数据用作示例,将其保存为 `sample.json`。

|

||||

|

||||

假设我想从 `sample.json` 文件中过滤出地址。所以命令应该是这样的:

|

||||

|

||||

```

|

||||

$ jq .address sample.json

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

```

|

||||

{

|

||||

"streetAddress": "21 2nd Street",

|

||||

"city": "New York",

|

||||

"state": "NY",

|

||||

"postalCode": "10021"

|

||||

}

|

||||

```

|

||||

|

||||

再次,我想要邮政编码,然后我要添加另一个**对象标识符-索引**,即另一个过滤器。

|

||||

|

||||

```

|

||||

$ cat sample.json | jq .address.postalCode

|

||||

```

|

||||

|

||||

另请注意,**过滤器区分大小写**,并且你必须使用完全相同的字符串来获取有意义的输出,否则就是 null。

|

||||

|

||||

#### 从 JSON 数组中解析元素

|

||||

|

||||

JSON 数组的元素包含在方括号内,这无疑是非常通用的。

|

||||

|

||||

要解析数组中的元素,你必须使用 `[]` 标识符以及其他对象标识符索引。

|

||||

|

||||

在此示例 JSON 数据中,电话号码存储在数组中,要从此数组中获取所有内容,你只需使用括号,像这个示例:

|

||||

|

||||

```

|

||||

$ jq .phoneNumber[] sample.json

|

||||

```

|

||||

|

||||

假设你只想要数组的第一个元素,然后使用从 `0` 开始的数组对象编号,对于第一个项目,使用 `[0]`,对于下一个项目,它应该每步增加 1。

|

||||

|

||||

```

|

||||

$ jq .phoneNumber[0] sample.json

|

||||

```

|

||||

|

||||

#### 脚本编程示例

|

||||

|

||||

假设我只想要家庭电话,而不是整个 JSON 数组数据。这就是用 `jq` 命令脚本编写的方便之处。

|

||||

|

||||

```

|

||||

$ cat sample.json | jq -r '.phoneNumber[] | select(.type == "home") | .number'

|

||||

```

|

||||

|

||||

首先,我将一个过滤器的结果传递给另一个,然后使用 `select` 属性选择特定类型的数据,再次将结果传递给另一个过滤器。

|

||||

|

||||

解释每种类型的 `jq` 过滤器和脚本编程超出了本教程的范围和目的。强烈建议你阅读 `jq` 手册,以便更好地理解下面的内容。

|

||||

|

||||

资源:

|

||||

|

||||

- https://stedolan.github.io/jq/manual/

|

||||

- http://www.compciv.org/recipes/cli/jq-for-parsing-json/

|

||||

- https://lzone.de/cheat-sheet/jq

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/how-to-parse-and-pretty-print-json-with-linux-commandline-tools/

|

||||

|

||||

作者:[ostechnix][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/editor/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://stedolan.github.io/jq/download/

|

||||

@ -1,74 +1,64 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11195-1.html)

|

||||

[#]: subject: (Check storage performance with dd)

|

||||

[#]: via: (https://fedoramagazine.org/check-storage-performance-with-dd/)

|

||||

[#]: author: (Gregory Bartholomew https://fedoramagazine.org/author/glb/)

|

||||

|

||||

Check storage performance with dd

|

||||

使用 dd 检查存储性能

|

||||

======

|

||||

|

||||

![][1]

|

||||

|

||||

This article includes some example commands to show you how to get a _rough_ estimate of hard drive and RAID array performance using the _dd_ command. Accurate measurements would have to take into account things like [write amplification][2] and [system call overhead][3], which this guide does not. For a tool that might give more accurate results, you might want to consider using [hdparm][4].

|

||||

本文包含一些示例命令,向你展示如何使用 `dd` 命令*粗略*估计硬盘驱动器和 RAID 阵列的性能。准确的测量必须考虑诸如[写入放大][2]和[系统调用开销][3]之类的事情,本指南不会考虑这些。对于可能提供更准确结果的工具,你可能需要考虑使用 [hdparm][4]。

|

||||

|

||||

To factor out performance issues related to the file system, these examples show how to test the performance of your drives and arrays at the block level by reading and writing directly to/from their block devices. **WARNING** : The _write_ tests will destroy any data on the block devices against which they are run. **Do not run them against any device that contains data you want to keep!**

|

||||

为了分解与文件系统相关的性能问题,这些示例显示了如何通过直接读取和写入块设备来在块级测试驱动器和阵列的性能。**警告**:*写入*测试将会销毁用来运行测试的块设备上的所有数据。**不要对包含你想要保留的数据的任何设备运行这些测试!**

|

||||

|

||||

### Four tests

|

||||

### 四个测试

|

||||

|

||||

Below are four example dd commands that can be used to test the performance of a block device:

|

||||

下面是四个示例 `dd` 命令,可用于测试块设备的性能:

|

||||

|

||||

1. One process reading from $MY_DISK:

|

||||

1、 从 `$MY_DISK` 读取的一个进程:

|

||||

|

||||

```

|

||||

# dd if=$MY_DISK of=/dev/null bs=1MiB count=200 iflag=nocache

|

||||

```

|

||||

|

||||

2. One process writing to $MY_DISK:

|

||||

2、写入到 `$MY_DISK` 的一个进程:

|

||||

|

||||

```

|

||||

# dd if=/dev/zero of=$MY_DISK bs=1MiB count=200 oflag=direct

|

||||

```

|

||||

|

||||

3. Two processes reading concurrently from $MY_DISK:

|

||||

3、从 `$MY_DISK` 并发读取的两个进程:

|

||||

|

||||

```

|

||||

# (dd if=$MY_DISK of=/dev/null bs=1MiB count=200 iflag=nocache &); (dd if=$MY_DISK of=/dev/null bs=1MiB count=200 iflag=nocache skip=200 &)

|

||||

```

|

||||

|

||||

4. Two processes writing concurrently to $MY_DISK:

|

||||

4、 并发写入到 `$MY_DISK` 的两个进程:

|

||||

|

||||

```

|

||||

# (dd if=/dev/zero of=$MY_DISK bs=1MiB count=200 oflag=direct &); (dd if=/dev/zero of=$MY_DISK bs=1MiB count=200 oflag=direct skip=200 &)

|

||||

```

|

||||

|

||||

- 执行读写测试时,相应的 `iflag=nocache` 和 `oflag=direct` 参数非常重要,因为没有它们,`dd` 命令有时会显示从[内存][5]中传输数据的结果速度,而不是从硬盘。

|

||||

- `bs` 和 `count` 参数的值有些随意,我选择的值应足够大,以便在大多数情况下为当前硬件提供合适的平均值。

|

||||

- `null` 和 `zero` 设备在读写测试中分别用于目标和源,因为它们足够快,不会成为性能测试中的限制因素。

|

||||

- 并发读写测试中第二个 `dd` 命令的 `skip=200` 参数是为了确保 `dd` 的两个副本在硬盘驱动器的不同区域上运行。

|

||||

|

||||

### 16 个示例

|

||||

|

||||

下面是演示,显示针对以下四个块设备中之一运行上述四个测试中的各个结果:

|

||||

|

||||

– The _iflag=nocache_ and _oflag=direct_ parameters are important when performing the read and write tests (respectively) because without them the dd command will sometimes show the resulting speed of transferring the data to/from [RAM][5] rather than the hard drive.

|

||||

1. `MY_DISK=/dev/sda2`(用在示例 1-X 中)

|

||||

2. `MY_DISK=/dev/sdb2`(用在示例 2-X 中)

|

||||

3. `MY_DISK=/dev/md/stripped`(用在示例 3-X 中)

|

||||

4. `MY_DISK=/dev/md/mirrored`(用在示例 4-X 中)

|

||||

|

||||

– The values for the _bs_ and _count_ parameters are somewhat arbitrary and what I have chosen should be large enough to provide a decent average in most cases for current hardware.

|

||||

|

||||

– The _null_ and _zero_ devices are used for the destination and source (respectively) in the read and write tests because they are fast enough that they will not be the limiting factor in the performance tests.

|

||||

|

||||

– The _skip=200_ parameter on the second dd command in the concurrent read and write tests is to ensure that the two copies of dd are operating on different areas of the hard drive.

|

||||

|

||||

### 16 examples

|

||||

|

||||

Below are demonstrations showing the results of running each of the above four tests against each of the following four block devices:

|

||||

|

||||

1. MY_DISK=/dev/sda2 (used in examples 1-X)

|

||||

2. MY_DISK=/dev/sdb2 (used in examples 2-X)

|

||||

3. MY_DISK=/dev/md/stripped (used in examples 3-X)

|

||||

4. MY_DISK=/dev/md/mirrored (used in examples 4-X)

|

||||

|

||||

|

||||

|

||||

A video demonstration of the these tests being run on a PC is provided at the end of this guide.

|

||||

|

||||

Begin by putting your computer into _rescue_ mode to reduce the chances that disk I/O from background services might randomly affect your test results. **WARNING** : This will shutdown all non-essential programs and services. Be sure to save your work before running these commands. You will need to know your _root_ password to get into rescue mode. The _passwd_ command, when run as the root user, will prompt you to (re)set your root account password.

|

||||

首先将计算机置于*救援*模式,以减少后台服务的磁盘 I/O 随机影响测试结果的可能性。**警告**:这将关闭所有非必要的程序和服务。在运行这些命令之前,请务必保存你的工作。你需要知道 `root` 密码才能进入救援模式。`passwd` 命令以 `root` 用户身份运行时,将提示你(重新)设置 `root` 帐户密码。

|

||||

|

||||

```

|

||||

$ sudo -i

|

||||

@ -77,14 +67,14 @@ $ sudo -i

|

||||

# systemctl rescue

|

||||

```

|

||||

|

||||

You might also want to temporarily disable logging to disk:

|

||||

你可能还想暂时禁止将日志记录到磁盘:

|

||||

|

||||

```

|

||||

# sed -r -i.bak 's/^#?Storage=.*/Storage=none/' /etc/systemd/journald.conf

|

||||

# systemctl restart systemd-journald.service

|

||||

```

|

||||

|

||||

If you have a swap device, it can be temporarily disabled and used to perform the following tests:

|

||||

如果你有交换设备,可以暂时禁用它并用于执行后面的测试:

|

||||

|

||||

```

|

||||

# swapoff -a

|

||||

@ -93,7 +83,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

# mdadm --zero-superblock $MY_DEVS

|

||||

```

|

||||

|

||||

#### Example 1-1 (reading from sda)

|

||||

#### 示例 1-1 (从 sda 读取)

|

||||

|

||||

```

|

||||

# MY_DISK=$(echo $MY_DEVS | cut -d ' ' -f 1)

|

||||

@ -106,7 +96,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.7003 s, 123 MB/s

|

||||

```

|

||||

|

||||

#### Example 1-2 (writing to sda)

|

||||

#### 示例 1-2 (写入到 sda)

|

||||

|

||||

```

|

||||

# MY_DISK=$(echo $MY_DEVS | cut -d ' ' -f 1)

|

||||

@ -119,7 +109,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.67117 s, 125 MB/s

|

||||

```

|

||||

|

||||

#### Example 1-3 (reading concurrently from sda)

|

||||

#### 示例 1-3 (从 sda 并发读取)

|

||||

|

||||

```

|

||||

# MY_DISK=$(echo $MY_DEVS | cut -d ' ' -f 1)

|

||||

@ -135,7 +125,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 3.52614 s, 59.5 MB/s

|

||||

```

|

||||

|

||||

#### Example 1-4 (writing concurrently to sda)

|

||||

#### 示例 1-4 (并发写入到 sda)

|

||||

|

||||

```

|

||||

# MY_DISK=$(echo $MY_DEVS | cut -d ' ' -f 1)

|

||||

@ -150,7 +140,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 3.60872 s, 58.1 MB/s

|

||||

```

|

||||

|

||||

#### Example 2-1 (reading from sdb)

|

||||

#### 示例 2-1 (从 sdb 读取)

|

||||

|

||||

```

|

||||

# MY_DISK=$(echo $MY_DEVS | cut -d ' ' -f 2)

|

||||

@ -163,7 +153,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.67285 s, 125 MB/s

|

||||

```

|

||||

|

||||

#### Example 2-2 (writing to sdb)

|

||||

#### 示例 2-2 (写入到 sdb)

|

||||

|

||||

```

|

||||

# MY_DISK=$(echo $MY_DEVS | cut -d ' ' -f 2)

|

||||

@ -176,7 +166,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.67198 s, 125 MB/s

|

||||

```

|

||||

|

||||

#### Example 2-3 (reading concurrently from sdb)

|

||||

#### 示例 2-3 (从 sdb 并发读取)

|

||||

|

||||

```

|

||||

# MY_DISK=$(echo $MY_DEVS | cut -d ' ' -f 2)

|

||||

@ -192,7 +182,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 3.57736 s, 58.6 MB/s

|

||||

```

|

||||

|

||||

#### Example 2-4 (writing concurrently to sdb)

|

||||

#### 示例 2-4 (并发写入到 sdb)

|

||||

|

||||

```

|

||||

# MY_DISK=$(echo $MY_DEVS | cut -d ' ' -f 2)

|

||||

@ -208,7 +198,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 3.81475 s, 55.0 MB/s

|

||||

```

|

||||

|

||||

#### Example 3-1 (reading from RAID0)

|

||||

#### 示例 3-1 (从 RAID0 读取)

|

||||

|

||||

```

|

||||

# mdadm --create /dev/md/stripped --homehost=any --metadata=1.0 --level=0 --raid-devices=2 $MY_DEVS

|

||||

@ -222,7 +212,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 0.837419 s, 250 MB/s

|

||||

```

|

||||

|

||||

#### Example 3-2 (writing to RAID0)

|

||||

#### 示例 3-2 (写入到 RAID0)

|

||||

|

||||

```

|

||||

# MY_DISK=/dev/md/stripped

|

||||

@ -235,7 +225,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 0.823648 s, 255 MB/s

|

||||

```

|

||||

|

||||

#### Example 3-3 (reading concurrently from RAID0)

|

||||

#### 示例 3-3 (从 RAID0 并发读取)

|

||||

|

||||

```

|

||||

# MY_DISK=/dev/md/stripped

|

||||

@ -251,7 +241,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.80016 s, 116 MB/s

|

||||

```

|

||||

|

||||

#### Example 3-4 (writing concurrently to RAID0)

|

||||

#### 示例 3-4 (并发写入到 RAID0)

|

||||

|

||||

```

|

||||

# MY_DISK=/dev/md/stripped

|

||||

@ -267,7 +257,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.81323 s, 116 MB/s

|

||||

```

|

||||

|

||||

#### Example 4-1 (reading from RAID1)

|

||||

#### 示例 4-1 (从 RAID1 读取)

|

||||

|

||||

```

|

||||

# mdadm --stop /dev/md/stripped

|

||||

@ -282,7 +272,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.74963 s, 120 MB/s

|

||||

```

|

||||

|

||||

#### Example 4-2 (writing to RAID1)

|

||||

#### 示例 4-2 (写入到 RAID1)

|

||||

|

||||

```

|

||||

# MY_DISK=/dev/md/mirrored

|

||||

@ -295,7 +285,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.74625 s, 120 MB/s

|

||||

```

|

||||

|

||||

#### Example 4-3 (reading concurrently from RAID1)

|

||||

#### 示例 4-3 (从 RAID1 并发读取)

|

||||

|

||||

```

|

||||

# MY_DISK=/dev/md/mirrored

|

||||

@ -311,7 +301,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 1.67685 s, 125 MB/s

|

||||

```

|

||||

|

||||

#### Example 4-4 (writing concurrently to RAID1)

|

||||

#### 示例 4-4 (并发写入到 RAID1)

|

||||

|

||||

```

|

||||

# MY_DISK=/dev/md/mirrored

|

||||

@ -327,7 +317,7 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

209715200 bytes (210 MB, 200 MiB) copied, 4.1067 s, 51.1 MB/s

|

||||

```

|

||||

|

||||

#### Restore your swap device and journald configuration

|

||||

#### 恢复交换设备和日志配置

|

||||

|

||||

```

|

||||

# mdadm --stop /dev/md/stripped /dev/md/mirrored

|

||||

@ -339,23 +329,19 @@ If you have a swap device, it can be temporarily disabled and used to perform th

|

||||

# reboot

|

||||

```

|

||||

|

||||

### Interpreting the results

|

||||

### 结果解读

|

||||

|

||||

Examples 1-1, 1-2, 2-1, and 2-2 show that each of my drives read and write at about 125 MB/s.

|

||||

示例 1-1、1-2、2-1 和 2-2 表明我的每个驱动器以大约 125 MB/s 的速度读写。

|

||||

|

||||

Examples 1-3, 1-4, 2-3, and 2-4 show that when two reads or two writes are done in parallel on the same drive, each process gets at about half the drive’s bandwidth (60 MB/s).

|

||||

示例 1-3、1-4、2-3 和 2-4 表明,当在同一驱动器上并行完成两次读取或写入时,每个进程的驱动器带宽大约为一半(60 MB/s)。

|

||||

|

||||

The 3-x examples show the performance benefit of putting the two drives together in a RAID0 (data stripping) array. The numbers, in all cases, show that the RAID0 array performs about twice as fast as either drive is able to perform on its own. The trade-off is that you are twice as likely to lose everything because each drive only contains half the data. A three-drive array would perform three times as fast as a single drive (all drives being equal) but it would be thrice as likely to suffer a [catastrophic failure][6].

|

||||

3-X 示例显示了将两个驱动器放在 RAID0(数据条带化)阵列中的性能优势。在所有情况下,这些数字表明 RAID0 阵列的执行速度是任何一个驱动器能够独立提供的速度的两倍。相应的是,丢失所有内容的可能性也是两倍,因为每个驱动器只包含一半的数据。一个三个驱动器阵列的执行速度是单个驱动器的三倍(所有驱动器规格都相同),但遭受[灾难性故障][6]的可能也是三倍。

|

||||

|

||||

The 4-x examples show that the performance of the RAID1 (data mirroring) array is similar to that of a single disk except for the case where multiple processes are concurrently reading (example 4-3). In the case of multiple processes reading, the performance of the RAID1 array is similar to that of the RAID0 array. This means that you will see a performance benefit with RAID1, but only when processes are reading concurrently. For example, if a process tries to access a large number of files in the background while you are trying to use a web browser or email client in the foreground. The main benefit of RAID1 is that your data is unlikely to be lost [if a drive fails][7].

|

||||

4-X 示例显示 RAID1(数据镜像)阵列的性能类似于单个磁盘的性能,除了多个进程同时读取的情况(示例4-3)。在多个进程读取的情况下,RAID1 阵列的性能类似于 RAID0 阵列的性能。这意味着你将看到 RAID1 的性能优势,但仅限于进程同时读取时。例如,当你在前台使用 Web 浏览器或电子邮件客户端时,进程会尝试访问后台中的大量文件。RAID1 的主要好处是,[如果驱动器出现故障][7],你的数据不太可能丢失。

|

||||

|

||||

### Video demo

|

||||

### 故障排除

|

||||

|

||||

Testing storage throughput using dd

|

||||

|

||||

### Troubleshooting

|

||||

|

||||

If the above tests aren’t performing as you expect, you might have a bad or failing drive. Most modern hard drives have built-in Self-Monitoring, Analysis and Reporting Technology ([SMART][8]). If your drive supports it, the _smartctl_ command can be used to query your hard drive for its internal statistics:

|

||||

如果上述测试未按预期执行,则可能是驱动器坏了或出现故障。大多数现代硬盘都内置了自我监控、分析和报告技术([SMART][8])。如果你的驱动器支持它,`smartctl` 命令可用于查询你的硬盘驱动器的内部统计信息:

|

||||

|

||||

```

|

||||

# smartctl --health /dev/sda

|

||||

@ -363,21 +349,21 @@ If the above tests aren’t performing as you expect, you might have a bad or fa

|

||||

# smartctl -x /dev/sda

|

||||

```

|

||||

|

||||

Another way that you might be able to tune your PC for better performance is by changing your [I/O scheduler][9]. Linux systems support several I/O schedulers and the current default for Fedora systems is the [multiqueue][10] variant of the [deadline][11] scheduler. The default performs very well overall and scales extremely well for large servers with many processors and large disk arrays. There are, however, a few more specialized schedulers that might perform better under certain conditions.

|

||||

另一种可以调整 PC 以获得更好性能的方法是更改 [I/O 调度程序][9]。Linux 系统支持多个 I/O 调度程序,Fedora 系统的当前默认值是 [deadline][11] 调度程序的 [multiqueue][10] 变体。默认情况下它的整体性能非常好,并且对于具有许多处理器和大型磁盘阵列的大型服务器,其扩展性极为出色。但是,有一些更专业的调度程序在某些条件下可能表现更好。

|

||||

|

||||

To view which I/O scheduler your drives are using, issue the following command:

|

||||

要查看驱动器正在使用的 I/O 调度程序,请运行以下命令:

|

||||

|

||||

```

|

||||

$ for i in /sys/block/sd?/queue/scheduler; do echo "$i: $(<$i)"; done

|

||||

```

|

||||

|

||||

You can change the scheduler for a drive by writing the name of the desired scheduler to the /sys/block/<device name>/queue/scheduler file:

|

||||

你可以通过将所需调度程序的名称写入 `/sys/block/<device name>/queue/scheduler` 文件来更改驱动器的调度程序:

|

||||

|

||||

```

|

||||

# echo bfq > /sys/block/sda/queue/scheduler

|

||||

```

|

||||

|

||||

You can make your changes permanent by creating a [udev rule][12] for your drive. The following example shows how to create a udev rule that will set all [rotational drives][13] to use the [BFQ][14] I/O scheduler:

|

||||

你可以通过为驱动器创建 [udev 规则][12]来永久更改它。以下示例显示了如何创建将所有的[旋转式驱动器][13]设置为使用 [BFQ][14] I/O 调度程序的 udev 规则:

|

||||

|

||||

```

|

||||

# cat << END > /etc/udev/rules.d/60-ioscheduler-rotational.rules

|

||||

@ -385,7 +371,7 @@ ACTION=="add|change", KERNEL=="sd[a-z]", ATTR{queue/rotational}=="1", ATTR{queue

|

||||

END

|

||||

```

|

||||

|

||||

Here is another example that sets all [solid-state drives][15] to use the [NOOP][16] I/O scheduler:

|

||||

这是另一个设置所有的[固态驱动器][15]使用 [NOOP][16] I/O 调度程序的示例:

|

||||

|

||||

```

|

||||

# cat << END > /etc/udev/rules.d/60-ioscheduler-solid-state.rules

|

||||

@ -393,11 +379,7 @@ ACTION=="add|change", KERNEL=="sd[a-z]", ATTR{queue/rotational}=="0", ATTR{queue

|

||||

END

|

||||

```

|

||||

|

||||

Changing your I/O scheduler won’t affect the raw throughput of your devices, but it might make your PC seem more responsive by prioritizing the bandwidth for the foreground tasks over the background tasks or by eliminating unnecessary block reordering.

|

||||

|

||||

* * *

|

||||

|

||||

_Photo by _[ _James Donovan_][17]_ on _[_Unsplash_][18]_._

|

||||

更改 I/O 调度程序不会影响设备的原始吞吐量,但通过优先考虑后台任务的带宽或消除不必要的块重新排序,可能会使你的 PC 看起来响应更快。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -405,8 +387,8 @@ via: https://fedoramagazine.org/check-storage-performance-with-dd/

|

||||

|

||||

作者:[Gregory Bartholomew][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,183 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11221-1.html)

|

||||

[#]: subject: (How to install Elasticsearch and Kibana on Linux)

|

||||

[#]: via: (https://opensource.com/article/19/7/install-elasticsearch-and-kibana-linux)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

如何在 Linux 上安装 Elasticsearch 和 Kibana

|

||||

======

|

||||

|

||||

> 获取我们关于安装两者的简化说明。

|

||||

|

||||

![5 pengiuns floating on iceburg][1]

|

||||

|

||||

如果你渴望学习基于开源 Lucene 库的著名开源搜索引擎 Elasticsearch,那么没有比在本地安装它更好的方法了。这个过程在 [Elasticsearch 网站][2]中有详细介绍,但如果你是初学者,官方说明就比必要的信息多得多。本文采用一种简化的方法。

|

||||

|

||||

### 添加 Elasticsearch 仓库

|

||||

|

||||

首先,将 Elasticsearch 仓库添加到你的系统,以便你可以根据需要安装它并接收更新。如何做取决于你的发行版。在基于 RPM 的系统上,例如 [Fedora][3]、[CentOS] [4]、[Red Hat Enterprise Linux(RHEL)][5] 或 [openSUSE][6],(本文任何地方引用 Fedora 或 RHEL 的也适用于 CentOS 和 openSUSE)在 `/etc/yum.repos.d/` 中创建一个名为 `elasticsearch.repo` 的仓库描述文件:

|

||||

|

||||

```

|

||||

$ cat << EOF | sudo tee /etc/yum.repos.d/elasticsearch.repo

|

||||

[elasticsearch-7.x]

|

||||

name=Elasticsearch repository for 7.x packages

|

||||

baseurl=https://artifacts.elastic.co/packages/oss-7.x/yum

|

||||

gpgcheck=1

|

||||

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

|

||||

enabled=1

|

||||

autorefresh=1

|

||||

type=rpm-md

|

||||

EOF

|

||||

```

|

||||

|

||||

在 Ubuntu 或 Debian 上,不要使用 `add-apt-repository` 工具。由于它自身默认的和 Elasticsearch 仓库提供的不匹配而导致错误。相反,设置这个:

|

||||

|

||||

```

|

||||

$ echo "deb https://artifacts.elastic.co/packages/oss-7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

|

||||

```

|

||||

|

||||

在你从该仓库安装之前,导入 GPG 公钥,然后更新:

|

||||

|

||||

```

|

||||

$ sudo apt-key adv --keyserver \

|

||||

hkp://keyserver.ubuntu.com:80 \

|

||||

--recv D27D666CD88E42B4

|

||||

$ sudo apt update

|

||||

```

|

||||

|

||||

此存储库仅包含 Elasticsearch 的开源功能,在 [Apache 许可证][7]下发布,没有提供订阅版本的额外功能。如果你需要仅限订阅的功能(这些功能是**并不**开源),那么 `baseurl` 必须设置为:

|

||||

|

||||

```

|

||||

baseurl=https://artifacts.elastic.co/packages/7.x/yum

|

||||

```

|

||||

|

||||

### 安装 Elasticsearch

|

||||

|

||||

你需要安装的软件包的名称取决于你使用的是开源版本还是订阅版本。本文使用开源版本,包名最后有 `-oss` 后缀。如果包名后没有 `-oss`,那么表示你请求的是仅限订阅版本。

|

||||

|

||||

如果你创建了订阅版本的仓库却尝试安装开源版本,那么就会收到“非指定”的错误。如果你创建了一个开源版本仓库却没有将 `-oss` 添加到包名后,那么你也会收到错误。

|

||||

|

||||

使用包管理器安装 Elasticsearch。例如,在 Fedora、CentOS 或 RHEL 上运行以下命令:

|

||||

|

||||

```

|

||||

$ sudo dnf install elasticsearch-oss

|

||||

```

|

||||

|

||||

在 Ubuntu 或 Debian 上,运行:

|

||||

|

||||

```

|

||||

$ sudo apt install elasticsearch-oss

|

||||

```

|

||||

|

||||

如果你在安装 Elasticsearch 时遇到错误,那么你可能安装的是错误的软件包。如果你想如本文这样使用开源包,那么请确保使用正确的 apt 仓库或在 Yum 配置正确的 `baseurl`。

|

||||

|

||||

### 启动并启用 Elasticsearch

|

||||

|

||||

安装 Elasticsearch 后,你必须启动并启用它:

|

||||

|

||||

```

|

||||

$ sudo systemctl daemon-reload

|

||||

$ sudo systemctl enable --now elasticsearch.service

|

||||

```

|

||||

|

||||

要确认 Elasticsearch 在其默认端口 9200 上运行,请在 Web 浏览器中打开 `localhost:9200`。你可以使用 GUI 浏览器,也可以在终端中执行此操作:

|

||||

|

||||

|

||||

```

|

||||

$ curl localhost:9200

|

||||

{

|

||||

|

||||

"name" : "fedora30",

|

||||

"cluster_name" : "elasticsearch",

|

||||

"cluster_uuid" : "OqSbb16NQB2M0ysynnX1hA",

|

||||

"version" : {

|

||||

"number" : "7.2.0",

|

||||

"build_flavor" : "oss",

|

||||

"build_type" : "rpm",

|

||||

"build_hash" : "508c38a",

|

||||

"build_date" : "2019-06-20T15:54:18.811730Z",

|

||||

"build_snapshot" : false,

|

||||

"lucene_version" : "8.0.0",

|

||||

"minimum_wire_compatibility_version" : "6.8.0",

|

||||

"minimum_index_compatibility_version" : "6.0.0-beta1"

|

||||

},

|

||||

"tagline" : "You Know, for Search"

|

||||

}

|

||||

```

|

||||

|

||||

### 安装 Kibana

|

||||

|

||||

Kibana 是 Elasticsearch 数据可视化的图形界面。它包含在 Elasticsearch 仓库,因此你可以使用包管理器进行安装。与 Elasticsearch 本身一样,如果你使用的是 Elasticsearch 的开源版本,那么必须将 `-oss` 放到包名最后,订阅版本则不用(两者安装需要匹配):

|

||||

|

||||

|

||||

```

|

||||

$ sudo dnf install kibana-oss

|

||||

```

|

||||

|

||||

在 Ubuntu 或 Debian 上:

|

||||

|

||||

```

|

||||

$ sudo apt install kibana-oss

|

||||

```

|

||||

|

||||

Kibana 在端口 5601 上运行,因此打开图形化 Web 浏览器并进入 `localhost:5601` 来开始使用 Kibana,如下所示:

|

||||

|

||||

![Kibana running in Firefox.][8]

|

||||

|

||||

### 故障排除

|

||||

|

||||

如果在安装 Elasticsearch 时出现错误,请尝试手动安装 Java 环境。在 Fedora、CentOS 和 RHEL 上:

|

||||

|

||||

```

|

||||

$ sudo dnf install java-openjdk-devel java-openjdk

|

||||

```

|

||||

|

||||

在 Ubuntu 上:

|

||||

|

||||

```

|

||||

$ sudo apt install default-jdk

|

||||

```

|

||||

|

||||

如果所有其他方法都失败,请尝试直接从 Elasticsearch 服务器安装 Elasticsearch RPM:

|

||||

|

||||

```

|

||||