mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

commit

74cbf1f7cc

Installing the openQRM Cloud Computing Platform on Debian
================================================================================

### Introduction ###

**openQRM** is a web-based open source cloud computing and data center management platform that integrates flexibly with the existing components of an enterprise data center.

It supports the following virtualization technologies:

- KVM
- XEN
- Citrix XenServer
- VMWare ESX
- LXC
- OpenVZ

The hybrid cloud connector in openQRM supports a range of private and public cloud providers, such as **Amazon AWS**, **Eucalyptus** and **OpenStack**, so you can extend your infrastructure on demand. It also automates resource provisioning, virtualization, storage and configuration management, and takes care of high availability. A self-service cloud portal with an integrated billing system lets end users request new servers and application stacks on demand.

openQRM is available in two different flavors:

- Enterprise Edition
- Community Edition

You can see the differences between the two editions [here][1].

### Features ###

- Private/hybrid cloud computing platform
- Manages physical and virtualized server systems
- Integrates with all major open source and commercial storage technologies
- Cross-platform: Linux, Windows, OpenSolaris and BSD
- Supports KVM, XEN, Citrix XenServer, VMWare ESX(i), lxc, OpenVZ and VirtualBox
- Supports hybrid cloud setups using additional cloud resources such as Amazon AWS, Eucalyptus and Ubuntu UEC
- Supports P2V, P2P, V2P and V2V migration, and high availability
- Integrates the best open source management tools, such as puppet, nagios/Icinga or collectd
- Over 50 plugins for extended functionality and integration with your infrastructure
- Self-service portal for end users
- Integrated billing system

### 安装 ###

|

||||

|

||||

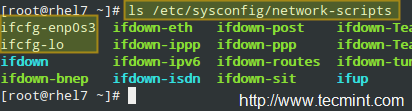

在这里我们将在 in Debian 7.5 上安装 openQRM。你的服务器必须至少满足以下要求:

|

||||

在这里我们将在 Debian 7.5 上安装 openQRM。你的服务器必须至少满足以下要求:

|

||||

|

||||

- 1 GB RAM;

|

||||

- 100 GB Hdd(硬盘驱动器);

|

||||

- 可选: Bios 支持虚拟化(Intel CPUs 的 VT 或 AMD CPUs AMD-V).

|

||||

- 1 GB RAM

|

||||

- 100 GB Hdd(硬盘驱动器)

|

||||

- 可选: Bios 支持虚拟化(Intel CPUs 的 VT 或 AMD CPUs AMD-V)

|

||||

|

||||

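You may want to check a server against these requirements before installing. The sketch below is minimal and assumes the Linux `/proc/meminfo` and `/proc/cpuinfo` formats and GNU `df`. The function names are hypothetical. The `vmx` CPU flag indicates Intel VT and `svm` indicates AMD-V. These flags only show what the CPU supports, so virtualization may still be switched off in the BIOS.

```bash
#!/usr/bin/env bash
# Hypothetical pre-install checks for the openQRM requirements listed above.
# Each function takes an optional path so it can be pointed at sample files.

# Print total RAM in kB, as reported by the MemTotal line of meminfo.
mem_total_kb() {
    awk '/^MemTotal:/ { print $2 }' "${1:-/proc/meminfo}"
}

# Succeed if the CPU advertises hardware virtualization:
# "vmx" = Intel VT, "svm" = AMD-V. This does not prove the BIOS has it enabled.
has_virt_flags() {
    grep -qwE 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# Print the available space (in GB, rounded up by df) on the filesystem holding a path.
disk_avail_gb() {
    df -P -BG "${1:-/}" | awk 'NR == 2 { sub(/G$/, "", $4); print $4 }'
}
```

For example: `echo "RAM: $(mem_total_kb) kB, free disk: $(disk_avail_gb /) GB"; has_virt_flags && echo "VT/AMD-V supported"`. MemTotal is usually a little below the installed RAM because the kernel reserves some memory, so a "1 GB" machine reports slightly under 1048576 kB.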
首先,安装 `make` 软件包来编译 openQRM 源码包:

|

||||

|

||||

@ -52,7 +53,7 @@ openQRM 有两种不同风格的版本可获取:

|

||||

|

||||

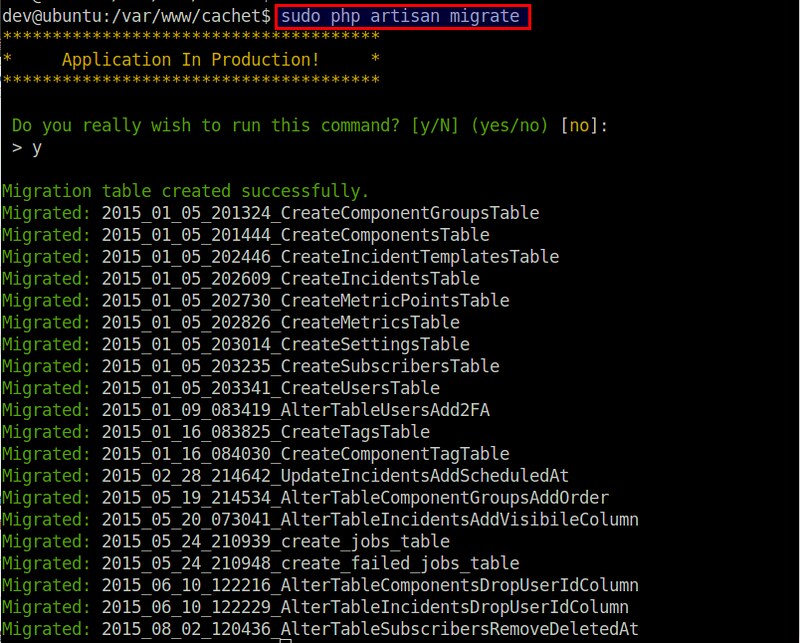

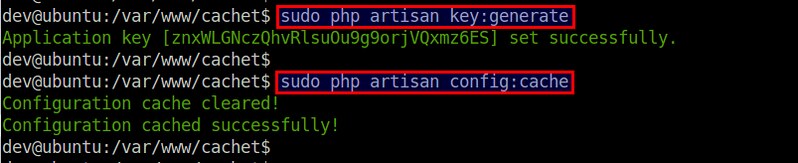

然后,逐次运行下面的命令来安装 openQRM。

|

||||

|

||||

从[这里][2] 下载最新的可用版本:

|

||||

从[这里][2]下载最新的可用版本:

|

||||

|

||||

wget http://sourceforge.net/projects/openqrm/files/openQRM-Community-5.1/openqrm-community-5.1.tgz

|

||||

|

||||

@ -66,35 +67,35 @@ openQRM 有两种不同风格的版本可获取:

|

||||

|

||||

sudo make start

|

||||

|

||||

安装期间,你将被询问去更新文件 `php.ini`

|

||||

安装期间,会要求你更新文件 `php.ini`

|

||||

|

||||

|

||||

|

||||

|

||||

输入 mysql root 用户密码。

|

||||

|

||||

|

||||

|

||||

|

||||

再次输入密码:

|

||||

|

||||

|

||||

|

||||

|

||||

选择邮件服务器配置类型。

|

||||

选择邮件服务器配置类型:

|

||||

|

||||

|

||||

|

||||

|

||||

假如你不确定该如何选择,可选择 `Local only`。在我们的这个示例中,我选择了 **Local only** 选项。

|

||||

|

||||

|

||||

|

||||

|

||||

输入你的系统邮件名称,并最后输入 Nagios 管理员密码。

|

||||

|

||||

|

||||

|

||||

|

||||

根据你的网络连接状态,上面的命令可能将花费很长的时间来下载所有运行 openQRM 所需的软件包,请耐心等待。

|

||||

|

||||

最后你将得到 openQRM 配置 URL 地址以及相关的用户名和密码。

|

||||

|

||||

|

||||

|

||||

|

||||

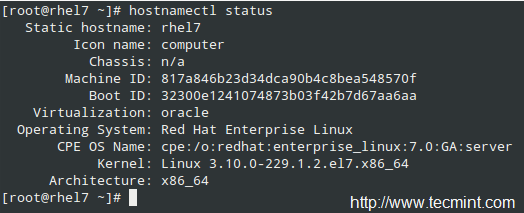

### 配置 ###

|

||||

|

||||

@ -104,23 +105,23 @@ openQRM 有两种不同风格的版本可获取:

|

||||

|

||||

The default username and password are **openqrm/openqrm**.

Select a network card for the openQRM management network.

Select a database type. In this example, I chose mysql.

Now configure the database connection and initialize openQRM. Here I use **openQRM** as the database name, **root** as the user, and debian as the database password. Be careful: enter the MySQL root password you created earlier while installing openQRM.

Congratulations! openQRM is now installed and configured.

### Updating openQRM ###

    cd openqrm/src/
    make update

So far, all we have done is install and configure openQRM on our Debian server. For creating and running virtual machines, managing storage, integrating additional systems and running your own private cloud, I suggest you read the [openQRM Administrator Guide][3].

That's all. Cheers, and have a happy weekend!

--------------------------------------------------------------------------------

via: http://www.unixmen.com/install-openqrm-cloud-computing-platform-debian/

Author: [SK][a]

Translator: [FSSlc](https://github.com/FSSlc)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](http://linux.cn/)

Tickr: An Open Source RSS News Ticker App for the Linux Desktop
================================================================================

**Extra! Extra! Read all about it!**

OK, the app we're recommending today is no binary version of an old-fashioned newspaper. What it does is push the latest news to your desktop in a nice way.

Tickr is a GTK-based news ticker app for the Linux desktop. It scrolls the latest headlines and the article titles from your favorite RSS feeds along a horizontal strip, and you can place it anywhere on your desktop.

Call me Joey Calamezzo; I put mine at the bottom, like the scrolling ticker on a TV news channel. (LCTT note: Joan Callamezzo is the host of Pawnee Today, a local news/talk show host in Pawnee. The author of this article is Joey.)

"Over to you, sub-heading."

### RSS, Remember That? ###

"Thanks, paragraph ending."

In an era flooded with push notifications, social media, clickbait and listicles that cajole people into clicking, RSS can seem a little outdated.

For me? Well, RSS lives up to its name: Really Simple Syndication. It's the simplest, most manageable way for me to get updates. I can manage and read things whenever I want. There's no need to rush to look in case the tweet vanishes into the stream or the push notification disappears.

The beauty of Tickr is its utility. You can have a constant stream of news scrolling along the bottom of your screen and glance at it from time to time.

Although Tickr can be installed from the Ubuntu Software Center, it hasn't been updated in a long time. Nothing conveys a sense of abandonment more than opening its clunky, unintuitive control panel.

To open it:

1. Right-click on the Tickr bar
2. Go to Edit > Preferences
3. Adjust the various settings

There are row after row of options and settings, and some of them seem self-explanatory. But if you get to know them in detail, you can control almost everything, including:

- Scrolling speed
- Behavior on mouse-over
- Feed update frequency
- Font, including font size and color
- Message separator ("delineator")
- Tickr's position on the screen
- Color and opacity of the Tickr bar
- How many articles to show from each feed

One "quirk" worth mentioning: clicking "Apply" only updates Tickr's on-screen preview. Click "OK" when you exit the Preferences window.

Getting the ticker to display perfectly takes a little tweaking, especially on Unity.

Pressing the "full width button" lets the app auto-detect your screen width. By default, when it is placed at the top or bottom, it leaves a 25-pixel gap (the app was created back in the days of the GNOME 2.x desktop). Just add an extra 25 pixels to the value in the input box to make up for this.

Other options include: which browser articles open in; whether Tickr appears as a regular window; whether a clock is shown; and how often the app checks feeds for new articles.

#### Adding Feeds ####

Tickr comes with more than 30 different feeds, ranging from tech blogs to mainstream news…

That's Tickr. It won't change the world, but it will keep you informed about what's happening in it.

### Installing Tickr on Ubuntu 14.04 LTS or Later ###

To install it on Ubuntu 14.04 LTS or later, click the button below to go to the Ubuntu Software Center.

- [Click here to install Tickr from the Ubuntu Software Center][1]

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2015/06/tickr-open-source-desktop-rss-news-ticke

Author: [Joey-Elijah Sneddon][a]

Translator: [xiaoyu33](https://github.com/xiaoyu33)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

published/20150717 How to monitor NGINX with Datadog - Part 3.md

How to Monitor NGINX with Datadog (Part 3)
================================================================================

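If you've already read our previous article, [How to Monitor NGINX][1], you know how much information you can gain about your web environment from just a handful of metrics. You've also seen how easy it is to start collecting metrics from NGINX on an ad hoc basis. But comprehensive, ongoing NGINX monitoring needs a robust monitoring system that stores and visualizes your metrics and alerts you when anomalies happen. In this post, we'll show you how to set up NGINX monitoring in Datadog so that you can view these metrics on customizable dashboards.

Datadog lets you build graphs and alerts around individual hosts, services, processes and metrics, or virtually any combination of them. For instance, you can monitor all of your hosts, or all NGINX hosts in a certain availability zone, or a single key metric across all hosts with a certain tag. This post will show you how to:

- Monitor NGINX metrics on Datadog dashboards, alongside all your other systems
- Set up automated alerts to notify you when a key metric changes dramatically

### Configuring NGINX ###

To collect metrics from NGINX, you first need to make sure that NGINX has the status module enabled and a URL that reports status metrics. Step-by-step instructions for configuring [open source NGINX][2] and [NGINX Plus][3] are in our previous related article.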

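For reference, the status page of open source NGINX (the stub_status module) is a short plain-text report. Its counters are roughly the raw data behind metrics such as nginx.net.request_per_s. The sketch below is minimal and assumes that standard four-line format; it pulls the counters out with awk. The function name is hypothetical. NGINX Plus exposes a different, JSON-based status interface, and this sketch does not handle it.

```bash
# Hypothetical helper: read NGINX stub_status output on stdin and print the
# counters as key=value lines. "dropped" is accepts minus handled.
parse_stub_status() {
    awk '
        /^Active connections:/ { print "active=" $3 }
        /^ *[0-9]+ +[0-9]+ +[0-9]+ *$/ {
            print "accepts=" $1
            print "handled=" $2
            print "requests=" $3
            print "dropped=" ($1 - $2)
        }
        /^Reading:/ { print "reading=" $2; print "writing=" $4; print "waiting=" $6 }
    '
}
```

With the status URL used in the sample configuration, you would run `curl -s http://localhost/nginx_status/ | parse_stub_status`.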
### Integrating Datadog and NGINX ###

#### Installing the Datadog Agent ####

The Datadog Agent is [open source software][4] that collects and reports metrics from your hosts so that you can view and monitor them in Datadog. Installing the Agent usually takes [just a single command][5].

As soon as your Agent is up and running, you should see your host's metrics reporting [in your Datadog account][6].

#### Configuring the Agent ####

Next, you need to create a simple NGINX configuration file for the Agent. You can find the location of the Agent's configuration directory on your system [here][7].

Inside that directory, at conf.d/nginx.yaml.example, you will find [a sample configuration file][8]. Edit it to provide the status URL and optional tags for each of your NGINX instances:

    init_config:

    instances:
      - nginx_status_url: http://localhost/nginx_status/
        tags:
          - instance:foo

Once you have supplied the status URLs and any tags, save the configuration file as conf.d/nginx.yaml.

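If you manage many NGINX hosts, you may prefer to generate this file from a script. The sketch below is minimal and assumes the conf.d layout described above. The function and argument names are hypothetical. Newer Agent versions may expect a different directory layout, so check the documentation for the Agent version you run.

```bash
# Hypothetical helper: write conf.d/nginx.yaml for a single NGINX instance.
# Usage: write_nginx_check_config <conf.d directory> <status URL> <tag>
write_nginx_check_config() {
    local conf_dir="$1" status_url="$2" tag="$3"
    mkdir -p "$conf_dir" || return 1
    cat > "$conf_dir/nginx.yaml" <<EOF
init_config:

instances:
  - nginx_status_url: $status_url
    tags:
      - $tag
EOF
}
```

For example, `write_nginx_check_config "$AGENT_CONF_DIR" http://localhost/nginx_status/ instance:foo`, where `AGENT_CONF_DIR` is a placeholder for your Agent's conf.d directory. Restart the Agent afterwards, as described next.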
#### Restarting the Agent ####

You must restart the Agent to load your new configuration file. The restart command varies by platform; see [here][9].

#### Checking the Configuration ####

To check that Datadog and NGINX are properly integrated, run the Datadog `info` command. The command for each platform is listed [here][10].

If the configuration is correct, you should see a section like this in the output:

    Checks
    ======

      [...]

      nginx
      -----
        - instance #0 [OK]
        - Collected 8 metrics & 0 events

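You can automate this check, for example after a configuration change, by scanning the `info` output for the nginx section. The sketch below is minimal and assumes the output layout shown above. The function name is hypothetical. Pipe your platform's info command into it.

```bash
# Hypothetical helper: read Agent "info" output on stdin and succeed only if
# the nginx section reports an instance marked [OK].
nginx_check_ok() {
    awk '
        $1 == "nginx" { in_nginx = 1; next }
        in_nginx && /^ *- instance .*\[OK\]/ { found = 1 }
        in_nginx && NF == 1 && $1 !~ /^-+$/ { in_nginx = 0 }   # next check name ends the section
        END { if (found) exit 0; exit 1 }
    '
}
```

For example: `<your platform's info command> | nginx_check_ok && echo "nginx check OK"`. An `[OK]` under another check does not count, because the function only looks inside the nginx section.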
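#### Installing the Integration ####

Finally, switch on the NGINX integration inside your Datadog account. It's as simple as clicking the "Install Integration" button in the [NGINX integration settings][11].

### Metrics! ###

Once the Agent begins reporting NGINX metrics, you will see [an NGINX dashboard][12] in your list of available dashboards in Datadog.

The basic NGINX dashboard displays useful graphs covering several of the key metrics highlighted in [our introduction to NGINX monitoring][13]. (Some metrics, notably request processing time, require log analysis and are not supported by Datadog.)

You can easily build a comprehensive dashboard for your entire web stack by adding graphs of important metrics from outside NGINX. For example, you might want to monitor host-level metrics on your NGINX hosts, such as system load. To build a custom dashboard, clone the default NGINX dashboard: click the options menu near the upper right corner of the dashboard and select "Clone Dash".

You can also monitor your NGINX instances at a higher level using Datadog's [Host Maps][14]. For example, you can color-code all your NGINX hosts by CPU usage to spot potential hotspots.

### Alerting on NGINX Metrics ###

Once Datadog is capturing and visualizing your metrics, you will likely want to set up monitors that watch your metrics automatically and alert you when there are problems. Below we'll walk through a typical example: a metric monitor that alerts you to sudden drops in NGINX throughput.

#### Monitoring NGINX Throughput ####

Datadog metric alerts can be "threshold-based" (alert when the metric exceeds a set value) or "change-based" (alert when the metric changes by more than a certain amount). In this case we take the latter approach and alert when our incoming requests per second drop precipitously, since such drops often indicate a problem.

1. **Create a new metric monitor.** Select "New Monitor" from the "Monitors" dropdown in Datadog, and select "Metric" as the monitor type.

2. **Define your metric monitor.** We want to know when our total NGINX requests per second drop, so we define the metric of interest as the sum of nginx.net.request_per_s across our infrastructure.

3. **Set metric alert conditions.** Since we want to alert on a change rather than a fixed value, we select "Change Alert". We set the monitor to alert us whenever the request volume drops by 30 percent or more. Here we use a one-minute window of data to represent the metric's value "now", and compare the average change across that interval with the metric's value 10 minutes earlier.

4. **Customize the notification.** If our NGINX request volume drops, we want to notify our team. In this case we post a notification in the ops team's chat room and page the on-call engineer. In "Say what's happening", we name the monitor and add a short message to accompany the notification that suggests where to start investigating. We @mention the Slack channel our ops team uses, and use @pagerduty to [route the alert to the on-call engineer via PagerDuty][15].

5. **Save the integration monitor.** Click the "Save" button at the bottom of the page. You're now monitoring a key NGINX [work metric][16], and your on-call engineer will be paged whenever it drops rapidly.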

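The change alert in step 3 comes down to simple arithmetic. The function below only illustrates that comparison and is not Datadog's implementation. The function name and sample numbers are hypothetical. It computes the percentage change from the value 10 minutes earlier to the average over the last minute, and signals an alert when the drop is 30% or more.

```bash
# Illustration of a change-based alert condition (not Datadog's code).
# Usage: should_alert_on_drop <value_10_min_ago> <avg_last_minute> [threshold_pct]
# Prints the percentage change; exits 0 (alert) if it dropped by >= threshold_pct.
should_alert_on_drop() {
    awk -v old="$1" -v now="$2" -v pct="${3:-30}" 'BEGIN {
        if (old <= 0) exit 1                   # no usable baseline
        change = (now - old) / old * 100       # negative means a drop
        printf "change: %.1f%%\n", change
        if (change <= -pct) exit 0
        exit 1
    }'
}
```

For example, `should_alert_on_drop 200 130` prints `change: -35.0%` and succeeds, because a fall from 200 to 130 requests per second is a 35% drop. `should_alert_on_drop 200 150` (a 25% drop) does not alert.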
### Conclusion ###

In this post we've walked through integrating NGINX with Datadog to visualize your key metrics and notify your team when your web infrastructure shows signs of trouble.

If you've followed along using your own Datadog account, you should now have much better visibility into your web environment. You should also be able to create automated monitors tailored to your environment, your usage patterns, and the metrics that matter most to your organization.

If you don't yet have a Datadog account, you can sign up for [a free trial][17] and start monitoring your infrastructure, applications and services today.

------------------------------------------------------------

via: https://www.datadoghq.com/blog/how-to-monitor-nginx-with-datadog/

Author: K Young

Translator: [strugglingyouth](https://github.com/strugglingyouth)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[1]:https://linux.cn/article-5970-1.html
[2]:https://linux.cn/article-5985-1.html#open-source
[3]:https://linux.cn/article-5985-1.html#plus
[4]:https://github.com/DataDog/dd-agent
[5]:https://app.datadoghq.com/account/settings#agent
[6]:https://app.datadoghq.com/infrastructure
[7]:http://docs.datadoghq.com/guides/basic_agent_usage/
[8]:https://github.com/DataDog/dd-agent/blob/master/conf.d/nginx.yaml.example
[9]:http://docs.datadoghq.com/guides/basic_agent_usage/
[10]:http://docs.datadoghq.com/guides/basic_agent_usage/
[11]:https://app.datadoghq.com/account/settings#integrations/nginx
[12]:https://app.datadoghq.com/dash/integration/nginx
[13]:https://linux.cn/article-5970-1.html
[14]:https://www.datadoghq.com/blog/introducing-host-maps-know-thy-infrastructure/
[15]:https://www.datadoghq.com/blog/pagerduty/
[16]:https://www.datadoghq.com/blog/monitoring-101-collecting-data/#metrics
[17]:https://www.datadoghq.com/blog/how-to-monitor-nginx-with-datadog/#sign-up
[18]:https://github.com/DataDog/the-monitor/blob/master/nginx/how_to_monitor_nginx_with_datadog.md
[19]:https://github.com/DataDog/the-monitor/issues

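How to Find Your Public IP Address from the Linux Terminal
================================================================================

Public addresses are assigned by InterNIC. They consist of class-based network IDs or CIDR-based address blocks (called CIDR blocks) and are guaranteed to be unique on the global Internet. When public addresses are assigned, routes to them are recorded in the routers of the Internet, so that traffic destined for those public addresses can reach them over the Internet. For example, when a CIDR block is assigned to an organization in the form of a network ID and subnet mask, the corresponding [network ID, subnet mask] pair is also stored as a route in the routers of the Internet. IP packets destined for any address in the CIDR block are then forwarded to the right place.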

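Here is the CIDR idea in concrete terms: an address belongs to a block when its first N bits (N being the prefix length after the slash) match the block's network ID. Below is a minimal pure-bash sketch with hypothetical function names. The test uses the RFC 1918 private ranges (10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16). These ranges are not routed on the public Internet, so an address inside them cannot be your public IP.

```bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IPv4 address $1 lies inside CIDR block $2, e.g. 10.0.0.0/8.
# Assumes 64-bit shell arithmetic, as in bash on common platforms.
in_cidr() {
    local ip net bits mask
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( (ip & mask) == (net & mask) ))
}

# Succeed if the address falls in one of the RFC 1918 private ranges.
is_private_ipv4() {
    in_cidr "$1" 10.0.0.0/8 || in_cidr "$1" 172.16.0.0/12 || in_cidr "$1" 192.168.0.0/16
}
```

For instance, `in_cidr 172.20.5.4 172.16.0.0/12` succeeds because the first 12 bits of both addresses match, while `172.32.0.1` falls just outside that block.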
In this article I will show you several ways to find your public IP address from the Linux terminal. This means little to ordinary desktop users, but it is useful on Linux servers (with no GUI, or when you are logged in as a user who can only use basic tools). Either way, getting your public IP from the Linux terminal is useful in many situations, and it may come in handy one day.

The two main commands we'll use are curl and wget. You can use either one.

### Plain-text output with curl: ###

    curl icanhazip.com
    curl ifconfig.me
    curl curlmyip.com
    curl ip.appspot.com
    curl ipinfo.io/ip
    curl ipecho.net/plain
    curl www.trackip.net/i

### JSON output with curl: ###

    curl ipinfo.io/json
    curl ifconfig.me/all.json
    curl 'www.trackip.net/ip?json'   # a bit ugly

### XML output with curl: ###

    curl ifconfig.me/all.xml

### All IP details with curl (the motherlode): ###

    curl ifconfig.me/all

### Using DynDNS (useful when you use a DynDNS service): ###

    curl -s 'http://checkip.dyndns.org' | sed 's/.*Current IP Address: \([0-9\.]*\).*/\1/g'
    curl -s http://checkip.dyndns.org/ | grep -o "[[:digit:].]\+"

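checkip.dyndns.org returns a small HTML page rather than a bare address, which is why the commands above filter its output. The minimal sketch below wraps the sed approach in a function so it can be checked against a sample page. The function name is hypothetical, and the sample HTML in the test simply contains the "Current IP Address:" text that the sed expression expects.

```bash
# Hypothetical helper: read the checkip.dyndns.org page on stdin and print
# only the IPv4 address that follows "Current IP Address:".
# sed -n with the p flag prints nothing for lines that do not match.
extract_dyndns_ip() {
    sed -n 's/.*Current IP Address: \([0-9.]*\).*/\1/p'
}
```

Usage: `curl -s http://checkip.dyndns.org/ | extract_dyndns_ip`. Unlike the first one-liner above, this prints nothing at all when the page does not contain the expected text.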
### Using wget instead of curl ###

    wget http://ipecho.net/plain -O - -q ; echo
    wget http://observebox.com/ip -O - -q ; echo

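Any single service can be slow or disappear, so a script may want to try several of them. The sketch below is minimal and assumes curl is installed. The function names are hypothetical, and the service list is simply taken from the commands above. It only accepts answers that look like an IPv4 address, so an HTML error page is never mistaken for your IP.

```bash
#!/usr/bin/env bash
# Succeed if $1 is a dotted-quad IPv4 address with every octet <= 255.
is_ipv4() {
    local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$'
    [[ $1 =~ $re ]] || return 1
    local octet
    for octet in "${BASH_REMATCH[@]:1}"; do
        (( 10#$octet <= 255 )) || return 1   # 10# avoids octal parsing of e.g. "08"
    done
}

# Try the services in order and print the first valid answer.
get_public_ip() {
    local url ip
    for url in icanhazip.com ipinfo.io/ip ipecho.net/plain ifconfig.me; do
        ip=$(curl -s --max-time 5 "$url" | tr -d '[:space:]')
        if is_ipv4 "$ip"; then
            echo "$ip"
            return 0
        fi
    done
    return 1
}
```

`get_public_ip || echo "no service answered"` prints your address, or reports failure when every service is down or unreachable.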
### Using the host and dig commands ###

If they are available on your system, you can also use the host and dig commands directly.

    host -t a dartsclink.com | sed 's/.*has address //'
    dig +short myip.opendns.com @resolver1.opendns.com

### Example bash script: ###

    #!/bin/bash

    PUBLIC_IP=$(wget http://ipecho.net/plain -O - -q)
    echo "$PUBLIC_IP"

Simple and easy.

I was actually writing a script that records all of my router's IP changes each day and saves them to a file. I found these handy commands while searching. Hopefully they will help someone else someday.

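The script the author describes could look something like this. This is a hypothetical version, not the author's script. It appends a timestamped line to a log file only when the address differs from the last one recorded, so running it daily (for example from cron) keeps a compact history of changes.

```bash
# Hypothetical sketch: log the public IP only when it changes.
# Usage: log_ip_change <logfile> <ip>
log_ip_change() {
    local logfile="$1" ip="$2" last=""
    [[ -n $ip ]] || return 1                      # refuse to log an empty answer
    if [[ -s $logfile ]]; then
        last=$(tail -n 1 "$logfile" | awk '{ print $NF }')
    fi
    if [[ $ip != "$last" ]]; then
        printf '%s %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$ip" >> "$logfile"
    fi
}
```

For example: `log_ip_change "$HOME/ip-history.log" "$(wget -qO- http://ipecho.net/plain)"`. The empty-string guard keeps a failed lookup (no network) from writing a bogus entry.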
--------------------------------------------------------------------------------

via: http://www.blackmoreops.com/2015/06/14/how-to-get-public-ip-from-linux-terminal/

Translator: [KevinSJ](https://github.com/KevinSJ)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

published/20150817 Top 5 Torrent Clients For Ubuntu Linux.md

The 5 Best BitTorrent Clients for Ubuntu
================================================================================

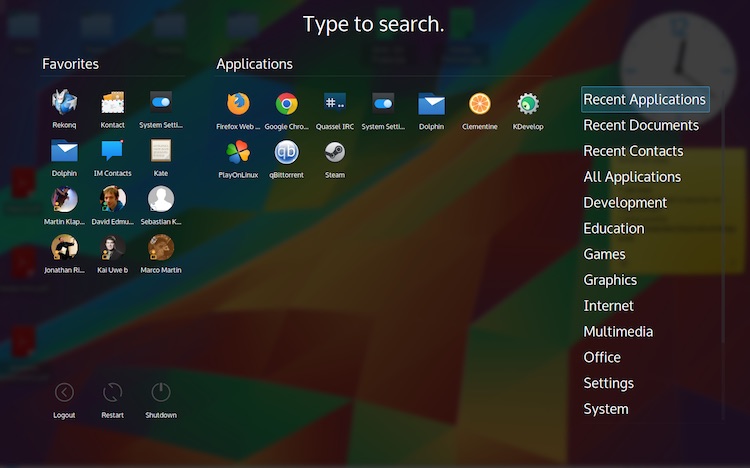

在寻找 **Ubuntu 中最好的 BT 客户端**吗?事实上,Linux 桌面平台中有许多 BT 客户端,但是它们中的哪些才是**最好的 Ubuntu 客户端**呢?

|

||||

|

||||

我将会列出 Linux 上最好的五个 BT 客户端,它们都拥有着体积轻盈,功能强大的特点,而且还有令人印象深刻的用户界面。自然,易于安装和使用也是特性之一。

|

||||

|

||||

### Ubuntu 下最好的 BT 客户端 ###

|

||||

|

||||

考虑到 Ubuntu 默认安装了 Transmission,所以我将会从这个列表中排除了 Transmission。但是这并不意味着 Transmission 没有资格出现在这个列表中,事实上,Transmission 是一个非常好的BT客户端,这也正是它被包括 Ubuntu 在内的多个发行版默认安装的原因。

|

||||

|

||||

### Deluge ###

|

||||

|

||||

|

||||

|

||||

[Deluge][1] 被 Lifehacker 评选为 Linux 下最好的 BT 客户端,这说明了 Deluge 是多么的有用。而且,并不仅仅只有 Lifehacker 是 Deluge 的粉丝,纵观多个论坛,你都会发现不少 Deluge 的忠实拥趸。

|

||||

|

||||

快速,时尚而直观的界面使得 Deluge 成为 Linux 用户的挚爱。

|

||||

|

||||

Deluge 可在 Ubuntu 的仓库中获取,你能够在 Ubuntu 软件中心中安装它,或者使用下面的命令:

|

||||

|

||||

sudo apt-get install deluge

|

||||

|

||||

### qBittorrent ###

|

||||

|

||||

|

||||

|

||||

正如它的名字所暗示的,[qBittorrent][2] 是著名的 [Bittorrent][3] 应用的 Qt 版本。如果曾经使用过它,你将会看到和 Windows 下的 Bittorrent 相似的界面。同样轻巧并且有着 BT 客户端的所有标准功能, qBittorrent 也可以在 Ubuntu 的默认仓库中找到。

|

||||

|

||||

它可以通过 Ubuntu 软件仓库安装,或者使用下面的命令:

|

||||

|

||||

sudo apt-get install qbittorrent

|

||||

|

||||

|

||||

### Tixati ###

|

||||

|

||||

|

||||

|

||||

[Tixati][4] 是另一个不错的 Ubuntu 下的 BT 客户端。它有着一个默认的黑暗主题,尽管很多人喜欢,但是我例外。它拥有着一切你能在 BT 客户端中找到的功能。

|

||||

|

||||

除此之外,它还有着数据分析的额外功能。你可以在美观的图表中分析流量以及其它数据。

|

||||

|

||||

- [下载 Tixati][5]

|

||||

|

||||

|

||||

|

||||

### Vuze ###

|

||||

|

||||

|

||||

|

||||

[Vuze][6] 是许多 Linux 以及 Windows 用户最喜欢的 BT 客户端。除了标准的功能,你可以直接在应用程序中搜索种子,也可以订阅系列片源,这样就无需再去寻找新的片源了,因为你可以在侧边栏中的订阅看到它们。

|

||||

|

||||

它还配备了一个视频播放器,可以播放带有字幕的高清视频等等。但是我不认为你会用它来代替那些更好的视频播放器,比如 VLC。

|

||||

|

||||

Vuze 可以通过 Ubuntu 软件中心安装或者使用下列命令:

|

||||

|

||||

sudo apt-get install vuze

|

||||

|

||||

|

||||

|

||||

### Frostwire ###

|

||||

|

||||

|

||||

|

||||

[Frostwire][7] 是一个你应该试一下的应用。它不仅仅是一个简单的 BT 客户端,它还可以应用于安卓,你可以用它通过 Wifi 来共享文件。

|

||||

|

||||

你可以在应用中搜索种子并且播放他们。除了下载文件,它还可以浏览本地的影音文件,并且将它们有条理的呈现在播放器中。这同样适用于安卓版本。

|

||||

|

||||

还有一个特点是:Frostwire 提供了独立音乐人的[合法音乐下载][13]。你可以下载并且欣赏它们,免费而且合法。

|

||||

|

||||

- [下载 Frostwire][8]

|

||||

|

||||

|

||||

|

||||

### 荣誉奖 ###

|

||||

|

||||

在 Windows 中,uTorrent(发音:mu torrent)是我最喜欢的 BT 应用。尽管 uTorrent 可以在 Linux 下运行,但是我还是特意忽略了它。因为在 Linux 下使用 uTorrent 不仅困难,而且无法获得完整的应用体验(运行在浏览器中)。

|

||||

|

||||

可以[在这里][9]阅读 Ubuntu下uTorrent 的安装教程。

|

||||

|

||||

#### 快速提示: ####

|

||||

|

||||

大多数情况下,BT 应用不会默认自动启动。如果想改变这一行为,请阅读[如何管理 Ubuntu 下的自启动程序][10]来学习。

|

||||

|

||||

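You can also do this from a script instead of a graphical tool. Desktops that follow the freedesktop.org Autostart specification launch every .desktop file in `~/.config/autostart` (or `$XDG_CONFIG_HOME/autostart`) at login. The sketch below is minimal and uses a hypothetical function name. The Exec command is whatever starts your client, for example `deluge-gtk` if that is the command your Deluge package installs.

```bash
# Hypothetical helper: register an application for autostart at login by
# writing a .desktop file into the XDG autostart directory.
# Usage: add_autostart <name> <command>
add_autostart() {
    local name="$1" cmd="$2"
    local dir="${XDG_CONFIG_HOME:-$HOME/.config}/autostart"
    mkdir -p "$dir" || return 1
    cat > "$dir/$name.desktop" <<EOF
[Desktop Entry]
Type=Application
Name=$name
Exec=$cmd
X-GNOME-Autostart-enabled=true
EOF
}
```

To undo it, delete the generated `.desktop` file or untick the entry in your desktop's startup applications tool.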
### What's Your Favorite? ###

Those are my picks for the best torrent clients on Ubuntu. Which one is your favorite? Let us know in the comments. You can also check out the related article on the [best download managers for Ubuntu][11]. And if you use Popcorn Time, try these [Popcorn Time tips][12].

--------------------------------------------------------------------------------

via: http://itsfoss.com/best-torrent-ubuntu/

Author: [Abhishek][a]

Translator: [Xuanwo](https://github.com/Xuanwo)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:http://itsfoss.com/author/abhishek/
[1]:http://deluge-torrent.org/
[2]:http://www.qbittorrent.org/
[3]:http://www.bittorrent.com/
[4]:http://www.tixati.com/
[5]:http://www.tixati.com/download/
[6]:http://www.vuze.com/
[7]:http://www.frostwire.com/
[8]:http://www.frostwire.com/downloads
[9]:http://sysads.co.uk/2014/05/install-utorrent-3-3-ubuntu-14-04-13-10/
[10]:http://itsfoss.com/manage-startup-applications-ubuntu/
[11]:http://itsfoss.com/4-best-download-managers-for-linux/
[12]:http://itsfoss.com/popcorn-time-tips/
[13]:http://www.frostclick.com/wp/

@ -0,0 +1,100 @@

|

||||

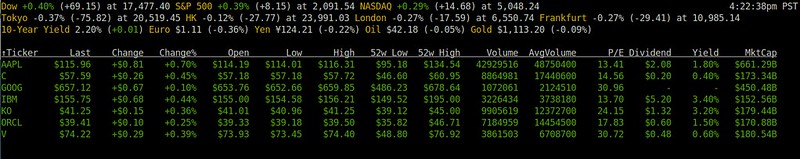

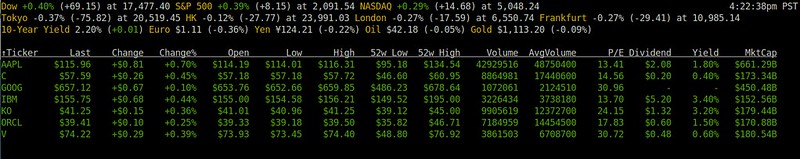

Linux中通过命令行监控股票报价

|

||||

================================================================================

|

||||

|

||||

如果你是那些股票投资者或者交易者中的一员,那么监控证券市场将是你的日常工作之一。最有可能的是你会使用一个在线交易平台,这个平台有着一些漂亮的实时图表和全部种类的高级股票分析和交易工具。虽然这种复杂的市场研究工具是任何严肃的证券投资者了解市场的必备工具,但是监控最新的股票报价来构建有利可图的投资组合仍然有很长一段路要走。

|

||||

|

||||

如果你是一位长久坐在终端前的全职系统管理员,而证券交易又成了你日常生活中的业余兴趣,那么一个简单地显示实时股票报价的命令行工具会是给你的恩赐。

|

||||

|

||||

在本教程中,让我来介绍一个灵巧而简洁的命令行工具,它可以让你在Linux上从命令行监控股票报价。

|

||||

|

||||

这个工具叫做[Mop][1]。它是用GO编写的一个轻量级命令行工具,可以极其方便地跟踪来自美国市场的最新股票报价。你可以很轻松地自定义要监控的证券列表,它会在一个基于ncurses的便于阅读的界面显示最新的股票报价。

|

||||

|

||||

**注意**:Mop是通过雅虎金融API获取最新的股票报价的。你必须意识到,他们的的股票报价已知会有15分钟的延时。所以,如果你正在寻找0延时的“实时”股票报价,那么Mop就不是你的菜了。这种“现场”股票报价订阅通常可以通过向一些不开放的私有接口付费获取。了解这些之后,让我们来看看怎样在Linux环境下使用Mop吧。

|

||||

|

||||

### 安装 Mop 到 Linux ###

|

||||

|

||||

由于Mop是用Go实现的,你首先需要安装Go语言。如果你还没有安装Go,请参照[此指南][2]将Go安装到你的Linux平台中。请确保按指南中所讲的设置GOPATH环境变量。

|

||||

|

||||

安装完Go后,继续像下面这样安装Mop。

|

||||

|

||||

**Debian,Ubuntu 或 Linux Mint**

|

||||

|

||||

$ sudo apt-get install git

|

||||

$ go get github.com/michaeldv/mop

|

||||

$ cd $GOPATH/src/github.com/michaeldv/mop

|

||||

$ make install

|

||||

|

||||

**Fedora,CentOS,RHEL**

|

||||

|

||||

$ sudo yum install git

|

||||

$ go get github.com/michaeldv/mop

|

||||

$ cd $GOPATH/src/github.com/michaeldv/mop

|

||||

$ make install

|

||||

|

||||

上述命令将安装Mop到$GOPATH/bin。

|

||||

|

||||

现在,编辑你的.bashrc,将$GOPATH/bin写到你的PATH变量中。

|

||||

|

||||

export PATH="$PATH:$GOPATH/bin"

|

||||

|

||||

----------

|

||||

|

||||

$ source ~/.bashrc

|

||||

|

||||

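Appending to PATH unconditionally adds another copy of the directory every time `source ~/.bashrc` is run again. Below is a minimal sketch of an idempotent alternative for ~/.bashrc. The function name is hypothetical, and the sketch assumes GOPATH holds a single directory. If GOPATH is unset, it falls back to $HOME/go, Go's default GOPATH since Go 1.8.

```bash
# Append a directory to PATH only if it is not already present.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;                          # already present, do nothing
        *) PATH="${PATH:+$PATH:}$1" ;;
    esac
}

path_append "${GOPATH:-$HOME/go}/bin"
export PATH
```

Wrapping both PATH and the candidate directory in colons makes the match exact, so `/opt/go/bin` is not mistaken for `/opt/go/bin2`.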
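### Monitoring Stock Quotes from the Command Line with Mop ###

To launch Mop, simply run the command named cmd (LCTT note: what a name…).

    $ cmd

The first time you launch it, you will see a few stock tickers that Mop comes preconfigured with.

The quotes show information such as the latest price, percent change, daily low/high, 52-week low/high, dividend and annual yield. Mop obtains the market overview from [CNN][3] and individual stock quotes from [Yahoo Finance][4], and it refreshes the quote information in the terminal periodically.

### Customizing Stock Quotes in Mop ###

Let's try customizing the stock list. Mop provides easy-to-remember shortcuts for this: "+" adds a new stock and "-" removes one.

To add a new stock, press "+" and type the stock symbol (e.g., MSFT). You can add several stocks at once by typing a comma-separated list of ticker symbols (e.g., "MSFT, AMZN, TSLA").

Removing stocks from the list works the same way, with "-".

### Sorting Stock Quotes in Mop ###

You can sort the stock quote list by any column. To sort, press "o", then use the left/right keys to choose the column to sort by. Once a column is selected, press Enter to sort the list in ascending or descending order.

By pressing "g", you can group stocks by whether they are advancing or declining for the day. Advancing stocks are shown in green and declining ones in white.

If you want to see the help page, just press "?".

### Conclusion ###

As you can see, Mop is a lightweight yet extremely handy stock monitoring tool. Of course, you can easily get stock quotes from many other places, such as websites or your smartphone. However, if you spend all day in a terminal environment, Mop fits easily into your workspace, hopefully without distracting you too much from your workflow. Just leave it running in one of your terminals to keep your market data continuously up to date, and that's it.

Happy trading!

--------------------------------------------------------------------------------

via: http://xmodulo.com/monitor-stock-quotes-command-line-linux.html

Author: [Dan Nanni][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:http://xmodulo.com/author/nanni
[1]:https://github.com/michaeldv/mop
[2]:http://ask.xmodulo.com/install-go-language-linux.html
[3]:http://money.cnn.com/data/markets/
[4]:http://finance.yahoo.com/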

Linux Without Limits: IBM Launches LinuxONE Mainframes
================================================================================

*LinuxONE Emperor Mainframe*

Good news from the Ubuntu server team today: [IBM has launched LinuxONE][1], a Linux-only mainframe that can also run Ubuntu.

The largest LinuxONE system IBM has launched is called "Emperor". It can scale up to 8000 virtual machines or tens of thousands of containers, a possible record for any single Linux system.

IBM describes LinuxONE as a "game changer" that "unleashes the potential of Linux for business".

IBM and Canonical are working together to create an Ubuntu distribution for LinuxONE and other IBM z Systems. Ubuntu will join Red Hat and SUSE as a premier Linux distribution on IBM z.

Launched alongside the IBM "Emperor" is LinuxONE Rockhopper, a smaller mainframe for medium-sized businesses and organizations.

IBM is the leader in mainframes and holds a 90% share of the mainframe market.

Note: YouTube video

<iframe width="750" height="422" frameborder="0" allowfullscreen="" src="https://www.youtube.com/embed/2ABfNrWs-ns?feature=oembed"></iframe>

### What Are Mainframes Used For? ###

The computer you're reading this article on would be dwarfed by a "big iron" mainframe. Mainframes are huge, hulking cabinets packed with high-end components, custom-designed technology and dizzying amounts of storage (that is, data storage, not room for pens and rulers).

Large organizations and businesses use mainframes to process and store large amounts of data, crunch statistics, and handle large-scale transaction processing.

### "World's Fastest Processor" ###

IBM has teamed up with Canonical Ltd to bring Ubuntu to LinuxONE and other IBM z Systems.

The LinuxONE Emperor uses the IBM z13 processor. The chip, released in January, is claimed to be the world's fastest microprocessor. It can respond to transactions within milliseconds.

Besides handling high volumes of mobile transactions well, the z13-based LinuxONE systems are also ideal cloud systems.

Each core can handle more than 50 virtual servers, for a total of over 8000 virtual servers, which makes them a cheaper, greener and more efficient way to scale out to the cloud.

**You don't have to be a CIO or a mainframe spotter to read this article. The possibilities LinuxONE offers are clear enough.**

Source: [Reuters (h/t @popey)][2]

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2015/08/ibm-linuxone-mainframe-ubuntu-partnership

Author: [Joey-Elijah Sneddon][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://plus.google.com/117485690627814051450/?rel=author
[1]:http://www-03.ibm.com/systems/z/announcement.html
[2]:http://www.reuters.com/article/2015/08/17/us-ibm-linuxone-idUSKCN0QM09P20150817

Ubuntu Linux Comes to IBM Mainframes
================================================================================

It's finally happening. At [LinuxCon][1], IBM and [Canonical][2] announced that [Ubuntu Linux][3] will soon run on IBM's [LinuxONE][10] mainframes. LinuxONE is a Linux-only mainframe line that can now run Ubuntu as well.

The largest LinuxONE system IBM has launched, called "Emperor", can scale up to 8000 virtual machines or tens of thousands of containers, which may be a record for any single Linux system.

IBM describes LinuxONE as a "game changer" that "unleashes the potential of Linux for business".

*Soon you'll be able to install Ubuntu Linux orange on your IBM mainframe*

According to Ross Mauri, general manager of IBM z Systems, and Mark Shuttleworth, founder of Canonical and Ubuntu, this is happening because customers asked for it. For more than a decade, IBM mainframes have supported only the [Red Hat Enterprise Linux (RHEL)][4] and [SUSE Linux Enterprise Server (SLES)][5] Linux distributions.

As Ubuntu has matured, more and more businesses have adopted it as their enterprise Linux, and more and more of them want it on IBM mainframes. Banks in particular want this. Soon, financial CIOs will be able to give them what they want.

In an interview, Shuttleworth said that Ubuntu Linux will be available on the mainframe in April 2016 with the next long-term support release, Ubuntu 16.04. Canonical and IBM took the first step in late 2014, when they brought [Ubuntu to IBM's POWER][6] architecture.

Before that, Canonical and IBM almost signed a deal [to bring Ubuntu to IBM mainframes in 2011][7], but it never came to pass. This time, it's really happening.

Canonical CEO Jane Silber explained that "[expanding Ubuntu platform support][8] to [IBM z Systems][9] is a recognition of the number of customers that count on z Systems to run their businesses, and of the maturity the hybrid cloud is reaching in the marketplace."

**Silber added:**

> With support for z Systems, including [LinuxONE][10], Canonical is deepening its relationship with IBM, building on our support for the POWER architecture and the OpenPOWER ecosystem. Power Systems clients benefit from the scale-out capabilities of Ubuntu, and our agile development process brought first-to-market support for technologies such as POWER8 CAPI (Coherent Accelerator Processor Interface). In the same way, z Systems clients can expect technology advancements to be rolled out rapidly, and can benefit from [Juju][11] and our other cloud tools to deliver new services to end users faster. In addition, our collaboration with IBM includes enabling scale-out deployment of many IBM software solutions with Juju. Mainframe clients will be delighted to deploy a rich set of "charmed" IBM solutions, other software vendors' products and open source solutions on mainframes with Juju.

Shuttleworth expects Ubuntu on z Systems to be a big success. It is moving fast, and thanks to its OpenStack support, those who want outstanding cloud performance will be very pleased.

--------------------------------------------------------------------------------

via: http://www.zdnet.com/article/ubuntu-linux-is-coming-to-the-mainframe/

via: http://www.omgubuntu.co.uk/2015/08/ibm-linuxone-mainframe-ubuntu-partnership

Author: [Steven J. Vaughan-Nichols][a], [Joey-Elijah Sneddon][a]

Translator: [ictlyh](https://github.com/ictlyh), [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:http://www.zdnet.com/meet-the-team/us/steven-j-vaughan-nichols/
[1]:http://events.linuxfoundation.org/events/linuxcon-north-america
[2]:http://www.canonical.com/
[3]:http://www.ubuntu.com/
[4]:http://www.redhat.com/en/technologies/linux-platforms/enterprise-linux
[5]:https://www.suse.com/products/server/
[6]:http://www.zdnet.com/article/ibm-doubles-down-on-linux/
[7]:http://www.zdnet.com/article/mainframe-ubuntu-linux/
[8]:https://insights.ubuntu.com/2015/08/17/ibm-and-canonical-plan-ubuntu-support-on-ibm-z-systems-mainframe/
[9]:http://www-03.ibm.com/systems/uk/z/
[10]:http://www.zdnet.com/article/linuxone-ibms-new-linux-mainframes/
[11]:https://jujucharms.com/

@ -0,0 +1,49 @@

|

||||

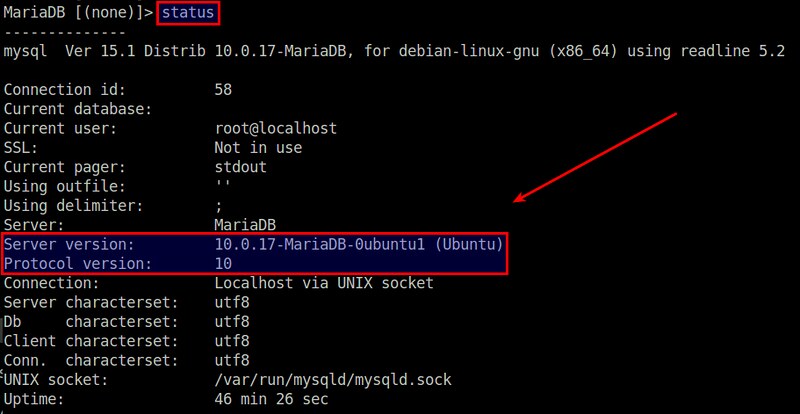

Linux有问必答:如何检查MariaDB服务端版本

|

||||

================================================================================

|

||||

> **提问**: 我使用的是一台运行MariaDB的VPS。我该如何检查MariaDB服务端的版本?

|

||||

|

||||

有时候你需要知道你的数据库版本,比如当你升级你数据库或对已知缺陷打补丁时。这里有几种方法找出MariaDB版本的方法。

|

||||

|

||||

### 方法一 ###

|

||||

|

||||

第一种找出版本的方法是登录MariaDB服务器,登录之后,你会看到一些MariaDB的版本信息。

|

||||

|

||||

|

||||

|

||||

另一种方法是在登录MariaDB后出现的命令行中输入‘status’命令。输出会显示服务器的版本还有协议版本。

|

||||

|

||||

|

||||

|

||||

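Besides the login banner and `status`, the SQL function `VERSION()` also returns the server version, which is handy in scripts. The sketch below is minimal and uses hypothetical function names. It assumes you can log in as root with a password, and the string-trimming step assumes version strings of the form `10.0.17-MariaDB-...`.

```bash
# Trim a version string such as "10.0.17-MariaDB-1~trusty-log" to "10.0.17".
mariadb_base_version() {
    printf '%s\n' "${1%%-*}"
}

# Hypothetical non-interactive query (prompts for the root password):
# -N drops the column header, -B prints plain tab-separated output.
mariadb_server_version() {
    mysql -u root -p -N -B -e 'SELECT VERSION();'
}
```

Usage: `mariadb_base_version "$(mariadb_server_version)"`.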
### Method Two ###

If you cannot access the MariaDB server, you cannot use the first method. In that case, you can infer the version from the installed MariaDB package. This only works if MariaDB was installed with a package manager.

You can check the installed MariaDB packages as follows.

#### Debian, Ubuntu or Linux Mint: ####

    $ dpkg -l | grep mariadb

In the example output, this showed that the MariaDB version was 10.0.17.

#### Fedora, CentOS or RHEL: ####

    $ rpm -qa | grep mariadb

In the example output, this showed that the installed version was 5.5.41.

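Instead of grepping the full package list, you can ask the package manager for a single package's version. The sketch below is minimal and assumes the server package is called `mariadb-server` on both families. Package names differ between distributions and repositories, and on Debian-based systems `mariadb-server` may be a metapackage whose version differs from the server's. The function names are hypothetical.

```bash
# Reduce a package version string to its upstream part, e.g.
#   "1:10.0.17-0ubuntu1"     -> "10.0.17"  (epoch and Debian revision removed)
#   "10.0.17+maria-1~trusty" -> "10.0.17"
upstream_version() {
    local v="${1#*:}"            # drop an "epoch:" prefix if present
    printf '%s\n' "${v%%[-+~]*}" # cut at the first -, + or ~
}

# Query the installed server package directly (package name is an assumption).
pkg_mariadb_version() {
    if command -v dpkg-query >/dev/null 2>&1; then
        dpkg-query -W -f='${Version}\n' mariadb-server
    else
        rpm -q --qf '%{VERSION}\n' mariadb-server
    fi
}
```

Usage: `upstream_version "$(pkg_mariadb_version)"`.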
--------------------------------------------------------------------------------

via: http://ask.xmodulo.com/check-mariadb-server-version.html

Author: [Dan Nanni][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:http://ask.xmodulo.com/author/nanni

@ -0,0 +1,74 @@

|

||||

如何在 Docker 容器中运行 Kali Linux 2.0

|

||||

================================================================================

|

||||

### 介绍 ###

|

||||

|

||||

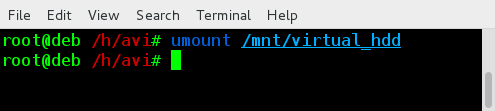

Kali Linux 是一个对于安全测试人员和白帽的一个知名操作系统。它带有大量安全相关的程序,这让它很容易用于渗透测试。最近,[Kali Linux 2.0][1] 发布了,它被认为是这个操作系统最重要的一次发布。另一方面,Docker 技术由于它的可扩展性和易用性让它变得很流行。Dokcer 让你非常容易地将你的程序带给你的用户。好消息是你可以通过 Docker 运行Kali Linux 了,让我们看看该怎么做 :)

|

||||

|

||||

### 在 Docker 中运行 Kali Linux 2.0 ###

|

||||

|

||||

**相关提示**

|

||||

|

||||

> 如果你还没有在系统中安装docker,你可以运行下面的命令:

|

||||

|

||||

> **对于 Ubuntu/Linux Mint/Debian:**

|

||||

|

||||

> sudo apt-get install docker

|

||||

|

||||

> **对于 Fedora/RHEL/CentOS:**

|

||||

|

||||

> sudo yum install docker

|

||||

|

||||

> **对于 Fedora 22:**

|

||||

|

||||

> dnf install docker

|

||||

|

||||

> 你可以运行下面的命令来启动docker:

|

||||

|

||||

> sudo docker start

|

||||

|

||||

首先运行下面的命令确保 Docker 服务运行正常:

|

||||

|

||||

sudo docker status

|

||||

|

||||

Kali Linux 的开发团队已将 Kali Linux 的 docker 镜像上传了,只需要输入下面的命令来下载镜像。

|

||||

|

||||

docker pull kalilinux/kali-linux-docker

|

||||

|

||||

|

||||

|

||||

下载完成后,运行下面的命令来找出你下载的 docker 镜像的 ID。

|

||||

|

||||

docker images

|

||||

|

||||

|

||||

|

||||

现在运行下面的命令来从镜像文件启动 kali linux docker 容器(这里需用正确的镜像ID替换)。

|

||||

|

||||

docker run -i -t 198cd6df71ab3 /bin/bash
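Rather than copying the image ID by hand, it can be looked up by repository name in the `docker images` listing. This sketch assumes the column order REPOSITORY, TAG, IMAGE ID shown in the header; `image_id_for_repo` is a hypothetical helper and the ID in the sample is made up.

```shell
# Hypothetical helper: print the IMAGE ID of the first image whose REPOSITORY
# column equals $1, reading `docker images` output on stdin.
image_id_for_repo() {
    # NR > 1 skips the header row; field 3 is the ID because REPOSITORY and
    # TAG never contain spaces.
    awk -v repo="$1" 'NR > 1 && $1 == repo { print $3; exit }'
}

# Illustrative listing with a made-up ID:
printf '%s\n' \
    'REPOSITORY                    TAG      IMAGE ID       CREATED       VIRTUAL SIZE' \
    'kalilinux/kali-linux-docker   latest   0123456789ab   2 weeks ago   325.7 MB' \
    | image_id_for_repo kalilinux/kali-linux-docker
```

For example: `docker run -i -t "$(docker images | image_id_for_repo kalilinux/kali-linux-docker)" /bin/bash`. Note that `docker run` also accepts the repository name itself, so `docker run -i -t kalilinux/kali-linux-docker /bin/bash` skips the lookup entirely.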

|

||||

|

||||

它会立刻启动容器并且让你登录到该操作系统,你现在可以在 Kaili Linux 中工作了。

|

||||

|

||||

|

||||

|

||||

你可以在容器外面通过下面的命令来验证容器已经启动/运行中了:

|

||||

|

||||

docker ps

|

||||

|

||||

|

||||

|

||||

### 总结 ###

|

||||

|

||||

Docker 是一种最聪明的用来部署和分发包的方式。Kali Linux docker 镜像非常容易上手,也不会消耗很大的硬盘空间,这样也可以很容易地在任何安装了 docker 的操作系统上测试这个很棒的发行版了。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linuxpitstop.com/run-kali-linux-2-0-in-docker-container/

|

||||

|

||||

作者:[Aun][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linuxpitstop.com/author/aun/

|

||||

[1]:https://linux.cn/article-6005-1.html

|

||||

@ -1,24 +1,25 @@

|

||||

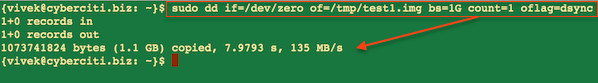

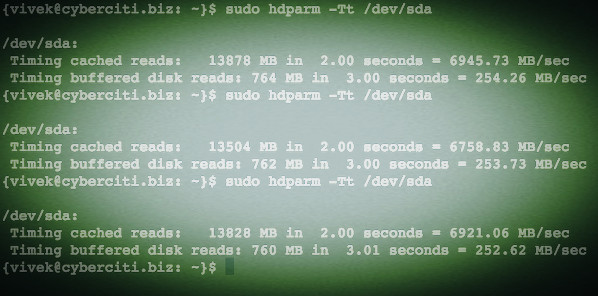

使用dd命令在Linux和Unix环境下进行硬盘I/O性能检测

|

||||

使用 dd 命令进行硬盘 I/O 性能检测

|

||||

================================================================================

|

||||

如何使用dd命令测试硬盘的性能?如何在linux操作系统下检测硬盘的读写能力?

|

||||

|

||||

如何使用dd命令测试我的硬盘性能?如何在linux操作系统下检测硬盘的读写速度?

|

||||

|

||||

你可以使用以下命令在一个Linux或类Unix操作系统上进行简单的I/O性能测试。

|

||||

|

||||

- **dd命令** :它被用来在Linux和类Unix系统下对硬盘设备进行写性能的检测。

|

||||

- **hparm命令**:它被用来获取或设置硬盘参数,包括测试读性能以及缓存性能等。

|

||||

- **dd命令** :它被用来在Linux和类Unix系统下对硬盘设备进行写性能的检测。

|

||||

- **hparm命令**:它用来在基于 Linux 的系统上获取或设置硬盘参数,包括测试读性能以及缓存性能等。

|

||||

|

||||

在这篇指南中,你将会学到如何使用dd命令来测试硬盘性能。

|

||||

|

||||

### 使用dd命令来监控硬盘的读写性能:###

|

||||

|

||||

- 打开shell终端(这里貌似不能翻译为终端提示符)。

|

||||

- 通过ssh登录到远程服务器。

|

||||

- 打开shell终端。

|

||||

- 或者通过ssh登录到远程服务器。

|

||||

- 使用dd命令来测量服务器的吞吐率(写速度) `dd if=/dev/zero of=/tmp/test1.img bs=1G count=1 oflag=dsync`

|

||||

- 使用dd命令测量服务器延迟 `dd if=/dev/zero of=/tmp/test2.img bs=512 count=1000 oflag=dsync`
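The write test can be wrapped in a small function that removes its scratch file afterwards. A minimal sketch, assuming GNU dd (which accepts `oflag=dsync` and size suffixes such as `1M`); the function name and the small default size are choices made here, not part of the original article.

```shell
# Hypothetical wrapper around the throughput test: write COUNT blocks of BS
# with O_DSYNC to a scratch file, print dd's summary line, delete the file.
# The ( ) body runs in a subshell so the variables do not leak out.
dd_write_test() (
    target=${1:-/tmp/dd_write_test.img}
    bs=${2:-1M}        # small default; pass 1G to match the article
    count=${3:-16}
    # GNU dd writes its statistics to stderr; the last line holds the rate.
    dd if=/dev/zero of="$target" bs="$bs" count="$count" oflag=dsync 2>&1 | tail -n 1
    rm -f "$target"
)
```

`dd_write_test /tmp/test1.img 1G 1` repeats the article's 1 GB throughput test; a 1G block size needs that much free RAM for dd's buffer.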

|

||||

|

||||

####理解dd命令的选项###

|

||||

|

||||

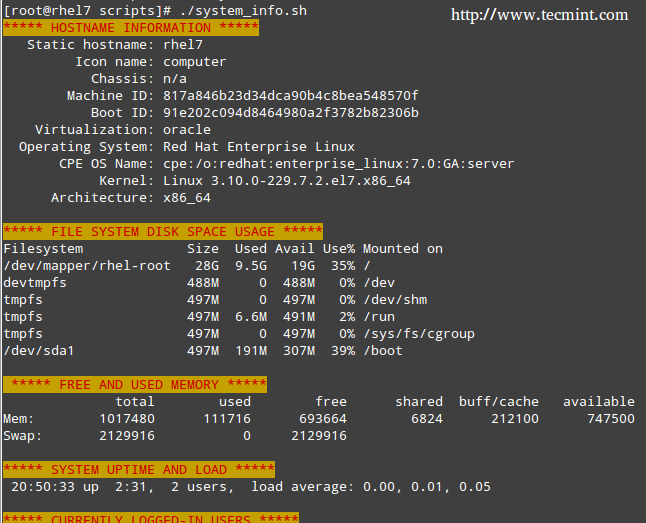

在这个例子当中,我将使用搭载Ubuntu Linux 14.04 LTS系统的RAID-10(配有SAS SSD的Adaptec 5405Z)服务器阵列来运行。基本语法为:

|

||||

在这个例子当中,我将使用搭载Ubuntu Linux 14.04 LTS系统的RAID-10(配有SAS SSD的Adaptec 5405Z)服务器阵列来运行。基本语法为:

|

||||

|

||||

dd if=/dev/input.file of=/path/to/output.file bs=block-size count=number-of-blocks oflag=dsync

|

||||

## GNU dd语法 ##

|

||||

@ -29,18 +30,19 @@

|

||||

输出样例:

|

||||

|

||||

|

||||

Fig.01: 使用dd命令获取的服务器吞吐率

|

||||

|

||||

*图01: 使用dd命令获取的服务器吞吐率*

|

||||

|

||||

请各位注意在这个实验中,我们写入一个G的数据,可以发现,服务器的吞吐率是135 MB/s,这其中

|

||||

|

||||

- `if=/dev/zero (if=/dev/input.file)` :用来设置dd命令读取的输入文件名。

|

||||

- `of=/tmp/test1.img (of=/path/to/output.file)` :dd命令将input.file写入的输出文件的名字。

|

||||

- `bs=1G (bs=block-size)` :设置dd命令读取的块的大小。例子中为1个G。

|

||||

- `count=1 (count=number-of-blocks)`: dd命令读取的块的个数。

|

||||

- `oflag=dsync (oflag=dsync)` :使用同步I/O。不要省略这个选项。这个选项能够帮助你去除caching的影响,以便呈现给你精准的结果。

|

||||

- `if=/dev/zero` (if=/dev/input.file) :用来设置dd命令读取的输入文件名。

|

||||

- `of=/tmp/test1.img` (of=/path/to/output.file):dd命令将input.file写入的输出文件的名字。

|

||||

- `bs=1G` (bs=block-size) :设置dd命令读取的块的大小。例子中为1个G。

|

||||

- `count=1` (count=number-of-blocks):dd命令读取的块的个数。

|

||||

- `oflag=dsync` (oflag=dsync) :使用同步I/O。不要省略这个选项。这个选项能够帮助你去除caching的影响,以便呈现给你精准的结果。

|

||||

- `conv=fdatasyn`: 这个选项和`oflag=dsync`含义一样。

|

||||

|

||||

在这个例子中,一共写了1000次,每次写入512字节来获得RAID10服务器的延迟时间:

|

||||

在下面这个例子中,一共写了1000次,每次写入512字节来获得RAID10服务器的延迟时间:

|

||||

|

||||

dd if=/dev/zero of=/tmp/test2.img bs=512 count=1000 oflag=dsync

|

||||

|

||||

@ -50,11 +52,11 @@ Fig.01: 使用dd命令获取的服务器吞吐率

|

||||

1000+0 records out

|

||||

512000 bytes (512 kB) copied, 0.60362 s, 848 kB/s
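The figures in that summary line can be checked by hand: throughput is bytes divided by seconds, and because each of the 1000 writes is synchronous, the elapsed time divided by 1000 approximates the latency of a single write. A sketch assuming dd's SI units (1 kB = 1000 bytes); `dd_stats` is a hypothetical helper.

```shell
# Hypothetical helper: recompute throughput (kB/s, SI units as dd uses) and
# the average time per write (ms) from dd's summary figures.
dd_stats() {
    # $1 = bytes copied, $2 = elapsed seconds, $3 = number of writes (count=)
    awk -v b="$1" -v s="$2" -v n="$3" 'BEGIN {
        printf "throughput: %.0f kB/s\n", b / s / 1000
        printf "latency per write: %.3f ms\n", s / n * 1000
    }'
}

dd_stats 512000 0.60362 1000
```

For the run above this prints `throughput: 848 kB/s`, matching dd's own figure, and `latency per write: 0.604 ms` for each 512-byte synchronous write.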

|

||||

|

||||

请注意服务器的吞吐率以及延迟时间也取决于服务器/应用的加载。所以我推荐你在一个刚刚重启过并且处于峰值时间的服务器上来运行测试,以便得到更加准确的度量。现在你可以在你的所有设备上互相比较这些测试结果了。

|

||||

请注意服务器的吞吐率以及延迟时间也取决于服务器/应用的负载。所以我推荐你在一个刚刚重启过并且处于峰值时间的服务器上来运行测试,以便得到更加准确的度量。现在你可以在你的所有设备上互相比较这些测试结果了。

|

||||

|

||||

####为什么服务器的吞吐率和延迟时间都这么差?###

|

||||

###为什么服务器的吞吐率和延迟时间都这么差?###

|

||||

|

||||

低的数值并不意味着你在使用差劲的硬件。可能是HARDWARE RAID10的控制器缓存导致的。

|

||||

低的数值并不意味着你在使用差劲的硬件。可能是硬件 RAID10的控制器缓存导致的。

|

||||

|

||||

使用hdparm命令来查看硬盘缓存的读速度。

|

||||

|

||||

@ -79,11 +81,12 @@ Fig.01: 使用dd命令获取的服务器吞吐率

|

||||

输出样例:

|

||||

|

||||

|

||||

Fig.02: 检测硬盘读入以及缓存性能的Linux hdparm命令

|

||||

|

||||

请再一次注意由于文件文件操作的缓存属性,你将总是会看到很高的读速度。

|

||||

*图02: 检测硬盘读入以及缓存性能的Linux hdparm命令*

|

||||

|

||||

**使用dd命令来测试读入速度**

|

||||

请再次注意,由于文件文件操作的缓存属性,你将总是会看到很高的读速度。

|

||||

|

||||

###使用dd命令来测试读取速度###

|

||||

|

||||

为了获得精确的读测试数据,首先在测试前运行下列命令,来将缓存设置为无效:

|

||||

|

||||

@ -91,11 +94,11 @@ Fig.02: 检测硬盘读入以及缓存性能的Linux hdparm命令

|

||||

echo 3 | sudo tee /proc/sys/vm/drop_caches

|

||||

time time dd if=/path/to/bigfile of=/dev/null bs=8k

|

||||

|

||||

**笔记本上的示例**

|

||||

####笔记本上的示例####

|

||||

|

||||

运行下列命令:

|

||||

|

||||

### Cache存在的Debian系统笔记本吞吐率###

|

||||

### 带有Cache的Debian系统笔记本吞吐率###

|

||||

dd if=/dev/zero of=/tmp/laptop.bin bs=1G count=1 oflag=direct

|

||||

|

||||

###使cache失效###

|

||||

@ -104,10 +107,11 @@ Fig.02: 检测硬盘读入以及缓存性能的Linux hdparm命令

|

||||

###没有Cache的Debian系统笔记本吞吐率###

|

||||

dd if=/dev/zero of=/tmp/laptop.bin bs=1G count=1 oflag=direct

|

||||

|

||||

**苹果OS X Unix(Macbook pro)的例子**

|

||||

####苹果OS X Unix(Macbook pro)的例子####

|

||||

|

||||

GNU dd has many more options but OS X/BSD and Unix-like dd command need to run as follows to test real disk I/O and not memory add sync option as follows:

|

||||

GNU dd命令有其他许多选项但是在 OS X/BSD 以及类Unix中, dd命令需要像下面那样执行来检测去除掉内存地址同步的硬盘真实I/O性能:

|

||||

|

||||

GNU dd命令有其他许多选项,但是在 OS X/BSD 以及类Unix中, dd命令需要像下面那样执行来检测去除掉内存地址同步的硬盘真实I/O性能:

|

||||

|

||||

## 运行这个命令2-3次来获得更好地结果 ###

|

||||

time sh -c "dd if=/dev/zero of=/tmp/testfile bs=100k count=1k && sync"

|

||||

@ -124,26 +128,29 @@ GNU dd命令有其他许多选项但是在 OS X/BSD 以及类Unix中, dd命令

|

||||

|

||||

本人Macbook Pro的写速度是635346520字节(635.347MB/s)。

|

||||

|

||||

**不喜欢用命令行?^_^**

|

||||

###不喜欢用命令行?\^_^###

|

||||

|

||||

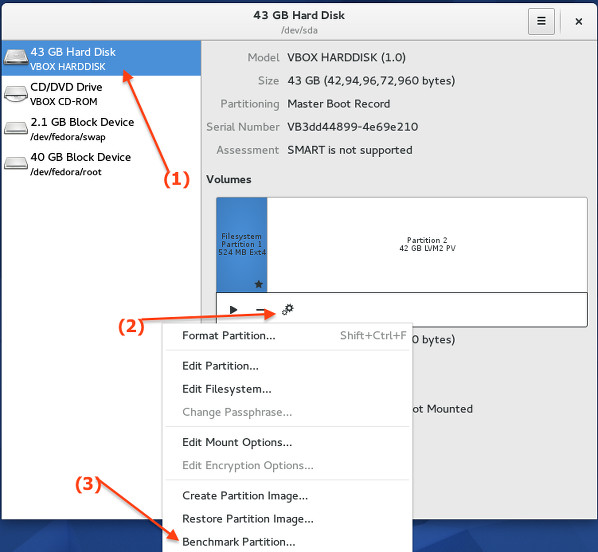

你可以在Linux或基于Unix的系统上使用disk utility(gnome-disk-utility)这款工具来得到同样的信息。下面的那个图就是在我的Fedora Linux v22 VM上截取的。

|

||||

|

||||

**图形化方法**

|

||||

####图形化方法####

|

||||

|

||||

点击“Activites”或者“Super”按键来在桌面和Activites视图间切换。输入“Disks”

|

||||

|

||||

|

||||

Fig.03: 打开Gnome硬盘工具

|

||||

|

||||

*图03: 打开Gnome硬盘工具*

|

||||

|

||||

在左边的面板上选择你的硬盘,点击configure按钮,然后点击“Benchmark partition”:

|

||||

|

||||

|

||||

Fig.04: 评测硬盘/分区

|

||||

|

||||

最后,点击“Start Benchmark...”按钮(你可能被要求输入管理员用户名和密码):

|

||||

*图04: 评测硬盘/分区*

|

||||

|

||||

最后,点击“Start Benchmark...”按钮(你可能需要输入管理员用户名和密码):

|

||||

|

||||

|

||||

Fig.05: 最终的评测结果

|

||||

|

||||

*图05: 最终的评测结果*

|

||||

|

||||

如果你要问,我推荐使用哪种命令和方法?

|

||||

|

||||

@ -158,7 +165,7 @@ via: http://www.cyberciti.biz/faq/howto-linux-unix-test-disk-performance-with-dd

|

||||

|

||||

作者:Vivek Gite

|

||||

译者:[DongShuaike](https://github.com/DongShuaike)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,156 @@

|

||||

在 Linux 下使用 RAID(一):介绍 RAID 的级别和概念

|

||||

================================================================================

|

||||

|

||||

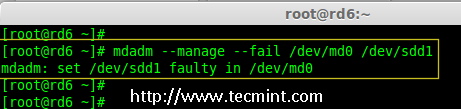

RAID 的意思是廉价磁盘冗余阵列(Redundant Array of Inexpensive Disks),但现在它被称为独立磁盘冗余阵列(Redundant Array of Independent Drives)。早先一个容量很小的磁盘都是非常昂贵的,但是现在我们可以很便宜的买到一个更大的磁盘。Raid 是一系列放在一起,成为一个逻辑卷的磁盘集合。

|

||||

|

||||

|

||||

|

||||

*在 Linux 中理解 RAID 设置*

|

||||

|

||||

RAID 包含一组或者一个集合甚至一个阵列。使用一组磁盘结合驱动器组成 RAID 阵列或 RAID 集。将至少两个磁盘连接到一个 RAID 控制器,而成为一个逻辑卷,也可以将多个驱动器放在一个组中。一组磁盘只能使用一个 RAID 级别。使用 RAID 可以提高服务器的性能。不同 RAID 的级别,性能会有所不同。它通过容错和高可用性来保存我们的数据。

|

||||

|

||||

这个系列被命名为“在 Linux 下使用 RAID”,分为9个部分,包括以下主题:

|

||||

|

||||

- 第1部分:介绍 RAID 的级别和概念

|

||||

- 第2部分:在Linux中如何设置 RAID0(条带化)

|

||||

- 第3部分:在Linux中如何设置 RAID1(镜像化)

|

||||

- 第4部分:在Linux中如何设置 RAID5(条带化与分布式奇偶校验)

|

||||

- 第5部分:在Linux中如何设置 RAID6(条带双分布式奇偶校验)

|

||||

- 第6部分:在Linux中设置 RAID 10 或1 + 0(嵌套)

|

||||

- 第7部分:增加现有的 RAID 阵列并删除损坏的磁盘

|

||||

- 第8部分:在 RAID 中恢复(重建)损坏的驱动器

|

||||

- 第9部分:在 Linux 中管理 RAID

|

||||

|

||||

这是9篇系列教程的第1部分,在这里我们将介绍 RAID 的概念和 RAID 级别,这是在 Linux 中构建 RAID 需要理解的。

|

||||

|

||||

### 软件 RAID 和硬件 RAID ###

|

||||

|

||||

软件 RAID 的性能较低,因为其使用主机的资源。 需要加载 RAID 软件以从软件 RAID 卷中读取数据。在加载 RAID 软件前,操作系统需要引导起来才能加载 RAID 软件。在软件 RAID 中无需物理硬件。零成本投资。

|

||||

|

||||

硬件 RAID 的性能较高。他们采用 PCI Express 卡物理地提供有专用的 RAID 控制器。它不会使用主机资源。他们有 NVRAM 用于缓存的读取和写入。缓存用于 RAID 重建时,即使出现电源故障,它会使用后备的电池电源保持缓存。对于大规模使用是非常昂贵的投资。

|

||||

|

||||

硬件 RAID 卡如下所示:

|

||||

|

||||

|

||||

|

||||

*硬件 RAID*

|

||||

|

||||

#### 重要的 RAID 概念 ####

|

||||

|

||||

- **校验**方式用在 RAID 重建中从校验所保存的信息中重新生成丢失的内容。 RAID 5,RAID 6 基于校验。

|

||||

- **条带化**是将切片数据随机存储到多个磁盘。它不会在单个磁盘中保存完整的数据。如果我们使用2个磁盘,则每个磁盘存储我们的一半数据。

|

||||

- **镜像**被用于 RAID 1 和 RAID 10。镜像会自动备份数据。在 RAID 1 中,它会保存相同的内容到其他盘上。

|

||||

- **热备份**只是我们的服务器上的一个备用驱动器,它可以自动更换发生故障的驱动器。在我们的阵列中,如果任何一个驱动器损坏,热备份驱动器会自动用于重建 RAID。

|

||||

- **块**是 RAID 控制器每次读写数据时的最小单位,最小 4KB。通过定义块大小,我们可以增加 I/O 性能。

|

||||

|

||||

RAID有不同的级别。在这里,我们仅列出在真实环境下的使用最多的 RAID 级别。

|

||||

|

||||

- RAID0 = 条带化

|

||||

- RAID1 = 镜像

|

||||

- RAID5 = 单磁盘分布式奇偶校验

|

||||

- RAID6 = 双磁盘分布式奇偶校验

|

||||

- RAID10 = 镜像 + 条带。(嵌套RAID)
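The effect of each level on usable capacity comes down to simple arithmetic on the number of disks and the size of each. A sketch assuming identical disks; `raid_usable` is a hypothetical helper, real arrays lose a little extra space to metadata, and the RAID 10 minimum of 4 reflects the classic nested 1+0 layout rather than every implementation.

```shell
# Hypothetical helper: usable capacity for N identical disks of SIZE units.
# Usage: raid_usable LEVEL N SIZE. The ( ) body runs in a subshell, so
# `exit 1` only ends the function with a failure status.
raid_usable() (
    level=$1 n=$2 size=$3
    case $level in
        0)  min=2; usable=$(( n * size )) ;;        # striping: no redundancy
        1)  min=2; usable=$size ;;                  # every disk holds a full copy
        5)  min=3; usable=$(( (n - 1) * size )) ;;  # one disk's worth of parity
        6)  min=4; usable=$(( (n - 2) * size )) ;;  # two disks' worth of parity
        10) min=4; usable=$(( n * size / 2 )) ;;    # mirrored pairs, then striped
        *)  echo "unsupported level: $level" >&2; exit 1 ;;
    esac
    if [ "$n" -lt "$min" ]; then
        echo "RAID $level needs at least $min disks" >&2
        exit 1
    fi
    echo "$usable"
)

raid_usable 5 4 1000    # four 1000 GB disks in RAID 5
```

This prints 3000, consistent with the RAID 5 example of four 1TB drives later in this article, and `raid_usable 6 6 1000` gives 4000, as in the RAID 6 example.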

|

||||

|

||||

RAID 在大多数 Linux 发行版上使用名为 mdadm 的软件包进行管理。让我们先对每个 RAID 级别认识一下。

|

||||

|

||||

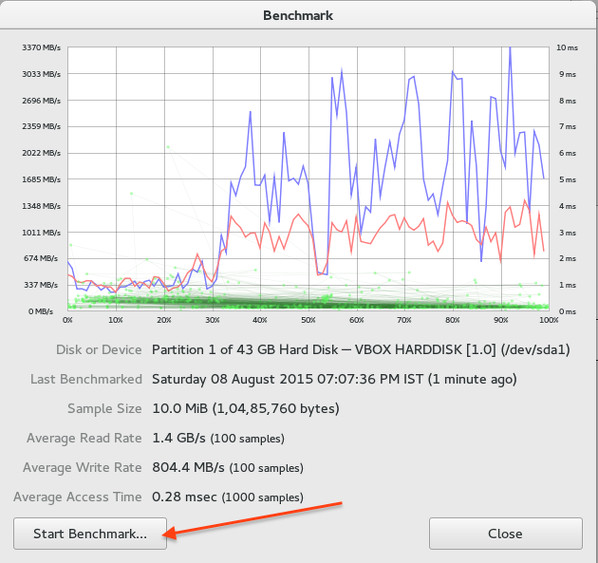

#### RAID 0 / 条带化 ####

|

||||

|

||||

|

||||

|

||||

条带化有很好的性能。在 RAID 0(条带化)中数据将使用切片的方式被写入到磁盘。一半的内容放在一个磁盘上,另一半内容将被写入到另一个磁盘。

|

||||

|

||||

假设我们有2个磁盘驱动器,例如,如果我们将数据“TECMINT”写到逻辑卷中,“T”将被保存在第一盘中,“E”将保存在第二盘,'C'将被保存在第一盘,“M”将保存在第二盘,它会一直继续此循环过程。(LCTT 译注:实际上不可能按字节切片,是按数据块切片的。)
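The round-robin placement in the "TECMINT" example can be simulated in a few lines. This toy sketch treats each character as one chunk, which, as the LCTT note points out, is not how real arrays slice data; the `stripe` helper is hypothetical and numbers the disks from 0.

```shell
# Toy model of RAID 0 striping: character i (counting from 0) goes to disk
# i mod N, just like the "TECMINT" example. Real arrays stripe whole chunks.
stripe() {
    # $1 = data, $2 = number of disks
    printf '%s' "$1" | awk -v disks="$2" '{
        for (i = 1; i <= length($0); i++)
            out[(i - 1) % disks] = out[(i - 1) % disks] substr($0, i, 1)
        for (d = 0; d < disks; d++)
            printf "disk%d: %s\n", d, out[d]
    }'
}

stripe TECMINT 2
```

With two disks this prints `disk0: TCIT` and `disk1: EMN`: neither disk alone holds the whole word, which is why losing one disk loses the data.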

|

||||

|

||||

在这种情况下,如果驱动器中的任何一个发生故障,我们就会丢失数据,因为一个盘中只有一半的数据,不能用于重建 RAID。不过,当比较写入速度和性能时,RAID 0 是非常好的。创建 RAID 0(条带化)至少需要2个磁盘。如果你的数据是非常宝贵的,那么不要使用此 RAID 级别。

|

||||

|

||||

- 高性能。

|

||||

- RAID 0 中容量零损失。

|

||||

- 零容错。

|

||||

- 写和读有很高的性能。

|

||||

|

||||

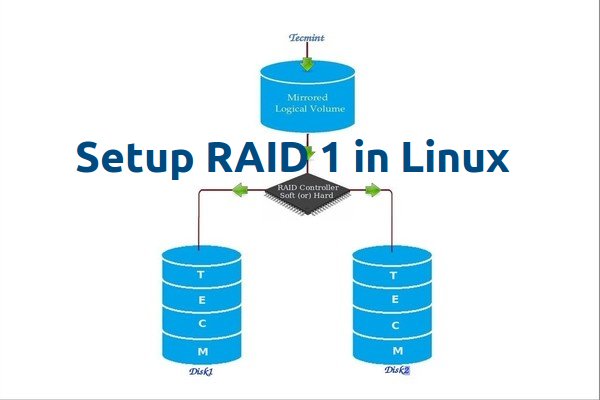

#### RAID 1 / 镜像化 ####

|

||||

|

||||

|

||||

|

||||

镜像也有不错的性能。镜像可以对我们的数据做一份相同的副本。假设我们有两个2TB的硬盘驱动器,我们总共有4TB,但在镜像中,但是放在 RAID 控制器后面的驱动器形成了一个逻辑驱动器,我们只能看到这个逻辑驱动器有2TB。

|

||||

|

||||

当我们保存数据时,它将同时写入这两个2TB驱动器中。创建 RAID 1(镜像化)最少需要两个驱动器。如果发生磁盘故障,我们可以通过更换一个新的磁盘恢复 RAID 。如果在 RAID 1 中任何一个磁盘发生故障,我们可以从另一个磁盘中获取相同的数据,因为另外的磁盘中也有相同的数据。所以是零数据丢失。

|

||||

|

||||

- 良好的性能。

|

||||

- 总容量丢失一半可用空间。

|

||||

- 完全容错。

|

||||

- 重建会更快。

|

||||

- 写性能变慢。

|

||||

- 读性能变好。

|

||||

- 能用于操作系统和小规模的数据库。

|

||||

|

||||

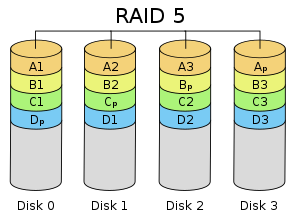

#### RAID 5 / 分布式奇偶校验 ####

|

||||

|

||||

|

||||

|

||||

RAID 5 多用于企业级。 RAID 5 的以分布式奇偶校验的方式工作。奇偶校验信息将被用于重建数据。它从剩下的正常驱动器上的信息来重建。在驱动器发生故障时,这可以保护我们的数据。

|

||||

|

||||

假设我们有4个驱动器,如果一个驱动器发生故障而后我们更换发生故障的驱动器后,我们可以从奇偶校验中重建数据到更换的驱动器上。奇偶校验信息存储在所有的4个驱动器上,如果我们有4个 1TB 的驱动器。奇偶校验信息将被存储在每个驱动器的256G中,而其它768GB是用户自己使用的。单个驱动器故障后,RAID 5 依旧正常工作,如果驱动器损坏个数超过1个会导致数据的丢失。
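RAID 5 parity is computed with XOR: the parity block of a stripe is the XOR of its data blocks, so any single missing block equals the XOR of the surviving blocks and the parity. In a real array the parity block also moves between drives from one stripe to the next, which is what "distributed" refers to. This toy sketch uses small made-up integers in place of data blocks.

```shell
# Toy illustration of single parity for one stripe on four drives.
d1=5 d2=12 d3=10                 # data blocks on drives 1-3 (made-up values)
parity=$(( d1 ^ d2 ^ d3 ))       # parity block on drive 4
echo "parity: $parity"

# Drive 2 fails: rebuild its block from the survivors plus the parity.
rebuilt=$(( d1 ^ d3 ^ parity ))
echo "rebuilt d2: $rebuilt"
```

This prints `parity: 3` and `rebuilt d2: 12`, recovering the lost value exactly. Losing a second block in the same stripe would leave two unknowns and one equation, which is why RAID 5 survives only one failed drive.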

|

||||

|

||||

- 性能卓越

|

||||

- 读速度将非常好。

|

||||

- 写速度处于平均水准,如果我们不使用硬件 RAID 控制器,写速度缓慢。

|

||||

- 从所有驱动器的奇偶校验信息中重建。

|

||||

- 完全容错。

|

||||

- 1个磁盘空间将用于奇偶校验。

|

||||

- 可以被用在文件服务器,Web服务器,非常重要的备份中。

|

||||

|

||||

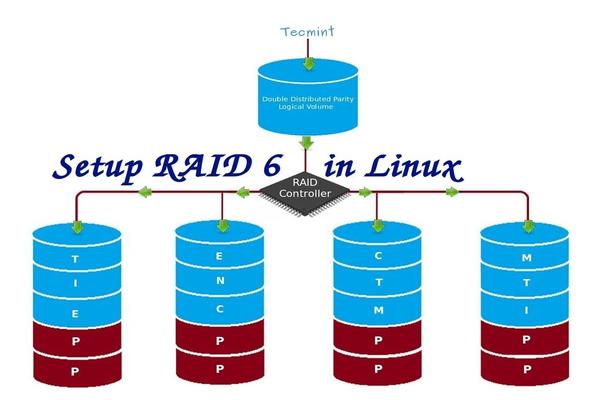

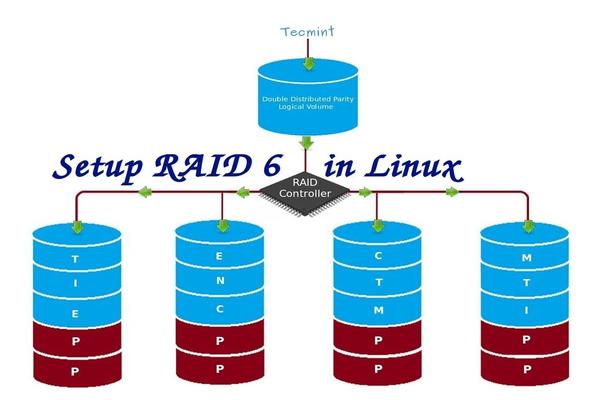

#### RAID 6 双分布式奇偶校验磁盘 ####

|

||||

|

||||

|

||||

|

||||

RAID 6 和 RAID 5 相似但它有两个分布式奇偶校验。大多用在大数量的阵列中。我们最少需要4个驱动器,即使有2个驱动器发生故障,我们依然可以更换新的驱动器后重建数据。

|

||||

|

||||

它比 RAID 5 慢,因为它将数据同时写到4个驱动器上。当我们使用硬件 RAID 控制器时速度就处于平均水准。如果我们有6个的1TB驱动器,4个驱动器将用于数据保存,2个驱动器将用于校验。

|

||||

|

||||

- 性能不佳。

|

||||

- 读的性能很好。

|

||||

- 如果我们不使用硬件 RAID 控制器写的性能会很差。

|

||||

- 从两个奇偶校验驱动器上重建。

|

||||

- 完全容错。

|

||||

- 2个磁盘空间将用于奇偶校验。

|

||||

- 可用于大型阵列。

|

||||

- 用于备份和视频流中,用于大规模。

|

||||

|

||||

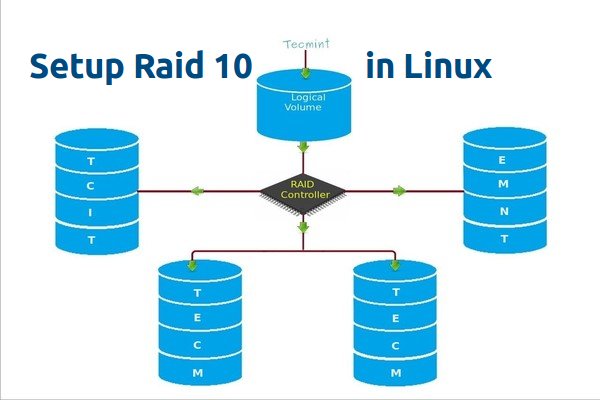

#### RAID 10 / 镜像+条带 ####

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

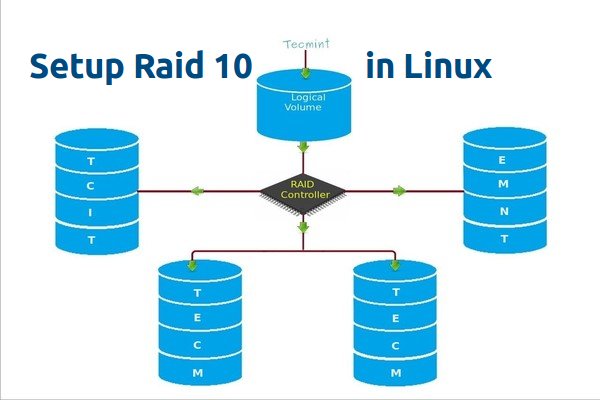

RAID 10 可以被称为1 + 0或0 +1。它将做镜像+条带两个工作。在 RAID 10 中首先做镜像然后做条带。在 RAID 01 上首先做条带,然后做镜像。RAID 10 比 01 好。

|

||||

|

||||

假设,我们有4个驱动器。当我逻辑卷上写数据时,它会使用镜像和条带的方式将数据保存到4个驱动器上。

|

||||

|

||||

如果我在 RAID 10 上写入数据“TECMINT”,数据将使用如下方式保存。首先将“T”同时写入两个磁盘,“E”也将同时写入另外两个磁盘,所有数据都写入两块磁盘。这样可以将每个数据复制到另外的磁盘。

|

||||

|

||||

同时它将使用 RAID 0 方式写入数据,遵循将“T”写入第一组盘,“E”写入第二组盘。再次将“C”写入第一组盘,“M”到第二组盘。

|

||||

|

||||

- 良好的读写性能。

|

||||

- 总容量丢失一半的可用空间。

|

||||

- 容错。

|

||||

- 从副本数据中快速重建。

|

||||

- 由于其高性能和高可用性,常被用于数据库的存储中。

|

||||

|

||||

### 结论 ###

|

||||

|

||||

在这篇文章中,我们已经了解了什么是 RAID 和在实际环境大多采用哪个级别的 RAID。希望你已经学会了上面所写的。对于 RAID 的构建必须了解有关 RAID 的基本知识。以上内容可以基本满足你对 RAID 的了解。

|

||||

|

||||

在接下来的文章中,我将介绍如何设置和使用各种级别创建 RAID,增加 RAID 组(阵列)和驱动器故障排除等。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/understanding-raid-setup-in-linux/

|

||||

|

||||

作者:[Babin Lonston][a]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/babinlonston/

|

||||

@ -0,0 +1,219 @@

|

||||

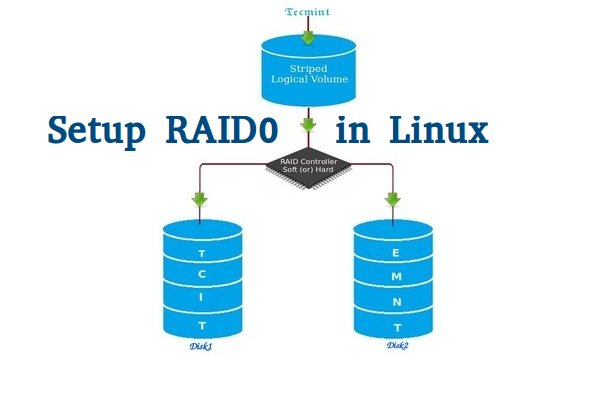

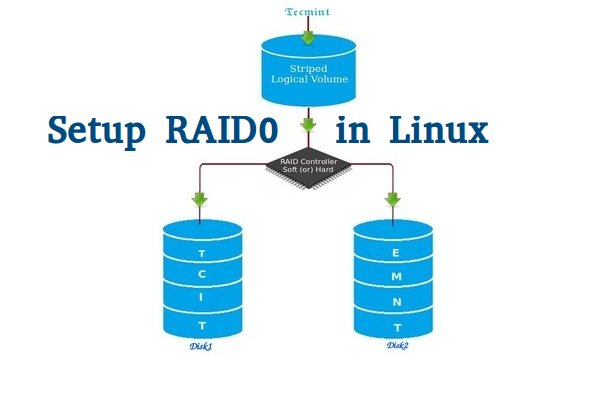

在 Linux 下使用 RAID(一):使用 mdadm 工具创建软件 RAID 0 (条带化)

|

||||

================================================================================

|

||||

|

||||

RAID 即廉价磁盘冗余阵列,其高可用性和可靠性适用于大规模环境中,相比正常使用,数据更需要被保护。RAID 是一些磁盘的集合,是包含一个阵列的逻辑卷。驱动器可以组合起来成为一个阵列或称为(组的)集合。

|

||||

|

||||

创建 RAID 最少应使用2个连接到 RAID 控制器的磁盘组成,来构成逻辑卷,可以根据定义的 RAID 级别将更多的驱动器添加到一个阵列中。不使用物理硬件创建的 RAID 被称为软件 RAID。软件 RAID 也叫做穷人 RAID。

|

||||

|

||||

|

||||

|

||||

*在 Linux 中创建 RAID0*

|

||||

|

||||

使用 RAID 的主要目的是为了在发生单点故障时保存数据,如果我们使用单个磁盘来存储数据,如果它损坏了,那么就没有机会取回我们的数据了,为了防止数据丢失我们需要一个容错的方法。所以,我们可以使用多个磁盘组成 RAID 阵列。

|

||||

|

||||

#### 在 RAID 0 中条带是什么 ####

|

||||

|

||||

条带是通过将数据在同时分割到多个磁盘上。假设我们有两个磁盘,如果我们将数据保存到该逻辑卷上,它会将数据保存在两个磁盘上。使用 RAID 0 是为了获得更好的性能,但是如果驱动器中一个出现故障,我们将不能得到完整的数据。因此,使用 RAID 0 不是一种好的做法。唯一的解决办法就是安装有 RAID 0 逻辑卷的操作系统来提高重要文件的安全性。

|

||||

|

||||

- RAID 0 性能较高。

|

||||

- 在 RAID 0 上,空间零浪费。

|

||||

- 零容错(如果硬盘中的任何一个发生故障,无法取回数据)。

|

||||

- 写和读性能都很好。

|

||||

|

||||

#### 要求 ####

|

||||

|

||||

创建 RAID 0 允许的最小磁盘数目是2个,但你可以添加更多的磁盘,不过数目应该是2,4,6,8等的偶数。如果你有一个物理 RAID 卡并且有足够的端口,你可以添加更多磁盘。

|

||||

|

||||

在这里,我们没有使用硬件 RAID,此设置只需要软件 RAID。如果我们有一个物理硬件 RAID 卡,我们可以从它的功能界面访问它。有些主板默认内建 RAID 功能,还可以使用 Ctrl + I 键访问它的界面。

|

||||

|

||||

如果你是刚开始设置 RAID,请阅读我们前面的文章,我们已经介绍了一些关于 RAID 基本的概念。

|

||||

|

||||

- [介绍 RAID 的级别和概念][1]

|

||||

|

||||

**我的服务器设置**

|

||||

|

||||

操作系统 : CentOS 6.5 Final

|

||||

IP 地址 : 192.168.0.225

|

||||

两块盘 : 20 GB each

|

||||

|

||||

这是9篇系列教程的第2部分,在这部分,我们将看看如何能够在 Linux 上创建和使用 RAID 0(条带化),以名为 sdb 和 sdc 两个 20GB 的硬盘为例。

|

||||

|

||||

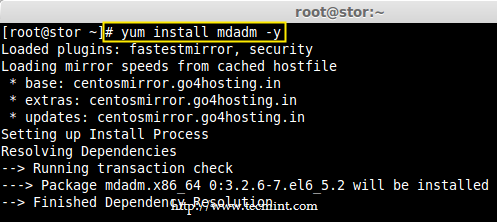

### 第1步:更新系统和安装管理 RAID 的 mdadm 软件 ###

|

||||

|

||||

1、 在 Linux 上设置 RAID 0 前,我们先更新一下系统,然后安装`mdadm` 包。mdadm 是一个小程序,这将使我们能够在Linux下配置和管理 RAID 设备。

|

||||

|

||||

# yum clean all && yum update

|

||||

# yum install mdadm -y

|

||||

|

||||

|

||||

|

||||

*安装 mdadm 工具*

|

||||

|

||||

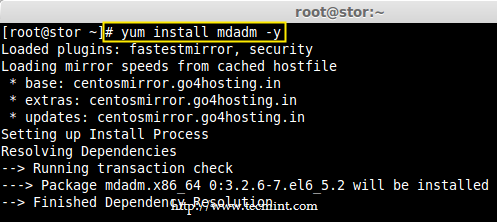

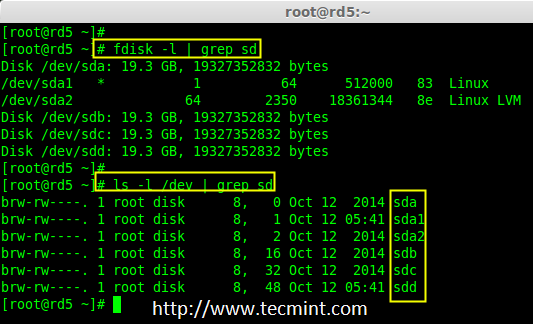

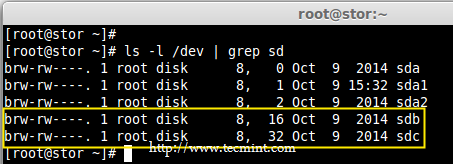

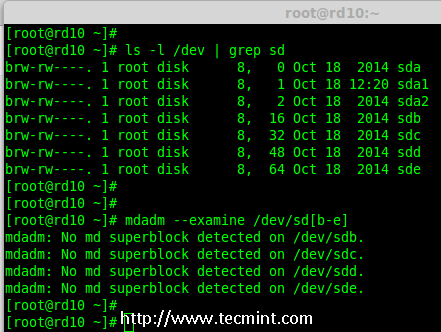

### 第2步:确认连接了两个 20GB 的硬盘 ###

|

||||

|

||||

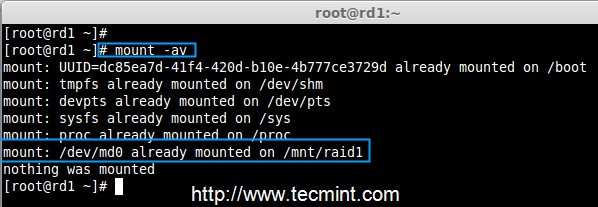

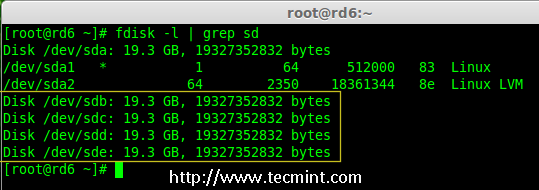

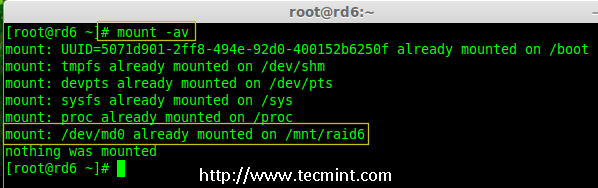

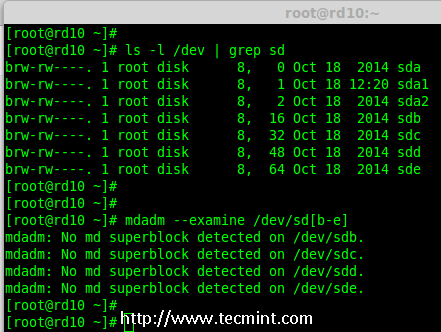

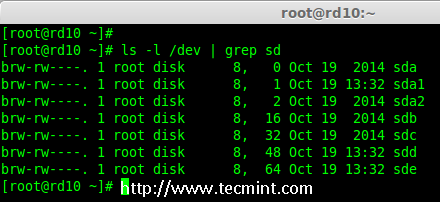

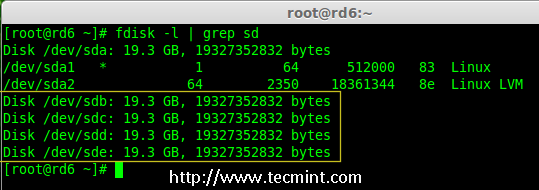

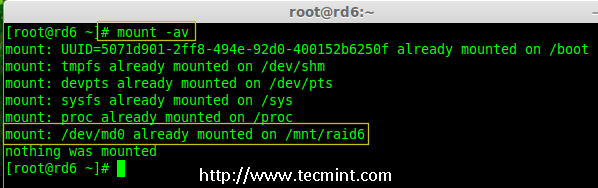

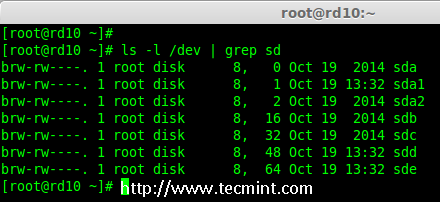

2、 在创建 RAID 0 前,请务必确认两个硬盘能被检测到,使用下面的命令确认。

|

||||

|

||||

# ls -l /dev | grep sd

|

||||

|

||||

|

||||

|

||||

*检查硬盘*

|

||||

|

||||

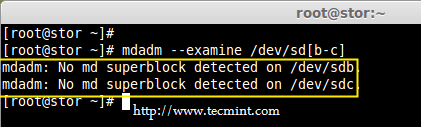

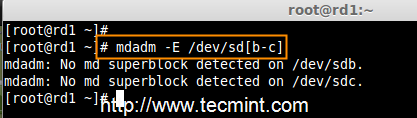

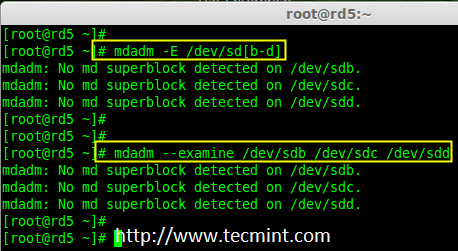

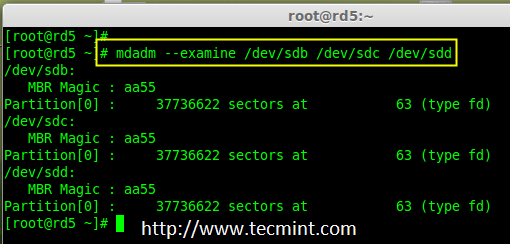

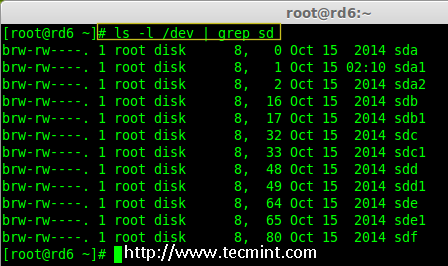

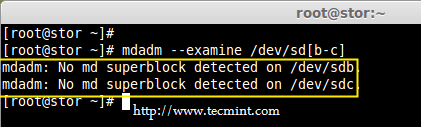

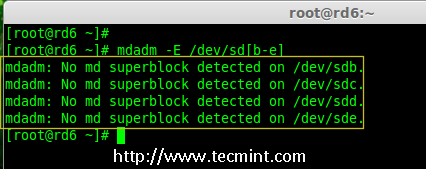

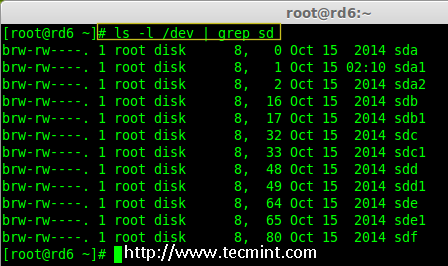

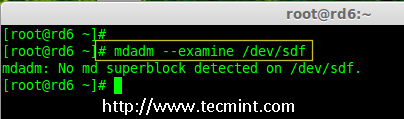

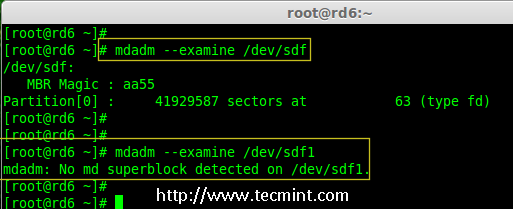

3、 一旦检测到新的硬盘驱动器,同时检查是否连接的驱动器已经被现有的 RAID 使用,使用下面的`mdadm` 命令来查看。

|

||||

|

||||

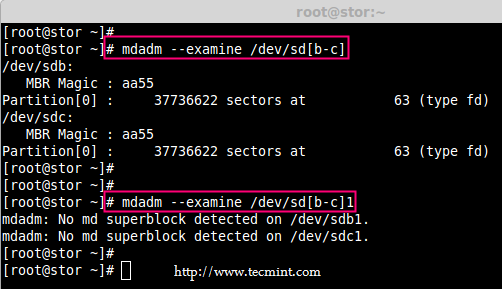

# mdadm --examine /dev/sd[b-c]

|

||||

|

||||

|

||||

|

||||

*检查 RAID 设备*

|

||||

|

||||

从上面的输出我们可以看到,没有任何 RAID 使用 sdb 和 sdc 这两个驱动器。
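When checking several disks, the `mdadm --examine` result can be reduced to a one-word verdict. This sketch assumes that mdadm reports `No md superblock detected` for a disk that belongs to no array; a disk that merely carries a partition table also produces other output, which is why anything else is reported only as "possibly in use". The helper name is hypothetical.

```shell
# Hypothetical helper: classify `mdadm --examine` output read on stdin.
md_superblock_status() {
    if grep -q 'No md superblock detected'; then
        echo free
    else
        echo "possibly in use"
    fi
}

# Live use (as root); mdadm prints this message on stderr, hence 2>&1:
#   mdadm --examine /dev/sdb 2>&1 | md_superblock_status
printf 'mdadm: No md superblock detected on /dev/sdb.\n' | md_superblock_status
```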

|

||||

|

||||

### 第3步:创建 RAID 分区 ###

|

||||

|

||||

4、 现在用 sdb 和 sdc 创建 RAID 的分区,使用 fdisk 命令来创建。在这里,我将展示如何创建 sdb 驱动器上的分区。

|

||||

|

||||

# fdisk /dev/sdb

|

||||

|

||||

请按照以下说明创建分区。

|

||||

|

||||

- 按`n` 创建新的分区。

|

||||

- 然后按`P` 选择主分区。

|

||||

- 接下来选择分区号为1。

|

||||

- 只需按两次回车键选择默认值即可。

|

||||

- 然后,按`P` 来显示创建好的分区。

|

||||

|

||||

|

||||

|

||||

*创建分区*

|

||||

|

||||

请按照以下说明将分区创建为 Linux 的 RAID 类型。

|

||||

|

||||

- 按`L`,列出所有可用的类型。

|

||||

- 按`t` 去修改分区。

|

||||

- 键入`fd` 设置为 Linux 的 RAID 类型,然后按回车确认。

|

||||

- 然后再次使用`p`查看我们所做的更改。

|

||||

- 使用`w`保存更改。

|

||||

|

||||

|

||||

|

||||

*在 Linux 上创建 RAID 分区*

|

||||

|

||||

**注**: 请使用上述步骤同样在 sdc 驱动器上创建分区。

|

||||

|

||||

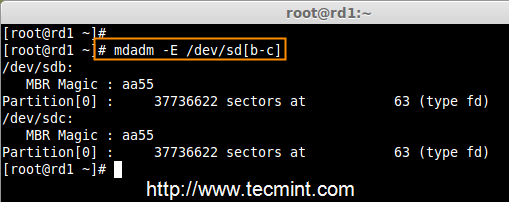

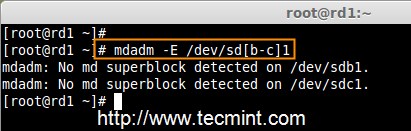

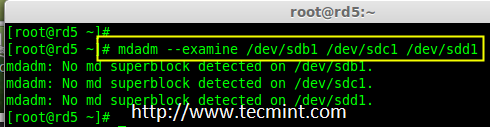

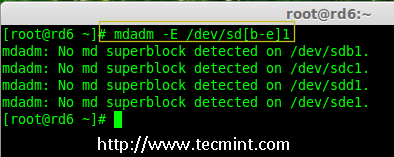

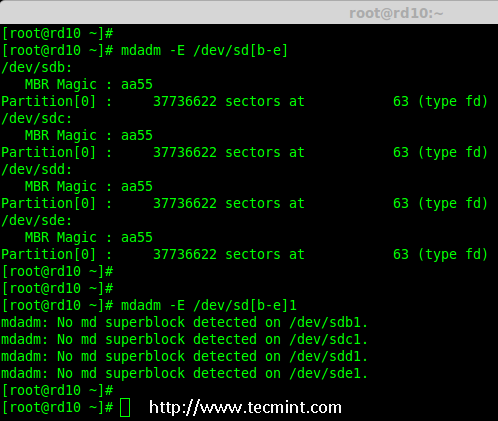

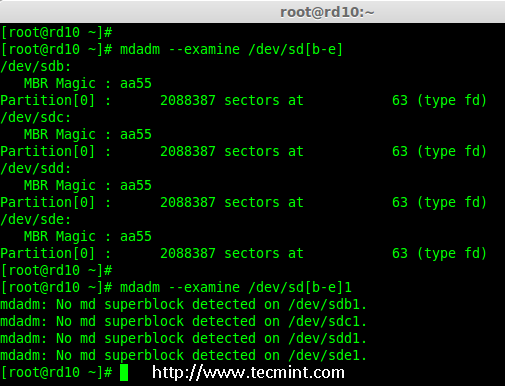

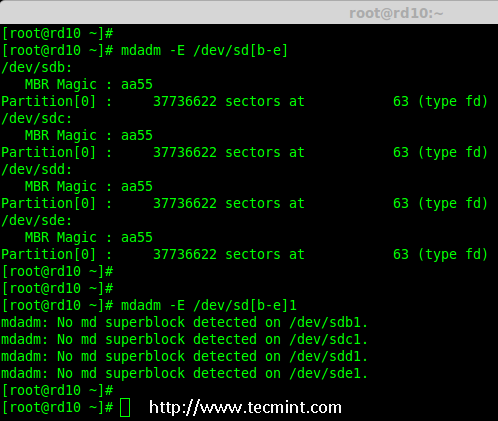

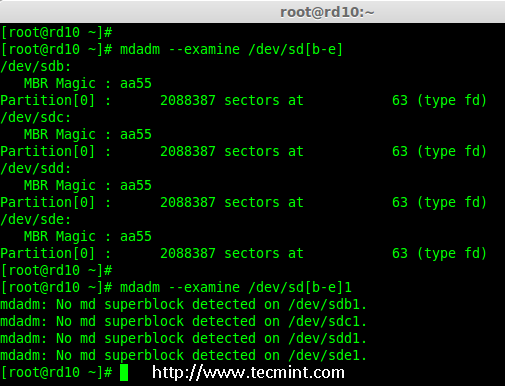

5、 创建分区后,验证这两个驱动器是否正确定义 RAID,使用下面的命令。

|

||||

|

||||

# mdadm --examine /dev/sd[b-c]

|

||||

# mdadm --examine /dev/sd[b-c]1

|

||||

|

||||

|

||||

|

||||

*验证 RAID 分区*

|

||||

|

||||

### 第4步:创建 RAID md 设备 ###

|

||||

|

||||

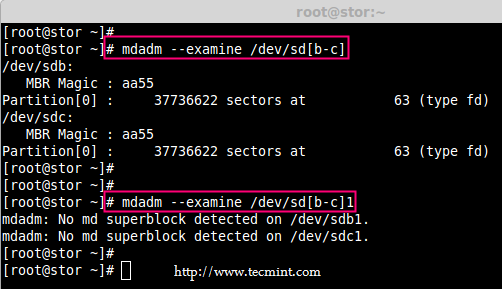

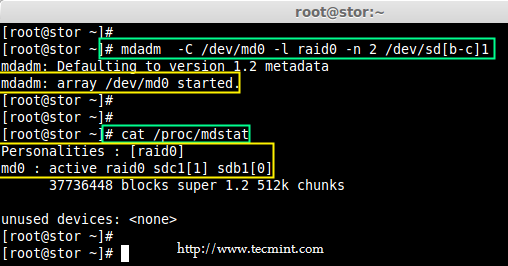

6、 现在使用以下命令创建 md 设备(即 /dev/md0),并选择 RAID 合适的级别。

|

||||

|

||||

# mdadm -C /dev/md0 -l raid0 -n 2 /dev/sd[b-c]1

|

||||

# mdadm --create /dev/md0 --level=stripe --raid-devices=2 /dev/sd[b-c]1

|

||||

|

||||

- -C – 创建

|

||||

- -l – 级别

|

||||

- -n – RAID 设备数

|

||||

|

||||

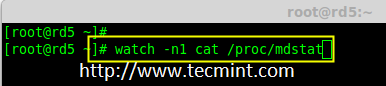

7、 一旦 md 设备已经建立,使用如下命令可以查看 RAID 级别,设备和阵列的使用状态。

|

||||

|

||||

# cat /proc/mdstat

|

||||

|

||||

|

||||

|

||||

*查看 RAID 级别*
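/proc/mdstat is plain text, so the array name, state and level can be picked out with `awk`. A sketch assuming array lines of the form shown in the sample (for an active array the fourth field is the level); the helper name and the block count are made up.

```shell
# Hypothetical helper: print "name state level" for each array line of
# /proc/mdstat-style text on stdin, e.g. "md0 : active raid0 sdc1[1] sdb1[0]".
md_arrays() {
    awk '$1 ~ /^md[0-9]+$/ && $2 == ":" { print $1, $3, $4 }'
}

# Live use: md_arrays < /proc/mdstat
printf '%s\n' \
    'Personalities : [raid0]' \
    'md0 : active raid0 sdc1[1] sdb1[0]' \
    '      41909248 blocks super 1.2 512k chunks' \
    'unused devices: <none>' \
    | md_arrays
```

For the sample this prints `md0 active raid0`.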

|

||||

|

||||

# mdadm -E /dev/sd[b-c]1

|

||||

|

||||

|

||||

|

||||

*查看 RAID 设备*

|

||||

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

*查看 RAID 阵列*

|

||||

|

||||

### 第5步:给 RAID 设备创建文件系统 ###

|

||||

|

||||

8、 将 RAID 设备 /dev/md0 创建为 ext4 文件系统,并挂载到 /mnt/raid0 下。

|

||||

|

||||

# mkfs.ext4 /dev/md0

|

||||

|

||||

|

||||

|

||||

*创建 ext4 文件系统*

|

||||

|

||||

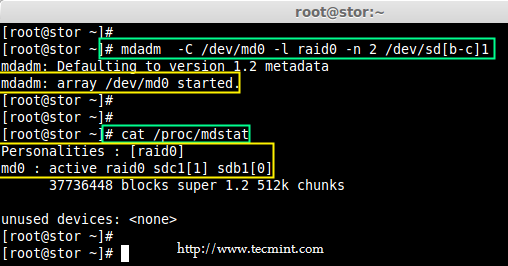

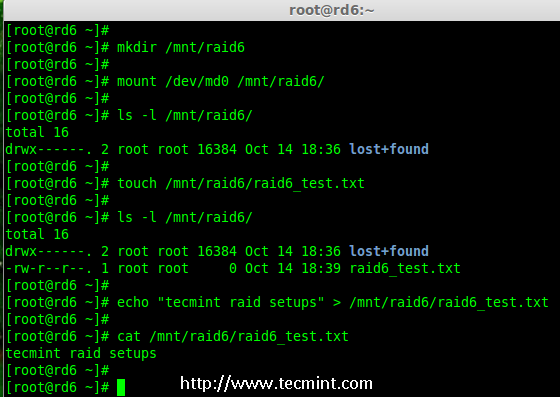

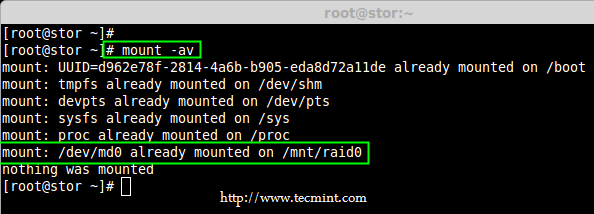

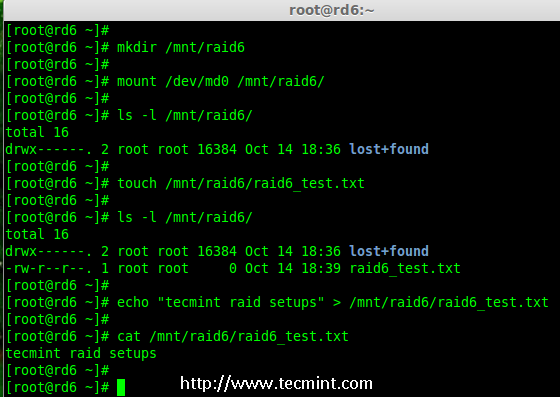

9、 在 RAID 设备上创建好 ext4 文件系统后,现在创建一个挂载点(即 /mnt/raid0),并将设备 /dev/md0 挂载在它下。

|

||||

|

||||

# mkdir /mnt/raid0

|

||||

# mount /dev/md0 /mnt/raid0/

|

||||

|

||||

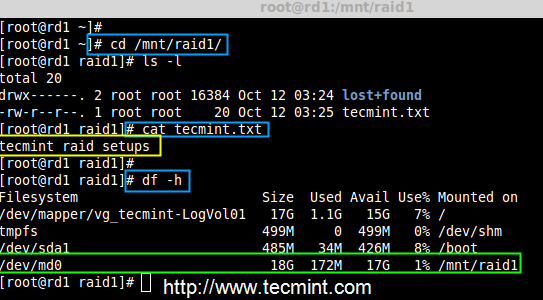

10、下一步,使用 df 命令验证设备 /dev/md0 是否被挂载在 /mnt/raid0 下。

|

||||

|

||||

# df -h

|

||||

|

||||

11、 接下来,在挂载点 /mnt/raid0 下创建一个名为`tecmint.txt` 的文件,为创建的文件添加一些内容,并查看文件和目录的内容。

|

||||

|

||||

# touch /mnt/raid0/tecmint.txt

|

||||

# echo "Hi everyone how you doing ?" > /mnt/raid0/tecmint.txt

|

||||

# cat /mnt/raid0/tecmint.txt

|

||||

# ls -l /mnt/raid0/

|

||||

|

||||

|

||||

|

||||

*验证挂载的设备*

|

||||

|

||||

12、 当你验证挂载点后,就可以将它添加到 /etc/fstab 文件中。

|

||||

|

||||

# vim /etc/fstab

|

||||

|

||||

添加以下条目,根据你的安装位置和使用文件系统的不同,自行做修改。

|

||||

|

||||

/dev/md0 /mnt/raid0 ext4 deaults 0 0

|

||||

|

||||

|

||||

|

||||

*添加设备到 fstab 文件中*
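Because a broken /etc/fstab entry can stop the system from booting cleanly, it can help to check the shape of a new line first. This rough sketch only checks that each entry has 4 to 6 fields and that the optional dump and pass fields are numbers; it cannot catch a misspelled mount option, so `mount -a` remains the real test. `fstab_lint` is a hypothetical helper.

```shell
# Hypothetical rough check of fstab-style lines on stdin. Exits non-zero if
# any entry has the wrong number of fields or non-numeric dump/pass fields.
fstab_lint() {
    awk '
        /^[[:space:]]*#/ || NF == 0 { next }          # skip comments and blank lines
        NF < 4 || NF > 6 {
            printf "line %d: expected 4-6 fields, got %d\n", NR, NF; bad = 1; next
        }
        (NF >= 5 && $5 !~ /^[0-9]+$/) || (NF == 6 && $6 !~ /^[0-9]+$/) {
            printf "line %d: dump/pass fields must be numbers\n", NR; bad = 1
        }
        END { exit bad }
    '
}

printf '/dev/md0 /mnt/raid0 ext4 defaults 0 0\n' | fstab_lint && echo "looks OK"
```

To check the whole file: `fstab_lint < /etc/fstab`.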

|

||||

|

||||

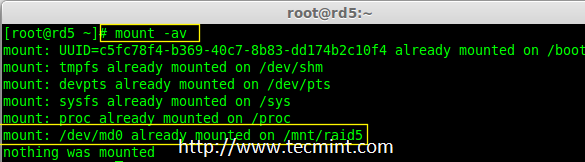

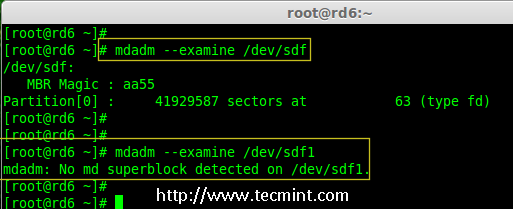

13、 使用 mount 命令的 `-a` 来检查 fstab 的条目是否有误。

|

||||

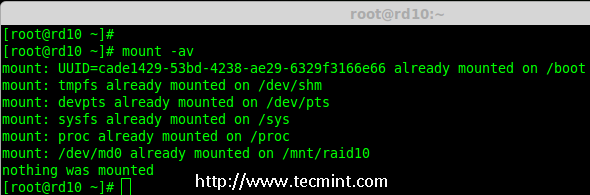

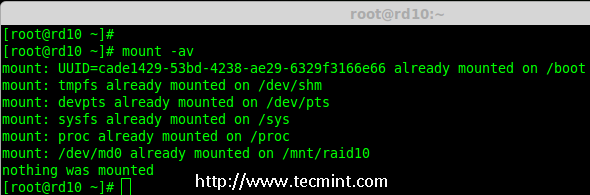

|

||||

# mount -av

|

||||

|

||||

|

||||

|

||||

*检查 fstab 文件是否有误*

|

||||

|

||||

### 第6步:保存 RAID 配置 ###

|

||||

|

||||

14、 最后,保存 RAID 配置到一个文件中,以供将来使用。我们再次使用带有`-s` (scan) 和`-v` (verbose) 选项的 `mdadm` 命令,如图所示。

|

||||

|

||||

# mdadm -E -s -v >> /etc/mdadm.conf

|

||||

# mdadm --detail --scan --verbose >> /etc/mdadm.conf

|

||||

# cat /etc/mdadm.conf

|

||||

|

||||

|

||||

|

||||

*保存 RAID 配置*

|

||||

|

||||

就这样,我们在这里看到,如何通过使用两个硬盘配置具有条带化的 RAID 0 。在接下来的文章中,我们将看到如何设置 RAID 1。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/create-raid0-in-linux/

|

||||

|

||||

作者:[Babin Lonston][a]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/babinlonston/

|

||||

[1]:https://linux.cn/article-6085-1.html

|

||||

@ -1,83 +1,82 @@

Using RAID in Linux (3): Creating RAID 1 (mirroring) with two disks
================================================================================

**RAID mirroring** means that an exact clone (or mirror) of the same data is written to two disks. Creating RAID 1 requires at least two disks, and it is used only where read performance or reliability matters more than storage capacity.

*Setting up RAID 1 in Linux*

Mirroring is set up to prevent data loss caused by disk failure. Each disk in a mirror contains a complete copy of the data. When one disk fails, the same data can be read from the other healthy disk. The failed disk can then be replaced in the running computer without any interruption.

### Features of RAID 1 ###

- Mirroring has good performance.
- Disk utilization is 50%. That is, if we have two disks of 500GB each, 1TB in total, the mirror shows only 500GB.
- No data is lost in a mirror if one disk fails, because both disks hold the same content.
- Read performance is better than write performance.

#### Requirements ####

At least two disks are required to create RAID 1; you can add more disks in multiples of two (2, 4, 6, 8...). To add more disks, your system must have a physical RAID adapter (hardware card).

Here we use software RAID rather than hardware RAID. If your system has a built-in physical hardware RAID card, you can access it from its UI or with the Ctrl + I keys.

Recommended reading: [Introduction to RAID levels and concepts][1]

#### My server setup ####

    Operating system : CentOS 6.5 Final
    IP address       : 192.168.0.226
    Hostname         : rd1.tecmintlocal.com
    Disk 1 [20GB]    : /dev/sdb
    Disk 2 [20GB]    : /dev/sdc

This article walks you step by step through setting up software RAID 1 (mirroring) on Linux with mdadm (the tool used to create and manage RAID). The same steps also apply to Linux distributions such as RedHat, CentOS and Fedora.

### Step 1: Install the required software and check the disks ###

1. As I said before, in Linux we need the mdadm software to create and manage RAID. So let's install the mdadm package on Linux with the yum or apt-get package manager.

    # yum install mdadm        [on RedHat systems]
    # apt-get install mdadm    [on Debian systems]

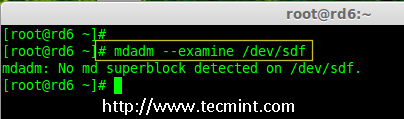

2. 一旦安装好‘mdadm‘包,我们需要使用下面的命令来检查磁盘是否已经配置好。

|

||||

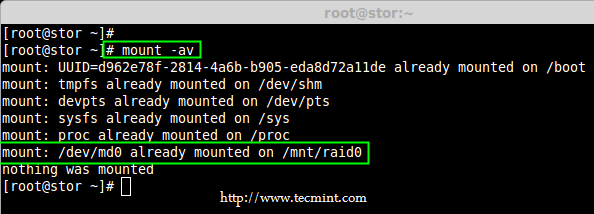

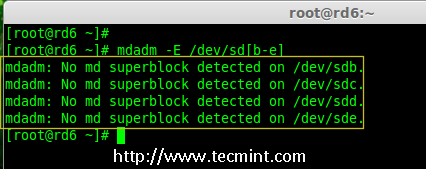

2、 一旦安装好`mdadm`包,我们需要使用下面的命令来检查磁盘是否已经配置好。

|

||||

|

||||

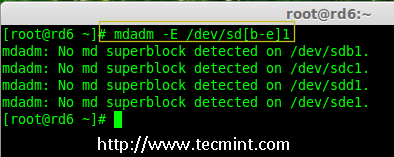

# mdadm -E /dev/sd[b-c]

|

||||

|

||||

|

||||

|

||||

检查 RAID 的磁盘

|

||||

|

||||

*检查 RAID 的磁盘*

|

||||

|

||||

正如你从上面图片看到的,没有检测到任何超级块,这意味着还没有创建RAID。

|

||||

|

||||

### 第2步:为 RAID 创建分区 ###

|

||||

|

||||

3. 正如我提到的,我们最少使用两个分区 /dev/sdb 和 /dev/sdc 来创建 RAID1。我们首先使用‘fdisk‘命令来创建这两个分区并更改其类型为 raid。

|

||||

3、 正如我提到的,我们使用最少的两个分区 /dev/sdb 和 /dev/sdc 来创建 RAID 1。我们首先使用`fdisk`命令来创建这两个分区并更改其类型为 raid。

|

||||

|

||||

# fdisk /dev/sdb

|

||||

|

||||

按照下面的说明

|

||||

|

||||

- 按 ‘n’ 创建新的分区。

|

||||

- 然后按 ‘P’ 选择主分区。

|

||||

- 按 `n` 创建新的分区。

|

||||

- 然后按 `P` 选择主分区。

|

||||

- 接下来选择分区号为1。

|

||||

- 按两次回车键默认将整个容量分配给它。

|

||||

- 然后,按 ‘P’ 来打印创建好的分区。

|

||||

- 按 ‘L’,列出所有可用的类型。

|

||||

- 按 ‘t’ 修改分区类型。

|

||||

- 键入 ‘fd’ 设置为Linux 的 RAID 类型,然后按 Enter 确认。

|

||||

- 然后再次使用‘p’查看我们所做的更改。

|

||||

- 使用‘w’保存更改。

|

||||

- 然后,按 `P` 来打印创建好的分区。

|

||||

- 按 `L`,列出所有可用的类型。

|

||||

- 按 `t` 修改分区类型。

|

||||

- 键入 `fd` 设置为 Linux 的 RAID 类型,然后按 Enter 确认。

|

||||

- 然后再次使用`p`查看我们所做的更改。

|

||||

- 使用`w`保存更改。

|

||||

|

||||

|

||||

|

||||

创建磁盘分区

|

||||

*创建磁盘分区*

|

||||

|

||||

在创建“/dev/sdb”分区后,接下来按照同样的方法创建分区 /dev/sdc 。

|

||||

|

||||

@ -85,59 +84,59 @@ RAID 镜像意味着相同数据的完整克隆(或镜像)写入到两个磁

|

||||

|

||||

|

||||

|

||||

创建第二个分区

|

||||

*创建第二个分区*

|

||||

|

||||

4. 一旦这两个分区创建成功后,使用相同的命令来检查 sdb & sdc 分区并确认 RAID 分区的类型如上图所示。

|

||||

4、 一旦这两个分区创建成功后,使用相同的命令来检查 sdb 和 sdc 分区并确认 RAID 分区的类型如上图所示。
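As an alternative to reading the `mdadm -E` output, the partition type can also be checked from `fdisk -l`. This sketch assumes the type column reads `Linux raid autodetect` for type `fd` partitions, as in classic fdisk output; the helper name and the sample lines are illustrative. Matching on the type name keeps it independent of how many size columns a given fdisk version prints.

```shell
# Hypothetical helper: print the device name of every partition whose type
# is "Linux raid autodetect", reading `fdisk -l` output on stdin.
raid_partitions() {
    awk '/Linux raid autodetect[[:space:]]*$/ { print $1 }'
}

# Live use (as root): fdisk -l /dev/sdb /dev/sdc | raid_partitions
printf '%s\n' \
    'Disk /dev/sdb: 21.5 GB, 21474836480 bytes' \
    '/dev/sdb1               1        2610    20964793+  fd  Linux raid autodetect' \
    '/dev/sdc1               1        2610    20964793+  fd  Linux raid autodetect' \
    | raid_partitions
```

If both /dev/sdb1 and /dev/sdc1 appear in the output, both partitions are ready to be used as RAID 1 members.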
