mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

74aab89fe5

@ -1,15 +1,15 @@

|

||||

Streams:一个新的 Redis 通用数据结构

|

||||

======

|

||||

|

||||

直到几个月以前,对于我来说,在消息传递的环境中,<ruby>流<rt>streams</rt></ruby>只是一个有趣且相对简单的概念。这个概念在 Kafka 流行之后,我主要研究它们在 Disque 案例中的应用,Disque 是一个消息队列,它将在 Redis 4.2 中被转换为 Redis 的一个模块。后来我决定让 Disque 都用 AP 消息[1],也就是说,它将在不需要客户端过多参与的情况下实现容错和可用性,这样一来,我更加确定地认为流的概念在那种情况下并不适用。

|

||||

直到几个月以前,对于我来说,在消息传递的环境中,<ruby>流<rt>streams</rt></ruby>只是一个有趣且相对简单的概念。这个概念在 Kafka 流行之后,我主要研究它们在 Disque 案例中的应用,Disque 是一个消息队列,它将在 Redis 4.2 中被转换为 Redis 的一个模块。后来我决定让 Disque 都用 AP 消息(LCTT 译注:参见 [CAP 定理][1]) ,也就是说,它将在不需要客户端过多参与的情况下实现容错和可用性,这样一来,我更加确定地认为流的概念在那种情况下并不适用。

|

||||

|

||||

然而在那时 Redis 有个问题,那就是缺省情况下导出数据结构并不轻松。它在 Redis <ruby>列表<rt>list</rt></ruby>、<ruby>有序集合<rt>sorted list</rt></ruby>、<ruby>发布/订阅<rt>Pub/Sub</rt></ruby>功能之间有某些缺陷。你可以权衡使用这些工具对一个消息或事件建模。

|

||||

然而在那时 Redis 有个问题,那就是缺省情况下导出数据结构并不轻松。它在 Redis <ruby>列表<rt>list</rt></ruby>、<ruby>有序集<rt>sorted list</rt></ruby>、<ruby>发布/订阅<rt>Pub/Sub</rt></ruby>功能之间有某些缺陷。你可以权衡使用这些工具对一系列消息或事件建模。

|

||||

|

||||

排序集合是内存消耗大户,那自然就不能对投递相同消息进行一次又一次的建模,客户端不能阻塞新消息。因为有序集合并不是一个序列化的数据结构,它是一个元素可以根据它们量的变化而移动的集合:所以它不像时序性的数据那样。

|

||||

有序集是内存消耗大户,那自然就不能对投递的相同消息进行一次又一次的建模,客户端不能阻塞新消息。因为有序集并不是一个序列化的数据结构,它是一个元素可以根据它们量的变化而移动的集合:所以它不像时序性的数据那样。

|

||||

|

||||

列表有另外的问题,它在某些特定的用例中产生类似的适用性问题:你无法浏览列表中间的内容,因为在那种情况下,访问时间是线性的。此外,没有任何指定输出的功能,列表上的阻塞操作仅为单个客户端提供单个元素。列表中没有固定的元素标识,也就是说,不能指定从哪个元素开始给我提供内容。

|

||||

列表有另外的问题,它在某些特定的用例中会产生类似的适用性问题:你无法浏览列表中间的内容,因为在那种情况下,访问时间是线性的。此外,没有任何指定输出的功能,列表上的阻塞操作仅为单个客户端提供单个元素。列表中没有固定的元素标识,也就是说,不能指定从哪个元素开始给我提供内容。

|

||||

|

||||

对于一对多的工作任务,有发布/订阅机制,它在大多数情况下是非常好的,但是,对于某些不想<ruby>“即发即弃”<rt>fire-and-forget</rt></ruby>的东西:保留一个历史是很重要的,不只是因为是断开之后重新获得消息,也因为某些如时序性的消息列表,用范围查询浏览是非常重要的:在这 10 秒范围内温度读数是多少?

|

||||

对于一对多的工作任务,有发布/订阅机制,它在大多数情况下是非常好的,但是,对于某些不想<ruby>“即发即弃”<rt>fire-and-forget</rt></ruby>的东西:保留一个历史是很重要的,不只是因为是断开之后会重新获得消息,也因为某些如时序性的消息列表,用范围查询浏览是非常重要的:比如在这 10 秒范围内温度读数是多少?

|

||||

|

||||

我试图解决上述问题,我想规划一个通用的有序集合,并列入一个独特的、更灵活的数据结构,然而,我的设计尝试最终以生成一个比当前的数据结构更加矫揉造作的结果而告终。Redis 有个好处,它的数据结构导出更像自然的计算机科学的数据结构,而不是 “Salvatore 发明的 API”。因此,我最终停止了我的尝试,并且说,“ok,这是我们目前能提供的”,或许我会为发布/订阅增加一些历史信息,或者为列表访问增加一些更灵活的方式。然而,每次在会议上有用户对我说 “你如何在 Redis 中模拟时间系列” 或者类似的问题时,我的脸就绿了。

|

||||

|

||||

@ -22,7 +22,7 @@ Streams:一个新的 Redis 通用数据结构

|

||||

|

||||

他的思路启发了我。我想了几天,并且意识到这可能是我们马上同时解决上面所有问题的契机。我需要去重新构思 “日志” 的概念是什么。日志是个基本的编程元素,每个人都使用过它,因为它只是简单地以追加模式打开一个文件,并以一定的格式写入数据。然而 Redis 数据结构必须是抽象的。它们在内存中,并且我们使用内存并不是因为我们懒,而是因为使用一些指针,我们可以概念化数据结构并把它们抽象,以使它们摆脱明确的限制。例如,一般来说日志有几个问题:偏移不是逻辑化的,而是真实的字节偏移,如果你想要与条目插入的时间相关的逻辑偏移应该怎么办?我们有范围查询可用。同样,日志通常很难进行垃圾回收:在一个只能进行追加操作的数据结构中怎么去删除旧的元素?好吧,在我们理想的日志中,我们只需要说,我想要数字最大的那个条目,而旧的元素一个也不要,等等。

|

||||

|

||||

当我从 Timothy 的想法中受到启发,去尝试着写一个规范的时候,我使用了 Redis 集群中的 radix 树去实现,优化了它内部的某些部分。这为实现一个有效利用空间的日志提供了基础,而且仍然可以用<ruby>对数时间<rt>logarithmic time</rt></ruby>来访问范围。同时,我开始去读关于 Kafka 流以获得另外的灵感,它也非常适合我的设计,最后借鉴了 Kafka <ruby>消费群体<rt>consumer groups</rt></ruby>的概念,并且再次针对 Redis 进行优化,以适用于 Redis 在内存中使用的情况。然而,该规范仅停留在纸面上,在一段时间后我几乎把它从头到尾重写了一遍,以便将我与别人讨论的所得到的许多建议一起增加到 Redis 升级中。我希望 Redis 流能成为对于时间序列有用的特性,而不仅是一个常见的事件和消息类的应用程序。

|

||||

当我从 Timothy 的想法中受到启发,去尝试着写一个规范的时候,我使用了 Redis 集群中的 radix 树去实现,优化了它内部的某些部分。这为实现一个有效利用空间的日志提供了基础,而且仍然有可能在<ruby>对数时间<rt>logarithmic time</rt></ruby>内访问范围。同时,我开始去读关于 Kafka 的流相关的内容以获得另外的灵感,它也非常适合我的设计,最后借鉴了 Kafka <ruby>消费组<rt>consumer groups</rt></ruby>的概念,并且再次针对 Redis 进行优化,以适用于 Redis 在内存中使用的情况。然而,该规范仅停留在纸面上,在一段时间后我几乎把它从头到尾重写了一遍,以便将我与别人讨论的所得到的许多建议一起增加到 Redis 升级中。我希望 Redis 流能成为对于时间序列有用的特性,而不仅是一个常见的事件和消息类的应用程序。

|

||||

|

||||

### 让我们写一些代码吧

|

||||

|

||||

@ -159,7 +159,7 @@ QUEUED

|

||||

|

||||

### 内存使用和节省加载时间

|

||||

|

||||

因为用来建模 Redis 流的设计,内存使用率是非常低的。这取决于它们的字段、值的数量和长度,对于简单的消息,每使用 100MB 内存可以有几百万条消息。此外,格式设想为需要极少的序列化:listpack 块以 radix 树节点方式存储,在磁盘上和内存中都以相同方式表示的,因此它们可以很轻松地存储和读取。例如,Redis 可以在 0.3 秒内从 RDB 文件中读取 500 万个条目。这使流的复制和持久存储非常高效。

|

||||

因为用来建模 Redis 流的设计,内存使用率是非常低的。这取决于它们的字段、值的数量和长度,对于简单的消息,每使用 100MB 内存可以有几百万条消息。此外,该格式设想为需要极少的序列化:listpack 块以 radix 树节点方式存储,在磁盘上和内存中都以相同方式表示的,因此它们可以很轻松地存储和读取。例如,Redis 可以在 0.3 秒内从 RDB 文件中读取 500 万个条目。这使流的复制和持久存储非常高效。

|

||||

|

||||

我还计划允许从条目中间进行部分删除。现在仅实现了一部分,策略是在条目在标记中标识条目为已删除,并且,当已删除条目占全部条目的比例达到指定值时,这个块将被回收重写,如果需要,它将被连到相邻的另一个块上,以避免碎片化。

|

||||

|

||||

@ -175,7 +175,7 @@ via: http://antirez.com/news/114

|

||||

|

||||

作者:[antirez][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)、[pityonline](https://github.com/pityonline)

|

||||

校对:[wxy](https://github.com/wxy), [pityonline](https://github.com/pityonline)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,40 +1,41 @@

|

||||

通过构建一个区块链来学习区块链技术

|

||||

想学习区块链?那就用 Python 构建一个

|

||||

======

|

||||

|

||||

> 了解区块链是如何工作的最快的方法是构建一个。

|

||||

|

||||

|

||||

你看到这篇文章是因为和我一样,对加密货币的大热而感到兴奋。并且想知道区块链是如何工作的 —— 它们背后的技术是什么。

|

||||

|

||||

你看到这篇文章是因为和我一样,对加密货币的大热而感到兴奋。并且想知道区块链是如何工作的 —— 它们背后的技术基础是什么。

|

||||

|

||||

但是理解区块链并不容易 —— 至少对我来说是这样。我徜徉在各种难懂的视频中,并且因为示例太少而陷入深深的挫败感中。

|

||||

|

||||

我喜欢在实践中学习。这迫使我去处理被卡在代码级别上的难题。如果你也是这么做的,在本指南结束的时候,你将拥有一个功能正常的区块链,并且实实在在地理解了它的工作原理。

|

||||

我喜欢在实践中学习。这会使得我在代码层面上处理主要问题,从而可以让我坚持到底。如果你也是这么做的,在本指南结束的时候,你将拥有一个功能正常的区块链,并且实实在在地理解了它的工作原理。

|

||||

|

||||

### 开始之前 …

|

||||

|

||||

记住,区块链是一个 _不可更改的、有序的_ 被称为区块的记录链。它们可以包括事务~~(交易???校对确认一下,下同)~~、文件或者任何你希望的真实数据。最重要的是它们是通过使用_哈希_链接到一起的。

|

||||

记住,区块链是一个 _不可更改的、有序的_ 记录(被称为区块)的链。它们可以包括<ruby>交易<rt>transaction</rt></ruby>、文件或者任何你希望的真实数据。最重要的是它们是通过使用_哈希_链接到一起的。

|

||||

|

||||

如果你不知道哈希是什么,[这里有解释][1]。

|

||||

|

||||

**_本指南的目标读者是谁?_** 你应该能很容易地读和写一些基本的 Python 代码,并能够理解 HTTP 请求是如何工作的,因为我们讨论的区块链将基于 HTTP。

|

||||

**_本指南的目标读者是谁?_** 你应该能轻松地读、写一些基本的 Python 代码,并能够理解 HTTP 请求是如何工作的,因为我们讨论的区块链将基于 HTTP。

|

||||

|

||||

**_我需要做什么?_** 确保安装了 [Python 3.6][2]+(以及 `pip`),还需要去安装 Flask 和非常好用的 Requests 库:

|

||||

|

||||

```

|

||||

pip install Flask==0.12.2 requests==2.18.4

|

||||

pip install Flask==0.12.2 requests==2.18.4

|

||||

```

|

||||

|

||||

当然,你也需要一个 HTTP 客户端,像 [Postman][3] 或者 cURL。哪个都行。

|

||||

|

||||

**_最终的代码在哪里可以找到?_** 源代码在 [这里][4]。

|

||||

|

||||

* * *

|

||||

|

||||

### 第 1 步:构建一个区块链

|

||||

|

||||

打开你喜欢的文本编辑器或者 IDE,我个人 ❤️ [PyCharm][5]。创建一个名为 `blockchain.py` 的新文件。我将使用一个单个的文件,如果你看晕了,可以去参考 [源代码][6]。

|

||||

打开你喜欢的文本编辑器或者 IDE,我个人喜欢 [PyCharm][5]。创建一个名为 `blockchain.py` 的新文件。我将仅使用一个文件,如果你看晕了,可以去参考 [源代码][6]。

|

||||

|

||||

#### 描述一个区块链

|

||||

|

||||

我们将创建一个 `Blockchain` 类,它的构造函数将去初始化一个空列表(去存储我们的区块链),以及另一个列表去保存事务。下面是我们的类规划:

|

||||

我们将创建一个 `Blockchain` 类,它的构造函数将去初始化一个空列表(去存储我们的区块链),以及另一个列表去保存交易。下面是我们的类规划:

|

||||

|

||||

```

|

||||

class Blockchain(object):

|

||||

@ -58,15 +59,16 @@ class Blockchain(object):

|

||||

@property

|

||||

def last_block(self):

|

||||

# Returns the last Block in the chain

|

||||

pass

|

||||

pass

|

||||

```

|

||||

|

||||

*我们的 Blockchain 类的原型*

|

||||

|

||||

我们的区块链类负责管理链。它将存储事务并且有一些为链中增加新区块的助理性质的方法。现在我们开始去充实一些类的方法。

|

||||

我们的 `Blockchain` 类负责管理链。它将存储交易并且有一些为链中增加新区块的辅助性质的方法。现在我们开始去充实一些类的方法。

|

||||

|

||||

#### 一个区块是什么样子的?

|

||||

#### 区块是什么样子的?

|

||||

|

||||

每个区块有一个索引、一个时间戳(Unix 时间)、一个事务的列表、一个证明(后面会详细解释)、以及前一个区块的哈希。

|

||||

每个区块有一个索引、一个时间戳(Unix 时间)、一个交易的列表、一个证明(后面会详细解释)、以及前一个区块的哈希。

|

||||

|

||||

单个区块的示例应该是下面的样子:

|

||||

|

||||

@ -86,13 +88,15 @@ block = {

|

||||

}

|

||||

```

|

||||

|

||||

此刻,链的概念应该非常明显 —— 每个新区块包含它自身的信息和前一个区域的哈希。这一点非常重要,因为这就是区块链不可更改的原因:如果攻击者修改了一个早期的区块,那么所有的后续区块将包含错误的哈希。

|

||||

*我们的区块链中的块示例*

|

||||

|

||||

这样做有意义吗?如果没有,就让时间来埋葬它吧 —— 这就是区块链背后的核心思想。

|

||||

此刻,链的概念应该非常明显 —— 每个新区块包含它自身的信息和前一个区域的哈希。**这一点非常重要,因为这就是区块链不可更改的原因**:如果攻击者修改了一个早期的区块,那么**所有**的后续区块将包含错误的哈希。

|

||||

|

||||

#### 添加事务到一个区块

|

||||

*这样做有意义吗?如果没有,就让时间来埋葬它吧 —— 这就是区块链背后的核心思想。*

|

||||

|

||||

我们将需要一种区块中添加事务的方式。我们的 `new_transaction()` 就是做这个的,它非常简单明了:

|

||||

#### 添加交易到一个区块

|

||||

|

||||

我们将需要一种区块中添加交易的方式。我们的 `new_transaction()` 就是做这个的,它非常简单明了:

|

||||

|

||||

```

|

||||

class Blockchain(object):

|

||||

@ -113,14 +117,14 @@ class Blockchain(object):

|

||||

'amount': amount,

|

||||

})

|

||||

|

||||

return self.last_block['index'] + 1

|

||||

return self.last_block['index'] + 1

|

||||

```

|

||||

|

||||

在 `new_transaction()` 运行后将在列表中添加一个事务,它返回添加事务后的那个区块的索引 —— 那个区块接下来将被挖矿。提交事务的用户后面会用到这些。

|

||||

在 `new_transaction()` 运行后将在列表中添加一个交易,它返回添加交易后的那个区块的索引 —— 那个区块接下来将被挖矿。提交交易的用户后面会用到这些。

|

||||

|

||||

#### 创建新区块

|

||||

|

||||

当我们的区块链被实例化后,我们需要一个创世区块(一个没有祖先的区块)来播种它。我们也需要去添加一些 “证明” 到创世区块,它是挖矿(工作量证明 PoW)的成果。我们在后面将讨论更多挖矿的内容。

|

||||

当我们的 `Blockchain` 被实例化后,我们需要一个创世区块(一个没有祖先的区块)来播种它。我们也需要去添加一些 “证明” 到创世区块,它是挖矿(工作量证明 PoW)的成果。我们在后面将讨论更多挖矿的内容。

|

||||

|

||||

除了在我们的构造函数中创建创世区块之外,我们还需要写一些方法,如 `new_block()`、`new_transaction()` 以及 `hash()`:

|

||||

|

||||

@ -190,18 +194,18 @@ class Blockchain(object):

|

||||

|

||||

# We must make sure that the Dictionary is Ordered, or we'll have inconsistent hashes

|

||||

block_string = json.dumps(block, sort_keys=True).encode()

|

||||

return hashlib.sha256(block_string).hexdigest()

|

||||

return hashlib.sha256(block_string).hexdigest()

|

||||

```

|

||||

|

||||

上面的内容简单明了 —— 我添加了一些注释和文档字符串,以使代码清晰可读。到此为止,表示我们的区块链基本上要完成了。但是,你肯定想知道新区块是如何被创建、打造或者挖矿的。

|

||||

|

||||

#### 理解工作量证明

|

||||

|

||||

一个工作量证明(PoW)算法是在区块链上创建或者挖出新区块的方法。PoW 的目标是去撞出一个能够解决问题的数字。这个数字必须满足“找到它很困难但是验证它很容易”的条件 —— 网络上的任何人都可以计算它。这就是 PoW 背后的核心思想。

|

||||

<ruby>工作量证明<rt>Proof of Work</rt></ruby>(PoW)算法是在区块链上创建或者挖出新区块的方法。PoW 的目标是去撞出一个能够解决问题的数字。这个数字必须满足“找到它很困难但是验证它很容易”的条件 —— 网络上的任何人都可以计算它。这就是 PoW 背后的核心思想。

|

||||

|

||||

我们来看一个非常简单的示例来帮助你了解它。

|

||||

|

||||

我们来解决一个问题,一些整数 x 乘以另外一个整数 y 的结果的哈希值必须以 0 结束。因此,hash(x * y) = ac23dc…0。为简单起见,我们先把 x = 5 固定下来。在 Python 中的实现如下:

|

||||

我们来解决一个问题,一些整数 `x` 乘以另外一个整数 `y` 的结果的哈希值必须以 `0` 结束。因此,`hash(x * y) = ac23dc…0`。为简单起见,我们先把 `x = 5` 固定下来。在 Python 中的实现如下:

|

||||

|

||||

```

|

||||

from hashlib import sha256

|

||||

@ -215,19 +219,21 @@ while sha256(f'{x*y}'.encode()).hexdigest()[-1] != "0":

|

||||

print(f'The solution is y = {y}')

|

||||

```

|

||||

|

||||

在这里的答案是 y = 21。因为它产生的哈希值是以 0 结尾的:

|

||||

在这里的答案是 `y = 21`。因为它产生的哈希值是以 0 结尾的:

|

||||

|

||||

```

|

||||

hash(5 * 21) = 1253e9373e...5e3600155e860

|

||||

```

|

||||

|

||||

在比特币中,工作量证明算法被称之为 [Hashcash][10]。与我们上面的例子没有太大的差别。这就是矿工们进行竞赛以决定谁来创建新块的算法。一般来说,其难度取决于在一个字符串中所查找的字符数量。然后矿工会因其做出的求解而得到奖励的币——在一个交易当中。

|

||||

|

||||

网络上的任何人都可以很容易地去核验它的答案。

|

||||

|

||||

#### 实现基本的 PoW

|

||||

|

||||

为我们的区块链来实现一个简单的算法。我们的规则与上面的示例类似:

|

||||

|

||||

> 找出一个数字 p,它与前一个区块的答案进行哈希运算得到一个哈希值,这个哈希值的前四位必须是由 0 组成。

|

||||

> 找出一个数字 `p`,它与前一个区块的答案进行哈希运算得到一个哈希值,这个哈希值的前四位必须是由 `0` 组成。

|

||||

|

||||

```

|

||||

import hashlib

|

||||

@ -266,25 +272,21 @@ class Blockchain(object):

|

||||

|

||||

guess = f'{last_proof}{proof}'.encode()

|

||||

guess_hash = hashlib.sha256(guess).hexdigest()

|

||||

return guess_hash[:4] == "0000"

|

||||

return guess_hash[:4] == "0000"

|

||||

```

|

||||

|

||||

为了调整算法的难度,我们可以修改前导 0 的数量。但是 4 个零已经足够难了。你会发现,将前导 0 的数量每增加一,那么找到正确答案所需要的时间难度将大幅增加。

|

||||

|

||||

我们的类基本完成了,现在我们开始去使用 HTTP 请求与它交互。

|

||||

|

||||

* * *

|

||||

|

||||

### 第 2 步:以 API 方式去访问我们的区块链

|

||||

|

||||

我们将去使用 Python Flask 框架。它是个微框架,使用它去做端点到 Python 函数的映射很容易。这样我们可以使用 HTTP 请求基于 web 来与我们的区块链对话。

|

||||

我们将使用 Python Flask 框架。它是个微框架,使用它去做端点到 Python 函数的映射很容易。这样我们可以使用 HTTP 请求基于 web 来与我们的区块链对话。

|

||||

|

||||

我们将创建三个方法:

|

||||

|

||||

* `/transactions/new` 在一个区块上创建一个新事务

|

||||

|

||||

* `/transactions/new` 在一个区块上创建一个新交易

|

||||

* `/mine` 告诉我们的服务器去挖矿一个新区块

|

||||

|

||||

* `/chain` 返回完整的区块链

|

||||

|

||||

#### 配置 Flask

|

||||

@ -332,33 +334,33 @@ def full_chain():

|

||||

return jsonify(response), 200

|

||||

|

||||

if __name__ == '__main__':

|

||||

app.run(host='0.0.0.0', port=5000)

|

||||

app.run(host='0.0.0.0', port=5000)

|

||||

```

|

||||

|

||||

对上面的代码,我们做添加一些详细的解释:

|

||||

|

||||

* Line 15:实例化我们的节点。更多关于 Flask 的知识读 [这里][7]。

|

||||

|

||||

* Line 18:为我们的节点创建一个随机的名字。

|

||||

|

||||

* Line 21:实例化我们的区块链类。

|

||||

|

||||

* Line 24–26:创建 /mine 端点,这是一个 GET 请求。

|

||||

|

||||

* Line 28–30:创建 /transactions/new 端点,这是一个 POST 请求,因为我们要发送数据给它。

|

||||

|

||||

* Line 32–38:创建 /chain 端点,它返回全部区块链。

|

||||

|

||||

* Line 24–26:创建 `/mine` 端点,这是一个 GET 请求。

|

||||

* Line 28–30:创建 `/transactions/new` 端点,这是一个 POST 请求,因为我们要发送数据给它。

|

||||

* Line 32–38:创建 `/chain` 端点,它返回全部区块链。

|

||||

* Line 40–41:在 5000 端口上运行服务器。

|

||||

|

||||

#### 事务端点

|

||||

#### 交易端点

|

||||

|

||||

这就是对一个事务的请求,它是用户发送给服务器的:

|

||||

这就是对一个交易的请求,它是用户发送给服务器的:

|

||||

|

||||

```

|

||||

{ "sender": "my address", "recipient": "someone else's address", "amount": 5}

|

||||

{

|

||||

"sender": "my address",

|

||||

"recipient": "someone else's address",

|

||||

"amount": 5

|

||||

}

|

||||

```

|

||||

|

||||

因为我们已经有了添加交易到块中的类方法,剩下的就很容易了。让我们写个函数来添加交易:

|

||||

|

||||

```

|

||||

import hashlib

|

||||

import json

|

||||

@ -383,18 +385,17 @@ def new_transaction():

|

||||

index = blockchain.new_transaction(values['sender'], values['recipient'], values['amount'])

|

||||

|

||||

response = {'message': f'Transaction will be added to Block {index}'}

|

||||

return jsonify(response), 201

|

||||

return jsonify(response), 201

|

||||

```

|

||||

创建事务的方法

|

||||

|

||||

*创建交易的方法*

|

||||

|

||||

#### 挖矿端点

|

||||

|

||||

我们的挖矿端点是见证奇迹的地方,它实现起来很容易。它要做三件事情:

|

||||

|

||||

1. 计算工作量证明

|

||||

|

||||

2. 因为矿工(我们)添加一个事务而获得报酬,奖励矿工(我们) 1 个硬币

|

||||

|

||||

2. 因为矿工(我们)添加一个交易而获得报酬,奖励矿工(我们) 1 个币

|

||||

3. 通过将它添加到链上而打造一个新区块

|

||||

|

||||

```

|

||||

@ -434,10 +435,10 @@ def mine():

|

||||

'proof': block['proof'],

|

||||

'previous_hash': block['previous_hash'],

|

||||

}

|

||||

return jsonify(response), 200

|

||||

return jsonify(response), 200

|

||||

```

|

||||

|

||||

注意,挖掘出的区块的接收方是我们的节点地址。现在,我们所做的大部分工作都只是与我们的区块链类的方法进行交互的。到目前为止,我们已经做到了,现在开始与我们的区块链去交互。

|

||||

注意,挖掘出的区块的接收方是我们的节点地址。现在,我们所做的大部分工作都只是与我们的 `Blockchain` 类的方法进行交互的。到目前为止,我们已经做完了,现在开始与我们的区块链去交互。

|

||||

|

||||

### 第 3 步:与我们的区块链去交互

|

||||

|

||||

@ -447,24 +448,33 @@ return jsonify(response), 200

|

||||

|

||||

```

|

||||

$ python blockchain.py

|

||||

* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

|

||||

```

|

||||

|

||||

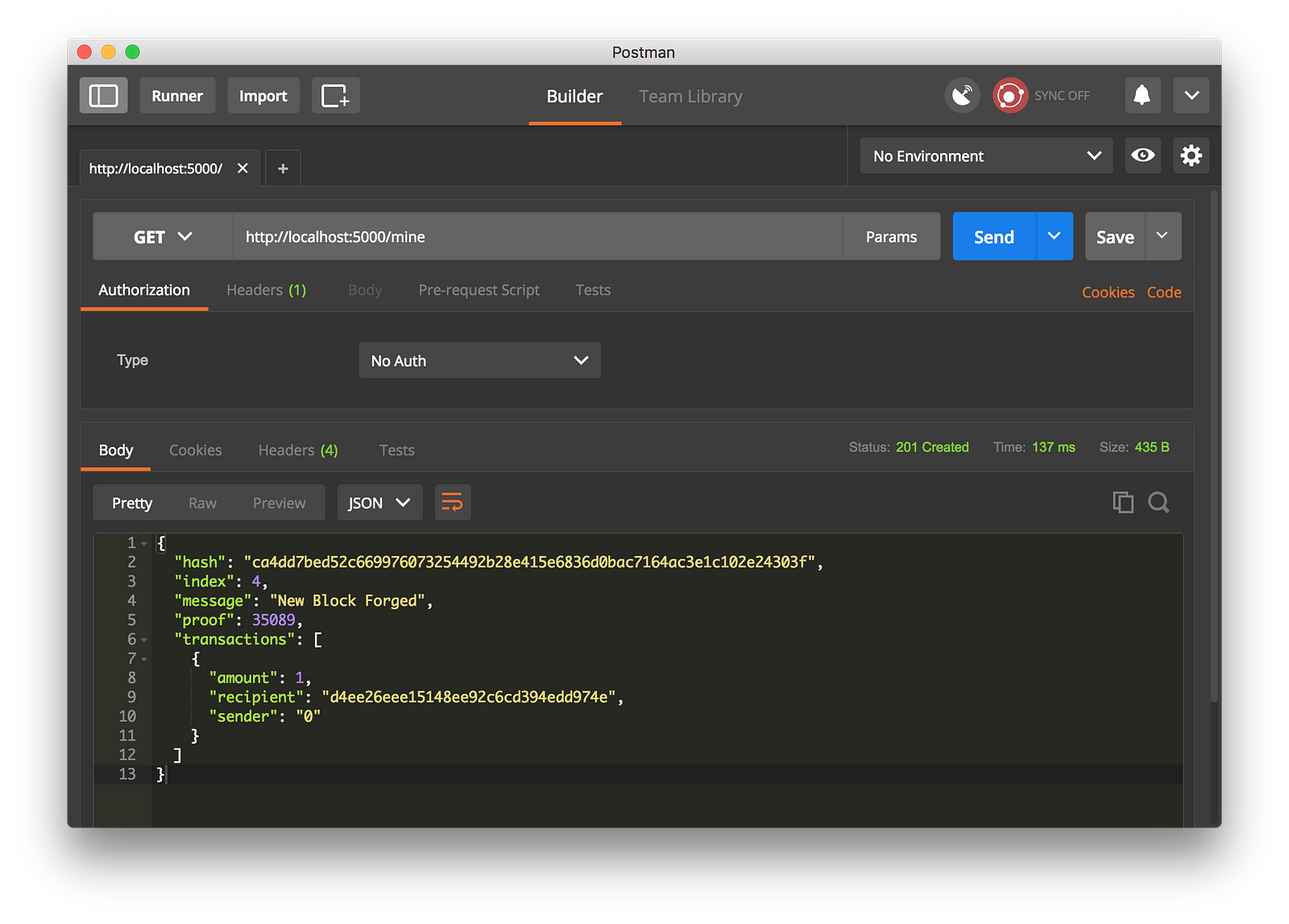

我们通过生成一个 GET 请求到 http://localhost:5000/mine 去尝试挖一个区块:

|

||||

我们通过生成一个 `GET` 请求到 `http://localhost:5000/mine` 去尝试挖一个区块:

|

||||

|

||||

|

||||

使用 Postman 去生成一个 GET 请求

|

||||

|

||||

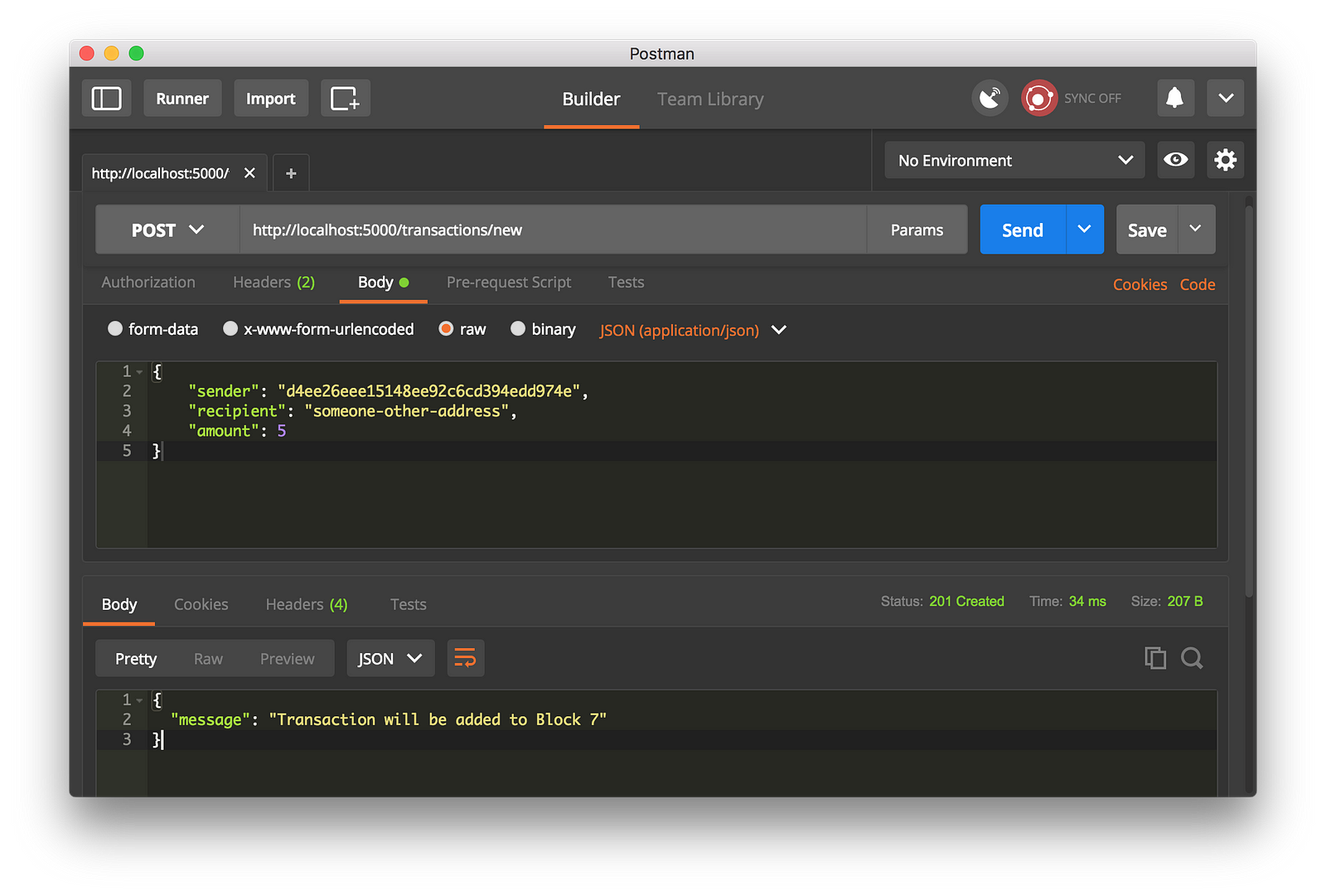

我们通过生成一个 POST 请求到 http://localhost:5000/transactions/new 去创建一个区块,它带有一个包含我们的事务结构的 `Body`:

|

||||

*使用 Postman 去生成一个 GET 请求*

|

||||

|

||||

我们通过生成一个 `POST` 请求到 `http://localhost:5000/transactions/new` 去创建一个区块,请求数据包含我们的交易结构:

|

||||

|

||||

|

||||

使用 Postman 去生成一个 POST 请求

|

||||

|

||||

*使用 Postman 去生成一个 POST 请求*

|

||||

|

||||

如果你不使用 Postman,也可以使用 cURL 去生成一个等价的请求:

|

||||

|

||||

```

|

||||

$ curl -X POST -H "Content-Type: application/json" -d '{ "sender": "d4ee26eee15148ee92c6cd394edd974e", "recipient": "someone-other-address", "amount": 5}' "http://localhost:5000/transactions/new"

|

||||

$ curl -X POST -H "Content-Type: application/json" -d '{

|

||||

"sender": "d4ee26eee15148ee92c6cd394edd974e",

|

||||

"recipient": "someone-other-address",

|

||||

"amount": 5

|

||||

}' "http://localhost:5000/transactions/new"

|

||||

```

|

||||

我重启动我的服务器,然后我挖到了两个区块,这样总共有了3 个区块。我们通过请求 http://localhost:5000/chain 来检查整个区块链:

|

||||

|

||||

我重启动我的服务器,然后我挖到了两个区块,这样总共有了 3 个区块。我们通过请求 `http://localhost:5000/chain` 来检查整个区块链:

|

||||

|

||||

```

|

||||

{

|

||||

"chain": [

|

||||

@ -503,18 +513,18 @@ $ curl -X POST -H "Content-Type: application/json" -d '{ "sender": "d4ee26eee151

|

||||

}

|

||||

],

|

||||

"length": 3

|

||||

}

|

||||

```

|

||||

### 第 4 步:共识

|

||||

|

||||

这是很酷的一个地方。我们已经有了一个基本的区块链,它可以接收事务并允许我们去挖掘出新区块。但是区块链的整个重点在于它是去中心化的。而如果它们是去中心化的,那我们如何才能确保它们表示在同一个区块链上?这就是共识问题,如果我们希望在我们的网络上有多于一个的节点运行,那么我们将必须去实现一个共识算法。

|

||||

这是很酷的一个地方。我们已经有了一个基本的区块链,它可以接收交易并允许我们去挖掘出新区块。但是区块链的整个重点在于它是<ruby>去中心化的<rt>decentralized</rt></ruby>。而如果它们是去中心化的,那我们如何才能确保它们表示在同一个区块链上?这就是<ruby>共识<rt>Consensus</rt></ruby>问题,如果我们希望在我们的网络上有多于一个的节点运行,那么我们将必须去实现一个共识算法。

|

||||

|

||||

#### 注册新节点

|

||||

|

||||

在我们能实现一个共识算法之前,我们需要一个办法去让一个节点知道网络上的邻居节点。我们网络上的每个节点都保留有一个该网络上其它节点的注册信息。因此,我们需要更多的端点:

|

||||

|

||||

1. /nodes/register 以 URLs 的形式去接受一个新节点列表

|

||||

|

||||

2. /nodes/resolve 去实现我们的共识算法,由它来解决任何的冲突 —— 确保节点有一个正确的链。

|

||||

1. `/nodes/register` 以 URL 的形式去接受一个新节点列表

|

||||

2. `/nodes/resolve` 去实现我们的共识算法,由它来解决任何的冲突 —— 确保节点有一个正确的链。

|

||||

|

||||

我们需要去修改我们的区块链的构造函数,来提供一个注册节点的方法:

|

||||

|

||||

@ -538,11 +548,12 @@ class Blockchain(object):

|

||||

"""

|

||||

|

||||

parsed_url = urlparse(address)

|

||||

self.nodes.add(parsed_url.netloc)

|

||||

self.nodes.add(parsed_url.netloc)

|

||||

```

|

||||

一个添加邻居节点到我们的网络的方法

|

||||

|

||||

注意,我们将使用一个 `set()` 去保存节点列表。这是一个非常合算的方式,它将确保添加的内容是幂等的 —— 这意味着不论你将特定的节点添加多少次,它都是精确地只出现一次。

|

||||

*一个添加邻居节点到我们的网络的方法*

|

||||

|

||||

注意,我们将使用一个 `set()` 去保存节点列表。这是一个非常合算的方式,它将确保添加的节点是<ruby>幂等<rt>idempotent</rt></ruby>的 —— 这意味着不论你将特定的节点添加多少次,它都是精确地只出现一次。

|

||||

|

||||

#### 实现共识算法

|

||||

|

||||

@ -615,12 +626,12 @@ class Blockchain(object)

|

||||

self.chain = new_chain

|

||||

return True

|

||||

|

||||

return False

|

||||

return False

|

||||

```

|

||||

|

||||

第一个方法 `valid_chain()` 是负责来检查链是否有效,它通过遍历区块链上的每个区块并验证它们的哈希和工作量证明来检查这个区块链是否有效。

|

||||

|

||||

`resolve_conflicts()` 方法用于遍历所有的邻居节点,下载它们的链并使用上面的方法去验证它们是否有效。如果找到有效的链,确定谁是最长的链,然后我们就用最长的链来替换我们的当前的链。

|

||||

`resolve_conflicts()` 方法用于遍历所有的邻居节点,下载它们的链并使用上面的方法去验证它们是否有效。**如果找到有效的链,确定谁是最长的链,然后我们就用最长的链来替换我们的当前的链。**

|

||||

|

||||

在我们的 API 上来注册两个端点,一个用于添加邻居节点,另一个用于解决冲突:

|

||||

|

||||

@ -658,18 +669,20 @@ def consensus():

|

||||

'chain': blockchain.chain

|

||||

}

|

||||

|

||||

return jsonify(response), 200

|

||||

return jsonify(response), 200

|

||||

```

|

||||

|

||||

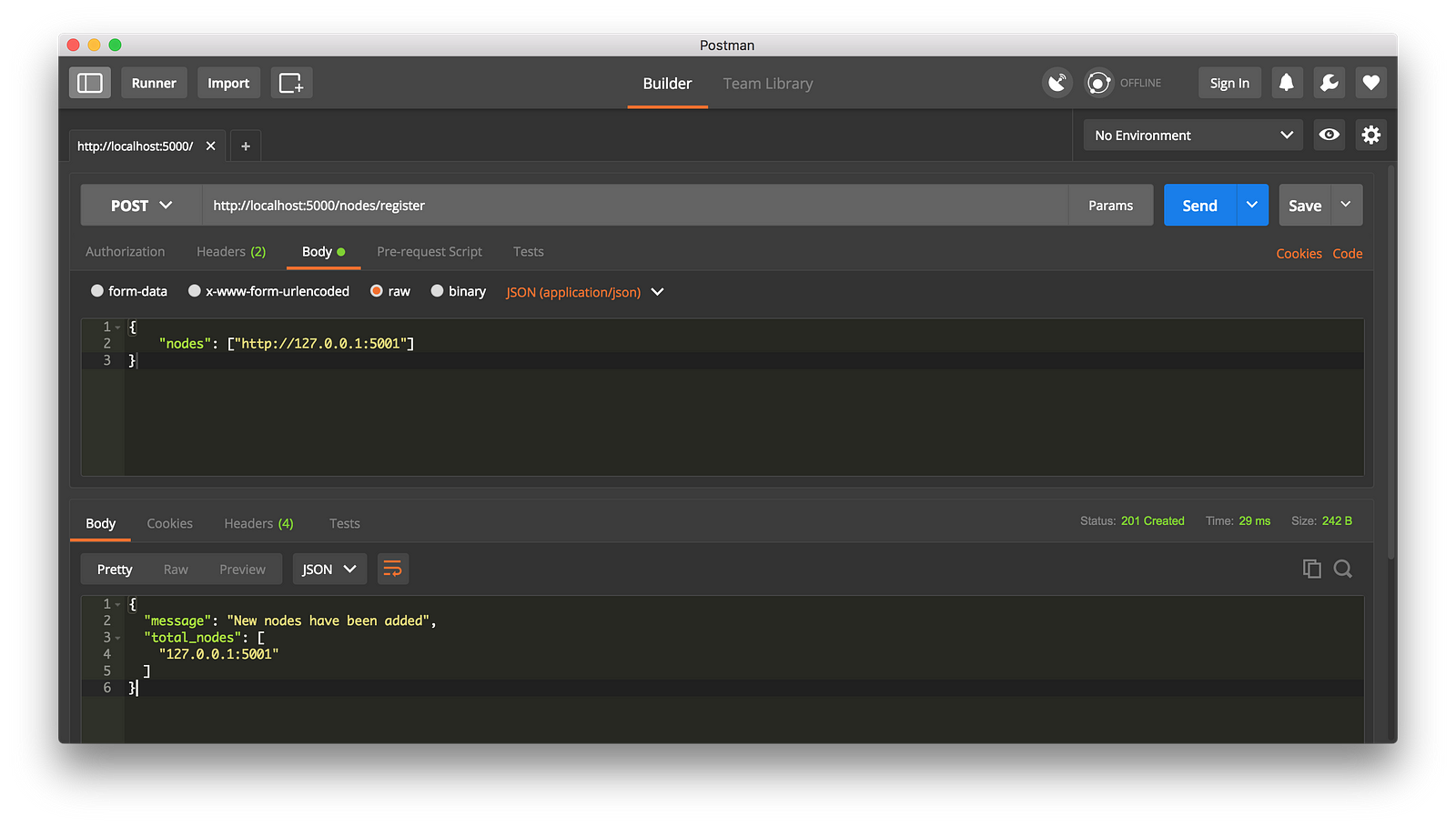

这种情况下,如果你愿意可以使用不同的机器来做,然后在你的网络上启动不同的节点。或者是在同一台机器上使用不同的端口启动另一个进程。我是在我的机器上使用了不同的端口启动了另一个节点,并将它注册到了当前的节点上。因此,我现在有了两个节点:[http://localhost:5000][9] 和 http://localhost:5001。

|

||||

这种情况下,如果你愿意,可以使用不同的机器来做,然后在你的网络上启动不同的节点。或者是在同一台机器上使用不同的端口启动另一个进程。我是在我的机器上使用了不同的端口启动了另一个节点,并将它注册到了当前的节点上。因此,我现在有了两个节点:`http://localhost:5000` 和 `http://localhost:5001`。

|

||||

|

||||

|

||||

注册一个新节点

|

||||

|

||||

*注册一个新节点*

|

||||

|

||||

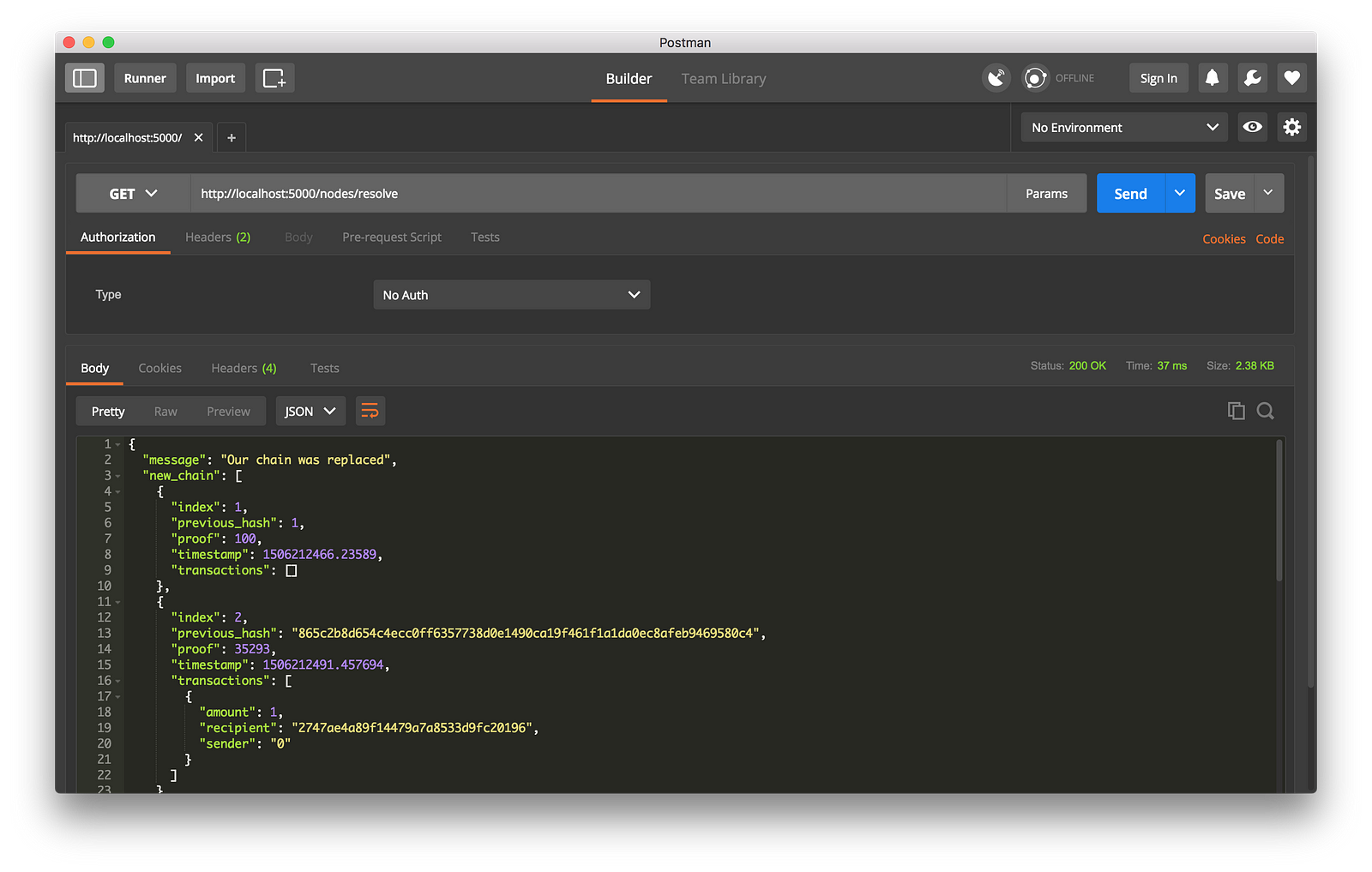

我接着在节点 2 上挖出一些新区块,以确保这个链是最长的。之后我在节点 1 上以 `GET` 方式调用了 `/nodes/resolve`,这时,节点 1 上的链被共识算法替换成节点 2 上的链了:

|

||||

|

||||

|

||||

工作中的共识算法

|

||||

|

||||

*工作中的共识算法*

|

||||

|

||||

然后将它们封装起来 … 找一些朋友来帮你一起测试你的区块链。

|

||||

|

||||

@ -677,7 +690,7 @@ return jsonify(response), 200

|

||||

|

||||

我希望以上内容能够鼓舞你去创建一些新的东西。我是加密货币的狂热拥护者,因此我相信区块链将迅速改变我们对经济、政府和记录保存的看法。

|

||||

|

||||

**更新:** 我正计划继续它的第二部分,其中我将扩展我们的区块链,使它具备事务验证机制,同时讨论一些你可以在其上产生你自己的区块链的方式。

|

||||

**更新:** 我正计划继续它的第二部分,其中我将扩展我们的区块链,使它具备交易验证机制,同时讨论一些你可以在其上产生你自己的区块链的方式。(LCTT 译注:第二篇并没有~!)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -685,7 +698,7 @@ via: https://hackernoon.com/learn-blockchains-by-building-one-117428612f46

|

||||

|

||||

作者:[Daniel van Flymen][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -699,3 +712,4 @@ via: https://hackernoon.com/learn-blockchains-by-building-one-117428612f46

|

||||

[7]:http://flask.pocoo.org/docs/0.12/quickstart/#a-minimal-application

|

||||

[8]:http://localhost:5000/transactions/new

|

||||

[9]:http://localhost:5000

|

||||

[10]:https://en.wikipedia.org/wiki/Hashcash

|

||||

87

published/20180115 Why DevSecOps matters to IT leaders.md

Normal file

87

published/20180115 Why DevSecOps matters to IT leaders.md

Normal file

@ -0,0 +1,87 @@

|

||||

为什么 DevSecOps 对 IT 领导来说如此重要

|

||||

======

|

||||

|

||||

> DevSecOps 也许不是一个优雅的词汇,但是其结果很吸引人:更强的安全、提前出现在开发周期中。来看看一个 IT 领导与 Meltdown 的拼搏。

|

||||

|

||||

|

||||

|

||||

如果 [DevOps][1] 最终是关于创造更好的软件,那也就意味着是更安全的软件。

|

||||

|

||||

而到了术语 “DevSecOps”,就像任何其他 IT 术语一样,DevSecOps —— 一个更成熟的 DevOps 的后代 ——可能容易受到炒作和盗用。但这个术语对那些拥抱了 DevOps 文化的领导者们来说具有重要的意义,并且其实践和工具可以帮助他们实现其承诺。

|

||||

|

||||

说道这里:“DevSecOps”是什么意思?

|

||||

|

||||

“DevSecOps 是开发、安全、运营的混合,”来自 [Datical][2] 的首席技术官和联合创始人 Robert 说。“这提醒我们,对我们的应用程序来说安全和创建并部署应用到生产中一样重要。”

|

||||

|

||||

**[想阅读其他首席技术官的 DevOps 文章吗?查阅我们丰富的资源,[DevOps:IT 领导者指南][3]]**

|

||||

|

||||

向非技术人员解释 DevSecOps 的一个简单的方法是:它是指将安全有意并提前加入到开发过程中。

|

||||

|

||||

“安全团队从历史上一直都被孤立于开发团队——每个团队在 IT 的不同领域都发展了很强的专业能力”,来自红帽安全策的专家 Kirsten 最近告诉我们。“不需要这样,非常关注安全也关注他们通过软件来兑现商业价值的能力的企业正在寻找能够在应用开发生命周期中加入安全的方法。他们通过在整个 CI/CD 管道中集成安全实践、工具和自动化来采用 DevSecOps。”

|

||||

|

||||

“为了能够做的更好,他们正在整合他们的团队——专业的安全人员从开始设计到部署到生产中都融入到了开发团队中了,”她说,“双方都收获了价值——每个团队都拓展了他们的技能和基础知识,使他们自己都成更有价值的技术人员。 DevOps 做的很正确——或者说 DevSecOps——提高了 IT 的安全性。”

|

||||

|

||||

IT 团队比任何以往都要求要快速频繁的交付服务。DevOps 在某种程度上可以成为一个很棒的推动者,因为它能够消除开发和运营之间通常遇到的一些摩擦,运营一直被排挤在整个过程之外直到要部署的时候,开发者把代码随便一放之后就不再去管理,他们承担更少的基础架构的责任。那种孤立的方法引起了很多问题,委婉的说,在数字时代,如果将安全孤立起来同样的情况也会发生。

|

||||

|

||||

“我们已经采用了 DevOps,因为它已经被证明通过移除开发和运营之间的阻碍来提高 IT 的绩效,”Reevess 说,“就像我们不应该在开发周期要结束时才加入运营,我们不应该在快要结束时才加入安全。”

|

||||

|

||||

### 为什么 DevSecOps 必然出现

|

||||

|

||||

或许会把 DevSecOps 看作是另一个时髦词,但对于安全意识很强的IT领导者来说,它是一个实质性的术语:在软件开发管道中安全必须是第一层面的要素,而不是部署前的最后一步的螺栓,或者更糟的是,作为一个团队只有当一个实际的事故发生的时候安全人员才会被重用争抢。

|

||||

|

||||

“DevSecOps 不只是一个时髦的术语——因为多种原因它是现在和未来 IT 将呈现的状态”,来自 [Sumo Logic] 的安全和合规副总裁 George 说道,“最重要的好处是将安全融入到开发和运营当中开提供保护的能力”

|

||||

|

||||

此外,DevSecOps 的出现可能是 DevOps 自身逐渐成熟并扎根于 IT 之中的一个征兆。

|

||||

|

||||

“企业中的 DevOps 文化已成定局,而且那意味着开发者们正以不断增长的速度交付功能和更新,特别是自我管理的组织会对合作和衡量的结果更加满意”,来自 [CYBRIC] 的首席技术官和联合创始人 Mike 说道。

|

||||

|

||||

在实施 DevOps 的同时继续保留原有安全措施的团队和公司,随着他们继续部署的更快更频繁可能正在经历越来越多的安全管理风险上的痛苦。

|

||||

|

||||

“现在的手工的安全测试方法会继续远远被甩在后面。”

|

||||

|

||||

“如今,手动的安全测试方法正被甩得越来越远,利用自动化和协作将安全测试转移到软件开发生命周期中,因此推动 DevSecOps 的文化是 IT 领导者们为增加整体的灵活性提供安全保证的唯一途径”,Kail 说。

|

||||

|

||||

转移安全测试也使开发者受益:他们能够在开放的较早的阶段验证并解决潜在的问题——这样很少需要或者甚至不需要安全人员的介入,而不是在一个新的服务或者更新部署之前在他们的代码中发现一个明显的漏洞。

|

||||

|

||||

“做的正确,DevSecOps 能够将安全融入到开发生命周期中,允许开发者们在没有安全中断的情况下更加快速容易的保证他们应用的安全”,来自 [SAS][8] 的首席信息安全员 Wilson 说道。

|

||||

|

||||

Wilson 指出静态(SAST)和源组合分析(SCA)工具,集成到团队的持续交付管道中,作为有用的技术通过给予开发者关于他们的代码中的潜在问题和第三方依赖中的漏洞的反馈来使之逐渐成为可能。

|

||||

|

||||

“因此,开发者们能够主动和迭代的缓解应用安全的问题,然后在不需要安全人员介入的情况下重新进行安全扫描。” Wilson 说。他同时指出 DevSecOps 能够帮助开发者简化更新和打补丁。

|

||||

|

||||

DevSecOps 并不意味着你不再需要安全组的意见了,就如同 DevOps 并不意味着你不再需要基础架构专家;它只是帮助你减少在生产中发现缺陷的可能性,或者减少导致降低部署速度的阻碍,因为缺陷已经在开发周期中被发现解决了。

|

||||

|

||||

“如果他们有问题或者需要帮助,我们就在这儿,但是因为已经给了开发者他们需要的保护他们应用安全的工具,我们很少在一个深入的测试中发现一个导致中断的问题,”Wilson 说道。

|

||||

|

||||

### DevSecOps 遇到 Meltdown

|

||||

|

||||

Sumo Locic 的 Gerchow 向我们分享了一个在运转中的 DevSecOps 文化的一个及时案例:当最近 [Meltdown 和 Spectre] 的消息传来的时候,团队的 DevSecOps 方法使得有了一个快速的响应来减轻风险,没有任何的通知去打扰内部或者外部的顾客,Gerchow 所说的这点对原生云、高监管的公司来说特别的重要。

|

||||

|

||||

第一步:Gerchow 的小型安全团队都具有一定的开发能力,能够通过 Slack 和它的主要云供应商协同工作来确保它的基础架构能够在 24 小时之内完成修复。

|

||||

|

||||

“接着我的团队立即开始进行系统级的修复,实现终端客户的零停机时间,不需要去开工单给工程师,如果那样那意味着你需要等待很长的变更过程。所有的变更都是通过 Slack 的自动 jira 票据进行,通过我们的日志监控和分析解决方案”,Gerchow 解释道。

|

||||

|

||||

在本质上,它听起来非常像 DevOps 文化,匹配正确的人员、过程和工具,但它明确的将安全作为文化中的一部分进行了混合。

|

||||

|

||||

“在传统的环境中,这将花费数周或数月的停机时间来处理,因为开发、运维和安全三者是相互独立的”,Gerchow 说道,“通过一个 DevSecOps 的过程和习惯,终端用户可以通过简单的沟通和当日修复获得无缝的体验。”

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://enterprisersproject.com/article/2018/1/why-devsecops-matters-it-leaders

|

||||

|

||||

作者:[Kevin Casey][a]

|

||||

译者:[FelixYFZ](https://github.com/FelixYFZ)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://enterprisersproject.com/user/kevin-casey

|

||||

[1]:https://enterprisersproject.com/tags/devops

|

||||

[2]:https://www.datical.com/

|

||||

[3]:https://enterprisersproject.com/devops?sc_cid=70160000000h0aXAAQ

|

||||

[4]:https://www.redhat.com/en?intcmp=701f2000000tjyaAAA

|

||||

[5]:https://enterprisersproject.com/article/2017/10/what-s-next-devops-5-trends-watch

|

||||

[6]:https://www.sumologic.com/

|

||||

[7]:https://www.cybric.io/

|

||||

[8]:https://www.sas.com/en_us/home.html

|

||||

[9]:https://www.redhat.com/en/blog/what-are-meltdown-and-spectre-heres-what-you-need-know?intcmp=701f2000000tjyaAAA

|

||||

@ -1,9 +1,11 @@

|

||||

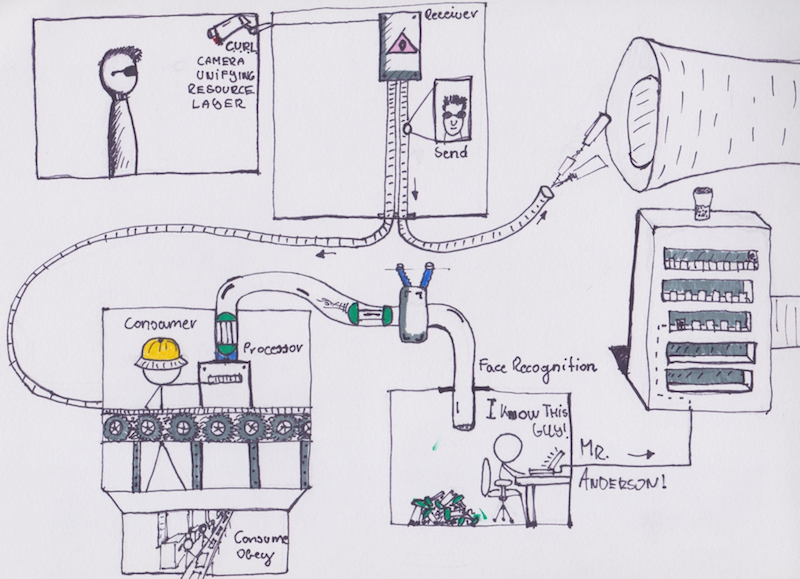

Kubernetes 分布式应用部署实战 -- 以人脸识别应用为例

|

||||

Kubernetes 分布式应用部署实战:以人脸识别应用为例

|

||||

============================================================

|

||||

|

||||

# 简介

|

||||

|

||||

|

||||

伙计们,请做好准备,下面将是一段漫长的旅程,期望你能够乐在其中。

|

||||

## 简介

|

||||

|

||||

伙计们,请搬好小板凳坐好,下面将是一段漫长的旅程,期望你能够乐在其中。

|

||||

|

||||

我将基于 [Kubernetes][5] 部署一个分布式应用。我曾试图编写一个尽可能真实的应用,但由于时间和精力有限,最终砍掉了很多细节。

|

||||

|

||||

@ -11,17 +13,17 @@ Kubernetes 分布式应用部署实战 -- 以人脸识别应用为例

|

||||

|

||||

让我们开始吧。

|

||||

|

||||

# 应用

|

||||

## 应用

|

||||

|

||||

### TL;DR

|

||||

|

||||

|

||||

|

||||

应用本身由 6 个组件构成。代码可以从如下链接中找到:[Kubenetes 集群示例][6]。

|

||||

该应用本身由 6 个组件构成。代码可以从如下链接中找到:[Kubenetes 集群示例][6]。

|

||||

|

||||

这是一个人脸识别服务,通过比较已知个人的图片,识别给定图片对应的个人。前端页面用表格形式简要的展示图片及对应的个人。具体而言,向 [接收器][6] 发送请求,请求包含指向一个图片的链接。图片可以位于任何位置。接受器将图片地址存储到数据库 (MySQL) 中,然后向队列发送处理请求,请求中包含已保存图片的 ID。这里我们使用 [NSQ][8] 建立队列。

|

||||

|

||||

[图片处理][9]服务一直监听处理请求队列,从中获取任务。处理过程包括如下几步:获取图片 ID,读取图片,通过 [gRPC][11] 将图片路径发送至 Python 编写的[人脸识别][10]后端。如果识别成功,后端给出图片对应个人的名字。图片处理器进而根据个人 ID 更新图片记录,将其标记为处理成功。如果识别不成功,图片被标记为待解决。如果图片识别过程中出现错误,图片被标记为失败。

|

||||

[图片处理][9] 服务一直监听处理请求队列,从中获取任务。处理过程包括如下几步:获取图片 ID,读取图片,通过 [gRPC][11] 将图片路径发送至 Python 编写的 [人脸识别][10] 后端。如果识别成功,后端给出图片对应个人的名字。图片处理器进而根据个人 ID 更新图片记录,将其标记为处理成功。如果识别不成功,图片被标记为待解决。如果图片识别过程中出现错误,图片被标记为失败。

|

||||

|

||||

标记为失败的图片可以通过计划任务等方式进行重试。

|

||||

|

||||

@ -33,39 +35,31 @@ Kubernetes 分布式应用部署实战 -- 以人脸识别应用为例

|

||||

|

||||

```

|

||||

curl -d '{"path":"/unknown_images/unknown0001.jpg"}' http://127.0.0.1:8000/image/post

|

||||

|

||||

```

|

||||

|

||||

此时,接收器将<ruby>路径<rt>path</rt></ruby>存储到共享数据库集群中,对应的条目包括数据库服务提供的 ID。本应用采用”持久层提供条目对象唯一标识“的模型。获得条目 ID 后,接收器向 NSQ 发送消息,至此接收器的工作完成。

|

||||

此时,接收器将<ruby>路径<rt>path</rt></ruby>存储到共享数据库集群中,该实体存储后将从数据库服务收到对应的 ID。本应用采用“<ruby>实体对象<rt>Entity Object</rt></ruby>的唯一标识由持久层提供”的模型。获得实体 ID 后,接收器向 NSQ 发送消息,至此接收器的工作完成。

|

||||

|

||||

### 图片处理器

|

||||

|

||||

从这里开始变得有趣起来。图片处理器首次运行时会创建两个 Go routines,具体为:

|

||||

从这里开始变得有趣起来。图片处理器首次运行时会创建两个 Go <ruby>协程<rt>routine</rt></ruby>,具体为:

|

||||

|

||||

### Consume

|

||||

|

||||

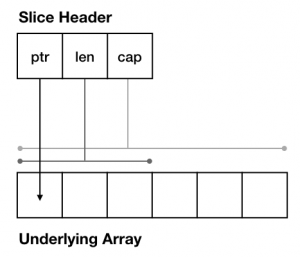

这是一个 NSQ 消费者,需要完成三项任务。首先,监听队列中的消息。其次,当有新消息到达时,将对应的 ID 追加到一个线程安全的 ID 片段中,以供第二个 routine 处理。最后,告知第二个 routine 处理新任务,方法为 [sync.Condition][12]。

|

||||

这是一个 NSQ 消费者,需要完成三项必需的任务。首先,监听队列中的消息。其次,当有新消息到达时,将对应的 ID 追加到一个线程安全的 ID 片段中,以供第二个协程处理。最后,告知第二个协程处理新任务,方法为 [sync.Condition][12]。

|

||||

|

||||

### ProcessImages

|

||||

|

||||

该 routine 会处理指定 ID 片段,直到对应片段全部处理完成。当处理完一个片段后,该 routine 并不是在一个通道上睡眠等待,而是进入悬挂状态。对每个 ID,按如下步骤顺序处理:

|

||||

该协程会处理指定 ID 片段,直到对应片段全部处理完成。当处理完一个片段后,该协程并不是在一个通道上睡眠等待,而是进入悬挂状态。对每个 ID,按如下步骤顺序处理:

|

||||

|

||||

* 与人脸识别服务建立 gRPC 连接,其中人脸识别服务会在人脸识别部分进行介绍

|

||||

|

||||

* 从数据库获取图片对应的条目

|

||||

|

||||

* 从数据库获取图片对应的实体

|

||||

* 为 [断路器][1] 准备两个函数

|

||||

* 函数 1: 用于 RPC 方法调用的主函数

|

||||

|

||||

* 函数 2: 基于 ping 的断路器健康检查

|

||||

|

||||

* 调用函数 1 将图片路径发送至人脸识别服务,其中路径应该是人脸识别服务可以访问的,最好是共享的,例如 NFS

|

||||

|

||||

* 如果调用失败,将图片条目状态更新为 FAILEDPROCESSING

|

||||

|

||||

* 如果调用失败,将图片实体状态更新为 FAILEDPROCESSING

|

||||

* 如果调用成功,返回值是一个图片的名字,对应数据库中的一个个人。通过联合 SQL 查询,获取对应个人的 ID

|

||||

|

||||

* 将数据库中的图片条目状态更新为 PROCESSED,更新图片被识别成的个人的 ID

|

||||

* 将数据库中的图片实体状态更新为 PROCESSED,更新图片被识别成的个人的 ID

|

||||

|

||||

这个服务可以复制多份同时运行。

|

||||

|

||||

@ -89,7 +83,7 @@ curl -d '{"path":"/unknown_images/unknown0001.jpg"}' http://127.0.0.1:8000/image

|

||||

|

||||

注意:我曾经试图使用 [GoCV][14],这是一个极好的 Go 库,但欠缺所需的 C 绑定。推荐马上了解一下这个库,它会让你大吃一惊,例如编写若干行代码即可实现实时摄像处理。

|

||||

|

||||

这个 Python 库的工作方式本质上很简单。准备一些你认识的人的图片,把信息记录下来。对于我而言,我有一个图片文件夹,包含若干图片,名称分别为 `hannibal_1.jpg, hannibal_2.jpg, gergely_1.jpg, john_doe.jpg`。在数据库中,我使用两个表记录信息,分别为 `person, person_images`,具体如下:

|

||||

这个 Python 库的工作方式本质上很简单。准备一些你认识的人的图片,把信息记录下来。对于我而言,我有一个图片文件夹,包含若干图片,名称分别为 `hannibal_1.jpg`、 `hannibal_2.jpg`、 `gergely_1.jpg`、 `john_doe.jpg`。在数据库中,我使用两个表记录信息,分别为 `person`、 `person_images`,具体如下:

|

||||

|

||||

```

|

||||

+----+----------+

|

||||

@ -126,13 +120,13 @@ NSQ 是 Go 编写的小规模队列,可扩展且占用系统内存较少。NSQ

|

||||

|

||||

### 配置

|

||||

|

||||

为了尽可能增加灵活性以及使用 Kubernetes 的 ConfigSet 特性,我在开发过程中使用 .env 文件记录配置信息,例如数据库服务的地址以及 NSQ 的查询地址。在生产环境或 Kubernetes 环境中,我将使用环境变量属性配置。

|

||||

为了尽可能增加灵活性以及使用 Kubernetes 的 ConfigSet 特性,我在开发过程中使用 `.env` 文件记录配置信息,例如数据库服务的地址以及 NSQ 的查询地址。在生产环境或 Kubernetes 环境中,我将使用环境变量属性配置。

|

||||

|

||||

### 应用小结

|

||||

|

||||

这就是待部署应用的全部架构信息。应用的各个组件都是可变更的,他们之间仅通过数据库、消息队列和 gRPC 进行耦合。考虑到更新机制的原理,这是部署分布式应用所必须的;在部署部分我会继续分析。

|

||||

|

||||

# 使用 Kubernetes 部署应用

|

||||

## 使用 Kubernetes 部署应用

|

||||

|

||||

### 基础知识

|

||||

|

||||

@ -144,55 +138,51 @@ Kubernetes 是容器化服务及应用的管理器。它易于扩展,可以管

|

||||

|

||||

在 Kubernetes 中,你给出期望的应用状态,Kubernetes 会尽其所能达到对应的状态。状态可以是已部署、已暂停,有 2 个副本等,以此类推。

|

||||

|

||||

Kubernetes 使用标签和注释标记组件,包括服务,部署,副本组,守护进程组等在内的全部组件都被标记。考虑如下场景,为了识别 pod 与 应用的对应关系,使用 `app: myapp` 标签。假设应用已部署 2 个容器,如果你移除其中一个容器的 `app` 标签,Kubernetes 只能识别到一个容器(隶属于应用),进而启动一个新的具有 `myapp` 标签的实例。

|

||||

Kubernetes 使用标签和注释标记组件,包括服务、部署、副本组、守护进程组等在内的全部组件都被标记。考虑如下场景,为了识别 pod 与应用的对应关系,使用 `app: myapp` 标签。假设应用已部署 2 个容器,如果你移除其中一个容器的 `app` 标签,Kubernetes 只能识别到一个容器(隶属于应用),进而启动一个新的具有 `myapp` 标签的实例。

|

||||

|

||||

### Kubernetes 集群

|

||||

|

||||

要使用 Kubernetes,需要先搭建一个 Kubernetes 集群。搭建 Kubernetes 集群可能是一个痛苦的经历,但所幸有工具可以帮助我们。Minikube 为我们在本地搭建一个单节点集群。AWS 的一个 beta 服务工作方式类似于 Kubernetes 集群,你只需请求 Nodes 并定义你的部署即可。Kubernetes 集群组件的文档如下:[Kubernetes 集群组件][17]。

|

||||

要使用 Kubernetes,需要先搭建一个 Kubernetes 集群。搭建 Kubernetes 集群可能是一个痛苦的经历,但所幸有工具可以帮助我们。Minikube 为我们在本地搭建一个单节点集群。AWS 的一个 beta 服务工作方式类似于 Kubernetes 集群,你只需请求节点并定义你的部署即可。Kubernetes 集群组件的文档如下:[Kubernetes 集群组件][17]。

|

||||

|

||||

### 节点 (Nodes)

|

||||

### 节点

|

||||

|

||||

节点是工作单位,形式可以是虚拟机、物理机,也可以是各种类型的云主机。

|

||||

<ruby>节点<rt>node</rt></ruby>是工作单位,形式可以是虚拟机、物理机,也可以是各种类型的云主机。

|

||||

|

||||

### Pods

|

||||

### Pod

|

||||

|

||||

Pods 是本地容器组成的集合,即一个 Pod 中可能包含若干个容器。Pod 创建后具有自己的 DNS 和 虚拟 IP,这样 Kubernetes 可以对到达流量进行负载均衡。你几乎不需要直接和容器打交道;即使是调试的时候,例如查看日志,你通常调用 `kubectl logs deployment/your-app -f` 查看部署日志,而不是使用 `-c container_name` 查看具体某个容器的日志。`-f` 参数表示从日志尾部进行流式输出。

|

||||

Pod 是本地容器逻辑上组成的集合,即一个 Pod 中可能包含若干个容器。Pod 创建后具有自己的 DNS 和虚拟 IP,这样 Kubernetes 可以对到达流量进行负载均衡。你几乎不需要直接和容器打交道;即使是调试的时候,例如查看日志,你通常调用 `kubectl logs deployment/your-app -f` 查看部署日志,而不是使用 `-c container_name` 查看具体某个容器的日志。`-f` 参数表示从日志尾部进行流式输出。

|

||||

|

||||

### 部署 (Deployments)

|

||||

### 部署

|

||||

|

||||

在 Kubernetes 中创建任何类型的资源时,后台使用一个部署,它指定了资源的期望状态。使用部署对象,你可以将 Pod 或服务变更为另外的状态,也可以更新应用或上线新版本应用。你一般不会直接操作副本组 (后续会描述),而是通过部署对象创建并管理。

|

||||

在 Kubernetes 中创建任何类型的资源时,后台使用一个<ruby>部署<rt>deployment</rt></ruby>组件,它指定了资源的期望状态。使用部署对象,你可以将 Pod 或服务变更为另外的状态,也可以更新应用或上线新版本应用。你一般不会直接操作副本组 (后续会描述),而是通过部署对象创建并管理。

|

||||

|

||||

### 服务 (Services)

|

||||

### 服务

|

||||

|

||||

默认情况下,Pod 会获取一个 IP 地址。但考虑到 Pod 是 Kubernetes 中的易失性组件,我们需要更加持久的组件。不论是队列,mysql,内部 API 或前端,都需要长期运行并使用保持不变的 IP 或 更佳的 DNS 记录。

|

||||

默认情况下,Pod 会获取一个 IP 地址。但考虑到 Pod 是 Kubernetes 中的易失性组件,我们需要更加持久的组件。不论是队列,MySQL、内部 API 或前端,都需要长期运行并使用保持不变的 IP 或更好的 DNS 记录。

|

||||

|

||||

为解决这个问题,Kubernetes 提供了服务组件,可以定义访问模式,支持的模式包括负载均衡,简单 IP 或 内部 DNS。

|

||||

为解决这个问题,Kubernetes 提供了<ruby>服务<rt>service</rt></ruby>组件,可以定义访问模式,支持的模式包括负载均衡、简单 IP 或内部 DNS。

|

||||

|

||||

Kubernetes 如何获知服务运行正常呢?你可以配置健康性检查和可用性检查。健康性检查是指检查容器是否处于运行状态,但容器处于运行状态并不意味着服务运行正常。对此,你应该使用可用性检查,即请求应用的一个特别<ruby>接口<rt>endpoint</rt></ruby>。

|

||||

|

||||

由于服务非常重要,推荐你找时间阅读以下文档:[服务][18]。严肃的说,需要阅读的东西很多,有 24 页 A4 纸的篇幅,涉及网络,服务及自动发现。这也有助于你决定是否真的打算在生产环境中使用 Kubernetes。

|

||||

由于服务非常重要,推荐你找时间阅读以下文档:[服务][18]。严肃的说,需要阅读的东西很多,有 24 页 A4 纸的篇幅,涉及网络、服务及自动发现。这也有助于你决定是否真的打算在生产环境中使用 Kubernetes。

|

||||

|

||||

### DNS / 服务发现

|

||||

|

||||

在 Kubernetes 集群中创建服务后,该服务会从名为 kube-proxy 和 kube-dns 的特殊 Kubernetes 部署中获取一个 DNS 记录。他们两个用于提供集群内的服务发现。如果你有一个正在运行的 mysql 服务并配置 `clusterIP: no`,那么集群内部任何人都可以通过 `mysql.default.svc.cluster.local` 访问该服务,其中:

|

||||

在 Kubernetes 集群中创建服务后,该服务会从名为 `kube-proxy` 和 `kube-dns` 的特殊 Kubernetes 部署中获取一个 DNS 记录。它们两个用于提供集群内的服务发现。如果你有一个正在运行的 MySQL 服务并配置 `clusterIP: no`,那么集群内部任何人都可以通过 `mysql.default.svc.cluster.local` 访问该服务,其中:

|

||||

|

||||

* `mysql` – 服务的名称

|

||||

|

||||

* `default` – 命名空间的名称

|

||||

|

||||

* `svc` – 对应服务分类

|

||||

|

||||

* `cluster.local` – 本地集群的域名

|

||||

|

||||

可以使用自定义设置更改本地集群的域名。如果想让服务可以从集群外访问,需要使用 DNS 提供程序并使用例如 Nginx 将 IP 地址绑定至记录。服务对应的对外 IP 地址可以使用如下命令查询:

|

||||

可以使用自定义设置更改本地集群的域名。如果想让服务可以从集群外访问,需要使用 DNS 服务,并使用例如 Nginx 将 IP 地址绑定至记录。服务对应的对外 IP 地址可以使用如下命令查询:

|

||||

|

||||

* 节点端口方式 – `kubectl get -o jsonpath="{.spec.ports[0].nodePort}" services mysql`

|

||||

|

||||

* 负载均衡方式 – `kubectl get -o jsonpath="{.spec.ports[0].LoadBalancer}" services mysql`

|

||||

|

||||

### 模板文件

|

||||

|

||||

类似 Docker Compose, TerraForm 或其它的服务管理工具,Kubernetes 也提供了基础设施描述模板。这意味着,你几乎不用手动操作。

|

||||

类似 Docker Compose、TerraForm 或其它的服务管理工具,Kubernetes 也提供了基础设施描述模板。这意味着,你几乎不用手动操作。

|

||||

|

||||

以 Nginx 部署为例,查看下面的 yaml 模板:

|

||||

|

||||

@ -218,26 +208,26 @@ spec: #(4)

|

||||

image: nginx:1.7.9

|

||||

ports:

|

||||

- containerPort: 80

|

||||

|

||||

```

|

||||

|

||||

在这个示例部署中,我们做了如下操作:

|

||||

|

||||

* (1) 使用 kind 关键字定义模板类型

|

||||

* (2) 使用 metadata 关键字,增加该部署的识别信息,使用 labels 标记每个需要创建的资源 (3)

|

||||

* (4) 然后使用 spec 关键字描述所需的状态

|

||||

* (5) nginx 应用需要 3 个副本

|

||||

* (6) Pod 中容器的模板定义部分

|

||||

* 容器名称为 nginx

|

||||

* 容器模板为 nginx:1.7.9 (本例使用 Docker 镜像)

|

||||

* (1) 使用 `kind` 关键字定义模板类型

|

||||

* (2) 使用 `metadata` 关键字,增加该部署的识别信息

|

||||

* (3) 使用 `labels` 标记每个需要创建的资源

|

||||

* (4) 然后使用 `spec` 关键字描述所需的状态

|

||||

* (5) nginx 应用需要 3 个副本

|

||||

* (6) Pod 中容器的模板定义部分

|

||||

* 容器名称为 nginx

|

||||

* 容器模板为 nginx:1.7.9 (本例使用 Docker 镜像)

|

||||

|

||||

### 副本组 (ReplicaSet)

|

||||

### 副本组

|

||||

|

||||

副本组是一个底层的副本管理器,用于保证运行正确数目的应用副本。相比而言,部署是更高层级的操作,应该用于管理副本组。除非你遇到特殊的情况,需要控制副本的特性,否则你几乎不需要直接操作副本组。

|

||||

<ruby>副本组<rt>ReplicaSet</rt></ruby>是一个底层的副本管理器,用于保证运行正确数目的应用副本。相比而言,部署是更高层级的操作,应该用于管理副本组。除非你遇到特殊的情况,需要控制副本的特性,否则你几乎不需要直接操作副本组。

|

||||

|

||||

### 守护进程组 (DaemonSet)

|

||||

### 守护进程组

|

||||

|

||||

上面提到 Kubernetes 始终使用标签,还有印象吗?守护进程组是一个控制器,用于确保守护进程化的应用一直运行在具有特定标签的节点中。

|

||||

上面提到 Kubernetes 始终使用标签,还有印象吗?<ruby>守护进程组<rt>DaemonSet</rt></ruby>是一个控制器,用于确保守护进程化的应用一直运行在具有特定标签的节点中。

|

||||

|

||||

例如,你将所有节点增加 `logger` 或 `mission_critical` 的标签,以便运行日志 / 审计服务的守护进程。接着,你创建一个守护进程组并使用 `logger` 或 `mission_critical` 节点选择器。Kubernetes 会查找具有该标签的节点,确保守护进程的实例一直运行在这些节点中。因而,节点中运行的所有进程都可以在节点内访问对应的守护进程。

|

||||

|

||||

@ -253,7 +243,7 @@ spec: #(4)

|

||||

|

||||

### Kubernetes 部分小结

|

||||

|

||||

Kubernetes 是容器编排的便捷工具,工作单元为 Pods,具有分层架构。最顶层是部署,用于操作其它资源,具有高度可配置性。对于你的每个命令调用,Kubernetes 提供了对应的 API,故理论上你可以编写自己的代码,向 Kubernetes API 发送数据,得到与 `kubectl` 命令同样的效果。

|

||||

Kubernetes 是容器编排的便捷工具,工作单元为 Pod,具有分层架构。最顶层是部署,用于操作其它资源,具有高度可配置性。对于你的每个命令调用,Kubernetes 提供了对应的 API,故理论上你可以编写自己的代码,向 Kubernetes API 发送数据,得到与 `kubectl` 命令同样的效果。

|

||||

|

||||

截至目前,Kubernetes 原生支持所有主流云服务供应商,而且完全开源。如果你愿意,可以贡献代码;如果你希望对工作原理有深入了解,可以查阅代码:[GitHub 上的 Kubernetes 项目][22]。

|

||||

|

||||

@ -272,7 +262,7 @@ kubectl get nodes -o yaml

|

||||

|

||||

### 构建容器

|

||||

|

||||

Kubernetes 支持大多数现有的容器技术。我这里使用 Docker。每一个构建的服务容器,对应代码库中的一个 Dockerfile 文件。我推荐你仔细阅读它们,其中大多数都比较简单。对于 Go 服务,我采用了最近引入的多步构建的方式。Go 服务基于 Alpine Linux 镜像创建。人脸识别程序使用 Python,NSQ 和 MySQL 使用对应的容器。

|

||||

Kubernetes 支持大多数现有的容器技术。我这里使用 Docker。每一个构建的服务容器,对应代码库中的一个 Dockerfile 文件。我推荐你仔细阅读它们,其中大多数都比较简单。对于 Go 服务,我采用了最近引入的多步构建的方式。Go 服务基于 Alpine Linux 镜像创建。人脸识别程序使用 Python、NSQ 和 MySQL 使用对应的容器。

|

||||

|

||||

### 上下文

|

||||

|

||||

@ -293,9 +283,9 @@ Switched to context "kube-face-cluster".

|

||||

```

|

||||

此后,所有 `kubectl` 命令都会使用 `face` 命名空间。

|

||||

|

||||

(译注:作者后续并没有使用 face 命名空间,模板文件中的命名空间仍为 default,可能 face 命名空间用于开发环境。如果希望使用 face 命令空间,需要将内部 DNS 地址中的 default 改成 face;如果只是测试,可以不执行这两条命令。)

|

||||

(LCTT 译注:作者后续并没有使用 face 命名空间,模板文件中的命名空间仍为 default,可能 face 命名空间用于开发环境。如果希望使用 face 命令空间,需要将内部 DNS 地址中的 default 改成 face;如果只是测试,可以不执行这两条命令。)

|

||||

|

||||

### 应用部署

|

||||

## 应用部署

|

||||

|

||||

Pods 和 服务概览:

|

||||

|

||||

@ -318,7 +308,6 @@ type: Opaque

|

||||

data:

|

||||

mysql_password: base64codehere

|

||||

mysql_userpassword: base64codehere

|

||||

|

||||

```

|

||||

|

||||

其中 base64 编码通过如下命令生成:

|

||||

@ -326,10 +315,9 @@ data:

|

||||

```

|

||||

echo -n "ubersecurepassword" | base64

|

||||

echo -n "root:ubersecurepassword" | base64

|

||||

|

||||

```

|

||||

|

||||

(LCTT 译注:secret yaml 文件中的 data 应该有两条,一条对应 mysql_password, 仅包含密码;另一条对应 mysql_userpassword,包含用户和密码。后文会用到 mysql_userpassword,但没有提及相应的生成)

|

||||

(LCTT 译注:secret yaml 文件中的 data 应该有两条,一条对应 `mysql_password`,仅包含密码;另一条对应 `mysql_userpassword`,包含用户和密码。后文会用到 `mysql_userpassword`,但没有提及相应的生成)

|

||||

|

||||

我的部署 yaml 对应部分如下:

|

||||

|

||||

@ -362,13 +350,12 @@ echo -n "root:ubersecurepassword" | base64

|

||||

|

||||

其中 `presistentVolumeClain` 是关键,告知 Kubernetes 当前资源需要持久化存储。持久化存储的提供方式对用户透明。类似 Pods,如果想了解更多细节,参考文档:[Kubernetes 持久化存储][27]。

|

||||

|

||||

(LCTT 译注:使用 presistentVolumeClain 之前需要创建 presistentVolume,对于单节点可以使用本地存储,对于多节点需要使用共享存储,因为 Pod 可以能调度到任何一个节点)

|

||||

(LCTT 译注:使用 `presistentVolumeClain` 之前需要创建 `presistentVolume`,对于单节点可以使用本地存储,对于多节点需要使用共享存储,因为 Pod 可以能调度到任何一个节点)

|

||||

|

||||

使用如下命令部署 MySQL 服务:

|

||||

|

||||

```

|

||||

kubectl apply -f mysql.yaml

|

||||

|

||||

```

|

||||

|

||||

这里比较一下 `create` 和 `apply`。`apply` 是一种<ruby>宣告式<rt>declarative</rt></ruby>的对象配置命令,而 `create` 是<ruby>命令式<rt>imperative</rt>的命令。当下我们需要知道的是,`create` 通常对应一项任务,例如运行某个组件或创建一个部署;相比而言,当我们使用 `apply` 的时候,用户并没有指定具体操作,Kubernetes 会根据集群目前的状态定义需要执行的操作。故如果不存在名为 `mysql` 的服务,当我执行 `apply -f mysql.yaml` 时,Kubernetes 会创建该服务。如果再次执行这个命令,Kubernetes 会忽略该命令。但如果我再次运行 `create`,Kubernetes 会报错,告知服务已经创建。

|

||||

@ -460,7 +447,7 @@ volumes:

|

||||

|

||||

```

|

||||

|

||||

(LCTT 译注:数据库初始化脚本需要改成对应的路径,如果是多节点,需要是共享存储中的路径。另外,作者给的 sql 文件似乎有误,person_images 表中的 person_id 列数字都小 1,作者默认 id 从 0 开始,但应该是从 1 开始)

|

||||

(LCTT 译注:数据库初始化脚本需要改成对应的路径,如果是多节点,需要是共享存储中的路径。另外,作者给的 sql 文件似乎有误,`person_images` 表中的 `person_id` 列数字都小 1,作者默认 `id` 从 0 开始,但应该是从 1 开始)

|

||||

|

||||

运行如下命令查看引导脚本是否正确执行:

|

||||

|

||||

@ -489,7 +476,6 @@ mysql>

|

||||

|

||||

```

|

||||

kubectl logs deployment/mysql -f

|

||||

|

||||

```

|

||||

|

||||

### NSQ 查询

|

||||

@ -505,7 +491,7 @@ NSQ 查询将以内部服务的形式运行。由于不需要外部访问,这

|

||||

|

||||

```

|

||||

|

||||

那么,内部 DNS 对应的条目类似于:`nsqlookup.default.svc.cluster.local`。

|

||||

那么,内部 DNS 对应的实体类似于:`nsqlookup.default.svc.cluster.local`。

|

||||

|

||||

无头服务的更多细节,可以参考:[无头服务][32]。

|

||||

|

||||

@ -517,7 +503,7 @@ args: ["--broadcast-address=nsqlookup.default.svc.cluster.local"]

|

||||

|

||||

```

|

||||

|

||||

你可能会疑惑,`--broadcast-address` 参数是做什么用的?默认情况下,nsqlookup 使用 `hostname` (LCTT 译注:这里是指容器的主机名,而不是 hostname 字符串本身)作为广播地址;这意味着,当用户运行回调时,回调试图访问的地址类似于 `http://nsqlookup-234kf-asdf:4161/lookup?topics=image`,但这显然不是我们期望的。将广播地址设置为内部 DNS 后,回调地址将是 `http://nsqlookup.default.svc.cluster.local:4161/lookup?topic=images`,这正是我们期望的。

|

||||

你可能会疑惑,`--broadcast-address` 参数是做什么用的?默认情况下,`nsqlookup` 使用容器的主机名作为广播地址;这意味着,当用户运行回调时,回调试图访问的地址类似于 `http://nsqlookup-234kf-asdf:4161/lookup?topics=image`,但这显然不是我们期望的。将广播地址设置为内部 DNS 后,回调地址将是 `http://nsqlookup.default.svc.cluster.local:4161/lookup?topic=images`,这正是我们期望的。

|

||||

|

||||

NSQ 查询还需要转发两个端口,一个用于广播,另一个用于 nsqd 守护进程的回调。在 Dockerfile 中暴露相应端口,在 Kubernetes 模板中使用它们,类似如下:

|

||||

|

||||

@ -533,6 +519,7 @@ NSQ 查询还需要转发两个端口,一个用于广播,另一个用于 nsq

|

||||

```

|

||||

|

||||

服务模板:

|

||||

|

||||

```

|

||||

spec:

|

||||

ports:

|

||||

@ -592,13 +579,13 @@ NSQ 守护进程也需要一些调整的参数配置:

|

||||

|

||||

```

|

||||

|

||||

其中我们配置了 lookup-tcp-address 和 broadcast-address 参数。前者是 nslookup 服务的 DNS 地址,后者用于回调,就像 nsqlookupd 配置中那样。

|

||||

其中我们配置了 `lookup-tcp-address` 和 `broadcast-address` 参数。前者是 nslookup 服务的 DNS 地址,后者用于回调,就像 nsqlookupd 配置中那样。

|

||||

|

||||

#### 对外公开

|

||||

|

||||

下面即将创建第一个对外公开的服务。有两种方式可供选择。考虑到该 API 负载较高,可以使用负载均衡的方式。另外,如果希望将其部署到生产环境中的任选节点,也应该使用负载均衡方式。

|

||||

|

||||

但由于我使用的本地集群只有一个节点,那么使用 `节点端口` 的方式就足够了。`节点端口` 方式将服务暴露在对应节点的固定端口上。如果未指定端口,将从 30000-32767 数字范围内随机选其一个。也可以指定端口,可以在模板文件中使用 `nodePort` 设置即可。可以通过 `<NodeIP>:<NodePort>` 访问该服务。如果使用多个节点,负载均衡可以将多个 IP 合并为一个 IP。

|

||||

但由于我使用的本地集群只有一个节点,那么使用 `NodePort` 的方式就足够了。`NodePort` 方式将服务暴露在对应节点的固定端口上。如果未指定端口,将从 30000-32767 数字范围内随机选其一个。也可以指定端口,可以在模板文件中使用 `nodePort` 设置即可。可以通过 `<NodeIP>:<NodePort>` 访问该服务。如果使用多个节点,负载均衡可以将多个 IP 合并为一个 IP。

|

||||

|

||||

更多信息,请参考文档:[服务发布][33]。

|

||||

|

||||

@ -643,7 +630,7 @@ spec:

|

||||

|

||||

### 图片处理器

|

||||

|

||||

图片处理器用于将图片传送至识别组件。它需要访问 nslookupd, mysql 以及后续部署的人脸识别服务的 gRPC 接口。事实上,这是一个无聊的服务,甚至其实并不是服务(LCTT 译注:第一个服务是指在整个架构中,图片处理器作为一个服务;第二个服务是指 Kubernetes 服务)。它并需要对外暴露端口,这是第一个只包含部署的组件。长话短说,下面是完整的模板:

|

||||

图片处理器用于将图片传送至识别组件。它需要访问 nslookupd、 mysql 以及后续部署的人脸识别服务的 gRPC 接口。事实上,这是一个无聊的服务,甚至其实并不是服务(LCTT 译注:第一个服务是指在整个架构中,图片处理器作为一个服务;第二个服务是指 Kubernetes 服务)。它并需要对外暴露端口,这是第一个只包含部署的组件。长话短说,下面是完整的模板:

|

||||

|

||||

```

|

||||

---

|

||||

@ -781,7 +768,7 @@ curl -d '{"path":"/unknown_people/unknown220.jpg"}' http://192.168.99.100:30251/

|

||||

|

||||

```

|

||||

|

||||

图像处理器会在 `/unknown_people` 目录搜索名为 unknown220.jpg 的图片,接着在 known_foler 文件中找到 unknown220.jpg 对应个人的图片,最后返回匹配图片的名称。

|

||||

图像处理器会在 `/unknown_people` 目录搜索名为 unknown220.jpg 的图片,接着在 `known_folder` 文件中找到 `unknown220.jpg` 对应个人的图片,最后返回匹配图片的名称。

|

||||

|

||||

查看日志,大致信息如下:

|

||||

|

||||

@ -861,9 +848,9 @@ receiver-deployment-5cb4797598-sf5ds 1/1 Running 0 26s

|

||||

|

||||

```

|

||||

|

||||

### 滚动更新 (Rolling Update)

|

||||

## 滚动更新

|

||||

|

||||

滚动更新过程中会发生什么呢?

|

||||

<ruby>滚动更新<rt>Rolling Update</rt></ruby>过程中会发生什么呢?

|

||||

|

||||

|

||||

|

||||

@ -871,7 +858,7 @@ receiver-deployment-5cb4797598-sf5ds 1/1 Running 0 26s

|

||||

|

||||

目前的 API 一次只能处理一个图片,不能批量处理,对此我并不满意。

|

||||

|

||||

#### 代码

|

||||

### 代码

|

||||

|

||||

目前,我们使用下面的代码段处理单个图片的情形:

|

||||

|

||||

@ -900,7 +887,7 @@ func main() {

|

||||

|

||||

这里,你可能会说你并不需要保留旧代码;某些情况下,确实如此。因此,我们打算直接修改旧代码,让其通过少量参数调用新代码。这样操作操作相当于移除了旧代码。当所有客户端迁移完毕后,这部分代码也可以安全地删除。

|

||||

|

||||

#### 新的 Endpoint

|

||||

### 新的接口

|

||||

|

||||

让我们添加新的路由方法:

|

||||

|

||||

@ -941,7 +928,7 @@ func PostImage(w http.ResponseWriter, r *http.Request) {

|

||||

|

||||

```

|

||||

|

||||

当然,方法名可能容易混淆,但你应该能够理解我想表达的意思。我将请求中的单个路径封装成新方法所需格式,然后将其作为请求发送给新接口处理。仅此而已。在 [滚动更新批量图片 PR][34] 中可以找到更多的修改方式。

|

||||

当然,方法名可能容易混淆,但你应该能够理解我想表达的意思。我将请求中的单个路径封装成新方法所需格式,然后将其作为请求发送给新接口处理。仅此而已。在 [滚动更新批量图片的 PR][34] 中可以找到更多的修改方式。

|

||||

|

||||

至此,我们使用两种方法调用接收器:

|

||||

|

||||

@ -958,7 +945,7 @@ curl -d '{"paths":[{"path":"unknown4456.jpg"}]}' http://127.0.0.1:8000/images/po

|

||||

|

||||

为了简洁,我不打算为 NSQ 和其它组件增加批量图片处理的能力。这些组件仍然是一次处理一个图片。这部分修改将留给你作为扩展内容。 :)

|

||||

|

||||

#### 新镜像

|

||||

### 新镜像

|

||||

|

||||

为实现滚动更新,我首先需要为接收器服务创建一个新的镜像。新镜像使用新标签,告诉大家版本号为 v1.1。

|

||||

|

||||

@ -969,11 +956,11 @@ docker build -t skarlso/kube-receiver-alpine:v1.1 .

|

||||

|

||||

新镜像创建后,我们可以开始滚动更新了。

|

||||

|

||||

#### 滚动更新

|

||||

### 滚动更新

|

||||

|

||||

在 Kubernetes 中,可以使用多种方式完成滚动更新。

|

||||

|

||||

##### 手动更新

|

||||

#### 手动更新

|

||||

|

||||

不妨假设在我配置文件中使用的容器版本为 `v1.0`,那么实现滚动更新只需运行如下命令:

|

||||

|

||||

@ -991,7 +978,7 @@ kubectl rolling-update receiver --rollback

|

||||

|

||||

容器将回滚到使用上一个版本镜像,操作简捷无烦恼。

|

||||

|

||||

##### 应用新的配置文件

|

||||

#### 应用新的配置文件

|

||||

|

||||

手动更新的不足在于无法版本管理。

|

||||

|

||||

@ -1051,7 +1038,7 @@ kubectl delete services -all

|

||||

|

||||

```

|

||||

|

||||

# 写在最后的话

|

||||

## 写在最后的话

|

||||

|

||||

各位看官,本文就写到这里了。我们在 Kubernetes 上编写、部署、更新和扩展(老实说,并没有实现)了一个分布式应用。

|

||||

|

||||

@ -1065,9 +1052,9 @@ Gergely 感谢你阅读本文。

|

||||

|

||||

via: https://skarlso.github.io/2018/03/15/kubernetes-distributed-application/

|

||||

|

||||

作者:[hannibal ][a]

|

||||

作者:[hannibal][a]

|

||||

译者:[pinewall](https://github.com/pinewall)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

215

published/20180708 Getting Started with Debian Packaging.md

Normal file

215

published/20180708 Getting Started with Debian Packaging.md

Normal file

@ -0,0 +1,215 @@

|

||||

Debian 打包入门

|

||||

======

|

||||

|

||||

> 创建 CardBook 软件包、本地 Debian 仓库,并修复错误。

|

||||

|

||||

|

||||

|

||||

我在 GSoC(LCTT 译注:Google Summer Of Code,一项针对学生进行的开源项目训练营,一般在夏季进行。)的任务中有一项是为用户构建 Thunderbird <ruby>扩展<rt>add-ons</rt></ruby>。一些非常流行的扩展,比如 [Lightning][1] (日历行事历)已经拥有了 deb 包。

|

||||

|

||||

另外一个重要的用于管理基于 CardDav 和 vCard 标准的联系人的扩展 [Cardbook][2] ,还没有一个 deb 包。

|

||||

|

||||

我的导师, [Daniel][3] 鼓励我去为它制作一个包,并上传到 [mentors.debian.net][4]。因为这样就可以使用 `apt-get` 来安装,简化了安装流程。这篇博客描述了我是如何从头开始学习为 CardBook 创建一个 Debian 包的。

|

||||

|

||||

首先,我是第一次接触打包,我在从源码构建包的基础上进行了大量研究,并检查它的协议是是否与 [DFSG][5] 兼容。

|

||||

|

||||

我从多个 Debian Wiki 中的指南中进行学习,比如 [打包介绍][6]、 [构建一个包][7],以及一些博客。

|

||||

|

||||

我还研究了包含在 [Lightning 扩展包][8]的 amd64 文件。

|

||||

|

||||

我创建的包可以在[这里][9]找到。

|

||||

|

||||

![Debian Package!][10]

|

||||

|

||||

*Debian 包*

|

||||

|

||||

### 创建一个空的包

|

||||

|

||||

我从使用 `dh_make` 来创建一个 `debian` 目录开始。

|

||||

|

||||

```

|

||||

# Empty project folder

|

||||

$ mkdir -p Debian/cardbook

|

||||

```

|

||||

|

||||

```

|

||||

# create files

|

||||

$ dh_make\

|

||||

> --native \

|

||||

> --single \

|

||||

> --packagename cardbook_1.0.0 \

|

||||

> --email minkush@example.com

|

||||

```

|

||||

|

||||

一些重要的文件,比如 `control`、`rules`、`changelog`、`copyright` 等文件被初始化其中。

|

||||

|

||||

所创建的文件的完整列表如下:

|

||||

|

||||

```

|

||||

$ find /debian

|

||||

debian/

|

||||

debian/rules

|

||||

debian/preinst.ex

|

||||

debian/cardbook-docs.docs

|

||||

debian/manpage.1.ex

|

||||

debian/install

|

||||

debian/source

|

||||

debian/source/format

|

||||

debian/cardbook.debhelper.lo

|

||||

debian/manpage.xml.ex

|

||||

debian/README.Debian

|

||||

debian/postrm.ex

|

||||

debian/prerm.ex

|

||||

debian/copyright

|

||||

debian/changelog

|

||||

debian/manpage.sgml.ex

|

||||

debian/cardbook.default.ex

|

||||

debian/README

|

||||

debian/cardbook.doc-base.EX

|

||||

debian/README.source

|

||||

debian/compat

|

||||

debian/control

|

||||

debian/debhelper-build-stamp

|

||||

debian/menu.ex

|

||||

debian/postinst.ex

|

||||

debian/cardbook.substvars

|

||||

debian/files

|

||||

```

|

||||

|

||||

我了解了 Debian 系统中 [Dpkg][11] 包管理器及如何用它安装、删除和管理包。

|

||||

|

||||

我使用 `dpkg` 命令创建了一个空的包。这个命令创建一个空的包文件以及四个名为 `.changes`、`.deb`、 `.dsc`、 `.tar.gz` 的文件。

|

||||

|

||||

- `.dsc` 文件包含了所发生的修改和签名

|

||||

- `.deb` 文件是用于安装的主要包文件。

|

||||

- `.tar.gz` (tarball)包含了源代码

|

||||

|

||||

这个过程也在 `/usr/share` 目录下创建了 `README` 和 `changelog` 文件。它们包含了关于这个包的基本信息比如描述、作者、版本。

|

||||

|

||||

我安装这个包,并检查这个包安装的内容。我的新包中包含了版本、架构和描述。

|

||||

|

||||

```

|

||||

$ dpkg -L cardbook

|

||||

/usr

|

||||

/usr/share

|

||||

/usr/share/doc

|

||||

/usr/share/doc/cardbook

|

||||

/usr/share/doc/cardbook/README.Debian

|

||||

/usr/share/doc/cardbook/changelog.gz

|

||||

/usr/share/doc/cardbook/copyright

|

||||

```

|

||||

|

||||

### 包含 CardBook 源代码

|

||||

|

||||

在成功的创建了一个空包以后,我在包中添加了实际的 CardBook 扩展文件。 CardBook 的源代码托管在 [Gitlab][12] 上。我将所有的源码文件包含在另外一个目录,并告诉打包命令哪些文件需要包含在这个包中。

|

||||

|

||||

我使用 `vi` 编辑器创建一个 `debian/install` 文件并列举了需要被安装的文件。在这个过程中,我花费了一些时间去学习基于 Linux 终端的文本编辑器,比如 `vi` 。这让我熟悉如何在 `vi` 中编辑、创建文件和快捷方式。

|

||||

|

||||

当这些完成后,我在变更日志中更新了包的版本并记录了我所做的改变。

|

||||

|

||||

```

|

||||

$ dpkg -l | grep cardbook

|

||||

ii cardbook 1.1.0 amd64 Thunderbird add-on for address book

|

||||

```

|

||||

|

||||

![Changelog][13]

|

||||

|

||||

*更新完包的变更日志*

|

||||

|

||||

在重新构建完成后,重要的依赖和描述信息可以被加入到包中。 Debian 的 `control` 文件可以用来添加额外的必须项目和依赖。

|

||||

|

||||

### 本地 Debian 仓库

|

||||

|

||||

在不创建本地存储库的情况下,CardBook 可以使用如下的命令来安装:

|

||||

|

||||

```

|

||||

$ sudo dpkg -i cardbook_1.1.0.deb

|

||||

```

|

||||

|

||||

为了实际测试包的安装,我决定构建一个本地 Debian 存储库。没有它,`apt-get` 命令将无法定位包,因为它没有在 Debian 的包软件列表中。

|

||||

|

||||

为了配置本地 Debian 存储库,我复制我的包 (.deb)为放在 `/tmp` 目录中的 `Packages.gz` 文件。

|

||||

|

||||

![Packages-gz][14]

|

||||

|

||||

*本地 Debian 仓库*

|

||||

|

||||

为了使它工作,我了解了 `apt` 的配置和它查找文件的路径。

|

||||

|

||||

我研究了一种在 `apt-config` 中添加文件位置的方法。最后,我通过在 APT 中添加 `*.list` 文件来添加包的路径,并使用 `apt-cache` 更新APT缓存来完成我的任务。

|

||||

|

||||

因此,最新的 CardBook 版本可以成功的通过 `apt-get install cardbook` 来安装了。

|

||||

|

||||

![Package installation!][15]

|

||||

|

||||

*使用 apt-get 安装 CardBook*

|

||||

|

||||

### 修复打包错误和 Bugs

|

||||

|

||||

我的导师 Daniel 在这个过程中帮了我很多忙,并指导我如何进一步进行打包。他告诉我使用 [Lintian][16] 来修复打包过程中出现的常见错误和最终使用 [dput][17] 来上传 CardBook 包。

|

||||

|

||||

> Lintian 是一个用于发现策略问题和 Bug 的包检查器。它是 Debian 维护者们在上传包之前广泛使用的自动化检查 Debian 策略的工具。

|

||||

|

||||

我上传了该软件包的第二个更新版本到 Debian 目录中的 [Salsa 仓库][18] 的一个独立分支中。

|

||||

|

||||

我从 Debian backports 上安装 Lintian 并学习在一个包上用它来修复错误。我研究了它用在其错误信息中的缩写,和如何查看 Lintian 命令返回的详细内容。

|

||||

|

||||

```

|

||||

$ lintian -i -I --show-overrides cardbook_1.2.0.changes

|

||||

```

|

||||

|

||||

最初,在 `.changes` 文件上运行命令时,我惊讶地看到显示出来了大量错误、警告和注释!

|

||||

|

||||

![Package Error Brief!][19]

|

||||

|

||||

*在包上运行 Lintian 时看到的大量报错*

|

||||

|

||||

![Lintian error1!][20]

|

||||

|

||||

*详细的 Lintian 报错*

|

||||

|

||||

![Lintian error2!][23]

|

||||

|

||||

*详细的 Lintian 报错 (2) 以及更多*

|

||||

|

||||

我花了几天时间修复与 Debian 包策略违例相关的一些错误。为了消除一个简单的错误,我必须仔细研究每一项策略和 Debian 的规则。为此,我参考了 [Debian 策略手册][21] 以及 [Debian 开发者参考][22]。

|

||||

|

||||

我仍然在努力使它变得完美无暇,并希望很快可以将它上传到 mentors.debian.net!

|

||||

|

||||

如果 Debian 社区中使用 Thunderbird 的人可以帮助修复这些报错就太感谢了。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://minkush.me/cardbook-debian-package/

|

||||

|

||||

作者:[Minkush Jain][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[Bestony](https://github.com/bestony)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://minkush.me/cardbook-debian-package/#

|

||||

[1]:https://addons.mozilla.org/en-US/thunderbird/addon/lightning/

|

||||

[2]:https://addons.mozilla.org/nn-NO/thunderbird/addon/cardbook/?src=hp-dl-featured

|

||||

[3]:https://danielpocock.com/

|

||||

[4]:https://mentors.debian.net/

|

||||

[5]:https://wiki.debian.org/DFSGLicenses

|

||||

[6]:https://wiki.debian.org/Packaging/Intro

|

||||

[7]:https://wiki.debian.org/BuildingAPackage

|

||||

[8]:https://packages.debian.org/stretch/amd64/lightning/filelist

|

||||

[9]:https://salsa.debian.org/minkush-guest/CardBook/tree/debian-package/Debian

|

||||

[10]:http://minkush.me/img/posts/13.png

|

||||

[11]:https://packages.debian.org/stretch/dpkg

|

||||

[12]:https://gitlab.com/CardBook/CardBook

|

||||

[13]:http://minkush.me/img/posts/15.png

|

||||

[14]:http://minkush.me/img/posts/14.png

|

||||

[15]:http://minkush.me/img/posts/11.png

|

||||

[16]:https://packages.debian.org/stretch/lintian

|

||||

[17]:https://packages.debian.org/stretch/dput

|

||||

[18]:https://salsa.debian.org/minkush-guest/CardBook/tree/debian-package

|

||||

[19]:http://minkush.me/img/posts/16.png (Running Lintian on package)

|

||||

[20]:http://minkush.me/img/posts/10.png

|

||||

[21]:https://www.debian.org/doc/debian-policy/

|

||||

[22]:https://www.debian.org/doc/manuals/developers-reference/

|

||||

[23]:http://minkush.me/img/posts/17.png

|

||||

@ -1,38 +1,38 @@

|

||||

15 个适用于 MacOS 的开源应用程序

|

||||

======

|

||||

|

||||

> 钟爱开源的用户不会觉得在非 Linux 操作系统上使用他们喜爱的应用有多难。

|

||||

|

||||

|

||||

|

||||

只要有可能的情况下,我都会去选择使用开源工具。不久之前,我回到大学去攻读教育领导学硕士学位。即便是我将喜欢的 Linux 笔记本电脑换成了一台 MacBook Pro(因为我不能确定校园里能够接受 Linux),我还是决定继续使用我喜欢的工具,哪怕是在 MacOS 上也是如此。

|

||||

|

||||

幸运的是,它很容易,并且没有哪个教授质疑过我用的是什么软件。即然如此,我就不能保守秘密。

|

||||

幸运的是,它很容易,并且没有哪个教授质疑过我用的是什么软件。即然如此,我就不能秘而不宣。

|

||||

|

||||

我知道,我的一些同学最终会在学区担任领导职务,因此,我与我的那些使用 MacOS 或 Windows 的同学分享了关于下面描述的这些开源软件。毕竟,开源软件是真正地自由和友好的。我也希望他们去了解它,并且愿意以很少的一些成本去提供给他们的学生去使用这些世界级的应用程序。他们中的大多数人都感到很惊讶,因为,众所周知,开源软件除了有像你和我这样的用户之外,压根就没有销售团队。

|

||||

|

||||

### 我的 MacOS 学习曲线

|

||||

|

||||

虽然大多数的开源工具都能像以前我在 Linux 上使用的那样工作,只是需要不同的安装方法。但是,经过这个过程,我学习了这些工具在 MacOS 上的一些细微差别。像 [yum][1]、[DNF][2]、和 [APT][3] 在 MacOS 的世界中压根不存在 — 我真的很怀念它们。

|

||||

虽然大多数的开源工具都能像以前我在 Linux 上使用的那样工作,只是需要不同的安装方法。但是,经过这个过程,我学习了这些工具在 MacOS 上的一些细微差别。像 [yum][1]、[DNF][2]、和 [APT][3] 在 MacOS 的世界中压根不存在 —— 我真的很怀念它们。

|

||||

|

||||

一些 MacOS 应用程序要求依赖项,并且安装它们要比我在 Linux 上习惯的方法困难很多。尽管如此,我仍然没有放弃。在这个过程中,我学会了如何在我的新平台上保留最好的软件。即便是 MacOS 大部分的核心也是 [开源的][4]。

|

||||

|

||||

此外,我的 Linux 的知识背景让我使用 MacOS 的命令行很轻松很舒适。我仍然使用命令行去创建和拷贝文件、添加用户、以及使用其它的像 cat、tac、more、less、和 tail 这样的 [实用工具][5]。

|

||||

此外,我的 Linux 的知识背景让我使用 MacOS 的命令行很轻松很舒适。我仍然使用命令行去创建和拷贝文件、添加用户、以及使用其它的像 `cat`、`tac`、`more`、`less` 和 `tail` 这样的 [实用工具][5]。

|

||||

|

||||

### 15 个适用于 MacOS 的非常好的开源应用程序

|

||||

|

||||

* 在大学里,要求我使用 DOCX 的电子版格式来提交我的工作,而这其实很容易,最初我使用的是 [OpenOffice][6],而后来我使用的是 [LibreOffice][7] 去完成我的论文。

|

||||

* 当我因为演示需要去做一些图像时,我使用的是我最喜欢的图像应用程序 [GIMP][8] 和 [Inkscape][9]。

|

||||

* 我喜欢的播客创建工具是 [Audacity][10]。它比起 Mac 上搭载的专有的应用程序更加简单。我使用它去录制访谈和为视频演示创建配乐。

|

||||

* 我喜欢的播客创建工具是 [Audacity][10]。它比起 Mac 上搭载的专有应用程序更加简单。我使用它去录制访谈和为视频演示创建配乐。

|

||||

* 在 MacOS 上我最早发现的多媒体播放器是 [VideoLan][11] (VLC)。

|

||||

* MacOS 的内置专有视频创建工具是一个非常好的产品,但是你也可以很轻松地去安装和使用 [OpenShot][12],它是一个非常好的内容创建工具。

|

||||

* MacOS 内置的专有视频创建工具是一个非常好的产品,但是你也可以很轻松地去安装和使用 [OpenShot][12],它是一个非常好的内容创建工具。

|

||||

* 当我需要在我的客户端上分析网络时,我在我的 Mac 上使用了易于安装的 [Nmap][13] (Network Mapper) 和 [Wireshark][14] 工具。

|

||||

* 当我为图书管理员和其它教育工作者提供培训时,我在 MacOS 上使用 [VirtualBox][15] 去做 Raspbian、Fedora、Ubuntu、和其它 Linux 发行版的示范操作。

|

||||

* 当我为图书管理员和其它教育工作者提供培训时,我在 MacOS 上使用 [VirtualBox][15] 去做 Raspbian、Fedora、Ubuntu 和其它 Linux 发行版的示范操作。

|

||||

* 我使用 [Etcher.io][16] 在我的 MacBook 上制作了一个引导盘,下载 ISO 文件,将它刻录到一个 U 盘上。

|

||||

* 我认为 [Firefox][17] 比起 MacBook Pro 自带的专有浏览器更易用更安全,并且它允许我跨操作系统去同步我的书签。

|

||||

* 当我使用电子书阅读器时,[Calibre][18] 是当之无愧的选择。它很容易去下载和安装,你甚至只需要几次点击就能将它配置为一台 [教室中使用的电子书服务器][19]。

|

||||

* 最近我给中学的学习教 Python 课程,我发现它可以很容易地从 [Python.org][20] 上下载和安装 Python 3 及 IDLE3 编辑器。我也喜欢学习数据科学,并与学生分享。不论你是对 Python 还是 R 感兴趣,我都建议你下载和 [安装][21] [Anaconda 发行版][22]。它包含了非常好的 iPython 编辑器、RStudio、Jupyter Notebooks、和 JupyterLab,以及其它一些应用程序。

|

||||

* [HandBrake][23] 是一个将你家里的旧的视频 DVD 转成 MP4 的工具,这样你就可以将它们共享到 YouTube、Vimeo、或者你的 MacOS 上的 [Kodi][24] 服务器上。

|

||||

|

||||

|

||||

* 最近我给中学的学生教 Python 课程,我发现它可以很容易地从 [Python.org][20] 上下载和安装 Python 3 及 IDLE3 编辑器。我也喜欢学习数据科学,并与学生分享。不论你是对 Python 还是 R 感兴趣,我都建议你下载和 [安装][21] [Anaconda 发行版][22]。它包含了非常好的 iPython 编辑器、RStudio、Jupyter Notebooks、和 JupyterLab,以及其它一些应用程序。

|

||||

* [HandBrake][23] 是一个将你家里的旧的视频 DVD 转成 MP4 的工具,这样你就可以将它们共享到 YouTube、Vimeo、或者你的 MacOS 上的 [Kodi][24] 服务器上。

|

||||

|

||||

现在轮到你了:你在 MacOS(或 Windows)上都使用什么样的开源软件?在下面的评论区共享出来吧。

|

||||

|

||||

@ -43,7 +43,7 @@ via: https://opensource.com/article/18/7/open-source-tools-macos

|

||||

作者:[Don Watkins][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,101 +1,93 @@

|

||||

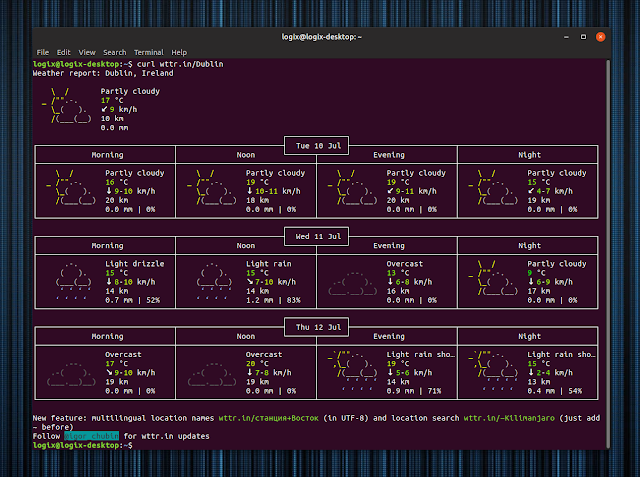

使用 Wttr.in 在你的终端中显示天气预报

|

||||

======

|

||||

**[wttr.in][1] 是一个功能丰富的天气预报服务,它支持在命令行显示天气**。它可以自动检测你的位置(根据你的 IP 地址),支持指定位置或搜索地理位置(如城市、山区等)等。哦,另外**你不需要安装它 - 你只需要使用 cURL 或 Wget**(见下文)。

|

||||

|

||||

|

||||

|

||||

[wttr.in][1] 是一个功能丰富的天气预报服务,它支持在命令行显示天气。它可以(根据你的 IP 地址)自动检测你的位置,也支持指定位置或搜索地理位置(如城市、山区等)等。哦,另外**你不需要安装它 —— 你只需要使用 cURL 或 Wget**(见下文)。

|

||||

|

||||

wttr.in 功能包括:

|

||||

|

||||

* **显示当前天气以及 3 天天气预报,分为早晨、中午、傍晚和夜晚**(包括温度范围、风速和风向、可见度、降水量和概率)

|

||||

|

||||

* **显示当前天气以及 3 天内的天气预报,分为早晨、中午、傍晚和夜晚**(包括温度范围、风速和风向、可见度、降水量和概率)

|

||||

* **可以显示月相**

|

||||

|

||||

* **基于你的 IP 地址自动检测位置**

|

||||

|

||||

* **允许指定城市名称、3 字母的机场代码、区域代码、GPS 坐标、IP 地址或域名**。你还可以指定地理位置,如湖泊、山脉、地标等)

|

||||

|

||||

* **支持多语言位置名称**(查询字符串必须以 Unicode 指定)

|

||||

|

||||

* **支持指定**天气预报显示的语言(它支持超过 50 种语言)

|

||||

|

||||

* **it uses USCS units for queries from the USA and the metric system for the rest of the world** , but you can change this by appending `?u` for USCS, and `?m` for the metric system (SI)

|

||||

* **来自美国的查询使用 USCS 单位用于,世界其他地方使用公制系统**,但你可以通过附加 `?u` 使用 USCS,附加 `?m` 使用公制系统。 )

|

||||

* **3 种输出格式:终端的 ANSI,浏览器的 HTML 和 PNG**

|

||||

|

||||

* **3 种输出格式:终端的 ANSI,浏览器的 HTML 和 PNG**。

|

||||

|

||||

|

||||

|

||||

|

||||

就像我在文章开头提到的那样,使用 wttr.in,你只需要 cURL 或 Wget,但你也可以

|

||||

就像我在文章开头提到的那样,使用 wttr.in,你只需要 cURL 或 Wget,但你也可以在你的服务器上[安装它][3]。 或者你可以安装 [wego][4],这是一个使用 wtter.in 的终端气候应用,虽然 wego 要求注册一个 API 密钥来安装。

|

||||

|

||||

**在使用 wttr.in 之前,请确保已安装 cURL。**在 Debian、Ubuntu 或 Linux Mint(以及其他基于 Debian 或 Ubuntu 的 Linux 发行版)中,使用以下命令安装 cURL:

|

||||

|

||||

```

|

||||

sudo apt install curl

|

||||

|

||||

```

|

||||

|

||||

### wttr.in 命令行示例

|

||||

|

||||

获取你所在位置的天气(wttr.in 会根据你的 IP 地址猜测你的位置):

|

||||

|

||||

```

|

||||

curl wttr.in

|

||||

|

||||

```

|

||||

|

||||

通过在 `curl` 之后添加 `-4`,强制 cURL 将名称解析为 IPv4 地址(如果你遇到 IPv6 和 wttr.in 问题):

|

||||

通过在 `curl` 之后添加 `-4`,强制 cURL 将名称解析为 IPv4 地址(如果你用 IPv6 访问 wttr.in 有问题):

|

||||

|

||||

```

|

||||

curl -4 wttr.in

|

||||

|

||||

```

|

||||

|

||||

如果你想检索天气预报保存为 png,**还可以使用 Wget**(而不是 cURL),或者你想这样使用它:

|

||||

|

||||

```

|

||||

wget -O- -q wttr.in

|

||||

|

||||

```

|

||||

|

||||

如果相对 cURL 你更喜欢 Wget ,可以在下面的所有命令中用 `wget -O- -q` 替换 `curl`。

|

||||

|

||||

指定位置:

|

||||

|

||||

```

|

||||

curl wttr.in/Dublin

|

||||

|

||||

```

|

||||

|

||||

显示地标的天气信息(本例中为艾菲尔铁塔):

|

||||

|

||||

```

|

||||

curl wttr.in/~Eiffel+Tower

|

||||

|

||||

```

|

||||

|

||||

获取 IP 地址位置的天气信息(以下 IP 属于 GitHub):

|

||||

|

||||

```

|

||||

curl wttr.in/@192.30.253.113

|

||||

|

||||

```

|

||||

|

||||

使用 USCS 单位检索天气:

|