mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-25 00:50:15 +08:00

commit

73347fb470

@ -1,8 +1,9 @@

|

||||

如何在 Docker 中通过 Kitematic 交互式执行任务

|

||||

如何在 Windows 上通过 Kitematic 使用 Docker

|

||||

================================================================================

|

||||

在本篇文章中,我们会学习如何在 Windows 操作系统上安装 Kitematic 以及部署一个 Hello World Nginx Web 服务器。Kitematic 是一个自由开源软件,它有现代化的界面设计使得允许我们在 Docker 中交互式执行任务。Kitematic 设计非常漂亮、界面也很不错。我们可以简单快速地开箱搭建我们的容器而不需要输入命令,我们可以在图形用户界面中通过简单的点击从而在容器上部署我们的应用。Kitematic 集成了 Docker Hub,允许我们搜索、拉取任何需要的镜像,并在上面部署应用。它同时也能很好地切换到命令行用户接口模式。目前,它包括了自动映射端口、可视化更改环境变量、配置卷、精简日志以及其它功能。

|

||||

|

||||

下面是在 Windows 上安装 Kitematic 并部署 Hello World Nginx Web 服务器的 3 个简单步骤。

|

||||

在本篇文章中,我们会学习如何在 Windows 操作系统上安装 Kitematic 以及部署一个测试性的 Nginx Web 服务器。Kitematic 是一个具有现代化的界面设计的自由开源软件,它可以让我们在 Docker 中交互式执行任务。Kitematic 设计的非常漂亮、界面美观。使用它,我们可以简单快速地开箱搭建我们的容器而不需要输入命令,可以在图形用户界面中通过简单的点击从而在容器上部署我们的应用。Kitematic 集成了 Docker Hub,允许我们搜索、拉取任何需要的镜像,并在上面部署应用。它同时也能很好地切换到命令行用户接口模式。目前,它包括了自动映射端口、可视化更改环境变量、配置卷、流式日志以及其它功能。

|

||||

|

||||

下面是在 Windows 上安装 Kitematic 并部署测试性 Nginx Web 服务器的 3 个简单步骤。

|

||||

|

||||

### 1. 下载 Kitematic ###

|

||||

|

||||

@ -16,15 +17,15 @@

|

||||

|

||||

### 2. 安装 Kitematic ###

|

||||

|

||||

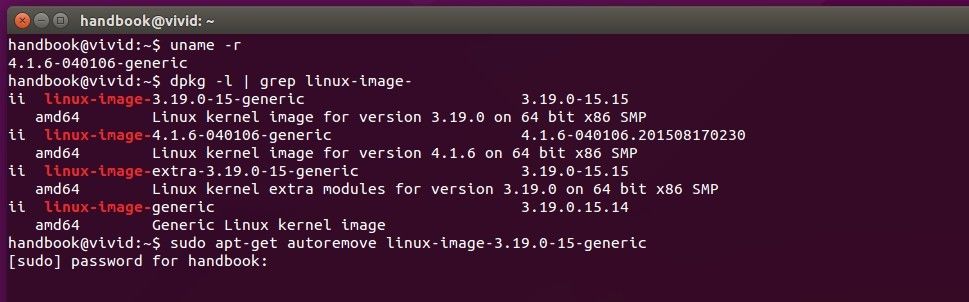

下载好可执行安装程序之后,我们现在打算在我们的 Windows 操作系统上安装 Kitematic。安装程序现在会开始下载并安装运行 Kitematic 需要的依赖,包括 Virtual Box 和 Docker。如果已经在系统上安装了 Virtual Box,它会把它升级到最新版本。安装程序会在几分钟内完成,但取决于你网络和系统的速度。如果你还没有安装 Virtual Box,它会问你是否安装 Virtual Box 网络驱动。建议安装它,因为它有助于 Virtual Box 的网络。

|

||||

下载好可执行安装程序之后,我们现在就可以在我们的 Windows 操作系统上安装 Kitematic了。安装程序现在会开始下载并安装运行 Kitematic 需要的依赖软件,包括 Virtual Box 和 Docker。如果已经在系统上安装了 Virtual Box,它会把它升级到最新版本。安装程序会在几分钟内完成,但取决于你网络和系统的速度。如果你还没有安装 Virtual Box,它会问你是否安装 Virtual Box 网络驱动。建议安装它,因为它用于 Virtual Box 的网络功能。

|

||||

|

||||

|

||||

|

||||

需要的依赖 Docker 和 Virtual Box 安装完成并运行后,会让我们登录到 Docker Hub。如果我们还没有账户或者还不想登录,可以点击 **SKIP FOR NOW** 继续后面的步骤。

|

||||

所需的依赖 Docker 和 Virtual Box 安装完成并运行后,会让我们登录到 Docker Hub。如果我们还没有账户或者还不想登录,可以点击 **SKIP FOR NOW** 继续后面的步骤。

|

||||

|

||||

|

||||

|

||||

如果你还没有账户,你可以在应用程序上点击注册链接并在 Docker Hub 上创建账户。

|

||||

如果你还没有账户,你可以在应用程序上点击注册(Sign Up)链接并在 Docker Hub 上创建账户。

|

||||

|

||||

完成之后,就会出现 Kitematic 应用程序的第一个界面。正如下面看到的这样。我们可以搜索可用的 docker 镜像。

|

||||

|

||||

@ -50,7 +51,11 @@

|

||||

|

||||

### 总结 ###

|

||||

|

||||

我们终于成功在 Windows 操作系统上安装了 Kitematic 并部署了一个 Hello World Ngnix 服务器。总是推荐下载安装 Kitematic 最新的发行版,因为会增加很多新的高级功能。由于 Docker 运行在 64 位平台,当前 Kitematic 也是为 64 位操作系统构建。它只能在 Windows 7 以及更高版本上运行。在这篇教程中,我们部署了一个 Nginx Web 服务器,类似地我们可以在 Kitematic 中简单的点击就能通过镜像部署任何 docker 容器。Kitematic 已经有可用的 Mac OS X 和 Windows 版本,Linux 版本也在开发中很快就会发布。如果你有任何疑问、建议或者反馈,请在下面的评论框中写下来以便我们更改地改进或更新我们的内容。非常感谢!Enjoy :-)

|

||||

我们终于成功在 Windows 操作系统上安装了 Kitematic 并部署了一个 Hello World Ngnix 服务器。推荐下载安装 Kitematic 最新的发行版,因为会增加很多新的高级功能。由于 Docker 运行在 64 位平台,当前 Kitematic 也是为 64 位操作系统构建。它只能在 Windows 7 以及更高版本上运行。

|

||||

|

||||

在这篇教程中,我们部署了一个 Nginx Web 服务器,类似地我们可以在 Kitematic 中简单的点击就能通过镜像部署任何 docker 容器。Kitematic 已经有可用的 Mac OS X 和 Windows 版本,Linux 版本也在开发中很快就会发布。

|

||||

|

||||

如果你有任何疑问、建议或者反馈,请在下面的评论框中写下来以便我们更改地改进或更新我们的内容。非常感谢!Enjoy :-)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -58,7 +63,7 @@ via: http://linoxide.com/linux-how-to/interactively-docker-kitematic/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,5 +1,6 @@

|

||||

安装Strongswan - Linux上一个基于IPsec的vpn工具

|

||||

安装 Strongswan :Linux 上一个基于 IPsec 的 VPN 工具

|

||||

================================================================================

|

||||

|

||||

IPsec是一个提供网络层安全的标准。它包含认证头(AH)和安全负载封装(ESP)组件。AH提供包的完整性,ESP组件提供包的保密性。IPsec确保了在网络层的安全特性。

|

||||

|

||||

- 保密性

|

||||

@ -7,27 +8,27 @@ IPsec是一个提供网络层安全的标准。它包含认证头(AH)和安全

|

||||

- 来源不可抵赖性

|

||||

- 重放攻击防护

|

||||

|

||||

[Strongswan][1]是一个IPsec协议实现的开源代码,Strongswan代表强壮开源广域网(StrongS/WAN)。它支持IPsec的VPN两个版本的密钥自动交换(网络密钥交换(IKE)V1和V2)。

|

||||

[Strongswan][1]是一个IPsec协议的开源代码实现,Strongswan的意思是强安全广域网(StrongS/WAN)。它支持IPsec的VPN中的两个版本的密钥自动交换(网络密钥交换(IKE)V1和V2)。

|

||||

|

||||

Strongswan基本上提供了自动交换密钥共享VPN两个节点或网络,然后它使用Linux内核的IPsec(AH和ESP)实现。密钥共享使用了IKE机制的特性使用ESP编码数据。在IKE阶段,strongswan使用OpenSSL加密算法(AES,SHA等等)和其他加密类库。无论如何,ESP组成IPsec使用的安全算法,它是Linux内核实现的。Strongswan的主要特性是下面这些。

|

||||

Strongswan基本上提供了在VPN的两个节点/网关之间自动交换密钥的共享,然后它使用了Linux内核的IPsec(AH和ESP)实现。密钥共享使用了之后用于ESP数据加密的IKE 机制。在IKE阶段,strongswan使用OpenSSL的加密算法(AES,SHA等等)和其他加密类库。无论如何,IPsec中的ESP组件使用的安全算法是由Linux内核实现的。Strongswan的主要特性如下:

|

||||

|

||||

- x.509证书或基于预共享密钥认证

|

||||

- 支持IKEv1和IKEv2密钥交换协议

|

||||

- 可选内置插件和库的完整性和加密测试

|

||||

- 支持椭圆曲线DH群体和ECDSA证书

|

||||

- 可选的,对于插件和库的内置完整性和加密测试

|

||||

- 支持椭圆曲线DH群和ECDSA证书

|

||||

- 在智能卡上存储RSA私钥和证书

|

||||

|

||||

它能被使用在客户端或服务器(road warrior模式)和网关到网关的情景。

|

||||

它能被使用在客户端/服务器(road warrior模式)和网关到网关的情景。

|

||||

|

||||

### 如何安装 ###

|

||||

|

||||

几乎所有的Linux发行版都支持Strongswan的二进制包。在这个教程,我们将从二进制包安装strongswan也编译strongswan合适的特性的源代码。

|

||||

几乎所有的Linux发行版都支持Strongswan的二进制包。在这个教程,我们会从二进制包安装strongswan,也会从源代码编译带有合适的特性的strongswan。

|

||||

|

||||

### 使用二进制包 ###

|

||||

|

||||

可以使用以下命令安装Strongswan到Ubuntu 14.04 LTS

|

||||

|

||||

$sudo aptitude install strongswan

|

||||

$ sudo aptitude install strongswan

|

||||

|

||||

|

||||

|

||||

@ -35,35 +36,35 @@ strongswan的全局配置(strongswan.conf)文件和ipsec配置(ipsec.conf/

|

||||

|

||||

### strongswan源码编译安装的依赖包 ###

|

||||

|

||||

- GMP(strongswan使用的Mathematical/Precision 库)

|

||||

- OpenSSL(加密算法在这个库里)

|

||||

- PKCS(1,7,8,11,12)(证书编码和智能卡与Strongswan集成)

|

||||

- GMP(strongswan使用的高精度数学库)

|

||||

- OpenSSL(加密算法来自这个库)

|

||||

- PKCS(1,7,8,11,12)(证书编码和智能卡集成)

|

||||

|

||||

#### 步骤 ####

|

||||

|

||||

**1)** 在终端使用下面命令到/usr/src/目录

|

||||

|

||||

$cd /usr/src

|

||||

$ cd /usr/src

|

||||

|

||||

**2)** 用下面命令从strongswan网站下载源代码

|

||||

|

||||

$sudo wget http://download.strongswan.org/strongswan-5.2.1.tar.gz

|

||||

$ sudo wget http://download.strongswan.org/strongswan-5.2.1.tar.gz

|

||||

|

||||

(strongswan-5.2.1.tar.gz 是最新版。)

|

||||

(strongswan-5.2.1.tar.gz 是当前最新版。)

|

||||

|

||||

|

||||

|

||||

**3)** 用下面命令提取下载软件,然后进入目录。

|

||||

**3)** 用下面命令提取下载的软件,然后进入目录。

|

||||

|

||||

$sudo tar –xvzf strongswan-5.2.1.tar.gz; cd strongswan-5.2.1

|

||||

$ sudo tar –xvzf strongswan-5.2.1.tar.gz; cd strongswan-5.2.1

|

||||

|

||||

**4)** 使用configure命令配置strongswan每个想要的选项。

|

||||

|

||||

./configure --prefix=/usr/local -–enable-pkcs11 -–enable-openssl

|

||||

$ ./configure --prefix=/usr/local -–enable-pkcs11 -–enable-openssl

|

||||

|

||||

|

||||

|

||||

如果GMP库没有安装,然后配置脚本将会发生下面的错误。

|

||||

如果GMP库没有安装,配置脚本将会发生下面的错误。

|

||||

|

||||

|

||||

|

||||

@ -71,19 +72,19 @@ strongswan的全局配置(strongswan.conf)文件和ipsec配置(ipsec.conf/

|

||||

|

||||

|

||||

|

||||

无论如何,如果GMP已经安装而且还一致报错,然后在Ubuntu上使用下面命令创建libgmp.so库的软连到/usr/lib,/lib/,/usr/lib/x86_64-linux-gnu/路径下。

|

||||

不过,如果GMP已经安装还报上述错误的话,在Ubuntu上使用如下命令,给在路径 /usr/lib,/lib/,/usr/lib/x86_64-linux-gnu/ 下的libgmp.so库创建软连接。

|

||||

|

||||

$ sudo ln -s /usr/lib/x86_64-linux-gnu/libgmp.so.10.1.3 /usr/lib/x86_64-linux-gnu/libgmp.so

|

||||

|

||||

|

||||

|

||||

创建libgmp.so软连后,再执行./configure脚本也许就找到gmp库了。无论如何,gmp头文件也许发生其他错误,像下面这样。

|

||||

创建libgmp.so软连接后,再执行./configure脚本也许就找到gmp库了。然而,如果gmp头文件发生其他错误,像下面这样。

|

||||

|

||||

|

||||

|

||||

为解决上面的错误,使用下面命令安装libgmp-dev包

|

||||

|

||||

$sudo aptitude install libgmp-dev

|

||||

$ sudo aptitude install libgmp-dev

|

||||

|

||||

|

||||

|

||||

@ -105,7 +106,7 @@ via: http://linoxide.com/security/install-strongswan/

|

||||

|

||||

作者:[nido][a]

|

||||

译者:[wyangsun](https://github.com/wyangsun)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,14 +1,14 @@

|

||||

Ubuntu上使用LVM轻松调整分区并制作快照

|

||||

Ubuntu 上使用 LVM 轻松调整分区并制作快照

|

||||

================================================================================

|

||||

|

||||

|

||||

Ubuntu的安装器提供了一个轻松“使用LVM”的复选框。说明中说,它启用了逻辑卷管理,因此你可以制作快照,并更容易地调整硬盘分区大小——这里将为大家讲述如何完成这些操作。

|

||||

Ubuntu的安装器提供了一个轻松“使用LVM”的复选框。它的描述中说,启用逻辑卷管理可以让你制作快照,并更容易地调整硬盘分区大小——这里将为大家讲述如何完成这些操作。

|

||||

|

||||

LVM是一种技术,某种程度上和[RAID阵列][1]或[Windows上的存储空间][2]类似。虽然该技术在服务器上更为有用,但是它也可以在桌面端PC上使用。

|

||||

LVM是一种技术,某种程度上和[RAID阵列][1]或[Windows上的“存储空间”][2]类似。虽然该技术在服务器上更为有用,但是它也可以在桌面端PC上使用。

|

||||

|

||||

### 你应该在新安装Ubuntu时使用LVM吗? ###

|

||||

|

||||

第一个问题是,你是否想要在安装Ubuntu时使用LVM?如果是,那么Ubuntu让这一切变得很简单,只需要轻点鼠标就可以完成,但是该选项默认是不启用的。正如安装器所说的,它允许你调整分区、创建快照、合并多个磁盘到一个逻辑卷等等——所有这一切都可以在系统运行时完成。不同于传统分区,你不需要关掉你的系统,从Live CD或USB驱动,然后[调整这些不使用的分区][3]。

|

||||

第一个问题是,你是否想要在安装Ubuntu时使用LVM?如果是,那么Ubuntu让这一切变得很简单,只需要轻点鼠标就可以完成,但是该选项默认是不启用的。正如安装器所说的,它允许你调整分区、创建快照、将多个磁盘合并到一个逻辑卷等等——所有这一切都可以在系统运行时完成。不同于传统分区,你不需要关掉你的系统,从Live CD或USB驱动,然后[当这些分区不使用时才能调整][3]。

|

||||

|

||||

完全坦率地说,普通Ubuntu桌面用户可能不会意识到他们是否正在使用LVM。但是,如果你想要在今后做一些更高深的事情,那么LVM就会有所帮助了。LVM可能更复杂,可能会在你今后恢复数据时会导致问题——尤其是在你经验不足时。这里不会有显著的性能损失——LVM是彻底地在Linux内核中实现的。

|

||||

|

||||

@ -18,7 +18,7 @@ LVM是一种技术,某种程度上和[RAID阵列][1]或[Windows上的存储空

|

||||

|

||||

前面,我们已经[说明了何谓LVM][4]。概括来讲,它在你的物理磁盘和呈现在你系统中的分区之间提供了一个抽象层。例如,你的计算机可能装有两个硬盘驱动器,它们的大小都是 1 TB。你必须得在这些磁盘上至少分两个区,每个区大小 1 TB。

|

||||

|

||||

LVM就在这些分区上提供了一个抽象层。用于取代磁盘上的传统分区,LVM将在你对这些磁盘初始化后,将它们当作独立的“物理卷”来对待。然后,你就可以基于这些物理卷创建“逻辑卷”。例如,你可以将这两个 1 TB 的磁盘组合成一个 2 TB 的分区,你的系统将只看到一个 2 TB 的卷,而LVM将会在后台处理这一切。一组物理卷以及一组逻辑卷被称之为“卷组”,一个标准的系统只会有一个卷组。

|

||||

LVM就在这些分区上提供了一个抽象层。用于取代磁盘上的传统分区,LVM将在你对这些磁盘初始化后,将它们当作独立的“物理卷”来对待。然后,你就可以基于这些物理卷创建“逻辑卷”。例如,你可以将这两个 1 TB 的磁盘组合成一个 2 TB 的分区,你的系统将只看到一个 2 TB 的卷,而LVM将会在后台处理这一切。一组物理卷以及一组逻辑卷被称之为“卷组”,一个典型的系统只会有一个卷组。

|

||||

|

||||

该抽象层使得调整分区、将多个磁盘组合成单个卷、甚至为一个运行着的分区的文件系统创建“快照”变得十分简单,而完成所有这一切都无需先卸载分区。

|

||||

|

||||

@ -28,11 +28,11 @@ LVM就在这些分区上提供了一个抽象层。用于取代磁盘上的传

|

||||

|

||||

通常,[LVM通过Linux终端命令来管理][5]。这在Ubuntu上也行得通,但是有个更简单的图形化方法可供大家采用。如果你是一个Linux用户,对GParted或者与其类似的分区管理器熟悉,算了,别瞎掰了——GParted根本不支持LVM磁盘。

|

||||

|

||||

然而,你可以使用Ubuntu附带的磁盘工具。该工具也被称之为GNOME磁盘工具,或者叫Palimpsest。点击停靠盘上的图标来开启它吧,搜索磁盘然后敲击回车。不像GParted,该磁盘工具将会在“其它设备”下显示LVM分区,因此你可以根据需要格式化这些分区,也可以调整其它选项。该工具在Live CD或USB 驱动下也可以使用。

|

||||

然而,你可以使用Ubuntu附带的磁盘工具。该工具也被称之为GNOME磁盘工具,或者叫Palimpsest。点击dash中的图标来开启它吧,搜索“磁盘”然后敲击回车。不像GParted,该磁盘工具将会在“其它设备”下显示LVM分区,因此你可以根据需要格式化这些分区,也可以调整其它选项。该工具在Live CD或USB 驱动下也可以使用。

|

||||

|

||||

|

||||

|

||||

不幸的是,该磁盘工具不支持LVM的大多数强大的特性,没有管理卷组、扩展分区,或者创建快照等选项。对于这些操作,你可以通过终端来实现,但是你没有那个必要。相反,你可以打开Ubuntu软件中心,搜索关键字LVM,然后安装逻辑卷管理工具,你可以在终端窗口中运行**sudo apt-get install system-config-lvm**命令来安装它。安装完之后,你就可以从停靠盘上打开逻辑卷管理工具了。

|

||||

不幸的是,该磁盘工具不支持LVM的大多数强大的特性,没有管理卷组、扩展分区,或者创建快照等选项。对于这些操作,你可以通过终端来实现,但是没有那个必要。相反,你可以打开Ubuntu软件中心,搜索关键字LVM,然后安装逻辑卷管理工具,你可以在终端窗口中运行**sudo apt-get install system-config-lvm**命令来安装它。安装完之后,你就可以从dash上打开逻辑卷管理工具了。

|

||||

|

||||

这个图形化配置工具是由红帽公司开发的,虽然有点陈旧了,但却是唯一的图形化方式,你可以通过它来完成上述操作,将那些终端命令抛诸脑后了。

|

||||

|

||||

@ -40,11 +40,11 @@ LVM就在这些分区上提供了一个抽象层。用于取代磁盘上的传

|

||||

|

||||

|

||||

|

||||

卷组视图会列出你所有物理卷和逻辑卷的总览。这里,我们有两个横跨两个独立硬盘驱动器的物理分区,我们有一个交换分区和一个根分区,就像Ubuntu默认设置的分区图表。由于我们从另一个驱动器添加了第二个物理分区,现在那里有大量未使用空间。

|

||||

卷组视图会列出你所有的物理卷和逻辑卷的总览。这里,我们有两个横跨两个独立硬盘驱动器的物理分区,我们有一个交换分区和一个根分区,这是Ubuntu默认设置的分区图表。由于我们从另一个驱动器添加了第二个物理分区,现在那里有大量未使用空间。

|

||||

|

||||

|

||||

|

||||

要扩展逻辑分区到物理空间,你可以在逻辑视图下选择它,点击编辑属性,然后修改大小来扩大分区。你也可以在这里缩减分区。

|

||||

要扩展逻辑分区到物理空间,你可以在逻辑视图下选择它,点击编辑属性,然后修改大小来扩大分区。你也可以在这里缩小分区。

|

||||

|

||||

|

||||

|

||||

@ -55,7 +55,7 @@ system-config-lvm的其它选项允许你设置快照和镜像。对于传统桌

|

||||

via: http://www.howtogeek.com/211937/how-to-use-lvm-on-ubuntu-for-easy-partition-resizing-and-snapshots/

|

||||

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,89 @@

|

||||

如何在树莓派 2 运行 ubuntu Snappy Core

|

||||

================================================================================

|

||||

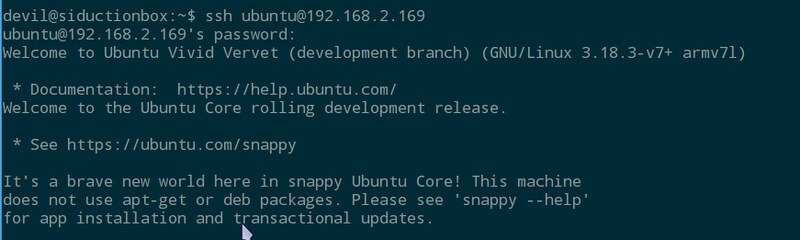

物联网(Internet of Things, IoT) 时代即将来临。很快,过不了几年,我们就会问自己当初是怎么在没有物联网的情况下生存的,就像我们现在怀疑过去没有手机的年代。Canonical 就是一个物联网快速发展却还是开放市场下的竞争者。这家公司宣称自己把赌注压到了IoT 上,就像他们已经在“云”上做过的一样。在今年一月底,Canonical 启动了一个基于Ubuntu Core 的小型操作系统,名字叫做 [Ubuntu Snappy Core][1] 。

|

||||

|

||||

Snappy 代表了两种意思,它是一种用来替代 deb 的新的打包格式;也是一个用来更新系统的前端,从CoreOS、红帽子和其他系统借鉴了**原子更新**这个想法。自从树莓派 2 投入市场,Canonical 很快就发布了用于树莓派的Snappy Core 版本。而第一代树莓派因为是基于ARMv6 ,Ubuntu 的ARM 镜像是基于ARMv7 ,所以不能运行ubuntu 。不过这种状况现在改变了,Canonical 通过发布 Snappy Core 的RPI2 镜像,抓住机会证明了Snappy 就是一个用于云计算,特别是用于物联网的系统。

|

||||

|

||||

Snappy 同样可以运行在其它像Amazon EC2, Microsofts Azure, Google的 Compute Engine 这样的云端上,也可以虚拟化在 KVM、Virtuabox 和vagrant 上。Canonical Ubuntu 已经拥抱了微软、谷歌、Docker、OpenStack 这些重量级选手,同时也与一些小项目达成合作关系。除了一些创业公司,比如 Ninja Sphere、Erle Robotics,还有一些开发板生产商,比如 Odroid、Banana Pro, Udoo, PCDuino 和 Parallella 、全志,Snappy 也提供了支持。Snappy Core 同时也希望尽快运行到路由器上来帮助改进路由器生产商目前很少更新固件的策略。

|

||||

|

||||

接下来,让我们看看怎么样在树莓派 2 上运行 Ubuntu Snappy Core。

|

||||

|

||||

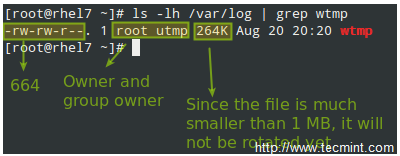

用于树莓派2 的Snappy 镜像可以从 [Raspberry Pi 网站][2] 上下载。解压缩出来的镜像必须[写到一个至少8GB 大小的SD 卡][3]。尽管原始系统很小,但是原子升级和回滚功能会占用不小的空间。使用 Snappy 启动树莓派 2 后你就可以使用默认用户名和密码(都是ubuntu)登录系统。

|

||||

|

||||

|

||||

|

||||

sudo 已经配置好了可以直接用,安全起见,你应该使用以下命令来修改你的用户名

|

||||

|

||||

$ sudo usermod -l <new name> <old name>

|

||||

|

||||

或者也可以使用`adduser` 为你添加一个新用户。

|

||||

|

||||

因为RPI缺少硬件时钟,而 Snappy Core 镜像并不知道这一点,所以系统会有一个小 bug:处理某些命令时会报很多错。不过这个很容易解决:

|

||||

|

||||

使用这个命令来确认这个bug 是否影响:

|

||||

|

||||

$ date

|

||||

|

||||

如果输出类似 "Thu Jan 1 01:56:44 UTC 1970", 你可以这样做来改正:

|

||||

|

||||

$ sudo date --set="Sun Apr 04 17:43:26 UTC 2015"

|

||||

|

||||

改成你的实际时间。

|

||||

|

||||

|

||||

|

||||

现在你可能打算检查一下,看看有没有可用的更新。注意通常使用的命令是不行的:

|

||||

|

||||

$ sudo apt-get update && sudo apt-get distupgrade

|

||||

|

||||

这时系统不会让你通过,因为 Snappy 使用它自己精简过的、基于dpkg 的包管理系统。这么做的原因是 Snappy 会运行很多嵌入式程序,而同时你也会试图所有事情尽可能的简化。

|

||||

|

||||

让我们来看看最关键的部分,理解一下程序是如何与 Snappy 工作的。运行 Snappy 的SD 卡上除了 boot 分区外还有3个分区。其中的两个构成了一个重复的文件系统。这两个平行文件系统被固定挂载为只读模式,并且任何时刻只有一个是激活的。第三个分区是一个部分可写的文件系统,用来让用户存储数据。通过更新系统,标记为'system-a' 的分区会保持一个完整的文件系统,被称作核心,而另一个平行的文件系统仍然会是空的。

|

||||

|

||||

|

||||

|

||||

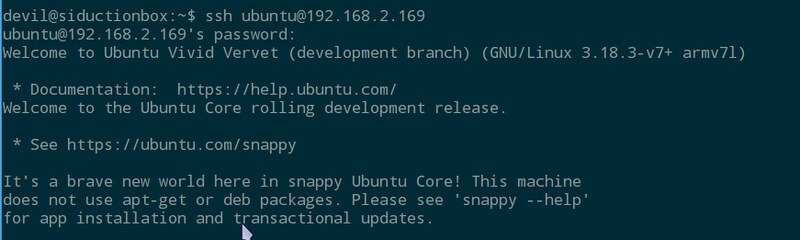

如果我们运行以下命令:

|

||||

|

||||

$ sudo snappy update

|

||||

|

||||

系统将会在'system-b' 上作为一个整体进行更新,这有点像是更新一个镜像文件。接下来你将会被告知要重启系统来激活新核心。

|

||||

|

||||

重启之后,运行下面的命令可以检查你的系统是否已经更新到最新版本,以及当前被激活的是哪个核心

|

||||

|

||||

$ sudo snappy versions -a

|

||||

|

||||

经过更新-重启两步操作,你应该可以看到被激活的核心已经被改变了。

|

||||

|

||||

因为到目前为止我们还没有安装任何软件,所以可以用下面的命令更新:

|

||||

|

||||

$ sudo snappy update ubuntu-core

|

||||

|

||||

如果你打算仅仅更新特定的OS 版本这就够了。如果出了问题,你可以使用下面的命令回滚:

|

||||

|

||||

$ sudo snappy rollback ubuntu-core

|

||||

|

||||

这将会把系统状态回滚到更新之前。

|

||||

|

||||

|

||||

|

||||

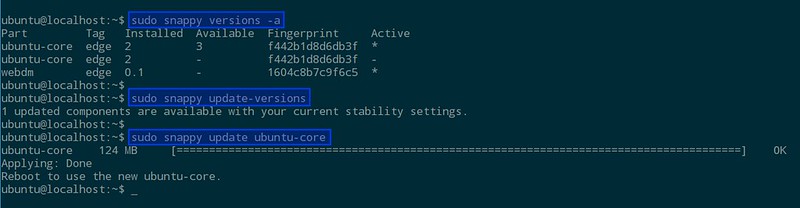

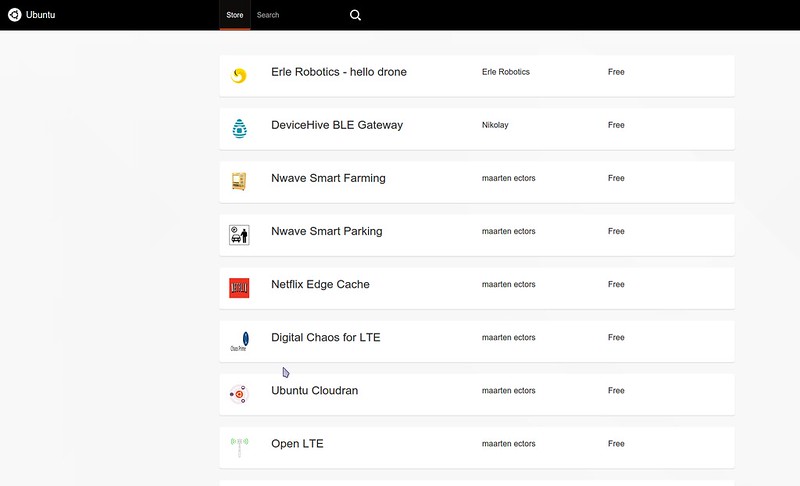

再来说说那些让 Snappy 变得有用的软件。这里不会讲的太多关于如何构建软件、向 Snappy 应用商店添加软件的基础知识,但是你可以通过 Freenode 上的IRC 频道 #snappy 了解更多信息,那个上面有很多人参与。你可以通过浏览器访问http://\<ip-address>:4200 来浏览应用商店,然后从商店安装软件,再在浏览器里访问 http://webdm.local 来启动程序。如何构建用于 Snappy 的软件并不难,而且也有了现成的[参考文档][4] 。你也可以很容易的把 DEB 安装包使用Snappy 格式移植到Snappy 上。

|

||||

|

||||

|

||||

|

||||

尽管 Ubuntu Snappy Core 吸引了我们去研究新型的 Snappy 安装包格式和 Canonical 式的原子更新操作,但是因为有限的可用应用,它现在在生产环境里还不是很有用。但是既然搭建一个 Snappy 环境如此简单,这看起来是一个学点新东西的好机会。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/ubuntu-snappy-core-raspberry-pi-2.html

|

||||

|

||||

作者:[Ferdinand Thommes][a]

|

||||

译者:[Ezio](https://github.com/oska874)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/ferdinand

|

||||

[1]:http://www.ubuntu.com/things

|

||||

[2]:http://www.raspberrypi.org/downloads/

|

||||

[3]:http://xmodulo.com/write-raspberry-pi-image-sd-card.html

|

||||

[4]:https://developer.ubuntu.com/en/snappy/

|

||||

@ -1,6 +1,6 @@

|

||||

在 VirtualBox 中使用 Docker Machine 管理主机

|

||||

================================================================================

|

||||

大家好,今天我们学习在 VirtualBox 中使用 Docker Machine 来创建和管理 Docker 主机。Docker Machine 是一个应用,用于在我们的电脑上、在云端、在数据中心创建 Docker 主机,然后用户可以使用 Docker 客户端来配置一些东西。这个 API 为本地主机、或数据中心的虚拟机、或云端的实例提供 Docker 服务。Docker Machine 支持 Windows、OSX 和 Linux,并且是以一个独立的二进制文件包形式安装的。使用(与现有 Docker 工具)相同的接口,我们就可以充分利用已经提供 Docker 基础框架的生态系统。只要一个命令,用户就能快速部署 Docker 容器。

|

||||

大家好,今天我们学习在 VirtualBox 中使用 Docker Machine 来创建和管理 Docker 主机。Docker Machine 是一个可以帮助我们在电脑上、在云端、在数据中心内创建 Docker 主机的应用。它为根据用户的配置和需求创建服务器并在其上安装 Docker和客户端提供了一个轻松的解决方案。这个 API 可以用于在本地主机、或数据中心的虚拟机、或云端的实例提供 Docker 服务。Docker Machine 支持 Windows、OSX 和 Linux,并且是以一个独立的二进制文件包形式安装的。仍然使用(与现有 Docker 工具)相同的接口,我们就可以充分利用已经提供 Docker 基础框架的生态系统。只要一个命令,用户就能快速部署 Docker 容器。

|

||||

|

||||

本文列出一些简单的步骤用 Docker Machine 来部署 docker 容器。

|

||||

|

||||

@ -8,15 +8,15 @@

|

||||

|

||||

Docker Machine 完美支持所有 Linux 操作系统。首先我们需要从 [github][1] 下载最新版本的 Docker Machine,本文使用 curl 作为下载工具,Docker Machine 版本为 0.2.0。

|

||||

|

||||

** 64 位操作系统 **

|

||||

**64 位操作系统**

|

||||

|

||||

# curl -L https://github.com/docker/machine/releases/download/v0.2.0/docker-machine_linux-amd64 > /usr/local/bin/docker-machine

|

||||

|

||||

** 32 位操作系统 **

|

||||

**32 位操作系统**

|

||||

|

||||

# curl -L https://github.com/docker/machine/releases/download/v0.2.0/docker-machine_linux-i386 > /usr/local/bin/docker-machine

|

||||

|

||||

下载完成后,找到 **/usr/local/bin** 目录下的 **docker-machine** 文件,执行一下:

|

||||

下载完成后,找到 **/usr/local/bin** 目录下的 **docker-machine** 文件,让其可以执行:

|

||||

|

||||

# chmod +x /usr/local/bin/docker-machine

|

||||

|

||||

@ -28,12 +28,12 @@ Docker Machine 完美支持所有 Linux 操作系统。首先我们需要从 [gi

|

||||

|

||||

运行下面的命令,安装 Docker 客户端,以便于在我们自己的电脑止运行 Docker 命令:

|

||||

|

||||

# curl -L https://get.docker.com/builds/linux/x86_64/docker-latest > /usr/local/bin/docker

|

||||

# chmod +x /usr/local/bin/docker

|

||||

# curl -L https://get.docker.com/builds/linux/x86_64/docker-latest > /usr/local/bin/docker

|

||||

# chmod +x /usr/local/bin/docker

|

||||

|

||||

### 2. 创建 VirtualBox 虚拟机 ###

|

||||

|

||||

在 Linux 系统上安装完 Docker Machine 后,接下来我们可以安装 VirtualBox 虚拟机,运行下面的就可以了。--driver virtualbox 选项表示我们要在 VirtualBox 的虚拟机里面部署 docker,最后的参数“linux” 是虚拟机的名称。这个命令会下载 [boot2docker][2] iso,它是个基于 Tiny Core Linux 的轻量级发行版,自带 Docker 程序,然后 docker-machine 命令会创建一个 VirtualBox 虚拟机(LCTT:当然,我们也可以选择其他的虚拟机软件)来运行这个 boot2docker 系统。

|

||||

在 Linux 系统上安装完 Docker Machine 后,接下来我们可以安装 VirtualBox 虚拟机,运行下面的就可以了。`--driver virtualbox` 选项表示我们要在 VirtualBox 的虚拟机里面部署 docker,最后的参数“linux” 是虚拟机的名称。这个命令会下载 [boot2docker][2] iso,它是个基于 Tiny Core Linux 的轻量级发行版,自带 Docker 程序,然后 `docker-machine` 命令会创建一个 VirtualBox 虚拟机(LCTT译注:当然,我们也可以选择其他的虚拟机软件)来运行这个 boot2docker 系统。

|

||||

|

||||

# docker-machine create --driver virtualbox linux

|

||||

|

||||

@ -49,7 +49,7 @@ Docker Machine 完美支持所有 Linux 操作系统。首先我们需要从 [gi

|

||||

|

||||

### 3. 设置环境变量 ###

|

||||

|

||||

现在我们需要让 docker 与虚拟机通信,运行 docker-machine env <虚拟机名称> 来实现这个目的。

|

||||

现在我们需要让 docker 与 docker-machine 通信,运行 `docker-machine env <虚拟机名称>` 来实现这个目的。

|

||||

|

||||

# eval "$(docker-machine env linux)"

|

||||

# docker ps

|

||||

@ -64,7 +64,7 @@ Docker Machine 完美支持所有 Linux 操作系统。首先我们需要从 [gi

|

||||

|

||||

### 4. 运行 Docker 容器 ###

|

||||

|

||||

完成配置后我们就可以在 VirtualBox 上运行 docker 容器了。测试一下,在虚拟机里执行 **docker run busybox echo hello world** 命令,我们可以看到容器的输出信息。

|

||||

完成配置后我们就可以在 VirtualBox 上运行 docker 容器了。测试一下,我们可以运行虚拟机 `docker run busybox` ,并在里面里执行 `echo hello world` 命令,我们可以看到容器的输出信息。

|

||||

|

||||

# docker run busybox echo hello world

|

||||

|

||||

@ -72,7 +72,7 @@ Docker Machine 完美支持所有 Linux 操作系统。首先我们需要从 [gi

|

||||

|

||||

### 5. 拿到 Docker 主机的 IP ###

|

||||

|

||||

我们可以执行下面的命令获取 Docker 主机的 IP 地址。

|

||||

我们可以执行下面的命令获取运行 Docker 的主机的 IP 地址。我们可以看到在 Docker 主机的 IP 地址上的任何暴露出来的端口。

|

||||

|

||||

# docker-machine ip

|

||||

|

||||

@ -94,7 +94,9 @@ Docker Machine 完美支持所有 Linux 操作系统。首先我们需要从 [gi

|

||||

|

||||

### 总结 ###

|

||||

|

||||

最后,我们使用 Docker Machine 成功在 VirtualBox 上创建并管理一台 Docker 主机。Docker Machine 确实能让用户快速地在不同的平台上部署 Docker 主机,就像我们这里部署在 VirtualBox 上一样。这个 --driver virtulbox 驱动可以在本地机器上使用,也可以在数据中心的虚拟机上使用。Docker Machine 驱动除了支持本地的 VirtualBox 之外,还支持远端的 Digital Ocean、AWS、Azure、VMware 以及其他基础设施。如果你有任何疑问,或者建议,请在评论栏中写出来,我们会不断改进我们的内容。谢谢,祝愉快。

|

||||

最后,我们使用 Docker Machine 成功在 VirtualBox 上创建并管理一台 Docker 主机。Docker Machine 确实能让用户快速地在不同的平台上部署 Docker 主机,就像我们这里部署在 VirtualBox 上一样。这个 virtualbox 驱动可以在本地机器上使用,也可以在数据中心的虚拟机上使用。Docker Machine 驱动除了支持本地的 VirtualBox 之外,还支持远端的 Digital Ocean、AWS、Azure、VMware 以及其它基础设施。

|

||||

|

||||

如果你有任何疑问,或者建议,请在评论栏中写出来,我们会不断改进我们的内容。谢谢,祝愉快。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -102,7 +104,7 @@ via: http://linoxide.com/linux-how-to/host-virtualbox-docker-machine/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[bazz2](https://github.com/bazz2)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -2,7 +2,7 @@ Ubuntu 14.04中修复“update information is outdated”错误

|

||||

================================================================================

|

||||

|

||||

|

||||

看到Ubuntu 14.04的顶部面板上那个显示下面这个错误的红色三角形了吗?

|

||||

看到过Ubuntu 14.04的顶部面板上那个显示下面这个错误的红色三角形了吗?

|

||||

|

||||

> 更新信息过时。该错误可能是由网络问题,或者某个仓库不再可用而造成的。请通过从指示器菜单中选择‘显示更新’来手动更新,然后查看是否存在有失败的仓库。

|

||||

>

|

||||

@ -25,7 +25,7 @@ Ubuntu 14.04中修复“update information is outdated”错误

|

||||

|

||||

### 修复‘update information is outdated’错误 ###

|

||||

|

||||

这里讨论的‘解决方案’可能对Ubuntu的这些版本有用:Ubuntu 14.04,12.04或14.04。你所要做的仅仅是打开终端(Ctrl+Alt+T),然后使用下面的命令:

|

||||

这里讨论的‘解决方案’可能对Ubuntu的这些版本有用:Ubuntu 14.04,12.04。你所要做的仅仅是打开终端(Ctrl+Alt+T),然后使用下面的命令:

|

||||

|

||||

sudo apt-get update

|

||||

|

||||

@ -47,7 +47,7 @@ via: http://itsfoss.com/fix-update-information-outdated-ubuntu/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -56,4 +56,4 @@ via: http://itsfoss.com/fix-update-information-outdated-ubuntu/

|

||||

[2]:http://itsfoss.com/notification-terminal-command-completion-ubuntu/

|

||||

[3]:http://itsfoss.com/solve-gpg-error-signatures-verified-ubuntu/

|

||||

[4]:http://itsfoss.com/install-spotify-ubuntu-1504/

|

||||

[5]:http://itsfoss.com/fix-update-errors-ubuntu-1404/

|

||||

[5]:https://linux.cn/article-5603-1.html

|

||||

@ -1,10 +1,11 @@

|

||||

在 Linux 中使用日志来排错

|

||||

================================================================================

|

||||

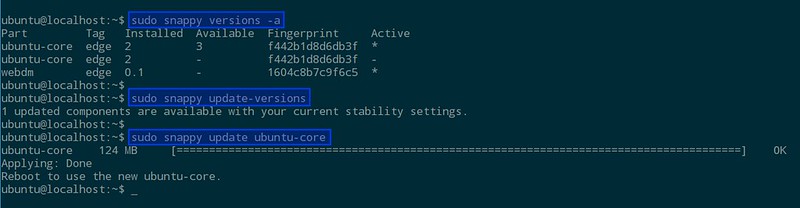

人们创建日志的主要原因是排错。通常你会诊断为什么问题发生在你的 Linux 系统或应用程序中。错误信息或一些列事件可以给你提供造成根本原因的线索,说明问题是如何发生的,并指出如何解决它。这里有几个使用日志来解决的样例。

|

||||

|

||||

人们创建日志的主要原因是排错。通常你会诊断为什么问题发生在你的 Linux 系统或应用程序中。错误信息或一系列的事件可以给你提供找出根本原因的线索,说明问题是如何发生的,并指出如何解决它。这里有几个使用日志来解决的样例。

|

||||

|

||||

### 登录失败原因 ###

|

||||

|

||||

如果你想检查你的系统是否安全,你可以在验证日志中检查登录失败的和登录成功但可疑的用户。当有人通过不正当或无效的凭据来登录时会出现认证失败,经常使用 SSH 进行远程登录或 su 到本地其他用户来进行访问权。这些是由[插入式验证模块][1]来记录,或 PAM 进行短期记录。在你的日志中会看到像 Failed 这样的字符串密码和未知的用户。成功认证记录包括像 Accepted 这样的字符串密码并打开会话。

|

||||

如果你想检查你的系统是否安全,你可以在验证日志中检查登录失败的和登录成功但可疑的用户。当有人通过不正当或无效的凭据来登录时会出现认证失败,这通常发生在使用 SSH 进行远程登录或 su 到本地其他用户来进行访问权时。这些是由[插入式验证模块(PAM)][1]来记录的。在你的日志中会看到像 Failed password 和 user unknown 这样的字符串。而成功认证记录则会包括像 Accepted password 和 session opened 这样的字符串。

|

||||

|

||||

失败的例子:

|

||||

|

||||

@ -30,22 +31,21 @@

|

||||

|

||||

由于没有标准格式,所以你需要为每个应用程序的日志使用不同的命令。日志管理系统,可以自动分析日志,将它们有效的归类,帮助你提取关键字,如用户名。

|

||||

|

||||

日志管理系统可以使用自动解析功能从 Linux 日志中提取用户名。这使你可以看到用户的信息,并能单个的筛选。在这个例子中,我们可以看到,root 用户登录了 2700 次,因为我们筛选的日志显示尝试登录的只有 root 用户。

|

||||

日志管理系统可以使用自动解析功能从 Linux 日志中提取用户名。这使你可以看到用户的信息,并能通过点击过滤。在下面这个例子中,我们可以看到,root 用户登录了 2700 次之多,因为我们筛选的日志仅显示 root 用户的尝试登录记录。

|

||||

|

||||

|

||||

|

||||

日志管理系统也让你以时间为做坐标轴的图标来查看使你更容易发现异常。如果有人在几分钟内登录失败一次或两次,它可能是一个真正的用户而忘记了密码。但是,如果有几百个失败的登录并且使用的都是不同的用户名,它更可能是在试图攻击系统。在这里,你可以看到在3月12日,有人试图登录 Nagios 几百次。这显然不是一个合法的系统用户。

|

||||

日志管理系统也可以让你以时间为做坐标轴的图表来查看,使你更容易发现异常。如果有人在几分钟内登录失败一次或两次,它可能是一个真正的用户而忘记了密码。但是,如果有几百个失败的登录并且使用的都是不同的用户名,它更可能是在试图攻击系统。在这里,你可以看到在3月12日,有人试图登录 Nagios 几百次。这显然不是一个合法的系统用户。

|

||||

|

||||

|

||||

|

||||

### 重启的原因 ###

|

||||

|

||||

|

||||

有时候,一台服务器由于系统崩溃或重启而宕机。你怎么知道它何时发生,是谁做的?

|

||||

|

||||

#### 关机命令 ####

|

||||

|

||||

如果有人手动运行 shutdown 命令,你可以看到它的身份在验证日志文件中。在这里,你可以看到,有人从 IP 50.0.134.125 上作为 ubuntu 的用户远程登录了,然后关闭了系统。

|

||||

如果有人手动运行 shutdown 命令,你可以在验证日志文件中看到它。在这里,你可以看到,有人从 IP 50.0.134.125 上作为 ubuntu 的用户远程登录了,然后关闭了系统。

|

||||

|

||||

Mar 19 18:36:41 ip-172-31-11-231 sshd[23437]: Accepted publickey for ubuntu from 50.0.134.125 port 52538 ssh

|

||||

Mar 19 18:36:41 ip-172-31-11-231 23437]:sshd[ pam_unix(sshd:session): session opened for user ubuntu by (uid=0)

|

||||

@ -53,7 +53,7 @@

|

||||

|

||||

#### 内核初始化 ####

|

||||

|

||||

如果你想看看服务器重新启动的所有原因(包括崩溃),你可以从内核初始化日志中寻找。你需要搜索内核设施和初始化 cpu 的信息。

|

||||

如果你想看看服务器重新启动的所有原因(包括崩溃),你可以从内核初始化日志中寻找。你需要搜索内核类(kernel)和 cpu 初始化(Initializing)的信息。

|

||||

|

||||

Mar 19 18:39:30 ip-172-31-11-231 kernel: [ 0.000000] Initializing cgroup subsys cpuset

|

||||

Mar 19 18:39:30 ip-172-31-11-231 kernel: [ 0.000000] Initializing cgroup subsys cpu

|

||||

@ -61,9 +61,9 @@

|

||||

|

||||

### 检测内存问题 ###

|

||||

|

||||

有很多原因可能导致服务器崩溃,但一个普遍的原因是内存用尽。

|

||||

有很多原因可能导致服务器崩溃,但一个常见的原因是内存用尽。

|

||||

|

||||

当你系统的内存不足时,进程会被杀死,通常会杀死使用最多资源的进程。当系统正在使用的内存发生错误并且有新的或现有的进程试图使用更多的内存。在你的日志文件查找像 Out of Memory 这样的字符串,内核也会发出杀死进程的警告。这些信息表明系统故意杀死进程或应用程序,而不是允许进程崩溃。

|

||||

当你系统的内存不足时,进程会被杀死,通常会杀死使用最多资源的进程。当系统使用了所有内存,而新的或现有的进程试图使用更多的内存时就会出现错误。在你的日志文件查找像 Out of Memory 这样的字符串或类似 kill 这样的内核警告信息。这些信息表明系统故意杀死进程或应用程序,而不是允许进程崩溃。

|

||||

|

||||

例如:

|

||||

|

||||

@ -75,20 +75,20 @@

|

||||

$ grep “Out of memory” /var/log/syslog

|

||||

[33238.178288] Out of memory: Kill process 6230 (firefox) score 53 or sacrifice child

|

||||

|

||||

请记住,grep 也要使用内存,所以导致内存不足的错误可能只是运行的 grep。这是另一个分析日志的独特方法!

|

||||

请记住,grep 也要使用内存,所以只是运行 grep 也可能导致内存不足的错误。这是另一个你应该中央化存储日志的原因!

|

||||

|

||||

### 定时任务错误日志 ###

|

||||

|

||||

cron 守护程序是一个调度器只在指定的日期和时间运行进程。如果进程运行失败或无法完成,那么 cron 的错误出现在你的日志文件中。你可以找到这些文件在 /var/log/cron,/var/log/messages,和 /var/log/syslog 中,具体取决于你的发行版。cron 任务失败原因有很多。通常情况下,问题出在进程中而不是 cron 守护进程本身。

|

||||

cron 守护程序是一个调度器,可以在指定的日期和时间运行进程。如果进程运行失败或无法完成,那么 cron 的错误出现在你的日志文件中。具体取决于你的发行版,你可以在 /var/log/cron,/var/log/messages,和 /var/log/syslog 几个位置找到这个日志。cron 任务失败原因有很多。通常情况下,问题出在进程中而不是 cron 守护进程本身。

|

||||

|

||||

默认情况下,cron 作业会通过电子邮件发送信息。这里是一个日志中记录的发送电子邮件的内容。不幸的是,你不能看到邮件的内容在这里。

|

||||

默认情况下,cron 任务的输出会通过 postfix 发送电子邮件。这是一个显示了该邮件已经发送的日志。不幸的是,你不能在这里看到邮件的内容。

|

||||

|

||||

Mar 13 16:35:01 PSQ110 postfix/pickup[15158]: C3EDC5800B4: uid=1001 from=<hoover>

|

||||

Mar 13 16:35:01 PSQ110 postfix/cleanup[15727]: C3EDC5800B4: message-id=<20150310110501.C3EDC5800B4@PSQ110>

|

||||

Mar 13 16:35:01 PSQ110 postfix/qmgr[15159]: C3EDC5800B4: from=<hoover@loggly.com>, size=607, nrcpt=1 (queue active)

|

||||

Mar 13 16:35:05 PSQ110 postfix/smtp[15729]: C3EDC5800B4: to=<hoover@loggly.com>, relay=gmail-smtp-in.l.google.com[74.125.130.26]:25, delay=4.1, delays=0.26/0/2.2/1.7, dsn=2.0.0, status=sent (250 2.0.0 OK 1425985505 f16si501651pdj.5 - gsmtp)

|

||||

|

||||

你应该想想 cron 在日志中的标准输出以帮助你定位问题。这里展示你可以使用 logger 命令重定向 cron 标准输出到 syslog。用你的脚本来代替 echo 命令,helloCron 可以设置为任何你想要的应用程序的名字。

|

||||

你可以考虑将 cron 的标准输出记录到日志中,以帮助你定位问题。这是一个你怎样使用 logger 命令重定向 cron 标准输出到 syslog的例子。用你的脚本来代替 echo 命令,helloCron 可以设置为任何你想要的应用程序的名字。

|

||||

|

||||

*/5 * * * * echo ‘Hello World’ 2>&1 | /usr/bin/logger -t helloCron

|

||||

|

||||

@ -97,7 +97,9 @@ cron 守护程序是一个调度器只在指定的日期和时间运行进程。

|

||||

Apr 28 22:20:01 ip-172-31-11-231 CRON[15296]: (ubuntu) CMD (echo 'Hello World!' 2>&1 | /usr/bin/logger -t helloCron)

|

||||

Apr 28 22:20:01 ip-172-31-11-231 helloCron: Hello World!

|

||||

|

||||

每个 cron 作业将根据作业的具体类型以及如何输出数据来记录不同的日志。希望在日志中有问题根源的线索,也可以根据需要添加额外的日志记录。

|

||||

每个 cron 任务将根据任务的具体类型以及如何输出数据来记录不同的日志。

|

||||

|

||||

希望在日志中有问题根源的线索,也可以根据需要添加额外的日志记录。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -107,7 +109,7 @@ via: http://www.loggly.com/ultimate-guide/logging/troubleshooting-with-linux-log

|

||||

作者:[Amy Echeverri][a2]

|

||||

作者:[Sadequl Hussain][a3]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,6 +1,7 @@

|

||||

Ubuntu 15.04 and系统中安装 Logwatch

|

||||

如何在 Ubuntu 15.04 系统中安装 Logwatch

|

||||

================================================================================

|

||||

大家好,今天我们会讲述在 Ubuntu 15.04 操作系统上如何安装 Logwatch 软件,它也可以在任意的 Linux 系统和类 Unix 系统上安装。Logwatch 是一款可定制的日志分析和日志监控报告生成系统,它可以根据一段时间的日志文件生成您所希望关注的详细报告。它具有易安装、易配置、可审查等特性,同时对其提供的数据的安全性上也有一些保障措施。Logwatch 会扫描重要的操作系统组件像 SSH、网站服务等的日志文件,然后生成用户所关心的有价值的条目汇总报告。

|

||||

|

||||

大家好,今天我们会讲述在 Ubuntu 15.04 操作系统上如何安装 Logwatch 软件,它也可以在各种 Linux 系统和类 Unix 系统上安装。Logwatch 是一款可定制的日志分析和日志监控报告生成系统,它可以根据一段时间的日志文件生成您所希望关注的详细报告。它具有易安装、易配置、可审查等特性,同时对其提供的数据的安全性上也有一些保障措施。Logwatch 会扫描重要的操作系统组件像 SSH、网站服务等的日志文件,然后生成用户所关心的有价值的条目汇总报告。

|

||||

|

||||

### 预安装设置 ###

|

||||

|

||||

@ -16,13 +17,13 @@ Ubuntu 15.04 and系统中安装 Logwatch

|

||||

|

||||

root@ubuntu-15:~# apt-get install logwatch

|

||||

|

||||

在安装过程中,一旦您按提示按下“Y”健同意对系统修改的话,Logwatch 将会开始安装一些额外的必须软件包。

|

||||

在安装过程中,一旦您按提示按下“Y”键同意对系统修改的话,Logwatch 将会开始安装一些额外的必须软件包。

|

||||

|

||||

在安装过程中会根据您机器上的邮件服务器设置情况弹出提示对 Postfix 设置的配置界面。在这篇教程中我们使用最容易的 “仅本地” 选项。根据您的基础设施情况也可以选择其它的可选项,然后点击“确定”继续。

|

||||

在安装过程中会根据您机器上的邮件服务器设置情况弹出提示对 Postfix 设置的配置界面。在这篇教程中我们使用最容易的 “仅本地(Local only)” 选项。根据您的基础设施情况也可以选择其它的可选项,然后点击“确定”继续。

|

||||

|

||||

|

||||

|

||||

随后您得选择邮件服务器名,这邮件服务器名也会被其它程序使用,所以它应该是一个完全合格域名/全称域名(FQDN),且只一个。

|

||||

随后您得选择邮件服务器名,这邮件服务器名也会被其它程序使用,所以它应该是一个完全合格域名/全称域名(FQDN)。

|

||||

|

||||

|

||||

|

||||

@ -70,11 +71,11 @@ Ubuntu 15.04 and系统中安装 Logwatch

|

||||

# complete email address.

|

||||

MailFrom = Logwatch

|

||||

|

||||

对这个配置文件保存修改,至于其它的参数就让它是默认的,无需改动。

|

||||

对这个配置文件保存修改,至于其它的参数就让它保持默认,无需改动。

|

||||

|

||||

**调度任务配置**

|

||||

|

||||

现在编辑在日常 crons 目录下的 “00logwatch” 文件来配置从 logwatch 生成的报告需要发送的邮件地址。

|

||||

现在编辑在 “daily crons” 目录下的 “00logwatch” 文件来配置从 logwatch 生成的报告需要发送的邮件地址。

|

||||

|

||||

root@ubuntu-15:~# vim /etc/cron.daily/00logwatch

|

||||

|

||||

@ -88,25 +89,25 @@ Ubuntu 15.04 and系统中安装 Logwatch

|

||||

|

||||

root@ubuntu-15:~#logwatch

|

||||

|

||||

生成的报告开始部分显示的是执行的时间和日期。它包含不同的部分,每个部分以开始标识开始而以结束标识结束,中间显示的标识部分提到的完整日志信息。

|

||||

生成的报告开始部分显示的是执行的时间和日期。它包含不同的部分,每个部分以开始标识开始而以结束标识结束,中间显示的是该部分的完整信息。

|

||||

|

||||

这儿演示的是开始标识头的样子,要显示系统上所有安装包的信息,如下所示:

|

||||

这儿显示的是开始的样子,它以显示系统上所有安装的软件包的部分开始,如下所示:

|

||||

|

||||

|

||||

|

||||

接下来的部分显示的日志信息是关于当前系统登陆会话、rsyslogs 和当前及最后可用的会话 SSH 连接信息。

|

||||

接下来的部分显示的日志信息是关于当前系统登录会话、rsyslogs 和当前及最近的 SSH 会话信息。

|

||||

|

||||

|

||||

|

||||

Logwatch 报告最后显示的是安全 sudo 日志及root目录磁盘使用情况,如下示:

|

||||

Logwatch 报告最后显示的是安全方面的 sudo 日志及根目录磁盘使用情况,如下示:

|

||||

|

||||

|

||||

|

||||

您也可以打开如下的文件来检查生成的 logwatch 报告电子邮件。

|

||||

您也可以打开如下的文件来查看生成的 logwatch 报告电子邮件。

|

||||

|

||||

root@ubuntu-15:~# vim /var/mail/root

|

||||

|

||||

您会看到所有已生成的邮件到其配置用户的信息传送状态。

|

||||

您会看到发送给你配置的用户的所有已生成的邮件及其邮件递交状态。

|

||||

|

||||

### 更多详情 ###

|

||||

|

||||

@ -130,7 +131,7 @@ via: http://linoxide.com/ubuntu-how-to/install-use-logwatch-ubuntu-15-04/

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[runningwater](https://github.com/runningwater)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

如何在 Linux 中安装 Visual Studio Code

|

||||

================================================================================

|

||||

大家好,今天我们一起来学习如何在 Linux 发行版中安装 Visual Studio Code。Visual Studio Code 是基于 Electron 优化代码后的编辑器,后者是基于 Chromium 的一款软件,用于为桌面系统发布 io.js 应用。Visual Studio Code 是微软开发的包括 Linux 在内的全平台代码编辑器和文本编辑器。它是免费软件但不开源,在专有软件许可条款下发布。它是我们日常使用的超级强大和快速的代码编辑器。Visual Studio Code 有很多很酷的功能,例如导航、智能感知支持、语法高亮、括号匹配、自动补全、片段、支持自定义键盘绑定、并且支持多种语言,例如 Python、C++、Jade、PHP、XML、Batch、F#、DockerFile、Coffee Script、Java、HandleBars、 R、 Objective-C、 PowerShell、 Luna、 Visual Basic、 .Net、 Asp.Net、 C#、 JSON、 Node.js、 Javascript、 HTML、 CSS、 Less、 Sass 和 Markdown。Visual Studio Code 集成了包管理器和库,并构建通用任务使得加速每日的工作流。Visual Studio Code 中最受欢迎的是它的调试功能,它包括流式支持 Node.js 的预览调试。

|

||||

大家好,今天我们一起来学习如何在 Linux 发行版中安装 Visual Studio Code。Visual Studio Code 是基于 Electron 优化代码后的编辑器,后者是基于 Chromium 的一款软件,用于为桌面系统发布 io.js 应用。Visual Studio Code 是微软开发的支持包括 Linux 在内的全平台代码编辑器和文本编辑器。它是免费软件但不开源,在专有软件许可条款下发布。它是可以用于我们日常使用的超级强大和快速的代码编辑器。Visual Studio Code 有很多很酷的功能,例如导航、智能感知支持、语法高亮、括号匹配、自动补全、代码片段、支持自定义键盘绑定、并且支持多种语言,例如 Python、C++、Jade、PHP、XML、Batch、F#、DockerFile、Coffee Script、Java、HandleBars、 R、 Objective-C、 PowerShell、 Luna、 Visual Basic、 .Net、 Asp.Net、 C#、 JSON、 Node.js、 Javascript、 HTML、 CSS、 Less、 Sass 和 Markdown。Visual Studio Code 集成了包管理器、库、构建,以及其它通用任务,以加速日常的工作流。Visual Studio Code 中最受欢迎的是它的调试功能,它包括流式支持 Node.js 的预览调试。

|

||||

|

||||

注意:请注意 Visual Studio Code 只支持 64 位 Linux 发行版。

|

||||

注意:请注意 Visual Studio Code 只支持 64 位的 Linux 发行版。

|

||||

|

||||

下面是在所有 Linux 发行版中安装 Visual Studio Code 的几个简单步骤。

|

||||

|

||||

@ -32,12 +32,12 @@

|

||||

|

||||

### 3. 运行 Visual Studio Code ###

|

||||

|

||||

提取软件包之后,我们可以直接运行一个名为 Code 的文件启动 Visual Studio Code。

|

||||

展开软件包之后,我们可以直接运行一个名为 Code 的文件启动 Visual Studio Code。

|

||||

|

||||

# sudo chmod +x /opt/VSCode-linux-x64/Code

|

||||

# sudo /opt/VSCode-linux-x64/Code

|

||||

|

||||

如果我们想启动 Code 并通过终端能在任何地方打开,我们就需要创建 /opt/vscode/Code 的一个链接 /usr/local/bin/code。

|

||||

如果我们想通过终端在任何地方启动 Code,我们就需要创建 /opt/vscode/Code 的一个链接 /usr/local/bin/code。

|

||||

|

||||

# ln -s /opt/VSCode-linux-x64/Code /usr/local/bin/code

|

||||

|

||||

@ -47,11 +47,11 @@

|

||||

|

||||

### 4. 创建桌面启动 ###

|

||||

|

||||

下一步,成功抽取 Visual Studio Code 软件包之后,我们打算创建桌面启动程序,使得根据不同桌面环境能够从启动器、菜单、桌面启动它。首先我们要复制一个图标文件到 /usr/share/icons/ 目录。

|

||||

下一步,成功展开 Visual Studio Code 软件包之后,我们打算创建桌面启动程序,使得根据不同桌面环境能够从启动器、菜单、桌面启动它。首先我们要复制一个图标文件到 /usr/share/icons/ 目录。

|

||||

|

||||

# cp /opt/VSCode-linux-x64/resources/app/vso.png /usr/share/icons/

|

||||

|

||||

然后,我们创建一个桌面启动程序,文件扩展名为 .desktop。这里我们在 /tmp/VSCODE/ 目录中使用喜欢的文本编辑器创建名为 visualstudiocode.desktop 的文件。

|

||||

然后,我们创建一个桌面启动程序,文件扩展名为 .desktop。这里我们使用喜欢的文本编辑器在 /tmp/VSCODE/ 目录中创建名为 visualstudiocode.desktop 的文件。

|

||||

|

||||

# vi /tmp/vscode/visualstudiocode.desktop

|

||||

|

||||

@ -99,17 +99,19 @@

|

||||

# apt-get update

|

||||

# apt-get install ubuntu-make

|

||||

|

||||

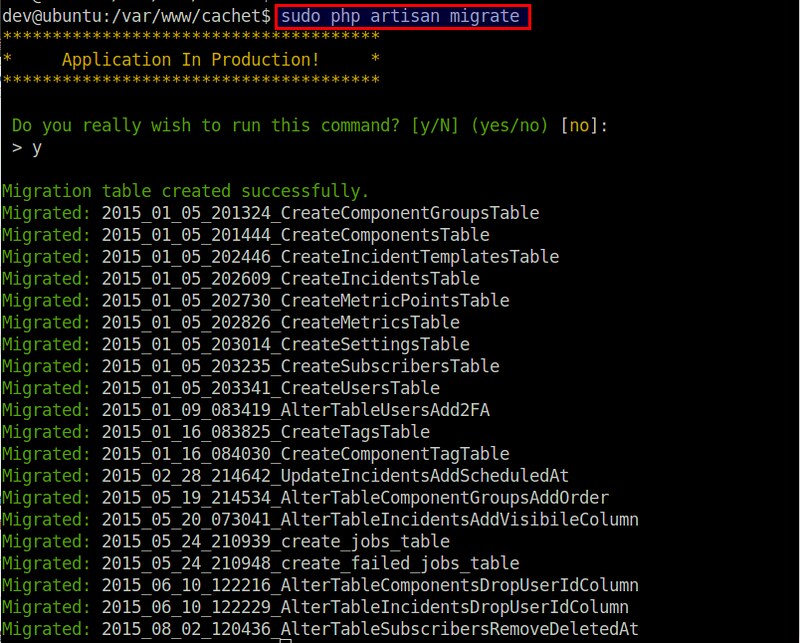

在我们的 ubuntu 操作系统上安装完 Ubuntu Make 之后,我们打算在一个终端中运行以下命令安装 Code。

|

||||

在我们的 ubuntu 操作系统上安装完 Ubuntu Make 之后,我们可以在一个终端中运行以下命令来安装 Code。

|

||||

|

||||

# umake web visual-studio-code

|

||||

|

||||

|

||||

|

||||

运行完上面的命令之后,会要求我们输入想要的安装路径。然后,会请求我们允许在 ubuntu 系统中安装 Visual Studio Code。我们敲击 “a”。点击完后,它会在 ubuntu 机器上下载和安装 Code。最后,我们可以在启动器或者菜单中启动它。

|

||||

运行完上面的命令之后,会要求我们输入想要的安装路径。然后,会请求我们允许在 ubuntu 系统中安装 Visual Studio Code。我们输入“a”(接受)。输入完后,它会在 ubuntu 机器上下载和安装 Code。最后,我们可以在启动器或者菜单中启动它。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

我们已经成功地在 Linux 发行版上安装了 Visual Studio Code。在所有 linux 发行版上安装 Visual Studio Code 都和上面介绍的相似,我们同样可以使用 umake 在 linux 发行版中安装。Umake 是一个安装开发工具,IDEs 和语言流行的工具。我们可以用 Umake 轻松地安装 Android Studios、Eclipse 和很多其它流行 IDE。Visual Studio Code 是基于 Github 上一个叫 [Electron][2] 的项目,它是 [Atom.io][3] 编辑器的一部分。它有很多 Atom.io 编辑器没有的改进功能。当前 Visual Studio Code 只支持 64 位 linux 操作系统平台。如果你有任何疑问、建议或者反馈,请在下面的评论框中留言以便我们改进和更新我们的内容。非常感谢!Enjoy :-)

|

||||

我们已经成功地在 Linux 发行版上安装了 Visual Studio Code。在所有 linux 发行版上安装 Visual Studio Code 都和上面介绍的相似,我们也可以使用 umake 在 Ubuntu 发行版中安装。Umake 是一个安装开发工具,IDEs 和语言的流行工具。我们可以用 Umake 轻松地安装 Android Studios、Eclipse 和很多其它流行 IDE。Visual Studio Code 是基于 Github 上一个叫 [Electron][2] 的项目,它是 [Atom.io][3] 编辑器的一部分。它有很多 Atom.io 编辑器没有的改进功能。当前 Visual Studio Code 只支持 64 位 linux 操作系统平台。

|

||||

|

||||

如果你有任何疑问、建议或者反馈,请在下面的评论框中留言以便我们改进和更新我们的内容。非常感谢!Enjoy :-)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -117,7 +119,7 @@ via: http://linoxide.com/linux-how-to/install-visual-studio-code-linux/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,160 @@

|

||||

Alien 魔法:RPM 和 DEB 互转

|

||||

================================================================================

|

||||

|

||||

正如我确信,你们一定知道Linux下的多种软件安装方式:使用发行版所提供的包管理系统([aptitude,yum,或者zypper][1],还可以举很多例子),从源码编译(尽管现在很少用了,但在Linux发展早期却是唯一可用的方法),或者使用各自的低级工具dpkg用于.deb,以及rpm用于.rpm,预编译包,如此这般。

|

||||

|

||||

|

||||

|

||||

*使用Alien将RPM转换成DEB以及将DEB转换成RPM*

|

||||

|

||||

在本文中,我们将为你介绍alien,一个用于在各种不同的Linux包格式相互转换的工具,其最常见的用法是将.rpm转换成.deb(或者反过来)。

|

||||

|

||||

如果你需要某个特定类型的包,而你只能找到其它格式的包的时候,该工具迟早能派得上用场——即使是其作者不再维护,并且在其网站声明:alien将可能永远维持在实验状态。

|

||||

|

||||

例如,有一次,我正查找一个用于喷墨打印机的.deb驱动,但是却没有找到——生产厂家只提供.rpm包,这时候alien拯救了我。我安装了alien,将包进行转换,不久之后我就可以使用我的打印机了,没有任何问题。

|

||||

|

||||

即便如此,我们也必须澄清一下,这个工具不应当用来转换重要的系统文件和库,因为它们在不同的发行版中有不同的配置。只有在前面说的那种情况下所建议的安装方法根本不适合时,alien才能作为最后手段使用。

|

||||

|

||||

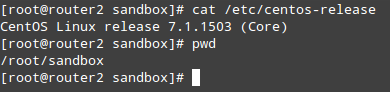

最后一项要点是,我们必须注意,虽然我们在本文中使用CentOS和Debian,除了前两个发行版及其各自的家族体系外,据我们所知,alien可以工作在Slackware中,甚至Solaris中。

|

||||

|

||||

### 步骤1:安装Alien及其依赖包 ###

|

||||

|

||||

要安装alien到CentOS/RHEL 7中,你需要启用EPEL和Nux Dextop(是的,是Dextop——不是Desktop)仓库,顺序如下:

|

||||

|

||||

# yum install epel-release

|

||||

|

||||

启用Nux Dextop仓库的包的当前最新版本是0.5(2015年8月10日发布),在安装之前你可以查看[http://li.nux.ro/download/nux/dextop/el7/x86_64/][2]上是否有更新的版本。

|

||||

|

||||

# rpm --import http://li.nux.ro/download/nux/RPM-GPG-KEY-nux.ro

|

||||

# rpm -Uvh http://li.nux.ro/download/nux/dextop/el7/x86_64/nux-dextop-release-0-5.el7.nux.noarch.rpm

|

||||

|

||||

然后再做,

|

||||

|

||||

# yum update && yum install alien

|

||||

|

||||

在Fedora中,你只需要运行上面的命令即可。

|

||||

|

||||

在Debian及其衍生版中,只需要:

|

||||

|

||||

# aptitude install alien

|

||||

|

||||

### 步骤2:将.deb转换成.rpm包 ###

|

||||

|

||||

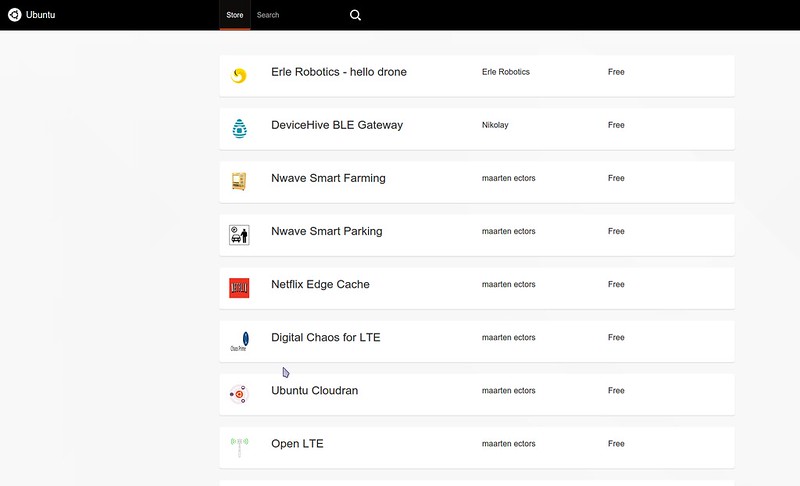

对于本次测试,我们选择了date工具,它提供了一系列日期和时间工具用于处理大量金融数据。我们将下载.deb包到我们的CentOS 7机器中,将它转换成.rpm并安装:

|

||||

|

||||

|

||||

|

||||

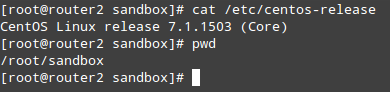

检查CentOS版本

|

||||

|

||||

# cat /etc/centos-release

|

||||

# wget http://ftp.us.debian.org/debian/pool/main/d/dateutils/dateutils_0.3.1-1.1_amd64.deb

|

||||

# alien --to-rpm --scripts dateutils_0.3.1-1.1_amd64.deb

|

||||

|

||||

|

||||

|

||||

*在Linux中将.deb转换成.rpm*

|

||||

|

||||

**重要**:(请注意alien是怎样来增加目标包的次版本号的。如果你想要无视该行为,请添加-keep-version标识)。

|

||||

|

||||

如果我们尝试马上安装该包,我们将碰到些许问题:

|

||||

|

||||

# rpm -Uvh dateutils-0.3.1-2.1.x86_64.rpm

|

||||

|

||||

|

||||

|

||||

*安装RPM包*

|

||||

|

||||

要解决该问题,我们需要启用epel-testing仓库,然后安装rpmbuild工具来编辑该包的配置以重建包:

|

||||

|

||||

# yum --enablerepo=epel-testing install rpmrebuild

|

||||

|

||||

然后运行,

|

||||

|

||||

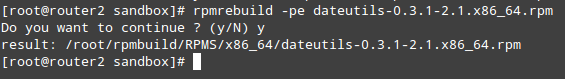

# rpmrebuild -pe dateutils-0.3.1-2.1.x86_64.rpm

|

||||

|

||||

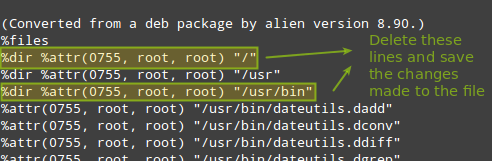

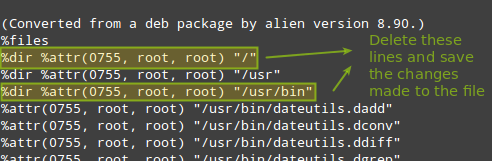

它会打开你的默认文本编辑器。请转到`%files`章节并删除涉及到错误信息中提到的目录的行,然后保存文件并退出:

|

||||

|

||||

|

||||

|

||||

*转换.deb到Alien版*

|

||||

|

||||

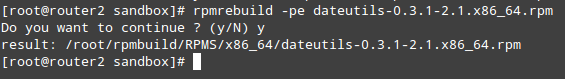

但你退出该文件后,将提示你继续去重构。如果你选择“Y”,该文件会重构到指定的目录(与当前工作目录不同):

|

||||

|

||||

# rpmrebuild –pe dateutils-0.3.1-2.1.x86_64.rpm

|

||||

|

||||

|

||||

|

||||

*构建RPM包*

|

||||

|

||||

现在你可以像以往一样继续来安装包并验证:

|

||||

|

||||

# rpm -Uvh /root/rpmbuild/RPMS/x86_64/dateutils-0.3.1-2.1.x86_64.rpm

|

||||

# rpm -qa | grep dateutils

|

||||

|

||||

|

||||

|

||||

*安装构建RPM包*

|

||||

|

||||

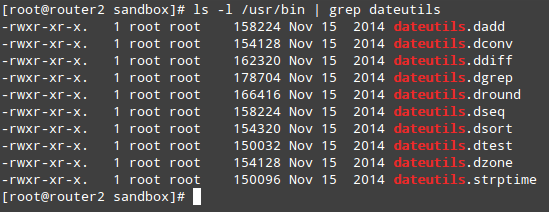

最后,你可以列出date工具包含的各个工具,也可以查看各自的手册页:

|

||||

|

||||

# ls -l /usr/bin | grep dateutils

|

||||

|

||||

|

||||

|

||||

*验证安装的RPM包*

|

||||

|

||||

### 步骤3:将.rpm转换成.deb包 ###

|

||||

|

||||

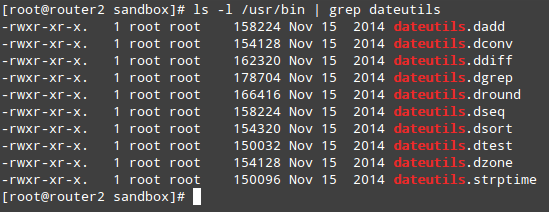

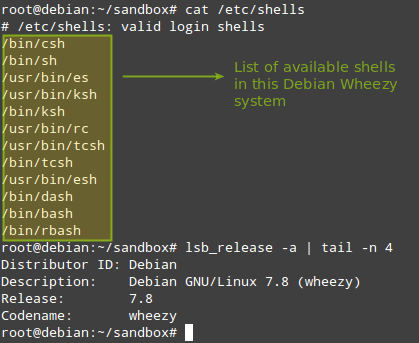

在本节中,我们将演示如何将.rpm转换成.deb。在一台32位的Debian Wheezy机器中,让我们从CentOS 6操作系统仓库中下载用于zsh shell的.rpm包。注意,该shell在Debian及其衍生版的默认安装中是不可用的。

|

||||

|

||||

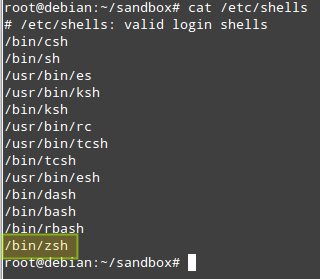

# cat /etc/shells

|

||||

# lsb_release -a | tail -n 4

|

||||

|

||||

|

||||

|

||||

*检查Shell和Debian操作系统版本*

|

||||

|

||||

# wget http://mirror.centos.org/centos/6/os/i386/Packages/zsh-4.3.11-4.el6.centos.i686.rpm

|

||||

# alien --to-deb --scripts zsh-4.3.11-4.el6.centos.i686.rpm

|

||||

|

||||

你可以安全地无视关于签名丢失的信息:

|

||||

|

||||

|

||||

|

||||

*将.rpm转换成.deb包*

|

||||

|

||||

过了一会儿后,.deb包应该已经生成,并可以安装了:

|

||||

|

||||

# dpkg -i zsh_4.3.11-5_i386.deb

|

||||

|

||||

|

||||

|

||||

*安装RPM转换来的Deb包*

|

||||

|

||||

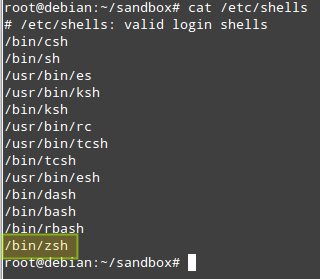

安装完后,你看看可以zsh是否添加到了合法shell列表中:

|

||||

|

||||

# cat /etc/shells

|

||||

|

||||

|

||||

|

||||

*确认安装的Zsh包*

|

||||

|

||||

### 小结 ###

|

||||

|

||||

在本文中,我们已经解释了如何将.rpm转换成.deb及其反向转换,这可以作为这类程序不能从仓库中或者作为可分发源代码获得的最后安装手段。你一定想要将本文添加到书签中,因为我们都需要alien。

|

||||

|

||||

请自由分享你关于本文的想法,写到下面的表单中吧。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/convert-from-rpm-to-deb-and-deb-to-rpm-package-using-alien/

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/gacanepa/

|

||||

[1]:http://www.tecmint.com/linux-package-management/

|

||||

[2]:http://li.nux.ro/download/nux/dextop/el7/x86_64/

|

||||

@ -1,17 +1,21 @@

|

||||

|

||||

Linux/UNIX: Bash 下如何逐行读取一个文件

|

||||

Bash 下如何逐行读取一个文件

|

||||

================================================================================

|

||||

在 Linux 或类 UNIX 系统下如何使用 KSH 或 BASH shell 逐行读取一个文件?

|

||||

|

||||

在 Linux, OSX, * BSD ,或者类 Unix 系统下你可以使用while..do..done bash 的循环来逐行读取一个文件。

|

||||

在 Linux 或类 UNIX 系统下如何使用 KSH 或 BASH shell 逐行读取一个文件?

|

||||

|

||||

**在 Bash Unix 或者 Linux shell 中逐行读取一个文件的语法:**

|

||||

在 Linux、OSX、 *BSD 或者类 Unix 系统下你可以使用 while..do..done 的 bash 循环来逐行读取一个文件。

|

||||

|

||||

1.对于 bash, ksh, zsh,和其他的 shells 语法如下 -

|

||||

1. while read -r line; do COMMAND; done < input.file

|

||||

1.通过 -r 选项传递给红色的命令阻止反斜杠被解释。

|

||||

1.在 read 命令之前添加 IFS= option,来防止 leading/trailing 尾随的空白字符被分割 -

|

||||

1. while IFS= read -r line; do COMMAND_on $line; done < input.file

|

||||

###在 Bash Unix 或者 Linux shell 中逐行读取一个文件的语法

|

||||

|

||||

对于 bash、ksh、 zsh 和其他的 shells 语法如下

|

||||

|

||||

while read -r line; do COMMAND; done < input.file

|

||||

|

||||

通过 -r 选项传递给 read 命令以防止阻止解释其中的反斜杠转义符。

|

||||

|

||||

在 read 命令之前添加 `IFS=` 选项,来防止首尾的空白字符被去掉。

|

||||

|

||||

while IFS= read -r line; do COMMAND_on $line; done < input.file

|

||||

|

||||

这是更适合人类阅读的语法:

|

||||

|

||||

@ -30,7 +34,7 @@ Linux/UNIX: Bash 下如何逐行读取一个文件

|

||||

file="/home/vivek/data.txt"

|

||||

while IFS= read line

|

||||

do

|

||||

# display $line or do somthing with $line

|

||||

# display $line or do somthing with $line

|

||||

echo "$line"

|

||||

done <"$file"

|

||||

|

||||

@ -40,7 +44,7 @@ Linux/UNIX: Bash 下如何逐行读取一个文件

|

||||

file="/home/vivek/data.txt"

|

||||

while IFS= read -r line

|

||||

do

|

||||

# display $line or do somthing with $line

|

||||

# display $line or do somthing with $line

|

||||

printf '%s\n' "$line"

|

||||

done <"$file"

|

||||

|

||||

@ -50,17 +54,17 @@ Linux/UNIX: Bash 下如何逐行读取一个文件

|

||||

file="/etc/passwd"

|

||||

while IFS=: read -r f1 f2 f3 f4 f5 f6 f7

|

||||

do

|

||||

# display fields using f1, f2,..,f7

|

||||

printf 'Username: %s, Shell: %s, Home Dir: %s\n' "$f1" "$f7" "$f6"

|

||||

# display fields using f1, f2,..,f7

|

||||

printf 'Username: %s, Shell: %s, Home Dir: %s\n' "$f1" "$f7" "$f6"

|

||||

done <"$file"

|

||||

|

||||

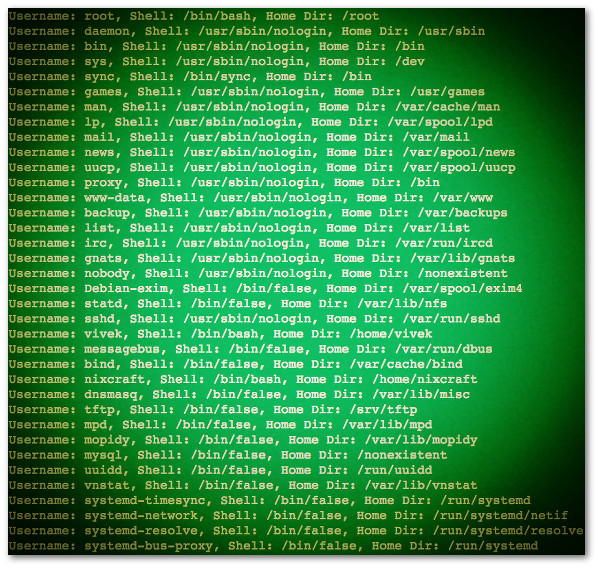

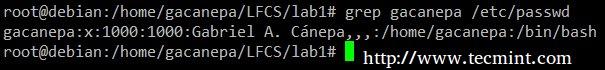

示例输出:

|

||||

|

||||

|

||||

|

||||

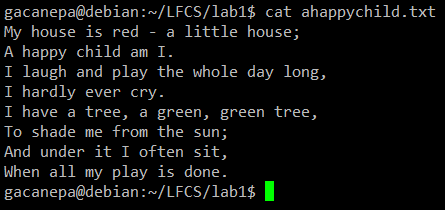

图01:Bash shell scripting- 读取文件并逐行输出文件

|

||||

*图01:Bash 脚本:读取文件并逐行输出文件*

|

||||

|

||||

**Bash Scripting: 逐行读取文本文件并创建为 pdf 文件**

|

||||

###Bash 脚本:逐行读取文本文件并创建为 pdf 文件

|

||||

|

||||

我的输入文件如下(faq.txt):

|

||||

|

||||

@ -75,7 +79,7 @@ Linux/UNIX: Bash 下如何逐行读取一个文件

|

||||

8292|http://www.cyberciti.biz/faq/mounting-harddisks-in-freebsd-with-mount-command/|FreeBSD: Mount Hard Drive / Disk Command

|

||||

8190|http://www.cyberciti.biz/faq/rebooting-solaris-unix-server/|Reboot a Solaris UNIX System

|

||||

|

||||

我的 bash script:

|

||||

我的 bash 脚本:

|

||||

|

||||

#!/bin/bash

|

||||

# Usage: Create pdf files from input (wrapper script)

|

||||

@ -106,11 +110,11 @@ Linux/UNIX: Bash 下如何逐行读取一个文件

|

||||

done <"$_db"

|

||||

fi

|

||||

|

||||

**提示:从 bash 的变量开始读取**

|

||||

###技巧:从 bash 变量中读取

|

||||

|

||||

让我们看看如何在 Debian 或者 Ubuntu Linux 下列出所有安装过的 php 包,请输入:

|

||||

|

||||

# 我将输出内容赋值到一个变量名为$list中 #

|

||||

# 我将输出内容赋值到一个变量名为 $list中 #

|

||||

|

||||

list=$(dpkg --list php\* | awk '/ii/{print $2}')

|

||||

printf '%s\n' "$list"

|

||||

@ -128,7 +132,7 @@ Linux/UNIX: Bash 下如何逐行读取一个文件

|

||||

php5-readline

|

||||

php5-suhosin-extension

|

||||

|

||||

你现在可以从 $list 中看到安装的包:

|

||||

你现在可以从 $list 中看到它们,并安装这些包:

|

||||

|

||||

#!/bin/bash

|

||||

# BASH can iterate over $list variable using a "here string" #

|

||||

@ -152,15 +156,14 @@ Linux/UNIX: Bash 下如何逐行读取一个文件

|

||||

Installing php package php5-readline...

|

||||

Installing php package php5-suhosin-extension...

|

||||

|

||||

|

||||

*** 不要忘了运行php5enmod并重新启动服务(httpd 或 php5-fpm) ***

|

||||

*** Do not forget to run php5enmod and restart the server (httpd or php5-fpm) ***

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.cyberciti.biz/faq/unix-howto-read-line-by-line-from-file/

|

||||

|

||||

作者:[作者名][a]

|

||||

作者: VIVEK GIT

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

@ -1,77 +1,78 @@

|

||||

|

||||

在 Linux 中安装 RAID 6(条带化双分布式奇偶校验) - 第5部分

|

||||

在 Linux 下使用 RAID(五):安装 RAID 6(条带化双分布式奇偶校验)

|

||||

================================================================================

|

||||

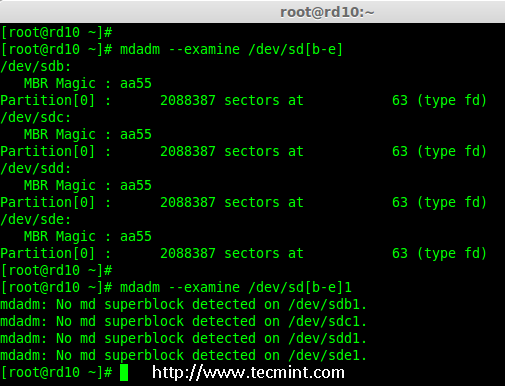

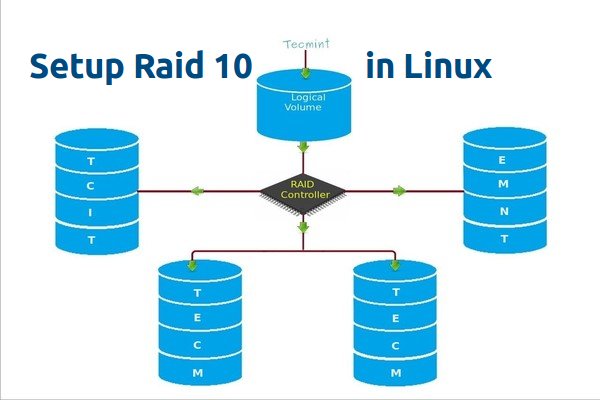

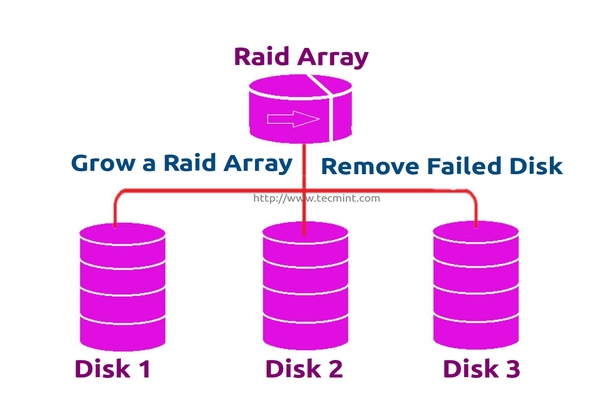

RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两个磁盘发生故障后依然有容错能力。两并列的磁盘发生故障时,系统的关键任务仍然能运行。它与 RAID 5 相似,但性能更健壮,因为它多用了一个磁盘来进行奇偶校验。

|

||||

|

||||

在之前的文章中,我们已经在 RAID 5 看了分布式奇偶校验,但在本文中,我们将看到的是 RAID 6 双分布式奇偶校验。不要期望比其他 RAID 有额外的性能,我们仍然需要安装一个专用的 RAID 控制器。在 RAID 6 中,即使我们失去了2个磁盘,我们仍可以取回数据通过更换磁盘,然后从校验中构建数据。

|

||||

RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即使两个磁盘发生故障后依然有容错能力。在两个磁盘同时发生故障时,系统的关键任务仍然能运行。它与 RAID 5 相似,但性能更健壮,因为它多用了一个磁盘来进行奇偶校验。

|

||||

|

||||

在之前的文章中,我们已经在 RAID 5 看了分布式奇偶校验,但在本文中,我们将看到的是 RAID 6 双分布式奇偶校验。不要期望比其他 RAID 有更好的性能,除非你也安装了一个专用的 RAID 控制器。在 RAID 6 中,即使我们失去了2个磁盘,我们仍可以通过更换磁盘,从校验中构建数据,然后取回数据。

|

||||

|

||||

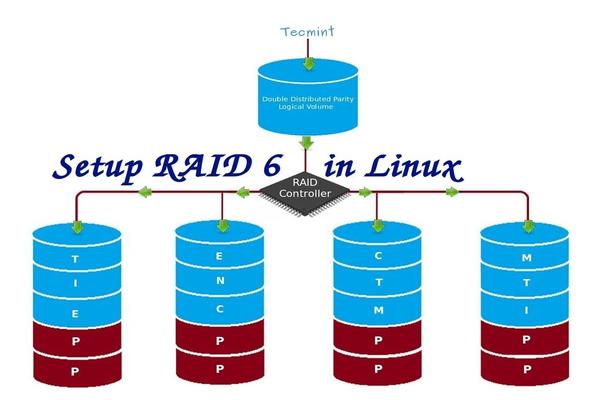

|

||||

|

||||

在 Linux 中安装 RAID 6

|

||||

*在 Linux 中安装 RAID 6*

|

||||

|

||||

要建立一个 RAID 6,一组最少需要4个磁盘。RAID 6 甚至在有些设定中会有多组磁盘,当读取数据时,它会同时从所有磁盘读取,所以读取速度会更快,当写数据时,因为它要将数据写在条带化的多个磁盘上,所以性能会较差。

|

||||

要建立一个 RAID 6,一组最少需要4个磁盘。RAID 6 甚至在有些组中会有更多磁盘,这样将多个硬盘捆在一起,当读取数据时,它会同时从所有磁盘读取,所以读取速度会更快,当写数据时,因为它要将数据写在条带化的多个磁盘上,所以性能会较差。

|

||||

|

||||

现在,很多人都在讨论为什么我们需要使用 RAID 6,它的性能和其他 RAID 相比并不太好。提出这个问题首先需要知道的是,如果需要高容错的必须选择 RAID 6。在每一个对数据库的高可用性要求较高的环境中,他们需要 RAID 6 因为数据库是最重要,无论花费多少都需要保护其安全,它在视频流环境中也是非常有用的。

|

||||

现在,很多人都在讨论为什么我们需要使用 RAID 6,它的性能和其他 RAID 相比并不太好。提出这个问题首先需要知道的是,如果需要高容错性就选择 RAID 6。在每一个用于数据库的高可用性要求较高的环境中,他们需要 RAID 6 因为数据库是最重要,无论花费多少都需要保护其安全,它在视频流环境中也是非常有用的。

|

||||

|

||||

#### RAID 6 的的优点和缺点 ####

|

||||

|

||||

- 性能很不错。

|

||||

- RAID 6 非常昂贵,因为它要求两个独立的磁盘用于奇偶校验功能。

|

||||

- 性能不错。

|

||||

- RAID 6 比较昂贵,因为它要求两个独立的磁盘用于奇偶校验功能。

|

||||

- 将失去两个磁盘的容量来保存奇偶校验信息(双奇偶校验)。

|

||||

- 不存在数据丢失,即时两个磁盘损坏。我们可以在更换损坏的磁盘后从校验中重建数据。

|

||||

- 即使两个磁盘损坏,数据也不会丢失。我们可以在更换损坏的磁盘后从校验中重建数据。

|

||||

- 读性能比 RAID 5 更好,因为它从多个磁盘读取,但对于没有专用的 RAID 控制器的设备写性能将非常差。

|

||||

|

||||

#### 要求 ####

|

||||

|

||||

要创建一个 RAID 6 最少需要4个磁盘.你也可以添加更多的磁盘,但你必须有专用的 RAID 控制器。在软件 RAID 中,我们在 RAID 6 中不会得到更好的性能,所以我们需要一个物理 RAID 控制器。

|

||||

要创建一个 RAID 6 最少需要4个磁盘。你也可以添加更多的磁盘,但你必须有专用的 RAID 控制器。使用软件 RAID 我们在 RAID 6 中不会得到更好的性能,所以我们需要一个物理 RAID 控制器。

|

||||

|

||||

这些是新建一个 RAID 需要的设置,我们建议先看完以下 RAID 文章。

|

||||

如果你新接触 RAID 设置,我们建议先看完以下 RAID 文章。

|

||||

|

||||

- [Linux 中 RAID 的基本概念 – 第一部分][1]

|

||||

- [在 Linux 上创建软件 RAID 0 (条带化) – 第二部分][2]

|

||||

- [在 Linux 上创建软件 RAID 1 (镜像) – 第三部分][3]

|

||||

- [介绍 RAID 的级别和概念][1]

|

||||

- [使用 mdadm 工具创建软件 RAID 0 (条带化)][2]

|

||||

- [用两块磁盘创建 RAID 1(镜像)][3]

|

||||

- [创建 RAID 5(条带化与分布式奇偶校验)](4)

|

||||

|

||||

#### My Server Setup ####

|

||||

#### 我的服务器设置 ####

|

||||

|

||||

Operating System : CentOS 6.5 Final

|

||||

IP Address : 192.168.0.228

|

||||

Hostname : rd6.tecmintlocal.com

|

||||

Disk 1 [20GB] : /dev/sdb

|

||||

Disk 2 [20GB] : /dev/sdc

|

||||

Disk 3 [20GB] : /dev/sdd

|

||||

Disk 4 [20GB] : /dev/sde

|

||||

操作系统 : CentOS 6.5 Final

|

||||

IP 地址 : 192.168.0.228

|

||||

主机名 : rd6.tecmintlocal.com

|

||||

磁盘 1 [20GB] : /dev/sdb

|

||||

磁盘 2 [20GB] : /dev/sdc

|

||||

磁盘 3 [20GB] : /dev/sdd

|

||||

磁盘 4 [20GB] : /dev/sde

|

||||

|

||||

这篇文章是9系列 RAID 教程的第5部分,在这里我们将看到我们如何在 Linux 系统或者服务器上创建和设置软件 RAID 6 或条带化双分布式奇偶校验,使用四个 20GB 的磁盘 /dev/sdb, /dev/sdc, /dev/sdd 和 /dev/sde.

|

||||

这是9篇系列教程的第5部分,在这里我们将看到如何在 Linux 系统或者服务器上使用四个 20GB 的磁盘(名为 /dev/sdb、 /dev/sdc、 /dev/sdd 和 /dev/sde)创建和设置软件 RAID 6 (条带化双分布式奇偶校验)。

|

||||

|

||||

### 第1步:安装 mdadm 工具,并检查磁盘 ###

|

||||

|

||||

1.如果你按照我们最进的两篇 RAID 文章(第2篇和第3篇),我们已经展示了如何安装‘mdadm‘工具。如果你直接看的这篇文章,我们先来解释下在Linux系统中如何使用‘mdadm‘工具来创建和管理 RAID,首先根据你的 Linux 发行版使用以下命令来安装。

|

||||

1、 如果你按照我们最进的两篇 RAID 文章(第2篇和第3篇),我们已经展示了如何安装`mdadm`工具。如果你直接看的这篇文章,我们先来解释下在 Linux 系统中如何使用`mdadm`工具来创建和管理 RAID,首先根据你的 Linux 发行版使用以下命令来安装。

|

||||

|

||||

# yum install mdadm [on RedHat systems]

|

||||

# apt-get install mdadm [on Debain systems]

|

||||

# yum install mdadm [在 RedHat 系统]

|

||||

# apt-get install mdadm [在 Debain 系统]

|

||||

|

||||

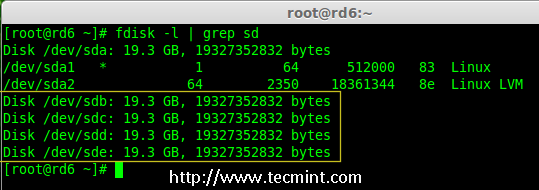

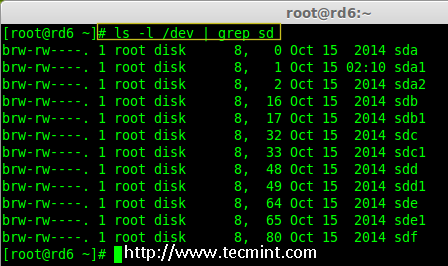

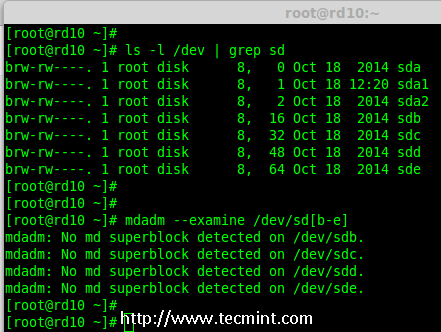

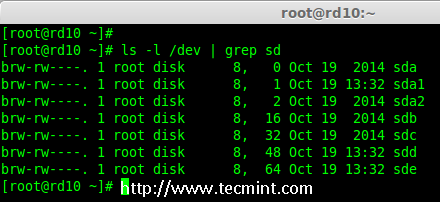

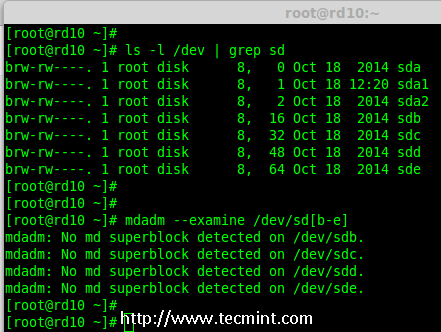

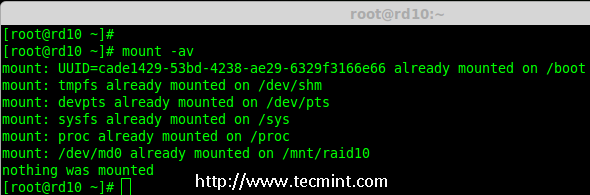

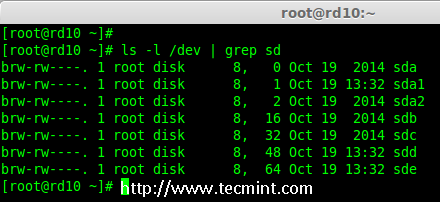

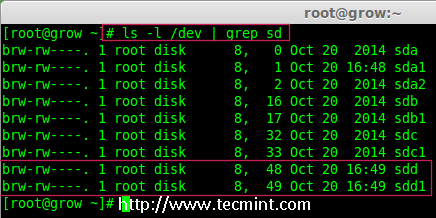

2.安装该工具后,然后来验证需要的四个磁盘,我们将会使用下面的‘fdisk‘命令来检验用于创建 RAID 的磁盘。

|

||||

2、 安装该工具后,然后来验证所需的四个磁盘,我们将会使用下面的`fdisk`命令来检查用于创建 RAID 的磁盘。

|

||||

|

||||

# fdisk -l | grep sd

|

||||

|

||||

|

||||

|

||||

在 Linux 中检查磁盘

|

||||

*在 Linux 中检查磁盘*

|

||||

|

||||

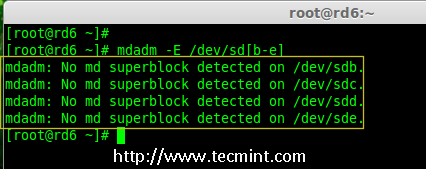

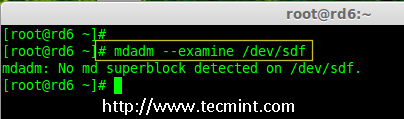

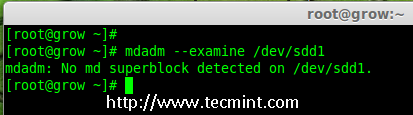

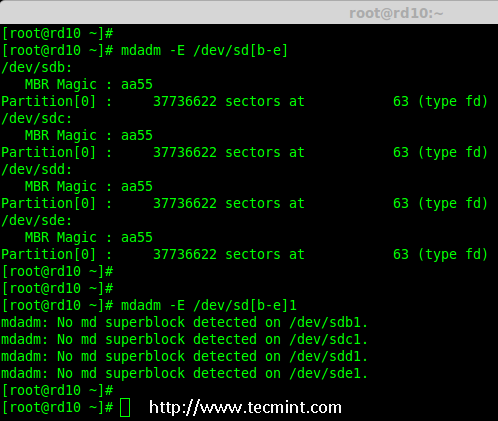

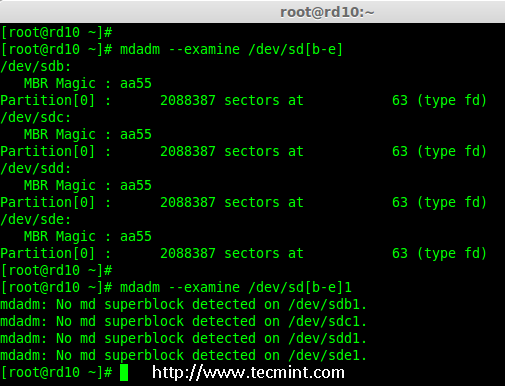

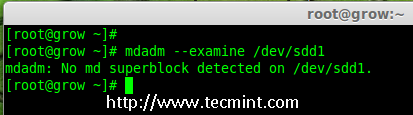

3.在创建 RAID 磁盘前,先检查下我们的磁盘是否创建过 RAID 分区。

|

||||

3、 在创建 RAID 磁盘前,先检查下我们的磁盘是否创建过 RAID 分区。

|

||||

|

||||

# mdadm -E /dev/sd[b-e]

|

||||

# mdadm --examine /dev/sdb /dev/sdc /dev/sdd /dev/sde

|

||||

# mdadm --examine /dev/sdb /dev/sdc /dev/sdd /dev/sde # 或

|

||||

|

||||

|

||||

|

||||

在磁盘上检查 Raid 分区

|

||||

*在磁盘上检查 RAID 分区*

|

||||

|

||||

**注意**: 在上面的图片中,没有检测到任何 super-block 或者说在四个磁盘上没有 RAID 存在。现在我们开始创建 RAID 6。

|

||||

|

||||

### 第2步:为 RAID 6 创建磁盘分区 ###

|

||||

|

||||

4.现在为 raid 创建分区‘/dev/sdb‘, ‘/dev/sdc‘, ‘/dev/sdd‘ 和 ‘/dev/sde‘使用下面 fdisk 命令。在这里,我们将展示如何创建分区在 sdb 磁盘,同样的步骤也适用于其他分区。

|

||||

4、 现在在 `/dev/sdb`, `/dev/sdc`, `/dev/sdd` 和 `/dev/sde`上为 RAID 创建分区,使用下面的 fdisk 命令。在这里,我们将展示如何在 sdb 磁盘创建分区,同样的步骤也适用于其他分区。

|

||||

|

||||

**创建 /dev/sdb 分区**

|

||||

|

||||

@ -79,20 +80,20 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

请按照说明进行操作,如下图所示创建分区。

|

||||

|

||||

- 按 ‘n’ 创建新的分区。

|

||||

- 然后按 ‘P’ 选择主分区。

|

||||

- 按 `n`创建新的分区。

|

||||

- 然后按 `P` 选择主分区。

|

||||

- 接下来选择分区号为1。

|

||||

- 只需按两次回车键选择默认值即可。

|

||||

- 然后,按 ‘P’ 来打印创建好的分区。

|

||||

- 按 ‘L’,列出所有可用的类型。

|

||||

- 按 ‘t’ 去修改分区。

|

||||

- 键入 ‘fd’ 设置为 Linux 的 RAID 类型,然后按 Enter 确认。

|

||||

- 然后再次使用‘p’查看我们所做的更改。

|

||||

- 使用‘w’保存更改。

|

||||

- 然后,按 `P` 来打印创建好的分区。

|

||||

- 按 `L`,列出所有可用的类型。

|

||||

- 按 `t` 去修改分区。

|

||||

- 键入 `fd` 设置为 Linux 的 RAID 类型,然后按回车确认。

|

||||

- 然后再次使用`p`查看我们所做的更改。

|

||||

- 使用`w`保存更改。

|

||||

|

||||

|

||||

|

||||

创建 /dev/sdb 分区

|

||||

*创建 /dev/sdb 分区*

|

||||

|

||||

**创建 /dev/sdc 分区**

|

||||

|

||||

@ -100,7 +101,7 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

创建 /dev/sdc 分区

|

||||

*创建 /dev/sdc 分区*

|

||||

|

||||

**创建 /dev/sdd 分区**

|

||||

|

||||

@ -108,7 +109,7 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

创建 /dev/sdd 分区

|

||||

*创建 /dev/sdd 分区*

|

||||

|

||||

**创建 /dev/sde 分区**

|

||||

|

||||

@ -116,71 +117,67 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

创建 /dev/sde 分区

|

||||

*创建 /dev/sde 分区*

|

||||

|

||||

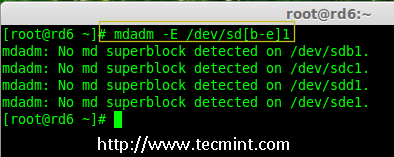

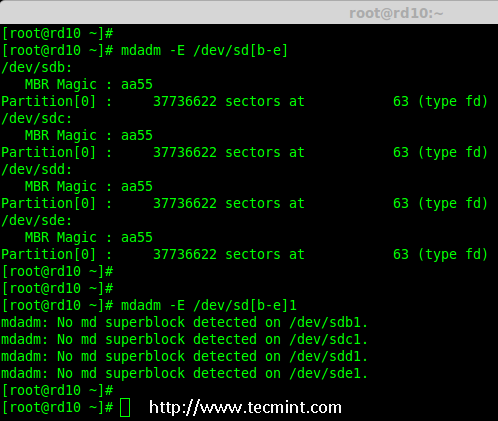

5.创建好分区后,检查磁盘的 super-blocks 是个好的习惯。如果 super-blocks 不存在我们可以按前面的创建一个新的 RAID。

|

||||

5、 创建好分区后,检查磁盘的 super-blocks 是个好的习惯。如果 super-blocks 不存在我们可以按前面的创建一个新的 RAID。

|

||||

|

||||

# mdadm -E /dev/sd[b-e]1

|

||||

|

||||

|

||||

或者

|

||||

|

||||

# mdadm --examine /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1

|

||||

# mdadm -E /dev/sd[b-e]1

|

||||

# mdadm --examine /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 # 或

|

||||

|

||||

|

||||

|

||||

在新分区中检查 Raid

|

||||

*在新分区中检查 RAID *

|

||||

|

||||

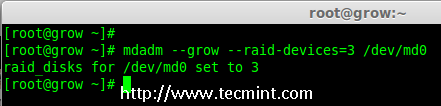

### 步骤3:创建 md 设备(RAID) ###

|

||||

|

||||

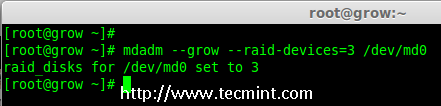

6,现在是时候来创建 RAID 设备‘md0‘ (即 /dev/md0)并应用 RAID 级别在所有新创建的分区中,确认 raid 使用以下命令。

|

||||

6、 现在可以使用以下命令创建 RAID 设备`md0` (即 /dev/md0),并在所有新创建的分区中应用 RAID 级别,然后确认 RAID 设置。

|

||||

|

||||

# mdadm --create /dev/md0 --level=6 --raid-devices=4 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1

|

||||

# cat /proc/mdstat

|

||||

|

||||

|

||||

|

||||

创建 Raid 6 设备

|

||||

*创建 Raid 6 设备*

|

||||

|

||||

7.你还可以使用 watch 命令来查看当前 raid 的进程,如下图所示。

|

||||

7、 你还可以使用 watch 命令来查看当前创建 RAID 的进程,如下图所示。

|

||||

|

||||

# watch -n1 cat /proc/mdstat

|

||||

|

||||

|

||||

|

||||

检查 Raid 6 进程

|

||||

*检查 RAID 6 创建过程*

|

||||

|

||||

8.使用以下命令验证 RAID 设备。

|

||||

8、 使用以下命令验证 RAID 设备。

|

||||

|

||||

# mdadm -E /dev/sd[b-e]1

|

||||

# mdadm -E /dev/sd[b-e]1

|

||||

|

||||

**注意**::上述命令将显示四个磁盘的信息,这是相当长的,所以没有截取其完整的输出。

|

||||

|

||||

9.接下来,验证 RAID 阵列,以确认 re-syncing 被启动。

|

||||

9、 接下来,验证 RAID 阵列,以确认重新同步过程已经开始。

|

||||

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

检查 Raid 6 阵列

|

||||

*检查 Raid 6 阵列*

|

||||

|

||||

### 第4步:在 RAID 设备上创建文件系统 ###

|

||||

|

||||

10.使用 ext4 为‘/dev/md0‘创建一个文件系统并将它挂载在 /mnt/raid5 。这里我们使用的是 ext4,但你可以根据你的选择使用任意类型的文件系统。

|

||||

10、 使用 ext4 为`/dev/md0`创建一个文件系统,并将它挂载在 /mnt/raid6 。这里我们使用的是 ext4,但你可以根据你的选择使用任意类型的文件系统。

|

||||

|

||||

# mkfs.ext4 /dev/md0

|

||||

|

||||

|

||||

|

||||

在 Raid 6 上创建文件系统

|

||||

*在 RAID 6 上创建文件系统*

|

||||

|

||||

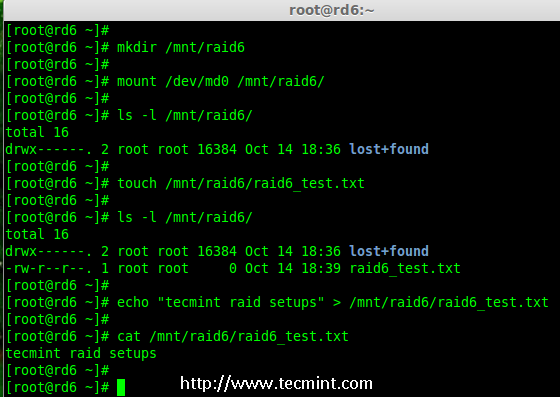

11.挂载创建的文件系统到 /mnt/raid6,并验证挂载点下的文件,我们可以看到 lost+found 目录。

|

||||

11、 将创建的文件系统挂载到 /mnt/raid6,并验证挂载点下的文件,我们可以看到 lost+found 目录。

|

||||

|

||||

# mkdir /mnt/raid6

|

||||

# mount /dev/md0 /mnt/raid6/

|

||||

# ls -l /mnt/raid6/

|

||||

|

||||

12.在挂载点下创建一些文件,在任意文件中添加一些文字并验证其内容。

|

||||

12、 在挂载点下创建一些文件,在任意文件中添加一些文字并验证其内容。

|

||||

|

||||

# touch /mnt/raid6/raid6_test.txt

|

||||

# ls -l /mnt/raid6/

|

||||

@ -189,9 +186,9 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

验证 Raid 内容

|

||||

*验证 RAID 内容*

|

||||

|

||||

13.在 /etc/fstab 中添加以下条目使系统启动时自动挂载设备,环境不同挂载点可能会有所不同。

|

||||

13、 在 /etc/fstab 中添加以下条目使系统启动时自动挂载设备,操作系统环境不同挂载点可能会有所不同。

|

||||

|

||||

# vim /etc/fstab

|

||||

|

||||

@ -199,36 +196,37 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

自动挂载 Raid 6 设备

|

||||

*自动挂载 RAID 6 设备*

|

||||

|

||||

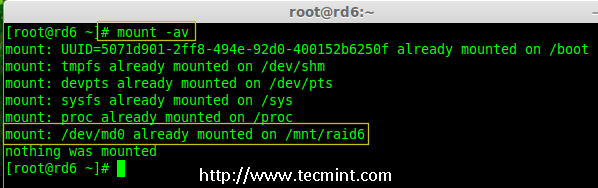

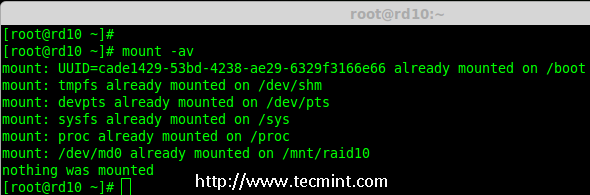

14.接下来,执行‘mount -a‘命令来验证 fstab 中的条目是否有错误。

|

||||

14、 接下来,执行`mount -a`命令来验证 fstab 中的条目是否有错误。

|

||||

|

||||

# mount -av

|

||||

|

||||

|

||||

|

||||

验证 Raid 是否自动挂载

|

||||

*验证 RAID 是否自动挂载*

|

||||

|

||||

### 第5步:保存 RAID 6 的配置 ###

|

||||

|

||||

15.请注意默认 RAID 没有配置文件。我们需要使用以下命令手动保存它,然后检查设备‘/dev/md0‘的状态。

|

||||

15、 请注意,默认情况下 RAID 没有配置文件。我们需要使用以下命令手动保存它,然后检查设备`/dev/md0`的状态。

|

||||

|

||||

# mdadm --detail --scan --verbose >> /etc/mdadm.conf

|

||||

# cat /etc/mdadm.conf

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

保存 Raid 6 配置

|

||||

*保存 RAID 6 配置*

|

||||

|

||||

|

||||

|

||||

检查 Raid 6 状态

|

||||

*检查 RAID 6 状态*

|

||||

|

||||

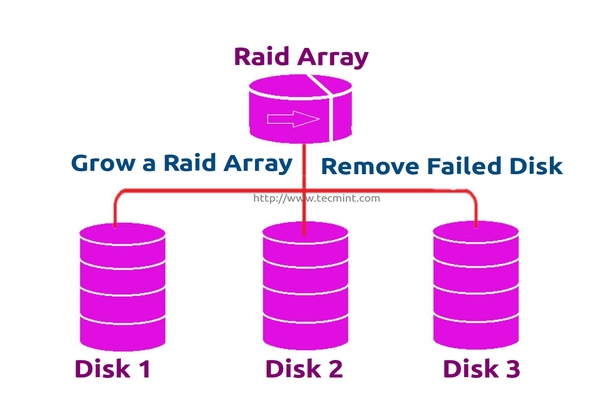

### 第6步:添加备用磁盘 ###

|

||||

|

||||

16.现在,它使用了4个磁盘,并且有两个作为奇偶校验信息来使用。在某些情况下,如果任意一个磁盘出现故障,我们仍可以得到数据,因为在 RAID 6 使用双奇偶校验。

|

||||

16、 现在,已经使用了4个磁盘,并且其中两个作为奇偶校验信息来使用。在某些情况下,如果任意一个磁盘出现故障,我们仍可以得到数据,因为在 RAID 6 使用双奇偶校验。

|

||||

|

||||

如果第二个磁盘也出现故障,在第三块磁盘损坏前我们可以添加一个新的。它可以作为一个备用磁盘并入 RAID 集合,但我在创建 raid 集合前没有定义备用的磁盘。但是,在磁盘损坏后或者创建 RAId 集合时我们可以添加一块磁盘。现在,我们已经创建好了 RAID,下面让我演示如何添加备用磁盘。

|

||||

如果第二个磁盘也出现故障,在第三块磁盘损坏前我们可以添加一个新的。可以在创建 RAID 集时加入一个备用磁盘,但我在创建 RAID 集合前没有定义备用的磁盘。不过,我们可以在磁盘损坏后或者创建 RAID 集合时添加一块备用磁盘。现在,我们已经创建好了 RAID,下面让我演示如何添加备用磁盘。

|

||||

|

||||

为了达到演示的目的,我已经热插入了一个新的 HDD 磁盘(即 /dev/sdf),让我们来验证接入的磁盘。

|

||||

|

||||

@ -236,15 +234,15 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

检查新 Disk

|

||||

*检查新磁盘*

|

||||

|

||||

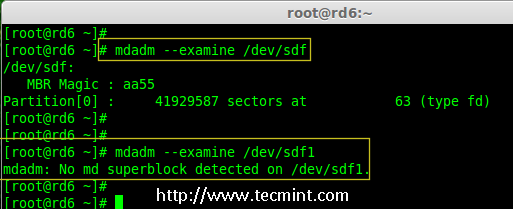

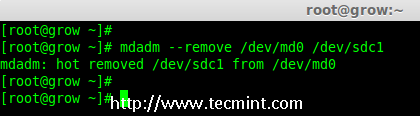

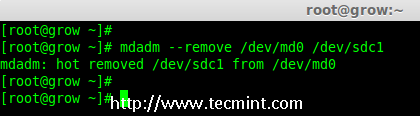

17.现在再次确认新连接的磁盘没有配置过 RAID ,使用 mdadm 来检查。

|

||||

17、 现在再次确认新连接的磁盘没有配置过 RAID ,使用 mdadm 来检查。

|

||||

|

||||

# mdadm --examine /dev/sdf

|

||||

|

||||

|

||||

|

||||

在新磁盘中检查 Raid

|

||||

*在新磁盘中检查 RAID*

|

||||

|

||||

**注意**: 像往常一样,我们早前已经为四个磁盘创建了分区,同样,我们使用 fdisk 命令为新插入的磁盘创建新分区。

|

||||

|

||||

@ -252,9 +250,9 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

为 /dev/sdf 创建分区

|

||||

*为 /dev/sdf 创建分区*

|

||||

|

||||

18.在 /dev/sdf 创建新的分区后,在新分区上确认 raid,包括/dev/md0 raid 设备的备用磁盘,并验证添加的设备。

|

||||

18、 在 /dev/sdf 创建新的分区后,在新分区上确认没有 RAID,然后将备用磁盘添加到 RAID 设备 /dev/md0 中,并验证添加的设备。

|

||||

|

||||

# mdadm --examine /dev/sdf

|

||||

# mdadm --examine /dev/sdf1

|

||||

@ -263,19 +261,19 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

在 sdf 分区上验证 Raid

|

||||

*在 sdf 分区上验证 Raid*

|

||||

|

||||

|

||||

|

||||

为 RAID 添加 sdf 分区

|

||||

*添加 sdf 分区到 RAID *

|

||||

|

||||

|

||||

|

||||

验证 sdf 分区信息

|

||||

*验证 sdf 分区信息*

|

||||

|

||||

### 第7步:检查 RAID 6 容错 ###

|

||||

|

||||

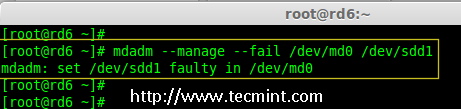

19.现在,让我们检查备用驱动器是否能自动工作,当我们阵列中的任何一个磁盘出现故障时。为了测试,我亲自将一个磁盘模拟为故障设备。

|

||||

19、 现在,让我们检查备用驱动器是否能自动工作,当我们阵列中的任何一个磁盘出现故障时。为了测试,我将一个磁盘手工标记为故障设备。

|

||||

|

||||

在这里,我们标记 /dev/sdd1 为故障磁盘。

|

||||

|

||||

@ -283,15 +281,15 @@ RAID 6 是 RAID 5 的升级版,它有两个分布式奇偶校验,即时两

|

||||

|

||||

|

||||

|

||||

检查 Raid 6 容错

|

||||

*检查 RAID 6 容错*

|

||||

|

||||

20.让我们查看 RAID 的详细信息,并检查备用磁盘是否开始同步。

|

||||

20、 让我们查看 RAID 的详细信息,并检查备用磁盘是否开始同步。

|

||||

|

||||

# mdadm --detail /dev/md0

|

||||

|

||||

|

||||

|

||||

检查 Raid 自动同步

|

||||

*检查 RAID 自动同步*

|

||||

|

||||

**哇塞!** 这里,我们看到备用磁盘激活了,并开始重建进程。在底部,我们可以看到有故障的磁盘 /dev/sdd1 标记为 faulty。可以使用下面的命令查看进程重建。

|

||||

|

||||