mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-18 02:00:18 +08:00

commit

71ed0966d3

@ -1,35 +1,33 @@

|

||||

[调试器的工作原理: 第3篇 - 调试信息][25]

|

||||

调试器工作原理(三):调试信息

|

||||

============================================================

|

||||

|

||||

|

||||

这是调试器的工作原理系列文章的第三篇。阅读这篇文章之前应当先阅读[第一篇][26]与[第二篇][27]。

|

||||

|

||||

### 这篇文章的主要内容

|

||||

|

||||

本文将解释调试器是如何在机器码中,查找它将C语言源代码转换成机器语言代码时所需要的C语言函数、变量、与数据。

|

||||

本文将解释调试器是如何在机器码中查找它将 C 语言源代码转换成机器语言代码时所需要的 C 语言函数、变量、与数据。

|

||||

|

||||

### 调试信息

|

||||

|

||||

|

||||

现代编译器能够将有着各种排版或嵌套的程序流程、各种数据类型的变量的高级语言代码转换为一大堆称之为机器码的 0/1 数据,这么做的唯一目的是尽可能快的在目标 CPU 上运行程序。通常来说一行C语言代码能够转换为若干条机器码。变量被分散在机器码中的各个部分,有的在堆栈中,有的在寄存器中,或者直接被优化掉了。数据结构与对象在机器码中甚至不“存在”,它们只是用于将数据按一定的结构编码存储进缓存。

|

||||

现代编译器能够将有着各种缩进或嵌套的程序流程、各种数据类型的变量的高级语言代码转换为一大堆称之为机器码的 0/1 数据,这么做的唯一目的是尽可能快的在目标 CPU 上运行程序。通常来说一行 C 语言代码能够转换为若干条机器码。变量被分散在机器码中的各个部分,有的在堆栈中,有的在寄存器中,或者直接被优化掉了。数据结构与对象在机器码中甚至不“存在”,它们只是用于将数据按一定的结构编码存储进缓存。

|

||||

|

||||

那么调试器怎么知道,当你需要在某个函数入口处暂停时,程序要在哪停下来呢?它怎么知道当你查看某个变量值时,它怎么找到这个值?答案是,调试信息。

|

||||

|

||||

编译器在生成机器码时同时会生成相应的调试信息。调试信息代表了可执行程序与源代码之间的关系,并以一种提前定义好的格式,同机器码存放在一起。过去的数年里,人们针对不同的平台与可执行文件发明了很多种用于存储这些信息的格式。不过我们这篇文章不会讲这些格式的历史,而是将阐述这些调试信息是如何工作的,所以我们将专注于一些事情,比如 `DWARF`。`DWARF` 如今十分广泛的应用在类 `Unix` 平台上的可执行文件的调试。

|

||||

编译器在生成机器码时同时会生成相应的调试信息。调试信息代表了可执行程序与源代码之间的关系,并以一种提前定义好的格式,同机器码存放在一起。过去的数年里,人们针对不同的平台与可执行文件发明了很多种用于存储这些信息的格式。不过我们这篇文章不会讲这些格式的历史,而是将阐述这些调试信息是如何工作的,所以我们将专注于一些事情,比如 `DWARF`。`DWARF` 如今十分广泛的用作 Linux 和类 `Unix` 平台上的可执行文件的调试格式。

|

||||

|

||||

### ELF 中的 DWARF

|

||||

|

||||

|

||||

|

||||

|

||||

根据[它的维基百科][17] 所描述,虽然 `DWARF` 是同 `ELF` 一同设计的(`DWARF` 是由 `DWARF` 标准委员会推出的开放标准。上文中展示的 图标就来自这个网站。),但 `DWARF` 在理论上来说也可以嵌入到其他的可执行文件格式中。

|

||||

根据[它的维基百科][17] 所描述,虽然 `DWARF` 是同 `ELF` 一同设计的(`DWARF` 是由 `DWARF` 标准委员会推出的开放标准。上文中展示的图标就来自这个网站。),但 `DWARF` 在理论上来说也可以嵌入到其他的可执行文件格式中。

|

||||

|

||||

`DWARF` 是一种复杂的格式,它的构建基于过去多年中许多不同的编译器与操作系统。正是因为它解决了一个为任意语言在任何平台与业务系统中产生调试信息的这样棘手的难题,它也必须很复杂。想要透彻的讲解 `DWARF` 仅仅是通过这单薄的一篇文章是远远不够的,说实话我也并没有充分地了解 `DWARF` 到每一个微小的细节,所以我也不能十分透彻的讲解 (如果你感兴趣的话,文末有一些能够帮助你的资源。建议从 `DWARF` 教程开始上手)。这篇文章中我将以浅显易懂的方式展示 `DWARF` 在实际应用中调试信息是如何工作的。

|

||||

`DWARF` 是一种复杂的格式,它吸收了过去许多年各种不同的架构与操作系统的格式的经验。正是因为它解决了一个在任何平台与 ABI (应用二进制接口)上为任意高级语言产生调试信息这样棘手的难题,它也必须很复杂。想要透彻的讲解 `DWARF` 仅仅是通过这单薄的一篇文章是远远不够的,说实话我也并没有充分地了解 `DWARF` 到每一个微小的细节,所以我也不能十分透彻的讲解 (如果你感兴趣的话,文末有一些能够帮助你的资源。建议从 `DWARF` 教程开始上手)。这篇文章中我将以浅显易懂的方式展示 `DWARF`,以说明调试信息是如何实际工作的。

|

||||

|

||||

### ELF文件中的调试部分

|

||||

### ELF 文件中的调试部分

|

||||

|

||||

首先让我们看看 `DWARF` 处在 ELF 文件中的什么位置。`ELF` 定义了每一个生成的目标文件中的每一部分。 _section header table_ 声明并定义了每一部分。不同的工具以不同的方式处理不同的部分,例如连接器会寻找连接器需要的部分,调试器会查找调试器需要的部分。

|

||||

首先让我们看看 `DWARF` 处在 ELF 文件中的什么位置。`ELF` 定义了每一个生成的目标文件中的每一节。 <ruby>节头表<rt>section header table</rt></ruby> 声明并定义了每一节及其名字。不同的工具以不同的方式处理不同的节,例如连接器会寻找连接器需要的部分,调试器会查找调试器需要的部分。

|

||||

|

||||

我们本文的实验会使用从这个C语言源文件构建的可执行文件,编译成 tracedprog2:

|

||||

我们本文的实验会使用从这个 C 语言源文件构建的可执行文件,编译成 `tracedprog2`:

|

||||

|

||||

|

||||

```

|

||||

@ -52,7 +50,7 @@ int main()

|

||||

```

|

||||

|

||||

|

||||

使用 `objdump -h` 命令检查 `ELF` 可执行文件中的段落头,我们会看到几个以 .debug_ 开头的段落,这些就是 `DWARF` 的调试部分。

|

||||

使用 `objdump -h` 命令检查 `ELF` 可执行文件中的<ruby>节头<rt>section header</rt></ruby>,我们会看到几个以 `.debug_` 开头的节,这些就是 `DWARF` 的调试部分。

|

||||

|

||||

```

|

||||

26 .debug_aranges 00000020 00000000 00000000 00001037

|

||||

@ -73,23 +71,23 @@ int main()

|

||||

CONTENTS, READONLY, DEBUGGING

|

||||

```

|

||||

|

||||

每个段落的第一个数字代表了这个段落的大小,最后一个数字代表了这个段落开始位置距离 `ELF` 的偏移量。调试器利用这些信息从可执行文件中读取段落。

|

||||

每个节的第一个数字代表了该节的大小,最后一个数字代表了这个节开始位置距离 `ELF` 的偏移量。调试器利用这些信息从可执行文件中读取节。

|

||||

|

||||

现在让我们看看一些在 `DWARF` 中查找有用的调试信息的实际例子。

|

||||

|

||||

### 查找函数

|

||||

|

||||

调试器的基础任务之一,就是当我们在某个函数处设置断点时,调试器需要能够在入口处暂停。为此,必须为函数与函数在机器码地址这两者建立起某种映射关系。

|

||||

调试器的最基础的任务之一,就是当我们在某个函数处设置断点时,调试器需要能够在入口处暂停。为此,必须为高级代码中的函数名称与函数在机器码中指令开始的地址这两者之间建立起某种映射关系。

|

||||

|

||||

为了获取这种映射关系,我们可以查找 `DWARF` 中的 .debug_info 段落。在我们深入之前,需要一点基础知识。`DWARF` 中每一个描述类型被称之为调试信息入口(`DIE`)。每个 `DIE` 都有关于它的属性之类的标签。`DIE` 之间通过兄弟节点或子节点连接,属性的值也可以指向其他的 `DIE`.

|

||||

为了获取这种映射关系,我们可以查找 `DWARF` 中的 `.debug_info` 节。在我们深入之前,需要一点基础知识。`DWARF` 中每一个描述类型被称之为调试信息入口(`DIE`)。每个 `DIE` 都有关于它的类型、属性之类的标签。`DIE` 之间通过兄弟节点或子节点相互连接,属性的值也可以指向其它的 `DIE`。

|

||||

|

||||

运行以下命令

|

||||

运行以下命令:

|

||||

|

||||

```

|

||||

objdump --dwarf=info tracedprog2

|

||||

```

|

||||

|

||||

输出文件相当的长,为了方便举例我们只关注这些行(从这里开始,无用的冗长信息我会以 (...)代替,方便排版。):

|

||||

输出文件相当的长,为了方便举例我们只关注这些行(从这里开始,无用的冗长信息我会以 (...)代替,方便排版):

|

||||

|

||||

```

|

||||

<1><71>: Abbrev Number: 5 (DW_TAG_subprogram)

|

||||

@ -114,7 +112,7 @@ objdump --dwarf=info tracedprog2

|

||||

<c7> DW_AT_frame_base : 0x2c (location list)

|

||||

```

|

||||

|

||||

上面的代码中有两个带有 DW_TAG_subprogram 标签的入口,在 `DWARF` 中这是对函数的指代。注意,这是两个段落入口,其中一个是 do_stuff 函数的入口,另一个是主函数的入口。这些信息中有很多值得关注的属性,但其中最值得注意的是 DW_AT_low_pc。它代表了函数开始处程序指针的值(在x86平台上是 `EIP`)。此处 0x8048604 代表了 do_stuff 函数开始处的程序指针。下面我们将利用 `objdump -d` 命令对可执行文件进行反汇编。来看看这块地址中都有什么:

|

||||

上面的代码中有两个带有 `DW_TAG_subprogram` 标签的入口,在 `DWARF` 中这是对函数的指代。注意,这是两个节的入口,其中一个是 `do_stuff` 函数的入口,另一个是主(`main`)函数的入口。这些信息中有很多值得关注的属性,但其中最值得注意的是 `DW_AT_low_pc`。它代表了函数开始处程序指针的值(在 x86 平台上是 `EIP`)。此处 `0x8048604` 代表了 `do_stuff` 函数开始处的程序指针。下面我们将利用 `objdump -d` 命令对可执行文件进行反汇编。来看看这块地址中都有什么:

|

||||

|

||||

```

|

||||

08048604 <do_stuff>:

|

||||

@ -139,13 +137,13 @@ objdump --dwarf=info tracedprog2

|

||||

804863d: c3 ret

|

||||

```

|

||||

|

||||

显然,0x8048604 是 do_stuff 的开始地址,这样一来,调试器就可以建立函数与其在可执行文件中的位置间的映射关系。

|

||||

显然,`0x8048604` 是 `do_stuff` 的开始地址,这样一来,调试器就可以建立函数与其在可执行文件中的位置间的映射关系。

|

||||

|

||||

### 查找变量

|

||||

|

||||

假设我们当前在 do_staff 函数中某个位置上设置断点停了下来。我们想通过调试器取得 my_local 这个变量的值。调试器怎么知道在哪里去找这个值呢?很显然这要比查找函数更为困难。变量可能存储在全局存储区、堆栈、甚至是寄存器中。此外,同名变量在不同的作用域中可能有着不同的值。调试信息必须能够反映所有的这些变化,当然,`DWARF` 就能做到。

|

||||

假设我们当前在 `do_staff` 函数中某个位置上设置断点停了下来。我们想通过调试器取得 `my_local` 这个变量的值。调试器怎么知道在哪里去找这个值呢?很显然这要比查找函数更为困难。变量可能存储在全局存储区、堆栈、甚至是寄存器中。此外,同名变量在不同的作用域中可能有着不同的值。调试信息必须能够反映所有的这些变化,当然,`DWARF` 就能做到。

|

||||

|

||||

我不会逐一去将每一种可能的状况,但我会以调试器在 do_stuff 函数中查找 my_local 变量的过程来举个例子。下面我们再看一遍 .debug_info 中 do_stuff 的每一个入口,这次连它的子入口也要一起看。

|

||||

我不会逐一去将每一种可能的状况,但我会以调试器在 `do_stuff` 函数中查找 `my_local` 变量的过程来举个例子。下面我们再看一遍 `.debug_info` 中 `do_stuff` 的每一个入口,这次连它的子入口也要一起看。

|

||||

|

||||

```

|

||||

<1><71>: Abbrev Number: 5 (DW_TAG_subprogram)

|

||||

@ -178,11 +176,11 @@ objdump --dwarf=info tracedprog2

|

||||

<af> DW_AT_location : (...) (DW_OP_fbreg: -24)

|

||||

```

|

||||

|

||||

看到每个入口处第一对尖括号中的数字了吗?这些是嵌套的等级,在上面的例子中,以 <2> 开头的入口是以 <1> 开头的子入口。因此我们得知 my_local 变量(以 DW_TAG_variable 标签标记)是 do_stuff 函数的局部变量。除此之外,调试器也需要知道变量的数据类型,这样才能正确的使用与显示变量。上面的例子中 my_local 的变量类型指向另一个 `DIE` <0x4b>。如果使用 objdump 命令查看这个 `DIE` 部分的话,我们会发现这部分代表了有符号4字节整型数据。

|

||||

看到每个入口处第一对尖括号中的数字了吗?这些是嵌套的等级,在上面的例子中,以 `<2>` 开头的入口是以 `<1>` 开头的子入口。因此我们得知 `my_local` 变量(以 `DW_TAG_variable` 标签标记)是 `do_stuff` 函数的局部变量。除此之外,调试器也需要知道变量的数据类型,这样才能正确的使用与显示变量。上面的例子中 `my_local` 的变量类型指向另一个 `DIE` `<0x4b>`。如果使用 `objdump` 命令查看这个 `DIE` 的话,我们会发现它是一个有符号 4 字节整型数据。

|

||||

|

||||

而为了在实际运行的程序内存中查找变量的值,调试器需要使用到 DW_AT_location 属性。对于 my_local 而言,是 DW_OP_fbreg: -20。这个代码段的意思是说 my_local 存储在距离它所在函数起始地址偏移量为-20的地方。

|

||||

而为了在实际运行的程序内存中查找变量的值,调试器需要使用到 `DW_AT_location` 属性。对于 `my_local` 而言,是 `DW_OP_fbreg: -20`。这个代码段的意思是说 `my_local` 存储在距离它所在函数起始地址偏移量为 `-20` 的地方。

|

||||

|

||||

do_stuff 函数的 DW_AT_frame_base 属性值为 0x0 (location list)。这意味着这个属性的值需要在 location list 中查找。下面我们来一起看看。

|

||||

`do_stuff` 函数的 `DW_AT_frame_base` 属性值为 `0x0 (location list)`。这意味着这个属性的值需要在 `location list` 中查找。下面我们来一起看看。

|

||||

|

||||

```

|

||||

$ objdump --dwarf=loc tracedprog2

|

||||

@ -202,9 +200,9 @@ Contents of the .debug_loc section:

|

||||

0000002c <End of list>

|

||||

```

|

||||

|

||||

我们需要关注的是第一列(do_stuff 函数的 DW_AT_frame_base 属性包含 location list 中 0x0 的偏移量。而 main 函数的相同属性包含 0x2c 的偏移量,这个偏移量是第二套地址列表的偏移量。)。对于调试器可能定位到的每一个地址,它都会指定当前栈帧到变量间的偏移量,而这个偏移就是通过寄存器来计算的。对于x86平台而言,bpreg4 指向 esp,而 bpreg5 指向 ebp。

|

||||

我们需要关注的是第一列(`do_stuff` 函数的 `DW_AT_frame_base` 属性包含 `location list` 中 `0x0` 的偏移量。而 `main` 函数的相同属性包含 `0x2c` 的偏移量,这个偏移量是第二套地址列表的偏移量)。对于调试器可能定位到的每一个地址,它都会指定当前栈帧到变量间的偏移量,而这个偏移就是通过寄存器来计算的。对于 x86 平台而言,`bpreg4` 指向 `esp`,而 `bpreg5` 指向 `ebp`。

|

||||

|

||||

让我们再看看 do_stuff 函数的头几条指令。

|

||||

让我们再看看 `do_stuff` 函数的头几条指令。

|

||||

|

||||

```

|

||||

08048604 <do_stuff>:

|

||||

@ -216,15 +214,15 @@ Contents of the .debug_loc section:

|

||||

8048610: 89 45 f4 mov DWORD PTR [ebp-0xc],eax

|

||||

```

|

||||

|

||||

只有当第二条指令执行后,ebp 寄存器才真正存储了有用的值。当然,前两条指令的基址是由上面所列出来的地址信息表计算出来的。一但 ebp 确定了,计算偏移量就十分方便了,因为尽管 esp 在操作堆栈的时候需要移动,但 ebp 作为栈底并不需要移动。

|

||||

只有当第二条指令执行后,`ebp` 寄存器才真正存储了有用的值。当然,前两条指令的基址是由上面所列出来的地址信息表计算出来的。一但 `ebp` 确定了,计算偏移量就十分方便了,因为尽管 `esp` 在操作堆栈的时候需要移动,但 `ebp` 作为栈底并不需要移动。

|

||||

|

||||

究竟我们应该去哪里找 my_local 的值呢?在 0x8048610 这块地址后, my_local 的值经过在 eax 中的计算后被存在了内存中,从这里开始我们才需要关注 my_local 的值。调试器会利用 DW_OP_breg5: 8 这个基址来查找。我们回想下,my_local 的 DW_AT_location 属性值为 DW_OP_fbreg: -20。所以应当从基址中 -20 ,同时由于 ebp 寄存器需要 + 8,所以最终结果为 - 12。现在再次查看反汇编代码,来看看数据从 eax 中被移动到哪里了。当然,这里 my_local 应当被存储在了 ebp - 12 的地址中。

|

||||

究竟我们应该去哪里找 `my_local` 的值呢?在 `0x8048610` 这块地址后, `my_local` 的值经过在 `eax` 中的计算后被存在了内存中,从这里开始我们才需要关注 `my_local` 的值。调试器会利用 `DW_OP_breg5: 8` 这个栈帧来查找。我们回想下,`my_local` 的 `DW_AT_location` 属性值为 `DW_OP_fbreg: -20`。所以应当从基址中 `-20` ,同时由于 `ebp` 寄存器需要 `+8`,所以最终结果为 `ebp - 12`。现在再次查看反汇编代码,来看看数据从 `eax` 中被移动到哪里了。当然,这里 `my_local` 应当被存储在了 `ebp - 12` 的地址中。

|

||||

|

||||

### 查看行号

|

||||

|

||||

当我们谈论调试信息的时候,我们利用了些技巧。当调试C语言源代码并在某个函数出放置断点的时候,我们并不关注第一条“机器码”指令(函数的调用准备工作已经完成而局部变量还没有初始化。)。我们真正关注的是函数的第一行“C代码”。

|

||||

当我们谈到在调试信息寻找函数的时候,我们利用了些技巧。当调试 C 语言源代码并在某个函数出放置断点的时候,我们并不关注第一条“机器码”指令(函数的调用准备工作已经完成而局部变量还没有初始化)。我们真正关注的是函数的第一行“C 代码”。

|

||||

|

||||

这就是 `DWARF` 完全覆盖映射C源代码与可执行文件中机器码地址的原因。下面是 .debug_line 段中所包含的内容,我们将其转换为可读的格式展示如下。

|

||||

这就是 `DWARF` 完全覆盖映射 C 源代码中的行与可执行文件中机器码地址的原因。下面是 `.debug_line` 节中所包含的内容,我们将其转换为可读的格式展示如下。

|

||||

|

||||

```

|

||||

$ objdump --dwarf=decodedline tracedprog2

|

||||

@ -247,27 +245,27 @@ tracedprog2.c 17 0x8048653

|

||||

tracedprog2.c 18 0x8048658

|

||||

```

|

||||

|

||||

很容易就可以看出其中C源代码与反汇编代码之间的对应关系。第5行指向 do_stuff 函数的入口,0x8040604。第6行,指向 0x804860a ,正是调试器在调试 do_stuff 函数时需要停下来的地方。这里已经完成了函数调用的准备工作。上面的这些信息形成了行号与地址间的双向映射关系。

|

||||

很容易就可以看出其中 C 源代码与反汇编代码之间的对应关系。第 5 行指向 `do_stuff` 函数的入口,`0x8040604`。第 6 行,指向 `0x804860a` ,正是调试器在调试 `do_stuff` 函数时需要停下来的地方。这里已经完成了函数调用的准备工作。上面的这些信息形成了行号与地址间的双向映射关系。

|

||||

|

||||

* 当在某一行设置断点的时候,调试器会利用这些信息去查找相应的地址来做断点工作(还记得上篇文章中的 int 3 指令吗?)

|

||||

* 当指令造成代码段错误时,调试器会利用这些信息来查看源代码中发生的状况。

|

||||

* 当在某一行设置断点的时候,调试器会利用这些信息去查找相应的地址来做断点工作(还记得上篇文章中的 `int 3` 指令吗?)

|

||||

* 当指令造成段错误时,调试器会利用这些信息来查看源代码中发生问题的行。

|

||||

|

||||

### libdwarf - 用 DWARF 编程

|

||||

|

||||

尽管使用命令行工具来获得 `DWARF` 很有用,但这仍然不够易用。作为程序员,我们应当知道当我们需要这些调试信息时应当怎么编程来获取这些信息。

|

||||

尽管使用命令行工具来获得 `DWARF` 很有用,但这仍然不够易用。作为程序员,我们希望知道当我们需要这些调试信息时应当怎么编程来获取这些信息。

|

||||

|

||||

自然我们想到的第一种方法就是阅读 `DWARF` 规范并按规范操作阅读使用。有句话说的好,分析 HTML 应当使用库函数,永远不要手工分析。对于 `DWARF` 来说这是如此。`DWARF` 比 HTML 要复杂得多。上面所展示出来的只是冰山一角。更糟糕的是,在实际的目标文件中,大部分信息是以压缩格式存储的,分析起来更加复杂(信息中的某些部分,例如位置信息与行号信息,在某些虚拟机下是以指令的方式编码的。)。

|

||||

自然我们想到的第一种方法就是阅读 `DWARF` 规范并按规范操作阅读使用。有句话说的好,分析 HTML 应当使用库函数,永远不要手工分析。对于 `DWARF` 来说正是如此。`DWARF` 比 HTML 要复杂得多。上面所展示出来的只是冰山一角。更糟糕的是,在实际的目标文件中,大部分信息是以非常紧凑的压缩格式存储的,分析起来更加复杂(信息中的某些部分,例如位置信息与行号信息,在某些虚拟机下是以指令的方式编码的)。

|

||||

|

||||

所以我们要使用库函数来处理 `DWARF`。下面是两种我熟悉的主流库(还有些不完整的库这里没有写)

|

||||

所以我们要使用库来处理 `DWARF`。下面是两种我熟悉的主要的库(还有些不完整的库这里没有写)

|

||||

|

||||

1. `BFD` (libbfd),包含了 `objdump` (对,就是这篇文章中我们一直在用的这货),`ld`(`GNU` 连接器)与 `as`(`GNU` 编译器)。`BFD` 主要用于[GNU binutils][11]。

|

||||

1. `BFD` (libbfd),包含了 `objdump` (对,就是这篇文章中我们一直在用的这货),`ld`(`GNU` 连接器)与 `as`(`GNU` 编译器)。`BFD` 主要用于 [GNU binutils][11]。

|

||||

2. `libdwarf` ,同它的哥哥 `libelf` 一同用于 `Solaris` 与 `FreeBSD` 中的调试信息分析。

|

||||

|

||||

相比较而言我更倾向于使用 `libdwarf`,因为我对它了解的更多,并且 `libdwarf` 的开源协议更开放。

|

||||

相比较而言我更倾向于使用 `libdwarf`,因为我对它了解的更多,并且 `libdwarf` 的开源协议更开放(`LGPL` 对比 `GPL`)。

|

||||

|

||||

因为 `libdwarf` 本身相当复杂,操作起来需要相当多的代码,所以我在这不会展示所有代码。你可以在 [这里][24] 下载代码并运行试试。运行这些代码需要提前安装 `libelfand` 与 `libdwarf` ,同时在使用连接器的时候要使用参数 `-lelf` 与 `-ldwarf`。

|

||||

|

||||

这个示例程序可以接受可执行文件并打印其中的函数名称与函数入口地址。下面是我们整篇文章中使用的C程序经过示例程序处理后的输出。

|

||||

这个示例程序可以接受可执行文件并打印其中的函数名称与函数入口地址。下面是我们整篇文章中使用的 C 程序经过示例程序处理后的输出。

|

||||

|

||||

```

|

||||

$ dwarf_get_func_addr tracedprog2

|

||||

@ -285,17 +283,17 @@ high pc : 0x0804865a

|

||||

|

||||

原理上讲,调试信息是个很简单的概念。尽管实现细节可能比较复杂,但经过了上面的学习我想你应该了解了调试器是如何从可执行文件中获取它需要的源代码信息的了。对于程序员而言,程序只是代码段与数据结构;对可执行文件而言,程序只是一系列存储在内存或寄存器中的指令或数据。但利用调试信息,调试器就可以将这两者连接起来,从而完成调试工作。

|

||||

|

||||

此文与这系列的前两篇,一同介绍了调试器的内部工作过程。利用这里所讲到的知识,再敲些代码,应该可以完成一个 `Linux` 中最简单基础但也有一定功能的调试器。

|

||||

此文与这系列的前两篇,一同介绍了调试器的内部工作过程。利用这里所讲到的知识,再敲些代码,应该可以完成一个 Linux 中最简单、基础但也有一定功能的调试器。

|

||||

|

||||

下一步我并不确定要做什么,这个系列文章可能就此结束,也有可能我要讲些堆栈调用的事情,又或者讲 `Windows` 下的调试。你们有什么好的点子或者相关材料,可以直接评论或者发邮件给我。

|

||||

下一步我并不确定要做什么,这个系列文章可能就此结束,也有可能我要讲些堆栈调用的事情,又或者讲 Windows 下的调试。你们有什么好的点子或者相关材料,可以直接评论或者发邮件给我。

|

||||

|

||||

### 参考

|

||||

|

||||

* objdump 参考手册

|

||||

* [ELF][12] 与 [DWARF][13]的维基百科

|

||||

* [Dwarf Debugging Standard home page][14],这里有很棒的 DWARF 教程与 DWARF 标准,作者是 Michael Eager。第二版基于 GCC 也许更能吸引你。

|

||||

* [libdwarf home page][15],这里可以下载到 libwarf 的完整库与参考手册

|

||||

* [BFD documentation][16]

|

||||

* [ELF][12] 与 [DWARF][13] 的维基百科

|

||||

* [Dwarf Debugging Standard 主页][14],这里有很棒的 DWARF 教程与 DWARF 标准,作者是 Michael Eager。第二版基于 GCC 也许更能吸引你。

|

||||

* [libdwarf 主页][15],这里可以下载到 libwarf 的完整库与参考手册

|

||||

* [BFD 文档][16]

|

||||

|

||||

* * *

|

||||

|

||||

@ -305,7 +303,7 @@ via: http://eli.thegreenplace.net/2011/02/07/how-debuggers-work-part-3-debugging

|

||||

|

||||

作者:[Eli Bendersky][a]

|

||||

译者:[YYforymj](https://github.com/YYforymj)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -335,5 +333,5 @@ via: http://eli.thegreenplace.net/2011/02/07/how-debuggers-work-part-3-debugging

|

||||

[23]:http://eli.thegreenplace.net/2011/02/07/how-debuggers-work-part-3-debugging-information#id12

|

||||

[24]:https://github.com/eliben/code-for-blog/blob/master/2011/dwarf_get_func_addr.c

|

||||

[25]:http://eli.thegreenplace.net/2011/02/07/how-debuggers-work-part-3-debugging-information

|

||||

[26]:http://eli.thegreenplace.net/2011/01/23/how-debuggers-work-part-1/

|

||||

[27]:http://eli.thegreenplace.net/2011/01/27/how-debuggers-work-part-2-breakpoints/

|

||||

[26]:https://linux.cn/article-8552-1.html

|

||||

[27]:https://linux.cn/article-8418-1.html

|

||||

@ -0,0 +1,101 @@

|

||||

软件定义存储(SDS)的发展:十个你应当知道的项目

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

*凭借 SDS,组织机构可以更好抽象出底层存储的管理功能,并且通过不同策略实现灵活配置。下面将要向你展示一些你应当知道的此类开源项目。Creative Commons Zero,Pixabay *

|

||||

|

||||

纵观 2016 年,SDS(Software-Defined Storage,软件定义存储)方面取得了很多里程碑式的进步,并且日益紧密的与云部署结合在了一起。凭借 SDS ,组织机构可以更好抽象出底层存储的管理功能,并且通过不同策略实现灵活配置。当然,他们也可以选择自由开源的 SDS 解决方案。人们熟知的 Ceph 正是凭借 OpenStack 部署在不断扩大自己的影响力,但是它离成为唯一的 SDS 开源项目还有很长的路要走。

|

||||

|

||||

Gartner 的一份市场调查报告中预测,截至到 2019 年,70% 已有的存储部署解决方案会支持以纯软件的方式来实施。同时 Gartner 还预测截至到 2020 年,70% 到 80% 的非结构化数据会存储在由 SDS 管理的廉价存储设备中。

|

||||

|

||||

最近,Dell EMC 公司加入到了由 Linux 基金会发起的 [OpenSDS][4] 项目中。 OpenSDS 致力于寻求解决 SDS 集成所面临的挑战,并推动企业对开放标准的采用。它由存储客户与厂商组成,包括 Fujitsu,Hitachi Data Systems,Huawei,Oregon State University 以及 Vodafone。同时 OpenSDS 也寻求与其它的上游开源社区进行合作,比如 Cloud Native Computing Foundation、Docker、OpenStack 以及 Open Container Initiative。

|

||||

|

||||

根据 Open SDS 项目的 [主页][5],2017 年会是 SDS 的一个元年:“社区希望在 2017 第二季度完成原型的发布,并且在第三季度中发布一个测试版本。OpenSDS 的最初组织者期望能通过这个项目来影响到一些开源技术,比如来自 Openstack 社区的 Cinder 和 Manila 项目,并且能够支持更广泛的云存储解决方案。”

|

||||

|

||||

与此同时,SDS 相关项目也呈现了爆发式的增长,其范围横跨 Apache Cassandra 到 Cehp。Linux 基金会最近发布了 2016 年度报告“[开放云指南:当前的趋势及开源项目][7]”,报告从整体上分析了开放云计算的现状,其中有一章涵盖了 SDS。你可以[下载][8]这篇报告,需要注意的是,这是一份综合了容器发展趋势、SDS,以及云计算的重新定义等等很多内容。报告中涵盖了当今对于开源云计算最重要的一些项目,并分类给出了描述和链接。

|

||||

|

||||

在这个系列的文章中,我们从该报告中整理了很多项目,并且针对它们是如何发展的提供了一些额外的视角及信息。在下面的内容当中,你会看到现今对 SDS 来说很重要的项目,并且能了解到它们为什么具有这么大的影响力。同时,根据上面的报告,我们提供了相关项目的 GitHub 仓库链接,方便大家查看。

|

||||

|

||||

### 软件定义存储(SDS)

|

||||

|

||||

- [Apache Cassandra][9]

|

||||

|

||||

Apache Cassandra 是一个可扩展的、高可用的,面向任务优先应用的数据库。它可以运行在商业设备或者云架构上,并且能实现跨数据中心的低延迟数据传输,同时具备良好的容错性。[Cassandra 的 GitHub 仓库][10]。

|

||||

|

||||

- [Ceph][11]

|

||||

|

||||

Ceph 是 Red Hat 构建的一个企业级可扩展的块设备、对象,以及文件存储平台,并且可部署在公有云或者私有云之上。Ceph 目前被广泛应用于 OpenStack。[Ceph 的 GitHub 仓库][12]。

|

||||

|

||||

- [CouchDB][13]

|

||||

|

||||

CouchDB 是一个 Apache 软件基金会项目,是一个单节点或者集群数据库管理系统。CouchDB 提供了 RESTful HTTP 接口来读取和更新数据库文件。[CouchDB 的 GitHub 仓库][14]。

|

||||

|

||||

- [Docker 数据卷插件][15]

|

||||

|

||||

Docker Engine 数据卷插件可以使 Engine 与外部的存储系统一起集成部署,并且数据卷的生命周期与单一 Engine 主机相同。目前存在很多第三方的数据卷管理插件,包括 Azure File Storage、NetApp、VMware vSphere 等等。你可以在 GitHub上查找到更多的插件。

|

||||

|

||||

- [GlusterFS][16]

|

||||

|

||||

Gluster 是 Red Hat 的可扩展网络文件系统,同时也是数据管理平台。Gluster 可以部署在公有云,私有云或者混合云之上,可用于 Linux 容器内的流媒体处理任务、数据分析任务,以及其它数据和带宽敏感型任务的执行。[GlusterFS 的 GitHub 仓库][17]。

|

||||

|

||||

- [MongoDB][18]

|

||||

|

||||

MongoDB 是一个高性能的文件数据库,并且部署和扩展都非常简单。[MongoDB 的 GitHub 仓库][19]。

|

||||

|

||||

- [Nexenta][20]

|

||||

|

||||

NexentaStor 是一个可扩展的、统一的软件定义的文件和块设备管理服务,同时支持数据管理功能。它能够与 VMware 集成,并且支持 Docker 和 OpenStack。[Nexenta 的 GitHub 仓库][21]。

|

||||

|

||||

- [Redis][22]

|

||||

|

||||

Redis 是一个基于内存的数据存储,一般被用作数据库、缓存,以及消息代理。它支持多种数据结构,并且本身支持复制、Lua 脚本、LRU 算法、事务,以及多层级的硬盘持久化。

|

||||

|

||||

- [Riak CS][24]

|

||||

|

||||

Riak CS(Cloud Storage)是基于 Basho 的分布式数据库 Riak KV 构建的对象存储软件。它提供了在不同规模的分布式云存储能力,可以用于公有云和私有云,还能为大压力的应用和服务提供基础的存储服务。其 API 兼容 Amazon S3,并且支持租户级别的费用计算和测量能力。[Riak CS 的 GitHub 仓库][25]。

|

||||

|

||||

- [Swift][26]

|

||||

|

||||

Swift 是 OpenStack 项目中的对象存储系统,设计初衷是通过简单 API 存储和获取非结构化数据。Swift 设计之初就是可扩展的,并且针对持久性、可靠性以及并发数据读取做了优化。[Swift 的 GitHub 仓库][27]。

|

||||

|

||||

_了解更多的开源云计算趋势以及更完整的开源云计算项目列表,请[下载 Linux 基金会的“开放云指南”][3]。_

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/news/open-cloud-report/2016/guide-open-cloud-software-defined-storage-opens

|

||||

|

||||

作者:[SAM DEAN][a]

|

||||

译者:[toutoudnf](https://github.com/toutoudnf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/sam-dean

|

||||

[1]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[2]:https://www.linux.com/files/images/software-definedjpg

|

||||

[3]:http://bit.ly/2eHQOwy

|

||||

[4]:http://ctt.marketwire.com/?release=11G125514-001&id=10559023&type=0&url=https%3A%2F%2Fwww.opensds.io%2F

|

||||

[5]:https://www.opensds.io/

|

||||

[6]:https://www.linux.com/blog/linux-foundation-issues-2016-guide-open-source-cloud-projects

|

||||

[7]:http://ctt.marketwire.com/?release=11G120876-001&id=10172077&type=0&url=http%3A%2F%2Fgo.linuxfoundation.org%2Frd-open-cloud-report-2016-pr

|

||||

[8]:http://go.linuxfoundation.org/l/6342/2016-10-31/3krbjr

|

||||

[9]:http://cassandra.apache.org/

|

||||

[10]:https://github.com/apache/cassandra

|

||||

[11]:http://ceph.com/

|

||||

[12]:https://github.com/ceph/ceph

|

||||

[13]:http://couchdb.apache.org/

|

||||

[14]:https://github.com/apache/couchdb

|

||||

[15]:https://docs.docker.com/engine/extend/plugins_volume/

|

||||

[16]:https://www.gluster.org/

|

||||

[17]:https://github.com/gluster/glusterfs

|

||||

[18]:https://www.mongodb.com/

|

||||

[19]:https://github.com/mongodb/mongo

|

||||

[20]:https://nexenta.com/

|

||||

[21]:https://github.com/Nexenta

|

||||

[22]:http://redis.io/

|

||||

[23]:https://github.com/antirez/redis

|

||||

[24]:http://docs.basho.com/riak/cs/2.1.1/

|

||||

[25]:https://github.com/basho/riak_cs

|

||||

[26]:https://wiki.openstack.org/wiki/Swift

|

||||

[27]:https://github.com/openstack/swift

|

||||

@ -3,7 +3,7 @@

|

||||

|

||||

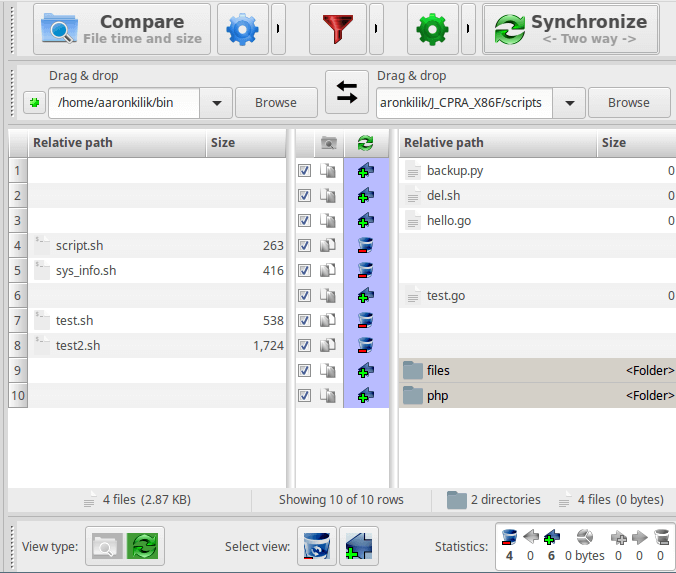

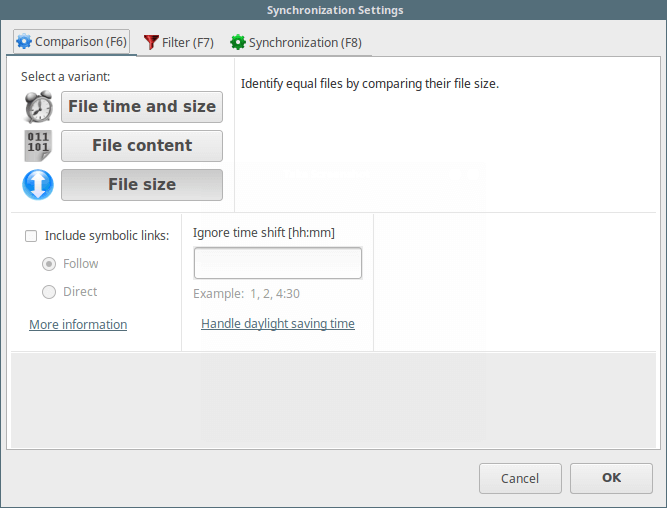

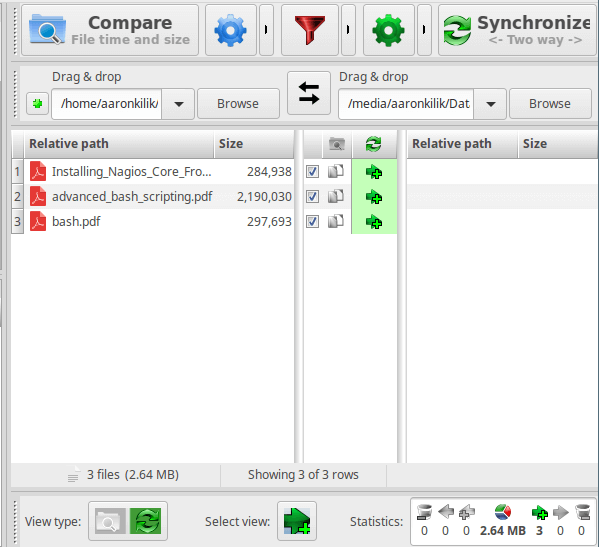

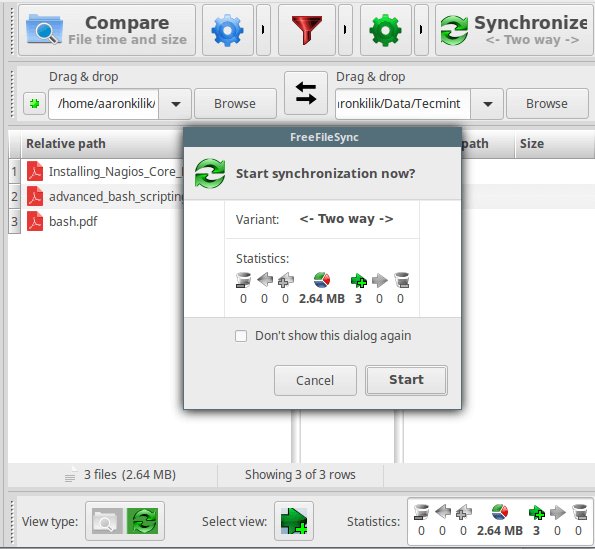

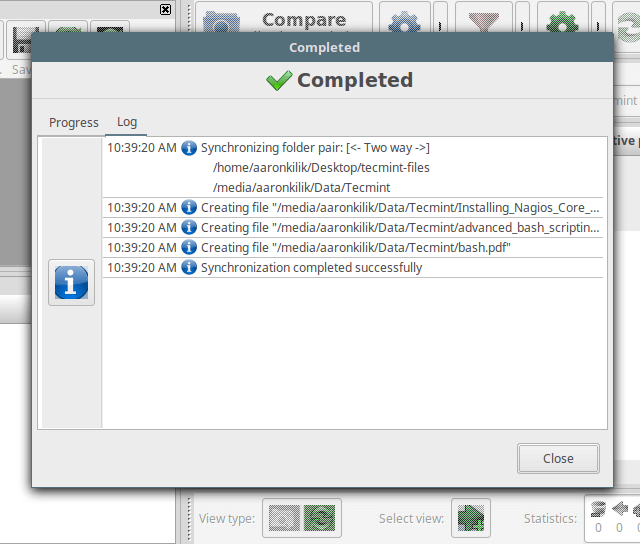

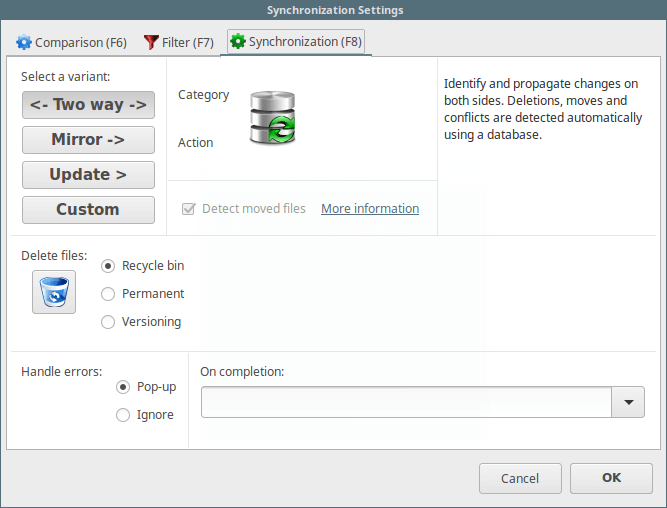

在之前的一篇文章里,我们回顾了[ Linux 下 9 个最好的文件比较工具][1],本篇文章中,我们将会描述在 Linux 下怎样找到两个目录之间的不同。

|

||||

|

||||

一般情况下,要在 Linux 下比较两个文件,我们会使用 **diff** (一个简单的源自 Unix 的命令行工具 )来显示两个计算机文件的不同;它一行一行的去比较文件,而且很方便使用,在几乎全部的 Linux 发行版都预装了。

|

||||

一般情况下,要在 Linux 下比较两个文件,我们会使用 `diff` (一个简单的源自 Unix 的命令行工具)来显示两个计算机文件的不同;它一行一行的去比较文件,而且很方便使用,在几乎全部的 Linux 发行版都预装了。

|

||||

|

||||

问题是在 Linux 下我们怎么才能比较两个目录?现在,我们想知道两个目录中哪些文件/子目录是共有的,哪些只存在一个于目录。

|

||||

|

||||

@ -14,7 +14,7 @@ $ diff [OPTION]… FILES

|

||||

$ diff options dir1 dir2

|

||||

```

|

||||

|

||||

默认情况下,输出是按文件/子文件夹的文件名的字母排序的,如下面截图所示,在命令中, `-q` 开关是告诉 diif 只有在文件有差异时报告。

|

||||

默认情况下,输出是按文件/子文件夹的文件名的字母排序的,如下面截图所示,在命令中, `-q` 开关是告诉 `diif` 只有在文件有差异时报告。

|

||||

|

||||

```

|

||||

$ diff -q directory-1/ directory-2/

|

||||

@ -25,7 +25,7 @@ $ diff -q directory-1/ directory-2/

|

||||

|

||||

*两个文件夹之间的差异*

|

||||

|

||||

再次运行 diff 并不能进入子文件夹,但是我们可以使用 `-r` 开关来读子文件夹,如下所示。

|

||||

再次运行 `diff` 并不能进入子文件夹,但是我们可以使用 `-r` 开关来读子文件夹,如下所示。

|

||||

|

||||

```

|

||||

$ diff -qr directory-1/ directory-2/

|

||||

@ -33,7 +33,7 @@ $ diff -qr directory-1/ directory-2/

|

||||

|

||||

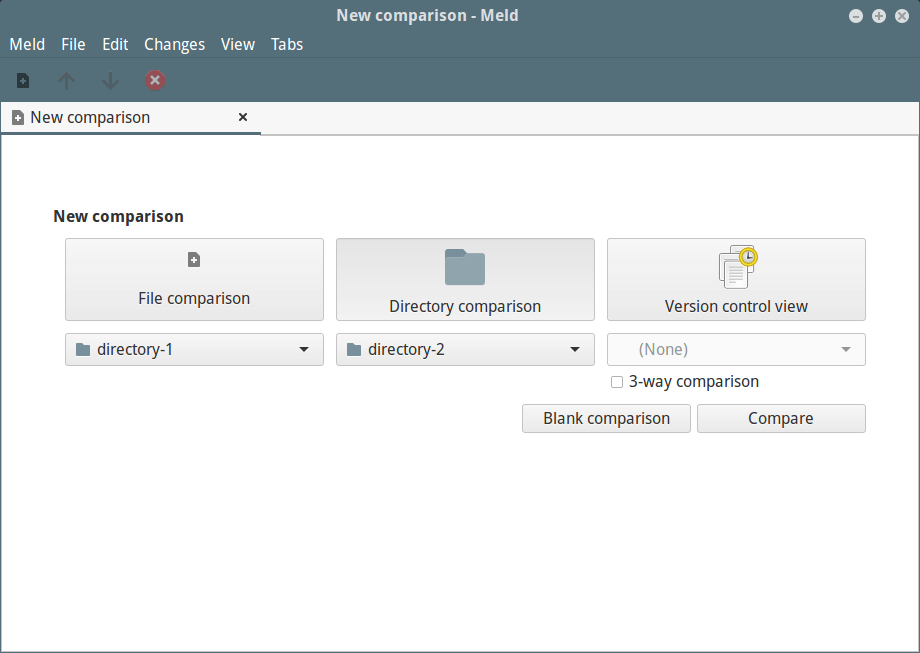

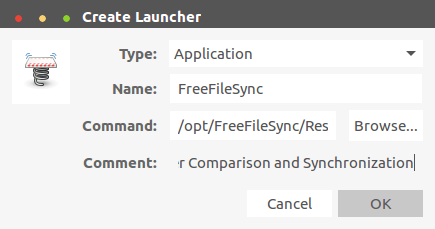

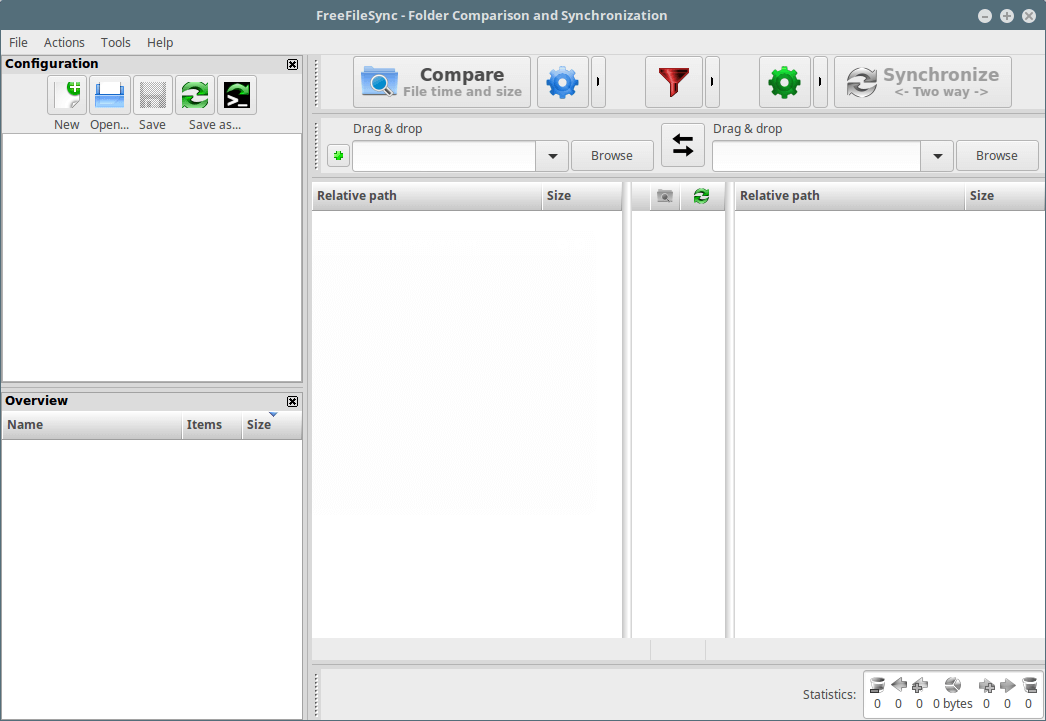

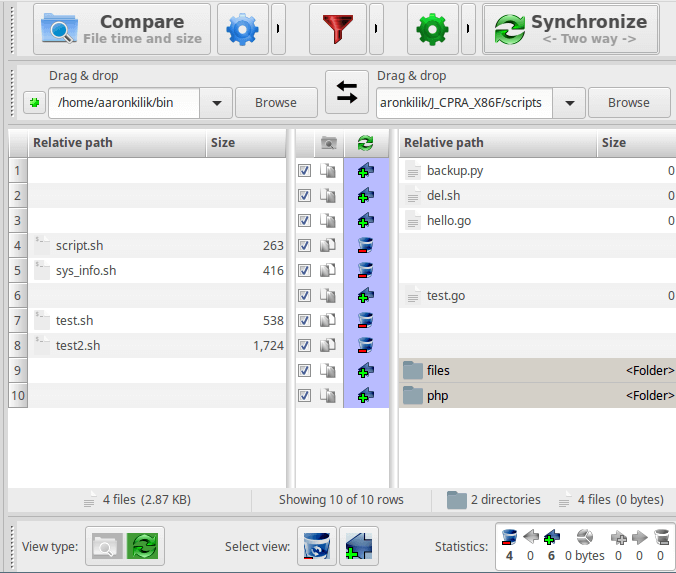

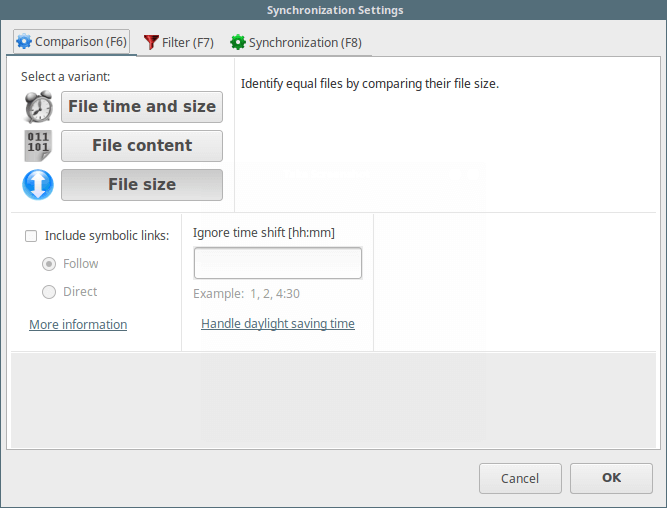

### 使用 Meld 可视化比较和合并工具

|

||||

|

||||

meld 是一个很酷的图形化工具(一个 GNOME 桌面下的可视化的比较和合并工具),可供那些喜欢使用鼠标的人使用,可按如下来安装。

|

||||

`meld` 是一个很酷的图形化工具(一个 GNOME 桌面下的可视化的比较和合并工具),可供那些喜欢使用鼠标的人使用,可按如下来安装。

|

||||

|

||||

```

|

||||

$ sudo apt install meld [Debian/Ubuntu systems]

|

||||

@ -51,6 +51,7 @@ $ sudo dnf install meld [Fedora 22+]

|

||||

*Meld 比较工具*

|

||||

|

||||

选择你想要比较的文件夹,注意你可以勾选 “**3-way Comparison**” 选项,添加第三个文件夹。

|

||||

|

||||

[

|

||||

|

||||

][5]

|

||||

@ -76,7 +77,7 @@ Aaron Kili 是一个 Linux 和 F.O.S.S 爱好者,即将成为 Linux 系统管

|

||||

|

||||

via: http://www.tecmint.com/compare-find-difference-between-two-directories-in-linux/

|

||||

|

||||

作者:[Aaron Kili ][a]

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[hkurj](https://github.com/hkurj)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

@ -1,45 +1,40 @@

|

||||

wcnnbdk1 translating

|

||||

ssh_scan – Verifies Your SSH Server Configuration and Policy in Linux

|

||||

ssh_scan:远程验证你 SSH 服务的配置和策略

|

||||

============================================================

|

||||

|

||||

`ssh_scan` 是一个面向 Linux 和 UNIX 服务器的易用的 SSH 服务参数配置和策略的扫描器程序,其思路来自[Mozilla OpenSSH 安全指南][6],这个指南为 SSH 服务参数配置提供了一个可靠的安全策略基线的建议,如加密算法(Ciphers),报文认证信息码算法(MAC),密钥交换算法(KexAlgos)和其它。

|

||||

|

||||

ssh_scan is an easy-to-use prototype SSH configuration and policy scanner for Linux and UNIX servers, inspired by [Mozilla OpenSSH Security Guide][6], which provides a reasonable baseline policy recommendation for SSH configuration parameters such as Ciphers, MACs, and KexAlgos and much more.

|

||||

`ssh_scan` 有如下好处:

|

||||

|

||||

It has some of the following benefits:

|

||||

* 它的依赖是最小化的,`ssh_scan` 只引入了本地 Ruby 和 BinData 来进行它的工作,没有太多的依赖。

|

||||

* 它是可移植的,你可以在其它的项目中使用 `ssh_scan` 或者将它用在[自动化任务][1]上。

|

||||

* 它是易于使用的,只需要简单的将它指向一个 SSH 服务就可以获得一个该服务所支持的选项和策略状态的 JSON 格式报告。

|

||||

* 它同时也是易于配置的,你可以创建适合你策略需求的策略。

|

||||

|

||||

* It has minimal dependencies, ssh_scan only employs native Ruby and BinData to do its work, no heavy dependencies.

|

||||

**建议阅读:** [如何在 Linux 上安装配置 OpenSSH 服务][7]

|

||||

|

||||

* It’s portable, you can use ssh_scan in another project or for [automation of tasks][1].

|

||||

### 如何在 Linux 上安装 ssh_scan

|

||||

|

||||

* It’s easy to use, simply point it at an SSH service and get a JSON report of what it supports and it’s policy status.

|

||||

有如下三种安装 `ssh_scan` 的方式:

|

||||

|

||||

* It’s also configurable, you can create your own custom policies that fit your specific policy requirements.

|

||||

|

||||

**Suggested Read:** [How to Install and Configure OpenSSH Server in Linux][7]

|

||||

|

||||

### How to Install ssh_scan in Linux

|

||||

|

||||

There are three ways you can install ssh_scan and they are:

|

||||

|

||||

To install and run as a gem, type:

|

||||

使用 Ruby gem 来安装运行,如下:

|

||||

|

||||

```

|

||||

----------- On Debian/Ubuntu -----------

|

||||

----------- 在 Debian/Ubuntu -----------

|

||||

$ sudo apt-get install ruby gem

|

||||

$ sudo gem install ssh_scan

|

||||

----------- On CentOS/RHEL -----------

|

||||

----------- 在 CentOS/RHEL -----------

|

||||

# yum install ruby rubygem

|

||||

# gem install ssh_scan

|

||||

```

|

||||

|

||||

To run from a [docker container][8], type:

|

||||

使用[docker 容器][8]来运行,如下:

|

||||

|

||||

```

|

||||

# docker pull mozilla/ssh_scan

|

||||

# docker run -it mozilla/ssh_scan /app/bin/ssh_scan -t github.com

|

||||

```

|

||||

|

||||

To install and run from source, type:

|

||||

使用源码安装运行,如下:

|

||||

|

||||

```

|

||||

# git clone https://github.com/mozilla/ssh_scan.git

|

||||

@ -53,29 +48,29 @@ To install and run from source, type:

|

||||

# ./bin/ssh_scan

|

||||

```

|

||||

|

||||

### How to Use ssh_scan in Linux

|

||||

### 如何在 Linux 上使用 ssh_scan

|

||||

|

||||

The syntax for using ssh_scan is as follows:

|

||||

使用 `ssh_scan` 的语法如下:

|

||||

|

||||

```

|

||||

$ ssh_scan -t ip-address

|

||||

$ ssh_scan -t server-hostname

|

||||

$ ssh_scan -t ip地址

|

||||

$ ssh_scan -t 主机名

|

||||

```

|

||||

|

||||

For example to scan SSH configs and policy of server 92.168.43.198, enter:

|

||||

举个例子来扫描 192.168.43.198 这台服务器的 SSH 配置和策略,键入:

|

||||

|

||||

```

|

||||

$ ssh_scan -t 192.168.43.198

|

||||

```

|

||||

|

||||

Note you can also pass a [IP/Range/Hostname] to the `-t` option as shown in the options below:

|

||||

注意你同时也可以像下方展示的给 `-t` 选项传入一个[IP地址/地址段/主机名]:

|

||||

|

||||

```

|

||||

$ ssh_scan -t 192.168.43.198,200,205

|

||||

$ ssh_scan -t test.tecmint.lan

|

||||

```

|

||||

|

||||

##### Sample Output

|

||||

输出示例:

|

||||

|

||||

```

|

||||

I, [2017-05-09T10:36:17.913644 #7145] INFO -- : You're using the latest version of ssh_scan 0.0.19

|

||||

@ -192,25 +187,25 @@ I, [2017-05-09T10:36:17.913644 #7145] INFO -- : You're using the latest version

|

||||

]

|

||||

```

|

||||

|

||||

You can use `-p` to specify a different port, `-L` to enable the logger and `-V` to define the verbosity level as shown below:

|

||||

你可以使用 `-p` 选项来指定不同的端口,`-L` 选项来开启日志记录配合 `-V` 选项来指定日志级别:

|

||||

|

||||

```

|

||||

$ ssh_scan -t 192.168.43.198 -p 22222 -L ssh-scan.log -V INFO

|

||||

```

|

||||

|

||||

Additionally, use a custom policy file (default is Mozilla Modern) with the `-P` or `--policy [FILE]` like so:

|

||||

另外,可以使用 `-P` 或 `--policy` 选项来指定一个策略文件(默认是 Mozilla Modern)(LCTT 译注:这里的 Modern 可能指的是 https://wiki.mozilla.org/Security/Server_Side_TLS 中提到的 Modern compatibility ):

|

||||

|

||||

```

|

||||

$ ssh_scan -t 192.168.43.198 -L ssh-scan.log -V INFO -P /path/to/custom/policy/file

|

||||

```

|

||||

|

||||

Type this to view all ssh_scan usage options and more examples:

|

||||

ssh_scan 使用帮助与其它示例:

|

||||

|

||||

```

|

||||

$ ssh_scan -h

|

||||

```

|

||||

|

||||

##### Sample Output

|

||||

输出示例:

|

||||

|

||||

```

|

||||

ssh_scan v0.0.17 (https://github.com/mozilla/ssh_scan)

|

||||

@ -245,34 +240,28 @@ ssh_scan -t 192.168.1.1 -P custom_policy.yml

|

||||

ssh_scan -t 192.168.1.1 --unit-test -P custom_policy.yml

|

||||

```

|

||||

|

||||

Check out some useful artilces on SSH Server:

|

||||

SSH 服务器相关参考阅读:

|

||||

|

||||

1. [SSH Passwordless Login Using SSH Keygen in 5 Easy Steps][2]

|

||||

1. [使用 SSH Keygen(ssh-keygen)五步实现 SSH 免密登录][2]

|

||||

2. [安全 SSH 服务器的 5 个最佳实践][3]

|

||||

3. [使用 Chroot 来限制 SSH 用户进入某些目录][4]

|

||||

4. [如何配置 SSH 连接来简化远程登录][5]

|

||||

|

||||

2. [5 Best Practices to Secure SSH Server][3]

|

||||

如果需要更详细的信息可以访问 `ssh_scan` 的 Github 仓库:[https://github.com/mozilla/ssh_scan][9]

|

||||

|

||||

3. [Restrict SSH User Access to Certain Directory Using Chrooted Jail][4]

|

||||

|

||||

4. [How to Configure Custom SSH Connections to Simplify Remote Access][5]

|

||||

|

||||

For more details visit ssh_scan Github repository: [https://github.com/mozilla/ssh_scan][9]

|

||||

|

||||

In this article, we showed you how to set up and use ssh_scan in Linux. Do you know of any similar tools out there? Let us know via the feedback form below, including any other thoughts concerning this guide.

|

||||

|

||||

SHARE[+][10][0][11][20][12][25][13] [][14]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

作者简介:

|

||||

|

||||

Aaron Kili is a Linux and F.O.S.S enthusiast, an upcoming Linux SysAdmin, web developer, and currently a content creator for TecMint who loves working with computers and strongly believes in sharing knowledge.

|

||||

Aaron Kili 是 Linux 与 F.O.S.S (自由及开源软件)爱好者,一位将来的 Linux 系统管理员,网站开发者,现在是一个热爱与计算机一起工作并且拥有强烈知识分信念的 TecMint 内容贡献者。

|

||||

|

||||

------------------

|

||||

|

||||

via: https://www.tecmint.com/ssh_scan-ssh-configuration-and-policy-scanner-for-linux/

|

||||

|

||||

作者:[Aaron Kili ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[wcnnbdk1](https://github.com/wcnnbdk1)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -286,11 +275,4 @@ via: https://www.tecmint.com/ssh_scan-ssh-configuration-and-policy-scanner-for-l

|

||||

[7]:https://www.tecmint.com/install-openssh-server-in-linux/

|

||||

[8]:https://www.tecmint.com/install-docker-and-learn-containers-in-centos-rhel-7-6/

|

||||

[9]:https://github.com/mozilla/ssh_scan

|

||||

[10]:https://www.tecmint.com/ssh_scan-ssh-configuration-and-policy-scanner-for-linux/#

|

||||

[11]:https://www.tecmint.com/ssh_scan-ssh-configuration-and-policy-scanner-for-linux/#

|

||||

[12]:https://www.tecmint.com/ssh_scan-ssh-configuration-and-policy-scanner-for-linux/#

|

||||

[13]:https://www.tecmint.com/ssh_scan-ssh-configuration-and-policy-scanner-for-linux/#

|

||||

[14]:https://www.tecmint.com/ssh_scan-ssh-configuration-and-policy-scanner-for-linux/#comments

|

||||

[15]:https://www.tecmint.com/author/aaronkili/

|

||||

[16]:https://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/

|

||||

[17]:https://www.tecmint.com/free-linux-shell-scripting-books/

|

||||

@ -0,0 +1,90 @@

|

||||

理解 Linux 中的 Shutdown、Poweroff、Halt 和 Reboot 命令

|

||||

============================================================

|

||||

|

||||

在本篇中,我们会向你解释 **shutdown、poweroff、halt 以及 reboot** 命令。我们会解释当使用可选项时,它们实际做了什么。

|

||||

|

||||

如果你想深入管理 Linux 服务器,那么为了有效和可靠的服务器管理,这些[重要的 Linux 命令][1]你需要完全理解。

|

||||

|

||||

通常上,当你想要关闭或者重启你的机器时,你会运行下面之一的命令:

|

||||

|

||||

### Shutdown 命令

|

||||

|

||||

**shutdown** 会给系统计划一个时间关机。它可被用于停止、关机、重启机器。

|

||||

|

||||

你也许会指定一个时间字符串(通常是 “now” 或者 “hh:mm” 用于小时/分钟)作为第一个参数。额外地,你也可以设置一个广播信息在系统关闭前发送给所有已登录的用户。

|

||||

|

||||

重要:如果使用了时间参数,系统关机前 5 分钟,/run/nologin 文件会被创建来确保没有人可以再登录。

|

||||

|

||||

shutdown 命令示例:

|

||||

|

||||

```

|

||||

# shutdown

|

||||

# shutdown now

|

||||

# shutdown 13:20

|

||||

# shutdown -p now #关闭机器

|

||||

# shutdown -H now #停止机器

|

||||

# shutdown -r09:35 #在 09:35am 重启机器

|

||||

```

|

||||

|

||||

要取消即将的关机,只要输入下面的命令:

|

||||

|

||||

```

|

||||

# shutdown -c

|

||||

```

|

||||

|

||||

### Halt 命令

|

||||

|

||||

**halt** 通知硬件来停止所有的 CPU 功能,但是仍然保持通电。你可以用它使系统处于低层维护状态。

|

||||

|

||||

注意在有些情况会它会完全关闭系统。下面是 halt 命令示例:

|

||||

|

||||

```

|

||||

# halt #停止机器

|

||||

# halt -p #关闭机器

|

||||

# halt --reboot #重启机器

|

||||

```

|

||||

|

||||

### poweroff 命令

|

||||

|

||||

**poweroff** 会发送一个 ACPI 信号来通知系统关机。

|

||||

|

||||

下面是 poweroff 命令示例:

|

||||

|

||||

```

|

||||

# poweroff #关闭机器

|

||||

# poweroff --halt #停止机器

|

||||

# poweroff --reboot #重启机器

|

||||

```

|

||||

|

||||

### Reboot 命令

|

||||

|

||||

reboot 通知系统重启。

|

||||

|

||||

```

|

||||

# reboot #重启机器

|

||||

# reboot --halt #停止机器

|

||||

# reboot -p #关闭机器

|

||||

```

|

||||

|

||||

就是这样了!如先前提到的,理解这些命令能够有效并可靠地在多用户环境下管理 Linux 服务器。你有一些额外的想法么?在评论区留言与我们分享。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Aaron Kili 是一名 Linux 和 F.O.S.S 的爱好者,未来的 Linux 系统管理员、网站开发人员,目前是 TecMint 的内容创作者,他喜欢用电脑工作,并乐于分享知识。

|

||||

|

||||

--------------------------

|

||||

|

||||

via: https://www.tecmint.com/shutdown-poweroff-halt-and-reboot-commands-in-linux/

|

||||

|

||||

作者:[Aaron Kili ][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.tecmint.com/author/aaronkili/

|

||||

[1]:https://www.tecmint.com/60-commands-of-linux-a-guide-from-newbies-to-system-administrator/

|

||||

[2]:https://www.tecmint.com/author/aaronkili/

|

||||

|

||||

131

published/20170529 Linux comm command tutorial for beginners.md

Normal file

131

published/20170529 Linux comm command tutorial for beginners.md

Normal file

@ -0,0 +1,131 @@

|

||||

使用 comm 比较两个排序好的文件

|

||||

===========================================================

|

||||

|

||||

Linux 中的 `comm` 命令可以让用户按行比较两个**已经排序好**的文件。在本教程中,我们将使用一些浅显易懂的例子来讨论这个命令行工具。在开始之前,请注意,本教程中提到的所有例子都已经在 Ubuntu 16.04LTS 版本中测试过。

|

||||

|

||||

下面的例子将会告诉你 `comm` 命令是如何工作的。

|

||||

|

||||

### 1、 如何使用 `comm` 比较两个排序好的文件

|

||||

|

||||

要使用 `comm` 命令比较两个排序好的文件,只需要把它们的名字作为 `comm` 命令的参数。下面是通常的语法:

|

||||

|

||||

```

|

||||

comm [name-of-first-file] [name-of-second-file]

|

||||

```

|

||||

|

||||

比如,假设 `file1` 和 `file2` 是这种情况下的两个文件。前者包含下面几行内容:

|

||||

|

||||

```

|

||||

001

|

||||

056

|

||||

127

|

||||

258

|

||||

```

|

||||

|

||||

而后者包含下面几行内容:

|

||||

|

||||

```

|

||||

002

|

||||

056

|

||||

167

|

||||

369

|

||||

```

|

||||

|

||||

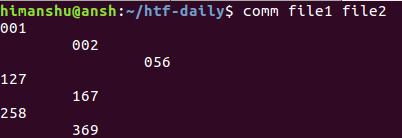

此时,`comm` 命令的输出如下图所示:

|

||||

|

||||

```

|

||||

comm file1 file2

|

||||

```

|

||||

|

||||

[][8]

|

||||

|

||||

你可以看到,输出包含 3 列。第一列是仅包含在 `file1` 中的内容,第二列是仅包含在 `file2` 中的内容,最后,第三列是两个文件中均包含的内容。

|

||||

|

||||

### 2、 如何不输出 `comm` 命令输出中的某些列

|

||||

|

||||

如果你想,你可以不输出 `comm` 命令输出中的某些列。对于该特性,你有三个命令行选项可用:`-1`、`-2` 和 `-3` 。正如你所猜想的,这些数字表示你不想输出的列。

|

||||

|

||||

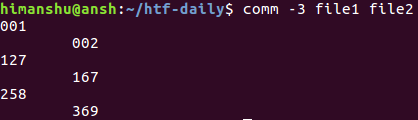

比如,下面这个命令将会不输出上面例子中的第三列:

|

||||

|

||||

```

|

||||

comm -3 file1 file2

|

||||

```

|

||||

[][9]

|

||||

|

||||

|

||||

因此,你可以看到,第三列并没有输出。

|

||||

|

||||

注意,你可以通过一个单一命令同时不输出多列内容。比如:

|

||||

|

||||

```

|

||||

comm -12 file1 file2

|

||||

```

|

||||

|

||||

上面这个命令将会不输出第一、二列。

|

||||

|

||||

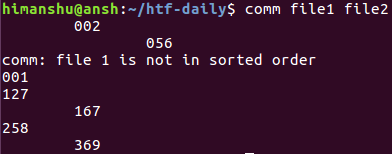

### 3、 如何使用 `comm` 命令比较两个未排序好的文件

|

||||

|

||||

正如我们所知道的,`comm` 只可用于排序好的文件。如果发现其中一个文件未排序好,那么便会在输出中产生一条信息来告诉用户。比如,我们交换 `file1` 的第一行和第二行,然后与 `file2` 进行比较。下面是该命令的输出:

|

||||

|

||||

[][10]

|

||||

|

||||

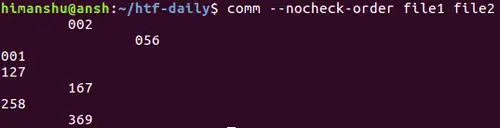

你可以看到,这个命令产生一个输出告诉我们:`file1` 还没有排序好。此时,如果你不想让这个工具检查输入是否已经排序好,那么你可以使用 `--nocheck-order` 选项:

|

||||

|

||||

```

|

||||

comm --nocheck-order file1 file2

|

||||

```

|

||||

|

||||

[][11]

|

||||

|

||||

你可以看到,前面出现的提示信息已经消失了。

|

||||

|

||||

注意,如果你想明确告诉 `comm` 命令来检查输入文件是否排序好,那么你可以使用 `--check-order` 选项。

|

||||

|

||||

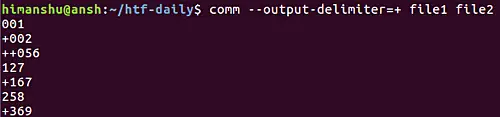

### 4、 如何用自定义字符串分隔 `comm` 命令的输出列

|

||||

|

||||

默认情况下,`comm` 命令的输出列之间是以空格分隔的。然而,如何你想使用一个自定义字符串作为分隔符,那么你可以使用 `--output-delimiter` 选项。使用该选项时需要指定你想用来作为分隔符的字符串。

|

||||

|

||||

```

|

||||

comm --output-delimiter=+ file1 file2

|

||||

```

|

||||

|

||||

比如,我们使用加号来作为分隔符:

|

||||

|

||||

[][12]

|

||||

|

||||

### 5、 如何使 `comm` 的输出行以 `NUL` 字符终止

|

||||

|

||||

默认情况下,`comm` 命令的输出行以新行终止。然而,如果你想,那么你可以改为以 `NUL` 字符终止,只需要使用 `-z` 选项即可:

|

||||

|

||||

```

|

||||

comm -z file1 file2

|

||||

```

|

||||

|

||||

### 结论

|

||||

|

||||

`comm` 命令并没有特别多的特性性,我们在这儿已经讨论了它的绝大多数命令行选项。只需要理解和练习在这篇教程中讨论的内容,那么你便可以在日常工作中知道如何使用这个工具了。如果你有任何问题或者疑问,请前往该命令的 [man 手册][13],或者在下面评论。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/linux-comm-command/

|

||||

|

||||

作者:[Himanshu Arora][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.howtoforge.com/linux-comm-command/

|

||||

[1]:https://www.howtoforge.com/linux-comm-command/#linux-comm-command

|

||||

[2]:https://www.howtoforge.com/linux-comm-command/#-how-to-compare-two-sorted-files-using-comm

|

||||

[3]:https://www.howtoforge.com/linux-comm-command/#-how-tonbspsuppress-individual-columns-in-comm-command-output

|

||||

[4]:https://www.howtoforge.com/linux-comm-command/#-how-to-make-comm-compare-files-that-are-not-sorted

|

||||

[5]:https://www.howtoforge.com/linux-comm-command/#-how-to-separate-comm-output-columns-with-custom-string

|

||||

[6]:https://www.howtoforge.com/linux-comm-command/#-how-to-make-comm-output-lines-nul-terminated

|

||||

[7]:https://www.howtoforge.com/linux-comm-command/#conclusion

|

||||

[8]:https://www.howtoforge.com/images/linux_comm_command/big/comm-output.png

|

||||

[9]:https://www.howtoforge.com/images/linux_comm_command/big/comm-supress-column.png

|

||||

[10]:https://www.howtoforge.com/images/linux_comm_command/big/comm-not-sorted-message.png

|

||||

[11]:https://www.howtoforge.com/images/linux_comm_command/big/comm-nocheck-order.png

|

||||

[12]:https://www.howtoforge.com/images/linux_comm_command/big/comm-output-delimiter.png

|

||||

[13]:https://linux.cn/man/1/comm

|

||||

@ -0,0 +1,108 @@

|

||||

使用 Python 开始你的机器学习之旅

|

||||

============================================================

|

||||

|

||||

> 机器学习是你的简历中必需的一门技能。我们简要概括一下使用 Python 来进行机器学习的一些步骤。

|

||||

|

||||

|

||||

|

||||

|

||||

>图片来自: opensource.com

|

||||

|

||||

你想知道如何开始机器学习吗?在这篇文章中,我将简要概括一下使用 [Python][16] 来开始机器学习的一些步骤。Python 是一门流行的开源程序设计语言,也是在人工智能及其它相关科学领域中最常用的语言之一。机器学习简称 ML,是人工智能的一个分支,它是利用算法从数据中进行学习,然后作出预测。机器学习有助于帮助我们预测我们周围的世界。

|

||||

|

||||

从无人驾驶汽车到股市预测,再到在线学习,机器学习通过预测来进行自我提高的方法几乎被用在了每一个领域。由于机器学习的实际运用,目前它已经成为就业市场上最有需求的技能之一。另外,使用 Python 来开始机器学习很简单,因为有大量的在线资源,以及许多可用的 [Python 机器学习库][16]。

|

||||

|

||||

你需要如何开始使用 Python 进行机器学习呢?让我们来总结一下这个过程。

|

||||

|

||||

### 提高你的 Python 技能

|

||||

|

||||

由于 Python 在工业界和科学界都非常受欢迎,因此你不难找到 Python 的学习资源。如果你是一个从未接触过 Python 的新手,你可以利用在线资源,比如课程、书籍和视频来学习 Python。比如下面列举的一些资源:

|

||||

|

||||

* [Python 学习之路][5]

|

||||

* [Google 开发者 Python 课程(视频)][6]

|

||||

* [Google 的 Python 课堂][7]

|

||||

|

||||

### 安装 Anaconda

|

||||

|

||||

下一步是安装 [Anacona][2]。有了 Anaconda ,你将可以开始使用 Python 来探索机器学习的世界了。Anaconda 的默认安装库包含了进行机器学习所需要的工具。

|

||||

|

||||

### 基本的机器学习技能

|

||||

|

||||

有了一些基本的 Python 编程技能,你就可以开始学习一些基本的机器学习技能了。一个实用的学习方法是学到一定技能便开始进行练习。然而,如果你想深入学习这个领域,那么你需要准备投入更多的学习时间。

|

||||

|

||||

一个获取技能的有效方法是在线课程。吴恩达的 Coursera [机器学习课程][20] 是一个不错的选择。其它有用的在线训练包括:

|

||||

|

||||

* [Python 机器学习: Scikit-Learn 教程][8]

|

||||

* [Python 实用机器学习教程][9]

|

||||

|

||||

你也可以在 [LiveEdu.tv][21] 上观看机器学习视频,从而进一步了解这个领域。

|

||||

|

||||

### 学习更多的 Python 库

|

||||

|

||||

当你对 Python 和机器学习有一个好的感觉之后,可以开始学习一些[开源的 Python 库][22]。科学的 Python 库将会使完成一些简单的机器学习任务变得很简单。然而,选择什么库是完全主观的,并且在业界内许多人有很大的争论。

|

||||

|

||||

一些实用的 Python 库包括:

|

||||

|

||||

* [Scikit-learn][10] :一个优雅的机器学习算法库,可用于数据挖掘和数据分析任务。

|

||||

* [Tensorflow][11] :一个易于使用的神经网络库。

|

||||

* [Theano][12] : 一个强大的机器学习库,可以帮助你轻松的评估数学表达式。

|

||||

* [Pattern][13] : 可以帮助你进行自然语言处理、数据挖掘以及更多的工作。

|

||||

* [Nilearn][14] :基于 Scikit-learn,它可以帮助你进行简单快速的统计学习。

|

||||

|

||||

### 探索机器学习

|

||||

|

||||

对基本的 Python、机器学习技能和 Python 库有了一定理解之后,就可以开始探索机器学习了。接下来,尝试探索一下 Scikit-learn 库。一个不错的教程是 Jake VanderPlas 写的 [Scikit-learn 简介][23]。

|

||||

|

||||

然后,进入中级主题,比如 [K-均值聚类算法简介][24]、线性回归、[决策树][25]和逻辑回归。

|

||||

|

||||

最后,深入高级机器学习主题,比如向量机和复杂数据转换。

|

||||

|

||||

就像学习任何新技能一样,练习得越多,就会学得越好。你可以通过练习不同的算法,使用不同的数据集来更好的理解机器学习,并提高解决问题的整体能力。

|

||||

|

||||

使用 Python 进行机器学习是对你的技能的一个很好的补充,并且有大量免费和低成本的在线资源可以帮助你。你已经掌握机器学习技能了吗?可以在下面留下你的评论,或者[提交一篇文章][26]来分享你的故事。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Michael J. Garbade 博士是旧金山 LiveEdu Inc(Livecoding.tv)的创始人兼首席执行官。Livecoding.tv 是世界上观看工程师直播编代码最先进的直播平台。你可以通过观看工程师们写网站、移动应用和游戏,来将你的技能提升到一个新的水平。MichaelJ. Garbade 博士拥有金融学博士学位,并且是一名自学成才的工程师,他喜欢 Python、Django、Sencha Touch 和视频流。

|

||||

|

||||

-----------

|

||||

|

||||

via: https://opensource.com/article/17/5/python-machine-learning-introduction

|

||||

|

||||

作者:[Michael J. Garbade][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/drmjg

|

||||

[1]:https://opensource.com/tags/python?src=programming_resource_menu

|

||||

[2]:https://opensource.com/tags/javascript?src=programming_resource_menu

|

||||

[3]:https://opensource.com/tags/perl?src=programming_resource_menu

|

||||

[4]:https://developers.redhat.com/?intcmp=7016000000127cYAAQ&amp;amp;amp;src=programming_resource_menu

|

||||

[5]:https://learnpythonthehardway.org/book/

|

||||

[6]:https://www.youtube.com/playlist?list=PLfZeRfzhgQzTMgwFVezQbnpc1ck0I6CQl

|

||||

[7]:https://developers.google.com/edu/python/

|

||||

[8]:https://www.datacamp.com/community/tutorials/machine-learning-python#gs.HfAvLRs

|

||||

[9]:https://pythonprogramming.net/machine-learning-tutorial-python-introduction/

|

||||

[10]:http://scikit-learn.org/stable/

|

||||

[11]:https://opensource.com/article/17/2/machine-learning-projects-tensorflow-raspberry-pi

|

||||

[12]:http://deeplearning.net/software/theano/

|

||||

[13]:https://github.com/clips/pattern

|

||||

[14]:https://github.com/nilearn/nilearn

|

||||

[15]:https://opensource.com/article/17/5/python-machine-learning-introduction?rate=jgAmIV_YqoWTbnSgNjZ0EE5lyhJtzf-ukzhiMmXtfMQ

|

||||

[16]:https://opensource.com/article/17/2/3-top-machine-learning-libraries-python

|

||||

[17]:https://www.liveedu.tv/learn/python/

|

||||

[18]:https://opensource.com/article/17/2/3-top-machine-learning-libraries-python

|

||||

[19]:http://docs.continuum.io/anaconda/install

|

||||

[20]:https://www.coursera.org/learn/machine-learning

|

||||

[21]:https://www.liveedu.tv/

|

||||

[22]:https://opensource.com/article/17/5/4-practical-python-libraries

|

||||

[23]:http://nbviewer.jupyter.org/github/donnemartin/data-science-ipython-notebooks/blob/master/scikit-learn/scikit-learn-intro.ipynb

|

||||

[24]:https://www.datascience.com/blog/introduction-to-k-means-clustering-algorithm-learn-data-science-tutorials

|

||||

[25]:http://machinelearningmastery.com/implement-decision-tree-algorithm-scratch-python/

|

||||

[26]:https://opensource.com/story

|

||||

[27]:https://opensource.com/user/78291/feed

|

||||

[28]:https://opensource.com/users/drmjg

|

||||

@ -0,0 +1,156 @@

|

||||

MyCLI :一个支持自动补全和语法高亮的 MySQL/MariaDB 客户端

|

||||

====

|

||||

|

||||

MyCLI 是一个易于使用的命令行客户端,可用于受欢迎的数据库管理系统 MySQL、MariaDB 和 Percona,支持自动补全和语法高亮。它是使用 `prompt_toolkit` 库写的,需要 Python 2.7、3.3、3.4、3.5 和 3.6 的支持。MyCLI 还支持通过 SSL 安全连接到 MySQL 服务器。

|

||||

|

||||

#### MyCLI 的特性

|

||||

|

||||

* 当你第一次使用它的时候,将会自动创建一个文件 `~/.myclirc`。

|

||||

* 当输入 SQL 的关键词和数据库中的表、视图和列时,支持自动补全。

|

||||

* 默认情况下也支持智能补全,能根据上下文的相关性提供补全建议。

|

||||

|

||||

比如:

|

||||

|

||||

```

|

||||

SELECT * FROM <Tab> - 这将显示出数据库中的表名。

|

||||

SELECT * FROM users WHERE <Tab> - 这将简单的显示出列名称。

|

||||

```

|

||||

|

||||

* 通过使用 `Pygents` 支持语法高亮

|

||||

* 支持 SSL 连接

|

||||

* 提供多行查询支持

|

||||

* 它可以将每一个查询和输出记录到一个文件中(默认情况下禁用)。

|

||||

* 允许保存收藏一个查询(使用 `\fs 别名` 保存一个查询,并可使用 `\f 别名` 运行它)。

|

||||

* 支持 SQL 语句执行和表查询计时

|

||||

* 以更吸引人的方式打印表格数据

|

||||

|

||||

### 如何在 Linux 上为 MySQL 和 MariaDB 安装 MyCLI

|

||||

|

||||

在 Debian/Ubuntu 发行版上,你可以很容易的像下面这样使用 [`apt` 命令][6] 来安装 MyCLI 包:

|

||||

|

||||

```

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install mycli

|

||||

```

|

||||

|

||||

同样,在 Fedora 22+ 上也有 MyCLI 的可用包,你可以像下面这样使用 [`dnf` 命令][7] 来安装它:

|

||||

|

||||

```

|

||||

$ sudo dnf install mycli

|

||||

```

|

||||

|

||||

对于其他 Linux 发行版,比如 RHEL/CentOS,你需要使用 Python 的 `pip` 工具来安装 MyCLI。首先,使用下面的命令来安装 pip:

|

||||

|

||||

```

|

||||

$ sudo yum install pip

|

||||

```

|

||||

|

||||

安装好 `pip` 以后,你可以像下面这样安装 MyCLI:

|

||||

|

||||

```

|

||||

$ sudo pip install mycli

|

||||

```

|

||||

|

||||

### 在 Linux 中如何使用 MyCLI 连接 MySQL 和 MariaDB

|

||||

|

||||

安装好 MyCLI 以后,你可以像下面这样使用它:

|

||||

|

||||

```

|

||||

$ mycli -u root -h localhost

|

||||

```

|

||||

|

||||

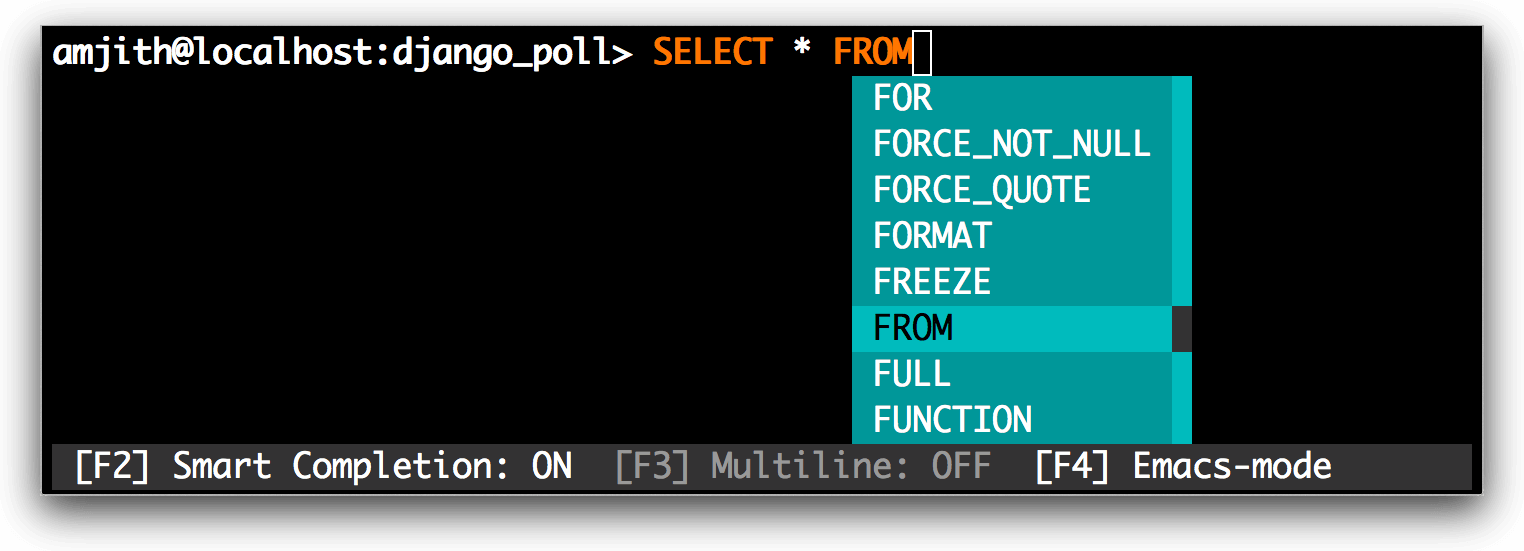

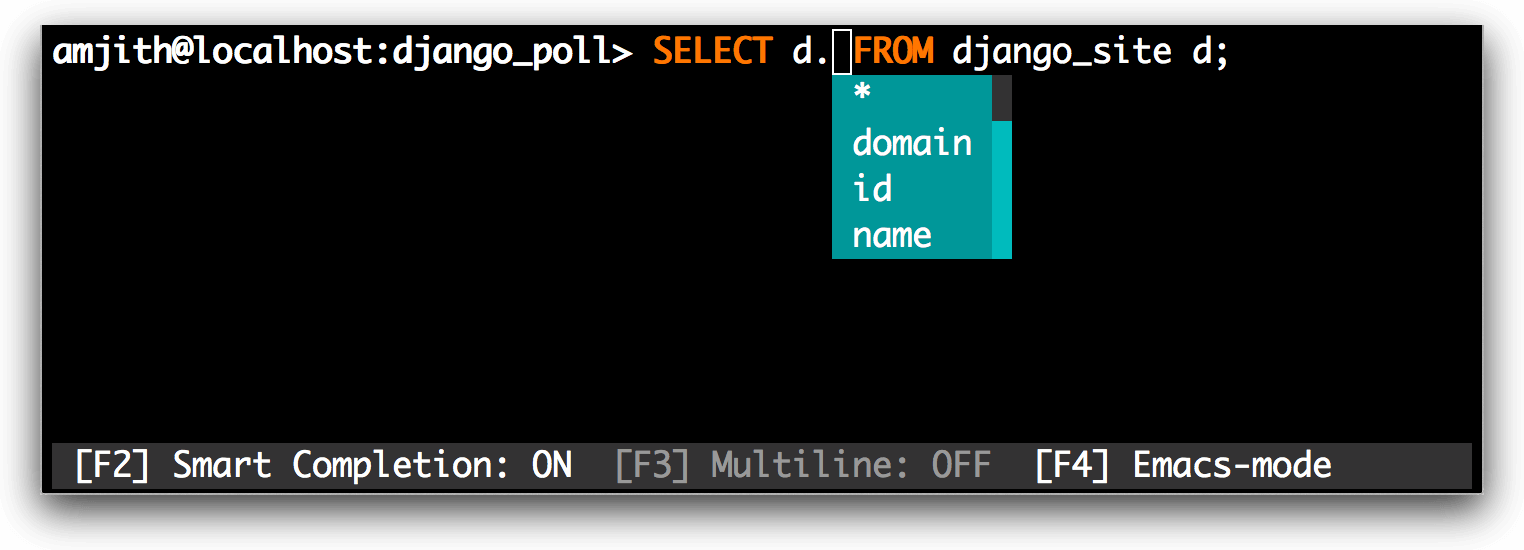

#### 自动补全

|

||||

|

||||

对于关键词和 SQL 函数可以进行简单的自动补全:

|

||||

|

||||

|

||||

|

||||

*MySQL 自动补全*

|

||||

|

||||

#### 智能补全

|

||||

|

||||

当输入 `FROM` 关键词以后会进行表名称的补全:

|

||||

|

||||

|

||||

|

||||

*MySQL 智能补全*

|

||||

|

||||

#### 别名支持

|

||||

|

||||

当表的名称设置别名以后,也支持列名称的补全:

|

||||

|

||||

|

||||

|

||||

*MySQL 别名支持*

|

||||

|

||||

#### 语法高亮

|

||||

|

||||

支持 MySQL 语法高亮:

|

||||

|

||||

|

||||

|

||||

*MySQL 语法高亮*

|

||||

|

||||

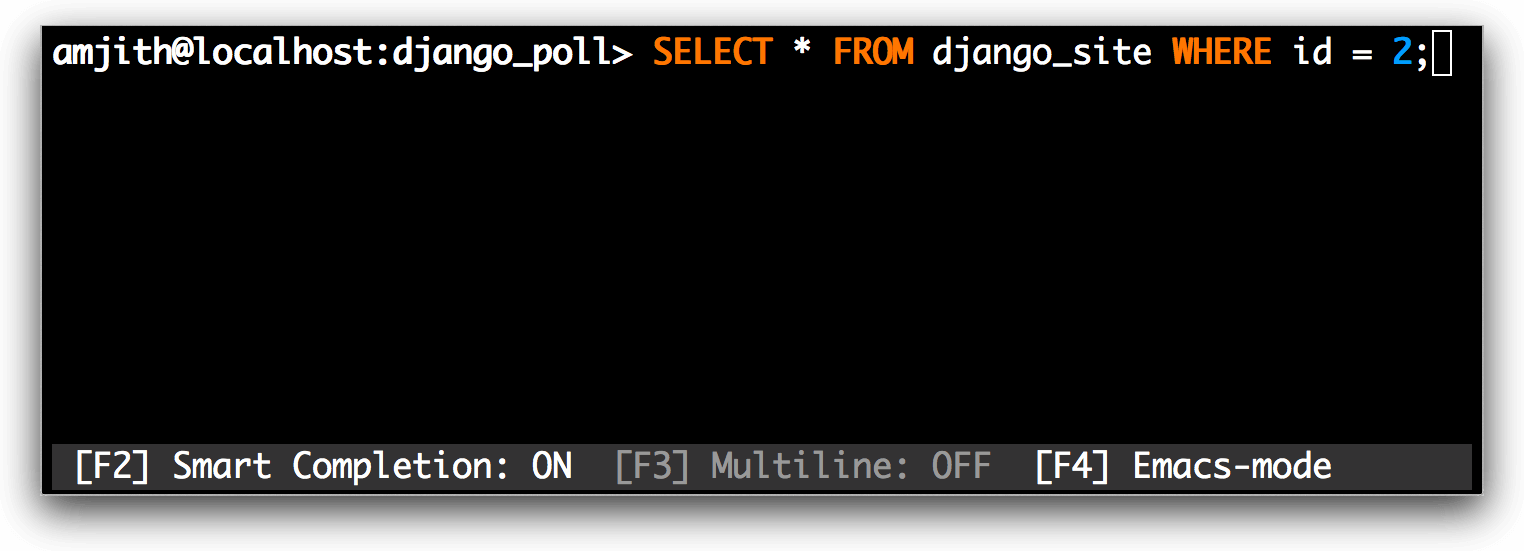

#### 格式化 SQL 的输出

|

||||

|

||||

MySQL 的输出会通过 [`less` 命令][8] 进行格式化输出:

|

||||

|

||||

|

||||

|

||||

*MySQL 格式化输出*

|

||||

|

||||

要登录 MySQL 并同时选择数据库,你可以使用和下面类似的命令:

|

||||

|

||||

```

|

||||

$ mycli local_database

|

||||

$ mycli -h localhost -u root app_db

|

||||

$ mycli mysql://amjith@localhost:3306/django_poll

|

||||

```

|

||||

|

||||

更多使用选项,请输入:

|

||||

|

||||

```

|

||||

$ mycli --help

|

||||

```

|

||||

|

||||

MyCLI 主页: [http://mycli.net/index][9]

|

||||

|

||||

记得阅读一些关于 MySQL 管理的有用文章:

|

||||

|

||||

1. [在 Linux 中用于数据库管理的 20 个 MySQL(Mysqladmin)命令][1]

|

||||

2. [如何在 Linux 中更改默认的 MySQL/MariaDB 数据目录][2]

|

||||

3. [在 Linux 中监测 MySQL 性能的 4 个实用命令行工具][3]

|

||||

4. [如何在 Linux 中更改 MySQL 或 MariaDB 的 Root 密码][4]

|

||||

5. [MySQL 备份和恢复数据库管理命令 ][5]

|

||||

|

||||

这就是本文的全部内容了。在这篇指南中,我们展示了如何通过一些简单的命令在 Linux 中安装和使用 MyCLI。记得通过下面的反馈表向我们分享你关于这篇文章的想法。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Aaron Kili是一名 Linux 和 F.O.S.S 的爱好者,未来的 Linux 系统管理员、网站开发人员,目前是 TecMint 的内容创作者,他喜欢用电脑工作,并乐于分享知识。

|

||||

|

||||

---------

|

||||

|

||||

via: https://www.tecmint.com/mycli-mysql-client-with-auto-completion-syntax-highlighting/

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.tecmint.com/author/aaronkili/

|

||||

[1]:https://www.tecmint.com/mysqladmin-commands-for-database-administration-in-linux/

|

||||

[2]:https://www.tecmint.com/change-default-mysql-mariadb-data-directory-in-linux/

|

||||

[3]:https://www.tecmint.com/mysql-performance-monitoring/

|

||||

[4]:https://www.tecmint.com/change-mysql-mariadb-root-password/

|

||||

[5]:https://www.tecmint.com/mysql-backup-and-restore-commands-for-database-administration/

|

||||

[6]:https://www.tecmint.com/apt-advanced-package-command-examples-in-ubuntu/

|

||||

[7]:https://www.tecmint.com/dnf-commands-for-fedora-rpm-package-management/

|

||||

[8]:https://www.tecmint.com/linux-more-command-and-less-command-examples/

|

||||

[9]:http://mycli.net/index

|

||||

[10]:https://www.tecmint.com/author/aaronkili/

|

||||

[11]:https://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/

|

||||

[12]:https://www.tecmint.com/free-linux-shell-scripting-books/

|

||||

@ -0,0 +1,105 @@

|

||||

mimipenguin:从当前 Linux 用户转储登录密码

|

||||

============================================================

|

||||

|

||||

mimipenguin 是一个免费、开源、简单但是强大的 shell/python 脚本,用来从当前 Linux 桌面用户转储登录凭证(用户名和密码),并且已在不同的 Linux 发行版中测试过。

|

||||

|

||||

另外,它还支持如:VSFTPd(活跃的 FTP 客户端连接)、Apache2(活跃的/旧的 HTTP 基础认证会话,但是这需要 Gcore),还有 openssh-server(活跃的 SSH 链接,需用 [sudo 命令][5])。重要的是,它逐渐被移植到其他语言中,以支持所有可想到的以后可以利用的情况。

|

||||

|

||||

### mimipenguin 是如何工作的?

|

||||

|

||||

要理解 mimipenguin 是如何工作的,你需要知道所有或者大多数的 Linux 发行版会在内存中存储大量的重要信息, 如:凭据、加密密钥以及个人数据。

|

||||

|

||||

尤其是用户名和密码是由进程(运行中的程序)保存在内存中,并以明文形式存储较长时间。mimipenguin 在技术上利用这些在内存中的明文凭证 - 它会转储一个进程,并提取可能包含明文凭据的行。

|

||||

|

||||

然后,通过以下内容的哈希值来尝试计算每个单词的出现几率:`/etc/shadow`、内存和 regex 搜索。一旦找到任何内容,它就会在标准输出上打印出来。

|

||||

|

||||

### 在 Linux 中安装 mimipenguin

|

||||

|

||||

我们将使用 git 来克隆 mimipenguin 仓库,因此如果你还没安装,那么首先在系统上安装 git。

|

||||

|

||||

```

|

||||

$ sudo apt install git #Debian/Ubuntu systems

|

||||

$ sudo yum install git #RHEL/CentOS systems

|

||||

$ sudo dnf install git #Fedora 22+

|

||||

```

|

||||

|

||||

接着像这样在你的家目录(或者其他任何地方)克隆 mimipenguin 目录:

|

||||

|

||||

```

|

||||

$ git clone https://github.com/huntergregal/mimipenguin.git

|

||||

```

|

||||

|

||||

下载完成后,进入并如下运行 mimipenguin:

|

||||

|

||||

```

|

||||

$ cd mimipenguin/

|

||||

$ ./mimipenguin.sh

|

||||

```

|

||||

|

||||

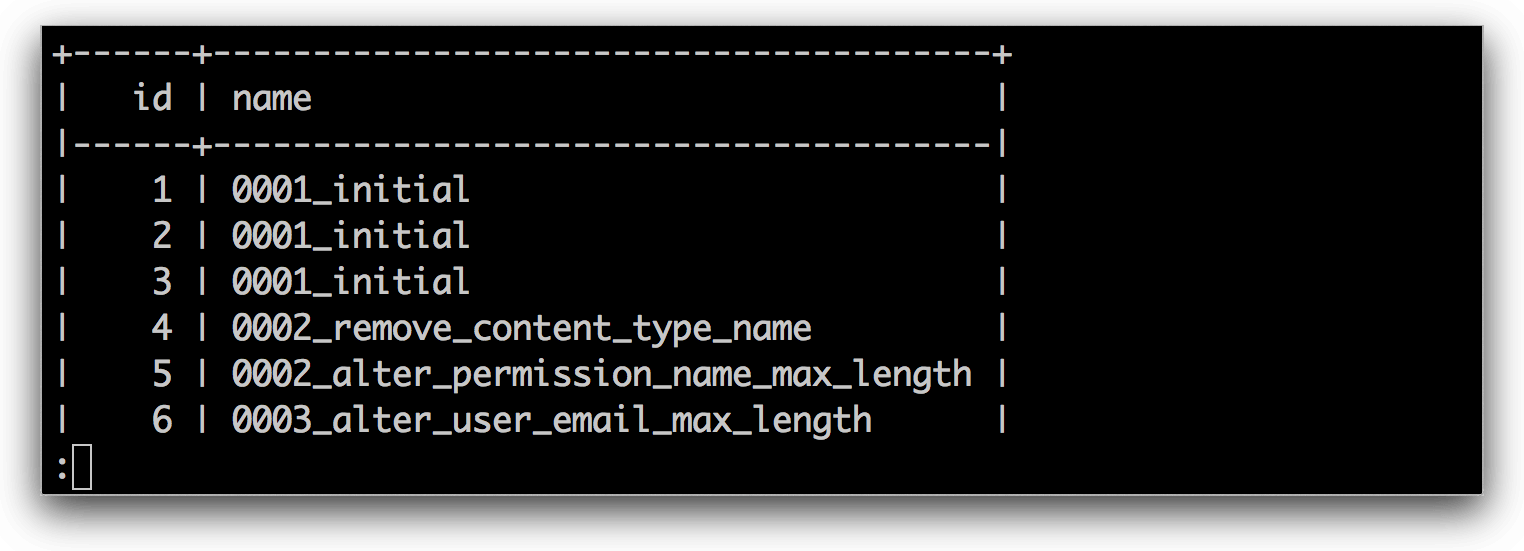

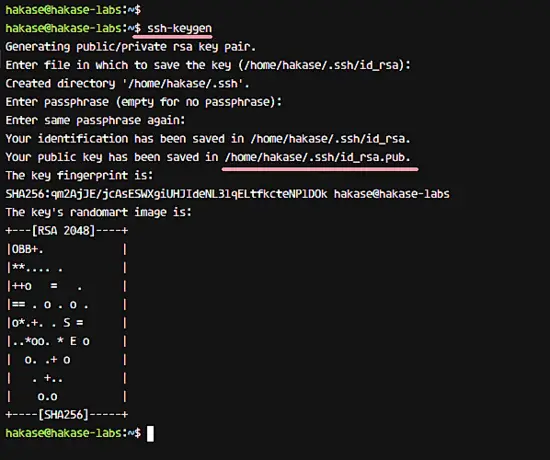

注意:如果你遇到下面的错误,那就使用 sudo 命令:

|

||||

|

||||

```

|

||||

Root required - You are dumping memory...

|

||||

Even mimikatz requires administrator

|

||||

```

|

||||

|

||||

|

||||

|

||||

*在 Linux 中转储登录密码*

|

||||

|

||||

从上面的输出中,mimipenguin 向你提供了桌面环境的用户名和密码。

|

||||

|

||||

另外,还可以如下运行 python 版脚本:

|

||||

|

||||

```

|

||||

$ sudo ./mimipenguin.py

|

||||

```

|

||||

|

||||

注意有时 gcore 可能会阻塞脚本(这是 gcore 中一个已知问题)。

|

||||

|

||||

#### 未来更新

|

||||

|

||||

下面是将会被添加到 mimipenguin 的功能:

|

||||

|

||||

* 提升总体效率

|

||||

* 添加更多支持以及其他的凭据位置

|

||||

* 包括支持非桌面环境

|

||||

* 添加 LDAP 的支持

|

||||

|

||||

mimipenguin 的 Github 仓库:[https://github.com/huntergregal/mimipenguin][6]

|

||||

|

||||

同样,请查阅:

|

||||

|

||||

1. [如何在 Linux 中用密码保护一个 vim 文件][1]

|

||||

2. [如何在 Linux 中生成/加密/解密随机密码][2]

|

||||

3. [如何在 RHEL/CentOS/Fedora 中用密码保护 GRUB][3]

|

||||

4. [在 CentOS 7 中重置/恢复忘记的 root 用户账号密码][4]

|

||||

|

||||

在下面的评论栏中分享你关于这个工具的额外想法或者对 Linux 中内存中明文凭据的问题。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Aaron Kili 是 Linux 和 F.O.S.S 爱好者,即将成为 Linux SysAdmin 和网络开发人员,目前是 TecMint 的内容创作者,他喜欢在电脑上工作,并坚信分享知识。

|

||||

|

||||

-------------

|

||||

|

||||

via: https://www.tecmint.com/mimipenguin-hack-login-passwords-of-linux-users/

|

||||

|

||||

作者:[Aaron Kili][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.tecmint.com/author/aaronkili/

|

||||

[1]:https://linux.cn/article-8547-1.html

|

||||

[2]:https://www.tecmint.com/generate-encrypt-decrypt-random-passwords-in-linux/

|

||||

[3]:https://www.tecmint.com/password-protect-grub-in-linux/

|

||||

[4]:https://linux.cn/article-8212-1.html

|

||||

[5]:https://www.tecmint.com/sudoers-configurations-for-setting-sudo-in-linux/

|

||||

[6]:https://github.com/huntergregal/mimipenguin

|

||||

[7]:https://www.tecmint.com/author/aaronkili/

|

||||

[8]:https://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/

|

||||

[9]:https://www.tecmint.com/free-linux-shell-scripting-books/

|

||||

101

published/The history of Android/18 - The history of Android.md

Normal file

101

published/The history of Android/18 - The history of Android.md

Normal file

@ -0,0 +1,101 @@

|

||||

安卓编年史(16):安卓 3.0 蜂巢—平板和设计复兴

|

||||

================================================================================

|

||||

|

||||

|

||||

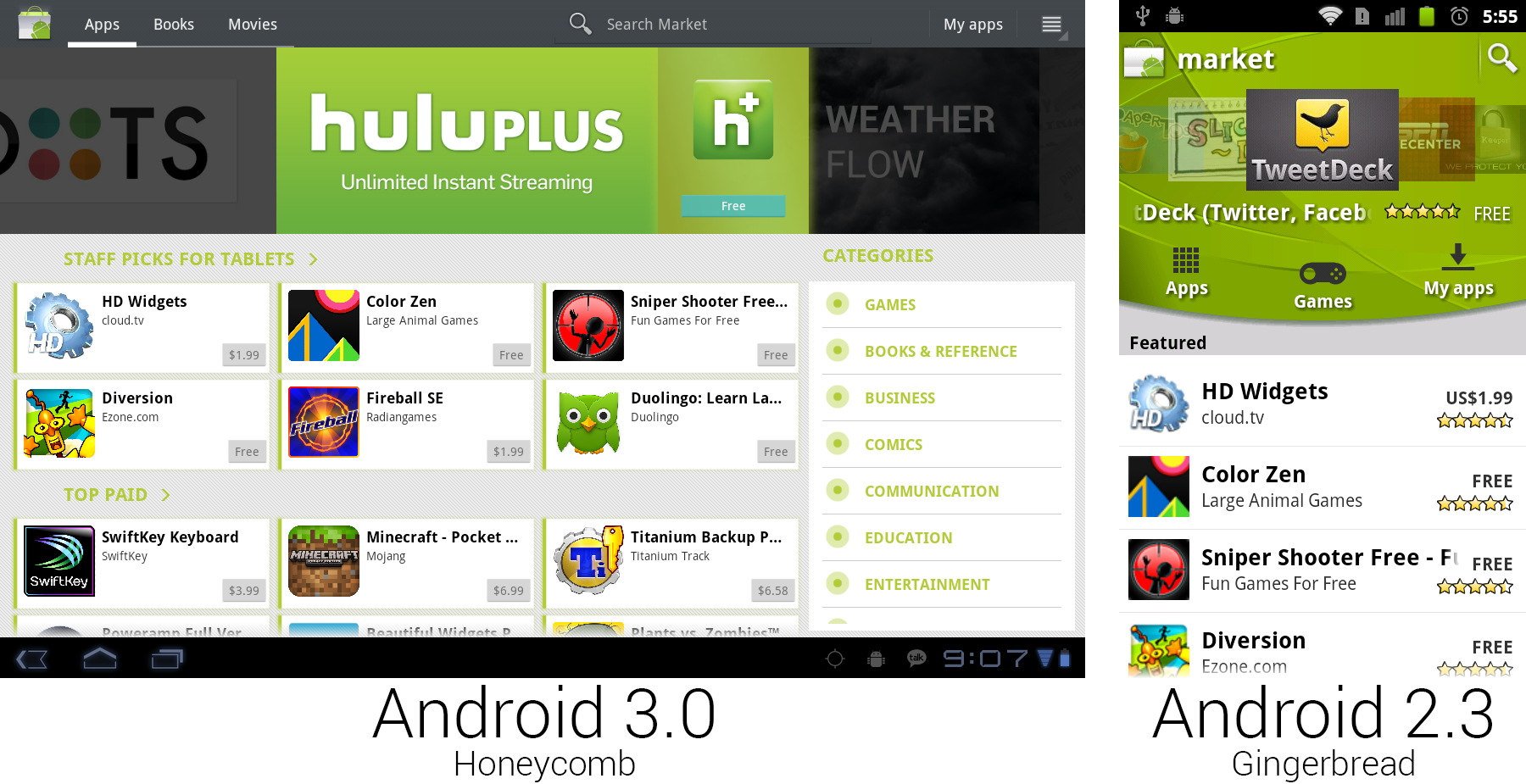

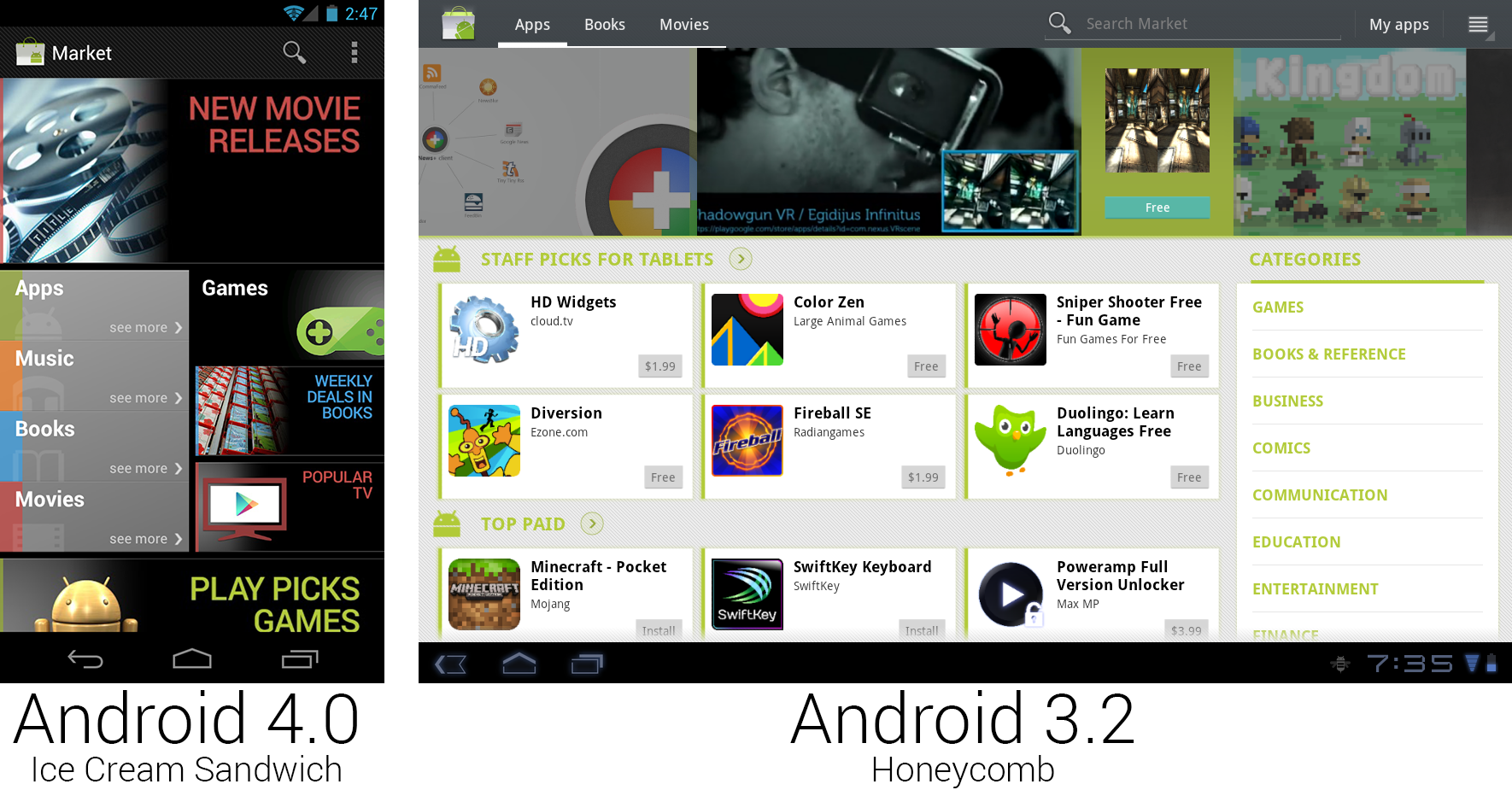

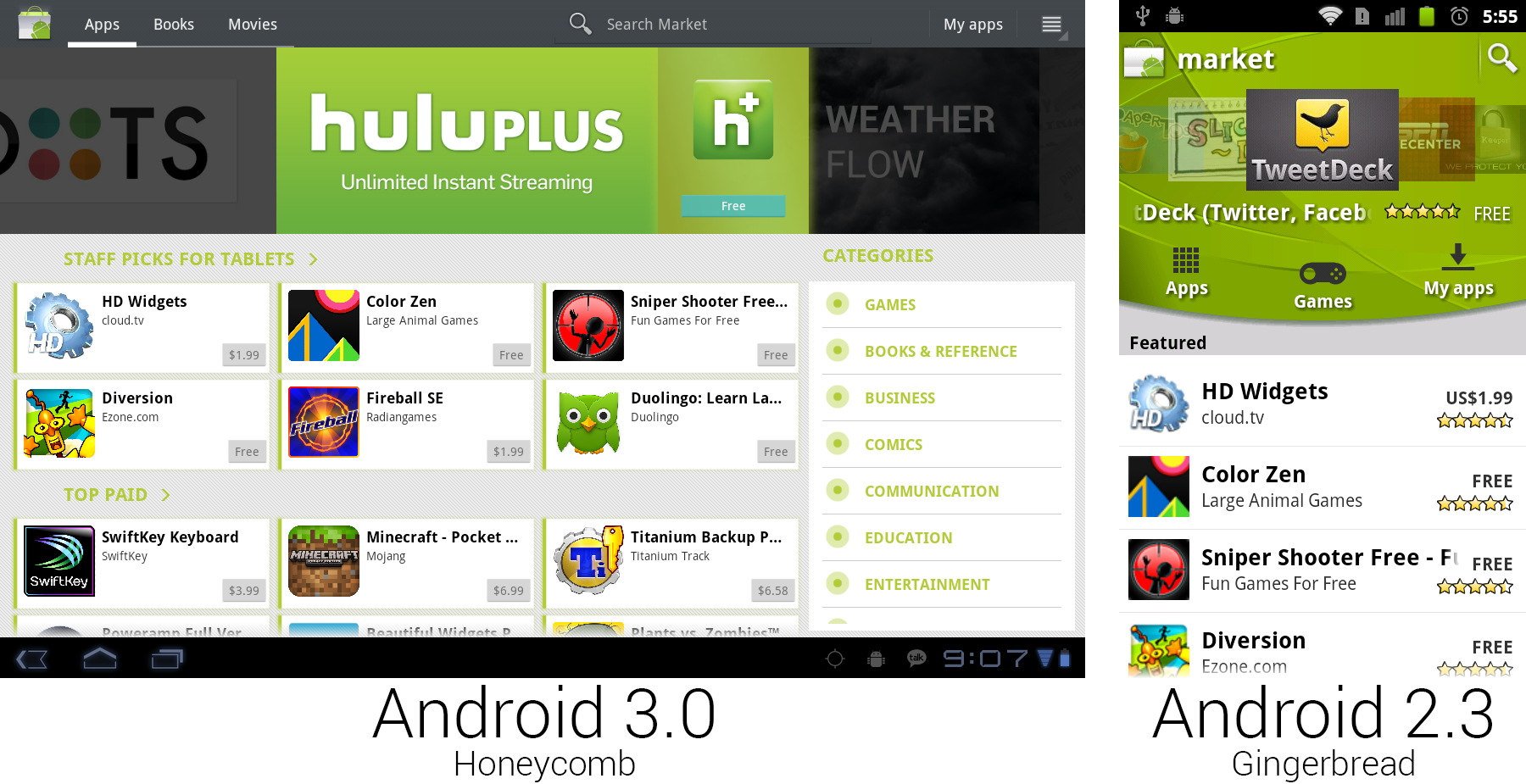

*安卓市场的新设计试水“卡片式”界面,这将成为谷歌的主要风格。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

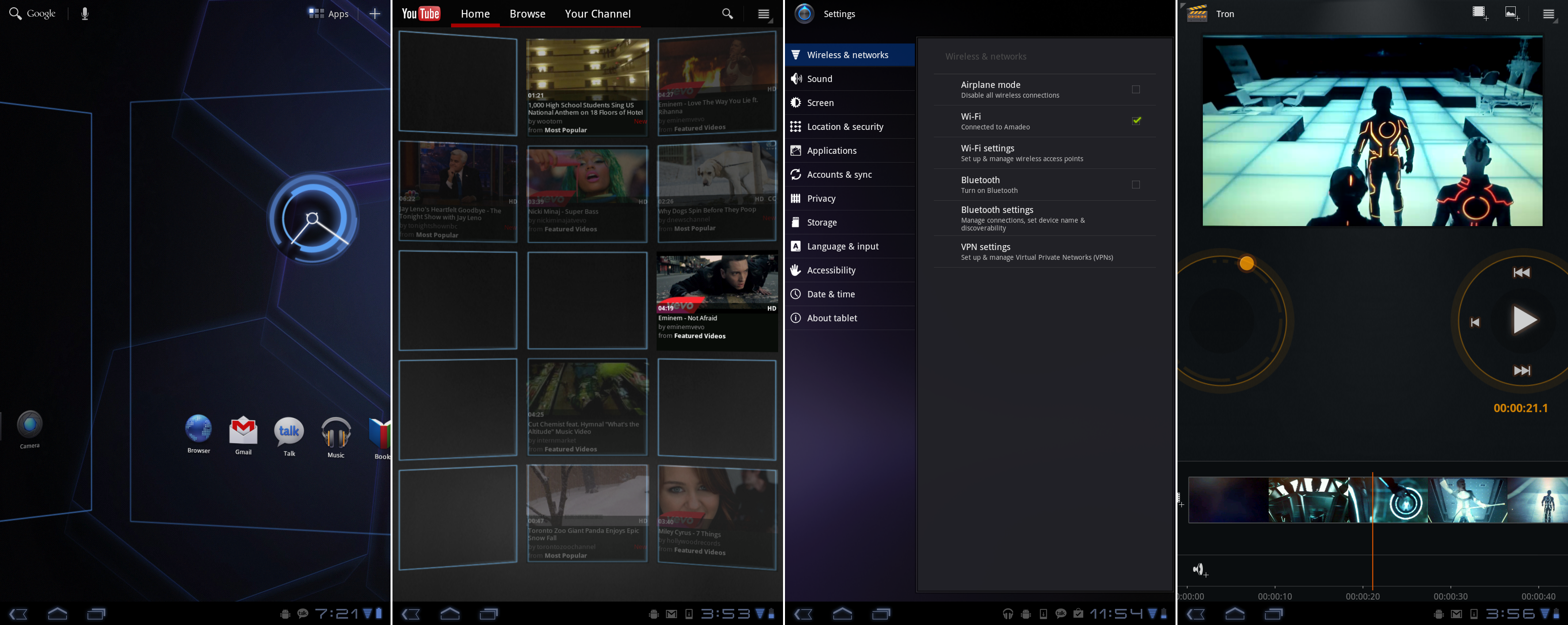

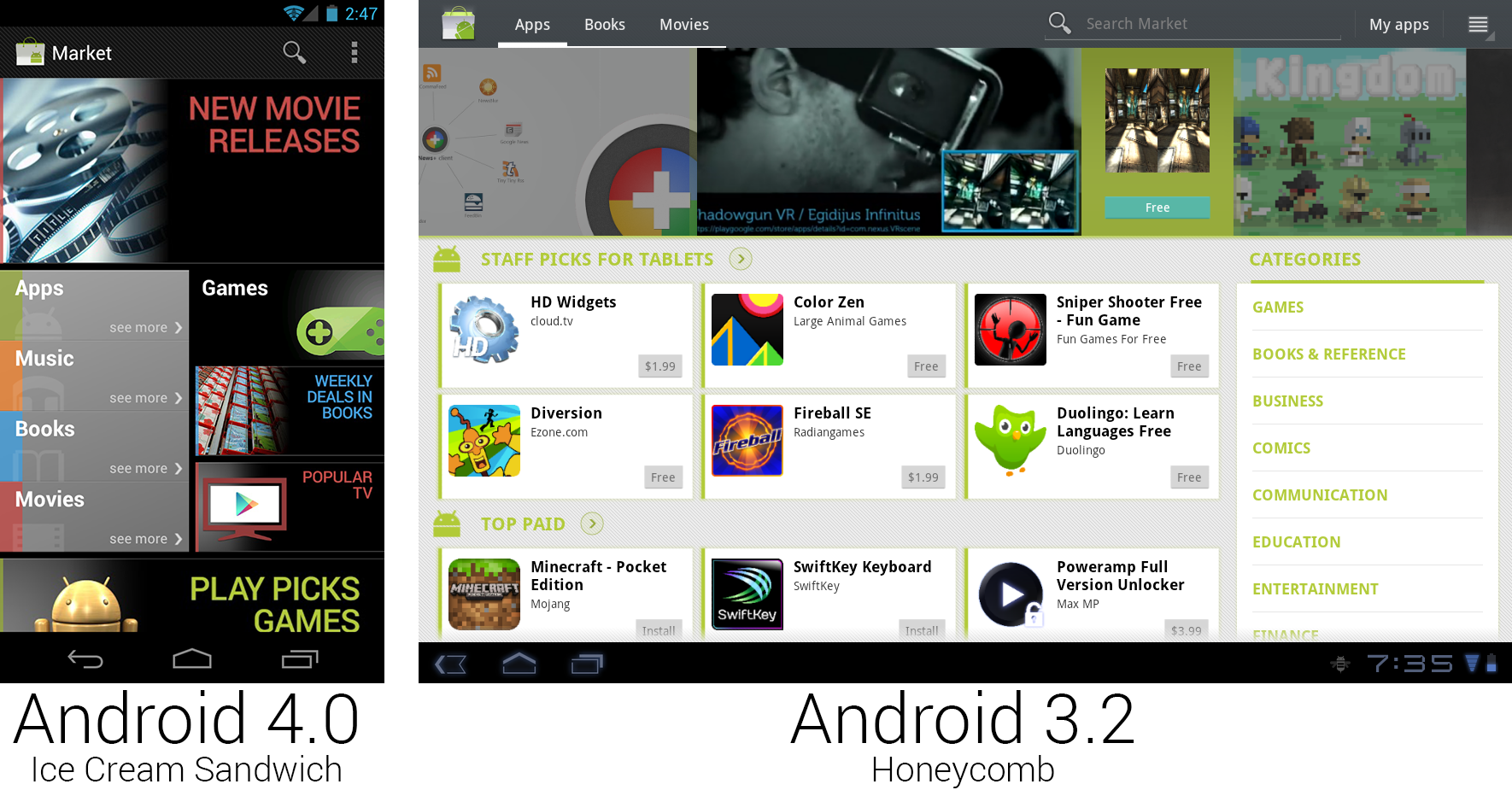

安卓推向市场已经有两年半时间了,安卓市场放出了它的第四版设计。这个新设计十分重要,因为它已经很接近谷歌的“卡片式”界面了。通过在小方块中显示应用或其他内容,谷歌可以使它的设计在不同尺寸屏幕下无缝过渡而不受影响。内容可以像一个相册应用里的照片一样显示——给布局渲染填充一个内容块列表,加上屏幕包装,就完成了。更大的屏幕一次可以看到更多的内容块,小点的屏幕一次看到的内容就少。内容用了不一样的方式显示,谷歌还在右边新增了一个“分类”板块,顶部还有个巨大的热门应用滚动显示。

|

||||

|

||||

虽然设计上为更容易配置界面准备好准备好了,但功能上还没有。最初发布的市场版本锁定为横屏模式,而且还是蜂巢独占的。

|

||||

|

||||

|

||||

|

||||

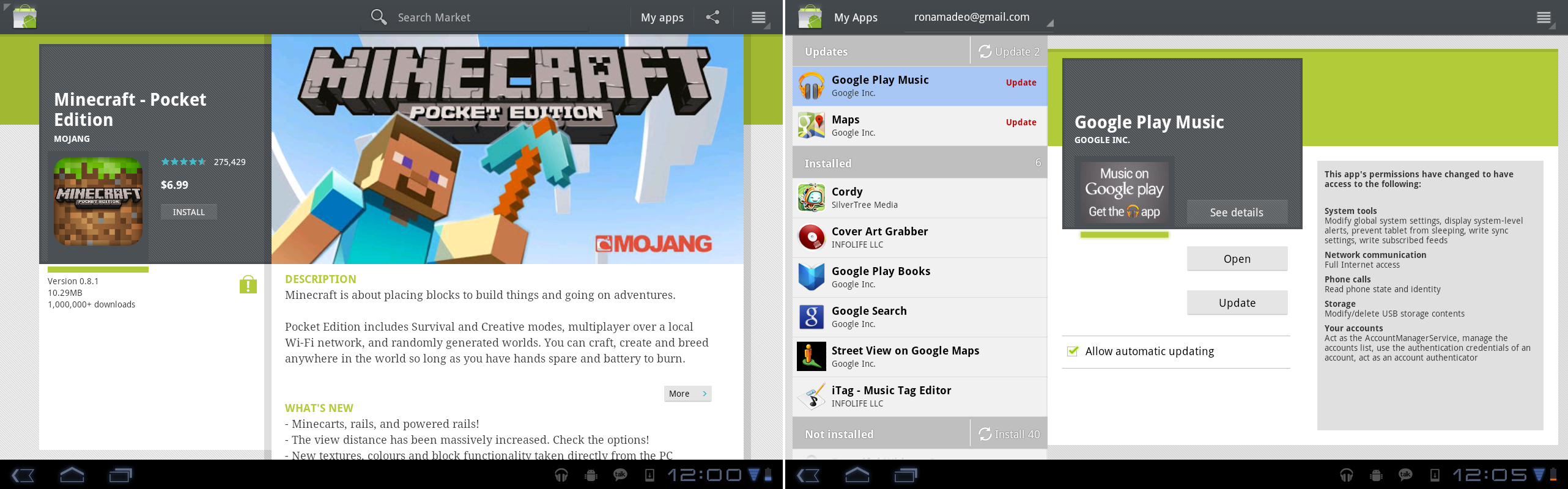

*应用详情页和“我的应用”界面。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

新的市场不仅出售应用,还加入了书籍和电影租借。谷歌从 2010 年开始出售图书;之前只通过网站出售。新的市场将谷歌所有的内容销售聚合到了一处,进一步向苹果 iTunes 所的主宰地位展开较量。虽然在“安卓市场”出售这些东西有点品牌混乱,因为大部分内容都不依赖于安卓才能使用。

|

||||

|

||||

|

||||

|

||||

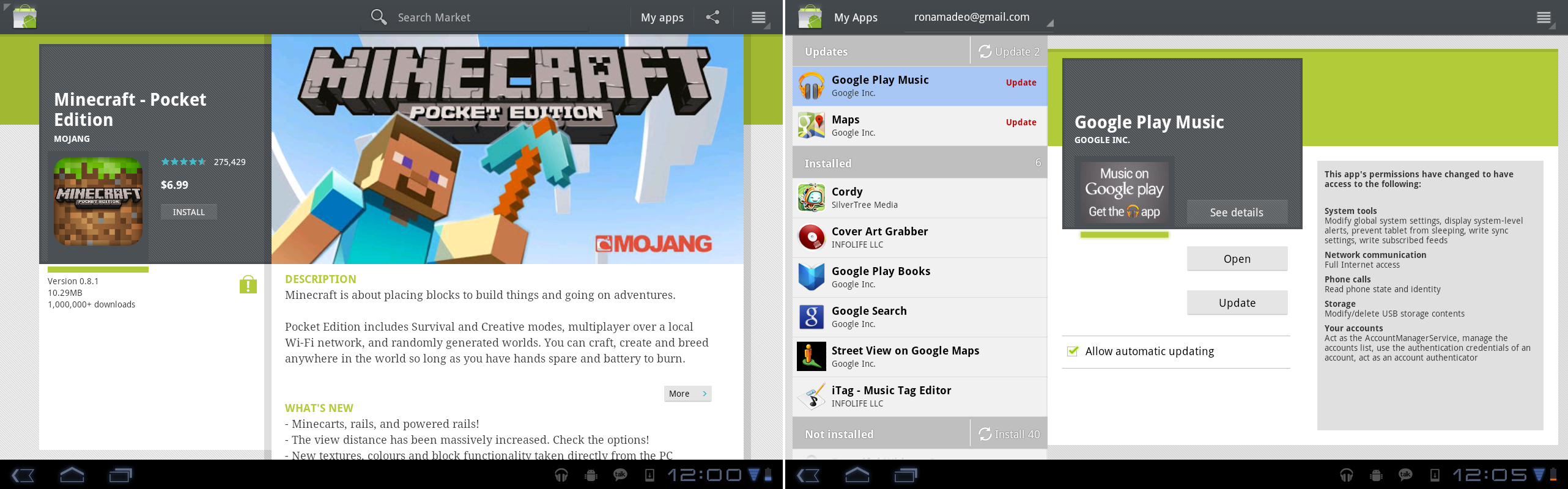

*浏览器看起来非常像 Chrome,联系人使用了双面板界面。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

新浏览器界面顶部添加了标签页栏。尽管这个浏览器并不是 Chrome ,但它模仿了许多 Chrome 的设计和特性。除了这个探索性的顶部标签页界面,浏览器还加入了隐身标签,在浏览网页时不保存历史记录和自动补全记录。它还有个选项可以让你拥有一个 Chrome 风格的新标签页,页面上包含你最经常访问的网页略缩图。

|

||||

|

||||

新浏览器甚至还能和 Chrome 同步。在浏览器登录后,它会下载你的 Chrome 书签并且自动登录你的谷歌账户。收藏一个页面只需点击地址栏的星形标志即可,和谷歌地图一样,浏览器抛弃了缩放按钮,完全改用手势控制。

|

||||

|

||||

联系人应用最终从电话应用中移除,并且独立为一个应用。之前的联系人/拨号的混合式设计相对于人们使用现代智能手机的方式来说,过于以电话为中心了。联系人中存有电子邮件、IM、短信、地址、生日以及社交网络等信息,所以将它们捆绑在电话应用里的意义和将它们放进谷歌地图里差不多。抛开了电话通讯功能,联系人能够简化成没有标签页的联系人列表。蜂巢采用了双面板视图,在左侧显示完整的联系人列表,右侧是联系人详情。应用利用了 Fragments API,通过它应用可以在同一屏显示多个面板界面。

|

||||

|

||||

蜂巢版本的联系人应用是第一个拥有快速滚动功能的版本。当按住左侧滚动条的时候,你可以快速上下拖动,应用会显示列表当前位置的首字母预览。

|

||||

|

||||

|

||||

|

||||

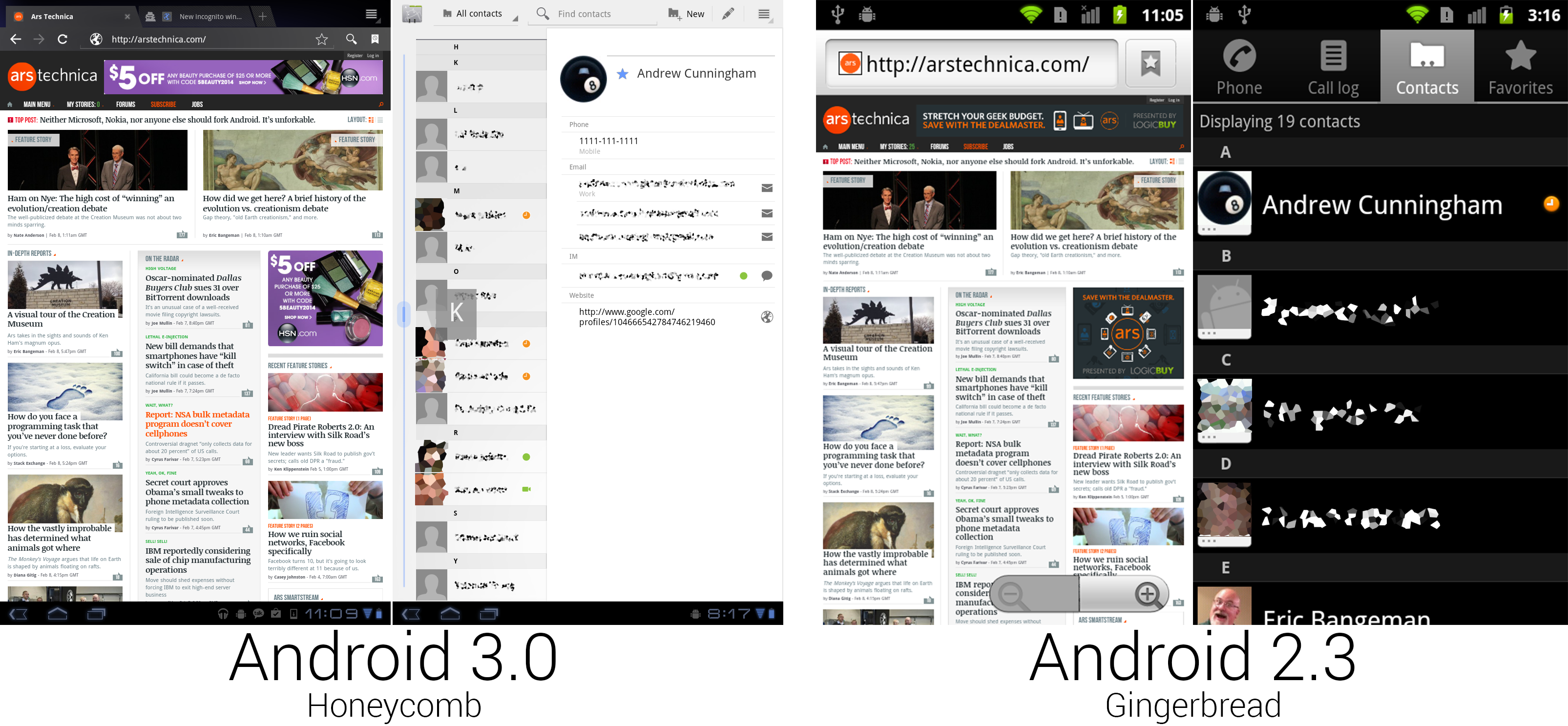

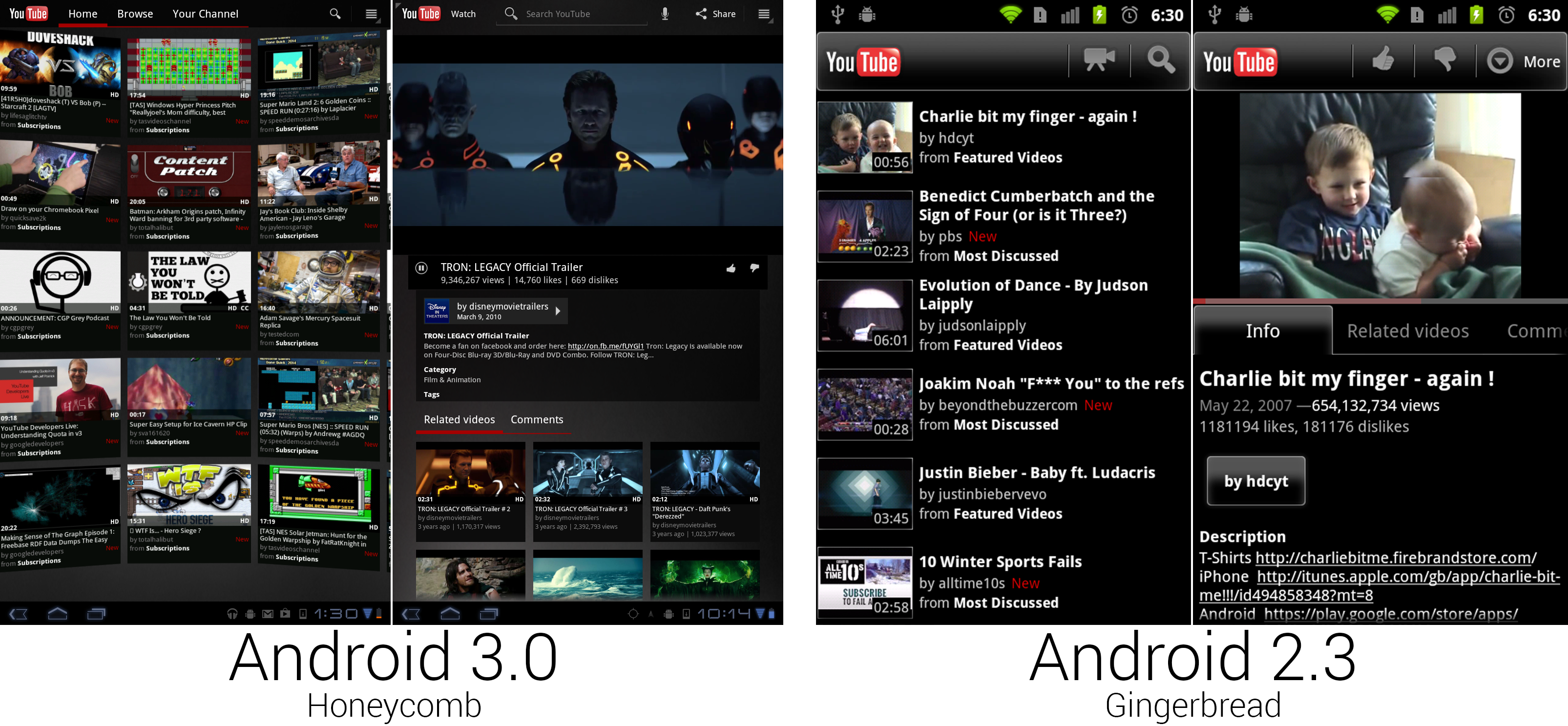

*新 Youtube 应用看起来像是来自黑客帝国。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

谢天谢地 Youtube 终于抛弃了自安卓 2.3 以来的谷歌给予这个视频服务的“独特”设计,新界面设计与系统更加一体化。主界面是一个水平滚动的曲面墙,上面显示着最热门或者(登录之后)个人关注的视频。虽然谷歌从来没有将这个设计带到手机上,但它可以被认为是一个易于重新配置的卡片界面。操作栏在这里是个可配置的工具栏。没有登录时,操作栏由一个搜索栏填满。当你登录后,搜索缩小为一个按钮,“首页”,“浏览”和“你的频道”标签将会显示出来。

|

||||

|

||||

|

||||

|

||||

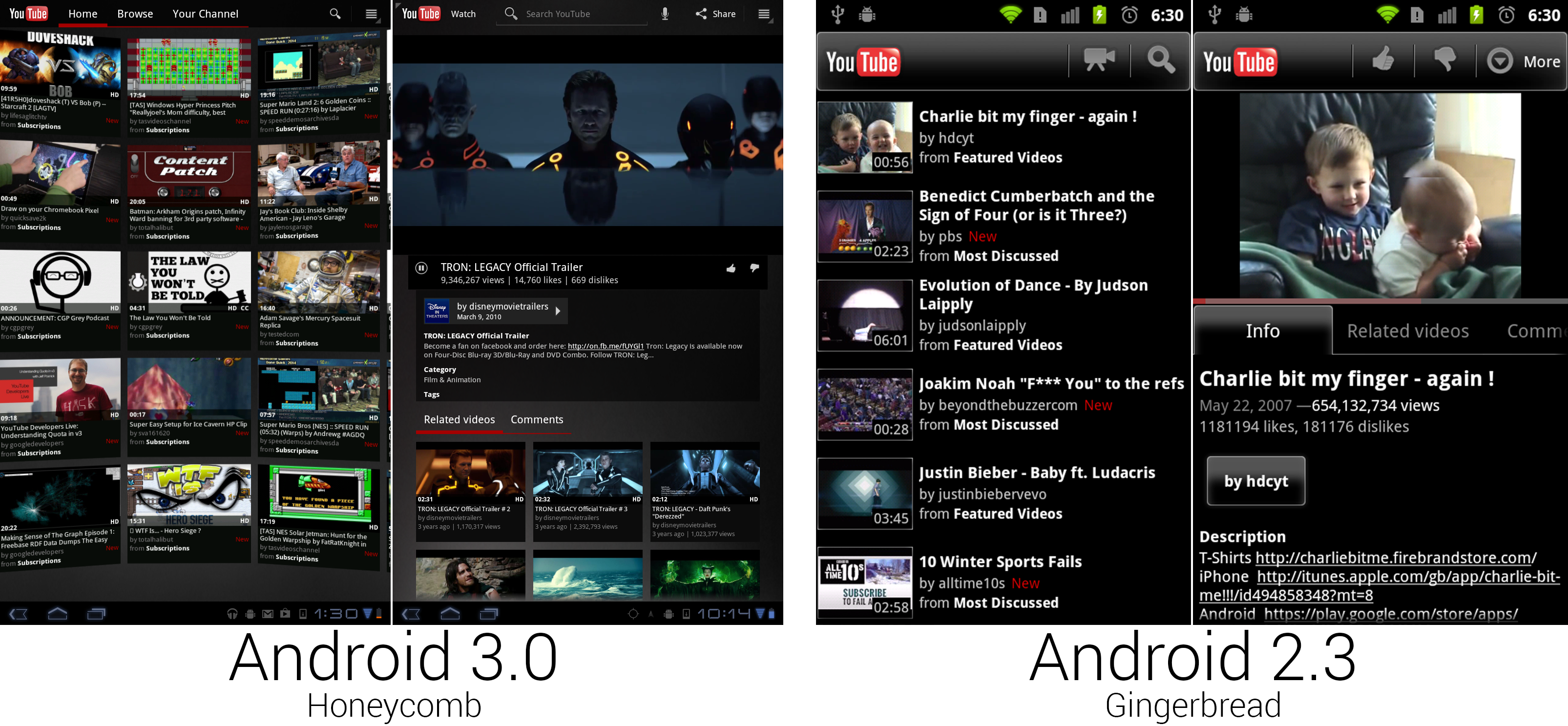

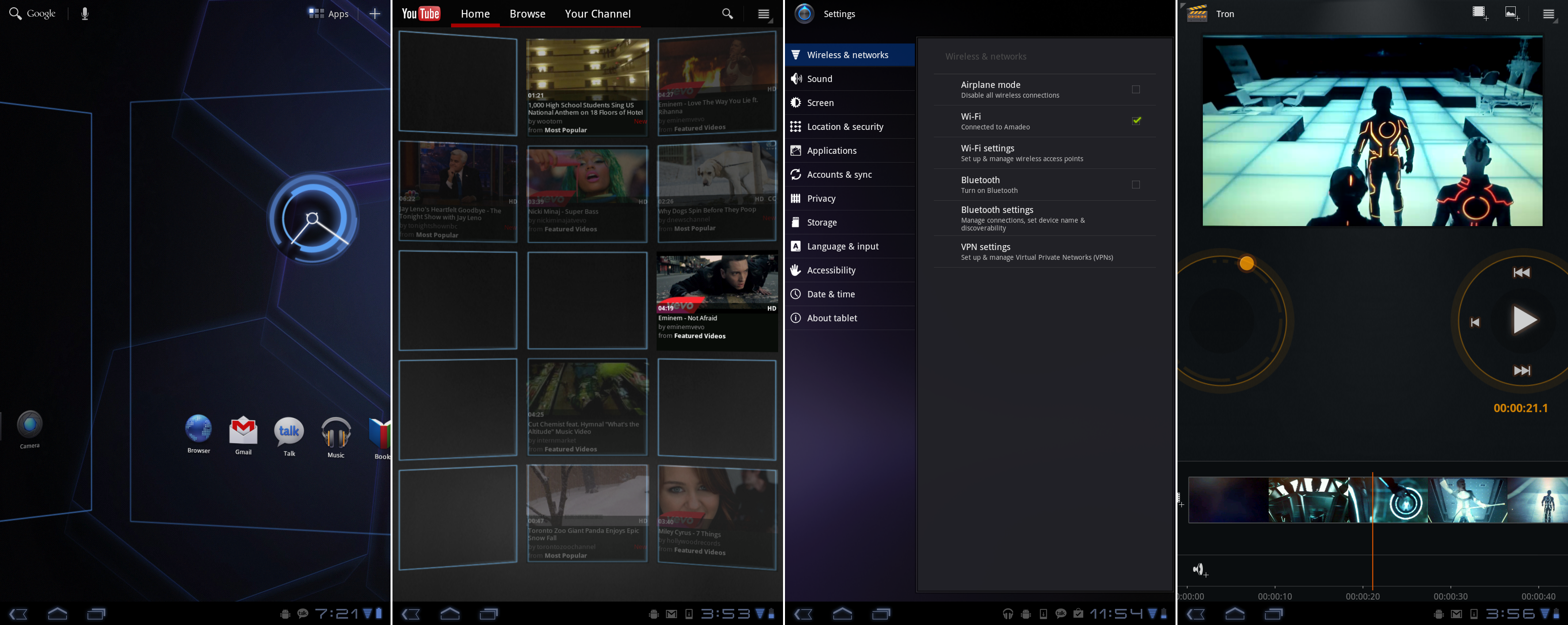

*蜂巢用一个蓝色框架的电脑界面来驱动主屏。电影工作室完全采用橙色电子风格主题。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

蜂巢新增的应用“电影工作室”,这不是一个不言自明的应用,而且没有任何的解释或说明。就我们所知,你可以导入视频,剪切它们,添加文本和场景过渡。编辑视频——电脑上你可以做的最耗时、困难,以及处理器密集型任务之一 —— 在平板上完成感觉有点野心过大了,谷歌在之后的版本里将其完全移除了。电影工作室里我们最喜欢的部分是它完全的电子风格主题。虽然系统的其它部分使用蓝色高亮,在这里是橙色的。(电影工作室是个邪恶的程序!)

|

||||

|

||||

|

||||

*小部件!

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

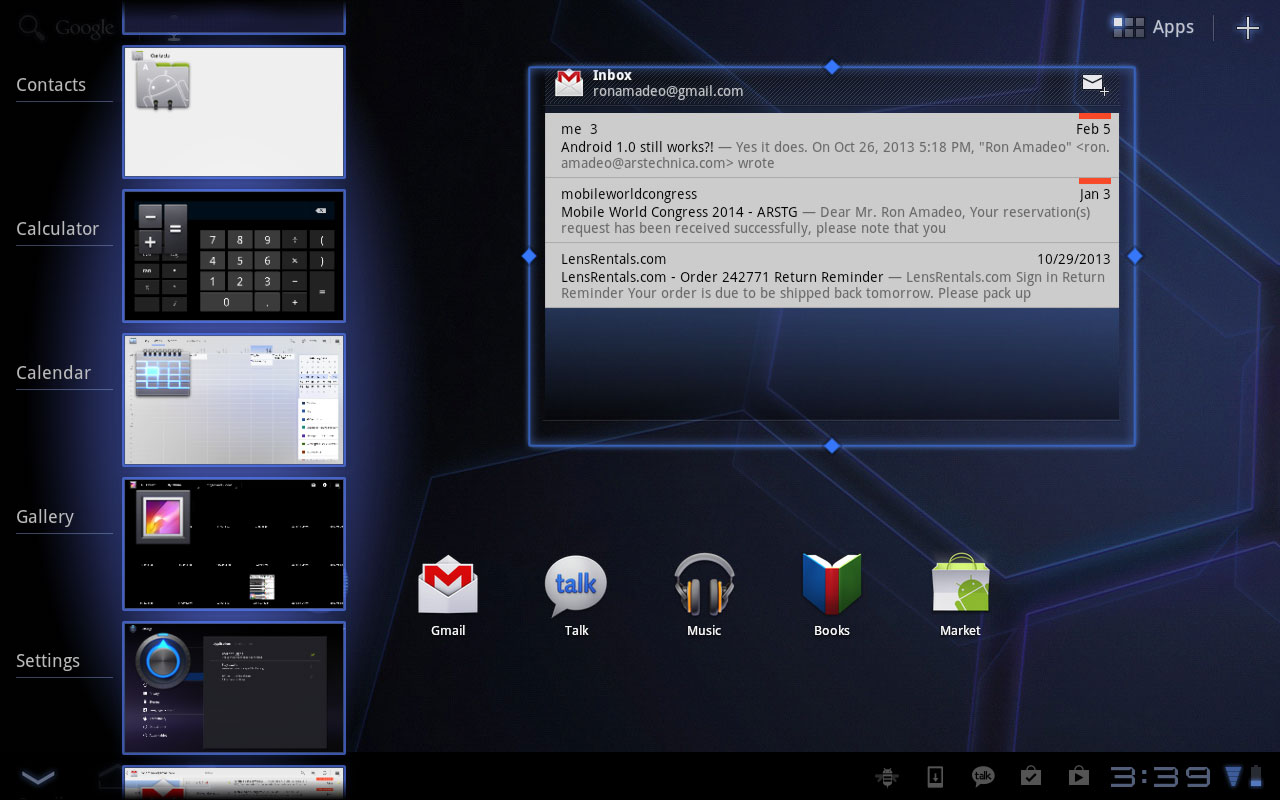

蜂巢带来了新的部件框架,允许部件滚动,Gmail、Email 以及日历部件都升级了以支持改功能。Youtube 和书籍使用了新的部件,内容卡片可以自动滚动切换。在小部件上轻轻向上或向下滑动可以切换卡片。我们不确定你的书籍中哪些书会被显示出来,但如果你想要的话它就在那儿。尽管所有的这些小部件在 10 英寸屏幕上运行良好,谷歌从未为手机重新设计它们,这让它们在安卓最流行的规格上几乎毫无用处。所有的小部件有个大块的标识标题栏,而且通常占据大半屏幕只显示很少的内容。

|

||||

|

||||

|

||||

|

||||

*安卓 3.1 中可滚动的最近应用以及可自定义大小的小部件。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

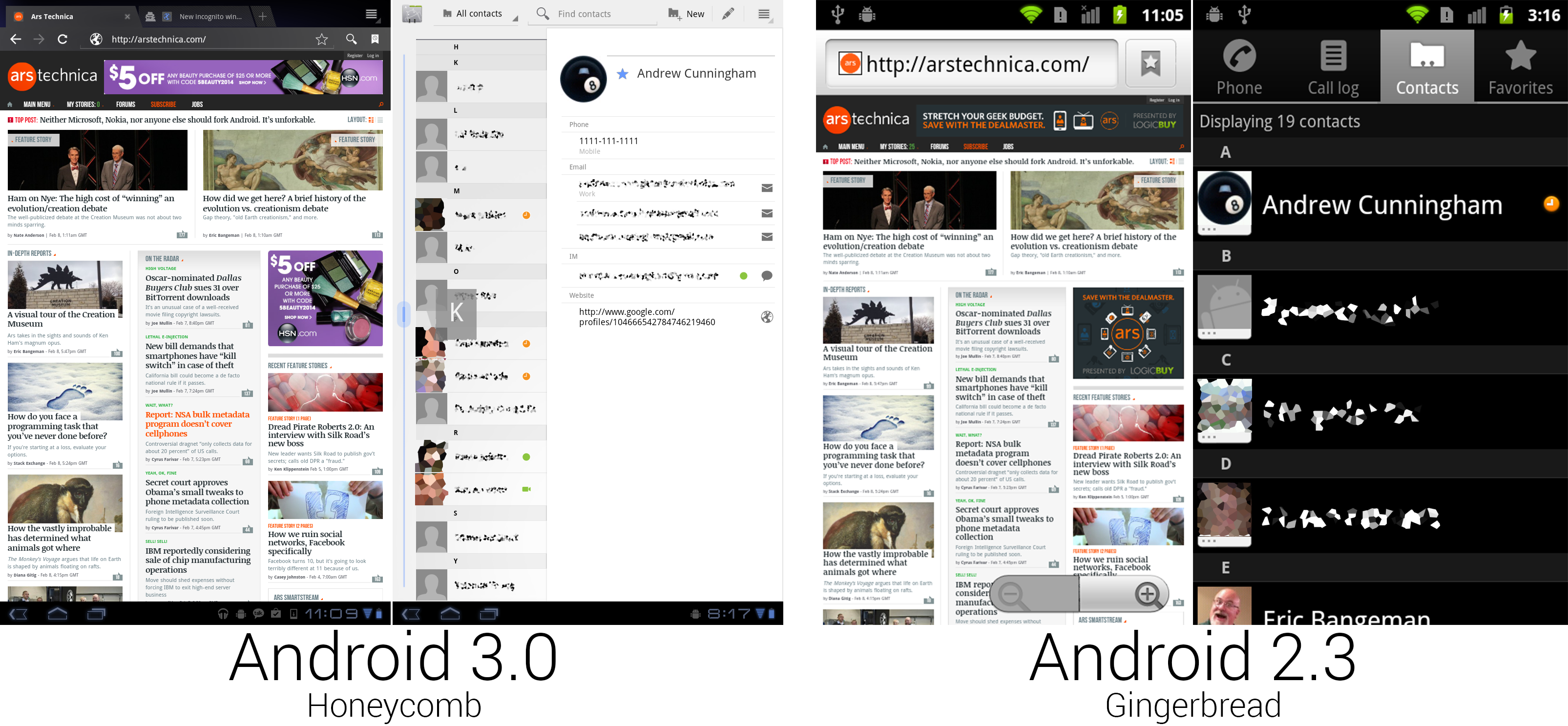

蜂巢后续的版本修复了 3.0 早期的一些问题。安卓 3.1 在蜂巢的第一个版本之后三个月放出,并带来了一些改进。小部件自定义大小是添加的最大特性之一。长按小部件之后,一个带有拖拽按钮的蓝色外框会显示出来,拖动按钮可以改变小部件尺寸。最近应用界面现在可以垂直滚动并且承载更多应用。这个版本唯一缺失的功能是滑动关闭应用。

|

||||

|

||||

在今天,一个 0.1 版本的升级是个主要更新,但是在蜂巢,那只是个小更新。除了一些界面调整,3.1 添加了对游戏手柄,键盘,鼠标以及其它 USB 和蓝牙输入设备的支持。它还提供了更多的开发者 API。

|

||||

|

||||

|

||||

|

||||

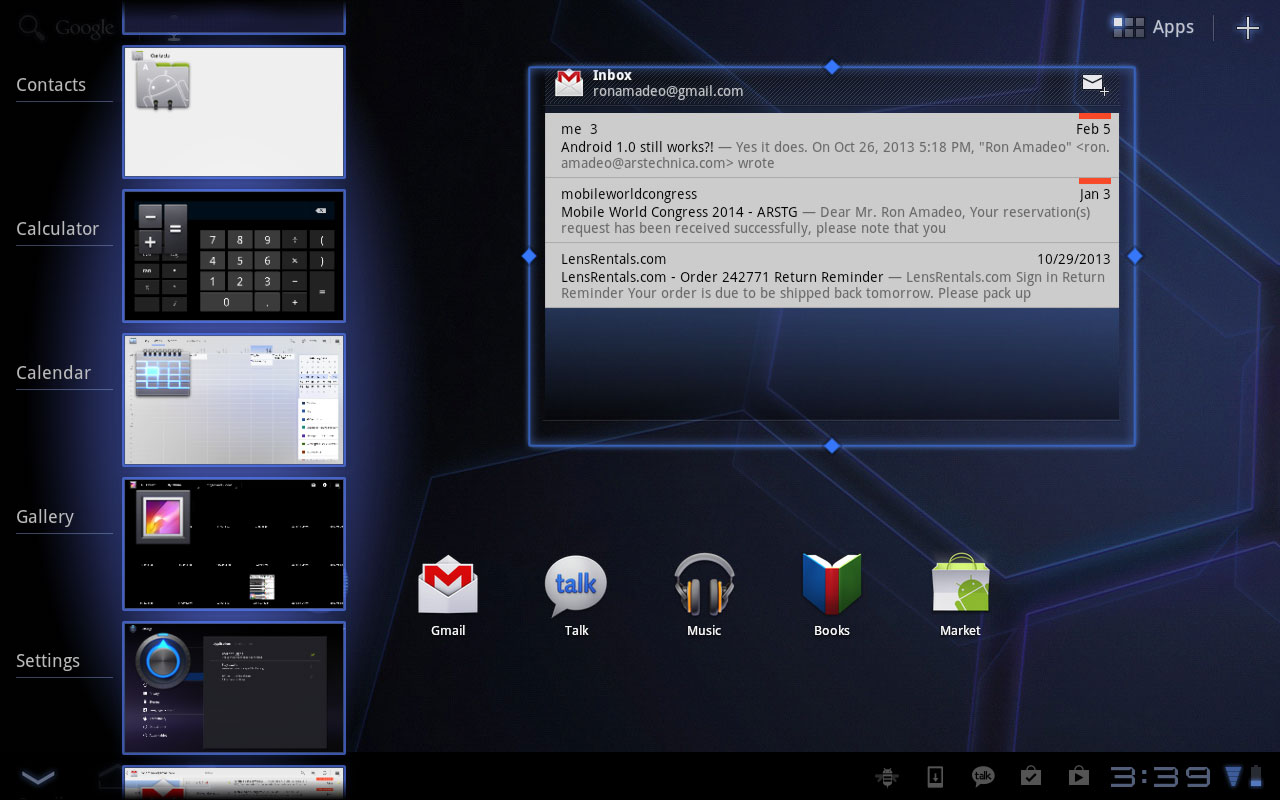

*安卓 3.2 的兼容性缩放和一个安卓平板上典型的展开视图应用。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

安卓 3.2 在 3.1 发布后两个月放出,添加了七到八英寸的小尺寸平板支持。3.2 终于启用了 SD 卡支持,Xoom 在生命最初的五个月就像是初生儿的柔弱的肢体一样。

|

||||

|

||||

蜂巢匆匆问世是为了成为一个生态系统建设者。如果应用没有平板版本,没人会想要一个安卓平板的,所以谷歌知道需要尽快将东西送到开发者手中。在这个安卓平板生态的早期阶段,应用还没有到齐。这是拥有 Xoom 的人们所面临的最大的问题。

|

||||

|

||||

3.2 添加了“兼容缩放”,给了用户一个新选项,可以将应用拉伸适应屏幕(如右侧图片显示的那样)或缩放成正常的应用布局来适应屏幕。这些选项都不是很理想,由于没有应用生态来支持平板,蜂巢设备销售状况惨淡。但谷歌的平板决策最终还是会得到回报。今天,安卓平板已经[取代 iOS 占据了最大的市场份额][1]。

|

||||

|

||||

|

||||

|

||||

*姜饼上的 Google Music Beta。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

### Google Music Beta —— 取代内容商店的云存储 ###

|

||||

|

||||

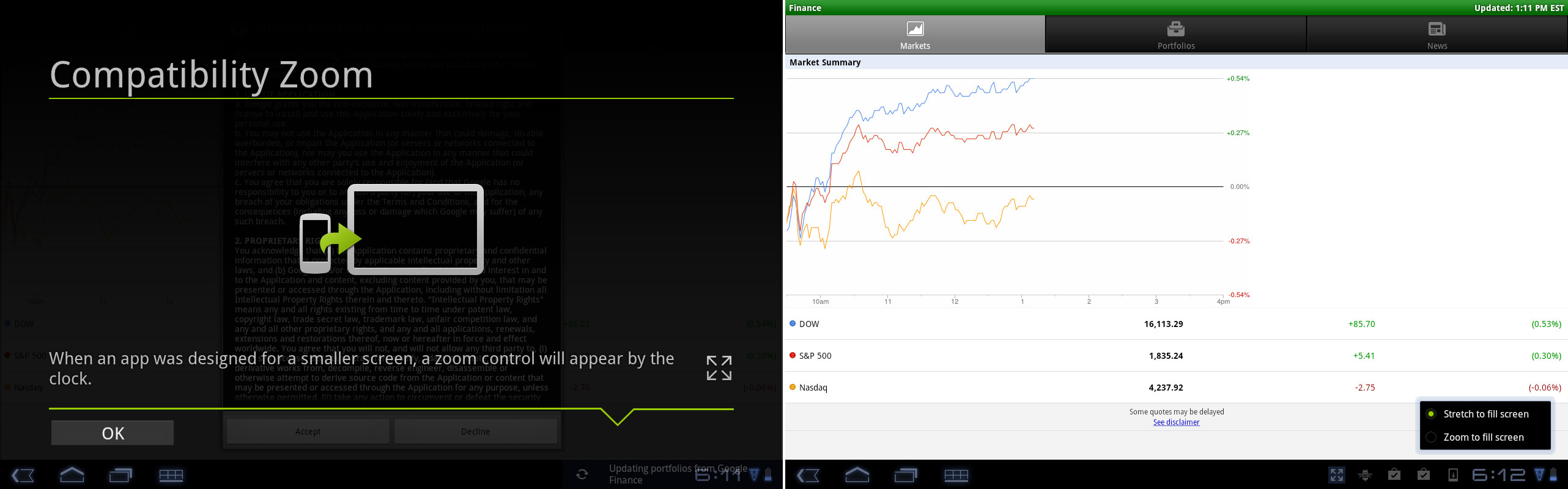

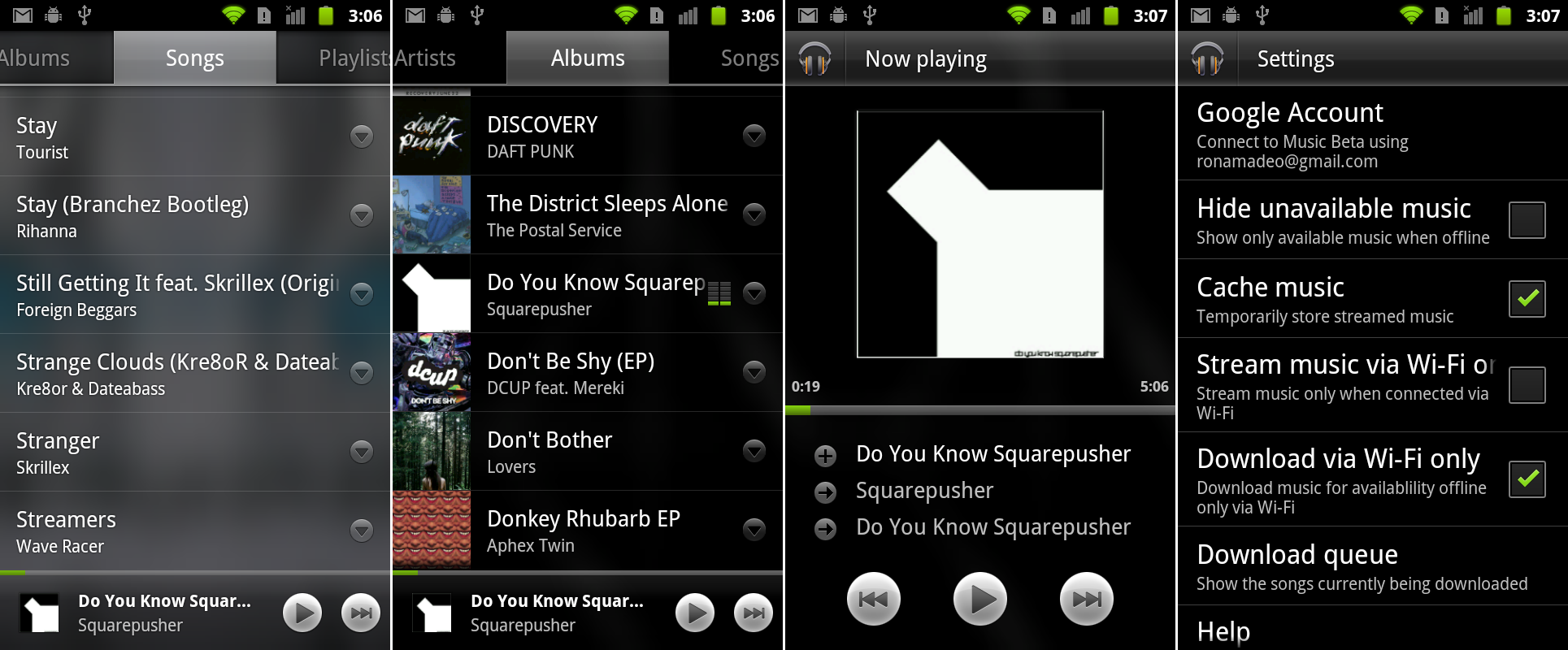

尽管蜂巢改进了 Google Music 的界面,但是音乐应用的设计并没有从蜂巢直接进化到冰淇淋三明治。2011 年 5 月,谷歌发布了“[Google Music Beta][1]”,和新的 Google Music 应用一同到来的在线音乐存储。

|

||||

|

||||

新 Google Music 为安卓 2.2 及以上版本设计,借鉴了 Cooliris 相册的设计语言,但也有改变之处,背景使用了模糊处理的图片。几乎所有东西都是透明的:弹出菜单,顶部标签页,还有底部的正在播放栏。可以下载单独的歌曲或整个播放列表到设备上离线播放,这让 Google Music 成为一个让音乐同步到你所有设备的好途径。除了移动应用外,Google Music 还有一个 Web 应用,让它可以在任何一台桌面电脑上使用。

|

||||

|

||||

谷歌和唱片公司关于内容的合约还没有谈妥,音乐商店还没准备好,所以它的权宜之计是允许用户存储音乐到线上并下载到设备上。如今谷歌除了音乐存储服务外,还有单曲购买和订阅模式。

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||

[Ron Amadeo][a] / Ron 是 Ars Technica 的评论编缉,专注于安卓系统和谷歌产品。他总是在追寻新鲜事物,还喜欢拆解事物看看它们到底是怎么运作的。[@RonAmadeo][t]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://arstechnica.com/gadgets/2016/10/building-android-a-40000-word-history-of-googles-mobile-os/18/

|

||||

|

||||

译者:[alim0x](https://github.com/alim0x) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://techcrunch.com/2014/03/03/gartner-195m-tablets-sold-in-2013-android-grabs-top-spot-from-ipad-with-62-share/

|

||||

[a]:http://arstechnica.com/author/ronamadeo

|

||||

[t]:https://twitter.com/RonAmadeo

|

||||

@ -0,0 +1,62 @@

|

||||

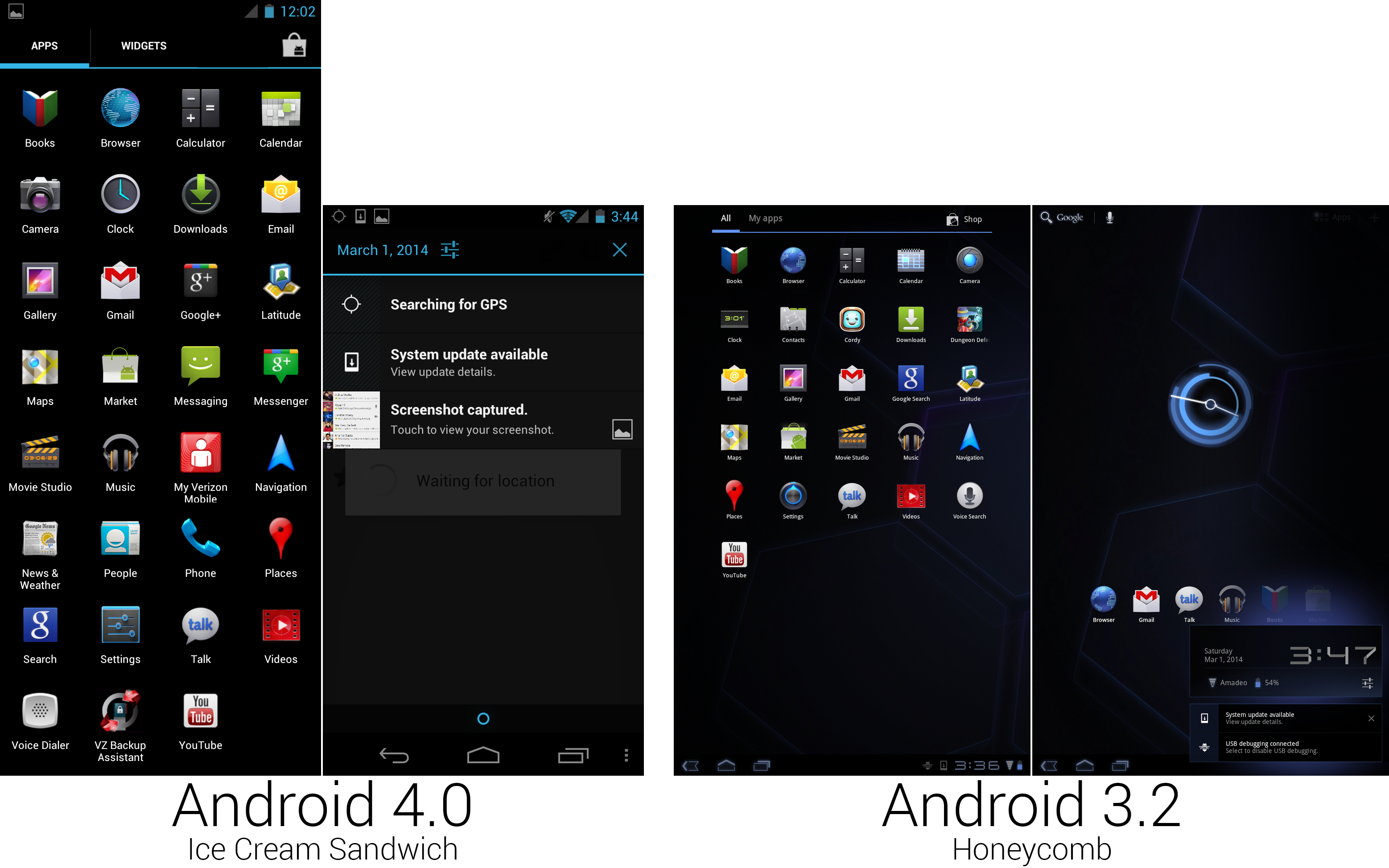

安卓编年史(18):Android 4.0 冰淇淋三明治—摩登时代

|

||||

================================================================================

|

||||

|

||||

### Android 4.0 冰淇淋三明治 —— 摩登时代 ###

|

||||

|

||||

|

||||

|

||||

*三星 Galaxy Nexus,安卓4.0的首发设备。*

|

||||

|

||||

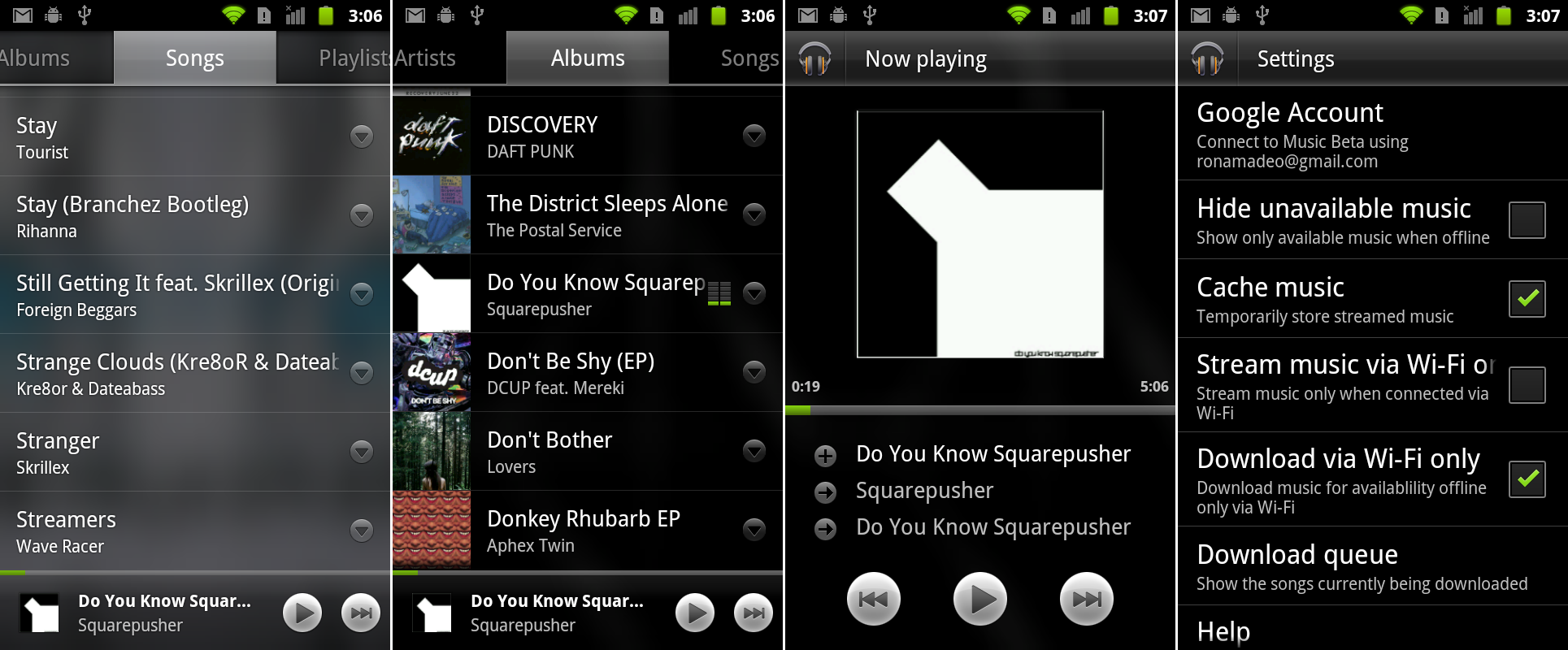

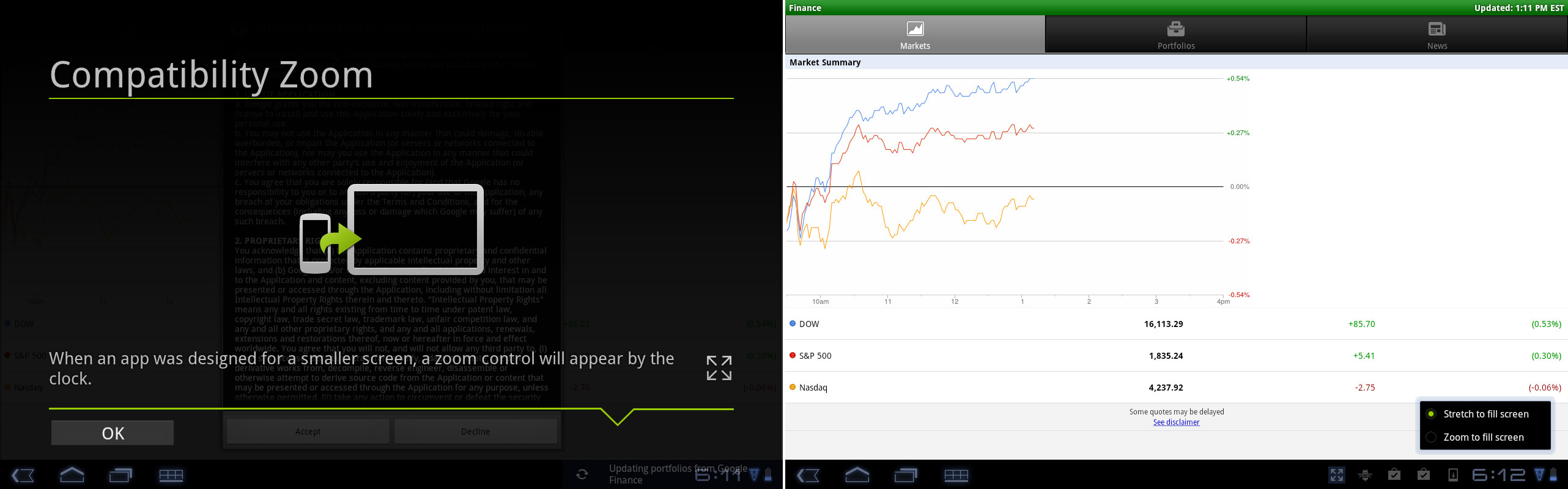

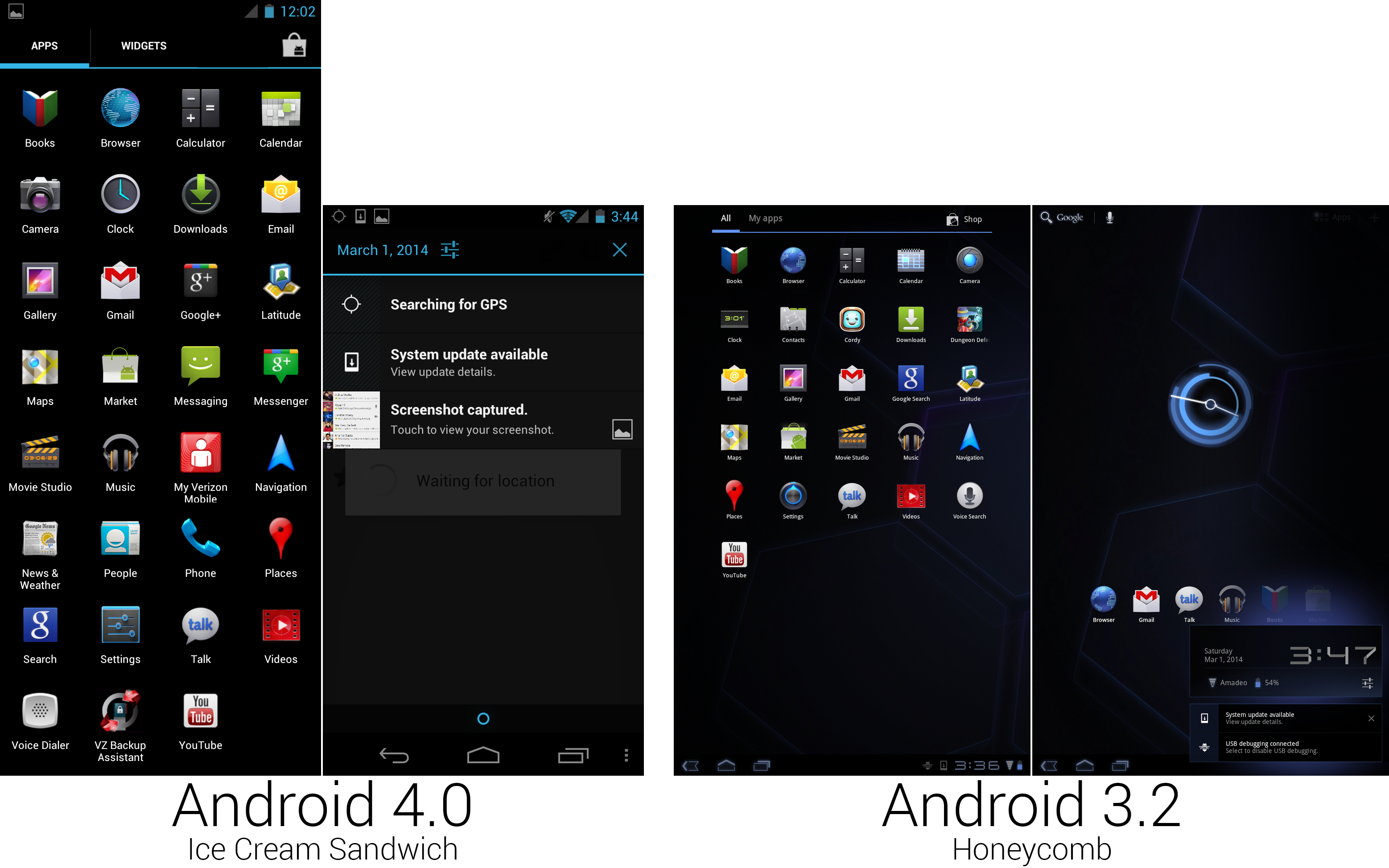

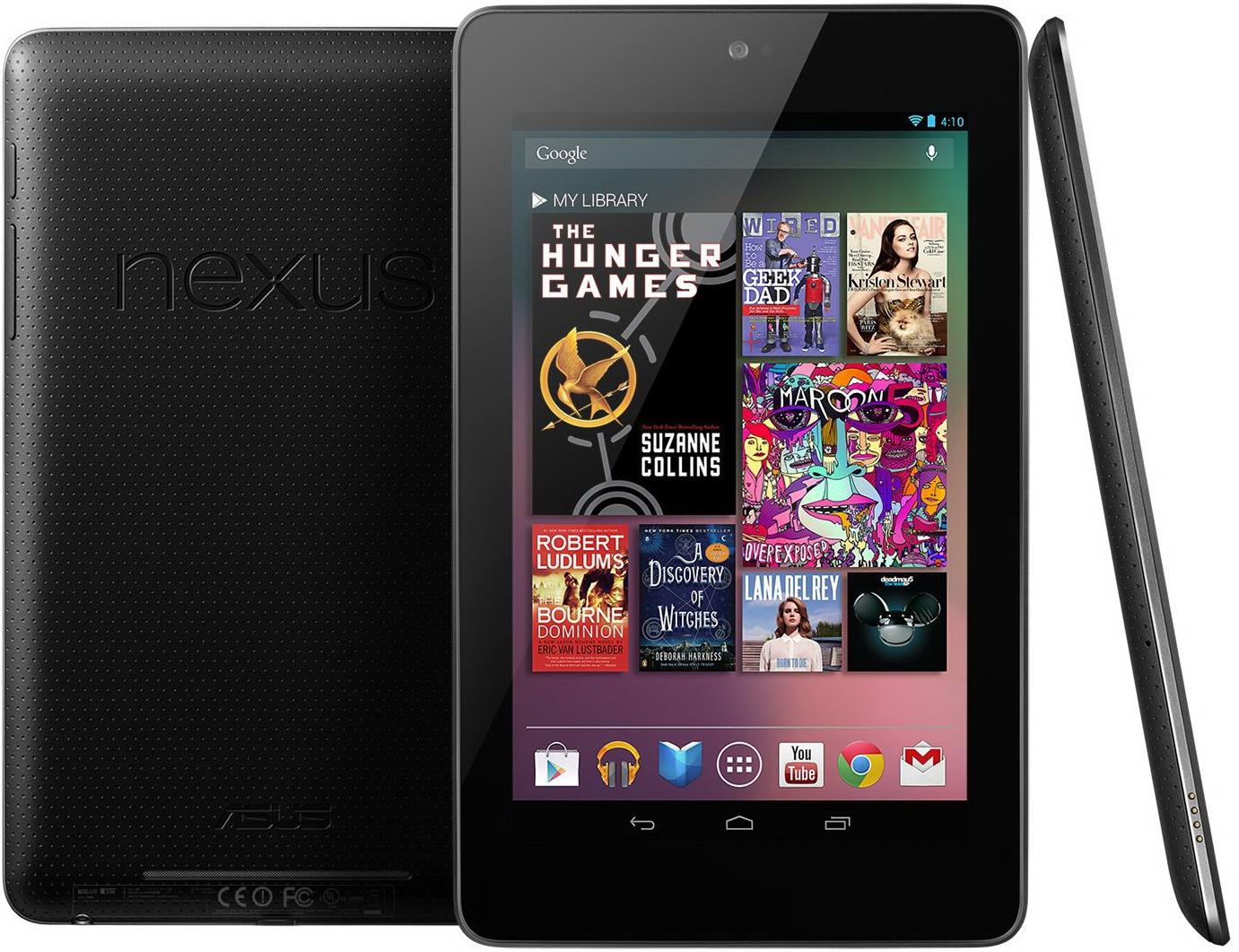

安卓 4.0,冰淇淋三明治,在 2011 年 10 月发布,系统发布回到正轨,带来定期发布的手机和平板,并且安卓再次开源。这是自姜饼以来手机设备的第一个更新,意味着最主要的安卓用户群体近乎一年没有见到更新了。4.0 随处可见缩小版的蜂巢设计,还将虚拟按键,操作栏(Action Bar),全新的设计语言带到了手机上。

|

||||

|

||||

冰淇淋三明治在三星 Galaxy Nexus 上首次亮相,也是最早带有 720p 显示屏的安卓手机之一。随着分辨率的提高,Galaxy Nexus 使用了更大的 4.65 英寸显示屏——几乎比最初的 Nexus One 大了一整英寸。这被许多批评者认为“太大了”,但如今的安卓设备甚至更大。(5 英寸当今是“正常”的。)冰淇淋三明治比姜饼的性能要求更高,Galaxy Nexus 配备了一颗双核,1.2Ghz 德州仪器 OMAP 处理器和 1GB 的内存。

|

||||

|

||||

在美国,Galaxy Nexus 在 Verizon 首发并且支持 LTE。不像之前的 Nexus 设备,最流行的型号——Verizon 版——是在运营商的控制之下,谷歌的软件和更新在手机得到更新之前要经过 Verizon 的核准。这导致了更新的延迟以及 Verizon 不喜欢的应用被移除,即便是 Google Wallet 也不例外。

|

||||

|

||||

多亏了冰淇淋三明治的软件改进,谷歌终于达成了移除手机上按钮的目标。有了虚拟导航键,实体电容按钮就可以移除了,最终 Galaxy Nexus 仅有电源和音量是实体按键。

|

||||

|

||||

|

||||

|

||||

*安卓 4.0 将很多蜂巢的设计缩小了。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

电子质感的审美在蜂巢中显得有点多。于是在冰淇淋三明治中,谷歌开始减少科幻风的设计。科幻风的时钟字体从半透明折叠风格转变成纤细,优雅,看起来更加正常的字体。解锁环的水面波纹效果被去除了,蜂巢中的外星风格时钟小部件也被极简设计所取代。系统按钮也经过了重新设计,原先的蓝色轮廓,偶尔的厚边框变成了细的,设置带有白色轮廓。默认壁纸从蜂巢的蓝色太空船内部变成条纹状,破碎的彩虹,给默认布局增添了不少迟来的色彩。

|

||||

|

||||

蜂巢的系统栏在手机上一分为二。在顶上是传统的状态栏,底部是新的系统栏,放着三个系统按钮:后退、主屏幕、最近应用。一个固定的搜索栏放置在了主屏幕顶部。该栏以和底栏一样的方式固定在屏幕上,所以在五个主屏上,它总共占据了 20 个图标大小的位置。在蜂巢的锁屏上,内部的小圆圈可以向大圆圈外的任意位置滑动来解锁设备。在冰淇淋三明治,你得把小圆圈移动到解锁图标上。这个新准确度要求允许谷歌向锁屏添加新的选项:一个相机快捷方式。将小圆圈拖向相机图标会直接启动相机,跳过了主屏幕。

|

||||

|

||||

|

||||

|

||||

*一个手机系统意味着更多的应用,通知面板重新回到了全屏界面。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

应用抽屉还是标签页式的,但是蜂巢中的“我的应用”标签被“部件”标签页替代,这是个简单的 2×3 部件略缩图视图。像蜂巢里的那样,这个应用抽屉是分页的,需要水平滑动换页。(如今安卓仍在使用这个应用抽屉设计。)应用抽屉里新增的是 Google+ 应用,后来独立存在。还有一个“Messenger”快捷方式,是 Google+ 的私密信息服务。(不要混淆 “Messenger” 和已有的 “Messaging” 短信应用。)

|

||||

|

||||

因为我们现在回到了手机上,所以短信,新闻和天气,电话,以及语音拨号都回来了,以及 Cordy,一个平板的游戏,被移除了。尽管不是 Nexus 设备,我们的截图还是来自 Verizon 版的设备,可以从图上看到有像 “My Verizon Mobile” 和 “VZ Backup Assistant” 这样没用的应用。为了和冰淇淋三明治的去电子风格主题一致,日历和相机图标现在看起来更像是来自地球的东西而不是来自外星球。时钟,下载,电话,以及安卓市场同样得到了新图标,“联系人”获得了新图标,还有新名字 “People”。

|

||||

|

||||

通知面板进行了大改造,特别是和[之前姜饼中的设计][2]相比而言。面板头部有个日期,一个设置的快捷方式,以及“清除所有”按钮。虽然蜂巢的第一个版本就允许用户通过通知右边的“X”消除单个通知,但是冰淇淋三明治的实现更加优雅:只要从左向右滑动通知即可。蜂巢有着蓝色高亮,但是蓝色色调到处都是。冰淇淋三明治几乎把所有地方的蓝色统一成一个(如果你想知道确定的值,hex 码是 `#33B5E5`)。通知面板的背景是透明的,底部的“把手”变为一个简单的小蓝圈,带着不透明的黑色背景。

|

||||

|

||||

|

||||

|

||||

*安卓市场的主页背景变成了黑色。

|

||||

[Ron Amadeo 供图]*

|

||||

|

||||

市场获得了又一个新设计。它终于再次支持纵向模式,并且添加了音乐到商店中,你可以从中购买音乐。新的市场拓展了从蜂巢中引入的卡片概念,它还是第一个同时使用在手机和平板上的版本。主页上的卡片通常不是链接到应用的,而是指向特别的促销页面,像是“编辑精选”或季度促销。

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||