mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit

716f606025

64

published/20170131 Book review Ours to Hack and to Own.md

Normal file

@ -0,0 +1,64 @@

|

||||

书评:《Ours to Hack and to Own》

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

Image by : opensource.com

|

||||

|

||||

私有制的时代看起来似乎结束了,在这里我将不仅仅讨论那些由我们中的许多人引入到我们的家庭与生活的设备和软件,我也将讨论这些设备与应用依赖的平台与服务。

|

||||

|

||||

尽管我们使用的许多服务是免费的,但我们对它们并没有任何控制权。本质上讲,这些企业确实控制着我们所看到的、听到的以及阅读到的内容。不仅如此,许多企业还在改变工作的本质。他们正使用封闭的平台来助长由全职工作到[零工经济][2]的转变,这种方式提供极少的安全性与确定性。

|

||||

|

||||

这项行动对于网络以及每一个使用与依赖网络的人产生了广泛的影响。仅仅二十多年前的对开放互联网的想象正在逐渐消逝,并迅速地被一块难以穿透的幕帘所取代。

|

||||

|

||||

一种逐渐流行的补救办法就是建立<ruby>[平台合作社][3]<rt>platform cooperatives</rt></ruby>, 即由他们的用户所拥有的电子化平台。正如这本书[《Ours to Hack and to Own》][4]所阐述的,平台合作社背后的观点与开源有许多相同的根源。

|

||||

|

||||

学者 Trebor Scholz 和作家 Nathan Schneider 已经收集了 40 篇论文,探讨平台合作社作为普通人可使用的工具的增长及需求,以提升开放性并对闭源系统的不透明性及各种限制予以还击。

|

||||

|

||||

### 何处适合开源

|

||||

|

||||

任何平台合作社核心及接近核心的部分依赖于开源;不仅开源技术是必要的,构成开源开放性、透明性、协同合作以及共享的准则与理念同样不可或缺。

|

||||

|

||||

在这本书的介绍中,Trebor Scholz 指出:

|

||||

|

||||

> 与斯诺登时代的互联网黑盒子系统相反,这些平台需要使它们的数据流透明来辨别自身。他们需要展示客户与员工的数据在哪里存储,数据出售给了谁以及数据用于何种目的。

|

||||

|

||||

正是对开源如此重要的透明性,促使平台合作社如此吸引人,并在目前大量已有平台之中成为令人耳目一新的变化。

|

||||

|

||||

开源软件在《Ours to Hack and to Own》所分享的平台合作社的构想中必然充当着重要角色。开源软件能够为群体建立助推合作社的技术基础设施提供快速而不算昂贵的途径。

|

||||

|

||||

Mickey Metts 在论文中这样形容, “邂逅你的友邻技术伙伴。" Metts 为一家名为 Agaric 的企业工作,这家企业使用 Drupal 为团体及小型企业建立他们不能自行完成的平台。除此以外, Metts 还鼓励任何想要建立并运营自己的企业的公司或合作社的人接受自由开源软件。为什么呢?因为它是高质量的、并不昂贵的、可定制的,并且你能够与由乐于助人而又热情的人们组成的大型社区产生联系。

|

||||

|

||||

### 不总是开源的,但开源总在

|

||||

|

||||

这本书里不是所有的论文都关注或提及开源的;但是,开源方式的关键元素——合作、社区、开放治理以及电子自由化——总是在其间若隐若现。

|

||||

|

||||

事实上正如《Ours to Hack and to Own》中许多论文所讨论的,建立一个更加开放、基于平常人的经济与社会区块,平台合作社会变得非常重要。用 Douglas Rushkoff 的话讲,那会是类似 Creative Commons 的组织“对共享知识资源的私有化”的补偿。它们也如 Barcelona 的 CTO Francesca Bria 所描述的那样,是“通过确保市民数据安全性、隐私性和权利的系统”来运营他们自己的“分布式通用数据基础架构”的城市。

|

||||

|

||||

### 最后的思考

|

||||

|

||||

如果你在寻找改变互联网以及我们工作的方式的蓝图,《Ours to Hack and to Own》并不是你要寻找的。这本书与其说是用户指南,不如说是一种宣言。如书中所说,《Ours to Hack and to Own》让我们略微了解如果我们将开源方式准则应用于社会及更加广泛的世界我们能够做的事。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

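Scott Nesbitt: writer, editor, soldier of fortune, ocelot wrangler, husband and father, blogger, and collector of pottery. Scott is a few of these things. He is also a long-time user of free and open source software who writes and blogs about it extensively. You can find him on Twitter and GitHub.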

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/1/review-book-ours-to-hack-and-own

|

||||

|

||||

作者:[Scott Nesbitt][a]

|

||||

译者:[darsh8](https://github.com/darsh8)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/scottnesbitt

|

||||

[1]:https://opensource.com/article/17/1/review-book-ours-to-hack-and-own?rate=dgkFEuCLLeutLMH2N_4TmUupAJDjgNvFpqWqYCbQb-8

|

||||

[2]:https://en.wikipedia.org/wiki/Access_economy

|

||||

[3]:https://en.wikipedia.org/wiki/Platform_cooperative

|

||||

[4]:http://www.orbooks.com/catalog/ours-to-hack-and-to-own/

|

||||

[5]:https://opensource.com/user/14925/feed

|

||||

[6]:https://opensource.com/users/scottnesbitt

|

||||

@ -1,84 +1,101 @@

|

||||

检查系统和硬件信息的命令

|

||||

======

|

||||

你们好,linux 爱好者们,在这篇文章中,我将讨论一些作为系统管理员重要的事。众所周知,作为一名优秀的系统管理员意味着要了解有关 IT 基础架构的所有信息,并掌握有关服务器的所有信息,无论是硬件还是操作系统。所以下面的命令将帮助你了解所有的硬件和系统信息。

|

||||

|

||||

#### 1- 查看系统信息

|

||||

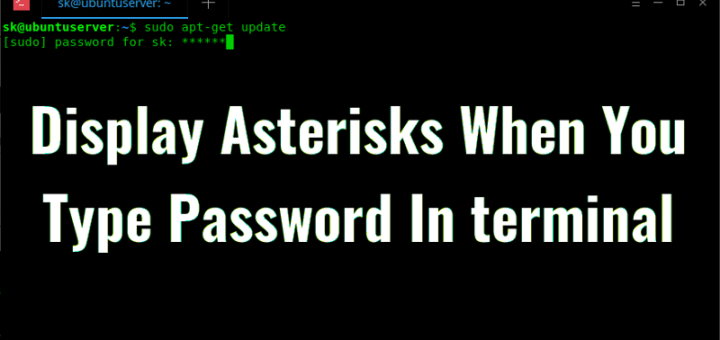

你们好,Linux 爱好者们,在这篇文章中,我将讨论一些作为系统管理员重要的事。众所周知,作为一名优秀的系统管理员意味着要了解有关 IT 基础架构的所有信息,并掌握有关服务器的所有信息,无论是硬件还是操作系统。所以下面的命令将帮助你了解所有的硬件和系统信息。

|

||||

|

||||

### 1 查看系统信息

|

||||

|

||||

```

|

||||

$ uname -a

|

||||

```

|

||||

|

||||

![uname command][2]

|

||||

|

||||

它会为你提供有关系统的所有信息。它会为你提供系统的内核名、主机名、内核版本、内核发布号、硬件名称。
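The fields that `uname -a` prints together can also be requested one at a time, which is handy in scripts. A minimal sketch using standard `uname` flags; the variable names are illustrative only:

```shell
# Query the fields of `uname -a` individually.
kernel_name=$(uname -s)      # kernel name, e.g. Linux
host_name=$(uname -n)        # network node hostname
kernel_release=$(uname -r)   # kernel release
kernel_version=$(uname -v)   # kernel version (build information)
machine=$(uname -m)          # machine hardware name, e.g. x86_64

printf '%s %s on %s (host %s)\n' "$kernel_name" "$kernel_release" "$machine" "$host_name"
```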

|

||||

|

||||

#### 2- 查看硬件信息

|

||||

### 2 查看硬件信息

|

||||

|

||||

```

|

||||

$ lshw

|

||||

```

|

||||

|

||||

![lshw command][4]

|

||||

|

||||

使用 lshw 将在屏幕上显示所有硬件信息。

|

||||

使用 `lshw` 将在屏幕上显示所有硬件信息。

|

||||

|

||||

#### 3- 查看块设备(硬盘、闪存驱动器)信息

|

||||

### 3 查看块设备(硬盘、闪存驱动器)信息

|

||||

|

||||

```

|

||||

$ lsblk

|

||||

```

|

||||

|

||||

![lsblk command][6]

|

||||

|

||||

lsblk 命令在屏幕上打印关于块设备的所有信息。使用 lsblk -a 显示所有块设备。

|

||||

`lsblk` 命令在屏幕上打印关于块设备的所有信息。使用 `lsblk -a` 可以显示所有块设备。

|

||||

|

||||

#### 4- 查看 CPU 信息

|

||||

### 4 查看 CPU 信息

|

||||

|

||||

```

|

||||

$ lscpu

|

||||

```

|

||||

|

||||

![lscpu command][8]

|

||||

|

||||

lscpu 在屏幕上显示所有 CPU 信息。

|

||||

`lscpu` 在屏幕上显示所有 CPU 信息。

|

||||

|

||||

#### 5- 查看 PCI 信息

|

||||

### 5 查看 PCI 信息

|

||||

|

||||

```

|

||||

$ lspci

|

||||

```

|

||||

|

||||

![lspci command][10]

|

||||

|

||||

所有的网络适配器卡、USB 卡、图形卡都被称为 PCI。要查看他们的信息使用 lspci。

|

||||

所有的网络适配器卡、USB 卡、图形卡都被称为 PCI。要查看他们的信息使用 `lspci`。

|

||||

|

||||

lspci -v 将提供有关 PCI 卡的详细信息。

|

||||

`lspci -v` 将提供有关 PCI 卡的详细信息。

|

||||

|

||||

lspci -t 会以树形格式显示它们。

|

||||

`lspci -t` 会以树形格式显示它们。

|

||||

|

||||

#### 6- 查看 USB 信息

|

||||

### 6 查看 USB 信息

|

||||

|

||||

```

|

||||

$ lsusb

|

||||

```

|

||||

|

||||

![lsusb command][12]

|

||||

|

||||

要查看有关连接到机器的所有 USB 控制器和设备的信息,我们使用 lsusb。

|

||||

要查看有关连接到机器的所有 USB 控制器和设备的信息,我们使用 `lsusb`。

|

||||

|

||||

#### 7- 查看 SCSI 信息

|

||||

### 7 查看 SCSI 信息

|

||||

|

||||

$ lssci

|

||||

```

|

||||

$ lsscsi

|

||||

```

|

||||

|

||||

![lssci][14]

|

||||

![lsscsi][14]

|

||||

|

||||

要查看 SCSI 信息输入 lsscsi。lsscsi -s 会显示分区的大小。

|

||||

To view SCSI information, run `lsscsi`. `lsscsi -s` additionally shows the size of each device.

|

||||

|

||||

#### 8- 查看文件系统信息

|

||||

### 8 查看文件系统信息

|

||||

|

||||

```

|

||||

$ fdisk -l

|

||||

```

|

||||

|

||||

![fdisk command][16]

|

||||

|

||||

使用 fdisk -l 将显示有关文件系统的信息。虽然 fdisk 的主要功能是修改文件系统,但是也可以创建新分区,删除旧分区(详情在我以后的教程中)。

|

||||

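`fdisk -l` lists the partition tables of the disks on the system (it usually needs root privileges). `fdisk` is primarily a partition table editor: besides listing partitions, it can create new partitions and delete old ones (details in a future tutorial).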

|

||||

|

||||

就是这些了,我的 Linux 爱好者们。建议你在**[这里][17]**和**[这里][18]**查看我文章中关于另外的 Linux 命令。

|

||||

就是这些了,我的 Linux 爱好者们。建议你在**[这里][17]**和**[这里][18]**的文章中查看关于另外的 Linux 命令。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linuxtechlab.com/commands-system-hardware-info/

|

||||

|

||||

作者:[Shusain][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,59 @@

|

||||

安全专家的需求正在快速增长

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

> 来自 Dice 和 Linux 基金会的“开源工作报告”发现,未来对具有安全经验的专业人员的需求很高。

|

||||

|

||||

对安全专业人员的需求是真实的。在 [Dice.com][4] 多达 75,000 个职位中,有 15% 是安全职位。[福布斯][6] 称:“根据网络安全数据工具 [CyberSeek][5],在美国每年有 4 万个信息安全分析师的职位空缺,雇主正在努力填补其他 20 万个与网络安全相关的工作。”我们知道,安全专家的需求正在快速增长,但感兴趣的程度还较低。

|

||||

|

||||

### 安全是要关注的领域

|

||||

|

||||

根据我的经验,很少有大学生对安全工作感兴趣,所以很多人把安全视为商机。入门级技术专家对业务分析师或系统分析师感兴趣,因为他们认为,如果想学习和应用核心 IT 概念,就必须坚持分析师工作或者更接近产品开发的工作。事实并非如此。

|

||||

|

||||

事实上,如果你有兴趣成为商业领导者,那么安全是要关注的领域 —— 作为一名安全专业人员,你必须端到端地了解业务,你必须看大局来给你的公司带来优势。

|

||||

|

||||

### 无所畏惧

|

||||

|

||||

分析师和安全工作并不完全相同。公司出于必要继续合并工程和安全工作。企业正在以前所未有的速度进行基础架构和代码的自动化部署,从而提高了安全作为所有技术专业人士日常生活的一部分的重要性。在我们的 [Linux 基金会的开源工作报告][7]中,42% 的招聘经理表示未来对有安全经验的专业人士的需求很大。

|

||||

|

||||

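There has never been a more exciting time to work in security. If you keep up with technology news, you will see that a large share of the stories are security related: data breaches, system failures, and fraud. Security teams work in constantly changing, fast-paced environments. The real challenge is to be proactive about security, finding and eliminating vulnerabilities, while maintaining or even improving the end-user experience.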

|

||||

|

||||

### 增长即将来临

|

||||

|

||||

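As with any aspect of technology, security will keep growing alongside the cloud. Businesses are moving to cloud computing more and more, and they find themselves more exposed to security vulnerabilities than organizations were in the past. As the cloud matures, security becomes increasingly important.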

|

||||

|

||||

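Regulations keep growing as well, and the definition of personally identifiable information (PII) is getting broader all the time. Many companies are finding that they must invest in security to stay compliant and out of the headlines. Facing heavy fines, reputational damage, and threats to executives' job security, companies are budgeting more and more for security tooling and staffing.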

|

||||

|

||||

### 培训和支持

|

||||

|

||||

即使你不选择一个专门的安全工作,你也一定会发现自己需要写安全的代码,如果你没有这个技能,你将开始一场艰苦的战斗。如果你的公司提供在工作中学习的话也是可以的,但我建议结合培训、指导和不断的实践。如果你不使用安全技能,你将很快在快速进化的恶意攻击的复杂性中失去它们。

|

||||

|

||||

对于那些寻找安全工作的人来说,我的建议是找到组织中那些在工程、开发或者架构领域最为强大的人员 —— 与他们和其他团队进行交流,做好实际工作,并且确保在心里保持大局。成为你的组织中一个脱颖而出的人,一个可以写安全的代码,同时也可以考虑战略和整体基础设施健康状况的人。

|

||||

|

||||

### 游戏最后

|

||||

|

||||

越来越多的公司正在投资安全性,并试图填补他们的技术团队的开放角色。如果你对管理感兴趣,那么安全是值得关注的地方。执行层领导希望知道他们的公司正在按规则行事,他们的数据是安全的,并且免受破坏和损失。

|

||||

|

||||

明智地实施和有战略思想的安全是受到关注的。安全对高管和消费者之类至关重要 —— 我鼓励任何对安全感兴趣的人进行培训和贡献。

|

||||

|

||||

_现在[下载][2]完整的 2017 年开源工作报告_

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/os-jobs-report/2017/11/security-jobs-are-hot-get-trained-and-get-noticed

|

||||

|

||||

作者:[BEN COLLEN][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/bencollen

|

||||

[1]:https://www.linux.com/licenses/category/used-permission

|

||||

[2]:http://bit.ly/2017OSSjobsreport

|

||||

[3]:https://www.linux.com/files/images/security-skillspng

|

||||

[4]:http://www.dice.com/

|

||||

[5]:http://cyberseek.org/index.html#about

|

||||

[6]:https://www.forbes.com/sites/jeffkauflin/2017/03/16/the-fast-growing-job-with-a-huge-skills-gap-cyber-security/#292f0a675163

|

||||

[7]:http://media.dice.com/report/the-2017-open-source-jobs-report-employers-prioritize-hiring-open-source-professionals-with-latest-skills/

|

||||

158

published/20171205 How to Use the Date Command in Linux.md

Normal file

@ -0,0 +1,158 @@

|

||||

如何使用 date 命令

|

||||

======

|

||||

|

||||

|

||||

|

||||

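In this article, we walk through several examples of how to use the `date` command on Linux. The `date` command prints or sets the system date and time. It is simple to use; see the examples and syntax below.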

|

||||

|

||||

默认情况下,当不带任何参数运行 `date` 命令时,它会输出当前系统日期和时间:

|

||||

|

||||

```shell

|

||||

$ date

|

||||

Sat 2 Dec 12:34:12 CST 2017

|

||||

```

|

||||

|

||||

### 语法

|

||||

|

||||

```

|

||||

Usage: date [OPTION]... [+FORMAT]

|

||||

or: date [-u|--utc|--universal] [MMDDhhmm[[CC]YY][.ss]]

|

||||

以给定格式显示当前时间,或设置系统时间。

|

||||

```

|

||||

|

||||

### 案例

|

||||

|

||||

下面这些案例会向你演示如何使用 `date` 命令来查看前后一段时间的日期时间。

|

||||

|

||||

#### 1、 查找 5 周后的日期

|

||||

|

||||

```shell

|

||||

date -d "5 weeks"

|

||||

Sun Jan 7 19:53:50 CST 2018

|

||||

```

|

||||

|

||||

#### 2、 查找 5 周后又过 4 天的日期

|

||||

|

||||

```shell

|

||||

date -d "5 weeks 4 days"

|

||||

Thu Jan 11 19:55:35 CST 2018

|

||||

```

|

||||

|

||||

#### 3、 获取下个月的日期

|

||||

|

||||

```shell

|

||||

date -d "next month"

|

||||

Wed Jan 3 19:57:43 CST 2018

|

||||

```

|

||||

|

||||

#### 4. Get the date of last Sunday

|

||||

|

||||

```shell

|

||||

date -d last-sunday

|

||||

Sun Nov 26 00:00:00 CST 2017

|

||||

```
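GNU `date` also accepts `next` in front of a weekday name. A small sketch, assuming GNU coreutils `date` (the BSD and macOS `date` use different options); the results depend on the day the commands are run, so no fixed output is shown:

```shell
# Resolve the previous and the upcoming Sunday relative to today.
last_sunday=$(date -d "last sunday" +%F)
next_sunday=$(date -d "next sunday" +%F)

echo "last Sunday: $last_sunday"
echo "next Sunday: $next_sunday"
```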

|

||||

|

||||

`date` 命令还有很多格式化相关的选项, 下面的例子向你演示如何格式化 `date` 命令的输出.

|

||||

|

||||

#### 5、 以 `yyyy-mm-dd` 的格式显示日期

|

||||

|

||||

```shell

|

||||

date +"%F"

|

||||

2017-12-03

|

||||

```

|

||||

|

||||

#### 6、 以 `mm/dd/yyyy` 的格式显示日期

|

||||

|

||||

```shell

|

||||

date +"%m/%d/%Y"

|

||||

12/03/2017

|

||||

```

|

||||

|

||||

#### 7、 只显示时间

|

||||

|

||||

```shell

|

||||

date +"%T"

|

||||

20:07:04

|

||||

```

|

||||

|

||||

#### 8、 显示今天是一年中的第几天

|

||||

|

||||

```shell

|

||||

date +"%j"

|

||||

337

|

||||

```

|

||||

|

||||

#### 9、 与格式化相关的选项

|

||||

|

||||

| 格式 | 说明 |

|

||||

|---------------|----------------|

|

||||

| `%%` | 显示百分号 (`%`)。 |

|

||||

| `%a` | 星期的缩写形式 (如: `Sun`)。 |

|

||||

| `%A` | 星期的完整形式 (如: `Sunday`)。 |

|

||||

| `%b` | 缩写的月份 (如: `Jan`)。 |

|

||||

| `%B` | 当前区域的月份全称 (如: `January`)。 |

|

||||

| `%c` | 日期以及时间 (如: `Thu Mar 3 23:05:25 2005`)。 |

|

||||

| `%C` | 当前世纪;类似 `%Y`, 但是会省略最后两位 (如: `20`)。 |

|

||||

| `%d` | 月中的第几日 (如: `01`)。 |

|

||||

| `%D` | 日期;效果与 `%m/%d/%y` 一样。 |

|

||||

| `%e` | 月中的第几日, 会填充空格;与 `%_d` 一样。 |

|

||||

| `%F` | 完整的日期;跟 `%Y-%m-%d` 一样。 |

|

||||

| `%g` | Last two digits of the ISO week-numbering year (see `%G`). |

|

||||

| `%G` | ISO week-numbering year (see `%V`); normally useful only together with `%V`. |

|

||||

| `%h` | 同 `%b`。 |

|

||||

| `%H` | 小时 (`00`..`23`)。 |

|

||||

| `%I` | 小时 (`01`..`12`)。 |

|

||||

| `%j` | 一年中的第几天 (`001`..`366`)。 |

|

||||

| `%k` | 小时, 用空格填充 ( `0`..`23`); 与 `%_H` 一样。 |

|

||||

| `%l` | 小时, 用空格填充 ( `1`..`12`); 与 `%_I` 一样。 |

|

||||

| `%m` | 月份 (`01`..`12`)。 |

|

||||

| `%M` | 分钟 (`00`..`59`)。 |

|

||||

| `%n` | 换行。 |

|

||||

| `%N` | 纳秒 (`000000000`..`999999999`)。 |

|

||||

| `%p` | 当前区域时间是上午 `AM` 还是下午 `PM`;未知则为空。 |

|

||||

| `%P` | 类似 `%p`, 但是用小写字母显示。 |

|

||||

| `%r` | 当前区域的 12 小时制显示时间 (如: `11:11:04 PM`)。 |

|

||||

| `%R` | 24 小时制的小时和分钟;同 `%H:%M`。 |

|

||||

| `%s` | 从 1970-01-01 00:00:00 UTC 到现在经历的秒数。 |

|

||||

| `%S` | 秒数 (`00`..`60`)。 |

|

||||

| `%t` | 制表符。 |

|

||||

| `%T` | 时间;同 `%H:%M:%S`。 |

|

||||

| `%u` | 星期 (`1`..`7`);1 表示 `星期一`。 |

|

||||

| `%U` | 一年中的第几个星期,以周日为一周的开始 (`00`..`53`)。 |

|

||||

| `%V` | ISO week number, with Monday as the first day of the week (`01`..`53`). |

|

||||

| `%w` | 用数字表示周几 (`0`..`6`); 0 表示 `周日`。 |

|

||||

| `%W` | 一年中的第几个星期, 周一为一周的开始 (`00`..`53`)。 |

|

||||

| `%x` | 当前区域的日期表示(如: `12/31/99`)。 |

|

||||

| `%X` | 当前区域的时间表示 (如: `23:13:48`)。 |

|

||||

| `%y` | 年份的后面两位 (`00`..`99`)。 |

|

||||

| `%Y` | 年。 |

|

||||

| `%z` | 以 `+hhmm` 的数字格式表示时区 (如: `-0400`)。 |

|

||||

| `%:z` | 以 `+hh:mm` 的数字格式表示时区 (如: `-04:00`)。 |

|

||||

| `%::z` | 以 `+hh:mm:ss` 的数字格式表示时区 (如: `-04:00:00`)。 |

|

||||

| `%:::z` | 以数字格式表示时区, 其中 `:` 的个数由你需要的精度来决定 (例如, `-04`, `+05:30`)。 |

|

||||

| `%Z` | 时区的字符缩写(例如, `EDT`)。 |

|

||||

|

||||
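Several of the specifiers in the table can be combined in a single format string. A brief sketch, assuming GNU `date`; the `@0` argument (seconds since the epoch) is used only so that part of the output is reproducible:

```shell
# Combine format specifiers into common timestamp layouts.
iso_now=$(date -u +"%Y-%m-%dT%H:%M:%SZ")    # current time in UTC, ISO 8601 style
epoch_start=$(date -u -d @0 +"%F %T")       # the Unix epoch rendered with %F and %T
day_of_year=$(date -u -d @0 +"%j")          # day of the year for that instant

echo "$iso_now"
echo "$epoch_start"   # 1970-01-01 00:00:00
echo "$day_of_year"   # 001
```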

#### 10、 设置系统时间

|

||||

|

||||

你也可以使用 `date` 来手工设置系统时间,方法是使用 `--set` 选项, 下面的例子会将系统时间设置成 2017 年 8 月 30 日下午 4 点 22 分。

|

||||

|

||||

```shell

|

||||

date --set="20170830 16:22"

|

||||

```
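Because `--set` changes the clock immediately and requires root privileges, it can help to check first how `date` interprets the string. A cautious sketch, assuming GNU `date`, where `-d` parses the same kind of string without touching the system clock; the variable names are illustrative:

```shell
# Preview how the timestamp string is parsed; the clock is NOT changed.
new_time="20170830 16:22"
preview=$(date -d "$new_time" +"%F %R")
echo "would set the clock to: $preview"   # 2017-08-30 16:22

# Only after checking the preview (needs root):
#   sudo date --set="$new_time"
```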

|

||||

|

||||

当然, 如果你使用的是我们的 [VPS 托管服务][1],你总是可以联系并咨询我们的 Linux 专家管理员(通过客服电话或者下工单的方式)关于 `date` 命令的任何东西。他们是 24×7 在线的,会立即向您提供帮助。(LCTT 译注:原文的广告~)

|

||||

|

||||

PS. 如果你喜欢这篇帖子,请点击下面的按钮分享或者留言。谢谢。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.rosehosting.com/blog/use-the-date-command-in-linux/

|

||||

|

||||

作者:[rosehosting][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.rosehosting.com

|

||||

[1]:https://www.rosehosting.com/hosting-services.html

|

||||

@ -0,0 +1,143 @@

|

||||

如何在 Linux 使用文件压缩

|

||||

=======

|

||||

|

||||

|

||||

|

||||

> Linux 系统为文件压缩提供了许多选择,关键是选择一个最适合你的。

|

||||

|

||||

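If you have any doubts about the file compression commands or options available on Linux systems, take a look at the output of `apropos compress`. You may be surprised by how many commands there are for compressing and decompressing files, and by how many more exist for comparing compressed files, checking them, searching through their contents, and even converting a compressed file from one format to another (for example, from `.Z` to `.gz`).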

|

||||

|

||||

你可以看到只是适用于 bzip2 压缩的全部条目就有这么多。加上 zip、gzip 和 xz 在内,你会有非常多的选择。

|

||||

|

||||

```

|

||||

$ apropos compress | grep ^bz

|

||||

bzcat (1) - decompresses files to stdout

|

||||

bzcmp (1) - compare bzip2 compressed files

|

||||

bzdiff (1) - compare bzip2 compressed files

|

||||

bzegrep (1) - search possibly bzip2 compressed files for a regular expression

|

||||

bzexe (1) - compress executable files in place

|

||||

bzfgrep (1) - search possibly bzip2 compressed files for a regular expression

|

||||

bzgrep (1) - search possibly bzip2 compressed files for a regular expression

|

||||

bzip2 (1) - a block-sorting file compressor, v1.0.6

|

||||

bzless (1) - file perusal filter for crt viewing of bzip2 compressed text

|

||||

bzmore (1) - file perusal filter for crt viewing of bzip2 compressed text

|

||||

```

|

||||

|

||||

在我的 Ubuntu 系统上 ,`apropos compress` 命令的返回中列出了 60 条以上的命令。

|

||||

|

||||

### 压缩算法

|

||||

|

||||

压缩并没有普适的方案,某些压缩工具是有损压缩,例如一些压缩用于减少 mp3 文件大小,而能够使聆听者有接近原声的音乐感受。但是在 Linux 命令行上压缩或归档用户文件所使用的算法必须能够精确地重新恢复为原始数据。换句话说,它们必须是无损的。

|

||||

|

||||

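How is that possible? A run of 300 identical characters could be compressed into a string like "300x", but that kind of algorithm would not help much with most files, because long runs of the same character are no more likely to appear in them than completely random data. Compression algorithms are far more sophisticated than that, and they have only grown more so since compression was first introduced in the early days of Unix.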
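The "300x" idea is known as run-length encoding. A toy sketch with standard tools, purely for illustration; the `rle` function is a made-up helper and is not how `gzip`, `bzip2`, or `xz` work internally:

```shell
# Toy run-length encoder: "aaabbbcc" -> "3a3b2c".
# fold puts one character per line, uniq -c counts each run,
# and awk glues each count and character back together.
rle() {
    printf '%s\n' "$1" | fold -w1 | uniq -c |
        awk '{ printf "%s%s", $1, $2 } END { print "" }'
}

rle "aaabbbcc"   # 3a3b2c
rle "abcd"       # 1a1b1c1d (longer than the input)
```

The second call shows why this approach gains nothing on data without long runs.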

|

||||

|

||||

### 在 Linux 系统上的压缩命令

|

||||

|

||||

在 Linux 系统上最常用的文件压缩命令包括 `zip`、`gzip`、`bzip2`、`xz`。 所有这些压缩命令都以类似的方式工作,但是你需要权衡有多少文件要压缩(节省多少空间)、压缩花费的时间、压缩文件在其他你需要使用的系统上的兼容性。

|

||||

|

||||

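Sometimes the time and effort spent compressing a file does not pay off. In the example below, the compressed file is actually larger than the original. This is not common, but it can happen, especially when the file content is close to random.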

|

||||

|

||||

```

|

||||

$ time zip bigfile.zip bigfile

|

||||

adding: bigfile (default 0% )

|

||||

real 0m0.055s

|

||||

user 0m0.000s

|

||||

sys 0m0.016s

|

||||

$ ls -l bigfile*

|

||||

-rw-r--r-- 1 root root 0 12月 20 22:36 bigfile

|

||||

-rw------- 1 root root 164 12月 20 22:41 bigfile.zip

|

||||

```

|

||||

|

||||

注意该文件压缩后的版本(`bigfile.zip`)比原始文件(`bigfile`)要大。如果压缩增加了文件的大小或者减少很少的比例,也许唯一的好处就是便于在线备份。如果你在压缩文件后看到了下面的信息,你不会从压缩中得到什么受益。

|

||||

|

||||

```

|

||||

( deflated 1% )

|

||||

```

|

||||

|

||||

文件内容在文件压缩的过程中有很重要的作用。在上面文件大小增加的例子中是因为文件内容过于随机。压缩一个文件内容只包含 `0` 的文件,你会有一个相当震惊的压缩比。在如此极端的情况下,三个常用的压缩工具都有非常棒的效果。

|

||||

|

||||

```

|

||||

-rw-rw-r-- 1 shs shs 10485760 Dec 8 12:31 zeroes.txt

|

||||

-rw-rw-r-- 1 shs shs 49 Dec 8 17:28 zeroes.txt.bz2

|

||||

-rw-rw-r-- 1 shs shs 10219 Dec 8 17:28 zeroes.txt.gz

|

||||

-rw-rw-r-- 1 shs shs 1660 Dec 8 12:31 zeroes.txt.xz

|

||||

-rw-rw-r-- 1 shs shs 10360 Dec 8 12:24 zeroes.zip

|

||||

```
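The effect of file content on the result is easy to reproduce. A rough sketch, assuming `gzip` and `/dev/urandom` are available; the file names and the 1 MiB size are arbitrary choices:

```shell
# Compress 1 MiB of zero bytes and 1 MiB of random bytes, then compare sizes.
workdir=$(mktemp -d)
head -c 1048576 /dev/zero    > "$workdir/zeroes.bin"
head -c 1048576 /dev/urandom > "$workdir/random.bin"

gzip -c "$workdir/zeroes.bin" > "$workdir/zeroes.bin.gz"
gzip -c "$workdir/random.bin" > "$workdir/random.bin.gz"

zero_size=$(wc -c < "$workdir/zeroes.bin.gz")
random_size=$(wc -c < "$workdir/random.bin.gz")
echo "zeroes: $zero_size bytes, random: $random_size bytes"
```

The zero-filled file should shrink to a tiny fraction of its size, while the random file typically does not shrink at all.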

|

||||

|

||||

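Impressive as that is, you are unlikely to see a file of more than 10 million bytes compress to fewer than 50 bytes, because real files essentially never look like this.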

|

||||

|

||||

在更真实的情况下 ,大小差异总体上是不同的,但是差别并不显著,比如对于确实不太大的 jpg 图片文件来说。

|

||||

|

||||

```

|

||||

-rw-r--r-- 1 shs shs 13522 Dec 11 18:58 image.jpg

|

||||

-rw-r--r-- 1 shs shs 13875 Dec 11 18:58 image.jpg.bz2

|

||||

-rw-r--r-- 1 shs shs 13441 Dec 11 18:58 image.jpg.gz

|

||||

-rw-r--r-- 1 shs shs 13508 Dec 11 18:58 image.jpg.xz

|

||||

-rw-r--r-- 1 shs shs 13581 Dec 11 18:58 image.jpg.zip

|

||||

```

|

||||

|

||||

在对大的文本文件同样进行压缩时 ,你会看到显著的不同。

|

||||

|

||||

```

|

||||

$ ls -l textfile*

|

||||

-rw-rw-r-- 1 shs shs 8740836 Dec 11 18:41 textfile

|

||||

-rw-rw-r-- 1 shs shs 1519807 Dec 11 18:41 textfile.bz2

|

||||

-rw-rw-r-- 1 shs shs 1977669 Dec 11 18:41 textfile.gz

|

||||

-rw-rw-r-- 1 shs shs 1024700 Dec 11 18:41 textfile.xz

|

||||

-rw-rw-r-- 1 shs shs 1977808 Dec 11 18:41 textfile.zip

|

||||

```

|

||||

|

||||

In this case, `xz` reduced the file size considerably more than the other commands, with `bzip2` coming in second.

|

||||

|

||||

### 查看压缩文件

|

||||

|

||||

这些以 `more` 结尾的命令(`bzmore` 等等)能够让你查看压缩文件的内容而不需要解压文件。

|

||||

|

||||

```

|

||||

bzmore (1) - file perusal filter for crt viewing of bzip2 compressed text

|

||||

lzmore (1) - view xz or lzma compressed (text) files

|

||||

xzmore (1) - view xz or lzma compressed (text) files

|

||||

zmore (1) - file perusal filter for crt viewing of compressed text

|

||||

```

|

||||

|

||||

为了解压缩文件内容显示给你,这些命令做了大量的计算。但在另一方面,它们不会把解压缩后的文件留在你系统上,它们只是即时解压需要的部分。

|

||||

|

||||

```

|

||||

$ xzmore textfile.xz | head -1

|

||||

Here is the agenda for tomorrow's staff meeting:

|

||||

```
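The same idea works with the gzip family. A small sketch, assuming GNU `gzip` and its `zcat` are installed; the sample file is created just for the demonstration:

```shell
# Peek at the first line of a gzip-compressed file without unpacking it to disk.
demo_dir=$(mktemp -d)
printf 'first line\nsecond line\n' > "$demo_dir/notes.txt"
gzip "$demo_dir/notes.txt"                 # leaves only notes.txt.gz

first_line=$(zcat "$demo_dir/notes.txt.gz" | head -n 1)
echo "$first_line"                         # first line
ls "$demo_dir"                             # still only notes.txt.gz
```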

|

||||

|

||||

### 比较压缩文件

|

||||

|

||||

有几个压缩工具箱包含一个差异命令(例如 :`xzdiff`),那些工具会把这些工作交给 `cmp` 和 `diff` 来进行比较,而不是做特定算法的比较。例如,`xzdiff` 命令比较 bz2 类型的文件和比较 xz 类型的文件一样简单 。
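As a concrete illustration with the gzip counterpart, `zdiff`, here is a short sketch; it assumes GNU `gzip` (which ships `zdiff`) and `diff` are installed, and the two sample files are invented for the demonstration:

```shell
# Compare compressed files by content rather than by their compressed bytes.
cmp_dir=$(mktemp -d)
printf 'alpha\nbeta\n'  > "$cmp_dir/a.txt"
printf 'alpha\ngamma\n' > "$cmp_dir/b.txt"
gzip "$cmp_dir/a.txt" "$cmp_dir/b.txt"

# zdiff decompresses both files and hands the result to diff;
# like diff, it exits non-zero when the contents differ.
if zdiff "$cmp_dir/a.txt.gz" "$cmp_dir/b.txt.gz" > "$cmp_dir/diff.out"; then
    result="identical"
else
    result="different"
fi
echo "$result"
cat "$cmp_dir/diff.out"
```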

|

||||

|

||||

### 如何选择最好的 Linux 压缩工具

|

||||

|

||||

如何选择压缩工具取决于你工作。在一些情况下,选择取决于你所压缩的数据内容。在更多的情况下,取决你组织内的惯例,除非你对磁盘空间有着很高的敏感度。下面是一般性建议:

|

||||

|

||||

**zip** 对于需要分享给或者在 Windows 系统下使用的文件最适合。

|

||||

|

||||

**gzip** 或许对你要在 Unix/Linux 系统下使用的文件是最好的。虽然 bzip2 已经接近普及,但 gzip 看起来仍将长期存在。

|

||||

|

||||

**bzip2** 使用了和 gzip 不同的算法,并且会产生比 gzip 更小的文件,但是它们需要花费更长的时间进行压缩。

|

||||

|

||||

**xz** 通常可以提供最好的压缩率,但是也会花费相当长的时间。它比其他工具更新一些,可能在你工作的系统上还不存在。

|

||||

|

||||

### 注意

|

||||

|

||||

在压缩文件时,你有很多选择,而在极少的情况下,并不能有效节省磁盘存储空间。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3240938/linux/how-to-squeeze-the-most-out-of-linux-file-compression.html

|

||||

|

||||

作者:[Sandra Henry-Stocker][1]

|

||||

译者:[singledo][2]

|

||||

校对:[wxy][4]

|

||||

|

||||

本文由 [ LCTT ][3]原创编译,Linux中国 荣誉推出

|

||||

|

||||

[1]:https://www.networkworld.com

|

||||

[2]:https://github.com/singledo

|

||||

[3]:https://github.com/LCTT/TranslateProject

|

||||

[4]:https://github.com/wxy

|

||||

@ -0,0 +1,136 @@

|

||||

利用 Resetter 将 Ubuntu 系发行版重置为初始状态

|

||||

======

|

||||

|

||||

|

||||

|

||||

*这个 Resetter 工具可以将 Ubuntu、 Linux Mint (以及其它基于 Ubuntu 的发行版)返回到其初始配置。*

|

||||

|

||||

有多少次你投身于 Ubuntu(或 Ubuntu 衍生版本),配置某项内容和安装软件,却发现你的桌面(或服务器)平台并不是你想要的结果。当在机器上产生了大量的用户文件时,这种情况可能会出现问题。既然这样,你有一个选择,你要么可以备份你所有的数据,重新安装操作系统,然后将您的数据复制回本机,或者也可以利用一种类似于 [Resetter][1] 的工具做同样的事情。

|

||||

|

||||

Resetter 是一个新的工具(由名为“[gaining][2]”的加拿大开发者开发),用 Python 和 PyQt 编写,它将会重置 Ubuntu、Linux Mint(和一些其他的,基于 Ubuntu 的衍生版)回到初始配置。Resetter 提供了两种不同的复位选择:自动和自定义。利用自动方式,工具就会完成以下内容:

|

||||

|

||||

* 删除用户安装的应用软件

|

||||

* 删除用户及家目录

|

||||

* 创建默认备份用户

|

||||

* 自动安装缺失的预装应用软件(MPIA)

|

||||

* 删除非默认用户

|

||||

* 删除 snap 软件包

|

||||

|

||||

自定义方式会:

|

||||

|

||||

* 删除用户安装的应用程序或者允许你选择要删除的应用程序

|

||||

* 删除旧的内核

|

||||

* 允许你选择用户进行删除

|

||||

* 删除用户及家目录

|

||||

* 创建默认备份用户

|

||||

* 允许您创建自定义备份用户

|

||||

* 自动安装缺失的预装应用软件(MPIA)或选择 MPIA 进行安装

|

||||

* 删除非默认用户

|

||||

* 查看所有相关依赖包

|

||||

* 删除 snap 软件包

|

||||

|

||||

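I will walk you through installing and using Resetter. I must warn you, however, that this tool is at a very early beta stage. Even so, Resetter is definitely worth a try. In fact, I encourage you to test the app and submit bug reports (either through [GitHub][3] or by email directly to the developer at [gaining7@outlook.com][4]).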

|

||||

|

||||

还应注意的是,目前仅支持的衍生版有:

|

||||

|

||||

* Debian 9.2 (稳定)Gnome 版本

|

||||

* Linux Mint 17.3+(对 Mint 18.3 的支持即将推出)

|

||||

* Ubuntu 14.04+ (虽然我发现不支持 17.10)

|

||||

* Elementary OS 0.4+

|

||||

* Linux Deepin 15.4+

|

||||

|

||||

说到这里,让我们安装和使用 Resetter。我将在 [Elementary OS Loki][5] 平台展示。

|

||||

|

||||

### 安装

|

||||

|

||||

有几种方法可以安装 Resetter。我选择的方法是通过 `gdebi` 辅助应用程序,为什么?因为它将获取安装所需的所有依赖项。首先,我们必须安装这个特定的工具。打开终端窗口并发出命令:

|

||||

|

||||

```

|

||||

sudo apt install gdebi

|

||||

```

|

||||

|

||||

一旦安装完毕,请将浏览器指向 [Resetter 下载页面][6],并下载该软件的最新版本。一旦下载完毕,打开文件管理器,导航到下载的文件,然后单击(或双击,这取决于你如何配置你的桌面) `resetter_XXX-stable_all.deb` 文件(XXX 是版本号)。`gdebi` 应用程序将会打开(图 1)。点击安装包按钮,输入你的 `sudo` 密码,接下来 Resetter 将开始安装。

|

||||

|

||||

|

||||

![gdebi][8]

|

||||

|

||||

*图 1:利用 gdebi 安装 Resetter*

|

||||

|

||||

当安装完成,准备接下来的操作。

|

||||

|

||||

### 使用 Resetter

|

||||

|

||||

**记住,在这之前,必须备份数据。别怪我没提醒你。**

|

||||

|

||||

从终端窗口发出命令 `sudo resetter`。您将被提示输入 `sudo`密码。一旦 Resetter 打开,它将自动检测您的发行版(图 2)。

|

||||

|

||||

![Resetter][11]

|

||||

|

||||

*图 2:Resetter 主窗口*

|

||||

|

||||

我们将通过自动重置来测试 Resetter 的流程。从主窗口,点击 Automatic Reset(自动复位)。这款应用将提供一个明确的警告,它将把你的操作系统(我的实例,Elementary OS 0.4.1 Loki)重新设置为出厂默认状态(图 3)。

|

||||

|

||||

![警告][13]

|

||||

|

||||

*图 3:在继续之前,Resetter 会警告您。 *

|

||||

|

||||

|

||||

单击“Yes”,Resetter 会显示它将删除的所有包(图 4)。如果您没有问题,单击 OK,重置将开始。

|

||||

|

||||

![移除软件包][15]

|

||||

|

||||

*图 4:所有要删除的包,以便将 Elementary OS 重置为出厂默认值。*

|

||||

|

||||

在重置过程中,应用程序将显示一个进度窗口(图 5)。根据安装的数量,这个过程不应该花费太长时间。

|

||||

|

||||

![进度][17]

|

||||

|

||||

*图 5:Resetter 进度窗口*

|

||||

|

||||

当过程完成时,Resetter 将显示一个新的用户名和密码,以便重新登录到新重置的发行版(图 6)。

|

||||

|

||||

![新用户][19]

|

||||

|

||||

*图 6:新用户及密码*

|

||||

|

||||

单击 OK,然后当提示时单击“Yes”以重新启动系统。当提示登录时,使用 Resetter 应用程序提供给您的新凭证。成功登录后,您需要重新创建您的原始用户。该用户的主目录仍然是完整的,所以您需要做的就是发出命令 `sudo useradd USERNAME` ( USERNAME 是用户名)。完成之后,发出命令 `sudo passwd USERNAME` (USERNAME 是用户名)。使用设置的用户/密码,您可以注销并以旧用户的身份登录(使用在重新设置操作系统之前相同的家目录)。

|

||||

|

||||

### 我的成果

|

||||

|

||||

我必须承认,在将密码添加到我的老用户(并通过使用 `su` 命令切换到该用户进行测试)之后,我无法使用该用户登录到 Elementary OS 桌面。为了解决这个问题,我登录了 Resetter 所创建的用户,移动了老用户的家目录,删除了老用户(使用命令 `sudo deluser jack`),并重新创建了老用户(使用命令 `sudo useradd -m jack`)。

|

||||

|

||||

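After doing this, I checked the original home directory and found that its ownership had changed from `jack.jack` to `1000.1000`. That is easy to fix with the command `sudo chown -R jack.jack /home/jack`. The lesson? If you use Resetter and find you cannot log in as your old user (after recreating the user and setting a new password), make sure to fix the ownership of that user's home directory.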
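Before running `chown`, ownership can be inspected with `stat`. A brief sketch, assuming GNU `stat`; it runs on a scratch directory, so substitute the real home directory when checking an actual account:

```shell
# Show the owner and group of a directory, by numeric ID and by name.
# When ls shows only numbers for the owner, no account name currently
# maps to that numeric ID.
scratch=$(mktemp -d)
owner_ids=$(stat -c '%u:%g' "$scratch")     # numeric UID:GID
owner_names=$(stat -c '%U:%G' "$scratch")   # names, or UNKNOWN if unmapped
echo "$scratch is owned by $owner_names ($owner_ids)"

# To hand a tree back to a user (needs root; the colon is the
# separator that POSIX specifies for chown):
#   sudo chown -R jack:jack /home/jack
```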

|

||||

|

||||

在这个问题之外,Resetter 在将 Elementary OS Loki 恢复到默认状态方面做了大量的工作。虽然 Resetter 处在测试中,但它是一个相当令人印象深刻的工具。试一试,看看你是否有和我一样出色的成绩。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/learn/intro-to-linux/2017/12/set-ubuntu-derivatives-back-default-resetter

|

||||

|

||||

作者:[Jack Wallen][a]

|

||||

译者:[stevenzdg988](https://github.com/stevenzdg988)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/jlwallen

|

||||

[1]:https://github.com/gaining/Resetter

|

||||

[2]:https://github.com/gaining

|

||||

[3]:https://github.com

|

||||

[4]:mailto:gaining7@outlook.com

|

||||

[5]:https://elementary.io/

|

||||

[6]:https://github.com/gaining/Resetter/releases/tag/v1.1.3-stable

|

||||

[7]:/files/images/resetter1jpg-0

|

||||

[8]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/resetter_1_0.jpg?itok=3c_qrApr (gdebi)

|

||||

[9]:/licenses/category/used-permission

|

||||

[10]:/files/images/resetter2jpg

|

||||

[11]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/resetter_2.jpg?itok=bmawiCYJ (Resetter)

|

||||

[12]:/files/images/resetter3jpg

|

||||

[13]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/resetter_3.jpg?itok=2wlbC3Ue (warning)

|

||||

[14]:/files/images/resetter4jpg-1

|

||||

[15]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/resetter_4_1.jpg?itok=f2I3noDM (remove packages)

|

||||

[16]:/files/images/resetter5jpg

|

||||

[17]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/resetter_5.jpg?itok=3FYs5_2S (progress)

|

||||

[18]:/files/images/resetter6jpg

|

||||

[19]:https://www.linux.com/sites/lcom/files/styles/rendered_file/public/resetter_6.jpg?itok=R9SVZgF1 (new username)

|

||||

[20]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

@ -1,32 +1,34 @@

|

||||

yum find out path where is package installed to on CentOS/RHEL

|

||||

在 CentOS/RHEL 上查找 yum 安裝的软件的位置

|

||||

======

|

||||

|

||||

I have [install htop package on a CentOS/RHEL][1] . I wanted find out where and at what path htop package installed all files. Is there an easy way to tell yum where is package installed on a CentOS/RHEL?

|

||||

**我已经在 CentOS/RHEL 上[安装了 htop][1] 。现在想知道软件被安装在哪个位置。有没有简单的方法能找到 yum 软件包安装的目录呢?**

|

||||

|

||||

[yum command][2] is an interactive, open source, rpm based, package manager for a CentOS/RHEL and clones. It can automatically perform the following operations for you:

|

||||

[yum 命令][2] 是可交互的、基于 rpm 的 CentOS/RHEL 的开源软件包管理工具。它会帮助你自动地完成以下操作:

|

||||

|

||||

1. Core system file updates

|

||||

2. Package updates

|

||||

3. Install a new packages

|

||||

4. Delete of old packages

|

||||

5. Perform queries on the installed and/or available packages

|

||||

1. 核心系统文件更新

|

||||

2. 软件包更新

|

||||

3. 安装新的软件包

|

||||

4. 删除旧的软件包

|

||||

5. 查找已安装和可用的软件包

|

||||

|

||||

yum is similar to other high level package managers like [apt-get command][3]/[apt command][4].

|

||||

和 `yum` 相似的软件包管理工具有: [apt-get 命令][3] 和 [apt 命令][4]。

|

||||

|

||||

### yum where is package installed

|

||||

### yum 安装软件包的位置

|

||||

|

||||

The syntax is as follows to install htop package for a demo purpose:

|

||||

For demonstration purposes, install `htop` with the following command:

|

||||

|

||||

`# yum install htop`

|

||||

```

|

||||

# yum install htop

|

||||

```

|

||||

|

||||

To list the files installed by a yum package called htop, run the following rpm command:

|

||||

要列出名为 htop 的 yum 软件包安装的文件,运行下列 `rpm` 命令:

|

||||

|

||||

```

|

||||

# rpm -q {packageNameHere}

|

||||

# rpm -ql htop

|

||||

```

|

||||

|

||||

Sample outputs:

|

||||

示例输出:

|

||||

|

||||

```

|

||||

/usr/bin/htop

|

||||

@ -37,18 +39,17 @@ Sample outputs:

|

||||

/usr/share/doc/htop-2.0.2/README

|

||||

/usr/share/man/man1/htop.1.gz

|

||||

/usr/share/pixmaps/htop.png

|

||||

|

||||

```

|

||||

|

||||

### How to see the files installed by a yum package using repoquery command

|

||||

### 如何使用 repoquery 命令查看由 yum 软件包安装的文件位置

|

||||

|

||||

First install yum-utils package using [yum command][2]:

|

||||

首先使用 [yum 命令][2] 安装 yum-utils 软件包:

|

||||

|

||||

```

|

||||

# yum install yum-utils

|

||||

```

|

||||

|

||||

Sample outputs:

|

||||

示例输出:

|

||||

|

||||

```

|

||||

Resolving Dependencies

|

||||

@ -60,9 +61,9 @@ Resolving Dependencies

|

||||

---> Package libxml2-python.x86_64 0:2.9.1-6.el7_2.3 will be installed

|

||||

---> Package python-kitchen.noarch 0:1.1.1-5.el7 will be installed

|

||||

--> Finished Dependency Resolution

|

||||

|

||||

|

||||

Dependencies Resolved

|

||||

|

||||

|

||||

=======================================================================================

|

||||

Package Arch Version Repository Size

|

||||

=======================================================================================

|

||||

@ -71,56 +72,61 @@ Installing:

|

||||

Installing for dependencies:

|

||||

libxml2-python x86_64 2.9.1-6.el7_2.3 rhui-rhel-7-server-rhui-rpms 247 k

|

||||

python-kitchen noarch 1.1.1-5.el7 rhui-rhel-7-server-rhui-rpms 266 k

|

||||

|

||||

|

||||

Transaction Summary

|

||||

=======================================================================================

|

||||

Install 1 Package (+2 Dependent packages)

|

||||

|

||||

|

||||

Total download size: 630 k

|

||||

Installed size: 3.1 M

|

||||

Is this ok [y/d/N]: y

|

||||

Downloading packages:

|

||||

(1/3): python-kitchen-1.1.1-5.el7.noarch.rpm | 266 kB 00:00:00

|

||||

(2/3): libxml2-python-2.9.1-6.el7_2.3.x86_64.rpm | 247 kB 00:00:00

|

||||

(3/3): yum-utils-1.1.31-42.el7.noarch.rpm | 117 kB 00:00:00

|

||||

(1/3): python-kitchen-1.1.1-5.el7.noarch.rpm | 266 kB 00:00:00

|

||||

(2/3): libxml2-python-2.9.1-6.el7_2.3.x86_64.rpm | 247 kB 00:00:00

|

||||

(3/3): yum-utils-1.1.31-42.el7.noarch.rpm | 117 kB 00:00:00

|

||||

---------------------------------------------------------------------------------------

|

||||

Total 1.0 MB/s | 630 kB 00:00

|

||||

Total 1.0 MB/s | 630 kB 00:00

|

||||

Running transaction check

|

||||

Running transaction test

|

||||

Transaction test succeeded

|

||||

Running transaction

|

||||

Installing : python-kitchen-1.1.1-5.el7.noarch 1/3

|

||||

Installing : libxml2-python-2.9.1-6.el7_2.3.x86_64 2/3

|

||||

Installing : yum-utils-1.1.31-42.el7.noarch 3/3

|

||||

Verifying : libxml2-python-2.9.1-6.el7_2.3.x86_64 1/3

|

||||

Verifying : yum-utils-1.1.31-42.el7.noarch 2/3

|

||||

Verifying : python-kitchen-1.1.1-5.el7.noarch 3/3

|

||||

|

||||

Installed:

|

||||

yum-utils.noarch 0:1.1.31-42.el7

|

||||

|

||||

Dependency Installed:

|

||||

libxml2-python.x86_64 0:2.9.1-6.el7_2.3 python-kitchen.noarch 0:1.1.1-5.el7

|

||||

|

||||

Complete!

|

||||

```

|

||||

|

||||

### 如何列出通过 yum 安装的命令?

|

||||

|

||||

### How do I list the contents of an installed package using YUM?

|

||||

现在可以使用 `repoquery` 命令:

|

||||

|

||||

Now run repoquery command as follows:

|

||||

```

|

||||

# repoquery --list htop

|

||||

```

|

||||

|

||||

`# repoquery --list htop`

|

||||

或者:

|

||||

|

||||

OR

|

||||

```

|

||||

# repoquery -l htop

|

||||

```

|

||||

|

||||

`# repoquery -l htop`

|

||||

|

||||

Sample outputs:

|

||||

示例输出:

|

||||

|

||||

[![yum where is package installed][5]][5]

|

||||

|

||||

You can also use the `type` command or the `command` command to find the location of a given binary file such as httpd or htop:

|

||||

*使用 repoquery 命令确定 yum 包安装的路径*

|

||||

|

||||

你也可以使用 `type` 命令或者 `command` 命令查找指定二进制文件的位置,例如 `httpd` 或者 `htop` :

|

||||

|

||||

```

|

||||

$ type -a httpd

|

||||

@ -128,19 +134,19 @@ $ type -a htop

|

||||

$ command -V htop

|

||||

```
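The same lookup can be wrapped in a small function for use in scripts. This is a minimal sketch: `locate_bin` is a hypothetical helper name (not part of yum or yum-utils), and `sh` is used in the example only because it is present on practically every system.

```shell
#!/bin/sh
# locate_bin NAME: print the path the shell would run for NAME,
# or return non-zero if NAME cannot be found in PATH.
locate_bin() {
    command -v "$1"
}

# Example: look up a binary that is almost certainly installed.
if path=$(locate_bin sh); then
    echo "sh resolves to: $path"
else
    echo "sh not found in PATH" >&2
fi
```

Replacing `sh` with `htop` or `httpd` gives the same kind of answer as `command -V`, in a form that is easier to reuse in a script.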

|

||||

|

||||

### about the author

|

||||

### 关于作者

|

||||

|

||||

The author is the creator of nixCraft and a seasoned sysadmin and a trainer for the Linux operating system/Unix shell scripting. He has worked with global clients and in various industries, including IT, education, defense and space research, and the nonprofit sector. Follow him on [Twitter][6], [Facebook][7], [Google+][8].

|

||||

作者是 nixCraft 的创始人,是经验丰富的系统管理员并且是 Linux 命令行脚本编程的教练。他拥有全球多行业合作的经验,客户包括 IT,教育,安防和空间研究。他的联系方式:[Twitter][6]、 [Facebook][7]、 [Google+][8]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.cyberciti.biz/faq/yum-determining-finding-path-that-yum-package-installed-to/

|

||||

|

||||

作者:[][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

作者:[cyberciti][a]

|

||||

译者:[cyleung](https://github.com/cyleung)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux 中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.cyberciti.biz

|

||||

[1]:https://www.cyberciti.biz/faq/centos-redhat-linux-install-htop-command-using-yum/

|

||||

@ -151,3 +157,5 @@ via: https://www.cyberciti.biz/faq/yum-determining-finding-path-that-yum-package

|

||||

[6]:https://twitter.com/nixcraft

|

||||

[7]:https://facebook.com/nixcraft

|

||||

[8]:https://plus.google.com/+CybercitiBiz

|

||||

|

||||

|

||||

@ -1,6 +1,3 @@

|

||||

ezio is translating

|

||||

|

||||

|

||||

Anatomy of a Program in Memory

|

||||

============================================================

|

||||

|

||||

|

||||

@ -0,0 +1,116 @@

|

||||

Creating a YUM repository from ISO & Online repo

|

||||

======

|

||||

|

||||

YUM is one of the most important tools for CentOS/RHEL/Fedora. Although DNF has replaced it in recent Fedora releases, that does not mean it has run its course: it is still widely used for installing RPM packages. We have already discussed YUM with examples in an earlier tutorial ([ **READ HERE**][1]).

|

||||

|

||||

In this tutorial, we will create a local YUM repository, first from the ISO image of the OS and then by mirroring an online YUM repository.

|

||||

|

||||

### Creating YUM with DVD ISO

|

||||

|

||||

We are using a CentOS 7 DVD for this tutorial; the same process should work on RHEL 7 as well.

|

||||

|

||||

First, create a directory named YUM in the root folder:

|

||||

|

||||

```

|

||||

$ mkdir /YUM

|

||||

```

|

||||

|

||||

Then mount the CentOS 7 ISO (the mount point `/mnt/iso` must already exist):

|

||||

|

||||

```

|

||||

$ mount -t iso9660 -o loop /home/dan/Centos-7-x86_x64-DVD.iso /mnt/iso/

|

||||

```

|

||||

|

||||

Next, copy the packages from the mounted ISO to the /YUM folder. Once all the packages have been copied, install the packages required for creating the repository. Change to /YUM and install the following RPM packages:

|

||||

|

||||

```

|

||||

$ rpm -ivh deltarpm-*.rpm

|

||||

$ rpm -ivh python-deltarpm-*.rpm

|

||||

$ rpm -ivh createrepo-*.rpm

|

||||

```
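The copy step mentioned above is described without a command. A minimal sketch of it follows, assuming the ISO is mounted at `/mnt/iso` and keeps its packages in a `Packages/` directory; `copy_rpms` is a hypothetical helper name, not a standard tool.

```shell
#!/bin/sh
# copy_rpms SRC DEST: copy every .rpm file found directly under SRC into DEST.
copy_rpms() {
    src=$1
    dest=$2
    mkdir -p "$dest" || return 1
    # Fail early if the source holds no packages, instead of handing
    # an unexpanded glob to cp.
    set -- "$src"/*.rpm
    [ -e "$1" ] || { echo "no .rpm files in $src" >&2; return 1; }
    cp -- "$@" "$dest"/
}

# Assumed locations: ISO mounted at /mnt/iso, packages under Packages/.
# copy_rpms /mnt/iso/Packages /YUM
```

The call is left commented out so the function can be tried on a scratch directory first; uncomment it once the paths match your system.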

|

||||

|

||||

Once these packages have been installed, create a file named **local.repo** in the **/etc/yum.repos.d** folder with the repository information:

|

||||

|

||||

```

|

||||

$ vi /etc/yum.repos.d/local.repo

|

||||

```

|

||||

|

||||

```

|

||||

[local-repo]

|

||||

name=Local YUM

|

||||

baseurl=file:///YUM

|

||||

gpgcheck=0

|

||||

enabled=1

|

||||

```
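If you prefer not to edit the file by hand, the same definition can be generated from a script. This is a sketch under assumptions: `REPO_DIR` is a hypothetical variable that defaults to a scratch directory so the result can be reviewed first, and the section name `local-repo` is an illustrative repository ID without spaces.

```shell
#!/bin/sh
# Write a repo definition into a scratch directory for review.
# REPO_DIR is an assumed variable; set it to /etc/yum.repos.d (as root)
# once the contents look right.
REPO_DIR=${REPO_DIR:-$(mktemp -d)}
REPO_FILE=$REPO_DIR/local.repo

# Quoted heredoc delimiter: the text is written literally, no expansion.
cat > "$REPO_FILE" <<'EOF'
[local-repo]
name=Local YUM
baseurl=file:///YUM
gpgcheck=0
enabled=1
EOF

echo "wrote $REPO_FILE"
```

Running it with `REPO_DIR=/etc/yum.repos.d` as root would place the file where yum looks for repository definitions.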

|

||||

|

||||

Save and exit the file. Next, create the repository metadata by running the following command:

|

||||

|

||||

```

|

||||

$ createrepo -v /YUM

|

||||

```

|

||||

|

||||

It will take some time to create the repo data. Once the process finishes, run

|

||||

|

||||

```

|

||||

$ yum clean all

|

||||

```

|

||||

|

||||

to clean cache & then run

|

||||

|

||||

```

|

||||

$ yum repolist

|

||||

```

|

||||

|

||||

to check the list of all repositories. You should see the new local repository in the list.

|

||||

|

||||

|

||||

### Creating mirror YUM repository with online repository

|

||||

|

||||

The process of creating a mirror repository is similar to creating one from an ISO image, with one exception: we will fetch the RPM packages from an online repository instead of an ISO.

|

||||

|

||||

First, we need to find an online repository to get the latest packages. It is advisable to pick a mirror close to your location in order to optimize download speeds. We will use the mirror shown below; you can select the one nearest to you from the [CENTOS MIRROR LIST][2].

|

||||

|

||||

After selecting a mirror, we will sync it to our system using rsync. Before you do that, make sure that you have plenty of space on your server:

|

||||

|

||||

```

|

||||

$ rsync -avz rsync://mirror.fibergrid.in/centos/7.2/os/x86_64/Packages/s/ /YUM

|

||||

```

|

||||

|

||||

The sync will take quite a while (maybe an hour) depending on your internet speed. After the sync completes, update the repository metadata:

|

||||

|

||||

```

|

||||

$ createrepo -v /YUM

|

||||

```

|

||||

|

||||

Our YUM repository is now ready to be used. We can create a cron job so the repository is updated automatically at a set time, daily or weekly, as per your needs.

|

||||

|

||||

To create a cron job for syncing the repository, run

|

||||

|

||||

```

|

||||

$ crontab -e

|

||||

```

|

||||

|

||||

& add the following line

|

||||

|

||||

```

|

||||

30 0 * * * rsync -avz rsync://mirror.fibergrid.in/centos/7.2/os/x86_64/Packages/s/ /YUM

|

||||

```

|

||||

|

||||

This will sync the repository every night at 12:30 AM. Also remember to create the repository configuration file in /etc/yum.repos.d, as we did above.
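A sync on its own leaves the repository metadata stale, so it may be worth having cron run a small wrapper that syncs and then refreshes the metadata. The following is a sketch, not a definitive script: `sync_repo` is a hypothetical function name and both arguments are placeholders for your chosen mirror and target directory.

```shell
#!/bin/sh
# sync_repo MIRROR DEST: mirror packages with rsync, then refresh metadata.
# MIRROR is expected to be an rsync:// URL; DEST is the local repo directory.
sync_repo() {
    mirror=$1
    dest=$2
    # Stop with a clear message if a required tool is not installed.
    for tool in rsync createrepo; do
        command -v "$tool" >/dev/null 2>&1 || {
            echo "missing required tool: $tool" >&2
            return 127
        }
    done
    # Refresh metadata only if the sync itself succeeded.
    rsync -avz "$mirror" "$dest" && createrepo --update "$dest"
}
```

Saved as a script that calls `sync_repo` with your mirror and `/YUM`, it can take the place of the bare rsync command in the crontab entry.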

|

||||

|

||||

That's it guys, you now have your own yum repository to use. Please share this article if you like it & leave your comments/queries in the comment box down below.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linuxtechlab.com/creating-yum-repository-iso-online-repo/

|

||||

|

||||

作者:[Shusain][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linuxtechlab.com/author/shsuain/

|

||||

[1]:http://linuxtechlab.com/using-yum-command-examples/

|

||||

[2]:http://mirror.centos.org/centos/

|

||||

@ -1,4 +1,4 @@

|

||||

python-hwinfo : Display Summary Of Hardware Information In Linux

|

||||

Translating by Torival python-hwinfo : Display Summary Of Hardware Information In Linux

|

||||

======

|

||||

To date, we have covered most of the utilities that discover Linux system hardware information and configuration, but there are still plenty of commands available for the same purpose.

|

||||

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by FelixYFZ

|

||||

How to test internet speed in Linux terminal

|

||||

======

|

||||

Learn how to use speedtest cli tool to test internet speed in Linux terminal. Also includes one liner python command to get speed details right away.

|

||||

|

||||

@ -1,83 +0,0 @@

|

||||

Translating by zjon

|

||||

|

||||

What is a firewall?

|

||||

======

|

||||

Network-based firewalls have become almost ubiquitous across US enterprises for their proven defense against an ever-increasing array of threats.

|

||||

|

||||

A recent study by network testing firm NSS Labs found that up to 80% of US large businesses run a next-generation firewall. Research firm IDC estimates the firewall and related unified threat management market was a $7.6 billion industry in 2015 and expected to reach $12.7 billion by 2020.

|

||||

|

||||

**[ If you're upgrading, here's [What to consider when deploying a next generation firewall][1].]**

|

||||

|

||||

### What is a firewall?

|

||||

|

||||

Firewalls act as a perimeter defense tool that monitors traffic and either allows it or blocks it. Over the years the functionality of firewalls has increased, and now most firewalls can not only block a set of known threats and enforce advanced access control list policies, but they can also deeply inspect individual packets of traffic and test packets to determine if they're safe. Most firewalls are deployed as network hardware that processes traffic and software that allows end users to configure and manage the system. Increasingly, software-only versions of firewalls are being deployed in highly virtualized environments to enforce policies on segmented networks or in the IaaS public cloud.

|

||||

|

||||

Advancements in firewall technology have created new options for firewall deployments over the past decade, so there are now a handful of options for end users looking to deploy a firewall. These include:

|

||||

|

||||

### Stateful firewalls

|

||||

|

||||

When firewalls were first created they were stateless, meaning that the hardware the traffic traversed while being inspected monitored each packet of network traffic individually and either blocked or allowed it in isolation. Beginning in the mid to late 1990s, the first major advancement in firewalls was the introduction of state. Stateful firewalls examine traffic in a more holistic context, taking into account the operating state and characteristics of the network connection. Maintaining this state lets the firewall, for example, allow certain traffic to reach certain users while blocking that same traffic for other users.

|

||||

|

||||

### Next-generation firewalls

|

||||

|

||||

Over the years firewalls have added myriad new features, including deep packet inspection, intrusion detection and prevention and inspection of encrypted traffic. Next-generation firewalls (NGFWs) refer to firewalls that have integrated many of these advanced features into the firewall.

|

||||

|

||||

### Proxy-based firewalls

|

||||

|

||||

These firewalls act as a gateway between end users who request data and the source of that data. All traffic is filtered through this proxy before being passed on to the end user. This protects the client from exposure to threats by masking the identity of the original requester of the information.

|

||||

|

||||

### Web application firewalls

|

||||

|

||||

These firewalls sit in front of specific applications as opposed to sitting on an entry or exit point of a broader network. Whereas proxy-based firewalls are typically thought of as protecting end-user clients, WAFs are typically thought of as protecting the application servers.

|

||||

|

||||

### Firewall hardware

|

||||

|

||||

Firewall hardware is typically a straightforward server that can act as a router for filtering traffic and running firewall software. These devices are placed at the edge of a corporate network, between a router and the Internet service provider's connection point. A typical enterprise may deploy dozens of physical firewalls throughout a data center. Users need to determine what throughput capacity they need the firewall to support based on the size of the user base and speed of the Internet connection.

|

||||

|

||||

### Firewall software

|

||||

|

||||

Typically end users deploy multiple firewall hardware endpoints and a central firewall software system to manage the deployment. This central system is where policies and features are configured, where analysis can be done and threats can be responded to.

|

||||

|

||||

### Next-generation firewalls

|

||||

|

||||

Over the years firewalls have added myriad new features, including deep packet inspection, intrusion detection and prevention and inspection of encrypted traffic. Next-generation firewalls (NGFWs) refer to firewalls that have integrated many of these advanced features, and here is a description of some of them.

|

||||

|

||||

### Stateful inspection

|

||||

|

||||

This is the basic firewall functionality in which the device blocks known unwanted traffic.

|

||||

|

||||

### Anti-virus

|

||||

|

||||

This functionality, which searches for known viruses and vulnerabilities in network traffic, is aided by the firewall receiving regular updates on the latest threats so that it can protect against them.

|

||||

|

||||

### Intrusion Prevention Systems (IPS)

|

||||

|

||||

This class of security products can be deployed as a standalone product, but IPS functionality is increasingly being integrated into NGFWs. Whereas basic firewall technologies identify and block certain types of network traffic, IPS uses more granular security measures such as signature tracing and anomaly detection to prevent unwanted threats from entering corporate networks. IPS systems have replaced the previous version of this technology, Intrusion Detection Systems (IDS) which focused more on identifying threats rather than containing them.

|

||||

|

||||

### Deep Packet Inspection (DPI)

|

||||

|

||||

DPI can be part of or used in conjunction with an IPS, but it has nonetheless become an important feature of NGFWs because of its ability to provide granular analysis of traffic, most specifically the headers of traffic packets and traffic data. DPI can also be used to monitor outbound traffic to ensure sensitive information is not leaving corporate networks, a technology referred to as Data Loss Prevention (DLP).

|

||||

|

||||

### SSL Inspection

|

||||

|

||||

Secure Sockets Layer (SSL) Inspection is the idea of inspecting encrypted traffic to test for threats. As more and more traffic is encrypted, SSL Inspection is becoming an important component of the DPI technology that is being implemented in NGFWs. SSL Inspection acts as a buffer that decrypts the traffic and tests it before it is delivered to its final destination.

|

||||

|

||||

### Sandboxing

|

||||

|

||||

This is one of the newer features being rolled into NGFWs and refers to the ability of a firewall to take certain unknown traffic or code and run it in a test environment to determine if it is nefarious.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3230457/lan-wan/what-is-a-firewall-perimeter-stateful-inspection-next-generation.html

|

||||

|

||||

作者:[Brandon Butler][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.networkworld.com/author/Brandon-Butler/

|

||||

[1]:https://www.networkworld.com/article/3236448/lan-wan/what-to-consider-when-deploying-a-next-generation-firewall.html

|

||||

|

||||

|

||||

@ -1,159 +0,0 @@

|

||||

How To Create Custom Ubuntu Live CD Image

|

||||

======

|

||||

|

||||

|

||||

Today let us discuss how to create a custom Ubuntu live CD image (ISO). We have already done this using [**Pinguy Builder**][1], but it seems to be discontinued now; I haven't seen any updates lately on the Pinguy Builder official site. Fortunately, I found an alternative tool to create Ubuntu live CD images. Meet **Cubic**, an acronym for **C**ustom **Ub**untu **I**SO **C**reator, a GUI application to create a customized bootable Ubuntu Live CD (ISO) image.

|

||||

|

||||

Cubic is being actively developed and it offers many options to easily create a customized Ubuntu live CD. It has an integrated command-line chroot environment where you can do all customization, such as installing new packages and kernels, adding more background wallpapers, and adding additional files and folders. It has an intuitive GUI that allows effortless navigation (back and forth with a mouse click) during the live image creation process. You can create a new custom image or modify existing projects. Since it is used to make Ubuntu live images, I believe it can be used with other Ubuntu flavours and derivatives such as Linux Mint.

|

||||

|

||||

### Install Cubic

|

||||

|

||||

The Cubic developer has created a PPA to ease the installation process. To install Cubic on your Ubuntu system, run the following commands one by one in your Terminal:

|

||||

```

|

||||

sudo apt-add-repository ppa:cubic-wizard/release

|

||||

```

|

||||

```

|

||||

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 6494C6D6997C215E

|

||||

```

|

||||

```

|

||||

sudo apt update

|

||||

```

|

||||

```

|

||||

sudo apt install cubic

|

||||

```

|

||||

|

||||

### Create Custom Ubuntu Live Cd Image Using Cubic

|

||||

|

||||

Once installed, launch Cubic from the application menu or dock. This is how Cubic looks on my Ubuntu 16.04 LTS desktop system.

|

||||

|

||||

Choose a directory for your new project. It is the directory where your files will be saved.

|

||||

|

||||

[![][2]][3]

|

||||

|

||||

Please note that Cubic will not create a live CD of your system. Instead, it creates a custom live CD from an Ubuntu installation CD, so you should have a recent ISO image at hand.

|

||||

|

||||

Choose the path where you have stored your Ubuntu installation ISO image. Cubic will automatically fill out all details of your custom OS. You can change the details if you want. Click Next to continue.

|

||||

|

||||

[![][2]][4]

|

||||

|

||||

Next, the compressed Linux file system from the source installation medium will be extracted to your project's directory (i.e **/home/ostechnix/custom_ubuntu** in our case).

|

||||

|

||||

[![][2]][5]

|

||||

|

||||

Once the file system is extracted, you will land in the chroot environment automatically. If you don't see the Terminal prompt, press the ENTER key a few times.

|

||||

|

||||

[![][2]][6]

|

||||

|

||||

From here you can install any additional packages, add background images, add software sources repositories list, add latest Linux kernel to your live cd and all other customization.

|

||||

|

||||

For example, I want vim installed in my live cd, so I am going to install it now.

|

||||

|

||||

[![][2]][7]

|

||||

|

||||

We don't need `sudo`, because we are already in a root environment.

|

||||

|

||||

Similarly, install any additional Linux Kernel version if you want.

|

||||

```

|

||||

apt install linux-image-extra-4.10.0-24-generic

|

||||

```

|

||||

|

||||

Also, you can update software sources list (Add or remove repositories list):

|

||||

|

||||

[![][2]][8]

|

||||

|

||||

After modifying the sources list, don't forget to run "apt update" command to update the sources list:

|

||||

```

|

||||

apt update

|

||||

```

|

||||

|

||||

Also, you can add files or folders to the live cd. Copy the files/folders (right click on them and choose copy or CTRL+C) and right click in the Terminal (inside Cubic window), choose **Paste file(s)** and finally click Copy in the bottom corner of the Cubic wizard.

|

||||

|

||||

[![][2]][9]

|

||||

|

||||

**Note for Ubuntu 17.10 users: **

|

||||

|

||||

In Ubuntu 17.10, DNS lookup may not work in the chroot environment. If you are making a custom Ubuntu 17.10 live image, you need to point to the correct resolv.conf file:

|

||||

```

|

||||

ln -sr /run/systemd/resolve/resolv.conf /run/systemd/resolve/stub-resolv.conf

|

||||

|

||||

```

|

||||

|

||||

To verify DNS resolution works, run:

|

||||

```

|

||||

cat /etc/resolv.conf

|

||||

ping google.com

|

||||

```

|

||||

|

||||

Add your own wallpapers if you want. To do so, go to the **/usr/share/backgrounds/** directory,

|

||||

```

|

||||

cd /usr/share/backgrounds

|

||||

```

|

||||

|

||||

and drag/drop the images into the Cubic window. Or copy the images, right-click on the Cubic Terminal window and choose the **Paste file(s)** option. Also, make sure you have added the new wallpapers in an XML file under **/usr/share/gnome-background-properties**, so you can choose the newly added image in the **Change Desktop Background** dialog when you right-click on your desktop. When you have made all changes, click Next in the Cubic wizard.
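The XML file mentioned above is not shown in the article. The sketch below generates one in a scratch directory so it can be reviewed before it is copied into the chroot. The file name, the image path and the `OUT_DIR` variable are illustrative assumptions, and the element layout follows the wallpaper list format commonly shipped in that directory; compare it against an existing file there before relying on it.

```shell
#!/bin/sh
# Generate a wallpaper list entry in a scratch directory.
# OUT_DIR, the file name and the image path are illustrative assumptions;
# inside the Cubic chroot the target directory would be
# /usr/share/gnome-background-properties.
OUT_DIR=${OUT_DIR:-$(mktemp -d)}
XML_FILE=$OUT_DIR/custom-wallpapers.xml

# Quoted heredoc delimiter: the XML is written literally, no expansion.
cat > "$XML_FILE" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE wallpapers SYSTEM "gnome-wp-list.dtd">
<wallpapers>
  <wallpaper deleted="false">
    <name>My Wallpaper</name>
    <filename>/usr/share/backgrounds/my-wallpaper.jpg</filename>
    <options>zoom</options>
  </wallpaper>
</wallpapers>
EOF

echo "wrote $XML_FILE"
```

One `<wallpaper>` element per image is enough; the `<filename>` value has to match the path where the image was pasted.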

|

||||

|

||||

In the next screen, choose the Linux kernel version to use when booting into the new live ISO. If you have installed any additional kernels, they will also be listed in this section. Just choose the kernel you'd like to use in your live CD.

|

||||

|

||||

[![][2]][10]

|

||||

|

||||

In the next section, select the packages that you want to remove from your live image. The selected packages will be automatically removed after the Ubuntu OS has been installed using the custom live image. Be careful when choosing the packages to remove; you might unknowingly remove a package that another package depends on.

|

||||

|

||||

[![][2]][11]

|

||||

|

||||

Now, the live image creation process will start. It will take some time depending upon your system's specifications.

|

||||

|

||||

[![][2]][12]

|

||||

|

||||

Once the image creation process has completed, click Finish. Cubic will display the newly created custom image details.

|

||||

|

||||

If you want to modify the newly created custom live image in the future, **uncheck** the option that says **"Delete all project files, except the generated disk image and the corresponding MD5 checksum file"**. Cubic will leave the custom image in the project's working directory, so you can make changes in the future without starting all over again.

|

||||

|

||||

To create a new live image for different Ubuntu versions, use a different project directory.

|

||||

|

||||

### Modify Custom Ubuntu Live Cd Image Using Cubic

|

||||

|

||||

Launch Cubic from menu, and select an existing project directory. Click the Next button, and you will see the following three options:

|

||||

|

||||

1. Create a disk image from the existing project.

|

||||

2. Continue customizing the existing project.

|

||||

3. Delete the existing project.

|

||||

|

||||

|

||||

|

||||

[![][2]][13]

|

||||

|

||||

The first option will allow you to create a new live ISO image from your existing project using the same customization you previously made. If you lost your ISO image, you can use the first option to create a new one.

|

||||

|

||||

The second option allows you to make additional changes to your existing project. If you choose this option, you will land in the chroot environment again. You can add new files or folders, install new software, remove software, add other Linux kernels, add desktop backgrounds and so on.

|

||||

|

||||

The third option will delete the existing project, so you can start all over from the beginning. Please note that this option will delete all files, including the newly generated ISO.

|

||||

|

||||

I made a custom Ubuntu 16.04 LTS desktop live CD using Cubic. It worked just fine as described here. If you want to create an Ubuntu live CD, Cubic might be a good choice.

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/create-custom-ubuntu-live-cd-image/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://www.ostechnix.com/pinguy-builder-build-custom-ubuntu-os/

|

||||

[2]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-1.png ()

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-2.png ()

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-3.png ()

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-4.png ()

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-6.png ()

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-5.png ()

|

||||

[9]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-7.png ()

|

||||

[10]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-8.png ()

|

||||

[11]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-10-1.png ()

|

||||

[12]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-12-1.png ()

|

||||

[13]:http://www.ostechnix.com/wp-content/uploads/2017/10/Cubic-13.png ()

|

||||

115

sources/tech/20171220 Containers without Docker at Red Hat.md

Normal file

@ -0,0 +1,115 @@

|

||||

Containers without Docker at Red Hat

|

||||

======

|

||||

|

||||

The Docker (now [Moby][1]) project has done a lot to popularize containers in recent years. Along the way, though, it has generated concerns about its concentration of functionality into a single, monolithic system under the control of a single daemon running with root privileges: `dockerd`. Those concerns were reflected in a [talk][2] by Dan Walsh, head of the container team at Red Hat, at [KubeCon \+ CloudNativeCon][3]. Walsh spoke about the work the container team is doing to replace Docker with a set of smaller, interoperable components. His rallying cry is "no big fat daemons" as he finds them to be contrary to the venerated Unix philosophy.

|

||||

|

||||

### The quest to modularize Docker

|

||||

|

||||

As we saw in an [earlier article][4], the basic set of container operations is not that complicated: you need to pull a container image, create a container from the image, and start it. On top of that, you need to be able to build images and push them to a registry. Most people still use Docker for all of those steps but, as it turns out, Docker isn't the only name in town anymore: an early alternative was `rkt`, which led to the creation of various standards like CRI (runtime), OCI (image), and CNI (networking) that allow backends like [CRI-O][5] or Docker to interoperate with, for example, [Kubernetes][6].

|

||||

|

||||