mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-30 02:40:11 +08:00

commit

6f1bca3d49

published

20150823 How learning data structures and algorithms make you a better developer.md20150827 The Strangest Most Unique Linux Distros.md20150914 Display Awesome Linux Logo With Basic Hardware Info Using screenfetch and linux_logo Tools.md20150921 Configure PXE Server In Ubuntu 14.04.md

201510

20150202 How to filter BGP routes in Quagga BGP router.md20150716 Interview--Larry Wall.md20150821 Linux 4.3 Kernel To Add The MOST Driver Subsystem.md20150906 Installing NGINX and NGINX Plus With Ansible.md20150908 How to Run ISO Files Directly From the HDD with GRUB2.md20150911 5 Useful Commands to Manage File Types and System Time in Linu--Part 3.md20150914 How to Setup Node JS v4.0.0 on Ubuntu 14.04 or 15.04.md20150914 Linux FAQs with Answers--How to check weather forecasts from the command line on Linux.md20150917 TERMINATOR 0.98 INSTALL IN UBUNTU AND LINUX MINT.md20150918 How To Add And Remove Bookmarks In Ubuntu Beginner Tip.md20150918 Install Justniffer In Ubuntu 15.04.md20150921 How to Setup IonCube Loaders on Ubuntu 14.04 or 15.04.md20150921 Meet The New Ubuntu 15.10 Default Wallpaper.md20150921 Red Hat CEO Optimistic on OpenStack Revenue Opportunity.md20150923 How To Upgrade From Oracle 11g To Oracle 12c.md20150923 Xenlism WildFire--Minimal Icon Theme For Linux Desktop.md20150925 HTTP 2 Now Fully Supported in NGINX Plus.md20150930 Debian dropping the Linux Standard Base.md20150930 Install and use Ansible (Automation Tool) in CentOS 7.md20151005 pyinfo() A good looking phpinfo-like python script.md20151007 How To Download Videos Using youtube-dl In Linux.md20151007 Open Source Media Player MPlayer 1.2 Released.md20151007 Productivity Tools And Tips For Linux.md20151012 10 Useful Utilities For Linux Users.md20151012 Linux FAQs with Answers--How to change USB device permission permanently on Linux.md20151012 Linux FAQs with Answers--How to force password change at the next login on Linux.md20151013 Mytodo--A ToDo List Manager For DIY Lovers.md20151015 New Collaborative Group to Speed Real-Time Linux.md20151019 10 passwd command examples in Linux.md20151019 11 df command examples in Linux.md20151019 How-To--Compile the Latest Wine 32-bit on 64-bit Ubuntu (15.10).md20151020 Five Years of LibreOffice Evolution (2010-2015).md20151020 Linux History--24 Years Step by Step.md

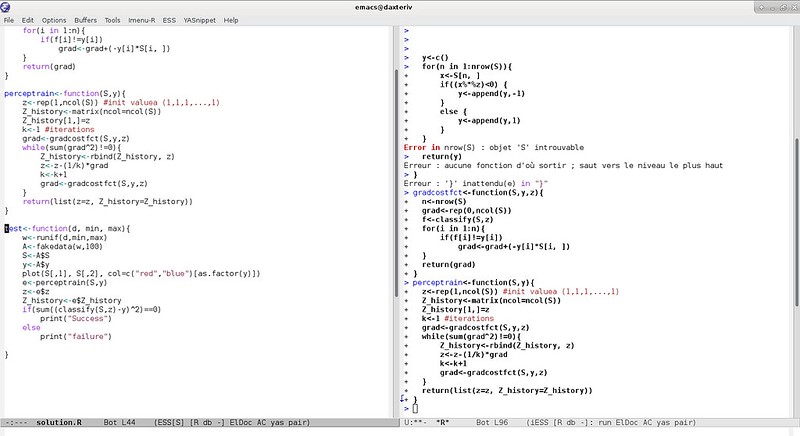

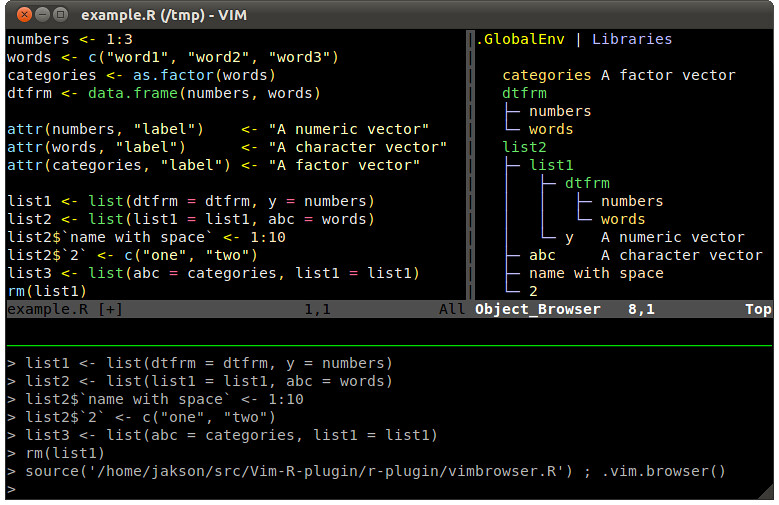

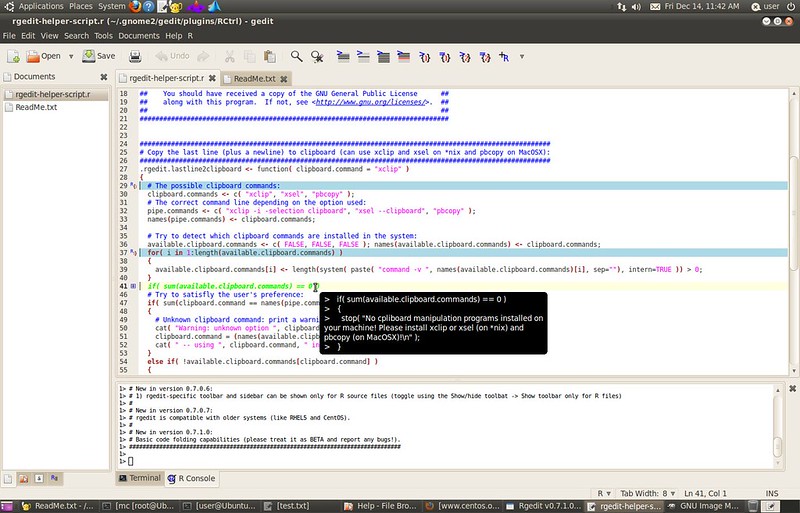

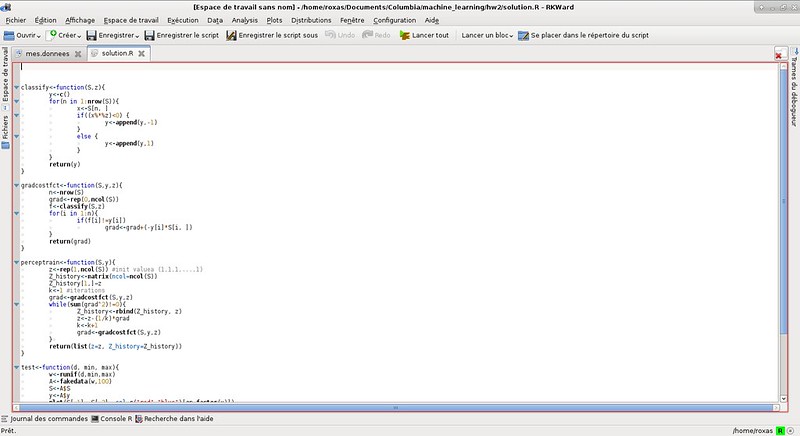

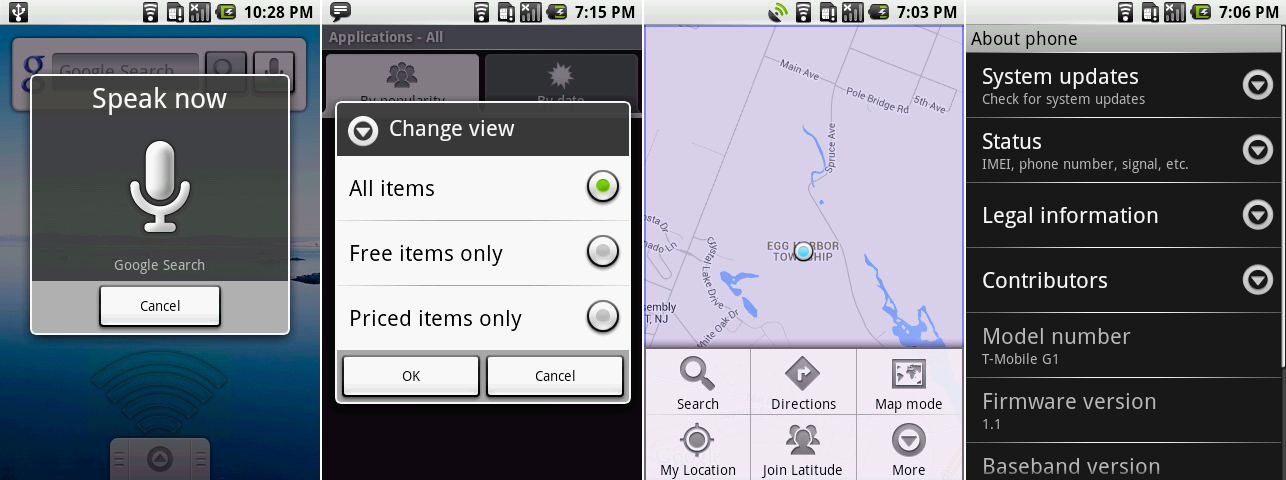

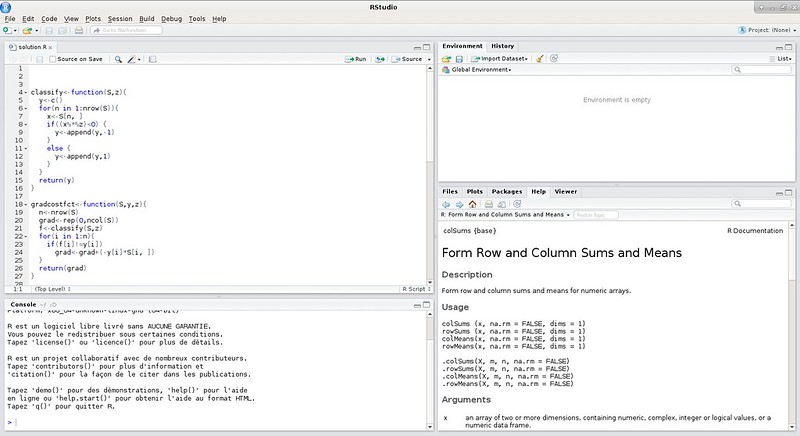

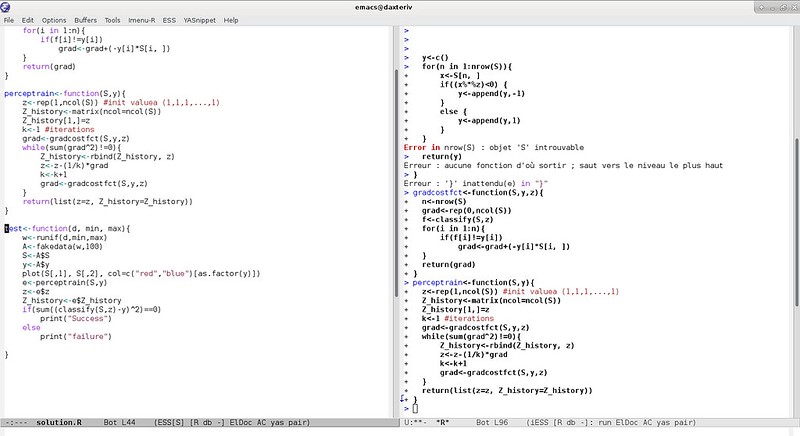

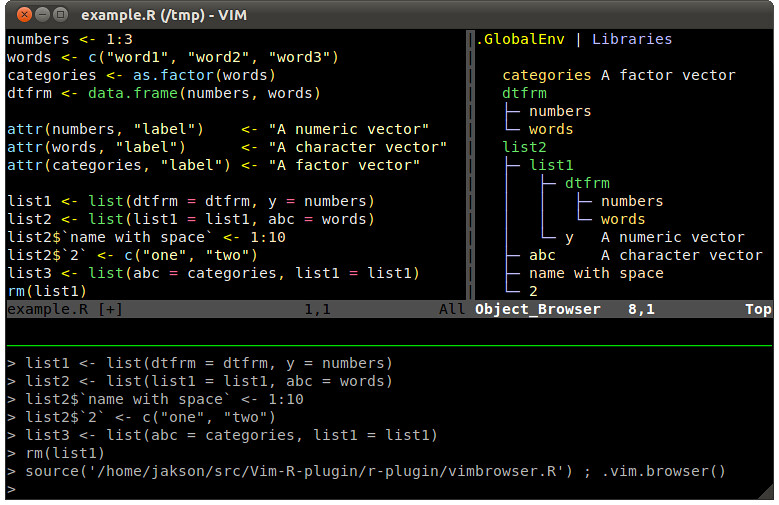

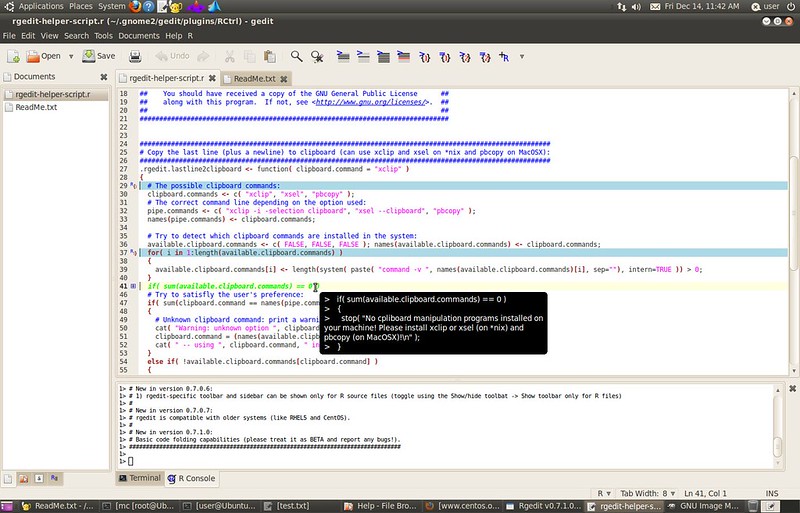

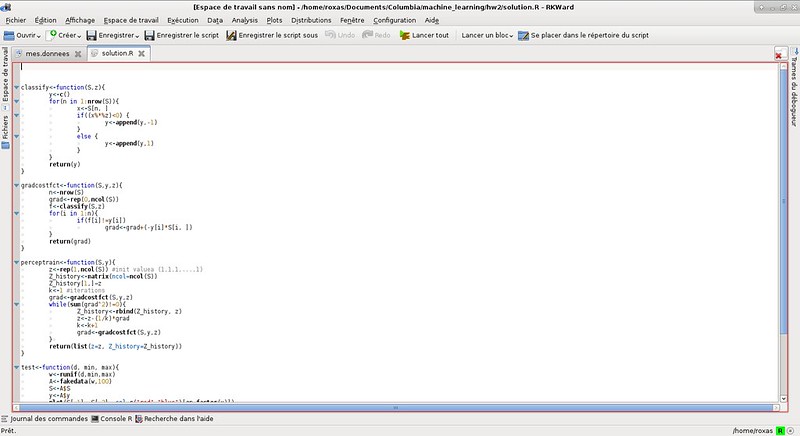

20151012 How To Use iPhone In Antergos Linux.md20151012 Linux FAQs with Answers--How to find information about built-in kernel modules on Linux.md20151012 What is a good IDE for R on Linux.md20151019 Linux FAQs with Answers--How to install Ubuntu desktop behind a proxy.md20151027 How To Show Desktop In GNOME 3.md20151104 Ubuntu Software Centre To Be Replaced in 16.04 LTS.mdLearn with Linux--Master Your Math with These Linux Apps.mdLetsEncrypt.mdRAID

Part 1 - Introduction to RAID, Concepts of RAID and RAID Levels.mdPart 2 - Creating Software RAID0 (Stripe) on ‘Two Devices’ Using ‘mdadm’ Tool in Linux.mdPart 3 - Setting up RAID 1 (Mirroring) using 'Two Disks' in Linux.mdPart 4 - Creating RAID 5 (Striping with Distributed Parity) in Linux.mdPart 5 - Setup RAID Level 6 (Striping with Double Distributed Parity) in Linux.mdPart 6 - Setting Up RAID 10 or 1+0 (Nested) in Linux.mdPart 7 - Growing an Existing RAID Array and Removing Failed Disks in Raid.mdPart 8 - How to Recover Data and Rebuild Failed Software RAID's.mdPart 9 - How to Manage Software RAID's in Linux with 'Mdadm' Tool.md

RHCE

Part 1 - RHCE Series--How to Setup and Test Static Network Routing.mdPart 2 - How to Perform Packet Filtering Network Address Translation and Set Kernel Runtime Parameters.mdPart 3 - How to Produce and Deliver System Activity Reports Using Linux Toolsets.mdPart 4 - Using Shell Scripting to Automate Linux System Maintenance Tasks.mdPart 5 - How to Manage System Logs (Configure, Rotate and Import Into Database) in RHEL 7.mdPart 6 - Setting Up Samba and Configure FirewallD and SELinux to Allow File Sharing on Linux or Windows Clients.md

The history of Android

sources

share

20150824 Great Open Source Collaborative Editing Tools.md20150901 5 best open source board games to play online.md20151012 Curious about Linux Try Linux Desktop on the Cloud.md20151012 What is a good IDE for R on Linux.md20151028 Bossie Awards 2015--The best open source application development tools.md20151028 Bossie Awards 2015--The best open source applications.md20151028 Bossie Awards 2015--The best open source big data tools.md20151028 Bossie Awards 2015--The best open source data center and cloud software.md20151028 Bossie Awards 2015--The best open source desktop and mobile software.md20151028 Bossie Awards 2015--The best open source networking and security software.md20151030 80 Linux Monitoring Tools for SysAdmins.md20151104 Optimize Web Delivery with these Open Source Tools.md

talk

20101020 19 Years of KDE History--Step by Step.md20150820 LinuxCon's surprise keynote speaker Linus Torvalds muses about open-source software.md20150820 Why did you start using Linux.md20150824 LinuxCon exclusive--Mark Shuttleworth says Snappy was born long before CoreOS and the Atomic Project.md20150827 The Strangest Most Unique Linux Distros.md20150909 Superclass--15 of the world's best living programmers.md20150910 The Free Software Foundation--30 years in.md20150916 Italy's Ministry of Defense to Drop Microsoft Office in Favor of LibreOffice.md20150921 14 tips for teaching open source development.md20150921 Red Hat CEO Optimistic on OpenStack Revenue Opportunity.md20150929 A Slick New Set-Up Wizard Is Coming To Ubuntu and Ubuntu Touch.md20151012 The Brief History Of Aix HP-UX Solaris BSD And LINUX.md20151015 New Collaborative Group to Speed Real-Time Linux.md20151019 Gaming On Linux--All You Need To Know.md20151020 18 Years of GNOME Design and Software Evolution--Step by Step.md20151020 30 Years of Free Software Foundation--Best Quotes of Richard Stallman.md20151023 Mark Shuttleworth--The Man Behind Ubuntu Operating System.md20151105 Linus Torvalds Lambasts Open Source Programmers over Insecure Code.md

tech

20150806 Installation Guide for Puppet on Ubuntu 15.04.md20150824 How to Setup Zephyr Test Management Tool on CentOS 7.x.md20151012 How To Use iPhone In Antergos Linux.md20151012 How to Setup DockerUI--a Web Interface for Docker.md20151028 10 Tips for 10x Application Performance.md20151104 How to Install Pure-FTPd with TLS on FreeBSD 10.2.md20151104 How to Install Redis Server on CentOS 7.md20151104 How to Install SQLite 3.9.1 with JSON Support on Ubuntu 15.04.md

@ -1,41 +1,41 @@

|

||||

学习数据结构与算法分析如何帮助您成为更优秀的开发人员?

|

||||

学习数据结构与算法分析如何帮助您成为更优秀的开发人员

|

||||

================================================================================

|

||||

|

||||

> "相较于其它方式,我一直热衷于推崇围绕数据设计代码,我想这也是Git能够如此成功的一大原因[…]在我看来,区别程序员优劣的一大标准就在于他是否认为自己设计的代码或数据结构更为重要。"

|

||||

> "相较于其它方式,我一直热衷于推崇围绕数据设计代码,我想这也是Git能够如此成功的一大原因[…]在我看来,区别程序员优劣的一大标准就在于他是否认为自己设计的代码还是数据结构更为重要。"

|

||||

-- Linus Torvalds

|

||||

|

||||

---

|

||||

|

||||

> "优秀的数据结构与简陋的代码组合远比倒过来的组合方式更好。"

|

||||

> "优秀的数据结构与简陋的代码组合远比反之的组合更好。"

|

||||

-- Eric S. Raymond, The Cathedral and The Bazaar

|

||||

|

||||

学习数据结构与算法分析会让您成为一名出色的程序员。

|

||||

|

||||

**数据结构与算法分析是一种解决问题的思维模式** 在您的个人知识库中,数据结构与算法分析的相关知识储备越多,您将具备应对并解决越多各类繁杂问题的能力。掌握了这种思维模式,您还将有能力针对新问题提出更多以前想不到的漂亮的解决方案。

|

||||

**数据结构与算法分析是一种解决问题的思维模式。** 在您的个人知识库中,数据结构与算法分析的相关知识储备越多,您将越多具备应对并解决各类繁杂问题的能力。掌握了这种思维模式,您还将有能力针对新问题提出更多以前想不到的漂亮的解决方案。

|

||||

|

||||

您将***更深入地***了解,计算机如何完成各项操作。无论您是否是直接使用给定的算法,它都影响着您作出的各种技术决定。从计算机操作系统的内存分配到RDBMS的内在工作机制,以及网络堆栈如何实现将数据从地球的一个角落发送至另一个角落这些大大小小的工作的完成,都离不开基础的数据结构与算法,理解并掌握它将会让您更了解计算机的运作机理。

|

||||

您将*更深入地*了解,计算机如何完成各项操作。无论您是否是直接使用给定的算法,它都影响着您作出的各种技术决定。从计算机操作系统的内存分配到RDBMS的内在工作机制,以及网络协议如何实现将数据从地球的一个角落发送至另一个角落,这些大大小小的工作的完成,都离不开基础的数据结构与算法,理解并掌握它将会让您更了解计算机的运作机理。

|

||||

|

||||

对算法广泛深入的学习能让为您应对大体系的问题储备解决方案。之前建模困难时遇到的问题如今通常都能融合进经典的数据结构中得到很好地解决。即使是最基础的数据结构,只要对它进行足够深入的钻研,您将会发现在每天的编程任务中都能经常用到这些知识。

|

||||

对算法广泛深入的学习能为您储备解决方案来应对大体系的问题。之前建模困难时遇到的问题如今通常都能融合进经典的数据结构中得到很好地解决。即使是最基础的数据结构,只要对它进行足够深入的钻研,您将会发现在每天的编程任务中都能经常用到这些知识。

|

||||

|

||||

有了这种思维模式,在遇到磨棱两可的问题时,您会具备想出新的解决方案的能力。即使最初并没有打算用数据结构与算法解决相应问题的情况,当真正用它们解决这些问题时您会发现它们将非常有用。要意识到这一点,您至少要对数据结构与算法分析的基础知识有深入直观的认识。

|

||||

有了这种思维模式,在遇到磨棱两可的问题时,您将能够想出新奇的解决方案。即使最初并没有打算用数据结构与算法解决相应问题的情况,当真正用它们解决这些问题时您会发现它们将非常有用。要意识到这一点,您至少要对数据结构与算法分析的基础知识有深入直观的认识。

|

||||

|

||||

理论认识就讲到这里,让我们一起看看下面几个例子。

|

||||

|

||||

###最短路径问题###

|

||||

|

||||

我们想要开发一个计算从一个国际机场出发到另一个国际机场的最短距离的软件。假设我们受限于以下路线:

|

||||

我们想要开发一个软件来计算从一个国际机场出发到另一个国际机场的最短距离。假设我们受限于以下路线:

|

||||

|

||||

|

||||

|

||||

从这张画出机场各自之间的距离以及目的地的图中,我们如何才能找到最短距离,比方说从赫尔辛基到伦敦?**Dijkstra算法**是能让我们在最短的时间得到正确答案的适用算法。

|

||||

从这张画出机场各自之间的距离以及目的地的图中,我们如何才能找到最短距离,比方说从赫尔辛基到伦敦?**[Dijkstra算法][3]**是能让我们在最短的时间得到正确答案的适用算法。

|

||||

|

||||

在所有可能的解法中,如果您曾经遇到过这类问题,知道可以用Dijkstra算法求解,您大可不必从零开始实现它,只需***知道***该算法能指向固定的代码库帮助您解决相关的实现问题。

|

||||

在所有可能的解法中,如果您曾经遇到过这类问题,知道可以用Dijkstra算法求解,您大可不必从零开始实现它,只需***知道***该算法的代码库能帮助您解决相关的实现问题。

|

||||

|

||||

实现了该算法,您将深入理解一项著名的重要图论算法。您会发现实际上该算法太集成化,因此名为A*的扩展包经常会代替该算法使用。这个算法应用广泛,从机器人指引的功能实现到TCP数据包路由,以及GPS寻径问题都能应用到这个算法。

|

||||

如果你深入到该算法的实现中,您将深入理解一项著名的重要图论算法。您会发现实际上该算法比较消耗资源,因此名为[A*][4]的扩展经常用于代替该算法。这个算法应用广泛,从机器人寻路的功能实现到TCP数据包路由,以及GPS寻径问题都能应用到这个算法。

|

||||

|

||||

###先后排序问题###

|

||||

|

||||

您想要在开放式在线课程平台上(如Udemy或Khan学院)学习某课程,有些课程之间彼此依赖。例如,用户学习牛顿力学机制课程前必须先修微积分课程,课程之间可以有多种依赖关系。用YAML表述举例如下:

|

||||

您想要在开放式在线课程(MOOC,Massive Open Online Courses)平台上(如Udemy或Khan学院)学习某课程,有些课程之间彼此依赖。例如,用户学习牛顿力学(Newtonian Mechanics)课程前必须先修微积分(Calculus)课程,课程之间可以有多种依赖关系。用YAML表述举例如下:

|

||||

|

||||

# Mapping from course name to requirements

|

||||

#

|

||||

@ -54,16 +54,16 @@

|

||||

astrophysics: [radioactivity, calculus]

|

||||

quantumn_mechanics: [atomic_physics, radioactivity, calculus]

|

||||

|

||||

鉴于以上这些依赖关系,作为一名用户,我希望系统能帮我列出必修课列表,让我在之后可以选择任意一门课程学习。如果我选择了`微积分`课程,我希望系统能返回以下列表:

|

||||

鉴于以上这些依赖关系,作为一名用户,我希望系统能帮我列出必修课列表,让我在之后可以选择任意一门课程学习。如果我选择了微积分(calculus)课程,我希望系统能返回以下列表:

|

||||

|

||||

arithmetic -> algebra -> trigonometry -> calculus

|

||||

|

||||

这里有两个潜在的重要约束条件:

|

||||

|

||||

- 返回的必修课列表中,每门课都与下一门课存在依赖关系

|

||||

- 必修课列表中不能有重复项

|

||||

- 我们不希望列表中有任何重复课程

|

||||

|

||||

这是解决数据间依赖关系的例子,解决该问题的排序算法称作拓扑排序算法(tsort)。它适用于解决上述我们用YAML列出的依赖关系图的情况,以下是在图中显示的相关结果(其中箭头代表`需要先修的课程`):

|

||||

这是解决数据间依赖关系的例子,解决该问题的排序算法称作拓扑排序算法(tsort,topological sort)。它适用于解决上述我们用YAML列出的依赖关系图的情况,以下是在图中显示的相关结果(其中箭头代表`需要先修的课程`):

|

||||

|

||||

|

||||

|

||||

@ -79,16 +79,17 @@

|

||||

|

||||

这符合我们上面描述的需求,用户只需选出`radioactivity`,就能得到在此之前所有必修课程的有序列表。

|

||||

|

||||

在运用该排序算法之前,我们甚至不需要深入了解算法的实现细节。一般来说,选择不同的编程语言在其标准库中都会有相应的算法实现。即使最坏的情况,Unix也会默认安装`tsort`程序,运行`tsort`程序,您就可以实现该算法。

|

||||

在运用该排序算法之前,我们甚至不需要深入了解算法的实现细节。一般来说,你可能选择的各种编程语言在其标准库中都会有相应的算法实现。即使最坏的情况,Unix也会默认安装`tsort`程序,运行`man tsort` 来了解该程序。

|

||||

|

||||

###其它拓扑排序适用场合###

|

||||

|

||||

- **工具** 使用诸如`make`的工具您可以声明任务之间的依赖关系,这里拓扑排序算法将从底层实现具有依赖关系的任务顺序执行的功能。

|

||||

- **有`require`指令的编程语言**,适用于要运行当前文件需先运行另一个文件的情况。这里拓扑排序用于识别文件运行顺序以保证每个文件只加载一次,且满足所有文件间的依赖关系要求。

|

||||

- **包含甘特图的项目管理工具**.甘特图能直观列出给定任务的所有依赖关系,在这些依赖关系之上能提供给用户任务完成的预估时间。我不常用到甘特图,但这些绘制甘特图的工具很可能会用到拓扑排序算法。

|

||||

- **类似`make`的工具** 可以让您声明任务之间的依赖关系,这里拓扑排序算法将从底层实现具有依赖关系的任务顺序执行的功能。

|

||||

- **具有`require`指令的编程语言**适用于要运行当前文件需先运行另一个文件的情况。这里拓扑排序用于识别文件运行顺序以保证每个文件只加载一次,且满足所有文件间的依赖关系要求。

|

||||

- **带有甘特图的项目管理工具**。甘特图能直观列出给定任务的所有依赖关系,在这些依赖关系之上能提供给用户任务完成的预估时间。我不常用到甘特图,但这些绘制甘特图的工具很可能会用到拓扑排序算法。

|

||||

|

||||

###霍夫曼编码实现数据压缩###

|

||||

[霍夫曼编码](http://en.wikipedia.org/wiki/Huffman_coding)是一种用于无损数据压缩的编码算法。它的工作原理是先分析要压缩的数据,再为每个字符创建一个二进制编码。字符出现的越频繁,编码赋值越小。因此在一个数据集中`e`可能会编码为`111`,而`x`会编码为`10010`。创建了这种编码模式,就可以串联无定界符,也能正确地进行解码。

|

||||

|

||||

[霍夫曼编码][5](Huffman coding)是一种用于无损数据压缩的编码算法。它的工作原理是先分析要压缩的数据,再为每个字符创建一个二进制编码。字符出现的越频繁,编码赋值越小。因此在一个数据集中`e`可能会编码为`111`,而`x`会编码为`10010`。创建了这种编码模式,就可以串联无定界符,也能正确地进行解码。

|

||||

|

||||

在gzip中使用的DEFLATE算法就结合了霍夫曼编码与LZ77一同用于实现数据压缩功能。gzip应用领域很广,特别适用于文件压缩(以`.gz`为扩展名的文件)以及用于数据传输中的http请求与应答。

|

||||

|

||||

@ -96,10 +97,11 @@

|

||||

|

||||

- 您会理解为什么较大的压缩文件会获得较好的整体压缩效果(如压缩的越多,压缩率也越高)。这也是SPDY协议得以推崇的原因之一:在复杂的HTTP请求/响应过程数据有更好的压缩效果。

|

||||

- 您会了解数据传输过程中如果想要压缩JavaScript/CSS文件,运行压缩软件是完全没有意义的。PNG文件也是类似,因为它们已经使用DEFLATE算法完成了压缩。

|

||||

- 如果您试图强行破译加密的信息,您可能会发现重复数据压缩质量越好,给定的密文单位bit的数据压缩将帮助您确定相关的[分组密码模式](http://en.wikipedia.org/wiki/Block_cipher_mode_of_operation).

|

||||

- 如果您试图强行破译加密的信息,您可能会发现由于重复数据压缩质量更好,密文给定位的数据压缩率将帮助您确定相关的[分组密码工作模式][6](block cipher mode of operation.)。

|

||||

|

||||

###下一步选择学习什么是困难的###

|

||||

作为一名程序员应当做好持续学习的准备。为成为一名web开发人员,您需要了解标记语言以及Ruby/Python,正则表达式,SQL,JavaScript等高级编程语言,还需要了解HTTP的工作原理,如何运行UNIX终端以及面向对象的编程艺术。您很难有效地预览到未来的职业全景,因此选择下一步要学习哪些知识是困难的。

|

||||

|

||||

作为一名程序员应当做好持续学习的准备。为了成为一名web开发人员,您需要了解标记语言以及Ruby/Python、正则表达式、SQL、JavaScript等高级编程语言,还需要了解HTTP的工作原理,如何运行UNIX终端以及面向对象的编程艺术。您很难有效地预览到未来的职业全景,因此选择下一步要学习哪些知识是困难的。

|

||||

|

||||

我没有快速学习的能力,因此我不得不在时间花费上非常谨慎。我希望尽可能地学习到有持久生命力的技能,即不会在几年内就过时的技术。这意味着我也会犹豫这周是要学习JavaScript框架还是那些新的编程语言。

|

||||

|

||||

@ -111,13 +113,14 @@ via: http://www.happybearsoftware.com/how-learning-data-structures-and-algorithm

|

||||

|

||||

作者:[Happy Bear][a]

|

||||

译者:[icybreaker](https://github.com/icybreaker)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.happybearsoftware.com/

|

||||

[1]:http://en.wikipedia.org/wiki/Huffman_coding

|

||||

[2]:http://en.wikipedia.org/wiki/Block_cipher_mode_of_operation

|

||||

|

||||

|

||||

|

||||

[3]:http://en.wikipedia.org/wiki/Dijkstra's_algorithm

|

||||

[4]:http://en.wikipedia.org/wiki/A*_search_algorithm

|

||||

[5]:http://en.wikipedia.org/wiki/Huffman_coding

|

||||

[6]:http://en.wikipedia.org/wiki/Block_cipher_mode_of_operation

|

||||

@ -0,0 +1,88 @@

|

||||

那些奇特的 Linux 发行版本

|

||||

================================================================================

|

||||

从大多数消费者所关注的诸如 Ubuntu,Fedora,Mint 或 elementary OS 到更加复杂、轻量级和企业级的诸如 Slackware,Arch Linux 或 RHEL,这些发行版本我都已经见识过了。除了这些,难道没有其他别的了吗?其实 Linux 的生态系统是非常多样化的,对每个人来说,总有一款适合你。下面就让我们讨论一些稀奇古怪的小众 Linux 发行版本吧,它们代表着开源平台真正的多样性。

|

||||

|

||||

### Puppy Linux

|

||||

|

||||

|

||||

|

||||

它是一个仅有一个普通 DVD 光盘容量十分之一大小的操作系统,这就是 Puppy Linux。整个操作系统仅有 100MB 大小!并且它还可以从内存中运行,这使得它运行极快,即便是在老式的 PC 机上。 在操作系统启动后,你甚至可以移除启动介质!还有什么比这个更好的吗? 系统所需的资源极小,大多数的硬件都会被自动检测到,并且它预装了能够满足你基本需求的软件。[在这里体验 Puppy Linux 吧][1].

|

||||

|

||||

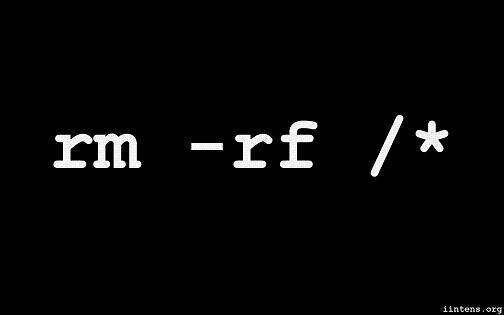

### Suicide Linux(自杀 Linux)

|

||||

|

||||

|

||||

|

||||

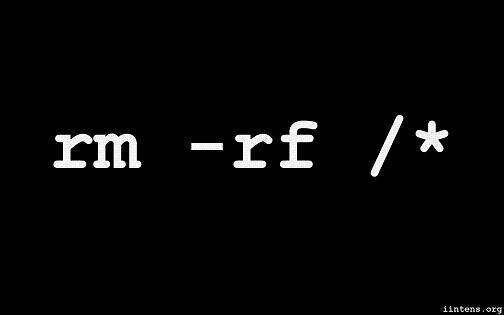

这个名字吓到你了吗?我想应该是。 ‘任何时候 -注意是任何时候-一旦你远程输入不正确的命令,解释器都会创造性地将它重定向为 `rm -rf /` 命令,然后擦除你的硬盘’。它就是这么简单。我真的很想知道谁自信到将[Suicide Linux][2] 安装到生产机上。 **警告:千万不要在生产机上尝试这个!** 假如你感兴趣的话,现在可以通过一个简洁的[DEB 包][3]来获取到它。

|

||||

|

||||

### PapyrOS

|

||||

|

||||

|

||||

|

||||

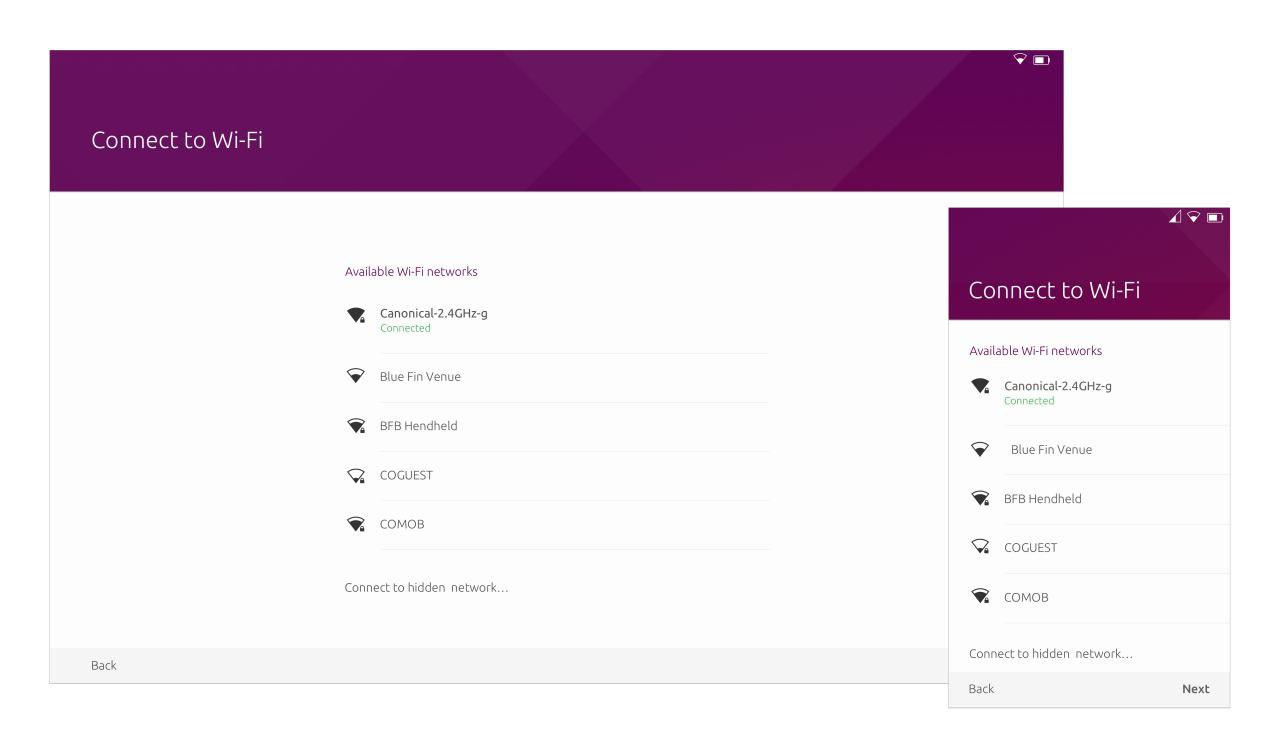

它的 “奇怪”是好的方面。PapyrOS 正尝试着将 Android 的 material design 设计语言引入到新的 Linux 发行版本上。尽管这个项目还处于早期阶段,看起来它已经很有前景。该项目的网页上说该系统已经完成了 80%,随后人们可以期待它的第一个 Alpha 发行版本。在该项目被宣告提出时,我们做了 [PapyrOS][4] 的小幅报道,从它的外观上看,它甚至可能会引领潮流。假如你感兴趣的话,可在 [Google+][5] 上关注该项目并可通过 [BountySource][6] 来贡献出你的力量。

|

||||

|

||||

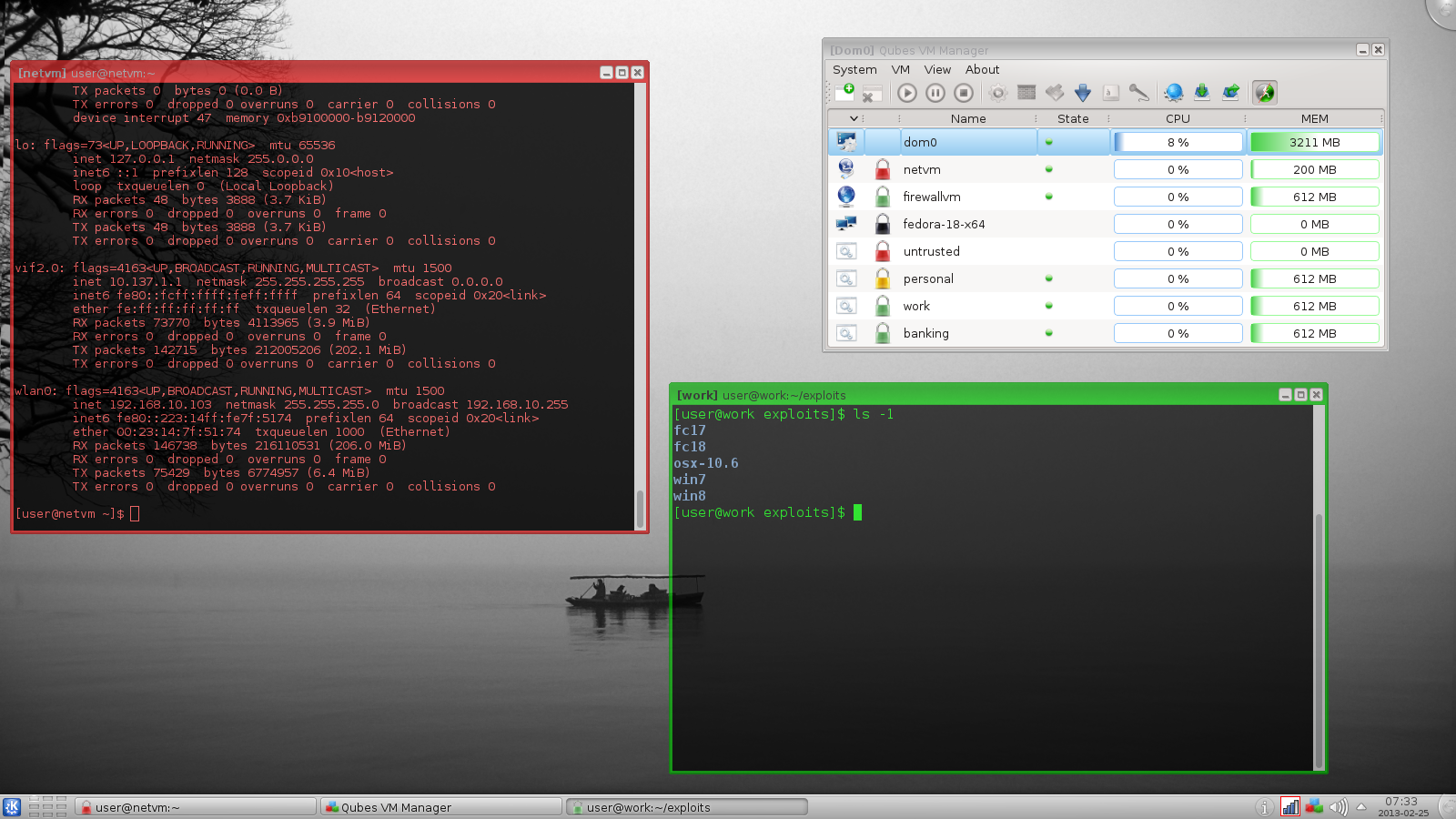

### Qubes OS

|

||||

|

||||

|

||||

|

||||

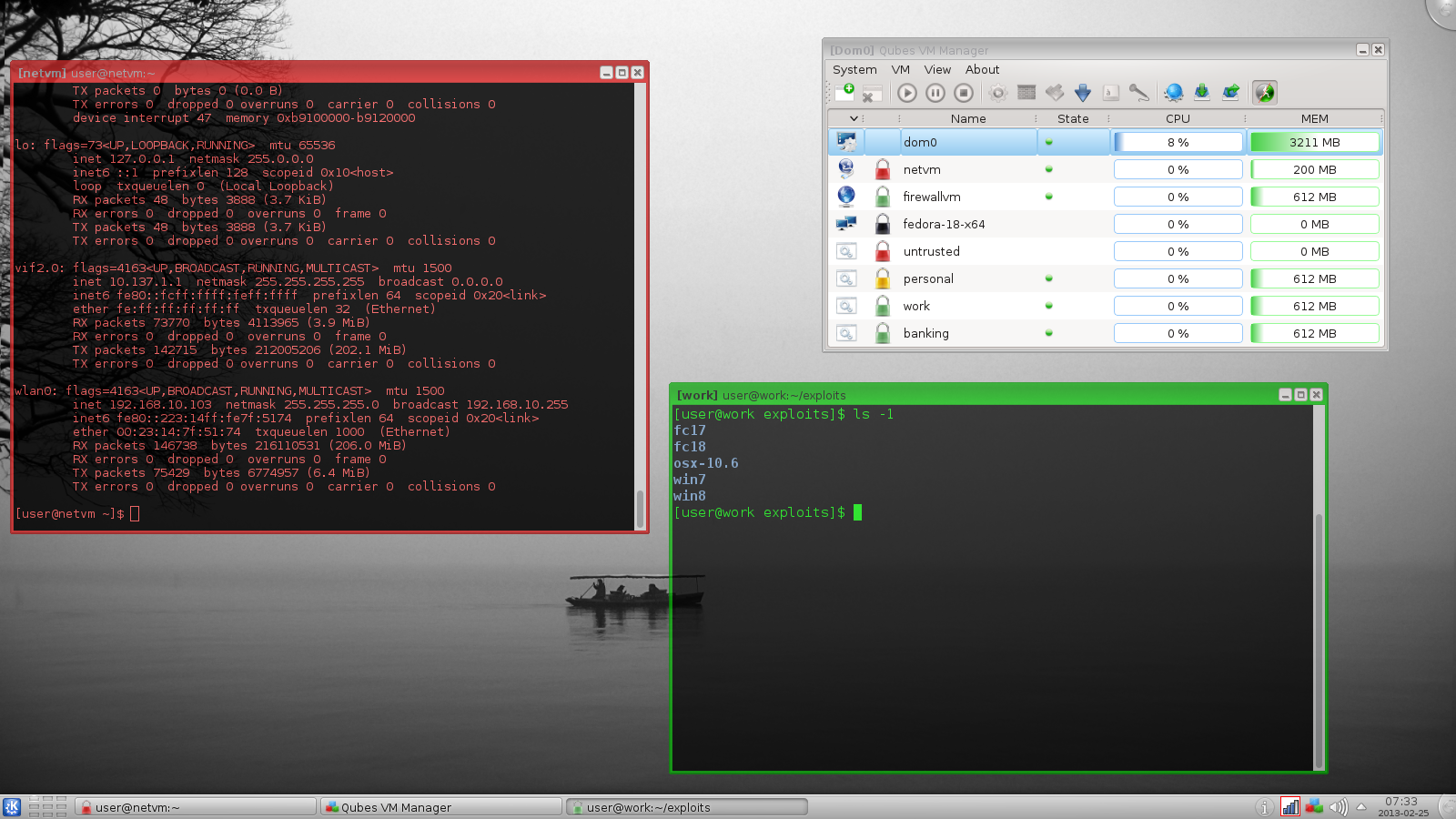

Qubes 是一个开源的操作系统,其设计通过使用[安全分级(Security by Compartmentalization)][14]的方法,来提供强安全性。其前提假设是不存在完美的没有 bug 的桌面环境。并通过实现一个‘安全隔离(Security by Isolation)’ 的方法,[Qubes Linux][7]试图去解决这些问题。Qubes 基于 Xen、X 视窗系统和 Linux,并可运行大多数的 Linux 应用,支持大多数的 Linux 驱动。Qubes 入选了 Access Innovation Prize 2014 for Endpoint Security Solution 决赛名单。

|

||||

|

||||

### Ubuntu Satanic Edition

|

||||

|

||||

|

||||

|

||||

Ubuntu SE 是一个基于 Ubuntu 的发行版本。通过一个含有主题、壁纸甚至来源于某些天才新晋艺术家的重金属音乐的综合软件包,“它同时带来了最好的自由软件和免费的金属音乐” 。尽管这个项目看起来不再积极开发了, Ubuntu Satanic Edition 甚至在其名字上都显得奇异。 [Ubuntu SE (Slightly NSFW)][8]。

|

||||

|

||||

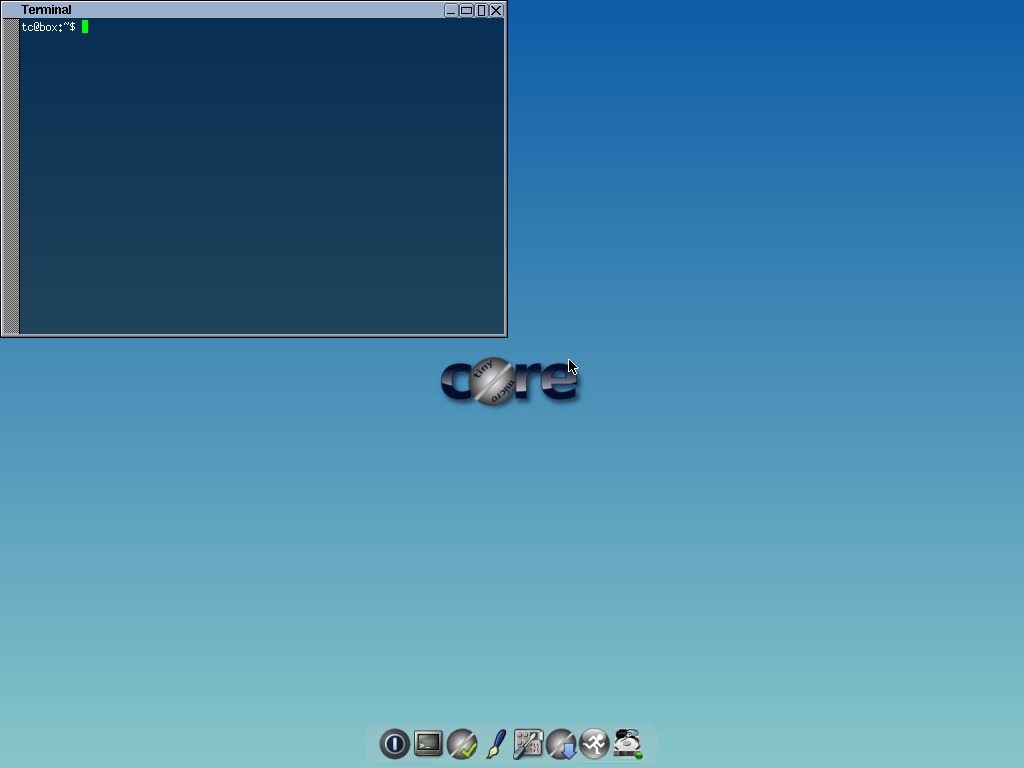

### Tiny Core Linux

|

||||

|

||||

|

||||

|

||||

Puppy Linux 还不够小?试试这个吧。 Tiny Core Linux 是一个 12MB 大小的图形化 Linux 桌面!是的,你没有看错。一个主要的补充说明:它不是一个完整的桌面,也并不完全支持所有的硬件。它只含有能够启动进入一个非常小巧的 X 桌面,支持有线网络连接的核心部件。它甚至还有一个名为 Micro Core Linux 的没有 GUI 的版本,仅有 9MB 大小。[Tiny Core Linux][9]。

|

||||

|

||||

### NixOS

|

||||

|

||||

|

||||

|

||||

它是一个资深用户所关注的 Linux 发行版本,有着独特的打包和配置管理方式。在其他的发行版本中,诸如升级的操作可能是非常危险的。升级一个软件包可能会引起其他包无法使用,而升级整个系统感觉还不如重新安装一个。在那些你不能安全地测试由一个配置的改变所带来的结果的更改之上,它们通常没有“重来”这个选项。在 NixOS 中,整个系统由 Nix 包管理器按照一个纯功能性的构建语言的描述来构建。这意味着构建一个新的配置并不会重写先前的配置。大多数其他的特色功能也遵循着这个模式。Nix 相互隔离地存储所有的软件包。有关 NixOS 的更多内容请看[这里][10]。

|

||||

|

||||

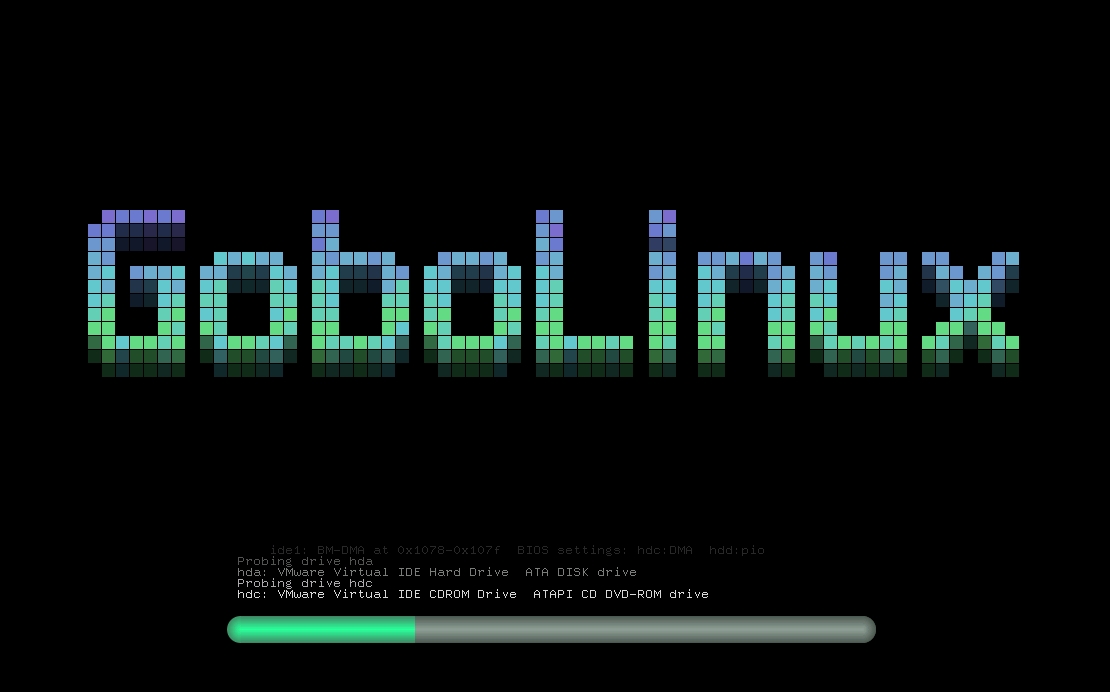

### GoboLinux

|

||||

|

||||

|

||||

|

||||

这是另一个非常奇特的 Linux 发行版本。它与其他系统如此不同的原因是它有着独特的重新整理的文件系统。它有着自己独特的子目录树,其中存储着所有的文件和程序。GoboLinux 没有专门的包数据库,因为其文件系统就是它的数据库。在某些方面,这类重整有些类似于 OS X 上所看到的功能。

|

||||

|

||||

### Hannah Montana Linux

|

||||

|

||||

|

||||

|

||||

它是一个基于 Kubuntu 的 Linux 发行版本,它有着汉娜·蒙塔娜( Hannah Montana) 主题的开机启动界面、KDM(KDE Display Manager)、图标集、ksplash、plasma、颜色主题和壁纸(I'm so sorry)。[这是它的链接][12]。这个项目现在不再活跃了。

|

||||

|

||||

### RLSD Linux

|

||||

|

||||

它是一个极其精简、小巧、轻量和安全可靠的,基于 Linux 文本的操作系统。开发者称 “它是一个独特的发行版本,提供一系列的控制台应用和自带的安全特性,对黑客或许有吸引力。” [RLSD Linux][13].

|

||||

|

||||

我们还错过了某些更加奇特的发行版本吗?请让我们知晓吧。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.techdrivein.com/2015/08/the-strangest-most-unique-linux-distros.html

|

||||

|

||||

作者:Manuel Jose

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://puppylinux.org/main/Overview%20and%20Getting%20Started.htm

|

||||

[2]:http://qntm.org/suicide

|

||||

[3]:http://sourceforge.net/projects/suicide-linux/files/

|

||||

[4]:http://www.techdrivein.com/2015/02/papyros-material-design-linux-coming-soon.html

|

||||

[5]:https://plus.google.com/communities/109966288908859324845/stream/3262a3d3-0797-4344-bbe0-56c3adaacb69

|

||||

[6]:https://www.bountysource.com/teams/papyros

|

||||

[7]:https://www.qubes-os.org/

|

||||

[8]:http://ubuntusatanic.org/

|

||||

[9]:http://tinycorelinux.net/

|

||||

[10]:https://nixos.org/

|

||||

[11]:http://www.gobolinux.org/

|

||||

[12]:http://hannahmontana.sourceforge.net/

|

||||

[13]:http://rlsd2.dimakrasner.com/

|

||||

[14]:https://en.wikipedia.org/wiki/Compartmentalization_(information_security)

|

||||

@ -1,12 +1,12 @@

|

||||

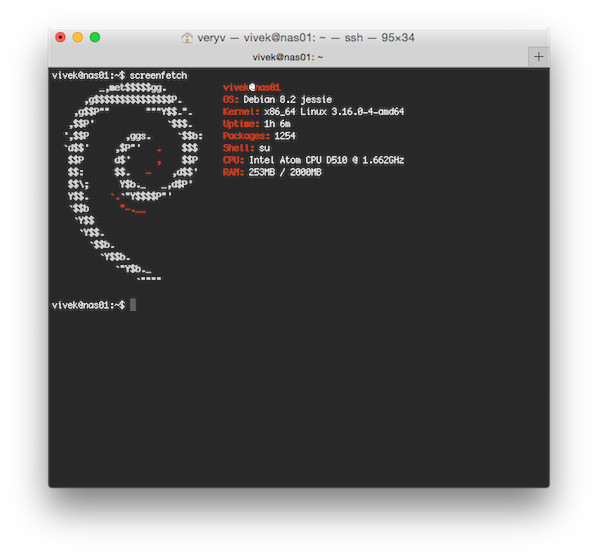

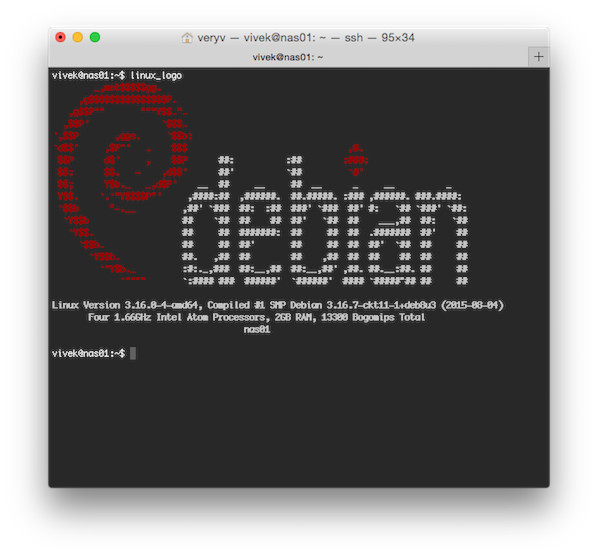

用 screenfetch 和 linux_logo 工具显示带有酷炫 Linux 标志的基本硬件信息

|

||||

用 screenfetch 和 linux_logo 显示带有酷炫 Linux 标志的基本硬件信息

|

||||

================================================================================

|

||||

想在屏幕上显示出你的 Linux 发行版的酷炫标志和基本硬件信息吗?不用找了,来试试超赞的 screenfetch 和 linux_logo 工具。

|

||||

|

||||

### 来见见 screenfetch 吧 ###

|

||||

### 来看看 screenfetch 吧 ###

|

||||

|

||||

screenFetch 是一个能够在截屏中显示系统/主题信息的命令行脚本。它可以在 Linux,OS X,FreeBSD 以及其它的许多类Unix系统上使用。来自 man 手册的说明:

|

||||

|

||||

> 这个方便的 Bash 脚本可以用来生成那些漂亮的终端主题信息和 ASCII 发行版标志,就像如今你在别人的截屏里看到的那样。它会自动检测你的发行版并显示 ASCII 版的发行版标志,并且在右边显示一些有价值的信息。

|

||||

> 这个方便的 Bash 脚本可以用来生成那些漂亮的终端主题信息和用 ASCII 构成的发行版标志,就像如今你在别人的截屏里看到的那样。它会自动检测你的发行版并显示 ASCII 版的发行版标志,并且在右边显示一些有价值的信息。

|

||||

|

||||

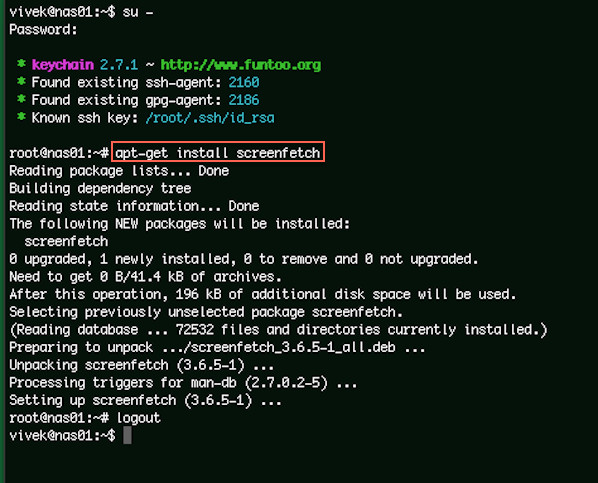

#### 在 Linux 上安装 screenfetch ####

|

||||

|

||||

@ -16,7 +16,7 @@ screenFetch 是一个能够在截屏中显示系统/主题信息的命令行脚

|

||||

|

||||

|

||||

|

||||

图一:用 apt-get 安装 screenfetch

|

||||

*图一:用 apt-get 安装 screenfetch*

|

||||

|

||||

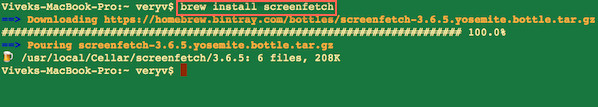

#### 在 Mac OS X 上安装 screenfetch ####

|

||||

|

||||

@ -26,7 +26,7 @@ screenFetch 是一个能够在截屏中显示系统/主题信息的命令行脚

|

||||

|

||||

|

||||

|

||||

图二:用 brew 命令安装 screenfetch

|

||||

*图二:用 brew 命令安装 screenfetch*

|

||||

|

||||

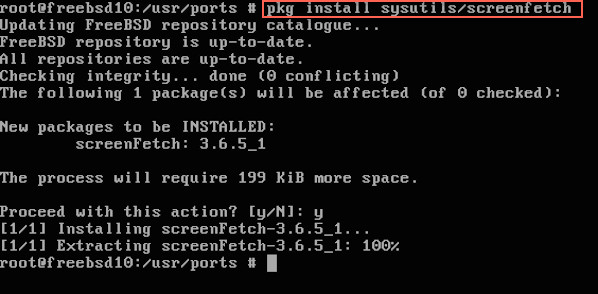

#### 在 FreeBSD 上安装 screenfetch ####

|

||||

|

||||

@ -36,7 +36,7 @@ screenFetch 是一个能够在截屏中显示系统/主题信息的命令行脚

|

||||

|

||||

|

||||

|

||||

图三:在 FreeBSD 用 pkg 安装 screenfetch

|

||||

*图三:在 FreeBSD 用 pkg 安装 screenfetch*

|

||||

|

||||

#### 在 Fedora 上安装 screenfetch ####

|

||||

|

||||

@ -46,7 +46,7 @@ screenFetch 是一个能够在截屏中显示系统/主题信息的命令行脚

|

||||

|

||||

|

||||

|

||||

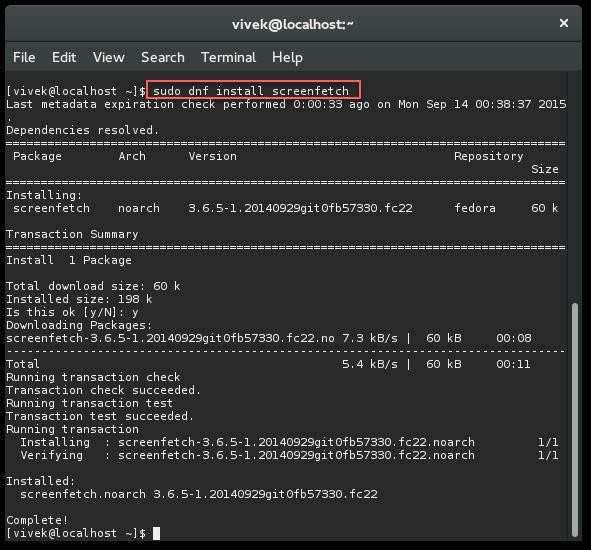

图四:在 Fedora 22 用 dnf 安装 screenfetch

|

||||

*图四:在 Fedora 22 用 dnf 安装 screenfetch*

|

||||

|

||||

#### 我该怎么使用 screefetch 工具? ####

|

||||

|

||||

@ -56,21 +56,21 @@ screenFetch 是一个能够在截屏中显示系统/主题信息的命令行脚

|

||||

|

||||

这是不同系统的输出:

|

||||

|

||||

|

||||

|

||||

|

||||

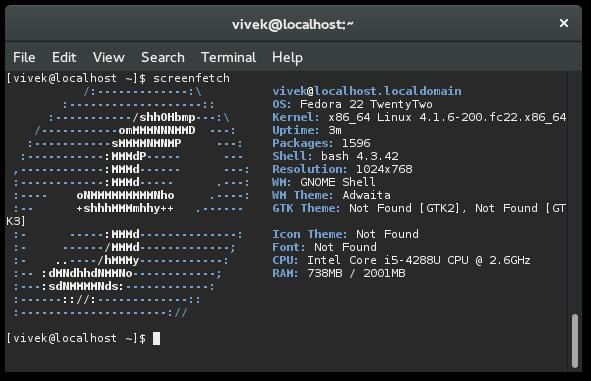

Fedora 上的 Screenfetch

|

||||

*Fedora 上的 Screenfetch*

|

||||

|

||||

|

||||

|

||||

|

||||

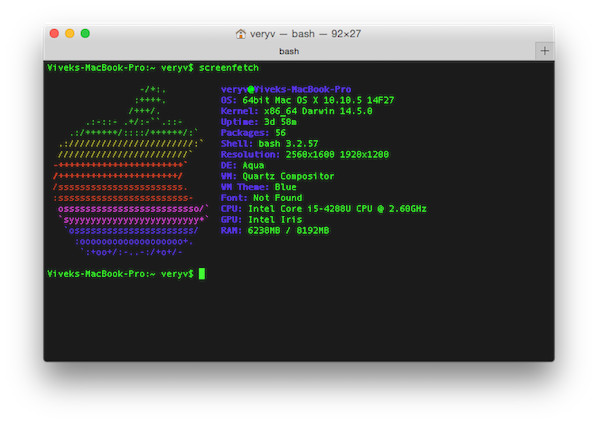

OS X 上的 Screenfetch

|

||||

*OS X 上的 Screenfetch*

|

||||

|

||||

|

||||

|

||||

|

||||

FreeBSD 上的 Screenfetch

|

||||

*FreeBSD 上的 Screenfetch*

|

||||

|

||||

|

||||

|

||||

|

||||

Debian 上的 Screenfetch

|

||||

*Debian 上的 Screenfetch*

|

||||

|

||||

#### 获取截屏 ####

|

||||

|

||||

@ -134,7 +134,7 @@ linux_logo 程序生成一个彩色的 ANSI 版企鹅图片,还包含一些来

|

||||

|

||||

|

||||

|

||||

运行 linux_logo

|

||||

*运行 linux_logo*

|

||||

|

||||

#### 等等,还有更多! ####

|

||||

|

||||

@ -196,7 +196,7 @@ linux_logo 程序生成一个彩色的 ANSI 版企鹅图片,还包含一些来

|

||||

|

||||

|

||||

|

||||

动图1: linux_logo 和 bash 循环,既有趣又能发朋友圈耍酷

|

||||

*动图1: linux_logo 和 bash 循环,既有趣又能发朋友圈耍酷*

|

||||

|

||||

### 获取帮助 ###

|

||||

|

||||

@ -216,7 +216,7 @@ via: http://www.cyberciti.biz/hardware/howto-display-linux-logo-in-bash-terminal

|

||||

|

||||

作者:Vivek Gite

|

||||

译者:[alim0x](https://github.com/alim0x)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,9 +1,9 @@

|

||||

|

||||

在 Ubuntu 14.04 中配置 PXE 服务器

|

||||

在 Ubuntu 14.04 中配置 PXE 服务器

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

PXE(Preboot Execution Environment--预启动执行环境)服务器允许用户从网络中启动 Linux 发行版并且可以同时在数百台 PC 中安装而不需要 Linux ISO 镜像。如果你客户端的计算机没有 CD/DVD 或USB 引导盘,或者如果你想在大型企业中同时安装多台计算机,那么 PXE 服务器可以帮你节省时间和金钱。

|

||||

PXE(Preboot Execution Environment--预启动执行环境)服务器允许用户从网络中启动 Linux 发行版并且可以不需要 Linux ISO 镜像就能同时在数百台 PC 中安装。如果你客户端的计算机没有 CD/DVD 或USB 引导盘,或者如果你想在大型企业中同时安装多台计算机,那么 PXE 服务器可以帮你节省时间和金钱。

|

||||

|

||||

在这篇文章中,我们将告诉你如何在 Ubuntu 14.04 配置 PXE 服务器。

|

||||

|

||||

@ -11,11 +11,11 @@ PXE(Preboot Execution Environment--预启动执行环境)服务器允许用

|

||||

|

||||

开始前,你需要先设置 PXE 服务器使用静态 IP。在你的系统中要使用静态 IP 地址,需要编辑 “/etc/network/interfaces” 文件。

|

||||

|

||||

1. 打开 “/etc/network/interfaces” 文件.

|

||||

打开 “/etc/network/interfaces” 文件.

|

||||

|

||||

sudo nano /etc/network/interfaces

|

||||

|

||||

作如下修改:

|

||||

作如下修改:

|

||||

|

||||

# 回环网络接口

|

||||

auto lo

|

||||

@ -43,23 +43,23 @@ DHCP,TFTP 和 NFS 是 PXE 服务器的重要组成部分。首先,需要更

|

||||

|

||||

### 配置 DHCP 服务: ###

|

||||

|

||||

DHCP 代表动态主机配置协议(Dynamic Host Configuration Protocol),并且它主要用于动态分配网络配置参数,如用于接口和服务的 IP 地址。在 PXE 环境中,DHCP 服务器允许客户端请求并自动获得一个 IP 地址来访问网络。

|

||||

DHCP 代表动态主机配置协议(Dynamic Host Configuration Protocol),它主要用于动态分配网络配置参数,如用于接口和服务的 IP 地址。在 PXE 环境中,DHCP 服务器允许客户端请求并自动获得一个 IP 地址来访问网络。

|

||||

|

||||

1. 编辑 “/etc/default/dhcp3-server” 文件.

|

||||

1、编辑 “/etc/default/dhcp3-server” 文件.

|

||||

|

||||

sudo nano /etc/default/dhcp3-server

|

||||

|

||||

作如下修改:

|

||||

作如下修改:

|

||||

|

||||

INTERFACES="eth0"

|

||||

|

||||

保存 (Ctrl + o) 并退出 (Ctrl + x) 文件.

|

||||

|

||||

2. 编辑 “/etc/dhcp3/dhcpd.conf” 文件:

|

||||

2、编辑 “/etc/dhcp3/dhcpd.conf” 文件:

|

||||

|

||||

sudo nano /etc/dhcp/dhcpd.conf

|

||||

|

||||

作如下修改:

|

||||

作如下修改:

|

||||

|

||||

default-lease-time 600;

|

||||

max-lease-time 7200;

|

||||

@ -74,29 +74,29 @@ DHCP 代表动态主机配置协议(Dynamic Host Configuration Protocol),

|

||||

|

||||

保存文件并退出。

|

||||

|

||||

3. 启动 DHCP 服务.

|

||||

3、启动 DHCP 服务.

|

||||

|

||||

sudo /etc/init.d/isc-dhcp-server start

|

||||

|

||||

### 配置 TFTP 服务器: ###

|

||||

|

||||

TFTP 是一种文件传输协议,类似于 FTP。它不用进行用户认证也不能列出目录。TFTP 服务器总是监听网络上的 PXE 客户端。当它检测到网络中有 PXE 客户端请求 PXE 服务器时,它将提供包含引导菜单的网络数据包。

|

||||

TFTP 是一种文件传输协议,类似于 FTP,但它不用进行用户认证也不能列出目录。TFTP 服务器总是监听网络上的 PXE 客户端的请求。当它检测到网络中有 PXE 客户端请求 PXE 服务时,它将提供包含引导菜单的网络数据包。

|

||||

|

||||

1. 配置 TFTP 时,需要编辑 “/etc/inetd.conf” 文件.

|

||||

1、配置 TFTP 时,需要编辑 “/etc/inetd.conf” 文件.

|

||||

|

||||

sudo nano /etc/inetd.conf

|

||||

|

||||

作如下修改:

|

||||

作如下修改:

|

||||

|

||||

tftp dgram udp wait root /usr/sbin/in.tftpd /usr/sbin/in.tftpd -s /var/lib/tftpboot

|

||||

|

||||

保存文件并退出。

|

||||

保存文件并退出。

|

||||

|

||||

2. 编辑 “/etc/default/tftpd-hpa” 文件。

|

||||

2、编辑 “/etc/default/tftpd-hpa” 文件。

|

||||

|

||||

sudo nano /etc/default/tftpd-hpa

|

||||

|

||||

作如下修改:

|

||||

作如下修改:

|

||||

|

||||

TFTP_USERNAME="tftp"

|

||||

TFTP_DIRECTORY="/var/lib/tftpboot"

|

||||

@ -105,14 +105,14 @@ TFTP 是一种文件传输协议,类似于 FTP。它不用进行用户认证

|

||||

RUN_DAEMON="yes"

|

||||

OPTIONS="-l -s /var/lib/tftpboot"

|

||||

|

||||

保存文件并退出。

|

||||

保存文件并退出。

|

||||

|

||||

3. 使用 `xinetd` 让 boot 服务在每次系统开机时自动启动,并启动tftpd服务。

|

||||

3、 使用 `xinetd` 让 boot 服务在每次系统开机时自动启动,并启动tftpd服务。

|

||||

|

||||

sudo update-inetd --enable BOOT

|

||||

sudo service tftpd-hpa start

|

||||

|

||||

4. 检查状态。

|

||||

4、检查状态。

|

||||

|

||||

sudo netstat -lu

|

||||

|

||||

@ -123,7 +123,7 @@ TFTP 是一种文件传输协议,类似于 FTP。它不用进行用户认证

|

||||

|

||||

### 配置 PXE 启动文件 ###

|

||||

|

||||

现在,你需要将 PXE 引导文件 “pxelinux.0” 放在 TFTP 根目录下。为 TFTP 创建一个目录,并复制 syslinux 在 “/usr/lib/syslinux/” 下提供的所有引导程序文件到 “/var/lib/tftpboot/” 下,操作如下:

|

||||

现在,你需要将 PXE 引导文件 “pxelinux.0” 放在 TFTP 根目录下。为 TFTP 创建目录结构,并从 “/usr/lib/syslinux/” 复制 syslinux 提供的所有引导程序文件到 “/var/lib/tftpboot/” 下,操作如下:

|

||||

|

||||

sudo mkdir /var/lib/tftpboot

|

||||

sudo mkdir /var/lib/tftpboot/pxelinux.cfg

|

||||

@ -135,13 +135,13 @@ TFTP 是一种文件传输协议,类似于 FTP。它不用进行用户认证

|

||||

|

||||

PXE 配置文件定义了 PXE 客户端启动时显示的菜单,它能引导并与 TFTP 服务器关联。默认情况下,当一个 PXE 客户端启动时,它会使用自己的 MAC 地址指定要读取的配置文件,所以我们需要创建一个包含可引导内核列表的默认文件。

|

||||

|

||||

编辑 PXE 服务器配置文件使用可用的安装选项。.

|

||||

编辑 PXE 服务器配置文件,使用有效的安装选项。

|

||||

|

||||

编辑 “/var/lib/tftpboot/pxelinux.cfg/default,”

|

||||

编辑 “/var/lib/tftpboot/pxelinux.cfg/default”:

|

||||

|

||||

sudo nano /var/lib/tftpboot/pxelinux.cfg/default

|

||||

|

||||

作如下修改:

|

||||

作如下修改:

|

||||

|

||||

DEFAULT vesamenu.c32

|

||||

TIMEOUT 100

|

||||

@ -183,12 +183,12 @@ PXE 配置文件定义了 PXE 客户端启动时显示的菜单,它能引导

|

||||

|

||||

### 为 PXE 服务器添加 Ubuntu 14.04 桌面启动镜像 ###

|

||||

|

||||

对于这一步,Ubuntu 内核和 initrd 文件是必需的。要获得这些文件,你需要 Ubuntu 14.04 桌面 ISO 镜像。你可以通过以下命令下载 Ubuntu 14.04 ISO 镜像到 /mnt 目录:

|

||||

对于这一步需要 Ubuntu 内核和 initrd 文件。要获得这些文件,你需要 Ubuntu 14.04 桌面 ISO 镜像。你可以通过以下命令下载 Ubuntu 14.04 ISO 镜像到 /mnt 目录:

|

||||

|

||||

sudo cd /mnt

|

||||

sudo wget http://releases.ubuntu.com/14.04/ubuntu-14.04.3-desktop-amd64.iso

|

||||

|

||||

**注意**: 下载用的 URL 可能会改变,因为 ISO 镜像会进行更新。如果上面的网址无法访问,看看这个网站,了解最新的下载链接。

|

||||

**注意**: 下载用的 URL 可能会改变,因为 ISO 镜像会进行更新。如果上面的网址无法访问,看看[这个网站][4],了解最新的下载链接。

|

||||

|

||||

挂载 ISO 文件,使用以下命令将所有文件复制到 TFTP文件夹中:

|

||||

|

||||

@ -199,9 +199,9 @@ PXE 配置文件定义了 PXE 客户端启动时显示的菜单,它能引导

|

||||

|

||||

### 将导出的 ISO 目录配置到 NFS 服务器上 ###

|

||||

|

||||

现在,你需要通过 NFS 协议安装源镜像。你还可以使用 HTTP 和 FTP 来安装源镜像。在这里,我已经使用 NFS 导出 ISO 内容。

|

||||

现在,你需要通过 NFS 协议来设置“安装源镜像( Installation Source Mirrors)”。你还可以使用 HTTP 和 FTP 来安装源镜像。在这里,我已经使用 NFS 输出 ISO 内容。

|

||||

|

||||

要配置 NFS 服务器,你需要编辑 “etc/exports” 文件。

|

||||

要配置 NFS 服务器,你需要编辑 “/etc/exports” 文件。

|

||||

|

||||

sudo nano /etc/exports

|

||||

|

||||

@ -209,7 +209,7 @@ PXE 配置文件定义了 PXE 客户端启动时显示的菜单,它能引导

|

||||

|

||||

/var/lib/tftpboot/Ubuntu/14.04/amd64 *(ro,async,no_root_squash,no_subtree_check)

|

||||

|

||||

保存文件并退出。为使更改生效,启动 NFS 服务。

|

||||

保存文件并退出。为使更改生效,输出并启动 NFS 服务。

|

||||

|

||||

sudo exportfs -a

|

||||

sudo /etc/init.d/nfs-kernel-server start

|

||||

@ -218,9 +218,9 @@ PXE 配置文件定义了 PXE 客户端启动时显示的菜单,它能引导

|

||||

|

||||

### 配置网络引导 PXE 客户端 ###

|

||||

|

||||

PXE 客户端可以被任何具备 PXE 网络引导的系统来启用。现在,你的客户端可以启动并安装 Ubuntu 14.04 桌面,需要在系统的 BIOS 中设置 “Boot From Network” 选项。

|

||||

PXE 客户端可以是任何支持 PXE 网络引导的计算机系统。现在,你的客户端只需要在系统的 BIOS 中设置 “从网络引导(Boot From Network)” 选项就可以启动并安装 Ubuntu 14.04 桌面。

|

||||

|

||||

现在你可以去做 - 用网络引导启动你的 PXE 客户端计算机,你现在应该看到一个子菜单,显示了我们创建的 Ubuntu 14.04 桌面。

|

||||

现在准备出发吧 - 用网络引导启动你的 PXE 客户端计算机,你现在应该看到一个子菜单,显示了我们创建的 Ubuntu 14.04 桌面的菜单项。

|

||||

|

||||

|

||||

|

||||

@ -241,7 +241,7 @@ via: https://www.maketecheasier.com/configure-pxe-server-ubuntu/

|

||||

|

||||

作者:[Hitesh Jethva][a]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -249,3 +249,4 @@ via: https://www.maketecheasier.com/configure-pxe-server-ubuntu/

|

||||

[1]:https://en.wikipedia.org/wiki/Preboot_Execution_Environment

|

||||

[2]:https://help.ubuntu.com/community/PXEInstallServer

|

||||

[3]:https://www.flickr.com/photos/jhcalderon/3681926417/

|

||||

[4]:http://releases.ubuntu.com/14.04/

|

||||

@ -1,6 +1,6 @@

|

||||

如何使用 Quagga BGP(边界网关协议)路由器来过滤 BGP 路由

|

||||

================================================================================

|

||||

在[之前的文章][1]中,我们介绍了如何使用 Quagga 将 CentOS 服务器变成一个 BGP 路由器,也介绍了 BGP 对等体和前缀交换设置。在本教程中,我们将重点放在如何使用**前缀列表**和**路由映射**来分别控制数据注入和数据输出。

|

||||

在[之前的文章][1]中,我们介绍了如何使用 Quagga 将 CentOS 服务器变成一个 BGP 路由器,也介绍了 BGP 对等体和前缀交换设置。在本教程中,我们将重点放在如何使用**前缀列表(prefix-list)**和**路由映射(route-map)**来分别控制数据注入和数据输出。

|

||||

|

||||

之前的文章已经说过,BGP 的路由判定是基于前缀的收取和前缀的广播。为避免错误的路由,你需要使用一些过滤机制来控制这些前缀的收发。举个例子,如果你的一个 BGP 邻居开始广播一个本不属于它们的前缀,而你也将错就错地接收了这些不正常前缀,并且也将它转发到网络上,这个转发过程会不断进行下去,永不停止(所谓的“黑洞”就这样产生了)。所以确保这样的前缀不会被收到,或者不会转发到任何网络,要达到这个目的,你可以使用前缀列表和路由映射。前者是基于前缀的过滤机制,后者是更为常用的基于前缀的策略,可用于精调过滤机制。

|

||||

|

||||

@ -36,15 +36,15 @@

|

||||

|

||||

上面的命令创建了名为“DEMO-FRFX”的前缀列表,只允许存在 192.168.0.0/23 这个前缀。

|

||||

|

||||

前缀列表的另一个牛X功能是支持子网掩码区间,请看下面的例子:

|

||||

前缀列表的另一个强大功能是支持子网掩码区间,请看下面的例子:

|

||||

|

||||

ip prefix-list DEMO-PRFX permit 192.168.0.0/23 le 24

|

||||

|

||||

这个命令创建的前缀列表包含在 192.168.0.0/23 和 /24 之间的前缀,分别是 192.168.0.0/23, 192.168.0.0/24 and 192.168.1.0/24。运算符“le”表示小于等于,你也可以使用“ge”表示大于等于。

|

||||

这个命令创建的前缀列表包含在 192.168.0.0/23 和 /24 之间的前缀,分别是 192.168.0.0/23, 192.168.0.0/24 和 192.168.1.0/24。运算符“le”表示小于等于,你也可以使用“ge”表示大于等于。

|

||||

|

||||

一个前缀列表语句可以有多个允许或拒绝操作。每个语句都自动或手动地分配有一个序列号。

|

||||

|

||||

如果存在多个前缀列表语句,则这些语句会按序列号顺序被依次执行。在配置前缀列表的时候,我们需要注意在所有前缀列表语句后面的**隐性拒绝**属性,就是说凡是不被明显允许的,都会被拒绝。

|

||||

如果存在多个前缀列表语句,则这些语句会按序列号顺序被依次执行。在配置前缀列表的时候,我们需要注意在所有前缀列表语句之后是**隐性拒绝**语句,就是说凡是不被明显允许的,都会被拒绝。

|

||||

|

||||

如果要设置成允许所有前缀,前缀列表语句设置如下:

|

||||

|

||||

@ -81,7 +81,7 @@

|

||||

probability Match portion of routes defined by percentage value

|

||||

tag Match tag of route

|

||||

|

||||

如你所见,路由映射可以匹配很多属性,本教程需要匹配一个前缀。

|

||||

如你所见,路由映射可以匹配很多属性,在本教程中匹配的是前缀。

|

||||

|

||||

route-map DEMO-RMAP permit 10

|

||||

match ip address prefix-list DEMO-PRFX

|

||||

@ -163,7 +163,7 @@

|

||||

|

||||

可以看到,router-A 有4条路由前缀到达 router-B,而 router-B 只接收3条。查看一下范围,我们就能知道只有被路由映射允许的前缀才能在 router-B 上显示出来,其他的前缀一概丢弃。

|

||||

|

||||

**小提示**:如果接收前缀内容没有刷新,试试重置下 BGP 会话,使用这个命令:clear ip bgp neighbor-IP。本教程中命令如下:

|

||||

**小提示**:如果接收前缀内容没有刷新,试试重置下 BGP 会话,使用这个命令:`clear ip bgp neighbor-IP`。本教程中命令如下:

|

||||

|

||||

clear ip bgp 192.168.1.1

|

||||

|

||||

@ -193,9 +193,9 @@ via: http://xmodulo.com/filter-bgp-routes-quagga-bgp-router.html

|

||||

|

||||

作者:[Sarmed Rahman][a]

|

||||

译者:[bazz2](https://github.com/bazz2)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/sarmed

|

||||

[1]:http://xmodulo.com/centos-bgp-router-quagga.html

|

||||

[1]:https://linux.cn/article-4609-1.html

|

||||

132

published/201510/20150716 Interview--Larry Wall.md

Normal file

132

published/201510/20150716 Interview--Larry Wall.md

Normal file

@ -0,0 +1,132 @@

|

||||

Larry Wall 专访——语言学、Perl 6 的设计和发布

|

||||

================================================================================

|

||||

|

||||

> 经历了15年的打造,Perl 6 终将在年底与大家见面。我们预先采访了它的作者了解一下新特性。

|

||||

|

||||

Larry Wall 是个相当有趣的人。他是编程语言 Perl 的创造者,这种语言被广泛的誉为将互联网粘在一起的胶水,也由于大量地在各种地方使用非字母的符号被嘲笑为‘只写’语言——以难以阅读著称。Larry 本人具有语言学背景,以其介绍 Perl 未来发展的演讲“[洋葱的状态][1](State of the Onion)”而闻名。(LCTT 译注:“洋葱的状态”是 Larry Wall 的年度演讲的主题,洋葱也是 Perl 基金会的标志。)

|

||||

|

||||

在2015年布鲁塞尔的 FOSDEM 上,我们赶上了 Larry,问了问他为什么 Perl 6 花了如此长的时间(Perl 5 的发布时间是1994年),了解当项目中的每个人都各执己见时是多么的难以管理,以及他的语言学背景自始至终究竟给 Perl 带来了怎样的影响。做好准备,让我们来领略其中的奥妙……

|

||||

|

||||

|

||||

|

||||

**Linux Voice:你曾经有过计划去寻找世界上某个地方的某种不见经传的语言,然后为它创造书写的文字,但你从未有机会去实现它。如果你能回到过去,你会去做么?**

|

||||

|

||||

Larry Wall:你首先得是个年轻人才能搞得定!做这些事需要投入很大的努力和人力,以至于已经不适合那些上了年纪的人了。健康、活力是其中的一部分,同样也因为人们在年轻的时候更容易学习一门新的语言,只有在你学会了语言之后你才能写呀。

|

||||

|

||||

我自学了日语十年,由于我的音系学和语音学的训练我能说的比较流利——但要理解别人的意思对我来说还十分困难。所以到了日本我会问路,但我听不懂他们的回答!

|

||||

|

||||

通常需要一门语言学习得足够好才能开发一个文字体系,并可以使用这种语言进行少量的交流。在你能够实际推广它和用本土人自己的文化教育他们前,那还需要一些年。最后才可以教授本土人如何以他们的文明书写。

|

||||

|

||||

当然如果在语言方面你有帮手 —— 经过别人的提醒我们不再使用“语言线人”来称呼他们了,那样显得我们像是在 CIA 工作的一样!—— 你可以通过他们的帮助来学习外语。他们不是老师,但他们会以另一种方式来启发你学习 —— 当然他们也能教你如何说。他们会拿着一根棍子,指着它说“这是一根棍子”,然后丢掉同时说“棒子掉下去了”。然后,你就可以记下一些东西并将其系统化。

|

||||

|

||||

大多数让人们有这样做的动力是翻译圣经。但是这只是其中的一方面;另一方面也是为了文化保护。传教士在这方面臭名昭著,因为人类学家认为人们应该基于自己的文明来做这件事。但有些人注定会改变他们的文化——他们可能是军队、或是商业侵入,如可口可乐或者缝纫机,或传教士。在这三者之间,传教士相对来讲伤害最小的了,如果他们恪守本职的话。

|

||||

|

||||

**LV:许多文字系统有本可依,相较而言你的发明就像是格林兰语…**

|

||||

|

||||

印第安人照搬字母就发明了他们自己的语言,而且没有在这些字母上施加太多我们给这些字母赋予的涵义,这种做法相当随性。它们只要能够表达出人们的所思所想,使交流顺畅就行。经常是有些声调语言(Tonal language)使用的是西方文字拼写,并尽可能的使用拉丁文的字符变化,然后用重音符或数字标注出音调。

|

||||

|

||||

在你开始学习如何使用语音和语调表示之后,你也开始变得迷糊——或者你的书写就不如从前准确。或者你对话的时候像在讲英文,但发音开始无法匹配拼写。

|

||||

|

||||

**LV:当你在开发 Perl 的时候,你的语言学背景会不会使你认为:“这对程序设计语言真的非常重要”?**

|

||||

|

||||

LW:我在人们是如何使用语言上想了很多。在现实的语言中,你有一套名词、动词和形容词的体系,并且你知道这些单词的词性。在现实的自然语言中,你时常将一个单词放到不同的位置。我所学的语言学理论也被称为法位学(phoenetic),它解释了这些在自然语言中工作的原理 —— 也就是有些你当做名词的东西,有时候你可以将它用作动词,并且人们总是这样做。

|

||||

|

||||

你能很好的将任何单词放在任何位置而进行沟通。我比较喜欢的例子是将一个整句用作为一个形容词。这句话会是这样的:“我不喜欢你的[我可以用任何东西来取代这个形容词的]态度”!

|

||||

|

||||

所以自然语言非常灵活,因为聆听者非常聪明 —— 至少,相对于电脑而言 —— 你相信他们会理解你最想表达的意思,即使存在歧义。当然对电脑而言,你必须保证歧义不大。

|

||||

|

||||

> “在 Perl 6 中,我们试图让电脑更准确的了解我们。”

|

||||

|

||||

可以说在 Perl 1到5上,我们针对歧义方面处理做得还不够。有时电脑会在不应该的时候迷惑。在 Perl 6上,我们找了许多方法,使得电脑对你所说的话能更准确的理解,就算用户并不清楚这底是字符串还是数字,电脑也能准确的知道它的类型。我们找到了内部以强类型存储,而仍然可以无视类型的“以此即彼”的方法。

|

||||

|

||||

|

||||

|

||||

**LV:Perl 被视作互联网上的“胶水(glue)”语言已久,能将点点滴滴组合在一起。在你看来 Perl 6 的发布是否符合当前用户的需要,或者旨在招揽更多新用户,能使它重获新生吗?**

|

||||

|

||||

LW:最初的设想是为 Perl 程序员带来更好的 Perl。但在看到了 Perl 5 上的不足后,很明显改掉这些不足会使 Perl 6更易用,就像我在讨论中提到过 —— 类似于 [托尔金(J. R. R. Tolkien) 在《指环王》前言中谈到的适用性一样][2]。

|

||||

|

||||

重点是“简单的东西应该简单,而困难的东西应该可以实现”。让我们回顾一下,在 Perl 2和3之间的那段时间。在 Perl 2上我们不能处理二进制数据或嵌入的 null 值 —— 只支持 C 语言风格的字符串。我曾说过“Perl 只是文本处理语言 —— 在文本处理语言里你并不需要这些功能”。

|

||||

|

||||

但当时发生了一大堆的问题,因为大多数的文本中会包含少量的二进制数据 —— 如网络地址(network addresses)及类似的东西。你使用二进制数据打开套接字,然后处理文本。所以通过支持二进制数据,语言的适用性(applicability)翻了一倍。

|

||||

|

||||

这让我们开始探讨在语言中什么应该简单。现在的 Perl 中有一条原则,是我们偷师了哈夫曼编码(Huffman coding)的做法,它在位编码系统中为字符采取了不同的尺寸,常用的字符占用的位数较少,不常用的字符占用的位数更多。

|

||||

|

||||

我们偷师了这种想法并将它作为 Perl 的一般原则,针对常用的或者说常输入的 —— 这些常用的东西必须简单或简洁。不过,另一方面,也显得更加的不规则(irregular)。在自然语言中也是这样的,最常用的动词实际上往往是最不规则的。

|

||||

|

||||

所以在这样的情况下需要更多的差异存在。我很喜欢一本书是 Umberto Eco 写的的《探寻完美的语言(The Search for the Perfect Language)》,说的并不是计算机语言;而是哲学语言,大体的意思是古代的语言也许是完美的,我们应该将它们带回来。

|

||||

|

||||

所有的这类语言错误的认为类似的事物其编码也应该总是类似的。但这并不是我们沟通的方式。如果你的农场中有许多动物,他们都有相近的名字,当你想杀一只鸡的时候说“走,去把 Blerfoo 宰了”,你的真实想法是宰了 Blerfee,但有可能最后死的是一头牛(LCTT 译注:这是杀鸡用牛刀的意思吗?哈哈)。

|

||||

|

||||

所以在这种时候我们其实更需要好好的将单词区分开,使沟通信道的冗余增加。常用的单词应该有更多的差异。为了达到更有效的通讯,还有一种自足(LCTT 译注:self-clocking ,自同步,[概念][3]来自电信和电子行业,此处译为“自足”更能体现涵义)编码。如果你在一个货物上看到过 UPC 标签(条形码),它就是一个自足编码,每对“条”和“空”总是以七个列宽为单位,据此你就知道“条”的宽度加起来总是这么宽。这就是自足。

|

||||

|

||||

在电子产品中还有另一种自足编码。在老式的串行传输协议中有停止和启动位,来保持同步。自然语言中也会包含这些。比如说,在写日语时,不用使用空格。由于书写方式的原因,他们会在每个词组的开头使用中文中的汉字字符,然后用音节表(syllabary)中的字符来结尾。

|

||||

|

||||

**LV:是平假名,对吗?**

|

||||

|

||||

LW: 是的,平假名。所以在这一系统,每个词组的开头就自然就很重要了。同样的,在古希腊,大多数的动词都是搭配好的(declined 或 conjugated),所以它们的标准结尾是一种自足机制。在他们的书写体系中空格也是可有可无的 —— 引入空格是更近代的发明。

|

||||

|

||||

所以在计算机语言上也要如此,有的值也可以自足编码。在 Perl 上我们重度依赖这种方法,而且在 Perl 6 上相较于前几代这种依赖更重。当你使用表达式时,你要么得到的是一个词,要么得到的是插值(infix)操作符。当你想要得到一个词,你有可能得到的是一个前缀操作符,它也在相同的位置;同样当你想要得到一个插值操作符,你也可能得到的是前一个词的后缀。

|

||||

|

||||

但是反过来。如果编译器准确的知道它想要什么,你可以稍微重载(overload)它们,其它的让 Perl 来完成。所以在斜线“/”后面是单词时它会当成正则表达式,而斜线“/”在字串中时视作除法。而我们并不会重载所有东西,因为那只会使你失去自足冗余。

|

||||

|

||||

多数情况下我们提示的比较好的语法错误消息,是出于发现了一行中出现了两个关键词,然后我们尝试找出为什么一行会出现两个关键字 —— “哦,你一定漏掉了上一行的分号”,所以我们相较于很多其他的按步照班的解析器可以生成更好的错误消息。

|

||||

|

||||

|

||||

|

||||

**LV:为什么 Perl 6 花了15年?当每个人对事物有不同看法时一定十分难于管理,而且正确和错误并不是绝对的。**

|

||||

|

||||

LW:这必须要非常小心地平衡。刚开始会有许多的好的想法 —— 好吧,我并不是说那些全是好的想法。也有很多令人烦恼的地方,就像有361条 RFC [功能建议文件],而我也许只想要20条。我们需要坐下来,将它们全部看完,并忽略其中的解决方案,因为它们通常流于表象、视野狭隘。几乎每一条只针对一样事物,如若我们将它们全部拼凑起来,那简直是一堆垃圾。

|

||||

|

||||

> “掌握平衡时需要格外小心。毕竟在刚开始的时候总会有许多的好主意。”

|

||||

|

||||

所以我们必须基于人们在使用 Perl 5 时的真实感受重新整理,寻找统一、深层的解决方案。这些 RFC 文档许多都提到了一个事实,就是类型系统的不足。通过引入更条理分明的类型系统,我们可以解决很多问题并且即聪明又紧凑。

|

||||

|

||||

同时我们开始关注其他方面:如何统一特征集并开始重用不同领域的想法,这并不需要它们在下层相同。我们有一种标准的书写配对(pair)的方式——好吧,在 Perl 里面有两种!但使用冒号书写配对的方法同样可以用于基数计数法或是任何进制的文本编号。同样也可以用于其他形式的引用(quoting)。在 Perl 里我们称它为“奇妙的一致”。

|

||||

|

||||

> “做了 Perl 6 的早期实现的朋友们,握着我的手说:“我们真的很需要一位语言的设计者。””

|

||||

|

||||

同样的想法涌现出来,你说“我已经熟悉了语法如何运作,但是我看见它也被用在别处”,所以说视角相同才能找出这种一致。那些提出各种想法和做了 Perl 6 的早期实现的人们回来看我,握着我的手说:“我们真的需要一位语言的设计者。您能作为我们的[仁慈独裁者][4](benevolent dictator)吗?”(LCTT 译注:Benevolent Dictator For Life,或 BDFL,指开源领袖,通常指对社区争议拥有最终裁决权的领袖,典故来自 Python 创始人 Guido van Rossum, 具体参考维基条目[解释][4])

|

||||

|

||||

所以我是语言的设计者,但总是听到:“不要管具体实现(implementation)!我们目睹了你对 Perl 5 做的那些,我们不想历史重演!”真是让我忍俊不禁,因为他们作为起步的核心和原先 Perl 5 的内部结构上几乎别无二致,也许这就是为什么一些早期的实现做的并不好的原因。

|

||||

|

||||

因为我们仍然在摸索我们的整个设计,其实现在做了许多 VM (虚拟机)该做什么和不该做什么的假设,所以最终这个东西就像面向对象的汇编语言一样。类似的问题在伊始阶段无处不在。然后 Pugs 这家伙走过来说:“用用看 Haskell 吧,它能让你们清醒的认识自己正在干什么,让我们用它来弄清楚下层的语义模型(semantic model)。”

|

||||

|

||||

因此,我们明确了其中的一些语义模型,但更重要的是,我们开始建立符合那些语义模型的测试套件。在这之后,Parrot VM 继续进行开发,并且出现了另一个实现 Niecza ,它基于 .Net,是由一个年轻的家伙搞出来的。他很聪明,实现了 Perl 6 的一个很大的子集。不过他还是一个人干,并没有找到什么好方法让别人介入他的项目。

|

||||

|

||||

同时 Parrot 项目变得过于庞大,以至于任何人都不能真正的深入掌控它,并且很难重构。同时,开发 Rakudo 的人们觉得我们可能需要在更多平台上运行它,而不只是在 Parrot VM 上。 于是他们发明了所谓的可移植层 NQP ,即 “Not Quite Perl”。他们一开始将它移植到 JVM(Java虚拟机)上运行,与此同时,他们还秘密的开发了一个叫做 MoarVM 的 VM ,它去年才刚刚为人知晓。

|

||||

|

||||

无论 MoarVM 还是 JVM 在回归测试(regression test)中表现得十分接近 —— 在许多方面 Parrot 算是尾随其后。这样不挑剔 VM 真的很棒,我们也能开始考虑将 NQP 发扬光大。谷歌夏季编码大赛(Google Summer of Code project)的目标就是针对 JavaScript 的 NQP,这应该靠谱,因为 MoarVM 也同样使用 Node.js 作为日常处理。

|

||||

|

||||

我们可能要将今年余下的时光投在 MoarVM 上,直到 6.0 发布,方可休息片刻。

|

||||

|

||||

**LV:去年英国,政府开展编程年活动(Year of Code),来激发年轻人对编程的兴趣。针对活动的建议五花八门——类似为了让人们准确的认识到内存的使用你是否应该从低阶语言开始讲授,或是一门高阶语言。你对此作何看法?**

|

||||

|

||||

LW:到现在为止,Python 社区在低阶方面的教学工作做得比我们要好。我们也很想在这一方面做点什么,这也是我们有蝴蝶 logo 的部分原因,以此来吸引七岁大小的女孩子!

|

||||

|

||||

|

||||

|

||||

> “到现在为止,Python 社区在低阶方面的教学工作做得比我们要好。”

|

||||

|

||||

我们认为将 Perl 6 作为第一门语言来学习是可行的。一大堆的将 Perl 5 作为第一门语言学习的人让我们吃惊。你知道,在 Perl 5 中有许多相当大的概念,如闭包,词法范围,和一些你通常在函数式编程中见到的特性。甚至在 Perl 6 中更是如此。

|

||||

|

||||

Perl 6 花了这么长时间的部分原因是我们尝试去坚持将近 50 种互不相同的原则,在设计语言的最后对于“哪点是最重要的规则”这个问题还是悬而未决。有太多的问题需要讨论。有时我们做出了决定,并已经工作了一段时间,才发现这个决定并不很正确。

|

||||

|

||||

之前我们并未针对并发程序设计或指定很多东西,直到 Jonathan Worthington 的出现,他非常巧妙的权衡了各个方面。他结合了一些其他语言诸如 Go 和 C# 的想法,将并发原语写的非常好。可组合性(Composability)是一个语言至关重要的一部分。

|

||||

|

||||

有很多的程序设计系统的并发和并行写的并不好 —— 比如线程和锁,不良的操作方式有很多。所以在我看来,额外花点时间看一下 Go 或者 C# 这种高阶原语的开发是很值得的 —— 那是一种关键字上的矛盾 —— 写的相当棒。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxvoice.com/interview-larry-wall/

|

||||

|

||||

作者:[Mike Saunders][a]

|

||||

译者:[martin2011qi](https://github.com/martin2011qi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxvoice.com/author/mike/

|

||||

[1]:https://en.wikipedia.org/wiki/Perl#State_of_the_Onion

|

||||

[2]:http://tinyurl.com/nhpr8g2

|

||||

[3]:http://en.wikipedia.org/wiki/Self-clocking_signal

|

||||

[4]:https://en.wikipedia.org/wiki/Benevolent_dictator_for_life

|

||||

@ -0,0 +1,28 @@

|

||||

Linux 4.3 内核增加了 MOST 驱动子系统

|

||||

================================================================================

|

||||

当 4.2 内核还没有正式发布的时候,Greg Kroah-Hartman 就为他维护的各种子系统模块打开了4.3 的合并窗口。

|

||||

|

||||

之前 Greg KH 发起的拉取请求(pull request)里包含了 linux 4.3 的合并窗口更新,内容涉及驱动核心、TTY/串口、USB 驱动、字符/杂项以及暂存区内容。这些拉取申请没有提供任何震撼性的改变,大部分都是改进/附加/修改bug。暂存区内容又是大量的修正和清理,但是还是有一个新的驱动子系统。

|

||||

|

||||

Greg 提到了[4.3 的暂存区改变][2],“这里的很多东西,几乎全部都是细小的修改和改变。通常的 IIO 更新和新驱动,以及我们已经添加了的 MOST 驱动子系统,已经在源码树里整理了。ozwpan 驱动最终还是被删掉,因为它很明显被废弃了而且也没有人关心它。”

|

||||

|

||||

MOST 驱动子系统是面向媒体的系统传输(Media Oriented Systems Transport)的简称。在 linux 4.3 新增的文档里面解释道,“MOST 驱动支持 LInux 应用程序访问 MOST 网络:汽车信息骨干网(Automotive Information Backbone),高速汽车多媒体网络的事实上的标准。MOST 定义了必要的协议、硬件和软件层,提供高效且低消耗的传输控制,实时的数据包传输,而只需要使用一个媒介(物理层)。目前使用的媒介是光线、非屏蔽双绞线(UTP)和同轴电缆。MOST 也支持多种传输速度,最高支持150Mbps。”如文档解释的,MOST 主要是关于 Linux 在汽车上的应用。

|

||||

|

||||

当 Greg KH 发出了他为 Linux 4.3 多个子系统做出的更新,但是他还没有打算提交 [KDBUS][5] 的内核代码。他之前已经放出了 [linux 4.3 的 KDBUS] 的开发计划,所以我们将需要等待官方的4.3 合并窗口,看看会发生什么。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.phoronix.com/scan.php?page=news_item&px=Linux-4.3-Staging-Pull

|

||||

|

||||

作者:[Michael Larabel][a]

|

||||

译者:[oska874](https://github.com/oska874)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.michaellarabel.com/

|

||||

[1]:http://www.phoronix.com/scan.php?page=search&q=Linux+4.2

|

||||

[2]:http://lkml.iu.edu/hypermail/linux/kernel/1508.2/02604.html

|

||||

[3]:http://www.phoronix.com/scan.php?page=news_item&px=KDBUS-Not-In-Linux-4.2

|

||||

[4]:http://www.phoronix.com/scan.php?page=news_item&px=Linux-4.2-rc7-Released

|

||||

[5]:http://www.phoronix.com/scan.php?page=search&q=KDBUS

|

||||

@ -1,27 +1,28 @@

|

||||

在 Ubuntu 和 Linux Mint 上安装 Terminator 0.98

|

||||

================================================================================

|

||||

[Terminator][1],在一个窗口中有多个终端。该项目的目标之一是为管理终端提供一个有用的工具。它的灵感来自于类似 gnome-multi-term,quankonsole 等程序,这些程序关注于在窗格中管理终端。 Terminator 0.98 带来了更完美的标签功能,更好的布局保存/恢复,改进了偏好用户界面和多出 bug 修复。

|

||||

[Terminator][1],它可以在一个窗口内打开多个终端。该项目的目标之一是为摆放终端提供一个有用的工具。它的灵感来自于类似 gnome-multi-term,quankonsole 等程序,这些程序关注于按网格摆放终端。 Terminator 0.98 带来了更完美的标签功能,更好的布局保存/恢复,改进了偏好用户界面和多处 bug 修复。

|

||||

|

||||

|

||||

|

||||

###TERMINATOR 0.98 的更改和新特性

|

||||

|

||||

- 添加了一个布局启动器,允许在不用布局之间简单切换(用 Alt + L 打开一个新的布局切换器);

|

||||

- 添加了一个新的手册(使用 F1 打开);

|

||||

- 保存的时候,布局现在会记住:

|

||||

- * 最大化和全屏状态

|

||||

- * 窗口标题

|

||||

- * 激活的标签

|

||||

- * 激活的终端

|

||||

- * 每个终端的工作目录

|

||||

- 添加选项用于启用/停用非同质标签和滚动箭头;

|

||||

- 最大化和全屏状态

|

||||

- 窗口标题

|

||||

- 激活的标签

|

||||

- 激活的终端

|

||||

- 每个终端的工作目录

|

||||

- 添加选项用于启用/停用非同类(non-homogenous)标签和滚动箭头;

|

||||

- 添加快捷键用于按行/半页/一页向上/下滚动;

|

||||

- 添加使用 Ctrl+鼠标滚轮放大/缩小,Shift+鼠标滚轮向上/下滚动页面;

|

||||

- 为下一个/上一个 profile 添加快捷键

|

||||

- 添加使用 Ctrl+鼠标滚轮来放大/缩小,Shift+鼠标滚轮向上/下滚动页面;

|

||||

- 为下一个/上一个配置文件(profile)添加快捷键

|

||||

- 改进自定义命令菜单的一致性

|

||||

- 新增快捷方式/代码来切换所有/标签分组;

|

||||

- 改进监视插件

|

||||

- 增加搜索栏切换;

|

||||

- 清理和重新组织窗口偏好,包括一个完整的全局便签更新

|

||||

- 清理和重新组织偏好(preferences)窗口,包括一个完整的全局便签更新

|

||||

- 添加选项用于设置 ActivityWatcher 插件静默时间

|

||||

- 其它一些改进和 bug 修复

|

||||

- [点击此处查看完整更新日志][2]

|

||||

@ -37,10 +38,6 @@ Terminator 0.98 有可用的 PPA,首先我们需要在 Ubuntu/Linux Mint 上

|

||||

如果你想要移除 Terminator,只需要在终端中运行下面的命令(可选)

|

||||

|

||||

$ sudo apt-get remove terminator

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -48,7 +45,7 @@ via: http://www.ewikitech.com/articles/linux/terminator-install-ubuntu-linux-min

|

||||

|

||||

作者:[admin][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,10 +1,10 @@

|

||||

如何在Ubuntu 14.04 / 15.04中设置IonCube Loaders

|

||||

================================================================================

|

||||

IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护你的PHP代码不会被在未授权的计算机上查看。使用ionCube编码并加密PHP需要一个叫ionCube Loader的文件安装在web服务器上并提供给需要大量访问的PHP用。它在运行时处理并执行编码。PHP只需在‘php.ini’中添加一行就可以使用这个loader。

|

||||

IonCube Loaders是一个PHP中用于加解密的工具,并带有加速页面运行的功能。它也可以保护你的PHP代码不会查看和运行在未授权的计算机上。要使用ionCube编码、加密的PHP文件,需要在web服务器上安装一个叫ionCube Loader的文件,并需要让 PHP 可以访问到,很多 PHP 应用都在用它。它可以在运行时读取并执行编码过后的代码。PHP只需在‘php.ini’中添加一行就可以使用这个loader。

|

||||

|

||||

### 前提条件 ###

|

||||

|

||||

在这篇文章中,我们将在Ubuntu14.04/15.04安装Ioncube Loader ,以便它可以在所有PHP模式中使用。本教程的唯一要求就是你系统安装了LEMP,并有“的php.ini”文件。

|

||||

在这篇文章中,我们将在Ubuntu14.04/15.04安装Ioncube Loader ,以便它可以在所有PHP模式中使用。本教程的唯一要求就是你系统安装了LEMP,并有“php.ini”文件。

|

||||

|

||||

### 下载 IonCube Loader ###

|

||||

|

||||

@ -14,15 +14,15 @@ IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护

|

||||

|

||||

|

||||

|

||||

下载完成后用下面的命令解压到"/usr/local/src/"。

|

||||

下载完成后用下面的命令解压到“/usr/local/src/"。

|

||||

|

||||

# tar -zxvf ioncube_loaders_lin_x86-64.tar.gz -C /usr/local/src/

|

||||

|

||||

|

||||

|

||||

解压完成后我们就可以看到所有的存在的模块。但是我们只需要我们安装的PHP版本的相关模块。

|

||||

解压完成后我们就可以看到所有提供的模块。但是我们只需要我们所安装的PHP版本的对应模块。

|

||||

|

||||

要检查PHP版本,你可以运行下面的命令来找出相关的模块。

|

||||

要检查PHP版本,你可以运行下面的命令来找出相应的模块。

|

||||

|

||||

# php -v

|

||||

|

||||

@ -30,14 +30,14 @@ IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护

|

||||

|

||||

根据上面的命令我们知道我们安装的是PHP 5.6.4,因此我们需要拷贝合适的模块到PHP模块目录下。

|

||||

|

||||

首先我们在“/usr/local/”创建一个叫“ioncube”的目录并复制需要的ioncube loader到这里。

|

||||

首先我们在“/usr/local/”创建一个叫“ioncube”的目录并复制所需的ioncube loader到这里。

|

||||

|

||||

root@ubuntu-15:/usr/local/src/ioncube# mkdir /usr/local/ioncube

|

||||

root@ubuntu-15:/usr/local/src/ioncube# cp ioncube_loader_lin_5.6.so ioncube_loader_lin_5.6_ts.so /usr/local/ioncube/

|

||||

|

||||

### PHP 配置 ###

|

||||

|

||||

我们要在位于"/etc/php5/cli/"文件夹下的"php.ini"中加入下面的配置行并重启web服务和php模块。

|

||||

我们要在位于"/etc/php5/cli/"文件夹下的"php.ini"中加入如下的配置行并重启web服务和php模块。

|

||||

|

||||

# vim /etc/php5/cli/php.ini

|

||||

|

||||

@ -54,7 +54,6 @@ IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护

|

||||

|

||||

要为我们的网站测试ioncube loader。用下面的内容创建一个"info.php"文件并放在网站的web目录下。

|

||||

|

||||

|

||||

# vim /usr/share/nginx/html/info.php

|

||||

|

||||

加入phpinfo的脚本后重启web服务后用域名或者ip地址访问“info.php”。

|

||||

@ -63,7 +62,6 @@ IonCube Loaders是PHP中用于辅助加速页面的加解密工具。它保护

|

||||

|

||||

|

||||

|

||||

From the terminal issue the following command to verify the php version that shows the ionCube PHP Loader is Enabled.

|

||||

在终端中运行下面的命令来验证php版本并显示PHP Loader已经启用了。

|

||||

|

||||

# php -v

|

||||

@ -74,7 +72,7 @@ From the terminal issue the following command to verify the php version that sho

|

||||

|

||||

### 总结 ###

|

||||

|

||||

教程的最后你已经了解了在安装有nginx的Ubuntu中安装和配置ionCube Loader,如果你正在使用其他的web服务,这与其他服务没有明显的差别。因此做完这些安装Loader是很简单的,并且在大多数服务器上的安装都不会有问题。然而并没有一个所谓的“标准PHP安装”,服务可以通过许多方式安装,并启用或者禁用功能。

|

||||

教程的最后你已经了解了如何在安装有nginx的Ubuntu中安装和配置ionCube Loader,如果你正在使用其他的web服务,这与其他服务没有明显的差别。因此安装Loader是很简单的,并且在大多数服务器上的安装都不会有问题。然而并没有一个所谓的“标准PHP安装”,服务可以通过许多方式安装,并启用或者禁用功能。

|

||||

|

||||

如果你是在共享服务器上,那么确保运行了ioncube-loader-helper.php脚本,并点击链接来测试运行时安装。如果安装时你仍然遇到了问题,欢迎联系我们及给我们留下评论。

|

||||

|

||||

@ -84,7 +82,7 @@ via: http://linoxide.com/ubuntu-how-to/setup-ioncube-loaders-ubuntu-14-04-15-04/

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,41 @@

|

||||

红帽 CEO 对 OpenStack 收益表示乐观

|

||||

================================================================================

|

||||

得益于围绕 Linux 和云不断发展的平台与基础设施技术,红帽正在持续快速发展。红帽宣布在九月二十一日完成了 2016 财年第二季度的财务业绩,再次超过预期。

|

||||

|

||||

|

||||

|

||||

这一季度,红帽的收入为 5 亿 4 百万美元,和去年同比增长 13%。净收入为 5 千 1 百万美元,超过了 2015 财年第二季度的 4 千 7 百万美元。

|

||||

|

||||

展望未来,红帽为下一季度和全年提供了积极的目标。对于第三季度,红帽希望指导收益能在 5亿1千9百万美元和5亿2千3百万美元之间,和去年同期相比增长 15%。

|

||||

|

||||

对于 2016 财年,红帽的全年指导目标是 20亿4千4百万美元,和去年相比增长 14%。

|

||||

|

||||

红帽 CFO Frank Calderoni 在电话会议上指出,红帽最高的 30 个订单差不多甚至超过了 1 百万美元。其中有 4 个订单超过 5 百万美元,还有一个超过 1 千万美元。

|

||||

|

||||

从近几年的经验来看,红帽产品的交叉销售非常成功,全部订单中有超过 65% 的订单包括了一个或多个红帽应用和新兴技术产品组件。

|

||||

|

||||

Calderoni 说 “我们希望这些技术,例如中间件、RHEL OpenStack 平台、OpenShift、云管理和存储能持续推动收益增长。”

|

||||

|

||||

### OpenStack ###

|

||||

|

||||

在电话会议中,红帽 CEO Jim Whitehurst 多次问到 OpenStack 的预期收入。Whitehurst 说得益于安装程序的改进,最近发布的 Red Hat OpenStack Platform 7.0 向前垮了一大步。

|

||||

|

||||

Whitehurst 提到:“在识别硬件和使用方面它做的很好,当然,这也意味着在硬件识别并正确使用它们方便还有很多工作要做。”

|

||||

|

||||

Whitehurst 说他已经开始注意到很多的生产应用程序开始迁移到 OpenStack 云上来。他也警告说在产业化方面迁移到 OpenStack 大部分只是尝鲜,还并没有成为主流。

|

||||

|

||||

对于竞争对手, Whitehurst 尤其提到了微软、惠普和 Mirantis。在他看来,很多组织仍然会使用多种操作系统,如果他们部分使用了微软产品,会更倾向于开源方案作为替代选项。Whitehurst 说在云方面他还没有看到太多和惠普面对面的竞争,但和 Mirantis 则确实如此。

|

||||

|

||||

Whitehurst 说 “我们也有几次胜利,客户从 Mirantis 转到了 RHEL。”

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.serverwatch.com/server-news/red-hat-ceo-optimistic-on-openstack-revenue-opportunity.html

|

||||

|

||||

作者:[Sean Michael Kerner][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.serverwatch.com/author/Sean-Michael-Kerner-101580.htm

|

||||

@ -1,12 +1,8 @@

|

||||

如何将 Oracle 11g 升级到 Orcale 12c

|

||||

================================================================================

|

||||

大家好。

|

||||

大家好。今天我们来学习一下如何将 Oracle 11g 升级到 Oracle 12c。开始吧。

|

||||

|

||||

今天我们来学习一下如何将 Oracle 11g 升级到 Oracle 12c。开始吧。

|

||||

|

||||

在此,我使用的是 CentOS 7 64 位 Linux 发行版。

|

||||

|

||||

我假设你已经在你的系统上安装了 Oracle 11g。这里我会展示一下安装 Oracle 11g 时我的操作步骤。

|

||||

在此,我使用的是 CentOS 7 64 位 Linux 发行版。我假设你已经在你的系统上安装了 Oracle 11g。这里我会展示一下安装 Oracle 11g 时我的操作步骤。

|

||||

|

||||

我在 Oracle 11g 上选择 “Create and configure a database”,如下图所示。

|

||||

|

||||

@ -16,7 +12,7 @@

|

||||

|

||||

|

||||

|

||||

然后你输入安装 Oracle 11g 的所有路径以及密码。下面是我自己的 Oracle 11g 安装配置。确保你正确输入了 Oracle 的密码。

|

||||

然后你输入安装 Oracle 11g 的各种路径以及密码。下面是我自己的 Oracle 11g 安装配置。确保你正确输入了 Oracle 的密码。

|

||||

|

||||

|

||||

|

||||

@ -30,7 +26,7 @@

|

||||

|

||||

你需要从该[链接][1]上下载两个 zip 文件。下载并解压两个文件到相同目录。文件名为 **linuxamd64_12c_database_1of2.zip** & **linuxamd64_12c_database_2of2.zip**。提取或解压完后,它会创建一个名为 database 的文件夹。

|

||||

|

||||

注意:升级到 12c 之前,请确保在你的 CentOS 上已经安装了所有必须的软件包并且 path 环境变量也已经正确配置,还有其它前提条件也已经满足。

|

||||

注意:升级到 12c 之前,请确保在你的 CentOS 上已经安装了所有必须的软件包,并且所有的路径变量也已经正确配置,还有其它前提条件也已经满足。

|

||||

|

||||

下面是必须使用正确版本安装的一些软件包

|

||||

|

||||

@ -47,13 +43,11 @@

|

||||

|

||||

在因特网上搜索正确的 rpm 版本。

|

||||

|

||||

你也可以用一个查询处理多个软件包,然后在输出中查找正确版本。例如:

|

||||

|

||||

在终端中输入下面的命令

|

||||

你也可以用一个查询处理多个软件包,然后在输出中查找正确版本。例如,在终端中输入下面的命令:

|

||||

|

||||

rpm -q binutils compat-libstdc++ gcc glibc libaio libgcc libstdc++ make sysstat unixodbc

|

||||

|

||||

你的系统中必须安装了以下软件包(版本可能较新会旧)

|

||||

你的系统中必须安装了以下软件包(版本可能或新或旧)

|

||||

|

||||

- binutils-2.23.52.0.1-12.el7.x86_64

|

||||

- compat-libcap1-1.10-3.el7.x86_64

|

||||

@ -83,11 +77,7 @@

|

||||

|

||||

你也需要 unixODBC-2.3.1 或更新版本的驱动。

|

||||

|

||||

我希望你安装 Oracle 11g 的时候已经在你的 CentOS 7 上创建了名为 oracle 的用户。

|

||||

|

||||

让我们以用户 oracle 登录 CentOS。

|

||||

|

||||

以用户 oracle 登录到 CentOS 之后,在你的 CentOS上打开一个终端。

|

||||

我希望你安装 Oracle 11g 的时候已经在你的 CentOS 7 上创建了名为 oracle 的用户。让我们以用户 oracle 登录 CentOS。以用户 oracle 登录到 CentOS 之后,在你的 CentOS上打开一个终端。

|

||||

|

||||

使用终端更改工作目录并导航到你解压两个 zip 文件的目录。在终端中输入以下命令开始安装 12c。

|

||||

|

||||

@ -119,15 +109,15 @@

|

||||

|

||||

|

||||

|

||||

第七步,像下面这样使用默认的选择继续下一步。

|

||||

对于第七步,像下面这样使用默认的选择继续下一步。

|

||||

|

||||

|

||||

|

||||

在第九步,你会看到一个类似下面这样的总结报告。

|

||||

在第九步中,你会看到一个类似下面这样的总结报告。

|

||||

|

||||

|

||||

|

||||

如果一切正常,你可以点击步骤九中的 install 开始安装,进入步骤十。

|

||||

如果一切正常,你可以点击第九步中的 install 开始安装,进入第十步。

|

||||

|

||||

|

||||

|

||||

@ -135,7 +125,7 @@

|

||||

|

||||

要有耐心,一步一步走下来最后它会告诉你成功了。否则,在谷歌上搜索做必要的操作解决问题。再一次说明,由于你可能会遇到的错误有很多,我无法在这里提供所有详细介绍。

|

||||

|

||||

现在,只需要按照下面屏幕指令配置监听器

|

||||

现在,只需要按照下面屏幕指令配置监听器。

|

||||

|

||||

配置完监听器之后,它会启动数据库升级助手(Database Upgrade Assistant)。选择 Upgrade Oracle Database。

|

||||

|

||||

@ -157,7 +147,7 @@ via: http://www.unixmen.com/upgrade-from-oracle-11g-to-oracle-12c/

|

||||

|

||||

作者:[Mohammad Forhad Iftekher][a]

|

||||

译者:[ictlyh](http://www.mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -4,11 +4,11 @@

|

||||

|

||||

我知道你已经看过[如何下载 YouTube 视频][1]。但那些工具大部分都采用图形用户界面的方式。我会向你展示如何通过终端使用 youtube-dl 下载 YouTube 视频。

|

||||

|

||||

### [youtube-dl][2] ###

|

||||

### youtube-dl ###

|

||||

|

||||

youtube-dl 是基于 Python 的命令行小工具,允许你从 YouTube.com、Dailymotion、Google Video、Photobucket、Facebook、Yahoo、Metacafe、Depositfiles 以及其它一些类似网站中下载视频。它是用 pygtk 编写的,需要 Python 解析器来运行,对平台要求并不严格。它能够在 Unix、Windows 或者 Mac OS X 系统上运行。

|

||||

[youtube-dl][2] 是基于 Python 的命令行小工具,允许你从 YouTube.com、Dailymotion、Google Video、Photobucket、Facebook、Yahoo、Metacafe、Depositfiles 以及其它一些类似网站中下载视频。它是用 pygtk 编写的,需要 Python 解析器来运行,对平台要求并不严格。它能够在 Unix、Windows 或者 Mac OS X 系统上运行。

|

||||

|

||||

youtube-dl 支持断点续传。如果在下载的过程中 youtube-dl 被杀死了(例如通过 Ctrl-C 或者丢失网络连接),你只需要使用相同的 YouTube 视频 URL 再次运行它。只要当前目录中有下载的部分文件,它就会自动恢复没有完成的下载,也就是说,你不需要[下载][3]管理器来恢复下载。

|

||||

youtube-dl 支持断点续传。如果在下载的过程中 youtube-dl 被杀死了(例如通过 Ctrl-C 或者丢失网络连接),你只需要使用相同的 YouTube 视频 URL 再次运行它。只要当前目录中有下载的部分文件,它就会自动恢复没有完成的下载,也就是说,你不需要[下载管理器][3]来恢复下载。

|

||||

|

||||

#### 安装 youtube-dl ####

|

||||

|

||||

@ -16,7 +16,7 @@ youtube-dl 支持断点续传。如果在下载的过程中 youtube-dl 被杀死

|

||||

|

||||

sudo apt-get install youtube-dl

|

||||

|

||||

对于任何 Linux 发行版,你都可以通过下面的命令行接口在你的系统上快速安装 youtube-dl:

|

||||

对于任何 Linux 发行版,你都可以通过下面的命令行在你的系统上快速安装 youtube-dl:

|

||||

|

||||

sudo wget https://yt-dl.org/downloads/latest/youtube-dl -O/usr/local/bin/youtube-dl

|

||||

|

||||

@ -83,11 +83,11 @@ via: http://itsfoss.com/download-youtube-linux/

|

||||

|

||||

作者:[alimiracle][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://itsfoss.com/author/ali/

|

||||

[1]:http://itsfoss.com/download-youtube-videos-ubuntu/

|

||||

[2]:https://rg3.github.io/youtube-dl/

|

||||

[3]:http://itsfoss.com/xtreme-download-manager-install/

|

||||

[3]:https://linux.cn/article-6209-1.html

|

||||

@ -1,10 +1,10 @@

|

||||

对 Linux 用户10个有用的工具

|

||||

10 个给 Linux 用户的有用工具

|

||||

================================================================================

|

||||

|

||||

|

||||

### 引言 ###

|

||||

|

||||

在本教程中,我已经收集了对 Linux 用户10个有用的工具,其中包括各种网络监控,系统审计和一些其它实用的命令,它可以帮助用户提高工作效率。我希望你会喜欢他们。

|

||||

在本教程中,我已经收集了10个给 Linux 用户的有用工具,其中包括各种网络监控,系统审计和一些其它实用的命令,它可以帮助用户提高工作效率。我希望你会喜欢他们。

|

||||

|

||||

#### 1. w ####

|

||||

|

||||

@ -14,19 +14,18 @@

|

||||

|

||||

|

||||

|

||||

显示帮助信息

|

||||

不显示头部信息(LCTT译注:原文此处有误)

|

||||

|

||||

$w -h

|

||||

|

||||

(LCTT译注:-h为不显示头部信息)

|

||||

|

||||

显示当前用户信息

|

||||

显示指定用户的信息

|

||||

|

||||

$w <username>

|

||||

|

||||

|

||||

|

||||

#### 2. nmon ####

|

||||

|

||||

Nmon(nigel’s monitor 的简写)是一个显示系统性能信息的工具。

|

||||

|

||||

$ sudo apt-get install nmon

|

||||

@ -37,7 +36,7 @@ Nmon(nigel’s monitor 的简写)是一个显示系统性能信息的工具

|

||||

|

||||

|

||||

|

||||

nmon 可以转储与 netwrok,cpu, memory 和磁盘使用情况的信息。

|

||||

nmon 可以显示与 netwrok,cpu, memory 和磁盘使用情况的信息。

|

||||

|

||||

**nmon 显示 cpu 信息 (按 c)**

|

||||

|

||||

@ -53,7 +52,7 @@ nmon 可以转储与 netwrok,cpu, memory 和磁盘使用情况的信息。

|

||||

|

||||

#### 3. ncdu ####

|

||||

|

||||

是一个基于‘du’的光标版本的命令行程序,这个命令是用来分析各种目录占用的磁盘空间。

|

||||

是一个支持光标的`du`程序,这个命令是用来分析各种目录占用的磁盘空间。

|

||||

|

||||

$apt-get install ncdu

|

||||

|

||||

@ -71,7 +70,7 @@ nmon 可以转储与 netwrok,cpu, memory 和磁盘使用情况的信息。

|

||||

|

||||

#### 4. slurm ####

|

||||

|

||||

一个基于网络接口的带宽监控命令行程序,它会基于图形来显示 ascii 文件。

|

||||

一个基于网络接口的带宽监控命令行程序,它会用字符来显示文本图形。

|

||||

|

||||

$ apt-get install slurm

|

||||

|

||||

@ -94,7 +93,7 @@ nmon 可以转储与 netwrok,cpu, memory 和磁盘使用情况的信息。

|

||||

|

||||

#### 5.findmnt ####

|

||||

|

||||

Findmnt 命令用于查找挂载的文件系统。它是用来列出安装设备,当需要时也可以挂载或卸载设备,它也是 util-linux 的一部分。

|

||||

Findmnt 命令用于查找挂载的文件系统。它用来列出安装设备,当需要时也可以挂载或卸载设备,它是 util-linux 软件包的一部分。

|

||||

|

||||

例子:

|

||||

|

||||

@ -122,7 +121,7 @@ Findmnt 命令用于查找挂载的文件系统。它是用来列出安装设备

|

||||

|

||||

#### 6. dstat ####

|

||||

|

||||

一种组合和灵活的工具,它可用于监控内存,进程,网络和磁盘的性能,它可以用来取代 ifstat, iostat, dmstat 等。

|

||||

一种灵活的组合工具,它可用于监控内存,进程,网络和磁盘性能,它可以用来取代 ifstat, iostat, dmstat 等。

|

||||

|

||||

$apt-get install dstat

|

||||

|

||||

@ -134,27 +133,27 @@ Findmnt 命令用于查找挂载的文件系统。它是用来列出安装设备

|

||||

|

||||

|

||||

|

||||

- **-c** cpu

|

||||

**-c** cpu

|

||||

|

||||

$ dstat -c

|

||||

|

||||

|

||||

|

||||

显示 cpu 的详细信息。

|

||||

|

||||

$ dstat -cdl -D sda1

|

||||

|

||||

|

||||

|

||||

- **-d** 磁盘

|

||||

**-d** 磁盘

|

||||

|

||||

$ dstat -d

|

||||

|

||||

|

||||

|

||||

显示 cpu、磁盘等的详细信息。

|

||||

|

||||

$ dstat -cdl -D sda1

|

||||

|

||||

|

||||

|

||||

#### 7. saidar ####

|

||||

|

||||

另一种基于 CLI 的系统统计数据监控工具,提供了有关磁盘使用,网络,内存,交换等信息。

|

||||

另一种基于命令行的系统统计数据监控工具,提供了有关磁盘使用,网络,内存,交换分区等信息。

|

||||

|

||||

$ sudo apt-get install saidar

|

||||

|

||||

@ -172,7 +171,7 @@ Findmnt 命令用于查找挂载的文件系统。它是用来列出安装设备

|

||||

|

||||

#### 8. ss ####

|

||||

|

||||

ss(socket statistics)是一个很好的选择来替代 netstat,它从内核空间收集信息,比 netstat 的性能更好。

|

||||

ss(socket statistics)是一个很好的替代 netstat 的选择,它从内核空间收集信息,比 netstat 的性能更好。

|

||||

|

||||

例如:

|

||||

|

||||

@ -196,7 +195,7 @@ ss(socket statistics)是一个很好的选择来替代 netstat,它从内

|

||||

|

||||

#### 9. ccze ####

|

||||

|

||||

一个自定义日志格式的工具 :).

|

||||

一个美化日志显示的工具 :).

|

||||

|

||||

$ apt-get install ccze

|

||||

|

||||

@ -222,7 +221,7 @@ ss(socket statistics)是一个很好的选择来替代 netstat,它从内

|

||||

|

||||

一种基于 Python 的终端工具,它可以用来以图形方式显示系统活动状态。详细信息以一个丰富多彩的柱状图来展示。

|

||||

|

||||

安装 python:

|

||||

安装 python(LCTT 译注:一般来说,你应该已经有了 python,不需要此步):

|

||||

|

||||

$ sudo apt-add-repository ppa:fkrull/deadsnakes

|

||||

|

||||

@ -234,7 +233,7 @@ ss(socket statistics)是一个很好的选择来替代 netstat,它从内

|

||||

|

||||

$ sudo apt-get install python3.2

|

||||

|

||||

- [下载 ranwhen.py][1]

|

||||

[点此下载 ranwhen.py][1]

|

||||

|

||||

$ unzip ranwhen-master.zip && cd ranwhen-master

|

||||

|

||||

@ -246,7 +245,7 @@ ss(socket statistics)是一个很好的选择来替代 netstat,它从内

|

||||

|

||||

### 结论 ###

|

||||

|

||||

这都是些冷门但重要的 Linux 管理工具。他们可以在日常生活中帮助用户。在我们即将发表的文章中,我们会尽量多带来些管理员/用户工具。

|

||||

这都是些不常见但重要的 Linux 管理工具。他们可以在日常生活中帮助用户。在我们即将发表的文章中,我们会尽量多带来些管理员/用户工具。

|

||||

|

||||

玩得愉快!

|

||||

|

||||

@ -256,7 +255,7 @@ via: http://www.unixmen.com/10-useful-utilities-linux-users/

|

||||

|

||||

作者:[Rajneesh Upadhyay][a]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

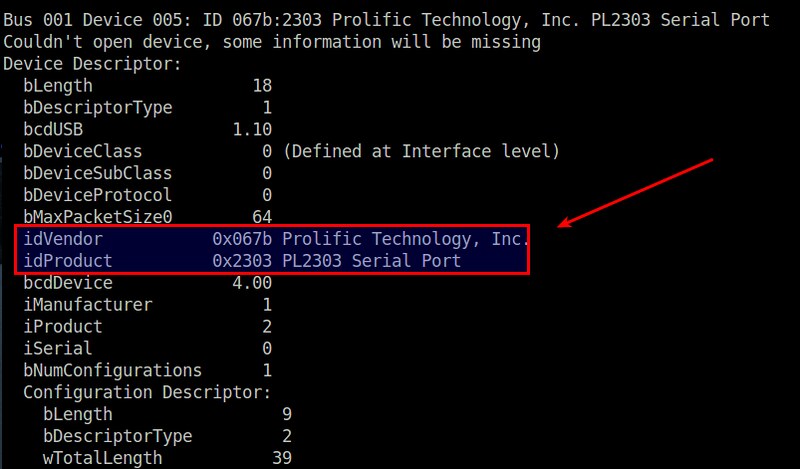

@ -1,26 +1,26 @@

|

||||

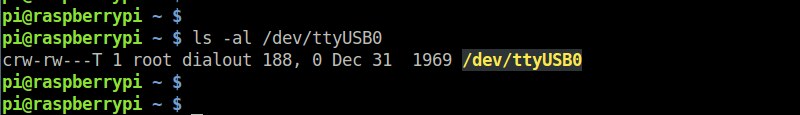

Linux有问必答 -- 如何在LInux中永久修改USB设备权限

|

||||

Linux 有问必答:如何在 Linux 中永久修改 USB 设备权限

|

||||

================================================================================

|

||||

> **提问**:当我尝试在Linux中运行USB GPS接收器时我遇到了下面来自gpsd的错误。

|

||||

> **提问**:当我尝试在 Linux 中运行 USB GPS 接收器时我遇到了下面来自 gpsd 的错误。

|

||||

>

|

||||

> gpsd[377]: gpsd:ERROR: read-only device open failed: Permission denied

|

||||

> gpsd[377]: gpsd:ERROR: /dev/ttyUSB0: device activation failed.

|

||||

> gpsd[377]: gpsd:ERROR: device open failed: Permission denied - retrying read-only

|

||||

>

|

||||

> 看上去gpsd没有权限访问USB设备(/dev/ttyUSB0)。我该如何永久修改它在Linux上的权限?

|

||||

> 看上去 gpsd 没有权限访问 USB 设备(/dev/ttyUSB0)。我该如何永久修改它在Linux上的权限?

|

||||

|

||||

当你在运行一个会读取或者写入USB设备的进程时,进程的用户/组必须有权限这么做。当然你可以手动用chmod命令改变USB设备的权限,但是手动的权限改变只是暂时的。USB设备会在下次重启时恢复它的默认权限。