mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

6c009e04ac

@ -0,0 +1,94 @@

|

||||

GNU/Linux,爱憎由之

|

||||

==================

|

||||

|

||||

首先,我能确定本文提及的内容一定会造成激烈的辩论,从之前那篇 [我讨厌 GNU/Linux 的五个理由 – 你呢,爱还是恨?][1] 的页底评论区就可见一斑。

|

||||

|

||||

也因此,我在此没有使用恨 (hate) 这个词,那会让我感觉很不舒服,所以我觉得用不喜欢 (dislike) 来代替更合适。

|

||||

|

||||

[][2]

|

||||

|

||||

*关于 Linux,我所不喜欢的 5 件事。*

|

||||

|

||||

也就是说,请读者记住,文中的观点完完全全出于我个人和自身的经历,而这些想法和经历可能会和他人的相似,也可能相去甚远。

|

||||

|

||||

此外,我也意识到,这些所谓的不喜欢(dislike)是与经验相关的,Linux 就是这个样子。然而,但正是这些事实阻碍了新用户做出迁移系统的决定。

|

||||

|

||||

像从前一样,随时留下评论并展开讨论,或者提出任何其他符合本文主题的观点。

|

||||

|

||||

### 不喜欢理由之一:从 Windows 迁移到 Linux 对用户来说是个陡峭的学习曲线

|

||||

|

||||

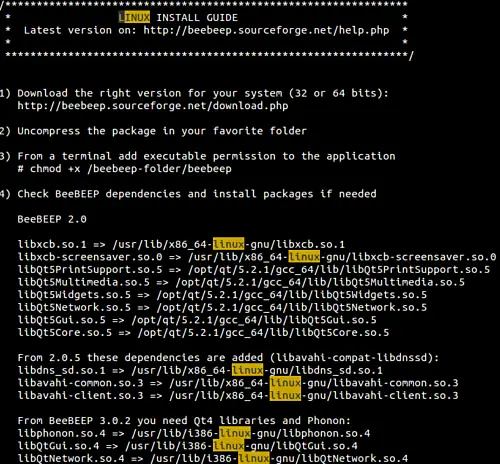

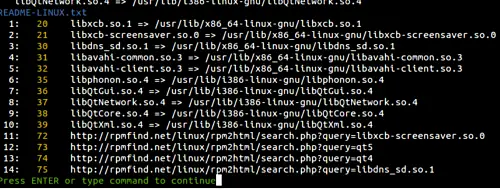

如果说使用 Windows 已经成为了你生活中不可缺少的一个部分,那么你在 Linux 电脑上安装一个新软件之前,还必须要习惯和理解诸如远程仓库(repository)、依赖关系(dependency)、包(package)和包管理器(package manager)等概念。

|

||||

|

||||

不久你也会发现,仅仅使用鼠标点击一个可执行程序是很难完成某个程序的安装的。或者由于一些原因,你没有可用的网络,那么安装一个你想要的软件会是一件非常累人的任务。

|

||||

|

||||

### 不喜欢理由之二:独立学习使用仍存在困难

|

||||

|

||||

类似理由一,事实上,最开始独立学习 Linux 知识的时候,很多人都会觉得那是一个巨大挑战。尽管网上有数以千万计的教程和 [大量的好书][3],但初学者也会因此烦了选择困难症,不知从何开始学习。

|

||||

|

||||

此外,数不清的社区 (比如:[linuxsay.com][4]) 论坛中都有大量的有经验用户为大家无偿提供(通常都是这样的)解答,但不幸的是,这些问题的解答并不完全可信、或者与新用户的经验和知识层面不匹配,导致用户无法理解。

|

||||

|

||||

事实上,因为有太多的发行版系列及其衍生版本可以获取,这使得我们有必要向第三方机构付费,让他们指引我们走向 Linux 世界的第一步、了解这些发行版系列之间的相同点以及区别。

|

||||

|

||||

### 不喜欢理由之三:新老系统/软件迁移问题

|

||||

|

||||

一旦你下定决心开始使用 Linux,那么无论是在家里或是办公室,也无论是个人版或者企业级,你都要完全从旧系统向新系统迁移,然后要考虑这些年来你所使用的软件在 Linux 平台上的替代产品。

|

||||

|

||||

而这确实令人矛盾不已,特别是要面对相同类型(比如文本处理器、关系型数据库系统、图形套件等) 的多个不同程序,而又没有受过专业指导和训练,那么很多人都下定不了决心要使用哪个好。

|

||||

|

||||

除非有可敬的有经验用户或者教学视频进行指导,否则存在太多的软件实例给用户进行选择,真的会让人走进误区。

|

||||

|

||||

### 不喜欢理由之四:缺乏硬件厂商的驱动支持

|

||||

|

||||

恐怕没有人能否认这样的事实,Linux 走过了漫长的历史,它的第一个内核版本公布已经有 20 多年了(LCTT 译注:准确说是将近 26 年了,1991.10.05 - 2017.02,相信现今很多我们这些 Linux 用户在第一个内核版本公布的时候都还没出生,包括译者在内)。随着越来越多的设备驱动编译进每次发布的稳定内核中、越来越多的厂商开始支持研究和开发兼容 Linux 的设备驱动,Linux 用户们不再会经常遇到设备运行不正常的情况了,但还是会偶尔遭遇的。

|

||||

|

||||

并且,如果你的个人计算或者公司业务需要一个特殊设备,但恰巧又没有现成的 Linux 驱动,你还得困在 Windows 或者其他有驱动支持的其他系统。

|

||||

|

||||

尽管你经常这样提醒自己:“闭源软件真他妈邪恶!”,但事实上的确有闭源软件,并且不幸的是,出于商业需求我们多数情况还是被迫使用它。

|

||||

|

||||

### 不喜欢理由之五:Linux 的主要力量仍在于服务器

|

||||

|

||||

这么说吧,我加入 Linux 阵营的主要原因是多年前它将一台老电脑生机焕发并能够正常使用让我看到了它的前景。花费了一段时间来解决不喜欢理由之一、之二中遇到的那些问题,并且成功使用一台 566 MHz 赛扬处理器、10 GB IDE 硬盘以及仅有 256 MB 内存的机器搭载 Debian Squeeze 建立起一个家庭文件/打印/ Web 服务于一体的服务器之后,我非常开心。

|

||||

|

||||

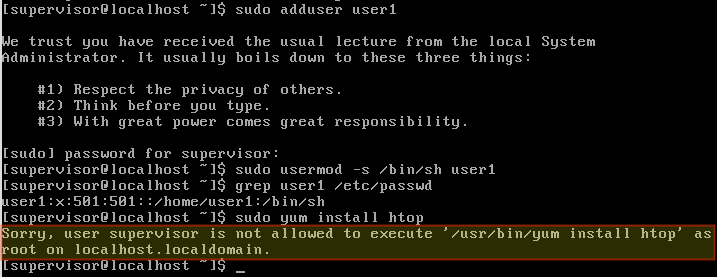

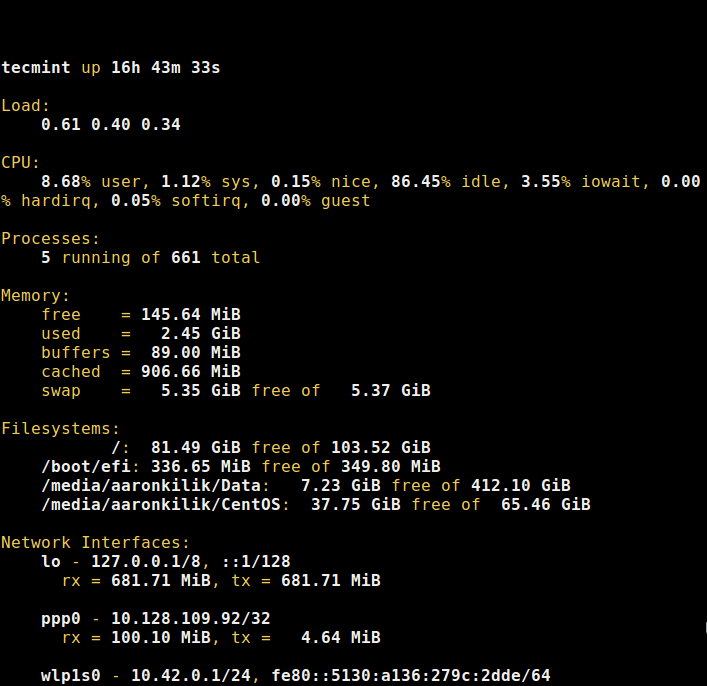

当我发现即便是处于高负载的情况,[htop 显示][5] 系统资源消耗才勉强到达一半,这令非常我惊喜。

|

||||

|

||||

你可能已经不停在再问自己,文中明明说的是不喜欢 Linux,为什么还提这些事呢?答案很简单,我是需要一个比较好的 Linux 桌面发行版来运行在一台相对老旧的电脑上。当然我并不指望能够有那么一个发行版可以运行上述提到那种硬件特征的电脑上,但我的确没有发现有任何一款外观漂亮的可定制桌面系统能运行在 1 GB 内存以下的电脑中,如果可以,其速度大概比鼻涕虫还慢吧。

|

||||

|

||||

我想在此重申一下:我是说“我没发现”,而非“不存在”。可能某天我会发现一个较好的 Linux 桌面发行版能够用在我房间里那台寿终正寝的笔记本上。如果那天真的到来,我将首先删除这篇文章,并向它竖起大拇指。

|

||||

|

||||

### 总而言之

|

||||

|

||||

在本文中,我也尝试了提及 Linux 在某些地方仍需不断改进。我是一名幸福的 Linux 用户,并由衷地感谢那些杰出的社区不断为 Linux 系统、组件和其他功能做出贡献。我想重复一下我在本文开头说的 —— 这些明显的不足点,如果从适当的角度去看也是一种优势,或者也快了吧。

|

||||

|

||||

在那到来之前,让我们相互支持,一起学习并帮助 Linux 成长和传播。随时在下方留下你的评论和问题 —— 我们期待你不同的观点。

|

||||

|

||||

-------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Gabriel Cánepa —— 一位来自阿根廷圣路易斯梅塞德斯镇 (Villa Mercedes, San Luis, Argentina) 的 GNU/Linux 系统管理员,Web 开发者。就职于一家世界领先级的消费品公司,乐于在每天的工作中能使用 FOSS 工具来提高生产力。

|

||||

|

||||

-------------------------------

|

||||

|

||||

译者简介:

|

||||

|

||||

[GHLandy](http://GHLandy.com) —— 生活中所有欢乐与苦闷都应藏在心中,有些事儿注定无人知晓,自己也无从说起。

|

||||

|

||||

-------------------------------

|

||||

|

||||

via: http://www.tecmint.com/things-i-dislike-and-love-about-gnu-linux/

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[GHLandy](https://github.com/GHLandy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/gacanepa/

|

||||

[1]:https://linux.cn/article-3855-1.html

|

||||

[2]:http://www.tecmint.com/wp-content/uploads/2015/11/Things-I-Dislike-About-Linux.png

|

||||

[3]:http://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/

|

||||

[4]:http://linuxsay.com/

|

||||

[5]:http://www.tecmint.com/install-htop-linux-process-monitoring-for-rhel-centos-fedora/

|

||||

[6]:http://www.tecmint.com/author/gacanepa/

|

||||

[7]:http://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/

|

||||

[8]:http://www.tecmint.com/free-linux-shell-scripting-books/

|

||||

@ -1,53 +1,57 @@

|

||||

获取、安装和制作 GTK 主题

|

||||

----------------

|

||||

如何获取、安装和制作 GTK 主题

|

||||

=====================

|

||||

|

||||

非常多的桌面版 Linux 都支持主题。GUI(译者注:图形用户界面)独有的外观或者”风格“叫做主题。用户可以改变主题让桌面看起来与众不同。通常,用户也会更改图标。然而,主题和图标包是两个独立的实体。很多人想制作他们自己的主题,因此这是一篇关于GTK 主题的制作以及各种制作时必需的信息的文章。

|

||||

多数桌面版 Linux 都支持主题。GUI(LCTT 译注:图形用户界面)独有的外观或者“风格”叫做主题。用户可以改变主题让桌面看起来与众不同。通常,用户也会更改图标,然而,主题和图标包是两个独立的实体。很多人想制作他们自己的主题,因此这是一篇关于 GTK 主题的制作以及各种制作时所必需的信息的文章。

|

||||

|

||||

**注意:** 这篇文章着重讨论 GTK3,但会稍微谈一下 GTK2、Metacity 等。本文不会讨论光标和图标。

|

||||

|

||||

**基本概念**

|

||||

GIMP 工具包(简称 GTK)是一个用来创造在多种系统上(如此造就了 GTK 的跨平台)图形用户界面的构件工具包。GTK([http://www.gtk.org/][17])通常被错误地认为代表“GNOME 工具包”,但实际上它代表“GIMP 工具包”,因为最初创造它是为了给 GIMP 设计用户界面。GTK 是一个用 C 语言编写的面向对象工具包(GTK 本身不是一种语言)。GTK 遵循 LGPL协议完全开源。GTK 是一个使用广泛的图形用户界面工具包,它含有很多可用工具。

|

||||

###基本概念

|

||||

|

||||

GTK 主题无法在基于 QT 的应用上使用。QT 主题需要在基于 QT 的应用上使用。

|

||||

GIMP 工具包(简称 GTK)是一个用来在多种系统上(因此造就了 GTK 的跨平台)创建图形用户界面的构件工具包。GTK([http://www.gtk.org/][17])通常被误认为代表“GNOME 工具包”,但实际上它代表“GIMP 工具包”,因为最初创造它是为了给 GIMP 设计用户界面。GTK 是一个用 C 语言编写的面向对象工具包(GTK 本身不是一种语言)。GTK 遵循 LGPL 协议完全开源。GTK 是一个广泛使用的图形用户界面工具包,它含有很多用于 GTK 的工具。

|

||||

|

||||

主题使用层叠样式表(CSS)来生成主题样式。这里的 CSS 和网站开发者在网页上使用的相同。然而它引用的 HTML 标签被 GTK 构件的专用标签代替。学习 CSS 对主题开发者来说很重要。

|

||||

为 GTK 制作的主题无法用在基于 Qt 的应用上。QT 应用需要使用 Qt 主题。

|

||||

|

||||

**主题存放位置**

|

||||

主题可能会存储在“~/.themes”或者“/usr/share/themes”文件夹中。存放在“~/.themes”文件夹下的主题只有此 home 文件夹的所有者可以使用。而存放在“/usr/share/themes”文件夹下的全局主题可供所有用户使用。当执行 GTK 程序时,它会按照某种确定的顺序检查可用主题文件的列表。如果没有找到主题文件,它会尝试检查列表中的下一个文件。下述文字是 GTK3 程序检查时的顺序列表。

|

||||

$XDG_CONFIG_HOME/gtk-3.0/gtk.css (另一写法 ~/.config/gtk-3.0/gtk.css)

|

||||

~/.themes/NAME/gtk-3.0/gtk.css

|

||||

$datadir/share/themes/NAME/gtk-3.0/gtk.css (另一写法 /usr/share/themes/name/gtk-3.0/gtk.css)

|

||||

主题使用层叠样式表(CSS)来生成主题样式。这里的 CSS 和网站开发者在网页上使用的相同。然而不是引用 HTML 标签,而是引用 GTK 构件的专用标签。学习 CSS 对主题开发者来说很重要。

|

||||

|

||||

**注意:** ”NAME“是当前主题名称的占位符。

|

||||

### 主题存放位置

|

||||

|

||||

如果有两个主题名字相同,那么存放在用户 home 文件夹(~/.themes)里的主题会被使用。开发者测试存放在本地 home 文件夹的主题时可以好好的利用 GTK 的主题查找算法。

|

||||

主题可能会存储在 `~/.themes` 或者 `/usr/share/themes` 文件夹中。存放在 `~/.themes` 文件夹下的主题只有此 home 文件夹的所有者可以使用。而存放在 `/usr/share/themes` 文件夹下的全局主题可供所有用户使用。当执行 GTK 程序时,它会按照某种确定的顺序检查可用主题文件的列表。如果没有找到主题文件,它会尝试检查列表中的下一个文件。下述文字是 GTK3 程序检查时的顺序列表。

|

||||

|

||||

1. `$XDG_CONFIG_HOME/gtk-3.0/gtk.css` (另一写法 `~/.config/gtk-3.0/gtk.css`)

|

||||

2. `~/.themes/NAME/gtk-3.0/gtk.css`

|

||||

3. `$datadir/share/themes/NAME/gtk-3.0/gtk.css` (另一写法 `/usr/share/themes/name/gtk-3.0/gtk.css`)

|

||||

|

||||

**注意:** “NAME”代表当前主题名称。

|

||||

|

||||

如果有两个主题名字相同,那么存放在用户 home 文件夹(`~/.themes`)里的主题会被优先使用。开发者可以利用这个 GTK 主题查找算法的优势来测试存放在本地 home 文件夹的主题。

|

||||

|

||||

### 主题引擎

|

||||

|

||||

**主题引擎**

|

||||

主题引擎是软件的一部分,用来改变图形用户界面构件的外观。引擎通过解析主题文件来了解应当绘制多少种构件。有些引擎随着主题被开发出来。每种引擎都有优点和缺点,还有些引擎添加了某些特性和特色。

|

||||

|

||||

从默认软件源中可以获取很多主题引擎。Debian 系的 Linux 发行版可以执行“apt-get install gtk2-engines-murrine gtk2-engines-pixbuf gtk3-engines-unico”命令来安装三种不同的引擎。很多引擎同时支持 GTK2 和 GTK3。以下述短列表为例。

|

||||

从默认软件源中可以获取很多主题引擎。Debian 系的 Linux 发行版可以执行 `apt-get install gtk2-engines-murrine gtk2-engines-pixbuf gtk3-engines-unico` 命令来安装三种不同的引擎。很多引擎同时支持 GTK2 和 GTK3。以下述列表为例:

|

||||

|

||||

* gtk2-engines-aurora - Aurora GTK2 engine

|

||||

* gtk2-engines-pixbuf - Pixbuf GTK2 engine

|

||||

* gtk3-engines-oxygen - Engine port of the Oxygen widget style to GTK

|

||||

* gtk3-engines-unico - Unico GTK3 engine

|

||||

* gtk3-engines-xfce - GTK3 engine for Xfce

|

||||

* gtk2-engines-aurora - Aurora GTK2 引擎

|

||||

* gtk2-engines-pixbuf - Pixbuf GTK2 引擎

|

||||

* gtk3-engines-oxygen - 将 Oxygen 组件风格移植 GTK 的引擎

|

||||

* gtk3-engines-unico - Unico GTK3 引擎

|

||||

* gtk3-engines-xfce - 用于 Xfce 的 GTK3 引擎

|

||||

|

||||

### 创作 GTK3 主题

|

||||

|

||||

**创作 GTK3 主题**

|

||||

开发者创作 GTK3 主题时,或者从空文件着手,或者将已有的主题作为模板。从现存主题着手可能会对新手有帮助。比如,开发者可以把主题复制到用户的 home 文件夹,然后编辑这些文件。

|

||||

|

||||

GTK3 主题的通用格式是新建一个以主题名字命名的文件夹。然后新建一个名为“gtk-3.0”的子目录,在子目录里新建一个名为“gtk.css”的文件。在文件“gtk.css“里,使用 CSS 代码写出主题的外观。为了测试将主题移动到 ~/.theme 里。使用新主题并在必要时进行改进。如果有需求,开发者可以添加额外的组件,使主题支持 GTK2,Openbox,Metacity,Unity等桌面环境。

|

||||

GTK3 主题的通用格式是新建一个以主题名字命名的文件夹。然后新建一个名为 `gtk-3.0` 的子目录,在子目录里新建一个名为 `gtk.css` 的文件。在文件 `gtk.css` 里,使用 CSS 代码写出主题的外观。为了测试可以将主题移动到 `~/.theme` 里。使用新主题并在必要时进行改进。如果有需求,开发者可以添加额外的组件,使主题支持 GTK2、Openbox、Metacity、Unity 等桌面环境。

|

||||

|

||||

为了阐明如何创造主题,我们会学习”Ambiance“主题,通常可以在 /usr/share/themes/Ambiance 找到它。此目录包含下面列出的子目录以及一个名为”index.theme“的文件。

|

||||

为了阐明如何创造主题,我们会学习 Ambiance 主题,通常可以在 `/usr/share/themes/Ambiance` 找到它。此目录包含下面列出的子目录以及一个名为 `index.theme` 的文件。

|

||||

|

||||

* gtk-2.0

|

||||

* gtk-3.0

|

||||

* metacity-1

|

||||

* unity

|

||||

* gtk-2.0

|

||||

* gtk-3.0

|

||||

* metacity-1

|

||||

* unity

|

||||

|

||||

“**index.theme**”含有元数据(比如主题的名字)和一些重要的配置(比如按钮的布局)。下面是”Ambiance“主题的”index.theme“文件内容。

|

||||

`index.theme` 含有元数据(比如主题的名字)和一些重要的配置(比如按钮的布局)。下面是 Ambiance 主题的 `index.theme` 文件内容。

|

||||

|

||||

代码:

|

||||

```

|

||||

[Desktop Entry]

|

||||

Type=X-GNOME-Metatheme

|

||||

@ -64,9 +68,8 @@ ButtonLayout=close,minimize,maximize:

|

||||

X-Ubuntu-UseOverlayScrollbars=true

|

||||

```

|

||||

|

||||

”**gtk-2.0**“目录包括支持 GTK2 的文件,比如文件”gtkrc“和文件夹”apps“。文件夹”apps“包括具体程序的 GTK 配置。文件”gtkrc“是 GTK2 部分的主要 CSS 文件。下面是 /usr/share/themes/Ambiance/gtk-2.0/apps/nautilus.rc 文件的内容。

|

||||

`gtk-2.0` 目录包括支持 GTK2 的文件,比如文件 `gtkrc` 和文件夹 `apps`。文件夹 `apps` 包括具体程序的 GTK 配置。文件 `gtkrc` 是 GTK2 部分的主要 CSS 文件。下面是 `/usr/share/themes/Ambiance/gtk-2.0/apps/nautilus.rc` 文件的内容。

|

||||

|

||||

代码:

|

||||

```

|

||||

# ==============================================================================

|

||||

# NAUTILUS SPECIFIC SETTINGS

|

||||

@ -81,9 +84,8 @@ widget_class "*Nautilus*<GtkButton>" style "notebook_button"

|

||||

widget_class "*Nautilus*<GtkButton>*<GtkLabel>" style "notebook_button"

|

||||

```

|

||||

|

||||

”**gtk-3.0**“目录里是 GTK3 的文件。GTK3 使用”gtk.css“取代了"gtkrc",作为主文件。对于 Ambiance 主题,此文件有一行‘@import url("gtk-main.css");’。”settings.ini“是重要的主题范围配置文件。GTK3 主题的”apps“目录和 GTK2 有同样的作用。”assets“目录里有单选按钮、多选框等的图像文件。下面是 /usr/share/themes/Ambiance/gtk-3.0/gtk-main.css 的内容。

|

||||

`gtk-3.0` 目录里是 GTK3 的文件。GTK3 使用 `gtk.css` 取代了 `gtkrc` 作为主文件。对于 Ambiance 主题,此文件有一行 `@import url("gtk-main.css");`。`settings.ini` 包含重要的主题级配置。GTK3 主题的 `apps` 目录和 GTK2 有同样的作用。`assets` 目录里有单选按钮、多选框等的图像文件。下面是 `/usr/share/themes/Ambiance/gtk-3.0/gtk-main.css` 的内容。

|

||||

|

||||

代码:

|

||||

```

|

||||

/*default color scheme */

|

||||

@define-color bg_color #f2f1f0;

|

||||

@ -155,14 +157,14 @@ widget_class "*Nautilus*<GtkButton>*<GtkLabel>" style "notebook_button"

|

||||

@import url("public-colors.css");

|

||||

```

|

||||

|

||||

”**metacity-1**“文件夹含有 Metacity 窗口管理器按钮(比如”关闭窗口“按钮)的图像文件。此目录还有一个名为”metacity-theme-1.xml“的文件,包括了主题的元数据(像开发者的名字)和主题设计。然而,主题的 Metacity 部分使用 XML 文件而不是 CSS 文件。

|

||||

`metacity-1` 文件夹含有 Metacity 窗口管理器按钮(比如“关闭窗口”按钮)的图像文件。此目录还有一个名为 `metacity-theme-1.xml` 的文件,包括了主题的元数据(像开发者的名字)和主题设计。然而,主题的 Metacity 部分使用 XML 文件而不是 CSS 文件。

|

||||

|

||||

”**unity**“文件夹含有 Unity 按钮使用的 SVG 文件。除了 SVG 文件,这里没有其他的文件。

|

||||

`unity` 文件夹含有 Unity 按钮使用的 SVG 文件。除了 SVG 文件,这里没有其他的文件。

|

||||

|

||||

一些主题可能也会包含其他的目录。比如, Clearlooks-Phenix 主题有名为 `openbox-3` 和 `xfwm4` 的文件夹。`openbox-3` 文件夹仅有一个 `themerc` 文件,声明了主题配置和外观(下面有文件示例)。`xfwm4` 目录含有几个 xpm 文件、几个 png 图像文件(在 `png` 文件夹里)、一个 `README` 文件,还有个包含了主题配置的 `themerc` 文件(就像下面看到的那样)。

|

||||

|

||||

一些主题可能也会包含其他的目录。比如,“Clearlooks-Phenix”主题有名为”**openbox-3**”和“**xfwm4**“的文件夹。”openbox-3“文件夹仅有一个”themerc“文件,声明了主题配置和外观(下面有文件示例)。”xfwm4“目录含有几个 xpm 文件,几个 png 图像文件(在”png“文件夹里),一个”README“文件,还有个包含了主题配置的”themerc“文件(就像下面看到的那样)。

|

||||

/usr/share/themes/Clearlooks-Phenix/xfwm4/themerc

|

||||

|

||||

代码:

|

||||

```

|

||||

# Clearlooks XFWM4 by Casey Kirsle

|

||||

|

||||

@ -182,7 +184,6 @@ title_vertical_offset_inactive=1

|

||||

|

||||

/usr/share/themes/Clearlooks-Phenix/openbox-3/themerc

|

||||

|

||||

代码:

|

||||

```

|

||||

!# Clearlooks-Evolving

|

||||

!# Clearlooks as it evolves in gnome-git...

|

||||

@ -349,16 +350,17 @@ osd.unhilight.bg.color: #BABDB6

|

||||

osd.unhilight.bg.colorTo: #efefef

|

||||

```

|

||||

|

||||

**测试主题**

|

||||

在创作主题时,测试主题并且微调代码对得到想要的样子是很有帮助的。有相当的开发者想要用到”主题预览器“这样的工具呢。幸运的是,已经有了。

|

||||

### 测试主题

|

||||

|

||||

* GTK+ Change Theme - 这个程序可以更改 GTK 主题,开发者可以用它预览主题。这个程序由一个含有很多构件的窗口组成,因此可以为主题提供一个完整的预览。要安装它,只需输入命令”apt-get install gtk-chtheme“。

|

||||

* GTK Theme Switch - 用户可以使用它轻松的更换用户主题。测试主题时确保打开了一些应用,方便预览效果。要安装它,只需输入命令”apt-get install gtk-theme-switch“,然后在终端敲出”gtk-theme-switch2“即可运行。

|

||||

* LXappearance - 它可以更换主题,图标以及字体。

|

||||

* PyWF - 这是”The Widget Factory“的一个基于 Python 的可选组件。可以在[http://gtk-apps.org/content/show.php/PyTWF?content=102024][1]获取Pywf。

|

||||

* The Widget Factory - 这是一个古老的 GTK 预览器。要安装它,只需输入命令”apt-get install thewidgetfactory",然后在终端敲出“twf”即可运行。

|

||||

在创作主题时,测试主题并且微调代码对得到想要的样子是很有帮助的。有相当的开发者想要用到“主题预览器”这样的工具。幸运的是,已经有了。

|

||||

|

||||

**主题下载**

|

||||

* GTK+ Change Theme - 这个程序可以更改 GTK 主题,开发者可以用它预览主题。这个程序由一个含有很多构件的窗口组成,因此可以为主题提供一个完整的预览。要安装它,只需输入命令 `apt-get install gtk-chtheme`。

|

||||

* GTK Theme Switch - 用户可以使用它轻松地更换用户主题。测试主题时确保打开了一些应用,方便预览效果。要安装它,只需输入命令 `apt-get install gtk-theme-switch`,然后在终端敲出 `gtk-theme-switch2` 即可运行。

|

||||

* LXappearance - 它可以更换主题,图标以及字体。

|

||||

* PyWF - 这是基于 Python 开发的一个 The Widget Factory 的替代品。可以在 [http://gtk-apps.org/content/show.php/PyTWF?content=102024][1] 获取 PyWF。

|

||||

* The Widget Factory - 这是一个古老的 GTK 预览器。要安装它,只需输入命令 `apt-get install thewidgetfactory`,然后在终端敲出 `twf` 即可运行。

|

||||

|

||||

### 主题下载

|

||||

|

||||

* Cinnamon - [http://gnome-look.org/index.php?xcontentmode=104][2]

|

||||

* Compiz - [http://gnome-look.org/index.php?xcontentmode=102][3]

|

||||

@ -370,7 +372,7 @@ osd.unhilight.bg.colorTo: #efefef

|

||||

* Metacity - [http://gnome-look.org/index.php?xcontentmode=101][9]

|

||||

* Ubuntu Themes - [http://www.ubuntuthemes.org/][10]

|

||||

|

||||

**延伸阅读**

|

||||

### 延伸阅读

|

||||

|

||||

* Graphical User Interface (GUI) Reading Guide - [http://www.linux.org/threads/gui-reading-guide.6471/][11]

|

||||

* GTK - [http://www.linux.org/threads/understanding-gtk.6291/][12]

|

||||

@ -385,7 +387,7 @@ via: http://www.linux.org/threads/installing-obtaining-and-making-gtk-themes.846

|

||||

|

||||

作者:[DevynCJohnson][a]

|

||||

译者:[fuowang](https://github.com/fuowang)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -3,19 +3,19 @@ Docker 是什么?

|

||||

|

||||

|

||||

|

||||

这是一段摘录,取自于 Karl Matthias 和 Sean P. Kane 撰写的 [Docker: Up and Running][3]。其中或许包含一些引用到不可用内容,因为那些是整篇大文章中的一部分。

|

||||

> 这是一篇摘录,取自于 Karl Matthias 和 Sean P. Kane 撰写的 [Docker 即学即用][3]。其中或许包含一些引用到本文中没有的内容,因为那些是整本书中的一部分。

|

||||

|

||||

2013 年 3 月 15 日,在加利福尼亚州圣克拉拉召开的 Python 开发者大会上,dotCloud 的创始人兼首席执行官 Solomon Hvkes 在一场仅五分钟的[微型演讲][4]中,首次提出了 Docker 这一概念。当时,仅约 40 人(除 dotCloud 内部人员)获得了使用 Docker 的机会。

|

||||

|

||||

这在之后的几周内,有关 Docker 的新闻铺天盖地。随后这个项目很快在 [Github][5] 上开源,任何人都可以下载它并为其做出贡献。在之后的几个月中,越来越多的业界人士开始听说 Docker 以及它是如何彻底地改变了软件开发,交付和运行的方式。一年之内,Docker 的名字几乎无人不知无人不晓,但还是有很多人不太明白 Docker 究竟是什么,人们为何如此兴奋。

|

||||

这在之后的几周内,有关 Docker 的新闻铺天盖地。随后这个项目很快在 [Github][5] 上开源,任何人都可以下载它并为其做出贡献。在之后的几个月中,越来越多的业界人士开始听说 Docker 以及它是如何彻底地改变了软件的开发、交付和运行的方式。一年之内,Docker 的名字几乎无人不知无人不晓,但还是有很多人不太明白 Docker 究竟是什么,人们为何如此兴奋。

|

||||

|

||||

Docker 是一个工具,它致力于为任何应用程序创建分发版本而简化封装流程,将其部署到各种规模的环境中,并将敏捷软件组织的工作流程和响应流水化。

|

||||

|

||||

### Docker 带来的希望

|

||||

|

||||

虽然表面上被视为一个虚拟化平台,但 Docker 远远不止如此。Docker 涉及的领域横跨了业界多个方面,包括 KVM, Xen, OpenStack, Mesos, Capistrano, Fabric, Ansible, Chef, Puppet, SaltStack 等技术。或许你已经发现了,在 Docker 的竞争产品列表中有一些很值得关注。例如,大多数工程师不会说,虚拟化产品和配置管理工具是竞争关系,但 Docker 和这两种技术都有点关系。前面列举的一些技术常常因其提高了工作效率而获得称赞,这就导致了大量的探讨。而现在 Docker 正处在这些过去十年间最广泛使用的技术之中。

|

||||

虽然表面上被视为一个虚拟化平台,但 Docker 远远不止如此。Docker 涉及的领域横跨了业界多个方面,包括 KVM、 Xen、 OpenStack、 Mesos、 Capistrano、 Fabric、 Ansible、 Chef、 Puppet、 SaltStack 等技术。或许你已经发现了,在 Docker 的竞争产品列表中有一些很值得关注。例如,大多数工程师都不会认为,虚拟化产品和配置管理工具是竞争关系,但 Docker 和这两种技术都有点关系。前面列举的一些技术常常因其提高了工作效率而获得称赞,这就导致了大量的探讨。而现在 Docker 正是这些过去十年间最广泛使用的技术之一。

|

||||

|

||||

如果你要拿 Docker 分别与这些领域的卫冕冠军按照功能逐项比较,那么 Docker 看上去可能只是个一般的竞争对手。Docker 在某些领域表现的更好,但它带来的是一个跨越广泛的解决工作流程中众多挑战的功能集合。通过将应用程序部署工具(如 Capistrano, Fabric)的易用性和虚拟化系统管理的易于性结合,使工作流程自动化,以及易于实施<ruby>编排<rt>orchestration </rt></ruby>,Docker 提供了一个非常强大的功能集合。

|

||||

如果你要拿 Docker 分别与这些领域的卫冕冠军按照功能逐项比较,那么 Docker 看上去可能只是个一般的竞争对手。Docker 在某些领域表现的更好,但它带来的是一个跨越广泛的解决工作流程中众多挑战的功能集合。通过将应用程序部署工具(如 Capistrano、 Fabric)的易用性和虚拟化系统管理的易于性结合,使工作流程自动化,以及易于实施<ruby>编排<rt>orchestration</rt></ruby>,Docker 提供了一个非常强大的功能集合。

|

||||

|

||||

大量的新技术来来去去,因此对这些新事物保持一定的怀疑总是好的。如果不深入研究,人们很容易误以为 Docker 只是另一种为开发者和运营团队解决一些具体问题的技术。如果把 Docker 单独看作一种虚拟化技术或者部署技术,它看起来并不引人注目。不过 Docker 可比表面上看起来的强大得多。

|

||||

|

||||

@ -23,11 +23,11 @@ Docker 是一个工具,它致力于为任何应用程序创建分发版本而

|

||||

|

||||

那么,最让公司感到头疼的问题是什么呢?现如今,很难按照预期的速度发布软件,而随着公司从只有一两个开发人员成长到拥有若干开发团队的时候,发布新版本时的沟通负担将越来越重,难以管理。开发者不得不去了解软件所处环境的复杂性,生产运营团队也需要不断地理解所发布软件的内部细节。这些通常都是不错的工作技能,因为它们有利于更好地从整体上理解发布环境,从而促进软件的鲁棒性设计。但是随着组织成长的加速,这些技能的拓展很困难。

|

||||

|

||||

充分了解所用的环境细节往往需要团队之间大量的沟通,而这并不能直接为团队创造值。例如,为了发布版本 1.2.1, 开发人员要求运维团队升级特定的库,这个过程就降低了开发效率,也没有为公司创造价值。如果开发人员能够直接升级他们所使的库,然后编写代码,测试新版本,最后发布软件,那么整个交付过程所用的时间将会明显缩短。如果运维人员无需与多个应用开发团队相协调,就能够在宿主系统上升级软件,那么效率将大大提高。Docker 有助于在软件层面建立一层隔离,从而减轻团队的沟通负担。

|

||||

充分了解所用的环境细节往往需要团队之间大量的沟通,而这并不能直接为团队创造值。例如,为了发布版本 1.2.1、开发人员要求运维团队升级特定的库,这个过程就降低了开发效率,也没有为公司创造价值。如果开发人员能够直接升级他们所使的库,然后编写代码,测试新版本,最后发布软件,那么整个交付过程所用的时间将会明显缩短。如果运维人员无需与多个应用开发团队相协调,就能够在宿主系统上升级软件,那么效率将大大提高。Docker 有助于在软件层面建立一层隔离,从而减轻团队的沟通负担。

|

||||

|

||||

除了有助于解决沟通问题,在某种程度上 Docker 的软件架构还鼓励开发出更多健壮的应用程序。这种架构哲学的核心是一次性的小型容器。在新版本部署的时候,会将旧版本应用的整个运行环境全部丢弃。在应用所处的环境中,任何东西的存在时间都不会超过应用程序本身。这是一个简单却影响深远的想法。这就意味着,应用程序不会意外地依赖于之前版本的遗留产物; 对应用的短暂调试和修改也不会存在于未来的版本中; 应用程序具有高度的可移植性,因为应用的所有状态要么直接包含于部署物中,且不可修改,要么存储于数据库、缓存或文件服务器等外部依赖中。

|

||||

除了有助于解决沟通问题,在某种程度上 Docker 的软件架构还鼓励开发出更多健壮的应用程序。这种架构哲学的核心是一次性的小型容器。在新版本部署的时候,会将旧版本应用的整个运行环境全部丢弃。在应用所处的环境中,任何东西的存在时间都不会超过应用程序本身。这是一个简单却影响深远的想法。这就意味着,应用程序不会意外地依赖于之前版本的遗留产物;对应用的短暂调试和修改也不会存在于未来的版本中;应用程序具有高度的可移植性,因为应用的所有状态要么直接包含于部署物中,且不可修改,要么存储于数据库、缓存或文件服务器等外部依赖中。

|

||||

|

||||

因此,应用程序不仅具有更好的可扩展性,而且更加可靠。存储应用的容器实例数量的增减,对于前端网站的影响很小。事实证明,这种架构对于非 Docker 化的应用程序已然成功,但是 Docker 自身包含了这种架构方式,使得 Docker 化的应用程序始终遵循这些最佳实践,这也是一件好事。

|

||||

因此,应用程序不仅具有更好的可扩展性,而且更加可靠。存储应用的容器实例数量的增减,对于前端网站的影响很小。事实证明,这种架构对于非 Docker 化的应用程序已然成功,但是 Docker 自身包含了这种架构方式,使得 Docker 化的应用程序始终遵循这些最佳实践,这也是一件好事。

|

||||

|

||||

### Docker 工作流程的好处

|

||||

|

||||

@ -35,11 +35,11 @@ Docker 是一个工具,它致力于为任何应用程序创建分发版本而

|

||||

|

||||

**使用开发人员已经掌握的技能打包软件**

|

||||

|

||||

> 许多公司为了管理各种工具来为它们支持的平台生成软件包,不得不提供一些软件发布和构建工程师的岗位。像 rpm, mock, dpkg 和 pbuilder 等工具使用起来并不容易,每一种工具都需要单独学习。而 Docker 则把你所有需要的东西全部打包起来,定义为一个文件。

|

||||

> 许多公司为了管理各种工具来为它们支持的平台生成软件包,不得不提供一些软件发布和构建工程师的岗位。像 rpm、mock、 dpkg 和 pbuilder 等工具使用起来并不容易,每一种工具都需要单独学习。而 Docker 则把你所有需要的东西全部打包起来,定义为一个文件。

|

||||

|

||||

**使用标准化的镜像格式打包应用软件及其所需的文件系统**

|

||||

|

||||

> 过去,不仅需要打包应用程序,还需要包含一些依赖库和守护进程等。然而,我们永远不能百分之百地保证,软件运行的环境是完全一致的。这就使得软件的打包很难掌握,许多公司也不能可靠地完成这项工作。常有类似事发生,使用 Scientific Linux 的用户试图部署一个来自社区的、仅在 Red Hat Linux 上经过测试的软件包,希望这个软件包足够接近他们的需求。如果使用 Dokcer, 只需将应用程序和其所依赖的每个文件一起部署即可。Docker 的分层镜像使得这个过程更加高效,确保应用程序运行在预期的环境中。

|

||||

> 过去,不仅需要打包应用程序,还需要包含一些依赖库和守护进程等。然而,我们永远不能百分之百地保证,软件运行的环境是完全一致的。这就使得软件的打包很难掌握,许多公司也不能可靠地完成这项工作。常有类似的事发生,使用 Scientific Linux 的用户试图部署一个来自社区的、仅在 Red Hat Linux 上经过测试的软件包,希望这个软件包足够接近他们的需求。如果使用 Dokcer、只需将应用程序和其所依赖的每个文件一起部署即可。Docker 的分层镜像使得这个过程更加高效,确保应用程序运行在预期的环境中。

|

||||

|

||||

**测试打包好的构建产物并将其部署到运行任意系统的生产环境**

|

||||

|

||||

@ -57,27 +57,27 @@ Docker 发布的第一年,许多刚接触的新人惊讶地发现,尽管 Doc

|

||||

|

||||

Docker 可以解决很多问题,这些问题是其他类型的传统工具专门解决的。那么 Docker 在功能上的广度就意味着它在特定的功能上缺乏深度。例如,一些组织认为,使用 Docker 之后可以完全摈弃配置管理工具,但 Docker 真正强大之处在于,它虽然能够取代某些传统的工具,但通常与它们是兼容的,甚至与它们结合使用还能增强自身的功能。下面将列举一些 Docker 还未能完全取代的工具,如果与它们结合起来使用,往往能取得更好的效果。

|

||||

|

||||

**企业级虚拟化平台(VMware, KVM 等)**

|

||||

**企业级虚拟化平台(VMware、KVM 等)**

|

||||

|

||||

> 容器并不是传统意义上的虚拟机。虚拟机包含完整的操作系统,运行在宿主操作系统之上。虚拟化平台最大的优点是,一台宿主机上可以使用虚拟机运行多个完全不同的操作系统。而容器是和主机共用同一个内核,这就意味着容器使用更少的系统资源,但必须基于同一个底层操作系统(如 Linux)。

|

||||

|

||||

**云平台(Openstack, CloudStack 等)**

|

||||

**云平台(Openstack、CloudStack 等)**

|

||||

|

||||

> 与企业级虚拟化平台一样,容器和云平台的工作流程表面上有大量的相似之处。从传统意义上看,二者都可以按需横向扩展。但是,Docker 并不是云平台,它只能在预先安装 Docker 的宿主机中部署,运行和管理容器,并能创建新的宿主系统(实例),对象存储,数据块存储以及其他与云平台相关的资源。

|

||||

|

||||

**配置管理工具(Puppet,Chef 等)**

|

||||

**配置管理工具(Puppet、Chef 等)**

|

||||

|

||||

> 尽管 Docker 能够显著提高一个组织管理应用程序及其依赖的能力,但不能完全取代传统的配置管理工具。Dockerfile 文件用于定义一个容器构建时内容,但不能持续管理容器运行时的状态和 Docker 的宿主系统。

|

||||

|

||||

**部署框架(Capistrano,Fabric等)**

|

||||

**部署框架(Capistrano、Fabric等)**

|

||||

|

||||

> Docker 通过创建自成一体的容器镜像,简化了应用程序在所有环境上的部署过程。这些用于部署的容器镜像封装了应用程序的全部依赖。然而 Docker 本身不无法执行复杂的自动化部署任务。我们通常使用其他工具一起实现较大的工作流程自动化。

|

||||

> Docker 通过创建自成一体的容器镜像,简化了应用程序在所有环境上的部署过程。这些用于部署的容器镜像封装了应用程序的全部依赖。然而 Docker 本身无法执行复杂的自动化部署任务。我们通常使用其他工具一起实现较大的工作流程自动化。

|

||||

|

||||

**工作负载管理工具(Mesos,Fleet等)**

|

||||

**工作负载管理工具(Mesos、Fleet等)**

|

||||

|

||||

> Docker 服务器没有集群的概念。我们必须使用其他的业务流程工具(如 Docker 自己开发的 Swarm)智能地协调多个 Docker 主机的任务,跟踪所有主机的状态及其资源使用情况,确保运行着足够的容器。

|

||||

|

||||

**虚拟化开发环境(Vagrant等)**

|

||||

**虚拟化开发环境(Vagrant 等)**

|

||||

|

||||

> 对开发者来说,Vagrant 是一个虚拟机管理工具,经常用来模拟与实际生产环境尽量一致的服务器软件栈。此外,Vagrant 可以很容易地让 Mac OS X 和基于 Windows 的工作站运行 Linux 软件。由于 Docker 服务器只能运行在 Linux 上,于是它提供了一个名为 Boot2Docker 的工具允许开发人员在不同的平台上快速运行基于 Linux 的 Docker 容器。Boot2Docker 足以满足很多标准的 Docker 工作流程,但仍然无法支持 Docker Machine 和 Vagrant 的所有功能。

|

||||

|

||||

@ -98,7 +98,7 @@ Sean Kane 目前在 New Relic 公司的共享基础设施团队中担任首席

|

||||

|

||||

via: https://www.oreilly.com/learning/what-is-docker

|

||||

|

||||

作者:[Karl Matthias ][a],[Sean Kane][b]

|

||||

作者:[Karl Matthias][a],[Sean Kane][b]

|

||||

译者:[Cathon](https://github.com/Cathon)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

@ -0,0 +1,130 @@

|

||||

6 个值得好好学习的 JavaScript 框架

|

||||

=====================

|

||||

|

||||

|

||||

|

||||

**常言道,条条大路通罗马,可是那一条适合我呢?** 由于用于构建前端页面等现代技术的出现,JavaScript 在 Web 开发社区早已是如雷贯耳。通过在网页上编写几个函数并提供执行逻辑,可以很好的支持 HTML (主要是用于页面的 _表现_ 或者 _布局_)。如果没有 JavaScript,那页面将没有任何 _交互特性_ 可言。

|

||||

|

||||

现在的框架和库的已经从蛮荒时代崛起了,很多老旧的技术纷纷开始将功能分离成模块。现在不再需要在整个核心语言中支持所有特性了,开发者允许所有用户创建库和框架来增强核心语言的功能。这样,语言的灵活性获得了了显著提高。

|

||||

|

||||

如果在已经在使用 **JavaScript** (以及 **JQuery**) 来支持 HTML,那么你肯定知道开发和维护一个大型应用需要付出多大的努力以及编写多么复杂的代码,而 JavaScript 框架可以帮助你快速的构建交互式 Web 应用 (包含单页面应用或者多页面应用)。

|

||||

|

||||

当一个新手开发者想要学习 JavaScript 时,他常常会被各种 JavaScript 框架所吸引,也幸亏有为数众多的社区,任何开发者都可以轻易地通过在线教程或者其他资源来学习。

|

||||

|

||||

但是,唉!多数的程序员都很难决定学习和使用哪一个框架。因此在本文中,我将为大家推荐 6 个值得好好学习的 JavaScript 框架。让我们开始吧。

|

||||

|

||||

### 1、AngularJS

|

||||

|

||||

|

||||

|

||||

**(注:这是我个人最喜欢的框架)**

|

||||

|

||||

无论你是何时听说的 JavaScript,很可能你早就听过 AngularJS,因为这是在 JavaScript 社区中最为广泛使用的框架了。它发布于 2000 年,由 Google 开发 (这够有说服力让你是用了吧) ,它是一个开源项目,这意味着你可以阅读、编辑和修改其源代码以便更加符合自身的需求,并且不用向其开发者支付一分钱 (这不是很酷吗?)。

|

||||

|

||||

如果说你觉得通过纯粹的 JavaScript 代码编写一个复杂的 Web 应用比较困难的话,那么你肯定会兴奋的跳起来,因为它将显著地减轻你的编码负担。它符合支持双向数据绑定的 MVC (Model–view–controller,模型-视图-控制) 设计典范。假如你不熟悉 MVC,你只需要知道它代表着无论何时探测到某些变化,它将自动更新前端 (比如,用户界面端) 和后端 (代码或者服务器端) 数据。

|

||||

|

||||

MVC 可为大大减少构建复杂应用程序所需的时间和精力,所有你只需要集中精力于一处即可 (DOM 编程接口会自动同步更新视图和模型)。由于 _视图组件_ 与 _模型组件_ 是分离的,你可以很容易的创建一个可复用的组件,使得用户界面的效果非常好看。

|

||||

|

||||

如果因为某些原因,你已经使用了 **TypeScript** (一种与 JavaScript 非常相似的语言),那么你可以很容易就上手 AngularJS,因为这两者的语法高度相似。与 **TypeScript** 相似这一特点在一定程度上提升了 AngularJS 的受欢迎程度。

|

||||

|

||||

目前,Angular 2.0 已经发布,并且提升了移动端的性能,这也足以向一个新的开发者证明,该框架的开发活跃的够高并且定期更新。

|

||||

|

||||

AngularJS 有着大量的用户,包括 (但不限于) Udemy、Forbes、GoDaddy、Ford、NBA 和 Oscars。

|

||||

|

||||

对于那些想要一个高效的 MVC 框架,用来开发面面俱到、包含健壮且现代化的基础架构的单页应用的用户来说,我极力的推荐这个框架。这是第一个为无经验 JavaScript 开发者设计的框架。

|

||||

|

||||

### 2、React

|

||||

|

||||

|

||||

|

||||

与 AngularJS 相似,React 也是一个 MVC (Model–view–controller,模型-视图-控制) 类型的框架,但不同的是,它完全针对于 _视图组件_ (因为它是为 UI 特别定制的) 并且可与任何架构进行无缝衔接。这意味着你可以马上将它运用到你的网站中去。

|

||||

|

||||

它从核心功能中抽象出 DOM 编程接口 (并且因此使用了虚拟 DOM),所以你可以快速的渲染 UI,这使得你能够通过 _node.js_ 将它作为一个客户端框架来使用。它是由 Facebook 开发的开源项目,还有其它的开发者为它贡献代码。

|

||||

|

||||

假如说你见到过并喜欢 Facebook 和 Instagram 的界面,那么你将会爱上 React。通过 React,你可以给你的应用的每个状态设计一个简单的视图,当数据改变的时候,视图也自动随之改变。只要你想的话,可以创建各种的复杂 UI,也可以在任何应用中复用它。在服务器端,React 同样支持通过 _node.js_ 来进行渲染。对于其他的接口,React 也一样表现得足够的灵活。

|

||||

|

||||

除 Facebook 和 Instagram 外,还有好多公司也在使用 React,包括 Whatsapp、BBC、、PayPal、Netflix 和 Dropbox 等。

|

||||

|

||||

如果你只需要一个前端开发框架来构建一个非常复杂且界面极好的强大视图层,那我极力向你推荐这个框架,但你需要有足够的经验来处理各种类型的 JavaScript 代码,而且你再也不需要其他的组件了 (因为你可以自己集成它们)。

|

||||

|

||||

### 3、Ember

|

||||

|

||||

|

||||

|

||||

这个 JavaScript 框架在 2011 年正式发布,是由 _Yehuda Katz_ 开发的开源项目。它有一个庞大且活跃的在线社区,所有在有任何问题时,你都可以在社区中提问。该框架吸收融合了非常多的通用 JavaScript 风格和经验,以便确保开发者能最快的做到开箱即用。

|

||||

|

||||

它使用了 MVVM (Model–view–viewmodel,模型-视图-视图模型) 的设计模式,这使得它与 MVC 有些不一样,因为它由一个 _连接器 (binder)_ 帮助视图和数据连接器进行通信。

|

||||

|

||||

对于 DOM 编程接口的快速服务端渲染,它借助了 _Fastboot.js_,这能够让那些复杂 UI 的性能得到极大提高。

|

||||

|

||||

它的现代化路由模式和模型引擎还支持 _RESTful API_,这可以却确保你可以使用这种最新的技术。它支持句柄集成模板(Handlebars integrated template),用以自动更新数据。

|

||||

|

||||

早在 2015 年间,它的风头曾一度盖过 AngularJS 和 React,被称为最好的 JavaScript 框架,对于它在 JavaScript 社区中的可用性和吸引力,这样的说服力该是足够了的。

|

||||

|

||||

对于不追求高灵活性和大型架构的用户,并且仅仅只是为了赶赴工期、完成任务的话,我个人非常推荐这个 JavaScript 框架,

|

||||

|

||||

### 4、Adonis

|

||||

|

||||

|

||||

|

||||

如果你曾使用过 _Laravel_ 和 _NodeJS_,那么你在使用这一个框架之时会觉得相当顺手,因为它是集合了这两个平台的优点而形成的一个框架,对于任何种类的现代应用来说,它都显得非常专业、圆润和精致。

|

||||

|

||||

它使用了 _NodeJS_,所以是一个很好的后端框架,同时还附带有一些前端特性 (与前面提到那些更多地注重前端的框架不同),所以想要进入后端开发的新手开发者会发觉这个框架相当迷人。

|

||||

|

||||

相比于 _NoSQL_,很多的开发者都比价喜欢使用 _SQL_ 数据库 (因为他们需要增强和数据以及其它特性的交互性),这一现象在这个框架中得到了很好的体现,这时的它更接近标准,开发者也更容易使用。

|

||||

|

||||

如果你混迹于各类 PHP 社区,那你一定很熟悉 **服务提供商 (Service Providers)**,也由于 Adonis 相应的 PHP 风格包含其中,所以在使用它的时候,你会觉得似曾相识。

|

||||

|

||||

在它所有的特性中,最好的便是那个极为强大的路由引擎,支持使用函数来组织和管理应用的所有状态、支持错误处理机制、支持通过 SQL ORM 来进行数据库查询、支持生成器、支持箭头函数 (arrow functions)、支持代理等等。

|

||||

|

||||

如果喜欢使用无状态 REST API 来构建服务器端应用,我比较推荐它,因为你会爱上这个框架的。

|

||||

|

||||

### 5、Vue.js

|

||||

|

||||

|

||||

|

||||

这一个开源的 JavaScript 框架,发布于 2014 年,它有个极为简单的 API,用以为现代 Web 界面(Modern Web Interface)开发交互式组件 (Reactive components)。其设计着重于简单易用。与 Ember 相似,它使用的是 MVVM (Model–view–viewmodel,模型-视图-视图模型) 设计范例,这样简化了设计。

|

||||

|

||||

这个框架最有吸引力的一点是,你可以根据自身需求来选择使用的模块。比如,你需要编写简单的 HTML 代码,抓取 JSON,然后创建一个 Vue 实例来完成可以复用的小特效。

|

||||

|

||||

与之前的那些 JavaScript 框架相似,它使用双路数据绑定来更新模型和视图,同时也使用连接器来完成视图和数据连接器的通信。这是一个还未完全成熟的框架,因为它全部的关注点都在视图层,所以你需要自己处理其它的组件。

|

||||

|

||||

如果你熟悉 _AngularJS_,那你会感觉很顺手,因为它大量嵌入了 _AngularJS_ 的架构,如果你懂得 JavaScript 的基础用法,那你的许多项目都可以轻易地迁移到该框架之下。

|

||||

|

||||

假如你只想把任务完成,或者想提升你自身的 JavaScript 编程经验,又或者你需要学习不同的 JavAScript 框架的本质,我极力推荐这个。

|

||||

|

||||

### 6、Backbone.js

|

||||

|

||||

|

||||

|

||||

这个框架可以很容易的集成到任何第三方的模板引擎,默认使用的是 _Underscore_ 模板引擎,而且该框架仅有一个依赖 (**JQuery**),因此它以轻量而闻名。它支持带有 **RESTful JSON** 接口的 MVC (Model–view–controller,模型-视图-控制) (可以自动更新前端和后端) 设计范例。

|

||||

|

||||

假如你曾经使用过著名的社交新闻网络服务 **reddit**,那么你肯定听说过它在几个单页面应用中使用了 **Backbone.js**。**Backbone.js** 的原作者为之建立了与 _CoffeScript_ 旗鼓相当的 _Underscore_ 模板引擎,所以你可以放心,开发者知道该做什么。

|

||||

|

||||

该框架在一个软件包中提供了键值对 (key-value) 模型、视图以及几个打包的模块,所以你不需要额外下载其他的外部包,这样可以节省不少时间。框架的源码可以在 GitHub 进行查看,这意味着你可以根据需求进行深度定制。

|

||||

|

||||

如果你是寻找一个入门级框架来快速构建一个单页面应用,那么这个框架非常适合你。

|

||||

|

||||

### 总而言之

|

||||

|

||||

至此,我已经在本文着重说明了 6 个值得好好学习的 JavaScript 框架,希望你读完本文后能够决定使用哪个框架来完成自己的任务。

|

||||

|

||||

如果说对于选择框架,你还是不知所措,请记住,这个世界是实践出真知而非教条主义的。最好就是从列表中挑选一个来使用,看看最后是否满足你的需求和兴趣,如果还是不行,接着试试另一个。你也尽管放心好了,列表中的框架肯定是足够了的。

|

||||

|

||||

-------------------------------

|

||||

|

||||

译者简介:

|

||||

|

||||

[GHLandy](http://GHLandy.com) —— 生活中所有欢乐与苦闷都应藏在心中,有些事儿注定无人知晓,自己也无从说起。

|

||||

|

||||

-------------------------------

|

||||

|

||||

via: http://www.discoversdk.com/blog/6-best-javascript-frameworks-to-learn-in-2016

|

||||

|

||||

作者:[Danyal Zia][a]

|

||||

译者:[GHLandy](https://github.com/GHLandy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.discoversdk.com/blog/6-best-javascript-frameworks-to-learn-in-2016

|

||||

@ -0,0 +1,85 @@

|

||||

如何重置 RHEL7/CentOS7 系统的密码

|

||||

=================

|

||||

|

||||

### 介绍

|

||||

|

||||

**目的**

|

||||

|

||||

在 RHEL7/CentOS7/Scientific Linux 7 中重设 root 密码。

|

||||

|

||||

**要求**

|

||||

|

||||

RHEL7 / CentOS7 / Scientific Linux 7

|

||||

|

||||

**困难程度**

|

||||

|

||||

中等

|

||||

|

||||

### 指导

|

||||

|

||||

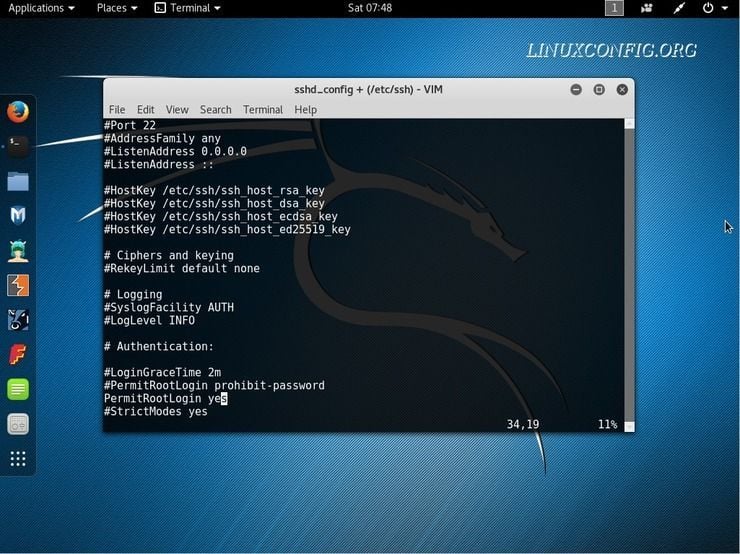

RHEL7 的世界发生了变化,重置 root 密码的方式也一样。虽然中断引导过程的旧方法(init=/bin/bash)仍然有效,但它不再是推荐的。“Systemd” 使用 “rd.break” 来中断引导。让我们快速浏览下整个过程。

|

||||

|

||||

**启动进入最小模式**

|

||||

|

||||

重启系统并在内核列表页面在系统启动之前按下 `e`。你会进入编辑模式。

|

||||

|

||||

**中断启动进程**

|

||||

|

||||

在内核字符串中 - 在以 `linux 16 /vmlinuz- ect` 结尾的行中输入 `rd.break`。接着 `Ctrl+X` 重启。系统启动进入初始化内存磁盘,并挂载在 `/sysroot`。在此模式中你不需要输入密码。

|

||||

|

||||

**重新挂载文件系统以便读写**

|

||||

|

||||

```

|

||||

switch_root:/# mount -o remount,rw /sysroot/

|

||||

```

|

||||

|

||||

**使 /sysroot 成为根目录**

|

||||

|

||||

```

|

||||

switch_root:/# chroot /sysroot

|

||||

```

|

||||

|

||||

命令行提示符会稍微改变。

|

||||

|

||||

**修改 root 密码**

|

||||

|

||||

```

|

||||

sh-4.2# passwd

|

||||

```

|

||||

|

||||

**加载 SELinux 策略**

|

||||

|

||||

```

|

||||

sh-4.2# load_policy -i

|

||||

```

|

||||

|

||||

**在 /etc/shadow 中设置上下文类型**

|

||||

|

||||

```

|

||||

sh-4.2# chcon -t shadow_t /etc/shadow

|

||||

```

|

||||

|

||||

注意:你可以通过如下创建 `autorelabel` 文件的方式来略过最后两步,但自动重建卷标会花费很长时间。

|

||||

|

||||

```

|

||||

sh-4.2# touch /.autorelabel

|

||||

```

|

||||

|

||||

因为这个原因,尽管它更简单,它应该作为“懒人选择”,而不是建议。

|

||||

|

||||

**退出并重启**

|

||||

|

||||

退出并重启并用新的 root 密码登录。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://linuxconfig.org/how-to-reset-the-root-password-in-rhel7-centos7-scientific-linux-7-based-systems

|

||||

|

||||

作者:[Rado Folwarczny][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://linuxconfig.org/how-to-reset-the-root-password-in-rhel7-centos7-scientific-linux-7-based-systems

|

||||

|

||||

90

published/20161103 Perl and the birth of the dynamic web.md

Normal file

90

published/20161103 Perl and the birth of the dynamic web.md

Normal file

@ -0,0 +1,90 @@

|

||||

Perl 与动态网站的诞生

|

||||

==================

|

||||

|

||||

> 在新闻组和邮件列表里、在计算机科学实验室里、在各大陆之间,流传着一个神秘的故事,那是关于 Perl 与动态网站之间的不得不说的往事。

|

||||

|

||||

|

||||

|

||||

>图片来源 : [Internet Archive Book Images][30], 由 Opensource.com 修改. [CC BY-SA 4.0][29].

|

||||

|

||||

早期互联网历史中,有一些脍炙人口的开创性事件:如 蒂姆·伯纳斯·李(Tim Berners-Lee)在邮件组上[宣布][28] WWW-project 的那天,该文档随同 [CERN][27] 发布的项目代码进入到了公共域,以及 1993 年 1 月的[第一版 NCSA Mosaic 浏览器][26]。虽然这些独立的事件是相当重要的,但是当时的技术的开发已经更为丰富,不再是由一组的孤立事件组成,而更像是一系列有内在联系的故事。

|

||||

|

||||

这其中的一个故事描述的是网站是如何变成_动态的_,通俗说来就是我们如何使服务器除了提供静态 HTML 文档之外做更多的事。这是个流传在[新闻组][25]和邮件列表间、计算机科学实验室里、各个大陆之间的故事,重点不是一个人,而是一种编程语言:Perl。

|

||||

|

||||

### CGI 脚本和信息软件

|

||||

|

||||

在上世纪 90 年代中后期,Perl 几乎和动态网站是同义词。Perl 是一种相对来说容易学习的解释型语言,并且有强大的文本处理特性,使得它能够很容易的编写脚本来把一个网站关联到数据库、处理由用户发送的表单数据,当然,还要创造那些上世纪 90 年代的网站的经典形象——计数器和留言簿。

|

||||

|

||||

类似的网站特性渐渐的变成了 CGI 脚本的形式,其全称为通用网关接口(Common Gateway Interface),[首个实现][24]由 Rob McCool 于 1993 年 11 月在 NCSA HTTPD 上完成。CGI 是目的是直面功能,并且在短短几年间,任何人都可以很容易的找到一些由 Perl 写的预制的脚本存档。有一个声名狼籍的案例就是 [Matt's Scripts Archive][23],这是一种流行却包含各种安全缺陷的源代码库,它甚至使得 Perl 社区成员创建了一种被称为 [Not Matt‘s Scripts][22] 的更为专业的替换选择。

|

||||

|

||||

在当时,无论是业余爱好者,还是职业程序员都采用 Perl 来制作动态网站和应用,Tim O’Reilly [创造了词汇“信息软件(infoware)”][21] 来描述网站和 Perl 怎样成为变化中的计算机工业的一部分。考虑到 Yahoo!和 Amazon 带来的创新,O‘Reilly 写道:“传统软件在大量的软件中仅仅包含了少量的信息;而信息软件则在少量的软件中包含了大量的信息。” Perl 是一种像瑞士军刀一样的完美的小而强大的工具,它支撑了信息媒体从巨大的网站目录向早期的用户生成内容(UGC)平台的转变。

|

||||

|

||||

### 题外话

|

||||

|

||||

尽管使用 Perl 来制作 CGI 简直是上佳之选,但是编程语言和不断提升中的动态网站之间的关系变得更加的密切与深入。从[第一个网站][20](在 1990 年的圣诞节前)出现到 1993 年 McCool 实现 CGI 的短暂时期内,Web 上的各种东西,比如表单、图片以及表格,就这么逐渐出现在上世纪 90 年代乃至后来。尽管伯纳斯·李也对这些早期的岁月产生了影响,但是不同的人看到的是 Web 不同的潜在作用,并将它推向各自不同的方向。一方面,这样的结果来自一些著名的辩论,例如 [HTML 应该和 SGML 保持多近的关系][19]、[是否应该实现一个图像标签][18]等等。在另一方面,在没有直接因素影响的情况下改变是极其缓慢的。后者已经很好的描述了动态网站是如何发展的。

|

||||

|

||||

从某种意义上说,第一个“网关”的诞生可以追溯到 1991 至 1992 年之间(LCTT 译注:此处所谓“网关”的意义请参照 CGI 的定义),当时伯纳斯·李和一些计算机科学家与超文本爱好者[编写服务程序][17]使得一些特定的资源能够连接到一起,例如 CERN 的内部应用程序、通用的应用程序如 Oracle 数据库、[广域信息查询系统(WAIS)][16] 等等。(WAIS 是 Web 的前身,上世纪 80 年代后期开发,其中,开发者之一 [Brewster Kahle][15],是一个数字化图书管理员和 [Internet Archive][14] 的创始人。)可以这样理解,“网关”就是一个被设计用来连接其它 Web、数据库或者应用程序的定制的 Web 服务器。任何的动态功能就意味着在不同的端口上运行另外一个守护进程(参考阅读,例如伯纳斯·李对于在网站上[如何添加一个搜索功能][13] 的描述)。伯纳斯·李期望 Web 可以成为不同信息系统之间的通用接口,并且鼓励建立单一用途服务。他也提到 Perl 是一种强大的(甚至是不可思议)、可以将各种东西组合起来的语言。

|

||||

|

||||

然而,另一种对“网关”的理解指出它不一定是一个定制设备,可能只是一个脚本,一个并不需要额外服务器的低吞吐量的附加脚本。这种形式的首次出现是有争议性的 Jim Davis 的 [Gateway to the U Mich Geography server][11],于 1992 年的 11 月发布在了 WWW-talk 邮件列表中。Davis 的脚本是使用 Perl 编写的,是一种 Web API 的原型,基于格式化的用户查询从另外的服务器拉取数据。我们来说明一下这两种对于网关的理解的不同之处,伯纳斯·李[回复了][10] Davis 的邮件,期望他和 Michigan 服务器的作者“能够达成某种共识”,“从网络的角度来看的话”仅使用一台服务器来提供这样的信息可能会更有意义。伯纳斯·李,可能是期待着 Web 的发明者可以提出一种有秩序的信息资源访问方式。这样从不同服务器上拉取数据的网关和脚本意味着一种潜在的 Web 的质的变化,虽然不断增多,但也可能有点偏离了伯纳斯·李的原始观点。

|

||||

|

||||

### 回到 Perl HTTPD

|

||||

|

||||

在 Davis 的地理服务器上的网关向标准化的、低吞吐量的、通过 CGI 方式实现的脚本化网关迈进的一步中,[Perl HTTPD][9] 的出现是很重要的事件,它是 1993 年初由印地安纳大学的研究生 Marc Van Heyningen 在布卢明顿(Bloomington)完全使用 Perl 语言实现的一个 Web 服务器程序。从 Van Heyningen 给出的[设计原则][8]来看,基于使用 Perl 就不需要任何的编译过程这样一种事实,使得它能够成为一种极易扩展的服务器程序,这个服务器包含了“一个向代码中增加新特性时只要简单的重启一下就可以,而不会有任何的宕机时间的特性”,使得这个服务器程序可以频繁的加入新功能。

|

||||

|

||||

Perl HTTPD 代表了那种服务器程序应该是单一、特定目的的观点。相应的,这种模式似乎暗示了在 Web 开发中像这样渐进式的、持续测试的软件产品可能会最终变成一种共识。Van Heyningen 在后来[提到过][7]他从头编写这样一个服务器程序的初衷是当时没有一种简便的方式使用 CERN 服务器程序来生成“虚拟文档”(例如,动态生成的页面),他打趣说使用 Perl 这样的“神之语言”来写可能是最简单的方式了。在他初期编写的众多脚本中有一个 Sun 操作系统的用户手册的 Web 界面,以及 [Finger 网关][6](这是一种早期用来共享计算机系统信息或者是用户信息的协议)。

|

||||

|

||||

虽然 Van Heyningen 将印地安纳大学的服务器主要用来连接现存的信息资源,他和研究生们同时也看见了作为个人发布形式的潜在可能。其中一件广为人知事件是在 1993-1994 年之间围绕着一个著名的加拿大案件而[公布][5]的一系列的文件、照片和新闻故事,与此形成鲜明对比的是,所有的全国性媒体都保持了沉默。

|

||||

|

||||

Perl HTTPD 没有坚持到现在的需要。今天,Van Heyningen 回忆起这个程序的时候认为这个程序只是当时的一个原型产品。它的原始目的只是向那些已经选择了 Gopher 作为大学的网络界面的资深教员们展示了网络的另一种利用方式。Van Heyningen 以[一种基于 Web 的、可搜索的出版物索引][4]的方式,用代码回应了他的导师们的虚荣。就是说,在服务器程序技术方面关键创新是为了赢得争论的胜利而诞生的,在这个角度上来看代码做到了所有要求它所做的事。

|

||||

|

||||

不管该服务器程序的生命是否短暂,伴随者 Perl HTTPD 一起出现的理念已经传播到了各个角落。Van Heyningen 开始收到了获取该代码的请求,而后将它分享到了网上,并提示说,需要了解一些 Perl 就可以将它移植到其它操作系统(或者找到一个这样的人也行)。不久之后,居住在奥斯汀(Austin)的程序员 Tony Sanders 开发了一个被称为 [Plexus][3] 的轻便版本。Sander 的服务器程序是一款全功能的产品,并且同样包含了 Perl HTTPD 所建议的易扩展性,而且添加一些新的特性如图片解码等。Plexus [直接影响了][2] Rob McCool 给 NCSA HTTPD 服务器上的脚本开发的“htbin”,并且同样影响到了不久之后诞生的通用网关接口(CGI)。

|

||||

|

||||

在这些历史遗产之外,感谢妙不可言的 Internet Archive(互联网时光机)使得 Perl HTTPD 在今天依然保留在一种我们依然可以获取的形式,你可以从[这里下载 tarball][1]。

|

||||

|

||||

### 历史展望

|

||||

|

||||

对于技术世界的颠覆来说,技术的改变总是在一个相互对立的过程中。现有的技术是思考新技术的基础与起点。过时的编程形式启迪了今天人们做事的新方式。网络世界的创新可能看起来更像是对于旧技术的扩展,不仅仅是 Perl。

|

||||

|

||||

在萌芽事件的简单的时间轴之外,Web 历史学者也许可以从 Perl 获取更多的线索。其中一部份的挑战在于材料的获取。更多需要做的事情包括从可获取的大量杂乱的数据中梳理出它的结构,将分散在邮件列表、归档网站,书本和杂志中的信息内容组合在一起。还有一部分的挑战是需要认识到 Web 的历史不仅仅是新技术发布的日子,它同时包括了个人记忆、人类情感与社会进程等,并且这不仅仅是单一的历史线而是有许许多多条相似的历史线组合而成的。就如 Perl 的信条一样“殊途同归。(There's More Than One Way To Do It.)”

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/11/perl-and-birth-dynamic-web

|

||||

|

||||

作者:[Michael Stevenson][a]

|

||||

译者:[wcnnbdk1](https://github.com/wcnnbdk1)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/mstevenson

|

||||

[1]:https://web.archive.org/web/20011126190051/http://www.cs.indiana.edu/perl-server/httpd.pl.tar.Z

|

||||

[2]:http://1997.webhistory.org/www.lists/www-talk.1993q4/0516.html

|

||||

[3]:https://web.archive.org/web/19990421192342/http://www.earth.com/server/doc/plexus.html

|

||||

[4]:https://web.archive.org/web/19990428030253/http://www.cs.indiana.edu:800/cstr/search

|

||||

[5]:https://web.archive.org/web/19970720205155/http://www.cs.indiana.edu/canada/karla.html

|

||||

[6]:https://web.archive.org/web/19990429014629/http://www.cs.indiana.edu:800/finger/gateway

|

||||

[7]:https://web.archive.org/web/19980122184328/http://www.cs.indiana.edu/perl-server/history.html

|

||||

[8]:https://web.archive.org/web/19970720025822/http://www.cs.indiana.edu/perl-server/intro.html

|

||||

[9]:https://web.archive.org/web/19970720025822/http://www.cs.indiana.edu/perl-server/code.html

|

||||

[10]:https://lists.w3.org/Archives/Public/www-talk/1992NovDec/0069.html

|

||||

[11]:https://lists.w3.org/Archives/Public/www-talk/1992NovDec/0060.html

|

||||

[12]:http://info.cern.ch/hypertext/WWW/Provider/ShellScript.html

|

||||

[13]:http://1997.webhistory.org/www.lists/www-talk.1993q1/0109.html

|

||||

[14]:https://archive.org/index.php

|

||||

[15]:http://brewster.kahle.org/about/

|

||||

[16]:https://en.wikipedia.org/wiki/Wide_area_information_server

|

||||

[17]:http://info.cern.ch/hypertext/WWW/Daemon/Overview.html

|

||||

[18]:http://1997.webhistory.org/www.lists/www-talk.1993q1/0182.html

|

||||

[19]:http://1997.webhistory.org/www.lists/www-talk.1993q1/0096.html

|

||||

[20]:http://info.cern.ch/hypertext/WWW/TheProject.html

|

||||

[21]:https://web.archive.org/web/20000815230603/http://www.edventure.com/release1/1198.html

|

||||

[22]:http://nms-cgi.sourceforge.net/

|

||||

[23]:https://web.archive.org/web/19980709151514/http://scriptarchive.com/

|

||||

[24]:http://1997.webhistory.org/www.lists/www-talk.1993q4/0518.html

|

||||

[25]:https://en.wikipedia.org/wiki/Usenet_newsgroup

|

||||

[26]:http://1997.webhistory.org/www.lists/www-talk.1993q1/0099.html

|

||||

[27]:https://tenyears-www.web.cern.ch/tenyears-www/

|

||||

[28]:https://groups.google.com/forum/#!msg/alt.hypertext/eCTkkOoWTAY/bJGhZyooXzkJ

|

||||

[29]:https://creativecommons.org/licenses/by-sa/4.0/

|

||||

[30]:https://www.flickr.com/photos/internetarchivebookimages/14591826409/in/photolist-oeqVBX-xezHCD-otJDtG-whb6Qz-tohe9q-tCxH8y-xq4VfN-otJFfh-xEmn3b-tERUdv-oucUgd-wKDyLy-owgebW-xd6Wew-xGEvuT-toqHkP-oegBCj-xtDdzN-tF19ip-xGFbWP-xcQMJq-wxrrkN-tEYczi-tEYvCn-tohQuy-tEzFwN-xHikPT-oetG8V-toiGvh-wKEgAu-xut1qp-toh7PG-xezovR-oegRMa-wKN2eg-oegSRp-sJ29GF-oeqXLV-oeJTBY-ovLF3X-oeh2iJ-xcQBWs-oepQoy-ow4xoo-xknjyD-ovunVZ-togQaj-tEytff-xEkSLS-xtD8G1

|

||||

@ -0,0 +1,99 @@

|

||||

如何在 XenServer 7 GUI 虚拟机(VM)上提高屏幕分辨率

|

||||

============

|

||||

|

||||

### 介绍

|

||||

|

||||

**目的**

|

||||

|

||||

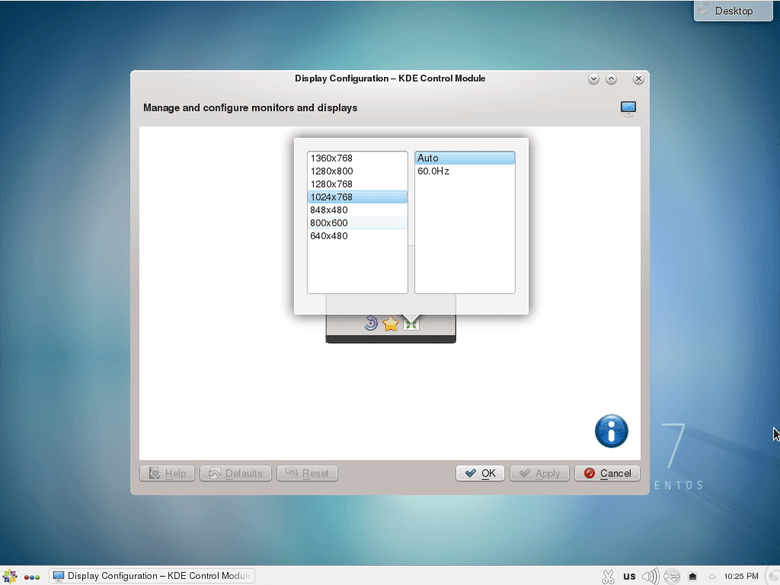

如果你想要将 XenServer 虚拟机作为远程桌面,默认的分辨率可能不能满足你的要求。

|

||||

|

||||

|

||||

|

||||

本篇的目标是提高 XenServer 7 GUI 虚拟机(VM)的屏幕分辨率

|

||||

|

||||

**要求**

|

||||

|

||||

访问 XenServer 7 系统的权限

|

||||

|

||||

**难易性**

|

||||

|

||||

简单

|

||||

|

||||

**惯例**

|

||||

|

||||

* `#` - 给定命令需要作为 root 用户权限运行或者使用 `sudo` 命令

|

||||

* `$` - 给定命令作为常规权限用户运行

|

||||

|

||||

### 指导

|

||||

|

||||

**获得 VM UUID**

|

||||

|

||||

首先,我们需要获得想要提升分辨率的虚拟机的 UUID。

|

||||

|

||||

```

|

||||

# xe vm-list

|

||||

uuid ( RO) : 09a3d0d3-f16c-b215-9460-50dde9123891

|

||||

name-label ( RW): CentOS 7

|

||||

power-state ( RO): running

|

||||

```

|

||||

|

||||

提示:如果你将此 UUID 保存为 shell 变量会节省一些时间:

|

||||

|

||||

```

|

||||

# UUID=09a3d0d3-f16c-b215-9460-50dde9123891

|

||||

```

|

||||

|

||||

**关闭 VM**

|

||||

|

||||

优雅地关闭 VM 或使用 `xe vm-vm-shutdown` 命令:

|

||||

|

||||

```

|

||||

# xe vm-shutdown uuid=$UUID

|

||||

```

|

||||

|

||||

**更新 VGA 的 VIDEORAM 设置**

|

||||

|

||||

检查你目前的 VGA 的 VIDEORAM 参数设置:

|

||||

|

||||

```

|

||||

# xe vm-param-get uuid=$UUID param-name="platform" param-key=vga

|

||||

std

|

||||

# xe vm-param-get uuid=$UUID param-name="platform" param-key=videoram

|

||||

8

|

||||

```

|

||||

|

||||

要提升屏幕的分辨率,将 VGA 更新到 `std` (如果已经设置过,就不需要做什么),并将 `videoram` 调大几兆,如设置成 16:

|

||||

|

||||

```

|

||||

# xe vm-param-set uuid=$UUID platform:vga=std

|

||||

# xe vm-param-set uuid=$UUID platform:videoram=16

|

||||

```

|

||||

|

||||

**启动 VM**

|

||||

|

||||

```

|

||||

# xe vm-start uuid=$UUID

|

||||

```

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm

|

||||

|

||||

作者:[Lubos Rendek][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm

|

||||

[1]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h5-1-obtain-vm-uuid

|

||||

[2]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h5-2-shutdown-vm

|

||||

[3]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h5-3-update-vga-a-videoram-settings

|

||||

[4]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h5-4-start-vm

|

||||

[5]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h1-objective

|

||||

[6]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h2-requirements

|

||||

[7]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h3-difficulty

|

||||

[8]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h4-conventions

|

||||

[9]:https://linuxconfig.org/how-to-increase-screen-resolution-on-xenserver-7-gui-virtual-machine-vm#h5-instructions

|

||||

@ -0,0 +1,82 @@

|

||||

如何在 RHEL 上设置 Linux RAID 1

|

||||

============================================================

|

||||

|

||||

### 设置 Linux RAID 1

|

||||

|

||||

配置 LINUX RAID 1 非常重要,因为它提供了冗余性。

|

||||

|

||||

RAID 分区拥有高级功能,如冗余和更好的性能。所以让我们来说下如何实现 RAID,以及让我们来看看不同类型的 RAID:

|

||||

|

||||

- RAID 0(条带):磁盘组合在一起,形成一个更大的驱动器。这以可用性为代价提供了更好的性能。如果 RAID 中的任何一块磁盘出现故障,则整个磁盘集将无法使用。最少需要两块磁盘。

|

||||

- RAID 1(镜像):磁盘从一个复制到另一个,提供了冗余。如果一块磁盘发生故障,则另一块磁盘接管,它有另外一份原始磁盘的数据的完整副本。其缺点是写入时间慢。最少需要两块磁盘。

|

||||

- RAID 5(带奇偶校验的条带):磁盘类似于 RAID 0,并且连接在一起以形成一个大型驱动器。这里的区别是,25% 的磁盘用于奇偶校验位,这允许在单个磁盘发生故障时可以恢复磁盘。最少需要三块盘。

|

||||

|

||||

让我们继续进行 Linux RAID 1 配置。

|

||||

|

||||

安装 Linux RAID 1 的要求:

|

||||

|

||||

1、系统中应该安装了 mdam,请用下面的命令确认。

|

||||

|

||||

```

|

||||

[root@rhel1 ~]# rpm -qa | grep -i mdadm

|

||||

mdadm-3.2.2-9.el6.x86_64

|

||||

[root@rhel1 ~]#

|

||||

```

|

||||

|

||||

2、 系统应该连接了 2 块磁盘。

|

||||

|

||||

创建两个分区,一个磁盘一个分区(sdc、sdd),每个分区占据整块磁盘。

|

||||

|

||||

```

|

||||

Disk /dev/sdc: 1073 MB, 1073741824 bytes

|

||||

255 heads, 63 sectors/track, 130 cylinders

|

||||

Units = cylinders of 16065 * 512 = 8225280 bytes

|

||||

Sector size (logical/physical): 512 bytes / 512 bytes

|

||||

I/O size (minimum/optimal): 512 bytes / 512 bytes

|

||||

Disk identifier: 0x67cc8cfb

|

||||

|

||||

Device Boot Start End Blocks Id System

|

||||

/dev/sdc1 1 130 1044193+ 83 Linux

|

||||

|

||||

Disk /dev/sdd: 1073 MB, 1073741824 bytes

|

||||

255 heads, 63 sectors/track, 130 cylinders

|

||||

Units = cylinders of 16065 * 512 = 8225280 bytes

|

||||

Sector size (logical/physical): 512 bytes / 512 bytes

|

||||

I/O size (minimum/optimal): 512 bytes / 512 bytes

|

||||

Disk identifier: 0x0294382b

|

||||

|

||||

Device Boot Start End Blocks Id System

|

||||

/dev/sdd1 1 130 1044193+ 83 Linux

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

|

||||

作者简介:

|

||||

|

||||

大家好!我是 Manmohan Mirkar。我很高兴见到你们!我在 10 多年前开始使用 Linux,我从来没有想过我会到今天这个地步。我的激情是帮助你们获取 Linux 知识。谢谢阅读!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxroutes.com/linux-raid-1/

|

||||

|

||||

作者:[Manmohan Mirkar][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxroutes.com/author/admin/

|

||||

[1]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[2]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[3]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[4]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[5]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[6]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[7]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[8]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[9]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[10]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[11]:http://www.linuxroutes.com/linux-raid-1/#

|

||||

[12]:http://www.linuxroutes.com/author/admin/

|

||||

[13]:http://www.linuxroutes.com/linux-raid-1/#respond

|

||||

183

published/20161226 Top 10 open source projects of 2016.md

Normal file

183

published/20161226 Top 10 open source projects of 2016.md

Normal file

@ -0,0 +1,183 @@

|

||||

2016 年十大顶级开源项目

|

||||

============================================================

|

||||

|

||||

> 在我们今年的年度顶级开源项目列表中,让我们回顾一下作者们提到的几个 2016 年受欢迎的项目,以及社区管理员选出的钟爱项目。

|

||||

|

||||

|

||||

|

||||

图片来自:[George Eastman House][1] 和 [Internet Archive Book Images][2] 。修改自 Opensource.com. CC BY-SA 4.0

|

||||

|

||||

我们持续关注每年新出现的、成长、改变和发展的优秀开源项目。挑选 10 个开源项目到我们的年度顶级项目列表中并不太容易,而且,也没有哪个如此短的列表能够包含每一个应该包含在内的项目。

|

||||

|

||||

为了挑选 10 个顶级开源项目,我们回顾了作者们 2016 年涉及到的流行的开源项目,同时也从社区管理员收集了一些意见。经过管理员的一番推荐和投票之后,我们的编辑团队选定了最终的列表。

|

||||

|

||||

它们就在这儿, 2016 年 10 个顶级开源项目:

|

||||

|

||||

### Atom

|

||||

|

||||

[Atom][3] 是一个来自 GitHub 的可魔改的(hackable)文本编辑器。Jono Bacon 在今年的早些时候为它的“简单核心”[写了一篇文章][4],对该开源项目所给用户带来的选择而大加赞赏。

|

||||

|

||||

“[Atom][3] 带来了大多数用户想要的主要核心特性和设置,但是缺失了一些用户可能想要的更加高级或独特的特性。……Atom 提供了一个强大的框架,从而允许它的许多部分都可以被改变或扩展。”

|

||||

|

||||

如果打算开始使用 Atom, 请先阅读[这篇指南][5]。如果想加入到用户社区,你可以在 [GitHub][6]、[Discuss][7] 和 [Slack][8] 上找到 Atom 。

|

||||

|

||||

Atom 是 [MIT][9] 许可的,它的[源代码][10]托管在 GitHub 上。

|

||||

|

||||

### Eclipse Che

|

||||

|

||||

[Eclipse Che][11] 是下一代在线集成开发环境(IDE)和开发者工作区。Joshua Allen Holm 在 2016 年 11 月为我们[点评][12]了 Eclipse Che,使我们可以一窥项目背后的开发者社区,Eclipse Che 创新性地使用了容器技术,并且开箱即用就支持多种流行语言。

|

||||

|

||||

“Eclipse Che 集成了就绪即用( ready-to-go)的软件环境(stack)覆盖了绝大多数现代流行语言。这包括 C++、Java、Go、PHP、Python、.NET、Node.js、Ruby on Rails 和 Android 开发的软件环境。软件环境仓库(Stack Library )如果不够的话,甚至还提供了更多的选择,你可以创建一个能够提供特殊环境的定制软件环境。”

|

||||

|

||||

你可以通过网上的[托管账户][13]、[本地安装][14],或者在你常用的[云供应商][15]上测试 Eclipse Che。你也可以在 GitHub 上找到它的[源代码][16],发布于 [Eclipse 公开许可证][17]之下。

|

||||

|

||||

### FreeCAD

|

||||

|

||||

[FreeCAD][18] 是用 Python 写的,是一款电脑辅助设计工具(或叫电脑辅助起草工具),可以用它来为实际物体创建设计模型。 Jason Baker 在 [3 款可供选择的 AutoCAD 的开源替代品][19]一文中写到关于 FreeCAD :

|

||||

|

||||

“FreeCAD 可以从各种常见格式中导入和导出 3D 对象,其模块化结构使得它易于通过各种插件扩展基本功能。该程序有许多内置的界面选项,这包括从草稿到渲染器,甚至还有一个机器人仿真能力。”

|

||||

|

||||

FreeCAD 是 [LGPL][20] 许可的,它的[源代码][21]托管在 GitHub 上。

|

||||

|

||||

### GnuCash

|

||||

|

||||

[GnuCash][22] 是一个跨平台的开源桌面应用,它可以用来管理个人和小型商业账户。 Jason Baker 把 GnuCash 列入了我们针对个人金融的 Mint 和 Quicken 的开源替代品的[综述列表][23]中:

|

||||

|

||||

GnuCash “具有多项记账的特性,能从多种格式导入数据,处理多重汇率,为你创建预算,打印支票,创建定制计划报告,并且能够直接从网上银行导入和拉取股票行情。”

|

||||

|

||||

其发布于 GPL [版本 2 或版本 3 许可证][25]下,你可以在 GitHub 上找到 GnuCash 的[源代码][24]。

|

||||

|

||||

一个值得一提的 GnuCash 可选替代品是 [KMyMoney][26],它也得到了该列表的提名,是另一个在 Linux 上管理财务的好选择。

|

||||

|

||||

### Kodi

|

||||

|

||||

[Kodi][27] 是一个开源媒体中心应用,之前叫做 XBMC,它能够在多种设备上工作,是一个用来 DIY 播放电影、TV、音乐的机顶盒的工具。 Kodi 高度可定制化,它支持多种皮肤、插件和许多遥控设备(包括它自己定制的 Android remote 应用)。

|

||||

|

||||

尽管今年我们没有深入地报道 Kodi, 但依旧出现在许多关于创建一个家用 Linux [音乐服务器][28]、媒体[管理工具][29]的文章中,还出现在之前的一个关于最喜爱的开源[视频播放器][30]的投票中(如果你在家中使用 Kodi,想要写一些自己的体验,[请让我们知道][31])。

|

||||

|

||||

其发布于 [GPLv2][33] 许可证下,你可以在 GitHub 上找到 Kodi 的[源代码][32]。

|

||||

|

||||

### MyCollab

|

||||

|

||||

[MyCollab][34] 是一套针对顾客关系管理(CRM)、文档管理和项目管理的工具。社区管理员 Robin Muilwijk 在他的综述 [2016 年 11 个顶级的项目管理工具][35]一文中详细阐述了 MyCollab-Project 的细节:

|

||||

|

||||

“MyCollab-Project 包含许多特性,比如甘特图、里程碑、时间跟踪和事件管理。它有 Kanban 板功能,因而支持敏捷开发模式。 MyCollab-Project 有三个不同的版本,其中[社区版][36]是自由且开源的。”

|

||||

|

||||

安装 MyCollab 需要 Java 运行环境和 MySQL 环境的支持。请访问 [MyCollab 网站][37]来了解如何对项目做贡献。

|

||||

|

||||

MyCollab 是 AGPLv3 许可的,它的[源代码][38]托管在 GitHub 上。

|

||||

|

||||

### OpenAPS

|

||||

|

||||

[OpenAPS][39] 是社区管理员在 2016 年发现的另一个有趣的项目,我们也深入报道过它。 OpenAPS,即 Open Artificial Pancreas System 项目,是一个致力于提高 1 型糖尿病患者生活质量的开源项目。

|

||||

|

||||

该项目包含“[一个专注安全的典范(reference)设计][40]、一个[工具箱][41]和一个开源的[典范(reference)实现][42],它们是为设备制造商或者任何能够构造人工胰腺设备的个人设计的,从而能够根据胰岛素水平安全地调节血液中葡萄糖水平。尽管潜在用户在尝试亲自构建或使用该系统前应该小心地测试该项目并和他们的健康护理医生讨论,但该项目的创建者希望开放技术能够加速医疗设备行业的研究和开发步伐,从而发现新的治疗方案并更快的投入市场。”

|

||||

|

||||

### OpenHAB

|

||||

|

||||

[OpenHAB][43] 是一个具有可插拔体系结构的家用自动化平台。社区管理员 D Ruth Bavousett 今年购买该平台并尝试使用以后为 OpenHAB [写到][44]:

|

||||

|

||||

“我所发现的其中一个有趣的模块是蓝牙绑定;它能够发现特定的已启用蓝牙的设备(比如你的智能手机、你孩子的那些设备)并且在这些设备到达或离开的时候采取行动-关门或开门、开灯、调节恒温器和关闭安全模式等等”

|

||||

|

||||

查看这个能够与社交网络、即时消息和云 IoT 平台进行集成和通讯的[绑定和捆绑设备的完整列表][45]。

|

||||

|

||||

OpenHAB 是 EPL 许可的,它的[源代码][46]托管在 GitHub 上。

|

||||

|

||||

### OpenToonz

|

||||

|

||||

[OpenToonz][47] 是一个 2D 动画生产软件。社区管理员 Joshua Allen 在 2016 年 3 月[报道][48]了它的开源版本,在 Opensource.com 网站的其他动画相关的文章中它也有被提及,但是我们并没有深入介绍,敬请期待。

|

||||

|

||||

现在,我们可以告诉你的是, OpenToonz 有许多独一无二的特性,包括 GTS,它是吉卜力工作室(Studio Ghibli )开发的一个生成工具,还有一个用于图像处理的[效果插件 SDK][49]。

|

||||

|

||||

如果想讨论开发和视频研究的话题,请查看 GitHub 上的[论坛][50]。 OpenToonz 的[源代码][51]托管在 GitHub 上,该项目是以 BSD 许可证发布。

|

||||

|

||||

### Roundcube

|

||||

|

||||

[Roundcube][52] 是一个现代化、基于浏览器的邮件客户端,它提供了邮箱用户使用桌面客户端时可能用到的许多(如果不是全部)功能。它有许多特性,包括支持超过 70 种语言、集成拼写检查、拖放界面、功能丰富的通讯簿、 HTML 电子邮件撰写、多条件搜索、 PGP 加密支持、会话线索等。 Roundcube 可以作为许多用户的邮件客户端的偶尔的替代品工作。

|

||||

|

||||

在我们的 [Gmail的开源替代品][53] 综述中, Roundcube 和另外四个邮件客户端均被包含在内。

|

||||

|

||||

其以 [GPLv3][55] 许可证发布,你可以在 GitHub 上找到 Roundcube 的[源代码][54]。除了直接[下载][56]、安装该项目,你也可以在许多完整的邮箱服务器软件中找到它,如 [Groupware][57]、[iRedMail][58]、[Mail-in-a-Box][59] 和 [mailcow][60]。

|

||||

|

||||

|

||||

这就是我们的列表了。在 2016 年,你有什么喜爱的开源项目吗?喜爱的原因呢?请在下面的评论框发表。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

|

||||

|

||||

Jen Wike Huger - Jen Wike Huger 是 Opensource.com 网站的内容管理员。她负责日期发布、协调编辑团队并指导新作者和已有作者。请在 Twitter 上关注她 @jenwike, 并在 Jen.io 上查看她的更多个人简介。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/16/12/yearbook-top-10-open-source-projects

|

||||

|

||||

作者:[Jen Wike Huger][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/jen-wike

|

||||

[1]:https://www.flickr.com/photos/george_eastman_house/

|

||||

[2]:https://www.flickr.com/photos/internetarchivebookimages/14784547612/in/photolist-owsEVj-odcHUi-osAjiE-x91Jr9-obHow3-owt68v-owu56t-ouySJt-odaPbp-owajfC-ouBSeL-oeTzy4-ox1okT-odZmpW-ouXBnc-ot2Du4-ocakCh-obZ8Pp-oeTNDK-ouiMZZ-ie12mP-oeVPhH-of2dD4-obXM65-owkSzg-odBEbi-oqYadd-ouiNiK-icoz2G-ie4G4G-ocALsB-ouHTJC-wGocbd-osUxcE-oeYNdc-of1ymF-idPbwn-odoerh-oeSekw-ovaayH-otn9x3-ouoPm7-od8KVS-oduYZL-obYkk3-hXWops-ocUu6k-dTeHx6-ot6Fs5-ouXK46

|

||||

[3]:https://atom.io/

|

||||

[4]:https://opensource.com/life/16/2/culture-pluggable-open-source

|

||||

[5]:https://github.com/atom/atom/blob/master/CONTRIBUTING.md

|

||||

[6]:https://github.com/atom/atom

|

||||

[7]:http://discuss.atom.io/

|

||||

[8]:http://atom-slack.herokuapp.com/

|

||||

[9]:https://raw.githubusercontent.com/atom/atom/master/LICENSE.md

|

||||

[10]:https://github.com/atom/atom

|

||||

[11]:http://www.eclipse.org/che/

|

||||

[12]:https://linux.cn/article-8018-1.html

|

||||

[13]:https://www.eclipse.org/che/getting-started/cloud/

|

||||

[14]:https://www.eclipse.org/che/getting-started/download/

|

||||

[15]:https://bitnami.com/stack/eclipse-che

|

||||

[16]:https://github.com/eclipse/che/

|

||||

[17]:https://github.com/eclipse/che/blob/master/LICENSE

|

||||

[18]:http://www.freecadweb.org/

|

||||

[19]:https://opensource.com/alternatives/autocad

|

||||

[20]:https://github.com/FreeCAD/FreeCAD/blob/master/COPYING

|

||||

[21]:https://github.com/FreeCAD/FreeCAD

|

||||

[22]:https://www.gnucash.org/

|

||||

[23]:https://opensource.com/life/16/1/3-open-source-personal-finance-tools-linux

|

||||

[24]:https://github.com/Gnucash/

|

||||

[25]:https://github.com/Gnucash/gnucash/blob/master/LICENSE

|

||||

[26]:https://kmymoney.org/

|

||||

[27]:https://kodi.tv/

|

||||

[28]:https://opensource.com/life/16/1/how-set-linux-based-music-server-home

|

||||

[29]:https://opensource.com/life/16/6/tinymediamanager-catalogs-your-movie-and-tv-files

|

||||

[30]:https://opensource.com/life/15/11/favorite-open-source-video-player

|

||||

[31]:https://opensource.com/how-submit-article

|

||||

[32]:https://github.com/xbmc/xbmc

|

||||

[33]:https://github.com/xbmc/xbmc/blob/master/LICENSE.GPL

|

||||

[34]:https://community.mycollab.com/

|

||||

[35]:https://opensource.com/business/16/3/top-project-management-tools-2016

|

||||

[36]:https://github.com/MyCollab/mycollab

|

||||

[37]:https://community.mycollab.com/docs/developing-mycollab/how-can-i-contribute-to-mycollab/

|

||||

[38]:https://github.com/MyCollab/mycollab

|

||||

[39]:https://openaps.org/

|

||||

[40]:https://openaps.org/reference-design

|

||||

[41]:https://github.com/openaps/openaps

|

||||

[42]:https://github.com/openaps/oref0/

|

||||

[43]:http://www.openhab.org/

|

||||

[44]:https://opensource.com/life/16/4/automating-your-home-openhab

|

||||

[45]:http://www.openhab.org/features/supported-technologies.html

|

||||

[46]:https://github.com/openhab/openhab

|

||||

[47]:https://opentoonz.github.io/e/index.html

|

||||

[48]:https://opensource.com/life/16/3/weekly-news-march-26

|

||||

[49]:https://github.com/opentoonz/plugin_sdk

|

||||

[50]:https://github.com/opentoonz/opentoonz/issues

|

||||

[51]:https://github.com/opentoonz/opentoonz

|

||||

[52]:https://roundcube.net/

|

||||

[53]:https://opensource.com/alternatives/gmail

|

||||

[54]:https://github.com/roundcube/roundcubemail

|

||||

[55]:https://github.com/roundcube/roundcubemail/blob/master/LICENSE

|

||||

[56]:https://roundcube.net/download/

|

||||

[57]:http://kolab.org/

|

||||

[58]:http://www.iredmail.org/

|

||||

[59]:https://mailinabox.email/

|

||||

[60]:https://mailcow.email/

|

||||

@ -1,14 +1,14 @@

|

||||

编写 android 测试单元该做的和不该做的事

|

||||

============================================================

|

||||

|

||||

在本文中, 我将根据我的实际经验,为大家阐述一个编写测试用例的最佳实践。在本文中我将使用 Espresso 编码, 但是它们可以用到单元和 instrumentation 测试当中。基于以上目的, 我们来研究一个新闻程序.

|

||||

在本文中, 我将根据我的实际经验,为大家阐述一个编写测试用例的最佳实践。在本文中我将使用 Espresso 编码, 但是它们可以用到单元测试和仪器测试(instrumentation test)当中。基于以上目的,我们来研究一个新闻程序。

|

||||

|

||||

> 以下内容纯属虚构,如有雷同纯属巧合:P

|

||||

> 以下内容纯属虚构,如有雷同纯属巧合 :P

|

||||

|

||||

一个新闻 APP 应该会有以下这些 <ruby>活动<rt>activities</rt></ruby>。

|

||||

一个新闻 APP 应该会有以下这些 activity。

|

||||

|

||||

* 语言选择 - 当用户第一次打开软件, 他必须至少选择一种语言。选择后,选项保存在共享偏好中,用户跳转到新闻列表 activity。

|

||||

* 新闻列表 - 当用户来到新闻列表 activity,将发送一个包含语言参数的请求到服务器,并将服务器返回的内容显示在 recycler view 上(包含有新闻列表的 id _news_list_)。 如果共享偏好中未存语言参数,或者服务器没有返回一个成功消息, 就会弹出一个错误对话框并且 recycler view 将不可见。如果用户只选择了一种语言,新闻列表 activity 有个 “Change your Language” 的按钮,或者如果用户选择多种语言,则按钮为 “Change your Languages” 。 ( 我对天发誓这是一个虚构的 APP 软件)

|

||||

* 新闻列表 - 当用户来到新闻列表 activity,将发送一个包含语言参数的请求到服务器,并将服务器返回的内容显示在 recycler view 上(包含有新闻列表的 id, _news_list_)。 如果共享偏好中未存语言参数,或者服务器没有返回一个成功消息, 就会弹出一个错误对话框并且 recycler view 将不可见。如果用户只选择了一种语言,新闻列表 activity 有个 “Change your Language” 的按钮,或者如果用户选择多种语言,则按钮为 “Change your Languages” 。 (我对天发誓这是一个虚构的 APP 软件)

|

||||

* 新闻细节 - 如同名字所述, 当用户点选新闻列表项时将启动这个 activity。

|

||||

|

||||

这个 APP 功能已经足够,,让我们深入研究下为新闻列表 activity 编写的测试用例。 这是我第一次写的代码。

|

||||

@ -40,9 +40,9 @@

|

||||

?}

|

||||

```

|

||||

|

||||

#### 仔细想想测试什么。

|

||||

#### 仔细想想测试什么

|

||||

|

||||

在第一个测试用例 _testClickOnAnyNewsItem()_, 如果服务器没有返回成功信息,测试用例将会返回失败,因为 recycler view 是不可见的。但是这个测试用例的目的并非如此。 **不管该用例为 PASS 还是 FAIL,它的最低要求是 recycler view 总是可见的,** 如果因某种原因,recycler view 不可见,那么测试用例不应视为 FAILED。正确的测试代码应该像下面这个样子。

|

||||

在第一个测试用例 `testClickOnAnyNewsItem()`, 如果服务器没有返回成功信息,测试用例将会返回失败,因为 recycler view 是不可见的。但是这个测试用例的目的并非如此。 **不管该用例为 PASS 还是 FAIL,它的最低要求是 recycler view 总是可见的,** 如果因某种原因,recycler view 不可见,那么测试用例不应视为 FAILED。正确的测试代码应该像下面这个样子。

|

||||

|

||||

```

|

||||

/*

|

||||

@ -74,15 +74,13 @@

|

||||

```

|

||||

#### 一个测试用例本身应该是完整的

|

||||

|

||||

当我开始测试, 我通常按如下顺序测试 activities:

|

||||

|

||||

* 语音选择

|

||||

当我开始测试, 我通常按如下顺序测试 activity:

|

||||

|

||||

* 语言选择

|

||||

* 新闻列表

|

||||

|

||||

* 新闻细节

|

||||

|

||||

因为我首先测试语音选择 activity,在测试 NewsList activity 之前,总有一种语音已经是选择好了的。但是当我先测试新闻列表 activity 时,测试用例开始返回错误信息。原因很简单 - 没有选择语言,recycler view 不会显示。**注意, 测试用例的执行顺序不能影响测试结果。** 因此在运行测试用例之前, 语言选项必须是保存在共享偏好中的。在本例中,测试用例独立于语言选择 activity 的测试。

|

||||

因为我首先测试语言选择 activity,在测试 NewsList activity 之前,总有一种语言已经是选择好了的。但是当我先测试新闻列表 activity 时,测试用例开始返回错误信息。原因很简单 - 没有选择语言,recycler view 不会显示。**注意, 测试用例的执行顺序不能影响测试结果。** 因此在运行测试用例之前, 语言选项必须是保存在共享偏好中的。在本例中,测试用例独立于语言选择 activity 的测试。

|

||||

|

||||

```

|

||||

@Rule

|

||||

@ -111,9 +109,9 @@

|

||||

intended(hasComponent(NewsDetailsActivity.class.getName()));

|

||||

?}

|

||||

```

|

||||

#### 在测试用例中避免使用条件代码。

|

||||

#### 在测试用例中避免使用条件代码

|

||||

|

||||

现在在第二个测试用例 _testChangeLanguageFeature()_中,我们获取到用户选择语言的个数, 基于这个数目,我们写了 if-else 条件来进行测试。 但是 if-else 条件应该写在你的代码当中,而不是测试代码里。每一个条件应该单独测试。 因此, 在本例中, 不是只写一条测试用例,而是要写如下两个测试用例。

|

||||

现在在第二个测试用例 `testChangeLanguageFeature()` 中,我们获取到用户选择语言的个数,基于这个数目,我们写了 if-else 条件来进行测试。 但是 if-else 条件应该写在你的代码当中,而不是测试代码里。每一个条件应该单独测试。 因此,在本例中,不是只写一条测试用例,而是要写如下两个测试用例。

|

||||

|

||||

```

|

||||

/**

|

||||

@ -145,14 +143,13 @@

|

||||

|

||||

在大多数应用中,我们与外部网络或者数据库进行交互。一个测试用例运行时可以向服务器发送一个请求,并获取成功或失败的返回信息。但是不能因从服务器获取到失败信息,就认为测试用例没有通过。这样想这个问题 - 如果测试用例失败,然后我们修改客户端代码,以便测试用例通过。 但是在本例中, 我们要在客户端进行任何更改吗?- **NO**。

|

||||

|

||||

但是你应该也无法完全避免要测试网络请求和响应。由于服务器是一个外部代理,我们可以设想一个场景,发送一些可能导致程序崩溃的错误响应。因此, 你写的测试用例应该覆盖所有可能来自服务器的响应,甚至包括服务器决不会发出的响应。这样可以覆盖所有代码,并能保证应用可以处理所有响应,而不会崩溃。

|

||||

|

||||

但是你应该也无法完全避免要测试网络请求和响应。由于服务器是一个外部代理,我们可以设想一个场景,发送一些可能导致程序崩溃的错误响应。因此,你写的测试用例应该覆盖所有可能来自服务器的响应,甚至包括服务器决不会发出的响应。这样可以覆盖所有代码,并能保证应用可以处理所有响应,而不会崩溃。

|

||||

|

||||

> 正确的编写测试用例与编写这些测试代码同等重要。

|

||||

|

||||

感谢你阅读此文章。希望对测试用例写的更好有所帮助。你可以在 [LinkedIn][1] 上联系我。还可以[在这里][2]阅读我的其他文章。

|

||||

|

||||

获取更多资讯请关注_[Mindorks][3]_, 我们发新文章时您将获得通知。

|

||||

获取更多资讯请关注我们, 我们发新文章时您将获得通知。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -3,7 +3,7 @@

|

||||

|

||||

如果你经常光顾 [Distrowatch][1] 网站,你会发现每一年的 Linux 系统流行度排行榜几乎都没啥变化。

|

||||

|

||||

排在前十名的一直都是那几个发行版,其它一些发行版也许现在还在排行榜中,到下一年年底就有可能不在了。

|

||||

排在前十名的一直都是那几个发行版,而其它一些发行版也许现在还在排行榜中,到下一年年底就有可能不在了。

|

||||

|

||||

关于 Distrowatch 的一个大家很不了解的功能叫做[候选列表][2],它包括以下类型的发行版:

|

||||

|

||||

@ -12,15 +12,15 @@

|

||||

- 相关的英文资料不够丰富

|

||||

- 该项目好像都没人进行维护

|

||||

|

||||

其它一些非常具有潜力,但是还未被评审的 Linux 系统发行版也是值得大家去关注的。注意,由于 Distrowatch 网站暂时没时间或人力去评审这些新的发行版,因此它们可能永远无法进入网站首页排名。

|

||||

一些非常具有潜力,但是还未被评审的 Linux 系统发行版也是值得大家去关注的。但是注意,由于 Distrowatch 网站暂时没时间或人力去评审这些新的发行版,因此它们可能永远无法进入网站首页排名。

|

||||

|

||||

因此,我们将会跟大家分享下 **2017** 年最具潜力的 **5** 个新的 Linux 发行版系统,并且会对它们做一些简单的介绍。

|

||||

|

||||

由于 Linux 系统的生态圈都非常活跃,你可以期待着这篇文章后续的不断更新,或许在下一年中它将完全大变样了。

|

||||

|

||||

尽管如此,咱们还是来看下这些新系统吧!

|

||||

不管怎么说,咱们还是来看下这些新系统吧!

|

||||

|

||||

### 1\. SemicodeOS 操作系统

|

||||

### 1、 SemicodeOS 操作系统

|

||||

|

||||

[SemicodeOS 操作系统][3] 是一个专为程序员和 Web 开发人员设计的 Linux 发行版。它包括所有的开箱即用的代码编译器,[各种文本编辑器][4],[最流行的编程语言的 IDE 环境][5],以及团队协作编程工具。

|

||||

|

||||

@ -34,7 +34,7 @@

|

||||

|

||||

*Semicode Linux 操作系统*

|

||||

|

||||

### 2\. EnchantmentOS 操作系统

|

||||

### 2、 EnchantmentOS 操作系统

|

||||

|

||||

[EnchantmentOS][7] 操作系统是一个基于 Xubuntu 16.04 的发行版,它包括一些经过特别挑选的对内存要求较低的应用程序。这无论对新老设备来说都是一个不错的选择。

|

||||

|

||||

@ -48,9 +48,9 @@

|

||||

|

||||

*EnchantmentOS 操作系统*

|

||||

|

||||

### 3\. Escuelas Linux 操作系统

|

||||

### 3、 Escuelas Linux 操作系统

|

||||

|

||||

[Escuelas Linux 操作系统][9](在西班牙语中是 ”Linux 学校“ 的意思)是一个基于 Bodhi 的 Linux 发行版,它主要是为中小学教育而设计的,它包括各种各样的与教育相关的应用软件。请忽略其西班牙语名字,它也提供全英语支持。

|

||||

[Escuelas Linux 操作系统][9](在西班牙语中是 “Linux 学校” 的意思)是一个基于 Bodhi 的 Linux 发行版,它主要是为中小学教育而设计的,它包括各种各样的与教育相关的应用软件。请忽略其西班牙语名字,它也提供全英语支持。

|

||||

|

||||