mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

6a38ecd4df

@ -1,39 +1,40 @@

|

||||

|

||||

如何在 Linux 中使用 Fio 来测评硬盘性能

|

||||

======

|

||||

|

||||

|

||||

|

||||

Fio(Flexible I/O Tester) 是一款由 Jens Axboe 开发的用于测评和压力/硬件验证的[免费开源][1]的软件

|

||||

Fio(Flexible I/O Tester) 是一款由 Jens Axboe 开发的用于测评和压力/硬件验证的[自由开源][1]的软件。

|

||||

|

||||

它支持 19 种不同类型的 I/O 引擎 (sync, mmap, libaio, posixaio, SG v3, splice, null, network, syslet, guasi, solarisaio, 以及更多), I/O 优先级(针对较新的 Linux 内核),I/O 速度,复刻或线程任务,和其他更多的东西。它能够在块设备和文件上工作。

|

||||

它支持 19 种不同类型的 I/O 引擎 (sync、mmap、libaio、posixaio、SG v3、splice、null、network、 syslet、guasi、solarisaio,以及更多), I/O 优先级(针对较新的 Linux 内核),I/O 速度,fork 的任务或线程任务等等。它能够在块设备和文件上工作。

|

||||

|

||||

Fio 接受一种非常简单易于理解的文本格式作为任务描述。软件默认包含了许多示例任务文件。 Fio 展示了所有类型的 I/O 性能信息,包括完整的 IO 延迟和百分比。

|

||||

Fio 接受一种非常简单易于理解的文本格式的任务描述。软件默认包含了几个示例任务文件。 Fio 展示了所有类型的 I/O 性能信息,包括完整的 IO 延迟和百分比。

|

||||

|

||||

它被广泛的应用在非常多的地方,包括测评、QA,以及验证用途。它支持 Linux 、 FreeBSD 、 NetBSD、 OpenBSD、 OS X、 OpenSolaris、 AIX、 HP-UX、 Android 以及 Windows。

|

||||

它被广泛的应用在非常多的地方,包括测评、QA,以及验证用途。它支持 Linux 、FreeBSD 、NetBSD、 OpenBSD、 OS X、 OpenSolaris、 AIX、 HP-UX、 Android 以及 Windows。

|

||||

|

||||

在这个教程,我们将使用 Ubuntu 16 ,你需要拥有这台电脑的 sudo 或 root 权限。我们将完整的进行安装和 Fio 的使用。

|

||||

在这个教程,我们将使用 Ubuntu 16 ,你需要拥有这台电脑的 `sudo` 或 root 权限。我们将完整的进行安装和 Fio 的使用。

|

||||

|

||||

### 使用源码安装 Fio

|

||||

|

||||

我们要去克隆 Github 上的仓库。安装所需的依赖,然后我们将会从源码构建应用。首先,确保我们安装了 Git 。

|

||||

我们要去克隆 GitHub 上的仓库。安装所需的依赖,然后我们将会从源码构建应用。首先,确保我们安装了 Git 。

|

||||

|

||||

```

|

||||

sudo apt-get install git

|

||||

```

|

||||

|

||||

CentOS 用户可以执行下述命令:

|

||||

|

||||

```

|

||||

sudo yum install git

|

||||

```

|

||||

|

||||

现在,我们切换到 /opt 目录,并从 Github 上克隆仓库:

|

||||

现在,我们切换到 `/opt` 目录,并从 Github 上克隆仓库:

|

||||

|

||||

```

|

||||

cd /opt

|

||||

git clone https://github.com/axboe/fio

|

||||

```

|

||||

|

||||

你应该会看到下面这样的输出

|

||||

你应该会看到下面这样的输出:

|

||||

|

||||

```

|

||||

Cloning into 'fio'...

|

||||

@ -45,7 +46,7 @@ Resolving deltas: 100% (16251/16251), done.

|

||||

Checking connectivity... done.

|

||||

```

|

||||

|

||||

现在,我们通过在 opt 目录下输入下方的命令切换到 Fio 的代码目录:

|

||||

现在,我们通过在 `/opt` 目录下输入下方的命令切换到 Fio 的代码目录:

|

||||

|

||||

```

|

||||

cd fio

|

||||

@ -61,7 +62,7 @@ cd fio

|

||||

|

||||

### 在 Ubuntu 上安装 Fio

|

||||

|

||||

对于 Ubuntu 和 Debian 来说, Fio 已经在主仓库内。你可以很容易的使用类似 yum 和 apt-get 的标准包管理器来安装 Fio。

|

||||

对于 Ubuntu 和 Debian 来说, Fio 已经在主仓库内。你可以很容易的使用类似 `yum` 和 `apt-get` 的标准包管理器来安装 Fio。

|

||||

|

||||

对于 Ubuntu 和 Debian ,你只需要简单的执行下述命令:

|

||||

|

||||

@ -69,7 +70,8 @@ cd fio

|

||||

sudo apt-get install fio

|

||||

```

|

||||

|

||||

对于 CentOS/Redhat 你只需要简单执行下述命令:

|

||||

对于 CentOS/Redhat 你只需要简单执行下述命令。

|

||||

|

||||

在 CentOS ,你可能在你能安装 Fio 前需要去安装 EPEL 仓库到你的系统中。你可以通过执行下述命令来安装它:

|

||||

|

||||

```

|

||||

@ -124,23 +126,20 @@ Run status group 0 (all jobs):

|

||||

|

||||

Disk stats (read/write):

|

||||

sda: ios=0/0, merge=0/0, ticks=0/0, in_queue=0, util=0.00%

|

||||

|

||||

|

||||

```

|

||||

|

||||

### 执行随机读测试

|

||||

|

||||

我们将要执行一个随机读测试,我们将会尝试读取一个随机的 2GB 文件。

|

||||

|

||||

```

|

||||

|

||||

sudo fio --name=randread --ioengine=libaio --iodepth=16 --rw=randread --bs=4k --direct=0 --size=512M --numjobs=4 --runtime=240 --group_reporting

|

||||

|

||||

|

||||

```
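The command above gives each job `--size=512M` and starts `--numjobs=4` jobs, which is where the 2GB total mentioned in the text comes from (4 x 512 MiB = 2 GiB). The arithmetic can be sketched in Python as follows. `parse_size` and `total_bytes` are hypothetical helpers written for this example, not part of fio, and the sketch assumes 1024-based size suffixes.

```python
# Hypothetical helpers for illustration only; they are not part of fio.
# Assumption: size suffixes are 1024-based (K = 1024, M = 1024**2, G = 1024**3).
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3}


def parse_size(text: str) -> int:
    """Convert a size such as '512M' or '4k' to a number of bytes."""
    text = text.strip().upper()
    if text and text[-1] in UNITS:
        return int(text[:-1]) * UNITS[text[-1]]
    return int(text)


def total_bytes(size: str, numjobs: int) -> int:
    """--size applies to each job, so the total is size multiplied by numjobs."""
    return parse_size(size) * numjobs


if __name__ == "__main__":
    # Values taken from the random read command above.
    print(total_bytes("512M", 4) / 1024**3, "GiB")
```

The same helper shows that `--bs=4k` corresponds to 4096-byte requests under the same assumption.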

|

||||

|

||||

你应该会看到下面这样的输出

|

||||

```

|

||||

你应该会看到下面这样的输出:

|

||||

|

||||

```

|

||||

...

|

||||

fio-2.2.10

|

||||

Starting 4 processes

|

||||

@ -176,15 +175,13 @@ Run status group 0 (all jobs):

|

||||

|

||||

Disk stats (read/write):

|

||||

sda: ios=521587/871, merge=0/1142, ticks=96664/612, in_queue=97284, util=99.85%

|

||||

|

||||

|

||||

```

|

||||

|

||||

最后,我们想要展示一个简单的随机读-写测试来看一看 Fio 返回的输出类型。

|

||||

|

||||

### 读写性能测试

|

||||

|

||||

下述命令将会测试 USB Pen 驱动器 (/dev/sdc1) 的随机读写性能:

|

||||

下述命令将会测试 USB Pen 驱动器 (`/dev/sdc1`) 的随机读写性能:

|

||||

|

||||

```

|

||||

sudo fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=random_read_write.fio --bs=4k --iodepth=64 --size=4G --readwrite=randrw --rwmixread=75

|

||||

@ -213,8 +210,6 @@ Disk stats (read/write):

|

||||

sda: ios=774141/258944, merge=1463/899, ticks=748800/150316, in_queue=900720, util=99.35%

|

||||

```

|

||||

|

||||

We hope you enjoyed following along with this tutorial. Fio is a very useful tool, and we hope you can use it in your next debugging session. If you enjoyed this post, feel free to leave a comment or a question. Go ahead and clone the repo and play around with the code.

|

||||

|

||||

我们希望你能喜欢这个教程并且享受接下来的内容,Fio 是一个非常有用的工具,并且我们希望你能在你下一次 Debugging 活动中使用到它。如果你喜欢这个文章,欢迎留下评论和问题。

|

||||

|

||||

|

||||

@ -224,7 +219,7 @@ via: https://wpmojo.com/how-to-use-fio-to-measure-disk-performance-in-linux/

|

||||

|

||||

作者:[Alex Pearson][a]

|

||||

译者:[Bestony](https://github.com/bestony)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,58 @@

|

||||

老树发新芽:微服务

|

||||

======

|

||||

|

||||

|

||||

|

||||

如果我告诉你有这样一种软件架构,一个应用程序的组件通过基于网络的通讯协议为其它组件提供服务,我估计你可能会说它是 …

|

||||

|

||||

是的,它和你编程的年限有关。如果你从上世纪九十年代就开始了你的编程生涯,那么你肯定会说它是 <ruby>[面向服务的架构][1]<rt> Service-Oriented Architecture</rt></ruby>(SOA)。但是,如果你是个年青人,并且在云上获得初步的经验,那么,你将会说:“哦,你说的是 <ruby>[微服务][2]<rt>Microservices</rt></ruby>。”

|

||||

|

||||

你们都没错。如果想真正地了解它们的差别,你需要深入地研究这两种架构。

|

||||

|

||||

在 SOA 中,服务是一个功能,它是定义好的、自包含的、并且是不依赖上下文和其它服务的状态的功能。总共有两种服务。一种是消费者服务,它从另外类型的服务 —— 提供者服务 —— 中请求一个服务。一个 SOA 服务可以同时扮演这两种角色。

|

||||

|

||||

SOA 服务可以与其它服务交换数据。两个或多个服务也可以彼此之间相互协调。这些服务执行基本的任务,比如创建一个用户帐户、提供登录功能、或验证支付。

|

||||

|

||||

与其说 SOA 是模块化一个应用程序,还不如说它是把分布式的、独立维护和部署的组件,组合成一个应用程序。然后在服务器上运行这些组件。

|

||||

|

||||

早期版本的 SOA 使用面向对象的协议进行组件间通讯。例如,微软的 <ruby>[分布式组件对象模型][3]<rt> Distributed Component Object Model</rt></ruby>(DCOM) 和使用 <ruby>[通用对象请求代理架构][5]<rt>Common Object Request Broker Architecture</rt></ruby>(CORBA) 规范的 <ruby>[对象请求代理][4]<rt> Object Request Broker</rt></ruby>(ORB)。

|

||||

|

||||

用于消息服务的最新的版本,有 <ruby>[Java 消息服务][6]<rt> Java Message Service</rt></ruby>(JMS)或者 <ruby>[高级消息队列协议][7]<rt>Advanced Message Queuing Protocol</rt></ruby>(AMQP)。这些服务通过<ruby>企业服务总线<rt>Enterprise Service Bus</rt></ruby>(ESB) 进行连接。基于这些总线,来传递和接收可扩展标记语言(XML)格式的数据。

|

||||

|

||||

[微服务][2] 是一个架构样式,其中的应用程序以松散耦合的服务或模块组成。它适用于开发大型的、复杂的应用程序的<ruby>持续集成<rt>Continuous Integration</rt></ruby>/<ruby>持续部署<rt>Continuous Deployment</rt></ruby>(CI/CD)模型。一个应用程序就是一堆模块的汇总。

|

||||

|

||||

每个微服务提供一个应用程序编程接口(API)端点。它们通过轻量级协议连接,比如,<ruby>[表述性状态转移][8]<rt> REpresentational State Transfer</rt></ruby>(REST),或 [gRPC][9]。数据倾向于使用 <ruby>[JavaScript 对象标记][10]<rt> JavaScript Object Notation</rt></ruby>(JSON)或 [Protobuf][11] 来表示。

|

||||

|

||||

这两种架构都可以用于去替代以前老的整体式架构,整体式架构的应用程序被构建为单个的、自治的单元。例如,在一个客户机 —— 服务器模式中,一个典型的 Linux、Apache、MySQL、PHP/Python/Perl (LAMP) 服务器端应用程序将去处理 HTTP 请求、运行子程序、以及从底层的 MySQL 数据库中检索/更新数据。所有这些应用程序“绑”在一起提供服务。当你改变了任何一个东西,你都必须去构建和部署一个新版本。

|

||||

|

||||

使用 SOA,你可以只改变需要的几个组件,而不是整个应用程序。使用微服务,你可以做到一次只改变一个服务。使用微服务,你才能真正做到一个解耦架构。

|

||||

|

||||

微服务也比 SOA 更轻量级。不过 SOA 服务是部署到服务器和虚拟机上,而微服务是部署在容器中。协议也更轻量级。这使得微服务比 SOA 更灵活。因此,它更适合于要求敏捷性的电商网站。

|

||||

|

||||

说了这么多,到底意味着什么呢?微服务就是 SOA 在容器和云计算上的变种。

|

||||

|

||||

老式的 SOA 并没有离我们远去,而因为我们不断地将应用程序搬迁到容器中,所以微服务架构将越来越流行。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://blogs.dxc.technology/2018/05/08/everything-old-is-new-again-microservices/

|

||||

|

||||

作者:[Cloudy Weather][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://blogs.dxc.technology/author/steven-vaughan-nichols/

|

||||

[1]:https://www.service-architecture.com/articles/web-services/service-oriented_architecture_soa_definition.html

|

||||

[2]:http://microservices.io/

|

||||

[3]:https://technet.microsoft.com/en-us/library/cc958799.aspx

|

||||

[4]:https://searchmicroservices.techtarget.com/definition/Object-Request-Broker-ORB

|

||||

[5]:http://www.corba.org/

|

||||

[6]:https://docs.oracle.com/javaee/6/tutorial/doc/bncdq.html

|

||||

[7]:https://www.amqp.org/

|

||||

[8]:https://www.service-architecture.com/articles/web-services/representational_state_transfer_rest.html

|

||||

[9]:https://grpc.io/

|

||||

[10]:https://www.json.org/

|

||||

[11]:https://github.com/google/protobuf/

|

||||

@ -2,18 +2,16 @@

|

||||

============================================================

|

||||

|

||||

|

||||

>欧洲核子研究组织(简称 CERN)依靠开源技术处理大型强子对撞机生成的大量数据。ATLAS(超环面仪器,如图所示)是一种探测基本粒子的通用探测器。(图片来源:CERN)[经许可使用][2]

|

||||

|

||||

>欧洲核子研究组织(简称 CERN)依靠开源技术处理大型强子对撞机生成的大量数据。ATLAS(超环面仪器,如图所示)是一种探测基本粒子的通用探测器。(图片来源:CERN)

|

||||

|

||||

[CERN][3]

|

||||

|

||||

[CERN][6] 无需过多介绍了吧。CERN 创建了万维网和大型强子对撞机(LHC),这是世界上最大的粒子加速器,就是通过它发现了 [希格斯玻色子][7]。负责该组织 IT 操作系统和基础架构的 Tim Bell 表示,他的团队的目标是“为全球 13000 名物理学家提供计算设施,以分析这些碰撞、了解宇宙的构成以及是如何运转的。”

|

||||

[CERN][6] 无需过多介绍了吧。CERN 创建了<ruby>万维网<rt>World Wide Web</rt></ruby>(WWW)和<ruby>大型强子对撞机<rt>Large Hadron Collider</rt></ruby>(LHC),这是世界上最大的<ruby>粒子加速器<rt>particle accelerator</rt></ruby>,就是通过它发现了 <ruby>[希格斯玻色子][7]<rt>Higgs boson</rt></ruby>。负责该组织 IT 操作系统和基础架构的 Tim Bell 表示,他的团队的目标是“为全球 13000 名物理学家提供计算设施,以分析这些碰撞,了解宇宙的构成以及是如何运转的。”

|

||||

|

||||

CERN 正在进行硬核科学研究,尤其是大型强子对撞机,它在运行时 [生成大量数据][8]。“CERN 目前存储大约 200 PB 的数据,当加速器运行时,每月有超过 10 PB 的数据产生。这必然会给计算基础架构带来极大的挑战,包括存储大量数据,以及能够在合理的时间范围内处理数据,对于网络、存储技术和高效计算架构都是很大的压力。“Bell 说到。

|

||||

|

||||

### [tim-bell-cern.png][4]

|

||||

|

||||

|

||||

|

||||

Tim Bell, CERN [经许可使用][1] Swapnil Bhartiya

|

||||

*Tim Bell, CERN*

|

||||

|

||||

大型强子对撞机的运作规模和它产生的数据量带来了严峻的挑战,但 CERN 对这些问题并不陌生。CERN 成立于 1954 年,已经 60 余年了。“我们一直面临着难以解决的计算能力挑战,但我们一直在与开源社区合作解决这些问题。”Bell 说,“即使在 90 年代,当我们发明万维网时,我们也希望与人们共享,使其能够从 CERN 的研究中受益,开源是做这件事的再合适不过的工具了。”

|

||||

|

||||

@ -29,19 +27,20 @@ CERN 帮助 CentOS 提供基础架构,他们还组织了 CentOS DoJo 活动(

|

||||

|

||||

除了 OpenStack 和 CentOS 之外,CERN 还是其他开源项目的深度用户,包括用于配置管理的 Puppet、用于监控的 Grafana 和 InfluxDB,等等。

|

||||

|

||||

“我们与全球约 170 个实验室合作。因此,每当我们发现一个开源项目的可完善之处,其他实验室便可以很容易地采纳使用。“Bell 说,”与此同时,我们也向其他项目学习。当像 eBay 和 Rackspace 这样大规模的安装提高了解决方案的可扩展性时,我们也从中受益,也可以扩大规模。“

|

||||

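"We collaborate with around 170 labs worldwide. So whenever we find an improvement to an open source project, other labs can easily adopt it," Bell said. "At the same time, we also learn from others. When large-scale installations like eBay and Rackspace improve the scalability of a solution, we benefit as well and can scale up too."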

|

||||

|

||||

### 解决现实问题

|

||||

|

||||

2012 年左右,CERN 正在研究如何为大型强子对撞机扩展计算能力,但难点是人员而不是技术。CERN 雇用的员工人数是固定的。“我们必须找到一种方法来扩展计算能力,而不需要大量额外的人来管理。”Bell 说,“OpenStack 为我们提供了一个自动的 API 驱动和软件定义的基础架构。”OpenStack 还帮助 CERN 检查与服务交付相关的问题,然后使其自动化,而无需增加员工。

|

||||

|

||||

“我们目前在日内瓦和布达佩斯的两个数据中心运行大约 280000 个核心(cores)和 7000 台服务器。我们正在使用软件定义的基础架构使一切自动化,这使我们能够在保持员工数量不变的同时继续添加更多的服务器。“Bell 说。

|

||||

“我们目前在日内瓦和布达佩斯的两个数据中心运行大约 280000 个处理器核心和 7000 台服务器。我们正在使用软件定义的基础架构使一切自动化,这使我们能够在保持员工数量不变的同时继续添加更多的服务器。“Bell 说。

|

||||

|

||||

随着时间的推移,CERN 将面临更大的挑战。大型强子对撞机有一个到 2035 年的蓝图,包括一些重要的升级。“我们的加速器运转三到四年,然后会用 18 个月或两年的时间来升级基础架构。在这维护期间我们会做一些计算能力的规划。“Bell 说。CERN 还计划升级高亮度大型强子对撞机,会允许更高光度的光束。与目前的 CERN 的规模相比,升级意味着计算需求需增加约 60 倍。

|

||||

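CERN will face even greater challenges over time. The Large Hadron Collider has a roadmap out to 2035 that includes several major upgrades. "We run the accelerator for three to four years and then spend 18 months or two years upgrading the infrastructure. We do our capacity planning during that maintenance period," Bell said. CERN also plans the High-Luminosity LHC upgrade, which will allow beams of higher luminosity. Compared with CERN's current scale, the upgrade means computing requirements will grow roughly 60-fold.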

|

||||

|

||||

“根据摩尔定律,我们可能只能满足需求的四分之一,因此我们必须找到相应的扩展计算能力和存储基础架构的方法,并找到自动化和解决方案,例如 OpenStack,将有助于此。”Bell 说。

|

||||

|

||||

“当我们开始使用大型强子对撞机并观察我们如何提供计算能力时,很明显我们无法将所有内容都放入 CERN 的数据中心,因此我们设计了一个分布式网格结构:位于中心的 CERN 和围绕着它的级联结构。“Bell 说,“全世界约有 12 个大型一级数据中心,然后是 150 所小型大学和实验室。他们从大型强子对撞机的数据中收集样本,以帮助物理学家理解和分析数据。“

|

||||

“当我们开始使用大型强子对撞机并观察我们如何提供计算能力时,很明显我们无法将所有内容都放入 CERN 的数据中心,因此我们设计了一个分布式网格结构:位于中心的 CERN 和围绕着它的级联结构。”Bell 说,“全世界约有 12 个大型一级数据中心,然后是 150 所小型大学和实验室。他们从大型强子对撞机的数据中收集样本,以帮助物理学家理解和分析数据。”

|

||||

|

||||

这种结构意味着 CERN 正在进行国际合作,数百个国家正致力于分析这些数据。归结为一个基本原则,即开源不仅仅是共享代码,还包括人们之间的协作、知识共享,以实现个人、组织或公司无法单独实现的目标。这就是开源世界的希格斯玻色子。

|

||||

|

||||

@ -49,9 +48,9 @@ CERN 帮助 CentOS 提供基础架构,他们还组织了 CentOS DoJo 活动(

|

||||

|

||||

via: https://www.linux.com/blog/2018/5/how-cern-using-linux-open-source

|

||||

|

||||

作者:[SWAPNIL BHARTIYA ][a]

|

||||

作者:[SWAPNIL BHARTIYA][a]

|

||||

译者:[jessie-pang](https://github.com/jessie-pang)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,21 +1,24 @@

|

||||

如何在 Git 中重置、恢复、和返回到以前的状态

|

||||

如何在 Git 中重置、恢复,返回到以前的状态

|

||||

======

|

||||

|

||||

> 用简洁而优雅的 Git 命令撤销仓库中的改变。

|

||||

|

||||

|

||||

|

||||

使用 Git 工作时其中一个鲜为人知(和没有意识到)的方面就是,如何很容易地返回到你以前的位置 —— 也就是说,在仓库中如何很容易地去撤销那怕是重大的变更。在本文中,我们将带你了解如何去重置、恢复、和完全回到以前的状态,做到这些只需要几个简单而优雅的 Git 命令。

|

||||

使用 Git 工作时其中一个鲜为人知(和没有意识到)的方面就是,如何轻松地返回到你以前的位置 —— 也就是说,在仓库中如何很容易地去撤销那怕是重大的变更。在本文中,我们将带你了解如何去重置、恢复和完全回到以前的状态,做到这些只需要几个简单而优雅的 Git 命令。

|

||||

|

||||

### reset

|

||||

### 重置

|

||||

|

||||

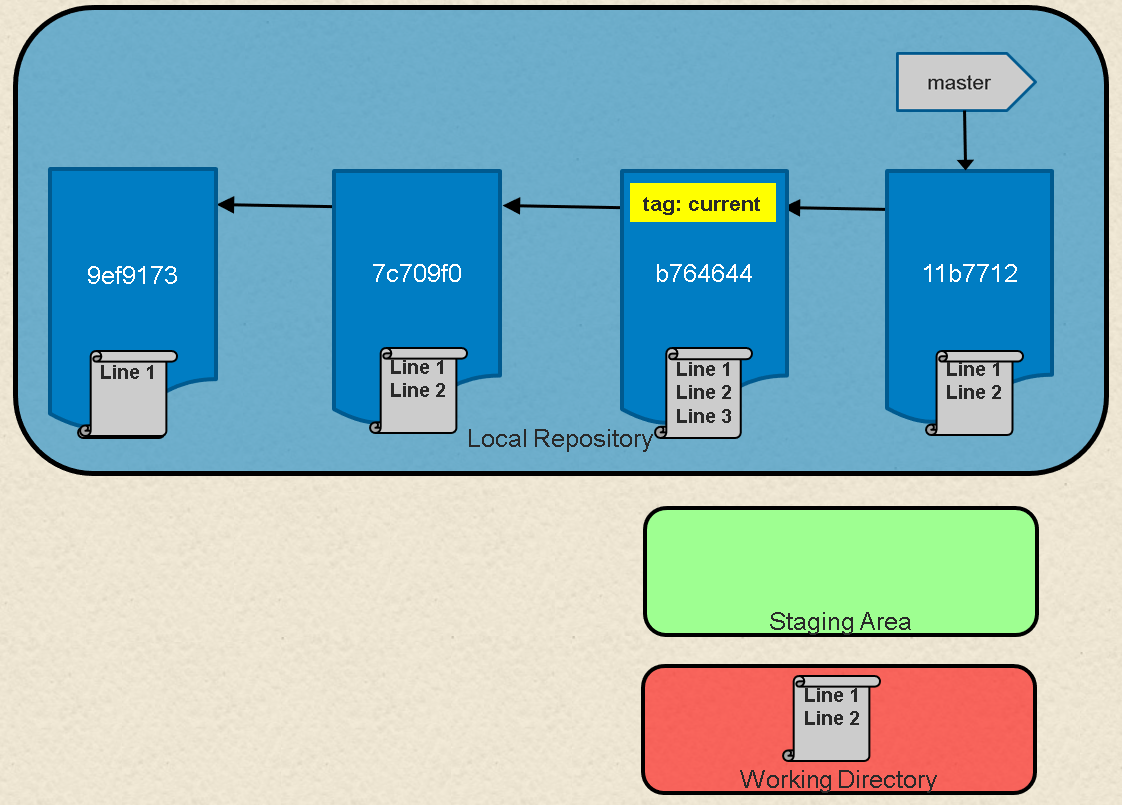

我们从 Git 的 `reset` 命令开始。确实,你应该能够想到它就是一个 "回滚" — 它将你本地环境返回到前面的提交。这里的 "本地环境" 一词,我们指的是你的本地仓库、暂存区、以及工作目录。

|

||||

我们从 Git 的 `reset` 命令开始。确实,你应该能够认为它就是一个 “回滚” —— 它将你本地环境返回到之前的提交。这里的 “本地环境” 一词,我们指的是你的本地仓库、暂存区以及工作目录。

|

||||

|

||||

先看一下图 1。在这里我们有一个在 Git 中表示一系列状态的提交。在 Git 中一个分支就是简单的一个命名的、可移动指针到一个特定的提交。在这种情况下,我们的 master 分支是链中指向最新提交的一个指针。

|

||||

先看一下图 1。在这里我们有一个在 Git 中表示一系列提交的示意图。在 Git 中一个分支简单来说就是一个命名的、指向一个特定的提交的可移动指针。在这里,我们的 master 分支是指向链中最新提交的一个指针。

|

||||

|

||||

![Local Git environment with repository, staging area, and working directory][2]

|

||||

|

||||

图 1:有仓库、暂存区、和工作目录的本地环境

|

||||

*图 1:有仓库、暂存区、和工作目录的本地环境*

|

||||

|

||||

如果看一下我们的 master 分支是什么,可以看一下到目前为止我们产生的提交链。

|

||||

|

||||

```

|

||||

$ git log --oneline

|

||||

b764644 File with three lines

|

||||

@ -23,41 +26,49 @@ b764644 File with three lines

|

||||

9ef9173 File with one line

|

||||

```

|

||||

|

||||

如果我们想回滚到前一个提交会发生什么呢?很简单 —— 我们只需要移动分支指针即可。Git 提供了为我们做这个动作的命令。例如,如果我们重置 master 为当前提交回退两个提交的位置,我们可以使用如下之一的方法:

|

||||

如果我们想回滚到前一个提交会发生什么呢?很简单 —— 我们只需要移动分支指针即可。Git 提供了为我们做这个动作的 `reset` 命令。例如,如果我们重置 master 为当前提交回退两个提交的位置,我们可以使用如下之一的方法:

|

||||

|

||||

`$ git reset 9ef9173`(使用一个绝对的提交 SHA1 值 9ef9173)

|

||||

```

|

||||

$ git reset 9ef9173

|

||||

```

|

||||

|

||||

或

|

||||

(使用一个绝对的提交 SHA1 值 `9ef9173`)

|

||||

|

||||

`$ git reset current~2`(在 “current” 标签之前,使用一个相对值 -2)

|

||||

或:

|

||||

|

||||

```

|

||||

$ git reset current~2

|

||||

```

|

||||

(在 “current” 标签之前,使用一个相对值 -2)

|

||||

|

||||

图 2 展示了操作的结果。在这之后,如果我们在当前分支(master)上运行一个 `git log` 命令,我们将看到只有一个提交。

|

||||

|

||||

```

|

||||

$ git log --oneline

|

||||

|

||||

9ef9173 File with one line

|

||||

|

||||

```

|

||||

|

||||

![After reset][4]

|

||||

|

||||

图 2:在 `reset` 之后

|

||||

*图 2:在 `reset` 之后*

|

||||

|

||||

`git reset` 命令也包含使用一个你最终满意的提交内容去更新本地环境的其它部分的选项。这些选项包括:`hard` 在仓库中去重置指向的提交,用提交的内容去填充工作目录,并重置暂存区;`soft` 仅重置仓库中的指针;而 `mixed`(默认值)将重置指针和暂存区。

|

||||

`git reset` 命令也包含使用一些选项,可以让你最终满意的提交内容去更新本地环境的其它部分。这些选项包括:`hard` 在仓库中去重置指向的提交,用提交的内容去填充工作目录,并重置暂存区;`soft` 仅重置仓库中的指针;而 `mixed`(默认值)将重置指针和暂存区。

|

||||

|

||||

这些选项在特定情况下非常有用,比如,`git reset --hard <commit sha1 | reference>` 这个命令将覆盖本地任何未提交的更改。实际上,它重置了(清除掉)暂存区,并用你重置的提交内容去覆盖了工作区中的内容。在你使用 `hard` 选项之前,一定要确保这是你真正地想要做的操作,因为这个命令会覆盖掉任何未提交的更改。

|

||||

|

||||

### revert

|

||||

### 恢复

|

||||

|

||||

`git revert` 命令的实际结果类似于 `reset`,但它的方法不同。`reset` 命令是在(默认)链中向后移动分支的指针去“撤销”更改,`revert` 命令是在链中添加一个新的提交去“取消”更改。再次查看图 1 可以非常轻松地看到这种影响。如果我们在链中的每个提交中向文件添加一行,一种方法是使用 `reset` 使那个提交返回到仅有两行的那个版本,如:`git reset HEAD~1`。

|

||||

`git revert` 命令的实际结果类似于 `reset`,但它的方法不同。`reset` 命令(默认)是在链中向后移动分支的指针去“撤销”更改,`revert` 命令是在链中添加一个新的提交去“取消”更改。再次查看图 1 可以非常轻松地看到这种影响。如果我们在链中的每个提交中向文件添加一行,一种方法是使用 `reset` 使那个提交返回到仅有两行的那个版本,如:`git reset HEAD~1`。

|

||||

|

||||

另一个方法是添加一个新的提交去删除第三行,以使最终结束变成两行的版本 —— 实际效果也是取消了那个更改。使用一个 `git revert` 命令可以实现上述目的,比如:

|

||||

|

||||

另一个方法是添加一个新的提交去删除第三行,以使最终结束变成两行的版本 — 实际效果也是取消了那个更改。使用一个 `git revert` 命令可以实现上述目的,比如:

|

||||

```

|

||||

$ git revert HEAD

|

||||

|

||||

```

|

||||

|

||||

因为它添加了一个新的提交,Git 将提示如下的提交信息:

|

||||

|

||||

```

|

||||

Revert "File with three lines"

|

||||

|

||||

@ -74,6 +85,7 @@ This reverts commit b764644bad524b804577684bf74e7bca3117f554.

|

||||

图 3(在下面)展示了 `revert` 操作完成后的结果。

|

||||

|

||||

如果我们现在运行一个 `git log` 命令,我们将看到前面的提交之前的一个新提交。

|

||||

|

||||

```

|

||||

$ git log --oneline

|

||||

11b7712 Revert "File with three lines"

|

||||

@ -83,6 +95,7 @@ b764644 File with three lines

|

||||

```
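The contrast between `reset` and `revert` described above can be sketched with a toy model in Python. This is only an illustration of the pointer idea, not how Git is implemented: the `Repo` class and its commit ids are hypothetical, and the model tracks commit messages only, not file contents, the staging area, or the working directory.

```python
import itertools


class Repo:
    """Toy model: commits form a parent-linked chain, a branch is just a pointer."""

    def __init__(self):
        self.commits = {}               # commit id -> (parent id, message)
        self.branch = None              # id of the commit the branch points to
        self._ids = itertools.count(1)  # source of made-up commit ids

    def commit(self, message):
        """Add a commit on top of the current one and move the branch to it."""
        cid = f"c{next(self._ids)}"
        self.commits[cid] = (self.branch, message)
        self.branch = cid
        return cid

    def log(self):
        """Messages reachable from the branch pointer, newest first."""
        out, cid = [], self.branch
        while cid is not None:
            parent, message = self.commits[cid]
            out.append(message)
            cid = parent
        return out

    def reset(self, cid):
        """Like `git reset <commit>`: only the pointer moves, no commit is deleted."""
        self.branch = cid

    def revert(self):
        """Like `git revert HEAD`: add a new commit that undoes the latest one."""
        _, message = self.commits[self.branch]
        return self.commit(f'Revert "{message}"')


if __name__ == "__main__":
    repo = Repo()
    first = repo.commit("File with one line")
    repo.commit("File with two lines")
    repo.commit("File with three lines")
    repo.revert()
    print(repo.log())       # history grows by one commit
    repo.reset(first)
    print(repo.log())       # history as seen from the branch shrinks
    print(len(repo.commits))  # but every commit is still stored
```

In this model `revert` makes the visible history longer, while `reset` makes it shorter without removing anything from the commit store.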

|

||||

|

||||

这里是工作目录中这个文件当前的内容:

|

||||

|

||||

```

|

||||

$ cat <filename>

|

||||

Line 1

|

||||

@ -91,31 +104,34 @@ Line 2

|

||||

|

||||

|

||||

|

||||

#### Revert 或 reset 如何选择?

|

||||

*图 3 `revert` 操作之后*

|

||||

|

||||

#### 恢复或重置如何选择?

|

||||

|

||||

为什么要优先选择 `revert` 而不是 `reset` 操作?如果你已经将你的提交链推送到远程仓库(其它人可以已经拉取了你的代码并开始工作),一个 `revert` 操作是让他们去获得更改的非常友好的方式。这是因为 Git 工作流可以非常好地在分支的末端添加提交,但是当有人 `reset` 分支指针之后,一组提交将再也看不见了,这可能会是一个挑战。

|

||||

|

||||

当我们以这种方式使用 Git 工作时,我们的基本规则之一是:在你的本地仓库中使用这种方式去更改还没有推送的代码是可以的。如果提交已经推送到了远程仓库,并且可能其它人已经使用它来工作了,那么应该避免这些重写提交历史的更改。

|

||||

|

||||

总之,如果你想回滚、撤销、或者重写其它人已经在使用的一个提交链的历史,当你的同事试图将他们的更改合并到他们拉取的原始链上时,他们可能需要做更多的工作。如果你必须对已经推送并被其他人正在使用的代码做更改,在你做更改之前必须要与他们沟通,让他们先合并他们的更改。然后在没有需要去合并的侵入操作之后,他们再拉取最新的副本。

|

||||

总之,如果你想回滚、撤销或者重写其它人已经在使用的一个提交链的历史,当你的同事试图将他们的更改合并到他们拉取的原始链上时,他们可能需要做更多的工作。如果你必须对已经推送并被其他人正在使用的代码做更改,在你做更改之前必须要与他们沟通,让他们先合并他们的更改。然后在这个侵入操作没有需要合并的内容之后,他们再拉取最新的副本。

|

||||

|

||||

你可能注意到了,在我们做了 `reset` 操作之后,原始的提交链仍然在那个位置。我们移动了指针,然后 `reset` 代码回到前一个提交,但它并没有删除任何提交。换句话说就是,只要我们知道我们所指向的原始提交,我们能够通过简单的返回到分支的原始链的头部来“恢复”指针到前面的位置:

|

||||

|

||||

你可能注意到了,在我们做了 `reset` 操作之后,原始的链仍然在那个位置。我们移动了指针,然后 `reset` 代码回到前一个提交,但它并没有删除任何提交。换句话说就是,只要我们知道我们所指向的原始提交,我们能够通过简单的返回到分支的原始头部来“恢复”指针到前面的位置:

|

||||

```

|

||||

git reset <sha1 of commit>

|

||||

|

||||

```

|

||||

|

||||

当提交被替换之后,我们在 Git 中做的大量其它操作也会发生类似的事情。新提交被创建,有关的指针被移动到一个新的链,但是老的提交链仍然存在。

|

||||

|

||||

### Rebase

|

||||

### 变基

|

||||

|

||||

现在我们来看一个分支变基。假设我们有两个分支 — master 和 feature — 提交链如下图 4 所示。Master 的提交链是 `C4->C2->C1->C0` 和 feature 的提交链是 `C5->C3->C2->C1->C0`.

|

||||

现在我们来看一个分支变基。假设我们有两个分支:master 和 feature,提交链如下图 4 所示。master 的提交链是 `C4->C2->C1->C0` 和 feature 的提交链是 `C5->C3->C2->C1->C0`。

|

||||

|

||||

![Chain of commits for branches master and feature][6]

|

||||

|

||||

图 4:master 和 feature 分支的提交链

|

||||

*图 4:master 和 feature 分支的提交链*

|

||||

|

||||

如果我们在分支中看它的提交记录,它们看起来应该像下面的这样。(为了易于理解,`C` 表示提交信息)

|

||||

|

||||

```

|

||||

$ git log --oneline master

|

||||

6a92e7a C4

|

||||

@ -131,9 +147,10 @@ f33ae68 C1

|

||||

5043e79 C0

|

||||

```

|

||||

|

||||

我给人讲,在 Git 中,可以将 `rebase` 认为是 “将历史合并”。从本质上来说,Git 将一个分支中的每个不同提交尝试“重放”到另一个分支中。

|

||||

我告诉人们在 Git 中,可以将 `rebase` 认为是 “将历史合并”。从本质上来说,Git 将一个分支中的每个不同提交尝试“重放”到另一个分支中。

|

||||

|

||||

因此,我们使用基本的 Git 命令,可以变基一个 feature 分支进入到 master 中,并将它拼入到 `C4` 中(比如,将它插入到 feature 的链中)。操作命令如下:

|

||||

|

||||

因此,我们使用基本的 Git 命令,可以 rebase 一个 feature 分支进入到 master 中,并将它拼入到 `C4` 中(比如,将它插入到 feature 的链中)。操作命令如下:

|

||||

```

|

||||

$ git checkout feature

|

||||

$ git rebase master

|

||||

@ -147,9 +164,10 @@ Applying: C5

|

||||

|

||||

![Chain of commits after the rebase command][8]

|

||||

|

||||

图 5:`rebase` 命令完成后的提交链

|

||||

*图 5:`rebase` 命令完成后的提交链*

|

||||

|

||||

接着,我们看一下提交历史,它应该变成如下的样子。

|

||||

|

||||

```

|

||||

$ git log --oneline master

|

||||

6a92e7a C4

|

||||

@ -168,25 +186,27 @@ f33ae68 C1

|

||||

|

||||

注意那个 `C3'` 和 `C5'`— 在 master 分支上已处于提交链的“顶部”,由于产生了更改而创建了新提交。但是也要注意的是,rebase 后“原始的” `C3` 和 `C5` 仍然在那里 — 只是再没有一个分支指向它们而已。

|

||||

|

||||

如果我们做了这个 rebase,然后确定这不是我们想要的结果,希望去撤销它,我们可以做下面示例所做的操作:

|

||||

如果我们做了这个变基,然后确定这不是我们想要的结果,希望去撤销它,我们可以做下面示例所做的操作:

|

||||

|

||||

```

|

||||

$ git reset 79768b8

|

||||

|

||||

```

|

||||

|

||||

由于这个简单的变更,现在我们的分支将重新指向到做 `rebase` 操作之前一模一样的位置 —— 完全等效于撤销操作(图 6)。

|

||||

|

||||

![After undoing rebase][10]

|

||||

|

||||

图 6:撤销 `rebase` 操作之后

|

||||

*图 6:撤销 `rebase` 操作之后*

|

||||

|

||||

如果你想不起来之前一个操作指向的一个分支上提交了什么内容怎么办?幸运的是,Git 命令依然可以帮助你。用这种方式可以修改大多数操作的指针,Git 会记住你的原始提交。事实上,它是在 `.git` 仓库目录下,将它保存为一个特定的名为 `ORIG_HEAD ` 的文件中。在它被修改之前,那个路径是一个包含了大多数最新引用的文件。如果我们 `cat` 这个文件,我们可以看到它的内容。

|

||||

|

||||

```

|

||||

$ cat .git/ORIG_HEAD

|

||||

79768b891f47ce06f13456a7e222536ee47ad2fe

|

||||

```

|

||||

|

||||

我们可以使用 `reset` 命令,正如前面所述,它返回指向到原始的链。然后它的历史将是如下的这样:

|

||||

|

||||

```

|

||||

$ git log --oneline feature

|

||||

79768b8 C5

|

||||

@ -196,7 +216,8 @@ f33ae68 C1

|

||||

5043e79 C0

|

||||

```

|

||||

|

||||

在 reflog 中是获取这些信息的另外一个地方。这个 reflog 是你本地仓库中相关切换或更改的详细描述清单。你可以使用 `git reflog` 命令去查看它的内容:

|

||||

在 reflog 中是获取这些信息的另外一个地方。reflog 是你本地仓库中相关切换或更改的详细描述清单。你可以使用 `git reflog` 命令去查看它的内容:

|

||||

|

||||

```

|

||||

$ git reflog

|

||||

79768b8 HEAD@{0}: reset: moving to 79768b

|

||||

@ -216,10 +237,10 @@ f33ae68 HEAD@{13}: commit: C1

|

||||

5043e79 HEAD@{14}: commit (initial): C0

|

||||

```

|

||||

|

||||

你可以使用日志中列出的、你看到的相关命名格式,去 reset 任何一个东西:

|

||||

你可以使用日志中列出的、你看到的相关命名格式,去重置任何一个东西:

|

||||

|

||||

```

|

||||

$ git reset HEAD@{1}

|

||||

|

||||

```

|

||||

|

||||

一旦你理解了当“修改”链的操作发生后,Git 是如何跟踪原始提交链的基本原理,那么在 Git 中做一些更改将不再是那么可怕的事。这就是强大的 Git 的核心能力之一:能够很快速、很容易地尝试任何事情,并且如果不成功就撤销它们。

|

||||

@ -233,7 +254,7 @@ via: https://opensource.com/article/18/6/git-reset-revert-rebase-commands

|

||||

作者:[Brent Laster][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,34 +1,34 @@

|

||||

比特币是一个邪教 — Adam Caudill

|

||||

比特币是一个邪教

|

||||

======

|

||||

经过这些年,比特币社区已经发生了非常大的变化;社区成员从闭着眼睛都能讲解 [Merkle 树][1] 的技术迷们,变成了被一夜爆富欲望驱使的投机者和由一些连什么是 Merkle 树都不懂的人所领导的企图寻求 10 亿美元估值的区块链初创公司。随着时间的流逝,围绕比特币和其它加密货币形成了一个狂热,他们认为比特币和其它加密货币远比实际的更重要;他们相信常见的货币(法定货币)正在成为过去,而加密货币将从根本上改变世界经济。

|

||||

|

||||

每一年他们的队伍都在壮大,而他们对加密货币的看法也在变得更加宏伟,那怕是因为[使用新技术][2]而使它陷入困境的情况下。虽然我坚信设计优良的加密货币可以使金钱的跨境流动更容易,并且在大规模通胀的领域提供一个更稳定的选择,但现实情况是,我们并没有做到这些。实际上,正是价值的巨大不稳定性才使得投机者赚钱。那些宣扬美元和欧元即将死去的人,已经完全抛弃了对现实世界客观公正的看法。

|

||||

经过这些年,比特币社区已经发生了非常大的变化;社区成员从闭着眼睛都能讲解 [梅克尔树][1] 的技术迷们,变成了被一夜爆富欲望驱使的投机者和由一些连什么是梅克尔树都不懂的人所领导的企图寻求 10 亿美元估值的区块链初创公司。随着时间的流逝,围绕比特币和其它加密货币形成了一股热潮,他们认为比特币和其它加密货币远比实际的更重要;他们相信常见的货币(法定货币)正在成为过去,而加密货币将从根本上改变世界经济。

|

||||

|

||||

每一年他们的队伍都在壮大,而他们对加密货币的看法也在变得更加宏伟,那怕是对该技术的[新奇的用法][2]而使它陷入了困境。虽然我坚信设计优良的加密货币可以使金钱的跨境流动更容易,并且在大规模通胀的领域提供一个更稳定的选择,但现实情况是,我们并没有做到这些。实际上,正是价值的巨大不稳定性才使得投机者赚钱。那些宣扬美元和欧元即将死去的人,已经完全抛弃了对现实世界客观公正的看法。

|

||||

|

||||

### 一点点背景 …

|

||||

|

||||

比特币发行那天,我读了它的白皮书 —— 它使用有趣的 [Merkle 树][1] 去创建一个公共账簿和一个非常合理的共识协议 —— 由于它新颖的特性引起了密码学领域中许多人的注意。在白皮书发布后的几年里,比特币变得非常有价值,并由此吸引了许多人将它视为是一种投资,和那些认为它将改变一切的忠实追随者(和发声者)。这篇文章将讨论的正是后者。

|

||||

比特币发行那天,我读了它的白皮书 —— 它使用有趣的 [梅克尔树][1] 去创建一个公共账簿和一个非常合理的共识协议 —— 由于它新颖的特性引起了密码学领域中许多人的注意。在白皮书发布后的几年里,比特币变得非常有价值,并由此吸引了许多人将它视为是一种投资,和那些认为它将改变一切的忠实追随者(和发声者)。这篇文章将讨论的正是后者。

|

||||

|

||||

昨天,有人在推特上发布了一个最近的比特币区块的哈希,下面成千上万的推文和其它讨论让我相信,比特币已经跨越界线进入了真正的邪教领域。

|

||||

昨天(2018/6/20),有人在推特上发布了一个最近的比特币区块的哈希,下面成千上万的推文和其它讨论让我相信,比特币已经跨越界线进入了真正的邪教领域。

|

||||

|

||||

一切都源于 Mark Wilcox 的这个推文:

|

||||

一切都源于 Mark Wilcox 的[这个推文][9]:

|

||||

|

||||

> #00000000000000000021e800c1e8df51b22c1588e5a624bea17e9faa34b2dc4a

|

||||

> — Mark Wilcox (@mwilcox) June 19, 2018

|

||||

> [#00000000000000000021e800c1e8df51b22c1588e5a624bea17e9faa34b2dc4a][8]

|

||||

|

||||

张贴的这个值是 [比特币 #528249 号区块][3] 的哈希值。前导零是挖矿过程的结果;挖掘一个区块就是把区块内容与一个 nonce(和其它数据)组合起来,然后做哈希运算,并且它至少有一定数量的前导零才能被验证为有效区块。如果它不是正确的数字,你可以更换 nonce 再试。重复这个过程直到哈希值的前导零数量是正确的数字之后,你就有了一个有效的区块。让人们感到很兴奋的部分是接下来的 21e800。

|

||||

> — Mark Wilcox (@mwilcox) [June 19, 2018][9]

|

||||

|

||||

张贴的这个值是 [比特币 #528249 号区块][3] 的哈希值。前导零是挖矿过程的结果;挖掘一个区块就是把区块内容与一个<ruby>现时数<rt>nonce</rt></ruby>(和其它数据)组合起来,然后做哈希运算,并且它至少有一定数量的前导零才能被验证为有效区块。如果它不是正确的数字,你可以更换现时数再试。重复这个过程直到哈希值的前导零数量是正确的数字之后,你就有了一个有效的区块。让人们感到很兴奋的部分是接下来的 `21e800`。
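The try-a-nonce-and-hash-again loop described above can be sketched in a few lines of Python. This is a simplified toy, not Bitcoin's actual procedure: real Bitcoin hashes an 80-byte block header twice with SHA-256 and compares the result against a numeric target, whereas this sketch hashes arbitrary bytes once and counts leading zero bits. The function names are made up for the example.

```python
import hashlib


def leading_zero_bits(digest: bytes) -> int:
    """Count how many zero bits the digest starts with."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def mine(block: bytes, zero_bits: int):
    """Try nonces 0, 1, 2, ... until the hash has at least `zero_bits` leading zero bits.

    Returns the winning nonce and the hash as a hex string.
    """
    nonce = 0
    while True:
        digest = hashlib.sha256(block + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= zero_bits:
            return nonce, digest.hex()
        nonce += 1


if __name__ == "__main__":
    # 12 zero bits means three leading hex zeros; this takes a few thousand tries on average.
    nonce, digest_hex = mine(b"toy block contents", 12)
    print(nonce, digest_hex)
```

Each extra required zero bit doubles the expected number of attempts, which is why demanding a further 24 specific bits on top of the normal difficulty would be so expensive.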

|

||||

|

||||

一些人说这是一个有意义的编号,挖掘出这个区块的人实际上的难度远远超出当前所看到的,不仅要调整前导零的数量,还要匹配接下来的 24 位 —— 它要求非常强大的计算能力。如果有人能够以蛮力去实现它,这将表明有些事情很严重,比如,在计算或密码学方面的重大突破。

|

||||

|

||||

你一定会有疑问,为什么 21e800 如此重要 —— 一个你问了肯定会后悔的问题。有人说它是参考了 [E8 理论][4](一个广受批评的提出标准场理论的论文),或是表示总共存在 2100000000 枚比特币(`21 x 10^8` 就是 2,100,000,000)。还有其它说法,因为太疯狂了而没有办法写出来。另一个重要的事实是,在前导零后面有 21e8 的区块平均每年被挖掘出一次 —— 这些从来没有人认为是很重要的。

|

||||

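You will surely wonder why `21e800` matters so much, a question you will regret asking. Some say it is a reference to [E8 Theory][4] (a widely criticized paper that proposes a standard field theory), or to the 21,000,000 bitcoins that will ever exist (even though `21 x 10^8` is 2,100,000,000). There are other claims that are too crazy to write down. Another important fact is that a block whose hash has 21e8 right after the leading zeros is mined about once a year on average, and nobody ever considered those blocks significant.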
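The "about once a year" figure can be checked with a rough back-of-the-envelope estimate. The sketch below assumes one block roughly every 10 minutes (Bitcoin's target interval) and treats the four hex digits after the leading zeros as uniformly random, ignoring that the first digit after the zeros cannot itself be zero. The names are chosen for this example only.

```python
BLOCKS_PER_YEAR = 6 * 24 * 365   # about one block every 10 minutes
PATTERN_HEX_DIGITS = 4           # the pattern "21e8"


def expected_matches_per_year(hex_digits: int = PATTERN_HEX_DIGITS) -> float:
    """Expected number of blocks per year showing one fixed pattern of
    `hex_digits` hex digits at a given position, assuming uniform hashes."""
    return BLOCKS_PER_YEAR / 16 ** hex_digits


if __name__ == "__main__":
    print(BLOCKS_PER_YEAR)                 # 52560 blocks
    print(expected_matches_per_year())     # a little under one per year
```

Under these assumptions the pattern is expected slightly less than once per year, so seeing it requires no special explanation.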

|

||||

|

||||

这就引出了有趣的地方:关于这是如何发生的[理论][5]。

|

||||

|

||||

* 一台量子计算机,它能以某种方式用不可思议的速度做哈希运算。尽管在量子计算机的理论中还没有迹象表明它能够做这件事。哈希是量子计算机认为很安全的东西之一。

|

||||

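* A quantum computer that can somehow hash at incredible speed, even though nothing in the theory of quantum computing suggests it could do this. Hashing is one of the things considered safe against quantum computers.
* Time travel. Yes, people really said this: someone travelled back from the future to mine this block. I think this is too absurd to bother explaining why it is wrong.
* Satoshi Nakamoto is back. Despite the fact that there has been no activity with his private keys, some people theorize that he has returned and can do things nobody else can. These theories do not explain how he could do it.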

|

||||

|

||||

|

||||

|

||||

> 因此,总的来说(按我的理解)中本聪,为了知道和计算他做的事情,根据现代科学,他可能是以下之一:

|

||||

>

|

||||

> A) 使用了一台量子计算机

|

||||

@ -37,39 +37,35 @@

|

||||

>

|

||||

> — Crypto Randy Marsh [REKT] (@nondualrandy) [June 21, 2018][6]

|

||||

|

||||

如果你觉得所有的这一切听起来像 [命理学][7],不止你一个人是这样想的。

|

||||

如果你觉得所有的这一切听起来像 <ruby>[命理学][7]<rt>numerology</rt></ruby>,不止你一个人是这样想的。

|

||||

|

||||

所有围绕有特殊意义的区块哈希的讨论,也引发了对在某种程度上比较有趣的东西的讨论。比特币的创世区块,它是第一个比特币区块,有一个不寻常的属性:早期的比特币要求哈希值的前 32 位是零;而创始区块的前导零有 43 位。因为由代码产生的创世区块从不会发布,它不知道它是如何产生的,也不知道是用什么类型的硬件产生的。中本聪有学术背景,因此可能他有比那个时候大学中常见设备更强大的计算能力。从这一点上说,只是对古怪的创世区块的历史有点好奇,仅此而已。

|

||||

所有围绕有特殊意义的区块哈希的讨论,也引发了对在某种程度上比较有趣的东西的讨论。比特币的创世区块,它是第一个比特币区块,有一个不寻常的属性:早期的比特币要求哈希值的前 32 <ruby>位<rt>bit</rt></ruby>是零;而创始区块的前导零有 43 位。因为产生创世区块的代码从未发布过,不知道它是如何产生的,也不知道是用什么类型的硬件产生的。中本聪有学术背景,因此可能他有比那个时候大学中常见设备更强大的计算能力。从这一点上说,只是对古怪的创世区块的历史有点好奇,仅此而已。

|

||||

|

||||

### 关于哈希运算的简单题外话

|

||||

|

||||

这种喧嚣始于比特币区块的哈希运算;因此理解哈希是什么很重要,并且要理解一个非常重要的属性,一个哈希是单向加密函数,它能够基于给定的数据创建一个伪随机输出。

|

||||

这种喧嚣始于比特币区块的哈希运算;因此理解哈希是什么很重要,并且要理解一个非常重要的属性,哈希是单向加密函数,它能够基于给定的数据创建一个伪随机输出。

|

||||

|

||||

这意味着什么呢?基于本文讨论的目的,对于每个给定的输入你将得到一个随机的输出。随机数有时看起来很有趣,很简单,因为它是随机的结果,并且人类大脑可以很容易从任何东西中找到顺序。当你从随机数据中开始查看顺序时,你就会发现有趣的事情 —— 这些东西毫无意义,因为它们只是简单地随机数。当人们把重要的意义归属到随机数据上时,它将告诉你很多这些参与者观念相关的东西,而不是数据本身。

|

||||

|

||||

### 币的邪教

|

||||

### 币之邪教

|

||||

|

||||

首先,我们来定义一组术语:

|

||||

|

||||

* 邪教:一个宗教崇拜和直接向一个特定的人或物虔诚的体系。

|

||||

* 宗教:有人认为是至高无上的追求或兴趣。

|

||||

* <ruby>邪教<rt>Cult</rt></ruby>:一个宗教崇拜和直接向一个特定的人或物虔诚的体系。

|

||||

* <ruby>宗教<rt>Religion</rt></ruby>:有人认为是至高无上的追求或兴趣。

|

||||

|

||||

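The Cult of the Coin has many saints, and perhaps none greater than Satoshi Nakamoto, the pseudonym of Bitcoin's creator(s). He is fervently defended and credited with abilities and understanding far beyond those of an ordinary researcher; his vision is held to be unmatched, and he is said to be shaping a new economic world order. Combined with his mysterious nature and unknown true identity, this leads fanatical followers to see Satoshi as a figure truly worthy of reverence.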

|

||||

|

||||

当然,除了追随其他圣人的追捧者之外,毫无疑问这些追捧者认为自己是正确的。任何对他们的圣人的批评都被认为也是对他们的批评。例如,那些追捧 EOS 的人,可能会视中本聪为一个开发了失败项目的黑客,而对 EOS 那怕是最轻微的批评,他们也会作出激烈的反应,之所以反应如此强烈,仅仅是因为攻击了他们心目中的神。那些追捧 IOTA 的人的反应也一样;还有更多这样的例子。

|

||||

|

||||

币的狂热追捧者中的许多圣人,或许没有人比中本聪更伟大,他是比特币创始人的假名。强力的护卫、赋予能力和理解力远超过一般的研究人员,认为他的远见卓视无人能比,他影响了世界新经济的秩序。当将中本聪的神秘本质和未知的真实身份结合起来时,狂热的追随着们将中本聪视为一个真正的值的尊敬的人物。

|

||||

|

||||

当然,除了追随其他圣人的追捧者之外,毫无疑问这些追捧者认为自己是正确的。任何对他们的圣人的批评都被认为也是对他们的批评。例如,那些追捧 EOS 的人,可能会认为中本聪是开发了一个失败项目的黑客,而对 EOS 那怕是最轻微的批评,他们也会作出激烈的反应,之所以反应如此强烈,仅仅是因为攻击了他们心目中的神。那些追捧 IOTA 的人的反应也一样;还有更多这样的例子。

|

||||

|

||||

这些追随着在讨论问题时已经失去了理性和客观,他们的狂热遮盖了他们的视野。任何对这些项目和项目背后的人的讨论,如果不是溢美之词,必然以某种程序的刻薄言辞结束,对于一个技术的讨论那种做法是毫无道理的。

|

||||

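These followers have lost reason and objectivity when discussing issues; their fanaticism clouds their vision. Any discussion of these projects and the people behind them that is not full of praise inevitably ends in some degree of vitriol, which makes no sense for a discussion about technology.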

|

||||

|

||||

这很危险,原因很多:

|

||||

|

||||

* 开发者 & 研究者对缺陷视而不见。由于追捧者的大量赞美,这些参与开发的人对自己的能力开始膨胀,并将一些批评看作是无端的攻击 —— 因为他们认为自己是不可能错的。

|

||||

* 开发者 & 研究者对缺陷视而不见。由于追捧者的大量赞美,这些参与开发的人对自己的能力的看法开始膨胀,并将一些批评看作是无端的攻击 —— 因为他们认为自己是不可能错的。

|

||||

* 真正的问题是被攻击。技术问题不再被看作是需要去解决的问题和改进的机会,他们认为是来自那些想去破坏项目的人的攻击。

|

||||

* 用一枚币来控制他们。追随者们通常会结盟,而圣人仅有一个。承认其它项目的优越,意味着认同自己项目的缺陷或不足,而这是他们不愿意做的事情。

|

||||

* 阻止真实的进步。进化是很残酷的,它要求死亡,项目失败,以及承认这些失败的原因。如果忽视失败的教训,如果不允许那些应该去死亡的事情发生,进步就会停止。

|

||||

|

||||

|

||||

* 物以类聚,人以币分。追随者们通常会结盟到一起,而圣人仅有一个。承认其它项目的优越,意味着认同自己项目的缺陷或不足,而这是他们不愿意做的事情。

|

||||

* 阻止真实的进步。进化是很残酷的,死亡是必然会有的,项目可能失败,也要承认这些失败的原因。如果忽视失败的教训,如果不允许那些应该去死亡的事情发生,进步就会停止。

|

||||

|

||||

许多围绕加密货币和相关区块链项目的讨论已经开始变得越来越”有毒“,善意的人想在不受攻击的情况下进行技术性讨论越来越不可能。随着对真正缺陷的讨论,那些在其它环境中注定要失败的缺陷,在没有做任何的事实分析的情况下即刻被判定为异端已经成为了惯例,善意的人参与其中的代价变得极其昂贵。至少有些人已经意识到极其严重的安全漏洞,由于高“毒性”的环境,他们选择保持沉默。

|

||||

|

||||

@ -79,7 +75,10 @@

|

||||

|

||||

[注意:这种行为有许多例子可以引用,但是为了保护那些因批评项目而成为被攻击目标的人,我选择尽可能少的列出这种例子。我看到许多我很尊敬的人、许多我认为是朋友的人成为这种恶毒攻击的受害者 —— 我不想引起人们对这些攻击的注意和重新引起对他们的攻击。]

|

||||

|

||||

---

|

||||

关于作者:

|

||||

|

||||

我是一个资深应用安全顾问、研究员和具有超过 15 年的经验的软件开发者。我主要关注的是应用程序安全、安全通信和加密, 虽然我经常由于无聊而去研究新的领域。我通常会写了一些关于我的研究和安全、开发和软件设计,和我当前吸引了我注意力的爱好的文章。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -88,7 +87,7 @@ via: https://adamcaudill.com/2018/06/21/bitcoin-is-a-cult/

|

||||

作者:[Adam Caudill][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -100,3 +99,5 @@ via: https://adamcaudill.com/2018/06/21/bitcoin-is-a-cult/

|

||||

[5]:https://medium.com/@coop__soup/00000000000000000021e800c1e8df51b22c1588e5a624bea17e9faa34b2dc4a-cd4b67d446be

|

||||

[6]:https://twitter.com/nondualrandy/status/1009609117768605696?ref_src=twsrc%5Etfw

|

||||

[7]:https://en.wikipedia.org/wiki/Numerology

|

||||

[8]:https://twitter.com/hashtag/00000000000000000021e800c1e8df51b22c1588e5a624bea17e9faa34b2dc4a?src=hash&ref_src=twsrc%5Etfw

|

||||

[9]:https://twitter.com/mwilcox/status/1009160832398262273?ref_src=twsrc%5Etfw

|

||||

52

published/20180801 Cross-Site Request Forgery.md

Normal file


@ -0,0 +1,52 @@

|

||||

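Introduction to CSRF (Cross-Site Request Forgery)
======

Security is a major concern when designing web applications. I am not talking about DDoS protection, strong passwords, or two-step verification. I am talking about the biggest threat to web applications. It is called **CSRF**, short for **Cross-Site Request Forgery**.

### What is CSRF?

[][1]

First of all, **CSRF** stands for Cross-Site Request Forgery. It is commonly pronounced "sea-surf" and is often written XSRF. CSRF is a type of attack in which actions are performed, without the victim's knowledge, on a web application the victim is logged in to. These actions can be anything from liking or commenting on a social media post to sending spam messages, or even transferring money out of the victim's bank account.

### How does CSRF work?

**CSRF** attacks try to exploit a simple, common weakness in all browsers. Every time we authenticate or log in to a website, session cookies are stored in the browser. Whenever we make a request to that website, these cookies are automatically sent to the server, which identifies us by matching the cookies sent with the request against its own records. That is how it knows it is us.

[][2]

This means that any request made from my browser, knowingly or unknowingly, will be accepted. Since the cookies are also sent and match the records on the server, the server thinks I am the one making the request.

CSRF attacks usually come in the form of links. We may click them on other websites or receive them by email. Clicking such a link sends an unwanted request to the server. As I said before, the server thinks we made the request and authenticates it.

#### A real-world example

To put things in perspective, imagine you are logged in to your bank's website and fill in a form on **yourbank.com/transfer**. You enter the recipient's account number as 1234, enter an amount of 5,000, and click the submit button. This produces a request to **yourbank.com/transfer/send?to=1234&amount=5000**, and the server acts on the request and makes the transfer. Now imagine you are on another website and click a link that opens the URL above with the attacker's account number as the parameter. The money is now transferred to the attacker, and the server thinks you made the transaction even though you did not.

[][3]

#### CSRF protection

CSRF protection is very easy to implement. It usually involves sending a token, called a CSRF token, to the web page. This token is sent and verified on every new request. A malicious request to the server therefore passes cookie authentication but fails CSRF validation. Most web frameworks provide out-of-the-box support for preventing CSRF attacks, and CSRF attacks are no longer as common as they used to be.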
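The token check described above can be sketched with Python's standard library. This is a minimal illustration under stated assumptions, not any framework's actual implementation: the `session` dictionary stands in for server-side session storage, and the function names and the `csrf_token` key are made up for this example.

```python
import hmac
import secrets


def issue_csrf_token(session: dict) -> str:
    """Create a random token, remember it in the server-side session, and
    return it so it can be embedded in the page (for example in a hidden form field)."""
    token = secrets.token_urlsafe(32)
    session["csrf_token"] = token
    return token


def is_request_allowed(session: dict, submitted_token) -> bool:
    """Accept a state-changing request only if it carries the session's token."""
    expected = session.get("csrf_token")
    if not expected or not submitted_token:
        return False
    # Constant-time comparison, so timing does not leak how much of the token matched.
    return hmac.compare_digest(expected.encode(), submitted_token.encode())


if __name__ == "__main__":
    session = {}
    token = issue_csrf_token(session)
    print(is_request_allowed(session, token))     # request from the real page
    print(is_request_allowed(session, "forged"))  # forged link without the right token
```

A forged link from another site still carries the session cookie, but the attacker cannot read the token from the victim's page, so the second check fails.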

|

||||

|

||||

### Conclusion

|

||||

|

||||

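CSRF attacks were a big deal 10 years ago, but we do not see many of them today. In the past, well-known sites such as YouTube, The New York Times, and Netflix were vulnerable to CSRF. The prevalence and frequency of CSRF attacks have since declined. Even so, CSRF remains a threat, and it is important to protect your own website or application against it.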

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxandubuntu.com/home/understanding-csrf-cross-site-request-forgery

|

||||

|

||||

Author: [linuxandubuntu][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [geekpi](https://github.com/geekpi)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://www.linuxandubuntu.com

|

||||

[1]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/csrf-what-is-cross-site-forgery_orig.jpg

|

||||

[2]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/cookies-set-by-website-chrome_orig.jpg

|

||||

[3]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/csrf-hacking-bank-account_orig.jpg

|

||||

@ -1,4 +1,4 @@

|

||||

20 questions DevOps job candidates should be prepared to answer

|

||||

Translating by FelixYFZ 20 questions DevOps job candidates should be prepared to answer

|

||||

======

|

||||

|

||||

|

||||

|

||||

@ -0,0 +1,68 @@

|

||||

3 pitfalls everyone should avoid with hybrid multi-cloud, part 2

|

||||

======

|

||||

|

||||

|

||||

|

||||

This article was co-written with [Roel Hodzelmans][1].

|

||||

|

||||

Cloud hype is all around you—you're told it's critical to ensuring a digital future for your business. Whether you choose cloud, hybrid cloud, or hybrid multi-cloud, you have numerous decisions to make, even as you continue the daily work of enhancing your customers' experience and agile delivery of your applications (including legacy applications)—likely some of your business' most important resources.

|

||||

|

||||

In this series, we explain three pitfalls everyone should avoid when transitioning to hybrid multi-cloud environments. [In part one][2], we defined the different cloud types and explained the differences between hybrid cloud and multi-cloud. Here, in part two, we will dive into the first pitfall: Why cost is not always the best motivator for moving to the cloud.

|

||||

|

||||

### Why not?

|

||||

|

||||

When looking at hybrid or multi-cloud strategies for your business, don't let cost become the obvious motivator. There are a few other aspects of any migration strategy that you should review when putting your plan together. But often budget rules the conversations.

|

||||

|

||||

When giving this talk three times at conferences, we've asked our audience to answer a live, online questionnaire about their company, customers, and experiences in the field. Over 73% of respondents said cost was the driving factor in their business' decision to move to hybrid or multi-cloud.

|

||||

|

||||

But, if you already have full control of your on-premises data centers, yet perpetually underutilize and overpay for resources, how can you expect to prevent those costs from rolling over into your cloud strategy?

|

||||

|

||||

There are three main (and often forgotten, ignored, and unaccounted for) reasons cost shouldn't be the primary motivating factor for migrating to the cloud: labor costs, overcapacity, and overpaying for resources. They are important points to consider when developing a hybrid or multi-cloud strategy.

|

||||

|

||||

### Labor costs

|

||||

|

||||

Imagine a utility company making the strategic decision to move everything to the cloud within the next three years. The company kicks off enthusiastically, envisioning huge cost savings, but soon runs into labor cost issues that threaten to blow up the budget.

|

||||

|

||||

One of the most overlooked aspects of moving to the cloud is the cost of labor to migrate existing applications and data. A Forrester study reports that labor costs can consume [over 50% of the total cost of a public cloud migration][3]. Forrester says, "customer-facing apps for systems of engagement… typically employ lots of new code rather than migrating existing code to cloud platforms."

|

||||

|

||||

Step back and analyze what's essential to your customer success and move only that to the cloud. Then, evaluate all your non-essential applications and, over time, consider moving them to commercial, off-the-shelf solutions that require little labor cost.

|

||||

|

||||

### Overcapacity

|

||||

|

||||

"More than 80% of in-house data centers have [way more server capacity than is necessary][4]," reports Business Insider. This amazing bit of information should shock you to your core.

|

||||

|

||||

What exactly is "way more" in this context?

|

||||

|

||||

One hint comes from Deutsche Bank CTO Pat Healey, presenting at Red Hat Summit 2017. He talks about ordering hardware for the financial institution's on-premises data center, only to find out later that [usage numbers were in the single digits][5].

|

||||

|

||||

Healey is not alone; many companies have these problems. They don't do routine assessments, such as checking electricity, cooling, licensing, and other factors, to see how much capacity they are using on a consistent basis.

|

||||

|

||||

### Overpaying

|

||||

|

||||

Companies are paying an average of 36% more for cloud services than they need to, according to the Business Insider article mentioned above.

|

||||

|

||||

One reason is that public cloud providers enthusiastically support customers coming agnostically into their cloud. As customers leverage more of the platform's cloud-native features, they reach a monetary threshold, and technical support drops off dramatically.

|

||||

|

||||

It's a classic case of vendor lock-in, where the public cloud provider knows it is cost-prohibitive for the customer to migrate off its cloud, so it doesn't feel compelled to provide better service.

|

||||

|

||||

### Coming up

|

||||

|

||||

In part three of this series, we'll discuss the second of three pitfalls that everyone should avoid with hybrid multi-cloud. Stay tuned to learn why you should take care with moving everything to the cloud.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/6/reasons-move-to-cloud

|

||||

|

||||

Author: [Eric D. Schabell][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/eschabell

|

||||

[1]:https://opensource.com/users/roelh

|

||||

[2]:https://opensource.com/article/18/4/pitfalls-hybrid-multi-cloud

|

||||

[3]:https://www.techrepublic.com/article/labor-costs-can-make-up-50-of-public-cloud-migration-is-it-worth-it/

|

||||

[4]:http://www.businessinsider.com/companies-waste-62-billion-on-the-cloud-by-paying-for-storage-they-dont-need-according-to-a-report-2017-11

|

||||

[5]:https://youtu.be/SPRUJ5Z-Aew

|

||||

@ -1,3 +1,5 @@

|

||||

Translating by jessie-pang

|

||||

|

||||

Why moving all your workloads to the cloud is a bad idea

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,180 @@

|

||||

3 tips for moving your team to a microservices architecture

|

||||

======

|

||||

|

||||

|

||||

Microservices are gaining in popularity and providing new ways for tech companies to improve their services for end users. But what impact does the shift to microservices have on team culture and morale? What issues should CTOs, developers, and project managers consider when the best technological choice is a move to microservices?

|

||||

|

||||

Below you’ll find key advice and insight from CTOs and project leads as they reflect on their experiences with team culture and microservices.

|

||||

|

||||

### You can't build successful microservices without a successful team culture

|

||||

|

||||

When I was working with Java developers, there was tension within the camp about who got to work on the newest and meatiest features. Our engineering leadership had decided that we would exclusively use Java to build all new microservices.

|

||||

|

||||

There were great reasons for this decision, but as I will explain later, such a restrictive decision comes with some repercussions. Communicating the “why” of technical decisions can go a long way toward creating a culture where people feel included and informed.

|

||||

|

||||

When you're organizing and managing a team around microservices, it’s always challenging to balance the mood, morale, and overall culture. In most cases, the leadership needs to balance the risk of team members using new technology against the needs of the client and the business itself.

|

||||

|

||||

This dilemma, and many others like it, has led CTOs to ask themselves questions such as: How much freedom should I give my team when it comes to adopting new technologies? And perhaps even more importantly, how can I manage the overarching culture within my camp?

|

||||

|

||||

### Give every team member a chance to thrive

|

||||

|

||||

When the engineering leaders in the example above decided that Java was the best technology to use when building microservices, the decision was best for the company: Java is performant, and many of the senior people on the team were well-versed with it. However, not everyone on the team had experience with Java.

|

||||

|

||||

The problem was, our team was split into two camps: the Java guys and the JavaScript guys. As time went by and exciting new projects came up, we’d always reach for Java to get the job done. Before long, some annoyance within the JavaScript camp crept in: “Why do the Java guys always get to work on the exciting new projects while we’re left to do the mundane front-end tasks like implementing third-party analytics tools? We want a big, exciting project to work on too!”

|

||||

|

||||

Like most rifts, it started out small, but it grew worse over time.

|

||||

|

||||

The lesson I learned from that experience was to take your team’s expertise and favored technologies into account when choosing a de facto tech stack for your microservices and when adjusting your team's level of freedom to pick and choose their tools.

|

||||

|

||||

Sure, you need some structure, but if you’re too restrictive—or worse, blind to the desire of team members to innovate with different technologies—you may have a rift of your own to manage.

|

||||

|

||||

So evaluate your team closely and come up with a plan that empowers everyone. That way, every section of your team can get involved in major projects, and nobody will feel like they’re being left on the bench.

|

||||

|

||||

### Technology choices: stability vs. flexibility

|

||||

|

||||

Let’s say you hire a new junior developer who is excited about some brand new, fresh-off-the-press JavaScript framework.

|

||||

|

||||

That framework, while sporting some technical breakthroughs, may not have proven itself in production environments, and it probably doesn’t have great support available. CTOs have to make a difficult choice: Okaying that move for the morale of the team, or declining it to protect the company and its bottom line and to keep the project stable as the deadline approaches.

|

||||

|

||||

The answer depends on a lot of different factors (which also means there is no single correct answer).

|

||||

|

||||

### Technological freedom

|

||||

|

||||

“We give our team and ourselves 100% freedom in considering technology choices. We eventually identified two or three technologies not to use in the end, primarily due to not wanting to complicate our deployment story,” said [Benjamin Curtis][1], co-founder of [Honeybadger][2].

|

||||

|

||||

“In other words, we considered introducing new languages and new approaches into our tech stack when creating our microservices, and we actually did deploy a production microservice on a different stack at one point. [While we do generally] stick with technologies that we know in order to simplify our ops stack, we periodically revisit that decision to see if potential performance or reliability benefits would be gained by adopting a new technology, but so far we haven't made a change,” Curtis continued.

|

||||

|

||||

When I spoke with [Stephen Blum][3], CTO at [PubNub][4], he expressed a similar view, welcoming pretty much any technology that cuts the mustard: “We're totally open with it. We want to continue to push forward with new open source technologies that are available, and we only have a couple of constraints with the team that are very fair: [It] must run in container environment, and it has to be cost-effective.”

|

||||

|

||||

### High freedom, high responsibility

|

||||

|

||||

[Sumo Logic][5] CTO [Christian Beedgen][6] and chief architect [Stefan Zier][7] expanded on this topic, agreeing that if you’re going to give developers freedom to choose their technology, it must come with a high level of responsibility attached. “It’s really important that [whoever builds] the software takes full ownership for it. In other words, they not only build software, but they also run the software and remain responsible for the whole lifecycle.”

|

||||

|

||||

Beedgen and Zier recommend implementing a system that resembles a federal government system, keeping those freedoms in check by heightening responsibility: “[You need] a federal culture, really. You've got to have a system where multiple, independent teams can come together towards the greater goal. That limits the independence of the units to some degree, as they have to agree that there is potentially a federal government of some sort. But within those smaller groups, they can make as many decisions on their own as they like within guidelines established on a higher level.”

|

||||

|

||||

Decentralized, federal, or however you frame it, this approach to structuring microservice teams gives each team and each team member the freedom they want, without enabling anyone to pull the project apart.

|

||||

|

||||

However, not everyone agrees.

|

||||

|

||||

### Restrict technology to simplify things

|

||||

|

||||

[Darby Frey][8], co-founder of [Lead Honestly][9], takes a more restrictive approach to technology selection.

|

||||

|

||||

“At my last company we had a lot of services and a fairly small team, and one of the main things that made it work, especially for the team size that we had, was that every app was the same. Every backend service was a Ruby app,” he explained.

|

||||

|

||||

Frey explained that this helped simplify the lives of his team members: “[Every service has] the same testing framework, the same database backend, the same background job processing tool, et cetera. Everything was the same.

|

||||

|

||||

“That meant that when an engineer would jump around between apps, they weren’t having to learn a new pattern or learn a different language each time,” Frey continued, “So we're very aware and very strict about keeping that commonality.”

|

||||

|

||||

While Frey is sympathetic to developers wanting to introduce a new language, admitting that he “loves the idea of trying new things,” he feels that the cons still outweigh the pros.

|

||||

|

||||

“Having a polyglot architecture can increase the development and maintenance costs. If it's just all the same, you can focus on business value and business features and not have to be super siloed in how your services operate. I don't think everybody loves that decision, but at the end of the day, when they have to fix something on a weekend or in the middle of the night, they appreciate it,” said Frey.

|

||||

|

||||

### Centralized or decentralized organization

|

||||

|

||||

How your team is structured is also going to impact your microservices engineering culture—for better or worse.

|

||||

|

||||

For example, it’s common for software engineers to write the code before shipping it off to the operations team, who in turn deploy it to the servers. But when things break (and things always break!), an internal conflict occurs.

|

||||

|

||||

Because operations engineers don’t write the code themselves, they rarely understand problems when they first arise. As a result, they need to get in touch with those who did code it: the software engineers. So right from the get-go, you’ve got a middleman relaying messages between the problem and the team that can fix that problem.

|

||||

|

||||

To add an extra layer of complexity, because software engineers aren’t involved with operations, they often can’t fully appreciate how their code affects the overall operation of the platform. They learn of issues only when operations engineers complain about them.

|

||||

|

||||

As you can see, this is a relationship that’s destined for constant conflict.

|

||||

|

||||

### Navigating conflict

|

||||

|

||||

One way to attack this problem is by following the lead of Netflix and Amazon, both of which favor decentralized governance. Software development thought leaders James Lewis and Martin Fowler feel that decentralized governance is the way to go when it comes to microservice team organization, as they explain in a [blog post][10].

|

||||

|

||||

“One of the consequences of centralized governance is the tendency to standardize on single technology platforms. Experience shows that this approach is constricting—not every problem is a nail and not every solution a hammer,” the article reads. “Perhaps the apogee of decentralized governance is the ‘build it, run it’ ethos popularized by Amazon. Teams are responsible for all aspects of the software they build, including operating the software 24/7.”

|

||||

|

||||

Netflix, Lewis and Fowler write, is another company pushing higher levels of responsibility on development teams. They hypothesize that, because they’ll be responsible and called upon should anything go wrong later down the line, more care will be taken during the development and testing stages to ensure each microservice is in ship shape.

|

||||

|

||||

“These ideas are about as far away from the traditional centralized governance model as it is possible to be,” they conclude.

|

||||

|

||||

### Who's on weekend pager duty?

|

||||

|

||||

When considering a centralized or decentralized culture, think about how it impacts your team members when problems inevitably crop up at inopportune times. A decentralized system implies that each decentralized team takes responsibility for one service or one set of services. But that also creates a problem: Silos.

|

||||

|

||||

That’s one reason why Lead Honestly's Frey isn’t a proponent of the concept of decentralized governance.

|

||||

|

||||

“The pattern of ‘a single team is responsible for a particular service’ is something you see a lot in microservice architectures. We don't do that, for a couple of reasons. The primary business reason is that we want teams that are responsible not for specific code but for customer-facing features. A team might be responsible for order processing, so that will touch multiple code bases but the end result for the business is that there is one team that owns the whole thing end to end, so there are fewer cracks for things to fall through,” Frey explained.

|

||||

|

||||

The other main reason, he continued, is that developers can take more ownership of the overall project: “They can actually think about [the project] holistically.”

|

||||

|

||||

Nathan Peck, developer advocate for container services at Amazon Web Services, [explained this problem in more depth][11]. In essence, when you separate the software engineers and the operations engineers, you make life harder for your team whenever an issue arises with the code—which is bad news for end users, too.

|

||||

|

||||

But does decentralization need to lead to separation and siloization?

|

||||

|

||||

Peck explained that his solution lies in [DevOps][12], a model aimed at tightening the feedback loop by bringing these two teams closer together, strengthening team culture and communication in the process. Peck describes this as the “you build it, you run it” approach.

|

||||

|

||||

However, that doesn’t mean teams need to get siloed or distanced away from partaking in certain tasks, as Frey suggests might happen.

|

||||

|

||||

“One of the most powerful approaches to decentralized governance is to build a mindset of ‘DevOps,’” Peck wrote. “[With this approach], engineers are involved in all parts of the software pipeline: writing code, building it, deploying the resulting product, and operating and monitoring it in production. The DevOps way contrasts with the older model of separating development teams from operations teams by having development teams ship code ‘over the wall’ to operations teams who were then responsible to run it and maintain it.”

|

||||

|

||||

DevOps, as [Armory][13] CTO [Isaac Mosquera][14] explained, is an agile software development framework and culture that’s gaining traction thanks to—well, pretty much everything that Peck said.

|

||||

|

||||

Interestingly, Mosquera feels that this approach actually flies in the face of [Conway’s Law][15]:

|

||||

|

||||

_"Organizations which design systems ... are constrained to produce designs which are copies of the communication structures of these organizations." (M. Conway)_

|

||||

|

||||

“Instead of communication driving software design, now software architecture drives communication. Not only do teams operate and organize differently, but it requires a new set of tooling and process to support this type of architecture; i.e., DevOps,” Mosquera explained.

|

||||

|

||||

[Chris McFadden][16], VP of engineering at [SparkPost][17], offers an interesting example that might be worth following. At SparkPost, you’ll find decentralized governance—but you won’t find a one-team-per-service culture.

|

||||

|

||||

“The team that is developing these microservices started off as one team, but they’re now split up into three teams under the same larger group. Each team has some level of responsibility around certain domains and certain expertise, but the ownership of these services is not restricted to any one of these teams,” McFadden explained.

|

||||

|

||||

This approach, McFadden continued, allows any team to work on anything from new features to bug fixes to production issues relating to any of those services. There’s total flexibility and not a silo in sight.

|

||||

|

||||

“It allows [the teams to be] a little more flexible both in terms of new product development as well, just because you're not getting too restricted and that's based on our size as a company and as an engineering team. We really need to retain some flexibility,” he said.

|

||||

|

||||

However, size might matter here. McFadden admitted that if SparkPost was a lot larger, “then it would make more sense to have a single, larger team own one of those microservices.”

|

||||

|

||||

“[It's] better, I think, to have a little bit more broad responsibility for these services and it gives you a little more flexibility. At least that works for us at this time, where we are as an organization,” he said.

|

||||

|

||||

### A successful microservices engineering culture is a balancing act

|

||||

|

||||

When it comes to technology, freedom with responsibility looks to be the most rewarding path. Team members with differing technological preferences will come and go, while new challenges may require you to ditch technologies that have previously served you well. Software development is constantly in flux, so you’ll need to continually balance the needs of your team as new devices, technologies, and clients emerge.

|

||||

|

||||

As for structuring your teams, a decentralized yet un-siloed approach that leverages DevOps and instills a “you build it, you run it” mentality seems to be popular, although other schools of thought do exist. As usual, you’re going to have to experiment to see what suits your team best.

|

||||

|

||||

Here’s a quick recap on how to ensure your team culture meshes well with a microservices architecture:

|

||||

|

||||

* **Be sustainable, yet flexible**: Balance sustainability without forgetting about flexibility and the need for your team to be innovative when the right opportunity comes along. However, there’s a distinct difference of opinion over how you should achieve that balance.

|

||||

|

||||

* **Give equal opportunities**: Don’t favor one section of your team over another. If you’re going to impose restrictions, make sure it’s not going to fundamentally alienate team members from the get-go. Think about how your product roadmap is shaping up and forecast how it will be built and who’s going to do the work.

|

||||

|

||||

* **Structure your team to be agile, yet responsible**: Decentralized governance and agile development are the flavor of the day for a good reason, but don’t forget to instill a sense of responsibility within each team.

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/8/microservices-team-challenges

|

||||

|

||||

Author: [Jake Lumetta][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/jakelumetta

|

||||

[1]:https://twitter.com/stympy?lang=en

|

||||

[2]:https://www.honeybadger.io/

|

||||

[3]:https://twitter.com/stephenlb

|

||||

[4]:https://www.pubnub.com/

|

||||

[5]:http://sumologic.com/

|

||||

[6]:https://twitter.com/raychaser

|

||||

[7]:https://twitter.com/stefanzier

|

||||

[8]:https://twitter.com/darbyfrey

|

||||

[9]:https://leadhonestly.com/

|

||||

[10]:https://martinfowler.com/articles/microservices.html#ProductsNotProjects

|

||||

[11]:https://medium.com/@nathankpeck/microservice-principles-decentralized-governance-4cdbde2ff6ca

|

||||

[12]:https://opensource.com/resources/devops

|

||||

[13]:http://armory.io/

|

||||

[14]:https://twitter.com/imosquera

|

||||

[15]:https://en.wikipedia.org/wiki/Conway%27s_law

|

||||

[16]:https://twitter.com/cristoirmac

|

||||

[17]:https://www.sparkpost.com/

|

||||

56

sources/talk/20180809 How do tools affect culture.md

Normal file

@ -0,0 +1,56 @@

|

||||

How do tools affect culture?

|

||||

======

|

||||

|

||||

|

||||

|

||||

Most of the DevOps community talks about how tools don’t matter much. The culture has to change first, the argument goes, which might modify how the tools are used.

|

||||

|

||||

I agree and disagree with that concept. I believe the relationship between tools and culture is more symbiotic and bidirectional than unidirectional. I have discovered this through real-world transformations across several companies now. I admit it’s hard to determine whether the tools changed the culture or whether the culture changed how the tools were used.

|

||||

|

||||

### Violating principles

|

||||

|

||||

Some tools violate core principles of modern development and operations. The most common offenders I have seen are tools that require GUI interactions. This often separates operators from the value pipeline in a way that is cognitively difficult to overcome. If everything in your infrastructure is supposed to be configured and deployed through a value pipeline, then taking someone out of that flow inherently changes their perspective and engagement. Making manual modifications also injects risk into the system that creates unpredictability and undermines the value of the pipeline.

|

||||

|

||||

I’ve heard it said that these tools are fine and can be made to work within the new culture, and I’ve tried this in the past. Screen scraping and form manipulation tools have been used to attempt automation with some systems I’ve integrated. This is very fragile and doesn’t work on all systems. It ultimately required a lot of manual intervention.

|

||||

|

||||

Another system from a large vendor providing integrated monitoring and ticketing solutions for infrastructure seemed to implement its API as an afterthought, and this resulted in the system being unable to handle the load from the automated system. This required constant manual recoveries and sometimes the tedious task of manually closing errant tickets that shouldn’t have been created or that weren’t closed properly.

|

||||

|

||||

The individuals maintaining these systems experienced great frustration and often expressed a lack of confidence in the overall DevOps transformation. In one of these instances, we introduced a modern tool for monitoring and alerting, and the same individuals suddenly developed a tremendous amount of confidence in the overall DevOps transformation. I believe this is because tools can reinforce culture and improve it when a similar tool that lacks modern capabilities would otherwise stymie motivation and engagement.

|

||||

|

||||

### Choosing tools

|

||||

|

||||

At the NAIC (National Association of Insurance Commissioners), we’ve adopted a practice of evaluating new and existing tools based on features we believe reinforce the core principles of our value pipeline. We currently have seven items on our list:

|

||||

|

||||

* REST API provided and fully functional (possesses all application functionality)

|

||||

* Ability to provision immutably (can be installed, configured, and started without human intervention)

|

||||

* Ability to provide all configuration through static files

|

||||

* Open source code

|

||||

* Uses open standards when available

|

||||

* Offered as Software as a Service (SaaS) or hosted (we don't run anything)

|

||||

* Deployable to public cloud (based on licensing and cost)

|

||||

|

||||

|

||||

|

||||

This is a prioritized list. Each item gets rated green, yellow, or red to indicate how much each statement applies to a particular technology. This creates a visual that makes it quite clear how the different candidates compare to one another. We then use this to make decisions about which tools we should use. We don’t make decisions solely on these criteria, but they do provide a clearer picture and help us know when we’re sacrificing principles. Transparency is a core principle in our culture, and this system helps reinforce that in our decision-making process.

|

||||

|

||||

We use green, yellow, and red because there’s not normally a clear binary representation of these criteria within each tool. For example, some tools have an incomplete API, which would result in yellow being applied. If the tool uses open standards like OpenAPI and there’s no other applicable open standard, then it would receive green for “Uses open standards when available.” However, a tracing system that uses OpenAPI and not OpenTracing would receive a yellow rating.
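
To make the rating idea concrete, here is a small Python sketch of such a comparison. The criteria come from the list above, in priority order; the two candidate tools and their ratings are invented purely for illustration, and the layout is only one possible way to build the visual, not the one the NAIC actually uses.

```python
# Criteria in priority order, taken from the list above.
CRITERIA = [
    "REST API provided and fully functional",
    "Ability to provision immutably",
    "All configuration through static files",
    "Open source code",
    "Uses open standards when available",
    "Offered as SaaS or hosted",
    "Deployable to public cloud",
]

# Hypothetical ratings for two made-up candidate tools, one rating per criterion.
RATINGS = {
    "tool-a": ["green", "green", "yellow", "green", "green", "yellow", "green"],
    "tool-b": ["yellow", "red", "green", "red", "yellow", "green", "green"],
}


def comparison_rows(criteria, ratings):
    """Return a header row, then one row per criterion with each tool's rating."""
    tools = sorted(ratings)
    rows = [["criterion", *tools]]
    for i, name in enumerate(criteria):
        rows.append([name, *(ratings[tool][i] for tool in tools)])
    return rows


# Print the rows as a plain-text grid so the candidates can be compared at a glance.
for row in comparison_rows(CRITERIA, RATINGS):
    print(" | ".join(f"{cell:<40}" if i == 0 else f"{cell:<8}" for i, cell in enumerate(row)))
```

The sketch deliberately does not collapse the colors into a single score, since the ratings are meant to inform the decision rather than make it.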

|

||||

|

||||

This type of system creates a common understanding of what is valued when it comes to tool selection, and it helps avoid unknowingly violating core principles of your value pipeline. We recently used this method to select [GitLab][1] as our version control and continuous integration system, and it has drastically improved our culture for many reasons. I estimated 50 users for the first year, and we’re already over 120 in just the first few months.

|

||||

|

||||

The tools we used previously didn’t allow us to contribute back our own features, collaborate transparently, or automate so completely. We’ve also benefited from GitLab’s culture influencing ours. Its [handbook][2] and open communication have been invaluable to our growth. Tools, and the companies that make them, can and will influence your company’s culture. What are you willing to allow in?

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/8/how-tools-affect-culture

|

||||

|

||||

Author: [Dan Barker][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/barkerd427

|

||||

[1]:https://about.gitlab.com/

|

||||

[2]:https://about.gitlab.com/handbook/

|

||||

@ -1,3 +1,5 @@

|

||||