mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-03-24 02:20:09 +08:00

Merge remote-tracking branch 'LCTT/master'

commit 694ea80870

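@@ -1,57 +1,58 @@

iWant – a distributed peer-to-peer file sharing command-line application

iWant: a decentralized peer-to-peer file sharing command-line tool
======

A while ago, we wrote a guide about a file sharing utility named [**transfer.sh**][1], a free web service that lets you share files over the Internet quickly and easily, and another about [**PSiTransfer**][2], a simple, open source, self-hosted file sharing solution. Today, we will look at another file sharing utility named **"iWant"**. It is a free, CLI-based, open source, distributed peer-to-peer file sharing application.

A while ago, we wrote a guide about a file sharing utility named [transfer.sh][1], a free web service that lets you share files over the Internet quickly and easily, and another about [PSiTransfer][2], a simple, open source, self-hosted file sharing solution. Today, we will look at another file sharing utility named “iWant”. It is a command-line-based, free and open source, decentralized peer-to-peer file sharing application.

You might wonder how it differs from other file sharing applications. Here are some of iWant's prominent features.

* It is a command-line application. This means you don't need to spend memory on loading a GUI utility. All you need is a terminal.
* It is distributed. This means your data is not stored in any central location, so it will not fail because the center fails.
* It is decentralized. This means your data is not stored in any central location, so it will not fail because a central point fails.
* iWant lets you interrupt a download and resume it at any later time. You don't need to start over; it resumes from where you left off.
* Any change made to files in the shared directory (such as deletion, addition, or modification) is reflected in the network immediately.
* Just like torrents, iWant downloads files from multiple peers. If any peer leaves the group or fails to respond, it continues the download from another peer.
* It is cross-platform, so you can use it on GNU/Linux, MS Windows or Mac OS X.
* It is cross-platform, so you can use it on GNU/Linux, MS Windows, or Mac OS X.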

### iWant – a CLI-based distributed peer-to-peer file sharing solution

#### Installing iWant

### Installing iWant

iWant can be easily installed with the PIP package manager. Make sure pip is installed on your Linux distribution. If it is not installed yet, refer to the following guide.

[How to manage Python packages using Pip](https://www.ostechnix.com/manage-python-packages-using-pip/)

After installing PIP, make sure you have the following dependencies:

After installing pip, make sure you have the following dependencies:

* libffi-dev
* libssl-dev

For example, on Ubuntu, you can install these dependencies with the following command:

```
$ sudo apt-get install libffi-dev libssl-dev
```

Once all dependencies are installed, install iWant with the following command:

```
$ sudo pip install iwant
```

Now that iWant is on our system, let's see how to use it to transfer files over the network.

#### Usage

### Usage

First, start the iWant server with the following command:

(LCTT translator's note: although this software is called iWant, its command is named `iwanto`. Also, this software has not been updated for at least a year.)

```
$ iwanto start
```

On first start, iWant asks for the locations of the shared folder and the download folder, so enter both locations. Then select the network interface to use.

Sample output:

```
Shared/Download folder details looks empty..
Note: Shared and Download folder cannot be the same
@@ -66,31 +67,31 @@ now scanning /home/sk/myshare
Updating Leader 56f6d5e8-654e-11e7-93c8-08002712f8c1
[Adding] /home/sk/myshare 0.0
connecting to 192.168.43.2:1235 for hashdump
```

If you see output like the above, you can start using iWant right away.

If you see output like the above, you can now start using iWant right away.

Likewise, start the iWant service on all systems in the network, specify valid locations for the shared and download folders, and select the appropriate network interface.

The iWant service keeps running in the current terminal window until you press **CTRL+C** to quit. You need to open a new tab or a new terminal window to use iWant.

The iWant service keeps running in the current terminal window until you press `CTRL+C` to quit. You need to open a new tab or a new terminal window to use iWant.

Using iWant is very simple; it has only a few commands, listed below.

* **iwanto start** – start the iWant service.
* **iwanto search <name>** – search for files.
* **iwanto download <hash>** – download a file.
* **iwanto share <path>** – change the shared folder location.
* **iwanto download to <destination>** – change the download folder location.
* **iwanto view config** – view the shared and download folders.
* **iwanto --version** – show the iWant version.
* **iwanto -h** – show the help message.
* `iwanto start` – start the iWant service.
* `iwanto search <name>` – search for files.
* `iwanto download <hash>` – download a file.
* `iwanto share <path>` – change the shared folder location.
* `iwanto download to <destination>` – change the download folder location.
* `iwanto view config` – view the shared and download folders.
* `iwanto --version` – show the iWant version.
* `iwanto -h` – show the help message.

Let me show you some examples.

**Searching for files**

#### Searching for files

To search for a file, run:

```
$ iwanto search <filename>
```

@@ -99,84 +100,86 @@ $ iwanto search <filename>

Note that you don't need to specify the exact name.

Example:

```
$ iwanto search command
```

The above command searches for all files whose names contain the string "command".

The above command searches for all files whose names contain the string “command”.

Output from my Ubuntu system:

```
Filename                                     Size     Checksum
-------------------------------------------  -------  --------------------------------
/home/sk/myshare/THE LINUX COMMAND LINE.pdf  3.85757  efded6cc6f34a3d107c67c2300459911
```

**Downloading files**

#### Downloading files

You can download files from any system on your network. To download a file, just provide the file's hash (checksum), as shown below. You can get the hashes of shared files with the `iwanto search` command.

You can download files from any system on your network. To download a file, just provide the file's hash (checksum), as shown below. You can get the hashes of shared files with the "iwanto search" command.

```
$ iwanto download efded6cc6f34a3d107c67c2300459911
```

The file is saved in your download location, which in this article is `/home/sk/mydownloads/`.

```
Filename: /home/sk/mydownloads/THE LINUX COMMAND LINE.pdf
Size: 3.857569 MB
```
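Identifying a file by checksum rather than by name is what lets a peer-to-peer tool fetch the same content from several peers. The sketch below only illustrates that idea. The article does not say which hash function iWant uses; the 32-hexadecimal-character checksum shown above happens to be the length of an MD5 digest, so MD5 is an assumption here, and `stream_checksum` and `file_checksum` are hypothetical helper names, not part of iWant.

```python
import hashlib
import io


def stream_checksum(stream, chunk_size=65536):
    """Return the hex MD5 digest of a binary stream, read in chunks."""
    digest = hashlib.md5()  # assumption: MD5, chosen only for illustration
    # Reading fixed-size chunks keeps memory use flat for large files.
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def file_checksum(path):
    """Return the hex MD5 digest of the file at `path`."""
    with open(path, "rb") as handle:
        return stream_checksum(handle)


# Two streams with identical bytes get the same checksum, whatever their names.
first = stream_checksum(io.BytesIO(b"hello"))
second = stream_checksum(io.BytesIO(b"hello"))
print(first == second)
```

Because the digest depends only on the bytes, two peers sharing the same file under different paths would report the same value.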

**Viewing the configuration**

#### Viewing the configuration

To view the configuration, such as the locations of the shared and download folders, run:

```
$ iwanto view config
```

Sample output:

```
Shared folder:/home/sk/myshare
Download folder:/home/sk/mydownloads
```

**Changing the shared and download folder locations**

#### Changing the shared and download folder locations

You can change the shared folder and the download folder.

```
$ iwanto share /home/sk/ostechnix
```

Now the shared location has been changed to `/home/sk/ostechnix`.

Likewise, you can change the download location with the following command:

```
$ iwanto download to /home/sk/Downloads
```

To see the changes you made, run the `config` command:

To see the changes you made, run the command:

```
$ iwanto view config
```

**Stopping iWant**

#### Stopping iWant

Once you are done with iWant, press **CTRL+C** to quit.

Once you are done with iWant, press `CTRL+C` to quit.

If it doesn't work, the cause may be a firewall, or your router may not support multicast. You can view all logs in the ** ~/.iwant/.iwant.log** file. For more details, see the project's GitHub page linked at the end.

If it doesn't work, the cause may be a firewall, or your router may not support multicast. You can view all logs in the `~/.iwant/.iwant.log` file. For more details, see the project's GitHub page linked at the end.

That's about it. I hope this tool helps. I'll be back with another interesting guide next time. Until then, stay tuned to OSTechNix!

That's about it. I hope this tool helps. I'll be back with another interesting guide next time.

Cheers!

### Resources

- [iWant GitHub](https://github.com/nirvik/iWant)

--------------------------------------------------------------------------------

@@ -185,7 +188,7 @@ via: https://www.ostechnix.com/iwant-decentralized-peer-peer-file-sharing-comman

Author: [SK][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [MjSeven](https://github.com/MjSeven)
Proofreader: [proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@@ -0,0 +1,130 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Why DevOps is the most important tech strategy today)
[#]: via: (https://opensource.com/article/19/3/devops-most-important-tech-strategy)
[#]: author: (Kelly AlbrechtWilly-Peter Schaub https://opensource.com/users/ksalbrecht/users/brentaaronreed/users/wpschaub/users/wpschaub/users/ksalbrecht)

Why DevOps is the most important tech strategy today
======
Clearing up some of the confusion about DevOps.
![CICD with gears][1]

Many people first learn about [DevOps][2] when they see one of its outcomes and ask how it happened. It's not necessary to understand why something is part of DevOps to implement it, but knowing that—and why a DevOps strategy is important—can mean the difference between being a leader or a follower in an industry.

Maybe you've heard some of the incredible outcomes attributed to DevOps, such as production environments that are so resilient they can handle thousands of releases per day while a "[Chaos Monkey][3]" is running around randomly unplugging things. This is impressive, but on its own, it's a weak business case, essentially burdened with [proving a negative][4]: The DevOps environment is resilient because a serious failure hasn't been observed… yet.

There is a lot of confusion about DevOps and many people are still trying to make sense of it. Here's an example from someone in my LinkedIn feed:

> Recently attended few #DevOps sessions where some speakers seemed to suggest #Agile is a subset of DevOps. Somehow, my understanding was just the opposite.
>
> Would like to hear your thoughts. What do you think is the relationship between Agile and DevOps?
>
> 1. DevOps is a subset of Agile
> 2. Agile is a subset of DevOps
> 3. DevOps is an extension of Agile, starts where Agile ends
> 4. DevOps is the new version of Agile
>

Tech industry professionals have been weighing in on the LinkedIn post with a wide range of answers. How would you respond?

### DevOps' roots in lean and agile

DevOps makes a lot more sense if we start with the strategies of Henry Ford and the Toyota Production System's refinements of Ford's model. Within this history is the birthplace of lean manufacturing, which has been well studied. In [_Lean Thinking_][5], James P. Womack and Daniel T. Jones distill it into five principles:

1. Specify the value desired by the customer
2. Identify the value stream for each product providing that value and challenge all of the wasted steps currently necessary to provide it
3. Make the product flow continuously through the remaining value-added steps
4. Introduce pull between all steps where continuous flow is possible
5. Manage toward perfection so that the number of steps and the amount of time and information needed to serve the customer continually falls

Lean seeks to continuously remove waste and increase the flow of value to the customer. This is easily recognizable and understood through a core tenet of lean: single piece flow. We can do a number of activities to learn why moving single pieces at a time is orders of magnitude faster than moving batches of many pieces; the [Penny Game][6] and the [Airplane Game][7] are two of them. In the Penny Game, if a batch of 20 pennies takes two minutes to get to the customer, they get the whole batch after waiting two minutes. If you move one penny at a time, the customer gets the first penny in about five seconds and continues getting pennies until the 20th penny arrives approximately 25 seconds later.
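The arithmetic behind the Penny Game can be sketched in a few lines. The model below is an illustration rather than anything taken from the article: it assumes four stations and 1.5 seconds of handling per penny per station, numbers picked only so that the batch case lands on the two minutes quoted above. The function names are hypothetical.

```python
def batch_delivery_times(pieces, stations, seconds_per_piece):
    """Each station finishes the whole batch before handing it on,
    so every piece reaches the customer at the same, late moment."""
    done = pieces * seconds_per_piece * stations
    return [done] * pieces


def single_piece_delivery_times(pieces, stations, seconds_per_piece):
    """Each piece moves on as soon as a station finishes it, so the
    stations work in parallel like a pipeline."""
    first = stations * seconds_per_piece
    return [first + i * seconds_per_piece for i in range(pieces)]


batch = batch_delivery_times(20, 4, 1.5)
flow = single_piece_delivery_times(20, 4, 1.5)
print("batch: every penny arrives at", batch[0], "seconds")
print("single piece: first at", flow[0], "seconds, last at", flow[-1], "seconds")
```

Under these assumed numbers the batch arrives after 120 seconds, while single piece flow delivers the first penny after 6 seconds and the last after 34.5 seconds, which is in the same range as the figures the article gives.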

This is a huge difference, but not everything in life is as simple and predictable as the penny in the Penny Game. This is where agile comes in. We certainly see lean principles on high-performing agile teams, but these teams need more than lean to do what they do.

To be able to handle the unpredictability and variance of typical software development tasks, agile methodology focuses on awareness, deliberation, decision, and action to adjust course in the face of a constantly changing reality. For example, agile frameworks (like scrum) increase awareness with ceremonies like the daily standup and the sprint review. If the scrum team becomes aware of a new reality, the framework allows and encourages them to adjust course if necessary.

For teams to make these types of decisions, they need to be self-organizing in a high-trust environment. High-performing agile teams working this way achieve a fast flow of value while continuously adjusting course, removing the waste of going in the wrong direction.

### Optimal batch size

To understand the power of DevOps in software development, it helps to understand the economics of batch size. Consider the following U-curve optimization illustration from Donald Reinertsen's _[Principles of Product Development Flow][8]:_

![U-curve optimization illustration of optimal batch size][9]

This can be explained with an analogy about grocery shopping. Suppose you need to buy some eggs and you live 30 minutes from the store. Buying one egg (far left on the illustration) at a time would mean a 30-minute trip each time. This is your _transaction cost_. The _holding cost_ might represent the eggs spoiling and taking up space in your refrigerator over time. The _total cost_ is the _transaction cost_ plus your _holding cost_. This U-curve explains why, for most people, buying a dozen eggs at a time is their _optimal batch size_. If you lived next door to the store, it'd cost you next to nothing to walk there, and you'd probably buy a smaller carton each time to save room in your refrigerator and enjoy fresher eggs.
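The egg analogy can be turned into a small calculation. This is a sketch under stated assumptions, not Reinertsen's model: the per-item transaction cost is taken as the trip cost divided by the batch size, the per-item holding cost is assumed to grow linearly with the batch size, and the holding cost of 0.2 per egg is an invented figure chosen for illustration.

```python
def total_cost_per_item(batch_size, transaction_cost, holding_cost_per_item):
    """Total cost per item: the trip is shared across the batch,
    while holding cost grows as more items sit waiting."""
    return transaction_cost / batch_size + holding_cost_per_item * batch_size


def optimal_batch_size(transaction_cost, holding_cost_per_item, max_batch=60):
    """Brute-force the batch size with the lowest total cost per item."""
    return min(
        range(1, max_batch + 1),
        key=lambda size: total_cost_per_item(
            size, transaction_cost, holding_cost_per_item
        ),
    )


# A 30-minute trip versus living next door (a 1-minute trip).
print(optimal_batch_size(transaction_cost=30, holding_cost_per_item=0.2))
print(optimal_batch_size(transaction_cost=1, holding_cost_per_item=0.2))
```

With these assumed costs the 30-minute trip favors a batch of 12 and the 1-minute trip favors a batch of 2: lowering the transaction cost moves the bottom of the U-curve toward smaller batches.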

This U-curve optimization illustration can shed some light on why productivity increases significantly in successful agile transformations. Consider the effect of agile transformation on decision making in an organization. In traditional hierarchical organizations, decision-making authority is centralized. This leads to larger decisions made less frequently by fewer people. An agile methodology will effectively reduce an organization's transaction cost for making decisions by decentralizing the decisions to where awareness and information are best: across the high-trust, self-organizing agile teams.

The following animation shows how reducing transaction cost shifts the optimal batch size to the left. You can't overstate the value to an organization in making faster decisions more frequently.

![U-curve optimization illustration][10]

### Where does DevOps fit in?

Automation is one of the things DevOps is most known for. The previous illustration shows the value of automation in great detail. Through automation, we reduce our transaction costs to nearly zero, essentially getting our testing and deployments for free. This lets us take advantage of smaller and smaller batch sizes of work. Smaller batches of work are easier to understand, commit to, test, review, and know when they are done. These smaller batch sizes also contain less variance and risk, making them easier to deploy and, if something goes wrong, to troubleshoot and recover from. With automation combined with a solid agile practice, we can get our feature development very close to single piece flow, providing value to customers quickly and continuously.

More traditionally, DevOps is understood as a way to knock down the walls of confusion between the dev and ops teams. In this model, development teams develop new features, while operations teams keep the system stable and running smoothly. Friction occurs because new features from development introduce change into the system, increasing the risk of an outage, which the operations team doesn't feel responsible for—but has to deal with anyway. DevOps is not just trying to get people working together; it's more about trying to make more frequent changes safely in a complex environment.

We can look to [Ron Westrum][11] for research about achieving safety in complex organizations. In researching why some organizations are safer than others, he found that an organization's culture is predictive of its safety. He identified three types of culture: Pathological, Bureaucratic, and Generative. He found that the Pathological culture was predictive of less safety and the Generative culture was predictive of more safety (e.g., far fewer plane crashes or accidental hospital deaths in his main areas of research).

![Three types of culture identified by Ron Westrum][12]

Effective DevOps teams achieve a Generative culture with lean and agile practices, showing that speed and safety are complementary, or two sides of the same coin. By reducing the optimal batch sizes of decisions and features to become very small, DevOps achieves a faster flow of information and value while removing waste and reducing risk.

In line with Westrum's research, change can happen easily with safety and reliability improving at the same time. When an agile DevOps team is trusted to make its own decisions, we get the tools and techniques DevOps is most known for today: automation and continuous delivery. Through this automation, transaction costs are reduced further than ever, and a near single piece lean flow is achieved, creating the potential for thousands of decisions and releases per day, as we've seen happen in high-performing DevOps organizations.

### Flow, feedback, learning

DevOps doesn't stop there. We've mainly been talking about DevOps achieving a revolutionary flow, but lean and agile practices are further amplified through similar efforts that achieve faster feedback loops and faster learning. In the [_DevOps Handbook_][13], the authors explain in detail how, beyond its fast flow, DevOps achieves telemetry across its entire value stream for fast and continuous feedback. Further, leveraging the [kaizen][14] bursts of lean and the [retrospectives][15] of scrum, high-performing DevOps teams will continuously drive learning and continuous improvement deep into the foundations of their organizations, achieving a lean manufacturing revolution in the software product development industry.

### Start with a DevOps assessment

The first step in leveraging DevOps is, either after much study or with the help of a DevOps consultant and coach, to conduct an assessment across a suite of dimensions consistently found in high-performing DevOps teams. The assessment should identify weak or non-existent team norms that need improvement. Evaluate the assessment's results to find quick wins—focus areas with high chances for success that will produce high-impact improvement. Quick wins are important for gaining the momentum needed to tackle more challenging areas. The teams should generate ideas that can be tried quickly and start to move the needle on the DevOps transformation.

After some time, the team should reassess on the same dimensions to measure improvements and identify new high-impact focus areas, again with fresh ideas from the team. A good coach will consult, train, mentor, and support as needed until the team owns its own continuous improvement and achieves near consistency on all dimensions by continually reassessing, experimenting, and learning.

In the [second part][16] of this article, we'll look at results from a DevOps survey in the Drupal community and see where the quick wins are most likely to be found.

* * *

_Rob Bayliss and Kelly Albrecht will present [DevOps: Why, How, and What][17] and host a follow-up [Birds of a Feather discussion][18] at [DrupalCon 2019][19] in Seattle, April 8-12._

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/3/devops-most-important-tech-strategy

Author: [Kelly AlbrechtWilly-Peter Schaub][a]
Topic selection: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/ksalbrecht/users/brentaaronreed/users/wpschaub/users/wpschaub/users/ksalbrecht
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/cicd_continuous_delivery_deployment_gears.png?itok=kVlhiEkc (CICD with gears)
[2]: https://opensource.com/resources/devops
[3]: https://github.com/Netflix/chaosmonkey
[4]: https://en.wikipedia.org/wiki/Burden_of_proof_(philosophy)#Proving_a_negative
[5]: https://www.amazon.com/dp/B0048WQDIO/ref=dp-kindle-redirect?_encoding=UTF8&btkr=1
[6]: https://youtu.be/5t6GhcvKB8o?t=54
[7]: https://www.shmula.com/paper-airplane-game-pull-systems-push-systems/8280/
[8]: https://www.amazon.com/dp/B00K7OWG7O/ref=dp-kindle-redirect?_encoding=UTF8&btkr=1
[9]: https://opensource.com/sites/default/files/uploads/batch_size_optimal_650.gif (U-curve optimization illustration of optimal batch size)
[10]: https://opensource.com/sites/default/files/uploads/batch_size_650.gif (U-curve optimization illustration)
[11]: https://en.wikipedia.org/wiki/Ron_Westrum
[12]: https://opensource.com/sites/default/files/uploads/information_flow.png (Three types of culture identified by Ron Westrum)
[13]: https://www.amazon.com/DevOps-Handbook-World-Class-Reliability-Organizations/dp/1942788002/ref=sr_1_3?keywords=DevOps+handbook&qid=1553197361&s=books&sr=1-3
[14]: https://en.wikipedia.org/wiki/Kaizen
[15]: https://www.scrum.org/resources/what-is-a-sprint-retrospective
[16]: https://opensource.com/article/19/3/where-drupal-community-stands-devops-adoption
[17]: https://events.drupal.org/seattle2019/sessions/devops-why-how-and-what
[18]: https://events.drupal.org/seattle2019/bofs/devops-getting-started
[19]: https://events.drupal.org/seattle2019

@@ -0,0 +1,115 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Continuous response: The essential process we're ignoring in DevOps)
[#]: via: (https://opensource.com/article/19/3/continuous-response-devops)
[#]: author: (Randy Bias https://opensource.com/users/randybias)

Continuous response: The essential process we're ignoring in DevOps
======
You probably practice CI and CD, but if you aren't thinking about
continuous response, you aren't really doing DevOps.
![CICD with gears][1]

Continuous response (CR) is an overlooked link in the DevOps process chain. The two other major links—[continuous integration (CI) and continuous delivery (CD)][2]—are well understood, but CR is not. Yet, CR is the essential element of follow-through required to make customers happy and fulfill the promise of greater speed and agility. At the heart of the DevOps movement is the need for greater velocity and agility to bring businesses into our new digital age. CR plays a pivotal role in enabling this.

### Defining CR

We need a crisp definition of CR to move forward with breaking it down. To put it into context, let's revisit the definitions of continuous integration (CI) and continuous delivery (CD). Here are Gartner's definitions as I wrote them down in 2017:

> [Continuous integration][3] is the practice of integrating, building, testing, and delivering functional software on a scheduled, repeatable, and automated basis.
>
> Continuous delivery is a software engineering approach where teams keep producing valuable software in short cycles while ensuring that the software can be reliably released at any time.

I propose the following definition for CR:

> Continuous response is a practice where developers and operators instrument, measure, observe, and manage their deployed software looking for changes in performance, resiliency, end-user behavior, and security posture and take corrective actions as necessary.

We can argue about whether these definitions are 100% correct. They are good enough for our purposes, which is framing the definition of CR in rough context so we can understand it is really just the last link in the chain of a holistic cycle.

![The holistic DevOps cycle][4]

What is this multi-colored ring, you ask? It's the famous [OODA Loop][5]. Before continuing, let's touch on what the OODA Loop is and why it's relevant to DevOps. We'll keep it brief though, as there is already a long history between the OODA Loop and DevOps.

#### A brief aside: The OODA Loop

At the heart of core DevOps thinking is using the OODA Loop to create a proactive process for evolving and responding to changing environments. A quick [web search][6] makes it easy to learn the long history between the OODA Loop and DevOps, but if you want the deep dive, I highly recommend [The Tao of Boyd: How to Master the OODA Loop][7].

Here is the "evolved OODA Loop" presented by John Boyd:

![OODA Loop][8]

The most important thing to understand about the OODA Loop is that it's a cognitive process for adapting to and handling changing circumstances.

The second most important thing to understand about the OODA Loop is, since it is a thought process that is meant to evolve, it depends on driving feedback back into the earlier parts of the cycle as you iterate.

As you can see in the diagram above, CI, CD, and CR are all their own isolated OODA Loops within the overall DevOps OODA Loop. The key here is that each OODA Loop is an evolving thought process for how test, release, and success are measured. Simply put, those who can execute on the OODA Loop fastest will win.

Put differently, DevOps wants to drive speed (executing the OODA Loop faster) combined with agility (taking feedback and using it to constantly adjust the OODA Loop). This is why CR is a vital piece of the DevOps process. We must drive production feedback into the DevOps maturation process. The DevOps notion of Culture, Automation, Measurement, and Sharing ([CAMS][9]) partially but inadequately captures this, whereas CR provides a much cleaner continuation of CI/CD in my mind.

### Breaking CR down

CR has more depth and breadth than CI or CD. This is natural, given that what we're categorizing is the post-deployment process by which our software is taking a variety of actions from autonomic responses to analytics of customer experience. I think, when it's broken down, there are three key buckets that CR components fall into. Each of these three areas forms a complete OODA Loop; however, the level of automation throughout the OODA Loop varies significantly.

The following table will help clarify the three areas of CR:

CR Type | Purpose | Examples
---|---|---
Real-time | Autonomics for availability and resiliency | Auto-scaling, auto-healing, developer-in-the-loop automated responses to real-time failures, automated root-cause analysis
Analytic | Feature/fix pipeline | A/B testing, service response times, customer interaction models
Predictive | History-based planning | Capacity planning, hardware failure prediction models, cost-basis analysis

_Real-time CR_ is probably the best understood of the three. This kind of CR is where our software has been instrumented for known issues and can take an immediate, automated response (autonomics). Examples of known issues include responding to high or low demand (e.g., elastic auto-scaling), responding to expected infrastructure resource failures (e.g., auto-healing), and responding to expected distributed application failures (e.g., circuit breaker pattern). In the future, we will see machine learning (ML) and similar technologies applied to automated root-cause analysis and event correlation, which will then provide a path towards "no ops" or "zero ops" operational models.
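The circuit breaker pattern named above is one of the simpler autonomic responses to show in code. What follows is a minimal sketch, not a production implementation and not taken from the article: the class name, the `max_failures` and `reset_after` parameters, and the injectable `clock` are all illustrative assumptions.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency for a cool-down period."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # cool-down length in seconds
        self.clock = clock                # injectable so tests can fake time
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Still cooling down: fail fast without touching the dependency.
                raise RuntimeError("circuit open: call skipped")
            # Cool-down elapsed: allow one trial call (the "half-open" state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

A caller would wrap each request to a remote service in `breaker.call(...)`. After `max_failures` consecutive errors the breaker fails fast until the cool-down passes, which keeps a struggling dependency from being hammered while it recovers.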

_Analytic CR_ is still the most manual of the CR processes. This kind of CR is focused primarily on observing end-user experience and providing feedback to the product development cycle to add features or fix existing functionality. Examples of this include traditional A/B website testing, measuring page-load times or service-response times, post-mortems of service failures, and so on.
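As one concrete instance of the A/B testing mentioned above, the sketch below compares the conversion rates of two page variants with a pooled two-proportion z-score. The statistic is a standard one, but choosing it here is an assumption for illustration; the article does not prescribe any particular test, and the visitor counts are invented.

```python
import math


def two_proportion_z(conversions_a, visitors_a, conversions_b, visitors_b):
    """z-score for the difference between two conversion rates,
    using the pooled estimate of the standard error."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    return (rate_b - rate_a) / std_err


# Hypothetical data: variant A converts 100 of 1000 visitors, B 130 of 1000.
z = two_proportion_z(100, 1000, 130, 1000)
print(round(z, 2))
```

An absolute z-score above roughly 1.96 is conventionally read as significant at the 5% level for a two-sided test, which gives the feature pipeline a simple rule for deciding whether a variant's measured gain is likely real.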

_Predictive CR_, due to the resurgence of AI and ML, is one of the innovation areas in CR. It uses historical data to predict future needs. ML techniques are allowing this area to become more fully automated. Examples include automated and predictive capacity planning (primarily for the infrastructure layer), automated cost-basis analysis of service delivery, and real-time reallocation of infrastructure resources to resolve capacity and hardware failure issues before they impact the end-user experience.
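History-based capacity planning can start far simpler than ML. The sketch below fits a straight line to past usage samples and estimates how long until the trend reaches a capacity limit. It is a deliberately naive illustration under stated assumptions: evenly spaced samples, linear growth, and invented numbers. The function names are hypothetical.

```python
def linear_trend(samples):
    """Least-squares slope and intercept for samples taken at t = 0, 1, 2, ...

    Needs at least two samples.
    """
    n = len(samples)
    mean_t = (n - 1) / 2
    mean_y = sum(samples) / n
    slope = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(samples)) / sum(
        (t - mean_t) ** 2 for t in range(n)
    )
    return slope, mean_y - slope * mean_t


def time_until_full(samples, capacity):
    """Periods after the last sample until the trend line reaches capacity.

    Returns None when usage is flat or shrinking.
    """
    slope, intercept = linear_trend(samples)
    if slope <= 0:
        return None
    return (capacity - intercept) / slope - (len(samples) - 1)


# Hypothetical daily disk usage in GB against a 100 GB volume.
print(time_until_full([50, 60, 70, 80], capacity=100))
```

With usage growing by 10 GB per day from 50 GB, the trend line hits 100 GB two days after the last sample, so the result could feed an alert or a provisioning request before users notice anything.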

### Diving deeper on CR

CR, like CI or CD, is a DevOps process supported by a set of underlying tools. CI and CD are not Jenkins, unit tests, or automated deployments alone. They are a process flow. Similarly, CR is a process flow that begins with the delivery of new code via CD, which open source tools like [Spinnaker][10] give us. CR is not monitoring, machine learning, or auto-scaling, but a diverse set of processes that occur after code deployment, supported by a variety of tools. CR is also different in two specific ways.

First, it is different because, by its nature, it is broader. The general software development lifecycle (SDLC) process means that most [CI/CD processes][11] are similar. However, code running in production differs from app to app or service to service. This means that CR differs as well.

Second, CR is different because it is nascent. Like CI and CD before it, the process and tools existed before they had a name. Over time, CI/CD became more normalized and easier to scope. CR is new, hence there is lots of room to discuss what's in or out. I welcome your comments in this regard and hope you will run with these ideas.

### CR: Closing the loop on DevOps

|

||||

|

||||

DevOps arose because of the need for greater service delivery velocity and agility. Essentially, DevOps is an extension of agile software development practices to an operational mindset. It's a direct response to the flexibility and automation possibilities that cloud computing affords. However, much of the thinking on DevOps to date has focused on deploying the code to production and ends there. But our jobs don't end there. As professionals, we must also make certain our code is behaving as expected, we are learning as it runs in production, and we are taking that learning back into the product development process.

|

||||

|

||||

This is where CR lives and breathes. DevOps without CR is the same as saying there is no OODA Loop around the DevOps process itself. It's like saying that operators' and developers' jobs end with the code being deployed. We all know this isn't true. Customer experience is the ultimate measurement of our success. Can people use the software or service without hiccups or undue friction? If not, we need to fix it. CR is the final link in the DevOps chain that enables delivering the truest customer experience.

|

||||

|

||||

If you aren't thinking about continuous response, you aren't doing DevOps. Share your thoughts on CR, and tell me what you think about the concept and the definition.

|

||||

|

||||

* * *

|

||||

|

||||

_This article is based on [The Essential DevOps Process We're Ignoring: Continuous Response][12], which originally appeared on the Cloudscaling blog under a [CC BY 4.0][13] license and is republished with permission._

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/3/continuous-response-devops

|

||||

|

||||

Author: [Randy Bias][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/randybias

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/cicd_continuous_delivery_deployment_gears.png?itok=kVlhiEkc (CICD with gears)

|

||||

[2]: https://opensource.com/article/18/8/what-cicd

|

||||

[3]: https://www.gartner.com/doc/3187420/guidance-framework-continuous-integration-continuous

|

||||

[4]: https://opensource.com/sites/default/files/uploads/holistic-devops-cycle-smaller.jpeg (The holistic DevOps cycle)

|

||||

[5]: https://en.wikipedia.org/wiki/OODA_loop

|

||||

[6]: https://www.google.com/search?q=site%3Ablog.b3k.us+ooda+loop&rlz=1C5CHFA_enUS730US730&oq=site%3Ablog.b3k.us+ooda+loop&aqs=chrome..69i57j69i58.8660j0j4&sourceid=chrome&ie=UTF-8#q=devops+ooda+loop&*

|

||||

[7]: http://www.artofmanliness.com/2014/09/15/ooda-loop/

|

||||

[8]: https://opensource.com/sites/default/files/uploads/ooda-loop-2-1.jpg (OODA Loop)

|

||||

[9]: https://itrevolution.com/devops-culture-part-1/

|

||||

[10]: https://www.spinnaker.io

|

||||

[11]: https://opensource.com/article/18/12/cicd-tools-sysadmins

|

||||

[12]: http://cloudscaling.com/blog/devops/the-essential-devops-process-were-ignoring-continuous-response/

|

||||

[13]: https://creativecommons.org/licenses/by/4.0/

|

||||

@ -0,0 +1,77 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Why do organizations have open secrets?)

|

||||

[#]: via: (https://opensource.com/open-organization/19/3/open-secrets-bystander-effect)

|

||||

[#]: author: (Laura Hilliger https://opensource.com/users/laurahilliger/users/maryjo)

|

||||

|

||||

Why do organizations have open secrets?

|

||||

======

|

||||

Everyone sees something, but no one says anything: that's the bystander effect. And it's damaging your organizational culture.

|

||||

![][1]

|

||||

|

||||

[The five characteristics of an open organization][2] must work together to ensure healthy and happy communities inside our organizations. Even the most transparent teams, departments, and organizations require equal doses of additional open principles—like inclusivity and collaboration—to avoid dysfunction.

|

||||

|

||||

The "open secrets" phenomenon illustrates the limitations of transparency when unaccompanied by additional open values. [A recent article in Harvard Business Review][3] explored the way certain organizational issues—widely apparent but seemingly impossible to solve—lead to discomfort in the workforce. Authors Insiya Hussain and Subra Tangirala performed a number of studies, and found that the more people in an organization who knew about a particular "secret," be it a software bug or a personnel issue, the less likely any one person would be to report the issue or otherwise _do_ something about it.

|

||||

|

||||

Hussain and Tangirala explain that so-called "open secrets" are the result of a [bystander effect][4], which comes into play when people think, "Well, if _everyone_ knows, surely _I_ don't need to be the one to point it out." The authors mention several causes of this behavior, but let's take a closer look at why open secrets might be circulating in your organization—with an eye on what an open leader might do to [create a safe space for whistleblowing][5].

|

||||

|

||||

### 1\. Fear

|

||||

|

||||

People don't want to complain about a known problem only to have their complaint be the one that initiates the quality assurance, integrity, or redress process. What if new information emerges that makes their report irrelevant? What if they are simply _wrong_?

|

||||

|

||||

At the root of all bystander behavior is fear—fear of repercussions, fear of losing reputation or face, or fear that the very thing you've stood up against turns out to be a non-issue for everyone else. Going on record as "the one who reported" carries with it a reputational risk that is very intimidating.

|

||||

|

||||

The first step to ensuring that your colleagues report malicious behavior, code, or _whatever_ needs reporting is to create a fear-free workplace. We're inundated with the idea that making a mistake is bad or wrong. We're taught that we have to "protect" our reputations. However, the qualities of a good and moral character are _always_ subjective.

|

||||

|

||||

_Tip for leaders_ : Reward courage and strength every time you see it, regardless of whether you deem it "necessary." For example, if everyone in a meeting except one person agrees on something, spend time on that person's concerns. Be patient and kind in helping that person change their mind, and be open-minded about that person being able to change yours. Brains work in different ways; never forget that one person might have a perspective that changes the lay of the land.

|

||||

|

||||

### 2\. Policies

|

||||

|

||||

Usually, complaint procedures and policies are designed to ensure fairness towards all parties involved in the complaint. Discouraging false reporting and ensuring such fairness in situations like these is certainly a good idea. But policies might actually deter people from standing up—because a victim might be discouraged from reporting an experience if the formal policy for reporting doesn't make them feel protected. Standing up to someone in a position of power and saying "Your behavior is horrid, and I'm not going to take it" isn't easy for anyone, but it's particularly difficult for marginalized groups.

|

||||

|

||||

To ensure fairness to all parties, we need to adjust for victims. As part of making the decision to file a report, a victim will be dealing with a variety of internal fears. They'll wonder what might happen to their self-worth if they're put in a situation where they have to talk to someone about their experience. They'll wonder if they'll be treated differently if they're the one who stands up, and how that will affect their future working environments and relationships. Especially in a situation involving an open secret, asking a victim to be strong is asking them to have to trust that numerous other people will back them up. This fear shouldn't be part of their workplace experience; it's just not fair.

|

||||

|

||||

Remember that if one feels responsible for a problem (e.g., "Crap, that's _my code_ that's bringing down the whole server!"), then that person might feel fear at pointing out the mistake. _The important thing is dealing with the situation, not finding someone to blame._ Policies that make people feel personally protected—no matter what the situation—are absolutely integral to ensuring the organization deals with open secrets.

|

||||

|

||||

_Tip for leaders_ : Make sure your team's or organization's policy regarding complaints makes anonymous reporting possible. Asking a victim to "go on record" puts them in the position of having to defend their perspective. If they feel they're the victim of harassment, they're feeling as if they are harassed _and_ being asked to defend their experience. This means they're doing double the work of the perpetrator, who only has to defend themselves.

|

||||

|

||||

### 3\. Marginalization

|

||||

|

||||

Women, LGBTQ people, racial minorities, people with physical disabilities, people who are neuro-atypical, and other marginalized groups often find themselves in positions that make them feel routinely dismissed, disempowered, disrespected, and generally dissed. These feelings are valid (and shouldn't be too surprising to anyone who has spent some time looking at issues of diversity and inclusion). Our emotional safety matters, and we tend to be quite protective of it, even if it means letting open secrets go unaddressed.

|

||||

|

||||

Marginalized groups have enough worries weighing on them, even when they're _not_ running the risk of damaging their relationships with others at work. Being seen and respected in both an organization and society more broadly is difficult enough _without_ drawing potentially negative attention.

|

||||

|

||||

Luckily, in recent years attitudes towards marginalized groups have become visible, and we as a society have begun to talk about our experiences as "outliers." We've also come to realize that marginalized groups aren't actually "outliers" at all; we can thank the colorful, beautiful internet for that.

|

||||

|

||||

_Tip for leaders_ : Diversity and inclusion play a role in dispelling open secrets. Make sure your diversity and inclusion practices and policies truly encourage a diverse workplace.

|

||||

|

||||

### Model the behavior

|

||||

|

||||

The best way to create a safe workplace and give people the ability to call attention to pervasive problems found within it is to _model the behaviors that you want other people to display_. Dysfunction occurs in cultures that don't pay attention to and value the principles upon which they are built. In order to discourage bystander behavior, transparent, inclusive, adaptable and collaborative communities must create policies that support calling attention to open secrets and then empathetically dealing with whatever the issue may be.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/open-organization/19/3/open-secrets-bystander-effect

|

||||

|

||||

Author: [Laura Hilliger][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/laurahilliger/users/maryjo

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/OSDC_secret_ingredient_520x292.png?itok=QbKzJq-N

|

||||

[2]: https://opensource.com/open-organization/resources/open-org-definition

|

||||

[3]: https://hbr.org/2019/01/why-open-secrets-exist-in-organizations

|

||||

[4]: https://www.psychologytoday.com/us/basics/bystander-effect

|

||||

[5]: https://opensource.com/open-organization/19/2/open-leaders-whistleblowers

|

||||

@ -0,0 +1,62 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (How Kubeflow is evolving without ksonnet)

|

||||

[#]: via: (https://opensource.com/article/19/4/kubeflow-evolution)

|

||||

[#]: author: (Jonathan Gershater (Red Hat) https://opensource.com/users/jgershat/users/jgershat)

|

||||

|

||||

How Kubeflow is evolving without ksonnet

|

||||

======

|

||||

There are big differences in how open source communities handle change compared to closed source vendors.

|

||||

![Chat bubbles][1]

|

||||

|

||||

Many software projects depend on modules that are run as separate open source projects. When one of those modules loses support (as is inevitable), the community around the main project must determine how to proceed.

|

||||

|

||||

This situation is happening right now in the [Kubeflow][2] community. Kubeflow is an evolving open source platform for developing, orchestrating, deploying, and running scalable and portable machine learning workloads on [Kubernetes][3]. Recently, the primary supporter of the Kubeflow component [ksonnet][4] announced that it would [no longer support][5] the software.

|

||||

|

||||

When a piece of software loses support, the decision-making process (and the outcome) differs greatly depending on whether the software is open source or closed source.

|

||||

|

||||

### A cellphone analogy

|

||||

|

||||

To illustrate the differences in how an open source community and a closed source/single software vendor proceed when a component loses support, let's use an example from hardware design.

|

||||

|

||||

Suppose you buy cellphone Model A and it stops working. When you try to get it repaired, you discover the manufacturer is out of business and no longer offering support. Since the cellphone's design is proprietary and closed, no other manufacturers can support it.

|

||||

|

||||

Now, suppose you buy cellphone Model B, it stops working, and its manufacturer is also out of business and no longer offering support. However, Model B's design is open, and another company is in business manufacturing, repairing and upgrading Model B cellphones.

|

||||

|

||||

This illustrates one difference between software written using closed and open source principles. If the vendor of a closed source software solution goes out of business, support disappears with the vendor, unless the vendor sells the software's design and intellectual property. But, if the vendor of an open source solution goes out of business, there is no intellectual property to sell. By the principles of open source, the source code is available for anyone to use and modify, under license, so another vendor can continue to maintain the software.

|

||||

|

||||

### How Kubeflow is evolving without ksonnet

|

||||

|

||||

The ramification of ksonnet's backers' decision to cease development illustrates Kubeflow's open and collaborative design process. Kubeflow's designers have several options, such as replacing ksonnet, adopting and developing ksonnet, etc. Because Kubeflow is an open source project, all options are discussed in the open on the Kubeflow mailing list. Some of the community's suggestions include:

|

||||

|

||||

> * Should we look at projects that are CNCF/Apache projects e.g. [helm][6]

|

||||

> * I would opt for back to the basics. KISS. How about plain old jsonnet + kubectl + makefile/scripts ? Thats how e.g. the coreos [prometheus operator][7] does it. It would also lower the entry barrier (no new tooling) and let vendors of k8s (gke, openshift, etc) easily build on top of that.

|

||||

> * I vote for using a simple, _programmatic_ context, be it manual jsonnet + kubectl, or simple Python scripts + Python K8s client, or any tool be can build on top of these.

|

||||

>

|

||||

|

||||

|

||||

The members of the mailing list are discussing and debating alternatives to ksonnet and will arrive at a decision to continue development. What I love about the open source way of adapting is that it's done communally. Unlike closed source software, which is often designed by one vendor, the organizations that are members of an open source project can collaboratively steer the project in the direction they best see fit. As Kubeflow evolves, it will benefit from an open, collaborative decision-making framework.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/4/kubeflow-evolution

|

||||

|

||||

Author: [Jonathan Gershater (Red Hat)][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/jgershat/users/jgershat

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/talk_chat_communication_team.png?itok=CYfZ_gE7 (Chat bubbles)

|

||||

[2]: https://www.kubeflow.org/

|

||||

[3]: https://github.com/kubernetes

|

||||

[4]: https://ksonnet.io/

|

||||

[5]: https://blogs.vmware.com/cloudnative/2019/02/05/welcoming-heptio-open-source-projects-to-vmware/

|

||||

[6]: https://landscape.cncf.io

|

||||

[7]: https://github.com/coreos/prometheus-operator/tree/master/contrib/kube-prometheus

|

||||

@ -0,0 +1,72 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Making computer science curricula as adaptable as our code)

|

||||

[#]: via: (https://opensource.com/open-organization/19/4/adaptable-curricula-computer-science)

|

||||

[#]: author: (Amarachi Achonu https://opensource.com/users/amarach1/users/johnsontanner3)

|

||||

|

||||

Making computer science curricula as adaptable as our code

|

||||

======

|

||||

No two computer science students are alike, so teachers need curricula that are open and adaptable.

|

||||

![][1]

|

||||

|

||||

Educators in elementary computer science face a lack of adaptable curricula. Calls for more modifiable, non-rigid curricula are therefore enticing—assuming that such curricula could benefit teachers by increasing their ability to mold resources for individual classrooms and, ultimately, produce better teaching experiences and learning outcomes.

|

||||

|

||||

Our team at [CSbyUs][2] noticed this scarcity, and we've created an open source web platform to facilitate more flexible, adaptable, and tested curricula for computer science educators. The mission of the CSbyUs team has always been utilizing open source technology to improve pedagogy in computer science, which includes increasing support for teachers. Therefore, this project primarily seeks to use open source principles—and the benefits inherent in them—to expand the possibilities of modern curriculum-making and support teachers by increasing access to more adaptable curricula.

|

||||

|

||||

### Rigid, monotonous, mundane

|

||||

|

||||

Why is the lack of adaptable curricula a problem for computer science education? Rigid curricula dominate most classrooms today, primarily through monotonous and routinely distributed lesson plans. Many of these plans are developed without the capacity for dynamic use and application to different classroom atmospheres. In contrast, an _adaptable_ curriculum is one that would _account_ for dynamic and changing classroom environments.

|

||||

|

||||

An adaptable curriculum means freedom and more options for educators. This is especially important in elementary-level classrooms, where instructors are introducing students to computer science for the first time, and in classrooms with higher populations of groups typically underrepresented in the field of computer science. Here especially, it's advantageous for instructors to have access to curricula that explicitly consider diverse classroom landscapes and grant the freedom necessary to adapt to specific student populations.

|

||||

|

||||

### Making it adaptable

|

||||

|

||||

This kind of adaptability is certainly at work at CSbyUs. Hayley Barton—a member of both the organization's curriculum-making team and its teaching team, and a senior at Duke University majoring in Economics and minoring in Computer Science and Spanish—recently demonstrated the benefits of adaptable curricula during an engagement in the field. Reflecting on her teaching experiences, Barton describes a major reason why curriculum adaptation is necessary in computer science classrooms. "We are seeing the range of students that we work with," she says, "and trying to make the curriculum something that can be tailored to different students."

|

||||

|

||||

A more adaptable curriculum is necessary for truly challenging students, Barton continues.

|

||||

|

||||

The need for change became most evident to Barton when working with students to make their own preliminary apps. Barton collaborated with students who appeared to be at different levels of focus and attention. On the one hand, a group of more advanced students took well to the style of a demonstrative curriculum and remained attentive and engaged in the task. On the other hand, another group of students seemed to have more trouble focusing in the classroom or even being motivated to engage with computer science topics. Given this difference among students, it became clear that the curriculum would need to be adaptable in multiple ways to engage more students at their level.

|

||||

|

||||

"We want to challenge every student without making it too challenging for any individual student," Barton says. "Thinking about those things definitely feeds into how I'm thinking about the curriculum in terms of making it accessible for all the students."

|

||||

|

||||

As a curriculum-maker, she subsequently uses experiences like this to make changes to the original curriculum.

|

||||

|

||||

"If those other students have one-on-one time themselves, they could be doing even more amazing things with their apps," says Barton.

|

||||

|

||||

Taking this advice, Barton would potentially incorporate into the curriculum more emphasis on cultivating students' sense of ownership in computer science, since this is important to their focus and productivity. For this, students may be afforded that sense of one-on-one time. The result will affect the next round of teachers who use the curriculum.

|

||||

|

||||

For these changes to be effective, the onus is on teachers to notice the dynamics of the classroom. In the future, curriculum adaptation may depend on paying particular attention to and identifying these subtle differences of style of curriculum. Identifying and commenting about these subtleties allows the possibility of applying a different strategy, and these are the changes that are applied to the curriculum.

|

||||

|

||||

"We've gone through a lot of stages of development," Barton says. "The goal is to have this kind of back and forth, where the curriculum is something that's been tested, where we've used our feedback, and also used other research that we've done, to make it something that's actually impactful."

|

||||

|

||||

Hayley's "back and forth" process is an iterative process of curriculum-making. Between utilizing curricula and modifying curricula, instructors like Hayley can take a once-rigid curriculum and mold it to any degree that the user sees fit, again and again. This iterative process depends on tests performed first in the classroom, and it depends on the teacher's rationale and reflection on how curricula uniquely pan out for them.

|

||||

|

||||

Adaptability of curriculum is the most important principle on which the CSbyUs platform is built. Much like Hayley's process of curriculum-making, curriculum adaptation should be _iterative_, as it involves learning from experience, returning to the drawing board, making changes, and finally, utilizing the curriculum again. Once launched, the CSbyUs website will document this iterative process.

|

||||

|

||||

The open-focused pedagogy behind the CSbyUs platform, then, brings to life the flexibility inherent in the process of curriculum adaptation. First, it invites and collects the valuable first-hand perspectives of real educators working with real curricula to produce real learning. Next, it capitalizes on an iterative process of development (one familiar to open source programmers) to enable modifications to curriculum (and the documentation of those modifications). Finally, it transforms the way teachers encounter curricula by helping them make selections from different versions of both modified curriculum and "the original." Our platform's open source strategy is crucial to cultivating a hub of flexible curricula for educators.

|

||||

|

||||

Open source practices can be a key difference in making rigid curricula more moldable for educators. Furthermore, since this approach effectively melds open source technologies with open-focused pedagogy, open pedagogy can potentially provide flexibility for educators teaching various curricula across disciplines.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/open-organization/19/4/adaptable-curricula-computer-science

|

||||

|

||||

Author: [Amarachi Achonu][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/amarach1/users/johnsontanner3

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/rh_003588_01_rd3os.combacktoschoolserieshe_rh_051x_0.png?itok=gIzbmxuI

|

||||

[2]: https://csbyus.herokuapp.com/

|

||||

@ -1,3 +1,4 @@

|

||||

Moelf translating

|

||||

Myths about /dev/urandom

|

||||

======

|

||||

|

||||

|

||||

@ -1,101 +0,0 @@

|

||||

tomjlw is translating

|

||||

Rediscovering make: the power behind rules

|

||||

======

|

||||

|

||||

|

||||

|

||||

I used to think makefiles were just a convenient way to list groups of shell commands; over time I've learned how powerful, flexible, and full-featured they are. This post brings to light some of those features related to rules.

|

||||

|

||||

### Rules

|

||||

|

||||

Rules are instructions that tell `make` how and when a file, called the target, should be built. The target can depend on other files, called prerequisites.

|

||||

|

||||

You instruct `make` how to build the target in the recipe, which is no more than a set of shell commands to be executed, one at a time, in the order they appear. The syntax looks like this:

|

||||

```

|

||||

target_name : prerequisites

|

||||

recipe

|

||||

```

|

||||

|

||||

Once you have defined a rule, you can build the target from the command line by executing:

|

||||

```

|

||||

$ make target_name

|

||||

```

|

||||

|

||||

Once the target is built, `make` is smart enough to not run the recipe ever again unless at least one of the prerequisites has changed.
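As a minimal sketch of such a rule, assume a source file `notes.md` and a target `notes.txt`; both names are made up for illustration and do not come from the original post:

```makefile
# Hypothetical file names, chosen only for illustration.
# notes.txt is the target; notes.md is its prerequisite.
# Recipe lines must begin with a tab character.
notes.txt: notes.md
	cp notes.md notes.txt
```

Running `make notes.txt` twice in a row should execute `cp` only the first time. The second run finds the target newer than its prerequisite and does nothing until `notes.md` is modified again.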

|

||||

|

||||

### More on prerequisites

|

||||

|

||||

Prerequisites indicate two things:

|

||||

|

||||

* When the target should be built: if a prerequisite is newer than the target, `make` assumes that the target should be built.

|

||||

* An order of execution: since prerequisites can, in turn, be built by another rule on the makefile, they also implicitly set an order on which rules are executed.

|

||||

|

||||

|

||||

|

||||

If you want to define an order, but you don't want to rebuild the target if the prerequisite changes, you can use a special kind of prerequisite called order-only, which is placed after the normal prerequisites and separated from them by a pipe (`|`).
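A common use for this kind of prerequisite is an output directory. The sketch below assumes a hypothetical `app.c` compiled into a `build/` directory; the names are illustrative only:

```makefile
# Hypothetical layout: build/ only has to exist before compiling.
# Everything after the pipe is an order-only prerequisite.
build/app.o: app.c | build
	cc -c app.c -o build/app.o

# Creates the directory when it is missing.
build:
	mkdir -p build
```

A directory's modification time changes whenever a file is added to it, so listing `build` as a normal prerequisite would trigger needless rebuilds. Placing it after the pipe keeps the ordering without that side effect.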

|

||||

|

||||

### Patterns

|

||||

|

||||

For convenience, `make` accepts patterns for targets and prerequisites. A pattern is defined by including the `%` character, a wildcard that matches any nonempty sequence of characters. Here are some examples:

|

||||

|

||||

* `%`: match any file

|

||||

* `%.md`: match all files with the `.md` extension

|

||||

* `prefix%.go`: match all files that start with `prefix` that have the `.go` extension
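To show how these patterns are used in a rule, here is a small sketch that converts Markdown files to HTML. It assumes `pandoc` is installed (any converter would do), and it relies on the automatic variables `$<` (the first prerequisite) and `$@` (the target):

```makefile
# Hypothetical pattern rule: build any .html file from the .md file
# that shares the same stem. Assumes pandoc is available on the PATH.
%.html: %.md
	pandoc $< -o $@
```

With this rule in place, `make README.html` would look for `README.md` and run the recipe with the stem `README` substituted for `%`.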

|

||||

|

||||

|

||||

|

||||

### Special targets

|

||||

|

||||

There's a set of target names that have special meaning for `make` called special targets.

|

||||

|

||||

You can find the full list of special targets in the [documentation][1]. As a rule of thumb, special targets start with a dot followed by uppercase letters.

|

||||

|

||||

Here are a few useful ones:

|

||||

|

||||

**.PHONY** : Tells `make` that the prerequisites of this target are phony targets, which means that `make` will always run their recipes regardless of whether a file with that name exists or what its last-modification time is.

|

||||

|

||||

**.DEFAULT** : Used for any target for which no rules are found.

|

||||

|

||||

**.IGNORE** : If you specify prerequisites for `.IGNORE`, `make` will ignore errors in execution of their recipes.
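The most familiar of these is probably `.PHONY`. The following is a sketch with a hypothetical cleanup target, not something taken from the original post:

```makefile
# Hypothetical cleanup target. Without .PHONY, a file named "clean"
# in the same directory would make this target look up to date,
# and the recipe would never run.
.PHONY: clean
clean:
	rm -f *.o
```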

|

||||

|

||||

### Substitutions

|

||||

|

||||

Substitutions are useful when you need to modify the value of a variable with alterations that you specify.

|

||||

|

||||

A substitution has the form `$(var:a=b)` and its meaning is to take the value of the variable `var`, replace every `a` at the end of a word with `b` in that value, and substitute the resulting string. For example:

|

||||

```

|

||||

foo := a.o

|

||||

bar := $(foo:.o=.c) # sets bar to a.c

|

||||

```
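The substitution is applied to each whitespace-separated word in the value, which makes it handy for deriving one file list from another. A sketch with made-up file names:

```makefile
# Hypothetical source list, used only to show the substitution.
sources := main.c util.c
# Replace the .c suffix of every word with .o.
objects := $(sources:.c=.o)

# Hypothetical helper target that prints the derived list.
.PHONY: show
show:
	@echo $(objects)
```

Here `make show` prints `main.o util.o`.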

|

||||

|

||||

note: special thanks to [Luis Lavena][2] for letting me know about the existence of substitutions.

|

||||

|

||||

### Archive Files

|

||||

|

||||

Archive files are used to collect multiple data files together into a single file (the same concept as a zip file). They are built with the `ar` Unix utility. `ar` can be used to create archives for any purpose, but it has been largely replaced by `tar` for purposes other than [static libraries][3].

|

||||

|

||||

In `make`, you can use an individual member of an archive file as a target or prerequisite as follows:

|

||||

```

|

||||

archive(member) : prerequisite

|

||||

recipe

|

||||

```
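A concrete version of this syntax might look like the sketch below, which assumes a hypothetical static library `libgreet.a` with a single member `greet.o`:

```makefile
# Hypothetical static library with one member.
# In a rule whose target is an archive member, $@ expands to the
# archive file (libgreet.a) and $< to the first prerequisite (greet.o).
libgreet.a(greet.o): greet.o
	ar cr $@ $<
```

If a `greet.c` exists alongside the makefile, GNU make's built-in rules should be able to produce `greet.o` without an explicit rule for it.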

|

||||

|

||||

### Final Thoughts

|

||||

|

||||

There's a lot more to discover about `make`, but at least this counts as a start. I strongly encourage you to check the [documentation][4], create a dumb makefile, and just play with it.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://monades.roperzh.com/rediscovering-make-power-behind-rules/

|

||||

|

||||

Author: [Roberto Dip][a]

|

||||

Translator: [tomjlw](https://github.com/tomjlw)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://monades.roperzh.com

|

||||

[1]:https://www.gnu.org/software/make/manual/make.html#Special-Targets

|

||||

[2]:https://twitter.com/luislavena/

|

||||

[3]:http://tldp.org/HOWTO/Program-Library-HOWTO/static-libraries.html

|

||||

[4]:https://www.gnu.org/software/make/manual/make.html

|

||||

@ -1,3 +1,5 @@

|

||||

translating by robsean

|

||||

|

||||

12 Best GTK Themes for Ubuntu and other Linux Distributions

|

||||

======

|

||||

**Brief: Let’s have a look at some of the beautiful GTK themes that you can use not only in Ubuntu but also in other Linux distributions that use GNOME.**

|

||||

|

||||

@ -1,137 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Oomox – Customize And Create Your Own GTK2, GTK3 Themes)

|

||||

[#]: via: (https://www.ostechnix.com/oomox-customize-and-create-your-own-gtk2-gtk3-themes/)

|

||||

[#]: author: (EDITOR https://www.ostechnix.com/author/editor/)

|

||||

|

||||

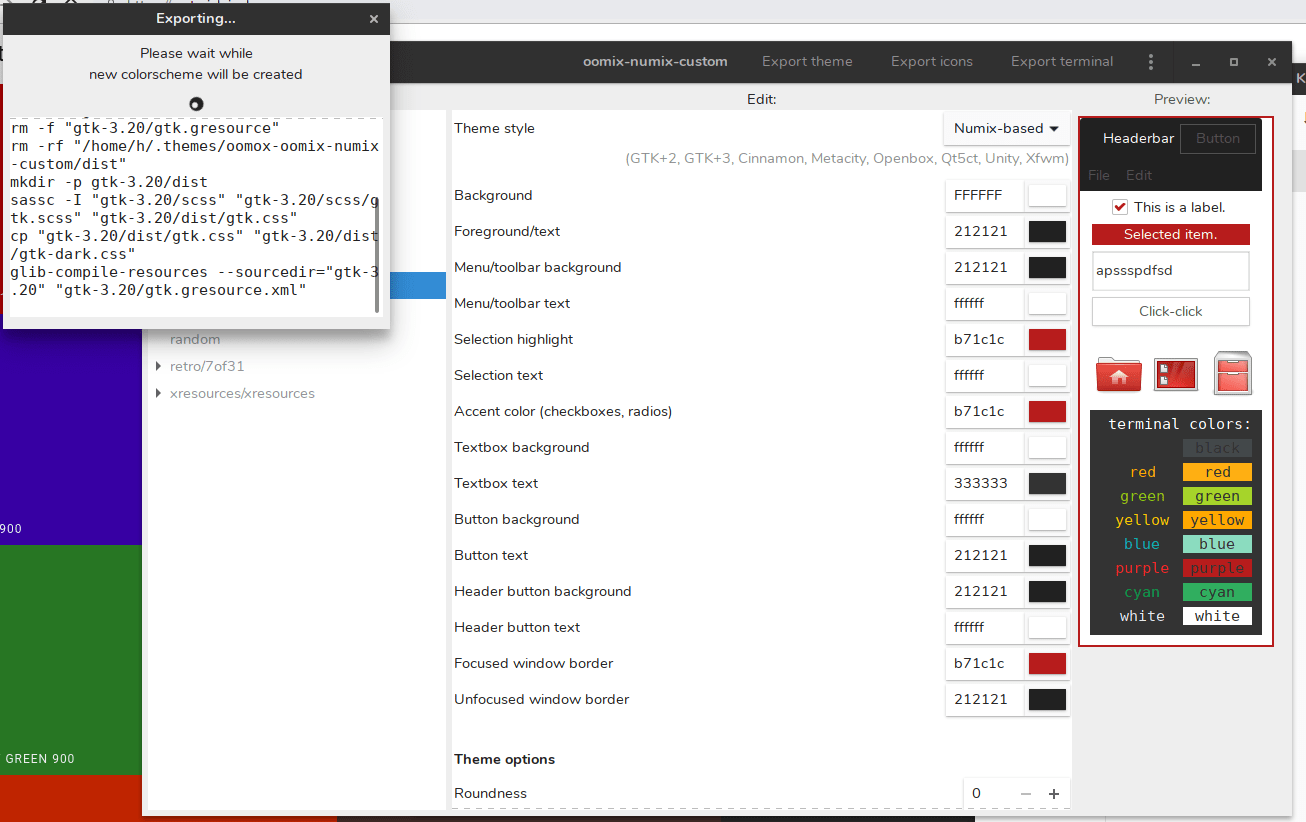

Oomox – Customize And Create Your Own GTK2, GTK3 Themes

|

||||

======

|

||||

|

||||

|

||||

|

||||

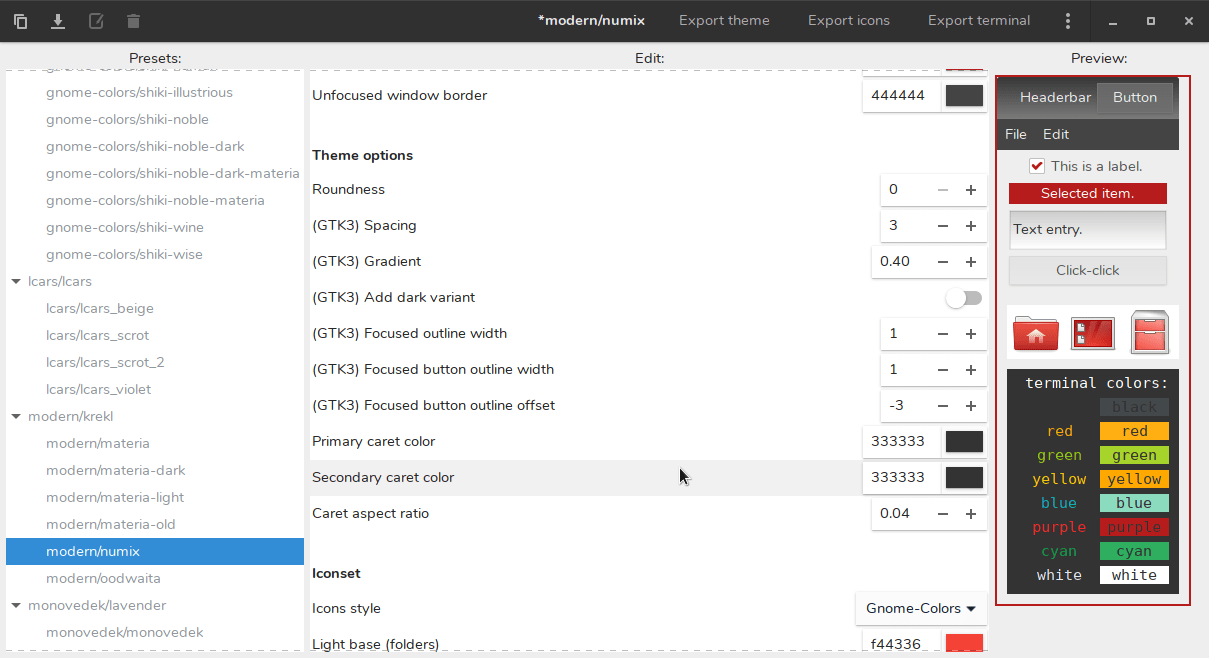

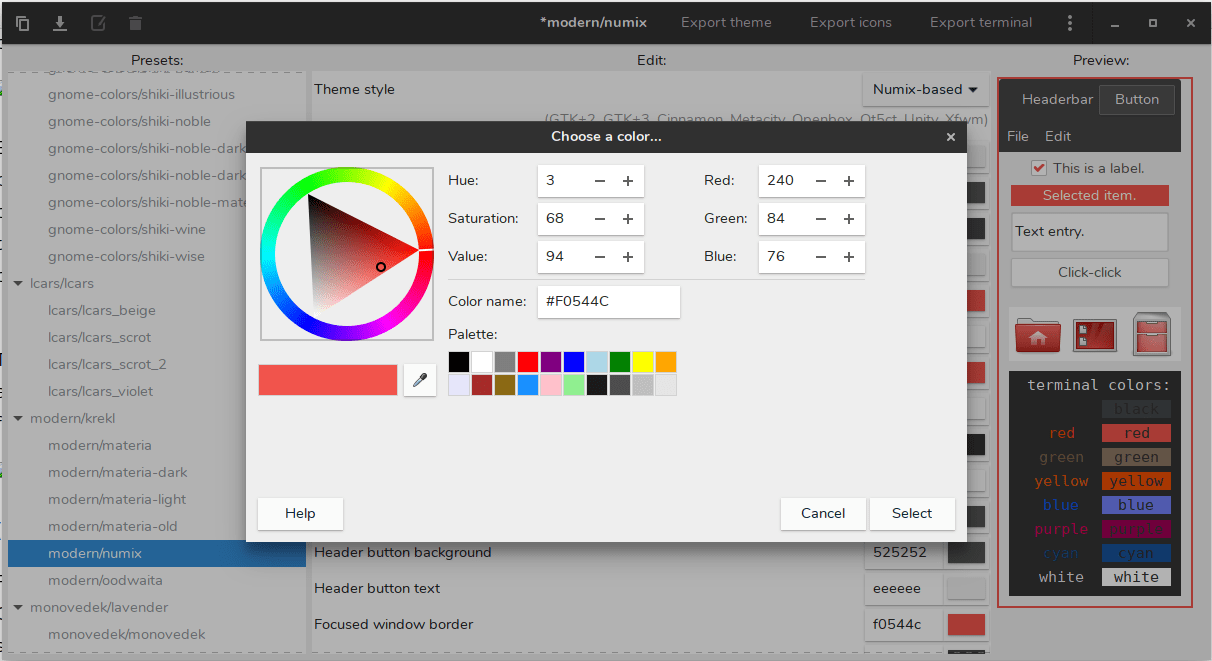

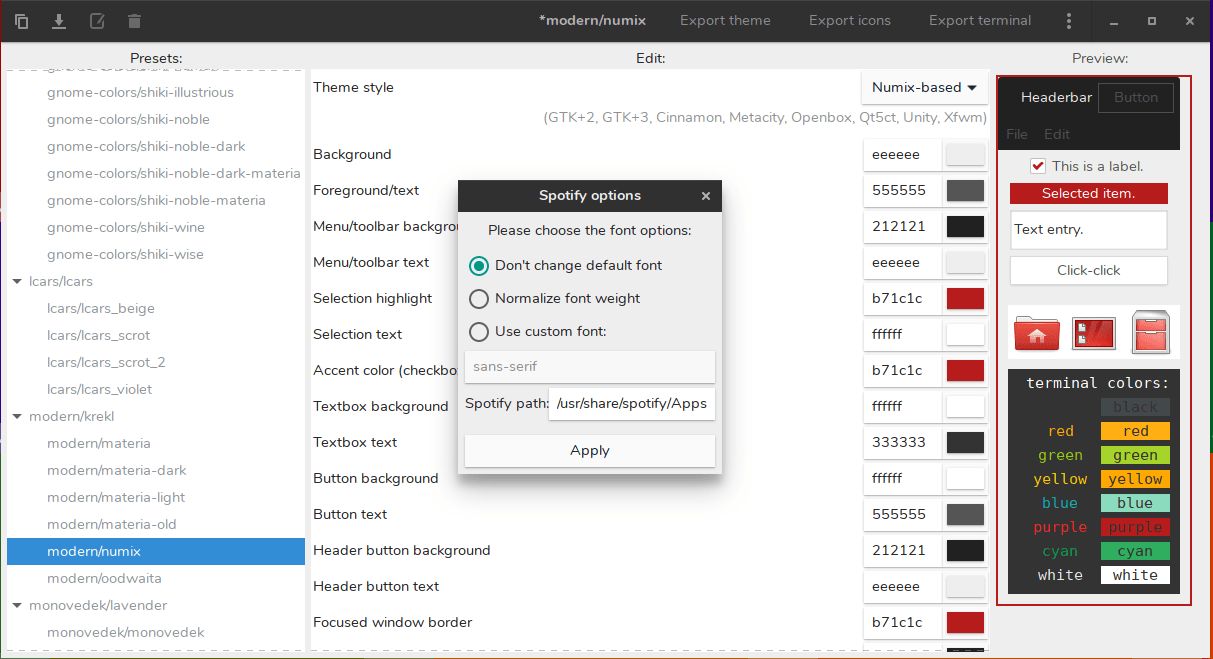

Theming and visual customization are among the main advantages of Linux. Since all the code is open, you can change how your Linux system looks and behaves to a far greater degree than you can on Windows or macOS. GTK theming is perhaps the most popular way to customize a Linux desktop. The GTK toolkit is used by a wide variety of desktop environments, such as GNOME, Cinnamon, Unity, Xfce, and Budgie, so a single GTK theme can be applied to any of them with few changes.

|

||||

|

||||

There are many popular, high-quality GTK themes out there, such as **Arc**, **Numix**, and **Adapta**. But if you want to customize these themes and create your own visual design, you can use **Oomox**.

|

||||

|

||||

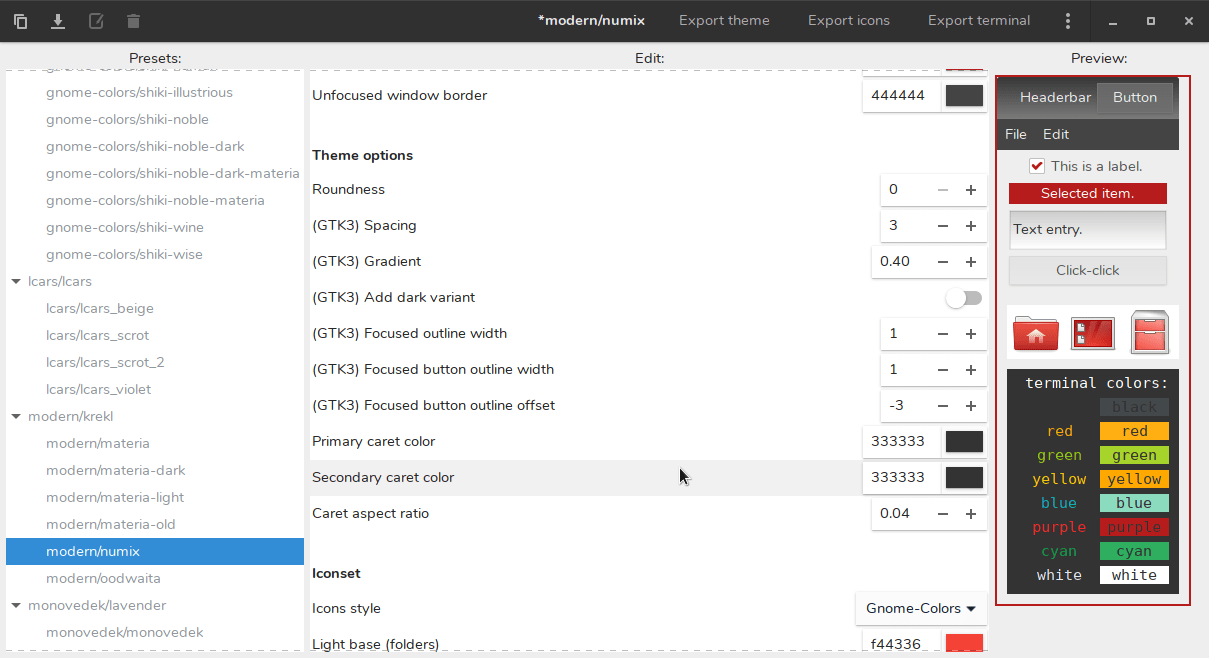

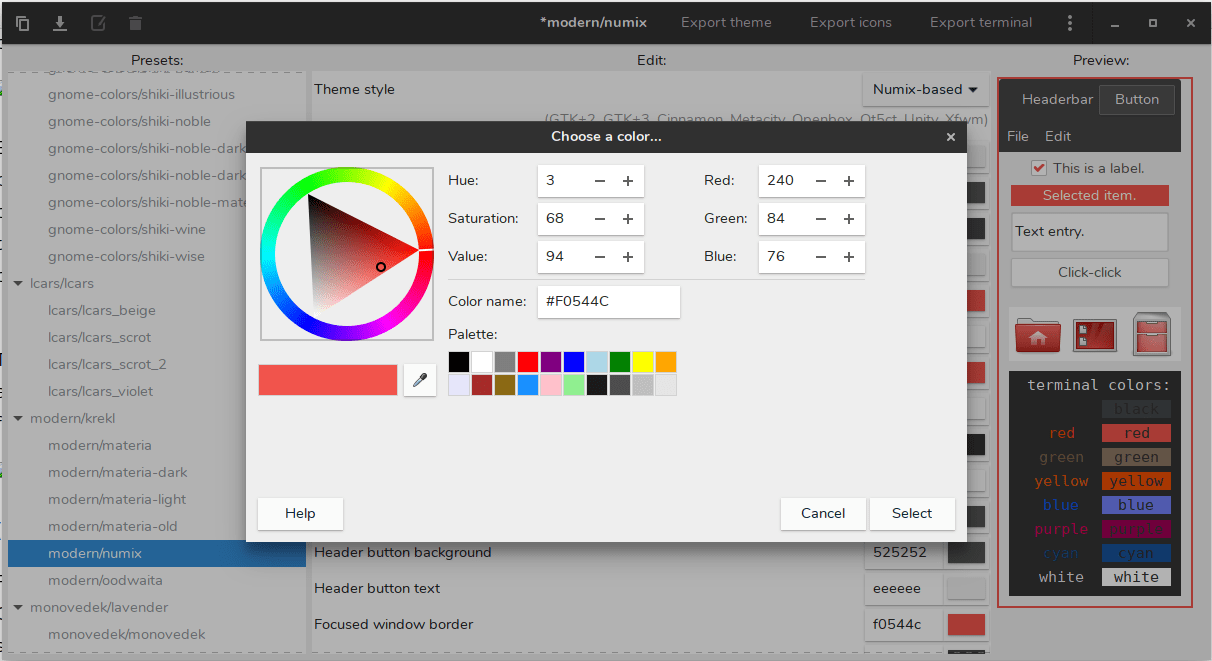

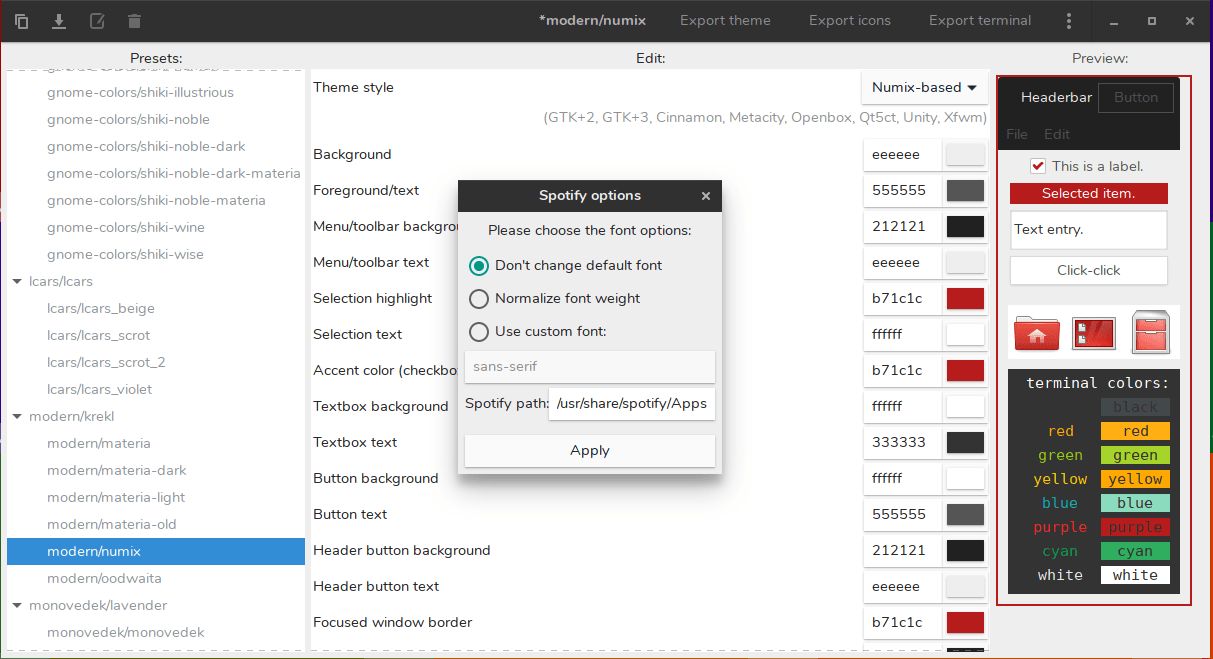

Oomox is a graphical app for customizing and creating your own GTK theme, complete with your own colors, icons, and terminal style. It comes with several presets, which you can apply to a Numix, Arc, or Materia style theme to create your own GTK theme.

|

||||

|

||||

### Installing Oomox

|

||||

|

||||

On Arch Linux and its variants:

|

||||

|

||||

Oomox is available in the [**AUR**][1], so you can install it using any AUR helper program such as [**Yay**][2].

|

||||

|

||||

```
$ yay -S oomox
```

|

||||

|

||||

On Debian/Ubuntu/Linux Mint, download the `oomox.deb` package from [**here**][3] and install it as shown below. At the time of writing this guide, the latest version was **oomox_1.7.0.5.deb**.

|

||||

|

||||

```
$ sudo dpkg -i oomox_1.7.0.5.deb
$ sudo apt install -f
```

|

||||

|

||||

On Fedora, Oomox is available in a third-party **COPR** repository.

|

||||

|

||||

```
$ sudo dnf copr enable tcg/themes
$ sudo dnf install oomox
```

|

||||

|

||||

Oomox is also available as a [**Flatpak app**][4]. Make sure you have installed Flatpak as described in [**this guide**][5]. Then install and run Oomox using the following commands:

|

||||

|

||||

```
$ flatpak install flathub com.github.themix_project.Oomox

$ flatpak run com.github.themix_project.Oomox
```

|

||||

|

||||

For other Linux distributions, go to the Oomox project page on GitHub (the link is given at the end of this guide), then compile and install it manually from source.

|

||||

|

||||

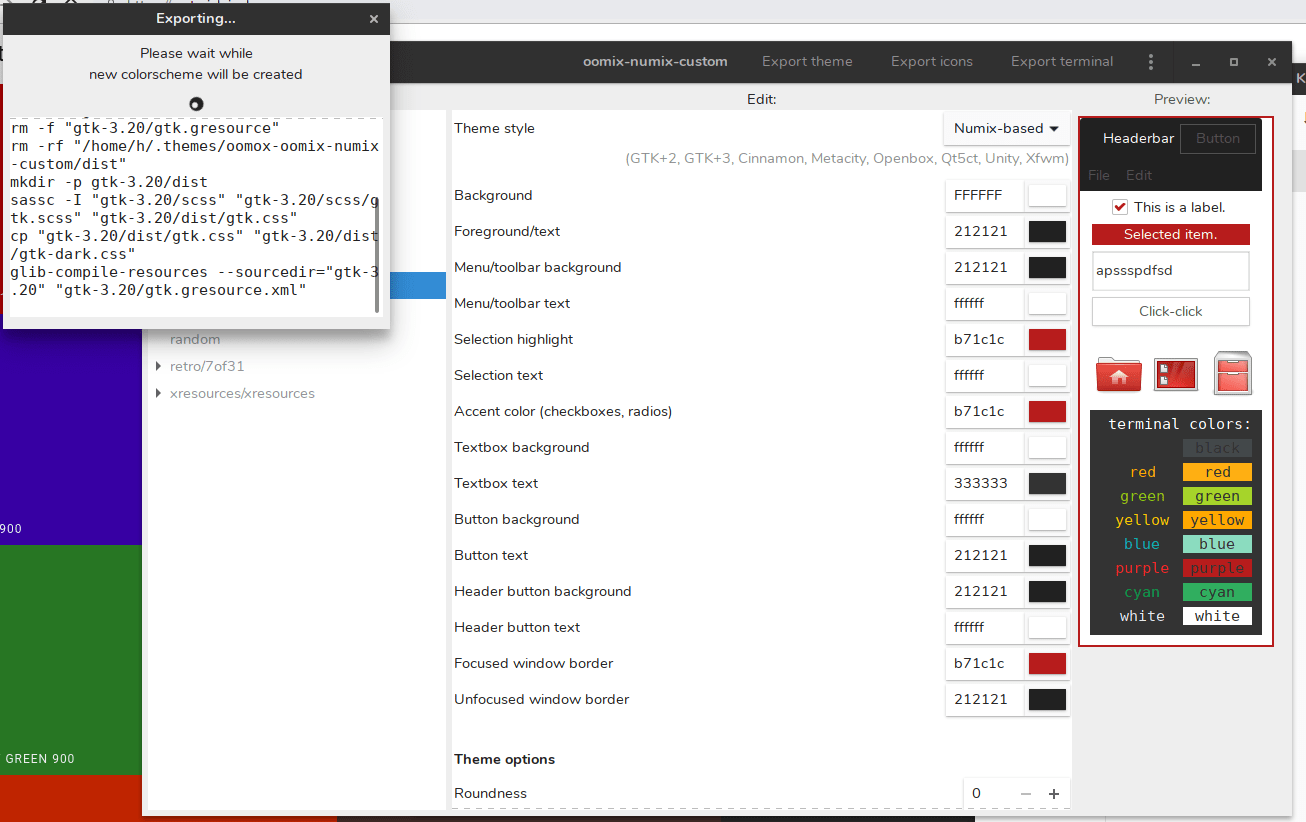

### Customize And Create Your Own GTK2, GTK3 Themes

|