Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-03-21 02:10:11 +08:00)

Merge remote-tracking branch 'LCTT/master'

This commit is contained in: commit 68af30be4e

published/20090203 How the Kernel Manages Your Memory.md (new file, 102 lines)

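How the Kernel Manages Your Memory
============================================================

After examining the [virtual address layout][1] of a process, we turn to the kernel and its mechanisms for managing user memory. Here is Gonzo again:

Linux processes are implemented in the kernel as instances of the process descriptor [task_struct][2] (LCTT note: the data structure that holds the complete description of a process in Linux). The [mm][3] field in task_struct points to the **memory descriptor**, [mm_struct][4], which is an executive summary of a program's memory. As shown above, it stores the start and end of memory segments, the [number][5] of physical memory pages used by the process (RSS, the resident set size), the [amount][6] of virtual address space used, and other tidbits. Within the memory descriptor we also find the two workhorses for managing program memory: the set of **virtual memory areas** and the **page tables**. Gonzo's memory areas are shown below: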

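Each virtual memory area (VMA) is a contiguous range of virtual addresses, and these areas never overlap. An instance of [vm_area_struct][7] fully describes a memory area. It records the area's start and end addresses, [flags][8] that determine access rights and behaviors, and the [vm_file][9] field, which names the file mapped by the area, if any. A VMA that does not map a file is **anonymous**. Each memory segment above (for example, the heap and the stack) corresponds to a single VMA, with the exception of the memory mapping segment. This is not a requirement, though it is usual on x86 machines. VMAs also do not care which segment they are in.

A program's VMAs are stored in its memory descriptor in two ways. One is a linked list in the [mmap][10] field, ordered by starting virtual address. The other is a [red-black tree][11] rooted at the [mm_rb][12] field. The red-black tree lets the kernel quickly find the memory area that covers a given virtual address. When you read the file `/proc/pid_of_process/maps`, the kernel simply walks the linked list of the process's VMAs and [prints each one][13].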
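
To see the list the kernel walks for `/proc/<pid>/maps`, you can parse that file yourself. The following is a minimal bash sketch, not part of the original article. It assumes the usual maps line layout (address range, permissions, offset, device, inode, optional path), and `print_vmas` is a hypothetical helper name. It prints each VMA's size, which is always a whole number of pages.

```bash
#!/usr/bin/env bash
# print_vmas FILE
# Hypothetical helper: summarizes each VMA listed in a /proc/<pid>/maps-style
# file as "start-end perms size path". Anonymous VMAs have no path, so they
# are labelled [anon].
print_vmas() {
  local range perms offset dev inode path start end
  while read -r range perms offset dev inode path; do
    start=${range%-*}   # text before the '-'
    end=${range#*-}     # text after the '-'
    # Addresses are hex without a 0x prefix; bash's base#number syntax parses them.
    printf '%s %s %d kB %s\n' "$range" "$perms" \
      $(( (16#$end - 16#$start) / 1024 )) "${path:-[anon]}"
  done < "$1"
}

# Example on a live Linux system: the VMAs of the current shell.
# print_vmas "/proc/$$/maps"
```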

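In Windows, the [EPROCESS][14] block is roughly a mix of task_struct and mm_struct. The Windows analog to a VMA is the Virtual Address Descriptor, or [VAD][15]; VADs are stored in an [AVL tree][16]. You know what the funniest thing about Windows and Linux is? It's the little differences.

The 4 GB virtual address space is divided into **pages**. x86 processors in 32-bit mode support page sizes of 4 KB, 2 MB, and 4 MB. Both Linux and Windows map the user portion of the virtual address space using 4 KB pages. Bytes 0-4095 fall in page 0, bytes 4096-8191 fall in page 1, and so on. The size of a VMA _must be a multiple of the page size_. Here is 3 GB of user space in 4 KB pages: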
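
The page arithmetic above is just a shift and a mask. Here is a minimal bash sketch, assuming 4 KB pages (`PAGE_SHIFT=12`). `page_of` and `offset_in` are illustrative names, not kernel functions.

```bash
#!/usr/bin/env bash
# Assumes 4 KB pages, as used by Linux and Windows for 32-bit user space.
PAGE_SHIFT=12
PAGE_SIZE=$(( 1 << PAGE_SHIFT ))   # 4096 bytes

# page_of ADDR: number of the virtual page that contains byte ADDR
page_of()   { echo $(( $1 >> PAGE_SHIFT )); }
# offset_in ADDR: position of byte ADDR inside its page
offset_in() { echo $(( $1 & (PAGE_SIZE - 1) )); }

# Number of 4 KB pages in a 3 GB user address space.
user_pages=$(( (3 << 30) / PAGE_SIZE ))

page_of 4095      # prints 0: byte 4095 is the last byte of page 0
page_of 4096      # prints 1: byte 4096 starts page 1
offset_in 8191    # prints 4095: the last byte of page 1
echo "$user_pages"  # prints 786432
```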

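The processor consults **page tables** to translate a virtual address into a physical memory address. Each process has its own set of page tables, and whenever a process switch occurs, the page tables for user space are switched as well. Linux stores a pointer to a process's page tables in the [pgd][17] field of the memory descriptor. Each virtual page has one corresponding **page table entry** (PTE) in the page tables. In regular x86 paging, a PTE is a simple 4-byte record, shown below: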
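
In the regular (non-PAE) 32-bit x86 scheme, the lookup has two levels. The top 10 bits of a virtual address select an entry in the page directory that `pgd` points to. The next 10 bits select a PTE in a page table, and the low 12 bits are the offset within the 4 KB page. Here is a bash sketch of that split, assuming exactly this classic layout (`split_vaddr` is a made-up name).

```bash
#!/usr/bin/env bash
# split_vaddr ADDR
# Splits a 32-bit virtual address using the classic non-PAE x86 layout:
#   bits 31-22: page directory index (1024 entries)
#   bits 21-12: page table index     (1024 PTEs, one per 4 KB page)
#   bits 11-0 : offset inside the page
split_vaddr() {
  local addr=$(( $1 ))
  local dir=$(( (addr >> 22) & 0x3ff ))
  local table=$(( (addr >> 12) & 0x3ff ))
  local offset=$(( addr & 0xfff ))
  printf 'dir=%d table=%d offset=0x%03x\n' "$dir" "$table" "$offset"
}

split_vaddr 0x08049f00   # prints: dir=32 table=73 offset=0xf00
```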

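Linux has functions to [read][18] and [set][19] each flag in a PTE. Bit P tells the processor whether the virtual page is **present** in physical memory. If it is clear (set to 0), accessing the page triggers a page fault. Keep in mind that when this bit is zero, **the kernel can do whatever it pleases** with the remaining fields. The R/W flag stands for read/write; if it is clear, the page is read-only. The U/S flag stands for user/supervisor; if it is clear, the page can only be accessed by the kernel. These flags are used to implement the read-only memory and protected kernel space we saw before.

Bits D and A are for **dirty** and **accessed**. A dirty page has been written to, while an accessed page has been either written to or read. Both flags are sticky: the processor only sets them, and they must be cleared by the kernel. Finally, the PTE stores the starting physical address that corresponds to this page, aligned to 4 KB. This naive-looking field is the source of some pain, because it limits addressable physical memory to [4 GB][20]. We will leave the other PTE fields for another day, along with physical address extension (PAE).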
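
To make the flag layout concrete, here is a bash sketch that decodes a 32-bit non-PAE x86 PTE. It assumes the standard bit positions: P = bit 0, R/W = bit 1, U/S = bit 2, A = bit 5, D = bit 6, and the frame address in bits 31-12. `decode_pte` is an illustrative name; the kernel uses its own accessor functions, such as the ones linked above. The last line reproduces the 4 GB limit, which comes from 2^20 possible frame numbers times 4 KB per frame.

```bash
#!/usr/bin/env bash
# decode_pte VALUE
# Decodes a 32-bit x86 page table entry (regular paging, no PAE).
decode_pte() {
  local pte=$(( $1 ))
  printf 'frame=0x%08x P=%d RW=%d US=%d A=%d D=%d\n' \
    $(( pte & 0xfffff000 )) \
    $(( pte & 1 )) \
    $(( (pte >> 1) & 1 )) \
    $(( (pte >> 2) & 1 )) \
    $(( (pte >> 5) & 1 )) \
    $(( (pte >> 6) & 1 ))
}

decode_pte 0x00123067   # frame=0x00123000 P=1 RW=1 US=1 A=1 D=1
decode_pte 0x00000000   # a blank PTE: nothing present, never mapped

# 20 bits of frame number, 4 KB per frame: the 4 GB ceiling.
echo $(( (1 << 20) * 4096 ))   # 4294967296 bytes
```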

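All bytes in a virtual page share a single U/S flag and a single R/W flag, so the virtual page is the unit of memory protection. However, the same physical memory can be mapped by different virtual pages, possibly with different protection flags. Notice that execute permissions are nowhere to be seen in the PTE. This is why classic x86 paging allows code on the stack to be executed, which makes stack buffer overflows easier to exploit. Non-executable stacks can still be exploited with [return-to-libc][21] and other techniques. This lack of a no-execute flag in the PTE illustrates a broader fact: the permission flags in a VMA may or may not translate cleanly into hardware protection. The kernel does what it can, but ultimately the architecture limits what is possible.

Virtual memory doesn't store anything. It simply _maps_ a program's address space onto the underlying physical memory, which the processor accesses as a large block called the **physical address space**. While memory operations on the bus are [somewhat involved][22], we can ignore that here and assume that physical addresses range from zero to the top of available memory in one-byte increments. The kernel breaks this physical address space down into **page frames**. The processor doesn't know or care about frames, yet they are crucial to the kernel, because **the page frame is the unit of physical memory management**. Both Linux and Windows use 4 KB page frames in 32-bit mode. Here is an example of a machine with 2 GB of RAM:

In Linux, each page frame is tracked by a [descriptor][23] and [several flags][24]. Together, these descriptors track the entire physical memory in the computer, so the precise state of each page frame is always known. Physical memory is managed with the [buddy memory allocation][25] technique (LCTT note: a memory allocation algorithm). A page frame is therefore **free** if it is available for allocation via the buddy system. An allocated page frame might be **anonymous**, holding program data. It might also be in the page cache, holding data stored in a file or block device. There are other, more exotic uses of page frames, but we will leave them alone for now. Windows has an analogous Page Frame Number (PFN) database to track physical memory.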
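
You can peek at the buddy allocator on a running Linux system through `/proc/buddyinfo`. Each zone line there lists how many free blocks of order 0, 1, 2, and so on are available, which means runs of 1, 2, 4, ... contiguous page frames. Here is a minimal bash sketch that assumes that column layout. `free_pages` is a made-up helper that turns one zone line into a count of free page frames.

```bash
#!/usr/bin/env bash
# free_pages "LINE"
# LINE is one row of /proc/buddyinfo, for example:
#   Node 0, zone   Normal   216   55   ...
# Column k (k = 0, 1, 2, ...) counts free blocks of 2^k contiguous page frames.
free_pages() {
  local -a fields
  read -r -a fields <<< "$1"
  local total=0 order=0 count
  # Skip "Node", "0,", "zone" and the zone name.
  for count in "${fields[@]:4}"; do
    total=$(( total + (count << order) ))
    order=$(( order + 1 ))
  done
  echo "$total"
}

# Example on a live system (Linux only):
# while read -r line; do echo "$line -> $(free_pages "$line") free frames"; done < /proc/buddyinfo
```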

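Let's put virtual memory areas (VMAs), page table entries (PTEs), and page frames together to understand how it all works. Here is an example of a user heap:

Blue rectangles represent pages in the VMA range, while arrows represent page table entries mapping pages onto page frames. Some virtual pages lack arrows, which means their corresponding PTEs have the Present flag cleared (set to 0). This could be because the page has never been touched, or because its contents have been swapped out. In either case, accessing these pages leads to a page fault, even though they are within the VMA. It may seem strange for the VMA and the page tables to disagree, yet this happens often.

A VMA is like a contract between your program and the kernel. You ask for something to be done (memory allocated, a file mapped, and so on), the kernel says "sure", and it creates or updates the appropriate VMA. But it _does not_ honor the request right away. Instead, it waits until a page fault happens to do the _real_ work. The kernel is a lazy, deceitful scumbag, and this is the fundamental principle of virtual memory. It applies in most situations, some familiar and some surprising. The rule is that VMAs record what has been _agreed upon_, while PTEs reflect what the lazy kernel has _actually done_. Together, these two data structures manage a program's memory. Both play a role in resolving page faults, freeing memory, swapping memory out, and so on. Here is a simple case of memory allocation: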
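
You can watch the gap between promise and reality from user space. `/proc/<pid>/status` reports `VmSize`, the total virtual memory covered by the process's VMAs. It reports `VmRSS` next to it, which is the resident memory actually backed by page frames. Here is a minimal bash sketch; `vm_summary` is a hypothetical helper that reads a status file given as its argument.

```bash
#!/usr/bin/env bash
# vm_summary FILE
# Prints VmSize (sum of all VMAs) and VmRSS (memory actually resident in RAM)
# from a /proc/<pid>/status-style file.
vm_summary() {
  local key value unit size="" rss=""
  while read -r key value unit; do
    case $key in
      VmSize:) size="$value $unit" ;;
      VmRSS:)  rss="$value $unit" ;;
    esac
  done < "$1"
  echo "VmSize=$size VmRSS=$rss"
}

# Example: the current shell; VmRSS is usually far below VmSize.
# vm_summary "/proc/$$/status"
```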

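When the program asks for more memory via the [brk()][26] system call, the kernel simply [updates][27] the heap VMA and says "done". No page frames are actually allocated at this point, and the new pages are not present in physical memory. Once the program tries to access the pages, the processor page faults and [do_page_fault()][28] is called. It [searches][29] for the VMA covering the faulting address using [find_vma()][30]. If a VMA is found, its permissions are checked against the attempted access (read or write). If there is no suitable VMA, no contract covers the attempted memory access, and the process is punished with a segmentation fault.

When a VMA is [found][31], the kernel must [handle][32] the fault by looking at the PTE contents and the type of VMA. In our case, the PTE shows that the page is [not present][33]. In fact, our PTE is completely blank (all zeros), which in Linux means the virtual page has never been mapped. Since this is an anonymous VMA, the fault is a purely RAM affair and must be handled by [do_anonymous_page()][34]. That function allocates a page frame and creates a PTE that maps the faulting virtual page onto the newly allocated frame.

Things could have been different. For example, the PTE for a swapped-out page has 0 in the Present flag but is not blank. Instead, it stores the swap location holding the page contents. Those contents must be read from disk and loaded into a page frame by [do_swap_page()][35], in what is called a [major fault][36].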
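
The kernel counts both kinds of faults per process. Field 10 of `/proc/<pid>/stat` is `minflt`, which counts faults resolved without disk I/O, like the anonymous-page case. Field 12 is `majflt`, which counts faults that had to read from disk, like the swap-in above. The bash sketch below assumes that field layout. The command name in field 2 is wrapped in parentheses and may contain spaces, so the sketch first cuts the line after the last `) `. `fault_counts` is an illustrative name.

```bash
#!/usr/bin/env bash
# fault_counts FILE
# Reads a /proc/<pid>/stat-style line and prints the minor and major fault counts.
fault_counts() {
  local line rest
  line=$(< "$1")
  # Drop "pid (comm) "; comm may itself contain spaces or parentheses.
  rest=${line##*") "}
  # The remaining fields start at field 3 (state), so field 10 is $8 and
  # field 12 is ${10}. Word splitting here is intentional.
  set -- $rest
  echo "minflt=$8 majflt=${10}"
}

# Example: fault counters of the current shell (Linux only).
# fault_counts "/proc/$$/stat"
```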

This concludes the first half of our tour through the kernel's management of user memory. In the next post, we will add files to the mix to build a complete picture of memory fundamentals, including the consequences for performance.

--------------------------------------------------------------------------------

via: http://duartes.org/gustavo/blog/post/how-the-kernel-manages-your-memory/

Author: [Gustavo Duarte][a]

Translator: [qhwdw](https://github.com/qhwdw)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:http://duartes.org/gustavo/blog/about/
[1]:https://linux.cn/article-9255-1.html
[2]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/sched.h#L1075
[3]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/sched.h#L1129
[4]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L173
[5]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L197
[6]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L206
[7]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L99
[8]:http://lxr.linux.no/linux+v2.6.28/include/linux/mm.h#L76
[9]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L150
[10]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L174
[11]:http://en.wikipedia.org/wiki/Red_black_tree
[12]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L175
[13]:http://lxr.linux.no/linux+v2.6.28.1/fs/proc/task_mmu.c#L201
[14]:http://www.nirsoft.net/kernel_struct/vista/EPROCESS.html
[15]:http://www.nirsoft.net/kernel_struct/vista/MMVAD.html
[16]:http://en.wikipedia.org/wiki/AVL_tree
[17]:http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L185
[18]:http://lxr.linux.no/linux+v2.6.28.1/arch/x86/include/asm/pgtable.h#L173
[19]:http://lxr.linux.no/linux+v2.6.28.1/arch/x86/include/asm/pgtable.h#L230
[20]:http://www.google.com/search?hl=en&q=2^20+*+2^12+bytes+in+GB
[21]:http://en.wikipedia.org/wiki/Return-to-libc_attack
[22]:http://duartes.org/gustavo/blog/post/getting-physical-with-memory
[23]:http://lxr.linux.no/linux+v2.6.28/include/linux/mm_types.h#L32
[24]:http://lxr.linux.no/linux+v2.6.28/include/linux/page-flags.h#L14
[25]:http://en.wikipedia.org/wiki/Buddy_memory_allocation
[26]:http://www.kernel.org/doc/man-pages/online/pages/man2/brk.2.html
[27]:http://lxr.linux.no/linux+v2.6.28.1/mm/mmap.c#L2050
[28]:http://lxr.linux.no/linux+v2.6.28/arch/x86/mm/fault.c#L583
[29]:http://lxr.linux.no/linux+v2.6.28/arch/x86/mm/fault.c#L692
[30]:http://lxr.linux.no/linux+v2.6.28/mm/mmap.c#L1466
[31]:http://lxr.linux.no/linux+v2.6.28/arch/x86/mm/fault.c#L711
[32]:http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2653
[33]:http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2674
[34]:http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2681
[35]:http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2280
[36]:http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2316

Learn your tools: Navigating your Git History
============================================================

Starting a greenfield application every day is nearly impossible, especially in your daily job. In reality, most of the time we work on a legacy codebase that we inherited, and modifying features or existing lines of code is a very important part of our daily work. This is where the distributed version control system `git` proves its value. Let's dive into how to use the `git` history and how to navigate it easily.

### Git history

First and foremost, what is the `git` history? As the name says, it is the commit history of a `git` repository. It contains a bunch of commits, along with their authors' names, the commit hashes, and the commit dates. Looking at the history of a `git` repository is easy: it is just the `git log` command.

> _Side note: For the purpose of this article, we will use the `master` branch of the Ruby on Rails repository. I chose it because Rails has a good `git` history, with nice commit messages, references, and explanations for every change. Given the size of the codebase and the age and number of its maintainers, Rails is certainly one of the best repositories I have seen. Of course, I'm not saying other `git` repositories are bad; this is just one of the better ones I have seen._

So, back to the Rails repository. If you run `git log` in the Rails repository, you will see output like this:

```
commit 66ebbc4952f6cfb37d719f63036441ef98149418
...
Date: Thu Jun 2 21:26:53 2016 -0500
...
[skip ci] Make header bullets consistent in engines.md
...
```

As you can see, `git log` shows the commit hash, the author and their email, and the date the commit was created. Of course, `git` output is highly customizable, and `git` lets you change the format of the `git log` output. For example, if we only want to see the first line of each commit message, we can run `git log --oneline`, which produces a more compact log:

```
66ebbc4 Dont re-define class SQLite3Adapter on test
...
e98caf8 [skip ci] Make header bullets consistent in engines.md
...
```

If you want to see all the options of `git log`, I recommend reading its man page, which you can open by typing `man git-log` or `git help log` in a terminal.

> _Tip: If you find `git log` scary, overly complicated, or just boring to look at, I recommend trying some `git` GUI or command-line tools. In the past I used [GitX][1], which I found quite good. However, I feel more at home on the command line, and after I tried [tig][2] I never went back to GitX._

### Finding Nemo

Now that we know some of the basics of the `git log` command, let's see how to use it to navigate the history more effectively in our daily work.

Suppose we suspect unexpected behavior in the `String#classify` method, and we want to find out why and locate the lines of code that implement it.

The first command you can use for this is `git grep`, which finds where the method is defined. Simply put, this command prints the lines that match a given pattern. Finding the method definition is simple: we grep for `def classify` and see the following output:

```
➜ git grep 'def classify'
activesupport/lib/active_support/core_ext/string/inflections.rb:205: def classify
activesupport/lib/active_support/inflector/methods.rb:186: def classify(table_name)
tools/profile:112: def classify
```

Better, right? Given this context, we can easily see that the method we want is `classify`, at line 205 of `activesupport/lib/active_support/core_ext/string/inflections.rb`. It looks like this. Easy, isn't it?

```
# Creates a class name from a plural table name like Rails does for table names to models.
# ...
end
```

The method we found is the one commonly called on `String`, but it calls another method with the same name on `ActiveSupport::Inflector`. Looking back at the `git grep` results, we can easily see that the second result is in `activesupport/lib/active_support/inflector/methods.rb`, at line 186. The method we are looking for looks like this:

```
# Creates a class name from a plural table name like Rails does for table
# ...
def classify(table_name)
# ...
end
```

Cool! Given the size of the Rails repository, finding it with `git grep` took us less than 30 seconds.
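
The line numbers in the output above (`:205:` and so on) are what `git grep -n` prints. Plain `git grep` shows only the file name and the matching line, unless the `grep.lineNumber` setting is enabled. The self-contained bash sketch below shows the difference in a throwaway repository. The file and its contents are made up for the demo.

```bash
#!/usr/bin/env bash
# grep_demo: builds a scratch repository and runs `git grep` with and without -n.
grep_demo() (
  set -e
  cd "$(mktemp -d)"
  git init -q
  printf 'class String\n  def classify\n  end\nend\n' > inflections.rb
  git add inflections.rb          # git grep only searches tracked files
  git grep 'def classify'         # file name and matching line
  git grep -n 'def classify'      # adds the line number
)

grep_demo
# inflections.rb:  def classify
# inflections.rb:2:  def classify
```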

### So, what was the last change?

Now that we have found the method we were looking for, we need to figure out what changes this file has gone through. Since we know the right file name and line numbers, we can use `git blame`. For each line of a file, this command shows the revision that last changed it and who made that change. Let's see what the last changes to this file were:

```
git blame activesupport/lib/active_support/inflector/methods.rb
```

This gives us the last change to every line of the file. However, we are more interested in the last changes to the specific method (lines 176 to 189). Let's add the `-L` option to `git blame` so that it only shows those lines. We will also add the `-s` (suppress) option, which omits the author name and the timestamp of the revision (commit) that last changed each line:

```
git blame -L 176,189 -s activesupport/lib/active_support/inflector/methods.rb
...
git show 5bb1d4d2
```

Did you try it yourself? If not, I'll just tell you the result. This amazing [commit][3] was made by [Schneems][4], who made a very interesting performance optimization using frozen strings, which makes a lot of sense in our current context. However, since we are in a hypothetical debugging session, it doesn't tell us what our current problem is. So how can we find out which changes our chosen method has gone through?

### Searching the log

Now, back to the `git` log. The question is: how can we see which revisions the `classify` method has gone through?

The `git log` command is very powerful, so it offers a long list of options. Let's look at the `git` log of this file with the `-p` option, which makes `git log` show the full patch of each commit:

```
git log -p activesupport/lib/active_support/inflector/methods.rb
...
git log -L 176,189:activesupport/lib/active_support/inflector/methods.rb
```

The `git log` command accepts the `-L` option, which takes a line range and a file name as its argument. The format may look a little odd; it works like this:

```
git log -L <start-line>,<end-line>:<path-to-file>
```

After running this command, we can see a list of the revisions to these lines, which takes us back to the first revision that created this method:

```
commit 51xd6bb829c418c5fbf75de1dfbb177233b1b154
...
diff --git a/activesupport/lib/active_support/inflector/methods.rb b/activesuppor
...
```

Now, look at that: it was committed in 2011, and `git` lets us travel back to that moment. This is a good example of why good commit messages matter so much for recovering the context of a change. This commit message doesn't give us enough information to understand the context in which the method was created. But then again, you **should not** get annoyed by that. The authors of the projects you are looking at donate their time and energy to open source for free. (Hats off to open source contributors!)

Back to the matter at hand. This first commit is only a refactoring, so we still can't tell how the `classify` method was originally implemented. Now, you might be thinking: "maybe, just maybe, the method was not within lines 176 to 189, so we should widen the search in this file". You are right. The commit message mentions the word "refactor", which means the method may well have existed elsewhere in that file. It may only have ended up in that line range after the refactoring.

But how can we confirm this? Believe it or not, `git` can help again. The `git log` command has an `-S` option that takes a specific string as its argument and looks for code changes (additions or removals) of that string. That is, if we run `git log -S classify`, we can see all the commits that added or removed the string `classify`.
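
`-S` does not list a commit that merely edits a line containing the string without changing how many times the string occurs. The bash sketch below demonstrates this in a throwaway repository. The file, commit messages, and identity are hypothetical.

```bash
#!/usr/bin/env bash
# pickaxe_demo: builds a scratch repository and prints the subjects of the
# commits that `git log -S 'def classify'` reports, newest first.
pickaxe_demo() (
  set -e
  cd "$(mktemp -d)"
  git init -q
  commit() {  # stage everything and commit with a throwaway identity
    git add -A
    git -c user.name=demo -c user.email=demo@example.com commit -q -m "$1"
  }
  printf 'def classify\nend\n' > inflections.rb
  commit 'Add classify'                    # 0 -> 1 occurrence: listed
  printf 'def classify\n  # faster now\nend\n' > inflections.rb
  commit 'Tweak classify body'             # 1 -> 1: not listed
  printf 'def classify(name)\n  # faster now\nend\n' > inflections.rb
  commit 'Add argument to classify'        # still 1 -> 1: not listed
  printf 'def camelize\nend\n' > inflections.rb
  commit 'Replace classify with camelize'  # 1 -> 0: listed
  git log -S 'def classify' --format=%s
)

pickaxe_demo
# Replace classify with camelize
# Add classify
```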

If you run `git log -S classify` in the Rails repository, you will first notice that it runs a bit slowly. But keep in mind that `git` is actually scanning every revision in the repository for this string, so it is in fact very fast. Once again, `git` shows its power at your fingertips. So, to find the first revision involving the `classify` method, we can run:

```
git log -S 'def classify'
...
Date: Wed Nov 24 01:04:44 2004 +0000
...
git-svn-id: http://svn-commit.rubyonrails.org/rails/trunk@4 5ecf4fe2-1ee6-0310-87b1-e25e094e27de
```

Cool, right? It was first committed to Rails by DHH, back when Rails lived in an `svn` repository! This means `classify` was added to Rails right at the very beginning. Now let's look at all the changes in that commit by running:

```
git show db045dbbf60b53dbe013ef25554fd013baf88134
...
```

### See you next time

Of course, we didn't actually fix any bug. We were only trying out some `git` commands to show how to explore the history of the `classify` method. Still, `git` is a very powerful tool, and it is well worth learning and using well. I hope this article helps you learn more about using `git`.

Did you like this content?

via: https://ieftimov.com/learn-your-tools-navigating-git-history

Author: [Ilija Eftimov][a]

Translator: [qhwdw](https://github.com/qhwdw)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

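How to reload .vimrc file without restarting Vim on Linux/Unix
======

I am a new Vim editor user. I usually use `:vs ~/.vimrc` to load my `~/.vimrc` configuration. While editing `.vimrc`, I need to reload it without restarting my Vim session. On Linux or Unix-like systems, how can I reload `.vimrc` after editing it without restarting Vim?

Vim is a free and open source text editor that is upwards compatible with Vi. It can be used to edit all kinds of text, and it is especially useful for editing programs written in C, Perl, or Python. It can also be used to edit Linux/Unix configuration files. `~/.vimrc` is your personal Vim initialization and customization file.

### How to reload .vimrc without restarting the Vim session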

The procedure to reload `.vimrc` in Vim without restarting it is:

1. Start vim by typing `vim filename`
2. Press `Esc` and type `:vs ~/.vimrc` to load the vim configuration
3. Add your customizations, for example:

```
filetype indent plugin on
set number
syntax on
```

4. Save the file with `:wq` and exit the `~/.vimrc` window
5. Reload `~/.vimrc` by typing either `:so $MYVIMRC` or `:source ~/.vimrc`

[![How to reload .vimrc file without restarting vim][1]][1]

*Figure 1: Edit ~/.vimrc and reload it when needed without quitting vim, so you can keep editing your program*

The vim command `:so[urce] {file}` reads Ex commands, such as the settings in `~/.vimrc`, from the given file. The variant `:so[urce]! {file}` is different: it reads Normal-mode commands from the file and executes them just as if you had typed them. When `:source!` is used after `:global`, `:argdo`, `:windo`, or `:bufdo`, in a loop, or when another command follows it, the display is not updated while the commands are executed.

### How to set up keys to edit and reload ~/.vimrc

Append the following to your `~/.vimrc`:

```
" Edit vim configuration file
nnoremap confe :e $MYVIMRC<CR>
" Reload vim's configuration file
nnoremap confr :source $MYVIMRC<CR>
```

Now just press `Esc` and type `confe` to edit `~/.vimrc`, or press `Esc` and type `confr` to reload it. Some people prefer to use the `<Leader>` key in their `.vimrc`. In that case, the mappings above become:

```
" Edit vim configuration file
nnoremap <Leader>ve :e $MYVIMRC<CR>
" Reload vim configuration file
nnoremap <Leader>vr :source $MYVIMRC<CR>
```

The `<Leader>` key is mapped to `\` by default. So type `\` followed by `ve` to edit the file, and `\` followed by `vr` to reload `~/.vimrc`.

That's it: you can now reload `.vimrc` without restarting Vim.

### About the author

The author is the creator of nixCraft, a seasoned sysadmin, and a trainer for Linux/Unix shell scripting. He has worked with global clients and in various industries, including IT, education, defense and space research, and the nonprofit sector. Follow him on [Twitter][2], [Facebook][3], and [Google+][4]. Get the latest tutorials on sysadmin, Linux/Unix, and open source topics via the [RSS/XML feed][5].

--------------------------------------------------------------------------------

via: https://www.cyberciti.biz/faq/how-to-reload-vimrc-file-without-restarting-vim-on-linux-unix/

Author: [Vivek Gite][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:https://www.cyberciti.biz/
[1]:https://www.cyberciti.biz/media/new/faq/2018/02/How-to-reload-.vimrc-file-without-restarting-vim.jpg
[2]:https://twitter.com/nixcraft
[3]:https://facebook.com/nixcraft
[4]:https://plus.google.com/+CybercitiBiz
[5]:https://www.cyberciti.biz/atom/atom.xml

How to Install Gogs, a Git Server Written in Go, on Ubuntu
======

Gogs is a free and open source Git service written in Go. Gogs is a painless, self-hosted Git service that lets you set up and run your own private Git server with minimal hardware resources. The Gogs web interface is very similar to GitHub's, and Gogs supports MySQL, PostgreSQL, and SQLite databases.

In this tutorial, we will show you step by step how to install and configure your own private Git server with Gogs on Ubuntu 16.04. The tutorial covers in detail how to install Go and PostgreSQL on Ubuntu. It also covers how to install and configure the Nginx web server as a reverse proxy for the Go application.

### Setting up the environment

...

8. Testing

### Step 1 - Update and upgrade the system

Before going any further, update all repositories on Ubuntu and upgrade all packages.

Run the `apt` commands below:

```
sudo apt update
sudo apt upgrade
```

...

Gogs provides support for MySQL, PostgreSQL, SQLite, and TiDB database systems.

In this step, we will use PostgreSQL as the database for Gogs.

Install PostgreSQL using the `apt` command below.

```
sudo apt install -y postgresql postgresql-client libpq-dev
```

Once the installation is complete, start the PostgreSQL service and enable it to start at boot.

```
systemctl start postgresql
systemctl enable postgresql
```

Next, we need to create a database and a user for Gogs.

Log in as the `postgres` user and run the `psql` command to access the PostgreSQL shell.

```
su - postgres
psql
```

Create a new user named `git` and give it the `CREATEDB` privilege. The `\password git` command then prompts you to set a password for that user.

```
CREATE USER git CREATEDB;
\password git
```

Create a database named `gogs_production` and set the `git` user as its owner.

```
CREATE DATABASE gogs_production OWNER git;
```

[![Create the Gogs database][1]][2]

The `gogs_production` PostgreSQL database and the `git` user for Gogs have been created.

### Step 3 - Install Go and Git

Install Git from the repository using the `apt` command below.

```
sudo apt install git
```

Now create a new system user named `git`.

```
sudo adduser --disabled-login --gecos 'Gogs' git
```

Log in to the `git` account and create a directory named `local`.

```
su - git
mkdir -p /home/git/local
```

Go to the `local` directory and download Go (the latest version at the time of writing) with `wget`, as shown below.

```
cd ~/local
wget https://dl.google.com/go/go1.9.2.linux-amd64.tar.gz
```

[![Install Go and Git][3]][4]

Extract the Go archive, then delete it.

```
tar -xf go1.9.2.linux-amd64.tar.gz
rm -f go1.9.2.linux-amd64.tar.gz
```

The Go binaries are now in the `~/local/go` directory. Next, we need to set up the environment variables by adding the `GOROOT` and `GOPATH` directories to the environment. This lets us run the `go` command as the `git` user.

Run the commands below.

```
cd ~/
echo 'export GOROOT=$HOME/local/go' >> $HOME/.bashrc
echo 'export GOPATH=$HOME/go' >> $HOME/.bashrc
echo 'export PATH=$PATH:$GOROOT/bin:$GOPATH/bin' >> $HOME/.bashrc
```

Then reload Bash by running `source ~/.bashrc`, as shown below:

```
source ~/.bashrc
```

[![Install the Go programming language][5]][6]

Now check the `go` version.

```
go version
```

[![Check the go version][7]][8]

Go is now installed on the system under the `git` user.

### Step 4 - Install the Git service with Gogs

Log in as the `git` user and download Gogs from GitHub with the `go` command.

```
su - git
go get -u github.com/gogits/gogs
```

This command downloads all of the Gogs source code into the `$GOPATH/src` directory.

Go to the `$GOPATH/src/github.com/gogits/gogs` directory and build Gogs with the commands below.

```
cd $GOPATH/src/github.com/gogits/gogs
go build
```

Make sure you don't get any errors.

Now run the Gogs Go Git server with the command below.

```
./gogs web
```

[![Install the Gogs Go Git service][9]][10]

Open your web browser and type your IP address and port number; mine is http://192.168.33.10:3000/

You should see a page like the one below.

[![Gogs web server][11]][12]

Gogs is now installed on your Ubuntu system. Go back to your terminal and press `Ctrl + C` to stop the server.

### Step 5 - Configure the Gogs Go Git server

In this step, we will create a custom configuration for Gogs.

Go to the Gogs installation directory and create a new `custom/conf` directory.

```
cd $GOPATH/src/github.com/gogits/gogs
mkdir -p custom/conf/
```

Copy the default configuration file to the `custom` directory and edit it with [vim][13].

```
cp conf/app.ini custom/conf/app.ini
vim custom/conf/app.ini
```

In the `[server]` section, change `HTTP_ADDR` to `127.0.0.1`.

```
[server]
PROTOCOL = http
; ...
ROOT_URL = %(PROTOCOL)s://%(DOMAIN)s:%(HTTP_PORT)s/
HTTP_ADDR = 127.0.0.1
HTTP_PORT = 3000
```

In the `[database]` section, change the settings to match your database information.

```
[database]
DB_TYPE = postgres
HOST = 127.0.0.1:5432
NAME = gogs_production
USER = git
PASSWD = aqwe123@#
```
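
Before restarting Gogs, it can help to read the values back out of `custom/conf/app.ini` to catch typos. Here is a minimal bash sketch. `ini_get` is a hypothetical helper, and it assumes simple `KEY = VALUE` lines with `;` or `#` comments only at the start of a line. As a result, a `#` inside a value, such as the password above, is kept.

```bash
#!/usr/bin/env bash
# ini_get FILE SECTION KEY
# Prints the value of KEY inside [SECTION] of an INI file such as app.ini.
# Returns 1 if the key is not found.
ini_get() {
  local file=$1 want_section=$2 want_key=$3
  local section="" line key value
  while IFS= read -r line || [ -n "$line" ]; do
    case $line in
      ''|\;*|\#*) continue ;;                                # blank line or comment
      \[*\]) section=${line#\[}; section=${section%\]}; continue ;;
    esac
    key=${line%%=*}                                          # text before the first '='
    value=${line#*=}                                         # text after the first '='
    key=${key//[[:space:]]/}                                 # "HTTP_PORT " -> "HTTP_PORT"
    value=${value#"${value%%[![:space:]]*}"}                 # trim leading whitespace
    if [ "$section" = "$want_section" ] && [ "$key" = "$want_key" ]; then
      printf '%s\n' "$value"
      return 0
    fi
  done < "$file"
  return 1
}

# Example (run from the Gogs directory):
# ini_get custom/conf/app.ini database NAME
```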

Save and exit.

Run the command below to verify the configuration.

```
./gogs web
```

[![Configure the server][14]][15]

Gogs is now running with the custom configuration, on port 3000 of `localhost`.

### Step 6 - Run the Gogs server as a service

In this step, we will configure Gogs to run as a service on the Ubuntu system. We will create a new service file named `gogs.service` in the `/etc/systemd/system` directory.

Go to the `/etc/systemd/system` directory and create the service file `gogs.service` with [vim][13].

```
cd /etc/systemd/system
vim gogs.service
```

Paste the following configuration into the Gogs service file.

```
[Unit]
Description=Gogs
After=syslog.target
After=network.target
After=mariadb.service mysqld.service postgresql.service memcached.service redis.service

[Service]
# Modify these two values and uncomment them if you have
# repos with lots of files and get an HTTP error 500 because
# of that
###
#LimitMEMLOCK=infinity
#LimitNOFILE=65535
Type=simple
User=git
Group=git
WorkingDirectory=/home/git/go/src/github.com/gogits/gogs
ExecStart=/home/git/go/src/github.com/gogits/gogs/gogs web
Restart=always
Environment=USER=git HOME=/home/git

[Install]
WantedBy=multi-user.target
```

Then save and exit.

Now reload the systemd configuration.

```
systemctl daemon-reload
```

Start the Gogs service and enable it to start at boot with the commands below.

```
systemctl start gogs
systemctl enable gogs
```

The Gogs server is now running on the Ubuntu system.

Check it with the commands below:

```
netstat -plntu
systemctl status gogs
```
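
On systems where `netstat` (from the net-tools package) is not installed, `ss -ltn` from iproute2 lists the same listening TCP sockets. The small bash sketch below checks `ss -ltn` output on stdin for a given port. `listening_on` is an illustrative name, and the sketch assumes the default column order: State, Recv-Q, Send-Q, Local Address:Port, Peer Address:Port.

```bash
#!/usr/bin/env bash
# listening_on PORT  (reads `ss -ltn` output on stdin)
# Prints the local address:port of the first LISTEN socket on PORT and
# returns 0; returns 1 if nothing is listening there.
listening_on() {
  local port=$1 state recvq sendq local_addr rest
  while read -r state recvq sendq local_addr rest; do
    [ "$state" = LISTEN ] || continue          # also skips the header line
    if [ "${local_addr##*:}" = "$port" ]; then
      printf '%s\n' "$local_addr"
      return 0
    fi
  done
  return 1
}

# Example: Gogs should listen on 127.0.0.1:3000, PostgreSQL on port 5432.
# ss -ltn | listening_on 3000
```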


In this step, we will install and configure Nginx as a reverse proxy for Gogs. We will use the Nginx packages from Nginx's own repository.

Add the Nginx repository with the following command.

```
sudo add-apt-repository -y ppa:nginx/stable
```

Now update all the repositories and install Nginx with the following commands.

```
sudo apt update
sudo apt install nginx -y
```

Then go to the `/etc/nginx/sites-available` directory and create the virtual host file `gogs`.

```
cd /etc/nginx/sites-available
vim gogs
```

Paste the following configuration into the file.

```
server {
    listen 80;

@ -316,21 +345,21 @@ server {

        proxy_pass http://localhost:3000;
    }
}
```

Save and exit.

**Note:** Change the `server_name` value to your own domain name.

Now activate the virtual host and test the Nginx configuration.

```
ln -s /etc/nginx/sites-available/gogs /etc/nginx/sites-enabled/
nginx -t
```

Make sure there are no errors, then restart the Nginx server.

```
systemctl restart nginx
```

@ -339,25 +368,25 @@ systemctl restart nginx


### Step 8 - Testing

Open your web browser and enter your Gogs URL; mine is http://git.hakase-labs.co

You will now see the installation page. At the top of the page, enter all of your PostgreSQL database information.

[![Gogs installation][22]][23]

Then scroll down to the bottom and click the "Admin account settings" drop-down.

Enter your admin username and email address.

[![Entering the Gogs installation settings][24]][25]

Then click the "Install Gogs" button.

You will then be redirected to the Gogs user dashboard, as shown below.

[![Gogs dashboard][26]][27]

Below is the Gogs "Admin Dashboard".

[![Browsing the Gogs dashboard][28]][29]


@ -369,7 +398,7 @@ via: https://www.howtoforge.com/tutorial/how-to-install-gogs-go-git-service-on-u


Author: [Muhammad Arul][a]
Translator: [CYLeft](https://github.com/CYLeft)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


@ -1,3 +1,5 @@

translating---geekpi

Record and Share Terminal Session with Showterm
======


@ -1,99 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

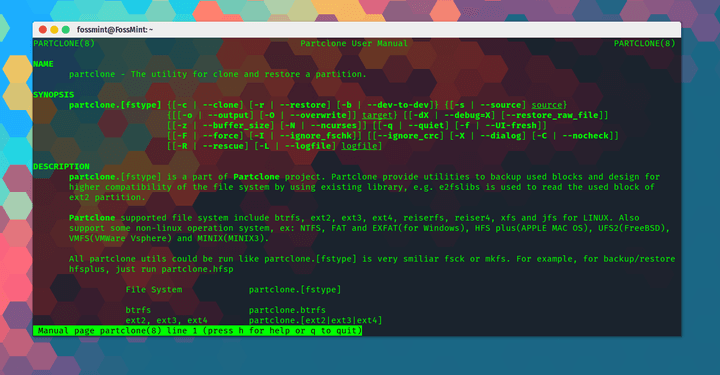

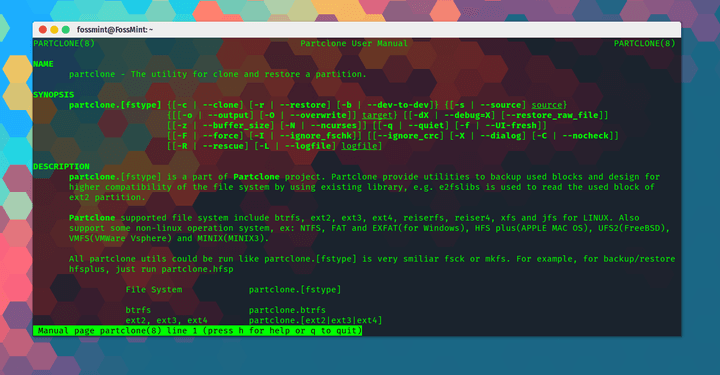

Partclone – A Versatile Free Software for Partition Imaging and Cloning

|

||||

======

|

||||

|

||||

|

||||

|

||||

**[Partclone][1]** is a free and open-source tool for creating and cloning partition images brought to you by the developers of **Clonezilla**. In fact, **Partclone** is one of the tools that **Clonezilla** is based on.

|

||||

|

||||

It provides users with the tools required to backup and restores used partition blocks along with high compatibility with several file systems thanks to its ability to use existing libraries like **e2fslibs** to read and write partitions e.g. **ext2**.

|

||||

|

||||

Its best stronghold is the variety of formats it supports including ext2, ext3, ext4, hfs+, reiserfs, reiser4, btrfs, vmfs3, vmfs5, xfs, jfs, ufs, ntfs, fat(12/16/32), exfat, f2fs, and nilfs.

|

||||

|

||||

It also has a plethora of available programs including **partclone.ext2** (ext3 & ext4), partclone.ntfs, partclone.exfat, partclone.hfsp, and partclone.vmfs (v3 and v5), among others.

|

||||

|

||||

### Features in Partclone

|

||||

|

||||

* **Freeware:** **Partclone** is free for everyone to download and use.

|

||||

* **Open Source:** **Partclone** is released under the GNU GPL license and is open to contribution on [GitHub][2].

|

||||

* **Cross-Platform** : Available on Linux, Windows, MAC, ESX file system backup/restore, and FreeBSD.

|

||||

* An online [Documentation page][3] from where you can view help docs and track its GitHub issues.

|

||||

* An online [user manual][4] for beginners and pros alike.

|

||||

* Rescue support.

|

||||

* Clone partitions to image files.

|

||||

* Restore image files to partitions.

|

||||

* Duplicate partitions quickly.

|

||||

* Support for raw clone.

|

||||

* Displays transfer rate and elapsed time.

|

||||

* Supports piping.

|

||||

* Support for crc32.

|

||||

* Supports vmfs for ESX vmware server and ufs for FreeBSD file system.

|

||||

|

||||

|

||||

|

||||

There are a lot more features bundled in **Partclone** and you can see the rest of them [here][5].

|

||||

|

||||

[__Download Partclone for Linux][6]

|

||||

|

||||

### How to Install and Use Partclone

|

||||

|

||||

To install Partclone on Linux.

|

||||

```

|

||||

$ sudo apt install partclone [On Debian/Ubuntu]

|

||||

$ sudo yum install partclone [On CentOS/RHEL/Fedora]

|

||||

|

||||

```

|

||||

|

||||

Clone partition to image.

|

||||

```

|

||||

# partclone.ext4 -d -c -s /dev/sda1 -o sda1.img

|

||||

|

||||

```

|

||||

|

||||

Restore image to partition.

|

||||

```

|

||||

# partclone.ext4 -d -r -s sda1.img -o /dev/sda1

|

||||

|

||||

```

|

||||

|

||||

Partition to partition clone.

|

||||

```

|

||||

# partclone.ext4 -d -b -s /dev/sda1 -o /dev/sdb1

|

||||

|

||||

```

|

||||

|

||||

Display image information.

|

||||

```

|

||||

# partclone.info -s sda1.img

|

||||

|

||||

```

|

||||

|

||||

Check image.

|

||||

```

|

||||

# partclone.chkimg -s sda1.img

|

||||

|

||||

```
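
If you script these operations, for example from a backup job, it can help to build the argument lists in one place. The sketch below reuses only the options shown in the commands above: `-c` (clone), `-r` (restore), `-b` (device to device), `-s` (source) and `-o` (output), plus `-d`, which partclone documents as its debug option. The helpers `partclone_cmd` and `run` are hypothetical. Actually executing a command requires root and the matching `partclone.<fs>` binary.

```python
import shlex
import subprocess

# Mode flags as used in the example commands above
MODE_FLAGS = {"clone": "-c", "restore": "-r", "dev-to-dev": "-b"}


def partclone_cmd(fs_type, mode, source, output, debug=True):
    """Build an argv list such as ['partclone.ext4', '-d', '-c', '-s', SRC, '-o', DST]."""
    if mode not in MODE_FLAGS:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(MODE_FLAGS)}")
    argv = [f"partclone.{fs_type}"]
    if debug:
        argv.append("-d")
    argv += [MODE_FLAGS[mode], "-s", source, "-o", output]
    return argv


def run(argv, dry_run=True):
    """Print the command; execute it only when dry_run is False (needs root)."""
    print(shlex.join(argv))
    if not dry_run:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    run(partclone_cmd("ext4", "clone", "/dev/sda1", "sda1.img"))
```

Defaulting to a dry run means the script only prints the command it would execute, which makes it easy to double-check device names before overwriting anything.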


Are you a **Partclone** user? I wrote about [**Deepin Clone**][7] just recently, and apparently there are certain tasks Partclone is better at handling. What has been your experience with other backup and restore utilities?

Do share your thoughts and suggestions with us in the comments section below.

--------------------------------------------------------------------------------

via: https://www.fossmint.com/partclone-linux-backup-clone-tool/

Author: [Martins D. Okoi][a]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:
[1]:https://partclone.org/
[2]:https://github.com/Thomas-Tsai/partclone
[3]:https://partclone.org/help/
[4]:https://partclone.org/usage/
[5]:https://partclone.org/features/
[6]:https://partclone.org/download/
[7]:https://www.fossmint.com/deepin-clone-system-backup-restore-for-deepin-users/

@ -1,265 +0,0 @@

Translating by qhwdw
Monitor your Kubernetes Cluster
======
This article originally appeared on [Kevin Monroe's blog][1]

Keeping an eye on logs and metrics is a necessary evil for cluster admins. The benefits are clear: metrics help you set reasonable performance goals, while log analysis can uncover issues that impact your workloads. The hard part, however, is getting a slew of applications to work together in a useful monitoring solution.

In this post, I'll cover monitoring a Kubernetes cluster with [Graylog][2] (for logging) and [Prometheus][3] (for metrics). Of course, that's not just wiring three things together. In fact, it'll end up looking like this:

![][4]

As you know, Kubernetes isn't just one thing -- it's a system of masters, workers, networking bits, etc(d). Similarly, Graylog comes with a supporting cast (apache2, mongodb, etc.), as does Prometheus (telegraf, grafana, etc.). Connecting the dots in a deployment like this may seem daunting, but the right tools can make all the difference.

I'll walk through this using [conjure-up][5] and the [Canonical Distribution of Kubernetes][6] (CDK). I find the conjure-up interface really helpful for deploying big software, but I know some of you hate GUIs and TUIs and probably other UIs too. For those folks, I'll do the same deployment again from the command line.

Before we jump in, note that Graylog and Prometheus will be deployed alongside Kubernetes and not in the cluster itself. Things like the Kubernetes Dashboard and Heapster are excellent sources of information from within a running cluster, but my objective is to provide a mechanism for log/metric analysis whether the cluster is running or not.

### The Walk Through

First things first, install conjure-up if you don't already have it. On Linux, that's simply:
```
sudo snap install conjure-up --classic
```

There's also a brew package for macOS users:
```
brew install conjure-up
```

You'll need at least version 2.5.2 to take advantage of the recent CDK spell additions, so be sure to `sudo snap refresh conjure-up` or `brew update && brew upgrade conjure-up` if you have an older version installed.

Once installed, run it:
```
conjure-up
```

![][7]

You'll be presented with a list of various spells. Select CDK and press `Enter`.

![][8]

At this point, you'll see additional components that are available for the CDK spell. We're interested in Graylog and Prometheus, so check both of those and hit `Continue`.

You'll be guided through various cloud choices to determine where you want your cluster to live. After that, you'll see options for post-deployment steps, followed by a review screen that lets you see what is about to be deployed:

![][9]

In addition to the typical K8s-related applications (etcd, flannel, load-balancer, master, and workers), you'll see additional applications related to our logging and metric selections.

The Graylog stack includes the following:

* apache2: reverse proxy for the graylog web interface
* elasticsearch: document database for the logs
* filebeat: forwards logs from K8s master/workers to graylog
* graylog: provides an api for log collection and an interface for analysis
* mongodb: database for graylog metadata

The Prometheus stack includes the following:

* grafana: web interface for metric-related dashboards
* prometheus: metric collector and time series database
* telegraf: sends host metrics to prometheus

You can fine-tune the deployment from this review screen, but the defaults will suit our needs. Click `Deploy all Remaining Applications` to get things going.

The deployment will take a few minutes to settle as machines are brought online and applications are configured in your cloud. Once complete, conjure-up will show a summary screen that includes links to various interesting endpoints for you to browse:

![][10]

#### Exploring Logs


Now that Graylog has been deployed and configured, let's take a look at some of the data we're gathering. By default, the filebeat application will send both syslog and container log events to graylog (that's `/var/log/*.log` and `/var/log/containers/*.log` from the kubernetes master and workers).
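
The two path patterns in the paragraph above are separate because a single `*` in a path glob only matches within one directory level. The sketch below uses Python's `pathlib.PurePosixPath.match`, which requires the whole path to match an absolute pattern and does not let `*` cross a `/`, to show which paths each pattern covers. It assumes filebeat's globbing behaves the same way for these simple patterns. The helper `matching_patterns` and the sample paths are hypothetical.

```python
from pathlib import PurePosixPath

# The two patterns the CDK spell hands to filebeat
LOG_PATTERNS = ["/var/log/*.log", "/var/log/containers/*.log"]


def matching_patterns(path, patterns=LOG_PATTERNS):
    """Return the patterns that match the given absolute path."""
    p = PurePosixPath(path)
    return [pattern for pattern in patterns if p.match(pattern)]


if __name__ == "__main__":
    for path in ["/var/log/auth.log",
                 "/var/log/containers/kube-dns.log",
                 "/var/log/syslog"]:
        print(path, "->", matching_patterns(path))
```

Under this assumption, container logs are picked up only by the second pattern. A file without a `.log` suffix, such as `/var/log/syslog`, matches neither pattern.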


Grab the apache2 address and graylog admin password as follows:
```
juju status --format yaml apache2/0 | grep public-address
public-address: <your-apache2-ip>
juju run-action --wait graylog/0 show-admin-password
admin-password: <your-graylog-password>

```
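
Piping YAML through `grep` works for a quick look. To script this step, one option is to ask Juju for JSON (`juju status --format json apache2/0`) and read the address from the parsed data. The sketch below assumes the Juju 2.x status layout, where units are nested under `applications.<app>.units` with a `public-address` field, so check it against your Juju version. The function names are hypothetical and the sample data is made up.

```python
import json
import subprocess


def unit_public_address(status, unit):
    """Return the public-address of a unit such as "apache2/0" from parsed status.

    Assumes the layout {"applications": {app: {"units": {unit: {...}}}}}.
    """
    app = unit.split("/", 1)[0]
    return status["applications"][app]["units"][unit]["public-address"]


def juju_status(unit):
    """Run `juju status --format json <unit>` and parse it (needs the juju CLI)."""
    out = subprocess.run(["juju", "status", "--format", "json", unit],
                         check=True, capture_output=True, text=True).stdout
    return json.loads(out)


if __name__ == "__main__":
    # Made-up sample standing in for real `juju status` output
    sample = {"applications": {"apache2": {"units": {
        "apache2/0": {"public-address": "203.0.113.10"}}}}}
    print(unit_public_address(sample, "apache2/0"))
```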


Browse to `http://<your-apache2-ip>` and log in with `admin` as the username and `<your-graylog-password>` as the password. **Note:** if the interface is not immediately available, please wait, as the reverse proxy configuration may take up to 5 minutes to complete.

Once logged in, head to the `Sources` tab to get an overview of the logs collected from our K8s master and workers:

![][11]

Drill into those logs by clicking the `System / Inputs` tab and selecting `Show received messages` for the filebeat input:

![][12]

From here, you may want to play around with various filters or set up Graylog dashboards to help identify the events that are most important to you. Check out the [Graylog Dashboard][13] docs for details on customizing your view.

#### Exploring Metrics

Our deployment exposes two types of metrics through our Grafana dashboards: system metrics include things like CPU/memory/disk utilization for the K8s master and worker machines, and cluster metrics include container-level data scraped from the K8s cAdvisor endpoints.

Grab the grafana address and admin password as follows:
```
juju status --format yaml grafana/0 | grep public-address
public-address: <your-grafana-ip>
juju run-action --wait grafana/0 get-admin-password
password: <your-grafana-password>
```

Browse to `http://<your-grafana-ip>:3000` and log in with `admin` as the username and `<your-grafana-password>` as the password. Once logged in, check out the cluster metric dashboard by clicking the `Home` drop-down box and selecting `Kubernetes Metrics (via Prometheus)`:

![][14]

We can also check out the system metrics of our K8s host machines by switching the drop-down box to `Node Metrics (via Telegraf)`:

![][15]


### The Other Way

As alluded to in the intro, I prefer the wizard-y feel of conjure-up to guide me through complex software deployments like Kubernetes. Now that we've seen the conjure-up way, some of you may want to see a command line approach to achieve the same results. Still others may have deployed CDK previously and want to extend it with the Graylog/Prometheus components described above. Regardless of why you've read this far, I've got you covered.

The tool that underpins conjure-up is [Juju][16]. Everything that the CDK spell did behind the scenes can be done on the command line with Juju. Let's step through how that works.

**Starting From Scratch**

If you're on Linux, install Juju like this:
```
sudo snap install juju --classic
```

For macOS, Juju is available from brew:
```
brew install juju
```

Now set up a controller for your preferred cloud. You may be prompted for any required cloud credentials:
```
juju bootstrap
```

We then need to deploy the base CDK bundle:
```
juju deploy canonical-kubernetes
```

**Starting From CDK**

With our Kubernetes cluster deployed, we need to add all the applications required for Graylog and Prometheus:
```
## deploy graylog-related applications
juju deploy xenial/apache2
juju deploy xenial/elasticsearch
juju deploy xenial/filebeat
juju deploy xenial/graylog
juju deploy xenial/mongodb
```
```
## deploy prometheus-related applications
juju deploy xenial/grafana
juju deploy xenial/prometheus
juju deploy xenial/telegraf
```

Now that the software is deployed, connect the applications so they can communicate:
```
## relate graylog applications
juju relate apache2:reverseproxy graylog:website
juju relate graylog:elasticsearch elasticsearch:client
juju relate graylog:mongodb mongodb:database
juju relate filebeat:beats-host kubernetes-master:juju-info
juju relate filebeat:beats-host kubernetes-worker:juju-info
```
```
## relate prometheus applications
juju relate prometheus:grafana-source grafana:grafana-source
juju relate telegraf:prometheus-client prometheus:target
juju relate kubernetes-master:juju-info telegraf:juju-info
juju relate kubernetes-worker:juju-info telegraf:juju-info
```

At this point, all the applications can communicate with each other, but we have a bit more configuration to do (e.g., setting up the apache2 reverse proxy, telling prometheus how to scrape k8s, importing our grafana dashboards, etc.):
```
## configure graylog applications
juju config apache2 enable_modules="headers proxy_html proxy_http"
juju config apache2 vhost_http_template="$(base64 <vhost-tmpl>)"
juju config elasticsearch firewall_enabled="false"
juju config filebeat \
    logpath="/var/log/*.log /var/log/containers/*.log"
juju config filebeat logstash_hosts="<graylog-ip>:5044"
juju config graylog elasticsearch_cluster_name="<es-cluster>"
```
```
## configure prometheus applications
juju config prometheus scrape-jobs="<scraper-yaml>"
juju run-action --wait grafana/0 import-dashboard \
    dashboard="$(base64 <dashboard-json>)"

```
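
The `$(base64 <file>)` substitutions above pass a file's contents as a single base64 string. This avoids quoting trouble with multi-line templates and JSON. For illustration, here is the same encoding in Python. The function names are hypothetical, and the sketch assumes only that the receiving side decodes standard base64. GNU `base64 FILE` wraps its output at 76 columns by default, while `base64.b64encode` produces one unwrapped line; Python's default decoder discards the embedded newlines either way.

```python
import base64
import json


def encode_for_juju(text):
    """Base64-encode text as one unwrapped line (like GNU `base64 -w 0 FILE`)."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def decode_config_value(value):
    """Decode a base64 string back to text.

    The default (non-validating) decoder discards characters outside the
    base64 alphabet, so newlines from 76-column wrapped output are ignored.
    """
    return base64.b64decode(value).decode("utf-8")


if __name__ == "__main__":
    # Made-up stand-in for a dashboard JSON file
    dashboard = json.dumps({"title": "Example dashboard", "panels": []}, indent=2)
    encoded = encode_for_juju(dashboard)
    print(encoded)
    print("round trip ok:", decode_config_value(encoded) == dashboard)
```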


Some of the above steps need values specific to your deployment. You can get these in the same way that conjure-up does:

* `<vhost-tmpl>`: fetch our sample [template][17] from GitHub
* `<graylog-ip>`: `juju run --unit graylog/0 'unit-get private-address'`
* `<es-cluster>`: `juju config elasticsearch cluster-name`
* `<scraper-yaml>`: fetch our sample [scraper][18] from GitHub; [substitute][19] appropriate values for [`K8S_PASSWORD`][20] and [`K8S_API_ENDPOINT`][21]
* `<dashboard-json>`: fetch our [host][22] and [k8s][23] dashboards from GitHub
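
The `<scraper-yaml>` step boils down to replacing two placeholder tokens in the downloaded template before passing it to `juju config`. The sketch below assumes the tokens appear literally as `K8S_PASSWORD` and `K8S_API_ENDPOINT` in the file. Check the linked template, and the linked `after-deploy` script for how conjure-up obtains the values. The function name, sample template fragment and values are hypothetical.

```python
import re

# Placeholder tokens named in the article; assumed to appear literally in the template
PLACEHOLDERS = ("K8S_PASSWORD", "K8S_API_ENDPOINT")


def fill_scraper_template(template, values):
    """Replace every placeholder token in a single pass.

    One regex pass means a replacement value that happens to contain a
    token name is not substituted a second time.
    """
    missing = [name for name in PLACEHOLDERS if name not in template]
    if missing:
        raise ValueError("template lacks placeholder(s): " + ", ".join(missing))
    pattern = re.compile("|".join(re.escape(name) for name in PLACEHOLDERS))
    return pattern.sub(lambda match: values[match.group(0)], template)


if __name__ == "__main__":
    # Made-up fragment; the real template is the one linked above
    sample = "password: K8S_PASSWORD\ntarget: K8S_API_ENDPOINT\n"
    print(fill_scraper_template(sample, {
        "K8S_PASSWORD": "example-password",
        "K8S_API_ENDPOINT": "203.0.113.20:6443",
    }))
```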


Finally, you'll want to expose the apache2 and grafana applications to make their web interfaces accessible:
```
## expose relevant endpoints
juju expose apache2
juju expose grafana
```

Now that we have everything deployed, related, configured, and exposed, you can log in and poke around using the same steps from the **Exploring Logs** and **Exploring Metrics** sections above.

### The Wrap Up

My goal here was to show you how to deploy a Kubernetes cluster with rich monitoring capabilities for logs and metrics. Whether you prefer a guided approach or command line steps, I hope it's clear that monitoring complex deployments doesn't have to be a pipe dream. The trick is to figure out how all the moving parts work, make them work together repeatably, and then break/fix/repeat for a while until everyone can use it.

This is where tools like conjure-up and Juju really shine. Leveraging the expertise of contributors to this ecosystem makes it easy to manage big software. Start with a solid set of apps, customize as needed, and get back to work!

Give these bits a try and let me know how it goes. You can find enthusiasts like me on Freenode IRC in **#conjure-up** and **#juju**. Thanks for reading!

### About the author

Kevin joined Canonical in 2014 with his focus set on modeling complex software. He found his niche on the Juju Big Software team, where his mission is to capture operational knowledge of Big Data and Machine Learning applications into repeatable (and reliable!) solutions.

--------------------------------------------------------------------------------

via: https://insights.ubuntu.com/2018/01/16/monitor-your-kubernetes-cluster/

Author: [Kevin Monroe][a]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://insights.ubuntu.com/author/kwmonroe/
[1]:https://medium.com/@kwmonroe/monitor-your-kubernetes-cluster-a856d2603ec3
[2]:https://www.graylog.org/
[3]:https://prometheus.io/
[4]:https://insights.ubuntu.com/wp-content/uploads/706b/1_TAA57DGVDpe9KHIzOirrBA.png
[5]:https://conjure-up.io/
[6]:https://jujucharms.com/canonical-kubernetes
[7]:https://insights.ubuntu.com/wp-content/uploads/98fd/1_o0UmYzYkFiHIs2sBgj7G9A.png
[8]:https://insights.ubuntu.com/wp-content/uploads/0351/1_pgVaO_ZlalrjvYd5pOMJMA.png
[9]:https://insights.ubuntu.com/wp-content/uploads/9977/1_WXKxMlml2DWA5Kj6wW9oXQ.png
[10]:https://insights.ubuntu.com/wp-content/uploads/8588/1_NWq7u6g6UAzyFxtbM-ipqg.png
[11]:https://insights.ubuntu.com/wp-content/uploads/a1c3/1_hHK5mSrRJQi6A6u0yPSGOA.png
[12]:https://insights.ubuntu.com/wp-content/uploads/937f/1_cP36lpmSwlsPXJyDUpFluQ.png
[13]:http://docs.graylog.org/en/2.3/pages/dashboards.html
[14]:https://insights.ubuntu.com/wp-content/uploads/9256/1_kskust3AOImIh18QxQPgRw.png
[15]:https://insights.ubuntu.com/wp-content/uploads/2037/1_qJpjPOTGMQbjFY5-cZsYrQ.png
[16]:https://jujucharms.com/
[17]:https://raw.githubusercontent.com/conjure-up/spells/master/canonical-kubernetes/addons/graylog/steps/01_install-graylog/graylog-vhost.tmpl
[18]:https://raw.githubusercontent.com/conjure-up/spells/master/canonical-kubernetes/addons/prometheus/steps/01_install-prometheus/prometheus-scrape-k8s.yaml
[19]:https://github.com/conjure-up/spells/blob/master/canonical-kubernetes/addons/prometheus/steps/01_install-prometheus/after-deploy#L25
[20]:https://github.com/conjure-up/spells/blob/master/canonical-kubernetes/addons/prometheus/steps/01_install-prometheus/after-deploy#L10
[21]:https://github.com/conjure-up/spells/blob/master/canonical-kubernetes/addons/prometheus/steps/01_install-prometheus/after-deploy#L11
[22]:https://raw.githubusercontent.com/conjure-up/spells/master/canonical-kubernetes/addons/prometheus/steps/01_install-prometheus/grafana-telegraf.json
[23]:https://raw.githubusercontent.com/conjure-up/spells/master/canonical-kubernetes/addons/prometheus/steps/01_install-prometheus/grafana-k8s.json

@ -1,171 +0,0 @@

|

||||

Translating by qhwdw

|

||||

Never miss a Magazine's article, build your own RSS notification system

|

||||

======

|

||||

|

||||

|

||||

|

||||

Python is a great programming language to quickly build applications that make our life easier. In this article we will learn how to use Python to build a RSS notification system, the goal being to have fun learning Python using Fedora. If you are looking for a complete RSS notifier application, there are a few already packaged in Fedora.

|

||||

|

||||

### Fedora and Python - getting started

|

||||

|

||||

Python 3.6 is available by default in Fedora, that includes Python's extensive standard library. The standard library provides a collection of modules which make some tasks simpler for us. For example, in our case we will use the [**sqlite3**][1] module to create, add and read data from a database. In the case where a particular problem we are trying to solve is not covered by the standard library, the chance is that someone has already developed a module for everyone to use. The best place to search for such modules is the Python Package Index known as [PyPI][2]. In our example we are going to use the [**feedparser**][3] to parse an RSS feed.

|

||||

|

||||

Since **feedparser** is not in the standard library, we have to install it in our system. Luckily for us there is an rpm package in Fedora, so the installation of **feedparser** is as simple as:

|

||||

```

|

||||

$ sudo dnf install python3-feedparser

|

||||

```

|

||||

|

||||

We now have everything we need to start coding our application.

|

||||

|

||||

### Storing the feed data

|

||||

|

||||

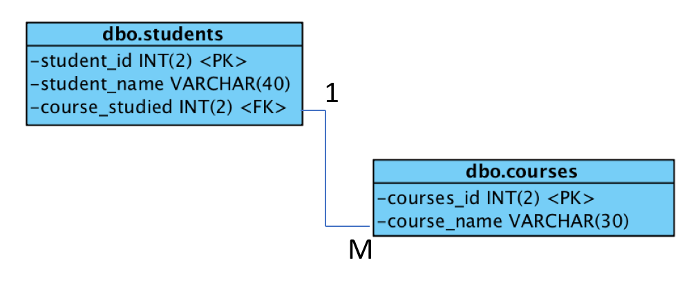

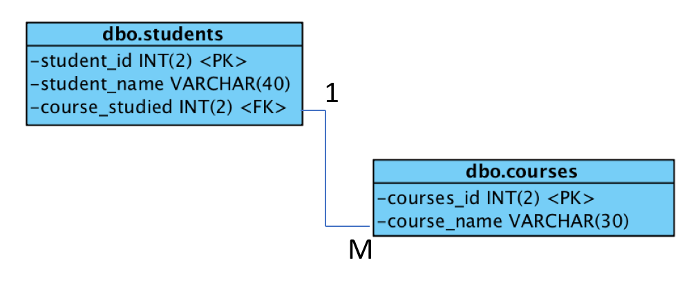

We need to store data from the articles that have already been published so that we send a notification only for new articles. The data we want to store will give us a unique way to identify an article. Therefore we will store the **title** and the **publication date** of the article.

|

||||

|

||||

So let's create our database using python **sqlite3** module and a simple SQL query. We are also adding the modules we are going to use later ( **feedparser** , **smtplib** and **email** ).

|

||||

|

||||

#### Creating the Database

|

||||

```
#!/usr/bin/python3
import sqlite3
import smtplib
from email.mime.text import MIMEText

import feedparser

# Open (or create) the SQLite database file and get a cursor for queries
db_connection = sqlite3.connect('/var/tmp/magazine_rss.sqlite')
db = db_connection.cursor()
# Create the table on the first run; later runs reuse the existing table
db.execute('CREATE TABLE IF NOT EXISTS magazine (title TEXT, date TEXT)')
```


These few lines of code create a new SQLite database stored in a file called `magazine_rss.sqlite`, and then create a new table within the database called `magazine`. This table has two columns, `title` and `date`, that can store data of the type TEXT, which means that the value of each column will be a text string.

#### Checking the Database for old articles

Since we only want to add new articles to our database, we need a function that checks whether the article we get from the RSS feed is already in our database. We will use it to decide if we should send an email notification (new article) or not (old article). OK, let's code this function.
```
def article_is_not_db(article_title, article_date):
    """ Check if a given pair of article title and date
    is in the database.

    Args:
        article_title (str): The title of an article
        article_date (str): The publication date of an article

    Return:
        True if the article is not in the database
        False if the article is already present in the database
    """
    db.execute("SELECT * from magazine WHERE title=? AND date=?", (article_title, article_date))
    if not db.fetchall():
        return True
    else:
        return False
```


The main part of this function is the SQL query we execute to search through the database. We use a SELECT instruction to define which columns of our magazine table the query returns, and the `*` symbol to select all columns (title and date). Then we ask to select only the rows of the table WHERE the article_title and article_date strings are equal to the values of the title and date columns.

To finish, we have some simple logic that returns True if the query did not return any results, and False if the query found an article in the database matching our (title, date) pair.

#### Adding a new article to the Database

Now we can code the function to add a new article to the database.
```
def add_article_to_db(article_title, article_date):
    """ Add a new article title and date to the database

    Args:
        article_title (str): The title of an article
        article_date (str): The publication date of an article
    """
    db.execute("INSERT INTO magazine VALUES (?,?)", (article_title, article_date))
    db_connection.commit()
```

This function is straightforward: we use a SQL query to INSERT a new row INTO the magazine table with the VALUES of the article_title and article_date. Then we commit the change to make it persistent.
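
To see the two functions working together without touching `/var/tmp/magazine_rss.sqlite`, here is a self-contained sketch that uses an in-memory SQLite database (`":memory:"`). It restates both functions with the connection and cursor passed in explicitly rather than as module-level globals, a change made only for this illustration. The helper `new_articles` and the sample title and date are hypothetical.

```python
import sqlite3


def article_is_not_db(db, article_title, article_date):
    """Return True if the (title, date) pair is not stored yet."""
    db.execute("SELECT * FROM magazine WHERE title=? AND date=?",
               (article_title, article_date))
    return not db.fetchall()


def add_article_to_db(db_connection, db, article_title, article_date):
    """Store a (title, date) pair and commit it."""
    db.execute("INSERT INTO magazine VALUES (?,?)", (article_title, article_date))
    db_connection.commit()


def new_articles(entries, db_connection, db):
    """Return the entries not seen before, recording each one as it is found."""
    fresh = []
    for title, date in entries:
        if article_is_not_db(db, title, date):
            add_article_to_db(db_connection, db, title, date)
            fresh.append((title, date))
    return fresh


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()
    cur.execute("CREATE TABLE IF NOT EXISTS magazine (title TEXT, date TEXT)")
    feed = [("Example article", "2018-01-20"), ("Example article", "2018-01-20")]
    print(new_articles(feed, conn, cur))  # → [('Example article', '2018-01-20')]
```

The second copy of the entry is skipped because the first one was already recorded, which is exactly the check that decides whether a notification gets sent.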


That's all we need from the database's point of view; now let's look at the notification system and how we can use Python to send emails.

### Sending an email notification