mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-04-14 03:30:29 +08:00

commit

68a71483e0

published/RHCE

Part 10 - Setting Up 'NTP (Network Time Protocol) Server' in RHEL or CentOS 7.md

Part 9 - How to Setup Postfix Mail Server (SMTP) using null-client Configuration.md

sources

talk

20150820 Why did you start using Linux.md

20151105 Linus Torvalds Lambasts Open Source Programmers over Insecure Code.md

tech

translated

@ -1,12 +1,13 @@

RHCE Series (Part 10): Setting Up an NTP (Network Time Protocol) Server in RHEL/CentOS 7
================================================================================

The Network Time Protocol (NTP) is a UDP protocol that runs on port 123 at the transport layer and lets computers synchronize their clocks accurately over a network. As time passes, a computer's internal clock drifts. This leads to time inconsistencies, which are a problem especially for server and client log files, or when you want to replicate server resources or databases.
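The following Python sketch puts the drift mentioned above into numbers. A clock whose oscillator is off by some number of parts per million (ppm) gains or loses that fraction of every second. The ppm values used here are illustrative assumptions, not measurements of any particular machine.

```python
SECONDS_PER_DAY = 86_400


def drift_per_day(ppm: float) -> float:
    """Seconds gained or lost per day by a clock with a frequency error of `ppm` parts per million."""
    return ppm * 1e-6 * SECONDS_PER_DAY


if __name__ == "__main__":
    # Illustrative (assumed) frequency errors, not measured values.
    for ppm in (10, 50, 100):
        print(f"{ppm:>3} ppm -> {drift_per_day(ppm):.2f} s/day")
```

At an assumed 50 ppm error, the clock drifts by about 4.3 seconds per day, or a little over two minutes per month. A local NTP server is meant to prevent this kind of divergence.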

|

||||

|

||||

|

||||

|

||||

在 CentOS 和 RHEL 7 上安装 NTP 服务器

|

||||

*在 CentOS 和 RHEL 7 上安装 NTP 服务器*

|

||||

|

||||

#### 要求: ####

|

||||

#### 前置要求: ####

|

||||

|

||||

- [CentOS 7 安装过程][1]

|

||||

- [RHEL 安装过程][2]

|

||||

@ -17,62 +18,62 @@

|

||||

- [在 CentOS/RHCE 7 上配置静态 IP][4]

|

||||

- [在 CentOS/RHEL 7 上停用并移除不需要的服务][5]

|

||||

|

||||

这篇指南会告诉你如何在 CentOS/RHCE 7 上安装和配置 NTP 服务器,并使用 NTP 公共时间服务器池列表中和你服务器地理位置最近的可用节点中同步时间。

|

||||

这篇指南会告诉你如何在 CentOS/RHCE 7 上安装和配置 NTP 服务器,并使用 NTP 公共时间服务器池(NTP Public Pool Time Servers)列表中和你服务器地理位置最近的可用节点中同步时间。

|

||||

|

||||

#### 步骤一:安装和配置 NTP 守护进程 ####

|

||||

|

||||

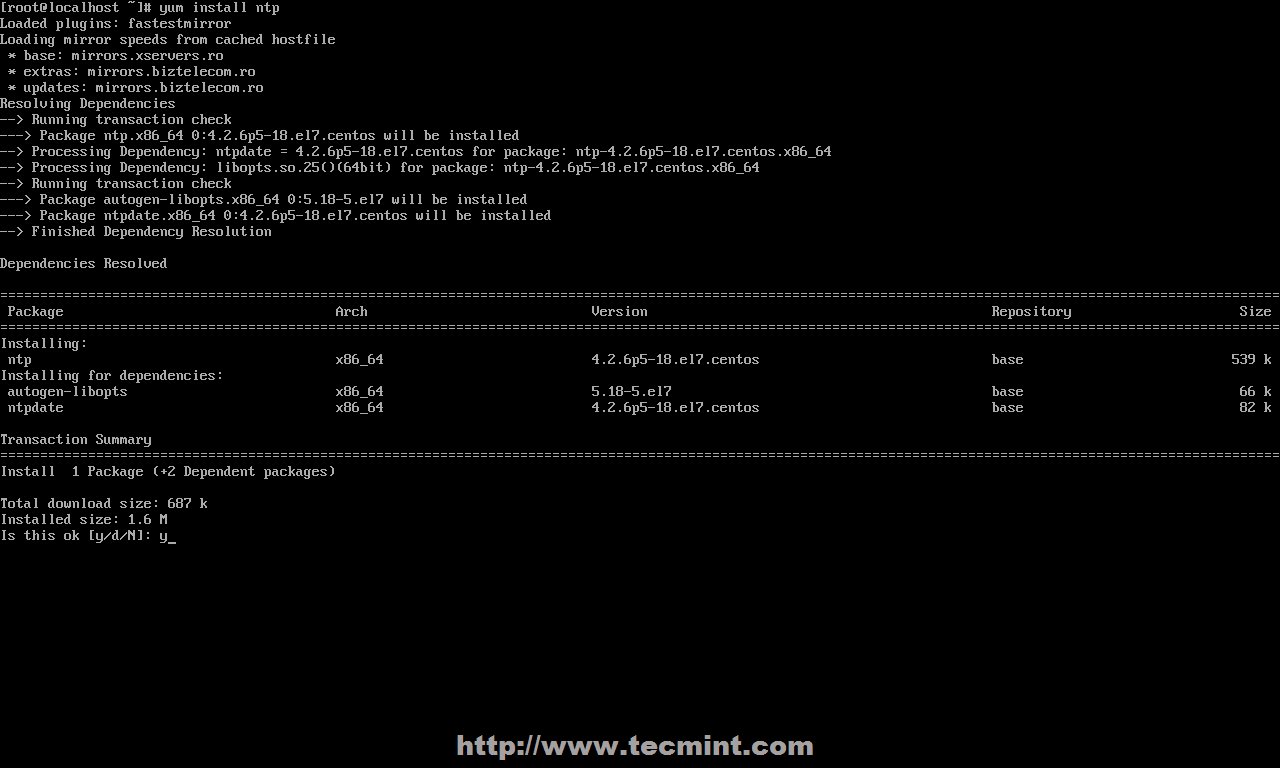

1. 官方 CentOS /RHEL 7 库默认提供 NTP 服务器安装包,可以通过使用下面的命令安装。

|

||||

1、 官方 CentOS /RHEL 7 库默认提供 NTP 服务器安装包,可以通过使用下面的命令安装。

|

||||

|

||||

# yum install ntp

|

||||

|

||||

|

||||

|

||||

安装 NTP 服务器

|

||||

*安装 NTP 服务器*

|

||||

|

||||

2. 安装完服务器之后,首先到官方 [NTP 公共时间服务器池][6],选择你服务器物理位置所在的洲,然后搜索你的国家位置,然后会出现 NTP 服务器列表。

|

||||

2、 安装完服务器之后,首先到官方 [NTP 公共时间服务器池(NTP Public Pool Time Servers)][6],选择你服务器物理位置所在的洲,然后搜索你的国家位置,然后会出现 NTP 服务器列表。

|

||||

|

||||

|

||||

|

||||

NTP 服务器池

|

||||

*NTP 服务器池*

|

||||

|

||||

3. 然后打开编辑 NTP 守护进程主要配置文件,从 pool.ntp.org 中注释掉默认的公共服务器列表并用类似下面截图提供给你国家的列表替换。

|

||||

3、 然后打开编辑 NTP 守护进程的主配置文件,注释掉来自 pool.ntp.org 项目的公共服务器默认列表,并用类似下面截图中提供给你所在国家的列表替换。(LCTT 译注:中国使用 0.cn.pool.ntp.org 等)
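If you would rather generate the replacement `server` lines than type them, the following Python sketch builds them for one country zone of the pool. It makes three assumptions:

- The pool uses numbered host names, `0.<cc>.pool.ntp.org` through `3.<cc>.pool.ntp.org`, following the same pattern as the `0.ro.pool.ntp.org` used later in this guide.
- The `iburst` option is wanted, as on the default `server` lines of the CentOS 7 `ntp.conf`.
- The helper name `pool_server_lines` is hypothetical.

```python
def pool_server_lines(country_code: str, count: int = 4, iburst: bool = True) -> list[str]:
    """Build ntp.conf 'server' lines for the N.<country_code>.pool.ntp.org pool zone."""
    suffix = " iburst" if iburst else ""
    return [f"server {i}.{country_code}.pool.ntp.org{suffix}" for i in range(count)]


if __name__ == "__main__":
    # "ro" (Romania) matches the zone used in the ntpdate example of this guide.
    print("\n".join(pool_server_lines("ro")))
```

Running it prints four `server` lines for the `ro` zone. Change the country code to your own, then paste the output into `/etc/ntp.conf` in place of the commented-out defaults.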

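*Configure NTP Server*

**4.** Next, you need to allow clients from your network to synchronize time with this server. To do this, add the following line to the NTP configuration file. The **restrict** statement controls which network is allowed to query and synchronize time; replace the network IP as needed.

    restrict 192.168.1.0 netmask 255.255.255.0 nomodify notrap

The `nomodify` option stops these clients from changing the server's configuration at run time: `ntpq`/`ntpdc` queries that try to modify the server are denied, while read-only queries are still answered. The `notrap` option declines to provide them the mode 6 control-message trap service. Preventing clients from becoming peers of the server is handled by a separate option, `nopeer`.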
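This Python sketch shows which clients the `restrict` line above covers. It reproduces the address/netmask arithmetic with the standard `ipaddress` module. It only illustrates the matching, since ntpd performs its own access-control evaluation, and the helper `matches_restrict` is hypothetical.

```python
import ipaddress

# The network and netmask taken from the restrict line above.
ALLOWED = ipaddress.ip_network("192.168.1.0/255.255.255.0")


def matches_restrict(client_ip: str, network: ipaddress.IPv4Network = ALLOWED) -> bool:
    """Return True if client_ip falls inside the restricted network."""
    return ipaddress.ip_address(client_ip) in network


if __name__ == "__main__":
    for ip in ("192.168.1.42", "192.168.2.10"):
        print(ip, matches_restrict(ip))
```

With the example network, `192.168.1.42` is covered and `192.168.2.10` is not, because a 255.255.255.0 netmask fixes the first three octets.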

**5.** If you need extra information for troubleshooting in case your NTP daemon runs into problems, add a `logfile` statement. It records all NTP server issues in a dedicated log file.

    logfile /var/log/ntp.log

*Enable NTP Logs*

**6.** When you have made all the edits explained above, save and close the ntp.conf file. Your final configuration should look like the screenshot below.

*NTP Server Configuration*

### Step 2: Add Firewall Rules and Start the NTP Daemon ###

**7.** The NTP service uses UDP port 123 at the OSI transport layer (layer 4). It was designed specifically to resist the effects of variable latency. To open this port on RHEL/CentOS 7, run the following commands against the Firewalld service.

    # firewall-cmd --add-service=ntp --permanent
    # firewall-cmd --reload
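The standard on-wire calculation (RFC 5905) shows how NTP copes with network latency. Each exchange yields four timestamps, and the clock offset and the round-trip delay are derived from them as in this Python sketch. The sample timestamps are hypothetical. The delay cancels out exactly only when the outbound and return paths take equal time; any path asymmetry shows up as offset error.

```python
def offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple[float, float]:
    """Clock offset and round-trip delay from one NTP request/response exchange.

    t1: client transmit time (client clock)
    t2: server receive time  (server clock)
    t3: server transmit time (server clock)
    t4: client receive time  (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2  # how far the client clock is behind the server
    delay = (t4 - t1) - (t3 - t2)         # round trip minus server processing time
    return offset, delay


if __name__ == "__main__":
    # Hypothetical exchange: the client clock is 0.5 s behind the server,
    # each network direction takes 0.05 s, and the server needs 0.01 s to reply.
    print(offset_and_delay(10.0, 10.55, 10.56, 10.11))
```

In the sample, the 0.05 s network delay in each direction is removed from the offset, which comes out at 0.5 s. The reported delay covers only the 0.1 s of network travel.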

*Open NTP Port on Firewall*

**8.** After opening port 123 in the firewall, start the NTP server and enable it system-wide so that it also starts at boot. Use the following commands to manage the service.

    # systemctl start ntpd
    # systemctl enable ntpd

@ -80,34 +81,34 @@ NTP Server Configuration

*Start NTP Service*

### Step 3: Verify Server Time Sync ###

**9.** After starting the NTP daemon, wait a few minutes for the server to synchronize time with its pool list. Then run the following commands to verify the NTP peer synchronization status and your system time.

    # ntpq -p
    # date -R
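To check the `ntpq -p` output from a script, the following Python sketch picks out the row marked with `*`, which ntpq uses for the currently selected system peer. The sample output is hypothetical and uses documentation host names and addresses. The parser assumes whitespace-separated columns; real output can truncate long host names, so treat this as a sketch rather than a robust parser.

```python
from typing import Optional


def selected_peer(ntpq_output: str) -> Optional[dict]:
    """Return the row marked '*' (the current system peer) from `ntpq -p` output."""
    for line in ntpq_output.splitlines():
        if not line.startswith("*"):
            continue  # header, separator, and non-selected peers
        fields = line[1:].split()
        delay, offset, jitter = (float(value) for value in fields[-3:])
        return {
            "remote": fields[0],
            "refid": fields[1],
            "stratum": int(fields[2]),
            "delay_ms": delay,
            "offset_ms": offset,
            "jitter_ms": jitter,
        }
    return None


# Hypothetical sample output using documentation host names and addresses.
SAMPLE = """\
     remote           refid      st t when poll reach   delay   offset  jitter
==============================================================================
+ntp1.example.net 192.0.2.10       2 u   35   64  377   12.345    0.812   0.241
*ntp2.example.net .GPS.            1 u   33   64  377    8.901   -0.153   0.097
"""

if __name__ == "__main__":
    print(selected_peer(SAMPLE))
```

If no row carries a `*`, the function returns `None`. This usually means the daemon has not selected a peer yet, so wait a few more minutes and check again.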

*Verify NTP Time Sync*

**10.** If you want to query, or synchronize against, a pool of your choice, you can use the `ntpdate` command followed by one or more server names or addresses, as in the following command-line example. The `-q` flag only queries the servers and does not set the clock.

    # ntpdate -q 0.ro.pool.ntp.org 1.ro.pool.ntp.org
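The minimal SNTP client sketch below, in Python, shows what a query like the one above sends over UDP port 123. It follows the 48-byte packet layout of RFC 4330/5905. It is a simplification: it only reads the server's transmit timestamp and skips the offset/delay correction that `ntpdate` and ntpd perform. The helper names are hypothetical.

```python
import socket
import struct

# Seconds between the NTP era origin (1900-01-01) and the Unix epoch (1970-01-01).
NTP_UNIX_DELTA = 2_208_988_800


def build_request() -> bytes:
    """Return a 48-byte SNTP client request: LI=0, version 4, mode 3 (client)."""
    first_byte = (0 << 6) | (4 << 3) | 3
    return bytes([first_byte]) + bytes(47)


def transmit_time(response: bytes) -> float:
    """Convert the server's transmit timestamp (bytes 40-47) to Unix time."""
    seconds, fraction = struct.unpack("!II", response[40:48])
    return seconds - NTP_UNIX_DELTA + fraction / 2**32


def query(server: str, timeout: float = 2.0) -> float:
    """Send one request to UDP port 123 on `server` and return its transmit time."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_request(), (server, 123))
        data, _addr = sock.recvfrom(1024)
    return transmit_time(data)
```

For example, `query("0.ro.pool.ntp.org")` returns the pool server's transmit time as a Unix timestamp. You can compare it with `time.time()` for a rough offset that is not corrected for network delay. Outgoing UDP traffic to port 123 must be allowed.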

*Synchronize NTP Time*

### Step 4: Set Up a Windows NTP Client ###

**11.** If your Windows machine is not joined to a domain, you can configure Windows to synchronize its time with your NTP server. Follow this path: click the time on the right side of the taskbar -> Change date and time settings -> Internet Time tab -> Change settings -> check Synchronize with an Internet time server -> enter your server's IP or FQDN in the Server field -> Update now -> OK.

*Synchronize Windows Time with NTP*

That's all! A local NTP server on your network ensures that all your servers and clients keep the same time, even if Internet connectivity fails, and that they all stay synchronized with each other.

@ -117,7 +118,7 @@ via: http://www.tecmint.com/install-ntp-server-in-centos/

Author: [Matei Cezar][a]

Translator: [ictlyh](http://motouxiaogui.cn/blog)

Proofreader: [wxy](https://github.com/wxy)

This article was compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,25 +1,25 @@

RHCE Series (Part 9): How to Set Up a Postfix Mail Server (SMTP) Using a Null-Client Configuration
================================================================================

There are many ways to communicate online today, yet email is still an effective way to get a message to someone on the other side of the world or to a colleague sitting next to us in the office.

The following image illustrates how an email travels, from the moment the sender sends it until the message reaches the recipient's inbox.

*How Email Works*

A lot happens behind the scenes to make this possible. An email message travels from a client application to a mail server, then to the destination server, and finally to its intended recipient. The client may be a program such as [Thunderbird][1] or Outlook, or a webmail service such as Gmail or Yahoo! Mail. For this to work, an SMTP (Simple Mail Transfer Protocol) service must be running on each server.

That is why this article explains how to set up an SMTP server in RHEL 7 so that mail sent by local users, even mail addressed to another local user, is forwarded to a central mail server for easier access.

In the exam's requirements, this is called a null-client setup.

Our test environment consists of an originating mail server and a central mail server, or relayhost.

- Originating mail server: (hostname: box1.mydomain.com / IP: 192.168.0.18)
- Central mail server: (hostname: mail.mydomain.com / IP: 192.168.0.20)

For name resolution, we will use the familiar `/etc/hosts` file on both machines:

    192.168.0.18 box1.mydomain.com box1
    192.168.0.20 mail.mydomain.com mail
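The two lines above map each IP address to a fully qualified name and a short alias. This Python sketch parses hosts-file text the same way to show which name resolves to which address. It is a simplification: it keeps the first address seen for each name and ignores everything after a `#`. The helper `parse_hosts` is hypothetical.

```python
def parse_hosts(text: str) -> dict[str, str]:
    """Map each host name and alias in hosts-file text to its IP address."""
    mapping: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            mapping.setdefault(name, address)  # keep the first address seen for a name
    return mapping


HOSTS = """\
192.168.0.18 box1.mydomain.com box1
192.168.0.20 mail.mydomain.com mail
"""

if __name__ == "__main__":
    for name, address in parse_hosts(HOSTS).items():
        print(f"{name:<20} {address}")
```

Both the short alias and the fully qualified name resolve to the same address. For example, either `mail` or `mail.mydomain.com` reaches the central server.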

|

||||

@ -28,34 +28,29 @@

|

||||

|

||||

首先,我们需要(在两台机器上):

|

||||

|

||||

**1. 安装 Postfix:**

|

||||

**1、 安装 Postfix:**

|

||||

|

||||

# yum update && yum install postfix

|

||||

|

||||

**2. 启动服务并启用开机自动启动:**

|

||||

**2、 启动服务并启用开机自动启动:**

|

||||

|

||||

# systemctl start postfix

|

||||

# systemctl enable postfix

|

||||

|

||||

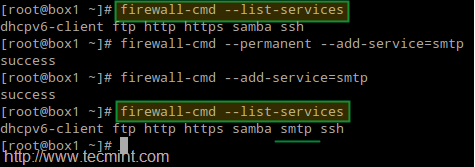

**3. 允许邮件流量通过防火墙:**

|

||||

**3、 允许邮件流量通过防火墙:**

|

||||

|

||||

# firewall-cmd --permanent --add-service=smtp

|

||||

# firewall-cmd --add-service=smtp

|

||||

|

||||

|

||||

|

||||

|

||||

在防火墙中开通邮件服务器端口

|

||||

*在防火墙中开通邮件服务器端口*

|

||||

|

||||

**4. 在 box1.mydomain.com 配置 Postfix**

|

||||

**4、 在 box1.mydomain.com 配置 Postfix**

|

||||

|

||||

Postfix 的主要配置文件是 /etc/postfix/main.cf。这个文件本身是一个很大的文本,因为其中包含的注释解析了程序设置的目的。

|

||||

Postfix 的主要配置文件是 `/etc/postfix/main.cf`。这个文件本身是一个很大的文本文件,因为其中包含了解释程序设置的用途的注释。

|

||||

|

||||

为了简洁,我们只显示了需要编辑的行(是的,在原始服务器中你需要保留 mydestination 为空;否则邮件会被保存到本地而不是我们实际想要的中央邮件服务器):

|

||||

|

||||

**在 box1.mydomain.com 配置 Postfix**

|

||||

|

||||

----------

|

||||

为了简洁,我们只显示了需要编辑的行(没错,在起源服务器中你需要保留 `mydestination` 为空;否则邮件会被存储到本地,而不是我们实际想要发往的中央邮件服务器):

|

||||

|

||||

myhostname = box1.mydomain.com

|

||||

mydomain = mydomain.com

|

||||

@ -64,11 +59,7 @@ Postfix 的主要配置文件是 /etc/postfix/main.cf。这个文件本身是一

|

||||

mydestination =

|

||||

relayhost = 192.168.0.20
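The null-client rules above require an empty `mydestination` and a `relayhost` that points at the central server, and they can be checked mechanically. This Python sketch parses simple `name = value` lines and reports any violations.

It is only an illustration: it does not join main.cf continuation lines, and on a real system `postconf -n` is the authoritative view of the active settings. The helper names are hypothetical. Note that leaving `mydestination` out entirely is not the same as setting it to an empty value, because Postfix then falls back to its non-empty default.

```python
def parse_main_cf(text: str) -> dict[str, str]:
    """Parse simple 'name = value' lines; later assignments override earlier ones."""
    settings: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # blanks, comments, and lines without '=' (continuations are not joined)
        name, value = line.split("=", 1)
        settings[name.strip()] = value.strip()
    return settings


def null_client_problems(settings: dict[str, str]) -> list[str]:
    """Report which of the null-client rules described above are not met."""
    problems = []
    # An unset mydestination falls back to Postfix's non-empty default,
    # so the parameter must be present and empty.
    if settings.get("mydestination") != "":
        problems.append("mydestination must be set to an empty value")
    if not settings.get("relayhost"):
        problems.append("relayhost must point at the central mail server")
    return problems


BOX1 = """\
myhostname = box1.mydomain.com
mydomain = mydomain.com
mydestination =
relayhost = 192.168.0.20
"""

if __name__ == "__main__":
    print(null_client_problems(parse_main_cf(BOX1)) or "null-client settings look fine")
```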

**5. Configure Postfix on mail.mydomain.com**

    myhostname = mail.mydomain.com
    mydomain = mydomain.com

@ -83,23 +74,23 @@ Postfix's main configuration file is /etc/postfix/main.cf. This file itself is a

*Set Postfix SELinux Permission*

The SELinux boolean above allows Postfix on the central server to write to the mail spool.

**6. Restart the service on both machines to apply the changes:**

    # systemctl restart postfix

If Postfix does not start correctly, you can use the following commands to troubleshoot.

    # systemctl -l status postfix
    # journalctl -xn
    # postconf -n

### Testing the Postfix Mail Service ###

To test the mail server, you can use any Mail User Agent (usually abbreviated to MUA), such as [mail or mutt][2].
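A script can also submit a test message instead of an MUA. This Python sketch uses the standard `smtplib` and `email.message` modules to hand a message to the Postfix instance on box1, which is assumed to listen on `localhost:25`. The addresses are hypothetical and based on the test environment above. Delivery to `tecmint@mydomain.com` also assumes that the central server accepts mail for `mydomain.com`; its full configuration is not shown here.

```python
import smtplib
from email.message import EmailMessage


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Create a plain-text message with the given headers and body."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_local_postfix(msg: EmailMessage, host: str = "localhost", port: int = 25) -> None:
    """Submit the message to the SMTP server (Postfix) listening on host:port."""
    with smtplib.SMTP(host, port, timeout=10) as smtp:
        smtp.send_message(msg)
```

On box1 you would call `send_via_local_postfix(build_message("root@box1.mydomain.com", "tecmint@mydomain.com", "Postfix null-client test", "Hello"))`. Because `mydestination` is empty on box1, Postfix should relay the message to mail.mydomain.com, as in the mutt test.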

|

||||

|

||||

由于我个人喜欢 mutt,我会在 box1 中使用它发送邮件给用户 tecmint,并把现有文件(mailbody.txt)作为信息内容:

|

||||

|

||||

@ -107,7 +98,7 @@ Postfix 的主要配置文件是 /etc/postfix/main.cf。这个文件本身是一

|

||||

|

||||

|

||||

|

||||

测试 Postfix 邮件服务器

|

||||

*测试 Postfix 邮件服务器*

|

||||

|

||||

现在到中央邮件服务器(mail.mydomain.com)以 tecmint 用户登录,并检查是否收到了邮件:

|

||||

|

||||

@ -116,15 +107,15 @@ Postfix 的主要配置文件是 /etc/postfix/main.cf。这个文件本身是一

|

||||

|

||||

|

||||

|

||||

检查 Postfix 邮件服务器发送

|

||||

*检查 Postfix 邮件服务器发送*

|

||||

|

||||

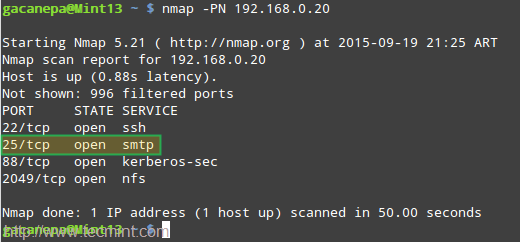

如果没有收到邮件,检查 root 用户的邮件池查看警告或者错误提示。你也需要使用 [nmap 命令][3]确保两台服务器运行了 SMTP 服务,并在中央邮件服务器中 打开了 25 号端口:

|

||||

如果没有收到邮件,检查 root 用户的邮件池看看是否有警告或者错误提示。你也许需要使用 [nmap 命令][3]确保两台服务器运行了 SMTP 服务,并在中央邮件服务器中打开了 25 号端口:

|

||||

|

||||

# nmap -PN 192.168.0.20
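If nmap is not installed, a rough single-port substitute is to attempt a TCP connection to port 25. The Python sketch below does only that. Unlike nmap, it checks just one port and does not identify the service behind it. The helper name `tcp_port_open` is hypothetical.

```python
import socket


def tcp_port_open(host: str, port: int = 25, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False
```

For example, `tcp_port_open("192.168.0.20")` should return `True` once the central server's firewall allows SMTP and Postfix is listening.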

*Postfix Mail Server Troubleshooting*

### Summary ###

@ -134,7 +125,7 @@ Postfix Mail Server Troubleshooting

- [Configure a Cache-Only DNS Server on CentOS/RHEL 7][4]

Finally, I strongly recommend that you become familiar with Postfix's configuration file (main.cf) and the program's man pages. If you have any questions, don't hesitate to let us know using the comment form below or via our forum, Linuxsay.com. You will get almost immediate help from Linux experts all around the world.

--------------------------------------------------------------------------------

@ -142,7 +133,7 @@ via: http://www.tecmint.com/setup-postfix-mail-server-smtp-using-null-client-on-

Author: [Gabriel Cánepa][a]

Translator: [ictlyh](https://www.mutouxiaogui.cn/blog/)

Proofreader: [wxy](https://github.com/wxy)

This article was compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,148 +0,0 @@

[bazz2222]

Why did you start using Linux?
================================================================================

> In today's open source roundup: What got you started with Linux? Plus: IBM's Linux only Mainframe. And why you should skip Windows 10 and go with Linux

### Why did you start using Linux? ###

Linux has become quite popular over the years, with many users defecting to it from OS X or Windows. But have you ever wondered what got people started with Linux? A redditor asked that question and got some very interesting answers.

SilverKnight asked his question on the Linux subreddit:

> I know this has been asked before, but I wanted to hear more from the younger generation why it is that they started using linux and what keeps them here.
>
> I dont want to discourage others from giving their linux origin stories, because those are usually pretty good, but I was mostly curious about our younger population since there isn't much out there from them yet.
>
> I myself am 27 and am a linux dabbler. I have installed quite a few different distros over the years but I haven't made the plunge to full time linux. I guess I am looking for some more reasons/inspiration to jump on the bandwagon.
>
> [More at Reddit][1]

Fellow redditors in the Linux subreddit responded with their thoughts:

> **DoublePlusGood**: "I started using Backtrack Linux (now Kali) at 12 because I wanted to be a "1337 haxor". I've stayed with Linux (Archlinux currently) because it lets me have the endless freedom to make my computer do what I want."
>
> **Zack**: "I'm a Linux user since, I think, the age of 12 or 13, I'm 15 now.
>
> It started when I got tired with Windows XP at 11 and the waiting, dammit am I impatient sometimes, but waiting for a basic task such as shutting down just made me tired of Windows all together.
>
> A few months previously I had started participating in discussions in a channel on the freenode IRC network which was about a game, and as freenode usually goes, it was open source and most of the users used Linux.
>
> I kept on hearing about this Linux but wasn't that interested in it at the time. However, because the channel (and most of freenode) involved quite a bit of programming I started learning Python.
>
> A year passed and I was attempting to install GNU/Linux (specifically Ubuntu) on my new (technically old, but I had just got it for my birthday) PC, unfortunately it continually froze, for reasons unknown (probably a bad hard drive, or a lot of dust or something else...).
>
> Back then I was the type to give up on things, so I just continually nagged my dad to try and install Ubuntu, he couldn't do it for the same reasons.
>
> After wanting Linux for a while I became determined to get Linux and ditch windows for good. So instead of Ubuntu I tried Linux Mint, being a derivative of Ubuntu(?) I didn't have high hopes, but it worked!
>
> I continued using it for another 6 months.
>
> During that time a friend on IRC gave me a virtual machine (which ran Ubuntu) on their server, I kept it for a year a bit until my dad got me my own server.
>
> After the 6 months I got a new PC (which I still use!) I wanted to try something different.
>
> I decided to install openSUSE.
>
> I liked it a lot, and on the same Christmas I obtained a Raspberry Pi, and stuck with Debian on it for a while due to the lack of support other distros had for it."
>
> **Cqz**: "Was about 9 when the Windows 98 machine handed down to me stopped working for reasons unknown. We had no Windows install disk, but Dad had one of those magazines that comes with demo programs and stuff on CDs. This one happened to have install media for Mandrake Linux, and so suddenly I was a Linux user. Had no idea what I was doing but had a lot of fun doing it, and although in following years I often dual booted with various Windows versions, the FLOSS world always felt like home. Currently only have one Windows installation, which is a virtual machine for games."
>
> **Tosmarcel**: "I was 15 and was really curious about this new concept called 'programming' and then I stumbled upon this Harvard course, CS50. They told users to install a Linux vm to use the command line. But then I asked myself: "Why doesn't windows have this command line?!". I googled 'linux' and Ubuntu was the top result -Ended up installing Ubuntu and deleted the windows partition accidentally... It was really hard to adapt because I knew nothing about linux. Now I'm 16 and running arch linux, never looked back and I love it!"
>
> **Micioonthet**: "First heard about Linux in the 5th grade when I went over to a friend's house and his laptop was running MEPIS (an old fork of Debian) instead of Windows XP.
>
> Turns out his dad was a socialist (in America) and their family didn't trust Microsoft. This was completely foreign to me, and I was confused as to why he would bother using an operating system that didn't support the majority of software that I knew.
>
> Fast forward to when I was 13 and without a laptop. Another friend of mine was complaining about how slow his laptop was, so I offered to buy it off of him so I could fix it up and use it for myself. I paid $20 and got a virus filled, unusable HP Pavilion with Windows Vista. Instead of trying to clean up the disgusting Windows install, I remembered that Linux was a thing and that it was free. I burned an Ubuntu 12.04 disc and installed it right away, and was absolutely astonished by the performance.
>
> Minecraft (one of the few early Linux games because it ran on Java), which could barely run at 5 FPS on Vista, ran at an entirely playable 25 FPS on a clean install of Ubuntu.
>
> I actually still have that old laptop and use it occasionally, because why not? Linux doesn't care how old your hardware is.
>
> I since converted my dad to Linux and we buy old computers at lawn sales and thrift stores for pennies and throw Linux Mint or some other lightweight distros on them."
>
> **Webtm**: "My dad had every computer in the house with some distribution on it, I think a couple with OpenSUSE and Debian, and his personal computer had Slackware on it. So I remember being little and playing around with Debian and not really getting into it much. So I had a Windows laptop for a few years and my dad asked me if I wanted to try out Debian. It was a fun experience and ever since then I've been using Debian and trying out distributions. I currently moved away from Linux and have been using FreeBSD for around 5 months now, and I am absolutely happy with it.
>
> The control over your system is fantastic. There are a lot of cool open source projects. I guess a lot of the fun was figuring out how to do the things I want by myself and tweaking those things in ways to make them do something else. Stability and performance is also a HUGE plus. Not to mention the level of privacy when switching."
>
> **Wyronaut**: "I'm currently 18, but I first started using Linux when I was 13. Back then my first distro was Ubuntu. The reason why I wanted to check out Linux, was because I was hosting little Minecraft game servers for myself and a couple of friends, back then Minecraft was pretty new-ish. I read that the defacto operating system for hosting servers was Linux.
>
> I was a big newbie when it came to command line work, so Linux scared me a little, because I had to take care of a lot of things myself. But thanks to google and a few wiki pages I managed to get up a couple of simple servers running on a few older PC's I had lying around. Great use for all that older hardware no one in the house ever uses.
>
> After running a few game servers I started running a few web servers as well. Experimenting with HTML, CSS and PHP. I worked with those for a year or two. Afterwards, took a look at Java. I made the terrible mistake of watching TheNewBoston video's.
>
> So after like a week I gave up on Java and went to pick up a book on Python instead. That book was Learn Python The Hard Way by Zed A. Shaw. After I finished that at the fast pace of two weeks, I picked up the book C++ Primer, because at the time I wanted to become a game developer. Went trough about half of the book (~500 pages) and burned out on learning. At that point I was spending a sickening amount of time behind my computer.
>
> After taking a bit of a break, I decided to pick up JavaScript. Read like 2 books, made like 4 different platformers and called it a day.
>
> Now we're arriving at the present. I had to go through the horrendous process of finding a school and deciding what job I wanted to strive for when I graduated. I ruled out anything in the gaming sector as I didn't want anything to do with graphics programming anymore, I also got completely sick of drawing and modelling. And I found this bachelor that had something to do with netsec and I instantly fell in love. I picked up a couple books on C to shred this vacation period and brushed up on some maths and I'm now waiting for the new school year to commence.
>
> Right now, I am having loads of fun with Arch Linux, made couple of different arrangements on different PC's and it's going great!
>
> In a sense Linux is what also got me into programming and ultimately into what I'm going to study in college starting this september. I probably have my future life to thank for it."
>
> **Linuxllc**: "You also can learn from old farts like me.
>
> The crutch, The crutch, The crutch. Getting rid of the crutch will inspired you and have good reason to stick with Linux.
>
> I got rid of my crutch(Windows XP) back in 2003. Took me only 5 days to get all my computer task back and running at a 100% workflow. Including all my peripheral devices. Minus any Windows games. I just play native Linux games."
>
> **Highclass**: "Hey I'm 28 not sure if this is the age group you are looking for.
>
> To be honest, I was always interested in computers and the thought of a free operating system was intriguing even though at the time I didn't fully grasp the free software philosophy, to me it was free as in no cost. I also did not find the CLI too intimidating as from an early age I had exposure to DOS.
>
> I believe my first distro was Mandrake, I was 11 or 12, I messed up the family computer on several occasions.... I ended up sticking with it always trying to push myself to the next level. Now I work in the industry with Linux everyday.
>
> /shrug"
>
> Matto: "My computer couldn't run fast enough for XP (got it at a garage sale), so I started looking for alternatives. Ubuntu came up in Google. I was maybe 15 or 16 at the time. Now I'm 23 and have a job working on a product that uses Linux internally."
>
> [More at Reddit][2]

### IBM's Linux only Mainframe ###

IBM has a long history with Linux, and now the company has created a Mainframe that features Ubuntu Linux. The new machine is named LinuxOne.

Ron Miller reports for TechCrunch:

> The new mainframes come in two flavors, named for penguins (Linux — penguins — get it?). The first is called Emperor and runs on the IBM z13, which we wrote about in January. The other is a smaller mainframe called the Rockhopper designed for a more “entry level” mainframe buyer.
>
> You may have thought that mainframes went the way of the dinosaur, but they are still alive and well and running in large institutions throughout the world. IBM as part of its broader strategy to promote the cloud, analytics and security is hoping to expand the potential market for mainframes by running Ubuntu Linux and supporting a range of popular open source enterprise software such as Apache Spark, Node.js, MongoDB, MariaDB, PostgreSQL and Chef.
>
> The metered mainframe will still sit inside the customer’s on-premises data center, but billing will be based on how much the customer uses the system, much like a cloud model, Mauri explained.
>
> ...IBM is looking for ways to increase those sales. Partnering with Canonical and encouraging use of open source tools on a mainframe gives the company a new way to attract customers to a small, but lucrative market.
>
> [More at TechCrunch][3]

### Why you should skip Windows 10 and opt for Linux ###

Since Windows 10 has been released there has been quite a bit of media coverage about its potential to spy on users. ZDNet has listed some reasons why you should skip Windows 10 and opt for Linux instead on your computer.

SJVN reports for ZDNet:

> You can try to turn Windows 10's data-sharing ways off, but, bad news: Windows 10 will keep sharing some of your data with Microsoft anyway. There is an alternative: Desktop Linux.
>
> You can do a lot to keep Windows 10 from blabbing, but you can't always stop it from talking. Cortana, Windows 10's voice activated assistant, for example, will share some data with Microsoft, even when it's disabled. That data includes a persistent computer ID to identify your PC to Microsoft.
>
> So, if that gives you a privacy panic attack, you can either stick with your old operating system, which is likely Windows 7, or move to Linux. Eventually, when Windows 7 is no longer supported, if you want privacy you'll have no other viable choice but Linux.
>
> There are other, more obscure desktop operating systems that are also desktop-based and private. These include the BSD Unix family such as FreeBSD, PCBSD, and NetBSD and eComStation, OS/2 for the 21st century. Your best choice, though, is a desktop-based Linux with a low learning curve.
>
> [More at ZDNet][4]

--------------------------------------------------------------------------------

via: http://www.itworld.com/article/2972587/linux/why-did-you-start-using-linux.html

Author: [Jim Lynch][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.itworld.com/author/Jim-Lynch/
[1]:https://www.reddit.com/r/linux/comments/3hb2sr/question_for_younger_users_why_did_you_start/
[2]:https://www.reddit.com/r/linux/comments/3hb2sr/question_for_younger_users_why_did_you_start/
[3]:http://techcrunch.com/2015/08/16/ibm-teams-with-canonical-on-linux-mainframe/
[4]:http://www.zdnet.com/article/sick-of-windows-spying-on-you-go-linux/

@ -1,35 +0,0 @@

Linus Torvalds Lambasts Open Source Programmers over Insecure Code
================================================================================

Linus Torvalds's latest rant underscores the high expectations the Linux developer places on open source programmers, as well as the importance of security for Linux kernel code.

Torvalds is the unofficial "benevolent dictator" of the Linux kernel project. That means he gets to decide which code contributions go into the kernel, and which ones land in the reject pile.

On Oct. 28, open source coders whose work did not meet Torvalds's expectations faced an [angry rant][1]. "Christ people," Torvalds wrote about the code. "This is just sh*t."

He went on to call the coders "just incompetent and out to lunch."

What made Torvalds so angry? He believed the code could have been written more efficiently. It could have been easier for other programmers to understand and would run better through a compiler, the program that translates human-readable code into the binaries that computers understand.

Torvalds posted his own substitution for the code in question and suggested that the programmers should have written it his way.

Torvalds has a history of lashing out against people with whom he disagrees. It stretches back to 1992, when he famously [flamed Andrew Tanenbaum][2], whose Minix operating system he later described as a series of "brain-damages." No doubt this latest criticism of fellow open source coders will go down as another example of Torvalds's confrontational personality.

But Torvalds may also have been acting strategically during this latest rant. "I want to make it clear to *everybody* that code like this is completely unacceptable," he wrote, suggesting that his goal was to send a message to all Linux programmers, not just vent his anger at particular ones.

Torvalds also used the incident as an opportunity to highlight the security concerns that arise from poorly written code. Those are issues dear to open source programmers' hearts in an age when enterprises are finally taking software security seriously, and demanding top-notch performance from their code in this regard. Lambasting open source programmers who write insecure code thus helps Linux's image.

--------------------------------------------------------------------------------

via: http://thevarguy.com/open-source-application-software-companies/110415/linus-torvalds-lambasts-open-source-programmers-over-inse

Author: [Christopher Tozzi][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://thevarguy.com/author/christopher-tozzi
[1]:http://lkml.iu.edu/hypermail/linux/kernel/1510.3/02866.html
[2]:https://en.wikipedia.org/wiki/Tanenbaum%E2%80%93Torvalds_debate

@ -1,330 +0,0 @@
translating by ezio

Going Beyond Hello World Containers is Hard Stuff
================================================================================
In [my previous post][1], I provided the basic concepts behind Linux container technology. I wrote as much for you as I did for me. Containers are new to me, and I figured that having the opportunity to blog about the subject would provide the motivation to really learn the stuff.

I intend to learn by doing: first get the concepts down, then get hands-on and write about it as I go. I assumed there must be a lot of Hello World type stuff out there to get me up to speed with the basics. Then I could take things a bit further and build a microservice container or something.

I mean, it can't be that hard, right?

Wrong.

Maybe it's easy for someone who spends a significant amount of their life immersed in operations work. But for me, getting started with this stuff turned out to be hard, to the point of posting my frustrations to Facebook...

But there is good news: I got it to work! And it's always nice being able to make lemonade from lemons. So I am going to share the story of how I made my first microservice container with you. Maybe my pain will save you some time.

If you've ever found yourself in a situation like this, fear not: folks like me are here to deal with the problems so you don't have to!

Let's begin.

### A Thumbnail Microservice ###

The microservice I designed was simple in concept: post a digital image in JPG or PNG format to an HTTP endpoint and get back a 100px-wide thumbnail.

Here's what that looks like:

I decided to use NodeJS for my code and a version of [ImageMagick][2] to do the thumbnail transformation.

I wrote the first version of the service using the logic shown here:

I downloaded the [Docker Toolbox][3], which installs the Docker Quickstart Terminal. The Docker Quickstart Terminal makes creating containers easier: it fires up a Linux virtual machine that has Docker installed, allowing you to run Docker commands from within a terminal.

In my case, I am running on OS X, but there's a Windows version too.

I am going to use the Docker Quickstart Terminal to build a container image for my microservice and run a container from that image.

The Docker Quickstart Terminal runs in your regular terminal, like so:

### The First Little Problem and the First Big Problem ###

So I fiddled around with NodeJS and ImageMagick, and I got the service to work on my local machine.

Then I created the Dockerfile, which is the configuration script Docker uses to build your container. (I'll say more about builds and the Dockerfile later on.)

Here's the build command I ran in the Docker Quickstart Terminal:

    $ docker build -t thumbnailer:0.1

I got this response:

    docker: "build" requires 1 argument.

Huh.

After 15 minutes, I realized: I forgot to put a period (`.`) as the last argument! That argument is the build context, the directory whose contents Docker uses for the build.

It needs to be:

    $ docker build -t thumbnailer:0.1 .

But this wasn't the end of my problems.

I got the image to build, and then I typed [the `run` command][4] in the Docker Quickstart Terminal to fire up a container based on the image, called `thumbnailer:0.1`:

    $ docker run -d -p 3001:3000 thumbnailer:0.1

The `-p 3001:3000` argument publishes port 3000 inside the container, where the NodeJS microservice listens, on port 3001 of the host virtual machine. The `-d` flag runs the container in the background.
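
The post doesn't show how the service starts listening. As a minimal sketch, assuming Node's built-in `http` module rather than the Express app the service actually uses, the detail that matters for `-p` is the bind address. The `startServer` function is hypothetical:

```javascript
const http = require('http');

// Bind to 0.0.0.0 (all IPv4 interfaces) rather than 127.0.0.1. Traffic published with
// `docker run -p 3001:3000` reaches the container through its network interface,
// so a server listening only on the container's loopback address would never see it.
function startServer(port = Number(process.env.PORT) || 3000) {
  const server = http.createServer((req, res) => {
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    res.end('Thumbnailer processor is up and running');
  });
  server.listen(port, '0.0.0.0', () => {
    console.log('Listening on port ' + server.address().port);
  });
  return server;
}
```

Calling `startServer()` from the container's start script would listen on port 3000, which is the port the `-p 3001:3000` mapping expects.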

Looks good so far, right?

Wrong. Things are about to get pretty bad.

I determined the IP address of the virtual machine created by the Docker Quickstart Terminal by running the `docker-machine` command:

    $ docker-machine ip default

This returns the IP address of the default virtual machine, the one that runs under the Docker Quickstart Terminal. For me, this IP address was 192.168.99.100.

I browsed to http://192.168.99.100:3001/ and got the file upload page I built:

I selected a file and clicked the Upload Image button.

But it didn't work.

The terminal told me it couldn't find the `/upload` directory my microservice required.

Now, keep in mind, I had been at this for about a day, between the fiddling and the research. I was feeling a little frustrated by this point.

Then a brain spark flew. Somewhere along the line, I remembered reading that a microservice should not do any data persistence on its own! Saving data should be the job of another service.

So what if the container can't find the `/upload` directory? The real issue is that my microservice has a fundamentally flawed design.

Let's take another look:

Why am I saving a file to disk? Microservices are supposed to be fast. Why not do all my work in memory? Using memory buffers will make the "I can't find no stickin' directory" error go away, and skipping the round trip to disk should make my app considerably faster.

So that's what I did. And here's what the plan was:

Here's the NodeJS code I wrote to do all the in-memory work for creating a thumbnail:

    // Bind to the packages
    var express = require('express');
    var router = express.Router();
    var path = require('path'); // used for file path
    var im = require('imagemagick');

    // req.busboy is added by the connect-busboy middleware, which must be
    // registered on the app (for example, app.use(busboy())) before this router

    // Simple GET that allows you to test that you can access the thumbnail process
    router.get('/', function (req, res, next) {
        res.status(200).send('Thumbnailer processor is up and running');
    });

    // This is the POST handler. It will take the uploaded file and make a thumbnail from the
    // submitted bytes. I know, it's not rocket science, but it serves a purpose
    router.post('/', function (req, res, next) {
        req.pipe(req.busboy);
        req.busboy.on('file', function (fieldname, file, filename) {
            var ext = path.extname(filename).toLowerCase();

            // Make sure that only png and jpg are allowed
            if (ext !== '.jpg' && ext !== '.png') {
                file.resume(); // discard the upload so the request can complete
                return res.status(406).send('Service accepts only jpg or png files');
            }

            var chunks = [];

            // collect the chunks of the uploaded file in memory as they arrive
            file.on('data', function (data) {
                chunks.push(data);
                console.log('File [' + fieldname + '] got ' + data.length + ' bytes');
            });

            // Once the upload is finished, join the chunks into one buffer and
            // process that buffer with the imagemagick resize function
            file.on('end', function () {
                var buffer = Buffer.concat(chunks);
                console.log('Buffer holds ' + buffer.length + ' bytes');

                // resize to 100px wide; ImageMagick keeps the aspect ratio
                im.resize({
                    srcData: buffer,
                    width: 100,
                    format: ext.slice(1) // output 'jpg' or 'png', matching the input
                }, function (err, stdout, stderr) {
                    if (err) {
                        // hand the error to Express instead of throwing inside a callback
                        return next(err);
                    }
                    // .jpg is served as image/jpeg, .png as image/png
                    res.setHeader('content-type', ext === '.png' ? 'image/png' : 'image/jpeg');
                    res.status(200);
                    // send the image back as a response
                    res.send(Buffer.from(stdout, 'binary'));
                });
            });
        });
    });

    module.exports = router;
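
The in-memory idea in the handler above is to collect the upload's bytes as they arrive, then work on them once the stream ends. That idea can also be isolated into a reusable, promise-based helper. The `collectStream` name is hypothetical and not part of the service:

```javascript
const { Readable } = require('stream');

// Hypothetical helper: read an entire stream into one Buffer, entirely in memory.
// Resolves with the joined bytes once the stream ends; rejects on a stream error.
function collectStream(stream) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    stream.on('data', (chunk) => {
      // upload streams emit Buffers; convert strings just in case
      chunks.push(Buffer.isBuffer(chunk) ? chunk : Buffer.from(chunk));
    });
    stream.on('end', () => resolve(Buffer.concat(chunks)));
    stream.on('error', reject);
  });
}

// Usage: an in-memory stream standing in for an uploaded file
collectStream(Readable.from([Buffer.from([0x89, 0x50]), Buffer.from([0x4e, 0x47])]))
  .then((buf) => console.log(buf.length + ' bytes collected'));
```

Nothing touches the disk, which is what makes the missing `/upload` directory irrelevant.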

Okay, so we were back on track, and everything was hunky-dory on my local machine. I went to sleep.

But before I did, I tested the microservice code running as a standard Node app on localhost...

It worked fine. Now all I needed to do was get it working in a container.

The next day I woke up, grabbed some coffee, and built an image, this time not forgetting to put in the period!

    $ docker build -t thumbnailer:0.1 .

I am building from the root directory of my thumbnailer project, and the build command uses the Dockerfile in that directory. That's how it works: by default, `docker build` looks for a file named `Dockerfile` at the root of the build context (here, the `.` directory), so if you put the Dockerfile where you run the build, it will be used automatically.

Here is the text of the Dockerfile I was using:

    FROM ubuntu:latest
    MAINTAINER bob@CogArtTech.com

    RUN apt-get update
    RUN apt-get install -y nodejs nodejs-legacy npm
    RUN apt-get install imagemagick libmagickcore-dev libmagickwand-dev
    RUN apt-get clean

    COPY ./package.json src/

    RUN cd src && npm install

    COPY . /src

    WORKDIR src/

    CMD npm start

What could go wrong?

### The Second Big Problem ###

I ran the `build` command and got this error:

    Do you want to continue? [Y/n] Abort.

    The command '/bin/sh -c apt-get install imagemagick libmagickcore-dev libmagickwand-dev' returned a non-zero code: 1

I figured something was wrong with the microservice. I went back to my machine, fired up the service on localhost, and uploaded a file.

Then I got this error from NodeJS:

    Error: spawn convert ENOENT

What's going on? This worked the other night!

I searched and searched for every permutation of the error I could think of. After about four hours of replacing different node modules here and there, I figured: why not restart the machine?

I did. And guess what? The error went away!

Go figure.
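
For the record, `spawn convert ENOENT` is how Node reports that it could not find the executable it tried to launch. The imagemagick module runs ImageMagick's `convert` command, so the error means `convert` was not on the `PATH` of the Node process at that moment. A startup check along these lines would surface the problem early. The `commandAvailable` helper is hypothetical and not part of the original service:

```javascript
const { spawnSync } = require('child_process');

// Hypothetical helper: returns false when the executable cannot be found,
// which is the same condition Node reports as "spawn <cmd> ENOENT".
function commandAvailable(cmd, args = ['-version']) {
  const result = spawnSync(cmd, args, { stdio: 'ignore' });
  return !(result.error && result.error.code === 'ENOENT');
}

// Check for ImageMagick's convert before starting the service
if (!commandAvailable('convert')) {
  console.error('ImageMagick "convert" was not found on PATH');
}
```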

### Putting the Genie Back in the Bottle ###

So, back to the original quest: I needed to get this build working.

I removed all of the containers on the VM, using [the `rm` command][5]:

    $ docker rm -f $(docker ps -a -q)

The `-f` flag here forces the removal of running containers.

Then I removed all of my Docker images, using [the `rmi` command][6]:

    $ docker rmi -f $(docker images | tail -n +2 | awk '{print $3}')

I went through the whole process of rebuilding the image, starting the container, and trying to get the microservice running. Then, after about an hour of self-doubt and accompanying frustration, I thought to myself: maybe this isn't a problem with the microservice.

So, I looked at the error again:

    Do you want to continue? [Y/n] Abort.

    The command '/bin/sh -c apt-get install imagemagick libmagickcore-dev libmagickwand-dev' returned a non-zero code: 1

Then it hit me: the build was waiting for a Y input from the keyboard! But this is a non-interactive Dockerfile script. There is no keyboard.

I went back to the Dockerfile, and there it was:

    RUN apt-get update
    RUN apt-get install -y nodejs nodejs-legacy npm
    RUN apt-get install imagemagick libmagickcore-dev libmagickwand-dev
    RUN apt-get clean

The second `apt-get install` command is missing the `-y` flag, which makes `apt-get` answer "yes" automatically to prompts it would otherwise wait on. With no one at a keyboard to answer, `apt-get` aborts and the build fails.

I added the missing `-y` to the command:

    RUN apt-get update
    RUN apt-get install -y nodejs nodejs-legacy npm
    RUN apt-get install -y imagemagick libmagickcore-dev libmagickwand-dev
    RUN apt-get clean

And guess what: after two days of trial and tribulation, it worked! Two whole days!

So, I did my build:

    $ docker build -t thumbnailer:0.1 .

I fired up the container:

    $ docker run -d -p 3001:3000 thumbnailer:0.1

Got the IP address of the virtual machine:

    $ docker-machine ip default

Went to my browser and entered http://192.168.99.100:3001/ into the address bar.

The upload page loaded.

I selected an image, and this is what I got:

It worked!

Inside a container, for the first time!

### So What Does It All Mean? ###

A long time ago, I accepted the fact that when it comes to tech, sometimes even the easy stuff is hard. Along with that, I abandoned the desire to be the smartest guy in the room. Still, the last few days of trying to get basic competency with containers have been, at times, a journey of self-doubt.

But you wanna know something? It's 2 AM as I write this, and every nerve-wracking hour has been worth it. Why? Because you gotta put in the time. This stuff is hard, and it does not come easy for anyone. And don't forget: you're learning tech, and tech runs the world!

P.S. For a two-part video on Hello World containers, check out [Raziel Tabib's][7] excellent work...

Note: YouTube video
<iframe width="560" height="315" src="https://www.youtube.com/embed/PJ95WY2DqXo" frameborder="0" allowfullscreen></iframe>

And don't miss part two...

Note: YouTube video
<iframe width="560" height="315" src="https://www.youtube.com/embed/lss2rZ3Ppuk" frameborder="0" allowfullscreen></iframe>

--------------------------------------------------------------------------------

via: https://deis.com/blog/2015/beyond-hello-world-containers-hard-stuff

Author: [Bob Reselman][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://deis.com/blog
[1]:http://deis.com/blog/2015/developer-journey-linux-containers
[2]:https://github.com/rsms/node-imagemagick
[3]:https://www.docker.com/toolbox
[4]:https://docs.docker.com/reference/commandline/run/
[5]:https://docs.docker.com/reference/commandline/rm/
[6]:https://docs.docker.com/reference/commandline/rmi/
[7]:http://twitter.com/RazielTabib

@ -0,0 +1,54 @@
NetworkManager and privacy in the IPv6 internet
======================

IPv6 is gaining momentum. With growing use of the protocol, privacy concerns that were not initially anticipated arise. The Internet community is actively publishing solutions to them. What's the current state, and how is NetworkManager catching up? Let's find out!

## The identity of an IPv6-connected host

IPv6-enabled nodes don't need a central authority, similar to IPv4 [DHCP](https://tools.ietf.org/html/rfc2132) servers, to configure their addresses. They discover the networks they are in and [complete the addresses themselves](https://tools.ietf.org/html/rfc4862) by generating the host part. This makes network configuration simpler and scales better to larger networks. However, there are some drawbacks to this approach. Firstly, the node needs to ensure that its address doesn't collide with the address of any other node on the network. Secondly, if the node uses the same host part of the address in every network it enters, then its movement can be tracked, and its privacy is at risk.

The Internet Engineering Task Force (IETF), the organization behind the Internet standards, [acknowledged this problem](https://tools.ietf.org/html/draft-iesg-serno-privacy-00) and recommends against the use of hardware serial numbers to identify the node in the network.

But what does the actual implementation look like?

The problem of address uniqueness is addressed with the [Duplicate Address Detection](https://tools.ietf.org/html/rfc4862#section-5.4) (DAD) mechanism. When a node creates an address for itself, it first checks whether another node uses the same address, using the [Neighbor Discovery Protocol](https://tools.ietf.org/html/rfc4861) (a mechanism not unlike the IPv4 [ARP](https://tools.ietf.org/html/rfc826) protocol). When it discovers that the address is already in use, it must discard it.

The other problem (privacy) is a bit harder to solve. An IP address (be it IPv4 or IPv6) consists of a network part and a host part. The host discovers the relevant network parts and is supposed to generate the host part. Traditionally, it just uses an Interface Identifier derived from the network hardware's (MAC) address. The MAC address is set at manufacturing time and can uniquely identify the machine. This guarantees the address is stable and unique. That's a good thing for address collision avoidance but a bad thing for privacy: a host part that stays constant across networks means the machine can be uniquely identified as it enters different networks. This seemed like a non-issue at the time the protocol was designed, but privacy concerns arose as IPv6 gained popularity. Fortunately, there's a solution to this problem.
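
For illustration, the traditional derivation is the modified EUI-64 format described in RFC 4291, Appendix A. It turns a 48-bit MAC address into a 64-bit interface identifier by inserting the bytes `ff:fe` in the middle and inverting the universal/local bit of the first byte. This is a minimal sketch; the function name and the sample MAC address are made up for the example:

```javascript
// Derive a modified EUI-64 interface identifier from a MAC address.
// The same MAC always yields the same identifier, which is exactly
// what makes the host trackable across networks.
function macToInterfaceId(mac) {
  const bytes = mac.split(/[:-]/).map((h) => parseInt(h, 16));
  if (bytes.length !== 6 || bytes.some((b) => Number.isNaN(b) || b < 0 || b > 255)) {
    throw new Error('expected a 48-bit MAC address such as 00:11:22:33:44:55');
  }
  // Insert 0xff 0xfe between the two halves of the MAC address
  const eui = [...bytes.slice(0, 3), 0xff, 0xfe, ...bytes.slice(3)];
  // Invert the universal/local bit (0x02) of the first byte
  eui[0] ^= 0x02;
  // Format as four 16-bit groups, as they appear in an IPv6 address
  const groups = [];
  for (let i = 0; i < 8; i += 2) {
    groups.push(((eui[i] << 8) | eui[i + 1]).toString(16).padStart(4, '0'));
  }
  return groups.join(':');
}

console.log(macToInterfaceId('00:11:22:33:44:55')); // 0211:22ff:fe33:4455
```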

## Enter privacy extensions

It's no secret that the biggest problem with IPv4 is that addresses are scarce. This is no longer true with IPv6; in fact, an IPv6-enabled host can use addresses quite liberally. There's absolutely nothing wrong with having multiple IPv6 addresses attached to the same interface. On the contrary, it's a pretty standard situation. At the very minimum, each node has an address used for contacting nodes on the same hardware link, called a link-local address. When the network contains a router that connects it to other networks in the internet, a node also has an address for every network it's directly connected to. If a host has several addresses in the same network, it accepts incoming traffic for all of them. For outgoing connections, which of course reveal the address to the remote host, the kernel picks the fittest one. But which one is it?

With privacy extensions enabled, as defined by [RFC4941](https://tools.ietf.org/html/rfc4941), a new address with a random host part is generated every now and then. The newest one is used for new outgoing connections, while the older ones are deprecated when they're unused. This is a nifty trick: the host does not reveal the stable address, as it's not used for outgoing connections, but it still accepts connections to it from the hosts that are aware of it.
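
To make the idea concrete, here is a rough sketch of building a temporary address. It uses 64 random bits for the host part, with the universal/local bit set to zero as RFC 4941 requires, and appends them to the network prefix. This is a simplification that assumes a /64 prefix written as exactly four groups. RFC 4941 itself derives these bits from an MD5-based history value, and the function names and the `2001:db8:1:2` documentation prefix are illustrative only:

```javascript
const crypto = require('crypto');

// Sketch of a temporary interface identifier: 64 random bits with the
// universal/local bit (0x02 of the first byte) set to zero.
function randomInterfaceId() {
  const iid = crypto.randomBytes(8);
  iid[0] &= ~0x02; // mark the identifier as locally significant only
  const groups = [];
  for (let i = 0; i < 8; i += 2) {
    groups.push(iid.readUInt16BE(i).toString(16).padStart(4, '0'));
  }
  return groups.join(':');
}

// Combine a /64 network prefix (four groups, e.g. "2001:db8:1:2") with a fresh host part.
function temporaryAddress(prefix64) {
  return prefix64 + ':' + randomInterfaceId();
}

// Each call yields a different address in the same network
console.log(temporaryAddress('2001:db8:1:2'));
console.log(temporaryAddress('2001:db8:1:2'));
```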

There's a downside to this. Certain applications tie the address to the user's identity. Consider a web application that issues an HTTP cookie to the user during authentication but only accepts it on connections that come from the address that performed the authentication. As the kernel generates a new temporary address, the server would reject the requests that use it, effectively logging the user out. It could be argued that the address is not an appropriate mechanism for establishing a user's identity, but that's what some real-world applications do.

## Privacy stable addressing to the rescue

Another approach is needed to cope with this. What's required is an address that is unique (of course) and stable for a particular network, but that still changes when the user enters another network, so that tracking is not possible. RFC7217 introduces a mechanism that provides exactly this.

Creating a privacy stable address relies on a pseudo-random key that's known only to the host itself and never revealed to other hosts in the network. This key is then hashed, using a cryptographically secure algorithm, together with values specific to a particular network connection: an identifier of the network interface, the network prefix, and possibly other network-specific values such as the wireless SSID. The secret key makes it impossible for other hosts to predict the resulting address, while the network-specific data makes the address different when the host enters a different network.

This also solves the duplicate address problem nicely. The random key makes collisions unlikely. If, in spite of this, a collision occurs, the hash can be salted with a DAD failure counter and a different address generated, instead of giving up on network connectivity. Now that's clever.
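
Here is a rough sketch of the RFC 7217 computation. It assumes SHA-256 as the pseudorandom function; RFC 7217 leaves that choice open and lists SHA-256 as one option. The rightmost 64 bits of the result become the interface identifier. The function name, parameter names, and sample values are hypothetical. The sketch also skips details a real implementation must handle, such as rejecting reserved interface identifiers:

```javascript
const crypto = require('crypto');

// Sketch of RFC 7217: RID = F(Prefix, Net_Iface, Network_ID, DAD_Counter, secret_key).
// SHA-256 stands in for F(), and the rightmost 64 bits of its output become the
// interface identifier.
function stablePrivacyInterfaceId({ prefix, netIface, networkId = '', dadCounter = 0, secretKey }) {
  const rid = crypto
    .createHash('sha256')
    .update(prefix)             // network prefix: differs from network to network
    .update(netIface)           // which interface of this host
    .update(networkId)          // optional extra network data, e.g. the Wi-Fi SSID
    .update(String(dadCounter)) // bumped after each DAD failure to get a new address
    .update(secretKey)          // known only to this host
    .digest();
  const iid = rid.subarray(rid.length - 8); // rightmost 64 bits
  const groups = [];
  for (let i = 0; i < 8; i += 2) {
    groups.push(iid.readUInt16BE(i).toString(16).padStart(4, '0'));
  }
  return groups.join(':');
}

const secretKey = crypto.randomBytes(16); // generated once and kept secret
const home = { prefix: '2001:db8:1:2::/64', netIface: 'wlan0', networkId: 'HomeSSID', secretKey };

// Same network: the same identifier every time. Another network or a DAD retry: a new one.
console.log(stablePrivacyInterfaceId(home));
console.log(stablePrivacyInterfaceId({ ...home, prefix: '2001:db8:9:9::/64' }));
console.log(stablePrivacyInterfaceId({ ...home, dadCounter: 1 }));
```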

Using privacy stable addresses doesn't interfere with the privacy extensions at all. You can use the [RFC7217](https://tools.ietf.org/html/rfc7217) stable address while still employing RFC4941 temporary addresses at the same time.

## Where does NetworkManager stand?

We already enabled the privacy extensions with the release of NetworkManager 1.0.4. They're turned on by default; you can control them with the `ipv6.ip6-privacy` property.

With the release of NetworkManager 1.2, we're adding stable privacy addressing. It's meant to address the situations where the privacy extensions don't make the cut. The use of the feature is controlled with the `ipv6.addr-gen-mode` property. If it's set to `stable-privacy`, then stable privacy addressing is used. Setting it to `eui64` or not setting it at all preserves the traditional default behavior.

Stay tuned for the NetworkManager 1.2 release in early 2016! If you want to try the bleeding-edge snapshot, give Fedora Rawhide a try. It will eventually become Fedora 24.

*I'd like to thank Hannes Frederic Sowa for valuable feedback. The article would make less sense without his corrections. Hannes also created the in-kernel implementation of the RFC7217 mechanism, which can be used when networking is not managed by NetworkManager.*

--------------------------------------------------------------------------------

via: https://blogs.gnome.org/lkundrak/2015/12/03/networkmanager-and-privacy-in-the-ipv6-internet/
Author: [Lubomir Rintel]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)
This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

46

sources/tech/20151206 Supporting secure DNS in glibc.md

Normal file

@ -0,0 +1,46 @@
Supporting secure DNS in glibc
========================

Credit: Jonathan Corbet

One of the many weak links in Internet security is the domain name system (DNS); it is subject to attacks that, among other things, can mislead applications regarding the IP address of a system they wish to connect to. That, in turn, can cause connections to go to the wrong place, facilitating man-in-the-middle attacks and more. The DNSSEC protocol extensions are meant to address this threat by setting up a cryptographically secure chain of trust for DNS information. When DNSSEC is set up properly, applications should be able to trust the results of domain lookups. As the discussion over an attempt to better integrate DNSSEC into the GNU C Library shows, though, ensuring that DNS lookups are safe is still not a straightforward problem.


In a sense, the problem was solved years ago; one can configure a local nameserver to perform full DNSSEC verification and use that server via glibc calls in applications. DNSSEC can even be used to increase security in other areas; it can, for example, carry SSH or TLS key fingerprints, allowing applications to verify that they are talking to the right server. Things get tricky, though, when one wants to be sure that DNS results claiming to have DNSSEC verification are actually what they claim to be; in other words, when one wants the security that DNSSEC is meant to provide.

### The /etc/resolv.conf problem ###

Part of the problem, from the glibc perspective, is that glibc itself does not do DNSSEC verification. Instead, it consults /etc/resolv.conf and asks the servers found therein to do the lookup and verification; the results are then returned to the application. If the application is using the low-level res_query() interface, those results may include the "authenticated data" (AD) flag (if the nameserver has set it) indicating that DNSSEC verification has been successfully performed. But glibc knows nothing about the trustworthiness of the nameserver that has provided those results, so it cannot tell the application anything about whether they should really be trusted.


One of the first steps suggested by glibc maintainer Carlos O'Donell is to add an option (dns-strip-dnssec-ad-bit) to the resolv.conf file telling glibc to unconditionally remove the AD bit. This option could be set by distributions to indicate that the DNS lookup results cannot be trusted at a DNSSEC level. Once things have been set up so that the results can be trusted, that option can be removed. In the meantime, though, applications would have a way to judge the DNS lookup results they get from glibc, something that does not exist now.
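
To make the AD flag concrete: it is a single bit (0x0020) in the 16-bit flags field of the DNS message header. The header layout comes from RFC 1035, and the DNSSEC RFCs such as RFC 4035 specify how the AD bit is set. The sketch below reads the bit from a raw response and clears it, which is roughly what the proposed dns-strip-dnssec-ad-bit option would do. It is an illustration in JavaScript, not glibc code, and the function names are hypothetical:

```javascript
// The DNS header is 12 bytes; bytes 2-3 hold the flags:
// QR | Opcode | AA | TC | RD | RA | Z | AD | CD | RCODE
const AD_FLAG = 0x0020; // "authenticated data" bit

// Returns true if a raw DNS message has the AD bit set.
function hasAdBit(message) {
  if (message.length < 12) throw new Error('truncated DNS header');
  return (message.readUInt16BE(2) & AD_FLAG) !== 0;
}

// Returns a copy of the message with the AD bit cleared, so a caller can no
// longer mistake the response for a DNSSEC-validated one.
function stripAdBit(message) {
  const copy = Buffer.from(message);
  copy.writeUInt16BE(copy.readUInt16BE(2) & ~AD_FLAG & 0xffff, 2);
  return copy;
}

// A made-up response header: ID 0x1234, flags 0x81a0 (QR, RD, RA and AD set)
const header = Buffer.from([0x12, 0x34, 0x81, 0xa0, 0, 1, 0, 1, 0, 0, 0, 0]);
console.log(hasAdBit(header));             // true
console.log(hasAdBit(stripAdBit(header))); // false
```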


What would a trustworthy setup look like? The standard picture looks something like this: there is a local nameserver, accessed via the loopback interface, as the only entry in /etc/resolv.conf. That nameserver would be configured to do verification and, in the case that verification fails, simply return no results at all. There would, in almost all cases, be no need to worry about whether applications see the AD bit or not; if the results are not trustworthy, applications will simply not see them at all. A number of distributions are moving toward this model, but the situation is still not as simple as some might think.
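
As a rough illustration of the setup described above, the check can be sketched as parsing resolv.conf text and testing whether every `nameserver` entry is a loopback address. This is a hypothetical helper, not a glibc interface. It only inspects the configuration, and as the discussion below shows, that file itself may not be trustworthy:

```javascript
const net = require('net');

// Is this address on the loopback interface? (127.0.0.0/8 for IPv4, ::1 for IPv6)
function isLoopback(address) {
  if (net.isIPv4(address)) return address.split('.')[0] === '127';
  if (net.isIPv6(address)) return address === '::1' || /^(0{1,4}:){7}0{0,3}1$/.test(address);
  return false;
}

// Hypothetical check: true only if resolv.conf lists at least one nameserver
// and every nameserver it lists is a loopback address.
function usesOnlyLocalNameserver(resolvConfText) {
  const servers = resolvConfText
    .split('\n')
    .map((line) => line.trim())
    .filter((line) => /^nameserver\s/.test(line))
    .map((line) => line.split(/\s+/)[1]);
  return servers.length > 0 && servers.every(isLoopback);
}

console.log(usesOnlyLocalNameserver('nameserver 127.0.0.1\n'));                        // true
console.log(usesOnlyLocalNameserver('nameserver 127.0.0.1\nnameserver 192.0.2.53\n')); // false
```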


One problem is that this scheme makes /etc/resolv.conf into a central point of trust for the system. But, in a typical Linux system, there is no end of DHCP clients, networking scripts, and more that will make changes to that file. As Paul Wouters pointed out, locking down this file in the short term is not really an option. Sometimes those changes are necessary: when a diskless system is booting, it may need name-resolution service before it is at a point where it can start up its own nameserver. A system's entire DNS environment may change depending on which network it is attached to. Systems in containers may be best configured to talk to a nameserver on the host. And so on.

So there seems to be a general belief that /etc/resolv.conf cannot really be trusted on current systems. Ideas to add secondary configuration files (/etc/secure-resolv.conf or whatever) have been floated, but they don't much change the basic nature of the situation. Beyond that, some participants felt that even a local nameserver running on the loopback interface is not really trustworthy; Zack Weinberg suggested that administrators might intentionally short out DNSSEC validation, for example.


Since the configuration cannot be trusted on current systems, the reasoning goes, glibc needs to have a way to indicate to applications when the situation has improved and things can be trusted. That could include the AD-stripping option described above (or, conversely, an explicit "this nameserver is trusted" option); that, of course, would require that the system be locked down to a level where surprising changes to /etc/resolv.conf no longer happen. A variant, as suggested by Petr Spacek, is to have a way for an application to ask glibc whether it is talking to a local nameserver or not.


### Do it in glibc? ###

An alternative would be to dispense with the nameserver and have glibc do DNSSEC validation itself. There is, however, resistance to putting a big pile of cryptographic code into glibc itself. That would increase the size of the library and, it is felt, increase the attack surface of any application using it. A variant of this idea, suggested by Zack, would be to put the validation code into the name-service caching daemon (nscd) instead. Since nscd is part of glibc, it is under the control of the glibc developers, and there could be a certain amount of confidence that DNSSEC validation is being performed properly. The location of the nscd socket is well known, so the /etc/resolv.conf issues don't come into play. Carlos worried, though, that this approach might deter adoption by users who do not want the caching features of nscd; in his mind, that seems to rule out the nscd option.

So, in the short term, at least, it seems unlikely that glibc will take on the full task of performing validated DNSSEC lookups. That means that, if security-conscious applications are going to use glibc for their name lookups, the library will have to provide an indication of how trustworthy the results received from a separate nameserver are. And that will almost certainly require explicit action on the part of the distributor and/or system administrator. As Simo Sorce put it:


> A situation in which glibc does not use an explicit configuration option to signal applications that it is using a trusted resolver is not useful ... no scratch that, it is actively harmful, because applications developers will quickly realize they cannot trust any information coming from glibc and will simply not use it for DNSSEC related information.

Configuring a system to properly use DNSSEC involves changes to many of the components of that system; it is a distribution-wide problem that will take time to solve fully. The role that glibc plays in this transition is likely to be relatively small, but it is an important one: glibc is probably the only place where applications can receive some assurance that their DNS results are trustworthy without implementing their own resolver code. Running multiple DNSSEC implementations on a system seems like an unlikely path to greater security, so it would be good to get this right.

The glibc project has not yet chosen a path by which it intends to get things right, though some sort of annotation in /etc/resolv.conf looks like a likely outcome. Any such change would then have to get into a release; given the conservative nature of glibc development, it may already be late for the 2.23 release, which is likely to happen in February. So higher DNSSEC awareness in glibc may not happen right away, but there is at least some movement in that direction.


---------------------------

via: https://lwn.net/Articles/663474/

Author: Jonathan Corbet

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

144

translated/talk/20150820 Why did you start using Linux.md

Normal file

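@ -0,0 +1,144 @@
Young people, why do you use Linux?
================================================================================
> In today's open source roundup: What brought you into the world of Linux? Plus: IBM's Linux-based mainframe. And the reasons you should ditch Windows 10 for Linux.

### Why did you start using Linux? ###

Linux is becoming more and more popular, and many OS X and Windows users have moved over to the Linux camp. But do you know what got them started with Linux? A Reddit user asked this question on the site and got a lot of interesting answers.

A user named SilverKnight asked the following question in Reddit's Linux forum:

> I know this question has surely been asked before, but I'd still like to hear why the younger generation uses Linux, and what made them committed Linux users.
>
> I don't mean to stop anyone from sharing their great Linux stories, but I'm more interested in the thoughts of newcomers who haven't had any great stories yet.
>
> I'm 27 and a half-hearted Linux user. I've installed quite a few distributions over the years, but I never put my full energy into Linux. I'm looking for more reasons, or motivation, to throw myself fully into the Linux wave.
>
> [More on Reddit][1]

Here are some of the replies from the site: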

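> **DoublePlusGood**: I started using Backtrack (now renamed Kali) when I was 12, because I wanted to be a hacker (LCTT translator's note: the original says "1337 haxor". 1337 is the leetspeak spelling of "leet", haxor is the leetspeak spelling of "hacker", and another spelling is "1337 h4x0r", leetspeak culture through and through). These days I use ArchLinux all the time, because it gives me unlimited freedom to do whatever I want with my computer.
>
> **Zack**: I think I started using Linux when I was 12 or 13, and I'm 15 now.
>
> When I was 11, I was already fed up with Windows XP: even something as simple as shutting down made me sit there waiting for it to damn well finish.
>
> A few months before that, I had taken part in discussions about a game in a freenode IRC chat room. It was an open source project, and most of its users ran Linux.
>
> I kept hearing about Linux but wasn't interested in it yet. However, since these chat channels (mostly on freenode) talked about programming a lot, I started learning Python.
>
> A year later, I tried installing GNU/Linux (mostly Ubuntu) on my new computer (not actually new, but I got it as a birthday present). Unfortunately, it never worked properly, for unknown reasons; maybe the hard drive was bad, maybe there was too much dust.
>
> At that point, I gave up trying to fix it myself and pestered my dad to install Ubuntu on my computer. He couldn't do it either, for the same reason.
>
> After chasing Linux for a while, I decided to ditch Windows and use Linux Mint instead of Ubuntu. I didn't have much hope, but Linux Mint actually ran!
>
> So I used that system for 6 months.
>
> During that time, a friend of mine gave me a virtual machine running Ubuntu, which I used for a year, until my dad gave me a server.
>
> Six months later, I got a new PC (which I still use), so I wanted to tinker with something different.
>
> I decided to install openSUSE.
>
> I really liked it. Then at Christmas I got a Raspberry Pi, which could only run Debian and didn't support other distributions yet.
>
> **Cqz**: When I was 9, I was playing with Windows 98 one day and the thing crashed, for reasons unknown. I didn't have a Windows install disc, but one of my dad's programming magazines came with a bonus CD that happened to have the Mandrake Linux installer on it, so I instantly became a Linux user. I had no idea what I was playing with back then, but I had a blast. Over the years I've installed various versions of Windows on my computers, but the FLOSS world is my real home. Now I only run Windows in a virtual machine, for gaming.
>
> **Tosmarcel**: At 15 I was curious about the concept of "programming", so I started Harvard's "CS50" course, which had us install a Linux virtual machine to run some commands. I asked myself back then why Windows didn't have these commands. So I Googled Linux, Ubuntu came up in the search results, and while installing Ubuntu I accidentally deleted my Windows partition... I knew nothing about Linux at the time, and adapting to the system was very hard. I'm 16 now, I use ArchLinux, and I don't want to go back to Windows. I love ArchLinux.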