mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-13 22:30:37 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

6860fa36ee

@ -0,0 +1,109 @@

|

||||

并发服务器(五):Redis 案例研究

|

||||

======

|

||||

|

||||

这是我写的并发网络服务器系列文章的第五部分。在前四部分中我们讨论了并发服务器的结构,这篇文章我们将去研究一个在生产系统中大量使用的服务器的案例—— [Redis][10]。

|

||||

|

||||

|

||||

|

||||

Redis 是一个非常有魅力的项目,我关注它很久了。它最让我着迷的一点就是它的 C 源代码非常清晰。它也是一个高性能、大并发的内存数据库服务器的非常好的例子,它是研究网络并发服务器的一个非常好的案例,因此,我们不能错过这个好机会。

|

||||

|

||||

我们来看看前四部分讨论的概念在真实世界中的应用程序。

|

||||

|

||||

本系列的所有文章有:

|

||||

|

||||

* [第一节 - 简介][3]

|

||||

* [第二节 - 线程][4]

|

||||

* [第三节 - 事件驱动][5]

|

||||

* [第四节 - libuv][6]

|

||||

* [第五节 - Redis 案例研究][7]

|

||||

|

||||

### 事件处理库

|

||||

|

||||

Redis 最初发布于 2009 年,它最牛逼的一件事情大概就是它的速度 —— 它能够处理大量的并发客户端连接。需要特别指出的是,它是用*一个单线程*来完成的,而且还不对保存在内存中的数据使用任何复杂的锁或者同步机制。

|

||||

|

||||

Redis 之所以如此牛逼是因为,它在给定的系统上使用了其可用的最快的事件循环,并将它们封装成由它实现的事件循环库(在 Linux 上是 epoll,在 BSD 上是 kqueue,等等)。这个库的名字叫做 [ae][11]。ae 使得编写一个快速服务器变得很容易,只要在它内部没有阻塞即可,而 Redis 则保证 ^注1 了这一点。

|

||||

|

||||

在这里,我们的兴趣点主要是它对*文件事件*的支持 —— 当文件描述符(如网络套接字)有一些有趣的未决事情时将调用注册的回调函数。与 libuv 类似,ae 支持多路事件循环(参阅本系列的[第三节][5]和[第四节][6])和不应该感到意外的 `aeCreateFileEvent` 信号:

|

||||

|

||||

```

|

||||

int aeCreateFileEvent(aeEventLoop *eventLoop, int fd, int mask,

|

||||

aeFileProc *proc, void *clientData);

|

||||

```

|

||||

|

||||

它在 `fd` 上使用一个给定的事件循环,为新的文件事件注册一个回调(`proc`)函数。当使用的是 epoll 时,它将调用 `epoll_ctl` 在文件描述符上添加一个事件(可能是 `EPOLLIN`、`EPOLLOUT`、也或许两者都有,取决于 `mask` 参数)。ae 的 `aeProcessEvents` 功能是 “运行事件循环和发送回调函数”,它在底层调用了 `epoll_wait`。

|

||||

|

||||

### 处理客户端请求

|

||||

|

||||

我们通过跟踪 Redis 服务器代码来看一下,ae 如何为客户端事件注册回调函数的。`initServer` 启动时,通过注册一个回调函数来读取正在监听的套接字上的事件,通过使用回调函数 `acceptTcpHandler` 来调用 `aeCreateFileEvent`。当新的连接可用时,这个回调函数被调用。它调用 `accept` ^注2 ,接下来是 `acceptCommonHandler`,它转而去调用 `createClient` 以初始化新客户端连接所需要的数据结构。

|

||||

|

||||

`createClient` 的工作是去监听来自客户端的入站数据。它将套接字设置为非阻塞模式(一个异步事件循环中的关键因素)并使用 `aeCreateFileEvent` 去注册另外一个文件事件回调函数以读取事件 —— `readQueryFromClient`。每当客户端发送数据,这个函数将被事件循环调用。

|

||||

|

||||

`readQueryFromClient` 就让我们期望的那样 —— 解析客户端命令和动作,并通过查询和/或操作数据来回复。因为客户端套接字是非阻塞的,所以这个函数必须能够处理 `EAGAIN`,以及部分数据;从客户端中读取的数据是累积在客户端专用的缓冲区中,而完整的查询可能被分割在回调函数的多个调用当中。

|

||||

|

||||

### 将数据发送回客户端

|

||||

|

||||

在前面的内容中,我说到了 `readQueryFromClient` 结束了发送给客户端的回复。这在逻辑上是正确的,因为 `readQueryFromClient` *准备*要发送回复,但它不真正去做实质的发送 —— 因为这里并不能保证客户端套接字已经准备好写入/发送数据。我们必须为此使用事件循环机制。

|

||||

|

||||

Redis 是这样做的,它注册一个 `beforeSleep` 函数,每次事件循环即将进入休眠时,调用它去等待套接字变得可以读取/写入。`beforeSleep` 做的其中一件事情就是调用 `handleClientsWithPendingWrites`。它的作用是通过调用 `writeToClient` 去尝试立即发送所有可用的回复;如果一些套接字不可用时,那么*当*套接字可用时,它将注册一个事件循环去调用 `sendReplyToClient`。这可以被看作为一种优化 —— 如果套接字可用于立即发送数据(一般是 TCP 套接字),这时并不需要注册事件 ——直接发送数据。因为套接字是非阻塞的,它从不会去阻塞循环。

|

||||

|

||||

### 为什么 Redis 要实现它自己的事件库?

|

||||

|

||||

在 [第四节][14] 中我们讨论了使用 libuv 来构建一个异步并发服务器。需要注意的是,Redis 并没有使用 libuv,或者任何类似的事件库,而是它去实现自己的事件库 —— ae,用 ae 来封装 epoll、kqueue 和 select。事实上,Antirez(Redis 的创建者)恰好在 [2011 年的一篇文章][15] 中回答了这个问题。他的回答的要点是:ae 只有大约 770 行他理解的非常透彻的代码;而 libuv 代码量非常巨大,也没有提供 Redis 所需的额外功能。

|

||||

|

||||

现在,ae 的代码大约增长到 1300 多行,比起 libuv 的 26000 行(这是在没有 Windows、测试、示例、文档的情况下的数据)来说那是小巫见大巫了。libuv 是一个非常综合的库,这使它更复杂,并且很难去适应其它项目的特殊需求;另一方面,ae 是专门为 Redis 设计的,与 Redis 共同演进,只包含 Redis 所需要的东西。

|

||||

|

||||

这是我 [前些年在一篇文章中][16] 提到的软件项目依赖关系的另一个很好的示例:

|

||||

|

||||

> 依赖的优势与在软件项目上花费的工作量成反比。

|

||||

|

||||

在某种程度上,Antirez 在他的文章中也提到了这一点。他提到,提供大量附加价值(在我的文章中的“基础” 依赖)的依赖比像 libuv 这样的依赖更有意义(它的例子是 jemalloc 和 Lua),对于 Redis 特定需求,其功能的实现相当容易。

|

||||

|

||||

### Redis 中的多线程

|

||||

|

||||

[在 Redis 的绝大多数历史中][17],它都是一个不折不扣的单线程的东西。一些人觉得这太不可思议了,有这种想法完全可以理解。Redis 本质上是受网络束缚的 —— 只要数据库大小合理,对于任何给定的客户端请求,其大部分延时都是浪费在网络等待上,而不是在 Redis 的数据结构上。

|

||||

|

||||

然而,现在事情已经不再那么简单了。Redis 现在有几个新功能都用到了线程:

|

||||

|

||||

1. “惰性” [内存释放][8]。

|

||||

2. 在后台线程中使用 fsync 调用写一个 [持久化日志][9]。

|

||||

3. 运行需要执行一个长周期运行的操作的用户定义模块。

|

||||

|

||||

对于前两个特性,Redis 使用它自己的一个简单的 bio(它是 “Background I/O" 的首字母缩写)库。这个库是根据 Redis 的需要进行了硬编码,它不能用到其它的地方 —— 它运行预设数量的线程,每个 Redis 后台作业类型需要一个线程。

|

||||

|

||||

而对于第三个特性,[Redis 模块][18] 可以定义新的 Redis 命令,并且遵循与普通 Redis 命令相同的标准,包括不阻塞主线程。如果在模块中自定义的一个 Redis 命令,希望去执行一个长周期运行的操作,这将创建一个线程在后台去运行它。在 Redis 源码树中的 `src/modules/helloblock.c` 提供了这样的一个示例。

|

||||

|

||||

有了这些特性,Redis 使用线程将一个事件循环结合起来,在一般的案例中,Redis 具有了更快的速度和弹性,这有点类似于在本系统文章中 [第四节][19] 讨论的工作队列。

|

||||

|

||||

- 注1: Redis 的一个核心部分是:它是一个 _内存中_ 数据库;因此,查询从不会运行太长的时间。当然了,这将会带来各种各样的其它问题。在使用分区的情况下,服务器可能最终路由一个请求到另一个实例上;在这种情况下,将使用异步 I/O 来避免阻塞其它客户端。

|

||||

- 注2: 使用 `anetAccept`;`anet` 是 Redis 对 TCP 套接字代码的封装。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://eli.thegreenplace.net/2017/concurrent-servers-part-5-redis-case-study/

|

||||

|

||||

作者:[Eli Bendersky][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://eli.thegreenplace.net/pages/about

|

||||

[1]:https://eli.thegreenplace.net/2017/concurrent-servers-part-5-redis-case-study/#id1

|

||||

[2]:https://eli.thegreenplace.net/2017/concurrent-servers-part-5-redis-case-study/#id2

|

||||

[3]:https://linux.cn/article-8993-1.html

|

||||

[4]:https://linux.cn/article-9002-1.html

|

||||

[5]:https://linux.cn/article-9117-1.html

|

||||

[6]:https://linux.cn/article-9397-1.html

|

||||

[7]:http://eli.thegreenplace.net/2017/concurrent-servers-part-5-redis-case-study/

|

||||

[8]:http://antirez.com/news/93

|

||||

[9]:https://redis.io/topics/persistence

|

||||

[10]:https://redis.io/

|

||||

[11]:https://redis.io/topics/internals-rediseventlib

|

||||

[12]:https://eli.thegreenplace.net/2017/concurrent-servers-part-5-redis-case-study/#id4

|

||||

[13]:https://eli.thegreenplace.net/2017/concurrent-servers-part-5-redis-case-study/#id5

|

||||

[14]:https://linux.cn/article-9397-1.html

|

||||

[15]:http://oldblog.antirez.com/post/redis-win32-msft-patch.html

|

||||

[16]:http://eli.thegreenplace.net/2017/benefits-of-dependencies-in-software-projects-as-a-function-of-effort/

|

||||

[17]:http://antirez.com/news/93

|

||||

[18]:https://redis.io/topics/modules-intro

|

||||

[19]:https://linux.cn/article-9397-1.html

|

||||

@ -1,35 +1,38 @@

|

||||

如何在 Linux 上使用 Vundle 管理 Vim 插件

|

||||

======

|

||||

|

||||

|

||||

|

||||

毋庸置疑,**Vim** 是一款强大的文本文件处理的通用工具,能够管理系统配置文件,编写代码。通过插件,vim 可以被拓展出不同层次的功能。通常,所有的插件和附属的配置文件都会存放在 **~/.vim** 目录中。由于所有的插件文件都被存储在同一个目录下,所以当你安装更多插件时,不同的插件文件之间相互混淆。因而,跟踪和管理它们将是一个恐怖的任务。然而,这正是 Vundle 所能处理的。Vundle,分别是 **V** im 和 B **undle** 的缩写,它是一款能够管理 Vim 插件的极其实用的工具。

|

||||

毋庸置疑,Vim 是一款强大的文本文件处理的通用工具,能够管理系统配置文件和编写代码。通过插件,Vim 可以被拓展出不同层次的功能。通常,所有的插件和附属的配置文件都会存放在 `~/.vim` 目录中。由于所有的插件文件都被存储在同一个目录下,所以当你安装更多插件时,不同的插件文件之间相互混淆。因而,跟踪和管理它们将是一个恐怖的任务。然而,这正是 Vundle 所能处理的。Vundle,分别是 **V** im 和 B **undle** 的缩写,它是一款能够管理 Vim 插件的极其实用的工具。

|

||||

|

||||

Vundle 为每一个你安装和存储的拓展配置文件创建各自独立的目录树。因此,相互之间没有混淆的文件。简言之,Vundle 允许你安装新的插件、配置已存在的插件、更新插件配置、搜索安装插件和清理不使用的插件。所有的操作都可以在单一按键的交互模式下完成。在这个简易的教程中,让我告诉你如何安装 Vundle,如何在 GNU/Linux 中使用它来管理 Vim 插件。

|

||||

Vundle 为每一个你安装的插件创建一个独立的目录树,并在相应的插件目录中存储附加的配置文件。因此,相互之间没有混淆的文件。简言之,Vundle 允许你安装新的插件、配置已有的插件、更新插件配置、搜索安装的插件和清理不使用的插件。所有的操作都可以在一键交互模式下完成。在这个简易的教程中,让我告诉你如何安装 Vundle,如何在 GNU/Linux 中使用它来管理 Vim 插件。

|

||||

|

||||

### Vundle 安装

|

||||

|

||||

如果你需要 Vundle,那我就当作你的系统中,已将安装好了 **vim**。如果没有,安装 vim,尽情 **git**(下载 vundle)去吧。在大部分 GNU/Linux 发行版中的官方仓库中都可以获取到这两个包。比如,在 Debian 系列系统中,你可以使用下面的命令安装这两个包。

|

||||

如果你需要 Vundle,那我就当作你的系统中,已将安装好了 Vim。如果没有,请安装 Vim 和 git(以下载 Vundle)。在大部分 GNU/Linux 发行版中的官方仓库中都可以获取到这两个包。比如,在 Debian 系列系统中,你可以使用下面的命令安装这两个包。

|

||||

|

||||

```

|

||||

sudo apt-get install vim git

|

||||

```

|

||||

|

||||

**下载 Vundle**

|

||||

#### 下载 Vundle

|

||||

|

||||

复制 Vundle 的 GitHub 仓库地址:

|

||||

|

||||

```

|

||||

git clone https://github.com/VundleVim/Vundle.vim.git ~/.vim/bundle/Vundle.vim

|

||||

```

|

||||

|

||||

**配置 Vundle**

|

||||

#### 配置 Vundle

|

||||

|

||||

创建 **~/.vimrc** 文件,通知 vim 使用新的插件管理器。这个文件获得有安装、更新、配置和移除插件的权限。

|

||||

创建 `~/.vimrc` 文件,以通知 Vim 使用新的插件管理器。安装、更新、配置和移除插件需要这个文件。

|

||||

|

||||

```

|

||||

vim ~/.vimrc

|

||||

```

|

||||

|

||||

在此文件顶部,加入如下若干行内容:

|

||||

|

||||

```

|

||||

set nocompatible " be iMproved, required

|

||||

filetype off " required

|

||||

@ -76,35 +79,39 @@ filetype plugin indent on " required

|

||||

" Put your non-Plugin stuff after this line

|

||||

```

|

||||

|

||||

被标记的行中,是 Vundle 的请求项。其余行仅是一些例子。如果你不想安装那些特定的插件,可以移除它们。一旦你安装过,键入 **:wq** 保存退出。

|

||||

被标记为 “required” 的行是 Vundle 的所需配置。其余行仅是一些例子。如果你不想安装那些特定的插件,可以移除它们。完成后,键入 `:wq` 保存退出。

|

||||

|

||||

最后,打开 Vim:

|

||||

|

||||

最后,打开 vim

|

||||

```

|

||||

vim

|

||||

```

|

||||

|

||||

然后键入下列命令安装插件。

|

||||

然后键入下列命令安装插件:

|

||||

|

||||

```

|

||||

:PluginInstall

|

||||

```

|

||||

|

||||

[![][1]][2]

|

||||

![][2]

|

||||

|

||||

将会弹出一个新的分窗口,.vimrc 中陈列的项目都会自动安装。

|

||||

将会弹出一个新的分窗口,我们加在 `.vimrc` 文件中的所有插件都会自动安装。

|

||||

|

||||

[![][1]][3]

|

||||

![][3]

|

||||

|

||||

安装完毕之后,键入下列命令,可以删除高速缓存区缓存并关闭窗口:

|

||||

|

||||

安装完毕之后,键入下列命令,可以删除高速缓存区缓存并关闭窗口。

|

||||

```

|

||||

:bdelete

|

||||

```

|

||||

|

||||

在终端上使用下面命令,规避使用 vim 安装插件

|

||||

你也可以在终端上使用下面命令安装插件,而不用打开 Vim:

|

||||

|

||||

```

|

||||

vim +PluginInstall +qall

|

||||

```

|

||||

|

||||

使用 [**fish shell**][4] 的朋友,添加下面这行到你的 **.vimrc** 文件中。

|

||||

使用 [fish shell][4] 的朋友,添加下面这行到你的 `.vimrc` 文件中。

|

||||

|

||||

```

|

||||

set shell=/bin/bash

|

||||

@ -112,123 +119,138 @@ set shell=/bin/bash

|

||||

|

||||

### 使用 Vundle 管理 Vim 插件

|

||||

|

||||

**添加新的插件**

|

||||

#### 添加新的插件

|

||||

|

||||

首先,使用下面的命令搜索可以使用的插件:

|

||||

|

||||

首先,使用下面的命令搜索可以使用的插件。

|

||||

```

|

||||

:PluginSearch

|

||||

```

|

||||

|

||||

命令之后添加 **"! "**,刷新 vimscripts 网站内容到本地。

|

||||

要从 vimscripts 网站刷新本地的列表,请在命令之后添加 `!`。

|

||||

|

||||

```

|

||||

:PluginSearch!

|

||||

```

|

||||

|

||||

一个陈列可用插件列表的新分窗口将会被弹出。

|

||||

会弹出一个列出可用插件列表的新分窗口:

|

||||

|

||||

[![][1]][5]

|

||||

![][5]

|

||||

|

||||

你还可以通过直接指定插件名的方式,缩小搜索范围。

|

||||

|

||||

```

|

||||

:PluginSearch vim

|

||||

```

|

||||

|

||||

这样将会列出包含关键词“vim”的插件。

|

||||

这样将会列出包含关键词 “vim” 的插件。

|

||||

|

||||

当然你也可以指定确切的插件名,比如:

|

||||

|

||||

```

|

||||

:PluginSearch vim-dasm

|

||||

```

|

||||

|

||||

移动焦点到正确的一行上,点击 **" i"** 来安装插件。现在,被选择的插件将会被安装。

|

||||

移动焦点到正确的一行上,按下 `i` 键来安装插件。现在,被选择的插件将会被安装。

|

||||

|

||||

[![][1]][6]

|

||||

![][6]

|

||||

|

||||

类似的,在你的系统中安装所有想要的插件。一旦安装成功,使用下列命令删除 Vundle 缓存:

|

||||

|

||||

在你的系统中,所有想要的的插件都以类似的方式安装。一旦安装成功,使用下列命令删除 Vundle 缓存:

|

||||

```

|

||||

:bdelete

|

||||

```

|

||||

|

||||

现在,插件已经安装完成。在 .vimrc 文件中添加安装好的插件名,让插件正确加载。

|

||||

现在,插件已经安装完成。为了让插件正确的自动加载,我们需要在 `.vimrc` 文件中添加安装好的插件名。

|

||||

|

||||

这样做:

|

||||

|

||||

```

|

||||

:e ~/.vimrc

|

||||

```

|

||||

|

||||

添加这一行:

|

||||

|

||||

```

|

||||

[...]

|

||||

Plugin 'vim-dasm'

|

||||

[...]

|

||||

```

|

||||

|

||||

用自己的插件名替换 vim-dasm。然后,敲击 ESC,键入 **:wq** 保存退出。

|

||||

用自己的插件名替换 vim-dasm。然后,敲击 `ESC`,键入 `:wq` 保存退出。

|

||||

|

||||

请注意,所有插件都必须在 `.vimrc` 文件中追加如下内容。

|

||||

|

||||

请注意,所有插件都必须在 .vimrc 文件中追加如下内容。

|

||||

```

|

||||

[...]

|

||||

filetype plugin indent on

|

||||

```

|

||||

|

||||

**列出已安装的插件**

|

||||

#### 列出已安装的插件

|

||||

|

||||

键入下面命令列出所有已安装的插件:

|

||||

|

||||

```

|

||||

:PluginList

|

||||

```

|

||||

|

||||

[![][1]][7]

|

||||

![][7]

|

||||

|

||||

**更新插件**

|

||||

#### 更新插件

|

||||

|

||||

键入下列命令更新插件:

|

||||

|

||||

```

|

||||

:PluginUpdate

|

||||

```

|

||||

|

||||

键入下列命令重新安装所有插件

|

||||

键入下列命令重新安装所有插件:

|

||||

|

||||

```

|

||||

:PluginInstall!

|

||||

```

|

||||

|

||||

**卸载插件**

|

||||

#### 卸载插件

|

||||

|

||||

首先,列出所有已安装的插件:

|

||||

|

||||

```

|

||||

:PluginList

|

||||

```

|

||||

|

||||

之后将焦点置于正确的一行上,敲 **" SHITF+d"** 组合键。

|

||||

之后将焦点置于正确的一行上,按下 `SHITF+d` 组合键。

|

||||

|

||||

[![][1]][8]

|

||||

![][8]

|

||||

|

||||

然后编辑你的 `.vimrc` 文件:

|

||||

|

||||

然后编辑你的 .vimrc 文件:

|

||||

```

|

||||

:e ~/.vimrc

|

||||

```

|

||||

|

||||

再然后删除插件入口。最后,键入 **:wq** 保存退出。

|

||||

删除插件入口。最后,键入 `:wq` 保存退出。

|

||||

|

||||

或者,你可以通过移除插件所在 `.vimrc` 文件行,并且执行下列命令,卸载插件:

|

||||

|

||||

或者,你可以通过移除插件所在 .vimrc 文件行,并且执行下列命令,卸载插件:

|

||||

```

|

||||

:PluginClean

|

||||

```

|

||||

|

||||

这个命令将会移除所有不在你的 .vimrc 文件中但是存在于 bundle 目录中的插件。

|

||||

这个命令将会移除所有不在你的 `.vimrc` 文件中但是存在于 bundle 目录中的插件。

|

||||

|

||||

你应该已经掌握了 Vundle 管理插件的基本方法了。在 Vim 中使用下列命令,查询帮助文档,获取更多细节。

|

||||

|

||||

你应该已经掌握了 Vundle 管理插件的基本方法了。在 vim 中使用下列命令,查询帮助文档,获取更多细节。

|

||||

```

|

||||

:h vundle

|

||||

```

|

||||

|

||||

**捎带看看:**

|

||||

|

||||

现在我已经把所有内容都告诉你了。很快,我就会出下一篇教程。保持关注 OSTechNix!

|

||||

现在我已经把所有内容都告诉你了。很快,我就会出下一篇教程。保持关注!

|

||||

|

||||

干杯!

|

||||

|

||||

**来源:**

|

||||

### 资源

|

||||

|

||||

[Vundle GitHub 仓库][9]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -236,16 +258,17 @@ via: https://www.ostechnix.com/manage-vim-plugins-using-vundle-linux/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[CYLeft](https://github.com/CYLeft)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-1.png ()

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-2.png ()

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-1.png

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-2.png

|

||||

[4]:https://www.ostechnix.com/install-fish-friendly-interactive-shell-linux/

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-3.png ()

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-4-2.png ()

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-5-1.png ()

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-6.png ()

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-3.png

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-4-2.png

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-5-1.png

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2018/01/Vundle-6.png

|

||||

[9]:https://github.com/VundleVim/Vundle.vim

|

||||

98

sources/talk/20180214 11 awesome vi tips and tricks.md

Normal file

98

sources/talk/20180214 11 awesome vi tips and tricks.md

Normal file

@ -0,0 +1,98 @@

|

||||

11 awesome vi tips and tricks

|

||||

======

|

||||

|

||||

|

||||

|

||||

The [vi editor][1] is one of the most popular text editors on Unix and Unix-like systems, such as Linux. Whether you're new to vi or just looking for a refresher, these 11 tips will enhance how you use it.

|

||||

|

||||

### Editing

|

||||

|

||||

Editing a long script can be tedious, especially when you need to edit a line so far down that it would take hours to scroll to it. Here's a faster way.

|

||||

|

||||

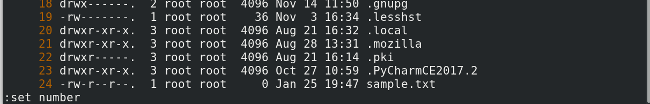

1. The command `:set number` numbers each line down the left side.

|

||||

|

||||

|

||||

|

||||

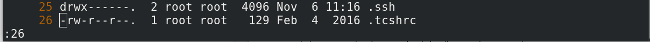

You can directly reach line number 26 by opening the file and entering this command on the CLI: `vi +26 sample.txt`. To edit line 26 (for example), the command `:26` will take you directly to it.

|

||||

|

||||

|

||||

|

||||

### Fast navigation

|

||||

|

||||

2. `i` changes your mode from "command" to "insert" and starts inserting text at the current cursor position.

|

||||

3. `a` does the same, except it starts just after the current cursor position.

|

||||

4. `o` starts the cursor position from the line below the current cursor position.

|

||||

|

||||

|

||||

|

||||

### Delete

|

||||

|

||||

If you notice an error or typo, being able to make a quick fix is important. Good thing vi has it all figured out.

|

||||

|

||||

Understanding vi's delete function so you don't accidentally press a key and permanently remove a line, paragraph, or more, is critical.

|

||||

|

||||

5. `x` deletes the character under the cursor.

|

||||

6. `dd` deletes the current line. (Yes, the whole line!)

|

||||

|

||||

|

||||

|

||||

Here's the scary part: `30dd` would delete 30 lines starting with the current line! Proceed with caution when using this command.

|

||||

|

||||

### Search

|

||||

|

||||

You can search for keywords from the "command" mode rather than manually navigating and looking for a specific word in a plethora of text.

|

||||

|

||||

7. `:/<keyword>` searches for the word mentioned in the `< >` space and takes your cursor to the first match.

|

||||

8. To navigate to the next instance of that word, type `n`, and keep pressing it until you get to the match you're looking for.

|

||||

|

||||

|

||||

|

||||

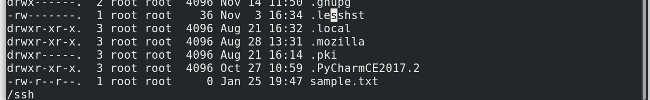

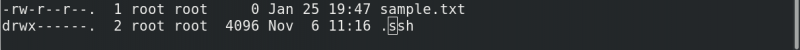

For example, in the image below I searched for `ssh`, and vi highlighted the beginning of the first result.

|

||||

|

||||

|

||||

|

||||

After I pressed `n`, vi highlighted the next instance.

|

||||

|

||||

|

||||

|

||||

### Save and exit

|

||||

|

||||

Developers (and others) will probably find this next command useful.

|

||||

|

||||

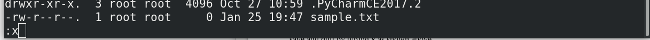

9. `:x` saves your work and exits vi.

|

||||

|

||||

|

||||

|

||||

10. If you think every nanosecond is worth saving, here's a faster way to shift to terminal mode in vi. Instead of pressing `Shift+:` on the keyboard, you can press `Shift+q` (or Q, in caps) to access [Ex mode][2], but this doesn't really make any difference if you just want to save and quit by typing `x` (as shown above).

|

||||

|

||||

|

||||

|

||||

### Substitution

|

||||

|

||||

Here is a neat trick if you want to substitute every occurrence of one word with another. For example, if you want to substitute "desktop" with "laptop" in a large file, it would be monotonous and waste time to search for each occurrence of "desktop," delete it, and type "laptop."

|

||||

|

||||

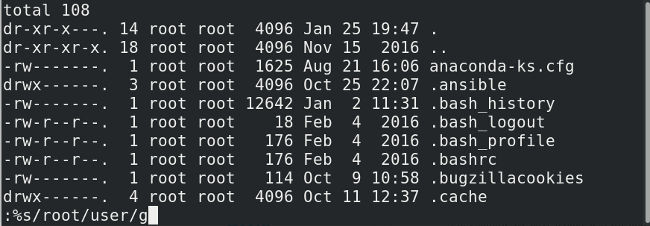

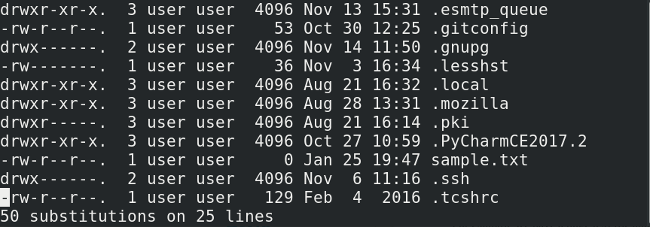

11. The command `:%s/desktop/laptop/g` would replace each occurrence of "desktop" with "laptop" throughout the file; it works just like the Linux `sed` command.

|

||||

|

||||

|

||||

|

||||

In this example, I replaced "root" with "user":

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

These tricks should help anyone get started using vi. Are there other neat tips I missed? Share them in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/1/top-11-vi-tips-and-tricks

|

||||

|

||||

作者:[Archit Modi][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/architmodi

|

||||

[1]:http://ex-vi.sourceforge.net/

|

||||

[2]:https://en.wikibooks.org/wiki/Learning_the_vi_Editor/Vim/Modes#Ex-mode

|

||||

@ -0,0 +1,53 @@

|

||||

Beyond metrics: How to operate as team on today's open source project

|

||||

======

|

||||

|

||||

|

||||

|

||||

How do we traditionally think about community health and vibrancy?

|

||||

|

||||

We might quickly zero in on metrics related primarily to code contributions: How many companies are contributing? How many individuals? How many lines of code? Collectively, these speak to both the level of development activity and the breadth of the contributor base. The former speaks to whether the project continues to be enhanced and expanded; the latter to whether it has attracted a diverse group of developers or is controlled primarily by a single organization.

|

||||

|

||||

The [Linux Kernel Development Report][1] tracks these kinds of statistics and, unsurprisingly, it appears extremely healthy on all counts.

|

||||

|

||||

However, while development cadence and code contributions are still clearly important, other aspects of the open source communities are also coming to the forefront. This is in part because, increasingly, open source is about more than a development model. It’s also about making it easier for users and other interested parties to interact in ways that go beyond being passive recipients of code. Of course, there have long been user groups. But open source streamlines the involvement of users, just as it does software development.

|

||||

|

||||

This was the topic of my discussion with Diane Mueller, the director of community development for OpenShift.

|

||||

|

||||

When OpenShift became a container platform based in part on Kubernetes in version 3, Mueller saw a need to broaden the community beyond the core code contributors. In part, this was because OpenShift was increasingly touching a broad range of open source projects and organizations such those associated with the [Open Container Initiative (OCI)][2] and the [Cloud Native Computing Foundation (CNCF)][3]. In addition to users, cloud service providers who were offering managed services also wanted ways to get involved in the project.

|

||||

|

||||

“What we tried to do was open up our minds about what the community constituted,” Mueller explained, adding, “We called it the [Commons][4] because Red Hat's near Boston, and I'm from that area. Boston Common is a shared resource, the grass where you bring your cows to graze, and you have your farmer's hipster market or whatever it is today that they do on Boston Common.”

|

||||

|

||||

This new model, she said, was really “a new ecosystem that incorporated all of those different parties and different perspectives. We used a lot of virtual tools, a lot of new tools like Slack. We stepped up beyond the mailing list. We do weekly briefings. We went very virtual because, one, I don't scale. The Evangelist and Dev Advocate team didn't scale. We need to be able to get all that word out there, all this new information out there, so we went very virtual. We worked with a lot of people to create online learning stuff, a lot of really good tooling, and we had a lot of community help and support in doing that.”

|

||||

|

||||

![diane mueller open shift][6]

|

||||

|

||||

Diane Mueller, director of community development at Open Shift, discusses the role of strong user communities in open source software development. (Credit: Gordon Haff, CC BY-SA 4.0)

|

||||

|

||||

However, one interesting aspect of the Commons model is that it isn’t just virtual. We see the same pattern elsewhere in many successful open source communities, such as the Linux kernel. Lots of day-to-day activities happen on mailings lists, IRC, and other collaboration tools. But this doesn’t eliminate the benefits of face-to-face time that allows for both richer and informal discussions and exchanges.

|

||||

|

||||

This interview with Mueller took place in London the day after the [OpenShift Commons Gathering][7]. Gatherings are full-day events, held a number of times a year, which are typically attended by a few hundred people. Much of the focus is on users and user stories. In fact, Mueller notes, “Here in London, one of the Commons members, Secnix, was really the major reason we actually hosted the gathering here. Justin Cook did an amazing job organizing the venue and helping us pull this whole thing together in less than 50 days. A lot of the community gatherings and things are driven by the Commons members.”

|

||||

|

||||

Mueller wants to focus on users more and more. “The OpenShift Commons gathering at [Red Hat] Summit will be almost entirely case studies,” she noted. “Users talking about what's in their stack. What lessons did they learn? What are the best practices? Sharing those ideas that they've done just like we did here in London.”

|

||||

|

||||

Although the Commons model grew out of some specific OpenShift needs at the time it was created, Mueller believes it’s an approach that can be applied more broadly. “I think if you abstract what we've done, you can apply it to any existing open source community,” she said. “The foundations still, in some ways, play a nice role in giving you some structure around governance, and helping incubate stuff, and helping create standards. I really love what OCI is doing to create standards around containers. There's still a role for that in some ways. I think the lesson that we can learn from the experience and we can apply to other projects is to open up the community so that it includes feedback mechanisms and gives the podium away.”

|

||||

|

||||

The evolution of the community model though approaches like the OpenShift Commons mirror the healthy evolution of open source more broadly. Certainly, some users have been involved in the development of open source software for a long time. What’s striking today is how widespread and pervasive direct user participation has become. Sure, open source remains central to much of modern software development. But it’s also becoming increasingly central to how users learn from each other and work together with their partners and developers.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/3/how-communities-are-evolving

|

||||

|

||||

作者:[Gordon Haff][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/ghaff

|

||||

[1]:https://www.linuxfoundation.org/2017-linux-kernel-report-landing-page/

|

||||

[2]:https://www.opencontainers.org/

|

||||

[3]:https://www.cncf.io/

|

||||

[4]:https://commons.openshift.org/

|

||||

[5]:/file/388586

|

||||

[6]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/images/life-uploads/39369010275_7df2c3c260_z.jpg?itok=gIhnBl6F (diane mueller open shift)

|

||||

[7]:https://www.meetup.com/London-OpenShift-User-Group/events/246498196/

|

||||

75

sources/talk/20180303 4 meetup ideas- Make your data open.md

Normal file

75

sources/talk/20180303 4 meetup ideas- Make your data open.md

Normal file

@ -0,0 +1,75 @@

|

||||

4 meetup ideas: Make your data open

|

||||

======

|

||||

|

||||

|

||||

|

||||

[Open Data Day][1] (ODD) is an annual, worldwide celebration of open data and an opportunity to show the importance of open data in improving our communities.

|

||||

|

||||

Not many individuals and organizations know about the meaningfulness of open data or why they might want to liberate their data from the restrictions of copyright, patents, and more. They also don't know how to make their data open—that is, publicly available for anyone to use, share, or republish with modifications.

|

||||

|

||||

This year ODD falls on Saturday, March 3, and there are [events planned][2] in every continent except Antarctica. While it might be too late to organize an event for this year, it's never too early to plan for next year. Also, since open data is important every day of the year, there's no reason to wait until ODD 2019 to host an event in your community.

|

||||

|

||||

There are many ways to build local awareness of open data. Here are four ideas to help plan an excellent open data event any time of year.

|

||||

|

||||

### 1. Organize an entry-level event

|

||||

|

||||

You can host an educational event at a local library, college, or another public venue about how open data can be used and why it matters for all of us. If possible, invite a [local speaker][3] or have someone present remotely. You could also have a roundtable discussion with several knowledgeable people in your community.

|

||||

|

||||

Consider offering resources such as the [Open Data Handbook][4], which not only provides a guide to the philosophy and rationale behind adopting open data, but also offers case studies, use cases, how-to guides, and other material to support making data open.

|

||||

|

||||

### 2. Organize an advanced-level event

|

||||

|

||||

For a deeper experience, organize a hands-on training event for open data newbies. Ideas for good topics include [training teachers on open science][5], [creating audiovisual expressions from open data][6], and using [open government data][7] in meaningful ways.

|

||||

|

||||

The options are endless. To choose a topic, think about what is locally relevant, identify issues that open data might be able to address, and find people who can do the training.

|

||||

|

||||

### 3. Organize a hackathon

|

||||

|

||||

Open data hackathons can be a great way to bring open data advocates, developers, and enthusiasts together under one roof. Hackathons are more than just training sessions, though; the idea is to build prototypes or solve real-life challenges that are tied to open data. In a hackathon, people in various groups can contribute to the entire assembly line in multiple ways, such as identifying issues by working collaboratively through [Etherpad][8] or creating focus groups.

|

||||

|

||||

Once the hackathon is over, make sure to upload all the useful data that is produced to the internet with an open license.

|

||||

|

||||

### 4. Release or relicense data as open

|

||||

|

||||

Open data is about making meaningful data publicly available under open licenses while protecting any data that might put people's private information at risk. (Learn [how to protect private data][9].) Try to find existing, interesting, and useful data that is privately owned by individuals or organizations and negotiate with them to relicense or release the data online under any of the [recommended open data licenses][10]. The widely popular [Creative Commons licenses][11] (particularly the CC0 license and the 4.0 licenses) are quite compatible with relicensing public data. (See this FAQ from Creative Commons for more information on [openly licensing data][12].)

|

||||

|

||||

Open data can be published on multiple platforms—your website, [GitHub][13], [GitLab][14], [DataHub.io][15], or anywhere else that supports open standards.

|

||||

|

||||

### Tips for event success

|

||||

|

||||

No matter what type of event you decide to do, here are some general planning tips to improve your chances of success.

|

||||

|

||||

* Find a venue that's accessible to the people you want to reach, such as a library, a school, or a community center.

|

||||

* Create a curriculum that will engage the participants.

|

||||

* Invite your target audience—make sure to distribute information through social media, community events calendars, Meetup, and the like.

|

||||

|

||||

|

||||

|

||||

Have you attended or hosted a successful open data event? If so, please share your ideas in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/3/celebrate-open-data-day

|

||||

|

||||

作者:[Subhashish Panigraphi][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/psubhashish

|

||||

[1]:http://www.opendataday.org/

|

||||

[2]:http://opendataday.org/#map

|

||||

[3]:https://openspeakers.org/

|

||||

[4]:http://opendatahandbook.org/

|

||||

[5]:https://docs.google.com/forms/d/1BRsyzlbn8KEMP8OkvjyttGgIKuTSgETZW9NHRtCbT1s/viewform?edit_requested=true

|

||||

[6]:http://dattack.lv/en/

|

||||

[7]:https://www.eventbrite.co.nz/e/open-data-open-potential-event-friday-2-march-2018-tickets-42733708673

|

||||

[8]:http://etherpad.org/

|

||||

[9]:https://ssd.eff.org/en/module/keeping-your-data-safe

|

||||

[10]:https://opendatacommons.org/licenses/

|

||||

[11]:https://creativecommons.org/share-your-work/licensing-types-examples/

|

||||

[12]:https://wiki.creativecommons.org/wiki/Data#Frequently_asked_questions_about_data_and_CC_licenses

|

||||

[13]:https://github.com/MartinBriza/MediaWriter

|

||||

[14]:https://about.gitlab.com/

|

||||

[15]:https://datahub.io/

|

||||

@ -1,215 +0,0 @@

|

||||

translating by imquanquan

|

||||

|

||||

9 Lightweight Linux Applications to Speed Up Your System

|

||||

======

|

||||

**Brief:** One of the many ways to [speed up Ubuntu][1] system is to use lightweight alternatives of the popular applications. We have already seen [must have Linux application][2] earlier. we'll see the lightweight alternative applications for Ubuntu and other Linux distributions.

|

||||

|

||||

![Use these Lightweight alternative applications in Ubuntu Linux][4]

|

||||

|

||||

## 9 Lightweight alternatives of popular Linux applications

|

||||

|

||||

Is your Linux system slow? Are the applications taking a long time to open? The best option you have is to use a [light Linux distro][5]. But it's not always possible to reinstall an operating system, is it?

|

||||

|

||||

So if you want to stick to your present Linux distribution, but want improved performance, you should use lightweight alternatives of the applications you are using. Here, I'm going to put together a small list of lightweight alternatives to various Linux applications.

|

||||

|

||||

Since I am using Ubuntu, I have provided installation instructions for Ubuntu-based Linux distributions. But these applications will work on almost all other Linux distribution. You just have to find a way to install these lightweight Linux software in your distro.

|

||||

|

||||

### 1. Midori: Web Browser

|

||||

|

||||

Midori is one of the most lightweight web browsers that have reasonable compatibility with the modern web. It is open source and uses the same rendering engine that Google Chrome was initially built on -- WebKit. It is super fast and minimal yet highly customizable.

|

||||

|

||||

![Midori Browser][6]

|

||||

|

||||

It has plenty of extensions and options to tinker with. So if you are a power user, it's a great choice for you too. If you face any problems browsing round the web, check the [Frequently Asked Question][7] section of their website -- it contains the common problems you might face along with their solution.

|

||||

|

||||

[Midori][8]

|

||||

|

||||

#### Installing Midori on Ubuntu based distributions

|

||||

|

||||

Midori is available on Ubuntu via the official repository. Just run the following commands for installing it:

|

||||

```

|

||||

sudo apt install midori

|

||||

```

|

||||

|

||||

### 2. Trojita: email client

|

||||

|

||||

Trojita is an open source robust IMAP e-mail client. It is fast and resource efficient. I can certainly call it one of the [best email clients for Linux][9]. If you can live with only IMAP support on your e-mail client, you might not want to look any further.

|

||||

|

||||

![Trojitá][10]

|

||||

|

||||

Trojita uses various techniques -- on-demand e-mail loading, offline caching, bandwidth-saving mode etc. -- for achieving its impressive performance.

|

||||

|

||||

[Trojita][11]

|

||||

|

||||

#### Installing Trojita on Ubuntu based distributions

|

||||

|

||||

Trojita currently doesn't have an official PPA for Ubuntu. But that shouldn't be a problem. You can install it quite easily using the following commands:

|

||||

```

|

||||

sudo sh -c "echo 'deb http://download.opensuse.org/repositories/home:/jkt-gentoo:/trojita/xUbuntu_16.04/ /' > /etc/apt/sources.list.d/trojita.list"

|

||||

wget http://download.opensuse.org/repositories/home:jkt-gentoo:trojita/xUbuntu_16.04/Release.key

|

||||

sudo apt-key add - < Release.key

|

||||

sudo apt update

|

||||

sudo apt install trojita

|

||||

```

|

||||

|

||||

### 3. GDebi: Package Installer

|

||||

|

||||

Sometimes you need to quickly install DEB packages. Ubuntu Software Center is a resource-heavy application and using it just for installing .deb files is not wise.

|

||||

|

||||

Gdebi is certainly a nifty tool for the same purpose, just with a minimal graphical interface.

|

||||

|

||||

![GDebi][12]

|

||||

|

||||

GDebi is totally lightweight and does its job flawlessly. You should even [make Gdebi the default installer for DEB files][13].

|

||||

|

||||

#### Installing GDebi on Ubuntu based distributions

|

||||

|

||||

You can install GDebi on Ubuntu with this simple one-liner:

|

||||

```

|

||||

sudo apt install gdebi

|

||||

```

|

||||

|

||||

### 4. App Grid: Software Center

|

||||

|

||||

If you use software center frequently for searching, installing and managing applications on Ubuntu, App Grid is a must have application. It is the most visually appealing and yet fast alternative to the default Ubuntu Software Center.

|

||||

|

||||

![App Grid][14]

|

||||

|

||||

App Grid supports ratings, reviews and screenshots for applications.

|

||||

|

||||

[App Grid][15]

|

||||

|

||||

#### Installing App Grid on Ubuntu based distributions

|

||||

|

||||

App Grid has its official PPA for Ubuntu. Use the following commands for installing App Grid:

|

||||

```

|

||||

sudo add-apt-repository ppa:appgrid/stable

|

||||

sudo apt update

|

||||

sudo apt install appgrid

|

||||

```

|

||||

|

||||

### 5. Yarock: Music Player

|

||||

|

||||

Yarock is an elegant music player with a modern and minimal user interface. It is lightweight in design and yet it has a comprehensive list of advanced features.

|

||||

|

||||

![Yarock][16]

|

||||

|

||||

The main features of Yarock include multiple music collections, rating, smart playlist, multiple back-end option, desktop notification, scrobbling, context fetching etc.

|

||||

|

||||

[Yarock][17]

|

||||

|

||||

#### Installing Yarock on Ubuntu based distributions

|

||||

|

||||

You will have to install Yarock on Ubuntu via PPA using the following commands:

|

||||

```

|

||||

sudo add-apt-repository ppa:nilarimogard/webupd8

|

||||

sudo apt update

|

||||

sudo apt install yarock

|

||||

```

|

||||

|

||||

### 6. VLC: Video Player

|

||||

|

||||

Who doesn't need a video player? And who has never heard about VLC? It doesn't really need any introduction.

|

||||

|

||||

![VLC][18]

|

||||

|

||||

VLC is all you need to play various media files on Ubuntu and it is quite lightweight too. It works flawlessly on even on very old PCs.

|

||||

|

||||

[VLC][19]

|

||||

|

||||

#### Installing VLC on Ubuntu based distributions

|

||||

|

||||

VLC has official PPA for Ubuntu. Enter the following commands for installing it:

|

||||

```

|

||||

sudo apt install vlc

|

||||

```

|

||||

|

||||

### 7. PCManFM: File Manager

|

||||

|

||||

PCManFM is the standard file manager from LXDE. As with the other applications from LXDE, this one too is lightweight. If you are looking for a lighter alternative for your file manager, try this one.

|

||||

|

||||

![PCManFM][20]

|

||||

|

||||

Although coming from LXDE, PCManFM works with other desktop environments just as well.

|

||||

|

||||

#### Installing PCManFM on Ubuntu based distributions

|

||||

|

||||

Installing PCManFM on Ubuntu will just take one simple command:

|

||||

```

|

||||

sudo apt install pcmanfm

|

||||

```

|

||||

|

||||

### 8. Mousepad: Text Editor

|

||||

|

||||

Nothing can beat command-line text editors like - nano, vim etc. in terms of being lightweight. But if you want a graphical interface, here you go -- Mousepad is a minimal text editor. It's extremely lightweight and blazing fast. It comes with a simple customizable user interface with multiple themes.

|

||||

|

||||

![Mousepad][21]

|

||||

|

||||

Mousepad supports syntax highlighting. So, you can also use it as a basic code editor.

|

||||

|

||||

#### Installing Mousepad on Ubuntu based distributions

|

||||

|

||||

For installing Mousepad use the following command:

|

||||

```

|

||||

sudo apt install mousepad

|

||||

```

|

||||

|

||||

### 9. GNOME Office: Office Suite

|

||||

|

||||

Many of us need to use office applications quite often. Generally, most of the office applications are bulky in size and resource hungry. Gnome Office is quite lightweight in that respect. Gnome Office is technically not a complete office suite. It's composed of different standalone applications and among them, **AbiWord** & **Gnumeric** stands out.

|

||||

|

||||

**AbiWord** is the word processor. It is lightweight and a lot faster than other alternatives. But that came to be at a cost -- you might miss some features like macros, grammar checking etc. It's not perfect but it works.

|

||||

|

||||

![AbiWord][22]

|

||||

|

||||

**Gnumeric** is the spreadsheet editor. Just like AbiWord, Gnumeric is also very fast and it provides accurate calculations. If you are looking for a simple and lightweight spreadsheet editor, Gnumeric has got you covered.

|

||||

|

||||

![Gnumeric][23]

|

||||

|

||||

There are some other applications listed under Gnome Office. You can find them in the official page.

|

||||

|

||||

[Gnome Office][24]

|

||||

|

||||

#### Installing AbiWord & Gnumeric on Ubuntu based distributions

|

||||

|

||||

For installing AbiWord & Gnumeric, simply enter the following command in your terminal:

|

||||

```

|

||||

sudo apt install abiword gnumeric

|

||||

```

|

||||

|

||||

That's all for today. Would you like to add some other **lightweight Linux applications** to this list? Do let us know!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/lightweight-alternative-applications-ubuntu/

|

||||

|

||||

作者:[Munif Tanjim][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://itsfoss.com/author/munif/

|

||||

[1]:https://itsfoss.com/speed-up-ubuntu-1310/

|

||||

[2]:https://itsfoss.com/essential-linux-applications/

|

||||

[4]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/Lightweight-alternative-applications-for-Linux-800x450.jpg

|

||||

[5]:https://itsfoss.com/lightweight-linux-beginners/

|

||||

[6]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/Midori-800x497.png

|

||||

[7]:http://midori-browser.org/faqs/

|

||||

[8]:http://midori-browser.org/

|

||||

[9]:https://itsfoss.com/best-email-clients-linux/

|

||||

[10]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/Trojit%C3%A1-800x608.png

|

||||

[11]:http://trojita.flaska.net/

|

||||

[12]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/GDebi.png

|

||||

[13]:https://itsfoss.com/gdebi-default-ubuntu-software-center/

|

||||

[14]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/AppGrid-800x553.png

|

||||

[15]:http://www.appgrid.org/

|

||||

[16]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/Yarock-800x529.png

|

||||

[17]:https://seb-apps.github.io/yarock/

|

||||

[18]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/VLC-800x526.png

|

||||

[19]:http://www.videolan.org/index.html

|

||||

[20]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/PCManFM.png

|

||||

[21]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/Mousepad.png

|

||||

[22]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/AbiWord-800x626.png

|

||||

[23]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/03/Gnumeric-800x470.png

|

||||

[24]:https://gnome.org/gnome-office/

|

||||

@ -0,0 +1,256 @@

|

||||

Build a bikesharing app with Redis and Python

|

||||

======

|

||||

|

||||

|

||||

|

||||

I travel a lot on business. I'm not much of a car guy, so when I have some free time, I prefer to walk or bike around a city. Many of the cities I've visited on business have bikeshare systems, which let you rent a bike for a few hours. Most of these systems have an app to help users locate and rent their bikes, but it would be more helpful for users like me to have a single place to get information on all the bikes in a city that are available to rent.

|

||||

|

||||

To solve this problem and demonstrate the power of open source to add location-aware features to a web application, I combined publicly available bikeshare data, the [Python][1] programming language, and the open source [Redis][2] in-memory data structure server to index and query geospatial data.

|

||||

|

||||

The resulting bikeshare application incorporates data from many different sharing systems, including the [Citi Bike][3] bikeshare in New York City. It takes advantage of the General Bikeshare Feed provided by the Citi Bike system and uses its data to demonstrate some of the features that can be built using Redis to index geospatial data. The Citi Bike data is provided under the [Citi Bike data license agreement][4].

|

||||

|

||||

### General Bikeshare Feed Specification

|

||||

|

||||

The General Bikeshare Feed Specification (GBFS) is an [open data specification][5] developed by the [North American Bikeshare Association][6] to make it easier for map and transportation applications to add bikeshare systems into their platforms. The specification is currently in use by over 60 different sharing systems in the world.

|

||||

|

||||

The feed consists of several simple [JSON][7] data files containing information about the state of the system. The feed starts with a top-level JSON file referencing the URLs of the sub-feed data:

|

||||

```

|

||||

{

|

||||

|

||||

"data": {

|

||||

|

||||

"en": {

|

||||

|

||||

"feeds": [

|

||||

|

||||

{

|

||||

|

||||

"name": "system_information",

|

||||

|

||||

"url": "https://gbfs.citibikenyc.com/gbfs/en/system_information.json"

|

||||

|

||||

},

|

||||

|

||||

{

|

||||

|

||||

"name": "station_information",

|

||||

|

||||

"url": "https://gbfs.citibikenyc.com/gbfs/en/station_information.json"

|

||||

|

||||

},

|

||||

|

||||

. . .

|

||||

|

||||

]

|

||||

|

||||

}

|

||||

|

||||

},

|

||||

|

||||

"last_updated": 1506370010,

|

||||

|

||||

"ttl": 10

|

||||

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

The first step is loading information about the bikesharing stations into Redis using data from the `system_information` and `station_information` feeds.

|

||||

|

||||

The `system_information` feed provides the system ID, which is a short code that can be used to create namespaces for Redis keys. The GBFS spec doesn't specify the format of the system ID, but does guarantee it is globally unique. Many of the bikeshare feeds use short names like coast_bike_share, boise_greenbike, or topeka_metro_bikes for system IDs. Others use familiar geographic abbreviations such as NYC or BA, and one uses a universally unique identifier (UUID). The bikesharing application uses the identifier as a prefix to construct unique keys for the given system.

|

||||

|

||||

The `station_information` feed provides static information about the sharing stations that comprise the system. Stations are represented by JSON objects with several fields. There are several mandatory fields in the station object that provide the ID, name, and location of the physical bike stations. There are also several optional fields that provide helpful information such as the nearest cross street or accepted payment methods. This is the primary source of information for this part of the bikesharing application.

|

||||

|

||||

### Building the database

|

||||

|

||||

I've written a sample application, [load_station_data.py][8], that mimics what would happen in a backend process for loading data from external sources.

|

||||

|

||||

### Finding the bikeshare stations

|

||||

|

||||

Loading the bikeshare data starts with the [systems.csv][9] file from the [GBFS repository on GitHub][5].

|

||||

|

||||

The repository's [systems.csv][9] file provides the discovery URL for registered bikeshare systems with an available GBFS feed. The discovery URL is the starting point for processing bikeshare information.

|

||||

|

||||

The `load_station_data` application takes each discovery URL found in the systems file and uses it to find the URL for two sub-feeds: system information and station information. The system information feed provides a key piece of information: the unique ID of the system. (Note: the system ID is also provided in the systems.csv file, but some of the identifiers in that file do not match the identifiers in the feeds, so I always fetch the identifier from the feed.) Details on the system, like bikeshare URLs, phone numbers, and emails, could be added in future versions of the application, so the data is stored in a Redis hash using the key `${system_id}:system_info`.

|

||||

|

||||

### Loading the station data

|

||||

|

||||

The station information provides data about every station in the system, including the system's location. The `load_station_data` application iterates over every station in the station feed and stores the data about each into a Redis hash using a key of the form `${system_id}:station:${station_id}`. The location of each station is added to a geospatial index for the bikeshare using the `GEOADD` command.

|

||||

|

||||

### Updating data

|

||||

|

||||

On subsequent runs, I don't want the code to remove all the feed data from Redis and reload it into an empty Redis database, so I carefully considered how to handle in-place updates of the data.

|

||||

|

||||

The code starts by loading the dataset with information on all the bikesharing stations for the system being processed into memory. When information is loaded for a station, the station (by key) is removed from the in-memory set of stations. Once all station data is loaded, we're left with a set containing all the station data that must be removed for that system.

|

||||

|

||||

The application iterates over this set of stations and creates a transaction to delete the station information, remove the station key from the geospatial indexes, and remove the station from the list of stations for the system.

|

||||

|

||||

### Notes on the code

|

||||

|

||||

There are a few interesting things to note in [the sample code][8]. First, items are added to the geospatial indexes using the `GEOADD` command but removed with the `ZREM` command. As the underlying implementation of the geospatial type uses sorted sets, items are removed using `ZREM`. A word of caution: For simplicity, the sample code demonstrates working with a single Redis node; the transaction blocks would need to be restructured to run in a cluster environment.

|

||||

|

||||

If you are using Redis 4.0 (or later), you have some alternatives to the `DELETE` and `HMSET` commands in the code. Redis 4.0 provides the [`UNLINK`][10] command as an asynchronous alternative to the `DELETE` command. `UNLINK` will remove the key from the keyspace, but it reclaims the memory in a separate thread. The [`HMSET`][11] command is [deprecated in Redis 4.0 and the `HSET` command is now variadic][12] (that is, it accepts an indefinite number of arguments).

|

||||

|

||||

### Notifying clients

|

||||

|

||||

At the end of the process, a notification is sent to the clients relying on our data. Using the Redis pub/sub mechanism, the notification goes out over the `geobike:station_changed` channel with the ID of the system.

|

||||

|

||||

### Data model

|

||||

|

||||

When structuring data in Redis, the most important thing to think about is how you will query the information. The two main queries the bikeshare application needs to support are:

|

||||

|

||||

* Find stations near us

|

||||

* Display information about stations

|

||||

|

||||

|

||||

|

||||

Redis provides two main data types that will be useful for storing our data: hashes and sorted sets. The [hash type][13] maps well to the JSON objects that represent stations; since Redis hashes don't enforce a schema, they can be used to store the variable station information.

|

||||

|

||||

Of course, finding stations geographically requires a geospatial index to search for stations relative to some coordinates. Redis provides [several commands][14] to build up a geospatial index using the [sorted set][15] data structure.

|

||||

|

||||

We construct keys using the format `${system_id}:station:${station_id}` for the hashes containing information about the stations and keys using the format `${system_id}:stations:location` for the geospatial index used to find stations.

|

||||

|

||||

### Getting the user's location

|

||||

|

||||

The next step in building out the application is to determine the user's current location. Most applications accomplish this through built-in services provided by the operating system. The OS can provide applications with a location based on GPS hardware built into the device or approximated from the device's available WiFi networks.

|

||||

|

||||

|

||||

|

||||

### Finding stations

|

||||

|

||||

After the user's location is found, the next step is locating nearby bikesharing stations. Redis' geospatial functions can return information on stations within a given distance of the user's current coordinates. Here's an example of this using the Redis command-line interface.

|

||||

|

||||

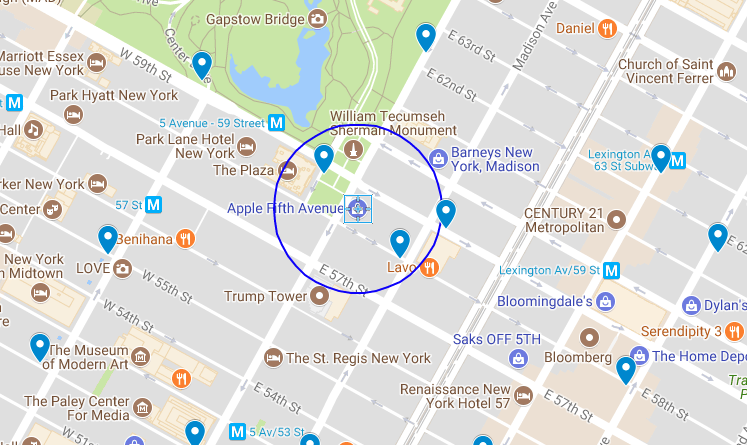

Imagine I'm at the Apple Store on Fifth Avenue in New York City, and I want to head downtown to Mood on West 37th to catch up with my buddy [Swatch][16]. I could take a taxi or the subway, but I'd rather bike. Are there any nearby sharing stations where I could get a bike for my trip?

|

||||

|

||||

The Apple store is located at 40.76384, -73.97297. According to the map, two bikeshare stations—Grand Army Plaza & Central Park South and East 58th St. & Madison—fall within a 500-foot radius (in blue on the map above) of the store.

|

||||

|

||||

I can use Redis' `GEORADIUS` command to query the NYC system index for stations within a 500-foot radius:

|

||||

```

|

||||

127.0.0.1:6379> GEORADIUS NYC:stations:location -73.97297 40.76384 500 ft

|

||||

|

||||

1) "NYC:station:3457"

|

||||

|

||||

2) "NYC:station:281"

|

||||

|

||||

```

|

||||

|

||||

Redis returns the two bikeshare locations found within that radius, using the elements in our geospatial index as the keys for the metadata about a particular station. The next step is looking up the names for the two stations:

|

||||

```

|

||||

127.0.0.1:6379> hget NYC:station:281 name

|

||||

|

||||

"Grand Army Plaza & Central Park S"

|

||||

|

||||

|

||||

|

||||

127.0.0.1:6379> hget NYC:station:3457 name

|

||||

|

||||

"E 58 St & Madison Ave"

|

||||

|

||||

```

|

||||

|

||||

Those keys correspond to the stations identified on the map above. If I want, I can add more flags to the `GEORADIUS` command to get a list of elements, their coordinates, and their distance from our current point:

|

||||

```

|

||||

127.0.0.1:6379> GEORADIUS NYC:stations:location -73.97297 40.76384 500 ft WITHDIST WITHCOORD ASC

|

||||

|

||||

1) 1) "NYC:station:281"

|

||||

|

||||

2) "289.1995"

|

||||

|

||||

3) 1) "-73.97371262311935425"

|

||||

|

||||

2) "40.76439830559216659"

|

||||

|

||||

2) 1) "NYC:station:3457"

|

||||

|

||||

2) "383.1782"

|

||||

|

||||

3) 1) "-73.97209256887435913"

|

||||

|

||||

2) "40.76302702144496237"

|

||||

|

||||

```

|

||||

|

||||

Looking up the names associated with those keys generates an ordered list of stations I can choose from. Redis doesn't provide directions or routing capability, so I use the routing features of my device's OS to plot a course from my current location to the selected bike station.

|

||||

|

||||

The `GEORADIUS` function can be easily implemented inside an API in your favorite development framework to add location functionality to an app.

|

||||

|

||||

### Other query commands

|

||||

|

||||

In addition to the `GEORADIUS` command, Redis provides three other commands for querying data from the index: `GEOPOS`, `GEODIST`, and `GEORADIUSBYMEMBER`.

|

||||

|

||||

The `GEOPOS` command can provide the coordinates for a given element from the geohash. For example, if I know there is a bikesharing station at West 38th and 8th and its ID is 523, then the element name for that station is NYC🚉523. Using Redis, I can find the station's longitude and latitude:

|

||||

```

|

||||

127.0.0.1:6379> geopos NYC:stations:location NYC:station:523

|

||||

|

||||

1) 1) "-73.99138301610946655"

|

||||

|

||||

2) "40.75466497634030105"

|

||||

|

||||

```

|

||||

|

||||

The `GEODIST` command provides the distance between two elements of the index. If I wanted to find the distance between the station at Grand Army Plaza & Central Park South and the station at East 58th St. & Madison, I would issue the following command:

|

||||

```

|

||||

127.0.0.1:6379> GEODIST NYC:stations:location NYC:station:281 NYC:station:3457 ft

|

||||

|

||||

"671.4900"

|

||||

|

||||

```

|

||||

|

||||

Finally, the `GEORADIUSBYMEMBER` command is similar to the `GEORADIUS` command, but instead of taking a set of coordinates, the command takes the name of another member of the index and returns all the members within a given radius centered on that member. To find all the stations within 1,000 feet of the Grand Army Plaza & Central Park South, enter the following:

|

||||

```

|

||||

127.0.0.1:6379> GEORADIUSBYMEMBER NYC:stations:location NYC:station:281 1000 ft WITHDIST

|

||||

|

||||

1) 1) "NYC:station:281"

|

||||

|

||||

2) "0.0000"

|

||||

|

||||

2) 1) "NYC:station:3132"

|

||||

|

||||

2) "793.4223"

|

||||

|

||||

3) 1) "NYC:station:2006"

|

||||

|

||||

2) "911.9752"

|

||||

|

||||

4) 1) "NYC:station:3136"

|

||||

|

||||

2) "940.3399"

|

||||

|

||||

5) 1) "NYC:station:3457"

|

||||

|

||||

2) "671.4900"

|

||||

|

||||

```

|

||||

|

||||

While this example focused on using Python and Redis to parse data and build an index of bikesharing system locations, it can easily be generalized to locate restaurants, public transit, or any other type of place developers want to help users find.

|

||||

|

||||

This article is based on [my presentation][17] at Open Source 101 in Raleigh this year.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/2/building-bikesharing-application-open-source-tools

|

||||

|

||||

作者:[Tague Griffith][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/tague

|

||||

[1]:https://www.python.org/

|

||||

[2]:https://redis.io/

|

||||

[3]:https://www.citibikenyc.com/

|

||||

[4]:https://www.citibikenyc.com/data-sharing-policy

|

||||

[5]:https://github.com/NABSA/gbfs

|

||||

[6]:http://nabsa.net/

|

||||

[7]:https://www.json.org/

|

||||

[8]:https://gist.github.com/tague/5a82d96bcb09ce2a79943ad4c87f6e15

|

||||

[9]:https://github.com/NABSA/gbfs/blob/master/systems.csv

|

||||

[10]:https://redis.io/commands/unlink

|

||||

[11]:https://redis.io/commands/hmset

|

||||

[12]:https://raw.githubusercontent.com/antirez/redis/4.0/00-RELEASENOTES

|

||||

[13]:https://redis.io/topics/data-types#Hashes

|

||||

[14]:https://redis.io/commands#geo

|

||||

[15]:https://redis.io/topics/data-types-intro#redis-sorted-sets

|

||||

[16]:https://twitter.com/swatchthedog

|

||||

[17]:http://opensource101.com/raleigh/talks/building-location-aware-apps-open-source-tools/

|

||||

@ -0,0 +1,264 @@

|

||||

Check Linux Distribution Name and Version

|

||||

======

|

||||

You have joined new company and want to install some software’s which is requested by DevApp team, also want to restart few of the service after installation. What to do?

|

||||

|

||||

In this situation at least you should know what Distribution & Version is running on it. It will help you perform the activity without any issue.

|

||||

|

||||

Administrator should gather some of the information about the system before doing any activity, which is first task for him.

|

||||

|

||||

There are many ways to find the Linux distribution name and version. You might ask, why i want to know this basic things?

|

||||

|

||||

We have four major distributions such as RHEL, Debian, openSUSE & Arch Linux. Each distribution comes with their own package manager which help us to install packages on the system.

|

||||

|

||||

If you don’t know the distribution name then you wont be able to perform the package installation.

|

||||

|

||||

Also you won’t able to run the proper command for service bounces because most of the distributions implemented systemd command instead of SysVinit script.

|

||||

|

||||

It’s good to have the basic commands which will helps you in many ways.

|

||||

|

||||

Use the following Methods to Check Your Linux Distribution Name and Version.

|

||||

|

||||

### List of methods

|

||||

|

||||

* lsb_release command

|

||||

* /etc/*-release file

|

||||

* uname command

|

||||

* /proc/version file

|

||||

* dmesg Command

|

||||

* YUM or DNF Command

|

||||

* RPM command

|

||||

* APT-GET command

|

||||

|

||||

|

||||

|

||||

### Method-1: lsb_release Command

|

||||

|

||||

LSB stands for Linux Standard Base that prints distribution-specific information such as Distribution name, Release version and codename.

|

||||

```

|

||||

# lsb_release -a

|

||||

No LSB modules are available.

|

||||