Mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-03-24 02:20:09 +08:00

Commit 667df81784

@@ -6,84 +6,83 @@

This is a question beginners ask a lot, so here I'll share my 10 favorite blogs. They help us solve problems and keep us up to date on news about every Ubuntu release. No, I'm not talking about the usual Linux and shell-scripting material; I mean a smooth Linux desktop and the Ubuntu experience an ordinary user is looking for.

These websites help you fix the problems you run into, point you to all kinds of applications, and bring you the latest news from the Ubuntu world. They help you get to know Ubuntu better, so below are my 10 favorite blogs, which together cover every aspect of Ubuntu.

### 10 Blogs Every Ubuntu User Must Follow ###

Since I write for itsfoss myself, I have deliberately left it off the list. I have also left out [Planet Ubuntu][1], because it isn't suited to beginners. Without further ado, let's look at the **best Ubuntu blogs** (in no particular order):

### [OMG! Ubuntu!][2] ###

This is a site purely for Ubuntu enthusiasts. However small, anything related to Ubuntu ends up on OMG! Ubuntu! The blog mostly covers news and applications. You can also find some Ubuntu tutorials here, though not many.

This blog keeps you informed about everything happening in the Ubuntu world.

### [Web Upd8][3] ###

Web Upd8 is my favorite blog. Besides news, it has lots of easy-to-follow tutorials. Web Upd8 also maintains several PPAs. Its author, [Andrei][4], sometimes answers your questions in the comments, which can be a big help.

A site where you can catch up on news and learn from tutorials.

### [Noobs Lab][5] ###

Like Web Upd8, Noobs Lab has lots of tutorials and news, and its PPA probably hosts the largest collection of themes and icon sets.

If you are a beginner, check out Noobs Lab.

### [Linux Scoop][6] ###

Most blogs are "text blogs": you learn by following written instructions and screenshots. Linux Scoop, by contrast, has lots of videos to help beginners learn; it is a video blog through and through.

If you prefer watching to reading, Linux Scoop should suit you best.

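### [Ubuntu Geek][7] ###

This is a relatively old blog. It covers a wide range of topics and has plenty of quick installation tutorials and instructions. That said, I sometimes find that some of its tutorials lack depth, though that may just be my personal opinion.

For quick tips, go to Ubuntu Geek.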

### [Tech Drive-in][8] ###

This site doesn't seem to be updated as often as it used to be, perhaps because Manuel is busy with his day job, but it still offers a lot. News, tutorials, and application reviews are the highlights of this blog.

Frequently featured in the [Ubuntu newsletter][9], Tech Drive-in is definitely a site worth following.

### [UbuntuHandbook][10] ###

Quick tips, news, and tutorials are UbuntuHandbook's USP. [Ji m][11] has also recently been helping maintain some PPAs. In all seriousness, I have to say the blog's pages could look a lot better, though that's purely my personal opinion.

UbuntuHandbook is really handy.

### [Unixmen][12] ###

This site is maintained by a team of people, and it isn't limited to Ubuntu: it also covers many other Linux distributions. It has its own forum to help users.

Keep up with Unixmen.

### [The Mukt][13] ###

The Mukt is Muktware's new incarnation. Muktware was a Linux outlet that gradually faded away and was reborn as The Mukt. Whereas Muktware was a strictly Linux and open source blog, The Mukt covers a wide range of topics, including tech news, geek news, and sometimes even entertainment news (sounds like quite a mix, doesn't it?). The Mukt also carries plenty of Ubuntu news you may find interesting.

The Mukt is more than a blog; it's a cultural trend.

### [LinuxG][14] ###

LinuxG is a site where you can find all kinds of "how to install" articles. Almost every article opens with the line "Hello, Linux geeksters, as you know…", and the blog could do better with a different theme. I often find that some articles lack depth and seem to have been written in a hurry, but it is still a good place to keep track of the latest application releases.

It's a good place to quickly check out new applications and their latest versions.

### Know any other good sites? ###

This is my list of the best Ubuntu blogs, which I follow regularly. I know there are plenty more out there, perhaps better than some of those listed here. So why not mention your favorite Ubuntu blog in the comments section below?

--------------------------------------------------------------------------------

via: http://itsfoss.com/ten-blogs-every-ubuntu-user-must-follow/

Author: [Abhishek][a]

Translator: [barney-ro](https://github.com/barney-ro)

Proofreader: [Caroline](https://github.com/carolinewuyan)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

@@ -0,0 +1,42 @@

Debian 8 "Jessie" to Have GNOME as the Default Desktop
================================================================================

> The Debian GNOME team has made real progress

<center>*GNOME 3.14 desktop*</center>

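**Debian project developers have spent a long time deciding whether Xfce, GNOME, or some other desktop environment should be the default, but for now it looks like GNOME has won.**

[We mentioned two days ago][1] that the GNOME 3.14 packages had been uploaded to the Debian Testing (Debian 8 "Jessie") repositories, which came as a pleasant surprise. Normally the maintainers are not this quick to add packages of any kind, let alone an entire desktop environment.

As it turns out, the debate over the default desktop for the upcoming Debian 8 release has been settled, although that may be too strong a word. In any case, there will always be some developers who want Xfce and others who prefer GNOME, and MATE appears to be the alternative favored by quite a few people as well.

### GNOME will most likely be the default desktop environment for Debian 8 "Jessie" ###

We say "most likely" because no agreement has been reached yet, but GNOME appears to be well ahead. Debian maintainer and developer Joey Hess explained why.

"Based on the preliminary results at https://wiki.debian.org/DebianDesktop/Requalification/Jessie, some of the required data is not yet available, but at this point I am 80% certain that GNOME has come out ahead, in particular because of the progress on accessibility and on certain systemd integration. On accessibility, both GNOME and MATE are well ahead. Some other desktops have improved their accessibility support in Debian, partly pushed by this process, but they still need substantial upstream work."

"On systemd /etc integration: Xfce, MATE, and others are trying to keep up with the changes happening in this area; hopefully, once the technical team stops making changes, there will be time to resolve these issues during the freeze. So this is not a complete rejection of those desktops, but judging by their current state, GNOME is the choice going forward," Joey Hess [added][2].

The developer also noted in the message that Debian's GNOME team is [highly passionate][3] about the project it maintains, while Debian's Xfce team has been the real obstacle to settling on a default desktop.

In any case, Debian 8 "Jessie" has no fixed release date, and there is no indication of when it might be released. GNOME 3.14, on the other hand, is already out (you may have seen the news), and it should soon be ready for Debian Testing.

We should also thank Jordi Mallach, one of the maintainers of the GNOME packages in Debian, for pointing us to the right information.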

--------------------------------------------------------------------------------

via: http://news.softpedia.com/news/Debian-8-quot-Jessie-quot-to-Have-GNOME-as-the-Default-Desktop-459665.shtml

Author: [Silviu Stahie][a]

Translator: [fbigun](https://github.com/fbigun)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[a]:http://news.softpedia.com/editors/browse/silviu-stahie
[1]:http://news.softpedia.com/news/Debian-8-quot-Jessie-quot-to-Get-GNOME-3-14-459470.shtml
[2]:http://anonscm.debian.org/cgit/tasksel/tasksel.git/commit/?id=dce99f5f8d84e4c885e6beb4cc1bb5bb1d9ee6d7
[3]:http://news.softpedia.com/news/Debian-Maintainer-Says-that-Xfce-on-Debian-Will-Not-Meet-Quality-Standards-GNOME-Is-Needed-454962.shtml

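@@ -0,0 +1,29 @@

End of the Line for Red Hat Enterprise Linux 5
================================================================================

In March 2007, Red Hat first announced its [Red Hat Enterprise Linux 5][1] (RHEL) platform. Though it may seem ordinary today, RHEL 5 was particularly notable as Red Hat's first major release to emphasize virtualization, a feature that modern distributions now take for granted.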

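The original plan was to give RHEL 5 a seven-year lifespan, but that plan changed in 2012 when Red Hat [extended][2] standard support for RHEL 5 to 10 years.

This past week, Red Hat released RHEL 5.11, the final minor milestone release of the RHEL 5.x series. RHEL 5 now enters what Red Hat calls "Production 3" support, which will last another three years. During this phase, no new functionality is added to the platform, and Red Hat will provide only critical-impact security fixes and urgent-priority bug fixes.

Jim Totton, vice president and general manager of Red Hat's Platform Business Unit, said in a statement: "Red Hat's commitment to a long, stable product lifecycle is a key benefit for enterprise customers who rely on Red Hat Enterprise Linux for their critical applications. While Red Hat Enterprise Linux 5.11 is the final minor release of the Red Hat Enterprise Linux 5 platform, the enhancements it offers in terms of security and reliability are designed to maintain the platform's viability for years to come."

The new enhancements include security and stability updates, including improvements to the way Red Hat can help users debug a system.

There are also new storage drivers to support newer storage adapters, and improved support for RHEL running on VMware ESXi.

On the security front, the big improvement is an update to OpenSCAP version 1.0.8. Red Hat first supported OpenSCAP in May 2011 with the [RHEL 5.7 milestone update][3]. OpenSCAP is an open source implementation of the Security Content Automation Protocol (SCAP) framework, which provides a standardized approach to maintaining secure systems.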

--------------------------------------------------------------------------------

via: http://www.linuxplanet.com/news/end-of-the-line-for-red-hat-enterprise-linux-5.html

Author: Sean Michael Kerner

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[1]:http://www.internetnews.com/ent-news/article.php/3665641
[2]:http://www.serverwatch.com/server-news/red-hat-extends-linux-support.html
[3]:http://www.internetnews.com/skerner/2011/05/red-hat-enterprise-linux-57-ad.html

@@ -0,0 +1,39 @@

Second Bugfix Release for KDE Plasma 5 Arrives with Lots of Changes
================================================================================

> The new Plasma 5 desktop is out with a new version

<center>*KDE Plasma 5*</center>

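### The second bugfix release for Plasma 5 is out and available for download ###

Bugfix releases keep arriving for KDE Plasma 5, the new desktop experience that will be an integral part of the KDE ecosystem.

The [announcement][1] reads: "This release, versioned plasma-5.0.2, adds a month's worth of new translations and fixes from KDE's contributors. The bugfixes are typically small but important such as fixing text which couldn't be translated, using the correct icons and fixing overlapping files with KDELibs 4 software. It also adds a month's hard work of translations to make support in other languages even more complete."

This desktop is not yet shipped by default in any Linux distribution, and it will be a while until we are able to test it properly.

The developers also explain that the updated packages can be reviewed in the development versions of Kubuntu Plasma 5.

You can also download the source packages if you need them individually.

- [KDE Plasma Packages][2]
- [KDE Plasma Sources][3]

If you decide to compile it, keep in mind that KDE Plasma 5.0.2 is a sophisticated piece of software, and you may have to work through quite a few problems.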

--------------------------------------------------------------------------------

via: http://news.softpedia.com/news/Second-Bugfix-Release-for-KDE-Plasma-5-Arrives-with-Lots-of-Changes-459688.shtml

Author: [Silviu Stahie][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[a]:http://news.softpedia.com/editors/browse/silviu-stahie
[1]:http://kde.org/announcements/plasma-5.0.2.php
[2]:https://community.kde.org/Plasma/Packages
[3]:http://kde.org/info/plasma-5.0.2.php

@@ -1,38 +0,0 @@

Translating by ZTinoZ

Red Hat Acquires FeedHenry for $82 Million to Advance Mobile Development
================================================================================

> Red Hat jumps into the mobile development sector with a key acquisition.

Red Hat's JBoss developer tools division has always focused on enterprise development, but hasn't always been focused on mobile. Today that will start to change, as Red Hat announced its intention to acquire mobile development vendor [FeedHenry][1] for $82 million in cash. The deal is set to close in the third quarter of Red Hat's fiscal 2015. Red Hat is set to disclose its second-quarter fiscal 2015 earnings at 4 ET today.

Mike Piech, general manager of Middleware at Red Hat, told Datamation that upon the deal's closing, FeedHenry's employees will become Red Hat employees.

FeedHenry's development platform enables application developers to rapidly build mobile applications for Android, iOS, Windows Phone and BlackBerry. The FeedHenry platform leverages the Node.js programming architecture, which is not an area where JBoss has had much exposure in the past.

"The acquisition of FeedHenry significantly expands Red Hat's support for and engagement in Node.js," Piech said.

Piech noted that Red Hat's OpenShift Platform-as-a-Service (PaaS) technology already has a Node.js cartridge. Additionally, Red Hat Enterprise Linux ships a tech preview of Node.js as part of the Red Hat Software Collections.

While Node.js itself is open source, not all of FeedHenry's technology is currently available under an open source license. In keeping with the policy Red Hat has followed throughout its history, it is now committing to making FeedHenry open source as well.

"As we've done with other acquisitions, open sourcing the technology we acquire is a priority for Red Hat, and we have no reason to expect that approach will change with FeedHenry," Piech said.

Red Hat's last major acquisition of a company with non-open source technology was [ManageIQ][2], for $104 million back in 2012. In May of this year, Red Hat launched the ManageIQ open source project, opening up development and code of the formerly closed-source cloud management technology.

From an integration standpoint, Red Hat is not yet providing full details of precisely where FeedHenry will fit in.

"We've already identified a number of areas where FeedHenry and Red Hat's existing technology and products can be better aligned and integrated," Piech said. "We'll share more details as we develop the roadmap over the next 90 days."

--------------------------------------------------------------------------------

via: http://www.datamation.com/mobile-wireless/red-hat-acquires-feedhenry-for-82-million-to-advance-mobile-development.html

Author: [Sean Michael Kerner][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[a]:http://www.datamation.com/author/Sean-Michael-Kerner-4807810.html
[1]:http://www.feedhenry.com/
[2]:http://www.datamation.com/cloud-computing/red-hat-makes-104-million-cloud-management-bid-with-manageiq-acquisition.html

|

||||

@ -1,36 +0,0 @@

|

||||

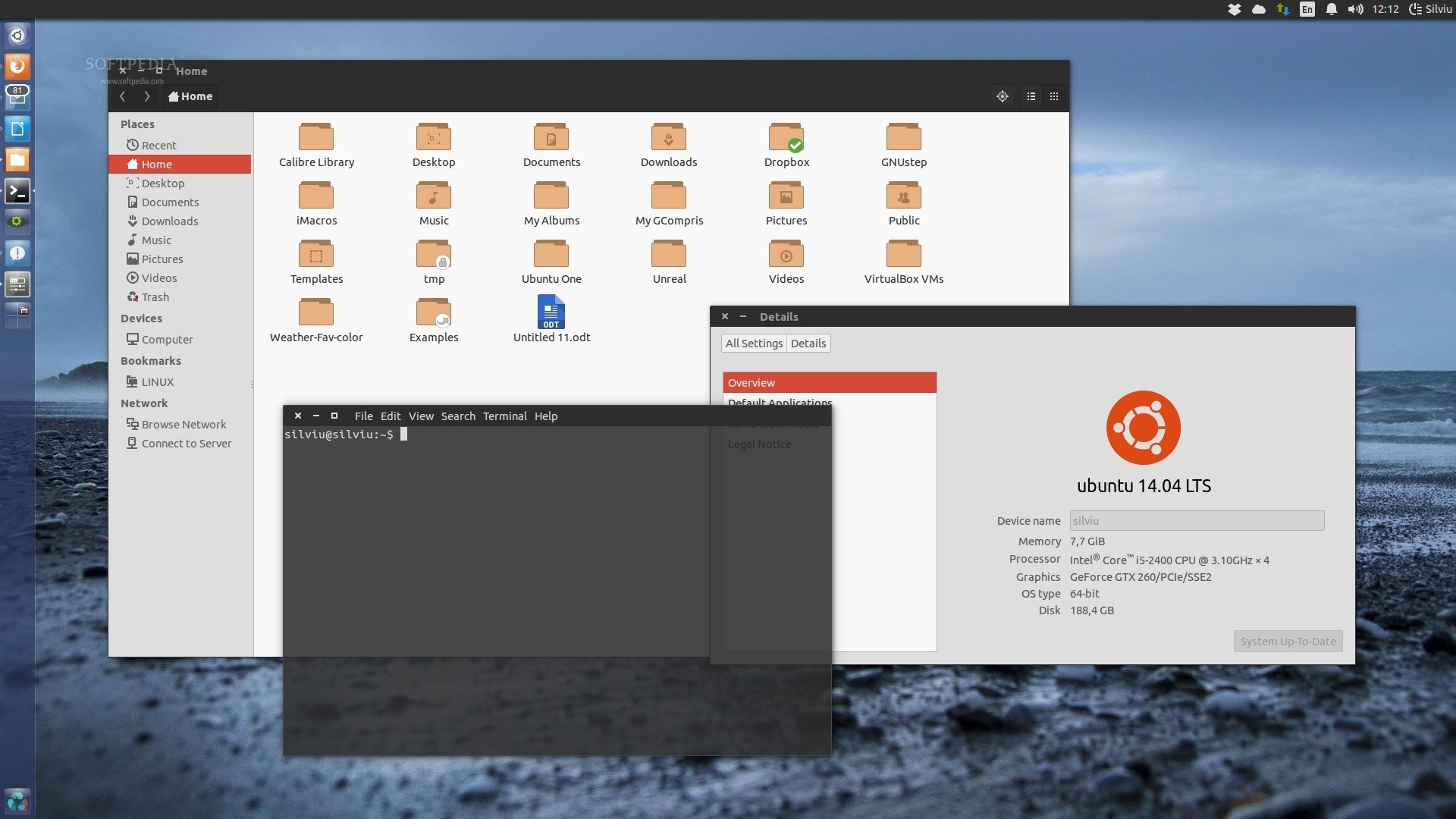

Canonical Closes nginx Exploit in Ubuntu 14.04 LTS

|

||||

================================================================================

|

||||

> Users have to upgrade their systems to fix the issue

Ubuntu 14.04 LTS

**Canonical has published details in a security notice about an nginx vulnerability that affected Ubuntu 14.04 LTS (Trusty Tahr). The problem has been identified and fixed.**

The Ubuntu developers have fixed a small nginx exploit. They explain that nginx could have been made to expose sensitive information over the network.

According to the security notice, “Antoine Delignat-Lavaud and Karthikeyan Bhargavan discovered that nginx incorrectly reused cached SSL sessions. An attacker could possibly use this issue in certain configurations to obtain access to information from a different virtual host.”
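
The following toy Python model shows why reusing cached sessions can leak information across virtual hosts. It is an illustration only, not nginx's code. The class, host names, and "secret" strings are hypothetical. When cached sessions are looked up by session ID alone, a session set up with one host can be resumed on another. Making the host part of the lookup key closes that gap.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class SessionCache:
    """Toy TLS session cache shared by several virtual hosts (hypothetical)."""

    bind_to_host: bool
    entries: Dict[object, str] = field(default_factory=dict)

    def _key(self, host: str, session_id: str) -> object:
        # With binding, the host is part of the lookup key, so a session
        # created for one virtual host cannot be found from another one.
        return (host, session_id) if self.bind_to_host else session_id

    def store(self, host: str, session_id: str, secret: str) -> None:
        self.entries[self._key(host, session_id)] = secret

    def resume(self, host: str, session_id: str) -> Optional[str]:
        # Returns the cached secret if the session can be resumed, else None
        # (meaning the client has to perform a full handshake).
        return self.entries.get(self._key(host, session_id))


def resume_on_other_host(bind_to_host: bool) -> Optional[str]:
    cache = SessionCache(bind_to_host=bind_to_host)
    # A client completes a full handshake with virtual host A...
    cache.store("a.example.com", "sess-1", "master-secret")
    # ...then presents the same session ID to virtual host B.
    return cache.resume("b.example.com", "sess-1")


print(resume_on_other_host(bind_to_host=False))  # prints master-secret
print(resume_on_other_host(bind_to_host=True))   # prints None
```

The design point is the cache key. Whatever identifies the security context a session belongs to has to be part of the key, or the cache cannot tell hosts apart. OpenSSL, which nginx uses, exposes a similar idea as the "session ID context".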

For a more detailed description of the problems, you can see Canonical's security [notification][1]. Users should upgrade their Linux distribution in order to correct this issue.

The problem can be repaired by upgrading the system to the latest nginx package (and dependencies). To apply the patch, you can simply run the Update Manager application.

If you don't want to use the Software Updater, you can open a terminal and enter the following commands (they use sudo, so you will need administrator privileges):

    sudo apt-get update
    sudo apt-get dist-upgrade

In general, a standard system update will make all the necessary changes. You don't have to restart the PC in order to implement this fix.

--------------------------------------------------------------------------------

via: http://news.softpedia.com/news/Canonical-Closes-Nginx-Exploit-in-Ubuntu-14-04-LTS-459677.shtml

Author: [Silviu Stahie][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[a]:http://news.softpedia.com/editors/browse/silviu-stahie
[1]:http://www.ubuntu.com/usn/usn-2351-1/

@@ -1,29 +0,0 @@

End of the Line for Red Hat Enterprise Linux 5
================================================================================

In March of 2007, Red Hat first announced its [Red Hat Enterprise Linux 5][1] (RHEL) platform. Though it might seem quaint today, RHEL 5 was particularly notable in that it was the first major release for Red Hat to emphasize virtualization, which is a feature all modern distros now take for granted.

Originally the plan was for RHEL 5 to have seven years of life, but that plan changed in 2012 when Red Hat [extended][2] its standard support for RHEL 5 to 10 years.

This past week, Red Hat released RHEL 5.11, which is the final minor milestone release for RHEL 5.x. RHEL 5 now enters what Red Hat calls Production 3 support, which will last for another three years. During the Production 3 phase, no new functionality is added to the platform, and Red Hat will only provide critical-impact security fixes and urgent-priority bug fixes.

"Red Hat’s commitment to a long, stable product lifecycle is a key benefit for enterprise customers who rely on Red Hat Enterprise Linux for their critical applications," Jim Totton, vice president and general manager, Platform Business Unit, Red Hat, said in a statement. "While Red Hat Enterprise Linux 5.11 is the final minor release of the Red Hat Enterprise Linux 5 platform, the enhancements it offers in terms of security and reliability are designed to maintain the platform’s viability for years to come."

The new enhancements include security and stability updates, including improvements to the way that Red Hat can help users to debug a system.

There are also new storage drivers to support newer storage adapters, and improved support for RHEL running on VMware ESXi.

On the security front, the big improvement is an update to OpenSCAP version 1.0.8. Red Hat first provided support for OpenSCAP in May of 2011 with the [RHEL 5.7 milestone update][3]. OpenSCAP is an open source implementation of the Security Content Automation Protocol (SCAP) framework for creating a standardized approach for maintaining secure systems.

--------------------------------------------------------------------------------

via: http://www.linuxplanet.com/news/end-of-the-line-for-red-hat-enterprise-linux-5.html

Author: Sean Michael Kerner

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[1]:http://www.internetnews.com/ent-news/article.php/3665641
[2]:http://www.serverwatch.com/server-news/red-hat-extends-linux-support.html
[3]:http://www.internetnews.com/skerner/2011/05/red-hat-enterprise-linux-57-ad.html

@@ -1,38 +0,0 @@

Second Bugfix Release for KDE Plasma 5 Arrives with Lots of Changes
================================================================================

> The new Plasma 5 desktop is out with a new version

KDE Plasma 5

### The KDE Community has announced that the second bugfix release for Plasma 5 is now out and available for download. ###

Bugfix releases for KDE Plasma 5, the new desktop experience that will be an integral part of the KDE ecosystem, have started to arrive very often.

"This release, versioned plasma-5.0.2, adds a month's worth of new translations and fixes from KDE's contributors. The bugfixes are typically small but important such as fixing text which couldn't be translated, using the correct icons and fixing overlapping files with KDELibs 4 software. It also adds a month's hard work of translations to make support in other languages even more complete," reads the [announcement][1].

This particular desktop is not yet shipped by default in any Linux distro, and it will be a while until we are able to test it properly.

The developers also explain that the updated packages can be reviewed in the development versions of Kubuntu Plasma 5.

You can also download the source packages, if you need them individually.

- [KDE Plasma Packages][2]
- [KDE Plasma Sources][3]

You also have to keep in mind that KDE Plasma 5.0.2 is a sophisticated piece of software and you really need to know what you are doing if you decide to compile it.

--------------------------------------------------------------------------------

via: http://news.softpedia.com/news/Second-Bugfix-Release-for-KDE-Plasma-5-Arrives-with-Lots-of-Changes-459688.shtml

Author: [Silviu Stahie][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[a]:http://news.softpedia.com/editors/browse/silviu-stahie
[1]:http://kde.org/announcements/plasma-5.0.2.php
[2]:https://community.kde.org/Plasma/Packages
[3]:http://kde.org/info/plasma-5.0.2.php

@@ -1,92 +0,0 @@

Making MySQL Better at GitHub
================================================================================

> At GitHub we say, "it's not fully shipped until it's fast." We've talked before about some of the ways we keep our [frontend experience speedy][1], but that's only part of the story. Our MySQL database infrastructure dramatically affects the performance of GitHub.com. Here's a look at how our infrastructure team seamlessly conducted a major MySQL improvement last August and made GitHub even faster.

### The mission ###

Last year we moved the bulk of GitHub.com's infrastructure into a new datacenter with world-class hardware and networking. Since MySQL forms the foundation of our backend systems, we expected database performance to benefit tremendously from an improved setup. But creating a brand-new cluster with brand-new hardware in a new datacenter is no small task, so we had to plan and test carefully to ensure a smooth transition.

### Preparation ###

A major infrastructure change like this requires measurement and metrics gathering every step of the way. After installing base operating systems on our new machines, it was time to test out our new setup with various configurations. To get a realistic test workload, we used tcpdump to extract SELECT queries from the old cluster that was serving production and replayed them onto the new cluster.
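
Replaying only SELECT queries means the test load should not change any data on the new cluster. Below is a minimal Python sketch of that filtering step. It assumes the captured traffic has already been decoded into one SQL statement per string; turning raw tcpdump output into statements is out of scope here. `replayable_queries` and the sample statements are hypothetical.

```python
import re
from typing import List

# A statement counts as a read if its first keyword is SELECT, ignoring
# leading whitespace and /* ... */ comments (case-insensitive).
_SELECT_RE = re.compile(r"^\s*(?:/\*.*?\*/\s*)*select\b", re.IGNORECASE | re.DOTALL)


def replayable_queries(statements: List[str]) -> List[str]:
    """Keep only SELECT statements, so replaying them should not change data."""
    return [s for s in statements if _SELECT_RE.match(s)]


# Hypothetical captured statements.
captured = [
    "SELECT * FROM repositories WHERE id = 42",
    "  /* web */ select name from users limit 1",
    "UPDATE users SET name = 'x' WHERE id = 1",
    "INSERT INTO stars VALUES (1, 2)",
]
print(replayable_queries(captured))  # the first two statements only
```

Locking reads such as `SELECT ... FOR UPDATE` would still pass this filter. A stricter version might exclude them too.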

MySQL tuning is very workload specific, and well-known configuration settings like innodb_buffer_pool_size often make the most difference in MySQL's performance. But on a major change like this, we wanted to make sure we covered everything, so we took a look at settings like innodb_thread_concurrency, innodb_io_capacity, and innodb_buffer_pool_instances, among others.

We were careful to only make one test configuration change at a time, and to run tests for at least 12 hours. We looked for query response time changes, stalls in queries per second, and signs of reduced concurrency. We observed the output of SHOW ENGINE INNODB STATUS, particularly the SEMAPHORES section, which provides information on workload contention.
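
The "one change at a time" discipline can be sketched as a test plan in which every candidate configuration differs from the baseline in exactly one setting. The setting names come from the paragraphs above. The baseline and candidate values are placeholders for illustration only; they are neither GitHub's actual configuration nor recommended values.

```python
from typing import Any, Dict, Iterator, List, Tuple

Config = Dict[str, Any]


def one_change_plans(
    baseline: Config, candidates: Dict[str, List[Any]]
) -> Iterator[Tuple[str, Any, Config]]:
    """Yield (setting, value, config) where config differs from the
    baseline in exactly one setting."""
    for setting, values in candidates.items():
        for value in values:
            if baseline.get(setting) == value:
                continue  # same as the baseline: nothing new to measure
            config = dict(baseline)  # copy, so the baseline is never mutated
            config[setting] = value
            yield setting, value, config


# Placeholder values for illustration only; not recommendations.
baseline = {
    "innodb_buffer_pool_size": "96G",
    "innodb_thread_concurrency": 0,
    "innodb_io_capacity": 200,
    "innodb_buffer_pool_instances": 8,
}
candidates = {
    "innodb_thread_concurrency": [0, 16, 32],
    "innodb_io_capacity": [200, 1000],
}

for setting, value, _config in one_change_plans(baseline, candidates):
    # Each run would be measured for at least 12 hours before the next one.
    print(f"test run: {setting}={value}")
```

Each candidate is a single-setting diff from the baseline. That is what makes a change in response time or queries per second attributable to that one setting.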

Once we were relatively comfortable with configuration settings, we started migrating one of our largest tables onto an isolated cluster. This served as an early test of the process, gave us more space in the buffer pools of our core cluster and provided greater flexibility for failover and storage. This initial migration introduced an interesting application challenge, as we had to make sure we could maintain multiple connections and direct queries to the correct cluster.

In addition to all our raw hardware improvements, we also made process and topology improvements: we added delayed replicas, faster and more frequent backups, and more read replica capacity. These were all built out and ready for go-live day.
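
A delayed replica deliberately applies changes from the primary a fixed time after they were committed. This leaves a window to recover data after an accidental destructive query. In MySQL 5.6 and later, the delay is set on the replica with `CHANGE MASTER TO MASTER_DELAY = <seconds>`. The post doesn't say which delay GitHub chose. The toy Python model below uses hypothetical names and is not MySQL code. It shows which events such a replica has applied at a given moment.

```python
from typing import List, NamedTuple


class Event(NamedTuple):
    committed_at: int  # seconds, on the primary's clock
    statement: str


def applied_by(events: List[Event], now: int, delay: int) -> List[Event]:
    """Events that a replica lagging `delay` seconds has applied at `now`."""
    return [e for e in events if e.committed_at + delay <= now]


# Hypothetical replication stream.
events = [
    Event(0, "INSERT INTO issues (id) VALUES (1)"),
    Event(1800, "UPDATE issues SET state = 'closed' WHERE id = 1"),
    Event(3500, "DROP TABLE issues"),  # the mistake we would want to undo
]

# With a one-hour delay, at t=3600 only the first event has been applied.
# The DROP is still pending, so replication on the delayed replica could
# be stopped before it ever executes there.
print([e.statement for e in applied_by(events, now=3600, delay=3600)])
```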

### Making a list; checking it twice ###

With millions of people using GitHub.com on a daily basis, we did not want to take any chances with the actual switchover. We came up with a thorough [checklist][2] before the transition:

We also planned a maintenance window and [announced it on our blog][3] to give our users plenty of notice.

### Migration day ###

At 5am Pacific Time on a Saturday, the migration team assembled online in chat and the process began:

We put the site in maintenance mode, made an announcement on Twitter, and set out to work through the list above:

**13 minutes** later, we were able to confirm operations of the new cluster:

Then we flipped GitHub.com out of maintenance mode, and let the world know that we were in the clear.

Lots of up-front testing and preparation meant that we kept the work we needed on go-live day to a minimum.

### Measuring the final results ###

In the weeks following the migration, we closely monitored performance and response times on GitHub.com. We found that our cluster migration cut the average GitHub.com page load time by half and the 99th percentile by *two-thirds*:
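
The average and the 99th percentile summarize different things. Below is a minimal sketch of both. It uses the nearest-rank definition of a percentile, which is one of several common definitions; the post doesn't say which one GitHub used. The sample values are made up and chosen only so that the arithmetic mirrors the claims above. They are not GitHub's data.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def reduction(before: float, after: float) -> float:
    """Fraction by which a metric dropped (0.5 means "cut by half")."""
    return 1 - after / before


# Hypothetical page-load times in milliseconds, 100 samples each.
before = [200] * 98 + [900, 1200]
after = [100] * 98 + [300, 750]

mean_before = sum(before) / len(before)
mean_after = sum(after) / len(after)
print("mean reduction:", reduction(mean_before, mean_after))
print("p99 reduction:", reduction(percentile(before, 99), percentile(after, 99)))
```

The two metrics can move independently. The mean is dominated by typical page loads, while the 99th percentile tracks the slowest 1% of them.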

### What we learned ###

#### Functional partitioning ####

During this process we decided that moving larger tables that mostly store historic data to a separate cluster was a good way to free up disk and buffer pool space. This allowed us to leave more resources for our "hot" data, splitting some connection logic to enable the application to query multiple clusters. This proved to be a big win for us and we are working to reuse this pattern.
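
On the application side, functional partitioning comes down to a routing decision made per table. Below is a minimal Python sketch that assumes a static table-to-cluster map. The cluster and table names are hypothetical, and the post doesn't describe how GitHub's application actually implements this.

```python
from typing import Dict, Iterable, Set


class ClusterRouter:
    """Pick the MySQL cluster for each table (hypothetical names)."""

    def __init__(self, default_cluster: str, table_map: Dict[str, str]):
        self.default_cluster = default_cluster
        self.table_map = dict(table_map)

    def cluster_for(self, table: str) -> str:
        # Tables moved to a separate cluster are listed explicitly; every
        # other table stays on the default cluster holding the "hot" data.
        return self.table_map.get(table, self.default_cluster)

    def clusters_for(self, tables: Iterable[str]) -> Set[str]:
        """All clusters a query touching `tables` would need."""
        return {self.cluster_for(t) for t in tables}


router = ClusterRouter("core", {"events_archive": "historic", "audit_log": "historic"})
print(router.cluster_for("repositories"))    # prints core
print(router.cluster_for("events_archive"))  # prints historic
```

In this sketch, a query for which `clusters_for` returns more than one cluster can't run as a single SQL statement on one server. It would have to be split, with the results combined in the application.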

#### Always be testing ####

You can never do too much acceptance and regression testing for your application. Replicating data from the old cluster to the new cluster while running acceptance tests and replaying queries were invaluable for tracing out issues and preventing surprises during the migration.

#### The power of collaboration ####

Large changes to infrastructure like this mean a lot of people need to be involved, so pull requests functioned as our primary point of coordination as a team. We had people all over the world jumping in to help.

Deploy day team map:

<iframe width="620" height="420" frameborder="0" src="https://render.githubusercontent.com/view/geojson?url=https://gist.githubusercontent.com/anonymous/5fa29a7ccbd0101630da/raw/map.geojson"></iframe>

This created a workflow where we could open a pull request to try out changes, get real-time feedback, and see commits that fixed regressions or errors, all without phone calls or face-to-face meetings. When everything has a URL that can provide context, it's easy to involve a diverse range of people and make it simple for them to give feedback.

### One year later ###

A full year later, we are happy to call this migration a success: MySQL performance and reliability continue to meet our expectations. And as an added bonus, the new cluster enabled us to make further improvements towards greater availability and faster query response times. I'll be writing more about those improvements here soon.

--------------------------------------------------------------------------------

via: https://github.com/blog/1880-making-mysql-better-at-github

Author: [samlambert][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/)

[a]:https://github.com/samlambert
[1]:https://github.com/blog/1756-optimizing-large-selector-sets
[2]:https://help.github.com/articles/writing-on-github#task-lists
[3]:https://github.com/blog/1603-site-maintenance-august-31st-2013

@@ -1,89 +0,0 @@

Drab Desktop? Try These 4 Beautiful Linux Icon Themes
================================================================================

**Ubuntu’s default icon theme [hasn’t changed much][1] in almost 5 years, save for the [odd new icon here and there][2]. If you’re tired of how it looks we’re going to show you a handful of gorgeous alternatives that will easily freshen things up.**

Do feel free to share links to your own favourite choices in the comments below.

### Captiva ###

Captiva icons, elementary folders and Moka GTK

Captiva is a relatively new icon theme that even the least bling-prone user can appreciate.

Made by DeviantArt user ~[bokehlicia][3], Captiva shuns the 2D flat look of many current icon themes for a softer, rounded look. The icons themselves have an almost material or textured look, with subtle drop shadows and a rich colour palette adding to the charm.

It doesn’t yet include a set of its own folder icons, and will fall back to using elementary (if available) or stock Ubuntu icons.

To install Captiva icons in Ubuntu 14.04, you can add the official PPA by opening a new Terminal window and entering the following commands:

    sudo add-apt-repository ppa:captiva/ppa
    sudo apt-get update && sudo apt-get install captiva-icon-theme

Or, if you’re not into software source cruft, download the icon pack directly from the DeviantArt page. To install it, extract the archive and move the resulting folder to the ‘.icons’ directory in your Home folder.
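
The manual route can also be scripted. Below is a minimal Python sketch. It assumes the download is a .zip archive (check the file you actually get from DeviantArt) and that each top-level folder inside it is a theme. `install_icon_archive` is a hypothetical helper. It creates `~/.icons` if that directory doesn't exist yet.

```python
import zipfile
from pathlib import Path
from typing import List, Optional, Union


def install_icon_archive(
    archive: Union[str, Path], icons_dir: Optional[Union[str, Path]] = None
) -> List[str]:
    """Extract an icon-theme .zip into ~/.icons (or icons_dir) and return
    the names of the top-level folders it contained."""
    target = Path(icons_dir) if icons_dir is not None else Path.home() / ".icons"
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        # "Captiva/index.theme" -> "Captiva"
        top_level = sorted({name.split("/", 1)[0] for name in zf.namelist()})
        # extractall() drops absolute paths and ".." components from member
        # names, so entries cannot be written outside `target`.
        zf.extractall(target)
    return top_level
```

For example, `install_icon_archive("captiva.zip")` (a hypothetical file name) performs the extract-and-move step in one call.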

|

||||

|

||||

However you choose to install it, you’ll need to apply this (and every other theme on this list) using a utility like [Unity Tweak Tool][4].

|

||||

|

||||

- [Captiva Icon Theme on DeviantArt][5]

|

||||

|

||||

### Square Beam ###

|

||||

|

||||

|

||||

|

||||

Square Beam icon set with Orchis GTK

|

||||

|

||||

After something a bit angular? Check out Square Beam. It offers a more imposing visual statement than other sets on this list, with electric colours, harsh gradients and stark iconography. It claims to have more than 30,000 different icons (!) included (you’ll forgive me for not counting) so you should find very few gaps in its coverage.

|

||||

|

||||

- [Square Beam Icon Theme on GNOME-Look.org][6]

|

||||

|

||||

### Moka & Faba ###

Moka/Faba Mono Icons with Orchis GTK

The Moka icon suite needs little introduction. In fact, I’d wager a good number of you are already using it.

With pastel colours, soft edges and simple icon artwork, Moka is a truly standout and comprehensive set of application icons. It’s best used with its sibling, Faba, which Moka inherits from to fill in all the system icons, folders, panel icons, etc. The combined result is…well, you’ve got eyes!
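
Under the freedesktop.org Icon Theme Specification, this kind of pairing is declared by the `Inherits` key in a theme's `index.theme` file. A minimal sketch that reads that key with Python's `configparser`; the `index.theme` text below is an illustrative assumption, not copied from the Moka package.

```python
import configparser

# Illustrative index.theme; the file shipped with Moka may differ.
INDEX_THEME = """\
[Icon Theme]
Name=Moka
Comment=Illustrative example only
Inherits=Faba,hicolor
Directories=48x48/apps

[48x48/apps]
Size=48
Context=Applications
Type=Fixed
"""

def inherited_themes(text):
    """Return the parent themes listed in an index.theme file, in order."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key case as written in the file
    parser.read_string(text)
    value = parser.get("Icon Theme", "Inherits", fallback="")
    return [name.strip() for name in value.split(",") if name.strip()]
```

The order matters: icons missing from the child theme are looked up in the listed parents from left to right.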

For full details on how to install them on Ubuntu, head over to the official project website (link below).

- [Download Moka and Faba Icon Themes][7]

### Compass ###

Compass Icon Theme with Numix Blue GTK

Last on our list, but by no means least, is Compass. This is a true adherent of the ‘2D, two-tone’ UI design trend of the moment. It may not be as visually diverse as others on this list, but that’s the point. It’s consistent and uniform and all the better for it; just check out those folder icons!

It’s available to download and install manually through GNOME-Look (link below) or through the Nitrux Artwork PPA:

```
sudo add-apt-repository ppa:nitrux/nitrux-artwork
sudo apt-get update && sudo apt-get install compass-icon-theme
```

- [Compass Icon Theme on GNOME-Look.org][8]

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2014/09/4-gorgeous-linux-icon-themes-download

Author: [Joey-Elijah Sneddon][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/).

[a]:https://plus.google.com/117485690627814051450/?rel=author

[1]:http://www.omgubuntu.co.uk/2010/02/lucid-gets-new-icons-for-rhythmbox-ubuntuone-memenu-more

[2]:http://www.omgubuntu.co.uk/2012/08/new-icon-theme-lands-in-lubuntu-12-10

[3]:http://bokehlicia.deviantart.com/

[4]:http://www.omgubuntu.co.uk/2014/06/unity-tweak-tool-0-7-development-download

[5]:http://bokehlicia.deviantart.com/art/Captiva-Icon-Theme-479302805

[6]:http://gnome-look.org/content/show.php/Square-Beam?content=165094

[7]:http://mokaproject.com/moka-icon-theme/download/ubuntu/

[8]:http://gnome-look.org/content/show.php/Compass?content=160629

@ -1,65 +0,0 @@

7 killer open source monitoring tools
================================================================================

Looking for greater visibility into your network? Look no further than these excellent free tools.

Network and system monitoring is a broad category. There are solutions that monitor for the proper operation of servers, network gear, and applications, and there are solutions that track the performance of those systems and devices, providing trending and analysis. Some tools will sound alarms and notifications when problems are detected, while others will even trigger actions to run when alarms sound. Here is a collection of open source solutions that aim to provide some or all of these capabilities.
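
The alarm-and-action idea can be reduced to a small loop: compare each sample against thresholds, notify when a metric changes state, and optionally run an action on a critical state. A minimal sketch; the metric name, thresholds and action hook are hypothetical and not taken from any of the tools below.

```python
from typing import Callable, Dict, List, Optional

class Check:
    """Threshold check for one metric, with an optional action on CRITICAL."""
    def __init__(self, metric: str, warn: float, crit: float,
                 action: Optional[Callable[[str], None]] = None):
        self.metric, self.warn, self.crit, self.action = metric, warn, crit, action

    def status(self, value: Optional[float]) -> str:
        if value is None:
            return "UNKNOWN"
        if value >= self.crit:
            return "CRITICAL"
        if value >= self.warn:
            return "WARNING"
        return "OK"

class Monitor:
    def __init__(self, checks: List[Check]):
        self.checks = checks
        self.state: Dict[str, str] = {}
        self.alerts: List[str] = []  # stand-in for e-mail/SMS notifications

    def evaluate(self, samples: Dict[str, float]) -> None:
        for check in self.checks:
            status = check.status(samples.get(check.metric))
            previous = self.state.get(check.metric, "OK")
            self.state[check.metric] = status
            if status == previous:
                continue  # notify on state changes only, not on every sample
            message = f"{check.metric}: {previous} -> {status}"
            self.alerts.append(message)
            if status == "CRITICAL" and check.action is not None:
                check.action(message)  # e.g. a hypothetical service restart
```

Alerting only on state changes is one common way to keep a flapping metric from flooding the notification channel.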

### Cacti ###

Cacti is a very extensive performance graphing and trending tool that can be used to track just about any monitored metric that can be plotted on a graph. From disk utilization to fan speeds in a power supply, if it can be monitored, Cacti can track it -- and make that data quickly available.
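
Many of the metrics such a tool graphs start life as ever-increasing counters (for example an interface's octet counter polled over SNMP) that are turned into rates between polls. A minimal sketch of that conversion, assuming 32-bit counters that wrap at most once between samples; it is not Cacti's own code.

```python
def counter_rate(prev_value, curr_value, interval_s, bits=32):
    """Per-second rate between two samples of an increasing counter.
    A smaller current value is treated as a single wrap at 2**bits."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    delta = curr_value - prev_value
    if delta < 0:
        delta += 2 ** bits
    return delta / interval_s

def octets_to_mbps(rate_octets_per_s):
    """Convert an octets-per-second rate to megabits per second."""
    return rate_octets_per_s * 8 / 1_000_000
```

For example, two samples taken five minutes (300 s) apart that differ by 30,000 octets give 100 octets per second.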

### Nagios ###

Nagios is the old guard of system and network monitoring. It is fast, reliable, and extremely customizable. Nagios can be a challenge for newcomers, but the rather complex configuration is also its strength, as it can be adapted to just about any monitoring task. What it may lack in looks it makes up for in power and reliability.
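
Much of that adaptability comes from plugins: small programs that print one status line and report their result through the exit code (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN), optionally followed by performance data after a `|`. A minimal sketch of a disk-usage check in that style; the thresholds and the `DISK` label are illustrative choices.

```python
import shutil

OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3  # Nagios plugin exit codes
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}

def check_disk(path="/", warn_pct=80.0, crit_pct=90.0):
    """Return (exit_code, output_line) for the disk holding `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    used_pct = 100.0 * usage.used / usage.total
    if used_pct >= crit_pct:
        code = CRITICAL
    elif used_pct >= warn_pct:
        code = WARNING
    else:
        code = OK
    # Text before '|' is for humans; after it is label=value;warn;crit;min;max.
    perfdata = f"used_pct={used_pct:.1f}%;{warn_pct};{crit_pct};0;100"
    return code, f"DISK {LABELS[code]} - {used_pct:.1f}% used on {path} | {perfdata}"
```

Run as an actual plugin, a script would print the line and exit with the code (`print(line)` followed by `sys.exit(code)`).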

### Icinga ###

Icinga is an offshoot of Nagios that is currently being rebuilt from scratch. It offers a thorough monitoring and alerting framework that’s designed to be as open and extensible as Nagios is, but with several different Web UI options. Icinga 1 is closely related to Nagios, while Icinga 2 is the rewrite. Both versions are currently supported, and Nagios users can migrate to Icinga 1 very easily.

### NeDi ###

NeDi may not be as well known as some of the others, but it’s a great solution for tracking devices across a network. It continuously walks through a network infrastructure and catalogs devices, keeping track of everything it discovers. It can provide the current location of any device, as well as a history.

NeDi can be used to locate stolen or lost devices by alerting you if they reappear on the network. It can even display all known and discovered connections on a map, showing how every network interconnect is laid out, down to the physical port level.
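
The lost-device alert boils down to comparing each discovery sweep against a watchlist. A minimal sketch of that comparison, not NeDi's implementation; the MAC addresses, switch names and ports are hypothetical. Normalizing MAC notation first matters because different vendors print the same address differently.

```python
def normalize_mac(mac):
    """Normalize 00:1A:2B:..., 00-1a-2b-... or 001a.2b3c.4d5e to aa:bb:cc:dd:ee:ff."""
    digits = "".join(ch for ch in mac.lower() if ch in "0123456789abcdef")
    if len(digits) != 12:
        raise ValueError(f"not a MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))

def reappeared(watchlist, discovered):
    """watchlist: MACs reported lost or stolen.
    discovered: {mac: (switch, port)} from the latest discovery sweep.
    Returns {mac: (switch, port)} for watched devices seen again."""
    watched = {normalize_mac(m) for m in watchlist}
    hits = {}
    for mac, location in discovered.items():
        norm = normalize_mac(mac)
        if norm in watched:
            hits[norm] = location
    return hits
```

Reporting the switch and port alongside the match is what turns the alert into a physical location.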

### Observium ###

Observium combines system and network monitoring with performance trending. It uses both static and auto discovery to identify servers and network devices, leverages a variety of monitoring methods, and can be configured to track just about any available metric. The Web UI is very clean, well thought out, and easy to navigate.

As shown, Observium can also display the physical location of monitored devices on a geographical map. Note too the heads-up panels showing active alarms and device counts.

### Zabbix ###

Zabbix monitors servers and networks with an extensive array of tools. There are Zabbix agents for most operating systems, or you can use passive or external checks, including SNMP to monitor hosts and network devices. You'll also find extensive alerting and notification facilities, and a highly customizable Web UI that can be adapted to a variety of heads-up displays. In addition, Zabbix has specific tools that monitor Web application stacks and virtualization hypervisors.

Zabbix can also produce logical interconnection diagrams detailing how certain monitored objects are interconnected. These maps are customizable, and maps can be created for groups of monitored devices and hosts.

### Ntop ###

Ntop is a packet sniffing tool with a slick Web UI that displays live data on network traffic passing by a monitoring interface. Instant data on network flows is available through an advanced live graphing function. Host data flows and host communication pair information are also available in real time.
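
Host communication pairs are essentially traffic totals keyed by the two endpoints. A minimal sketch of that aggregation over already-captured packet records; the `(source, destination, bytes)` record shape and the addresses are assumptions for illustration, and real capture and flow tracking are far more involved.

```python
from collections import Counter
from typing import Iterable, Tuple

Packet = Tuple[str, str, int]  # (source IP, destination IP, size in bytes)

def host_pairs(packets: Iterable[Packet]) -> Counter:
    """Total bytes per unordered host pair, so A->B and B->A are counted together."""
    totals = Counter()
    for src, dst, size in packets:
        pair = tuple(sorted((src, dst)))  # canonical order for the pair key
        totals[pair] += size
    return totals
```

`host_pairs(...).most_common(5)` then gives a simple "top talkers" view.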

--------------------------------------------------------------------------------

via: http://www.networkworld.com/article/2686794/asset-management/164219-7-killer-open-source-monitoring-tools.html

Author: [Paul Venezia][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/).

[a]:http://www.networkworld.com/author/Paul-Venezia/

@ -0,0 +1,108 @@

barney-ro translating

ChromeOS vs Linux: The Good, the Bad and the Ugly
================================================================================

> In the battle between ChromeOS and Linux, both desktop environments have strengths and weaknesses.

Anyone who believes Google isn't "making a play" for desktop users isn't paying attention. In recent years, I've seen [ChromeOS][1] making quite a splash on the [Google Chromebook][2]. Exploding with popularity on sites such as Amazon.com, it looks as if ChromeOS could be unstoppable.

In this article, I'm going to look at ChromeOS as a concept to market, how it's affecting Linux adoption and whether or not it's a good/bad thing for the Linux community as a whole. Plus, I'll talk about the biggest issue of all and how no one is doing anything about it.

### ChromeOS isn't really Linux ###

When folks ask me if ChromeOS is a Linux distribution, I usually reply that ChromeOS is to Linux what OS X is to BSD. In other words, I consider ChromeOS to be a forked operating system that uses the Linux kernel under the hood. Much of the operating system is made up of Google's own proprietary blend of code and software.

So while ChromeOS uses the Linux kernel under the hood, it's still very different from what we find in today's modern Linux distributions.

Where ChromeOS's difference becomes most apparent, however, is in the apps it offers the end user: Web applications. With everything being launched from a browser window, Linux users might find using ChromeOS to be a bit vanilla. But for non-Linux users, the experience is not all that different from what they may have used on their old PCs.

For example: Anyone who is living a Google-centric lifestyle on Windows will feel right at home on ChromeOS. Odds are this individual is already relying on the Chrome browser, Google Drive and Gmail. By extension, moving over to ChromeOS feels fairly natural for these folks, as they're simply using the browser they're already used to.

Linux enthusiasts, however, tend to feel constrained almost immediately. Software choices feel limited and boxed in, plus games and VoIP are totally out of the question. Sorry, but [GooglePlus Hangouts][3] isn't a replacement for [VoIP][4] software. Not even by a long shot.

### ChromeOS or Linux on the desktop ###

Anyone making the claim that ChromeOS hurts Linux adoption on the desktop needs to come up for air and meet non-technical users sometime.

Yes, desktop Linux is absolutely fine for most casual computer users. However, it helps to have someone to install the OS and offer "maintenance" services like we see in the Windows and OS X camps. Sadly, Linux lacks this here in the States, and that is where I see ChromeOS coming into play.

I've found the Linux desktop is best suited for environments where on-site tech support can manage things on the down-low. Examples include: Homes where advanced users can drop by and handle updates, governments and schools with IT departments. These are environments where Linux on the desktop is set up to be used by users of any skill level or background.

By contrast, ChromeOS is built to be completely maintenance free, requiring no third-party assistance beyond turning it on and letting updates work their magic behind the scenes. This is partly possible because ChromeOS is designed for specific hardware builds, in a similar spirit to how Apple develops its own computers. Because Google has its finger on the pulse of the hardware ChromeOS is bundled with, it can offer a generally error-free experience. And for some individuals, this is fantastic!

Comically, the folks who exclaim that there's a problem here are not even remotely the target market for ChromeOS. In short, these are passionate Linux enthusiasts looking for something to gripe about. My advice? Stop inventing problems where none exist.

The point is: the markets for ChromeOS and Linux on the desktop are not even remotely the same. This could change in the future, but at this time, these two groups are largely separate.

### ChromeOS use is growing ###

No matter what your view of ChromeOS happens to be, the fact remains that its adoption is growing. New computers built for ChromeOS are being released all the time. One of the most recent ChromeOS computer releases is from Dell. Appropriately named the [Dell Chromebox][5], this desktop ChromeOS appliance is yet another shot at traditional computing. It has zero software DVDs, no anti-malware software, and offers completely seamless updates behind the scenes. For casual users, Chromeboxes and Chromebooks are becoming a viable option for those who do most of their work from within a web browser.

Despite this growth, ChromeOS appliances face one huge downside – storage. Bound by limited hard drive size and a heavy reliance on cloud storage, ChromeOS isn't going to cut it for anyone who uses their computers outside of basic web browser functionality.

### ChromeOS and Linux crossing streams ###

Previously, I mentioned that ChromeOS and Linux on the desktop are in two completely separate markets. The reason why this is the case stems from the fact that the Linux community has done a horrid job at promoting Linux on the desktop offline.

Yes, there are occasional events where casual folks might discover this "Linux thing" for the first time. But there isn't a single entity to then follow up with these folks, making sure they’re getting their questions answered and that they're getting the most out of Linux.

In reality, the likely offline discovery breakdown goes something like this:

- Casual user finds out about Linux at their local Linux event.

- They bring the DVD/USB device home and attempt to install the OS.

- While some folks very well may have success with the install process, I've been contacted by a number of folks with the opposite experience.

- Frustrated, these folks are then expected to "search" online forums for help. That's difficult to do on a primary computer experiencing network or video issues.

- Completely fed up, some of these frustrated users bring their computers back into a Windows shop for "repair." In addition to having Windows re-installed, they also receive an earful about how "Linux isn't for them" and should be avoided.

Some of you might charge that the above example is exaggerated. I would respond with this: It's happened to people I know personally and it happens often. Wake up Linux community, our adoption model is broken and tired.

### Great platforms, horrible marketing and closing thoughts ###

If there is one thing that I feel ChromeOS and Linux on the desktop have in common...besides the Linux kernel, it's that they both happen to be great products with rotten marketing. The advantage, however, goes to Google with this one, due to its ability to spend big money online and reserve shelf space at big box stores.

Google believes that because they have the "online advantage" that offline efforts aren't really that important. This is incredibly short-sighted and reflects one of Google's biggest missteps. The belief that if you're not exposed to their online efforts, you're not worth bothering with, is only countered by local shelf-space at select big box stores.

My suggestion is this – offer Linux on the desktop to the ChromeOS market through offline efforts. This means Linux User Groups need to start raising funds to be present at county fairs, mall kiosks during the holiday season and teaching free classes at community centers. This will immediately put Linux on the desktop in front of the same audience that might otherwise end up with a ChromeOS powered appliance.

If local offline efforts like this don't happen, not to worry. Linux on the desktop will continue to grow as will the ChromeOS market. Sadly though, it will absolutely keep the two markets separate as they are now.

--------------------------------------------------------------------------------

via: http://www.datamation.com/open-source/chromeos-vs-linux-the-good-the-bad-and-the-ugly-1.html

Author: [Matt Hartley][a]

Translator: [barney-ro](https://github.com/barney-ro)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/).

[a]:http://www.datamation.com/author/Matt-Hartley-3080.html

[1]:http://en.wikipedia.org/wiki/Chrome_OS

[2]:http://www.google.com/chrome/devices/features/

[3]:https://plus.google.com/hangouts

[4]:http://en.wikipedia.org/wiki/Voice_over_IP

[5]:http://www.pcworld.com/article/2602845/dell-brings-googles-chrome-os-to-desktops.html

@ -1,111 +0,0 @@

alim0x translating

The history of Android
================================================================================

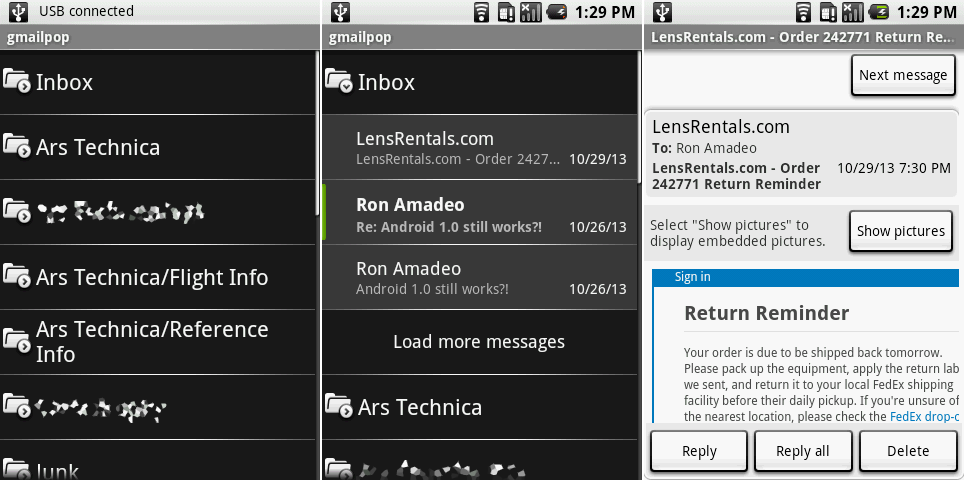

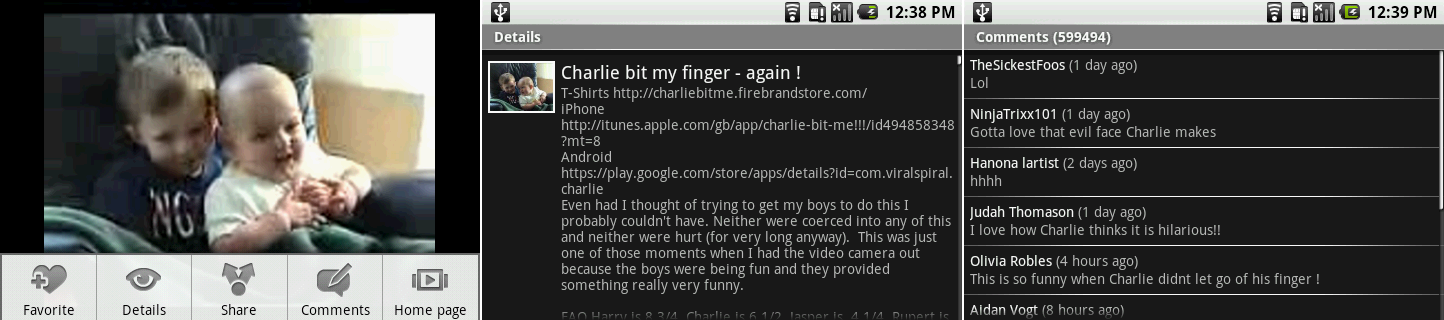

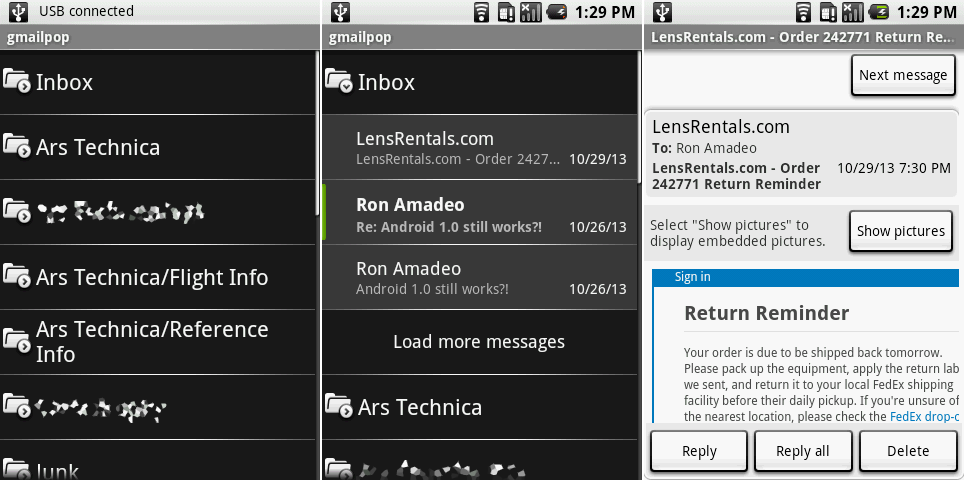

Both screens of the Email app. The first two screenshots show the combined label/inbox view, and the last shows a message.

Photo by Ron Amadeo

The message view was—surprise!—white. Android's e-mail app has historically been a watered-down version of the Gmail app, and you can see that close connection here. The message and compose views were taken directly from Gmail with almost no modifications.

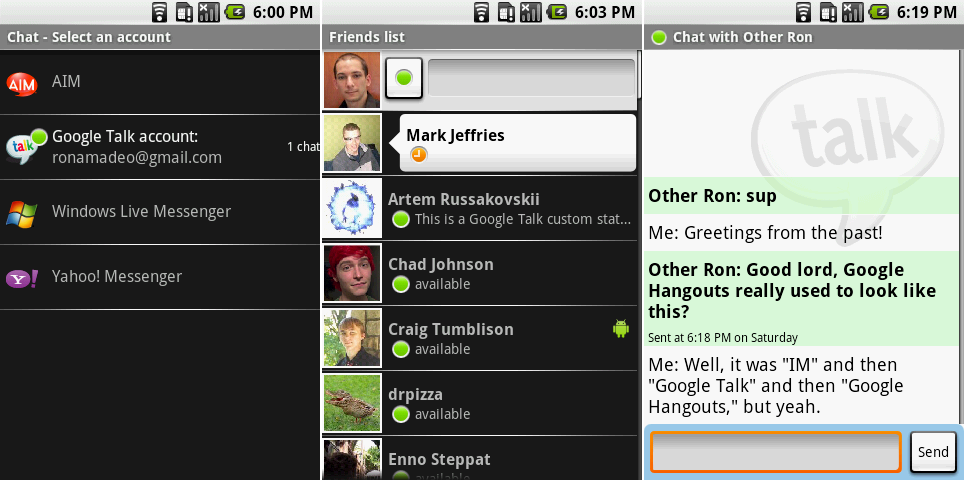

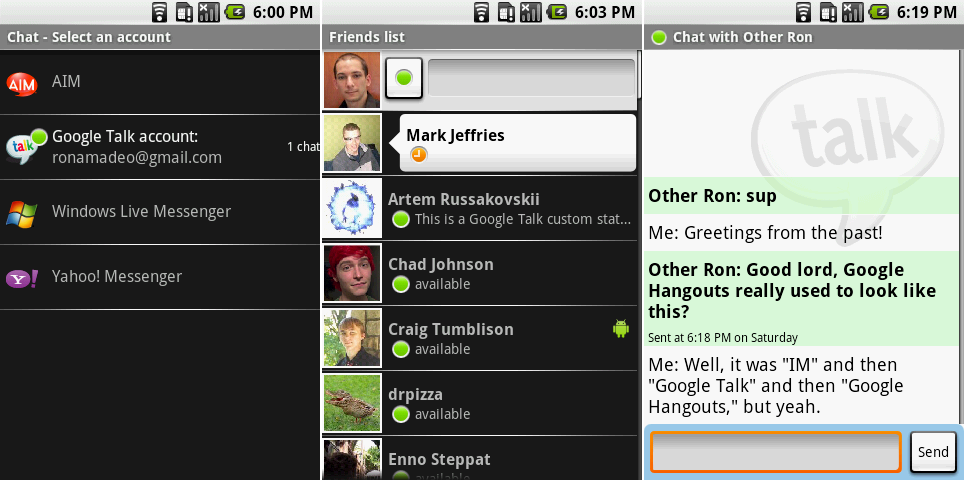

The “IM” application. Screenshots show the short-lived provider selection screen, the friends list, and a chat.

Photo by Ron Amadeo

Before Google Hangouts and even before Google Talk, there was "IM"—the only instant messaging client that shipped on Android 1.0. Surprisingly, multiple IM services were supported: users could pick from AIM, Google Talk, Windows Live Messenger, and Yahoo. Remember when OS creators cared about interoperability?

The friends list was a black background with white speech bubbles for open chats. Presence was indicated with colored circles, and a little Android on the right hand side would indicate that a person was mobile. It's amazing how much more communicative the IM app was than Google Hangouts. Green means the person is using a device they are signed into, yellow means they are signed in but idle, red means they have manually set busy and don't want to be bothered, and gray is offline. Today, Hangouts only shows when a user has the app open or closed.

The chats interface was clearly based on the Messaging program, and the chat backgrounds were changed from white and blue to white and green. No one changed the color of the blue text entry box, though, so along with the orange highlight effect, this screen used white, green, blue, and orange.

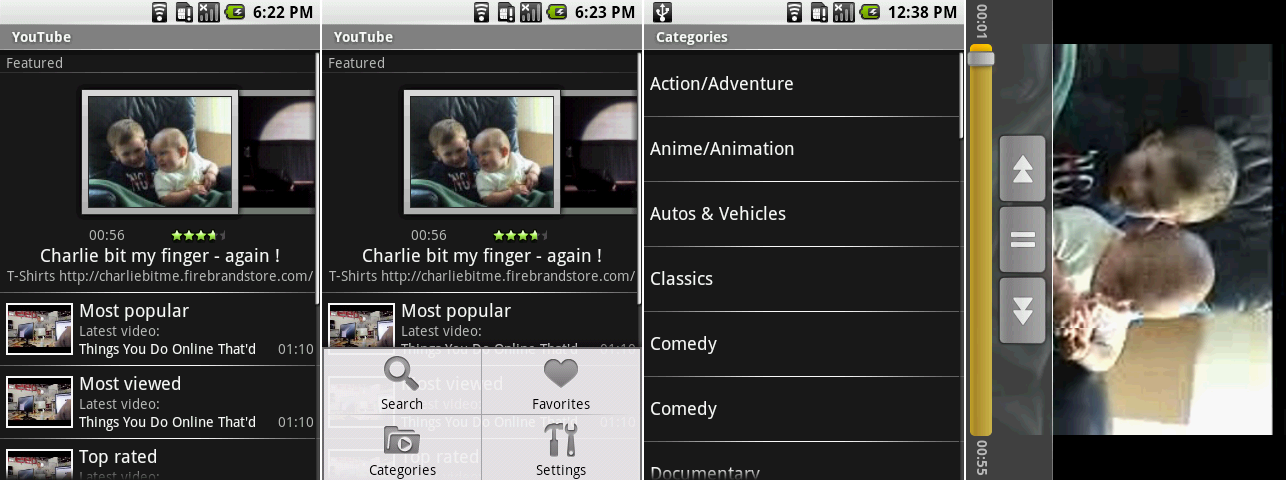

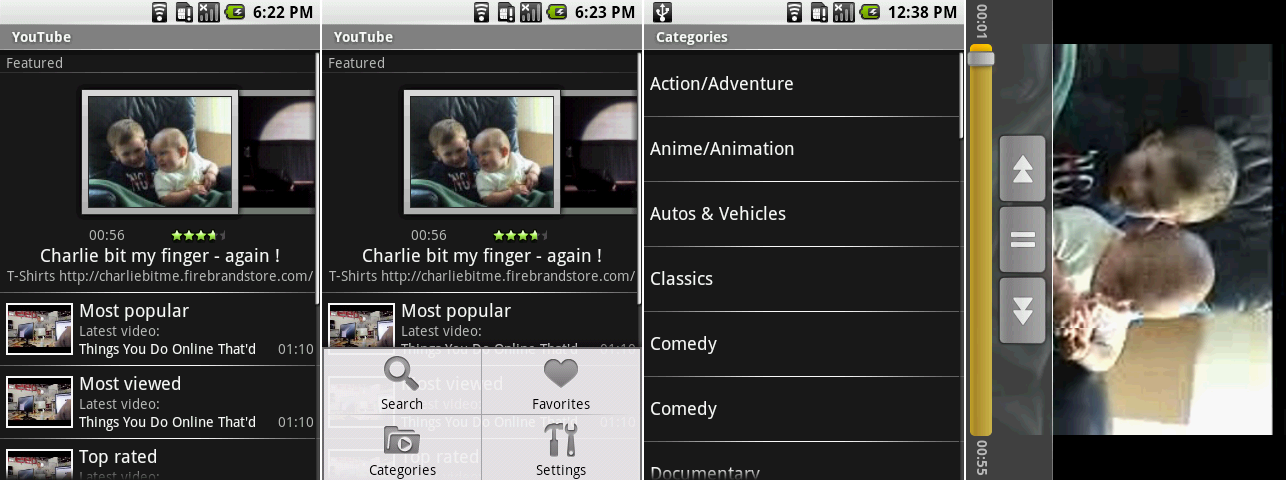

YouTube on Android 1.0. The screens show the main page, the main page with the menu open, the categories screen, and the videos screen.

Photo by Ron Amadeo

YouTube might not have been the mobile sensation it is today with the 320p screen and 3G data speeds of the G1, but Google's video service was present and accounted for on Android 1.0. The main screen looked like a tweaked version of the Android Market, with a horizontally scrolling featured section along the top and vertically scrolling categories along the bottom. Some of Google's category choices were pretty strange: what would the difference be between "Most popular" and "Most viewed"?

In a sign that Google had no idea how big YouTube would eventually become, one of the video categories was "Most recent." Today, with [100 hours of video][1] uploaded to the site every minute, if this section actually worked it would be an unreadable blur of rapidly scrolling videos.

The menu housed search, favorites, categories, and settings. Settings (not pictured) was the lamest screen ever, housing one option to clear the search history. Categories was equally barren, showing only a black list of text.

The last screen shows a video, which only supported horizontal mode. The auto-hiding video controls weirdly had rewind and fast forward buttons, even though there was a seek bar.

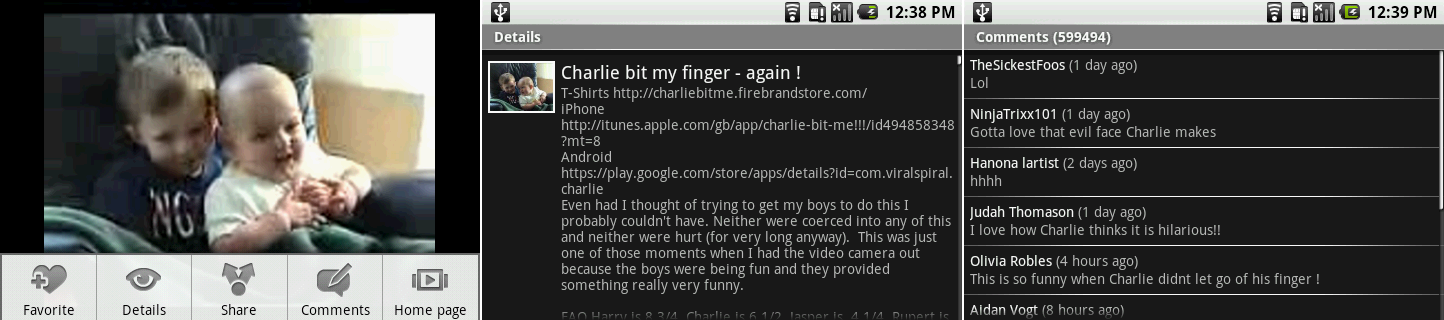

YouTube’s video menu, description page, and comments.

Photo by Ron Amadeo

Additional sections for each video could be brought up by hitting the menu button. Here you could favorite the video, access details, and read comments. All of these screens, like the videos, were locked to horizontal mode.

"Share" didn't bring up a share dialog yet; it just kicked the link out to a Gmail message. Texting or IMing someone a link wasn't possible. Comments could be read, but you couldn't rate them or post your own. You couldn't rate or like a video either.

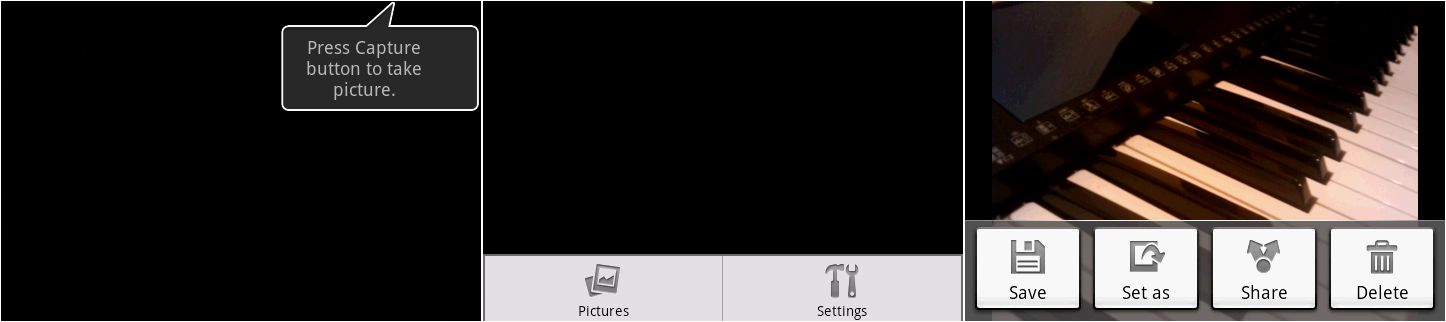

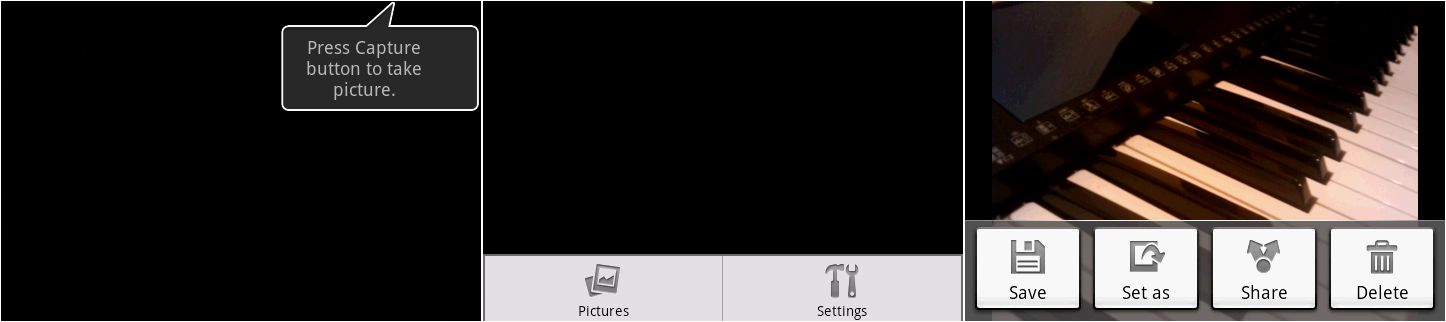

The camera app’s picture taking interface, menu, and photo review mode.

Photo by Ron Amadeo

Real Android on real hardware meant a functional camera app, even if there wasn't much to look at. That black square on the left was the camera interface, which should be showing a viewfinder image, but the SDK screenshot utility can't capture it. The G1 had a hardware camera button (remember those?), so there wasn't a need for an on-screen shutter button. There were no settings for exposure, white balance, or HDR—you could take a picture and that was about it.

The menu button revealed a meager two options: a shortcut to the Pictures app, and a Settings screen that itself held just two options. The first setting was whether or not to enable geotagging for pictures, and the second was for a dialog prompt after every capture, which you can see on the right. Also, you could only take pictures; there was no video support yet.

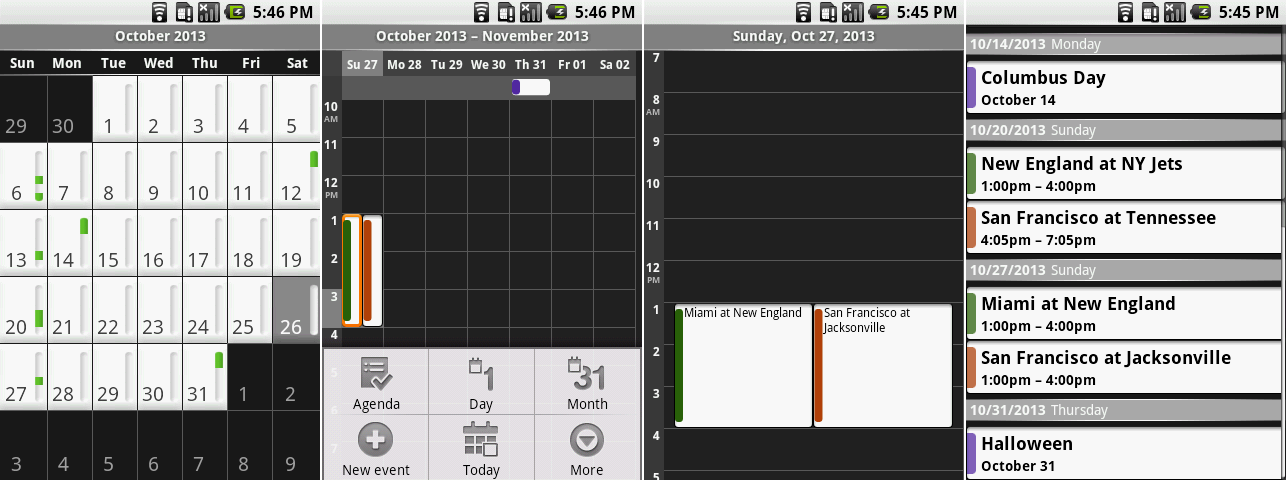

The Calendar’s month view, week view with the menu open, day view, and agenda.

Photo by Ron Amadeo

Like most apps of this era, the primary command interface for the calendar was the menu. It was used to switch views, add a new event, navigate to the current day, pick visible calendars, and go to the settings. The menu functioned as a catch-all for every single button.

The month view couldn't show appointment text. Every date had a bar next to it, and appointments were displayed as green sections in the bar denoting what time of day an appointment was. Week view couldn't show text either—the 320×480 display of the G1 just wasn't dense enough—so you got a white block with a strip of color indicating which calendar it was from. The only views that provided text were the agenda and day views. You could move through dates by swiping—week and day used left and right, and month and agenda used up and down.

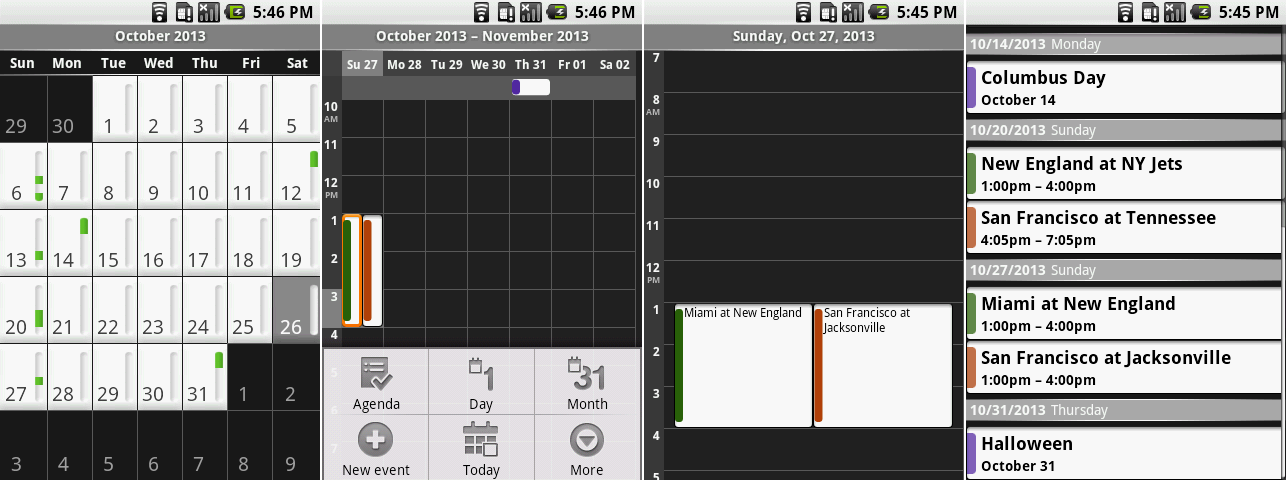

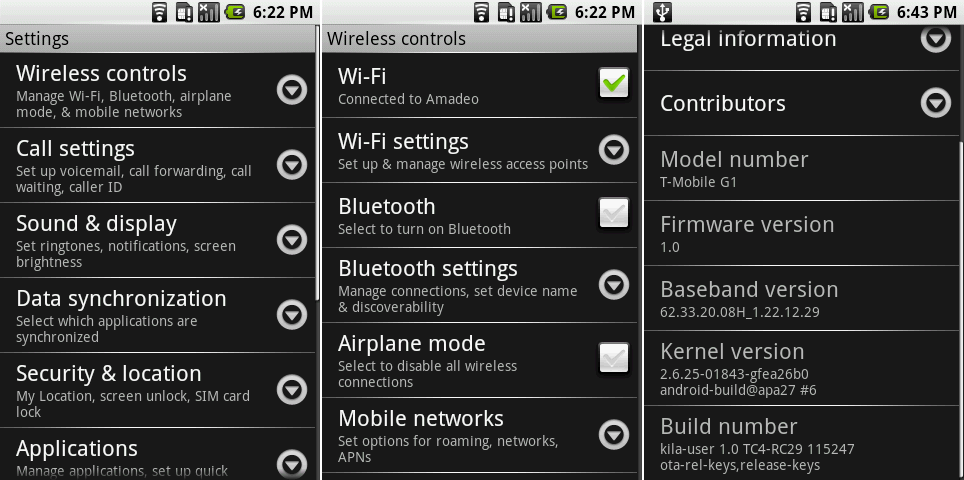

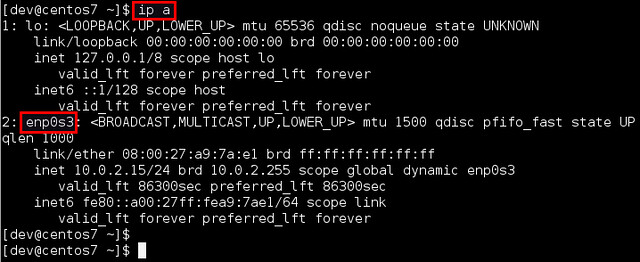

The main settings page, the Wireless section, and the bottom of the about page.

Photo by Ron Amadeo

Android 1.0 finally brought a settings screen to the party. It was a black and white wall of text that was roughly broken down into sections. Down arrows next to each list item confusingly looked like they would expand inline to show more of something, but touching anywhere on the list item would just load the next screen. All the screens were pretty boring and samey looking, but hey, it's a settings screen.

Any option with an on/off state used a cartoony-looking checkbox. The original checkboxes in Android 1.0 were pretty strange—even when they were "unchecked," they still had a gray check mark in them. Android treated the check mark like a light bulb that would light up when on and be dim when off, but that's not how checkboxes work. We did finally get an "About" page, though. Android 1.0 ran Linux kernel 2.6.25.

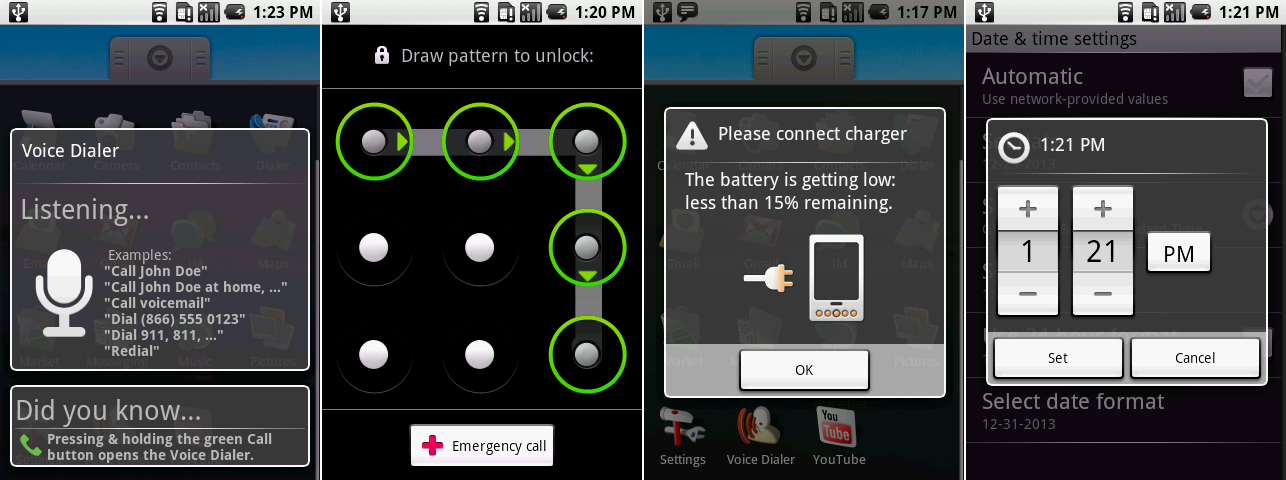

A settings screen means we can finally open the security settings and change lock screens. Android 1.0 only had two styles, the gray square lock screen pictured in the Android 0.9 section, and pattern unlock, which required you to draw a pattern over a grid of 9 dots. A swipe pattern like this was easier to remember and input than a PIN even if it did not add any more security.
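
Counting the patterns the grid allows gives a sense of scale for this input method. A sketch assuming the rules usually described for Android's pattern lock: 4 to 9 dots, no dot used twice, and a stroke may not jump over a dot that has not been visited yet. It measures only the size of the pattern space, not how guessable the patterns people actually choose are.

```python
def count_patterns(min_len=4, max_len=9):
    """Count unlock patterns on a 3x3 grid numbered
    1 2 3 / 4 5 6 / 7 8 9 whose length is between min_len and max_len."""
    # skip[a][b] is the dot lying midway between a and b (0 if none).
    skip = [[0] * 10 for _ in range(10)]
    for a, b, mid in [(1, 3, 2), (4, 6, 5), (7, 9, 8), (1, 7, 4),
                      (2, 8, 5), (3, 9, 6), (1, 9, 5), (3, 7, 5)]:
        skip[a][b] = skip[b][a] = mid
    visited = [False] * 10

    def dfs(current, length):
        # `length` dots are already in the pattern, ending at `current`.
        total = 1 if min_len <= length <= max_len else 0
        if length == max_len:
            return total
        visited[current] = True
        for nxt in range(1, 10):
            mid = skip[current][nxt]
            if not visited[nxt] and (mid == 0 or visited[mid]):
                total += dfs(nxt, length + 1)
        visited[current] = False
        return total

    # By symmetry, all four corners behave alike, as do all four edge dots.
    return 4 * dfs(1, 1) + 4 * dfs(2, 1) + dfs(5, 1)
```

Under these rules the search finds 389,112 patterns in total, 1,624 of them using exactly four dots.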

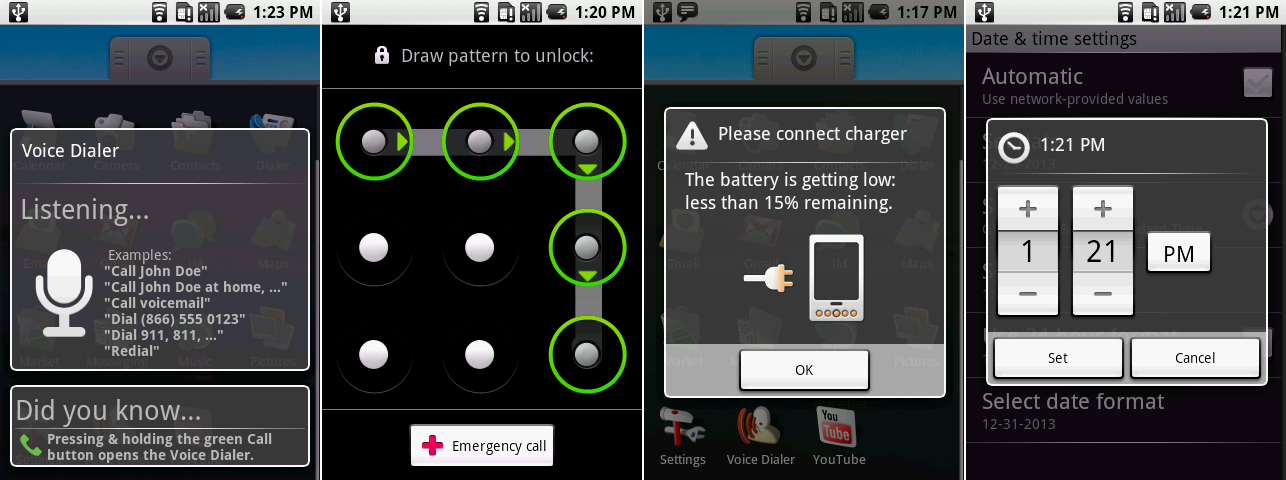

The Voice Dialer, pattern lock screen, low battery warning, and time picker.

Photo by Ron Amadeo

Voice functions arrived in 1.0 with Voice Dialer. This feature hung around in various capacities in AOSP for a while, as it was a simple voice command app for calling numbers and contacts. Voice Dialer was completely unrelated to Google's future voice products, however, and it worked the same way a voice dialer on a dumbphone would work.

As a final note, the low battery popup would appear when the battery dropped below 15 percent. It was a funny graphic, depicting plugging the wrong end of the power cord into the phone. That wasn't (and still isn't) how phones work, Google.

Android 1.0 was a great first start, but there were still so many gaps in functionality. Physical keyboards and tons of hardware buttons were mandatory, as Android devices were still not allowed to be sold without a d-pad or trackball. Base smartphone functionality like auto-rotate wasn't here yet, either. Updates for built-in apps weren't possible through the Android Market the way they are today. All the Google Apps were interwoven with the operating system. If Google wanted to update a single app, an update for the entire operating system needed to be pushed out through the carriers. There was still a lot of work to do.

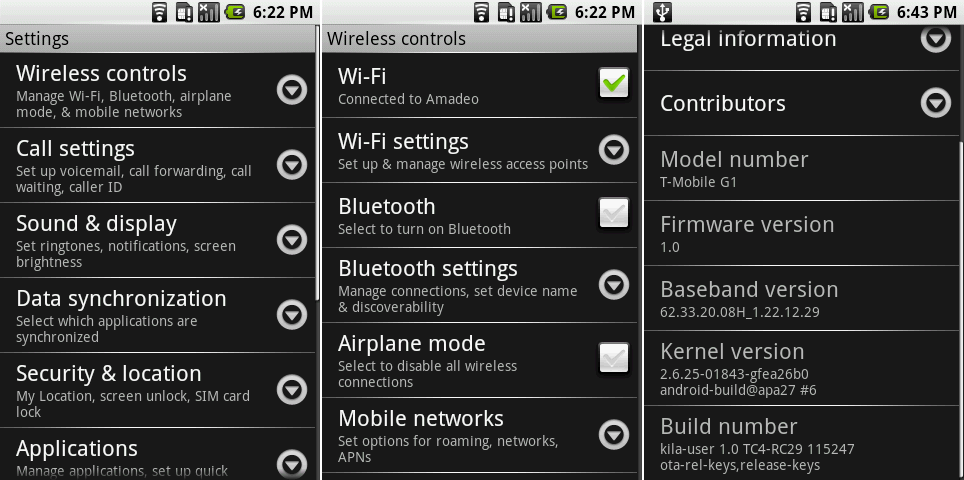

### Android 1.1—the first truly incremental update ###

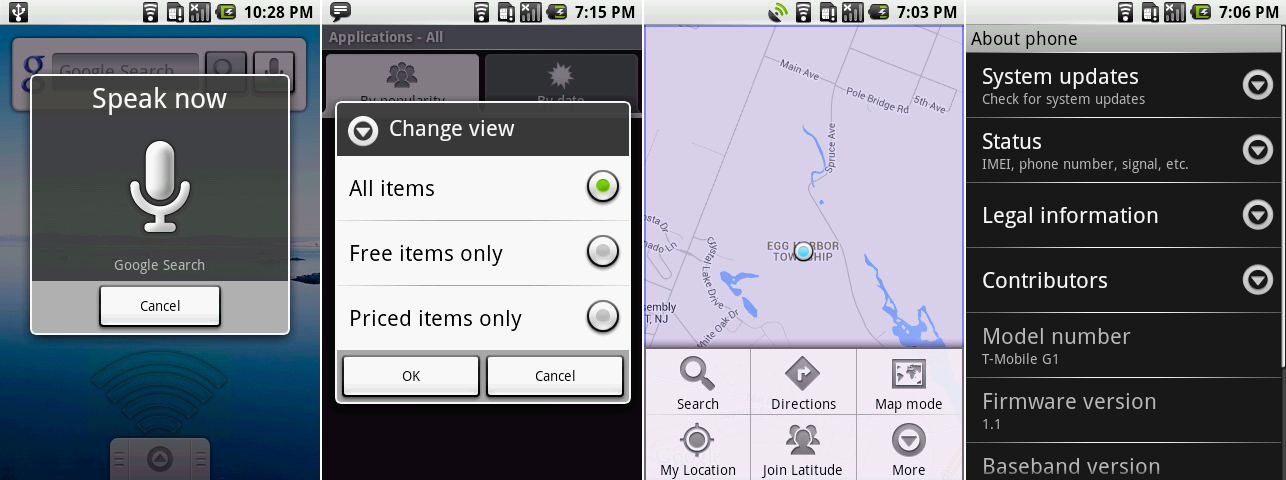

All of Android 1.1’s new features: Search by voice, the Android Market showing paid app support, Google Latitude, and the new “system updates” option in the settings.

Photo by Ron Amadeo

Four and a half months after Android 1.0, in February 2009, Android got its first public update in Android 1.1. Not much changed in the OS, and just about every new thing Google added with 1.1 has been shut down by now. Google Voice Search was Android's first foray into cloud-powered voice search, and it had its own icon in the app drawer. While the app can't communicate with Google's servers anymore, you can check out how it used to work [on the iPhone][2]. It wasn't yet Voice Actions, but you could speak and the results would go to a simple Google Search.

Support for paid apps was added to the Android Market, but just like the beta client, this version of the Android Market could no longer connect to the Google Play servers. The most that we could get to work was this sorting screen, which lets you pick between displaying free apps, paid apps, or a mix of both.

Maps added [Google Latitude][3], a way to share your location with friends. Latitude was shut down in favor of Google+ a few months ago and no longer works. There was an option for it in the Maps menu, but tapping on it just brings up a loading spinner forever.

Given that system updates come quickly in the Android world—or at least, that was the plan before carriers and OEMs got in the way—Google also added a button to the "About Phone" screen to check for system updates.

----------

[Ron Amadeo][a] / Ron is the Reviews Editor at Ars Technica, where he specializes in Android OS and Google products. He is always on the hunt for a new gadget and loves to rip things apart to see how they work.

[@RonAmadeo][t]

--------------------------------------------------------------------------------

via: http://arstechnica.com/gadgets/2014/06/building-android-a-40000-word-history-of-googles-mobile-os/7/

Translator: [Translator ID](https://github.com/译者ID) Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/).

[1]:http://www.youtube.com/yt/press/statistics.html

[2]:http://www.youtube.com/watch?v=y3z7Tw1K17A

[3]:http://arstechnica.com/information-technology/2009/02/google-tries-location-based-social-networking-with-latitude/

[a]:http://arstechnica.com/author/ronamadeo

[t]:https://twitter.com/RonAmadeo

@ -1,3 +1,4 @@

Translating by SPccman

How to configure SNMPv3 on ubuntu 14.04 server
================================================================================

Simple Network Management Protocol (SNMP) is an "Internet-standard protocol for managing devices on IP networks". Devices that typically support SNMP include routers, switches, servers, workstations, printers, modem racks and more. It is used mostly in network management systems to monitor network-attached devices for conditions that warrant administrative attention. SNMP is a component of the Internet Protocol Suite as defined by the Internet Engineering Task Force (IETF). It consists of a set of standards for network management, including an application layer protocol, a database schema, and a set of data objects.[2]
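
Each of those data objects is named by an object identifier (OID), and on the wire OIDs are BER-encoded. A minimal sketch of that encoding, assuming short-form lengths (content under 128 bytes, enough for typical OIDs); `1.3.6.1.2.1.1.1.0` is the standard `sysDescr.0` object.

```python
def encode_oid(oid: str) -> bytes:
    """BER-encode a dotted OID string such as '1.3.6.1.2.1.1.1.0'."""
    arcs = [int(part) for part in oid.split(".")]
    if len(arcs) < 2 or arcs[0] > 2 or (arcs[0] < 2 and arcs[1] > 39):
        raise ValueError(f"invalid OID: {oid}")
    # The first two arcs are packed into one sub-identifier: 40 * X + Y.
    subids = [40 * arcs[0] + arcs[1]] + arcs[2:]
    body = bytearray()
    for subid in subids:
        # Base-128 digits, most significant first; the high bit marks "more bytes follow".
        chunk = [subid & 0x7F]
        subid >>= 7
        while subid:
            chunk.append((subid & 0x7F) | 0x80)
            subid >>= 7
        body.extend(reversed(chunk))
    if len(body) > 127:
        raise ValueError("long-form lengths are not handled in this sketch")
    return bytes([0x06, len(body)]) + bytes(body)  # 0x06 = OBJECT IDENTIFIER tag
```

For instance, `encode_oid("1.3.6.1.2.1.1.1.0").hex()` gives `06082b06010201010100`.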

@ -96,4 +97,4 @@ via: http://www.ubuntugeek.com/how-to-configure-snmpv3-on-ubuntu-14-04-server.ht

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/).

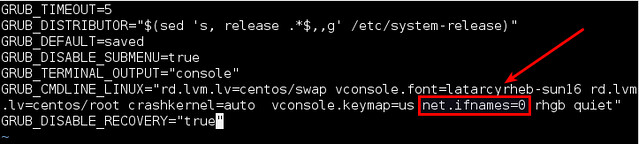

@ -1,466 +0,0 @@

Linux Tutorial: Install Ansible Configuration Management And IT Automation Tool


================================================================================


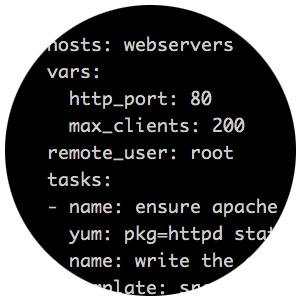

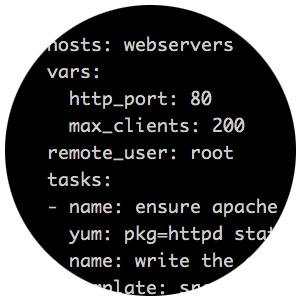


Today I will be talking about Ansible, a powerful configuration management solution written in Python. There are many configuration management solutions available, all with pros and cons; Ansible stands apart from many of them for its simplicity. What makes Ansible different from many of the most popular configuration management systems is that it's agentless: there is no need to set up agents on every node you want to control. This also lets you control your entire infrastructure from more than one place, if needed. Whether that last point is really a benefit may be debatable, but I find it a positive in most cases. Enough talk, let's get started with Ansible installation and configuration on RHEL/CentOS and Debian/Ubuntu based systems.


### Prerequisites ###


1. Distro: RHEL/CentOS/Debian/Ubuntu Linux


1. Jinja2: A modern and designer-friendly templating language for Python.


1. PyYAML: A YAML parser and emitter for the Python programming language.


1. paramiko: Native Python SSHv2 protocol library.


1. httplib2: A comprehensive HTTP client library.


1. Most of the actions listed in this post are written with the assumption that they will be executed by the root user running bash or any other modern shell.
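
Before installing, you can check whether the Python modules listed above are already importable. The following is a minimal sketch, assuming `python3` is the interpreter Ansible will run under (the `PYTHON` override variable and the `check_ansible_deps` function name are our own, hypothetical choices). Note that PyYAML is imported under the module name `yaml`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether each Python module Ansible relies on
# can be imported. Uses import names, so PyYAML is checked as "yaml".
check_ansible_deps() {
  local py="${PYTHON:-python3}"   # assumption: python3 is the target interpreter
  local mod
  for mod in jinja2 yaml paramiko httplib2; do
    if "$py" -c "import $mod" >/dev/null 2>&1; then
      echo "$mod: ok"
    else
      echo "$mod: missing"
    fi
  done
}

check_ansible_deps
```

Any module reported as `missing` can be installed through your distribution's packages or with pip before installing Ansible itself.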


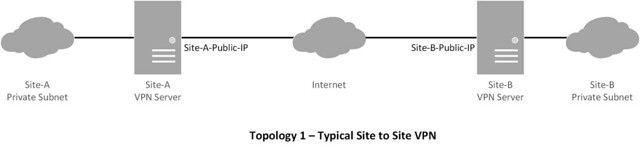

How Ansible works


Ansible uses no agents. It requires no additional custom security infrastructure, so it's easy to deploy. All you need is an SSH client and server:


    +----------------------+                    +---------------+
    |Linux/Unix workstation|        SSH         | file_server1  |
    |with Ansible          |<------------------>| db_server2    | Unix/Linux servers
    +----------------------+     Modules        | proxy_server3 | in local/remote
         192.168.1.100                          +---------------+ data centers


Where,


1. 192.168.1.100 - Install Ansible on your local workstation/server.


1. file_server1..proxy_server3 - Use 192.168.1.100 and Ansible to automate configuration management of all servers.


1. SSH - Set up SSH keys between 192.168.1.100 and the local/remote servers.
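
The setup described above can be sketched on the control machine (192.168.1.100) as follows. This is a minimal illustration, not the author's procedure: the inventory path `./hosts` and the group name `servers` are hypothetical, and the host names are the placeholders from the diagram, so they must resolve (via DNS or `/etc/hosts`) in your environment. The script only writes an INI-style inventory; the key distribution and connectivity check are left as comments because they need reachable servers.

```shell
#!/usr/bin/env bash
# Sketch for the control machine (192.168.1.100 in the diagram).
# Hypothetical: the inventory path and the "servers" group name.
INVENTORY="${INVENTORY:-./hosts}"
SERVERS=(file_server1 db_server2 proxy_server3)   # placeholder names from the diagram

# Write a minimal INI-style inventory: one group header, one host per line.
write_inventory() {
  {
    echo "[servers]"
    printf '%s\n' "${SERVERS[@]}"
  } > "$INVENTORY"
}

write_inventory

# Remaining one-time steps, run by hand once the servers are reachable:
#   ssh-keygen -t rsa                                         # create a key pair if needed
#   for h in "${SERVERS[@]}"; do ssh-copy-id "root@$h"; done  # install the public key
#   ansible -i "$INVENTORY" servers -m ping                   # each host should answer "pong"
```

Because Ansible connects over plain SSH, once the keys are in place no further software needs to be installed on file_server1..proxy_server3.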


### Ansible Installation Tutorial ###


Installing Ansible is a breeze. Many distributions have a package available in their third-party repos that can easily be installed; a quick alternative is to just pip install it or grab the latest copy from GitHub. To install using your package manager on [RHEL/CentOS Linux based systems, you will most likely need the EPEL repo][1], then:


#### Install ansible on a RHEL/CentOS Linux based system ####


Type the following [yum command][2]:


    $ sudo yum install ansible


#### Install ansible on a Debian/Ubuntu Linux based system ####


Type the following [apt-get command][3]:


    $ sudo apt-get install software-properties-common
    $ sudo apt-add-repository ppa:ansible/ansible
    $ sudo apt-get update
    $ sudo apt-get install ansible


#### Install ansible using pip ####


The [pip command is a tool for installing and managing Python packages][4], such as those found in the Python Package Index. The following method works on Linux and Unix-like systems:


    $ sudo pip install ansible
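
If you are unsure which of the three installation methods applies to a given machine, the choice can be sketched as a small script. This is an illustration under stated assumptions: it reads the `ID` field of `/etc/os-release` (absent on some older releases, in which case it falls back to pip), the mapping covers only the distributions named in this post, and `install_method` and `distro_id` are hypothetical helper names. The script only prints the suggested commands; it does not run them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a distribution ID to one of the install methods above.
install_method() {
  case "$1" in
    rhel|centos)   echo yum ;;   # needs the EPEL repo enabled first
    debian|ubuntu) echo apt ;;   # uses the ppa:ansible/ansible PPA
    *)             echo pip ;;   # fallback for anything not covered here
  esac
}

# Read ID from /etc/os-release in a subshell so its variables don't leak.
distro_id() {
  if [ -r /etc/os-release ]; then
    ( . /etc/os-release && echo "${ID:-unknown}" )
  else
    echo unknown
  fi
}

method="$(install_method "$(distro_id)")"
echo "Suggested install method: $method"
case "$method" in
  yum) echo "sudo yum install ansible" ;;
  apt) echo "sudo apt-get install software-properties-common && sudo apt-add-repository ppa:ansible/ansible && sudo apt-get update && sudo apt-get install ansible" ;;
  pip) echo "sudo pip install ansible" ;;
esac
```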
