mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-22 00:40:10 +08:00

commit

65f6a50b5a

@ -17,11 +17,11 @@

|

||||

|

||||

如果 **Jeb (Jeb!?) Bush** 使用 Linux,它一定是 [Debian][2]。Debian 属于一个相当无趣的分支,它是为真正意义上的、成熟的黑客设计的,这些人将清理那些由经验不甚丰富的开源爱好者所造成的混乱视为一大使命。当然,这也使得 Debian 显得很枯燥,所以它已有的用户基数一直在缩减。

|

||||

|

||||

**Scott Walker** ,对于他来说,应该是一个 [Damn Small Linux][3] (DSL) 用户。这个系统仅仅需要 50MB 的硬盘空间和 16MB 的 RAM 便可运行。DSL 可以使一台 20 年前的 486 计算机焕发新春,而这恰好符合了 **Scott Walker** 所主张的消减成本计划。当然,你在 DSL 上的用户体验也十分原始,这个系统平台只能够运行一个浏览器。但是至少你你不用浪费钱财购买新的电脑硬件,你那台 1993 年购买的机器仍然可以为你好好的工作。

|

||||

**Scott Walker** ,对于他来说,应该是一个 [Damn Small Linux][3] (DSL) 用户。这个系统仅仅需要 50MB 的硬盘空间和 16MB 的 RAM 便可运行。DSL 可以使一台 20 年前的 486 计算机焕发新春,而这恰好符合了 **Scott Walker** 所主张的消减成本计划。当然,你在 DSL 上的用户体验也十分原始,这个系统平台只能够运行一个浏览器。但是至少你不用浪费钱财购买新的电脑硬件,你那台 1993 年购买的机器仍然可以为你好好的工作。

|

||||

|

||||

**Chris Christie** 会使用哪种系统呢?他肯定会使用 [Relax-and-Recover Linux][4],它号称“一次搞定(Setup-and-forget)的裸机 Linux 灾难恢复方案” 。从那次不幸的华盛顿大桥事故后,“一次搞定(Setup-and-forget)”基本上便成了 Christie 的政治主张。不管灾难恢复是否能够让 Christie 最终挽回一切,但是当他的电脑死机的时候,至少可以找到一两封意外丢失的机密邮件。

|

||||

|

||||

至于 **Carly Fiorina**,她无疑将要使用 [惠普][6] (HPQ)为“[The Machine][5]”开发的操作系统,她在 1999 年到 2005 年这 6 年期间管理的这个公司。事实上,The Machine 可以运行几种不同的操作系统,也许是基于 Linux 的,也许不是,我们并不太清楚,它的开发始于 **Carly Fiorina** 在惠普公司的任期结束后。不管怎么说,作为 IT 圈里一个成功的管理者,这是她履历里面重要的组成部分,同时这也意味着她很难与惠普彻底断绝关系。

|

||||

至于 **Carly Fiorina**,她无疑将要使用 [惠普][6] (HPQ)为“[The Machine][5]”开发的操作系统,她在 1999 年到 2005 年这 6 年期间管理该公司。事实上,The Machine 可以运行几种不同的操作系统,也许是基于 Linux 的,也许不是,我们并不太清楚,它的开发始于 **Carly Fiorina** 在惠普公司的任期结束后。不管怎么说,作为 IT 圈里一个成功的管理者,这是她履历里面重要的组成部分,同时这也意味着她很难与惠普彻底断绝关系。

|

||||

|

||||

最后,但并不是不重要,你也猜到了——**Donald Trump**。他显然会动用数百万美元去雇佣一个精英黑客团队去定制属于自己的操作系统——尽管他原本是想要免费获得一个完美的、现成的操作系统——然后还能向别人炫耀自己的财力。他可能会吹嘘自己的操作系统是目前最好的系统,虽然它可能没有兼容 POSIX 或者一些其它的标准,因为那样的话就需要花掉更多的钱。同时这个系统也将根本不会提供任何文档,因为如果 **Donald Trump** 向人们解释他的系统的实际运行方式,他会冒着所有机密被泄露至伊斯兰国家的风险,绝对是这样的。

|

||||

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Linux内核中的数据结构 —— 基数树

|

||||

Linux 内核里的数据结构 —— 基数树

|

||||

================================================================================

|

||||

|

||||

基数树 Radix tree

|

||||

@ -11,35 +11,35 @@ Linux内核中的数据结构 —— 基数树

|

||||

让我们先说说什么是 `基数树` 吧。基数树是一种 `压缩的字典树 (compressed trie)` ,而[字典树](http://en.wikipedia.org/wiki/Trie)是实现了关联数组接口并允许以 `键值对` 方式存储值的一种数据结构。这里的键通常是字符串,但可以使用任意数据类型。字典树因为它的节点而与 `n叉树` 不同。字典树的节点不存储键,而是存储单个字符的标签。与一个给定节点关联的键可以通过从根遍历到该节点获得。举个例子:

|

||||

|

||||

```

|

||||

+-----------+

|

||||

| |

|

||||

| " " |

|

||||

+-----------+

|

||||

| |

|

||||

+------+-----------+------+

|

||||

| |

|

||||

| |

|

||||

+----v------+ +-----v-----+

|

||||

| | | |

|

||||

| g | | c |

|

||||

| | | |

|

||||

+-----------+ +-----------+

|

||||

| |

|

||||

| |

|

||||

+----v------+ +-----v-----+

|

||||

| | | |

|

||||

| o | | a |

|

||||

| | | |

|

||||

+-----------+ +-----------+

|

||||

|

|

||||

|

|

||||

+-----v-----+

|

||||

| |

|

||||

| t |

|

||||

| " " |

|

||||

| |

|

||||

+-----------+

|

||||

+------+-----------+------+

|

||||

| |

|

||||

| |

|

||||

+----v------+ +-----v-----+

|

||||

| | | |

|

||||

| g | | c |

|

||||

| | | |

|

||||

+-----------+ +-----------+

|

||||

| |

|

||||

| |

|

||||

+----v------+ +-----v-----+

|

||||

| | | |

|

||||

| o | | a |

|

||||

| | | |

|

||||

+-----------+ +-----------+

|

||||

|

|

||||

|

|

||||

+-----v-----+

|

||||

| |

|

||||

| t |

|

||||

| |

|

||||

+-----------+

|

||||

```

|

||||

|

||||

因此在这个例子中,我们可以看到一个有着两个键 `go` 和 `cat` 的 `字典树` 。压缩的字典树或者说 `基数树` ,它和 `字典树` 的不同之处在于,所有只有一个孩子的中间节点都被删除。

|

||||

因此在这个例子中,我们可以看到一个有着两个键 `go` 和 `cat` 的 `字典树` 。压缩的字典树也叫做 `基数树` ,它和 `字典树` 的不同之处在于,所有只有一个子节点的中间节点都被删除。

|

||||

|

||||

Linux 内核中的基数树是把值映射到整形键的一种数据结构。[include/linux/radix-tree.h](https://github.com/torvalds/linux/blob/master/include/linux/radix-tree.h)文件中的以下结构体描述了基数树:

|

||||

|

||||

@ -86,10 +86,10 @@ struct radix_tree_node {

|

||||

unsigned long tags[RADIX_TREE_MAX_TAGS][RADIX_TREE_TAG_LONGS];

|

||||

};

|

||||

```

|

||||

这个结构体包含的信息有父节点中的偏移以及到底端(叶节点)的高度、孩子节点的个数以及用于访问和释放节点的字段成员。这些字段成员描述如下:

|

||||

这个结构体包含的信息有父节点中的偏移以及到底端(叶节点)的高度、子节点的个数以及用于访问和释放节点的字段成员。这些字段成员描述如下:

|

||||

|

||||

* `path` - 父节点中的偏移和到底端(叶节点)的高度

|

||||

* `count` - 孩子节点的个数;

|

||||

* `count` - 子节点的个数;

|

||||

* `parent` - 父节点指针;

|

||||

* `private_data` - 由树的用户使用;

|

||||

* `rcu_head` - 用于释放节点;

|

||||

@ -188,9 +188,9 @@ unsigned int radix_tree_gang_lookup(struct radix_tree_root *root,

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://github.com/0xAX/linux-insides/edit/master/DataStructures/radix-tree.md

|

||||

via: https://github.com/0xAX/linux-insides/blob/master/DataStructures/radix-tree.md

|

||||

|

||||

作者:[0xAX]

|

||||

作者:0xAX

|

||||

译者:[cposture](https://github.com/cposture)

|

||||

校对:[Mr小眼儿](https://github.com/tinyeyeser)

|

||||

|

||||

@ -1,50 +1,51 @@

|

||||

点评:Linux编程中五款内存调试器

|

||||

点评五款用于 Linux 编程的内存调试器

|

||||

================================================================================

|

||||

|

||||

Credit: [Moini][1]

|

||||

>Credit: [Moini][1]

|

||||

|

||||

作为一个程序员,我知道我总在犯错误——事实是,怎么可能会不犯错的!程序员也是人啊。有的错误能在编码过程中及时发现,而有些却得等到软件测试才显露出来。然而,还有一类错误并不能在这两个时期被排除,从而导致软件不能正常运行,甚至是提前中止。

|

||||

作为一个程序员,我知道我肯定会犯错误——怎么可能不犯错!程序员也是人啊。有的错误能在编码过程中及时发现,而有些却得等到软件测试了才能显露出来。然而,还有一类错误并不能在这两个阶段被解决,这就导致软件不能正常运行,甚至是提前终止。

|

||||

|

||||

想到了吗?我说的就是内存相关的错误。手动调试这些错误不仅耗时,而且很难发现并纠正。值得一提的是,这种错误非常常见,特别是在用 C/C++ 这类允许[手动管理内存][2]的语言编写的软件里。

|

||||

如果你还没猜出是那种错误,我说的就是和内存相关的错误。手动调试这些错误不仅耗时,而且很难发现并纠正。值得一提的是,这种错误很常见,特别是在用 C/C++ 这类允许[手动管理内存][2]的语言编写的软件里。

|

||||

|

||||

幸运的是,现行有一些编程工具能够帮你找到软件程序中这些内存相关的错误。在这些工具集中,我评定了五款Linux可用的,流行、免费并且开源的内存调试器: Dmalloc 、 Electric Fence 、 Memcheck 、 Memwatch 以及 Mtrace 。在日常编码中,我已经把这五个调试器用了个遍,所以这些点评是建立在我的实际体验之上的。

|

||||

幸运的是,现行有一些编程工具能够帮你在软件程序中找到这些和内存相关的错误。在这些工具集中,我评估了五款支持 Linux 的、流行的、自由开源的内存调试器: Dmalloc 、 Electric Fence 、 Memcheck 、 Memwatch 以及 Mtrace 。在日常编码中,我已经用过这五个调试器了,所以这些评估是建立在我的实际体验之上的。

|

||||

|

||||

### [Dmalloc][3] ###

|

||||

|

||||

**开发者**:Gray Watson

|

||||

|

||||

**点评版本**:5.5.2

|

||||

**评估版本**:5.5.2

|

||||

|

||||

**支持的 Linux**:所有种类

|

||||

**支持的 Linux 版本**:所有种类

|

||||

|

||||

**许可**:知识共享署名-相同方式共享许可证 3.0

|

||||

**许可**: CC 3.0

|

||||

|

||||

Dmalloc 是 Gray Watson 开发的一款内存调试工具。它实现成库,封装了标准内存管理函数如 *malloc() , calloc() , free()* 等,使程序员得以检测出有问题的代码。

|

||||

Dmalloc 是 Gray Watson 开发的一款内存调试工具。它是作为库来实现的,封装了标准内存管理函数如`malloc() , calloc() , free()`等,使程序员得以检测出有问题的代码。

|

||||

|

||||

|

||||

Dmalloc

|

||||

|

||||

如同工具的网页所列,这个调试器提供的特性包括内存泄漏跟踪、[重复释放(double free)][4]错误跟踪、以及[越界写入(fence-post write)][5]检测。其它特性包括文件/行号报告、普通统计记录。

|

||||

*Dmalloc*

|

||||

|

||||

如同工具的网页所示,这个调试器提供的特性包括内存泄漏跟踪、[重复释放内存(double free)][4]错误跟踪、以及[越界写入(fence-post write)][5]检测。其它特性包括报告错误的文件/行号、通用的数据统计记录。

|

||||

|

||||

#### 更新内容 ####

|

||||

|

||||

5.5.2 版本是一个 [bug 修复发行版][6],同时修复了构建和安装的问题。

|

||||

5.5.2 版本是一个 [bug 修正发行版][6],修复了几个有关构建和安装的问题。

|

||||

|

||||

#### 有何优点 ####

|

||||

|

||||

Dmalloc 最大的优点就是高度可配置性。比如说,你可以配置以支持 C++ 程序和多线程应用。 Dmalloc 还提供一个有用的功能:运行时可配置,这表示在 Dmalloc 执行时,可以轻易地使能或者禁能它提供的特性。

|

||||

Dmalloc 最大的优点就是高度可配置性。比如说,你可以配置它以支持 C++ 程序和多线程应用。 Dmalloc 还提供一个有用的功能:运行时可配置,这表示在 Dmalloc 执行时,可以轻易地启用或者禁用它提供的一些特性。

|

||||

|

||||

你还可以配合 [GNU Project Debugger (GDB)][7]来使用 Dmalloc ,只需要将 *dmalloc.gdb* 文件(位于 Dmalloc 源码包中的 contrib 子目录里)的内容添加到你的主目录中的 *.gdbinit* 文件里即可。

|

||||

你还可以配合 [GNU Project Debugger (GDB)][7]来使用 Dmalloc ,只需要将`dmalloc.gdb`文件(位于 Dmalloc 源码包中的 contrib 子目录里)的内容添加到你的主目录中的`.gdbinit`文件里即可。

|

||||

|

||||

另外一个让我对 Dmalloc 爱不释手的优点是它有大量的资料文献。前往官网的 [Documentation 标签][8],可以获取所有关于如何下载、安装、运行,怎样使用库,和 Dmalloc 所提供特性的细节描述,及其输入文件的解释。其中还有一个章节介绍了一般问题的解决方法。

|

||||

另外一个让我对 Dmalloc 爱不释手的优点是它有大量的资料文献。前往官网的 [Documentation 栏目][8],可以获取所有关于如何下载、安装、运行、怎样使用库,和 Dmalloc 所提供特性的细节描述,及其生成的输出文件的解释。其中还有一个章节介绍了一般问题的解决方法。

|

||||

|

||||

#### 注意事项 ####

|

||||

|

||||

跟 Mtrace 一样, Dmalloc 需要程序员改动他们的源代码。比如说你可以(必须的)添加头文件 *dmalloc.h* ,工具就能汇报产生问题的调用的文件或行号。这个功能非常有用,因为它节省了调试的时间。

|

||||

跟 Mtrace 一样, Dmalloc 需要程序员改动他们的源代码。比如说你可以(也是必须的)添加头文件`dmalloc.h`,工具就能汇报产生问题的调用的文件或行号。这个功能非常有用,因为它节省了调试的时间。

|

||||

|

||||

除此之外,还需要在编译你的程序时,把 Dmalloc 库(编译源码包时产生的)链接进去。

|

||||

除此之外,还需要在编译你的程序时,把 Dmalloc 库(编译 Dmalloc 源码包时产生的)链接进去。

|

||||

|

||||

然而,还有点更麻烦的事,需要设置一个环境变量,命名为 *DMALLOC_OPTION* ,以供工具在运行时配置内存调试特性,以及输出文件的路径。可以手动为该环境变量分配一个值,不过初学者可能会觉得这个过程有点困难,因为该值的一部分用来表示要启用的 Dmalloc 特性——表示为各自的十六进制值的累加。[这里][9]有详细介绍。

|

||||

然而,还有点更麻烦的事,需要设置一个环境变量,命名为`DMALLOC_OPTION`,以供工具在运行时配置内存调试特性,比如定义输出文件的路径。可以手动为该环境变量分配一个值,不过初学者可能会觉得这个过程有点困难,因为该值的一部分用来表示要启用的 Dmalloc 特性——以十六进制值的累加值表示。[这里][9]有详细介绍。

|

||||

|

||||

一个比较简单方法设置这个环境变量是使用 [Dmalloc 实用指令][10],这是专为这个目的设计的方法。

|

||||

|

||||

@ -56,19 +57,19 @@ Dmalloc 真正的优势在于它的可配置选项。而且高度可移植,曾

|

||||

|

||||

**开发者**:Bruce Perens

|

||||

|

||||

**点评版本**:2.2.3

|

||||

**评估版本**:2.2.3

|

||||

|

||||

**支持的 Linux**:所有种类

|

||||

**支持的 Linux 版本**:所有种类

|

||||

|

||||

**许可**:GNU 通用公共许可证 (第二版)

|

||||

**许可**:GPL v2

|

||||

|

||||

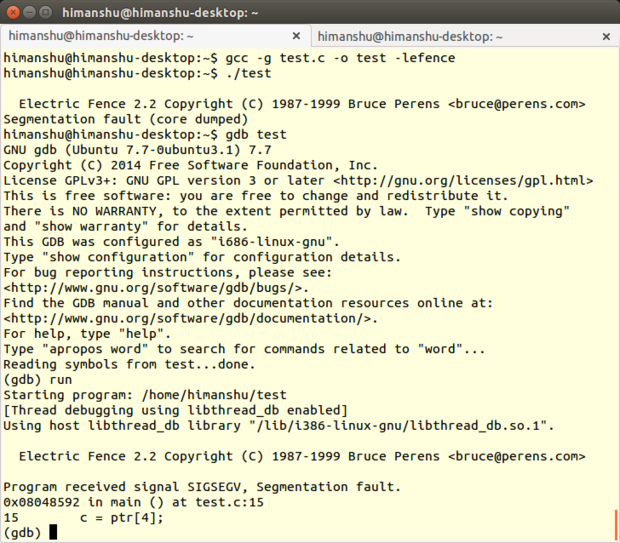

Electric Fence 是 Bruce Perens 开发的一款内存调试工具,它以库的形式实现,你的程序需要链接它。Electric Fence 能检测出[栈][11]内存溢出和访问已经释放的内存。

|

||||

Electric Fence 是 Bruce Perens 开发的一款内存调试工具,它以库的形式实现,你的程序需要链接它。Electric Fence 能检测出[堆][11]内存溢出和访问已经释放的内存。

|

||||

|

||||

|

||||

Electric Fence

|

||||

|

||||

顾名思义, Electric Fence 在每个申请的缓存边界建立了 virtual fence(虚拟围栏),任何非法内存访问都会导致[段错误][12]。这个调试工具同时支持 C 和 C++ 程序。

|

||||

*Electric Fence*

|

||||

|

||||

顾名思义, Electric Fence 在每个所申请的缓存边界建立了虚拟围栏,这样一来任何非法的内存访问都会导致[段错误][12]。这个调试工具同时支持 C 和 C++ 程序。

|

||||

|

||||

#### 更新内容 ####

|

||||

|

||||

@ -76,19 +77,19 @@ Electric Fence

|

||||

|

||||

#### 有何优点 ####

|

||||

|

||||

我喜欢 Electric Fence 首要的一点是它(不同于 Memwatch 、 Dmalloc 和 Mtrace ,)不需要你的源码做任何的改动,你只需要在编译的时候把它的库链接进你的程序即可。

|

||||

我喜欢 Electric Fence 的首要一点是它不同于 Memwatch 、 Dmalloc 和 Mtrace ,不需要对你的源码做任何的改动,你只需要在编译的时候把它的库链接进你的程序即可。

|

||||

|

||||

其次, Electric Fence 的实现保证了导致越界访问( a bounds violation )的第一个指令就是引起段错误的原因。这比在后面再发现问题要好多了。

|

||||

其次, Electric Fence 的实现保证了产生越界访问的第一个指令就会引起段错误。这比在后面再发现问题要好多了。

|

||||

|

||||

不管是否有检测出错误, Electric Fence 都会在输出产生版权信息。这一点非常有用,由此可以确定你所运行的程序已经启用了 Electric Fence 。

|

||||

|

||||

#### 注意事项 ####

|

||||

|

||||

另一方面,我对 Electric Fence 真正念念不忘的是它检测内存泄漏的能力。内存泄漏是 C/C++ 软件最常见也是最难隐秘的问题之一。不过, Electric Fence 不能检测出堆内存溢出,而且也不是线程安全的。

|

||||

另一方面,我对 Electric Fence 真正念念不忘的是它检测内存泄漏的能力。内存泄漏是 C/C++ 软件最常见也是最不容易发现的问题之一。不过, Electric Fence 不能检测出栈溢出,而且也不是线程安全的。

|

||||

|

||||

由于 Electric Fence 会在用户分配内存区的前后分配禁止访问的虚拟内存页,如果你过多的进行动态内存分配,将会导致你的程序消耗大量的额外内存。

|

||||

|

||||

Electric Fence 还有一个局限是不能明确指出错误代码所在的行号。它所能做只是在监测到内存相关错误时产生段错误。想要定位行号,需要借助 [The Gnu Project Debugger ( GDB )][14]这样的调试工具来调试启用了 Electric Fence 的程序。

|

||||

Electric Fence 还有一个局限是不能明确指出错误代码所在的行号。它所能做只是在检测到内存相关错误时产生段错误。想要定位错误的行号,需要借助 [GDB][14]这样的调试工具来调试启用了 Electric Fence 的程序。

|

||||

|

||||

最后一点,尽管 Electric Fence 能检测出大部分的缓冲区溢出,有一个例外是,如果所申请的缓冲区大小不是系统字长的倍数,这时候溢出(即使只有几个字节)就不能被检测出来。

|

||||

|

||||

@ -96,94 +97,95 @@ Electric Fence 还有一个局限是不能明确指出错误代码所在的行

|

||||

|

||||

尽管局限性较大, Electric Fence 的易用性仍然是加分项。只要链接一次程序, Electric Fence 就可以在监测出内存相关问题的时候报警。不过,如同前面所说, Electric Fence 需要配合像 GDB 这样的源码调试器使用。

|

||||

|

||||

|

||||

### [Memcheck][16] ###

|

||||

|

||||

**开发者**:[Valgrind 开发团队][17]

|

||||

|

||||

**点评版本**:3.10.1

|

||||

**评估版本**:3.10.1

|

||||

|

||||

**支持的 Linux**:所有种类

|

||||

**支持的 Linux 发行版**:所有种类

|

||||

|

||||

**许可**:通用公共许可证

|

||||

**许可**:GPL

|

||||

|

||||

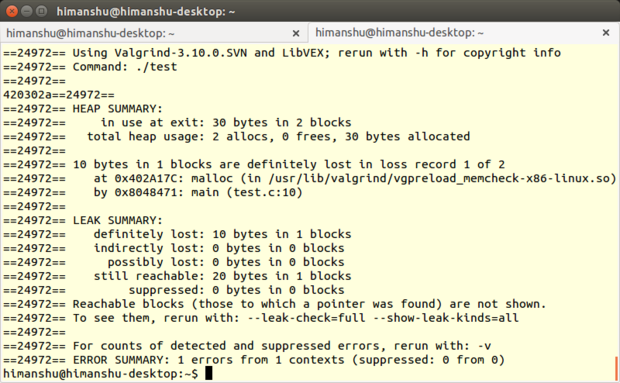

[Valgrind][18] 是一个提供好几款调试和 Linux 程序性能分析工具的套件。虽然 Valgrind 能和编写语言各不相同(有 Java 、 Perl 、 Python 、 Assembly code 、 ortran 、 Ada等等)的程序配合工作,但是它所提供的工具主要针对用 C/C++ 所编写的程序。

|

||||

[Valgrind][18] 是一个提供好几款调试和分析 Linux 程序性能的工具的套件。虽然 Valgrind 能和不同语言——Java 、 Perl 、 Python 、 Assembly code 、 ortran 、 Ada 等——编写的程序一起工作,但是它主要还是针对使用 C/C++ 所编写的程序。

|

||||

|

||||

Memcheck ,一款内存错误检测器,是其中最受欢迎的工具。它能够检测出如内存泄漏、无效的内存访问、未定义变量的使用以及栈内存分配和释放相关的问题等诸多问题。

|

||||

Memcheck ,一款内存错误检测器,是其中最受欢迎的工具。它能够检测出如内存泄漏、无效的内存访问、未定义变量的使用以及堆内存分配和释放相关的问题等诸多问题。

|

||||

|

||||

#### 更新内容 ####

|

||||

|

||||

工具套件( 3.10.1 )的[发行版][19]是一个副版本,主要修复了 3.10.0 版本发现的 bug 。除此之外,从主版本向后移植(标音: backport )一些包,修复了缺失的 AArch64 ARMv8 指令和系统调用。

|

||||

[工具套件( 3.10.1 )][19]主要修复了 3.10.0 版本发现的 bug 。除此之外,“从主干开发版本向后移植的一些补丁,修复了缺失的 AArch64 ARMv8 指令和系统调用”。

|

||||

|

||||

#### 有何优点 ####

|

||||

|

||||

同其它所有 Valgrind 工具一样, Memcheck 也是命令行实用程序。它的操作非常简单:通常我们会使用诸如 prog arg1 arg2 格式的命令来运行程序,而 Memcheck 只要求你多加几个值即可,如 valgrind --leak-check=full prog arg1 arg2 。

|

||||

同其它所有 Valgrind 工具一样, Memcheck 也是命令行程序。它的操作非常简单:通常我们会使用诸如 `prog arg1 arg2` 格式的命令来运行程序,而 Memcheck 只要求你多加几个值即可,如 `valgrind --leak-check=full prog arg1 arg2` 。

|

||||

|

||||

|

||||

Memcheck

|

||||

|

||||

(注意:因为 Memcheck 是 Valgrind 的默认工具所以无需提及 Memcheck。但是,需要在编译程序之初带上 -g 参数选项,这一步会添加调试信息,使得 Memcheck 的错误信息会包含正确的行号。)

|

||||

*Memcheck*

|

||||

|

||||

我真正倾心于 Memcheck 的是它提供了很多命令行选项(如上所述的 *--leak-check* 选项),如此不仅能控制工具运转还可以控制它的输出。

|

||||

(注意:因为 Memcheck 是 Valgrind 的默认工具,所以在命令行执行命令时无需提及 Memcheck。但是,需要在编译程序之初带上 `-g` 参数选项,这一步会添加调试信息,使得 Memcheck 的错误信息会包含正确的行号。)

|

||||

|

||||

举个例子,可以开启 *--track-origins* 选项,以查看程序源码中未初始化的数据;可以开启 *--show-mismatched-frees* 选项让 Memcheck 匹配内存的分配和释放技术。对于 C 语言所写的代码, Memcheck 会确保只能使用 *free()* 函数来释放内存, *malloc()* 函数来申请内存。而对 C++ 所写的源码, Memcheck 会检查是否使用了 *delete* 或 *delete[]* 操作符来释放内存,以及 *new* 或者*new[]* 来申请内存。

|

||||

我真正倾心于 Memcheck 的是它提供了很多命令行选项(如上所述的`--leak-check`选项),如此不仅能控制工具运转还可以控制它的输出。

|

||||

|

||||

Memcheck 最好的特点,尤其是对于初学者来说,是它会给用户建议使用哪个命令行选项能让输出更加有意义。比如说,如果你不使用基本的 *--leak-check* 选项, Memcheck 会在输出时建议“使用 --leak-check=full 重新运行以查看更多泄漏内存细节”。如果程序有未初始化的变量, Memcheck 会产生信息“使用 --track-origins=yes 以查看未初始化变量的定位”。

|

||||

举个例子,可以开启`--track-origins`选项,以查看程序源码中未初始化的数据;可以开启`--show-mismatched-frees`选项让 Memcheck 匹配内存的分配和释放技术。对于 C 语言所写的代码, Memcheck 会确保只能使用`free()`函数来释放内存,`malloc()`函数来申请内存。而对 C++ 所写的源码, Memcheck 会检查是否使用了`delete`或`delete[]`操作符来释放内存,以及`new`或者`new[]`来申请内存。

|

||||

|

||||

Memcheck 另外一个有用的特性是它可以[创建抑制文件( suppression files )][20],由此可以忽略特定不能修正的错误,这样 Memcheck 运行时就不会每次都报警了。值得一提的是, Memcheck 会去读取默认抑制文件来忽略系统库(比如 C 库)中的报错,这些错误在系统创建之前就已经存在了。可以选择创建一个新的抑制文件,或是编辑现有的文件(通常是*/usr/lib/valgrind/default.supp*)。

|

||||

Memcheck 最好的特点,尤其是对于初学者来说,是它会给用户建议使用哪个命令行选项能让输出更加有意义。比如说,如果你不使用基本的`--leak-check`选项, Memcheck 会在输出时给出建议:“使用 --leak-check=full 重新运行以查看更多泄漏内存细节”。如果程序有未初始化的变量, Memcheck 会产生信息:“使用 --track-origins=yes 以查看未初始化变量的定位”。

|

||||

|

||||

Memcheck 另外一个有用的特性是它可以[创建抑制文件( suppression files )][20],由此可以略过特定的不能修正的错误,这样 Memcheck 运行时就不会每次都报警了。值得一提的是, Memcheck 会去读取默认抑制文件来忽略系统库(比如 C 库)中的报错,这些错误在系统创建之前就已经存在了。可以选择创建一个新的抑制文件,或是编辑现有的文件(通常是`/usr/lib/valgrind/default.supp`)。

|

||||

|

||||

Memcheck 还有高级功能,比如可以使用[定制内存分配器][22]来[检测内存错误][21]。除此之外, Memcheck 提供[监控命令][23],当用到 Valgrind 内置的 gdbserver ,以及[客户端请求][24]机制(不仅能把程序的行为告知 Memcheck ,还可以进行查询)时可以使用。

|

||||

|

||||

#### 注意事项 ####

|

||||

|

||||

毫无疑问, Memcheck 可以节省很多调试时间以及省去很多麻烦。但是它使用了很多内存,导致程序执行变慢([由文档可知][25],大概花上 20 至 30 倍时间)。

|

||||

毫无疑问, Memcheck 可以节省很多调试时间以及省去很多麻烦。但是它使用了很多内存,导致程序执行变慢([由文档可知][25],大概会花费 20 至 30 倍时间)。

|

||||

|

||||

除此之外, Memcheck 还有其它局限。根据用户评论, Memcheck 明显不是[线程安全][26]的;它不能检测出 [静态缓冲区溢出][27];还有就是,一些 Linux 程序如 [GNU Emacs][28] 目前还不能使用 Memcheck 。

|

||||

除此之外, Memcheck 还有其它局限。根据用户评论, Memcheck 很明显不是[线程安全][26]的;它不能检测出 [静态缓冲区溢出][27];还有就是,一些 Linux 程序如 [GNU Emacs][28] 目前还不能配合 Memcheck 工作。

|

||||

|

||||

如果有兴趣,可以在[这里][29]查看 Valgrind 详尽的局限性说明。

|

||||

如果有兴趣,可以在[这里][29]查看 Valgrind 局限性的详细说明。

|

||||

|

||||

#### 总结 ####

|

||||

|

||||

无论是对于初学者还是那些需要高级特性的人来说, Memcheck 都是一款便捷的内存调试工具。如果你仅需要基本调试和错误核查, Memcheck 会非常容易上手。而当你想要使用像抑制文件或者监控指令这样的特性,就需要花一些功夫学习了。

|

||||

无论是对于初学者还是那些需要高级特性的人来说, Memcheck 都是一款便捷的内存调试工具。如果你仅需要基本调试和错误检查, Memcheck 会非常容易上手。而当你想要使用像抑制文件或者监控指令这样的特性,就需要花一些功夫学习了。

|

||||

|

||||

虽然罗列了大量的局限性,但是 Valgrind(包括 Memcheck )在它的网站上声称全球有[成千上万程序员][30]使用了此工具。开发团队称收到来自超过 30 个国家的用户反馈,而这些用户的工程代码有的高达 2.5 千万行。

|

||||

虽然罗列了大量的局限性,但是 Valgrind(包括 Memcheck )在它的网站上声称全球有[成千上万程序员][30]使用了此工具。开发团队称收到来自超过 30 个国家的用户反馈,而这些用户的工程代码有的高达两千五百万行。

|

||||

|

||||

### [Memwatch][31] ###

|

||||

|

||||

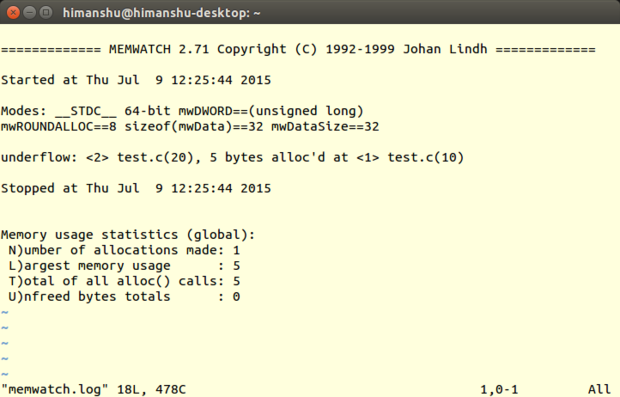

**开发者**:Johan Lindh

|

||||

|

||||

**点评版本**:2.71

|

||||

**评估版本**:2.71

|

||||

|

||||

**支持的 Linux**:所有种类

|

||||

**支持的 Linux 发行版**:所有种类

|

||||

|

||||

**许可**:GNU通用公共许可证

|

||||

**许可**:GNU GPL

|

||||

|

||||

Memwatch 是由 Johan Lindh 开发的内存调试工具,虽然它主要扮演内存泄漏检测器的角色,但是(根据网页介绍)它也具有检测其它如[重复释放跟踪和内存错误释放][32]、缓冲区溢出和下溢、[野指针][33]写入等等内存相关问题的能力。

|

||||

Memwatch 是由 Johan Lindh 开发的内存调试工具,虽然它扮演的主要角色是内存泄漏检测器,但是(根据网页介绍)它也具有检测其它如[内存重复释放和错误释放][32]、缓冲区溢出和下溢、[野指针][33]写入等等内存相关问题的能力。

|

||||

|

||||

Memwatch 支持用 C 语言所编写的程序。也可以在 C++ 程序中使用它,但是这种做法并不提倡(由 Memwatch 源码包随附的 Q&A 文件中可知)。

|

||||

|

||||

#### 更新内容 ####

|

||||

|

||||

这个版本添加了 *ULONG_LONG_MAX* 以区分 32 位和 64 位程序。

|

||||

这个版本添加了`ULONG_LONG_MAX`以区分 32 位和 64 位程序。

|

||||

|

||||

#### 有何优点 ####

|

||||

|

||||

跟 Dmalloc 一样, Memwatch 也有优秀的文档资料。参考 USING 文件,可以学习如何使用 Memwatch ,可以了解 Memwatch 是如何初始化、如何清理以及如何进行 I/O 操作,等等。还有一个 FAQ 文件,旨在帮助用户解决使用过程遇到的一般问题。最后还有一个 *test.c* 文件提供工作案例参考。

|

||||

跟 Dmalloc 一样, Memwatch 也有优秀的文档资料。参考 USING 文件,可以学习如何使用 Memwatch ,可以了解 Memwatch 是如何初始化、如何清理以及如何进行 I/O 操作,等等。还有一个 FAQ 文件,旨在帮助用户解决使用过程遇到的一般问题。最后还有一个`test.c`文件提供工作案例参考。

|

||||

|

||||

|

||||

Memwatch

|

||||

|

||||

不同于 Mtrace , Memwatch 的输出产生的日志文件(通常是 *memwatch.log* )是人类可阅读格式。而且, Memwatch 每次运行时总会拼接内存调试输出到文件末尾。如此便可在需要之时,轻松查看之前的输出信息。

|

||||

*Memwatch*

|

||||

|

||||

不同于 Mtrace , Memwatch 产生的日志文件(通常是`memwatch.log`)是人类可阅读的格式。而且, Memwatch 每次运行时总会把内存调试结果拼接到输出该文件的末尾。如此便可在需要之时轻松查看之前的输出信息。

|

||||

|

||||

同样值得一提的是当你执行了启用 Memwatch 的程序, Memwatch 会在[标准输出][34]中产生一个单行输出,告知发现了错误,然后你可以在日志文件中查看输出细节。如果没有产生错误信息,就可以确保日志文件不会写入任何错误,多次运行的话确实能节省时间。

|

||||

|

||||

另一个我喜欢的优点是 Memwatch 还提供了在源码中获取其输出信息的方式,你可以获取信息,然后任由你进行处理(参考 Memwatch 源码中的 *mwSetOutFunc()* 函数获取更多有关的信息)。

|

||||

另一个我喜欢的优点是 Memwatch 还提供了在源码中获取其输出信息的方式,你可以获取信息,然后任由你进行处理(参考 Memwatch 源码中的`mwSetOutFunc()`函数获取更多有关的信息)。

|

||||

|

||||

#### 注意事项 ####

|

||||

|

||||

跟 Mtrace 和 Dmalloc 一样, Memwatch 也需要你往你的源文件里增加代码:你需要把 *memwatch.h* 这个头文件包含进你的代码。而且,编译程序的时候,你需要连同 *memwatch.c* 一块编译;或者你可以把已经编译好的目标模块包含起来,然后在命令行定义 *MEMWATCH* 和 *MW_STDIO* 变量。不用说,想要在输出中定位行号, -g 编译器选项也少不了。

|

||||

跟 Mtrace 和 Dmalloc 一样, Memwatch 也需要你往你的源文件里增加代码:你需要把`memwatch.h`这个头文件包含进你的代码。而且,编译程序的时候,你需要连同`memwatch.c`一块编译;或者你可以把已经编译好的目标模块包含起来,然后在命令行定义`MEMWATCH`和`MW_STDIO`变量。不用说,想要在输出中定位行号, -g 编译器选项也少不了。

|

||||

|

||||

此外, Memwatch 缺少一些特性。比如 Memwatch 不能检测出往一块已经被释放的内存写入操作,或是在分配的内存块之外的读取操作。而且, Memwatch 也不是线程安全的。还有一点,正如我在开始时指出,在 C++ 程序上运行 Memwatch 的结果是不能预料的。

|

||||

此外, Memwatch 缺少一些特性。比如 Memwatch 不能检测出对一块已经被释放的内存进行写入操作,或是在分配的内存块之外的进行读取操作。而且, Memwatch 也不是线程安全的。还有一点,正如我在开始时指出,在 C++ 程序上运行 Memwatch 的结果是不能预料的。

|

||||

|

||||

#### 总结 ####

|

||||

|

||||

@ -191,20 +193,21 @@ Memcheck 可以检测很多内存相关的问题,在处理 C 程序时是非

|

||||

|

||||

### [Mtrace][35] ###

|

||||

|

||||

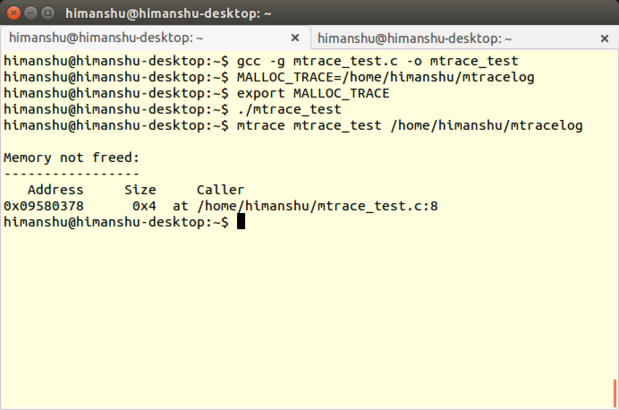

**开发者**: Roland McGrath and Ulrich Drepper

|

||||

**开发者**: Roland McGrath 和 Ulrich Drepper

|

||||

|

||||

**点评版本**: 2.21

|

||||

**评估版本**: 2.21

|

||||

|

||||

**支持的 Linux**:所有种类

|

||||

**支持的 Linux 发行版**:所有种类

|

||||

|

||||

**许可**:GNU 通用公共许可证

|

||||

**许可**:GNU GPL

|

||||

|

||||

Mtrace 是 [GNU C 库][36]中的一款内存调试工具,同时支持 Linux 上的 C 和 C++ 程序,检测由 *malloc()* 和 *free()* 函数的不对等调用所引起的内存泄漏问题。

|

||||

Mtrace 是 [GNU C 库][36]中的一款内存调试工具,同时支持 Linux 上的 C 和 C++ 程序,可以检测由函数`malloc()`和`free()`不匹配的调用所引起的内存泄漏问题。

|

||||

|

||||

|

||||

Mtrace

|

||||

|

||||

Mtrace 实现为对 *mtrace()* 函数的调用,跟踪程序中所有 malloc/free 调用,并在用户指定的文件中记录相关信息。文件以一种机器可读的格式记录数据,所以有一个 Perl 脚本(同样命名为 mtrace )用来把文件转换并展示为人类可读格式。

|

||||

*Mtrace*

|

||||

|

||||

Mtrace 实际上是实现了一个名为`mtrace()`的函数,它可以跟踪程序中所有 malloc/free 调用,并在用户指定的文件中记录相关信息。文件以一种机器可读的格式记录数据,所以有一个 Perl 脚本——同样命名为 mtrace ——用来把文件转换并为人类可读格式。

|

||||

|

||||

#### 更新内容 ####

|

||||

|

||||

@ -212,21 +215,21 @@ Mtrace 实现为对 *mtrace()* 函数的调用,跟踪程序中所有 malloc/fr

|

||||

|

||||

#### 有何优点 ####

|

||||

|

||||

Mtrace 最优秀的特点是非常简单易学。你只需要了解在你的源码中如何以及何处添加mtrace()及其对立的muntrace()函数,还有如何使用Mtrace的Perl脚本。后者非常简单,只需要运行指令 *mtrace <program-executable> <log-file-generated-upon-program-execution>*(例子见开头截图最后一条指令)。

|

||||

Mtrace 最好的地方是它非常简单易学。你只需要了解在你的源码中如何以及何处添加 mtrace() 及对应的 muntrace() 函数,还有如何使用 Mtrace 的 Perl 脚本。后者非常简单,只需要运行指令`mtrace <program-executable> <log-file-generated-upon-program-execution>`(例子见开头截图最后一条指令)。

|

||||

|

||||

Mtrace 另外一个优点是它的可收缩性,体现在,不仅可以使用它来调试完整的程序,还可以使用它来检测程序中独立模块的内存泄漏。只需在每个模块里调用 *mtrace()* 和 *muntrace()* 即可。

|

||||

Mtrace 另外一个优点是它的可伸缩性,这体现在不仅可以使用它来调试完整的程序,还可以使用它来检测程序中独立模块的内存泄漏。只需在每个模块里调用`mtrace()`和`muntrace()`即可。

|

||||

|

||||

最后一点,因为 Mtrace 会在 *mtace()*(在源码中添加的函数)执行时被触发,因此可以很灵活地[使用信号][39]动态地(在程序执行周期内)使能 Mtrace 。

|

||||

最后一点,因为 Mtrace 会在`mtrace()`——在源码中添加的函数——执行时被触发,因此可以很灵活地[使用信号][39]动态地(在程序执行时)使能 Mtrace 。

|

||||

|

||||

#### 注意事项 ####

|

||||

|

||||

因为 *mtrace()* 和 *mauntrace()* 函数(声明在 *mcheck.h* 文件中,所以必须在源码中包含此头文件)的调用是 Mtrace 运行( *mauntrace()* 函数并非[总是必要][40])的根本,因此 Mtrace 要求程序员至少改动源码一次。

|

||||

因为`mtrace()`和`mauntrace()`函数 —— 声明在`mcheck.h`文件中,所以必须在源码中包含此头文件 —— 的调用是 Mtrace 工作的基础(`mauntrace()`函数并非[总是必要][40]),因此 Mtrace 要求程序员至少改动源码一次。

|

||||

|

||||

需要注意的是,在编译程序的时候带上 -g 选项( [GCC][41] 和 [G++][42] 编译器均有提供),才能使调试工具在输出展示正确的行号。除此之外,有些程序(取决于源码体积有多大)可能会花很长时间进行编译。最后,带 -g 选项编译会增加了可执行文件的内存(因为提供了额外的调试信息),因此记得程序需要在测试结束后,不带 -g 选项重新进行编译。

|

||||

需要注意的是,在编译程序的时候带上 -g 选项( [GCC][41] 和 [G++][42] 编译器均有提供),才能使调试工具在输出结果时展示正确的行号。除此之外,有些程序(取决于源码体积有多大)可能会花很长时间进行编译。最后,带 -g 选项编译会增加了可执行文件的大小(因为提供了额外的调试信息),因此记得程序需要在测试结束后,不带 -g 选项重新进行编译。

|

||||

|

||||

使用 Mtrace ,你需要掌握 Linux 环境变量的基本知识,因为在程序执行之前,需要把用户指定的文件( *mtrace()* 函数将会记录全部信息到其中)路径设置为环境变量 *MALLOC_TRACE* 的值。

|

||||

使用 Mtrace ,你需要掌握 Linux 环境变量的基本知识,因为在程序执行之前,需要把用户把环境变量`MALLOC_TRACE`的值设为指定的文件(`mtrace()`函数将会记录全部信息到其中)路径。

|

||||

|

||||

Mtrace 在检测内存泄漏和尝试释放未经过分配的内存方面存在局限。它不能检测其它内存相关问题如非法内存访问、使用未初始化内存。而且,[有人抱怨][43] Mtrace 不是[线程安全][44]的。

|

||||

Mtrace 在检测内存泄漏和试图释放未经过分配的内存方面存在局限。它不能检测其它内存相关问题如非法内存访问、使用未初始化内存。而且,[有人抱怨][43] Mtrace 不是[线程安全][44]的。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

@ -238,7 +241,7 @@ Mtrace 在检测内存泄漏和尝试释放未经过分配的内存方面存在

|

||||

|

||||

虽然 Memwatch 的资料比 Dmalloc 的更加丰富,而且还能检测更多的错误种类,但是你只能在 C 语言写就的程序中使用它。一个让 Memwatch 脱颖而出的特性是它允许在你的程序源码中处理它的输出,这对于想要定制输出格式来说是非常有用的。

|

||||

|

||||

如果改动程序源码非你所愿,那么使用 Electric Fence 吧。不过,请记住, Electric Fence 只能检测两种错误类型,而此二者均非内存泄漏。还有就是,需要了解 GDB 基础以最大化发挥这款内存调试工具的作用。

|

||||

如果改动程序源码非你所愿,那么使用 Electric Fence 吧。不过,请记住, Electric Fence 只能检测两种错误类型,而此二者均非内存泄漏。还有就是,需要基本了解 GDB 以最大化发挥这款内存调试工具的作用。

|

||||

|

||||

Memcheck 可能是其中综合性最好的了。相比这里提及的其它工具,它能检测更多的错误类型,提供更多的特性,而且不需要你的源码做任何改动。但请注意,基本功能并不难上手,但是想要使用它的高级特性,就必须学习相关的专业知识了。

|

||||

|

||||

@ -248,7 +251,7 @@ via: http://www.computerworld.com/article/3003957/linux/review-5-memory-debugger

|

||||

|

||||

作者:[Himanshu Arora][a]

|

||||

译者:[soooogreen](https://github.com/soooogreen)

|

||||

校对:[PurlingNayuki](https://github.com/PurlingNayuki)

|

||||

校对:[PurlingNayuki](https://github.com/PurlingNayuki),[ezio](https://github.com/oska874)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,44 @@

|

||||

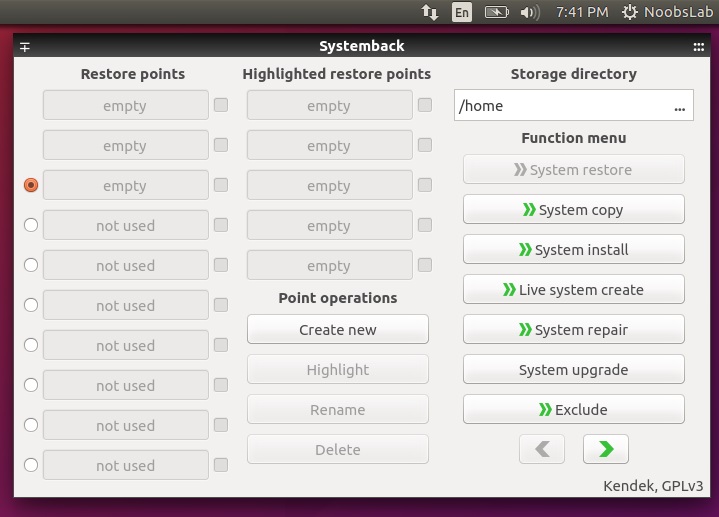

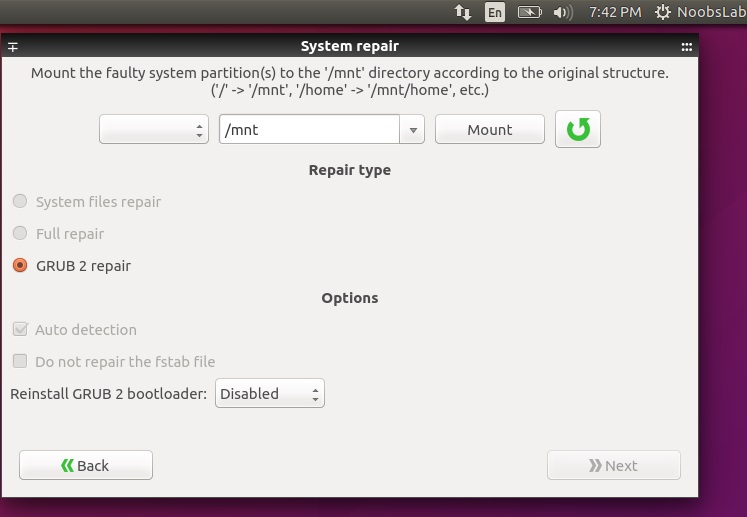

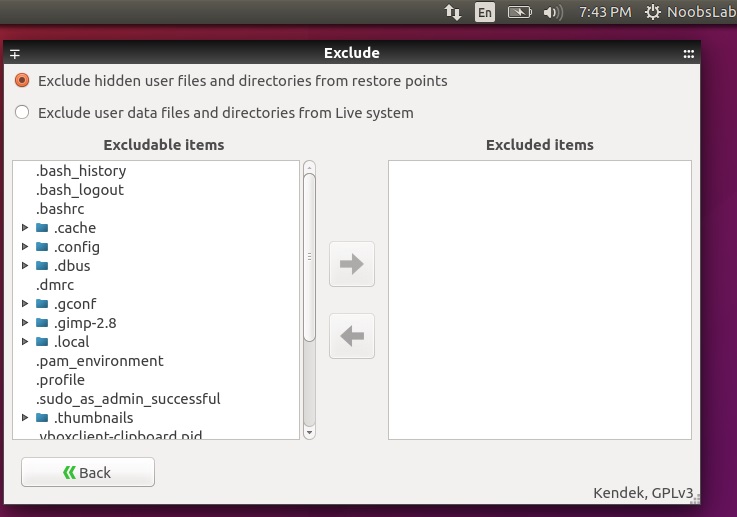

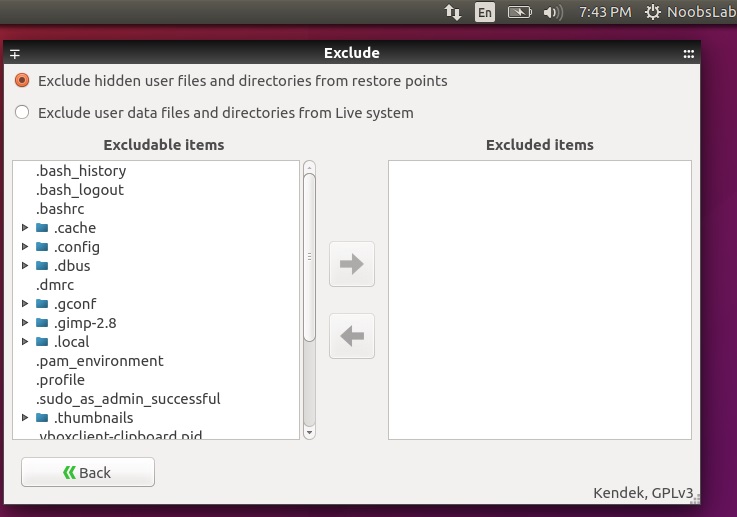

使用 SystemBack 备份/还原你的 Ubuntu/Linux Mint

|

||||

=================================================

|

||||

|

||||

对于任何一款允许用户还原电脑到之前状态(包括文件系统,安装的应用,以及系统设置)的操作系统来说,系统还原功能都是必备功能,它可以恢复系统故障以及其他的问题。

|

||||

|

||||

有的时候安装一个程序或者驱动可能让你的系统黑屏。系统还原则可以让你电脑里面的系统文件(LCTT 译注:是系统文件,并非普通文件,详情请看**注意**部分)和程序恢复到之前工作正常时候的状态,进而让你远离那让人头痛的排障过程了,而且它也不会影响你的文件,照片或者其他数据。

|

||||

|

||||

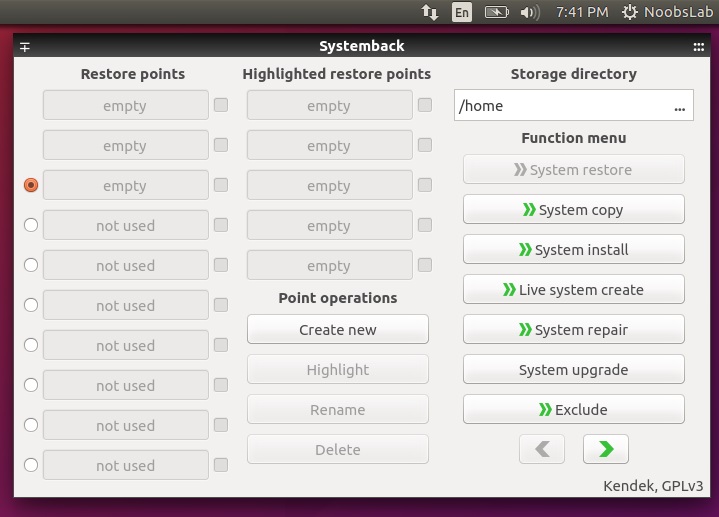

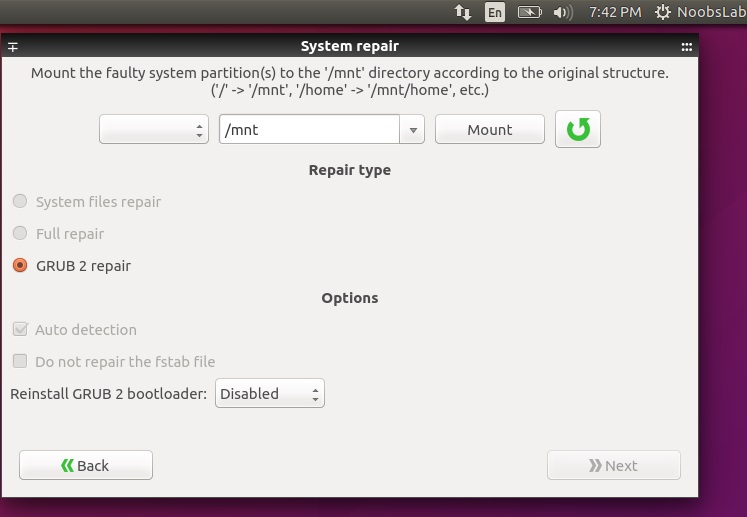

简单的系统备份还原工具 [Systemback](https://launchpad.net/systemback) 可以让你很容易地创建系统备份以及用户配置文件。一旦遇到问题,你可以简单地恢复到系统先前的状态。它还有一些额外的特征包括系统复制,系统安装以及Live系统创建。

|

||||

|

||||

**截图**

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

**注意**:使用系统还原不会还原你自己的文件、音乐、电子邮件或者其他任何类型的私人文件。对不同用户来讲,这既是优点又是缺点。坏消息是它不会还原你意外删除的文件,不过你可以通过一个文件恢复程序来解决这个问题。如果你的计算机没有创建还原点,那么系统恢复就无法奏效,所以这个工具就无法帮助你(还原系统),如果你尝试恢复一个主要问题,你将需要移步到另外的步骤来进行故障排除。

|

||||

|

||||

> 适用于 Ubuntu 15.10 Wily/16.04/15.04 Vivid/14.04 Trusty/Linux Mint 14.x/其他Ubuntu衍生版,打开终端,将下面这些命令复制过去:

|

||||

|

||||

终端命令:

|

||||

|

||||

```

|

||||

sudo add-apt-repository ppa:nemh/systemback

|

||||

sudo apt-get update

|

||||

sudo apt-get install systemback

|

||||

|

||||

```

|

||||

|

||||

大功告成。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.noobslab.com/2015/11/backup-system-restore-point-your.html

|

||||

|

||||

译者:[DongShuaike](https://github.com/DongShuaike)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://launchpad.net/systemback

|

||||

@ -6,18 +6,20 @@

|

||||

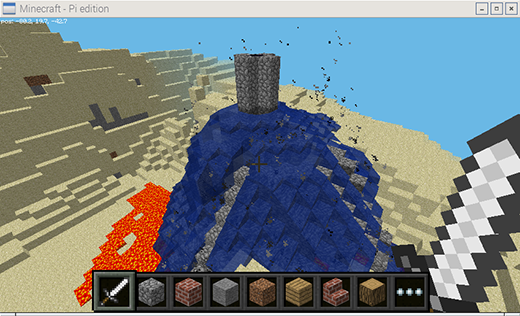

### 1. 我的世界: Pi

|

||||

|

||||

|

||||

>源于 Raspberry Pi 基金会. [CC BY-SA 4.0][1].

|

||||

|

||||

我的世界是世界上几乎每一个青少年都特别喜欢的一款游戏,而且它成功抓住了年轻人眼球,成为目前最能激发年轻人创造力的游戏之一。这个树莓派版本自带的我的世界不仅仅是一个具有创造性的建筑游戏,还是一个具有编程接口,可以通过 Python 与之交互的版本。

|

||||

*源于 Raspberry Pi 基金会. [CC BY-SA 4.0][1]*

|

||||

|

||||

“我的世界”是世界上几乎每一个青少年都特别喜欢的一款游戏,而且它成功抓住了年轻人眼球,成为目前最能激发年轻人创造力的游戏之一。这个树莓派版本自带的我的世界不仅仅是一个具有创造性的建筑游戏,还是一个具有编程接口,可以通过 Python 与之交互的版本。

|

||||

|

||||

我的世界:Pi 版对于老师来说是一个教授学生解决问题和编写代码完成任务的好方式。你可以使用 Python API 创建一个房子,并且一直跟随这你的脚步移动,在所到之处建造一座桥,让天空落下熔岩雨滴,在空中显示温度,以及其它你可以想象到的一切东西。

|

||||

|

||||

详情请见 "[Getting Started with Minecraft Pi][2]."

|

||||

详情请见 "[我的世界: Pi 入门][2]"

|

||||

|

||||

### 2. 反应游戏和交通灯

|

||||

|

||||

|

||||

>源于 [Low Voltage Labs][3]. [CC BY-SA 4.0][1].

|

||||

|

||||

*源于 [Low Voltage Labs][3]. [CC BY-SA 4.0][1]*

|

||||

|

||||

使用树莓派可以很轻松地进行物理计算,只需要连接几个 LED 和按钮到开发板上的 GPIO 接口,再用几行代码你就可以按下按钮来开灯。一旦你了解了如何使用代码来完成这些基本的操作,接下来就可以根据你的想象来做其它事情了。

|

||||

|

||||

@ -26,7 +28,8 @@

|

||||

代码并不是全部——这只是一个演练,让你理解现实世界里系统是如何完成设计的。计算思维是一个让你终身受用的技能。

|

||||

|

||||

|

||||

>源于 Raspberry Pi 基金会. [CC BY-SA 4.0][1].

|

||||

|

||||

*源于 Raspberry Pi 基金会. [CC BY-SA 4.0][1]*

|

||||

|

||||

接下来试着接通两个按钮和 LED 灯的电源,实现一个双玩家的反应游戏 —— 让 LED 灯随机时间点亮,然后看是谁抢先按下按钮。

|

||||

|

||||

@ -38,25 +41,25 @@ Astro Pi —— 一个增强版的树莓派 —— 将在 12 月问世,但是

|

||||

|

||||

[video](https://youtu.be/gfRDFvEVz-w)

|

||||

|

||||

>详见 "[探索 Sense HAT][9]."

|

||||

> 详见 "[探索 Sense HAT][9]."

|

||||

|

||||

### 4. 红外鸟笼

|

||||

|

||||

|

||||

>源于 Raspberry Pi 基金会. [CC BY-SA 4.0][1].

|

||||

|

||||

*源于 Raspberry Pi 基金会. [CC BY-SA 4.0][1]*

|

||||

|

||||

让整个班级都可以参与进来的好主意是在鸟笼里放置一个树莓派和夜视镜头,以及一些红外线灯,这样子你就可以在黑暗中看见鸟笼里的情况了,然后使用树莓派通过网络串流视频。然后就可以等待小鸟归笼了,你可以在不打扰的情况下近距离观察小窝里的它们了。

|

||||

|

||||

要了解更多有关红外线和光谱的知识,以及如何校准摄像头焦点和使用软件控制摄像头,可以访问 [Make an infrared bird box][10]。

|

||||

|

||||

|

||||

要了解更多有关红外线和光谱的知识,以及如何校准摄像头焦点和使用软件控制摄像头,可以访问 [打造一个红外鸟笼][10]。

|

||||

|

||||

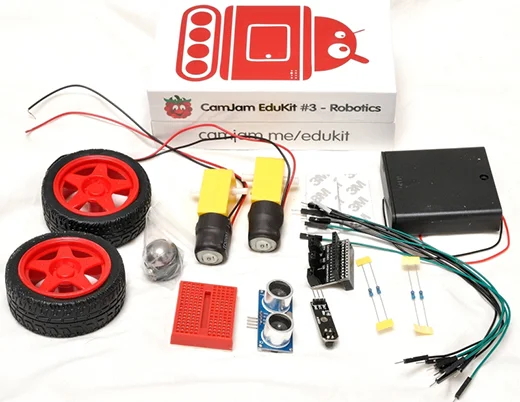

### 5. 机器人

|

||||

|

||||

|

||||

>源于 Raspberry Pi 基金会. [CC BY-SA 4.0][1].

|

||||

|

||||

只需要一个树莓派、很少的几个电机和电机控制器,你就可以自己动手制作一个机器人。可以制作的机器人有很多种,从简单的由几个轮子和自制底盘拼凑的简单小车,到由游戏控制器驱动、具有自我意识、配备了传感器,安装了摄像头的金属种马。

|

||||

*源于 Raspberry Pi 基金会. [CC BY-SA 4.0][1]*

|

||||

|

||||

只需要一个树莓派、很少的几个电机和电机控制器,你就可以自己动手制作一个机器人。可以制作的机器人有很多种,从简单的由几个轮子和自制底盘拼凑的简单小车,到由游戏控制器驱动、具有自我意识、配备了传感器,安装了摄像头的金属小马。

|

||||

|

||||

要学习如何控制不同的电机,可以使用 RTK 电机驱动开发板入门或者使用配置了电机、轮子和传感器的 CamJam 机器人开发套件——具有很大的价值和大量的学习潜力。

|

||||

|

||||

200

published/20160225 The Tao of project management.md

Normal file

200

published/20160225 The Tao of project management.md

Normal file

@ -0,0 +1,200 @@

|

||||

《道德经》之项目管理

|

||||

=================================

|

||||

|

||||

|

||||

|

||||

|

||||

[道德经][1],[被认为][2]是由圣人[老子][3]于公元前六世纪时所编写,是现存最为广泛翻译的经文之一。从[宗教][4]到[关于约会的有趣电影][5]等方方面面,它都深深地影响着它们,作者们借用它来做隐喻,以解释各种各样的事情(甚至是[编程][6])。

|

||||

|

||||

在思考有关开放性组织的项目管理时,我的脑海中便立马浮现出上面的这段文字。

|

||||

|

||||

这听起来可能会有点奇怪。若要理解我的这种想法从何而来,你应该读读 *《开放性组织:点燃激情提升执行力》* 这本书,它是红帽公司总裁、首席执行官 Jim Whitehurst 所写的一本有关企业文化和新领导力范式的宣言。在这本书中,Jim(还有来自其他红帽人的一点帮助)解释了传统组织机构(一种 “自上而下” 的方式,来自高层的决策被传达到员工,而员工通过晋升和薪酬来激励)和开放性组织机构(一种 自下而上 的方式,领导专注于激励和鼓励,员工被充分授权以各尽其能)之间的差异。

|

||||

|

||||

在开放性组织中的员工都是被激情、目标和参与感所激励,这个观点正是我认为项目管理者所应该关注的。

|

||||

|

||||

要解释这一切,我将从*道德经*上寻找依据。

|

||||

|

||||

### 不要让工作职衔框住自身

|

||||

|

||||

> 道,可道也,(The tao that can be told)

|

||||

|

||||

> 非恒道也。(is not the eternal Tao)

|

||||

|

||||

> 名,可名也,(The name that can be named)

|

||||

|

||||

> 非恒名也。(is not the eternal Name.)

|

||||

|

||||

> “无”,名天地之始;(The unnameable is the eternally real.)

|

||||

|

||||

> “有”,名万物之母。(Naming is the origin of all particular things.)

|

||||

[[1]][7]

|

||||

|

||||

项目管理到底是什么?作为一个项目管理者应该做些什么呢?

|

||||

|

||||

如您所想,项目管理者的一部分工作就是管理项目:收集需求、与项目相关人员沟通、设置项目优先级、安排任务、帮助团队解决困扰。许多机构都可以教你如何做好项目管理,并且这些技能你值得拥有。

|

||||

|

||||

然而,在开放性组织中,字面上的项目管理技能仅仅只是项目管理者需要做到的一小部分,这些组织需要更多其他的东西:即勇气。如果你擅长于管理项目(或者是真的擅长于任何工作),那么你就进入了舒适区。这时候就是需要鼓起勇气开始尝试冒险之时。

|

||||

|

||||

您有勇气跨出舒适区吗?向权威人士提出挑战性的问题,可能会引发对方的不快,但也可能会开启一个更好的方法,您有勇气这样做吗?有确定需要做的下一件事,然后真正去完成它的勇气吗?有主动去解决因为交流的鸿沟而遗留下来的问题的勇气吗?有去尝试各种事情的勇气吗?有失败的勇气吗?

|

||||

|

||||

道德经的开篇(上面引用的)就表明词语(words)、标签(labels)、名字(names)这些是有限制的,当然也包括工作职衔。在开放性组织中,项目经理不仅仅是执行管理项目所需的机械任务,而且要帮助团队完成组织的使命,尽管这已经被限定了。

|

||||

|

||||

### 联系起合适的人

|

||||

|

||||

> 三十辐共一轂,(We join spokes together in a wheel,)

|

||||

|

||||

> 当其无,(but it is the center hole)

|

||||

|

||||

> 有车之用。(that makes the wagon move.)

|

||||

[[11]][8]

|

||||

|

||||

当我过渡到项目管理的工作时,我必须学会的最为困难的一课是:并不是所有解决方案都是可完全地接受,甚至有的连预期都达不到。这对我来说是全新的一页。我*喜欢*全部都能解决。但作为项目管理者,我的角色更多的是与人沟通--使得那些确实有解决方案的人可以更高效地合作。

|

||||

|

||||

这并不是逃避责任或者不负责。这意味着可以很舒适的说,“我不知道,但我会给你找出答案”,然后就可迅速地结束这个循环。

|

||||

|

||||

想像一下马车的车轮,如果没有毂中的孔洞所提供的稳定性和方向,辐条便会失去支持,车轮也会散架。在一个开放性的组织中,项目管理者可以通过把合适的人凝聚在一起,培养正确的讨论话题来帮助团队保持持续向前的动力。

|

||||

|

||||

### 信任你的团队

|

||||

|

||||

>太上,不知有之;(When the Master governs, the people

|

||||

are hardly aware that he exists.)

|

||||

|

||||

> 其次,亲而誉之;(Next best is a leader who is loved.)

|

||||

|

||||

> 其次,畏之;(Next, one who is feared.)

|

||||

|

||||

> 其次,侮之。(The worst is one who is despised.)

|

||||

|

||||

>信不足焉,(If you don't trust the people,)

|

||||

|

||||

>有不信焉。(you make them untrustworthy.)

|

||||

|

||||

>悠兮,其贵言。(The Master doesn't talk, he acts.)

|

||||

|

||||

> 功成事遂,(When his work is done,)

|

||||

|

||||

> 百姓皆谓:“我自然”。(the people say, "Amazing:

|

||||

we did it, all by ourselves!")

|

||||

[[17]][9]

|

||||

|

||||

[Rebecca Fernandez][10] 曾经告诉我开放性组织的领导与其它组织的领导者最大的不同点在于,我们不是去取得别人的信任,而是信任别人。

|

||||

|

||||

开放性组织会雇佣那些非常聪明的,且对公司正在做的事情充满激情的人来做工作。为了能使他们能更好的工作,我们会提供其所需,并尊重他们的工作方式。

|

||||

|

||||

至于原因,我认为从道德经中摘出的上面一段就说的很清楚。

|

||||

|

||||

### 顺其自然

|

||||

|

||||

>上德无为而无以为;(The Master does nothing

|

||||

yet he leaves nothing undone.)

|

||||

|

||||

>下德为之而有以为。(The ordinary man is always doing things,

|

||||

yet many more are left to be done.)

|

||||

[[38]][11]

|

||||

|

||||

你认识那类总是极其忙碌的人吗?认识那些因为有太多事情要做而看起来疲倦和压抑的人吗?

|

||||

|

||||

不要成为那样的人。

|

||||

|

||||

我知道说比做容易。帮助我没有成为那类人的最重要的东西是:我时刻记着*大家都很忙*这件事。我没有一个那样无聊的同事。

|

||||

|

||||

但总需要有人成为在狂风暴雨中仍保持镇定的人。总需要有人能够宽慰团队告诉他们一切都会好起来,我们将在现实和一天中工作时间有限的情况下,找到方法使得任务能够完成(因为事实就是这样的,而且我们必须这样)。

|

||||

|

||||

成为那样的人吧。

|

||||

|

||||

对于上面这段道德经所说的,我的理解是那些总是谈论他或她正在做什么的人实际上并*没有时间*去做他们谈论的事。如果相比于你周围的人,你能把你的工作做的毫不费劲,那就说明你的工作做对了。

|

||||

|

||||

### 做一名文化传教士

|

||||

|

||||

>上士闻道,(When a superior man hears of the Tao,)

|

||||

|

||||

> 勤而行之;(he immediately begins to embody it.)

|

||||

|

||||

> 中士闻道,(When an average man hears of the Tao,)

|

||||

|

||||

>若存若亡;(he half believes it, half doubts it.)

|

||||

|

||||

> 下士闻道,(When a foolish man hears of the Tao,)

|

||||

|

||||

> 大笑之。(he laughs out loud.)

|

||||

|

||||

> 不笑不足以為道。(If he didn't laugh,it wouldn't be the Tao.)

|

||||

[[41]][12]

|

||||

|

||||

去年秋天,我和一群联邦雇员参加了一堂 MBA 的商业准则课程。当我开始介绍我们公司的文化、价值和伦理框架时,我得到的直接印象是:我的同学和教授都认为我就像一个天真可爱的小姑娘,做着许多关于公司应该如何运作的[甜美白日梦][13]。他们告诉我事情不可能是他们看起来的那样,他们还告诉我应该进一步考察。

|

||||

|

||||

所以我照做了。

|

||||

|

||||

然而我发现的是:事情*恰好*是他们看起来的那样。

|

||||

|

||||

在开放性组织,关于企业文化,人们应该随着企业的成长而时时维护那些文化,以使它随时精神焕发,充满斗志。我(和其它开源组织的成员)并不想过着如我同学们所描述的那样,“为生活而工作”。我需要有激情、有目标,需要明白自己的日常工作是如何对那些我所坚信的东西做贡献的。

|

||||

|

||||

作为一个项目管理者,你可能会认为在你的团队中,你的工作对培养你们公司的企业文化没有多少帮助。然而你的工作正是孕育文化本身。

|

||||

|

||||

### Kaizen (持续改善)

|

||||

|

||||

>为学日益,(In pursuit of knowledge,every day something is added.)

|

||||

|

||||

> 为道日损。(In the practice of the Tao,every day something is dropped.)

|

||||

|

||||

> 损之又损,(Less and less do you need to force things,)

|

||||

|

||||

> 以至于无为。(until finally you arrive at non-action. )

|

||||

|

||||

> 无为而无不为。(When nothing is done,nothing is left undone.)

|

||||

[[48]][14]

|

||||

|

||||

项目管理的常规领域都太过于专注最新、最强大的的工具,但对于应该使用哪种工具,这个问题的答案总是一致的:“最简单的”。

|

||||

|

||||

例如,我将任务列表放在桌面的一个文本文件中,因为它很单纯,不会受到不必要的干扰。您想介绍给团队的,无论是何种工具、流程和程序都应该是能提高效率,排除障碍的,而不是引入额外的复杂性。所以与其专注于工具,还不如专注于要使用这些工具来解决的*问题*。

|

||||

|

||||

作为一个项目经理,我最喜爱的部分是在敏捷世界中,我有自由抛弃那些没有成效的东西的权利。这与 [kaizen][16] 的概念相关,或叫 “持续改进”。不要害怕尝试和失败。失败是我们在探索什么能够起作用,什么不能起作用的过程中所用的标签,这是提高的唯一方式。

|

||||

|

||||

最好的过程都不是一蹴而就的。作为项目管理者,你应该通过支持他们,而不是强迫他们去做某些事来帮助你的团队。

|

||||

|

||||

### 实践

|

||||

|

||||

>天下皆谓我"道"大,(Some say that my teaching is nonsense.)

|

||||

|

||||

> 似不肖。(Others call it lofty but impractical.)

|

||||

|

||||

> 夫唯大,(But to those who have looked inside themselves,)

|

||||

|

||||

> 故似不肖。(this nonsense makes perfect sense.)

|

||||

|

||||

>若肖,(And to those who put it into practice,)

|

||||

|

||||

> 久矣其细也夫!(this loftiness has roots that go deep.)

|

||||

[[67]][15]

|

||||

|

||||

我相信开放性组织正在做的事。开放性组织在管理领域的工作几乎与他们提供的产品和服务一样重要。我们有机会以身作则,激发他人的激情和目的,创造激励和充分授权的工作环境。

|

||||

|

||||

我鼓励你们找到办法把这些想法融入到自己的项目和团队中,看看会发生什么。了解你们组织的使命,知晓你的项目是如何为这个使命做贡献的。鼓起勇气,尝试某些看起来没有多少成效的事,同时不要忘记和我们的社区分享你所学到的经验,这样我们就可以继续改进。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/open-organization/16/2/tao-project-management

|

||||

|

||||

作者:[Allison Matlack][a]

|

||||

译者:[runningwater](https://github.com/runningwater)

|

||||

校对:[FSSlc](https://github.com/FSSlc)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/amatlack

|

||||

[1]: http://acc6.its.brooklyn.cuny.edu/~phalsall/texts/taote-v3.html

|

||||

[2]: https://en.wikipedia.org/wiki/Tao_Te_Ching

|

||||

[3]: http://plato.stanford.edu/entries/laozi/

|

||||

[4]: https://en.wikipedia.org/wiki/Taoism

|

||||

[5]: http://www.imdb.com/title/tt0234853/

|

||||

[6]: http://www.mit.edu/~xela/tao.html

|

||||

[7]: http://acc6.its.brooklyn.cuny.edu/~phalsall/texts/taote-v3.html#1

|

||||

[8]: http://acc6.its.brooklyn.cuny.edu/~phalsall/texts/taote-v3.html#11

|

||||

[9]: http://acc6.its.brooklyn.cuny.edu/~phalsall/texts/taote-v3.html#17

|

||||

[10]: https://opensource.com/users/rebecca

|

||||

[11]: http://acc6.its.brooklyn.cuny.edu/~phalsall/texts/taote-v3.html#38

|

||||

[12]: http://acc6.its.brooklyn.cuny.edu/~phalsall/texts/taote-v3.html#41

|

||||

[13]: https://opensource.com/open-organization/15/9/reflections-open-organization-starry-eyed-dreamer

|

||||

[14]: http://acc6.its.brooklyn.cuny.edu/~phalsall/texts/taote-v3.html#48

|

||||

[15]: http://acc6.its.brooklyn.cuny.edu/~phalsall/texts/taote-v3.html#67

|

||||

[16]: https://www.kaizen.com/about-us/definition-of-kaizen.html

|

||||

@ -0,0 +1,48 @@

|

||||

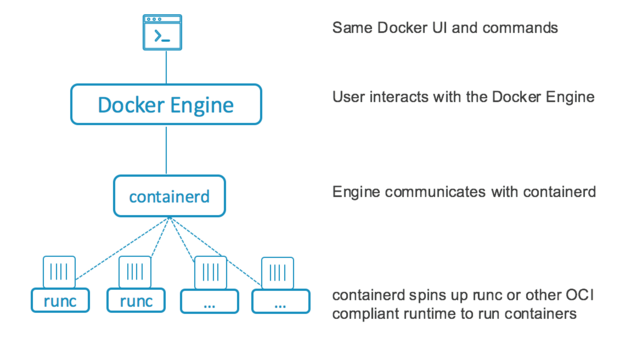

Docker 1.11 采纳了开源容器项目(OCP)组件

|

||||

=======================================================

|

||||

|

||||

|

||||

|

||||

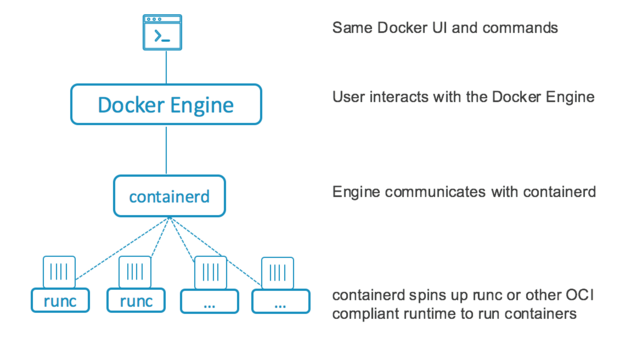

> Docker 在开放容器项目(Open Container Project,OCP)中的参与度达成圆满,最新构建的Docker采用了Docker 贡献给 OCP 的组件。

|

||||

|

||||

新发布的 [Docker 1.11][1] 的最大新闻并不是它的功能,而是它使用了在 OCP 支持下的标准化的组件版本。

|

||||

|

||||

去年,Docker 贡献了它的 [runC][2] 核心给 OCP 作为构建构建容器工具的基础。同样还有 [containerd][3],作为守护进程或者服务端用于控制 runC 的实例。Docker 1.11 现在使用的就是这个捐赠和公开的版本。

|

||||

|

||||

|

||||

Docker 此举挑战了它的容器生态仍[主要由 Docker 自身决定][6]这个说法。它并不是为了作秀才将容器规范和运行时细节贡献给 OCP。它希望项目将来的开发越开放和广泛越好。

|

||||

|

||||

|

||||

|

||||

> Docker 1.11 已经用贡献给 OCP 的 runC 和 containerd 进行了重构。runC 如果需要的话可以换成另外一个。

|

||||

|

||||

runC 的[两位主要提交者][7]来自 Docker,但是来自 Virtuozzo(Parallels fame)、OpenShift、Project Atomic、华为、GE Healthcare、Suse Linux 也都是提交人员里面的常客。

|

||||

|

||||

Docker 1.11 中一个更明显的变化是先前 Docker runtime 在 Docker 中是唯一可用的,并且评论家认为这个会限制用户的选择。runC runtime 现在是可替换的;虽然 Docker 在发布时将 runC 作为默认引擎,但是任何兼容的引擎都可以用来替换它。(Docker 同样希望它可以不用杀死并重启现在运行的容器,但是这个作为今后的改进规划。)

|

||||

|

||||

Docker 正在将基于 OCP 的开发流程作为内部创建其产品的更好方式。在它发布 1.11 的[官方博客中称][8]:“将 Docker 切分成独立的工具意味着更专注的维护者,最终会有更好的软件质量。”

|

||||

|

||||

除了修复长期以来存在的问题和确保 Docker 的 runC/containerd 跟上步伐,Docker 还在 Docker 1.11 中加入了一些改进。Docker Engine 现在支持 VLAN 和 IPv6 服务发现,并且会自动在多个相同别名容器间执行 DNS 轮询负载均衡。

|

||||

|

||||

|

||||

------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.infoworld.com/article/3055966/open-source-tools/docker-111-adopts-open-container-project-components.html

|

||||

|

||||

作者:[Serdar Yegulalp][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://www.infoworld.com/author/Serdar-Yegulalp/

|

||||

[1]: https://blog.docker.com/2016/04/docker-engine-1-11-runc/

|

||||

[2]: http://runc.io/

|

||||

[3]: https://containerd.tools/

|

||||

[4]: http://www.infoworld.com/resources/16373/application-virtualization/the-beginners-guide-to-docker#tk.ifw-infsb

|

||||

[5]: http://www.infoworld.com/newsletters/signup.html#tk.ifw-infsb

|

||||

[6]: http://www.infoworld.com/article/2876801/application-virtualization/docker-reorganization-grows-up.html

|

||||

[7]: https://github.com/opencontainers/runc/graphs/contributors

|

||||

[8]: https://blog.docker.com/2016/04/docker-engine-1-11-runc/

|

||||

|

||||

|

||||

@ -1,35 +1,35 @@

|

||||

LFCS 系列第七讲: 通过 SysVinit、Systemd 和 Upstart 管理系统自启动进程和服务

|

||||

LFCS 系列第七讲:通过 SysVinit、Systemd 和 Upstart 管理系统自启动进程和服务

|

||||

================================================================================

|

||||

几个月前, Linux 基金会宣布 LFCS(Linux 基金会认证系统管理员) 认证诞生了,这个令人兴奋的新计划定位于让来自全球各地的初级到中级的 Linux 系统管理员得到认证。这其中包括维护已经在运行的系统和服务能力、第一手的问题查找和分析能力、以及决定何时向开发团队提交问题的能力。

|

||||

几个月前, Linux 基金会宣布 LFCS (Linux 基金会认证系统管理员) 认证诞生了,这个令人兴奋的新计划定位于让来自全球各地的初级到中级的 Linux 系统管理员得到认证。这其中包括维护已经在运行的系统和服务的能力、第一手的问题查找和分析能力、以及决定何时向开发团队提交问题的能力。

|

||||

|

||||

|

||||

|

||||

第七讲: Linux 基金会认证系统管理员

|

||||

*第七讲: Linux 基金会认证系统管理员*

|

||||

|

||||

下面的视频简要介绍了 Linux 基金会认证计划。

|

||||

|

||||

注:youtube 视频

|

||||

<iframe width="720" height="405" frameborder="0" allowfullscreen="allowfullscreen" src="//www.youtube.com/embed/Y29qZ71Kicg"></iframe>

|

||||

|

||||

这篇博文是 10 指南系列中的第七篇,在这篇文章中,我们会介绍如何管理 Linux 系统自启动进程和服务,这是 LFCS 认证考试要求的一部分。

|

||||

本讲是系列教程中的第七讲,在这篇文章中,我们会介绍如何管理 Linux 系统自启动进程和服务,这是 LFCS 认证考试要求的一部分。

|

||||

|

||||

### 管理 Linux 自启动进程 ###

|

||||

|

||||

Linux 系统的启动程序包括多个阶段,每个阶段由一个不同的组件表示。下面的图示简要总结了启动过程以及所有包括的主要组件。

|

||||

Linux 系统的启动程序包括多个阶段,每个阶段由一个不同的图示块表示。下面的图示简要总结了启动过程以及所有包括的主要组件。

|

||||

|

||||

|

||||

|

||||

Linux 启动过程

|

||||

*Linux 启动过程*

|

||||

|

||||

当你按下你机器上的电源键时, 存储在主板 EEPROM 芯片中的固件初始化 POST(通电自检) 检查系统硬件资源的状态。POST 结束后,固件会搜索并加载位于第一块可用磁盘上的 MBR 或 EFI 分区的第一阶段引导程序,并把控制权交给引导程序。

|

||||

当你按下你机器上的电源键时,存储在主板 EEPROM 芯片中的固件初始化 POST(通电自检) 检查系统硬件资源的状态。POST 结束后,固件会搜索并加载位于第一块可用磁盘上的 MBR 或 EFI 分区的第一阶段引导程序,并把控制权交给引导程序。

|

||||

|

||||

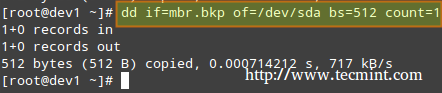

#### MBR 方式 ####

|

||||

|

||||

MBR 是位于 BISO 设置中标记为可启动磁盘上的第一个扇区,大小是 512 个字节。

|

||||

MBR 是位于 BIOS 设置中标记为可启动磁盘上的第一个扇区,大小是 512 个字节。

|

||||

|

||||

- 前面 446 个字节:包括可执行代码和错误信息文本的引导程序

|

||||

- 接下来的 64 个字节:四个分区(主分区或扩展分区)中每个分区一条记录的分区表。其中,每条记录标示了每个一个分区的状态(是否活跃)、大小以及开始和结束扇区。

|

||||

- 最后 2 个字节: MBR 有效性检查的魔数。

|

||||

- 最后 2 个字节: MBR 有效性检查的魔法数。

|

||||

|

||||

下面的命令对 MBR 进行备份(在本例中,/dev/sda 是第一块硬盘)。结果文件 mbr.bkp 在分区表被破坏、例如系统不可引导时能排上用场。

|

||||

|

||||

@ -41,7 +41,7 @@ MBR 是位于 BISO 设置中标记为可启动磁盘上的第一个扇区,大

|

||||

|

||||

|

||||

|

||||

在 Linux 中备份 MBR

|

||||

*在 Linux 中备份 MBR*

|

||||

|

||||

**恢复 MBR**

|

||||

|

||||

@ -49,7 +49,7 @@ MBR 是位于 BISO 设置中标记为可启动磁盘上的第一个扇区,大

|

||||

|

||||

|

||||

|

||||

在 Linux 中恢复 MBR

|

||||

*在 Linux 中恢复 MBR*

|

||||

|

||||

#### EFI/UEFI 方式 ####

|

||||

|

||||

@ -74,11 +74,11 @@ init 和 systemd 都是管理其它守护进程的守护进程(后台进程)

|

||||

|

||||

|

||||

|

||||

Systemd 和 Init

|

||||

*Systemd 和 Init*

|

||||

|

||||

### 自启动服务(SysVinit) ###

|

||||

|

||||

Linux 中运行等级的概念表示通过控制运行哪些服务来以不同方式使用系统。换句话说,运行等级控制着当前执行状态下可以完成什么任务(以及什么不能完成)。

|

||||

Linux 中运行等级通过控制运行哪些服务来以不同方式使用系统。换句话说,运行等级控制着当前执行状态下可以完成什么任务(以及什么不能完成)。

|

||||

|

||||

传统上,这个启动过程是基于起源于 System V Unix 的形式,通过执行脚本启动或者停止服务从而使机器进入指定的运行等级(换句话说,是一个不同的系统运行模式)。

|

||||

|

||||

@ -88,47 +88,17 @@ Linux 中运行等级的概念表示通过控制运行哪些服务来以不同

|

||||

|

||||

除了启动系统进程,init 还会查看 /etc/inittab 来决定进入哪个运行等级。

|

||||

|

||||

注:表格

|

||||

<table cellspacing="0" border="0">

|

||||

<colgroup width="85">

|

||||

</colgroup>

|

||||

<colgroup width="1514">

|

||||

</colgroup>

|

||||

<tbody>

|

||||

<tr>

|

||||

<td align="CENTER" height="18" style="border: 1px solid #000001;"><b>Runlevel</b></td>

|

||||

<td align="LEFT" style="border: 1px solid #000001;"><b> Description</b></td>

|

||||

</tr>

|

||||

<tr class="alt">

|

||||

<td align="CENTER" height="18" style="border: 1px solid #000001;">0</td>

|

||||

<td align="LEFT" style="border: 1px solid #000001;"> 停止系统。运行等级 0 是一个用于快速关闭系统的特殊过渡状态。</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td align="CENTER" height="20" style="border: 1px solid #000001;">1</td>

|

||||

<td align="LEFT" style="border: 1px solid #000001;"> 别名为 s 或 S,这个运行等级有时候也称为维护模式。在这个运行等级启动的服务由于发行版不同而不同。通常用于正常系统操作损坏时低级别的系统维护。</td>

|

||||

</tr>

|

||||

<tr class="alt">

|

||||

<td align="CENTER" height="18" style="border: 1px solid #000001;">2</td>

|

||||

<td align="LEFT" style="border: 1px solid #000001;"> 多用户。在 Debian 系统及其衍生版中,这是默认的运行等级,还包括了一个图形化登录(如果有的话)。在基于红帽的系统中,这是没有网络的多用户模式。</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td align="CENTER" height="18" style="border: 1px solid #000001;">3</td>

|

||||

<td align="LEFT" style="border: 1px solid #000001;"> 在基于红帽的系统中,这是默认的多用户模式,运行除了图形化环境以外的所有东西。基于 Debian 的系统中通常不会使用这个运行等级以及等级 4 和 5。</td>

|

||||

</tr>

|

||||

<tr class="alt">

|

||||

<td align="CENTER" height="18" style="border: 1px solid #000001;">4</td>

|

||||

<td align="LEFT" style="border: 1px solid #000001;"> 通常默认情况下不使用,可用于自定制。</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td align="CENTER" height="18" style="border: 1px solid #000001;">5</td>

|

||||

<td align="LEFT" style="border: 1px solid #000001;"> 基于红帽的系统中,支持 GUI 登录的完全多用户模式。这个运行等级和等级 3 类似,但是有可用的 GUI 登录。</td>

|

||||

</tr>

|

||||

<tr class="alt">

|

||||

<td align="CENTER" height="18" style="border: 1px solid #000001;">6</td>

|

||||

<td align="LEFT" style="border: 1px solid #000001;"> 重启系统。</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

|

||||

|Runlevel| Description|

|

||||

|--------|------------|

|

||||

|0|停止系统。运行等级 0 是一个用于快速关闭系统的特殊过渡状态。|

|

||||

|1|别名为 s 或 S,这个运行等级有时候也称为维护模式。在这个运行等级启动的服务由于发行版不同而不同。通常用于正常系统操作损坏时低级别的系统维护。|

|

||||

|2|多用户。在 Debian 系统及其衍生版中,这是默认的运行等级,还包括了一个图形化登录(如果有的话)。在基于红帽的系统中,这是没有网络的多用户模式。|

|

||||

|3|在基于红帽的系统中,这是默认的多用户模式,运行除了图形化环境以外的所有东西。基于 Debian 的系统中通常不会使用这个运行等级以及等级 4 和 5。|

|

||||

|4|通常默认情况下不使用,可用于自定制。|

|

||||

|5|基于红帽的系统中,支持 GUI 登录的完全多用户模式。这个运行等级和等级 3 类似,但是有可用的 GUI 登录。|

|

||||

|6|重启系统。|

|

||||

|

||||

|

||||

要在运行等级之间切换,我们只需要使用 init 命令更改运行等级:init N(其中 N 是上面列出的一个运行等级)。

|

||||

请注意这并不是运行中的系统切换运行等级的推荐方式,因为它不会给已经登录的用户发送警告(因而导致他们丢失工作以及进程异常终结)。

|

||||

@ -139,7 +109,7 @@ Linux 中运行等级的概念表示通过控制运行哪些服务来以不同

|

||||

|

||||

id:2:initdefault:

|

||||

|

||||

并用你喜欢的文本编辑器,例如 vim(本系列的[第二讲 : 如何在 Linux 中使用 vi/vim 编辑器][2]),更改数字 2 为想要的运行等级。

|

||||

并用你喜欢的文本编辑器,例如 vim(本系列的 [LFCS 系列第二讲:如何安装和使用纯文本编辑器 vi/vim][2]),更改数字 2 为想要的运行等级。

|

||||

|

||||

然后,以 root 用户执行

|

||||

|

||||

@ -149,7 +119,7 @@ Linux 中运行等级的概念表示通过控制运行哪些服务来以不同

|

||||

|

||||

|

||||

|

||||

在 Linux 中更改运行等级

|

||||

*在 Linux 中更改运行等级*

|

||||

|

||||

#### 使用 chkconfig 管理服务 ####

|

||||

|

||||

@ -165,7 +135,7 @@ Linux 中运行等级的概念表示通过控制运行哪些服务来以不同

|

||||

|

||||

|

||||

|

||||

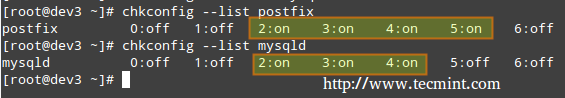

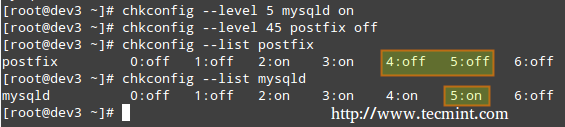

列出运行等级配置

|

||||

*列出运行等级配置*

|

||||

|

||||

从上图中我们可以看出,当系统进入运行等级 2 到 5 的时候就会启动 postfix,而默认情况下运行等级 2 到 4 时会运行 mysqld。现在假设我们并不希望如此。

|

||||

|

||||

@ -183,7 +153,7 @@ Linux 中运行等级的概念表示通过控制运行哪些服务来以不同

|

||||

|

||||

|

||||

|

||||

启用/停用服务

|

||||

*启用/停用服务*

|

||||

|

||||

我们在基于 Debian 的系统中使用 sysv-rc-conf 完成类似任务。

|

||||

|

||||

@ -196,29 +166,29 @@ Linux 中运行等级的概念表示通过控制运行哪些服务来以不同

|

||||

# ls -l /etc/rc[0-6].d | grep -E 'rc[0-6]|mdadm'

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

查看运行中服务的运行等级

|

||||

*查看运行中服务的运行等级*

|

||||

|

||||

2. 我们使用 sysv-rc-conf 设置防止 mdadm 在运行等级2 之外的其它等级启动。只需根据需要(你可以使用上下左右按键)选中或取消选中(通过空格键)。

|

||||

|

||||

# sysv-rc-conf

|

||||

|

||||

|

||||

|

||||

|

||||

Sysv 运行等级配置

|

||||

*Sysv 运行等级配置*

|

||||

|

||||

然后输入 q 退出。

|

||||

然后输入 q 退出。

|

||||

|

||||

3. 重启系统并从步骤 1 开始再操作一遍。

|

||||

|

||||

# ls -l /etc/rc[0-6].d | grep -E 'rc[0-6]|mdadm'

|

||||

|

||||

|

||||

|

||||

|

||||

验证服务运行等级

|

||||

*验证服务运行等级*

|

||||

|

||||

从上图中我们可以看出 mdadm 配置为只在运行等级 2 上启动。

|

||||

从上图中我们可以看出 mdadm 配置为只在运行等级 2 上启动。

|

||||

|

||||

### 那关于 systemd 呢? ###

|

||||

|

||||

@ -232,11 +202,11 @@ systemd 是另外一个被多种主流 Linux 发行版采用的服务和系统

|

||||

|

||||

|

||||

|

||||

查看运行中的进程

|

||||

*查看运行中的进程*

|

||||

|

||||

LOAD 一列显示了单元(UNIT 列,显示服务或者由 systemd 维护的其它进程)是否正确加载,ACTIVE 和 SUB 列则显示了该单元当前的状态。

|

||||

|

||||

显示服务当前状态的信息

|

||||

**显示服务当前状态的信息**

|

||||

|

||||

当 ACTIVE 列显示某个单元状态并非活跃时,我们可以使用以下命令查看具体原因。

|

||||

|

||||

@ -248,7 +218,7 @@ LOAD 一列显示了单元(UNIT 列,显示服务或者由 systemd 维护的

|

||||

|

||||

|

||||

|

||||

查看服务状态

|

||||

*查看服务状态*

|

||||

|

||||

我们可以看到 media-samba.mount 失败的原因是 host dev1 上的挂载进程无法找到 //192.168.0.10/gacanepa 上的共享网络。

|

||||

|

||||

@ -262,9 +232,9 @@ LOAD 一列显示了单元(UNIT 列,显示服务或者由 systemd 维护的

|

||||

# systemctl restart media-samba.mount

|

||||

# systemctl status media-samba.mount

|

||||

|

||||

|

||||

|

||||

|

||||

启动停止服务

|

||||

*启动停止服务*

|

||||

|

||||

**启用或停用某服务随系统启动**

|

||||

|

||||

@ -278,7 +248,7 @@ LOAD 一列显示了单元(UNIT 列,显示服务或者由 systemd 维护的

|

||||

|

||||

|

||||

|

||||

启用或停用服务

|

||||

*启用或停用服务*

|

||||

|

||||

你也可以用下面的命令查看某个服务的当前状态(启用或者停用)。

|

||||

|

||||

@ -358,13 +328,13 @@ via: http://www.tecmint.com/linux-boot-process-and-manage-services/

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/gacanepa/

|

||||

[1]:http://www.tecmint.com/systemd-replaces-init-in-linux/

|

||||

[2]:http://www.tecmint.com/vi-editor-usage/

|

||||

[2]:https://linux.cn/article-7165-1.html

|

||||

[3]:http://www.tecmint.com/chkconfig-command-examples/

|

||||

[4]:http://www.tecmint.com/remove-unwanted-services-from-linux/

|

||||

[5]:http://www.tecmint.com/chkconfig-command-examples/

|

||||

@ -0,0 +1,38 @@

|

||||

Linux will be the major operating system of 21st century cars

|

||||

===============================================================

|

||||

|

||||

>Cars are more than engines and good looking bodies. They're also complex computing devices so, of course, Linux runs inside them.

|

||||

|

||||

Linux doesn't just run your servers and, via Android, your phones. It also runs your cars. Of course, no one has ever bought a car for its operating system. But Linux is already powering the infotainment, heads-up display and connected car 4G and Wi-Fi systems for such major car manufacturers as Toyota, Nissan, and Jaguar Land Rover and [Linux is on its way to Ford][1], Mazda, Mitsubishi, and Subaru cars.

|

||||

|

||||

|

||||

>All the Linux and open-source car software efforts have now been unified under the Automotive Grade Linux project.

|

||||

|

||||

Software companies are also getting into this Internet of mobile things act. Movimento, Oracle, Qualcomm, Texas Instruments, UIEvolution and VeriSilicon have all [joined the Automotive Grade Linux (AGL)][2] project. The [AGL][3] is a collaborative open-source project devoted to creating a common, Linux-based software stack for the connected car.

|

||||

|

||||

AGL has seen tremendous growth over the past year as demand for connected car technology and infotainment are rapidly increasing," said Dan Cauchy, the Linux Foundation's General Manager of Automotive, in a statement.

|

||||

|

||||

Cauchy continued, "Our membership base is not only growing rapidly, but it is also diversifying across various business interests, from semiconductors and in-vehicle software to IoT and connected cloud services. This is a clear indication that the connected car revolution has broad implications across many industry verticals."

|

||||

|

||||

These companies have joined after AGL's recent announcement of a new AGL Unified Code Base (UCB). This new Linux distribution is based on AGL and two other car open-source projects: [Tizen][4] and the [GENIVI Alliance][5]. UCB is a second-generation car Linux. It was built from the ground up to address automotive specific applications. It handles navigation, communications, safety, security and infotainment functionality,

|

||||

|

||||

"The automotive industry needs a standard open operating system and framework to enable automakers and suppliers to quickly bring smartphone-like capabilities to the car," said Cauchy. "This new distribution integrates the best components from AGL, Tizen, GENIVI and related open-source code into a single AGL Unified Code Base, allowing car-makers to leverage a common platform for rapid innovation. The AGL UCB distribution will play a huge role in the adoption of Linux-based systems for all functions in the vehicle."

|

||||

|

||||

He's right. Since its release in January 2016, four car companies and ten new software businesses have joined AGL. Esso, now Exxon, made the advertising slogan, "Put a tiger in your tank!" famous. I doubt that "Put a penguin under your hood" will ever become well-known, but that's exactly what's happening. Linux is well on its way to becoming the major operating system of 21st century cars.

|

||||

|

||||

------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.zdnet.com/article/the-linux-in-your-car-movement-gains-momentum/

|

||||

|

||||

作者:[Steven J. Vaughan-Nichols][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.zdnet.com/meet-the-team/us/steven-j-vaughan-nichols/

|

||||

[1]: https://www.automotivelinux.org/news/announcement/2016/01/ford-mazda-mitsubishi-motors-and-subaru-join-linux-foundation-and

|

||||

[2]: https://www.automotivelinux.org/news/announcement/2016/05/oracle-qualcomm-innovation-center-texas-instruments-and-others-support

|

||||

[3]: https://www.automotivelinux.org/

|

||||

[4]: https://www.tizen.org/

|

||||

[5]: http://www.genivi.org/

|

||||

@ -0,0 +1,52 @@

|

||||

eriwoon 翻译中 -- 2106-May-19

|

||||

Open source from a recruiter's perspective

|

||||

============================================

|

||||

|

||||

|

||||

|

||||

I fell in love with technology when I went to my first open source convention in 2012.

|

||||

|

||||

After spending years in recruiting, I decided to take a job specializing in big data at [Greythorn][1]. I had been trying to learn the ropes for a few months leading up to [OSCON][2], but going to the conference sped that process up like crazy. There were so many brilliant people all in one place, and everyone was willing to share what they knew. It wasn't because they were trying to sell me anything, but because they were all so passionate about what they were working on.

|

||||

|

||||

I soon realized that, in many ways, the open source and big data industry was less an industry and more of a community. That's why I now try to pay it forward and share what I've learned about open source with those who are just getting started in their careers.

|

||||

|

||||

### Why employers want open source contributors

|

||||

|

||||

Many clients tell me that although they want a candidate who has an exceptional technical mind, the ideal person should also really like this stuff. When you are passionate about something, you find yourself working on it even when you aren't getting paid.

|

||||

|

||||

My clients often ask, "Do they code in their spare time?" "Can I find their work anywhere?" "What do they really enjoy?" Open source contributors are often at an advantage because they check these boxes, and not only are their projects out in the open—so is the evidence of their coding proficiency.

|

||||

|

||||

#### Why recruiters search for open source contributors

|

||||

|

||||

Solid tech recruiters understand the technologies and roles they're recruiting for, and they're going to assess your skills accordingly. But I'll admit that many of us have found that the best candidates we've come across have a tendency to be involved in open source, so we often just start our search there. Recruiters provide value to clients when they find candidates who are motivated to work on a team to create something awesome, because that's basically the description of a top-performing employee.

|

||||

|

||||

It makes sense to me: When you take really smart people and give them the chance to be collaborative—for the sake of making something that works really well or may change the landscape of our everyday lives—it creates an energy that can be addictive.

|

||||

|

||||

### What open source contributors can do to build a happy career

|

||||

|

||||

There are obvious things you can do to leverage your open source work to build your career: Put your code on GitHub, participate in projects, go to conferences and join panels and workshops, etc. These are worthwhile, but more than anything you need to know what will make you happy in your work.

|

||||

|

||||

Ask yourself questions like...

|

||||

|

||||

* **Is it important to work for a company that gives back to the open source and software community?** I find that some of my best candidates insist on this, and it makes a huge difference in their job satisfaction.

|

||||

* **Do you want to work for a company that is based on open source?** The culture is often different in these environments, and it helps to know if that's where you think you'll fit best.

|

||||

* **Are there people you'd specifically like to work with?** Although you can always try to join the same projects, the odds of collaborating with and learning from someone you admire are better if your day jobs align at the same company.

|

||||

|

||||

Once you know your own career priorities, it's easier to filter out the jobs that won't move you closer to your goals—and if you're working with a recruiter, it helps them match you with the right employer and team.

|

||||

|

||||

Although I don't contribute code, I'll always share what I've learned with those who are working on their career in open source. This community is made up of supportive and smart people, and I love that I've been able to be a small part of it.

|

||||

|

||||

|

||||

------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/business/16/5/open-source-recruiters-perspective

|

||||

|

||||

作者:[Lindsey Thorne][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/lindsey-thorne

|

||||

[1]: http://www.greythorn.com/

|

||||

[2]: http://conferences.oreilly.com/oscon

|

||||

104

sources/talk/20160523 Driving cars into the future with Linux.md

Normal file

104

sources/talk/20160523 Driving cars into the future with Linux.md

Normal file

@ -0,0 +1,104 @@

|

||||

Driving cars into the future with Linux

|

||||

===========================================

|

||||

|

||||

|

||||

|

||||

I don't think much about it while I'm driving, but I sure do love that my car is equipped with a system that lets me use a few buttons and my voice to call my wife, mom, and children. That same system allows me to choose whether I listen to music streaming from the cloud, satellite radio, or the more traditional AM/FM radio. I also get weather updates and can direct my in-vehicle GPS to find the fastest route to my next destination. [In-vehicle infotainment][1], or IVI as it's known in the industry, has become ubiquitous in today's newest automobiles.

|

||||

|

||||

A while ago, I had to travel hundreds of miles by plane and then rent a car. Happily, I discovered that my rental vehicle was equipped with IVI technology similar to my own car. In no time, I was connected via Bluetooth, had uploaded my contacts into the system, and was calling home to let my family know I arrived safely and my hosts to let them know I was en route to their home.

|

||||

|

||||

In a recent [news roundup][2], Scott Nesbitt cited an article that said Ford Motor Company is getting substantial backing from a rival automaker for its open source [Smart Device Link][3] (SDL) middleware framework, which supports mobile phones. SDL is a project of the [GENIVI Alliance][4], a nonprofit committed to building middleware to support open source in-vehicle infotainment systems. According to [Steven Crumb][5], executive director of GENIVI, their [membership][6] is broad and includes Daimler Group, Hyundai, Volvo, Nissan, Honda, and 170 others.

|

||||

|

||||

In order to remain competitive in the industry, automotive companies need a middleware system that can support the various human machine interface technologies available to consumers today. Whether you own an Android, iOS, or other device, automotive OEMs want their units to be able to support these systems. Furthermore, these IVI systems must be adaptable enough to support the ever decreasing half-life of mobile technology. OEMs want to provide value and add services in their IVI stacks that will support a variety of options for their customers. Enter Linux and open source software.

|

||||

|

||||

In addition to GENIVI's efforts, the [Linux Foundation][7] sponsors the [Automotive Grade Linux][8] (AGL) workgroup, a software foundation dedicated to finding open source solutions for automotive applications. Although AGL will initially focus on IVI systems, they envision branching out to include [telematics][9], heads up displays, and other control systems. AGL has over 50 members at this time, including Jaguar, Toyota, and Nissan, and in a [recent press release][10] announced that Ford, Mazda, Mitsubishi, and Subaru have joined.

|

||||

|

||||

To find out more, we interviewed two leaders in this emerging field. Specifically, we wanted to know how Linux and open source software are being used and if they are in fact changing the face of the automotive industry. First, we talk to [Alison Chaiken][11], a software engineer at Peloton Technology and an expert on automotive Linux, cybersecurity, and transparency. She previously worked for Mentor Graphics, Nokia, and the Stanford Linear Accelerator. Then, we chat with [Steven Crumb][12], executive director of GENIVI, who got started in open source in high-performance computing environments (supercomputers and early cloud computing). He says that though he's not a coder anymore, he loves to help organizations solve real business problems with open source software.

|

||||

|

||||

### Interview with Alison Chaiken (by [Deb Nicholson][13])

|

||||

|

||||

#### How did you get interested in the automotive software space?

|

||||

|

||||

I was working on [MeeGo][14] in phones at Nokia in 2009 when the project was cancelled. I thought, what's next? A colleague was working on [MeeGo-IVI][15], an early automotive Linux distribution. "Linux is going to be big in cars," I thought, so I headed in that direction.

|

||||

|

||||

#### Can you tell us what aspects you're working on these days?

|

||||

|

||||

I'm currently working for a startup on an advanced cruise control system that uses real-time Linux to increase the safety and fuel economy of big-rig trucks. I love working in this area, as no one would disagree that trucking can be improved.

|

||||

|

||||

#### There have been a few stories about hacked cars in recent years. Can open source solutions help address this issue?

|

||||

|

||||

I presented a talk on precisely this topic, on how Linux can (and cannot) contribute to security solutions in automotive at Southern California Linux Expo 2016 ([Slides][16]). Notably, GENIVI and Automotive Grade Linux have published their code and both projects take patches via Git. Please send your fixes upstream! Many eyes make all bugs shallow.

|

||||

|

||||

#### Law enforcement agencies and insurance companies could find plenty of uses for data about drivers. How easy will it be for them to obtain this information?

|

||||

|

||||

Good question. The Dedicated Short Range Communication Standard (IEEE-1609) takes great pains to keep drivers participating in Wi-Fi safety messaging anonymous. Still, if you're posting to Twitter from your car, someone will be able to track you.

|

||||

|

||||