mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-13 22:30:37 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

62ab23bef3

@ -1,17 +1,17 @@

|

||||

在命令行使用 Pandoc 进行文件转换

|

||||

======

|

||||

|

||||

这篇指南介绍如何使用 Pandoc 将文档转换为多种不同的格式。

|

||||

> 这篇指南介绍如何使用 Pandoc 将文档转换为多种不同的格式。

|

||||

|

||||

|

||||

|

||||

Pandoc 是一个命令行工具,用于将文件从一种标记语言转换为另一种标记语言。标记语言使用标签来标记文档的各个部分。常用的标记语言包括 Markdown,ReStructuredText,HTML,LaTex,ePub 和 Microsoft Word DOCX。

|

||||

Pandoc 是一个命令行工具,用于将文件从一种标记语言转换为另一种标记语言。标记语言使用标签来标记文档的各个部分。常用的标记语言包括 Markdown、ReStructuredText、HTML、LaTex、ePub 和 Microsoft Word DOCX。

|

||||

|

||||

简单来说,[Pandoc][1] 允许你将一些文件从一种标记语言转换为另一种标记语言。典型的例子包括将 Markdown 文件转换为演示文稿,LaTeX,PDF 甚至是 ePub。

|

||||

简单来说,[Pandoc][1] 允许你将一些文件从一种标记语言转换为另一种标记语言。典型的例子包括将 Markdown 文件转换为演示文稿、LaTeX,PDF 甚至是 ePub。

|

||||

|

||||

本文将解释如何使用 Pandoc 从单一标记语言(在本文中为 Markdown)生成多种格式的文档,引导你完成从 Pandoc 安装,到展示如何创建多种类型的文档,再到提供有关如何编写易于移植到其他格式的文档的提示。

|

||||

|

||||

文中还将解释使用元信息文件对文档内容和元信息(例如,作者姓名,使用的模板,书目样式等)进行分离的意义。

|

||||

文中还将解释使用元信息文件对文档内容和元信息(例如,作者姓名、使用的模板、书目样式等)进行分离的意义。

|

||||

|

||||

### Pandoc 安装和要求

|

||||

|

||||

@ -25,7 +25,7 @@ sudo apt-get install pandoc pandoc-citeproc texlive

|

||||

|

||||

您可以在 Pandoc 的网站上找到其他平台的 [安装说明][2]。

|

||||

|

||||

我强烈建议安装 [pandoc][3][- crossref][3],这是一个“用于对图表,方程式,表格和交叉引用进行编号的过滤器”。最简单的安装方式是下载 [预构建的可执行文件][4],但也可以通过以下命令从 Haskell 的软件包管理器 cabal 安装它:

|

||||

我强烈建议安装 [pandoc-crossref][3],这是一个“用于对图表,方程式,表格和交叉引用进行编号的过滤器”。最简单的安装方式是下载 [预构建的可执行文件][4],但也可以通过以下命令从 Haskell 的软件包管理器 cabal 安装它:

|

||||

|

||||

```

|

||||

cabal update

|

||||

@ -38,15 +38,15 @@ cabal install pandoc-crossref

|

||||

|

||||

我将通过解释如何生成三种类型的文档来演示 Pandoc 的工作原理:

|

||||

|

||||

- 由包含数学公式的 LaTeX 文件创建的网站

|

||||

- 由包含数学公式的 LaTeX 文件创建的网页

|

||||

- 由 Markdown 文件生成的 Reveal.js 幻灯片

|

||||

- 混合 Markdown 和 LaTeX 的合同文件

|

||||

|

||||

#### 创建一个包含数学公式的网站

|

||||

|

||||

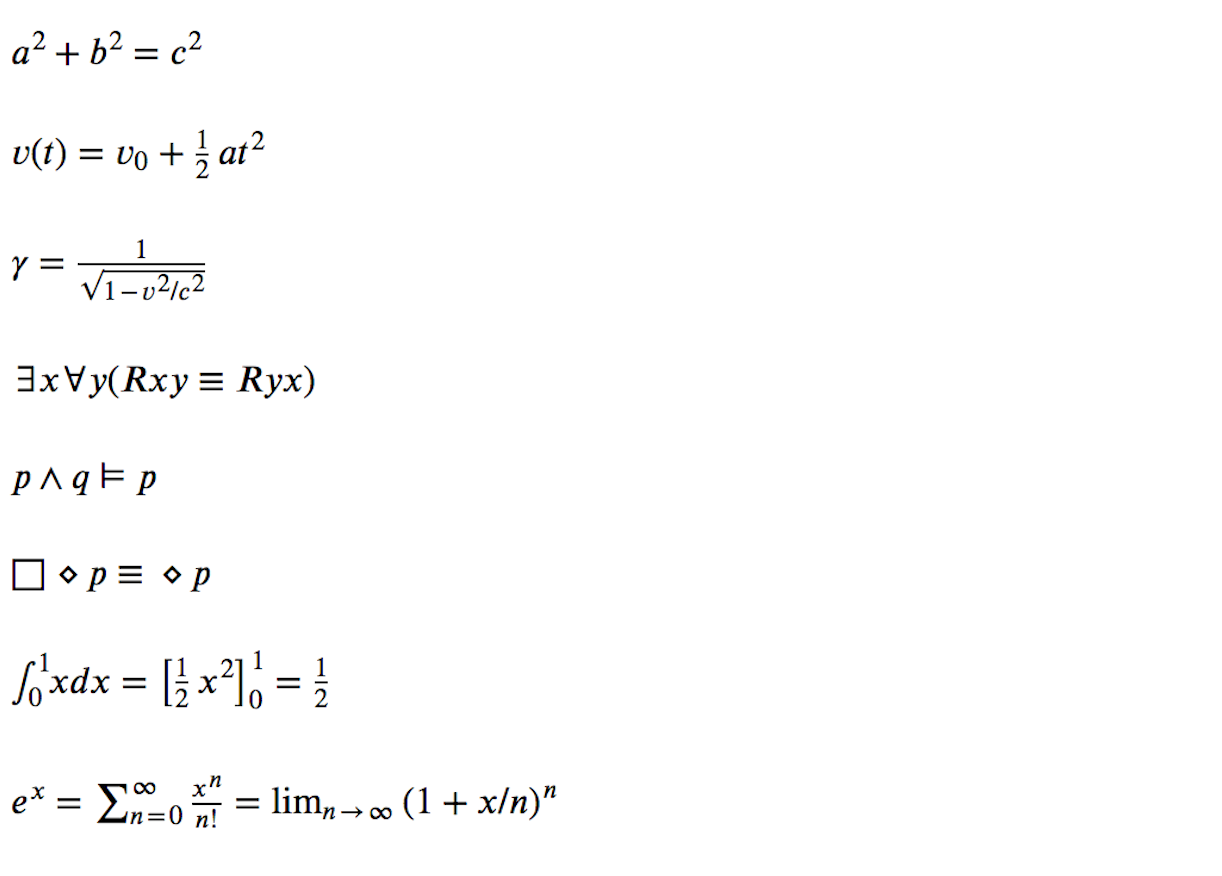

Pandoc 的优势之一是以不同的输出文件格式显示数学公式。例如,我们可以从包含一些数学符号(用 LaTeX 编写)的 LaTeX 文档(名为 math.tex)生成一个网站。

|

||||

Pandoc 的优势之一是以不同的输出文件格式显示数学公式。例如,我们可以从包含一些数学符号(用 LaTeX 编写)的 LaTeX 文档(名为 `math.tex`)生成一个网页。

|

||||

|

||||

math.tex 文档如下所示:

|

||||

`math.tex` 文档如下所示:

|

||||

|

||||

```

|

||||

% Pandoc math demos

|

||||

@ -68,23 +68,23 @@ $\int_{0}^{1} x dx = \left[ \frac{1}{2}x^2 \right]_{0}^{1} = \frac{1}{2}$

|

||||

$e^x = \sum_{n=0}^\infty \frac{x^n}{n!} = \lim_{n\rightarrow\infty} (1+x/n)^n$

|

||||

```

|

||||

|

||||

通过输入以下命令将 LaTeX 文档转换为名为 mathMathML.html 的网站:

|

||||

通过输入以下命令将 LaTeX 文档转换为名为 `mathMathML.html` 的网站:

|

||||

|

||||

```

|

||||

pandoc math.tex -s --mathml -o mathMathML.html

|

||||

```

|

||||

|

||||

参数 - s 告诉 Pandoc 生成一个独立的网站(而不是网站片段,因此它将包括 HTML 中的 head 和 body 标签),- mathml 参数强制 Pandoc 将 LaTeX 中的数学公式转换成 MathML,从而可以由现代浏览器进行渲染。

|

||||

参数 `-s` 告诉 Pandoc 生成一个独立的网页(而不是网页片段,因此它将包括 HTML 中的 head 和 body 标签),`-mathml` 参数强制 Pandoc 将 LaTeX 中的数学公式转换成 MathML,从而可以由现代浏览器进行渲染。

|

||||

|

||||

|

||||

|

||||

看一下 [网站效果][6] 和 [代码][7],代码仓库中的 Makefile 使得运行更加简单。

|

||||

看一下 [网页效果][6] 和 [代码][7],代码仓库中的 Makefile 使得运行更加简单。

|

||||

|

||||

#### 制作一个 Reveal.js 幻灯片

|

||||

|

||||

使用 Pandoc 从 Markdown 文件生成简单的演示文稿很容易。幻灯片包含顶级幻灯片和下面的嵌套幻灯片。可以通过键盘控制演示文稿,从一个顶级幻灯片跳转到下一个顶级幻灯片,或者显示顶级幻灯片下面的嵌套幻灯片。 这种结构在基于 HTML 的演示文稿框架中很常见。

|

||||

|

||||

创建一个名为 SLIDES 的幻灯片文档(参见 [代码仓库][8])。首先,在 % 后面添加幻灯片的元信息(例如,标题,作者和日期):

|

||||

创建一个名为 `SLIDES` 的幻灯片文档(参见 [代码仓库][8])。首先,在 `%` 后面添加幻灯片的元信息(例如,标题、作者和日期):

|

||||

|

||||

```

|

||||

% Case Study

|

||||

@ -94,7 +94,7 @@ pandoc math.tex -s --mathml -o mathMathML.html

|

||||

|

||||

这些元信息同时也创建了第一张幻灯片。要添加更多幻灯片,使用 Markdown 的一级标题(在下面例子中的第5行,参考 [Markdown 的一级标题][9] )生成顶级幻灯片。

|

||||

|

||||

例如,可以通过以下命令创建一个标题为 Case Study、顶级幻灯片名为 Wine Management System 的演示文稿:

|

||||

例如,可以通过以下命令创建一个标题为 “Case Study”、顶级幻灯片名为 “Wine Management System” 的演示文稿:

|

||||

|

||||

```

|

||||

% Case Study

|

||||

@ -106,8 +106,8 @@ pandoc math.tex -s --mathml -o mathMathML.html

|

||||

|

||||

使用 Markdown 的二级标题将内容(比如包含一个新管理系统的说明和实现的幻灯片)放入刚刚创建的顶级幻灯片。下面添加另外两张幻灯片(在下面例子中的第 7 行和 14 行 ,参考 [Markdown 的二级标题][9] )。

|

||||

|

||||

- 第一个二级幻灯片的标题为 Idea,并显示瑞士国旗的图像

|

||||

- 第二个二级幻灯片的标题为 Implementation

|

||||

- 第一个二级幻灯片的标题为 “Idea”,并显示瑞士国旗的图像

|

||||

- 第二个二级幻灯片的标题为 “Implementation”

|

||||

|

||||

```

|

||||

% Case Study

|

||||

@ -121,9 +121,9 @@ pandoc math.tex -s --mathml -o mathMathML.html

|

||||

## Implementation

|

||||

```

|

||||

|

||||

我们现在有一个顶级幻灯片(#Wine Management System),其中包含两张幻灯片(## Idea 和 ## Implementation)。

|

||||

我们现在有一个顶级幻灯片(`#Wine Management System`),其中包含两张幻灯片(`## Idea` 和 `## Implementation`)。

|

||||

|

||||

通过创建一个由符号 > 开头的 Markdown 列表,在这两张幻灯片中添加一些内容。在上面代码的基础上,在第一张幻灯片中添加两个项目(第 9-10 行),第二张幻灯片中添加五个项目(第 16-20 行):

|

||||

通过创建一个由符号 `>` 开头的 Markdown 列表,在这两张幻灯片中添加一些内容。在上面代码的基础上,在第一张幻灯片中添加两个项目(第 9-10 行),第二张幻灯片中添加五个项目(第 16-20 行):

|

||||

|

||||

```

|

||||

% Case Study

|

||||

@ -150,7 +150,7 @@ pandoc math.tex -s --mathml -o mathMathML.html

|

||||

|

||||

上面的代码添加了马特洪峰的图像,也可以使用纯 Markdown 语法或添加 HTML 标签来改进幻灯片。

|

||||

|

||||

要生成幻灯片,Pandoc 需要引用 Reveal.js 库,因此它必须与 SLIDES 文件位于同一文件夹中。生成幻灯片的命令如下所示:

|

||||

要生成幻灯片,Pandoc 需要引用 Reveal.js 库,因此它必须与 `SLIDES` 文件位于同一文件夹中。生成幻灯片的命令如下所示:

|

||||

|

||||

```

|

||||

pandoc -t revealjs -s --self-contained SLIDES \

|

||||

@ -161,13 +161,13 @@ pandoc -t revealjs -s --self-contained SLIDES \

|

||||

|

||||

上面的 Pandoc 命令使用了以下参数:

|

||||

|

||||

- -t revealjs 表示将输出一个 revealjs 演示文稿

|

||||

- -s 告诉 Pandoc 生成一个独立的文档

|

||||

- \--self-contained 生成没有外部依赖关系的 HTML 文件

|

||||

- -V 设置以下变量:

|

||||

- theme = white 将幻灯片的主题设为白色

|

||||

- slideNumber = true 显示幻灯片编号

|

||||

- -o index.html 在名为 index.html 的文件中生成幻灯片

|

||||

- `-t revealjs` 表示将输出一个 revealjs 演示文稿

|

||||

- `-s` 告诉 Pandoc 生成一个独立的文档

|

||||

- `--self-contained` 生成没有外部依赖关系的 HTML 文件

|

||||

- `-V` 设置以下变量:

|

||||

- `theme=white` 将幻灯片的主题设为白色

|

||||

- `slideNumber=true` 显示幻灯片编号

|

||||

- `-o index.html` 在名为 `index.html` 的文件中生成幻灯片

|

||||

|

||||

为了简化操作并避免键入如此长的命令,创建以下 Makefile:

|

||||

|

||||

@ -188,9 +188,9 @@ clean: index.html

|

||||

|

||||

#### 制作一份多种格式的合同

|

||||

|

||||

假设你正在准备一份文件,并且(这样的情况现在很常见)有些人想用 Microsoft Word 格式,其他人使用免费软件,想要 ODT 格式,而另外一些人则需要 PDF。你不必使用 OpenOffice 或 LibreOffice 来生成 DOCX 或 PDF 格式的文件,可以用 Markdown 创建一份文档(如果需要高级格式,可以使用一些 LaTeX 语法),并生成任何这些文件类型。

|

||||

假设你正在准备一份文件,并且(这样的情况现在很常见)有些人想用 Microsoft Word 格式,其他人使用自由软件,想要 ODT 格式,而另外一些人则需要 PDF。你不必使用 OpenOffice 或 LibreOffice 来生成 DOCX 或 PDF 格式的文件,可以用 Markdown 创建一份文档(如果需要高级格式,可以使用一些 LaTeX 语法),并生成任何这些文件类型。

|

||||

|

||||

和以前一样,首先声明文档的元信息(标题,作者和日期):

|

||||

和以前一样,首先声明文档的元信息(标题、作者和日期):

|

||||

|

||||

```

|

||||

% Contract Agreement for Software X

|

||||

@ -198,7 +198,7 @@ clean: index.html

|

||||

% August 28th, 2018

|

||||

```

|

||||

|

||||

然后在 Markdown 中编写文档(如果需要高级格式,则添加 LaTeX)。例如,创建一个固定间隔的表格(在 LaTeX 中用 \hspace{3cm} 声明)以及客户端和承包商应填写的行(在 LaTeX 中用 \hrulefill 声明)。之后,添加一个用 Markdown 编写的表格。

|

||||

然后在 Markdown 中编写文档(如果需要高级格式,则添加 LaTeX)。例如,创建一个固定间隔的表格(在 LaTeX 中用 `\hspace{3cm}` 声明)以及客户端和承包商应填写的行(在 LaTeX 中用 `\hrulefill` 声明)。之后,添加一个用 Markdown 编写的表格。

|

||||

|

||||

创建的文档如下所示:

|

||||

|

||||

@ -267,7 +267,7 @@ clean:

|

||||

|

||||

4 到 7 行是生成三种不同输出格式的具体命令:

|

||||

|

||||

如果有多个 Markdown 文件并想将它们合并到一个文档中,需要按照希望它们出现的顺序编写命令。例如,在撰写本文时,我创建了三个文档:一个介绍文档,三个示例和一些高级用法。以下命令告诉 Pandoc 按指定的顺序将这些文件合并在一起,并生成一个名为 document.pdf 的 PDF 文件。

|

||||

如果有多个 Markdown 文件并想将它们合并到一个文档中,需要按照希望它们出现的顺序编写命令。例如,在撰写本文时,我创建了三个文档:一个介绍文档、三个示例和一些高级用法。以下命令告诉 Pandoc 按指定的顺序将这些文件合并在一起,并生成一个名为 document.pdf 的 PDF 文件。

|

||||

|

||||

```

|

||||

pandoc -s introduction.md examples.md advanced-uses.md -o document.pdf

|

||||

@ -275,9 +275,9 @@ pandoc -s introduction.md examples.md advanced-uses.md -o document.pdf

|

||||

|

||||

### 模板和元信息

|

||||

|

||||

编写复杂的文档并非易事,你需要遵循一系列独立于内容的规则,例如使用特定的模板,编写摘要,嵌入特定字体,甚至可能要声明关键字。所有这些都与内容无关:简单地说,它就是元信息。

|

||||

编写复杂的文档并非易事,你需要遵循一系列独立于内容的规则,例如使用特定的模板、编写摘要、嵌入特定字体,甚至可能要声明关键字。所有这些都与内容无关:简单地说,它就是元信息。

|

||||

|

||||

Pandoc 使用模板生成不同的输出格式。例如,有一个 LaTeX 的模板,还有一个 ePub 的模板,等等。这些模板 的元信息中有未赋值的变量。使用以下命令找出 Pandoc 模板中可用的元信息:

|

||||

Pandoc 使用模板生成不同的输出格式。例如,有一个 LaTeX 的模板,还有一个 ePub 的模板,等等。这些模板的元信息中有未赋值的变量。使用以下命令找出 Pandoc 模板中可用的元信息:

|

||||

|

||||

```

|

||||

pandoc -D FORMAT

|

||||

@ -319,7 +319,7 @@ $endif$

|

||||

\begin{document}

|

||||

```

|

||||

|

||||

如你所见,输出的内容中有标题,致谢,作者,副标题和机构模板变量(还有许多其他可用的变量)。可以使用 YAML 元区块轻松设置这些内容。 在下面例子的第 1-5 行中,我们声明了一个 YAML 元区块并设置了一些变量(使用上面合同协议的例子):

|

||||

如你所见,输出的内容中有标题、致谢、作者、副标题和机构模板变量(还有许多其他可用的变量)。可以使用 YAML 元区块轻松设置这些内容。 在下面例子的第 1-5 行中,我们声明了一个 YAML 元区块并设置了一些变量(使用上面合同协议的例子):

|

||||

|

||||

```

|

||||

---

|

||||

@ -343,7 +343,7 @@ date: August 28th, 2018

|

||||

|

||||

考虑一下这些情况:

|

||||

|

||||

- 如果你只是尝试嵌入 YAML 变量 css:style-epub.css,那么将从 HTML 版本中移除该变量。这不起作用。

|

||||

- 如果你只是尝试嵌入 YAML 变量 `css:style-epub.css`,那么将从 HTML 版本中移除该变量。这不起作用。

|

||||

- 复制文档显然也不是一个好的解决方案,因为一个版本的更改不会与另一个版本同步。

|

||||

- 你也可以像下面这样将变量添加到 Pandoc 命令中:

|

||||

|

||||

@ -352,7 +352,7 @@ pandoc -s -V css=style-epub.css document.md document.epub

|

||||

pandoc -s -V css=style-html.css document.md document.html

|

||||

```

|

||||

|

||||

我的观点是,这样做很容易从命令行忽略这些变量,特别是当你需要设置数十个变量时(这可能出现在编写复杂文档的情况中)。现在,如果将它们放在同一文件中(meta.yaml 文件),则只需更新或创建新的元信息文件即可生成所需的输出格式。然后你会编写这样的命令:

|

||||

我的观点是,这样做很容易从命令行忽略这些变量,特别是当你需要设置数十个变量时(这可能出现在编写复杂文档的情况中)。现在,如果将它们放在同一文件中(`meta.yaml` 文件),则只需更新或创建新的元信息文件即可生成所需的输出格式。然后你会编写这样的命令:

|

||||

|

||||

```

|

||||

pandoc -s meta-pub.yaml document.md document.epub

|

||||

@ -372,7 +372,7 @@ via: https://opensource.com/article/18/9/intro-pandoc

|

||||

作者:[Kiko Fernandez-Reyes][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[jlztan](https://github.com/jlztan)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

Translating by lixinyuxx

|

||||

|

||||

Mixing software development roles produces great results

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,184 @@

|

||||

5 signs you are doing continuous testing wrong | Opensource.com

|

||||

======

|

||||

Avoid these common test automation mistakes in the era of DevOps and Agile.

|

||||

|

||||

|

||||

In the last few years, many companies have made large investments to automate every step of deploying features in production. Test automation has been recognized as a key enabler:

|

||||

|

||||

> “We found that Test Automation is the biggest contributor to continuous delivery.” – [2017 State of DevOps report][1]

|

||||

|

||||

Suppose you started adopting agile and DevOps practices to speed up your time to market and put new features in the hands of customers as soon as possible. You implemented continuous testing practices, but you’re facing the challenge of scalability: Implementing test automation at all system levels for code bases that contain tens of millions of lines of code involves many teams of developers and testers. And to add even more complexity, you need to support numerous browsers, mobile devices, and operating systems.

|

||||

|

||||

Despite your commitment and resources expenditure, the result is likely an automated test suite with high maintenance costs and long execution times. Worse, your teams don't trust it.

|

||||

|

||||

Here are five common test automation mistakes, and how to mitigate them using (in some cases) open source tools.

|

||||

|

||||

### 1\. Siloed automation teams

|

||||

|

||||

In medium and large IT projects with hundreds or even thousands of engineers, the most common cause of unmaintainable and expensive automated tests is keeping test teams separate from the development teams that deliver features.

|

||||

|

||||

This also happens in organizations that follow agile practices where analysts, developers, and testers work together on feature acceptance criteria and test cases. In these agile organizations, automated tests are often partially or fully managed by engineers outside the scrum teams. Inefficient communication can quickly become a bottleneck, especially when teams are geographically distributed, if you want to evolve the automated test suite over time.

|

||||

|

||||

Furthermore, when automated acceptance tests are written without developer involvement, they tend to be tightly coupled to the UI and thus brittle and badly factored, because the most testers don’t have insight into the UI’s underlying design and lack the skills to create abstraction layers or run acceptance tests against a public API.

|

||||

|

||||

A simple suggestion is to split your siloed automation teams and include test engineers directly in scrum teams where feature discussion and implementation happen, and the impacts on test scripts can be immediately discovered and fixed. This is certainly a good idea, but it is not the real point. Better yet is to make the entire scrum team responsible for automated tests. Product owners, developers, and testers must then work together to refine feature acceptance criteria, create test cases, and prioritize them for automation.

|

||||

|

||||

When different actors, inside or outside the development team, are involved in running automated test suites, one practice that levels up the overall collaborative process is [BDD][2], or behavior-driven development. It helps create business requirements that can be understood by the whole team and contributes to having a single source of truth for automated tests. Open source tools like [Cucumber][3], [JBehave][4], and [Gauge][5] can help you implement BDD and keep test case specifications and test scripts automatically synchronized. Such tools let you create concrete examples that illustrate business rules and acceptance criteria through the use of a simple text file containing Given-When-Then scenarios. They are used as executable software specifications to automatically verify that the software behaves as intended.

|

||||

|

||||

### 2\. Most of your automated suite is made by user interface tests

|

||||

|

||||

You should already know that user interface automated tests are brittle and even small changes will immediately break all the tests referring to a particular changed GUI element. This is one of the main reasons technical/business stakeholders perceive automated tests as expensive to maintain. Record-and-playback tools such as [SeleniumRecorder][6], used to generate GUI automatic tests, are tightly coupled to the GUI and therefore brittle. These tools can be used in the first stage of creating an automatic test, but a second optimization stage is required to provide a layer of abstraction that reduces the coupling between the acceptance tests and the GUI of the system under test. Design patterns such as [PageObject][7] can be used for this purpose.

|

||||

|

||||

However, if your automated test strategy is focused only on user interfaces, it will quickly become a bottleneck as it is resource-intensive, takes a long time to execute, and it is generally hard to fix. Indeed, resolving UI test failure may require you to go through all system levels to discover the root cause.

|

||||

|

||||

A better approach is to prioritize development of automated tests at the right level to balance the costs of maintaining them while trying to discover bugs in the early stages of the software [deployment pipeline][8] (a key pattern introduced in continuous delivery).

|

||||

|

||||

|

||||

|

||||

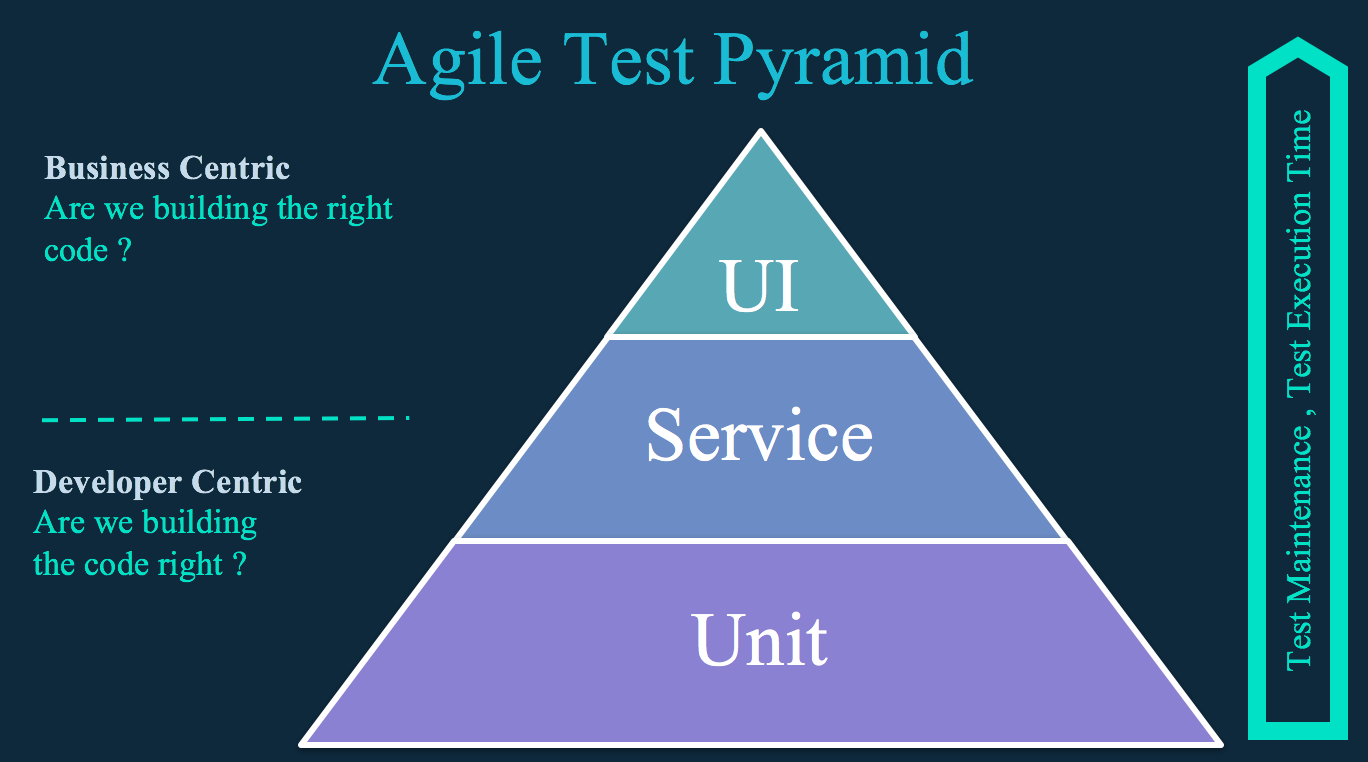

As suggested by the [agile test pyramid][9] shown above, the vast majority of automated tests should be comprised of unit tests (both back- and front-end level). The most important property of unit tests is that they should be very fast to execute (e.g., 5 to 10 minutes).

|

||||

|

||||

The service layer (or component tests) allows for testing business logic at the API or service level, where you're not encumbered by the user interface (UI). The higher the level, the slower and more brittle testing becomes.

|

||||

|

||||

Typically unit tests are run at every developer commit, and the build process is stopped in the case of a test failure or if the test coverage is under a predefined threshold (e.g., when less than 80% of code lines are covered by unit tests). Once the build passes, it is deployed in a stage environment, and acceptance tests are executed. Any build that passes acceptance tests is then typically made available for manual and integration testing.

|

||||

|

||||

Unit tests are an essential part of any automated test strategy, but they usually do not provide a high enough level of confidence that the application can be released. The objective of acceptance tests at service and UI level is to prove that your application does what the customer wants it to, not that it works the way its programmers think it should. Unit tests can sometimes share this focus, but not always.

|

||||

|

||||

To ensure that the application provides value to end users while balancing test suite costs and value, you must automate both the service/component and UI acceptance tests with the agile test pyramid in mind.

|

||||

|

||||

Read more about test types, levels, and tools in this comprehensive [article][10] from ThoughtWorks.

|

||||

|

||||

### 3\. External systems are integrated too early in your deployment pipeline

|

||||

|

||||

Integration with external systems is a common source of problems, and it can be difficult to get right. This implies that it is important to test such integration points carefully and effectively. The problem is that if you include the external systems themselves within the scope of your automated acceptance testing, you have less control over the system. It is difficult to set an external system starting state, and this, in turn, will end up in an unpredictable test run that fails most of the time. The rest of your time will be probably spent discussing how to fix testing failures with external providers. However, our objective with continuous testing is to find problems as early as possible, and to achieve this, we aim to integrate our system continuously. Clearly, there is a tension here and a “one-size-fits-all” answer doesn’t exist.

|

||||

|

||||

Having suites of tests around each integration point, intended to run in an environment that has real connections to external systems, is valuable, but the tests should be very small, focus on business risks, and cover core customer journeys. Instead, consider creating [test doubles][11] that represent the connection to all external systems and use them in development and/or early-stage environments so that your test suites are faster and test results are deterministic. If you are new to the concept of test doubles but have heard about mocks and stubs, you can learn about the differences in this [Martin Fowler blog post][11].

|

||||

|

||||

In their book, [Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation][12], Jez Humble and David Farley advise: “Test doubles must almost always be used to stub out part of an external system when:

|

||||

|

||||

* The external system is under development but the interface has been defined ahead of time (in these situations, be prepared for the interface to change).

|

||||

|

||||

* The external system is developed already but you don’t have a test instance of that system available for your testing, or the test system is too slow or buggy to act as a service for regular automated test runs.

|

||||

|

||||

* The test system exists, but responses are not deterministic and so make validation of tests results impossible for automated tests (for example, a stock market feed).

|

||||

|

||||

* The external system takes the form of another application that is difficult to install or requires manual intervention via a UI.

|

||||

|

||||

* The load that your automated continuous integration system imposes, and the service level that it requires, overwhelms the lightweight test environment that is set up to cope with only a few manual exploratory interactions.”

|

||||

|

||||

|

||||

|

||||

|

||||

Suppose you need to integrate one or more external systems that are under active development. In turn, there will likely be changes in the schemas, contracts, and so on. Such a scenario needs careful and regular testing to identify points at which different teams diverge. This is the case of microservice-based architectures, which involve several independent systems deployed to test a single functionality. In this context, review the overall automated testing strategies in favor of a more scalable and maintainable approach like the one used on [consumer-driven contracts][13].

|

||||

|

||||

If you are not in such a situation, I found the following open source tools useful to implement test doubles starting from an API contract specification:

|

||||

|

||||

* [SoapUI mocking services][14]: Despite its name, it can mock both SOAP and rest services.

|

||||

|

||||

* [WireMock][15]: It can mock rest services only.

|

||||

|

||||

* For rest services, look at [OpenAPI tools][16] for “mock servers,” which are able to generate test stubs starting from [OpenAPI][17] contract specification.

|

||||

|

||||

|

||||

|

||||

|

||||

### 4\. Test and development tools mismatch

|

||||

|

||||

One of the consequences of offloading test automation work to teams other than the development team is that it creates a divergence between development and test tools. This makes collaboration and communication harder between dev and test engineers, increases the overall cost for test automation, and fosters bad practices such as having the version of test scripts and feature code not aligned or not versioned at all.

|

||||

|

||||

I’ve seen a lot of teams struggle with expensive UI/API automated test tools that had poor integration with standard versioning systems like Git. Other tools, especially GUI-based commercial ones with visual workflow capabilities, create a false expectation—primarily between test managers—that you can easily expect testers to develop maintainable and reusable automated tests. Even if this is possible, they can’t scale your automated test suite over time; the tests must be curated as much as feature code, which requires developer-level programming skills and best practices.

|

||||

|

||||

There are several open source tools that help you write automated acceptance tests and reuse your development teams' skills. If your primary development language is Java or JavaScript, you may find the following options useful:

|

||||

|

||||

* Java

|

||||

|

||||

* [Cucumber-jvm][18] for implementing executable specifications in Java for both UI and API automated testing

|

||||

|

||||

* [REST Assured][19] for API testing

|

||||

|

||||

* [SeleniumHQ][20] for web testing

|

||||

|

||||

* [ngWebDriver][21] locators for Selenium WebDriver. It is optimized for web applications built with Angular.js 1.x or Angular 2+

|

||||

|

||||

* [Appium Java][22] for mobile testing using Selenium WebDriver

|

||||

|

||||

* JavaScript

|

||||

|

||||

* [Cucumber.js][23] same as Cucumber.jvm but runs on Node.js platform

|

||||

|

||||

* [Chakram][24] for API testing

|

||||

|

||||

* [Protractor][25] for web testing optimized for web applications built with AngularJS 1.x or Angular 2+

|

||||

|

||||

* [Appium][26] for mobile testing on the Node.js platform

|

||||

|

||||

|

||||

|

||||

|

||||

### 5\. Your test data management is not fully automated

|

||||

|

||||

To build maintainable test suites, it’s essential to have an effective strategy for creating and maintaining test data. It requires both automatic migration of data schema and test data initialization.

|

||||

|

||||

It's tempting to use large database dumps for automated tests, but this makes it difficult to version and automate them and will increase the overall time of test execution. A better approach is to capture all data changes in DDL and DML scripts, which can be easily versioned and executed by the data management system. These scripts should first create the structure of the database and then populate the tables with any reference data required for the application to start. Furthermore, you need to design your scripts incrementally so that you can migrate your database without creating it from scratch each time and, most importantly, without losing any valuable data.

|

||||

|

||||

Open source tools like [Flyway][27] can help you orchestrate your DDL and DML scripts' execution based on a table in your database that contains its current version number. At deployment time, Flyway checks the version of the database currently deployed and the version of the database required by the version of the application that is being deployed. It then works out which scripts to run to migrate the database from its current version to the required version, and runs them on the database in order.

|

||||

|

||||

One important characteristic of your automated acceptance test suite, which makes it scalable over time, is the level of isolation of the test data: Test data should be visible only to that test. In other words, a test should not depend on the outcome of the other tests to establish its state, and other tests should not affect its success or failure in any way. Isolating tests from one another makes them capable of being run in parallel to optimize test suite performance, and more maintainable as you don’t have to run tests in any specific order.

|

||||

|

||||

When considering how to set up the state of the application for an acceptance test, Jez Humble and David Farley note [in their book][12] that it is helpful to distinguish between three kinds of data:

|

||||

|

||||

* **Test reference data:** This is the data that is relevant for a test but that has little bearing upon the behavior under test. Such data is typically read by test scripts and remains unaffected by the operation of the tests. It can be managed by using pre-populated seed data that is reused in a variety of tests to establish the general environment in which the tests run.

|

||||

|

||||

* **Test-specific data:** This is the data that drives the behavior under test. It also includes transactional data that is created and/or updated during test execution. It should be unique and use test isolation strategies to ensure that the test starts in a well-defined environment that is unaffected by other tests. Examples of test isolation practices are deleting test-specific data and transactional data at the end of the test execution, or using a functional partitioning strategy.

|

||||

|

||||

* **Application reference data:** This data is irrelevant to the test but is required by the application for startup.

|

||||

|

||||

|

||||

|

||||

|

||||

Application reference data and test reference data can be kept in the form of database scripts, which are versioned and migrated as part of the application's initial setup. For test-specific data, you should use application APIs so the system is always put in a consistent state as a consequence of executing business logic (which otherwise would be bypassed if you directly load test data into the database using scripts).

|

||||

|

||||

### Conclusion

|

||||

|

||||

Agile and DevOps teams continue to fall short on continuous testing—a crucial element of the CI/CD pipeline. Even as a single process, continuous testing is made up of various components that must work in unison. Team structure, testing prioritization, test data, and tools all play a critical role in the success of continuous testing. Agile and DevOps teams must get every piece right to see the benefits.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/11/continuous-testing-wrong

|

||||

|

||||

作者:[Davide Antelmo][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/dantelmo

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://puppet.com/blog/2017-state-devops-report-here

|

||||

[2]: https://www.agilealliance.org/glossary/bdd/

|

||||

[3]: https://docs.cucumber.io/

|

||||

[4]: https://jbehave.org/

|

||||

[5]: https://www.gauge.org/

|

||||

[6]: https://www.seleniumhq.org/projects/ide/

|

||||

[7]: https://martinfowler.com/bliki/PageObject.html

|

||||

[8]: https://continuousdelivery.com/implementing/patterns/

|

||||

[9]: https://martinfowler.com/bliki/TestPyramid.html

|

||||

[10]: https://martinfowler.com/articles/practical-test-pyramid.html

|

||||

[11]: https://martinfowler.com/bliki/TestDouble.html

|

||||

[12]: https://martinfowler.com/books/continuousDelivery.html

|

||||

[13]: https://martinfowler.com/articles/consumerDrivenContracts.html

|

||||

[14]: https://www.soapui.org/soap-mocking/service-mocking-overview.html

|

||||

[15]: http://wiremock.org/

|

||||

[16]: https://openapi.tools/

|

||||

[17]: https://www.openapis.org/

|

||||

[18]: https://github.com/cucumber/cucumber-jvm

|

||||

[19]: http://rest-assured.io/

|

||||

[20]: https://www.seleniumhq.org/

|

||||

[21]: https://github.com/paul-hammant/ngWebDriver

|

||||

[22]: https://github.com/appium/java-client

|

||||

[23]: https://github.com/cucumber/cucumber-js

|

||||

[24]: http://dareid.github.io/chakram/

|

||||

[25]: https://www.protractortest.org/#/

|

||||

[26]: https://github.com/appium/appium

|

||||

[27]: https://flywaydb.org/

|

||||

@ -0,0 +1,65 @@

|

||||

How open source in education creates new developers

|

||||

======

|

||||

Self-taught developer and new Gibbon maintainer explains why open source is integral to creating the next generation of coders.

|

||||

|

||||

|

||||

Like many programmers, I got my start solving problems with code. When I was a young programmer, I was content to code anything I could imagine—mostly games—and do it all myself. I didn't need help; I just needed less sleep. It's a common pitfall, and one that I'm happy to have climbed out of with the help of two important realizations:

|

||||

|

||||

First, the software that impacts our daily lives the most isn't made by an amazingly talented solo developer. On a large scale, it's made by global teams of hundreds or thousands of developers. On smaller scales, it's still made by a team of dedicated professionals, often working remotely. Far beyond the value of churning out code is the value of communicating ideas, collaborating, sharing feedback, and making collective decisions.

|

||||

|

||||

Second, sustainable code isn't programmed in a vacuum. It's not just a matter of time or scale; it's a diversity of thinking. Designing software is about understanding an issue and the people it affects and setting out to find a solution. No one person can see an issue from every point of view. As a developer, learning to connect with other developers, empathize with users, and think of a project as a community rather than a codebase are invaluable.

|

||||

|

||||

### Open source and education: natural partners

|

||||

|

||||

Education is not a zero-sum game. Worldwide, members of the education community work together to share ideas, build professional learning networks, and create new learning models.

|

||||

|

||||

This collaboration is where there's an amazing synergy between open source software and education. It's already evident in the many open source projects used in schools worldwide; in classrooms, running blogs, sharing resources, hosting servers, and empowering collaboration.

|

||||

|

||||

Working in a school has sparked my passion to advocate for open source in education. My position as web developer and digital media specialist at [The International School of Macao][1] has become what I call a developer-in-residence. Working alongside educators has given me the incredible opportunity to learn their needs and workflows, then go back and write code to help solve those problems. There's a lot of power in this model: not just programming for hypothetical "users" but getting to know the people who use a piece of software on a day-to-day basis, watching them use it, learning their pain points, and aiming to build [something that meets their needs][2].

|

||||

|

||||

This is a model that I believe we can build on and share. Educators and developers working together have the ability to create the quality, open, affordable software they need, built on the values that matter most to them. These tools can be made available to those who cannot afford commercial systems but do want to educate the next generation.

|

||||

|

||||

Not every school may have the capacity to contribute code or hire developers, but with a larger community of people working together, extraordinary things are happening.

|

||||

|

||||

### What schools need from software

|

||||

|

||||

There are a lot of amazing educators out there re-thinking the learning models used in schools. They're looking for ways to provide students with agency, spark their curiosity, connect their learning to the real world, and foster mindsets that will help them navigate our rapidly changing world.

|

||||

|

||||

The software used in schools needs to be able to adapt and change at the same pace. No one knows for certain what education will look like in the future, but there are some great ideas for what directions it's going in. To keep moving forward, educators need to be able to experiment at the same level that learning is happening; to try, to fail, and to iterate on different approaches right in their classrooms.

|

||||

|

||||

This is where I believe open source tools for learning can be quite powerful. There are a lot of challenging projects that can arise in a school. My position started as a web design job but soon grew into developing staff portals, digital signage, school blogs, and automated newsletters. For each new project, open source was a natural jumping-off point: it was affordable, got me up to speed faster, and I was able to adapt each system to my school's ever-evolving needs.

|

||||

|

||||

One such project was transitioning our school's student information system, along with 10 years of data, to an open source platform called [Gibbon][3]. The system did a lot of [things that my school needed][4], which was awesome. Still, there were some things we needed to adapt and other things we needed to add, including tools to import large amounts of data. Since it's an open source school platform, I was able to dive in and make these changes, and then share them back with the community.

|

||||

|

||||

This is the point where open source started to change from something I used to something I contributed to. I've done a lot of solo development work in the past, so the opportunity to collaborate on new features and contribute bug fixes really hooked me.

|

||||

|

||||

As my work on Gibbon evolved from small fixes to whole features, I also started collaborating on ideas to refactor and modernize the codebase. This was an open source lightbulb for me, and over the past couple of years, I've become more and more involved in our growing community, recently stepping into the role of maintainer on the project.

|

||||

|

||||

### Creating a new generation of developers

|

||||

|

||||

As a software developer, I'm entirely self-taught, and much of what I know wouldn't have been possible if these tools were locked down and inaccessible. Learning in the information age is about having access to the ideas that inspire and motivate us.

|

||||

|

||||

The ability to explore, break, fix and tinker with the source code I've used is largely the driving force of my motivation to learn. Like many coders, early on I'd peek into a codebase and change a few variables here and there to see what happened. Then I started stringing spaghetti code together to see what I could build with it. Bit by bit, I'd wonder "what is it doing?" and "why does this work, but that doesn't?" Eventually, my haphazard jungles of code became carefully architected codebases; all of this learned through playing with source code written by other developers and seeking to understand the bigger concepts of what the software was accomplishing.

|

||||

|

||||

Beyond the possibilities open source offers to schools as a whole, it also can also offer individual students a profound opportunity to explore the technology that's part of our everyday lives. Schools embracing an open source mindset would do so not just to cut costs or create new tools for learning, but also to give their students the same freedoms to be a part of this evolving landscape of education and technology.

|

||||

|

||||

With this level of access, open source in the hands of a student transforms from a piece of software to a source of potential learning experiences, and possibly even a launching point for students who wish to dive deeper into computer science concepts. This is a powerful way that students can discover their intrinsic motivation: when they can see their learning as a path to unravel and understand the complexities of the world around them.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/11/next-gen-coders-education

|

||||

|

||||

作者:[Sandra Kuipers][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/skuipers

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.tis.edu.mo

|

||||

[2]: https://skuipers.com/portfolio/

|

||||

[3]: https://gibbonedu.org/

|

||||

[4]: https://opensource.com/education/14/2/gibbon-project-story

|

||||

@ -1,222 +0,0 @@

|

||||

translating by StdioA

|

||||

|

||||

Systemd Timers: Three Use Cases

|

||||

======

|

||||

|

||||

|

||||

|

||||

In this systemd tutorial series, we have[ already talked about systemd timer units to some degree][1], but, before moving on to the sockets, let's look at three examples that illustrate how you can best leverage these units.

|

||||

|

||||

### Simple _cron_ -like behavior

|

||||

|

||||

This is something I have to do: collect [popcon data from Debian][2] every week, preferably at the same time so I can see how the downloads for certain applications evolve. This is the typical thing you can have a _cron_ job do, but a systemd timer can do it too:

|

||||

```

|

||||

# cron-like popcon.timer

|

||||

|

||||

[Unit]

|

||||

Description= Says when to download and process popcons

|

||||

|

||||

[Timer]

|

||||

OnCalendar= Thu *-*-* 05:32:07

|

||||

Unit= popcon.service

|

||||

|

||||

[Install]

|

||||

WantedBy= basic.target

|

||||

|

||||

```

|

||||

|

||||

The actual _popcon.service_ runs a regular _wget_ job, so nothing special. What is new in here is the `OnCalendar=` directive. This is what lets you set a service to run on a certain date at a certain time. In this case, `Thu` means " _run on Thursdays_ " and the `*-*-*` means " _the exact date, month and year don't matter_ ", which translates to " _run on Thursday, regardless of the date, month or year_ ".

|

||||

|

||||

Then you have the time you want to run the service. I chose at about 5:30 am CEST, which is when the server is not very busy.

|

||||

|

||||

If the server is down and misses the weekly deadline, you can also work an _anacron_ -like functionality into the same timer:

|

||||

```

|

||||

# popcon.timer with anacron-like functionality

|

||||

|

||||

[Unit]

|

||||

Description=Says when to download and process popcons

|

||||

|

||||

[Timer]

|

||||

Unit=popcon.service

|

||||

OnCalendar=Thu *-*-* 05:32:07

|

||||

Persistent=true

|

||||

|

||||

[Install]

|

||||

WantedBy=basic.target

|

||||

|

||||

```

|

||||

|

||||

When you set the `Persistent=` directive to true, it tells systemd to run the service immediately after booting if the server was down when it was supposed to run. This means that if the machine was down, say for maintenance, in the early hours of Thursday, as soon as it is booted again, _popcon.service_ will be run immediately and then it will go back to the routine of running the service every Thursday at 5:32 am.

|

||||

|

||||

So far, so straightforward.

|

||||

|

||||

### Delayed execution

|

||||

|

||||

But let's kick thing up a notch and "improve" the [systemd-based surveillance system][3]. Remember that the system started taking pictures the moment you plugged in a camera. Suppose you don't want pictures of your face while you install the camera. You will want to delay the start up of the picture-taking service by a minute or two so you can plug in the camera and move out of frame.

|

||||

|

||||

To do this; first change the Udev rule so it points to a timer:

|

||||

```

|

||||

ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="03f0",

|

||||

ATTRS{idProduct}=="e207", TAG+="systemd", ENV{SYSTEMD_WANTS}="picchanged.timer",

|

||||

SYMLINK+="mywebcam", MODE="0666"

|

||||

|

||||

```

|

||||

|

||||

The timer looks like this:

|

||||

```

|

||||

# picchanged.timer

|

||||

|

||||

[Unit]

|

||||

Description= Runs picchanged 1 minute after the camera is plugged in

|

||||

|

||||

[Timer]

|

||||

OnActiveSec= 1 m

|

||||

Unit= picchanged.path

|

||||

|

||||

[Install]

|

||||

WantedBy= basic.target

|

||||

|

||||

```

|

||||

|

||||

The Udev rule gets triggered when you plug the camera in and it calls the timer. The timer waits for one minute after it starts (`OnActiveSec= 1 m`) and then runs _picchanged.path_ , which [monitors to see if the master image changes][4]. The _picchanged.path_ is also in charge of pulling in the _webcam.service_ , the service that actually takes the picture.

|

||||

|

||||

### Start and stop Minetest server at a certain time every day

|

||||

|

||||

In the final example, let's say you have decided to delegate parenting to systemd. I mean, systemd seems to be already taking over most of your life anyway. Why not embrace the inevitable?

|

||||

|

||||

So you have your Minetest service set up for your kids. You also want to give some semblance of caring about their education and upbringing and have them do homework and chores. What you want to do is make sure Minetest is only available for a limited time (say from 5 pm to 7 pm) every evening.

|

||||

|

||||

This is different from " _starting a service at certain time_ " in that, writing a timer to start the service at 5 pm is easy...:

|

||||

```

|

||||

# minetest.timer

|

||||

|

||||

[Unit]

|

||||

Description= Runs the minetest.service at 5pm everyday

|

||||

|

||||

[Timer]

|

||||

OnCalendar= *-*-* 17:00:00

|

||||

Unit= minetest.service

|

||||

|

||||

[Install]

|

||||

WantedBy= basic.target

|

||||

|

||||

```

|

||||

|

||||

... But writing a counterpart timer that shuts down a service at a certain time needs a bigger dose of lateral thinking.

|

||||

|

||||

Let's start with the obvious -- the timer:

|

||||

```

|

||||

# stopminetest.timer

|

||||

|

||||

[Unit]

|

||||

Description= Stops the minetest.service at 7 pm everyday

|

||||

|

||||

[Timer]

|

||||

OnCalendar= *-*-* 19:05:00

|

||||

Unit= stopminetest.service

|

||||

|

||||

[Install]

|

||||

WantedBy= basic.target

|

||||

|

||||

```

|

||||

|

||||

The tricky part is how to tell _stopminetest.service_ to actually, you know, stop the Minetest. There is no way to pass the PID of the Minetest server from _minetest.service_. and there are no obvious commands in systemd's unit vocabulary to stop or disable a running service.

|

||||

|

||||

The trick is to use systemd's `Conflicts=` directive. The `Conflicts=` directive is similar to systemd's `Wants=` directive, in that it does _exactly the opposite_. If you have `Wants=a.service` in a unit called _b.service_ , when it starts, _b.service_ will run _a.service_ if it is not running already. Likewise, if you have a line that reads `Conflicts= a.service` in your _b.service_ unit, as soon as _b.service_ starts, systemd will stop _a.service_.

|

||||

|

||||

This was created for when two services could clash when trying to take control of the same resource simultaneously, say when two services needed to access your printer at the same time. By putting a `Conflicts=` in your preferred service, you could make sure it would override the least important one.

|

||||

|

||||

You are going to use `Conflicts=` a bit differently, however. You will use `Conflicts=` to close down cleanly the _minetest.service_ :

|

||||

```

|

||||

# stopminetest.service

|

||||

|

||||

[Unit]

|

||||

Description= Closes down the Minetest service

|

||||

Conflicts= minetest.service

|

||||

|

||||

[Service]

|

||||

Type= oneshot

|

||||

ExecStart= /bin/echo "Closing down minetest.service"

|

||||

|

||||

```

|

||||

|

||||

The _stopminetest.service_ doesn't do much at all. Indeed, it could do nothing at all, but just because it contins that `Conflicts=` line in there, when it is started, systemd will close down _minetest.service_.

|

||||

|

||||

There is one last wrinkle in your perfect Minetest set up: What happens if you are late home from work, it is past the time when the server should be up but playtime is not over? The `Persistent=` directive (see above) that runs a service if it has missed its start time is no good here, because if you switch the server on, say at 11 am, it would start Minetest and that is not what you want. What you really want is a way to make sure that systemd will only start Minetest between the hours of 5 and 7 in the evening:

|

||||

```

|

||||

# minetest.timer

|

||||

|

||||

[Unit]

|

||||

Description= Runs the minetest.service every minute between the hours of 5pm and 7pm

|

||||

|

||||

[Timer]

|

||||

OnCalendar= *-*-* 17..19:*:00

|

||||

Unit= minetest.service

|

||||

|

||||

[Install]

|

||||

WantedBy= basic.target

|

||||

|

||||

```

|

||||

|

||||

The line `OnCalendar= *-*-* 17..19:*:00` is interesting for two reasons: (1) `17..19` is not a point in time, but a period of time, in this case the period of time between the times of 17 and 19; and (2) the `*` in the minute field indicates that the service must be run every minute. Hence, you would read this as " _run the minetest.service every minute between 5 and 7 pm_ ".

|

||||

|

||||

There is still one catch, though: once the _minetest.service_ is up and running, you want _minetest.timer_ to stop trying to run it again and again. You can do that by including a `Conflicts=` directive into _minetest.service_ :

|

||||

```

|

||||

# minetest.service

|

||||

|

||||

[Unit]

|

||||

Description= Runs Minetest server

|

||||

Conflicts= minetest.timer

|

||||

|

||||

[Service]

|

||||

Type= simple

|

||||

User= <your user name>

|

||||

|

||||

ExecStart= /usr/bin/minetest --server

|

||||

ExecStop= /bin/kill -2 $MAINPID

|

||||

|

||||

[Install]

|

||||

WantedBy= multi-user.targe

|

||||

|

||||

```

|

||||

|

||||

The `Conflicts=` directive shown above makes sure _minetest.timer_ is stopped as soon as the _minetest.service_ is successfully started.

|

||||

|

||||

Now enable and start _minetest.timer_ :

|

||||

```

|

||||

systemctl enable minetest.timer

|

||||

systemctl start minetest.timer

|

||||

|

||||

```

|

||||

|

||||

And, if you boot the server at, say, 6 o'clock, _minetest.timer_ will start up and, as the time falls between 5 and 7, _minetest.timer_ will try and start _minetest.service_ every minute. But, as soon as _minetest.service_ is running, systemd will stop _minetest.timer_ because it "conflicts" with _minetest.service_ , thus avoiding the timer from trying to start the service over and over when it is already running.

|

||||

|

||||

It is a bit counterintuitive that you use the service to kill the timer that started it up in the first place, but it works.

|

||||

|

||||

### Conclusion

|

||||

|

||||

You probably think that there are better ways of doing all of the above. I have heard the term "overengineered" in regard to these articles, especially when using systemd timers instead of cron.

|

||||

|

||||

But, the purpose of this series of articles is not to provide the best solution to any particular problem. The aim is to show solutions that use systemd units as much as possible, even to a ridiculous length. The aim is to showcase plenty of examples of how the different types of units and the directives they contain can be leveraged. It is up to you, the reader, to find the real practical applications for all of this.

|

||||

|

||||

Be that as it may, there is still one more thing to go: next time, we'll be looking at _sockets_ and _targets_ , and then we'll be done with systemd units.

|

||||

|

||||

Learn more about Linux through the free ["Introduction to Linux" ][5]course from The Linux Foundation and edX.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/intro-to-linux/2018/8/systemd-timers-two-use-cases-0

|

||||

|

||||

作者:[Paul Brown][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/bro66

|

||||

[1]:https://www.linux.com/blog/learn/intro-to-linux/2018/7/setting-timer-systemd-linux

|

||||

[2]:https://popcon.debian.org/

|

||||

[3]:https://www.linux.com/blog/intro-to-linux/2018/6/systemd-services-reacting-change

|

||||

[4]:https://www.linux.com/blog/learn/intro-to-linux/2018/6/systemd-services-monitoring-files-and-directories

|

||||

[5]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

@ -1,140 +0,0 @@

|

||||

HankChow translating

|

||||

|

||||

An Overview of Android Pie

|

||||

======

|

||||

|

||||

|

||||

|

||||

Let’s talk about Android for a moment. Yes, I know it’s only Linux by way of a modified kernel, but what isn’t these days? And seeing as how the developers of Android have released what many (including yours truly) believe to be the most significant evolution of the platform to date, there’s plenty to talk about. Of course, before we get into that, it does need to be mentioned (and most of you will already know this) that the whole of Android isn’t open source. Although much of it is, when you get into the bits that connect to Google services, things start to close up. One major service is the Google Play Store, a functionality that is very much proprietary. But this isn’t about how much of Android is open or closed, this is about Pie.

|

||||

Delicious, nutritious … efficient and battery-saving Pie.

|

||||

|

||||

I’ve been working with Android Pie on my Essential PH-1 daily driver (a phone that I really love, but understand how shaky the ground is under the company). After using Android Pie for a while now, I can safely say you want it. It’s that good. But what about the ninth release of Android makes it so special? Let’s dig in and find out. Our focus will be on the aspects that affect users, not developers, so I won’t dive deep into the underlying works.

|

||||

|

||||

### Gesture-Based Navigation

|

||||

|

||||

Much has been made about Android’s new gesture-based navigation—much of it not good. To be honest, this was a feature that aroused all of my curiosity. When it was first announced, no one really had much of an idea what it would be like. Would users be working with multi touch gestures to navigate around the Android interface? Or would this be something completely different.

|

||||

|

||||

|

||||

![Android Pie][2]

|

||||

|

||||

Figure 1: The Android Pie recent apps overview.

|

||||

|

||||

[Used with permission][3]

|

||||

|

||||

The reality is, gesture-based navigation is much more subtle and simple than what most assumed. And it all boils down to the Home button. With gesture-based navigation enabled, the Home button and the Recents button have been combined into a single feature. This means, in order to gain access to your recent apps, you can’t simply tap that square Recents button. Instead, the Recent apps overview (Figure 1) is opened with a short swipe up from the home button.

|

||||

|

||||

Another change is how the App Drawer is accessed. In similar fashion to opening the Recents overview, the App Drawer is opened via a long swipe up from the Home button.

|

||||

|

||||

As for the back button? It’s not been removed. Instead, what you’ll find is it appears (in the left side of the home screen dock) when an app calls for it. Sometimes that back button will appear, even if an app includes its own back button.

|

||||

|

||||

Thing is, however, if you don’t like gesture-based navigation, you can disable it. To do so, follow these steps:

|

||||

|

||||

1. Open Settings

|

||||

|

||||

2. Scroll down and tap System > Gestures

|

||||

|

||||

3. Tap Swipe up on Home button

|

||||

|

||||

4. Tap the On/Off slider (Figure 2) until it’s in the Off position

|

||||

|

||||

|

||||

|

||||

|

||||

### Battery Life

|

||||

|

||||

AI has become a crucial factor in Android. In fact, it is AI that has helped to greatly improve battery life in Android. This new feature is called Adaptive Battery and works by prioritizing battery power for the apps and services you use most. By using AI, Android learns how you use your Apps and, after a short period, can then shut down unused apps, so they aren’t draining your battery while waiting in memory.

|

||||

|

||||

The only caveat to Adaptive Battery is, should the AI pick up “bad habits” and your battery start to prematurely drain, the only way to reset the function is by way of a factory reset. Even with that small oversight, the improvement in battery life from Android Oreo to Pie is significant.

|

||||

|

||||

### Changes to Split Screen

|

||||

|

||||

Split Screen has been available to Android for some time. However, with Android Pie, how it’s used has slightly changed. This change only affects those who have gesture-based navigation enabled (otherwise, it remains the same). In order to work with Split Screen on Android 9.0, follow these steps:

|

||||

|

||||

![Adding an app][5]

|

||||

|

||||

Figure 3: Adding an app to split screen mode in Android Pie.

|

||||

|

||||

[Used with permission][3]

|

||||

|

||||

1. Swipe upward from the Home button to open the Recent apps overview.

|

||||

|

||||

2. Locate the app you want to place in the top portion of the screen.

|

||||

|

||||

3. Long press the app’s circle icon (located at the top of the app card) to reveal a new popup menu (Figure 3)

|

||||

|

||||

4. Tap Split Screen and the app will open in the top half of the screen.

|

||||

|

||||

5. Locate the second app you want to open and, tap it to add it to the bottom half of the screen.

|

||||

|

||||

|

||||

|

||||

|

||||

Using Split Screen and closing apps with the feature remains the same as it was.

|

||||

|

||||

###

|

||||

|

||||

![Actions][7]

|

||||

|

||||

Figure 4: Android App Actions in action.

|

||||

|

||||

[Used with permission][3]

|

||||

|

||||

### App Actions

|

||||

|

||||

This is another feature that was introduced some time ago, but was given some serious attention for the release of Android Pie. App Actions make it such that you can do certain things with an app, directly from the apps launcher.

|

||||

|

||||

For instance, if you long-press the GMail launcher, you can select to reply to a recent email, or compose a new email. Back in Android Oreo, that feature came in the form of a popup list of actions. With Android Pie, the feature now better fits with the Material Design scheme of things (Figure 4).

|

||||

|

||||

![Sound control][9]

|

||||

|

||||

Figure 5: Sound control in Android Pie.

|

||||

|

||||

[Used with permission][3]

|

||||

|

||||

### Sound Controls

|

||||

|

||||

Ah, the ever-changing world of sound controls on Android. Android Oreo had an outstanding method of controlling your sound, by way of minor tweaks to the Do Not Disturb feature. With Android Pie, that feature finds itself in a continued state of evolution.

|

||||

|

||||

What Android Pie nailed is the quick access buttons to controlling sound on a device. Now, if you press either the volume up or down button, you’ll see a new popup menu that allows you to control if your device is silenced and/or vibrations are muted. By tapping the top icon in that popup menu (Figure 5), you can cycle through silence, mute, or full sound.

|

||||

|

||||

### Screenshots

|

||||

|

||||

Because I write about Android, I tend to take a lot of screenshots. With Android Pie came one of my favorite improvements: sharing screenshots. Instead of having to open Google Photos, locate the screenshot to be shared, open the image, and share the image, Pie gives you a pop-up menu (after you take a screenshot) that allows you to share, edit, or delete the image in question.

|

||||

|

||||

![Sharing ][11]

|

||||

|

||||

Figure 6: Sharing screenshots just got a whole lot easier.

|

||||

|

||||

[Used with permission][3]

|

||||

|

||||

If you want to share the screenshot, take it, wait for the menu to pop up, tap Share (Figure 6), and then share it from the standard Android sharing menu.

|

||||

|

||||

### A More Satisfying Android Experience

|

||||

|

||||

The ninth iteration of Android has brought about a far more satisfying user experience. What I’ve illustrated only scratches the surface of what Android Pie brings to the table. For more information, check out Google’s official [Android Pie website][12]. And if your device has yet to receive the upgrade, have a bit of patience. Pie is well worth the wait.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/learn/2018/10/overview-android-pie

|

||||

|

||||

作者:[Jack Wallen][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linux.com/users/jlwallen

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: /files/images/pie1png

|

||||

[2]: https://www.linux.com/sites/lcom/files/styles/floated_images/public/pie_1.png?itok=BsSe8kqS (Android Pie)

|

||||

[3]: /licenses/category/used-permission

|

||||

[4]: /files/images/pie3png

|

||||

[5]: https://www.linux.com/sites/lcom/files/styles/floated_images/public/pie_3.png?itok=F-NB1dqI (Adding an app)

|

||||

[6]: /files/images/pie4png

|

||||

[7]: https://www.linux.com/sites/lcom/files/styles/floated_images/public/pie_4.png?itok=Ex-NzYSo (Actions)

|

||||

[8]: /files/images/pie5png

|

||||

[9]: https://www.linux.com/sites/lcom/files/styles/floated_images/public/pie_5.png?itok=NMW2vIlL (Sound control)

|

||||

[10]: /files/images/pie6png

|

||||

[11]: https://www.linux.com/sites/lcom/files/styles/floated_images/public/pie_6.png?itok=7Ik8_4jC (Sharing )

|

||||

[12]: https://www.android.com/versions/pie-9-0/

|

||||

237

sources/tech/20181105 How to manage storage on Linux with LVM.md

Normal file

237

sources/tech/20181105 How to manage storage on Linux with LVM.md

Normal file

@ -0,0 +1,237 @@

|

||||

How to manage storage on Linux with LVM

|

||||

======

|

||||

Create, expand, and encrypt storage pools as needed with the Linux LVM utilities.

|

||||

|

||||

|

||||

Logical Volume Manager ([LVM][1]) is a software-based RAID-like system that lets you create "pools" of storage and add hard drive space to those pools as needed. There are lots of reasons to use it, especially in a data center or any place where storage requirements change over time. Many Linux distributions use it by default for desktop installations, though, because users find the flexibility convenient and there are some built-in encryption features that the LVM structure simplifies.

|

||||

|

||||

However, if you aren't used to seeing an LVM volume when booting off of a Live CD for data rescue or migration purposes, LVM can be confusing because the **mount** command can't mount LVM volumes. For that, you need LVM tools installed. The chances are great that your distribution has LVM utils available—if they aren't already installed.

|

||||

|

||||

This tutorial explains how to create and deal with LVM volumes.

|

||||

|

||||

### Create an LVM pool

|

||||

|

||||

This article assumes you have a working knowledge of how to interact with hard drives on Linux. If you need more information on the basics before continuing, read my [introduction to hard drives on Linux][2]

|

||||

|

||||

Usually, you don't have to set up LVM at all. When you install Linux, it often defaults to creating a virtual "pool" of storage and adding your machine's hard drive(s) to that pool. However, manually creating an LVM storage pool is a great way to learn what happens behind the scenes.

|

||||

|

||||

You can practice with two spare thumb drives of any size, or two hard drives, or a virtual machine with two imaginary drives defined.

|

||||

|

||||

First, format the imaginary drive **/dev/sdx** so that you have a fresh drive ready to use for this demo.

|

||||

|

||||

```

|

||||

# echo "warning, this ERASES everything on this drive."

|

||||

warning, this ERASES everything on this drive.

|

||||

# dd if=/dev/zero of=/dev/sdx count=8196

|

||||

# parted /dev/sdx print | grep Disk

|

||||

Disk /dev/sdx: 100GB

|

||||

# parted /dev/sdx mklabel gpt

|

||||

# parted /dev/sdx mkpart primary 1s 100%

|

||||

```

|

||||

|

||||

This LVM command creates a storage pool. A pool can consist of one or more drives, and right now it consists of one. This example storage pool is named **billiards** , but you can call it anything.

|

||||

|

||||

```

|

||||

# vgcreate billiards /dev/sdx1

|

||||

```

|

||||

|

||||

Now you have a big, nebulous pool of storage space. Time to hand it out. To create two logical volumes (you can think of them as virtual drives), one called **vol0** and the other called **vol1** , enter the following:

|

||||

|

||||

```

|

||||

# lvcreate billiards 49G --name vol0

|

||||

# lvcreate billiards 49G --name vol1

|

||||

```

|

||||

|

||||

Now you have two volumes carved out of one storage pool, but neither of them has a filesystem yet. To create a filesystem on each volume, you must bring the **billiards** volume group online.

|

||||

|

||||

```

|

||||

# vgchange --activate y billiards

|

||||

```

|

||||

|

||||

Now make the file systems. The **-L** option provides a label for the drive, which is displayed when the drive is mounted on your desktop. The path to the volume is a little different than the usual device paths you're used to because these are virtual devices in an LVM storage pool.

|

||||

|

||||

```

|

||||

# mkfs.ext4 -L finance /dev/billiards/vol0

|

||||

# mkfs.ext4 -L production /dev/billiards/vol1

|

||||

```

|

||||

|

||||

You can mount these new volumes on your desktop or from a terminal.

|

||||

|

||||

```

|

||||

# mkdir -p /mnt/vol0 /mnt/vol1

|

||||

# mount /dev/billiards/vol0 /mnt/vol0

|

||||

# mount /dev/billiards/vol1 /mnt/vol1

|

||||

```

|

||||

|

||||

### Add space to your pool

|

||||

|

||||

So far, LVM has provided nothing more than partitioning a drive normally provides: two distinct sections of drive space on a single physical drive (in this example, 49GB and 49GB on a 100GB drive). Imagine now that the finance department needs more space. Traditionally, you'd have to restructure. Maybe you'd move the finance department data to a new, dedicated physical drive, or maybe you'd add a drive and then use an ugly symlink hack to provide users easy access to their additional storage space. With LVM, however, all you have to do is expand the storage pool.

|

||||

|

||||

You can add space to your pool by formatting another drive and using it to create more additional space.

|

||||

|

||||

First, create a partition on the new drive you're adding to the pool.

|

||||

|

||||

```

|

||||

# part /dev/sdy mkpart primary 1s 100%

|

||||

```

|

||||

|

||||

Then use the **vgextend** command to mark the new drive as part of the pool.

|

||||

|

||||

```

|

||||

# vgextend billiards /dev/sdy1

|

||||

```

|

||||

|

||||

Finally, dedicate some portion of the newly available storage pool to the appropriate logical volume.

|

||||

|

||||

```

|

||||

# lvextend -L +49G /dev/billiards/vol0

|

||||

```

|

||||

|

||||