mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

Merge remote-tracking branch 'LCTT/master'

commit 6273b84f10

Protecting Code Integrity with PGP (Part 3): Generating PGP Subkeys
======

> In this third article, we explain how to generate the PGP subkeys you will use for daily work.

In this tutorial series, we provide a practical guide to using PGP. Previously, we introduced [basic tools and concepts][1] and showed how to [generate and protect your master PGP key][2]. In this third article, we explain how to generate the PGP subkeys you will use for daily work.

### Checklist

1. Generate a 2048-bit encryption subkey (essential)
2. Generate a 2048-bit signing subkey (essential)
3. Generate a 2048-bit authentication subkey (recommended)
4. Upload your public keys to a PGP keyserver (essential)
5. Set up a refresh cronjob (essential)

### Considerations

Now that we have created the master key, let's create the keys you will actually use for day-to-day work. We create 2048-bit keys because a lot of specialized hardware (we will discuss this later) cannot handle longer keys, but also for pragmatic reasons. If we ever find ourselves in a world where 2048-bit RSA keys are not good enough, it will be because of fundamental breakthroughs in computing or mathematics, so longer 4096-bit keys will not make much of a difference.

### Creating the subkeys

To create the subkeys, run:

```
$ gpg --quick-add-key [fpr] rsa2048 encr
$ gpg --quick-add-key [fpr] rsa2048 sign
```

Replace `[fpr]` with the full fingerprint of your key.

You can also create an authentication key, which lets you use your PGP key for ssh:

```
$ gpg --quick-add-key [fpr] rsa2048 auth
```

You can review your key information with `gpg --list-key [fpr]`:

```
pub   rsa4096 2017-12-06 [C] [expires: 2019-12-06]
      111122223333444455556666AAAABBBBCCCCDDDD
uid           [ultimate] Alice Engineer <alice@example.org>
uid           [ultimate] Alice Engineer <allie@example.net>
sub   rsa2048 2017-12-06 [E]
sub   rsa2048 2017-12-06 [S]
```

### Uploading your public key to a keyserver

Your key creation is complete, so now you should upload it to one of the public keyservers, which makes it easier for others to find it. (Skip this step if you do not plan to actually use the key you created, as it only litters the keyservers with useless data.)

```
$ gpg --send-key [fpr]
```

If this command does not succeed, you can try specifying a keyserver and a port that are more likely to work:

```
$ gpg --keyserver hkp://pgp.mit.edu:80 --send-key [fpr]
```

Most keyservers communicate with each other, so your key information will eventually synchronize to all of them.

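**A note on privacy:** Keyservers are completely public and therefore, by design, leak potentially sensitive information about you, such as your full name, nicknames, and personal or work email addresses. If you sign other people's keys or someone signs yours, keyservers also expose your social connections. Once such personal information is sent to the keyservers, it can no longer be edited or deleted. Even if you revoke a signature or identity, it is not deleted from your key record; it is only marked as revoked, which makes it stand out even more.

That said, if you participate in software development on a public project, all of the above information is already public record, so also making it available through keyservers does not result in a net loss of privacy.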

### Uploading your public key to GitHub

If you use GitHub in your development (and who doesn't?), you should upload your key by following the instructions they provide:

- [Adding a PGP key to your GitHub account](https://help.github.com/articles/adding-a-new-gpg-key-to-your-github-account/)

To generate public key output suitable for pasting, run:

```
$ gpg --export --armor [fpr]
```

### Setting up a refresh cronjob

You need to refresh your keyring regularly to pick up the latest changes to other people's public keys. You can set up a cronjob to do that:

```
$ crontab -e
```

Add the following on a new line:

```
@daily /usr/bin/gpg2 --refresh >/dev/null 2>&1
```

**Note:** Check the full path to your `gpg` or `gpg2` command, and use `gpg2` if your regular `gpg` is the legacy GnuPG v.1.

Learn more about Linux through the free ["Introduction to Linux"](https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux) course from The Linux Foundation and edX.

--------------------------------------------------------------------------------

via: https://www.linux.com/blog/learn/pgp/2018/2/protecting-code-integrity-pgp-p

Author: [Konstantin Ryabitsev][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:https://www.linux.com/users/mricon
[1]:https://linux.cn/article-9524-1.html
[2]:https://linux.cn/article-9529-1.html

vrms Helps You Find Non-free Software in Debian
======

The other day I read an interesting guide on Digital Ocean that explained [the difference between free and open source software][1]. Until then, I thought the two were more or less the same. But I was wrong: there are some significant differences between them. While reading that article, I wondered how to find non-free software in Linux, hence this post.

### Say hello to "Virtual Richard M. Stallman", a Perl script to find non-free software in Debian

**Virtual Richard M. Stallman**, or **vrms** for short, is a program written in Perl that analyzes the list of installed software on your Debian-based system and reports all installed packages that come from the non-free and contrib trees. For those who are not clear on the distinction, free software should meet the following [**four essential freedoms**][2].

* **Freedom 0** – The freedom to run the program as you wish, for any purpose.
* **Freedom 1** – The freedom to study how the program works and adapt it to your needs. Access to the source code is a precondition for this.
* **Freedom 2** – The freedom to redistribute copies so you can help others.
* **Freedom 3** – The freedom to improve the program and release your improvements to the public, so that the whole community benefits. Access to the source code is a precondition for this.

Any software that does not meet the above four conditions is not considered free software. In short, **free software means users have the freedom to run, copy, distribute, study, change and improve the software.**

Now let's find out whether the installed software is free or non-free, shall we?

The vrms package is available in the default repositories of Debian and its derivatives, such as Ubuntu, so you can install it with the `apt` package manager using the following command.

```
$ sudo apt-get install vrms
```

Once it is installed, run the following command to find non-free software on a Debian-based system.

```
$ vrms
```

Sample output from my Ubuntu 16.04 LTS desktop:

```
Non-free packages installed on ostechnix

unrar Unarchiver for .rar files (non-free version)

1 non-free packages, 0.0% of 2103 installed packages.
```

![][4]

As you can see in the above screenshot, I have one non-free package installed on my Ubuntu system.

If you don't have any non-free packages on your system, you should see the following output instead.

```
No non-free or contrib packages installed on ostechnix! rms would be proud.
```

vrms can find non-free packages not only on Debian, but also on Ubuntu, Linux Mint and other deb-based systems.

**Limitations**

vrms does have some limitations, though. As I already mentioned, it lists the installed packages from the non-free and contrib sections. However, some distributions do not follow a policy of ensuring that proprietary software ends up only in repositories that vrms recognizes as "non-free", and they make no effort to maintain this separation. In such cases, vrms will not recognize the non-free software and will always report that you have non-free software installed on your system. If you use a distribution such as Debian or Ubuntu that follows the policy of keeping proprietary software in non-free repositories, vrms will certainly help you find non-free packages.

And that's all. I hope it was useful. More good stuff is on the way. Stay tuned!

Happy Tamil New Year to all Tamils around the world!

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/the-vrms-program-helps-you-to-find-non-free-software-in-debian/

Author: [SK][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

Cloud Commander – A Web File Manager With Console And Editor
======

**Cloud Commander** is a web-based file manager application that allows you to view, access, and manage the files and folders of your system from any computer, mobile phone, or tablet via a web browser. It has two simple, classic panels and automatically adjusts its size to your device's display. It also has two built-in editors, **Dword** and **Edward**, with syntax highlighting, and a **Console** that gives you access to your system's command line, so you can edit your files on the go. The Cloud Commander server is a cross-platform application that runs on Linux, Windows and Mac OS X, and the client runs in any web browser. It is written in **JavaScript/Node.js** and is licensed under **MIT**.

In this brief tutorial, let us see how to install Cloud Commander on an Ubuntu 18.04 LTS server.

### Prerequisites

As I mentioned earlier, Cloud Commander is written in Node.js, so to install Cloud Commander we need to install Node.js first. To do so, refer to the following guide.

### Install Cloud Commander

After installing Node.js, run the following command to install Cloud Commander:

```
$ npm i cloudcmd -g
```

Congratulations! Cloud Commander has been installed. Let us go ahead and see the basic usage of Cloud Commander.

### Getting started with Cloud Commander

Run the following command to start Cloud Commander:

```
$ cloudcmd
```

**Sample output:**

```
url: http://localhost:8000
```

Now, open your web browser and navigate to **<http://localhost:8000>** or **<http://IP-address:8000>**.

From now on, you can create, delete, view, and manage files or folders right in the web browser, from the local system or a remote system, or from a mobile phone, tablet, etc.

![][2]

As you can see in the above screenshot, Cloud Commander has two panels, ten hotkeys (F1 to F10), and a Console.

Each hotkey does a unique job.

* F1 – Help
* F2 – Rename file/folder
* F3 – View files and folders
* F4 – Edit files
* F5 – Copy files/folders
* F6 – Move files/folders
* F7 – Create new directory
* F8 – Delete file/folder
* F9 – Open Menu
* F10 – Open config

#### Cloud Commander console

Click on the Console icon. This will open your system's default shell.

![][3]

From this console you can perform all sorts of administration tasks, such as installing packages, removing packages, updating your system, etc. You can even shut down or reboot the system. Therefore, Cloud Commander is not just a file manager; it also has the functionality of a remote administration tool.

#### Creating files/folders

To create a new file or folder, right-click on any empty space and go to **New -> File or Directory**.

![][4]

#### View files

You can view pictures and play audio and video files.

![][5]

#### Upload files

Another cool feature is that you can easily upload a file to the Cloud Commander system from any system or device.

To upload a file, right-click on any empty space in the Cloud Commander panel, and click on the **Upload** option.

![][6]

Select the files you want to upload.

You can also upload files from cloud services like Google Drive, Dropbox, Amazon Cloud Drive, Facebook, Twitter, Gmail, GitHub, Picasa, Instagram and many more.

To upload files from the cloud, right-click on any empty space in the panel and select **Upload from Cloud**.

![][7]

Select any web service of your choice, for example Google Drive. Click the **Connect to Google drive** button.

![][8]

In the next step, authenticate your Google Drive account with Cloud Commander. Finally, select the files from your Google Drive and click **Upload**.

![][9]

#### Update Cloud Commander

To update Cloud Commander to the latest available version, run the following command:

```
$ npm update cloudcmd -g
```

#### Conclusion

In my testing, Cloud Commander worked like a charm. I didn't face a single issue while testing it on my Ubuntu server. Cloud Commander is not just a web-based file manager; it also acts as a remote administration tool that can perform most Linux administration tasks. You can create files and folders, and rename, delete, edit, and view them. You can also install, update, upgrade, and remove packages just as you would from a terminal on the local system. And, of course, you can even shut down or restart the system from the Cloud Commander console itself. What more do you need? Give it a try; you will find it useful.

That's all for now. I will be here soon with another interesting article. Until then, stay tuned with OSTechNix.

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/cloud-commander-a-web-file-manager-with-console-and-editor/

Author: [SK][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:https://www.ostechnix.com/author/sk/
[2]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-Google-Chrome_006-4.jpg
[3]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-Google-Chrome_007-2.jpg
[4]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-commander-file-folder-1.png
[5]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-home-sk-Google-Chrome_008-1.jpg
[6]:http://www.ostechnix.com/wp-content/uploads/2016/05/cloud-commander-upload-2.png
[7]:http://www.ostechnix.com/wp-content/uploads/2016/05/upload-from-cloud-1.png
[8]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-home-sk-Google-Chrome_009-2.jpg
[9]:http://www.ostechnix.com/wp-content/uploads/2016/05/Cloud-Commander-home-sk-Google-Chrome_010-1.jpg

Advanced image viewing tricks with ImageMagick
======

In my [introduction to ImageMagick][1], I showed how to use the application's menus to edit and add effects to your images. In this follow-up, I'll show additional ways to use this open source image editor to view your images.

### Another effect

Before diving into advanced image viewing with ImageMagick, I want to share another interesting, yet simple, effect using the **convert** command, which I discussed in detail in my previous article. This involves the **-edge** option, then **-negate**:

```
convert DSC_0027.JPG -edge 3 -negate edge3+negate.jpg
```

![Using the edge and negate options on an image.][3]

Before and after example of using the edge and negate options on an image.

There are a number of things I like about the edited image: the appearance of the sea, the background and foreground vegetation, but especially the sun and its reflection, and also the sky.

### Using display to view a series of images

If you're a command-line user like I am, you know that the shell provides a lot of flexibility and shortcuts for complex tasks. Here I'll show one example: the way ImageMagick's **display** command can overcome a problem I've had reviewing images I import with the [Shotwell][4] image manager for the GNOME desktop.

Shotwell creates a nice directory structure that uses each image's [Exif][5] data to store imported images based on the date they were taken or created. You end up with a top directory for the year, subdirectories for each month (01, 02, 03, and so on), followed by another level of subdirectories for each day of the month. I like this structure, because finding an image or set of images based on when they were taken is easy.

This structure is not so great, however, when I want to review all my images for the last several months or even the whole year. With a typical image viewer, this involves a lot of jumping up and down the directory structure, but ImageMagick's **display** command makes it simple. For example, imagine that I want to look at all my pictures for this year. If I enter **display** on the command line like this:

```
display -resize 35% 2017/*/*/*.JPG
```

I can march through the year, month by month, day by day.

Now imagine I'm looking for an image, but I can't remember whether I took it in the first half of 2016 or the first half of 2017. This command:

```
display -resize 35% 201[6-7]/0[1-6]/*/*.JPG
```

restricts the images shown to January through June of 2016 and 2017.
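If you want to check exactly which paths a pattern like `201[6-7]/0[1-6]/*/*.JPG` selects before pointing **display** at it, you can test it against a few sample paths. The sketch below is only an illustration, not part of ImageMagick: it uses Go's `path.Match`, whose `*`, `?` and `[...]` syntax is close to (but not identical to) the shell's globbing, and the function name `matchesHalfYear` and the sample paths are hypothetical Shotwell-style examples.

```go
package main

import (
	"fmt"
	"path"
)

// matchesHalfYear reports whether a slash-separated Shotwell-style path
// (year/month/day/file) matches the glob used above: January to June
// of 2016 or 2017. As in the shell, '*' never matches a '/'.
func matchesHalfYear(p string) bool {
	ok, err := path.Match("201[6-7]/0[1-6]/*/*.JPG", p)
	return err == nil && ok
}

func main() {
	// Hypothetical sample paths.
	samples := []string{
		"2016/03/14/DSC_0001.JPG", // March 2016: inside the range
		"2017/06/30/DSC_0420.JPG", // June 2017: inside the range
		"2017/07/01/DSC_0421.JPG", // July: month outside 0[1-6]
		"2015/01/02/DSC_0002.JPG", // 2015: year outside 201[6-7]
		"2016/02/10/DSC_0003.jpg", // lowercase extension: matching is case-sensitive
	}
	for _, s := range samples {
		fmt.Printf("%-26s %v\n", s, matchesHalfYear(s))
	}
}
```

Note that the match is case-sensitive, just like the shell glob, so a camera that writes `.jpg` instead of `.JPG` would need a different pattern.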

### Using montage to view thumbnails of images

Now say I'm looking for an image that I want to edit. One problem is that **display** shows each image's filename, but not its place in the directory structure, so it's not obvious where I can find that image. Also, when I (sporadically) download images from my camera, I clear them from the camera's storage, so the filenames restart at **DSC_0001.jpg** at unpredictable times. Finally, it can take a lot of time to go through 12 months of images when I use **display** to show an entire year.

This is where the **montage** command, which puts thumbnail versions of a series of images into a single image, can be very useful. For example:

```
montage -label %d/%f -title 2017 -tile 5x -resize 10% -geometry +4+4 2017/0[1-4]/*/*.JPG 2017JanApr.jpg
```

From left to right, this command starts by specifying a label for each image that consists of its directory (**%d**) and its filename (**%f**), separated with **/**. Next, the command specifies the main directory as the title, then instructs **montage** to tile the images in five columns, with each image resized to 10% (which fits my monitor's screen easily). The geometry setting puts whitespace around each image. Finally, it specifies which images to include in the montage, and an appropriate filename to save the montage to (**2017JanApr.jpg**). The image **2017JanApr.jpg** now becomes a reference I can use over and over when I want to view all my images from this time period.

### Managing memory

You might wonder why I specified just a four-month period (January to April) for this montage. Here is where you need to be a bit careful, because **montage** can consume a lot of memory. My camera creates image files that are about 2.5MB each, and I have found that my system's memory can pretty easily handle 60 images or so. When I get to around 80, my computer freezes when other programs, such as Firefox and Thunderbird, are running in the background. This seems to relate to memory usage, which goes up to 80% or more of available RAM for **montage**. (You can check this by running **top** while you do this procedure.) If I shut down all other programs, I can manage 80 images before my system freezes.

Here's how you can get some sense of how many files you're dealing with before running the **montage** command:

```
ls 2017/0[1-4]/*/*.JPG > filelist; wc -l filelist
```

The **ls** command generates a list of the files matching our search and saves it to the arbitrarily named file **filelist**. Then the **wc** command with the **-l** option reports how many lines are in that file, in other words, how many files **ls** found. Here's my output:

```
163 filelist
```

Oops! There are 163 images taken from January through April, and creating a montage of all of them would almost certainly freeze up my system. I need to trim down the list a bit, maybe just to March or even earlier. But what if I took a lot of pictures from April 20 to 30, and that's a big part of my problem? Here's how the shell can help us figure this out:

```
ls 2017/0[1-3]/*/*.JPG > filelist; ls 2017/04/0[1-9]/*.JPG >> filelist; ls 2017/04/1[0-9]/*.JPG >> filelist; wc -l filelist
```

This is a series of four commands on one line, separated by semicolons. The first command lists the images taken from January to March; the second appends April 1 through 9 using the **>>** append operator; the third appends April 10 through 19. The fourth command, **wc -l**, reports:

```
81 filelist
```

I know 81 files should be doable if I shut down my other applications.

Managing this with the **montage** command is easy, since we're just transposing what we did above:

```
montage -label %d/%f -title 2017 -tile 5x -resize 10% -geometry +4+4 2017/0[1-3]/*/*.JPG 2017/04/0[1-9]/*.JPG 2017/04/1[0-9]/*.JPG 2017Jan01Apr19.jpg
```

The last filename in the **montage** command will be the output; everything before that is input and is read from left to right. This took just under three minutes to run and resulted in an image about 2.5MB in size, but my system was sluggish for a bit afterward.

### Displaying the montage

When you first view a large montage using the **display** command, you may see that the montage's width is OK, but the image is squished vertically to fit the screen. Don't worry; just left-click the image and select **View > Original Size**. Click again to hide the menu.

I hope this has been helpful in showing you new ways to view your images. In my next article, I'll discuss more complex image manipulation.

### About The Author

Greg Pittman: Greg is a retired neurologist in Louisville, Kentucky, with a long-standing interest in computers and programming, beginning with Fortran IV. When Linux and open source software came along, it kindled a commitment to learning more, and eventually contributing. He is a member of the Scribus team.

--------------------------------------------------------------------------------

via: https://opensource.com/article/17/9/imagemagick-viewing-images

Author: [Greg Pittman][a]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:https://opensource.com/users/greg-p
[1]:https://opensource.com/article/17/8/imagemagick
[2]:/file/370946
[3]:https://opensource.com/sites/default/files/u128651/edge3negate.jpg (Using the edge and negate options on an image.)
[4]:https://wiki.gnome.org/Apps/Shotwell
[5]:https://en.wikipedia.org/wiki/Exif

Translating by MjSeven

Useful Resources for Those Who Want to Know More About Linux
======

Some Common Concurrent Programming Mistakes
============================================================

Go is a language with built-in support for concurrent programming. By using the `go` keyword to create goroutines (lightweight threads) and by [using][8] [channels][9] and [other concurrency][10] [synchronization techniques][11] provided in Go, concurrent programming becomes easy, flexible and enjoyable.

On the other hand, Go doesn't prevent Go programmers from making concurrent programming mistakes caused by carelessness or lack of experience. The rest of this article shows some common mistakes in Go concurrent programming, to help Go programmers avoid them.

### No Synchronizations When Synchronizations Are Needed

Code lines may [not be executed in the order in which they appear][2].

There are two mistakes in the following program.

* First, the read of `b` in the main goroutine and the write of `b` in the new goroutine might cause data races.
* Second, the condition `b == true` can't ensure that `a != nil` in the main goroutine. Compilers and CPUs may optimize by [reordering instructions][1] in the new goroutine, so the assignment of `b` may happen before the assignment of `a` at run time, which means slice `a` may still be `nil` when its elements are modified in the main goroutine.

```
package main

import (
	"time"
	"runtime"
)

func main() {
	var a []int // nil
	var b bool  // false

	// a new goroutine
	go func() {
		a = make([]int, 3)
		b = true // write b
	}()

	for !b { // read b
		time.Sleep(time.Second)
		runtime.Gosched()
	}
	a[0], a[1], a[2] = 0, 1, 2 // might panic
}
```

The above program may run fine on one computer but panic on another. Or it may run fine _N_ times and then panic on the _(N+1)_th run.

We should use channels or the synchronization techniques provided in the `sync` standard package to guarantee the memory order. For example:

```
package main

func main() {
	var a []int = nil
	c := make(chan struct{})

	// a new goroutine
	go func() {
		a = make([]int, 3)
		c <- struct{}{}
	}()

	<-c
	a[0], a[1], a[2] = 0, 1, 2
}
```

### Use `time.Sleep` Calls To Do Synchronizations

Let's look at a simple example.

```
package main

import (
	"fmt"
	"time"
)

func main() {
	var x = 123

	go func() {
		x = 789 // write x
	}()

	time.Sleep(time.Second)
	fmt.Println(x) // read x
}
```

We expect the program to print `789`. If we run it, it does print `789`, almost always. But is the program properly synchronized? No! The reason is that the Go runtime doesn't guarantee that the write of `x` happens before the read of `x`. Under certain conditions, such as when most CPU resources are consumed by other programs running on the same OS, the write of `x` might happen after the read of `x`. This is why we should never use `time.Sleep` calls for synchronization in production projects.

Let's look at another example.

```
package main

import (
	"fmt"
	"time"
)

var x = 0

func main() {
	var num = 123
	var p = &num

	c := make(chan int)

	go func() {
		c <- *p + x
	}()

	time.Sleep(time.Second)
	num = 789
	fmt.Println(<-c)
}
```

What do you expect the program to output: `123` or `789`? In fact, the output is compiler dependent. With the standard Go compiler 1.10, the program is very likely to output `123`. But in theory, it might output `789`, or some other arbitrary number.

Now, let's change `c <- *p + x` to `c <- *p` and run the program again. You will find the output becomes `789` (with the standard Go compiler 1.10). Again, the output is compiler dependent.

Yes, there are data races in the above program. The expression `*p` might be evaluated before, after, or while the assignment `num = 789` is processed. The `time.Sleep` call can't guarantee that the evaluation of `*p` happens before the assignment is processed.

For this particular example, we should store the value to be sent in a temporary variable before creating the new goroutine, and send that temporary value in the new goroutine instead, to remove the data races.

```
...
	tmp := *p + x
	go func() {
		c <- tmp
	}()
...
```

|

||||

|

||||

### Leave Goroutines Hanging

Hanging goroutines are goroutines that stay blocked forever. A goroutine can hang for many reasons, for example:

* a goroutine tries to receive a value from a nil channel, or from a channel that no other goroutine will ever send values to.

* a goroutine tries to send a value to a nil channel, or to a channel that no other goroutine will ever receive values from.

* a goroutine deadlocks itself.

* a group of goroutines deadlock each other.

* a goroutine is blocked executing a `select` code block that has no `default` branch, and all the channel operations following the `case` keywords in the `select` code block keep blocking forever.

Apart from the cases where we deliberately leave the main goroutine hanging to keep a program from exiting, most hanging goroutines are unintended. It is hard for the Go runtime to judge whether a blocked goroutine is hanging or is only blocked temporarily, so the Go runtime never releases the resources consumed by a hanging goroutine.
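One common way to keep a sender from hanging on a channel that nobody reads any more is to let it also wait on a cancellation channel. The sketch below assumes a hypothetical `generate` function and a `done` channel that the consumer closes when it no longer wants values; the extra `exited` channel exists only so the caller can observe that the goroutine really returned.

```go
package main

import "fmt"

// generate (hypothetical) sends 0, 1, 2, ... on out until done is closed.
// Without the <-done case, the goroutine would hang on its next send as
// soon as the consumer stopped receiving.
func generate(done <-chan struct{}) (<-chan int, <-chan struct{}) {
	out := make(chan int)
	exited := make(chan struct{})
	go func() {
		defer close(exited) // signals that the goroutine has returned
		for i := 0; ; i++ {
			select {
			case out <- i:
			case <-done:
				return
			}
		}
	}()
	return out, exited
}

func main() {
	done := make(chan struct{})
	out, exited := generate(done)
	for i := 0; i < 3; i++ {
		fmt.Println(<-out) // prints 0, then 1, then 2
	}
	close(done) // the consumer is finished
	<-exited    // returns because the generator goroutine exited
	fmt.Println("generator exited")
}
```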

In the [first-response-wins][12] channel use case, if the capacity of the channel used as a future is not large enough, some slower response goroutines will hang when trying to send a result to the future channel. For example, each time the following function is called, four goroutines will stay blocked forever.

```
func request() int {
	c := make(chan int)
	for i := 0; i < 5; i++ {
		i := i
		go func() {
			c <- i // 4 goroutines will hang here.
		}()
	}
	return <-c
}
```

To keep the four goroutines from hanging, the capacity of channel `c` must be at least `4`.
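A sketch of the fixed function, with `c` given capacity `4` as described above, follows. Which value wins depends on scheduling, so the check only verifies that the result is one of the five candidates. The name `requestBuffered` is hypothetical.

```go
package main

import "fmt"

// requestBuffered (hypothetical) starts five responders and returns the
// first result. One value is consumed by the receive below and the other
// four fit in the buffer, so every responder finishes its send and exits.
func requestBuffered() int {
	c := make(chan int, 4)
	for i := 0; i < 5; i++ {
		i := i // per-iteration copy (needed before Go 1.22)
		go func() {
			c <- i
		}()
	}
	return <-c
}

func main() {
	fmt.Println(requestBuffered()) // some value in [0, 5)
}
```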

In [the second way to implement the first-response-wins][13] channel use case, if the channel used as a future is unbuffered, it is possible that the channel receiver never gets a response and hangs. For example, if the following function is called in a goroutine, that goroutine might hang. The reason is that if all five try-send operations happen before the receive operation `<-c` is ready, all five try-sends will fail, so the caller goroutine will never receive a value.

```
func request() int {
	c := make(chan int)
	for i := 0; i < 5; i++ {
		i := i
		go func() {
			select {
			case c <- i:
			default:
			}
		}()
	}
	return <-c
}
```

Making `c` a buffered channel guarantees that at least one of the five try-send operations succeeds, so the caller goroutine will never hang in the above function.
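A sketch of that fix, assuming a buffer of capacity `1` (the smallest that works), is below. The first try-send to run always finds the buffer empty, so the final receive always gets a value; the remaining try-sends simply take the `default` branch. The name `requestTrySend` is hypothetical.

```go
package main

import "fmt"

// requestTrySend (hypothetical) uses non-blocking sends. Because c has a
// buffer of one, the first try-send to run always succeeds, so the receive
// below cannot hang; later try-sends fall through to default.
func requestTrySend() int {
	c := make(chan int, 1)
	for i := 0; i < 5; i++ {
		i := i // per-iteration copy (needed before Go 1.22)
		go func() {
			select {
			case c <- i:
			default: // buffer already full: drop this response
			}
		}()
	}
	return <-c
}

func main() {
	fmt.Println(requestTrySend()) // some value in [0, 5)
}
```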

### Copy Values Of The Types In The `sync` Standard Package

In practice, values of the types in the `sync` standard package shouldn't be copied. We should only copy pointers to such values.

The following is a bad concurrent programming example. In this example, when the `Counter.Value` method is called, a `Counter` receiver value is copied. Since the `Mutex` is a field of the receiver value, it is copied as well. The copy is not synchronized, so the copied `Mutex` value might be corrupt. Even if it is not corrupt, it only protects access to the copied `Counter` receiver value, which is generally meaningless.

```
import "sync"

type Counter struct {
	sync.Mutex
	n int64
}

// This method is okay.
func (c *Counter) Increase(d int64) (r int64) {
	c.Lock()
	c.n += d
	r = c.n
	c.Unlock()
	return
}

// The method is bad. When it is called, a Counter
// receiver value will be copied.
func (c Counter) Value() (r int64) {
	c.Lock()
	r = c.n
	c.Unlock()
	return
}
```

We should change the receiver type of the `Value` method to the pointer type `*Counter` to avoid copying `Mutex` values.

The `go vet` command provided in the official Go SDK reports potential bad value copies.
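With the receiver changed to `*Counter`, every method locks the same `Mutex`. The sketch below repeats the type with that fix and drives it from 100 goroutines; the goroutine count and the `sync.WaitGroup` harness are illustrative choices, not part of the original example.

```go
package main

import (
	"fmt"
	"sync"
)

type Counter struct {
	sync.Mutex
	n int64
}

func (c *Counter) Increase(d int64) (r int64) {
	c.Lock()
	c.n += d
	r = c.n
	c.Unlock()
	return
}

// Value now uses a pointer receiver, so it locks the shared Mutex
// instead of a copy of it.
func (c *Counter) Value() (r int64) {
	c.Lock()
	r = c.n
	c.Unlock()
	return
}

func main() {
	var c Counter
	var wg sync.WaitGroup
	for i := 0; i < 100; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			c.Increase(1)
		}()
	}
	wg.Wait()
	fmt.Println(c.Value()) // 100
}
```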

### Call Methods Of `sync.WaitGroup` At Wrong Places

Each `sync.WaitGroup` value maintains a counter internally. The initial value of the counter is zero. If the counter of a `WaitGroup` value is zero, a call to the `Wait` method of the `WaitGroup` value will not block; otherwise, the call blocks until the counter value becomes zero.

To make the use of a `WaitGroup` value meaningful, when the counter of the `WaitGroup` value is zero, a call to its `Add` method must happen before the corresponding call to its `Wait` method.

For example, in the following program, the `Add` method is called at an improper place, so the final printed number is not always `100`. In fact, the final printed number may be an arbitrary number in the range `[0, 100]`. The reason is that none of the `Add` method calls are guaranteed to happen before the `Wait` method call.

```
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

func main() {
	var wg sync.WaitGroup
	var x int32 = 0
	for i := 0; i < 100; i++ {
		go func() {
			wg.Add(1)
			atomic.AddInt32(&x, 1)
			wg.Done()
		}()
	}

	fmt.Println("To wait ...")
	wg.Wait()
	fmt.Println(atomic.LoadInt32(&x))
}
```

To make the program behave as expected, we should move the `Add` method calls out of the new goroutines created in the `for` loop, as shown in the following code.

```
...
	for i := 0; i < 100; i++ {
		wg.Add(1)
		go func() {
			atomic.AddInt32(&x, 1)
			wg.Done()
		}()
	}
...
```
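Putting the pieces together, a complete sketch of the corrected program might look like the following. Wrapping the loop in a function named `countTo` (a hypothetical name) makes the result easy to check: because every `Add` call now happens before `Wait`, the result is always `n`.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// countTo (hypothetical) starts n goroutines that each add 1 to x.
// wg.Add(1) runs in the loop, before each goroutine starts, so it is
// guaranteed to happen before wg.Wait.
func countTo(n int) int32 {
	var wg sync.WaitGroup
	var x int32
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			atomic.AddInt32(&x, 1)
		}()
	}
	wg.Wait()
	return atomic.LoadInt32(&x)
}

func main() {
	fmt.Println(countTo(100)) // 100
}
```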

### Use Channels As Futures Improperly

From the article [channel use cases][14], we know that some functions return [channels as futures][15]. Assume `fa` and `fb` are two such functions; then the following call uses the future arguments improperly.

```
doSomethingWithFutureArguments(<-fa(), <-fb())
```

In the above line, the two channel receive operations are processed sequentially instead of concurrently: arguments are evaluated from left to right, so `fb()` is not even called until the value from `fa()` has been received. We should modify it as follows to process them concurrently.

```
ca, cb := fa(), fb()
doSomethingWithFutureArguments(<-ca, <-cb)
```
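To make the difference concrete, here is a sketch in which `fa` and `fb` are stand-in futures (hypothetical implementations that sleep briefly and then deliver a value). Both producers start before either result is awaited, so the total wait should be roughly the longer of the two delays rather than their sum; the timing is printed, not asserted, because it depends on the scheduler.

```go
package main

import (
	"fmt"
	"time"
)

// fa and fb are hypothetical futures: each starts work in a goroutine and
// returns a channel that will deliver the result. The buffer of one lets
// the worker finish even if nobody ever receives.
func fa() <-chan int {
	c := make(chan int, 1)
	go func() {
		time.Sleep(100 * time.Millisecond) // simulated work
		c <- 1
	}()
	return c
}

func fb() <-chan int {
	c := make(chan int, 1)
	go func() {
		time.Sleep(100 * time.Millisecond) // simulated work
		c <- 2
	}()
	return c
}

func doSomethingWithFutureArguments(a, b int) int { return a + b }

func main() {
	start := time.Now()
	ca, cb := fa(), fb() // both futures are started here
	fmt.Println(doSomethingWithFutureArguments(<-ca, <-cb)) // 3
	fmt.Println("elapsed:", time.Since(start))              // roughly the longer delay, not the sum
}
```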

### Close Channels Not From The Last Active Sender Goroutine

A common mistake made by Go programmers is closing a channel while some other goroutines may still send values to it later. When such a potential send to the closed channel really happens, a panic occurs.

This mistake has been made even in some well-known Go projects, such as [this bug][3] and [this bug][4] in the Kubernetes project.

Please read [this article][5] for explanations on how to safely and gracefully close channels.
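One pattern that follows this rule when there are several senders is to let none of them close the channel, and instead close it from a separate goroutine once a `sync.WaitGroup` reports that every sender has finished. The sketch below is a minimal illustration under that assumption; `fanIn` and its senders are hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// fanIn (hypothetical) starts `senders` goroutines that each send one value.
// No sender closes out; a separate goroutine closes it only after wg.Wait
// confirms that every sender has returned, so no send can follow the close.
func fanIn(senders int) <-chan int {
	out := make(chan int)
	var wg sync.WaitGroup
	for i := 1; i <= senders; i++ {
		wg.Add(1)
		go func(v int) {
			defer wg.Done()
			out <- v
		}(i)
	}
	go func() {
		wg.Wait()
		close(out) // the last active sender has finished
	}()
	return out
}

func main() {
	sum := 0
	for v := range fanIn(3) { // the loop ends when out is closed
		sum += v
	}
	fmt.Println(sum) // 6
}
```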

### Do 64-bit Atomic Operations On Values Which Are Not Guaranteed To Be 64-bit Aligned

Up to now (Go 1.10), for the standard Go compiler, the address of the value involved in a 64-bit atomic operation is required to be 64-bit aligned. Failing to meet this requirement may make the current goroutine panic. For the standard Go compiler, such a failure can only happen on 32-bit architectures. Please read [memory layouts][6] to learn how to guarantee that the addresses of 64-bit words are 64-bit aligned on 32-bit OSes.
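A minimal sketch of one technique, relying on the rule stated in the `sync/atomic` documentation that the first word in an allocated struct can be relied upon to be 64-bit aligned: put the 64-bit field first. The `stats` type is hypothetical. (Go 1.19 and later also provide `atomic.Int64`, which is aligned automatically; the example sticks to the functions available in the Go version the article discusses.)

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// stats is a hypothetical type. hits is the first field, so in an
// allocated stats value it is 64-bit aligned even on 32-bit platforms.
type stats struct {
	hits    int64 // keep 64-bit atomically accessed fields first
	enabled bool
}

func (s *stats) Hit()        { atomic.AddInt64(&s.hits, 1) }
func (s *stats) Hits() int64 { return atomic.LoadInt64(&s.hits) }

func main() {
	s := new(stats) // allocated, so its first word is 64-bit aligned
	for i := 0; i < 10; i++ {
		s.Hit()
	}
	fmt.Println(s.Hits()) // 10
}
```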

### Ignore The Resources Consumed By Many Calls To The `time.After` Function

The `After` function in the `time` standard package returns [a channel for delay notification][7]. The function is convenient, but each call creates a new value of the `time.Timer` type. The newly created `Timer` value stays alive for the duration specified by the argument passed to `After`. If the function is called many times within that duration, many `Timer` values will be alive at once, consuming a lot of memory and computation.

For example, if the following `longRunning` function is called and millions of messages arrive within one minute, millions of `Timer` values will be alive during that period, even though most of them have already become useless.

```
import (
	"fmt"
	"time"
)

// The function will return if a message arrival interval
// is larger than one minute.
func longRunning(messages <-chan string) {
	for {
		select {
		case <-time.After(time.Minute):
			return
		case msg := <-messages:
			fmt.Println(msg)
		}
	}
}
```

To avoid creating so many `Timer` values in the above code, we should use a single `Timer` value to do the same job.

```
func longRunning(messages <-chan string) {
	timer := time.NewTimer(time.Minute)
	defer timer.Stop()

	for {
		select {
		case <-timer.C:
			return
		case msg := <-messages:
			fmt.Println(msg)
			if !timer.Stop() {
				<-timer.C
			}
		}

		// The above "if" block can also be put here.

		timer.Reset(time.Minute)
	}
}
```

### Use `time.Timer` Values Incorrectly

An idiomatic example of using a `time.Timer` value was shown in the last section. One detail worth noting is that the `Reset` method should always be invoked on stopped or expired `time.Timer` values.

At the end of the first `case` branch of the `select` block, the `time.Timer` value has expired, so we don't need to stop it. But we must stop the timer in the second branch. If the `if` code block in the second branch is missing, a send (by the Go runtime) to the channel `timer.C` may race with the `Reset` method call, and the `longRunning` function may return earlier than expected, because the `Reset` method only resets the internal timer; it does not clear (drain) a value that has already been sent to the `timer.C` channel.

For example, the following program is very likely to exit in about one second instead of ten seconds. More importantly, the program is not data race free.

```
package main

import (
	"fmt"
	"time"
)

func main() {
	start := time.Now()
	timer := time.NewTimer(time.Second / 2)
	select {
	case <-timer.C:
	default:
		time.Sleep(time.Second) // go here
	}
	timer.Reset(time.Second * 10)
	<-timer.C
	fmt.Println(time.Since(start)) // 1.000188181s
}
```

A `time.Timer` value can be left running when it is no longer used, but it is recommended to stop it in the end.

It is bug prone and not recommended to use a `time.Timer` value concurrently in multiple goroutines.

We should not rely on the return value of a `Reset` method call. The `Reset` method returns a result only for compatibility purposes.

--------------------------------------------------------------------------------

via: https://go101.org/article/concurrent-common-mistakes.html

Author: [go101.org][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:go101.org
[1]:https://go101.org/article/memory-model.html
[2]:https://go101.org/article/memory-model.html
[3]:https://github.com/kubernetes/kubernetes/pull/45291/files?diff=split
[4]:https://github.com/kubernetes/kubernetes/pull/39479/files?diff=split
[5]:https://go101.org/article/channel-closing.html
[6]:https://go101.org/article/memory-layout.html
[7]:https://go101.org/article/channel-use-cases.html#timer
[8]:https://go101.org/article/channel-use-cases.html
[9]:https://go101.org/article/channel.html
[10]:https://go101.org/article/concurrent-atomic-operation.html
[11]:https://go101.org/article/concurrent-synchronization-more.html
[12]:https://go101.org/article/channel-use-cases.html#first-response-wins
[13]:https://go101.org/article/channel-use-cases.html#first-response-wins-2
[14]:https://go101.org/article/channel-use-cases.html
[15]:https://go101.org/article/channel-use-cases.html#future-promise



How to start developing on Java in Fedora
======

Java is one of the most popular programming languages in the world. It is widely used to develop IoT appliances, Android apps, web applications, and enterprise applications. This article provides a quick guide to installing and configuring your workstation using [OpenJDK][1].

### Installing the compiler and tools

Installing the compiler, or Java Development Kit (JDK), is easy in Fedora. At the time of writing, versions 8 and 9 are available. Simply open a terminal and enter:

```
sudo dnf install java-1.8.0-openjdk-devel
```

This installs the JDK for version 8. For version 9, enter:

```
sudo dnf install java-9-openjdk-devel
```

For developers who need additional tools and libraries such as Ant and Maven, the **Java Development** group is available. To install the suite, enter:

```
sudo dnf group install "Java Development"
```

To verify that the compiler is installed, run:

```
javac -version
```

The output shows the compiler version and looks like this:

```
javac 1.8.0_162
```

### Compiling applications

You can use any basic text editor, such as nano, vim, or gedit, to write applications. This example provides a simple “Hello Fedora” program.

Open your favorite text editor and enter the following:

```
public class HelloFedora {

    public static void main(String[] args) {
        System.out.println("Hello Fedora!");
    }
}
```

Save the file as HelloFedora.java. In the terminal, change to the directory containing the file and run:

```
javac HelloFedora.java
```

The compiler will complain if it runs into any syntax errors. Otherwise, it simply returns to the shell prompt.

You should now have a file called HelloFedora.class, which contains the compiled program. Run it with the following command:

```
java HelloFedora
```

And the output will display:

```
Hello Fedora!
```

### Installing an Integrated Development Environment (IDE)

Some programs may be more complex, and an IDE can make things flow smoothly. There are quite a few IDEs available for Java programmers, including:

+ Geany, a basic IDE that loads quickly and provides built-in templates
+ Anjuta
+ GNOME Builder, which has been covered in the article Builder – a new IDE specifically for GNOME app developers

However, one of the most popular open-source IDEs, written mainly in Java, is [Eclipse][2]. Eclipse is available in the official repositories. To install it, run this command:

```
sudo dnf install eclipse-jdt
```

When the installation is complete, a shortcut for Eclipse appears in the desktop menu.

For more information on how to use Eclipse, consult the [User Guide][3] available on its website.

### Browser plugin

If you’re developing web applets and need a plugin for your browser, [IcedTea-Web][4] is available. Like OpenJDK, it is open source and easy to install in Fedora. Run this command:

```
sudo dnf install icedtea-web
```

As of Firefox 52, the web plugin no longer works. For details, visit the Mozilla support site at [https://support.mozilla.org/en-US/kb/npapi-plugins?as=u&utm_source=inproduct][5].

Congratulations, your Java development environment is ready to use.

--------------------------------------------------------------------------------

via: https://fedoramagazine.org/start-developing-java-fedora/

Author: [Shaun Assam][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://fedoramagazine.org/author/sassam/
[1]:http://openjdk.java.net/
[2]:https://www.eclipse.org/
[3]:http://help.eclipse.org/oxygen/nav/0
[4]:https://icedtea.classpath.org/wiki/IcedTea-Web
[5]:https://support.mozilla.org/en-US/kb/npapi-plugins?as=u&utm_source=inproduct

sources/tech/20180426 Continuous Profiling of Go programs.md

Continuous Profiling of Go programs
============================================================

One of the most interesting parts of Google is our fleet-wide continuous profiling service. We can see who is accountable for CPU and memory usage, we can continuously monitor our production services for contention and blocking profiles, and we can generate analyses and reports and easily tell which optimization projects would have the highest impact.

I briefly worked on [Stackdriver Profiler][2], our new product that fills the need for a cloud-wide profiling service for Cloud users. Note that you DON’T need to run your code on Google Cloud Platform in order to use it. Actually, I now use it at development time on a daily basis. It also supports Java and Node.js.

#### Profiling in production

pprof is safe to use in production. We target an additional 5% overhead for CPU and heap allocation profiling. Profiles are collected for 10 seconds out of every minute from a single instance. If you have multiple replicas of a Kubernetes pod, we make sure the collection is amortized across them. For example, if you have 10 replicas of a pod, the overhead will be 0.5%. This makes it possible for users to keep profiling on all the time.

We currently support CPU, heap, mutex and thread profiles for Go programs.

#### Why?

Before explaining how you can use the profiler in production, it helps to explain why you would ever want to profile in production. Some very common cases are:

* Debug performance problems that are only visible in production.

* Understand CPU usage in order to reduce billing.

* Understand where contention accumulates, and optimize it.

* Understand the impact of new releases, e.g. seeing the difference between canary and production.

* Enrich your distributed traces by [correlating][1] them with profiling samples to understand the root cause of latency.

#### Enabling

Stackdriver Profiler doesn’t work with the _net/http/pprof_ handlers; instead, it requires you to install and configure a one-line agent in your program.

```
go get cloud.google.com/go/profiler
```

Then start the profiler in your main function:

```
// Requires the imports "log" and "cloud.google.com/go/profiler".
if err := profiler.Start(profiler.Config{
	Service:        "indexing-service",
	ServiceVersion: "1.0",
	ProjectID:      "bamboo-project-606", // optional on GCP
}); err != nil {
	log.Fatalf("Cannot start the profiler: %v", err)
}
```

Once your program is running, the profiler package reports profiles for 10 seconds out of every minute.

#### Visualization

|

||||

|

||||

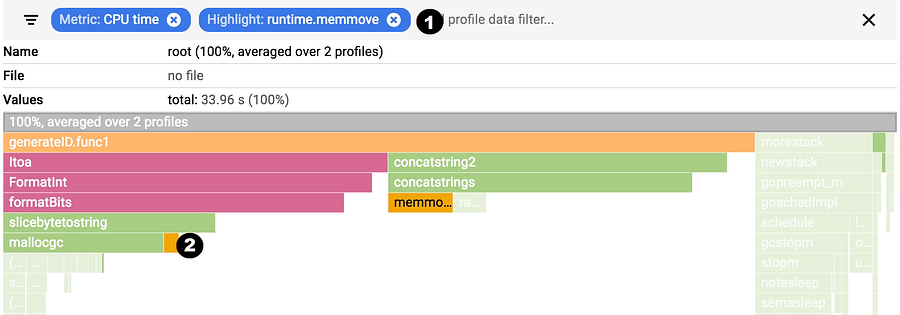

As soon as profiles are reported to the backend, you will start seeing a flamegraph at [https://console.cloud.google.com/profiler][4]. You can filter by tags and change the time span, as well as break down by service name and version. The data will be around up to 30 days.

|

||||

|

||||

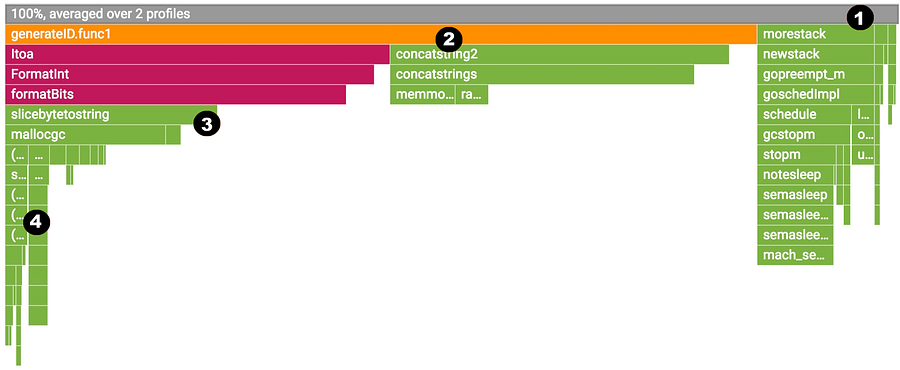

|

||||

|

||||

|

||||

You can choose one of the available profiles; break down by service, zone and version. You can move in the flame and filter by tags.

|

||||

|

||||

#### Reading the flame

Flame graph visualization is explained very comprehensively by [Brendan Gregg][5]. Stackdriver Profiler adds a little bit of its own flavor.

We will examine a CPU profile, but everything here also applies to the other profiles.

1. The top-most bar, spanning the whole x-axis, represents the entire program. Each box in the flame represents a frame on the call path. The width of a box is proportional to the CPU time spent executing that function.

2. Boxes are sorted from left to right, with the most expensive call path on the left.

3. Frames from the same package have the same color. In this case, all runtime functions are shown in green.

4. You can click on any box to expand the execution tree further.

You can hover over any box to see detailed information for that frame.

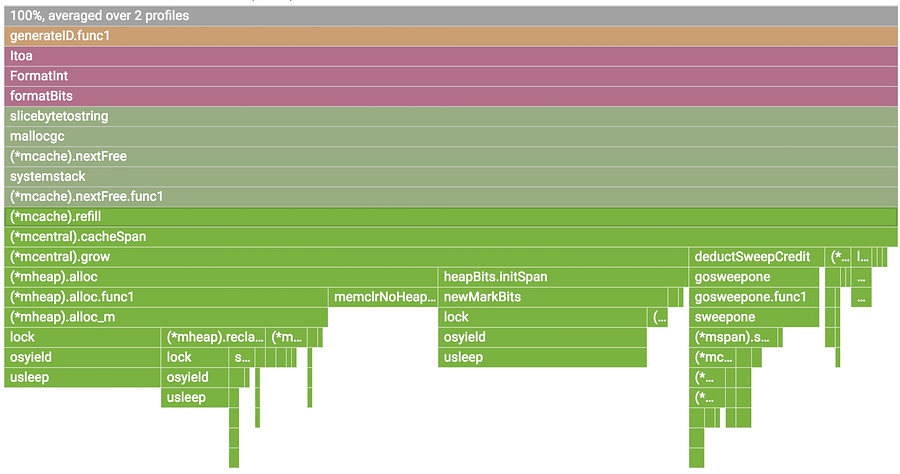

#### Filtering

You can show, hide and highlight frames by symbol name. These filters are extremely useful if you specifically want to understand the cost of a particular call or package.

1. Choose your filter. You can combine multiple filters. In this case, we are highlighting runtime.memmove.

2. The flame graph applies the filter to the frames and visualizes the matching boxes. In this case, it highlights all runtime.memmove boxes.

--------------------------------------------------------------------------------

via: https://medium.com/google-cloud/continuous-profiling-of-go-programs-96d4416af77b

Author: [JBD][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://medium.com/@rakyll?source=post_header_lockup
[1]:https://rakyll.org/profiler-labels/
[2]:https://cloud.google.com/profiler/
[3]:http://cloud.google.com/go/profiler
[4]:https://console.cloud.google.com/profiler
[5]:http://www.brendangregg.com/flamegraphs.html


// Copyright 2018 The Go Authors. All rights reserved.
// Use of this source code is governed by a BSD-style
// license that can be found in the LICENSE file.

## Introduction to the Go compiler

`cmd/compile` contains the main packages that form the Go compiler. The compiler
may be logically split into four phases, which we will briefly describe alongside
the list of packages that contain their code.

You may sometimes hear the terms "front-end" and "back-end" when referring to
the compiler. Roughly speaking, these translate to the first two and last two
phases we are going to list here. A third term, "middle-end", often refers to
much of the work that happens in the second phase.

Note that the `go/*` family of packages, such as `go/parser` and `go/types`,
have no relation to the compiler. Since the compiler was initially written in C,
the `go/*` packages were developed to enable writing tools working with Go code,
such as `gofmt` and `vet`.

It should be clarified that the name "gc" stands for "Go compiler", and has
little to do with uppercase GC, which stands for garbage collection.

### 1. Parsing

* `cmd/compile/internal/syntax` (lexer, parser, syntax tree)

In the first phase of compilation, source code is tokenized (lexical analysis),
parsed (syntax analysis), and a syntax tree is constructed for each source
file.

Each syntax tree is an exact representation of the respective source file, with
nodes corresponding to the various elements of the source such as expressions,
declarations, and statements. The syntax tree also includes position information
which is used for error reporting and the creation of debugging information.

### 2. Type-checking and AST transformations

* `cmd/compile/internal/gc` (create compiler AST, type checking, AST transformations)

The gc package includes an AST definition carried over from when it was written
in C. All of its code is written in terms of it, so the first thing that the gc
package must do is convert the syntax package's syntax tree to the compiler's
AST representation. This extra step may be refactored away in the future.

The AST is then type-checked. The first steps are name resolution and type
inference, which determine which object belongs to which identifier, and what
type each expression has. Type-checking includes certain extra checks, such as
"declared and not used" as well as determining whether or not a function
terminates.

Certain transformations are also done on the AST. Some nodes are refined based
on type information, such as string additions being split from the arithmetic
addition node type. Some other examples are dead code elimination, function call
inlining, and escape analysis.
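The compiler's own type checker lives in `cmd/compile/internal/gc` and is not importable, but the standard `go/types` package performs the same kinds of checks described above (name resolution, type inference, and the "declared and not used" check), so it can serve as an analogy. The sketch below rests on that assumption; `checkSource` is a hypothetical helper, and the sources it checks import nothing, so no `Importer` is configured.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
	"go/types"
)

// checkSource (hypothetical) parses and type-checks a single-file package
// that has no imports, returning the package or the first type error.
func checkSource(src string) (*types.Package, error) {
	fset := token.NewFileSet()
	f, err := parser.ParseFile(fset, "p.go", src, 0)
	if err != nil {
		return nil, err
	}
	var conf types.Config // zero Config is fine as long as nothing is imported
	return conf.Check("p", fset, []*ast.File{f}, nil)
}

func main() {
	// Type inference: s and n get their types from their initializers.
	pkg, err := checkSource(`package p; var s = "a" + "b"; var n = 1 + 2`)
	if err == nil {
		fmt.Println(pkg.Scope().Lookup("s").Type()) // string
		fmt.Println(pkg.Scope().Lookup("n").Type()) // int
	}

	// The "declared and not used" check rejects this function.
	_, err = checkSource(`package p; func f() { x := 1 }`)
	fmt.Println(err != nil) // true
}
```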

### 3. Generic SSA

* `cmd/compile/internal/gc` (converting to SSA)
* `cmd/compile/internal/ssa` (SSA passes and rules)

In this phase, the AST is converted into Static Single Assignment (SSA) form, a
lower-level intermediate representation with specific properties that make it
easier to implement optimizations and to eventually generate machine code from
it.

During this conversion, function intrinsics are applied. These are special
functions that the compiler has been taught to replace with heavily optimized
code on a case-by-case basis.
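For instance, several functions in the standard `math/bits` package are treated this way on architectures that have a matching instruction, so a call such as `bits.OnesCount64` can be compiled to a hardware population-count instruction instead of a Go loop. Which functions are intrinsified, and on which `GOARCH` values, varies by compiler version; the sketch below only shows the source-level calls (the helper `popAndTrail` is hypothetical), and their results are the same whether or not an intrinsic is used.

```go
package main

import (
	"fmt"
	"math/bits"
)

// popAndTrail (hypothetical) returns the number of set bits and trailing
// zero bits of x. Both math/bits calls are candidates for intrinsics.
func popAndTrail(x uint64) (ones, trailing int) {
	return bits.OnesCount64(x), bits.TrailingZeros64(x)
}

func main() {
	ones, trailing := popAndTrail(0b1011_0000)
	fmt.Println(ones, trailing) // 3 4
}
```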

Certain nodes are also lowered into simpler components during the AST to SSA
conversion, so that the rest of the compiler can work with them. For instance,
the copy builtin is replaced by memory moves, and range loops are rewritten into
for loops. Some of these currently happen before the conversion to SSA due to
historical reasons, but the long-term plan is to move all of them here.
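As a source-level analogy only (the compiler performs this rewrite on its internal representation, not on Go source, and the exact form it produces is not shown here), a `range` loop over a slice behaves like the index-based `for` loop below. Both functions are hypothetical and return the same sum.

```go
package main

import "fmt"

// sumRange uses a range loop.
func sumRange(xs []int) int {
	total := 0
	for _, x := range xs {
		total += x
	}
	return total
}

// sumLowered spells out roughly what a range loop over a slice means:
// the slice is evaluated once, its length captured, and an index counts up.
func sumLowered(xs []int) int {
	total := 0
	s, n := xs, len(xs)
	for i := 0; i < n; i++ {
		x := s[i]
		total += x
	}
	return total
}

func main() {
	xs := []int{1, 2, 3, 4}
	fmt.Println(sumRange(xs), sumLowered(xs)) // 10 10
}
```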

Then, a series of machine-independent passes and rules are applied. These do not
concern any single computer architecture, and thus run on all `GOARCH` variants.

Some examples of these generic passes include dead code elimination, removal of
unneeded nil checks, and removal of unused branches. The generic rewrite rules
mainly concern expressions, such as replacing some expressions with constant
values, and optimizing multiplications and float operations.
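Rewrite rules of this kind rely on algebraic identities. As an illustrative sketch (these are not the compiler's actual rule definitions), multiplying an unsigned integer by a power of two gives the same result as a left shift, which is the kind of equivalence that lets a multiplication be replaced with a cheaper operation, and an expression built only from constants can be folded into a single value before the program runs. The names `mulBy8`, `shl3`, and `secondsPerDay` are hypothetical.

```go
package main

import "fmt"

// mulBy8 and shl3 compute the same value for every uint64 x,
// including when the result wraps around on overflow.
func mulBy8(x uint64) uint64 { return x * 8 }
func shl3(x uint64) uint64   { return x << 3 }

// secondsPerDay is built only from constants, so it is folded
// into the single value 86400 at compile time.
const secondsPerDay = 24 * 60 * 60

func main() {
	for _, x := range []uint64{0, 1, 7, 1 << 62} {
		fmt.Println(mulBy8(x) == shl3(x)) // true
	}
	fmt.Println(secondsPerDay) // 86400
}
```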

### 4. Generating machine code
