mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

623d4e147f

245

published/20190725 Introduction to GNU Autotools.md

Normal file

245

published/20190725 Introduction to GNU Autotools.md

Normal file

@ -0,0 +1,245 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11218-1.html)

|

||||

[#]: subject: (Introduction to GNU Autotools)

|

||||

[#]: via: (https://opensource.com/article/19/7/introduction-gnu-autotools)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

GNU Autotools 介绍

|

||||

======

|

||||

|

||||

> 如果你仍未使用过 Autotools,那么这篇文章将改变你递交代码的方式。

|

||||

|

||||

|

||||

|

||||

你有没有下载过流行的软件项目的源代码,要求你输入几乎是仪式般的 `./configure; make && make install` 命令序列来构建和安装它?如果是这样,你已经使用过 [GNU Autotools][2] 了。如果你曾经研究过这样的项目所附带的一些文件,你可能会对这种构建系统的显而易见的复杂性感到害怕。

|

||||

|

||||

好的消息是,GNU Autotools 的设置要比你想象的要简单得多,GNU Autotools 本身可以为你生成这些上千行的配置文件。是的,你可以编写 20 或 30 行安装代码,并免费获得其他 4,000 行。

|

||||

|

||||

### Autotools 工作方式

|

||||

|

||||

如果你是初次使用 Linux 的用户,正在寻找有关如何安装应用程序的信息,那么你不必阅读本文!如果你想研究如何构建软件,欢迎阅读它;但如果你只是要安装一个新应用程序,请阅读我在[在 Linux 上安装应用程序][3]的文章。

|

||||

|

||||

对于开发人员来说,Autotools 是一种管理和打包源代码的快捷方式,以便用户可以编译和安装软件。 Autotools 也得到了主要打包格式(如 DEB 和 RPM)的良好支持,因此软件存储库的维护者可以轻松管理使用 Autotools 构建的项目。

|

||||

|

||||

Autotools 工作步骤:

|

||||

|

||||

1. 首先,在 `./configure` 步骤中,Autotools 扫描宿主机系统(即当前正在运行的计算机)以发现默认设置。默认设置包括支持库所在的位置,以及新软件应放在系统上的位置。

|

||||

2. 接下来,在 `make` 步骤中,Autotools 通常通过将人类可读的源代码转换为机器语言来构建应用程序。

|

||||

3. 最后,在 `make install` 步骤中,Autotools 将其构建好的文件复制到计算机上(在配置阶段检测到)的相应位置。

|

||||

|

||||

这个过程看起来很简单,和你使用 Autotools 的步骤一样。

|

||||

|

||||

### Autotools 的优势

|

||||

|

||||

GNU Autotools 是我们大多数人认为理所当然的重要软件。与 [GCC(GNU 编译器集合)][4]一起,Autotools 是支持将自由软件构建和安装到正在运行的系统的脚手架。如果你正在运行 [POSIX][5] 系统,可以毫不保守地说,你的计算机上的操作系统里大多数可运行软件都是这些这样构建的。

|

||||

|

||||

即使是你的项目是个玩具项目不是操作系统,你可能会认为 Autotools 对你的需求来说太过分了。但是,尽管它的名气很大,Autotools 有许多可能对你有益的小功能,即使你的项目只是一个相对简单的应用程序或一系列脚本。

|

||||

|

||||

#### 可移植性

|

||||

|

||||

首先,Autotools 考虑到了可移植性。虽然它无法使你的项目在所有 POSIX 平台上工作(这取决于你,编码的人),但 Autotools 可以确保你标记为要安装的文件安装到已知平台上最合理的位置。而且由于 Autotools,高级用户可以轻松地根据他们自己的系统情况定制和覆盖任何非最佳设定。

|

||||

|

||||

使用 Autotools,你只要知道需要将文件安装到哪个常规位置就行了。它会处理其他一切。不需要可能破坏未经测试的操作系统的定制安装脚本。

|

||||

|

||||

#### 打包

|

||||

|

||||

Autotools 也得到了很好的支持。将一个带有 Autotools 的项目交给一个发行版打包者,无论他们是打包成 RPM、DEB、TGZ 还是其他任何东西,都很简单。打包工具知道 Autotools,因此可能不需要修补、魔改或调整。在许多情况下,将 Autotools 项目结合到流程中甚至可以实现自动化。

|

||||

|

||||

### 如何使用 Autotools

|

||||

|

||||

要使用 Autotools,必须先安装它。你的发行版可能提供一个单个的软件包来帮助开发人员构建项目,或者它可能为每个组件提供了单独的软件包,因此你可能需要在你的平台上进行一些研究以发现需要安装的软件包。

|

||||

|

||||

Autotools 的组件是:

|

||||

|

||||

* `automake`

|

||||

* `autoconf`

|

||||

* `automake`

|

||||

* `make`

|

||||

|

||||

虽然你可能需要安装项目所需的编译器(例如 GCC),但 Autotools 可以很好地处理不需要编译的脚本或二进制文件。实际上,Autotools 对于此类项目非常有用,因为它提供了一个 `make uninstall` 脚本,以便于删除。

|

||||

|

||||

安装了所有组件之后,现在让我们了解一下你的项目文件的组成结构。

|

||||

|

||||

#### Autotools 项目结构

|

||||

|

||||

GNU Autotools 有非常具体的预期规范,如果你经常下载和构建源代码,可能大多数都很熟悉。首先,源代码本身应该位于一个名为 `src` 的子目录中。

|

||||

|

||||

你的项目不必遵循所有这些预期规范,但如果你将文件放在非标准位置(从 Autotools 的角度来看),那么你将不得不稍后在 `Makefile` 中对其进行调整。

|

||||

|

||||

此外,这些文件是必需的:

|

||||

|

||||

* `NEWS`

|

||||

* `README`

|

||||

* `AUTHORS`

|

||||

* `ChangeLog`

|

||||

|

||||

你不必主动使用这些文件,它们可以是包含所有信息的单个汇总文档(如 `README.md`)的符号链接,但它们必须存在。

|

||||

|

||||

#### Autotools 配置

|

||||

|

||||

在你的项目根目录下创建一个名为 `configure.ac` 的文件。`autoconf` 使用此文件来创建用户在构建之前运行的 `configure` shell 脚本。该文件必须至少包含 `AC_INIT` 和 `AC_OUTPUT` [M4 宏][6]。你不需要了解有关 M4 语言的任何信息就可以使用这些宏;它们已经为你编写好了,并且所有与 Autotools 相关的内容都在该文档中定义好了。

|

||||

|

||||

在你喜欢的文本编辑器中打开该文件。`AC_INIT` 宏可以包括包名称、版本、报告错误的电子邮件地址、项目 URL 以及可选的源 TAR 文件名称等参数。

|

||||

|

||||

[AC_OUTPUT][7] 宏更简单,不用任何参数。

|

||||

|

||||

```

|

||||

AC_INIT([penguin], [2019.3.6], [[seth@example.com][8]])

|

||||

AC_OUTPUT

|

||||

```

|

||||

|

||||

如果你此刻运行 `autoconf`,会依据你的 `configure.ac` 文件生成一个 `configure` 脚本,它是可以运行的。但是,也就是能运行而已,因为到目前为止你所做的就是定义项目的元数据,并要求创建一个配置脚本。

|

||||

|

||||

你必须在 `configure.ac` 文件中调用的下一个宏是创建 [Makefile][9] 的函数。 `Makefile` 会告诉 `make` 命令做什么(通常是如何编译和链接程序)。

|

||||

|

||||

创建 `Makefile` 的宏是 `AM_INIT_AUTOMAKE`,它不接受任何参数,而 `AC_CONFIG_FILES` 接受的参数是你要输出的文件的名称。

|

||||

|

||||

最后,你必须添加一个宏来考虑你的项目所需的编译器。你使用的宏显然取决于你的项目。如果你的项目是用 C++ 编写的,那么适当的宏是 `AC_PROG_CXX`,而用 C 编写的项目需要 `AC_PROG_CC`,依此类推,详见 Autoconf 文档中的 [Building Programs and Libraries][10] 部分。

|

||||

|

||||

例如,我可能会为我的 C++ 程序添加以下内容:

|

||||

|

||||

```

|

||||

AC_INIT([penguin], [2019.3.6], [[seth@example.com][8]])

|

||||

AC_OUTPUT

|

||||

AM_INIT_AUTOMAKE

|

||||

AC_CONFIG_FILES([Makefile])

|

||||

AC_PROG_CXX

|

||||

```

|

||||

|

||||

保存该文件。现在让我们将目光转到 `Makefile`。

|

||||

|

||||

#### 生成 Autotools Makefile

|

||||

|

||||

`Makefile` 并不难手写,但 Autotools 可以为你编写一个,而它生成的那个将使用在 `./configure` 步骤中检测到的配置选项,并且它将包含比你考虑要包括或想要自己写的还要多得多的选项。然而,Autotools 并不能检测你的项目构建所需的所有内容,因此你必须在文件 `Makefile.am` 中添加一些细节,然后在构造 `Makefile` 时由 `automake` 使用。

|

||||

|

||||

`Makefile.am` 使用与 `Makefile` 相同的语法,所以如果你曾经从头开始编写过 `Makefile`,那么这个过程将是熟悉和简单的。通常,`Makefile.am` 文件只需要几个变量定义来指示要构建的文件以及它们的安装位置即可。

|

||||

|

||||

以 `_PROGRAMS` 结尾的变量标识了要构建的代码(这通常被认为是<ruby>原语<rt>primary</rt></ruby>目标;这是 `Makefile` 存在的主要意义)。Automake 也会识别其他原语,如 `_SCRIPTS`、`_ DATA`、`_LIBRARIES`,以及构成软件项目的其他常见部分。

|

||||

|

||||

如果你的应用程序在构建过程中需要实际编译,那么你可以用 `bin_PROGRAMS` 变量将其标记为二进制程序,然后使用该程序名称作为变量前缀引用构建它所需的源代码的任何部分(这些部分可能是将被编译和链接在一起的一个或多个文件):

|

||||

|

||||

```

|

||||

bin_PROGRAMS = penguin

|

||||

penguin_SOURCES = penguin.cpp

|

||||

```

|

||||

|

||||

`bin_PROGRAMS` 的目标被安装在 `bindir` 中,它在编译期间可由用户配置。

|

||||

|

||||

如果你的应用程序不需要实际编译,那么你的项目根本不需要 `bin_PROGRAMS` 变量。例如,如果你的项目是用 Bash、Perl 或类似的解释语言编写的脚本,那么定义一个 `_SCRIPTS` 变量来替代:

|

||||

|

||||

```

|

||||

bin_SCRIPTS = bin/penguin

|

||||

```

|

||||

|

||||

Automake 期望源代码位于名为 `src` 的目录中,因此如果你的项目使用替代目录结构进行布局,则必须告知 Automake 接受来自外部源的代码:

|

||||

|

||||

```

|

||||

AUTOMAKE_OPTIONS = foreign subdir-objects

|

||||

```

|

||||

|

||||

最后,你可以在 `Makefile.am` 中创建任何自定义的 `Makefile` 规则,它们将逐字复制到生成的 `Makefile` 中。例如,如果你知道一些源代码中的临时值需要在安装前替换,则可以为该过程创建自定义规则:

|

||||

|

||||

```

|

||||

all-am: penguin

|

||||

touch bin/penguin.sh

|

||||

|

||||

penguin: bin/penguin.sh

|

||||

@sed "s|__datadir__|@datadir@|" $< >bin/$@

|

||||

```

|

||||

|

||||

一个特别有用的技巧是扩展现有的 `clean` 目标,至少在开发期间是这样的。`make clean` 命令通常会删除除了 Automake 基础结构之外的所有生成的构建文件。它是这样设计的,因为大多数用户很少想要 `make clean` 来删除那些便于构建代码的文件。

|

||||

|

||||

但是,在开发期间,你可能需要一种方法可靠地将项目返回到相对不受 Autotools 影响的状态。在这种情况下,你可能想要添加:

|

||||

|

||||

```

|

||||

clean-local:

|

||||

@rm config.status configure config.log

|

||||

@rm Makefile

|

||||

@rm -r autom4te.cache/

|

||||

@rm aclocal.m4

|

||||

@rm compile install-sh missing Makefile.in

|

||||

```

|

||||

|

||||

这里有很多灵活性,如果你还不熟悉 `Makefile`,那么很难知道你的 `Makefile.am` 需要什么。最基本需要的是原语目标,无论是二进制程序还是脚本,以及源代码所在位置的指示(无论是通过 `_SOURCES` 变量还是使用 `AUTOMAKE_OPTIONS` 告诉 Automake 在哪里查找源代码)。

|

||||

|

||||

一旦定义了这些变量和设置,如下一节所示,你就可以尝试生成构建脚本,并调整缺少的任何内容。

|

||||

|

||||

#### 生成 Autotools 构建脚本

|

||||

|

||||

你已经构建了基础结构,现在是时候让 Autotools 做它最擅长的事情:自动化你的项目工具。对于开发人员(你),Autotools 的接口与构建代码的用户的不同。

|

||||

|

||||

构建者通常使用这个众所周知的顺序:

|

||||

|

||||

```

|

||||

$ ./configure

|

||||

$ make

|

||||

$ sudo make install

|

||||

```

|

||||

|

||||

但是,要使这种咒语起作用,你作为开发人员必须引导构建这些基础结构。首先,运行 `autoreconf` 以生成用户在运行 `make` 之前调用的 `configure` 脚本。使用 `-install` 选项将辅助文件(例如符号链接)引入到 `depcomp`(这是在编译过程中生成依赖项的脚本),以及 `compile` 脚本的副本(一个编译器的包装器,用于说明语法,等等)。

|

||||

|

||||

```

|

||||

$ autoreconf --install

|

||||

configure.ac:3: installing './compile'

|

||||

configure.ac:2: installing './install-sh'

|

||||

configure.ac:2: installing './missing'

|

||||

```

|

||||

|

||||

使用此开发构建环境,你可以创建源代码分发包:

|

||||

|

||||

```

|

||||

$ make dist

|

||||

```

|

||||

|

||||

`dist` 目标是从 Autotools “免费”获得的规则。这是一个内置于 `Makefile` 中的功能,它是通过简单的 `Makefile.am` 配置生成的。该目标可以生成一个 `tar.gz` 存档,其中包含了所有源代码和所有必要的 Autotools 基础设施,以便下载程序包的人员可以构建项目。

|

||||

|

||||

此时,你应该仔细查看存档文件的内容,以确保它包含你要发送给用户的所有内容。当然,你也应该尝试自己构建:

|

||||

|

||||

```

|

||||

$ tar --extract --file penguin-0.0.1.tar.gz

|

||||

$ cd penguin-0.0.1

|

||||

$ ./configure

|

||||

$ make

|

||||

$ DESTDIR=/tmp/penguin-test-build make install

|

||||

```

|

||||

|

||||

如果你的构建成功,你将找到由 `DESTDIR` 指定的已编译应用程序的本地副本(在此示例的情况下为 `/tmp/penguin-test-build`)。

|

||||

|

||||

```

|

||||

$ /tmp/example-test-build/usr/local/bin/example

|

||||

hello world from GNU Autotools

|

||||

```

|

||||

|

||||

### 去使用 Autotools

|

||||

|

||||

Autotools 是一个很好的脚本集合,可用于可预测的自动发布过程。如果你习惯使用 Python 或 Bash 构建器,这个工具集对你来说可能是新的,但它为你的项目提供的结构和适应性可能值得学习。

|

||||

|

||||

而 Autotools 也不只是用于代码。Autotools 可用于构建 [Docbook][11] 项目,保持媒体有序(我使用 Autotools 进行音乐发布),文档项目以及其他任何可以从可自定义安装目标中受益的内容。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/7/introduction-gnu-autotools

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/linux_kernel_clang_vscode.jpg?itok=fozZ4zrr (Linux kernel source code (C) in Visual Studio Code)

|

||||

[2]: https://www.gnu.org/software/automake/faq/autotools-faq.html

|

||||

[3]: https://linux.cn/article-9486-1.html

|

||||

[4]: https://en.wikipedia.org/wiki/GNU_Compiler_Collection

|

||||

[5]: https://en.wikipedia.org/wiki/POSIX

|

||||

[6]: https://www.gnu.org/software/autoconf/manual/autoconf-2.67/html_node/Initializing-configure.html

|

||||

[7]: https://www.gnu.org/software/autoconf/manual/autoconf-2.67/html_node/Output.html#Output

|

||||

[8]: mailto:seth@example.com

|

||||

[9]: https://www.gnu.org/software/make/manual/html_node/Introduction.html

|

||||

[10]: https://www.gnu.org/software/automake/manual/html_node/Programs.html#Programs

|

||||

[11]: https://opensource.com/article/17/9/docbook

|

||||

@ -0,0 +1,99 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (How SD-Branch addresses today’s network security concerns)

|

||||

[#]: via: (https://www.networkworld.com/article/3431166/how-sd-branch-addresses-todays-network-security-concerns.html)

|

||||

[#]: author: (Zeus Kerravala https://www.networkworld.com/author/Zeus-Kerravala/)

|

||||

|

||||

How SD-Branch addresses today’s network security concerns

|

||||

======

|

||||

New digital technologies such as IoT at remote locations increase the need to identify devices and monitor network activity. That’s where SD-Branch can help, says Fortinet’s John Maddison.

|

||||

![KontekBrothers / Getty Images][1]

|

||||

|

||||

Secure software-defined WAN (SD-WAN) has become one of the hottest new technologies, with some reports claiming that 85% of companies are actively considering [SD-WAN][2] to improve cloud-based application performance, replace expensive and inflexible fixed WAN connections, and increase security.

|

||||

|

||||

But now the industry is shifting to software-defined branch ([SD-Branch][3]), which is broader than SD-WAN but introduced several new things for organizations to consider, including better security for new digital technologies. To understand what's required in this new solution set, I recently sat down with John Maddison, Fortinet’s executive vice president of products and solutions.

|

||||

|

||||

**[ Learn more: [SD-Branch: What it is, and why you'll need it][3] | Get regularly scheduled insights: [Sign up for Network World newsletters][4] ]**

|

||||

|

||||

### Zeus Kerravala: To get started, what exactly is SD-Branch?

|

||||

|

||||

**John Maddison:** To answer that question, let’s step back and look at the need for a secure SD-WAN solution. Organizations need to expand their digital transformation efforts out to their remote locations, such as branch offices, remote school campuses, and retail locations. The challenge is that today’s networks and applications are highly elastic and constantly changing, which means that the traditional fixed and static WAN connections to their remote offices, such as [MPLS][5], can’t support this new digital business model.

|

||||

|

||||

That’s where SD-WAN comes in. It replaces those legacy, and sometimes quite expensive, connections with flexible and intelligent connectivity designed to optimize bandwidth, maximize application performance, secure direct internet connections, and ensure that traffic, applications, workflows, and data are secure.

|

||||

|

||||

However, most branch offices and retail stores have a local LAN behind that connection that is undergoing rapid transformation. Internet of things (IoT) devices, for example, are being adopted at remote locations at an unprecedented rate. Retail shops now include a wide array of connected devices, from cash registers and scanners to refrigeration units and thermostats, to security cameras and inventory control devices. Hotels monitor room access, security and safety devices, elevators, HVAC systems, and even minibar purchases. The same sort of transformation is happening at schools, branch and field offices, and remote production facilities.

|

||||

|

||||

![John Maddison, executive vice president, Fortinet][6]

|

||||

|

||||

The challenge is that many of these environments, especially these new IoT and mobile end-user devices, lack adequate safeguards. SD-Branch extends the benefits of the secure SD-WAN’s security and control functions into the local network by securing wired and wireless access points, monitoring and inspecting internal traffic and applications, and leveraging network access control (NAC) to identify the devices being deployed at the branch and then dynamically assigning them to network segments where they can be more easily controlled.

|

||||

|

||||

### What unique challenges do remote locations, such as branch offices, schools, and retail locations, face?

|

||||

|

||||

Many of the devices being deployed at these remote locations need access to the internal network, to cloud services, or to internet resources to operate. The challenge is that IoT devices, in particular, are notoriously insecure and vulnerable to a host of threats and exploits. In addition, end users are connecting a growing number of unauthorized devices to the office. While these are usually some sort of personal smart device, they can also include anything from a connected coffee maker to a wireless access point.

|

||||

|

||||

**[ [Prepare to become a Certified Information Security Systems Professional with this comprehensive online course from PluralSight. Now offering a 10-day free trial!][7] ]**

|

||||

|

||||

Any of these, if connected to the network and then exploited, not only represent a threat to that remote location, but they can also be used as a door into the larger core network. There are numerous examples of vulnerable point-of-sale devices or HVAC systems being used to tunnel back into the organization’s data center to steal account and financial information.

|

||||

|

||||

Of course, these issues might be solved by adding a number of additional networking and security technologies to the branch, but most IT teams can’t afford to put IT resources onsite to deploy and manage these solutions, even temporarily. What’s needed is a security solution that combines traffic scanning and security enforcement, access control for both wired and wireless connections, device recognition, dynamic segmentation, and integrated management in a single low-touch/no-touch device. That’s where SD-Branch comes in.

|

||||

|

||||

### Why aren't traditional branch solutions, such as integrated routers, solving these challenges?

|

||||

|

||||

Most of the solutions designed for branch and retail locations predate SD-WAN and digital transformation. As a result, most do not provide support for the sort of flexible SD-WAN functionality that today’s remote locations require. In addition, while they may claim to provide low-touch deployment and management, the experience of most organizations tells a different story. Complicating things further, these solutions provide little more than a superficial integration between their various services.

|

||||

|

||||

For example, few if any of these integrated devices can manage or secure the wired and wireless access points deployed as part of the larger branch LAN, provide device recognition and network access control, scan network traffic, or deliver the sort of robust security that today’s networks require. Instead, many of these solutions are little more than a collection of separate limited networking, connectivity, and security elements wrapped in a piece of sheet metal that all require separate management systems, providing little to no control for those extended LAN environments with their own access points and switches – which adds to IT overhead rather than reducing it.

|

||||

|

||||

### What role does security play in an SD-Branch?

|

||||

|

||||

Security is a critical element of any branch or retail location, especially as the ongoing deployment of IoT and end-user devices continues to expand the potential attack surface. As I explained before, IoT devices are a particular concern, as they are generally quite insecure, and as a result, they need to be automatically identified, segmented, and continuously monitored for malware and unusual behaviors.

|

||||

|

||||

But that is just part of the equation. Security tools need to be integrated into the switch and wireless infrastructure so that networking protocols, security policies, and network access controls can work together as a single system. This allows the SD-Branch solution to identify devices and dynamically match them to security policies, inspect applications and workflows, and dynamically assign devices and traffic to their appropriate network segment based on their function and role.

|

||||

|

||||

The challenge is that there is often no IT staff on site to set up, manage, and fine-tune a system like this. SD-Branch provides these advanced security, access control, and network management services in a zero-touch model so they can be deployed across multiple locations and then be remotely managed through a common interface.

|

||||

|

||||

### Security teams often face challenges with a lack of visibility and control at their branch offices. How does SD-Branch address this?

|

||||

|

||||

An SD-Branch solution seamlessly extends an organization's core security into the local branch network. For organizations with multiple branch or retail locations, this enables the creation of an integrated security fabric operating through a single pane of glass management system that can see all devices and orchestrate all security policies and configurations. This approach allows all remote locations to be dynamically coordinated and updated, supports the collection and correlation of threat intelligence from every corner of the network – from the core to the branch to the cloud – and enables a coordinated response to cyber events that can automatically raise defenses everywhere while identifying and eliminating all threads of an attack.

|

||||

|

||||

Combining security with switches, access points, and network access control systems means that every connected device can not only be identified and monitored, but every application and workflow can also be seen and tracked, even if they travel across or between the different branch and cloud environments.

|

||||

|

||||

### How is SD-Branch related to secure SD-WAN?

|

||||

|

||||

SD-Branch is a natural extension of secure SD-WAN. We are finding that once an organization deploys a secure SD-WAN solution, they quickly discover that the infrastructure behind that connection is often not ready to support their digital transformation efforts. Every new threat vector adds additional risk to their organization.

|

||||

|

||||

While secure SD-WAN can see and secure applications running to or between remote locations, the applications and workflows running inside those branch offices, schools, or retail stores are not being recognized or properly inspected. Shadow IT instances are not being identified. Wired and wireless access points are not secured. End-user devices have open access to network resources. And IoT devices are expanding the potential attack surface without corresponding protections in place. That requires an SD-Branch solution.

|

||||

|

||||

Of course, this is about much more than the emergence of the next-gen branch. These new remote network environments are just another example of the new edge model that is extending and replacing the traditional network perimeter. Cloud and multi-cloud, mobile workers, 5G networks, and the next-gen branch – including offices, retail locations, and extended school campuses – are all emerging simultaneously. That means they all need to be addressed by IT and security teams at the same time. However, the traditional model of building a separate security strategy for each edge environment is a recipe for an overwhelmed IT staff. Instead, every edge needs to be seen as part of a larger, integrated security strategy where every component contributes to the overall health of the entire distributed network.

|

||||

|

||||

With that in mind, adding SD-Branch solutions to SD-WAN deployments not only extends security deep into branch office and other remote locations, but they are also a critical component of a broader strategy that ensures consistent security across all edge environments, while providing a mechanism for controlling operational expenses across the entire distributed network through central management, visibility, and control.

|

||||

|

||||

**[ For more on IoT security, see [our corporate guide to addressing IoT security concerns][8]. ]**

|

||||

|

||||

Join the Network World communities on [Facebook][9] and [LinkedIn][10] to comment on topics that are top of mind.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3431166/how-sd-branch-addresses-todays-network-security-concerns.html

|

||||

|

||||

作者:[Zeus Kerravala][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.networkworld.com/author/Zeus-Kerravala/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://images.idgesg.net/images/article/2019/07/cio_cw_distributed_decentralized_global_network_africa_by_kontekbrothers_gettyimages-1004007018_2400x1600-100802403-large.jpg

|

||||

[2]: https://www.networkworld.com/article/3031279/sd-wan-what-it-is-and-why-you-ll-use-it-one-day.html

|

||||

[3]: https://www.networkworld.com/article/3250664/sd-branch-what-it-is-and-why-youll-need-it.html

|

||||

[4]: https://www.networkworld.com/newsletters/signup.html

|

||||

[5]: https://www.networkworld.com/article/2297171/network-security-mpls-explained.html

|

||||

[6]: https://images.idgesg.net/images/article/2019/08/john-maddison-_fortinet-square-100808017-small.jpg

|

||||

[7]: https://pluralsight.pxf.io/c/321564/424552/7490?u=https%3A%2F%2Fwww.pluralsight.com%2Fpaths%2Fcertified-information-systems-security-professional-cisspr

|

||||

[8]: https://www.networkworld.com/article/3269165/internet-of-things/a-corporate-guide-to-addressing-iot-security-concerns.html

|

||||

[9]: https://www.facebook.com/NetworkWorld/

|

||||

[10]: https://www.linkedin.com/company/network-world

|

||||

@ -0,0 +1,69 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Xilinx launches new FPGA cards that can match GPU performance)

|

||||

[#]: via: (https://www.networkworld.com/article/3430763/xilinx-launches-new-fpga-cards-that-can-match-gpu-performance.html)

|

||||

[#]: author: (Andy Patrizio https://www.networkworld.com/author/Andy-Patrizio/)

|

||||

|

||||

Xilinx launches new FPGA cards that can match GPU performance

|

||||

======

|

||||

Xilinx says its new FPGA card, the Alveo U50, can match the performance of a GPU in areas of artificial intelligence (AI) and machine learning.

|

||||

![Thinkstock][1]

|

||||

|

||||

Xilinx has launched a new FPGA card, the Alveo U50, that it claims can match the performance of a GPU in areas of artificial intelligence (AI) and machine learning.

|

||||

|

||||

The company claims the card is the industry’s first low-profile adaptable accelerator with PCIe Gen 4 support, which offers double the throughput over PCIe Gen3. It was finalized in 2017, but cards and motherboards to support it have been slow to come to market.

|

||||

|

||||

The Alveo U50 provides customers with a programmable low-profile and low-power accelerator platform built for scale-out architectures and domain-specific acceleration of any server deployment, on premises, in the cloud, and at the edge.

|

||||

|

||||

**[ Also read: [What is quantum computing (and why enterprises should care)][2] ]**

|

||||

|

||||

Xilinx claims the Alveo U50 delivers 10 to 20 times improvements in throughput and latency as compared to a CPU. One thing's for sure, it beats the competition on power draw. It has a 75 watt power envelope, which is comparable to a desktop CPU and vastly better than a Xeon or GPU.

|

||||

|

||||

For accelerated networking and storage workloads, the U50 card helps developers identify and eliminate latency and data movement bottlenecks by moving compute closer to the data.

|

||||

|

||||

![Xilinx Alveo U50][3]

|

||||

|

||||

The Alveo U50 card is the first in the Alveo portfolio to be packaged in a half-height, half-length form factor. It runs the Xilinx UltraScale+ FPGA architecture, features high-bandwidth memory (HBM2), 100 gigabits per second (100 Gbps) networking connectivity, and support for the PCIe Gen 4 and CCIX interconnects. Thanks to the 8GB of HBM2 memory, data transfer speeds can reach 400Gbps. It also supports NVMe-over-Fabric for high-speed SSD transfers.

|

||||

|

||||

That’s a lot of performance packed into a small card.

|

||||

|

||||

**[ [Get certified as an Apple Technical Coordinator with this seven-part online course from PluralSight.][4] ]**

|

||||

|

||||

### What the Xilinx Alveo U50 can do

|

||||

|

||||

Xilinx is making some big boasts about Alveo U50's capabilities:

|

||||

|

||||

* Deep learning inference acceleration (speech translation): delivers up to 25x lower latency, 10x higher throughput, and significantly improved power efficiency per node compared to GPU-only for speech translation performance.

|

||||

* Data analytics acceleration (database query): running the TPC-H Query benchmark, Alveo U50 delivers 4x higher throughput per hour and reduced operational costs by 3x compared to in-memory CPU.

|

||||

* Computational storage acceleration (compression): delivers 20x more compression/decompression throughput, faster Hadoop and big data analytics, and over 30% lower cost per node compared to CPU-only nodes.

|

||||

* Network acceleration (electronic trading): delivers 20x lower latency and sub-500ns trading time compared to CPU-only latency of 10us.

|

||||

* Financial modeling (grid computing): running the Monte Carlo simulation, Alveo U50 delivers 7x greater power efficiency compared to GPU-only performance for a faster time to insight, deterministic latency and reduced operational costs.

|

||||

|

||||

|

||||

|

||||

The Alveo U50 is sampling now with OEM system qualifications in process. General availability is slated for fall 2019.

|

||||

|

||||

Join the Network World communities on [Facebook][5] and [LinkedIn][6] to comment on topics that are top of mind.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3430763/xilinx-launches-new-fpga-cards-that-can-match-gpu-performance.html

|

||||

|

||||

作者:[Andy Patrizio][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.networkworld.com/author/Andy-Patrizio/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://images.techhive.com/images/article/2014/04/bolts-of-light-speeding-through-the-acceleration-tunnel-95535268-100264665-large.jpg

|

||||

[2]: https://www.networkworld.com/article/3275367/what-s-quantum-computing-and-why-enterprises-need-to-care.html

|

||||

[3]: https://images.idgesg.net/images/article/2019/08/xilinx-alveo-u50-100808003-medium.jpg

|

||||

[4]: https://pluralsight.pxf.io/c/321564/424552/7490?u=https%3A%2F%2Fwww.pluralsight.com%2Fpaths%2Fapple-certified-technical-trainer-10-11

|

||||

[5]: https://www.facebook.com/NetworkWorld/

|

||||

[6]: https://www.linkedin.com/company/network-world

|

||||

@ -1,162 +0,0 @@

|

||||

DF-SHOW – A Terminal File Manager Based On An Old DOS Application

|

||||

======

|

||||

|

||||

|

||||

If you have worked on good-old MS-DOS, you might have used or heard about **DF-EDIT**. The DF-EDIT, stands for **D** irectory **F** ile **Edit** or, is an obscure DOS file manager, originally written by **Larry Kroeker** for MS-DOS and PC-DOS systems. It is used to display the contents of a given directory or file in MS-DOS and PC-DOS systems. Today, I stumbled upon a similar utility named **DF-SHOW** ( **D** irectory **F** ile **S** how), a terminal file manager for Unix-like operating systems. It is an Unix rewrite of obscure DF-EDIT file manager and is based on DF-EDIT 2.3d release from 1986. DF-SHOW is completely free, open source and released under GPLv3.

|

||||

|

||||

DF-SHOW can be able to,

|

||||

|

||||

* List contents of a directory,

|

||||

* View files,

|

||||

* Edit files using your default file editor,

|

||||

* Copy files to/from different locations,

|

||||

* Rename files,

|

||||

* Delete files,

|

||||

* Create new directories from within the DF-SHOW interface,

|

||||

* Update file permissions, owners and groups,

|

||||

* Search files matching a search term,

|

||||

* Launch executable files.

|

||||

|

||||

|

||||

|

||||

### DF-SHOW Usage

|

||||

|

||||

DF-SHOW consists of two programs, namely **“show”** and **“sf”**.

|

||||

|

||||

**Show command**

|

||||

|

||||

The “show” program (similar to the `ls` command) is used to display the contents of a directory, create new directories, rename, delete files/folders, update permissions, search files and so on.

|

||||

|

||||

To view the list of contents in a directory, use the following command:

|

||||

|

||||

```

|

||||

$ show <directory path>

|

||||

```

|

||||

|

||||

Example:

|

||||

|

||||

```

|

||||

$ show dfshow

|

||||

```

|

||||

|

||||

Here, dfshow is a directory. If you invoke the “show” command without specifying a directory path, it will display the contents of current directory.

|

||||

|

||||

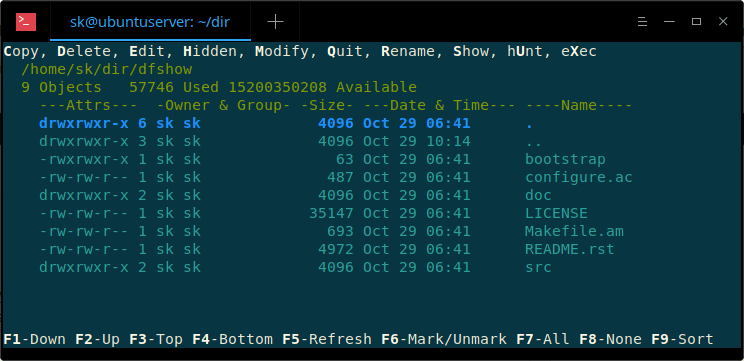

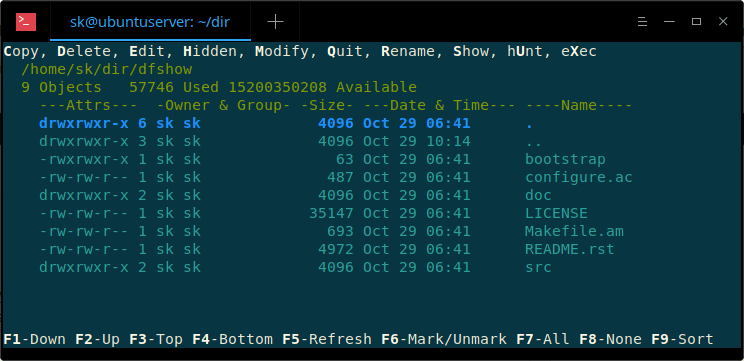

Here is how DF-SHOW default interface looks like.

|

||||

|

||||

|

||||

|

||||

As you can see, DF-SHOW interface is self-explanatory.

|

||||

|

||||

On the top bar, you see the list of available options such as Copy, Delete, Edit, Modify etc.

|

||||

|

||||

Complete list of available options are given below:

|

||||

|

||||

* **C** opy,

|

||||

* **D** elete,

|

||||

* **E** dit,

|

||||

* **H** idden,

|

||||

* **M** odify,

|

||||

* **Q** uit,

|

||||

* **R** ename,

|

||||

* **S** how,

|

||||

* h **U** nt,

|

||||

* e **X** ec,

|

||||

* **R** un command,

|

||||

* **E** dit file,

|

||||

* **H** elp,

|

||||

* **M** ake dir,

|

||||

* **Q** uit,

|

||||

* **S** how dir

|

||||

|

||||

|

||||

|

||||

In each option, one letter has been capitalized and marked as bold. Just press the capitalized letter to perform the respective operation. For example, to rename a file, just press **R** and type the new name and hit ENTER to rename the selected item.

|

||||

|

||||

|

||||

|

||||

To display all options or cancel an operation, just press **ESC** key.

|

||||

|

||||

Also, you will see a bunch of function keys at the bottom of DF-SHOW interface to navigate through the contents of a directory.

|

||||

|

||||

* **UP/DOWN** arrows or **F1/F2** – Move up and down (one line at time),

|

||||

* **PgUp/Pg/Dn** – Move one page at a time,

|

||||

* **F3/F4** – Instantly go to Top and bottom of the list,

|

||||

* **F5** – Refresh,

|

||||

* **F6** – Mark/Unmark files (Files marked will be indicated with an ***** in front of them),

|

||||

* **F7/F8** – Mark/Unmark all files at once,

|

||||

* **F9** – Sort the list by – Date & time, Name, Size.,

|

||||

|

||||

|

||||

|

||||

Press **h** to learn more details about **show** command and its options.

|

||||

|

||||

To exit DF-SHOW, simply press **q**.

|

||||

|

||||

**SF Command**

|

||||

|

||||

The “sf” (show files) is used to display the contents of a file.

|

||||

|

||||

```

|

||||

$ sf <file>

|

||||

```

|

||||

|

||||

|

||||

|

||||

Press **h** to learn more “sf” command and its options. To quit, press **q**.

|

||||

|

||||

Want to give it a try? Great! Go ahead and install DF-SHOW on your Linux system as described below.

|

||||

|

||||

### Installing DF-SHOW

|

||||

|

||||

DF-SHOW is available in [**AUR**][1], so you can install it on any Arch-based system using AUR programs such as [**Yay**][2].

|

||||

|

||||

```

|

||||

$ yay -S dfshow

|

||||

```

|

||||

|

||||

On Ubuntu and its derivatives:

|

||||

|

||||

```

|

||||

$ sudo add-apt-repository ppa:ian-hawdon/dfshow

|

||||

|

||||

$ sudo apt-get update

|

||||

|

||||

$ sudo apt-get install dfshow

|

||||

```

|

||||

|

||||

On other Linux distributions, you can compile and build it from the source as shown below.

|

||||

|

||||

```

|

||||

$ git clone https://github.com/roberthawdon/dfshow

|

||||

$ cd dfshow

|

||||

$ ./bootstrap

|

||||

$ ./configure

|

||||

$ make

|

||||

$ sudo make install

|

||||

```

|

||||

|

||||

The author of DF-SHOW project has only rewritten some of the applications of DF-EDIT utility. Since the source code is freely available on GitHub, you can add more features, improve the code and submit or fix the bugs (if there are any). It is still in alpha stage, but fully functional.

|

||||

|

||||

Have you tried it already? If so, how’d go? Tell us your experience in the comments section below.

|

||||

|

||||

And, that’s all for now. Hope this was useful.More good stuffs to come.

|

||||

|

||||

Stay tuned!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/df-show-a-terminal-file-manager-based-on-an-old-dos-application/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/sk/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://aur.archlinux.org/packages/dfshow/

|

||||

[2]: https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/

|

||||

@ -1,56 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (qfzy1233 )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (What is a Linux user?)

|

||||

[#]: via: (https://opensource.com/article/19/6/what-linux-user)

|

||||

[#]: author: (Anderson Silva https://opensource.com/users/ansilva/users/petercheer/users/ansilva/users/greg-p/users/ansilva/users/ansilva/users/bcotton/users/ansilva/users/seth/users/ansilva/users/don-watkins/users/ansilva/users/seth)

|

||||

|

||||

What is a Linux user?

|

||||

======

|

||||

The definition of who is a "Linux user" has grown to be a bigger tent,

|

||||

and it's a great change.

|

||||

![][1]

|

||||

|

||||

> _Editor's note: this article was updated on Jun 11, 2019, at 1:15:19 PM to more accurately reflect the author's perspective on an open and inclusive community of practice in the Linux community._

|

||||

|

||||

In only two years, the Linux kernel will be 30 years old. Think about that! Where were you in 1991? Were you even born? I was 13! Between 1991 and 1993 a few Linux distributions were created, and at least three of them—Slackware, Debian, and Red Hat–provided the [backbone][2] the Linux movement was built on.

|

||||

|

||||

Getting a copy of a Linux distribution and installing and configuring it on a desktop or server was very different back then than today. It was hard! It was frustrating! It was an accomplishment if you got it running! We had to fight with incompatible hardware, configuration jumpers on devices, BIOS issues, and many other things. Even if the hardware was compatible, many times, you still had to compile the kernel, modules, and drivers to get them to work on your system.

|

||||

|

||||

If you were around during those days, you are probably nodding your head. Some readers might even call them the "good old days," because choosing to use Linux meant you had to learn about operating systems, computer architecture, system administration, networking, and even programming, just to keep the OS functioning. I am not one of them though: Linux being a regular part of everyone's technology experience is one of the most amazing changes in our industry!

|

||||

|

||||

Almost 30 years later, Linux has gone far beyond the desktop and server. You will find Linux in automobiles, airplanes, appliances, smartphones… virtually everywhere! You can even purchase laptops, desktops, and servers with Linux preinstalled. If you consider cloud computing, where corporations and even individuals can deploy Linux virtual machines with the click of a button, it's clear how widespread the availability of Linux has become.

|

||||

|

||||

With all that in mind, my question for you is: **How do you define a "Linux user" today?**

|

||||

|

||||

If you buy your parent or grandparent a Linux laptop from System76 or Dell, log them into their social media and email, and tell them to click "update system" every so often, they are now a Linux user. If you did the same with a Windows or MacOS machine, they would be Windows or MacOS users. It's incredible to me that, unlike the '90s, Linux is now a place for anyone and everyone to compute.

|

||||

|

||||

In many ways, this is due to the web browser becoming the "killer app" on the desktop computer. Now, many users don't care what operating system they are using as long as they can get to their app or service.

|

||||

|

||||

How many people do you know who use their phone, desktop, or laptop regularly but can't manage files, directories, and drivers on their systems? How many can't install a binary that isn't attached to an "app store" of some sort? How about compiling an application from scratch?! For me, it's almost no one. That's the beauty of open source software maturing along with an ecosystem that cares about accessibility.

|

||||

|

||||

Today's Linux user is not required to know, study, or even look up information as the Linux user of the '90s or early 2000s did, and that's not a bad thing. The old imagery of Linux being exclusively for bearded men is long gone, and I say good riddance.

|

||||

|

||||

There will always be room for a Linux user who is interested, curious, _fascinated_ about computers, operating systems, and the idea of creating, using, and collaborating on free software. There is just as much room for creative open source contributors on Windows and MacOS these days as well. Today, being a Linux user is being anyone with a Linux system. And that's a wonderful thing.

|

||||

|

||||

### The change to what it means to be a Linux user

|

||||

|

||||

When I started with Linux, being a user meant knowing how to the operating system functioned in every way, shape, and form. Linux has matured in a way that allows the definition of "Linux users" to encompass a much broader world of possibility and the people who inhabit it. It may be obvious to say, but it is important to say clearly: anyone who uses Linux is an equal Linux user.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/6/what-linux-user

|

||||

|

||||

作者:[Anderson Silva][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/ansilva/users/petercheer/users/ansilva/users/greg-p/users/ansilva/users/ansilva/users/bcotton/users/ansilva/users/seth/users/ansilva/users/don-watkins/users/ansilva/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/linux_penguin_green.png?itok=ENdVzW22

|

||||

[2]: https://en.wikipedia.org/wiki/Linux_distribution#/media/File:Linux_Distribution_Timeline.svg

|

||||

@ -1,185 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (How to install Elasticsearch and Kibana on Linux)

|

||||

[#]: via: (https://opensource.com/article/19/7/install-elasticsearch-and-kibana-linux)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

How to install Elasticsearch and Kibana on Linux

|

||||

======

|

||||

Get our simplified instructions for installing both.

|

||||

![5 pengiuns floating on iceburg][1]

|

||||

|

||||

If you're keen to learn Elasticsearch, the famous open source search engine based on the open source Lucene library, then there's no better way than to install it locally. The process is outlined in detail on the [Elasticsearch website][2], but the official instructions have a lot more detail than necessary if you're a beginner. This article takes a simplified approach.

|

||||

|

||||

### Add the Elasticsearch repository

|

||||

|

||||

First, add the Elasticsearch software repository to your system, so you can install it and receive updates as needed. How you do so depends on your distribution. On an RPM-based system, such as [Fedora][3], [CentOS][4], [Red Hat Enterprise Linux (RHEL)][5], or [openSUSE][6], (anywhere in this article that references Fedora or RHEL applies to CentOS and openSUSE as well) create a repository description file in **/etc/yum.repos.d/** called **elasticsearch.repo**:

|

||||

|

||||

|

||||

```

|

||||

$ cat << EOF | sudo tee /etc/yum.repos.d/elasticsearch.repo

|

||||

[elasticsearch-7.x]

|

||||

name=Elasticsearch repository for 7.x packages

|

||||

baseurl=<https://artifacts.elastic.co/packages/oss-7.x/yum>

|

||||

gpgcheck=1

|

||||

gpgkey=<https://artifacts.elastic.co/GPG-KEY-elasticsearch>

|

||||

enabled=1

|

||||

autorefresh=1

|

||||

type=rpm-md

|

||||

EOF

|

||||

```

|

||||

|

||||

On Ubuntu or Debian, do not use the **add-apt-repository** utility. It causes errors due to a mismatch in its defaults and what Elasticsearch’s repository provides. Instead, set up this one:

|

||||

|

||||

|

||||

```

|

||||

$ echo "deb <https://artifacts.elastic.co/packages/oss-7.x/apt> stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

|

||||

```

|

||||

|

||||

This repository contains only Elasticsearch’s open source features, under an [Apache License][7], with none of the extra features provided by a subscription. If you need subscription-only features (these features are _not_ open source), the **baseurl** must be set to:

|

||||

|

||||

|

||||

```

|

||||

`baseurl=https://artifacts.elastic.co/packages/7.x/yum`

|

||||

```

|

||||

|

||||

|

||||

|

||||

### Install Elasticsearch

|

||||

|

||||

The name of the package you need to install depends on whether you use the open source version or the subscription version. This article uses the open source version, which appends **-oss** to the end of the package name. Without **-oss** appended to the package name, you are requesting the subscription-only version.

|

||||

|

||||

If you create a repository pointing to the subscription version but try to install the open source version, you will get a fairly non-specific error in return. If you create a repository for the open source version and fail to append **-oss** to the package name, you will also get an error.

|

||||

|

||||

Install Elasticsearch with your package manager. For instance, on Fedora, CentOS, or RHEL, run the following:

|

||||

|

||||

|

||||

```

|

||||

$ sudo dnf install elasticsearch-oss

|

||||

```

|

||||

|

||||

On Ubuntu or Debian, run:

|

||||

|

||||

|

||||

```

|

||||

$ sudo apt install elasticsearch-oss

|

||||

```

|

||||

|

||||

If you get errors while installing Elasticsearch, then you may be attempting to install the wrong package. If your intention is to use the open source package, as this article does, then make sure you are using the correct **apt** repository or baseurl in your Yum configuration.

|

||||

|

||||

### Start and enable Elasticsearch

|

||||

|

||||

Once Elasticsearch has been installed, you must start and enable it:

|

||||

|

||||

|

||||

```

|

||||

$ sudo systemctl daemon-reload

|

||||

$ sudo systemctl enable --now elasticsearch.service

|

||||

```

|

||||

|

||||

Then, to confirm that Elasticsearch is running on its default port of 9200, point a web browser to **localhost:9200**. You can use a GUI browser or you can do it in the terminal:

|

||||

|

||||

|

||||

```

|

||||

$ curl localhost:9200

|

||||

{

|

||||

|

||||

"name" : "fedora30",

|

||||

"cluster_name" : "elasticsearch",

|

||||

"cluster_uuid" : "OqSbb16NQB2M0ysynnX1hA",

|

||||

"version" : {

|

||||

"number" : "7.2.0",

|

||||

"build_flavor" : "oss",

|

||||

"build_type" : "rpm",

|

||||

"build_hash" : "508c38a",

|

||||

"build_date" : "2019-06-20T15:54:18.811730Z",

|

||||

"build_snapshot" : false,

|

||||

"lucene_version" : "8.0.0",

|

||||

"minimum_wire_compatibility_version" : "6.8.0",

|

||||

"minimum_index_compatibility_version" : "6.0.0-beta1"

|

||||

},

|

||||

"tagline" : "You Know, for Search"

|

||||

}

|

||||

```

|

||||

|

||||

### Install Kibana

|

||||

|

||||

Kibana is a graphical interface for Elasticsearch data visualization. It’s included in the Elasticsearch repository, so you can install it with your package manager. Just as with Elasticsearch itself, you must append **-oss** to the end of the package name if you are using the open source version of Elasticsearch, and not the subscription version (the two installations need to match):

|

||||

|

||||

|

||||

```

|

||||

$ sudo dnf install kibana-oss

|

||||

```

|

||||

|

||||

On Ubuntu or Debian:

|

||||

|

||||

|

||||

```

|

||||

$ sudo apt install kibana-oss

|

||||

```

|

||||

|

||||

Kibana runs on port 5601, so launch a graphical web browser and navigate to **localhost:5601** to start using the Kibana interface, which is shown below:

|

||||

|

||||

![Kibana running in Firefox.][8]

|

||||

|

||||

### Troubleshoot

|

||||

|

||||

If you get errors while installing Elasticsearch, try installing a Java environment manually. On Fedora, CentOS, and RHEL:

|

||||

|

||||

|

||||

```

|

||||

$ sudo dnf install java-openjdk-devel java-openjdk

|

||||

```

|

||||

|

||||

On Ubuntu:

|

||||

|

||||

|

||||

```

|

||||

`$ sudo apt install default-jdk`

|

||||

```

|

||||

|

||||

If all else fails, try installing the Elasticsearch RPM directly from the Elasticsearch servers:

|

||||

|

||||

|

||||

```

|

||||

$ wget <https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-oss-7.2.0-x86\_64.rpm{,.sha512}>

|

||||

$ shasum -a 512 -c elasticsearch-oss-7.2.0-x86_64.rpm.sha512 && sudo rpm --install elasticsearch-oss-7.2.0-x86_64.rpm

|

||||

```

|

||||

|

||||

On Ubuntu or Debian, use the DEB package instead.

|

||||

|

||||

If you cannot access either Elasticsearch or Kibana with a web browser, then your firewall may be blocking those ports. You can allow traffic on those ports by adjusting your firewall settings. For instance, if you are running **firewalld** (the default on Fedora and RHEL, and installable on Debian and Ubuntu), then you can use **firewall-cmd**:

|

||||

|

||||

|

||||

```

|

||||

$ sudo firewall-cmd --add-port=9200/tcp --permanent

|

||||

$ sudo firewall-cmd --add-port=5601/tcp --permanent

|

||||

$ sudo firewall-cmd --reload

|

||||

```

|

||||

|

||||

You’re now set up and can follow along with our upcoming installation articles for Elasticsearch and Kibana.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/7/install-elasticsearch-and-kibana-linux

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/rh_003499_01_linux31x_cc.png?itok=Pvim4U-B (5 pengiuns floating on iceburg)

|

||||

[2]: https://www.elastic.co/guide/en/elasticsearch/reference/current/rpm.html

|

||||

[3]: https://getfedora.org

|

||||

[4]: https://www.centos.org

|

||||

[5]: https://www.redhat.com/en/technologies/linux-platforms/enterprise-linux

|

||||

[6]: https://www.opensuse.org

|

||||

[7]: http://www.apache.org/licenses/

|

||||

[8]: https://opensource.com/sites/default/files/uploads/kibana.jpg (Kibana running in Firefox.)

|

||||

@ -1,263 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Introduction to GNU Autotools)

|

||||

[#]: via: (https://opensource.com/article/19/7/introduction-gnu-autotools)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

Introduction to GNU Autotools

|

||||

======

|

||||

If you're not using Autotools yet, this tutorial will change the way you

|

||||

deliver your code.

|

||||

![Linux kernel source code \(C\) in Visual Studio Code][1]

|

||||

|

||||

Have you ever downloaded the source code for a popular software project that required you to type the almost ritualistic **./configure; make && make install** command sequence to build and install it? If so, you’ve used [GNU Autotools][2]. If you’ve ever looked into some of the files accompanying such a project, you’ve likely also been terrified at the apparent complexity of such a build system.

|

||||

|

||||

Good news! GNU Autotools is a lot simpler to set up than you think, and it’s GNU Autotools itself that generates those 1,000-line configuration files for you. Yes, you can write 20 or 30 lines of installation code and get the other 4,000 for free.

|

||||

|

||||

### Autotools at work

|

||||

|

||||

If you’re a user new to Linux looking for information on how to install applications, you do not have to read this article! You’re welcome to read it if you want to research how software is built, but if you’re just installing a new application, go read my article about [installing apps on Linux][3].

|

||||

|

||||

For developers, Autotools is a quick and easy way to manage and package source code so users can compile and install software. Autotools is also well-supported by major packaging formats, like DEB and RPM, so maintainers of software repositories can easily prepare a project built with Autotools.

|

||||

|

||||

Autotools works in stages:

|

||||

|

||||

1. First, during the **./configure** step, Autotools scans the host system (the computer it’s being run on) to discover the default settings. Default settings include where support libraries are located, and where new software should be placed on the system.

|

||||

2. Next, during the **make** step, Autotools builds the application, usually by converting human-readable source code into machine language.

|

||||

3. Finally, during the **make install** step, Autotools copies the files it built to the appropriate locations (as detected during the configure stage) on your computer.

|

||||

|

||||

|

||||

|

||||

This process seems simple, and it is, as long as you use Autotools.

|

||||

|

||||

### The Autotools advantage

|

||||

|

||||

GNU Autotools is a big and important piece of software that most of us take for granted. Along with [GCC (the GNU Compiler Collection)][4], Autotools is the scaffolding that allows Free Software to be constructed and installed to a running system. If you’re running a [POSIX][5] system, it’s not an understatement to say that most of your operating system exists as runnable software on your computer because of these projects.

|

||||

|

||||

In the likely event that your pet project isn’t an operating system, you might assume that Autotools is overkill for your needs. But, despite its reputation, Autotools has lots of little features that may benefit you, even if your project is a relatively simple application or series of scripts.

|

||||

|

||||

#### Portability

|

||||

|

||||

First of all, Autotools comes with portability in mind. While it can’t make your project work across all POSIX platforms (that’s up to you, as the coder), Autotools can ensure that the files you’ve marked for installation get installed to the most sensible locations on a known platform. And because of Autotools, it’s trivial for a power user to customize and override any non-optimal value, according to their own system.

|

||||

|

||||

With Autotools, all you need to know is what files need to be installed to what general location. It takes care of everything else. No more custom install scripts that break on any untested OS.

|

||||

|

||||

#### Packaging

|

||||

|

||||

Autotools is also well-supported. Hand a project with Autotools over to a distro packager, whether they’re packaging an RPM, DEB, TGZ, or anything else, and their job is simple. Packaging tools know Autotools, so there’s likely to be no patching, hacking, or adjustments necessary. In many cases, incorporating an Autotools project into a pipeline can even be automated.

|

||||

|

||||

### How to use Autotools

|

||||

|

||||

To use Autotools, you must first have Autotools installed. Your distribution may provide one package meant to help developers build projects, or it may provide separate packages for each component, so you may have to do some research on your platform to discover what packages you need to install.

|

||||

|

||||

The components of Autotools are:

|

||||

|

||||

* **automake**

|

||||

* **autoconf**

|

||||

* **automake**

|

||||

* **make**

|

||||

|

||||

|

||||

|

||||

While you likely need to install the compiler (GCC, for instance) required by your project, Autotools works just fine with scripts or binary assets that don’t need to be compiled. In fact, Autotools can be useful for such projects because it provides a **make uninstall** script for easy removal.

|

||||

|

||||

Once you have all of the components installed, it’s time to look at the structure of your project’s files.

|

||||

|

||||

#### Autotools project structure

|

||||

|

||||

GNU Autotools has very specific expectations, and most of them are probably familiar if you download and build source code often. First, the source code itself is expected to be in a subdirectory called **src**.

|

||||

|

||||

Your project doesn’t have to follow all of these expectations, but if you put files in non-standard locations (from the perspective of Autotools), then you’ll have to make adjustments for that in your Makefile later.

|

||||

|

||||

Additionally, these files are required:

|

||||

|

||||

* **NEWS**

|

||||

* **README**

|

||||

* **AUTHORS**

|

||||

* **ChangeLog**

|

||||

|

||||

|

||||

|

||||

You don’t have to actively use the files, and they can be symlinks to a monolithic document (like **README.md**) that encompasses all of that information, but they must be present.

|

||||

|

||||

#### Autotools configuration

|

||||

|

||||

Create a file called **configure.ac** at your project’s root directory. This file is used by **autoconf** to create the **configure** shell script that users run before building. The file must contain, at the very least, the **AC_INIT** and **AC_OUTPUT** [M4 macros][6]. You don’t need to know anything about the M4 language to use these macros; they’re already written for you, and all of the ones relevant to Autotools are defined in the documentation.

|

||||

|

||||

Open the file in your favorite text editor. The **AC_INIT** macro may consist of the package name, version, an email address for bug reports, the project URL, and optionally the name of the source TAR file.

|

||||

|

||||

The **[AC_OUTPUT][7]** macro is much simpler and accepts no arguments.

|

||||

|

||||

|

||||

```

|

||||

AC_INIT([penguin], [2019.3.6], [[seth@example.com][8]])

|

||||

AC_OUTPUT

|

||||

```

|

||||

|

||||

If you were to run **autoconf** at this point, a **configure** script would be generated from your **configure.ac** file, and it would run successfully. That’s all it would do, though, because all you have done so far is define your project’s metadata and called for a configuration script to be created.

|

||||

|

||||

The next macros you must invoke in your **configure.ac** file are functions to create a [Makefile][9]. A Makefile tells the **make** command what to do (usually, how to compile and link a program).

|

||||

|

||||

The macros to create a Makefile are **AM_INIT_AUTOMAKE**, which accepts no arguments, and **AC_CONFIG_FILES**, which accepts the name you want to call your output file.

|

||||

|

||||

Finally, you must add a macro to account for the compiler your project needs. The macro you use obviously depends on your project. If your project is written in C++, the appropriate macro is **AC_PROG_CXX**, while a project written in C requires **AC_PROG_CC**, and so on, as detailed in the [Building Programs and Libraries][10] section in the Autoconf documentation.

|

||||

|

||||

For example, I might add the following for my C++ program:

|

||||

|

||||

|

||||

```

|

||||

AC_INIT([penguin], [2019.3.6], [[seth@example.com][8]])

|

||||

AC_OUTPUT

|

||||

AM_INIT_AUTOMAKE

|

||||

AC_CONFIG_FILES([Makefile])

|

||||

AC_PROG_CXX

|

||||

```

|

||||

|

||||

Save the file. It’s time to move on to the Makefile.

|

||||

|

||||

#### Autotools Makefile generation

|

||||

|

||||

Makefiles aren’t difficult to write manually, but Autotools can write one for you, and the one it generates will use the configuration options detected during the `./configure` step, and it will contain far more options than you would think to include or want to write yourself. However, Autotools can’t detect everything your project requires to build, so you have to add some details in the file **Makefile.am**, which in turn is used by **automake** when constructing a Makefile.

|

||||

|

||||

**Makefile.am** uses the same syntax as a Makefile, so if you’ve ever written a Makefile from scratch, then this process will be familiar and simple. Often, a **Makefile.am** file needs only a few variable definitions to indicate what files are to be built, and where they are to be installed.

|

||||

|

||||

Variables ending in **_PROGRAMS** identify code that is to be built (this is usually considered the _primary_ target; it’s the main reason the Makefile exists). Automake recognizes other primaries, like **_SCRIPTS**, **_DATA**, **_LIBRARIES**, and other common parts that make up a software project.

|

||||

|

||||

If your application is literally compiled during the build process, then you identify it as a binary program with the **bin_PROGRAMS** variable, and then reference any part of the source code required to build it (these parts may be one or more files to be compiled and linked together) using the program name as the variable prefix:

|

||||

|

||||

|

||||

```

|

||||

bin_PROGRAMS = penguin

|

||||

penguin_SOURCES = penguin.cpp

|

||||

```

|

||||

|

||||

The target of **bin_PROGRAMS** is installed into the **bindir**, which is user-configurable during compilation.

|

||||

|

||||

If your application isn’t actually compiled, then your project doesn’t need a **bin_PROGRAMS** variable at all. For instance, if your project is a script written in Bash, Perl, or a similar interpreted language, then define a **_SCRIPTS** variable instead:

|

||||

|

||||

|

||||

```

|

||||

bin_SCRIPTS = bin/penguin

|

||||

```

|

||||

|

||||

Automake expects sources to be located in a directory called **src**, so if your project uses an alternative directory structure for its layout, you must tell Automake to accept code from outside sources:

|

||||

|

||||

|

||||

```

|

||||

AUTOMAKE_OPTIONS = foreign subdir-objects

|

||||

```

|

||||

|

||||

Finally, you can create any custom Makefile rules in **Makefile.am** and they’ll be copied verbatim into the generated Makefile. For instance, if you know that a temporary value needs to be replaced in your source code before the installation proceeds, you could make a custom rule for that process:

|

||||

|

||||

|

||||

```

|

||||

all-am: penguin

|

||||

touch bin/penguin.sh

|

||||

|

||||

penguin: bin/penguin.sh

|

||||

@sed "s|__datadir__|@datadir@|" $< >bin/$@

|

||||

```

|

||||

|

||||

A particularly useful trick is to extend the existing **clean** target, at least during development. The **make clean** command generally removes all generated build files with the exception of the Automake infrastructure. It’s designed this way because most users rarely want **make clean** to obliterate the files that make it easy to build their code.

|

||||

|

||||

However, during development, you might want a method to reliably return your project to a state relatively unaffected by Autotools. In that case, you may want to add this:

|

||||

|

||||

|

||||

```

|

||||

clean-local:

|

||||

@rm config.status configure config.log

|

||||

@rm Makefile

|

||||

@rm -r autom4te.cache/

|

||||

@rm aclocal.m4

|

||||

@rm compile install-sh missing Makefile.in

|

||||

```

|

||||

|

||||

There’s a lot of flexibility here, and if you’re not already familiar with Makefiles, it can be difficult to know what your **Makefile.am** needs. The barest necessity is a primary target, whether that’s a binary program or a script, and an indication of where the source code is located (whether that’s through a **_SOURCES** variable or by using **AUTOMAKE_OPTIONS** to tell Automake where to look for source code).

|

||||

|

||||