mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

60b8a43faa

@ -1,8 +1,7 @@

The Cost of Cloud Computing
============================================================

### A day in the life of two development teams

> A day in the life of two development teams

@ -12,103 +11,108 @@

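The two teams were asked to build a new service for a global enterprise that currently serves millions of consumers worldwide. The new service must meet the following basic requirements:

1. Able to scale at any time to meet elastic demand
2. Resilient to data center failures
3. Keeps data secure and protected
4. Provides in-depth debugging for troubleshooting
5. The project must be delivered quickly
6. The service must be cost-efficient to build and maintain

1. Able to **scale** at any time to meet elastic demand
2. **Resilient** to data center failures
3. Keeps data **secure** and protected
4. Provides in-depth **debugging** for troubleshooting
5. The project must be **delivered quickly**
6. The service must be **cost-efficient** to build and maintain

For a new service, these look like fairly standard requirements; in essence, nothing on traditional dedicated infrastructure can beat the public cloud.

* * *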

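#### 1 - Scaling to meet customer demand

When it comes to scalability, the new service has to cope with unpredictable customer demand. The service we build must not turn away any request, or the company risks losing money or damaging its reputation.

The traditional team uses dedicated infrastructure, so the architecture's compute capacity has to match peak demand. For a service with a variable load, a large amount of expensive compute is wasted during periods of low utilization.

**The traditional team**

With dedicated infrastructure, the architecture's compute capacity has to match peak demand. For a service with a variable load, a large amount of expensive compute is wasted whenever utilization is low.

This is a wasteful approach, and the heavy capital expenditure eats into your profits. Maintaining a large fleet of underused servers is also a significant operating cost. It is a cost you cannot ignore; I cannot stress enough how much time and money it wastes to maintain a rack of servers for a single service.

The cloud team uses a cloud-based auto-scaling solution, so the application scales out and in automatically as needed. In other words, you pay only for the compute resources you consume.

**The cloud team**

With a cloud-based auto-scaling solution, the application scales out and in automatically as needed. In other words, you pay only for the compute resources you actually consume.

A well-architected cloud-based application scales seamlessly, and does so automatically. The development team only has to define the auto-scaling groups: how many instances to launch when the application's CPU utilization reaches a given high-water mark or when the request rate hits a given number per second. You can customize these rules however you like.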
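To make the idea of a scaling rule concrete, here is a minimal sketch in plain Python. It is not AWS code and calls no AWS API; the `ScalingRule` name, the thresholds, the per-instance request capacity, and the one-instance step are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingRule:
    """Hypothetical auto-scaling rule; every default is an illustrative assumption."""
    cpu_high: float = 70.0        # scale out above this average CPU percentage
    cpu_low: float = 30.0         # scale in below this average CPU percentage
    rps_per_instance: int = 500   # requests per second one instance is assumed to serve
    min_instances: int = 2
    max_instances: int = 20


def desired_instances(rule: ScalingRule, current: int,
                      cpu_percent: float, requests_per_second: float) -> int:
    """Return the instance count the rule asks for, clamped to the group limits."""
    # Instances needed to serve the observed request rate (ceiling division).
    needed_for_traffic = -(-int(requests_per_second) // rule.rps_per_instance)

    # Step the group by one instance when CPU crosses a threshold.
    target = current
    if cpu_percent > rule.cpu_high:
        target = current + 1
    elif cpu_percent < rule.cpu_low:
        target = current - 1

    # Never go below what the traffic needs, and stay within the group limits.
    target = max(target, needed_for_traffic)
    return max(rule.min_instances, min(rule.max_instances, target))
```

With the default rule, a group of 4 instances at 85% CPU and 1,000 requests per second would grow to 5, while a group at 10% CPU but 2,600 requests per second would still grow to 6, because the request rate takes precedence over the CPU signal in this sketch.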

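* * *

#### 2 - Resilience to failure

When it comes to resilience, hosting a service on infrastructure that sits in a single room is not a good choice. If your application is hosted in a single data center, then when (not if) some failure occurs (translator's note: a collapse, earthquake, flood, and so on), everything you have is buried with it.

When it comes to resilience, hosting a service on infrastructure that sits in a single room is not a good choice. If your application is hosted in a single data center, then when (not if) some failure occurs (LCTT translator's note: a collapse, earthquake, flood, and so on), everything you have is buried with it.

The traditional team's standard solution for this basic requirement is to set up at least two servers for local resilience, replicated within seconds between geographically redundant data centers.

**The traditional team**

The development team needs a load-balancing solution that redirects traffic to another node when one becomes saturated or fails, and it must also make sure the entire stack stays fully synchronized between the mirrored nodes.

The standard solution for this basic requirement is to set up at least two servers for local resilience, replicated within seconds between geographically redundant data centers.

In each of its 50 regions worldwide, AWS provides the cloud team with multiple _effective zones_. Each zone consists of several fault-tolerant data centers; with automatic failover, AWS can seamlessly move a service to another zone within the region.

The development team needs a load-balancing solution that redirects traffic to another node when one saturates or fails, and it must also make sure the entire stack stays fully synchronized between the mirrored nodes.

**The cloud team**

In each of its 50 regions around the world, AWS provides multiple _availability zones_. Each zone consists of several fault-tolerant data centers; with automatic failover, AWS can seamlessly move a service to another zone in the same region.

Defining your _infrastructure as code_ in a `CloudFormation` template keeps your infrastructure consistent across zones during auto-scaling events, and the AWS load-balancing service needs very little configuration to manage the traffic flow.

* * *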

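#### 3 - Security and data protection

Security is a basic requirement of every system in an organization. I am sure you do not want to be one of the companies unlucky enough to suffer a security incident.

To keep the servers that run its services secure, the traditional team has to keep spending. That means investing in a team that monitors, identifies, and patches security threats across multiple vendor solutions, drawing on a variety of data sources.

**The traditional team**

A team that uses the public cloud is not absolved of responsibility for security. The cloud team still has to stay vigilant, but it does not have to worry about patching the underlying infrastructure. AWS actively works to counter 0-day vulnerabilities, most recently Spectre and Meltdown.

Keeping the servers that run the service secure requires ongoing spending. That means investing in a team to monitor and identify security threats and to patch them with solutions from multiple vendors, drawing on a variety of data sources.

AWS's identification management and encryption security services let the cloud team focus on its application rather than on undifferentiated security management. Using `CloudTrail` to audit every API call to AWS services makes monitoring transparent.

**The cloud team**

* * *

Using the public cloud does not absolve you of responsibility for security. The cloud team still has to stay vigilant, but it does not have to worry about patching the underlying infrastructure. AWS actively works to counter zero-day vulnerabilities, most recently Spectre and Meltdown.

AWS's identity management and encryption security services let the cloud team focus on its application rather than on undifferentiated security management. Using CloudTrail to audit every API call to AWS services makes monitoring transparent.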

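#### 4 - Monitoring and logging

Every piece of infrastructure and every application deployed as a service needs close monitoring of real-time data. The team should have access to a dashboard that raises alerts when metrics cross their thresholds and that surfaces the logs related to an event during troubleshooting.

A traditional team on traditional infrastructure has to set up monitoring and reporting across different vendors and "snowflake" solutions. Configuring these "damned" solutions takes a great deal of time and effort, and getting them right is quite difficult.

**The traditional team**

With traditional infrastructure, you have to set up monitoring and reporting across different vendors and "snowflake" solutions. Configuring these "damned" solutions takes a great deal of time and effort, and getting them right is quite difficult.

For most applications deployed on dedicated infrastructure, you find out why the application crashed by searching the log files stored on the server's file system. Your team has to SSH into the server, navigate to the log directory, and then waste a great deal of time running `grep` over hundreds or thousands of log files. If you have to do this for an application deployed across 60 servers, I can tell you in all seriousness that it is a terrible solution.

For the cloud team, monitoring a cloud application with native AWS services such as CloudWatch and CloudTrail is very easy. With little configuration, the development team can monitor all kinds of metrics on the deployed service, and troubleshooting is no longer a nightmare.

**The cloud team**

Monitoring a cloud application with native AWS services such as CloudWatch and CloudTrail is very easy. With little configuration, the development team can monitor all kinds of metrics on the deployed service, and troubleshooting is no longer a nightmare.

With traditional infrastructure, the team has to build its own solution and configure its REST APIs or services to push logs to an aggregator. Getting this "out of the box" is a huge boost to productivity.

* * *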

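#### 5 - Accelerating development

In today's business environment, the ability to get to market quickly matters more and more. The opportunity cost of implementation delays can become a major drag on the bottom line.

In today's business environment, the ability to get to market quickly matters more and more. The opportunity cost of delays in implementation can become a major drag on the bottom line.

In most organizations, a traditional team like this spends a long time procuring, configuring, and deploying the hardware a new project needs, and because forecasting is poor, the extra capacity acquired up front ends up largely wasted.

**The traditional team**

In most organizations, it takes a long time to procure, configure, and deploy the hardware a new project needs, and because forecasting is poor, the extra capacity acquired up front ends up largely wasted.

It is also likely that the traditional development team spends months shuttling between countless "silos" and handing off the service it has built. Every step of the project requires a separate piece of work from database, systems, security, and network administration.

**The cloud team**

When the cloud team develops a new feature, it has a large suite of production-ready services at its disposal. This is developer heaven. Each AWS service is generally well documented and can be accessed programmatically from the language of your choice.

With newer cloud architectures such as serverless, a development team can build and deploy a scalable solution with minimal friction. For example, it took only a few days to build a [serverless clone of Imgur][4] that features image recognition, has a production-grade monitoring/logging solution built in, and is highly resilient.

If I had had to engineer the resilience and scalability myself, I can assure you I would still be developing this project, and the final product would be far worse than what I have now.

*How to build a serverless clone of Imgur*

If I had had to engineer the resilience and scalability myself, I can assure you I would still be stuck developing this project, and the final product would be far worse than what I have now.

In my experience, delivering with a serverless architecture takes far less time than provisioning hardware does in most companies. I simply glued a series of AWS services together with Lambda functions, and ta-da! I concentrated on building the solution, while AWS handled the undifferentiated scalability and resilience for me.

* * *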

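#### The verdict on the cost of cloud computing

On elasticity, the cloud team's on-demand scaling is the clear winner, because the team pays only for the compute it needs and spends no resources on maintaining and patching the underlying physical infrastructure.

Cloud computing also gives the development team a resilient architecture spanning multiple effective zones, security features built into every service, consistent logging and monitoring tools, pay-as-you-go services, and low-cost practices for accelerating delivery.

Cloud computing also gives the development team a resilient architecture spanning multiple availability zones, security features built into every service, consistent logging and monitoring tools, pay-as-you-go services, and low-cost practices for accelerating delivery.

In most cases, cloud computing costs far less than buying, supporting, maintaining, and designing your own infrastructure to run your application, and it comes with fewer headaches.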

@ -116,17 +120,17 @@

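There are cases where cloud computing is more expensive than traditional infrastructure, for example when someone forgets to shut down some extremely expensive test machines over the weekend.

[After deciding to roll out its own infrastructure and reduce its dependence on AWS services, Dropbox saved nearly $75 million over two years, Dropbox…www.geekwire.com][5][][6]

[After deciding to roll out its own infrastructure and reduce its dependence on AWS services, Dropbox saved nearly $75 million over two years, Dropbox… - www.geekwire.com][5][][6]

Even so, cases like this are still very rare. Not to mention that Dropbox started its business on AWS in the first place and decided to leave the platform only after reaching a critical mass. Even now, Dropbox remains in the cloud and keeps 40% of its infrastructure on AWS and GCP.

Comparing cloud services with traditional infrastructure on the single metric of "cost" (translator's note: "cost" here means only the purchase cost of physical infrastructure) is extremely naive; it blatantly ignores some of the major advantages the cloud brings to development teams and to your business.

Comparing cloud services with traditional infrastructure on the single metric of "cost" (LCTT translator's note: "cost" here means only the purchase cost of physical infrastructure) is extremely naive; it blatantly ignores some of the major advantages the cloud brings to development teams and to your business.

In the rare cases where cloud services cost more in absolute terms than traditional infrastructure, they still deliver better value in development team productivity, speed, and innovation.

In the rare cases where cloud services cost more in absolute terms than traditional infrastructure, they nevertheless deliver better value in development team productivity, speed, and innovation.

Customers don't care about your data center

*Customers don't care about your data center*

_I would love to hear your experiences and feedback on the true cost of developing in the cloud! Reach out in the comments below, on Twitter_ [_@Elliot_F_][7], _or directly on_ [_LinkedIn_][8].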

@ -136,7 +140,7 @@ via: https://read.acloud.guru/the-true-cost-of-cloud-a-comparison-of-two-develop

Author: [Elliot Forbes][a]
Translator: [qhwdw](https://github.com/qhwdw)
Proofreader: [proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -1,9 +1,11 @@

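Intel and AMD Reveal New Processor Designs
======

> Whiskey Lake U-series and Amber Lake Y-series Core chips will start showing up in more than 70 laptops and 2-in-1 models this fall.

With this week's Computex 2018 show in Taipei and other recent news, processors are front and center in the tech press. Intel made a series of announcements ranging from new Core processors to cutting-edge technology for extending battery life. Meanwhile, AMD unveiled a second-generation, 32-core Threadripper CPU for high-end gaming, along with some new embedded-friendly Ryzen models.

With the recent Computex 2018 show in Taipei and other recent news, processors are front and center in the tech press. Intel made a series of announcements ranging from new Core processors to cutting-edge technology for extending battery life. Meanwhile, AMD unveiled a second-generation, 32-core Threadripper CPU for high-end gaming, along with some new Ryzen models suited to embedded use.

Here is a quick tour of the major announcements from Intel and AMD, focusing on the processors of greatest interest to embedded Linux developers.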

@ -11,7 +13,7 @@ Intel 和 AMD 透露新的处理器设计

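In April, Intel announced that volume production of its 10nm Cannon Lake generation of Core processors would be delayed until 2019, which stirred up more talk about whether Moore's Law has finally run its course. Yet there was plenty of consolation in Intel's [Computex showcase][1]. Intel showed off two power-efficient, 14nm 8th Gen Core product families, as well as its first 5GHz designs.

Whiskey Lake U-series and Amber Lake Y-series Core chips will start showing up in over 70 laptops and 2-in-1 models this fall. Intel says the chips offer a twofold performance gain over the 7th Gen Kaby Lake Core processors. The new product families will also be more power efficient than products built on the current [Coffee Lake][2] chips.

Whiskey Lake U-series and Amber Lake Y-series Core chips will start showing up in more than 70 laptops and 2-in-1 models this fall. Intel says the chips offer a twofold performance gain over the 7th Gen Kaby Lake Core processors. The new product families will also be more power efficient than products built on the current [Coffee Lake][2] chips.

Both Whiskey Lake and Amber Lake will ship with Intel's high-performance gigabit WiFi (Intel 9560 AC), which also appears on the [Gemini Lake][3] Pentium Silver and Celeron processors that followed the Apollo Lake generation. Gigabit WiFi is essentially Intel's combination of 2×2 MU-MIMO and 160MHz channels with 802.11ac.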
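As a rough check on the "gigabit" label, the peak 802.11ac PHY rate for a 2×2, 160MHz link can be worked out from the standard's parameters. The sketch below assumes the best case, 256-QAM with rate-5/6 coding and the short guard interval, which the article does not state; the function name is made up for illustration, and real-world throughput is considerably lower than the PHY rate.

```python
def vht_phy_rate_mbps(spatial_streams: int,
                      data_subcarriers: int = 468,    # data subcarriers in a 160 MHz 802.11ac channel
                      bits_per_subcarrier: int = 8,   # 256-QAM carries 8 bits per subcarrier
                      coding_rate: float = 5 / 6,     # highest 802.11ac coding rate (assumed)
                      symbol_time_us: float = 3.6):   # 3.2 us symbol + 0.4 us short guard interval
    """Peak PHY rate in Mbit/s; bits per microsecond equals megabits per second."""
    bits_per_ofdm_symbol = data_subcarriers * bits_per_subcarrier * coding_rate
    return spatial_streams * bits_per_ofdm_symbol / symbol_time_us


if __name__ == "__main__":
    # Two spatial streams, as in a 2x2 configuration.
    print(f"{vht_phy_rate_mbps(2):.1f} Mbit/s")
```

Under these assumptions each spatial stream peaks at about 866.7 Mbit/s, so two streams give roughly 1.73 Gbit/s, which is where the gigabit branding comes from.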


@ -19,7 +21,7 @@ Intel 的 Whiskey Lake 将作为第七代和第八代 Skylake U 系列处理器

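[PC World][6] reports that the Amber Lake Y-series chips primarily target 2-in-1s. Like the dual-core [Kaby Lake Y-series][5] chips, Amber Lake will support a 4.5W TDP.

To celebrate Intel's upcoming 50th anniversary, as well as the 40th anniversary of the first 8086 processor, Intel is launching a limited-edition 8th Gen [Core i7-8086K][7] CPU with a 4GHz base clock. The 64-bit limited edition will be the first to offer a 5GHz single-core turbo boost, and the first 6-core, 12-thread processor with integrated graphics. Intel will start [giving away][8] 8,086 of the overclockable Core i7-8086K chips on June 7.

To celebrate Intel's upcoming 50th anniversary, as well as the 40th anniversary of the first 8086 processor, Intel is launching a limited-edition 8th Gen [Core i7-8086K][7] CPU with a 4GHz base clock. The 64-bit limited edition will be the first to offer a 5GHz single-core turbo boost, and the first 6-core, 12-thread processor with integrated graphics. Intel will start [giving away][8] 8086 of the overclockable Core i7-8086K chips on June 7.

Intel also revealed plans to launch a new high-end Core X series with high core and thread counts by the end of the year. [AnandTech predicts][9] it may use the Xeon-like Cascade Lake architecture. Later this year, Intel will announce new Core S-series models, which AnandTech predicts could be octa-core Coffee Lake chips.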

@ -32,7 +34,8 @@ Intel 也表示第一款疾速傲腾 SSD —— 一个 M.2 接口产品被称作

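### AMD keeps bouncing back

At the show, AMD unveiled a second-generation Threadripper CPU with 32 cores and 64 threads. To stay ahead of Intel's as-yet-unnamed 28-core monster, this high-end gaming processor will launch in the third quarter. According to [Engadget][11], the new Threadripper uses the same 12nm Zen+ architecture found in the Ryzen chips.

[WCCFTech][12] reports that AMD also said it is sampling a 7nm Vega Instinct GPU designed for graphics cards with 32GB of expensive HBM2 memory rather than GDDR5X or GDDR6. The Vega Instinct will offer 35% higher performance and twice the power efficiency of the current 14nm Vega GPUs. New rendering capabilities will help it compete with Nvidia's CUDA-enabled GPUs in ray tracing.

[WCCFTech][12] reports that AMD also said it is sampling a 7nm Vega Instinct GPU (designed for graphics cards with 32GB of expensive HBM2 memory rather than GDDR5X or GDDR6). The Vega Instinct will offer 35% higher performance and twice the power efficiency of the current 14nm Vega GPUs. New rendering capabilities will help it compete with Nvidia's CUDA-enabled GPUs in ray tracing.

Some new Ryzen 2000-series processors recently showed up on an ASRock CPU chart, and they will draw less power than the mainstream Ryzen chips. As detailed by [AnandTech][13], the 2.8GHz, 8-core, 16-thread Ryzen 7 2700E and the 3.4GHz/3.9GHz, six-core, 12-thread Ryzen 5 2600E both have a 45W TDP. That is higher than the 12-54W TDP of the [Ryzen Embedded V1000][2] processors, but lower than the mainstream Ryzen chips at 65W and up. The new Ryzen-E models are aimed at SFF (small form factor) and fanless systems.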

@ -45,7 +48,7 @@ via: https://www.linux.com/blog/2018/6/intel-amd-and-arm-reveal-new-processor-de

Author: [Eric Brown][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [softpaopao](https://github.com/softpaopao)
Proofreader: [proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -1,9 +1,9 @@

|

||||

停止手动合并你的 pull 请求

|

||||

不要再手动合并你的拉取请求(PR)

|

||||

======

|

||||

|

||||

|

||||

|

||||

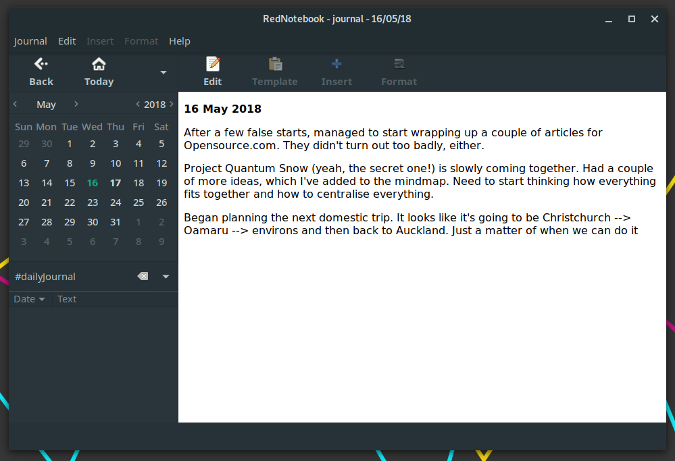

如果有什么我讨厌的东西,那就是当我知道我可以自动化它们时,但我手动进行了操作。只有我有这种情况么?我很怀疑。

|

||||

如果有什么我讨厌的东西,那就是当我知道我可以自动化它们时,但我手动进行了操作。只有我有这种情况么?我觉得不是。

|

||||

|

||||

尽管如此,他们每天都有数千名使用 [GitHub][1] 的开发人员一遍又一遍地做同样的事情:他们点击这个按钮:

|

||||

|

||||

@ -11,20 +11,18 @@

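This makes no sense.

Don't get me wrong. Merging a pull request makes sense. It just makes no sense to click this damn button every time.

Don't get me wrong. Merging pull requests makes sense. It just makes no sense to click this damn button every time.

It makes no sense because every development team in the world has a known list of prerequisites before it merges a pull request. Those requirements are almost always the same, and they go something like this:

It makes no sense because every development team in the world has a known list of prerequisites before merging pull requests. Those requirements are almost always the same, and they go something like this:

* Do the tests pass?
* Is the documentation up to date?
* Does this follow our code style guidelines?
* Have N developers reviewed this?
* Have several developers reviewed this?

As this list gets longer, the merge process becomes more error-prone. "Oops, John clicked the merge button before enough developers had reviewed the patch." Does that ring a bell?

As this list gets longer, the merge process becomes more error-prone. "Oops, John clicked the merge button, but not enough developers had reviewed the patch." Does that ring a bell?

My team is like every other team out there. We know what our criteria are for merging code into our repository. That is why we set up a continuous integration system that runs our tests every time someone creates a pull request. We also require the code to be reviewed by 2 members of the team before it is approved.

My team is like every other team out there. We know what our criteria are for merging code into our repository. That is why we set up a continuous integration system that runs our tests each time someone creates a pull request. We also require the code to be reviewed by 2 members of the team before it is approved.

When all of these conditions are met, I want the code to be merged.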

@ -34,7 +32,7 @@

|

||||

|

||||

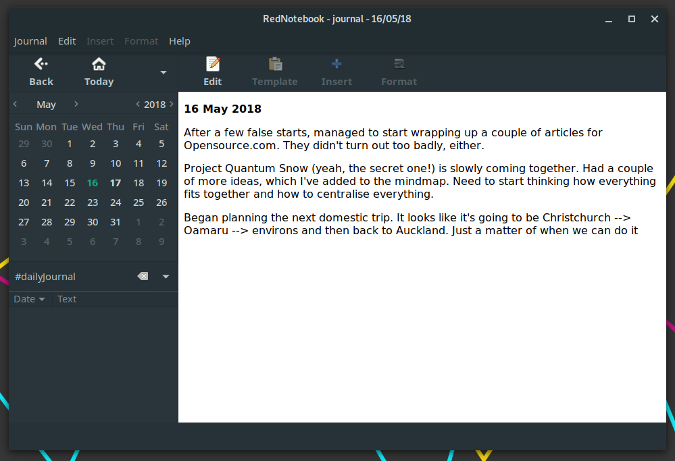

![github-branching-1][4]

|

||||

|

||||

[Mergify][3] 是一个为你按下合并按钮的服务。你可以在仓库的 .mergify.yml 中定义规则,当规则满足时,Mergify 将合并该请求。

|

||||

[Mergify][3] 是一个为你按下合并按钮的服务。你可以在仓库的 `.mergify.yml` 中定义规则,当规则满足时,Mergify 将合并该请求。

|

||||

|

||||

无需按任何按钮。

|

||||

|

||||

@ -42,26 +40,26 @@

![Screen-Shot-2018-06-20-at-17.12.11][5]

This is from a small project without many continuous integration services, just Travis. In this pull request, everything is green: one of the owners reviewed the code, and the tests pass. So the code should be merged, yet there it is, hanging, waiting for someone to push the merge button someday.

This comes from a small project without many continuous integration services, just Travis. In this pull request, everything is green: one of the owners reviewed the code, and the tests pass. So the code should be merged, yet there it is, still hanging, waiting for someone to push the merge button someday.

With [Mergify][3], you just put a `.mergify.yml` in the root of the repository:

```
rules:
  default:
    protection:
      required_status_checks:
        contexts:
          - continuous-integration/travis-ci
      required_pull_request_reviews:
        required_approving_review_count: 1
```

With this configuration, [Mergify][3] enforces the desired restrictions: Travis passes, and at least one project member has reviewed the code. As soon as those conditions hold, the pull request is automatically merged.

With this configuration, [Mergify][3] enforces the desired restrictions: Travis passes, and at least one project member has reviewed the code. As soon as those conditions hold, the pull request is merged automatically.
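The decision that this configuration encodes can be sketched in a few lines of Python. This is not Mergify's engine or API; `PullRequest`, `can_merge`, and the field names are hypothetical, and only the two conditions from the configuration above (one required status check and one approving review) are modeled.

```python
from dataclasses import dataclass, field

# Values taken from the example configuration above.
REQUIRED_CONTEXTS = ["continuous-integration/travis-ci"]
REQUIRED_APPROVING_REVIEW_COUNT = 1


@dataclass
class PullRequest:
    """Hypothetical snapshot of a pull request's state."""
    # Maps a status check context to "success", "failure", or "pending".
    status_checks: dict = field(default_factory=dict)
    approvals: int = 0


def can_merge(pr: PullRequest) -> bool:
    """Return True only when every required check passed and enough reviewers approved."""
    checks_ok = all(pr.status_checks.get(context) == "success"
                    for context in REQUIRED_CONTEXTS)
    reviews_ok = pr.approvals >= REQUIRED_APPROVING_REVIEW_COUNT
    return checks_ok and reviews_ok
```

A pull request with a green Travis check and one approval passes this test; a missing or pending check, or zero approvals, keeps it unmerged. That is the same judgment a person makes before pressing the button.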

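We offer [Mergify][3] **as a free service for open source projects**. [The engine powering the service][6] is also open source.

We built [Mergify][3] as **a free service for open source projects**. [The engine powering the service][6] is also open source.

Now go [check it out][3] and stop letting those pull requests hang for a second. Merge them!

Now go [try it][3], and don't let those pull requests hang for even one more second. Merge them!

If you have any questions, feel free to ask us or leave a comment below! And stay tuned, because Mergify offers a few other features that I cannot wait to talk about!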

@ -72,7 +70,7 @@ via: https://julien.danjou.info/stop-merging-your-pull-request-manually/

Author: [Julien Danjou][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -1,90 +0,0 @@

Translation in progress by ZenMoore

5 open source puzzle games for Linux
======

Gaming has traditionally been one of Linux's weak points. That has changed somewhat in recent years thanks to Steam, GOG, and other efforts to bring commercial games to multiple operating systems, but those games are often not open source. Sure, the games can be played on an open source operating system, but that is not good enough for an open source purist.

So, can someone who only uses free and open source software find games that are polished enough to present a solid gaming experience without compromising their open source ideals? Absolutely. While open source games are unlikely ever to rival some of the AAA commercial games developed with massive budgets, there are plenty of open source games, in many genres, that are fun to play and can be installed from the repositories of most major Linux distributions. Even if a particular game is not packaged for a particular distribution, it is usually easy to download the game from the project's website in order to install and play it.

This article looks at puzzle games. I have already written about [arcade-style games][1] and [board and card games][2]. In future articles, I plan to cover racing, role-playing, and strategy & simulation games.

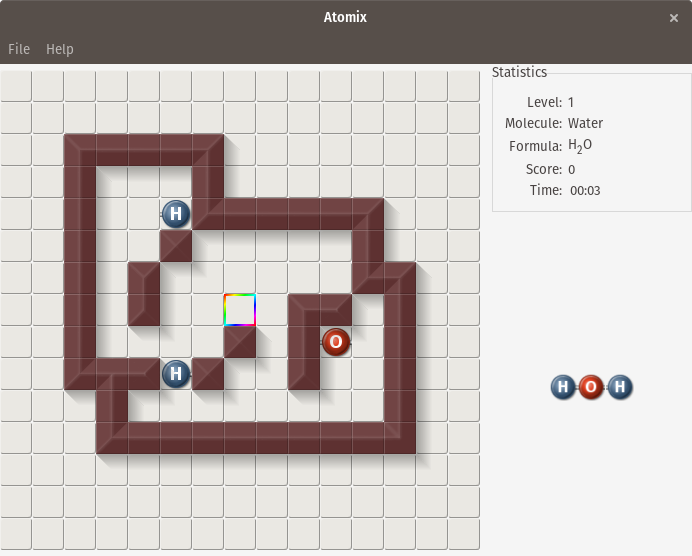

### Atomix

|

||||

|

||||

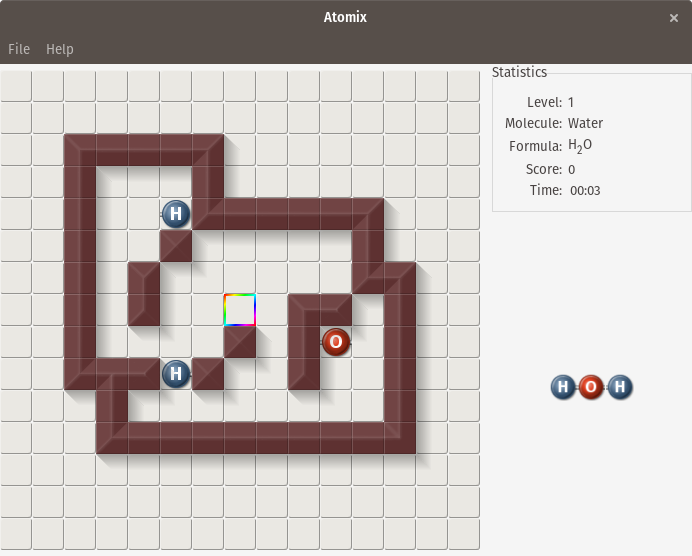

[Atomix][3] is an open source clone of the [Atomix][4] puzzle game released in 1990 for Amiga, Commodore 64, MS-DOS, and other platforms. The goal of Atomix is to construct atomic molecules by connecting atoms. Individual atoms can be moved up, down, left, or right and will keep moving in that direction until the atom hits an obstacle—either the level's walls or another atom. This means that planning is needed to figure out where in the level to construct the molecule and in what order to move the individual pieces. The first level features a simple water molecule, which is made up of two hydrogen atoms and one oxygen atom, but later levels feature more complex molecules.

|

||||

|

||||

To install Atomix, run the following command:

|

||||

|

||||

* On Fedora: `dnf`` install ``atomix`

|

||||

* On Debian/Ubuntu: `apt install`

|

||||

|

||||

|

||||

|

||||

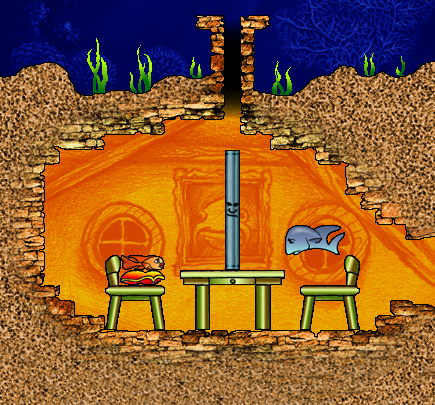

### Fish Fillets - Next Generation

|

||||

|

||||

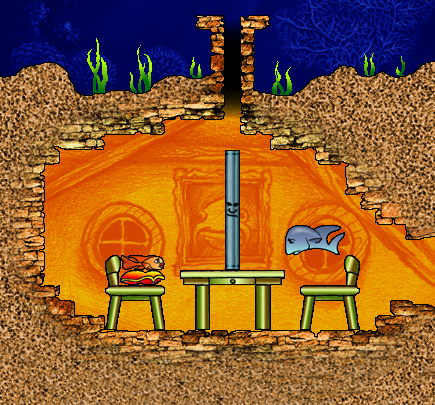

[Fish Fillets - Next Generation][5] is a Linux port of the game Fish Fillets, which was released in 1998 for Windows, and the source code was released under the GPL in 2004. The game involves two fish trying to escape various levels by moving objects out of their way. The two fish have different attributes, so the player needs to pick the right fish for each task. The larger fish can move heavier objects but it is bigger, which means it cannot fit in smaller gaps. The smaller fish can fit in those smaller gaps, but it cannot move the heavier objects. Both fish will be crushed if an object is dropped on them from above, so the player needs to be careful when moving pieces.

|

||||

|

||||

To install Fish Fillets, run the following command:

|

||||

|

||||

* On Fedora: `dnf`` install fillets-ng`

|

||||

* On Debian/Ubuntu: `apt install fillets-ng`

|

||||

|

||||

|

||||

|

||||

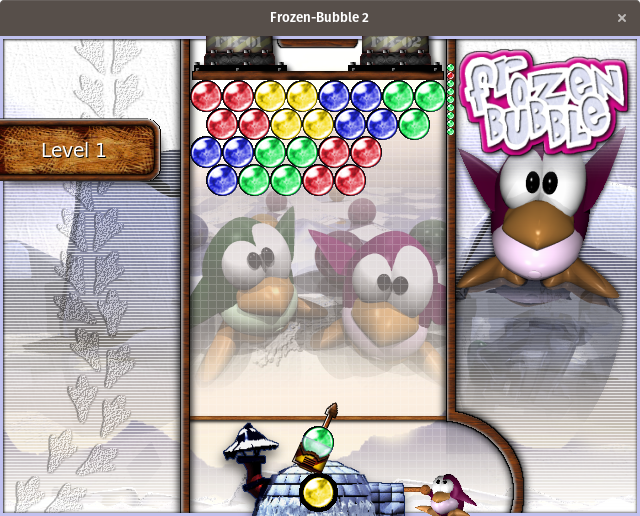

### Frozen Bubble

|

||||

|

||||

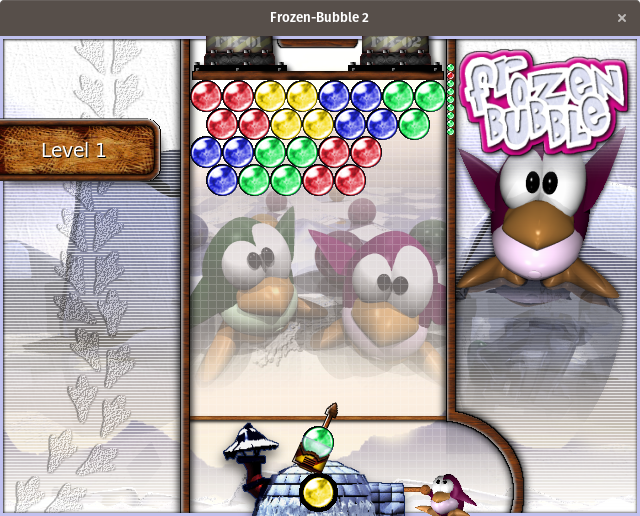

[Frozen Bubble][6] is an arcade-style puzzle game that involves shooting bubbles from the bottom of the screen toward a collection of bubbles at the top of the screen. If three bubbles of the same color connect, they are removed from the screen. Any other bubbles that were connected below the removed bubbles but that were not connected to anything else are also removed. In puzzle mode, the design of the levels is fixed, and the player simply needs to remove the bubbles from the play area before the bubbles drop below a line near the bottom of the screen. The games arcade mode and multiplayer modes follow the same basic rules but provide some differences, which adds to the variety. Frozen Bubble is one of the iconic open source games, so if you have not played it before, check it out.

|

||||

|

||||

To install Frozen Bubble, run the following command:

|

||||

|

||||

* On Fedora: `dnf`` install frozen-bubble`

|

||||

* On Debian/Ubuntu: `apt install frozen-bubble`

|

||||

|

||||

|

||||

|

||||

### Hex-a-hop

[Hex-a-hop][7] is a hexagonal tile-based puzzle game in which the player needs to remove all the green tiles from the level. Tiles are removed by moving over them. Since tiles disappear after they are moved over, it is imperative to plan the optimal path through the level to remove all the tiles without getting stuck. However, there is an undo feature if the player uses a sub-optimal path. Later levels add extra complexity by including tiles that need to be crossed over multiple times and bouncing tiles that cause the player to jump over a certain number of hexes.

To install Hex-a-hop, run the following command:

* On Fedora: `dnf install hex-a-hop`
* On Debian/Ubuntu: `apt install hex-a-hop`

### Pingus

[Pingus][8] is an open source clone of [Lemmings][9]. It is not an exact clone, but the game-play is very similar. Small creatures (lemmings in Lemmings, penguins in Pingus) enter the level through the level's entrance and start walking in a straight line. The player needs to use special abilities to make it so that the creatures can reach the level's exit without getting trapped or falling off a cliff. These abilities include things like digging or building a bridge. If a sufficient number of creatures make it to the exit, the level is successfully solved and the player can advance to the next level. Pingus adds a few extra features to the standard Lemmings features, including a world map and a few abilities not found in the original game, but fans of the classic Lemmings game should feel right at home in this open source variant.

To install Pingus, run the following command:

* On Fedora: `dnf install pingus`
* On Debian/Ubuntu: `apt install pingus`
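If you want all five games at once, the per-distribution commands above can be wrapped in a small helper. The following is only a sketch: the function names are made up for illustration, it only builds and prints the command rather than running it, and the install itself still needs root privileges. The package names are the ones given in this article.

```python
import shutil

# Package names as listed in the install instructions above.
PUZZLE_GAMES = ["atomix", "fillets-ng", "frozen-bubble", "hex-a-hop", "pingus"]


def detect_package_manager():
    """Return "dnf" or "apt" if one of them is on the PATH, otherwise None."""
    for manager in ("dnf", "apt"):
        if shutil.which(manager):
            return manager
    return None


def install_command(packages, manager):
    """Build the argument list for installing the given packages."""
    if manager not in ("dnf", "apt"):
        raise ValueError(f"unsupported package manager: {manager!r}")
    return [manager, "install", *packages]


if __name__ == "__main__":
    manager = detect_package_manager()
    if manager is None:
        print("Neither dnf nor apt was found on this system.")
    else:
        # Print the command instead of running it, so nothing is installed by accident.
        print(" ".join(install_command(PUZZLE_GAMES, manager)))
```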

Did I miss one of your favorite open source puzzle games? Share it in the comments below.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/6/puzzle-games-linux

Author: [Joshua Allen Holm][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/holmja
[1]:https://opensource.com/article/18/1/arcade-games-linux
[2]:https://opensource.com/article/18/3/card-board-games-linux
[3]:https://wiki.gnome.org/action/raw/Apps/Atomix
[4]:https://en.wikipedia.org/w/index.php?title=Atomix_(video_game)
[5]:http://fillets.sourceforge.net/index.php
[6]:http://www.frozen-bubble.org/home/
[7]:http://hexahop.sourceforge.net/index.html
[8]:https://pingus.seul.org/index.html
[9]:http://en.wikipedia.org/wiki/Lemmings

@ -1,74 +0,0 @@

|

||||

翻译中 by ZenMoore

|

||||

World Cup football on the command line

|

||||

======

|

||||

|

||||

|

||||

|

||||

Football is around us constantly. Even when domestic leagues have finished, there’s always a football score I want to know. Currently, it’s the biggest football tournament in the world, the Fifa World Cup 2018, hosted in Russia. Every World Cup there are some great football nations that don’t manage to qualify for the tournament. This time around the Italians and the Dutch missed out. But even in non-participating countries, it’s a rite of passage to keep track of the latest scores. I also like to keep abreast of the latest scores from the major leagues around the world without having to search different websites.

|

||||

|

||||

![Command-Line Interface][2]If you’re a big fan of the command-line, what better way to keep track of the latest World Cup scores and standings with a small command-line utility. Let’s take a look at one of the hottest trending football utilities available. It’s goes by the name football-cli.

|

||||

|

||||

If you’re a big fan of the command-line, what better way to keep track of the latest World Cup scores and standings with a small command-line utility. Let’s take a look at one of the hottest trending football utilities available. It’s goes by the name football-cli.

|

||||

|

||||

football-cli is not a groundbreaking app. Over the years, there’s been a raft of command line tools that let you keep you up-to-date with the latest football scores and league standings. For example, I am a heavy user of soccer-cli, a Python based tool, and App-Football, written in Perl. But I’m always looking on the look out for trending apps. And football-cli stands out from the crowd in a few ways.

|

||||

|

||||

football-cli is developed in JavaScript and written by Manraj Singh. It’s open source software, published under the MIT license. Installation is trivial with npm (the package manager for JavaScript), so let’s get straight into the action.

|

||||

|

||||

The utility offers commands that give scores of past and live fixtures, see upcoming and past fixtures of a league and team. It also displays standings of a particular league. There’s a command that lists the various supported competitions. Let’s start with the last command.

|

||||

|

||||

At a shell prompt.

|

||||

|

||||

`luke@ganges:~$ football lists`

|

||||

|

||||

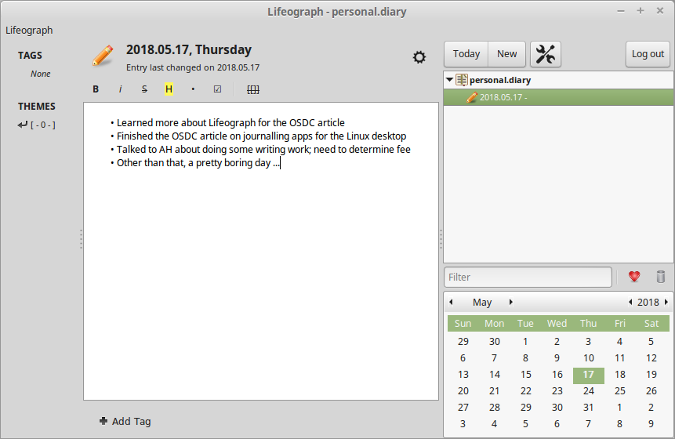

![football-lists][3]

|

||||

|

||||

The World Cup is listed at the bottom. I missed yesterday’s games, so to catch up on the scores, I type at a shell prompt:

|

||||

|

||||

`luke@ganges:~$ football scores`

|

||||

|

||||

![football-wc-22][4]

|

||||

|

||||

Now I want to see the current World Cup group standings. That’s easy.

|

||||

|

||||

`luke@ganges:~$ football standings -l WC`

|

||||

|

||||

Here’s an excerpt of the output:

|

||||

|

||||

![football-wc-table][5]

|

||||

|

||||

The eagle-eyed among you may notice a bug here. Belgium is showing as the leader of Group G. But this is not correct. Belgium and England are (at the time of writing) both tied on points, goal difference, and goals scored. In this situation, the team with the better disciplinary record is ranked higher. England and Belgium have received 2 and 3 yellow cards respectively, so England top the group.
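The tie-break described above amounts to sorting on several keys in order. The sketch below is a simplified illustration in Python, not football-cli's code and not the full FIFA rule set (it skips head-to-head criteria and treats the disciplinary record as a plain yellow-card count). The yellow-card counts come from the paragraph above; the points, goal difference, and goals scored are placeholder values chosen only so that the two teams are level.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamRecord:
    name: str
    points: int
    goal_difference: int
    goals_scored: int
    yellow_cards: int


def rank_group(teams):
    """Order teams by points, goal difference, goals scored, then fewest yellow cards."""
    return sorted(
        teams,
        key=lambda t: (-t.points, -t.goal_difference, -t.goals_scored, t.yellow_cards),
    )


if __name__ == "__main__":
    group_g = [
        # The first three numbers are illustrative placeholders that make the teams level.
        TeamRecord("Belgium", points=6, goal_difference=6, goals_scored=8, yellow_cards=3),
        TeamRecord("England", points=6, goal_difference=6, goals_scored=8, yellow_cards=2),
    ]
    for position, team in enumerate(rank_group(group_g), start=1):
        print(position, team.name)
```

Because the first three keys are equal for both teams, the yellow-card count decides the order and England is listed first, which is the ranking the article says the table should show.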

Suppose I want to find out Liverpool’s results in the Premiership going back 90 days from today.

`luke@ganges:~$ football fixtures -l PL -d 90 -t "Liverpool"`

![football-Liverpool][6]

I’m finding the utility really handy, displaying the scores and standings in a clear, uncluttered, and attractive way. When the European domestic games start up again, it’ll get heavy usage. (Actually, the 2018-19 Champions League is already underway!)

These few examples give a taster of the functionality available with football-cli. Read more about the utility from the developer’s **[GitHub page][7].** Football + command-line = football-cli

Like similar tools, the software retrieves its football data from football-data.org. This service provides football data for all major European leagues in a machine-readable way. This includes fixtures, teams, players, results and more. All this information is provided via an easy-to-use RESTful API in JSON representation.

--------------------------------------------------------------------------------

via: https://www.linuxlinks.com/football-cli-world-cup-football-on-the-command-line/

Author: [Luke Baker][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.linuxlinks.com/author/luke-baker/
[1]:https://www.linuxlinks.com/wp-content/plugins/jetpack/modules/lazy-images/images/1x1.trans.gif
[2]:https://i0.wp.com/www.linuxlinks.com/wp-content/uploads/2017/12/CLI.png?resize=195%2C171&ssl=1
[3]:https://i2.wp.com/www.linuxlinks.com/wp-content/uploads/2018/06/football-lists.png?resize=595%2C696&ssl=1
[4]:https://i2.wp.com/www.linuxlinks.com/wp-content/uploads/2018/06/football-wc-22.png?resize=634%2C75&ssl=1
[5]:https://i0.wp.com/www.linuxlinks.com/wp-content/uploads/2018/06/football-wc-table.png?resize=750%2C581&ssl=1
[6]:https://i1.wp.com/www.linuxlinks.com/wp-content/uploads/2018/06/football-Liverpool.png?resize=749%2C131&ssl=1
[7]:https://github.com/ManrajGrover/football-cli
[8]:https://www.linuxlinks.com/links/Software/
[9]:https://discord.gg/uN8Rqex

42

sources/talk/20180702 My first sysadmin mistake.md

Normal file

@ -0,0 +1,42 @@

My first sysadmin mistake
======

If you work in IT, you know that things never go completely as you think they will. At some point, you'll hit an error or something will go wrong, and you'll end up having to fix things. That's the job of a systems administrator.

As humans, we all make mistakes. Sometimes, we are the error in the process, or we are what went wrong. As a result, we end up having to fix our own mistakes. That happens. We all make mistakes, typos, or errors.

As a young systems administrator, I learned this lesson the hard way. I made a huge blunder. But thanks to some coaching from my supervisor, I learned not to dwell on my errors, but to create a "mistake strategy" to set things right. Learn from your mistakes. Get over it, and move on.

My first job was as a Unix systems administrator for a small company. Really, I was a junior sysadmin, but I worked alone most of the time. We were a small IT team, just the three of us. I was the only sysadmin for 20 or 30 Unix workstations and servers. The other two supported the Windows servers and desktops.

Any systems administrators reading this probably won't be surprised to know that, as an unseasoned, junior sysadmin, I eventually ran the `rm` command in the wrong directory. As root. I thought I was deleting some stale cache files for one of our programs. Instead, I wiped out all files in the `/etc` directory by mistake. Ouch.

My clue that I'd done something wrong was an error message that `rm` couldn't delete certain subdirectories. But the cache directory should contain only files! I immediately stopped the `rm` command and looked at what I'd done. And then I panicked. All at once, a million thoughts ran through my head. Did I just destroy an important server? What was going to happen to the system? Would I get fired?

Fortunately, I'd run `rm *` and not `rm -rf *` so I'd deleted only files. The subdirectories were still there. But that didn't make me feel any better.

Immediately, I went to my supervisor and told her what I'd done. She saw that I felt really dumb about my mistake, but I owned it. Despite the urgency, she took a few minutes to do some coaching with me. "You're not the first person to do this," she said. "What would someone else do in your situation?" That helped me calm down and focus. I started to think less about the stupid thing I had just done, and more about what I was going to do next.

I put together a simple strategy: Don't reboot the server. Use an identical system as a template, and re-create the `/etc` directory.

Once I had my plan of action, the rest was easy. It was just a matter of running the right commands to copy the `/etc` files from another server and edit the configuration so it matched the system. Thanks to my practice of documenting everything, I used my existing documentation to make any final adjustments. I avoided having to completely restore the server, which would have meant a huge disruption.

To be sure, I learned from that mistake. For the rest of my years as a systems administrator, I always confirmed what directory I was in before running any command.
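The habit of confirming the directory first can also be built into a cleanup script. Here is a minimal sketch of that idea in Python; it is not the author's tooling, and `clear_cache_files` and its parameters are hypothetical names chosen for illustration.

```python
from pathlib import Path


def clear_cache_files(target_dir, expected_dir):
    """Delete the regular files in target_dir, but only if it really is expected_dir."""
    target = Path(target_dir).resolve()
    expected = Path(expected_dir).resolve()

    # The guard: refuse to touch anything unless we are where we think we are.
    if target != expected:
        raise ValueError(f"refusing to delete in {target}; expected {expected}")

    removed = []
    for entry in sorted(target.iterdir()):
        # Only files are removed; subdirectories are skipped, much as
        # `rm *` without `-r` leaves directories in place.
        if entry.is_file():
            entry.unlink()
            removed.append(entry.name)
    return removed
```

Calling it with `Path.cwd()` as `target_dir` turns "check where you are before you delete" into a hard failure instead of a habit: a script started from `/etc` by mistake would stop with an error rather than remove anything.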

|

||||

|

||||

I also learned the value of building a "mistake strategy." When things go wrong, it's natural to panic and think about all the bad things that might happen next. That's human nature. But creating a "mistake strategy" helps me stop worrying about what just went wrong and focus on making things better. I may still think about it, but knowing my next steps allows me to "get over it."

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/my-first-sysadmin-mistake

|

||||

|

||||

Author: [Jim Hall][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [Translator ID](https://github.com/译者ID)

|

||||

Proofreader: [Proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/jim-hall

|

||||

@ -1,3 +1,4 @@

|

||||

translating by wenwensnow

|

||||

An Advanced System Configuration Utility For Ubuntu Power Users

|

||||

======

|

||||

|

||||

|

||||

110

sources/tech/20180424 A gentle introduction to FreeDOS.md

Normal file

@ -0,0 +1,110 @@

|

||||

A gentle introduction to FreeDOS

|

||||

======

|

||||

|

||||

|

||||

|

||||

FreeDOS is an old operating system, but it is new to many people. In 1994, several developers and I came together to [create FreeDOS][1]—a complete, free, DOS-compatible operating system you can use to play classic DOS games, run legacy business software, or develop embedded systems. Any program that works on MS-DOS should also run on FreeDOS.

|

||||

|

||||

In 1994, FreeDOS was immediately familiar to anyone who had used Microsoft's proprietary MS-DOS. And that was by design; FreeDOS intended to mimic MS-DOS as much as possible. As a result, DOS users in the 1990s were able to jump right into FreeDOS. But times have changed. Today, open source developers are more familiar with the Linux command line or they may prefer a graphical desktop like [GNOME][2], making the FreeDOS command line seem alien at first.

|

||||

|

||||

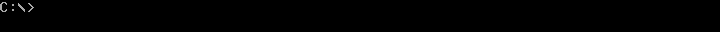

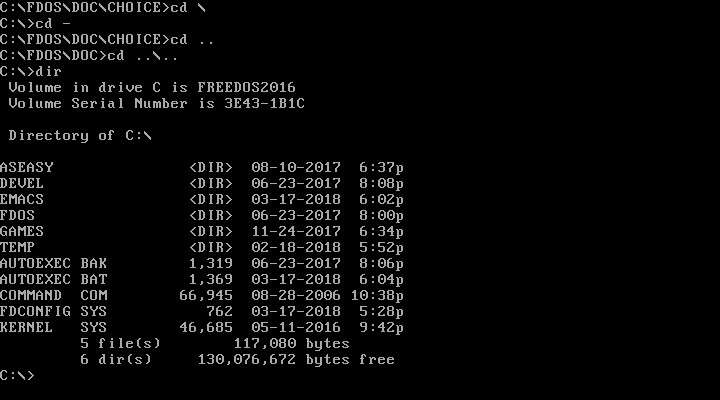

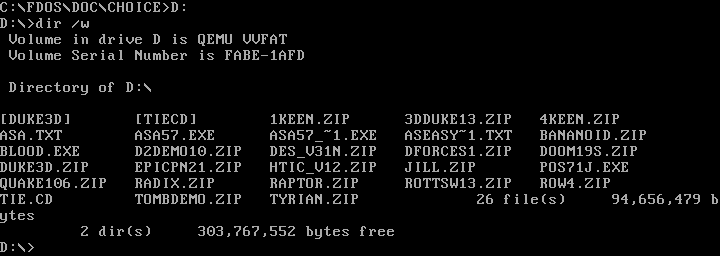

New users often ask, "I [installed FreeDOS][3], but how do I use it?" If you haven't used DOS before, the blinking `C:\>` DOS prompt can seem a little unfriendly. And maybe scary. This gentle introduction to FreeDOS should get you started. It offers just the basics: how to get around and how to look at files. If you want to learn more than what's offered here, visit the [FreeDOS wiki][4].

|

||||

|

||||

### The DOS prompt

|

||||

|

||||

First, let's look at the empty prompt and what it means.

|

||||

|

||||

|

||||

|

||||

DOS is a "disk operating system" created when personal computers ran from floppy disks. Even when computers supported hard drives, it was common in the 1980s and 1990s to switch frequently between the different drives. For example, you might make a backup copy of your most important files to a floppy disk.

|

||||

|

||||

DOS referenced each drive by a letter. Early PCs could have only two floppy drives, which were assigned as the `A:` and `B:` drives. The first partition on the first hard drive was the `C:` drive, and so on for other drives. The `C:` in the prompt means you are using the first partition on the first hard drive.

|

||||

|

||||

Starting with PC-DOS 2.0 in 1983, DOS also supported directories and subdirectories, much like the directories and subdirectories on Linux filesystems. But unlike Linux, DOS directory names are delimited by `\` instead of `/`. Putting that together with the drive letter, the `C:\` in the prompt means you are in the top, or "root," directory of the `C:` drive.

|

||||

|

||||

The `>` is the literal prompt where you type your DOS commands, like the `$` prompt on many Linux shells. The part before the `>` tells you the current working directory, and you type commands at the `>` prompt.

|

||||

|

||||

### Finding your way around in DOS

|

||||

|

||||

The basics of navigating through directories in DOS are very similar to the steps you'd use on the Linux command line. You need to remember only a few commands.

|

||||

|

||||

#### Displaying a directory

|

||||

|

||||

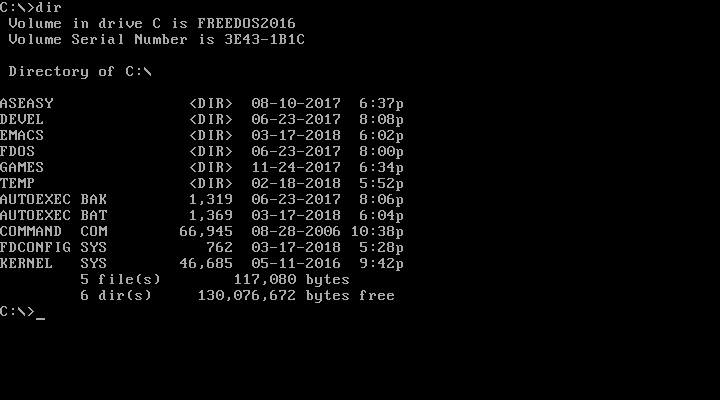

When you want to see the contents of the current directory, use the `DIR` command. Since DOS commands are not case-sensitive, you could also type `dir`. By default, DOS displays the details of every file and subdirectory, including the name, extension, size, and last modified date and time.

|

||||

|

||||

|

||||

|

||||

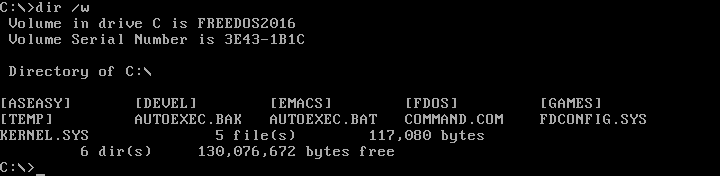

If you don't want the extra details about individual file sizes, you can display a "wide" directory by using the `/w` option with the `DIR` command. Note that Linux uses the hyphen (`-`) or double-hyphen (`--`) to start command-line options, but DOS uses the slash character (`/`).

|

||||

|

||||

|

||||

|

||||

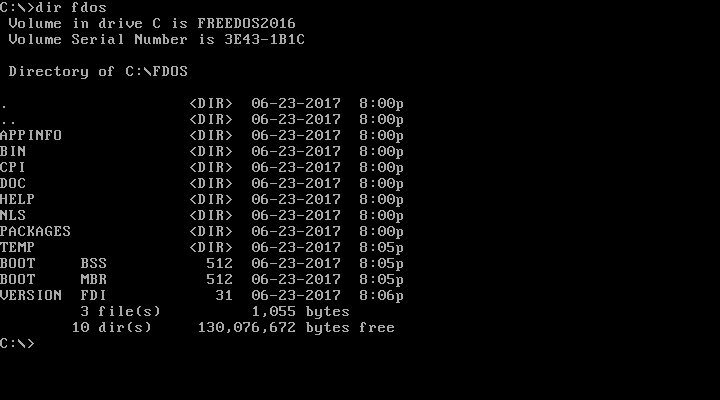

You can look inside a specific subdirectory by passing the pathname as a parameter to `DIR`. Again, another difference from Linux is that Linux files and directories are case-sensitive, but DOS names are case-insensitive. DOS will usually display files and directories in all uppercase, but you can equally reference them in lowercase.

|

||||

|

||||

|

||||

|

||||

|

||||

#### Changing the working directory

|

||||

|

||||

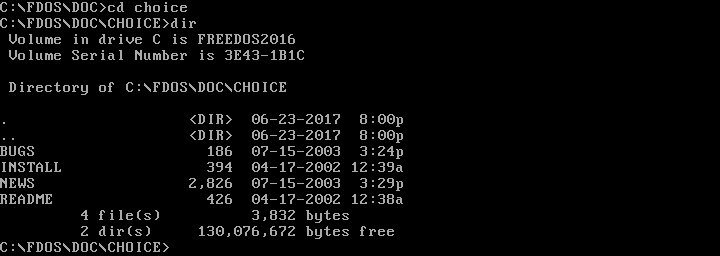

Once you can see the contents of a directory, you can "move into" any other directory. On DOS, you change your working directory with the `CHDIR` command, also abbreviated as `CD`. You can change into a subdirectory with a command like `CD CHOICE` or into a new path with `CD \FDOS\DOC\CHOICE`.

|

||||

|

||||

|

||||

|

||||

Just like on the Linux command line, DOS uses `.` to represent the current directory, and `..` for the parent directory (one level "up" from the current directory). You can combine these. For example, `CD ..` changes to the parent directory, and `CD ..\..` moves you two levels "up" from the current directory.

|

||||

|

||||

|

||||

|

||||

FreeDOS also borrows a feature from Linux: You can use `CD -` to jump back to your previous working directory. That is handy after you change into a new path to do one thing and want to go back to your previous work.

|

||||

|

||||

#### Changing the working drive

|

||||

|

||||

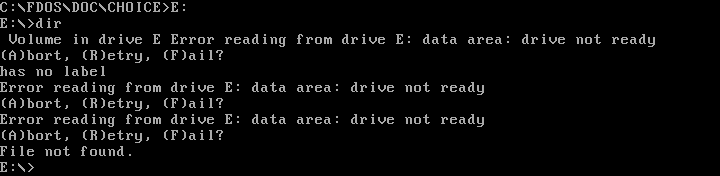

Under Linux, the concept of a "drive" is hidden. In Linux and other Unix systems, you "mount" a drive to a directory path, such as `/backup`, or the system does it for you automatically, such as `/var/run/media/user/flashdrive`. But DOS is a much simpler system. With DOS, you must change the working drive by yourself.

|

||||

|

||||

Remember that DOS assigns the first partition on the first hard drive as the `C:` drive, and so on for other drive letters. On modern systems, people rarely divide a hard drive with multiple DOS partitions; they simply use the whole disk—or as much of it as they can assign to DOS. Today, `C:` is usually the first hard drive, and `D:` is usually another hard drive or the CD-ROM drive. Other network drives can be mapped to other letters, such as `E:` or `Z:` or however you want to organize them.

|

||||

|

||||

Changing drives is easy under DOS. Just type the drive letter followed by a colon (`:`) on the command line, and DOS will change to that working drive. For example, on my [QEMU][5] system, I set my `D:` drive to a shared directory in my Linux home directory, where I keep installers for various DOS applications and games I want to test.

|

||||

|

||||

|

||||

|

||||

Be careful that you don't try to change to a drive that doesn't exist. DOS may set the working drive, but if you try to do anything there you'll get the somewhat infamous "Abort, Retry, Fail" DOS error message.

|

||||

|

||||

|

||||

|

||||

### Other things to try

|

||||

|

||||

With the `CD` and `DIR` commands, you have the basics of DOS navigation. These commands allow you to find your way around DOS directories and see what other subdirectories and files exist. Once you are comfortable with basic navigation, you might also try these other basic DOS commands:

|

||||

|

||||

* `MKDIR` or `MD` to create new directories

|

||||

* `RMDIR` or `RD` to remove directories

|

||||

* `TREE` to view a list of directories and subdirectories in a tree-like format

|

||||

* `TYPE` and `MORE` to display file contents

|

||||

* `RENAME` or `REN` to rename files

|

||||

* `DEL` or `ERASE` to delete files

|

||||

* `EDIT` to edit files

|

||||

* `CLS` to clear the screen

|

||||

|

||||

|

||||

|

||||

If those aren't enough, you can find a list of [all DOS commands][6] on the FreeDOS wiki.

|

||||

|

||||

In FreeDOS, you can use the `/?` parameter to get brief instructions to use each command. For example, `EDIT /?` will show you the usage and options for the editor. Or you can type `HELP` to use an interactive help system.

|

||||

|

||||

Like any DOS, FreeDOS is meant to be a simple operating system. The DOS filesystem is pretty simple to navigate with only a few basic commands. So fire up a QEMU session, install FreeDOS, and experiment with the DOS command line. Maybe now it won't seem so scary.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/4/gentle-introduction-freedos

|

||||

|

||||

Author: [Jim Hall][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [Translator ID](https://github.com/译者ID)

|

||||

Proofreader: [Proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/jim-hall

|

||||

[1]:https://opensource.com/article/17/10/freedos

|

||||

[2]:https://opensource.com/article/17/8/gnome-20-anniversary

|

||||

[3]:http://www.freedos.org/

|

||||

[4]:http://wiki.freedos.org/

|

||||

[5]:https://www.qemu.org/

|

||||

[6]:http://wiki.freedos.org/wiki/index.php/Dos_commands

|

||||

211

sources/tech/20180425 JavaScript Router.md

Normal file

@ -0,0 +1,211 @@

|

||||

JavaScript Router

|

||||

======

|

||||

There are a lot of frameworks and libraries for building single page applications, but I wanted something more minimal. I’ve come up with a solution and I just wanted to share it 🙂

|

||||

```

|

||||

class Router {

|

||||

constructor() {

|

||||

this.routes = []

|

||||

}

|

||||

|

||||

handle(pattern, handler) {

|

||||

this.routes.push({ pattern, handler })

|

||||

}

|

||||

|

||||

exec(pathname) {

|

||||

for (const route of this.routes) {

|

||||

if (typeof route.pattern === 'string') {

|

||||

if (route.pattern === pathname) {

|

||||

return route.handler()

|

||||

}

|

||||

} else if (route.pattern instanceof RegExp) {

|

||||

const result = pathname.match(route.pattern)

|

||||

if (result !== null) {

|

||||

const params = result.slice(1).map(decodeURIComponent)

|

||||

return route.handler(...params)

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

const router = new Router()

|

||||

|

||||

router.handle('/', homePage)

|

||||

router.handle(/^\/users\/([^\/]+)$/, userPage)

|

||||

router.handle(/^\//, notFoundPage)

|

||||

|

||||

function homePage() {

|

||||

return 'home page'

|

||||

}

|

||||

|

||||

function userPage(username) {

|

||||

return `${username}'s page`

|

||||

}

|

||||

|

||||

function notFoundPage() {

|

||||

return 'not found page'

|

||||

}

|

||||

|

||||

console.log(router.exec('/')) // home page

|

||||

console.log(router.exec('/users/john')) // john's page

|

||||

console.log(router.exec('/foo')) // not found page

|

||||

|

||||

```

|

||||

|

||||

To use it you add handlers for a URL pattern. This pattern can be a simple string or a regular expression. Using a string will match exactly that, but a regular expression allows you to do fancy things like capture parts from the URL as seen with the user page or match any URL as seen with the not found page.

|

||||

|

||||

Here is what the `exec` method does. As I said, the URL pattern can be a string or a regular expression, so it first checks for a string. If the pattern is equal to the given pathname, it returns the result of calling the handler. If the pattern is a regular expression, we match it against the given pathname. If it matches, it returns the result of calling the handler, passing it the captured parameters.
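Two details follow from that loop and are easy to miss: routes are tried in registration order, so a catch-all pattern has to be registered last, and `exec` returns `undefined` when nothing matches. A minimal sketch, repeating a condensed copy of the class so it runs on its own; the `postPage` handler and the posts route are made up for illustration:

```javascript
// Condensed copy of the Router, so the snippet is self-contained.
class Router {
  constructor() {
    this.routes = []
  }

  handle(pattern, handler) {
    this.routes.push({ pattern, handler })
  }

  exec(pathname) {
    for (const route of this.routes) {
      if (typeof route.pattern === 'string') {
        if (route.pattern === pathname) return route.handler()
      } else if (route.pattern instanceof RegExp) {
        const result = pathname.match(route.pattern)
        if (result !== null) {
          return route.handler(...result.slice(1).map(decodeURIComponent))
        }
      }
    }
    // Falls through: no route matched, so the result is undefined.
  }
}

// Hypothetical handler receiving two captured parameters.
function postPage(username, postId) {
  return `post ${postId} by ${username}`
}

const demoRouter = new Router()
demoRouter.handle(/^\/users\/([^\/]+)\/posts\/(\d+)$/, postPage)
demoRouter.handle(/^\//, () => 'not found page') // catch-all goes last

console.log(demoRouter.exec('/users/jane%20doe/posts/42')) // captures are URL-decoded
console.log(demoRouter.exec('/anything/else'))
console.log(new Router().exec('/')) // no routes registered
```

Had the catch-all been registered first, it would have shadowed every other regular expression route.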

|

||||

|

||||

### Working Example

|

||||

|

||||

That example just logs to the console. Let’s try to integrate it into a page and see something.

|

||||

```

|

||||

<!DOCTYPE html>

|

||||

<html lang="en">

|

||||

<head>

|

||||

<meta charset="utf-8">

|

||||

<meta name="viewport" content="width=device-width, initial-scale=1.0">

|

||||

<title>Router Demo</title>

|

||||

<link rel="shortcut icon" href="data:,">

|

||||

<script src="/main.js" type="module"></script>

|

||||

</head>

|

||||

<body>

|

||||

<header>

|

||||

<a href="/">Home</a>

|

||||

<a href="/users/john_doe">Profile</a>

|

||||

</header>

|

||||

<main></main>

|

||||

</body>

|

||||

</html>

|

||||

|

||||

```

|

||||

|

||||

This is the `index.html`. For single page applications, you must do special work on the server side because all unknown paths should return this `index.html`. For development, I’m using an npm tool called [serve][1], which serves static content. With the flag `-s`/`--single` you can serve single page applications.

|

||||

|

||||

With [Node.js][2] and npm (comes with Node) installed, run:

|

||||

```

|

||||

npm i -g serve

|

||||

serve -s

|

||||

|

||||

```

|

||||

|

||||

That HTML file loads the script `main.js` as a module. It has a simple `<header>` and a `<main>` element in which we’ll render the corresponding page.

|

||||

|

||||

Inside the `main.js` file:

|

||||

```

|

||||

const main = document.querySelector('main')

|

||||

const result = router.exec(location.pathname)

|

||||

main.innerHTML = result

|

||||

|

||||

```

|

||||

|

||||

We call `router.exec()` passing the current pathname and setting the result as HTML in the main element.

|

||||

|

||||

If you go to localhost and play with it you’ll see that it works, but not as you expect from a SPA. Single page applications shouldn’t refresh when you click on links.

|

||||

|

||||

We’ll have to listen for clicks on anchor links, prevent the default behavior and do the correct rendering ourselves. Because a single page application is dynamic, you can expect anchor links to be created on the fly, so to add the event listeners I’ll use a technique called [event delegation][3].

|

||||

|

||||

I’ll attach a click event listener to the whole document and check if that click was on an anchor link (or inside one).

|

||||

|

||||

In the `Router` class I’ll have a method that registers a callback to run every time we click on a link or a “popstate” event occurs. The popstate event is dispatched every time you use the browser back or forward buttons.

|

||||

|

||||

To the callback we’ll pass that same `router.exec(location.pathname)` for convenience.

|

||||

```
class Router {

|

||||

// ...

|

||||

install(callback) {

|

||||

const execCallback = () => {

|

||||

callback(this.exec(location.pathname))

|

||||

}

|

||||

|

||||

document.addEventListener('click', ev => {

|

||||

if (ev.defaultPrevented

|

||||

|| ev.button !== 0

|

||||

|| ev.ctrlKey

|

||||

|| ev.shiftKey

|

||||

|| ev.altKey

|

||||

|| ev.metaKey) {

|

||||

return

|

||||

}

|

||||

|

||||

const a = ev.target.closest('a')

|

||||

|

||||

if (a === null

|

||||

|| (a.target !== '' && a.target !== '_self')

|

||||

|| a.hostname !== location.hostname) {

|

||||

return

|

||||

}

|

||||

|

||||

ev.preventDefault()

|

||||

|

||||

if (a.href !== location.href) {

|

||||

history.pushState(history.state, document.title, a.href)

|

||||

execCallback()

|

||||

}

|

||||

})

|

||||

|

||||

addEventListener('popstate', execCallback)

|

||||

execCallback()

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

For link clicks, besides calling the callback, we update the URL with `history.pushState()`.
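The guard conditions in the click listener are plain checks on the event and the anchor, so they can be factored into a function and exercised without a browser. A sketch under that assumption: `shouldIntercept` is a hypothetical helper name, not part of the published package, and the plain objects below merely stand in for a real `MouseEvent`, anchor element and `location`.

```javascript
// Hypothetical helper: true when the router should take over the click.
function shouldIntercept(ev, a, loc) {
  // Leave alone: already-handled events, non-primary buttons, modified clicks.
  if (ev.defaultPrevented || ev.button !== 0 ||
      ev.ctrlKey || ev.shiftKey || ev.altKey || ev.metaKey) {
    return false
  }
  // Leave alone: clicks outside links, links opening elsewhere, other hosts.
  if (a === null ||
      (a.target !== '' && a.target !== '_self') ||
      a.hostname !== loc.hostname) {
    return false
  }
  return true
}

// Plain-object stand-ins for the browser objects.
const plainClick = {
  defaultPrevented: false, button: 0,
  ctrlKey: false, shiftKey: false, altKey: false, metaKey: false,
}
const here = { hostname: 'localhost' }
const internalLink = { target: '', hostname: 'localhost' }
const externalLink = { target: '', hostname: 'example.com' }

console.log(shouldIntercept(plainClick, internalLink, here))                       // true
console.log(shouldIntercept({ ...plainClick, ctrlKey: true }, internalLink, here)) // false
console.log(shouldIntercept(plainClick, externalLink, here))                       // false
```

Letting modified clicks and `target="_blank"` links through keeps "open in new tab" working as users expect.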

|

||||

|

||||

We’ll move that previous render we did in the main element into the install callback.

|

||||

```

|

||||

router.install(result => {

|

||||

main.innerHTML = result

|

||||

})

|

||||

|

||||

```

|

||||

|

||||

#### DOM

|

||||

|

||||

The handlers you pass to the router don’t need to return a `string`. If you need more power you can return actual DOM. For example:

|

||||

```

|

||||

const homeTmpl = document.createElement('template')

|

||||

homeTmpl.innerHTML = `

|

||||

<div class="container">

|

||||

<h1>Home Page</h1>

|

||||

</div>

|

||||

`

|

||||

|

||||

function homePage() {

|

||||

const page = homeTmpl.content.cloneNode(true)

|

||||

// You can do `page.querySelector()` here...

|

||||

return page

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

And now in the install callback you can check if the result is a `string` or a `Node`.

|

||||

```

|

||||

router.install(result => {

|

||||

if (typeof result === 'string') {

|

||||

main.innerHTML = result

|

||||

} else if (result instanceof Node) {

|

||||

main.innerHTML = ''

|

||||

main.appendChild(result)

|

||||

}

|

||||

})

|

||||

```

|

||||

|

||||

That covers the basic features. I wanted to share this because I’ll use this router in upcoming blog posts.

|

||||

|

||||

I’ve published it as an [npm package][4].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://nicolasparada.netlify.com/posts/js-router/

|

||||

|

||||

Author: [Nicolás Parada][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [Translator ID](https://github.com/译者ID)

|

||||

Proofreader: [Proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://nicolasparada.netlify.com/

|

||||

[1]:https://npm.im/serve

|

||||

[2]:https://nodejs.org/

|

||||

[3]:https://developer.mozilla.org/en-US/docs/Learn/JavaScript/Building_blocks/Events#Event_delegation

|

||||

[4]:https://www.npmjs.com/package/@nicolasparada/router

|

||||

@ -0,0 +1,355 @@

|

||||

How the Go runtime implements maps efficiently (without generics)

|

||||

============================================================

|

||||

|

||||

This post discusses how maps are implemented in Go. It is based on a presentation I gave at the [GoCon Spring 2018][7] conference in Tokyo, Japan.

|

||||

|

||||

# What is a map function?

|

||||

|

||||

To understand how a map works, let’s first talk about the idea of the _map function_. A map function maps one value to another. Given one value, called a _key_, it will return a second, the _value_.

|

||||

|

||||

```

|

||||

map(key) → value

|

||||

```

|

||||

|

||||

Now, a map isn’t going to be very useful unless we can put some data in the map. We’ll need a function that adds data to the map

|

||||

|

||||

```

|

||||

insert(map, key, value)

|

||||

```

|

||||

|

||||

and a function that removes data from the map

|

||||

|

||||

```

|

||||

delete(map, key)

|

||||

```

|

||||

|

||||

There are other interesting properties of map implementations like querying if a key is present in the map, but they’re outside the scope of what we’re going to discuss today. Instead we’re just going to focus on these properties of a map: insertion, deletion and mapping keys to values.
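For comparison, JavaScript’s built-in `Map` exposes the same three operations. This is only an illustration of the interface in another language, not of how Go implements it; the keys and counts are placeholders.

```javascript
// The three operations, using JavaScript's built-in Map.
const stars = new Map()

stars.set('moby/moby', 1)            // insert(map, key, value)
stars.set('golang/go', 2)
stars.set('moby/moby', 3)            // inserting an existing key overwrites

console.log(stars.get('moby/moby'))  // map(key) → value
stars.delete('golang/go')            // delete(map, key)
console.log(stars.get('golang/go'))  // missing keys yield undefined
```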

|

||||

|

||||

# Go’s map is a hashmap

|

||||

|

||||

The specific map implementation I’m going to talk about is the _hashmap_, because this is the implementation that the Go runtime uses. A hashmap is a classic data structure offering O(1) lookups on average and O(n) in the worst case. That is, when things are working well, the time to execute the map function is a near constant.

|

||||

|

||||

The size of this constant is part of the hashmap design and the point at which the map moves from O(1) to O(n) access time is determined by its _hash function_.

|

||||

|

||||

### The hash function

|

||||

|

||||

What is a hash function? A hash function takes a key of an unknown length and returns a value with a fixed length.

|

||||

|

||||

```

|

||||

hash(key) → integer

|

||||

```

|

||||

|

||||

This _hash value_ is almost always an integer for reasons that we’ll see in a moment.

|

||||

|

||||

Hash and map functions are similar. They both take a key and return a value. However, the hash function returns a value _derived_ from the key, not the value _associated_ with the key.

|

||||

|

||||

### Important properties of a hash function

|

||||

|

||||

It’s important to talk about the properties of a good hash function as the quality of the hash function determines how likely the map function is to run near O(1).

|

||||

|

||||

When used with a hashmap, hash functions have two important properties. The first is _stability_. The hash function must be stable. Given the same key, your hash function must return the same answer. If it doesn’t, you will not be able to find things you put into the map.

|

||||

|

||||

The second property is _good distribution_. Given two near identical keys, the result should be wildly different. This is important for two reasons. Firstly, as we’ll see, values in a hashmap should be distributed evenly across buckets, otherwise the access time is not O(1). Secondly, as the user can control some aspects of the input to the hash function, they may be able to control the output of the hash function, leading to poor distribution, which has been a DDoS vector for some languages. This property is also known as _collision resistance_.
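To make the two properties concrete, here is a small sketch using 32-bit FNV-1a, a simple non-cryptographic hash. It is chosen only because it fits in a few lines: it is not the hash the Go runtime uses, and it does not resist deliberately crafted collisions, so it illustrates stability and spread rather than protection against the attack mentioned above.

```javascript
// 32-bit FNV-1a over the UTF-8 bytes of a string.
function fnv1a(key) {
  let hash = 0x811c9dc5                        // FNV offset basis
  for (const byte of new TextEncoder().encode(key)) {
    hash ^= byte
    hash = Math.imul(hash, 0x01000193) >>> 0   // multiply by the FNV prime, keep 32 bits
  }
  return hash >>> 0
}

const first = fnv1a('moby/moby')
const again = fnv1a('moby/moby')
const near = fnv1a('moby/mobz')   // differs from the first key in one character

console.log(first === again)      // stability: same key, same hash
console.log(first.toString(2).padStart(32, '0'))
console.log(near.toString(2).padStart(32, '0'))
```

Printing the two bit patterns side by side gives a rough feel for how far apart the hashes of near identical keys land.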

|

||||

|

||||

### The hashmap data structure

|

||||

|

||||

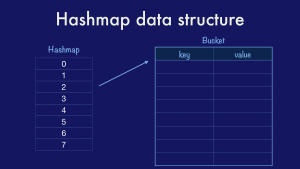

The second part of a hashmap is the way data is stored.

|

||||

|

||||

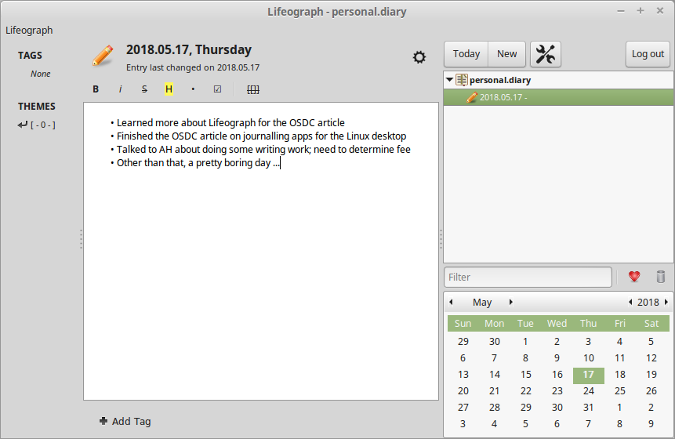

|

||||

The classical hashmap is an array of _buckets_, each of which contains a pointer to an array of key/value entries. In this case our hashmap has eight buckets (as this is the value that the Go implementation uses) and each bucket can hold up to eight entries (again drawn from the Go implementation). Using powers of two allows the use of cheap bit masks and shifts rather than expensive division.
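A short sketch of why the power of two matters: when the bucket count is a power of two, taking the remainder is the same as keeping the low bits of the hash. `bucketIndex` is an illustrative name, and the sample hash values are arbitrary.

```javascript
// Select a bucket by masking the low bits; numBuckets must be a power of two.
function bucketIndex(hash, numBuckets) {
  return (hash & (numBuckets - 1)) >>> 0
}

// For eight buckets the mask is 0b111, so only the low three bits matter.
for (const hash of [3, 11, 13, 0xdeadbeef]) {
  console.log(hash, bucketIndex(hash, 8), hash % 8) // mask and remainder agree
}
```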

|

||||

|

||||

As entries are added to a map, assuming a good hash function distribution, then the buckets will fill at roughly the same rate. Once the number of entries across each bucket passes some percentage of their total size, known as the _load factor,_ then the map will grow by doubling the number of buckets and redistributing the entries across them.

|

||||

|

||||

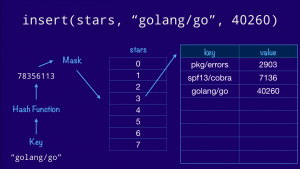

With this data structure in mind, if we had a map of project names to GitHub stars, how would we go about inserting a value into the map?

|

||||

|

||||

|

||||

|

||||

We start with the key, feed it through our hash function, then mask off the bottom few bits to get the correct offset into our bucket array. This is the bucket that will hold all the entries whose hash ends in three (011 in binary). Finally we walk down the list of entries in the bucket until we find a free slot and we insert our key and value there. If the key was already present, we’d just overwrite the value.

|

||||

|

||||

|

||||

|

||||

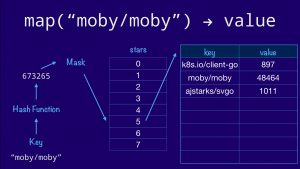

Now, let’s use the same diagram to look up a value in our map. The process is similar. We hash the key as before, then mask off the lower 3 bits, as our bucket array contains 8 entries, to navigate to the fifth bucket (101 in binary). If our hash function is correct then the string `"moby/moby"` will always hash to the same value, so we know that the key will not be in any other bucket. Now it’s a case of a linear search through the bucket comparing the key provided with the one stored in the entry.
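The insert and lookup walks described above can be sketched as a toy hashmap. Everything here is illustrative: `ToyMap`, the FNV-1a hash and the growth threshold of six entries per bucket are assumptions made for the sketch, the star counts are placeholders, and the buckets are plain arrays rather than fixed eight-slot structures. The real runtime is considerably more involved.

```javascript
// 32-bit FNV-1a, standing in for the runtime's hash function (an assumption).
function hashString(key) {
  let h = 0x811c9dc5
  for (const byte of new TextEncoder().encode(key)) {
    h = Math.imul(h ^ byte, 0x01000193) >>> 0
  }
  return h
}

class ToyMap {
  constructor(numBuckets = 8) {
    this.buckets = Array.from({ length: numBuckets }, () => [])
    this.size = 0
  }

  // Mask off the low bits of the hash to pick a bucket.
  bucketFor(key) {
    return this.buckets[hashString(key) & (this.buckets.length - 1)]
  }

  insert(key, value) {
    const bucket = this.bucketFor(key)
    const entry = bucket.find(e => e.key === key)
    if (entry) {
      entry.value = value // key already present: overwrite
      return
    }
    bucket.push({ key, value })
    this.size++
    // Assumed load factor: grow once the average exceeds 6 entries per bucket.
    if (this.size > 6 * this.buckets.length) this.grow()
  }

  // Linear search within the one bucket the key can live in.
  lookup(key) {
    const entry = this.bucketFor(key).find(e => e.key === key)
    return entry ? entry.value : undefined
  }

  remove(key) {
    const bucket = this.bucketFor(key)
    const i = bucket.findIndex(e => e.key === key)
    if (i === -1) return false
    bucket.splice(i, 1)
    this.size--
    return true
  }

  // Double the bucket array and redistribute every entry.
  grow() {
    const old = this.buckets
    this.buckets = Array.from({ length: old.length * 2 }, () => [])
    for (const bucket of old) {
      for (const e of bucket) this.bucketFor(e.key).push(e)
    }
  }
}

const toyStars = new ToyMap()
toyStars.insert('moby/moby', 100) // placeholder counts
toyStars.insert('moby/moby', 101) // same key: overwrite
for (let i = 0; i < 100; i++) toyStars.insert(`repo/${i}`, i) // enough to force growth
console.log(toyStars.lookup('moby/moby'), toyStars.lookup('repo/99'), toyStars.lookup('missing'))
console.log(toyStars.buckets.length)
```

Because growth doubles the bucket count, the mask simply gains one bit each time and entries are redistributed on that extra bit.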

|

||||

|

||||

### Four properties of a hash map

|

||||

|

||||

That was a very high level explanation of the classical hashmap. We’ve seen there are four properties you need to implement a hashmap:

|

||||

|

||||

1. You need a hash function for the key.

|

||||

|

||||

2. You need an equality function to compare keys.

|

||||

|

||||

3. You need to know the size of the key and,

|

||||

|

||||

4. You need to know the size of the value. Both sizes affect the size of the bucket structure, which the compiler needs in order to know how far to advance in memory as you walk or insert into that structure.

|

||||

|

||||

# Hashmaps in other languages

|

||||

|

||||

Before we talk about the way Go implements a hashmap, I wanted to give a brief overview of how two popular languages implement hashmaps. I’ve chosen these languages as both offer a single map type that works across a variety of keys and values.

|

||||

|

||||

### C++

|

||||

|

||||

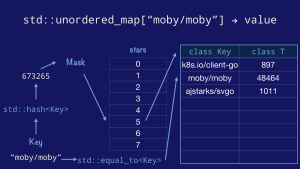

The first language we’ll discuss is C++. The C++ Standard Template Library (STL) provides `std::unordered_map` which is usually implemented as a hashmap.

|

||||

|

||||

This is the declaration for `std::unordered_map`. It’s a template, so the actual values of the parameters depend on how the template is instantiated.

|

||||

|

||||

```

|

||||

template<

|

||||

class Key, // the type of the key

|

||||

class T, // the type of the value

|

||||

class Hash = std::hash<Key>, // the hash function

|

||||

class KeyEqual = std::equal_to<Key>, // the key equality function

|

||||

class Allocator = std::allocator< std::pair<const Key, T> >

|

||||

> class unordered_map;

|

||||

```

|

||||

|

||||

There is a lot here, but the important things to take away are:

|

||||

|

||||

* The template takes the type of the key and value as parameters, so it knows their size.

|

||||

|

||||

* The template takes a `std::hash` function specialised on the key type, so it knows how to hash a key passed to it.

|

||||

|

||||

* And the template takes a `std::equal_to` function, also specialised on key type, so it knows how to compare two keys.

|

||||

|

||||

Now that we know how the four properties of a hashmap are communicated to the compiler in C++’s `std::unordered_map`, let’s look at how they work in practice.

|

||||

|

||||

|

||||

|

||||

First we take the key, pass it to the `std::hash` function to obtain the hash value of the key. We mask and index into the bucket array, then walk the entries in that bucket comparing the keys using the `std::equal_to` function.

|

||||

|

||||

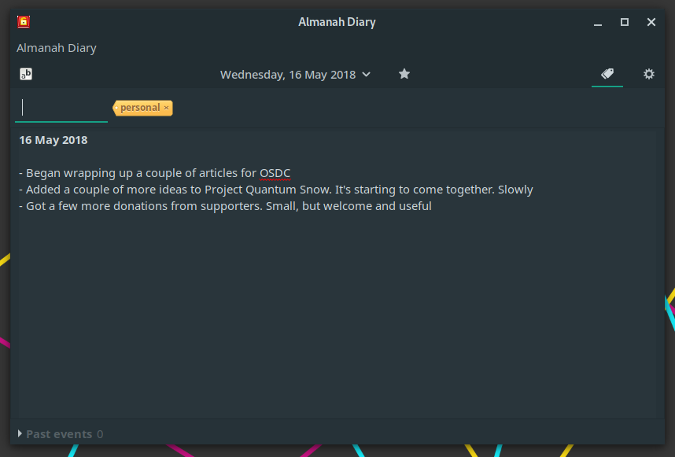

### Java

|

||||

|

||||

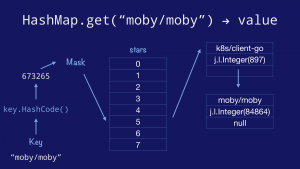

The second language we’ll discuss is Java. In Java the hashmap type is called, unsurprisingly, `java.util.HashMap`.

|

||||

|

||||

In Java, the `java.util.HashMap` type can only operate on objects, which is fine because in Java almost everything is a subclass of `java.lang.Object`. As every object in Java descends from `java.lang.Object`, it inherits, or overrides, a `hashCode` and an `equals` method.

|

||||

|

||||

However, you cannot directly store the eight primitive types (`boolean`, `int`, `short`, `long`, `byte`, `char`, `float`, and `double`) because they are not subclasses of `java.lang.Object`. You cannot use them as a key, and you cannot store them as a value. To work around this limitation, those types are silently converted into objects representing their primitive values. This is known as _boxing_.

|

||||

|

||||

Putting this limitation to one side for the moment, let’s look at how a lookup in Java’s hashmap would operate.

|

||||

|

||||

|

||||

|

||||

First we take the key and call its `hashCode` method to obtain the hash value of the key. We mask and index into the bucket array. In Java each slot holds a pointer to an `Entry`, which holds a key, a value, and a pointer to the next `Entry` in the bucket, forming a linked list of entries.
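The sketch below mimics that arrangement in JavaScript rather than Java: keys are objects supplying their own `hashCode` and `equals` methods, and each bucket is a singly linked list of entries. `RepoKey` and `ChainedMap` are hypothetical names, the hash follows the `31 * h + c` scheme documented for Java’s `String.hashCode`, and resizing is left out to keep it short.

```javascript
// A key type that carries its own hash and equality, as Java objects do.
class RepoKey {
  constructor(name) {
    this.name = name
  }

  hashCode() {
    let h = 0
    for (let i = 0; i < this.name.length; i++) {
      h = (Math.imul(31, h) + this.name.charCodeAt(i)) | 0 // wrap to a signed 32-bit int
    }
    return h
  }

  equals(other) {
    return other instanceof RepoKey && other.name === this.name
  }
}

class ChainedMap {
  constructor(numBuckets = 8) {
    this.table = new Array(numBuckets).fill(null) // each slot heads a linked list
  }

  put(key, value) {
    const i = key.hashCode() & (this.table.length - 1)
    for (let e = this.table[i]; e !== null; e = e.next) {
      if (e.key.equals(key)) {
        e.value = value // equal key found while chasing pointers: overwrite
        return
      }
    }
    this.table[i] = { key, value, next: this.table[i] } // prepend a new entry
  }

  get(key) {
    const i = key.hashCode() & (this.table.length - 1)
    for (let e = this.table[i]; e !== null; e = e.next) {
      if (e.key.equals(key)) return e.value
    }
    return undefined
  }
}

const chained = new ChainedMap()
chained.put(new RepoKey('moby/moby'), 1)
chained.put(new RepoKey('moby/moby'), 2) // a distinct but equal key object overwrites
console.log(chained.get(new RepoKey('moby/moby')))
```

The map never inspects the key itself; it relies entirely on the two methods the key provides, which is why a wrong `hashCode` or `equals` breaks lookups.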

|

||||

|

||||

# Tradeoffs

|

||||

|

||||

Now that we’ve seen how C++ and Java implement a hashmap, let’s compare their relative advantages and disadvantages.

|

||||

|

||||

### C++ templated `std::unordered_map`

|

||||

|

||||

### Advantages

|

||||

|

||||

* Size of the key and value types known at compile time.

|

||||

|

||||

* Data structures are always exactly the right size, with no need for boxing or indirection.

|

||||

|

||||

* As code is specialised at compile time, other compile time optimisations like inlining, constant folding, and dead code elimination, can come into play.

|

||||

|

||||

In a word, maps in C++ _can be_ as fast as hand writing a custom map for each key/value combination, because that is what is happening.

|

||||

|

||||

### Disadvantages

|

||||

|

||||

* Code bloat. Each different map is a different type. For N map types in your source, you will have N copies of the map code in your binary.

|

||||

|

||||

* Compile time bloat. Due to the way header files and templates work, for each file that mentions a `std::unordered_map` the source code for that implementation has to be generated, compiled, and optimised.

|

||||

|

||||

### Java `java.util.HashMap`

|

||||

|

||||

### Advantages

|

||||

|

||||

* One implementation of a map that works for any subclass of `java.lang.Object`. Only one copy of `java.util.HashMap` is compiled, and it’s referenced from every single class.

|

||||

|

||||

### Disadvantages

|

||||

|

||||

* Everything must be an object, even things which are not objects. This means maps of primitive values must be converted to objects via boxing. This adds GC pressure for wrapper objects, and cache pressure because of additional pointer indirections (each object is effectively another pointer lookup).

|

||||

|

||||

* Buckets are stored as linked lists, not sequential arrays. This leads to lots of pointer chasing while comparing objects.

|

||||

|

||||

* Hash and equality functions are left as an exercise to the author of the class. Incorrect hash and equals functions can slow down maps using those types, or worse, fail to implement the map behaviour.

|

||||

|

||||

# Go’s hashmap implementation

|

||||

|

||||

Now, let’s talk about how the hashmap implementation in Go allows us to retain many of the benefits of the best map implementations we’ve seen, without paying for the disadvantages.

|

||||

|

||||

Just like C++ and just like Java, Go’s hashmap is written _in Go._ But Go does not provide generic types, so how can we write a hashmap that works for (almost) any type, in Go?

|

||||

|

||||

### Does the Go runtime use interface{}?

|

||||

|

||||

No, the Go runtime does not use `interface{}` to implement its hashmap. While we have the `container/{list,heap}` packages which do use the empty interface, the runtime’s map implementation does not use `interface{}`.

|

||||

|

||||

### Does the compiler use code generation?

|

||||

|

||||

No, there is only one copy of the map implementation in a Go binary. There is only one map implementation, and unlike Java, it doesn’t use `interface{}` boxing. So, how does it work?

|

||||

|

||||

There are two parts to the answer, and they both involve co-operation between the compiler and the runtime.

|

||||

|

||||

### Compile time rewriting

|

||||

|

||||

The first part of the answer is to understand that map lookups, insertion, and removal are implemented in the runtime package. During compilation, map operations are rewritten to calls to the runtime, e.g.:

|

||||

|

||||

```

|

||||

v := m["key"] → runtime.mapaccess1(m, "key", &v)

|

||||

v, ok := m["key"] → runtime.mapaccess2(m, "key", &v, &ok)

|

||||

m["key"] = 9001 → runtime.mapinsert(m, "key", 9001)

|

||||

delete(m, "key") → runtime.mapdelete(m, "key")

|

||||

```

|

||||

|

||||

It’s also useful to note that the same thing happens with channels, but not with slices.

|

||||

|

||||