diff --git a/published/20160826 Forget Technical Debt —Here'sHowtoBuild Technical Wealth.MD b/published/20160826 Forget Technical Debt —Here'sHowtoBuild Technical Wealth.MD

new file mode 100644

index 0000000000..026e10c717

--- /dev/null

+++ b/published/20160826 Forget Technical Debt —Here'sHowtoBuild Technical Wealth.MD

@@ -0,0 +1,276 @@

+忘记技术债务 —— 教你如何创造技术财富

+===============

+

+电视里正播放着《老屋》节目,[Andrea Goulet][58] 和她的商业合作伙伴正悠闲地坐在客厅里,商讨着他们的战略计划。那正是大家思想的火花碰撞出创新事物的时刻。他们正在寻求一种能够实现自身价值的方式 —— 为其它公司清理遗留代码及科技债务。他们此刻的情景,像极了电视里的场景。(LCTT 译注:《老屋》电视节目提供专业的家装、家庭改建、重新装饰、创意等等信息,与软件的改造有异曲同工之处)。

+

+“我们意识到我们现在做的工作不仅仅是清理遗留代码,实际上我们是在用重建老屋的方式来重构软件,让系统运行更持久、更稳定、更高效,”Goulet 说。“这让我开始思考公司如何花钱来改善他们的代码,以便让他们的系统运行更高效。就好比为了让屋子变得更有价值,你不得不使用一个全新的屋顶。这并不吸引人,但却是至关重要的,然而很多人都搞错了。“

+

+如今,她是 [Corgibytes][57] 公司的 CEO —— 这是一家提高软件现代化和进行系统重构方面的咨询公司。她曾经见过各种各样糟糕的系统、遗留代码,以及严重的科技债务事件。Goulet 认为**创业公司需要转变思维模式,不是偿还债务,而是创造科技财富,不是要铲除旧代码,而是要逐步修复代码**。她解释了这种新的方法,以及如何完成这些看似不可能完成的事情 —— 实际上是聘用优秀的工程师来完成这些工作。

+

+### 反思遗留代码

+

+关于遗留代码最常见的定义是由 Michael Feathers 在他的著作[《高效利用遗留代码》][56]一书中提出:遗留代码就是没有被测试所覆盖的代码。这个定义比大多数人所认为的 —— 遗留代码仅指那些古老、陈旧的系统这个说法要妥当得多。但是 Goulet 认为这两种定义都不够明确。“遗留代码与软件的年头儿毫无关系。一个两年的应用程序,其代码可能已经进入遗留状态了,”她说。“**关键要看软件质量提高的难易程度。**”

+

+这意味着写得不够清楚、缺少解释说明的代码,是没有包含任何关于代码构思和决策制定的流程的成果。单元测试就是这样的一种成果,但它并没有包括了写那部分代码的原因以及逻辑推理相关的所有文档。如果想要提升代码,但没办法搞清楚原开发者的意图 —— 那些代码就属于遗留代码了。

+

+> **遗留代码不是技术问题,而是沟通上的问题。**

+

+

+

+如果你像 Goulet 所说的那样迷失在遗留代码里,你会发现每一次的沟通交流过程都会变得像那条[康威定律][54]所描述的一样。

+

+Goulet 说:“这个定律认为你的代码能反映出整个公司的组织沟通结构,如果想修复公司的遗留代码,而没有一个好的组织沟通方式是不可能完成的。那是很多人都没注意到的一个重要环节。”

+

+Goulet 和她的团队成员更像是考古学家一样来研究遗留系统项目。他们根据前开发者写的代码构件相关的线索来推断出他们的思想意图。然后再根据这些构件之间的关系来做出新的决策。

+

+代码构件最重要的什么呢?**良好的代码结构、清晰的思想意图、整洁的代码**。例如,如果使用通用的名称如 “foo” 或 “bar” 来命名一个变量,半年后再返回来看这段代码时,根本就看不出这个变量的用途是什么。

+

+如果代码读起来很困难,可以使用源代码控制系统,这是一个非常有用的工具,因为它可以提供代码的历史修改信息,并允许软件开发者写明他们作出本次修改的原因。

+

+Goulet 说:“我一个朋友认为提交代码时附带的信息,每一个概要部分的内容应该有半条推文那么长(几十个字),如需要的话,代码的描述信息应该有一篇博客那么长。你得用这个方式来为你修改的代码写一个合理的说明。这不会浪费太多额外的时间,并且能给后期的项目开发者提供非常多的有用信息,但是让人惊讶的是很少有人会这么做。我们经常能看到一些开发人员在被一段代码激怒之后,要用 `git blame` 扒代码库找出这些垃圾是谁干的,结果最后发现是他们自己干的。”

+

+使用自动化测试对于理解程序的流程非常有用。Goulet 解释道:“很多人都比较认可 Michael Feathers 提出的关于遗留代码的定义。测试套件对于理解开发者的意图来说是非常有用的工具,尤其当用来与[行为驱动开发模式][53]相结合时,比如编写测试场景。”

+

+理由很简单,如果你想将遗留代码限制在一定程度下,注意到这些细节将使代码更易于理解,便于在以后也能工作。编写并运行一个代码单元,接受、认可,并且集成测试。写清楚注释的内容,方便以后你自己或是别人来理解你写的代码。

+

+尽管如此,由于很多已知的和不可意料的原因,遗留代码仍然会出现。

+

+在创业公司刚成立初期,公司经常会急于推出很多新的功能。开发人员在巨大的交付压力下,测试常常半途而废。Corgibytes 团队就遇到过好多公司很多年都懒得对系统做详细的测试了。

+

+确实如此,当你急于开发出系统原型的时候,强制性地去做太多的测试也许意义不大。但是,一旦产品开发完成并投入使用后,你就需要投入时间精力来维护及完善系统了。Goulet 说:“很多人说,‘别在维护上费心思,重要的是功能!’ **如果真这样,当系统规模到一定程序的时候,就很难再扩展了。同时也就失去市场竞争力了。**”

+

+最后才明白过来,原来热力学第二定律对代码也同样适用:**你所面临的一切将向熵增的方向发展。**你需要与混乱无序的技术债务进行一场无休无止的战斗。随着时间的推移,遗留代码也逐渐变成一种债务。

+

+她说:“我们再次拿家来做比喻。你必须坚持每天收拾餐具、打扫卫生、倒垃圾。如果你不这么做,情况将来越来越糟糕,直到有一天你不得不向 HazMat 团队求助。”(LCTT 译注:HazMat 团队,危害物质专队)

+

+就跟这种情况一样,Corgibytes 团队接到很多公司 CEO 的求助电话,比如 Features 公司的 CEO 在电话里抱怨道:“现在我们公司的开发团队工作效率太低了,三年前只需要两个星期就完成的工作,现在却要花费12个星期。”

+

+> **技术债务往往反映出公司运作上的问题。**

+

+很多公司的 CTO 明知会发生技术债务的问题,但是他们很难说服其它同事相信花钱来修复那些已经存在的问题是值得的。这看起来像是在走回头路,很乏味,也不是新的产品。有些公司直到系统已经严重影响了日常工作效率时,才着手去处理这些技术债务方面的问题,那时付出的代价就太高了。

+

+### 忘记债务,创造技术财富

+

+如果你想把[重构技术债务][52] — [敏捷开发讲师 Declan Whelan 最近造出的一个术语][51] — 作为一个积累技术财富的机会,你很可能要先说服你们公司的 CEO、投资者和其它的股东接受并为之共同努力。

+

+“我们没必要把技术债务想像得很可怕。当产品处于开发设计初期,技术债务反而变得非常有用,”Goulet 说。“当你解决一些系统遗留的技术问题时,你会充满成就感。例如,当你在自己家里安装新窗户时,你确实会花费一笔不少的钱,但是之后你每个月就可以节省 100 美元的电费。程序代码亦是如此。虽然暂时没有提高工作效率,但随时时间推移将提高生产力。”

+

+一旦你意识到项目团队工作不再富有成效时,就需要确认下是哪些技术债务在拖后腿了。

+

+“我跟很多不惜一切代价招募英才的初创公司交流过,他们高薪聘请一些工程师来只为了完成更多的工作。”她说。“与此相反,他们应该找出如何让原有的每个工程师能更高效率工作的方法。你需要去解决什么样的技术债务以增加额外的生产率?”

+

+如果你改变自己的观点并且专注于创造技术财富,你将会看到产能过剩的现象,然后重新把多余的产能投入到修复更多的技术债务和遗留代码的良性循环中。你们的产品将会走得更远,发展得更好。

+

+> **别把你们公司的软件当作一个项目来看。从现在起,把它想象成一栋自己要长久居住的房子。**

+

+“这是一个极其重要的思想观念的转变,”Goulet 说。“这将带你走出短浅的思维模式,并让你比之前更加关注产品的维护工作。”

+

+这就像对一栋房子,要实现其现代化及维护的方式有两种:小动作,表面上的更改(“我买了一块新的小地毯!”)和大改造,需要很多年才能偿还所有债务(“我想我们应替换掉所有的管道...”)。你必须考虑好两者,才能让你们已有的产品和整个团队顺利地运作起来。

+

+这还需要提前预算好 —— 否则那些较大的花销将会是硬伤。定期维护是最基本的预期费用。让人震惊的是,很多公司都没把维护当成商务成本预算进来。

+

+这就是 Goulet 提出“**软件重构**”这个术语的原因。当你房子里的一些东西损坏的时候,你并不是铲除整个房子,从头开始重建。同样的,当你们公司出现老的、损坏的代码时,重写代码通常不是最明智的选择。

+

+下面是 Corgibytes 公司在重构客户代码用到的一些方法:

+

+* 把大型的应用系统分解成轻量级的更易于维护的微服务。

+* 让功能模块彼此解耦以便于扩展。

+* 更新形象和提升用户前端界面体验。

+* 集合自动化测试来检查代码可用性。

+* 代码库可以让重构或者修改更易于操作。

+

+系统重构也进入到 DevOps 领域。比如,Corgibytes 公司经常推荐新客户使用 [Docker][50],以便简单快速的部署新的开发环境。当你们团队有 30 个工程师的时候,把初始化配置时间从 10 小时减少到 10 分钟对完成更多的工作很有帮助。系统重构不仅仅是应用于软件开发本身,也包括如何进行系统重构。

+

+如果你知道做些什么能让你们的代码管理起来更容易更高效,就应该把这它们写入到每年或季度的项目规划中。别指望它们会自动呈现出来。但是也别给自己太大的压力来马上实施它们。Goulets 看到很多公司从一开始就致力于 100% 测试覆盖率而陷入困境。

+

+**具体来说,每个公司都应该把以下三种类型的重构工作规划到项目建设中来:**

+

+* 自动测试

+* 持续交付

+* 文化提升

+

+咱们来深入的了解下每一项内容。

+

+#### 自动测试

+

+ “有一位客户即将进行第二轮融资,但是他们没办法在短期内招聘到足够的人才。我们帮助他们引进了一种自动化测试框架,这让他们的团队在 3 个月的时间内工作效率翻了一倍,”Goulets 说。“这样他们就可以在他们的投资人面前自豪的说,‘我们一个精英团队完成的任务比两个普通的团队要多。’”

+

+自动化测试从根本上来讲就是单个测试的组合,就是可以再次检查某一行代码的单元测试。可以使用集成测试来确保系统的不同部分都正常运行。还可以使用验收性测试来检验系统的功能特性是否跟你想像的一样。当你把这些测试写成测试脚本后,你只需要简单地用鼠标点一下按钮就可以让系统自行检验了,而不用手工的去梳理并检查每一项功能。

+

+在产品市场尚未打开之前就来制定自动化测试机制有些言之过早。但是一旦你有一款感到满意,并且客户也很依赖的产品,就应该把这件事付诸实施了。

+

+#### 持续交付

+

+这是与自动化交付相关的工作,过去是需要人工完成。目的是当系统部分修改完成时可以迅速进行部署,并且短期内得到反馈。这使公司在其它竞争对手面前有很大的优势,尤其是在客户服务行业。

+

+“比如说你每次部署系统时环境都很复杂。熵值无法有效控制,”Goulets 说。“我们曾经见过花 12 个小时甚至更多的时间来部署一个很大的集群环境。在这种情况下,你不会愿意频繁部署了。因为太折腾人了,你还会推迟系统功能上线的时间。这样,你将落后于其它公司并失去竞争力。”

+

+**在持续性改进的过程中常见的其它自动化任务包括:**

+

+* 在提交完成之后检查构建中断部分。

+* 在出现故障时进行回滚操作。

+* 自动化审查代码的质量。

+* 根据需求增加或减少服务器硬件资源。

+* 让开发、测试及生产环境配置简单易懂。

+

+举一个简单的例子,比如说一个客户提交了一个系统 Bug 报告。开发团队越高效解决并修复那个 Bug 越好。对于开发人员来说,修复 Bug 的挑战根本不是个事儿,这本来也是他们的强项,主要是系统设置上不够完善导致他们浪费太多的时间去处理 bug 以外的其它问题。

+

+使用持续改进的方式时,你要严肃地决定决定哪些工作应该让机器去做,哪些交给研发去完成更好。如果机器更擅长,那就使其自动化完成。这样也能让研发愉快地去解决其它有挑战性的问题。同时客户也会很高兴地看到他们报怨的问题被快速处理了。你的待修复的未完成任务数减少了,之后你就可以把更多的时间投入到运用新的方法来提高产品的质量上了。**这是创造科技财富的一种转变。**因为开发人员可以修复 bug 后立即发布新代码,这样他们就有时间和精力做更多事。

+

+“你必须时刻问自己,‘我应该如何为我们的客户改善产品功能?如何做得更好?如何让产品运行更高效?’不过还要不止于此。”Goulets 说。“一旦你回答完这些问题后,你就得询问下自己,如何自动去完成那些需要改善的功能。”

+

+#### 文化提升

+

+Corgibytes 公司每天都会看到同样的问题:一家创业公司建立了一个对开发团队毫无推动的文化环境。公司 CEO 抱着双臂思考着为什么这样的环境对员工没多少改变。然而事实却是公司的企业文化对工作并不利。为了激励工程师,你必须全面地了解他们的工作环境。

+

+为了证明这一点,Goulet 引用了作者 Robert Henry 说过的一段话:

+

+> **目的不是创造艺术,而是在最美妙的状态下让艺术应运而生。**

+

+“你们也要开始这样思考一下你们的软件,”她说。“你们的企业文件就类似那个状态。你们的目标就是创造一个让艺术品应运而生的环境,这件艺术品就是你们公司的代码、一流的售后服务、充满幸福感的开发者、良好的市场预期、盈利能力等等。这些都息息相关。”

+

+优先考虑解决公司的技术债务和遗留代码也是一种文化。那是真正为开发团队清除障碍,以制造影响的方法。同时,这也会让你将来有更多的时间精力去完成更重要的工作。如果你不从根本上改变固有的企业文化环境,你就不可能重构公司产品。改变对产品维护及现代化的投资的态度是开始实施变革的第一步,最理想情况是从公司的 CEO 开始自顶向下转变。

+

+以下是 Goulet 关于建立那种流态文化方面提出的建议:

+

+* 反对公司嘉奖那些加班到深夜的“英雄”。提倡高效率的工作方式。

+* 了解协同开发技术,比如 Woody Zuill 提出的[合作编程][44]模式。

+* 遵从 4 个[现代敏捷开发][42]原则:用户至上、实践及快速学习、把安全放在首位、持续交付价值。

+* 每周为研发人员提供项目外的职业发展时间。

+* 把[日工作记录][43]作为一种驱动开发团队主动解决问题的方式。

+* 把同情心放在第一位。Corgibytes 公司让员工参加 [Brene Brown 勇气工厂][40]的培训是非常有用的。

+

+“如果公司高管和投资者不支持这种升级方式,你得从客户服务的角度去说服他们,”Goulet 说,“告诉他们通过这次调整后,最终产品将如何给公司的大多数客户提高更好的体验。这是你能做的一个很有力的论点。”

+

+### 寻找最具天才的代码重构者

+

+整个行业都认为顶尖的工程师不愿意干修复遗留代码的工作。他们只想着去开发新的东西。大家都说把他们留在维护部门真是太浪费人才了。

+

+**其实这些都是误解。如果你知道去哪里和如何找工程师,并为他们提供一个愉快的工作环境,你就可以找到技术非常精湛的工程师,来帮你解决那些最棘手的技术债务问题。**

+

+“每次在会议上,我们都会问现场的同事‘谁喜欢去在遗留代码上工作?’每次只有不到 10% 的与会者会举手。”Goulet 说。“但是我跟这些人交流后,我发现这些工程师恰好是喜欢最具挑战性工作的人才。”

+

+有一位客户来寻求她的帮助,他们使用国产的数据库,没有任何相关文档,也没有一种有效的方法来弄清楚他们公司的产品架构。她称修理这种情况的一类工程师为“修正者”。在 Corgibytes 公司,她有一支这样的修正者团队由她支配,热衷于通过研究二进制代码来解决技术问题。

+

+

+

+那么,如何才能找到这些技术人才呢? Goulet 尝试过各种各样的方法,其中有一些方法还是富有成效的。

+

+她创办了一个社区网站 [legacycode.rocks][49] 并且在招聘启示上写道:“长期招聘那些喜欢重构遗留代码的另类开发人员...如果你以从事处理遗留代码的工作为自豪,欢迎加入!”

+

+“我开始收到很多人发来邮件说,‘噢,天呐,我也属于这样的开发人员!’”她说。“只需要发布这条信息,并且告诉他们这份工作是非常有意义的,就吸引了合适的人才。”

+

+在招聘的过程中,她也会使用持续性交付的经验来回答那些另类开发者想知道的信息:包括详细的工作内容以及明确的要求。“我这么做的原因是因为我讨厌重复性工作。如果我收到多封邮件来咨询同一个问题,我会把答案发布在网上,我感觉自己更像是在写说明文档一样。”

+

+但是随着时间的推移,她发现可以重新定义招聘流程来帮助她识别出更出色的候选人。比如说,她在应聘要求中写道,“公司 CEO 将会重新审查你的简历,因此请确保求职信中致意时不用写明性别。所有以‘尊敬的先生’或‘先生’开头的信件将会被当垃圾处理掉”。这些只是她的招聘初期策略。

+

+“我开始这么做是因为很多申请人把我当成男性,因为我是一家软件公司的男性 CEO,我必须是男性!?”Goulet 说。“所以,有一天我想我应该它当作应聘要求放到网上,看有多少人注意到这个问题。令我惊讶的是,这让我过滤掉一些不太严谨的申请人。还突显出了很多擅于从事遗留代码方面工作的人。”

+

+Goulet 想起一个应聘者发邮件给我说,“我查看了你们网站的代码(我喜欢这个网站,这也是我的工作)。你们的网站架构很奇特,好像是用 PHP 写的,但是你们却运行在用 Ruby 语言写的 Jekyll 下。我真的很好奇那是什么呢。”

+

+Goulet 从她的设计师那里得知,原来,在 HTML、CSS 和 JavaScript 文件中有一个未使用的 PHP 类名,她一直想解决这个问题,但是一直没机会。Goulet 的回复是:“你正在找工作吗?”

+

+另外一名候选人注意到她曾经在一篇说明文档中使用 CTO 这个词,但是她的团队里并没有这个头衔(她的合作伙伴是 Chief Code Whisperer)。这些注重细节、充满求知欲、积极主动的候选者更能引起她的注意。

+

+> **代码修正者不仅需要注重细节,而且这也是他们必备的品质。**

+

+让人吃惊的是,Goulet 从来没有为招募最优秀的代码修正者而感到厌烦过。“大多数人都是通过我们的网站直接投递简历,但是当我们想扩大招聘范围的时候,我们会通过 [PowerToFly][48] 和 [WeWorkRemotely][47] 网站进行招聘。我现在确实不需要招募新人马了。他们需要经历一段很艰难的时期才能理解代码修正者的意义是什么。”

+

+如果他们通过首轮面试,Goulet 将会让候选者阅读一篇 Arlo Belshee 写的文章“[命名是一个过程][46]”。它讲的是非常详细的处理遗留代码的的过程。她最经典的指导方法是:“阅读完这段代码并且告诉我,你是怎么理解的。”

+

+她将找出对问题的理解很深刻并且也愿意接受文章里提出的观点的候选者。这对于区分有深刻理解的候选者和仅仅想获得工作的候选者来说,是极其有用的办法。她强烈要求候选者找出一段与他操作相关的代码,来证明他是充满激情的、有主见的及善于分析问题的人。

+

+最后,她会让候选者跟公司里当前的团队成员一起使用 [Exercism.io][45] 工具进行编程。这是一个开源项目,它允许开发者学习如何在不同的编程语言环境下使用一系列的测试驱动开发的练习进行编程。结对编程课程的第一部分允许候选者选择其中一种语言来使用。下一个练习中,面试官可以选择一种语言进行编程。他们总能看到那些人处理异常的方法、随机应便的能力以及是否愿意承认某些自己不了解的技术。

+

+“当一个人真正的从执业者转变为大师的时候,他会毫不犹豫的承认自己不知道的东西,”Goulet说。

+

+让他们使用自己不熟悉的编程语言来写代码,也能衡量其坚韧不拔的毅力。“我们想听到某个人说,‘我会深入研究这个问题直到彻底解决它。’也许第二天他们仍然会跑过来跟我们说,‘我会一直留着这个问题直到我找到答案为止。’那是作为一个成功的修正者表现出来的一种气质。”

+

+> **产品开发人员在我们这个行业很受追捧,因此很多公司也想让他们来做维护工作。这是一个误解。最优秀的维护修正者并不是最好的产品开发工程师。**

+

+如果一个有天赋的修正者在眼前,Goulet 懂得如何让他走向成功。下面是如何让这种类型的开发者感到幸福及高效工作的一些方式:

+

+* 给他们高度的自主权。把问题解释清楚,然后安排他们去完成,但是永不命令他们应该如何去解决问题。

+* 如果他们要求升级他们的电脑配置和相关工具,尽管去满足他们。他们明白什么样的需求才能最大限度地提高工作效率。

+* 帮助他们[避免分心][39]。他们喜欢全身心投入到某一个任务直至完成。

+

+总之,这些方法已经帮助 Corgibytes 公司培养出二十几位对遗留代码充满激情的专业开发者。

+

+### 稳定期没什么不好

+

+大多数创业公司都都不想跳过他们的成长期。一些公司甚至认为成长期应该是永无止境的。而且,他们觉得也没这个必要跳过成长期,即便他们已经进入到了下一个阶段:稳定期。**完全进入到稳定期意味着你拥有人力资源及管理方法来创造技术财富,同时根据优先权适当支出。**

+

+“在成长期和稳定期之间有个转折点,就是维护人员必须要足够壮大,并且相对于专注新功能的产品开发人员,你开始更公平的对待维护人员,”Goulet 说。“你们公司的产品开发完成了。现在你得让他们更加稳定地运行。”

+

+这就意味着要把公司更多的预算分配到产品维护及现代化方面。“你不应该把产品维护当作是一个不值得关注的项目,”她说。“这必须成为你们公司固有的一种企业文化 —— 这将帮助你们公司将来取得更大的成功。“

+

+最终,你通过这些努力创建的技术财富,将会为你的团队带来一大批全新的开发者:他们就像侦查兵一样,有充足的时间和资源去探索新的领域,挖掘新客户资源并且给公司创造更多的机遇。当你们在新的市场领域做得更广泛并且不断取得进展 —— 那么你们公司已经真正地进入到繁荣发展的状态了。

+

+--------------------------------------------------------------------------------

+

+via: http://firstround.com/review/forget-technical-debt-heres-how-to-build-technical-wealth/

+

+作者:[http://firstround.com/][a]

+译者:[rusking](https://github.com/rusking)

+校对:[jasminepeng](https://github.com/jasminepeng)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: http://firstround.com/

+[1]:http://corgibytes.com/blog/2016/04/15/inception-layers/

+[2]:http://www.courageworks.com/

+[3]:http://corgibytes.com/blog/2016/08/02/how-we-use-daily-journals/

+[4]:https://www.industriallogic.com/blog/modern-agile/

+[5]:http://mobprogramming.org/

+[6]:http://exercism.io/

+[7]:http://arlobelshee.com/good-naming-is-a-process-not-a-single-step/

+[8]:https://weworkremotely.com/

+[9]:https://www.powertofly.com/

+[10]:http://legacycode.rocks/

+[11]:https://www.docker.com/

+[12]:http://legacycoderocks.libsyn.com/technical-wealth-with-declan-wheelan

+[13]:https://www.agilealliance.org/resources/initiatives/technical-debt/

+[14]:https://en.wikipedia.org/wiki/Behavior-driven_development

+[15]:https://en.wikipedia.org/wiki/Conway%27s_law

+[16]:https://www.amazon.com/Working-Effectively-Legacy-Michael-Feathers/dp/0131177052

+[17]:http://corgibytes.com/

+[18]:https://www.linkedin.com/in/andreamgoulet

+[19]:http://corgibytes.com/blog/2016/04/15/inception-layers/

+[20]:http://www.courageworks.com/

+[21]:http://corgibytes.com/blog/2016/08/02/how-we-use-daily-journals/

+[22]:https://www.industriallogic.com/blog/modern-agile/

+[23]:http://mobprogramming.org/

+[24]:http://mobprogramming.org/

+[25]:http://exercism.io/

+[26]:http://arlobelshee.com/good-naming-is-a-process-not-a-single-step/

+[27]:https://weworkremotely.com/

+[28]:https://www.powertofly.com/

+[29]:http://legacycode.rocks/

+[30]:https://www.docker.com/

+[31]:http://legacycoderocks.libsyn.com/technical-wealth-with-declan-wheelan

+[32]:https://www.agilealliance.org/resources/initiatives/technical-debt/

+[33]:https://en.wikipedia.org/wiki/Behavior-driven_development

+[34]:https://en.wikipedia.org/wiki/Conway%27s_law

+[35]:https://www.amazon.com/Working-Effectively-Legacy-Michael-Feathers/dp/0131177052

+[36]:https://www.amazon.com/Working-Effectively-Legacy-Michael-Feathers/dp/0131177052

+[37]:http://corgibytes.com/

+[38]:https://www.linkedin.com/in/andreamgoulet

+[39]:http://corgibytes.com/blog/2016/04/15/inception-layers/

+[40]:http://www.courageworks.com/

+[41]:http://corgibytes.com/blog/2016/08/02/how-we-use-daily-journals/

+[42]:https://www.industriallogic.com/blog/modern-agile/

+[43]:http://corgibytes.com/blog/2016/08/02/how-we-use-daily-journals/

+[44]:http://mobprogramming.org/

+[45]:http://exercism.io/

+[46]:http://arlobelshee.com/good-naming-is-a-process-not-a-single-step/

+[47]:https://weworkremotely.com/

+[48]:https://www.powertofly.com/

+[49]:http://legacycode.rocks/

+[50]:https://www.docker.com/

+[51]:http://legacycoderocks.libsyn.com/technical-wealth-with-declan-wheelan

+[52]:https://www.agilealliance.org/resources/initiatives/technical-debt/

+[53]:https://en.wikipedia.org/wiki/Behavior-driven_development

+[54]:https://en.wikipedia.org/wiki/Conway%27s_law

+[56]:https://www.amazon.com/Working-Effectively-Legacy-Michael-Feathers/dp/0131177052

+[57]:http://corgibytes.com/

+[58]:https://www.linkedin.com/in/andreamgoulet

diff --git a/published/20161028 Inkscape: Adding some colour.md b/published/20161028 Inkscape: Adding some colour.md

new file mode 100644

index 0000000000..8e3b6969ab

--- /dev/null

+++ b/published/20161028 Inkscape: Adding some colour.md

@@ -0,0 +1,50 @@

+使用 Inkscape:添加颜色

+=========

+

+

+

+在我们先前的 Inkscape 文章中,[我们介绍了 Inkscape 的基础][2] - 安装,以及如何创建基本形状及操作它们。我们还介绍了使用 Palette 更改 inkscape 对象的颜色。 虽然 Palette 对于从预定义列表快速更改对象颜色非常有用,但大多数情况下,你需要更好地控制对象的颜色。这时我们使用 Inkscape 中最重要的对话框之一 - 填充和轮廓 对话框。

+

+**关于文章中的动画的说明:**动画中的一些颜色看起来有条纹。这只是动画创建导致的。当你在 Inkscape 尝试时,你会看到很好的平滑渐变的颜色。

+

+### 使用 Fill/Stroke 对话框

+

+要在 Inkscape 中打开 “Fill and Stroke” 对话框,请从主菜单中选择 `Object`>`Fill and Stroke`。打开后,此对话框中的三个选项卡允许你检查和更改当前选定对象的填充颜色、描边颜色和描边样式。

+

+

+

+在 Inkscape 中,Fill 用来给予对象主体颜色。对象的轮廓是你的对象的可选择外框,可在轮廓样式选项卡中进行配置,它允许您更改轮廓的粗细,创建虚线轮廓或为轮廓添加圆角。 在下面的动画中,我会改变星形的填充颜色,然后改变轮廓颜色,并调整轮廓的粗细:

+

+

+

+### 添加并编辑渐变效果

+

+对象的填充(或者轮廓)也可以是渐变的。要从 “Fill and Stroke” 对话框快速设置渐变填充,请先选择 “Fill” 选项卡,然后选择线性渐变 选项:

+

+

+

+要进一步编辑我们的渐变,我们需要使用专门的渐变工具。 从工具栏中选择“Gradient Tool”,会有一些渐变编辑锚点出现在你选择的形状上。 **移动锚点**将改变渐变的位置。 如果你**单击一个锚点**,您还可以在“Fill and Stroke”对话框中更改该锚点的颜色。 要**在渐变中添加新的锚点**,请双击连接锚点的线,然后会出现一个新的锚点。

+

+

+

+* * *

+

+这篇文章介绍了在 Inkscape 图纸中添加一些颜色和渐变的基础知识。 **“Fill and Stroke”** 对话框还有许多其他选项可供探索,如图案填充、不同的渐变样式和许多不同的轮廓样式。另外,查看**工具控制栏** 的 **Gradient Tool** 中的其他选项,看看如何以不同的方式调整渐变。

+

+-----------------------

+

+作者简介:Ryan 是一名 Fedora 设计师。他使用 Fedora Workstation 作为他的主要桌面,还有来自 Libre Graphics 世界的最好的工具,尤其是矢量图形编辑器 Inkscape。

+

+--------------------------------------------------------------------------------

+

+via: https://fedoramagazine.org/inkscape-adding-colour/

+

+作者:[Ryan Lerch][a]

+译者:[geekpi](https://github.com/geekpi)

+校对:[jasminepeng](https://github.com/jasminepeng)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: http://ryanlerch.id.fedoraproject.org/

+[1]:https://fedoramagazine.org/inkscape-adding-colour/

+[2]:https://linux.cn/article-8079-1.html

diff --git a/translated/tech/20161104 Create a simple wallpaper with Fedora and Inkscape.md b/published/20161104 Create a simple wallpaper with Fedora and Inkscape.md

similarity index 81%

rename from translated/tech/20161104 Create a simple wallpaper with Fedora and Inkscape.md

rename to published/20161104 Create a simple wallpaper with Fedora and Inkscape.md

index 5a0fbce7b4..a9317d1dfd 100644

--- a/translated/tech/20161104 Create a simple wallpaper with Fedora and Inkscape.md

+++ b/published/20161104 Create a simple wallpaper with Fedora and Inkscape.md

@@ -1,6 +1,7 @@

-### 使用 Fedora 和 Inkscape 制作一张简单的壁纸

+使用 Fedora 和 Inkscape 制作一张简单的壁纸

+================

-

+

在先前的两篇 Inkscape 的文章中,我们已经[介绍了 Inkscape 的基本使用、创建对象][18]以及[一些基本操作和如何修改颜色。][17]

@@ -14,7 +15,7 @@

][16]

-对于这张壁纸而言,我们会将尺寸改为**1024px x 768px**。要改变文档的尺寸,进入`File` > `Document Properties…`。在文档属性对话框中自定义文档大小区域中输入宽度为 1024px,高度为 768px:

+对于这张壁纸而言,我们会将尺寸改为**1024px x 768px**。要改变文档的尺寸,进入`File` > `Document Properties...`。在文档属性对话框中自定义文档大小区域中输入宽度为 `1024`,高度为 `768` ,单位是 `px`:

[

@@ -34,13 +35,13 @@

][13]

-接着在矩形中添加一个渐变填充。[如果你需要复习添加渐变,请阅读先前添加色彩的文章。][12]

+接着在矩形中添加一个渐变填充。如果你需要复习添加渐变,请阅读先前添加色彩的[那篇文章][12]。

[

][11]

-你的矩形可能也设置了轮廓颜色。 使用填充和轮廓对话框将轮廓设置为 **none**。

+你的矩形也可以设置轮廓颜色。 使用填充和轮廓对话框将轮廓设置为 **none**。

[

@@ -48,19 +49,19 @@

### 绘制图样

-接下来我们画一个三角形,使用 3个 顶点的星型/多边形工具。你可以**按住 CTRL** 键给三角形一个角度并使之对称。

+接下来我们画一个三角形,使用 3 个顶点的星型/多边形工具。你可以按住 `CTRL` 键给三角形一个角度并使之对称。

[

][9]

-选中三角形并按下 **CTRL+D** 来复制它(复制的图形会覆盖在原来图形的上面),**因此在复制后确保将它移动到别处。**

+选中三角形并按下 `CTRL+D` 来复制它(复制的图形会覆盖在原来图形的上面),**因此在复制后确保将它移动到别处。**

[

][8]

-如图选中一个三角形,进入**OBJECT > FLIP-HORIZONTAL(水平翻转)**。

+如图选中一个三角形,进入`Object` > `FLIP-HORIZONTAL`(水平翻转)。

[

@@ -82,7 +83,7 @@

### 导出背景

-最后,我们需要将我们的文档导出为 PNG 文件。点击 **FILE > EXPORT PNG**,打开导出对话框,选择文件位置和名字,确保选中的是 Drawing 标签,并点击 **EXPORT**。

+最后,我们需要将我们的文档导出为 PNG 文件。点击 `File` > `EXPORT PNG`,打开导出对话框,选择文件位置和名字,确保选中的是 `Drawing` 标签,并点击 `EXPORT`。

[

@@ -100,9 +101,7 @@

via: https://fedoramagazine.org/inkscape-design-imagination/

作者:[a2batic][a]

-

译者:[geekpi](https://github.com/geekpi)

-

校对:[jasminepeng](https://github.com/jasminepeng)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

@@ -119,11 +118,11 @@ via: https://fedoramagazine.org/inkscape-design-imagination/

[9]:https://1504253206.rsc.cdn77.org/wp-content/uploads/2016/10/Screenshot-from-2016-09-07-09-52-38.png

[10]:https://1504253206.rsc.cdn77.org/wp-content/uploads/2016/10/Screenshot-from-2016-09-07-09-44-15.png

[11]:https://1504253206.rsc.cdn77.org/wp-content/uploads/2016/10/Screenshot-from-2016-09-07-09-41-13.png

-[12]:https://fedoramagazine.org/inkscape-adding-colour/

+[12]:https://linux.cn/article-8084-1.html

[13]:https://1504253206.rsc.cdn77.org/wp-content/uploads/2016/10/rect.png

[14]:https://1504253206.rsc.cdn77.org/wp-content/uploads/2016/10/Screenshot-from-2016-09-07-09-01-03.png

[15]:https://1504253206.rsc.cdn77.org/wp-content/uploads/2016/10/Screenshot-from-2016-09-07-09-00-00.png

[16]:https://1504253206.rsc.cdn77.org/wp-content/uploads/2016/10/Screenshot-from-2016-09-07-08-37-01.png

-[17]:https://fedoramagazine.org/inkscape-adding-colour/

-[18]:https://fedoramagazine.org/getting-started-inkscape-fedora/

+[17]:https://linux.cn/article-8084-1.html

+[18]:https://linux.cn/article-8079-1.html

[19]:https://fedoramagazine.org/inkscape-design-imagination/

diff --git a/published/201612/20160505 A daughter of Silicon Valley shares her 'nerd' story.md b/published/201612/20160505 A daughter of Silicon Valley shares her 'nerd' story.md

new file mode 100644

index 0000000000..20fb963c6b

--- /dev/null

+++ b/published/201612/20160505 A daughter of Silicon Valley shares her 'nerd' story.md

@@ -0,0 +1,82 @@

+“硅谷的女儿”的成才之路

+=======================================================

+

+

+

+在 2014 年,为了对网上一些关于在科技行业女性稀缺的评论作出回应,我的同事 [Crystal Beasley][1] 倡议在科技/信息安全方面工作的女性在网络上分享自己的“成才之路”。这篇文章就是我的故事。我把我的故事与你们分享是因为我相信榜样的力量,也相信一个人有多种途径,选择一个让自己满意的有挑战性的工作以及可以实现目标的人生。

+

+### 和电脑相伴的童年

+

+我可以说是硅谷的女儿。我的故事不是一个从科技业余爱好转向专业的故事,也不是从小就专注于这份事业的故事。这个故事更多的是关于环境如何塑造你 — 通过它的那种已然存在的文化来改变你,如果你想要被改变的话。这不是从小就开始努力并为一个明确的目标而奋斗的故事,我意识到,这其实是享受了一些特权的成长故事。

+

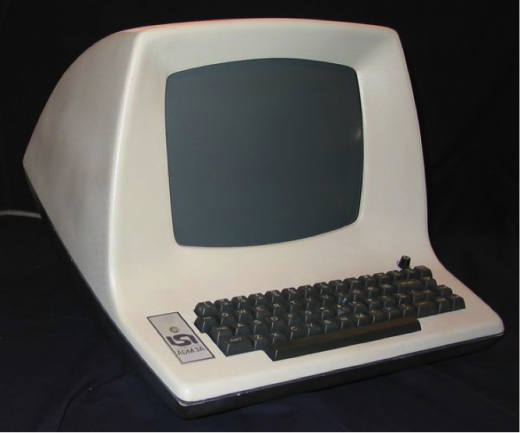

+我出生在曼哈顿,但是我在新泽西州长大,因为我的爸爸退伍后,在那里的罗格斯大学攻读计算机科学的博士学位。当我四岁时,学校里有人问我爸爸干什么谋生时,我说,“他就是看电视和捕捉小虫子,但是我从没有见过那些小虫子”(LCTT 译注:小虫子,bug)。他在家里有一台哑终端(LCTT 译注:就是那台“电视”),这大概与他在 Bolt Beranek Newman 公司的工作有关,做关于早期互联网人工智能方面的工作。我就在旁边看着。

+

+我没能玩上父亲的会抓小虫子的电视,但是我很早就接触到了技术领域,我很珍惜这个礼物。提早的熏陶对于一个未来的高手是十分必要的 — 所以,请花时间和你的小孩谈谈你在做的事情!

+

+

+

+*我父亲的终端和这个很类似 —— 如果不是这个的话 CC BY-SA 4.0*

+

+当我六岁时,我们搬到了加州。父亲在施乐的帕克研究中心(Xerox PARC)找到了一个工作。我记得那时我认为这个城市一定有很多熊,因为在它的旗帜上有一个熊。在1979年,帕洛阿图市还是一个大学城,还有果园和开阔地带。

+

+在 Palo Alto 的公立学校待了一年之后,我的姐姐和我被送到了“半岛学校”,这个“民主典范”学校对我造成了深刻的影响。在那里,好奇心和创新意识是被高度推崇的,教育也是由学生自己分组讨论决定的。在学校,我们很少能看到叫做电脑的东西,但是在家就不同了。

+

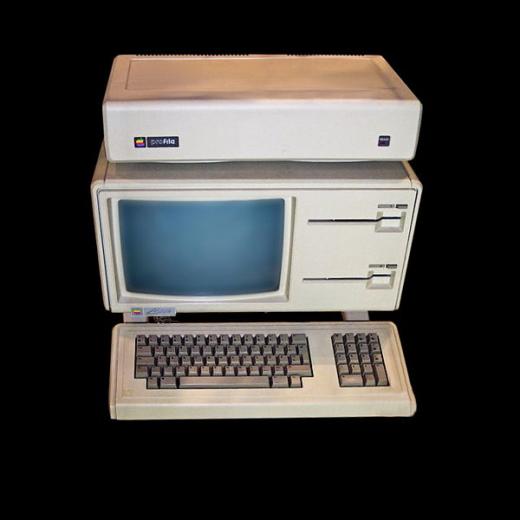

+在父亲从施乐辞职之后,他就去了苹果公司,在那里他工作使用并带回家让我玩的第一批电脑就是:Apple II 和 LISA。我的父亲在最初的 LISA 的研发团队。我直到现在还深刻的记得他让我们一次又一次的“玩”鼠标训练的场景,因为他想让我的 3 岁大的妹妹也能对这个东西觉得好用 —— 她也确实那样。

+

+

+

+*我们的 LISA 看起来就像这样。谁看到鼠标哪儿去了?CC BY-SA 4.0*

+

+在学校,我的数学的概念学得不错,但是基本计算却惨不忍睹。我的第一个学校的老师告诉我的家长和我,说我的数学很差,还说我很“笨”。虽然我在“常规的”数学项目中表现出色,能理解一个超出 7 岁孩子理解能力的逻辑谜题,但是我不能完成我们每天早上都要做的“练习”。她说我傻,这事我不会忘记。在那之后的十年我都没能相信自己的逻辑能力和算法的水平。**不要低估你对孩子说的话的影响**。

+

+在我玩了几年爸爸的电脑之后,他从 Apple 公司跳槽到了 EA,又跳到了 SGI,我又体验了他带回来的新玩意。这让我们认为我们家的房子是镇里最酷的,因为我们在车库里有一个能玩 Doom 的 SGI 的机器。我不会太多的编程,但是现在看来,从那些年里我学到对尝试新的科技毫不恐惧。同时,我的学文学和教育的母亲,成为了一个科技行业的作家,她向我证实了一个人的职业可以改变,而且一个做母亲的人可能同时驾驭一个科技职位。我不是说这对她来说很简单,但是她让我认为这件事看起来很简单。你可能会想这些早期的熏陶能把我带到科技行业,但是它没有。

+

+### 本科时光

+

+我想我要成为一个小学教师,我就读米尔斯学院就是想要做这个。但是后来我开始研究女性学,后来又研究神学,我这样做仅仅是由于我自己的一个渴求:我希望能理解人类的意志以及为更好的世界而努力。

+

+同时,我也感受到了互联网的巨大力量。在 1991 年,拥有你自己的 UNIX 的账户,能够和全世界的人谈话,是很令人兴奋的事。我仅仅从在互联网中“玩”就学到了不少,从那些愿意回答我提出的问题的人那里学到的就更多了。这些学习对我的职业生涯的影响不亚于我在正规学校教育之中学到的知识。所有的信息都是有用的。我在一个女子学院度过了学习的关键时期,那时是一个杰出的女性在掌管计算机院。在那个宽松氛围的学院,我们不仅被允许,还被鼓励去尝试很多的道路(我们能接触到很多很多的科技,还有聪明人愿意帮助我们),我也确实那样做了。我十分感激当年的教育。在那个学院,我也了解了什么是极客文化。

+

+之后我去了研究生院去学习女性主义神学,但是技术的气息已经渗入我的灵魂。当我意识到我不想成为一个教授或者一个学术伦理家时,我离开了学术圈,带着学校债务和一些想法回到了家。

+

+### 新的开端

+

+在 1995 年,我被互联网连接人们以及分享想法和信息的能力所震惊(直到现在仍是如此)。我想要进入这个行业。看起来我好像要“女承父业”,但是我不知道如何开始。我开始在硅谷做临时工,从 Sun 微系统公司得到我的第一个“真正”技术职位前尝试做了一些事情(为半导体数据公司写最基础的数据库,技术手册印发前的事务,备份工资单的存跟)。这些事很让人激动。(毕竟,我们是“.com”中的那个”点“)。

+

+在 Sun 公司,我努力学习,尽可能多的尝试新事物。我的第一个工作是网页化(啥?这居然是一个词!)白皮书,以及为 Beta 程序修改一些基础的服务工具(大多数是 Perl 写的)。后来我成为 Solaris beta 项目组中的项目经理,并在 Open Solaris 的 Beta 版运行中感受到了开源的力量。

+

+在那里我做的最重要的事情就是学习。我发现在同样重视工程和教育的地方有一种气氛,在那里我的问题不再显得“傻”。我很庆幸我选对了导师和朋友。在决定休第二个孩子的产假之前,我上每一堂我能上的课程,读每一本我能读的书,尝试自学我在学校没有学习过的技术,商业以及项目管理方面的技能。

+

+### 重回工作

+

+当我准备重新工作时,Sun 公司已经不再是合适的地方了。所以,我整理了我的联系信息(网络帮到了我),利用我的沟通技能,最终获得了一个管理互联网门户的长期合同(2005 年时,一切皆门户),并且开始了解 CRM、发布产品的方式、本地化、网络等知识。我讲这么多背景,主要是我的尝试以及失败的经历,和我成功的经历同等重要,从中学到很多。我也认为我们需要这个方面的榜样。

+

+从很多方面来看,我的职业生涯的第一部分是我的技术教育。时变势移 —— 我在帮助组织中的女性和其他弱势群体,但是并没有看出为一个技术行业的女性有多难。当时无疑我没有看到这个行业的缺陷,但是现在这个行业更加的厌恶女性,一点没有减少。

+

+在这些事情之后,我还没有把自己当作一个标杆,或者一个高级技术人员。当我在父母圈子里认识的一位极客朋友鼓励我申请一个看起来定位十分模糊且技术性很强的开源的非盈利基础设施机构(互联网系统协会 ISC,它是广泛部署的开源 DNS 名称服务器 BIND 的缔造者,也是 13 台根域名服务器之一的运营商)的产品经理时,我很震惊。有很长一段时间,我都不知道他们为什么要雇佣我!我对 DNS、基础设备,以及协议的开发知之甚少,但是我再次遇到了老师,并再度开始飞速发展。我花时间出差,在关键流程攻关,搞清楚如何与高度国际化的团队合作,解决麻烦的问题,最重要的是,拥抱支持我们的开源和充满活力的社区。我几乎重新学了一切,通过试错的方式。我学习如何构思一个产品。如何通过建设开源社区,领导那些有这特定才能,技能和耐心的人,是他们给了产品价值。

+

+### 成为别人的导师

+

+当我在 ISC 工作时,我通过 [TechWomen 项目][2] (一个让来自中东和北非的技术行业的女性到硅谷来接受教育的计划),我开始喜欢教学生以及支持那些技术女性,特别是在开源行业中奋斗的。也正是从这时起我开始相信自己的能力。我还需要学很多。

+

+当我第一次读 TechWomen 关于导师的广告时,我根本不认为他们会约我面试!我有冒名顶替综合症。当他们邀请我成为第一批导师(以及以后六年每年的导师)时,我很震惊,但是现在我学会了相信这些都是我努力得到的待遇。冒名顶替综合症是真实的,但是随着时间过去我就慢慢名副其实了。

+

+### 现在

+

+最后,我不得不离开我在 ISC 的工作。幸运的是,我的工作以及我的价值让我进入了 Mozilla ,在这里我的努力和我的幸运让我在这里承担着重要的角色。现在,我是一名支持多样性与包容的高级项目经理。我致力于构建一个更多样化,更有包容性的 Mozilla ,站在之前的做同样事情的巨人的肩膀上,与最聪明友善的人们一起工作。我用我的激情来让人们找到贡献一个世界需要的互联网的有意义的方式:这让我兴奋了很久。当我爬上山峰,我能极目四望!

+

+通过对组织和个人行为的干预来获取一种改变文化的新方式,这和我的人生轨迹有着不可思议的联系 —— 从我的早期的学术生涯,到职业生涯再到现在。每天都是一个新的挑战,我想这是我喜欢在科技行业工作,尤其是在开放互联网工作的理由。互联网天然的多元性是它最开始吸引我的原因,也是我还在寻求的 —— 所有人都有机会和获取资源的可能性,无论背景如何。榜样、导师、资源,以及最重要的,尊重,是不断发展技术和开源文化的必要组成部分,实现我相信它能实现的所有事 —— 包括给所有人平等的接触机会。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/life/16/5/my-open-source-story-larissa-shapiro

+

+作者:[Larissa Shapiro][a]

+译者:[name1e5s](https://github.com/name1e5s)

+校对:[jasminepeng](https://github.com/jasminepeng)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/larissa-shapiro

+[1]: http://skinnywhitegirl.com/blog/my-nerd-story/1101/

+[2]: https://www.techwomen.org/mentorship/why-i-keep-coming-back-to-mentor-with-techwomen

diff --git a/published/201612/20160516 Securing Your Server.md b/published/201612/20160516 Securing Your Server.md

new file mode 100644

index 0000000000..13a4e6568a

--- /dev/null

+++ b/published/201612/20160516 Securing Your Server.md

@@ -0,0 +1,365 @@

+Linux 服务器安全简明指南

+============================================================

+

+现在让我们强化你的服务器以防止未授权访问。

+

+### 经常升级系统

+

+保持最新的软件是你可以在任何操作系统上采取的最大的安全预防措施。软件更新的范围从关键漏洞补丁到小 bug 的修复,许多软件漏洞实际上是在它们被公开的时候得到修补的。

+

+### 自动安全更新

+

+有一些用于服务器上自动更新的参数。[Fedora 的 Wiki][15] 上有一篇很棒的剖析自动更新的利弊的文章,但是如果你把它限制到安全更新上,自动更新的风险将是最小的。

+

+自动更新的可行性必须你自己判断,因为它归结为**你**在你的服务器上做什么。请记住,自动更新仅适用于来自仓库的包,而不是自行编译的程序。你可能会发现一个复制了生产服务器的测试环境是很有必要的。可以在部署到生产环境之前,在测试环境里面更新来检查问题。

+

+* CentOS 使用 [yum-cron][2] 进行自动更新。

+* Debian 和 Ubuntu 使用 [无人值守升级][3]。

+* Fedora 使用 [dnf-automatic][4]。

+

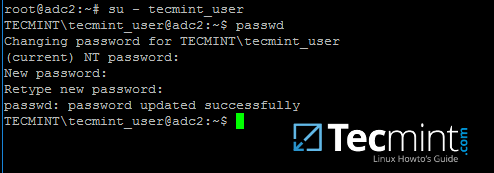

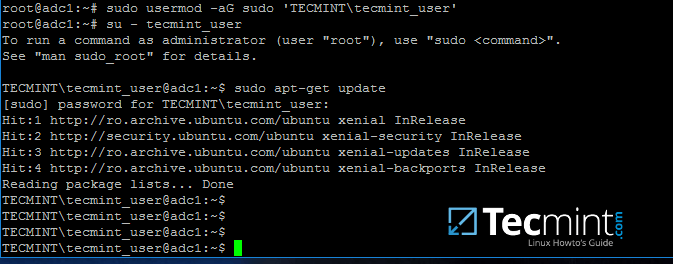

+### 添加一个受限用户账户

+

+到目前为止,你已经作为 `root` 用户访问了你的服务器,它有无限制的权限,可以执行**任何**命令 - 甚至可能意外中断你的服务器。 我们建议创建一个受限用户帐户,并始终使用它。 管理任务应该使用 `sudo` 来完成,它可以临时提升受限用户的权限,以便管理你的服务器。

+

+> 不是所有的 Linux 发行版都在系统上默认包含 `sudo`,但大多数都在其软件包仓库中有 `sudo`。 如果得到这样的输出 `sudo:command not found`,请在继续之前安装 `sudo`。

+

+要添加新用户,首先通过 SSH [登录到你的服务器][16]。

+

+#### CentOS / Fedora

+

+1、 创建用户,用你想要的名字替换 `example_user`,并分配一个密码:

+

+```

+useradd example_user && passwd example_user

+```

+

+2、 将用户添加到具有 sudo 权限的 `wheel` 组:

+

+```

+usermod -aG wheel example_user

+```

+

+#### Ubuntu

+

+1、 创建用户,用你想要的名字替换 `example_user`。你将被要求输入用户密码:

+

+```

+adduser example_user

+```

+

+2、 添加用户到 `sudo` 组,这样你就有管理员权限了:

+

+```

+adduser example_user sudo

+```

+

+#### Debian

+

+1、 Debian 默认的包中没有 `sudo`, 使用 `apt-get` 来安装:

+

+```

+apt-get install sudo

+```

+

+2、 创建用户,用你想要的名字替换 `example_user`。你将被要求输入用户密码:

+

+```

+adduser example_user

+```

+

+3、 添加用户到 `sudo` 组,这样你就有管理员权限了:

+

+```

+adduser example_user sudo

+```

+

+创建完有限权限的用户后,断开你的服务器连接:

+

+```

+exit

+```

+

+重新用你的新用户登录。用你的用户名代替 `example_user`,用你的服务器 IP 地址代替例子中的 IP 地址:

+

+```

+ssh example_user@203.0.113.10

+```

+

+现在你可以用你的新用户帐户管理你的服务器,而不是 `root`。 几乎所有超级用户命令都可以用 `sudo`(例如:`sudo iptables -L -nv`)来执行,这些命令将被记录到 `/var/log/auth.log` 中。

+

+### 加固 SSH 访问

+

+默认情况下,密码认证用于通过 SSH 连接到您的服务器。加密密钥对更加安全,因为它用私钥代替了密码,这通常更难以暴力破解。在本节中,我们将创建一个密钥对,并将服务器配置为不接受 SSH 密码登录。

+

+#### 创建验证密钥对

+

+1、这是在你本机上完成的,**不是**在你的服务器上,这里将创建一个 4096 位的 RSA 密钥对。在创建过程中,您可以选择使用密码加密私钥。这意味着它不能在没有输入密码的情况下使用,除非将密码保存到本机桌面的密钥管理器中。我们建议您使用带有密码的密钥对,但如果你不想使用密码,则可以将此字段留空。

+

+**Linux / OS X**

+

+> 如果你已经创建了 RSA 密钥对,则这个命令将会覆盖它,这可能会导致你不能访问其它的操作系统。如果你已创建过密钥对,请跳过此步骤。要检查现有的密钥,请运行 `ls〜/ .ssh / id_rsa *`。

+

+```

+ssh-keygen -b 4096

+```

+

+在输入密码之前,按下 **回车**使用 `/home/your_username/.ssh` 中的默认名称 `id_rsa` 和 `id_rsa.pub`。

+

+**Windows**

+

+这可以使用 PuTTY 完成,在我们指南中已有描述:[使用 SSH 公钥验证][6]。

+

+2、将公钥上传到您的服务器上。 将 `example_user` 替换为你用来管理服务器的用户名称,将 `203.0.113.10` 替换为你的服务器的 IP 地址。

+

+**Linux**

+

+在本机上:

+

+```

+ssh-copy-id example_user@203.0.113.10

+```

+

+**OS X**

+

+在你的服务器上(用你的权限受限用户登录):

+

+```

+mkdir -p ~/.ssh && sudo chmod -R 700 ~/.ssh/

+```

+

+在本机上:

+

+```

+scp ~/.ssh/id_rsa.pub example_user@203.0.113.10:~/.ssh/authorized_keys

+```

+

+> 如果相对于 `scp` 你更喜欢 `ssh-copy-id` 的话,那么它也可以在 [Homebrew][5] 中找到。使用 `brew install ssh-copy-id` 安装。

+

+**Windows**

+

+* **选择 1**:使用 [WinSCP][1] 来完成。 在登录窗口中,输入你的服务器的 IP 地址作为主机名,以及非 root 的用户名和密码。单击“登录”连接。

+

+ 一旦 WinSCP 连接后,你会看到两个主要部分。 左边显示本机上的文件,右边显示服务区上的文件。 使用左侧的文件浏览器,导航到你已保存公钥的文件,选择公钥文件,然后点击上面工具栏中的“上传”。

+

+ 系统会提示你输入要将文件放在服务器上的路径。 将文件上传到 `/home/example_user/.ssh /authorized_keys`,用你的用户名替换 `example_user`。

+

+* **选择 2**:将公钥直接从 PuTTY 键生成器复制到连接到你的服务器中(作为非 root 用户):

+

+ ```

+ mkdir ~/.ssh; nano ~/.ssh/authorized_keys

+ ```

+

+ 上面命令将在文本编辑器中打开一个名为 `authorized_keys` 的空文件。 将公钥复制到文本文件中,确保复制为一行,与 PuTTY 所生成的完全一样。 按下 `CTRL + X`,然后按下 `Y`,然后回车保存文件。

+

+最后,你需要为公钥目录和密钥文件本身设置权限:

+

+```

+sudo chmod 700 -R ~/.ssh && chmod 600 ~/.ssh/authorized_keys

+```

+

+这些命令通过阻止其他用户访问公钥目录以及文件本身来提供额外的安全性。有关它如何工作的更多信息,请参阅我们的指南[如何修改文件权限][7]。

+

+3、 现在退出并重新登录你的服务器。如果你为私钥指定了密码,则需要输入密码。

+

+#### SSH 守护进程选项

+

+1、 **不允许 root 用户通过 SSH 登录。** 这要求所有的 SSH 连接都是通过非 root 用户进行。当以受限用户帐户连接后,可以通过使用 `sudo` 或使用 `su -` 切换为 root shell 来使用管理员权限。

+

+```

+# Authentication:

+...

+PermitRootLogin no

+```

+

+2、 **禁用 SSH 密码认证。** 这要求所有通过 SSH 连接的用户使用密钥认证。根据 Linux 发行版的不同,它可能需要添加 `PasswordAuthentication` 这行,或者删除前面的 `#` 来取消注释。

+

+```

+# Change to no to disable tunnelled clear text passwords

+PasswordAuthentication no

+```

+

+> 如果你从许多不同的计算机连接到服务器,你可能想要继续启用密码验证。这将允许你使用密码进行身份验证,而不是为每个设备生成和上传密钥对。

+

+3、 **只监听一个互联网协议。** 在默认情况下,SSH 守护进程同时监听 IPv4 和 IPv6 上的传入连接。除非你需要使用这两种协议进入你的服务器,否则就禁用你不需要的。 _这不会禁用系统范围的协议,它只用于 SSH 守护进程。_

+

+使用选项:

+

+* `AddressFamily inet` 只监听 IPv4。

+* `AddressFamily inet6` 只监听 IPv6。

+

+默认情况下,`AddressFamily` 选项通常不在 `sshd_config` 文件中。将它添加到文件的末尾:

+

+```

+echo 'AddressFamily inet' | sudo tee -a /etc/ssh/sshd_config

+```

+

+4、 重新启动 SSH 服务以加载新配置。

+

+如果你使用的 Linux 发行版使用 systemd(CentOS 7、Debian 8、Fedora、Ubuntu 15.10+)

+

+```

+sudo systemctl restart sshd

+```

+

+如果您的 init 系统是 SystemV 或 Upstart(CentOS 6、Debian 7、Ubuntu 14.04):

+

+```

+sudo service ssh restart

+```

+

+#### 使用 Fail2Ban 保护 SSH 登录

+

+[Fail2Ban][17] 是一个应用程序,它会在太多的失败登录尝试后禁止 IP 地址登录到你的服务器。由于合法登录通常不会超过三次尝试(如果使用 SSH 密钥,那不会超过一个),因此如果服务器充满了登录失败的请求那就表示有恶意访问。

+

+Fail2Ban 可以监视各种协议,包括 SSH、HTTP 和 SMTP。默认情况下,Fail2Ban 仅监视 SSH,并且因为 SSH 守护程序通常配置为持续运行并监听来自任何远程 IP 地址的连接,所以对于任何服务器都是一种安全威慑。

+

+有关安装和配置 Fail2Ban 的完整说明,请参阅我们的指南:[使用 Fail2ban 保护服务器][18]。

+

+### 删除未使用的面向网络的服务

+

+大多数 Linux 发行版都安装并运行了网络服务,监听来自互联网、回环接口或两者兼有的传入连接。 将不需要的面向网络的服务从系统中删除,以减少对运行进程和对已安装软件包攻击的概率。

+

+#### 查明运行的服务

+

+要查看服务器中运行的服务:

+

+```

+sudo netstat -tulpn

+```

+

+> 如果默认情况下 `netstat` 没有包含在你的 Linux 发行版中,请安装软件包 `net-tools` 或使用 `ss -tulpn` 命令。

+

+以下是 `netstat` 的输出示例。 请注意,因为默认情况下不同发行版会运行不同的服务,你的输出将有所不同:

+

+

+```

+Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

+tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 7315/rpcbind

+tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 3277/sshd

+tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 3179/exim4

+tcp 0 0 0.0.0.0:42526 0.0.0.0:* LISTEN 2845/rpc.statd

+tcp6 0 0 :::48745 :::* LISTEN 2845/rpc.statd

+tcp6 0 0 :::111 :::* LISTEN 7315/rpcbind

+tcp6 0 0 :::22 :::* LISTEN 3277/sshd

+tcp6 0 0 ::1:25 :::* LISTEN 3179/exim4

+udp 0 0 127.0.0.1:901 0.0.0.0:* 2845/rpc.statd

+udp 0 0 0.0.0.0:47663 0.0.0.0:* 2845/rpc.statd

+udp 0 0 0.0.0.0:111 0.0.0.0:* 7315/rpcbind

+udp 0 0 192.0.2.1:123 0.0.0.0:* 3327/ntpd

+udp 0 0 127.0.0.1:123 0.0.0.0:* 3327/ntpd

+udp 0 0 0.0.0.0:123 0.0.0.0:* 3327/ntpd

+udp 0 0 0.0.0.0:705 0.0.0.0:* 7315/rpcbind

+udp6 0 0 :::111 :::* 7315/rpcbind

+udp6 0 0 fe80::f03c:91ff:fec:123 :::* 3327/ntpd

+udp6 0 0 2001:DB8::123 :::* 3327/ntpd

+udp6 0 0 ::1:123 :::* 3327/ntpd

+udp6 0 0 :::123 :::* 3327/ntpd

+udp6 0 0 :::705 :::* 7315/rpcbind

+udp6 0 0 :::60671 :::* 2845/rpc.statd

+```

+

+`netstat` 告诉我们服务正在运行 [RPC][19](`rpc.statd` 和 `rpcbind`)、SSH(`sshd`)、[NTPdate][20](`ntpd`)和[Exim][21](`exim4`)。

+

+##### TCP

+

+请参阅 `netstat` 输出的 `Local Address` 那一列。进程 `rpcbind` 正在侦听 `0.0.0.0:111` 和 `:::111`,外部地址是 `0.0.0.0:*` 或者 `:::*` 。这意味着它从任何端口和任何网络接口接受来自任何外部地址(IPv4 和 IPv6)上的其它 RPC 客户端的传入 TCP 连接。 我们看到类似的 SSH,Exim 正在侦听来自回环接口的流量,如所示的 `127.0.0.1` 地址。

+

+##### UDP

+

+UDP 套接字是[无状态][14]的,这意味着它们只有打开或关闭,并且每个进程的连接是独立于前后发生的连接。这与 TCP 的连接状态(例如 `LISTEN`、`ESTABLISHED`和 `CLOSE_WAIT`)形成对比。

+

+我们的 `netstat`输出说明 NTPdate :1)接受服务器的公网 IP 地址的传入连接;2)通过本地主机进行通信;3)接受来自外部的连接。这些连接是通过端口 123 进行的,同时支持 IPv4 和 IPv6。我们还看到了 RPC 打开的更多的套接字。

+

+#### 查明该移除哪个服务

+

+如果你在没有启用防火墙的情况下对服务器进行基本的 TCP 和 UDP 的 [nmap][22] 扫描,那么在打开端口的结果中将出现 SSH、RPC 和 NTPdate 。通过[配置防火墙][23],你可以过滤掉这些端口,但 SSH 除外,因为它必须允许你的传入连接。但是,理想情况下,应该禁用未使用的服务。

+

+* 你可能主要通过 SSH 连接管理你的服务器,所以让这个服务需要保留。如上所述,[RSA 密钥][8]和 [Fail2Ban][9] 可以帮助你保护 SSH。

+* NTP 是服务器计时所必需的,但有个替代 NTPdate 的方法。如果你喜欢不开放网络端口的时间同步方法,并且你不需要纳秒精度,那么你可能有兴趣用 [OpenNTPD][10] 来代替 NTPdate。

+* 然而,Exim 和 RPC 是不必要的,除非你有特定的用途,否则应该删除它们。

+

+> 本节针对 Debian 8。默认情况下,不同的 Linux 发行版具有不同的服务。如果你不确定某项服务的功能,请尝试搜索互联网以了解该功能是什么,然后再尝试删除或禁用它。

+

+#### 卸载监听的服务

+

+如何移除包取决于发行版的包管理器:

+

+**Arch**

+

+```

+sudo pacman -Rs package_name

+```

+

+**CentOS**

+

+```

+sudo yum remove package_name

+```

+

+**Debian / Ubuntu**

+

+```

+sudo apt-get purge package_name

+```

+

+**Fedora**

+

+```

+sudo dnf remove package_name

+```

+

+再次运行 `sudo netstat -tulpn`,你看到监听的服务就只会有 SSH(`sshd`)和 NTP(`ntpdate`,网络时间协议)。

+

+### 配置防火墙

+

+使用防火墙阻止不需要的入站流量能为你的服务器提供一个高效的安全层。 通过指定入站流量,你可以阻止入侵和网络测绘。 最佳做法是只允许你需要的流量,并拒绝一切其他流量。请参阅我们的一些关于最常见的防火墙程序的文档:

+

+* [iptables][11] 是 netfilter 的控制器,它是 Linux 内核的包过滤框架。 默认情况下,iptables 包含在大多数 Linux 发行版中。

+* [firewallD][12] 是可用于 CentOS/Fedora 系列发行版的 iptables 控制器。

+* [UFW][13] 为 Debian 和 Ubuntu 提供了一个 iptables 前端。

+

+### 接下来

+

+这些是加固 Linux 服务器的最基本步骤,但是进一步的安全层将取决于其预期用途。 其他技术可以包括应用程序配置,使用[入侵检测][24]或者安装某个形式的[访问控制][25]。

+

+现在你可以按你的需求开始设置你的服务器了。 我们有一个文档库来以帮助你从[从共享主机迁移][26]到[启用两步验证][27]到[托管网站] [28]等各种主题。

+

+--------------------------------------------------------------------------------

+

+via: https://www.linode.com/docs/security/securing-your-server/

+

+作者:[Phil Zona][a]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.linode.com/docs/security/securing-your-server/

+[1]:http://winscp.net/

+[2]:https://fedoraproject.org/wiki/AutoUpdates#Fedora_21_or_earlier_versions

+[3]:https://help.ubuntu.com/lts/serverguide/automatic-updates.html

+[4]:https://dnf.readthedocs.org/en/latest/automatic.html

+[5]:http://brew.sh/

+[6]:https://www.linode.com/docs/security/use-public-key-authentication-with-ssh#windows-operating-system

+[7]:https://www.linode.com/docs/tools-reference/modify-file-permissions-with-chmod

+[8]:https://www.linode.com/docs/security/securing-your-server/#create-an-authentication-key-pair

+[9]:https://www.linode.com/docs/security/securing-your-server/#use-fail2ban-for-ssh-login-protection

+[10]:https://en.wikipedia.org/wiki/OpenNTPD

+[11]:https://www.linode.com/docs/security/firewalls/control-network-traffic-with-iptables

+[12]:https://www.linode.com/docs/security/firewalls/introduction-to-firewalld-on-centos

+[13]:https://www.linode.com/docs/security/firewalls/configure-firewall-with-ufw

+[14]:https://en.wikipedia.org/wiki/Stateless_protocol

+[15]:https://fedoraproject.org/wiki/AutoUpdates#Why_use_Automatic_updates.3F

+[16]:https://www.linode.com/docs/getting-started#logging-in-for-the-first-time

+[17]:http://www.fail2ban.org/wiki/index.php/Main_Page

+[18]:https://www.linode.com/docs/security/using-fail2ban-for-security

+[19]:https://en.wikipedia.org/wiki/Open_Network_Computing_Remote_Procedure_Call

+[20]:http://support.ntp.org/bin/view/Main/SoftwareDownloads

+[21]:http://www.exim.org/

+[22]:https://nmap.org/

+[23]:https://www.linode.com/docs/security/securing-your-server/#configure-a-firewall

+[24]:https://linode.com/docs/security/ossec-ids-debian-7

+[25]:https://en.wikipedia.org/wiki/Access_control#Access_Control

+[26]:https://www.linode.com/docs/migrate-to-linode/migrate-from-shared-hosting

+[27]:https://www.linode.com/docs/security/linode-manager-security-controls

+[28]:https://www.linode.com/docs/websites/hosting-a-website

diff --git a/published/20160525 What containers and unikernels can learn from Arduino and Raspberry Pi.md b/published/201612/20160525 What containers and unikernels can learn from Arduino and Raspberry Pi.md

similarity index 100%

rename from published/20160525 What containers and unikernels can learn from Arduino and Raspberry Pi.md

rename to published/201612/20160525 What containers and unikernels can learn from Arduino and Raspberry Pi.md

diff --git a/published/20160615 Excel Filter and Edit - Demonstrated in Pandas.md b/published/201612/20160615 Excel Filter and Edit - Demonstrated in Pandas.md

similarity index 100%

rename from published/20160615 Excel Filter and Edit - Demonstrated in Pandas.md

rename to published/201612/20160615 Excel Filter and Edit - Demonstrated in Pandas.md

diff --git a/published/20160627 Linux Practicality vs Activism.md b/published/201612/20160627 Linux Practicality vs Activism.md

similarity index 100%

rename from published/20160627 Linux Practicality vs Activism.md

rename to published/201612/20160627 Linux Practicality vs Activism.md

diff --git a/published/20160817 Building a Real-Time Recommendation Engine with Data Science.md b/published/201612/20160817 Building a Real-Time Recommendation Engine with Data Science.md

similarity index 100%

rename from published/20160817 Building a Real-Time Recommendation Engine with Data Science.md

rename to published/201612/20160817 Building a Real-Time Recommendation Engine with Data Science.md

diff --git a/translated/tech/20160817 Dependency Injection for the Android platform 101 - Part 1.md b/published/201612/20160817 Dependency Injection for the Android platform 101 - Part 1.md

similarity index 66%

rename from translated/tech/20160817 Dependency Injection for the Android platform 101 - Part 1.md

rename to published/201612/20160817 Dependency Injection for the Android platform 101 - Part 1.md

index 6ae6ddccfb..b8c4427b83 100644

--- a/translated/tech/20160817 Dependency Injection for the Android platform 101 - Part 1.md

+++ b/published/201612/20160817 Dependency Injection for the Android platform 101 - Part 1.md

@@ -1,19 +1,19 @@

-安卓平台上的依赖注入 - 第一部分

+安卓平台上的依赖注入(一)

===========================

刚开始学习软件工程的时候,我们经常会碰到像这样的事情:

->软件应该符合 SOLID 原则。

+> 软件应该符合 SOLID 原则。

但这句话实际是什么意思?让我们看看 SOLID 中每个字母在架构里所代表的重要含义,例如:

-- [S 单职责原则][1]

-- [O 开闭原则][2]

-- [L Liskov 替换原则][3]

-- [I 接口分离原则][4]

-- [D 依赖反转原则][5] 这也是依赖注入的核心概念。

+- [S - 单职责原则][1]

+- [O - 开闭原则][2]

+- [L - Liskov 替换原则][3]

+- [I - 接口分离原则][4]

+- [D - 依赖反转原则][5] 这也是依赖注入(dependency injection)的核心概念。

简单来说,我们需要提供一个类,这个类有它所需要的所有对象,以便实现其功能。

@@ -39,7 +39,7 @@ class DependencyInjection {

}

```

-正如我们所见,第一种情况是我们在构造器里创建了依赖对象,但在第二种情况下,它作为参数被传递给构造器,这就是我们所说的依赖注入。这样做是为了让我们所写的类不依靠特定依赖关系的实现,却能直接使用它。

+正如我们所见,第一种情况是我们在构造器里创建了依赖对象,但在第二种情况下,它作为参数被传递给构造器,这就是我们所说的依赖注入(dependency injection)。这样做是为了让我们所写的类不依靠特定依赖关系的实现,却能直接使用它。

参数传递的目标是构造器,我们就称之为构造器依赖注入;或者是某个方法,就称之为方法依赖注入:

@@ -58,13 +58,13 @@ class Example {

```

-要是你想总体深入地了解依赖注入,可以看看由 [Dan Lew][t2] 发表的[精彩的演讲][t1],事实上是这个演讲启迪了这个概述。

+要是你想总体深入地了解依赖注入,可以看看由 [Dan Lew][t2] 发表的[精彩的演讲][t1],事实上是这个演讲启迪了这篇概述。

-在 Android 平台,当需要框架来处理依赖注入这个特殊的问题时,我们有不同的选择,其中最有名的框架就是 [Dagger 2][t3]。它最开始是由 Square 公司(译者注:Square 是美国一家移动支付公司)里一些很棒的开发者开发出来的,然后慢慢发展成由 Google 自己开发。特别地,Dagger 1 先被开发出来,然后 Big G 接手这个项目,做了很多改动,比如以注释为基础,在编译的时候就完成 Dagger 的任务,也就是第二个版本。

+在 Android 平台,当需要框架来处理依赖注入这个特殊的问题时,我们有不同的选择,其中最有名的框架就是 [Dagger 2][t3]。它最开始是由 Square 公司(LCTT 译注:Square 是美国一家移动支付公司)的一些很棒的开发者开发出来的,然后慢慢发展成由 Google 自己开发。首先开发出来的是 Dagger 1,然后 Big G 接手这个项目发布了第二个版本,做了很多改动,比如以注解(annotation)为基础,在编译的时候完成其任务。

### 导入框架

-安装 Dagger 并不难,但需要导入 `android-apt` 插件,通过向项目的根目录下的 build.gradle 文件中添加它的依赖关系:

+安装 Dagger 并不难,但需要导入 `android-apt` 插件,通过向项目的根目录下的 `build.gradle` 文件中添加它的依赖关系:

```

buildscript{

@@ -76,13 +76,13 @@ buildscript{

}

```

-然后,我们需要将 `android-apt` 插件应用到项目 build.gradle 文件,放在文件顶部 Android 应用那一句的下一行:

+然后,我们需要将 `android-apt` 插件应用到项目 `build.gradle` 文件,放在文件顶部 Android application 那一句的下一行:

```

apply plugin: ‘com.neenbedankt.android-apt’

```

-这个时候,我们只用添加依赖关系,然后就能使用库和注释了:

+这个时候,我们只用添加依赖关系,然后就能使用库及其注解(annotation)了:

```

dependencies{

@@ -93,11 +93,11 @@ dependencies{

}

```

->需要加上最后一个依赖关系是因为 @Generated 注解在 Android 里还不可用,但它是[原生的 Java 注解][t4]。

+> 需要加上最后一个依赖关系是因为 @Generated 注解在 Android 里还不可用,但它是[原生的 Java 注解][t4]。

### Dagger 模块

-要注入依赖,首先需要告诉框架我们能提供什么(比如说上下文)以及特定的对象应该怎样创建。为了完成注入,我们用 `@Module` 注释对一个特殊的类进行了注解(这样 Dagger 就能识别它了),寻找 `@Provide` 标记的方法,生成图表,能够返回我们所请求的对象。

+要注入依赖,首先需要告诉框架我们能提供什么(比如说上下文)以及特定的对象应该怎样创建。为了完成注入,我们用 `@Module` 注释对一个特殊的类进行了注解(这样 Dagger 就能识别它了),寻找 `@Provide` 注解的方法,生成图表,能够返回我们所请求的对象。

看下面的例子,这里我们创建了一个模块,它会返回给我们 `ConnectivityManager`,所以我们要把 `Context` 对象传给这个模块的构造器。

@@ -122,11 +122,11 @@ public class ApplicationModule {

}

```

->Dagger 中十分有意思的一点是只用在一个方法前面添加一个 Singleton 注解,就能处理所有从 Java 中继承过来的问题。

+> Dagger 中十分有意思的一点是简单地注解一个方法来提供一个单例(Singleton),就能处理所有从 Java 中继承过来的问题。

-### 容器

+### 组件

-当我们有一个模块的时候,我们需要告诉 Dagger 想把依赖注入到哪里:我们在一个容器里,一个特殊的注解过的接口里完成依赖注入。我们在这个接口里创造不同的方法,而接口的参数是我们想注入依赖关系的类。

+当我们有一个模块的时候,我们需要告诉 Dagger 想把依赖注入到哪里:我们在一个组件(Component)里完成依赖注入,这是一个我们特别创建的特殊注解接口。我们在这个接口里创造不同的方法,而接口的参数是我们想注入依赖关系的类。

下面给出一个例子并告诉 Dagger 我们想要 `MainActivity` 类能够接受 `ConnectivityManager`(或者在图表里的其它依赖对象)。我们只要做类似以下的事:

@@ -139,15 +139,15 @@ public interface ApplicationComponent {

}

```

->正如我们所见,@Component 注解有几个参数,一个是所支持的模块的数组,意味着它能提供的依赖。这里既可以是 Context 也可以是 ConnectivityManager,因为他们在 ApplicationModule 类中有声明。

+> 正如我们所见,@Component 注解有几个参数,一个是所支持的模块的数组,代表它能提供的依赖。这里既可以是 `Context` 也可以是 `ConnectivityManager`,因为它们在 `ApplicationModule` 类中有声明。

-### 使用

+### 用法

-这时,我们要做的是尽快创建容器(比如在应用的 onCreate 方法里面)并且返回这个容器,那么类就能用它来注入依赖了:

+这时,我们要做的是尽快创建组件(比如在应用的 `onCreate` 阶段)并返回它,那么类就能用它来注入依赖了:

->为了让框架自动生成 DaggerApplicationComponent,我们需要构建项目以便 Dagger 能够扫描我们的代码库,并且生成我们需要的部分。

+> 为了让框架自动生成 `DaggerApplicationComponent`,我们需要构建项目以便 Dagger 能够扫描我们的代码,并生成我们需要的部分。

-在 `MainActivity` 里,我们要做的两件事是用 `@Inject` 注解符对想要注入的属性进行注释,调用我们在 `ApplicationComponent` 接口中声明的方法(请注意后面一部分会因我们使用的注入类型的不同而变化,但这里简单起见我们不去管它),然后依赖就被注入了,我们就能自由使用他们:

+在 `MainActivity` 里,我们要做的两件事是用 `@Inject` 注解符对想要注入的属性进行注解,调用我们在 `ApplicationComponent` 接口中声明的方法(请注意后面一部分会因我们使用的注入类型的不同而变化,但这里简单起见我们不去管它),然后依赖就被注入了,我们就能自由使用他们:

```

public class MainActivity extends AppCompatActivity {

@@ -164,7 +164,7 @@ public class MainActivity extends AppCompatActivity {

### 总结

-当然了,我们可以手动注入依赖,管理所有不同的对象,但 Dagger 打消了很多有关模板的“噪声”,Dagger 给我们有用的附加品(比如 `Singleton`),而仅用 Java 处理将会很糟糕。

+当然了,我们可以手动注入依赖,管理所有不同的对象,但 Dagger 消除了很多比如模板这样的“噪声”,给我们提供有用的附加品(比如 `Singleton`),而仅用 Java 处理将会很糟糕。

--------------------------------------------------------------------------------

@@ -172,7 +172,7 @@ via: https://medium.com/di-101/di-101-part-1-81896c2858a0#.3hg0jj14o

作者:[Roberto Orgiu][a]

译者:[GitFuture](https://github.com/GitFuture)

-校对:[校对者ID](https://github.com/校对者ID)

+校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

diff --git a/published/20160908 Using webpack with the Amazon Cognito Identity SDK for JavaScript.md b/published/201612/20160908 Using webpack with the Amazon Cognito Identity SDK for JavaScript.md

similarity index 100%

rename from published/20160908 Using webpack with the Amazon Cognito Identity SDK for JavaScript.md

rename to published/201612/20160908 Using webpack with the Amazon Cognito Identity SDK for JavaScript.md

diff --git a/published/20160913 The Five Principles of Monitoring Microservices.md b/published/201612/20160913 The Five Principles of Monitoring Microservices.md

similarity index 100%

rename from published/20160913 The Five Principles of Monitoring Microservices.md

rename to published/201612/20160913 The Five Principles of Monitoring Microservices.md

diff --git a/published/20160915 Should Smartphones Do Away with the Headphone Jack Here Are Our Though.md b/published/201612/20160915 Should Smartphones Do Away with the Headphone Jack Here Are Our Though.md

similarity index 100%

rename from published/20160915 Should Smartphones Do Away with the Headphone Jack Here Are Our Though.md

rename to published/201612/20160915 Should Smartphones Do Away with the Headphone Jack Here Are Our Though.md

diff --git a/published/201612/20160921 How To Install The PyCharm Python In Linux.md b/published/201612/20160921 How To Install The PyCharm Python In Linux.md

new file mode 100644

index 0000000000..5894aaad37

--- /dev/null

+++ b/published/201612/20160921 How To Install The PyCharm Python In Linux.md

@@ -0,0 +1,109 @@

+如何在 Linux 下安装 PyCharm

+============================================

+

+/about/pycharmstart-57e2cb405f9b586c351a4cf7.png)

+

+### 简介

+

+Linux 经常被看成是一个远离外部世界,只有极客才会使用的操作系统,但是这是不准确的,如果你想开发软件,那么 Linux 能够为你提供一个非常棒的开发环境。

+

+刚开始学习编程的新手们经常会问这样一个问题:应该使用哪种语言?当涉及到 Linux 系统的时候,通常的选择是 C、C++、Python、Java、PHP、Perl 和 Ruby On Rails。

+

+Linux 系统的许多核心程序都是用 C 语言写的,但是如果离开 Linux 系统的世界, C 语言就不如其它语言比如 Java 和 Python 那么常用。

+

+对于学习编程的人来说, Python 和 Java 都是不错的选择,因为它们是跨平台的,因此,你在 Linux 系统上写的程序在 Windows 系统和 Mac 系统上也能够很好的工作。

+

+虽然你可以使用任何编辑器来开发 Python 程序,但是如果你使用一个同时包含编辑器和调试器的优秀的集成开发环境(IDE)来进行开发,那么你的编程生涯将会变得更加轻松。

+

+PyCharm 是由 Jetbrains 公司开发的一个跨平台编辑器。如果你之前是在 Windows 环境下进行开发,那么你会立刻认出 Jetbrains 公司,它就是那个开发了 Resharper 的公司。 Resharper 是一个用于重构代码的优秀产品,它能够指出代码可能存在的问题,自动添加声明,比如当你在使用一个类的时候它会自动为你导入。

+

+这篇文章将讨论如何在 Linux 系统上获取、安装和运行 PyCharm 。

+

+### 如何获取 PyCharm

+

+你可以通过访问[https://www.jetbrains.com/pycharm/][1]获取 PyCharm 。

+

+屏幕中央有一个很大的 'Download' 按钮。

+

+你可以选择下载专业版或者社区版。如果你刚刚接触 Python 编程那么推荐下载社区版。然而,如果你打算发展到专业化的编程,那么专业版的一些优秀特性是不容忽视的。

+

+### 如何安装 PyCharm

+

+下载好的文件的名称可能类似这种样子 ‘pycharm-professional-2016.2.3.tar.gz’。

+

+以 “tar.gz” 结尾的文件是被 [gzip][2] 工具压缩过的,并且把文件夹用 [tar][3] 工具归档到了一起。你可以阅读关于[提取 tar.gz 文件][4]指南的更多信息。

+

+加快速度,为了解压文件,你需要做的是首先打开终端,然后通过下面的命令进入下载文件所在的文件夹:

+

+```

+cd ~/Downloads

+```

+

+现在,通过运行下面的命令找到你下载的文件的名字:

+

+```

+ls pycharm*

+```

+

+然后运行下面的命令解压文件:

+

+```

+tar -xvzf pycharm-professional-2016.2.3.tar.gz -C ~

+```

+

+记得把上面命令中的文件名替换成通过 `ls` 命令获知的 pycharm 文件名。(也就是你下载的文件的名字)。上面的命令将会把 PyCharm 软件安装在 `home` 目录中。

+

+### 如何运行 PyCharm

+

+要运行 PyCharm, 首先需要进入 `home` 目录:

+

+```

+cd ~

+```

+

+运行 `ls` 命令查找文件夹名:

+

+```

+ls

+```

+

+查找到文件名以后,运行下面的命令进入 PyCharm 目录:

+

+```

+cd pycharm-2016.2.3/bin

+```

+

+最后,通过运行下面的命令来运行 PyCharm:

+

+```

+sh pycharm.sh &

+```

+

+如果你是在一个桌面环境比如 GNOME 、 KDE 、 Unity 、 Cinnamon 或者其他现代桌面环境上运行,你也可以通过桌面环境的菜单或者快捷方式来找到 PyCharm 。

+

+### 总结

+

+现在, PyCharm 已经安装好了,你可以开始使用它来开发一个桌面应用、 web 应用和各种工具。

+

+如果你想学习如何使用 Python 编程,那么这里有很好的[学习资源][5]值得一看。里面的文章更多的是关于 Linux 学习,但也有一些资源比如 Pluralsight 和 Udemy 提供了关于 Python 学习的一些很好的教程。

+

+如果想了解 PyCharm 的更多特性,请点击[这儿][6]来查看。它覆盖了从创建项目到描述用户界面、调试以及代码重构的全部内容。

+

+-----------------------------------------------------------------------------------------------------------

+

+via: https://www.lifewire.com/how-to-install-the-pycharm-python-ide-in-linux-4091033

+

+作者:[Gary Newell][a]

+译者:[ucasFL](https://github.com/ucasFL)

+校对:[oska874](https://github.com/oska874)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.lifewire.com/gary-newell-2180098

+[1]:https://www.jetbrains.com/pycharm/

+[2]:https://www.lifewire.com/example-uses-of-the-linux-gzip-command-4078675

+[3]:https://www.lifewire.com/uses-of-linux-command-tar-2201086

+[4]:https://www.lifewire.com/extract-tar-gz-files-2202057

+[5]:https://www.lifewire.com/learn-linux-in-structured-manner-4061368

+[6]:https://www.lifewire.com/pycharm-the-best-linux-python-ide-4091045

+[7]:https://fthmb.tqn.com/ju1u-Ju56vYnXabPbsVRyopd72Q=/768x0/filters:no_upscale()/about/pycharmstart-57e2cb405f9b586c351a4cf7.png

diff --git a/published/201612/20160923 PyCharm - The Best Linux Python IDE.md b/published/201612/20160923 PyCharm - The Best Linux Python IDE.md

new file mode 100644

index 0000000000..a21321caa2

--- /dev/null

+++ b/published/201612/20160923 PyCharm - The Best Linux Python IDE.md

@@ -0,0 +1,146 @@

+PyCharm - Linux 下最好的 Python IDE

+=========

+/about/pycharm2-57e2d5ee5f9b586c352c7493.png)

+

+### 介绍

+

+在这篇指南中,我将向你介绍一个集成开发环境 - PyCharm, 你可以在它上面使用 Python 编程语言开发专业应用。

+

+Python 是一门优秀的编程语言,因为它真正实现了跨平台,用它开发的应用程序在 Windows、Linux 以及 Mac 系统上均可运行,无需重新编译任何代码。

+

+PyCharm 是由 [Jetbrains][3] 开发的一个编辑器和调试器,[Jetbrains][3] 就是那个开发了 Resharper 的人。不得不说,Resharper 是一个很优秀的工具,它被 Windows 开发者们用来重构代码,同时,它也使得 Windows 开发者们写 .NET 代码更加轻松。[Resharper][2] 的许多原则也被加入到了 [PyCharm][3] 专业版中。

+

+### 如何安装 PyCharm

+

+我已经[写了一篇][4]关于如何获取 PyCharm 的指南,下载、解压文件,然后运行。

+

+### 欢迎界面

+

+当你第一次运行 PyCharm 或者关闭一个项目的时候,会出现一个屏幕,上面显示一系列近期项目。

+

+你也会看到下面这些菜单选项:

+

+* 创建新项目

+* 打开项目

+* 从版本控制仓库检出

+

+还有一个配置设置选项,你可以通过它设置默认 Python 版本或者一些其他设置。

+

+### 创建一个新项目

+

+当你选择‘创建一个新项目’以后,它会提供下面这一系列可能的项目类型供你选择:

+

+* Pure Python

+* Django

+* Flask

+* Google App Engine

+* Pyramid

+* Web2Py

+* Angular CLI

+* AngularJS

+* Foundation

+* HTML5 Bolierplate

+* React Starter Kit

+* Twitter Bootstrap

+* Web Starter Kit

+

+这不是一个编程教程,所以我没必要说明这些项目类型是什么。如果你想创建一个可以运行在 Windows、Linux 和 Mac 上的简单桌面运行程序,那么你可以选择 Pure Python 项目,然后使用 Qt 库来开发图形应用程序,这样的图形应用程序无论在何种操作系统上运行,看起来都像是原生的,就像是在该系统上开发的一样。

+

+选择了项目类型以后,你需要输入一个项目名字并且选择一个 Python 版本来进行开发。

+

+### 打开一个项目

+

+你可以通过单击‘最近打开的项目’列表中的项目名称来打开一个项目,或者,你也可以单击‘打开’,然后浏览到你想打开的项目所在的文件夹,找到该项目,然后选择‘确定’。

+

+### 从源码控制进行查看

+

+PyCharm 提供了从各种在线资源查看项目源码的选项,在线资源包括 [GitHub][5]、[CVS][6]、Git、[Mercurial][7] 以及 [Subversion][8]。

+

+### PyCharm IDE(集成开发环境)

+

+PyCharm IDE 中可以打开顶部的菜单,在这个菜单下方你可以看到每个打开的项目的标签。

+

+屏幕右方是调试选项区,可以单步运行代码。

+

+左侧面板有项目文件和外部库的列表。

+

+如果想在项目中新建一个文件,你可以鼠标右击项目的名字,然后选择‘新建’。然后你可以在下面这些文件类型中选择一种添加到项目中:

+

+* 文件

+* 目录

+* Python 包

+* Python 包

+* Jupyter 笔记

+* HTML 文件

+* Stylesheet

+* JavaScript

+* TypeScript

+* CoffeeScript

+* Gherkin

+* 数据源

+

+当添加了一个文件,比如 Python 文件以后,你可以在右边面板的编辑器中进行编辑。

+

+文本是全彩色编码的,并且有黑体文本。垂直线显示缩进,从而能够确保缩进正确。

+

+编辑器具有智能补全功能,这意味着当你输入库名字或可识别命令的时候,你可以按 'Tab' 键补全命令。

+

+### 调试程序

+

+你可以利用屏幕右上角的’调试选项’调试程序的任何一个地方。

+

+如果你是在开发一个图形应用程序,你可以点击‘绿色按钮’来运行程序,你也可以通过 'shift+F10' 快捷键来运行程序。

+

+为了调试应用程序,你可以点击紧挨着‘绿色按钮’的‘绿色箭头’或者按 ‘shift+F9’ 快捷键。你可以点击一行代码的灰色边缘,从而设置断点,这样当程序运行到这行代码的时候就会停下来。

+

+你可以按 'F8' 单步向前运行代码,这意味着你只是运行代码但无法进入函数内部,如果要进入函数内部,你可以按 'F7'。如果你想从一个函数中返回到调用函数,你可以按 'shift+F8'。

+

+调试过程中,你会在屏幕底部看到许多窗口,比如进程和线程列表,以及你正在监视的变量。

+

+当你运行到一行代码的时候,你可以对这行代码中出现的变量进行监视,这样当变量值改变的时候你能够看到。

+

+另一个不错的选择是使用覆盖检查器运行代码。在过去这些年里,编程界发生了很大的变化,现在,对于开发人员来说,进行测试驱动开发是很常见的,这样他们可以检查对程序所做的每一个改变,确保不会破坏系统的另一部分。

+

+覆盖检查器能够很好的帮助你运行程序,执行一些测试,运行结束以后,它会以百分比的形式告诉你测试运行所覆盖的代码有多少。

+

+还有一个工具可以显示‘类函数’或‘类’的名字,以及一个项目被调用的次数和在一个特定代码片段运行所花费的时间。

+

+### 代码重构

+

+PyCharm 一个很强大的特性是代码重构选项。

+

+当你开始写代码的时候,会在右边缘出现一个小标记。如果你写的代码可能出错或者写的不太好, PyCharm 会标记上一个彩色标记。

+

+点击彩色标记将会告诉你出现的问题并提供一个解决方法。

+

+比如,你通过一个导入语句导入了一个库,但没有使用该库中的任何东西,那么不仅这行代码会变成灰色,彩色标记还会告诉你‘该库未使用’。

+

+对于正确的代码,也可能会出现错误提示,比如在导入语句和函数起始之间只有一个空行。当你创建了一个名称非小写的函数时它也会提示你。

+

+你不必遵循 PyCharm 的所有规则。这些规则大部分只是好的编码准则,与你的代码是否能够正确运行无关。

+

+代码菜单还有其它的重构选项。比如,你可以进行代码清理以及检查文件或项目问题。

+

+### 总结

+

+PyCharm 是 Linux 系统上开发 Python 代码的一个优秀编辑器,并且有两个可用版本。社区版可供临时开发者使用,专业版则提供了开发者开发专业软件可能需要的所有工具。

+

+--------------------------------------------------------------------------------

+

+via: https://www.lifewire.com/pycharm-the-best-linux-python-ide-4091045

+

+作者:[Gary Newell][a]

+译者:[ucasFL](https://github.com/ucasFL)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.lifewire.com/gary-newell-2180098

+[1]:https://www.jetbrains.com/

+[2]:https://www.jetbrains.com/resharper/

+[3]:https://www.jetbrains.com/pycharm/specials/pycharm/pycharm.html?&gclid=CjwKEAjw34i_BRDH9fbylbDJw1gSJAAvIFqU238G56Bd2sKU9EljVHs1bKKJ8f3nV--Q9knXaifD8xoCRyjw_wcB&gclsrc=aw.ds.ds&dclid=CNOy3qGQoc8CFUJ62wodEywCDg

+[4]:https://www.lifewire.com/how-to-install-the-pycharm-python-ide-in-linux-4091033

+[5]:https://github.com/

+[6]:http://www.linuxhowtos.org/System/cvs_tutorial.htm

+[7]:https://www.mercurial-scm.org/

+[8]:https://subversion.apache.org/

diff --git a/published/20161014 IS OPEN SOURCE DESIGN A THING.md b/published/201612/20161014 IS OPEN SOURCE DESIGN A THING.md

similarity index 100%

rename from published/20161014 IS OPEN SOURCE DESIGN A THING.md

rename to published/201612/20161014 IS OPEN SOURCE DESIGN A THING.md

diff --git a/published/20161014 WattOS - A Rock-Solid Lightning-Fast Lightweight Linux Distro For All.md b/published/201612/20161014 WattOS - A Rock-Solid Lightning-Fast Lightweight Linux Distro For All.md

similarity index 100%

rename from published/20161014 WattOS - A Rock-Solid Lightning-Fast Lightweight Linux Distro For All.md

rename to published/201612/20161014 WattOS - A Rock-Solid Lightning-Fast Lightweight Linux Distro For All.md

diff --git a/published/20161017 How To Manually Backup Your SMS MMS Messages On Android.md b/published/201612/20161017 How To Manually Backup Your SMS MMS Messages On Android.md

similarity index 100%

rename from published/20161017 How To Manually Backup Your SMS MMS Messages On Android.md

rename to published/201612/20161017 How To Manually Backup Your SMS MMS Messages On Android.md

diff --git a/published/20161018 An Everyday Linux User Review Of Xubuntu 16.10 - A Good Place To Start.md b/published/201612/20161018 An Everyday Linux User Review Of Xubuntu 16.10 - A Good Place To Start.md

similarity index 100%

rename from published/20161018 An Everyday Linux User Review Of Xubuntu 16.10 - A Good Place To Start.md

rename to published/201612/20161018 An Everyday Linux User Review Of Xubuntu 16.10 - A Good Place To Start.md

diff --git a/published/201612/20161021 Getting started with Inkscape on Fedora.md b/published/201612/20161021 Getting started with Inkscape on Fedora.md

new file mode 100644

index 0000000000..99a1d40413

--- /dev/null

+++ b/published/201612/20161021 Getting started with Inkscape on Fedora.md

@@ -0,0 +1,113 @@

+Fedora 中使用 Inkscape 起步

+=============

+

+

+

+Inkscape 是一个流行的、功能齐全、自由而开源的矢量[图形编辑器][3],它已经在 Fedora 官方仓库中。它特别适合创作 [SVG 格式][4]的矢量图形。Inkscape 非常适于创建和操作图片和插图,以及创建图表和用户界面设计。

+

+[

+

+][5]

+

+*使用 inkscape 创建的[风车景色][1]的插图*

+

+[其官方网站的截图页][6]上有一些很好的例子,说明 Inkscape 可以做些什么。Fedora 杂志上的大多数精选图片也是使用 Inkscape 创建的,包括最近的精选图片:

+

+[

+

+][7]

+

+*Fedora 杂志最近使用 Inkscape 创建的精选图片*

+

+### 在 Fedora 上安装 Inkscape

+

+**Inkscape 已经[在 Fedora 官方仓库中了][8],因此可以非常简单地在 Fedora Workstation 上使用 Software 这个应用来安装它:**

+

+[

+

+][9]

+

+另外,如果你习惯用命令行,你可以使用 `dnf` 命令来安装:

+

+```

+sudo dnf install inkscape

+```

+

+### (开始)深入 Inkscape

+

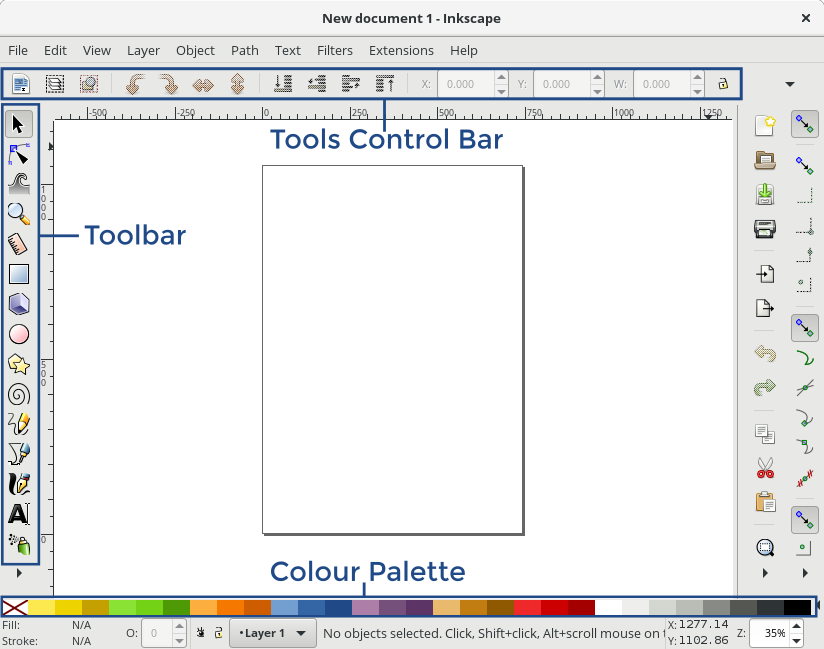

+当第一次打开程序时,你会看到一个空白页面,并且有一组不同的工具栏。对于初学者,最重要的三个工具栏是:Toolbar、Tools Control Bar、 Colour Palette(调色板):

+

+[

+

+][10]

+

+**Toolbar**提供了创建绘图的所有基本工具,包括以下工具:

+

+* 矩形工具:用于绘制矩形和正方形

+* 星形/多边形(形状)工具

+* 圆形工具:用于绘制椭圆和圆

+* 文本工具:用于添加标签和其他文本

+* 路径工具:用于创建或编辑更复杂或自定义的形状

+* 选择工具:用于选择图形中的对象

+

+**Colour Palette** 提供了一种设置当前选定对象的颜色的快速方式。 **Tools Control Bar** 提供了工具栏中当前选定工具的所有设置。每次选择新工具时,Tools Control Bar 会变成该工具的相应设置:

+

+[

+

+][11]

+

+### 绘图

+

+接下来,让我们使用 Inkscape 绘制一个星星。 首先,从 **Toolbar** 中选择星形工具,**然后在主绘图区域上单击并拖动。**

+

+你可能会注意到你画的星星看起来很像一个三角形。要更改它,请使用 **Tools Control Bar** 中的 **Corners** 选项,再添加几个点。 最后,当你完成后,在星星仍被选中的状态下,从 **Palette**(调色板)中选择一种颜色来改变星星的颜色:

+

+[

+

+][12]

+

+接下来,可以在 Toolbar 中实验一些其他形状工具,如矩形工具,螺旋工具和圆形工具。通过不同的设置,每个工具都可以创建一些独特的图形。

+

+### 在绘图中选择并移动对象

+

+现在你有一堆图形了,你可以使用 Select 工具来移动它们。要使用 Select 工具,首先从工具栏中选择它,然后单击要操作的形状,接着将图形拖动到您想要的位置。

+

+选择形状后,你还可以使用尺寸句柄调整图形大小。此外,如果你单击所选的图形,尺寸句柄将转变为旋转模式,并允许你旋转图形:

+

+[

+

+][13]

+

+* * *

+

+Inkscape是一个很棒的软件,它还包含了更多的工具和功能。在本系列的下一篇文章中,我们将介绍更多可用来创建插图和文档的功能和选项。

+

+-----------------------

+

+作者简介:Ryan 是一名 Fedora 设计师。他使用 Fedora Workstation 作为他的主要桌面,还有来自 Libre Graphics 世界的最好的工具,尤其是矢量图形编辑器 Inkscape。

+

+

+--------------------------------------------------------------------------------

+

+via: https://fedoramagazine.org/getting-started-inkscape-fedora/

+

+作者:[Ryan Lerch][a]

+译者:[geekpi](https://github.com/geekpi)

+校对:[jasminepeng](https://github.com/jasminepeng)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:http://ryanlerch.id.fedoraproject.org/

+[1]:https://openclipart.org/detail/185885/windmill-in-landscape

+[2]:https://fedoramagazine.org/getting-started-inkscape-fedora/

+[3]:https://inkscape.org/

+[4]:https://en.wikipedia.org/wiki/Scalable_Vector_Graphics

+[5]:https://cdn.fedoramagazine.org/wp-content/uploads/2016/10/cyberscoty-landscape-800px.png

+[6]:https://inkscape.org/en/about/screenshots/

+[7]:https://cdn.fedoramagazine.org/wp-content/uploads/2016/09/communty.png

+[8]:https://apps.fedoraproject.org/packages/inkscape

+[9]:https://cdn.fedoramagazine.org/wp-content/uploads/2016/10/inkscape-gnome-software.png

+[10]:https://cdn.fedoramagazine.org/wp-content/uploads/2016/10/inkscape_window.png

+[11]:https://cdn.fedoramagazine.org/wp-content/uploads/2016/10/inkscape-toolscontrolbar.gif

+[12]:https://cdn.fedoramagazine.org/wp-content/uploads/2016/10/inkscape-drawastar.gif

+[13]:https://cdn.fedoramagazine.org/wp-content/uploads/2016/10/inkscape-movingshapes.gif

diff --git a/published/20161021 Livepatch – Apply Critical Security Patches to Ubuntu Linux Kernel Without Rebooting.md b/published/201612/20161021 Livepatch – Apply Critical Security Patches to Ubuntu Linux Kernel Without Rebooting.md

similarity index 100%

rename from published/20161021 Livepatch – Apply Critical Security Patches to Ubuntu Linux Kernel Without Rebooting.md

rename to published/201612/20161021 Livepatch – Apply Critical Security Patches to Ubuntu Linux Kernel Without Rebooting.md

diff --git a/published/20161023 HOW TO SHARE STEAM GAME FILES BETWEEN LINUX AND WINDOWS.md b/published/201612/20161023 HOW TO SHARE STEAM GAME FILES BETWEEN LINUX AND WINDOWS.md

similarity index 100%

rename from published/20161023 HOW TO SHARE STEAM GAME FILES BETWEEN LINUX AND WINDOWS.md

rename to published/201612/20161023 HOW TO SHARE STEAM GAME FILES BETWEEN LINUX AND WINDOWS.md

diff --git a/published/20161024 Getting Started with Webpack 2.md b/published/201612/20161024 Getting Started with Webpack 2.md

similarity index 100%

rename from published/20161024 Getting Started with Webpack 2.md

rename to published/201612/20161024 Getting Started with Webpack 2.md

diff --git a/published/20161026 24 MUST HAVE ESSENTIAL LINUX APPLICATIONS IN 2016.md b/published/201612/20161026 24 MUST HAVE ESSENTIAL LINUX APPLICATIONS IN 2016.md

similarity index 100%

rename from published/20161026 24 MUST HAVE ESSENTIAL LINUX APPLICATIONS IN 2016.md

rename to published/201612/20161026 24 MUST HAVE ESSENTIAL LINUX APPLICATIONS IN 2016.md

diff --git a/published/20161026 Fedora-powered computer lab at our university.md b/published/201612/20161026 Fedora-powered computer lab at our university.md

similarity index 100%

rename from published/20161026 Fedora-powered computer lab at our university.md

rename to published/201612/20161026 Fedora-powered computer lab at our university.md

diff --git a/published/20161027 DTrace for Linux 2016.md b/published/201612/20161027 DTrace for Linux 2016.md

similarity index 100%

rename from published/20161027 DTrace for Linux 2016.md

rename to published/201612/20161027 DTrace for Linux 2016.md

diff --git a/published/20161027 Would You Consider Riding in a Driverless Car.md b/published/201612/20161027 Would You Consider Riding in a Driverless Car.md

similarity index 100%

rename from published/20161027 Would You Consider Riding in a Driverless Car.md

rename to published/201612/20161027 Would You Consider Riding in a Driverless Car.md

diff --git a/published/20161030 I dont understand Pythons Asyncio.md b/published/201612/20161030 I dont understand Pythons Asyncio.md

similarity index 100%

rename from published/20161030 I dont understand Pythons Asyncio.md

rename to published/201612/20161030 I dont understand Pythons Asyncio.md

diff --git a/published/20161102 5 Best FPS Games For Linux.md b/published/201612/20161102 5 Best FPS Games For Linux.md

similarity index 100%

rename from published/20161102 5 Best FPS Games For Linux.md

rename to published/201612/20161102 5 Best FPS Games For Linux.md

diff --git a/published/20161104 4 Easy Ways To Generate A Strong Password In Linux.md b/published/201612/20161104 4 Easy Ways To Generate A Strong Password In Linux.md

similarity index 100%

rename from published/20161104 4 Easy Ways To Generate A Strong Password In Linux.md

rename to published/201612/20161104 4 Easy Ways To Generate A Strong Password In Linux.md

diff --git a/published/20161105 How to Install Security Updates Automatically on Debian and Ubuntu.md b/published/201612/20161105 How to Install Security Updates Automatically on Debian and Ubuntu.md

similarity index 100%

rename from published/20161105 How to Install Security Updates Automatically on Debian and Ubuntu.md

rename to published/201612/20161105 How to Install Security Updates Automatically on Debian and Ubuntu.md

diff --git a/published/20161110 4 Ways to Batch Convert Your PNG to JPG and Vice-Versa.md b/published/201612/20161110 4 Ways to Batch Convert Your PNG to JPG and Vice-Versa.md

similarity index 100%

rename from published/20161110 4 Ways to Batch Convert Your PNG to JPG and Vice-Versa.md

rename to published/201612/20161110 4 Ways to Batch Convert Your PNG to JPG and Vice-Versa.md

diff --git a/published/20161110 How To Update Wifi Network Password From Terminal In Arch Linux.md b/published/201612/20161110 How To Update Wifi Network Password From Terminal In Arch Linux.md

similarity index 100%

rename from published/20161110 How To Update Wifi Network Password From Terminal In Arch Linux.md

rename to published/201612/20161110 How To Update Wifi Network Password From Terminal In Arch Linux.md

diff --git a/published/201612/20161110 How to check if port is in use on Linux or Unix.md b/published/201612/20161110 How to check if port is in use on Linux or Unix.md

new file mode 100644

index 0000000000..951cf2a490

--- /dev/null

+++ b/published/201612/20161110 How to check if port is in use on Linux or Unix.md

@@ -0,0 +1,119 @@

+如何在 Linux/Unix 系统中验证端口是否被占用

+==========

+

+[][1]

+

+在 Linux 或者类 Unix 中,我该如何检查某个端口是否被占用?我又该如何验证 Linux 服务器中有哪些端口处于监听状态?

+

+验证哪些端口在服务器的网络接口上处于监听状态是非常重要的。你需要注意那些开放端口来检测网络入侵。除了网络入侵,为了排除故障,确认服务器上的某个端口是否被其他应用程序占用也是必要的。比方说,你可能会在同一个系统中安装了 Apache 和 Nginx 服务器,所以了解是 Apache 还是 Nginx 占用了 # 80/443 TCP 端口真的很重要。这篇快速教程会介绍使用 `netstat` 、 `nmap` 和 `lsof` 命令来检查端口使用信息并找出哪些程序正在使用这些端口。

+

+### 如何检查 Linux 中的程序和监听的端口

+

+1、 打开一个终端,如 shell 命令窗口。

+2、 运行以下任意一行命令:

+

+```

+sudo lsof -i -P -n | grep LISTEN

+sudo netstat -tulpn | grep LISTEN

+sudo nmap -sTU -O IP地址

+```

+

+下面我们看看这些命令和它们的详细输出内容:

+

+### 方式 1:lsof 命令

+

+语法如下:

+

+```

+$ sudo lsof -i -P -n

+$ sudo lsof -i -P -n | grep LISTEN

+$ doas lsof -i -P -n | grep LISTEN ### OpenBSD

+```

+

+输出如下:

+

+[][2]

+

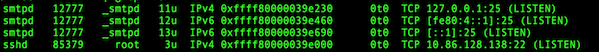

+*图 1:使用 lsof 命令检查监听端口和程序*

+

+仔细看上面输出的最后一行:

+

+```

+sshd 85379 root 3u IPv4 0xffff80000039e000 0t0 TCP 10.86.128.138:22 (LISTEN)

+```

+

+- `sshd` 是程序的名称

+- `10.86.128.138` 是 `sshd` 程序绑定 (LISTEN) 的 IP 地址

+- `22` 是被使用 (LISTEN) 的 TCP 端口

+- `85379` 是 `sshd` 任务的进程 ID (PID)

+

+### 方式 2:netstat 命令

+

+你可以如下面所示使用 `netstat` 来检查监听的端口和程序。

+

+**Linux 中 netstat 语法**

+

+```

+$ netstat -tulpn | grep LISTEN

+```

+

+**FreeBSD/MacOS X 中 netstat 语法**

+

+```

+$ netstat -anp tcp | grep LISTEN

+$ netstat -anp udp | grep LISTEN

+```

+

+**OpenBSD 中 netstat 语法**

+

+```

+$ netstat -na -f inet | grep LISTEN

+$ netstat -nat | grep LISTEN

+```

+

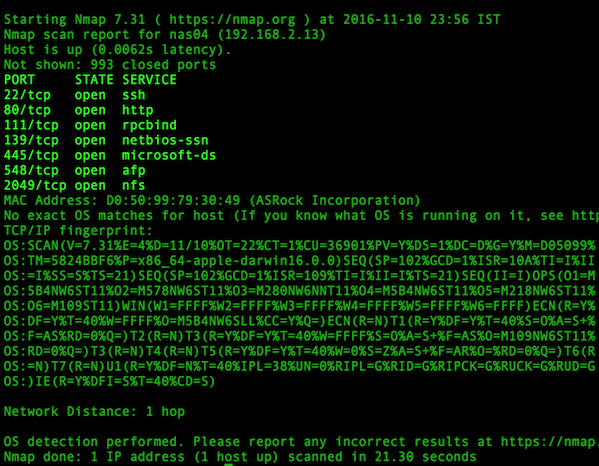

+### 方式 3:nmap 命令

+

+语法如下:

+

+```

+$ sudo nmap -sT -O localhost

+$ sudo nmap -sU -O 192.168.2.13 ### 列出打开的 UDP 端口

+$ sudo nmap -sT -O 192.168.2.13 ### 列出打开的 TCP 端口

+```

+

+示例输出如下:

+

+[][3]

+

+*图 2:使用 nmap 探测哪些端口监听 TCP 连接*

+

+你可以用一句命令合并 TCP/UDP 扫描:

+

+```

+$ sudo nmap -sTU -O 192.168.2.13

+```

+

+### 赠品:对于 Windows 用户

+

+在 windows 系统下可以使用下面的命令检查端口使用情况:

+

+```

+netstat -bano | more

+netstat -bano | grep LISTENING

+netstat -bano | findstr /R /C:"[LISTING]"

+```

+

+----------------------------------------------------

+

+via: https://www.cyberciti.biz/faq/unix-linux-check-if-port-is-in-use-command/

+

+作者:[VIVEK GITE][a]

+译者:[GHLandy](https://github.com/GHLandy)

+校对:[oska874](https://github.com/oska874)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.cyberciti.biz/faq/unix-linux-check-if-port-is-in-use-command/

+[1]:https://www.cyberciti.biz/faq/category/linux/

+[2]:http://www.cyberciti.biz/faq/unix-linux-check-if-port-is-in-use-command/lsof-outputs/

+[3]:http://www.cyberciti.biz/faq/unix-linux-check-if-port-is-in-use-command/nmap-outputs/

diff --git a/published/20161112 Neofetch – Shows Linux System Information with Distribution Logo.md b/published/201612/20161112 Neofetch – Shows Linux System Information with Distribution Logo.md

similarity index 100%

rename from published/20161112 Neofetch – Shows Linux System Information with Distribution Logo.md

rename to published/201612/20161112 Neofetch – Shows Linux System Information with Distribution Logo.md

diff --git a/published/20161114 Introduction to Eclipse Che a next-generation web-based IDE.md b/published/201612/20161114 Introduction to Eclipse Che a next-generation web-based IDE.md

similarity index 100%

rename from published/20161114 Introduction to Eclipse Che a next-generation web-based IDE.md

rename to published/201612/20161114 Introduction to Eclipse Che a next-generation web-based IDE.md

diff --git a/published/20161116 Fix Unable to lock the administration directory in Ubuntu.md b/published/201612/20161116 Fix Unable to lock the administration directory in Ubuntu.md

similarity index 100%

rename from published/20161116 Fix Unable to lock the administration directory in Ubuntu.md

rename to published/201612/20161116 Fix Unable to lock the administration directory in Ubuntu.md

diff --git a/published/201612/20161121 Create an Active Directory Infrastructure with Samba4 on Ubuntu – Part 1.md b/published/201612/20161121 Create an Active Directory Infrastructure with Samba4 on Ubuntu – Part 1.md

new file mode 100644

index 0000000000..e221170a57

--- /dev/null

+++ b/published/201612/20161121 Create an Active Directory Infrastructure with Samba4 on Ubuntu – Part 1.md

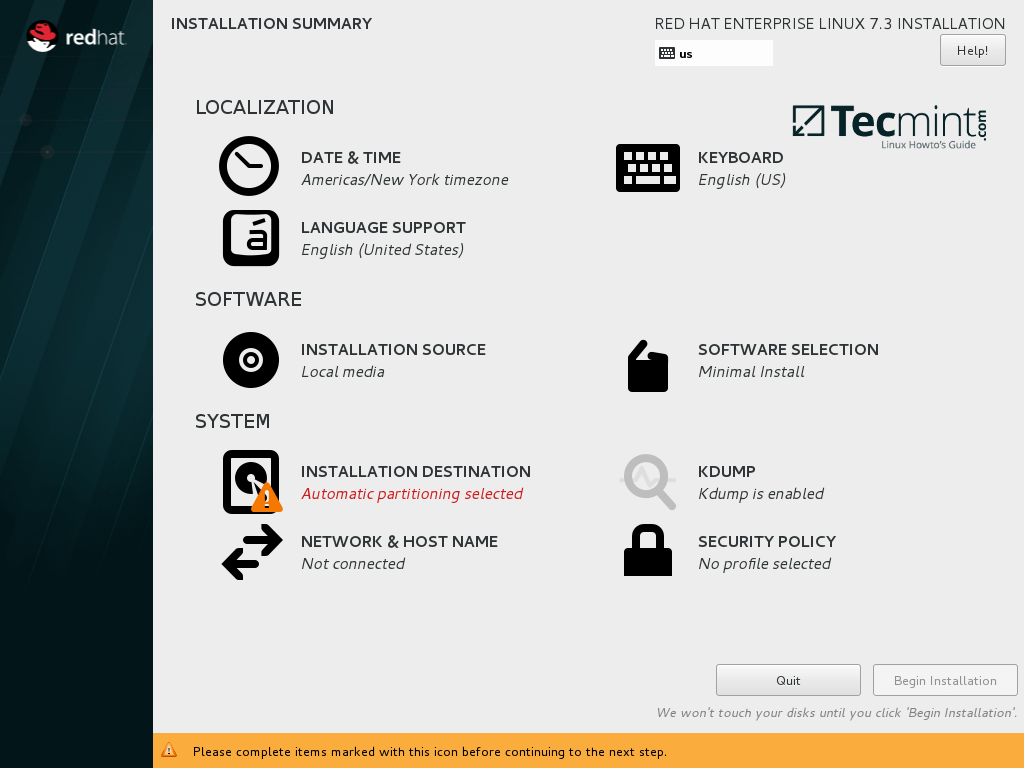

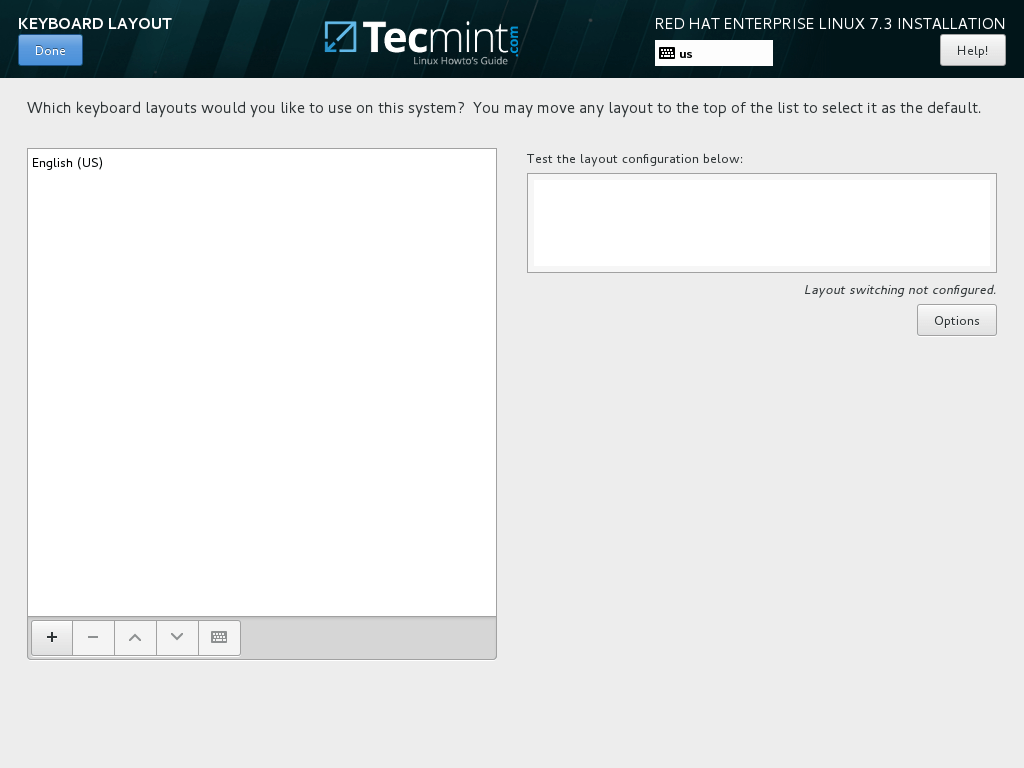

@@ -0,0 +1,257 @@

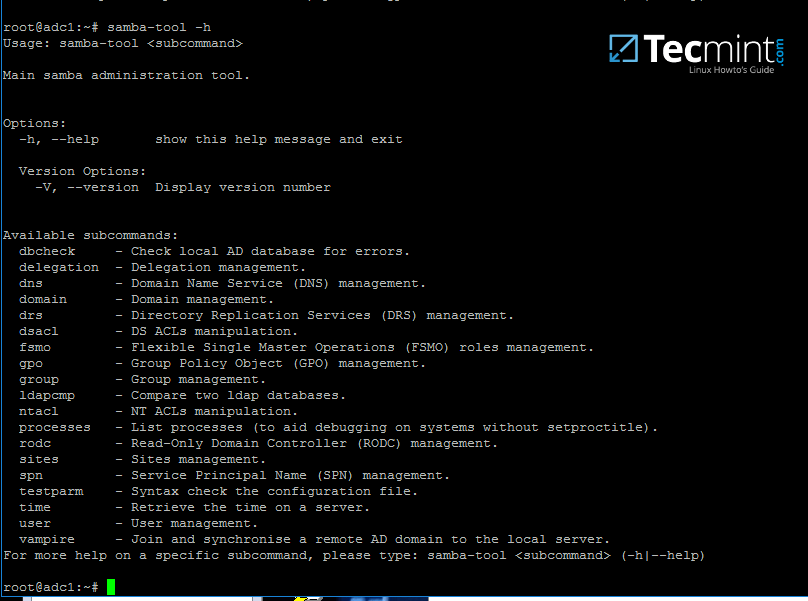

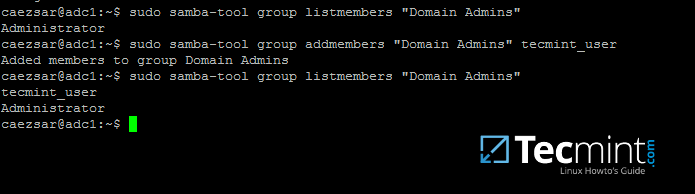

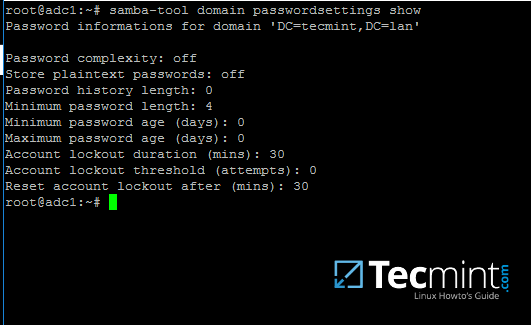

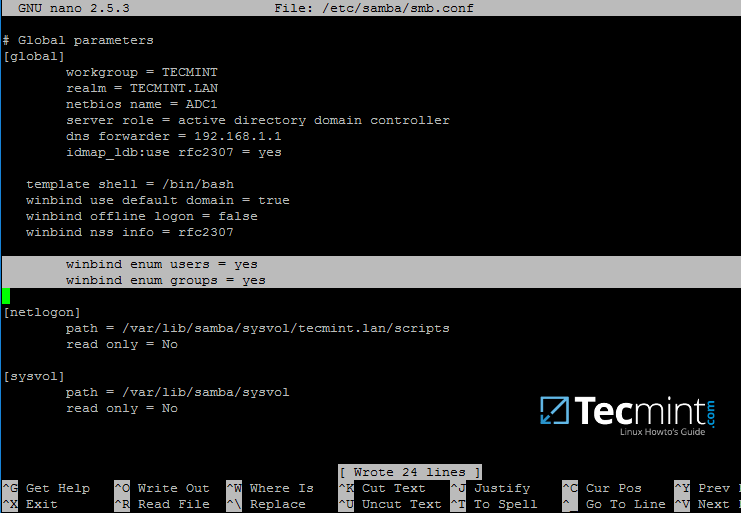

+在 Ubuntu 系统上使用 Samba4 来创建活动目录架构(一)

+============================================================

+

+Samba 是一个自由的开源软件套件,用于实现 Windows 操作系统与 Linux/Unix 系统之间的无缝连接及共享资源。

+

+Samba 不仅可以通过 SMB/CIFS 协议组件来为 Windows 与 Linux 系统之间提供独立的文件及打印机共享服务,它还能实现活动目录(Active Directory)域控制器(Domain Controller)的功能,或者让 Linux 主机加入到域环境中作为域成员服务器。当前的 Samba4 版本实现的 AD DC 域及森林级别可以取代 Windows 2008 R2 系统的域相关功能。

+

+本系列的文章的主要内容是使用 Samba4 软件来配置活动目录域控制器,涉及到 Ubuntu、CentOS 和 Windows 系统相关的以下主题:

+

+- 第 1 节:在 Ubuntu 系统上使用 Samba4 来创建活动目录架构

+- 第 2 节:在 Linux 命令行下管理 Samba4 AD 架构

+- 第 3 节:在 Windows 10 操作系统上安装 RSAT 工具来管理 Samba4 AD

+- 第 4 节:从 Windows 中管理 Samba4 AD 域控制器 DNS 和组策略

+- 第 5 节:使用 Sysvol Replication 复制功能把 Samba 4 DC 加入到已有的 AD

+- 第 6 节:从 Linux DC 服务器通过 GOP 来添加一个共享磁盘并映射到 AD

+- 第 7 节:把 Ubuntu 16.04 系统主机作为域成员服务器添加到 AD

+- 第 8 节:把 CenterOS 7 系统主机作为域成员服务器添加到 AD

+- 第 9 节:在 AD Intranet 区域创建使用 kerberos 认证的 Apache Website

+

+这篇指南将阐明在 Ubuntu 16.04 和 Ubuntu 14.04 操作系统上安装配置 Samba4 作为域控服务器组件的过程中,你需要注意的每一个步骤。

+

+以下安装配置文档将会说明在 Windows 和 Linux 的混合系统环境中,关于用户、机器、共享卷、权限及其它资源信息的主要配置点。

+

+#### 环境要求:

+

+1. [Ubuntu 16.04 服务器安装][1]

+2. [Ubuntu 14.04 服务器安装][2]

+3. 为你的 AD DC 服务器[设置静态IP地址][3]

+

+### 第一步:初始化 Samba4 安装环境

+

+1、 在开始安装 Samba4 AD DC 之前,让我们先做一些准备工作。首先运行以下命令来确保系统已更新了最新的安全特性,内核及其它补丁:

+

+```

+$ sudo apt-get update

+$ sudo apt-get upgrade

+$ sudo apt-get dist-upgrade

+```

+

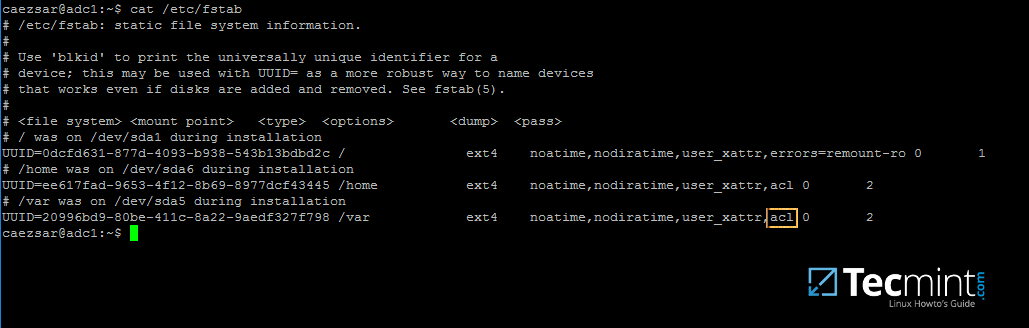

+2、 其次,打开服务器上的 `/etc/fstab` 文件,确保文件系统分区的 ACL 已经启用 ,如下图所示。

+

+通常情况下,当前常见的 Linux 文件系统,比如 ext3、ext4、xfs 或 btrfs 都默认支持并已经启用了 ACL 。如果未设置,则打开并编辑 `/etc/fstab` 文件,在第三列添加 `acl`,然后重启系统以使用修改的配置生效。

+

+[

+

+][5]

+

+*启动 Linux 文件系统的 ACL 功能*

+

+3、 最后使用一个具有描述性的名称来[设置主机名][6] ,比如这往篇文章所使用的 `adc1`。通过编辑 `/etc/hostname` 文件或使用使用下图所示的命令来设置主机名。

+

+```

+$ sudo hostnamectl set-hostname adc1

+```

+

+为了使修改的主机名生效必须重启服务器。

+

+### 第二步: 为 Samba4 AD DC 服务器安装必需的软件包

+

+4、 为了让你的服务器转变为域控制器,你需要在服务器上使用具有 root 权限的账号执行以下命令来安装 Samba 套件及所有必需的软件包。

+

+```

+$ sudo apt-get install samba krb5-user krb5-config winbind libpam-winbind libnss-winbind

+```

+[

+

+][7]

+

+*在 Ubuntu 系统上安装 Samba 套件*

+

+5、 安装包在执行的过程中将会询问你一系列的问题以便完成域控制器的配置。

+

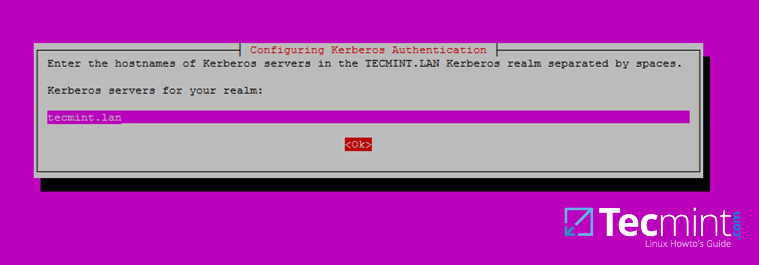

+在第一屏中你需要以大写为 Kerberos 默认 REALM 输入一个名字。以**大写**为你的域环境输入名字,然后单击回车继续。

+

+[

+

+][8]

+

+*配置 Kerosene 认证服务*

+

+6、 下一步,输入你的域中 Kerberos 服务器的主机名。使用和上面相同的名字,这一次使用**小写**,然后单击回车继续。

+

+[

+

+][9]

+

+*设置 Kerberos 服务器的主机名*

+

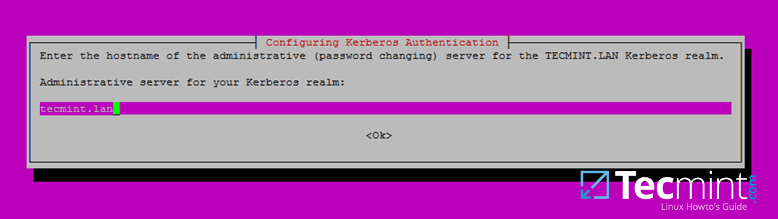

+7、 最后,指定 Kerberos realm 管理服务器的主机名。使用更上面相同的名字,单击回车安装完成。

+

+[

+

+][10]

+

+*设置管理服务器的主机名*

+

+### 第三步:为你的域环境开启 Samba AD DC 服务

+

+8、 在为域服务器配置 Samba 服务之前,先运行如下命令来停止并禁用所有 Samba 进程。

+

+```

+$ sudo systemctl stop samba-ad-dc.service smbd.service nmbd.service winbind.service

+$ sudo systemctl disable samba-ad-dc.service smbd.service nmbd.service winbind.service

+```

+

+9、 下一步,重命名或删除 Samba 原始配置文件。在开启 Samba 服务之前,必须执行这一步操作,因为在开启服务的过程中 Samba 将会创建一个新的配置文件,如果检测到原有的 `smb.conf` 配置文件则会报错。

+

+```

+$ sudo mv /etc/samba/smb.conf /etc/samba/smb.conf.initial

+```

+

+10、 现在,使用 root 权限的账号并接受 Samba 提示的默认选项,以交互方式启动域供给(domain provision)。

+

+还有,输入正确的 DNS 服务器地址并且为 Administrator 账号设置强密码。如果使用的是弱密码,则域供给过程会失败。

+

+```

+$ sudo samba-tool domain provision --use-rfc2307 –interactive

+```

+[

+

+][11]

+

+*Samba 域供给*

+

+11、 最后,使用以下命令重命名或删除 Kerberos 认证在 `/etc` 目录下的主配置文件,并且把 Samba 新生成的 Kerberos 配置文件创建一个软链接指向 `/etc` 目录。

+

+```

+$ sudo mv /etc/krb6.conf /etc/krb5.conf.initial

+$ sudo ln –s /var/lib/samba/private/krb5.conf /etc/

+```

+[

+

+][12]

+

+*创建 Kerberos 配置文件*

+

+12、 启动并开启 Samba 活动目录域控制器后台进程

+

+```

+$ sudo systemctl start samba-ad-dc.service

+$ sudo systemctl status samba-ad-dc.service

+$ sudo systemctl enable samba-ad-dc.service

+```

+[

+

+][13]

+

+*开启 Samba 活动目录域控制器服务*

+

+13、 下一步,[使用 netstat 命令][14] 来验证活动目录启动的服务是否正常。

+

+```

+$ sudo netstat –tulpn| egrep ‘smbd|samba’

+```

+[

+

+][15]

+

+*验证 Samba 活动目录*

+

+### 第四步: Samba 最后的配置

+

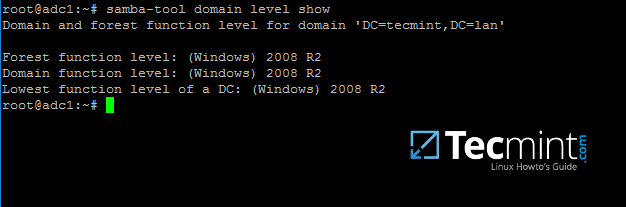

+14、 此刻,Samba 应该跟你想像的一样,完全运行正常。Samba 现在实现的域功能级别可以完全跟 Windows AD DC 2008 R2 相媲美。

+

+可以使用 `samba-tool` 工具来验证 Samba 服务是否正常:

+

+```

+$ sudo samba-tool domain level show

+```

+[

+

+][16]

+

+*验证 Samba 域服务级别*

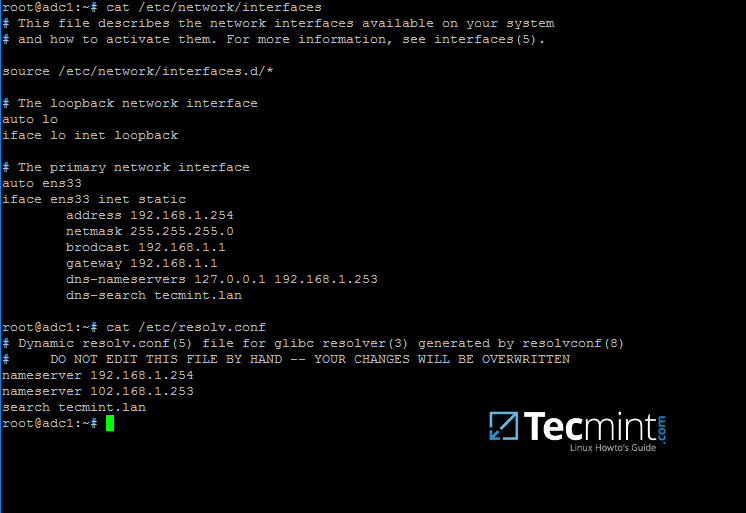

+

+15、 为了满足 DNS 本地解析的需求,你可以编辑网卡配置文件,修改 `dns-nameservers` 参数的值为域控制器地址(使用 127.0.0.1 作为本地 DNS 解析地址),并且设置 `dns-search` 参数为你的 realm 值。

+

+```

+$ sudo cat /etc/network/interfaces

+$ sudo cat /etc/resolv.conf

+```

+[

+

+][17]

+

+*为 Samba 配置 DNS 服务器地址*

+

+设置完成后,重启服务器并检查解析文件是否指向正确的 DNS 服务器地址。

+

+16、 最后,通过 `ping` 命令查询结果来检查某些重要的 AD DC 记录是否正常,使用类似下面的命令,替换对应的域名。

+

+```

+$ ping –c3 tecmint.lan # 域名

+$ ping –c3 adc1.tecmint.lan # FQDN

+$ ping –c3 adc1 # 主机

+```

+[

+

+][18]

+

+*检查 Samba AD DNS 记录*

+

+执行下面的一些查询命令来检查 Samba 活动目录域控制器是否正常。

+

+```

+$ host –t A tecmint.lan

+$ host –t A adc1.tecmint.lan

+$ host –t SRV _kerberos._udp.tecmint.lan # UDP Kerberos SRV record

+$ host -t SRV _ldap._tcp.tecmint.lan # TCP LDAP SRV record

+```

+

+17、 并且,通过请求一个域管理员账号的身份来列出缓存的票据信息以验证 Kerberos 认证是否正常。注意域名部分使用大写。

+

+```

+$ kinit administrator@TECMINT.LAN

+$ klist

+```