mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-06 01:20:12 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit

6063cc24cc

@ -0,0 +1,113 @@

|

||||

10 个应当了解的 Unikernel 开源项目

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

> unikernel 实质上是一个缩减的操作系统,它可以与应用程序结合成为一个 unikernel 程序,它通常在虚拟机中运行。下载《开放云指南》了解更多。

|

||||

|

||||

当涉及到操作系统、容器技术和 unikernel,趋势是朝着微型化发展。什么是 unikernel?unikernel 实质上是一个缩减的操作系统(特指 “unikernel”),它可以与应用程序结合成为一个 unikernel 程序, 它通常在虚拟机中运行。它们有时被称为库操作系统,因为它包含了使应用程序能够将硬件和网络协议与一组访问控制和网络层隔离的策略相结合使用的库。

|

||||

|

||||

在讨论云计算和 Linux 时容器常常会被提及,而 unikernel 也在做一些变革。容器和 unikernel 都不是新事物。在 20 世纪 90 年代就有类似 unikernel 的系统,如 Exokernel,而如今流行的 unikernel 系统则有 MirageOS 和 OSv。 Unikernel 程序可以独立使用并在异构环境中部署。它们可以促进专业化和隔离化服务,并被广泛用于在微服务架构中开发应用程序。

|

||||

|

||||

作为 unikernel 如何引起关注的一个例子,你可以看看 Docker 收购了[基于 Cambridge 的 Unikernel 系统][3],并且已在许多情况下在使用 unikernel。

|

||||

|

||||

unikernel,就像容器技术一样, 它剥离了非必需的的部分,因此它们对应用程序的稳定性、可用性以及安全性有非常积极的影响。在开源领域,它们也吸引了许多顶级,最具创造力的开发人员。

|

||||

|

||||

Linux 基金会最近[宣布][4]发布了其 2016 年度报告[开放云指南:当前趋势和开源项目指南][5]。这份第三年度的报告全面介绍了开放云计算的状况,并包含了一节关于 unikernel 的内容。你现在可以[下载该报告][6]。它汇总并分析研究、描述了容器、unikernel 的发展趋势,已经它们如何重塑云计算的。该报告提供了对当今开放云环境中心的各类项目的描述和链接。

|

||||

|

||||

在本系列文章中,我们将按类别分析指南中提到的项目,为整体类别的演变提供了额外的见解。下面, 你将看到几个重要 unikernel 项目的列表及其影响,以及它们的 GitHub 仓库的链接, 这些都是从开放云指南中收集到的:

|

||||

|

||||

### [ClickOS][7]

|

||||

|

||||

ClickOS 是 NEC 的高性能虚拟化软件中间件平台,用于构建于 MiniOS/MirageOS 之上网络功能虚拟化(NFV)

|

||||

|

||||

- [ClickOS 的 GitHub][8]

|

||||

|

||||

### [Clive][9]

|

||||

|

||||

Clive 是用 Go 编写的一个操作系统,旨在工作于分布式和云计算环境中。

|

||||

|

||||

### [HaLVM][10]

|

||||

|

||||

Haskell 轻量级虚拟机(HaLVM)是 Glasgow Haskell 编译器工具包的移植,它使开发人员能够编写可以直接在 Xen 虚拟机管理程序上运行的高级轻量级虚拟机。

|

||||

|

||||

- [HaLVM 的 GitHub][11]

|

||||

|

||||

### [IncludeOS][12]

|

||||

|

||||

IncludeOS 是在云中运行 C++ 服务的 unikernel 操作系统。它提供了一个引导加载程序、标准库以及运行服务的构建和部署系统。在 VirtualBox 或 QEMU 中进行测试,并在 OpenStack 上部署服务。

|

||||

|

||||

- [IncludeOS 的 GitHub][13]

|

||||

|

||||

### [Ling][14]

|

||||

|

||||

Ling 是一个用于构建超级可扩展云的 Erlang 平台,可直接运行在 Xen 虚拟机管理程序之上。它只运行三个外部库 (没有 OpenSSL),并且文件系统是只读的,以避免大多数攻击。

|

||||

|

||||

- [Ling 的 GitHub][15]

|

||||

|

||||

### [MirageOS][16]

|

||||

|

||||

MirageOS 是在 Linux 基金会的 Xen 项目下孵化的库操作系统。它使用 OCaml 语言构建的 unikernel 可以用于各种云计算和移动平台上安全的高性能网络应用。代码可以在诸如 Linux 或 MacOS X 等普通的操作系统上开发,然后编译成在 Xen 虚拟机管理程序下运行的完全独立的专用 Unikernel。

|

||||

|

||||

- [MirageOS 的 GitHub][17]

|

||||

|

||||

### [OSv][18]

|

||||

|

||||

OSv 是 Cloudius Systems 为云设计的开源操作系统。它支持用 Java、Ruby(通过 JRuby)、JavaScript(通过 Rhino 和 Nashorn)、Scala 等编写程序。它运行在 VMware、VirtualBox、KVM 和 Xen 虚拟机管理程序上。

|

||||

|

||||

- [OSV 的 GitHub][19]

|

||||

|

||||

### [Rumprun][20]

|

||||

|

||||

Rumprun 是一个可用于生产环境的 unikernel,它使用 rump 内核提供的驱动程序,添加了 libc 和应用程序环境,并提供了一个工具链,用于将现有的 POSIX-y 程序构建为 Rumprun unikernel。它适用于 KVM 和 Xen 虚拟机管理程序和裸机,并支持用 C、C ++、Erlang、Go、Java、JavaScript(Node.js)、Python、Ruby、Rust 等编写的程序。

|

||||

|

||||

- [Rumprun 的 GitHub][21]

|

||||

|

||||

### [Runtime.js][22]

|

||||

|

||||

Runtime.js 是用于在云上运行 JavaScript 的开源库操作系统(unikernel),它可以与应用程序捆绑在一起,并部署为轻量级和不可变的 VM 镜像。它基于 V8 JavaScript 引擎,并使用受 Node.js 启发的事件驱动和非阻塞 I/O 模型。KVM 是唯一支持的虚拟机管理程序。

|

||||

|

||||

- [Runtime.js 的 GitHub] [23]

|

||||

|

||||

### [UNIK][24]

|

||||

|

||||

Unik 是 EMC 推出的工具,可以将应用程序源编译为 unikernel(轻量级可引导磁盘镜像)而不是二进制文件。它允许应用程序在各种云提供商、嵌入式设备(IoT) 以及开发人员的笔记本或工作站上安全地部署,资源占用很少。它支持多种 unikernel 类型、处理器架构、管理程序和编排工具,包括 Cloud Foundry、Docker 和 Kubernetes。[Unik 的 GitHub] [25]

|

||||

|

||||

(题图:Pixabay)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/news/open-cloud-report/2016/guide-open-cloud-age-unikernel

|

||||

|

||||

作者:[SAM DEAN][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/sam-dean

|

||||

[1]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[2]:https://www.linux.com/files/images/unikernelsjpg-0

|

||||

[3]:http://www.infoworld.com/article/3024410/application-virtualization/docker-kicks-off-unikernel-revolution.html

|

||||

[4]:https://www.linux.com/blog/linux-foundation-issues-2016-guide-open-source-cloud-projects

|

||||

[5]:http://ctt.marketwire.com/?release=11G120876-001&id=10172077&type=0&url=http%3A%2F%2Fgo.linuxfoundation.org%2Frd-open-cloud-report-2016-pr

|

||||

[6]:http://go.linuxfoundation.org/l/6342/2016-10-31/3krbjr

|

||||

[7]:http://cnp.neclab.eu/clickos/

|

||||

[8]:https://github.com/cnplab/clickos

|

||||

[9]:http://lsub.org/ls/clive.html

|

||||

[10]:https://galois.com/project/halvm/

|

||||

[11]:https://github.com/GaloisInc/HaLVM

|

||||

[12]:http://www.includeos.org/

|

||||

[13]:https://github.com/hioa-cs/IncludeOS

|

||||

[14]:http://erlangonxen.org/

|

||||

[15]:https://github.com/cloudozer/ling

|

||||

[16]:https://mirage.io/

|

||||

[17]:https://github.com/mirage/mirage

|

||||

[18]:http://osv.io/

|

||||

[19]:https://github.com/cloudius-systems/osv

|

||||

[20]:http://rumpkernel.org/

|

||||

[21]:https://github.com/rumpkernel/rumprun

|

||||

[22]:http://runtimejs.org/

|

||||

[23]:https://github.com/runtimejs/runtime

|

||||

[24]:http://dojoblog.emc.com/unikernels/unik-build-run-unikernels-easy/

|

||||

[25]:https://github.com/emc-advanced-dev/unik

|

||||

@ -0,0 +1,91 @@

|

||||

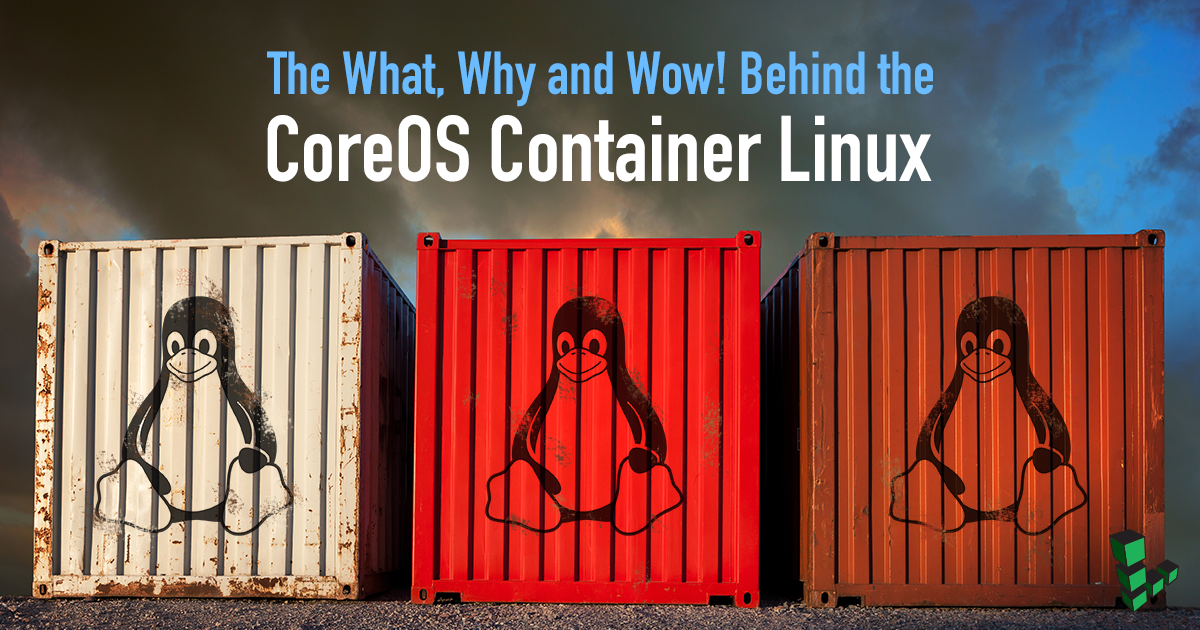

CoreOS,一款 Linux 容器发行版

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

> CoreOS,一款最新的 Linux 发行版本,支持自动升级内核软件,提供各集群间配置的完全控制。

|

||||

|

||||

关于使用哪个版本的 Linux 服务器系统的争论,常常是以这样的话题开始的:

|

||||

|

||||

> 你是喜欢基于 [Red Hat Enterprise Linux (RHEL)][1] 的 [CentOS][2] 或者 [Fedora][3],还是基于 [Debian][4] 的 [Ubuntu][5],抑或 [SUSE][6] 呢?

|

||||

|

||||

但是现在,一款名叫 [CoreOS 容器 Linux][7] 的 Linux 发行版加入了这场“圣战”。[这个最近在 Linode 服务器上提供的 CoreOS][8],和它的老前辈比起来,它使用了完全不同的实现方法。

|

||||

|

||||

你可能会感到不解,这里有这么多成熟的 Linux 发行版本,为什么要选择用 CoreOS ?借用 Linux 主干分支的维护者,也是 CoreOS 顾问的 Greg Kroah-Hartman 先生的一句话:

|

||||

|

||||

> CoreOS 可以控制发行版的升级(基于 ChromeOS 代码),并结合了 Docker 和潜在的核对/修复功能,这意味着不用停止或者重启你的相关进程,就可以[在线升级][9]。测试版本已经支持此功能,这是史无前例的。

|

||||

|

||||

当 Greg Kroah-Hartman 做出这段评价时,CoreOS 还处于 α 测试阶段,当时也许就是在硅谷的一个车库当中,[开发团队正在紧锣密鼓地开发此产品][10],但 CoreOS 不像最开始的苹果或者惠普,其在过去的四年当中一直稳步发展。

|

||||

|

||||

当我参加在旧金山举办的 [2017 CoreOS 大会][11]时,CoreOS 已经支持谷歌云、IBM、AWS 和微软的相关服务。现在有超过 1000 位开发人员参与到这个项目中,并为能够成为这个伟大产品的一员而感到高兴。

|

||||

|

||||

究其原因,CoreOS 从开始就是为容器而设计的轻量级 Linux 发行版,其起初是作为一个 [Docker][12] 平台,随着时间的推移, CoreOS 在容器方面走出了自己的道路,除了 Docker 之外,它也支持它自己的容器 [rkt][13] (读作 rocket )。

|

||||

|

||||

不像大多数其他的 Linux 发行版,CoreOS 没有包管理器,取而代之的是通过 Google ChromeOS 的页面自动进行软件升级,这样能提高在集群上运行的机器/容器的安全性和可靠性。不用通过系统管理员的干涉,操作系统升级组件和安全补丁可以定期推送到 CoreOS 容器。

|

||||

|

||||

你可以通过 [CoreUpdate 和它的 Web 界面][14]上来修改推送周期,这样你就可以控制你的机器何时更新,以及更新以多快的速度滚动分发到你的集群上。

|

||||

|

||||

CoreOS 通过一种叫做 [etcd][15] 的分布式配置服务来进行升级,etcd 是一种基于 [YAML][16] 的开源的分布式哈希存储系统,它可以为 Linux 集群容器提供配置共享和服务发现等功能。

|

||||

|

||||

此服务运行在集群上的每一台服务器上,当其中一台服务器需要下线升级时,它会发起领袖选举,以便服务器更新时整个Linux 系统和容器化的应用可以继续运行。

|

||||

|

||||

对于集群管理,CoreOS 之前采用的是 [fleet][17] 方法,这将 etcd 和 [systemd][18] 结合到分布式初始化系统中。虽然 fleet 仍然在使用,但 CoreOS 已经将 etcd 加入到 [Kubernetes][19] 容器编排系统构成了一个更加强有力的管理工具。

|

||||

|

||||

CoreOS 也可以让你定制其它的操作系统相关规范,比如用 [cloud-config][20] 的方式管理网络配置、用户账号和 systemd 单元等。

|

||||

|

||||

综上所述,CoreOS 可以不断地自行升级到最新版本,能让你获得从单独系统到集群等各种场景的完全控制。如 CoreOS 宣称的,你再也不用为了改变一个单独的配置而在每一台机器上运行 [Chef][21] 了。

|

||||

|

||||

假如说你想进一步的扩展你的 DevOps 控制,[CoreOS 能够轻松地帮助你部署 Kubernetes][22]。

|

||||

|

||||

CoreOS 从一开始就是构建来易于部署、管理和运行容器的。当然,其它的 Linux 发行版,比如 RedHat 家族的[原子项目][23]也可以达到类似的效果,但是对于那些发行版而言是以附加组件的方式出现的,而 CoreOS 从它诞生的第一天就是为容器而设计的。

|

||||

|

||||

当前[容器和 Docker 已经逐渐成为商业系统的主流][24],如果在可预见的未来中你要在工作中使用容器,你应该考虑下 CoreOS,不管你的系统是在裸机硬件上、虚拟机还是云上。

|

||||

|

||||

如果有任何关于 CoreOS 的观点或者问题,还请在评论栏中留言。如果你觉得这篇博客还算有用的话,还请分享一下~

|

||||

|

||||

---

|

||||

|

||||

关于博主:Steven J. Vaughan-Nichols 是一位经验丰富的 IT 记者,许多网站中都刊登有他的文章,包括 [ZDNet.com][25]、[PC Magazine][26]、[InfoWorld][27]、[ComputerWorld][28]、[Linux Today][29] 和 [eWEEK][30] 等。他拥有丰富的 IT 知识 - 而且他曾参加过智力竞赛节目 Jeopardy !他的相关观点都是自身思考的结果,并不代表 Linode 公司,我们对他做出的贡献致以最真诚的感谢。如果想知道他更多的信息,可以关注他的 Twitter [_@sjvn_][31]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://medium.com/linode-cube/the-what-why-and-wow-behind-the-coreos-container-linux-fa7ceae5593c

|

||||

|

||||

作者:[Steven J. Vaughan-Nichols][a]

|

||||

译者:[吴霄/toyijiu](https://github.com/toyijiu)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://medium.com/linode-cube/the-what-why-and-wow-behind-the-coreos-container-linux-fa7ceae5593c

|

||||

[1]:https://www.redhat.com/en/technologies/linux-platforms/enterprise-linux

|

||||

[2]:https://www.centos.org/

|

||||

[3]:https://getfedora.org/

|

||||

[4]:https://www.debian.org/

|

||||

[5]:https://www.ubuntu.com/

|

||||

[6]:https://www.suse.com/

|

||||

[7]:https://coreos.com/os/docs/latest

|

||||

[8]:https://www.linode.com/docs/platform/use-coreos-container-linux-on-linode

|

||||

[9]:https://plus.google.com/+gregkroahhartman/posts/YvWFmPa9kVf

|

||||

[10]:https://www.wired.com/2013/08/coreos-the-new-linux/

|

||||

[11]:https://coreos.com/fest/

|

||||

[12]:https://www.docker.com/

|

||||

[13]:https://coreos.com/rkt

|

||||

[14]:https://coreos.com/products/coreupdate/

|

||||

[15]:https://github.com/coreos/etcd

|

||||

[16]:http://yaml.org/

|

||||

[17]:https://github.com/coreos/fleet

|

||||

[18]:https://www.freedesktop.org/wiki/Software/systemd/

|

||||

[19]:https://kubernetes.io/

|

||||

[20]:https://coreos.com/os/docs/latest/cloud-config.html

|

||||

[21]:https://insights.hpe.com/articles/what-is-chef-a-primer-for-devops-newbies-1704.html

|

||||

[22]:https://blogs.dxc.technology/2017/06/08/coreos-moves-in-on-cloud-devops-with-kubernetes/

|

||||

[23]:http://www.projectatomic.io/

|

||||

[24]:http://www.zdnet.com/article/what-is-docker-and-why-is-it-so-darn-popular/

|

||||

[25]:http://www.zdnet.com/meet-the-team/us/steven-j-vaughan-nichols/

|

||||

[26]:http://www.pcmag.com/author-bio/steven-j.-vaughan-nichols

|

||||

[27]:http://www.infoworld.com/author/Steven-J.-Vaughan_Nichols/

|

||||

[28]:http://www.computerworld.com/author/Steven-J.-Vaughan_Nichols/

|

||||

[29]:http://www.linuxtoday.com/author/Steven+J.+Vaughan-Nichols/

|

||||

[30]:http://www.eweek.com/cp/bio/Steven-J.-Vaughan-Nichols/

|

||||

[31]:http://www.twitter.com/sjvn

|

||||

@ -1,12 +1,11 @@

|

||||

监控服务器:在 Ubuntu 16.04 Server 上安装 Zabbix

|

||||

在 Ubuntu 16.04 Server 上安装 Zabbix

|

||||

============================================================

|

||||

|

||||

[][3]

|

||||

|

||||

### 监控服务器 - 什么是 Zabbix

|

||||

|

||||

[Zabbix][2] 是企业级开源分布式监控服务器解决方案。该软件能监控网络的不同参数以及服务器的完整性,还允许为任何事件配置基于电子邮件的警报。Zabbix 根据存储在数据库(例如 MySQL)中的数据提供报告和数据可视化功能。软件收集的每个测量可以通过基于 Web 的界面访问。

|

||||

|

||||

[Zabbix][2] 是企业级开源分布式监控服务器解决方案。该软件能监控网络的不同参数以及服务器的完整性,还允许为任何事件配置基于电子邮件的警报。Zabbix 根据存储在数据库(例如 MySQL)中的数据提供报告和数据可视化功能。软件收集的每个测量指标都可以通过基于 Web 的界面访问。

|

||||

|

||||

Zabbix 根据 GNU 通用公共许可证版本 2(GPLv2)的条款发布,完全免费。

|

||||

|

||||

@ -19,6 +18,7 @@ Zabbix 根据 GNU 通用公共许可证版本 2(GPLv2)的条款发布,完

|

||||

```

|

||||

# apt-get install php7.0-bcmath php7.0-xml php7.0-mbstring

|

||||

```

|

||||

|

||||

Ubuntu 仓库中提供的 Zabbix 软件包已经过时了。使用官方 Zabbix 仓库安装最新的稳定版本。

|

||||

|

||||

通过执行以下命令来安装仓库软件包:

|

||||

@ -28,11 +28,12 @@ $ wget http://repo.zabbix.com/zabbix/3.2/ubuntu/pool/main/z/zabbix-release/zabbi

|

||||

# dpkg -i zabbix-release_3.2-1+xenial_all.deb

|

||||

```

|

||||

|

||||

然后更新 `apt` 包源码:

|

||||

然后更新 `apt` 包源:

|

||||

|

||||

```

|

||||

# apt-get update

|

||||

```

|

||||

|

||||

现在可以安装带有 MySQL 支持和 PHP 前端的 Zabbix 服务器。执行命令:

|

||||

|

||||

```

|

||||

@ -166,18 +167,19 @@ DBPassword=usr_strong_pwd

|

||||

|

||||

|

||||

|

||||

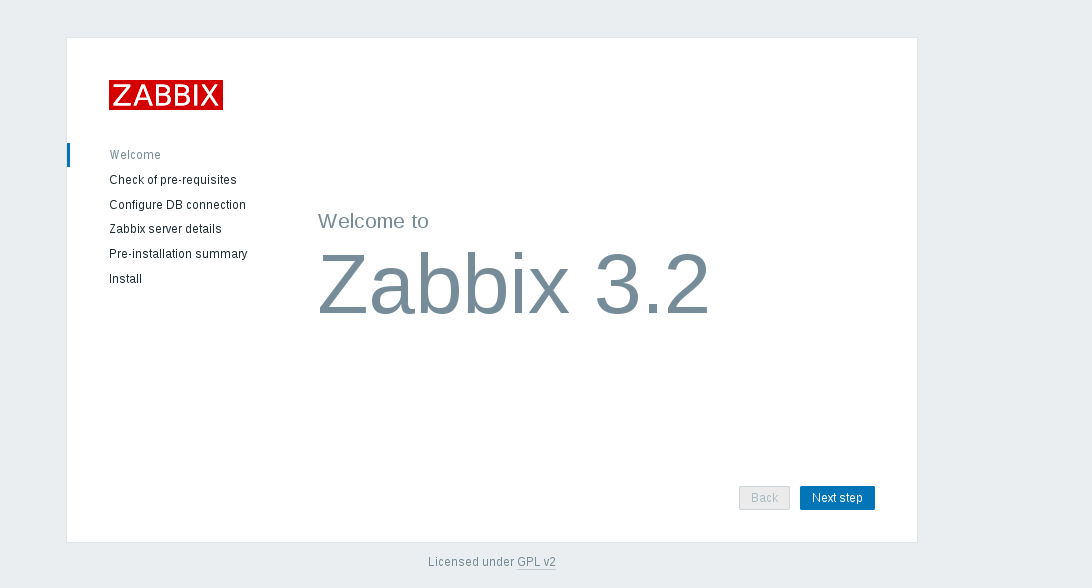

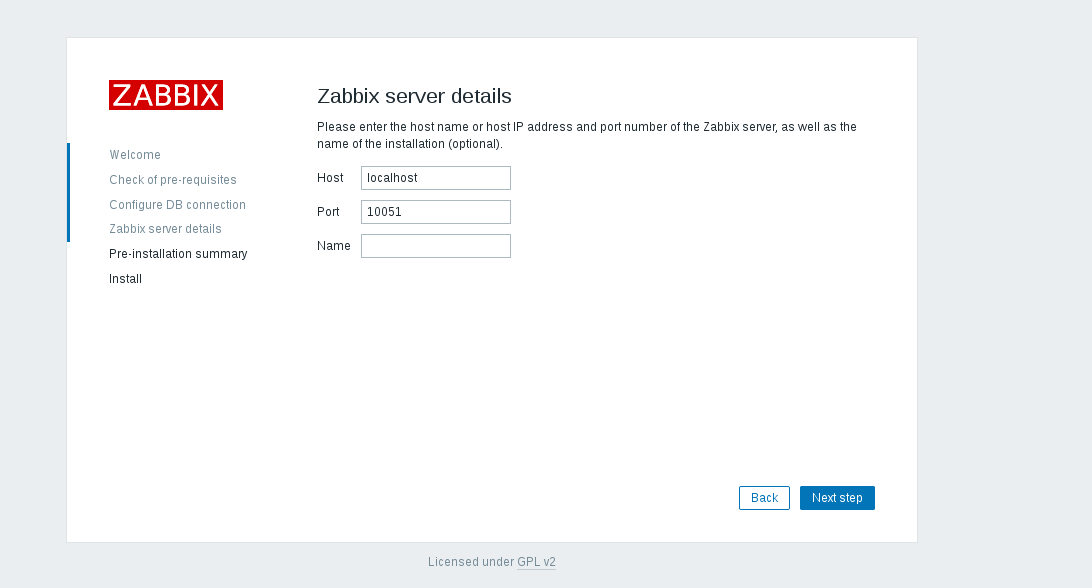

点击 _**Next step**_

|

||||

点击 **Next step**

|

||||

|

||||

|

||||

|

||||

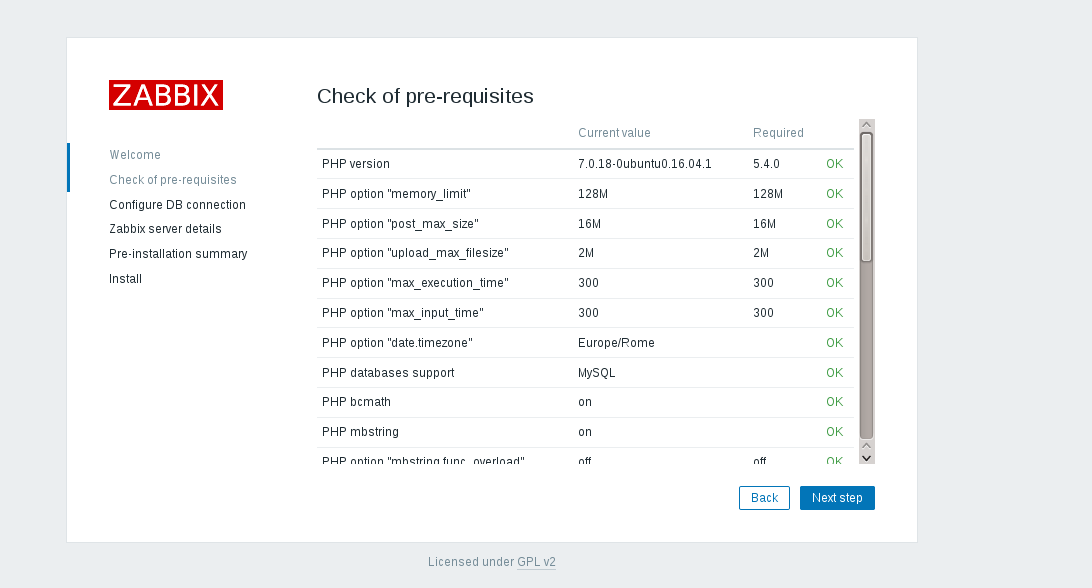

确保所有的值都是 **Ok**,然后再次单击 _**Next step** _ 。

|

||||

确保所有的值都是 **Ok**,然后再次单击 **Next step** 。

|

||||

|

||||

|

||||

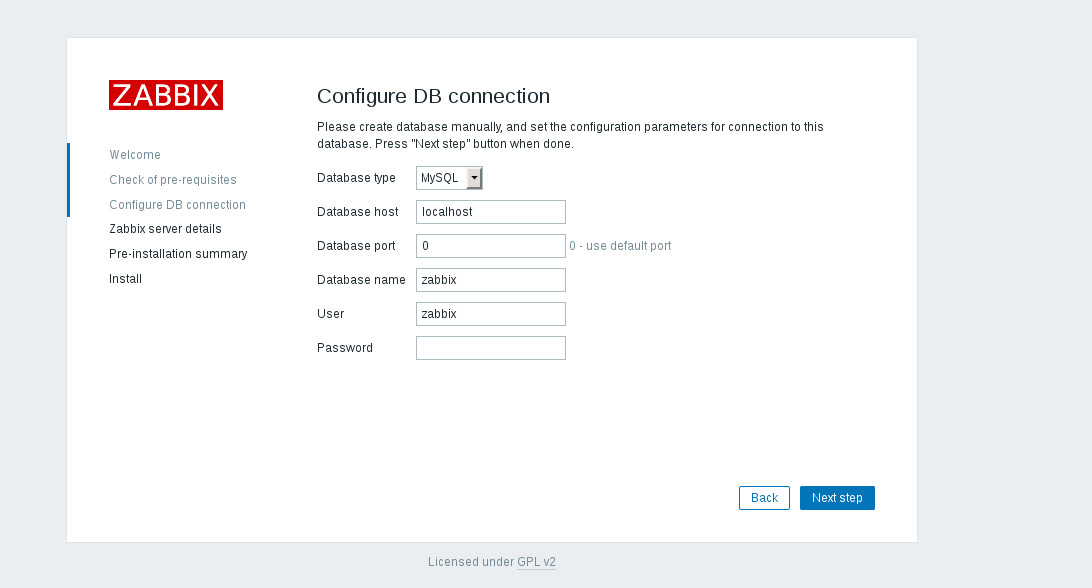

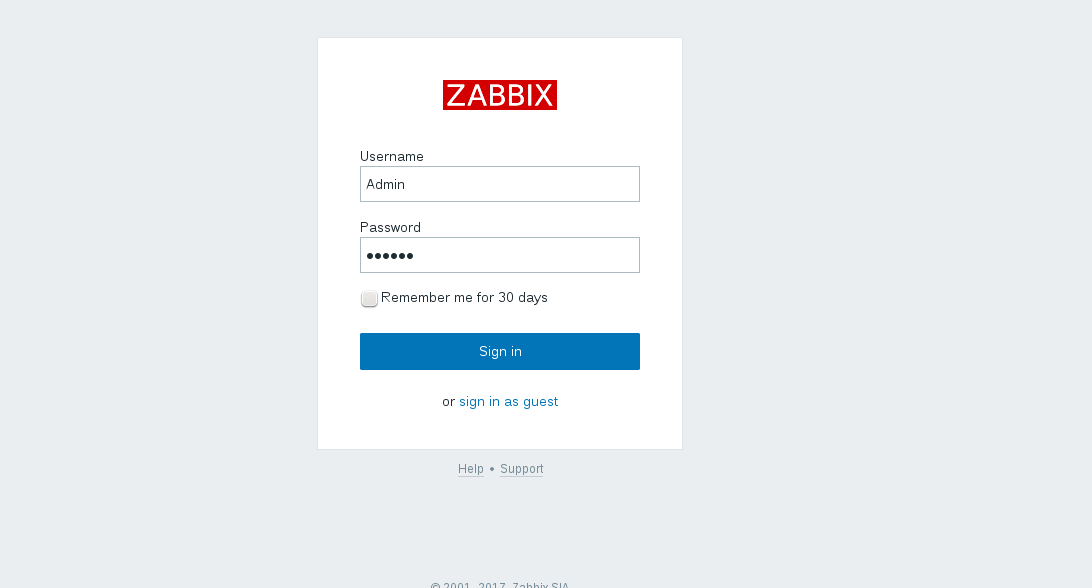

输入 MySQL **zabbix** 的用户密码,然后点击 _ **Next step** _。

|

||||

|

||||

输入 MySQL **zabbix** 的用户密码,然后点击 **Next step**。

|

||||

|

||||

|

||||

|

||||

单击 _**Next step**_ ,安装程序将显示具有所有配置参数的页面。再次检查以确保一切正确。

|

||||

单击 **Next step** ,安装程序将显示具有所有配置参数的页面。再次检查以确保一切正确。

|

||||

|

||||

|

||||

|

||||

@ -192,18 +194,24 @@ DBPassword=usr_strong_pwd

|

||||

|

||||

|

||||

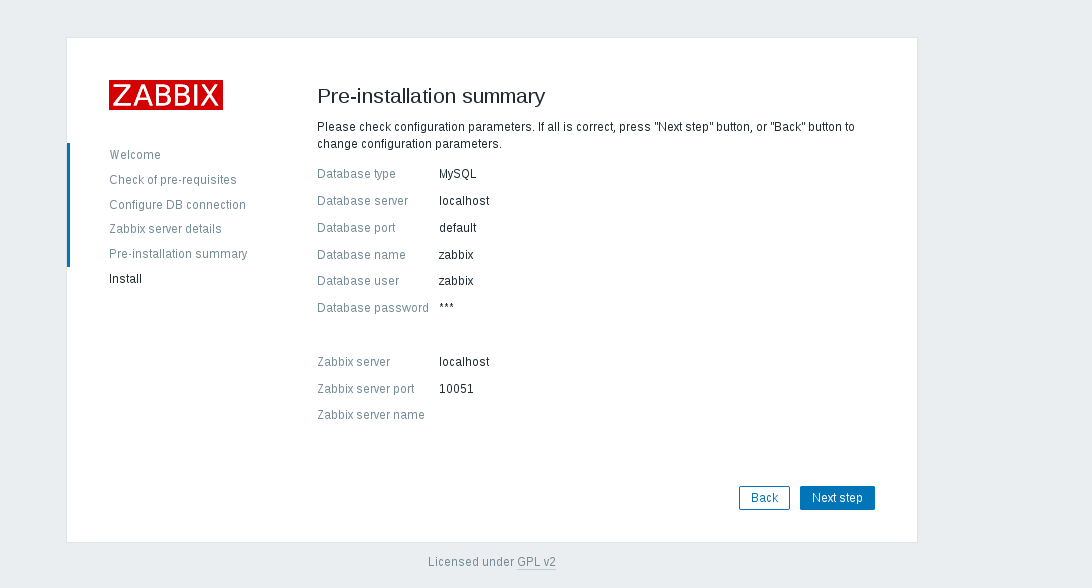

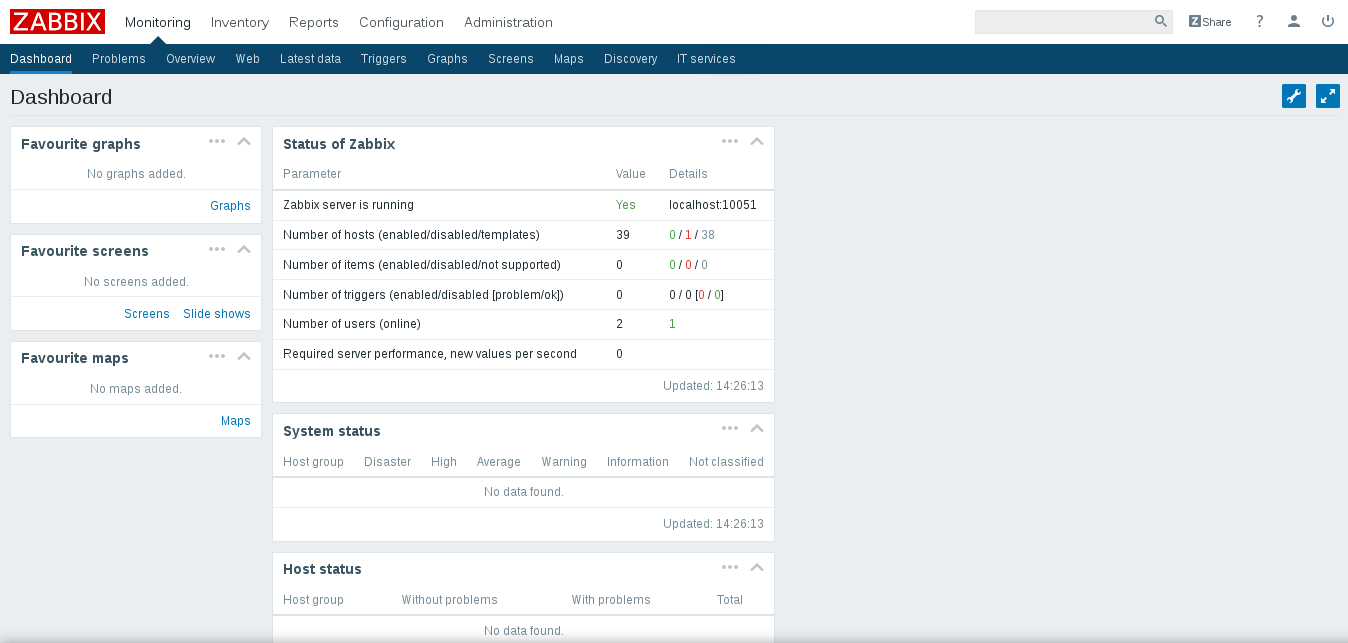

使用上述凭证登录后,我们将看到 Zabbix 面板:

|

||||

|

||||

|

||||

|

||||

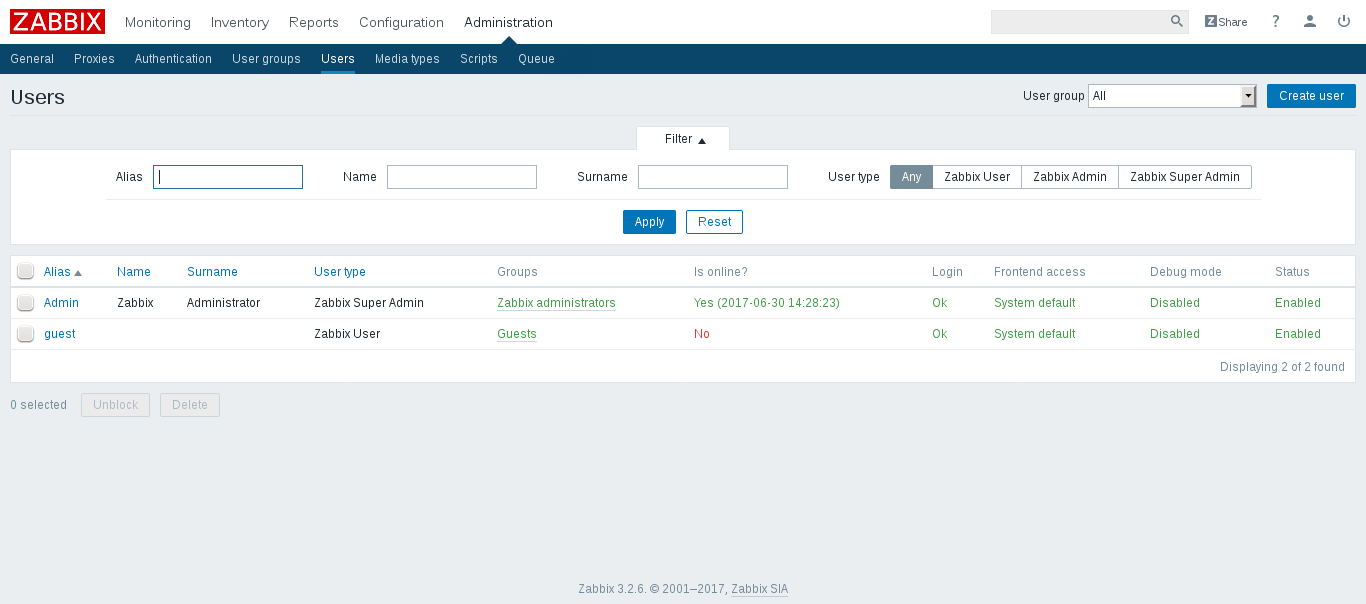

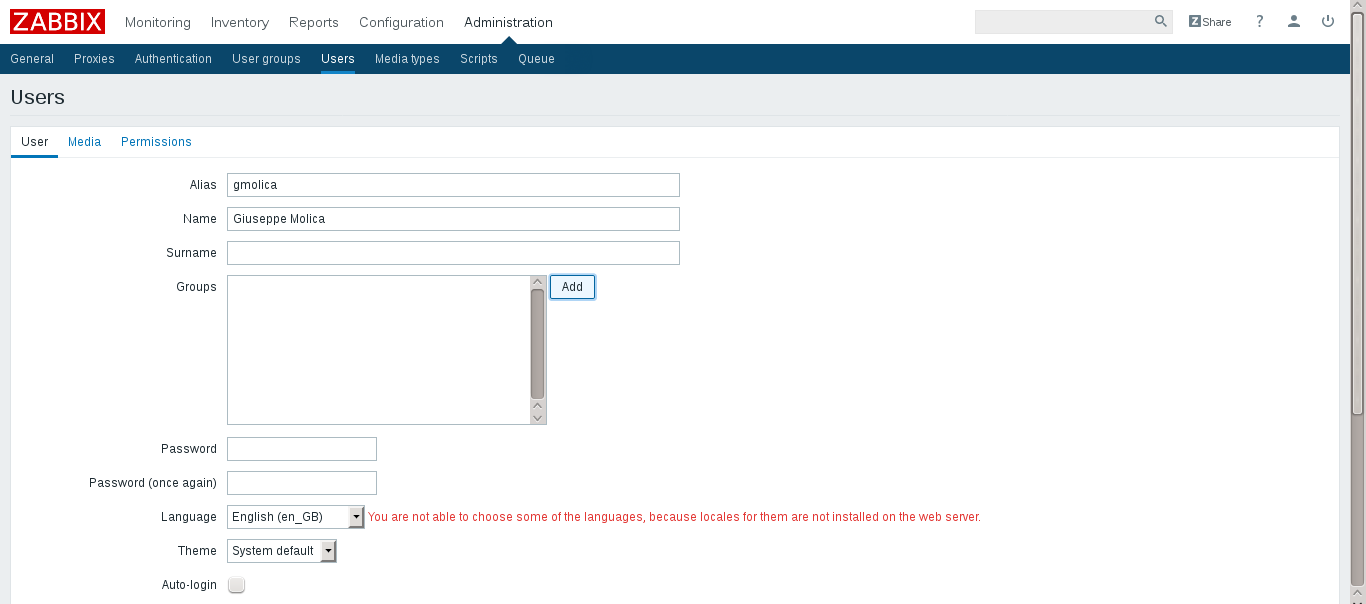

前往 _Administration -> Users_,了解已启用帐户的概况

|

||||

前往 **Administration -> Users**,了解已启用帐户的概况:

|

||||

|

||||

|

||||

|

||||

通过点击 _**Create user**_ 创建一个新帐户。

|

||||

通过点击 **Create user** 创建一个新帐户。

|

||||

|

||||

|

||||

|

||||

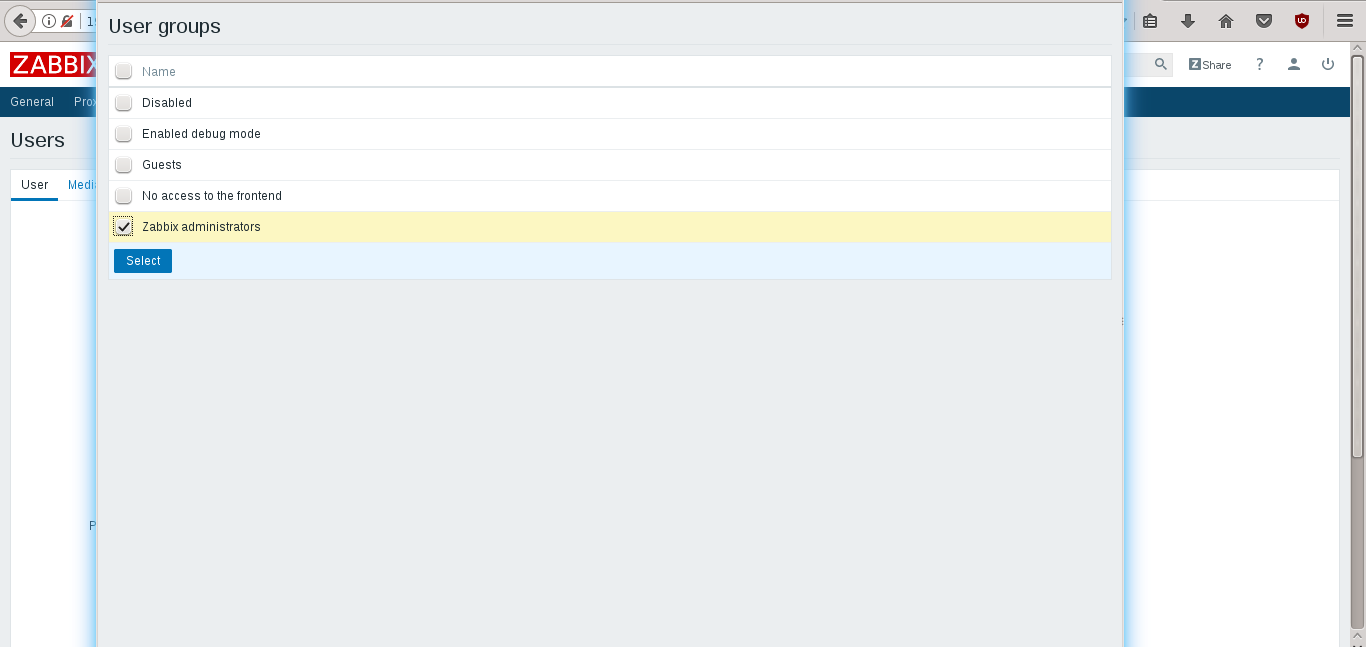

点击 **Groups** 中的 **Add**,然后选择一个组

|

||||

点击 **Groups** 中的 **Add**,然后选择一个组:

|

||||

|

||||

|

||||

|

||||

保存新用户凭证,它将显示在 _Administration -> Users_ 面板中。**请注意,在 Zabbix 中,主机的访问权限分配给用户组,而不是单个用户。**

|

||||

保存新用户凭证,它将显示在 **Administration -> Users** 面板中。

|

||||

|

||||

**请注意,在 Zabbix 中,主机的访问权限分配给用户组,而不是单个用户。**

|

||||

|

||||

### 总结

|

||||

|

||||

@ -213,9 +221,9 @@ DBPassword=usr_strong_pwd

|

||||

|

||||

via: https://www.unixmen.com/monitoring-server-install-zabbix-ubuntu-16-04/

|

||||

|

||||

作者:[Giuseppe Molica ][a]

|

||||

作者:[Giuseppe Molica][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

187

published/20170705 Two great uses for the cp command.md

Normal file

187

published/20170705 Two great uses for the cp command.md

Normal file

@ -0,0 +1,187 @@

|

||||

cp 命令两个高效的用法

|

||||

============================================================

|

||||

|

||||

> Linux 中高效的备份拷贝命令

|

||||

|

||||

|

||||

|

||||

在 Linux 上能使用鼠标点来点去的图形化界面是一件很美妙的事……但是如果你喜欢的开发交互环境和编译器是终端窗口、Bash 和 Vim,那你应该像我一样*经常*和终端打交道。

|

||||

|

||||

即使是不经常使用终端的人,如果对终端环境深入了解也能获益良多。举个例子—— `cp` 命令,据 [维基百科][12] 的解释,`cp` (意即 copy)命令是第一个版本的 [Unix][13] 系统的一部分。连同一组其它的命令 `ls`、`mv`、`cd`、`pwd`、`mkdir`、`vi`、`sh`、`sed` 和 `awk` ,还有提到的 `cp` 都是我在 1984 年接触 System V Unix 系统时所学习的命令之一。`cp` 命令最常见的用法是制作文件副本。像这样:

|

||||

|

||||

```

|

||||

cp sourcefile destfile

|

||||

```

|

||||

|

||||

在终端中执行此命令,上述命令将名为 `sourcefile` 的文件复制到名为 `destfile` 的文件中。如果在执行命令之前 `destfile` 文件不存在,那将会创建此文件,如果已经存在,那就会覆盖此文件。

|

||||

|

||||

这个命令我不知道自己用了多少次了(我也不想知道),但是我知道在我编写测试代码的时候,我经常用,为了保留当前正常的版本,而且又能继续修改,我会输入这个命令:

|

||||

|

||||

```

|

||||

cp test1.py test1.bak

|

||||

```

|

||||

|

||||

在过去的30多年里,我使用了无数次这个命令。另外,当我决定编写我的第二个版本的测试程序时,我会输入这个命令:

|

||||

|

||||

```

|

||||

cp test1.py test2.py

|

||||

```

|

||||

|

||||

这样就完成了修改程序的第一步。

|

||||

|

||||

我通常很少查看 `cp` 命令的参考文档,但是当我在备份我的图片文件夹的时候(在 GUI 环境下使用 “file” 应用),我开始思考“在 `cp` 命令中是否有个参数支持只复制新文件或者是修改过的文件。”果然,真的有!

|

||||

|

||||

### 高效用法 1:更新你的文件夹

|

||||

|

||||

比如说在我的电脑上有一个存放各种文件的文件夹,另外我要不时的往里面添加一些新文件,而且我会不时地修改一些文件,例如我手机里下载的照片或者是音乐。

|

||||

|

||||

假设我收集的这些文件对我而言都很有价值,我有时候会想做个拷贝,就像是“快照”一样将文件保存在其它媒体。当然目前有很多程序都支持备份,但是我想更为精确的将目录结构复制到可移动设备中,方便于我经常使用这些离线设备或者连接到其它电脑上。

|

||||

|

||||

`cp` 命令提供了一个易如反掌的方法。例子如下:

|

||||

|

||||

在我的 `Pictures` 文件夹下,我有这样一个文件夹名字为 `Misc`。为了方便说明,我把文件拷贝到 USB 存储设备上。让我们开始吧!

|

||||

|

||||

```

|

||||

me@desktop:~/Pictures$ cp -r Misc /media/clh/4388-D5FE

|

||||

me@desktop:~/Pictures$

|

||||

```

|

||||

|

||||

上面的命令是我从按照终端窗口中完整复制下来的。对于有些人来说不是很适应这种环境,在我们输入命令或者执行命令之前,需要注意的是 `me@mydesktop:~/Pictures` 这个前缀,`me` 这个是当前用户,`mydesktop` 这是电脑名称,`~/Pictures` 这个是当前工作目录,是 `/home/me/Pictures` 完整路径的缩写。

|

||||

|

||||

我输入这个命令 `cp -r Misc /media/clh/4388-D5FE` 并执行后 ,拷贝 `Misc` 目录下所有文件(这个 `-r` 参数,全称 “recursive”,递归处理,意思为本目录下所有文件及子目录一起处理)到我的 USB 设备的挂载目录 `/media/clh/4388-D5FE`。

|

||||

|

||||

执行命令后回到之前的提示,大多数命令继承了 Unix 的特性,在命令执行后,如果没有任何异常什么都不显示,在任务结束之前不会显示像 “execution succeeded” 这样的提示消息。如果想获取更多的反馈,就使用 `-v` 参数让执行结果更详细。

|

||||

|

||||

下图中是我的 USB 设备中刚刚拷贝过来的文件夹 `Misc` ,里面总共有 9 张图片。

|

||||

|

||||

|

||||

|

||||

假设我要在原始拷贝路径下 `~/Pictures/Misc` 下添加一些新文件,就像这样:

|

||||

|

||||

|

||||

|

||||

现在我想只拷贝新的文件到我的存储设备上,我就使用 `cp` 的“更新”和“详细”选项。

|

||||

|

||||

```

|

||||

me@desktop:~/Pictures$ cp -r -u -v Misc /media/clh/4388-D5FE

|

||||

'Misc/asunder.png' -> '/media/clh/4388-D5FE/Misc/asunder.png'

|

||||

'Misc/editing tags guayadeque.png' -> '/media/clh/4388-D5FE/Misc/editing tags guayadeque.png'

|

||||

'Misc/misc on usb.png' -> '/media/clh/4388-D5FE/Misc/misc on usb.png'

|

||||

me@desktop:~/Pictures$

|

||||

```

|

||||

上面的第一行中是 `cp` 命令和具体的参数(`-r` 是“递归”, `-u` 是“更新”,`-v` 是“详细”)。接下来的三行显示被复制文件的信息,最后一行显示命令行提示符。

|

||||

|

||||

通常来说,参数 `-r` 也可用更详细的风格 `--recursive`。但是以简短的方式,也可以这么连用 `-ruv`。

|

||||

|

||||

### 高效用法 2:版本备份

|

||||

|

||||

回到一开始的例子中,我在开发的时候定期给我的代码版本进行备份。然后我找到了另一种更好用的 `cp` 参数。

|

||||

|

||||

假设我正在编写一个非常有用的 Python 程序,作为一个喜欢不断修改代码的开发者,我会在一开始编写一个程序简单版本,然后不停的往里面添加各种功能直到它能成功的运行起来。比方说我的第一个版本就是用 Python 程序打印出 “hello world”。这只有一行代码的程序就像这样:

|

||||

|

||||

```

|

||||

print 'hello world'

|

||||

```

|

||||

|

||||

然后我将这个代码保存成文件命名为 `test1.py`。我可以这么运行它:

|

||||

|

||||

```

|

||||

me@desktop:~/Test$ python test1.py

|

||||

hello world

|

||||

me@desktop:~/Test$

|

||||

```

|

||||

|

||||

现在程序可以运行了,我想在添加新的内容之前进行备份。我决定使用带编号的备份选项,如下:

|

||||

|

||||

```

|

||||

clh@vancouver:~/Test$ cp --force --backup=numbered test1.py test1.py

|

||||

clh@vancouver:~/Test$ ls

|

||||

test1.py test1.py.~1~

|

||||

clh@vancouver:~/Test$

|

||||

```

|

||||

|

||||

所以,上面的做法是什么意思呢?

|

||||

|

||||

第一,这个 `--backup=numbered` 参数意思为“我要做个备份,而且是带编号的连续备份”。所以一个备份就是 1 号,第二个就是 2 号,等等。

|

||||

|

||||

第二,如果源文件和目标文件名字是一样的。通常我们使用 `cp` 命令去拷贝成自己,会得到这样的报错信息:

|

||||

|

||||

```

|

||||

cp: 'test1.py' and 'test1.py' are the same file

|

||||

```

|

||||

|

||||

在特殊情况下,如果我们想备份的源文件和目标文件名字相同,我们使用 `--force` 参数。

|

||||

|

||||

第三,我使用 `ls` (意即 “list”)命令来显示现在目录下的文件,名字为 `test1.py` 的是原始文件,名字为 `test1.py.~1~` 的是备份文件

|

||||

|

||||

假如现在我要加上第二个功能,在程序里加上另一行代码,可以打印 “Kilroy was here.”。现在程序文件 `test1.py` 的内容如下:

|

||||

|

||||

```

|

||||

print 'hello world'

|

||||

print 'Kilroy was here'

|

||||

```

|

||||

|

||||

看到 Python 编程多么简单了吗?不管怎样,如果我再次执行备份的步骤,结果如下:

|

||||

|

||||

|

||||

```

|

||||

clh@vancouver:~/Test$ cp --force --backup=numbered test1.py test1.py

|

||||

clh@vancouver:~/Test$ ls

|

||||

test1.py test1.py.~1~ test1.py.~2~

|

||||

clh@vancouver:~/Test$

|

||||

```

|

||||

现在我有有两个备份文件: `test1.py.~1~` 包含了一行代码的程序,和 `test1.py.~2~` 包含两行代码的程序。

|

||||

|

||||

这个很好用的功能,我考虑做个 shell 函数让它变得更简单。

|

||||

|

||||

### 最后总结

|

||||

|

||||

第一,Linux 手册页,它在大多数桌面和服务器发行版都默认安装了,它提供了更为详细的使用方法和例子,对于 `cp` 命令,在终端中输入如下命令:

|

||||

|

||||

```

|

||||

man cp

|

||||

```

|

||||

|

||||

对于那些想学习如何使用这些命令,但不清楚如何使用的用户应该首先看一下这些说明,然后我建议创建一个测试目录和文件来尝试使用命令和选项。

|

||||

|

||||

第二,兴趣是最好的老师。在你最喜欢的搜索引擎中搜索 “linux shell tutorial”,你会获得很多有趣和有用的资源。

|

||||

|

||||

第三,你是不是在想,“为什么我要用这么麻烦的方法,图形化界面中有相同的功能,只用点击几下岂不是更简单?”,关于这个问题我有两个理由。首先,在我们工作中需要中断其他工作流程以及大量使用点击动作时,点击动作可就不简单了。其次,如果我们要完成流水线般的重复性工作,通过使用 shell 脚本和 shell 函数以及 shell 重命名等功能就能很轻松的实现。

|

||||

|

||||

你还知道关于 `cp` 命令其他更棒的使用方式吗?请在留言中积极回复哦~

|

||||

|

||||

(题图:Opensource.com)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Chris Hermansen - 1978 年毕业于英国哥伦比亚大学后一直从事计算机相关职业,我从 2005 年开始一直使用 Linux、Solaris、SunOS,在那之前我就是 Unix 系统管理员了,在技术方面,我的大量的职业生涯都是在做数据分析,尤其是空间数据分析,我有大量的编程经验与数据分析经验,熟练使用 awk、Python、PostgreSQL、PostGIS 和 Groovy。

|

||||

|

||||

---

|

||||

|

||||

via: https://opensource.com/article/17/7/two-great-uses-cp-command

|

||||

|

||||

作者:[Chris Hermansen][a]

|

||||

译者:[bigdimple](https://github.com/bigdimple)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/clhermansen

|

||||

[1]:https://opensource.com/resources/what-is-linux?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[2]:https://opensource.com/resources/what-are-linux-containers?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[3]:https://developers.redhat.com/promotions/linux-cheatsheet/?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[4]:https://developers.redhat.com/cheat-sheet/advanced-linux-commands-cheatsheet?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[5]:https://opensource.com/tags/linux?intcmp=70160000000h1jYAAQ&utm_source=intcallout&utm_campaign=linuxcontent

|

||||

[6]:https://opensource.com/file/360601

|

||||

[7]:https://opensource.com/file/360606

|

||||

[8]:https://opensource.com/article/17/7/two-great-uses-cp-command?rate=87TiE9faHZRes_f4Gj3yQZXhZ-x7XovYhnhjrk3SdiM

|

||||

[9]:https://opensource.com/user/37806/feed

|

||||

[10]:https://www.flickr.com/photos/internetarchivebookimages/14746482994/in/photolist-ot6zCN-odgbDq-orm48o-otifuv-otdyWa-ouDjnZ-otGT2L-odYVqY-otmff7-otGamG-otnmSg-rxnhoq-orTmKf-otUn6k-otBg1e-Gm6FEf-x4Fh64-otUcGR-wcXsxg-tLTN9R-otrWYV-otnyUE-iaaBKz-ovcPPi-ovokCg-ov4pwM-x8Tdf1-hT5mYr-otb75b-8Zk6XR-vtefQ7-vtehjQ-xhhN9r-vdXhWm-xFBgtQ-vdXdJU-vvTH6R-uyG5rH-vuZChC-xhhGii-vvU5Uv-vvTNpB-vvxqsV-xyN2Ai-vdXcFw-vdXuNC-wBMhes-xxYmxu-vdXxwS-vvU8Zt

|

||||

[11]:https://www.flickr.com/photos/internetarchivebookimages/14774719031/in/photolist-ovAie2-otPK99-xtDX7p-tmxqWf-ow3i43-odd68o-xUPaxW-yHCtWi-wZVsrD-DExW5g-BrzB7b-CmMpC9-oy4hyF-x3UDWA-ow1m4A-x1ij7w-tBdz9a-tQMoRm-wn3tdw-oegTJz-owgrs2-rtpeX1-vNN6g9-owemNT-x3o3pX-wiJyEs-CGCC4W-owg22q-oeT71w-w6PRMn-Ds8gyR-x2Aodm-owoJQm-owtGp9-qVxppC-xM3Gw7-owgV5J-ou9WEs-wihHtF-CRmosE-uk9vB3-wiKdW6-oeGKq3-oeFS4f-x5AZtd-w6PNuv-xgkofr-wZx1gJ-EaYPED-oxCbFP

|

||||

[12]:https://en.wikipedia.org/wiki/Cp_(Unix)

|

||||

[13]:https://en.wikipedia.org/wiki/Unix

|

||||

[14]:https://opensource.com/users/clhermansen

|

||||

[15]:https://opensource.com/users/clhermansen

|

||||

[16]:https://opensource.com/article/17/7/two-great-uses-cp-command#comments

|

||||

@ -0,0 +1,159 @@

|

||||

Ubuntu Core:制作包含私有 snap 的工厂镜像

|

||||

========

|

||||

|

||||

这篇帖子是有关 [在 Ubuntu Core 开发 ROS 原型到成品][1] 系列的补充,用来回答我收到的一个问题: “我想做一个工厂镜像,但我不想使我的 snap 公开” 当然,这个问题和回答都不只是针对于机器人技术。在这篇帖子中,我将会通过两种方法来回答这个问题。

|

||||

|

||||

开始之前,你需要了解一些制作 Ubuntu Core 镜像的背景知识,如果你已经看过 [在 Ubuntu Core 开发 ROS 原型到成品[3] 系列文章(具体是第 5 部分),你就已经有了需要的背景知识,如果没有看过的话,可以查看有关 [制作你的 Ubuntu Core 镜像][5] 的教程。

|

||||

|

||||

如果你已经了解了最新的情况,并且当我说 “模型定义” 或者 “模型断言” 时知道我在谈论什么,那就让我们开始通过不同的方法使用私有 sanps 来制作 Ubuntu Core 镜像吧。

|

||||

|

||||

### 方法 1: 不要上传你的 snap 到商店

|

||||

|

||||

这是最简单的方法了。首先看一下这个有关模型定义的例子——`amd64-model.json`:

|

||||

|

||||

```

|

||||

{

|

||||

"type": "model",

|

||||

"series": "16",

|

||||

"model": "custom-amd64",

|

||||

"architecture": "amd64",

|

||||

"gadget": "pc",

|

||||

"kernel": "pc-kernel",

|

||||

"authority-id": "4tSgWHfAL1vm9l8mSiutBDKnnSQBv0c8",

|

||||

"brand-id": "4tSgWHfAL1vm9l8mSiutBDKnnSQBv0c8",

|

||||

"timestamp": "2017-06-23T21:03:24+00:00",

|

||||

"required-snaps": ["kyrofa-test-snap"]

|

||||

}

|

||||

```

|

||||

|

||||

让我们将它转换成模型断言:

|

||||

|

||||

```

|

||||

$ cat amd64-model.json | snap sign -k my-key-name > amd64.model

|

||||

You need a passphrase to unlock the secret key for

|

||||

user: "my-key-name"

|

||||

4096-bit RSA key, ID 0B79B865, created 2016-01-01

|

||||

...

|

||||

```

|

||||

|

||||

获得模型断言:`amd64.model` 后,如果你现在就把它交给 `ubuntu-image` 使用,你将会碰钉子:

|

||||

|

||||

```

|

||||

$ sudo ubuntu-image -c stable amd64.model

|

||||

Fetching core

|

||||

Fetching pc-kernel

|

||||

Fetching pc

|

||||

Fetching kyrofa-test-snap

|

||||

error: cannot find snap "kyrofa-test-snap": snap not found

|

||||

COMMAND FAILED: snap prepare-image --channel=stable amd64.model /tmp/tmp6p453gk9/unpack

|

||||

```

|

||||

|

||||

实际上商店中并没有名为 `kyrofa-test-snap` 的 snap。这里需要重点说明的是:模型定义(以及转换后的断言)只包含了一系列的 snap 的名字。如果你在本地有个那个名字的 snap,即使它没有存在于商店中,你也可以通过 `--extra-snaps` 选项告诉 `ubuntu-image` 在断言中匹配这个名字来使用它:

|

||||

|

||||

```

|

||||

$ sudo ubuntu-image -c stable \

|

||||

--extra-snaps /path/to/kyrofa-test-snap_0.1_amd64.snap \

|

||||

amd64.model

|

||||

Fetching core

|

||||

Fetching pc-kernel

|

||||

Fetching pc

|

||||

Copying "/path/to/kyrofa-test-snap_0.1_amd64.snap" (kyrofa-test-snap)

|

||||

kyrofa-test-snap already prepared, skipping

|

||||

WARNING: "kyrofa-test-snap" were installed from local snaps

|

||||

disconnected from a store and cannot be refreshed subsequently!

|

||||

Partition size/offset need to be a multiple of sector size (512).

|

||||

The size/offset will be rounded up to the nearest sector.

|

||||

```

|

||||

|

||||

现在,在 snap 并没有上传到商店的情况下,你已经获得一个预装了私有 snap 的 Ubuntu Core 镜像(名为 `pc.img`)。但是这样做有一个很大的问题,ubuntu-image 会提示一个警告:不通过连接商店预装 snap 意味着你没有办法在烧录了这些镜像的设备上更新它。你只能通过制作新的镜像并重新烧录到设备的方式来更新它。

|

||||

|

||||

### 方法 2: 使用品牌商店

|

||||

|

||||

当你注册了一个商店账号并访问 [dashboard.snapcraft.io][6] 时,你其实是在标准的 Ubuntu 商店中查看你的 snap。如果你是在系统中新安装的 snapd,默认会从这个商店下载。虽然你可以在 Ubuntu 商店中发布私有的 snap,但是你[不能将它们预装到镜像中][7],因为只有你(以及你添加的合作者)才有权限去使用它。在这种情况下制作镜像的唯一方式就是公开发布你的 snap,然而这并不符合这篇帖子的目的。

|

||||

|

||||

对于这种用例,我们有所谓的 [品牌商店][8]。品牌商店仍然托管在 Ubuntu 商店里,但是它们是针对于某一特定公司或设备的一个定制的、专门的版本。品牌商店可以继承或者不继承标准的 Ubuntu 商店,品牌商店也可以选择开放给所有的开发者或者将其限制在一个特定的组内(保持私有正是我们想要的)。

|

||||

|

||||

请注意,这是一个付费功能。你需要 [申请一个品牌商店][9]。请求通过后,你将可以通过访问用户名下的 “stores you can access” 看到你的新商店。

|

||||

|

||||

|

||||

|

||||

在那里你可以看到多个有权使用的商店。最少的情况下也会有两个:标准的 Ubuntu 商店以及你的新的品牌商店。选择品牌商店(红框),进去后记录下你的商店 ID(蓝框):等下你将会用到它。

|

||||

|

||||

|

||||

|

||||

在品牌商店里注册名字或者上传 snap 和标准的商店使用的方法是一样的,只是它们现在是上传到你的品牌商店而不是标准的那个。如果你将品牌商店放在 unlisted 里面,那么这些 snap 对外部用户是不可见。但是这里需要注意的是第一次上传 snap 的时候需要通过 web 界面来操作。在那之后,你可以继续像往常一样使用 Snapcraft 来操作。

|

||||

|

||||

那么这些是如何改变的呢?我的 “kyrofal-store” 从 Ubuntu 商店继承了 snap,并且还包含一个发布在稳定通道中的 “kyrofa-bran-test-snap” 。这个 snap 在 Ubuntu 商店里是使用不了的,如果你去搜索它,你是找不到的:

|

||||

|

||||

```

|

||||

$ snap find kyrofa-branded

|

||||

The search "kyrofa-branded" returned 0 snaps

|

||||

```

|

||||

|

||||

但是使用我们前面记录的商店 ID,我们可以创建一个从品牌商店而不是 Ubuntu 商店下载 snap 的模型断言。我们只需要将 “store” 键添加到 JSON 文件中,就像这样:

|

||||

|

||||

```

|

||||

{

|

||||

"type": "model",

|

||||

"series": "16",

|

||||

"model": "custom-amd64",

|

||||

"architecture": "amd64",

|

||||

"gadget": "pc",

|

||||

"kernel": "pc-kernel",

|

||||

"authority-id": "4tSgWHfAL1vm9l8mSiutBDKnnSQBv0c8",

|

||||

"brand-id": "4tSgWHfAL1vm9l8mSiutBDKnnSQBv0c8",

|

||||

"timestamp": "2017-06-23T21:03:24+00:00",

|

||||

"required-snaps": ["kyrofa-branded-test-snap"],

|

||||

"store": "ky<secret>ek"

|

||||

}

|

||||

```

|

||||

|

||||

使用方法 1 中的方式对它签名,然后我们就可以像这样很简单的制作一个预装有我们品牌商店私有 snap 的 Ubuntu Core 镜像:

|

||||

|

||||

```

|

||||

$ sudo ubuntu-image -c stable amd64.model

|

||||

Fetching core

|

||||

Fetching pc-kernel

|

||||

Fetching pc

|

||||

Fetching kyrofa-branded-test-snap

|

||||

Partition size/offset need to be a multiple of sector size (512).

|

||||

The size/offset will be rounded up to the nearest sector.

|

||||

```

|

||||

|

||||

现在,和方法 1 的最后一样,你获得了一个为工厂准备的 `pc.img`。并且使用这种方法制作的镜像中的所有 snap 都从商店下载的,这意味着它们将能像平常一样自动更新。

|

||||

|

||||

### 结论

|

||||

|

||||

到目前为止,做这个只有两种方法。当我开始写这篇帖子的时候,我想过可能还有第三种(将 snap 设置为私有然后使用它制作镜像),[但最后证明是不行的][12]。

|

||||

|

||||

另外,我们也收到很多内部部署或者企业商店的请求,虽然这样的产品还没有公布,但是商店团队正在从事这项工作。一旦可用,我将会写一篇有关它的文章。

|

||||

|

||||

希望能帮助到您!

|

||||

|

||||

---

|

||||

|

||||

关于作者

|

||||

|

||||

Kyle 是 Snapcraft 团队的一员,也是 Canonical 公司的常驻机器人专家,他专注于 snaps 和 snap 开发实践,以及 snaps 和 Ubuntu Core 的机器人技术实现。

|

||||

|

||||

---

|

||||

|

||||

via: https://insights.ubuntu.com/2017/07/11/ubuntu-core-making-a-factory-image-with-private-snaps/

|

||||

|

||||

作者:[Kyle Fazzari][a]

|

||||

译者:[Snaplee](https://github.com/Snaplee)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]: https://insights.ubuntu.com/2017/04/06/from-ros-prototype-to-production-on-ubuntu-core/

|

||||

[2]: https://insights.ubuntu.com/2017/04/06/from-ros-prototype-to-production-on-ubuntu-core/

|

||||

[3]: https://insights.ubuntu.com/2017/04/06/from-ros-prototype-to-production-on-ubuntu-core/

|

||||

[4]: https://insights.ubuntu.com/2017/04/06/from-ros-prototype-to-production-on-ubuntu-core/

|

||||

[5]: https://tutorials.ubuntu.com/tutorial/create-your-own-core-image

|

||||

[6]: https://dashboard.snapcraft.io/dev/snaps/

|

||||

[7]: https://forum.snapcraft.io/t/unable-to-create-an-image-that-uses-private-snaps

|

||||

[8]: https://docs.ubuntu.com/core/en/build-store/index?_ga=2.103787520.1269328701.1501772209-778441655.1499262639

|

||||

[9]: https://docs.ubuntu.com/core/en/build-store/create

|

||||

[12]: https://forum.snapcraft.io/t/unable-to-create-an-image-that-uses-private-snaps/1115

|

||||

[14]: https://insights.ubuntu.com/2017/04/06/from-ros-prototype-to-production-on-ubuntu-core/

|

||||

@ -1,73 +1,67 @@

|

||||

解密开放容器计划(OCI)规范

|

||||

============================================================

|

||||

|

||||

|

||||

开放容器计划(OCI)宣布本周完成容器运行时和镜像的第一版规范。OCI 在是 Linux 基金会(Linux Foundation)支持下的容器解决方案标准化的成果。两年来,为了[建立这些规范][12]已经付出了大量的努力。 由此,让我们一起来回顾过去两年中出现的一些误区。

|

||||

<ruby>开放容器计划<rt>Open Container Initiative</rt></ruby>(OCI)宣布本周完成了容器运行时和镜像的第一版规范。OCI 在是 <ruby>Linux 基金会<rt>Linux Foundation</rt></ruby>支持下的容器解决方案标准化的成果。两年来,为了[建立这些规范][12]已经付出了大量的努力。 由此,让我们一起来回顾过去两年中出现的一些误区。

|

||||

|

||||

|

||||

|

||||

**误区:OCI 是 Docker 的替代品**

|

||||

### 误区:OCI 是 Docker 的替代品

|

||||

|

||||

诚然标准非常重要,但它们远非一个完整的生产平台。 以万维网为例,它25年来一路演进,建立在诸如 TCP/IP ,HTTP 和 HTML 等核心可靠的标准之上。再以 TCP/IP 为例,当企业将 TCP/IP 合并为一种通用协议时,它推动了路由器行业,尤其是思科的发展。 然而,思科通过专注于在其路由平台上提供差异化的功能,而成为市场的领导者。我们认为 OCI 规范和 Docker 也是类似这样并行存在的。

|

||||

诚然标准非常重要,但它们远非一个完整的生产平台。 以万维网为例,它 25 年来一路演进,建立在诸如 TCP/IP 、HTTP 和 HTML 等可靠的核心标准之上。再以 TCP/IP 为例,当企业将 TCP/IP 合并为一种通用协议时,它推动了路由器行业,尤其是思科的发展。 然而,思科通过专注于在其路由平台上提供差异化的功能,而成为市场的领导者。我们认为 OCI 规范和 Docker 也是类似这样并行存在的。

|

||||

|

||||

[Docker 是一个完整的生产平台][13],提供了基于容器的开发、分发、安全、编排的一体化解决方案。Docker 使用了 OCI 规范,但它大约只占总代码的 5%,而且 Docker 平台只有一小部分涉及容器的运行时行为和容器镜像的布局。

|

||||

|

||||

**误区:产品和项目已经通过了 OCI 规范认证**

|

||||

### 误区:产品和项目已经通过了 OCI 规范认证

|

||||

|

||||

运行时和镜像规范本周刚发布1.0的版本。 而且 OCI 认证计划仍在开发阶段,所以企业在认证正式推出之前(今年晚些时候),没法要求容器产品的合规性,一致性或兼容性。

|

||||

运行时和镜像规范本周刚发布 1.0 的版本。 而且 OCI 认证计划仍在开发阶段,所以企业在该认证正式推出之前(今年晚些时候),没法要求容器产品的合规性、一致性或兼容性。

|

||||

|

||||

OCI [认证工作组][14] 目前正在制定标准,使容器产品和开源项目能够符合规范的要求。标准和规范对于实施解决方案的工程师很重要,但正式认证是向客户保证其正在使用的技术真正符合标准的唯一方式。

|

||||

OCI [认证工作组][14]目前正在制定标准,使容器产品和开源项目能够符合规范的要求。标准和规范对于实施解决方案的工程师很重要,但正式认证是向客户保证其正在使用的技术真正符合标准的唯一方式。

|

||||

|

||||

**误区:Docker不支持 OCI 规范的工作**

|

||||

### 误区:Docker 不支持 OCI 规范的工作

|

||||

|

||||

Docker 很早就开始为 OCI 做贡献。 我们向 OCI 贡献了大部分的代码,作为 OCI 项目的维护者,为 OCI 运行时和镜像规范定义提供了积极有益的帮助。Docker 运行时和镜像格式在 2013 年开源发布之后,便迅速成为事实上的标准,我们认为将代码捐赠给中立的管理机构,对于避免容器行业的碎片化和鼓励行业创新将是有益的。我们的目标是提供一个可靠和标准化的规范,因此 Docker 提供了一个简单的容器运行时 runc 作为运行时规范工作的基础,后来又贡献了 Docker V2 镜像规范作为 OCI 镜像规范工作的基础。

|

||||

|

||||

Docker 的开发人员如 Michael Crosby 和 Stephen Day 从一开始就是这项工作的关键贡献者,确保能将 Docker 的托管和运行数十亿个容器镜像的经验带给 OCI。等认证工作组完成(制定认证规范的)工作后,Docker 将通过 OCI 认证将其产品展示出来,以证明 OCI 的一致性。

|

||||

|

||||

**误区:OCI 仅用于 Linux 容器技术**

|

||||

### 误区:OCI 仅用于 Linux 容器技术

|

||||

|

||||

因为 OCI 是由 Linux 基金会 (Linux Foundation) 负责制定的,所以很容易让人误解为 OCI 仅适用于 Linux 容器技术。 而实际上并非如此,尽管 Docker 技术源于 Linux 世界,但 Docker 也一直在与微软合作,将我们的容器技术、平台和工具带到 Windows Server 的世界。 此外,Docker 向 OCI 贡献的基础技术广泛适用于包括 Linux ,Windows 和 Solaris 在内的多种操作系统环境,涵盖了 x86,ARM 和 IBM zSeries 等多种架构环境。

|

||||

因为 OCI 是由 <ruby>Linux 基金会<rt>Linux Foundation</rt></ruby> 负责制定的,所以很容易让人误解为 OCI 仅适用于 Linux 容器技术。 而实际上并非如此,尽管 Docker 技术源于 Linux 世界,但 Docker 也一直在与微软合作,将我们的容器技术、平台和工具带到 Windows Server 的世界。 此外,Docker 向 OCI 贡献的基础技术广泛适用于包括 Linux 、Windows 和 Solaris 在内的多种操作系统环境,涵盖了 x86、ARM 和 IBM zSeries 等多种架构环境。

|

||||

|

||||

**误区:Docker 仅仅是OCI的众多贡献者之一**

|

||||

### 误区:Docker 仅仅是 OCI 的众多贡献者之一

|

||||

|

||||

OCI 作为一个支持成员众多的开放组织,代表了容器行业的广度。 也就是说,它是一个小而专业的个人技术专家组,为制作初始规范的工作贡献了大量的时间和技术。 Docker 是 OCI 的创始成员,贡献了初始代码库,构成了运行时规范的基础,后来引入了参考实现。 同样地,Docker 也将 Docker V2 镜像规范贡献给 OCI 作为镜像规范的基础。

|

||||

OCI 作为一个支持成员众多的开放组织,代表了容器行业的广度。 也就是说,它是一个小而专业的个人技术专家组,为制作初始规范的工作贡献了大量的时间和技术。 Docker 是 OCI 的创始成员,贡献了初始代码库,构成了运行时规范的基础和后来的参考实现。 同样地,Docker 也将 Docker V2 镜像规范贡献给 OCI 作为镜像规范的基础。

|

||||

|

||||

**误区:CRI-O 是 OCI 项目**

|

||||

### 误区:CRI-O 是 OCI 项目

|

||||

|

||||

CRI-O 是云计算基金会(CNCF)Kubernetes 孵化器的开源项目 -- 它不是 OCI 项目。 它基于早期版本的 Docker 体系结构,而 containerd 是一个直接的 CNCF 项目,它是一个包括 runc 参考实现的更大的容器运行时。 containerd 负责镜像传输和存储,容器运行和监控,以及支持存储和网络附件等底层功能。 Docker 在五个最大的云提供商(阿里云,AWS,Google Cloud Platform (GCP),IBM Softlayer 和 Microsoft Azure)的支持下,将 containerd 捐赠给了云计算基金会(CNCF),作为多个容器平台和编排系统的核心容器运行时。

|

||||

CRI-O 是<ruby>云计算基金会<rt>Cloud Native Computing Foundation</rt></ruby>(CNCF)的 Kubernetes 孵化器的开源项目 -- 它不是 OCI 项目。 它基于早期版本的 Docker 体系结构,而 containerd 是一个直接的 CNCF 项目,它是一个包括 runc 参考实现的更大的容器运行时。 containerd 负责镜像传输和存储、容器运行和监控,以及支持存储和网络附件等底层功能。 Docker 在五个最大的云提供商(阿里云、AWS、Google Cloud Platform(GCP)、IBM Softlayer 和 Microsoft Azure)的支持下,将 containerd 捐赠给了云计算基金会(CNCF),作为多个容器平台和编排系统的核心容器运行时。

|

||||

|

||||

**误区:OCI 规范现在已经完成了**

|

||||

### 误区:OCI 规范现在已经完成了

|

||||

|

||||

虽然首版容器运行时和镜像格式规范的发布是一个重要的里程碑,但还有许多工作有待完成。 OCI 的一开始着眼于定义一个狭窄的规范:开发人员可以依赖于容器的运行时行为,防止容器行业碎片化,并且仍然允许在不断变化的容器域中进行创新。之后才将含容器镜像规范囊括其中。

|

||||

虽然首版容器运行时和镜像格式规范的发布是一个重要的里程碑,但还有许多工作有待完成。 OCI 一开始着眼于定义一个狭窄的规范:开发人员可以依赖于容器的运行时行为,防止容器行业碎片化,并且仍然允许在不断变化的容器域中进行创新。之后才将含容器镜像规范囊括其中。

|

||||

|

||||

随着工作组完成运行时行为和镜像格式的第一个稳定规范,新的工作考量也已经同步展开。未来的新特性将包括分发和签名等。 然而,OCI 的下一个最重要的工作是提供一个由测试套件支持的认证过程,因为第一个规范已经稳定了。

|

||||

|

||||

**在 Docker 了解更多关于 OCI 和开源的信息:**

|

||||

|

||||

* 阅读关于[OCI v1.0 版本的运行时和镜像格式规范]的博文[1]

|

||||

|

||||

* 阅读关于 [OCI v1.0 版本的运行时和镜像格式规范]的博文[1]

|

||||

* 访问 [OCI 的网站][2]

|

||||

|

||||

* 访问 [Moby 项目网站][3]

|

||||

|

||||

* 参加 [DockerCon Europe 2017][4]

|

||||

|

||||

* 参加 [Moby Summit LA][5]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Stephen 是 Docker 开源项目总监。 他曾在 Hewlett-Packard Enterprise (惠普企业)担任董事和杰出技术专家。他的关于开源软件和商业的博客 “再次违约”(http://stephesblog.blogs.com) 和网站 opensource.com。

|

||||

|

||||

Stephen 是 Docker 开源项目总监。 他曾在 Hewlett-Packard Enterprise (惠普企业)担任董事和杰出技术专家。他的关于开源软件和商业的博客发布在 “再次违约”(http://stephesblog.blogs.com) 和网站 opensource.com 上。

|

||||

|

||||

-----------------

|

||||

|

||||

via: https://blog.docker.com/2017/07/demystifying-open-container-initiative-oci-specifications/

|

||||

|

||||

作者:[Stephen ][a]

|

||||

作者:[Stephen][a]

|

||||

译者:[rieonke](https://github.com/rieonke)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,38 +1,38 @@

|

||||

Deploy Kubernetes cluster for Linux containers

|

||||

部署Kubernetes 容器集群

|

||||

在 Azure 中部署 Kubernetes 容器集群

|

||||

============================================================

|

||||

|

||||

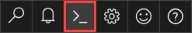

在这个快速入门教程中,我们使用 Azure CLI 创建 Kubernetes 集群。 然后在集群上部署并运行由 Web 前端和 Redis 实例组成的多容器应用程序。 一旦部署完成,应用程序可以通过互联网访问。

|

||||

在这个快速入门教程中,我们使用 Azure CLI 创建一个 Kubernetes 集群,然后在集群上部署运行由 Web 前端和 Redis 实例组成的多容器应用程序。一旦部署完成,应用程序可以通过互联网访问。

|

||||

|

||||

|

||||

|

||||

这个快速入门教程假设你已经基本了解了Kubernetes 的概念,有关 Kubernetes 的详细信息,请参阅[ Kubernetes 文档][3]。

|

||||

这个快速入门教程假设你已经基本了解了 Kubernetes 的概念,有关 Kubernetes 的详细信息,请参阅 [Kubernetes 文档][3]。

|

||||

|

||||

如果您没有 Azure 账号订阅,请在开始之前创建一个[免费帐户][4]。

|

||||

如果您没有 Azure 账号,请在开始之前创建一个[免费帐户][4]。

|

||||

|

||||

### 登陆Azure 云控制台

|

||||

### 登录 Azure 云控制台

|

||||

|

||||

Azure 云控制台是一个免费的 Bash shell ,你可以直接在 Azure 网站上运行。 它已经在你的账户中预先配置好了, 单击[ Azure 门户][5]右上角菜单上的 “Cloud Shell” 按钮;

|

||||

Azure 云控制台是一个免费的 Bash shell,你可以直接在 Azure 网站上运行。它已经在你的账户中预先配置好了, 单击 [Azure 门户][5]右上角菜单上的 “Cloud Shell” 按钮;

|

||||

|

||||

[][6]

|

||||

[][6]

|

||||

|

||||

该按钮启动一个交互式 shell,您可以使用它来运行本教程中的所有操作步骤。

|

||||

该按钮会启动一个交互式 shell,您可以使用它来运行本教程中的所有操作步骤。

|

||||

|

||||

[][7]

|

||||

[][7]

|

||||

|

||||

此快速入门教程所用的 Azure CLI 的版本最低要求为 2.0.4 。如果您选择在本地安装和使用 CLI 工具,请运行 `az --version` 来检查已安装的版本。 如果您需要安装或升级请参阅[安装 Azure CLI 2.0 ][8]。

|

||||

此快速入门教程所用的 Azure CLI 的版本最低要求为 2.0.4。如果您选择在本地安装和使用 CLI 工具,请运行 `az --version` 来检查已安装的版本。 如果您需要安装或升级请参阅[安装 Azure CLI 2.0 ][8]。

|

||||

|

||||

### 创建一个资源组

|

||||

|

||||

使用 [az group create][9] 命令创建一个资源组,一个 Azure 资源组是 Azure 资源部署和管理的逻辑组。

|

||||

使用 [az group create][9] 命令创建一个资源组,一个 Azure 资源组是指 Azure 资源部署和管理的逻辑组。

|

||||

|

||||

以下示例在 _eastus_ 位置中创建名为 _myResourceGroup_ 的资源组。

|

||||

以下示例在 _eastus_ 区域中创建名为 _myResourceGroup_ 的资源组。

|

||||

|

||||

```

|

||||

az group create --name myResourceGroup --location eastus

|

||||

|

||||

```

|

||||

|

||||

输出:

|

||||

输出:

|

||||

|

||||

|

||||

```

|

||||

@ -53,23 +53,21 @@ az group create --name myResourceGroup --location eastus

|

||||

|

||||

使用 [az acs create][10] 命令在 Azure 容器服务中创建 Kubernetes 集群。 以下示例使用一个 Linux 主节点和三个 Linux 代理节点创建一个名为 _myK8sCluster_ 的集群。

|

||||

|

||||

Azure CLICopyTry It

|

||||

|

||||

```

|

||||

az acs create --orchestrator-type=kubernetes --resource-group myResourceGroup --name=myK8sCluster --generate-ssh-keys

|

||||

|

||||

```

|

||||

几分钟后,命令将完成并返回有关该集群的json格式的信息。

|

||||

几分钟后,命令将完成并返回有关该集群的 json 格式的信息。

|

||||

|

||||

### 连接到 Kubernetes 集群

|

||||

|

||||

要管理 Kubernetes 群集,可以使用 Kubernetes 命令行工具 [kubectl][11]。

|

||||

|

||||

如果您使用 Azure CloudShell ,则已经安装了 kubectl 。 如果要在本地安装,可以使用 [az acs kubernetes install-cli][12] 命令。

|

||||

如果您使用 Azure CloudShell ,则已经安装了 kubectl 。如果要在本地安装,可以使用 [az acs kubernetes install-cli][12] 命令。

|

||||

|

||||

要配置 kubectl 连接到您的 Kubernetes 群集,请运行 [az acs kubernetes get-credentials][13] 命令下载凭据并配置 Kubernetes CLI 以使用它们。

|

||||

|

||||

|

||||

```

|

||||

az acs kubernetes get-credentials --resource-group=myResourceGroup --name=myK8sCluster

|

||||

|

||||

@ -83,7 +81,7 @@ kubectl get nodes

|

||||

|

||||

```

|

||||

|

||||

输出:

|

||||

输出:

|

||||

|

||||

|

||||

```

|

||||

@ -171,7 +169,7 @@ kubectl create -f azure-vote.yaml

|

||||

|

||||

```

|

||||

|

||||

输出:

|

||||

输出:

|

||||

|

||||

|

||||

```

|

||||

@ -188,15 +186,13 @@ service "azure-vote-front" created

|

||||

|

||||

要监控这个进程,使用 [kubectl get service][17] 命令时加上 `--watch` 参数。

|

||||

|

||||

Azure CLICopyTry It

|

||||

|

||||

```

|

||||

kubectl get service azure-vote-front --watch

|

||||

|

||||

```

|

||||

|

||||

Initially the EXTERNAL-IP for the _azure-vote-front_ service appears as _pending_ . Once the EXTERNAL-IP address has changed from _pending_ to an _IP address_ , use `CTRL-C` to stop the kubectl watch process.

|

||||

最初,_azure-vote-front_ 服务的 EXTERNAL-IP 显示为 _pending_ 。 一旦 EXTERNAL-IP 地址从 _pending_ 变成一个具体的IP地址,请使用 “CTRL-C” 来停止 kubectl 监视进程。

|

||||

最初,_azure-vote-front_ 服务的 EXTERNAL-IP 显示为 _pending_ 。 一旦 EXTERNAL-IP 地址从 _pending_ 变成一个具体的 IP 地址,请使用 “CTRL-C” 来停止 kubectl 监视进程。

|

||||

|

||||

```

|

||||

azure-vote-front 10.0.34.242 <pending> 80:30676/TCP 7s

|

||||

@ -204,7 +200,6 @@ azure-vote-front 10.0.34.242 52.179.23.131 80:30676/TCP 2m

|

||||

|

||||

```

|

||||

|

||||

You can now browse to the external IP address to see the Azure Vote App.

|

||||

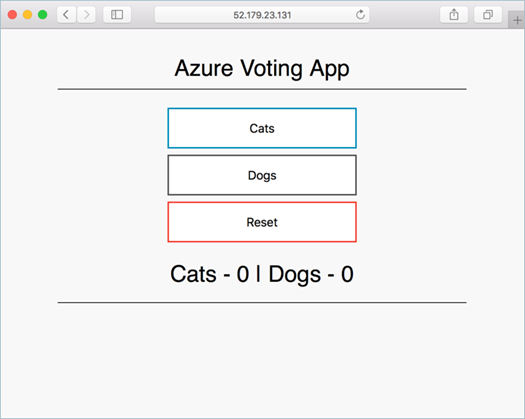

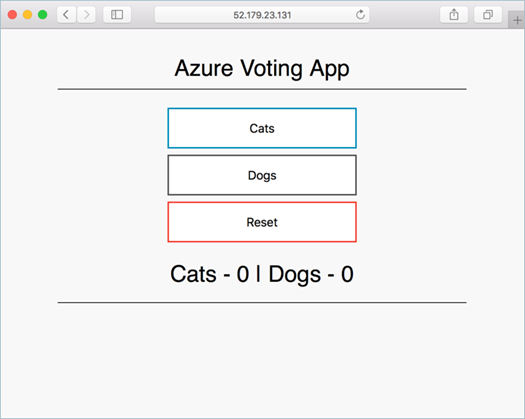

现在你可以通过这个外网 IP 地址访问到 Azure Vote 这个应用了。

|

||||

|

||||

|

||||

@ -234,9 +229,9 @@ az group delete --name myResourceGroup --yes --no-wait

|

||||

|

||||

via: https://docs.microsoft.com/en-us/azure/container-service/kubernetes/container-service-kubernetes-walkthrough

|

||||

|

||||

作者:[neilpeterson ][a],[mmacy][b]

|

||||

作者:[neilpeterson][a],[mmacy][b]

|

||||

译者:[rieonke](https://github.com/rieonke)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,5 +1,3 @@

|

||||

翻译中 by WuXiao(toyijiu)

|

||||

|

||||

Education of a Programmer

|

||||

============================================================

|

||||

|

||||

|

||||

@ -1,408 +0,0 @@

|

||||

[HaitaoBio](https://github.com/HaitaoBio)

|

||||

|

||||

TypeScript: the missing introduction

|

||||

============================================================

|

||||

|

||||

|

||||

**The following is a guest post by James Henry ([@MrJamesHenry][8]). I am a member of the ESLint Core Team, and a TypeScript evangelist. I am working with Todd on [UltimateAngular][9] to bring you more award-winning Angular and TypeScript courses.**

|

||||

|

||||

> The purpose of this article is to offer an introduction to how we can think about TypeScript, and its role in supercharging our **JavaScript** development.

|

||||

>

|

||||

> We will also try and come up with our own reasonable definitions for a lot of the buzzwords surrounding types and compilation.

|

||||

|

||||

There is huge amount of great stuff in the TypeScript project that we won’t be able to cover within the scope of this blog post. Please read the [official documentation][15] to learn more, and check out the [TypeScript courses over on UltimateAngular][16] to go from total beginner to TypeScript Pro!

|

||||

|

||||

### [Table of contents][17]

|

||||

|

||||

* [Background][10]

|

||||

* [Getting to grips with the buzzwords][11]

|

||||

* [JavaScript - interpreted or compiled?][1]

|

||||

* [Run Time vs Compile Time][2]

|

||||

* [The TypeScript Compiler][3]

|

||||

* [Dynamic vs Static Typing][4]

|

||||

* [TypeScript’s role in our JavaScript workflow][12]

|

||||

* [Our source file is our document, TypeScript is our Spell Check][5]

|

||||

* [TypeScript is a tool which enables other tools][13]

|

||||

* [What is an Abstract Syntax Tree (AST)?][6]

|

||||

* [Example: Renaming symbols in VS Code][7]

|

||||

* [Summary][14]

|

||||

|

||||

### [Background][18]

|

||||

|

||||

TypeScript is an amazingly powerful tool, and really quite easy to get started with.

|

||||

|

||||

It can, however, come across as more complex than it is, because it may simultaneously be introducing us to a whole host of technical concepts related to our JavaScript programs that we may not have considered before.

|

||||

|

||||

Whenever we stray into the area of talking about types, compilers, etc. things can get really confusing, really fast.

|

||||

|

||||

This article is designed as a “what you need to know” guide for a lot of these potentially confusing concepts, so that by the time you dive into the “Getting Started” style tutorials, you are feeling confident with the various themes and terminology that surround the topic.

|

||||

|

||||

### [Getting to grips with the buzzwords][19]

|

||||

|

||||

There is something about running our code in a web browser that makes us _feel_ differently about how it works. “It’s not compiled, right?”, “Well, I definitely know there aren’t any types…”

|

||||

|

||||

Things get even more interesting when we consider that both of those statements are both correct and incorrect at the same time - depending on the context and how you define some of these concepts.

|

||||

|

||||

As a first step, we are going to do exactly that!

|

||||

|

||||

#### [JavaScript - interpreted or compiled?][20]

|

||||

|

||||

Traditionally, developers will often think about a language being a “compiled language” when they are the ones responsible for compiling their own programs.

|

||||

|

||||

> In basic terms, when we compile a program we are converting it from the form we wrote it in, to the form it actually gets run in.

|

||||

|

||||

In a language like Golang, for example, you have a command line tool called `go build`which allows you to compile your `.go` file into a lower-level representation of the code, which can then be executed and run:

|

||||

|

||||

```

|

||||

# We manually compile our .go file into something we can run

|

||||

# using the command line tool "go build"

|

||||

go build ultimate-angular.go

|

||||

# ...then we execute it!

|

||||

./ultimate-angular

|

||||

```

|

||||

|

||||

As authors of JavaScript (ignoring our love of new-fangled build tools and module loaders for a moment), we don’t have such a fundamental compilation step in our workflow.

|

||||

|

||||

We write some code, and load it up in a browser using a `<script>` tag (or a server-side environment such as node.js), and it just runs.

|

||||

|

||||

**Ok, so JavaScript isn’t compiled - it must be an interpreted language, right?**

|

||||

|

||||

Well, actually, all we have determined so far is that JavaScript is not something that we compile _ourselves_, but we’ll come back to this after we briefly look an example of an “interpreted language”.

|

||||

|

||||

> An interpreted computer program is one that is executed like a human reads a book, starting at the top and working down line-by-line.

|

||||

|

||||

The classic example of interpreted programs that we are already familiar with are bash scripts. The bash interpreter in our terminal reads our commands in line-by-line and executes them.

|

||||

|

||||

Now, if we return to thinking about JavaScript and whether or not it is interpreted or compiled, intuitively there are some things about it that just don’t add up when we think about reading and executing a program line-by-line (our simple definition of “interpreted”).

|

||||

|

||||

Take this code as an example:

|

||||

|

||||

```

|

||||

hello();

|

||||

function hello() {

|

||||

console.log('Hello!');

|

||||

}

|

||||

```

|

||||

|

||||

This is perfectly valid JavaScript which will print the word “Hello!”, but we have used the `hello()` function before we have even defined it! A simple line-by-line execution of this program would just not be possible, because `hello()` on line 1 does not have any meaning until we reach its declaration on line 2.

|

||||

|

||||

The reason that this, and many other concepts like it, is possible in JavaScript is because our code is actually compiled by the so called “JavaScript engine”, or environment, before it is executed. The exact nature of this compilation process will depend on the specific implementation (e.g. V8, which powers node.js and Google Chrome, will behave slightly differently to SpiderMonkey, which is used by FireFox).

|

||||

|

||||

We will not dig any further into the subtleties of defining “compiled vs interpreted” here (there are a LOT).

|

||||

|

||||

> It’s useful to always keep in mind that the JavaScript code we write is already not the actual code that will be executed by our users, even when we simply have a `<script>` tag in an HTML document.

|

||||

|

||||

#### [Run Time vs Compile Time][21]

|

||||

|

||||

Now that we have properly introduced the idea that compiling a program and running a program are two distinct phases, the terms “Run Time” and “Compile Time” become a little easier to reason about.

|

||||

|

||||

When something happens at **Compile Time**, it is happening during the conversion of our code from what we wrote in our editor/IDE to some other form.

|

||||

|

||||

When something happens at **Run Time**, it is happening during the actual execution of our program. For example, our `hello()` function above is executed at “run time”.

|

||||

|

||||

#### [The TypeScript Compiler][22]

|

||||

|

||||

Now that we understand these key phases in the lifecycle of a program, we can introduce the **TypeScript compiler**.

|

||||

|

||||

The TypeScript compiler is at the core of how TypeScript is able to help us when we write our code. Instead of just including our JavaScript in a `<script>` tag, for example, we will first pass it through the TypeScript compiler so that it can give us helpful hints on how we can improve our program before it runs.

|

||||

|

||||

> We can think about this new step as our own personal “compile time”, which will help us ensure that our program is written in the way we intended, before it even reaches the main JavaScript engine.

|

||||

|

||||

It is a similar process to the one shown in the Golang example above, except that the TypeScript compiler just provides hints based on how we have written our program, and doesn’t turn it into a lower-level executable - it produces pure JavaScript.

|

||||

|

||||

```

|

||||

# One option for passing our source .ts file through the TypeScript

|

||||

# compiler is to use the command line tool "tsc"

|

||||

tsc ultimate-angular.ts

|

||||

|

||||

# ...this will produce a .js file of the same name

|

||||

# i.e. ultimate-angular.js

|

||||

```

|

||||

|

||||

There are many great posts about the different options for integrating the TypeScript compiler into your existing workflow, including the [official documentation][23]. It is beyond the scope of this article to go into those options here.

|

||||

|

||||

#### [Dynamic vs Static Typing][24]

|

||||

|

||||

Just like with “compiled vs interpreted” programs, the existing material on “dynamic vs static typing” can be incredibly confusing.

|

||||

|

||||

Let’s start by taking a step back and refreshing our memory on how much we _already_understand about types from our existing JavaScript code.

|

||||

|

||||

We have the following program:

|

||||

|

||||

```

|

||||

var name = 'James';

|

||||

var sum = 1 + 2;

|

||||

```

|

||||

|

||||

How would we describe this code to somebody?

|

||||

|

||||

“We have declared a variable called `name`, which is assigned the **string** of ‘James’, and we have declared the variable `sum`, which is assigned the value we get when we add the **number** `1` to the **number** `2`.”

|

||||

|

||||

Even in such a simple program, we have already highlighted two of JavaScript’s fundamental types: String and Number.

|

||||

|

||||

As with our introduction to compilation above, we are not going to get bogged down in the academic subtleties of types in programming languages - the key thing is understanding what it means for our JavaScript so that we can then extend it to properly understanding TypeScript.

|

||||

|

||||

We know from our traditional nightly ritual of reading the [latest ECMAScript specification][25]**(LOL, JK - “wat’s an ECMA?”)**, that it makes numerous references to types and their usage in JavaScript.

|

||||

|

||||

Taken directly from the official spec:

|

||||

|

||||

> An ECMAScript language type corresponds to values that are directly manipulated by an ECMAScript programmer using the ECMAScript language.

|

||||

>

|

||||

> The ECMAScript language types are Undefined, Null, Boolean, String, Symbol, Number, and Object.

|

||||

|

||||

We can see that the JavaScript language officially has 7 types, of which we have likely used 6 in just about every real-world program we have ever written (Symbol was first introduced in ES2015, a.k.a. ES6).

|

||||

|

||||

Now, let’s think a bit more deeply about our “name and sum” JavaScript program above.

|

||||

|

||||

We could take our `name` variable which is currently assigned the **string** ‘James’, and reassign it to the current value of our second variable `sum`, which is the **number** `3`.

|

||||

|

||||

```

|

||||

var name = 'James';

|

||||

var sum = 1 + 2;

|

||||

|

||||

name = sum;

|

||||

```

|

||||

|

||||

The `name` variable started out “holding” a string, but now it holds a number. This highlights a fundamental quality of variables and types in JavaScript:

|

||||

|

||||

The _value_ ‘James’ is always one type - a string - but the `name` variable can be assigned any value, and therefore any type. The exact same is true in the case of the `sum`assignment: the _value_ `1` is always a number type, but the `sum` variable could be assigned any possible value.

|

||||

|

||||

> In JavaScript, it is _values_, not variables, which have types. Variables can hold any value, and therefore any _type_, at any time.

|

||||

|

||||

For our purposes, this also just so happens to be the very definition of a **“dynamically typed language”**!

|

||||

|

||||

By contrast, we can think of a **“statically typed language”** as being one in which we can (and very likely have to) associate type information with a particular variable:

|

||||

|

||||

```

|

||||

var name: string = 'James';

|

||||

```

|

||||

|

||||

In this code, we are better able to explicitly declare our _intentions_ for the `name` variable - we want it to always be used as a string.

|

||||

|

||||

And guess what? We have just seen our first bit of TypeScript in action!

|

||||

|

||||

When we reflect on our own code (no programming pun intended), we can likely conclude that even when we are working with dynamic languages like JavaScript, in almost all cases we should have pretty clear intentions for the usage of our variables and function parameters when we first define them. If those variables and parameters are reassigned to hold values of _different_ types to ones we first assigned them to, it is possible that something is not working out as we planned.

|

||||

|

||||

> One great power that the static type annotations from TypeScript give us, as JavaScript authors, is the ability to clearly express our intentions for our variables.

|

||||

>

|

||||

> This improved clarity benefits not only the TypeScript compiler, but also our colleagues and future selves when they come to read and understand our code. Code is _read_ far more than it is written.

|

||||

|

||||

### [TypeScript’s role in our JavaScript workflow][26]

|

||||

|

||||

We have started to see why it is often said that TypeScript is just JavaScript + Static Types. Our so-called “type annotation” `: string` for our `name` variable is used by TypeScript at _compile time_ (in other words, when we pass our code through the TypeScript compiler) to make sure that the rest of the code is true to our original intention.

|

||||

|

||||

Let’s take a look at our program again, and add another explicit annotation, this time for our `sum` variable:

|

||||

|

||||

```

|

||||

var name: string = 'James';

|

||||

var sum: number = 1 + 2;

|

||||

|

||||

name = sum;

|

||||

```

|

||||

|

||||

If we let TypeScript take a look at this code for us, we will now get an error `Type 'number' is not assignable to type 'string'` for our `name = sum` assignment, and we are appropriately warned against shipping _potentially_ problematic code to be executed by our users.

|

||||

|

||||

> Importantly, we can choose to ignore errors from the TypeScript compiler if we want to, because it is just a tool which gives us feedback on our JavaScript code before we ship it to our users.

|

||||

|

||||

The final JavaScript code that the TypeScript compiler will output for us will look exactly the same as our original source above:

|

||||

|

||||

```

|

||||

var name = 'James';

|

||||

var sum = 1 + 2;

|

||||

|

||||

name = sum;

|

||||

```

|

||||

|

||||

The type annotations are all removed for us automatically, and we can now run our code.

|

||||

|

||||

> NOTE: In this example, the TypeScript Compiler would have been able to offer us the exact same error even if we hadn’t provided the explicit type annotations `: string` and `: number`.

|

||||

>

|

||||

> TypeScript is very often able to just _infer_ the type of a variable from the way we have used it!

|

||||

|

||||

#### [Our source file is our document, TypeScript is our Spell Check][27]

|

||||

|

||||

A great analogy for TypeScript’s relationship with our source code, is that of Spell Check’s relationship to a document we are writing in Microsoft Word, for example.

|

||||

|

||||

There are three key commonalities between the two examples:

|

||||

|

||||

1. **It can tell us when stuff we have written is objectively, flat-out wrong:**

|

||||

* _Spell Check_: “we have written a word that does not exist in the dictionary”

|

||||

* _TypeScript_: “we have referenced a symbol (e.g. a variable), which is not declared in our program”

|

||||

|

||||

2. **It can suggest that what we have written _might be_ wrong:**

|

||||

* _Spell Check_: “the tool is not able to fully infer the meaning of a particular clause and suggests rewriting it”

|

||||

* _TypeScript_: “the tool is not able to fully infer the type of a particular variable and warns against using it as is”

|

||||

|

||||

3. **Our source can be used for its original purpose, regardless of if there are errors from the tool or not:**

|

||||

* _Spell Check_: “even if your document has lots of Spell Check errors, you can still print it out and “use” it as document”

|

||||

* _TypeScript_: “even if your source code has TypeScript errors, it will still produce JavaScript code which you can execute”

|

||||

|

||||

### [TypeScript is a tool which enables other tools][28]

|

||||

|

||||

The TypeScript compiler is made up of a couple of different parts or phases. We are going to finish off this article by looking at how one of those parts - **the Parser** - offers us the chance to build _additional developer tools_ on top of what TypeScript already does for us.

|

||||

|

||||

The result of the “parser step” of the compilation process is what is called an **Abstract Syntax Tree**, or **AST** for short.

|

||||

|

||||

#### [What is an Abstract Syntax Tree (AST)?][29]

|

||||

|

||||

We write our programs in a free text form, as this is a great way for us humans to interact with our computers to get them to do the stuff we want them to. We are not so great at manually composing complex data structures!

|

||||

|

||||

However, free text is actually a pretty tricky thing to work with within a compiler in any kind of reasonable way. It may contain things which are unnecessary for the program to function, such as whitespace, or there may be parts which are ambiguous.

|

||||

|

||||

For this reason, we ideally want to convert our programs into a data structure which maps out all of the so-called “tokens” we have used, and where they slot into our program.

|

||||

|

||||

This data structure is exactly what an AST is!

|

||||

|

||||

An AST could be represented in a number of different ways, but let’s take a look at a quick example using our old buddy JSON.

|

||||

|

||||

If we have this incredibly basic source code:

|

||||

|

||||

```

|

||||

var a = 1;

|

||||

```

|

||||

|

||||

The (simplified) output of the TypeScript Compiler’s **Parser** phase will be the following AST:

|

||||

|

||||

```

|

||||

{

|

||||

"pos": 0,

|

||||

"end": 10,

|

||||

"kind": 256,

|

||||

"text": "var a = 1;",

|

||||

"statements": [

|

||||

{

|

||||

"pos": 0,

|

||||

"end": 10,

|

||||

"kind": 200,

|

||||

"declarationList": {

|

||||

"pos": 0,

|

||||

"end": 9,

|

||||

"kind": 219,

|

||||

"declarations": [

|

||||

{

|

||||

"pos": 3,

|

||||

"end": 9,

|

||||

"kind": 218,

|

||||

"name": {

|

||||

"pos": 3,

|

||||

"end": 5,

|

||||

"text": "a"

|

||||

},

|

||||

"initializer": {

|

||||

"pos": 7,

|

||||

"end": 9,

|

||||

"kind": 8,

|

||||

"text": "1"

|

||||

}

|

||||

}

|

||||

]

|

||||

}

|

||||

}

|

||||

]

|

||||

}

|

||||

```

|

||||

|

||||

The objects in our in our AST are called _nodes_.

|

||||

|

||||

#### [Example: Renaming symbols in VS Code][30]

|

||||

|

||||

Internally, the TypeScript Compiler will use the AST it has produced to power a couple of really important things such as the actual **Type Checking** that occurs when we compile our programs.

|

||||

|

||||

But it does not stop there!

|

||||

|

||||

> We can use the AST to develop our own tooling on top of TypeScript, such as linters, formatters, and analysis tools.

|

||||

|

||||

One great example of a tool built on top of this AST generation is the **Language Server**.

|

||||

|

||||

It is beyond the scope of this article to dive into how the Language Server works, but one absolutely killer feature that it enables for us when we write our programs is that of “renaming symbols”.

|

||||

|

||||

Let’s say that we have the following source code:

|

||||

|

||||

```

|

||||

// The name of the author is James

|

||||

var first_name = 'James';

|

||||

console.log(first_name);

|

||||

```

|

||||

|

||||