mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

5dec79dac0

85

published/20160506 Setup honeypot in Kali Linux.md

Normal file


@ -0,0 +1,85 @@

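Setting up a honeypot in Kali Linux
=========================

Pentbox is a security suite containing many tools that make penetration testing simpler and more streamlined. It is written in Ruby and targets GNU/Linux, and it also supports Windows, macOS, and any other system with Ruby installed. In this short article we explain how to set up a honeypot in a Kali Linux environment. If you don't yet know what a honeypot is: "a honeypot is a computer security mechanism set to detect, deflect, or, in some manner, counteract attempts at unauthorized use of information systems."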

|

||||

|

||||

### 下载 Pentbox:

|

||||

|

||||

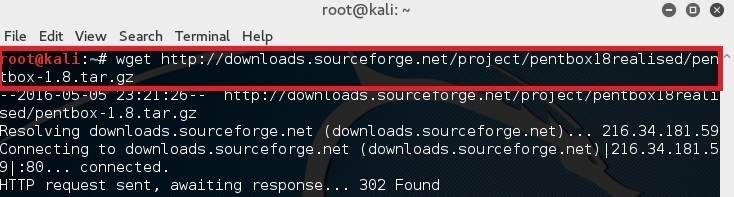

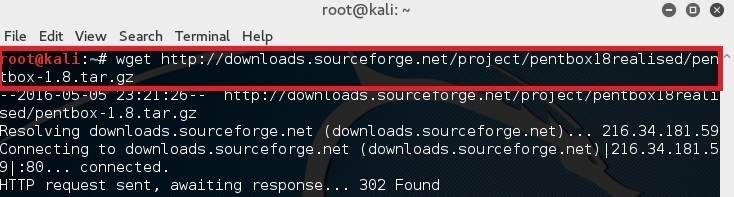

在你的终端中简单的键入下面的命令来下载 pentbox-1.8。

```
root@kali:~# wget http://downloads.sourceforge.net/project/pentbox18realised/pentbox-1.8.tar.gz
```

### 解压 pentbox 文件

|

||||

|

||||

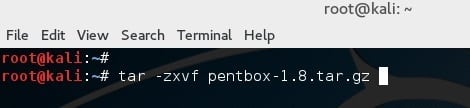

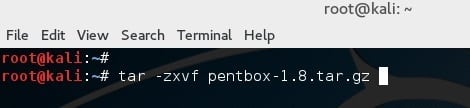

使用如下命令解压文件:

```
root@kali:~# tar -zxvf pentbox-1.8.tar.gz
```

### Run the pentbox Ruby script

Change into the pentbox directory:

```
root@kali:~# cd pentbox-1.8/
```

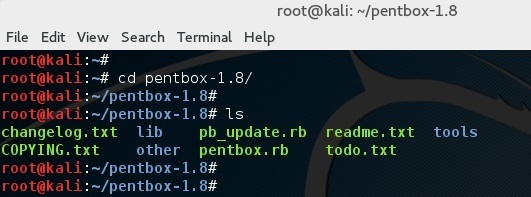

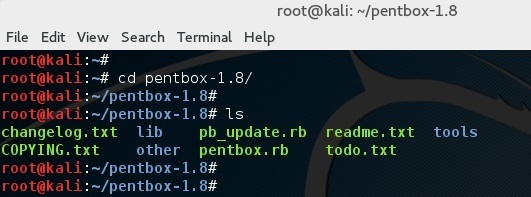

Run pentbox with the following command:

```
root@kali:~/pentbox-1.8# ./pentbox.rb
```

### 设置一个蜜罐

|

||||

|

||||

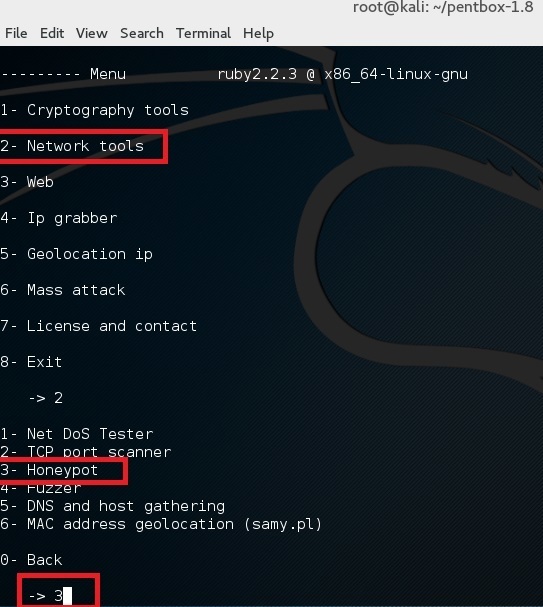

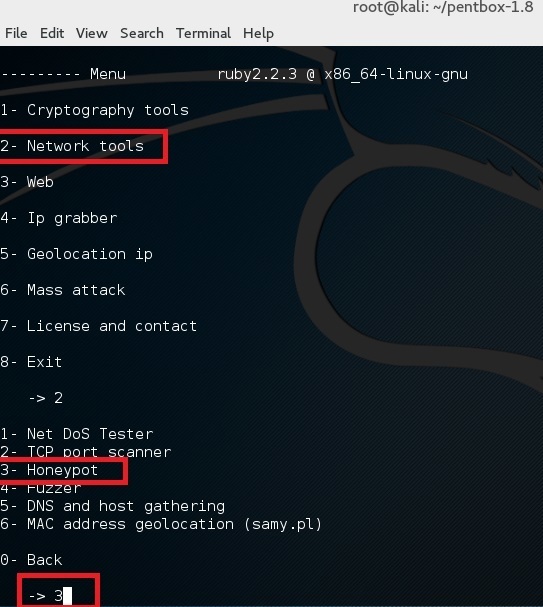

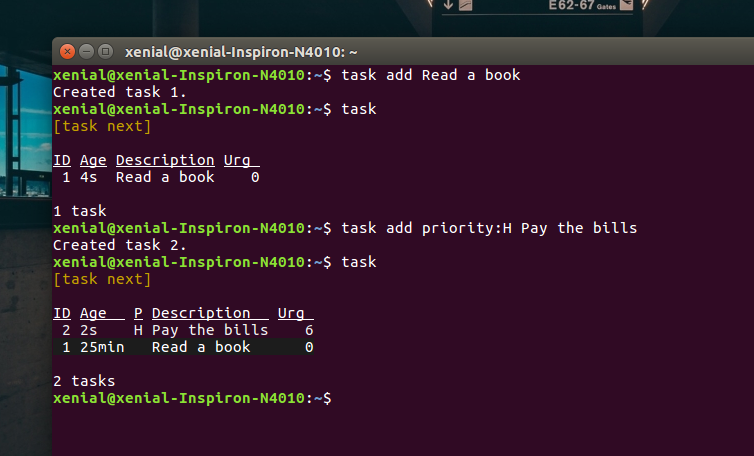

使用选项 2 (Network Tools) 然后是其中的选项 3 (Honeypot)。

|

||||

|

||||

|

||||

|

||||

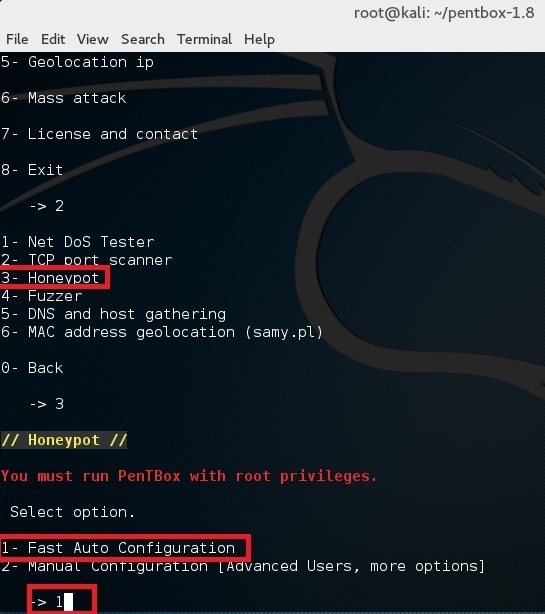

完成让我们执行首次测试,选择其中的选项 1 (Fast Auto Configuration)

|

||||

|

||||

|

||||

|

||||

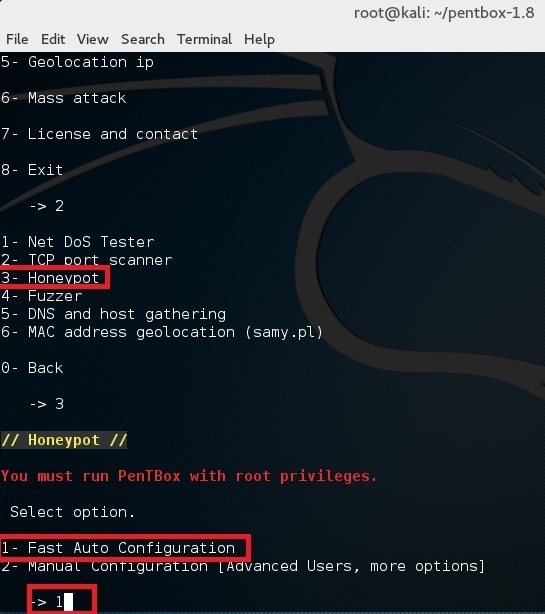

这样就在 80 端口上开启了一个蜜罐。打开浏览器并且打开链接 http://192.168.160.128 (这里的 192.168.160.128 是你自己的 IP 地址。)你应该会看到一个 Access denied 的报错。

In your terminal you should see "HONEYPOT ACTIVATED ON PORT 80" followed by "INTRUSION ATTEMPT DETECTED".
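To make the behaviour described above more concrete, here is a minimal Python sketch of what a low-interaction honeypot of this kind does: listen on a port, log every connection attempt, and answer with a fake "Access denied" response. This is not Pentbox's code (Pentbox is written in Ruby); the function names `make_listener` and `serve` and the banner text are assumptions made purely for illustration.

```python
import socket
from datetime import datetime

# Hypothetical fake response; Pentbox's real banner may differ.
BANNER = b"HTTP/1.1 403 Forbidden\r\n\r\nAccess denied\r\n"


def make_listener(host="127.0.0.1", port=8080):
    """Create a listening TCP socket (port 0 lets the OS pick a free port)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen()
    print("HONEYPOT ACTIVATED ON PORT {}".format(server.getsockname()[1]))
    return server


def serve(server, max_connections=1):
    """Log connection attempts and reply with the fake banner.

    Returns a list of (timestamp, peer_ip, peer_port) tuples.
    """
    attempts = []
    with server:
        while len(attempts) < max_connections:
            conn, (peer_ip, peer_port) = server.accept()
            with conn:
                attempts.append((datetime.now(), peer_ip, peer_port))
                print("INTRUSION ATTEMPT DETECTED from {}:{}".format(peer_ip, peer_port))
                conn.sendall(BANNER)
    return attempts
```

Calling `serve(make_listener("0.0.0.0", 8080), max_connections=10)` would log the first ten connection attempts on port 8080. Binding to ports below 1024, such as 80 or 22, normally requires root privileges.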

|

||||

|

||||

|

||||

|

||||

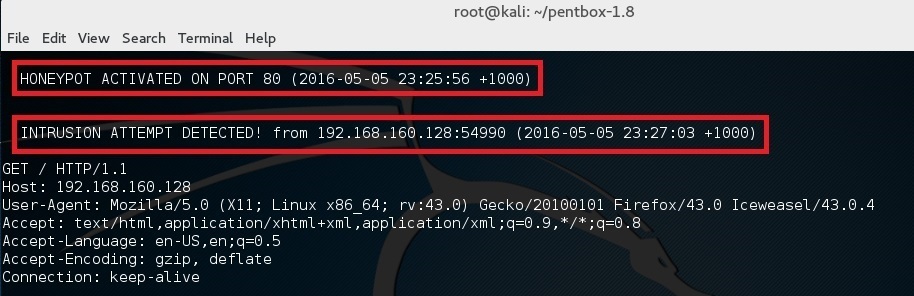

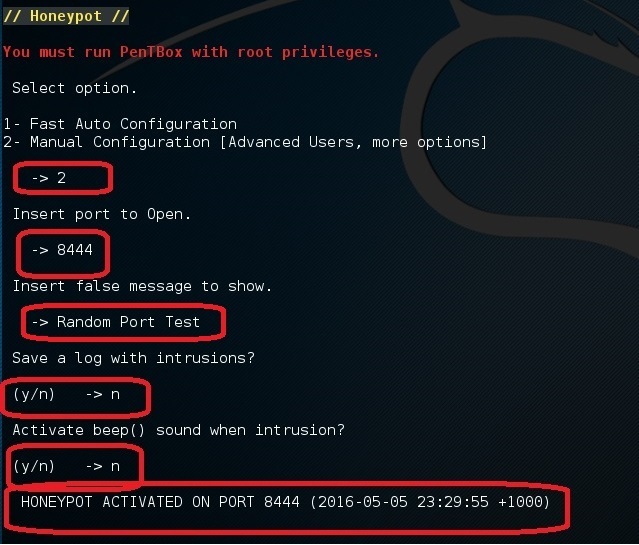

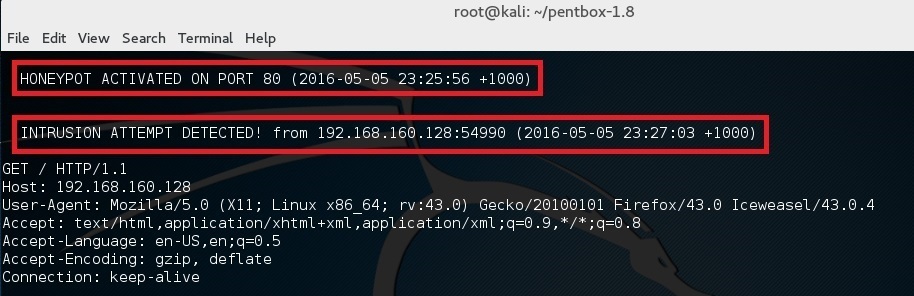

现在,如果你在同一步选择了选项 2 (Manual Configuration), 你应该看见更多的其它选项:

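Follow the same steps, but this time select port 22 (the SSH port). Then set up port forwarding on your home router to forward external port 22 to port 22 on this machine. Alternatively, set the honeypot up on a VPS in the cloud.

You will be amazed at how many machines are continuously scanning for SSH ports. And do you know what you should do next? Hack them back! Hehehe!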

If video is more your thing, here is a video on setting up a honeypot:

<https://youtu.be/NufOMiktplA>

--------------------------------------------------------------------------------

via: https://www.blackmoreops.com/2016/05/06/setup-honeypot-in-kali-linux/

Author: [blackmoreops.com][a]
Translator: [wcnnbdk1](https://github.com/wcnnbdk1)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: blackmoreops.com

@ -0,0 +1,236 @@

Writing an online multiplayer game with Python and asyncio (Part 2)
==================================================================

> Have you ever done asynchronous programming in Python? In this article I will show you how to do it, with a [working example][1]: the popular game Snake, designed for multiple players.

For the introduction and theory, see [Part 1: Getting asynchronous][2].

- [Play the game here][1].

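### 3. Writing the game loop

The game loop is at the heart of every game. It runs continuously to read player input, update the game state, and render the result on screen. In online games the loop is split into a client part and a server part, so there are generally two loops communicating over the network. The client's role is usually to collect the player's input, such as key presses or mouse movement, pass it to the server, and receive back the data to render. The server processes all the data coming from players, updates the game state, performs the calculations needed to render the next frame, and passes the result back to the clients, for example the new positions of game objects. It is very important not to mix the client and server roles without a solid reason. If you run game-logic calculations on the client, you can easily go out of sync with other clients, and the game ends up being driven by whatever data a client chooses to send.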

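> One iteration of the game loop is called a tick. A tick is an event signalling that the current iteration of the game loop is over and that the data for the next frame (or frames) is ready.

In the following examples we use the same client, which connects to the server from a web page using WebSocket. It runs a simple loop that sends key codes to the server and displays every message coming from the server. The [client source code is here][4].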

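### Example 3.1: Basic game loop

> [Example 3.1 source code][5].

We use the [aiohttp][6] library to create the game server. It allows creating web servers and clients on top of asyncio. A nice thing about this library is that it supports plain HTTP requests and websockets at the same time, so we do not need another web server to serve the game's HTML page.

Here is how we start the server: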

```
import asyncio
from aiohttp import web

# game_loop, wshandler and handle are defined in the linked example source
app = web.Application()
app["sockets"] = []

asyncio.ensure_future(game_loop(app))

app.router.add_route('GET', '/connect', wshandler)
app.router.add_route('GET', '/', handle)

web.run_app(app)
```

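`web.run_app` is a handy shortcut that creates the server's main task and runs the `asyncio` event loop through its `run_forever()` method. I suggest reading the source of this method to see how the server is actually created and terminated.

The `app` variable is a dict-like object that can be used to share data between connected clients. We use it to store the list of connected sockets, which is later used to send messages to all connected clients. The `asyncio.ensure_future()` call schedules the main game loop task, which sends a tick message to clients every 2 seconds. This task runs concurrently with the web server in the same asyncio event loop.

There are two web request handlers: `handle` serves the HTML page, and `wshandler` is the main websocket server task that handles interaction with clients. A new `wshandler` task is launched in the event loop for every connected client. This task adds the client's socket to the list so that the `game_loop` task can send messages to all clients. It then echoes every keypress back to the client in a message.

In the launched tasks we run worker loops on top of the main `asyncio` event loop. Switching between tasks happens whenever one of them uses an `await` statement to wait for a coroutine to finish. For example, `asyncio.sleep` simply hands execution back to the scheduler for the given amount of time, and `ws.receive` waits for a websocket message while the scheduler may switch to other tasks.

Open the main page in a browser, connect to the server, and try pressing some keys. Their key codes are echoed back from the server, and every 2 seconds this number is overwritten by the tick message that the game loop sends to all clients.

So we have just created a server that processes clients' keypresses while the main game loop does some work in the background and periodically updates all clients at once.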
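The interplay between the game loop and the per-client handlers can be sketched without aiohttp at all. In the snippet below, plain `asyncio.Queue` objects stand in for websockets and `client_handler` stands in for `wshandler`; both are assumptions for illustration, not the code of the linked example.

```python
import asyncio


async def game_loop(clients, ticks, interval=0.01):
    """Send a numbered tick message to every client, then sleep until the next tick."""
    for n in range(ticks):
        for inbox in clients:
            inbox.put_nowait("game loop tick {}".format(n))
        # sleep() hands control back to the event loop so other tasks can run
        await asyncio.sleep(interval)


async def client_handler(inbox, received, count):
    """Stand-in for wshandler: wait for messages and record them."""
    for _ in range(count):
        received.append(await inbox.get())


async def main(num_clients=2, ticks=3):
    clients = [asyncio.Queue() for _ in range(num_clients)]
    logs = [[] for _ in range(num_clients)]
    handlers = [asyncio.ensure_future(client_handler(queue, log, ticks))
                for queue, log in zip(clients, logs)]
    await game_loop(clients, ticks)
    await asyncio.gather(*handlers)
    return logs
```

Running `asyncio.run(main())` returns one list of tick messages per simulated client. All tasks share a single thread and switch only at `await` points.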

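### Example 3.2: Starting the game on request

> [Example 3.2 source code][7]

In the previous example the game loop ran for the entire life of the server. In practice, though, it usually makes no sense to run a game loop when nobody is connected. There may also be different "game rooms" on the same server. In this concept a player "creates" a game session (a match in a multiplayer game, or a raid in an MMO, for example) that other players can join. The game loop then runs only while the game session is in progress.

In this example we use a global flag to check whether the game loop is running, and we start it when the first player connects. Initially the game loop is not running, so the flag is set to `False`. The game loop is launched from the client's handler.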

```
if not app["game_is_running"]:
    asyncio.ensure_future(game_loop(app))
```

The flag is set to `True` while `game_loop()` is running, and set back to `False` when all clients have disconnected.
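As a rough, self-contained sketch of this flag-based start-up (without aiohttp), the snippet below uses a plain dict in place of `app` and a hypothetical `on_connect` function in place of the websocket handler. The `loops_started` counter exists only so the behaviour can be checked.

```python
import asyncio


async def game_loop(app):
    """Tick while at least one client is connected, then clear the flag."""
    app["loops_started"] += 1
    while app["sockets"]:
        await asyncio.sleep(0.01)   # one tick
    app["game_is_running"] = False


def on_connect(app, socket_id):
    """Hypothetical hook called from a client handler when a client connects."""
    app["sockets"].append(socket_id)
    if not app["game_is_running"]:
        # Set the flag right away so a second client cannot start another loop.
        app["game_is_running"] = True
        asyncio.ensure_future(game_loop(app))


async def demo():
    app = {"sockets": [], "game_is_running": False, "loops_started": 0}
    on_connect(app, "client-a")
    on_connect(app, "client-b")
    await asyncio.sleep(0.03)
    running_with_clients = app["game_is_running"]
    app["sockets"].clear()          # everyone disconnects
    await asyncio.sleep(0.05)
    return app["loops_started"], running_with_clients, app["game_is_running"]
```

One design choice here is an assumption of this sketch: the flag is set in `on_connect` rather than inside the loop, so two clients connecting before the loop first runs cannot start it twice.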

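### Example 3.3: Managing tasks

> [Example 3.3 source code][8]

This example shows how to work with task objects. Instead of a flag, we store the game loop's task directly in the application's global dictionary. This may not be optimal for an example as simple as this one, but sometimes you need to control tasks that have already been launched.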

```
if app["game_loop"] is None or \
   app["game_loop"].cancelled():
    app["game_loop"] = asyncio.ensure_future(game_loop(app))
```

Here `ensure_future()` returns the task object, which we store in the global dictionary. When all users have disconnected, we cancel it with:

```
app["game_loop"].cancel()
```

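This `cancel()` call tells the scheduler not to pass execution to this coroutine anymore and sets its state to `cancelled`, which can then be checked with the `cancelled()` method. One caveat is worth mentioning: when you hold an external reference to a task object and an exception occurs in that task, the exception is not raised. Instead, an exception is set on the task, and it can be checked with the `exception()` method. Such silent failures are not very helpful when debugging, so you may prefer to have all exceptions raised. To do that, call the task's `result()` method explicitly. This can be done in a callback:

```
app["game_loop"].add_done_callback(lambda t: t.result())
```

If we intend to cancel the task in our code and do not want a `CancelledError` exception, it makes sense to check its `cancelled` state first:

```
app["game_loop"].add_done_callback(lambda t: t.result()
                                   if not t.cancelled() else None)
```

Note that this is only needed if you keep a reference to the task object. In the previous examples all exceptions surface directly, without additional callbacks.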
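The two behaviours described here, cancelling a stored task and an exception being stored on the task rather than raised, can be observed in isolation with the small sketch below. `failing_loop` and `demo` are names invented for this illustration.

```python
import asyncio


async def game_loop():
    """Endless loop standing in for the real game loop."""
    while True:
        await asyncio.sleep(0.01)


async def failing_loop():
    """A loop that crashes on its first iteration."""
    await asyncio.sleep(0)
    raise RuntimeError("game loop crashed")


async def demo():
    # Keep a reference to the task, as the article does in app["game_loop"].
    task = asyncio.ensure_future(game_loop())
    await asyncio.sleep(0.02)
    task.cancel()
    try:
        await task
    except asyncio.CancelledError:
        pass
    was_cancelled = task.cancelled()

    # A failing task stores its exception instead of raising it at the call site.
    failed = asyncio.ensure_future(failing_loop())
    await asyncio.wait([failed])
    stored = failed.exception()
    return was_cancelled, stored
```

Here `asyncio.run(demo())` returns `True` together with the stored `RuntimeError` instance. Calling `failed.result()` instead of `failed.exception()` would re-raise that error.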

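### Example 3.4: Waiting for multiple events

> [Example 3.4 source code][9]

In many cases you need to wait for multiple events inside a client's handler. Besides messages from the client, you may need to wait for other kinds of events. For instance, if your game has a time limit, you may wait for a signal from a timer. Or you may wait for a message from another process via pipes, or for a message from a different server on the network through a distributed messaging system.

For simplicity this example is based on example 3.1, but here we use a `Condition` object to synchronize the game loop with connected clients. We do not keep a global list of sockets, because the sockets are only used inside the handler. When an iteration of the game loop ends, we notify all clients using the `Condition.notify_all()` method. This method makes it possible to implement the publish/subscribe pattern within the `asyncio` event loop.

To wait for the two events, we first wrap the awaitable objects in tasks using `ensure_future()`.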

```
if not recv_task:
    recv_task = asyncio.ensure_future(ws.receive())
if not tick_task:
    await tick.acquire()
    tick_task = asyncio.ensure_future(tick.wait())
```

Before we can call `Condition.wait()`, we need to acquire the lock behind it, which is why we call `tick.acquire()` first. The lock is released once `tick.wait()` is called, so other coroutines can use it too. When we receive a notification, however, the lock is acquired again, so we need to release it by calling `tick.release()` after being notified.

We use the `asyncio.wait()` coroutine to wait for the two tasks.

```
done, pending = await asyncio.wait(
    [recv_task,
     tick_task],
    return_when=asyncio.FIRST_COMPLETED)
```

It blocks until either of the tasks in the list completes. It then returns two sets: the tasks that are done and the tasks that are still pending. If a task is done, we set the corresponding variable to `None` so that it is created again on the next iteration.
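Put together, the pattern looks roughly like the sketch below. It is a simplified stand-in rather than the linked example: an `asyncio.Queue` replaces the websocket, and the helper `wait_for_tick` (an assumption of this sketch) wraps acquire, wait, and release in one coroutine using `async with`.

```python
import asyncio


async def ticker(tick, ticks, interval=0.01):
    """Game loop stand-in: notify all waiters at the end of each iteration."""
    for _ in range(ticks):
        await asyncio.sleep(interval)
        async with tick:
            tick.notify_all()


async def wait_for_tick(tick):
    """Acquire the condition's lock, wait for a notification, release the lock."""
    async with tick:
        await tick.wait()


async def handler(tick, inbox, ticks):
    """Wait for client messages and ticks at the same time."""
    events = []
    recv_task = tick_task = None
    seen_ticks = 0
    while seen_ticks < ticks:
        if recv_task is None:
            recv_task = asyncio.ensure_future(inbox.get())
        if tick_task is None:
            tick_task = asyncio.ensure_future(wait_for_tick(tick))
        done, pending = await asyncio.wait(
            [recv_task, tick_task], return_when=asyncio.FIRST_COMPLETED)
        if recv_task in done:
            events.append(("message", recv_task.result()))
            recv_task = None        # recreated on the next iteration
        if tick_task in done:
            events.append(("tick", None))
            seen_ticks += 1
            tick_task = None
    if recv_task is not None:
        recv_task.cancel()          # stop waiting for further messages
    return events


async def main():
    tick = asyncio.Condition()
    inbox = asyncio.Queue()
    inbox.put_nowait("key 37")      # one simulated keypress
    handler_task = asyncio.ensure_future(handler(tick, inbox, ticks=2))
    await ticker(tick, ticks=2)
    return await handler_task
```

The handler returns after it has seen two ticks, and the keypress queued at start-up appears in the event list before them.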

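### Example 3.5: Combining with threads

> [Example 3.5 source code][10]

In this example we combine the `asyncio` loop with threads by running the main game loop in a separate thread. As I mentioned before, truly parallel execution of Python code is not possible because of the `GIL`, so using other threads for heavy calculations is not a good idea. There is, however, one reason to use threads with `asyncio`: when we need to use libraries that do not support `asyncio`. Calling such libraries in the main thread would block the loop, so the only way to use them asynchronously is to run them in a different thread.

We run the game loop using the `run_in_executor()` method of the `asyncio` loop together with a `ThreadPoolExecutor`. Note that `game_loop()` is no longer a coroutine; it is a function executed in another thread. We still need to interact with the main thread, however, to notify clients of game events. `asyncio` itself is not thread-safe, but it provides methods that allow running your code from another thread: `call_soon_threadsafe()` for normal functions and `run_coroutine_threadsafe()` for coroutines. We put the code that notifies clients of the game's tick into the `notify()` coroutine and have it run in the main event loop from the other thread.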

```
def game_loop(asyncio_loop):
    print("Game loop thread id {}".format(threading.get_ident()))
    async def notify():
        print("Notify thread id {}".format(threading.get_ident()))
        await tick.acquire()
        tick.notify_all()
        tick.release()

    while 1:
        task = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)
        # blocking the thread
        sleep(1)
        # make sure the task has finished
        task.result()
```

When you run this example, you will see that "Notify thread id" is equal to "Main thread id", because the `notify()` coroutine is executed in the main thread. Meanwhile `sleep(1)` is executed in another thread, so it does not block the main event loop.
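The thread hand-off can be checked with a trimmed-down, terminating variant of the same idea. In this sketch the `seen_threads` list and the `ticks` parameter are additions for illustration, and the `Condition` is left out to keep the focus on which thread runs what.

```python
import asyncio
import threading
from concurrent.futures import ThreadPoolExecutor
from time import sleep


def game_loop(asyncio_loop, seen_threads, ticks=2):
    """Plain function (not a coroutine) executed in a worker thread."""
    async def notify():
        # Scheduled from the worker thread, but runs in the event loop's thread.
        seen_threads.append(("notify", threading.get_ident()))

    for _ in range(ticks):
        future = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)
        sleep(0.01)        # blocks only this worker thread
        future.result()    # make sure the coroutine has finished
    seen_threads.append(("game_loop", threading.get_ident()))


async def main():
    loop = asyncio.get_running_loop()
    seen = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        await loop.run_in_executor(pool, game_loop, loop, seen)
    return threading.get_ident(), seen
```

After `asyncio.run(main())`, every `"notify"` entry carries the main thread's id, while the `"game_loop"` entry carries the worker thread's id.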

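### Example 3.6: Multiple processes and scaling up

> [Example 3.6 source code][11]

A single-threaded server may work well, but it is limited to one CPU core. To scale the server beyond one core, we need to run multiple processes, each with its own event loop, and we then need some way for the processes to exchange information or share game data. Games also often require heavy calculations, such as path finding, which sometimes cannot be completed quickly within one game tick. Running time-consuming calculations in a coroutine is not recommended, as it blocks event processing, so in this case it may be reasonable to hand the heavy task to another process running in parallel.

The easiest way to use multiple cores is to launch several single-core servers like the ones in the previous examples, each on a different port. You can do this with `supervisord` or a similar process-control system. You will then need a load balancer such as `HAProxy` to distribute connecting clients between the processes. There are also adapters that connect asyncio to some popular messaging and storage systems, for example: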

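- [aiomcache][12], a memcached client
- [aiozmq][13] for ZeroMQ
- [aioredis][14] for Redis storage and pub/sub

You can find many other packages on GitHub and PyPI; most of them have the `aio` prefix.

Using network services can be effective for storing persistent state and exchanging some kinds of information, but their performance may not be sufficient if you need real-time processing involving inter-process communication. In that case standard Unix pipes may be a more appropriate solution. `asyncio` supports pipes, and there is [a very low-level example of a server using pipes][15] in the `aiohttp` repository.

In the current example we use Python's high-level [multiprocessing][16] library to run a heavy calculation on a different core and to exchange messages between processes using `multiprocessing.Queue`. Unfortunately, the current implementation of `multiprocessing` is not compatible with `asyncio`, so every blocking call would block the event loop. This is exactly where threads help: if we run the `multiprocessing` code in a different thread, it does not block the main thread. All we need to do is move all inter-process communication into another thread. This example illustrates the technique. It is very similar to the multithreading example above, but here we create a new process from a thread.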

```
def game_loop(asyncio_loop):
    # coroutine to run in main thread
    async def notify():
        await tick.acquire()
        tick.notify_all()
        tick.release()

    queue = Queue()

    # function to run in a different process
    def worker():
        while 1:
            print("doing heavy calculation in process {}".format(os.getpid()))
            sleep(1)
            queue.put("calculation result")

    Process(target=worker).start()

    while 1:
        # blocks this thread but not main thread with event loop
        result = queue.get()
        print("getting {} in process {}".format(result, os.getpid()))
        task = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)
        task.result()
```

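Here we run the `worker()` function in another process. It contains a loop that performs a heavy calculation and puts the result into `queue`, which is an instance of `multiprocessing.Queue`. We then fetch the results in the other thread and notify clients through the main event loop, exactly as in example 3.5. This example is heavily simplified; it does not terminate the process properly. In a real game we would probably also need a second queue to pass data to the `worker`.

There is a project called [aioprocessing][17], a wrapper around `multiprocessing` that makes it compatible with `asyncio`. Under the hood, however, it uses exactly the same approach as the example above: creating processes from threads. It gives you no advantage other than hiding these tricks behind a simple interface. Hopefully, a future version of Python will bring a `multiprocessing` library that is based on coroutines and supports `asyncio`.

> Important! If you run another `asyncio` event loop in a different thread or subprocess created from the main thread or process, you need to create the loop explicitly with `asyncio.new_event_loop()`, otherwise it may not work properly.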

--------------------------------------------------------------------------------

via: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-writing-game-loop/

Author: [Kyrylo Subbotin][a]
Translator: [chunyang-wen](https://github.com/chunyang-wen)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-writing-game-loop/
[1]: http://snakepit-game.com/
[2]: https://linux.cn/article-7767-1.html
[3]: http://snakepit-game.com/
[4]: https://github.com/7WebPages/snakepit-game/blob/master/simple/index.html
[5]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_basic.py
[6]: http://aiohttp.readthedocs.org/
[7]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_handler.py
[8]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_global.py
[9]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_wait.py
[10]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_thread.py
[11]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_process.py
[12]: https://github.com/aio-libs/aiomcache
[13]: https://github.com/aio-libs/aiozmq
[14]: https://github.com/aio-libs/aioredis
[15]: https://github.com/KeepSafe/aiohttp/blob/master/examples/mpsrv.py
[16]: https://docs.python.org/3.5/library/multiprocessing.html
[17]: https://github.com/dano/aioprocessing

@ -0,0 +1,46 @@

DAISY: a Linux-compatible text format for the visually impaired
=================================================================

*Image: photo by Kate Ter Haar, modified by opensource.com. CC BY-SA 2.0*

If you are blind or, like me, visually impaired, you often need various hardware or software to do things that sighted people take for granted. One of these is a specialized format for reading printed books: Braille (if you know how to read it) or a specialized text format such as DAISY.

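### What is DAISY?

DAISY stands for Digital Accessible Information System. It is an open standard used almost exclusively by the blind to read textbooks, periodicals, newspapers, fiction, you name it. It was founded in the mid-1990s by [the DAISY Consortium][1], a group of organizations dedicated to producing a set of standards that would allow text to be marked up so that it is easy to read, skip around in, annotate, and otherwise manipulate, in much the same way a sighted user would.

The current version, DAISY 3.0, was released in mid-2005 and is a complete rewrite of the standard. It was created with the goal of making it much easier to write books that comply with it. It is worth noting that DAISY can support plain text only, audio recordings only (in PCM Wave or MP3 format), or a combination of text and audio. Specialized software can read these books and lets users set bookmarks and navigate a book as easily as a sighted person would with a printed one.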

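### How does DAISY work?

DAISY, regardless of the specific version, works a bit like this: you have a main navigation file (ncc.html in DAISY 2.02) that contains metadata about the book, such as the author's name, copyright information, the number of pages, and so on. In DAISY 3.0 this file is a valid XML file, along with a DTD (document type definition) file that is strongly recommended to be included with each book.

In the navigation control file, markup precisely describes positions, either the caret position in text navigation or a time offset down to the millisecond in an audio recording, which lets the software jump to the exact point in the book, much as a sighted person would turn to a chapter. It is worth noting that this navigation control file contains positions only for the main, and largest, elements of the book.

The smaller elements are handled by SMIL (synchronized multimedia integration language) files. The level of navigation depends heavily on how well the book was marked up. Think of it this way: if a printed book had no chapter headings, you would spend a lot of time figuring out where you are. If a DAISY book is badly marked up, you might only be able to navigate to the start of the book or to the table of contents. If a book is marked up badly enough (or not at all), your DAISY reading software is likely to simply ignore it.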

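### Why the need for specialized software?

You may be wondering why, if DAISY is little more than HTML, XML, and audio files, you would need specialized software to read and manipulate it. Technically speaking, you don't. The specialized software is mostly a convenience. On Linux, for example, a simple web browser can be used to open and read the books. If you click the XML file in a DAISY 3 book, all the software generally does is read the titles of the books you have given it access to and build a list that you click on to open one. If a book is badly marked up, it won't show up in this list.

Producing DAISY is another matter entirely, and usually requires either specialized software or enough expertise to modify general-purpose software for the purpose.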

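### Conclusion

Fortunately, DAISY is a well-established standard. While it is great for reading, the need for special software to produce it has isolated the blind from the sighted world, where readers have all sorts of formats available for their electronic books. This is why the DAISY Consortium has succeeded DAISY with EPUB, whose version 3 supports a specification called "media overlays", which essentially allows optional audio or video to be included in an EPUB book. Because EPUB shares much of its XML markup with DAISY, some software that can read DAISY can see EPUB books but cannot read them. This means that once the websites that provide our books switch to this open format, we will have a much larger selection of software to read our books with.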

--------------------------------------------------------------------------------

via: https://opensource.com/life/16/5/daisy-linux-compatible-text-format-visually-impaired

Author: [Kendell Clark][a]
Translator: [theArcticOcean](https://github.com/theArcticOcean)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/kendell-clark
[1]: http://www.daisy.org

@ -0,0 +1,404 @@

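Backing up photos while traveling with a Raspberry Pi and an iPad Pro
===================================================================

*Backing up photos while traveling: the components*

### Introduction

For a long time I have been looking for an ideal way to back up photos while traveling. Keeping SD cards in your camera bag exposes you to too many risks: cards can be lost or stolen, and data can get corrupted or fail in transfer. A better option is to copy the photos to another medium, even if it is just another SD card, and keep it in a safer place. Backing up to a remote location is also viable, but not practical if you are going somewhere without internet access.

My ideal backup procedure would meet the following requirements: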

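1. Use an iPad Pro instead of a laptop. I like to travel light, and most of my trips are business related (rather than photography for leisure), so I hate carrying a personal laptop in addition to my work laptop. My iPad, however, is always with me, which is why I chose it.
2. Use as few hardware devices as possible.
3. Connections between devices must be secure. I will be using this setup in hotels and airports, so the connections need to be closed and encrypted.
4. The whole process should be reliable and stable. I have tried other router/combo devices, but [the results were not great][1].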

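### The setup

I put together a setup that meets the conditions above and can be expanded in the future. It uses the following components:

1. A [9.7-inch iPad Pro][2]. At the time of writing this is the most powerful small and light iOS device available. The Apple Pencil is not required, but I carry it as part of my kit so I can do some editing on the road. All the heavy lifting is done by the Raspberry Pi, so any other device that can connect over SSH would do.
2. A [Raspberry Pi 3][3] with Raspbian installed (LCTT translator's note: Raspbian is a Debian-based operating system for the Raspberry Pi).
3. A [Micro SD card][4] for the Raspberry Pi, and a [box/case][5].
4. A [128 GB flash drive][6], which is enough for me; you can buy a larger one. You could also get a portable hard drive like [this one][7], but the Raspberry Pi cannot supply enough power over USB for a hard drive, which means you would need an additional [powered USB hub][8] and cables, defeating the goal of keeping the kit light and small.
5. An [SD card reader][9].
6. [Additional SD cards][10]. I use several SD cards and swap each one out before it fills up, so that the photos from a single trip are spread across different cards.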

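The following diagram shows how these devices connect to each other.

*Backing up photos while traveling: flow diagram*

The Raspberry Pi acts as a secure hotspot. It creates its own WPA2-encrypted WiFi network, which the iPad Pro joins. Although many online tutorials show how to create an ad hoc (computer-to-computer) network, which is simpler, such a connection is not encrypted and nearby devices can join it easily. That is why I chose to create a WiFi network.

The camera's SD card plugs into one of the Pi's USB ports through an SD card reader, and the 128 GB flash drive stays plugged into another USB port. I chose a [SanDisk][11] one because of its small size. The main idea is to back up the photos from the SD card to the flash drive with a Python script. The backup is incremental: each time the script runs, only the changes (for example newly taken photos) are added to the backup folder, so the process is very fast. That is a huge advantage if you have many photos or shoot a lot in RAW. The iPad is used to trigger the Python script and to browse the files on the SD card and the flash drive.

As an added benefit, if the Pi is connected to the internet by cable (through its Ethernet port), it can share that connection with the devices joined to its WiFi network.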

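### 1. Raspberry Pi configuration

This is the part where you roll up your sleeves, since we will be using Raspbian's command line. I will try to be as detailed as possible so it is easy to follow along.

#### Install and configure Raspbian

Connect a mouse, keyboard, and LCD monitor to the Raspberry Pi, insert the SD card, and install Raspbian following the steps on the [official Raspberry Pi site][12].

Once installed, open a terminal in Raspbian and run the following commands: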

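```
sudo apt-get update
sudo apt-get upgrade
```

This upgrades all software on the machine to the latest version. I then connected the Pi to my local network and, for security, changed the default password.

Raspbian enables SSH by default, so all remaining setup can be done from a remote device. I also set up RSA authentication, but that is optional; see [here][13] for more information.

This is a screenshot of an SSH connection to the Raspberry Pi from [iTerm][14] on a Mac. (LCTT translator's note: the image is missing from the original.)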

#### Creating the WPA2-encrypted WiFi AP

The installation is based on [this article][15], adapted to my case.

**1. Install packages**

We need to install the following packages:

```
sudo apt-get install hostapd
sudo apt-get install dnsmasq
```

hostapd creates the access point using the built-in WiFi, and dnsmasq is a combined DHCP and DNS server that is very easy to set up.

**2. Edit dhcpcd.conf**

Connect the Pi via Ethernet. Network interface configuration on the Pi is handled by `dhcpcd`, so we first tell it to ignore `wlan0`, which will be given a static IP.

Open the dhcpcd configuration file with `sudo nano /etc/dhcpcd.conf` and add the following line at the bottom:

```
denyinterfaces wlan0
```

Note: this line must go **above** any other interface lines that may already be there.

**3. Edit interfaces**

Now set the static IP. Open the interface configuration file with `sudo nano /etc/network/interfaces` and edit the `wlan0` section as follows:

```
allow-hotplug wlan0
iface wlan0 inet static
    address 192.168.1.1
    netmask 255.255.255.0
    network 192.168.1.0
    broadcast 192.168.1.255
#    wpa-conf /etc/wpa_supplicant/wpa_supplicant.conf
```

Likewise, edit the `wlan1` section as follows:

```
#allow-hotplug wlan1
#iface wlan1 inet manual
#    wpa-conf /etc/wpa_supplicant/wpa_supplicant.conf
```

Important: restart the `dhcpcd` service with `sudo service dhcpcd restart`, then reload the `wlan0` configuration with `sudo ifdown wlan0; sudo ifup wlan0`.

**4. Configure hostapd**

Next we need to configure hostapd. Create a new configuration file with `sudo nano /etc/hostapd/hostapd.conf` with the following contents:

```
interface=wlan0

# Use the nl80211 driver with the brcmfmac driver
driver=nl80211

# This is the name of the network
ssid=YOUR_NETWORK_NAME_HERE

# Use the 2.4GHz band
hw_mode=g

# Use channel 6
channel=6

# Enable 802.11n
ieee80211n=1

# Enable QoS Support
wmm_enabled=1

# Enable 40MHz channels with 20ns guard interval
ht_capab=[HT40][SHORT-GI-20][DSSS_CCK-40]

# Accept all MAC addresses
macaddr_acl=0

# Use WPA authentication
auth_algs=1

# Require clients to know the network name
ignore_broadcast_ssid=0

# Use WPA2
wpa=2

# Use a pre-shared key
wpa_key_mgmt=WPA-PSK

# The network passphrase
wpa_passphrase=YOUR_NEW_WIFI_PASSWORD_HERE

# Use AES, instead of TKIP
rsn_pairwise=CCMP
```

With the configuration in place, we need to tell `hostapd` where to find it at startup. Open the default configuration file with `sudo nano /etc/default/hostapd`, find the line `#DAEMON_CONF=""`, and replace it with `DAEMON_CONF="/etc/hostapd/hostapd.conf"`.

**5. Configure dnsmasq**

The dnsmasq configuration file that ships with the package contains a lot of information on how to use it, but we don't need most of those options. I suggest moving it elsewhere (rather than deleting it) and creating a new one:

```
sudo mv /etc/dnsmasq.conf /etc/dnsmasq.conf.orig
sudo nano /etc/dnsmasq.conf
```

Paste the following into the new file:

```
interface=wlan0 # Use interface wlan0
listen-address=192.168.1.1 # Explicitly specify the address to listen on
bind-interfaces # Bind to the interface to make sure we aren't sending things elsewhere
server=8.8.8.8 # Forward DNS requests to Google DNS
domain-needed # Don't forward short names
bogus-priv # Never forward addresses in the non-routed address spaces.
dhcp-range=192.168.1.50,192.168.1.100,12h # Assign IP addresses in that range with a 12 hour lease time
```

**6. Set up IPv4 forwarding**

The last thing we need to do is enable packet forwarding. Open `sysctl.conf` with `sudo nano /etc/sysctl.conf` and remove the `#` at the start of the line containing `net.ipv4.ip_forward=1`. This takes effect on the next reboot.

We also need to share the Pi's internet connection with devices connected over WiFi by setting up NAT between `wlan0` and `eth0`. The following script does that:

```
sudo iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE
sudo iptables -A FORWARD -i eth0 -o wlan0 -m state --state RELATED,ESTABLISHED -j ACCEPT
sudo iptables -A FORWARD -i wlan0 -o eth0 -j ACCEPT
```

I named the script `hotspot-boot.sh` and made it executable:

```
sudo chmod 755 hotspot-boot.sh
```

The script should run when the Pi boots. There are many ways to do this; here is how I did it:

1. Put the file in `/home/pi/scripts`.
2. Edit `rc.local` with `sudo nano /etc/rc.local` and put the command that runs the script before the `exit 0` line (more information [here][16]).

After editing, `rc.local` looks like this:

```
#!/bin/sh -e
#
# rc.local
#
# This script is executed at the end of each multiuser runlevel.
# Make sure that the script will "exit 0" on success or any other
# value on error.
#
# In order to enable or disable this script just change the execution
# bits.
#
# By default this script does nothing.

# Print the IP address
_IP=$(hostname -I) || true
if [ "$_IP" ]; then
  printf "My IP address is %s\n" "$_IP"
fi

sudo /home/pi/scripts/hotspot-boot.sh &

exit 0
```

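#### Installing Samba and the NTFS compatibility driver

We install the following packages to enable the Samba protocol, so that the [File Browser app][20] can access the folders shared by the Pi. `ntfs-3g` lets us access NTFS-formatted portable drives.

```
sudo apt-get install ntfs-3g
sudo apt-get install samba samba-common-bin
```

You can follow [this article][17] to configure Samba.

Important: the referenced article also covers mounting external drives on the Pi. We skip that because, at the time of writing, Raspbian automatically mounts both the SD card and the flash drive under `/media/pi/` at boot. The article also sets up some redundancy features that we do not use.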

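### 2. The Python script

With the Pi configured, we need a script to actually copy and back up the photos. Note that the script merely automates the backup process. If you have basic Linux/Raspberry Pi command-line skills, you can simply SSH into the Pi, create the needed folders, and copy your photos from one device to the other with `cp` or `rsync`. In the script we use `rsync`, which is reliable and supports incremental backups.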
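To illustrate what "incremental" means here, the following sketch copies only files that are missing from the destination or look changed. It is a simplified stand-in written with the standard library, not what the script below does (the script delegates to `rsync`, which compares files more carefully). The function name `incremental_copy` and the size/modification-time check are assumptions of this sketch.

```python
import os
import shutil


def incremental_copy(src, dst):
    """Copy files from src to dst, skipping ones that already look up to date.

    Returns the sorted list of relative paths that were copied.
    """
    copied = []
    for root, _dirs, files in os.walk(src):
        rel = os.path.relpath(root, src)
        target_dir = dst if rel == "." else os.path.join(dst, rel)
        os.makedirs(target_dir, exist_ok=True)
        for name in files:
            source = os.path.join(root, name)
            target = os.path.join(target_dir, name)
            if os.path.exists(target):
                s, t = os.stat(source), os.stat(target)
                # Same size and not newer than the copy: treat as unchanged.
                if s.st_size == t.st_size and int(s.st_mtime) <= int(t.st_mtime):
                    continue
            shutil.copy2(source, target)   # copy2 also preserves timestamps
            copied.append(name if rel == "." else os.path.join(rel, name))
    return sorted(copied)
```

A second run over an unchanged card copies nothing, which is why repeated backups finish quickly.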

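The process relies on two files: the script itself and a configuration file, `backup_photos.conf`. The latter has just a couple of lines naming the destination drive (the flash drive) and the folder where drives are mounted. It looks like this:

```
mount folder=/media/pi/
destination folder=PDRIVE128GB
```

Important: do not add extra spaces around the `=` sign, or the script will break.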
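The reason spaces matter is that the script compares the text before `=` exactly against the expected names. If that restriction is inconvenient, a more tolerant parser could look like the sketch below. It is an optional variation, not part of the original script, and both the `parse_conf` name and the handling of `#` comment lines are assumptions.

```python
def parse_conf(lines):
    """Parse 'name=value' lines into a dict, tolerating spaces around '='."""
    settings = {}
    for line in lines:
        line = line.strip()
        # Skip blank lines, comment lines, and lines without a '=' sign.
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, value = line.split("=", 1)
        settings[name.strip()] = value.strip()
    return settings
```

With this, `parse_conf(open(path))` would accept both `mount folder=/media/pi/` and `mount folder = /media/pi/`.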

Below is the Python script, which I named `backup_photos.py` and placed in `/home/pi/scripts/`. I added comments to make it easier to see what each part does.

```
#!/usr/bin/python3

import os
import sys
from sh import rsync  # third-party "sh" package

'''
The script copies the contents of an SD card mounted under /media/pi into a
folder of the same name on the destination drive. The name of the destination
drive is defined in the .conf file.

Argument: label/name of the mounted SD Card.
'''

CONFIG_FILE = '/home/pi/scripts/backup_photos.conf'
ORIGIN_DEV = sys.argv[1]

def create_folder(path):

    print ('attempting to create destination folder: ',path)
    if not os.path.exists(path):
        try:
            os.mkdir(path)
            print ('Folder created.')
        except OSError:
            print ('Folder could not be created. Stopping.')
            return
    else:
        print ('Folder already in path. Using that instead.')


# Python 3 text mode already uses universal newlines, so \n and/or \r are
# both recognized as a new line (the old 'rU' mode was removed in Python 3.11).
with open(CONFIG_FILE, 'r') as confFile:
    confList = confFile.readlines()


for line in confList:
    line = line.strip('\n')

    try:
        name , value = line.split('=')

        if name == 'mount folder':
            mountFolder = value
        elif name == 'destination folder':
            destDevice = value

    except ValueError:
        print ('Incorrect line format. Passing.')
        pass


destFolder = mountFolder+destDevice+'/'+ORIGIN_DEV
create_folder(destFolder)

print ('Copying files...')

# Uncomment the following line to delete files that are not on the source
# rsync("-av", "--delete", mountFolder+ORIGIN_DEV, destFolder)
rsync("-av", mountFolder+ORIGIN_DEV+'/', destFolder)

print ('Done.')
```

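### 3. iPad Pro configuration

Since all the heavy lifting is done by the Pi and no files pass through the iPad Pro, this has a huge advantage over [a workflow I tried before][18]. On the iPad we only need [Prompt2][19] to connect to the Pi over SSH; from there you can either run the Python script or copy files manually.

*Connecting to the Raspberry Pi from the iPad over SSH with Prompt2*

Because we installed Samba, we can access the USB devices attached to the Pi in a more graphical way. You can watch videos and copy or move files between devices. [File Browser][20] is perfect for this.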

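### 4. Putting it all together

Let's assume `SD32GB-03` is the label of an SD card plugged into one of the Pi's USB ports, and `PDRIVE128GB` is the label of the flash drive, also plugged in and defined in the configuration file as shown above. To back up the photos on the SD card, we would do the following:

1. Power on the Raspberry Pi so that the drives are mounted automatically.
2. Connect to the WiFi network created by the Pi.
3. Connect to the Pi over SSH using the [Prompt2][21] app.
4. Once connected, run: `python3 backup_photos.py SD32GB-03`

The first backup may take a while, depending on how much of the SD card is used. That means you have to keep the connection between the iPad and the Pi alive the whole time. You can get around this by prefixing the command with [nohup][22]:

```
nohup python3 backup_photos.py SD32GB-03 &
```

*The script's output after a completed run*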

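### Further customization

I have installed a VNC server on the Pi, so I can reach its graphical interface from another computer or from the iPad using the [Remoter App][23]. I also installed [BitTorrent Sync][24] to back up my photos to a remote location, though it still needs to be set up. I will update this article once I have a working solution.

Feel free to leave your comments and questions below; I will reply on this page.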

--------------------------------------------------------------------------------

via: http://www.movingelectrons.net/blog/2016/06/26/backup-photos-while-traveling-with-a-raspberry-pi.html

Author: [Lenin][a]
Translator: [jiajia9linuxer](https://github.com/jiajia9linuxer)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: http://www.movingelectrons.net/blog/2016/06/26/backup-photos-while-traveling-with-a-raspberry-pi.html
[1]: http://bit.ly/1MVVtZi
[2]: http://www.amazon.com/dp/B01D3NZIMA/?tag=movinelect0e-20
[3]: http://www.amazon.com/dp/B01CD5VC92/?tag=movinelect0e-20
[4]: http://www.amazon.com/dp/B010Q57T02/?tag=movinelect0e-20
[5]: http://www.amazon.com/dp/B01F1PSFY6/?tag=movinelect0e-20
[6]: http://amzn.to/293kPqX
[7]: http://amzn.to/290syFY
[8]: http://amzn.to/290syFY
[9]: http://amzn.to/290syFY
[10]: http://amzn.to/290syFY
[11]: http://amzn.to/293kPqX
[12]: https://www.raspberrypi.org/downloads/noobs/
[13]: https://www.raspberrypi.org/documentation/remote-access/ssh/passwordless.md
[14]: https://www.iterm2.com/
[15]: https://frillip.com/using-your-raspberry-pi-3-as-a-wifi-access-point-with-hostapd/
[16]: https://www.raspberrypi.org/documentation/linux/usage/rc-local.md
[17]: http://www.howtogeek.com/139433/how-to-turn-a-raspberry-pi-into-a-low-power-network-storage-device/
[18]: http://bit.ly/1MVVtZi
[19]: https://itunes.apple.com/us/app/prompt-2/id917437289?mt=8&uo=4&at=11lqkH
[20]: https://itunes.apple.com/us/app/filebrowser-access-files-on/id364738545?mt=8&uo=4&at=11lqkH
[21]: https://itunes.apple.com/us/app/prompt-2/id917437289?mt=8&uo=4&at=11lqkH
[22]: https://en.m.wikipedia.org/wiki/Nohup
[23]: https://itunes.apple.com/us/app/remoter-pro-vnc-ssh-rdp/id519768191?mt=8&uo=4&at=11lqkH
[24]: https://getsync.com/

76

published/20160828 4 Best Linux Boot Loaders.md

Normal file


@ -0,0 +1,76 @@

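4 best Linux boot loaders
==================

When you power on your machine and the power-on self-test (POST) completes successfully, the BIOS (Basic Input/Output System) immediately locates the configured boot media and reads some instructions from the MBR (Master Boot Record) or the GUID (Globally Unique Identifier) partition table, that is, from the first 512 bytes of the boot media. The MBR contains two important sets of information: the boot loader and the partition table.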
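For readers who want to see that 512-byte layout in code, here is a small Python sketch that parses a classic MBR sector: 446 bytes of boot code, four 16-byte partition entries, and the two-byte `0x55 0xAA` signature. The function name `parse_mbr` and the returned dictionary keys are choices made for this illustration.

```python
import struct

MBR_SIZE = 512
BOOT_CODE_SIZE = 446          # boot loader code area at the start of the sector
PARTITION_ENTRY_SIZE = 16     # four entries follow the boot code
BOOT_SIGNATURE = b"\x55\xaa"  # last two bytes of a valid MBR


def parse_mbr(sector):
    """Return the non-empty partition entries of a 512-byte MBR sector."""
    if len(sector) != MBR_SIZE:
        raise ValueError("an MBR sector is exactly 512 bytes")
    if sector[510:512] != BOOT_SIGNATURE:
        raise ValueError("missing 0x55AA boot signature")
    partitions = []
    for index in range(4):
        offset = BOOT_CODE_SIZE + index * PARTITION_ENTRY_SIZE
        entry = sector[offset:offset + PARTITION_ENTRY_SIZE]
        # status (1 byte), CHS start (3, skipped), type (1), CHS end (3, skipped),
        # first LBA sector (4 bytes), sector count (4 bytes), little-endian
        status, ptype, first_lba, sectors = struct.unpack("<B3xB3xII", entry)
        if ptype != 0:            # type 0 marks an unused entry
            partitions.append({
                "bootable": status == 0x80,
                "type": ptype,
                "first_lba": first_lba,
                "sectors": sectors,
            })
    return partitions
```

On a real system the sector could be obtained by reading the first 512 bytes of a disk device (for example `/dev/sda`, which requires root). On GPT disks the MBR usually holds a single protective entry of type `0xEE`.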

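### What is a boot loader?

A boot loader is a small program stored in the MBR or the GUID partition table that helps load an operating system into memory. Without a boot loader, your operating system cannot be loaded into memory.

There are several boot loaders that can be installed alongside Linux. In this article we briefly look at a few of the best ones to work with.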

|

||||

|

||||

### 1. GNU GRUB

|

||||

|

||||

GNU GRUB 是一个非常受欢迎,也可能是用的最多的具有多重引导能力的 Linux 引导程序,它以原始的 Eirch Stefan Broleyn 发明的 GRUB(GRand Unified Bootlader)为基础。GNU GRUB 增强了原来的 GRUB,带来了一些改进、新的特性和漏洞修复。

|

||||

|

||||

重要的是,GRUB 2 现在已经取代了 GRUB。值得注意的是,GRUB 这个名字被重新命名为 GRUB Legacy,但没有活跃开发,不过,它可以用来引导老的系统,因为漏洞修复依然继续。

|

||||

|

||||

GRUB has the following notable features:

|

||||

|

||||

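- Supports multiboot
- Supports multiple hardware architectures and operating systems, such as Linux and Windows
- Offers a Bash-like interactive command-line interface for running GRUB commands and interacting with configuration files
- Provides access to the GRUB editor
- Supports setting encrypted passwords for security
- Supports booting from a network, along with several other minor features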

|

||||

|

||||

Visit Homepage: <https://www.gnu.org/software/grub/>

|

||||

|

||||

### 2. LILO (LInux LOader)

|

||||

|

||||

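LILO is a simple yet powerful and very stable Linux boot loader. As GRUB has improved considerably and gained many powerful features, it has grown in popularity, and LILO has become less popular among Linux users.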

|

||||

|

||||

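While LILO loads, the word "LILO" is displayed on the screen, with each letter appearing before or after a particular event has occurred. However, development of LILO stopped in December 2015. Its characteristics include the following: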

|

||||

|

||||

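- Does not offer an interactive command-line interface
- Supports several error codes
- Offers no support for booting from a network (LCTT translator's note: its variant ELILO supports TFTP/DHCP booting)
- All of its files are stored within the first 1024 cylinders of a drive
- Faces limitations with Btrfs, GPT, RAID, and more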

|

||||

|

||||

Visit Homepage: <http://lilo.alioth.debian.org/>

|

||||

|

||||

### 3. BURG - New Boot Loader

|

||||

|

||||

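Based on GRUB, BURG is a relatively new boot loader (LCTT translator's note: its development stopped in 2011). Because it is derived from GRUB, it ships with some of GRUB's primary features. Nonetheless, it also offers notable features of its own, such as a new object format that supports multiple platforms, including Linux, Windows, Mac OS, and FreeBSD.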

|

||||

|

||||

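In addition, BURG supports highly configurable text-mode and graphical-mode boot menus, and planned "stream" support is intended to let it work with different input/output devices in the future.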

|

||||

|

||||

Visit Homepage: <https://launchpad.net/burg>

|

||||

|

||||

### 4. Syslinux

|

||||

|

||||

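Syslinux is a lightweight boot loader that can boot from CD-ROMs, from a network, and from other media. It supports filesystems such as FAT for MS-DOS and ext2, ext3, and ext4 for Linux. It also supports uncompressed single-device Btrfs.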

|

||||

|

||||

Note that Syslinux can only access files in its own partition, so it has no multi-filesystem boot capability.

|

||||

|

||||

Visit Homepage: <http://www.syslinux.org/wiki/index.php?title=The_Syslinux_Project>

|

||||

|

||||

### Conclusion

|

||||

|

||||

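A boot loader lets you manage multiple operating systems on your machine and select which one to use at a given time. Without it, your machine cannot load the kernel and the rest of the operating system.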

|

||||

|

||||

Did we miss any first-rate Linux boot loader? If so, let us know by recommending it in the comment form below.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/best-linux-boot-loaders/

|

||||

|

||||

Author: [Aaron Kili][a]

Translator: [ucasFL](https://github.com/ucasFL)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]: http://www.tecmint.com/best-linux-boot-loaders/

|

||||

@ -0,0 +1,140 @@

|

||||

Googler: Now You Can Google From the Linux Terminal!

|

||||

============================================

|

||||

|

||||

|

||||

|

||||

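A quick question: what do you do every day? Plenty of things, of course. But I can name one thing you do almost every day, if not every day: a Google search. Am I right? (LCTT translator's note: What is Google? /cry)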

|

||||

|

||||

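Now, if you are a Linux user (which I'm guessing you are), here's another question: wouldn't it be nice if you could run a Google search without ever leaving the terminal, without even opening a browser window?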

|

||||

|

||||

If you are a [*nix][7] enthusiast who also loves the terminal, I know your answer is yes, and I think you will also like the nifty little tool I'm introducing today. It's called Googler.

|

||||

|

||||

### Googler: Google in your Linux terminal

|

||||

|

||||

Googler is a simple command-line utility for running Google searches directly from the terminal window. It mainly supports three types of Google search:

|

||||

|

||||

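- Google Search: a plain Google search, equivalent to searching on the Google homepage.
- Google News Search: a search for news, the same as searching in Google News.
- Google Site Search: a Google search restricted to results from a specific site.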

|

||||

|

||||

Googler shows each result with its title, URL, and page excerpt. A result can be opened directly in the browser with just a couple of keystrokes.

|

||||

|

||||

|

||||

|

||||

### Installation on Ubuntu

|

||||

|

||||

Let's go through the installation first.

|

||||

|

||||

First, make sure you have Python 3.3 or later. You can check with the following command:

|

||||

|

||||

```

|

||||

python3 --version

|

||||

```

|

||||

|

||||

If not, upgrade it. Googler requires Python 3.3 or later to run.
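The same check can be done from inside Python. The sketch below is only an illustration of the version requirement stated above; the helper name `meets_minimum` is made up for this example and is not part of Googler.

```python
import sys

MIN_VERSION = (3, 3)  # minimum Python version stated for Googler in this article


def meets_minimum(version_info=sys.version_info, minimum=MIN_VERSION):
    """Return True when the (major, minor) pair is at least the minimum."""
    return tuple(version_info[:2]) >= minimum


if not meets_minimum():
    print("Python %d.%d or later is required" % MIN_VERSION)
```

Comparing tuples avoids parsing the version string printed by `python3 --version`.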

|

||||

|

||||

Although Googler is not yet available in Ubuntu's software repositories, it is easy to install from its GitHub repository. All we need to do is run the following commands:

|

||||

|

||||

```

|

||||

cd /tmp

|

||||

git clone https://github.com/jarun/googler.git

|

||||

cd googler

|

||||

sudo make install

|

||||

cd auto-completion/bash/

|

||||

sudo cp googler-completion.bash /etc/bash_completion.d/

|

||||

```

|

||||

|

||||

Googler is now installed, along with its command auto-completion feature.

|

||||

|

||||

### Features & Basic Usage

|

||||

|

||||

A quick look through its features shows that Googler is actually quite a powerful tool. Some of the main features are:

|

||||

|

||||

#### Interactive Interface

|

||||

|

||||

Run the following command in the terminal:

|

||||

|

||||

```

|

||||

googler

|

||||

```

|

||||

|

||||

This opens the interactive interface, which Googler's developer, [Arun Prakash Jana][1], calls the omniprompt. You can enter `?` to list the available commands:

|

||||

|

||||

|

||||

|

||||

At the prompt, enter any search phrase to start a search. You can then enter `n` or `p` to move to the next or previous page of results.

|

||||

|

||||

To open a result in a browser window, just enter its index number, or enter `o` to open the search page itself.

|

||||

|

||||

#### News Search

|

||||

|

||||

If you want to search for news, start Googler with the `-N` option:

|

||||

|

||||

```

|

||||

googler -N

|

||||

```

|

||||

|

||||

Subsequent searches will fetch results from Google News.

|

||||

|

||||

#### Site Search

|

||||

|

||||

If you want to search within a specific site, start Googler with the `-w domain` option:

|

||||

|

||||

```

|

||||

googler -w itsfoss.com

|

||||

```

|

||||

|

||||

Subsequent searches will fetch results only from that blog!

|

||||

|

||||

#### Manual Page

|

||||

|

||||

Run the following command to view Googler's manual page, which includes various examples:

|

||||

|

||||

```

|

||||

man googler

|

||||

```

|

||||

|

||||

#### Google country/domain specific search

|

||||

|

||||

```

|

||||

googler -c in "hello world"

|

||||

```

|

||||

|

||||

The example command above fetches results from Google's Indian domain (`in` stands for India).

|

||||

|

||||

Also supported:

|

||||

|

||||

- Filtering search results by time and language preference
- Google search keywords such as `site:example.com` or `filetype:pdf`
- HTTPS proxy support
- Shell command auto-completion
- Disabling automatic spelling correction
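To illustrate how search keywords such as `site:` and `filetype:` combine with ordinary search terms, here is a small Python sketch that assembles a search URL. It is a hypothetical helper written for this example: the function name `build_search_url` and the base URL are assumptions, and this is not a description of how Googler itself issues requests.

```python
from urllib.parse import urlencode


def build_search_url(keywords, site=None, filetype=None,
                     base="https://www.google.com/search"):
    """Join plain keywords with optional site:/filetype: operators into one query."""
    terms = [keywords]
    if site:
        terms.append("site:" + site)          # restrict results to one site
    if filetype:
        terms.append("filetype:" + filetype)  # restrict results to one file type
    return base + "?" + urlencode({"q": " ".join(terms)})


print(build_search_url("hello world", site="itsfoss.com"))
```

The operators are simply part of the query string, which is why they can be typed at a search prompt like any other keyword.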

|

||||

|

||||

There are many more features. You can tailor Googler to suit your needs.

|

||||

|

||||

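Googler can also be integrated with text-based browsers (such as [elinks][2], [links][3], [lynx][4], or w3m), so you don't even need to leave the terminal to browse web pages. Instructions can be found on the [Googler GitHub project page][5].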

|

||||

|

||||

If you would like a visual demonstration of Googler's various features, check out the terminal recording linked from the GitHub project page: [jarun/googler v2.7 quick demo][6].

|

||||

|

||||

### Thoughts on Googler?

|

||||

|

||||

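Although Googler may not be necessary or desirable for everybody, it is a great tool for anyone who does not want to open a browser just to run a Google search, or who simply wants to spend as much time as possible in the terminal. What do you think?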

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/review-googler-linux/

|

||||

|

||||

Author: [Munif Tanjim][a]

Translator: [LinuxBars](https://github.com/LinuxBars)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]: https://itsfoss.com/author/munif/

|

||||

[1]: https://github.com/jarun

|

||||

[2]: http://elinks.or.cz/

|

||||

[3]: http://links.twibright.com/

|

||||

[4]: http://lynx.browser.org/

|

||||

[5]: https://github.com/jarun/googler#faq

|

||||

[6]: https://asciinema.org/a/85019

|

||||

[7]: https://en.wikipedia.org/wiki/Unix-like

|

||||

@ -0,0 +1,87 @@

|

||||

Torvalds 2.0: Linus's daughter on computing, college, feminism, and increasing diversity in tech

|

||||

====================

|

||||

|

||||

|

||||

|

||||

*Image credit: Photo by Becky Svartström, modified by Opensource.com*

|

||||

|

||||

Patricia Torvalds is not yet as well known in Linux and open source as her father, Linus.

|

||||

|

||||

|

||||

|

||||

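At 18, Patricia is already a feminist with a list of technical achievements and open source industry experience, and she has her sights set on her first term at Duke University's Pratt School of Engineering. At the time of this interview she was working as an intern at [Puppet Labs][2] in Portland, Oregon. Soon, however, she would head to Durham, North Carolina, to begin the fall semester of college.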

|

||||

|

||||

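In this exclusive interview, Patricia explains what got her interested in computer science and engineering (spoiler alert: it wasn't her father), what her high school did "right" in teaching technology, the important role feminism plays in her life, and her thoughts on the lack of diversity in technology.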

|

||||

|

||||

|

||||

|

||||

### What made you interested in studying computer science and engineering? ###

|

||||

|

||||

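My interest in tech mainly grew during high school. For a while I wanted to go into biology, and that lasted until around my sophomore year. After my sophomore year I had a web design internship at the Portland [VA][11]. At the same time, I took an engineering class called Exploratory Ventures (XV), and late in my sophomore year we sent an underwater robot into the Pacific Ocean. The turning point, though, probably came midway through my junior year, when I was named a regional winner and national runner-up for the [NCWIT Aspirations in Computing][6] award (LCTT translator's note: NCWIT is the National Center for Women & Information Technology).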

|

||||

|

||||

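Winning the award made me feel validated in my interest. Of course, I think the most important part was joining a Facebook group for all the award winners. The girls who have won the award are incredible, and we are very supportive of one another. Because of my work in XV and at the [VA][11], I was definitely interested in computer science before I won the award, but talking with these girls solidified that interest and made it stronger. Later, teaching XV in my junior and senior years also showed me how much fun engineering and computer science can be.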

|

||||

|

||||

### What do you plan to study? Do you already know what you want to do after graduation? ###

|

||||

|

||||

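I hope to major in either mechanical engineering or electrical and computer engineering, together with computer science, and to minor in women's studies. After graduation, I hope to work for a company that supports or creates technology for social good, or to start my own company.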

|

||||

|

||||

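### My daughter took a Visual Basic programming class in high school. She was the only girl in the class, and she ended up being harassed and having a miserable experience. What was your experience like? ###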

|

||||

|

||||

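My high school began offering computer science classes in my senior year, and I took Visual Basic as well! The class wasn't bad, but I was definitely one of only three or four girls in a class of more than 20 students. Other computing classes seemed to have similar gender breakdowns. However, my high school was extremely small and the teacher was very supportive of inclusivity in tech, so I did not experience any harassment. Hopefully these classes will become more diverse in the coming years.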

|

||||

|

||||

### What did your school do to promote technology? How could it have done better? ###

|

||||

|

||||

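My high school gave us access to computers for long stretches of time, and teachers would occasionally assign technology-based tasks in unrelated classes; a few times we built a website for a social studies class. I think this was great because it exposed everyone to tech. The robotics club was also active and well funded, though very small; I was not a member. One very important component of the school's technology and engineering program was a student-taught engineering class called [Exploratory Ventures][8], a hands-on course that tackled a new engineering or computer science problem every year. I taught it for two years with a classmate, and students came up to me after the course to tell me they had become interested in pursuing engineering or computer science.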

|

||||

|

||||

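However, my high school made no particular effort to bring young women into these programs, and it was not racially diverse. The computing classes and clubs were, by a large majority, made up of white male students. This could definitely be improved.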

|

||||

|

||||

### Growing up, how did you use technology at home? ###

|

||||

|

||||

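Honestly, when I was younger I used my computer time ([my dad Linus][9] had set up a tracker that logged us off after an hour online) to play [Neopets][10] or similar games. I suppose I could have tampered with the tracker or played games without a network connection, but I never did. I sometimes did small science projects with my dad, and I remember once printing "Hello world" thousands of times in the terminal with him. Mostly, though, I just played online games with my sisters, and I didn't start learning about computing until high school.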

|

||||

|

||||

### You were active in your high school's feminism club. What did you learn from that experience? Which feminist issues matter most to you now? ###

|

||||

|

||||

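My friend and I co-founded the feminism club late in our sophomore year of high school. At first we met a lot of opposition to the club, and that opposition never went away entirely. By the time we graduated, feminist ideals had become a thorough part of the school's culture. The feminist work we did at school was generally on a more immediate scale and focused on issues like the dress code.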

|

||||

|

||||

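Personally, I am more focused on intersectional feminism, which is feminism as it applies to other aspects of oppression, such as racism and classism. The Facebook page [Guerrilla Feminism][4] is a great example of intersectional feminism, and I have learned a lot from it. I currently run the Portland branch.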

|

||||

|

||||

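Feminism also matters a great deal to me in terms of diversity in tech, although as an upper-class white woman with strong connections to the tech world, the problems here affect me far less than they do other people. The same goes for my involvement in intersectional feminism. The publication [Model View Culture][5] is very inspiring to me, and I am grateful to Shanley Kane for everything she does.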

|

||||

|

||||

### What advice would you give parents who want to teach their children how to program? ###

|

||||

|

||||

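Honestly, nobody ever pushed me into computer science or engineering. As I said earlier, for a long time I wanted to be a geneticist. The summer after my sophomore year I spent a summer as a web design intern at the [VA][11], which totally changed my mind. So I don't know whether I can fully answer that question.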

|

||||

|

||||

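I do think genuine interest is important. If my dad had sat me down in front of a computer when I was 12 and taught me to configure a web server, I don't think I would have become interested in computer science. Instead, my parents gave me a lot of free time to do what I wanted, which mostly meant coding terrible little HTML sites for my Neopets. Neither of my younger sisters is interested in engineering or computer science, and my parents don't mind. I feel lucky that my parents gave me and my sisters the encouragement and resources to explore our own interests.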

|

||||

|

||||

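Still, growing up I often said I wanted my future career to be "like my dad's," even though I didn't yet know what my dad did, only that he had a cool job. Also, once in middle school I told my dad about this, and he offered no opinion except to tell me not to think about it until high school. So I guess that encouraged me to some degree.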

|

||||

|

||||

### What advice do you have for leaders in open source communities to help them attract and retain more diverse contributors? ###

|

||||

|

||||

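I'm actually not especially active in open source communities; I prefer discussing computing with other women. I am a member of the [NCWIT Aspirations in Computing][6] network, which has been a major part of my continued interest in technology, as has the Facebook group [Ladies Storm Hackathons][7].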

|

||||

|

||||

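I think a safe space is important for attracting and retaining talented and diverse contributors. I have seen misogynistic and racist comments made in some open source communities, followed by dismissals when people point out the problem. I think that to maintain a professional community there have to be high standards on harassment and inappropriate behavior. Of course, people have had, and will continue to have, widely differing opinions about what can be expressed in open source communities, or in any community. However, if community leaders truly want to attract and retain diverse talent, they need to create a safe space and hold community members to high standards.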

|

||||

|

||||

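I also think some community leaders do not understand the value of diversity. It is easy to argue that tech is a meritocracy, and that the reason so few marginalized people work in tech is that they are not interested, with the problem originating much earlier in the pipeline. They argue that if someone is good enough at their job, their gender, race, or sexual orientation doesn't matter. That is the easy argument, but I don't want to see excuses made for these mistakes. I think the lack of diversity is a mistake, and that we should take responsibility for it and actively try to fix it.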

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://opensource.com/life/15/8/patricia-torvalds-interview

|

||||

|

||||

Author: [Rikki Endsley][a]

Translator: [ucasFL](https://github.com/ucasFL)

Proofreaders: [LinuxBars](https://github.com/LinuxBars), [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]:http://opensource.com/users/rikki-endsley

|

||||

[1]:https://creativecommons.org/licenses/by-sa/4.0/

|

||||

[2]:https://puppetlabs.com/

|

||||

[3]:https://www.aspirations.org/

|

||||

[4]:https://www.facebook.com/guerrillafeminism

|

||||

[5]:https://modelviewculture.com/

|

||||

[6]:https://www.aspirations.org/

|

||||

[7]:https://www.facebook.com/groups/LadiesStormHackathons/

|

||||

[8]: http://exploratoryventures.com/

|

||||

[9]: https://plus.google.com/+LinusTorvalds/about

|

||||

[10]: http://www.neopets.com/

|

||||

[11]: http://www.va.gov/

|

||||

@ -1,3 +1,5 @@

|

||||

translating by Chao-zhi

|

||||

|

||||

Adobe's new CIO shares leadership advice for starting a new role

|

||||

====

|

||||

|

||||

@ -42,7 +44,7 @@ Through this whole process, I’ve been very open with people that this is not g

|

||||

via: https://enterprisersproject.com/article/2016/9/adobes-new-cio-shares-leadership-advice-starting-new-role

|

||||

|

||||

作者:[Cynthia Stoddard][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

译者:[Chao-zhi](https://github.com/Chao-zhi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,62 +0,0 @@

|

||||

Linus Torvalds reveals his favorite programming laptop

|

||||

====

|

||||

|

||||

>It's the Dell XPS 13 Developer Edition. Here's why.

|

||||

|

||||

I recently talked with some Linux developers about what the best laptop is for serious programmers. As a result I checked out several laptops from a programmer's viewpoint. The winner in my book? The 2016 Dell XPS 13 Developer Edition. I'm in good company. Linus Torvalds, Linux's creator, agrees. The Dell XPS 13 Developer Edition, for him, is the best laptop around.

|

||||

|

||||

|

||||

|

||||

Torvalds' requirements may not be yours, though.

|

||||

|

||||

On Google+, Torvalds explained, "First off: [I don't use my laptop as a desktop replacement][1], and I only travel for a small handful of events each year. So for me, the laptop is a fairly specialized thing that doesn't get daily (or even weekly) use, so the main criteria are not some kind of 'average daily use', but very much 'travel use'."

|

||||

|

||||

Therefore, for Torvalds, "I end up caring a lot about it being fairly small and light, because I may end up carrying it around all day at a conference. I also want it to have a good screen, because by now I'm just used to it at my main desktop, and I want my text to be legible but small."

|

||||

|

||||

The Dell's display is powered by Intel's Iris 540 GPU. In my experience it works really well.

|

||||

|

||||

The Iris powers a 13.3 inch display with a 3,200×1,800 touchscreen. That's 280 pixels per inch, 40 more than my beloved [2015 Chromebook Pixel][2] and 60 more than a [MacBook Pro with Retina][3].
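As a rough check on the pixel-density figure, the diagonal resolution can be divided by the diagonal size. The sketch below is a plain geometric calculation using only the resolution and screen size quoted above; the function name is made up for this example. Under these assumptions it gives roughly 276 pixels per inch, so the 280 quoted above appears to be a rounded figure.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Diagonal pixel count divided by the diagonal length in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


ppi = pixels_per_inch(3200, 1800, 13.3)
print(round(ppi, 1))
```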

|

||||

|

||||

However, getting that hardware to work and play well with the [Gnome][4] desktop isn't easy. As Torvalds explained in another post, it "has the [same resolution as my desktop][5], but apparently because the laptop screen is smaller, Gnome seems to decide on its own that I need an automatic scaling factor of 2, which blows up all the stupid things (window decorations, icons etc) to a ridiculous degree".

|

||||

|

||||

The solution? You can forget about looking to the user interface. You need to go to the shell and run: `gsettings set org.gnome.desktop.interface scaling-factor 1`.

|

||||

|

||||

Torvalds may use Gnome, but he's [never liked the Gnome 3.x family much][6]. I can't argue with him. That's why I use [Cinnamon][7] instead.

|

||||

|

||||

He also wants "a reasonably powerful CPU, because when I'm traveling I still build the kernel a lot. I don't do my normal full 'make allmodconfig' build between each pull request like I do at home, but I'd like to do it more often than I did with my previous laptop, which is actually (along with the screen) the main reason I wanted to upgrade."

|

||||

|

||||

Linus doesn't describe the features of his XPS 13, but my review unit was a high-end model. It came with a dual-core, 2.2GHz 6th Generation Intel Core i7-6560U Skylake processor and 16GB of DDR3 RAM, with a half-terabyte PCIe solid state drive (SSD). I'm sure Torvalds' system is at least that well-equipped.

|

||||

|

||||

Some features you may care about aren't on Torvalds' list.

|

||||

|

||||

>"What I don't tend to care about is touch-screens, because my fingers are big and clumsy compared to the text I'm looking at (I also can't handle the smudges: maybe I just have particularly oily fingers, but I really don't want to touch that screen).

|

||||

|

||||

>I also don't care deeply about some 'all day battery life', because quite frankly, I can't recall the last time I didn't have access to power. I might not want to bother to plug it in for some quick check, but it's just not a big overwhelming issue. By the time battery life is in 'more than a couple of hours', I just don't care very much any more."

|

||||

Dell claims the XPS 13, with its 56 WHr, 4-cell battery, has about a 12-hour battery life. It has lasted well over 10 hours in my experience. I haven't tried to run it down to the dregs.

|

||||

|

||||

Torvalds also didn't have any trouble with the Intel Wi-Fi chipset. The non-Developer Edition uses a Broadcom chipset, and that has proven troublesome for both Windows and Linux users. Dell technical support was extremely helpful to me in getting this problem under control.

|

||||

|

||||

Some people have trouble with the XPS 13 touchpad. Neither I nor Torvalds have any worries. Torvalds wrote, the "XPS13 touchpad works very well for me. That may be a personal preference thing, but it seems to be both smooth and responsive."

|

||||

|

||||

Still, while Torvalds likes the XPS 13, he's also fond of the latest Lenovo X1 Carbon, HP Spectre 13 x360, and last year's Lenovo Yoga 900. Me? I like the XPS 13 Developer Edition. The price tag, which for the model I reviewed was $1949.99, may keep you from reaching for your credit card.

|

||||

|

||||

Still, if you want to develop like one of the world's top programmers, the Dell XPS 13 Developer Edition is worth the money.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.zdnet.com/article/linus-torvalds-reveals-his-favorite-programming-laptop/

|

||||

|

||||

作者:[Steven J. Vaughan-Nichols][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://www.zdnet.com/meet-the-team/us/steven-j-vaughan-nichols/

|

||||

[1]: https://plus.google.com/+LinusTorvalds/posts/VZj8vxXdtfe

|

||||

[2]: http://www.zdnet.com/article/the-best-chromebook-ever-the-chromebook-pixel-2015/

|

||||

[3]: http://www.zdnet.com/product/apple-15-inch-macbook-pro-with-retina-display-mid-2015/

|

||||

[4]: https://www.gnome.org/

|

||||

[5]: https://plus.google.com/+LinusTorvalds/posts/d7nfnWSXjfD

|

||||

[6]: http://www.zdnet.com/article/linus-torvalds-finds-gnome-3-4-to-be-a-total-user-experience-design-failure/

|

||||

[7]: http://www.zdnet.com/article/how-to-customise-your-linux-desktop-cinnamon/

|

||||

@ -1,84 +0,0 @@

|

||||

Setup honeypot in Kali Linux

|

||||

====

|

||||

|

||||

Pentbox is a security kit containing various tools that streamline penetration testing. It is written in Ruby and oriented toward GNU/Linux, with support for Windows, macOS, and every other system where Ruby is installed. In this short article we will explain how to set up a honeypot in Kali Linux. If you don’t know what a honeypot is: “a honeypot is a computer security mechanism set to detect, deflect, or, in some manner, counteract attempts at unauthorized use of information systems.”

|

||||

|

||||

### Download Pentbox:

|

||||

|

||||

Simply type in the following command in your terminal to download pentbox-1.8.

|

||||

|

||||

```

|

||||

root@kali:~# wget http://downloads.sourceforge.net/project/pentbox18realised/pentbox-1.8.tar.gz

|

||||

```

|

||||

|

||||

|

||||

|

||||

### Uncompress pentbox files

|

||||

|

||||

Decompress the file with the following command:

|

||||

|

||||

```

|

||||

root@kali:~# tar -zxvf pentbox-1.8.tar.gz

|

||||

```

|

||||

|

||||

|

||||

|

||||

### Run pentbox ruby script

|

||||

|

||||

Change into the pentbox directory:

|

||||

|

||||

```

|

||||

root@kali:~# cd pentbox-1.8/

|

||||

```

|

||||

|

||||

|

||||

|

||||

Run pentbox using the following command:

|

||||

|

||||

```

|

||||

root@kali:~# ./pentbox.rb

|

||||

```

|

||||

|

||||

|

||||

|

||||

### Setup a honeypot

|

||||

|

||||

Use option 2 (Network Tools) and then option 3 (Honeypot).

|

||||

|

||||

|

||||

|

||||

Finally, for a first test, choose option 1 (Fast Auto Configuration).

|

||||

|

||||

|

||||

|

||||

This opens up a honeypot on port 80. Simply open a browser and browse to http://192.168.160.128 (where 192.168.160.128 is your own IP address). You should see an Access denied error.

|

||||

|

||||

|

||||

|

||||

In the terminal, you should see “HONEYPOT ACTIVATED ON PORT 80” followed by “INTRUSION ATTEMPT DETECTED”.
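To show the idea behind this behavior, here is a minimal Python sketch of a decoy listener: it accepts a connection, records the caller's address, answers with a denial, and hangs up. This is not Pentbox's code (Pentbox is written in Ruby); the function names `serve_decoy` and `start_decoy` are made up for this example, and the log strings simply mirror the messages quoted above.

```python
import socket
import threading


def serve_decoy(server_sock, log, max_connections=1):
    """Accept connections, log each peer, send a denial page, then hang up."""
    for _ in range(max_connections):
        conn, (peer_ip, peer_port) = server_sock.accept()
        with conn:
            log.append(f"INTRUSION ATTEMPT DETECTED from {peer_ip}:{peer_port}")
            body = b"Access denied"
            head = (
                "HTTP/1.1 403 Forbidden\r\n"
                f"Content-Length: {len(body)}\r\n"
                "Connection: close\r\n\r\n"
            )
            conn.sendall(head.encode("ascii") + body)


def start_decoy(host="127.0.0.1", port=0, max_connections=1):
    """Bind a listening socket and serve it from a background thread.

    port=0 asks the OS for a free port; binding port 80 usually needs root.
    """
    server_sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server_sock.bind((host, port))
    server_sock.listen(5)
    log = [f"HONEYPOT ACTIVATED ON PORT {server_sock.getsockname()[1]}"]
    worker = threading.Thread(
        target=serve_decoy, args=(server_sock, log, max_connections), daemon=True
    )
    worker.start()
    return server_sock, log, worker
```

Calling `start_decoy()` and then connecting to the returned socket's port from a browser or `curl` should produce the denial response and add one entry to `log`. A real honeypot would also record what the client sends, which this sketch deliberately leaves out.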

|

||||

|

||||

|

||||

|

||||

Now, if you repeat the same steps but this time select option 2 (Manual Configuration), you should see additional options.

|

||||

|

||||

|

||||

|

||||

Repeat the same steps, but select port 22 this time (the SSH port). Then set up port forwarding on your home router to forward external port 22 to this machine’s port 22. Alternatively, set it up on a VPS in the cloud.

|

||||

|

||||

You’d be amazed how many bots out there are continuously scanning the SSH port. You know what you do then? You try to hack them back for the lulz!

|

||||

|

||||

Here’s a video on setting up a honeypot, if video is your thing:

|

||||

|

||||

<https://youtu.be/NufOMiktplA>

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.blackmoreops.com/2016/05/06/setup-honeypot-in-kali-linux/

|

||||

|

||||

作者:[blackmoreops.com][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: blackmoreops.com

|

||||

@ -0,0 +1,234 @@

|

||||

# Scientific Audio Processing, Part II - How to make basic Mathematical Signal Processing in Audio files using Ubuntu with Octave 4.0

|

||||

|

||||

|

||||

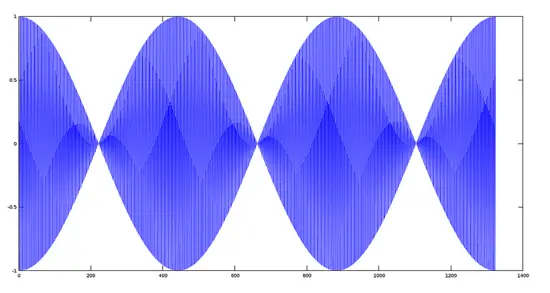

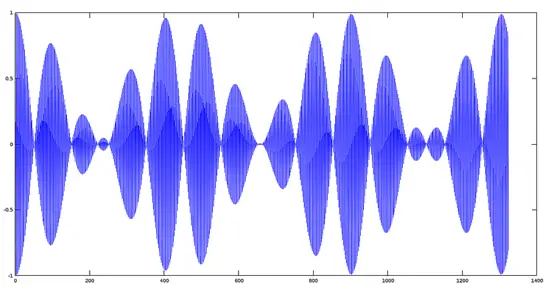

In the [previous tutorial](https://www.howtoforge.com/tutorial/how-to-read-and-write-audio-files-with-octave-4-in-ubuntu/), we saw the simple steps to read, write and play back audio files. We even saw how to synthesize an audio file from a periodic function such as the cosine function. In this tutorial, we will see how to add signals, multiply signals (modulation), and apply some basic mathematical functions to see their effect on the original signal.

|

||||

|

||||

### Adding Signals

|

||||

|

||||

The sum of two signals S1(t) and S2(t) results in a signal R(t) whose value at any instant of time is the sum of the added signal values at that moment. Just like this:

|

||||

|

||||

```

|

||||

R(t) = S1(t) + S2(t)

|

||||

```

|

||||

|

||||

We will recreate the sum of two signals in Octave and see the effect graphically. First, we will generate two signals of different frequencies to see the signal resulting from the sum.

|

||||

|

||||

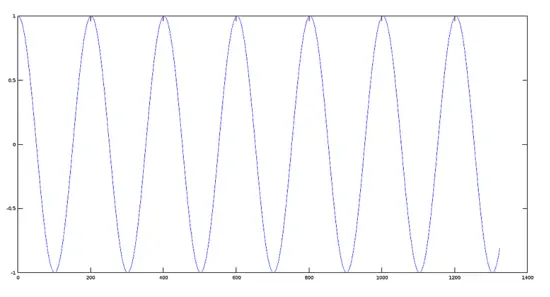

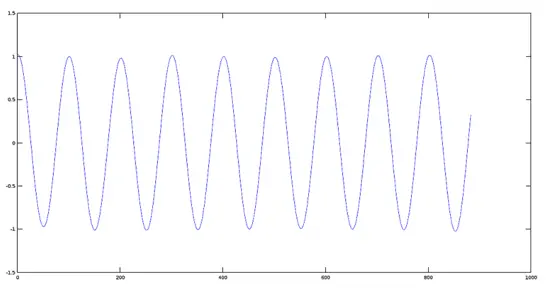

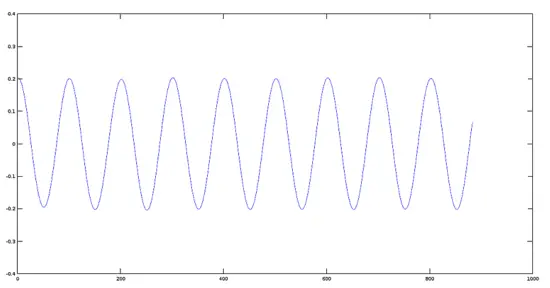

#### Step 1: Creating two signals of different frequencies (ogg files)

|

||||

|

||||

```

|

||||

>> sig1='cos440.ogg'; %creating the audio file @440 Hz

|

||||

>> sig2='cos880.ogg'; %creating the audio file @880 Hz

|

||||

>> fs=44100; %generating the parameters values (Period, sampling frequency and angular frequency)

|

||||

>> t=0:1/fs:0.02;

|

||||

>> w1=2*pi*440*t;

|

||||

>> w2=2*pi*880*t;

|

||||

>> audiowrite(sig1,cos(w1),fs); %writing the function cos(w) on the files created

|

||||

>> audiowrite(sig2,cos(w2),fs);

|

||||

```

|

||||

|

||||

Here we will plot both signals.

|

||||

|

||||

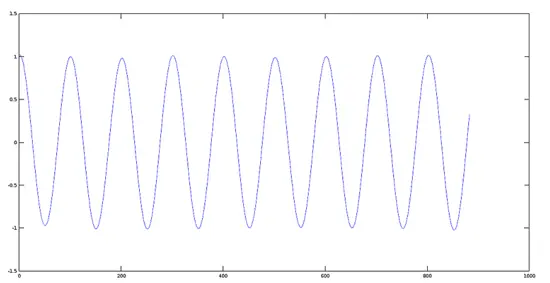

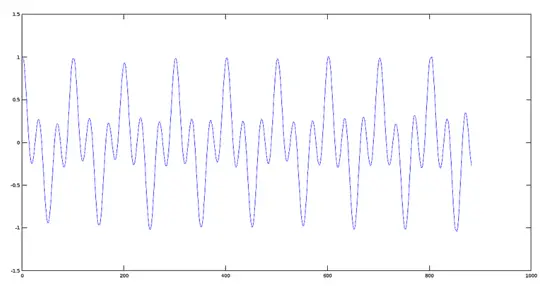

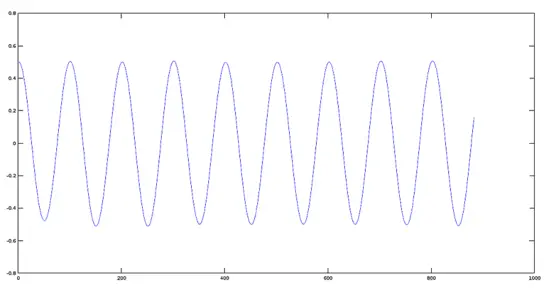

Plot of Signal 1 (440 Hz)

|

||||

|

||||

```

|

||||

>> [y1, fs] = audioread(sig1);

|

||||

>> plot(y1)

|

||||

```

|

||||

|

||||

[](https://www.howtoforge.com/images/octave-audio-signal-processing-ubuntu/big/plotsignal1.png)

|

||||

|

||||

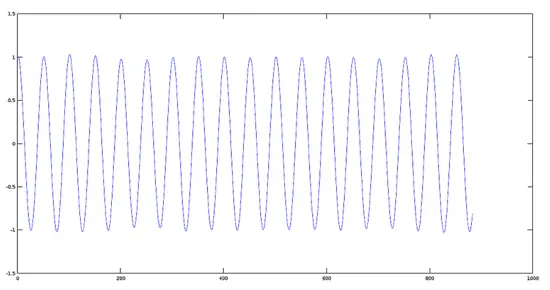

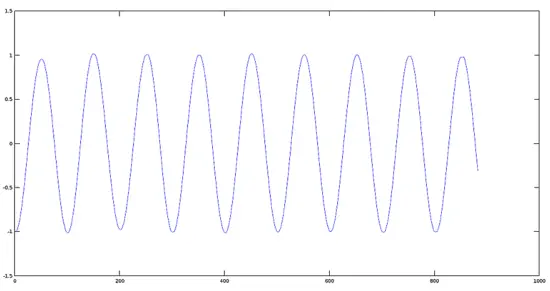

Plot of Signal 2 (880 Hz)

|

||||

|

||||

```

|

||||

>> [y2, fs] = audioread(sig2);

|

||||

>> plot(y2)

|

||||

```

|

||||

|

||||

[](https://www.howtoforge.com/images/octave-audio-signal-processing-ubuntu/big/plotsignal2.png)

|

||||

|

||||

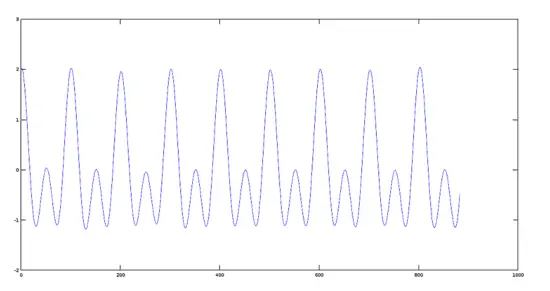

#### Step 2: Adding two signals

|

||||

|

||||

Now we perform the sum of the two signals created in the previous step.

|

||||

|

||||

```

|

||||

>> sumres=y1+y2;

|

||||

>> plot(sumres)

|

||||

```
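For readers who want to check the numbers outside Octave, the sample-by-sample sum can be sketched in plain Python. This is an illustration under the same parameters as Step 1 (44100 Hz sampling rate, 0.02 s of signal, 440 Hz and 880 Hz cosines); it works on the synthesized samples directly instead of reading the `.ogg` files, and the variable names are chosen for this example.

```python
import math

fs = 44100                         # sampling frequency in Hz, as in Step 1
duration = 0.02                    # seconds, matching t=0:1/fs:0.02
n = round(duration * fs) + 1       # sample count, endpoints included
t = [k / fs for k in range(n)]

s1 = [math.cos(2 * math.pi * 440 * x) for x in t]   # S1(t), 440 Hz
s2 = [math.cos(2 * math.pi * 880 * x) for x in t]   # S2(t), 880 Hz

# R(t) = S1(t) + S2(t): add the two signals one sample at a time
total = [a + b for a, b in zip(s1, s2)]

print(len(total), max(total), min(total))
```

Note that the sum reaches 2.0 at t = 0, outside the [-1, 1] range of each input, so writing a summed signal back to an audio file may clip it unless it is scaled first.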

|

||||

|

||||

Plot of Resulting Signal

|

||||

|

||||

[](https://www.howtoforge.com/images/octave-audio-signal-processing-ubuntu/big/plotsum.png)

|

||||

|

||||

The Octaver Effect

|

||||

|

||||

The Octaver effect has a characteristic sound because it emulates the note being played by the musician one octave lower or higher (depending on how it has been programmed) and combines it with the original note, i.e., the same note is heard in two octaves at once.

|

||||

|

||||

#### Step 3: Adding two real signals (example with two musical tracks)

|

||||

|

||||

For this purpose, we will use two tracks of Gregorian Chants (voice sampling).

|

||||

|

||||

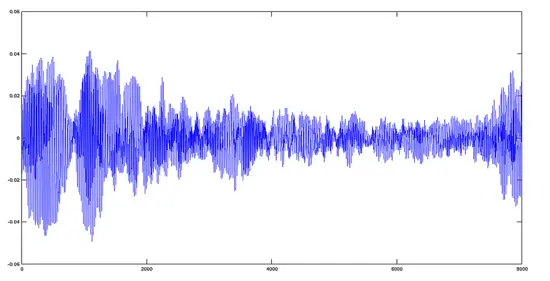

Avemaria Track

|

||||

|

||||

First, we will read and plot an Avemaria track:

|

||||

|

||||

```

|

||||

>> [y1,fs]=audioread('avemaria_.ogg');

|

||||

>> plot(y1)

|

||||

```

|

||||

|

||||

[](https://www.howtoforge.com/images/octave-audio-signal-processing-ubuntu/big/avemaria.png)

|

||||

|

||||

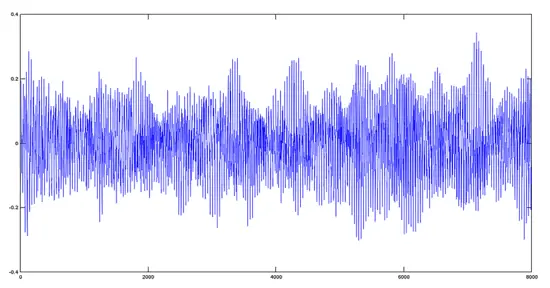

Hymnus Track

|

||||

|

||||

Now, we will read and plot a Hymnus track:

|

||||

|

||||

```

|

||||

>> [y2,fs]=audioread('hymnus.ogg');

|

||||

>> plot(y2)

|

||||

```

|

||||

|

||||

[](https://www.howtoforge.com/images/octave-audio-signal-processing-ubuntu/big/hymnus.png)

|

||||

|

||||

Avemaria + Hymnus Track

|

||||

|

||||

```

|

||||

>> y='avehymnus.ogg';

|

||||

>> audiowrite(y, y1+y2, fs);

|

||||

>> [y, fs]=audioread('avehymnus.ogg');

|

||||

>> plot(y)

|

||||

```

|

||||

|

||||

[](https://www.howtoforge.com/images/octave-audio-signal-processing-ubuntu/big/avehymnus.png)

The result, from the point of view of audio, is that both tracks will sound mixed.

|

||||

|

||||

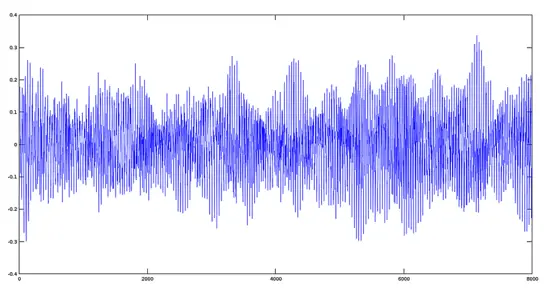

### Product of two Signals

|

||||

|

||||

To multiply two signals, we proceed in a way analogous to the sum. Let's use the same files created previously.

|

||||

|

||||

```

|

||||

R(t) = S1(t) * S2(t)

|

||||

```

|

||||

|

||||

```

|

||||

>> sig1='cos440.ogg'; %creating the audio file @440 Hz

|

||||

>> sig2='cos880.ogg'; %creating the audio file @880 Hz

|

||||

>> product='prod.ogg'; %creating the audio file for product

|

||||

>> fs=44100; %generating the parameters values (Period, sampling frequency and angular frequency)

|

||||

>> t=0:1/fs:0.02;

|

||||

>> w1=2*pi*440*t;

|

||||

>> w2=2*pi*880*t;

|

||||

>> audiowrite(sig1, cos(w1), fs); %writing the function cos(w) on the files created

|

||||

>> audiowrite(sig2, cos(w2), fs);
>> [y1,fs]=audioread(sig1);
>> [y2,fs]=audioread(sig2);

|

||||

>> audiowrite(product, y1.*y2, fs); %performing the product

|

||||

>> [yprod,fs]=audioread(product);

|

||||

>> plot(yprod); %plotting the product

|

||||

```

|

||||

|

||||

Note: we have to use the operator `.*` because this product is computed value by value (element-wise) on the sample vectors read from the files, not as a matrix product. For more information, please refer to the Octave manual on product operations with matrices.
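The element-wise product can also be checked numerically. The Python sketch below multiplies the two cosines sample by sample and compares the result with the standard product-to-sum identity cos(a)·cos(b) = ½[cos(a − b) + cos(a + b)], which predicts components at 880 − 440 = 440 Hz and 880 + 440 = 1320 Hz. It uses synthesized samples with the same parameters as above instead of the `.ogg` files, and the variable names are chosen for this example.

```python
import math

fs = 44100                          # sampling frequency in Hz
n = round(0.02 * fs) + 1            # same sample count as t=0:1/fs:0.02
t = [k / fs for k in range(n)]
f1, f2 = 440, 880                   # the two signal frequencies in Hz

# Element-wise product, the counterpart of y1.*y2 in Octave
product = [math.cos(2 * math.pi * f1 * x) * math.cos(2 * math.pi * f2 * x)
           for x in t]

# Product-to-sum identity: half the sum of the difference and sum frequencies
identity = [0.5 * (math.cos(2 * math.pi * (f2 - f1) * x)
                   + math.cos(2 * math.pi * (f2 + f1) * x))
            for x in t]

max_error = max(abs(p - q) for p, q in zip(product, identity))
print(max_error)
```

The two lists agree to within floating-point rounding, which is the basic reason multiplying signals shifts frequency content and underlies modulation.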

|

||||

|

||||

#### Plot of Resulting Product Signal

|

||||

|

||||

[](https://www.howtoforge.com/images/octave-audio-signal-processing-ubuntu/big/plotprod.png)

|

||||

|

||||

#### Graphical effect of multiplying two signals with a big fundamental frequency difference (Principles of Modulation)

|

||||

|

||||

##### Step 1:

|

||||

|

||||