mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit

5bad28ed52

@ -1,21 +1,21 @@

|

||||

Getting Started with PowerShell 6.0 on Linux [Beginner's Guide]

|

||||

Microsoft Loves Linux: When PowerShell Comes to Linux

|

||||

============================================================

|

||||

|

||||

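After Microsoft fell in love with Linux (as everyone knows, **Microsoft Loves Linux**), **PowerShell**, originally a Windows-only component, was open-sourced and made cross-platform on August 18, 2016, and can now be used on Linux and macOS.

|

||||

After Microsoft fell in love with Linux, **PowerShell**, a component that was originally Windows-only, was open-sourced on August 18, 2016 and became cross-platform software, landing on Linux and macOS.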

|

||||

|

||||

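**PowerShell** is a task automation and configuration management system developed by Microsoft. It is built on the .NET Framework and consists of a command-line language interpreter (shell) and a scripting language.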

|

||||

|

||||

PowerShell provides full access to **COM** (**Component Object Model**) and **WMI** (**Windows Management Instrumentation**), allowing system administrators to [carry out administrative tasks][1] on local and remote Windows systems, as well as access to WS-Management and CIM (**Common Information Model**), enabling management of remote Linux systems and network devices.

|

||||

PowerShell provides full access to **COM** (Component Object Model) and **WMI** (Windows Management Instrumentation), allowing system administrators to [carry out administrative tasks][1] on local and remote Windows systems, as well as access to WS-Management and CIM (Common Information Model), enabling management of remote Linux systems and network devices.

|

||||

|

||||

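With this framework, administrative tasks are essentially performed by **.NET** classes called **cmdlets** (pronounced "command-lets"). As with Linux shell scripts, users can build scripts or executables by writing **cmdlets** into a file according to certain rules. These scripts can be used as standalone [command-line programs or tools][2].

|

||||

With this framework, administrative tasks are essentially performed by **.NET** classes called **cmdlets** (pronounced "command-lets"). As with Linux shell scripts, users can build scripts or executables by writing a group of **cmdlets** into a file according to certain rules. These scripts can be used as standalone [command-line programs or tools][2].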

|

||||

|

||||

### Installing PowerShell Core 6.0 on Linux

|

||||

|

||||

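To install **PowerShell Core 6.0** on Linux, we will use the official Microsoft Ubuntu repository, which lets us install it through the most popular Linux package managers, such as [apt-get][3] and [yum][4].

|

||||

To install **PowerShell Core 6.0** on Linux, we will use the Microsoft software repository, which lets us install it through the most popular Linux package managers, such as [apt-get][3] and [yum][4].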

|

||||

|

||||

#### Installing on Ubuntu 16.04

|

||||

|

||||

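First, import the public repository **GPG** key, then register the **Microsoft Ubuntu** repository in the **APT** sources to install **PowerShell**:

|

||||

First, import the **GPG** key of this public repository, then register the **Microsoft Ubuntu** repository in the **APT** sources to install **PowerShell**: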

|

||||

|

||||

```

|

||||

$ curl https://packages.microsoft.com/keys/microsoft.asc | sudo apt-key add -

|

||||

@ -51,6 +51,7 @@ $ sudo yum install -y powershell

|

||||

```

|

||||

$ powershell

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][5]

|

||||

@ -62,6 +63,7 @@ $ powershell

|

||||

```

|

||||

$PSVersionTable

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][6]

|

||||

@ -78,7 +80,7 @@ get-location [# show the current working directory]

|

||||

|

||||

#### Working with Files and Directories in PowerShell

|

||||

|

||||

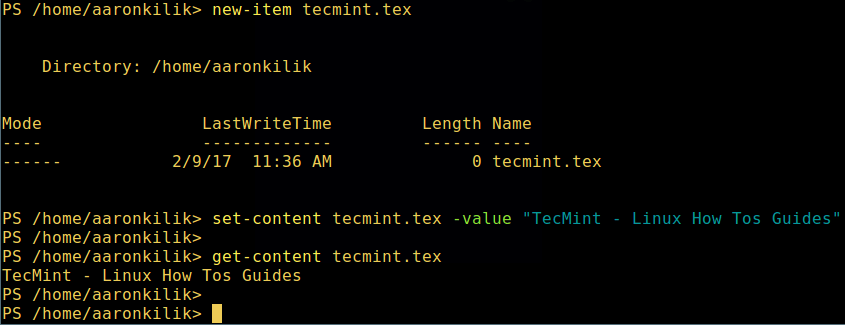

1. There are two ways to create an empty file:

|

||||

1、 There are two ways to create an empty file:

|

||||

|

||||

```

|

||||

new-item tecmint.tex

|

||||

@ -92,25 +94,27 @@ new-item tecmint.tex

|

||||

set-content tecmint.tex -value "TecMint Linux How Tos Guides"

|

||||

get-content tecmint.tex

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][7]

|

||||

|

||||

*Creating a new file in PowerShell*

|

||||

|

||||

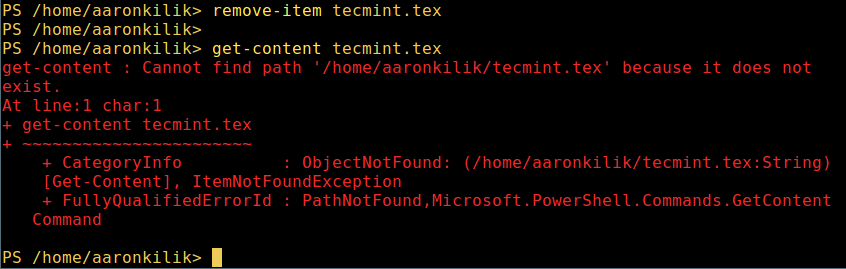

2. Delete a file in PowerShell

|

||||

2、 Delete a file in PowerShell

|

||||

|

||||

```

|

||||

remove-item tecmint.tex

|

||||

get-content tecmint.tex

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][8]

|

||||

|

||||

*Deleting a file in PowerShell*

|

||||

|

||||

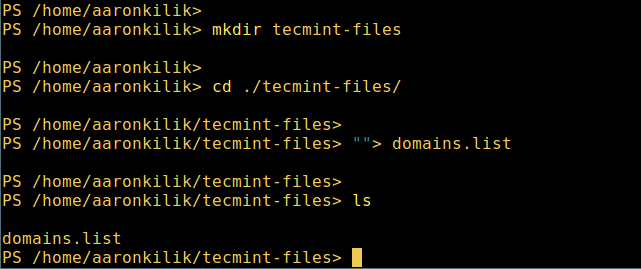

3. Create a directory

|

||||

3、 Create a directory

|

||||

|

||||

```

|

||||

mkdir tecmint-files

|

||||

@ -118,13 +122,14 @@ cd tecmint-files

|

||||

"" > domains.list

|

||||

ls

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][9]

|

||||

|

||||

*Creating a directory in PowerShell*

|

||||

|

||||

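4. To produce a long listing that shows file and directory details, including the mode (file type), last modification time, and so on, use the following command:

|

||||

4、 To perform a long-format listing that shows file and directory details, including the mode (file type), last modification time, and so on, use the following command: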

|

||||

|

||||

```

|

||||

dir

|

||||

@ -135,22 +140,24 @@ dir

|

||||

|

||||

*Long directory listing in PowerShell*

|

||||

|

||||

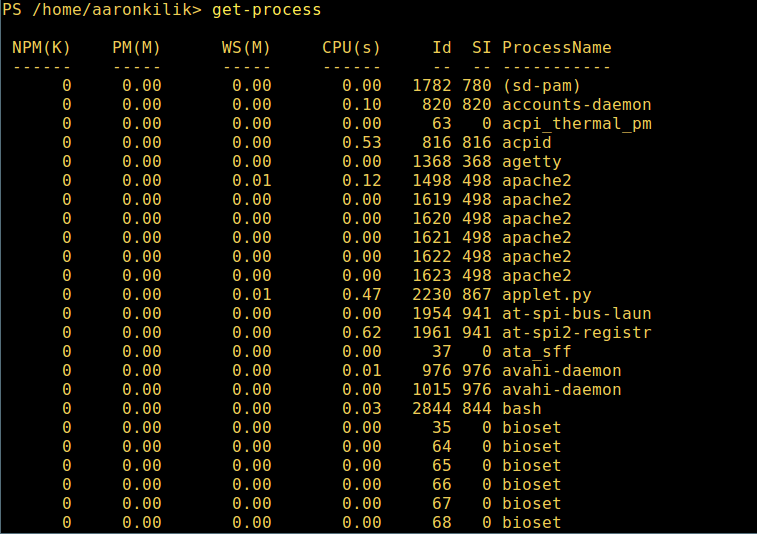

5. Show all processes on the system:

|

||||

5、 Show all processes on the system:

|

||||

|

||||

```

|

||||

get-process

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][11]

|

||||

|

||||

*Viewing running processes in PowerShell*

|

||||

|

||||

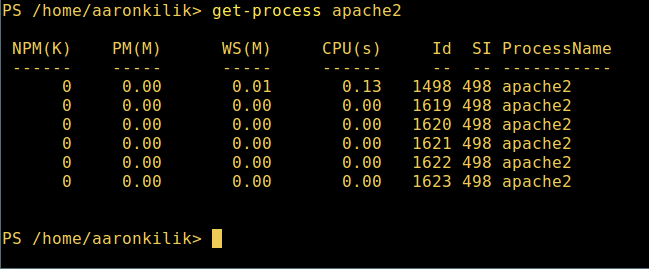

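6. To view details of a running process or process group by name, pass the process name as an argument to the command above, as follows:

|

||||

6、 To view details of a running process or process group by name, pass the process name as an argument to the command above, as follows: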

|

||||

|

||||

```

|

||||

get-process apache2

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][12]

|

||||

@ -159,58 +166,62 @@ get-process apache2

|

||||

|

||||

The meaning of each part of the output:

|

||||

|

||||

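* NPM(K) – total non-paged memory used by the process, in kilobytes.

|

||||

* PM(K) – total pageable memory used by the process, in kilobytes.

|

||||

* WS(K) – the size of the process's working set, in kilobytes, including the memory pages referenced by the process.

|

||||

* CPU(s) – the processor time used by the process, in seconds.

|

||||

* NPM(K) – non-paged memory used by the process, in kilobytes.

|

||||

* PM(K) – pageable memory used by the process, in kilobytes.

|

||||

* WS(K) – the size of the process's working set, in kilobytes; the working set consists of the memory pages referenced by the process.

|

||||

* CPU(s) – the processor time the process has used across all processors, in seconds.

|

||||

* ID – the process ID (PID).

|

||||

* ProcessName – the process name.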

|

||||

|

||||

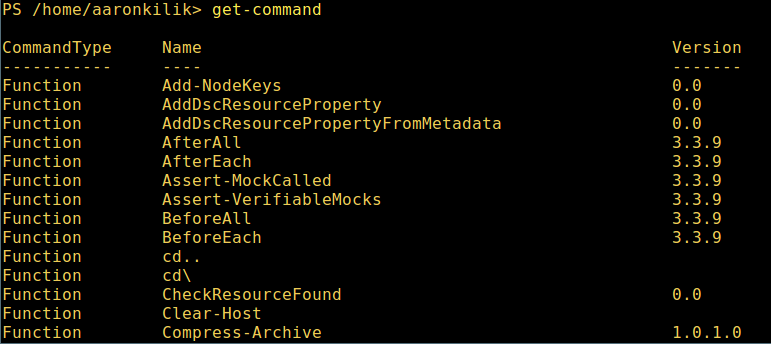

7. To learn more, get the list of PowerShell commands:

|

||||

7、 To learn more, get the list of PowerShell commands:

|

||||

|

||||

```

|

||||

get-command

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][13]

|

||||

|

||||

*Listing PowerShell commands*

|

||||

|

||||

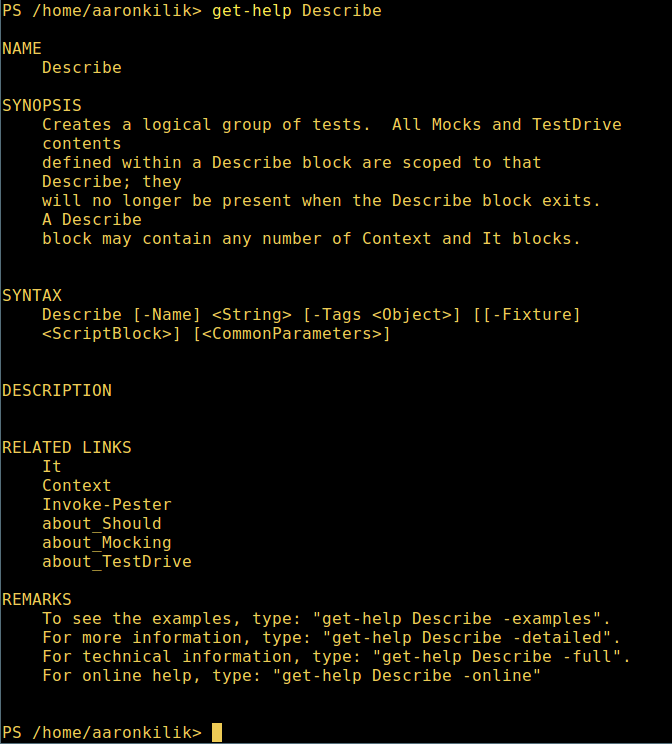

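8. To find out how to use a command, view its help (similar to man on Unix/Linux); for example, you can get help for the **Describe** command like this:

|

||||

8、 To find out how to use a command, view its help (similar to man on Unix/Linux); for example, you can get help for the **Describe** command like this: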

|

||||

|

||||

```

|

||||

get-help Describe

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][14]

|

||||

|

||||

*PowerShell help manual*

|

||||

|

||||

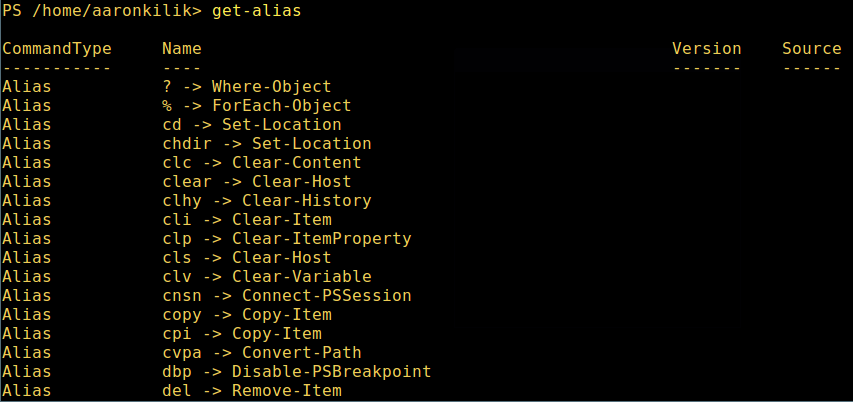

9. To show the aliases of all commands, type:

|

||||

9、 To show the aliases of all commands, type:

|

||||

|

||||

```

|

||||

get-alias

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][15]

|

||||

|

||||

*Listing PowerShell command aliases*

|

||||

|

||||

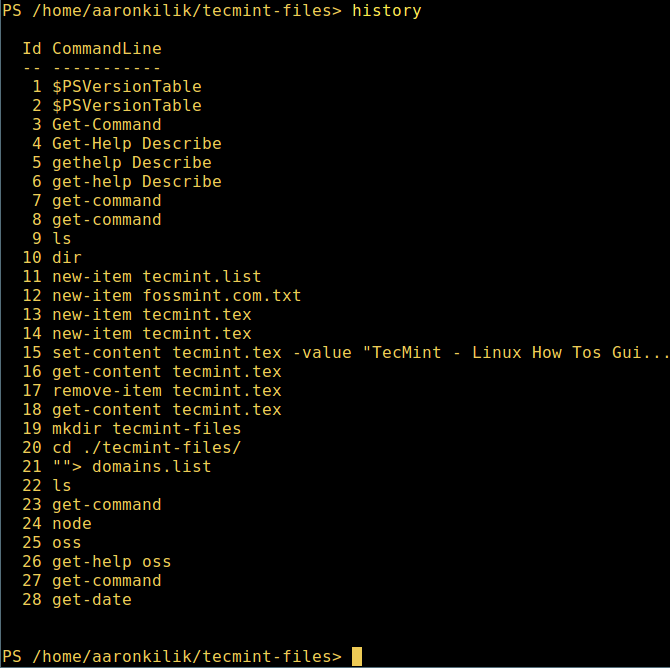

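10. Last but not least, display the command history (the list of commands you have run):

|

||||

10、 Last but not least, display the command history (the list of commands you have run):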

|

||||

|

||||

```

|

||||

history

|

||||

```

|

||||

|

||||

[

|

||||

|

||||

][16]

|

||||

|

||||

*Showing PowerShell command history*

|

||||

|

||||

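That's all! In this article, we showed how to install **Microsoft's PowerShell Core 6.0** on Linux. In my opinion, PowerShell still has a long way to go compared with traditional Unix/Linux shells. So far, the former offer better, more exciting, and more productive features for operating a machine from the command line and, more importantly, for programming (scripting).

|

||||

That's all! In this article, we showed how to install **Microsoft's PowerShell Core 6.0** on Linux. In my opinion, PowerShell still has a long way to go compared with traditional Unix/Linux shells. As things stand, PowerShell still needs to offer better, more exciting, and more productive features for operating a machine from the command line and, more importantly, for programming (scripting).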

|

||||

|

||||

Check out PowerShell's GitHub repository: [https://github.com/PowerShell/PowerShell][17].

|

||||

|

||||

290

sources/talk/20170303 A Programmes Introduction to Unicode.md

Normal file

@ -0,0 +1,290 @@

|

||||

[A Programmer’s Introduction to Unicode][18]

|

||||

============================================================

|

||||

|

||||

|

||||

Unicode! 🅤🅝🅘🅒🅞🅓🅔‽ 🇺🇳🇮🇨🇴🇩🇪! 😄 The very name strikes fear and awe into the hearts of programmers worldwide. We all know we ought to “support Unicode” in our software (whatever that means; like using `wchar_t` for all the strings, right?). But Unicode can be abstruse, and diving into the thousand-page [Unicode Standard][27] plus its dozens of supplementary [annexes, reports][28], and [notes][29] can be more than a little intimidating. I don’t blame programmers for still finding the whole thing mysterious, even 30 years after Unicode’s inception.

|

||||

|

||||

A few months ago, I got interested in Unicode and decided to spend some time learning more about it in detail. In this article, I’ll give an introduction to it from a programmer’s point of view.

|

||||

|

||||

I’m going to focus on the character set and what’s involved in working with strings and files of Unicode text. However, in this article I’m not going to talk about fonts, text layout/shaping/rendering, or localization in detail—those are separate issues, beyond my scope (and knowledge) here.

|

||||

|

||||

* [Diversity and Inherent Complexity][10]

|

||||

* [The Unicode Codespace][11]

|

||||

* [Codespace Allocation][2]

|

||||

* [Scripts][3]

|

||||

* [Usage Frequency][4]

|

||||

* [Encodings][12]

|

||||

* [UTF-8][5]

|

||||

* [UTF-16][6]

|

||||

* [Combining Marks][13]

|

||||

* [Canonical Equivalence][7]

|

||||

* [Normalization Forms][8]

|

||||

* [Grapheme Clusters][9]

|

||||

* [And More…][14]

|

||||

|

||||

### Diversity and Inherent Complexity

|

||||

|

||||

As soon as you start to study Unicode, it becomes clear that it represents a large jump in complexity over character sets like ASCII that you may be more familiar with. It’s not just that Unicode contains a much larger number of characters, although that’s part of it. Unicode also has a great deal of internal structure, features, and special cases, making it much more than what one might expect a mere “character set” to be. We’ll see some of that later in this article.

|

||||

|

||||

When confronting all this complexity, especially as an engineer, it’s hard not to find oneself asking, “Why do we need all this? Is this really necessary? Couldn’t it be simplified?”

|

||||

|

||||

However, Unicode aims to faithfully represent the _entire world’s_ writing systems. The Unicode Consortium’s stated goal is “enabling people around the world to use computers in any language”. And as you might imagine, the diversity of written languages is immense! To date, Unicode supports 135 different scripts, covering some 1100 languages, and there’s still a long tail of [over 100 unsupported scripts][31], both modern and historical, which people are still working to add.

|

||||

|

||||

Given this enormous diversity, it’s inevitable that representing it is a complicated project. Unicode embraces that diversity, and accepts the complexity inherent in its mission to include all human writing systems. It doesn’t make a lot of trade-offs in the name of simplification, and it makes exceptions to its own rules where necessary to further its mission.

|

||||

|

||||

Moreover, Unicode is committed not just to supporting texts in any _single_ language, but also to letting multiple languages coexist within one text—which introduces even more complexity.

|

||||

|

||||

Most programming languages have libraries available to handle the gory low-level details of text manipulation, but as a programmer, you’ll still need to know about certain Unicode features in order to know when and how to apply them. It may take some time to wrap your head around it all, but don’t be discouraged: think about the billions of people for whom your software will be more accessible through supporting text in their language. Embrace the complexity!

|

||||

|

||||

### The Unicode Codespace

|

||||

|

||||

Let’s start with some general orientation. The basic elements of Unicode (its “characters”, although that term isn’t quite right) are called _code points_. Code points are identified by number, customarily written in hexadecimal with the prefix “U+”, such as [U+0041 “A” latin capital letter a][33] or [U+03B8 “θ” greek small letter theta][34]. Each code point also has a short name, and quite a few other properties, specified in the [Unicode Character Database][35].
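As a small illustration of the “U+” notation and code point names, here is a minimal Python sketch (Python is my choice for the examples, not something the article prescribes). It uses the standard `unicodedata` module; the helper name `describe` is hypothetical.

```python
import unicodedata


def describe(ch: str) -> str:
    """Format one character as 'U+XXXX NAME' (hypothetical helper)."""
    cp = ord(ch)  # ord() returns the code point as an integer
    return f"U+{cp:04X} {unicodedata.name(ch)}"


print(describe("A"))  # U+0041 LATIN CAPITAL LETTER A
print(describe("θ"))  # U+03B8 GREEK SMALL LETTER THETA
print(chr(0x03B8))    # chr() goes the other way: code point to character
```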

|

||||

|

||||

The set of all possible code points is called the _codespace_. The Unicode codespace consists of 1,114,112 code points. However, only 128,237 of them (about 12% of the codespace) are actually assigned, to date. There’s plenty of room for growth! Unicode also reserves an additional 137,468 code points as “private use” areas, which have no standardized meaning and are available for individual applications to define for their own purposes.

|

||||

|

||||

### Codespace Allocation

|

||||

|

||||

To get a feel for how the codespace is laid out, it’s helpful to visualize it. Below is a map of the entire codespace, with one pixel per code point. It’s arranged in tiles for visual coherence; each small square is 16×16 = 256 code points, and each large square is a “plane” of 65,536 code points. There are 17 planes altogether.

|

||||

|

||||

[Figure: map of the entire Unicode codespace, one pixel per code point][37]

|

||||

|

||||

White represents unassigned space. Blue is assigned code points, green is private-use areas, and the small red area is surrogates (more about those later). As you can see, the assigned code points are distributed somewhat sparsely, but concentrated in the first three planes.

|

||||

|

||||

Plane 0 is also known as the “Basic Multilingual Plane”, or BMP. The BMP contains essentially all the characters needed for modern text in any script, including Latin, Cyrillic, Greek, Han (Chinese), Japanese, Korean, Arabic, Hebrew, Devanagari (Indian), and many more.

|

||||

|

||||

(In the past, the codespace was just the BMP and no more: Unicode was originally conceived as a straightforward 16-bit encoding, with only 65,536 code points. It was expanded to its current size in 1996. However, the vast majority of code points in modern text belong to the BMP.)

|

||||

|

||||

Plane 1 contains historical scripts, such as Sumerian cuneiform and Egyptian hieroglyphs, as well as emoji and various other symbols. Plane 2 contains a large block of less-common and historical Han characters. The remaining planes are empty, except for a small number of rarely-used formatting characters in Plane 14; planes 15–16 are reserved entirely for private use.
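The plane arithmetic can be checked in a few lines. This is only a sketch; the constant and function names (`PLANE_SIZE`, `plane_of`, and so on) are my own and not part of any standard API.

```python
PLANE_SIZE = 0x10000       # 65,536 code points per plane
NUM_PLANES = 17
MAX_CODE_POINT = 0x10FFFF


def plane_of(cp: int) -> int:
    """Return the plane number (0-16) that a code point falls in."""
    if not 0 <= cp <= MAX_CODE_POINT:
        raise ValueError("outside the Unicode codespace")
    return cp >> 16        # the plane is whatever sits above the low 16 bits


# 17 planes of 65,536 code points give the 1,114,112 total.
assert NUM_PLANES * PLANE_SIZE == MAX_CODE_POINT + 1 == 1_114_112

print(plane_of(ord("A")))   # 0: the BMP
print(plane_of(ord("😄")))  # 1: emoji live in plane 1
print(plane_of(0x20000))    # 2: less-common Han characters
print(plane_of(0xF0000))    # 15: private use
```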

|

||||

|

||||

### Scripts

|

||||

|

||||

Let’s zoom in on the first three planes, since that’s where the action is:

|

||||

|

||||

[Figure: map of the scripts assigned in planes 0–2][39]

|

||||

|

||||

This map color-codes the 135 different scripts in Unicode. You can see how Han and Korean take up most of the range of the BMP (the left large square). By contrast, all of the European, Middle Eastern, and South Asian scripts fit into the first row of the BMP in this diagram.

|

||||

|

||||

Many areas of the codespace are adapted or copied from earlier encodings. For example, the first 128 code points of Unicode are just a copy of ASCII. This has clear benefits for compatibility—it’s easy to losslessly convert texts from smaller encodings into Unicode (and the other direction too, as long as no characters outside the smaller encoding are used).

|

||||

|

||||

### Usage Frequency

|

||||

|

||||

One more interesting way to visualize the codespace is to look at the distribution of usage—in other words, how often each code point is actually used in real-world texts. Below is a heat map of planes 0–2 based on a large sample of text from Wikipedia and Twitter (all languages). Frequency increases from black (never seen) through red and yellow to white.

|

||||

|

||||

[Figure: heat map of code point usage frequency in planes 0–2][41]

|

||||

|

||||

You can see that the vast majority of this text sample lies in the BMP, with only scattered usage of code points from planes 1–2. The biggest exception is emoji, which show up here as the several bright squares in the bottom row of plane 1.

|

||||

|

||||

### Encodings

|

||||

|

||||

We’ve seen that Unicode code points are abstractly identified by their index in the codespace, ranging from U+0000 to U+10FFFF. But how do code points get represented as bytes, in memory or in a file?

|

||||

|

||||

The most convenient, computer-friendliest (and programmer-friendliest) thing to do would be to just store the code point index as a 32-bit integer. This works, but it consumes 4 bytes per code point, which is sort of a lot. Using 32-bit ints for Unicode will cost you a bunch of extra storage, memory, and performance in bandwidth-bound scenarios, if you work with a lot of text.

|

||||

|

||||

Consequently, there are several more-compact encodings for Unicode. The 32-bit integer encoding is officially called UTF-32 (UTF = “Unicode Transformation Format”), but it’s rarely used for storage. At most, it comes up sometimes as a temporary internal representation, for examining or operating on the code points in a string.

|

||||

|

||||

Much more commonly, you’ll see Unicode text encoded as either UTF-8 or UTF-16. These are both _variable-length_ encodings, made up of 8-bit or 16-bit units, respectively. In these schemes, code points with smaller index values take up fewer bytes, which saves a lot of memory for typical texts. The trade-off is that processing UTF-8/16 texts is more programmatically involved, and likely slower.
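To get a feel for the size trade-off, the following sketch measures the same strings in all three encodings using Python's built-in codecs. The helper name `encoded_sizes` and the sample strings are hypothetical; the `-le` codec variants are used so that no byte-order mark is counted.

```python
def encoded_sizes(text: str) -> dict:
    """Byte length of `text` in each encoding, without a byte-order mark."""
    encodings = ("utf-8", "utf-16-le", "utf-32-le")
    return {enc: len(text.encode(enc)) for enc in encodings}


for sample in ("hello", "θεός", "世界", "😄"):
    print(sample, encoded_sizes(sample))
```

ASCII text is smallest in UTF-8, while Han text is somewhat smaller in UTF-16; UTF-32 always costs 4 bytes per code point.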

|

||||

|

||||

### UTF-8

|

||||

|

||||

In UTF-8, each code point is stored using 1 to 4 bytes, based on its index value.

|

||||

|

||||

UTF-8 uses a system of binary prefixes, in which the high bits of each byte mark whether it’s a single byte, the beginning of a multi-byte sequence, or a continuation byte; the remaining bits, concatenated, give the code point index. This table shows how it works:

|

||||

|

||||

| UTF-8 (binary) | Code point (binary) | Range |
| --- | --- | --- |
| 0xxxxxxx | xxxxxxx | U+0000–U+007F |
| 110xxxxx 10yyyyyy | xxxxxyyyyyy | U+0080–U+07FF |
| 1110xxxx 10yyyyyy 10zzzzzz | xxxxyyyyyyzzzzzz | U+0800–U+FFFF |
| 11110xxx 10yyyyyy 10zzzzzz 10wwwwww | xxxyyyyyyzzzzzzwwwwww | U+10000–U+10FFFF |
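The table translates almost directly into code. Below is a hand-rolled encoder, written only to illustrate the bit layout; in practice you would call `str.encode("utf-8")`. The function name `utf8_encode` is hypothetical.

```python
def utf8_encode(cp: int) -> bytes:
    """Encode one code point as UTF-8, following the prefix table row by row."""
    if not 0 <= cp <= 0x10FFFF or 0xD800 <= cp <= 0xDFFF:
        raise ValueError("not an encodable code point")
    if cp < 0x80:                      # 0xxxxxxx
        return bytes([cp])
    if cp < 0x800:                     # 110xxxxx 10yyyyyy
        return bytes([0xC0 | (cp >> 6),
                      0x80 | (cp & 0x3F)])
    if cp < 0x10000:                   # 1110xxxx 10yyyyyy 10zzzzzz
        return bytes([0xE0 | (cp >> 12),
                      0x80 | ((cp >> 6) & 0x3F),
                      0x80 | (cp & 0x3F)])
    return bytes([0xF0 | (cp >> 18),   # 11110xxx 10yyyyyy 10zzzzzz 10wwwwww
                  0x80 | ((cp >> 12) & 0x3F),
                  0x80 | ((cp >> 6) & 0x3F),
                  0x80 | (cp & 0x3F)])


# Cross-check against Python's own encoder, one sample per table row.
for cp in (0x41, 0x3B8, 0x4E16, 0x1F604):
    assert utf8_encode(cp) == chr(cp).encode("utf-8")
```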

|

||||

|

||||

A handy property of UTF-8 is that code points below 128 (ASCII characters) are encoded as single bytes, and all non-ASCII code points are encoded using sequences of bytes 128–255. This has a couple of nice consequences. First, any strings or files out there that are already in ASCII can also be interpreted as UTF-8 without any conversion. Second, lots of widely-used string programming idioms, such as null termination or delimiters (newlines, tabs, commas, slashes, etc.), will just work on UTF-8 strings. ASCII bytes never occur inside the encoding of non-ASCII code points, so searching byte-wise for a null terminator or a delimiter will do the right thing.
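A quick way to see this property in action is to split raw UTF-8 bytes on an ASCII delimiter before decoding anything. The helper `split_fields` and the sample record are made up for this sketch.

```python
def split_fields(data: bytes) -> list:
    """Split UTF-8 bytes on ASCII commas, decoding only afterwards."""
    return [field.decode("utf-8") for field in data.split(b",")]


record = "naïve,日本語,😄".encode("utf-8")
print(split_fields(record))

# Every byte of a multi-byte sequence has its high bit set, so none of
# them can be mistaken for an ASCII delimiter or a NUL terminator.
assert all(b >= 0x80 for b in "ï日本語😄".encode("utf-8"))
```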

|

||||

|

||||

Thanks to this convenience, it’s relatively simple to extend legacy ASCII programs and APIs to handle UTF-8 strings. UTF-8 is very widely used in the Unix/Linux and Web worlds, and many programmers argue [UTF-8 should be the default encoding everywhere][44].

|

||||

|

||||

However, UTF-8 isn’t a drop-in replacement for ASCII strings in all respects. For instance, code that iterates over the “characters” in a string will need to decode UTF-8 and iterate over code points (or maybe grapheme clusters—more about those later), not bytes. When you measure the “length” of a string, you’ll need to think about whether you want the length in bytes, the length in code points, the width of the text when rendered, or something else.
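The different notions of “length” are easy to compare side by side. In this sketch the helper `lengths` is hypothetical, and the sample string deliberately writes “é” as a base letter plus a combining accent.

```python
def lengths(s: str) -> dict:
    """Report several different 'lengths' of the same string."""
    return {
        "utf8_bytes": len(s.encode("utf-8")),
        "code_points": len(s),                       # Python str counts code points
        "utf16_units": len(s.encode("utf-16-le")) // 2,
    }


sample = "Ame\u0301lie 😄"  # "Amélie" with é spelled as e + U+0301, then an emoji
print(lengths(sample))
```

None of the three numbers matches the eight characters a reader would count on screen, which is why the question “length in what unit?” matters.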

|

||||

|

||||

### UTF-16

|

||||

|

||||

The other encoding that you’re likely to encounter is UTF-16. It uses 16-bit words, with each code point stored as either 1 or 2 words.

|

||||

|

||||

Like UTF-8, we can express the UTF-16 encoding rules in the form of binary prefixes:

|

||||

|

||||

| UTF-16 (binary) | Code point (binary) | Range |
| --- | --- | --- |
| xxxxxxxxxxxxxxxx | xxxxxxxxxxxxxxxx | U+0000–U+FFFF |
| 110110xxxxxxxxxx 110111yyyyyyyyyy | xxxxxxxxxxyyyyyyyyyy + 0x10000 | U+10000–U+10FFFF |
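The second row of the table is the only non-trivial case, and it can be sketched in a few lines. As before, this is an illustration with a hypothetical function name (`utf16_encode`), not a replacement for `str.encode("utf-16")`.

```python
def utf16_encode(cp: int) -> list:
    """Return the one or two 16-bit words that encode a code point."""
    if not 0 <= cp <= 0x10FFFF or 0xD800 <= cp <= 0xDFFF:
        raise ValueError("not an encodable code point")
    if cp < 0x10000:
        return [cp]                    # BMP: stored as-is in one word
    v = cp - 0x10000                   # 20 bits remain after the offset
    high = 0xD800 | (v >> 10)          # 110110xxxxxxxxxx: top 10 bits
    low = 0xDC00 | (v & 0x3FF)         # 110111yyyyyyyyyy: bottom 10 bits
    return [high, low]


print([f"{w:04X}" for w in utf16_encode(0x1F604)])  # ['D83D', 'DE04']

# Cross-check against Python's big-endian UTF-16 codec.
for cp in (0x41, 0x4E16, 0x1F604, 0x10FFFF):
    words = b"".join(w.to_bytes(2, "big") for w in utf16_encode(cp))
    assert words == chr(cp).encode("utf-16-be")
```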

|

||||

|

||||

A more common way that people talk about UTF-16 encoding, though, is in terms of code points called “surrogates”. All the code points in the range U+D800–U+DFFF—or in other words, the code points that match the binary prefixes `110110` and `110111` in the table above—are reserved specifically for UTF-16 encoding, and don’t represent any valid characters on their own. They’re only meant to occur in the 2-word encoding pattern above, which is called a “surrogate pair”. Surrogate code points are illegal in any other context! They’re not allowed in UTF-8 or UTF-32 at all.

|

||||

|

||||

Historically, UTF-16 is a descendant of the original, pre-1996 versions of Unicode, in which there were only 65,536 code points. The original intention was that there would be no different “encodings”; Unicode was supposed to be a straightforward 16-bit character set. Later, the codespace was expanded to make room for a long tail of less-common (but still important) Han characters, which the Unicode designers didn’t originally plan for. Surrogates were then introduced, as—to put it bluntly—a kludge, allowing 16-bit encodings to access the new code points.

|

||||

|

||||

Today, JavaScript uses UTF-16 as its standard string representation: if you ask for the length of a string, or iterate over it, etc., the result will be in UTF-16 words, with any code points outside the BMP expressed as surrogate pairs. UTF-16 is also used by the Microsoft Win32 APIs; though Win32 supports either 8-bit or 16-bit strings, the 8-bit version unaccountably still doesn’t support UTF-8, only legacy code-page encodings, like ANSI. This leaves UTF-16 as the only way to get proper Unicode support in Windows.

|

||||

|

||||

By the way, UTF-16’s words can be stored either little-endian or big-endian. Unicode has no opinion on that issue, though it does encourage the convention of putting [U+FEFF zero width no-break space][46] at the top of a UTF-16 file as a [byte-order mark][47], to disambiguate the endianness. (If the file doesn’t match the system’s endianness, the BOM will be decoded as U+FFFE, which isn’t a valid code point.)
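Checking for a byte-order mark is a simple prefix comparison. The sketch below relies on the BOM constants from Python's standard `codecs` module; the function name `utf16_byte_order` is hypothetical, and the check is deliberately naive (a UTF-32 little-endian BOM begins with the same two bytes, which this sketch does not try to distinguish).

```python
import codecs


def utf16_byte_order(data: bytes) -> str:
    """Guess UTF-16 endianness from a leading BOM (naive sketch)."""
    if data.startswith(codecs.BOM_UTF16_LE):   # bytes FF FE
        return "little"
    if data.startswith(codecs.BOM_UTF16_BE):   # bytes FE FF
        return "big"
    return "unknown"


le_file = codecs.BOM_UTF16_LE + "hi".encode("utf-16-le")
be_file = codecs.BOM_UTF16_BE + "hi".encode("utf-16-be")
print(utf16_byte_order(le_file), utf16_byte_order(be_file))

# Python's plain "utf-16" decoder consumes the BOM and picks the byte order itself.
assert le_file.decode("utf-16") == be_file.decode("utf-16") == "hi"
```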

|

||||

|

||||

### Combining Marks

|

||||

|

||||

In the story so far, we’ve been focusing on code points. But in Unicode, a “character” can be more complicated than just an individual code point!

|

||||

|

||||

Unicode includes a system for _dynamically composing_ characters, by combining multiple code points together. This is used in various ways to gain flexibility without causing a huge combinatorial explosion in the number of code points.

|

||||

|

||||

In European languages, for example, this shows up in the application of diacritics to letters. Unicode supports a wide range of diacritics, including acute and grave accents, umlauts, cedillas, and many more. All these diacritics can be applied to any letter of any alphabet—and in fact, _multiple_ diacritics can be used on a single letter.

|

||||

|

||||

If Unicode tried to assign a distinct code point to every possible combination of letter and diacritics, things would rapidly get out of hand. Instead, the dynamic composition system enables you to construct the character you want, by starting with a base code point (the letter) and appending additional code points, called “combining marks”, to specify the diacritics. When a text renderer sees a sequence like this in a string, it automatically stacks the diacritics over or under the base letter to create a composed character.

|

||||

|

||||

For example, the accented character “Á” can be expressed as a string of two code points: [U+0041 “A” latin capital letter a][49] plus [U+0301 “◌́” combining acute accent][50]. This string automatically gets rendered as a single character: “Á”.
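You can inspect such a sequence from code. The sketch below lists each code point with its name and its canonical combining class (non-zero for combining marks), using the standard `unicodedata` module; the helper name `code_points` is hypothetical.

```python
import unicodedata


def code_points(s: str) -> list:
    """List (U+XXXX, name, combining class) for each code point in s."""
    return [(f"U+{ord(c):04X}", unicodedata.name(c), unicodedata.combining(c))
            for c in s]


composed_by_hand = "A\u0301"   # base letter followed by a combining acute accent
for entry in code_points(composed_by_hand):
    print(entry)

# The string renders as one character but holds two code points.
assert len(composed_by_hand) == 2
```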

|

||||

|

||||

Now, Unicode does also include many “precomposed” code points, each representing a letter with some combination of diacritics already applied, such as [U+00C1 “Á” latin capital letter a with acute][51] or [U+1EC7 “ệ” latin small letter e with circumflex and dot below][52]. I suspect these are mostly inherited from older encodings that were assimilated into Unicode, and kept around for compatibility. In practice, there are precomposed code points for most of the common letter-with-diacritic combinations in European-script languages, so they don’t use dynamic composition that much in typical text.

|

||||

|

||||

Still, the system of combining marks does allow for an _arbitrary number_ of diacritics to be stacked on any base character. The reductio-ad-absurdum of this is [Zalgo text][53], which works by ͖͟ͅr͞aṋ̫̠̖͈̗d͖̻̹óm̪͙͕̗̝ļ͇̰͓̳̫ý͓̥̟͍ ̕s̫t̫̱͕̗̰̼̘͜a̼̩͖͇̠͈̣͝c̙͍k̖̱̹͍͘i̢n̨̺̝͇͇̟͙ģ̫̮͎̻̟ͅ ̕n̼̺͈͞u̮͙m̺̭̟̗͞e̞͓̰̤͓̫r̵o̖ṷs҉̪͍̭̬̝̤ ̮͉̝̞̗̟͠d̴̟̜̱͕͚i͇̫̼̯̭̜͡ḁ͙̻̼c̲̲̹r̨̠̹̣̰̦i̱t̤̻̤͍͙̘̕i̵̜̭̤̱͎c̵s ͘o̱̲͈̙͖͇̲͢n͘ ̜͈e̬̲̠̩ac͕̺̠͉h̷̪ ̺̣͖̱ḻ̫̬̝̹ḙ̙̺͙̭͓̲t̞̞͇̲͉͍t̷͔̪͉̲̻̠͙e̦̻͈͉͇r͇̭̭̬͖,̖́ ̜͙͓̣̭s̘̘͈o̱̰̤̲ͅ ̛̬̜̙t̼̦͕̱̹͕̥h̳̲͈͝ͅa̦t̻̲ ̻̟̭̦̖t̛̰̩h̠͕̳̝̫͕e͈̤̘͖̞͘y҉̝͙ ̷͉͔̰̠o̞̰v͈͈̳̘͜er̶f̰͈͔ḻ͕̘̫̺̲o̲̭͙͠ͅw̱̳̺ ͜t̸h͇̭͕̳͍e̖̯̟̠ ͍̞̜͔̩̪͜ļ͎̪̲͚i̝̲̹̙̩̹n̨̦̩̖ḙ̼̲̼͢ͅ ̬͝s̼͚̘̞͝p͙̘̻a̙c҉͉̜̤͈̯̖i̥͡n̦̠̱͟g̸̗̻̦̭̮̟ͅ ̳̪̠͖̳̯̕a̫͜n͝d͡ ̣̦̙ͅc̪̗r̴͙̮̦̹̳e͇͚̞͔̹̫͟a̙̺̙ț͔͎̘̹ͅe̥̩͍ a͖̪̜̮͙̹n̢͉̝ ͇͉͓̦̼́a̳͖̪̤̱p̖͔͔̟͇͎͠p̱͍̺ę̲͎͈̰̲̤̫a̯͜r̨̮̫̣̘a̩̯͖n̹̦̰͎̣̞̞c̨̦̱͔͎͍͖e̬͓͘ ̤̰̩͙̤̬͙o̵̼̻̬̻͇̮̪f̴ ̡̙̭͓͖̪̤“̸͙̠̼c̳̗͜o͏̼͙͔̮r̞̫̺̞̥̬ru̺̻̯͉̭̻̯p̰̥͓̣̫̙̤͢t̳͍̳̖ͅi̶͈̝͙̼̙̹o̡͔n̙̺̹̖̩͝ͅ”̨̗͖͚̩.̯͓

|

||||

|

||||

A few other places where dynamic character composition shows up in Unicode:

|

||||

|

||||

* [Vowel-pointing notation][15] in Arabic and Hebrew. In these languages, words are normally spelled with some of their vowels left out. They then have diacritic notation to indicate the vowels (used in dictionaries, language-teaching materials, children’s books, and such). These diacritics are expressed with combining marks.

|

||||

|

||||

| A Hebrew example, with [niqqud][1]: | אֶת דַלְתִּי הֵזִיז הֵנִיעַ, קֶטֶב לִשְׁכַּתִּי יָשׁוֹד |

|

||||

| Normal writing (no niqqud): | את דלתי הזיז הניע, קטב לשכתי ישוד |

|

||||

|

||||

* [Devanagari][16], the script used to write Hindi, Sanskrit, and many other South Asian languages, expresses certain vowels as combining marks attached to consonant letters. For example, “ह” + “ि” = “हि” (“h” + “i” = “hi”).

|

||||

|

||||

* Korean characters stand for syllables, but they are composed of letters called [jamo][17] that stand for the vowels and consonants in the syllable. While there are code points for precomposed Korean syllables, it’s also possible to dynamically compose them by concatenating their jamo. For example, “ᄒ” + “ᅡ” + “ᆫ” = “한” (“h” + “a” + “n” = “han”).
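For the Korean case in the list above, the mapping from jamo to a precomposed syllable is pure arithmetic. The sketch below follows the Hangul composition formula from the Unicode Standard and cross-checks it against `unicodedata.normalize`; the function and constant names are my own.

```python
import unicodedata

S_BASE, L_BASE, V_BASE, T_BASE = 0xAC00, 0x1100, 0x1161, 0x11A7
V_COUNT, T_COUNT = 21, 28   # number of vowels, and of trailing slots (incl. "none")


def compose_hangul(lead: str, vowel: str, trail: str = "") -> str:
    """Combine conjoining jamo into one precomposed Hangul syllable."""
    l_index = ord(lead) - L_BASE
    v_index = ord(vowel) - V_BASE
    t_index = ord(trail) - T_BASE if trail else 0
    return chr(S_BASE + (l_index * V_COUNT + v_index) * T_COUNT + t_index)


jamo = "\u1112\u1161\u11AB"          # the three jamo for "han"
syllable = compose_hangul(*jamo)
print(syllable, f"U+{ord(syllable):04X}")

# NFC normalization performs the same composition.
assert syllable == unicodedata.normalize("NFC", jamo)
```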

|

||||

|

||||

### Canonical Equivalence

|

||||

|

||||

In Unicode, precomposed characters exist alongside the dynamic composition system. A consequence of this is that there are multiple ways to express “the same” string—different sequences of code points that result in the same user-perceived characters. For example, as we saw earlier, we can express the character “Á” either as the single code point U+00C1, _or_ as the string of two code points U+0041 U+0301.

|

||||

|

||||

Another source of ambiguity is the ordering of multiple diacritics in a single character. Diacritic order matters visually when two diacritics apply to the same side of the base character, e.g. both above: “ǡ” (dot, then macron) is different from “ā̇” (macron, then dot). However, when diacritics apply to different sides of the character, e.g. one above and one below, then the order doesn’t affect rendering. Moreover, a character with multiple diacritics might have one of the diacritics precomposed and others expressed as combining marks.

|

||||

|

||||

For example, the Vietnamese letter “ệ” can be expressed in _five_ different ways:

|

||||

|

||||

* Fully precomposed: U+1EC7 “ệ”

|

||||

* Partially precomposed: U+1EB9 “ẹ” + U+0302 “◌̂”

|

||||

* Partially precomposed: U+00EA “ê” + U+0323 “◌̣”

|

||||

* Fully decomposed: U+0065 “e” + U+0323 “◌̣” + U+0302 “◌̂”

|

||||

* Fully decomposed: U+0065 “e” + U+0302 “◌̂” + U+0323 “◌̣”

|

||||

|

||||

Unicode refers to a set of strings like this as “canonically equivalent”. Canonically equivalent strings are supposed to be treated as identical for purposes of searching, sorting, rendering, text selection, and so on. This has implications for how you implement operations on text. For example, if an app has a “find in file” operation and the user searches for “ệ”, it should, by default, find occurrences of _any_ of the five versions of “ệ” above!
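One way to sketch such a search is to bring both the needle and the haystack into a common decomposed form before comparing. The code below uses `unicodedata.normalize` from the Python standard library; the names `FORMS`, `canonically_equal`, and `contains` are hypothetical, and the approach is simplified (for instance, a search for a bare “e” would also match inside a decomposed “ệ”).

```python
import unicodedata

# The five spellings of the Vietnamese letter listed above.
FORMS = [
    "\u1EC7",           # fully precomposed
    "\u1EB9\u0302",     # e-with-dot-below + combining circumflex
    "\u00EA\u0323",     # e-with-circumflex + combining dot below
    "e\u0323\u0302",    # fully decomposed: dot below, then circumflex
    "e\u0302\u0323",    # fully decomposed: circumflex, then dot below
]


def canonically_equal(a: str, b: str) -> bool:
    """True if a and b are canonically equivalent."""
    return unicodedata.normalize("NFD", a) == unicodedata.normalize("NFD", b)


def contains(haystack: str, needle: str) -> bool:
    """Naive equivalence-aware substring test (a sketch, not a full search)."""
    return (unicodedata.normalize("NFD", needle)
            in unicodedata.normalize("NFD", haystack))


assert all(canonically_equal(FORMS[0], form) for form in FORMS)
print(contains("Vi\u00EA\u0323t Nam", "\u1EC7"))  # True
```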

|

||||

|

||||

### Normalization Forms

|

||||

|

||||

To address the problem of “how to handle canonically equivalent strings”, Unicode defines several _normalization forms_: ways of converting strings into a canonical form so that they can be compared code-point-by-code-point (or byte-by-byte).

|

||||

|

||||

The “NFD” normalization form fully _decomposes_ every character down to its component base and combining marks, taking apart any precomposed code points in the string. It also sorts the combining marks in each character according to their rendered position, so e.g. diacritics that go below the character come before the ones that go above the character. (It doesn’t reorder diacritics in the same rendered position, since their order matters visually, as previously mentioned.)

|

||||

|

||||

The “NFC” form, conversely, puts things back together into precomposed code points as much as possible. If an unusual combination of diacritics is called for, there may not be any precomposed code point for it, in which case NFC still precomposes what it can and leaves any remaining combining marks in place (again ordered by rendered position, as in NFD).
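Both forms are available in Python through `unicodedata.normalize`. The sketch below prints the code points each form produces for one spelling of “ệ”; the helper `hex_points` and the sample strings are hypothetical.

```python
import unicodedata


def hex_points(s: str) -> str:
    """Render a string as a space-separated list of U+XXXX code points."""
    return " ".join(f"U+{ord(c):04X}" for c in s)


variant = "e\u0302\u0323"   # circumflex first, then dot below

nfd = unicodedata.normalize("NFD", variant)
nfc = unicodedata.normalize("NFC", variant)
print(hex_points(nfd))  # U+0065 U+0323 U+0302 (the mark below is sorted first)
print(hex_points(nfc))  # U+1EC7 (one precomposed code point)

# With no precomposed code point available, NFC leaves the mark in place.
unusual = unicodedata.normalize("NFC", "q\u0301")
print(hex_points(unusual))
```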

|

||||

|

||||

There are also forms called NFKD and NFKC. The “K” here refers to _compatibility_ decompositions, which cover characters that are “similar” in some sense but not visually identical. However, I’m not going to cover that here.

|

||||

|

||||

### Grapheme Clusters

|

||||

|

||||

As we’ve seen, Unicode contains various cases where a thing that a user thinks of as a single “character” might actually be made up of multiple code points under the hood. Unicode formalizes this using the notion of a _grapheme cluster_: a string of one or more code points that constitute a single “user-perceived character”.

|

||||

|

||||

[UAX #29][57] defines the rules for what, precisely, qualifies as a grapheme cluster. It’s approximately “a base code point followed by any number of combining marks”, but the actual definition is a bit more complicated; it accounts for things like Korean jamo, and [emoji ZWJ sequences][58].

|

||||

|

||||

The main thing grapheme clusters are used for is text _editing_: they’re often the most sensible unit for cursor placement and text selection boundaries. Using grapheme clusters for these purposes ensures that you can’t accidentally chop off some diacritics when you copy-and-paste text, that left/right arrow keys always move the cursor by one visible character, and so on.

|

||||

|

||||

Another place where grapheme clusters are useful is in enforcing a string length limit, say, on a database field. While the true, underlying limit might be something like the byte length of the string in UTF-8, you wouldn’t want to enforce that by just truncating bytes. At a minimum, you’d want to “round down” to the nearest code point boundary; but even better, round down to the nearest _grapheme cluster boundary_. Otherwise, you might be corrupting the last character by cutting off a diacritic, or interrupting a jamo sequence or ZWJ sequence.
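Here is a rough sketch of that “round down” step using only the Python standard library. It finds a code point boundary exactly, but only approximates grapheme clusters as “a base code point plus any following combining marks”; it does not handle jamo or ZWJ sequences, for which a full UAX #29 implementation (for example via ICU) would be needed. The function name `truncate_utf8` is hypothetical, and `max_bytes` is assumed to be non-negative.

```python
import unicodedata


def truncate_utf8(text: str, max_bytes: int) -> str:
    """Truncate so the UTF-8 form fits in max_bytes without splitting
    a code point or separating a base letter from its combining marks."""
    data = text.encode("utf-8")
    if len(data) <= max_bytes:
        return text
    cut = max_bytes
    # Continuation bytes look like 10xxxxxx; step back to a lead byte.
    while cut > 0 and (data[cut] & 0xC0) == 0x80:
        cut -= 1
    n = len(data[:cut].decode("utf-8"))   # code points that fit
    # If the next code point is a combining mark, the last cluster is
    # incomplete: drop its marks and its base letter as well.
    if n < len(text) and unicodedata.combining(text[n]):
        while n > 0 and unicodedata.combining(text[n - 1]):
            n -= 1
        n = max(n - 1, 0)
    return text[:n]


print(truncate_utf8("世界", 4))                 # keeps only the first character
print(truncate_utf8("ae\u0323\u0302", 5))       # drops the partial final letter
```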

|

||||

|

||||

### And More…

|

||||

|

||||

There’s much more that could be said about Unicode from a programmer’s perspective! I haven’t gotten into such fun topics as case mapping, collation, compatibility decompositions and confusables, Unicode-aware regexes, or bidirectional text. Nor have I said anything yet about implementation issues—how to efficiently store and look-up data about the sparsely-assigned code points, or how to optimize UTF-8 decoding, string comparison, or NFC normalization. Perhaps I’ll return to some of those things in future posts.

|

||||

|

||||

Unicode is a fascinating and complex system. It has a many-to-one mapping between bytes and code points, and on top of that a many-to-one (or, under some circumstances, many-to-many) mapping between code points and “characters”. It has oddball special cases in every corner. But no one ever claimed that representing _all written languages_ was going to be _easy_, and it’s clear that we’re never going back to the bad old days of a patchwork of incompatible encodings.

|

||||

|

||||

Further reading:

|

||||

|

||||

* [The Unicode Standard][21]

|

||||

* [UTF-8 Everywhere Manifesto][22]

|

||||

* [Dark corners of Unicode][23] by Eevee

|

||||

* [ICU (International Components for Unicode)][24]—C/C++/Java libraries implementing many Unicode algorithms and related things

|

||||

* [Python 3 Unicode Howto][25]

|

||||

* [Google Noto Fonts][26]—set of fonts intended to cover all assigned code points

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

About the author:

|

||||

|

||||

I’m a graphics programmer, currently freelancing in Seattle. Previously I worked at NVIDIA on the DevTech software team, and at Sucker Punch Productions developing rendering technology for the Infamous series of games for PS3 and PS4.

|

||||

|

||||

I’ve been interested in graphics since about 2002 and have worked on a variety of assignments, including fog, atmospheric haze, volumetric lighting, water, visual effects, particle systems, skin and hair shading, postprocessing, specular models, linear-space rendering, and GPU performance measurement and optimization.

|

||||

|

||||

You can read about what I’m up to on my blog. In addition to graphics, I’m interested in theoretical physics, and in programming language design.

|

||||

|

||||

You can contact me at nathaniel dot reed at gmail dot com, or follow me on Twitter (@Reedbeta) or Google+. I can also often be found answering questions at Computer Graphics StackExchange.

|

||||

|

||||

-------------------

|

||||

|

||||

via: http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311

|

||||

|

||||

Author: [Nathan][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://reedbeta.com/about/

|

||||

[1]:https://en.wikipedia.org/wiki/Niqqud

|

||||

[2]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#codespace-allocation

|

||||

[3]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#scripts

|

||||

[4]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#usage-frequency

|

||||

[5]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#utf-8

|

||||

[6]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#utf-16

|

||||

[7]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#canonical-equivalence

|

||||

[8]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#normalization-forms

|

||||

[9]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#grapheme-clusters

|

||||

[10]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#diversity-and-inherent-complexity

|

||||

[11]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#the-unicode-codespace

|

||||

[12]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#encodings

|

||||

[13]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#combining-marks

|

||||

[14]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#and-more

|

||||

[15]:https://en.wikipedia.org/wiki/Vowel_pointing

|

||||

[16]:https://en.wikipedia.org/wiki/Devanagari

|

||||

[17]:https://en.wikipedia.org/wiki/Hangul#Letters

|

||||

[18]:http://reedbeta.com/blog/programmers-intro-to-unicode/

|

||||

[19]:http://reedbeta.com/blog/category/coding/

|

||||

[20]:http://reedbeta.com/blog/programmers-intro-to-unicode/#comments

|

||||

[21]:http://www.unicode.org/versions/latest/

|

||||

[22]:http://utf8everywhere.org/

|

||||

[23]:https://eev.ee/blog/2015/09/12/dark-corners-of-unicode/

|

||||

[24]:http://site.icu-project.org/

|

||||

[25]:https://docs.python.org/3/howto/unicode.html

|

||||

[26]:https://www.google.com/get/noto/

|

||||

[27]:http://www.unicode.org/versions/latest/

|

||||

[28]:http://www.unicode.org/reports/

|

||||

[29]:http://www.unicode.org/notes/

|

||||

[30]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#diversity-and-inherent-complexity

|

||||

[31]:http://linguistics.berkeley.edu/sei/

|

||||

[32]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#the-unicode-codespace

|

||||

[33]:http://unicode.org/cldr/utility/character.jsp?a=A

|

||||

[34]:http://unicode.org/cldr/utility/character.jsp?a=%CE%B8

|

||||

[35]:http://www.unicode.org/reports/tr44/

|

||||

[36]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#codespace-allocation

|

||||

[37]:http://reedbeta.com/blog/programmers-intro-to-unicode/codespace-map.png

|

||||

[38]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#scripts

|

||||

[39]:http://reedbeta.com/blog/programmers-intro-to-unicode/script-map.png

|

||||

[40]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#usage-frequency

|

||||

[41]:http://reedbeta.com/blog/programmers-intro-to-unicode/heatmap-wiki+tweets.png

|

||||

[42]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#encodings

|

||||

[43]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#utf-8

|

||||

[44]:http://utf8everywhere.org/

|

||||

[45]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#utf-16

|

||||

[46]:http://unicode.org/cldr/utility/character.jsp?a=FEFF

|

||||

[47]:https://en.wikipedia.org/wiki/Byte_order_mark

|

||||

[48]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#combining-marks

|

||||

[49]:http://unicode.org/cldr/utility/character.jsp?a=A

|

||||

[50]:http://unicode.org/cldr/utility/character.jsp?a=0301

|

||||

[51]:http://unicode.org/cldr/utility/character.jsp?a=%C3%81

|

||||

[52]:http://unicode.org/cldr/utility/character.jsp?a=%E1%BB%87

|

||||

[53]:https://eeemo.net/

|

||||

[54]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#canonical-equivalence

|

||||

[55]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#normalization-forms

|

||||

[56]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#grapheme-clusters

|

||||

[57]:http://www.unicode.org/reports/tr29/

|

||||

[58]:http://blog.emojipedia.org/emoji-zwj-sequences-three-letters-many-possibilities/

|

||||

[59]:http://reedbeta.com/blog/programmers-intro-to-unicode/?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311#and-more

|

||||

@ -0,0 +1,95 @@

|

||||

How to use pull requests to improve your code reviews
============================================================

|

||||

|

||||

Spend more time building and less time fixing with GitHub Pull Requests for proper code review.

|

||||

|

||||

|

||||

|

||||

|

||||

>Take a look at Brent and Peter’s book, [_Introducing GitHub_][5], for more on creating projects, starting pull requests, and getting an overview of your team’s software development process.

|

||||

|

||||

|

||||

If you don’t write code every day, you may not know some of the problems that software developers face on a daily basis:

|

||||

|

||||

* Security vulnerabilities in the code

|

||||

* Code that causes your application to crash

|

||||

* Code that can be referred to as “technical debt” and needs to be re-written later

|

||||

* Code that has already been written somewhere that you didn’t know about

|

||||

|

||||

|

||||

Code review helps improve the software we write by allowing other people and/or tools to look it over for us. This review can happen with automated code analysis or test coverage tools — two important pieces of the software development process that can save hours of manual work — or peer review. Peer review is a process where developers review each other's work. When it comes to developing software, speed and urgency are two components that often result in some of the previously mentioned problems. If you don’t release soon enough, your competitor may come out with a new feature first. If you don’t release often enough, your users may doubt whether or not you still care about improvements to your application.

|

||||

|

||||

### Weighing the time trade-off: code review vs. bug fixing

|

||||

|

||||

If someone is able to bring together multiple types of code review in a way that has minimal friction, then the quality of the software written will improve over time. It would be naive to think that introducing new tools or processes would not at first cause some delay. But what is more expensive: the time to fix bugs in production, or the time to improve the software before it makes it into production? Even if new tools introduce some lag before a new feature can be released and appreciated by customers, that lag will shorten as the software developers improve their own skills, and the pace of releases will return to previous levels while bugs should decrease.

|

||||

|

||||

One of the keys to achieving this goal of proactively improving code quality with code review is using a platform that is flexible enough to allow software developers to quickly write code, plug in the tools they are familiar with, and do peer review of each other’s code. [GitHub][9] is a great example of such a platform. However, putting your code on GitHub doesn’t just magically make code review happen; you have to open a pull request to start down this journey.

|

||||

|

||||

### Pull requests: a living discussion about code

|

||||

|

||||

[Pull requests][10] are a tool on GitHub that allows software developers to discuss and propose changes to the main codebase of a project that later can be deployed for all users to see. They were created back in February of 2008 for the purpose of suggesting a change to someone’s work before it would be accepted (merged) and later deployed to production for end-users to see that change.

|

||||

|

||||

Pull requests started out as a loose way to offer your change to someone’s project, but they have evolved into:

|

||||

|

||||

* A living discussion about the code you want merged

|

||||

* Added functionality that increases the visibility of what changed

|

||||

* Integration of your favorite tools

|

||||

* Explicit pull request reviews that can be required as part of a protected branch workflow

|

||||

|

||||

### Considering code: URLs are forever

|

||||

|

||||

Looking at the first two bullet points above, pull requests foster an ongoing code discussion that makes code changes very visible, as well as making it easy to pick up where you left off on your review. For both new and experienced developers, being able to refer back to these previous discussions about why a feature was developed the way it was or being linked to another conversation about a related feature should be priceless. Context can be so important when coordinating features across multiple projects and keeping everyone in the loop as close as possible to the code is great too. If those features are still being developed, it’s important to be able to just see what’s changed since you last reviewed. After all, it’s far easier to [review a small change than a large one][11], but that’s not always possible with large features. So, it’s important to be able to pick up where you last reviewed and only view the changes since then.

|

||||

|

||||

### Integrating tools: software developers are opinionated

|

||||

|

||||

Considering the third point above, GitHub’s pull requests have a lot of functionality, but developers will always have a preference for additional tools. Code quality is a whole realm of code review that involves the part of reviewing that isn’t necessarily human. Detecting code that’s “inefficient” or slow, a potential security vulnerability, or just not up to company standards is a task best left to automated tools. Tools like [SonarQube][12] and [Code Climate][13] can analyse your code, while tools like [Codecov][14] and [Coveralls][15] can tell you if the new code you just wrote is not well tested. The wonder of these tools is that they can plug into GitHub and report their findings right back into the pull request! This means the conversation not only has people reviewing the code, but the tools are reporting there too. Everyone can stay in the loop of exactly how a feature is developing.

|

||||

|

||||

Lastly, depending on the preference of your team, you can make the tools and the peer review required by leveraging the required status feature of the [protected branch workflow][16].

|

||||

|

||||

Whether you are just getting started on your software development journey, are a business stakeholder who wants to know how a project is doing, or are a project manager who wants to ensure the timeliness and quality of a project, getting involved in the pull request by setting up an approval workflow and thinking about integration with additional tools to ensure quality is important at any level of software development.

|

||||

|

||||

Whether it’s for your personal website, your company’s online store, or the latest combine to harvest this year’s corn with maximum yield, writing good software involves having good code review. Having good code review involves the right tools and platform. To learn more about GitHub and the software development process, take a look at the O’Reilly book, [_Introducing GitHub_][17], where you can learn about creating projects, starting pull requests, and getting an overview of your team’s software development process.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

About the authors:

|

||||

|

||||

**Brent Beer**

|

||||

|

||||

Brent Beer has used Git and GitHub for over 5 years through university classes, contributions to open source projects, and professionally as a web developer. While working as a trainer for GitHub, he also became a published author of “Introducing GitHub” for O’Reilly. He now works as a solutions engineer for GitHub in Amsterdam to help bring Git and GitHub to developers across the world.

|

||||

|

||||

**Peter Bell**

|

||||

|

||||

Peter Bell is the founder and CTO of Ronin Labs. Training is broken - we're fixing it through technology enhanced training! He is an experienced entrepreneur, technologist, agile coach and CTO specializing in EdTech projects. He wrote "Introducing GitHub" for O'Reilly, created the "Mastering GitHub" course for code school and "Git and GitHub LiveLessons" for Pearson. He has presented regularly at national and international conferences on ruby, nodejs, NoSQL (especially MongoDB and neo4j), cloud computing, software craftsmanship, java, groovy, j...

|

||||

|

||||

|

||||

-------------

|

||||

|

||||

|

||||

via: https://www.oreilly.com/ideas/how-to-use-pull-requests-to-improve-your-code-reviews?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311

|

||||

|

||||

Authors: [Brent Beer][a], [Peter Bell][b]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://www.oreilly.com/people/acf937de-cdf4-4b0e-85bd-b559404c580e

|

||||

[b]:https://www.oreilly.com/people/2256f119-7ea0-440e-99e8-65281919e952

|

||||

[1]:https://pixabay.com/en/measure-measures-rule-metro-106354/

|

||||

[2]:http://conferences.oreilly.com/oscon/oscon-tx?intcmp=il-prog-confreg-update-ostx17_new_site_oscon_17_austin_right_rail_cta

|

||||

[3]:https://www.oreilly.com/people/acf937de-cdf4-4b0e-85bd-b559404c580e

|

||||

[4]:https://www.oreilly.com/people/2256f119-7ea0-440e-99e8-65281919e952

|

||||

[5]:https://www.safaribooksonline.com/library/view/introducing-github/9781491949801/?utm_source=newsite&utm_medium=content&utm_campaign=lgen&utm_content=how-to-use-pull-requests-to-improve-your-code-reviews

|

||||

[6]:http://conferences.oreilly.com/oscon/oscon-tx?intcmp=il-prog-confreg-update-ostx17_new_site_oscon_17_austin_right_rail_cta

|

||||

[7]:http://conferences.oreilly.com/oscon/oscon-tx?intcmp=il-prog-confreg-update-ostx17_new_site_oscon_17_austin_right_rail_cta

|

||||

[8]:https://www.oreilly.com/ideas/how-to-use-pull-requests-to-improve-your-code-reviews?imm_mid=0ee8ca&cmp=em-prog-na-na-newsltr_20170311

|

||||

[9]:https://github.com/about

|

||||

[10]:https://help.github.com/articles/about-pull-requests/

|

||||

[11]:https://blog.skyliner.io/ship-small-diffs-741308bec0d1

|

||||

[12]:https://github.com/integrations/sonarqube

|

||||

[13]:https://github.com/integrations/code-climate

|

||||

[14]:https://github.com/integrations/codecov

|

||||

[15]:https://github.com/integrations/coveralls

|

||||

[16]:https://help.github.com/articles/about-protected-branches/

|

||||

[17]:https://www.safaribooksonline.com/library/view/introducing-github/9781491949801/?utm_source=newsite&utm_medium=content&utm_campaign=lgen&utm_content=how-to-use-pull-requests-to-improve-your-code-reviews-lower

|

||||

@ -0,0 +1,67 @@

|

||||

# Why DevOps is the end of security as we know it

|

||||

|

||||

|

||||

|

||||

Security can be a hard sell. It’s difficult to convince development teams to spend their limited cycles patching security holes with line-of-business managers pressuring them to release applications as quickly as possible. But given that 84 percent of all cyberattacks happen on the application layer, organizations can’t afford for their dev teams not to include security.

|

||||

|

||||

The rise of DevOps presents a dilemma for many security leads. “It’s a threat to security,” says [Josh Corman, former CTO at Sonatype][2], “and it’s an opportunity for security to get better.” Corman is a staunch advocate of [integrating security and DevOps practices to create “Rugged DevOps.”][3] _Business Insights_ talked with Corman about the values security and DevOps share, and how those shared values help make organizations less vulnerable to outages and exploits.

|

||||

|

||||


|

||||

|

||||

### How are security and DevOps practices mutually beneficial?

|

||||

|

||||

**Josh Corman:** A primary example is the tendency for DevOps teams to instrument everything that can be measured. Security is always looking for more intelligence and telemetry. You can take a lot of what DevOps teams are measuring and enter that info into your log management or your SIEM [security information and event management system].

|

||||

|

||||

An OODA loop [observe, orient, decide, act] is predicated on having enough pervasive eyes and ears to notice whispers and echoes. DevOps gives you pervasive instrumentation.

|

||||

|

||||

### Are there other cultural attitudes that they share?

|

||||

|

||||

**JC:** “Be mean to your code” is a shared value. For example, the software tool Chaos Monkey written by Netflix was a watershed moment for DevOps teams. Created to test the resiliency and recoverability of Amazon Web Services, Chaos Monkey made the Netflix teams stronger and more prepared for outages.

|

||||

|

||||

So there’s now this notion that our systems need to be tested and, as such, James Wickett and I and others decided to make an evil, weaponized Chaos Monkey, which is where the GAUNTLT project came from. It’s basically a barrage of security tests that can be used within DevOps cycle times and by DevOps tool chains. It’s also very DevOps-friendly with APIs.

|

||||

|

||||

### Where else do enterprise security and DevOps values intersect?

|

||||

|

||||

**JC:** Both teams believe complexity is the enemy of all things. For example, [security people and Rugged DevOps folks][4] can actually say, “Look, we’re using 11 logging frameworks in our project—maybe we don’t need that many, and maybe that attack surface and complexity could hurt us, or hurt the quality or availability of the product.”

|

||||

|

||||

Complexity tends to be the enemy of lots of things. Typically you don’t have a hard time convincing DevOps teams to use better building materials in architectural levels: use the most recent, least vulnerable versions, and use fewer of them.

|

||||

|

||||

### What do you mean by “better building materials”?

|

||||

|

||||

**JC:** I’m the custodian of the largest open-source repository in the world, so I see who’s using which versions, which vulnerabilities are in them, when they don’t take a fix for a vulnerability, and for how long. Certain logging frameworks, for example, fix none of their bugs, ever. Some of them fix most of their security bugs within 90 days. People are getting breached over and over because they’re using a framework that has zero security hygiene.

|

||||

|

||||

Beyond that, even if you don’t know the quality of your logging frameworks, having 11 different frameworks makes for a very clunky, buggy deliverable, with lots of extra work and complexity. Your exposure to vulnerabilities is much greater. How much development time do you want to be spending fixing lots of little defects, as opposed to creating the next big disruptive thing?

|

||||

|

||||

One of the keys to [Rugged DevOps is software supply chain management][5], which incorporates three principles: Use fewer and better suppliers; use the highest-quality parts from those suppliers; and track which parts went where, so that you can have a prompt and agile response when something goes wrong.

|

||||

|

||||

### So change management is also important.

|

||||

|

||||

**JC:** Yes, that’s another shared value. What I’ve found is that when a company wants to perform security tests such as anomaly detection or net-flow analysis, they need to know what “normal” looks like. A lot of the basic things that trip people up have to do with inventory and patch management.

|

||||

|

||||

I saw in the _Verizon Data Breach Investigations Report_ that 97 percent of last year’s successfully exploited vulnerabilities tracked to just ten CVEs [common vulnerabilities and exposures], and of those 10, eight have been fixed for over a decade. So, shame on us for talking about advanced espionage. We’re not doing basic patching. Now, I’m not saying that if you fix those ten CVEs, you’ll have no successful exploits, but they account for the lion’s share of how people are actually failing.

|

||||

|

||||

The nice thing about [DevOps automation tools][6] is that they’ve become an accidental change management database. It’s a single version of the truth of who pushed which change where, and when. That’s a huge win, because often the factors that have the greatest impact on security are out of your control. You inherit the downstream consequences of the choices made by the CIO and the CTO. As IT becomes more rigorous and repeatable through automation, you lessen the chance for human error and allow more traceability on which change happened where.

|

||||

|

||||

### What would you say is the most important shared value?

|

||||

|

||||

**JC:** DevOps involves processes and toolchains, but I think the defining attribute is culture, specifically empathy. DevOps works because dev and ops teams understand each other better and can make more informed decisions. Rather than solving problems in silos, they’re solving for the stream of activity and the goal. If you show DevOps teams how security can make them better, then as a reciprocation they tend to ask, “Well, are there any choices we make that would make your life easier?” Because often they don’t know that the choice they’ve made to do X, Y, or Z made it impossible to include security.

|

||||

|

||||

For security teams, one of the ways to drive value is to be helpful before we ask for help, and provide qualitative and quantitative value before we tell DevOps teams what to do. You’ve got to earn the trust of DevOps teams and earn the right to play, and then it will be reciprocated. It often happens a lot faster than you think.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://techbeacon.com/why-devops-end-security-we-know-it

|

||||

|

||||

Author: [Mike Barton][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://twitter.com/intent/follow?original_referer=https%3A%2F%2Ftechbeacon.com%2Fwhy-devops-end-security-we-know-it%3Fimm_mid%3D0ee8c5%26cmp%3Dem-webops-na-na-newsltr_20170310&ref_src=twsrc%5Etfw&region=follow_link&screen_name=mikebarton&tw_p=followbutton

|

||||

[1]:https://techbeacon.com/resources/application-security-devops-true-state?utm_source=tb&utm_medium=article&utm_campaign=inline-cta

|

||||

[2]:https://twitter.com/joshcorman

|

||||

[3]:https://techbeacon.com/want-rugged-devops-team-your-release-security-engineers

|

||||

[4]:https://techbeacon.com/rugged-devops-rsa-6-takeaways-security-ops-pros

|

||||

[5]:https://techbeacon.com/josh-corman-security-devops-how-shared-team-values-can-reduce-threats

|

||||

[6]:https://techbeacon.com/devops-automation-best-practices-how-much-too-much

|

||||

119

sources/tech/20150413 Why most High Level Languages are Slow.md

Normal file


@ -0,0 +1,119 @@


[Why (most) High Level Languages are Slow][7]
============================================================


Contents


* [Cache costs review][1]
* [Why C# introduces cache misses][2]
* [Garbage Collection][3]
* [Closing remarks][5]


In the last month or two I’ve had basically the same conversation half a dozen times, both online and in real life, so I figured I’d just write up a blog post that I can refer to in the future.


The reason most high level languages are slow usually comes down to two things:


1. They don’t play well with the cache.
2. They have to do expensive garbage collections.


But really, both of these boil down to a single reason: the language heavily encourages too many allocations.


First, I’ll just state up front that for all of this I’m talking mostly about client-side applications. If you’re spending 99.9% of your time waiting on the network then it probably doesn’t matter how slow your language is – optimizing network is your main concern. I’m talking about applications where local execution speed is important.


I’m going to pick on C# as the specific example here for two reasons: first, it’s the high level language I use most often these days; and second, if I used Java I’d get a bunch of C# fans telling me how it has value types and therefore doesn’t have these issues (this is wrong).


In the following I will be talking about what happens when you write idiomatic code. When you work “with the grain” of the language. When you write code in the style of the standard libraries and tutorials. I’m not very interested in ugly workarounds as “proof” that there’s no problem. Yes, you can sometimes fight the language to avoid a particular issue, but that doesn’t make the language unproblematic.


### Cache costs review


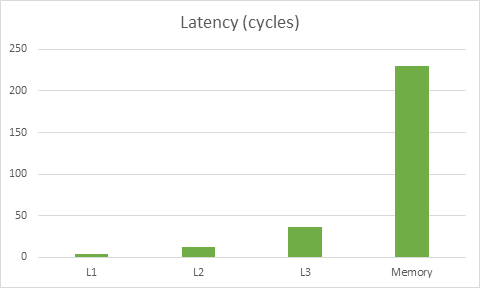

First, let’s review the importance of playing well with the cache. Here’s a graph based on [this data][10] on memory latencies for Haswell:


The latency for this particular CPU to get to memory is about 230 cycles, meanwhile the cost of reading data from L1 is 4 cycles. The key takeaway here is that doing the wrong thing for the cache can make code ~50x slower. In fact, it may be even worse than that – modern CPUs can often do multiple things at once so you could be loading stuff from L1 while operating on stuff that’s already in registers, thus hiding the L1 load cost partially or completely.
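To make the arithmetic concrete, here is a back-of-the-envelope sketch in Python. It assumes a deliberately crude model that is not part of the latency data itself: every access is either an L1 hit (4 cycles) or goes all the way to main memory (230 cycles), with no intermediate cache levels and no overlap between loads. The function name and the example miss rates are illustrative only.

```python
L1_HIT_CYCLES = 4      # L1 latency quoted above
MEMORY_CYCLES = 230    # main-memory latency quoted above


def average_access_cycles(miss_rate: float) -> float:
    """Expected cycles per access when a fraction miss_rate of accesses
    go to main memory and the rest hit L1 (simplified two-level model)."""
    return (1.0 - miss_rate) * L1_HIT_CYCLES + miss_rate * MEMORY_CYCLES


# Worst case versus best case: the source of the "~50x" figure.
print(MEMORY_CYCLES / L1_HIT_CYCLES)

# Even a modest miss rate dominates the average cost in this model.
for miss_rate in (0.0, 0.01, 0.1, 1.0):
    print(miss_rate, average_access_cycles(miss_rate))
```

Under these assumptions a 10% miss rate already costs about 26.6 cycles per access, more than six times the all-hits case.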


Without exaggerating we can say that aside from making reasonable algorithm choices, cache misses are the main thing you need to worry about for performance. Once you’re accessing data efficiently you can worry about fine tuning the actual operations you do. In comparison to cache misses, minor inefficiencies just don’t matter much.


This is actually good news for language designers! You don’t _have_ to build the most efficient compiler on the planet, and you totally can get away with some extra overhead here and there for your abstractions (e.g. array bounds checking). All you need to do is make sure that your design makes it easy to write code that accesses data efficiently, and programs in your language won’t have any problems running at speeds that are competitive with C.


### Why C# introduces cache misses


To put it bluntly, C# is a language that simply isn’t designed to run efficiently with modern cache realities in mind. Again, I’m now talking about the limitations of the design and the “pressure” it puts on the programmer to do things in inefficient ways. Many of these things have theoretical workarounds that you could do at great inconvenience. I’m talking about idiomatic code, what the language “wants” you to do.


The basic problem with C# is that it has very poor support for value-based programming. Yes, it has structs which are values that are stored “embedded” where they are declared (e.g. on the stack, or inside another object). But there are several big issues with structs that make them more of a band-aid than a solution.


* You have to declare your data types as struct up front – which means that if you _ever_ need this type to exist as a heap allocation then _all_ of them need to be heap allocations. You could make some kind of class-wrapper for your struct and forward all the members but it’s pretty painful. It would be better if classes and structs were declared the same way and could be used in both ways on a case-by-case basis. So when something can live on the stack you declare it as a value, and when it needs to be on the heap you declare it as an object. This is how C++ works, for example. You’re not encouraged to make everything into an object-type just because there are a few things here and there that need to be on the heap.


* _Referencing_ values is extremely limited. You can pass values by reference to functions, but that’s about it. You can’t just grab a reference to an element in a `List<int>`; you have to store both a reference to the list and an index. You can’t grab a pointer to a stack-allocated value, or a value stored inside an object (or value). You can only copy them, unless you’re passing them to a function (by ref). This is all understandable, by the way. If type safety is a priority, it’s pretty difficult (though not impossible) to support flexible referencing of values while also guaranteeing type safety. The rationale behind these restrictions doesn’t change the fact that the restrictions are there, though.


* [Fixed sized buffers][6] don’t support custom types and also require you to use an unsafe keyword.


* Limited “array slice” functionality. There’s an ArraySegment class, but it’s not really used by anyone, which means that in order to pass a range of elements from an array you have to create an IEnumerable, which means allocation (boxing). Even if the APIs accepted ArraySegment parameters it’s still not good enough – you can only use it for normal arrays, not for List<T>, not for [stack-allocated array][4]s, etc.
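
For contrast, here is a minimal C++ sketch of the flexibility the list above says C# structs lack: one type used both as a value and as a heap object, a direct reference to a container element, a pointer to a field of a stack value, and an allocation-free slice over either a vector or a stack array. The names `Vec3`, `FloatSlice`, `sum` and `value_semantics_demo` are made up for illustration and are not from the original article.

```cpp
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical type for illustration: declared once, usable as a value
// or as a heap object, decided at each use site.
struct Vec3 {
    float x, y, z;
};

// A non-owning "slice": just a pointer and a length, no allocation.
struct FloatSlice {
    const float* data;
    std::size_t size;
};

float sum(FloatSlice s) {
    float total = 0.0f;
    for (std::size_t i = 0; i < s.size; ++i) total += s.data[i];
    return total;
}

float value_semantics_demo() {
    Vec3 on_stack{1.0f, 2.0f, 3.0f};                            // value on the stack
    std::unique_ptr<Vec3> on_heap(new Vec3{4.0f, 5.0f, 6.0f});  // same type on the heap

    std::vector<float> values{1.0f, 2.0f, 3.0f, 4.0f};
    float& second = values[1];  // direct reference to an element
    second = 10.0f;             // writes through (valid until the vector reallocates)

    float* py = &on_stack.y;    // pointer to a field of a stack-allocated value
    *py += on_heap->y;          // 2 + 5

    float stack_array[3] = {1.0f, 1.0f, 1.0f};
    FloatSlice middle{values.data() + 1, 2};  // elements [1, 3) of the vector
    FloatSlice whole{stack_array, 3};         // the same slice type over a stack array

    return sum(middle) + sum(whole) + on_stack.y;
}
```

The price of this flexibility is exactly the one the last bullet on referencing hints at: nothing stops `second` from dangling once the vector grows, so the safety burden moves to the programmer.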


The bottom line is that for all but very simple cases, the language pushes you very strongly towards heap allocations. If all your data is on the heap, it means that accessing it is likely to cause cache misses (since you can’t decide how objects are organized in the heap). So while a C++ program poses few challenges to ensuring that data is organized in cache-efficient ways, C# typically encourages you to allocate each part of that data in a separate heap allocation. This means the programmer loses control over data layout, which means unnecessary cache misses are introduced and performance drops precipitously. It doesn’t matter that [you can now compile C# programs natively][11] ahead of time – improvement to code quality is a drop in the bucket compared to poor memory locality.
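
The difference in layout can be sketched in C++ as follows. This is only an illustration under stated assumptions: `Particle` and the function names are invented here, and `std::vector<std::unique_ptr<Particle>>` is used as a rough stand-in for a C# list of class instances (one heap allocation per object), not as an exact model of the CLR heap.

```cpp
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical payload type for illustration.
struct Particle {
    float x, y, vx, vy;
};

// With values stored inline, element i+1 sits immediately after element i,
// so a linear pass walks memory sequentially.
bool is_contiguous(const std::vector<Particle>& ps) {
    for (std::size_t i = 0; i + 1 < ps.size(); ++i) {
        if (&ps[i + 1] != &ps[i] + 1) return false;
    }
    return true;
}

// Inline layout: one allocation, predictable access pattern.
void step_inline(std::vector<Particle>& ps, float dt) {
    for (Particle& p : ps) {
        p.x += p.vx * dt;
        p.y += p.vy * dt;
    }
}

// Boxed layout: every element is a separate heap allocation, so each
// iteration chases a pointer to wherever the allocator placed the object.
void step_boxed(std::vector<std::unique_ptr<Particle>>& ps, float dt) {
    for (auto& p : ps) {
        p->x += p->vx * dt;
        p->y += p->vy * dt;
    }
}
```

Both loops do the same arithmetic; only the first gives the programmer any say over where the data lives.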


Plus, there’s storage overhead. Each reference is 8 bytes on a 64-bit machine, and each allocation has its own overhead in the form of various metadata. A heap full of tiny objects with lots of references everywhere has a lot of space overhead compared to a heap with very few large allocations where most data is just stored embedded within their owners at fixed offsets. Even if you don’t care about memory requirements, the fact that the heap is bloated with header words and references means that cache lines have more waste in them, which in turn means even more cache misses and reduced performance.
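
A rough way to see the space cost is to compare an object that embeds its parts with one that refers to them. The sketch below uses hypothetical C++ types (`ParticleEmbedded`, `ParticleBoxed`) and deliberately computes only a lower bound for the boxed case: it counts the references and the payloads but ignores per-allocation metadata, which a managed heap or a native allocator would add on top.

```cpp
#include <cstddef>
#include <memory>

// Hypothetical types for illustration.
struct Vec3 {
    float x, y, z;
};

// Parts stored embedded in their owner at fixed offsets.
struct ParticleEmbedded {
    Vec3 position;
    Vec3 velocity;
};

// Parts stored behind references, one separate allocation each.
struct ParticleBoxed {
    std::unique_ptr<Vec3> position;
    std::unique_ptr<Vec3> velocity;
};

// Bytes needed for one embedded particle.
constexpr std::size_t embedded_bytes() {
    return sizeof(ParticleEmbedded);
}

// Lower bound for one boxed particle: the two references plus the two
// payloads, NOT counting allocator or object-header overhead.
constexpr std::size_t boxed_bytes_lower_bound() {
    return sizeof(ParticleBoxed) + 2 * sizeof(Vec3);
}
```

On a typical 64-bit platform the references alone add 16 bytes to 24 bytes of actual data, before any header words are counted.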


There are sometimes workarounds you can do, for example you can use structs and allocate them in a pool using a big List<T>. This allows you to e.g. traverse the pool and update all of the objects in bulk, getting good locality. This does get pretty messy though, because now anything else wanting to refer to one of these objects has to have a reference to the pool as well as an index, and then keep doing array-indexing all over the place. For this reason, and the reasons above, it is significantly more painful to do this sort of stuff in C# than it is to do it in C++, because it’s just not something the language was designed to do. Furthermore, accessing a single element in the pool is now more expensive than just having an allocation per object - you now get _two_ cache misses because you have to first dereference the pool itself (since it’s a class). Ok, so you can duplicate the functionality of List<T> in struct-form and avoid this extra cache miss and make things even uglier. I’ve written plenty of code just like this and it’s just extremely low level and error prone.
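
The pool-and-index pattern described above looks roughly like this. It is written in C++ only to keep the examples in one language; the shape is the same in C#. `Enemy`, `EnemyHandle` and `EnemyPool` are hypothetical names, and the sketch assumes objects are never removed, which is where real pools get considerably messier.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical value type stored inline in the pool.
struct Enemy {
    float health;
    float regen_per_second;
};

// Instead of a reference to the object, other code holds an index.
struct EnemyHandle {
    std::size_t index;
};

class EnemyPool {
public:
    // Appends a value and returns the handle callers must keep.
    EnemyHandle add(Enemy e) {
        items_.push_back(e);
        return EnemyHandle{items_.size() - 1};
    }

    // Every access needs both the pool and the handle.
    Enemy& get(EnemyHandle h) { return items_[h.index]; }

    // Bulk update walks contiguous memory, which is the point of the pool.
    void regenerate_all(float dt) {
        for (Enemy& e : items_) e.health += e.regen_per_second * dt;
    }

    std::size_t size() const { return items_.size(); }

private:
    std::vector<Enemy> items_;
};
```

Note how every consumer now has to carry the pool around alongside the handle, which is the messiness the paragraph above complains about.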


Finally, I want to point out that this isn’t just an issue for “hot spot” code. Idiomatically written C# code tends to have classes and references basically _everywhere_. This means that all over your code at relatively uniform frequency there are random multi-hundred cycle stalls, dwarfing the cost of surrounding operations. Yes, there could be hotspots too, but after you’ve optimized them you’re left with a program that’s just [uniformly slow.][12] So unless you want to write all your code with memory pools and indices, effectively operating at a lower level of abstraction than even C++ does (and at that point, why bother with C#?), there’s not a ton you can do to avoid this issue.


### Garbage Collection


I’m just going to assume in the following that you already understand why garbage collection is a performance problem in a lot of cases: pausing randomly for many milliseconds is usually unacceptable for anything with animation. I won’t linger on it, and will move on to explaining why the language design itself exacerbates this issue.


Because of the limitations when it comes to dealing with values, the language very strongly discourages you from using big chunky allocations consisting mostly of values embedded within other values (perhaps stored on the stack), pressuring you instead to use lots of small classes which have to be allocated on the heap. Roughly speaking, more allocations means more time spent collecting garbage.


There are benchmarks that show how C# or Java beat C++ in some particular case, because an allocator based on a GC can have decent throughput (cheap allocations, and you batch all the deallocations up). However, this isn’t a common real world scenario. It takes a huge amount of effort to write a C# program with the same low allocation rate that even a very naïve C++ program has, so those kinds of comparisons are really comparing a highly tuned managed program with a naïve native one. Once you spend the same amount of effort on the C++ program, you’d be miles ahead of C# again.
