mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

Merge pull request #10473 from pygmalion666/master

Update 20180927 How To Find And Delete Duplicate Files In Linux.md

This commit is contained in:

commit

55a5b98c5e

@ -1,442 +0,0 @@

Translating by pygmalion666

How To Find And Delete Duplicate Files In Linux
======


I always back up configuration files and other old files somewhere on my hard disk before editing or modifying them, so I can restore them from the backup if I accidentally do something wrong. The problem is that I forget to clean up those files, and after a while my hard disk fills up with duplicates. I am either too lazy to clean out the old files or afraid that I might delete an important one. If you’re anything like me and are overwhelmed by multiple copies of the same files in different backup directories, you can find and delete duplicate files on Unix-like operating systems using the tools described below.


**A word of caution:**

Please be careful when deleting duplicate files. If you’re not careful, you may end up with [**accidental data loss**][1]. I advise you to pay extra attention while using these tools.


### Find And Delete Duplicate Files In Linux

For the purpose of this guide, I am going to discuss three utilities:

1. Rdfind,
2. Fdupes,
3. FSlint.

These three utilities are free, open source, and work on most Unix-like operating systems.


##### 1. Rdfind

**Rdfind**, which stands for **r**edundant **d**ata **find**, is a free and open source utility that finds duplicate files across and/or within directories and sub-directories. It compares files by their content, not by their names. Rdfind uses a **ranking** algorithm to classify original and duplicate files: if you have two or more identical files, it decides which one to treat as the original and considers the rest duplicates. Once it has found the duplicates, it reports them to you. You can then decide to either delete them or replace them with [**hard links** or **symbolic (soft) links**][2].
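
To make "comparing by content, not by name" concrete, here is a minimal sketch that groups files by checksum using only standard tools. It is not how Rdfind itself is implemented; the function name `list_duplicate_groups` is made up for this illustration, and it assumes GNU `sha256sum` and file names without newlines or backslashes.

```shell
# Illustrative sketch only: print every file whose SHA-256 checksum is shared
# with at least one other file under the given directory.
list_duplicate_groups() {
    local dir=$1
    find "$dir" -type f -print0 |
        xargs -0 -r sha256sum |
        sort |
        awk '
            # sha256sum prints 64 hex digits, two separator characters, then the path
            { hash = $1; path = substr($0, 67) }
            hash == prev {
                if (!printed) print prev_path   # first member of the group
                print path
                printed = 1
                next
            }
            { prev = hash; prev_path = path; printed = 0 }
        '
}
```

Running `list_duplicate_groups ~/Downloads` prints the paths that share content with at least one other file, whatever they are called. Unlike Rdfind, this sketch does not rank the files or decide which one is the original.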


**Installing Rdfind**

Rdfind is available in the [**AUR**][3], so you can install it on Arch-based systems using any AUR helper program such as [**Yay**][4], as shown below.

```
$ yay -S rdfind
```

On Debian, Ubuntu, Linux Mint:

```
$ sudo apt-get install rdfind
```

On Fedora:

```
$ sudo dnf install rdfind
```

On RHEL, CentOS:

```
$ sudo yum install epel-release
$ sudo yum install rdfind
```

|

||||

|

||||

**Usage**

|

||||

|

||||

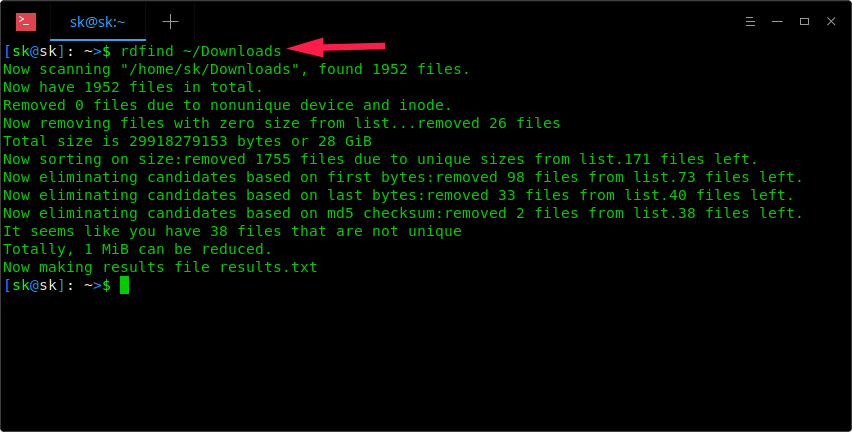

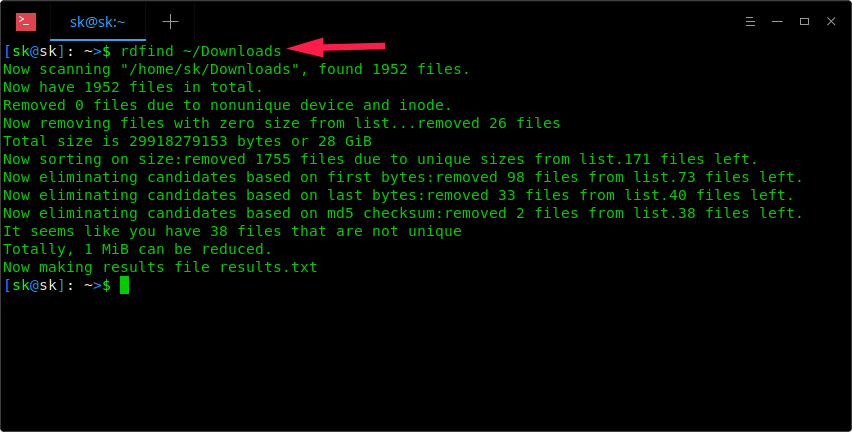

Once installed, simply run Rdfind command along with the directory path to scan for the duplicate files.

|

||||

|

||||

```

|

||||

$ rdfind ~/Downloads

|

||||

|

||||

```


As the screenshot above shows, the rdfind command scans the ~/Downloads directory and saves the results in a file named **results.txt** in the current working directory. You can view the names of the possible duplicate files in results.txt.

```
$ cat results.txt
# Automatically generated
# duptype id depth size device inode priority name
DUPTYPE_FIRST_OCCURRENCE 1469 8 9 2050 15864884 1 /home/sk/Downloads/tor-browser_en-US/Browser/TorBrowser/Tor/PluggableTransports/fte/tests/dfas/test5.regex
DUPTYPE_WITHIN_SAME_TREE -1469 8 9 2050 15864886 1 /home/sk/Downloads/tor-browser_en-US/Browser/TorBrowser/Tor/PluggableTransports/fte/tests/dfas/test6.regex
[...]
DUPTYPE_FIRST_OCCURRENCE 13 0 403635 2050 15740257 1 /home/sk/Downloads/Hyperledger(1).pdf
DUPTYPE_WITHIN_SAME_TREE -13 0 403635 2050 15741071 1 /home/sk/Downloads/Hyperledger.pdf
# end of file
```

By reviewing the results.txt file, you can easily find the duplicates. You can remove the duplicates manually if you want to.
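
If results.txt is long, a small filter can pull out just the paths marked as duplicates. The sketch below relies only on the column layout visible in the sample above (seven columns, then the file name); the function name `print_rdfind_duplicates` is hypothetical and not part of Rdfind.

```shell
# Print the paths that results.txt marks as duplicates, i.e. every entry
# except the first occurrence in each group. Assumes the column layout from
# the header comment: duptype id depth size device inode priority name
print_rdfind_duplicates() {
    awk '
        /^#/ || NF < 8 { next }                    # skip comments and short lines
        $1 == "DUPTYPE_FIRST_OCCURRENCE" { next }  # the file ranked as the original
        {
            line = $0
            for (i = 1; i <= 7; i++)               # drop the seven leading columns
                sub(/^[^ ]+ +/, "", line)
            print line                             # what remains is the path
        }
    ' "${1:-results.txt}"
}
```

Calling `print_rdfind_duplicates results.txt` only lists candidates and deletes nothing, so you can review the output before removing any file by hand.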


Also, you can use the **-dryrun** option to find all duplicates in a given directory without changing anything and print a summary in your terminal:

```
$ rdfind -dryrun true ~/Downloads
```

Once you have found the duplicates, you can replace them with either hard links or symlinks.

To replace all duplicates with hard links, run:

```
$ rdfind -makehardlinks true ~/Downloads
```

To replace all duplicates with symlinks (soft links), run:

```
$ rdfind -makesymlinks true ~/Downloads
```
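
To see what replacing a duplicate with a hard link means at the file level, here is a small sketch for a single pair of files. It is not Rdfind's implementation; the function name `replace_with_hardlink` is made up for this example, and both paths are assumed to be on the same filesystem.

```shell
# Replace DUPLICATE with a hard link to ORIGINAL, but only if the two files
# really have identical content.
replace_with_hardlink() {
    local original=$1 duplicate=$2
    cmp -s -- "$original" "$duplicate" || return 1   # refuse if contents differ
    ln -f -- "$original" "$duplicate"                # -f replaces the existing file
}
```

After a successful call, both names point to the same inode, so the data is stored once and `[ original -ef duplicate ]` is true. Editing the file through either name changes it for both, which is worth keeping in mind before linking files you expect to diverge later.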


You may have some empty files in a directory and want to ignore them. If so, use the **-ignoreempty** option as shown below.

```
$ rdfind -ignoreempty true ~/Downloads
```
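
Before deciding whether to ignore empty files, it can help to see which ones exist. This sketch uses plain `find` rather than Rdfind; the function name `list_empty_files` is only illustrative.

```shell
# List zero-length regular files under a directory (default: current directory).
list_empty_files() {
    find "${1:-.}" -type f -size 0
}
```

For example, `list_empty_files ~/Downloads` prints every empty file below ~/Downloads without modifying anything.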


If you don’t want the old files anymore, just delete the duplicates instead of replacing them with hard or soft links.

To delete all duplicates, simply run:

```
$ rdfind -deleteduplicates true ~/Downloads
```

If you do not want to ignore empty files and want to delete them along with all duplicates, run:

```
$ rdfind -deleteduplicates true -ignoreempty false ~/Downloads
```

For more details, refer to the help section:

```
$ rdfind --help
```

And the manual page:

```
$ man rdfind
```


##### 2. Fdupes

**Fdupes** is yet another command line utility to identify and remove duplicate files within specified directories and their sub-directories. It is a free, open source utility written in the **C** programming language. Fdupes identifies duplicates by comparing file sizes, partial MD5 signatures, and full MD5 signatures, and finally performs a byte-by-byte comparison for verification.
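
The staged comparison described above (size, partial hash, full hash, byte-by-byte) can be sketched for a single pair of files as follows. This is an illustration of the idea, not Fdupes' actual code; the function name `same_content` and the 4 KiB prefix size are assumptions chosen for the example.

```shell
# Return 0 if the two files have identical content, non-zero otherwise.
# Cheap checks run first so most non-duplicates are rejected early.
same_content() {
    local a=$1 b=$2
    # 1. Files of different sizes can never be duplicates.
    [ "$(wc -c < "$a")" -eq "$(wc -c < "$b")" ] || return 1
    # 2. Hash only the first 4 KiB (an arbitrary size for this sketch).
    [ "$(head -c 4096 -- "$a" | md5sum)" = "$(head -c 4096 -- "$b" | md5sum)" ] || return 1
    # 3. Hash the whole file.
    [ "$(md5sum < "$a")" = "$(md5sum < "$b")" ] || return 1
    # 4. A final byte-by-byte comparison guards against hash collisions.
    cmp -s -- "$a" "$b"
}
```

The ordering matters for speed: comparing sizes is nearly free, a partial hash reads only a small prefix, and the expensive full read happens only for files that passed every earlier stage.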


Similar to Rdfind, Fdupes comes with quite a handful of options to perform operations such as:

* Recursively search for duplicate files in directories and sub-directories
* Exclude empty files and hidden files from consideration
* Show the size of the duplicates
* Delete duplicates immediately as they are encountered
* Do not treat files with different owner/group or permission bits as duplicates
* And a lot more.


**Installing Fdupes**

Fdupes is available in the default repositories of most Linux distributions.

On Arch Linux and its variants such as Antergos and Manjaro Linux, install it using Pacman as shown below.

```
$ sudo pacman -S fdupes
```

On Debian, Ubuntu, Linux Mint:

```
$ sudo apt-get install fdupes
```

On Fedora:

```
$ sudo dnf install fdupes
```

On RHEL, CentOS:

```
$ sudo yum install epel-release
$ sudo yum install fdupes
```


**Usage**

Fdupes usage is pretty simple. Just run the following command to find the duplicate files in a directory, for example **~/Downloads**.

```
$ fdupes ~/Downloads
```

Sample output from my system:

```
/home/sk/Downloads/Hyperledger.pdf
/home/sk/Downloads/Hyperledger(1).pdf

```

As you can see, I have a duplicate file in the **/home/sk/Downloads/** directory. This shows duplicates from the top-level directory only. To view duplicates in sub-directories as well, use the **-r** option as shown below.

```
$ fdupes -r ~/Downloads
```

Now you will see the duplicates from the **/home/sk/Downloads/** directory and its sub-directories as well.

Fdupes can also find duplicates in multiple directories at once.

```
$ fdupes ~/Downloads ~/Documents/ostechnix
```


You can even search multiple directories and recurse into only some of them. The **-R** option applies recursion only to the directories given after it:

```
$ fdupes ~/Downloads -R ~/Documents/ostechnix
```

The above command searches for duplicates in the “~/Downloads” directory and in the “~/Documents/ostechnix” directory and its sub-directories.


Sometimes, you might want to know the size of the duplicates in a directory. If so, use the **-S** option as shown below.

```
$ fdupes -S ~/Downloads
403635 bytes each:
/home/sk/Downloads/Hyperledger.pdf
/home/sk/Downloads/Hyperledger(1).pdf

```

Similarly, to view the size of the duplicates in parent and child directories, use the **-Sr** option.

We can exclude empty and hidden files from consideration using **-n** and **-A** respectively.

```
$ fdupes -n ~/Downloads
$ fdupes -A ~/Downloads
```

The first command excludes zero-length files from consideration and the second excludes hidden files while searching for duplicates in the specified directory.

To summarize duplicate file information, use the **-m** option.

```
$ fdupes -m ~/Downloads
1 duplicate files (in 1 sets), occupying 403.6 kilobytes
```


To delete all duplicates, use the **-d** option.

```
$ fdupes -d ~/Downloads
```

Sample output:

```
[1] /home/sk/Downloads/Hyperledger Fabric Installation.pdf
[2] /home/sk/Downloads/Hyperledger Fabric Installation(1).pdf

Set 1 of 1, preserve files [1 - 2, all]:
```

This command prompts you for the files to preserve and deletes all other duplicates. Just enter a number to preserve the corresponding file and delete the remaining files. Pay close attention while using this option: you might delete original files if you’re not careful.

If you want to preserve the first file in each set of duplicates and delete the others without being prompted each time, use the **-dN** option (not recommended).

```
$ fdupes -dN ~/Downloads
```
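
Since deleting without a prompt is risky, one cautious approach is to preview which files would go if the first file of each set were kept. The sketch below is a plain text filter, not an Fdupes feature; the function name `all_but_first_in_each_set` is hypothetical. It assumes the plain listing format shown earlier (one path per line, sets separated by a blank line), so it does not apply to **-S** or **-m** output.

```shell
# Read an fdupes-style listing on standard input and print every path except
# the first one in each set. Nothing is deleted.
all_but_first_in_each_set() {
    awk '
        /^$/        { first_seen = 0; next }   # a blank line ends the current set
        !first_seen { first_seen = 1; next }   # keep the first file of the set
        { print }                              # the rest are deletion candidates
    '
}
```

A typical use would be `fdupes -r ~/Downloads | all_but_first_in_each_set`, which only prints the candidates so you can review the list before running any deletion.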


To delete duplicates as they are encountered, use the **-I** flag.

```
$ fdupes -I ~/Downloads
```

For more details about Fdupes, view the help section and man pages.

```
$ fdupes --help
$ man fdupes
```


##### 3. FSlint

**FSlint** is yet another duplicate file finder that I use from time to time to get rid of unnecessary duplicate files and free up disk space on my Linux system. Unlike the other two utilities, FSlint has both GUI and CLI modes, so it is a more user-friendly tool for newbies. FSlint finds not just duplicates but also bad symlinks, bad names, temp files, bad IDs, empty directories, non-stripped binaries, and so on.


**Installing FSlint**

FSlint is available in the [**AUR**][5], so you can install it using any AUR helper.

```
$ yay -S fslint
```

On Debian, Ubuntu, Linux Mint:

```
$ sudo apt-get install fslint
```

On Fedora:

```
$ sudo dnf install fslint
```

On RHEL, CentOS:

```
$ sudo yum install epel-release
$ sudo yum install fslint
```

|

||||

|

||||

Once it is installed, launch it from menu or application launcher.

|

||||

|

||||

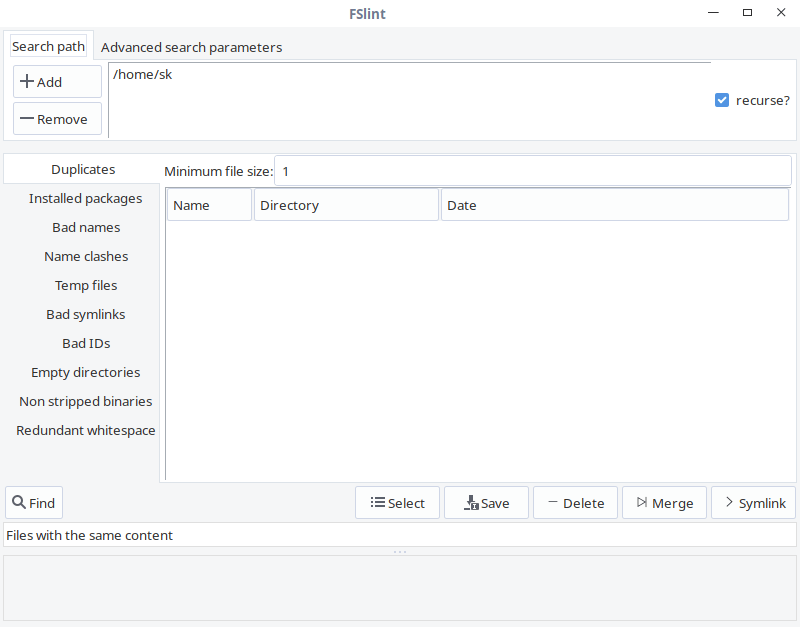

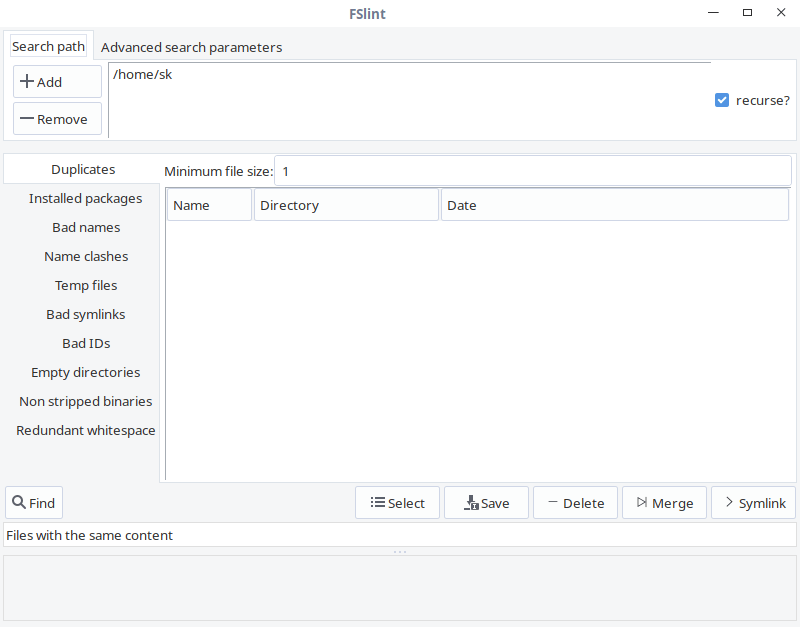

This is how FSlint GUI looks like.

|

||||

|

||||

|

||||

|

||||

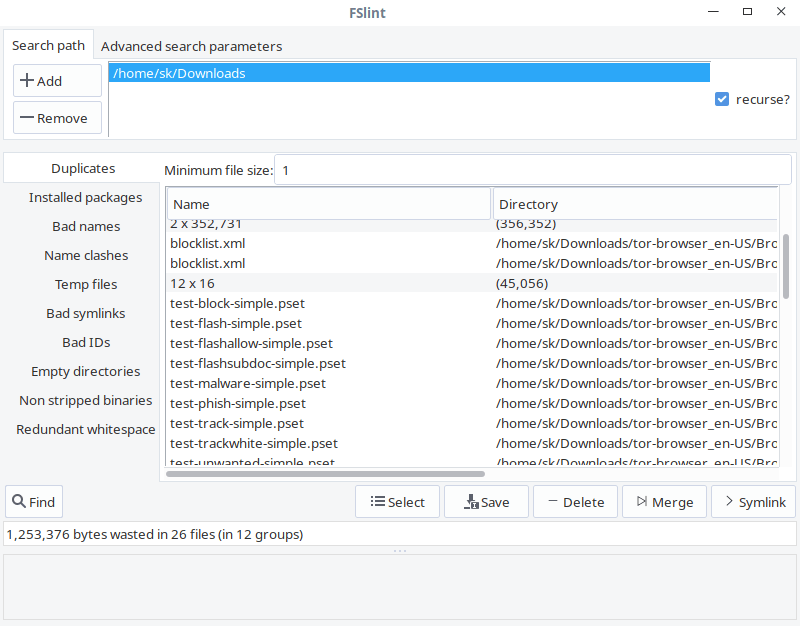

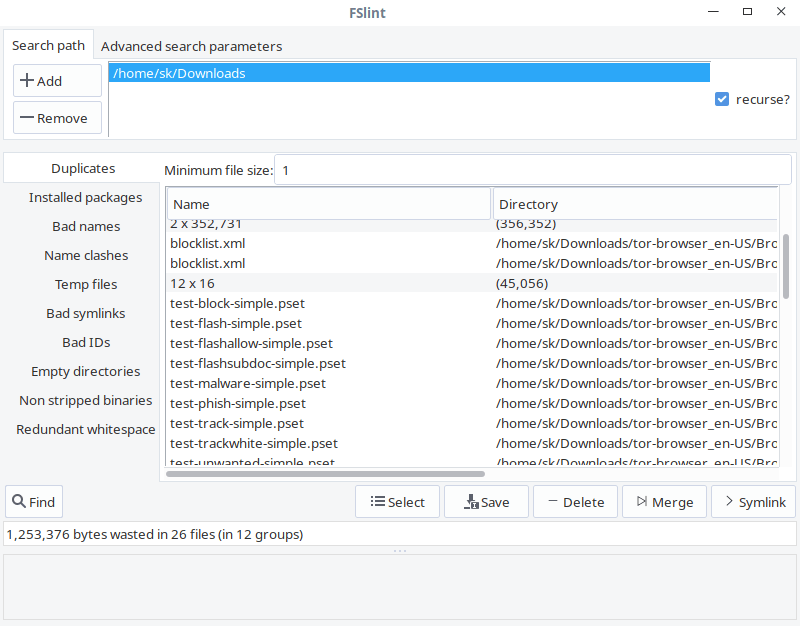

As you can see, the interface of FSlint is user-friendly and self-explanatory. In the **Search path** tab, add the path of the directory you want to scan and click **Find** button on the lower left corner to find the duplicates. Check the recurse option to recursively search for duplicates in directories and sub-directories. The FSlint will quickly scan the given directory and list out them.

|

||||

|

||||

|

||||

|

||||

From the list, choose the duplicates you want to clean and select any one of them given actions like Save, Delete, Merge and Symlink.

|

||||

|

||||

In the **Advanced search parameters** tab, you can specify the paths to exclude while searching for duplicates.


**FSlint command line options**

FSlint provides the following collection of CLI utilities to find duplicates and other lint in your filesystem:

* **findup** — find DUPlicate files
* **findnl** — find Name Lint (problems with filenames)
* **findu8** — find filenames with invalid utf8 encoding
* **findbl** — find Bad Links (various problems with symlinks)
* **findsn** — find Same Name (problems with clashing names)
* **finded** — find Empty Directories
* **findid** — find files with dead user IDs
* **findns** — find Non Stripped executables
* **findrs** — find Redundant Whitespace in files
* **findtf** — find Temporary Files
* **findul** — find possibly Unused Libraries
* **zipdir** — Reclaim wasted space in ext2 directory entries

All of these utilities are available under the **/usr/share/fslint/fslint** directory.

For example, to find duplicates in a given directory, do:

```
$ /usr/share/fslint/fslint/findup ~/Downloads/
```

Similarly, to find empty directories, the command would be:

```
$ /usr/share/fslint/fslint/finded ~/Downloads/
```
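
Package layouts can differ between distributions, so it may be worth checking which of the helper scripts listed above are actually installed. The sketch below is not part of FSlint; the function name `list_fslint_tools` is made up, and the default directory is simply the path used in this guide.

```shell
# Print the full path of each FSlint helper script that exists and is
# executable in the given directory (default: the path used in this guide).
list_fslint_tools() {
    local dir=${1:-/usr/share/fslint/fslint} tool
    for tool in findup findnl findu8 findbl findsn finded findid findns findrs findtf findul zipdir; do
        [ -x "$dir/$tool" ] && printf '%s\n' "$dir/$tool"
    done
    return 0
}
```

If the scripts are where you expect them, adding that directory to your `PATH` (for example `PATH="$PATH:/usr/share/fslint/fslint"`) lets you run `findup` and the others without typing the full path each time.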


To get more details on each utility, for example **findup**, run:

```
$ /usr/share/fslint/fslint/findup --help
```

For more details about FSlint, refer to the help section and man pages.

```
$ /usr/share/fslint/fslint/fslint --help
$ man fslint
```


##### Conclusion

You now know about three tools to find and delete unwanted duplicate files in Linux. Among these three tools, I often use Rdfind. That doesn’t mean the other two utilities are not efficient; I am just happy with Rdfind so far. Well, it’s your turn. Which is your favorite tool, and why? Let us know in the comment section below.

And that’s all for now. Hope this was useful. More good stuff to come. Stay tuned!

Cheers!


--------------------------------------------------------------------------------

via: https://www.ostechnix.com/how-to-find-and-delete-duplicate-files-in-linux/

Author: [SK][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.ostechnix.com/author/sk/
[1]: https://www.ostechnix.com/prevent-files-folders-accidental-deletion-modification-linux/
[2]: https://www.ostechnix.com/explaining-soft-link-and-hard-link-in-linux-with-examples/
[3]: https://aur.archlinux.org/packages/rdfind/
[4]: https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/
[5]: https://aur.archlinux.org/packages/fslint/


@ -0,0 +1,439 @@

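
How To Find And Delete Duplicate Files In Linux
======

Before editing or modifying configuration files or old files, I usually back them up somewhere on my hard disk, so that if I accidentally break them I can restore them from the backup. The problem is that if I forget to clean up the backups, after a while my disk fills up with a large number of duplicate files. I am either too lazy to clean out the old files or worried that I might delete something important. If you are like me and have many versions of the same files scattered across different backup directories on a Unix-like operating system, you can use the tools below to find and delete the duplicates.

**A word of caution:**

Please be careful when deleting duplicate files. If you are careless, you may end up with [**accidental data loss**][1]. I advise you to pay extra attention while using these tools.

### Find And Delete Duplicate Files In Linux

For the purpose of this guide, I will discuss the following three tools:

1. Rdfind
2. Fdupes
3. FSlint

These three tools are free, open source, and run on most Unix-like systems.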


##### 1. Rdfind

**Rdfind** stands for redundant data find. It is a free, open source tool that finds duplicate files by walking directories and sub-directories. It compares files by content rather than by name. Rdfind uses a **ranking** algorithm to tell original files from duplicates. If you have two or more identical files, Rdfind works out which one is the original and treats the rest as duplicates. Once it finds duplicates, it reports them to you. You can then decide whether to delete them or replace them with [**hard links** or **symbolic (soft) links**][2].

**Installing Rdfind**

Rdfind is available in the [**AUR**][3], so on Arch-based systems you can install it with any AUR helper such as [**Yay**][4], as shown below.

```
$ yay -S rdfind
```

On Debian, Ubuntu, Linux Mint:

```
$ sudo apt-get install rdfind
```

On Fedora:

```
$ sudo dnf install rdfind
```

On RHEL, CentOS:

```
$ sudo yum install epel-release
$ sudo yum install rdfind
```


**Usage**

Once it is installed, run the rdfind command with a directory path to scan for duplicate files.

```
$ rdfind ~/Downloads
```

As the screenshot above shows, the rdfind command scans the ~/Downloads directory and stores the results in a file named **results.txt** in the current working directory. You can see the names of the possible duplicate files in results.txt.

```
$ cat results.txt
# Automatically generated
# duptype id depth size device inode priority name
DUPTYPE_FIRST_OCCURRENCE 1469 8 9 2050 15864884 1 /home/sk/Downloads/tor-browser_en-US/Browser/TorBrowser/Tor/PluggableTransports/fte/tests/dfas/test5.regex
DUPTYPE_WITHIN_SAME_TREE -1469 8 9 2050 15864886 1 /home/sk/Downloads/tor-browser_en-US/Browser/TorBrowser/Tor/PluggableTransports/fte/tests/dfas/test6.regex
[...]
DUPTYPE_FIRST_OCCURRENCE 13 0 403635 2050 15740257 1 /home/sk/Downloads/Hyperledger(1).pdf
DUPTYPE_WITHIN_SAME_TREE -13 0 403635 2050 15741071 1 /home/sk/Downloads/Hyperledger.pdf
# end of file
```

By checking the results.txt file, you can easily find the duplicates. You can delete them manually if you wish.


You can also use the **-dryrun** option to find all duplicates without changing anything and print a summary in the terminal.

```
$ rdfind -dryrun true ~/Downloads
```

Once you have found the duplicates, you can replace them with hard links or symbolic links.

To replace all duplicates with hard links, run:

```
$ rdfind -makehardlinks true ~/Downloads
```

To replace all duplicates with symbolic (soft) links, run:

```
$ rdfind -makesymlinks true ~/Downloads
```

If a directory contains some empty files that you want to ignore, use the **-ignoreempty** option as shown below:

```
$ rdfind -ignoreempty true ~/Downloads
```

If you no longer want the old files, delete the duplicates instead of replacing them with hard or soft links.

To delete the duplicates, run:

```
$ rdfind -deleteduplicates true ~/Downloads
```

If you do not want to ignore empty files and want to delete them along with all duplicates, run:

```
$ rdfind -deleteduplicates true -ignoreempty false ~/Downloads
```

For more details, refer to the help section:

```
$ rdfind --help
```

And the manual page:

```
$ man rdfind
```


##### 2. Fdupes

**Fdupes** is another command line tool that identifies and removes duplicate files in specified directories and their sub-directories. It is a free, open source tool written in **C**. Fdupes identifies duplicates by comparing file sizes, partial MD5 signatures, and full MD5 signatures, and finally performs a byte-by-byte comparison for verification.

Like Rdfind, Fdupes comes with a handful of options for performing operations such as:

* Recursively search for duplicate files in directories and sub-directories
* Exclude empty files and hidden files from consideration
* Show the size of the duplicates
* Delete duplicates immediately as they are encountered
* Do not treat files with different owner/group or permission bits as duplicates
* And more

**Installing Fdupes**

Fdupes is available in the default repositories of most Linux distributions.

On Arch Linux and its variants such as Antergos and Manjaro Linux, install it using Pacman as shown below.

```
$ sudo pacman -S fdupes
```

On Debian, Ubuntu, Linux Mint:

```
$ sudo apt-get install fdupes
```

On Fedora:

```
$ sudo dnf install fdupes
```

On RHEL, CentOS:

```
$ sudo yum install epel-release
$ sudo yum install fdupes
```


**Usage**

Fdupes is very simple to use. Just run the following command to find duplicate files in a directory, for example **~/Downloads**.

```
$ fdupes ~/Downloads
```

Sample output from my system:

```
/home/sk/Downloads/Hyperledger.pdf
/home/sk/Downloads/Hyperledger(1).pdf

```

As you can see, there is a duplicate file in the **/home/sk/Downloads/** directory. This shows duplicates from the top-level directory only. To show duplicates in sub-directories as well, use the **-r** option as shown below.

```
$ fdupes -r ~/Downloads
```

Now you will see the duplicates in the **/home/sk/Downloads/** directory and its sub-directories.

Fdupes can also find duplicates in multiple directories at once.

```
$ fdupes ~/Downloads ~/Documents/ostechnix
```

You can even search multiple directories and recurse into only some of them. The **-R** option applies recursion only to the directories given after it:

```
$ fdupes ~/Downloads -R ~/Documents/ostechnix
```

The above command searches for duplicates in the “~/Downloads” directory and in the “~/Documents/ostechnix” directory and its sub-directories.

Sometimes you may want to know the size of the duplicates in a directory. In that case, use the **-S** option as shown below:

```
$ fdupes -S ~/Downloads
403635 bytes each:
/home/sk/Downloads/Hyperledger.pdf
/home/sk/Downloads/Hyperledger(1).pdf

```

Similarly, to show the size of the duplicates in parent and child directories, use the **-Sr** option.

We can exclude empty files and hidden files from consideration using the **-n** and **-A** options respectively.

```
$ fdupes -n ~/Downloads
$ fdupes -A ~/Downloads
```

When searching the specified directory for duplicates, the first command excludes zero-length files and the second excludes hidden files.

To summarize duplicate file information, use the **-m** option.

```
$ fdupes -m ~/Downloads
1 duplicate files (in 1 sets), occupying 403.6 kilobytes
```


To delete all duplicates, use the **-d** option.

```
$ fdupes -d ~/Downloads
```

Sample output:

```
[1] /home/sk/Downloads/Hyperledger Fabric Installation.pdf
[2] /home/sk/Downloads/Hyperledger Fabric Installation(1).pdf

Set 1 of 1, preserve files [1 - 2, all]:
```

This command prompts you for the files to preserve and deletes all other duplicates. Enter a number to preserve the corresponding file and delete the rest. Pay close attention when using this option: if you are careless, you may delete the original files.

If you want to preserve the first file in each set of duplicates and delete the others without being prompted, use the **-dN** option (not recommended).

```
$ fdupes -dN ~/Downloads
```

To delete duplicates as they are encountered, use the **-I** flag.

```
$ fdupes -I ~/Downloads
```

For more details about Fdupes, see the help section and the man page.

```
$ fdupes --help
$ man fdupes
```


##### 3. FSlint

**FSlint** is another duplicate file finder that I use from time to time to remove unneeded duplicate files and free up disk space on my Linux system. Unlike the other two tools, FSlint has both GUI and CLI modes, so it is friendlier for newcomers. FSlint finds not only duplicates but also bad symlinks, bad names, temp files, bad IDs, empty directories, non-stripped binaries, and so on.

**Installing FSlint**

FSlint is available in the [**AUR**][5], so you can install it using any AUR helper.

```
$ yay -S fslint
```

On Debian, Ubuntu, Linux Mint:

```
$ sudo apt-get install fslint
```

On Fedora:

```
$ sudo dnf install fslint
```

On RHEL, CentOS:

```
$ sudo yum install epel-release
$ sudo yum install fslint
```

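
Once it is installed, launch it from the menu or application launcher.

The FSlint GUI looks like this:

As you can see, the FSlint interface is user-friendly and self-explanatory. In the **Search path** tab, add the path of the directory you want to scan and click the **Find** button in the lower left corner to find the duplicates. Check the recurse option to search for duplicates recursively in directories and sub-directories. FSlint will quickly scan the given directory and list the duplicates.

From the list, choose the duplicates you want to clean up and apply one of the available actions, such as Save, Delete, Merge and Symlink.

In the **Advanced search parameters** tab, you can specify paths to exclude while searching for duplicates.

**FSlint command line options**

FSlint provides the following collection of CLI utilities to find duplicates and other lint in your filesystem:

* **findup** — find duplicate files
* **findnl** — find name lint (problems with filenames)
* **findu8** — find filenames with invalid utf8 encoding
* **findbl** — find bad links (problems with symlinks)
* **findsn** — find files with the same name (possibly clashing names)
* **finded** — find empty directories
* **findid** — find files with dead user IDs
* **findns** — find non-stripped executables
* **findrs** — find redundant whitespace in files
* **findtf** — find temporary files
* **findul** — find possibly unused libraries
* **zipdir** — reclaim wasted space in ext2 directory entries

All of these utilities are located under the **/usr/share/fslint/fslint** directory.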


For example, to find duplicates in a given directory, run:

```
$ /usr/share/fslint/fslint/findup ~/Downloads/
```

Similarly, the command to find empty directories is:

```
$ /usr/share/fslint/fslint/finded ~/Downloads/
```

To get more details on each utility, for example **findup**, run:

```
$ /usr/share/fslint/fslint/findup --help
```

For more details about FSlint, refer to the help section and the man page.

```
$ /usr/share/fslint/fslint/fslint --help
$ man fslint
```


##### Conclusion

You now know three tools for finding and deleting unwanted duplicate files in Linux. Of the three, I use Rdfind most often. That does not mean the other two are inefficient; I am simply happy with Rdfind so far. Now it's your turn. Which tool is your favorite, and why? Let us know in the comment section below.

That's all for now. I hope this article was useful. More good stuff is on the way, so stay tuned.

Thanks!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/how-to-find-and-delete-duplicate-files-in-linux/

Author: [SK][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [pygmalion666](https://github.com/pygmalion666)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.ostechnix.com/author/sk/
[1]: https://www.ostechnix.com/prevent-files-folders-accidental-deletion-modification-linux/
[2]: https://www.ostechnix.com/explaining-soft-link-and-hard-link-in-linux-with-examples/
[3]: https://aur.archlinux.org/packages/rdfind/
[4]: https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/
[5]: https://aur.archlinux.org/packages/fslint/
