mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

52717946a7

38

README.md

38

README.md

@ -30,6 +30,26 @@ LCTT的组成

|

||||

|

||||

请阅读[WIKI](https://github.com/LCTT/TranslateProject/wiki)。

|

||||

|

||||

历史

|

||||

-------------------------------

|

||||

|

||||

* 2013/09/10 倡议并得到了大家的积极响应,成立翻译组。

|

||||

* 2013/09/11 采用github进行翻译协作,并开始进行选题翻译。

|

||||

* 2013/09/16 公开发布了翻译组成立消息后,又有新的成员申请加入了。并从此建立见习成员制度。

|

||||

* 2013/09/24 鉴于大家使用Github的水平不一,容易导致主仓库的一些错误,因此换成了常规的fork+PR的模式来进行翻译流程。

|

||||

* 2013/10/11 根据对LCTT的贡献,划分了Core Translators组,最先的加入成员是vito-L和tinyeyeser。

|

||||

* 2013/10/12 取消对LINUX.CN注册用户的依赖,在QQ群内、文章内都采用github的注册ID。

|

||||

* 2013/10/18 正式启动man翻译计划。

|

||||

* 2013/11/10 举行第一次北京线下聚会。

|

||||

* 2014/01/02 增加了Core Translators 成员: geekpi。

|

||||

* 2014/05/04 更换了新的QQ群:198889102

|

||||

* 2014/05/16 增加了Core Translators 成员: will.qian、vizv。

|

||||

* 2014/06/18 由于GOLinux令人惊叹的翻译速度和不错的翻译质量,升级为Core Translators成员。

|

||||

* 2014/09/09 LCTT 一周年,做一年[总结](http://linux.cn/article-3784-1.html)。并将曾任 CORE 的成员分组为 Senior,以表彰他们的贡献。

|

||||

* 2014/10/08 提升bazz2为Core Translators成员。

|

||||

* 2014/11/04 提升zpl1025为Core Translators成员。

|

||||

* 2014/12/25 提升runningwater为Core Translators成员。

|

||||

|

||||

活跃成员

|

||||

-------------------------------

|

||||

|

||||

@ -119,21 +139,3 @@ LCTT的组成

|

||||

|

||||

谢谢大家的支持!

|

||||

|

||||

历史

|

||||

-------------------------------

|

||||

|

||||

* 2013/09/10 倡议并得到了大家的积极响应,成立翻译组。

|

||||

* 2013/09/11 采用github进行翻译协作,并开始进行选题翻译。

|

||||

* 2013/09/16 公开发布了翻译组成立消息后,又有新的成员申请加入了。并从此建立见习成员制度。

|

||||

* 2013/09/24 鉴于大家使用Github的水平不一,容易导致主仓库的一些错误,因此换成了常规的fork+PR的模式来进行翻译流程。

|

||||

* 2013/10/11 根据对LCTT的贡献,划分了Core Translators组,最先的加入成员是vito-L和tinyeyeser。

|

||||

* 2013/10/12 取消对LINUX.CN注册用户的依赖,在QQ群内、文章内都采用github的注册ID。

|

||||

* 2013/10/18 正式启动man翻译计划。

|

||||

* 2013/11/10 举行第一次北京线下聚会。

|

||||

* 2014/01/02 增加了Core Translators 成员: geekpi。

|

||||

* 2014/05/04 更换了新的QQ群:198889102

|

||||

* 2014/05/16 增加了Core Translators 成员: will.qian、vizv。

|

||||

* 2014/06/18 由于GOLinux令人惊叹的翻译速度和不错的翻译质量,升级为Core Translators成员。

|

||||

* 2014/09/09 LCTT 一周年,做一年[总结](http://linux.cn/article-3784-1.html)。并将曾任 CORE 的成员分组为 Senior,以表彰他们的贡献。

|

||||

* 2014/10/08 提升bazz2为Core Translators成员。

|

||||

* 2014/11/04 提升zpl1025为Core Translators成员。

|

||||

@ -1,20 +1,19 @@

|

||||

为 Linux 用户准备的 10 个开源克隆软件

|

||||

给 Linux 用户的 10 个开源克隆软件

|

||||

================================================================================

|

||||

> 这些克隆软件会读取整个磁盘的数据,将它们转换成一个 .img 文件,之后你可以将它复制到其他硬盘上。

|

||||

|

||||

|

||||

|

||||

磁盘克隆意味着从一个硬盘复制数据到另一个硬盘上,而且你可以通过简单的复制粘贴来做到。但是你却不能复制隐藏文件和文件夹,以及正在使用中的文件。这便是一个克隆软件可以通过保存一份文件和文件夹的镜像来帮助你的地方。克隆软件会读取整个磁盘的数据,将它们转换成一个 .img 文件,之后你可以将它复制到其他硬盘上。现在我们将要向你介绍最优秀的 10 个开源的克隆软件:

|

||||

磁盘克隆的意思是说从一个硬盘复制数据到另一个硬盘上。虽然你可以通过简单的复制粘贴来做到这一点,但是你却不能复制隐藏文件和文件夹,以及正在使用中的文件。这便是一个克隆软件可以通过保存一份文件和文件夹的镜像来做到的。克隆软件会读取整个磁盘的数据,将它们转换成一个 .img 文件,之后你可以将它复制到其他硬盘上。现在我们将要向你介绍最优秀的 10 个开源的克隆软件:

|

||||

|

||||

### 1. [Clonezilla][1]:###

|

||||

|

||||

Clonezilla 是一个基于 Ubuntu 和 Debian 的 Live CD。它可以像 Windows 里的诺顿 Ghost 一样克隆你的磁盘数据和做备份,不过它更有效率。Clonezilla 支持包括 ext2、ext3、ext4、btrfs 和 xfs 在内的很多文件系统。它还支持 BIOS、UEFI、MBR 和 GPT 分区。

|

||||

Clonezilla 是一个基于 Ubuntu 和 Debian 的 Live CD。它可以像 Windows 里的 Ghost 一样克隆你的磁盘数据和做备份,不过它更有效率。Clonezilla 支持包括 ext2、ext3、ext4、btrfs 和 xfs 在内的很多文件系统。它还支持 BIOS、UEFI、MBR 和 GPT 分区。

|

||||

|

||||

|

||||

|

||||

### 2. [Redo Backup][2]:###

|

||||

### 2. [Redo Backup][2]:###

|

||||

|

||||

Redo Backup 是另一个用来方便地克隆磁盘的 Live CD。它是自由和开源的软件,使用 GPL 3 许可协议授权。它的主要功能和特点包括从 CD 引导的简单易用的 GUI、无需安装,可以恢复 Linux 和 Windows 等系统、无需登陆访问文件,以及已删除的文件等。

|

||||

Redo Backup 是另一个用来方便地克隆磁盘的 Live CD。它是自由和开源的软件,使用 GPL 3 许可协议授权。它的主要功能和特点包括从 CD 引导的简单易用的 GUI、无需安装,可以恢复 Linux 和 Windows 等系统,无需登陆访问文件,以及已删除的文件等。

|

||||

|

||||

|

||||

|

||||

@ -26,7 +25,7 @@ Mondo 和其他的软件不大一样,它并不将你的磁盘数据转换为

|

||||

|

||||

### 4. [Partimage][4]:###

|

||||

|

||||

这是一个开源的备份软件,默认情况下在 Linux 系统里工作。在大多数发行版中,你都可以从发行版自带的软件包管理工具中安装。如果你没有 Linux 系统,你也可以使用“SystemRescueCd”。它是一个默认包括 Partimage 的 Live CD,可以为你完成备份工作。Partimage 在克隆硬盘方面的性能非常出色。

|

||||

这是一个开源的备份软件,默认工作在 Linux 系统下。在大多数发行版中,你都可以从发行版自带的软件包管理工具中安装。如果你没有 Linux 系统,你也可以使用“SystemRescueCd”。它是一个默认包含了 Partimage 的 Live CD,可以为你完成备份工作。Partimage 在克隆硬盘方面的性能非常出色。

|

||||

|

||||

|

||||

|

||||

@ -71,7 +70,7 @@ via: http://www.efytimes.com/e1/fullnews.asp?edid=148039

|

||||

|

||||

作者:Sanchari Banerjee

|

||||

译者:[felixonmars](https://github.com/felixonmars)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,72 +1,79 @@

|

||||

什么是有用的bash别名和函数

|

||||

一大波有用的 bash 别名和函数

|

||||

================================================================================

|

||||

作为一个命令行探索者,你或许发现你自己一遍又一遍. 如果你总是用ssh进入到同一台电脑, 同时你总是管道关联相同的命令,或者如果你时常用一些参数运行一个程序,你应该想要拯救你人生中的这个珍贵的助手。你一遍又一遍花费着重复相同的动作.

|

||||

解决方案是使用一个别名.正如你可能知道的,别名用一种方式告诉你的shell记住详细的命令并且给它一个新的名字:别名,的方式。不管怎么样,别名是即时有效的,同样地它只是shell命令的快捷方式,没有能力传递或者控制参数.所以补充时,bash也允许你创建你自己的函数,那样可能更漫长和复杂,并且也允许任意数量的参数.

|

||||

当然,当你有一个好的食谱-像汤,你要分享它.因此这里有一个列表,用一些最有用bash别名和函数的.注意"最有用的"是随意的定义,当然别名的有益依赖在于你每天shell的使用性

|

||||

在你用别名开始试验之前, 这里有一个便于使用的小技巧:如果你给予别名相同的名字作为常规命令,你可以选择开始原始的命令并且用技巧忽略别名

|

||||

|

||||

作为一个命令行探索者,你或许发现你自己一遍又一遍重复同样的命令。如果你总是用ssh进入到同一台电脑,如果你总是将一连串命令连接起来,如果你总是用同样的参数运行一个程序,你也许希望在这种不断的重复中为你的生命节约下几秒钟。

|

||||

|

||||

解决方案是使用一个别名(alias)。正如你可能知道的,别名用一种让你的shell记住一个特定的命令并且给它一个新的名字的方式。不管怎么样,别名有一些限制,它只是shell命令的快捷方式,不能传递或者控制其中的参数。所以作为补充,bash 也允许你创建你自己的函数,这可能更长一些和复杂一点,它允许任意数量的参数。

|

||||

|

||||

当然,当你有美食时,比如某种汤,你要分享它给大家。我这里有一个列表,列出了一些最有用bash别名和函数的。注意“最有用的”只是个说法,别名的是否有用要看你是否每天都需要在 shell 里面用它。

|

||||

|

||||

在你开始你的别名体验之旅前,这里有一个便于使用的小技巧:如果你的别名和原本的命令名字相同,你可以用如下技巧来访问原本的命令(LCTT 译注:你也可以直接原本命令的完整路径来访问它。)

|

||||

|

||||

\command

|

||||

例如,第一个别名在下面替换ls命令。如果你想使用常规的ls命令而不是别名,通过调用它:

|

||||

|

||||

例如,如果有一个替换了ls命令的别名 ls。如果你想使用原本的ls命令而不是别名,通过调用它:

|

||||

|

||||

\ls

|

||||

|

||||

### Productivity ###

|

||||

### 提升生产力 ###

|

||||

|

||||

这些别名真的很简单并且真的很短,但他们大多数主要是以主题为依据,那样无论何时倘若你第二次保存一小部分,它允许在多年以后再结束.也许不会.

|

||||

这些别名真的很简单并且真的很短,但他们大多数是为了给你的生命节省几秒钟,最终也许为你这一辈子节省出来几年,也许呢。

|

||||

|

||||

alias ls="ls --color=auto"

|

||||

|

||||

简单但非常重要.使ls命令带着彩色输出

|

||||

简单但非常重要。使ls命令带着彩色输出。

|

||||

|

||||

alias ll = "ls --color -al"

|

||||

alias ll="ls --color -al"

|

||||

|

||||

从一个目录采用列表格式快速显示全部文件.

|

||||

以彩色的列表方式列出目录里面的全部文件。

|

||||

|

||||

alias grep='grep --color=auto'

|

||||

|

||||

相同地,把一些颜色在grep里输出

|

||||

类似,只是在grep里输出带上颜色。

|

||||

|

||||

mcd() { mkdir -p "$1"; cd "$1";}

|

||||

|

||||

我的最爱之一. 制造一个目录采用一个命令mcd[名字]和cd命令进入到目录里面

|

||||

我的最爱之一。创建一个目录并进入该目录里: mcd [目录名]。

|

||||

|

||||

cls() { cd "$1"; ls;}

|

||||

|

||||

类似于前面的功能,cd命令进入一个目录别且列出它的的内容:cls[名字]

|

||||

类似上一个函数,进入一个目录并列出它的的内容:cls[目录名]。

|

||||

|

||||

backup() { cp "$1"{,.bak};}

|

||||

|

||||

简单的方法,使文件有一个备份: backup [文件]将会在相同的目录创建[文件].bak.

|

||||

简单的给文件创建一个备份: backup [文件] 将会在同一个目录下创建 [文件].bak。

|

||||

|

||||

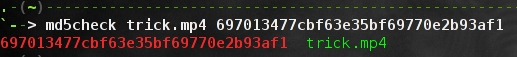

md5check() { md5sum "$1" | grep "$2";}

|

||||

|

||||

因为我讨厌通过手工比较文件的md5算法,这个函数计算它并且计算它使用grep:md5check[文件][钥匙]

|

||||

因为我讨厌通过手工比较文件的md5校验值,这个函数会计算它并进行比较:md5check[文件][校验值]。

|

||||

|

||||

|

||||

|

||||

alias makescript="fc -rnl | head -1 >"

|

||||

|

||||

很容易地制造上个命令的脚本输出,你运行makescript[脚本名字.sh]

|

||||

很容易用你上一个运行的命令创建一个脚本:makescript [脚本名字.sh]

|

||||

|

||||

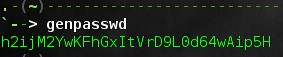

alias genpasswd="strings /dev/urandom | grep -o '[[:alnum:]]' | head -n 30 | tr -d '\n'; echo"

|

||||

|

||||

只是瞬间产生一个强壮的密码

|

||||

只是瞬间产生一个强壮的密码。

|

||||

|

||||

|

||||

|

||||

alias c="clear"

|

||||

|

||||

不能较为简单的清除你终端的屏幕

|

||||

清除你终端屏幕不能更简单了吧?

|

||||

|

||||

alias histg="history | grep"

|

||||

|

||||

通过你的命令历史:histg[关键字]快速地搜索

|

||||

快速搜索你的命令输入历史:histg [关键字]

|

||||

|

||||

alias ..='cd ..'

|

||||

|

||||

不需要写cd命令到上层目录

|

||||

回到上层目录还需要输入 cd 吗?

|

||||

|

||||

alias ...='cd ../..'

|

||||

|

||||

类似地,去到上两个目录

|

||||

自然,去到上两层目录。

|

||||

|

||||

extract() {

|

||||

if [ -f $1 ] ; then

|

||||

@ -89,98 +96,93 @@

|

||||

fi

|

||||

}

|

||||

|

||||

很长,但是也是最有用的。解压任何的文档类型:extract:[文档文件]

|

||||

|

||||

很长,但是也是最有用的。解压任何的文档类型:extract: [压缩文件]

|

||||

|

||||

### 系统信息 ###

|

||||

|

||||

想尽快地知道一切关于你的系统?

|

||||

想尽快地知道关于你的系统一切信息?

|

||||

|

||||

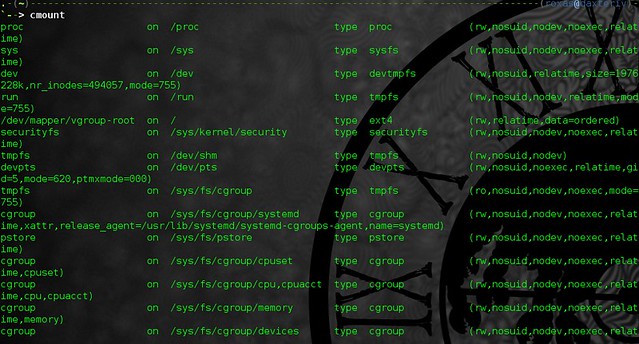

alias cmount="mount | column -t"

|

||||

|

||||

mount到列队中的格式输出

|

||||

按列格式化输出mount信息。

|

||||

|

||||

|

||||

|

||||

alias tree="ls -R | grep ":$" | sed -e 's/:$//' -e 's/[^-][^\/]*\//--/g' -e 's/^/ /' -e 's/-/|/'"

|

||||

|

||||

递归树格式显示目录结构.

|

||||

以树形结构递归地显示目录结构。

|

||||

|

||||

sbs() { du -b --max-depth 1 | sort -nr | perl -pe 's{([0-9]+)}{sprintf "%.1f%s", $1>=2**30? ($1/2**30, "G"): $1>=2**20? ($1/2**20, "M"): $1>=2**10? ($1/2**10, "K"): ($1, "")}e';}

|

||||

|

||||

在当前目录里“按大小排序”显示列表的文件,排序按它们在磁盘上的大小

|

||||

安装文件在磁盘存储的大小排序,显示当前目录的文件列表。

|

||||

|

||||

alias intercept="sudo strace -ff -e trace=write -e write=1,2 -p"

|

||||

|

||||

intercept[一些PID]阻止进程的标准输入输出文件和标准错误文件。注意你需要看着安装完成

|

||||

接管某个进程的标准输出和标准错误。注意你需要安装了 strace。

|

||||

|

||||

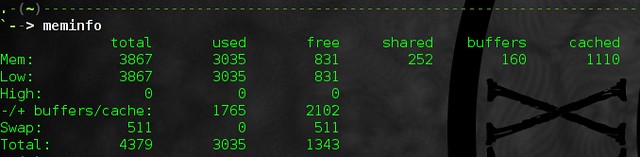

alias meminfo='free -m -l -t'

|

||||

|

||||

查看你还有剩下多少内存

|

||||

查看你还有剩下多少内存。

|

||||

|

||||

|

||||

|

||||

alias ps? = "ps aux | grep"

|

||||

|

||||

ps?[名字]很容易地发现,这个任何进程的

|

||||

可以很容易地找到某个进程的PID:ps? [名字]。

|

||||

|

||||

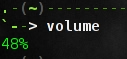

alias volume="amixer get Master | sed '1,4 d' | cut -d [ -f 2 | cut -d ] -f 1"

|

||||

|

||||

显示现在声音的音量.

|

||||

显示当前音量设置。

|

||||

|

||||

|

||||

|

||||

### 网络 ###

|

||||

|

||||

对于所有涉及互联网和你本地网络的命令,也有奇特的别名给它们

|

||||

|

||||

对于所有用在互联网和本地网络的命令,也有一些神奇的别名给它们。

|

||||

|

||||

alias websiteget="wget --random-wait -r -p -e robots=off -U mozilla"

|

||||

|

||||

websiteget[指定的位置]下载完整的网站地址

|

||||

下载整个网站:websiteget [URL]。

|

||||

|

||||

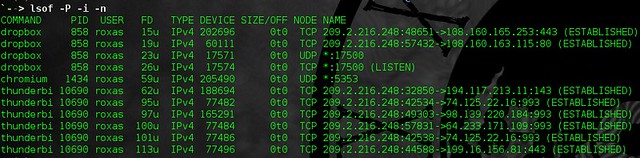

alias listen="lsof -P -i -n"

|

||||

|

||||

显示出哪个应用程序连接到网络

|

||||

显示出哪个应用程序连接到网络。

|

||||

|

||||

|

||||

|

||||

alias port='netstat -tulanp'

|

||||

|

||||

显示出活动的端口

|

||||

显示出活动的端口。

|

||||

|

||||

gmail() { curl -u "$1" --silent "https://mail.google.com/mail/feed/atom" | sed -e 's/<\/fullcount.*/\n/' | sed -e 's/.*fullcount>//'}

|

||||

|

||||

gmail:[用户名]大概的显示你的谷歌邮件里未读邮件的数量

|

||||

|

||||

大概的显示你的谷歌邮件里未读邮件的数量:gmail [用户名]

|

||||

|

||||

alias ipinfo="curl ifconfig.me && curl ifconfig.me/host"

|

||||

|

||||

获得你的公共IP地址和主机

|

||||

获得你的公网IP地址和主机名。

|

||||

|

||||

getlocation() { lynx -dump http://www.ip-adress.com/ip_tracer/?QRY=$1|grep address|egrep 'city|state|country'|awk '{print $3,$4,$5,$6,$7,$8}'|sed 's\ip address flag \\'|sed 's\My\\';}

|

||||

|

||||

以你的IP地址为基础返回你现在的位置

|

||||

返回你的当前IP地址的地理位置。

|

||||

|

||||

### 没用的 ###

|

||||

|

||||

所以呢,如果一些别名是不是全部具有使用价值?它们可能仍然有趣

|

||||

### 也许无用 ###

|

||||

|

||||

所以呢,如果一些别名并不是全都具有使用价值?它们可能仍然有趣。

|

||||

|

||||

kernelgraph() { lsmod | perl -e 'print "digraph \"lsmod\" {";<>;while(<>){@_=split/\s+/; print "\"$_[0]\" -> \"$_\"\n" for split/,/,$_[3]}print "}"' | dot -Tpng | display -;}

|

||||

|

||||

要绘制内核模块依赖曲线图。需要镜像阅读器

|

||||

绘制内核模块依赖曲线图。需要可以查看图片。

|

||||

|

||||

alias busy="cat /dev/urandom | hexdump -C | grep "ca fe""

|

||||

alias busy="cat /dev/urandom | hexdump -C | grep 'ca fe'"

|

||||

|

||||

在非技术人员的眼里你看起来都在忙和构思

|

||||

在那些非技术人员的眼里你看起来是总是那么忙和神秘。

|

||||

|

||||

|

||||

|

||||

最后,这些别名和函数的很大一部分来自于我个人的.bashrc.这些令人敬畏的网站 [alias.sh][1]和[commandlinefu.com][2]我早已经展示在我的[best online tools for Linux][3].当然去检测它们的输出,让你拥有特有的秘诀。如果你真的同意,在注释里分享你的智慧,

|

||||

最后,这些别名和函数的很大一部分来自于我个人的.bashrc。而那些令人点赞的网站 [alias.sh][1]和[commandlinefu.com][2]我早已在我的帖子[best online tools for Linux][3] 里面介绍过。你可以去看看,如果你愿意,也可以分享下你的。也欢迎你在这里评论,分享一下你的智慧。

|

||||

|

||||

|

||||

做为奖励,这里有我提到的全部别名和函数的纯文本版本,随时可以复制粘贴到你的.bashrc.

|

||||

做为奖励,这里有我提到的全部别名和函数的纯文本版本,随时可以复制粘贴到你的.bashrc。(如果你已经一行一行的复制到这里了,哈哈,你发现你又浪费了生命的几秒钟~)

|

||||

|

||||

#Productivity

|

||||

alias ls="ls --color=auto"

|

||||

@ -243,8 +245,8 @@ gmail:[用户名]大概的显示你的谷歌邮件里未读邮件的数量

|

||||

via: http://xmodulo.com/useful-bash-aliases-functions.html

|

||||

|

||||

作者:[Adrien Brochard][a]

|

||||

译者:[译者luoyutiantang](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[luoyutiantang](https://github.com/luoyutiantang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

Linux上使用备份管理器进行系统备份

|

||||

Linux 上使用 backup-manager 进行系统备份

|

||||

================================================================================

|

||||

无论简单与否,我们都有机会去了解这么一件事,那就是备份的重要性从来都不可以被低估。考虑到备份的方法真的多如牛毛,你可能想要知道怎样来有效地为你的系统选择正确的工具和和合适的策略。

|

||||

|

||||

在本文中,我将为你介绍[备份管理器][1],一个简单易用的命令行备份工具,在大多数的Linux发行版的标准软件库中都能见到它的身影。

|

||||

在本文中,我将为你介绍[backup-manager][1],一个简单易用的命令行备份工具,在大多数的Linux发行版的标准软件库中都能见到它的身影。

|

||||

|

||||

是什么让备份管理器在众多的备份工具或备份策略中脱颖而出呢?让我来简单介绍一些它的与众不同的特性吧:

|

||||

|

||||

@ -28,7 +28,7 @@ Linux上使用备份管理器进行系统备份

|

||||

|

||||

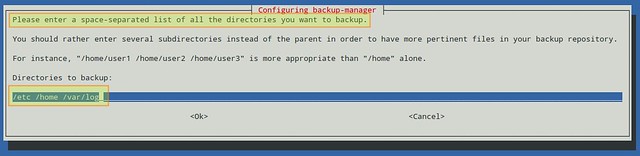

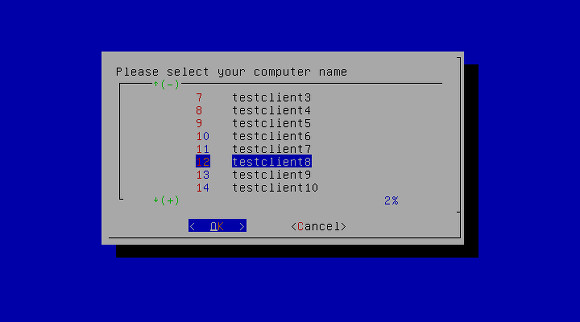

在下一步中,会询问你要备份的所有目录(用空格分隔)。建议,但不是严格要求,列出同一父目录中的几个子目录,而不要仅仅输入父目录。

|

||||

|

||||

你可以跳过该步骤并在以后对配置文件中BM_TARBALL_DIRECTORIESb变量进行设置。否则的话,就请尽可能多地添加你想要的目录,然后选择OK:

|

||||

你可以跳过该步骤并在以后对配置文件中BM\_TARBALL\_DIRECTORIESb变量进行设置。否则的话,就请尽可能多地添加你想要的目录,然后选择OK:

|

||||

|

||||

|

||||

|

||||

@ -115,11 +115,11 @@ Linux上使用备份管理器进行系统备份

|

||||

|

||||

# backup-manager

|

||||

|

||||

BM_TARBALL_DIRECTORIES列出的目录将作为tarball备份到BM_REPOSITORY_ROOT目录,然后通过SSH传输到BM_UPLOAD_SSH_DESTINATION指定的主机dev1和dev3。

|

||||

BM\_TARBALL\_DIRECTORIES列出的目录将作为tarball备份到BM\_REPOSITORY\_ROOT目录,然后通过SSH传输到BM\_UPLOAD\_SSH_DESTINATION指定的主机dev1和dev3。

|

||||

|

||||

|

||||

|

||||

正如你在上面图片中看到的那样,备份管理器在运行的时候创建了一个名为/root/.back-manager_my.cnf的文件,MySQL密码通过BM_MYSQL_ ADMINPASS指定。那样,mysqldump可以验证到MySQL服务器,而不必在命令行以明文格式接受密码,那样会有安全风险。

|

||||

正如你在上面图片中看到的那样,备份管理器在运行的时候创建了一个名为/root/.back-manager\_my.cnf的文件,MySQL密码通过BM\_MYSQL\_ADMINPASS指定。那样,mysqldump可以验证到MySQL服务器,而不必在命令行以明文格式接受密码,那样会有安全风险。

|

||||

|

||||

### 通过cron运行备份管理器 ###

|

||||

|

||||

@ -145,7 +145,7 @@ via: http://xmodulo.com/linux-backup-manager.html

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -8,23 +8,23 @@ Linux能够提供消费者想要的东西吗?

|

||||

|

||||

Linux需要深深凝视自己的水晶球,仔细体会那场浏览器大战留下的尘埃,然后留意一下这点建议:

|

||||

|

||||

如果你不能提供他们想要的,他们就会离开。

|

||||

> 如果你不能提供他们想要的,他们就会离开。

|

||||

|

||||

而这种事与愿违的另一个例子是Windows 8。消费者不喜欢那套界面。而微软却坚持使用,因为这是把所有东西搬到Surface平板上所必须的。相同的情况也可能发生在Canonical和Ubuntu Unity身上 -- 尽管它们的目标并不是单一独特地针对平板电脑来设计(所以,整套界面在桌面系统上仍然很实用而且直观)。

|

||||

|

||||

一直以来,Linux开发者和设计者们看上去都按照他们自己的想法来做事情。他们过分在意“吃你自家的狗粮”这句话了。以至于他们忘记了一件非常重要的事情:

|

||||

|

||||

没有新用户,他们的“根基”也仅仅只属于他们自己。

|

||||

> 没有新用户,他们的“根基”也仅仅只属于他们自己。

|

||||

|

||||

换句话说,唱诗班不仅仅是被传道,他们也同时在宣传。让我给你看三个案例来完全掌握这一点。

|

||||

|

||||

- 多年以来,有在Linux系统中替代活动目录(Active Directory)的需求。我很想把这个名称换成LDAP,但是你真的用过LDAP吗?那就是个噩梦。开发者们也努力了想让LDAP能易用一点,但是没一个做到了。而让我很震惊的是这样一个从多用户环境下发展起来的平台居然没有一个能和AD正面较量的功能。这需要一组开发人员,从头开始建立一个AD的开源替代。这对那些寻求从微软产品迁移的中型企业来说是非常大的福利。但是在这个产品做好之前,他们还不能开始迁移。

|

||||

- 多年以来,一直有在Linux系统中替代活动目录(Active Directory)的需求。我很想把这个名称换成LDAP,但是你真的用过LDAP吗?那就是个噩梦。开发者们也努力了想让LDAP能易用一点,但是没一个做到了。而让我很震惊的是这样一个从多用户环境下发展起来的平台居然没有一个能和AD正面较量的功能。这需要一组开发人员,从头开始建立一个AD的开源替代。这对那些寻求从微软产品迁移的中型企业来说是非常大的福利。但是在这个产品做好之前,他们还不能开始迁移。

|

||||

- 另一个从微软激发的需求是Exchange/Outlook。是,我也知道许多人都开始用云。但是,事实上中等和大型规模生意仍然依赖于Exchange/Outlook组合,直到能有更好的产品出现。而这将非常有希望发生在开源社区。整个拼图的一小块已经摆好了(虽然还需要一些工作)- 群件客户端,Evolution。如果有人能够从Zimbra拉出一个分支,然后重新设计成可以配合Evolution(甚至Thunderbird)来提供服务实现Exchange的简单替代,那这个游戏就不是这么玩了,而消费者获得的利益将是巨大的。

|

||||

- 便宜,便宜,还是便宜。这是大多数人都得咽下去的苦药片 - 但是消费者(和生意)就是希望便宜。看看去年一年Chromebook的销量吧。现在,搜索一下Linux笔记本看能不能找到700美元以下的。而只用三分之一的价格,就可以买到一个让你够用的Chromebook(一个使用了Linux内核的平台)。但是因为Linux仍然是一个细分市场,很难降低成本。像红帽那种公司也许可以改变现状。他们也已经推出了服务器硬件。为什么不推出一些和Chromebook有类似定位但是却运行完整Linux环境的低价中档笔记本呢?(请看“[Cloudbook是Linux的未来吗?][1]”)其中的关键是这种设备要低成本并且符合普通消费者的要求。不要站在游戏玩家/开发者的角度去思考了,记住普通消费者真正的需求 - 一个网页浏览器,不会有更多了。这是Chromebook为什么可以这么轻松地成功。Google精确地知道消费者想要什么,然后推出相应的产品。而面对Linux,一些公司仍然认为他们吸引买家的唯一途径是高端昂贵的硬件。而有一点讽刺的是,口水战中最经常听到的却是Linux只能在更慢更旧的硬件上运行。

|

||||

|

||||

最后,Linux需要看一看乔布斯传(Book Of Jobs),搞清楚如何说服消费者们他们真正要的就是Linux。在生意上和在家里 -- 每个人都可以享受到Linux带来的好处。说真的,开源社区怎么可能做不到这点呢?Linux本身就已经带有很多漂亮的时髦术语标签:稳定性,可靠性,安全性,云,免费 -- 再加上Linux实际已经进入到绝大多数人手中了(只是他们自己还不清楚罢了)。现在是时候让他们知道这一点了。如果你是用Android或者Chromebooks,那么你就在用(某种形式上的)Linux。

|

||||

最后,Linux需要看一看乔布斯传(Book Of Jobs),搞清楚如何说服消费者们他们真正要的就是Linux。在公司里和在家里 -- 每个人都可以享受到Linux带来的好处。说真的,开源社区怎么可能做不到这点呢?Linux本身就已经带有很多漂亮的时髦术语标签:稳定性、可靠性、安全性、云、免费 -- 再加上Linux实际已经进入到绝大多数人手中了(只是他们自己还不清楚罢了)。现在是时候让他们知道这一点了。如果你是用Android或者Chromebooks,那么你就在用(某种形式上的)Linux。

|

||||

|

||||

搞清楚消费者需求一直以来都是Linux社区的绊脚石。而且我知道 -- 太多的Linux开发都基于某个开发者有个特殊的想法。这意味着这些开发都针对的“微型市场”。是时候,无论如何,让Linux开发社区能够进行全球性思考了。“一般用户有什么需求,我们怎么满足他们?”让我提几个最基本的点。

|

||||

搞清楚消费者需求一直以来都是Linux社区的绊脚石。而且我知道 -- 太多的Linux开发都基于某个开发者有个特殊的想法。这意味着这些开发都针对的“微型市场”。是时候了,无论如何,让Linux开发社区能够进行全球性思考了。“一般用户有什么需求,我们怎么满足他们?”让我提几个最基本的点。

|

||||

|

||||

一般用户想要:

|

||||

|

||||

@ -43,7 +43,7 @@ via: http://www.techrepublic.com/article/will-linux-ever-be-able-to-give-consume

|

||||

|

||||

作者:[Jack Wallen][a]

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,16 +1,16 @@

|

||||

为什么一些古老的编程语言不会消亡?

|

||||

================================================================================

|

||||

> 我们中意于我们所知道的。

|

||||

> 我们钟爱我们已知的。

|

||||

|

||||

|

||||

|

||||

当今许多知名的编程语言已经都非常古老了。PHP 语言20年、Python 语言23年、HTML 语言21年、Ruby 语言和 JavaScript 语言已经19年,C 语言更是高达42年之久。

|

||||

|

||||

这是没人能预料得到的,即使是计算机科学家 [Brian Kernighan][1] 也一样。他是写著第一本关于 C 语言的作者之一,只到今天这本书还在印刷着。(C 语言本身的发明者 [Dennis Ritchie][2] 是 Kernighan 的合著者,他于 2011 年已辞世。)

|

||||

这是没人能预料得到的,即使是计算机科学家 [Brian Kernighan][1] 也一样。他是写著第一本关于 C 语言的作者之一,直到今天这本书还在印刷着。(C 语言本身的发明者 [Dennis Ritchie][2] 是 Kernighan 的合著者,他于 2011 年已辞世。)

|

||||

|

||||

“我依稀记得早期跟编辑们的谈话,告诉他们我们已经卖出了5000册左右的量,”最近采访 Kernighan 时他告诉我说。“我们设法做的更好。我没有想到的是在2014年的教科书里学生仍然在使用第一个版本的书。”

|

||||

|

||||

关于 C 语言的持久性特别显著的就是 Google 开发出了新的语言 Go,解决同一问题比用 C 语言更有效率。

|

||||

关于 C 语言的持久性特别显著的就是 Google 开发出了新的语言 Go,解决同一问题比用 C 语言更有效率。不过,我仍然很难想象 Go 能彻底杀死 C,无论它有多么好。

|

||||

|

||||

“大多数语言并不会消失或者至少很大一部分用户承认它们不会消失,”他说。“C 语言仍然在一定的领域独领风骚,所以它很接地气。”

|

||||

|

||||

@ -20,13 +20,13 @@

|

||||

|

||||

分别来自普林斯顿大学和加州大学伯克利分校的研究者 Ari Rabkin 和 Leo Meyerovich 花费了两年时间来研究解决上面的问题。他们的研究报告,[《编程语言使用情况实例分析》][3],记录了对超过 200,000 个 Sourceforge 项目和超过 13,000 个程序员投票结果的分析。

|

||||

|

||||

他们主要的发现呢?大多数时候程序员选择的编程语言都是他们所熟悉的。

|

||||

他们主要的发现是什么呢?大多数时候程序员选择的编程语言都是他们所熟悉的。

|

||||

|

||||

“存在着我们使用的语言是因为我们经常使用他们,” Rabkin 告诉我。“例如:天文学家就经常使用 IDL [交互式数据语言]来开发他们的计算机程序,并不是因为它具有什么特殊的星级功能或其它特点,而是因为用它形成习惯了。他们已经用些语言构建出很优秀的程序了,并且想保持原状。”

|

||||

“这些我们使用的语言还继续存在是因为我们经常使用他们,” Rabkin 告诉我。“例如:天文学家就经常使用 IDL [交互式数据语言]来开发他们的计算机程序,并不是因为它具有什么特殊的亮点功能或其它特点,而是因为用它形成习惯了。他们已经用些语言构建出很优秀的程序了,并且想保持原状。”

|

||||

|

||||

换句话说,它部分要归功于创建其的语言的的知名度仍保留较大劲头。当然,这并不意味着流行的语言不会变化。Rabkin 指出我们今天在使用的 C 语言就跟 Kernighan 第一次创建时的一点都不同,那时的 C 编译器跟现代的也不是完全兼容。

|

||||

换句话说,它部分要归功于这些语言所创立的知名度仍保持较高。当然,这并不意味着流行的语言不会变化。Rabkin 指出我们今天在使用的 C 语言就跟 Kernighan 第一次创建时的一点都不同,那时的 C 编译器跟现代的也不是完全兼容。

|

||||

|

||||

“有一个古老的,关于工程师的笑话。工程师被问到哪一种编程语言人们会使用30年,他说,‘我不知道,但它总会被叫做 Fortran’,” Rabkin 说到。“长期存活的语言跟他们在70年代和80年代刚设计出来的时候不一样了。人们通常都是在上面增加功能,而不会删除功能,因为要保持向后兼容,但有些功能会被修正。”

|

||||

“有一个古老的,关于工程师的笑话。工程师被问到哪一种编程语言人们会使用30年,他说,‘我不知道,但它总会被叫做 Fortran’,” Rabkin 说到。“长期存活的语言跟他们在70年代和80年代刚设计出来的时候不太一样了。人们通常都是在上面增加功能,而不会删除功能,因为要保持向后兼容,但有些功能会被修正。”

|

||||

|

||||

向后兼容意思就是当语言升级后,程序员不仅可以使用升级语言的新特性,也不用回去重写已经实现的老代码块。老的“遗留代码”的语法规则已经不用了,但舍弃是要花成本的。只要它们存在,我们就有理由相信相关的语言也会存在。

|

||||

|

||||

@ -34,17 +34,17 @@

|

||||

|

||||

遗留代码指的是用过时的源代码编写的程序或部分程序。想想看,一个企业或工程项目的关键程序功能部分是用没人维护的编程语言写出来的。因为它们仍起着作用,用现代的源代码重写非常困难或着代价太高,所以它们不得不保留下来,即使其它部分的代码都变动了,程序员也必须不断折腾以保证它们能正常工作。

|

||||

|

||||

任何的编程语言,存在了超过几十年时间都具有某种形式的遗留代码问题, PHP 也不加例外。PHP 是一个很有趣的例子,因为它的遗留代码跟现在的代码明显不同,支持者或评论家都承认这是一个巨大的进步。

|

||||

任何编程语言,存在了超过几十年时间都具有某种形式的遗留代码问题, PHP 也不例外。PHP 是一个很有趣的例子,因为它的遗留代码跟现在的代码明显不同,支持者或评论家都承认这是一个巨大的进步。

|

||||

|

||||

Andi Gutmans 是 已经成为 PHP4 的标准编译器的 Zend Engine 的发明者之一。Gutmans 说他和搭档本来是想改进完善 PHP3 的,他们的工作如此成功,以至于 PHP 的原发明者 Rasmus Lerdorf 也加入他们的项目。结果就成为了 PHP4 和他的后续者 PHP5 的编译器。

|

||||

Andi Gutmans 是已经成为 PHP4 的标准编译器的 Zend Engine 的发明者之一。Gutmans 说他和搭档本来是想改进完善 PHP3 的,他们的工作如此成功,以至于 PHP 的原发明者 Rasmus Lerdorf 也加入他们的项目。结果就成为了 PHP4 和他的后续者 PHP5 的编译器。

|

||||

|

||||

因此,当今的 PHP 与它的祖先即最开始的 PHP 是完全不同的。然而,在 Gutmans 看来,在用古老的 PHP 语言版本写的遗留代码的地方一直存在着偏见以至于上升到整个语言的高度。比如 PHP 充满着安全漏洞或没有“集群”功能来支持大规模的计算任务等概念。

|

||||

因此,当今的 PHP 与它的祖先——即最开始的 PHP 是完全不同的。然而,在 Gutmans 看来,在用古老的 PHP 语言版本写的遗留代码的地方一直存在着偏见以至于上升到整个语言的高度。比如 PHP 充满着安全漏洞或没有“集群”功能来支持大规模的计算任务等概念。

|

||||

|

||||

“批评 PHP 的人们通常批评的是在 1998 年时候的 PHP 版本,”他说。“这些人都没有与时俱进。当今的 PHP 已经有了很成熟的生态系统了。”

|

||||

|

||||

如今,Gutmans 说,他作为一个管理者最重要的事情就是鼓励人们升级到最新版本。“PHP有个很大的社区,足以支持您的遗留代码的问题,”他说。“但总的来说,我们的社区大部分都在 PHP5.3 及以上的。”

|

||||

|

||||

问题是,任何语言用户都不会全部升级到最新版本。这就是为什么 Python 用户仍在使用 2000 年发布的 Python 2,而不是使用 2008 年发布的 Python 3 的原因。甚至是已经六年了喜欢 Google 的大多数用户仍没有升级。这种情况是多种原因造成的,但它使得很多开发者在承担风险。

|

||||

问题是,任何语言用户都不会全部升级到最新版本。这就是为什么 Python 用户仍在使用 2000 年发布的 Python 2,而不是使用 2008 年发布的 Python 3 的原因。甚至在六年后,大多数像 Google 这样的用户仍没有升级。这种情况是多种原因造成的,但它使得很多开发者在承担风险。

|

||||

|

||||

“任何东西都不会消亡的,”Rabkin 说。“任何语言的遗留代码都会一直存在。重写的代价是非常高昂的,如果它们不出问题就不要去改动。”

|

||||

|

||||

@ -54,15 +54,15 @@ Andi Gutmans 是 已经成为 PHP4 的标准编译器的 Zend Engine 的发明

|

||||

|

||||

> 有一件事使我们被深深震撼到了。这事最重要的就是我们给人们按年龄分组,然后询问他们知道多少编程语言。我们主观的认为随着年龄的增长知道的会越来越多,但实际上却不是,25岁年龄组和45岁年龄组知道的语言数目是一样的。几个反复询问的问题这里持续不变的。您知道一种语言的几率并不与您的年龄挂钩。

|

||||

|

||||

换句话说,不仅仅里年长的开发者坚持传统,年轻的程序员会承认并采用古老的编程语言作为他们的第一们语言。这可能是因为这些语言具有很有趣的开发库及功能特点,也可能是因为在社区里开发者都是一个组的都喜爱这种开发语言。

|

||||

换句话说,不仅仅年长的开发者坚持传统,年轻的程序员也会认可并采用古老的编程语言作为他们的第一们语言。这可能是因为这些语言具有很有趣的开发库及功能特点,也可能是因为在社区里开发者都是喜爱这种开发语言的一伙人。

|

||||

|

||||

“在全球程序员关注的语言的数量是有定数的,” Rabkin 说。“如果一们语言表现出足够独特的价值,人们将会学习和使用它。如果是和您交流代码和知识的的某个人分享一门编程语言,您将会学习它。因此,例如,只要那些开发库是 Python 库和社区特长是 Python 语言的经验,那么 Python 将会大行其道。”

|

||||

“在全球程序员关注的语言的数量是有定数的,” Rabkin 说。“如果一们语言表现出足够独特的价值,人们将会学习和使用它。如果是和您交流代码和知识的的某个人分享一门编程语言,您将会学习它。因此,例如,只要那些 Python 库存在、 社区也对 Python 语言很有经验的话,那么 Python 仍将会大行其道。”

|

||||

|

||||

研究人员发现关于语言实现的功能,社区是一个巨大的因素。虽然像 Python 和 Ruby 这样的高级语言并没有太大的差别,但,例如程序员就更容易觉得一种比另一种优越。

|

||||

研究人员发现关于语言实现的功能,社区是一个巨大的因素。虽然像 Python 和 Ruby 这样的高级语言并没有太大的差别,但,程序员总是容易觉得一种比另一种优越。

|

||||

|

||||

“Rails 不一定要用 Ruby 语言编写,但它用了,这就是社会因素在起作用,” Rabkin 说。“例如,复活 Objective-C 语言这件事就是苹果的工程师团队说‘让我们使用它吧,’ 他们就没得选择了。”

|

||||

“Rails 不一定要用 Ruby 语言编写,但它用了,这就是社区因素在起作用,” Rabkin 说。“例如,复活 Objective-C 语言这件事就是苹果的工程师团队说‘让我们使用它吧,’ 他们就没得选择了。”

|

||||

|

||||

通观社会的影响及老旧代码这些问题,我们发现最古老的和最新的计算机语言都有巨大的惰性。Go 语言怎么样能超越 C 语言呢?如果有合适的人或公司说它超越它就超越。

|

||||

通观社会的影响及老旧代码这些问题,我们发现最古老的和最新的计算机语言都有巨大的惰性。Go 语言怎么样才能超越 C 语言呢?如果有合适的人或公司说它超越它就超越。

|

||||

|

||||

“它归结为谁传播的更好谁就好,” Rabkin 说。

|

||||

|

||||

@ -74,7 +74,7 @@ via: http://readwrite.com/2014/09/02/programming-language-coding-lifetime

|

||||

|

||||

作者:[Lauren Orsini][a]

|

||||

译者:[runningwater](https://github.com/runningwater)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,27 +1,26 @@

|

||||

让下载更方便

|

||||

================================================================================

|

||||

下载管理器是一个电脑程序,专门处理下载文件,优化带宽占用,以及让下载更有条理等任务。有些网页浏览器,例如Firefox,也集成了一个下载管理器作为功能,但是它们的方式还是没有专门的下载管理器(或者浏览器插件)那么专业,没有最佳地使用带宽,也没有好用的文件管理功能。

|

||||

下载管理器是一个电脑程序,专门处理下载文件,优化带宽占用,以及让下载更有条理等任务。有些网页浏览器,例如Firefox,也集成了一个下载管理器作为功能,但是它们的使用方式还是没有专门的下载管理器(或者浏览器插件)那么专业,没有最佳地使用带宽,也没有好用的文件管理功能。

|

||||

|

||||

对于那些经常下载的人,使用一个好的下载管理器会更有帮助。它能够最大化下载速度(加速下载),断点续传以及制定下载计划,让下载更安全也更有价值。下载管理器已经没有之前流行了,但是最好的下载管理器还是很实用,包括和浏览器的紧密结合,支持类似YouTube的主流网站,以及更多。

|

||||

|

||||

有好几个能在Linux下工作都非常优秀的开源下载管理器,以至于让人无从选择。我整理了一个摘要,是我喜欢的下载管理器,以及Firefox里的一个非常好用的下载插件。这里列出的每一个程序都是开源许可发布的。

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###uGet

|

||||

|

||||

|

||||

|

||||

uGet是一个轻量级,容易使用,功能完备的开源下载管理器。uGet允许用户从不同的源并行下载来加快速度,添加文件到下载序列,暂停或继续下载,提供高级分类管理,和浏览器集成,监控剪贴板,批量下载,支持26种语言,以及其他许多功能。

|

||||

|

||||

uGet是一个成熟的软件;保持开发超过11年。在这个时间里,它发展成一个非常多功能的下载管理器,拥有一套很高价值的功能集,还保持了易用性。

|

||||

uGet是一个成熟的软件;持续开发超过了11年。在这段时间里,它发展成一个非常多功能的下载管理器,拥有一套很高价值的功能集,还保持了易用性。

|

||||

|

||||

uGet是用C语言开发的,使用了cURL作为底层支持,以及应用库libcurl。uGet有非常好的平台兼容性。它一开始是Linux系统下的项目,但是被移植到在Mac OS X,FreeBSD,Android和Windows平台运行。

|

||||

|

||||

#### 功能点: ####

|

||||

|

||||

- 容易使用

|

||||

- 下载队列可以让下载任务按任意多或少或你希望的数量同时进行。

|

||||

- 下载队列可以让下载任务按任意数量或你希望的数量同时进行。

|

||||

- 断点续传

|

||||

- 默认分类

|

||||

- 完美实现的剪贴板监控功能

|

||||

@ -43,19 +42,19 @@ uGet是用C语言开发的,使用了cURL作为底层支持,以及应用库li

|

||||

- 支持GnuTLS

|

||||

- 支持26种语言,包括:阿拉伯语,白俄罗斯语,简体中文,繁体中文,捷克语,丹麦语,英语(默认),法语,格鲁吉亚语,德语,匈牙利语,印尼语,意大利语,波兰语,葡萄牙语(巴西),俄语,西班牙语,土耳其语,乌克兰语,以及越南语。

|

||||

|

||||

---

|

||||

|

||||

- 网站:[ugetdm.com][1]

|

||||

- 开发人员:C.H. Huang and contributors

|

||||

- 许可:GNU LGPL 2.1

|

||||

- 版本:1.10.5

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###DownThemAll!

|

||||

|

||||

|

||||

|

||||

DownThemAll!是一个小巧的,可靠的以及易用的,开源下载管理器/加速器,是Firefox的一个组件。它可以让用户下载一个页面上所有链接和图片以及更多功能。它可以让用户完全控制下载任务,随时分配下载速度以及同时下载的任务数量。通过使用Metalinks或者手动添加镜像的方式,可以同时从不同的服务器下载同一个文件。

|

||||

DownThemAll!是一个小巧可靠的、易用的开源下载管理器/加速器,是Firefox的一个组件。它可以让用户下载一个页面上所有链接和图片,还有更多功能。它可以让用户完全控制下载任务,随时分配下载速度以及同时下载的任务数量。通过使用Metalinks或者手动添加镜像的方式,可以同时从不同的服务器下载同一个文件。

|

||||

|

||||

DownThemAll会根据你要下载的文件大小,切割成不同的部分,然后并行下载。

|

||||

|

||||

@ -69,6 +68,7 @@ DownThemAll会根据你要下载的文件大小,切割成不同的部分,然

|

||||

- 高级重命名选项

|

||||

- 暂停和继续下载任务

|

||||

|

||||

---

|

||||

|

||||

- 网站:[addons.mozilla.org/en-US/firefox/addon/downthemall][2]

|

||||

- 开发人员:Federico Parodi, Stefano Verna, Nils Maier

|

||||

@ -77,13 +77,13 @@ DownThemAll会根据你要下载的文件大小,切割成不同的部分,然

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###JDownloader

|

||||

|

||||

|

||||

|

||||

JDownloader是一个免费,开源的下载管理工具,拥有一个大型社区的开发者支持,让下载更简单和快捷。用户可以开始,停止或暂停下载,设置带宽限制,自动解压缩包,以及更多功能。它提供了一个容易扩展的框架。

|

||||

|

||||

JDownloader简化了从一键下载网站下载文件。它还支持从不同并行资源下载,手势识别,自动文件解压缩以及更多功能。另外,还支持许多“加密链接”网站-所以你只需要复制粘贴“加密的”链接,然后JDownloader会处理剩下的事情。JDownloader还能导入CCF,RSDF和DLC文件。

|

||||

JDownloader简化了从一键下载网站下载文件。它还支持从不同并行资源下载、手势识别、自动文件解压缩以及更多功能。另外,还支持许多“加密链接”网站-所以你只需要复制粘贴“加密的”链接,然后JDownloader会处理剩下的事情。JDownloader还能导入CCF,RSDF和DLC文件。

|

||||

|

||||

#### 功能点: ####

|

||||

|

||||

@ -98,6 +98,7 @@ JDownloader简化了从一键下载网站下载文件。它还支持从不同并

|

||||

- 网页更新

|

||||

- 集成包管理器支持额外模块(例如,Webinterface,Shutdown)

|

||||

|

||||

---

|

||||

|

||||

- 网站:[jdownloader.org][3]

|

||||

- 开发人员:AppWork UG

|

||||

@ -106,11 +107,11 @@ JDownloader简化了从一键下载网站下载文件。它还支持从不同并

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###FreeRapid Downloader

|

||||

|

||||

|

||||

|

||||

FreeRapid Downloader是一个易用的开源下载程序,支持从Rapidshare,Youtube,Facebook,Picasa和其他文件分享网站下载。他的下载引擎基于一些插件,所以可以从特殊站点下载。

|

||||

FreeRapid Downloader是一个易用的开源下载程序,支持从Rapidshare,Youtube,Facebook,Picasa和其他文件分享网站下载。他的下载引擎基于一些插件,所以可以从那些特别的站点下载。

|

||||

|

||||

对于需要针对特定文件分享网站的下载管理器用户来说,FreeRapid Downloader是理想的选择。

|

||||

|

||||

@ -133,6 +134,7 @@ FreeRapid Downloader使用Java语言编写。需要至少Sun Java 7.0版本才

|

||||

- 支持多国语言:英语,保加利亚语,捷克语,芬兰语,葡萄牙语,斯洛伐克语,匈牙利语,简体中文,以及其他

|

||||

- 支持超过700个站点

|

||||

|

||||

---

|

||||

|

||||

- 网站:[wordrider.net/freerapid/][4]

|

||||

- 开发人员:Vity and contributors

|

||||

@ -141,7 +143,7 @@ FreeRapid Downloader使用Java语言编写。需要至少Sun Java 7.0版本才

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###FlashGot

|

||||

|

||||

|

||||

|

||||

@ -151,7 +153,7 @@ FlashGot把所支持的所有下载管理器统一成Firefox中的一个下载

|

||||

|

||||

#### 功能点: ####

|

||||

|

||||

- Linux下支持:Aria, Axel Download Accelerator, cURL, Downloader 4 X, FatRat, GNOME Gwget, FatRat, JDownloader, KDE KGet, pyLoad, SteadyFlow, uGet, wxDFast, 和wxDownload Fast

|

||||

- Linux下支持:Aria, Axel Download Accelerator, cURL, Downloader 4 X, FatRat, GNOME Gwget, FatRat, JDownloader, KDE KGet, pyLoad, SteadyFlow, uGet, wxDFast 和 wxDownload Fast

|

||||

- 支持图库功能,可以帮助把原来分散在不同页面的系列资源,整合到一个所有媒体库页面中,然后可以轻松迅速地“下载所有”

|

||||

- FlashGot Link会使用默认下载管理器下载当前鼠标选中的链接

|

||||

- FlashGot Selection

|

||||

@ -160,12 +162,13 @@ FlashGot把所支持的所有下载管理器统一成Firefox中的一个下载

|

||||

- FlashGot Media

|

||||

- 抓取页面里所有链接

|

||||

- 抓取所有标签栏的所有链接

|

||||

- 链接过滤(例如,只下载指定类型文件)

|

||||

- 链接过滤(例如只下载指定类型文件)

|

||||

- 在网页上抓取点击所产生的所有链接

|

||||

- 支持从大多数链接保护和文件托管服务器直接和批量下载

|

||||

- 隐私选项

|

||||

- 支持国际化

|

||||

|

||||

---

|

||||

|

||||

- 网站:[flashgot.net][5]

|

||||

- 开发人员:Giorgio Maone

|

||||

@ -178,7 +181,7 @@ via: http://www.linuxlinks.com/article/20140913062041384/DownloadManagers.html

|

||||

|

||||

作者:Frazer Kline

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,79 @@

|

||||

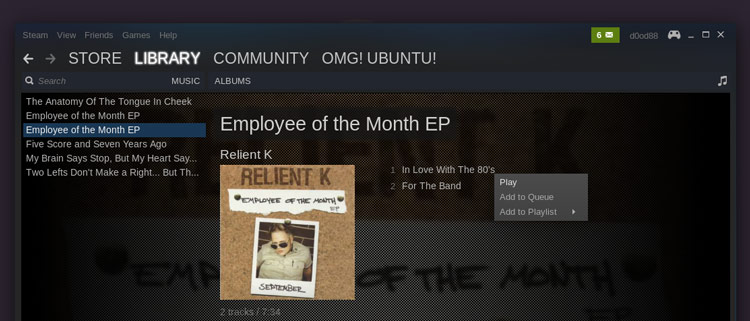

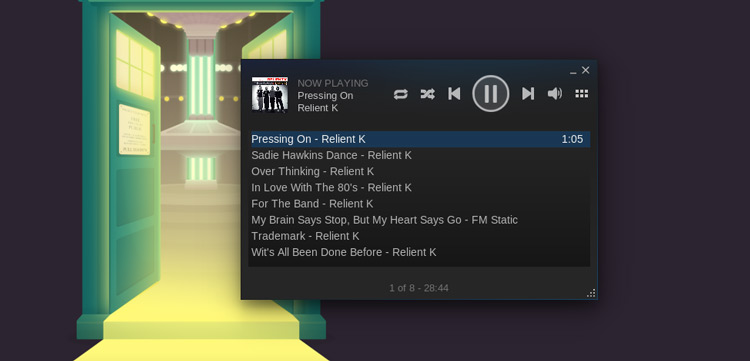

如何在Ubuntu桌面上使用Steam Music音乐播放器

|

||||

================================================================================

|

||||

|

||||

|

||||

**‘音乐让人们走到一起’ 麦当娜曾这样唱道。但是Steam的新音乐播放器特性能否很好的混搭小资与叛逆?**

|

||||

|

||||

如果你曾与世隔绝,充耳不闻,你就会错过与Steam Music的相识。它的特性并不是全新的。从今年的早些时候开始,它就已经以这样或那样的形式进行了测试。

|

||||

|

||||

但Steam客户端最近一次在Windows、Mac和Linux上的定期更新中,所有的客户端都能使用它了。你会问为什么一个游戏客户端会添加一个音乐播放器呢?当然是为了让你能一边玩游戏一边一边听你最喜欢的音乐了。

|

||||

|

||||

别担心:在游戏的音乐声中再加上你自己的音乐,听起来并不会像你想象的那么糟(哈哈)。Steam会帮你减少或消除游戏的背景音乐,但在混音器中保持效果音的高音量,以便于你能和平时一样听到那些叮,嘭和各种爆炸声。

|

||||

|

||||

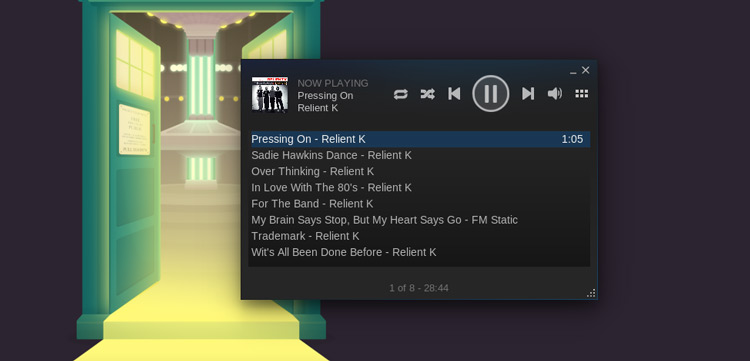

### 使用Steam Music音乐播放器 ###

|

||||

|

||||

|

||||

|

||||

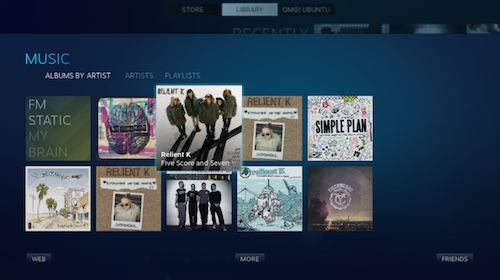

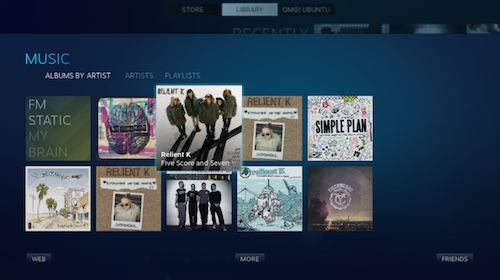

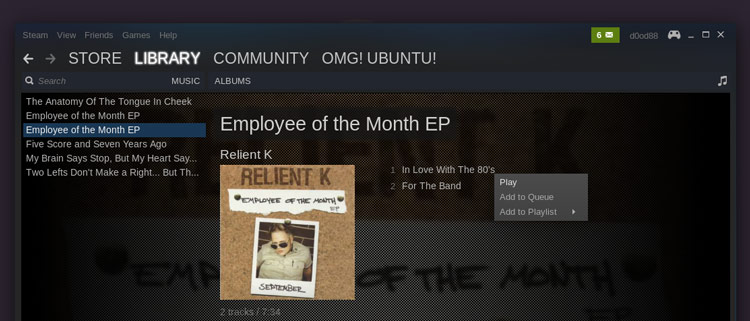

*大图模式*

|

||||

|

||||

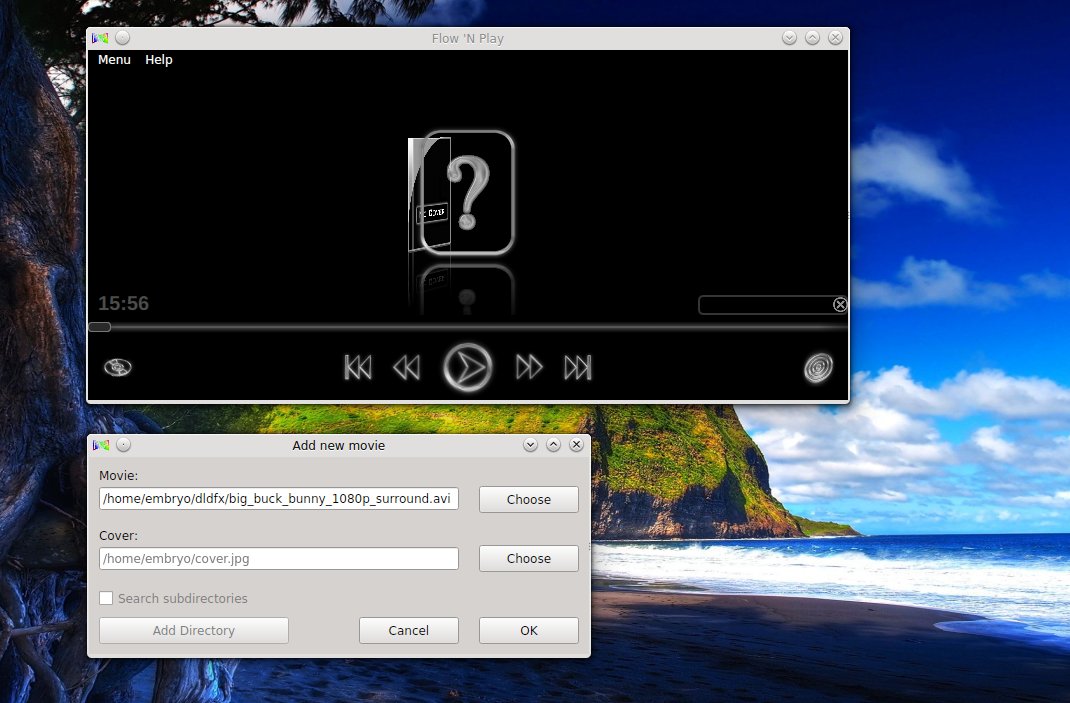

任何使用最新版客户端的人都能使用Steam Music音乐播放器。它是个相当简单的附加程序:它让你能从你的电脑中添加、浏览并播放音乐。

|

||||

|

||||

播放器可以以两种方式进入:桌面和(超棒的)Steam大图模式。在两种方式下,控制播放都超级简单。

|

||||

|

||||

作为一个Rhythmbox的对手或是Spotify的继承者,把**为玩游戏时放音乐而设计**作为特点一点也不吸引人。事实上,他没有任何可购买音乐的商店,也没有整合Rdio,Grooveshark这类在线服务或是桌面服务。没错,你的多媒体键在Linux的播放器上完全不能用。

|

||||

|

||||

Valve说他们“*……计划增加更多的功能以便用户能以新的方式体验Steam Music。我们才刚刚开始。*”

|

||||

|

||||

#### Steam Music的重要特性:####

|

||||

|

||||

- 只能播放MP3文件

|

||||

- 与游戏中的音乐相融

|

||||

- 在游戏中可以控制音乐

|

||||

- 播放器可以在桌面上或在大图模式下运行

|

||||

- 基于播放列表的播放方式

|

||||

|

||||

**它没有整合到Ubuntu的声音菜单里,而且目前也不支持键盘上的多媒体键。**

|

||||

|

||||

### 在Ubuntu上使用Steam Music播放器 ###

|

||||

|

||||

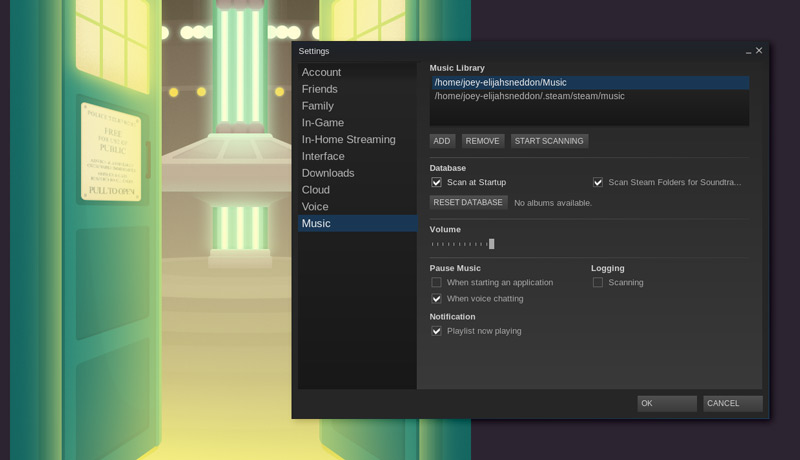

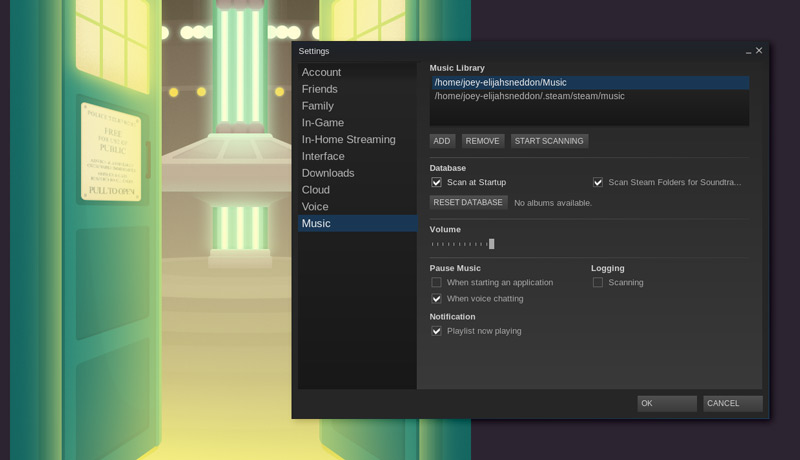

显然,添加音乐是你播放音乐前的第一件事。在Ubuntu上,默认设置下,Steam会自动添加两个文件夹:Home下的标准Music目录和它自带的Steam Music文件夹。任何可下载的音轨都保存在其中。

|

||||

|

||||

注意:目前**Steam Music只能播放MP3文件**。如果你的大部分音乐都是其他文件格式(比如.acc、.m4a等等),这些文件不会被添加也不能被播放。

|

||||

|

||||

若想添加其他的文件夹或重新扫描:

|

||||

|

||||

- 到**View > Settings > Music**。

|

||||

- 点击‘**Add**‘将其他位置的文件夹添加到已列出两个文件夹的列表下。

|

||||

- 点击‘**Start Scanning**’

|

||||

|

||||

|

||||

|

||||

你还可以在这个对话框中调整其他设置,包括‘scan at start’。如果你经常添加新音乐而且很容易忘记手动启动扫描,请标记此项。你还可以选择当路径变化时是否显示提示,设置默认的音量,还能调整当你打开一个应用软件或语音聊天时的播放状态的改变。

|

||||

|

||||

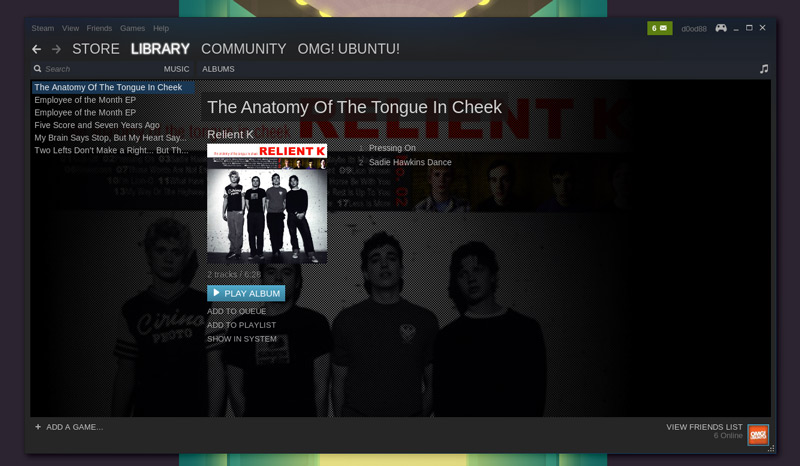

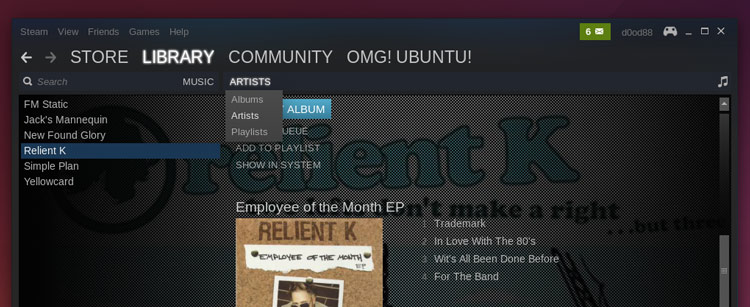

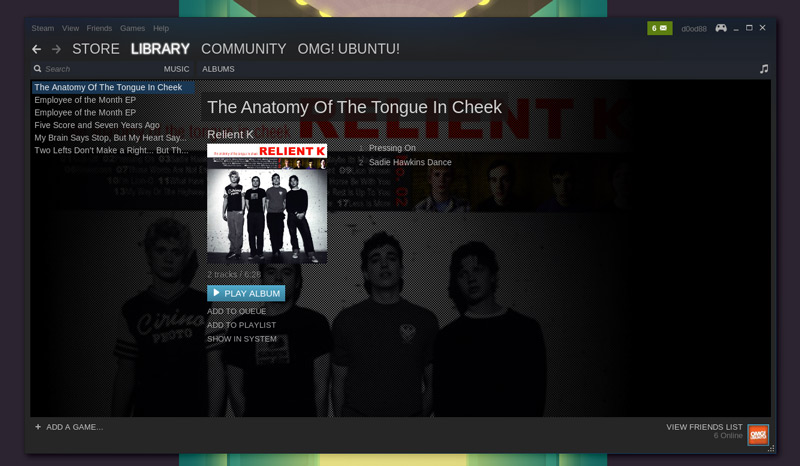

一旦你的音乐源成功的被添加并扫描后,你就可以通过主客户端的**Library > Music**区域浏览你的音乐了。

|

||||

|

||||

|

||||

|

||||

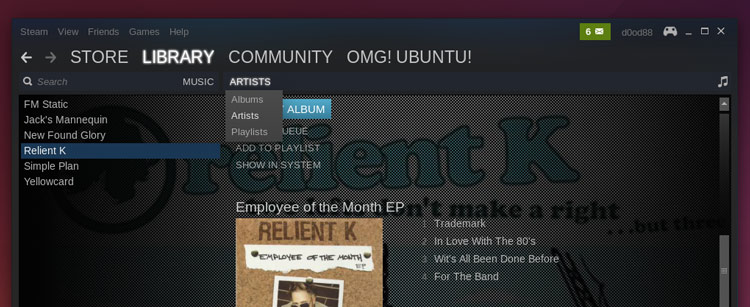

Steam Music会默认的将音乐按照专辑进行分组。若想按照乐队名进行浏览,你需要点击‘Albums’然后从下拉菜单中选择‘Artists’。

|

||||

|

||||

|

||||

|

||||

Steam Music是一个以‘队列’方式工作的系统。你可以通过双击浏览器里的音乐或右键单击并选择‘Add to Queue’来把音乐添加到播放队列里。

|

||||

|

||||

|

||||

|

||||

若想**启动桌面播放器**请点击右上角的音符图标或通过**View > Music Player**菜单。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2014/10/use-steam-music-player-linux

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[H-mudcup](https://github.com/H-mudcup)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

@ -1,10 +1,10 @@

|

||||

Linux中使用rsync——文件和目录排除列表

|

||||

================================================================================

|

||||

**rsync**是一个十分有用,而且十分流行的linux工具。它用于备份和恢复文件,也用于对比和同步文件。我们已经在前面的文章讲述了[Linux中rsync命令的使用实例][1],而今天我们将增加一些更为有用的rsync使用技巧。

|

||||

**rsync**是一个十分有用,而且十分流行的linux工具。它用于备份和恢复文件,也用于对比和同步文件。我们已经在前面的文章讲述了[如何在Linux下使用rsync][1],而今天我们将增加一些更为有用的rsync使用技巧。

|

||||

|

||||

### 排除文件和目录列表 ###

|

||||

|

||||

有时候,当我们做大量同步的时候,我们可能想要从同步的文件和目录中排除一个文件和目录的列表。一般来说,像不能被同步的设备文件和某些系统文件,或者像临时文件或者缓存文件这类占据不必要磁盘空间的文件,这类文件时我们需要排除的。

|

||||

有时候,当我们做大量同步的时候,我们可能想要从同步的文件和目录中排除一个文件和目录的列表。一般来说,像设备文件和某些系统文件,或者像临时文件或者缓存文件这类占据不必要磁盘空间的文件是不合适同步的,这类文件是我们需要排除的。

|

||||

|

||||

首先,让我们创建一个名为“excluded”的文件(当然,你想取什么名都可以),然后将我们想要排除的文件夹或文件写入该文件,一行一个。在我们的例子中,如果你想要对根分区进行完整的备份,你应该排除一些在启动时创建的设备目录和放置临时文件的目录,列表看起来像下面这样:

|

||||

|

||||

@ -19,7 +19,8 @@ Linux中使用rsync——文件和目录排除列表

|

||||

### 从命令行排除文件 ###

|

||||

|

||||

你也可以从命令行直接排除文件,该方法在你要排除的文件数量较少,并且在你想要将它写成脚本或加到crontab中又不想脚本或cron依赖于另外一个文件运行时十分有用。

|

||||

For example if you wish to sync /var to a backup directory but you don't wish to include cache and tmp folder that usualy don't hold important content between restarts you can use the following command:

|

||||

|

||||

|

||||

例如,如果你想要同步/var到一个备份目录,但是你不想要包含cache和tmp这些通常不会有重要内容的文件夹,你可以使用以下命令:

|

||||

|

||||

$ sudo rsync -aAXhv --exclude={"/var/cache","/var/tmp"} /var /home/adrian/var

|

||||

@ -34,9 +35,9 @@ via: http://linoxide.com/linux-command/exclude-files-rsync-examples/

|

||||

|

||||

作者:[Adrian Dinu][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linoxide.com/author/adriand/

|

||||

[1]:http://linoxide.com/how-tos/rsync-copy/

|

||||

[1]:http://linux.cn/article-4503-1.html

|

||||

@ -1,25 +1,25 @@

|

||||

Pitivi 发布 0.94 版本,使用 GTK HeaderBar,修复无数 Bugs

|

||||

Pitivi 0.94 切换到 GTK HeaderBar,修复无数 Bugs

|

||||

=====================================

|

||||

|

||||

** 我是 [Pitivi 视频编辑器][1] 的狂热爱好者。Pitivi 可能不是(至少现在不是)Linux 上可用的最拉风的,功能完善的非线性视频编辑器,但是它绝对是最可靠的一个。 **

|

||||

** 我是 [Pitivi 视频编辑器][1] 的狂热爱好者。Pitivi 可能不是(至少现在不是)Linux 上可用的、最拉风的、功能完善的、非线性视频编辑器,但是它绝对是最可靠的一个。 **

|

||||

|

||||

|

||||

|

||||

自然而然地,我一直在期待这个开源视频编辑器在 [这周末][2] 发布的新的 beta 测试版。

|

||||

自然而然地,我一直在期待这个开源视频编辑器[这次][2]发布的新的 beta 测试版。

|

||||

|

||||

Pitivi 0.94 是基于新的 “GStreamer Editing Service”(GES)的第四个发行版本。

|

||||

|

||||

开发组成员 Jean-François Fortin Tam,称号 “Nekohayo” 将本次升级描述为 “** ...主要作为一个维护版本发布,但是除了对 Bug 的修复之外,还是增加了几个有意思的改进和功能。 **”

|

||||

开发组成员 Jean-François Fortin Tam(“Nekohayo”)将本次升级描述为 “**...主要作为一个维护版本发布,但是除了对 Bug 的修复之外,还是增加了几个有意思的改进和功能。**”

|

||||

|

||||

## 有什么新改进? ##

|

||||

### 有什么新改进? ###

|

||||

|

||||

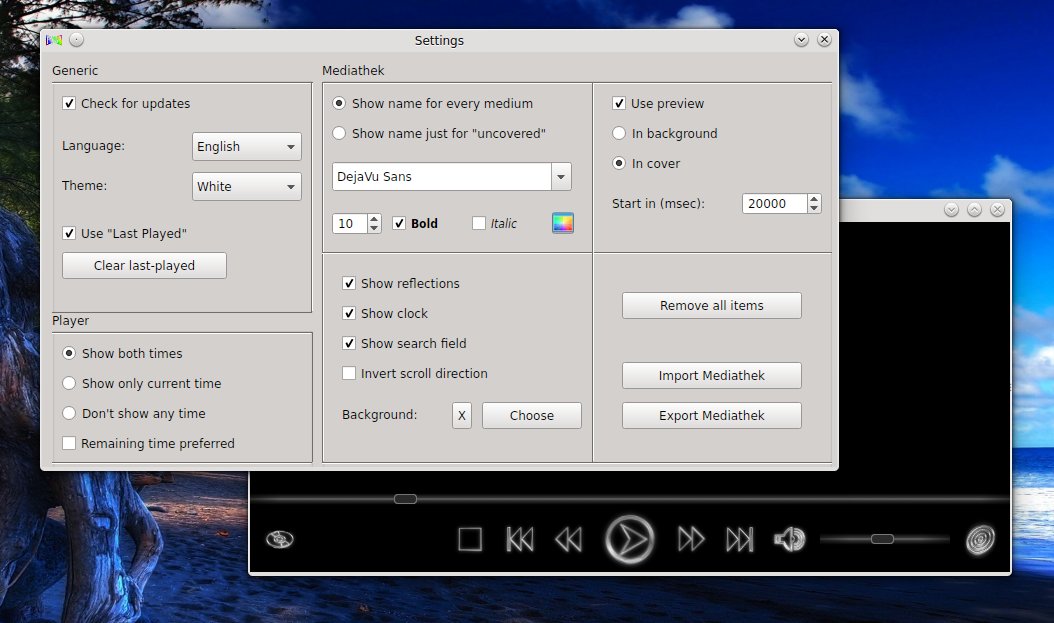

有不少有意思的改进!作为 Pitivi 0.94 版本中最明显的变化,Pitivi 添加了如同 GNOME 客户端一般的 GTK HeaderBar 装饰。HeaderBar 整合了桌面窗口栏,标题栏以及工具栏,节省了大块浪费的垂直以及水平的占用空间。

|

||||

|

||||

“*当你用过一次后,你就在也回不来了,*” Fortin Tam 介绍说。欣赏一下下面这张截图,你肯定会同意的。

|

||||

“*当你用过一次后,你就再也不会走了*” Fortin Tam 介绍说。欣赏一下下面这张截图,你肯定会同意的。

|

||||

|

||||

|

||||

|

||||

Pitivi 现在使用了 GTK HeaderBar 以及菜单键。(image: Nekohayo)

|

||||

*Pitivi 现在使用了 GTK HeaderBar 以及菜单键。(image: Nekohayo)*

|

||||

|

||||

那么应用菜单又怎么样呢?别担心,应用菜单遵循了 GNOME 交互界面的标准,看一下自己机器上的应用菜单确认一下吧。

|

||||

|

||||

@ -49,13 +49,11 @@ Pitivi 现在使用了 GTK HeaderBar 以及菜单键。(image: Nekohayo)

|

||||

|

||||

上面这些信息听起来都很不错吧?下一次更新会更好!这不只是一个通常的来自开发者的夸张,如同 Jean François 解释的一般:

|

||||

|

||||

> “下一次更新(0.95)会运行在难以置信的强大的后端上。感谢 Mathieu [Duponchelle] 和 Thibault [Saunier] 在用 NLE(新的为了 GES 的非线性引擎)替代 GNonLin 并修复问题等工作中做出的努力。”

|

||||

> “下一次更新(0.95)会运行在令人难以置信的强大的后端上。感谢 Mathieu [Duponchelle] 和 Thibault [Saunier] 在用 NLE(新的为了 GES 的非线性引擎)替代 GNonLin 并修复问题等工作中做出的努力。”

|

||||

|

||||

Ubuntu 14.10 带有老的(更容易崩溃)的软件中心,进入 Pitivi 官网¹下载 [安装包][5] 来体验最新杰作。

|

||||

Ubuntu 14.10 带有老的(更容易崩溃)的软件中心,进入 Pitivi 官网下载 [安装包][5] 来体验最新杰作。

|

||||

|

||||

** Pitivi 基金会酬了将近 €20,000,使我们能够向着约定的 1.0 版本迈出一大步。如果你也想早点看到 1.0 版本的到来的话,省下你在星巴克买的格郎德香草奶油咖啡,捐赠我们! **

|

||||

|

||||

*¹目前 0.94 安装包还没发布,你可以下载 nightly tar*

|

||||

**Pitivi 基金会筹了将近 €20,000,使我们能够向着约定的 1.0 版本迈出一大步。如果你也想早点看到 1.0 版本的到来的话,省下你在星巴克买的格郎德香草奶油咖啡,捐赠我们!**

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -64,7 +62,7 @@ via: http://www.omgubuntu.co.uk/2014/11/pitivi-0-94-header-bar-more-features

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[ThomazL](https://github.com/ThomazL)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

运行级别与服务管理命令systemd简介

|

||||

systemd的运行级别与服务管理命令简介

|

||||

================================================================================

|

||||

|

||||

|

||||

@ -6,20 +6,21 @@

|

||||

|

||||

在开始介绍systemd命令前,让我们先简单的回顾一下历史。在Linux世界里,有一个很奇怪的现象,一方面Linux和自由软件(FOSS)在不断的向前推进,另一方面人们对这些变化却不断的抱怨。这就是为什么我要在此稍稍提及那些反对systemd所引起的争论的原因,因为我依然记得历史上有不少类似的争论:

|

||||

|

||||

- 软件包(Pacakge)是邪恶的,因为正真的Linux用户会从源码构建他所想要的的一切,并严格的管理系统中安装的软件。

|

||||

- 解析依赖关系的包管理器是邪恶的,正真的Linux用户会手动解决这些该死的依赖关系。

|

||||

- 软件包(Pacakge)是邪恶的,因为真正的Linux用户会从源码构建他所想要的的一切,并严格的管理系统中安装的软件。

|

||||

- 解析依赖关系的包管理器是邪恶的,真正的Linux用户会手动解决这些该死的依赖关系。

|

||||

- apt-get总能把事情干好,所以只有Yum是邪恶的。

|

||||

- Red Hat简直就是Linux中的微软。

|

||||

- 好样的,Ubuntu!

|

||||

- 滚蛋吧,Ubuntu!

|

||||

|

||||

诸如此类...就像我之前常常说的一样,变化总是让人沮丧。这些该死的变化搅乱了我的工作流程,这可不是一件小事情,任何业务流程的中断,都会直接影响到生产力。但是,我们现在还处于计算机发展的婴儿期,在未来的很长的一段时间内将会持续有快速的变化和发展。想必大家应该都认识一些因循守旧的人,在他们的心里,商品一旦买回家以后就是恒久不变的,就像是买了一把扳手、一套家具或是一个粉红色的火烈鸟草坪装饰品。就是这些人,仍然在坚持使用Windows Vista,甚至还有人在使用运行Windows95的老破烂机器和CRT显示器。他们不能理解为什么要去换一台新机器。老的还能用啊,不是么?

|

||||

诸如此类...就像我之前常常说的一样,变化总是让人沮丧。这些该死的变化搅乱了我的工作流程,这可不是一件小事情,任何业务流程的中断,都会直接影响到生产力。但是,我们现在还处于计算机发展的婴儿期,在未来的很长的一段时间内将会持续有快速的变化和发展。想必大家应该都认识一些因循守旧的人,在他们的心里,商品一旦买回家以后就是恒久不变的,就像是买了一把扳手、一套家具或是一个粉红色的火烈鸟草坪装饰品。就是这些人,仍然在坚持使用Windows Vista,甚至还有人在使用运行Windows 95的老破烂机器和CRT显示器。他们不能理解为什么要去换一台新机器。老的还能用啊,不是么?

|

||||

|

||||

这让我回忆起了我在维护老电脑上的一项伟大的成就,那台破电脑真的早就该淘汰掉。从前我有个朋友有一台286的老机器,安装了一个极其老的MS-DOS版本。她使用这台电脑来处理一些简单的任务,比如说约会、日记、记账等,我还用BASIC给她写了一个简单的记账软件。她不用关注任何安全更新,是这样么?因为它压根都没有联网。所以我会时不时给她维修一下电脑,更换电阻、电容、电源或者是CMOS电池什么的。它竟然还一直能用。它那袖珍的琥珀CRT显示器变得越来越暗,在使用了20多年后,终于退出了历史舞台。现在我的这位朋友,换了一台运行Linux的老Thinkpad,来干同样的活。

|

||||

|

||||

前面的话题有点偏题了,下面抓紧时间开始介绍systemd。

|

||||

|

||||

###运行级别 vs. 状态###

|

||||

|

||||

SysVInit使用静态的运行级别来构建不同的启动状态,大部分发布版本中提供了以下5个运行级别:

|

||||

|

||||

- 单用户模式(Single-user mode)

|

||||

@ -28,7 +29,7 @@ SysVInit使用静态的运行级别来构建不同的启动状态,大部分发

|

||||

- 系统关机(System shutdown)

|

||||

- 系统重启(System reboot)

|

||||

|

||||

对于我来说,使用多个运行级别并没有太大的好处,但它们却一直在系统中存在着。 不同于运行级别,systemd可以创建不同的状态,状态提供了灵活的机制来设置启动时的配置项。这些状态是由多个unit文件组成的,状态又叫做启动目标(target)。启动目标有一个漂亮的描述性命名,而不是像运行级别那样使用数字。unit文件可以控制服务、设备、套接字和挂载点。参考/usr/lib/systemd/system/graphical.target,这是CentOS 7默认的启动目标:

|

||||

对于我来说,使用多个运行级别并没有太大的好处,但它们却一直在系统中存在着。 不同于运行级别,systemd可以创建不同的状态,状态提供了灵活的机制来设置启动时的配置项。这些状态是由多个unit文件组成的,状态又叫做启动目标(target)。启动目标有一个清晰的描述性命名,而不是像运行级别那样使用数字。unit文件可以控制服务、设备、套接字和挂载点。参考下/usr/lib/systemd/system/graphical.target,这是CentOS 7默认的启动目标:

|

||||

|

||||

[Unit]

|

||||

Description=Graphical Interface

|

||||

@ -71,15 +72,16 @@ SysVInit使用静态的运行级别来构建不同的启动状态,大部分发

|

||||

DIR_SUFFIX="${APACHE_CONFDIR##/etc/apache2-}"

|

||||

else

|

||||

DIR_SUFFIX=

|

||||

|

||||

整个文件一共有410行。

|

||||

|

||||

你可以检查unit件的依赖关系,我常常被这些复杂的依赖关系给吓到:

|

||||

你可以检查unit文件的依赖关系,我常常被这些复杂的依赖关系给吓到:

|

||||

|

||||

$ systemctl list-dependencies httpd.service

|

||||

|

||||

### cgroups ###

|

||||

|

||||

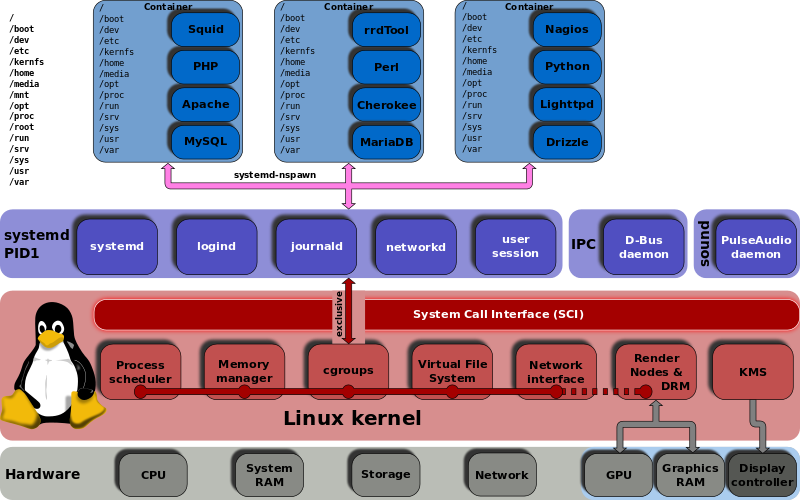

cgroups,或者叫控制组,在Linux内核里已经出现好几年了,但直到systemd的出现才被真正使用起来。[The kernel documentation][1]中是这样描述cgroups的:“控制组提供层次化的机制来管理任务组,使用它可以聚合和拆分任务组,并管理任务组后续产生的子任务。”换句话说,它提供了多种有效的方式来控制、限制和分配资源。systemd使用了cgroups,你可以便捷得查看它,使用下面的命令可以展示你系统中的整个cgroup树:

|

||||

cgroups,或者叫控制组,在Linux内核里已经出现好几年了,但直到systemd的出现才被真正使用起来。[The kernel documentation][1]中是这样描述cgroups的:“控制组提供层次化的机制来管理任务组,使用它可以聚合和拆分任务组,并管理任务组后续产生的子任务。”换句话说,它提供了多种有效的方式来控制、限制和分配资源。systemd使用了cgroups,你可以便捷的查看它,使用下面的命令可以展示你系统中的整个cgroup树:

|

||||

|

||||

$ systemd-cgls

|

||||

|

||||

@ -115,7 +117,7 @@ via: http://www.linux.com/learn/tutorials/794615-systemd-runlevels-and-service-m

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[coloka](https://github.com/coloka)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Linux问答时间--如何在CentOS上安装phpMyAdmin

|

||||

Linux有问必答:如何在CentOS上安装phpMyAdmin

|

||||

================================================================================

|

||||

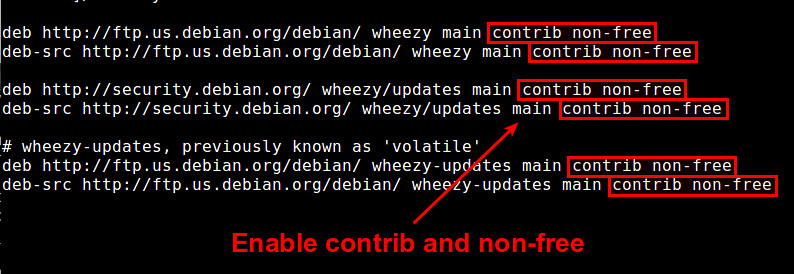

> **问题**:我正在CentOS上运行一个MySQL/MariaDB服务,并且我想要通过网络接口来用phpMyAdmin来管理数据库。在CentOS上安装phpMyAdmin的最佳方法是什么?

|

||||

|

||||

@ -108,7 +108,7 @@ phpMyAdmin是一款以PHP为基础,基于Web的MySQL/MariaDB数据库管理工

|

||||

|

||||

### 测试phpMyAdmin ###

|

||||

|

||||

测试phpMyAdmin是否设置成功,访问这个页面:http://<web-server-ip-addresss>/phpmyadmin

|

||||

测试phpMyAdmin是否设置成功,访问这个页面:http://\<web-server-ip-addresss>/phpmyadmin

|

||||

|

||||

|

||||

|

||||

@ -153,14 +153,14 @@ phpMyAdmin是一款以PHP为基础,基于Web的MySQL/MariaDB数据库管理工

|

||||

via: http://ask.xmodulo.com/install-phpmyadmin-centos.html

|

||||

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://xmodulo.com/set-web-based-database-management-system-adminer.html

|

||||

[2]:http://xmodulo.com/install-lamp-stack-centos.html

|

||||

[3]:http://xmodulo.com/install-lemp-stack-centos.html

|

||||

[4]:http://xmodulo.com/how-to-set-up-epel-repository-on-centos.html

|

||||

[2]:http://linux.cn/article-1567-1.html

|

||||

[3]:http://linux.cn/article-4314-1.html

|

||||

[4]:http://linux.cn/article-2324-1.html

|

||||

[5]:

|

||||

[6]:

|

||||

[7]:

|

||||

@ -1,6 +1,6 @@

|

||||

Linux的十条SCP传输命令

|

||||

十个 SCP 传输命令例子

|

||||

================================================================================

|

||||

Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是不安装**GUI**的。**SSH**可能是Linux系统管理员通过远程方式安全管理服务器的最流行协议。在**SSH**命令中内置了一种叫**SCP**的命令,用来在服务器之间安全传输文件。

|

||||

Linux系统管理员应该很熟悉**CLI**环境,因为通常在Linux服务器中是不安装**GUI**的。**SSH**可能是Linux系统管理员通过远程方式安全管理服务器的最流行协议。在**SSH**命令中内置了一种叫**SCP**的命令,用来在服务器之间安全传输文件。

|

||||

|

||||

|

||||

|

||||

@ -10,7 +10,7 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

scp source_file_name username@destination_host:destination_folder

|

||||

|

||||

**SCP**命令有很多参数供你使用,这里指的是每次都会用到的参数。

|

||||

**SCP**命令有很多可以使用的参数,这里指的是每次都会用到的参数。

|

||||

|

||||

### 用-v参数来提供SCP进程的详细信息 ###

|

||||

|

||||

@ -53,7 +53,7 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

### 用-C参数来让文件传输更快 ###

|

||||

|

||||

有一个参数能让传输文件更快,就是“**-C**”参数,它的作用是不停压缩所传输的文件。它特别之处在于压缩是在网络中进行,当文件传到目标服务器时,它会变回压缩之前的原始大小。

|

||||

有一个参数能让传输文件更快,就是“**-C**”参数,它的作用是不停压缩所传输的文件。它特别之处在于压缩是在网络传输中进行,当文件传到目标服务器时,它会变回压缩之前的原始大小。

|

||||

|

||||

来看看这些命令,我们使用一个**93 Mb**的单一文件来做例子。

|

||||

|

||||

@ -121,18 +121,18 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

看到了吧,压缩了文件之后,传输过程在**162.5**秒内就完成了,速度是不用“**-C**”参数的10倍。如果你要通过网络拷贝很多份文件,那么“**-C**”参数能帮你节省掉很多时间。

|

||||

|

||||

有一点我们需要注意,这个压缩的方法不是适用于所有文件。当源文件已经被压缩过了,那就没办法再压缩了。诸如那些像**.zip**,**.rar**,**pictures**和**.iso**的文件,用“**-C**”参数就无效。

|

||||

有一点我们需要注意,这个压缩的方法不是适用于所有文件。当源文件已经被压缩过了,那就没办法再压缩很多了。诸如那些像**.zip**,**.rar**,**pictures**和**.iso**的文件,用“**-C**”参数就没什么意义。

|

||||

|

||||

### 选择其它加密算法来加密文件 ###

|

||||

|

||||

**SCP**默认是用“**AES-128**”加密算法来加密文件的。如果你想要改用其它加密算法来加密文件,你可以用“**-c**”参数。我们来瞧瞧。

|

||||

**SCP**默认是用“**AES-128**”加密算法来加密传输的。如果你想要改用其它加密算法来加密传输,你可以用“**-c**”参数。我们来瞧瞧。

|

||||

|

||||

pungki@mint ~/Documents $ scp -c 3des Label.pdf mrarianto@202.x.x.x:.

|

||||

|

||||

mrarianto@202.x.x.x's password:

|

||||

Label.pdf 100% 3672KB 282.5KB/s 00:13

|

||||

|

||||

上述命令是告诉**SCP**用**3des algorithm**来加密文件。要注意这个参数是“**-c**”而不是“**-C**“。

|

||||

上述命令是告诉**SCP**用**3des algorithm**来加密文件。要注意这个参数是“**-c**”(小写)而不是“**-C**“(大写)。

|

||||

|

||||

### 限制带宽使用 ###

|

||||

|

||||

@ -143,24 +143,24 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

mrarianto@202.x.x.x's password:

|

||||

Label.pdf 100% 3672KB 50.3KB/s 01:13

|

||||

|

||||

在“**-l**”参数后面的这个**400**值意思是我们给**SCP**进程限制了带宽为**50 KB/秒**。有一点要记住,带宽是以**千比特/秒** (**kbps**)表示的,**8 比特**等于**1 字节**。

|

||||

在“**-l**”参数后面的这个**400**值意思是我们给**SCP**进程限制了带宽为**50 KB/秒**。有一点要记住,带宽是以**千比特/秒** (**kbps**)表示的,而**8 比特**等于**1 字节**。

|

||||

|

||||

因为**SCP**是用**千字节/秒** (**KB/s**)计算的,所以如果你想要限制**SCP**的最大带宽只有**50 KB/s**,你就需要设置成**50 x 8 = 400**。

|

||||

|

||||

### 指定端口 ###

|

||||

|

||||

通常**SCP**是把**22**作为默认端口。但是为了安全起见,你可以改成其它端口。比如说,我们想用**2249**端口,命令如下所示。

|

||||

通常**SCP**是把**22**作为默认端口。但是为了安全起见SSH 监听端口改成其它端口。比如说,我们想用**2249**端口,这种情况下就要指定端口。命令如下所示。

|

||||

|

||||

pungki@mint ~/Documents $ scp -P 2249 Label.pdf mrarianto@202.x.x.x:.

|

||||

|

||||

mrarianto@202.x.x.x's password:

|

||||

Label.pdf 100% 3672KB 262.3KB/s 00:14

|

||||

|

||||

确认一下写的是大写字母“**P**”而不是“**p**“,因为“**p**”已经被用来保留源文件的修改时间和模式。

|

||||

确认一下写的是大写字母“**P**”而不是“**p**“,因为“**p**”已经被用来保留源文件的修改时间和模式(LCTT 译注:和 ssh 命令不同了)。

|

||||

|

||||

### 递归拷贝文件和文件夹 ###

|

||||

|

||||

有时我们需要拷贝文件夹及其内部的所有**文件** / **文件夹**,我们如果能用一条命令解决问题那就更好了。**SCP**用“**-r**”参数就能做到。

|

||||

有时我们需要拷贝文件夹及其内部的所有**文件**/**子文件夹**,我们如果能用一条命令解决问题那就更好了。**SCP**用“**-r**”参数就能做到。

|

||||

|

||||

pungki@mint ~/Documents $ scp -r documents mrarianto@202.x.x.x:.

|

||||

|

||||

@ -172,7 +172,7 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

### 禁用进度条和警告/诊断信息 ###

|

||||

|

||||

如果你不想从SCP中看到进度条和警告/诊断信息,你可以用“**-q**”参数来禁用它们,举例如下。

|

||||

如果你不想从SCP中看到进度条和警告/诊断信息,你可以用“**-q**”参数来静默它们,举例如下。

|

||||

|

||||

pungki@mint ~/Documents $ scp -q Label.pdf mrarianto@202.x.x.x:.

|

||||

|

||||

@ -207,7 +207,7 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

### 选择不同的ssh_config文件 ###

|

||||

|

||||

对于经常在公司网络和公共网络之间切换的移动用户来说,一直改变SCP的设置显然是很痛苦的。如果我们能放一个不同的**ssh_config**文件来匹配我们的需求那就很好了。

|

||||

对于经常在公司网络和公共网络之间切换的移动用户来说,一直改变SCP的设置显然是很痛苦的。如果我们能放一个保存不同配置的**ssh_config**文件来匹配我们的需求那就很好了。

|

||||

|

||||

#### 以下是一个简单的场景 ####

|

||||

|

||||

@ -231,7 +231,7 @@ via: http://www.tecmint.com/scp-commands-examples/

|

||||

|

||||

作者:[Pungki Arianto][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,10 +1,10 @@

|

||||

5大最佳开源的浏览器安全应用

|

||||

5个最佳开源的浏览器安全应用

|

||||

================================================================================

|

||||

浏览器是现在各种在线服务的入口。电脑安全问题迄今仍未得到解决,技术进步为恶意软件提供了新的途径,感染我们的设备,入侵商业网络。例如,智能手机与平板为恶意软件--及其同伙“[恶意广告][1]”--带来一片全新天地,它们在其中腾挪作乱。

|

||||

浏览器是现在各种在线服务的入口。电脑安全问题迄今仍未得到解决,技术进步为恶意软件提供了新的途径,感染我们的设备、入侵商业网络。例如,智能手机与平板为恶意软件--及其同伙“[恶意广告][1]”--带来一片全新天地,它们在其中腾挪作乱。

|

||||

|

||||

恶意广告在合法广告与合法网络中注入恶意软件。当然你可能会认为“合法”广告与网络与非法广告与网络之间仅有一线之隔。但是请不要偏题哦。隐私与安全天生就是一对兄弟,保护隐私也就是保护你的安全。

|

||||

|

||||

Firefox, Chrome, 以及 Opera当仁不让属最棒的浏览器:性能最佳、兼容性最好、以及安全性最优。以下五个开源安全应用安装于浏览器后会助你抵御种种威胁。

|

||||

Firefox, Chrome, 以及 Opera 当仁不让属最棒的浏览器:性能最佳、兼容性最好、以及安全性最优。以下五个开源安全应用安装于浏览器后会助你抵御种种威胁。

|

||||

|

||||

### 保护隐私: 开源浏览器安全应用 ###

|

||||

|

||||

@ -12,11 +12,11 @@ Firefox, Chrome, 以及 Opera当仁不让属最棒的浏览器:性能最佳、

|

||||

|

||||

广告网络为恶意软件提供了肥沃的土壤。一个广告网络可以覆盖数千站点,因此攻陷一个广告网络就相当于攻陷数千台机器。AdBlock及其衍生品—[AdBlock Plus][2], [AdBlock Pro][3], 与 [AdBlock Edge][4]--都是屏蔽广告的优秀工具,可以让那些充斥烦人广告的网站重新还你一片清静。

|

||||

|

||||

当然,凡事都有两面性:上述做法损害了依靠广告收入的站点的利益。这些工具一键式白名单功能,对于那些你希望支持的网站,你可以通过白名单功能关闭这些网站的广告屏蔽。(真的,我亲爱的站长们,如果你不希望网站访问者屏蔽你的广告,那么就适可而止,不要让人反感。)

|

||||

当然,凡事都有两面性:上述做法损害了依靠广告收入的站点的利益。这些工具一键式白名单功能,对于那些你希望支持的网站,你可以通过白名单功能关闭这些网站的广告屏蔽。(真的,我亲爱的站长们,如果你不希望网站访问者屏蔽你的广告,那么就适可而止,不要让人反感。当然,作为粉丝,也请您支持您喜爱的站点,将它们放到白名单吧。)

|

||||

|

||||

|

||||

|

||||

图1:在Ad Blocker中添加其它过滤规则。

|

||||

*图1:在Ad Blocker中添加其它过滤规则。*

|

||||

|

||||

Ad Blocker们不仅能屏蔽广告;它们还能屏蔽网站跟踪爬虫与恶意域名。要打开额外过滤规则,点击ad blocker图标 > 点击**首选项**,转至**过滤规则订阅**标签。点击按纽**添加订阅过滤规则**,然后加入**Easy Privacy + EasyList**规则。加入恶意域名过滤也是个不错的选择;它会屏蔽那些供恶意软件与间谍软件寄生的域名。Adblock可在Firefox, Chrome, Opera, Safari, IE, 以及Android平台下工作。

|

||||

|

||||

@ -24,7 +24,7 @@ Ad Blocker们不仅能屏蔽广告;它们还能屏蔽网站跟踪爬虫与恶

|

||||

|

||||

浏览器扩展HTTPS Everywhere可确保在网站HTTPS可用的时候,总是以HTTPS方式连接到站点。HTTPS意味着你的连接是以SSL(安全套接层)方式加密的,SSL协议通常用于加密网站与电子邮件连接。HTTPS Everywhere可在Firefox, Chrome, 及Opera下使用。

|

||||

|

||||

安装了HTTPS Everywhere之后,它会询问你是否希望启用SSL检测程序。点击是,因为SSL检测程序会提供额外保护,防止中间人攻击与虚假SSL证书攻击。HTTPS Everywhere可在Firefox, Chrome, Opera, Safari, IE, 以及Android平台下工作。

|

||||

安装了HTTPS Everywhere之后,它会询问你是否希望启用SSL检测程序。点击“是”,因为SSL检测程序会提供额外保护,防止中间人攻击与虚假SSL证书攻击。HTTPS Everywhere可在Firefox, Chrome, Opera, Safari, IE, 以及Android平台下工作。

|

||||

|

||||

#### 3. [Social Fixer][6] ####

|

||||

|

||||

@ -37,7 +37,9 @@ Social Fixer本身不是安全工具,但它具有两个重要的安全特性

|

||||

|

||||

|

||||

|

||||

图2: 使用Social Fixer匿名化Facebook网面。

|

||||

*图2: 使用Social Fixer匿名化Facebook网面。*

|

||||

|

||||

(LCTT 译注:好吧,这个应用和我等无关~~)

|

||||

|

||||

#### 4. [Privacy Badger][7] ####

|

||||

|

||||

@ -47,7 +49,7 @@ AdBlock也能拦截这些乌七八糟的东西,不过Privacy Badger在此方

|

||||

|

||||

|

||||

|

||||

图3: Privacy Badger拦截跟踪站点。

|

||||

*图3: Privacy Badger拦截跟踪站点。*

|

||||

|

||||

Privacy Badger装好后就能使用了。点击图标,看看它对你浏览的网页都拦截了哪些东西。你可以试试访问Huffingtonpost.com,这是一家不在每一个页面塞满第三方组件誓不罢休的网站(图3)。

|

||||

|

||||

@ -63,7 +65,7 @@ Disconnect还有安全搜索功能,可以阻止搜索引擎爱挖数据的癖

|

||||

|

||||

想象一下,网页上所有东西都腾空而出,奔你而去。当然这一切都是抽象的且在幕后悄然发生,不象有人正在猛击窗户试图进入你家那么明显罢了。但是,威胁倒是实实在在的,而且数不胜数,所以你必须采取预防措施,来保护自己。

|

||||

|

||||

Carla Schroder著有The Book of Audacity, Linux Cookbook, Linux Networking Cookbook等书,并撰写了上百篇Linux指南文章。她曾担任Linux Planet与Linux Today网站总编。

|

||||

本文作者 Carla Schroder 著有The Book of Audacity, Linux Cookbook, Linux Networking Cookbook等书,并撰写了上百篇Linux指南文章。她曾担任Linux Planet与Linux Today网站总编。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -71,7 +73,7 @@ via: http://www.smallbusinesscomputing.com/biztools/5-best-open-source-web-brows

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[yupmoon](https://github.com/yupmoon)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Postfix提示和故障排除命令

|

||||

Postfix 技巧和故障排除命令

|

||||

================================================================================

|

||||

这里是一些我每天用的命令,当然,其他的email管理员也会使用,因此我写下来,以防我忘记。

|

||||

|

||||

@ -16,7 +16,7 @@ Postfix提示和故障排除命令

|

||||

|

||||

# postqueue -f

|

||||

|

||||

立即交付所有某domain.com域名的所有邮件

|

||||

立即投递某domain.com域名的所有邮件

|

||||

|

||||

# postqueue -s domain.com

|

||||

|

||||

@ -39,7 +39,7 @@ Postfix提示和故障排除命令

|

||||

|

||||

你也可以查看下面的连接,这个连接有很多例子和不错的可用的解释文档,可以用来配置postfix.

|

||||

|

||||

[Postfix Configuration - ][1]

|

||||

[Postfix Configuration][1]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -47,7 +47,7 @@ via: http://techarena51.com/index.php/postfix-configuration-and-explanation-of-p

|

||||

|

||||

作者:[Leo G][a]

|

||||

译者:[Vic020](http://www.vicyu.net)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,47 @@

|

||||

2014年会是 "Linux桌面年"吗?

|

||||

================================================================================

|

||||

> Linux桌面现在终于发出最强音!

|

||||

|

||||

|

||||

|

||||

**看来Linux在2014年有很多改变,许多用户都表示今年Linux的确有进步,但是仅凭这个就能断定2014年就是"Linux桌面年"吗?**

|

||||

|

||||

"Linux桌面年"这句话,在过去几年就被传诵得像句颂歌一样,可以说是在试图用一种比较有意义的方式来标记它的发展进程。此类事情目前还没有发生过,在我们的见证下也从无先例,所以这就不难理解为什么Linux用户会用这个角度去看待这句话。

|

||||

|

||||

大多数软件和硬件领域不太会有这种快速的进步,都以较慢的速度发展,但是对于那些在工业领域有更好眼光的人来说,事情就会变得疯狂。即使有可能,针对某一时刻或某一事件还是比较困难的,但是Linux在几年的过程中还是以指数方式迅速发展成长。

|

||||

|

||||

|

||||

|

||||

|

||||

### Linux桌面年这句话不可轻言 ###

|

||||

|

||||

没有一个比较权威的人和机构能判定Linux桌面年已经到来或者已经过去,所以我们只能尝试根据迄今为止我们所看到的和用户所反映的去推断。有一些人比较保守,改变对他们影响不大,还有一些人则比较激进,永远不知满足。这真的要取决于你的见解了。

|

||||

|

||||

点燃这一切的火花似乎就是Linux上的Steam平台,尽管在这变成现实之前我们已经看到了一些Linux游戏已经开始有重要的动作了。在任何情况下,Valve都可能是我们今天所看到的一系列复苏事件的催化剂。

|

||||

|

||||

|

||||

|

||||

在过去的十年里,Linux桌面以一种缓慢的速度在发展,并没有什么真正的改变。创新肯定是有的,但是市场份额几乎还是保持不变。无论桌面变得多么酷或Linux相比之前的任何一版多出了多少特点,很大程度上还是在原地踏步,包括那些开发商业软件的公司,他们的参与度一直很小,基本上就忽略掉了Linux。

|

||||

|

||||

|

||||

|

||||

|

||||

现在,相比过去的十年里,更多的公司表现出了对Linux平台的浓厚兴趣。或许这是一种自然地演变,Valve并没有做什么,但是Linux最终还是达到了一个能被普通用户接受并理解的水平,并不只是因为令人着迷的开源技术。

|

||||

|

||||

驱动程序能力强了,游戏工作室就会定期移植游戏,在Linux中我们前所未见的应用和中间件就会开始出现。Linux内核发展达到了难以置信的速度,大多数发行版的安装过程通常都不怎么难,所有这一切都只是冰山一角。

|

||||

|

||||

|

||||

|

||||

所以,当有人问你2014年是不是Linux桌面年时,你可以说“是的!”,因为Linux桌面完全统治了2014年。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Was-2014-The-Year-of-Linux-Desktop-467036.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

@ -0,0 +1,92 @@

|

||||

Attic——删除重复数据的备份程序

|

||||

================================================================================

|

||||

Attic是一个Python写的删除重复数据的备份程序,其主要目标是提供一种高效安全的数据备份方式。重复数据消除技术的使用使得Attic适用于日常备份,因为它可以只存储那些修改过的数据。

|

||||

|

||||

### Attic特性 ###

|

||||

|

||||

#### 空间高效存储 ####

|

||||

|

||||

可变块大小重复数据消除技术用于减少检测到的冗余数据存储字节数量。每个文件被分割成若干可变长度组块,只有那些从没见过的组合块会被压缩并添加到仓库中。

|

||||

|

||||

#### 可选数据加密 ####

|

||||

|

||||

所有数据可以使用256位AES加密进行保护,并使用HMAC-SHA256验证数据完整性和真实性。

|

||||

|

||||

#### 离场备份 ####

|

||||

|

||||

Attic可以通过SSH将数据存储到安装有Attic的远程主机上。

|

||||

|

||||

#### 备份可作为文件系统挂载 ####

|

||||

|

||||

备份归档可作为用户空间文件系统挂载,用于便捷地验证和恢复备份。

|

||||

|

||||

#### 安装attic到ubuntu 14.10 ####

|

||||

|

||||

打开终端并运行以下命令

|

||||

|

||||

sudo apt-get install attic

|

||||

|

||||

### 使用Attic ###

|

||||

|

||||

#### 手把手实例教学 ####

|

||||

|

||||

在进行备份之前,首先要对仓库进行初始化:

|

||||

|

||||

$ attic init /somewhere/my-repository.attic

|

||||

|

||||

将~/src和~/Documents目录备份到名为Monday的归档:

|

||||

|

||||

$ attic create /somwhere/my-repository.attic::Monday ~/src ~/Documents

|

||||

|

||||

第二天创建一个新的名为Tuesday的归档:

|

||||

|

||||

$ attic create --stats /somwhere/my-repository.attic::Tuesday ~/src ~/Documents

|

||||

|

||||

该备份将更快些,也更小些,因为只有之前从没见过的新数据会被存储。--stats选项会让Attic输出关于新创建的归档的统计数据,比如唯一数据(不和其它归档共享)的数量:

|

||||

|

||||

归档名:Tuesday

|

||||

归档指纹:387a5e3f9b0e792e91ce87134b0f4bfe17677d9248cb5337f3fbf3a8e157942a

|

||||

开始时间: Tue Mar 25 12:00:10 2014

|

||||

结束时间: Tue Mar 25 12:00:10 2014

|

||||

持续时间: 0.08 seconds

|

||||

文件数量: 358

|

||||

最初大小 压缩后大小 重复数据删除后大小

|

||||

本归档: 57.16 MB 46.78 MB 151.67 kB

|

||||

所有归档:114.02 MB 93.46 MB 44.81 MB

|

||||

|

||||

列出仓库中所有归档:

|

||||

|

||||

$ attic list /somewhere/my-repository.attic

|

||||

|

||||

Monday Mon Mar 24 11:59:35 2014

|

||||

Tuesday Tue Mar 25 12:00:10 2014

|

||||

|

||||

列出Monday归档的内容:

|

||||

|

||||

$ attic list /somewhere/my-repository.attic::Monday

|

||||

|

||||

drwxr-xr-x user group 0 Jan 06 15:22 home/user/Documents

|

||||

-rw-r--r-- user group 7961 Nov 17 2012 home/user/Documents/Important.doc

|

||||

|

||||

恢复Monday归档:

|

||||

|

||||

$ attic extract /somwhere/my-repository.attic::Monday

|

||||

|

||||

通过手动删除Monday归档恢复磁盘空间:

|

||||

|

||||

$ attic delete /somwhere/my-backup.attic::Monday

|

||||

|

||||

详情请查阅[Attic文档][1]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.ubuntugeek.com/attic-deduplicating-backup-program.html

|

||||

|

||||

作者:[ruchi][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.ubuntugeek.com/author/ubuntufix

|

||||

[1]:https://attic-backup.org/index.html

|

||||

@ -0,0 +1,68 @@

|

||||

红帽反驳:“grinch(鬼精灵)”算不上Linux漏洞

|

||||

================================================================================

|

||||

|

||||

|

||||

图片来源:[Natalia Wilson,受Creative Commons许可][1]

|

||||

|

||||

> 安全专家表示,Linux处理权限的方式仍有可能导致潜在的误操作。

|

||||

|

||||

但红帽对此不以为然,称 Alert Logic 于本周二(译者注:12月16日)公布的 grinch (“鬼精灵”) Linux漏洞根本算不上是安全漏洞。

|

||||

|

||||

[红帽于周三发表简报][2] 回应Alert Logic 说法,表示:“(Alert Logic的)这份报告错误地将正常预期动作归为安全问题。”

|

||||

|

||||

安全公司Alert Logic于本周二声称“鬼精灵”漏洞其严重性堪比 Heartbleed 臭虫,并称其是 [Linux 系统处理用户权限时的重大设计缺陷][3],恶意攻击者可借此获取机器的root权限。

|

||||

|

||||

Alert Logic 称攻击者可以使用第三方Linux 软件框架Policy Kit (Polkit)达到利用“鬼精灵”漏洞的目的。Polkit旨在帮助用户安装与运行软件包,此开源程序由红帽维护。Alert Logic 声称,允许用户安装软件程序的过程中往往需要超级用户权限,如此一来,Polkit也在不经意间或通过其它形式为恶意程序的运行洞开方便之门。

|

||||

|

||||

红帽对此不以为意,表示系统就是这么设计的,换句话说,**“鬼精灵”不是臭虫而是一项特性。**

|

||||

|

||||

安全监控公司Threat Stack联合创造人 Jen Andre [就此在一篇博客][4]中写道:“如果你任由用户通过使用那些利用了Policykit的软件,无需密码就可以在系统上安装任何软件,实际上也就绕过了Linux内在授权与访问控制。”

|

||||

|

||||

Alert Logic 高级安全研究员 James Staten 在发给国际数据集团新闻社(IDG News Service)的电子邮件中写道,虽然这种行为是设计使然,有意为之,但“鬼精灵”仍然可能被加以利用或修改来攻陷系统。

|

||||

|

||||

“现在的问题是表面存在一个薄弱环节,可以被用来攻击系统,如果安装软件包象其它操作一样,比如删除软件包或添加软件源,没有密码不行,那么就不会存在被恶意利用的可能性了。”

|

||||

|

||||

不过 Andre 在一次采访中也表示,对那些跃跃欲试的攻击者来说,想利用Polkit还是有一些苛刻限制的。

|

||||

|

||||

攻击者需要能够物理访问机器,并且还须通过外设键鼠与机器互动。如果攻击者能够物理访问机器,可以象重启机器进入恢复模式访问数据与程序一样地轻而易举的得手。

|

||||

|

||||

Andre表示,不是所有Linux机器都默认安装Polkit -- 事实上,其主要用于拥有桌面图形界面的工作站,在当今运行的Linux机器中占有很小的份额。

|

||||

|

||||

换句话说,“鬼精灵”并不具有象[Shellshock][5]那样广泛的攻击面, 后者存在于Bash shell中,几乎所有发行版无一幸免。

|

||||

|

||||

其他安全专家对“鬼精灵”漏洞也不以为然。

|

||||

|

||||

系统网络安全协会(SANS Institute)互联网风暴中心(Internet Storm Center)咨询网站的 Johanners Ullrich 在[一篇博文][6]中写道:“某种程度上,与很多Linux系统过分随意的设置相比,这个并算不上多大的漏洞。”

|

||||

|

||||

Ullrich 同时还指出,“鬼精灵”漏洞也并非完全“良性”,“可以很容易地加以利用,获得超出Polkit设置预期的权限。”

|

||||

|

||||

Andre指出,负责管理运行Polkit桌面Linux机器的管理员要做到心中有数,了解潜在的危险,检查那些程序是靠Polkit来管理的,确保系统无虞。

|

||||

|

||||

他还表示,应用开发者与Linux 发行者也应确保正确使用Polkit框架。

|

||||

|

||||

原始报告的另一位作者Even Tyler似乎也承认“鬼精灵”并非十分严重。

|

||||

|

||||

[在开源安全邮件列表的一封邮件中][7],Bourland 提到攻击者需要借助其它漏洞,连同“鬼精灵”才能发起攻击时,他写道,“鬼精灵”就象个“开启界面的熟练工,但是本身并不能翻多高的浪。”

|

||||

|

||||

(Lucian Constantin 对本文也有贡献。)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via:http://www.computerworld.com/article/2861392/security0/the-grinch-isnt-a-linux-vulnerability-red-hat-says.html

|

||||

|

||||

作者:[Joab Jackson][a]

|

||||

译者:[yupmoon](https://github.com/yupmoon)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出