mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

清除太久远的文章

This commit is contained in:

parent

e55ebfbbeb

commit

523ed70853

@ -1,108 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Fedora CoreOS out of preview)

|

||||

[#]: via: (https://fedoramagazine.org/fedora-coreos-out-of-preview/)

|

||||

[#]: author: (bgilbert https://fedoramagazine.org/author/bgilbert/)

|

||||

|

||||

Fedora CoreOS out of preview

|

||||

======

|

||||

|

||||

![The Fedora CoreOS logo on a gray background.][1]

|

||||

|

||||

The Fedora CoreOS team is pleased to announce that Fedora CoreOS is now [available for general use][2].

|

||||

|

||||

Fedora CoreOS is a new Fedora Edition built specifically for running containerized workloads securely and at scale. It’s the successor to both [Fedora Atomic Host][3] and [CoreOS Container Linux][4] and is part of our effort to explore new ways of assembling and updating an OS. Fedora CoreOS combines the provisioning tools and automatic update model of Container Linux with the packaging technology, OCI support, and SELinux security of Atomic Host. For more on the Fedora CoreOS philosophy, goals, and design, see the [announcement of the preview release][5].

|

||||

|

||||

Some highlights of the current Fedora CoreOS release:

|

||||

|

||||

* [Automatic updates][6], with staged deployments and phased rollouts

|

||||

* Built from Fedora 31, featuring:

|

||||

* Linux 5.4

|

||||

* systemd 243

|

||||

* Ignition 2.1

|

||||

* OCI and Docker Container support via Podman 1.7 and Moby 18.09

|

||||

* cgroups v1 enabled by default for broader compatibility; cgroups v2 available via configuration

|

||||

|

||||

|

||||

|

||||

Fedora CoreOS is available on a variety of platforms:

|

||||

|

||||

* Bare metal, QEMU, OpenStack, and VMware

|

||||

* Images available in all public AWS regions

|

||||

* Downloadable cloud images for Alibaba, AWS, Azure, and GCP

|

||||

* Can run live from RAM via ISO and PXE (netboot) images

|

||||

|

||||

|

||||

|

||||

Fedora CoreOS is under active development. Planned future enhancements include:

|

||||

|

||||

* Addition of the _next_ release stream for extended testing of upcoming Fedora releases.

|

||||

* Support for additional cloud and virtualization platforms, and processor architectures other than _x86_64_.

|

||||

* Closer integration with Kubernetes distributions, including [OKD][7].

|

||||

* [Aggregate statistics collection][8].

|

||||

* Additional [documentation][9].

|

||||

|

||||

|

||||

|

||||

### Where do I get it?

|

||||

|

||||

To try out the new release, head over to the [download page][10] to get OS images or cloud image IDs. Then use the [quick start guide][11] to get a machine running quickly.

|

||||

|

||||

### How do I get involved?

|

||||

|

||||

It’s easy! You can report bugs and missing features to the [issue tracker][12]. You can also discuss Fedora CoreOS in [Fedora Discourse][13], the [development mailing list][14], in _#fedora-coreos_ on Freenode, or at our [weekly IRC meetings][15].

|

||||

|

||||

### Are there stability guarantees?

|

||||

|

||||

In general, the Fedora Project does not make any guarantees around stability. While Fedora CoreOS strives for a high level of stability, this can be challenging to achieve in the rapidly evolving Linux and container ecosystems. We’ve found that the incremental, exploratory, forward-looking development required for Fedora CoreOS — which is also a cornerstone of the Fedora Project as a whole — is difficult to reconcile with the iron-clad stability guarantee that ideally exists when automatically updating systems.

|

||||

|

||||

We’ll continue to do our best not to break existing systems over time, and to give users the tools to manage the impact of any regressions. Nevertheless, automatic updates may produce regressions or breaking changes for some use cases. You should make your own decisions about where and how to run Fedora CoreOS based on your risk tolerance, operational needs, and experience with the OS. We will continue to announce any major planned or unplanned breakage to the [coreos-status mailing list][16], along with recommended mitigations.

|

||||

|

||||

### How do I migrate from CoreOS Container Linux?

|

||||

|

||||

Container Linux machines cannot be migrated in place to Fedora CoreOS. We recommend [writing a new Fedora CoreOS Config][11] to provision Fedora CoreOS machines. Fedora CoreOS Configs are similar to Container Linux Configs, and must be passed through the Fedora CoreOS Config Transpiler to produce an Ignition config for provisioning a Fedora CoreOS machine.

|

||||

|

||||

Whether you’re currently provisioning your Container Linux machines using a Container Linux Config, handwritten Ignition config, or cloud-config, you’ll need to adjust your configs for differences between Container Linux and Fedora CoreOS. For example, on Fedora CoreOS network configuration is performed with [NetworkManager key files][17] instead of _systemd-networkd_, and time synchronization is performed by _chrony_ rather than _systemd-timesyncd_. Initial migration documentation will be [available soon][9] and a skeleton list of differences between the two OSes is available in [this issue][18].

|

||||

|

||||

CoreOS Container Linux will be maintained for a few more months, and then will be declared end-of-life. We’ll announce the exact end-of-life date later this month.

|

||||

|

||||

### How do I migrate from Fedora Atomic Host?

|

||||

|

||||

Fedora Atomic Host has already reached end-of-life, and you should migrate to Fedora CoreOS as soon as possible. We do not recommend in-place migration of Atomic Host machines to Fedora CoreOS. Instead, we recommend [writing a Fedora CoreOS Config][11] and using it to provision new Fedora CoreOS machines. As with CoreOS Container Linux, you’ll need to adjust your existing cloud-configs for differences between Fedora Atomic Host and Fedora CoreOS.

|

||||

|

||||

Welcome to Fedora CoreOS. Deploy it, launch your apps, and let us know what you think!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/fedora-coreos-out-of-preview/

|

||||

|

||||

作者:[bgilbert][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://fedoramagazine.org/author/bgilbert/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://fedoramagazine.org/wp-content/uploads/2019/07/introducing-fedora-coreos-816x345.png

|

||||

[2]: https://getfedora.org/coreos/

|

||||

[3]: https://www.projectatomic.io/

|

||||

[4]: https://coreos.com/os/docs/latest/

|

||||

[5]: https://fedoramagazine.org/introducing-fedora-coreos/

|

||||

[6]: https://docs.fedoraproject.org/en-US/fedora-coreos/auto-updates/

|

||||

[7]: https://www.okd.io/

|

||||

[8]: https://github.com/coreos/fedora-coreos-pinger/

|

||||

[9]: https://docs.fedoraproject.org/en-US/fedora-coreos/

|

||||

[10]: https://getfedora.org/coreos/download/

|

||||

[11]: https://docs.fedoraproject.org/en-US/fedora-coreos/getting-started/

|

||||

[12]: https://github.com/coreos/fedora-coreos-tracker/issues

|

||||

[13]: https://discussion.fedoraproject.org/c/server/coreos

|

||||

[14]: https://lists.fedoraproject.org/archives/list/coreos@lists.fedoraproject.org/

|

||||

[15]: https://github.com/coreos/fedora-coreos-tracker#meetings

|

||||

[16]: https://lists.fedoraproject.org/archives/list/coreos-status@lists.fedoraproject.org/

|

||||

[17]: https://developer.gnome.org/NetworkManager/stable/nm-settings-keyfile.html

|

||||

[18]: https://github.com/coreos/fedora-coreos-tracker/issues/159

|

||||

@ -1,80 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Open source fights cancer, Tesla adopts Coreboot, Uber and Lyft release open source machine learning)

|

||||

[#]: via: (https://opensource.com/article/20/1/news-january-19)

|

||||

[#]: author: (Scott Nesbitt https://opensource.com/users/scottnesbitt)

|

||||

|

||||

Open source fights cancer, Tesla adopts Coreboot, Uber and Lyft release open source machine learning

|

||||

======

|

||||

Catch up on the biggest open source headlines from the past two weeks.

|

||||

![Weekly news roundup with TV][1]

|

||||

|

||||

In this edition of our open source news roundup, we take a look machine learning tools from Uber and Lyft, open source software to fight cancer, saving students money with open textbooks, and more!

|

||||

|

||||

### Uber and Lyft release machine learning tools

|

||||

|

||||

It's hard to a growing company these days that doesn't take advantage of machine learning to streamline its business and make sense of the data it amasses. Ridesharing companies, which gather massive amounts of data, have enthusiastically embraced the promise of machine learning. Two of the biggest players in the ridesharing sector have made some of their machine learning code open source.

|

||||

|

||||

Uber recently [released the source code][2] for its Manifold tool for debugging machine learning models. According to Uber software engineer Lezhi Li, Manifold will "benefit the machine learning (ML) community by providing interpretability and debuggability for ML workflows." If you're interested, you can browse Manifold's source code [on GitHub][3].

|

||||

|

||||

Lyft has also upped its open source stakes by releasing Flyte. Flyte, whose source code is [available on GitHub][4], manages machine learning pipelines and "is an essential backbone to (Lyft's) operations." Lyft has been using it to train AI models and process data "across pricing, logistics, mapping, and autonomous projects."

|

||||

|

||||

### Software to detect cancer cells

|

||||

|

||||

In a study recently published in _Nature Biotechnology_, a team of medical researchers from around the world announced [new open source software][5] that "could make it easier to create personalised cancer treatment plans."

|

||||

|

||||

The software assesses "the proportion of cancerous cells in a tumour sample" and can help clinicians "judge the accuracy of computer predictions and establish benchmarks" across tumor samples. Maxime Tarabichi, one of the lead authors of [the study][6], said that the software "provides a foundation which will hopefully become a much-needed, unbiased, gold-standard benchmarking tool for assessing models that aim to characterise a tumour’s genetic diversity."

|

||||

|

||||

### University of Regina saves students over $1 million with open textbooks

|

||||

|

||||

If rising tuition costs weren't enough to send university student spiralling into debt, the high prices of textbooks can deepen the crater in their bank accounts. To help ease that financial pain, many universities turn to open textbooks. One of those schools is the University of Regina. By offering open text books, the university [expects to save a huge amount for students][7] over the next five years.

|

||||

|

||||

The expected savings are in the region of $1.5 million (CAD), or around $1.1 million USD (at the time of writing). The textbooks, according to a report by radio station CKOM, are "provided free for (students) and they can be printed off or used as e-books." Students aren't getting inferior-quality textbooks, though. Nilgun Onder of the University of Regina said that the "textbooks and other open education resources the university published are all peer-reviewed resources. In other words, they are reliable and credible."

|

||||

|

||||

### Tesla adopts Coreboot

|

||||

|

||||

Much of the software driving (no pun intended) the electric vehicles made by Tesla Motors is open source. So it's not surprising to learn that the company has [adopted Coreboot][8] "as part of their electric vehicle computer systems."

|

||||

|

||||

Coreboot was developed as a replacement for proprietary BIOS and is used to boot hardware and the Linux kernel. The code, which is in [Tesla's GitHub repository][9], "is from Tesla Motors and Samsung," according to Phoronix. Samsung, in case you're wondering, makes the chip on which Tesla's self-driving software runs.

|

||||

|

||||

#### In other news

|

||||

|

||||

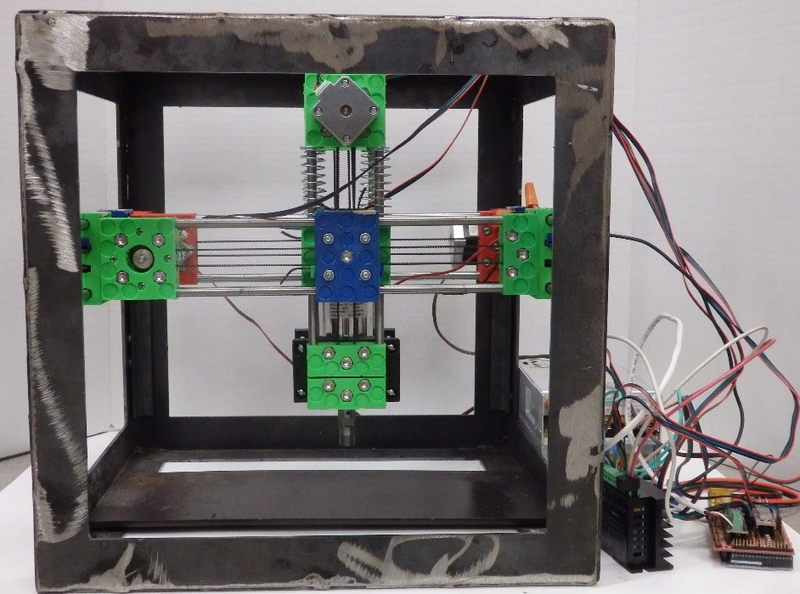

* [Arduino launches new modular platform for IoT development][10]

|

||||

* [SUSE and Karunya Institute of Technology and Sciences collaborate to enhance cloud and open source learning][11]

|

||||

* [How open-source code could help us survive natural disasters][12]

|

||||

* [The hottest thing in robotics is an open source project you've never heard of][13]

|

||||

|

||||

|

||||

|

||||

_Thanks, as always, to Opensource.com staff members and moderators for their help this week. Make sure to check out [our event calendar][14], to see what's happening next week in open source._

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/20/1/news-january-19

|

||||

|

||||

作者:[Scott Nesbitt][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/scottnesbitt

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/weekly_news_roundup_tv.png?itok=B6PM4S1i (Weekly news roundup with TV)

|

||||

[2]: https://venturebeat.com/2020/01/07/uber-open-sources-manifold-a-visual-tool-for-debugging-ai-models/

|

||||

[3]: https://github.com/uber/manifold

|

||||

[4]: https://github.com/lyft/flyte

|

||||

[5]: https://www.cbronline.com/industry/healthcare/open-source-cancer-cells/

|

||||

[6]: https://www.nature.com/articles/s41587-019-0364-z

|

||||

[7]: https://www.ckom.com/2020/01/07/open-source-program-to-save-u-of-r-students-1-5m/

|

||||

[8]: https://www.phoronix.com/scan.php?page=news_item&px=Tesla-Uses-Coreboot

|

||||

[9]: https://github.com/teslamotors/coreboot

|

||||

[10]: https://techcrunch.com/2020/01/07/arduino-launches-a-new-modular-platform-for-iot-development/

|

||||

[11]: https://www.crn.in/news/suse-and-karunya-institute-of-technology-and-sciences-collaborate-to-enhance-cloud-and-open-source-learning/

|

||||

[12]: https://qz.com/1784867/open-source-data-could-help-save-lives-during-natural-disasters/

|

||||

[13]: https://www.techrepublic.com/article/the-hottest-thing-in-robotics-is-an-open-source-project-youve-never-heard-of/

|

||||

[14]: https://opensource.com/resources/conferences-and-events-monthly

|

||||

@ -1,61 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (What 2020 brings for the developer, and more industry trends)

|

||||

[#]: via: (https://opensource.com/article/20/1/hybrid-developer-future-industry-trends)

|

||||

[#]: author: (Tim Hildred https://opensource.com/users/thildred)

|

||||

|

||||

What 2020 brings for the developer, and more industry trends

|

||||

======

|

||||

A weekly look at open source community and industry trends.

|

||||

![Person standing in front of a giant computer screen with numbers, data][1]

|

||||

|

||||

As part of my role as a senior product marketing manager at an enterprise software company with an open source development model, I publish a regular update about open source community, market, and industry trends for product marketers, managers, and other influencers. Here are five of my and their favorite articles from that update.

|

||||

|

||||

## [How developers will work in 2020][2]

|

||||

|

||||

> Developers have been spending an enormous amount of time on everything *except* making software that solves problems. ‘DevOps’ has transmogrified from ‘developers releasing software’ into ‘developers building ever more complex infrastructure atop Kubernetes’ and ‘developers reinventing their software as distributed stateless functions.’ In 2020, ‘serverless’ will mature. Handle state. Handle data storage without requiring devs to learn yet-another-proprietary-database-service. Learning new stuff is fun-but shipping is even better, and we’ll finally see systems and services that support that.

|

||||

|

||||

**The impact:** A lot of forces are converging to give developers superpowers. There are ever more open source building blocks in place; thousands of geniuses are collaborating to make developer workflows more fun and efficient, and artificial intelligences are being brought to bear solving the types of problems a developer might face. On the one hand, there is clear leverage to giving developer superpowers: if they can make magic with software they'll be able to make even bigger magic with all this help. On the other hand, imagine if teachers had the same level of investment and support. Makes ya wonder don't it?

|

||||

|

||||

## [2020 forecast: Cloud-y with a chance of hybrid][3]

|

||||

|

||||

> Behind this growth is an array of new themes and strategies that are pushing cloud further up business agendas the world over. With ‘emerging’ technologies, such as AI and machine learning, containers and functions, and even more flexibility available with hybrid cloud solutions being provided by the major providers, it’s no wonder cloud is set to take centre stage.

|

||||

|

||||

**The impact:** Hybrid cloud finally has the same level of flesh that public cloud and on-premises have. Over the course of 2019 especially the competing visions offered for what it meant to be hybrid formed a composite that drove home why someone would want it. At the same time more and more of the technology pieces that make hybrid viable are in place and maturing. 2019 was the year that people truly "got" hybrid. 2020 will be the year that people start to take advantage of it.

|

||||

|

||||

## [The no-code delusion][4]

|

||||

|

||||

> Increasingly popular in the last couple of years, I think 2020 is going to be the year of “no code”: the movement that says you can write business logic and even entire applications without having the training of a software developer. I empathise with people doing this, and I think some of the “no code” tools are great. But I also thing it’s wrong at heart.

|

||||

|

||||

**The impact:** I've heard many devs say it over many years: "software development is hard." It would be a mistake to interpret that as "all software development is equally hard." What I've always found hard about learning to code is trying to think in a way that a computer will understand. With or without code, making computers do complex things will always require a different kind of thinking.

|

||||

|

||||

## [All things Java][5]

|

||||

|

||||

> The open, multi-vendor model has been a major strength—it’s very hard for any single vendor to pioneer a market for a sustained period of time—and taking different perspectives from diverse industries has been a key strength of the [evolution of Java][6]. Choosing to open source Java in 2006 was also a decision that only worked to strengthen the Java ecosystem, as it allowed Sun Microsystems and later Oracle to share the responsibility of maintaining and evolving Java with many other organizations and individuals.

|

||||

|

||||

**The impact:** The things that move quickly in technology are the things that can be thrown away. When you know you're going to keep something for a long time, you're likely to make different choices about what to prioritize when building it. Disposable and long-lived both have their places, and the Java community made enough good decisions over the years that the language itself can have a foot in both camps.

|

||||

|

||||

_I hope you enjoyed this list of what stood out to me from last week and come back next Monday for more open source community, market, and industry trends._

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/20/1/hybrid-developer-future-industry-trends

|

||||

|

||||

作者:[Tim Hildred][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/thildred

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/data_metrics_analytics_desktop_laptop.png?itok=9QXd7AUr (Person standing in front of a giant computer screen with numbers, data)

|

||||

[2]: https://thenextweb.com/readme/2020/01/15/how-developers-will-work-in-2020/

|

||||

[3]: https://www.itproportal.com/features/2020-forecast-cloud-y-with-a-chance-of-hybrid/

|

||||

[4]: https://www.alexhudson.com/2020/01/13/the-no-code-delusion/

|

||||

[5]: https://appdevelopermagazine.com/all-things-java/

|

||||

[6]: https://appdevelopermagazine.com/top-10-developer-technologies-in-2019/

|

||||

@ -1,221 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (The Ultimate Guide to JavaScript Fatigue: Realities of our industry)

|

||||

[#]: via: (https://lucasfcosta.com/2017/07/17/The-Ultimate-Guide-to-JavaScript-Fatigue.html)

|

||||

[#]: author: (Lucas Fernandes Da Costa https://lucasfcosta.com)

|

||||

|

||||

The Ultimate Guide to JavaScript Fatigue: Realities of our industry

|

||||

======

|

||||

|

||||

**Complaining about JS Fatigue is just like complaining about the fact that humanity has created too many tools to solve the problems we have** , from email to airplanes and spaceships.

|

||||

|

||||

Last week I’ve done a talk about this very same subject at the NebraskaJS 2017 Conference and I got so many positive feedbacks that I just thought this talk should also become a blog post in order to reach more people and help them deal with JS Fatigue and understand the realities of our industry. **My goal with this post is to change the way you think about software engineering in general and help you in any areas you might work on**.

|

||||

|

||||

One of the things that has inspired me to write this blog post and that totally changed my life is [this great post by Patrick McKenzie, called “Don’t Call Yourself a Programmer and other Career Advice”][1]. **I highly recommend you read that**. Most of this blog post is advice based on what Patrick has written in that post applied to the JavaScript ecosystem and with a few more thoughts I’ve developed during these last years working in the tech industry.

|

||||

|

||||

This first section is gonna be a bit philosophical, but I swear it will be worth reading.

|

||||

|

||||

### Realities of Our Industry 101

|

||||

|

||||

Just like Patrick has done in [his post][1], let’s start with the most basic and essential truth about our industry:

|

||||

|

||||

Software solves business problems

|

||||

|

||||

This is it. **Software does not exist to please us as programmers** and let us write beautiful code. Neither it exists to create jobs for people in the tech industry. **Actually, it exists to kill as many jobs as possible, including ours** , and this is why basic income will become much more important in the next few years, but that’s a whole other subject.

|

||||

|

||||

I’m sorry to say that, but the reason things are that way is that there are only two things that matter in the software engineering (and any other industries):

|

||||

|

||||

**Cost versus Revenue**

|

||||

|

||||

**The more you decrease cost and increase revenue, the more valuable you are** , and one of the most common ways of decreasing cost and increasing revenue is replacing human beings by machines, which are more effective and usually cost less in the long run.

|

||||

|

||||

You are not paid to write code

|

||||

|

||||

**Technology is not a goal.** Nobody cares about which programming language you are using, nobody cares about which frameworks your team has chosen, nobody cares about how elegant your data structures are and nobody cares about how good is your code. **The only thing that somebody cares about is how much does your software cost and how much revenue it generates**.

|

||||

|

||||

Writing beautiful code does not matter to your clients. We write beautiful code because it makes us more productive in the long run and this decreases cost and increases revenue.

|

||||

|

||||

The whole reason why we try not to write bugs is not that we value correctness, but that **our clients** value correctness. If you have ever seen a bug becoming a feature you know what I’m talking about. That bug exists but it should not be fixed. That happens because our goal is not to fix bugs, our goal is to generate revenue. If our bugs make clients happy then they increase revenue and therefore we are accomplishing our goals.

|

||||

|

||||

Reusable space rockets, self-driving cars, robots, artificial intelligence: these things do not exist just because someone thought it would be cool to create them. They exist because there are business interests behind them. And I’m not saying the people behind them just want money, I’m sure they think that stuff is also cool, but the truth is that if they were not economically viable or had any potential to become so, they would not exist.

|

||||

|

||||

Probably I should not even call this section “Realities of Our Industry 101”, maybe I should just call it “Realities of Capitalism 101”.

|

||||

|

||||

And given that our only goal is to increase revenue and decrease cost, I think we as programmers should be paying more attention to requirements and design and start thinking with our minds and participating more actively in business decisions, which is why it is extremely important to know the problem domain we are working on. How many times before have you found yourself trying to think about what should happen in certain edge cases that have not been thought before by your managers or business people?

|

||||

|

||||

In 1975, Boehm has done a research in which he found out that about 64% of all errors in the software he was studying were caused by design, while only 36% of all errors were coding errors. Another study called [“Higher Order Software—A Methodology for Defining Software”][2] also states that **in the NASA Apollo project, about 73% of all errors were design errors**.

|

||||

|

||||

The whole reason why Design and Requirements exist is that they define what problems we’re going to solve and solving problems is what generates revenue.

|

||||

|

||||

> Without requirements or design, programming is the art of adding bugs to an empty text file.

|

||||

>

|

||||

> * Louis Srygley

|

||||

>

|

||||

|

||||

|

||||

This same principle also applies to the tools we’ve got available in the JavaScript ecosystem. Babel, webpack, react, Redux, Mocha, Chai, Typescript, all of them exist to solve a problem and we gotta understand which problem they are trying to solve, we need to think carefully about when most of them are needed, otherwise, we will end up having JS Fatigue because:

|

||||

|

||||

JS Fatigue happens when people use tools they don't need to solve problems they don't have.

|

||||

|

||||

As Donald Knuth once said: “Premature optimization is the root of all evil”. Remember that software only exists to solve business problems and most software out there is just boring, it does not have any high scalability or high-performance constraints. Focus on solving business problems, focus on decreasing cost and generating revenue because this is all that matters. Optimize when you need, otherwise you will probably be adding unnecessary complexity to your software, which increases cost, and not generating enough revenue to justify that.

|

||||

|

||||

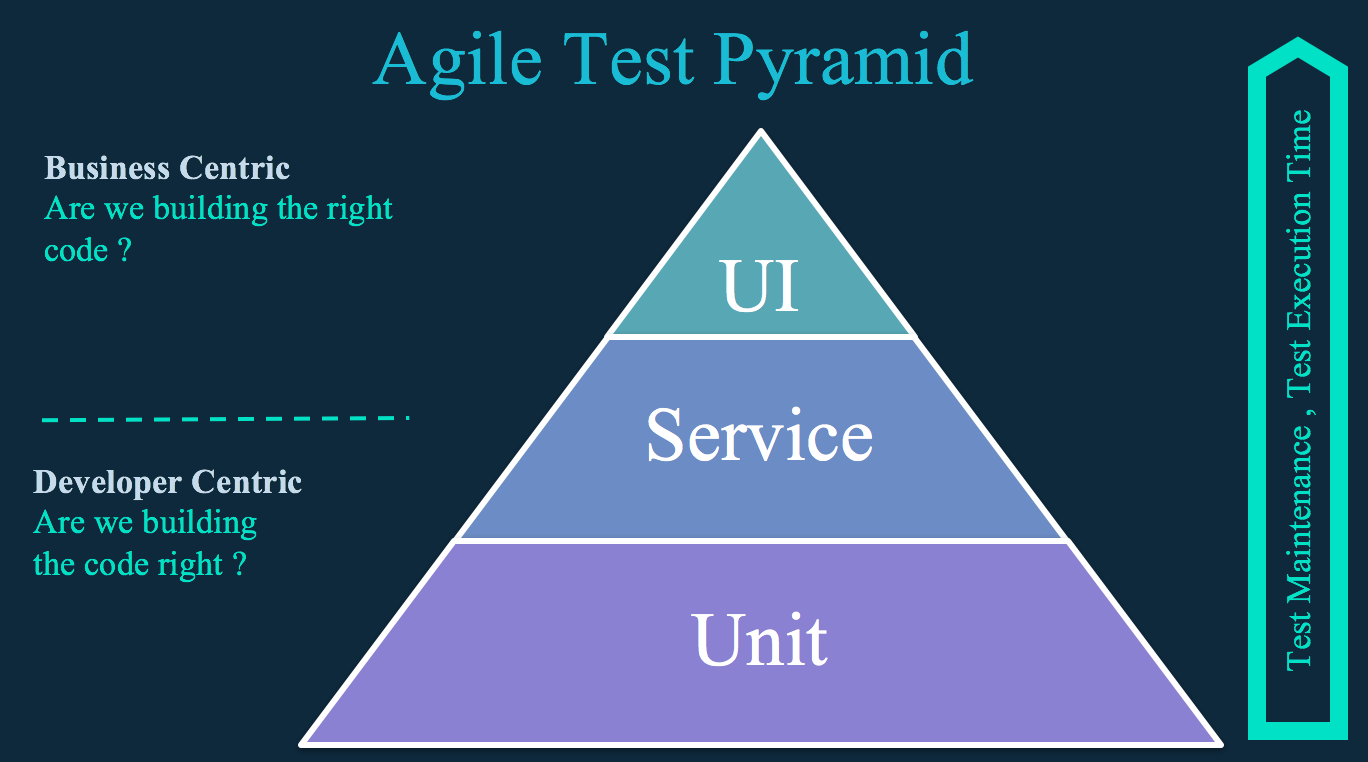

This is why I think we should apply [Test Driven Development][3] principles to everything we do in our job. And by saying this I’m not just talking about testing. **I’m talking about waiting for problems to appear before solving them. This is what TDD is all about**. As Kent Beck himself says: “TDD reduces fear” because it guides your steps and allows you take small steps towards solving your problems. One problem at a time. By doing the same thing when it comes to deciding when to adopt new technologies then we will also reduce fear.

|

||||

|

||||

Solving one problem at a time also decreases [Analysis Paralysis][4], which is basically what happens when you open Netflix and spend three hours concerned about making the optimal choice instead of actually watching something. By solving one problem at a time we reduce the scope of our decisions and by reducing the scope of our decisions we have fewer choices to make and by having fewer choices to make we decrease Analysis Paralysis.

|

||||

|

||||

Have you ever thought about how easier it was to decide what you were going to watch when there were only a few TV channels available? Or how easier it was to decide which game you were going to play when you had only a few cartridges at home?

|

||||

|

||||

### But what about JavaScript?

|

||||

|

||||

By the time I’m writing this post NPM has 489,989 packages and tomorrow approximately 515 new ones are going to be published.

|

||||

|

||||

And the packages we use and complain about have a history behind them we must comprehend in order to understand why we need them. **They are all trying to solve problems.**

|

||||

|

||||

Babel, Dart, CoffeeScript and other transpilers come from our necessity of writing code other than JavaScript but making it runnable in our browsers. Babel even lets us write new generation JavaScript and make sure it will work even on older browsers, which has always been a great problem given the inconsistencies and different amount of compliance to the ECMA Specification between browsers. Even though the ECMA spec is becoming more and more solid these days, we still need Babel. And if you want to read more about Babel’s history I highly recommend that you read [this excellent post by Henry Zhu][5].

|

||||

|

||||

Module bundlers such as Webpack and Browserify also have their reason to exist. If you remember well, not so long ago we used to suffer a lot with lots of `script` tags and making them work together. They used to pollute the global namespace and it was reasonably hard to make them work together when one depended on the other. In order to solve this [`Require.js`][6] was created, but it still had its problems, it was not that straightforward and its syntax also made it prone to other problems, as you can see [in this blog post][7]. Then Node.js came with `CommonJS` imports, which were synchronous, simple and clean, but we still needed a way to make that work on our browsers and this is why we needed Webpack and Browserify.

|

||||

|

||||

And Webpack itself actually solves more problems than that by allowing us to deal with CSS, images and many other resources as if they were JavaScript dependencies.

|

||||

|

||||

Front-end frameworks are a bit more complicated, but the reason why they exist is to reduce the cognitive load when we write code so that we don’t need to worry about manipulating the DOM ourselves or even dealing with messy browser APIs (another problem JQuery came to solve), which is not only error prone but also not productive.

|

||||

|

||||

This is what we have been doing this whole time in computer science. We use low-level abstractions and build even more abstractions on top of it. The more we worry about describing how our software should work instead of making it work, the more productive we are.

|

||||

|

||||

But all those tools have something in common: **they exist because the web platform moves too fast**. Nowadays we’re using web technology everywhere: in web browsers, in desktop applications, in phone applications or even in watch applications.

|

||||

|

||||

This evolution also creates problems we need to solve. PWAs, for example, do not exist only because they’re cool and we programmers have fun writing them. Remember the first section of this post: **PWAs exist because they create business value**.

|

||||

|

||||

And usually standards are not fast enough to be created and therefore we need to create our own solutions to these things, which is why it is great to have such a vibrant and creative community with us. We’re solving problems all the time and **we are allowing natural selection to do its job**.

|

||||

|

||||

The tools that suit us better thrive, get more contributors and develop themselves more quickly and sometimes other tools end up incorporating the good ideas from the ones that thrive and becoming even more popular than them. This is how we evolve.

|

||||

|

||||

By having more tools we also have more choices. If you remember the UNIX philosophy well, it states that we should aim at creating programs that do one thing and do it well.

|

||||

|

||||

We can clearly see this happening in the JS testing environment, for example, where we have Mocha for running tests and Chai for doing assertions, while in Java JUnit tries to do all these things. This means that if we have a problem with one of them or if we find another one that suits us better, we can simply replace that small part and still have the advantages of the other ones.

|

||||

|

||||

The UNIX philosophy also states that we should write programs that work together. And this is exactly what we are doing! Take a look at Babel, Webpack and React, for example. They work very well together but we still do not need one to use the other. In the testing environment, for example, if we’re using Mocha and Chai all of a sudden we can just install Karma and run those same tests in multiple environments.

|

||||

|

||||

### How to Deal With It

|

||||

|

||||

My first advice for anyone suffering from JS Fatigue would definitely be to stay aware that **you don’t need to know everything**. Trying to learn it all at once, even when we don’t have to do so, only increases the feeling of fatigue. Go deep in areas that you love and for which you feel an inner motivation to study and adopt a lazy approach when it comes to the other ones. I’m not saying that you should be lazy, I’m just saying that you can learn those only when needed. Whenever you face a problem that requires you to use a certain technology to solve it, go learn.

|

||||

|

||||

Another important thing to say is that **you should start from the beginning**. Make sure you have learned enough about JavaScript itself before using any JavaScript frameworks. This is the only way you will be able to understand them and bend them to your will, otherwise, whenever you face an error you have never seen before you won’t know which steps to take in order to solve it. Learning core web technologies such as CSS, HTML5, JavaScript and also computer science fundamentals or even how the HTTP protocol works will help you master any other technologies a lot more quickly.

|

||||

|

||||

But please, don’t get too attached to that. Sometimes you gotta risk yourself and start doing things on your own. As Sacha Greif has written in [this blog post][8], spending too much time learning the fundamentals is just like trying to learn how to swim by studying fluid dynamics. Sometimes you just gotta jump into the pool and try to swim by yourself.

|

||||

|

||||

And please, don’t get too attached to a single technology. All of the things we have available nowadays have already been invented in the past. Of course, they have different features and a brand new name, but, in their essence, they are all the same.

|

||||

|

||||

If you look at NPM, it is nothing new, we already had Maven Central and Ruby Gems quite a long time ago.

|

||||

|

||||

In order to transpile your code, Babel applies the very same principles and theory as some of the oldest and most well-known compilers, such as the GCC.

|

||||

|

||||

Even JSX is not a new idea. It E4X (ECMAScript for XML) already existed more than 10 years ago.

|

||||

|

||||

Now you might ask: “what about Gulp, Grunt and NPM Scripts?” Well, I’m sorry but we can solve all those problems with GNU Make in 1976. And actually, there are a reasonable number of JavaScript projects that still use it, such as Chai.js, for example. But we do not do that because we are hipsters that like vintage stuff. We use `make` because it solves our problems, and this is what you should aim at doing, as we’ve talked before.

|

||||

|

||||

If you really want to understand a certain technology and be able to solve any problems you might face, please, dig deep. One of the most decisive factors to success is curiosity, so **dig deep into the technologies you like**. Try to understand them from bottom-up and whenever you think something is just “magic”, debunk that myth by exploring the codebase by yourself.

|

||||

|

||||

In my opinion, there is no better quote than this one by Richard Feinman, when it comes to really learning something:

|

||||

|

||||

> What I cannot create, I do not understand

|

||||

|

||||

And just below this phrase, [in the same blackboard, Richard also wrote][9]:

|

||||

|

||||

> Know how to solve every problem that has been solved

|

||||

|

||||

Isn’t this just amazing?

|

||||

|

||||

When Richard said that, he was talking about being able to take any theoretical result and re-derive it, but I think the exact same principle can be applied to software engineering. The tools that solve our problems have already been invented, they already exist, so we should be able to get to them all by ourselves.

|

||||

|

||||

This is the very reason I love [some of the videos available in Egghead.io][10] in which Dan Abramov explains how to implement certain features that exist in Redux from scratch or [blog posts that teach you how to build your own JSX renderer][11].

|

||||

|

||||

So why not trying to implement these things by yourself or going to GitHub and reading their codebase in order to understand how they work? I’m sure you will find a lot of useful knowledge out there. Comments and tutorials might lie and be incorrect sometimes, the code cannot.

|

||||

|

||||

Another thing that we have been talking a lot in this post is that **you should not get ahead of yourself**. Follow a TDD approach and solve one problem at a time. You are paid to increase revenue and decrease cost and you do this by solving problems, this is the reason why software exists.

|

||||

|

||||

And since we love comparing our role to the ones related to civil engineering, let’s do a quick comparison between software development and civil engineering, just as [Sam Newman does in his brilliant book called “Building Microservices”][12].

|

||||

|

||||

We love calling ourselves “engineers” or “architects”, but is that term really correct? We have been developing software for what we know as computers less than a hundred years ago, while the Colosseum, for example, exists for about two thousand years.

|

||||

|

||||

When was the last time you’ve seen a bridge falling and when was the last time your telephone or your browser crashed?

|

||||

|

||||

In order to explain this, I’ll use an example I love.

|

||||

|

||||

This is the beautiful and awesome city of Barcelona:

|

||||

|

||||

![The City of Barcelona][13]

|

||||

|

||||

When we look at it this way and from this distance, it just looks like any other city in the world, but when we look at it from above, this is how Barcelona looks:

|

||||

|

||||

![Barcelona from above][14]

|

||||

|

||||

As you can see, every block has the same size and all of them are very organized. If you’ve ever been to Barcelona you will also know how good it is to move through the city and how well it works.

|

||||

|

||||

But the people that planned Barcelona could not predict what it was going to look like in the next two or three hundred years. In cities, people come in and people move through it all the time so what they had to do was make it grow organically and adapt as the time goes by. They had to be prepared for changes.

|

||||

|

||||

This very same thing happens to our software. It evolves quickly, refactors are often needed and requirements change more frequently than we would like them to.

|

||||

|

||||

So, instead of acting like a Software Engineer, act as a Town Planner. Let your software grow organically and adapt as needed. Solve problems as they come by but make sure everything still has its place.

|

||||

|

||||

Doing this when it comes to software is even easier than doing this in cities due to the fact that **software is flexible, civil engineering is not**. **In the software world, our build time is compile time**. In Barcelona we cannot simply destroy buildings to give space to new ones, in Software we can do that a lot easier. We can break things all the time, we can make experiments because we can build as many times as we want and it usually takes seconds and we spend a lot more time thinking than building. Our job is purely intellectual.

|

||||

|

||||

So **act like a town planner, let your software grow and adapt as needed**.

|

||||

|

||||

By doing this you will also have better abstractions and know when it’s the right time to adopt them.

|

||||

|

||||

As Sam Koblenski says:

|

||||

|

||||

> Abstractions only work well in the right context, and the right context develops as the system develops.

|

||||

|

||||

Nowadays something I see very often is people looking for boilerplates when they’re trying to learn a new technology, but, in my opinion, **you should avoid boilerplates when you’re starting out**. Of course boilerplates and generators are useful if you are already experienced, but they take a lot of control out of your hands and therefore you won’t learn how to set up a project and you won’t understand exactly where each piece of the software you are using fits.

|

||||

|

||||

When you feel like you are struggling more than necessary to get something simple done, it might be the right time for you to look for an easier way to do this. In our role **you should strive to be lazy** , you should work to not work. By doing that you have more free time to do other things and this decreases cost and increases revenue, so that’s another way of accomplishing your goal. You should not only work harder, you should work smarter.

|

||||

|

||||

Probably someone has already had the same problem as you’re having right now, but if nobody did it might be your time to shine and build your own solution and help other people.

|

||||

|

||||

But sometimes you will not be able to realize you could be more effective in your tasks until you see someone doing them better. This is why it is so important to **talk to people**.

|

||||

|

||||

By talking to people you share experiences that help each other’s careers and we discover new tools to improve our workflow and, even more important than that, learn how they solve their problems. This is why I like reading blog posts in which companies explain how they solve their problems.

|

||||

|

||||

Especially in our area we like to think that Google and StackOverflow can answer all our questions, but we still need to know which questions to ask. I’m sure you have already had a problem you could not find a solution for because you didn’t know exactly what was happening and therefore didn’t know what was the right question to ask.

|

||||

|

||||

But if I needed to sum this whole post in a single advice, it would be:

|

||||

|

||||

Solve problems.

|

||||

|

||||

Software is not a magic box, software is not poetry (unfortunately). It exists to solve problems and improves peoples’ lives. Software exists to push the world forward.

|

||||

|

||||

**Now it’s your time to go out there and solve problems**.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://lucasfcosta.com/2017/07/17/The-Ultimate-Guide-to-JavaScript-Fatigue.html

|

||||

|

||||

作者:[Lucas Fernandes Da Costa][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://lucasfcosta.com

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: http://www.kalzumeus.com/2011/10/28/dont-call-yourself-a-programmer/

|

||||

[2]: http://ieeexplore.ieee.org/document/1702333/

|

||||

[3]: https://en.wikipedia.org/wiki/Test_Driven_Development

|

||||

[4]: https://en.wikipedia.org/wiki/Analysis_paralysis

|

||||

[5]: https://babeljs.io/blog/2016/12/07/the-state-of-babel

|

||||

[6]: http://requirejs.org

|

||||

[7]: https://benmccormick.org/2015/05/28/moving-past-requirejs/

|

||||

[8]: https://medium.freecodecamp.org/a-study-plan-to-cure-javascript-fatigue-8ad3a54f2eb1

|

||||

[9]: https://www.quora.com/What-did-Richard-Feynman-mean-when-he-said-What-I-cannot-create-I-do-not-understand

|

||||

[10]: https://egghead.io/lessons/javascript-redux-implementing-store-from-scratch

|

||||

[11]: https://jasonformat.com/wtf-is-jsx/

|

||||

[12]: https://www.barnesandnoble.com/p/building-microservices-sam-newman/1119741399/2677517060476?st=PLA&sid=BNB_DRS_Marketplace+Shopping+Books_00000000&2sid=Google_&sourceId=PLGoP4760&k_clickid=3x4760

|

||||

[13]: /assets/barcelona-city.jpeg

|

||||

[14]: /assets/barcelona-above.jpeg

|

||||

[15]: https://twitter.com/thewizardlucas

|

||||

@ -1,69 +0,0 @@

|

||||

Why I love technical debt

|

||||

======

|

||||

|

||||

This is not necessarily the title you'd expect for an article, I guess,* but I'm a fan of [technical debt][1]. There are two reasons for this: a Bad Reason and a Good Reason. I'll be upfront about the Bad Reason first, then explain why even that isn't really a reason to love it. I'll then tackle the Good Reason, and you'll nod along in agreement.

|

||||

|

||||

### The Bad Reason I love technical debt

|

||||

|

||||

We'll get this out of the way, then, shall we? The Bad Reason is that, well, there's just lots of it, it's interesting, it keeps me in a job, and it always provides a reason, as a security architect, for me to get involved in** projects that might give me something new to look at. I suppose those aren't all bad things. It can also be a bit depressing, because there's always so much of it, it's not always interesting, and sometimes I need to get involved even when I might have better things to do.

|

||||

|

||||

And what's worse is that it almost always seems to be security-related, and it's always there. That's the bad part.

|

||||

|

||||

Security, we all know, is the piece that so often gets left out, or tacked on at the end, or done in half the time it deserves, or done by people who have half an idea, but don't quite fully grasp it. I should be clear at this point: I'm not saying that this last reason is those people's fault. That people know they need security is fantastic. If we (the security folks) or we (the organization) haven't done a good enough job in making sufficient security resources--whether people, training, or visibility--available to those people who need it, the fact that they're trying is great and something we can work on. Let's call that a positive. Or at least a reason for hope.***

|

||||

|

||||

### The Good Reason I love technical debt

|

||||

|

||||

Let's get on to the other reason: the legitimate reason. I love technical debt when it's named.

|

||||

|

||||

What does that mean?

|

||||

|

||||

We all get that technical debt is a bad thing. It's what happens when you make decisions for pragmatic reasons that are likely to come back and bite you later in a project's lifecycle. Here are a few classic examples that relate to security:

|

||||

|

||||

* Not getting around to applying authentication or authorization controls on APIs that might, at some point, be public.

|

||||

* Lumping capabilities together so it's difficult to separate out appropriate roles later on.

|

||||

* Hard-coding roles in ways that don't allow for customisation by people who may use your application in different ways from those you initially considered.

|

||||

* Hard-coding cipher suites for cryptographic protocols, rather than putting them in a config file where they can be changed or selected later.

|

||||

|

||||

|

||||

|

||||

There are lots more, of course, but those are just a few that jump out at me and that I've seen over the years. Technical debt means making decisions that will mean more work later on to fix them. And that can't be good, can it?

|

||||

|

||||

There are two words in the preceding paragraphs that should make us happy: they are "decisions" and "pragmatic." Because, in order for something to be named technical debt, I'd argue, it has to have been subject to conscious decision-making, and trade-offs must have been made--hopefully for rational reasons. Those reasons may be many and various--lack of qualified resources; project deadlines; lack of sufficient requirement definition--but if they've been made consciously, then the technical debt can be named, and if technical debt can be named, it can be documented.

|

||||

|

||||

And if it's documented, we're halfway there. As a security guy, I know that I can't force everything that goes out of the door to meet all the requirements I'd like--but the same goes for the high availability gal, the UX team, the performance folks, etc.

|

||||

|

||||

What we need--what we all need--is for documentation to exist about why decisions were made, because when we return to the problem we'll know it was thought about. And, what's more, the recording of that information might even make it into product documentation. "This API is designed to be used in a protected environment and should not be exposed on the public Internet" is a great piece of documentation. It may not be what a customer is looking for, but at least they know how to deploy the product, and, crucially, it's an opportunity for them to come back to the product manager and say, "We'd really like to deploy that particular API in this way. Could you please add this as a feature request?" Product managers like that. Very much.****

|

||||

|

||||

The best thing, though, is not just that named technical debt is visible technical debt, but that if you encourage your developers to document the decisions in code,***** then there's a decent chance that they'll record some ideas about how this should be done in the future. If you're really lucky, they might even add some hooks in the code to make it easier (an "auth" parameter on the API, which is unused in the current version, but will make API compatibility so much simpler in new releases; or cipher entry in the config file that currently only accepts one option, but is at least checked by the code).

|

||||

|

||||

I've been a bit disingenuous, I know, by defining technical debt as named technical debt. But honestly, if it's not named, then you can't know what it is, and until you know what it is, you can't fix it.******* My advice is this: when you're doing a release close-down (or in your weekly standup--EVERY weekly standup), have an agenda item to record technical debt. Name it, document it, be proud, sleep at night.

|

||||

|

||||

* Well, apart from the obvious clickbait reason--for which I'm (a little) sorry.

|

||||

|

||||

** I nearly wrote "poke my nose into."

|

||||

|

||||

*** Work with me here.

|

||||

|

||||

**** If you're software engineer/coder/hacker, here's a piece of advice: Learn to talk to product managers like real people, and treat them nicely. They (the better ones, at least) are invaluable allies when you need to prioritize features or have tricky trade-offs to make.

|

||||

|

||||

***** Do this. Just do it. Documentation that isn't at least mirrored in code isn't real documentation.******

|

||||

|

||||

****** Don't believe me? Talk to developers. "Who reads product documentation?" "Oh, the spec? I skimmed it. A few releases back. I think." "I looked in the header file; couldn't see it there."

|

||||

|

||||

******* Or decide not to fix it, which may also be an entirely appropriate decision.

|

||||

|

||||

This article originally appeared on [Alice, Eve, and Bob - a security blog][2] and is republished with permission.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/10/why-i-love-technical-debt

|

||||

|

||||

作者:[Mike Bursell][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/mikecamel

|

||||

[1]:https://en.wikipedia.org/wiki/Technical_debt

|

||||

[2]:https://aliceevebob.wordpress.com/2017/08/29/why-i-love-technical-debt/

|

||||

@ -1,86 +0,0 @@

|

||||

How to Monetize an Open Source Project

|

||||

======

|

||||

|

||||

|

||||

The problem for any small group of developers putting the finishing touches on a commercial open source application is figuring out how to monetize the software in order to keep the bills paid and food on the table. Often these small pre-startups will start by deciding which of the recognized open source business models they're going to adapt, whether that be following Red Hat's lead and offering professional services, going the SaaS route, releasing as open core or something else.

|

||||

|

||||

Steven Grandchamp, general manager for MariaDB's North America operations and CEO for Denver-based startup [Drud Tech][1], thinks that might be putting the cart before the horse. With an open source project, the best first move is to get people downloading and using your product for free.

|

||||

|

||||

**Related:** [Demand for Open Source Skills Continues to Grow][2]

|

||||

|

||||

"The number one tangent to monetization in any open source product is adoption, because the key to monetizing an open source product is you flip what I would call the sales funnel upside down," he told ITPro at the recent All Things Open conference in Raleigh, North Carolina.

|

||||

|

||||

In many ways, he said, selling open source solutions is the opposite of marketing traditional proprietary products, where adoption doesn't happen until after a contract is signed.

|

||||

|

||||

**Related:** [Is Raleigh the East Coast's Silicon Valley?][3]

|

||||

|

||||

"In a proprietary software company, you advertise, you market, you make claims about what the product can do, and then you have sales people talk to customers. Maybe you have a free trial or whatever. Maybe you have a small version. Maybe it's time bombed or something like that, but you don't really get to realize the benefit of the product until there's a contract and money changes hands."

|

||||

|

||||

Selling open source solutions is different because of the challenge of selling software that's freely available as a GitHub download.

|

||||

|

||||

"The whole idea is to put the product out there, let people use it, experiment with it, and jump on the chat channels," he said, pointing out that his company Drud has a public chat channel that's open to anybody using their product. "A subset of that group is going to raise their hand and go, 'Hey, we need more help. We'd like a tighter relationship with the company. We'd like to know where your road map's going. We'd like to know about customization. We'd like to know if maybe this thing might be on your road map.'"

|

||||

|

||||

Grandchamp knows more than a little about making software pay, from both the proprietary and open source sides of the fence. In the 1980s he served as VP of research and development at Formation Technologies, and became SVP of R&D at John H. Harland after it acquired Formation in the mid-90s. He joined MariaDB in 2016, after serving eight years as CEO at OpenLogic, which was providing commercial support for more than 600 open-source projects at the time it was acquired by Rogue Wave Software. Along the way, there was a two year stint at Microsoft's Redmond campus.

|

||||

|

||||

OpenLogic was where he discovered open source, and his experiences there are key to his approach for monetizing open source projects.

|

||||

|

||||

"When I got to OpenLogic, I was told that we had 300 customers that were each paying $99 a year for access to our tool," he explained. "But the problem was that nobody was renewing the tool. So I called every single customer that I could find and said 'did you like the tool?'"

|

||||

|

||||

It turned out that nearly everyone he talked to was extremely happy with the company's software, which ironically was the reason they weren't renewing. The company's tool solved their problem so well there was no need to renew.

|

||||

|

||||

"What could we have offered that would have made you renew the tool?" he asked. "They said, 'If you had supported all of the open source products that your tool assembled for me, then I would have that ongoing relationship with you.'"

|

||||

|

||||

Grandchamp immediately grasped the situation, and when the CTO said such support would be impossible, Grandchamp didn't mince words: "Then we don't have a company."

|

||||

|

||||

"We figured out a way to support it," he said. "We created something called the Open Logic Expert Community. We developed relationships with committers and contributors to a couple of hundred open source packages, and we acted as sort of the hub of the SLA for our customers. We had some people on staff, too, who knew the big projects."

|

||||

|

||||

After that successful launch, Grandchamp and his team began hearing from customers that they were confused over exactly what open source code they were using in their projects. That lead to the development of what he says was the first software-as-a-service compliance portal of open source, which could scan an application's code and produce a list of all of the open source code included in the project. When customers then expressed confusion over compliance issues, the SaaS service was expanded to flag potential licensing conflicts.

|

||||

|

||||

Although the product lines were completely different, the same approach was used to monetize MariaDB, then called SkySQL, after MySQL co-founders Michael "Monty" Widenius, David Axmark, and Allan Larsson created the project by forking MySQL, which Oracle had acquired from Sun Microsystems in 2010.

|

||||

|

||||

Again, users were approached and asked what things they would be willing to purchase.

|

||||

|

||||

"They wanted different functionality in the database, and you didn't really understand this if you didn't talk to your customers," Grandchamp explained. "Monty and his team, while they were being acquired at Sun and Oracle, were working on all kinds of new functionality, around cloud deployments, around different ways to do clustering, they were working on lots of different things. That work, Oracle and MySQL didn't really pick up."

|

||||

|

||||

Rolling in the new features customers wanted needed to be handled gingerly, because it was important to the folks at MariaDB to not break compatibility with MySQL. This necessitated a strategy around when the code bases would come together and when they would separate. "That road map, knowledge, influence and technical information was worth paying for."

|

||||

|

||||

As with OpenLogic, MariaDB customers expressed a willingness to spend money on a variety of fronts. For example, a big driver in the early days was a project called Remote DBA, which helped customers make up for a shortage of qualified database administrators. The project could help with design issues, as well as monitor existing systems to take the workload off of a customer's DBA team. The service also offered access to MariaDB's own DBAs, many of whom had a history with the database going back to the early days of MySQL.

|

||||

|

||||

"That was a subscription offering that people were definitely willing to pay for," he said.

|

||||

|

||||

The company also learned, again by asking and listening to customers, that there were various types of support subscriptions that customers were willing to purchase, including subscriptions around capability and functionality, and a managed service component of Remote DBA.

|

||||

|

||||

These days Grandchamp is putting much of his focus on his latest project, Drud, a startup that offers a suite of integrated, automated, open source development tools for developing and managing multiple websites, which can be running on any combination of content management systems and deployment platforms. It is monetized partially through modules that add features like a centralized dashboard and an "intelligence engine."

|

||||

|

||||

As you might imagine, he got it off the ground by talking to customers and giving them what they indicated they'd be willing to purchase.

|

||||

|

||||

"Our number one customer target is the agency market," he said. "The enterprise market is a big target, but I believe it's our second target, not our first. And the reason it's number two is they don't make decisions very fast. There are technology refresh cycles that have to come up, there are lots of politics involved and lots of different vendors. It's lucrative once you're in, but in a startup you've got to figure out how to pay your bills. I want to pay my bills today. I don't want to pay them in three years."

|

||||

|

||||

Drud's focus on the agency market illustrates another consideration: the importance of understanding something about your customers' business. When talking with agencies, many said they were tired of being offered generic software that really didn't match their needs from proprietary vendors that didn't understand their business. In Drud's case, that understanding is built into the company DNA. The software was developed by an agency to fill its own needs.

|

||||

|

||||

"We are a platform designed by an agency for an agency," Grandchamp said. "Right there is a relationship that they're willing to pay for. We know their business."

|

||||

|

||||

Grandchamp noted that startups also need to be able to distinguish users from customers. Most of the people downloading and using commercial open source software aren't the people who have authorization to make purchasing decisions. These users, however, can point to the people who control the purse strings.

|

||||

|

||||

"It's our job to build a way to communicate with those users, provide them value so that they'll give us value," he explained. "It has to be an equal exchange. I give you value of a tool that works, some advice, really good documentation, access to experts who can sort of guide you along. Along the way I'm asking you for pieces of information. Who do you work for? How are the technology decisions happening in your company? Are there other people in your company that we should refer the product to? We have to create the dialog."

|

||||

|

||||

In the end, Grandchamp said, in the open source world the people who go out to find business probably shouldn't see themselves as salespeople, but rather, as problem solvers.

|

||||

|

||||

"I believe that you're not really going to need salespeople in this model. I think you're going to need customer success people. I think you're going to need people who can enable your customers to be successful in a business relationship that's more highly transactional."

|

||||

|

||||

"People don't like to be sold," he added, "especially in open source. The last person they want to see is the sales person, but they like to ply and try and consume and give you input and give you feedback. They love that."

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.itprotoday.com/software-development/how-monetize-open-source-project

|

||||

|

||||

作者:[Christine Hall][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.itprotoday.com/author/christine-hall

|

||||

[1]:https://www.drud.com/

|

||||

[2]:http://www.itprotoday.com/open-source/demand-open-source-skills-continues-grow

|

||||

[3]:http://www.itprotoday.com/software-development/raleigh-east-coasts-silicon-valley

|

||||

@ -1,87 +0,0 @@

|

||||

Why pair writing helps improve documentation

|

||||

======

|

||||

|

||||

|

||||

Professional writers, at least in the Red Hat documentation team, nearly always work on docs alone. But have you tried writing as part of a pair? In this article, I'll explain a few benefits of pair writing.

|

||||

### What is pair writing?

|

||||

|

||||

Pair writing is when two writers work in real time, on the same piece of text, in the same room. This approach improves document quality, speeds up writing, and allows writers to learn from each other. The idea of pair writing is borrowed from [pair programming][1].

|

||||

|

||||

When pair writing, you and your colleague work on the text together, making suggestions and asking questions as needed. Meanwhile, you're observing each other's work. For example, while one is writing, the other writer observes details such as structure or context. Often discussion around the document turns into sharing experiences and opinions, and brainstorming about writing in general.

|

||||

|

||||

At all times, the writing is done by only one person. Thus, you need only one computer, unless you want one writer to do online research while the other person does the writing. The text workflow is the same as if you are working alone: a text editor, the documentation source files, git, and so on.

|

||||

|

||||

### Pair writing in practice

|

||||

|

||||

My colleague Aneta Steflova and I have done more than 50 hours of pair writing working on the Red Hat Enterprise Linux System Administration docs and on the Red Hat Identity Management docs. I've found that, compared to writing alone, pair writing:

|

||||

|

||||

* is as productive or more productive;

|

||||

* improves document quality;

|

||||

* helps writers share technical expertise; and

|

||||

* is more fun.

|

||||

|

||||

|

||||

|

||||

### Speed

|

||||

|

||||

Two writers writing one text? Sounds half as productive, right? Wrong. (Usually.)

|

||||

|

||||

Pair writing can help you work faster because two people have solutions to a bigger set of problems, which means getting blocked less often during the process. For example, one time we wrote urgent API docs for identity management. I know at least the basics of web APIs, the REST protocol, and so on, which helped us speed through those parts of the documentation. Working alone, Aneta would have needed to interrupt the writing process frequently to study these topics.

|

||||

|

||||

### Quality

|

||||

|

||||

Poor wording or sentence structure, inconsistencies in material, and so on have a harder time surviving under the scrutiny of four eyes. For example, one of our pair writing documents was reviewed by an extremely critical developer, who was known for catching technical inaccuracies and bad structure. After this particular review, he said, "Perfect. Thanks a lot."

|

||||

|

||||

### Sharing expertise

|

||||

|

||||

Each of us lives in our own writing bubble, and we normally don't know how others approach writing. Pair writing can help you improve your own writing process. For example, Aneta showed me how to better handle assignments in which the developer has provided starting text (as opposed to the writer writing from scratch using their own knowledge of the subject), which I didn't have experience with. Also, she structures the docs thoroughly, which I began doing as well.

|

||||

|

||||

As another example, I'm good enough at Vim that XML editing (e.g., tags manipulation) is enjoyable instead of torturous. Aneta saw how I was using Vim, asked about it, suffered through the learning curve, and now takes advantage of the Vim features that help me.

|

||||

|

||||

Pair writing is especially good for helping and mentoring new writers, and it's a great way to get to know professionally (and have fun with) colleagues.

|

||||

|

||||

### When pair writing shines

|

||||

|

||||

In addition to benefits I've already listed, pair writing is especially good for:

|

||||

|

||||

* **Working with[Bugzilla][2]** : Bugzillas can be cumbersome and cause problems, especially for administration-clumsy people (like me).

|

||||