mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-31 23:30:11 +08:00

commit

4f225d6faa

@ -15,7 +15,7 @@

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

当计算机还是快乐、安全的时代时,在机器中的几乎每个进程上,那些段的起始虚拟地址都是**完全相同**的。这将使远程挖掘安全漏洞变得容易。漏洞利用经常需要去引用绝对内存位置:比如在栈中的一个地址,一个库函数的地址,等等。远程攻击闭着眼睛也会选择这个地址,因为地址空间都是相同的。当攻击者们这样做的时候,人们就会受到伤害。因此,地址空间随机化开始流行起来。Linux 会通过在其起始地址上增加偏移量来随机化[栈][3]、[内存映射段][4]、以及[堆][5]。不幸的是,32 位的地址空间是非常拥挤的,为地址空间随机化留下的空间不多,因此 [妨碍了地址空间随机化的效果][6]。

|

当计算机还是快乐、安全的时代时,在机器中的几乎每个进程上,那些段的起始虚拟地址都是**完全相同**的。这将使远程挖掘安全漏洞变得容易。漏洞利用经常需要去引用绝对内存位置:比如在栈中的一个地址,一个库函数的地址,等等。远程攻击可以闭着眼睛选择这个地址,因为地址空间都是相同的。当攻击者们这样做的时候,人们就会受到伤害。因此,地址空间随机化开始流行起来。Linux 会通过在其起始地址上增加偏移量来随机化[栈][3]、[内存映射段][4]、以及[堆][5]。不幸的是,32 位的地址空间是非常拥挤的,为地址空间随机化留下的空间不多,因此 [妨碍了地址空间随机化的效果][6]。

|

||||||

|

|

||||||

在进程地址空间中最高的段是栈,在大多数编程语言中它存储本地变量和函数参数。调用一个方法或者函数将推送一个新的<ruby>栈帧<rt>stack frame</rt></ruby>到这个栈。当函数返回时这个栈帧被删除。这个简单的设计,可能是因为数据严格遵循 [后进先出(LIFO)][7] 的次序,这意味着跟踪栈内容时不需要复杂的数据结构 —— 一个指向栈顶的简单指针就可以做到。推入和弹出也因此而非常快且准确。也可能是,持续的栈区重用往往会在 [CPU 缓存][8] 中保持活跃的栈内存,这样可以加快访问速度。进程中的每个线程都有它自己的栈。

|

在进程地址空间中最高的段是栈,在大多数编程语言中它存储本地变量和函数参数。调用一个方法或者函数将推送一个新的<ruby>栈帧<rt>stack frame</rt></ruby>到这个栈。当函数返回时这个栈帧被删除。这个简单的设计,可能是因为数据严格遵循 [后进先出(LIFO)][7] 的次序,这意味着跟踪栈内容时不需要复杂的数据结构 —— 一个指向栈顶的简单指针就可以做到。推入和弹出也因此而非常快且准确。也可能是,持续的栈区重用往往会在 [CPU 缓存][8] 中保持活跃的栈内存,这样可以加快访问速度。进程中的每个线程都有它自己的栈。

|

||||||

|

|

||||||

@ -25,7 +25,7 @@

|

|||||||

|

|

||||||

在栈的下面,有内存映射段。在这里,内核将文件内容直接映射到内存。任何应用程序都可以通过 Linux 的 [`mmap()`][12] 系统调用( [代码实现][13])或者 Windows 的 [`CreateFileMapping()`][14] / [`MapViewOfFile()`][15] 来请求一个映射。内存映射是实现文件 I/O 的方便高效的方式。因此,它经常被用于加载动态库。有时候,也被用于去创建一个并不匹配任何文件的匿名内存映射,这种映射经常被用做程序数据的替代。在 Linux 中,如果你通过 [`malloc()`][16] 去请求一个大的内存块,C 库将会创建这样一个匿名映射而不是使用堆内存。这里所谓的“大”表示是超过了`MMAP_THRESHOLD` 设置的字节数,它的缺省值是 128 kB,可以通过 [`mallopt()`][17] 去调整这个设置值。

|

在栈的下面,有内存映射段。在这里,内核将文件内容直接映射到内存。任何应用程序都可以通过 Linux 的 [`mmap()`][12] 系统调用( [代码实现][13])或者 Windows 的 [`CreateFileMapping()`][14] / [`MapViewOfFile()`][15] 来请求一个映射。内存映射是实现文件 I/O 的方便高效的方式。因此,它经常被用于加载动态库。有时候,也被用于去创建一个并不匹配任何文件的匿名内存映射,这种映射经常被用做程序数据的替代。在 Linux 中,如果你通过 [`malloc()`][16] 去请求一个大的内存块,C 库将会创建这样一个匿名映射而不是使用堆内存。这里所谓的“大”表示是超过了`MMAP_THRESHOLD` 设置的字节数,它的缺省值是 128 kB,可以通过 [`mallopt()`][17] 去调整这个设置值。

|

||||||

|

|

||||||

接下来讲的是“堆”,就在我们接下来的地址空间中,堆提供运行时内存分配,像栈一样,但又不同于栈的是,它分配的数据生存期要长于分配它的函数。大多数编程语言都为程序提供了堆管理支持。因此,满足内存需要是编程语言运行时和内核共同来做的事情。在 C 中,堆分配的接口是 [`malloc()`][18] 一族,然而在垃圾回收式编程语言中,像 C#,这个接口使用 `new` 关键字。

|

接下来讲的是“堆”,就在我们接下来的地址空间中,堆提供运行时内存分配,像栈一样,但又不同于栈的是,它分配的数据生存期要长于分配它的函数。大多数编程语言都为程序提供了堆管理支持。因此,满足内存需要是编程语言运行时和内核共同来做的事情。在 C 中,堆分配的接口是 [`malloc()`][18] 一族,然而在支持垃圾回收的编程语言中,像 C#,这个接口使用 `new` 关键字。

|

||||||

|

|

||||||

如果在堆中有足够的空间可以满足内存请求,它可以由编程语言运行时来处理内存分配请求,而无需内核参与。否则将通过 [`brk()`][19] 系统调用([代码实现][20])来扩大堆以满足内存请求所需的大小。堆管理是比较 [复杂的][21],在面对我们程序的混乱分配模式时,它通过复杂的算法,努力在速度和内存使用效率之间取得一种平衡。服务一个堆请求所需要的时间可能是非常可观的。实时系统有一个 [特定用途的分配器][22] 去处理这个问题。堆也会出现 _碎片化_ ,如下图所示:

|

如果在堆中有足够的空间可以满足内存请求,它可以由编程语言运行时来处理内存分配请求,而无需内核参与。否则将通过 [`brk()`][19] 系统调用([代码实现][20])来扩大堆以满足内存请求所需的大小。堆管理是比较 [复杂的][21],在面对我们程序的混乱分配模式时,它通过复杂的算法,努力在速度和内存使用效率之间取得一种平衡。服务一个堆请求所需要的时间可能是非常可观的。实时系统有一个 [特定用途的分配器][22] 去处理这个问题。堆也会出现 _碎片化_ ,如下图所示:

|

||||||

|

|

||||||

@ -51,7 +51,7 @@

|

|||||||

|

|

||||||

via: http://duartes.org/gustavo/blog/post/anatomy-of-a-program-in-memory/

|

via: http://duartes.org/gustavo/blog/post/anatomy-of-a-program-in-memory/

|

||||||

|

|

||||||

作者:[gustavo][a]

|

作者:[Gustavo Duarte][a]

|

||||||

译者:[qhwdw](https://github.com/qhwdw)

|

译者:[qhwdw](https://github.com/qhwdw)

|

||||||

校对:[wxy](https://github.com/wxy)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

@ -1,52 +1,47 @@

|

|||||||

当你在 Linux 上启动一个进程时会发生什么?

|

当你在 Linux 上启动一个进程时会发生什么?

|

||||||

===========================================================

|

===========================================================

|

||||||

|

|

||||||

|

|

||||||

本文是关于 fork 和 exec 是如何在 Unix 上工作的。你或许已经知道,也有人还不知道。几年前当我了解到这些时,我惊叹不已。

|

本文是关于 fork 和 exec 是如何在 Unix 上工作的。你或许已经知道,也有人还不知道。几年前当我了解到这些时,我惊叹不已。

|

||||||

|

|

||||||

我们要做的是启动一个进程。我们已经在博客上讨论了很多关于**系统调用**的问题,每当你启动一个进程或者打开一个文件,这都是一个系统调用。所以你可能会认为有这样的系统调用:

|

我们要做的是启动一个进程。我们已经在博客上讨论了很多关于**系统调用**的问题,每当你启动一个进程或者打开一个文件,这都是一个系统调用。所以你可能会认为有这样的系统调用:

|

||||||

|

|

||||||

```

|

```

|

||||||

start_process(["ls", "-l", "my_cool_directory"])

|

start_process(["ls", "-l", "my_cool_directory"])

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

这是一个合理的想法,显然这是它在 DOS 或 Windows 中的工作原理。我想说的是,这并不是 Linux 上的工作原理。但是,我查阅了文档,确实有一个 [posix_spawn][2] 的系统调用基本上是这样做的,不过这不在本文的讨论范围内。

|

这是一个合理的想法,显然这是它在 DOS 或 Windows 中的工作原理。我想说的是,这并不是 Linux 上的工作原理。但是,我查阅了文档,确实有一个 [posix_spawn][2] 的系统调用基本上是这样做的,不过这不在本文的讨论范围内。

|

||||||

|

|

||||||

### fork 和 exec

|

### fork 和 exec

|

||||||

|

|

||||||

Linux 上的 `posix_spawn` 是通过两个系统调用实现的,分别是 `fork` 和 `exec`(实际上是 execve),这些都是人们常常使用的。尽管在 OS X 上,人们使用 `posix_spawn`,而 fork 和 exec 是不提倡的,但我们将讨论的是 Linux。

|

Linux 上的 `posix_spawn` 是通过两个系统调用实现的,分别是 `fork` 和 `exec`(实际上是 `execve`),这些都是人们常常使用的。尽管在 OS X 上,人们使用 `posix_spawn`,而 `fork` 和 `exec` 是不提倡的,但我们将讨论的是 Linux。

|

||||||

|

|

||||||

Linux 中的每个进程都存在于“进程树”中。你可以通过运行 `pstree` 命令查看进程树。树的根是 `init`,进程号是 1。每个进程(init 除外)都有一个父进程,一个进程都可以有很多子进程。

|

Linux 中的每个进程都存在于“进程树”中。你可以通过运行 `pstree` 命令查看进程树。树的根是 `init`,进程号是 1。每个进程(`init` 除外)都有一个父进程,一个进程都可以有很多子进程。

|

||||||

|

|

||||||

所以,假设我要启动一个名为 `ls` 的进程来列出一个目录。我是不是只要发起一个进程 `ls` 就好了呢?不是的。

|

所以,假设我要启动一个名为 `ls` 的进程来列出一个目录。我是不是只要发起一个进程 `ls` 就好了呢?不是的。

|

||||||

|

|

||||||

我要做的是,创建一个子进程,这个子进程是我本身的一个克隆,然后这个子进程的“大脑”被替代,变成 `ls`。

|

我要做的是,创建一个子进程,这个子进程是我(`me`)本身的一个克隆,然后这个子进程的“脑子”被吃掉了,变成 `ls`。

|

||||||

|

|

||||||

开始是这样的:

|

开始是这样的:

|

||||||

|

|

||||||

```

|

```

|

||||||

my parent

|

my parent

|

||||||

|- me

|

|- me

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

然后运行 `fork()`,生成一个子进程,是我自己的一份克隆:

|

然后运行 `fork()`,生成一个子进程,是我(`me`)自己的一份克隆:

|

||||||

|

|

||||||

```

|

```

|

||||||

my parent

|

my parent

|

||||||

|- me

|

|- me

|

||||||

|-- clone of me

|

|-- clone of me

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

然后我让子进程运行 `exec("ls")`,变成这样:

|

然后我让该子进程运行 `exec("ls")`,变成这样:

|

||||||

|

|

||||||

```

|

```

|

||||||

my parent

|

my parent

|

||||||

|- me

|

|- me

|

||||||

|-- ls

|

|-- ls

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

当 ls 命令结束后,我几乎又变回了我自己:

|

当 ls 命令结束后,我几乎又变回了我自己:

|

||||||

@ -55,24 +50,22 @@ my parent

|

|||||||

my parent

|

my parent

|

||||||

|- me

|

|- me

|

||||||

|-- ls (zombie)

|

|-- ls (zombie)

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

在这时 ls 其实是一个僵尸进程。这意味着它已经死了,但它还在等我,以防我需要检查它的返回值(使用 `wait` 系统调用)。一旦我获得了它的返回值,我将再次恢复独自一人的状态。

|

在这时 `ls` 其实是一个僵尸进程。这意味着它已经死了,但它还在等我,以防我需要检查它的返回值(使用 `wait` 系统调用)。一旦我获得了它的返回值,我将再次恢复独自一人的状态。

|

||||||

|

|

||||||

```

|

```

|

||||||

my parent

|

my parent

|

||||||

|- me

|

|- me

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

### fork 和 exec 的代码实现

|

### fork 和 exec 的代码实现

|

||||||

|

|

||||||

如果你要编写一个 shell,这是你必须做的一个练习(这是一个非常有趣和有启发性的项目。Kamal 在 Github 上有一个很棒的研讨会:[https://github.com/kamalmarhubi/shell-workshop][3])

|

如果你要编写一个 shell,这是你必须做的一个练习(这是一个非常有趣和有启发性的项目。Kamal 在 Github 上有一个很棒的研讨会:[https://github.com/kamalmarhubi/shell-workshop][3])。

|

||||||

|

|

||||||

事实证明,有了 C 或 Python 的技能,你可以在几个小时内编写一个非常简单的 shell,例如 bash。(至少如果你旁边能有个人多少懂一点,如果没有的话用时会久一点。)我已经完成啦,真的很棒。

|

事实证明,有了 C 或 Python 的技能,你可以在几个小时内编写一个非常简单的 shell,像 bash 一样。(至少如果你旁边能有个人多少懂一点,如果没有的话用时会久一点。)我已经完成啦,真的很棒。

|

||||||

|

|

||||||

这就是 fork 和 exec 在程序中的实现。我写了一段 C 的伪代码。请记住,[fork 也可能会失败哦。][4]

|

这就是 `fork` 和 `exec` 在程序中的实现。我写了一段 C 的伪代码。请记住,[fork 也可能会失败哦。][4]

|

||||||

|

|

||||||

```

|

```

|

||||||

int pid = fork();

|

int pid = fork();

|

||||||

@ -80,7 +73,7 @@ int pid = fork();

|

|||||||

// “我”是谁呢?可能是子进程也可能是父进程

|

// “我”是谁呢?可能是子进程也可能是父进程

|

||||||

if (pid == 0) {

|

if (pid == 0) {

|

||||||

// 我现在是子进程

|

// 我现在是子进程

|

||||||

// 我的大脑将被替代,然后变成一个完全不一样的进程“ls”

|

// “ls” 吃掉了我脑子,然后变成一个完全不一样的进程

|

||||||

exec(["ls"])

|

exec(["ls"])

|

||||||

} else if (pid == -1) {

|

} else if (pid == -1) {

|

||||||

// 天啊,fork 失败了,简直是灾难!

|

// 天啊,fork 失败了,简直是灾难!

|

||||||

@ -89,59 +82,48 @@ if (pid == 0) {

|

|||||||

// 继续做一个酷酷的美男子吧

|

// 继续做一个酷酷的美男子吧

|

||||||

// 需要的话,我可以等待子进程结束

|

// 需要的话,我可以等待子进程结束

|

||||||

}

|

}

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

### 上文提到的“大脑被替代“是什么意思呢?

|

### 上文提到的“脑子被吃掉”是什么意思呢?

|

||||||

|

|

||||||

进程有很多属性:

|

进程有很多属性:

|

||||||

|

|

||||||

* 打开的文件(包括打开的网络连接)

|

* 打开的文件(包括打开的网络连接)

|

||||||

|

|

||||||

* 环境变量

|

* 环境变量

|

||||||

|

|

||||||

* 信号处理程序(在程序上运行 Ctrl + C 时会发生什么?)

|

* 信号处理程序(在程序上运行 Ctrl + C 时会发生什么?)

|

||||||

|

|

||||||

* 内存(你的“地址空间”)

|

* 内存(你的“地址空间”)

|

||||||

|

|

||||||

* 寄存器

|

* 寄存器

|

||||||

|

* 可执行文件(`/proc/$pid/exe`)

|

||||||

* 可执行文件(/proc/$pid/exe)

|

|

||||||

|

|

||||||

* cgroups 和命名空间(与 Linux 容器相关)

|

* cgroups 和命名空间(与 Linux 容器相关)

|

||||||

|

|

||||||

* 当前的工作目录

|

* 当前的工作目录

|

||||||

|

|

||||||

* 运行程序的用户

|

* 运行程序的用户

|

||||||

|

|

||||||

* 其他我还没想到的

|

* 其他我还没想到的

|

||||||

|

|

||||||

当你运行 `execve` 并让另一个程序替代你的时候,实际上几乎所有东西都是相同的! 你们有相同的环境变量、信号处理程序和打开的文件等等。

|

当你运行 `execve` 并让另一个程序吃掉你的脑子的时候,实际上几乎所有东西都是相同的! 你们有相同的环境变量、信号处理程序和打开的文件等等。

|

||||||

|

|

||||||

唯一改变的是,内存、寄存器以及正在运行的程序,这可是件大事。

|

唯一改变的是,内存、寄存器以及正在运行的程序,这可是件大事。

|

||||||

|

|

||||||

### 为何 fork 并非那么耗费资源(写入时复制)

|

### 为何 fork 并非那么耗费资源(写入时复制)

|

||||||

|

|

||||||

你可能会问:“如果我有一个使用了 2 GB 内存的进程,这是否意味着每次我启动一个子进程,所有 2 GB 的内存都要被复制一次?这听起来要耗费很多资源!“

|

你可能会问:“如果我有一个使用了 2GB 内存的进程,这是否意味着每次我启动一个子进程,所有 2 GB 的内存都要被复制一次?这听起来要耗费很多资源!”

|

||||||

|

|

||||||

事实上,Linux 为 fork() 调用实现了写入时复制(copy on write),对于新进程的 2 GB 内存来说,就像是“看看旧的进程就好了,是一样的!”。然后,当如果任一进程试图写入内存,此时系统才真正地复制一个内存的副本给该进程。如果两个进程的内存是相同的,就不需要复制了。

|

事实上,Linux 为 `fork()` 调用实现了<ruby>写时复制<rt>copy on write</rt></ruby>,对于新进程的 2GB 内存来说,就像是“看看旧的进程就好了,是一样的!”。然后,当如果任一进程试图写入内存,此时系统才真正地复制一个内存的副本给该进程。如果两个进程的内存是相同的,就不需要复制了。

|

||||||

|

|

||||||

### 为什么你需要知道这么多

|

### 为什么你需要知道这么多

|

||||||

|

|

||||||

你可能会说,好吧,这些琐事听起来很厉害,但为什么这么重要?关于信号处理程序或环境变量的细节会被继承吗?这对我的日常编程有什么实际影响呢?

|

你可能会说,好吧,这些细节听起来很厉害,但为什么这么重要?关于信号处理程序或环境变量的细节会被继承吗?这对我的日常编程有什么实际影响呢?

|

||||||

|

|

||||||

有可能哦!比如说,在 Kamal 的博客上有一个很有意思的 [bug][5]。它讨论了 Python 如何使信号处理程序忽略了 SIGPIPE。也就是说,如果你从 Python 里运行一个程序,默认情况下它会忽略 SIGPIPE!这意味着,程序从 Python 脚本和从 shell 启动的表现会**有所不同**。在这种情况下,它会造成一个奇怪的问题。

|

有可能哦!比如说,在 Kamal 的博客上有一个很有意思的 [bug][5]。它讨论了 Python 如何使信号处理程序忽略了 `SIGPIPE`。也就是说,如果你从 Python 里运行一个程序,默认情况下它会忽略 `SIGPIPE`!这意味着,程序从 Python 脚本和从 shell 启动的表现会**有所不同**。在这种情况下,它会造成一个奇怪的问题。

|

||||||

|

|

||||||

所以,你的程序的环境(环境变量、信号处理程序等)可能很重要,都是从父进程继承来的。知道这些,在调试时是很有用的。

|

所以,你的程序的环境(环境变量、信号处理程序等)可能很重要,都是从父进程继承来的。知道这些,在调试时是很有用的。

|

||||||

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

via: https://jvns.ca/blog/2016/10/04/exec-will-eat-your-brain/

|

via: https://jvns.ca/blog/2016/10/04/exec-will-eat-your-brain/

|

||||||

|

|

||||||

作者:[ Julia Evans][a]

|

作者:[Julia Evans][a]

|

||||||

译者:[jessie-pang](https://github.com/jessie-pang)

|

译者:[jessie-pang](https://github.com/jessie-pang)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -1,8 +1,9 @@

|

|||||||

如何方便地寻找 GitHub 上超棒的项目和资源

|

如何轻松地寻找 GitHub 上超棒的项目和资源

|

||||||

======

|

======

|

||||||

|

|

||||||

|

|

||||||

在 **GitHub** 网站上每天都会新增上百个项目。由于 GitHub 上有成千上万的项目,要在上面搜索好的项目简直要累死人。好在,有那么一伙人已经创建了一些这样的列表。其中包含的类别五花八门,如编程,数据库,编辑器,游戏,娱乐等。这使得我们寻找在 GitHub 上托管的项目,软件,资源,裤,书籍等其他东西变得容易了很多。有一个 GitHub 用户更进了一步,创建了一个名叫 `Awesome-finder` 的命令行工具,用来在 awesome 系列的仓库中寻找超棒的项目和资源。该工具帮助我们不需要离开终端(当然也就不需要使用浏览器了)的情况下浏览 awesome 列表。

|

|

||||||

|

|

||||||

|

在 GitHub 网站上每天都会新增上百个项目。由于 GitHub 上有成千上万的项目,要在上面搜索好的项目简直要累死人。好在,有那么一伙人已经创建了一些这样的列表。其中包含的类别五花八门,如编程、数据库、编辑器、游戏、娱乐等。这使得我们寻找在 GitHub 上托管的项目、软件、资源、库、书籍等其他东西变得容易了很多。有一个 GitHub 用户更进了一步,创建了一个名叫 `Awesome-finder` 的命令行工具,用来在 awesome 系列的仓库中寻找超棒的项目和资源。该工具可以让我们不需要离开终端(当然也就不需要使用浏览器了)的情况下浏览 awesome 列表。

|

||||||

|

|

||||||

在这篇简单的说明中,我会向你演示如何方便地在类 Unix 系统中浏览 awesome 列表。

|

在这篇简单的说明中,我会向你演示如何方便地在类 Unix 系统中浏览 awesome 列表。

|

||||||

|

|

||||||

@ -12,12 +13,14 @@

|

|||||||

|

|

||||||

使用 `pip` 可以很方便地安装该工具,`pip` 是一个用来安装使用 Python 编程语言开发的程序的包管理器。

|

使用 `pip` 可以很方便地安装该工具,`pip` 是一个用来安装使用 Python 编程语言开发的程序的包管理器。

|

||||||

|

|

||||||

在 **Arch Linux** 一起衍生发行版中(比如 **Antergos**,**Manjaro Linux**),你可以使用下面命令安装 `pip`:

|

在 Arch Linux 及其衍生发行版中(比如 Antergos,Manjaro Linux),你可以使用下面命令安装 `pip`:

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo pacman -S python-pip

|

sudo pacman -S python-pip

|

||||||

```

|

```

|

||||||

|

|

||||||

在 **RHEL**,**CentOS** 中:

|

在 RHEL,CentOS 中:

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo yum install epel-release

|

sudo yum install epel-release

|

||||||

```

|

```

|

||||||

@ -25,32 +28,33 @@ sudo yum install epel-release

|

|||||||

sudo yum install python-pip

|

sudo yum install python-pip

|

||||||

```

|

```

|

||||||

|

|

||||||

在 **Fedora** 上:

|

在 Fedora 上:

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo dnf install epel-release

|

sudo dnf install epel-release

|

||||||

```

|

|

||||||

```

|

|

||||||

sudo dnf install python-pip

|

sudo dnf install python-pip

|

||||||

```

|

```

|

||||||

|

|

||||||

在 **Debian**,**Ubuntu**,**Linux Mint** 上:

|

在 Debian,Ubuntu,Linux Mint 上:

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo apt-get install python-pip

|

sudo apt-get install python-pip

|

||||||

```

|

```

|

||||||

|

|

||||||

在 **SUSE**,**openSUSE** 上:

|

在 SUSE,openSUSE 上:

|

||||||

```

|

```

|

||||||

sudo zypper install python-pip

|

sudo zypper install python-pip

|

||||||

```

|

```

|

||||||

|

|

||||||

PIP 安装好后,用下面命令来安装 'Awesome-finder'。

|

`pip` 安装好后,用下面命令来安装 'Awesome-finder'。

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo pip install awesome-finder

|

sudo pip install awesome-finder

|

||||||

```

|

```

|

||||||

|

|

||||||

#### 用法

|

#### 用法

|

||||||

|

|

||||||

Awesome-finder 会列出 GitHub 网站中如下这些主题(其实就是仓库)的内容:

|

Awesome-finder 会列出 GitHub 网站中如下这些主题(其实就是仓库)的内容:

|

||||||

|

|

||||||

* awesome

|

* awesome

|

||||||

* awesome-android

|

* awesome-android

|

||||||

@ -66,83 +70,84 @@ Awesome-finder 会列出 GitHub 网站中如下这些主题(其实就是仓库)

|

|||||||

* awesome-scala

|

* awesome-scala

|

||||||

* awesome-swift

|

* awesome-swift

|

||||||

|

|

||||||

|

|

||||||

该列表会定期更新。

|

该列表会定期更新。

|

||||||

|

|

||||||

比如,要查看 `awesome-go` 仓库中的列表,只需要输入:

|

比如,要查看 `awesome-go` 仓库中的列表,只需要输入:

|

||||||

|

|

||||||

```

|

```

|

||||||

awesome go

|

awesome go

|

||||||

```

|

```

|

||||||

|

|

||||||

你就能看到用 “Go” 写的所有流行的东西了,而且这些东西按字母顺序进行了排列。

|

你就能看到用 “Go” 写的所有流行的东西了,而且这些东西按字母顺序进行了排列。

|

||||||

|

|

||||||

[![][1]][2]

|

![][2]

|

||||||

|

|

||||||

你可以通过 **上/下** 箭头在列表中导航。一旦找到所需要的东西,只需要选中它,然后按下 **回车** 键就会用你默认的 web 浏览器打开相应的链接了。

|

你可以通过 上/下 箭头在列表中导航。一旦找到所需要的东西,只需要选中它,然后按下回车键就会用你默认的 web 浏览器打开相应的链接了。

|

||||||

|

|

||||||

类似的,

|

类似的,

|

||||||

|

|

||||||

* "awesome android" 命令会搜索 **awesome-android** 仓库。

|

* `awesome android` 命令会搜索 awesome-android 仓库。

|

||||||

* "awesome awesome" 命令会搜索 **awesome** 仓库。

|

* `awesome awesome` 命令会搜索 awesome 仓库。

|

||||||

* "awesome elixir" 命令会搜索 **awesome-elixir**。

|

* `awesome elixir` 命令会搜索 awesome-elixir。

|

||||||

* "awesome go" 命令会搜索 **awesome-go**。

|

* `awesome go` 命令会搜索 awesome-go。

|

||||||

* "awesome ios" 命令会搜索 **awesome-ios**。

|

* `awesome ios` 命令会搜索 awesome-ios。

|

||||||

* "awesome java" 命令会搜索 **awesome-java**。

|

* `awesome java` 命令会搜索 awesome-java。

|

||||||

* "awesome javascript" 命令会搜索 **awesome-javascript**。

|

* `awesome javascript` 命令会搜索 awesome-javascript。

|

||||||

* "awesome php" 命令会搜索 **awesome-php**。

|

* `awesome php` 命令会搜索 awesome-php。

|

||||||

* "awesome python" 命令会搜索 **awesome-python**。

|

* `awesome python` 命令会搜索 awesome-python。

|

||||||

* "awesome ruby" 命令会搜索 **awesome-ruby**。

|

* `awesome ruby` 命令会搜索 awesome-ruby。

|

||||||

* "awesome rust" 命令会搜索 **awesome-rust**。

|

* `awesome rust` 命令会搜索 awesome-rust。

|

||||||

* "awesome scala" 命令会搜索 **awesome-scala**。

|

* `awesome scala` 命令会搜索 awesome-scala。

|

||||||

* "awesome swift" 命令会搜索 **awesome-swift**。

|

* `awesome swift` 命令会搜索 awesome-swift。

|

||||||

|

|

||||||

而且,它还会随着你在提示符中输入的内容而自动进行筛选。比如,当我输入 "dj" 后,他会显示与 Django 相关的内容。

|

而且,它还会随着你在提示符中输入的内容而自动进行筛选。比如,当我输入 `dj` 后,他会显示与 Django 相关的内容。

|

||||||

|

|

||||||

[![][1]][3]

|

![][3]

|

||||||

|

|

||||||

若你想从最新的 `awesome-<topic>`( 而不是用缓存中的数据) 中搜索,使用 `-f` 或 `-force` 标志:

|

若你想从最新的 `awesome-<topic>`( 而不是用缓存中的数据) 中搜索,使用 `-f` 或 `-force` 标志:

|

||||||

|

|

||||||

```

|

```

|

||||||

awesome <topic> -f (--force)

|

awesome <topic> -f (--force)

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

**像这样:**

|

像这样:

|

||||||

|

|

||||||

```

|

```

|

||||||

awesome python -f

|

awesome python -f

|

||||||

```

|

```

|

||||||

|

|

||||||

或,

|

或,

|

||||||

|

|

||||||

```

|

```

|

||||||

awesome python --force

|

awesome python --force

|

||||||

```

|

```

|

||||||

|

|

||||||

上面命令会显示 **awesome-python** GitHub 仓库中的列表。

|

上面命令会显示 awesome-python GitHub 仓库中的列表。

|

||||||

|

|

||||||

很棒,对吧?

|

很棒,对吧?

|

||||||

|

|

||||||

要退出这个工具的话,按下 **ESC** 键。要显示帮助信息,输入:

|

要退出这个工具的话,按下 ESC 键。要显示帮助信息,输入:

|

||||||

|

|

||||||

```

|

```

|

||||||

awesome -h

|

awesome -h

|

||||||

```

|

```

|

||||||

|

|

||||||

本文至此就结束了。希望本文能对你产生帮助。如果你觉得我们的文章对你有帮助,请将他们分享到你的社交网络中去,造福大众。我们马上还有其他好东西要来了。敬请期待!

|

本文至此就结束了。希望本文能对你产生帮助。如果你觉得我们的文章对你有帮助,请将他们分享到你的社交网络中去,造福大众。我们马上还有其他好东西要来了。敬请期待!

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

via: https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/

|

via: https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/

|

||||||

|

|

||||||

作者:[SK][a]

|

作者:[SK][a]

|

||||||

译者:[lujun9972](https://github.com/lujun9972)

|

译者:[lujun9972](https://github.com/lujun9972)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

[a]:https://www.ostechnix.com/author/sk/

|

[a]:https://www.ostechnix.com/author/sk/

|

||||||

[1]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

[1]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||||

[2]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_008-1.png ()

|

[2]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_008-1.png

|

||||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_009.png ()

|

[3]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_009.png

|

||||||

[4]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=reddit (Click to share on Reddit)

|

[4]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=reddit (Click to share on Reddit)

|

||||||

[5]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=twitter (Click to share on Twitter)

|

[5]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=twitter (Click to share on Twitter)

|

||||||

[6]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=facebook (Click to share on Facebook)

|

[6]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=facebook (Click to share on Facebook)

|

||||||

@ -1,119 +0,0 @@

|

|||||||

translating---geekpi

|

|

||||||

|

|

||||||

|

|

||||||

Working with Vi/Vim Editor : Advanced concepts

|

|

||||||

======

|

|

||||||

Earlier we have discussed some basics about VI/VIM editor but VI & VIM are both very powerful editors and there are many other functionalities that can be used with these editors. In this tutorial, we are going to learn some advanced uses of VI/VIM editor.

|

|

||||||

|

|

||||||

( **Recommended Read** : [Working with VI editor : The Basics ][1])

|

|

||||||

|

|

||||||

## Opening multiple files with VI/VIM editor

|

|

||||||

|

|

||||||

To open multiple files, command would be same as is for a single file; we just add the file name for second file as well.

|

|

||||||

|

|

||||||

```

|

|

||||||

$ vi file1 file2 file 3

|

|

||||||

```

|

|

||||||

|

|

||||||

Now to browse to next file, we can use

|

|

||||||

|

|

||||||

```

|

|

||||||

$ :n

|

|

||||||

```

|

|

||||||

|

|

||||||

or we can also use

|

|

||||||

|

|

||||||

```

|

|

||||||

$ :e filename

|

|

||||||

```

|

|

||||||

|

|

||||||

## Run external commands inside the editor

|

|

||||||

|

|

||||||

We can run external Linux/Unix commands from inside the vi editor, i.e. without exiting the editor. To issue a command from editor, go back to Command Mode if in Insert mode & we use the BANG i.e. '!' followed by the command that needs to be used. Syntax for running a command is,

|

|

||||||

|

|

||||||

```

|

|

||||||

$ :! command

|

|

||||||

```

|

|

||||||

|

|

||||||

An example for this would be

|

|

||||||

|

|

||||||

```

|

|

||||||

$ :! df -H

|

|

||||||

```

|

|

||||||

|

|

||||||

## Searching for a pattern

|

|

||||||

|

|

||||||

To search for a word or pattern in the text file, we use following two commands in command mode,

|

|

||||||

|

|

||||||

* command '/' searches the pattern in forward direction

|

|

||||||

|

|

||||||

* command '?' searched the pattern in backward direction

|

|

||||||

|

|

||||||

|

|

||||||

Both of these commands are used for same purpose, only difference being the direction they search in. An example would be,

|

|

||||||

|

|

||||||

`$ :/ search pattern` (If at beginning of the file)

|

|

||||||

|

|

||||||

`$ :/ search pattern` (If at the end of the file)

|

|

||||||

|

|

||||||

## Searching & replacing a pattern

|

|

||||||

|

|

||||||

We might be required to search & replace a word or a pattern from our text files. So rather than finding the occurrence of word from whole text file & replace it, we can issue a command from the command mode to replace the word automatically. Syntax for using search & replacement is,

|

|

||||||

|

|

||||||

```

|

|

||||||

$ :s/pattern_to_be_found/New_pattern/g

|

|

||||||

```

|

|

||||||

|

|

||||||

Suppose we want to find word "alpha" & replace it with word "beta", the command would be

|

|

||||||

|

|

||||||

```

|

|

||||||

$ :s/alpha/beta/g

|

|

||||||

```

|

|

||||||

|

|

||||||

If we want to only replace the first occurrence of word "alpha", then the command would be

|

|

||||||

|

|

||||||

```

|

|

||||||

$ :s/alpha/beta/

|

|

||||||

```

|

|

||||||

|

|

||||||

## Using Set commands

|

|

||||||

|

|

||||||

We can also customize the behaviour, the and feel of the vi/vim editor by using the set command. Here is a list of some options that can be use set command to modify the behaviour of vi/vim editor,

|

|

||||||

|

|

||||||

`$ :set ic ` ignores cases while searching

|

|

||||||

|

|

||||||

`$ :set smartcase ` enforce case sensitive search

|

|

||||||

|

|

||||||

`$ :set nu` display line number at the begining of the line

|

|

||||||

|

|

||||||

`$ :set hlsearch ` highlights the matching words

|

|

||||||

|

|

||||||

`$ : set ro ` change the file type to read only

|

|

||||||

|

|

||||||

`$ : set term ` prints the terminal type

|

|

||||||

|

|

||||||

`$ : set ai ` sets auto-indent

|

|

||||||

|

|

||||||

`$ :set noai ` unsets the auto-indent

|

|

||||||

|

|

||||||

Some other commands to modify vi editors are,

|

|

||||||

|

|

||||||

`$ :colorscheme ` its used to change the color scheme for the editor. (for VIM editor only)

|

|

||||||

|

|

||||||

`$ :syntax on ` will turn on the color syntax for .xml, .html files etc. (for VIM editor only)

|

|

||||||

|

|

||||||

This complete our tutorial, do mention your queries/questions or suggestions in the comment box below.

|

|

||||||

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

via: http://linuxtechlab.com/working-vivim-editor-advanced-concepts/

|

|

||||||

|

|

||||||

作者:[Shusain][a]

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]:http://linuxtechlab.com/author/shsuain/

|

|

||||||

[1]:http://linuxtechlab.com/working-vi-editor-basics/

|

|

||||||

@ -1,3 +1,5 @@

|

|||||||

|

translating---geekpi

|

||||||

|

|

||||||

Easy guide to secure VNC server with TLS encryption

|

Easy guide to secure VNC server with TLS encryption

|

||||||

======

|

======

|

||||||

In this tutorial, we will learn to install VNC server & secure VNC server sessions with TLS encryption.

|

In this tutorial, we will learn to install VNC server & secure VNC server sessions with TLS encryption.

|

||||||

|

|||||||

99

sources/tech/20180115 How debuggers really work.md

Normal file

99

sources/tech/20180115 How debuggers really work.md

Normal file

@ -0,0 +1,99 @@

|

|||||||

|

How debuggers really work

|

||||||

|

======

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Image by : opensource.com

|

||||||

|

|

||||||

|

A debugger is one of those pieces of software that most, if not every, developer uses at least once during their software engineering career, but how many of you know how they actually work? During my talk at [linux.conf.au 2018][1] in Sydney, I will be talking about writing a debugger from scratch... in [Rust][2]!

|

||||||

|

|

||||||

|

In this article, the terms debugger/tracer are interchangeably. "Tracee" refers to the process being traced by the tracer.

|

||||||

|

|

||||||

|

### The ptrace system call

|

||||||

|

|

||||||

|

Most debuggers heavily rely on a system call known as `ptrace(2)`, which has the prototype:

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

long ptrace(enum __ptrace_request request, pid_t pid, void *addr, void *data);

|

||||||

|

```

|

||||||

|

|

||||||

|

This is a system call that can manipulate almost all aspects of a process; however, before the debugger can attach to a process, the "tracee" has to call `ptrace` with the request `PTRACE_TRACEME`. This tells Linux that it is legitimate for the parent to attach via `ptrace` to this process. But... how do we coerce a process into calling `ptrace`? Easy-peasy! `fork/execve` provides an easy way of calling `ptrace` after `fork` but before the tracee really starts using `execve`. Conveniently, `fork` will also return the `pid` of the tracee, which is required for using `ptrace` later.

|

||||||

|

|

||||||

|

Now that the tracee can be traced by the debugger, important changes take place:

|

||||||

|

|

||||||

|

* Every time a signal is delivered to the tracee, it stops and a wait-event is delivered to the tracer that can be captured by the `wait` family of system calls.

|

||||||

|

* Each `execve` system call will cause a `SIGTRAP` to be delivered to the tracee. (Combined with the previous item, this means the tracee is stopped before an `execve` can fully take place.)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

This means that, once we issue the `PTRACE_TRACEME` request and call the `execve` system call to actually start the program in the tracee, the tracee will immediately stop, since `execve` delivers a `SIGTRAP`, and that is caught by a wait-event in the tracer. How do we continue? As one would expect, `ptrace` has a number of requests that can be used for telling the tracee it's fine to continue:

|

||||||

|

|

||||||

|

* `PTRACE_CONT`: This is the simplest. The tracee runs until it receives a signal, at which point a wait-event is delivered to the tracer. This is most commonly used to implement "continue-until-breakpoint" and "continue-forever" options of a real-world debugger. Breakpoints will be covered below.

|

||||||

|

* `PTRACE_SYSCALL`: Very similar to `PTRACE_CONT`, but stops before a system call is entered and also before a system call returns to userspace. It can be used in combination with other requests (which we will cover later in this article) to monitor and modify a system call's arguments or return value. `strace`, the system call tracer, uses this request heavily to figure out what system calls are made by a process.

|

||||||

|

* `PTRACE_SINGLESTEP`: This one is pretty self-explanatory. If you used a debugger before, this request executes the next instruction, but stops immediately after.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

We can stop the process with a variety of requests, but how do we get the state of the tracee? The state of a process is mostly captured by its registers, so of course `ptrace` has a request to get (or modify!) the registers:

|

||||||

|

|

||||||

|

* `PTRACE_GETREGS`: This request will give the registers' state as it was when a tracee was stopped.

|

||||||

|

* `PTRACE_SETREGS`: If the tracer has the values of registers from a previous call to `PTRACE_GETREGS`, it can modify the values in that structure and set the registers to the new values via this request.

|

||||||

|

* `PTRACE_PEEKUSER` and `PTRACE_POKEUSER`: These allow reading from the tracee's `USER` area, which holds the registers and other useful information. This can be used to modify a single register, without the more heavyweight `PTRACE_{GET,SET}REGS`.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Modifying the registers isn't always sufficient in a debugger. A debugger will sometimes need to read some parts of the memory or even modify it. The GNU Project Debugger (GDB) can use `print` to get the value of a memory location or a variable. `ptrace` has the functionality to implement this:

|

||||||

|

|

||||||

|

* `PTRACE_PEEKTEXT` and `PTRACE_POKETEXT`: These allow reading and writing a word in the address space of the tracee. Of course, the tracee has to be stopped for this to work.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Real-world debuggers also have features like breakpoints and watchpoints. In the next section, I'll dive into the architectural details of debugging support. For the purposes of clarity and conciseness, this article will consider x86 only.

|

||||||

|

|

||||||

|

### Architectural support

|

||||||

|

|

||||||

|

`ptrace` is all cool, but how does it work? In the previous section, we've seen that `ptrace` has quite a bit to do with signals: `SIGTRAP` can be delivered during single-stepping, before `execve` and before or after system calls. Signals can be generated a number of ways, but we will look at two specific examples that can be used by debuggers to stop a program (effectively creating a breakpoint!) at a given location:

|

||||||

|

|

||||||

|

* **Undefined instructions:** When a process tries to execute an undefined instruction, an exception is raised by the CPU. This exception is handled via a CPU interrupt, and a handler corresponding to the interrupt in the kernel is called. This will result in a `SIGILL` being sent to the process. This, in turn, causes the process to stop, and the tracer is notified via a wait-event. It can then decide what to do. On x86, an instruction `ud2` is guaranteed to be always undefined.

|

||||||

|

|

||||||

|

* **Debugging interrupt:** The problem with the previous approach is that the `ud2` instruction takes two bytes of machine code. A special instruction exists that takes one byte and raises an interrupt. It's `int $3` and the machine code is `0xCC`. When this interrupt is raised, the kernel sends a `SIGTRAP` to the process and, just as before, the tracer is notified.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

This is fine, but how do we coerce the tracee to execute these instructions? Easy: `ptrace` has `PTRACE_POKETEXT`, which can override a word at a memory location. A debugger would read the original word at the location using `PTRACE_PEEKTEXT` and replace it with `0xCC`, remembering the original byte and the fact that it is a breakpoint in its internal state. The next time the tracee executes at the location, it is automatically stopped by the virtue of a `SIGTRAP`. The debugger's end user can then decide how to continue (for instance, inspect the registers).

|

||||||

|

|

||||||

|

Okay, we've covered breakpoints, but what about watchpoints? How does a debugger stop a program when a certain memory location is read or written? Surely you wouldn't just overwrite every instruction with `int $3` that could read or write some memory location. Meet debug registers, a set of registers designed to fulfill this goal more efficiently:

|

||||||

|

|

||||||

|

* `DR0` to `DR3`: Each of these registers contains an address (a memory location), where the debugger wants the tracee to stop for some reason. The reason is specified as a bitmask in `DR7`.

|

||||||

|

* `DR4` and `DR5`: These obsolete aliases to `DR6` and `DR7`, respectively.

|

||||||

|

* `DR6`: Debug status. Contains information about which `DR0` to `DR3` caused the debugging exception to be raised. This is used by Linux to figure out the information passed along with the `SIGTRAP` to the tracee.

|

||||||

|

* `DR7`: Debug control. Using the bits in these registers, the debugger can control how the addresses specified in `DR0` to `DR3` are interpreted. A bitmask controls the size of the watchpoint (whether 1, 2, 4, or 8 bytes are monitored) and whether to raise an exception on execution, reading, writing, or either of reading and writing.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Because the debug registers form part of the `USER` area of a process, the debugger can use `PTRACE_POKEUSER` to write values into the debug registers. The debug registers are only relevant to a specific process and are thus restored to the value at preemption before the process regains control of the CPU.

|

||||||

|

|

||||||

|

### Tip of the iceberg

|

||||||

|

|

||||||

|

We've glanced at the iceberg a debugger is: we've covered `ptrace`, went over some of its functionality, then we had a look at how `ptrace` is implemented. Some parts of `ptrace` can be implemented in software, but other parts have to be implemented in hardware, otherwise they'd be very expensive or even impossible.

|

||||||

|

|

||||||

|

There's plenty that we didn't cover, of course. Questions, like "how does a debugger know where a variable is in memory?" remain open due to space and time constraints, but I hope you've learned something from this article; if it piqued your interest, there are plenty of resources available online to learn more.

|

||||||

|

|

||||||

|

For more, attend Levente Kurusa's talk, [Let's Write a Debugger!][3], at [linux.conf.au][1], which will be held January 22-26 in Sydney.

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://opensource.com/article/18/1/how-debuggers-really-work

|

||||||

|

|

||||||

|

作者:[Levente Kurusa][a]

|

||||||

|

译者:[译者ID](https://github.com/译者ID)

|

||||||

|

校对:[校对者ID](https://github.com/校对者ID)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://opensource.com/users/lkurusa

|

||||||

|

[1]:https://linux.conf.au/index.html

|

||||||

|

[2]:https://www.rust-lang.org

|

||||||

|

[3]:https://rego.linux.conf.au/schedule/presentation/91/

|

||||||

@ -0,0 +1,97 @@

|

|||||||

|

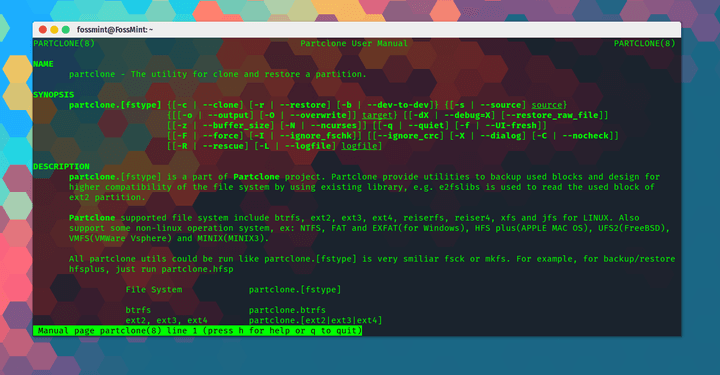

Partclone – A Versatile Free Software for Partition Imaging and Cloning

|

||||||

|

======

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

**[Partclone][1]** is a free and open-source tool for creating and cloning partition images brought to you by the developers of **Clonezilla**. In fact, **Partclone** is one of the tools that **Clonezilla** is based on.

|

||||||

|

|

||||||

|

It provides users with the tools required to backup and restores used partition blocks along with high compatibility with several file systems thanks to its ability to use existing libraries like **e2fslibs** to read and write partitions e.g. **ext2**.

|

||||||

|

|

||||||

|

Its best stronghold is the variety of formats it supports including ext2, ext3, ext4, hfs+, reiserfs, reiser4, btrfs, vmfs3, vmfs5, xfs, jfs, ufs, ntfs, fat(12/16/32), exfat, f2fs, and nilfs.

|

||||||

|

|

||||||

|

It also has a plethora of available programs including **partclone.ext2** (ext3 & ext4), partclone.ntfs, partclone.exfat, partclone.hfsp, and partclone.vmfs (v3 and v5), among others.

|

||||||

|

|

||||||

|

### Features in Partclone

|

||||||

|

|

||||||

|

* **Freeware:** **Partclone** is free for everyone to download and use.

|

||||||

|

* **Open Source:** **Partclone** is released under the GNU GPL license and is open to contribution on [GitHub][2].

|

||||||

|

* **Cross-Platform** : Available on Linux, Windows, MAC, ESX file system backup/restore, and FreeBSD.

|

||||||

|

* An online [Documentation page][3] from where you can view help docs and track its GitHub issues.

|

||||||

|

* An online [user manual][4] for beginners and pros alike.

|

||||||

|

* Rescue support.

|

||||||

|

* Clone partitions to image files.

|

||||||

|

* Restore image files to partitions.

|

||||||

|

* Duplicate partitions quickly.

|

||||||

|

* Support for raw clone.

|

||||||

|

* Displays transfer rate and elapsed time.

|

||||||

|

* Supports piping.

|

||||||

|

* Support for crc32.

|

||||||

|

* Supports vmfs for ESX vmware server and ufs for FreeBSD file system.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

There are a lot more features bundled in **Partclone** and you can see the rest of them [here][5].

|

||||||

|

|

||||||

|

[__Download Partclone for Linux][6]

|

||||||

|

|

||||||

|

### How to Install and Use Partclone

|

||||||

|

|

||||||

|

To install Partclone on Linux.

|

||||||

|

```

|

||||||

|

$ sudo apt install partclone [On Debian/Ubuntu]

|

||||||

|

$ sudo yum install partclone [On CentOS/RHEL/Fedora]

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

Clone partition to image.

|

||||||

|

```

|

||||||

|

# partclone.ext4 -d -c -s /dev/sda1 -o sda1.img

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

Restore image to partition.

|

||||||

|

```

|

||||||

|

# partclone.ext4 -d -r -s sda1.img -o /dev/sda1

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

Partition to partition clone.

|

||||||

|

```

|

||||||

|

# partclone.ext4 -d -b -s /dev/sda1 -o /dev/sdb1

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

Display image information.

|

||||||

|

```

|

||||||

|

# partclone.info -s sda1.img

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

Check image.

|

||||||

|

```

|

||||||

|

# partclone.chkimg -s sda1.img

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

Are you a **Partclone** user? I wrote on [**Deepin Clone**][7] just recently and apparently, there are certain tasks Partclone is better at handling. What has been your experience with other backup and restore utility tools?

|

||||||

|

|

||||||

|

Do share your thoughts and suggestions with us in the comments section below.

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://www.fossmint.com/partclone-linux-backup-clone-tool/

|

||||||

|

|

||||||

|

作者:[Martins D. Okoi;View All Posts;Peter Beck;Martins Divine Okoi][a]

|

||||||

|

译者:[译者ID](https://github.com/译者ID)

|

||||||

|

校对:[校对者ID](https://github.com/校对者ID)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:

|

||||||

|

[1]:https://partclone.org/

|

||||||

|

[2]:https://github.com/Thomas-Tsai/partclone

|

||||||

|

[3]:https://partclone.org/help/

|

||||||

|

[4]:https://partclone.org/usage/

|

||||||

|

[5]:https://partclone.org/features/

|

||||||

|

[6]:https://partclone.org/download/

|

||||||

|

[7]:https://www.fossmint.com/deepin-clone-system-backup-restore-for-deepin-users/

|

||||||

251

sources/tech/20180116 Analyzing the Linux boot process.md

Normal file

251

sources/tech/20180116 Analyzing the Linux boot process.md

Normal file

@ -0,0 +1,251 @@

|

|||||||

|

Analyzing the Linux boot process

|

||||||

|

======

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Image by : Penguin, Boot. Modified by Opensource.com. CC BY-SA 4.0.

|

||||||

|

|

||||||

|

The oldest joke in open source software is the statement that "the code is self-documenting." Experience shows that reading the source is akin to listening to the weather forecast: sensible people still go outside and check the sky. What follows are some tips on how to inspect and observe Linux systems at boot by leveraging knowledge of familiar debugging tools. Analyzing the boot processes of systems that are functioning well prepares users and developers to deal with the inevitable failures.

|

||||||

|

|

||||||

|

In some ways, the boot process is surprisingly simple. The kernel starts up single-threaded and synchronous on a single core and seems almost comprehensible to the pitiful human mind. But how does the kernel itself get started? What functions do [initial ramdisk][1] ) and bootloaders perform? And wait, why is the LED on the Ethernet port always on?

|

||||||

|

|

||||||

|

Read on for answers to these and other questions; the [code for the described demos and exercises][2] is also available on GitHub.

|

||||||

|

|

||||||

|

### The beginning of boot: the OFF state

|

||||||

|

|

||||||

|

#### Wake-on-LAN

|

||||||

|

|

||||||

|

The OFF state means that the system has no power, right? The apparent simplicity is deceptive. For example, the Ethernet LED is illuminated because wake-on-LAN (WOL) is enabled on your system. Check whether this is the case by typing:

|

||||||

|

```

|

||||||

|

$# sudo ethtool <interface name>

|

||||||

|

```

|

||||||

|

|

||||||

|

where `<interface name>` might be, for example, `eth0`. (`ethtool` is found in Linux packages of the same name.) If "Wake-on" in the output shows `g`, remote hosts can boot the system by sending a [MagicPacket][3]. If you have no intention of waking up your system remotely and do not wish others to do so, turn WOL off either in the system BIOS menu, or via:

|

||||||

|

```

|

||||||

|

$# sudo ethtool -s <interface name> wol d

|

||||||

|

```

|

||||||

|

|

||||||

|

The processor that responds to the MagicPacket may be part of the network interface or it may be the [Baseboard Management Controller][4] (BMC).

|

||||||

|

|

||||||

|

#### Intel Management Engine, Platform Controller Hub, and Minix

|

||||||

|

|

||||||

|

The BMC is not the only microcontroller (MCU) that may be listening when the system is nominally off. x86_64 systems also include the Intel Management Engine (IME) software suite for remote management of systems. A wide variety of devices, from servers to laptops, includes this technology, [which enables functionality][5] such as KVM Remote Control and Intel Capability Licensing Service. The [IME has unpatched vulnerabilities][6], according to [Intel's own detection tool][7]. The bad news is, it's difficult to disable the IME. Trammell Hudson has created an [me_cleaner project][8] that wipes some of the more egregious IME components, like the embedded web server, but could also brick the system on which it is run.

|

||||||

|

|

||||||

|

The IME firmware and the System Management Mode (SMM) software that follows it at boot are [based on the Minix operating system][9] and run on the separate Platform Controller Hub processor, not the main system CPU. The SMM then launches the Universal Extensible Firmware Interface (UEFI) software, about which much has [already been written][10], on the main processor. The Coreboot group at Google has started a breathtakingly ambitious [Non-Extensible Reduced Firmware][11] (NERF) project that aims to replace not only UEFI but early Linux userspace components such as systemd. While we await the outcome of these new efforts, Linux users may now purchase laptops from Purism, System76, or Dell [with IME disabled][12], plus we can hope for laptops [with ARM 64-bit processors][13].

|

||||||

|

|

||||||

|

#### Bootloaders

|

||||||

|

|

||||||

|

Besides starting buggy spyware, what function does early boot firmware serve? The job of a bootloader is to make available to a newly powered processor the resources it needs to run a general-purpose operating system like Linux. At power-on, there not only is no virtual memory, but no DRAM until its controller is brought up. A bootloader then turns on power supplies and scans buses and interfaces in order to locate the kernel image and the root filesystem. Popular bootloaders like U-Boot and GRUB have support for familiar interfaces like USB, PCI, and NFS, as well as more embedded-specific devices like NOR- and NAND-flash. Bootloaders also interact with hardware security devices like [Trusted Platform Modules][14] (TPMs) to establish a chain of trust from earliest boot.

|

||||||

|

|

||||||

|

![Running the U-boot bootloader][16]

|

||||||

|

|

||||||

|

Running the U-boot bootloader in the sandbox on the build host.

|

||||||

|

|

||||||

|

The open source, widely used [U-Boot ][17]bootloader is supported on systems ranging from Raspberry Pi to Nintendo devices to automotive boards to Chromebooks. There is no syslog, and when things go sideways, often not even any console output. To facilitate debugging, the U-Boot team offers a sandbox in which patches can be tested on the build-host, or even in a nightly Continuous Integration system. Playing with U-Boot's sandbox is relatively simple on a system where common development tools like Git and the GNU Compiler Collection (GCC) are installed:

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

$# git clone git://git.denx.de/u-boot; cd u-boot

|

||||||

|

|

||||||

|

$# make ARCH=sandbox defconfig

|

||||||

|

|

||||||

|

$# make; ./u-boot

|

||||||

|

|

||||||

|

=> printenv

|

||||||

|

|

||||||

|

=> help

|

||||||

|

```

|

||||||

|

|

||||||

|

That's it: you're running U-Boot on x86_64 and can test tricky features like [mock storage device][2] repartitioning, TPM-based secret-key manipulation, and hotplug of USB devices. The U-Boot sandbox can even be single-stepped under the GDB debugger. Development using the sandbox is 10x faster than testing by reflashing the bootloader onto a board, and a "bricked" sandbox can be recovered with Ctrl+C.

|

||||||

|

|

||||||

|

### Starting up the kernel

|

||||||

|

|

||||||

|

#### Provisioning a booting kernel

|

||||||

|

|

||||||

|

Upon completion of its tasks, the bootloader will execute a jump to kernel code that it has loaded into main memory and begin execution, passing along any command-line options that the user has specified. What kind of program is the kernel? `file /boot/vmlinuz` indicates that it is a bzImage, meaning a big compressed one. The Linux source tree contains an [extract-vmlinux tool][18] that can be used to uncompress the file:

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

$# scripts/extract-vmlinux /boot/vmlinuz-$(uname -r) > vmlinux

|

||||||

|

|

||||||

|

$# file vmlinux

|

||||||

|

|

||||||

|

vmlinux: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), statically

|

||||||

|

|

||||||

|

linked, stripped

|

||||||

|

```

|

||||||

|

|

||||||

|

The kernel is an [Executable and Linking Format][19] (ELF) binary, like Linux userspace programs. That means we can use commands from the `binutils` package like `readelf` to inspect it. Compare the output of, for example:

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

$# readelf -S /bin/date

|

||||||

|

|

||||||

|

$# readelf -S vmlinux

|

||||||

|

```

|

||||||

|

|

||||||

|

The list of sections in the binaries is largely the same.

|

||||||

|

|

||||||

|

So the kernel must start up something like other Linux ELF binaries ... but how do userspace programs actually start? In the `main()` function, right? Not precisely.

|

||||||

|

|

||||||

|

Before the `main()` function can run, programs need an execution context that includes heap and stack memory plus file descriptors for `stdio`, `stdout`, and `stderr`. Userspace programs obtain these resources from the standard library, which is `glibc` on most Linux systems. Consider the following:

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

$# file /bin/date

|

||||||

|

|

||||||

|

/bin/date: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically

|

||||||

|

|

||||||

|

linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 2.6.32,

|

||||||

|

|

||||||

|

BuildID[sha1]=14e8563676febeb06d701dbee35d225c5a8e565a,

|

||||||

|

|

||||||

|

stripped

|

||||||

|

```

|

||||||

|

|

||||||

|

ELF binaries have an interpreter, just as Bash and Python scripts do, but the interpreter need not be specified with `#!` as in scripts, as ELF is Linux's native format. The ELF interpreter [provisions a binary][20] with the needed resources by calling `_start()`, a function available from the `glibc` source package that can be [inspected via GDB][21]. The kernel obviously has no interpreter and must provision itself, but how?

|

||||||

|

|

||||||

|

Inspecting the kernel's startup with GDB gives the answer. First install the debug package for the kernel that contains an unstripped version of `vmlinux`, for example `apt-get install linux-image-amd64-dbg`, or compile and install your own kernel from source, for example, by following instructions in the excellent [Debian Kernel Handbook][22]. `gdb vmlinux` followed by `info files` shows the ELF section `init.text`. List the start of program execution in `init.text` with `l *(address)`, where `address` is the hexadecimal start of `init.text`. GDB will indicate that the x86_64 kernel starts up in the kernel's file [arch/x86/kernel/head_64.S][23], where we find the assembly function `start_cpu0()` and code that explicitly creates a stack and decompresses the zImage before calling the `x86_64 start_kernel()` function. ARM 32-bit kernels have the similar [arch/arm/kernel/head.S][24]. `start_kernel()` is not architecture-specific, so the function lives in the kernel's [init/main.c][25]. `start_kernel()` is arguably Linux's true `main()` function.

|

||||||

|

|

||||||

|

### From start_kernel() to PID 1

|

||||||

|

|

||||||

|

#### The kernel's hardware manifest: the device-tree and ACPI tables

|

||||||

|

|

||||||

|

At boot, the kernel needs information about the hardware beyond the processor type for which it has been compiled. The instructions in the code are augmented by configuration data that is stored separately. There are two main methods of storing this data: [device-trees][26] and [ACPI tables][27]. The kernel learns what hardware it must run at each boot by reading these files.

|

||||||

|

|

||||||

|

For embedded devices, the device-tree is a manifest of installed hardware. The device-tree is simply a file that is compiled at the same time as kernel source and is typically located in `/boot` alongside `vmlinux`. To see what's in the binary device-tree on an ARM device, just use the `strings` command from the `binutils` package on a file whose name matches `/boot/*.dtb`, as `dtb` refers to a device-tree binary. Clearly the device-tree can be modified simply by editing the JSON-like files that compose it and rerunning the special `dtc` compiler that is provided with the kernel source. While the device-tree is a static file whose file path is typically passed to the kernel by the bootloader on the command line, a [device-tree overlay][28] facility has been added in recent years, where the kernel can dynamically load additional fragments in response to hotplug events after boot.

|

||||||

|

|

||||||

|

x86-family and many enterprise-grade ARM64 devices make use of the alternative Advanced Configuration and Power Interface ([ACPI][27]) mechanism. In contrast to the device-tree, the ACPI information is stored in the `/sys/firmware/acpi/tables` virtual filesystem that is created by the kernel at boot by accessing onboard ROM. The easy way to read the ACPI tables is with the `acpidump` command from the `acpica-tools` package. Here's an example:

|

||||||

|

|

||||||

|

![ACPI tables on Lenovo laptops][30]

|

||||||

|

|

||||||

|

|

||||||

|

ACPI tables on Lenovo laptops are all set for Windows 2001.

|

||||||

|

|

||||||

|

Yes, your Linux system is ready for Windows 2001, should you care to install it. ACPI has both methods and data, unlike the device-tree, which is more of a hardware-description language. ACPI methods continue to be active post-boot. For example, starting the command `acpi_listen` (from package `apcid`) and opening and closing the laptop lid will show that ACPI functionality is running all the time. While temporarily and dynamically [overwriting the ACPI tables][31] is possible, permanently changing them involves interacting with the BIOS menu at boot or reflashing the ROM. If you're going to that much trouble, perhaps you should just [install coreboot][32], the open source firmware replacement.

|

||||||

|

|

||||||

|

#### From start_kernel() to userspace

|

||||||

|

|

||||||

|

The code in [init/main.c][25] is surprisingly readable and, amusingly, still carries Linus Torvalds' original copyright from 1991-1992. The lines found in `dmesg | head` on a newly booted system originate mostly from this source file. The first CPU is registered with the system, global data structures are initialized, and the scheduler, interrupt handlers (IRQs), timers, and console are brought one-by-one, in strict order, online. Until the function `timekeeping_init()` runs, all timestamps are zero. This part of the kernel initialization is synchronous, meaning that execution occurs in precisely one thread, and no function is executed until the last one completes and returns. As a result, the `dmesg` output will be completely reproducible, even between two systems, as long as they have the same device-tree or ACPI tables. Linux is behaving like one of the RTOS (real-time operating systems) that runs on MCUs, for example QNX or VxWorks. The situation persists into the function `rest_init()`, which is called by `start_kernel()` at its termination.

|

||||||

|

|

||||||

|

![Summary of early kernel boot process.][34]

|

||||||

|

|

||||||

|

Summary of early kernel boot process.

|

||||||

|

|

||||||

|

The rather humbly named `rest_init()` spawns a new thread that runs `kernel_init()`, which invokes `do_initcalls()`. Users can spy on `initcalls` in action by appending `initcall_debug` to the kernel command line, resulting in `dmesg` entries every time an `initcall` function runs. `initcalls` pass through seven sequential levels: early, core, postcore, arch, subsys, fs, device, and late. The most user-visible part of the `initcalls` is the probing and setup of all the processors' peripherals: buses, network, storage, displays, etc., accompanied by the loading of their kernel modules. `rest_init()` also spawns a second thread on the boot processor that begins by running `cpu_idle()` while it waits for the scheduler to assign it work.

|

||||||

|

|

||||||

|

`kernel_init()` also [sets up symmetric multiprocessing][35] (SMP). With more recent kernels, find this point in `dmesg` output by looking for "Bringing up secondary CPUs..." SMP proceeds by "hotplugging" CPUs, meaning that it manages their lifecycle with a state machine that is notionally similar to that of devices like hotplugged USB sticks. The kernel's power-management system frequently takes individual cores offline, then wakes them as needed, so that the same CPU hotplug code is called over and over on a machine that is not busy. Observe the power-management system's invocation of CPU hotplug with the [BCC tool][36] called `offcputime.py`.

|

||||||

|

|

||||||

|

Note that the code in `init/main.c` is nearly finished executing when `smp_init()` runs: The boot processor has completed most of the one-time initialization that the other cores need not repeat. Nonetheless, the per-CPU threads must be spawned for each core to manage interrupts (IRQs), workqueues, timers, and power events on each. For example, see the per-CPU threads that service softirqs and workqueues in action via the `ps -o psr` command.

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

$\# ps -o pid,psr,comm $(pgrep ksoftirqd)

|

||||||

|

|

||||||

|

PID PSR COMMAND

|

||||||

|

|

||||||

|

7 0 ksoftirqd/0

|

||||||

|

|

||||||

|

16 1 ksoftirqd/1

|

||||||

|

|

||||||

|

22 2 ksoftirqd/2

|

||||||

|

|

||||||

|

28 3 ksoftirqd/3

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

$\# ps -o pid,psr,comm $(pgrep kworker)

|

||||||

|

|

||||||

|

PID PSR COMMAND

|

||||||

|

|

||||||

|

4 0 kworker/0:0H

|

||||||

|

|

||||||

|

18 1 kworker/1:0H

|

||||||

|

|

||||||

|

24 2 kworker/2:0H

|

||||||

|

|

||||||

|

30 3 kworker/3:0H

|

||||||

|

|

||||||

|

[ . . . ]

|

||||||

|

```

|

||||||

|

|

||||||

|

where the PSR field stands for "processor." Each core must also host its own timers and `cpuhp` hotplug handlers.

|

||||||

|

|

||||||

|

How is it, finally, that userspace starts? Near its end, `kernel_init()` looks for an `initrd` that can execute the `init` process on its behalf. If it finds none, the kernel directly executes `init` itself. Why then might one want an `initrd`?

|

||||||

|

|

||||||

|

#### Early userspace: who ordered the initrd?

|

||||||

|

|

||||||

|

Besides the device-tree, another file path that is optionally provided to the kernel at boot is that of the `initrd`. The `initrd` often lives in `/boot` alongside the bzImage file vmlinuz on x86, or alongside the similar uImage and device-tree for ARM. List the contents of the `initrd` with the `lsinitramfs` tool that is part of the `initramfs-tools-core` package. Distro `initrd` schemes contain minimal `/bin`, `/sbin`, and `/etc` directories along with kernel modules, plus some files in `/scripts`. All of these should look pretty familiar, as the `initrd` for the most part is simply a minimal Linux root filesystem. The apparent similarity is a bit deceptive, as nearly all the executables in `/bin` and `/sbin` inside the ramdisk are symlinks to the [BusyBox binary][37], resulting in `/bin` and `/sbin` directories that are 10x smaller than glibc's.

|

||||||

|

|

||||||

|

Why bother to create an `initrd` if all it does is load some modules and then start `init` on the regular root filesystem? Consider an encrypted root filesystem. The decryption may rely on loading a kernel module that is stored in `/lib/modules` on the root filesystem ... and, unsurprisingly, in the `initrd` as well. The crypto module could be statically compiled into the kernel instead of loaded from a file, but there are various reasons for not wanting to do so. For example, statically compiling the kernel with modules could make it too large to fit on the available storage, or static compilation may violate the terms of a software license. Unsurprisingly, storage, network, and human input device (HID) drivers may also be present in the `initrd`--basically any code that is not part of the kernel proper that is needed to mount the root filesystem. The `initrd` is also a place where users can stash their own [custom ACPI][38] table code.

|

||||||

|

|

||||||

|

![Rescue shell and a custom <code>initrd</code>.][40]

|

||||||

|

|

||||||

|

Having some fun with the rescue shell and a custom `initrd`.

|

||||||

|

|

||||||

|

`initrd`'s are also great for testing filesystems and data-storage devices themselves. Stash these test tools in the `initrd` and run your tests from memory rather than from the object under test.

|

||||||

|

|

||||||

|